Open Access

ARTICLE

Human Intelligent-Things Interaction Application Using 6G and Deep Edge Learning

College of Computer and Cyber Sciences, University of Prince Mugrin, Medina, 42241, Saudi Arabia

* Corresponding Author: Ftoon H. Kedwan. Email:

Journal on Internet of Things 2024, 6, 43-73. https://doi.org/10.32604/jiot.2024.052325

Received 30 March 2024; Accepted 12 August 2024; Issue published 10 September 2024

Abstract

Impressive advancements and novel techniques have been witnessed in AI-based Human Intelligent-Things Interaction (HITI) systems. Several technological breakthroughs have contributed to HITI, such as the Internet of Things (IoT), deep and edge learning for deducing intelligence, and 6G for ultra-fast, ultralow-latency communication between cyber-physical HITI systems. However, despite these advancements, human-AI teaming presents several challenges that are yet to be addressed. Allowing human stakeholders to understand AI’s decision-making process is a novel challenge. Artificial Intelligence (AI) needs to adopt diversified human-understandable features, such as ethics, non-biases, trustworthiness, explainability, safety guarantees, data privacy, system security, and auditability. While adopting these features, high system performance should be maintained, and the processing involved in ‘human intelligent-things teaming’ should be conveyed transparently. To this end, we introduce the fusion of four key technologies, namely an ensemble of deep learning, 6G, IoT, and corresponding security/privacy techniques, to support HITI. This paper presents a framework that integrates these four technologies, together with a comprehensive review of the existing techniques for fusing security and privacy within future HITI applications. As proof of concept, we showcase two security applications that use this fusion to offer next-generation HITI services, namely intelligent smart city surveillance and handling emergency services. The proposed research outcome is envisioned to democratize the use of AI within smart city surveillance applications.

Advanced technologies, such as the Internet of Things (IoT), Artificial Intelligence (AI), and 6G network connectivity, have become an integral part of the civilized modern lifestyle and how businesses are run [1]. Yet, there are some obstacles to completely relying on them, especially when human judgment is required to assess certain values, such as safety, ethics, and trustworthiness. Therefore, a near real-time Human Intelligent-Things Interaction (HITI) application is an emergent challenge. The current research work will try to resolve the aforementioned challenges. One way to do this is by allowing humans to understand how decisions are made by the HITI algorithms. This could be achieved by establishing HITI processing transparency to eliminate the Blackbox syndrome of the processing step. Understandability between humans and the edge processing endpoints must also be established to support HITI processing transparency.

A fusion of advanced technologies is used in the current research work to enable a clear understanding of non-functional processing features, such as ethics, trustworthiness, explainability, and auditability. Hence, this research investigates the possibility of building edge GPU devices that can train and run multiple ensembles of deep learning models on different types of edge IoT nodes with constrained resources. In addition, a 6G architecture-based framework will be built to fuse edge IoT devices and an ensemble of GPU-enabled deep-learning processing units. This framework will be supported with edge 6G communication capability [2], edge storage system [3], and system security and data privacy techniques. As a proof of concept, a smart city surveillance application and handling emergency services will be implemented to showcase the research idea.

• Study the 6G architecture and design a framework that allows intelligent fusion of IoT and deep learning surveillance applications within a smart city.

• Study the fusion of diversified types of IoT devices that can leverage the advantage of deep learning and 6G network convergence.

• Study supervised, unsupervised, and hybrid deep learning models and their ensemble techniques that consider:

• Explainable fusion at the Edge Node

• Explainable fusion in the Cloud

• Study different security and privacy models that will allow IoT devices and edge learning models to take part in smart city surveillance applications.

• Finally, the following four dimensions, 6G network characteristics, fusion of IoT devices, the ensemble of human understandable and explainable deep learning models, and security/privacy dimensions, will be leveraged to develop two smart city surveillance applications.

1.3 Research Secondary Objectives

The proposed system will bring the following benefits:

1. The implemented framework will make an integrated system that is the first of its kind, integrating 6G, Deep Learning, IoT, and Security and Privacy Approaches.

2. The framework will showcase deep learning-assisted:

a) Edge-to-cloud secure big data sharing

b) Live dashboard

c) Live alerts

d) Live events

3. The framework will showcase next-generation demonstrable AI-based services to different stakeholders, such as city offices and ministries, for further technology transfer.

In this research, we will address the following challenges:

1. Deep learning models typically run on a cloud GPU. However, since data security and privacy are essential for smart city applications, edge GPU devices should be able to run deep learning models on IoT nodes that have constrained resources. Running multiple deep learning models on the same GPU node is even more challenging. In the current research work, methods that allow multiple ensembles of deep learning models to run on edge nodes with constrained resources will be investigated and presented. This will allow each edge node to detect multiple smart city surveillance events.

2. 6G offers massive IoT connectivity with microseconds of latency between any two endpoints. Some key features of 6G include software-defined security, software functions virtualization, narrowband IoT [4], and SD-WAN [5]. Results for the two selected smart city applications will be shared.

3. Finally, we will present approaches that will allow AI-based applications to integrate with IoT, deep learning, and 6G to support human-AI interaction.

As mentioned above, this paper is based on the fusion of several key technologies, namely deep and edge learning, which deduce intelligence. The 6G network allows for ultra-fast communication between IoT and cyber-physical systems while maintaining relatively low latency. The deep learning and IoT fields have been extensively studied in many books and papers [6]. The reader should refer to the surveys [7–9] for a detailed overview of these two fields. In this section, we focus on the related works in the newly emerging fields of 6G wireless networks and deep learning on edge devices.

The number of intelligent devices connected to the internet is increasing day by day. This requires more reliable, resilient, and secure connectivity to wireless networks. Thus, a wireless network beyond 5G (i.e., 6G) is essential for building connectivity between humans and machines [10–12]. Moreover, recent advances in deep learning support many remarkable applications, such as robotics and self-driving cars. This increases the demand for more innovations in 6G wireless networks. Such networks will be needed to support extensive AI services in all the network layers. A potential architecture for 6G was introduced in [13]. Many other works also describe the roadmap towards 6G [14–16]. Zhang et al. [17] discussed several possible technology transformations that will define the 6G networks. Additionally, many recent applications are now using 6G to upgrade both performance and service quality [18–21]. Some other noteworthy research that focuses on 6G can be reached at [22–26].

1.5.2 Deep Learning on Edge Devices

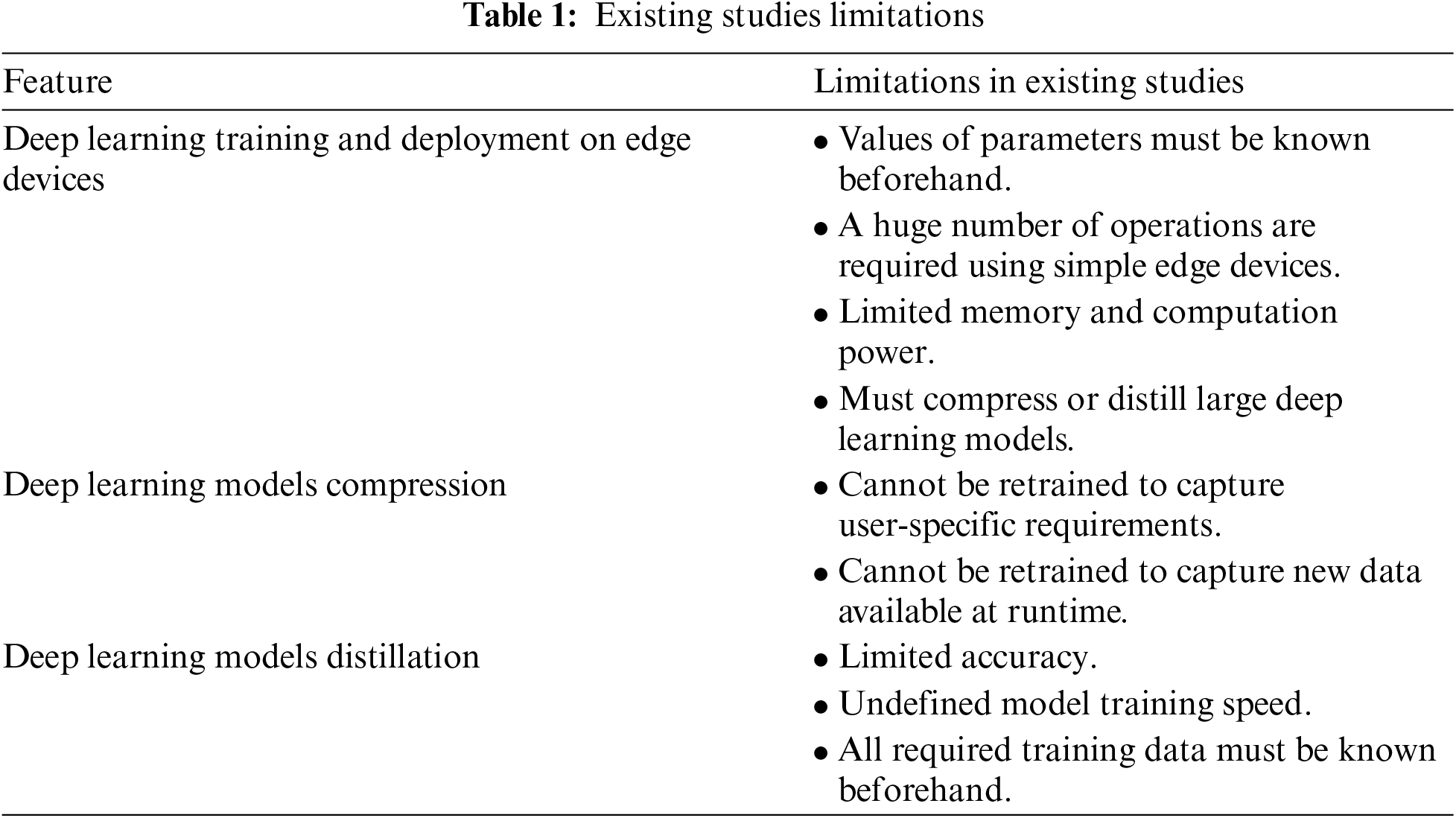

Deep learning models have achieved excellent accuracy in several practical applications [27–29], such as speech recognition/generation and motion detection/analysis. In many situations, deep learning models must be trained and deployed on edge devices, such as in [30]. This is important because it enables personalized, responsive, and private learning. The task is challenging since it requires learning the values of millions of parameters by performing a huge number of operations on simple edge devices with limited memory and computation power. Thus, it is impossible to benefit from the great power of deep learning on edge devices without either compressing or distilling the large deep learning model.

The compression of an already-trained model is done using weight sharing [31], quantization [32], or pruning [33,34]. It is also possible to combine more than one of these compression techniques [35]. However, the compressed models cannot be retrained to capture user-specific requirements, nor can they be retrained to capture new data available at runtime. The distillation approaches are based on knowledge transfer, in which knowledge is distilled from a so-called teacher (a cloud-based deep model) to improve the accuracy of a so-called student (a small on-device model) [36–39]. The distillation approaches suffer from limited accuracy [40,41] and from the undefined training time the model needs to reach an acceptable accuracy. Moreover, these approaches assume that all required training data is available at training time. It is also assumed that the tasks for the student and the teacher remain the same. However, neither assumption is realistic in practice. The limitations of the current studies are summarized in Table 1 for easier readability and reference. Other noteworthy studies that use deep learning on edge devices include [42,43].
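To make the compression techniques named above more concrete, the following is a minimal sketch of magnitude pruning followed by uniform 8-bit quantization on a flat list of weights. It is an illustration only, not the method of the cited works: the function names, the 50% sparsity, and the affine 0 to 255 mapping are all assumptions chosen for brevity.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by ascending |w|; the first n_prune entries are dropped.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_uint8(weights):
    """Uniform affine quantization of floats to integer codes in [0, 255]."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for constant input
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Map integer codes back to approximate float weights."""
    return [c * scale + lo for c in codes]


# Hypothetical layer weights, used only for illustration.
layer = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2]
sparse_layer = prune_by_magnitude(layer, sparsity=0.5)
codes, scale, lo = quantize_uint8(sparse_layer)
approx_layer = dequantize(codes, scale, lo)
```

Each stored weight shrinks from a float to a single byte plus two shared constants (`scale` and `lo`), at the cost of a reconstruction error of at most half a quantization step per weight.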

The next section presents the research methodology and framework design, covering advanced architectures in communication technologies, secure big data sharing and transmission techniques, and advanced deep learning algorithms that can support HITI. It also demonstrates a use-case scenario that explains the incorporation of human-understandable features (i.e., ethics, trust, security, privacy, and non-biases) in the use of AI for HITI applications. The framework design is demonstrated via a high-level diagram that shows all the technologies required to run the research project implementation, together with the techniques used for preserving the security and privacy of human-AI interaction data. The subsequent section covers the project implementation of two types of services: smart city surveillance and emergency handling scenarios. The implementation is then tested, and the results are discussed and evaluated using measures such as accuracy, recall, precision, and F1 score. That section also assesses the usage of edge learning and blockchain-based provenance. The last section concludes the paper and identifies potential future work.

2 Methodology & Framework Design

Recent advancements in AI, IoT, and 6G communications have shown promising results in HITI applications. What was perceived as a computer in the HITI loop is now replaced with several technologies. The computing part of HITI can now be accomplished through IoT devices that can carry out complex computing tasks. Recent IoT devices come with larger memory modules and high-performance CPUs, GPUs, and RAM. Many sensors can be added through expansion slots. IoT devices are small in form factor and, hence, can be deployed at any location where computing tasks need to be carried out. Examples of deployment are human body area networks, smart homes, smart buildings, industrial control systems, vehicles, and virtually anywhere else. In parallel, the cloud infrastructure has also matured to a new level. IoT data can now be sensed at its origin and parsed by the edge computing devices, and important phenomena can be deduced. In addition, a carefully crafted amount of local data or decisions can be shared with the cloud providers for further processing or storage. This section discusses the advanced technologies employed in the current research. It also discusses the framework design at a high level.

2.1 Proposed Research Background

The adopted novel technologies include 6G connectivity architectures that improve the data exchange between data sources and cloud services within a few milliseconds of latency. In addition, HITI-supported deep learning algorithms are adopted to form the fusion of deep edge learning models that can be used for surveillance and emergency situations. Real-time video analytics techniques are also discussed since emergency handling scenarios are usually captured in real time and should be dealt with swiftly. A use-case scenario is demonstrated to explain the feasibility of using AI for HITI applications to leverage human understandable features (i.e., ethics, trust, security, privacy, and non-biases).

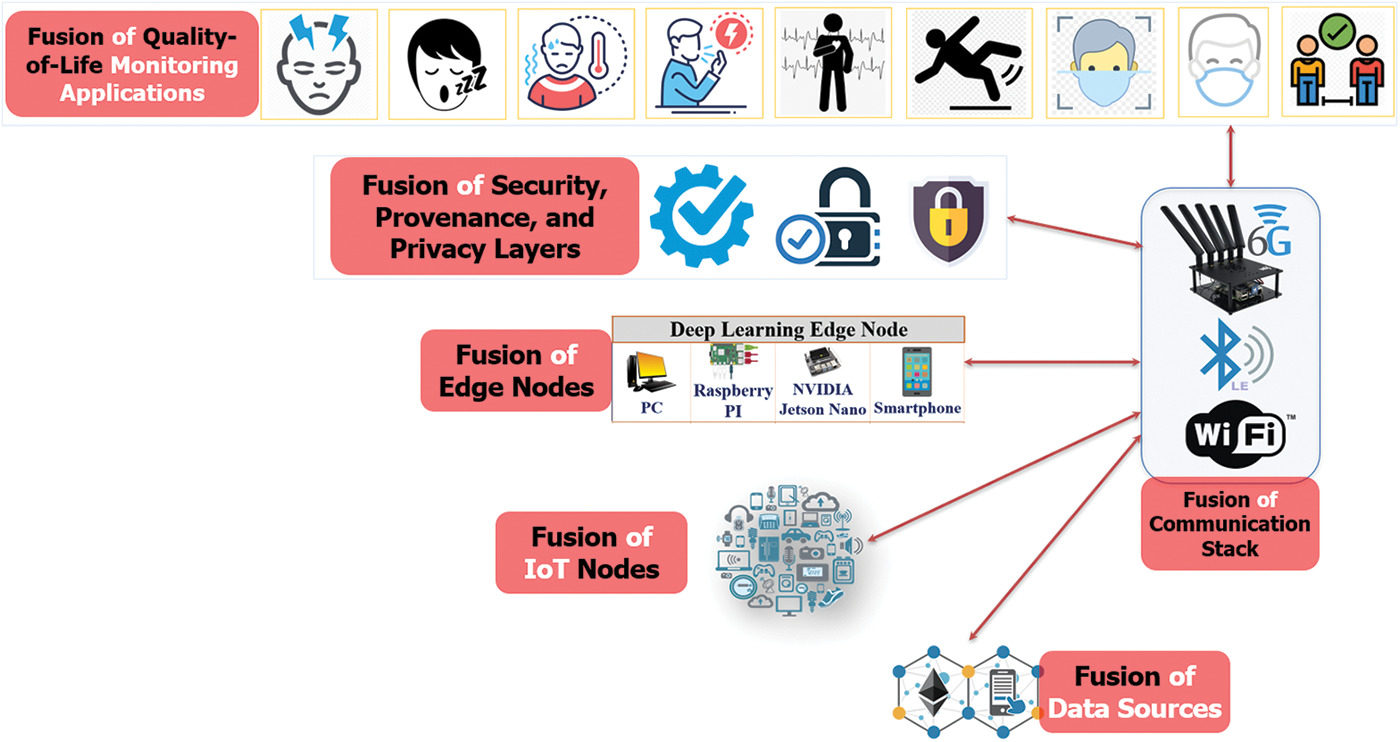

Recent advancements in communication technologies have progressed from 4G all-digital, to 5G mass connectivity, to 6G mass autonomy. This advancement in network connectivity has opened the door to innovative and rich HITI qualities, such as quality of service, experience, and trust [44]. Fig. 1 shows fusion scenarios at different layers where intelligent things can stack up and provide rich, personalized emergency services for humans. There was an earlier notion that cloud computing infrastructure is overly distant from data sources, which used to be a concern with respect to the HITI applications’ quality of service. Data privacy and security when data is shared with third-party cloud providers was another concern with remote data sources. With recent advancements in 5G, beyond 5G, and 6G communication networks, the gap between the source of the data at the edge and the cloud has diminished [45]. Massive amounts of data can travel between any two points around the world within milliseconds or even less. This high-speed communication channel has opened the door to a tactile internet for human-computing device interactions. Fig. 2 shows the fusion of different entities that form the HITI ecosystem. AI is one of the areas that has taken full benefit of this ultra-low latency and massive bandwidth communication [13]. Owing to the availability of a massive number of datasets, very high bandwidth communication, and massive memory and GPU resources, innovative AI applications have been developed and proposed by researchers. AI processes can now even be performed at the edge nodes [46].

Figure 1: Fusion of different entities to support HITI

Figure 2: Fusion of communication entities within HITI ecosystem

Deep learning algorithms supporting a very large number of hidden layers can now be run on edge devices within smart homes or around human body area networks [47]. Fig. 3 shows the next generation deep learning algorithms that can support HITI. Fig. 4 shows a sample HITI emergency healthcare application where diverse types of IoT devices are attached to AI-enabled edge devices. The edge nodes leverage GPUs to process deep learning algorithms developed for tracking different quality of life (QoL) states of an elderly person. The deep learning algorithms hosted on the edge node are trained to understand the emergency needs of an elderly person and provide the necessary services [48]. The subject can interact with the AI algorithms through natural user interfaces, such as gesture, speech, brain thought, and other channels.

Figure 3: Fusion of deep edge learning models to support surveillance and emergency situations

Figure 4: Fusion of human intelligence and AI interaction for emergency health applications within a smart city

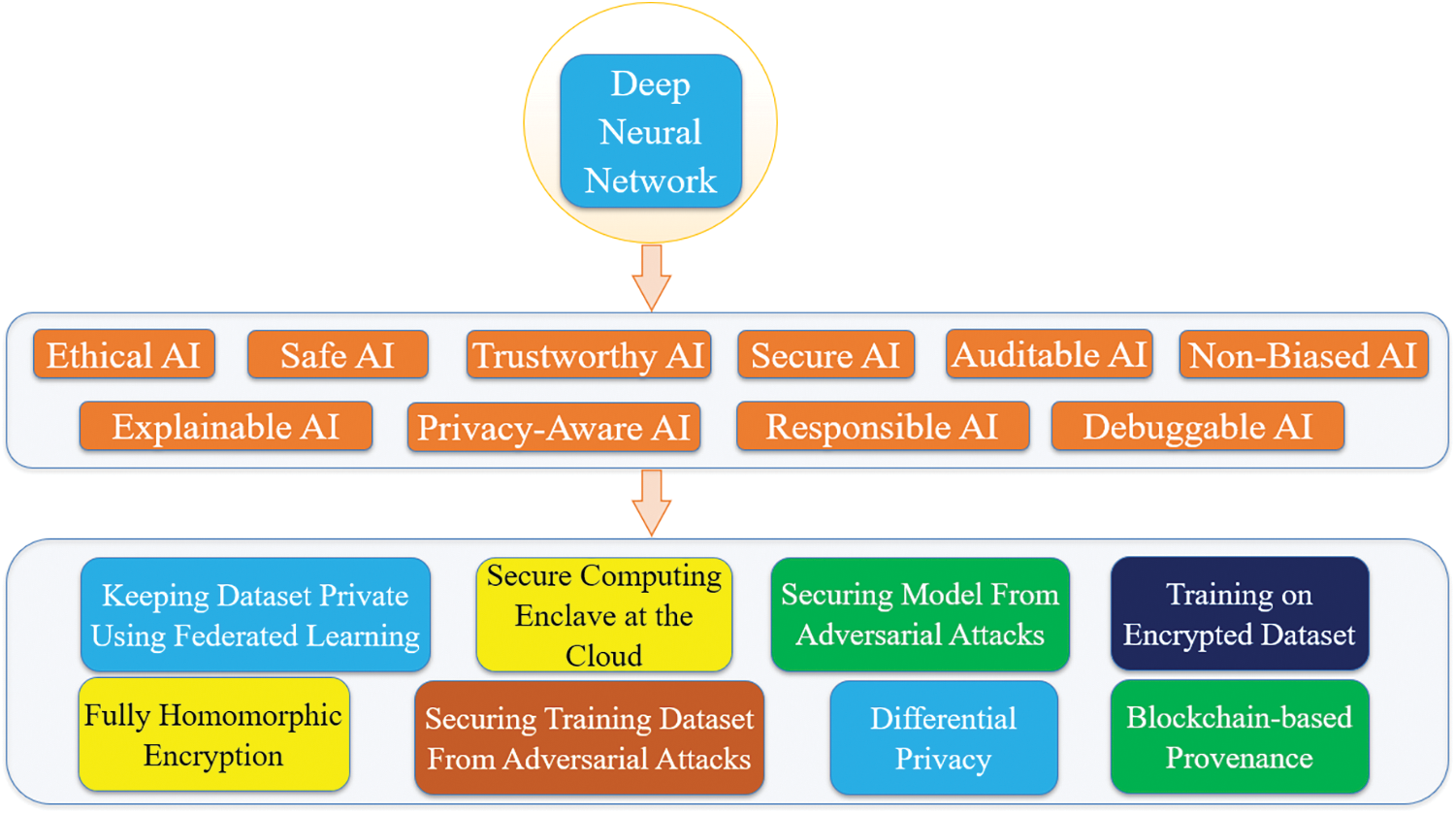

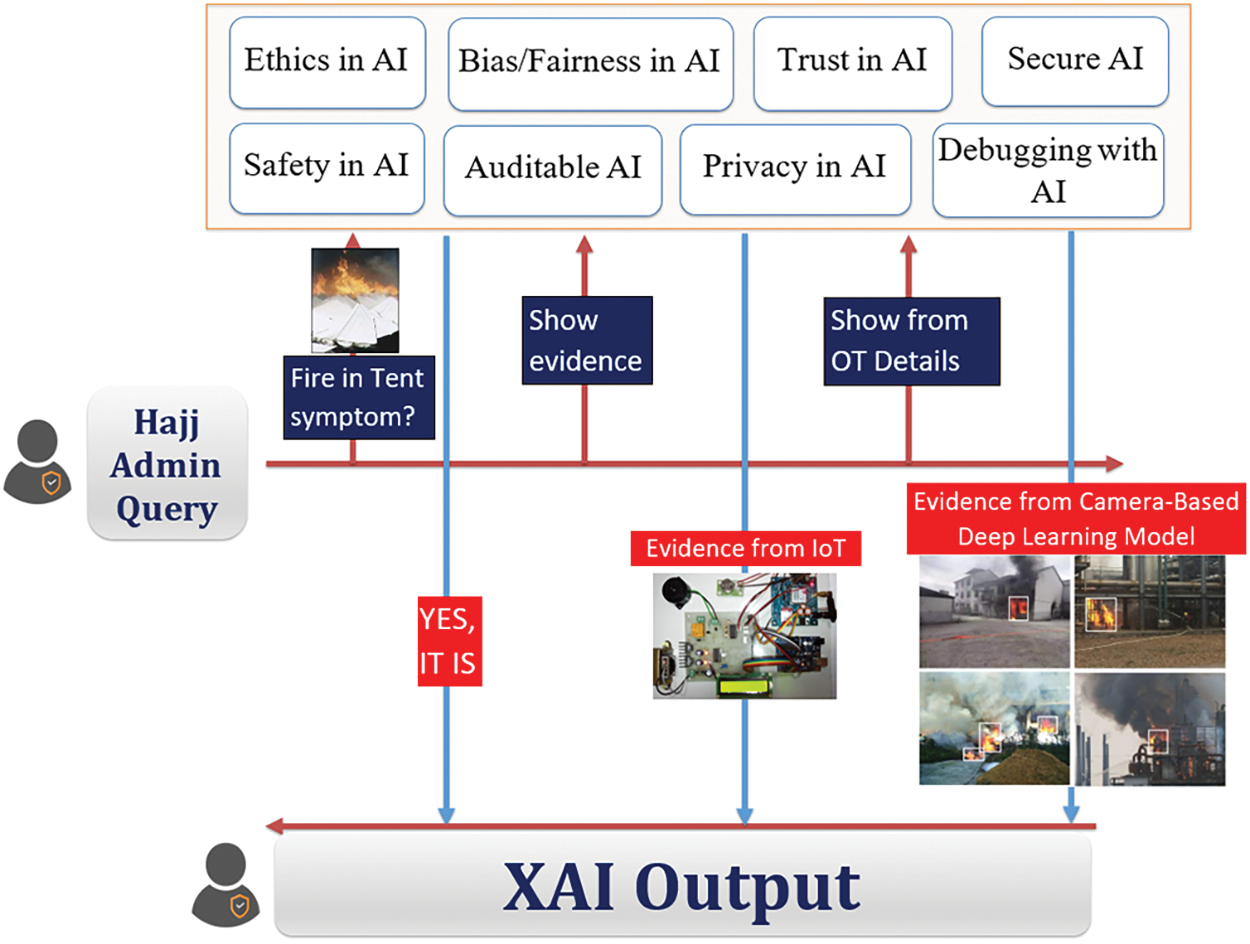

2.1.3 AI Algorithms Understandability

While AI algorithms have the potential to be used with HITI applications, researchers have tried to address several related concerns. For example, if an AI algorithm is used within a safety-critical industrial system, can humans interacting with the AI device trust the algorithm? How can one make sure that the AI algorithm is not compromised or leaking training data to the algorithm provider? The Blackbox nature of the existing AI algorithms does not provide evidence about the inner details regarding how the input was processed and how the algorithm came to the intermediate and final decisions. The current research adopts a new generation of AI algorithms that try to build explainability, human-like ethics, trust, security, privacy, and non-biases into the AI algorithm itself [49]. This will allow the human actors to query and interact with the AI and reason about its working principles and the machine’s decision-making processes. The specific measures that could be taken to ensure these human-like features are listed in Fig. 5. Some of them are adopted in the current research, such as:

• Preserving dataset privacy using federated learning.

• Secure computing environment in the cloud.

• Securing training datasets from adversarial attacks.

• Securing algorithmic models from adversarial attacks.

• Blockchain-based provenance.
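As an illustration of the first measure in the list above, the sketch below shows the aggregation step of federated averaging: each edge node trains locally and shares only its model weights, which a coordinator combines in proportion to each node's local dataset size. The paper does not state which aggregation rule it uses, so FedAvg-style weighting, the function name, and the toy numbers are assumptions.

```python
def federated_average(client_weights, client_sizes):
    """Combine per-client weight vectors, weighted by local dataset size.

    Raw training data never leaves the clients; only weights are shared.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client weight vector")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


# Two hypothetical edge nodes holding 1 and 3 local samples respectively.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
# global_weights == [2.5, 3.5]
```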

Figure 5: Fusion of different types of AI with security and privacy

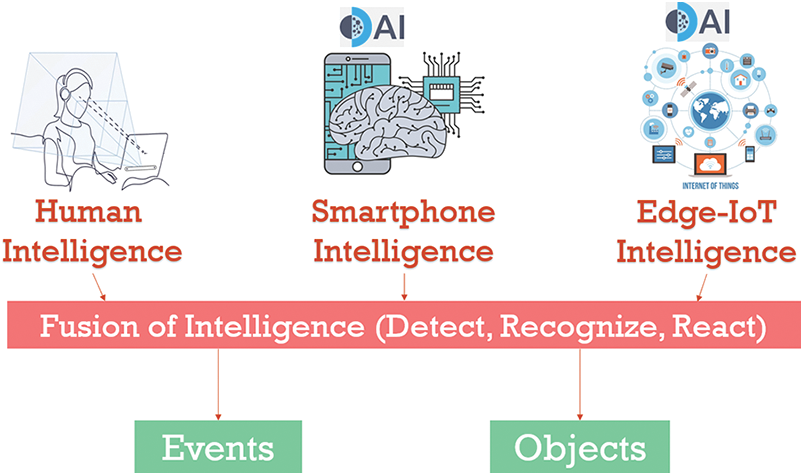

By avoiding the Blackbox syndrome during model development, the AI algorithm could be explainable, auditable, and debuggable. Focusing on datasets, models, and computing environment security, in addition to encryption mechanisms, helps satisfy features like safety, privacy-awareness, and responsibility. Measures like differential privacy, Blockchain-based provenance, and homomorphic encryption support non-biases and trustworthiness. Fig. 6 shows a scenario in which human intelligence interacts with an AI that understands human semantics. This semantic AI would build trust among human subjects and convince HITI application designers to democratize AI for more and more critical applications.

Figure 6: Fusion of human intelligence with edge AI for deducing different types of objects and events

2.1.4 AI-Enabled Fusion Scenarios

To make the HITI application near real-time, the human and the underlying application need to process the end-to-end data within milliseconds. In this case, the human perceives that the application is responding in real-time. 5G showed hope by supporting very low latency, high reliability, very high bandwidth, low response time and ability to handle a massive number of IoT devices within a square kilometer. In addition, 5G introduced virtualization of network functions, edge caching, software defined networks, network slicing, directional antenna, and so on. Using 5G networks, the underlying HITI applications can reserve the network resources on the fly. Lately, researchers started studying the shortcomings of 5G, which has led to research terms such as “Beyond 5G” and “6G”.

For example, one of the limitations of 5G networks is that the physical layer frequency allocation, bandwidth reservations, network slicing, and other virtualization parameters need to be manually configured, which is very inefficient for initializing a complex system. Hence, 6G is proposed, which will inherently be maintained by AI algorithms [17]. Different 6G radio access networks, resource allocations, efficient spectrum allocation, security functions virtualization, network slice configuration, and other processes will be managed by the built-in AI algorithms.

Fig. 7 shows the AI-enabled 6G properties, such as latency below 0.1 milliseconds, reliability on the order of nine 9s, and devices with zero energy usage [50]. 6G is expected to support subnetworks with throughput exceeding 100 Gbps within the subnetwork and around 5 Gbps at the network edge. 6G is also expected to accommodate 10 times as many IoT devices, with less than 1% missed detections and false positives, and with location accuracy on the order of centimeters. All these features are expected to offer a tactile internet experience and push for innovative HITI applications [44].

Figure 7: 6G for smart city HITI applications

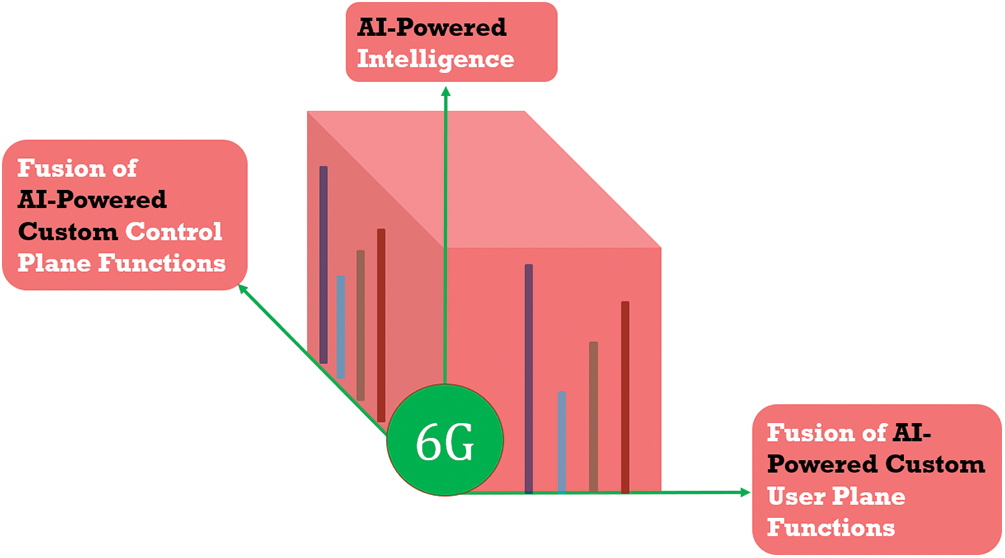

As shown in Fig. 8, 6G will allow User Plane (UP) functions that provide personalized qualities of service and experience-related functionalities with the help of the AI functional plane. The Control Plane (CP) functionalities use AI algorithms that manage the network functions, security functions, user mobility, and other control mechanisms to support the UP requirements. 6G will use built-in AI plane functions to offer custom, dynamic, and personalized network slices that will serve the HITI applications’ needs [13]. AI-based Multiple-Input Multiple-Output (MIMO) beam-forming will use only the bandwidth needed by the HITI vertical. Network slices and network functions are virtually configured according to the QoS needed.

Figure 8: Fusion of deep learning within 6G framework

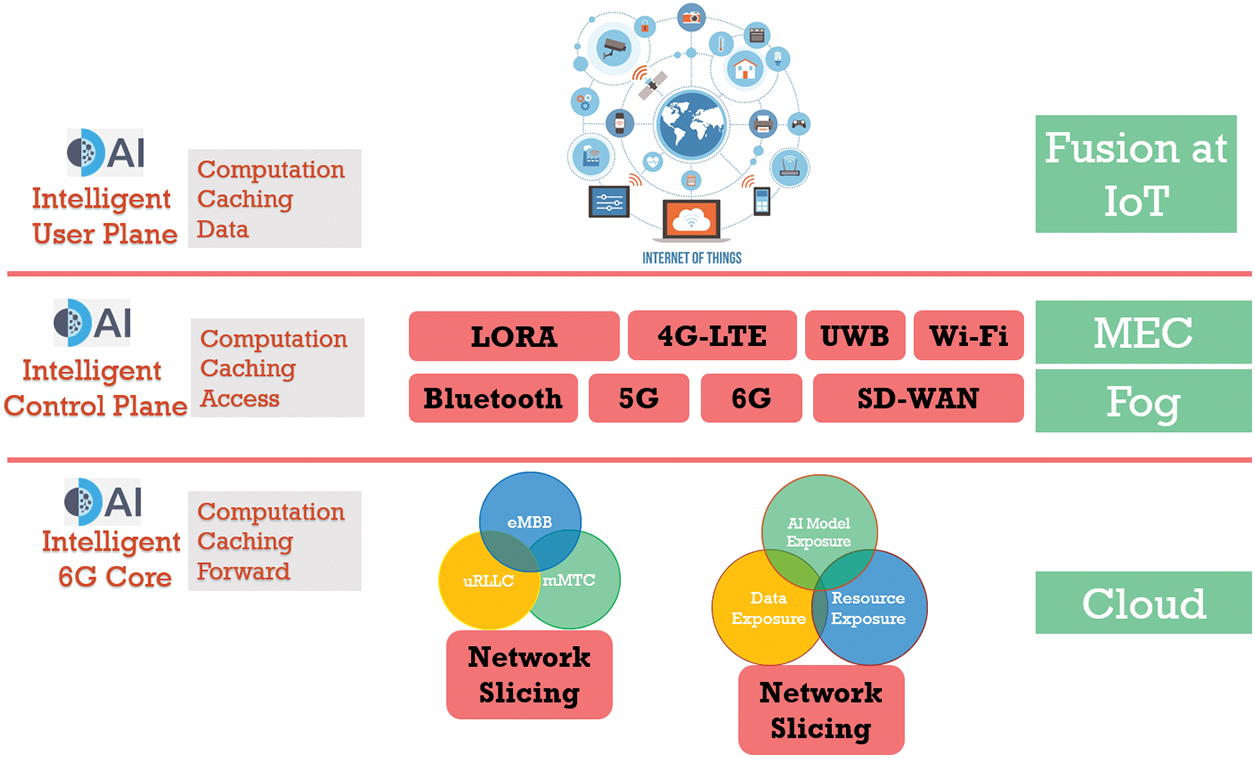

As shown in Fig. 9, 6G will use intelligence to manage applications across the Open Systems Interconnection (OSI) layers, from the application layer down to the physical layer [51]. Intelligent computing offloading and caching can be managed by application layer AI algorithms. Intelligent traffic prediction can be done by transport layer AI algorithms. Traffic clustering can be done by employing AI algorithms in the network layer. Intelligent channel allocation can be performed by a datalink layer AI algorithm. Fusion of OSI layers can also be done to offer hybrid services. For example, the physical and datalink layers can be jointly optimized by AI algorithms to offer adaptive configuration, the network and datalink layers can offer radio resource scheduling, and the network and transport layers can offer intelligent network traffic control, while the rest of the upper layers offer data rate control. 6G will also use AI algorithms at different computing tiers, e.g., AI for the edge computing and cloud computing layers. At the edge computing layer, where sensing takes place, intelligent control functions and access strategies are managed by AI algorithms. In the fog computing layer, intelligent resource management, slice orchestration, and routing are managed by AI algorithms. Finally, in the cloud computing layer, i.e., the service space, computation and storage resource allocation are performed by AI algorithms.

Figure 9: 6G intelligent network configuration based on HITI performance and needs. 6G intelligent network configuration for mobility, low-latency, and high-bandwidth requiring HITI surveillance applications

2.1.5 AI-Enabled 6G Communication

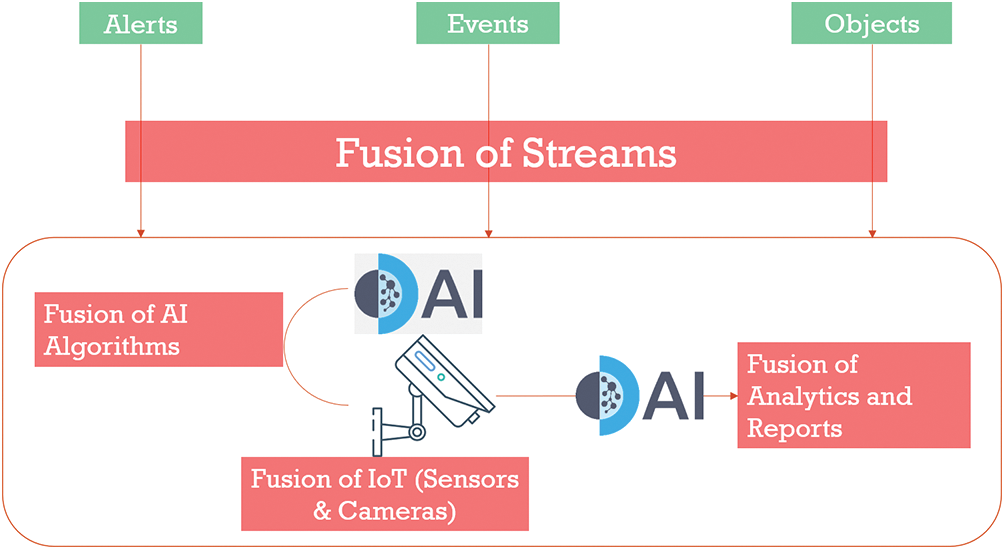

Leveraging the AI-enabled 6G communication architecture, future HITI applications will be able to offer many real-time video analytics services. As shown in Fig. 10, IoT devices, such as camera sensors, will be able to process live video, analyze the scene, infer objects and events, and generate alerts and reports. For example, if the underlying video analytics requires more than 30 frames per second on a 4K video stream, the 6G control plane will employ AI to provide the required network quality of service through the right network slice [52].
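To give a feel for the network demand behind the 4K example, the following back-of-the-envelope sketch computes the raw bit rate of an uncompressed stream and the per-frame time budget. The resolution (3840 x 2160) and colour depth (24 bits per pixel) are assumptions, since the paper only says "4K" and "30 frames per second".

```python
def raw_video_bitrate_bps(width, height, fps, bits_per_pixel=24):
    """Bit rate of an uncompressed video stream in bits per second."""
    return width * height * bits_per_pixel * fps


def frame_budget_ms(fps):
    """Time available to move and analyze one frame, in milliseconds."""
    return 1000.0 / fps


# Assumed 4K UHD frame size and 8-bit RGB colour.
bitrate = raw_video_bitrate_bps(3840, 2160, 30)  # 5,971,968,000 bits/s
budget = frame_budget_ms(30)                     # about 33.3 ms per frame
```

Under these assumptions a single raw stream needs roughly 5.97 Gbps, slightly above the figure of around 5 Gbps at the network edge quoted earlier in the paper, which is one reason such streams are normally compressed or analyzed close to the camera.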

Figure 10: 6G intelligent network will allow real-time and live AI-based HITI surveillance applications

This section discusses the framework architecture developed to run the implementation of the current research project. All advanced technologies and techniques discussed in the previous section are incorporated into this framework design. This section also describes the virtualization framework of the 6G secure functions as a means of preserving the security and privacy of human-AI interaction data.

2.2.1 Overall Framework Architecture

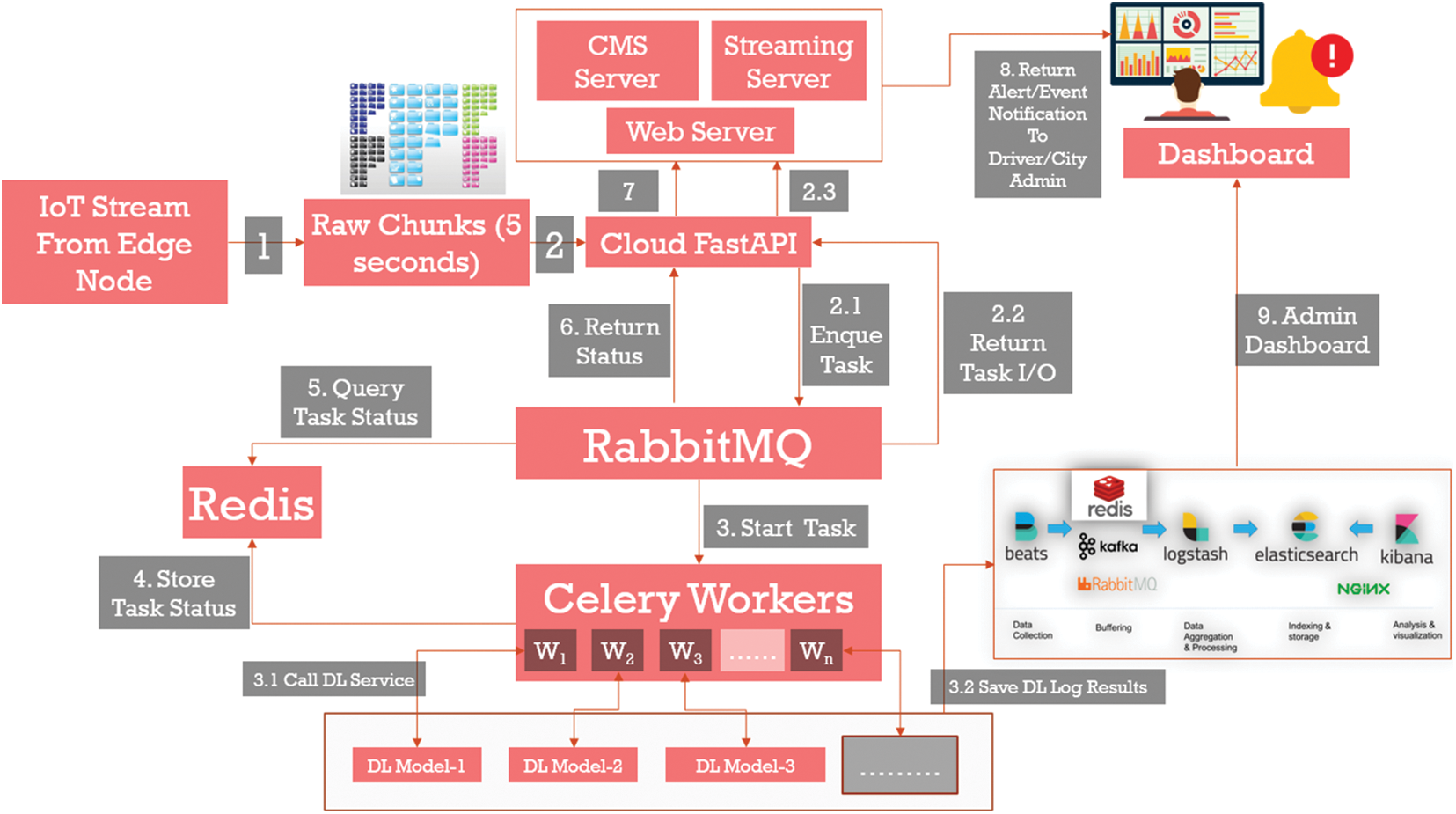

In this section, the general design of the framework is discussed. Fig. 11 shows the overall proposed framework. The framework will employ a set of edge IoT sensors, a set of GPU-enabled edge AI processing units, edge 6G communication capability, and edge storage. Raw IoT sensor data will be processed at the edge node based on the requirements. Events will be detected by the edge node hosting the deep learning algorithms. The outcomes will be sent to the Cloud Fast API [53], which will enqueue the client raw files as well as the detected results.

Figure 11: Overall general architecture

Some heavy computing needs will be addressed using server-side GPUs and resources. The server-side architecture will use RabbitMQ [54] for managing the tasks and engaging other components, such as Celery Worker processes [55], a Redis in-memory task processor, a web server, a Content Management System (CMS) server [56], and a streaming server. For logging and search, the Elastic Stack is used. Security and privacy of the smart city data containing human-AI interaction is of the utmost importance. Hence, in this paper, end-to-end encryption is used. Differential privacy has been applied as a security and privacy mechanism for data privacy, deep learning model computation, and sharing of the results.
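As a concrete illustration of the differential privacy idea mentioned above, the sketch below applies the standard Laplace mechanism to a counting query, such as the number of people detected in a scene, before the result is shared. The paper does not describe its mechanism or parameters, so the Laplace mechanism, the function names, and the chosen epsilon values are assumptions.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count, epsilon, rng=None, sensitivity=1.0):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    `sensitivity` is how much one individual can change the count (1 here).
    Smaller epsilon means stronger privacy and therefore more noise.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical crowd count of 100 released under two privacy budgets.
rng = random.Random(42)
loose = private_count(100, epsilon=1.0, rng=rng)   # noise scale 1
strict = private_count(100, epsilon=0.1, rng=rng)  # noise scale 10
```

The released value is unbiased, so dashboards that average many reports stay accurate, while any single report reveals little about whether one particular person was present.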

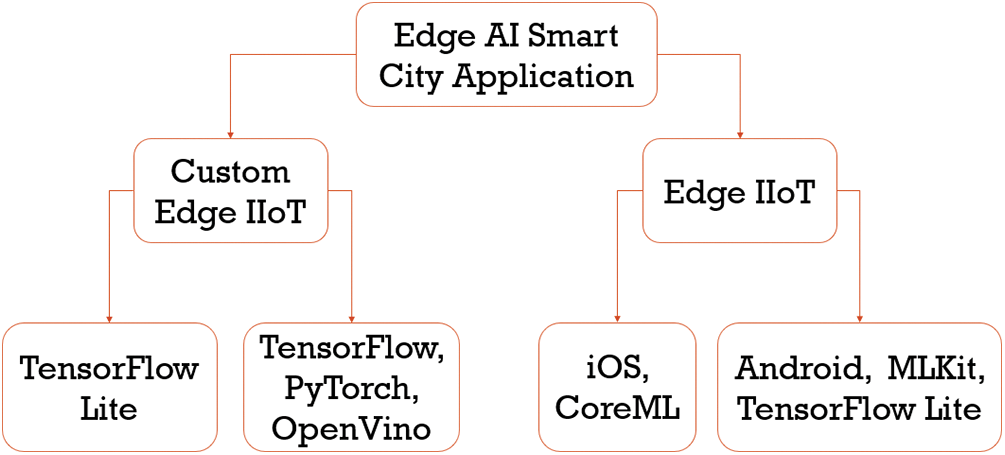

Fig. 12 shows the proposed security and privacy framework that will be added as a 6G secure functions’ virtualization framework. The complete pipeline, from training on the raw dataset through model creation, model deployment, and inferencing, will be built on security and privacy. Finally, the deep learning models will form an ensemble of edge- and cloud-capable models. This will allow targeting any type of GPU capability within the complete end-to-end smart city application scenario. Fig. 13 shows different open-source frameworks that we have researched, tested, and finally considered in this research.

Figure 12: Security and privacy models that have been studied, implemented, and tested

Figure 13: Families of edge learning models that have been studied, implemented, and tested

3 Implementation, Test Results, and Discussion

This section demonstrates the implementation of 17 smart city surveillance scenarios, in addition to multiple emergency handling scenarios. The implementation is then tested and the results are discussed to evaluate the efficiency, reliability, and resilience of the proposed framework and system architecture in real-world applications. The usage of edge learning, privacy, and trustworthiness has also been tested. Provenance is assessed using blockchain to test the chain of trust between all entities involved within a smart city application.
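The chain-of-trust idea behind blockchain-based provenance can be sketched with a simple hash chain, in which every recorded event stores the hash of the previous record so that later tampering becomes detectable. This is a single-process illustration with no consensus or distribution, and the record fields and function names are hypothetical rather than taken from the paper's implementation.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def make_block(index, payload, prev_hash):
    """Build a record whose hash covers its content and its predecessor."""
    body = {"index": index, "payload": payload, "prev_hash": prev_hash}
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return {**body, "hash": hashlib.sha256(encoded).hexdigest()}


def append_event(chain, payload):
    """Append a new event record linked to the current end of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append(make_block(len(chain), payload, prev_hash))
    return chain


def verify_chain(chain):
    """Return True only if every record is intact and correctly linked."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        expected = make_block(block["index"], block["payload"], block["prev_hash"])
        if (block["index"] != i or block["prev_hash"] != prev_hash
                or block["hash"] != expected["hash"]):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical detections reported by two edge nodes.
ledger = []
append_event(ledger, {"node": "edge-1", "event": "fire"})
append_event(ledger, {"node": "edge-2", "event": "unattended luggage"})
```

Calling `verify_chain(ledger)` returns `True` for the untouched ledger and `False` once any stored payload is edited, which is the property an auditor relies on when tracing a decision back to its source.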

As a proof of concept, we have implemented two types of services in which human and AI teaming has been realized, namely intelligent smart city surveillance and handling emergency services. For pre-training, two classification learning algorithms were used for selective feature extraction and recognition: Logistic Regression (LR) and Support Vector Machine (SVM). To improve the classification accuracy, the number of iterations was increased, and the model parameters were continuously observed and adjusted for best performance. Hence, input images can be accurately classified into a specific class based on the features extracted and recognized.
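The effect of increasing the number of iterations can be illustrated with a minimal logistic regression trained by batch gradient descent. The toy two-feature data, the learning rate, and the function names below are assumptions made for illustration; the actual features, data, and settings of the study are not given in the text.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(x, w, b):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_logistic_regression(xs, ys, iterations, lr=0.5):
    """Batch gradient descent on the mean log-loss; returns (weights, bias)."""
    w, b = [0.0] * len(xs[0]), 0.0
    for _ in range(iterations):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in zip(xs, ys):
            err = predict_proba(x, w, b) - y  # derivative of the loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(xs) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(xs)
    return w, b


def log_loss(xs, ys, w, b):
    """Mean binary cross-entropy, clipped to avoid log(0)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = min(max(predict_proba(x, w, b), 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(xs)


# Hypothetical two-feature samples standing in for extracted image features.
features = [[0.0, 0.2], [0.3, 0.1], [0.9, 0.8], [1.0, 0.7]]
labels = [0, 0, 1, 1]
short_run = log_loss(features, labels, *train_logistic_regression(features, labels, 10))
long_run = log_loss(features, labels, *train_logistic_regression(features, labels, 500))
```

With this small learning rate every update lowers the loss, so `long_run` ends below `short_run`; in practice the gain from extra iterations flattens out and has to be weighed against training time.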

The deep learning model is trained by running the training data iteratively through the Convolutional Neural Network (CNN) model while updating the parameters between the neural network layers for higher detection accuracy. Those parameters represent the weights assigned to each neuron in each hidden layer. In the input layer of the neural network, neurons represent image features. Hence, adjusting the parameters or weights is reflected in how accurately the model can recognize an image feature. The data extracted from the training images at this stage are internal neural network rules, or intricate patterns, that enable the algorithm to learn how those images are analyzed and how to detect such features independently in the future. This deep learning model configuration proved to improve the classification performance compared to traditional feature selection methods.

3.1.1 Smart City Surveillance Implementation Scenarios

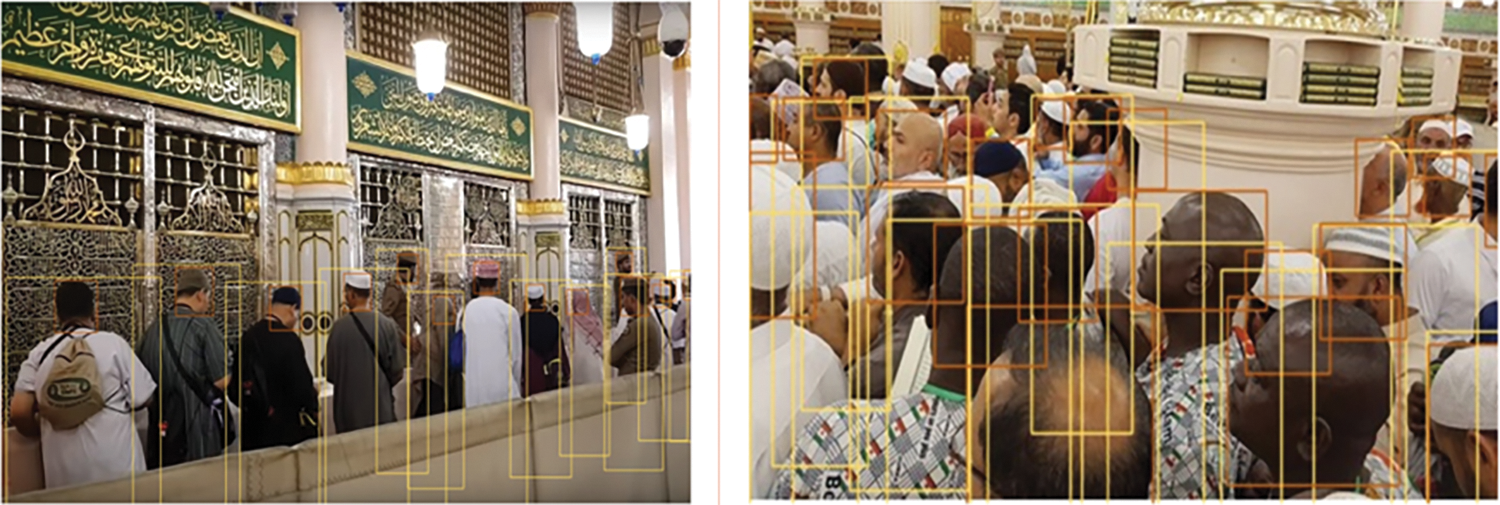

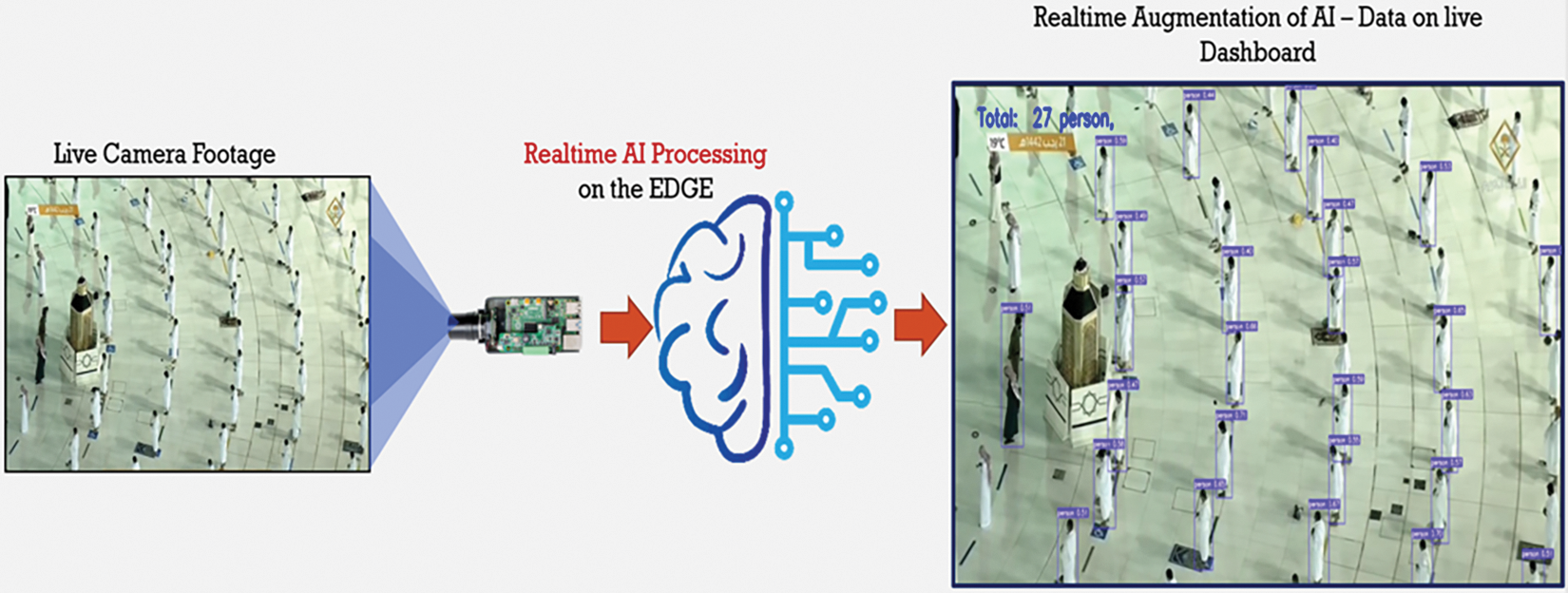

Seventeen different smart city surveillance scenarios in which the proposed technologies are used were targeted and chosen. Fig. 14 shows the live human crowd count in the Prophet’s Mosque, where the proposed system can detect the number of people, any violent activity, etc.

Figure 14: Deep learning crowd-based count

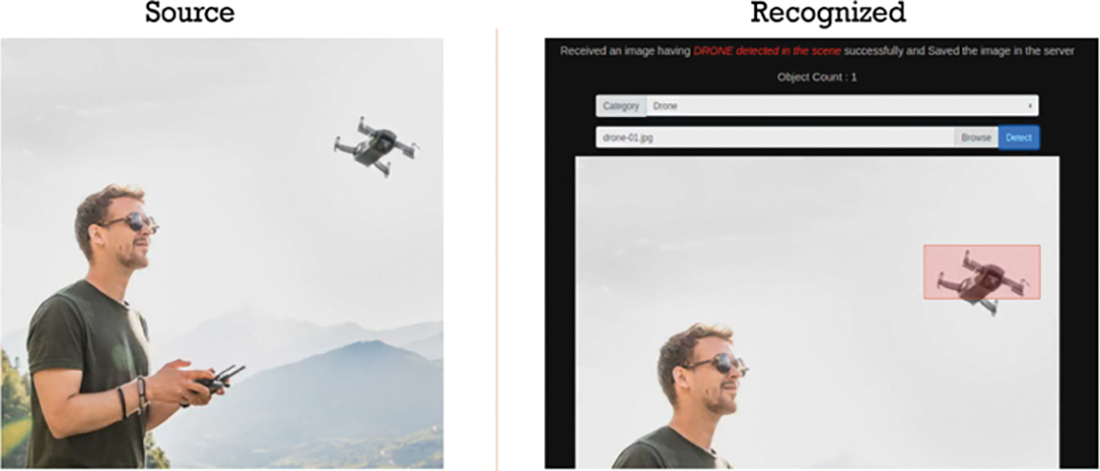

Figs. 15 and 16 show the surveillance of different suspicious objects, such as drones, ships, and mines, and the separation of these objects of interest under different labels, such as harmful (e.g., drones) or regular (e.g., birds) objects.

Figure 15: Deep learning-based suspicious object detection

Figure 16: Deep learning-based drone detection

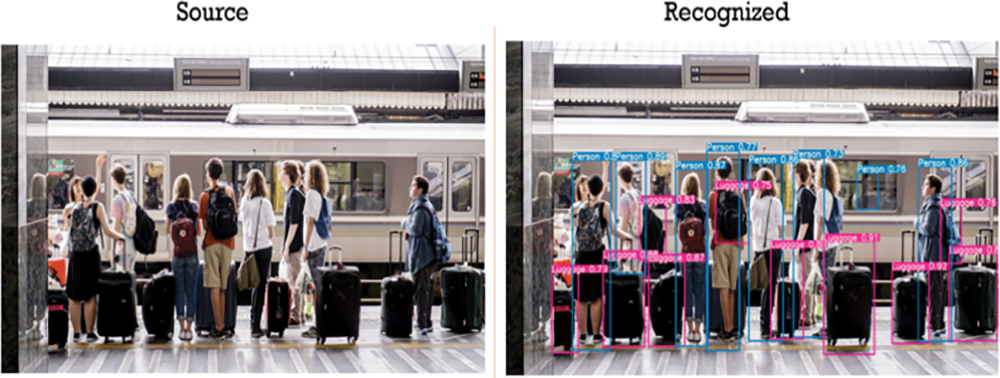

Figs. 17 and 18 show a scenario in which public places (e.g., train stations, airports, mosques) can be monitored for luggage that is not accompanied by any human.
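One simple way to turn detector output into an "unattended luggage" alert is a proximity rule: a bag is flagged when no detected person is within a chosen distance of it. The sketch below assumes bounding boxes in `(x1, y1, x2, y2)` pixel coordinates and a hypothetical distance threshold; it is not the detection logic used in the paper, which is not described in the text.

```python
import math


def box_center(box):
    """Center point of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def unattended_luggage(luggage_boxes, person_boxes, max_distance):
    """Return the luggage boxes with no person center within max_distance pixels."""
    person_centers = [box_center(p) for p in person_boxes]
    flagged = []
    for bag in luggage_boxes:
        bx, by = box_center(bag)
        attended = any(
            math.hypot(bx - px, by - py) <= max_distance
            for px, py in person_centers
        )
        if not attended:
            flagged.append(bag)
    return flagged


# Hypothetical detections from one video frame.
bags = [(0, 0, 10, 10), (200, 200, 210, 210)]
people = [(5, 0, 15, 20)]
alerts = unattended_luggage(bags, people, max_distance=50)
# alerts == [(200, 200, 210, 210)]
```

A deployed system would likely also require the bag to stay unattended over several consecutive frames before raising an alert, to avoid flagging luggage that is only momentarily set down.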

Figure 17: Deep learning-based unattended luggage detection

Figure 18: Deep learning-based luggage detection

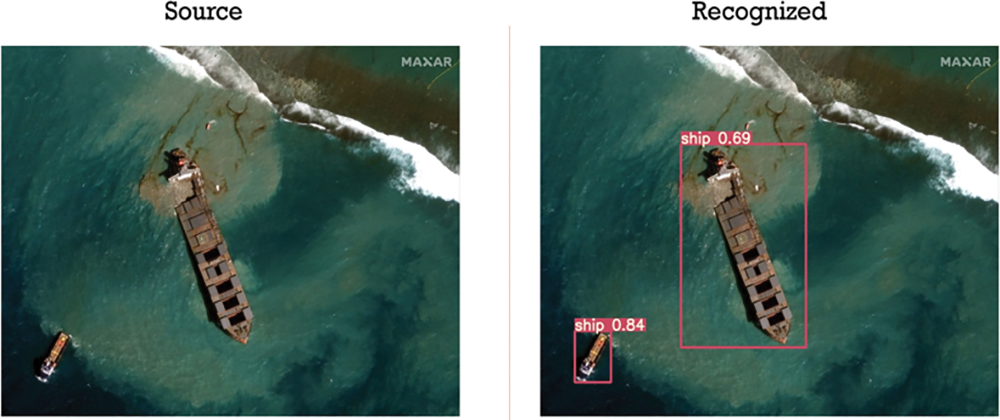

Fig. 19 shows deep learning-based surveillance on satellite imagery.

Figure 19: Deep learning-based detection from satellite imagery

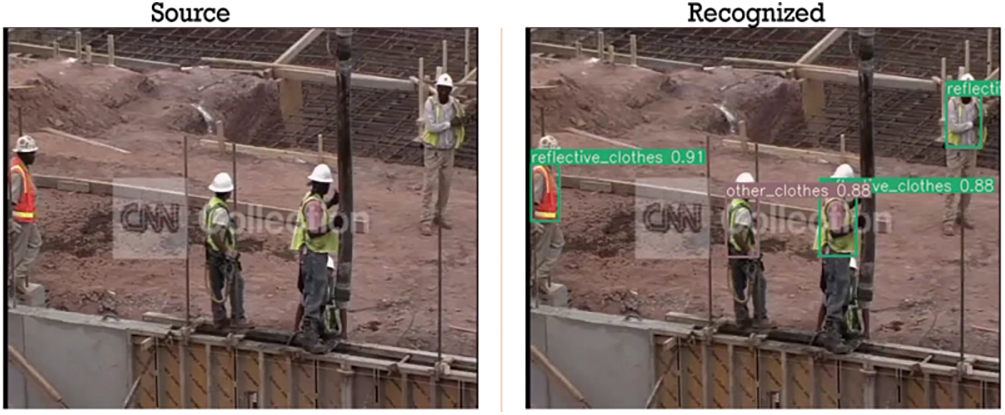

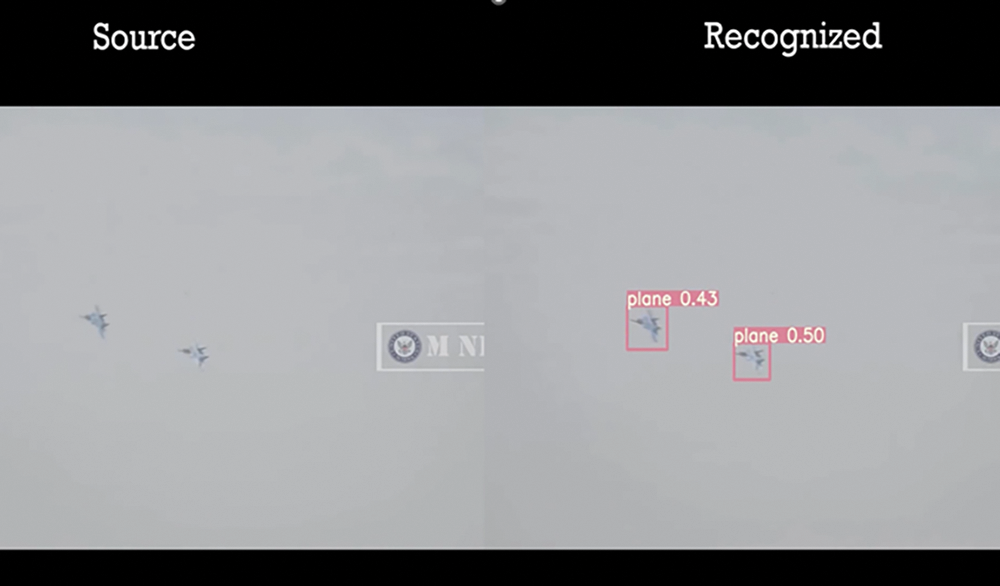

Figs. 20–22 show live surveillance of a construction site and airspace for possible violations.

Figure 20: Deep learning-based helmet detector

Figure 21: Deep learning-based safety cloth detector

Figure 22: Deep learning-based airspace violation detector

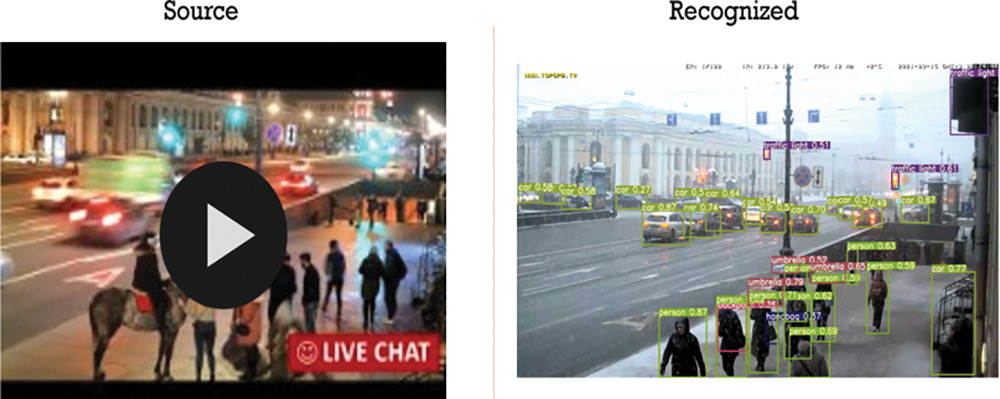

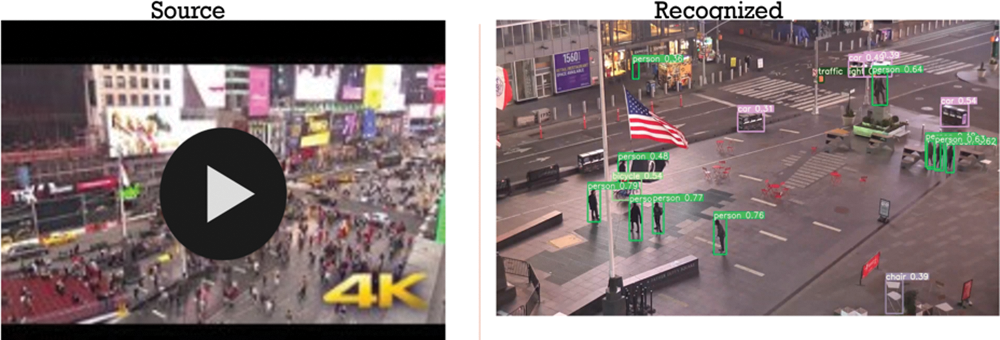

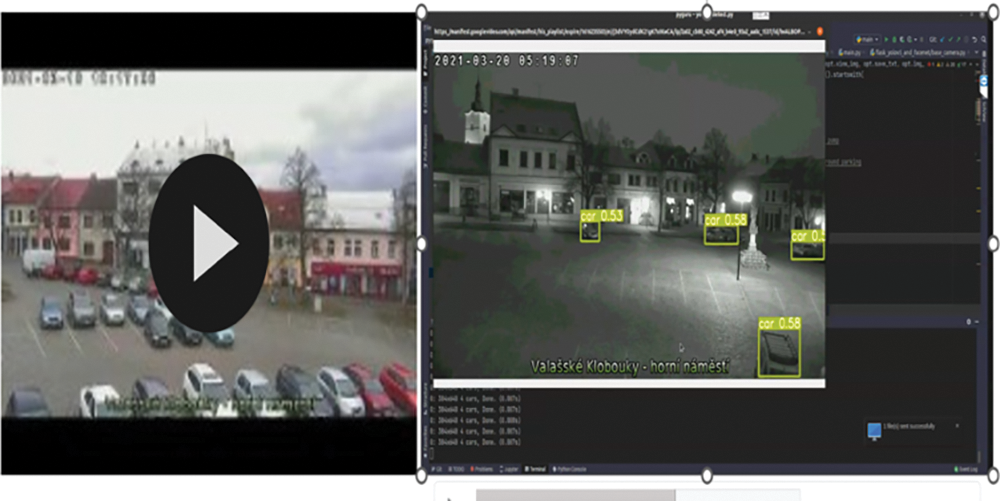

Figs. 23 and 24 show live surveillance capability of the city’s public spaces.

Figure 23: Deep learning-based detection from live stream

Figure 24: Deep learning-based object detection from live stream

Figs. 25–28 show our deep learning model running on drone images and video feed to detect objects of interest.

Figure 25: Deep learning-based object detection from video

Figure 26: Deep learning-based cars detection from video

Figure 27: Deep learning-based parked car detection from video

Figure 28: Deep learning-based social distancing alert

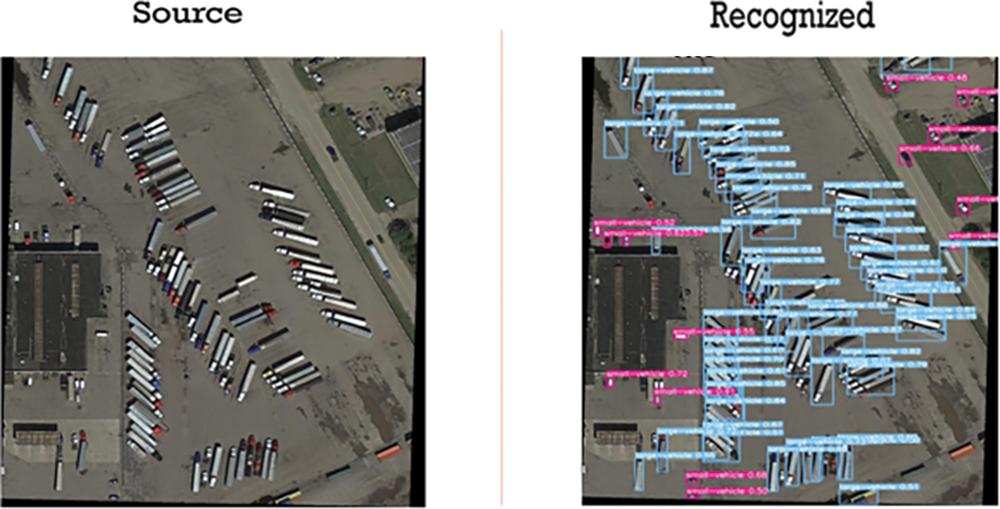

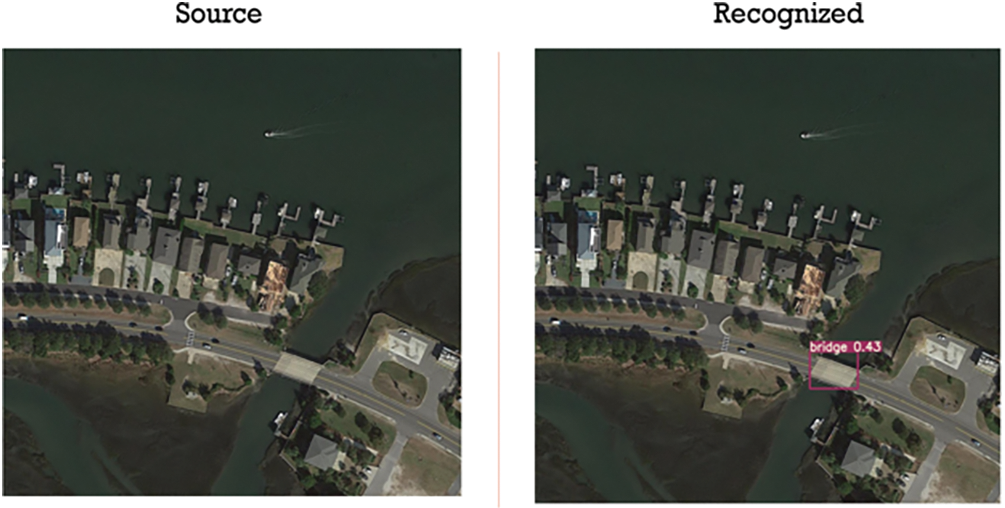

Figs. 29 and 30 show deep learning-based surveillance on satellite imagery to detect airplanes, bridges, and other objects of interest.

Figure 29: Deep learning-based trucks detection from satellite imagery

Figure 30: Deep learning-based bridge detection from satellite imagery

Finally, Fig. 31 shows the proposed live video analytics on social distancing in Mecca Grand Mosque.

Figure 31: Human–explainable AI teaming to handle smart city surveillance scenarios

3.1.2 Emergency Handling Scenarios

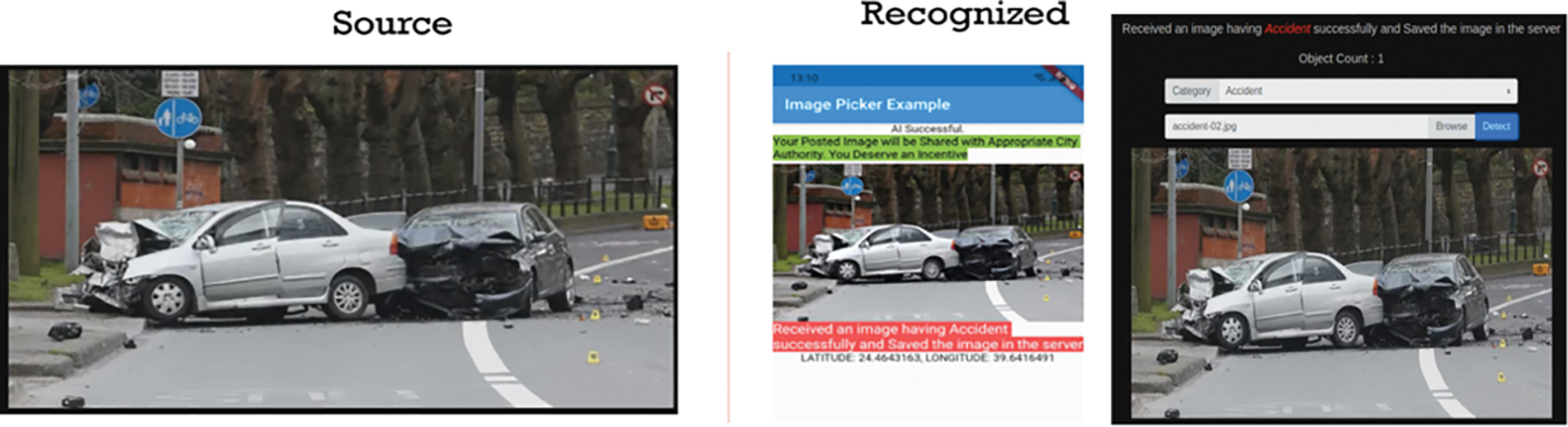

We have developed different emergency handling situations in which deep learning, edge learning, IoT devices, cloud and 6G architecture work seamlessly as shown in Fig. 11. Figs. 32 to 38 show 8 diversified types of emergency handling scenarios in which human-AI teaming scenarios have been implemented and tested. For example, Fig. 32 shows a crowd sensing scenario in which a developed citizen application is being used to capture an emergency car accident and share the captured image with the emergency 911 department. The evidence that is shared with the 911 department for further action is also included.

Figure 32: Deep learning-based accident detection

Figure 33: Deep learning-based accident and human injury detection

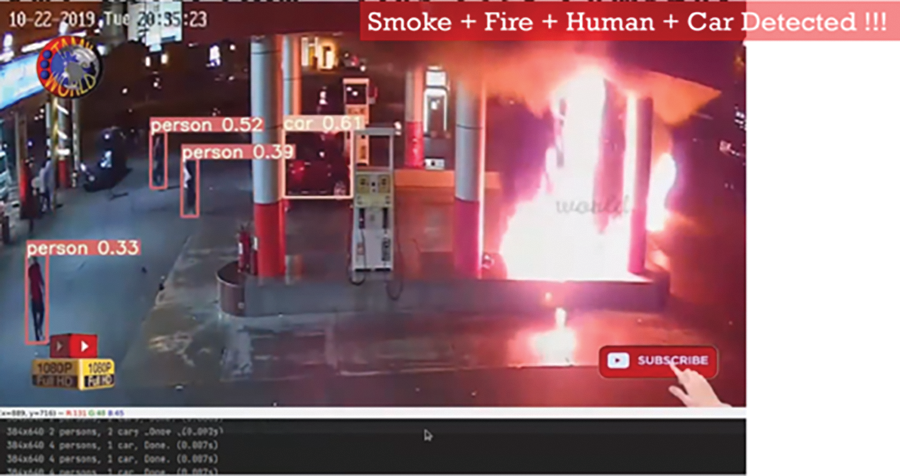

Figure 34: Deep learning-based fire and smoke detection

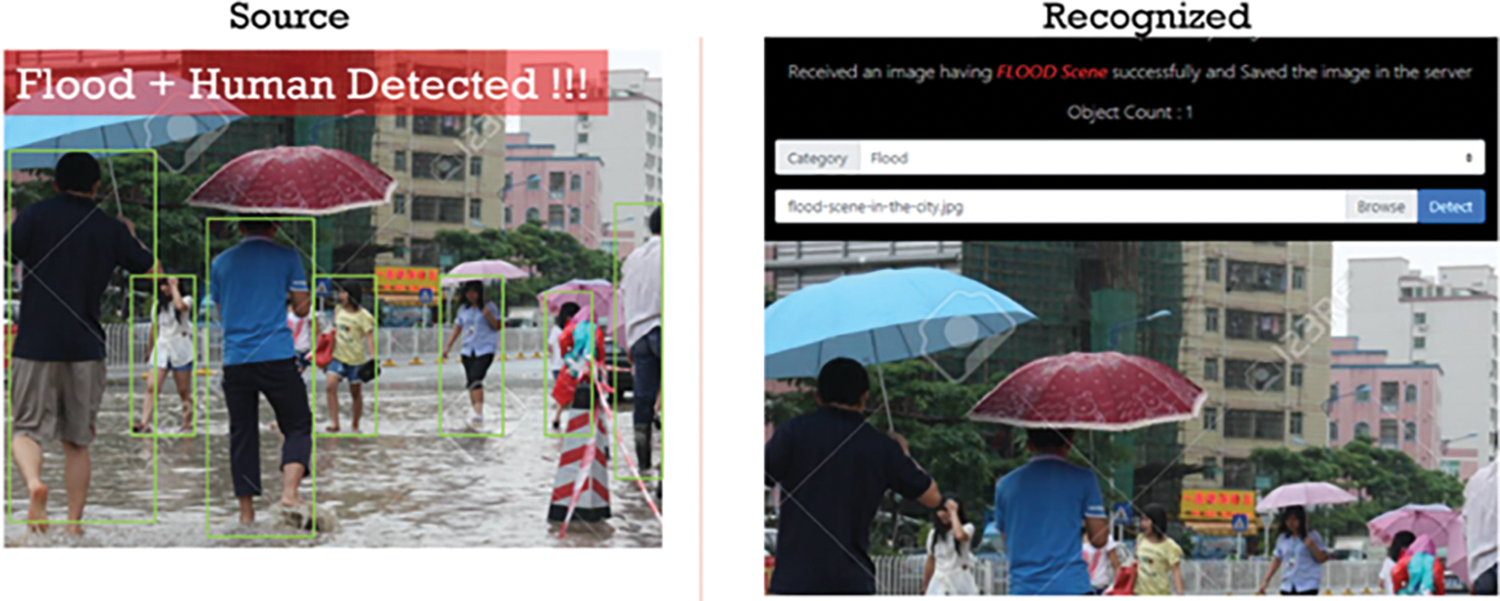

Figure 35: Deep learning-based flood detection

Figure 36: Deep learning-based fire detection

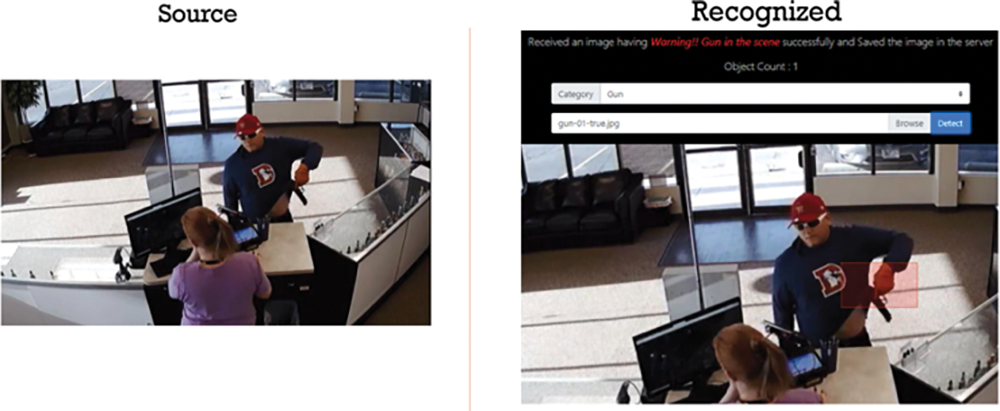

Figure 37: Deep learning-based gun detection

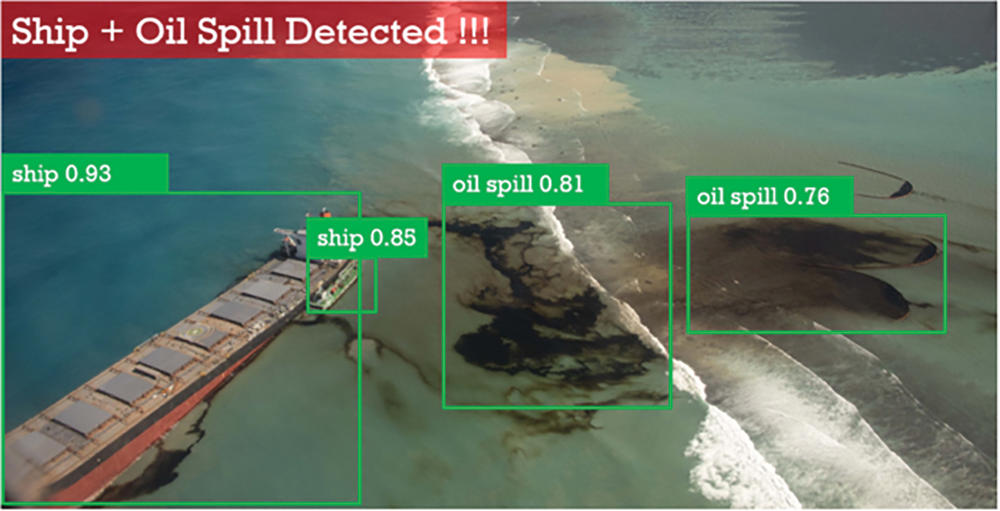

Figure 38: Deep learning-based oil spillage detection

Fig. 33 shows video analytics describing the automated emergency environment with the 911 department.

Fig. 34 shows a detailed report generated by our developed deep learning algorithms to support description of emergency events by the 911 department.

Similarly, Fig. 35 represents an emergency flood event.

Fig. 36 shows the description of a fire event at a gas station.

Fig. 37 shows the detection and reporting of a gun violence scene.

Fig. 38 shows the spillage of oil as a disaster scenario.

The test strategies for the different surveillance and emergency-handling scenarios, shown in Figs. 14 to 38, are discussed in this section. In all test scenarios, the required datasets were obtained from open sources and from data that we collected ourselves in different experiments. The dataset used is a multi-class dataset. The testing dataset is an independent dataset that we collected ourselves in different experiments.

Diversified types of deep learning algorithms were used, including supervised, semi-supervised, unsupervised, and federated learning, as appropriate. We also used transfer learning in certain cases. Some of the algorithms used in this experiment are presented as well. A CNN performs better at image classification when a larger training dataset is available, but it may not perform well on a very small dataset. When only a small amount of training data is available, the CNN is combined with a Fuzzy Neural Network (FNN) for better performance. As shown in Fig. 39, the output of the CNN is used as feature maps for the fuzzifier layers.
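To make the CNN-to-fuzzifier hand-off concrete, the sketch below maps each CNN feature value to membership degrees in a few fuzzy sets. It is a minimal illustration under assumptions: the text does not state which membership function is used, so Gaussian membership functions are assumed here, and the set names, centres, and widths in `FUZZY_SETS` are hypothetical values that would be learned parameters in a trained network.

```python
from math import exp


def gaussian_membership(x, centre, sigma):
    """Degree (0..1) to which x belongs to a fuzzy set centred at `centre`."""
    return exp(-((x - centre) ** 2) / (2.0 * sigma ** 2))


def fuzzify(features, fuzzy_sets):
    """Map each CNN feature value to its membership degree in every fuzzy set.

    features   : flat list of floats, e.g. a flattened CNN feature map
    fuzzy_sets : dict name -> (centre, sigma); assumed values here
    """
    return [
        {name: gaussian_membership(x, c, s) for name, (c, s) in fuzzy_sets.items()}
        for x in features
    ]


# Hypothetical fuzzy sets over activations normalised to [0, 1].
FUZZY_SETS = {"low": (0.0, 0.25), "medium": (0.5, 0.25), "high": (1.0, 0.25)}

cnn_features = [0.0, 0.5, 0.9]  # stand-in for a flattened CNN output
memberships = fuzzify(cnn_features, FUZZY_SETS)
for value, degrees in zip(cnn_features, memberships):
    print(value, {k: round(v, 3) for k, v in degrees.items()})
```

In a full fuzzy network, these membership degrees would feed the rule and defuzzification layers that produce the final class scores.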

Figure 39: Human–explainable AI teaming to handle an emergency situation

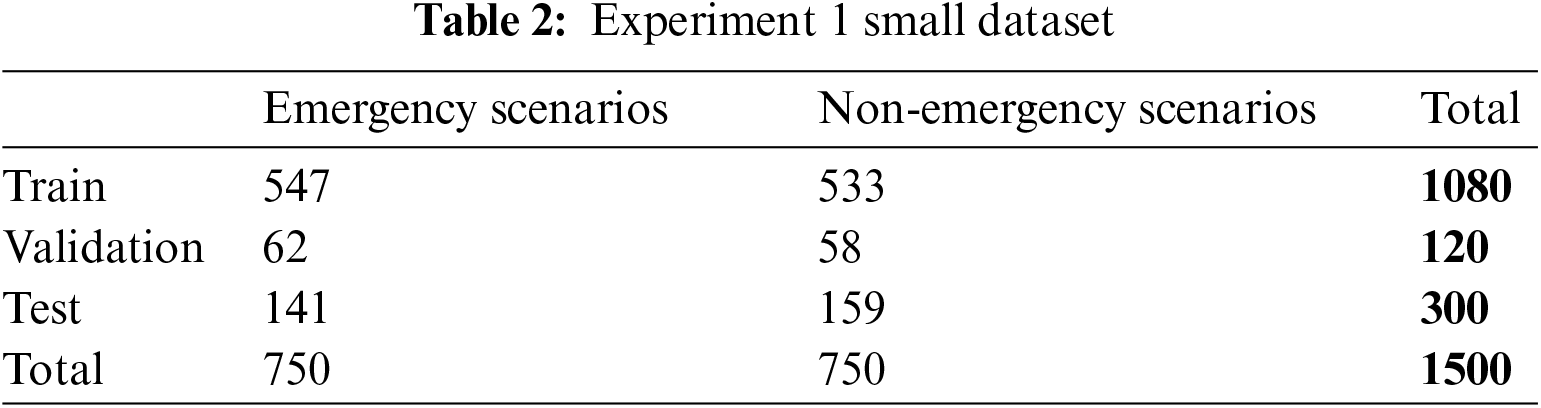

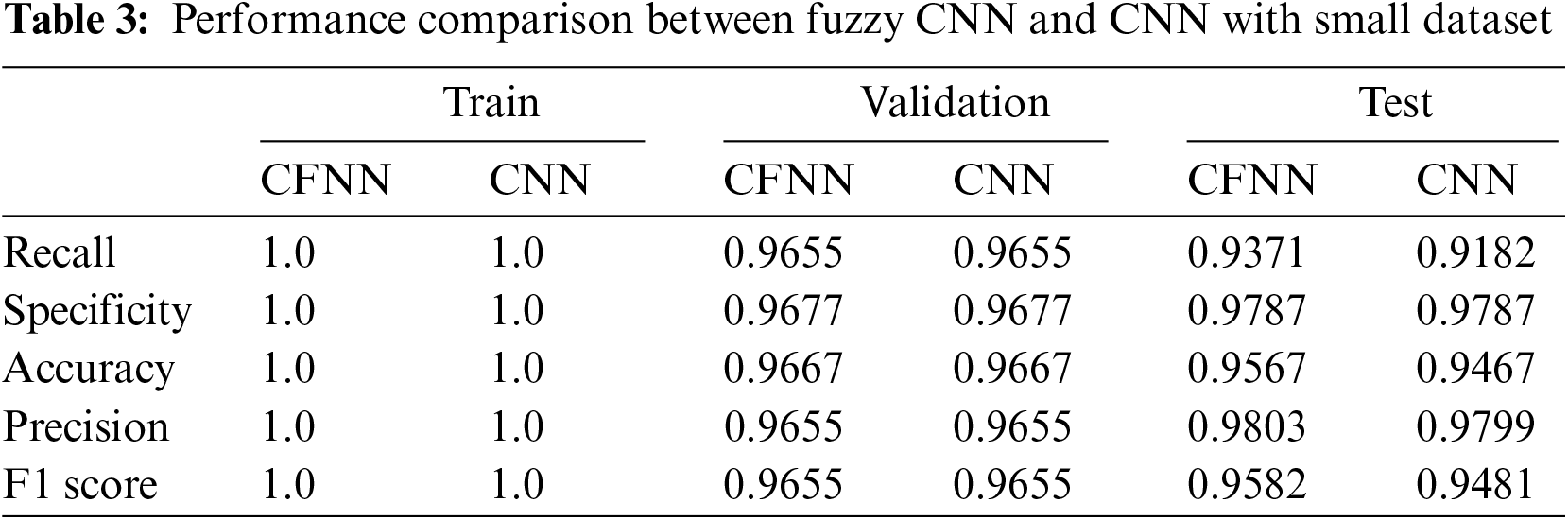

For example, two experiments were conducted to compare the performance of a Convolutional Fuzzy Neural Network (CFNN) with that of a basic CNN model. Both models share the same CNN architecture; the CFNN additionally has FNN layers. Experiment 1 used a small dataset containing 1500 smart city images: 1080 images were used for training, 120 for validation, and 300 for testing, as shown in Table 2.
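The 1080/120/300 partition corresponds to a 72%/8%/20% split of the 1500 images. A minimal sketch of such a split follows; the text does not say whether the split was random or stratified by class, so a seeded random shuffle is assumed, and the image identifiers are hypothetical placeholders.

```python
import random


def split_dataset(items, n_train, n_val, n_test, seed=0):
    """Shuffle items reproducibly and cut them into train/val/test lists."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("split sizes must add up to the dataset size")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical identifiers standing in for the 1500 smart city images.
image_ids = [f"img_{i:04d}" for i in range(1500)]
train, val, test = split_dataset(image_ids, n_train=1080, n_val=120, n_test=300)
print(len(train), len(val), len(test))
```

Fixing the seed keeps the three subsets disjoint and reproducible, so both models can be trained and tested on exactly the same images.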

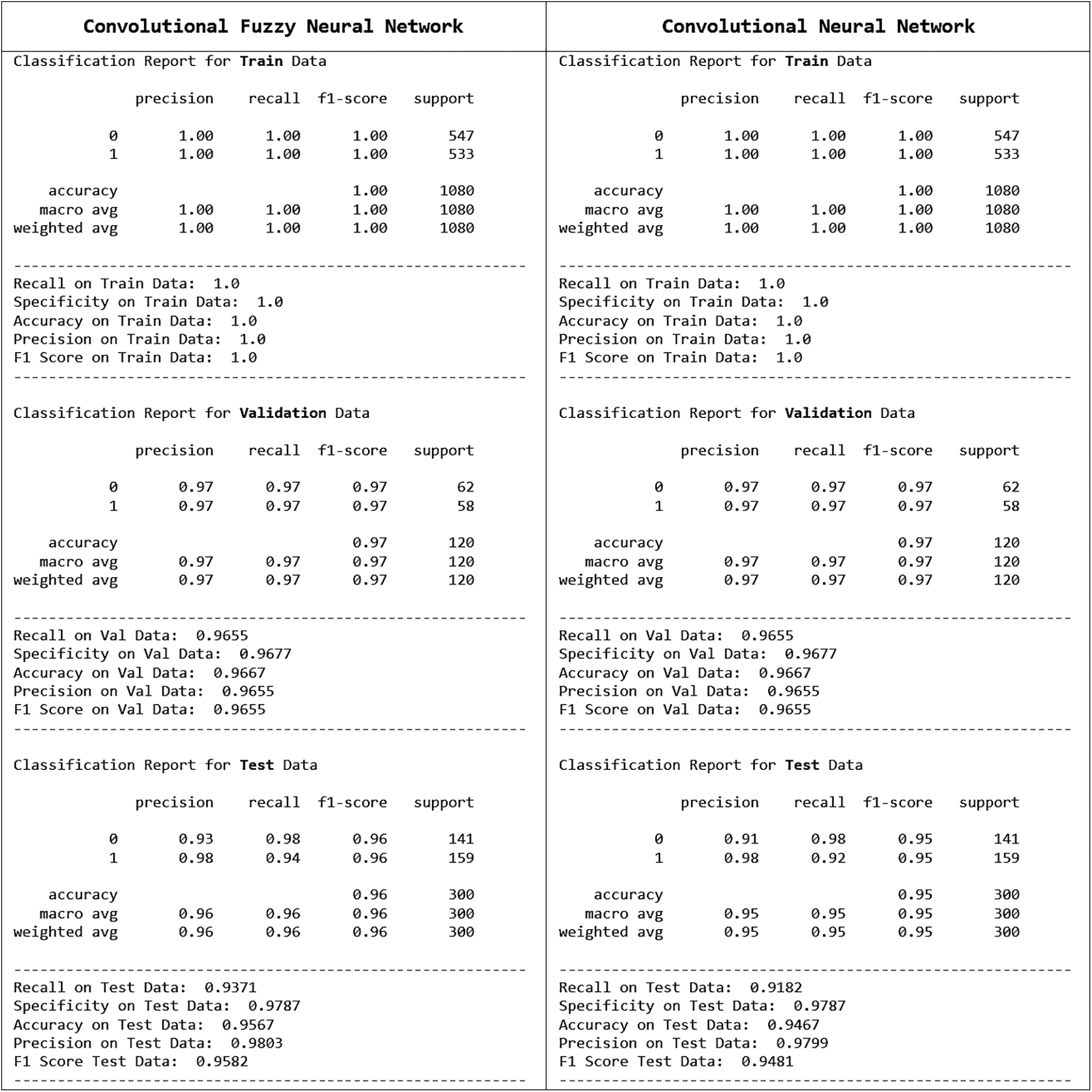

In this case, the CFNN performs better, with a test accuracy of 95.67% and a processing speed of 56.21 Frames Per Second (FPS), whereas the CNN has a test accuracy of 94.67% and a processing speed of 54.43 FPS. The CFNN also has higher recall, precision, and F1 scores than the CNN on this small dataset, as shown in Table 3. The experiment trials are compared in further detail in Fig. 40. A detailed report is also provided in Figs. 41 and 42.
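On a 300-image test set, the reported accuracies are consistent with 287 and 284 correct predictions respectively (287/300 ≈ 95.67%, 284/300 ≈ 94.67%). The sketch below shows how accuracy and per-class precision, recall, and F1 follow from a multi-class confusion matrix. The 3-class matrix is made up purely for illustration, chosen only so that 287 of 300 predictions are correct; it is not the confusion matrix of either model.

```python
def per_class_metrics(confusion):
    """Compute accuracy and per-class precision/recall/F1.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    metrics = {"accuracy": correct / total, "classes": []}
    for k in range(n_classes):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n_classes)) - tp
        fn = sum(confusion[k]) - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics["classes"].append(
            {"precision": precision, "recall": recall, "f1": f1})
    return metrics


# Illustrative matrix over 300 test images (made-up counts).
example = [
    [96, 3, 1],
    [4, 95, 1],
    [2, 2, 96],
]
result = per_class_metrics(example)
print(round(result["accuracy"] * 100, 2))
```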

Figure 40: Confusion matrix comparison

Figure 41: Detailed report (for small dataset with 1500 samples)

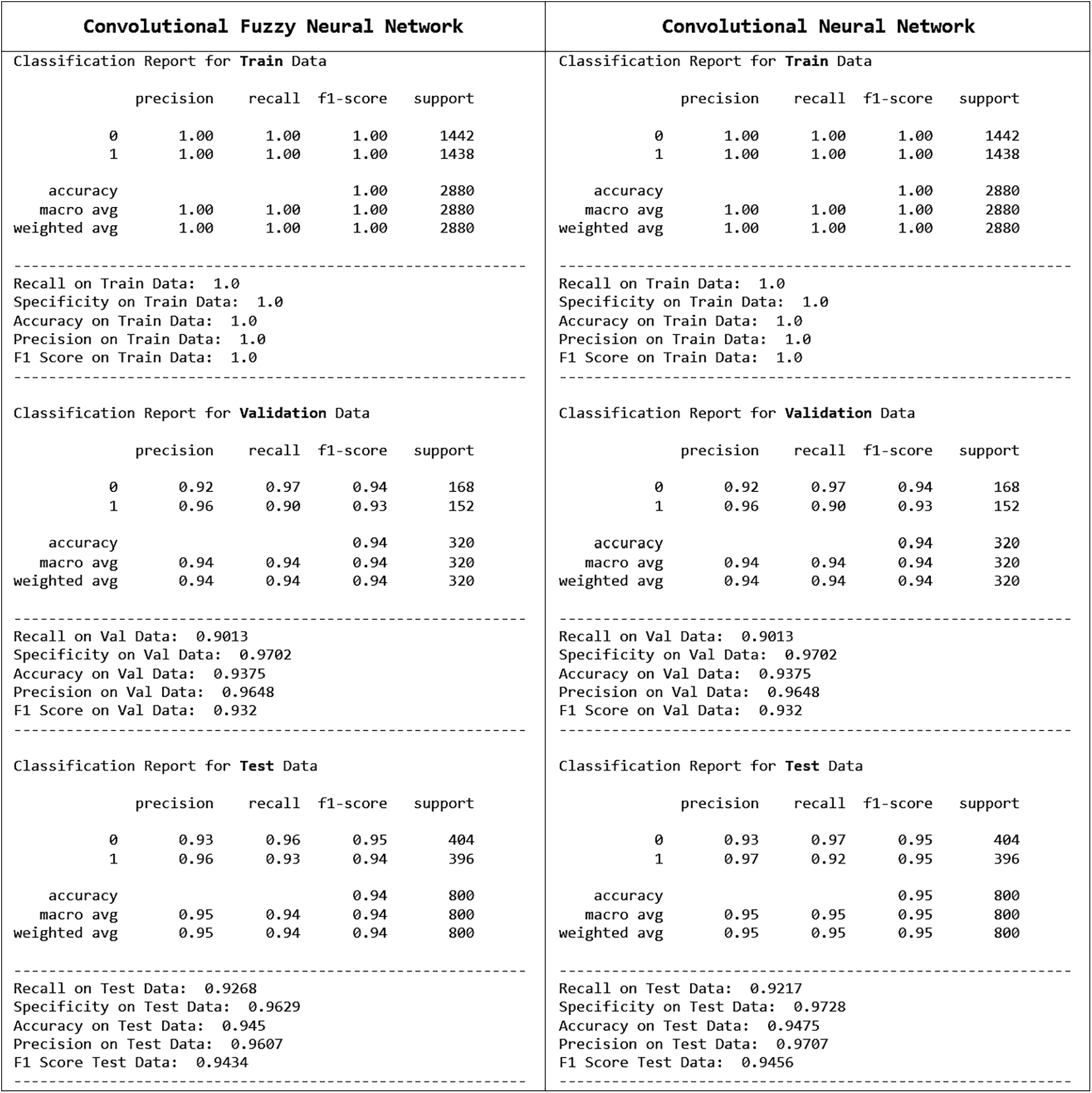

Figure 42: Detailed report (for dataset with 4000 samples)

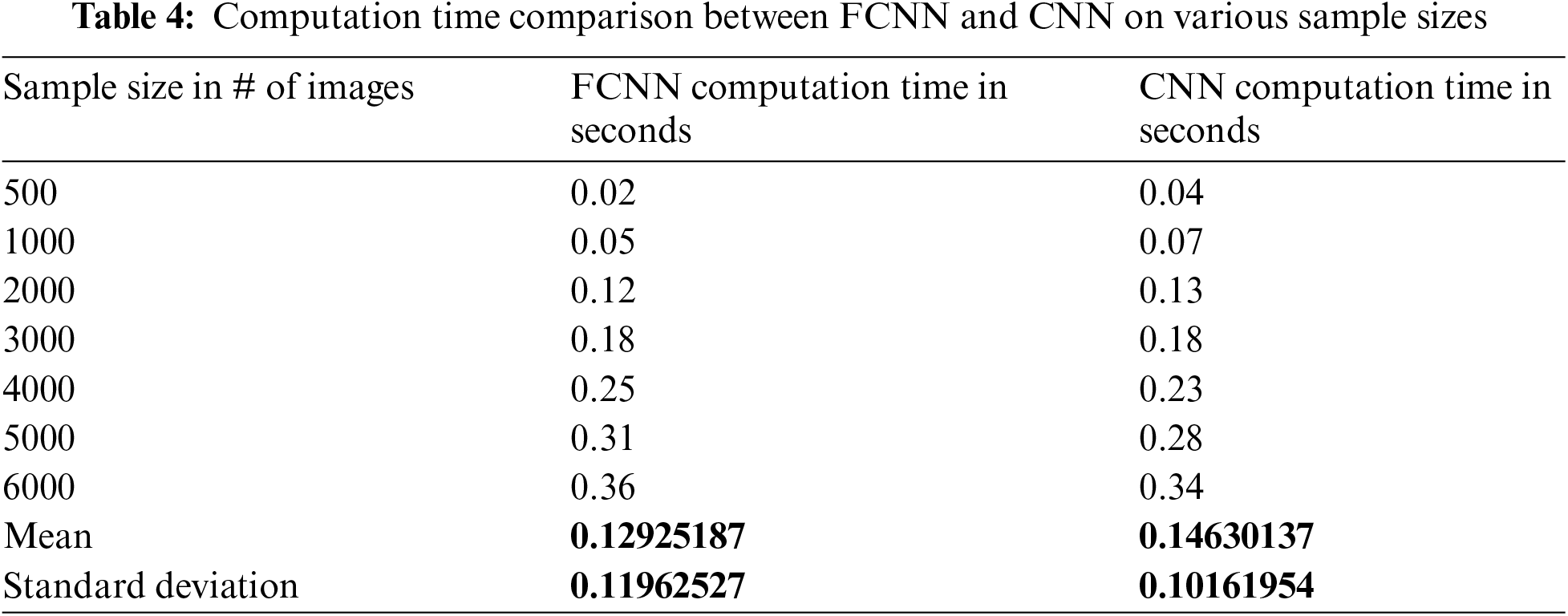

In Experiment 2, the dataset had a total of 4000 samples. With this enlarged dataset, the CNN slightly outperformed the CFNN during testing in terms of specificity, accuracy, precision, F1 score, and processing speed in FPS. The data distribution and performance measures for this experiment are shown in Figs. 41 and 42. The CNN achieved a testing accuracy of 94.75% and a processing speed of 55.25 FPS, whereas the CFNN achieved a close testing accuracy of 94.5% and a processing speed of 55.21 FPS. So, for a small dataset, the CFNN might perform better than the CNN; if enough training data is available, the CNN might be sufficient. According to Table 4, the CFNN classifies images faster than the CNN, in terms of computation time, on smaller datasets (<3000 images). For larger datasets, the CNN classifies faster.
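Processing speed in FPS can be estimated by timing a model over a batch of frames. The text does not describe how the FPS figures were measured, so the harness below is only one plausible approach; `dummy_classifier` is a hypothetical stand-in for a CNN or CFNN forward pass, and the warm-up count is an arbitrary choice.

```python
import time


def measure_fps(predict, frames, warmup=2):
    """Return frames processed per second by `predict` over `frames`."""
    for frame in frames[:warmup]:      # warm-up calls are excluded from timing
        predict(frame)
    start = time.perf_counter()
    for frame in frames:
        predict(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


def dummy_classifier(frame):
    """Hypothetical stand-in for a model's forward pass on one frame."""
    return sum(frame) % 2


frames = [[i, i + 1, i + 2] for i in range(1000)]
fps = measure_fps(dummy_classifier, frames)
print(f"{fps:.1f} frames per second")
```

Running both models through the same harness on the same frames and hardware keeps the comparison fair, since differences of about one FPS are easily masked by measurement noise.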

3.2.1 Assessing the Provenance Using Blockchain

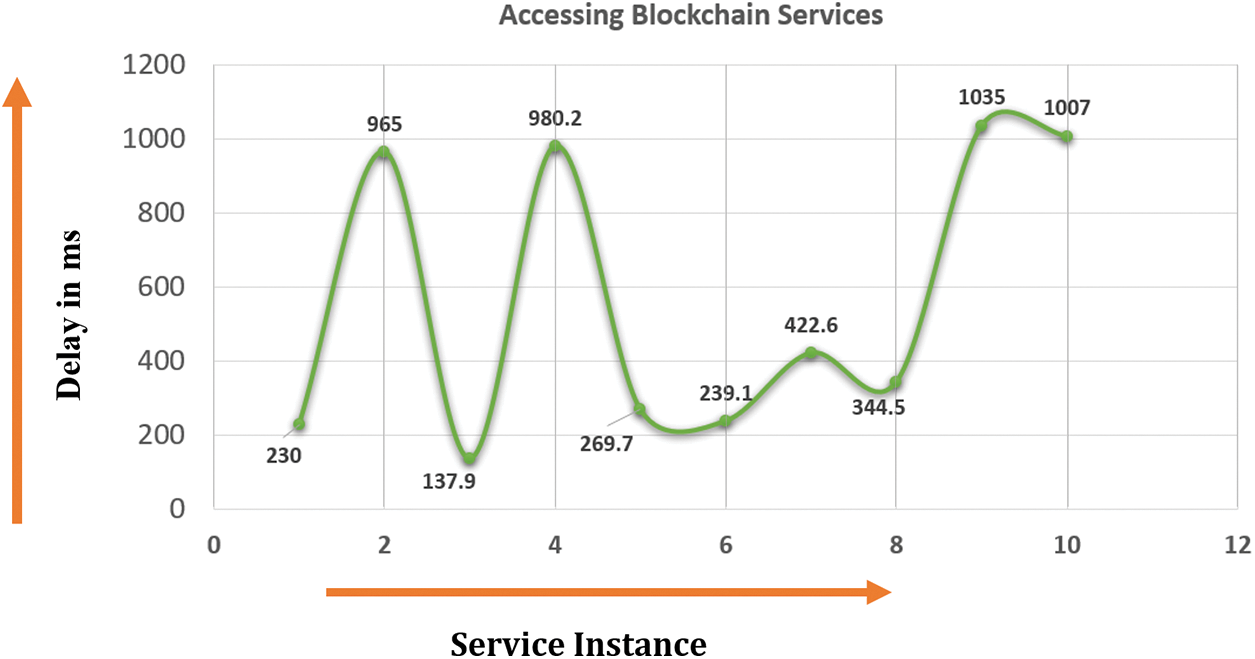

We measured the delay in accessing the deep learning applications after introducing provenance for the different trustworthy entities within our framework. It is extremely important that all the entities involved in a smart city application are properly privacy-protected and can trust each other. Fig. 43 shows the delay introduced by the Peer-To-Peer (P2P) system, which is tolerable for smart city surveillance and emergency handling applications.

Figure 43: Service access delay posed by the introduction of Blockchain-based provenance
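The extra access delay comes from recording and checking provenance on each interaction. The sketch below illustrates only the core idea behind tamper-evident provenance, a hash-linked record chain, using the Python standard library. It is not the blockchain platform used in this work: it has no consensus, peers, or networking, and the entity names and actions are hypothetical.

```python
import hashlib
import json


def record_hash(record):
    """SHA-256 over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(chain, entity, action):
    """Append a provenance record linked to the hash of the previous one."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    body = {"index": len(chain), "entity": entity,
            "action": action, "prev_hash": prev_hash}
    chain.append({**body, "hash": record_hash(body)})


def verify_chain(chain):
    """Return True if every record's hash and back-link are intact."""
    prev_hash = "0" * 64
    for record in chain:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or record_hash(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


chain = []
append_record(chain, "edge-node-1", "uploaded detection result")
append_record(chain, "dashboard", "read detection result")
ok_before = verify_chain(chain)

chain[0]["action"] = "tampered"        # simulate an unauthorised change
ok_after = verify_chain(chain)
print(ok_before, ok_after)
```

Because each record embeds the hash of its predecessor, altering any earlier entry invalidates verification, which is what lets participating entities audit who accessed or produced a given result.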

3.2.2 Assessing the Usage of Edge and Federated Learning

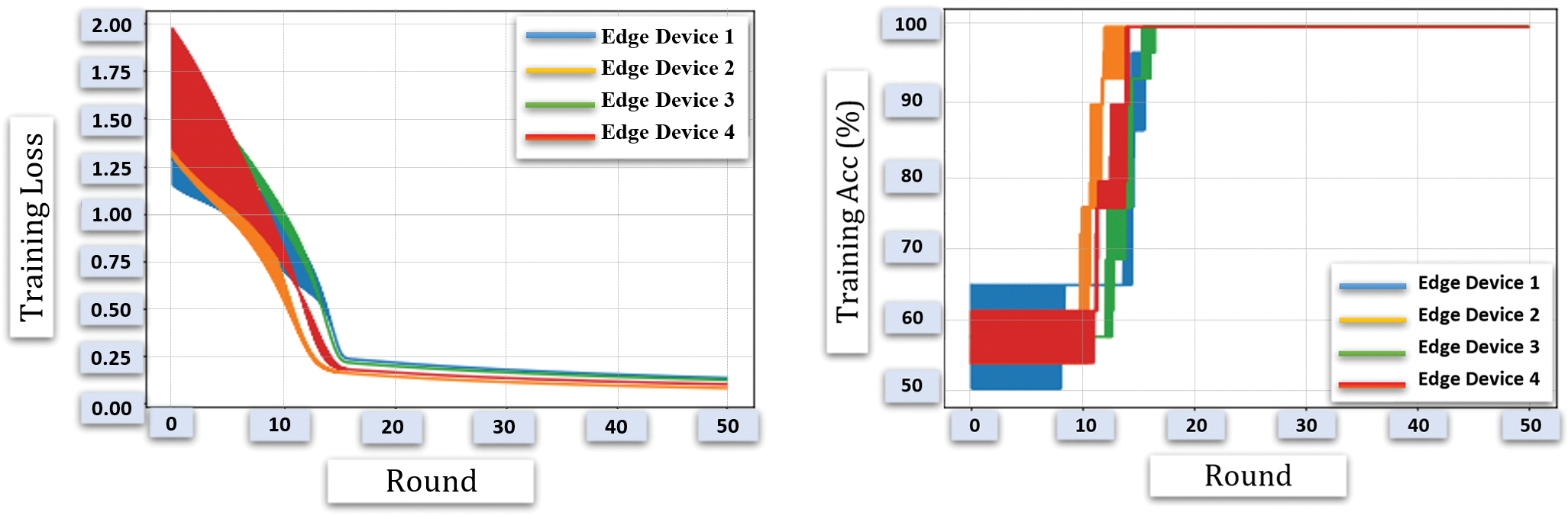

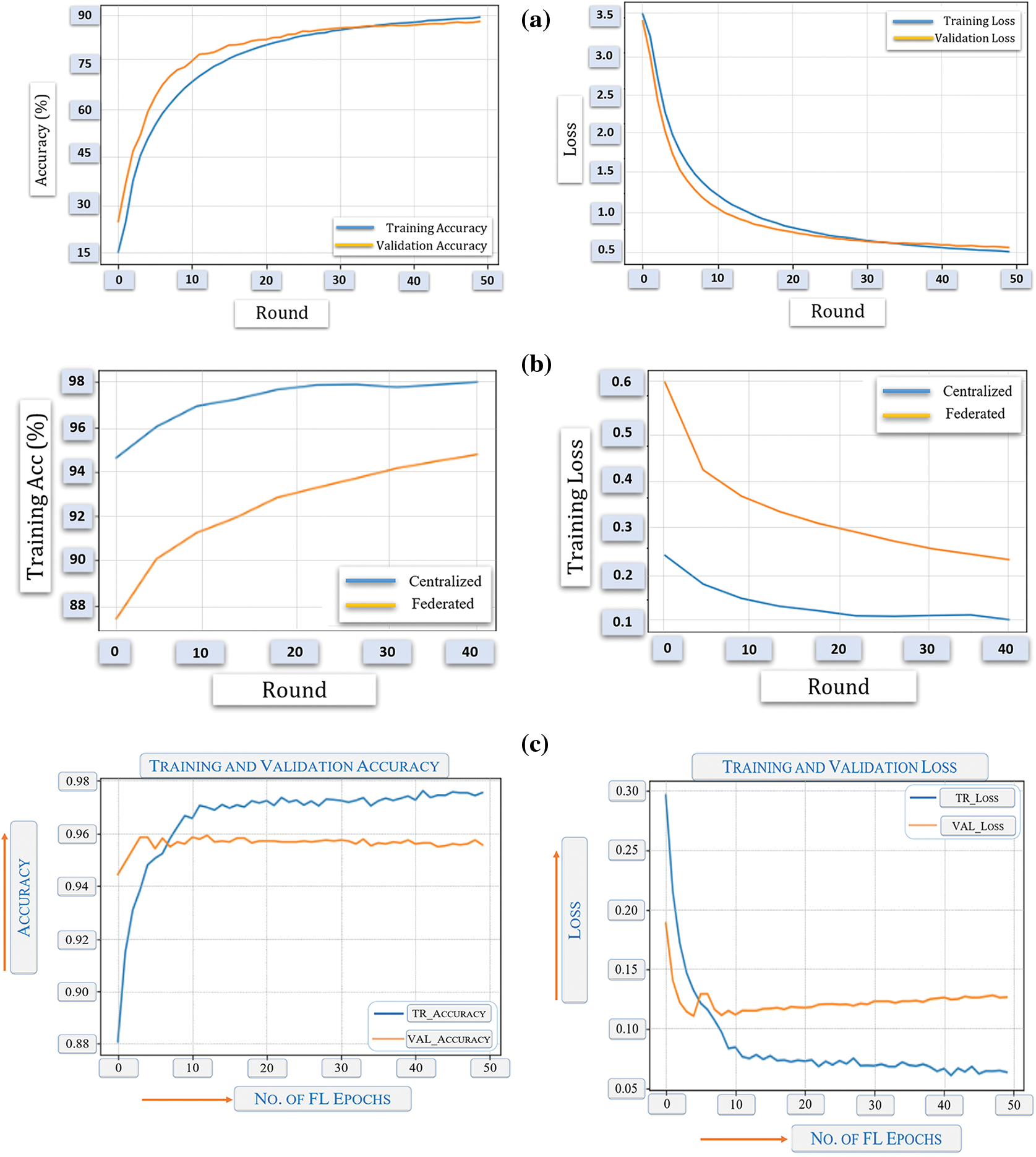

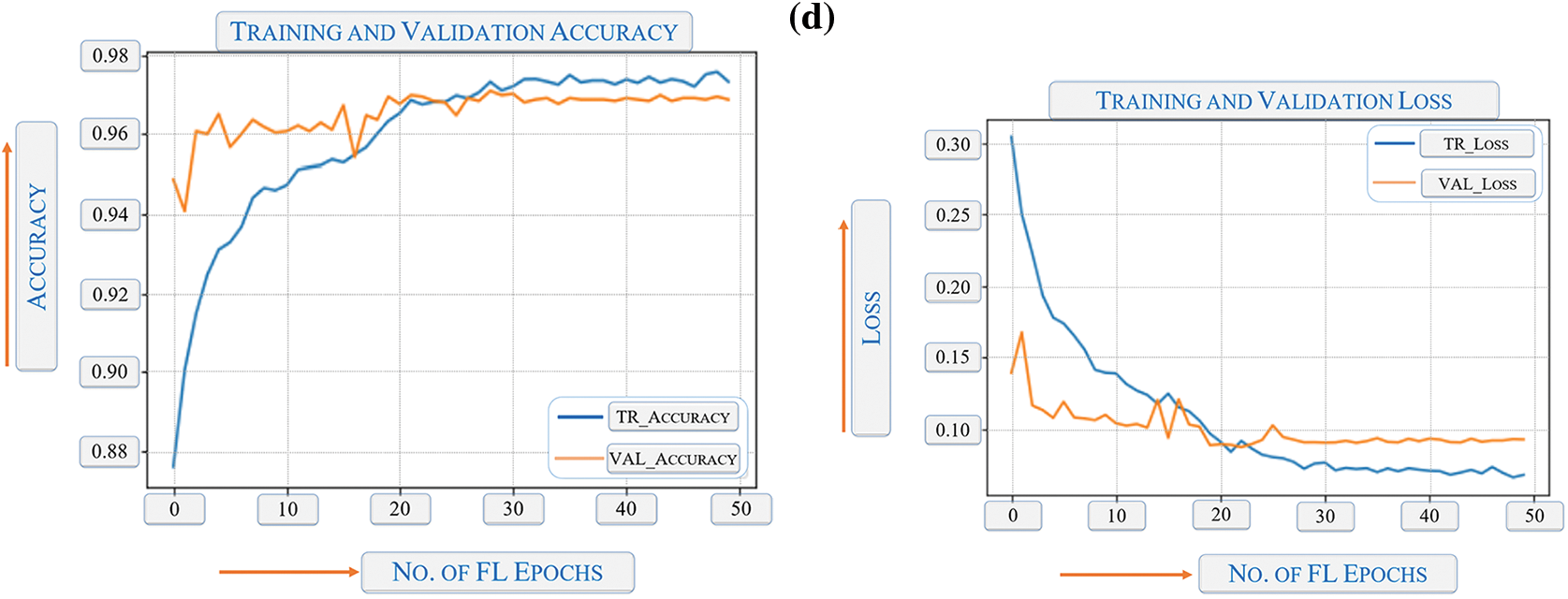

Fig. 44 shows the experimental results of implementing federated learning on edge devices. As shown in the figure, both training loss and accuracy appear to have degraded with the introduction of federated learning through a blockchain-based mediator. However, the privacy preservation and anonymity that it introduces add value to the smart city surveillance and emergency handling scenarios.

Figure 44: Performance of training for edge learning applications through federated learning
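In federated learning, edge nodes train locally and share only model updates, which a coordinator combines into a global model. The aggregation rule is not specified in the text; the sketch below assumes the widely used federated averaging (FedAvg) rule, where each node's weights are averaged in proportion to its local sample count. The weight vectors and sample counts are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Weighted average of client weight vectors (FedAvg-style aggregation).

    client_weights : list of equal-length lists of floats, one per edge node
    client_sizes   : number of local training samples on each edge node
    """
    if len(client_weights) != len(client_sizes):
        raise ValueError("one sample count is required per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[p] * n for w, n in zip(client_weights, client_sizes)) / total
        for p in range(n_params)
    ]


# Hypothetical updates from three edge nodes; raw images never leave the nodes.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
global_weights = federated_average(updates, sizes)
print(global_weights)
```

Averaging updates from nodes with different local data is one reason a federated model can trail a centrally trained one, in line with the degradation seen in Fig. 44.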

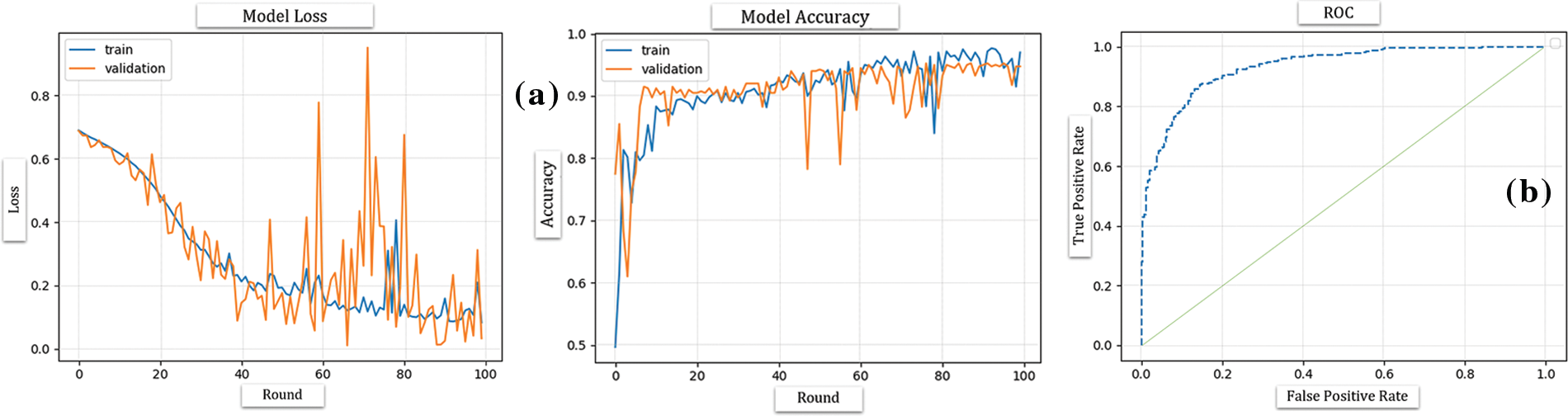

Figs. 45 and 46 demonstrate the training performance for drone-based deep learning model development using different techniques. Fig. 45 compares the true positive and false positive rates. Fig. 46 presents different capturing techniques.

Finally, it is important to note that no matter how sophisticated a deep learning model is, it still has certain limitations to overcome. One such limitation is the need for enough training data. Some emergency phenomena (earthquakes, hurricanes, etc.) or behaviors do not occur often enough to collect sufficient data for training, validation, and testing, and hence to detect their future occurrences. In addition, some complex problems, when used to train deep learning models, yield very large, highly resource-consuming models, while others are simply unlearnable due to their extreme complexity. Hence, despite the promising results of this research work, its scalability is subject to the complexity of the problem at hand and the availability of sufficient training data.

Figure 45: Training performance for drone-based deep learning model development

Figure 46: Training performance for deep learning model development; (a) satellite-based, (b) human-based, (c) other objects of interest shown in Figs. 14 to 30, and (d) for emergency events shown in Figs. 32 to 38
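A comparison of true positive and false positive rates, as in Fig. 45, is obtained by sweeping a confidence threshold over the detector's scores. The sketch below computes one (TPR, FPR) point per threshold; the labels, scores, and thresholds are made-up values used only to exercise the function and are not results from this work.

```python
def tpr_fpr(labels, scores, threshold):
    """True positive rate and false positive rate at one score threshold.

    labels : 1 for a real event, 0 for no event
    scores : detector confidence in [0, 1]
    """
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    tpr = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return tpr, fpr


# Made-up labels and confidences.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]
roc_points = [tpr_fpr(labels, scores, t) for t in (0.25, 0.5, 0.75)]
print(roc_points)
```

Raising the threshold lowers both rates, so the operating point can be chosen to match how costly a missed emergency is relative to a false alarm.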

The proposed system presents a novel approach that supports secure HITI via the intelligent fusion of IoT, deep learning, and 6G network technologies. This approach employs an innovative method that allows multiple ensembles of deep learning models to run on resource-constrained edge nodes. In this framework, each edge node can detect multiple smart city surveillance events. Event detection proved to be ~95% accurate, which seems sufficient to build trust in these AI-based applications. This considerably high accuracy gives system developers a green light to apply the proposed system to many other real-life scenarios.

In addition, the deep learning algorithms employed in this research project allowed the framework to accommodate secure edge-to-cloud big data sharing, a live dashboard, live alerts, and live events. Therefore, these AI-based services, with such high detection accuracy, pave the way towards incorporating advanced technologies in settings such as smart city initiatives, government offices, and emergency response teams. Lastly, since such usage of technology in sensitive settings mandates absolute privacy and anonymity, robust security and privacy approaches are adopted to allow IoT devices and edge learning models to take part in sensitive AI-based applications, such as smart city surveillance.

Acknowledgement: Special thanks to Dr. Ahmed Elhayek for his input in regard to the AI aspect of this work.

Funding Statement: The authors received no specific funding for this study.

Author Contributions: Mohammed Abdur Rahman established the research idea and execution. Ftoon H. Kedwan and Mohammed Abdur Rahman shared fair writing responsibility. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data and materials used were collected from personal experiments and hence will not be supplied for public access.

Ethics Approval: The accomplished work does not involve any humans, animals, or private and personal data. Therefore, no ethical approvals were needed.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. D. Vrontis, M. Christofi, V. Pereira, S. Tarba, A. Makrides and E. Trichina, “Artificial intelligence, robotics, advanced technologies and human resource management: A systematic review,” Int. J. Hum. Resour. Manage., vol. 33, no. 6, pp. 1237–1266, May 2022. doi: 10.1080/09585192.2020.1871398. [Google Scholar] [CrossRef]

2. W. Jiang et al., “Terahertz communications and sensing for 6G and beyond: A comprehensive review,” IEEE Commun. Surv. Tutorials, Apr. 2024. doi: 10.1109/COMST.2024.3385908. [Google Scholar] [CrossRef]

3. C. Li, L. Wang, J. Li, and Y. Fei, “CIM: CP-ABE-based identity management framework for collaborative edge storage,” Peer Peer Netw. Appl., vol. 17, no. 2, pp. 639–655, Mar. 2024. doi: 10.1007/s12083-023-01606-6. [Google Scholar] [CrossRef]

4. R. Gnanaselvam and M. S. Vasanthi, “Dynamic spectrum access-based augmenting coverage in narrow band Internet of Things,” Int. J. Commun. Syst., vol. 37, no. 1, Jan. 2024, Art. no. e5629. doi: 10.1002/dac.5629. [Google Scholar] [CrossRef]

5. P. Segeč, M. Moravčik, J. Uratmová, J. Papán, and O. Yeremenko, “SD-WAN-architecture, functions and benefits,” presented at the 18th Int. Conf. Emerg. eLearn. Technol. Appl. (ICETAKošice, Slovenia, IEEE, Nov. 2020, pp. 593–599. [Google Scholar]

6. F. H. Kedwan and C. Sharma, “Twitter texts’ quality classification using data mining and neural networks,” Int. J. Comput. Appl., vol. 178, no. 32, pp. 19–27, Jul. 2019. [Google Scholar]

7. S. H. Shah and I. Yaqoob, “A survey: Internet of Things (IoT) technologies, applications and challenges,” IEEE Smart Energy Grid Eng. (SEGE), vol. 17, pp. 381–385, Aug. 2016. doi: 10.1109/SEGE.2016.7589556. [Google Scholar] [CrossRef]

8. D. C. Nguyen et al., “6G Internet of Things: A comprehensive survey,” IEEE Internet Things J., vol. 9, no. 1, pp. 359–383, Jan. 2022. doi: 10.1109/JIOT.2021.3103320. [Google Scholar] [CrossRef]

9. S. Dong, P. Wang, and K. Abbas, “A survey on deep learning and its applications,” Comput. Sci. Rev., vol. 40, May 2021, Art. no. 100379. doi: 10.1016/j.cosrev.2021.100379. [Google Scholar] [CrossRef]

10. C. D’Andrea et al., “6G wireless technologies,” The Road towards 6G: Opportunities, Challenges, and Applications: A Comprehensive View of the Enabling Technologies, Cham: Springer Nat. Switzerland, vol. 51, no. 114, pp. 1–222, 2024. [Google Scholar]

11. R. Chataut, M. Nankya, and R. Akl, “6G networks and the AI revolution—exploring technologies, applications, and emerging challenges,” Sensors, vol. 24, no. 6, Mar. 2024, Art. no. 1888. doi: 10.3390/s24061888. [Google Scholar] [PubMed] [CrossRef]

12. A. Samad et al., “6G white paper on machine learning in wireless communication networks,” Apr. 2020, arXiv:2004.13875. [Google Scholar]

13. K. B. Letaief, W. Chen, Y. Shi, J. Zhang, and Y. J. A. Zhang, “The roadmap to 6G: AI empowered wireless networks,” IEEE Commun. Mag., vol. 57, no. 8, pp. 84–90, Aug. 2019. doi: 10.1109/MCOM.2019.1900271. [Google Scholar] [CrossRef]

14. K. David and H. Berndt, “6G vision and requirements: Is there any need for beyond 5G?,” IEEE Veh. Technol. Mag., vol. 13, no. 3, pp. 72–80, Sep. 2018. doi: 10.1109/MVT.2018.2848498. [Google Scholar] [CrossRef]

15. E. C. Strinati et al., “6G: The next frontier: From holographic messaging to artificial intelligence using subterahertz and visible light communication,” IEEE Veh. Technol. Mag., vol. 14, no. 3, pp. 42–50, Aug. 2019. doi: 10.1109/MVT.2019.2921162. [Google Scholar] [CrossRef]

16. F. Tariq, M. R. Khandaker, K. K. Wong, M. A. Imran, M. Bennis and M. Debbah, “A speculative study on 6G,” IEEE Wirel. Commun., vol. 27, no. 4, pp. 118–125, Aug. 2020. doi: 10.1109/MWC.001.1900488. [Google Scholar] [CrossRef]

17. Z. Zhang et al., “6G wireless networks: Vision, requirements, architecture, and key technologies,” IEEE Veh. Technol. Mag., vol. 14, no. 3, pp. 28–41, Sep. 2019. doi: 10.1109/MVT.2019.2921208. [Google Scholar] [CrossRef]

18. M. H. Alsharif, A. Jahid, R. Kannadasan, and M. K. Kim, “Unleashing the potential of sixth generation (6G) wireless networks in smart energy grid management: A comprehensive review,” Energy Rep., vol. 11, pp. 1376–1398, Jun. 2024. doi: 10.1016/j.egyr.2024.01.011. [Google Scholar] [CrossRef]

19. J. Bae, W. Khalid, A. Lee, H. Lee, S. Noh and H. Yu, “Overview of RIS-enabled secure transmission in 6G wireless networks,” Digit. Commun. Netw., Mar. 2024. doi: 10.1016/j.dcan.2024.02.005. [Google Scholar] [CrossRef]

20. W. Abdallah, “A physical layer security scheme for 6G wireless networks using post-quantum cryptography,” Comput. Commun., vol. 218, no. 5, pp. 176–187, Mar. 2024. doi: 10.1016/j.comcom.2024.02.019. [Google Scholar] [CrossRef]

21. A. Alhammadi et al., “Artificial intelligence in 6G wireless networks: Opportunities, applications, and challenges,” Int. J. Intell. Syst., vol. 2024, no. 1, pp. 1–27, 2024. doi: 10.1155/2024/8845070. [Google Scholar] [CrossRef]

22. R. Sun, N. Cheng, C. Li, F. Chen, and W. Chen, “Knowledge-driven deep learning paradigms for wireless network optimization in 6G,” IEEE Netw., vol. 38, no. 2, pp. 70–78, Mar. 2024. doi: 10.1109/MNET.2024.3352257. [Google Scholar] [CrossRef]

23. D. Verbruggen, H. Salluoha, and S. Pollin, “Distributed deep learning for modulation classification in 6G Cell-free wireless networks,” Mar. 2024, arXiv:2403.08563. [Google Scholar]

24. P. Yang, Y. Xiao, M. Xiao, and S. Li, “6G wireless communications: Vision and potential techniques,” IEEE Netw., vol. 33, no. 4, pp. 70–75, Jul. 2019. doi: 10.1109/MNET.2019.1800418. [Google Scholar] [CrossRef]

25. T. S. Rappaport et al., “Wireless communications and applications above 100 GHz: Opportunities and challenges for 6G and beyond,” IEEE Access, vol. 7, pp. 78729–78757, Jun. 2019. doi: 10.1109/ACCESS.2019.2921522. [Google Scholar] [CrossRef]

26. K. Zhao, Y. Chen, and M. Zhao, “Enabling deep learning on edge devices through filter pruning and knowledge transfer,” Jan. 2022, arXiv:2201.10947. [Google Scholar]

27. A. Raha, D. A. Mathaikutty, S. K. Ghosh, and S. Kundu, “FlexNN: A dataflow-aware flexible deep learning accelerator for energy-efficient edge devices,” Mar. 2024, arXiv:2403.09026. [Google Scholar]

28. M. Zawish, S. Davy, and L. Abraham, “Complexity-driven model compression for resource-constrained deep learning on edge,” IEEE Trans. Artif. Intell., vol. 5, no. 8, pp. 1–15, Jan. 2024. doi: 10.1109/TAI.2024.3353157. [Google Scholar] [CrossRef]

29. J. DeGe and S. Sang, “Optimization of news dissemination push mode by intelligent edge computing technology for deep learning,” Sci. Rep., vol. 14, no. 1, Mar. 2024, Art. no. 6671. doi: 10.1038/s41598-024-53859-7. [Google Scholar] [PubMed] [CrossRef]

30. W. Chen, J. Wilson, S. Tyree, K. Weinberger, and Y. Chen, “Compressing neural networks with the hashing trick,” presented at the Int. Conf. Mach. Learn., Lille, France, Jul. 2015, vol. 37, pp. 2285–2294. [Google Scholar]

31. D. Kadetotad, S. Arunachalam, C. Chakrabarti, and J. -S. Seo, “Efficient memory compression in deep neural networks using coarse-grain sparsification for speech applications,” presented at the 35th Int. Conf. Comput.-Aided Des., ACM, Austin, TX, USA, Nov. 2016, pp. 1–8. [Google Scholar]

32. Y. LeCun, J. S. Denker, and S. A. Solla, “Optimal brain damage,” Adv. Neural Inf. Process. Syst., vol. 2, pp. 598–605, 1989. [Google Scholar]

33. S. Srinivas and R. V. Babu, “Data-free parameter pruning for deep neural networks,” Jul. 2015, arXiv:1507.06149. [Google Scholar]

34. S. Han, H. Mao, and W. J. Dally, “Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding,” Oct. 2015, arXiv:1510.00149. [Google Scholar]

35. J. Ba and R. Caruana, “Do deep nets really need to be deep?,” Adv. Neural Inf. Process. Syst., vol. 27, pp. 2654–2662, 2014. [Google Scholar]

36. G. Hinton, O. Vinyals, and J. Dean, “Distilling the knowledge in a neural network,” Mar. 2015, arXiv:1503.02531. [Google Scholar]

37. A. Romero, N. Ballas, S. E. Kahou, A. Chassang, C. Gatta and Y. Bengio, “FitNets: Hints for thin deep nets,” arXiv preprint arXiv:1412.6550, vol. 1, p. 6550, Dec. 2014. [Google Scholar]

38. R. Venkatesan and B. Li, “Diving deeper into mentee networks,” Apr. 2016, arXiv:1604.08220. [Google Scholar]

39. J. Yim, D. Joo, J. Bae, and J. Kim, “A gift from knowledge distillation: Fast optimization network minimization and transfer learning,” in 2017 IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Honolulu, HI, USA, 2017. [Google Scholar]

40. A. Benito-Santos and R. T. Sanchez, “A data-driven introduction to authors, readings, and techniques in visualization for the digital humanities,” IEEE Comput. Graph. App., vol. 40, no. 3, pp. 45–57, Feb. 2020. [Google Scholar]

41. D. Berardini, L. Migliorelli, A. Galdelli, E. Frontoni, A. Mancini and S. Moccia, “A deep-learning framework running on edge devices for handgun and knife detection from indoor video-surveillance cameras,” Multimed. Tools Appl., vol. 83, no. 7, pp. 19109–19127, Feb. 2024. doi: 10.1007/s11042-023-16231-x. [Google Scholar] [CrossRef]

42. N. Rai, Y. Zhang, M. Villamil, K. Howatt, M. Ostlie and X. Sun, “Agricultural weed identification in images and videos by integrating optimized deep learning architecture on an edge computing technology,” Comput. Electron. Agric., vol. 216, Jan. 2024, Art. no. 108442. doi: 10.1016/j.compag.2023.108442. [Google Scholar] [CrossRef]

43. C. Li, W. Guo, S. C. Sun, S. Al-Rubaye, and A. Tsourdos, “Trustworthy deep learning in 6G-enabled mass autonomy: From concept to quality-of-trust KPIs,” IEEE Veh. Technol. Mag., vol. 15, no. 4, pp. 112–121, Sep. 2020. doi: 10.1109/MVT.2020.3017181. [Google Scholar] [CrossRef]

44. X. Wang, Y. Han, V. C. M. Leung, D. Niyato, X. Yan and X. Chen, “Convergence of edge computing and deep learning: A comprehensive survey,” IEEE Commun. Surv. Tutorials, vol. 22, no. 2, pp. 869–904, Jan. 2020. doi: 10.1109/COMST.2020.2970550. [Google Scholar] [CrossRef]

45. M. A. Rahman, M. S. Hossain, N. Alrajeh, and F. Alsolami, “Adversarial examples–security threats to COVID-19 deep learning systems in medical IoT devices,” IEEE Internet Things J., vol. 8, no. 12, pp. 9603–9610, Aug. 2020. doi: 10.1109/JIOT.2020.3013710. [Google Scholar] [PubMed] [CrossRef]

46. M. A. Rahman, M. S. Hossain, N. Alrajeh, and N. Guizani, “B5G and explainable deep learning assisted healthcare vertical at the edge COVID 19 perspective,” IEEE Netw., vol. 34, no. 4, pp. 98–105, Jul. 2020. doi: 10.1109/MNET.011.2000353. [Google Scholar] [CrossRef]

47. A. Rahman, M. S. Hossain, M. M. Rashid, S. Barnes, and E. Hassanain, “IoEV-Chain: A 5G-based secure inter-connected mobility framework for the internet of electric vehicles,” IEEE Netw., vol. 34, no. 5, pp. 190–197, Aug. 2020. doi: 10.1109/MNET.001.1900597. [Google Scholar] [CrossRef]

48. H. Viswanathan and P. E. Mogensen, “Communications in the 6G Era,” IEEE Access, vol. 8, pp. 57063–57074, Mar. 2020. doi: 10.1109/ACCESS.2020.2981745. [Google Scholar] [CrossRef]

49. C. She et al., “A tutorial on ultrareliable and low-latency communications in 6G: Integrating domain knowledge into deep learning,” Proc. IEEE, vol. 109, no. 3, pp. 204–246, Mar. 2021. doi: 10.1109/JPROC.2021.3053601. [Google Scholar] [CrossRef]

50. N. Kato, B. Mao, F. Tang, Y. Kawamoto, and J. Liu, “Ten challenges in advancing machine learning technologies toward 6G,” IEEE Wirel. Commun., vol. 27, no. 3, pp. 96–103, Apr. 2020. doi: 10.1109/MWC.001.1900476. [Google Scholar] [CrossRef]

51. A. Khan, L. Serafini, L. Bozzato, and B. Lazzerini, “Event detection from video using answer set programming,” in CEUR Workshop Proc., 2019, vol. 2396, pp. 48–58. [Google Scholar]

52. M. Emmi et al., “RAPID: Checking API usage for the cloud in the cloud,” presented at the 29th ACM Joint Meet. Eur. Soft. Eng. Conf. Symp. Found. Soft. Eng., New York, NY, USA, Aug. 2021, pp. 1416–1426. [Google Scholar]

53. A. N. Aprianto, A. S. Girsang, Y. Nugroho, and W. K. Putra, “Performance analysis of RabbitMQ and Nats streaming for communication in microservice,” Teknologi: Jurnal Ilmiah Sistem Informasi, vol. 14, no. 1, pp. 37–47, Mar. 2024. [Google Scholar]

54. R. Sileika and S. Rytis, “Distributed message processing system,” in Pro Python Syst. Adm., Nov. 2014, pp. 331–347. [Google Scholar]

55. S. M. Levin, “Unleashing real-time analytics: A comparative study of in-memory computing vs. traditional disk-based systems,” Braz. J. Sci., vol. 3, no. 5, pp. 30–39, Apr. 2024. doi: 10.14295/bjs.v3i5.553. [Google Scholar] [CrossRef]

56. S. Lin, C. Li, and K. Niu, “End-to-end encrypted message distribution system for the Internet of Things based on conditional proxy re-encryption,” Sensors, vol. 24, no. 2, Jan. 2024, Art. no. 438. doi: 10.3390/s24020438. [Google Scholar] [PubMed] [CrossRef]


Copyright © 2024 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
