Open Access

PROCEEDINGS

Application of Simplified Swarm Optimization on Graph Convolutional Networks

1 Department of Electrical Engineering, National Taiwan University, Taipei City, 106319, Taiwan R.O.C.

2 Institute of Artificial Intelligence Innovation, National Yang Ming Chiao Tung University, Hsinchu City, 300093, Taiwan R.O.C.

* Corresponding Author: Guan-Yan Yang. Email:

The International Conference on Computational & Experimental Engineering and Sciences 2024, 32(1), 1-4. https://doi.org/10.32604/icces.2024.013279

Abstract

1 Introduction

This paper explores strategies for enhancing neural network performance, including adjustments to network architecture, selection of activation functions and optimizers, and regularization techniques. Hyperparameter optimization is a widely recognized approach to improving model performance [2], and methods such as grid search, genetic algorithms, and particle swarm optimization (PSO) [3] have previously been used to search for optimal neural network configurations. However, these techniques can be complex and challenging for beginners. This research therefore advocates Simplified Swarm Optimization (SSO), a straightforward and effective method first applied to the LeNet model in 2023 [4]. That work used SSO to optimize parameters such as the number of kernels, kernel size, stride, number of units in fully connected layers, and batch size, but did not address activation functions, optimizers, or regularization. These elements are the focus of the current study. In addition, because Graph Convolutional Networks (GCNs) also incorporate convolutional structures, SSO is applied to GCN models as well. The primary contributions of this study are:

1. Demonstrating the applicability of SSO to GCN.

2. Highlighting the importance of choosing activation functions and optimizers over making structural modifications.

3. Providing a systematic approach to identify effective parameters through SSO.

2 The Proposed Method

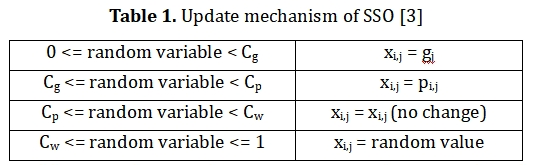

The SSO algorithm is characterized by its simplicity. Its update mechanism is detailed in Table 1.
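Because Table 1 is available only as an image, the following is a reconstruction of the update rule that assumes the standard SSO formulation: $C_g < C_p < C_w$ are cumulative thresholds, $\rho_{i,j}$ is a uniform random number drawn anew for each variable, $g_j$ is the $j$-th variable of the best solution found so far, $p_{i,j}$ is the $j$-th variable of solution $i$'s own best solution, and $x$ is a random value from the feasible range of variable $j$.

```latex
x_{i,j}^{t+1} =
\begin{cases}
g_j         & \text{if } \rho_{i,j} \in [0, C_g) \\
p_{i,j}     & \text{if } \rho_{i,j} \in [C_g, C_p) \\
x_{i,j}^{t} & \text{if } \rho_{i,j} \in [C_p, C_w) \\
x           & \text{if } \rho_{i,j} \in [C_w, 1]
\end{cases}
```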

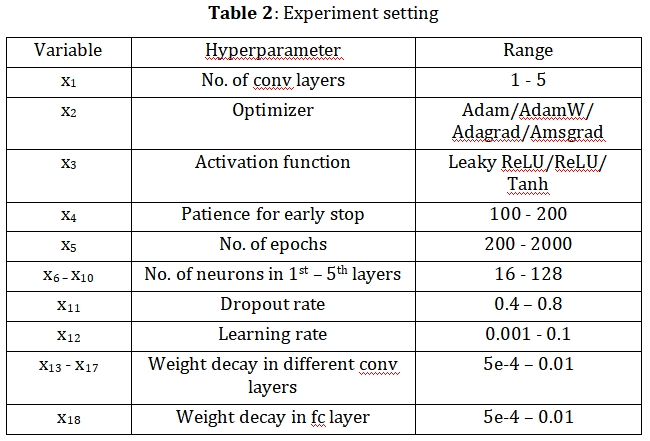

Each variable $x_{i,j}$ of a solution vector $X_i = (x_{i,1}, x_{i,2}, \ldots)$ is updated according to a random number drawn uniformly between 0 and 1. Here, $g_j$ is the corresponding variable of the best solution found so far, and $p_{i,j}$ is the corresponding variable of the best solution previously found by $X_i$ itself. The parameters $C_g$, $C_p$, and $C_w$ are cumulative thresholds, set to 0.4, 0.7, and 0.9 in this study, that determine whether the updated $x_{i,j}$ takes the value $g_j$, $p_{i,j}$, its current value $x_{i,j}$, or a random value. With these settings, the four outcomes occur with probabilities 0.4, 0.3, 0.2, and 0.1, respectively. The experimental configurations for the GCNs are detailed in Table 2, which highlights the extensive range of variables or hyperparameters involved.
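The update rule can be sketched in Python for a discrete hyperparameter search space. This is a minimal illustration, not the authors' implementation: it assumes each variable is an index into a list of options, that `p` is the solution's personal best, and that a "random value" is an index drawn uniformly from that variable's options. The function name `sso_update` and the `SPACE` encoding are hypothetical.

```python
import random
from typing import List, Sequence

# Cumulative thresholds used in this study: Cg = 0.4, Cp = 0.7, Cw = 0.9.
C_G, C_P, C_W = 0.4, 0.7, 0.9


def sso_update(x: Sequence[int], p: Sequence[int], g: Sequence[int],
               choices: Sequence[int], rng: random.Random) -> List[int]:
    """Apply one SSO update to solution x (hypothetical helper).

    x: current solution X_i; p: its personal best P_i; g: global best G.
    Each variable is an index into a discrete option list, and choices[j]
    is the number of options available for variable j.
    """
    updated = []
    for j in range(len(x)):
        rho = rng.random()          # fresh uniform draw in [0, 1) per variable
        if rho < C_G:               # probability Cg = 0.4: copy global best
            updated.append(g[j])
        elif rho < C_P:             # probability Cp - Cg = 0.3: copy personal best
            updated.append(p[j])
        elif rho < C_W:             # probability Cw - Cp = 0.2: keep current value
            updated.append(x[j])
        else:                       # probability 1 - Cw = 0.1: random option
            updated.append(rng.randrange(choices[j]))
    return updated


# Hypothetical encoding: activation, optimizer, hidden units.
SPACE = [["relu", "tanh", "elu"], ["adam", "sgd", "adagrad"], [16, 32, 64]]

if __name__ == "__main__":
    rng = random.Random(42)
    x, p, g = [0, 0, 0], [1, 1, 1], [1, 2, 2]
    new = sso_update(x, p, g, [len(opts) for opts in SPACE], rng)
    print([SPACE[j][k] for j, k in enumerate(new)])
```

Encoding hyperparameters as option indices keeps the update identical for categorical choices (activation, optimizer) and numeric ones (hidden units, dropout rate).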

3 Experiment

In this study, the GCN experiments used the Cora, Citeseer, and Pubmed datasets. All experiments were conducted with Python 3.10.12 and PyTorch 2.2.1 on Google Colab, running on an Intel® Xeon® CPU @ 2.00GHz with 16 GB of RAM and an NVIDIA Tesla T4 GPU.

The application of the Simplified Swarm Optimization (SSO) algorithm involved three steps:

1. Conduct an initial, unconstrained run to identify the optimal activation function and optimizer.

2. With the activation function and optimizer fixed, determine the optimal network structure and other hyperparameters, such as the dropout rate or weight decay.

3. Optionally, once the optimal solution has been found, apply a learning rate scheduler or data augmentation if needed.
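The first two steps can be sketched as two SSO runs, where the second run pins the activation function and optimizer found by the first. This is a minimal sketch under stated assumptions: `sso_search`, `staged_search`, `SPACE`, and `toy_fitness` are hypothetical names, the search space values are illustrative, and `toy_fitness` is an arbitrary stand-in for GCN validation accuracy (in practice each evaluation would train and validate a GCN).

```python
import random
from typing import Callable, Dict, List, Optional, Sequence

C_G, C_P, C_W = 0.4, 0.7, 0.9  # cumulative SSO thresholds used in this study


def sso_search(fitness: Callable[[List[int]], float], choices: Sequence[int],
               n_sol: int = 10, n_gen: int = 30, seed: int = 0,
               fixed: Optional[Dict[int, int]] = None) -> List[int]:
    """Maximize fitness over index vectors; variables in `fixed` stay pinned."""
    rng = random.Random(seed)
    fixed = fixed or {}

    def pin(sol: List[int]) -> List[int]:
        for j, v in fixed.items():
            sol[j] = v
        return sol

    X = [pin([rng.randrange(c) for c in choices]) for _ in range(n_sol)]
    P = [list(s) for s in X]                      # personal bests
    P_fit = [fitness(s) for s in P]
    best = max(range(n_sol), key=lambda i: P_fit[i])
    G, G_fit = list(P[best]), P_fit[best]         # global best
    for _ in range(n_gen):
        for i in range(n_sol):
            new = []
            for j, c in enumerate(choices):
                rho = rng.random()
                if rho < C_G:
                    new.append(G[j])
                elif rho < C_P:
                    new.append(P[i][j])
                elif rho < C_W:
                    new.append(X[i][j])
                else:
                    new.append(rng.randrange(c))
            X[i] = pin(new)
            f = fitness(X[i])
            if f > P_fit[i]:                      # update personal best
                P[i], P_fit[i] = list(X[i]), f
                if f > G_fit:                     # update global best
                    G, G_fit = list(X[i]), f
    return G


# Hypothetical search space; a solution holds one index per entry.
SPACE = {
    "activation": ["relu", "tanh", "elu", "sigmoid"],
    "optimizer": ["adam", "sgd", "adagrad", "rmsprop"],
    "hidden_units": [16, 32, 64, 128],
    "dropout": [0.0, 0.2, 0.4, 0.5, 0.6],
}
CHOICES = [len(v) for v in SPACE.values()]


def toy_fitness(sol: List[int]) -> float:
    # Hypothetical stand-in for validation accuracy, peaking at one arbitrary point.
    target = [1, 2, 2, 3]
    return -sum(abs(a - b) for a, b in zip(sol, target))


def staged_search(fitness, choices, seed: int = 0):
    stage1 = sso_search(fitness, choices, seed=seed)            # step 1: unconstrained
    fixed = {0: stage1[0], 1: stage1[1]}                        # pin activation, optimizer
    stage2 = sso_search(fitness, choices, seed=seed + 1, fixed=fixed)  # step 2
    return stage1, stage2


if __name__ == "__main__":
    s1, s2 = staged_search(toy_fitness, CHOICES)
    names = list(SPACE)
    print({names[j]: SPACE[names[j]][k] for j, k in enumerate(s2)})
```

Step 3 is left out because it depends on the training loop; it would be applied to the configuration returned by the second run.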

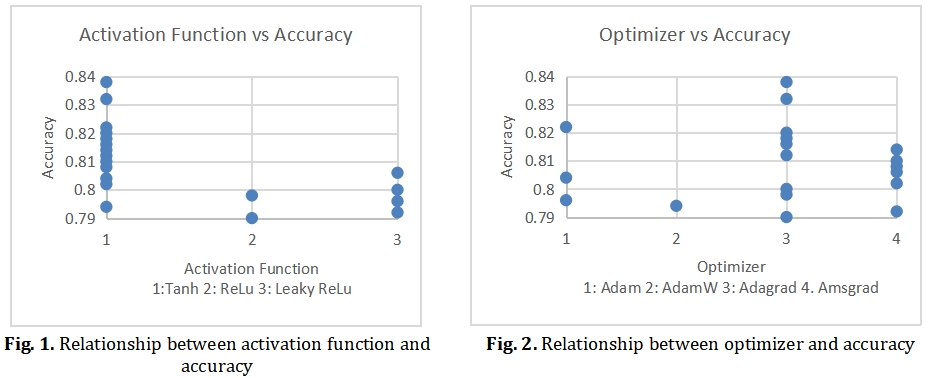

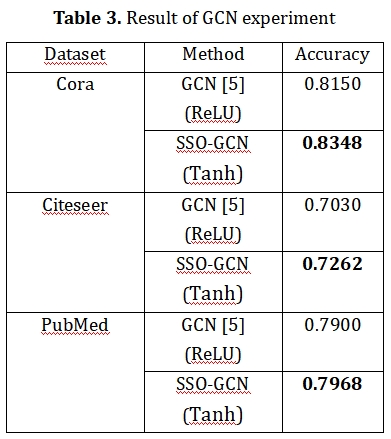

The results indicating the optimal activation function and optimizer are presented in Figures 1 and 2.

After step 2, the SSO algorithm identified an optimal solution using the best activation function (Tanh) and optimizer (Adagrad), as detailed in Table 3.
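For reference, assuming the GCN models follow the standard layer-wise propagation rule of Kipf and Welling (the section does not spell out the exact architecture), the selected Tanh activation would enter each hidden layer as shown below, where $A$ is the adjacency matrix, $I_N$ the identity matrix, $H^{(l)}$ the node features at layer $l$, and $W^{(l)}$ the trainable weights.

```latex
H^{(l+1)} = \tanh\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right),
\qquad \tilde{A} = A + I_N, \qquad \tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}
```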

4 Conclusion

This research demonstrates that SSO is an effective method for identifying optimal solutions for GCNs. SSO systematically identifies crucial hyperparameters, such as activation functions and optimizers. SSO's applicability also extends to other neural network architectures, and we are currently conducting research to apply it to a wider range of neural networks. In future work, we believe SSO could improve test case generation [6], test prioritization [7], the deep learning process [8], and blockchain latency [9, 10].

Copyright © 2024 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.