Open Access

ARTICLE

Chase, Pounce, and Escape Optimization Algorithm

Computer Science Department, Faculty of Science, University of Zakho, Duhok City, 42001, Iraq

* Corresponding Author: Adel Sabry Eesa. Email:

(This article belongs to the Special Issue: AI Powered Human-centric Computing with Cloud/Fog/Edge)

Intelligent Automation & Soft Computing 2024, 39(4), 697-723. https://doi.org/10.32604/iasc.2024.053192

Received 26 April 2024; Accepted 02 July 2024; Issue published 06 September 2024

Abstract

While many metaheuristic optimization algorithms strive to address optimization challenges, they often grapple with the delicate balance between exploration and exploitation, leading to issues such as premature convergence, sensitivity to parameter settings, and difficulty in maintaining population diversity. In response to these challenges, this study introduces the Chase, Pounce, and Escape (CPE) algorithm, drawing inspiration from predator-prey dynamics. Unlike traditional optimization approaches, the CPE algorithm divides the population into two groups, each independently exploring the search space to efficiently navigate complex problem domains and avoid local optima. By incorporating a unique search mechanism that integrates both the average of the best solution and the current solution, the CPE algorithm demonstrates superior convergence properties. Additionally, the inclusion of a pouncing process facilitates rapid movement towards optimal solutions. Through comprehensive evaluations across various optimization scenarios, including standard test functions, Congress on Evolutionary Computation (CEC)-2017 benchmarks, and real-world engineering challenges, the effectiveness of the CPE algorithm is demonstrated. Results consistently highlight the algorithm’s performance, surpassing that of other well-known optimization techniques, and achieving remarkable outcomes in terms of mean, best, and standard deviation values across different problem domains, underscoring its robustness and versatility.

In many fields, including physics, biology, engineering, economics, and business, optimization algorithms are the foundation of problem-solving efforts. They are primarily classified into two types: deterministic and metaheuristic. The goal of optimization algorithms is to find the best possible solution within given constraints [1]. Deterministic algorithms employ gradient-based methods and are particularly effective for solving unimodal problems. In contrast, metaheuristic algorithms leverage stochastic search strategies to navigate complex multi-dimensional landscapes in pursuit of global optima. The richness of these optimization techniques is highlighted by their application to real-world challenges, where factors like time, design specifications, and geometric complexities pose unique hurdles to finding optimal solutions. To tackle this, several metaheuristic optimization algorithms have been developed, inspired by nature, such as physics-based algorithms, Swarm Intelligence (SI), and Evolutionary Algorithms (EA). EA imitates organic evolution by using processes including recombination, mutation, and selection [2], while SI algorithms, like Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), are based on collective swarm behavior [3]. Recent years have witnessed the proliferation of metaheuristic optimization algorithms, inspired by various phenomena in nature including physics-based algorithms [4], animal behavior-based algorithms [5–9], and human behavior-based algorithms [10–12]. Each of these paradigms has unique inspirations and methodologies, developed with the goal of effectively balancing exploration and exploitation. Despite these efforts, many existing algorithms still face significant challenges in achieving this balance.

Amidst recent algorithm proposals, a critical question arises: do we need more optimization techniques? The No Free Lunch (NFL) theorem [13] indicates that no single algorithm can address all problems effectively, as each excels only in specific cases; averaged over all possible problems, optimization techniques perform equally. In addition, the persistent challenge of balancing exploration and exploitation continues to inspire innovative adaptations. These factors drive researchers to develop or enhance algorithms tailored to specific subsets of problems within various fields. Our research introduces the Chase, Pounce, and Escape (CPE) algorithm, a novel metaheuristic approach inspired by the hunting dynamics of predators and prey. Unlike conventional optimization algorithms, the CPE algorithm partitions the population into two distinct groups, each independently exploring the search space. This approach facilitates efficient navigation through complex problem domains while mitigating the risk of becoming trapped in local optima.

The contributions of this paper include:

• Introduction of the CPE algorithm: We introduce a novel nature-inspired metaheuristic as a competitive alternative to existing methods. This algorithm employs an innovative partitioning strategy, dividing the population into two groups operating in different scenarios, thereby enhancing both exploration and exploitation dynamics.

• Evaluation across various optimization scenarios: We conduct a comprehensive evaluation of the CPE algorithm’s efficacy across a spectrum of optimization scenarios, encompassing 50 standard test functions and CEC-2017 benchmark functions.

• Application to real-world engineering challenges: The proposed CPE is applied and tested on four prominent engineering problems, including the design of welded beams, speed reducers, cantilever beams, and multi-plate disc clutch brakes.

To provide a comprehensive overview, we organize the remainder of the paper as follows: Section 2 reviews related work, focusing on the drawbacks identified in current literature. Section 3 delineates the approach to cooperative hunting between predators, serving as the theoretical foundation for the proposed CPE optimization algorithm. In Section 4, we elaborate on the intricacies of the CPE algorithm, outlining its methodology and design principles. Section 5 presents experimental results, showcasing the efficacy of the CPE algorithm across various optimization problems, including real-world scenarios. Finally, Section 6 concludes the paper by summarizing key findings and suggesting avenues for future research.

Many optimization algorithms, with different motivations and approaches, have been created with the goal of efficiently striking a balance between exploration and exploitation, yet many of them still face significant challenges in this respect. EAs, PSO, and ACO are among the most well-known. EAs are inspired by the concepts of biological evolution and work by means of mechanisms including recombination, mutation, and selection. To produce high-quality solutions to optimization problems, these algorithms mimic natural evolutionary processes. EAs are widely used in many different areas, but they frequently encounter substantial obstacles, such as premature convergence, which occurs when the algorithm becomes stuck in local optima, and difficulty in maintaining an effective balance between exploration and exploitation [14].

Fish schools and flocks of birds serve as models for the social dynamics of PSO. In PSO, potential solutions are represented by a population of particles that traverse the search space under the influence of their own experience and that of their neighbors. Despite PSO’s effectiveness in solving a variety of optimization problems, it can have trouble preserving diversity within the swarm and is prone to premature convergence. Notably, in complex, multimodal landscapes, this may lead to inadequate exploration [15].

The foraging strategy of ants served as the model for ACO. Ants leave behind pheromones on the paths they travel, which direct the subsequent movements of other ants. This cooperative process makes it easier to find the best routes to resources. However, if the pheromone update mechanism is too aggressive, ACO algorithms may converge too quickly on suboptimal paths. This can drastically diminish the algorithm’s capacity for exploration, limiting its ability to find global optima in intricate problem spaces [16].

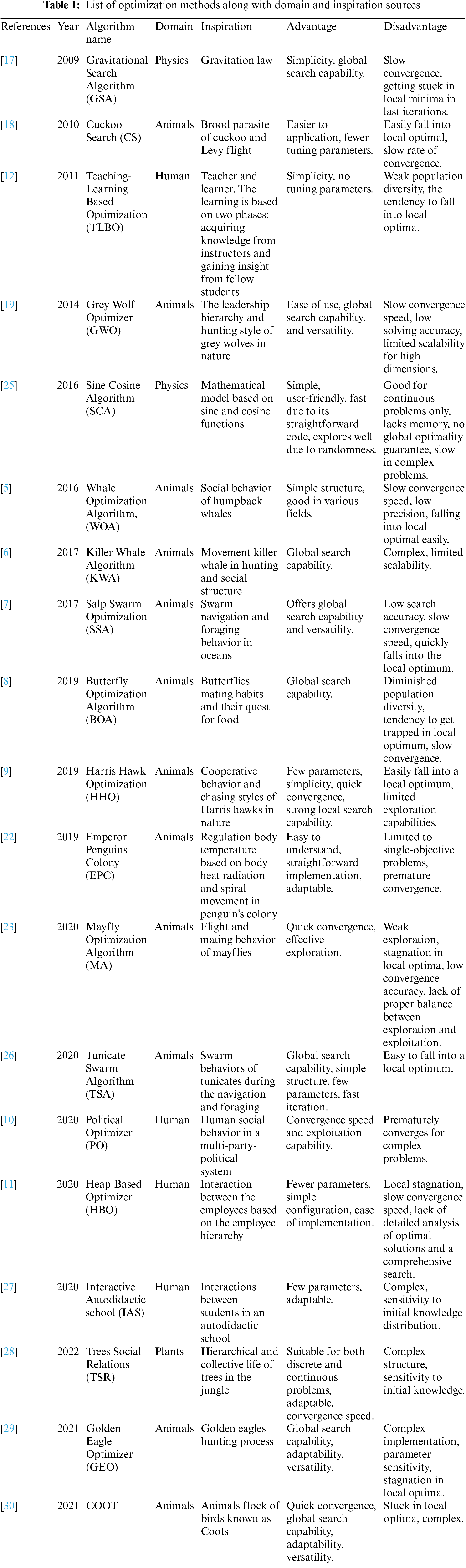

Recent developments in optimization algorithms have drawn inspiration from various domains, including physics, animal behavior, and human behavior. Table 1 highlights some of the most popular metaheuristic optimization algorithms from the past decade, detailing their inspirations, advantages, and disadvantages.

Physics inspirations such as Gravitational Search Algorithm (GSA) [17] are based on the law of gravity and mass interactions. While GSA is powerful, it often converges slowly, especially in high-dimensional spaces, due to the gradual reduction in exploration capability.

Animal-inspired algorithms like the Cuckoo Search (CS) [18] and Grey Wolf Optimizer (GWO) [19] draw inspiration from animal behavior. CS, inspired by the brood parasitism of cuckoos, and GWO, mimicking the leadership hierarchy in grey wolf packs, both exhibit strong exploration abilities initially. However, they can suffer from a rapid convergence phase, limiting their exploitation capabilities in the later stages [20,21]. The Whale Optimization Algorithm (WOA) [5] and Salp Swarm algorithm (SSA) [7], inspired by the social behavior of whales and salps respectively, show similar trends where maintaining a balance between global search (exploration) and local search (exploitation) remains challenging. Harris Hawk Optimization (HHO) [9], Emperor Penguins Colony (EPC) [22], and Mayfly Optimization Algorithm (MA) [23] show promise in tackling specific problems but still face the common issue of balancing exploration and exploitation. HHO, inspired by the cooperative behavior of Harris hawks, and EPC, mimicking the huddling behavior of emperor penguins, often require careful parameter adjustment to maintain this balance.

Human behavior-based algorithms, such as Political Optimizer (PO) [10], Heap-Based Optimizer (HBO) [11], and Teaching-Learning Based Optimization (TLBO) [12], introduce unique social and computational strategies but similarly struggle with premature convergence and maintaining diversity in the solution space.

Overall, while these algorithms introduce various innovative strategies to address optimization problems, they often struggle with the trade-off between exploration and exploitation. Premature convergence, sensitivity to parameter settings, and difficulty in maintaining diversity within the population are common challenges [24]. In addition, optimization algorithms assuming function continuity struggle when faced with non-smooth or discontinuous functions. Continuous functions, which exhibit smooth behavior, enable seamless exploration. In contrast, non-smooth functions, featuring abrupt changes or non-differentiable points, challenge traditional methods relying on smoothness and differentiability. The need for adaptive, robust, and efficient optimization techniques continues to drive research in this field, motivating the development of new algorithms such as the Chase, Pounce, and Escape (CPE) algorithm proposed in this study. The CPE algorithm aims to address these challenges by leveraging novel search strategies inspired by the hunting dynamics of predators and prey, offering a competitive alternative to existing metaheuristic approaches. The CPE algorithm introduced in this study innovatively balances exploration and exploitation through two distinct predator groups. In the first group, predators employ a weighted combination of current, best, and average solutions to approach and encircle prey, facilitating targeted exploration while maintaining diversity. The second group utilizes random equation selection to update positions, mimicking the pouncing and escape process of prey, promoting adaptive exploration.

In natural ecosystems, predators and prey exhibit behaviors that mirror the principles of exploration and exploitation. Predators explore vast territories in search of prey. Once prey is located, predators engage in pursuit, exploiting their position to capture and consume their quarry. Conversely, prey species employ evasive maneuvers to escape predation, contributing to the diversity and adaptability of their movements within the environment. Chasing and escaping are the two primary activities involved in group hunting [31]. With the goal of trapping the flock of targets, the chasers band together and form packs. Lions are an example of group chasers that use a cooperative hunting approach: they surround the herd, cover it from all sides, and, once they have drawn closer, pounce on the group. Typically, lions target weaker animals, older animals, and calves to increase their chances of capturing them [32]. On the prey side, the flight initiation distance measures the distance at which prey flee from predators. Predator-prey distances come in three varieties: start, alert, and flight initiation. The start distance is the distance at which the predator begins its approach, the alert distance is the distance at which the prey becomes aware of the predator, and the flight initiation distance is the distance at which the prey takes flight [33].

Inspired by the division of roles between predators and prey, the CPE algorithm partitions the population into two distinct groups. This approach balances exploration and exploitation. In the first group, the algorithm employs the concept of approaching and encircling prey, which involves identifying and narrowing down promising regions within the search space. In the second group, the algorithm incorporates the concept of pouncing on prey and the prey’s escape process. This partitioning fosters a dynamic balance between exploration (via predator-like agents) and exploitation (through prey-like solutions).

Emulating the pursuit tactics of predators, the algorithm guides predator agents towards promising solution regions within the search space. The movement of predators is influenced by factors such as the proximity of prey and the overall distribution of the prey, facilitating comprehensive exploration of the solution landscape. When a predatory agent closes in on a prey-like solution, it executes a “pouncing” action akin to a focused local search. This mechanism enables the algorithm to exploit promising solution regions efficiently, refining candidate solutions and enhancing convergence towards optimal outcomes.

To prevent premature convergence and maintain solution diversity, prey-like solutions employ adaptive escape mechanisms. These mechanisms ensure that solutions dynamically adjust their positions in response to predator movements, thereby preventing over-exploitation of specific solution regions and encouraging continued exploration. This strategy not only enhances the diversity of the solutions but also significantly improves the algorithm’s ability to find the global optimum.

Through the integration of these algorithmic strategies, the CPE algorithm achieves an effective balance and synergy between exploration and exploitation. The chase dynamics drive thorough exploration of the solution space, while the pounce mechanism facilitates efficient exploitation of promising solution regions. Additionally, the adaptive escape behavior of prey-like solutions fosters solution diversity, contributing to the algorithm’s robust performance across various optimization scenarios.

4 Proposed Chase, Pounce, and Escape Optimization Algorithm

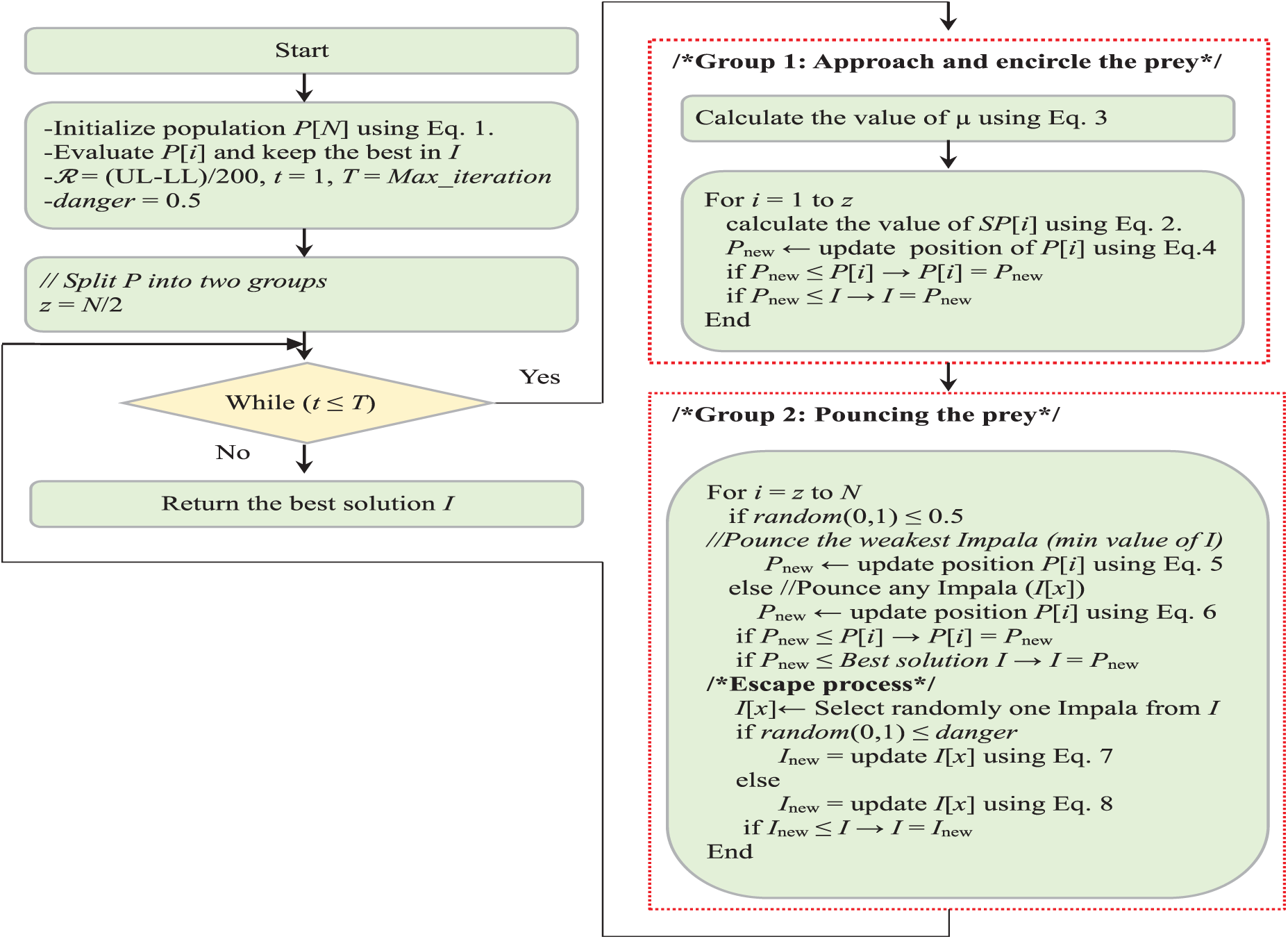

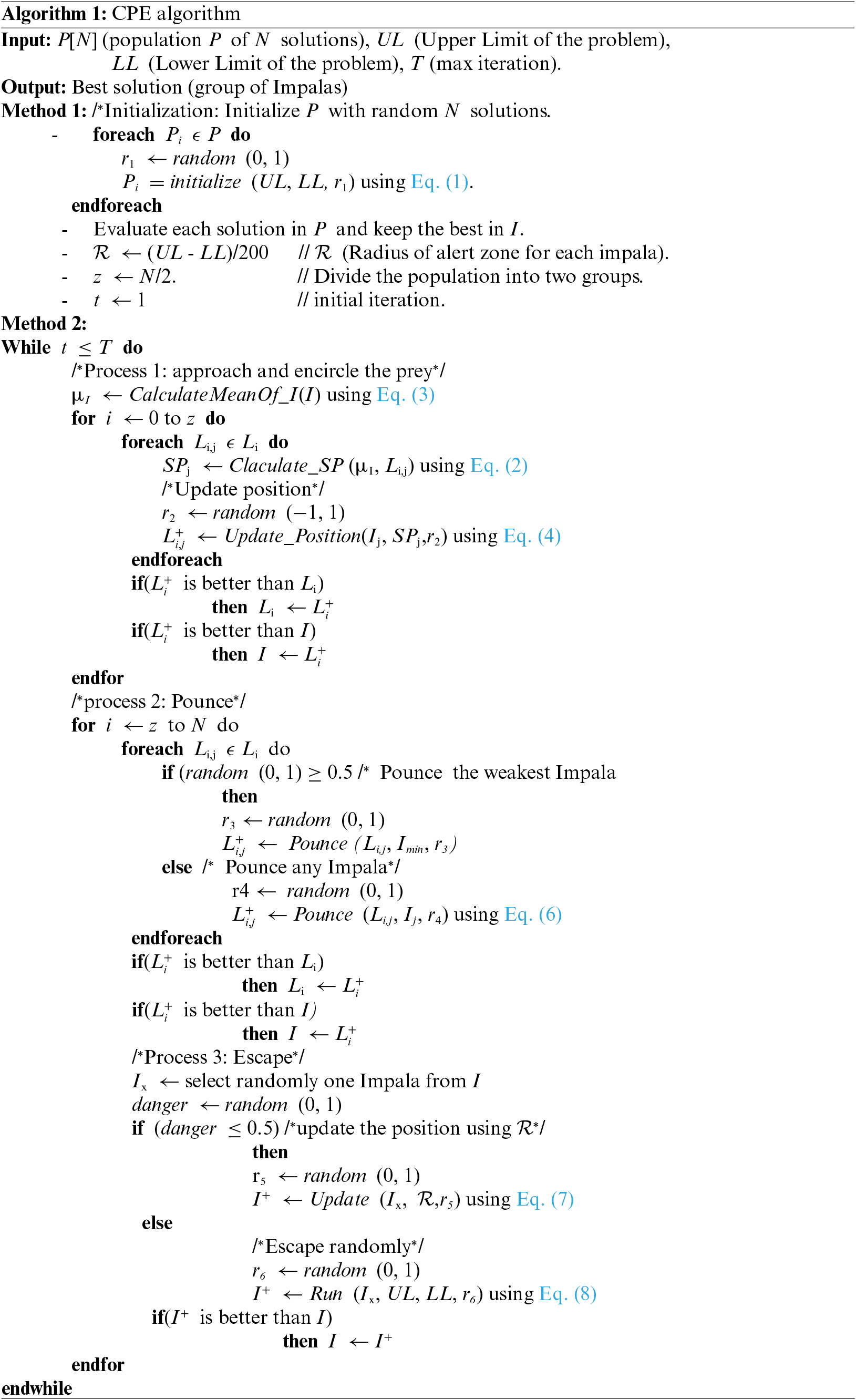

This section gives a thorough explanation of the proposed CPE method, which is based on the image of a group of lions (L) chasing a group of impalas (I). The chase, pounce, and escape operations comprise three processes: approaching and encircling the prey, pouncing on the prey, and escaping. Sections 4.2–4.4, respectively, provide mathematical definitions of these procedures.

Initially, as per Eq. (1), a population P of N initial solutions is dispersed randomly across the D-dimensional problem space.

Here, UL and LL denote the upper and lower bounds of the problem domain, respectively.
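The initialization step can be illustrated with a minimal sketch. It assumes that the random dispersion in Eq. (1) is a uniform draw between LL and UL in every dimension, which is the usual choice but is not confirmed by the text above; the function name `init_population` and its arguments are hypothetical.

```python
import numpy as np

def init_population(n, dim, lower, upper, seed=None):
    """Scatter n candidate solutions uniformly inside the box [lower, upper].

    Assumption: uniform sampling per dimension (not confirmed by the source).
    """
    rng = np.random.default_rng(seed)
    # Accept either scalar bounds or one bound per dimension.
    lower = np.broadcast_to(np.asarray(lower, dtype=float), (dim,))
    upper = np.broadcast_to(np.asarray(upper, dtype=float), (dim,))
    # One uniform draw in [0, 1) per solution and per dimension.
    r = rng.random((n, dim))
    return lower + r * (upper - lower)

# Example: N = 30 solutions in a D = 5 dimensional space with LL = -10, UL = 10.
P = init_population(n=30, dim=5, lower=-10.0, upper=10.0, seed=0)
```

Each row of `P` is one initial solution; passing arrays for `lower` and `upper` gives per-dimension bounds.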

4.2 Approaching and Encircling the Prey

In this procedure, each Lion (

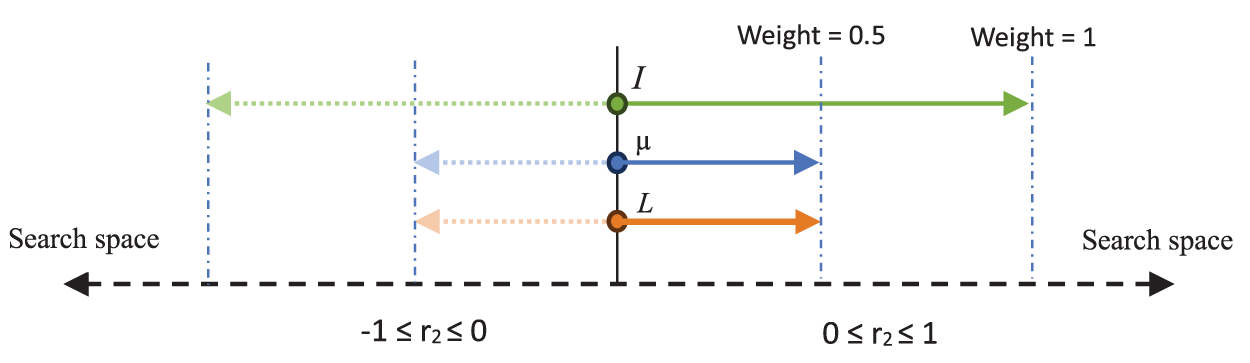

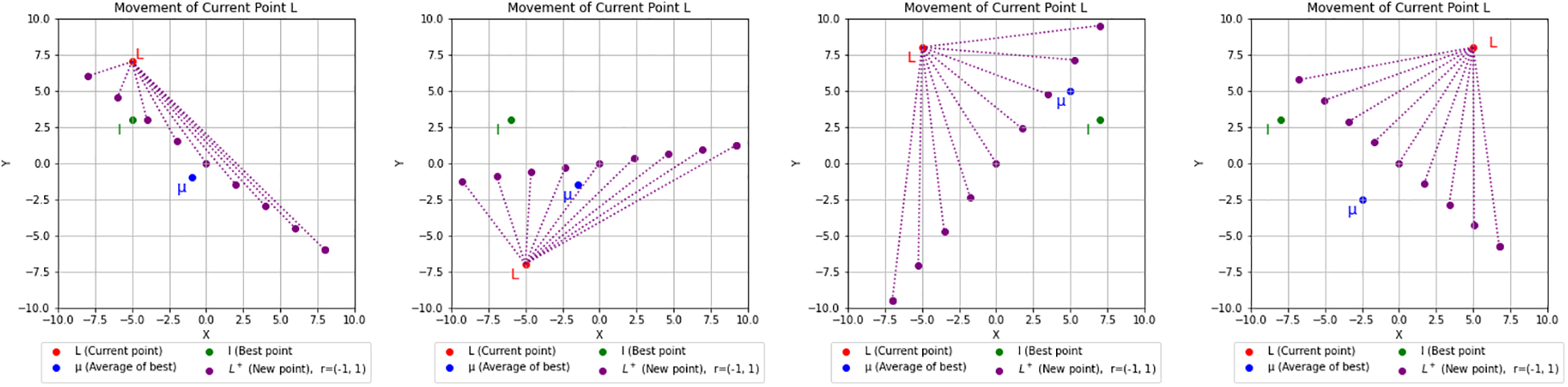

To clarify the operation of this part of the population, the algorithm employs historical knowledge stored in the current point L, while also taking into account the overall performance achieved by the optimal point I. The introduction of the mean point µ acts as a balancing factor between exploration and exploitation. The weights of 0.5 assigned to L and µ in Eq. (2) signify their moderate significance, enabling controlled exploration around the current and mean points. In contrast, the weight of 1.0 designated to I in Eq. (4) imparts a more pronounced exploitation element, steering the algorithm towards promising regions within the search space. The inclusion of the random value

Figure 1: Interaction between the current point L, the mean point µ, and the best point I

Figure 2: Potential trajectory of the current point L across various scenarios
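The interplay of the three points can be sketched as follows. This is a hypothetical illustration only: it does not reproduce Eqs. (2)–(4), which are not shown in the text. It merely assumes that the current point L and the mean point µ are blended with weights of 0.5 each, and that the result is pulled towards the best point I with weight 1.0, scaled by a uniform random value. The function name `encircle_step` is an assumption.

```python
import numpy as np

def encircle_step(lion, best, mean, rng):
    """Hypothetical encircling move; NOT the paper's Eqs. (2)-(4).

    Assumed weights: 0.5 for the current point and the mean point,
    1.0 for the best point, with a uniform random scale per dimension.
    """
    r = rng.random(lion.shape)           # assumed random value in [0, 1)
    anchor = 0.5 * lion + 0.5 * mean     # moderate weight on L and mu
    return anchor + r * 1.0 * (best - anchor)  # full weight on I

rng = np.random.default_rng(0)
lion = np.array([0.0, 4.0])
mean = np.array([2.0, 0.0])
best = np.array([5.0, -3.0])
new_lion = encircle_step(lion, best, mean, rng)
```

Under these assumptions the new position always lies, per dimension, between the blended point and the best point, which matches the encircling intuition described above.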

In this process, the Lions attempt to pounce on the prey, prioritizing the weakest targets. The process for pouncing on the weakest prey is defined in Eq. (5), while Eq. (6) defines the procedure for pouncing on any accessible prey.

If the selection condition is satisfied, Eq. (5) is used; otherwise, Eq. (6) is used. The parameter that governs this choice is a real number in the range (0, 1) determined by the user. By offering two equations for the position update, the algorithm introduces variability into the lions’ movement strategy. This promotes exploration by allowing lions to choose between two different directions for updating their positions based on the current context. The motivation behind this design is to inject randomness into the movement of lions in the second group, which encourages exploration by ensuring that lions do not get stuck in local optima. The algorithm takes a cautious approach in Eq. (5) by emphasizing movement towards the minimum value among the best solutions, and a more adventurous approach in Eq. (6) by focusing on the current best solution. The random choice of equations enables adaptive exploration based on the current context. Once the attacking process begins, Impalas try to escape using the process described in the next section.
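The random choice between the two pounce rules can be sketched as below. The sketch is hypothetical: the exact forms of Eqs. (5) and (6) and the selection condition are not shown in the text, so it assumes that a uniform random number is compared against the user-defined parameter (called `p` here) and that each rule moves the lion a random fraction of the way towards its target. The names `pounce_step`, `weakest_prey`, and `any_prey` are illustrative.

```python
import numpy as np

def pounce_step(lion, weakest_prey, any_prey, p, rng):
    """Hypothetical pounce move; NOT the paper's Eqs. (5)-(6).

    Assumption: with probability p the lion targets the weakest prey
    (cautious rule), otherwise any accessible prey (adventurous rule).
    """
    target = weakest_prey if rng.random() < p else any_prey
    step = rng.random(lion.shape)        # random fraction of the distance
    return lion + step * (target - lion)

rng = np.random.default_rng(1)
lion = np.array([1.0, 1.0])
weakest = np.array([3.0, -1.0])
other = np.array([-4.0, 6.0])
cautious = pounce_step(lion, weakest, other, p=1.0, rng=rng)     # always rule 1
adventurous = pounce_step(lion, weakest, other, p=0.0, rng=rng)  # always rule 2
```

Setting `p` close to 1 favours the cautious rule, while values close to 0 favour the adventurous one; intermediate values mix the two behaviours.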

Some Impalas evaluate the degree of risk in their surroundings throughout this process. If they believe the danger to be minimal, they cautiously adjust their location within the area of the alert zone, as defined in Eq. (7). On the other hand, if the danger is high, the Impalas instinctively flee from the threat and escape randomly, as described in Eq. (8).

Here,

where

Figure 3: Flowchart diagram of the proposed CPE algorithm
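The two escape behaviours described for the Impalas (a cautious adjustment inside the alert zone versus a random flight) can be sketched as follows. The sketch is hypothetical and does not reproduce Eqs. (7) and (8): it assumes that the alert zone is a box of half-width `radius` around the current position, that a random flight is a uniform relocation inside the search bounds, and that the danger level is supplied by the caller as a flag.

```python
import numpy as np

def escape_step(impala, lower, upper, radius, danger_high, rng):
    """Hypothetical escape move; NOT the paper's Eqs. (7)-(8)."""
    if danger_high:
        # Assumed random flight: relocate uniformly inside the search bounds.
        new = lower + rng.random(impala.shape) * (upper - lower)
    else:
        # Assumed cautious move: small shift within +/- radius (alert zone).
        new = impala + rng.uniform(-radius, radius, impala.shape)
    # Keep the solution inside the problem domain.
    return np.clip(new, lower, upper)

rng = np.random.default_rng(2)
impala = np.array([0.5, -0.5, 2.0])
calm = escape_step(impala, -5.0, 5.0, radius=0.1, danger_high=False, rng=rng)
fled = escape_step(impala, -5.0, 5.0, radius=0.1, danger_high=True, rng=rng)
```

A small `radius` keeps the cautious move local, which corresponds to fine adjustment, whereas the random flight reintroduces diversity across the whole domain.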

The time complexity can generally be calculated using the formula

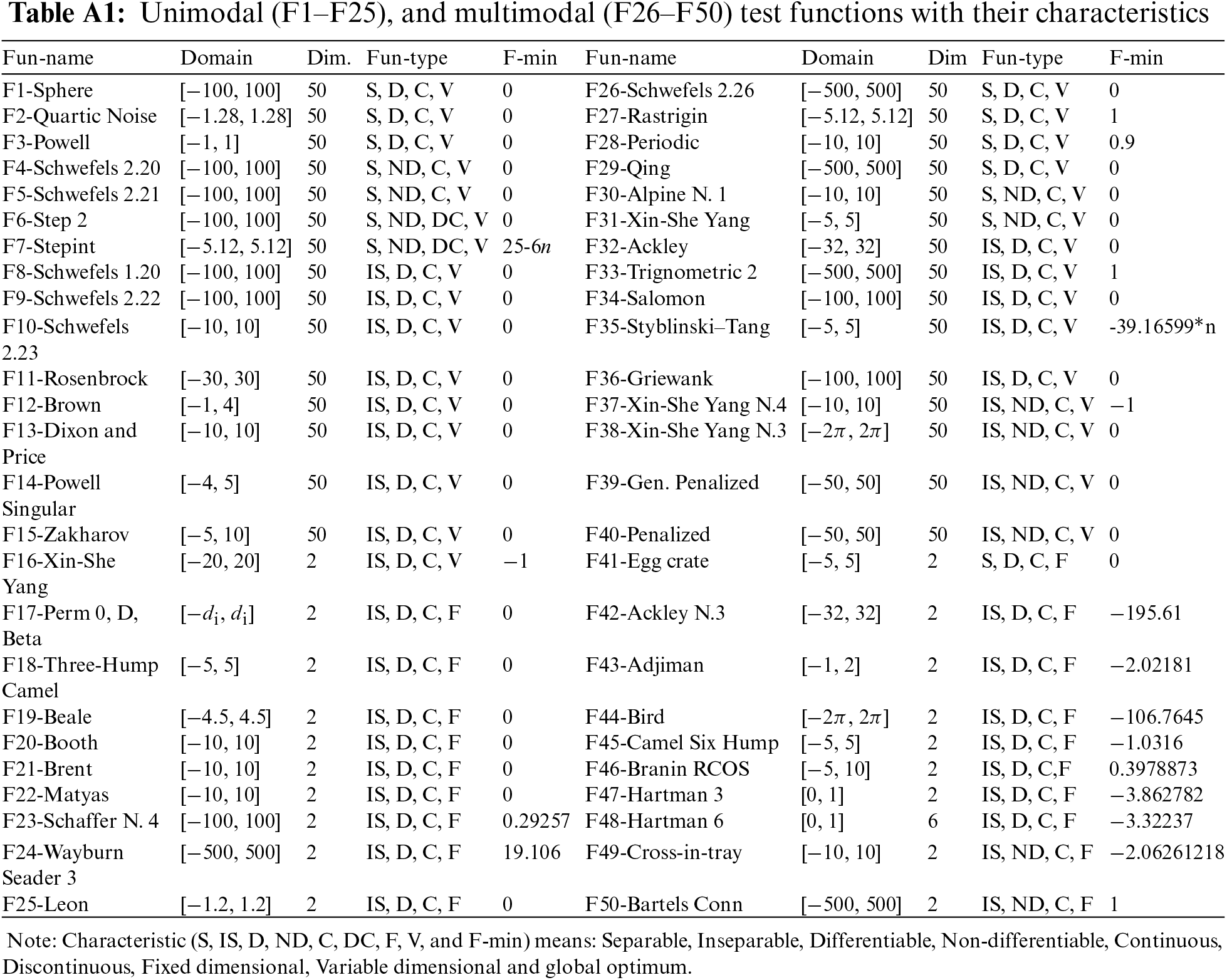

5.1 Experiment 1: Using 50 Test Functions

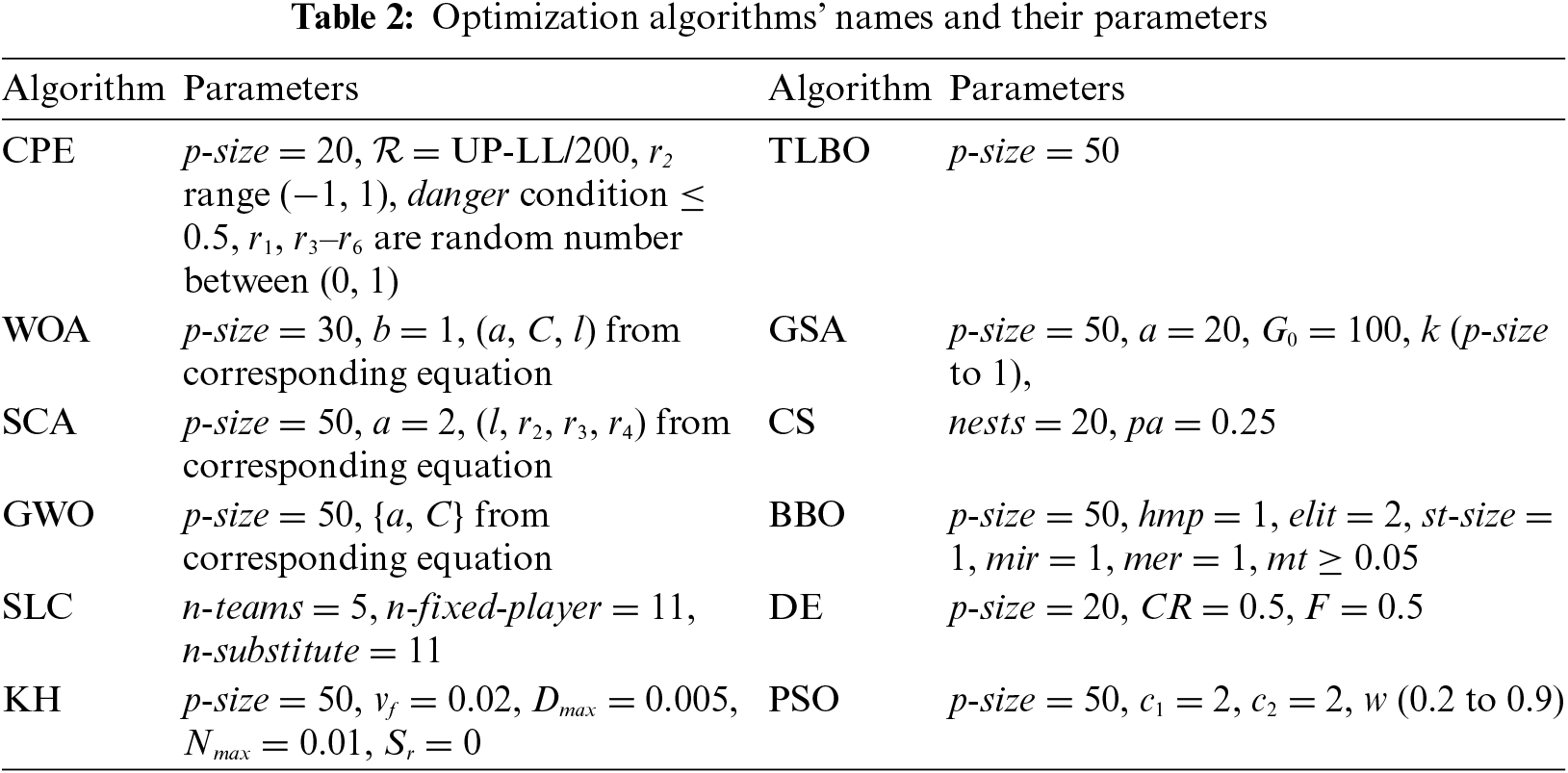

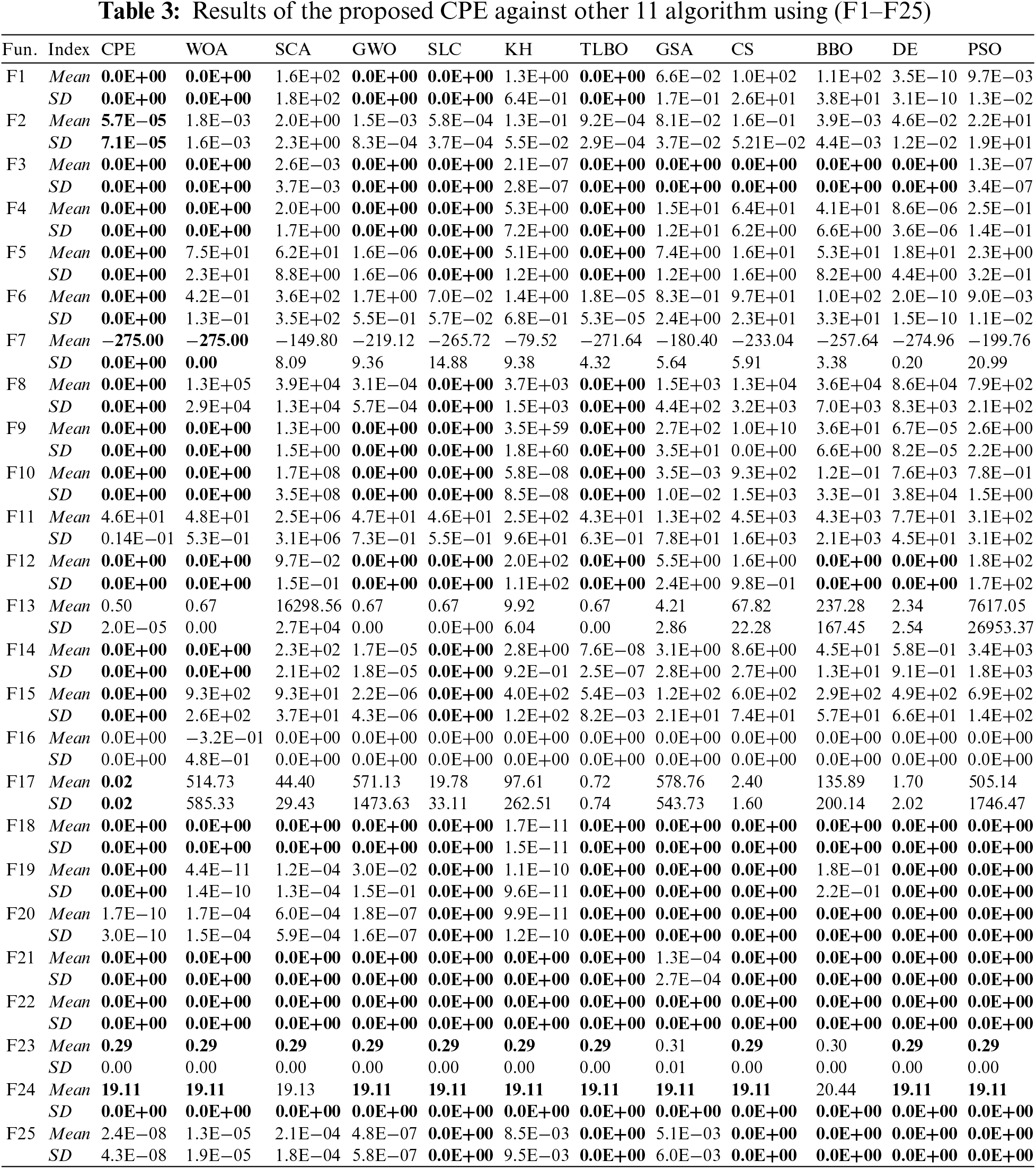

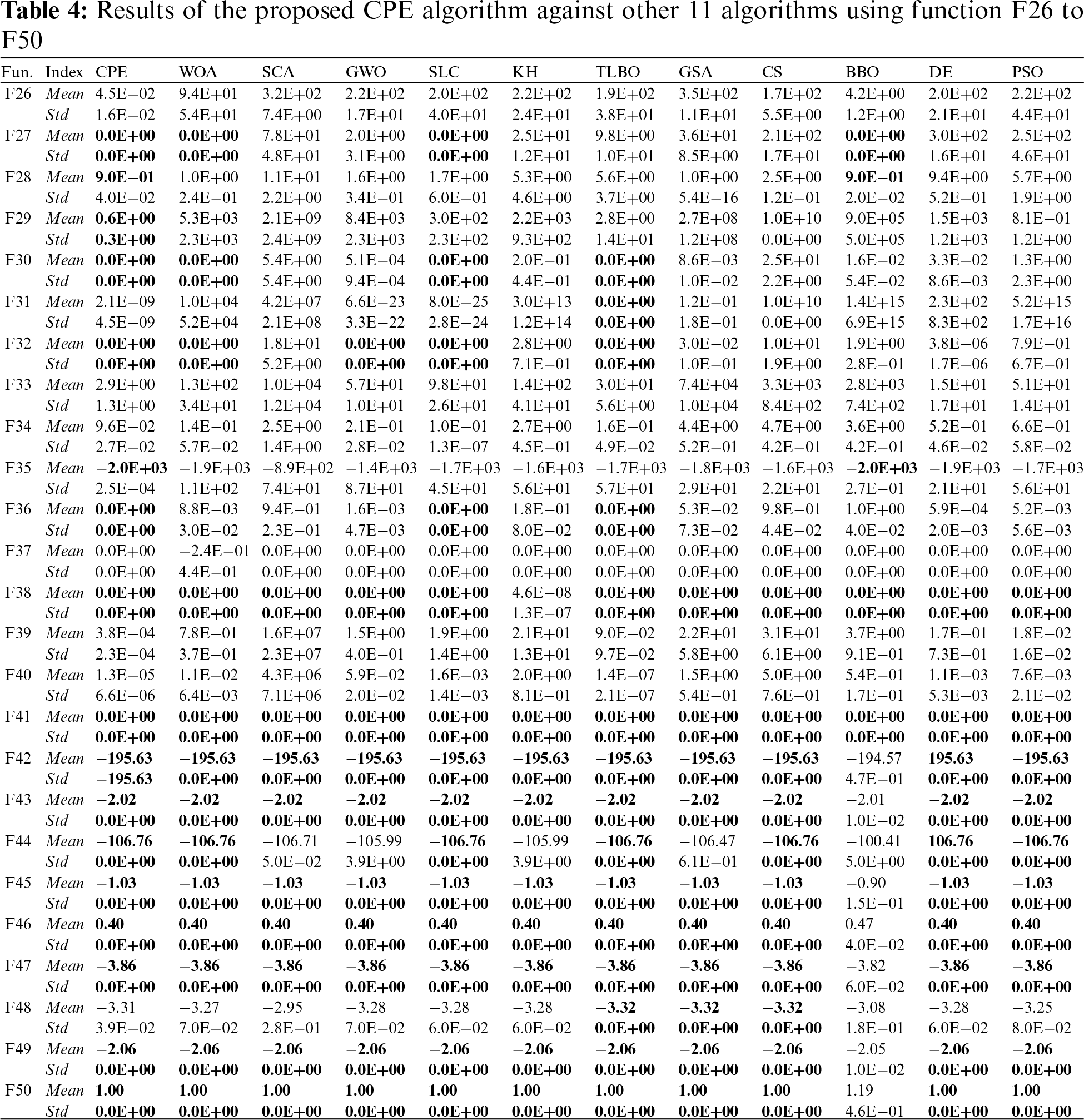

This experiment utilized 50 commonly used test functions to assess the performance of the proposed algorithm. The characteristics of these functions are described in Table A1 in Appendix A. Specifically, functions 1–25 are unimodal and lack local optima, making them suitable for evaluating the algorithm’s exploitation ability. Meanwhile, functions 26–50 are multimodal and have numerous local optima, enabling the evaluation of the algorithm’s exploration capability. For the mathematical formulas of these test functions, the reader can refer to [10]. The performance of the proposed algorithm is compared to 11 well-known algorithms: WOA, Sine Cosine Algorithm (SCA), GWO, Soccer League Competition (SLC), Krill Herd (KH), TLBO, GSA, CS, Biogeography Based Optimizer (BBO), Differential Evolution (DE), and PSO. These algorithms were selected because they represent a diverse range of nature-inspired metaheuristics that have been widely used and validated in the field of optimization. Each algorithm is executed 25 times for each test function, and the Mean and Standard Deviation (SD) are recorded as metrics. Results with a value lower than the specified tolerance, δ = 1E−11, are considered to be 0 during the evaluation. To ensure a fair comparison, the Number of Function Evaluations (NFEs) is set to 30,000 for all algorithms. The names of the algorithms and their parameter settings are presented in Table 2.
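The reporting protocol above (repeated runs, Mean and SD, and a tolerance below which results count as zero) can be sketched as follows. The sketch assumes that the tolerance is applied to the reported statistics rather than to each individual run, and that SD is the sample standard deviation; neither detail is stated in the text. The function name `summarise_runs` is hypothetical.

```python
import statistics

TOLERANCE = 1e-11  # results below this value are reported as 0

def summarise_runs(best_values, tol=TOLERANCE):
    """Return (mean, sd) over independent runs, zeroing tiny values.

    Assumptions: sample standard deviation; tolerance applied to the
    reported statistics, not to each run.
    """
    mean = statistics.fmean(best_values)
    sd = statistics.stdev(best_values) if len(best_values) > 1 else 0.0
    mean = 0.0 if abs(mean) < tol else mean
    sd = 0.0 if abs(sd) < tol else sd
    return mean, sd

# 25 runs that all ended very close to the optimum are reported as exactly 0.
mean, sd = summarise_runs([1e-13] * 25)
```

Applying the tolerance in this way prevents differences that are purely numerical noise from influencing the ranking of the algorithms.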

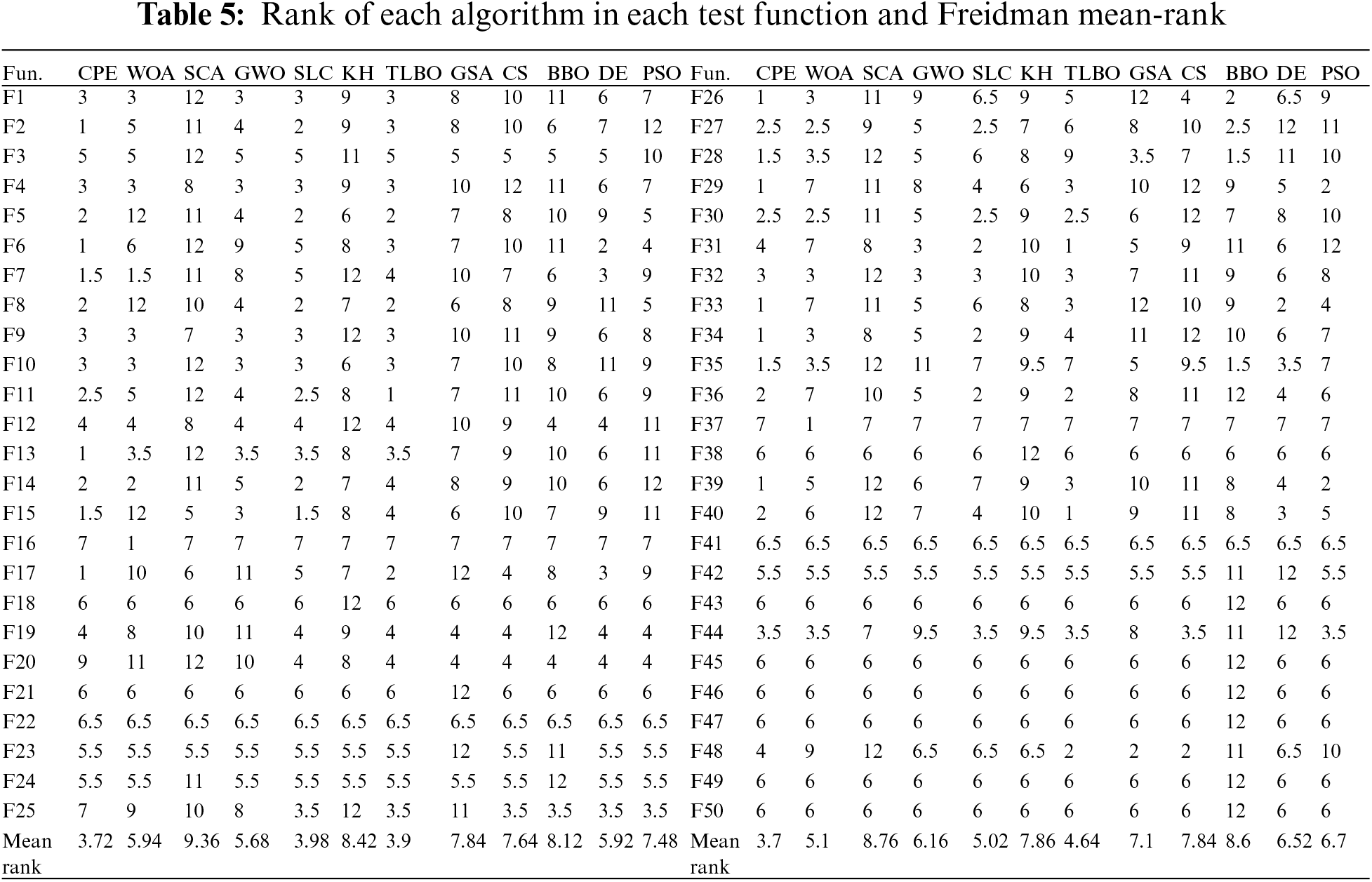

The Mean and SD results for functions 1–25 in the exploitation test and functions 26–50 in the exploration test are shown in Tables 3 and 4, respectively. The best result is highlighted in bold in the tables. Table 5 presents the performance ranks across all 50 test functions. The evaluation employs Friedman mean rank values to consolidate the algorithmic performances, providing a collective perspective on their effectiveness across all functions. Results indicate that the proposed CPE algorithm ranks first in 9 out of the 50 functions, specifically F2, F6, F13, F17, F26, F29, F33, F34, and F39. For the remaining 41 test functions, the proposed CPE algorithm exhibits either superior or comparable performance in comparison to the other algorithms, except for functions F20 and F25, where it obtains ranks of 9 and 7, respectively. The strengths of the CPE algorithm lie in its partitioning strategy, which enhances both exploration and exploitation dynamics and allows it to perform well on both unimodal and multimodal functions. However, its weaker results on certain functions (e.g., F20 and F25) indicate areas where parameter tuning or additional mechanisms might be needed to improve performance. Other algorithms, such as GWO and PSO, also demonstrated strong performance, particularly on specific types of functions where their specialized strategies are highly effective. For example, PSO excels in exploitation due to its velocity and position update mechanisms. However, these algorithms may have limitations in maintaining diversity and avoiding local optima, which can impact their performance on more complex multimodal functions.
Furthermore, the mean rank analysis reveals that the proposed CPE algorithm achieves better mean ranks, approximately 3.72 and 3.7 for the first and second sets of test functions, respectively. These results summarize the algorithm’s overall performance across the entire range of test functions.
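The Friedman mean rank used in this comparison can be computed as in the following sketch: on each test function the algorithms are ranked by their mean result (rank 1 is the lowest value, ties share the average rank), and the ranks are then averaged over all functions. The numbers in the example are made up for illustration and are not taken from Tables 3–5; the function names are hypothetical.

```python
def average_ranks(values):
    """Rank values ascending (1 = lowest); tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def friedman_mean_ranks(results):
    """results[f][a] is the mean result of algorithm a on function f."""
    per_function = [average_ranks(row) for row in results]
    n_algos = len(results[0])
    return [sum(r[a] for r in per_function) / len(per_function)
            for a in range(n_algos)]

# Illustrative data only: 3 functions (rows) and 3 algorithms (columns).
demo = [[0.1, 0.2, 0.3],
        [0.0, 0.0, 1.0],
        [3.0, 2.0, 1.0]]
mean_ranks = friedman_mean_ranks(demo)
```

A lower mean rank indicates better overall performance, which is how the values reported for the CPE algorithm should be read.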

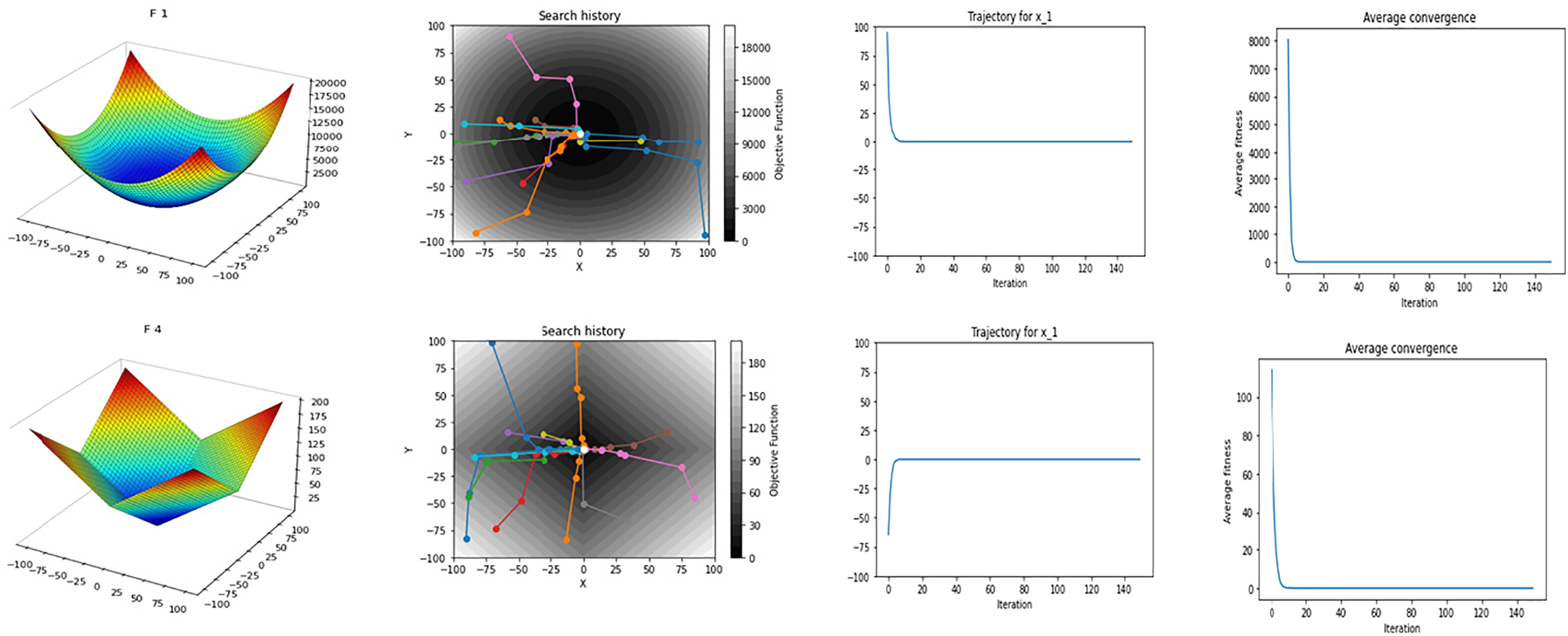

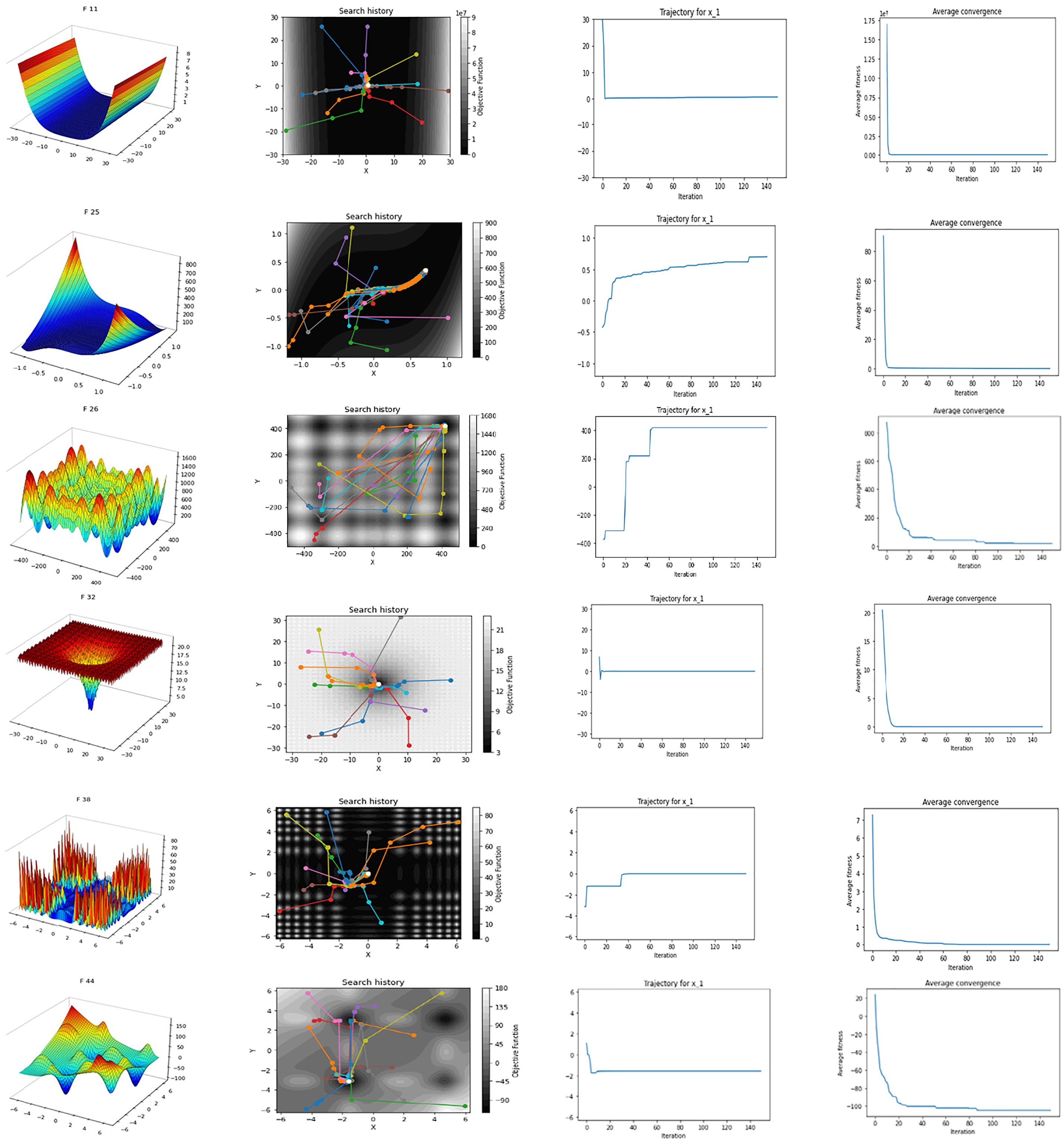

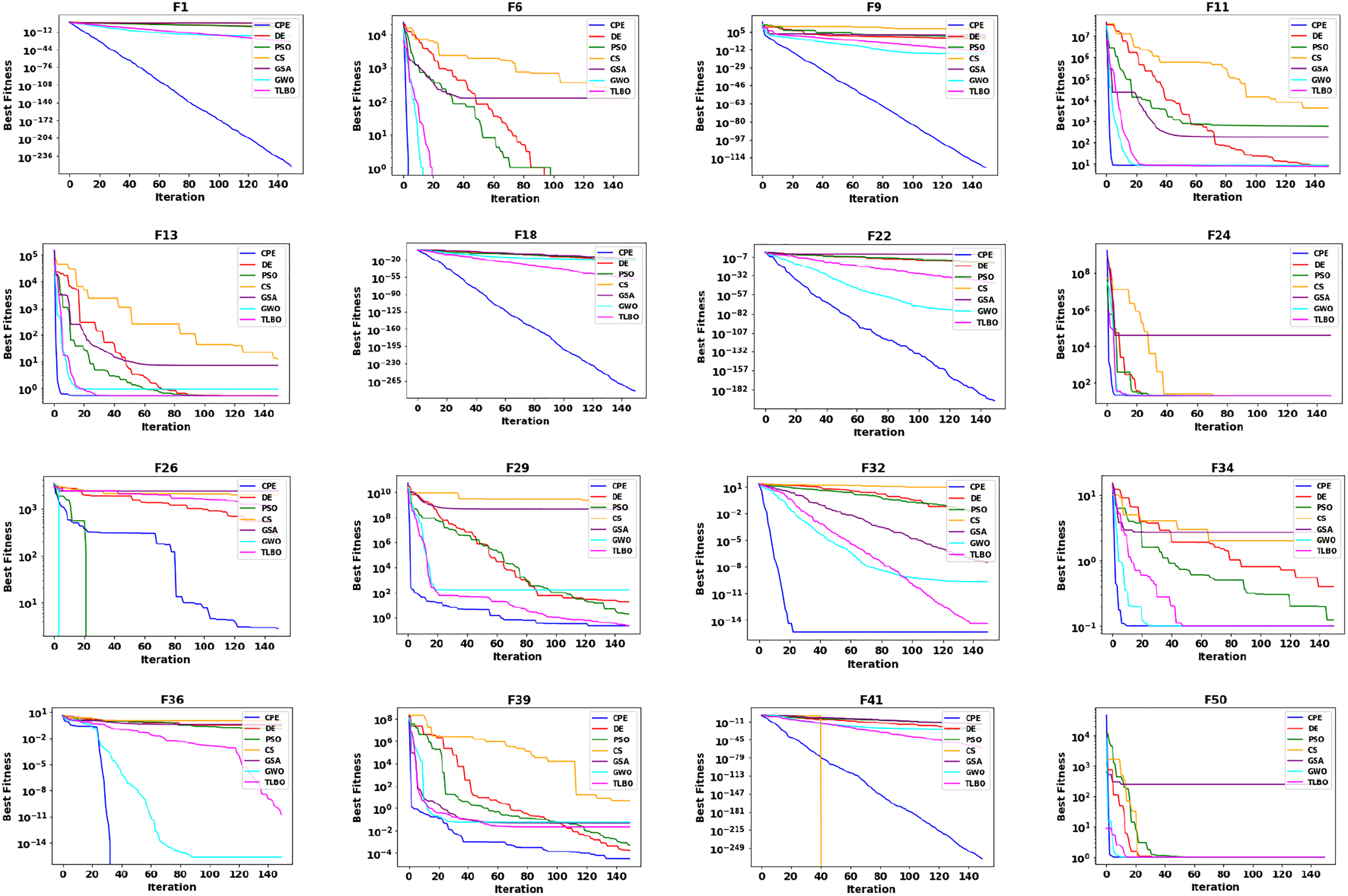

The convergence behaviour is another important feature of an optimization method. It involves the movement of solutions along the search space towards the best answer. To observe the convergence behaviour of the CPE algorithm, Fig. 4 illustrates the search history and trajectory of 12 agents. The first column depicts the 2-D version of the function, the second column shows the search history and trajectory of 12 agents, the third column displays the trajectory of a randomly selected agent in its first dimension, and the fourth column represents the average convergence of all search agents. It is evident that the CPE algorithm’s search history extensively explores promising regions of the search space, avoiding local minima traps, and effectively exploiting the best solution. The trajectory of the first dimension of the agent in column 3 shows that the variables lead to the best value in early iterations. Furthermore, Fig. 5 displays the convergence curves of the CPE algorithm compared to other algorithms, including DE, PSO, CS, GSA, GWO, and TLBO, for some unimodal and multimodal benchmark functions. The curves are plotted against the number of iterations, with a maximum of 150. For unimodal functions (1–25), the curves indicate that the CPE algorithm outperformed all other algorithms, highlighting its notable ability to rapidly explore and exploit promising areas. In the case of multimodal functions (26–50) in Fig. 5, the experimental results demonstrate that the CPE algorithm performs better than all other algorithms, except for GWO and PSO in the case of F26, and PSO in the case of F41. Nevertheless, the CPE algorithm consistently exhibits a pronounced capacity to escape local optima. These empirical observations underscore the algorithm’s adaptability, which enables it to dynamically adjust its exploration strategies in accordance with the contextual requirements, manifesting a multifaceted spectrum of exploration and exploitation techniques. 
The first group within its methodology amalgamates lion positions, optimal solutions, and averaging techniques, thereby achieving a judicious equilibrium between intensification and diversification. In contrast, the second group introduces variance into lion movement patterns, thereby promoting exploratory behaviours while effectively mitigating stagnation. The algorithm, fundamentally, optimizes solutions through a synthesis of individual experiences, communal knowledge, and controlled stochasticity. Consequently, we assert that the CPE algorithm excels in probing the search space, showcasing rapid convergence toward optimal solutions, and possesses commendable exploration capabilities. These attributes collectively empower it to adeptly circumvent local minima.

Figure 4: Search history and trajectory of 12 agents, trajectory of the first dimension of one agent, and the average convergence of all agents

Figure 5: Comparisons of convergence curves for some benchmark functions

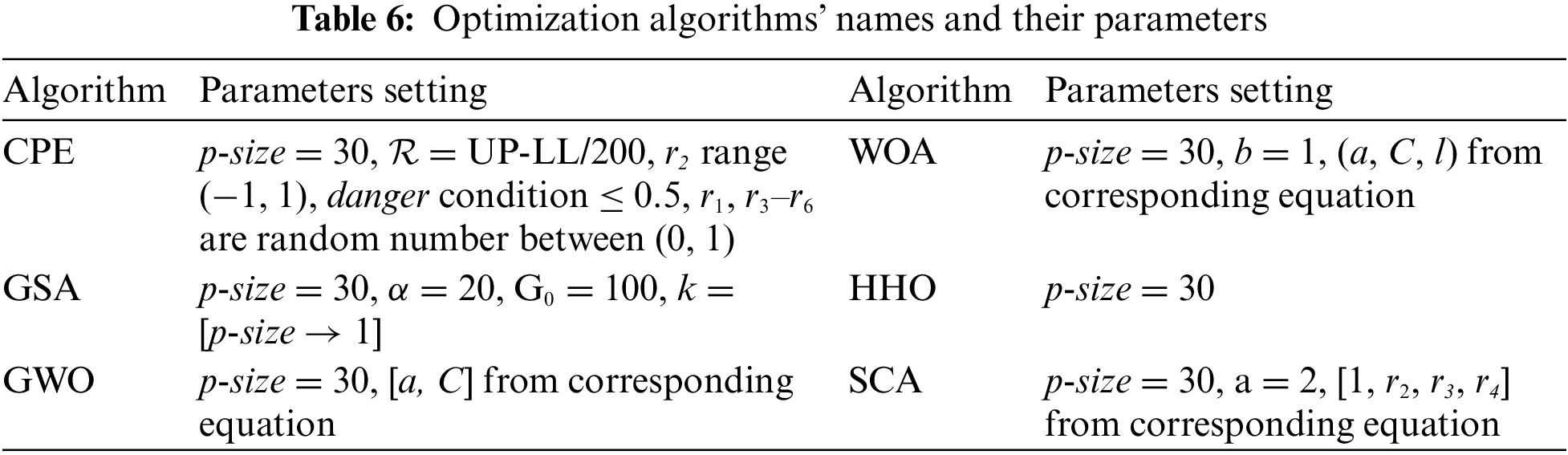

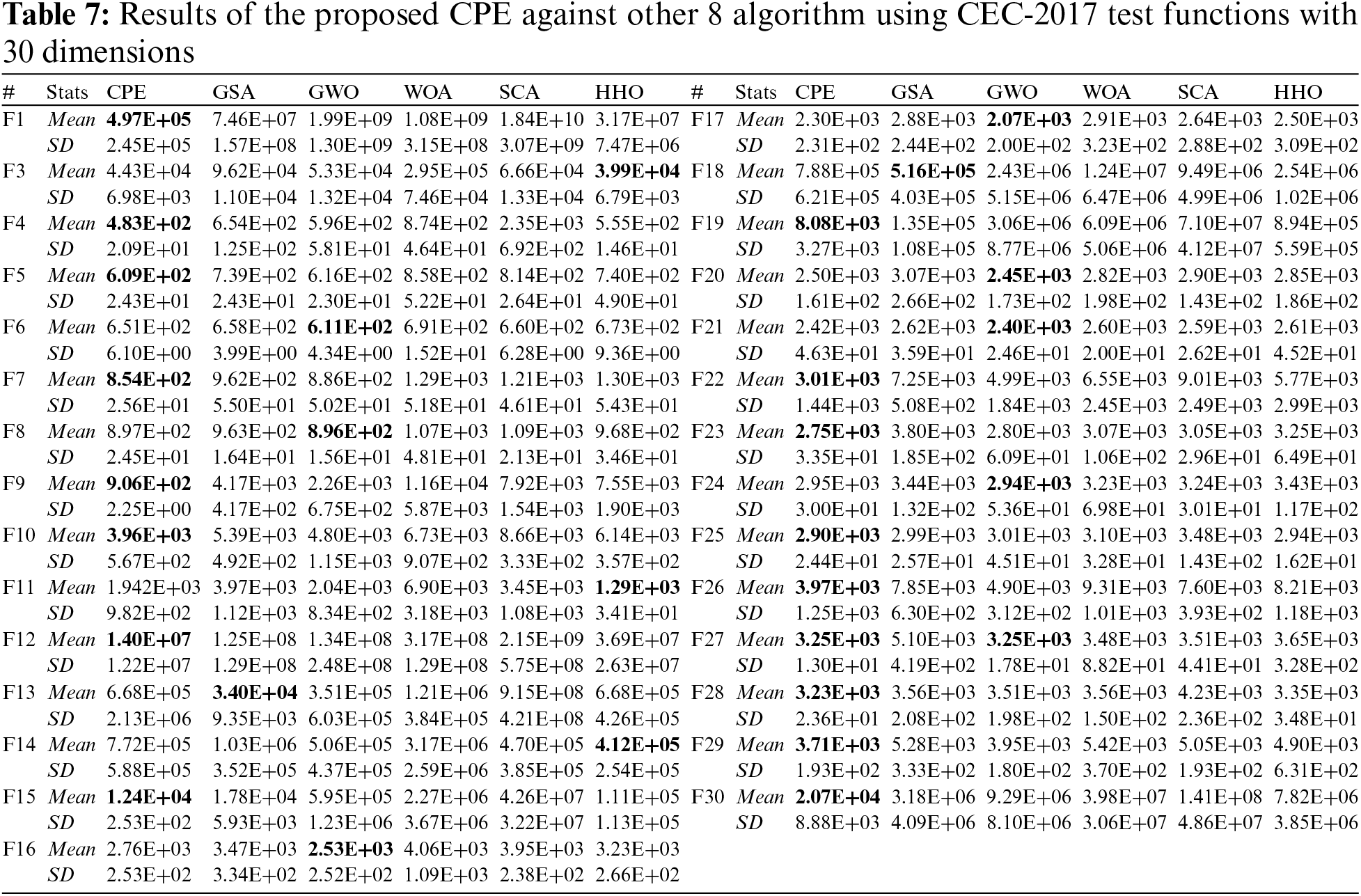

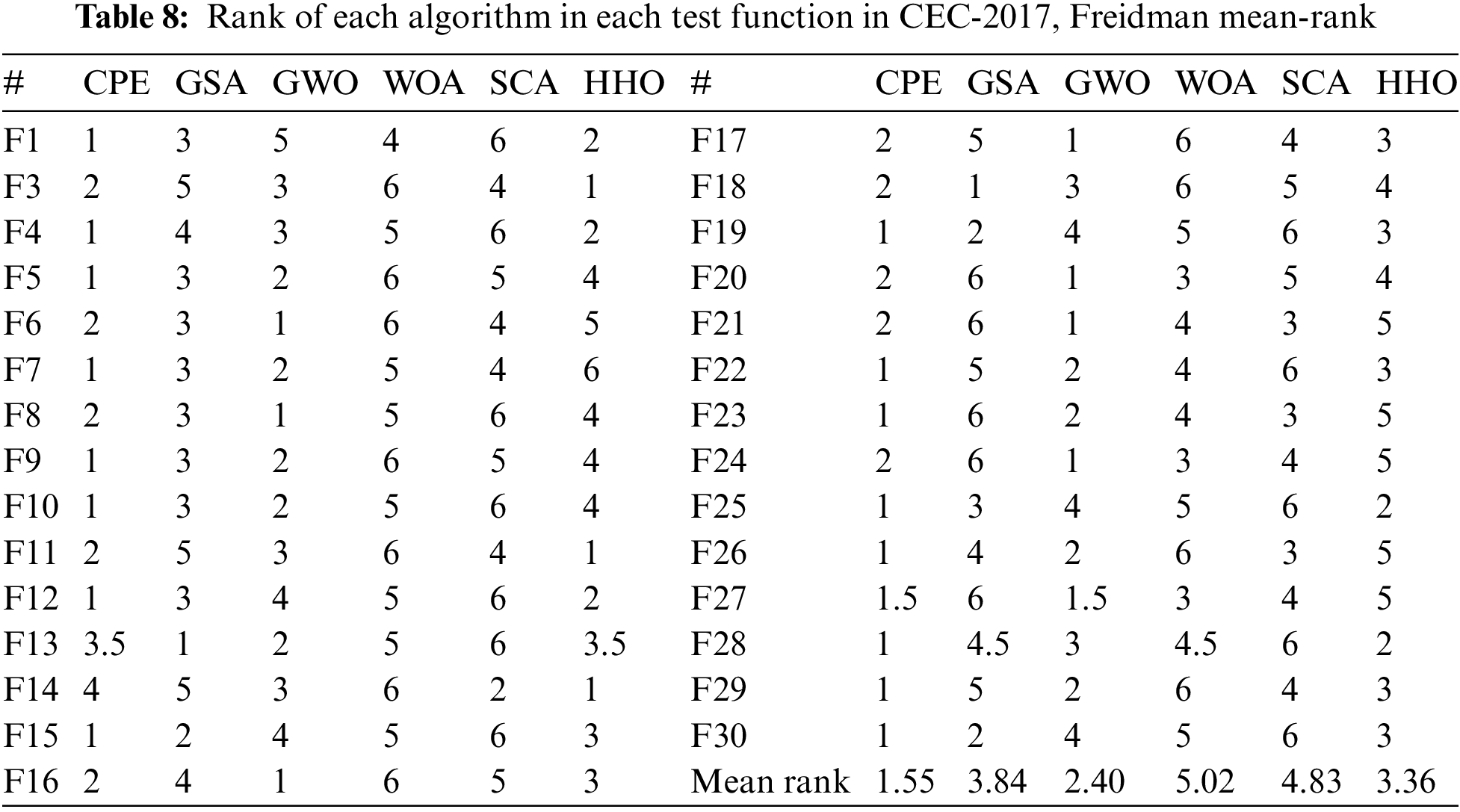

5.2 Experiment 2: Using CEC-2017 with 30 Dimensions

In this experiment, we utilized the CEC-2017 benchmark suite with 30 dimensions, which comprises 30 test functions, including unimodal, multimodal, hybrid, and composition functions. However, function 2 was excluded from the analysis due to its instability. The performance of the proposed CPE algorithm is compared against five well-known optimization algorithms, namely GSA, GWO, WOA, SCA, and HHO. These algorithms were chosen because they are representative of powerful techniques in the field of optimization, each inspired by a different domain, and provide a robust benchmark for evaluating the performance of CPE. For all algorithms, the NFEs is set to 60,000. Table 6 presents the names of the algorithms and their parameter settings. Each algorithm is executed 30 times for each test function, and the Mean and SD are recorded. The best result is highlighted in bold. Table 7 presents the Mean and SD results of the proposed algorithm against the other algorithms, while Table 8 shows the rank of each algorithm on each test function. The last row in Table 8 presents the Friedman mean rank for each algorithm across all 29 test functions. Analysing the results from Tables 7 and 8, it is evident that the proposed CPE algorithm ranks first in 15 out of the 29 functions. Of the remaining 14 test functions, it achieves the second rank in 10. The worst result is obtained in only two functions (13 and 14). Overall, the proposed algorithm either outperforms or is comparable to the other algorithms. Its strengths are again evident in its strong performance across a diverse set of test functions, particularly in achieving the top rank in over half of the functions tested. This demonstrates its robustness and adaptability to different types of optimization problems.
However, its lower performance on functions 13 and 14 suggests areas where the algorithm could be refined, potentially through parameter adjustments or hybridization with other techniques. Among the alternative algorithms, GSA and GWO showed competitive performance, particularly on specific function types. GSA’s strength in global search makes it effective for complex landscapes, while GWO’s hierarchical structure aids convergence. Nonetheless, these algorithms may struggle to maintain a balance between exploration and exploitation, which is crucial for achieving consistent performance across varied functions. Furthermore, the mean rank analysis reveals that the proposed CPE algorithm achieves a better mean rank, approximately 1.55. These results summarize the algorithm’s overall performance across the entire range of test functions and suggest that the proposed CPE algorithm possesses notable strengths and competitiveness.

5.3 Experiment 3: Applying CPE to Real Engineering Design Problems

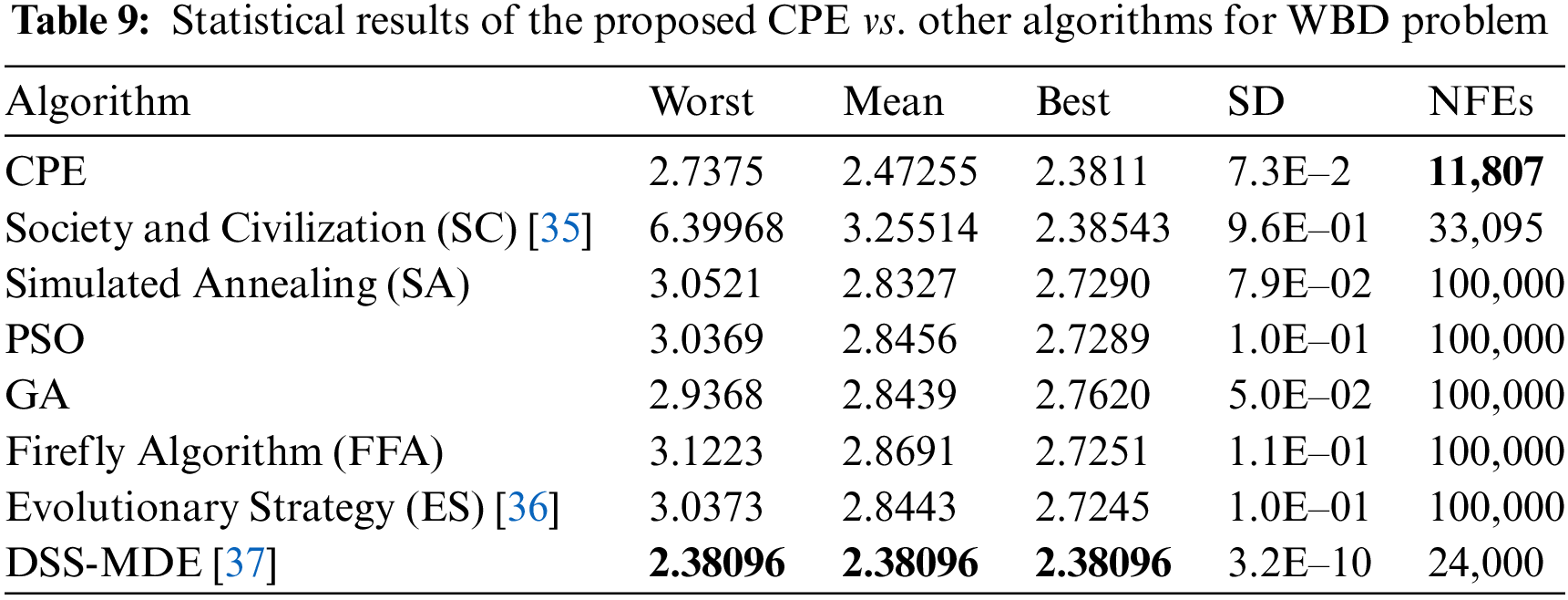

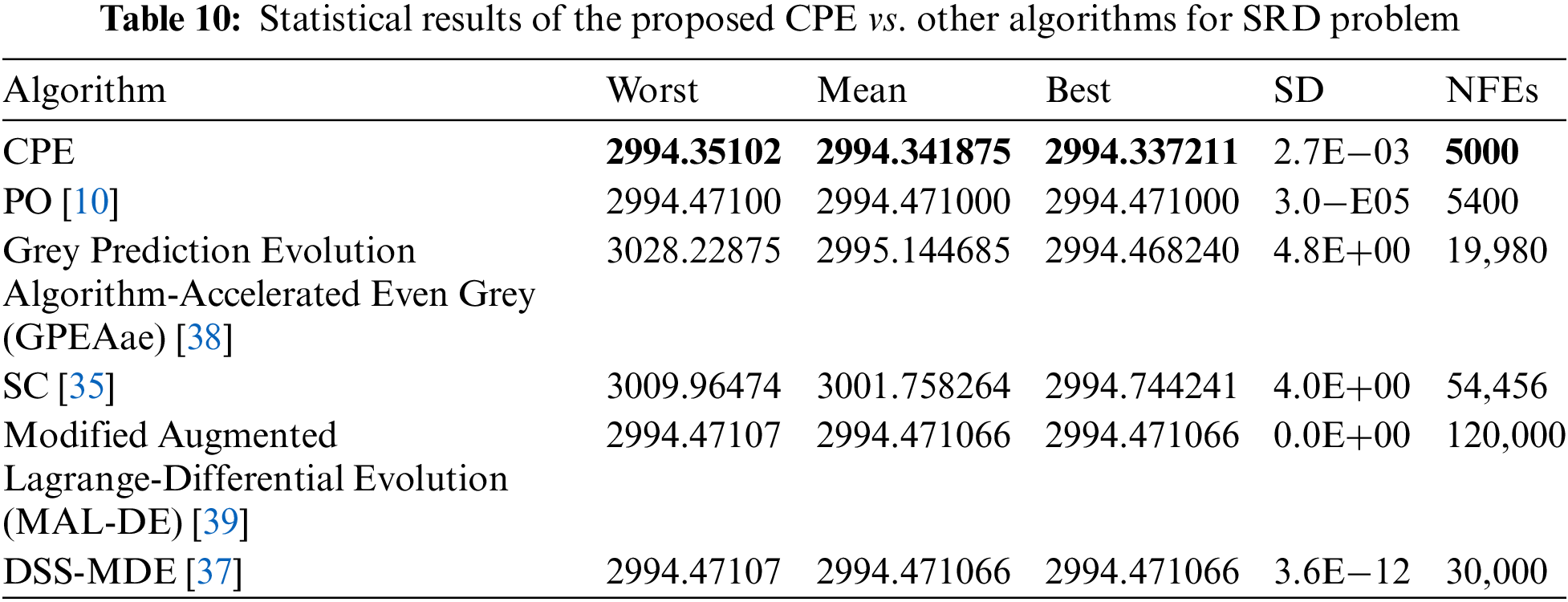

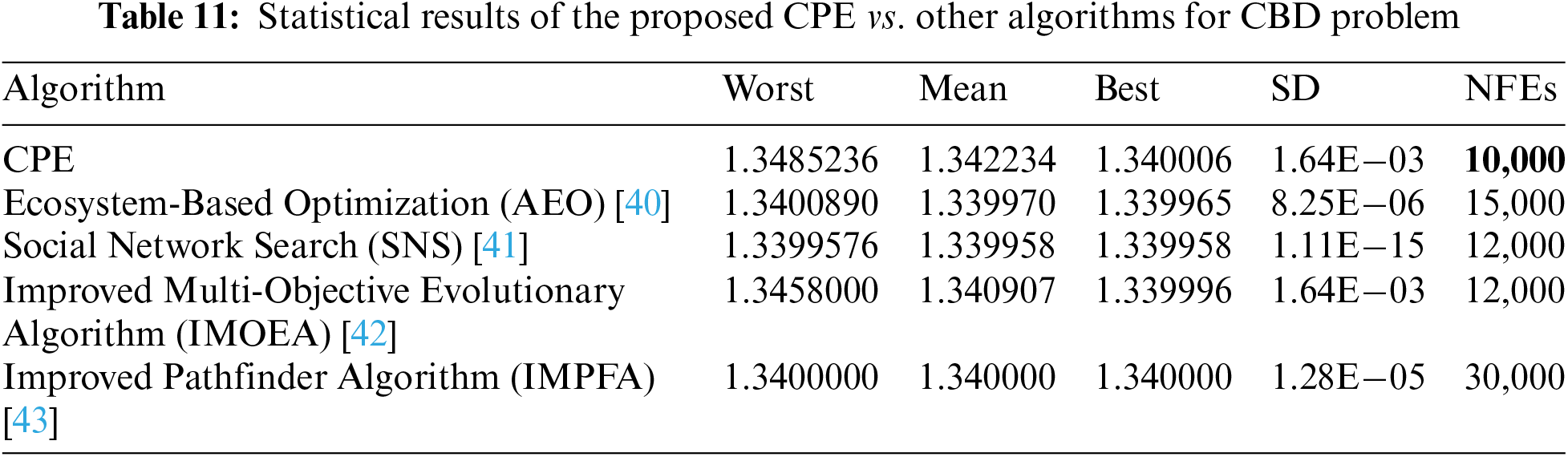

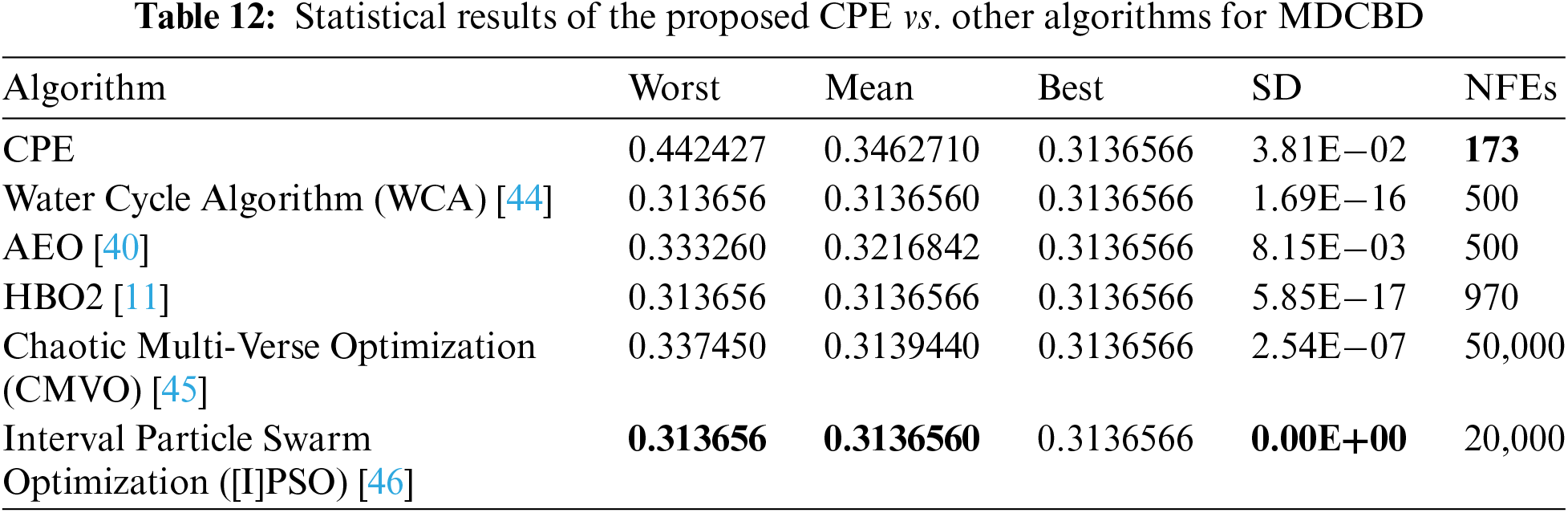

In this study, we applied the proposed CPE algorithm to four established engineering design problems: Welded Beam Design (WBD), Speed Reducer Design (SRD), Cantilever Beam Design (CBD), and Multi-plate Disc Clutch Brake Design (MDCBD) [34]. To gauge its effectiveness, we compared CPE with other algorithms that have been applied to the same problems, restricting the comparison to studies that used the correct mathematical equations, because differences in the problem formulations can lead to different solutions [34].

Table 9 presents the statistical results for the WBD problem, including Worst, Mean, Best, SD, and Number of Function Evaluations (NFEs). The CPE algorithm outperforms all other algorithms in terms of Mean, Best, and SD, except for the Dynamic Stochastic Selection-Multimember Differential Evolution (DSS-MDE) method, which produces slightly better results but requires significantly more NFEs (18,467 NFEs vs. 11,807 NFEs for CPE, i.e., CPE uses about 36% fewer evaluations). Similarly, Table 10 shows that CPE is superior to all other algorithms on every measure for the SRD problem, using only 5000 NFEs.

In contrast, Table 11 shows no appreciable differences among the algorithms for the CBD problem in terms of Worst, Mean, and Best; on the positive side, the CPE algorithm requires only 173 NFEs. Lastly, Table 12 presents the results for the MDCBD problem, where the algorithms do not differ significantly in terms of Best. The proposed algorithm performs slightly worse than the other algorithms in terms of Mean and Worst, with differences of about 0.02 and 0.1, respectively. However, the CPE algorithm requires only 173 NFEs to reach the same best solution produced by the other algorithms, making it the fastest of the compared algorithms in terms of function evaluations.
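To make the reported columns concrete, the following minimal sketch shows one way the Worst, Mean, Best, SD, and NFEs statistics can be collected over independent runs of a constrained design problem. Several parts are assumptions and not the paper's setup: the objective is the commonly cited CBD formulation (formulations differ between studies, see [34]), the constraint is handled with a simple static penalty, the number of runs and the budget are arbitrary, SD is taken as the sample standard deviation, and `random_search` is only a stand-in optimizer, not the CPE algorithm.

```python
import random
import statistics


class CountingObjective:
    """Wraps an objective function and counts its evaluations (NFEs)."""

    def __init__(self, func):
        self.func = func
        self.nfes = 0

    def __call__(self, x):
        self.nfes += 1
        return self.func(x)


def cantilever_penalized(x, penalty=1e6):
    """Commonly cited CBD form (an assumption here; see [34] on discrepancies).

    Minimize 0.0624 * sum(x) subject to
    61/x1^3 + 37/x2^3 + 19/x3^3 + 7/x4^3 + 1/x5^3 - 1 <= 0.
    Constraint violation is added as a static penalty (also an assumption).
    """
    weight = 0.0624 * sum(x)
    coeffs = (61.0, 37.0, 19.0, 7.0, 1.0)
    g = sum(c / xi ** 3 for c, xi in zip(coeffs, x)) - 1.0
    return weight + penalty * max(0.0, g)


def random_search(objective, bounds, budget, rng):
    """Placeholder optimizer (NOT CPE): uniform random sampling within bounds."""
    best = float("inf")
    for _ in range(budget):
        x = [rng.uniform(lo, hi) for lo, hi in bounds]
        best = min(best, objective(x))
    return best


def summarize(run_bests, run_nfes):
    """Aggregate per-run results into the columns used in the result tables."""
    return {
        "Worst": max(run_bests),
        "Mean": statistics.mean(run_bests),
        "Best": min(run_bests),
        "SD": statistics.stdev(run_bests),  # sample SD; a study may use population SD
        "NFEs": statistics.mean(run_nfes),
    }


def run_experiment(n_runs=30, budget=500, seed=0):
    """Run the placeholder optimizer n_runs times with independent seeds."""
    bounds = [(0.01, 100.0)] * 5  # commonly used variable bounds (assumption)
    bests, nfes = [], []
    for r in range(n_runs):
        rng = random.Random(seed + r)
        objective = CountingObjective(cantilever_penalized)
        bests.append(random_search(objective, bounds, budget, rng))
        nfes.append(objective.nfes)
    return summarize(bests, nfes)


if __name__ == "__main__":
    print(run_experiment())
```

Counting evaluations inside the objective wrapper, instead of inside the optimizer, keeps the NFE figure comparable across algorithms that spend their budget differently.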

The statistical results in Tables 9–12 indicate that the proposed CPE algorithm matches or outperforms the other algorithms on the majority of the tested problems in terms of Mean and Best. Moreover, the CPE algorithm requires fewer function evaluations, making it a faster optimization method. These findings suggest that the CPE algorithm is a promising optimization method that can be applied to a wide range of problems and may be a useful tool for researchers and practitioners in various fields.

6 Conclusions and Future Works

This study presents a novel optimization algorithm, called the CPE algorithm, which is inspired by the hunting behavior of predators and prey. The proposed CPE algorithm combines multiple search strategies. By partitioning the population into two groups, each tasked with independently exploring the search space, the algorithm can efficiently navigate the problem domain and avoid local optima. Moreover, the algorithm employs a distinct search mechanism that incorporates the average of the best solution and the current solution, facilitating convergence to the optimal solution. Additionally, the pouncing process enables faster movement towards the best solution. The algorithm’s efficacy is demonstrated across 50 well-known test functions, CEC-2017 benchmarks, and real-world engineering challenges, where it consistently surpasses other algorithms. However, we acknowledge that the algorithm does have certain limitations. Specifically, determining parameters such as the radius

Acknowledgement: Not applicable.

Funding Statement: The author received no specific funding for this study.

Availability of Data and Materials: Not applicable.

Conflicts of Interest: The author declares that he has no conflicts of interest to report regarding the present study.

References

1. R. R. Bulatović, S. R. Đorđević, and V. S. Đorđević, “Cuckoo search algorithm: A metaheuristic approach to solving the problem of optimum synthesis of a six-bar double dwell linkage,” Mech. Mach. Theory, vol. 61, no. 4, pp. 1–13, 2013. doi: 10.1016/j.mechmachtheory.2012.10.010.

2. M. A. Albadr, S. Tiun, M. Ayob, and F. Al-Dhief, “Genetic algorithm based on natural selection theory for optimization problems,” Symmetry, vol. 12, no. 11, pp. 1758, 2020. doi: 10.3390/sym12111758.

3. J. Tang, G. Liu, and Q. Pan, “A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends,” IEEE/CAA J. Automat. Sin., vol. 8, no. 10, pp. 1627–1643, 2021. doi: 10.1109/JAS.2021.1004129.

4. N. Siddique and H. Adeli, “Nature inspired computing: An overview and some future directions,” Cognit. Comput., vol. 7, no. 6, pp. 706–714, 2015. doi: 10.1007/s12559-015-9370-8.

5. S. Mirjalili and A. Lewis, “The whale optimization algorithm,” Adv. Eng. Softw., vol. 95, no. 12, pp. 51–67, 2016. doi: 10.1016/j.advengsoft.2016.01.008.

6. T. R. Biyanto et al., “Killer whale algorithm: An algorithm inspired by the life of killer whale,” in Proc. Comput. Sci., Bali, Indonesia, 2017, vol. 124, pp. 151–157. doi: 10.1016/j.procs.2017.12.141.

7. S. Mirjalili, A. H. Gandomi, S. Z. Mirjalili, S. Saremi, H. Faris and S. M. Mirjalili, “Salp swarm algorithm: A bio-inspired optimizer for engineering design problems,” Adv. Eng. Softw., vol. 114, pp. 163–191, 2017. doi: 10.1016/j.advengsoft.2017.07.002.

8. S. Arora and S. Singh, “Butterfly optimization algorithm: A novel approach for global optimization,” Soft Comput., vol. 23, no. 3, pp. 715–734, 2019. doi: 10.1007/s00500-018-3102-4.

9. A. A. Heidari, S. Mirjalili, H. Faris, I. Aljarah, M. Mafarja and H. Chen, “Harris hawks’ optimization: Algorithm and applications,” Future Gener. Comput. Syst., vol. 97, pp. 849–872, 2019. doi: 10.1016/j.future.2019.02.028.

10. Q. Askari, I. Younas, and M. Saeed, “Political optimizer: A novel socio-inspired meta-heuristic for global optimization,” Knowl.-Based Syst., vol. 195, no. 5, pp. 105709, 2020. doi: 10.1016/j.knosys.2020.105709.

11. Q. Askari, M. Saeed, and I. Younas, “Heap-based optimizer inspired by corporate rank hierarchy for global optimization,” Expert Syst. Appl., vol. 161, no. 3, pp. 113702, 2020. doi: 10.1016/j.eswa.2020.113702.

12. R. V. Rao, V. J. Savsani, and D. P. Vakharia, “Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems,” Comput.-Aided Design, vol. 43, no. 3, pp. 303–315, 2011. doi: 10.1016/j.cad.2010.12.015.

13. D. H. Wolpert and W. G. Macready, “No free lunch theorems for optimization,” IEEE Trans. Evol. Comput., vol. 1, no. 1, pp. 67–82, 1997. doi: 10.1109/4235.585893.

14. A. Hussain and Y. S. Muhammad, “Trade-off between exploration and exploitation with genetic algorithm using a novel selection operator,” Complex Intell. Syst., vol. 6, no. 1, pp. 1–14, 2020. doi: 10.1007/s40747-019-0102-7.

15. L. Ming, D. Wenqiang, and N. Fuzhong, “An adaptive particle swarm optimization algorithm based on directed weighted complex network,” Math. Probl. Eng., vol. 2014, pp. 434972, 2014. doi: 10.1155/2014/434972.

16. B. Li, Y. Zhou, Y. Qiu, and Z. Li, “An ant-based optimizer for the training of convolutional neural networks,” in Proc. 2022 China Automat. Congress (CAC), Xiamen, China, Nov. 25–27, 2022. doi: 10.1109/CAC57257.2022.10055228.

17. E. Rashedi, H. Nezamabadi, and S. Saryazdi, “GSA: A gravitational search algorithm,” Inf. Sci., vol. 179, no. 13, pp. 2232–2248, 2009. doi: 10.1016/j.ins.2009.03.004.

18. X. S. Yang and S. Deb, “Engineering optimization by cuckoo search,” Int. J. Math. Modell. Numer. Optim., vol. 1, no. 4, pp. 330–343, 2010. doi: 10.1504/IJMMNO.2010.035430.

19. S. Mirjalili, S. M. Mirjalili, and A. Lewis, “Grey wolf optimizer,” Adv. Eng. Softw., vol. 69, pp. 46–61, 2014. doi: 10.1016/j.advengsoft.2013.12.007.

20. W. G. Luo and X. B. Yu, “A novel enhanced cuckoo search algorithm for global optimization,” J. Intell. Fuzzy Syst., vol. 43, no. 3, pp. 2945–2962, 2022. doi: 10.3233/JIFS-220179.

21. Y. Ou, P. Yin, and L. Mo, “An improved grey wolf optimizer and its application in robot path planning,” Biomimetics, vol. 8, no. 1, pp. 84, 2023. doi: 10.3390/biomimetics8010084.

22. S. Harifi, M. Khalilian, J. Mohammadzadeh, and S. Ebrahimnejad, “Emperor penguins’ colony: A new metaheuristic algorithm for optimization,” Evol. Intell., vol. 12, no. 2, pp. 211–226, 2019. doi: 10.1007/s12065-019-00212-x.

23. K. Zervoudakis and S. Tsafarakis, “A mayfly optimization algorithm,” Comput. Ind. Eng., vol. 145, no. 5, pp. 106559, 2020. doi: 10.1016/j.cie.2020.106559.

24. M. Dirik, “Comparison of recent meta-heuristic optimization algorithms using different benchmark functions,” J. Math. Sci. Model., vol. 5, no. 3, pp. 113–124, 2022. doi: 10.33187/jmsm.1115792.

25. S. Mirjalili, “SCA: A sine cosine algorithm for solving optimization problems,” Knowl.-Based Syst., vol. 96, pp. 120–133, 2016. doi: 10.1016/j.knosys.2015.12.022.

26. S. Kaur, L. K. Awasthi, A. L. Sangal, and G. Dhiman, “Tunicate swarm algorithm: A new bio-inspired based metaheuristic paradigm for global optimization,” Eng. Appl. Artif. Intell., vol. 90, no. 2, pp. 103541, 2020. doi: 10.1016/j.engappai.2020.103541.

27. M. Jahangiri, M. A. Hadianfard, M. A. Najafgholipour, M. Jahangiri, and M. R. Gerami, “Interactive autodidactic school: A new metaheuristic optimization algorithm for solving mathematical and structural design optimization problems,” Comput. Struct., vol. 235, no. 2, pp. 106268, 2020. doi: 10.1016/j.compstruc.2020.106268.

28. M. Alimoradi, H. Azgomi, and A. Asghari, “Trees social relations optimization algorithm: A new swarm-based metaheuristic technique to solve continuous and discrete optimization problems,” Math. Comput. Simul., vol. 194, no. 4, pp. 629–664, 2022. doi: 10.1016/j.matcom.2021.12.010.

29. A. M. Balani, M. D. Nayeri, A. Azar, and M. T. Yazdi, “Golden eagle optimizer: A nature-inspired metaheuristic algorithm,” Comput. Ind. Eng., vol. 152, pp. 107050, 2021. doi: 10.1016/j.cie.2020.107050.

30. I. Naruei and F. Keynia, “A new optimization method based on COOT bird natural life model,” Expert Syst. Appl., vol. 183, no. 2, pp. 115352, 2021. doi: 10.1016/j.eswa.2021.115352.

31. J. R. Šćepanović, A. Karač, Z. M. Jakšić, L. B. Petković, and S. B. Vrhovac, “Group chase and escape in the presence of obstacles,” Phys. A: Stat. Mech. Appl., vol. 525, pp. 450–465, 2019. doi: 10.1016/j.physa.2019.03.017.

32. G. B. Schaller, “Predator-prey relations,” in The Serengeti Lion: A Study of Predator-Prey Relations, 1st ed. Chicago, USA: University of Chicago Press, 1972, pp. 87.

33. F. Dumont, C. Pasquaretta, D. Réale, G. Bogliani, and A. V. Hardenberg, “Flight initiation distance and starting distance: Biological effect or mathematical artefact,” Ethology, vol. 118, no. 11, pp. 1051–1062, 2012. doi: 10.1111/eth.12006.

34. A. S. Eesa, M. M. Hassan, and W. K. Arabo, “Letter: Application of optimization algorithms to engineering design problems and discrepancies in mathematical formulas,” Appl. Soft Comput., vol. 140, pp. 110252, 2023. doi: 10.1016/j.asoc.2023.110252.

35. T. Ray and K. M. Liew, “Society and civilization: An optimization algorithm based on the simulation of social behavior,” IEEE Trans. Evol. Comput., vol. 7, no. 4, pp. 386–396, 2003. doi: 10.1109/TEVC.2003.814902.

36. M. Ebrahimi, “Design and optimization of aluminum cross-car beam assemblies considering uncertainties,” M.S. dissertation, University of Toronto, Canada, 2015.

37. M. Zhang, W. Luo, and X. Wang, “Differential evolution with dynamic stochastic selection for constrained optimization,” Inf. Sci., vol. 178, no. 15, pp. 3043–3074, 2008. doi: 10.1016/j.ins.2008.02.014.

38. C. Gao, Z. Hu, Z. Xiong, and Q. Su, “Grey prediction evolution algorithm based on accelerated even grey model,” IEEE Access, vol. 8, pp. 107941–107957, 2020. doi: 10.1109/ACCESS.2020.3001194.

39. W. Long, X. Liang, Y. Huang, and Y. Chen, “A hybrid differential evolution augmented Lagrange method for constrained numerical and engineering optimization,” Comput.-Aided Design, vol. 45, no. 12, pp. 1562–1574, 2013. doi: 10.1016/j.cad.2013.07.007.

40. W. Zhao, L. Wang, and Z. Zhang, “Artificial ecosystem-based optimization: A novel nature-inspired meta-heuristic algorithm,” Neural Comput. Appl., vol. 32, no. 13, pp. 9383–9425, 2020. doi: 10.1007/s00521-019-04452-x.

41. H. Bayzidi, S. Talatahari, M. Saraee, and C. P. Lamarche, “Social network search for solving engineering optimization problems,” Comput. Intell. Neurosci., vol. 2021, pp. 1–32, 2021. doi: 10.1155/2021/8548639.

42. H. G. Arab, A. M. Rayeni, and M. R. Ghasemi, “An effective improved multi-objective evolutionary algorithm (IMOEA) for solving constraint civil engineering optimization problems,” Teknik Dergi, vol. 32, no. 2, pp. 10645–10674, 2021. doi: 10.18400/tekderg.541640.

43. C. Tang, Y. Zhou, Q. Luo, and Z. Tang, “An enhanced pathfinder algorithm for engineering optimization problems,” Eng. Comput., vol. 83, no. 2, pp. 1481–1503, 2021.

44. H. Eskandar, A. Sadollah, A. Bahreininejad, and M. Hamdi, “Water cycle algorithm—A novel metaheuristic optimization method for solving constrained engineering optimization problems,” Comput. Struct., vol. 110–111, no. 1, pp. 151–166, 2012. doi: 10.1016/j.compstruc.2012.07.010.

45. G. I. Sayed, A. Darwish, and A. E. Hassanien, “A new chaotic multi-verse optimization algorithm for solving engineering optimization problems,” J. Exp. Theor. Artif. Intell., vol. 30, no. 2, pp. 293–317, 2018. doi: 10.1080/0952813X.2018.1430858.

46. T. M. Coelho, A. M. Machado, L. Jaulin, P. Ekel, W. Pedrycz and G. L. Soares, “An interval space reducing method for constrained problems with particle swarm optimization,” Appl. Soft Comput., vol. 59, no. 4, pp. 405–417, 2017. doi: 10.1016/j.asoc.2017.05.022.

Appendix A

Copyright © 2024 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
