Open Access

ARTICLE

Feature Fusion Based Deep Transfer Learning Based Human Gait Classification Model

1 Department of Electronics and Instrumentation Engineering, V. R. Siddhartha Engineering College, Vijayawada, 520007, India

2 Candidate of Pedagogical Sciences, Department of Industrial Electronics and Lighting Engineering, Kazan State Power Engineering University, Kazan, 420066, Russia

3 Department of Theories and Principles of Physical Education and Life Safety, North-Eastern Federal University Named After M. K. Ammosov, Yakutsk, 677000, Russia

4 Department of Computer Science and Engineering, Vignan’s Institute of Information Technology, Visakhapatnam, 530049, India

5 Department of Applied Data Science, Noroff University College, Kristiansand, Norway

6 Artificial Intelligence Research Center (AIRC), Ajman University, Ajman, 346, United Arab Emirates

7 Department of Electrical and Computer Engineering, Lebanese American University, Byblos, Lebanon

8 Department of ICT Convergence, Soonchunhyang University, Asan, 31538, Korea

* Corresponding Author: Yunyoung Nam. Email:

Intelligent Automation & Soft Computing 2023, 37(2), 1453-1468. https://doi.org/10.32604/iasc.2023.038321

Received 07 December 2022; Accepted 16 January 2023; Issue published 21 June 2023

Abstract

Gait is a biological characteristic that describes the way people walk. Walking is one of the most important activities for sustaining daily life and physical condition. Surface electromyography (sEMG) is a weak bioelectric signal that reflects, to some extent, the functional state of the human muscles and nervous system. Gait classifiers based on sEMG signals are widely used in analysing muscle diseases and as a guide for rehabilitation treatment. Several approaches to gait recognition, using both conventional and deep learning (DL) methods, have been reported in the literature. This study designs an Enhanced Artificial Algae Algorithm with Hybrid Deep Learning based Human Gait Classification (EAAA-HDLGR) technique on sEMG signals. The EAAA-HDLGR technique extracts time domain (TD) and frequency domain (FD) features from the sEMG signals and fuses them. In addition, the EAAA-HDLGR technique exploits a hybrid deep learning (HDL) model for gait recognition. Finally, an EAAA-based hyperparameter optimizer, derived mainly from the quasi-oppositional based learning (QOBL) concept, is applied to the HDL model, which constitutes the novelty of the work. The classification performance of the EAAA-HDLGR technique is examined under diverse aspects, and the results indicate that the inclusion of the EAAA improves gait recognition.

Human gait, the way an individual walks, is personally distinctive because of differences in physical properties between individuals, and it may be employed as a biometric for the authentication and identification of a person [1]. Compared with other biometrics, namely iris, face, and fingerprint, human gait recognition (HGR) has the advantages of being non-invasive, requiring no cooperation or interaction with the subject, being hard to disguise, and working at long distance. These properties make it attractive as a means of detection and give it considerable potential in surveillance and security applications [2,3]. Many sensing modalities, including wearable devices, vision, and foot pressure, have been used for capturing gait data [4].

Traditional HGR techniques, which rely on data preprocessing and handcrafted feature extraction for subsequent identification [5], frequently suffer from challenges and constraints imposed by the difficulty of the task, namely occlusions, viewing angle, locating the body segments, shadows, large intra-class variations, etc. [6,7]. Deep learning (DL), an emerging trend in machine learning (ML), has become established in the past few years as a ground-breaking tool for computer vision, speech, sound, and image processing, outperforming virtually all previously established baselines [8]. DL removes the need for experts to extract representative features manually and has provided promising initial results on HGR, overcoming present difficulties and opening room for further investigation [9,10]. In smartphone-based HGR, manually extracted features are affected by the smartphone’s position while the data are gathered [11]. Consequently, typical statistical features were extracted manually from raw smartphone sensor data. After the handcrafted features were extracted, a shallow ML classifier was used to identify various human physical activities. Shallow ML algorithms therefore rely on handcrafted features [12,13]. DL algorithms are more advanced than shallow ML algorithms because they automatically learn useful features from the raw sensor data without human intervention and identify the human’s physical activities [14]. Combining shallow ML algorithms with DL algorithms, and handcrafted features with automatically learned features, has achieved better results in smartphone-based HGR. It is therefore apparent that integrating manually extracted features with automatically learned features in a DL algorithm might enhance the potential of the smartphone-based HGR paradigm [15].

Khan et al. [16] developed a lightweight DL algorithm for HGR. The presented algorithm involves sequential steps, from pretrained deep model selection to feature classification. Initially, two lightweight pretrained models are considered and fine-tuned by adding layers and freezing some middle layers. Thereafter, the models were trained using deep transfer learning (DTL), and features were engineered on the average pooling and fully connected layers. The fusion is carried out through discriminant correlation analysis and is then optimized by the moth-flame optimization (MFO) technique. Liang et al. [17] examine the effect of every layer in a parallel-structured convolutional neural network (CNN). In particular, they gradually freeze the parameters of GaitSet from the high layers to the low layers and observe the fine-tuning performance. Furthermore, increasing the number of frozen layers has negative consequences for the performance; the maximal efficacy could be reached with a single convolution layer unfrozen.

The authors in [18] proposed a novel architecture for HGR using DL and improved feature selection. During the augmentation phase, three flip operations were performed. During the feature extraction phase, two pre-trained models were used, NASNet Mobile and Inception-ResNetV2. Both models were fine-tuned and trained using transfer learning (TL) on the CASIA B gait dataset. The features of the selected deep models were optimized through a modified three-step whale optimization algorithm, and the best features were selected. Hashem et al. [19] proposed an accurate and advanced end-user software system capable of identifying individuals in video based on their gait signature for hospital security. TL based on a pretrained CNN was used to extract deep feature vectors and classify people directly, rather than relying on a typical pipeline of hand-crafted feature engineering and binary silhouette computation.

In [20], a novel fully automatic technique for HGR under different view angles using DL was proposed. It comprises four major phases: pre-processing of the original video frames, feature extraction with a pre-trained Densenet-201 CNN model, reduction of the extracted feature vector with a hybrid selection technique, and recognition using supervised learning methods. Sharif et al. [21] suggest a method that efficiently deals with the problems of walking styles and viewing angle shifts in real time. It includes the following steps: (a) real-time video capture, (b) feature extraction using transfer learning (TL) on the ResNet101 deep model, and (c) feature selection based on the kurtosis-controlled entropy (KcE) method, followed by a correlation-based feature fusion phase. The most discriminative features are then classified using advanced ML classifiers.

This study designs an Enhanced Artificial Algae Algorithm with Hybrid Deep Learning based Human Gait Classification (EAAA-HDLGR) technique on surface electromyography (sEMG) signals. The EAAA-HDLGR technique first extracts time domain (TD) and frequency domain (FD) features from the sEMG signals and fuses them. In addition, it exploits a hybrid deep learning (HDL) model for gait recognition. Finally, an EAAA-based hyperparameter optimizer, derived mainly from the quasi-oppositional based learning (QOBL) concept, is applied to the HDL model. The classification performance of the EAAA-HDLGR technique is examined under diverse aspects.

The rest of the paper is organized as follows. Section 2 provides the overall description of the proposed model. Next, Section 3 offers the experimental validation process. Finally, Section 4 concludes the work with major findings.

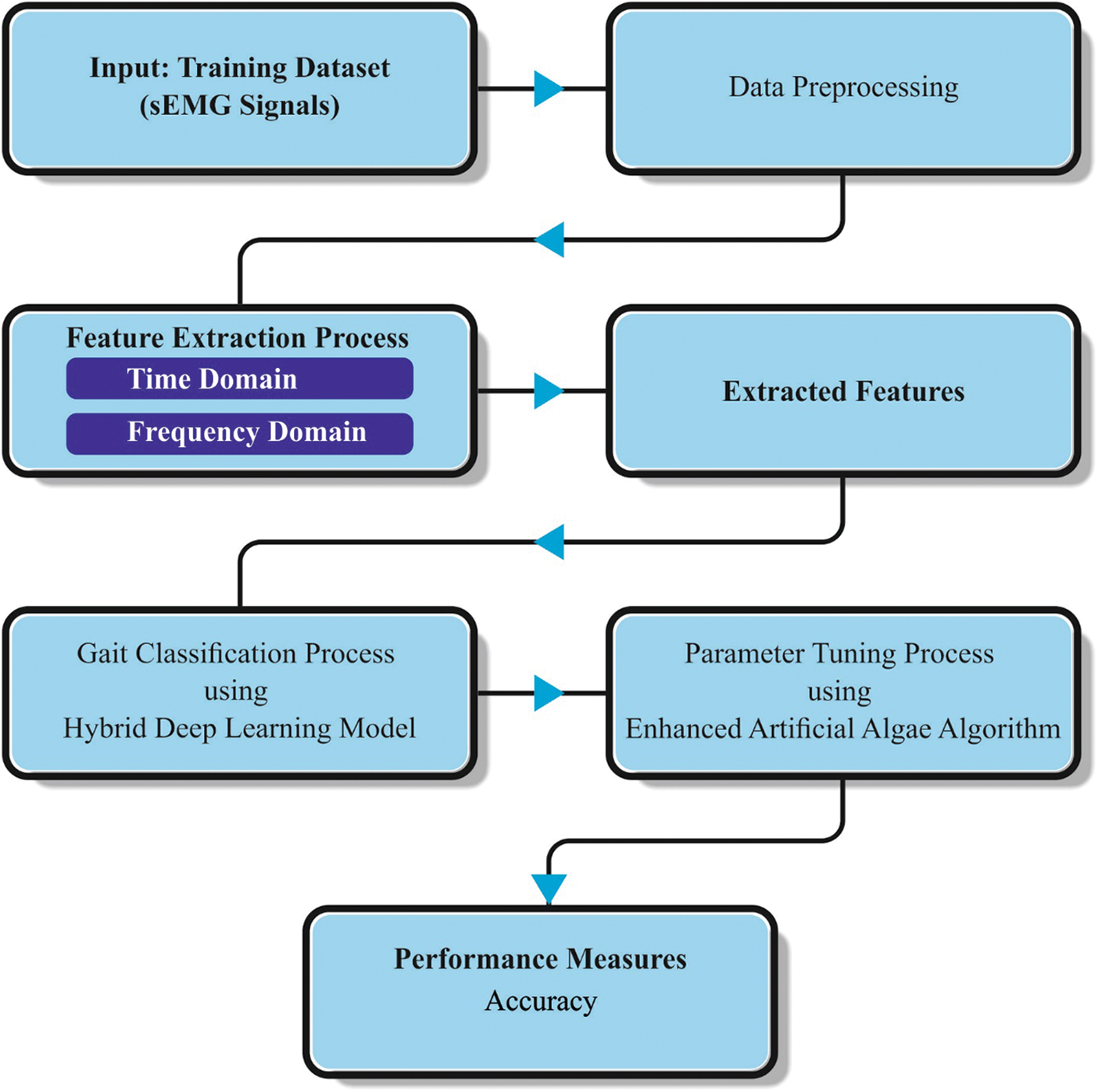

In this study, a new EAAA-HDLGR technique is developed for gait recognition using sEMG signals. First, the EAAA-HDLGR technique derives the TD and FD features from the sEMG signals, which are then fused. In addition, the EAAA-HDLGR technique exploits the HDL model for gait recognition. Finally, an EAAA-based hyperparameter optimizer, derived mainly from the QOBL concept, is applied to the HDL model. Fig. 1 depicts the workflow of the EAAA-HDLGR algorithm.

Figure 1: Workflow of EAAA-HDLGR approach

2.1 Feature Extraction Process

After de-noising, the TD and FD features of all channels of the sEMG signal are extracted [22]. In this case, three representative time domain features are used: variance (VAR), zero crossing points (ZC), and mean absolute value (MAV). MAV takes advantage of the property that sEMG signals show large amplitude fluctuations in the time domain that are linearly related to the muscle activation level. The maximum value of

whereas,

whereas,

Two representative frequency domain features are chosen, such as the average power frequency

whereas
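The feature extraction and fusion described in this subsection can be sketched as follows. This is a minimal illustration using common textbook definitions of MAV, VAR, ZC, and mean power frequency, not the authors' implementation. Several points are assumptions: the second frequency domain feature is taken to be the median frequency, fusion is taken to be simple concatenation, the spectrum is computed with a naive DFT, and all function names are hypothetical.

```python
import cmath
import math


def mav(x):
    """Mean absolute value of one signal window."""
    return sum(abs(v) for v in x) / len(x)


def var(x):
    """Sample variance (N - 1 in the denominator)."""
    mean = sum(x) / len(x)
    return sum((v - mean) ** 2 for v in x) / (len(x) - 1)


def zero_crossings(x, threshold=0.0):
    """Count sign changes between consecutive samples.

    `threshold` (an assumption) ignores crossings smaller than a noise floor.
    """
    count = 0
    for a, b in zip(x, x[1:]):
        if a * b < 0 and abs(a - b) >= threshold:
            count += 1
    return count


def power_spectrum(x, fs):
    """One-sided power spectrum via a naive O(N^2) DFT (fine for short windows)."""
    n = len(x)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        s = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(s) ** 2 / n)
    return freqs, power


def mean_power_frequency(freqs, power):
    """Power-weighted average frequency."""
    return sum(f * p for f, p in zip(freqs, power)) / sum(power)


def median_frequency(freqs, power):
    """Frequency at which the cumulative power reaches half of the total."""
    half = sum(power) / 2
    acc = 0.0
    for f, p in zip(freqs, power):
        acc += p
        if acc >= half:
            return f
    return freqs[-1]


def fused_features(x, fs):
    """Concatenate TD features [MAV, VAR, ZC] with FD features [MPF, MF]."""
    freqs, power = power_spectrum(x, fs)
    td = [mav(x), var(x), float(zero_crossings(x))]
    fd = [mean_power_frequency(freqs, power), median_frequency(freqs, power)]
    return td + fd


# Usage: a synthetic 10 Hz sine sampled at 100 Hz stands in for one sEMG channel.
fs = 100.0
signal = [math.sin(2 * math.pi * 10 * t / fs) for t in range(100)]
features = fused_features(signal, fs)
```

For a multi-channel recording, the same function would be applied per channel and the per-channel vectors concatenated; that choice is also an assumption of this sketch.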

2.2 Gait Classification using HDL Model

In this study, the HDL technique is employed as the gait classifier. It combines a CNN with long short-term memory (LSTM) and bidirectional LSTM (Bi-LSTM) layers [23]. CNNs are increasingly popular in DL and consist of convolution (Conv) and pooling layers. The function of the Conv layer is to extract useful features from the input data. It contains one or more Conv kernels, and effective features are extracted by sliding the Conv kernels over the input. A Max-Pooling layer is added after each Conv layer; it retains the strong features and discards the weaker ones to prevent over-fitting and reduce complexity. LSTM avoids the vanishing and exploding gradients that arise when training a recurrent neural network (RNN) by introducing input, output, and forget gates. These three gates effectively solve the training problem of RNNs. The input gate determines which information from the current time step is carried forward to future time steps. The forget gate determines how much of the information from preceding time steps is preserved at the current time step. The output gate selects what is passed from the current state to the next state. The following equations describe a single LSTM cell:
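In the standard LSTM formulation, the cell at time step $t$ can be written as below. The notation is an assumption chosen for this sketch and may differ from the symbols used in [23].

```latex
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
```

Here $x_t$ is the input, $h_t$ the hidden state, and $c_t$ the cell state; $f_t$, $i_t$, and $o_t$ are the forget, input, and output gates; $\sigma$ is the logistic sigmoid and $\odot$ is element-wise multiplication; $W$, $U$, and $b$ are learned weights and biases.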

At this point,

• The CNN is designed with three 1-D Conv layers, and the numbers of Conv kernels are set to 16, 32, and 64, respectively. The Conv kernel size is set to 2, and the striding phase has set as. Effective features are extracted by striding the Conv kernel over the input. A Max-Pooling layer is then added after each Conv layer, with a pooling window size of 2 and a stride of one. The Max-Pooling layer reduces the feature dimension and avoids overfitting.

• By assigning weights to features through the attention mechanism, the attention block strengthens the influence of important time series features, suppresses the interference of insignificant features, and effectively addresses the problem that the model otherwise fails to account for the differing importance of various time series features.

• The extracted features are fed as input to one Bi-LSTM layer and two LSTM layers, which produce the classification result.
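To make the CNN configuration above concrete, the following sketch traces how the sequence length changes through the three Conv and Max-Pooling stages. It only does shape arithmetic and is not a trained model. The Conv stride is not stated in the text, so `conv_stride=1` is an assumption; the input length of 20 and the function names are hypothetical, and no padding is assumed.

```python
def out_len(n, window, stride):
    """Output length of an unpadded 1-D convolution or pooling operation."""
    if n < window:
        raise ValueError("input is shorter than the window")
    return (n - window) // stride + 1


def cnn_block_shapes(input_len, filters=(16, 32, 64), kernel=2,
                     conv_stride=1, pool=2, pool_stride=1):
    """Return (sequence length, channels) after each Conv + Max-Pooling stage.

    Kernel size 2, pooling window 2, and pooling stride 1 follow the text;
    conv_stride=1 is an assumption.
    """
    shapes = []
    n = input_len
    for channels in filters:
        n = out_len(n, kernel, conv_stride)   # Conv layer
        n = out_len(n, pool, pool_stride)     # Max-Pooling layer
        shapes.append((n, channels))
    return shapes


# Usage with a hypothetical input window of 20 time steps.
shapes = cnn_block_shapes(input_len=20)
```

With a stride of one everywhere, each stage shortens the sequence by two samples while the channel count grows from 16 to 64, so the recurrent layers that follow still receive a sequence of nearly the original length.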

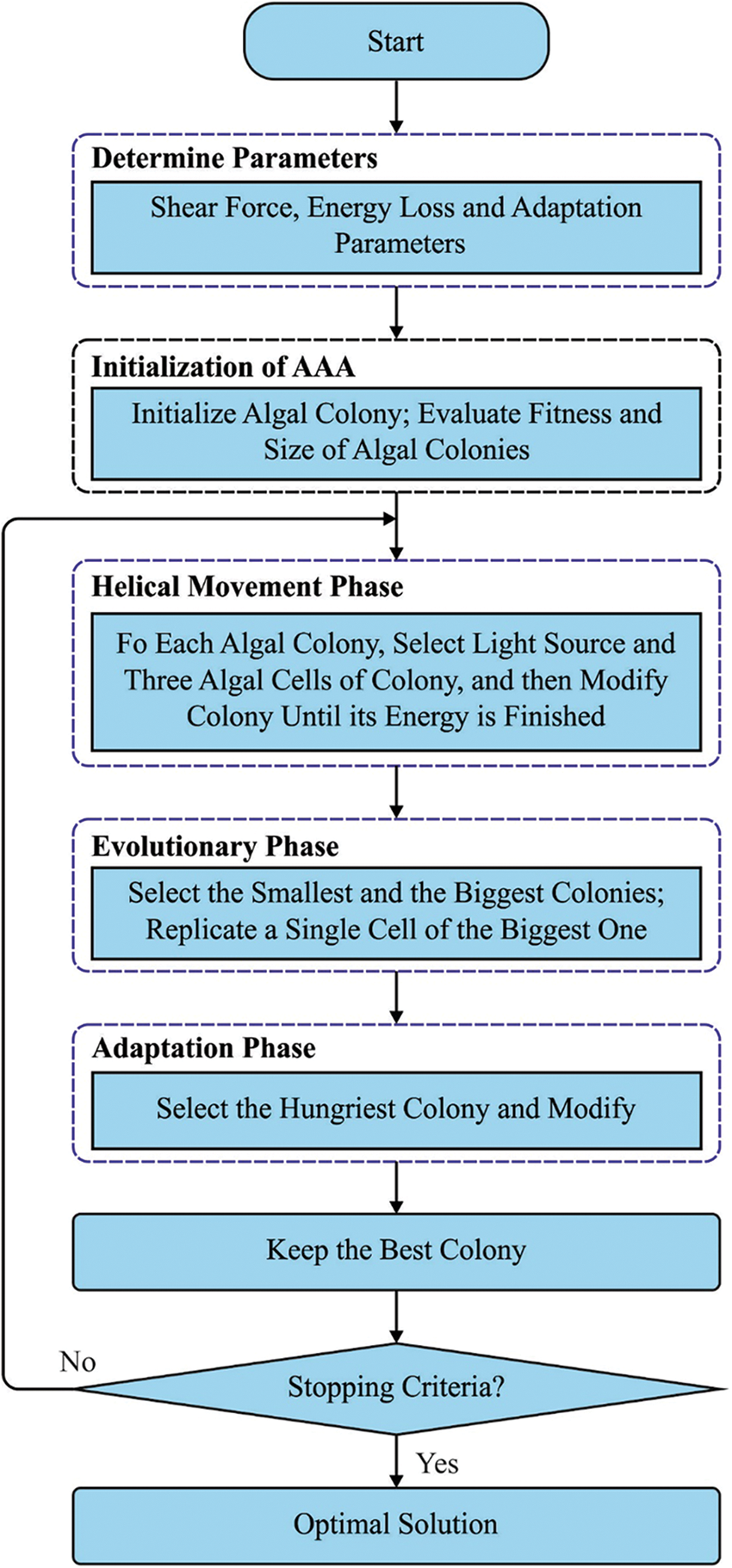

2.3 Parameter Optimization using EAAA Model

To improve the recognition rate, an EAAA-based hyperparameter optimizer is applied to the HDL model. Artificial algae are modeled on the characteristics of real algae, and each artificial alga corresponds to a solution in the problem space [24]. Like real algae, artificial algae move toward a light source by helical swimming in order to photosynthesize, can adapt to the environment, can change the dominant species, and eventually reproduce by mitotic division. Thus, the algorithm contains three important processes: Helical Motion, Evolutionary Process, and Adaptation. The term algae colony denotes a group of algae cells that live together. The algae colony and population can be expressed by the following formula.

The algae colony acts as a single cell and moves together, and cells in the colony may die under unfavourable living conditions. The colony located at the optimal point is called the optimum colony, and it consists of optimum algae cells. The growth kinetics of an algae colony are calculated with the Monod model, as shown below.
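As a rough illustration of the Monod growth step, the sketch below uses the classical Monod relation, in which the specific growth rate saturates as the nutrient concentration rises. The function and parameter names are hypothetical, and the colony-size update (new size equals growth rate times current size) follows the usual description of the artificial algae algorithm; it is an assumption rather than a formula confirmed by this text.

```python
def monod_growth_rate(nutrient, half_saturation, mu_max=1.0):
    """Classical Monod relation: mu = mu_max * S / (K + S).

    nutrient        -- nutrient concentration S (non-negative)
    half_saturation -- half-saturation constant K (positive)
    mu_max          -- maximum specific growth rate (1.0 is an assumption)
    """
    return mu_max * nutrient / (half_saturation + nutrient)


def grow_colony(size, nutrient, half_saturation, mu_max=1.0):
    """Assumed update rule: next colony size = growth rate * current size."""
    return monod_growth_rate(nutrient, half_saturation, mu_max) * size


# Usage: when S equals K the growth rate is exactly half of mu_max.
rate = monod_growth_rate(nutrient=2.0, half_saturation=2.0)
next_size = grow_colony(size=4.0, nutrient=2.0, half_saturation=2.0)
```

In an optimizer, the nutrient term would typically be tied to the fitness of the solution that the colony represents, so that better solutions grow faster.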

In Eq. (14),

In Eq. (15),

In the above formula,

During this equation, the value

Figure 2: Flowchart of AAA

The distance to the light source and the friction surface control the step size of the movement.

For the helical rotation of algae cells,

In addition, the QOAAO technique is obtained by using quasi-opposition-based population initialization. The underlying idea is that a random solution may lie farther from the global best solution than its opposite solution. Therefore, the N best individuals are chosen from the combined population containing

In the formula, the
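Quasi-opposition-based initialization can be sketched as follows, using the usual QOBL definition: for a point x in [a, b], the opposite point is a + b - x, and the quasi-opposite point is drawn uniformly between the interval centre and the opposite point. This is a minimal sketch under that assumption, not the authors' code; the function names, the seed handling, and the convention that larger fitness is better are all hypothetical choices.

```python
import random


def quasi_opposite(x, lower, upper, rng):
    """Quasi-opposite point of x, sampled per dimension."""
    result = []
    for xi, a, b in zip(x, lower, upper):
        centre = (a + b) / 2
        opposite = a + b - xi
        lo, hi = min(centre, opposite), max(centre, opposite)
        result.append(rng.uniform(lo, hi))
    return result


def qobl_initialise(n, lower, upper, fitness, seed=0):
    """Build a random population, add its quasi-opposite population,
    and keep the n fittest individuals (fitness is maximised here)."""
    rng = random.Random(seed)
    population = [[rng.uniform(a, b) for a, b in zip(lower, upper)]
                  for _ in range(n)]
    quasi = [quasi_opposite(x, lower, upper, rng) for x in population]
    merged = population + quasi
    merged.sort(key=fitness, reverse=True)
    return merged[:n]


# Usage on a toy problem: maximise -(x1^2 + x2^2) inside [-5, 5]^2.
def toy_fitness(x):
    return -sum(v * v for v in x)


initial = qobl_initialise(6, [-5.0, -5.0], [5.0, 5.0], toy_fitness, seed=1)
```

Evaluating 2N candidates and keeping N costs one extra round of fitness evaluations at start-up, which is usually small compared with the cost of the iterations that follow.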

Fitness selection is a vital factor in the EAAA system. Solution encoding is used to assess the goodness of candidate solutions. Here, the accuracy value is the main criterion used to design the fitness function.

In this expression, TP denotes the number of true positives and FP the number of false positives.
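A fitness function built only from TP and FP can be sketched as below. The text names accuracy as the criterion but defines only TP and FP, so this sketch assumes the ratio TP / (TP + FP), which the optimizer would maximise. The function name and the candidate counts are hypothetical.

```python
def fitness_from_counts(tp, fp):
    """Assumed fitness: TP / (TP + FP); returns 0.0 when nothing is predicted positive."""
    total = tp + fp
    if total == 0:
        return 0.0
    return tp / total


# Usage: pick the hyperparameter candidate with the highest fitness.
# The (TP, FP) counts below are made-up placeholders, not reported results.
candidates = {"candidate_a": (45, 5), "candidate_b": (40, 10)}
best = max(candidates, key=lambda name: fitness_from_counts(*candidates[name]))
```

In practice the counts would come from evaluating the HDL model, trained with a given hyperparameter set, on held-out validation data.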

In this section, the gait classification results of the EAAA-HDLGR approach are investigated in detail. The proposed model is simulated using Python 3.6.5 on a PC with an i5-8600k CPU, GeForce 1050Ti 4GB GPU, 16GB RAM, 250GB SSD, and 1TB HDD. The parameter settings are as follows: learning rate: 0.01, dropout: 0.5, batch size: 5, epoch count: 50, and activation: ReLU.

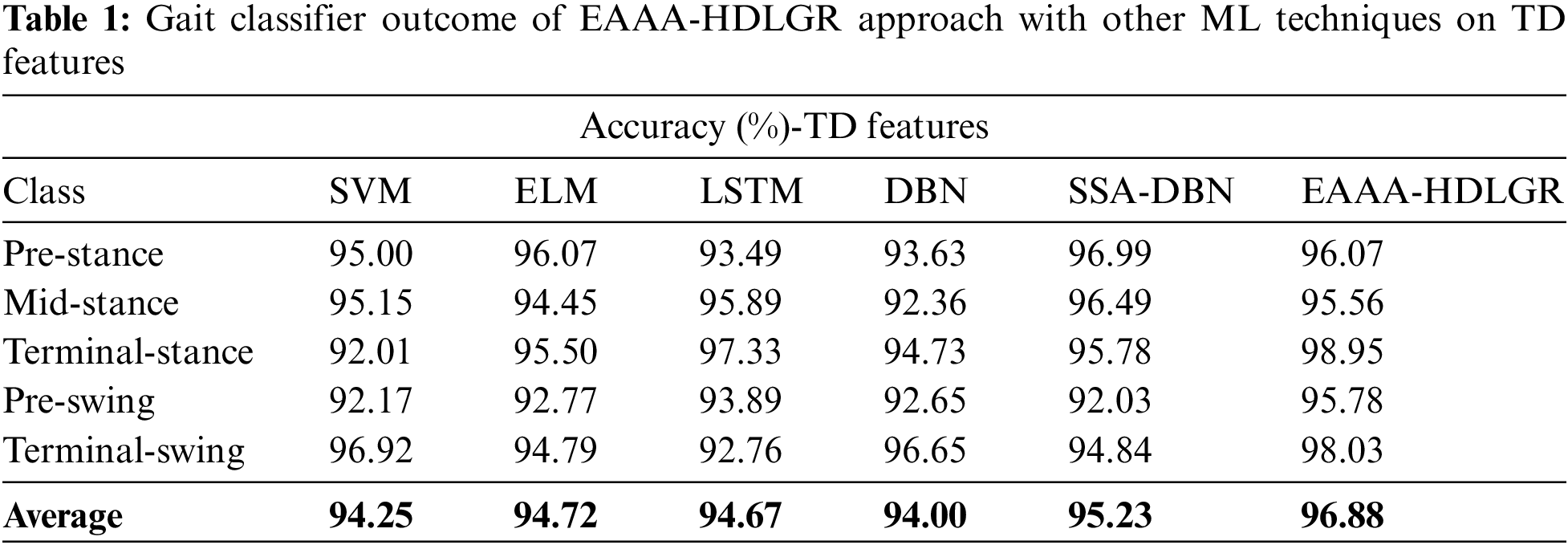

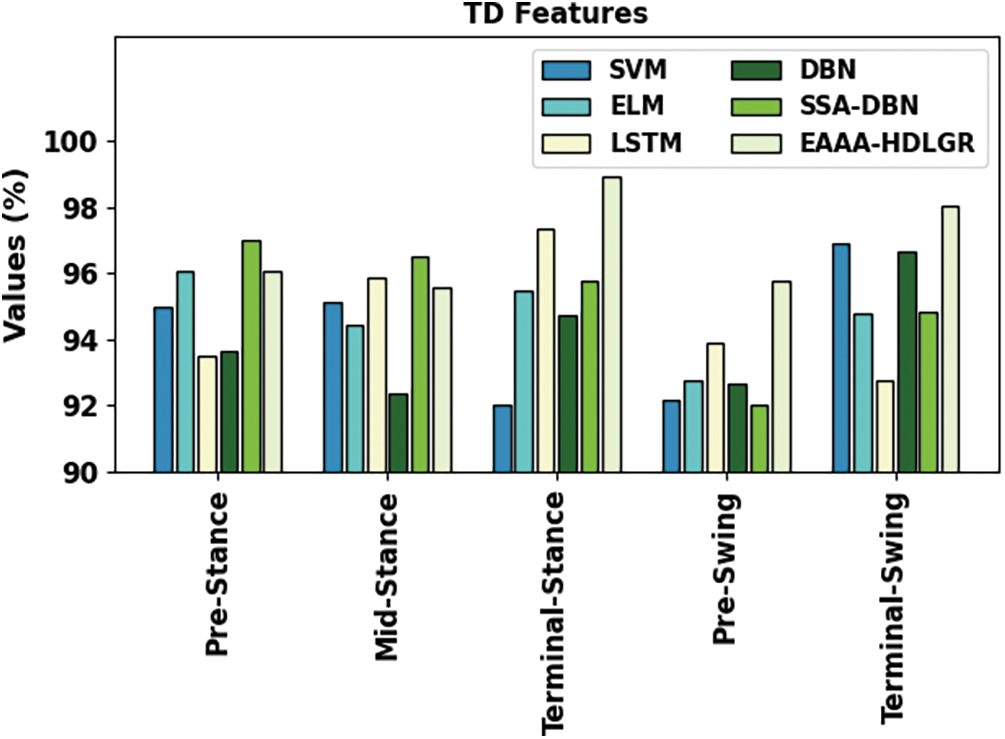

Table 1 and Fig. 3 compare the gait classification results of the EAAA-HDLGR model with those of other ML models on TD features [22]. The experimental results indicate that the EAAA-HDLGR model achieves effective outcomes. For instance, at the pre-stance stage, the EAAA-HDLGR model has highlighted an increasing

Figure 3: Gait classifier outcome of EAAA-HDLGR approach on TD features

Meanwhile, with the Terminal-Stance stage, the EAAA-HDLGR approach has emphasized increasing

An average

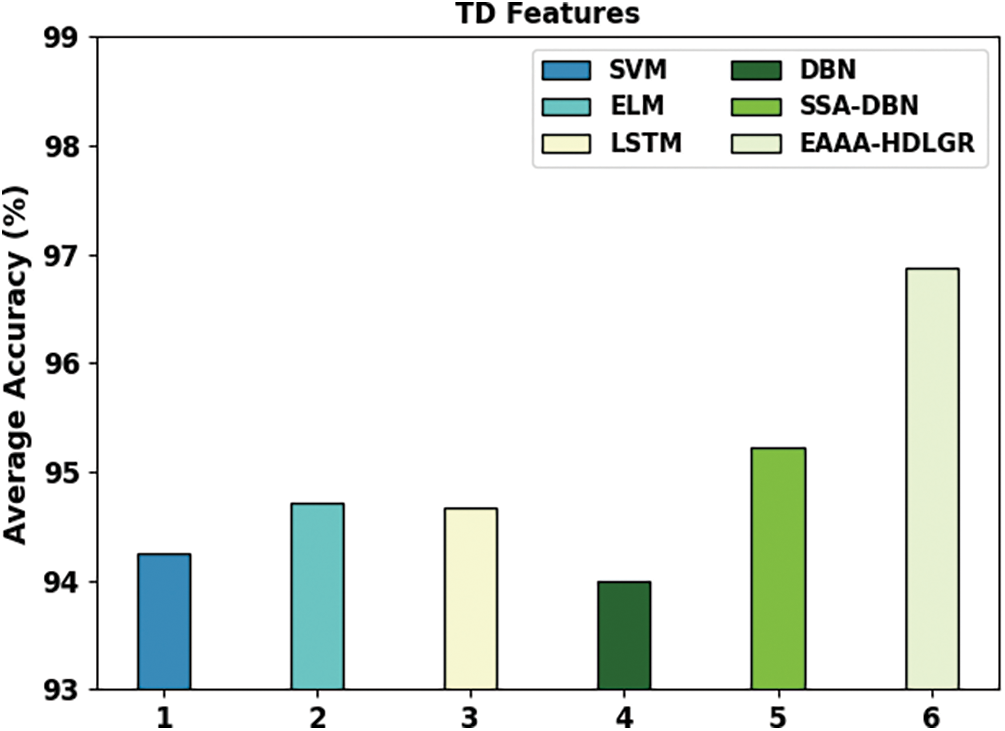

Figure 4: Average outcome of EAAA-HDLGR approach on TD features

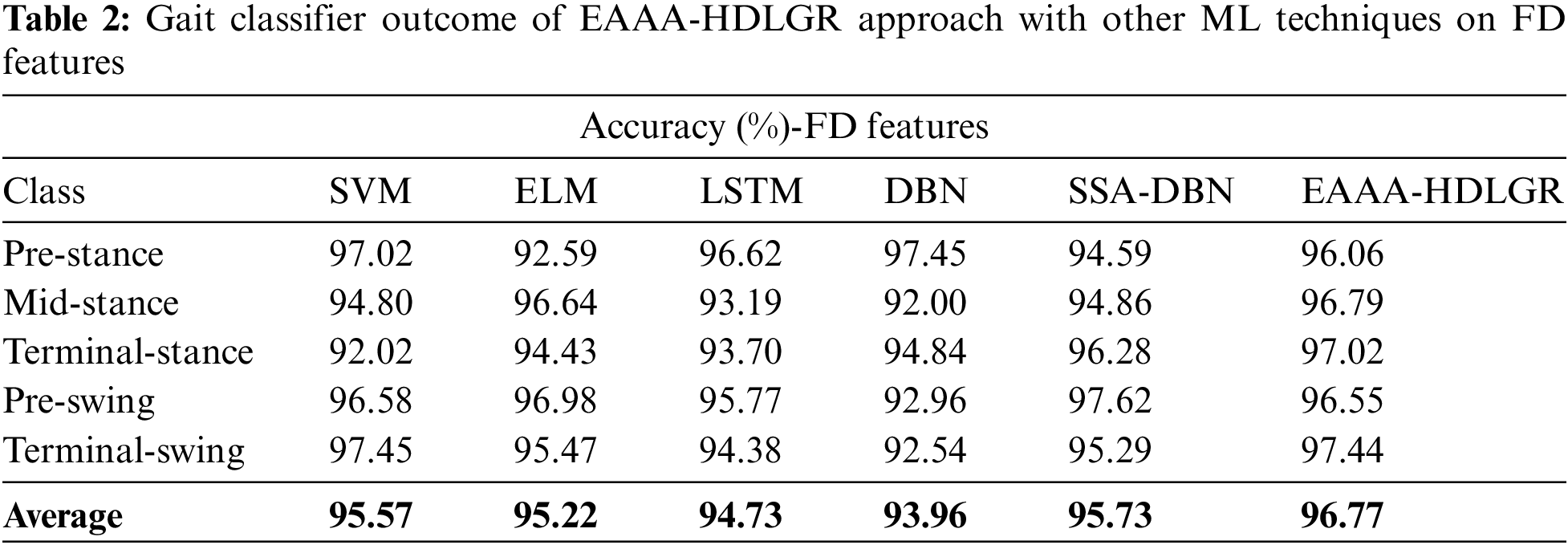

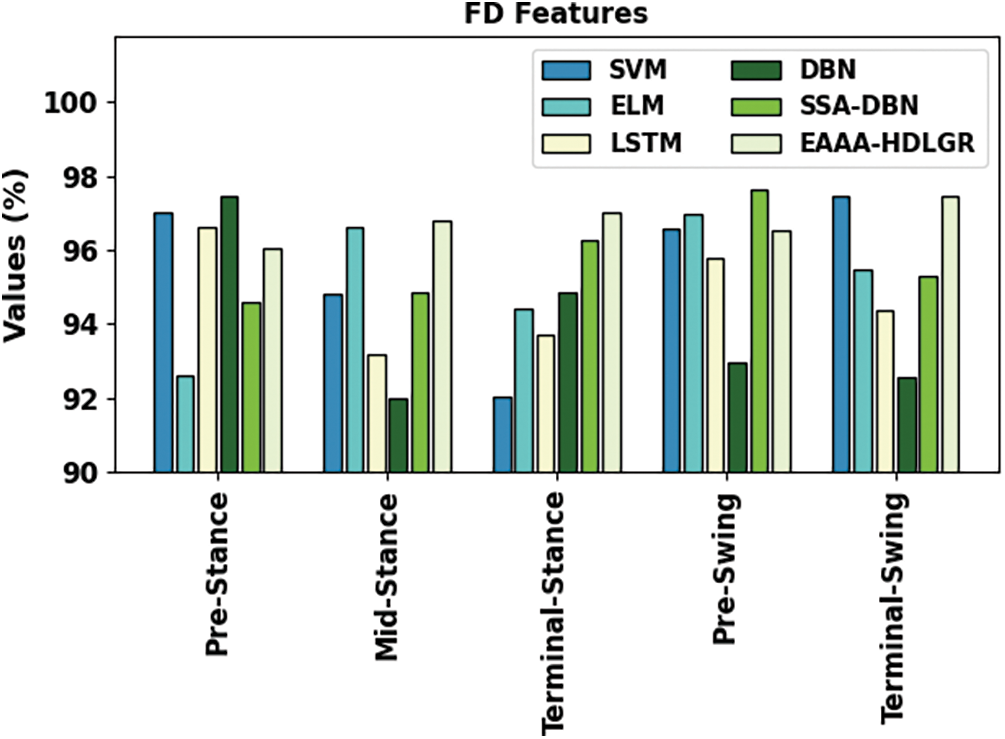

Table 2 and Fig. 5 compare the gait classification results of the EAAA-HDLGR approach with those of other ML models on FD features. The experimental results indicate that the EAAA-HDLGR approach achieves effective outcomes. For instance, at the pre-stance stage, the EAAA-HDLGR method has exhibited a maximal

Figure 5: Gait classifier outcome of EAAA-HDLGR approach on FD features

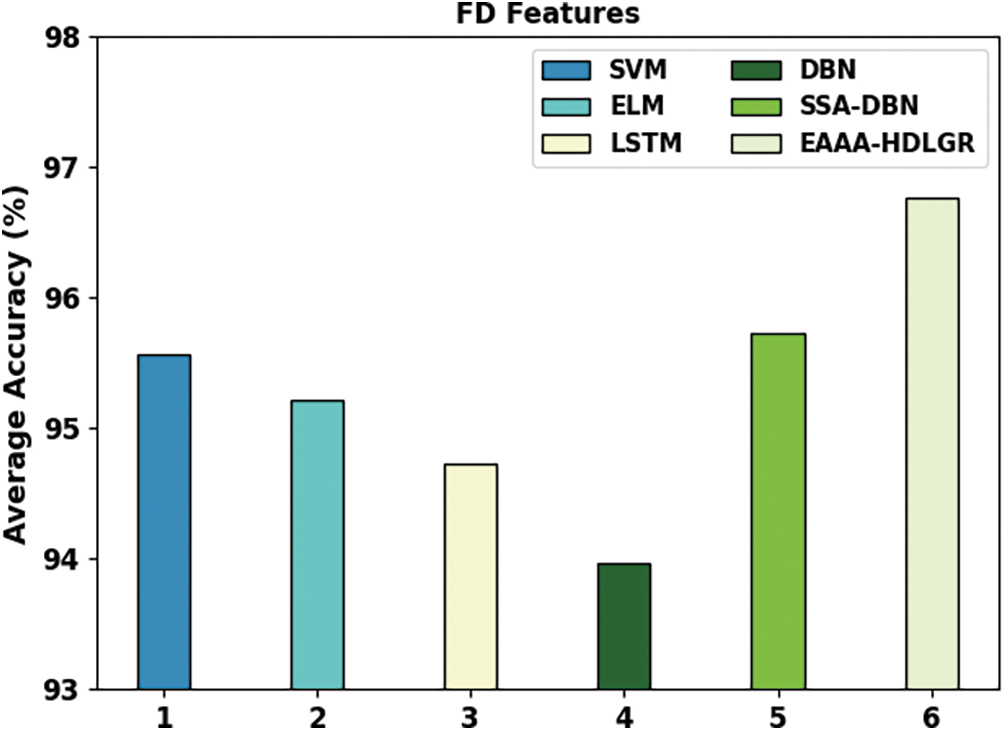

An average

Figure 6: Average outcome of EAAA-HDLGR approach on FD features

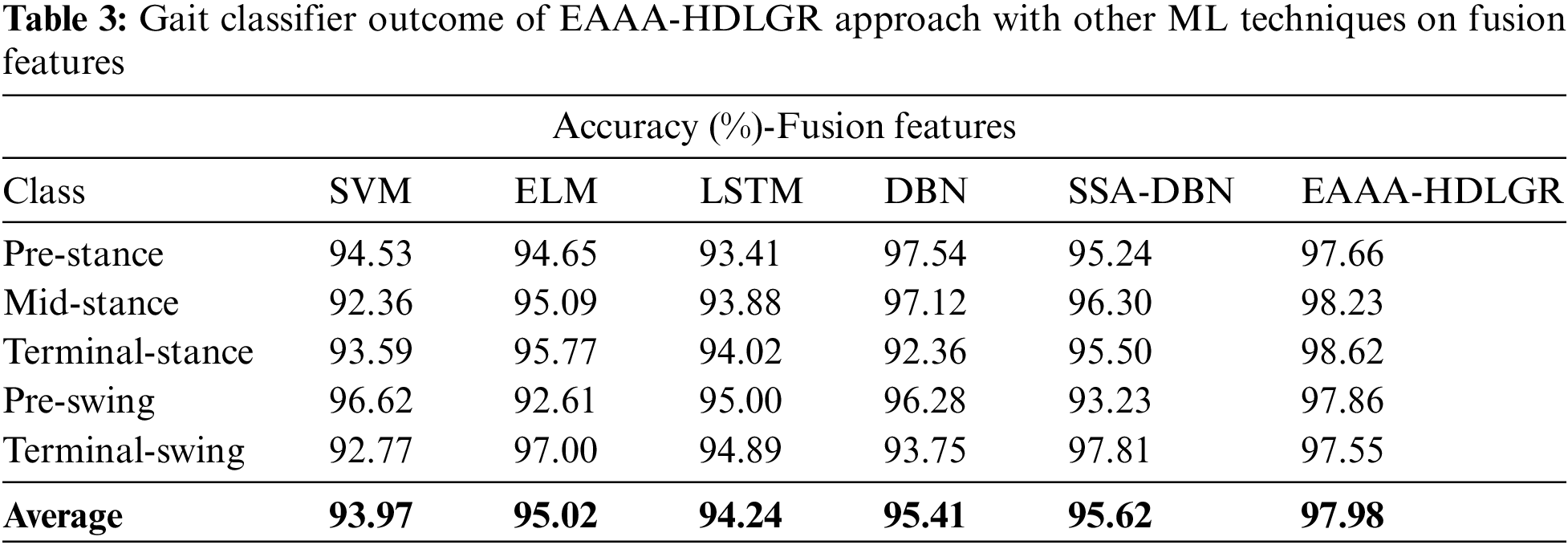

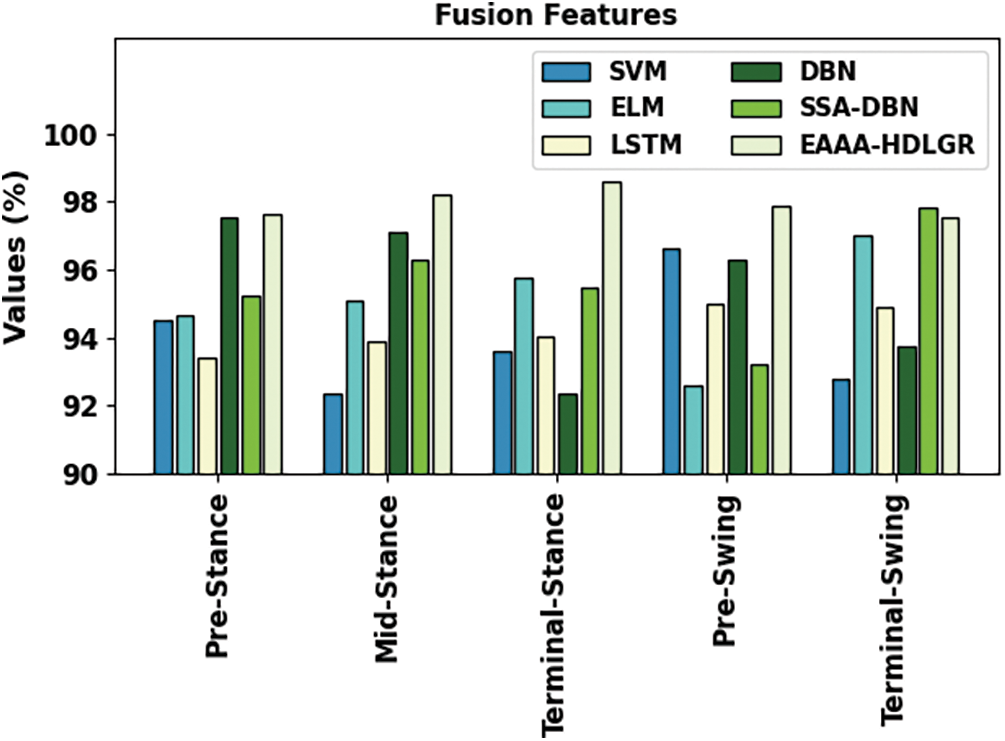

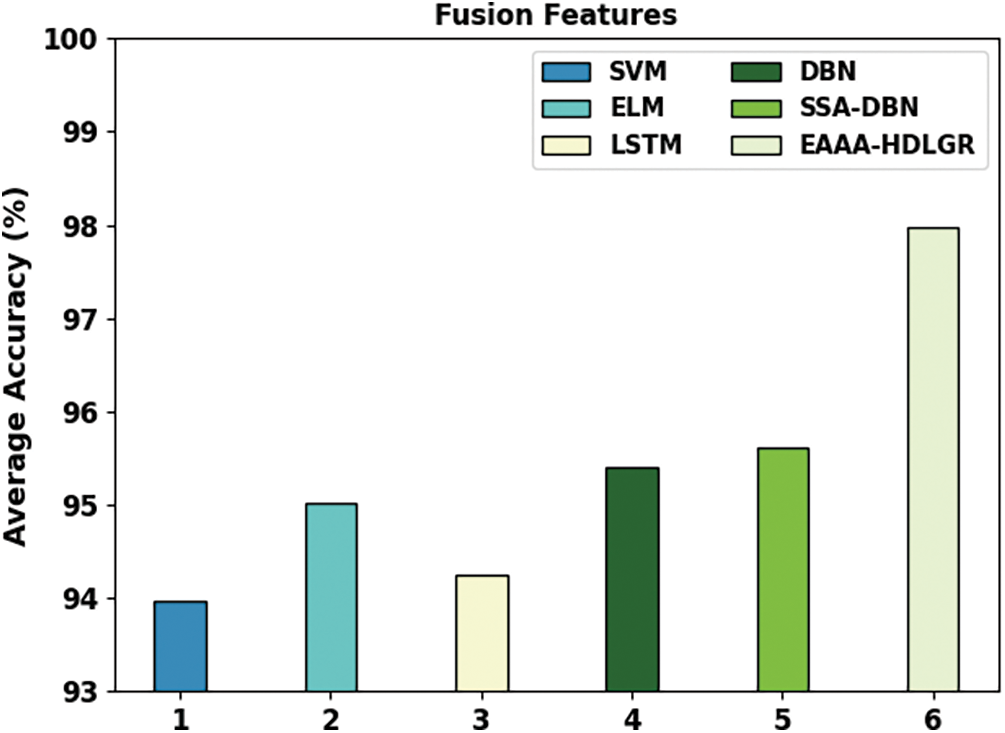

Table 3 and Fig. 7 compare the gait classification results of the EAAA-HDLGR algorithm with those of other ML techniques on fusion features. The experimental results indicate that the EAAA-HDLGR algorithm achieves effective outcomes. For instance, at the pre-stance stage, the EAAA-HDLGR approach has highlighted a higher

Figure 7: Gait classifier outcome of EAAA-HDLGR approach on fusion features

An average

Figure 8: Average outcome of EAAA-HDLGR approach on fusion features

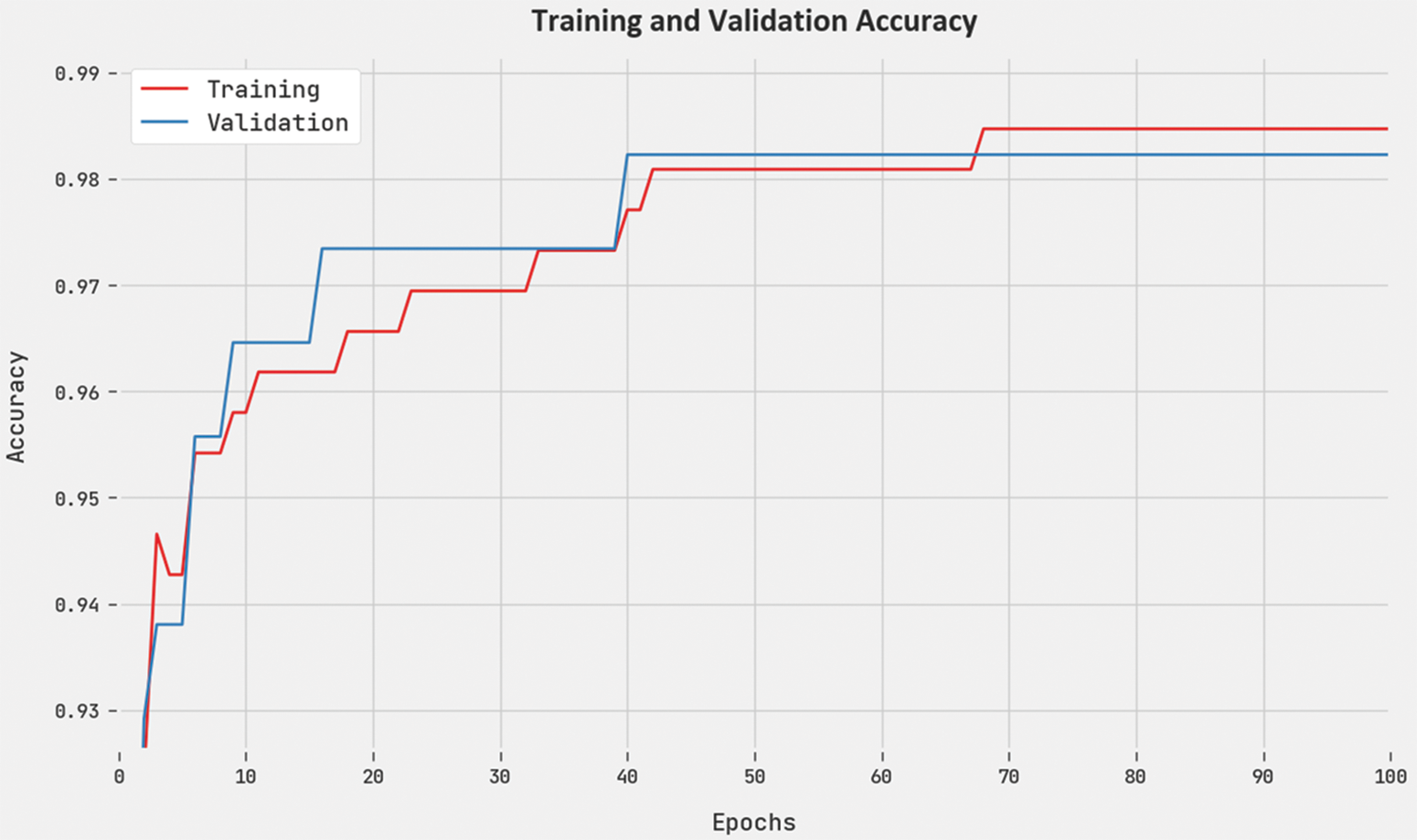

The training accuracy (TACC) and validation accuracy (VACC) of the EAAA-HDLGR approach on gait classification are shown in Fig. 9. The figure indicates that the EAAA-HDLGR approach performs well, with high values of TACC and VACC. In particular, the EAAA-HDLGR approach reaches maximal TACC values.

Figure 9: TACC and VACC outcome of EAAA-HDLGR approach

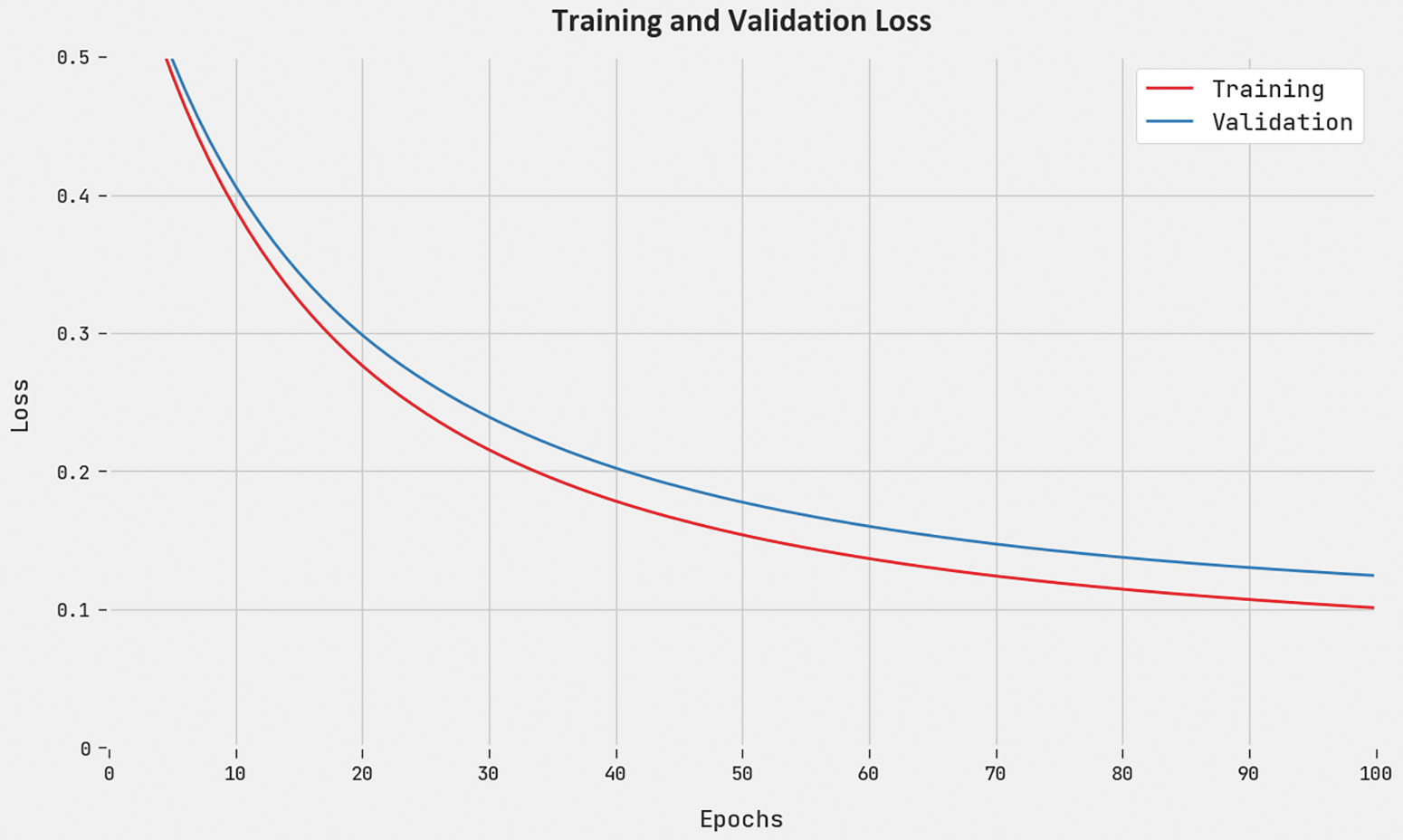

The training loss (TLS) and validation loss (VLS) of the EAAA-HDLGR approach on gait classification are shown in Fig. 10. The figure indicates that the EAAA-HDLGR approach performs well, with low values of TLS and VLS. In particular, the EAAA-HDLGR approach yields lower VLS values.

Figure 10: TLS and VLS outcome of the EAAA-HDLGR approach

In this study, a new EAAA-HDLGR technique was developed for gait recognition using sEMG signals. First, the EAAA-HDLGR technique derives the TD and FD features from the sEMG signals, which are then fused. In addition, the EAAA-HDLGR technique exploits the HDL model for gait recognition. Finally, an EAAA-based hyperparameter optimizer, derived mainly from the QOBL concept, is applied to the HDL model. The classification performance of the EAAA-HDLGR technique was examined under diverse aspects, and the results indicate that the inclusion of the EAAA improves gait recognition. In future, feature reduction and feature selection processes can be combined to boost the recognition rate of the EAAA-HDLGR technique.

Funding Statement: This research was supported by a grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (grant number: HI21C1831) and the Soonchunhyang University Research Fund.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Z. Ni and B. Huang, “Human identification based on natural gait micro‐Doppler signatures using deep transfer learning,” IET Radar, Sonar & Navigation, vol. 14, no. 10, pp. 1640–1646, 2020.

2. X. Bai, Y. Hui, L. Wang and F. Zhou, “Radar-based human gait recognition using dual-channel deep convolutional neural network,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 12, pp. 9767–9778, 2019.

3. M. Sharif, M. Attique, M. Z. Tahir, M. Yasmim, T. Saba et al., “A machine learning method with threshold based parallel feature fusion and feature selection for automated gait recognition,” Journal of Organizational and End User Computing, vol. 32, no. 2, pp. 67–92, 2020.

4. H. Wu, X. Zhang, J. Wu and L. Yan, “Classification algorithm for human walking gait based on multi-sensor feature fusion,” in Int. Conf. on Mechatronics and Intelligent Robotics, Advances in Intelligent Systems and Computing Book Series, Cham, Springer, vol. 856, pp. 718–725, 2019.

5. H. Arshad, M. A. Khan, M. Sharif, M. Yasmin and M. Y. Javed, “Multi-level features fusion and selection for human gait recognition: An optimized framework of Bayesian model and binomial distribution,” International Journal of Machine Learning and Cybernetics, vol. 10, no. 12, pp. 3601–3618, 2019.

6. N. Mansouri, M. A. Issa and Y. B. Jemaa, “Gait features fusion for efficient automatic age classification,” IET Computer Vision, vol. 12, no. 1, pp. 69–75, 2018.

7. D. Thakur and S. Biswas, “Feature fusion using deep learning for smartphone based human activity recognition,” International Journal of Information Technology, vol. 13, no. 4, pp. 1615–1624, 2021.

8. F. M. Castro, M. J. Marín-Jiménez, N. Guil and N. Pérez de la Blanca, “Multimodal feature fusion for CNN-based gait recognition: An empirical comparison,” Neural Computing and Applications, vol. 32, no. 17, pp. 14173–14193, 2020.

9. M. M. Hasan and H. A. Mustafa, “Multi-level feature fusion for robust pose-based gait recognition using RNN,” International Journal of Computer Science and Information Security, vol. 18, no. 2, pp. 20–31, 2021.

10. Y. Lang, Q. Wang, Y. Yang, C. Hou, D. Huang et al., “Unsupervised domain adaptation for micro-Doppler human motion classification via feature fusion,” IEEE Geoscience and Remote Sensing Letters, vol. 16, no. 3, pp. 392–396, 2018.

11. M. Li, S. Tian, L. Sun and X. Chen, “Gait analysis for post-stroke hemiparetic patient by multi-features fusion method,” Sensors, vol. 19, no. 7, pp. 1737, 2019.

12. A. Abdelbaky and S. Aly, “Two-stream spatiotemporal feature fusion for human action recognition,” The Visual Computer, vol. 37, no. 7, pp. 1821–1835, 2021.

13. F. F. Wahid, “Statistical features from frame aggregation and differences for human gait recognition,” Multimedia Tools and Applications, vol. 80, no. 12, pp. 18345–18364, 2021.

14. Q. Hong, Z. Wang, J. Chen and B. Huang, “Cross-view gait recognition based on feature fusion,” in IEEE 33rd Int. Conf. on Tools with Artificial Intelligence (ICTAI), Washington, DC, USA, pp. 640–646, 2021.

15. K. Sugandhi, F. F. Wahid and G. Raju, “Inter frame statistical feature fusion for human gait recognition,” in Int. Conf. on Data Science and Communication (IconDSC), Bangalore, India, pp. 1–5, 2019.

16. M. A. Khan, H. Arshad, R. Damaševičius, A. Alqahtani, S. Alsubai et al., “Human gait analysis: A sequential framework of lightweight deep learning and improved moth-flame optimization algorithm,” Computational Intelligence and Neuroscience, vol. 2022, pp. 1–13, 2022.

17. Y. Liang, E. H. K. Yeung and Y. Hu, “Parallel CNN classification for human gait identification with optimal cross data-set transfer learning,” in IEEE Int. Conf. on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA), Hong Kong, China, pp. 1–6, 2021.

18. F. Saleem, M. A. Khan, M. Alhaisoni, U. Tariq, A. Armghan et al., “Human gait recognition: A single stream optimal deep learning features fusion,” Sensors, vol. 21, no. 22, pp. 7584, 2021.

19. L. Hashem, R. Al-Harakeh and A. Cherry, “Human gait identification system based on transfer learning,” in 21st Int. Arab Conf. on Information Technology (ACIT), Giza, Egypt, pp. 1–6, 2020.

20. A. Mehmood, M. A. Khan, M. Sharif, S. A. Khan, M. Shaheen et al., “Prosperous human gait recognition: An end-to-end system based on pre-trained CNN features selection,” Multimedia Tools and Applications, pp. 1–21, 2020. https://doi.org/10.1007/s11042-020-08928-0

21. M. I. Sharif, M. A. Khan, A. Alqahtani, M. Nazir, S. Alsubai et al., “Deep learning and kurtosis-controlled, entropy-based framework for human gait recognition using video sequences,” Electronics, vol. 11, no. 3, pp. 334, 2022.

22. J. He, F. Gao, J. Wang, Q. Wu, Q. Zhang et al., “A method combining multi-feature fusion and optimized deep belief network for emg-based human gait classification,” Mathematics, vol. 10, no. 22, pp. 4387, 2022.

23. R. J. Kavitha, C. Thiagarajan, P. I. Priya, A. V. Anand, E. A. Al-Ammar et al., “Improved harris hawks optimization with hybrid deep learning based heating and cooling load prediction on residential buildings,” Chemosphere, vol. 309, pp. 136525, 2022.

24. K. I. Anwer and S. Servi, “Clustering method based on artificial algae algorithm,” International Journal of Intelligent Systems and Applications in Engineering, vol. 9, no. 4, pp. 136–151, 2021.

25. J. Xia, H. Zhang, R. Li, Z. Wang, Z. Cai et al., “Adaptive barebones salp swarm algorithm with quasi-oppositional learning for medical diagnosis systems: A comprehensive analysis,” Journal of Bionic Engineering, vol. 19, no. 1, pp. 240–256, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.