Open Access

ARTICLE

Metaheuristics with Vector Quantization Enabled Codebook Compression Model for Secure Industrial Embedded Environment

Department of Biomedical Engineering, Saveetha School of Engineering, Saveetha Institute of Medical and Technical Sciences, Saveetha University, Saveetha Nagar, Thandalam, Chennai, 602105, India

* Corresponding Author: S. Srinivasan. Email:

Intelligent Automation & Soft Computing 2023, 36(3), 3607-3620. https://doi.org/10.32604/iasc.2023.036647

Received 08 October 2022; Accepted 06 December 2022; Issue published 15 March 2023

Abstract

The Industrial Internet of Things (IIoT) has evolved rapidly, and the image data collected by terminal devices or IoT nodes are tied to users' private data. Image sensors are increasingly common as an automation tool in the IIoT. Because such a system transfers an enormous number of images at any one time, one of its most significant challenges is to reduce the quantity of data sent, and hence the bandwidth consumed, without compromising image quality. Compressing images at the sensor speeds up data transfer while reducing bandwidth use. Traditional methods of protecting sensitive data are less effective in an IoT-dominated environment owing to the involvement of third parties. An image encryption model provides a safe and adaptable way to protect the confidentiality of image transmission and storage inside an IIoT system, which helps to keep image datasets safe. Vector quantization (VQ) is a form of image compression, and the Linde–Buzo–Gray (LBG) algorithm is an extensively used VQ algorithm. The purpose of this research is therefore to create an artificial hummingbird optimization with LBG-enabled codebook creation and encryption (AHBO-LBGCCE) approach for use in an IIoT setting. The AHBO-LBGCCE method first uses the LBG model in conjunction with the AHBO algorithm to construct the VQ codebook. The Burrows-Wheeler Transform (BWT) model is then used for codebook compression. In addition, the Blowfish algorithm carries out the encryption so that security is attained. A comprehensive experimental investigation is carried out to verify the effectiveness of the proposed algorithm in comparison to other algorithms. The experimental values confirm the enhanced performance of the proposed approach, and the outcomes are examined from several perspectives.

The Industrial Internet of Things (IIoT) is the primary impetus behind the development of the 6th generation (6G) mobile communications infrastructure [1]. An artificial intelligence (AI) supported 6G network will present a new paradigm that promotes the inclusion of vertical industries in scenarios such as industrial digital transformation and smart manufacturing [2]. The evolving 6G network needs to offer ultra-high data rates with ultra-low latency, reliability, ubiquitous intelligence, and massive connections [3]. These capabilities support intelligent production scheduling, predictive maintenance of equipment, high-precision product quality control, and other value-added services in a variety of industrial IoT systems, giving rise to the fourth industrial revolution [4]. With the phenomenal rise of the Internet, people are becoming more concerned about the security of their data, and several strategies have been presented for protecting sensitive information. One such method is data hiding [5], which embeds large datasets within carriers so that the information cannot be recognised, thereby achieving the goal of information security [6].

Data hiding may be divided into two categories, irreversible and reversible, and it is often used in cloud applications for accessorial data embedding, namely the embedding of keywords for image retrieval [7]. One of the key concerns is therefore to maximise payload while minimising distortion. Vector quantization (VQ) is a lossy image compression method that may be broken down into three stages: codebook training, image compression, and decompression. The Linde-Buzo-Gray (LBG) technique has been used extensively to create conventional codebooks [8], and researchers have proposed ways to speed up both the index code search and the design of the codebook. Using the codebook as a guide, the image is compressed into an index table. During decompression, the index table is converted back into an image by using a matching codebook [9]. VQ is an efficient and reliable source coding technology. The LBG approach was named after the individuals who first proposed it, and a number of improved variants exist [10]. The technology provides a straightforward decoding strategy in addition to a high compression ratio.

In this work, an artificial hummingbird optimization with LBG-enabled codebook creation and encryption approach, known as AHBO-LBGCCE, is developed for use in an IIoT setting. The AHBO-LBGCCE method first uses the LBG model in conjunction with the AHBO algorithm to construct the VQ codebook. The Burrows-Wheeler Transform (BWT) model is then used for codebook compression. In addition, the Blowfish algorithm carries out the encryption so that security is attained. A comprehensive experimental investigation is carried out to verify the effectiveness of the proposed algorithm in comparison to other algorithms.

In [11], the whale optimization algorithm (WOA) is used to determine the best codebook for image compression. WOA combines a variety of search strategies and is used to construct a robust codebook for image compression. Its results on a variety of standard images show that the approach produces compressed images with the desired characteristics, and its compression capability surpassed that of the PSO, BA, and FA approaches. A manta ray foraging optimization (MRFO) with LBG-based microarray image compression (MRFOLBG-MIC) technique is investigated by Alkhaldi et al. [12]. The LBG technique generally constructs a locally optimal codebook for image compression, and the MRFO method is used to improve the resulting LBG-VQ codebook.

An effective method for compressing biomedical images on a cloud computing (CC) platform using HHO-based LBG techniques was proposed by Satish Kumar et al. [13]. The method allows a seamless transition between the exploration phase and the exploitation phase. A fragile data hiding method for digital images based on lattice VQ (LVQ) was provided in [14]. In a primary preprocessing phase, the image is converted from the spatial domain to the frequency domain using an integer-to-integer discrete wavelet transform (IIDWT). The A4 and Z4 lattices of LVQ are then applied to embed data into the cover image. The authors of [15] focus on steganography in Internet Low Bitrate Codec (iLBC) speech streams. The secret information is embedded by adjusting the search range of the gain codebooks used to quantize the gain values.

A hardware-efficient VQ-based image compression circuit for high-speed image sensors is investigated by Huang et al. [16]. In this circuit, a self-organizing map (SOM) performs on-chip learning of codebooks in order to flexibly meet the requirements of many applications. The authors propose a reconfigurable complete-binary adder tree, in which the arithmetic units are fully utilised, as a means of decreasing the hardware resources required. Filali et al. [17] establish a new hierarchical geometry approach based on adaptive Tree-Structured Point-LVQ (TSPLVQ). This technology structures three-dimensional content hierarchically, which improves the compression efficiency of static point clouds. In accordance with the adaptive and multiscale architecture of the system, two quantization techniques are used to represent the three-dimensional point clouds recursively as a succession of embedded truncated cubic lattices.

In this work, we present a new AHBO-LBGCCE system for the construction and compression of secure codebooks in the IIoT environment. The AHBO-LBGCCE method first uses the LBG model in conjunction with the AHBO algorithm to construct the VQ codebook. The BWT model is then utilised for codebook compression. In addition, the Blowfish algorithm is utilised for encryption in order to achieve security.

3.1 AHBO Based Codebook Construction Process

VQ is a block coding mechanism used for lossy image compression. In the presented method, the construction of the codebook is a crucial process [18]. Consider

Subjected to the constraint provided by the subsequent equation:

Figure 1: Overall VQ process
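As a rough illustration of the overall VQ process in Fig. 1, the sketch below splits a grayscale image into non-overlapping blocks, maps each block to the index of its nearest codeword, and rebuilds an image from the index table. The function names, the 4 × 4 block size, and the squared Euclidean distance are assumptions made for this example and are not specified in the text.

```python
import numpy as np

def image_to_blocks(image, block=4):
    """Split a 2-D image into non-overlapping block x block vectors (assumed layout)."""
    h, w = image.shape
    h, w = h - h % block, w - w % block          # crop so the image tiles exactly
    img = image[:h, :w]
    tiles = img.reshape(h // block, block, w // block, block).swapaxes(1, 2)
    return tiles.reshape(-1, block * block).astype(np.float64), (h, w)

def vq_encode(vectors, codebook):
    """Return, for every input vector, the index of the nearest codeword."""
    dist = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1)

def vq_decode(indices, codebook, shape, block=4):
    """Rebuild an image of the given (cropped) shape from the index table."""
    h, w = shape
    tiles = codebook[indices].reshape(h // block, w // block, block, block)
    return tiles.swapaxes(1, 2).reshape(h, w)
```

Only the index table needs to be transmitted; the decoder looks each index up in its own copy of the codebook.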

In 1957, Lloyd developed an algorithm for scalar quantization. In 1980, Linde, Buzo, and Gray generalised it for use with VQ, which gave rise to the LBG algorithm. To define the codebook, it applies two predetermined conditions to the input vectors. The LBG approach applies these two conditions iteratively, as follows, to arrive at a locally optimal codebook:

• Split the input vectors into groups using the minimum distance rule. The resulting partition is stored in a binary indicator matrix whose elements are given by Eq. (4),

• Find the centroid of each group. The former codeword is replaced with this centroid:

• Return to the first step and repeat until no change in cj takes place.
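The three steps above can be sketched as follows. This is a minimal illustration and not the authors' implementation: the random initial codebook, the squared Euclidean distance, the iteration cap, and the handling of empty groups (the old codeword is kept) are all assumptions.

```python
import numpy as np

def lbg(vectors, codebook_size, max_iter=100, seed=0):
    """Iterate the partition and centroid steps until the codewords stop changing."""
    rng = np.random.default_rng(seed)
    # Assumption: start from randomly chosen, distinct training vectors.
    start = rng.choice(len(vectors), size=codebook_size, replace=False)
    codebook = vectors[start].astype(np.float64)

    for _ in range(max_iter):
        # Step 1: assign every vector to its nearest codeword (minimum distance rule).
        dist = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)

        # Step 2: replace each codeword with the centroid of its group.
        updated = codebook.copy()
        for j in range(codebook_size):
            members = vectors[labels == j]
            if len(members) > 0:                 # keep the old codeword if the group is empty
                updated[j] = members.mean(axis=0)

        # Step 3: stop once no codeword changes.
        if np.allclose(updated, codebook):
            break
        codebook = updated

    # Final assignment against the returned codebook.
    dist = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return codebook, dist.argmin(axis=1)
```

Because the result depends on the starting codebook, the procedure only reaches a local optimum, which is the motivation for seeding a metaheuristic with it.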

The AHBO is a bio-inspired optimization approach based on the intelligent behaviours of hummingbirds (HB) [19]. The three most essential components of AHBO are discussed in the following.

Sources of food: An HB frequently evaluates the properties of food sources in order to select an appropriate one from a group of sources. These properties include the time elapsed since the flowers were last visited, the nectar-refilling rate, the content of each individual flower, and the quality of the nectar.

Hummingbird: Each HB is assigned to a specific food source from which it feeds, so the HB and its food source occupy the same position. An HB can remember the location and nectar-refilling rate of its food source and share this knowledge with other members of the population. It can also remember how long it has been since it last visited each food source.

Visit table: The visit table records the visit level of each food source for each HB, which represents the time elapsed since that HB's most recent visit to the food source. An HB gives priority to food sources with a high visit level.
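A small sketch of how a visit table could drive the choice of a target food source is given below. The tie-breaking rule (prefer the source with the better fitness, here the lower value), the exclusion of a bird's own source, and the update rule are assumptions drawn from the general description of the artificial hummingbird algorithm rather than from this section; `pick_target` and `record_visit` are hypothetical names.

```python
import numpy as np

def pick_target(visit_table, fitness, bird):
    """Pick the food source with the highest visit level for `bird`.

    Assumption: ties are broken by the better (lower) fitness value, and a
    bird never targets its own food source.
    """
    levels = visit_table[bird].astype(np.float64)
    levels[bird] = -np.inf                       # exclude the bird's own source
    candidates = np.flatnonzero(levels == levels.max())
    return int(candidates[np.argmin(fitness[candidates])])

def record_visit(visit_table, bird, target):
    """Assumed update: reset the visited source to 0 and age the other sources by 1."""
    idx = np.arange(visit_table.shape[1])
    others = (idx != target) & (idx != bird)
    visit_table[bird, others] += 1
    visit_table[bird, target] = 0
```

For a population of n birds the table is an n × n integer array, with row i holding the visit levels seen by bird i.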

The AHBO algorithm is a swarm-based metaheuristic for solving optimization problems. This section presents three mathematical models that represent the three foraging behaviours of HB: directed foraging, territorial foraging, and migratory foraging. Like other swarm-based optimizers, the method may be broken down into three primary stages, and the flying and foraging behaviour of HB served as the inspiration for the optimization strategy. Directed foraging employs three flight skills, namely axial flight, diagonal flight, and omnidirectional flight, as stated in the following equation:

In Eq. (2),

While

Figure 2: Steps involved in AHBO algorithm

Territorial foraging: The succeeding expression indicates the local searching of HB:

In Eq. (8),

In Eq. (9),

The operation of the AHBO-based LBG model is as follows. The input image is first cut into non-overlapping blocks before the LBG technique is applied in the quantization process [20]. The codebook produced by the LBG model is then trained using the AHBO technique and has to satisfy the requirement of global convergence. The index numbers are sent over the communication medium and are reconstructed at the destination by the decoder. The reconstructed indices and their corresponding codewords are arranged in order to generate a decompressed image whose size closely matches that of the input image. The AHBO model is discussed in the following steps.

Step1: (Initializing parameters): The codebook created through the LBG approach is declared the initial solution, and the remaining solutions are generated randomly. Each solution denotes a codebook of

Step2: (Selecting the current optimum solution): The fitness of every solution is computed according to Eq. (1), and the position with the highest fitness is chosen as the best outcome.

Step3: (Generation of novel solutions): The hummingbird positions are updated. If a randomly generated number (K) is greater than a, the worst position is replaced with the newly discovered position, and the optimum position is kept unchanged.

Step4: Rank the solutions using the fitness function and choose the best solution.

Step5: (Ending condition): Repeat Steps 2 and 3 until the stopping condition is reached.

3.2 Codebook Compression Process

In the presented AHBO-LBGCCE technique, the codebook is compressed using the BWT technique. Let us

Step1: Append a $ symbol to the end of S; $ is treated as lexicographically smaller than every symbol in S.

Step2: Generate the matrix M, whose first row is S. The second row of M is the first row cyclically shifted one position to the right, the third row is the second row cyclically shifted one position to the right, and this is repeated until row n is reached. Inspecting the matrix shows that shifting the nth row one position to the right yields the first row S again, which means that the cyclic shifts up to the nth row complete one full rotation of the sequence S.

Step3: Sort the rows of M lexicographically, and create the transformed text BWT(S) by taking the final column of M.
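The three steps can be sketched on a character string as follows. The sketch sorts the rotations before reading the last column, which is what the standard Burrows-Wheeler Transform does, and adds a naive inverse to show that the transform is lossless. Treating the codebook as a string of symbols is an assumption made for illustration.

```python
def bwt(s, end="$"):
    """Burrows-Wheeler Transform of s using `end` as the smallest, unique terminator."""
    if end in s:
        raise ValueError("terminator must not occur in the input")
    s = s + end                                               # Step 1: append $
    rows = [s[-i:] + s[:-i] for i in range(len(s))]          # Step 2: right cyclic shifts
    rows.sort()                                               # sort rotations lexicographically
    return "".join(row[-1] for row in rows)                   # Step 3: read the last column

def inverse_bwt(t, end="$"):
    """Naive inverse: repeatedly prepend the transformed column and re-sort."""
    table = [""] * len(t)
    for _ in range(len(t)):
        table = sorted(t[i] + table[i] for i in range(len(t)))
    original = next(row for row in table if row.endswith(end))
    return original[:-1]

print(bwt("banana"))               # annb$aa
print(inverse_bwt(bwt("banana")))  # banana
```

The transform only permutes the symbols so that equal symbols tend to cluster; the size reduction comes from a coder applied afterwards, which this section does not specify.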

The Blowfish (BF) method is used here for encrypting the data. BF is a symmetric-key block cipher with a block size of 64 bits and a variable key length of 32 to 448 bits [22]. It was designed by Bruce Schneier as a fast, free alternative to the most common encryption methods and can be used as a drop-in replacement for DES or IDEA. BF is not protected by a patent and is licence-free, so anybody can use it without paying a fee, and it has been incorporated into numerous software tools, SplashID among them. Because the design is public, it has been analysed extensively, and it has gradually gained acceptance as a reliable encryption system. It runs efficiently on a number of different processor types and is suitable for both domestic and commercial applications. Lightweight cryptography has become more significant in recent years because few primitives are capable of operating on devices with little CPU capacity.

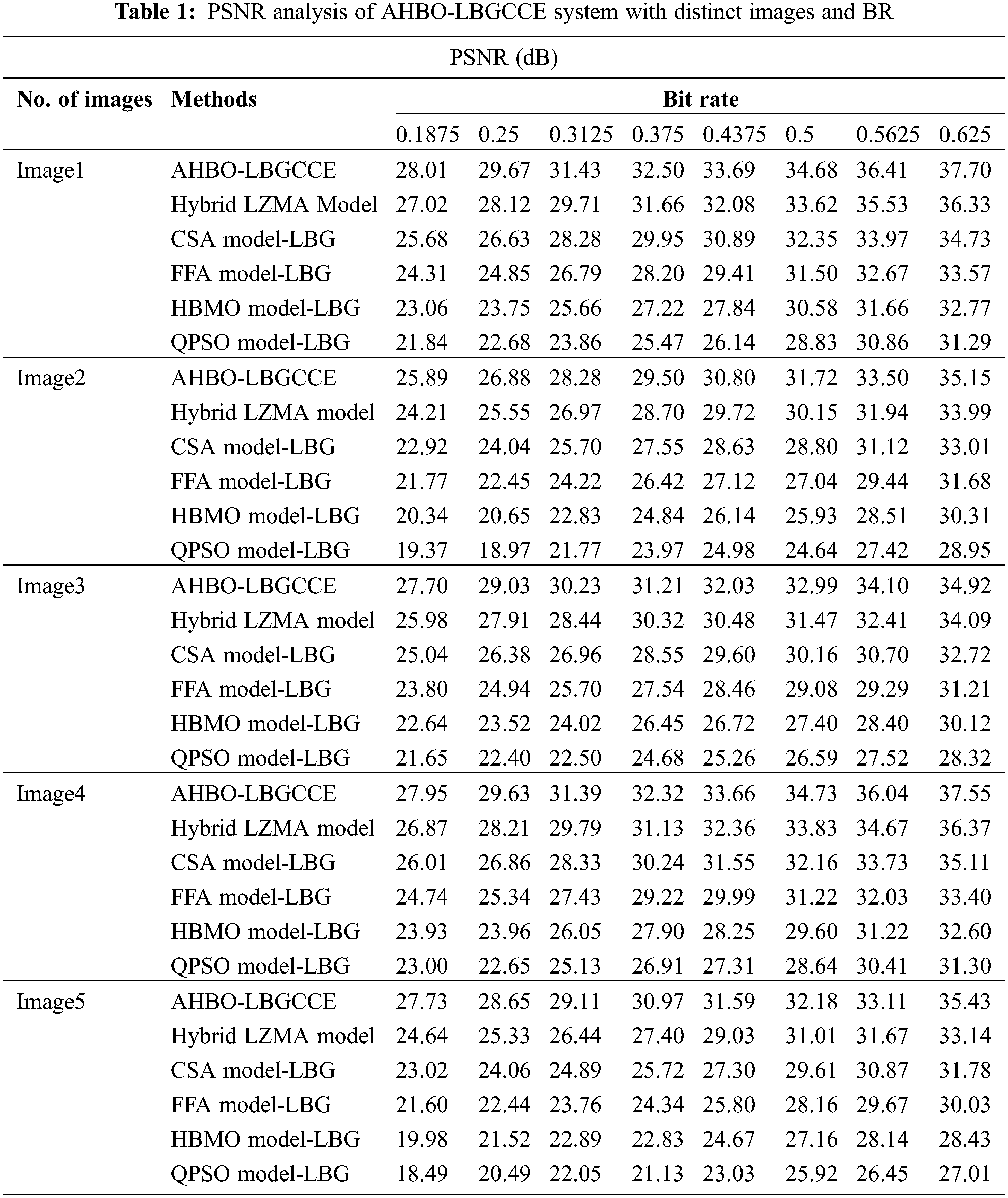

In this section, the experimental validation of the AHBO-LBGCCE technique is carried out on a set of images. Table 1 offers a comprehensive PSNR examination of the AHBO-LBGCCE model under varying levels of bit rate (BR).
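For reference, the sketch below shows how PSNR and bit rate are commonly computed for VQ-compressed images. The section does not state its exact formulas, so the 8-bit peak value of 255 and the definition of bit rate as index bits per pixel are assumptions. Under the further assumption of 4 × 4 blocks, a codebook of 8 codewords gives log2(8) / 16 = 0.1875 bits per pixel, which matches one of the BR levels reported here.

```python
import math
import numpy as np

def psnr(original, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = original.astype(np.float64) - reconstructed.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return math.inf                      # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

def bit_rate(codebook_size, block_pixels):
    """Bits per pixel when each block is sent as one fixed-length codebook index."""
    return math.log2(codebook_size) / block_pixels
```

A higher PSNR at the same bit rate indicates a codebook that reconstructs the image more faithfully.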

Fig. 3 presents a brief PSNR examination of the AHBO-LBGCCE model at diverse BR levels for image 1. The results show that the AHBO-LBGCCE method reached higher PSNR values at each BR. For example, with a BR of 0.1875, the AHBO-LBGCCE technique attained the maximum PSNR of 28.01 dB. In contrast, the hybrid LZMA, CSA Model_LBG, FFA Model_LBG, HBMO Model_LBG, and QPSO Model_LBG approaches obtained lower PSNR values of 27.02, 25.68, 24.31, 23.06, and 21.84 dB, respectively.

Figure 3: PSNR analysis of AHBO-LBGCCE system under image 1

Fig. 4 shows a detailed PSNR inspection of the AHBO-LBGCCE approach at varied BR levels for image 2. The outcome demonstrates that the AHBO-LBGCCE technique obtained the maximum PSNR values at each BR. For example, with a BR of 0.1875, the AHBO-LBGCCE approach achieved the maximum PSNR of 25.89 dB. On the other hand, the hybrid LZMA, CSA Model_LBG, FFA Model_LBG, HBMO Model_LBG, and QPSO Model_LBG techniques obtained lower PSNR values of 24.21, 22.92, 21.77, 20.34, and 19.37 dB, respectively.

Figure 4: PSNR analysis of AHBO-LBGCCE system under image 2

Fig. 5 illustrates a comparative PSNR study of the AHBO-LBGCCE technique at several BR levels for image 3. The outcomes show that the AHBO-LBGCCE approach achieved higher PSNR values at each BR. For example, with a BR of 0.1875, the AHBO-LBGCCE approach provided a higher PSNR of 27.70 dB. In contrast, the hybrid LZMA, CSA Model_LBG, FFA Model_LBG, HBMO Model_LBG, and QPSO Model_LBG techniques attained lower PSNR values of 25.98, 25.04, 23.80, 22.64, and 21.65 dB, respectively.

Figure 5: PSNR analysis of AHBO-LBGCCE system under image 3

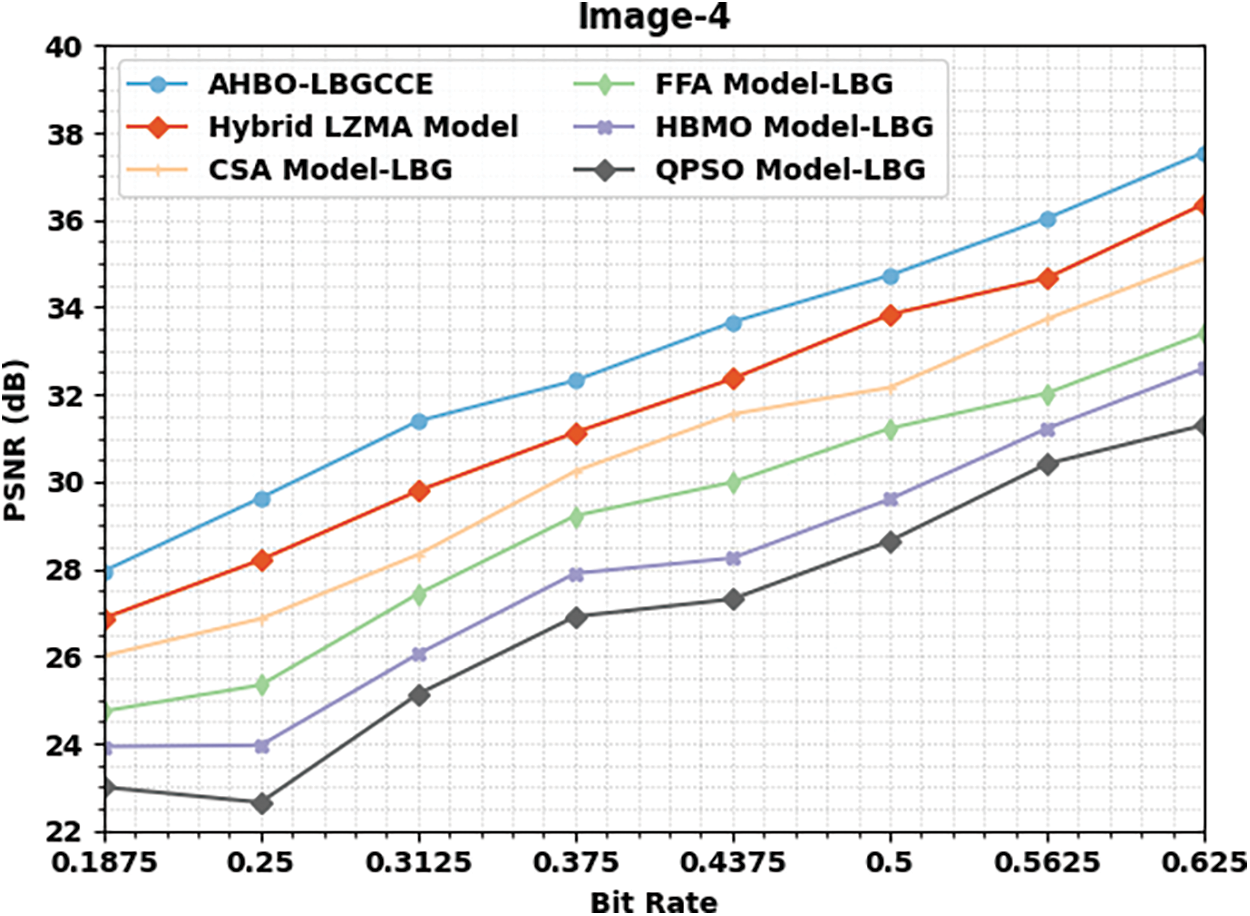

Fig. 6 presents comparative PSNR outcomes of the AHBO-LBGCCE model at different BR levels for image 4. The experimental values show that the AHBO-LBGCCE model achieved better PSNR values at each BR. For example, with a BR of 0.1875, the AHBO-LBGCCE technique accomplished the highest PSNR of 27.95 dB. Meanwhile, the hybrid LZMA, CSA Model_LBG, FFA Model_LBG, HBMO Model_LBG, and QPSO Model_LBG techniques demonstrated lower PSNR values of 26.87, 26.01, 24.74, 23.93, and 23.00 dB, respectively.

Figure 6: PSNR analysis of AHBO-LBGCCE system under image 4

Fig. 7 gives a comprehensive PSNR analysis of the AHBO-LBGCCE method at several BR levels for image 5. The experimental outcomes show that the AHBO-LBGCCE technique attained higher PSNR values at each BR. For example, with a BR of 0.1875, the AHBO-LBGCCE approach demonstrated a greater PSNR of 27.73 dB. In contrast, the hybrid LZMA, CSA Model_LBG, FFA Model_LBG, HBMO Model_LBG, and QPSO Model_LBG techniques exhibited inferior PSNR values of 24.64, 23.02, 21.60, 19.98, and 18.49 dB, respectively.

Figure 7: PSNR analysis of AHBO-LBGCCE system under image 5

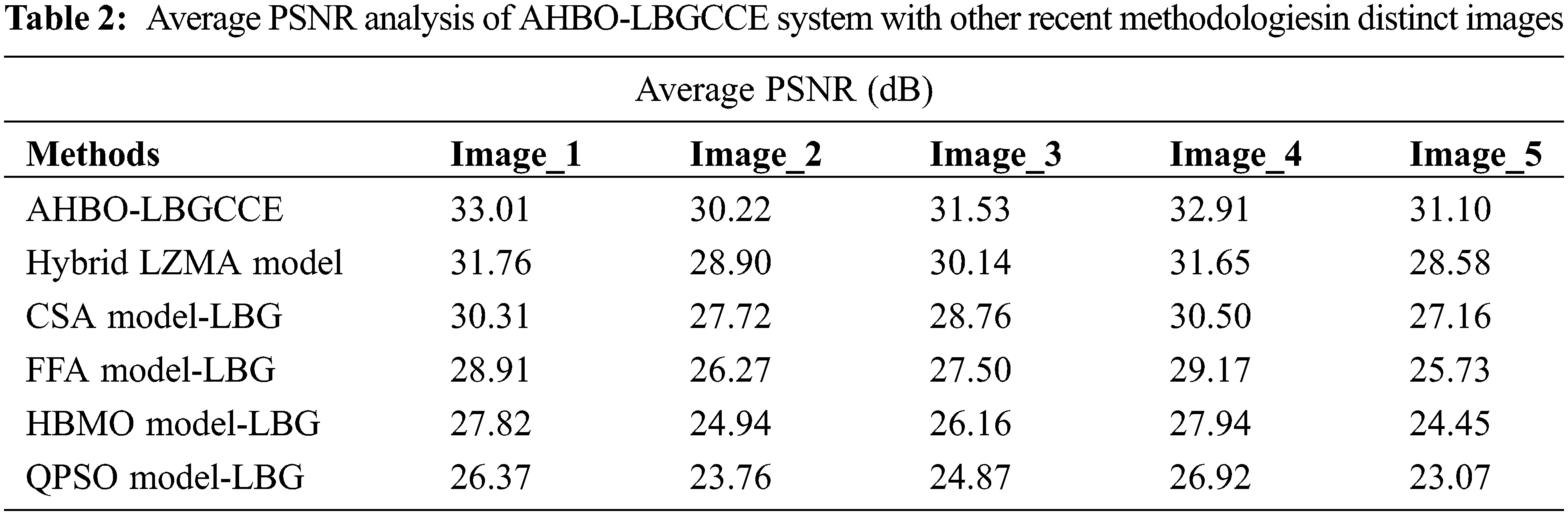

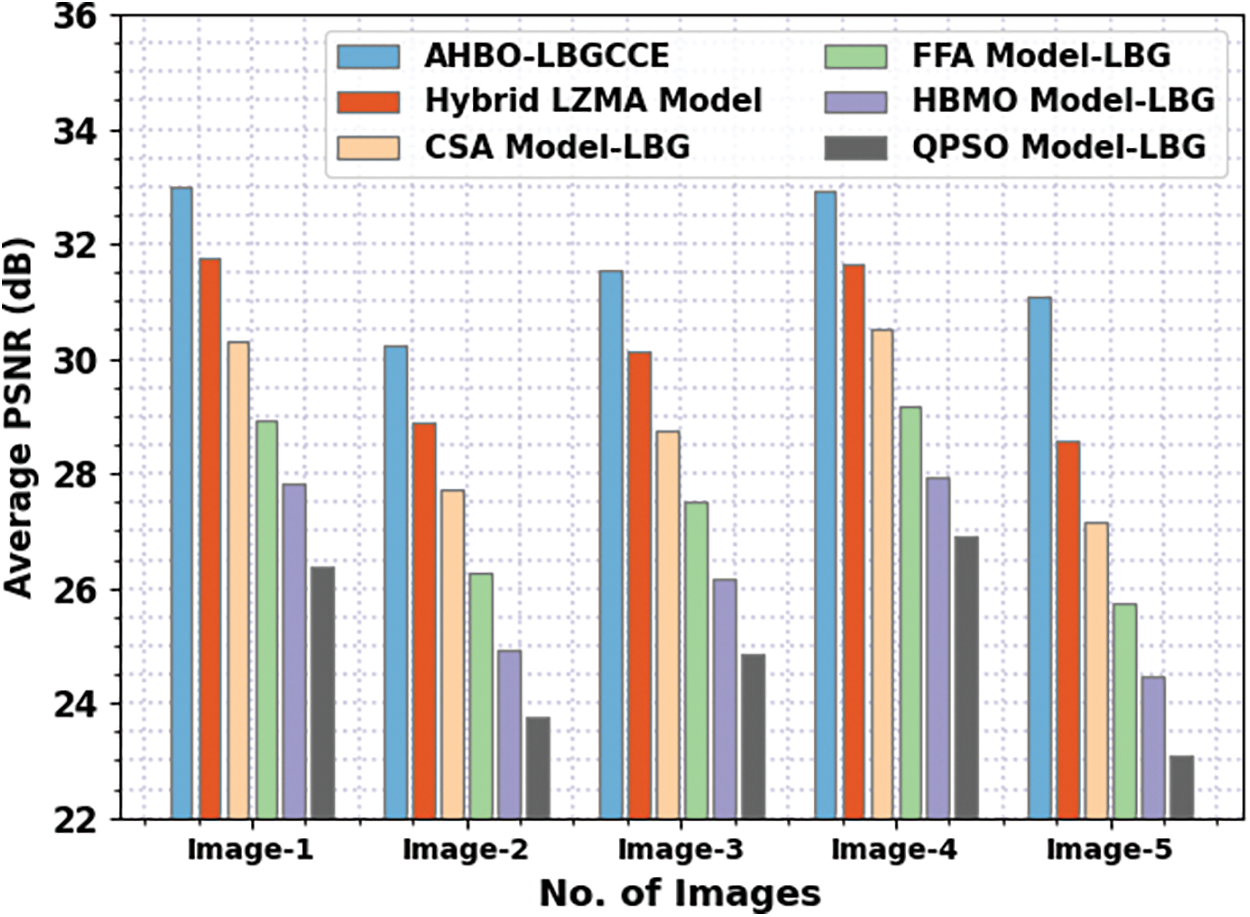

Next, an average PSNR study of the AHBO-LBGCCE model is presented in Table 2 and Fig. 8. The outcome demonstrates the improved performance of the AHBO-LBGCCE model, with maximum PSNR values on all test images. The FFA Model_LBG, HBMO Model_LBG, and QPSO Model_LBG approaches demonstrated the lowest PSNR values. Although the hybrid LZMA and CSA Model_LBG reported closer average PSNR values, the AHBO-LBGCCE model outperformed the other ones.

Figure 8: Average PSNR analysis of AHBO-LBGCCE system with distinct images

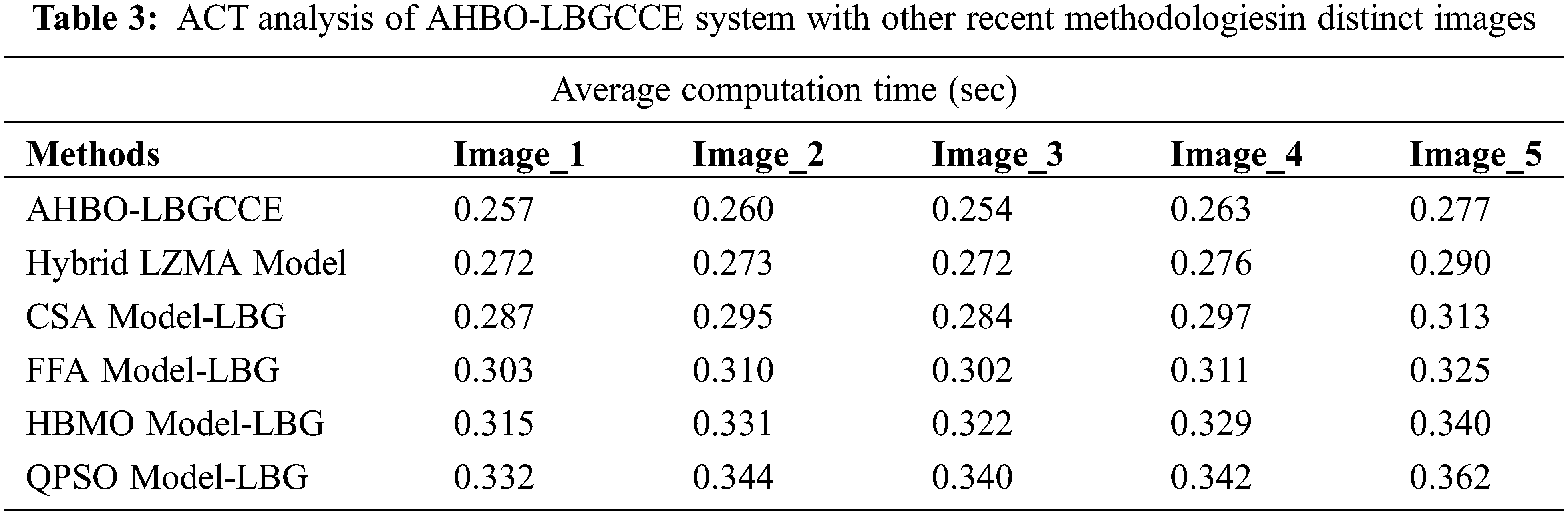

Finally, an average computation time (ACT) examination of the AHBO-LBGCCE system is presented in Table 3 and Fig. 9.

Figure 9: ACT analysis of AHBO-LBGCCE system under distinct images

The result indicates that the AHBO-LBGCCE approach obtained superior performance, with minimal ACT values on all test images. The FFA Model_LBG, HBMO Model_LBG, and QPSO Model_LBG approaches showed poor performance. Although the hybrid LZMA and CSA Model_LBG reported closer ACT values, the AHBO-LBGCCE model outperformed the other ones. These results confirm the enhanced performance of the AHBO-LBGCCE method over other recent approaches.

In this research, we developed an innovative AHBO-LBGCCE method for the secure generation and compression of codebooks in the context of the IIoT. The AHBO-LBGCCE method first uses the LBG model in conjunction with the AHBO algorithm to construct the VQ codebook. The BWT model is then used for codebook compression. In addition, the Blowfish algorithm is used for encryption in order to achieve security. A comprehensive experimental investigation was carried out to verify the effectiveness of the presented technique in comparison to other algorithms. The experimental results confirmed the improved performance of the proposed approach under several measures. In the future, hybrid metaheuristics may be used to improve the performance of the AHBO-LBGCCE approach.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. J. Löhdefink, A. Bär, J. Sitzmann and T. Fingscheidt, “Adaptive bitrate quantization scheme without codebook for learned image compression,” in Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition, New Orleans, Louisiana, pp. 1732–1737, 2022.

2. X. Zhang, F. An, L. Chen, I. Ishii and H. J. Mattausch, “A modular and reconfigurable pipeline architecture for learning vector quantization,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 65, no. 10, pp. 3312–3325, 2018.

3. U. Jayasankar, V. Thirumal and D. Ponnurangam, “A survey on data compression techniques: From the perspective of data quality, coding schemes, data type and applications,” Journal of King Saud University-Computer and Information Sciences, vol. 33, no. 2, pp. 119–140, 2021.

4. N. Floropoulos and A. Tefas, “Complete vector quantization of feedforward neural networks,” Neurocomputing, vol. 367, no. 1, pp. 55–63, 2019.

5. J. Ravishankar, M. Sharma and S. Khaidem, “A novel compression scheme based on hybrid tucker-vector quantization via tensor sketching for dynamic light fields acquired through coded aperture camera,” in Proc. of the Int. Conf. on 3D Immersion (IC3D), Brussels, Belgium, IEEE, pp. 1–8, 2021.

6. J. Vizárraga, R. Casas, A. Marc and J. D. Buldain, “Dimensionality reduction for smart IoT sensors,” Electronics, vol. 9, no. 12, pp. 2035, 2020.

7. S. Nousias, E. V. Pikoulis, C. Mavrokefalidis and A. S. Lalos, “Accelerating deep neural networks for efficient scene understanding in automotive cyber-physical systems,” in Proc. of the 4th IEEE Int. Conf. on Industrial Cyber-Physical Systems (ICPS), Victoria, BC, Canada, IEEE, pp. 63–69, 2021.

8. M. Chen, D. Gündüz, K. Huang, W. Saad, M. Bennis et al., “Distributed learning in wireless networks: Recent progress and future challenges,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 12, pp. 1–26, 2021.

9. S. M. Darwish and A. A. Almajtomi, “Metaheuristic-based vector quantization approach: A new paradigm for neural network-based video compression,” Multimedia Tools and Applications, vol. 80, no. 5, pp. 7367–7396, 2021.

10. Y. Jeon, A. M. Mohammadi and L. Namyoon, “Communication-efficient federated learning over MIMO multiple access channels,” IEEE Transactions on Communications, vol. 70, no. 10, pp. 6547–6562, 2022.

11. J. Rahebi, “Vector quantization using whale optimization algorithm for digital image compression,” Multimedia Tools and Applications, vol. 2022, pp. 1–27, 2022.

12. N. A. Alkhaldi, R. A. A. Alsedais, H. T. Halawani and S. M. A. Aboutaleb, “Manta ray foraging optimization with vector quantization based microarray image compression technique,” Computational Intelligence and Neuroscience, vol. 2022, pp. 1–18, 2022.

13. T. Satish Kumar, S. Jothilakshmi, B. C. James, M. Prakash, N. Arulkumar et al., “HHO-based vector quantization technique for biomedical image compression in cloud computing,” International Journal of Image and Graphics, vol. 2021, pp. 1–18, 2021.

14. E. Akhtarkavan, B. Majidi, M. F. M. Salleh and J. C. Patra, “Fragile high capacity data hiding in digital images using integer-to-integer DWT and lattice vector quantization,” Multimedia Tools and Applications, vol. 79, no. 19, pp. 13427–13447, 2020.

15. Z. Su, W. Li, G. Zhang, D. Hu and X. Zhou, “A steganographic method based on gain quantization for iLBC speech streams,” Multimedia Systems, vol. 26, no. 2, pp. 223–233, 2020.

16. Z. Huang, X. Zhang, L. Chen, Y. Zhu, F. An et al., “A vector-quantization compression circuit with on-chip learning ability for high-speed image sensor,” IEEE Access, vol. 5, pp. 22132–22143, 2017.

17. A. Filali, V. Ricordel, N. Normand and W. Hamidouche, “Rate-distortion optimized tree-structured point-lattice vector quantization for compression of 3D point clouds geometry,” in Proc. of the IEEE Int. Conf. on Image Processing (ICIP), Taipei, Taiwan, pp. 1099–1103, 2019.

18. M. H. Horng, “Vector quantization using the firefly algorithm for image compression,” Expert Systems with Applications, vol. 39, no. 1, pp. 1078–1091, 2012.

19. W. Zhao, L. Wang and S. Mirjalili, “Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications,” Computer Methods in Applied Mechanics and Engineering, vol. 388, pp. 114194, 2022.

20. S. M. Syfullah, Z. B. Zakaria, M. P. Uddin, M. F. Rabbi, M. I. Afjal et al., “Efficient vector code-book generation using K-means and Linde-Buzo-Gray (LBG) algorithm for Bengali voice recognition,” in Proc. of the Int. Conf. on Advancement in Electrical and Electronic Engineering (ICAEEE), Gazipur, Bangladesh, pp. 1–4, 2018.

21. C. Tang, L. Luo, Y. Xu, G. Chen, L. Tang et al., “Sequence fusion algorithm of tumor gene sequencing and alignment based on machine learning,” Computational Intelligence and Neuroscience, vol. 2021, pp. 1–16, 2021.

22. P. Rani, P. N. Singh, S. Verma, N. Ali, P. K. Shukla et al., “An implementation of modified blowfish technique with honey bee behavior optimization for load balancing in cloud system environment,” Wireless Communications and Mobile Computing, vol. 2022, pp. 1–22, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
