Open Access

ARTICLE

Automated Disabled People Fall Detection Using Cuckoo Search with Mobile Networks

Department of Computer Science, College of Sciences and Humanities-Aflaj, Prince Sattam bin Abdulaziz University, Saudi Arabia

* Corresponding Author: Mesfer Al Duhayyim. Email:

Intelligent Automation & Soft Computing 2023, 36(3), 2473-2489. https://doi.org/10.32604/iasc.2023.033585

Received 21 June 2022; Accepted 26 October 2022; Issue published 15 March 2023

Abstract

Falls are among the most common concerns for older adults and for disabled people who use scooters and wheelchairs. Early detection of a disabled person's fall is required to improve the individual's chance of survival and to provide support whenever it is needed. In recent times, the arrival of the Internet of Things (IoT), smartphones, Artificial Intelligence (AI), wearables and so on has made it easier to design fall detection mechanisms for smart homecare. The current study develops an Automated Disabled People Fall Detection using Cuckoo Search Optimization with Mobile Networks (ADPFD-CSOMN) model. The major aim of the proposed model is to detect fall events and distinguish them from non-fall events automatically. To attain this, the presented ADPFD-CSOMN technique incorporates the MobileNet model for the feature extraction process. Next, a CSO-based hyperparameter tuning process is executed for the MobileNet model, which constitutes the novelty of the paper. Finally, the Radial Basis Function (RBF) classification model recognises and classifies the instances as either fall or non-fall. To validate the performance of the proposed ADPFD-CSOMN model, a comprehensive experimental analysis was conducted. The results confirmed the enhanced fall classification outcomes of the ADPFD-CSOMN model over other approaches, with an accuracy of 99.17%.

Disabled people are highly susceptible to hazardous falls and are often incapable of calling for or getting help at crucial moments. During such emergencies, it is challenging for them to push the emergency button on their necklace or medical bracelet. This drawback creates a need for a medical alert mechanism that helps in the detection of falls [1]. A gadget should be developed that uses technology to predict the fall events of its wearer. In case of such a prediction, the device should automatically send an alert to the healthcare monitoring company. A fall can be defined as any unanticipated event that leads the individual to come to rest at a lower level [2]. Falls cause injuries that may be fatal, and they also result in psychological complaints. Individuals who have suffered serious falls may not be able to perform their day-to-day activities and may suffer from constraints, anxiety, depression and the fear of falling again. A major problem among older adults is the fear of falling, which limits their Activities of Daily Life (ADL) [3]. This fear leads to activity constraints, which in turn weaken the muscles and cause insufficient gait balance. Consequently, the independence of the individual is affected, and older people come to rely on others for their mobility. Against this background, wearable or remote technologies are required for tracking, detecting and preventing falls so as to enhance the overall Quality of Life (QoL). Research on falls can be divided into fall prevention and fall detection [4].

When a fall is detected with the help of cameras or sensors in order to summon help, the process is called fall detection [5]. Conversely, fall prevention focuses on averting falls by monitoring human locomotion. Various advanced mechanisms have been developed in recent years with the help of sensors and methods to detect as well as prevent falls [6]. Detection refers to the task of identifying a specific object or a specific incident in the provided context. Recognition, on the other hand, can be explained as the assignment of an incident or an instance to a specific class. Thus, the fall ‘detection’ process can be defined as an identification process that determines, from the presented data, whether a fall has occurred. Fall ‘recognition’ can be defined as the recognition of a particular kind of fall event, such as a fall from the sitting position, forward fall, fall from a lying position, backward fall, fall from the standing position, and so on [7,8]. Fall recognition is highly helpful in increasing the appropriateness of the response. For instance, a fall from a standing position can be fatal, whereas the outcomes of a fall from either a lying or a sitting posture depend on the prevailing conditions. If the fall type is correctly identified, effective responses can be raised to handle the probable complications of that particular kind of fall [9]. This study does not explicitly state whether a reviewed mechanism is a fall recognition or a fall detection mechanism. Rather, it uses the term ‘fall detection’ to represent both general fall detection and activity recognition mechanisms. The Machine Learning (ML) technique offers a learning capability to the mechanism on the basis of trends and patterns in the data. During the data collection process, the sensors collect the data related to distinct fall variables [10]. Therefore, ML techniques are utilized in the data processing mechanisms for the identification and classification of fall activities based on their application needs.

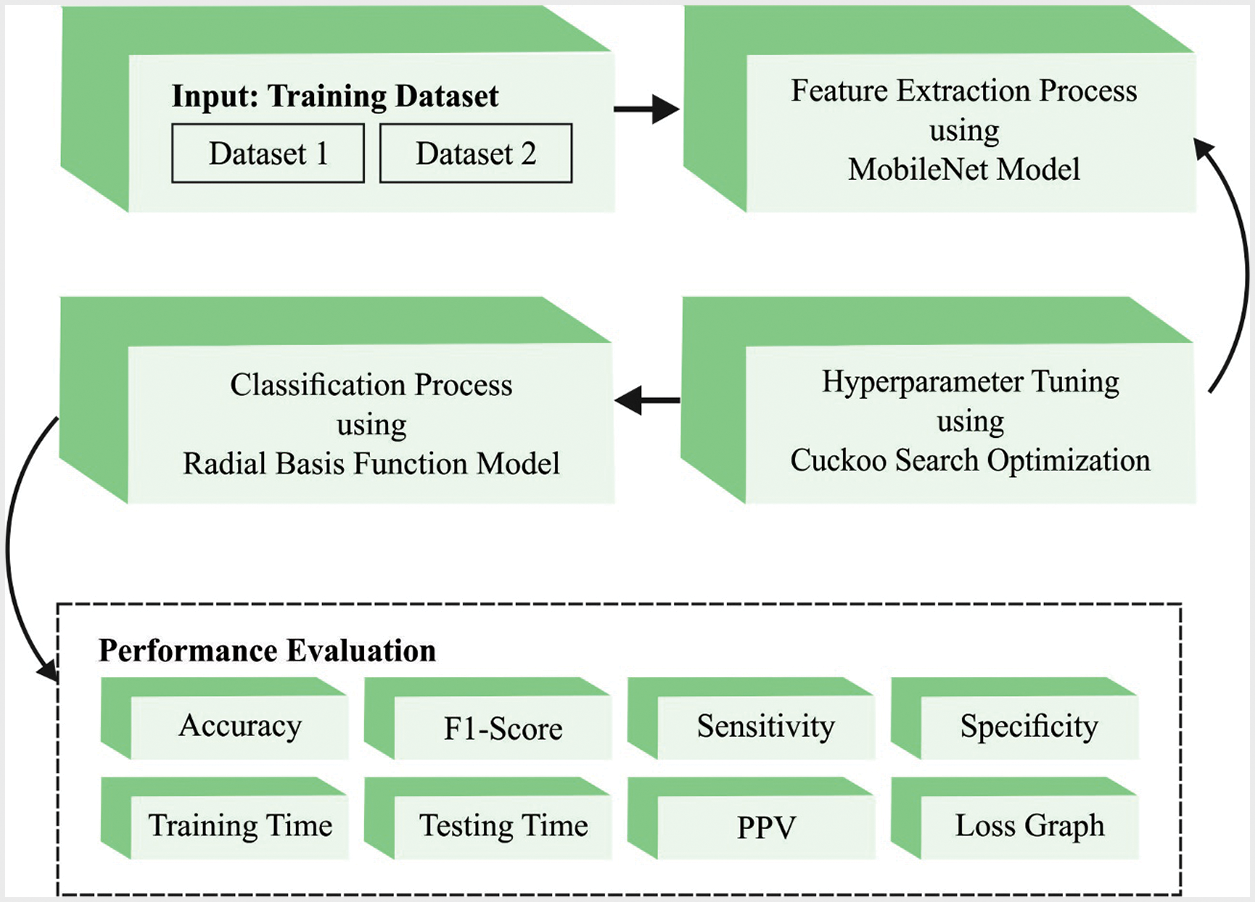

The current study presents an Automated Disabled People Fall Detection using Cuckoo Search Optimization with Mobile Networks (ADPFD-CSOMN) model. The presented ADPFD-CSOMN technique incorporates the MobileNet model for the feature extraction process in the preliminary stage. In addition, the CSO algorithm is exploited for optimal tuning of the hyperparameters involved. Finally, the Radial Basis Function (RBF) classification model recognises and classifies an instance as either a fall event or a non-fall event. To validate the superiority of the proposed ADPFD-CSOMN model, a comprehensive experimental analysis was conducted.

Yacchirema et al. [11] developed the Internet of Things and Ensemble (IoTE)-Fall method, a smart fall detection technology for elderly persons in indoor environments. The proposed technique used IoT technology and an ensemble ML method. The IoTE-Fall technique applied a 3D-axis accelerometer embedded in an IPv6 over Low-Power Wireless Personal Area Network (6LoWPAN) wearable device. This device can capture real-time data regarding the movements of elderly volunteers. In the study conducted earlier [12], a low-cost, highly accurate ML-based fall detection technique was presented. In particular, a novel online feature extraction methodology was developed in this study that efficiently exploited the time features of the falls. Additionally, a new ML-based technique was developed to achieve an optimal trade-off between accuracy and numerical complexity. The low computation cost incurred by the presented approach allowed it to be embedded in wearable sensors. Further, its power requirement was comparatively lower than that of other devices. These features improve the independence of wearable devices, since the need for battery replacement or recharge is reduced. Hassan et al. [13] presented a fall detection architecture that identifies the falls of elderly persons and assists the family members as well as their caregivers by instantly localizing the victims. In this work, the information retrieved from the accelerometer sensors on smartphones was analysed and processed through online fall detection techniques installed on the smartphones. In indoor settings, this technique transmits a sound alert to the family member via a wireless access point at home; in outdoor settings, it sends a Short Message Service (SMS) message to the caregiver or the hospital via the mobile network base station. A hybrid Deep Learning (DL) method was applied in this study for the fall detection process.

Zerrouki et al. [14] developed an advanced technique for consistent identification of falls based on the variation of the human silhouette shape in vision monitoring. This was accomplished through the following steps: (i) reduction of the feature vector dimension via a differential evolution method; (ii) use of a curvelet transform along with area ratios to recognize the postures of a human being in the image; (iii) identification of the postures using the Support Vector Machine (SVM) model; and (iv) adaptation of a Hidden Markov Model for the classification of a video sequence as either a fall or a non-fall action. Maitre et al. [15] recommended a Deep Neural Network (DNN) method using three ultra-wide band radars to distinguish the fall events in an apartment measuring 40 square meters. The DNN had a Convolutional Neural Network (CNN) that was stacked with a Long Short-Term Memory (LSTM) model and a Fully Convolutional Network (FCN) to recognize the fall events. In other terms, the problem tackled here was a binary classification problem that discriminates the fall events from the non-fall events.

3 Process Involved in Fall Detection Model

In this study, a novel ADPFD-CSOMN method has been developed to identify and classify fall events. The presented ADPFD-CSOMN technique incorporates the MobileNet model for the feature extraction process during the preliminary stage. In addition, the CSO technique is exploited for optimal fine-tuning of the hyperparameters involved. At last, the RBF classification algorithm is utilized to recognize and classify an event as either a fall or a non-fall event. Fig. 1 illustrates the overall block diagram of the ADPFD-CSOMN approach.

Figure 1: Overall block diagram of the ADPFD-CSOMN approach

3.1 Feature Extraction Using MobileNet Model

At first, the presented ADPFD-CSOMN technique incorporates the MobileNet model design for feature extraction. The MobileNet structure is effective even with a small number of features, as seen in tasks such as palmprint detection [16]. Further, the MobileNet framework is built on depth-wise separable convolutions. The basic architecture depends on different abstraction layers, whereas the mechanism behind the distinct convolution layers seems to be a quantized formation that evaluates the common challenge. The difficulty of

Since the multiplier value is a context-specific entity, it is also used in the classification of skin diseases. Here, the value of the multiplier

The projected algorithm incorporates both depth-wise and point-wise convolution layers, which are limited by a renowned depletion parameter using the d parameter and are evaluated as given below.

Two hyperparameters, namely the resolution multiplier and the width multiplier, help in adjusting the size of the model to achieve a precise prediction. In this work, the input size of the image is
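As a rough illustration of why depth-wise separable convolutions are cheaper, the sketch below counts multiply-accumulate operations for a standard convolution and for its depth-wise plus point-wise factorisation, following the cost model commonly given for MobileNet. The function names, the symbols (`k` for kernel size, `m` and `n` for input and output channels, `f` for feature-map size) and the example layer dimensions are illustrative assumptions and are not taken from this paper; `alpha` and `rho` stand in for the width and resolution multipliers.

```python
def standard_conv_cost(k: int, m: int, n: int, f: int) -> int:
    """Multiply-accumulates of a standard k x k convolution.

    m: input channels, n: output channels, f: spatial size of the feature map.
    """
    return k * k * m * n * f * f


def separable_conv_cost(k: int, m: int, n: int, f: int,
                        alpha: float = 1.0, rho: float = 1.0) -> int:
    """Multiply-accumulates of a depth-wise separable convolution.

    alpha (width multiplier) thins the channels; rho (resolution multiplier)
    shrinks the feature map. Both names are assumptions for this sketch.
    """
    m_a = int(alpha * m)          # thinned input channels
    n_a = int(alpha * n)          # thinned output channels
    f_r = int(rho * f)            # reduced feature-map size
    depthwise = k * k * m_a * f_r * f_r   # one k x k filter per input channel
    pointwise = m_a * n_a * f_r * f_r     # 1 x 1 convolution mixing channels
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernel, 32 -> 64 channels, 112 x 112 feature map.
full = standard_conv_cost(3, 32, 64, 112)
separable = separable_conv_cost(3, 32, 64, 112)
ratio = separable / full   # equals 1/n + 1/k**2 when alpha = rho = 1
print(full, separable, round(ratio, 4))
```

For this hypothetical layer the factorised form needs roughly one eighth of the operations, and lowering `alpha` or `rho` reduces the cost further at the expense of capacity.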

3.2 Hyperparameter Adjustment Using CSO Algorithm

In this stage, the CSO algorithm is exploited for optimal fine-tuning of the hyperparameters involved. The CSO algorithm is inspired by the brood-parasitic breeding behaviour of the cuckoo [17]. This algorithm is a population-based search method in which an optimal outcome is identified for the given optimization problem. Computationally, the CSO approach rests on a few fundamental assumptions. First, the number of available host nests is fixed. Next, every cuckoo lays only one egg at timestamp t in an arbitrarily chosen host nest. At last, the highest-quality egg from the optimal nest proceeds to the upcoming generation. Consider that

If

Mantegna’s and McCulloch’s techniques are two popular techniques used in this optimization method to generate the Lévy steps. The Lévy step size is calculated by following the Mantegna approach as given herewith.
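The Mantegna approach can be sketched in a few lines of Python. This is a minimal illustration of the widely used textbook form of the algorithm, not the authors' code: the exponent `beta = 1.5` is a common default assumed here, and the function names `mantegna_sigma` and `levy_step` are hypothetical.

```python
import math
import random
from typing import Optional


def mantegna_sigma(beta: float) -> float:
    """Standard deviation of the numerator variable u in Mantegna's method."""
    numerator = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    denominator = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (numerator / denominator) ** (1 / beta)


def levy_step(beta: float = 1.5, rng: Optional[random.Random] = None) -> float:
    """Draw one Levy-distributed step: u / |v|^(1/beta)."""
    rng = rng or random.Random()
    u = rng.gauss(0.0, mantegna_sigma(beta))   # u ~ N(0, sigma_u^2)
    v = rng.gauss(0.0, 1.0) or 1e-12           # v ~ N(0, 1), guarded against 0
    return u / abs(v) ** (1 / beta)


# Draw a few steps with a fixed seed so the run is repeatable.
generator = random.Random(42)
steps = [levy_step(rng=generator) for _ in range(5)]
print(steps)
```

Most draws are small, but the heavy tail occasionally yields a very large step, which is what lets the search jump out of a local region.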

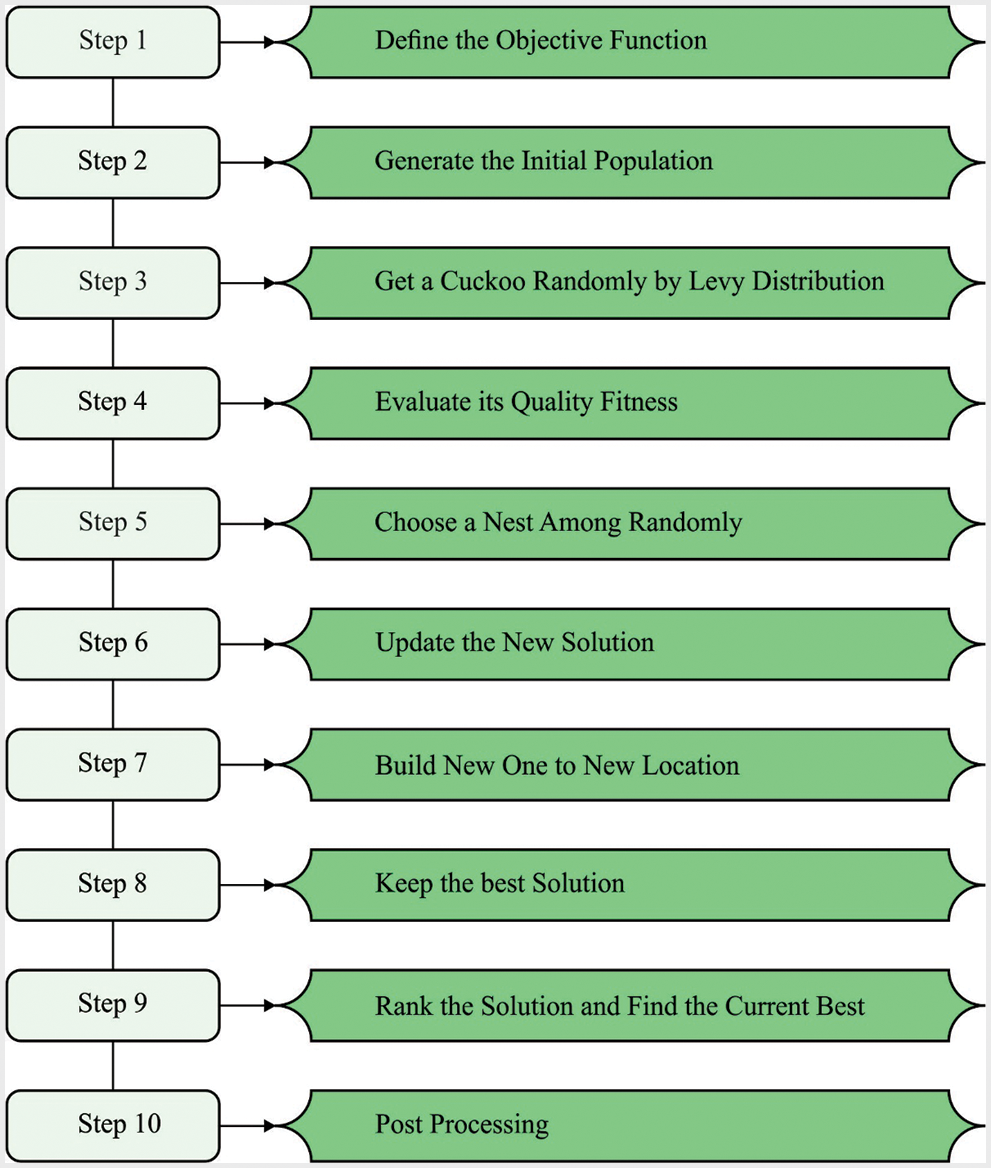

Here, the abandonment probability, the total size of the population and the maximum number of eggs a cuckoo lays during its lifetime are set by the user at the initial stage. Fig. 2 demonstrates the flowchart of the CSO technique.

Figure 2: Flowchart of CSO technique

Consider that t indicates the existing generation,

In Eq. (7),

In Eq. (6),

In Eq. (8),

The CSO method derives a fitness function to achieve enhanced classification performance. It assigns a positive value to denote the quality of each candidate solution. In this study, the minimization of the classification error rate is taken as the fitness function, as shown in Eq. (10). The best solution has the lowest error rate, whereas the worst solution has the highest error rate.
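To make the role of the error-rate fitness concrete, the following sketch runs a simplified cuckoo search over a single hyperparameter. Everything in it is an assumption made for illustration: the toy data, the threshold classifier standing in for the real model, the parameter values, and the simplification that a fixed fraction `pa` of the worst nests is abandoned in each generation. It is not the tuning procedure applied to MobileNet in this study.

```python
import math
import random

# Toy one-dimensional data: the label is 1 when the feature exceeds 0.6.
XS = [i / 20 for i in range(21)]
YS = [1 if x > 0.6 else 0 for x in XS]


def error_rate(theta: float) -> float:
    """Fitness: fraction of samples misclassified by the rule x > theta."""
    wrong = sum((1 if x > theta else 0) != y for x, y in zip(XS, YS))
    return wrong / len(XS)


def levy_step(rng: random.Random, beta: float = 1.5) -> float:
    """Levy step via Mantegna's method."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
             ) ** (1 / beta)
    u = rng.gauss(0.0, sigma)
    v = rng.gauss(0.0, 1.0) or 1e-12
    return u / abs(v) ** (1 / beta)


def cuckoo_search(fitness, low, high, n_nests=15, pa=0.25,
                  step_scale=0.05, iterations=100, seed=0):
    """Minimise `fitness` over [low, high] with a simplified cuckoo search."""
    rng = random.Random(seed)
    nests = [rng.uniform(low, high) for _ in range(n_nests)]
    scores = [fitness(nest) for nest in nests]
    for _ in range(iterations):
        # Each cuckoo lays one egg (a Levy-perturbed copy of its solution)
        # in a randomly chosen host nest; the egg survives only if better.
        for i in range(n_nests):
            egg = nests[i] + step_scale * levy_step(rng)
            egg = max(low, min(high, egg))
            j = rng.randrange(n_nests)
            egg_score = fitness(egg)
            if egg_score < scores[j]:
                nests[j], scores[j] = egg, egg_score
        # Abandon the worst fraction pa of nests and rebuild them at random,
        # so the best nest always carries over to the next generation.
        order = sorted(range(n_nests), key=lambda idx: scores[idx])
        for idx in order[n_nests - int(pa * n_nests):]:
            nests[idx] = rng.uniform(low, high)
            scores[idx] = fitness(nests[idx])
    best = min(range(n_nests), key=lambda idx: scores[idx])
    return nests[best], scores[best]


best_theta, best_err = cuckoo_search(error_rate, 0.0, 1.0)
print(best_theta, best_err)
```

In a real pipeline, `error_rate` would train and validate the classifier for a given hyperparameter setting, which makes every fitness evaluation far more expensive than in this toy case.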

3.3 Fall Detection Using RBF Model

In this final stage, the Radial Basis Function (RBF) classification method is utilized to recognize and classify an instance as either a fall event or a non-fall event. RBF is a well-known supervised Neural Network (NN) learning model [18] that belongs to the family of feed-forward networks. The RBF network is established through the three successive layers given below.

Input Layer. It transfers the input with no distortion.

Hidden Layer. It applies the radial basis functions to the input.

Output Layer. A simple layer that comprises a linear function.

Normally, the basis function takes the form

In Eq. (11),

The weight vector is described by minimizing the mean squared variances among the classification output.

Then, the target value

The parameters

or

Hence,

After calculations, the following notations are attained.
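The layered structure described in this section can be illustrated with a small, self-contained sketch. It assumes Gaussian basis functions, a shared width, centres placed on the training points, and output weights fitted by plain gradient descent on the mean squared error; these choices, the toy two-feature data and all function names are assumptions for illustration and are not the configuration used in this study.

```python
import math


def gaussian_rbf(x, center, width):
    """Gaussian basis function exp(-||x - c||^2 / (2 * width^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-sq_dist / (2 * width ** 2))


def hidden_layer(x, centers, width):
    """Hidden layer: one Gaussian activation per centre."""
    return [gaussian_rbf(x, c, width) for c in centers]


def train_output_weights(X, y, centers, width, lr=1.0, epochs=500):
    """Fit the linear output layer by minimising the mean squared error."""
    phi = [hidden_layer(x, centers, width) for x in X]
    weights = [0.0] * len(centers)
    n = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for row, target in zip(phi, y):
            err = sum(w * p for w, p in zip(weights, row)) - target
            for j, p in enumerate(row):
                grad[j] += err * p / n
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights


def predict(x, centers, width, weights):
    """Output layer: linear combination, thresholded at 0.5 (assumed)."""
    activations = hidden_layer(x, centers, width)
    score = sum(w * p for w, p in zip(weights, activations))
    return 1 if score >= 0.5 else 0


# Toy, non-linearly separable data; 1 stands for "fall", 0 for "non-fall".
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
y = [0, 1, 1, 0]
centers, width = X, 0.5
weights = train_output_weights(X, y, centers, width)
predictions = [predict(x, centers, width, weights) for x in X]
print(predictions)
```

Because the output layer is linear in the hidden activations, fitting the weights is a least-squares problem; gradient descent is used here only to keep the sketch free of external dependencies.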

In this section, the proposed ADPFD-CSOMN model was experimentally validated using two datasets, namely, the Multiple Cameras Fall (MCF) dataset (available at http://www.iro.umontreal.ca/~labimage/Dataset/) and UR Fall Detection (URFD) dataset (available at http://fenix.univ.rzeszow.pl/~mkepski/ds/uf.html).

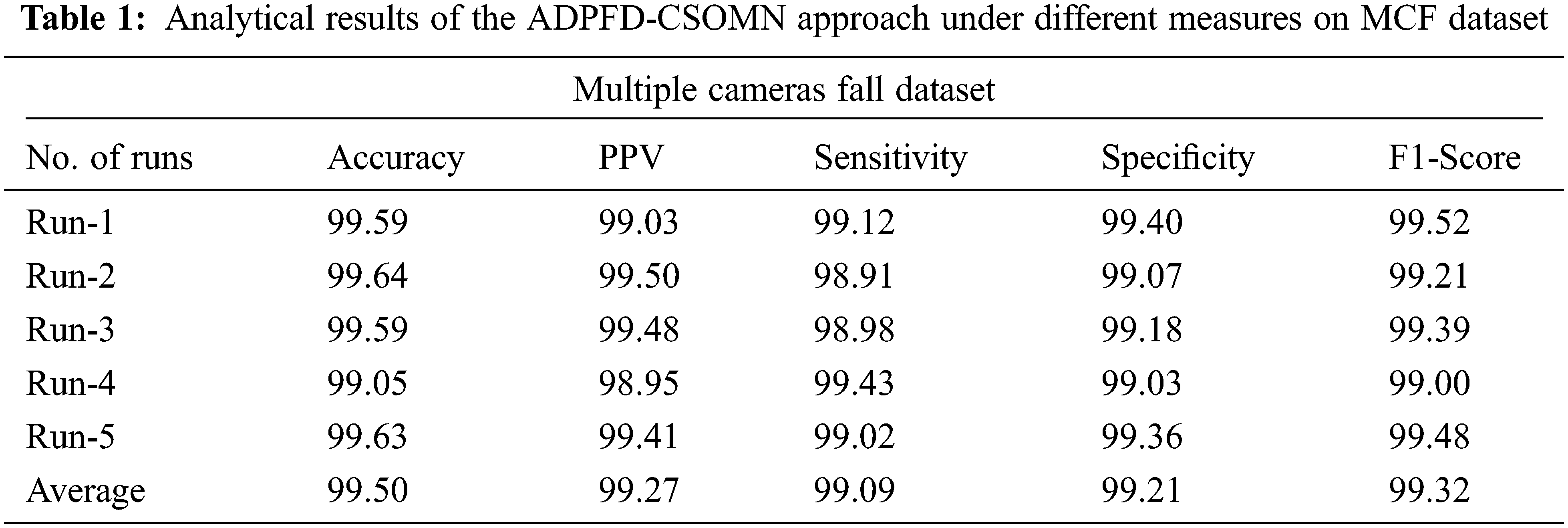

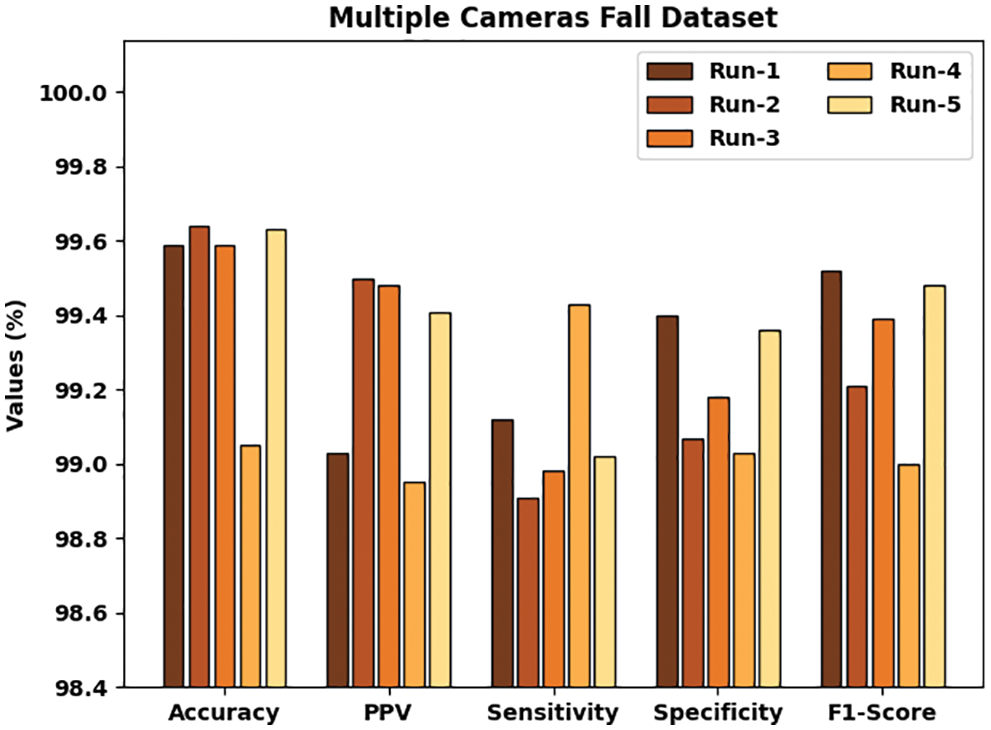

Table 1 and Fig. 3 show the fall detection results attained by the proposed ADPFD-CSOMN algorithm on MCF dataset. The results infer that the ADPFD-CSOMN method achieved an effectual recognition performance under each class. For example, on run-1, the ADPFD-CSOMN model achieved

Figure 3: Analytical results of the ADPFD-CSOMN approach on MCF dataset

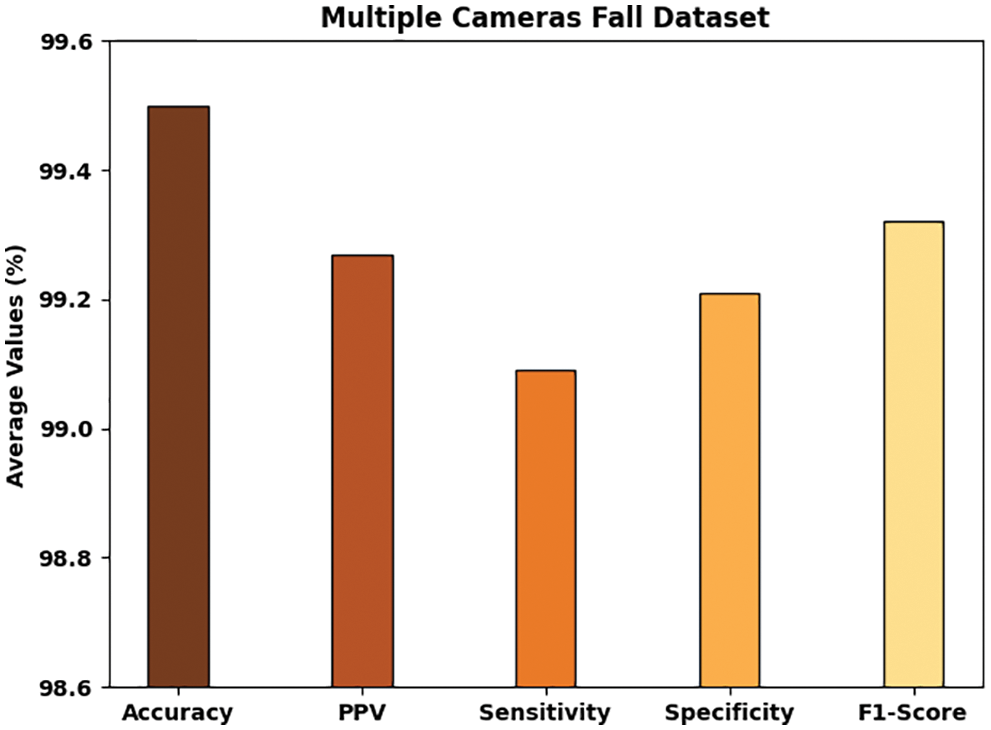

Fig. 4 demonstrates the average fall detection outcomes accomplished by the proposed ADPFD-CSOMN algorithm on test MCF dataset. The figure implies that the proposed ADPFD-CSOMN method reached average

Figure 4: Average analytical results of the ADPFD-CSOMN approach on MCF dataset

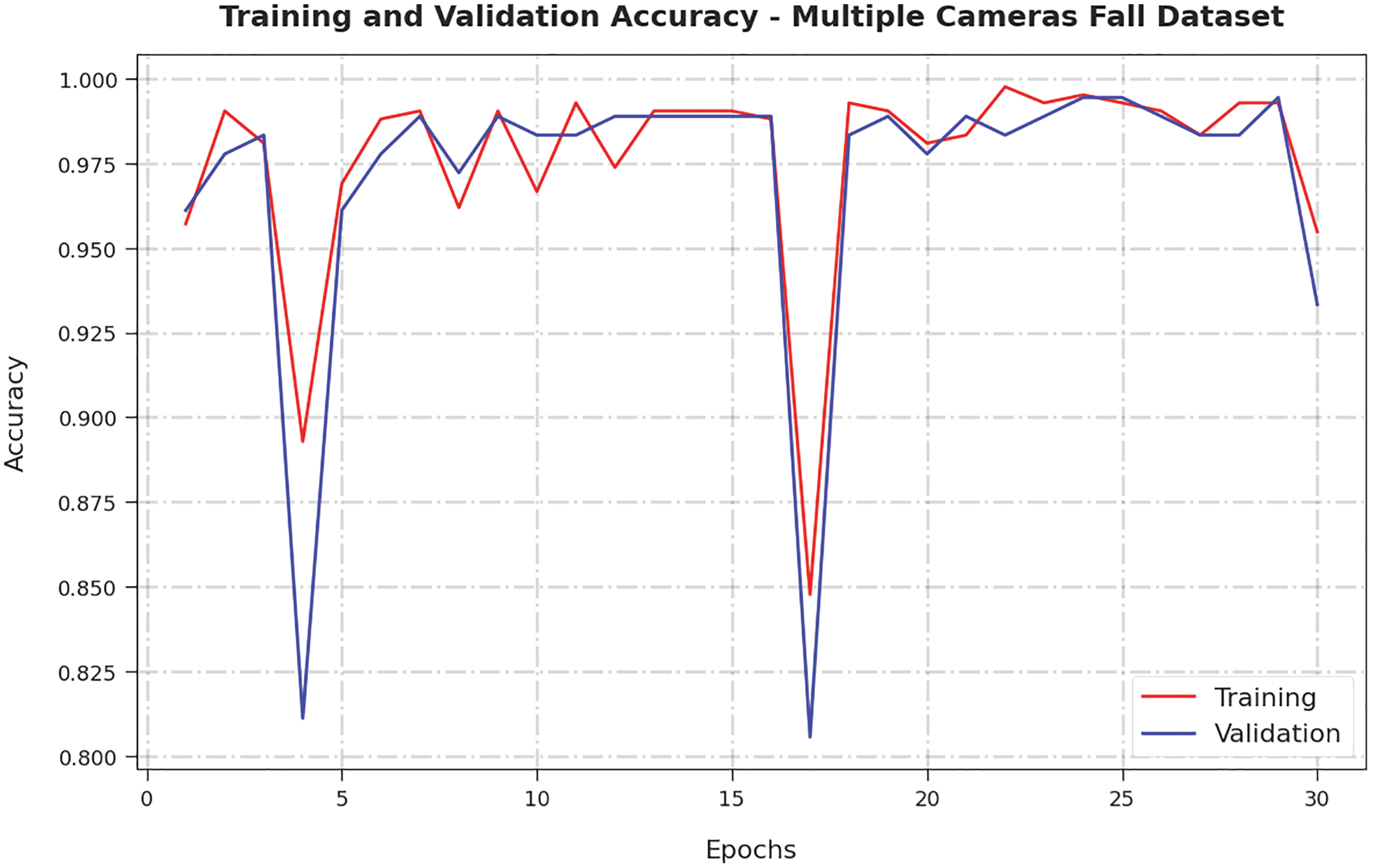

Both Training Accuracy (TA) and Validation Accuracy (VA) values, obtained by the ADPFD-CSOMN method on MCF dataset, are shown in Fig. 5. The experimental outcomes revealed that the presented ADPFD-CSOMN system reached high TA and VA values, with the VA values being higher than the TA values.

Figure 5: TA and VA analyses results of the ADPFD-CSOMN approach on MCF dataset

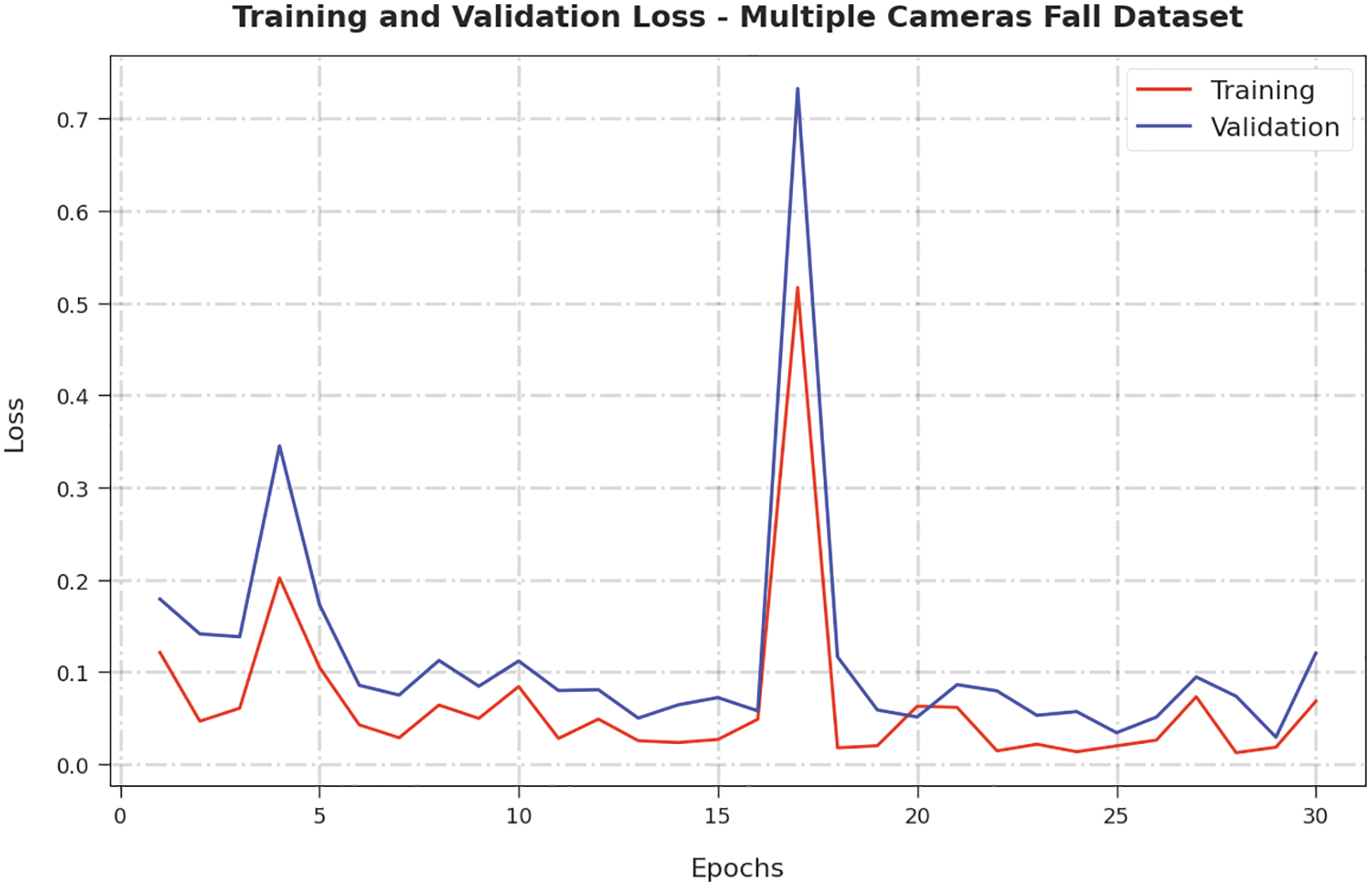

Both Training Loss (TL) and Validation Loss (VL) values, acquired by the ADPFD-CSOMN approach on MCF dataset, are exhibited in Fig. 6. The experimental outcomes denote that the proposed ADPFD-CSOMN approach exhibited low TL and VL values, with the VL values being lower than the TL values.

Figure 6: TL and VL analyses results of the ADPFD-CSOMN approach on MCF dataset

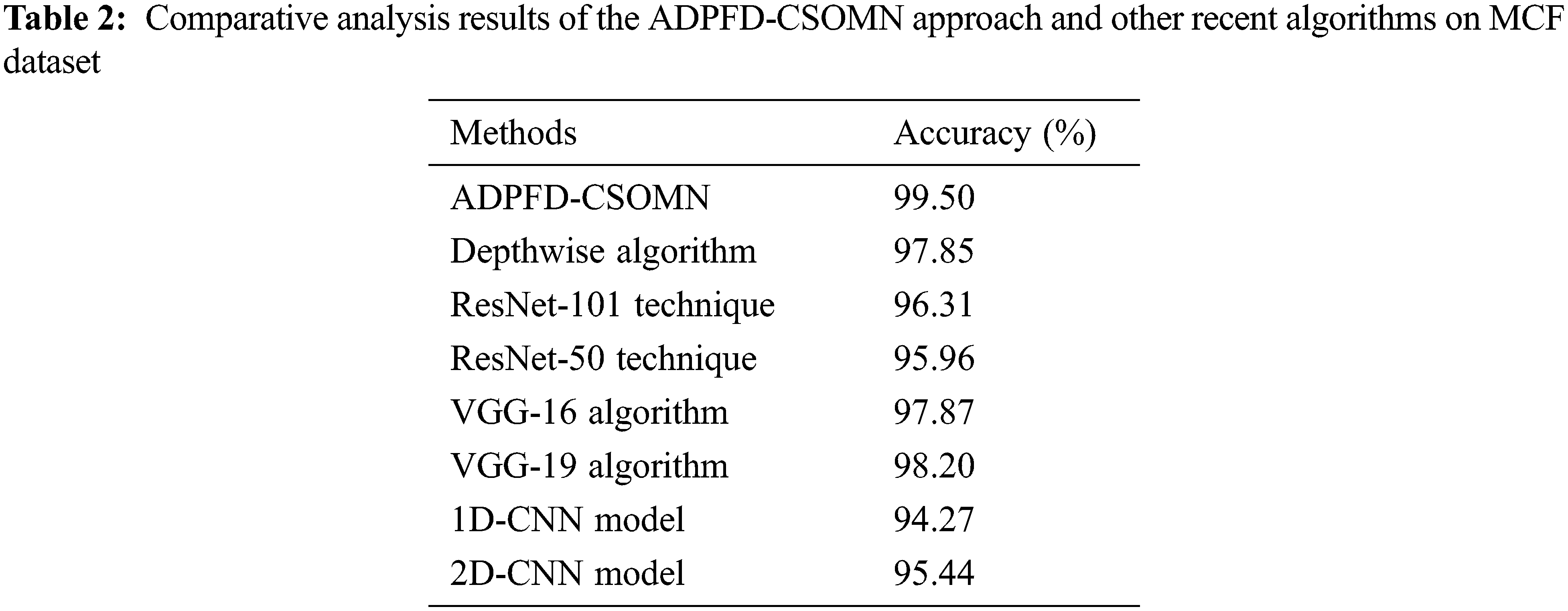

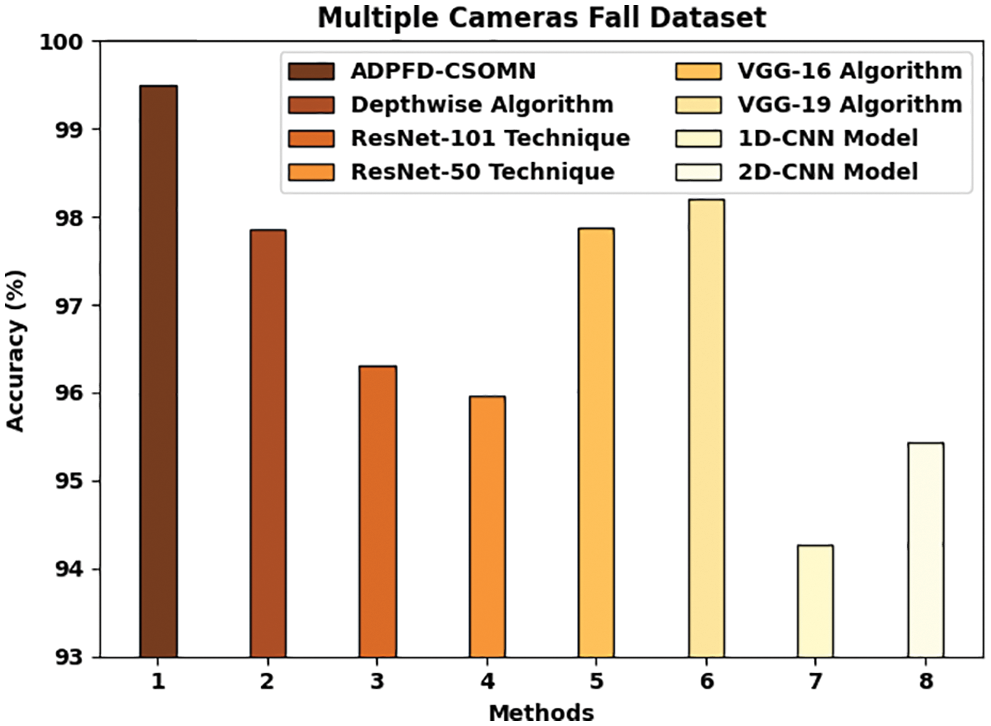

Table 2 and Fig. 7 portray the brief overview of the comparative analysis results, attained by the proposed ADPFD-CSOMN method and other recent approaches on MCF dataset [19]. The figure reports that the 1D-CNN, 2D-CNN, ResNet-101 and the ResNet-50 models reported the least

Figure 7: Comparative analysis results of the ADPFD-CSOMN approach on MCF dataset

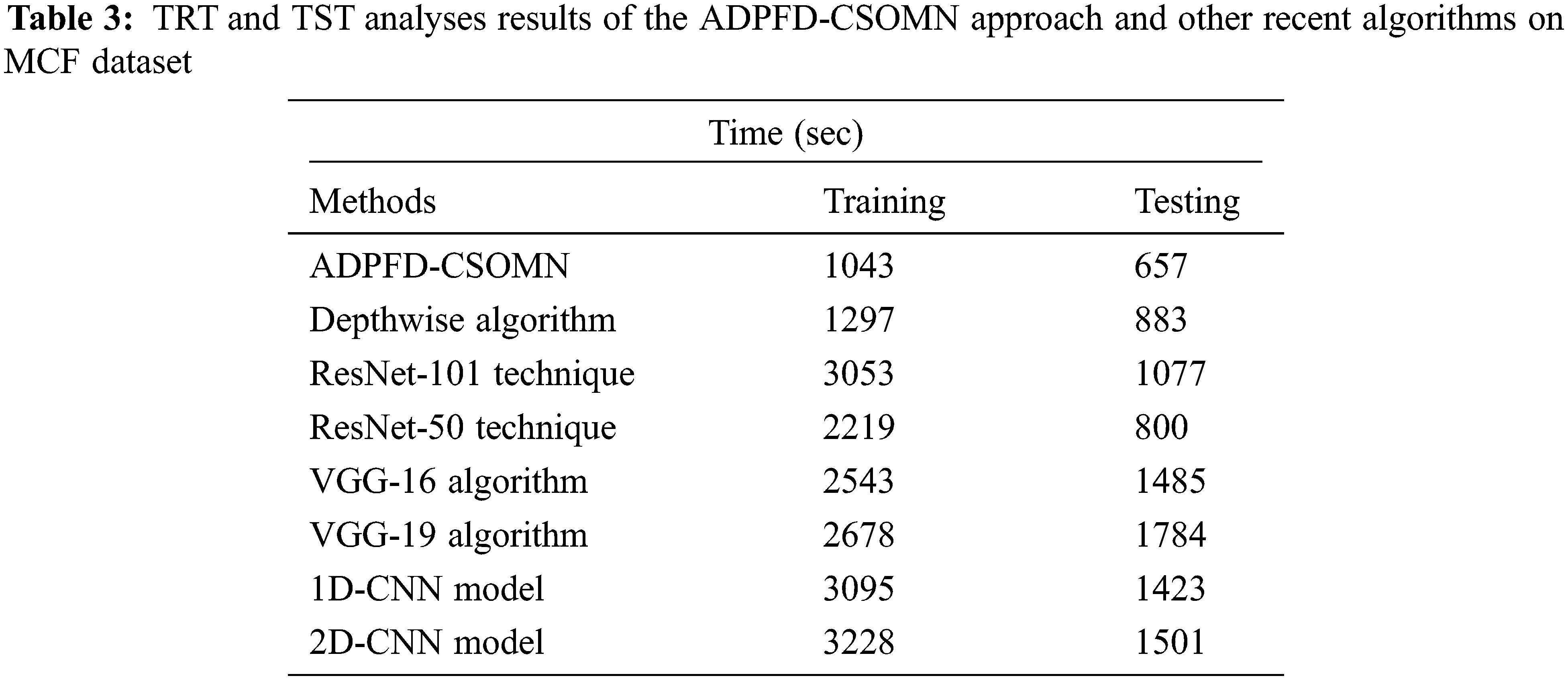

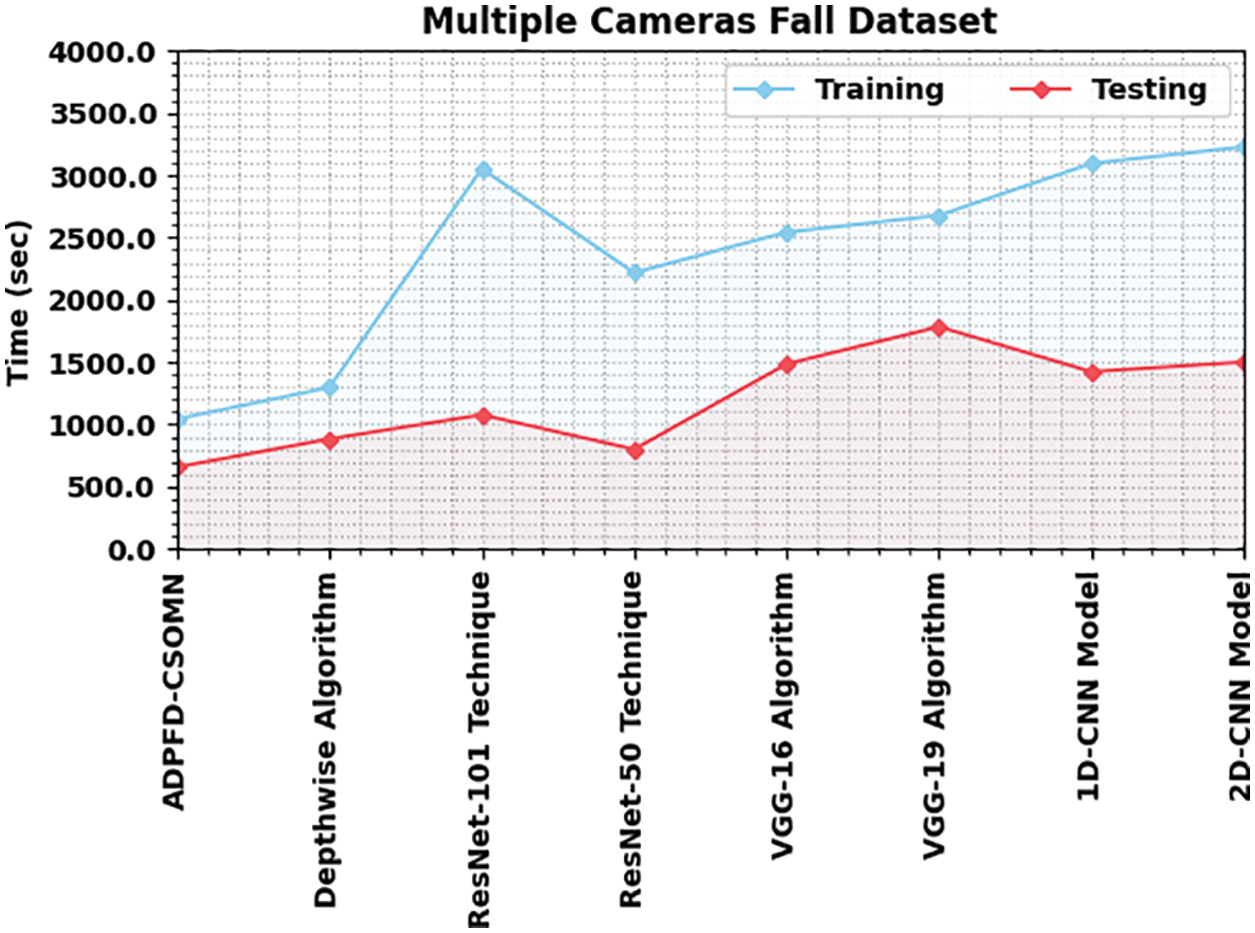

The researchers conducted the Training Time (TRT) and Testing Time (TST) analyses in detail between the proposed ADPFD-CSOMN model and other recent models on MCF dataset and the results are shown in Table 3 and Fig. 8. Based on the TRT analysis outcomes, it can be inferred that the proposed ADPFD-CSOMN model achieved proficient results with a minimal TRT of 1043 s. In contrast, the depthwise technique, ResNet-101, ResNet-50, VGG-16, VGG-19, 1D-CNN and the 2D-CNN models produced higher TRT values of 1297, 3053, 2219, 2543, 2678, 3095 and 3228 s respectively. Likewise, based on the TST analysis outcomes, the ADPFD-CSOMN algorithm proved its proficiency with a minimal TST of 657 s, whereas the depthwise, ResNet-101, ResNet-50, VGG-16, VGG-19, 1D-CNN and the 2D-CNN models produced higher TST values of 883, 1077, 800, 1485, 1784, 1423 and 1501 s respectively.

Figure 8: TRT and TST analyses results of the ADPFD-CSOMN approach on MCF dataset

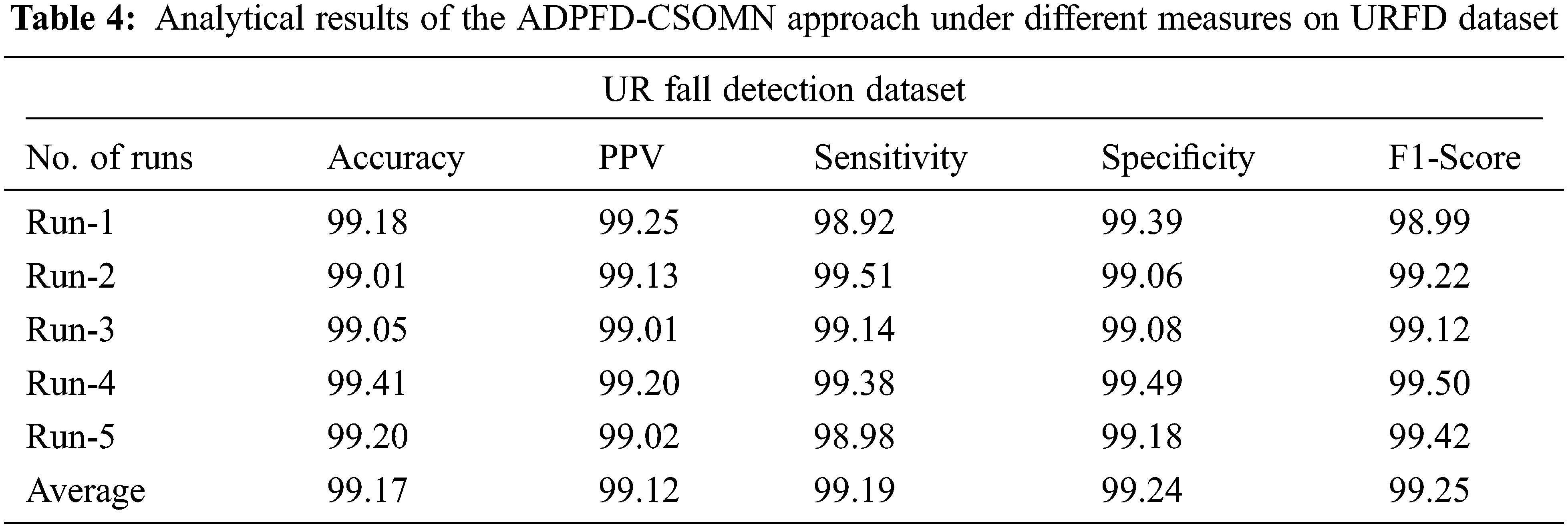

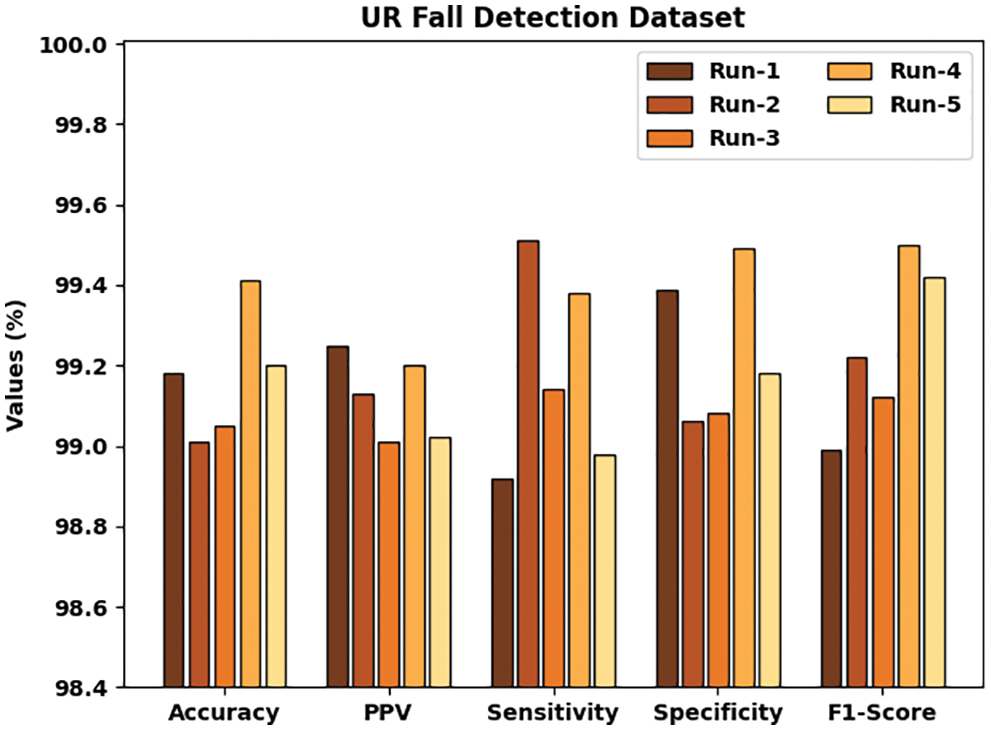

Table 4 and Fig. 9 provide the fall detection results offered by the ADPFD-CSOMN model on URFD dataset. The results infer that the proposed ADPFD-CSOMN method displayed an effectual recognition performance for every class. For example, on run-1, the ADPFD-CSOMN model rendered

Figure 9: Analytical results of the ADPFD-CSOMN approach on URFD dataset

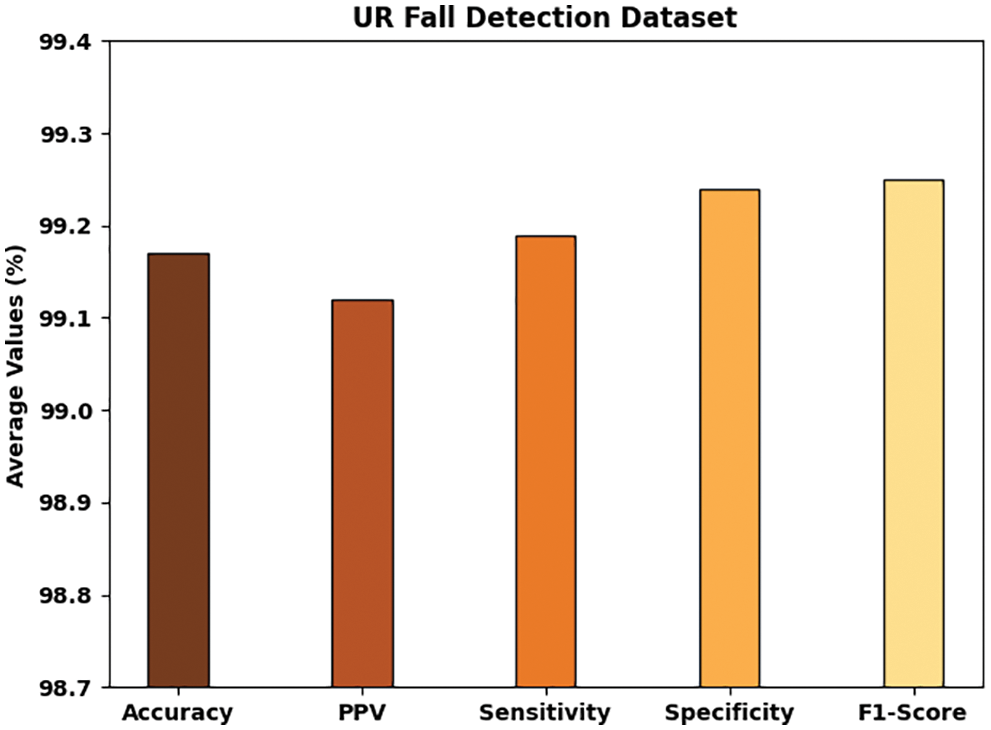

Fig. 10 establishes the average fall detection outcomes attained by the proposed ADPFD-CSOMN method on test URFD dataset. The figure implies that the ADPFD-CSOMN approach reached average

Figure 10: Average analysis results of the ADPFD-CSOMN approach on URFD dataset

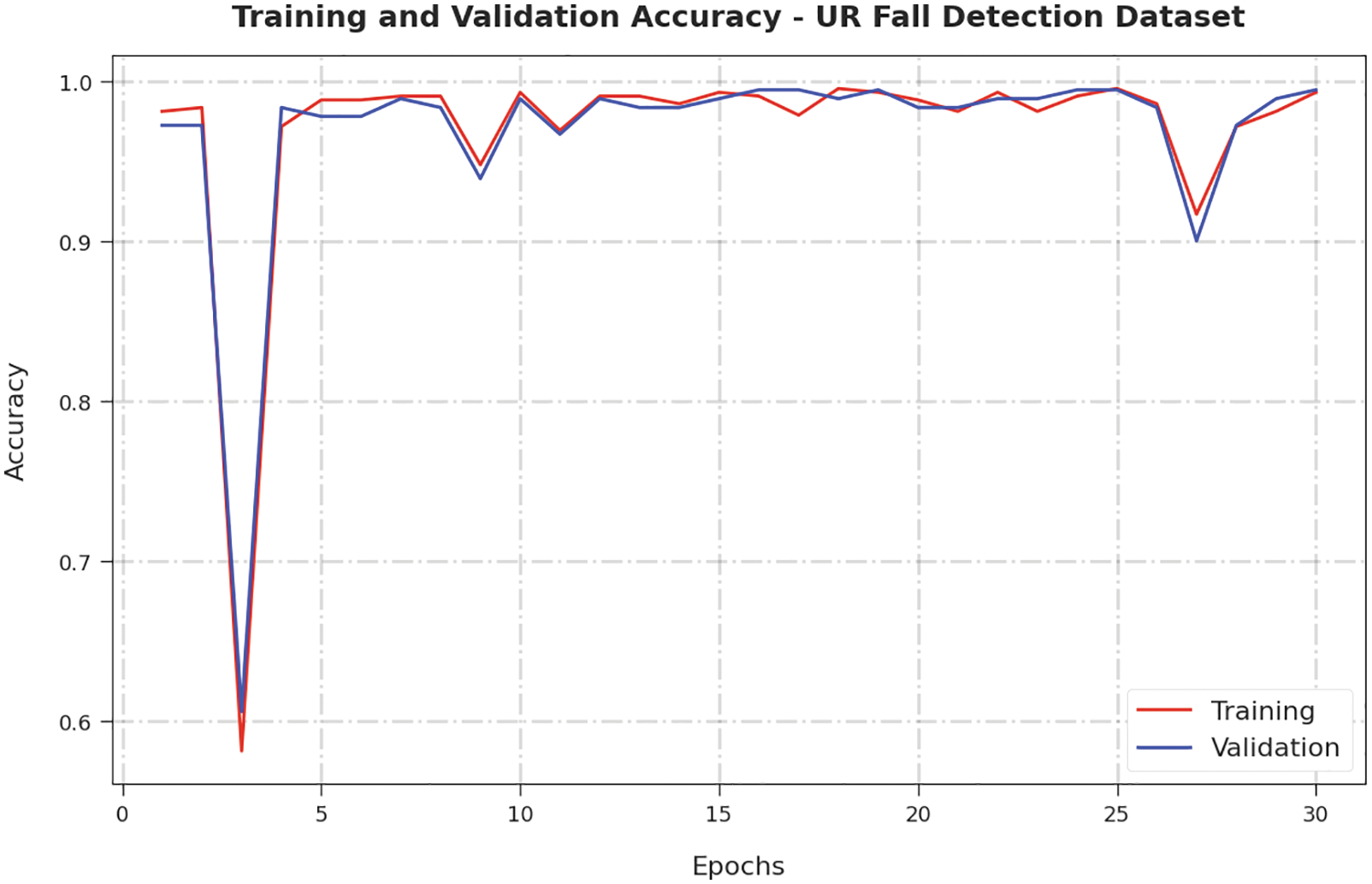

Both TA and VA values, acquired by the ADPFD-CSOMN method on URFD dataset, are shown in Fig. 11. The experimental outcomes infer that the proposed ADPFD-CSOMN technique achieved high TA and VA values, with the VA values being higher than the TA values.

Figure 11: TA and VA analyses results of the ADPFD-CSOMN approach on URFD dataset

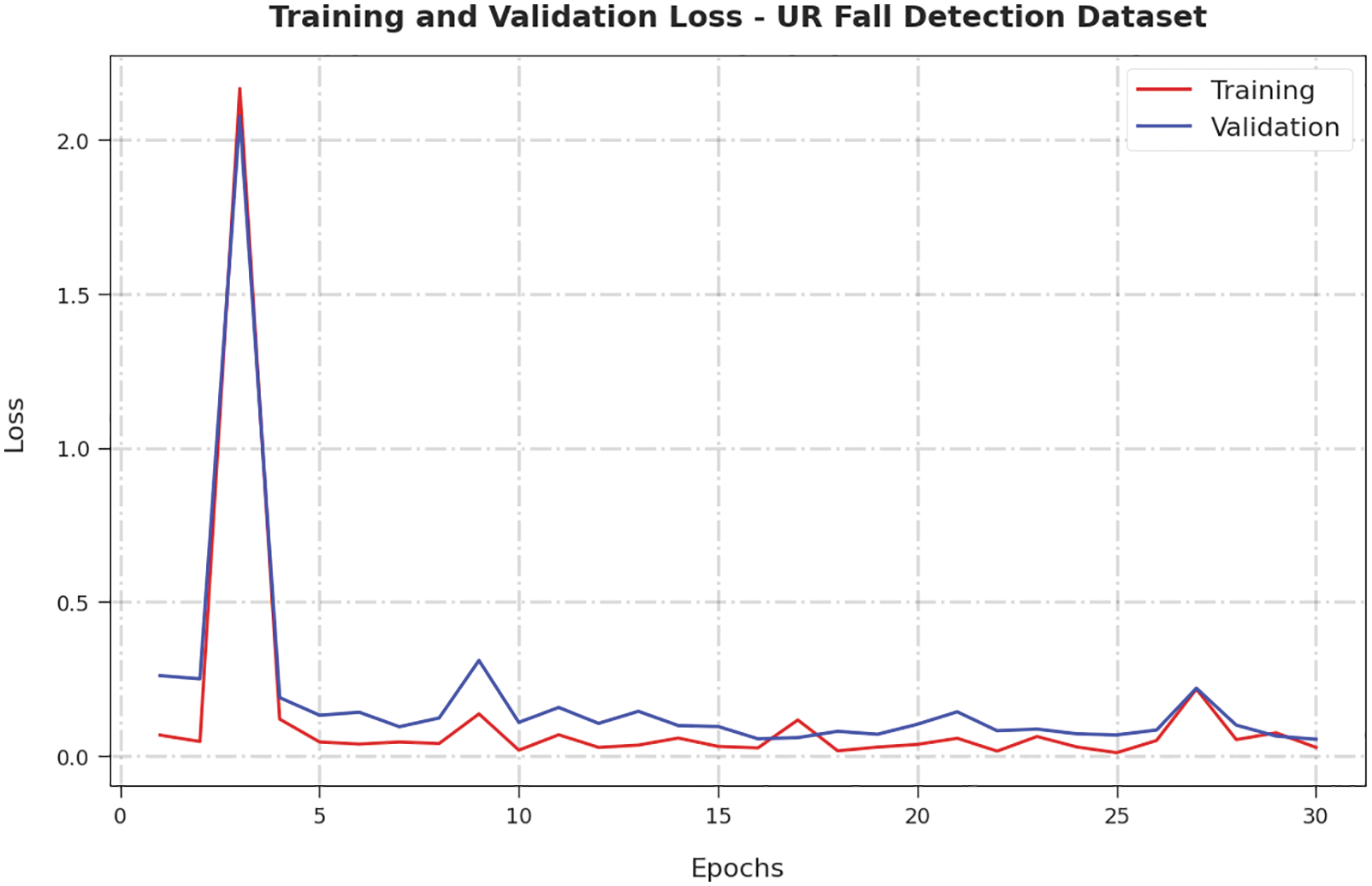

Both TL and VL values, reached by the proposed ADPFD-CSOMN approach on URFD dataset, are exhibited in Fig. 12. The experimental outcomes imply that the proposed ADPFD-CSOMN algorithm exhibited low TL and VL values, with the VL values being lower than the TL values.

Figure 12: TL and VL analyses results of the ADPFD-CSOMN approach on URFD dataset

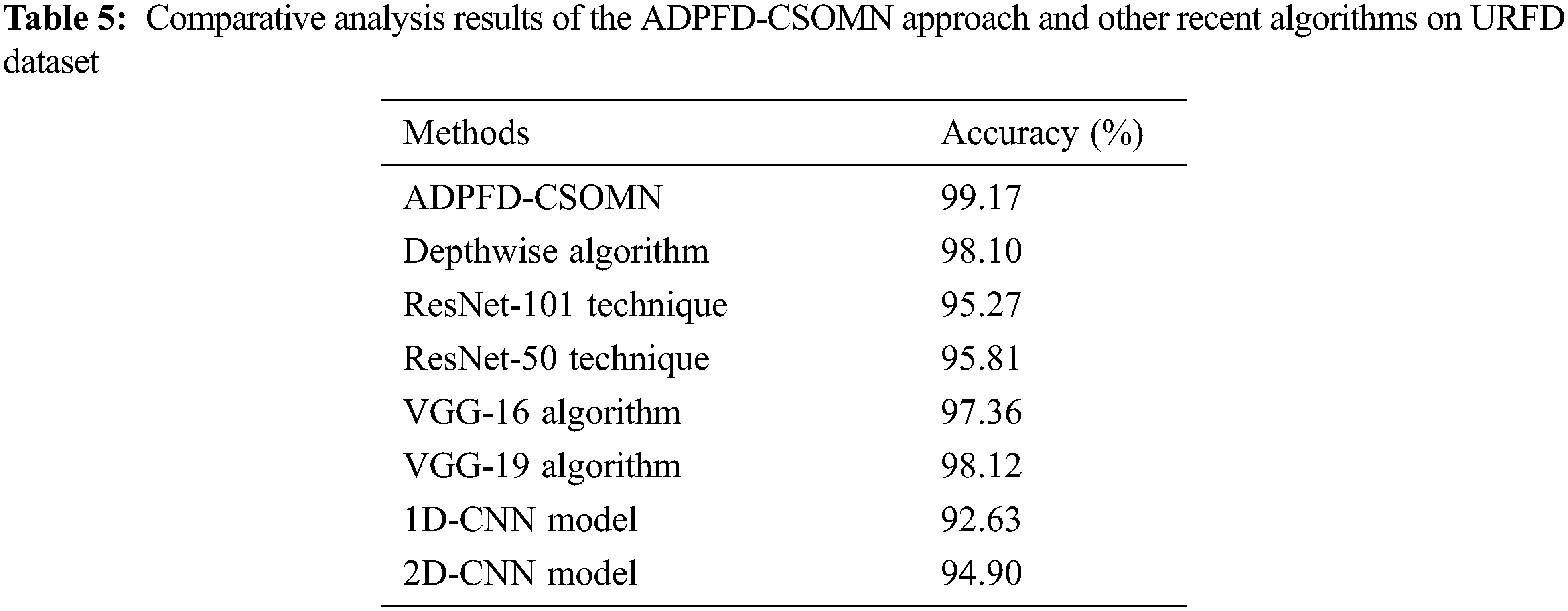

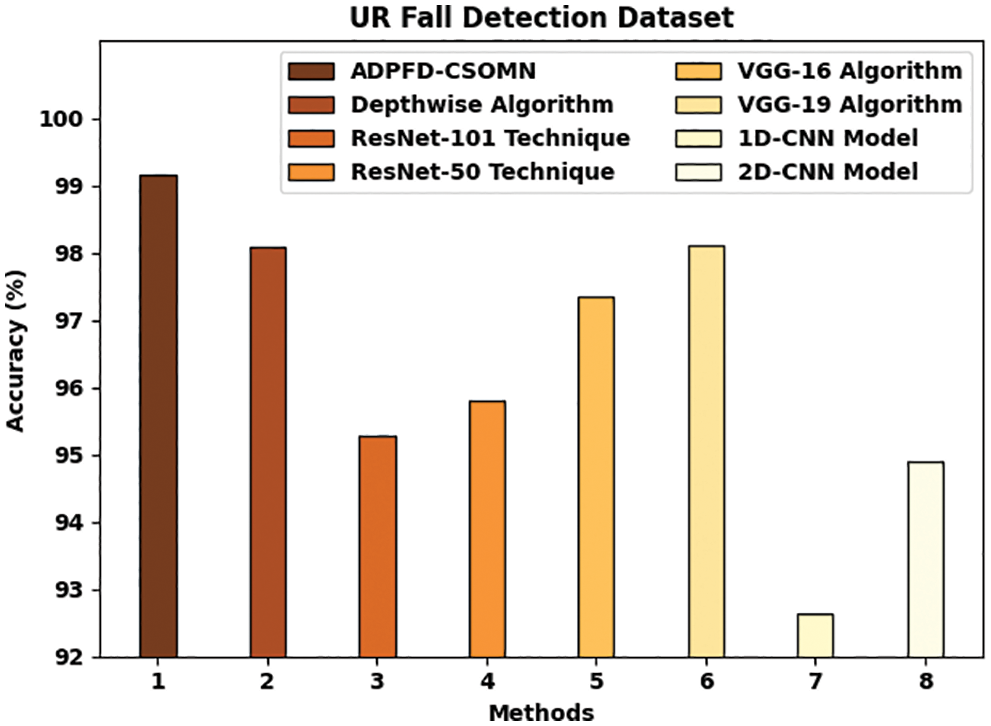

Table 5 and Fig. 13 report the comparative analysis results achieved by the ADPFD-CSOMN model and other recent techniques on URFD dataset. The figure infers that the 1D-CNN, 2D-CNN, ResNet-101 and ResNet-50 algorithms reported the least

Figure 13: Comparative analysis results of the ADPFD-CSOMN approach on URFD dataset

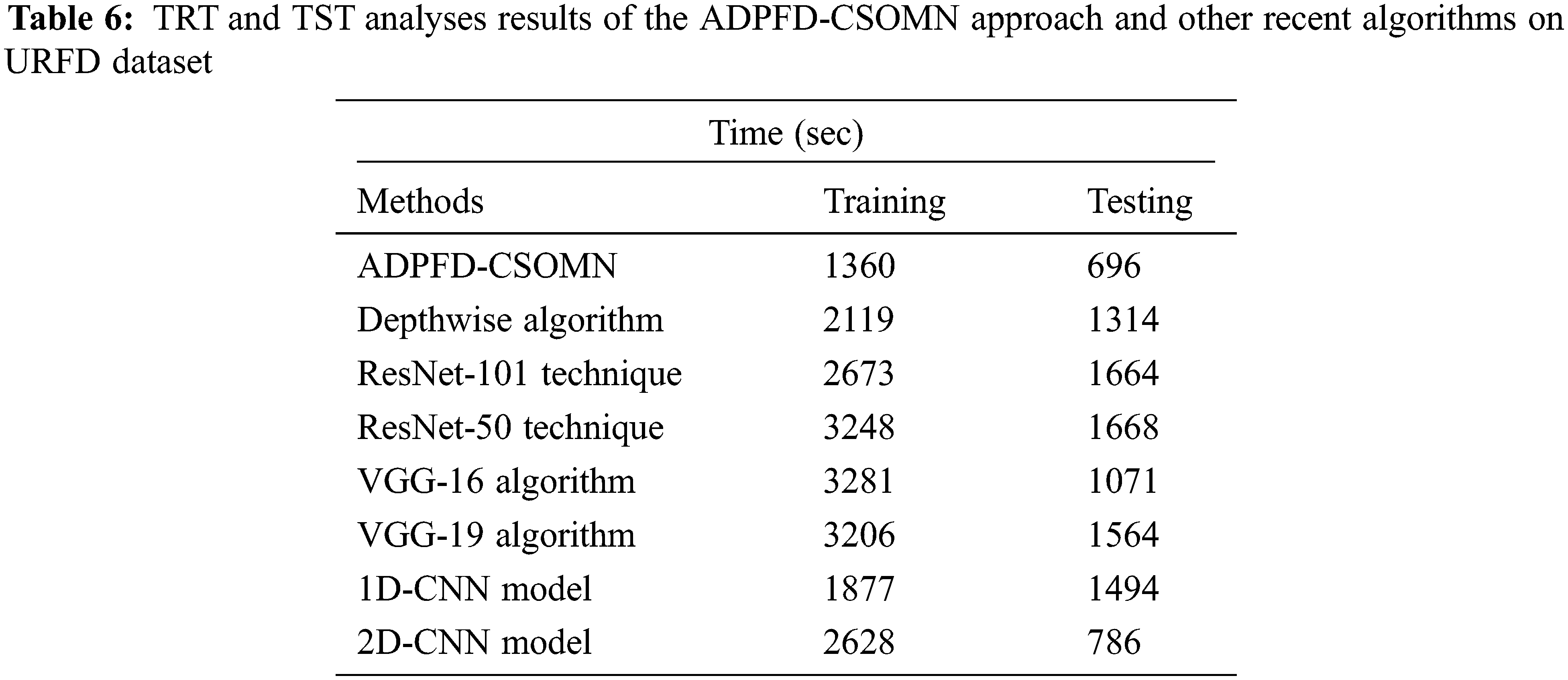

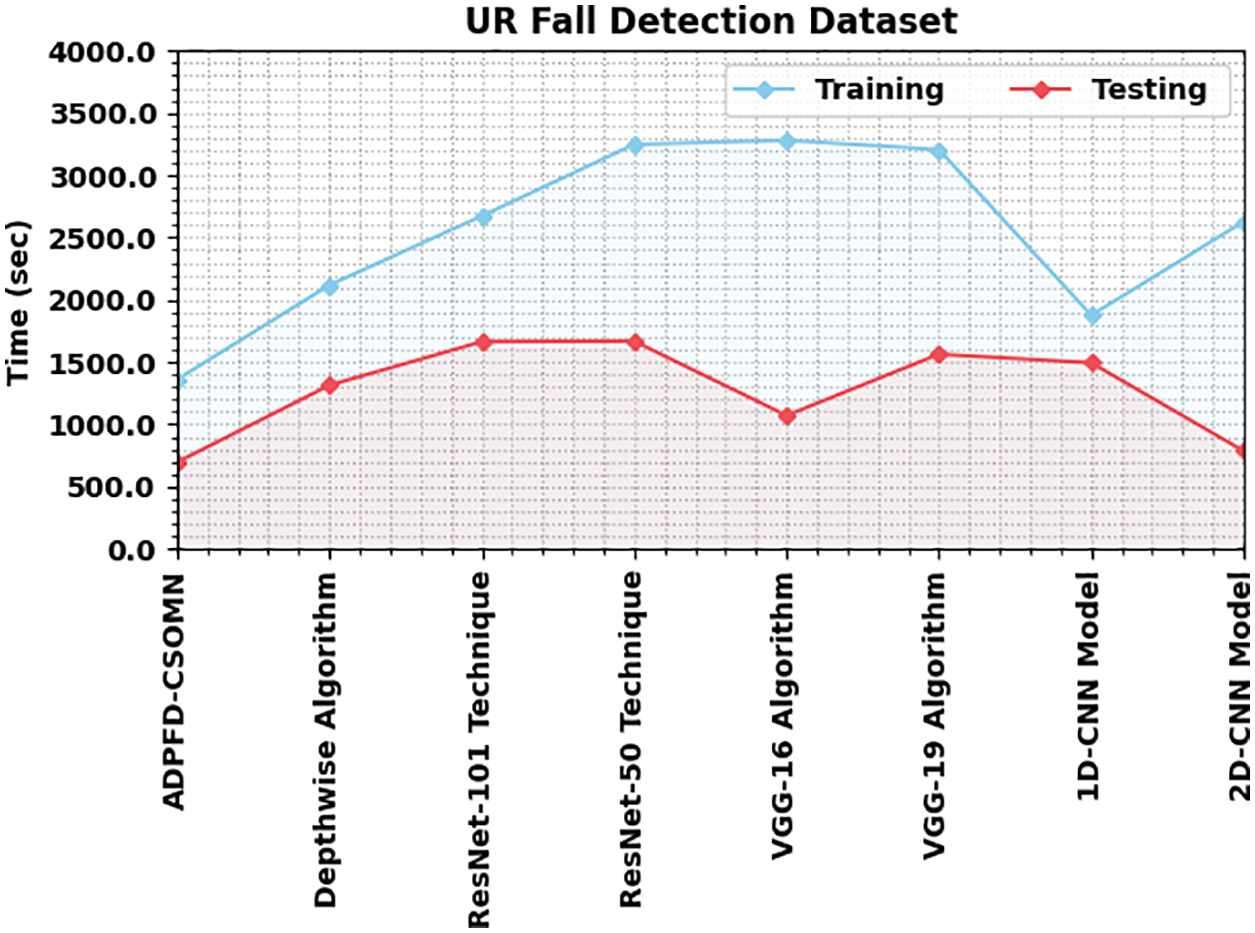

The authors conducted the TRT and TST analyses between the proposed ADPFD-CSOMN model and other recent models on URFD dataset and the results are presented in Table 6 and Fig. 14. Based on the TRT analysis outcomes, it can be inferred that the proposed ADPFD-CSOMN model achieved proficient results with a minimal TRT of 1360 s. In contrast, the depthwise, ResNet-101, ResNet-50, VGG-16, VGG-19, 1D-CNN and the 2D-CNN models produced higher TRT values of 2119, 2673, 3248, 3281, 3206, 1877 and 2628 s respectively. In addition, based on the TST analysis outcomes, it can be inferred that the proposed ADPFD-CSOMN model displayed proficient results with a minimal TST of 696 s, whereas the depthwise, ResNet-101, ResNet-50, VGG-16, VGG-19, 1D-CNN and 2D-CNN models yielded higher TST values of 1314, 1664, 1668, 1071, 1564, 1494 and 786 s respectively.

Figure 14: TRT and TST analyses results of the ADPFD-CSOMN approach on URFD dataset

The outcomes and the discussion made above highlight the proficient performance of the proposed ADPFD-CSOMN model in the fall detection process.

In this study, a novel ADPFD-CSOMN method has been devised for the identification and categorization of fall events. The presented ADPFD-CSOMN technique incorporates the MobileNet model design for the feature extraction process during the preliminary stage. In addition, the CSO technique is exploited for the optimal tuning of the hyperparameters related to the model. In the final stage, the RBF classification model is utilized to recognize and classify an instance as either a fall event or a non-fall event. To validate the superiority of the proposed ADPFD-CSOMN model, a comprehensive experimental analysis was conducted. The comparison study pointed out the enhanced fall classification outcomes of the ADPFD-CSOMN model over other approaches. Thus, the ADPFD-CSOMN model can be employed as a proficient tool in real-time applications to identify fall events. In future, the performance of the proposed ADPFD-CSOMN model can be further improved with the help of hybrid metaheuristic algorithms.

Funding Statement: The author received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. L. M. Villaseñor, H. Ponce, J. Brieva, E. M. Albor, J. N. Martínez et al., “UP-Fall detection dataset: A multimodal approach,” Sensors, vol. 19, no. 9, pp. 1988, 2019.

2. S. Usmani, A. Saboor, M. Haris, M. A. Khan and H. Park, “Latest research trends in fall detection and prevention using machine learning: A systematic review,” Sensors, vol. 21, no. 15, pp. 5134, 2021.

3. S. Rastogi and J. Singh, “A systematic review on machine learning for fall detection system,” Computational Intelligence, vol. 37, no. 2, pp. 951–974, 2021.

4. F. Hussain, F. Hussain, M. E. Haq and M. A. Azam, “Activity-aware fall detection and recognition based on wearable sensors,” IEEE Sensors Journal, vol. 19, no. 12, pp. 4528–4536, 2019.

5. T. Mauldin, M. Canby, V. Metsis, A. Ngu and C. Rivera, “SmartFall: A smartwatch-based fall detection system using deep learning,” Sensors, vol. 18, no. 10, pp. 3363, 2018.

6. A. Bhattacharya and R. Vaughan, “Deep learning radar design for breathing and fall detection,” IEEE Sensors Journal, vol. 20, no. 9, pp. 5072–5085, 2020.

7. I. Chandra, N. Sivakumar, C. B. Gokulnath and P. Parthasarathy, “IoT based fall detection and ambient assisted system for the elderly,” Cluster Computing, vol. 22, no. S1, pp. 2517–2525, 2019.

8. F. Shu and J. Shu, “An eight-camera fall detection system using human fall pattern recognition via machine learning by a low-cost android box,” Scientific Reports, vol. 11, no. 1, pp. 2471, 2021.

9. N. Thakur and C. Han, “A study of fall detection in assisted living: Identifying and improving the optimal machine learning method,” Journal of Sensor and Actuator Networks, vol. 10, no. 3, pp. 39, 2021.

10. F. Lezzar, D. Benmerzoug and I. Kitouni, “Camera-based fall detection system for the elderly with occlusion recognition,” Applied Medical Informatics, vol. 42, no. 3, pp. 169–179, 2020.

11. D. Yacchirema, J. S. de Puga, C. Palau and M. Esteve, “Fall detection system for elderly people using IoT and ensemble machine learning algorithm,” Personal and Ubiquitous Computing, vol. 23, no. 5–6, pp. 801–817, 2019.

12. M. Saleh and R. L. B. Jeannes, “Elderly fall detection using wearable sensors: A low cost highly accurate algorithm,” IEEE Sensors Journal, vol. 19, no. 8, pp. 3156–3164, 2019.

13. M. M. Hassan, A. Gumaei, G. Aloi, G. Fortino and M. Zhou, “A smartphone-enabled fall detection framework for elderly people in connected home healthcare,” IEEE Network, vol. 33, no. 6, pp. 58–63, 2019.

14. N. Zerrouki and A. Houacine, “Combined curvelets and hidden Markov models for human fall detection,” Multimedia Tools and Applications, vol. 77, no. 5, pp. 6405–6424, 2018.

15. J. Maitre, K. Bouchard and S. Gaboury, “Fall detection with uwb radars and cnn-lstm architecture,” IEEE Journal of Biomedical and Health Informatics, vol. 25, no. 4, pp. 1273–1283, 2021.

16. P. N. Srinivasu, J. G. SivaSai, M. F. Ijaz, A. K. Bhoi, W. Kim et al., “Classification of skin disease using deep learning neural networks with mobilenet v2 and lstm,” Sensors, vol. 21, no. 8, pp. 2852, 2021.

17. J. Yu, C. H. Kim and S. B. Rhee, “Clustering cuckoo search optimization for economic load dispatch problem,” Neural Computing and Applications, vol. 32, no. 22, pp. 16951–16969, 2020.

18. C. She, Z. Wang, F. Sun, P. Liu and L. Zhang, “Battery aging assessment for real-world electric buses based on incremental capacity analysis and radial basis function neural network,” IEEE Transactions on Industrial Informatics, vol. 16, no. 5, pp. 3345–3354, 2020.

19. T. Vaiyapuri, E. L. Lydia, M. Y. Sikkandar, V. G. Díaz, I. V. Pustokhina et al., “Internet of things and deep learning enabled elderly fall detection model for smart homecare,” IEEE Access, vol. 9, pp. 113879–113888, 2021.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
