Open Access


ARTICLE

Artificial Intelligence-Based Image Reconstruction for Computed Tomography: A Survey

1 College of Computer, National University of Defense Technology, Changsha, 410073, China

2 Technical Service Center for Vocational Education, National University of Defense Technology, Changsha, 410073, China

3 School of Computing and Communications, Lancaster University, Lancaster, LA1 4WA, UK

* Corresponding Author: Zhiping Cai. Email:

Intelligent Automation & Soft Computing 2023, 36(3), 2545-2558. https://doi.org/10.32604/iasc.2023.029857

Received 13 March 2022; Accepted 19 April 2022; Issue published 15 March 2023

Abstract

Computed tomography (CT) has advanced considerably since its introduction in the early 1970s. In the early stage, researchers focused mainly on the quality of the reconstructed images. However, radiation exposure poses a health risk, which has created demand for the lowest possible dose in CT examinations. To obtain high-quality images from low-dose acquisitions, CT reconstruction techniques have evolved from conventional analytical and iterative reconstruction to methods based on artificial intelligence (AI). All these efforts aim to produce high-quality images from low doses with fast reconstruction. Conventional reconstruction methods usually optimize only one of these aspects, whereas AI-based reconstruction has managed to attain all of them simultaneously. However, AI-based reconstruction methods have limitations, including the need for large datasets, unstable performance, and weak generalizability. This work reviews and discusses the classification, commercial use, advantages, and limitations of AI-based image reconstruction methods in CT.

1 Introduction

The first CT examination of the brain was performed in 1972 with Sir Godfrey Hounsfield's EMI scanner, and CT has since evolved into a vital diagnostic tool in modern medicine [1]. Using an algebraic reconstruction method, that first examination took over 5 min to acquire and about 2.5 h to reconstruct. Today, CT scanners image the whole body in seconds with almost instantaneous reconstruction [2]. Image resolution has also increased: early CT scans produced fuzzy images of 80 × 80 pixels, whereas today's scanners produce images of 1024 × 1024 pixels or more.

The growing use of CT has raised concerns about the health risks of radiation exposure, and scientists and the media have warned that CT radiation might lead to cancer. CT provides essential clinical information for diagnosis, but excessive or inappropriate use results in unnecessary radiation exposure. To minimize exposure, the decision to perform a CT examination should weigh the radiation risk against the expected diagnostic benefit, ensuring that the projected benefits outweigh the projected risks [3]. In short, the ALARA (as low as reasonably achievable) principle should be applied to CT scans [4].

In the early 1970s, there were initial efforts to bring iterative reconstruction (IR) into transmission CT. IR was already well established in single-photon emission CT by the 1960s [5]. However, computing capacity was limited, so IR was quickly supplanted by analytical approaches such as filtered back projection (FBP). Analytical methods then remained the dominant type of reconstruction until the first IR technique was introduced clinically in 2009. Computer technology grew rapidly, and it was only a matter of time before all the leading CT vendors offered IR algorithms for clinical use. Increasingly sophisticated reconstruction algorithms followed [6]. Artificial intelligence has likewise found its way into medicine, and medical image processing is one of its most important applications. Recent developments in computing infrastructure have made AI applications prevalent in many fields of study, including medical image reconstruction [7]. Examples include graphics processing units (GPUs) and cloud computing systems. All these efforts aim to produce high-quality images from low doses with fast reconstruction. Conventional reconstruction methods usually optimize only one of these aspects, whereas AI-based reconstruction has managed to attain all of them simultaneously [8].

This article gives an overview of AI-based CT image reconstruction, reviewing and discussing its classification, commercial use, advantages, and limitations.

2 Conventional Reconstruction Method

Conventional reconstruction algorithms can be divided into analytical and iterative reconstruction algorithms. CT images were originally reconstructed iteratively with the algebraic reconstruction technique (ART) [9]. The computers of the time lacked the necessary power, so ART was quickly replaced by simpler analytical techniques. The most common of these is filtered back projection (FBP). FBP remained the method of choice for decades, until the first iterative reconstruction (IR) technique was introduced clinically in 2009. This triggered a surge of interest in the CT imaging community. Within a few years, the major CT vendors had introduced IR algorithms for clinical use, and these were quickly developed into more complex algorithms. This section briefly discusses the conventional CT reconstruction process.

Scanning and reconstruction essentially consist of transforming projection data and image data among object space, Radon space, and Fourier space.

The Radon transform and the central slice theorem form the theoretical basis of analytical reconstruction. In two-dimensional imaging, CT reconstruction is the transformation between object space and Radon space. The two-dimensional central slice theorem shows that Fourier space is the bridge between them. The theorem concerns the parallel-beam projection of an object at angle θ. It states that the one-dimensional Fourier transform of this projection equals the object's two-dimensional Fourier transform evaluated along the line through the origin at the same angle θ.
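
In formulas, the theorem can be written as follows. Assume an object $f(x,y)$, and let $F$ be its 2D Fourier transform. The parallel-beam projection at angle $\theta$ and detector coordinate $t$ is then

$$p_\theta(t)=\iint f(x,y)\,\delta(x\cos\theta+y\sin\theta-t)\,dx\,dy,\qquad P_\theta(\omega)=\int p_\theta(t)\,e^{-i2\pi\omega t}\,dt=F(\omega\cos\theta,\ \omega\sin\theta).$$

The short Python sketch below checks the discrete analogue numerically for the special case $\theta=0$. At that angle, summing a pixel grid along $y$ is an exact projection, and no interpolation is needed. The random 8 × 8 test image and the naive O(N²) DFTs are illustrative assumptions, not part of the surveyed methods.

```python
import cmath
import random

def dft_1d(signal):
    """Naive 1D discrete Fourier transform (O(N^2); fine for a tiny demo)."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def dft_2d(image):
    """Naive 2D DFT of image[x][y]; the result is indexed as F[kx][ky]."""
    nx, ny = len(image), len(image[0])
    return [[sum(image[x][y] * cmath.exp(-2j * cmath.pi * (kx * x / nx + ky * y / ny))
                 for x in range(nx) for y in range(ny))
             for ky in range(ny)]
            for kx in range(nx)]

def projection_theta0(image):
    """Parallel-beam projection at theta = 0: line sums along y for each detector bin t = x."""
    return [sum(ray) for ray in image]

random.seed(0)
N = 8
phantom = [[random.random() for _ in range(N)] for _ in range(N)]

P = dft_1d(projection_theta0(phantom))         # 1D Fourier transform of the projection
F = dft_2d(phantom)                            # 2D Fourier transform of the object
central_slice = [F[kx][0] for kx in range(N)]  # line through the origin at theta = 0
max_err = max(abs(a - b) for a, b in zip(P, central_slice))
print(f"max |P(k) - F(k, 0)| = {max_err:.1e}")
```

For other angles, the same identity holds in the continuous setting. On a pixel grid, however, it only holds approximately, because the radial slice falls between Fourier samples. This is one reason practical analytical methods filter and back-project in the spatial domain instead of interpolating in Fourier space.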

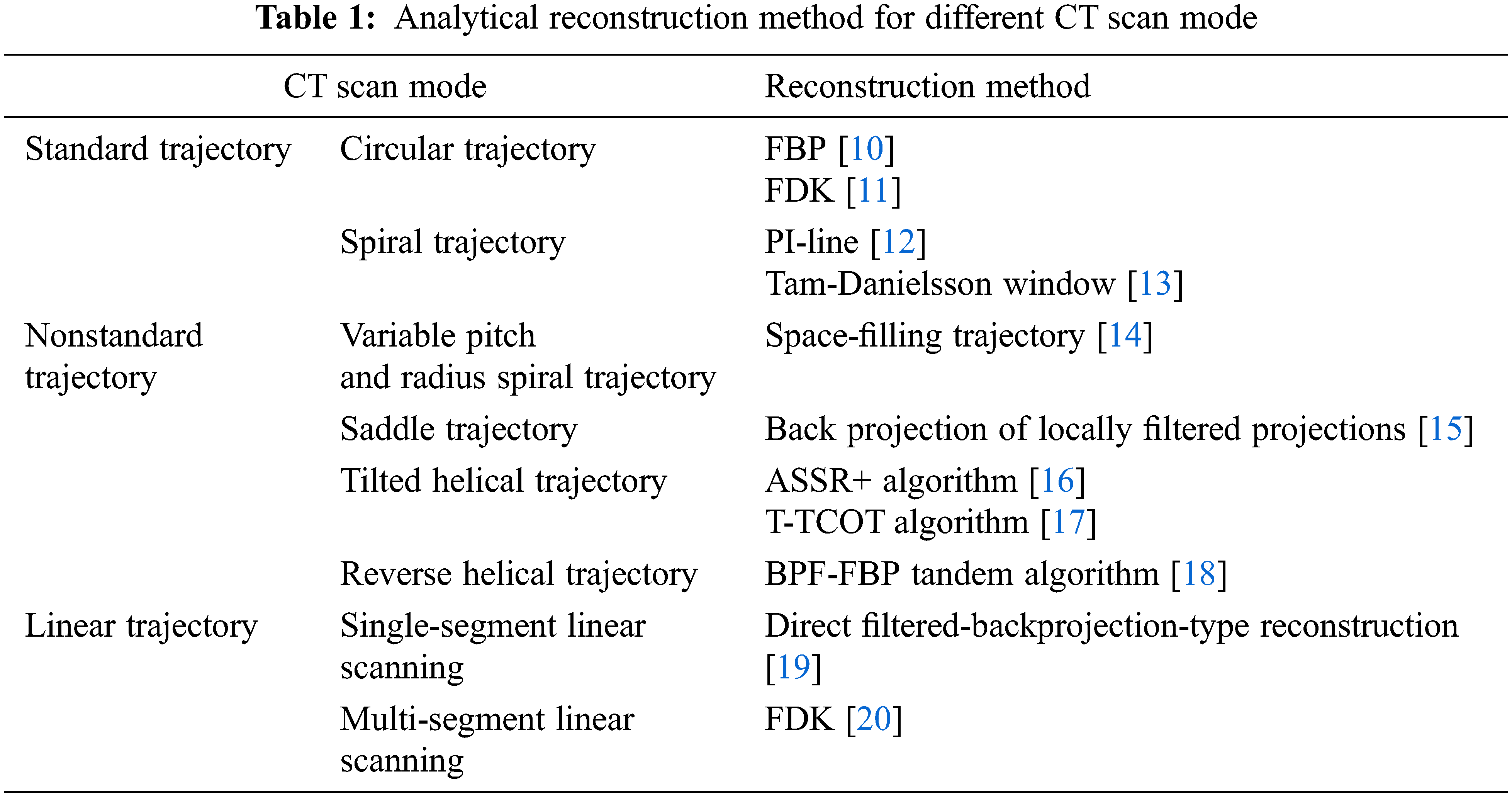

Because different scanning modes have different geometries, they require different reconstruction methods. This section introduces analytical reconstruction methods according to the CT scan mode. Table 1 lists some of the reconstruction methods corresponding to each scan mode.

For the first 30 years after the invention of CT, FBP was the dominant analytical algorithm for CT image reconstruction. It is both computationally efficient and highly accurate. In terms of efficiency, the algorithm parallelizes very well, so the image can be reconstructed almost in real time while the patient is being scanned. In terms of accuracy, when the input data (the sinogram) are acquired under ideal conditions, the algorithm reconstructs an accurate image of the scanned object. Under non-ideal conditions, however, FBP has clear limitations, because it cannot model how a CT system behaves in such conditions. Non-ideal conditions may arise from several factors:

- the basic physics of X-rays, such as beam hardening and scatter;
- the statistics of data collection, such as limited X-ray photon flux and electronic noise;
- geometric factors of the system, such as partial volume effects and finite focal spot and detector sizes;
- patient factors, such as positioning and motion.

Because of these limitations, patients often have to receive higher radiation doses to achieve acceptable image quality [6].
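
The filter-then-backproject structure of FBP can be made concrete with a minimal pure-Python sketch of parallel-beam FBP. The sketch makes several assumptions:

- The sinogram is the ideal, noise-free analytic sinogram of a centred uniform disk, matching the "ideal conditions" above.
- Filtering uses the standard spatial-domain Ram-Lak (ramp) kernel.
- Back-projection uses linear interpolation between detector bins.
- The disk radius, 90 angles, and 65 detector bins are arbitrary illustration values.

Clinical FBP uses fan- or cone-beam geometry, apodized kernels, and FFT-based filtering.

```python
import math

def disk_sinogram(radius, n_angles, n_det, t_max=1.0):
    """Analytic parallel-beam sinogram of a centred disk with unit attenuation.

    Every projection of a centred disk is the same chord length: p(t) = 2*sqrt(r^2 - t^2).
    """
    tau = 2 * t_max / (n_det - 1)                                   # detector spacing
    ts = [(j - (n_det - 1) / 2) * tau for j in range(n_det)]
    proj = [2 * math.sqrt(radius ** 2 - t ** 2) if abs(t) < radius else 0.0 for t in ts]
    angles = [k * math.pi / n_angles for k in range(n_angles)]      # 0 <= theta < pi
    return angles, [list(proj) for _ in angles], tau

def ram_lak(n, tau):
    """Spatial-domain ramp (Ram-Lak) kernel sampled at n * tau."""
    if n == 0:
        return 1 / (4 * tau ** 2)
    if n % 2 == 0:
        return 0.0
    return -1 / (n ** 2 * math.pi ** 2 * tau ** 2)

def filter_projection(proj, tau):
    """Filtering step: q[j] = tau * sum_m p[m] * h[j - m]."""
    n = len(proj)
    return [tau * sum(proj[m] * ram_lak(j - m, tau) for m in range(n)) for j in range(n)]

def backproject(filtered, angles, tau, x, y):
    """Back-projection step: f(x, y) ~ (pi / K) * sum_k q_k(x cos theta_k + y sin theta_k)."""
    n_det = len(filtered[0])
    centre = (n_det - 1) / 2
    total = 0.0
    for theta, q in zip(angles, filtered):
        u = (x * math.cos(theta) + y * math.sin(theta)) / tau + centre  # fractional bin index
        i = math.floor(u)
        if 0 <= i < n_det - 1:                                      # linear interpolation
            w = u - i
            total += (1 - w) * q[i] + w * q[i + 1]
    return total * math.pi / len(angles)

angles, sino, tau = disk_sinogram(radius=0.5, n_angles=90, n_det=65)
filtered = [filter_projection(p, tau) for p in sino]
points = [(0.0, 0.0), (0.25, 0.0), (0.8, 0.0)]
values = {pt: backproject(filtered, angles, tau, *pt) for pt in points}
for (x, y), v in values.items():
    print(f"f({x}, {y}) = {v:.3f}")
```

With these settings, the reconstructed values inside the disk come out close to 1, and the value well outside it comes out close to 0. Adding random noise to `sino` before filtering shows how the ramp filter amplifies high-frequency noise. That amplification is one reason FBP needs higher doses when data are noisy.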

To overcome the shortcomings of analytical reconstruction, iterative reconstruction was introduced into CT. Analytical reconstruction computes a closed-form solution. Iterative reconstruction instead solves the problem progressively: starting from a preset reconstruction model, it iterates repeatedly until it finds the solution that best matches the measured data. In other words, iterative reconstruction drives image quality through modeling accuracy, and the accuracy of the reconstruction model determines the best image quality that can be achieved. A complex reconstruction model is usually needed to capture how the CT system behaves under non-ideal conditions. The higher the image-quality requirement, the more complex the model, and usually the longer the reconstruction time [21].
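
The iterative principle can be illustrated with the algebraic reconstruction technique (ART) mentioned earlier. ART is the Kaczmarz method applied to the linear system $A\mathbf{x}=\mathbf{p}$. The sketch assumes a hypothetical 2 × 2-pixel image probed by six rays (two rows, two columns, two diagonals), so $A$ is a 0/1 matrix. Each update projects the current estimate onto the hyperplane defined by one ray equation. Real systems have millions of unknowns, model noise and physics explicitly, and need many sweeps.

```python
# Hypothetical 2x2 image [a, b; c, d] flattened to x = [a, b, c, d].
# Each row of A marks the pixels crossed by one ray.
A = [
    [1, 1, 0, 0],  # top row
    [0, 0, 1, 1],  # bottom row
    [1, 0, 1, 0],  # left column
    [0, 1, 0, 1],  # right column
    [1, 0, 0, 1],  # main diagonal
    [0, 1, 1, 0],  # anti-diagonal
]
x_true = [0.2, 0.9, 0.4, 0.7]
p = [sum(a * v for a, v in zip(row, x_true)) for row in A]  # simulated ray sums

def art(A, p, n_sweeps=50, relax=1.0):
    """Kaczmarz/ART: cycle through the rays, correcting x so that ray i matches p[i]."""
    x = [0.0] * len(A[0])
    for _ in range(n_sweeps):
        for row, p_i in zip(A, p):
            residual = p_i - sum(a * v for a, v in zip(row, x))
            norm2 = sum(a * a for a in row)
            x = [v + relax * residual * a / norm2 for v, a in zip(x, row)]
    return x

x_rec = art(A, p)
print([round(v, 6) for v in x_rec])
```

With noisy measurements, a relaxation factor `relax` below 1 is commonly used so that no single ray equation is enforced exactly.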

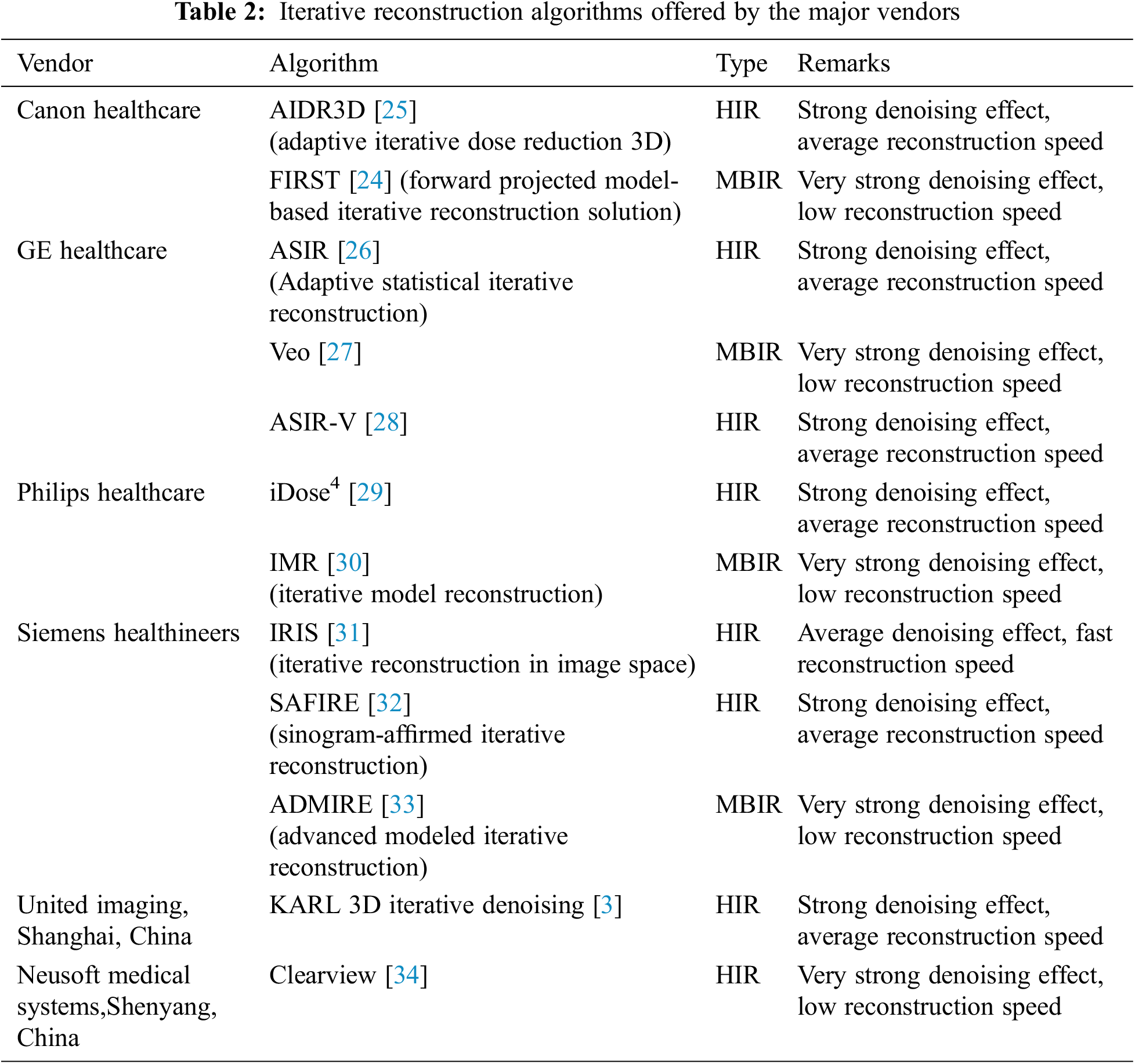

Iterative reconstruction techniques fall into two main classes: hybrid iterative reconstruction (HIR) and model-based iterative reconstruction (MBIR) [22]. Table 2 lists the iterative algorithms of the major vendors.

Compared with FBP, MBIR reconstructs images with a higher signal-to-noise ratio (SNR) at a lower radiation dose. It achieves this by using sophisticated models to minimize the difference between the measured data and synthetic data computed from the current image estimate. However, it requires substantial computing power and long reconstruction times [1]. Veo (GE Healthcare) was the first MBIR algorithm [23]. FIRST (Canon Healthcare), a three-dimensional (3D) model-based reconstruction approach, was developed in 2016 [24]. Other MBIR algorithms include IMR (Philips Healthcare) and ADMIRE (Siemens Healthineers).
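
MBIR-type methods are often described as minimizing a penalized, statistically weighted least-squares objective:

$$\Phi(\mathbf{x})=\sum_i w_i\,(y_i-[A\mathbf{x}]_i)^2+\beta R(\mathbf{x})$$

Here the weights $w_i$ down-weight noisy measurements, and $R$ penalizes roughness. The sketch below minimizes such an objective by plain gradient descent on a hypothetical 2 × 2-pixel problem. The following are all assumptions:

- the weights and the noise values;
- $\beta = 0.1$ and the neighbour-difference penalty;
- the step size.

Vendor MBIR algorithms such as Veo, FIRST, and IMR are proprietary and use far more detailed system, noise, and optics models.

```python
# Hypothetical 2x2 image x = [a, b, c, d] probed by six rays (rows, columns, diagonals).
A = [[1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 0, 1], [0, 1, 1, 0]]
x_true = [1.0, 2.0, 3.0, 4.0]
noise = [0.1, -0.1, 0.05, -0.05, 0.0, 0.1]          # fixed, made-up measurement noise
y = [sum(a * v for a, v in zip(row, x_true)) + e for row, e in zip(A, noise)]
w = [1.0, 1.0, 1.0, 1.0, 0.5, 0.5]                   # assumed weights: diagonal rays noisier
pairs = [(0, 1), (2, 3), (0, 2), (1, 3)]             # horizontally/vertically adjacent pixels
beta, step, n_iter = 0.1, 0.05, 500

def objective(x):
    """Weighted data misfit plus roughness penalty."""
    misfit = sum(wi * (yi - sum(a * v for a, v in zip(row, x))) ** 2
                 for row, yi, wi in zip(A, y, w))
    roughness = sum((x[j] - x[k]) ** 2 for j, k in pairs)
    return misfit + beta * roughness

def gradient(x):
    """Gradient of objective(x) with respect to every pixel."""
    g = [0.0] * len(x)
    for row, yi, wi in zip(A, y, w):
        r = sum(a * v for a, v in zip(row, x)) - yi  # forward-projected minus measured
        for j, a in enumerate(row):
            g[j] += 2 * wi * r * a
    for j, k in pairs:
        d = 2 * beta * (x[j] - x[k])
        g[j] += d
        g[k] -= d
    return g

x = [0.0] * 4
start = objective(x)
for _ in range(n_iter):
    x = [v - step * gv for v, gv in zip(x, gradient(x))]
print(f"objective {start:.3f} -> {objective(x):.4f}, x = {[round(v, 3) for v in x]}")
```

Raising `beta` smooths the estimate further at the cost of bias towards equal neighbouring pixels. This is a small-scale version of the noise-versus-resolution trade-off that MBIR parameters control.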

HIR combines statistical system modeling with forward-projection steps to reach a compromise between MBIR and FBP. The first HIR algorithms, IRIS (Siemens Healthineers) and ASIR (GE Healthcare), were developed in 2008. Several others followed, such as iDose4 (Philips Healthcare) and ClearView (Neusoft Medical Systems, Shenyang, China).

Although iterative reconstruction reduces the radiation dose, its image quality is still not fully satisfactory. Modeling complexity is limited, so iteratively reconstructed images often look different from FBP images obtained under ideal conditions. Their noise texture is often described as blotchy, plastic, or unnatural [1].

3 AI-Based Reconstruction

Artificial intelligence is widely used in fields such as coverless information hiding and machine vision [35–39]. AI can help analyze medical images or signals to identify deviations from normal patterns that may indicate disease [40]. Object detection is an image processing technique of great significance to medical image processing. It relies heavily on predicting bounding boxes together with object categories and classification confidences [37]. During the COVID-19 pandemic of the past two years, AI-based CT reconstruction technology has been instrumental in detecting pneumonia infections [41,42]. The emerging field of AI has attracted substantial interest for its potential to improve CT image reconstruction [43], and a number of research teams are investigating this application.

Neural networks are among the most popular AI methods at present. Sparse-view CT reconstruction helps reduce radiation exposure, but obtaining a high-quality image from only a few projections remains difficult. Consequently, deep learning-based reconstruction of sparse-view CT is an active research topic. Fig. 1 shows the process of image reconstruction with a neural network:

1. The low-dose sinogram is fed into the network.
2. The resulting suboptimal image is compared with the reference image reconstructed from the corresponding high-dose data. The comparison uses criteria such as image noise, low-contrast resolution, and low-contrast detectability.
3. The differences are used to update the network by backpropagation.
4. Steps 1 to 3 are repeated until the output image reaches a specified level of accuracy [44–47].

Figure 1: Schematic view of the neural network-based reconstruction process
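
The compare-and-update cycle in Fig. 1 can be reduced to its bare mechanics. For brevity, the hypothetical sketch below works in the image domain rather than on sinograms. Its components are:

- a single learnable 3-tap convolution, standing in for the network;
- synthetic sine profiles, playing the role of high-dose reference images;
- noisy copies of those profiles, playing the role of low-dose inputs;
- the mean squared error, as the only comparison criterion;
- a hand-derived gradient step, the one-layer case of backpropagation.

Practical systems use deep CNNs, automatic differentiation, and richer image-quality criteria.

```python
import math
import random

random.seed(42)
N_SAMPLES, N_PAIRS, NOISE_SD, LR, EPOCHS = 64, 20, 0.3, 0.05, 200  # assumed settings

def make_pair():
    """Hypothetical training pair: noisy 'low-dose' input and smooth 'high-dose' target."""
    phase = random.uniform(0, 2 * math.pi)
    clean = [math.sin(2 * math.pi * i / N_SAMPLES + phase) for i in range(N_SAMPLES)]
    noisy = [c + random.gauss(0, NOISE_SD) for c in clean]
    return noisy, clean

def neighbours(x, i):
    """Left, centre, and right samples, with edge replication."""
    return x[max(i - 1, 0)], x[i], x[min(i + 1, len(x) - 1)]

def apply_filter(w, x):
    """Learnable 3-tap convolution: a one-layer stand-in for the network."""
    return [sum(wk * xk for wk, xk in zip(w, neighbours(x, i))) for i in range(len(x))]

def dataset_loss(w, pairs):
    """Mean squared error between network output and high-dose target."""
    total = sum(sum((o - t) ** 2 for o, t in zip(apply_filter(w, x), y)) for x, y in pairs)
    return total / (len(pairs) * N_SAMPLES)

pairs = [make_pair() for _ in range(N_PAIRS)]
w = [0.0, 1.0, 0.0]                       # start as the identity: output = low-dose input
initial_loss = dataset_loss(w, pairs)
for _ in range(EPOCHS):
    grad = [0.0, 0.0, 0.0]
    for x, y in pairs:
        out = apply_filter(w, x)
        for i in range(N_SAMPLES):
            d_out = 2 * (out[i] - y[i]) / (N_PAIRS * N_SAMPLES)  # dLoss/dout[i]
            for k, xk in enumerate(neighbours(x, i)):
                grad[k] += d_out * xk                             # chain rule to tap k
    w = [wk - LR * gk for wk, gk in zip(w, grad)]                 # gradient-descent update
final_loss = dataset_loss(w, pairs)
print(f"taps {[round(v, 3) for v in w]}, MSE {initial_loss:.4f} -> {final_loss:.4f}")
```

Starting from the identity filter, training typically shifts weight from the centre tap to its neighbours. The network thus learns a smoothing filter that trades a little sharpness for lower noise.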

Analytical reconstruction is efficient but requires adequate sampling. Iterative reconstruction models the statistical and physical properties of the imaging device, but discrepancies remain between the model and the actual physical factors. AI-based reconstruction, by contrast, learns features from low-quality image data and can reconstruct images with greater accuracy and speed.

3.1 Classification of AI-Based Reconstruction

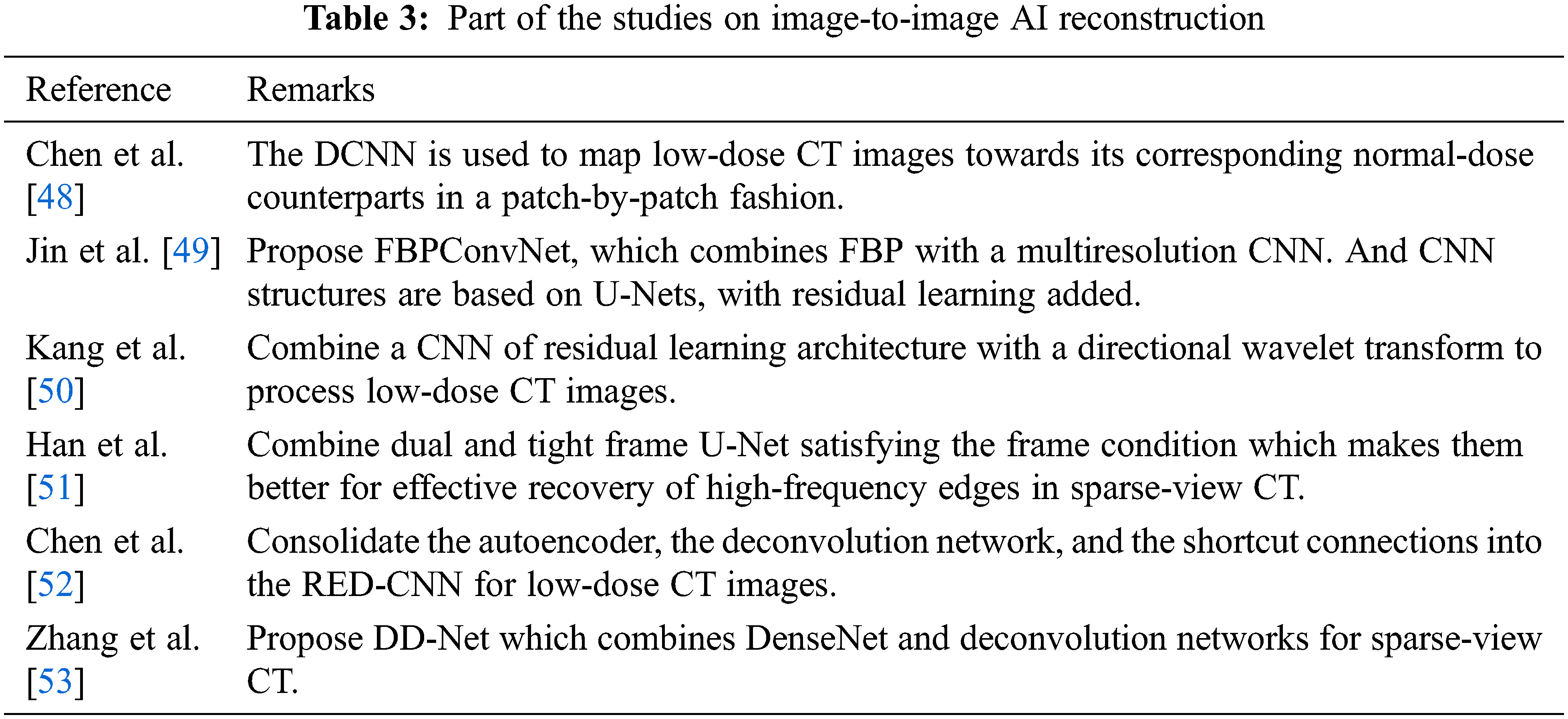

One type of AI-based reconstruction is image-to-image reconstruction, which operates in image space. Its main idea is to train neural networks that transform low-dose or sparse-view CT images into acceptable images without requiring raw or sinogram data. Table 3 summarizes some of the studies on image-to-image AI-based reconstruction. Examples include a deep convolutional neural network (DCNN) combined with FBP (FBPConvNet) [49], a directional wavelet transform-based CNN [50], and the dual-frame and tight-frame U-Nets [51]. These methods produce high-quality images at high speed. In most cases, CNNs are trained on low-dose CT images to reproduce the corresponding routine-dose images.

Although these image-to-image methods obtain good results, their outputs may be inconsistent with the original measurements. This is because they predict the output from learned image priors rather than from the measured data.

Compared with conventional reconstruction methods, AI-based image reconstruction, especially deep learning (DL), has the advantage of learning features directly from raw input data during training.

Data-to-image reconstruction methods convert the measurements directly into images. Zhu et al. [54] presented an image reconstruction framework called automated transform by manifold approximation (AUTOMAP). AUTOMAP learns a mapping from the sensor domain to the image domain by supervised learning on pairs of sensor-domain and image-domain data. It replaces sequential, modular reconstruction chains, which may include discrete transforms such as Fourier or Hilbert transforms, data interpolation, nonlinear optimization, and various filtering steps.
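
AUTOMAP's central idea is to learn the sensor-to-image mapping from example pairs instead of hand-coding the inverse transform. This can be shown with a single linear layer in a hypothetical sketch:

- The "sensor" data are six ray sums of a 2 × 2-pixel image.
- The mapping is a 4 × 6 weight matrix, fitted by gradient descent on 40 random training images.
- The learned matrix is then applied to an unseen image.

AUTOMAP itself uses fully connected layers followed by convolutional layers and is trained on large datasets. The zero-mean random training images are an assumption chosen to keep this toy problem well conditioned.

```python
import random

random.seed(3)
# Hypothetical sensor model: six ray sums of a 2x2 image x = [a, b, c, d].
A = [[1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 0, 1], [0, 1, 1, 0]]

def sense(x):
    """Forward model: measurements = A @ x."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def reconstruct(W, y):
    """Learned data-to-image map: image = W @ measurements (one fully connected layer)."""
    return [sum(w * m for w, m in zip(row, y)) for row in W]

train_x = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(40)]
train_y = [sense(x) for x in train_x]

W = [[0.0] * 6 for _ in range(4)]        # 4 image pixels x 6 sensor values
LR, EPOCHS = 0.2, 200
for _ in range(EPOCHS):
    grad = [[0.0] * 6 for _ in range(4)]
    for x, y in zip(train_x, train_y):
        pred = reconstruct(W, y)
        for i in range(4):
            err = 2 * (pred[i] - x[i]) / len(train_x)   # d(MSE)/d(pred[i])
            for j in range(6):
                grad[i][j] += err * y[j]
    W = [[w - LR * g for w, g in zip(wr, gr)] for wr, gr in zip(W, grad)]

x_new = [0.3, -0.5, 0.8, 0.1]             # image not seen during training
x_hat = reconstruct(W, sense(x_new))
print([round(v, 4) for v in x_hat])
```

Because this toy sensor model is linear, the learned matrix ends up acting as an inverse of `A`. AUTOMAP's nonlinear layers are what allow it to go beyond such linear inverses.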

He et al. [55] presented inverse Radon transform approximation (iRadonMAP), which transforms Radon projections into an image. In addition to standard DL components, they used a fully connected filtering layer and a sinusoidal back-projection layer to build a Radon inversion strategy. This strategy is further enhanced by a shared network structure.

Hybrid methods combine AI with iterative algorithms in order to retain the benefits of iterative reconstruction.

One family of hybrid methods unrolls an iterative process into a network that takes the geometric properties of the image into account. The learned experts' assessment-based reconstruction network (LEARN) unrolls an iterative reconstruction scheme built on a fields-of-experts regularizer, which is then trained in a data-driven manner [56]. In the manifold and graph integrative convolutional network (MAGIC), the iterative scheme is unrolled, and the network operates on both images and manifolds [57]. A network based on the alternating direction method of multipliers (ADMM) has been reported to significantly outperform conventional iterative reconstruction methods in recovering image details and improving low-contrast saliency [58].
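
Unrolling turns a fixed number of iterations of an iterative algorithm into network layers whose parameters are fitted to data. The sketch below is a minimal, hypothetical illustration, not LEARN itself, whose unrolled layers contain learned convolutional regularizers trained by backpropagation. Here, three gradient-descent (Landweber) iterations are unrolled on a toy 2 × 2-pixel problem. Only the per-layer step sizes are fitted, using a brute-force coordinate search over training pairs instead of gradient-based training.

```python
import random

random.seed(1)
# Hypothetical toy geometry: six ray sums (rows, columns, diagonals) of a 2x2 image.
A = [[1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 0, 1], [0, 1, 1, 0]]

def forward(x):
    """Forward projection A @ x."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def unrolled_net(y, steps):
    """Each 'layer' is one gradient step x <- x - alpha_k * A^T (A x - y)."""
    x = [0.0] * 4
    for alpha in steps:
        r = [ax - yi for ax, yi in zip(forward(x), y)]
        grad = [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(4)]
        x = [v - alpha * g for v, g in zip(x, grad)]
    return x

def loss(steps, data):
    """Mean squared reconstruction error over (image, measurement) pairs."""
    return sum(sum((p - t) ** 2 for p, t in zip(unrolled_net(y, steps), x))
               for x, y in data) / len(data)

train = []
for _ in range(30):
    x = [random.random() for _ in range(4)]
    train.append((x, [m + random.gauss(0, 0.05) for m in forward(x)]))  # noisy measurements

LAYERS = 3
baseline = [0.1] * LAYERS                        # hand-picked fixed step size
candidates = [0.02 * k for k in range(1, 26)]    # 0.02 ... 0.50
tuned = list(baseline)
for _ in range(3):                               # coordinate search stands in for training
    for k in range(LAYERS):
        tuned[k] = min(candidates + [tuned[k]],
                       key=lambda a: loss(tuned[:k] + [a] + tuned[k + 1:], train))
print(f"steps {[round(a, 2) for a in tuned]}: loss {loss(baseline, train):.4f} "
      f"-> {loss(tuned, train):.4f}")
```

The search keeps a new step size only when it does not increase the training loss. The tuned unrolled network is therefore never worse on the training data than the hand-picked baseline.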

Another family of hybrid methods uses trained networks iteratively. The learned primal-dual hybrid gradient (PDHG) algorithm unrolls proximal primal-dual optimization methods into convolutional neural networks. In doing so, it incorporates a possibly non-linear forward operator into a deep neural network [59]. Chun et al. presented Momentum-Net, the first fast and convergent iterative neural network (INN) architecture [60]. Momentum-Net uses a block-wise MBIR algorithm that combines momentum and majorizers with regression networks. In Deep BCD-NET, CNNs are integrated into iterative image recovery [61].

Wu et al. [62] developed the ACID algorithm, which integrates analytic methods, compressed sensing, iteration refinement and deep learning, in an effort to overcome the instabilities resulting from deep learning in image reconstruction [63].

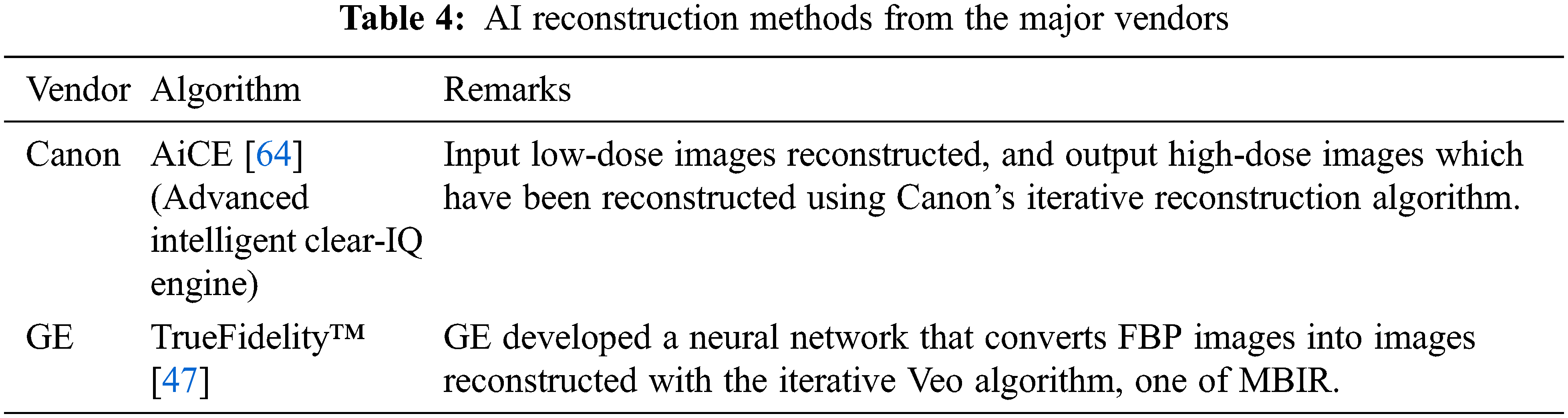

3.2 AI-Based Reconstruction for Commercial Use

There are at least two commercially available AI-based image reconstruction algorithms, listed in Table 4: Advanced Intelligent Clear-IQ Engine (AiCE, Canon Medical Solutions) and TrueFidelity™ (GE Healthcare). AiCE, the first commercial deep learning reconstruction tool, is based on a DCNN [65]. Its training targets are high-quality CT images acquired at high radiation dose and reconstructed with an MBIR algorithm. Training on pairs of HIR images and high-dose MBIR images helps the DCNN learn statistical features that distinguish signal from noise and artifacts. These learned features are then incorporated into the DCNN for subsequent use on test data. Because the DCNN is trained against high-dose MBIR images, experimental results indicate that AiCE yields better reconstructed images than MBIR, at a faster reconstruction speed [66].

TrueFidelity™ (GE Healthcare) feeds highly noisy projection data into a DCNN and compares the output image with its low-noise counterpart on a variety of attributes, such as image noise and low-contrast detectability. Both phantom and clinical studies show that TrueFidelity images greatly improve image quality. The improvement extends to low-dose imaging, high-resolution imaging, and imaging of overweight patients. Further, the algorithm offers the potential to lower the radiation dose of CT acquisitions without sacrificing image quality. This is particularly relevant for screening examinations, pediatric imaging, and repeat examinations [47].

3.3 Advantage of AI-Based Reconstruction

Compared with other reconstruction techniques, AI-based reconstruction offers lower noise, higher contrast and spatial resolution, and better detectability.

Medical imaging phantoms are objects that stand in for human tissue in biomedical research. They are used to ensure that methods for imaging the human body work properly. Phantom studies are usually performed first to verify a hypothesis, and patient studies are then conducted to determine whether it holds in practice. This section therefore presents the advantages of AI-based reconstruction from both phantom and patient studies.

(1) In phantom studies, AI-based image reconstruction outperformed iterative reconstruction techniques for low-dose CT.

Racine et al. [67] compared FBP, HIR (ASiR-V, GE), and AI-based reconstruction (TrueFidelity™, GE) at three dose levels. Using simulated abdominal lesions such as appendicitis, colonic diverticulitis, and calcium-containing urinary stones, they computed noise power spectra and task-based transfer functions. The results showed significant noise reduction with AI-based reconstruction, with noise texture maintained and overall spatial resolution enhanced. TrueFidelity™ outperformed ASiR-V for the simulated clinical tasks at the different dose levels.
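
The noise power spectrum (NPS) separates noise magnitude from noise texture. Its integral equals the noise variance, while its shape shows which spatial frequencies carry the noise. The sketch below computes a 1D NPS from synthetic noise profiles. Clinical NPS measurements instead use 2D or 3D ROIs from phantom scans. The sketch compares white noise with noise smoothed by a 3-point moving average, a crude stand-in for coarser, blotchier texture. The pixel size, profile length, and number of profiles are assumed values.

```python
import cmath
import random

random.seed(7)
PIXEL_MM = 0.5      # assumed pixel spacing (mm)
N = 64              # samples per noise profile
N_PROFILES = 20

def nps_1d(profile, pixel_mm=PIXEL_MM):
    """1D noise power spectrum of one profile: (dx / N) * |DFT(profile - mean)|^2."""
    n = len(profile)
    mean = sum(profile) / n
    d = [v - mean for v in profile]
    spectrum = [sum(d[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
                for k in range(n)]
    return [pixel_mm / n * abs(s) ** 2 for s in spectrum]

def averaged_nps(profiles):
    """Ensemble average of the NPS over several noise realizations."""
    spectra = [nps_1d(p) for p in profiles]
    return [sum(col) / len(spectra) for col in zip(*spectra)]

def mean_frequency(nps, pixel_mm=PIXEL_MM):
    """Spectral centroid from 0 to Nyquist (cycles/mm); lower means coarser noise."""
    n = len(nps)
    freqs = [k / (n * pixel_mm) for k in range(n // 2 + 1)]
    half = nps[: n // 2 + 1]
    return sum(f * s for f, s in zip(freqs, half)) / sum(half)

white = [[random.gauss(0, 10) for _ in range(N)] for _ in range(N_PROFILES)]  # 10 HU noise
smooth = [[(w[i - 1] + w[i] + w[(i + 1) % N]) / 3 for i in range(N)] for w in white]
nps_white, nps_smooth = averaged_nps(white), averaged_nps(smooth)

# Parseval check on one profile: sum of NPS * df (df = 1 / (N * dx)) equals the variance.
mean0 = sum(white[0]) / N
variance0 = sum((v - mean0) ** 2 for v in white[0]) / N
area0 = sum(nps_1d(white[0])) / (N * PIXEL_MM)
print(f"variance {variance0:.2f} HU^2 vs NPS area {area0:.2f} HU^2")
print(f"mean NPS frequency: white {mean_frequency(nps_white):.3f}, "
      f"smoothed {mean_frequency(nps_smooth):.3f} cycles/mm")
```

A reconstruction that lowers noise "while maintaining noise texture" would shrink the NPS area while leaving its mean frequency roughly unchanged. The smoothed noise here instead shifts the spectrum towards low frequencies.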

Higaki et al. [64] reconstructed phantom images with FBP, HIR (AIDR3D, Canon), MBIR (FIRST, Canon), and AI-based reconstruction (AiCE, Canon). Using the same CT scanner, they scanned a phantom containing cylindrical modules of different contrasts. They evaluated image performance with three measures:

- the noise power spectrum (NPS), for noise characteristics;
- the 10% modulation transfer function (MTF) level, for spatial resolution;
- a model observer, for task-based detectability.

AI-based reconstruction images performed better on all of these measures.
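
The 10% MTF level is the spatial frequency at which the modulation transfer function falls to 0.1. The sketch below computes the MTF as the normalized magnitude of the Fourier transform of a line spread function (LSF). It then locates the 10% crossing by linear interpolation between frequency bins. The Gaussian LSF (σ = 0.5 mm) and the 0.1 mm sampling are assumptions, chosen because the result can be checked against the closed form $f_{10}=\sqrt{\ln 10/(2\pi^2\sigma^2)}$. Measured LSFs come from wire or edge phantoms and are noisier.

```python
import cmath
import math

def mtf_from_lsf(lsf, dx):
    """MTF as |DFT(LSF)| normalised to 1 at zero frequency; returns (freqs, mtf) up to Nyquist."""
    n = len(lsf)
    freqs, mags = [], []
    for k in range(n // 2 + 1):
        s = sum(v * cmath.exp(-2j * cmath.pi * k * t / n) for t, v in enumerate(lsf))
        freqs.append(k / (n * dx))
        mags.append(abs(s))
    return freqs, [m / mags[0] for m in mags]

def frequency_at(freqs, mtf, level=0.10):
    """First frequency where the MTF drops to `level`, by linear interpolation between bins."""
    for i in range(1, len(mtf)):
        if mtf[i] <= level:
            f0, f1, m0, m1 = freqs[i - 1], freqs[i], mtf[i - 1], mtf[i]
            return f0 + (m0 - level) / (m0 - m1) * (f1 - f0)
    return None

sigma_mm, dx_mm, n = 0.5, 0.1, 256        # hypothetical Gaussian blur and sampling
lsf = [math.exp(-((i - n // 2) * dx_mm) ** 2 / (2 * sigma_mm ** 2)) for i in range(n)]
freqs, mtf = mtf_from_lsf(lsf, dx_mm)
f10 = frequency_at(freqs, mtf)
f10_exact = math.sqrt(math.log(10) / (2 * math.pi ** 2 * sigma_mm ** 2))
print(f"10% MTF: {f10:.3f} cycles/mm (analytic {f10_exact:.3f})")
```

A higher 10% MTF frequency means finer detail is still transferred with at least 10% modulation, i.e. better spatial resolution.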

Greffier et al. [68] compared an AI-based image reconstruction algorithm (TrueFidelity™, GE) with the conventional reconstruction methods FBP and HIR (ASiR-V, GE) at seven dose levels, assessing its impact on image quality and dose reduction. The results demonstrated that the AI-based algorithm reduced noise magnitude and improved both spatial resolution and detectability, without affecting noise texture.

(2) In patient studies, AI-based image reconstruction showed better image quality than other reconstruction methods.

Akagi et al. [66] examined the clinical applicability of abdominal ultrahigh-resolution CT (U-HRCT) examinations in 46 patients. They compared images reconstructed with AI-based reconstruction (AiCE, Canon) against HIR (AIDR3D, Canon) and MBIR (FIRST, Canon). The standard deviation of attenuation was recorded as the image noise, and the contrast-to-noise ratio (CNR) was calculated. AI-based reconstruction yielded significantly lower image noise and higher CNR than both HIR and MBIR.

Similarly, Tatsugami et al. [69] used a CNN to reconstruct images of 30 adult patients who had undergone clinically indicated CT coronary angiography. Comparing HIR with AI-based image reconstruction, they found that AI-based reconstruction provided lower image noise, higher CNR, and better image quality.
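
Image noise and CNR, the metrics used in the two studies above, reduce to simple ROI statistics. CNR definitions vary between papers. The sketch assumes one common form, $\mathrm{CNR}=|\bar{x}_{\text{lesion}}-\bar{x}_{\text{bg}}|/\sigma_{\text{bg}}$, with noise taken as the standard deviation of attenuation (HU) in a background ROI. The HU values are made up for illustration.

```python
import statistics

def roi_noise(roi_hu):
    """Image noise as the standard deviation of attenuation (HU) in a uniform ROI."""
    return statistics.stdev(roi_hu)

def cnr(lesion_hu, background_hu):
    """Contrast-to-noise ratio: |mean(lesion) - mean(background)| / SD(background)."""
    contrast = abs(statistics.mean(lesion_hu) - statistics.mean(background_hu))
    return contrast / roi_noise(background_hu)

lesion = [70, 72, 68, 71, 69]        # hypothetical HU samples inside a lesion
background = [40, 43, 37, 41, 39]    # hypothetical HU samples in nearby tissue
print(f"noise = {roi_noise(background):.2f} HU, CNR = {cnr(lesion, background):.2f}")
```

For the same contrast, a reconstruction that lowers the background standard deviation raises the CNR. This is how the lower noise reported for AI-based reconstruction translates into higher CNR.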

Singh et al. [65] analyzed 59 adult CT scans to assess the effects of AI-based image reconstruction. They compared image quality and the detection of clinically significant lesions between AI-based reconstruction (AiCE, Canon) and IR images of submillisievert chest and abdomen CT. They found that 97% of the low-dose abdominal CT images and 95% to 100% of the low-dose chest CT images reconstructed with AiCE were adequate for diagnostic interpretation.

3.4 Limitations of AI-Based Reconstruction

AI-based reconstruction methods produce better images than conventional methods such as analytical and iterative reconstruction, but they have limitations. They require larger datasets than conventional methods, their performance can be unstable, and their generalizability is weak [7]. The following subsections discuss these shortcomings in detail.

3.4.1 Requirements of Large Datasets

AI methods, especially deep learning, depend heavily on the amount of training data, and the quality and quantity of the training datasets strongly affect the reconstruction result. The datasets should cover wide variations in patient size and shape. They should also cover variations in the attenuation and margins of diffuse abnormalities found in real clinical data, so that they can be used to cross-validate AI-based reconstruction methods. Large datasets usually require large numbers of labels, which are time-consuming and labor-intensive to produce. In addition, using clinical image data in commercial applications raises legal and ethical issues.

3.4.2 Unstable Performance

The results of AI-based reconstruction are not always ideal, because its performance can be unstable. Antun et al. [63] showed that the instability of deep learning image reconstruction usually takes three forms:

- Tiny, almost undetectable perturbations may cause severe artefacts in the reconstruction.
- Deep learning methods may miss small structural changes such as a tumor. Such overlooked lesions could lead to serious medical errors.
- Algorithm performance may decrease as the number of samples increases. This is counterintuitive, but Antun et al. confirmed it through stability tests with algorithms and easy-to-use software.

3.4.3 Weak Generalizability

Generalizability may be a significant problem when a given AI-based reconstruction method is applied to different datasets or scanners [70]. As described in Section 3.2, commercial AI-based reconstruction methods are available only for a few CT scanners from a few vendors. CT vendors are likely to create different AI-based reconstruction methods for their own scanners, which may increase the complexity of CT image reconstruction. Training a universal model that works on any dataset and scanner is unrealistic, but ways to improve the generalizability of AI-based reconstruction methods should still be sought.

4 Conclusion

This study reviewed AI-based CT image reconstruction. Technology is developing rapidly, medical data keep expanding, and hardware keeps improving, so the combination of AI and medicine is becoming increasingly diverse. AI-based CT image reconstruction improves image quality and reduces image noise, and it is therefore becoming more popular than conventional methods such as analytical and iterative reconstruction. Some AI-based reconstruction methods are already available from commercial CT vendors and are used clinically. However, they still face issues such as the need for large datasets, unstable performance, and weak generalizability. The application prospects of AI-based image reconstruction are broad, but further research is needed. Because this paper analyzes AI-based CT reconstruction in terms of classification, commercial use, advantages, and limitations, it may serve as a reference point for such research.

Acknowledgement: The authors extend their appreciation to the National Key Research and Development Program of China and the UK EPSRC project for funding this work.

Funding Statement: This work is supported by the National Key Research and Development Program of China (2020YFC2003400). Qiang Ni’s work was funded by the UK EPSRC project under grant number EP/K011693/1.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. L. L. Geyer, U. J. Schoepf, F. G. Meinel, J. W. Nance Jr. G. Bastarrika et al., “State of the art: Iterative CT reconstruction techniques,” Radiology, vol. 276, no. 2, pp. 339–357, 2015. [Google Scholar] [PubMed]

2. Y. Zhao, X. Zhao and P. Zhang, “An extended algebraic reconstruction technique (E-ART) for dual spectral CT,” IEEE Transactions on Medical Imaging, vol. 34, no. 3, pp. 761–768, 2014. [Google Scholar] [PubMed]

3. R. Singh, W. Wu, G. Wang and M. K. Kalra, “Artificial intelligence in image reconstruction: The change is here,” Physica Medica, vol. 79, no. 12, pp. 113–125, 2020. [Google Scholar] [PubMed]

4. T. L. Slovis, “Children, computed tomography radiation dose, and the as low as reasonably achievable (ALARA) concept,” Pediatrics, vol. 112, no. 4, pp. 971–972, 2003. [Google Scholar] [PubMed]

5. D. Fleischmann and F. E. Boas, “Computed tomography old ideas and new technology,” European Radiology, vol. 21, no. 3, pp. 510–517, 2011. [Google Scholar] [PubMed]

6. M. J. Willemink and P. B. Noël, “The evolution of image reconstruction for CT—from filtered back projection to artificial intelligence,” European Radiology, vol. 29, no. 5, pp. 2185–2195, 2019. [Google Scholar] [PubMed]

7. E. Ahishakiye, M. B. Van Gijzen, J. Tumwiine, R. Wario and J. Obungoloch, “A survey on deep learning in medical image reconstruction,” Intelligent Medicine, vol. 1, no. 1, pp. 118–127, 2021. [Google Scholar]

8. C. M. McLeavy, M. H. Chunara, R. J. Gravell, A. Rauf, A. Cushnie et al., “The future of CT: Deep learning reconstruction,” Clinical Radiology, vol. 76, no. 6, pp. 407–415, 2021. [Google Scholar] [PubMed]

9. D. Fleischmann and F. E. Boas, “Computed tomography—Old ideas and new technology,” European Radiology, vol. 21, no. 3, pp. 510–517, 2011. [Google Scholar] [PubMed]

10. F. Pontana, A. Duhamel, J. Pagniez, T. Flohr, J. B. Faivre et al., “Chest computed tomography using iterative reconstruction vs filtered back projection (Part 2Image quality of low-dose CT examinations in 80 patients,” European Radiology, vol. 21, no. 3, pp. 636–643, 2011. [Google Scholar] [PubMed]

11. L. Li, Y. Xing, Z. Chen, L. Zhang and K. Kang, “A curve-filtered FDK (C-FDK) reconstruction algorithm for circular cone-beam CT,” Journal of X-ray Science and Technology, vol. 19, no. 3, pp. 355–371, 2011. [Google Scholar] [PubMed]

12. O. Delgado-Friedrichs, A. M. Kingston, S. J. Latham, G. R. Myers and A. P. Sheppard, “PI-line difference for alignment and motion-correction of cone-beam helical-trajectory micro-tomography data,” IEEE Transactions on Computational Imaging, vol. 6, pp. 24–33, 2019. [Google Scholar]

13. S. Shim, N. Saltybaeva, N. Berger, M. Marcon, H. Alkadhi et al., “Lesion detectability and radiation dose in spiral breast CT with photon-counting detector technology: A phantom study,” Investigative Radiology, vol. 55, no. 8, pp. 515–523, 2020. [Google Scholar] [PubMed]

14. A. M. Kingston, G. R. Myers, S. J. Latham, B. Recur, H. Li et al., “Space-filling X-ray source trajectories for efficient scanning in large-angle cone-beam computed tomography,” IEEE Transactions on Computational Imaging, vol. 4, no. 3, pp. 447–458, 2018. [Google Scholar]

15. J. D. Pack, F. Noo and R. Clackdoyle, “Cone-beam reconstruction using the backprojection of locally filtered projections,” IEEE Transactions on Medical Imaging, vol. 24, no. 1, pp. 70–85, 2005. [Google Scholar] [PubMed]

16. M. Kachelrieß, T. Fuchs, S. Schaller and W. A. Kalender, “Advanced single-slice rebinning for tilted spiral cone-beam CT,” Medical Physics, vol. 28, no. 6, pp. 1033–1041, 2001. [Google Scholar]

17. I. A. Hein, K. Taguchi, I. Mori, M. Kazama and M. D. Silver, “Tilted helical Feldkamp cone-beam reconstruction algorithm for multislice CT,” Proceedings of SPIE - The International Society for Optical Engineering, vol. 5032, no.1, pp. 1901–1910, 2003. [Google Scholar]

18. S. Cho, D. Xia, C. A. Pellizzari and X. Pan, “A BPF-FBP tandem algorithm for image reconstruction in reverse helical cone-beam CT,” Medical Physics, vol. 37, no. 1, pp. 32–39, 2010.

19. H. Gao, L. Zhang, Z. Chen, Y. Xing, J. Cheng et al., “Direct filtered-backprojection-type reconstruction from a straight-line trajectory,” Optical Engineering, vol. 46, no. 5, pp. 057003, 2007.

20. E. Morton, K. Mann, A. Berman, M. Knaup and M. Kachelrieß, “Ultrafast 3D reconstruction for X-ray real-time tomography (RTT),” in 2009 IEEE Nuclear Science Symp. Conf. Record (NSS/MIC), Orlando, FL, USA, pp. 4077–4080, 2009.

21. P. B. Noël, A. M. Walczak, J. Xu, J. J. Corso, K. R. Hoffmann et al., “GPU-based cone beam computed tomography,” Computer Methods and Programs in Biomedicine, vol. 98, no. 3, pp. 271–277, 2010.

22. M. J. Willemink, P. A. de Jong, T. Leiner, L. M. de Heer, R. A. Nievelstein et al., “Iterative reconstruction techniques for computed tomography part 1: Technical principles,” European Radiology, vol. 23, no. 6, pp. 1623–1631, 2013.

23. M. J. Willemink, R. A. Takx, P. A. de Jong, R. P. Budde, R. L. Bleys et al., “Computed tomography radiation dose reduction: Effect of different iterative reconstruction algorithms on image quality,” Journal of Computer Assisted Tomography, vol. 38, no. 6, pp. 815–823, 2014.

24. C. Hassani, A. Ronco, A. E. Prosper, S. Dissanayake, S. Y. Cen et al., “Forward-projected model-based iterative reconstruction in screening low-dose chest CT: Comparison with adaptive iterative dose reduction 3D,” American Journal of Roentgenology, vol. 211, no. 3, pp. 548–556, 2018.

25. M. Y. Chen, M. L. Steigner, S. W. Leung, K. K. Kumamaru, K. Schultz et al., “Simulated 50% radiation dose reduction in coronary CT angiography using adaptive iterative dose reduction in three-dimensions (AIDR3D),” The International Journal of Cardiovascular Imaging, vol. 29, no. 5, pp. 1167–1175, 2013.

26. J. Leipsic, T. M. Labounty, B. Heilbron, J. K. Min, G. J. Mancini et al., “Adaptive statistical iterative reconstruction: Assessment of image noise and image quality in coronary CT angiography,” American Journal of Roentgenology, vol. 195, no. 3, pp. 649–654, 2010.

27. M. A. Yoon, S. H. Kim, J. M. Lee, H. S. Woo, E. S. Lee et al., “Adaptive statistical iterative reconstruction and Veo: Assessment of image quality and diagnostic performance in CT colonography at various radiation doses,” Journal of Computer Assisted Tomography, vol. 36, no. 5, pp. 596–601, 2012.

28. H. Kwon, J. Cho, J. Oh, D. Kim, J. Cho et al., “The adaptive statistical iterative reconstruction-V technique for radiation dose reduction in abdominal CT: comparison with the adaptive statistical iterative reconstruction technique,” The British Journal of Radiology, vol. 88, no. 1054, pp. 20150463, 2015.

29. Y. Funama, K. Taguchi, D. Utsunomiya, S. Oda, Y. Yanaga et al., “Combination of a low tube voltage technique with the hybrid iterative reconstruction (iDose) algorithm at coronary CT angiography,” Journal of Computer Assisted Tomography, vol. 35, no. 4, pp. 480–485, 2011.

30. D. Mehta, R. Thompson, T. Morton, A. Dhanantwari and E. Shefer, “Iterative model reconstruction: Simultaneously lowered computed tomography radiation dose and improved image quality,” A Journal of the International Organization for Medical Physics, vol. 1, no. 2, pp. 147–155, 2013.

31. M. S. Bittencourt, B. Schmidt, M. Seltmann, G. Muschiol, D. Ropers et al., “Iterative reconstruction in image space (IRIS) in cardiac computed tomography: Initial experience,” The International Journal of Cardiovascular Imaging, vol. 27, no. 7, pp. 1081–1087, 2011.

32. K. Grant and R. Raupach, SAFIRE: Sinogram affirmed iterative reconstruction, Siemens, NJ, USA: Siemens Medical Solutions Whitepaper, 2012. [Online]. Available: http://www.medical.siemens.com/siemens/en_US/gg_ct_FBAs/files/Definition_AS/Safire.pdf.

33. J. Solomon, A. Mileto, J. C. Ramirez-Giraldo and E. Samei, “Diagnostic performance of an advanced modeled iterative reconstruction algorithm for low-contrast detectability with a third-generation dual-source multidetector CT scanner: Potential for radiation dose reduction in a multireader study,” Radiology, vol. 275, no. 3, pp. 735–745, 2015.

34. L. Zhang, H. Shi and S. Dong, “Impact of artificial intelligence imaging optimization technique on image quality of low-dose chest CT scan,” Chinese Journal of Radiological Medicine and Protection, vol. 12, pp. 722–727, 2020.

35. S. Zhao, M. Hu, Z. Cai, Z. Zhang, T. Zhou et al., “Enhancing Chinese character representation with lattice-aligned attention,” IEEE Transactions on Neural Networks and Learning Systems, vol. 77, pp. 1–10, 2021.

36. S. Zhao, M. Hu, Z. Cai and F. Liu, “Dynamic modeling cross-modal interactions in two-phase prediction for entity-relation extraction,” IEEE Transactions on Neural Networks and Learning Systems, vol. 80, pp. 1–10, 2021.

37. W. Ma, T. Zhou, J. Qin, Q. Zhou and Z. Cai, “Joint-attention feature fusion network and dual-adaptive NMS for object detection,” Knowledge-Based Systems, vol. 241, no. 2, pp. 108213, 2022.

38. K. Yang, J. Jiang and Z. Pan, “Mixed noise removal by residual learning of deep CNN,” Journal of New Media, vol. 2, no. 1, pp. 1–10, 2020.

39. W. Sun, X. Chen, X. R. Zhang, G. Z. Dai, P. S. Chang et al., “A multi-feature learning model with enhanced local attention for vehicle re-identification,” Computers, Materials & Continua, vol. 69, no. 3, pp. 3549–3561, 2021.

40. V. V. Lakshmi and J. S. Leena Jasmine, “A hybrid artificial intelligence model for skin cancer diagnosis,” Computer Systems Science and Engineering, vol. 37, no. 2, pp. 233–245, 2021.

41. M. Mahmoud, Y. Bazi, R. M. Jomaa, M. Zuair and N. A. Ajlan, “Deep learning approach for COVID-19 detection in computed tomography images,” Computers, Materials & Continua, vol. 67, no. 2, pp. 2093–2110, 2021.

42. S. Ketu and P. K. Mishra, “A hybrid deep learning model for COVID-19 prediction and current status of clinical trials worldwide,” Computers, Materials & Continua, vol. 66, no. 2, pp. 1896–1919, 2021.

43. J. M. Wolterink, T. Leiner, M. A. Viergever and I. Išgum, “Generative adversarial networks for noise reduction in low-dose CT,” IEEE Transactions on Medical Imaging, vol. 36, no. 12, pp. 2536–2545, 2017.

44. T. P. Szczykutowicz, B. Nett, L. Cherkezyan, M. Pozniak, J. Tang et al., “Protocol optimization considerations for implementing deep learning CT reconstruction,” American Journal of Roentgenology, vol. 216, no. 6, pp. 1668–1677, 2021.

45. W. Wu, D. Hu, C. Niu, H. Yu, V. Vardhanabhuti et al., “DRONE: Dual-domain residual-based optimization network for sparse-view CT reconstruction,” IEEE Transactions on Medical Imaging, vol. 40, no. 11, pp. 3002–3014, 2021.

46. A. W. Reed, H. Kim, R. Anirudh, K. A. Mohan, K. Champley et al., “Dynamic CT reconstruction from limited views with implicit neural representations and parametric motion fields,” in Proc. of the IEEE/CVF Int. Conf. on Computer Vision, Montreal, Canada, pp. 2258–2268, 2021.

47. J. Hsieh, E. Liu, B. Nett, J. Tang, J. B. Thibault et al., A new era of image reconstruction: TrueFidelity™. GE Healthcare, Connecticut, USA: White Paper, 2019. [Online]. Available: https://www.gehealthcare.com/-/jssmedia/040dd213fa89463287155151fdb01922.pdf.

48. H. Chen, Y. Zhang, W. Zhang, P. Liao, K. Li et al., “Low-dose CT via convolutional neural network,” Biomedical Optics Express, vol. 8, no. 2, pp. 679–694, 2017.

49. K. H. Jin, M. T. McCann, E. Froustey and M. Unser, “Deep convolutional neural network for inverse problems in imaging,” IEEE Transactions on Image Processing, vol. 26, no. 9, pp. 4509–4522, 2017.

50. E. Kang, J. Min and J. C. Ye, “A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction,” Medical Physics, vol. 44, no. 10, pp. e360–e375, 2017.

51. Y. Han and J. C. Ye, “Framing U-Net via deep convolutional framelets: Application to sparse-view CT,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1418–1429, 2018.

52. H. Chen, Y. Zhang, M. K. Kalra, F. Lin, Y. Chen et al., “Low-dose CT with a residual encoder-decoder convolutional neural network (RED-CNN),” IEEE Transactions on Medical Imaging, vol. 36, no. 12, pp. 2524–2535, 2017.

53. Z. Zhang, X. Liang, X. Dong, Y. Xie and G. Cao, “A sparse-view CT reconstruction method based on combination of DenseNet and deconvolution,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1407–1417, 2018.

54. B. Zhu, J. Z. Liu, S. F. Cauley, B. R. Rosen and M. S. Rosen, “Image reconstruction by domain-transform manifold learning,” Nature, vol. 555, no. 7697, pp. 487–492, 2018.

55. J. He, Y. Wang and J. Ma, “Radon inversion via deep learning,” IEEE Transactions on Medical Imaging, vol. 39, no. 6, pp. 2076–2087, 2020.

56. H. Chen, Y. Zhang, Y. Chen, J. Zhang, W. Zhang et al., “LEARN: Learned experts’ assessment-based reconstruction network for sparse-data CT,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1333–1347, 2018.

57. W. Xia, Z. Lu, Y. Huang, Z. Shi, Y. Liu et al., “MAGIC: Manifold and graph integrative convolutional network for low-dose CT reconstruction,” IEEE Transactions on Medical Imaging, vol. 40, no. 12, pp. 3459–3472, 2021.

58. Q. Lyu, D. Ruan, J. Hoffman, R. Neph, M. McNitt-Gray et al., “Iterative reconstruction for low dose CT using plug-and-play alternating direction method of multipliers (ADMM) framework,” Proceedings of SPIE - The International Society for Optical Engineering, vol. 10949, no. 1, pp. 36–44, 2019.

59. J. Adler and O. Öktem, “Learned primal-dual reconstruction,” IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1322–1332, 2018.

60. I. Y. Chun, Z. Huang, H. Lim and J. Fessler, “Momentum-net: Fast and convergent iterative neural network for inverse problems,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 122, no. 10, pp. 1, 2020.

61. I. Y. Chun and J. A. Fessler, “Deep BCD-Net using identical encoding-decoding CNN structures for iterative image recovery,” in IEEE 13th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP), Aristi Village, Greece, pp. 1–5, 2018.

62. W. Wu, D. Hu, W. Cong, H. Shan, S. Wang et al., “Stabilizing deep tomographic reconstruction networks,” arXiv: 2008.01846, 2020.

63. V. Antun, F. Renna, C. Poon, B. Adcock and A. C. Hansen, “On instabilities of deep learning in image reconstruction and the potential costs of AI,” Proceedings of the National Academy of Sciences of the United States of America, vol. 117, no. 48, pp. 30088–30095, 2020.

64. T. Higaki, Y. Nakamura, J. Zhou, Z. Yu, T. Nemoto et al., “Deep learning reconstruction at CT: Phantom study of the image characteristics,” Academic Radiology, vol. 27, no. 1, pp. 82–87, 2020.

65. R. Singh, S. R. Digumarthy, V. V. Muse, A. R. Kambadakone, M. A. Blake et al., “Image quality and lesion detection on deep learning reconstruction and iterative reconstruction of submillisievert chest and abdominal CT,” American Journal of Roentgenology, vol. 214, no. 3, pp. 566–573, 2020.

66. M. Akagi, Y. Nakamura, T. Higaki, K. Narita, Y. Honda et al., “Deep learning reconstruction improves image quality of abdominal ultra-high-resolution CT,” European Radiology, vol. 29, no. 11, pp. 6163–6171, 2019.

67. D. Racine, F. Becce, A. Viry, P. Monnin, B. Thomsen et al., “Task-based characterization of a deep learning image reconstruction and comparison with filtered back-projection and a partial model-based iterative reconstruction in abdominal CT: A phantom study,” Physica Medica, vol. 76, pp. 28–37, 2020.

68. J. Greffier, A. Hamard, F. Pereira, C. Barrau, H. Pasquier et al., “Image quality and dose reduction opportunity of deep learning image reconstruction algorithm for CT: A phantom study,” European Radiology, vol. 30, no. 7, pp. 3951–3959, 2020.

69. F. Tatsugami, T. Higaki, Y. Nakamura, Z. Yu, J. Zhou et al., “Deep learning-based image restoration algorithm for coronary CT angiography,” European Radiology, vol. 29, no. 10, pp. 5322–5329, 2019.

70. X. Liang, D. Nguyen and S. B. Jiang, “Generalizability issues with deep learning models in medicine and their potential solutions: Illustrated with cone-beam computed tomography (CBCT) to computed tomography (CT) image conversion,” Machine Learning: Science and Technology, vol. 2, no. 1, pp. 015007, 2020.


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
