Open Access

ARTICLE

Deep Learning Implemented Visualizing City Cleanliness Level by Garbage Detection

1

Anna University, Chennai, 600025, Tamil Nadu, India

2

Mahendra Engineering College (Autonomous), Namakkal, 637503, Tamil Nadu, India

* Corresponding Author: T. Jesudas. Email:

Intelligent Automation & Soft Computing 2023, 36(2), 1639-1652. https://doi.org/10.32604/iasc.2023.032301

Received 13 May 2022; Accepted 12 July 2022; Issue published 05 January 2023

A correction of this article was approved in:

Correction: Deep Learning Implemented Visualizing City Cleanliness Level by Garbage Detection

Abstract

In urban areas, keeping streets clean is a daily challenge that demands considerable resources, manual labour, and budget. Conventional street cleaning sends sweepers to different metropolitan areas to verify manually whether a street requires cleaning and then take action. This research presents a novel street garbage recognition and robotic navigation technique based on street-level images of the city and multi-level segmentation. For large volumes of data, deep learning-based methods can achieve better classification and object detection accuracy than other learning algorithms. The proposed method uses the Histogram of Oriented Gradients (HOG) to extract features and a deep learning technique to classify the images of the ground-level segmentation process. In this paper, we use mobile edge computing to process street images in advance and select the pictures that meet our needs, which significantly affects recognition efficiency. To measure the cleanliness of urban streets, our street cleanliness assessment approach provides a multi-level assessment model across different layers. In addition, with ground-level segmentation using a deep neural network, a novel navigation strategy is proposed for robotic classification. The Single Shot MultiBox Detector (SSD) discretizes the output space of bounding boxes into a set of default boxes over different aspect ratios and scales per feature map location. Using deep learning for garbage recognition, the SSD can classify and detect garbage accurately and autonomously. Experimental results show that accurate street garbage detection and navigation can reach approximately the same cleaning effectiveness as traditional methods.

Conventionally, street sweepers clean the streets manually and must travel to many different locations. This method demands massive investment and is not optimized in terms of money and time. This research introduces an automated platform that addresses city cleaning problems by using new equipment with computational techniques and cameras to analyse street conditions and produce an efficient schedule for the clean-up process. Deep neural network-based techniques are used because, on large volumes of images, they achieve higher classification and object detection accuracy than conventional machine learning algorithms. The proposed deep learning framework determines how dirty a street is by analysing photographs of it, detecting litter objects through classification, and grading the degree of litter at an early stage [1–4].

Solutions for efficient garbage removal have been suggested for large cities. Existing hardware and software systems already solve some of the computational problems of optimally scheduling waste disposal. Several approaches apply different detection methods to monitor the fill level of garbage containers. Still, the problems of dynamic changes in container fill level and in the city's real traffic have not been solved yet. The proposed research uses a dynamic process of feature extraction and accurate segmentation, under time-optimal criteria, to detect all waste at ground level [4].

Jong et al. [5] note that current change detection methods typically follow one of two approaches: post-classification analysis or difference image analysis. Because of the high-resolution nature of satellite images, a Convolutional Neural Network (CNN) is used in that study to build a useful Difference Image (DI). Deep Neural Networks (DNNs) have been used effectively to support the discovery and highlighting of differences while avoiding some of the weaknesses of the classic methods. Their study proposes a novel way to detect and categorize change using CNNs trained for semantic segmentation.

Li et al. [6] observe that advanced mobile cloud and Internet of Things (IoT) technologies have connected the world like never before and made it seem smaller. Cities are being transformed into smart cities by these technologies, which bring both opportunities and challenges for solving city management problems. Their work addresses the automated, real-time assessment of street cleanliness. It proposes a multi-level assessment system in which mobile stations collect data on the status of streets; the data are transmitted over city networks, analysed in the cloud, and presented to city management online or on mobile devices. This application gives city residents opportunities to contribute to making the city a better place.

Lopeza et al. [7] developed an application to evaluate street cleanliness and waste collection services. The app is based on a plan of indicators that can be used to assess the street cleanliness and waste collection services of a municipality. The indicators plan was applied to the city for which the app was designed. The goals of the app include, but are not limited to, measuring and storing the above indicators and publishing the resulting status of the service elements to the public.

Bai et al. [8] present a novel garbage pickup robot that operates on the ground. The robot detects and identifies garbage autonomously and accurately by using a deep neural network. Experimental results illustrate that the garbage recognition accuracy can reach a high level and that, even without using a deep neural network for path planning, the path navigation plan can reach almost the same cleaning efficiency as traditional methods. Thus, the proposed robot can serve as a good assistant that relieves dustmen of the physical labour of garbage cleaning [9,10].

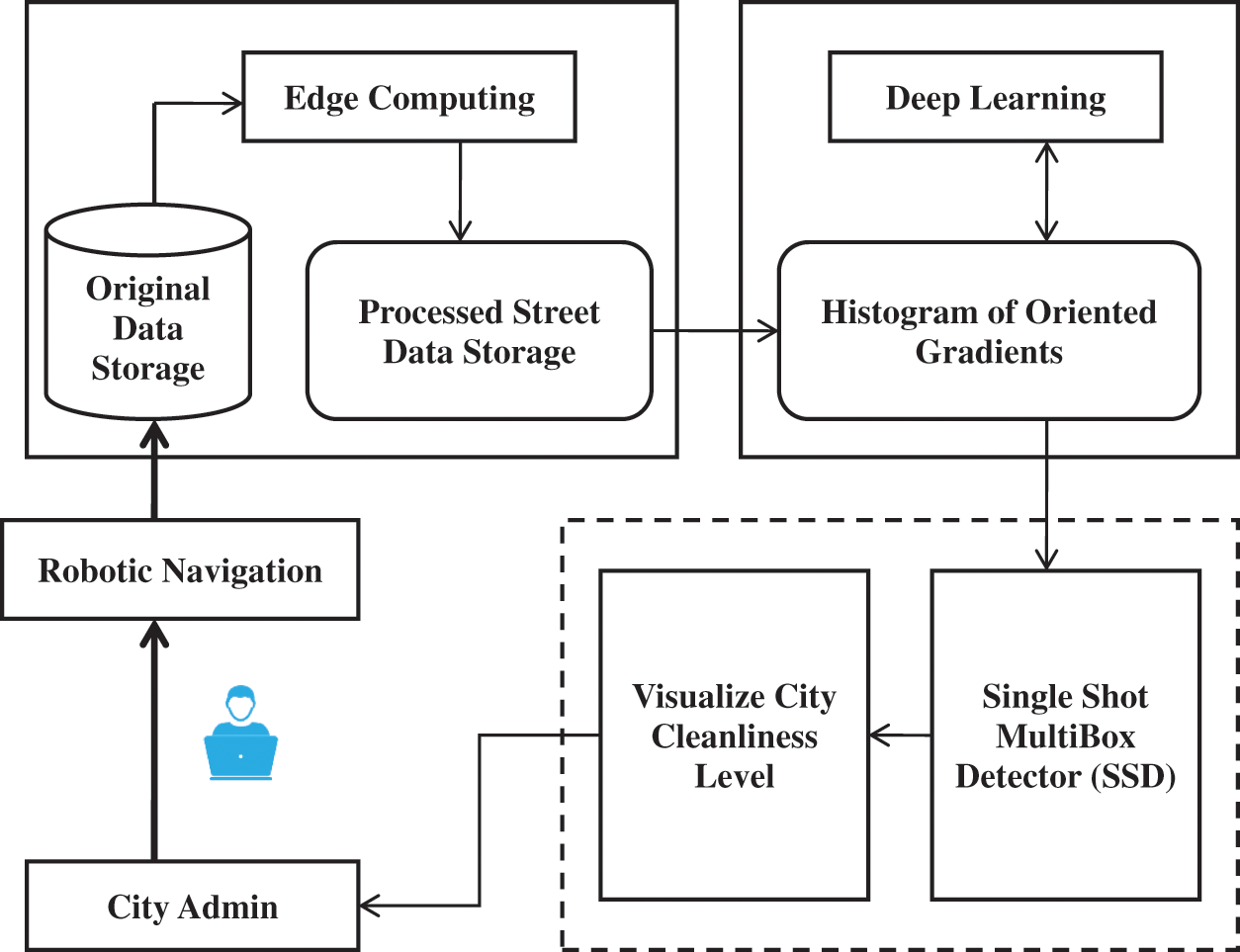

3.1 Process of Data Collection

The proposed methodology automatically detects garbage by applying advanced deep learning algorithms to the street images in the dataset repository. The deep learning pipeline covers pre-processing, classification, and object detection on images gathered through robotic navigation. Based on the number of junk objects detected, the classification method assigns the street to a predefined level of cleanliness. The data analysis dashboard processes information from the raw data store to plot the real-time cleanliness status [11,12]. Edge computing is executed on the original raw data in the repository to prepare the datasets for centralized visualization of the street. It performs the computing operations systematically: feature extraction, classification, ground-level segmentation, and finally litter detection on the street. Fig. 1 shows the proposed flow diagram.

Figure 1: Proposed flow diagram
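The step that assigns a street to a predefined cleanliness level from its count of detected junk objects can be sketched as follows. This is a minimal illustration only: the level names and the count thresholds are hypothetical placeholders, because the article does not state the actual levels.

```python
from bisect import bisect_left

# Hypothetical upper bounds on the litter count for each level and
# hypothetical level names; the article does not specify the real values.
THRESHOLDS = [0, 5, 15]
LEVELS = ["clean", "slightly littered", "littered", "heavily littered"]


def cleanliness_level(litter_count: int) -> str:
    """Map the number of detected litter objects on a street to a level."""
    if litter_count < 0:
        raise ValueError("litter_count must be non-negative")
    # bisect_left finds the first threshold that is >= litter_count, so
    # 0 -> level 0, 1..5 -> level 1, 6..15 -> level 2, above 15 -> level 3.
    return LEVELS[bisect_left(THRESHOLDS, litter_count)]
```

Keeping the thresholds in a sorted list means that the levels can be retuned for a particular city without changing the lookup logic.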

3.2 Overview of Edge Computing

Edge computing is an optimization approach in which data are processed in proximity to where they are sensed. It is a paradigm in which data are processed and stored at the edge of the network; here, the data are decentralized so that the indication points for litter detection can be analysed from the street view. Edge computing is applied in the ground-level segmentation assessment to supply the indication points to the centralized method [13–16]. This research presents the design and evaluation of a decentralized storage system for edge computing, aiming to provide integrated storage for data created by different edge devices.

3.3 Histogram of Oriented Gradient (HOG)

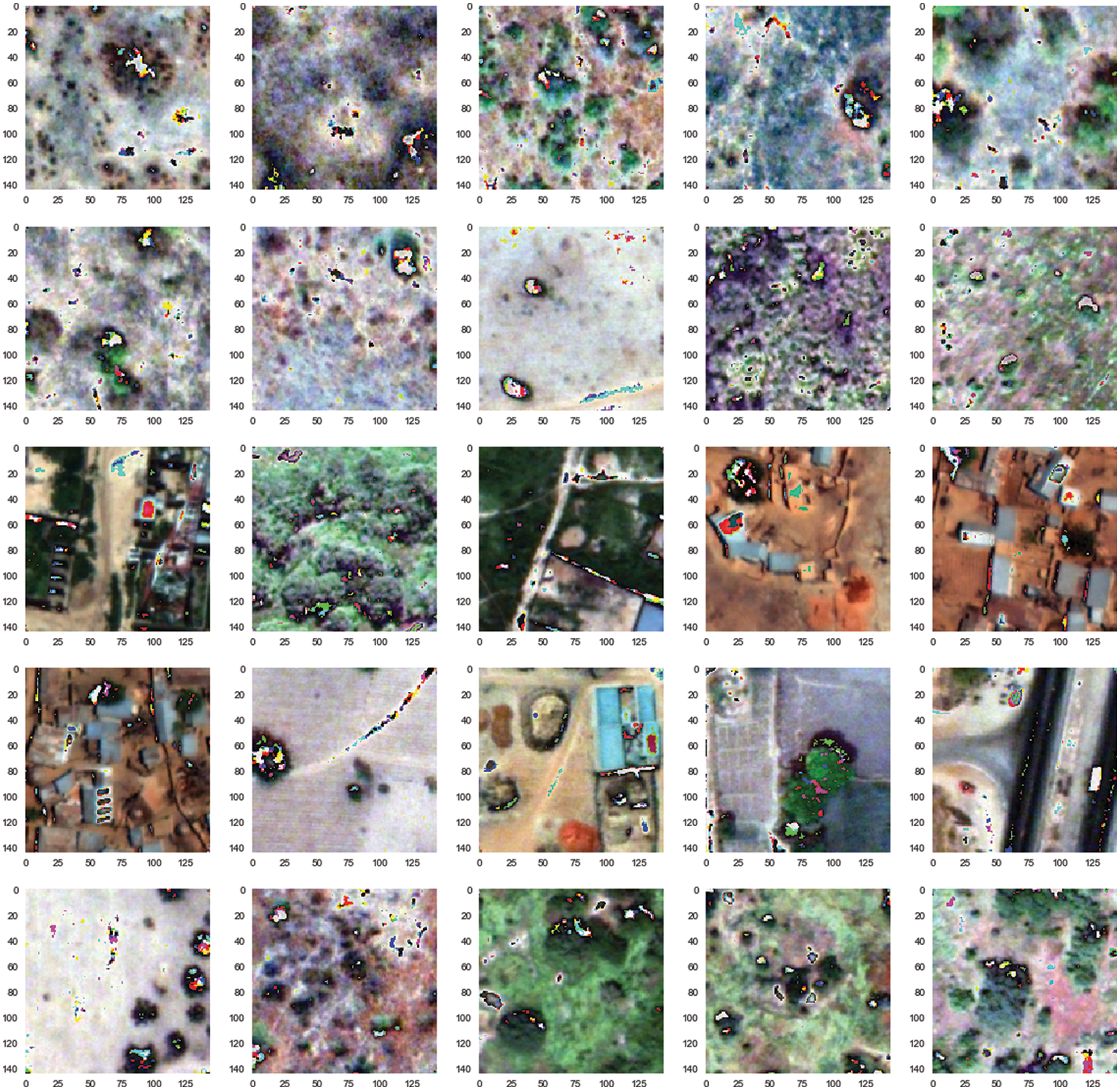

Efficient garbage detection with real-time processing requires a feature extraction and classification method designed with both high classification performance and computational cost in mind. In a comparison of various feature extraction methods, the HOG feature demonstrates the best classification performance for ground-level segmentation. The HOG features are then passed to the deep learning technique, which classifies the high-dimensional features obtained from street view data processing. Fig. 2 shows the city diagnosis for dividing street points.

Figure 2: City diagnosis for dividing street point

Feature extraction obtains features from the dataset of ground images. Its objective is to represent raw images in a compact form that supports decision-making processes such as pattern classification. This stage is responsible for extracting all features that are expected to be effective in ground-level segmentation without incurring the disadvantages of unnecessary dimensionality. Therefore, HOG features should be selected not only to reduce computational cost but also to enhance classification performance.
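To make the idea of a compact HOG representation concrete, the following sketch computes the orientation histogram of a single image cell. It is a simplified illustration under stated assumptions, not the authors' implementation: the 9 unsigned-orientation bins, the central-difference gradients, and the function names are choices made here, and a full HOG descriptor would also interpolate votes between bins and normalize over blocks of cells.

```python
import math


def hog_cell_histogram(cell, n_bins=9):
    """Orientation histogram of one grayscale cell given as a list of rows.

    Gradients use central differences on interior pixels; each pixel votes
    its gradient magnitude into an unsigned-orientation bin over [0, 180).
    """
    height, width = len(cell), len(cell[0])
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The final modulo guards against a rounded angle of exactly 180.
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist


def l2_normalize(hist, eps=1e-6):
    """Scale a histogram to unit L2 norm to reduce illumination effects."""
    norm = math.sqrt(sum(v * v for v in hist) + eps * eps)
    return [v / norm for v in hist]
```

For a cell that contains a single vertical edge, all votes fall into the first bin (horizontal gradient), which shows how the descriptor summarizes edge direction in a handful of numbers.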

HOG features have been used as descriptors in many pattern recognition techniques; they are discussed below in Eqs. (1)–(3).

where,

F: the number of features extracted

Hj: the pixel value at the center of segment j

(n + 1): the iteration number

P: the indices of the pixels whose distance is minimized by Hj

k: the segmentation value

After each assignment of pixels, the arithmetic means are computed and become the new positions of the cluster centers. In this calculation, the components of the center Hj after iteration n + 1 are formed as the arithmetic mean of the components of the pixel values assigned to that center of ground-level segmentation. Fig. 3 shows the feature extraction for segmentation.

Figure 3: Feature extraction for segmentation
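As a reading aid, the center update described above can be written in the form of the standard centroid update. The notation is an assumption made here and does not reproduce the article's numbered equations: x_i stands for the value (or feature vector) of pixel i, and P_j^{(n)} for the set of pixel indices whose nearest center at iteration n is H_j.

```latex
H_j^{(n+1)} = \frac{1}{\lvert P_j^{(n)} \rvert} \sum_{i \in P_j^{(n)}} x_i ,
\qquad
P_j^{(n)} = \left\{\, i : \lVert x_i - H_j^{(n)} \rVert \le \lVert x_i - H_l^{(n)} \rVert
\ \text{for all } l = 1, \dots, k \,\right\}
```

Under this reading, each iteration first assigns every pixel to its nearest center and then moves each center to the arithmetic mean of the pixels assigned to it.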

To compare the proposed feature extraction with the conventional HOG feature, the classification method is kept fixed, for example a compressive sensing technique applied to the selected elements. Experimental results illustrate that the proposed feature extraction achieves better classification performance than traditional HOG features on street-level datasets with accuracy reduction and various illumination conditions.

3.4 Robotic Navigation with Ground Level Segmentation

The model combines robust multi-task learning with feature learning to extract the features used for detecting the classified regions. The main contributions are summarized as follows.

1. An efficient feature extraction method is developed that processes the visual features of images over multi-view regions, including the edge features of the Histogram of Oriented Gradients. The dataset representation is thereby enriched and reduced, and complex noise is further suppressed.

2. The litter object detection framework, built on multi-task learning, detects garbage in the street dataset gathered by robotic navigation. The framework focuses on accurate litter detection using multi-task feature learning and further improves the robustness and efficiency of litter detection.

3. An incremental updating equation is derived for the proposed region detector, making it flexible enough to classify street map regions with the latest available training image data. With this updating equation, the object detector adapts suitably to changing environments.

Fig. 4 demonstrates the assessment of ground-level segmentation using HOG feature extraction over four layers. Each layer is segmented step by step from the ground-level map dataset. The first layer analyses the city map, using robotic navigation data stored in a repository, and divides the city map into areas. The second layer segments the area map and divides each area into blocks. The third layer divides the block map into street maps that give the points of indication to the user admin. Finally, the fourth layer takes the street map with its points of indication and detects the litter by object detection.

Figure 4: Ground level segmentation
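The four-layer division (city, area, block, street) maps naturally onto a nested data structure in which street-level detections are rolled up to the higher layers. The sketch below is illustrative only: the class names, field names, and example values are hypothetical and are not taken from the article.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Street:
    name: str
    litter_points: int = 0  # detections at the street's points of indication


@dataclass
class Block:
    name: str
    streets: List[Street] = field(default_factory=list)

    def litter_count(self) -> int:
        return sum(street.litter_points for street in self.streets)


@dataclass
class Area:
    name: str
    blocks: List[Block] = field(default_factory=list)

    def litter_count(self) -> int:
        return sum(block.litter_count() for block in self.blocks)


@dataclass
class City:
    name: str
    areas: List[Area] = field(default_factory=list)

    def litter_count(self) -> int:
        return sum(area.litter_count() for area in self.areas)


# Hypothetical example data for the four layers.
city = City("Example City", [
    Area("North", [
        Block("N1", [Street("First St", 3), Street("Second St", 0)]),
        Block("N2", [Street("Third St", 7)]),
    ]),
    Area("South", [Block("S1", [Street("Fourth St", 1)])]),
])
```

Because every layer exposes the same `litter_count` method, a dashboard can report cleanliness at whichever layer the user selects.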

3.5 SSD and Garbage Recognition

With the development of deep learning theory, object detection technology has come to be widely used in all fields. As a key technology of advanced data analysis, it is one of the learning methods used to detect objects accurately. In the proposed system, deep learning is used to facilitate object detection for sorting waste. The lightweight deep learning model SSD is capable of detecting objects using its model parameters. The experimental results show that the modified model can recognize the categories of waste accurately. Fig. 5 shows the classification of the SSD detection method.

Figure 5: Classification of detecting SSD method

The extracted features are characterized by their mean and standard deviation over the ground-level segmentation dataset.

Mean (M): the mean of each band and sub-band of the ground-level segmentation is computed by Eq. (4).

Standard Deviation (SD): Eq. (5) measures the variation of the dataset in the repository of robotic navigation detection for the city ground-level analysis. Fig. 6 shows the histogram for object detection.

Figure 6: Histogram for object detection
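The two statistics can be computed per band with the Python standard library, as in the short sketch below. Whether the article's Eq. (5) uses the population or the sample form of the standard deviation is not recoverable from this text; the population form is assumed here, and the function name is illustrative.

```python
import statistics


def band_statistics(values):
    """Return (mean, population standard deviation) of one band's values.

    M  = (1 / N) * sum(x_i)
    SD = sqrt((1 / N) * sum((x_i - M) ** 2))   # population form, assumed
    """
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values, mu=mean)
    return mean, sd
```

If the sample form is intended instead, `statistics.stdev` can be substituted for `statistics.pstdev`.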

To better understand SSD, let us start by explaining where the name of this architecture comes from:

• Single Shot:

This means that the object localization and classification tasks are done in a single forward pass of the network.

• MultiBox:

This is the name of a technique developed for bounding box regression.

• Detector:

The network is an object detector that also classifies the detected objects.

The SSD is one of the most widely used algorithms in the field of current target detection. It has achieved good results in target detection, but some problems remain to be solved, such as poor feature extraction in shallow layers and feature loss in deep layers. Fig. 7 shows the SSD with extracted features.

Figure 7: SSD with feature extracted
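The default boxes that SSD places at every feature map location, over several scales and aspect ratios, can be generated as in the sketch below. It follows the construction in the original SSD formulation (box width s·sqrt(a), height s/sqrt(a), scales spaced linearly between a minimum and a maximum); the function names are illustrative, and the extra box that SSD adds for aspect ratio 1 is omitted for brevity.

```python
import math


def layer_scales(num_layers, s_min=0.2, s_max=0.9):
    """Linearly spaced box scales, one per feature map."""
    if num_layers == 1:
        return [s_min]
    step = (s_max - s_min) / (num_layers - 1)
    return [s_min + step * k for k in range(num_layers)]


def default_boxes(feature_map_size, scale, aspect_ratios):
    """Default boxes (cx, cy, w, h), normalized to [0, 1], for one feature map."""
    boxes = []
    for row in range(feature_map_size):
        for col in range(feature_map_size):
            # Box centers sit at the center of each feature map cell.
            cx = (col + 0.5) / feature_map_size
            cy = (row + 0.5) / feature_map_size
            for ratio in aspect_ratios:
                w = scale * math.sqrt(ratio)
                h = scale / math.sqrt(ratio)
                boxes.append((cx, cy, w, h))
    return boxes
```

Every box at a given scale covers the same area regardless of aspect ratio, so the ratios change only the shape that the detector is primed to match.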

The classification process consists of the following steps, which are used to classify the objects.

Step 1: Let P be the data points in the given input image.

Step 2: Partition the data points into k equal sets.

Step 3: In each set, take the center point as the point of indication.

Step 4: Calculate the distance between each data point di (1 ≤ i ≤ n) and all initial means mj (1 ≤ j ≤ k).

Step 5: For each data point di, find the closest centroid mj and assign di to classification j.

Step 6: Set classification [i] = j.

Step 7: Set nearest point P[i] = (di, mj).

Step 8: For each classification (1 ≤ j ≤ k), recalculate the centroids.

Step 9: For each data point di, compute its distance from the centroid of the present nearest cluster.

Step 10: If all objects are grouped, the algorithm terminates; otherwise, steps 3 to 9 are repeated for objects that may move between classifications.
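Steps 1 to 10 describe a k-means-style clustering loop rather than the SSD network itself, and they can be sketched in plain Python as follows. The sketch makes several assumptions that the steps leave open: points are coordinate tuples, the "k equal sets" are consecutive chunks of the input order, the "center point" of a set is its middle element, distance is Euclidean, and the loop stops when no point changes its classification.

```python
import math


def cluster_points(points, k, max_iter=100):
    """Cluster points (tuples of coordinates) with a k-means-style loop."""
    n = len(points)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of points")
    # Steps 2-3: split into k nearly equal consecutive sets and take the
    # middle point of each set as its initial mean.
    bounds = [j * n // k for j in range(k + 1)]
    means = [points[(bounds[j] + bounds[j + 1] - 1) // 2] for j in range(k)]
    labels = [-1] * n
    for _ in range(max_iter):
        # Steps 4-7: assign every point to its closest mean.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, means[j])) for p in points
        ]
        # Step 10: stop once no point changes its classification.
        if new_labels == labels:
            break
        labels = new_labels
        # Step 8: recompute each centroid as the arithmetic mean of its points.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old mean if a cluster became empty
                means[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, means
```

For two well-separated groups of points, the loop converges after a single update, and each returned mean is the arithmetic mean of its group.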

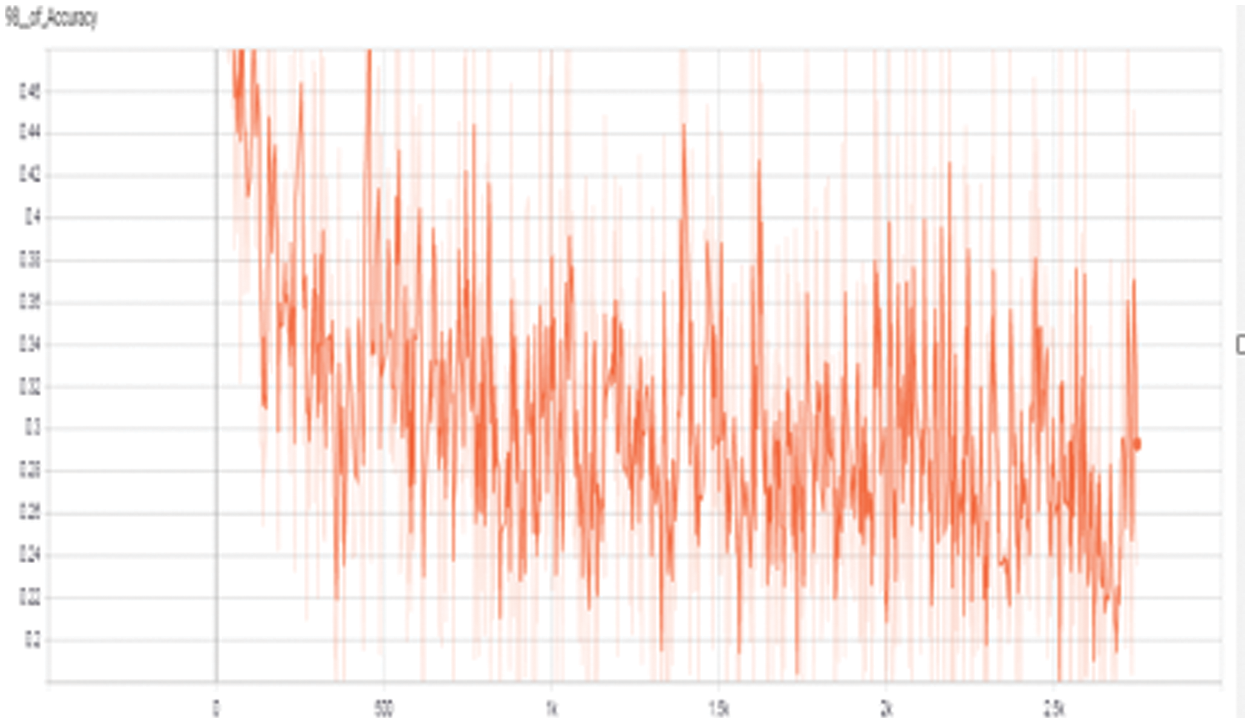

The proposed application has been developed to provide quick results for each indicator in each district, which is essential for making an appropriate diagnosis of the city's cleanliness, optimizing service processes, and supporting decision making. Such a platform can prove effective in reducing resource consumption and the overall operational cost of street cleaning. Fig. 8 shows the garbage detection classification accuracy in graphical form.

Figure 8: Accuracy classification of garbage detection

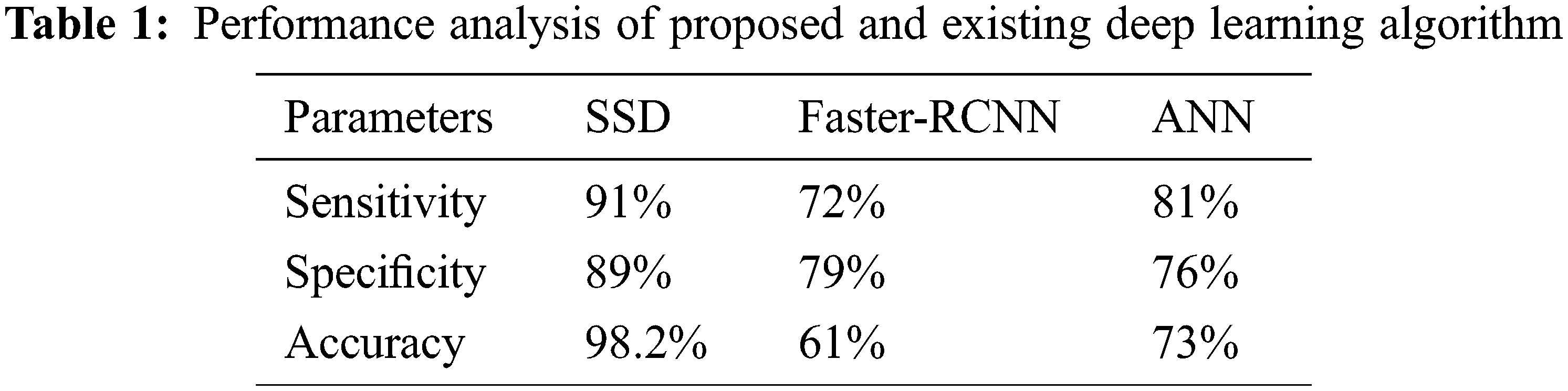

This section evaluates the proposed method, which is enhanced with HOG feature extraction and measures the ground-level segmentation of litter detection by using the SSD algorithm. The proposed system is compared with the existing systems in terms of sensitivity, specificity, accuracy, classification accuracy, time complexity, and error rate.

4.2 Sensitivity, Specificity, and Accuracy

Here, the performance of the proposed improved deep learning method is compared with existing techniques: the proposed SSD classifier is compared with the existing Faster Region-based Convolutional Neural Network (Faster RCNN) and Artificial Neural Network (ANN) classifiers in terms of the sensitivity, specificity, and accuracy of garbage detection, which are calculated by the equations below.

The statistical measures considered are sensitivity, specificity, and accuracy, together with precision and the F-measure:

Accuracy (A), or correct rate: A = (TP + TN) / (TP + TN + FP + FN)

Precision (P), or Positive Predictive Value (PPV): P = TP / (TP + FP)

Recall (R), or True Positive Rate (TPR) or sensitivity: R = TP / (TP + FN)

Specificity (S), or True Negative Rate (TNR): S = TN / (TN + FP)

F-measure (F): F = 2PR / (P + R)

True positives and true negatives are the image classifications that the classifier labels correctly. A true positive refers to pixel values that are properly classified as positive; a positive label that the classifier assigns improperly is recorded as a false positive.

where,

TP specifies the True Positive,

FP denotes the False Positive,

TN can indicate the True Negative,

FN represents the False Negative.
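Given the four counts defined above, the statistical measures follow from their standard definitions, as the sketch below shows. The function name is illustrative, and returning 0.0 when a denominator is zero is a convention chosen here; no counts from the article's experiments are used.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute the standard measures from the four confusion counts."""

    def ratio(numerator, denominator):
        # Convention assumed here: an undefined ratio is reported as 0.0.
        return numerator / denominator if denominator else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)  # sensitivity, true positive rate
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": ratio(tn, tn + fp),  # true negative rate
        "f_measure": ratio(2 * precision * recall, precision + recall),
    }
```

Because accuracy is a weighted average of sensitivity and specificity, it always lies between those two values, which gives a quick sanity check on reported results.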

For city garbage detection, TP counts the garbage regions that are correctly segmented and detected, and TN counts the non-garbage regions that are correctly rejected. FP counts the pixel regions in the image that are incorrectly detected and segmented as garbage, and FN counts the garbage regions that are wrongly missed. The performance of the proposed method and of the existing deep learning algorithms for segmentation and identification is compared in Tab. 1.

According to the comparison table, the proposed method achieves 91% sensitivity, 89% specificity, and 98.2% accuracy, compared with the existing Faster RCNN and ANN classifiers. The classification accuracy on the given test dataset is the overall percentage of test records that the classifier classifies properly; it is shown in Fig. 9.

Figure 9: Comparison of statistical parameters

4.3 Optimal Feature Extraction

Fig. 10 shows the feature classification obtained with the proposed HOG feature extraction, which extracts features that make garbage easier to analyse. The evaluation is compared with existing algorithms.

Figure 10: Feature extraction process

4.4 The Time Complexity for Segmentation

The execution time, in milliseconds, of garbage detection by segmentation and object detection with the proposed method was measured and compared with that of the existing systems. The comparison results are shown in Fig. 11.

Figure 11: Comparison of time complexity

Compared with the Support Vector Machine (SVM) and Recurrent Neural Network (RNN) of the existing systems, the HOG and SSD algorithms of the proposed system take the least time to detect garbage, as the comparison chart shows. Therefore, the proposed system has lower time complexity than the existing systems.

4.5 Accurate Segmentation of SSD

The prediction accuracy of the proposed and existing methods is analysed by how well garbage is recognized from the dataset through deep learning feature extraction. The efficiency of each method is evaluated by its accuracy level. The segmentation accuracy of garbage detection is shown in Fig. 12, which clearly indicates that the proposed method gives higher accuracy for litter detection from ground-level segmentation than the existing methods SVM, CNN, and You Only Look Once (YOLO).

Figure 12: Ground level segmentation

Street cleanliness is one of the challenging problems addressed in this paper, in which litter is detected using deep learning algorithms. With the development of novel technologies, the proposed city cleanliness approach uses decentralized edge computing to detect litter. Robotic navigation stores its data in the repository of the decentralized edge computing system. HOG is the proposed feature extraction algorithm, which supports ground-level segmentation from a large city or area down to a particular point. The SSD detects objects by their bounding boxes, applied to the output of the feature extraction process. The experimental results show that litter detection by object detection achieves better performance and accuracy.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. S. Watanasophon and S. Ouitrakul, “Garbage collection robot on the beach using wireless communications,” in Proc. 3rd Int. Conf. on Informatics, Environment, Energy, Applications, IPCBEE, Singapore, vol. 66, pp. 92–96, 2014.

2. S. Kulkarni and S. Junghare, “Robot-based indoor autonomous trash detection algorithm using ultrasonic sensors,” in Proc. Int. Conf. on Control, Automation, Robotics and Embedded Systems (CARE), Jabalpur, pp. 1–5, 2013.

3. S. Chen, D. Wang, T. Liu, W. Ren and Y. Zhong, “An autonomous ship for cleaning the garbage floating on a lake,” in Proc. Second Int. Conf. on Intelligent Computation Technology and Automation, Changsha, pp. 471–474, 2009.

4. C. Y. Tsai, C. C. Wong, C. J. Yu, C. C. Liu and T. Y. Liu, “A hybrid switched reactive-based visual servo control of 5-dof robot manipulators for pick-and-place tasks,” IEEE Systems Journal, vol. 9, no. 1, pp. 119–130, 2015.

5. K. L. Jong and A. S. Bosman, “Unsupervised change detection in satellite images using convolutional neural networks,” in Proc. 2019 Int. Joint Conf. on Neural Networks (IJCNN), Hungary, IEEE, pp. 1–8, 2019.

6. W. Li, B. Bhushan and J. Gao, “SmartClean: Smart city street cleanliness system using multi-level assessment model (Research notes),” International Journal of Software Engineering and Knowledge Engineering, vol. 28, no. 11n12, pp. 1755–1774, 2018.

7. I. Lopeza, V. Gutierrezb, F. Collantesa, D. Gila, R. Revillaa et al., “Developing an indicators plan and software for evaluating street cleanliness and waste collection services,” Journal of Urban Management, vol. 6, no. 2, pp. 66–79, 2017.

8. J. Bai, S. Lian, Z. Liu, K. Wang and D. Liu, “Deep learning-based robot automatically picking up garbage on grass,” IEEE Transactions on Consumer Electronics, vol. 64, no. 3, pp. 382–389, 2018.

9. G. Ferri, A. Manzi, P. Salvini, B. Mazzolai, C. Laschi et al., “Dust cart, an autonomous robot for door-to-door garbage collection: From dust bot project to the experimentation in the small town of peccioli,” in Proc. 2011 IEEE Int. Conf. on Robotics and Automation (ICRA), Shanghai, pp. 655–660, 2011.

10. M. C. Kang, K. S. Kim, D. K. Noh, J. W. Han and S. J. Ko, “A robust obstacle detection method for robotic vacuum cleaners,” IEEE Transactions on Consumer Electronics, vol. 60, no. 4, pp. 587–595, 2014.

11. R. Boellaard, N. C. Krak and O. S. Hoekstra, “Effects of noise, image resolution, and roi definition on the accuracy of standard uptake values: A simulation study,” Journal of Nuclear Medicine, vol. 45, no. 9, pp. 1519, 2004.

12. J. Palacin, J. A. Salse, I. Valganon and X. Clua, “Building a mobile robot for a floor-cleaning operation in domestic environments,” IEEE Transactions on Instrumentation and Measurement, vol. 53, no. 5, pp. 1418–1424, 2004.

13. Y. Gong, D. Li and L. Yu, “Research on target detection and tracking technology based on intelligent machine vision,” Computer Knowledge and Technology, vol. 34, pp. 216–218, 2016.

14. Y. Gao, B. Zhou and X. Hu, “Research on image recognition based on data enhancement in convolutional neural networks,” Computer Technology and Development, vol. 28, no. 8, pp. 62–65, 2018.

15. F. Cherni, Y. Boutereaa, C. Rekik and N. Derbel, “Autonomous mobile robot navigation algorithm for planning collision-free path designed in dynamic environments,” in Proc. 9th Jordanian Int. Electrical and Electronics Engineering Conf. (JIEEEC), Amman, Jordan, pp. 1–6, 2015.

16. Y. Zhu, R. Mottaghi, E. Kolve, J. J. Lim, A. Gupta et al., “Target-driven visual navigation in indoor scenes using deep reinforcement learning,” in Proc. 2017 IEEE Int. Conf. on Robotics and Automation (ICRA), Singapore, pp. 3357–3364, 2017.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
