Open Access

ARTICLE

Multisensor Information Fusion for Condition Based Environment Monitoring

1 Department of Computer Science and Engineering, Hindusthan College of Engineering and Technology, Coimbatore, 641050, India

2 Department of Electronics and Communication Engineering, Hindusthan College of Engineering and Technology, Coimbatore, 641050, India

* Corresponding Author: A. Reyana. Email:

Intelligent Automation & Soft Computing 2023, 36(1), 1013-1025. https://doi.org/10.32604/iasc.2023.032538

Received 21 May 2022; Accepted 21 June 2022; Issue published 29 September 2022

Abstract

Destructive wildfires are becoming an annual event, similar to climate change, resulting in catastrophes that wreak havoc on both humans and the environment and cause irreversible damage to the ecosystem. The location of the incident and of the hotspot can affect the performance of early fire detection systems. With the advancement of intelligent sensor-based control technologies, the multi-sensor data fusion technique integrates data from multiple sensor nodes. The primary objective in limiting wildfire damage is to identify the exact location of the fire so that fire units can respond as soon as possible. To predict the occurrence of fire in forests, a fast and effective intelligent control system is therefore proposed. The proposed algorithm uses decision tree classification to determine whether the fire detection parameters are within the acceptable range, and further applies fuzzy-based optimization to cope with the complex environment. In experiments, the proposed model achieved a detection rate of 98.3%. The system thus provides real-time monitoring of selected environmental variables for continuous situational awareness and instant responsiveness.

Forests help to maintain the earth’s ecological balance. Uncontrolled fires primarily occur in forest areas that have invaded agricultural lands. Wildfires are started either intentionally or unintentionally; in both cases the result is disastrous, causing irreparable damage to the ecosystem [1]. The Australian bushfires began in 2020 and lasted until March 2021, killing nearly 3 billion animals [2], including mammals, birds, frogs, and reptiles. In 2020 alone, the world was confronted with numerous massive wildfires and forest fires. The Australian bushfires of January 2020 destroyed six million acres of land. Thousands of people were evacuated in Arizona on June 28, 2020, due to a wildfire. On September 8, 2020, California faced more than forty-nine new wildfires that had been burning for several weeks.

Destructive wildfires are becoming an annual event, similar to climate change [3]. As a result, death tolls are increasing, and the resulting catastrophes wreak havoc on both humans and the environment. Firefighters must inspect residual fires on the scene, but their safety is not guaranteed [4]. Despite increased efforts, fire disasters cannot be effectively predicted. Early detection and monitoring of fires can make immediate evacuation possible [5]. Monitoring potentially dangerous areas and providing early warning of fires greatly reduces response time, potential damage, and firefighting costs [6]. It is therefore critical to identify the fire situation and accurately determine the ignition point and the real-time dynamics of the fire [7].

With the advancement of sensor technologies [8], researchers have shown growing interest in condition-based environment monitoring systems. The data obtained in this manner, however, are redundant, ambiguous, and imprecise. The key aspect of the multi-sensor fusion model is that it removes redundancy from the data, providing robust and accurate results [9]. Current multi-sensor data fusion algorithms used for fire detection cannot effectively remove erroneous data, resulting in false alarms and data omission. The Kalman filter algorithm fuses a variety of data while removing noise [10]. The proposed system is therefore built as an enhancement of the Kalman filter. The use of a Wireless Sensor Network (WSN) for fire detection has proven effective in providing accurate and reliable information [11]. Wi-Fi and ultra-wideband signals are being developed to provide a non-Global Positioning System solution [12,13]. However, sensor measurements can be inaccurate, and sensors may stop working due to insufficient energy [14]. Under such conditions the network cannot provide reliable and accurate information [15]. In addition, the sink node receives a large volume of redundant data. These issues can be avoided by employing the data fusion technique [16,17].

The multi-sensor data fusion technique integrates data from multiple sensor nodes [18]. The location of the incident and of the hotspot can affect the performance of early fire detection systems [19]. The primary objective of the proposed system is to identify the exact location of wildfire occurrence, allowing fire units to respond as soon as possible. To predict the occurrence of fire in forests, a fast and effective Adaptive Decentralized Kalman Filter with Decision Tree-based Multi-sensor Fusion (ADKF-DT-MF) fire detection technique is proposed. A decentralized Kalman filter combines data from multiple local Kalman filters. Its performance can equal that of a single Kalman filter that integrates all the independent sensor data in the system, while offering a higher degree of fault tolerance. The primary contributions are:

• The ADKF-DT-MF technique detects wildfire at an early stage, increasing the chance of putting it out by collecting data on temperature and humidity, among other things.

• The ADKF-DT-MF technique obtains redundant and reliable data from multiple sensors for processing, monitoring, tracking, detection, and communication purposes to create environmental awareness.

• The technique provides an intricate intelligent decision-making system with fuzzy optimization capabilities.

• The technique avoids estimation delays at the data fusion center.

• When a hazard is detected, alert messages are delivered through an integrated cloud platform and online short message service (SMS). As a result, communication overhead and battery consumption are reduced. Further, the performance is validated by comparison with the existing Enhanced Neural Network (ENN) technique.

• Thus, real-time monitoring and control of wildfires provide continuous situational awareness and instant responsiveness.

The rest of the article is structured as follows: Section 2 reviews the literature. Section 3 describes in detail the ADKF-DT-MF-based fire detection technique. Section 4 presents the experimental results and discussion, and Section 5 concludes and discusses future scope.

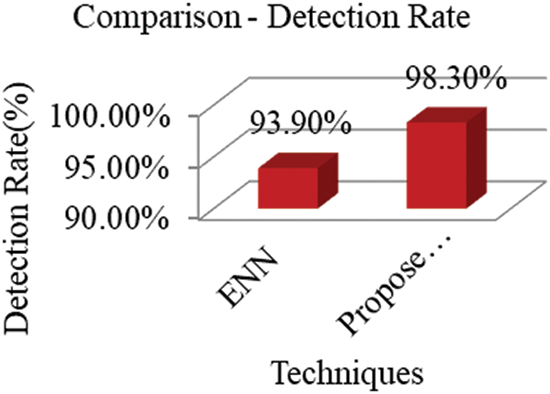

In [20], the authors proposed a reasoning method for a fire detection system using a fusion model based on an extension neural network. The fusion system integrates information at various levels. The sensor signal is first received and processed by the extension neural network: the input layer receives the sensed signals, and the output layer provides the classification result. The layers are joined using a double-weight connection. Three input signals are considered to determine the fire: temperature, smoke, and carbon monoxide concentration. The network is trained to verify the model’s validity and superiority. Although the system demonstrated rapid convergence in fire detection, there were training errors, and the fire identification rate was only 93.9%. The authors of [21] conducted a literature review on video flame and smoke-based fire detection. Forest fires cause environmental damage that takes decades to repair. Fire was previously detected using point sensors based on heat, smoke, flame, and gas. Such sensors are activated only when particles reach the sensor body, so their response lags. This approach is appropriate for small rooms but not for large open spaces. Furthermore, point sensors are not efficient at detecting fire from its other characteristics.

The authors of [22] investigated fire sensor information fusion using a backpropagation neural network to ensure firefighter safety. Robots were used in the study to identify residual fires, lowering labor costs. Temperature, infrared signals, smoke, and carbon monoxide were all considered as fusion parameters. The DS18B20 temperature sensor, as well as the MQ-2 smoke sensor and the MQ-7 carbon monoxide sensor, were used. The fire detection algorithm combines artificial neural networks and fuzzy algorithms. Although the robots’ fire detection assisted the firefighters, fire detection in dense forest areas, as well as fires caused by environmental factors such as high temperatures, continue to be challenging. In [23], the authors sought to summarize onboard multisensor configurations for ground vehicle detection in off-road conditions. When working with condition-based environmental applications, it is critical to understand the different types of sensors and their performance. Range-based, image-based, and hybrid sensors are utilized in intelligent vehicles. The multisensor fusion method is the most effective way to perceive information in a complex environment. Sensor fusion methods are classified as probability-based, classification-based, and inference-based. Despite the authors’ description of the different types of sensors and methods, integrating them into emerging technologies remains a challenge.

The authors of [24] proposed the “Safe From Fire” (SFF) system, which receives data from multiple sensors located throughout the affected area. Fuzzy logic integration monitors the location and severity of fire outbreaks. The system is aided by data fusion algorithms. Text messages and phone calls are used to notify people about the fire. The SFF system detected fires with 95% accuracy. However, the system accepted multiple readings and issued repeated warning alarms. The authors of [25] proposed a mobile autonomous firefighting robot for fire detection in an industrial setting. The robot has two driving wheels, and a motor controls its speed and direction. It employs a variety of sensors, including proximity, thermal infrared, and light sensors, and measures how well the input signal matches the target. Despite an improvement in detection mode, performance on suppressed and noisy targets was inferior.

The authors of [26] developed a hybrid body sensor network architecture to aid in the delivery of medical services. A body sensor network gathers physiological, psychological, and activity data to provide medical services, which requires capturing the users’ current state. The fixed devices allow for flexible human-robot interaction. The layers generate sensory data that are used to perform multisensor fusion. Although multisensor fusion improves the quality of the fused information, fusion decision-making remains a challenge. In [27], the authors created wearable motion-sensing devices for residents’ feet and wrists, followed by an intelligent fire detection system for home safety [28]. The intelligent system transmits real-time temperature and CO concentration, as well as alarms and appliance status. The system’s feasibility and effectiveness were confirmed. However, in two cross-validations, the results showed only a 92.2% recognition rate. According to [29], IoT devices generate useful data and enable data analysis, allowing for optimal real-time environmental decisions. However, wireless sensor networks consume a lot of energy and exhibit poor real-time performance. Feature optimization reduces the network parameters and improves the performance of the system [30]. Catastrophic events cause tremendous losses to life and nature. Global warming has contributed to increased forest fires, a problem that needs immediate attention [31]. Energy efficiency and reliability remain concerns in forest fire monitoring; optimization algorithms can improve energy efficiency, along with data routing, in the context of WSNs [32].

IoT is the primary source of big data. The quality of information is critical in decision-making, and data fusion can help achieve it. The challenges associated with multisensor data fusion include the following: i) Information from multiple sensors must be decisive, sensible, and precise. ii) Replacing the batteries of low-power sensors is complicated, which necessitates energy-efficient sensors. iii) The semantics of fused information must be hidden in critical applications. iv) To obtain accurate information, ambiguity, inconsistency, and conflict in the data must be removed. As a result, developing a multisensor fusion technique that addresses these issues remains a challenge.

3 ADKF-DT Multisensor Fusion Technique

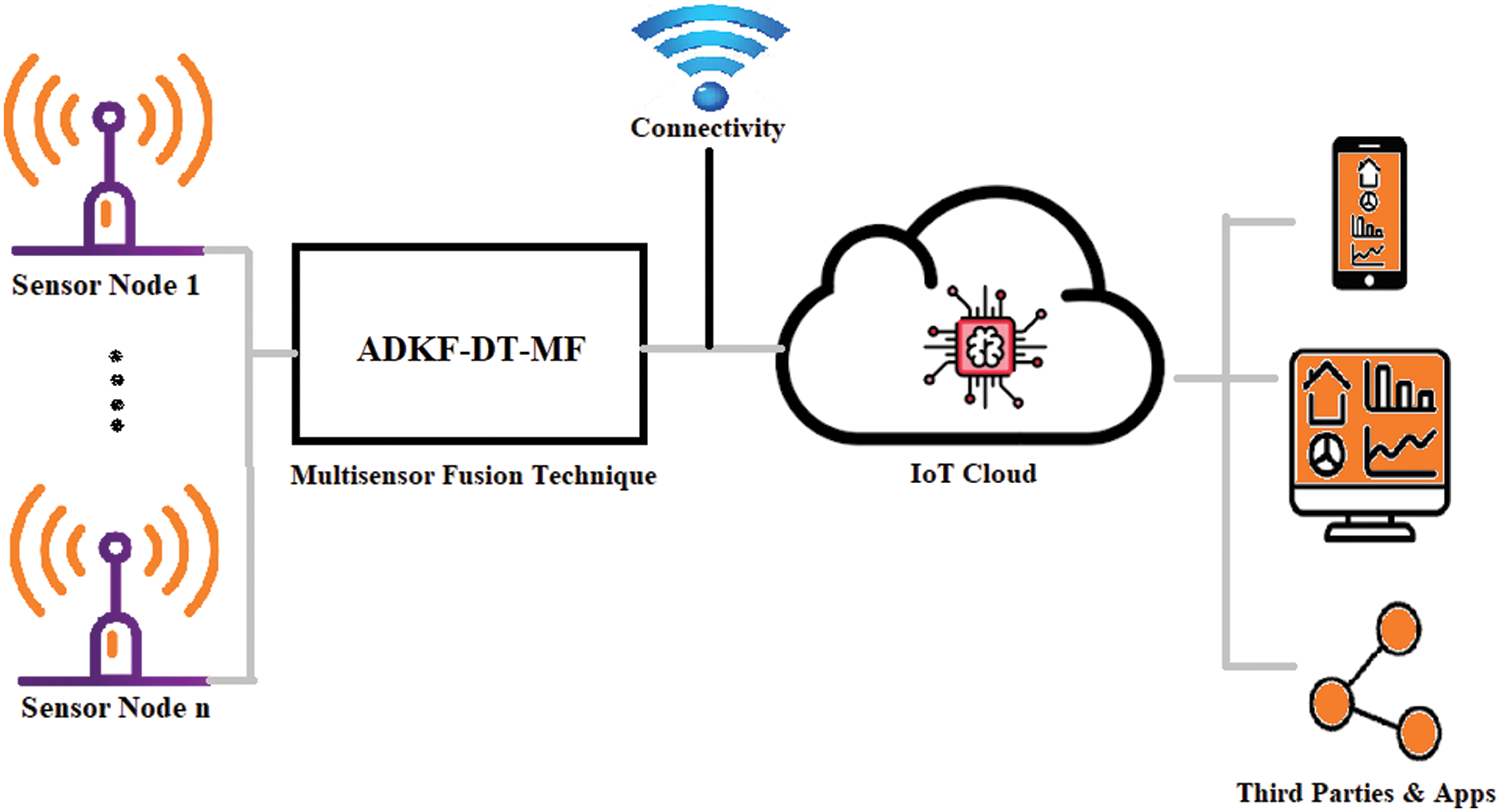

The primary goal of multi-sensor fusion is to improve system performance by combining data from all sensors. Multiple sensors can be used to provide better spatial and temporal coverage, and they also improve estimation accuracy and fault tolerance. To meet long-term and high-precision requirements, a multi-sensor fusion framework must provide weather parameters continuously, at all times. The integrated fusion method isolates measurement failures and fuses state estimates, combining the local estimation results obtained from each sensor. Temperature, humidity, and noise are among the physical attributes monitored by the sensors. As data transmission speeds up, network complexity also grows. When the sensor batteries run out, they cannot be replaced, so it is necessary to extend battery life. Here the ADKF-DT multisensor fusion technique is applied as a possible solution to the challenge of early identification of forest fires. The proposed framework of the wildfire detection system is shown in Fig. 1.

Figure 1: Wildfire control system
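The idea of combining local estimation results at a fusion centre can be illustrated with a minimal Python sketch. It is not the authors' ADKF: it assumes scalar states, a random-walk state model, hypothetical noise variances and readings, and independent local estimation errors, and it fuses the local estimates by inverse-variance weighting. The adaptive part of the technique is not modelled.

```python
from dataclasses import dataclass


@dataclass
class LocalKalmanFilter:
    """Scalar Kalman filter for one sensor node (random-walk state model)."""
    x: float          # state estimate, e.g. temperature in deg C
    p: float          # variance of the estimate
    q: float = 0.01   # process-noise variance (assumed value)
    r: float = 1.0    # measurement-noise variance (assumed value)

    def step(self, z: float) -> None:
        # Predict: the state is assumed constant, so only uncertainty grows.
        self.p += self.q
        # Update: blend prediction and measurement using the Kalman gain.
        gain = self.p / (self.p + self.r)
        self.x += gain * (z - self.x)
        self.p *= (1.0 - gain)


def fuse(filters):
    """Fusion centre: inverse-variance weighted mean of the local estimates."""
    weights = [1.0 / f.p for f in filters]
    total = sum(weights)
    x_fused = sum(w * f.x for w, f in zip(weights, filters)) / total
    p_fused = 1.0 / total
    return x_fused, p_fused


# Two hypothetical nodes observing the same temperature with different noise.
node1 = LocalKalmanFilter(x=30.0, p=4.0, r=1.0)
node2 = LocalKalmanFilter(x=30.0, p=4.0, r=2.0)
for z1, z2 in [(34.8, 36.1), (35.2, 35.5), (35.0, 34.2)]:
    node1.step(z1)
    node2.step(z2)

x_fused, p_fused = fuse([node1, node2])
print(f"fused temperature = {x_fused:.2f}, variance = {p_fused:.3f}")
```

Under the independence assumption, the fused variance is never larger than the smallest local variance, which is one way to read the claim that fusion improves estimation accuracy. If one node fails, `fuse` can simply be called with the remaining filters.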

The sensors transmit data through a wireless medium, and the data are then processed by the proposed technique. Finally, the data are sent to the IoT cloud server, which communicates an early alert message about the fire threat. Dynamic real-time environmental measurements such as temperature and humidity are considered as input data. The system detects fire and sends an alert message to the station. Depending on the budget, any number of sensors can be used; here, NodeMCU (ESP8266) boards interfaced with DHT11 temperature and humidity sensors are used. The observed sensor data are classified to identify threats and gain knowledge of the environment. The decision tree classifies the temperature and humidity parameters at the fusion center. Once a fire is detected in the monitored area, the concerned authorities are informed of its exact location and intensity, which helps them take the necessary action. Since the sensors have limited energy, the algorithm performs functions such as:

i) predefining the active sensors and determining the shortest path for information transmission,

ii) reducing the amount of data sent to the base station, and

iii) putting inactive sensors into sleep mode to save energy.
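Functions i) and iii) can be sketched as follows. This is a hedged illustration rather than the authors' routing scheme: the node names, link costs, battery levels, and the `MIN_BATTERY` threshold are all hypothetical, and Dijkstra's algorithm is used here only as one common way to find a shortest path.

```python
import heapq


def shortest_path(graph, source, sink):
    """Dijkstra over a dict-of-dicts graph; returns (cost, path)."""
    queue = [(0.0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == sink:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, weight in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + weight, neighbour, path + [neighbour]))
    return float("inf"), []


# Hypothetical link costs (e.g. transmission energy) between nodes.
links = {
    "n1": {"n2": 1.0, "n3": 4.0},
    "n2": {"n3": 1.5, "sink": 5.0},
    "n3": {"sink": 1.0},
    "n4": {"sink": 2.0},
}
battery = {"n1": 0.9, "n2": 0.7, "n3": 0.6, "n4": 0.05}
MIN_BATTERY = 0.1  # assumed threshold below which a node is not kept active

# i) Predefine the active sensors and route only through them.
active = {n for n, level in battery.items() if level >= MIN_BATTERY}
active_graph = {
    n: {m: w for m, w in nbrs.items() if m in active or m == "sink"}
    for n, nbrs in links.items() if n in active
}
cost, path = shortest_path(active_graph, "n1", "sink")

# iii) Nodes that are not needed on the route go to sleep mode.
sleeping = sorted(set(battery) - set(path))
print(cost, path, sleeping)
```

In this toy network the route from `n1` passes through `n2` and `n3`, and the low-battery node `n4` is put to sleep.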

The coverage function (FC) for a fire hotspot region is expressed in Eq. (1)

The sensing coordinates are x and y, and the time is denoted by t. Eq. (2) expresses the equation for a temperature sensor, while Eq. (3) expresses the equation for a fire event.

Regardless of sensor failure, the fusion operation is carried out by the fusion centre. The optimal estimation is calculated by the signal level fusion stage, which keeps track of the sensor’s estimated state and its uncertainty. The filter evaluates the incoming evidence recursively, yielding estimates of the state. Similarly, the uncertainty resulting from the covariance is calculated using Eq. (4).

where x1 and x2 are independent random variables. The observation Z and the Gaussian noise w are shown in Eq. (5)

where x(k) is the state vector and w(k) represents the unknown zero-mean noise. The proposed technique’s efficiency is also validated by comparing it with the existing ENN technique [23]. In the classification and regression tree, an attribute is selected for the root node at each level. This selection depends mainly on three criteria: entropy, information gain, and the Gini index. These criteria assign a value to each attribute, and the attribute with the highest information gain is placed at the root node. For continuous attributes, the value is calculated using the Gini index. The entropy for a single attribute is given in Eq. (6)

where S, the current state;

The cost function corresponds to the Gini index, which evaluates the splits in the dataset and favors large partitions. The Gini index reaches its minimum value of zero when all cases in the node belong to the same target category. The gain ratio is calculated as in Eq. (8). The gain ratio uses intrinsic information to reduce the bias that arises from multi-valued attributes. This is done by considering the number and size of branches when choosing an attribute.
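The split criteria named above can be made concrete with a short Python sketch. It uses the standard textbook definitions of entropy, Gini index, information gain, and gain ratio, which may differ in notation from the authors' Eqs. (6) to (8); the toy temperature bands and fire labels are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """Gini index: 0 when every label in the node is the same."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def split_metrics(values, labels):
    """Information gain and gain ratio for splitting `labels` by `values`."""
    n = len(labels)
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    # Weighted entropy remaining after the split.
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    info_gain = entropy(labels) - remainder
    # Intrinsic (split) information penalises attributes with many branches.
    split_info = -sum(len(g) / n * log2(len(g) / n) for g in groups.values())
    gain_ratio = info_gain / split_info if split_info > 0 else 0.0
    return info_gain, gain_ratio


# Hypothetical toy readings: temperature band versus fire label.
temperature = ["low", "low", "medium", "high", "high", "high"]
fire = ["no", "no", "no", "yes", "yes", "yes"]

info_gain, gain_ratio = split_metrics(temperature, fire)
print(entropy(fire), gini(fire), info_gain, gain_ratio)
```

Here the temperature band separates the labels perfectly, so the information gain equals the entropy of the labels, while the gain ratio is smaller because the split has three branches.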

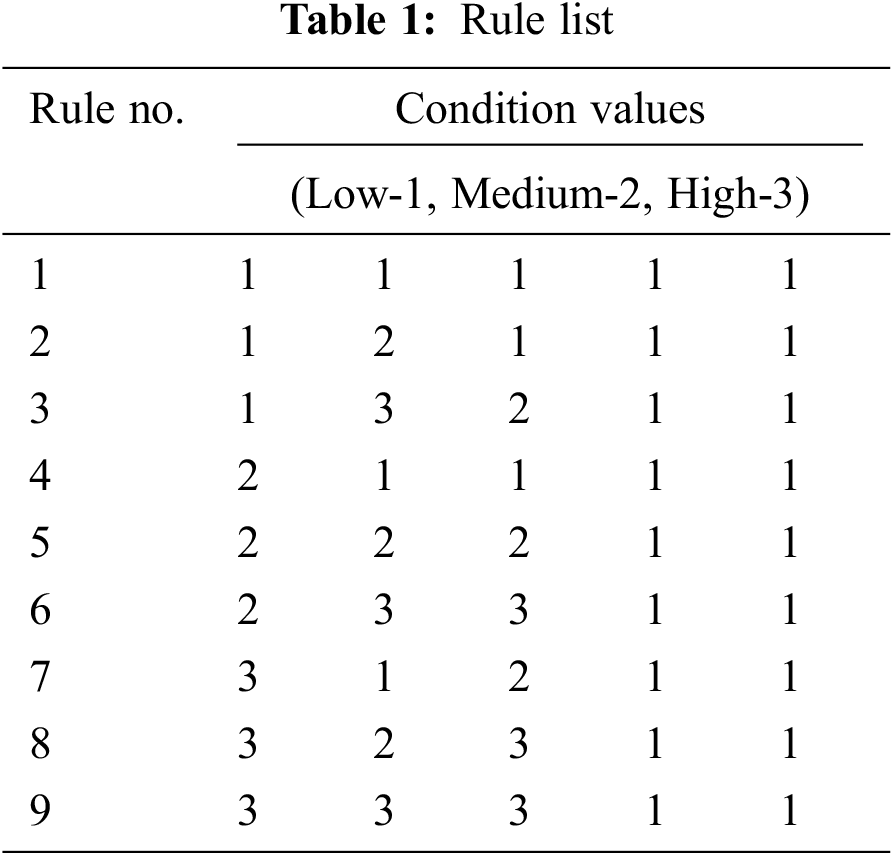

Over a given period, real-time environmental datasets of varying temperature and humidity are observed. The simulation was set up in MATLAB R2018a on a PC with an Intel(R) Core(TM) i7-4500U CPU and RAM, running Windows 10. Data communication is carried out via the ThingSpeak IoT platform channels. The sensors were placed at distinct locations so that the entire forest territory could be monitored for the onset of abnormal temperature and humidity. The proposed Adaptive Decentralized Kalman Filter (ADKF) is used for data fusion. After fusion, fire detection is performed, and the decision tree (ADKF-DT-MF) classifies the fused data for accurate prediction. The rule induction associated with the decision tree is shown in Tab. 1, which presents the value ranges low, medium, and high. Each path from the root of the decision tree to one of its leaves is transformed into a rule.

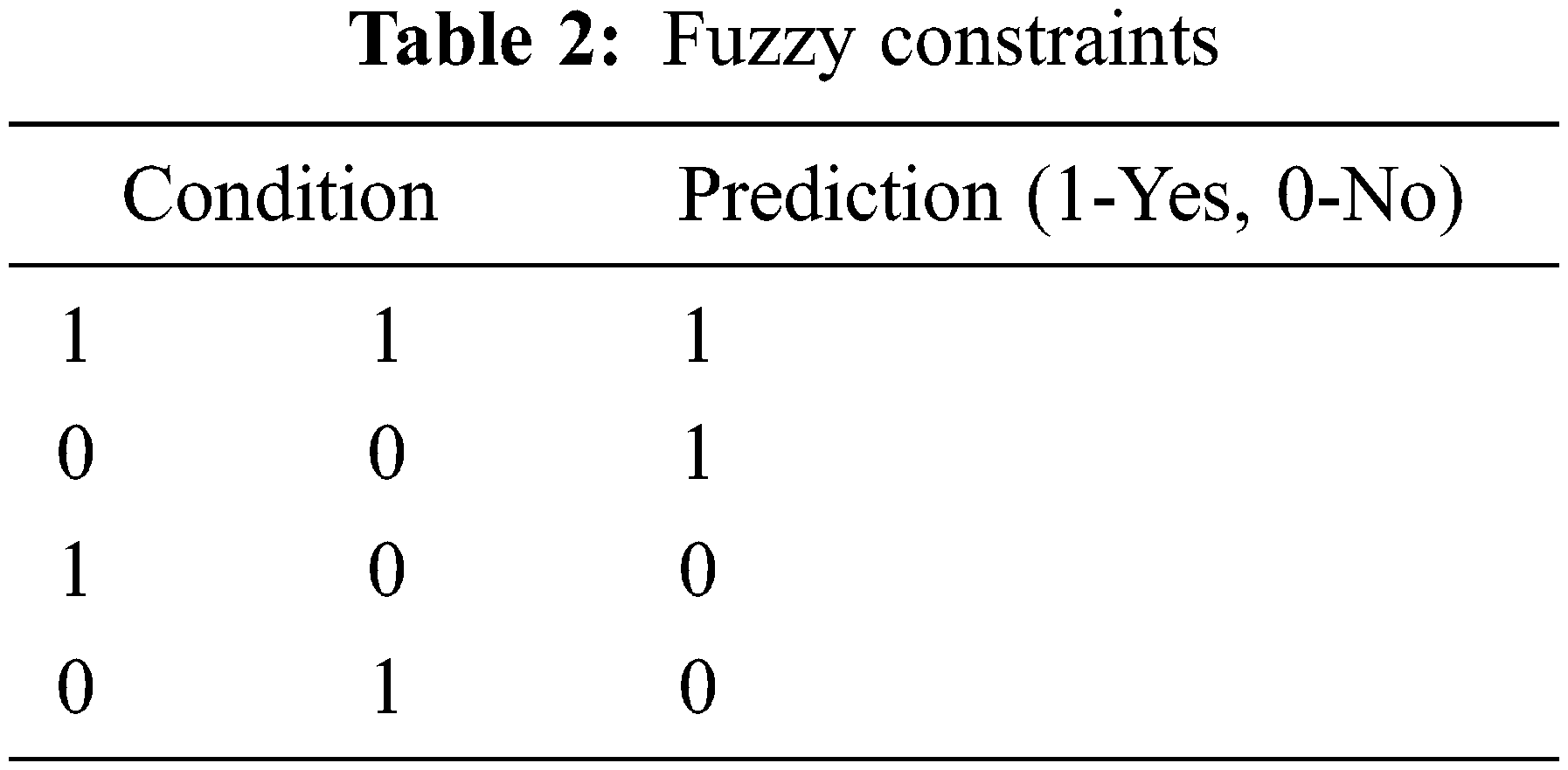

Classification starts from the root of the tree by comparing the value of the root attribute with the record’s attribute. Because the target variable is continuous, the decision tree here is a continuous-variable decision tree. The major challenge is to forecast climate-related threats in the forest environment and to improve the prediction accuracy. The classification and regression decision tree technique was implemented to address the accuracy problem. A decision tree of depth 5 with 1000 candidates at each tree node is modeled. The temperature and humidity changes are observed on an hourly basis, and each recursion happens every 24 h. This makes it possible to find the best-fit line, which can predict the output more accurately. Here the input attributes are temperature (low, medium, high) and humidity (low, medium, high), with a membership function value between 0 and 1 as the target variable. The decision tree compares the information gain of candidate splits and splits the tree based on the three features. The split with the highest information gain is chosen first, and the process continues until the information gain is zero, as presented in Tab. 2. On sensor failure, the fuzzy-based decision tree model allows the signaling devices to be adjusted, thus optimizing the system and minimizing the generalization error. The optimization is based on maximum temperature, priority, and distance, as depicted in Tab. 2. At that point the node is pure, and the level of impurity reaches 0.
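A minimal sketch of the low, medium, and high memberships for temperature and humidity, with values between 0 and 1, is given below. The breakpoints, the single rule "temperature is high AND humidity is low", and the 0.5 decision threshold are illustrative assumptions, not the values from the authors' tables.

```python
def falling(x, lo, hi):
    """Membership that is 1 below `lo` and falls linearly to 0 at `hi`."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def rising(x, lo, hi):
    """Membership that is 0 below `lo` and rises linearly to 1 at `hi`."""
    return 1.0 - falling(x, lo, hi)


def triangular(x, left, peak, right):
    """Membership that is 0 outside (left, right) and 1 at `peak`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def temperature_memberships(t):
    # Hypothetical breakpoints in deg C.
    return {"low": falling(t, 15, 25),
            "medium": triangular(t, 20, 30, 40),
            "high": rising(t, 35, 45)}


def humidity_memberships(h):
    # Hypothetical breakpoints in percent relative humidity.
    return {"low": falling(h, 30, 50),
            "medium": triangular(h, 40, 60, 80),
            "high": rising(h, 70, 90)}


def fire_risk(t, h):
    """Fuzzy AND (minimum) of 'temperature is high' and 'humidity is low'."""
    return min(temperature_memberships(t)["high"],
               humidity_memberships(h)["low"])


def classify(t, h, threshold=0.5):
    """Crisp decision from the fuzzy risk; the threshold is an assumption."""
    return "fire" if fire_risk(t, h) >= threshold else "no fire"


print(classify(44, 25), classify(36, 85))
```

A hot, dry reading such as 44 °C at 25% humidity yields a high risk under these assumed breakpoints, while a warm, humid reading such as 36 °C at 85% humidity yields zero risk.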

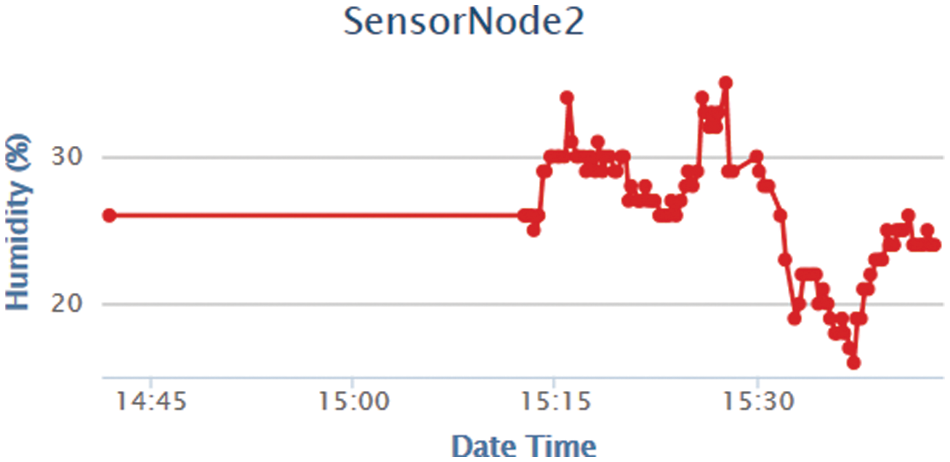

The proposed intelligent control system consists of two parts: sensor nodes and the ThingSpeak IoT cloud server. The optimization view, shown in Fig. 2, is based on priority, degree, and distance. A sensor node is made up of temperature and humidity sensors, a controller board, and a power supply. Each channel saves the current temperature, humidity, and time of update. The data stored in the training data channel are used for training. Because ThingSpeak’s free account allows a maximum of four channels per account, the fused data and predicted data are stored on the same channel. The React app monitors the fire status in the channel and, if a fire is detected, triggers the ThingHTTP app, in which the SMS gateway APIs are pre-configured during implementation. When the API request reaches the SMS gateway server, an SMS is sent to the numbers specified in the request. In this way, the results are sent to the appropriate authorities for immediate action. Figs. 3–6 depict the input data sensed by Sensor Node-1 and Sensor Node-2. Temperature and humidity data are considered as input for the system. The graphs depict the temperature and humidity curves before preprocessing. The collected data were found to be affected by interference from external factors.

Figure 2: Optimization view

Figure 3: Temperature sensing sensor node-1

Figure 4: Humidity sensing sensor node-1

Figure 5: Temperature sensing sensor node-2

Figure 6: Humidity sensing sensor node-2
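The channel update step in the cloud workflow described above can be sketched in Python using ThingSpeak's REST update endpoint. The field assignment (field1 for temperature, field2 for humidity, field3 for the fire flag) and the placeholder API key are assumptions; the source does not state how the authors' channels are laid out. The sketch only builds the request URL and does not send it.

```python
from urllib.parse import urlencode

# ThingSpeak's channel update endpoint; the write API key identifies the channel.
THINGSPEAK_UPDATE_URL = "https://api.thingspeak.com/update"


def build_update_url(write_api_key, temperature, humidity, fire_flag):
    """Build a channel update request URL for one fused reading."""
    params = {
        "api_key": write_api_key,
        "field1": temperature,     # assumed: fused temperature in deg C
        "field2": humidity,        # assumed: fused relative humidity in %
        "field3": int(fire_flag),  # assumed: 1 if fire predicted, else 0
    }
    return f"{THINGSPEAK_UPDATE_URL}?{urlencode(params)}"


url = build_update_url("YOUR_WRITE_API_KEY", 36.5, 42.0, True)
print(url)
# Sending the request (for example with urllib.request.urlopen(url)) would
# write the entry; a React condition on the fire-flag field could then
# trigger the ThingHTTP request to the SMS gateway.
```

Keeping the fire flag in its own field gives the React app a single numeric condition to watch, which fits the constraint that fused and predicted data share one channel.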

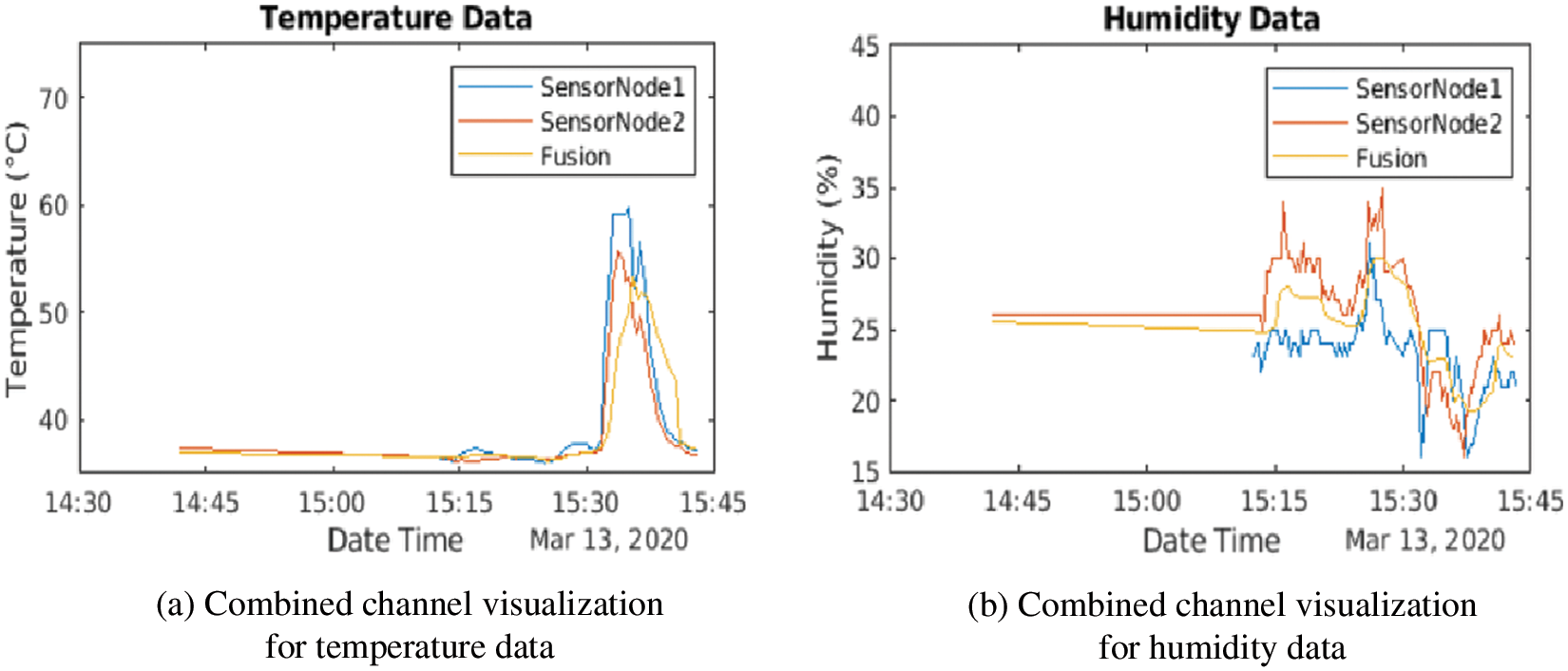

The data from the sensor node is fused using the ADKF algorithm, and the visualization is done on a separate channel on ThingSpeak. Figs. 7a and 7b depict a combined graph visualization of data from Sensor Node 1 and Sensor Node 2, as well as the fused version of both sensor data.

Figure 7: (a) Combined channel visualization for temperature data (b) Combined channel visualization for humidity data

Figs. 7a and 7b depict the visualization of fused data from the training data channels for both temperature and humidity. Training is conducted using data collected from real-world forest environments during the summer season. The temperature was low at night and high during the day, close to 35°C to 37°C under the hot sun, and the humidity was between 80% and 95%.

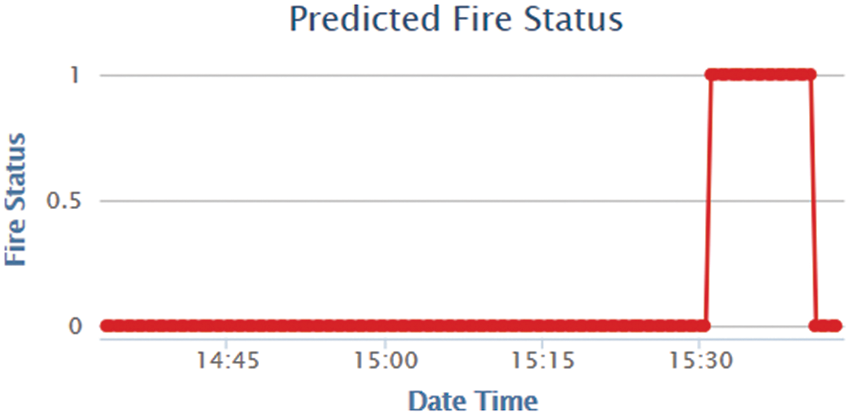

Fig. 8 shows the graphical status of fire prediction. When a fire is predicted, an alert message is sent to the relevant authority, so the volume of data sent is reduced. As a result, the proposed system’s anti-interference capability for fire detection is enhanced. Furthermore, the ADKF eliminates noisy data, increasing detection accuracy. The proposed technique is compared with the existing technique [23] to demonstrate its efficiency; the comparison in terms of detection rate is shown in Fig. 9. The proposed model has a detection rate of 98.3%, demonstrating that the proposed technique is robust. During rainy seasons, the moisture content of the air is high, indicating a lower risk of fire. Due to a lack of rain during the summer, temperatures rise, the air becomes drier, and the likelihood of a fire increases. However, sensor measurements can be inaccurate at times because a sensor is faulty or sends malicious data, and some sensors do not function properly due to a lack of energy. With such individual sensors alone, accurate information about the fire event is difficult to obtain. The ADKF-DT multi-sensor fusion technique addresses this by providing precise and accurate information on wildfires.

Figure 8: Fire prediction status graph

Figure 9: Comparison with existing technique

Although fire has always been a natural and beneficial part of many ecosystems, wildfires cause irreparable damage to the ecosystem. Destructive wildfires are becoming an annual event. Despite increased efforts, fire disasters cannot be effectively predicted. The proposed approach classifies fused data using the ADKF-decision tree together with an intelligent fuzzy-membership optimized control system for forest fire detection. It detects wildfires as early as possible, before they spread across a large area, thereby preventing poaching. The experiment was successful in predicting fire, with a detection rate of 98.3%. For technical feasibility, only a minimum number of sensor nodes was tested. Future research can consider more sensor nodes deployed in a heterogeneous environment. The feasibility of the study can also be verified with different algorithms. In addition, connectivity to the IoT cloud server could be studied in future work.

Acknowledgement: The authors, with a deep sense of gratitude, would like to thank the supervisor for her guidance and constant support during this research.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. F. Alam, R. Mehmood, I. Katib, N. N. Albogami and A. Albeshri, “Data fusion and IoT for smart ubiquitous environments: A survey,” IEEE Access, vol. 5, pp. 9533–9554, 2017.

2. P. H. Chou, Y. L. Hsu, W. L. Lee, Y. C. Kuo, C. C. Chang et al., “Development of a smart home system based on multi-sensor data fusion technology,” in Proc. Int. Conf. on Applied System Innovation (ICASI), Sapporo, Japan, pp. 690–693, 2017.

3. V. Chowdary, M. K. Gupta and R. Singh, “A review on forest fire detection techniques: A decadal perspective,” Networks, vol. 4, pp. 12, 2018.

4. M. A. Crowley, J. A. Cardille, J. C. White and M. A. Wulder, “Multi-sensor, multi-scale, Bayesian data synthesis for mapping within-year wildfire progression,” Remote Sensing Letters, vol. 10, no. 3, pp. 302–311, 2019.

5. C. M. de Farias, L. Pirmez, G. Fortino and A. Guerrieri, “A multi-sensor data fusion technique using data correlations among multiple applications,” Future Generation Computer Systems, vol. 92, pp. 109–118, 2019.

6. M. A. El Abbassi, A. Jilbab and A. Bourouhou, “Detection model based on multi-sensor data for early fire prevention,” in Proc. Int. Conf. on Electrical and Information Technologies (ICEIT), Tangiers, Morocco, pp. 214–218, 2016.

7. W. Fengbo, L. Xitong and Z. Huike, “Design and development of forest fire monitoring terminal,” in Proc. Int. Conf. on Sensor Networks and Signal Processing (SNSP), Xi’an, China, pp. 40–44, 2018.

8. A. Gaur, A. Singh, A. Kumar, A. Kumar and K. Kapoor, “Video flame and smoke-based fire detection algorithms: A literature review,” Fire Technology, vol. 56, no. 5, pp. 1943–1980, 2020.

9. F. Gong, C. Li, W. Gong, X. Li, X. Yuan et al., “A real-time fire detection method from video with multifeature fusion,” Computational Intelligence and Neuroscience, vol. 2019, pp. 1–17, 2019.

10. Y. L. Hsu, P. H. Chou, H. Chang, S. L. Lin, S. C. Yang et al., “Design and implementation of a smart home system using multisensor data fusion technology,” Sensors, vol. 17, no. 7, pp. 1631, 2017.

11. J. Hu, B. Y. Zheng, C. Wang, C. H. Zhao, X. L. Hou et al., “A survey on multi-sensor fusion-based obstacle detection for intelligent ground vehicles in off-road environments,” Frontiers of Information Technology & Electronic Engineering, vol. 21, pp. 675–692, 2020.

12. S. H. Javadi and A. Mohammadi, “Fire detection by fusing correlated measurements,” Journal of Ambient Intelligence and Humanized Computing, vol. 10, no. 4, pp. 1443–1451, 2019.

13. Y. H. Liang and W. M. Tian, “Multi-sensor fusion approach for fire alarm using BP neural network,” in Proc. Int. Conf. on Intelligent Networking and Collaborative Systems (INCoS), Ostrava, Czech Republic, pp. 99–102, 2016.

14. K. Lin, Y. Li, J. Sun, D. Zhou and Q. Zhang, “Multi-sensor fusion for body sensor network in medical human–robot interaction scenario,” Information Fusion, vol. 57, pp. 15–26, 2020.

15. M. I. Mobin, M. Abid-Ar-Rafi, M. N. Islam and M. R. Hasan, “An intelligent fire detection and mitigation system safe from fire (SFF),” International Journal of Computer Applications, vol. 133, no. 6, pp. 1–7, 2016.

16. F. Z. Rachman and G. Hendrantoro, “A fire detection system using multi-sensor networks based on fuzzy logic in indoor scenarios,” in Proc. 8th Int. Conf. on Information and Communication Technology (ICoICT), Yogyakarta, Indonesia, pp. 1–6, 2020.

17. T. Rakib and M. R. Sarkar, “Design and fabrication of an autonomous firefighting robot with multisensor fire detection using PID controller,” in Proc. 5th Int. Conf. on Informatics, Electronics and Vision (ICIEV), Dhaka, Bangladesh, pp. 909–914, 2016.

18. A. Reyana and P. Vijayalakshmi, “Multisensor data fusion technique for environmental awareness in wireless sensor networks,” European Journal of Molecular & Clinical Medicine, vol. 7, no. 08, pp. 4479–4490, 2021.

19. H. Seiti and A. Hafezalkotob, “Developing pessimistic–optimistic risk-based methods for multi-sensor fusion: An interval-valued evidence theory approach,” Applied Soft Computing, vol. 72, pp. 609–623, 2018.

20. R. A. Sowah, A. R. Ofoli, S. N. Krakani and S. Y. Fiawoo, “Hardware design and web-based communication modules of a real-time multisensor fire detection and notification system using fuzzy logic,” IEEE Transactions on Industry Applications, vol. 53, no. 1, pp. 559–566, 2016.

21. A. Rehman, D. S. Necsulescu and J. Sasiadek, “Robotic based fire detection in smart manufacturing facilities,” IFAC-PapersOnLine, vol. 48, no. 3, pp. 1640–1645, 2015.

22. N. Verma and D. Singh, “Analysis of cost-effective sensors: Data fusion approach used for forest fire application,” Materials Today: Proceedings, vol. 24, pp. 2283–2289, 2020.

23. T. C. Wang, Y. Z. Xie and H. Yan, “Research of multi-sensor information fusion technology based on extension neural network,” Mathematical Modelling of Engineering Problems, vol. 3, no. 3, pp. 129–134, 2016.

24. T. Wang, J. Hu, T. Ma and J. Song, “Forest fire detection system based on fuzzy Kalman filter,” in Proc. Int. Conf. on Urban Engineering and Management Science (ICUEMS), Zhuhai, China, pp. 630–633, 2020.

25. C. Yang, A. Mohammadi and Q. W. Chen, “Multi-sensor fusion with interaction multiple models and chi-square test tolerant filter,” Sensors, vol. 16, no. 11, pp. 1835, 2016.

26. A. Yoddumnern, T. Yooyativong and R. Chaisricharoen, “The wifi multi-sensor network for fire detection mechanism using fuzzy logic with IFTTT process based on cloud,” in Proc. 14th Int. Conf. on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Phuket, Thailand, pp. 785–789, 2017.

27. J. Zhang, Z. Ye and K. Li, “Multi-sensor information fusion detection system for fire robot through backpropagation neural network,” Plos One, vol. 15, no. 7, pp. e0236482, 2020.

28. E. Zervas, A. Mpimpoudis, C. Anagnostopoulos, O. Sekkas and S. Hadjiefthymiades, “Multisensor data fusion for fire detection,” Information Fusion, vol. 12, no. 3, pp. 150–159, 2011.

29. H. Zhao, Z. Wang, S. Qiu, Y. Shen, L. Zhang et al., “Heading drift reduction for foot-mounted inertial navigation system via multi-sensor fusion and dual-gait analysis,” IEEE Sensors Journal, vol. 19, no. 19, pp. 8514–8521, 2018.

30. W. Sun, G. C. Zhang, X. R. Zhang, X. Zhang and N. N. Ge, “Fine-grained vehicle type classification using light weight convolutional neural network with feature optimization and joint learning strategy,” Multimedia Tools and Applications, vol. 80, no. 20, pp. 30803–30816, 2021.

31. A. Aljumah, “IoT-inspired framework for real-time prediction of forest fire,” International Journal of Computers, Communications & Control, vol. 17, no. 3, pp. 1–18, 2022.

32. N. Moussa, E. Nurellari and A. El Alaoui, “A novel energy-efficient and reliable ACO-based routing protocol for WSN-enabled forest fires detection,” Journal of Ambient Intelligence and Humanized Computing, vol. 35, no. 1, pp. e5008, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
