Open Access

ARTICLE

Early Detection of Autism in Children Using Transfer Learning

1 School of Information Technology, Skyline University College, Sharjah, 1797, UAE

2 Network and Communication Technology Lab, Center for Cyber Security, Faculty of Information Science and Technology, Universiti Kebangsaan Malaysia, 43600, Malaysia

3 School of Computer Science, National College of Business Administration & Economics, Lahore, 54000, Pakistan

4 Lahore Garrison University, Lahore, 54000, Pakistan

5 Department of Computer Science, COMSATS University Islamabad–Lahore Campus, Lahore, 54000, Pakistan

6 Department of Software, Pattern Recognition and Machine Learning Lab, Gachon University, Seongnam, Gyeonggido, 13120, Korea

* Corresponding Author: Muhammad Adnan Khan. Email:

Intelligent Automation & Soft Computing 2023, 36(1), 11-22. https://doi.org/10.32604/iasc.2023.030125

Received 18 March 2022; Accepted 30 May 2022; Issue published 29 September 2022

Abstract

Autism spectrum disorder (ASD) is a challenging and complex neurodevelopmental syndrome that affects a child’s language, speech, social skills, communication skills, and logical thinking ability. The early detection of ASD is essential for delivering effective, timely interventions. Various features, such as a lack of eye contact, uncommon hand or body movements, babbling or talking in an unusual tone, and not using common gestures, could be used to detect and classify ASD at an early stage. Our study aimed to develop a deep transfer learning model to facilitate the early detection of ASD based on facial features. A dataset of facial images of autistic and non-autistic children was collected from the Kaggle data repository and used to develop the ASD detection with transfer learning AlexNet (ASDDTLA) model. Our model achieved a detection accuracy of 87.7% and performed better than other established ASD detection models. Therefore, this model could facilitate the early detection of ASD in clinical practice.

Autism spectrum disorder (ASD) is a complex neurodevelopmental disability that directly affects the brain’s ability to process information. It is typically diagnosed in children after the age of 3 years and is characterized by a lack of eye contact, poor social interaction, and stereotyped behaviors [1]. One in every 70 children born worldwide is affected by autism [2]. In the United States, about 3.63% of boys and 1.25% of girls aged 3 to 17 years are diagnosed with ASD [3]. There is no single known cause of ASD; however, both environmental factors and genetic mutations may play an important role in its development. ASD affects various parts of the brain and has been linked with several genetic mutations [4]. More than 100 different genes are linked to the development of ASD, and approximately 10% to 20% of ASD cases have multiple genetic mutations [5,6].

Because there are no pathophysiological diagnostic markers for ASD, various psychological questionnaires have been developed to diagnose the disorder and assess its impact on development. The questionnaires evaluate the child’s social interaction and behaviors relative to their age. These tools are used alongside the medical history, clinical observations, and intelligence tests [7] to confirm the diagnosis.

Although ASD is still not curable, an early diagnosis is essential for delivering the timely interventions needed to reduce the symptoms and to help the child develop the skills needed to function later in life. Several studies have evaluated features that could be used to diagnose ASD, including eye-tracking, facial appearance, speech, and behavioral patterns [8]. However, these features are often very subtle and difficult to recognize, especially in very young children. Healthcare professionals may not have sufficient tools or skills to diagnose and treat autistic children effectively; they may not have received formal training in diagnosing the disorder, or they may have difficulty communicating with autistic children. Deep learning (DL) algorithms have given promising results in disease diagnosis, surpassing human performance when assessing large datasets, and machine learning (ML) and DL algorithms can make accurate predictions [9].

Machine learning and deep learning models are increasingly used for medical image diagnosis [10], natural language processing [11], sentiment analysis [12], computer vision [13], speech recognition [14], predictive analytics [15], data analytics [16], and patient-centered care [17]. An advantage of deep learning models such as convolutional neural networks (CNNs) is that developing them does not require a healthcare professional to manually tag image features, which is time-consuming. This allows the model to be trained on large datasets, which can help minimize bias in the model.

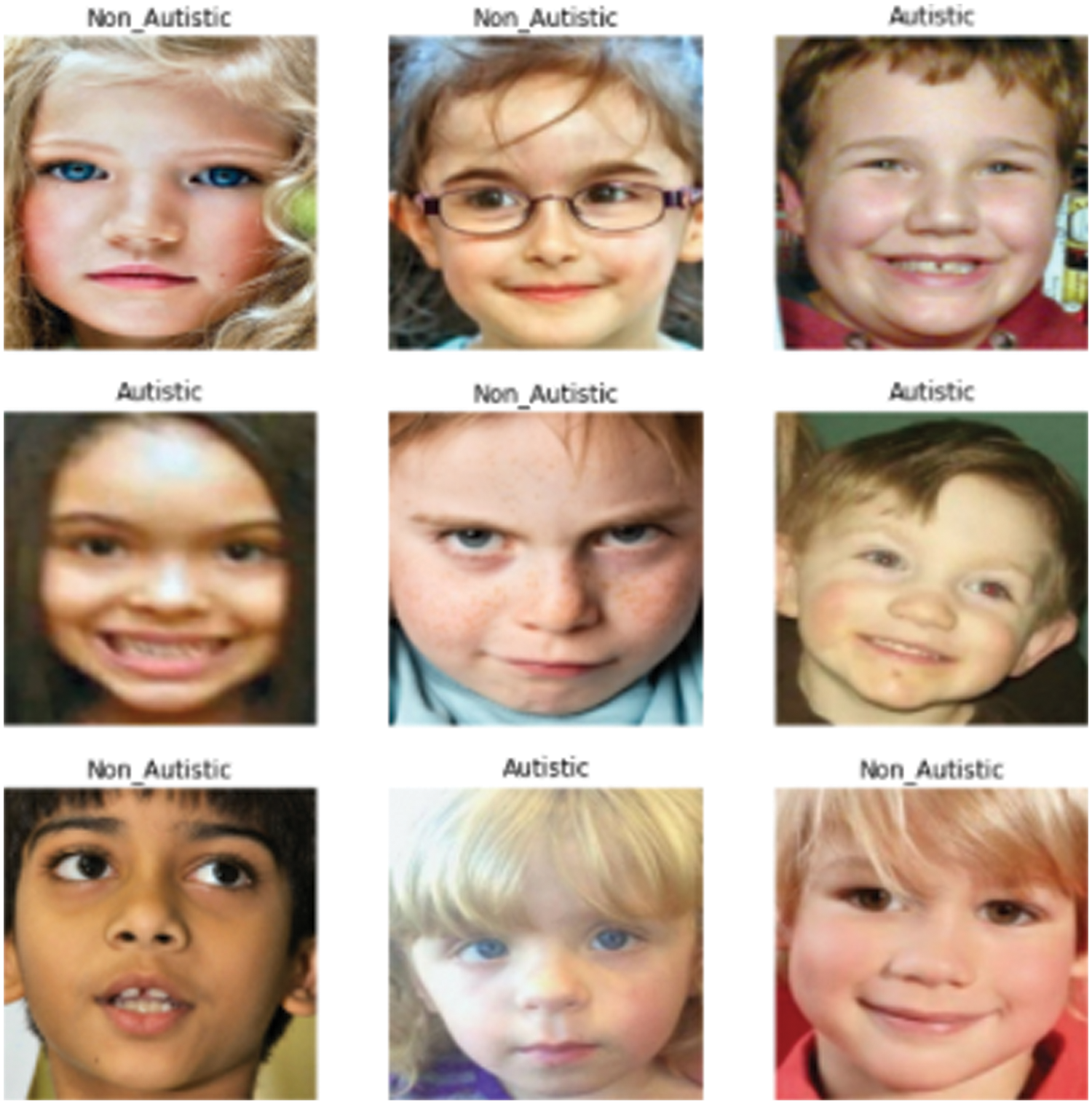

However, very few machine learning and deep learning models have been developed to diagnose ASD based on facial features and behavioral patterns. Therefore, this study aimed to develop a transfer learning model based on the AlexNet architecture to diagnose and classify ASD from the facial features in images of autistic and non-autistic children obtained from the Kaggle autism image data repository [18], as shown in Fig. 1.

Figure 1: Sample images illustrating autistic (1) and non-autistic (2) appearances obtained from the Kaggle autism image data repository

The rest of the article is structured as follows. Section 2 reviews the literature on diagnostic models that could be used to diagnose ASD based on facial features. Section 3 describes the methods used to develop and test the accuracy of the ASD detection with transfer learning AlexNet (ASDDTLA) model. Section 4 presents and discusses the findings of the study. The final section concludes this work.

Several studies have evaluated facial expressions in patients with neurological disorders, including Alzheimer’s disease [9], neurodegenerative disorders [19], and frontotemporal dementia [20]. The evaluation of facial expressions in ASD patients has gained momentum in the past few years, and various techniques have been proposed to help ASD children process and produce different facial expressions. Yolcu et al. [21] used a CNN to identify important facial features related to ASD and a second CNN model to recognize the relevant facial expressions; this model achieved a maximum accuracy of 94.44%. The same research group further improved the model by adapting it to identify features of the mouth, eyes, and eyebrows in children diagnosed with neurological disorders. These models were integrated to create iconized images that could be used to facilitate differential diagnosis.

Haque and Valles [22] used CNN models to recognize human facial expressions using images from Kaggle’s FER2013 dataset, modified to include images of ASD children acquired under different lighting conditions. Other studies used deep learning and Raspberry Pi 3 hardware to evaluate the emotions of ASD children undergoing robot-assisted therapy (RAT) [23,24].

Guha et al. [25] used time-series modeling and statistical analysis to compare six universal emotions in children with and without ASD. Their findings showed that ASD children have less complex facial expressions, particularly in the eye region. Grossard et al. [26] developed an educational multimodal emotional imitation game (JEMImE) in a three-dimensional virtual environment to teach ASD children to express sadness, anger, and happiness. The game used various visual and motivational tools to keep the children engaged [27].

Smitha and Vinod [28] compared the efficacy of parallel and serial principal component analysis (PCA) feature extraction algorithms for a portable emotion detector for ASD children. The PCA algorithm [29] was implemented at the hardware level to simplify the extraction of relevant emotion features and achieved a maximum accuracy of 82.3% for a word length of 8 bits. Pramerdorfer and Kampel [30] evaluated various CNN models for improving the recognition of image-based facial expressions linked with ASD. Basic CNN architectures improved detection performance compared with other models, and an ensemble of modern deep CNNs achieved an accuracy of 75.2%, significantly outperforming previously developed models. Another advantage of this approach is that it requires no auxiliary face registration or additional training data.

Ibala et al. [31] used transfer learning and ensemble techniques to identify seven key human emotions (sadness, fear, disgust, neutrality, surprise, happiness, and anger) in facial images of people with and without ASD. The maximum accuracies achieved with transfer learning and the ensemble methods were 78.3% and 67.2%, respectively.

Many deep learning techniques have been proposed to solve classification problems on medical images. This study used the transfer learning approach to train an Autism Spectrum Disorder Detection with Transfer Learning AlexNet (ASDDTLA) model to diagnose ASD from facial images.

Our proposed model is a pre-trained deep CNN based on the AlexNet architecture. The model was retrained after modifying its final layers, to improve its classification performance on the ASD dataset and hence facilitate the identification of ASD at an early stage. The ASDDTLA model automatically and robustly extracts numerous facial features that can be difficult to identify in a clinical assessment because of their subtlety. The algorithm was developed in three phases: modification of the pre-trained AlexNet, training of the model, and validation of the model.

3.1 Modification of the Transfer Learning-Based Autism Detection and Classification Pre-Trained Architecture CNN (AlexNet)

The CNN used in our study has a multi-layered, stacked architecture. Its core purpose is to recognize patterns directly from image pixels, so it requires minimal preprocessing. A typical CNN consists of three types of layers: convolutional layers, pooling layers, and a final fully connected layer. The convolutional layer is one of the core layers of a CNN and performs most of the computation. It convolves learned filters with the input data; each filter responds to a particular feature across the whole input, producing a feature map that is passed to the next layer. A pooling layer located between consecutive convolutional layers reduces the spatial size of the representation, and hence the computational cost of the next convolutional layer. Together, the convolutional and pooling layers extract the relevant features from the input images. The fully connected layer produces the final output, with one output per class.
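To make the roles of the three layer types concrete, the following NumPy sketch runs one forward pass through a convolution (with ReLU), a 2 × 2 max-pooling step, and a fully connected layer that outputs one score per class. It is a toy illustration under assumed sizes (an 8 × 8 single-channel input, one 3 × 3 filter, two classes), not the AlexNet implementation used in this study; the helpers `conv2d_valid`, `max_pool`, and `forward` are hypothetical.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over a single-channel `image` (stride 1, no padding).

    Like CNN libraries, this computes cross-correlation (the kernel is not
    flipped), which is what CNN layers call "convolution".
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


def forward(image, kernel, fc_weights, fc_bias):
    """Conv -> ReLU -> max pool -> flatten -> fully connected class scores."""
    fmap = np.maximum(conv2d_valid(image, kernel), 0.0)  # feature map after ReLU
    pooled = max_pool(fmap)                               # spatially reduced map
    return fc_weights @ pooled.ravel() + fc_bias          # one score per class


rng = np.random.default_rng(0)
image = rng.random((8, 8))                  # toy 8x8 grey-scale "image"
kernel = np.array([[1.0, 0.0, -1.0]] * 3)   # 3x3 vertical-edge filter
# conv: 8x8 -> 6x6, pool: 6x6 -> 3x3, flatten: 9 values, FC: 9 -> 2 classes
fc_weights = rng.standard_normal((2, 9))
fc_bias = np.zeros(2)
scores = forward(image, kernel, fc_weights, fc_bias)
print(scores.shape)  # (2,)
```

In a trained CNN the filter values and fully connected weights are learned from data rather than fixed by hand as they are here.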

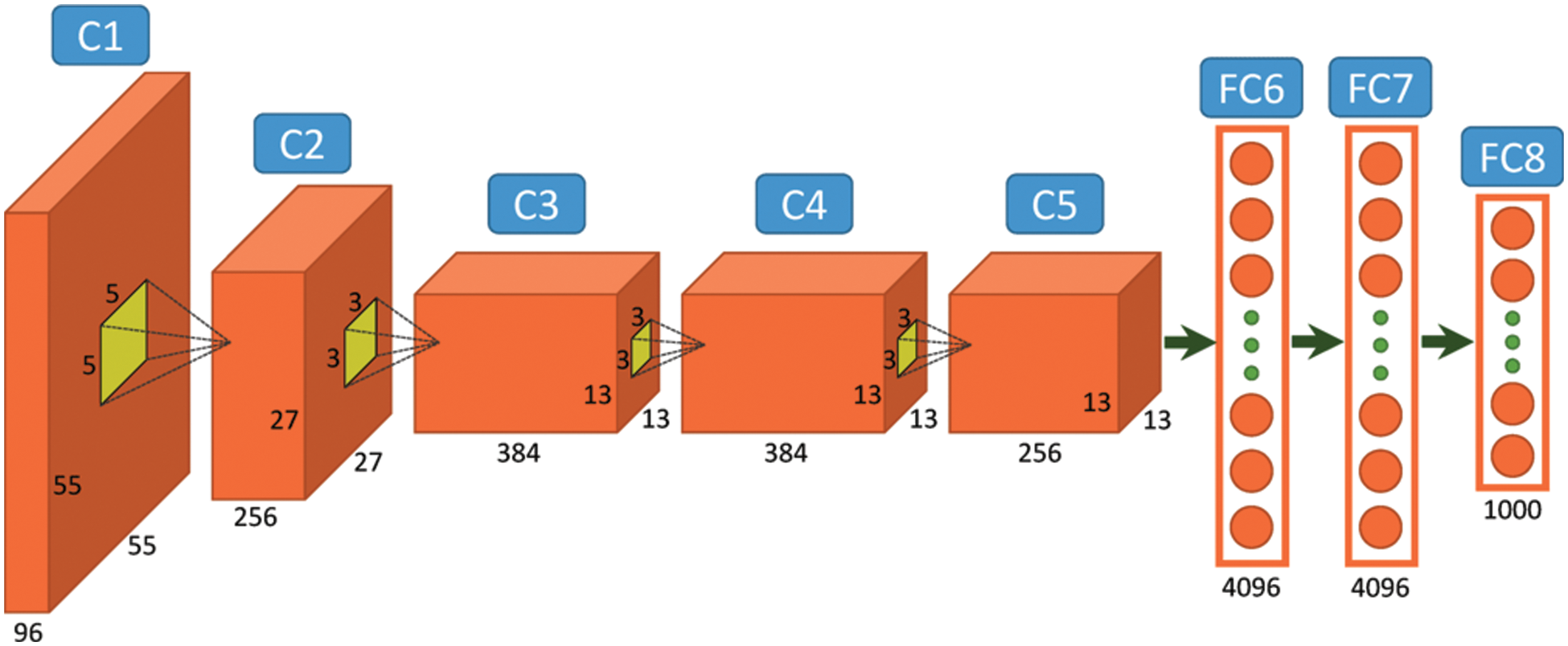

Most CNNs share this general structural design, although the number and arrangement of layers vary. In this work, we built the proposed system model for the detection and classification of autism on the pre-trained AlexNet architecture [32]. The general architecture of AlexNet is shown in Fig. 2, where C1-C5 denote the five convolutional layers and FC6-FC8 denote the three fully connected layers.

Figure 2: General architecture of the AlexNet [32]
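The feature-map sizes passed between the layers in Fig. 2 follow from the standard output-size formula, floor((n + 2p - k) / s) + 1, for input size n, kernel size k, stride s, and padding p. The sketch below traces a 227 × 227 RGB input through C1-C5. The kernel sizes, strides, paddings, channel counts, and the 3 × 3 stride-2 max pooling after C1, C2, and C5 are assumed from the original AlexNet design; they are not listed in this article.

```python
def conv_output_size(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling window: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels) for the 227 x 227 AlexNet variant.
# Values follow the original AlexNet; None marks a pooling stage (channels unchanged).
ALEXNET_FEATURES = [
    ("C1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("C2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("C3", 3, 1, 1, 384),
    ("C4", 3, 1, 1, 384),
    ("C5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]


def trace_shapes(size: int = 227):
    """Return (name, height, width, channels) after each stage, starting from RGB input."""
    channels = 3
    shapes = []
    for name, k, s, p, out_ch in ALEXNET_FEATURES:
        size = conv_output_size(size, k, s, p)
        if out_ch is not None:
            channels = out_ch
        shapes.append((name, size, size, channels))
    return shapes


shapes = trace_shapes()
for name, h, w, c in shapes:
    print(f"{name:6s} -> {h} x {w} x {c}")
_, h, w, c = shapes[-1]
print("FC6 input length:", h * w * c)  # 9216
```

The flattened 6 × 6 × 256 = 9216-value output of the last pooling stage feeds FC6. In the original network, FC6 and FC7 have 4096 units each and FC8 has 1000 units, one per ImageNet class; it is this class-specific end of the network that transfer learning replaces.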

3.2 Parameters of Transfer Learning

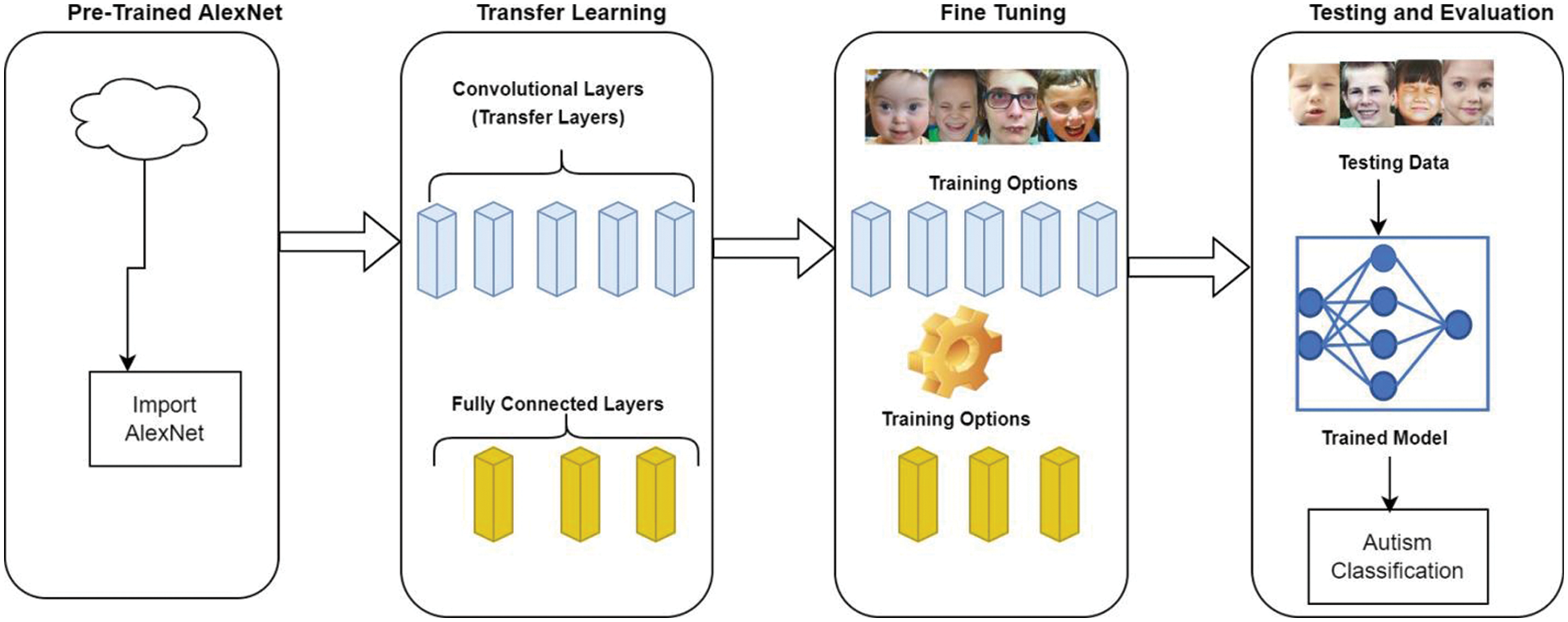

Transfer learning is a machine learning technique in which a model trained on one task is repurposed for a new, related task. It is therefore increasingly used to reduce the time and data required to train a new model: because the bulk of the model is already trained, fewer labelled examples are needed for the new task. We therefore used the pre-trained AlexNet model as the starting point for learning the new task. AlexNet was trained on images from ImageNet, a large database of about 15 million images of different objects. The pre-trained parameters of the internal layers of the architecture (all layers except the last three) were transferred to the new model. The last three layers of AlexNet were replaced with a fully connected layer, a softmax layer, and an output classification layer.
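The layer replacement described above can be sketched as list surgery on the network’s layer sequence. This is a schematic under stated assumptions: `Layer`, `alexnet_layers`, and `replace_head` are hypothetical helpers, weights are omitted, and the 25-layer layout and layer names imitate a common AlexNet layer listing rather than the authors’ code.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    name: str
    kind: str                                    # "conv", "fc", "softmax", ...
    params: dict = field(default_factory=dict)   # hyperparameters only, no weights


def alexnet_layers() -> list[Layer]:
    """Hypothetical stand-in for a pre-trained AlexNet layer list (weights omitted)."""
    layers = [Layer("data", "input", {"size": (227, 227, 3)})]
    for i, ch in enumerate([96, 256, 384, 384, 256], start=1):
        layers.append(Layer(f"conv{i}", "conv", {"filters": ch}))
        layers.append(Layer(f"relu{i}", "relu"))
        if i in (1, 2):
            layers.append(Layer(f"norm{i}", "norm"))
        if i in (1, 2, 5):
            layers.append(Layer(f"pool{i}", "maxpool"))
    for i in (6, 7):
        layers += [Layer(f"fc{i}", "fc", {"outputs": 4096}),
                   Layer(f"relu{i}", "relu"),
                   Layer(f"drop{i}", "dropout")]
    # The last three layers are specific to the 1000 ImageNet classes.
    layers += [Layer("fc8", "fc", {"outputs": 1000}),
               Layer("prob", "softmax"),
               Layer("output", "classification", {"classes": 1000})]
    return layers


def replace_head(layers: list[Layer], num_classes: int) -> list[Layer]:
    """Keep every layer except the last three and append a new task-specific head."""
    transferred = layers[:-3]
    new_head = [
        Layer("fc_asd", "fc", {"outputs": num_classes}),
        Layer("softmax", "softmax"),
        Layer("classoutput", "classification", {"classes": num_classes}),
    ]
    return transferred + new_head


pretrained = alexnet_layers()
asd_model = replace_head(pretrained, num_classes=2)   # ASD vs. non-ASD
print(len(pretrained), len(asd_model))                # 25 25
print([layer.name for layer in asd_model[-4:]])
```

Only the last three entries change; the 22 transferred layers are the same objects as in the pre-trained list, which is what lets them carry over the ImageNet-trained parameters.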

3.3 Modification of the AlexNet Architecture

AlexNet takes a 227 × 227-pixel red-green-blue (RGB) image as input and assigns it to one of the ImageNet object classes. The network comprises five convolutional layers and three fully connected (FC) layers. Because the pre-trained classes differ from the classes of the new task, we built a new output structure that produces scores for the ASD output classes. The earlier layers of the architecture learn general features of the training images, such as edges, whereas the final FC layers learn the class-specific features needed to assign an image to a specific class. To obtain ASD class-specific features, we therefore kept all layers of AlexNet except the last three and replaced those with layers configured for our binary classification problem. No changes were made to the five convolutional layers, which were trained on ImageNet as part of AlexNet, while the new final layers were trained on images from the autism dataset downloaded from the Kaggle repository. The parameters of the new fully connected layer are the weight learning-rate factor, the bias learning-rate factor, and the output size: the weight factor scales the learning rate applied to the layer’s weights, and the bias factor scales the rate applied to its biases. The softmax layer applies the softmax function to its input, and the output size of the classification layer equals the number of classes. The methodology used to develop the model is illustrated in Fig. 3.

Figure 3: Proposed methodology
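The weight and bias learning-rate factors described above follow a convention used, for example, in MATLAB’s Deep Learning Toolbox (`WeightLearnRateFactor`, `BiasLearnRateFactor`), where a layer’s effective learning rate is the global learning rate multiplied by its factor; the article does not state which framework the authors used. In the sketch below, the global rate of 0.0001 comes from Section 3, while the layer names and the factor of 20 for the new fully connected layer are assumed example values.

```python
GLOBAL_LEARNING_RATE = 1e-4  # global rate reported in this article

# Hypothetical per-layer factors: transferred layers keep factor 1, while the new
# fully connected layer gets a larger factor so it learns faster (20 is assumed).
layer_factors = {
    "conv1": {"weight": 1.0, "bias": 1.0},
    "fc7": {"weight": 1.0, "bias": 1.0},
    "fc_asd": {"weight": 20.0, "bias": 20.0},
}


def effective_rates(global_lr: float, factors: dict) -> dict:
    """Effective learning rate of each parameter group = global rate x its factor."""
    return {
        layer: {param: global_lr * factor for param, factor in per_param.items()}
        for layer, per_param in factors.items()
    }


for layer, rates in effective_rates(GLOBAL_LEARNING_RATE, layer_factors).items():
    print(layer, rates)
```

With these assumed factors, the new layer learns 20 times faster (0.002) than the transferred layers (0.0001), so it can adapt to the ASD classes while the pre-trained features change only slowly.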

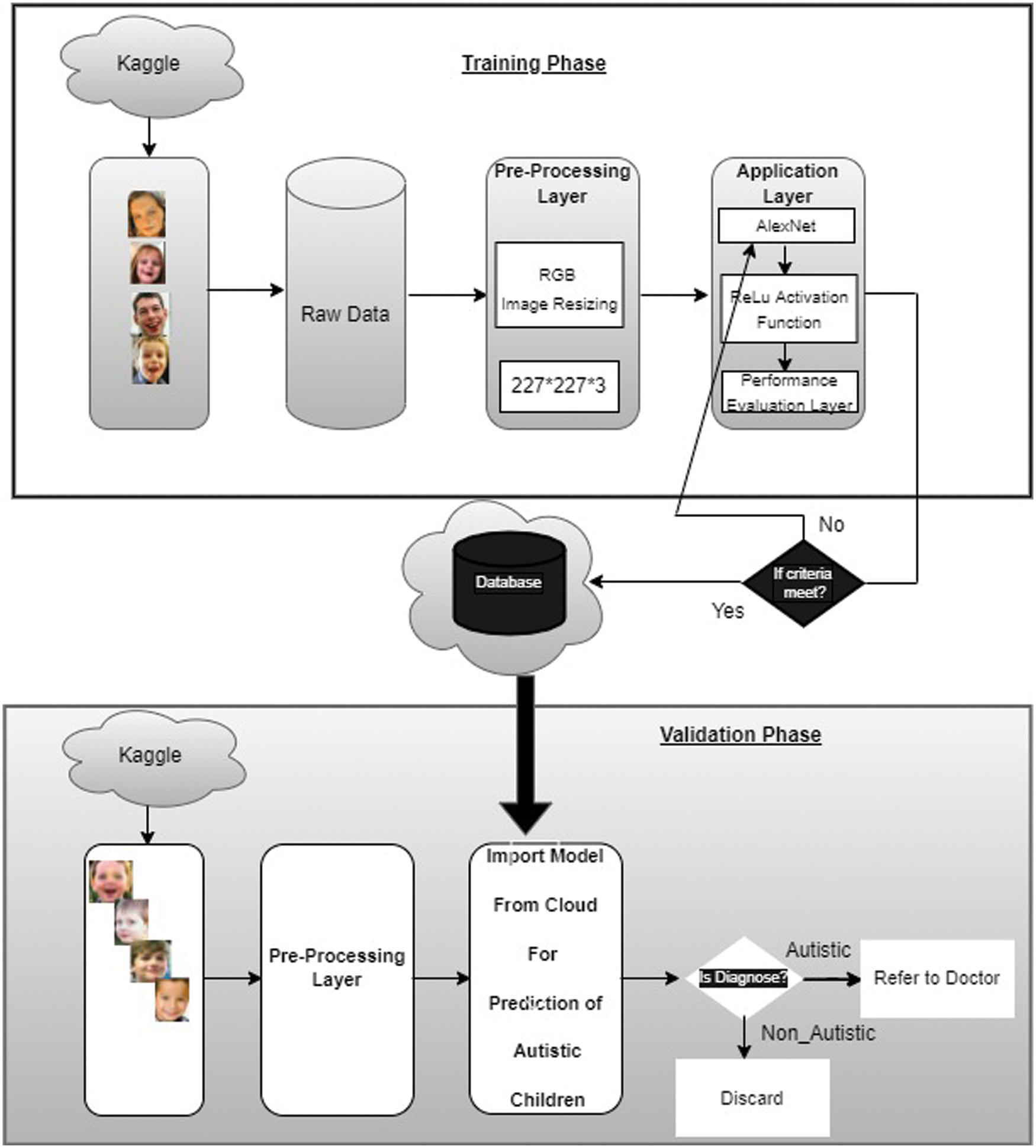

Our proposed ASDDTLA system model is illustrated in Fig. 4. Model development included a training phase and a validation phase on the image dataset used for the classification problem. Since the pre-trained AlexNet architecture only accepts 227 × 227 images, all images were resized to this dimension during preprocessing.

Figure 4: Proposed ASDDTLA system model
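Resizing every image to AlexNet’s fixed 227 × 227 input can be sketched as below. The article does not say which interpolation method was used; this NumPy sketch assumes nearest-neighbour sampling for simplicity (image libraries typically also offer bilinear or bicubic resizing), and `resize_nearest` is a hypothetical helper.

```python
import numpy as np

TARGET_SIZE = 227  # AlexNet input height and width


def resize_nearest(image: np.ndarray, size: int = TARGET_SIZE) -> np.ndarray:
    """Nearest-neighbour resize of an H x W (x C) array to size x size (x C)."""
    h, w = image.shape[:2]
    rows = (np.arange(size) * h / size).astype(int)  # source row for each output row
    cols = (np.arange(size) * w / size).astype(int)  # source column for each output column
    # Broadcasting the index arrays selects a size x size grid; channels are kept.
    return image[rows[:, None], cols[None, :]]


photo = np.zeros((300, 250, 3), dtype=np.uint8)  # toy RGB "photo" of arbitrary size
x = resize_nearest(photo)
print(x.shape)  # (227, 227, 3)
```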

In the training phase, 80% of the data was passed to the modified pre-trained AlexNet model, which was trained for 40 epochs. In the validation phase, the remaining 20% of the dataset was used to validate the binary classification of the trained model.
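A random 80/20 split of the 2940 images can be sketched as follows. The seed, the (filename, label) placeholders, and the helper `train_val_split` are assumptions for illustration; the article does not describe how its split was drawn.

```python
import random


def train_val_split(items, train_fraction=0.8, seed=42):
    """Shuffle a copy of `items` and split it into training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical (filename, label) pairs standing in for the 2940 Kaggle images
# (1470 ASD and 1470 non-ASD, as reported in Section 4.1).
dataset = (
    [(f"asd_{i}.jpg", "ASD") for i in range(1470)]
    + [(f"non_asd_{i}.jpg", "non-ASD") for i in range(1470)]
)
train, val = train_val_split(dataset)
print(len(train), len(val))  # 2352 588
```

Because this split shuffles the pooled images, the per-class counts in each subset vary with the seed; splitting each class separately (a stratified split) would instead give exactly 1176 training and 294 validation images per class.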

AlexNet was pre-trained on 1000 classes of images from the ImageNet dataset. In the training phase of the proposed system model, labelled images from the target domain were used to train the modified pre-trained model: the transferred CNN layers were fine-tuned on the target dataset, which kept the low-level features learned from ImageNet intact. A faster learning rate was used for the new layers so that they learn features specific to the target classes, and these features were fine-tuned for classification in the target domain. The last three layers, designed specifically for the target domain, categorize the target images into their corresponding classes. This process preserves the low-level features in the shallow layers, which helps solve the new problem and speeds up learning.
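The idea of reusing transferred layers while training new task-specific layers can be illustrated with its simplest variant, feature extraction, in which the transferred layers are frozen entirely. The authors fine-tune the transferred layers rather than freezing them, so this NumPy sketch is a simplified, hypothetical illustration: a fixed random projection followed by ReLU stands in for the pre-trained AlexNet layers, the data are synthetic, and only a new fully connected layer with softmax is trained by gradient descent on the cross-entropy loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic target-domain data: two classes of 20-dimensional inputs (assumed).
n_per_class, dim = 100, 20
X = np.vstack([rng.normal(-1.0, 1.0, (n_per_class, dim)),
               rng.normal(+1.0, 1.0, (n_per_class, dim))])
y = np.repeat([0, 1], n_per_class)

# Stand-in for the transferred layers: a fixed projection that is never updated.
W_frozen = rng.normal(0.0, 1.0, (dim, 64))
W_frozen_before = W_frozen.copy()


def frozen_features(inputs):
    return np.maximum(inputs @ W_frozen, 0.0)  # ReLU(x W), no training


H = frozen_features(X)
H = (H - H.mean(axis=0)) / (H.std(axis=0) + 1e-8)  # standardize the features

# New task-specific head: fully connected layer (64 -> 2) followed by softmax.
W_head = np.zeros((64, 2))
b_head = np.zeros(2)
Y = np.eye(2)[y]  # one-hot targets


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(probs):
    return -np.mean(np.log(probs[np.arange(len(y)), y] + 1e-12))


learning_rate = 0.02
initial_loss = cross_entropy(softmax(H @ W_head + b_head))
for _ in range(300):
    probs = softmax(H @ W_head + b_head)
    grad_logits = (probs - Y) / len(y)       # gradient of mean cross-entropy w.r.t. logits
    W_head -= learning_rate * H.T @ grad_logits
    b_head -= learning_rate * grad_logits.sum(axis=0)

final_probs = softmax(H @ W_head + b_head)
final_loss = cross_entropy(final_probs)
accuracy = np.mean(final_probs.argmax(axis=1) == y)
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, training accuracy {accuracy:.2f}")
```

The training loss falls while `W_frozen` is never updated, which mirrors how the transferred layers keep their pre-trained features while the new head learns the target classes.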

Several training parameters can be varied, such as the learning rate, validation frequency, number of epochs, and number of iterations. For our proposed model, we trained for 40 epochs on 80% of the dataset with a learning rate of 0.0001. The network was trained with the stochastic gradient descent with momentum (SGDM) algorithm, which updates the network weights to minimize the loss function. To optimize performance, the learning rate was varied from 1e-1 to 1e-10; the optimal learning rate was 0.0001.
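The SGDM update and the learning-rate grid described above can be sketched as follows. The update rule is the common momentum formulation (velocity = momentum × velocity - learning rate × gradient); the momentum value of 0.9 is an assumption, as the article does not report it, and the quadratic toy loss only checks that the update converges.

```python
import numpy as np

# Candidate learning rates 1e-1, 1e-2, ..., 1e-10 (the range searched in this article).
LEARNING_RATE_GRID = [float(f"1e-{k}") for k in range(1, 11)]


def sgdm_step(weights, velocity, grad, learning_rate=1e-4, momentum=0.9):
    """One stochastic gradient descent with momentum update.

    velocity <- momentum * velocity - learning_rate * grad
    weights  <- weights + velocity
    """
    velocity = momentum * velocity - learning_rate * grad
    return weights + velocity, velocity


# Toy check on the loss L(w) = 0.5 * ||w||^2, whose gradient is w itself.
w = np.array([1.0, -2.0])
v = np.zeros_like(w)
for _ in range(200):
    w, v = sgdm_step(w, v, grad=w, learning_rate=0.1)
print(np.linalg.norm(w))  # close to 0 after 200 steps
```

In a sweep of this kind, each rate in the grid would be used to train the network and the one with the best validation performance kept; the authors report 0.0001 as the optimum for their model.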

The trained model was saved on the cloud for validation purposes. Image samples of children with and without ASD were used to validate the model.

The performance of the algorithm was evaluated by calculating the accuracy, miss rate, precision, sensitivity, specificity, F1 score, false-negative rate (FNR), false-positive rate (FPR), positive likelihood ratio (LRP), and negative likelihood ratio (LRN) [33].
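All of these measures can be derived from the four cells of a binary confusion matrix. In the values reported in Section 4.4, the miss rate equals 100% minus the accuracy (for example, 12.3% for 87.7% accuracy), so this sketch treats it as the misclassification rate, separate from the FNR. The counts in the usage line are illustrative, not the article’s data, and `binary_metrics` is a hypothetical helper that assumes non-zero denominators (the positive likelihood ratio is returned as infinity at perfect specificity).

```python
def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Performance measures for a binary classifier (ASD = positive class)."""
    total = tp + fn + fp + tn
    sensitivity = tp / (tp + fn)            # true-positive rate (recall)
    specificity = tn / (tn + fp)            # true-negative rate
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / total
    if specificity == 1:
        lrp = float("inf")                  # no false positives
    else:
        lrp = sensitivity / (1 - specificity)
    return {
        "accuracy": accuracy,
        "miss_rate": 1 - accuracy,          # misclassification rate
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "fnr": 1 - sensitivity,             # false-negative rate
        "fpr": 1 - specificity,             # false-positive rate
        "lrp": lrp,                         # positive likelihood ratio (unitless)
        "lrn": (1 - sensitivity) / specificity,  # negative likelihood ratio (unitless)
    }


# Illustrative counts only (not the article's confusion matrices).
m = binary_metrics(tp=90, fn=10, fp=20, tn=80)
for name, value in m.items():
    print(f"{name:12s} {value:.3f}")
```

Note that the F1 score and the likelihood ratios are unitless quantities rather than percentages.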

4.1 Training and Validation Datasets

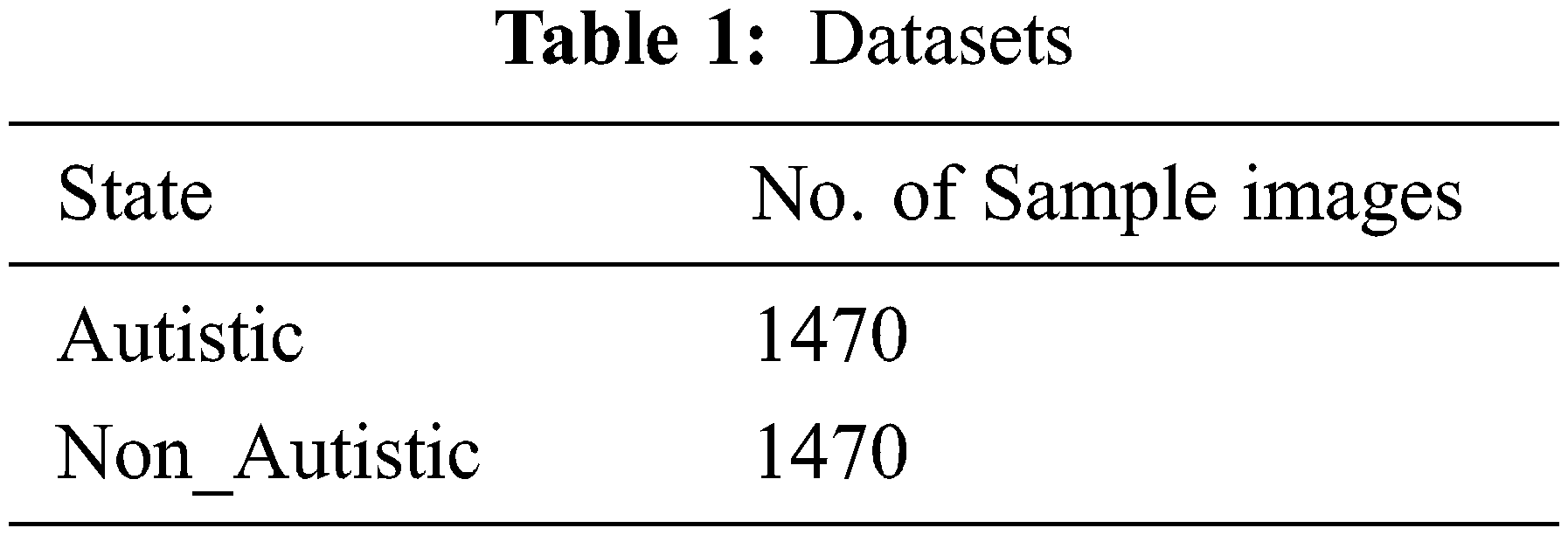

A total of 2940 images were retrieved from the public Kaggle data repository [18]: 1470 of ASD children and 1470 of non-ASD children, as shown in Tab. 1. Of these, 2352 images (80%) were used to train the algorithm and 588 images (20%) were used for validation.

The ASDDTLA model was used to assign the images to the ASD class and non-ASD class based on the facial features, as shown in Fig. 5.

Figure 5: Classification of the images by the ASDDTLA model to the ASD and non-ASD classes

4.2 Image Labeling for the Training and Validation Datasets

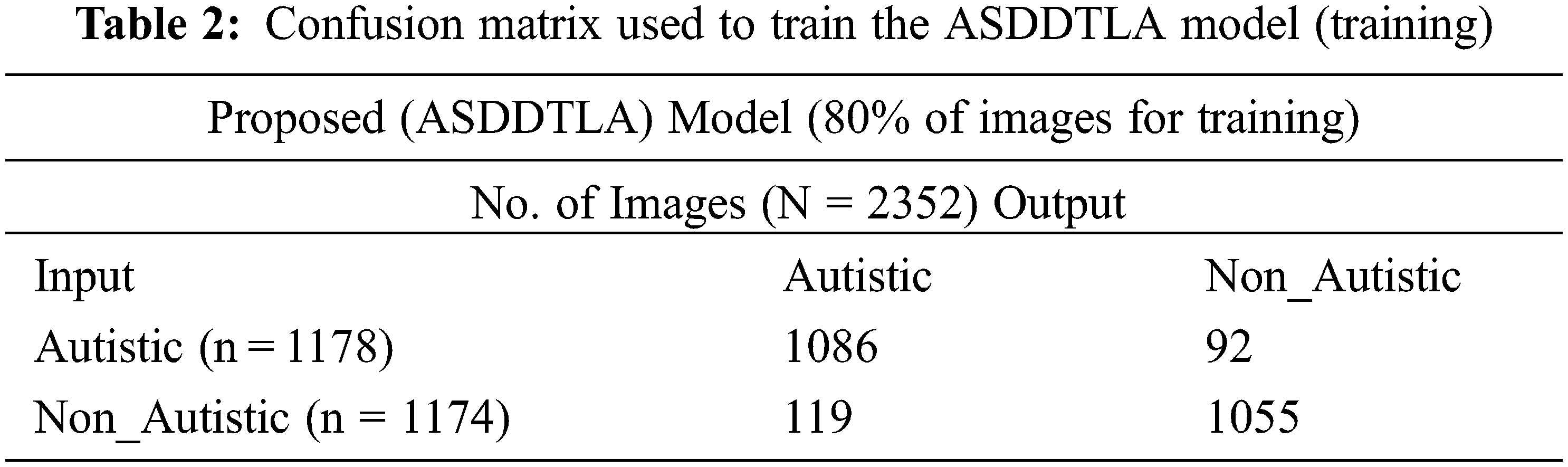

Tab. 2 shows the confusion matrix for the training data. Because this is a binary classification problem, the training images belong to two classes: 1178 ASD images and 1174 non-ASD images. Of the 1174 non-ASD images, 1055 were correctly classified as non-autistic and 119 were wrongly classified as autistic.

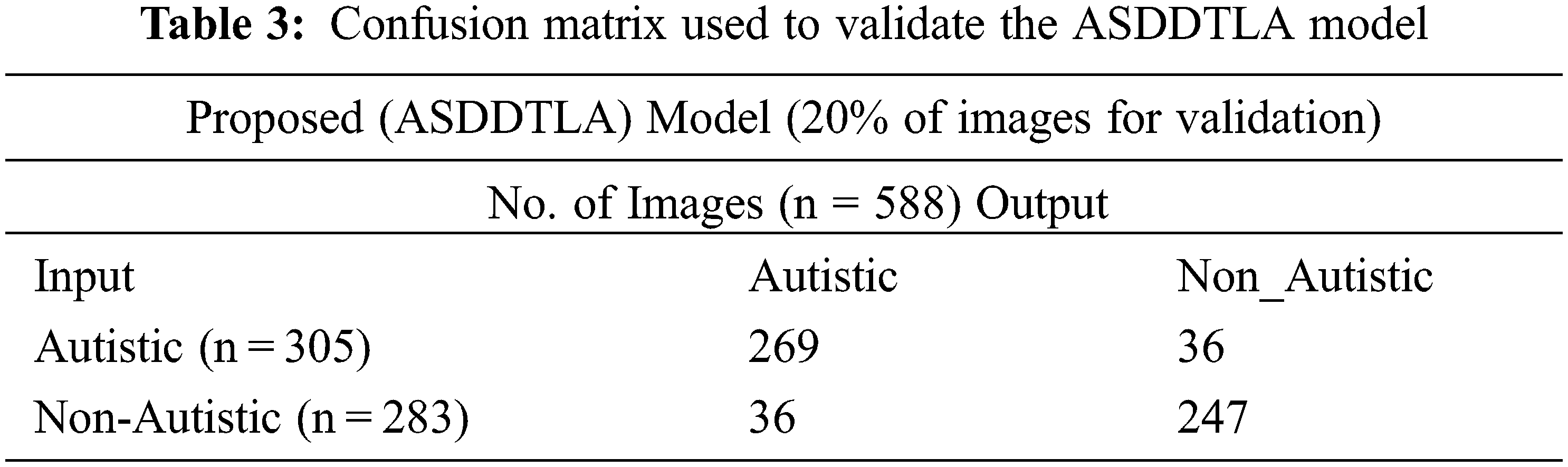

Tab. 3 shows the confusion matrix for the validation data. Of the 588 images, 305 were assigned to the ASD class and 283 to the non-ASD class. Most images in both the ASD (n = 263) and non-ASD (n = 247) classes were classified correctly, whereas 36 images in each class were misclassified.
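The counts in Tab. 2 and Tab. 3 come from comparing each image’s true label with the model’s prediction. A minimal sketch with hypothetical labels: in the resulting matrix, TP + FN and FP + TN give the number of images that actually belong to each class, while TP + FP and FN + TN give the number the model assigned to each class. The helper `confusion_matrix` is illustrative, not the authors’ code.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, positive="ASD"):
    """Return (TP, FN, FP, TN) counts for a binary problem with the given positive class."""
    counts = Counter(zip(y_true, y_pred))
    tp = sum(n for (t, p), n in counts.items() if t == positive and p == positive)
    fn = sum(n for (t, p), n in counts.items() if t == positive and p != positive)
    fp = sum(n for (t, p), n in counts.items() if t != positive and p == positive)
    tn = sum(n for (t, p), n in counts.items() if t != positive and p != positive)
    return tp, fn, fp, tn


y_true = ["ASD", "ASD", "ASD", "non-ASD", "non-ASD", "non-ASD"]
y_pred = ["ASD", "ASD", "non-ASD", "non-ASD", "ASD", "non-ASD"]
print(confusion_matrix(y_true, y_pred))  # (2, 1, 1, 2)
```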

4.3 Training and Validation Accuracy Using Different Parameters

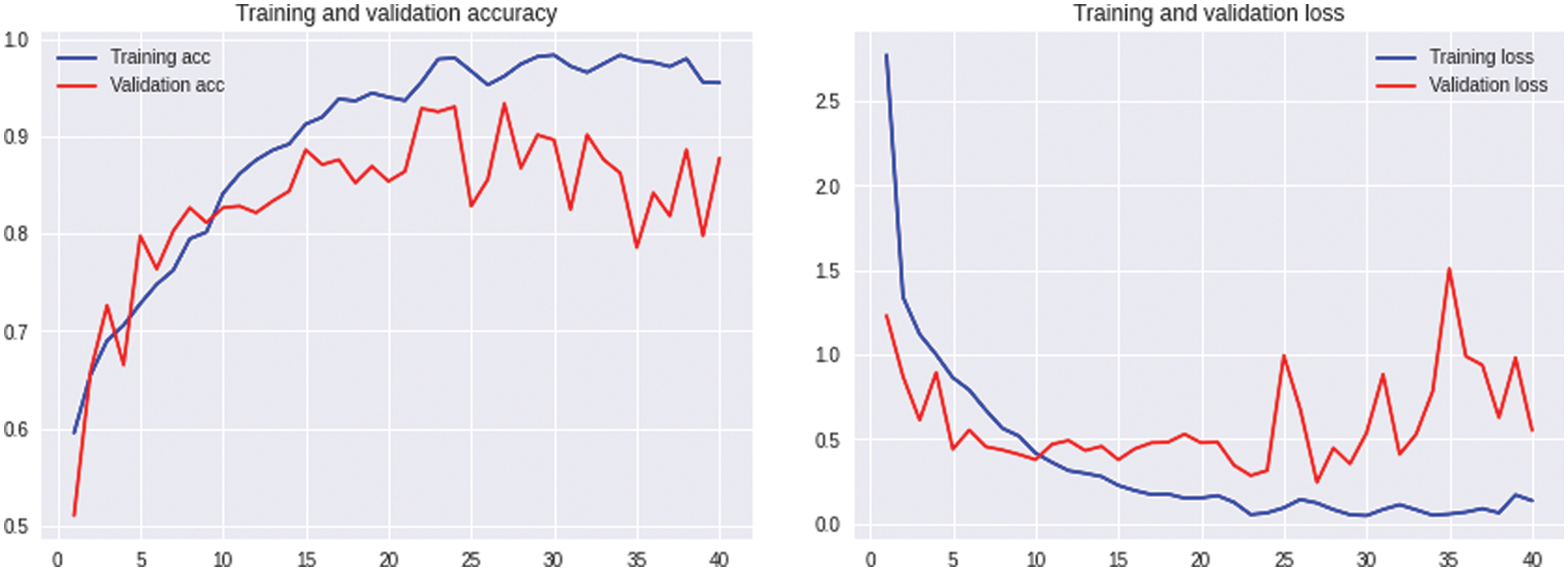

Fig. 6 shows the training and validation accuracy and loss of the ASDDTLA model over up to 40 epochs. The model gave the best results at 40 epochs.

Figure 6: Accuracy and loss for the training and validation datasets across epochs

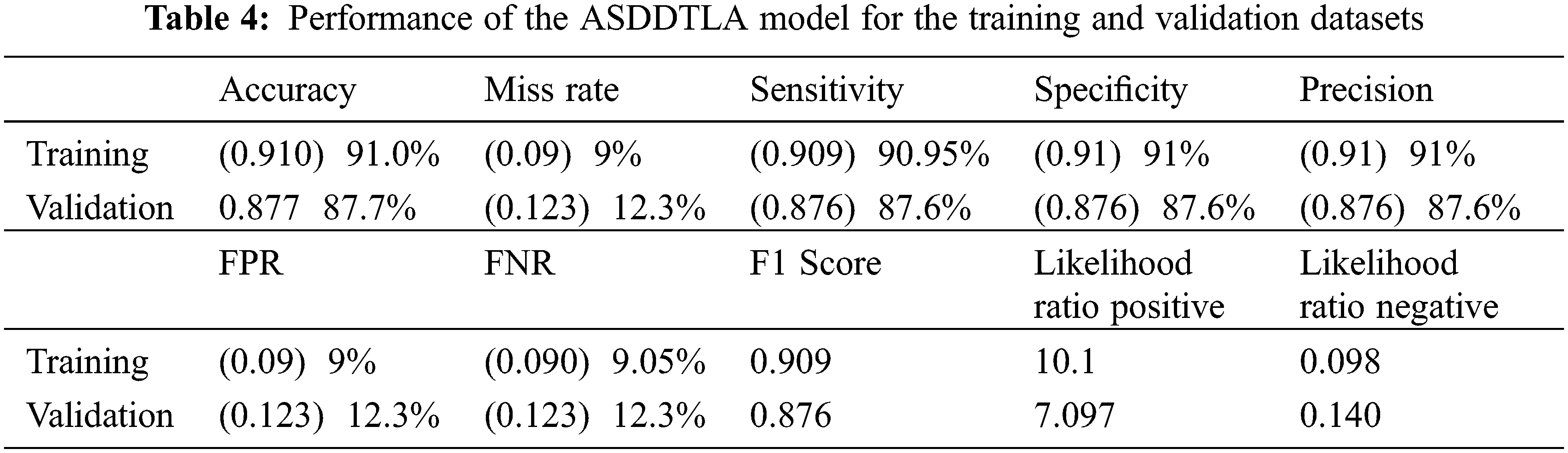

4.4 Performance of the ASDDTLA Algorithm for the Training and Validation Data

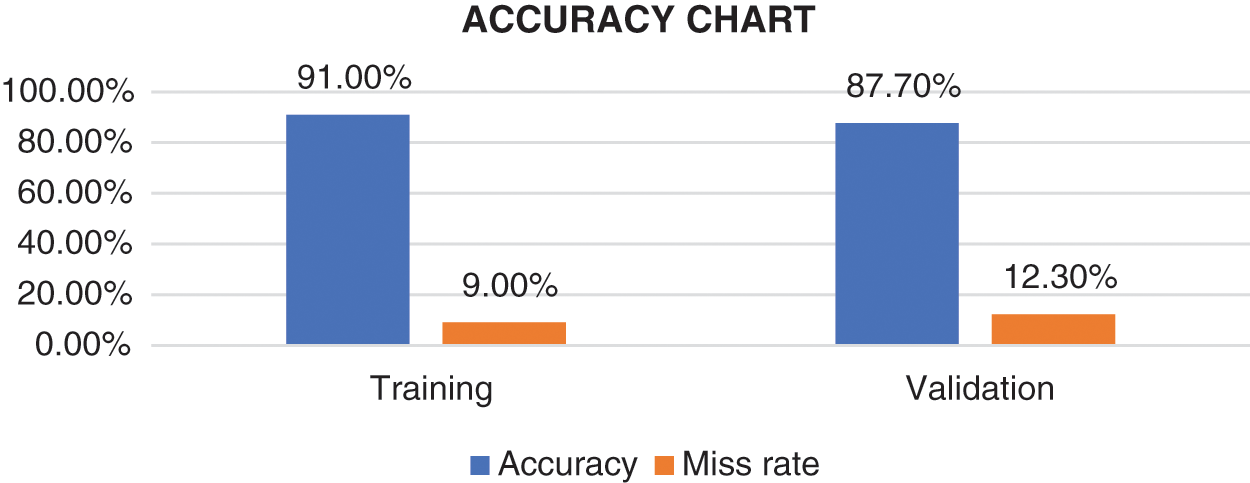

Tab. 4 summarizes all the statistical measures used to evaluate the model. The final model achieved an accuracy of 91.0% on the training data and 87.7% on the validation data, as shown in Fig. 7. For the validation data, the sensitivity, specificity, accuracy, miss rate, FNR, FPR, and precision were 87.6%, 87.6%, 87.7%, 12.3%, 12.3%, 12.3%, and 87.6%, respectively, with an F1 score of 0.876, an LRP of 7.097, and an LRN of 0.140. For the training data, the corresponding values were 90.95%, 91%, 91.0%, 9%, 9.05%, 9%, and 91%, with an F1 score of 0.909, an LRP of 10.1, and an LRN of 0.098.

Figure 7: Accuracy chart

4.5 Performance of the ASDDTLA Algorithm in Relation to Other Established Models

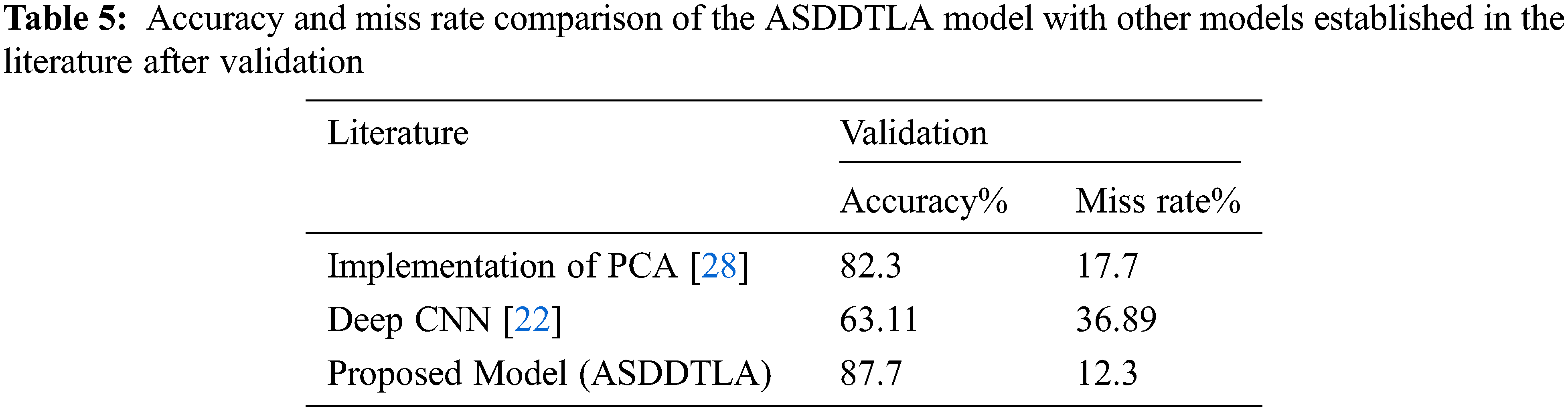

Although some deep learning algorithms have been proposed to detect and classify ASD, to our knowledge this is the first study to use a deep transfer learning approach to detect ASD based on facial features. Compared with other established models, including PCA [28] and deep CNN [22], our model achieved higher accuracy and a lower miss rate, as shown in Tab. 5.

This study developed and implemented a deep transfer learning facial recognition framework to diagnose and classify ASD. Our model achieved an accuracy of 87.7% on the validation set and performed better than the other models it was compared with (Tab. 5). Therefore, clinicians could potentially use this model to facilitate the diagnosis of ASD at an early stage. The methodology can be easily applied to small image datasets, and it could also be applied to other classification problems on medical images and to other ASD datasets. The main contribution of this research is a tool that could be used to detect and classify ASD at an early stage by evaluating facial features.

Acknowledgement: The authors thank their families and colleagues for their continued support.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors have no conflicts of interest to report regarding the present study.

References

1. K. L. Goh, S. Morris, S. Rosalie, C. Foster, T. Falkmer et al., “Typically developed adults and adults with autism spectrum disorder classification using centre of pressure measurements,” in Proc. IEEE Int. Conf. on Acoustics, Speech and Signal Processing, Shanghai, China, pp. 844–848, 2016.

2. C. M. Zaroff and S. Y. Uhm, “Prevalence of autism spectrum disorders and influence of country of measurement and ethnicity,” Social Psychiatry and Psychiatric Epidemiology, vol. 47, no. 3, pp. 395–398, 2012.

3. American Academy of Pediatrics and Bright Futures Guidelines, “Summary of changes made to the Bright Futures/AAP recommendations for preventive pediatric health care (periodicity schedule),” NCHS Data Brief, vol. 7, no. 291, pp. 18–19, 2017.

4. E. Stevens, D. R. Dixon, M. N. Novack, D. Granpeesheh, T. Smith et al., “Identification and analysis of behavioral phenotypes in autism spectrum disorder via unsupervised machine learning,” International Journal of Medical Informatics, vol. 129, no. 2, pp. 29–36, 2019.

5. B. S. Abrahams and D. H. Geschwind, “Advances in autism genetics: On the threshold of a new neurobiology,” Nature Reviews Genetics, vol. 9, no. 5, pp. 341–355, 2008.

6. C. Betancur, “Etiological heterogeneity in autism spectrum disorders: More than 100 genetic and genomic disorders and still counting,” Brain Research, vol. 1380, no. 1, pp. 42–77, 2011.

7. A. C. Y. Tse and R. S. W. Masters, “Improving motor skill acquisition through analogy in children with autism spectrum disorders,” Psychology of Sport and Exercise, vol. 41, no. 5, pp. 63–69, 2019.

8. E. A. Papagiannopoulou, K. M. Chitty, D. F. Hermens, I. B. Hickie and J. Lagopoulos, “A systematic review and meta-analysis of eye-tracking studies in children with autism spectrum disorders,” Social Neuroscience, vol. 9, no. 6, pp. 610–632, 2014.

9. T. M. Ghazal, S. Abbas, S. Munir, M. A. Khan, M. Ahmad et al., “Alzheimer disease detection empowered with transfer learning,” Computers, Materials & Continua, vol. 70, no. 3, pp. 5005–5019, 2022.

10. E. Moradi, A. Pepe, C. Gaser, H. Huttunen and J. Tohka, “Machine learning framework for early MRI-based Alzheimer’s conversion prediction in MCI subjects,” Neuroimage, vol. 104, pp. 398–412, 2015.

11. S. L. M. Sainte, N. Alalyani, S. Alotaibi, S. Ghouzali and I. Abunadi, “Arabic natural language processing and machine learning-based systems,” IEEE Access, vol. 7, pp. 7011–7020, 2019.

12. B. Gupta, M. Negi, K. Vishwakarma, G. Rawat and P. Badhani, “Study of twitter sentiment analysis using machine learning algorithms on python,” International Journal of Computer Applications, vol. 165, no. 9, pp. 29–34, 2017.

13. A. I. Khan and S. Al-Habsi, “Machine learning in computer vision,” Procedia Computer Science, vol. 167, no. 2019, pp. 1444–1451, 2020.

14. T. B. Mokgonyane, T. J. Sefara, T. I. Modipa, M. M. Mogale, M. J. Manamela et al., “Automatic speaker recognition system based on machine learning algorithms,” in Proc. Southern African Universities Power Engineering Conf. Mechatronics/Pattern Recognition Association South Africa, Potchefstroom, South Africa, pp. 141–146, 2019.

15. B. Nithya and V. Ilango, “Predictive analytics in health care using machine learning tools and techniques,” in Proc. Int. Conf. on Intelligent Computing Control Systems, Madurai, India, pp. 492–499, 2018.

16. A. Moubayed, M. Injadat, A. B. Nassif, H. Lutfiyya and A. Shami, “E-Learning: Challenges and research opportunities using machine learning data analytics,” IEEE Access, vol. 6, pp. 39117–39138, 2018.

17. I. H. Sarker, A. S. M. Kayes and P. Watters, “Effectiveness analysis of machine learning classification models for predicting personalized context-aware smartphone usage,” Journal of Big Data, vol. 6, no. 1, pp. 1–28, 2019.

18. “Autism dataset,” 2022. [Online]. Available: https://www.kaggle.com/cihan063/autism-image-data.

19. M. D. Sweeney, K. Kisler, A. Montagne, A. W. Toga and B. V. Zlokovic, “The role of brain vasculature in neurodegenerative disorders,” Nature Neuroscience, vol. 21, no. 10, pp. 1318–1331, 2018.

20. D. Fernandez-Duque and S. E. Black, “Impaired recognition of negative facial emotions in patients with frontotemporal dementia,” Neuropsychologia, vol. 43, no. 11, pp. 1673–1687, 2005.

21. G. Yolcu, I. Oztel, S. Kazan, C. Oz, K. Palaniappan et al., “Facial expression recognition for monitoring neurological disorders based on convolutional neural network,” Multimedia Tools and Applications, vol. 78, no. 22, pp. 31581–31603, 2019.

22. M. I. U. Haque and D. Valles, “A facial expression recognition approach using DCNN for autistic children to identify emotions,” in Proc. 9th IEEE Annual Information Technology, Electronics and Mobile Communication Conf., Canada, pp. 546–551, 2019.

23. A. Di Nuovo, D. Conti, G. Trubia, S. Buono and S. D. Nuovo, “Deep learning systems for estimating visual attention in robot-assisted therapy of children with autism and intellectual disability,” Robotics, vol. 7, no. 2, pp. 1–21, 2018.

24. S. Singh, R. Ramya, V. Sushma, S. Roshini and R. Pavithra, “Facial recognition using machine learning algorithms on raspberry pi,” in Proc. 4th Int. Conf. on Electrical, Electronics, Communication Computer Technologies and Optimization Techniques, Mysuru, India, pp. 197–202, 2019.

25. T. Guha, Z. Yang, A. Ramakrishna, R. B. Grossman, H. Darren et al., “On quantifying facial expression-related atypicality of children with autism spectrum disorder,” in Proc. IEEE Int. Conf. on Acoustics, Speech and Signal Processing, South Brisbane, Queensland, Australia, pp. 803–807, 2015.

26. C. Grossard, S. Hun, A. Dapogny, E. Juillet, F. Hamel et al., “Teaching facial expression production in autism: The serious game JEMImE,” Creative Education, vol. 10, no. 11, pp. 2347–2366, 2019.

27. A. Dapogny, C. Grossard, S. Hun, S. Serret, J. Bourgeois et al., “JEMImE: A serious game to teach children with ASD how to adequately produce facial expressions,” in Proc. 13th IEEE Int. Conf. on Automatic Face and Gesture Recognition, Xi’an, China, pp. 723–730, 2018.

28. K. G. Smitha and A. P. Vinod, “Facial emotion recognition system for autistic children: A feasible study based on FPGA implementation,” Medical & Biological Engineering & Computing, vol. 53, no. 11, pp. 1221–1229, 2015.

29. S. X. Wu, H. T. Wai, L. Li and A. Scaglione, “A review of distributed algorithms for principal component analysis,” Proceedings of the IEEE, vol. 106, no. 8, pp. 1321–1340, 2018.

30. C. Pramerdorfer and M. Kampel, “Facial expression recognition using convolutional neural networks: State of the art,” in Proc. 10th Annual Computing and Communication Workshop and Conf., Las Vegas, NV, USA, pp. 1–7, 2020.

31. R. K. Ibala, J. R. M. Babela and P. Senga, “Encephalopathy after pertussis immunization,” Archives of Pediatrics, vol. 7, no. 2, pp. 216–217, 2000.

32. S. Collet, 2017. [Online]. Available: https://www.saagie.com/blog/object-detection-part1/.

33. N. Seliya, T. M. Khoshgoftaar and J. Van Hulse, “A study on the relationships of classifier performance metrics,” in Proc. Int. Conf. on Tools with Artificial Intelligence, Volos, Greece, pp. 59–66, 2009.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.