Open Access

ARTICLE

Robust Frequency Estimation Under Additive Symmetric α-Stable Gaussian Mixture Noise

1 Beijing Orient Institute of Measurement and Test, Beijing, 10083, China

2 University of Science & Technology Beijing, Beijing, 10083, China

* Corresponding Author: Bolong Men. Email:

Intelligent Automation & Soft Computing 2023, 36(1), 83-95. https://doi.org/10.32604/iasc.2023.027602

Received 22 January 2022; Accepted 19 April 2022; Issue published 29 September 2022

Abstract

This paper investigates parameter estimation for a single sinusoidal signal in additive symmetric α-stable Gaussian (ASαSG) noise, which is modeled as the sum of a Gaussian variable and a symmetric α-stable distributed variable. Because the probability density function (PDF) of ASαSG noise is complicated, traditional estimators cannot provide optimum estimates. Based on the Metropolis-Hastings (M-H) sampling scheme, a robust frequency estimator is proposed for ASαSG noise. Moreover, to accelerate the convergence of the developed algorithm, a new criterion for reconstructing the proposal covariance is derived, whose main idea is to update the proposal variance using several previous samples drawn in each iteration. An approximate PDF of the ASαSG noise, namely the weighted sum of a Voigt function and a Gaussian PDF, is also employed to reduce the computational complexity. Computer simulations show that the proposed method outperforms the maximum likelihood and lp-norm estimators.

In real-world applications, impulsive noise is commonly encountered, especially in wireless communication and image processing [1–7]. Among heavy-tailed noise models, the α-stable [7], Student’s t and Laplace distributions [8–13] are typical ones, each of whose probability density function (PDF) is usually described by a single known mathematical function. Mixture noise models have also been proposed, such as the Gaussian mixture and the Cauchy Gaussian mixture [14–18]. However, none of these models can represent the noise encountered in some real-world applications, such as astrophysical image processing [19] and multi-user communication networks [20]. In astrophysical image processing, for example, the noise is described as the sum of a variable following the symmetric α-stable (SαS) distribution and a Gaussian distributed variable, known as additive symmetric α-stable Gaussian (ASαSG) mixture noise [21]. Here the SαS component is due to galactic radiation and the Gaussian component is caused by the antenna of the satellite [22].

In this paper, the estimation problem is investigated for a single sinusoidal signal embedded in ASαSG mixture noise. As the PDF of SαS noise cannot be written as a closed-form function, the PDF of the ASαSG distribution, obtained by convolving the SαS PDF with the Gaussian PDF, cannot be expressed in analytical form. Therefore, traditional estimators such as the maximum likelihood estimator (MLE) cannot provide optimal and stable estimates. To address this problem, we adopt a Markov chain Monte Carlo (MCMC) method, which samples from a simple conditional distribution of a stationary Markov chain [22] instead of from a complicated target PDF. Since a suitable conditional distribution is difficult to choose, the Metropolis-Hastings (M-H) method [23–27] is employed, which draws samples from any simple proposal distribution subject to an acceptance ratio [28]. As only the conditional PDF of a stationary Markov chain corresponds to the target PDF, the convergence of the chain influences the computational complexity of the proposed method. To reduce the computational cost, a proposal covariance reconstruction method is proposed, which iteratively updates the proposal variance with the residuals between adjacent samples. Here we consider an independent-parameter estimation problem, so the proposal covariance is defined as a diagonal matrix whose non-zero elements are the candidate proposal variances. To further reduce the complexity caused by the PDF of the ASαSG noise, an approximation of the SαS PDF [22,29–33] is utilized, which is a weighted sum of a Cauchy PDF and a Gaussian PDF. Hence the PDF of ASαSG noise can be simplified as the sum of a Voigt profile [34,35] and a normal distribution. It is also worth pointing out that our work is a generalization of [36,37], which consider the additive Gaussian and Cauchy noise (

The rest of this paper is organized as follows. Section 2 reviews the main idea of the M-H algorithm. The PDF approximation of the ASαSG noise is presented in Section 3. In Section 4, the proposed method is described in detail, including the development of the new proposal covariance updating criterion. Computer simulations are conducted in Section 5 to verify the robustness of the proposed scheme. In Section 6, conclusions are drawn.

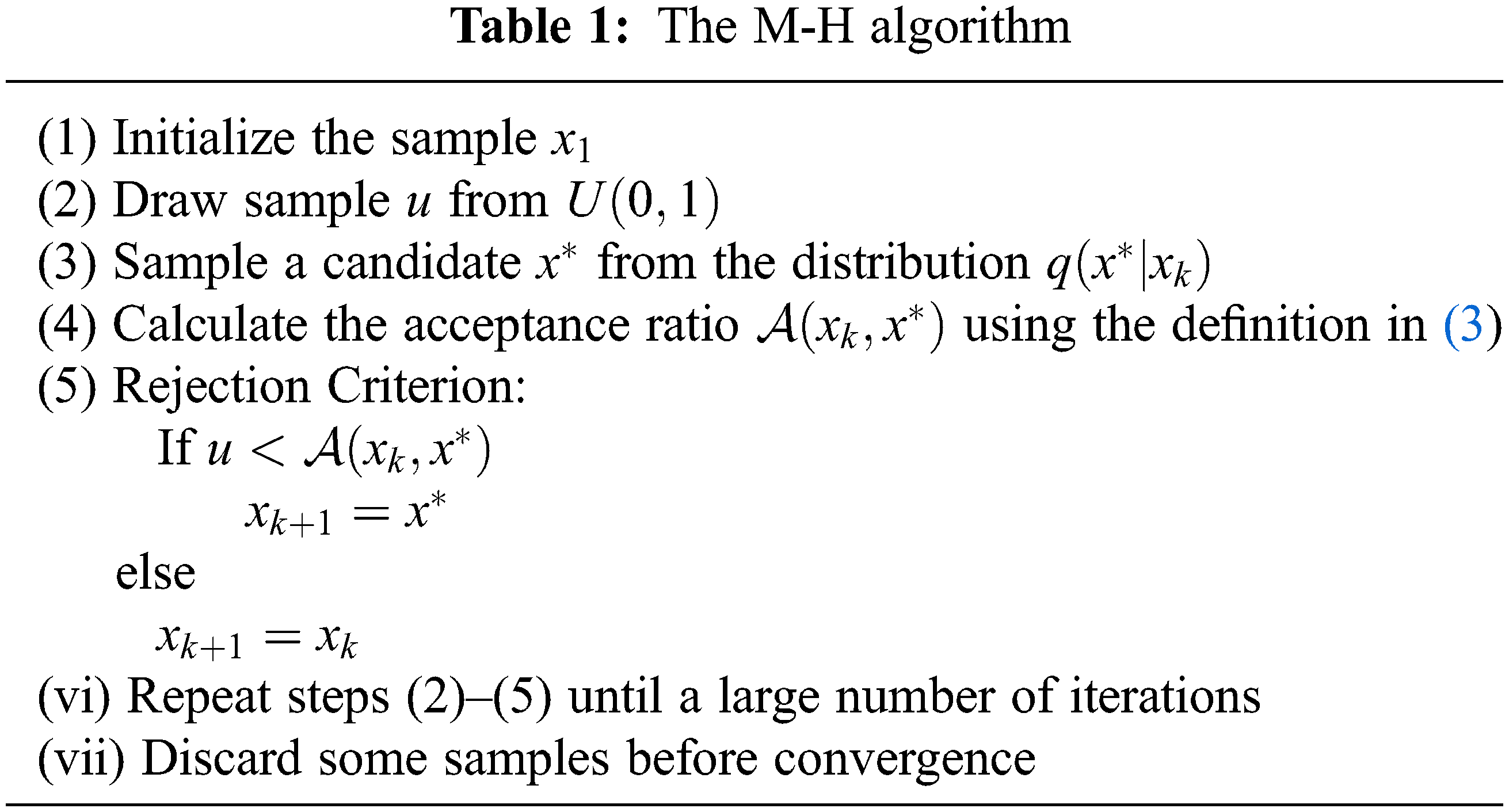

A Markov chain [38–41] can be defined by a series of random variables {

where

Denote the PDF of

is satisfied with

However, in some scenarios of complicated target PDF, the proper conditional PDF

Usually, the
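The sampling idea reviewed in this section can be illustrated with a minimal sketch of a random-walk M-H sampler. It is not the estimator developed later in the paper: the standard normal toy target, the fixed proposal standard deviation `step_std`, the burn-in of 2000 draws and all function names are assumptions made only for this example.

```python
import math
import random


def metropolis_hastings(log_target, x0, n_samples, step_std, seed=0):
    """Random-walk Metropolis-Hastings with a symmetric Gaussian proposal.

    log_target: function returning the log of the (unnormalised) target PDF.
    step_std:   standard deviation of the Gaussian proposal (kept fixed here).
    """
    rng = random.Random(seed)
    x = x0
    log_p = log_target(x)
    chain = []
    accepted = 0
    for _ in range(n_samples):
        candidate = x + rng.gauss(0.0, step_std)
        log_p_cand = log_target(candidate)
        # Symmetric proposal: the acceptance ratio reduces to the target ratio.
        log_ratio = log_p_cand - log_p
        if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
            x, log_p = candidate, log_p_cand
            accepted += 1
        chain.append(x)  # a rejected candidate repeats the current state
    return chain, accepted / n_samples


def log_std_normal(x):
    # Toy target for illustration: standard normal, up to an additive constant.
    return -0.5 * x * x


chain, acc_rate = metropolis_hastings(log_std_normal, x0=3.0,
                                      n_samples=20000, step_std=2.4)
kept = chain[2000:]  # discard an assumed burn-in period
mean = sum(kept) / len(kept)
var = sum((v - mean) ** 2 for v in kept) / len(kept)
print(f"acceptance rate={acc_rate:.2f}, mean={mean:.3f}, variance={var:.3f}")
```

Working with the logarithm of the target avoids numerical underflow when the target is a product of many likelihood terms, which is the usual situation in parameter estimation.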

3 The PDF Approximation of Mixture Noise

The ASαSG noise

where

Since the mixture noise is the sum of two random variables with different PDFs, the PDF of the mixed noise q is usually calculated as the convolution of the SαS and Gaussian PDFs, which is

where

As the SαS process has no closed-form PDF expression, it is usually described by its characteristic function (CF) [44], which is

where

Because of the complicated relationship between the CF and the PDF, the PDF of ASαSG in (8) cannot be expressed in analytic form, owing to the convolution and integral operations. Therefore, to obtain a closed-form PDF expression, we use an approximate PDF of the SαS noise. Because for an SαS variable,

where

where p denotes the order of the fractional moment. According to the investigation in [48], the value of p is usually chosen as −1/4.
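To see why a fractional moment of negative order such as p = −1/4 is usable although an SαS variable with α < 2 has no finite variance, the following sketch draws SαS samples and compares the empirical value of E|X|^p with a standard closed-form expression. The parameterisation of the CF as exp(−γ|t|^α), the Chambers-Mallows-Stuck generator, and all function names are assumptions of this example and may differ from the definitions used in the paper.

```python
import math
import random


def sas_sample(alpha, gamma, rng):
    """One symmetric alpha-stable variate with CF exp(-gamma * |t|**alpha)
    (assumed parameterisation), via the Chambers-Mallows-Stuck method."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)
    if alpha == 1.0:
        return gamma * math.tan(v)  # Cauchy special case
    w = rng.expovariate(1.0)
    while w == 0.0:  # guard against a division by zero
        w = rng.expovariate(1.0)
    scale = gamma ** (1.0 / alpha)
    return (scale * math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))


def asasg_sample(alpha, gamma, sigma, rng):
    """ASaSG noise: an SaS variate plus an independent zero-mean Gaussian."""
    return sas_sample(alpha, gamma, rng) + rng.gauss(0.0, sigma)


def sas_fractional_moment(alpha, gamma, p):
    """Closed-form E|X|**p of an SaS variable, for -1 < p < alpha and p != 0."""
    c = (2.0 ** (p + 1) * math.gamma((p + 1) / 2) * math.gamma(-p / alpha)
         / (alpha * math.sqrt(math.pi) * math.gamma(-p / 2)))
    return c * gamma ** (p / alpha)


rng = random.Random(1)
alpha, gamma, p = 1.5, 1.0, -0.25  # illustrative values only
n = 100000
empirical = sum(abs(sas_sample(alpha, gamma, rng)) ** p for _ in range(n)) / n
theoretical = sas_fractional_moment(alpha, gamma, p)
print(f"empirical={empirical:.4f}, closed form={theoretical:.4f}")
```

With p = −1/4 both E|X|^p and E|X|^(2p) are finite, so the sample average converges at the usual Monte Carlo rate, which is not the case for moments of order α or higher.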

Then we express the PDF of the Gaussian variable g as

With the use of (10)–(14), the PDF of ASαSG distribution in (8) is simplified as

where

According to [32],

where
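The structure of the approximate PDF described in this section, a weighted sum of a Voigt profile and a Gaussian PDF, can be sketched as follows. The sketch assumes that the SαS part is approximated by `eps` times a Cauchy PDF with scale `gamma_c` plus `1 - eps` times a zero-mean Gaussian PDF with standard deviation `sigma_s`; convolving that mixture with the additive Gaussian noise of standard deviation `sigma_g` then gives a Voigt term and a Gaussian term. These parameter names and the plain trapezoidal evaluation of the Voigt profile are choices made for this example, and the paper's expressions for the weights are not reproduced here.

```python
import math


def gaussian_pdf(x, sigma):
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def cauchy_pdf(x, gamma_c):
    return gamma_c / (math.pi * (x * x + gamma_c * gamma_c))


def voigt_pdf(x, sigma, gamma_c, n_points=2001, span=8.0):
    """Voigt profile as the convolution of a Gaussian and a Cauchy PDF,
    evaluated with the trapezoidal rule over t in [-span*sigma, span*sigma]."""
    h = 2.0 * span * sigma / (n_points - 1)
    total = 0.0
    for i in range(n_points):
        t = -span * sigma + i * h
        weight = 0.5 if i in (0, n_points - 1) else 1.0
        total += weight * gaussian_pdf(t, sigma) * cauchy_pdf(x - t, gamma_c)
    return total * h


def asasg_pdf_approx(x, eps, gamma_c, sigma_s, sigma_g):
    """Approximate ASaSG PDF: eps * Voigt + (1 - eps) * Gaussian.

    eps, gamma_c and sigma_s are hypothetical parameters of the
    Cauchy-Gaussian approximation of the SaS part; sigma_g is the standard
    deviation of the additive Gaussian noise.
    """
    # Gaussian convolved with Gaussian: the variances add.
    gauss_part = gaussian_pdf(x, math.sqrt(sigma_s ** 2 + sigma_g ** 2))
    # Cauchy convolved with Gaussian: a Voigt profile.
    return eps * voigt_pdf(x, sigma_g, gamma_c) + (1.0 - eps) * gauss_part


for x in (0.0, 1.0, 5.0):
    print(x, asasg_pdf_approx(x, eps=0.4, gamma_c=1.0, sigma_s=1.2, sigma_g=0.8))
```

The quadrature is adequate when `gamma_c` is not much smaller than the grid spacing; a library routine based on the Faddeeva function would be the more efficient choice inside a sampler that evaluates the PDF many times.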

In general, the observations have the form of:

where

with

4.1 Posterior of Unknown Parameters

Let

where

By employing Bayes’ theorem [19], we have

where

with

Furthermore, it can easily be seen from (27) that the expectations of the posteriors of the unknown parameters are their true values. Therefore, the means of the parameter samples drawn by the M-H algorithm are unbiased estimates.

Although the PDF expression of the ASαSG noise is known, estimators such as the MLE and the lp-norm methods [51] cannot be utilized because of poor performance and convergence problems. Furthermore, since the posteriors of the unknown parameters are complicated, sampling from them directly is difficult.

Therefore, in order to accurately estimate

where

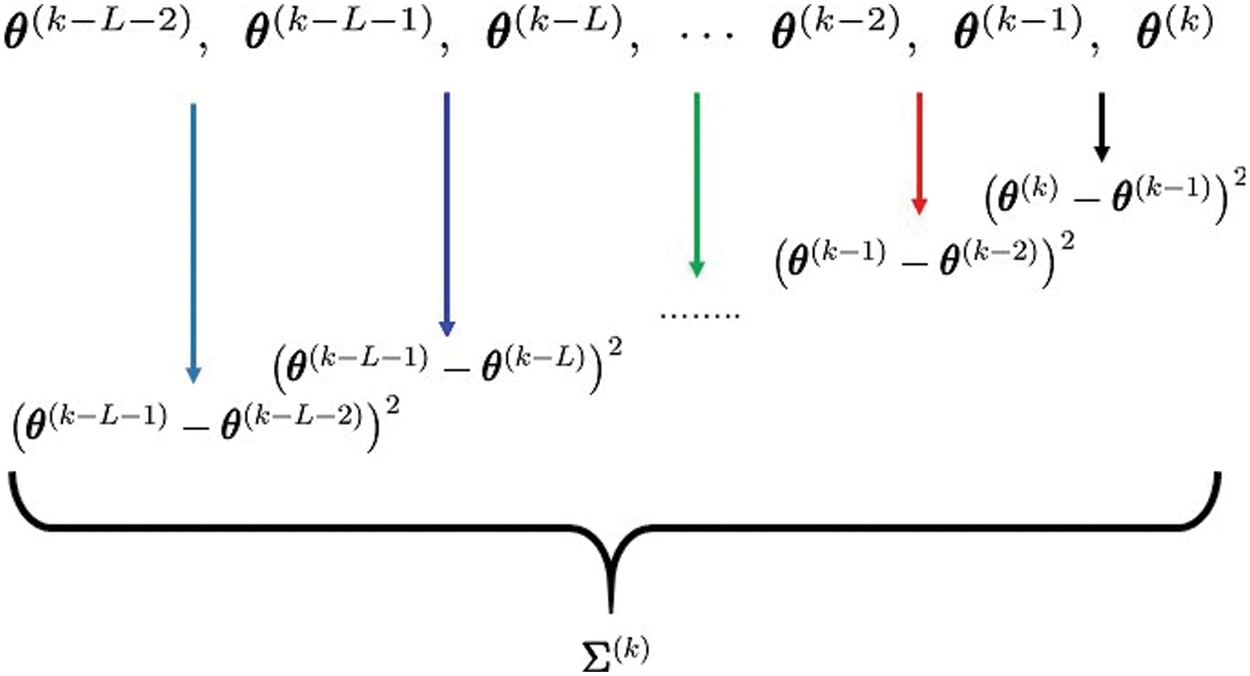

In this paper, we propose employing batch-mode samples to update the proposal variances in the proposal covariance matrix. The details of the proposal covariance matrix

Figure 1: The batch-mode proposal covariance

where L is the length of the batch-mode window.
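A batch-mode update of this kind can be sketched as below. The exact update rule of the paper is not reproduced here; the sketch assumes that each proposal variance is the mean squared residual between adjacent samples inside the last window of length L, and that the proposal covariance is diagonal with one such variance per parameter. The function names and the small `floor` value, which keeps the variance positive when all recent candidates were rejected, are hypothetical.

```python
def batch_proposal_variance(chain, window, floor=1e-12):
    """Proposal variance from the residuals between adjacent samples in the
    last `window` steps of a scalar chain (assumed update rule)."""
    recent = chain[-(window + 1):]  # window residuals need window + 1 samples
    residuals = [b - a for a, b in zip(recent, recent[1:])]
    if not residuals:
        return floor
    return max(sum(r * r for r in residuals) / len(residuals), floor)


def proposal_covariance(chains, window):
    """Diagonal proposal covariance: one batch variance per parameter chain."""
    variances = [batch_proposal_variance(c, window) for c in chains]
    size = len(variances)
    return [[variances[i] if i == j else 0.0 for j in range(size)]
            for i in range(size)]


# Two hypothetical parameter chains (for example frequency and amplitude).
chains = [[0.0, 1.0, 3.0, 6.0], [2.0, 2.0, 2.5, 2.5]]
cov = proposal_covariance(chains, window=3)
print(cov)
```

A short window reacts quickly while the chain is still moving towards the high-probability region, whereas a long window gives a smoother variance once the chain has settled, which is the trade-off examined when L is varied in the simulations.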

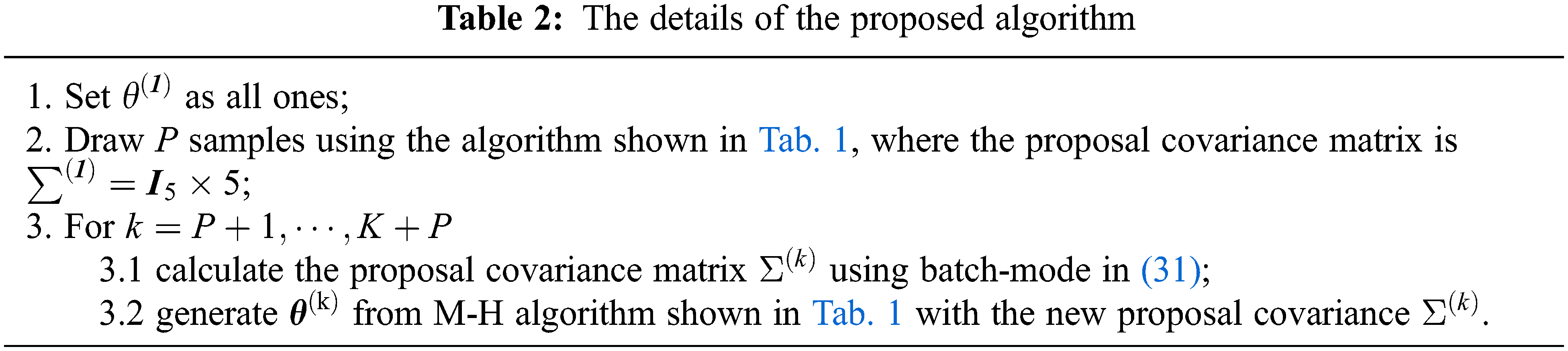

According to the previous discussion, the initialization of the M-H method, denoted by

After the steps in Tab. 2, the chain of

where
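Since the means of the samples drawn after convergence are used as the estimates, the final step can be sketched as a burn-in discard followed by an average. The function name, the toy chain and the burn-in length below are illustrative assumptions only.

```python
def posterior_mean_estimate(chain, burn_in):
    """Average the samples that remain after discarding the first
    `burn_in` draws of a scalar parameter chain."""
    kept = chain[burn_in:]
    if not kept:
        raise ValueError("burn_in must be smaller than the chain length")
    return sum(kept) / len(kept)


# Hypothetical frequency chain: a transient followed by samples near 0.30.
omega_chain = [0.90, 0.60, 0.40, 0.31, 0.29, 0.30, 0.30]
omega_hat = posterior_mean_estimate(omega_chain, burn_in=3)
print(omega_hat)
```

Including the transient samples would bias the average towards the initial value, which is why the burn-in period has to be chosen from the observed convergence behaviour of the chain.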

5 Cramér-Rao Lower Bound (CRLB)

Let

where

with

and

where

In this section, computer simulations are conducted to verify the effectiveness of our method. Then the mean square frequency error (MSFE), denoted by

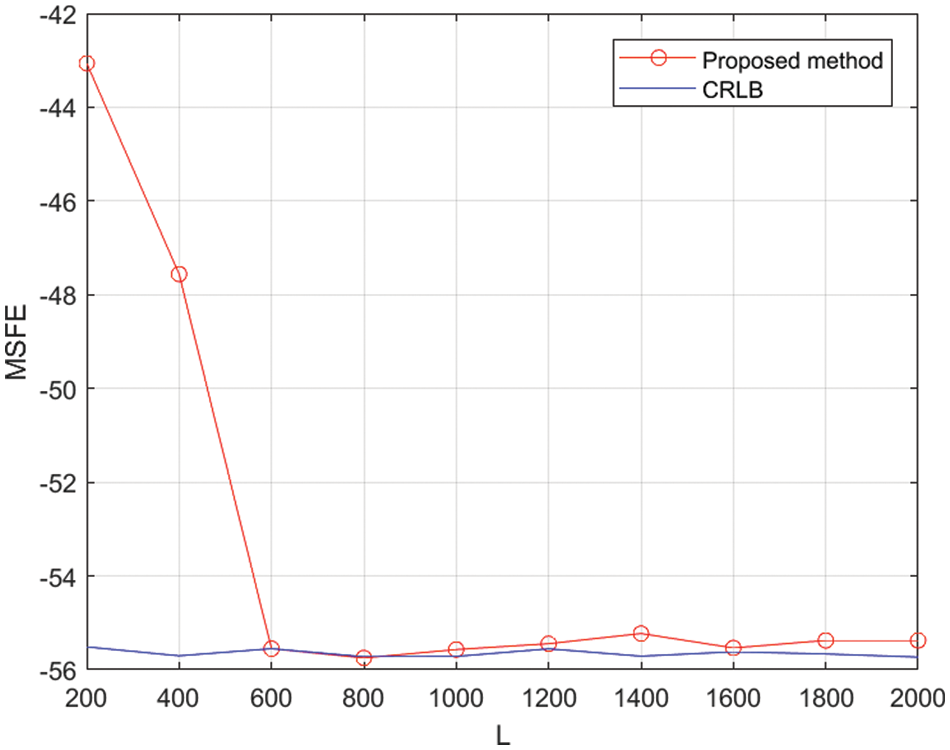

First of all, to obtain the proper

Figure 2: MSFE vs. L

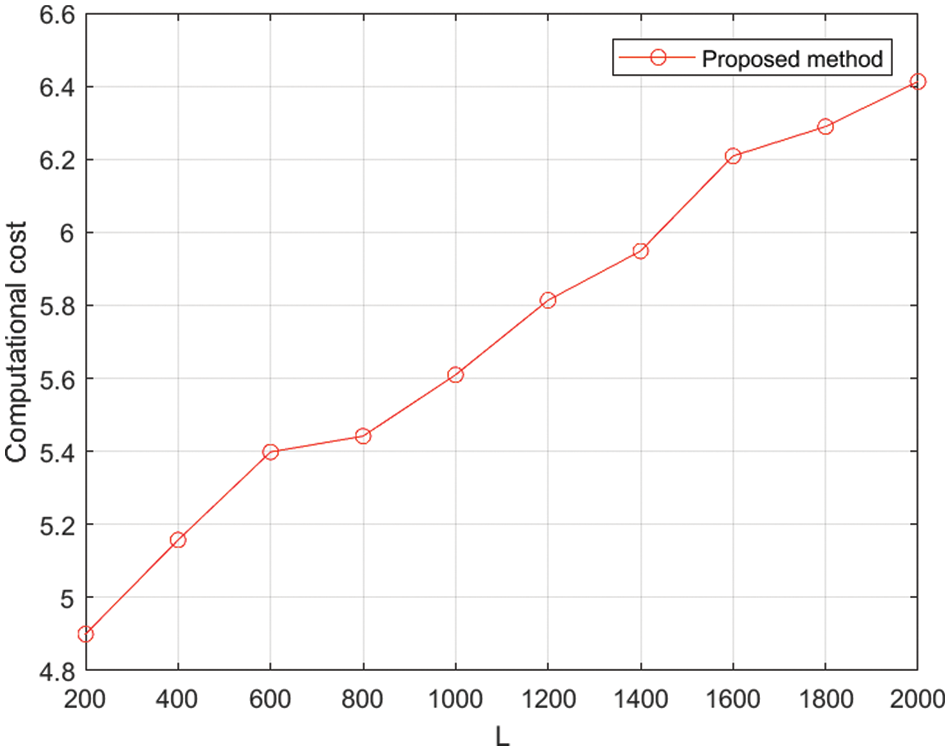

Figure 3: The computational cost of the proposed method vs. L

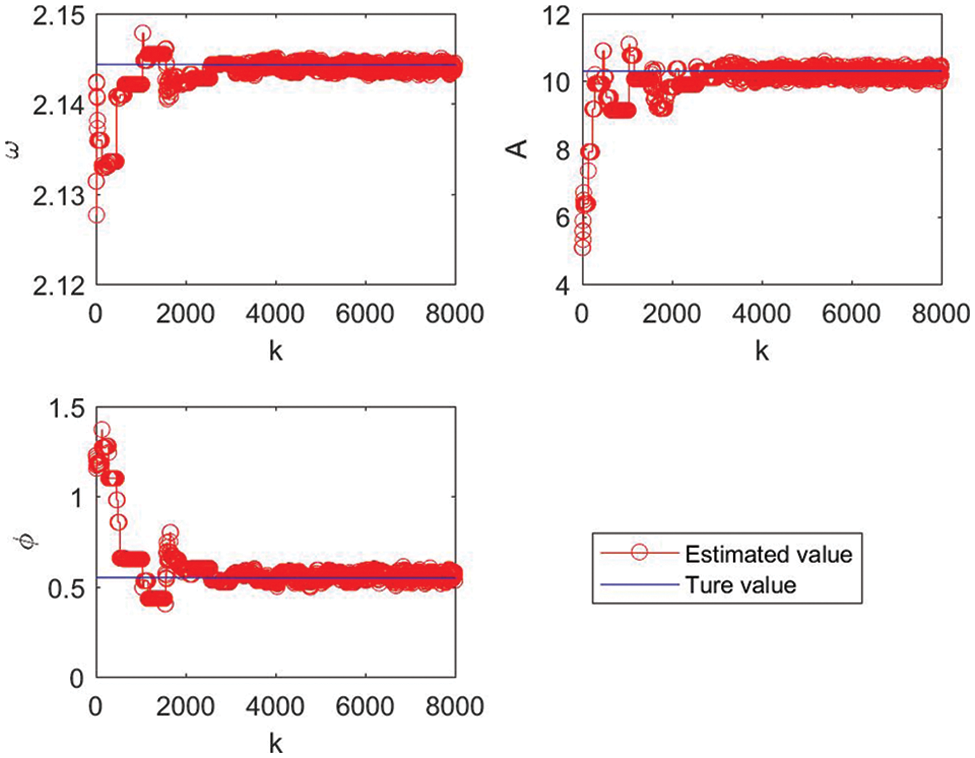

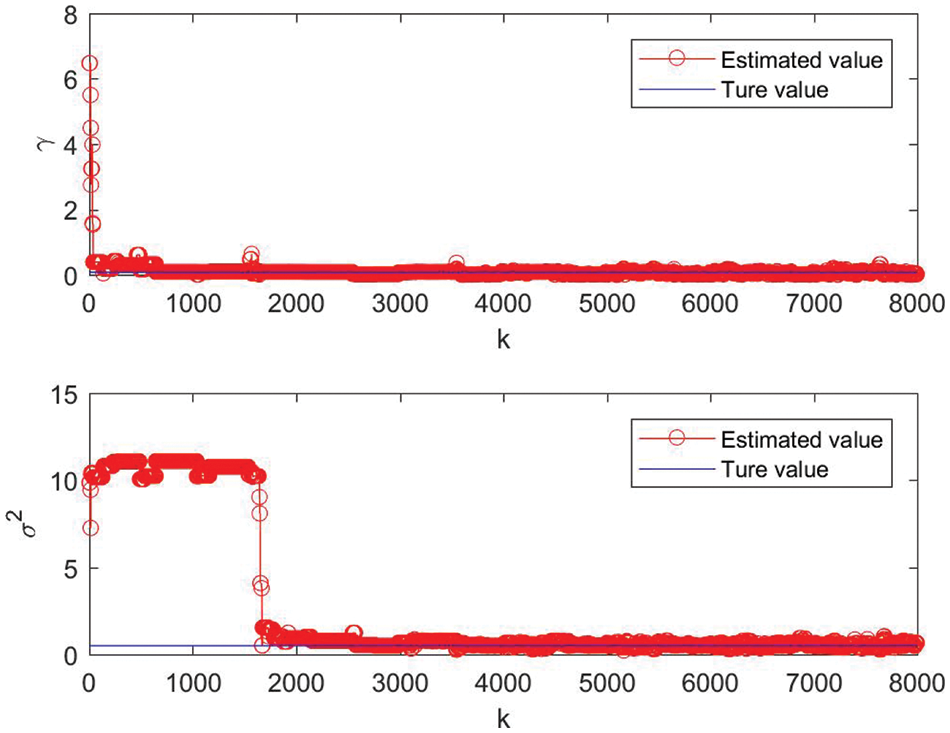

Second, we study the convergence rate of the M-H chain and the value of the burn-in period P. In this test, the density parameters take the same values as in the previous test and the proposal covariance matrix is calculated by (31) with

Figure 4: Estimates of unknown parameters vs. iteration number k

Figure 5: Estimates of density parameters vs. iteration number k

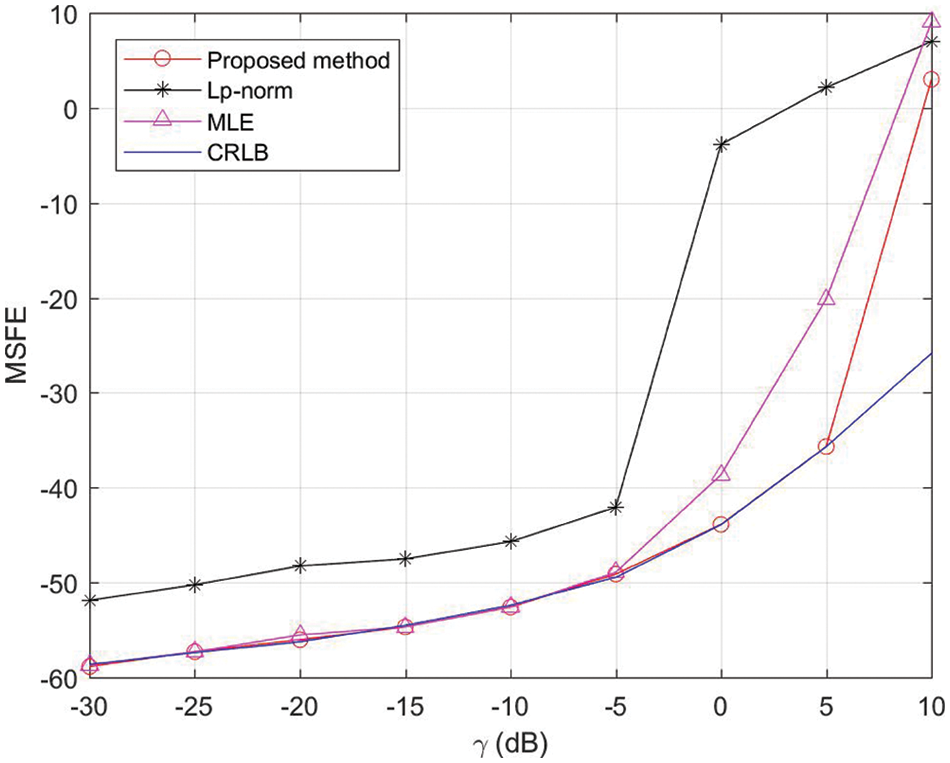

In the following, the MSFE performance of our estimator, the MLE and the lp-norm estimator is compared. Since the signal-to-noise ratio is not defined for ASαSG noise (the SαS component has infinite variance for α < 2), γ is scaled to generate different noise conditions. Based on the previous tests, we discard the first 2000 samples to guarantee the stationarity of the chain. Fig. 6 indicates that the MSFE of our proposed method can attain the CRLB for the noise conditions

Figure 6: Mean square frequency error of ω vs. γ

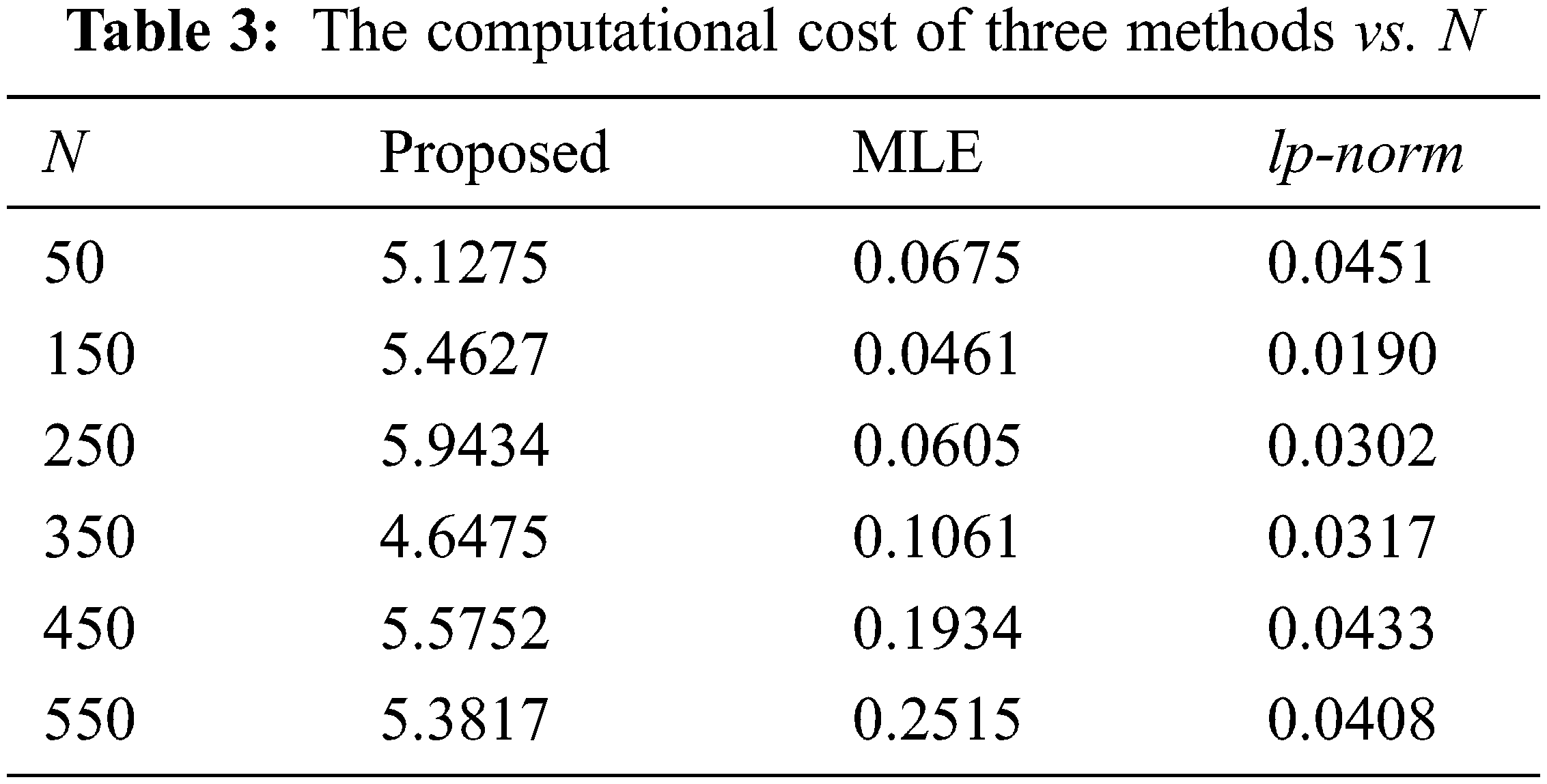

Finally, the computational complexity of our scheme is studied for different data lengths. It can be seen in Tab. 3 that the computational cost of the MLE and the lp-norm estimator is lower than that of the proposed estimator [38]. However, the computational cost of the proposed scheme does not increase as the data length grows. That is to say, our method is not sensitive to the data length, which is an advantage in big-data applications.

In this paper, an improved Bayesian method based on the M-H algorithm is used for accurate frequency estimation of a single sinusoidal signal in ASαSG noise. To reduce the computational cost, a new proposal covariance matrix reconstruction criterion and a PDF approximation are designed. Simulation results indicate that the developed method obtains unbiased estimates once the chain is stationary. In addition, the MSFE of the proposed estimator attains the CRLB after the burn-in samples are discarded. Our method can also be extended to other complicated signal models.

Funding Statement: The work was financially supported by National Key R&D Program of China (Grant No. 2018YFF01012600), National Natural Science Foundation of China (Grant No. 61701021) and Fundamental Research Funds for the Central Universities (Grant No. FRF-TP-19-006A3).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. P. H. Vincent and T. Lang, Signal Processing for Wireless Communication Systems. The Netherlands: Kluwer Academic, 2002.

2. M. Bhoopathi and P. Palanivel, “Estimation of locational marginal pricing using hybrid optimization algorithms,” Intelligent Automation & Soft Computing, vol. 31, no. 1, pp. 143–159, 2022.

3. K. Mal, I. H. Kalwar, K. Shaikh, T. D. Memon, B. S. Chowdhry et al., “A new estimation of nonlinear contact forces of railway vehicle,” Intelligent Automation & Soft Computing, vol. 28, no. 3, pp. 823–841, 2021.

4. S. M. Kay, Fundamentals of Statistical Signal Processing: Estimation Theory. Englewood Cliffs, NJ: Prentice-Hall, 1993.

5. A. M. Zoubir, V. Koivunen, Y. Chakhchoukh and M. Muma, “Robust estimation in signal processing: A tutorial-style treatment of fundamental concepts,” IEEE Signal Processing Magazine, vol. 29, no. 4, pp. 61–80, 2012.

6. R. C. Gonzalez and R. E. Woods, Digital Image Processing. New Jersey: Pearson Prentice Hall, 2008.

7. Y. Chen, E. E. Kuruoglu and H. C. So, “Estimation under additive Cauchy-Gaussian noise using Markov chain Monte Carlo,” in Proc. of IEEE Workshop on Statistical Signal Processing (SSP 14), Gold Coast, Australia, pp. 356–359, 2014.

8. C. L. Nikias and M. Shao, Signal Processing with Alpha-Stable Distribution and Applications. New York: John Wiley & Sons Inc, 1995.

9. J. F. Zhang, T. S. Qiu, P. Wang and S. Y. Luan, “A novel Cauchy score function based DOA estimation method under alpha-stable noise environments,” Signal Processing, vol. 138, no. 17, pp. 98–105, 2017.

10. K. Aas, “The generalized hyperbolic skew Student’s t-distribution,” Journal of Financial Econometrics, vol. 4, no. 2, pp. 275–309, 2006.

11. B. Jorgensen, Statistical Properties of the Generalized Inverse Gaussian Distribution. Heidelberg, Germany: Springer-Verlag, 1982.

12. T. Zhang, A. Wiesel and M. S. Greco, “Multivariate generalized Gaussian distribution: Convexity and graphical models,” IEEE Transactions on Signal Processing, vol. 61, no. 16, pp. 4141–4148, 2013.

13. J. J. Shynk, Probability, Random Variables, and Random Processes: Theory and Signal Processing Applications. Hoboken, NJ: Wiley, 2013.

14. T. Poggio and K. K. Sung, Finding Human Faces with a Gaussian Mixture Distribution-based Face Model. Berlin Heidelberg, Germany: Springer, 1995.

15. J. T. Flam, S. Chatterjee, K. Kansanen and T. Ekman, “On MMSE estimation: A linear model under Gaussian mixture statistics,” IEEE Transactions on Signal Processing, vol. 60, no. 7, pp. 3840–3845, 2012.

16. J. Zhang, N. Zhao, M. Liu, Y. Chen and F. R. Yu, “Modified Cramér-Rao bound for M-FSK signal parameter estimation in Cauchy and Gaussian noise,” IEEE Transactions on Vehicular Technology, vol. 68, no. 10, pp. 10283–10288, 2019.

17. C. Gong, X. Yang, W. Huangfu and Q. Lu, “A mixture model parameters estimation algorithm for inter-contact times in internet of vehicles,” Computers, Materials & Continua, vol. 69, no. 2, pp. 2445–2457, 2021.

18. J. N. Hwang, S. R. Lay and A. Lippman, “Nonparametric multivariate density estimation: A comparative study,” IEEE Transactions on Signal Processing, vol. 42, no. 10, pp. 2795–2810, 1994.

19. D. Herranz, E. E. Kuruoglu and L. Toffolatti, “An α-stable approach to the study of the P(D) distribution of unresolved point sources in CMB sky maps,” Astronomy and Astrophysics, vol. 424, no. 3, pp. 1081–1096, 2004.

20. J. Ilow, D. Hatzinakos and A. N. Venetsanopoulos, “Performance of FH SS radio networks with interference modeled as a mixture of Gaussian and alpha-stable noise,” IEEE Transactions on Communications, vol. 46, no. 4, pp. 509–520, 1998.

21. D. S. Gonzalez, E. E. Kuruoglu and D. P. Ruiz, “Finite mixture of α-stable distributions,” Digital Signal Processing, vol. 19, no. 2, pp. 250–264, 2009.

22. X. L. Yuan, W. Bao and N. H. Tran, “Quality, reliability, security and robustness in heterogeneous systems,” in 17th EAI International Conf., QShine 2021, Virtual Event, Springer, 2021.

23. G. Kail, S. P. Chepuri and G. Leus, “Robust censoring using Metropolis-Hastings sampling,” IEEE Journal of Selected Topics in Signal Processing, vol. 10, no. 2, pp. 270–283, 2016.

24. W. Aydi and F. S. Alduais, “Estimating Weibull parameters using least squares and multilayer perceptron vs. Bayes estimation,” Computers, Materials & Continua, vol. 71, no. 2, pp. 4033–4050, 2022.

25. M. Zichuan, S. Hussain, Z. Ahmad, O. Kharazmi and Z. Almaspoor, “A new generalized Weibull model: Classical and Bayesian estimation,” Computer Systems Science and Engineering, vol. 38, no. 1, pp. 79–92, 2021.

26. S. Chib and E. Greenberg, “Understanding the Metropolis-Hastings algorithm,” The American Statistician, vol. 49, no. 4, pp. 327–335, 1995.

27. K. M. Zuev and L. S. Katafygiotis, “Modified Metropolis-Hastings algorithm with delayed rejection,” Probabilistic Engineering Mechanics, vol. 26, no. 3, pp. 405–412, 2011.

28. Y. Chen, Y. L. Tian, D. F. Zhang, L. T. Huang and J. G. Xu, “Robust frequency estimation under additive mixture noise,” Computers, Materials & Continua, vol. 72, no. 1, pp. 1671–1684, 2022.

29. C. M. Grinstead and J. L. Snell, Introduction to Probability. Rhode Island: American Mathematical Society, 2012.

30. G. James, D. Witten, T. Hastie and R. Tibshirani, An Introduction to Statistical Learning. New York: Springer, 2013.

31. X. Li, C. Peng, L. Fan and F. Gao, “Normalisation-based receiver using BCGM approximation for α-stable noise channels,” Electronics Letters, vol. 49, no. 15, pp. 965–967, 2013.

32. Y. Chen and J. Chen, “Novel SαS PDF approximations and their applications in wireless signal detection,” IEEE Transactions on Wireless Communications, vol. 14, no. 2, pp. 1080–1091, 2015.

33. X. T. Li, J. Sun, L. W. Jin and M. Liu, “Bi-parameter CGM model for approximation of α-stable PDF,” Electronics Letters, vol. 44, no. 18, pp. 1096–1097, 2008.

34. Z. Hashemifard and H. Amindavar, “PDF approximations to estimation and detection in time-correlated alpha-stable channels,” Signal Processing, vol. 133, no. 10, pp. 97–106, 2017.

35. F. W. J. Olver, D. M. Lozier and R. F. Boisvert, NIST Handbook of Mathematical Functions. Cambridge: Cambridge University Press, 2010.

36. I. S. Gradshteyn and I. M. Ryzhik, Table of Integrals, Series, and Products. Salt Lake City: Academic Press, 2007.

37. Y. Chen, E. E. Kuruoglu, H. C. So, L.-T. Huang and W.-Q. Wang, “Density parameter estimation for additive Cauchy-Gaussian mixture,” in Proc. of IEEE Workshop on Statistical Signal Processing (SSP 14), Gold Coast, Australia, pp. 205–208, 2014.

38. Y. Chen, E. E. Kuruoglu and H. C. So, “Optimum linear regression in additive Cauchy-Gaussian noise,” Signal Processing, vol. 106, pp. 312–318, 2015.

39. J. C. Spall, “Estimation via Markov chain Monte Carlo,” IEEE Control Systems, vol. 23, no. 2, pp. 34–45, 2003.

40. C. Andrieu, N. D. Freitas, A. Doucet and M. I. Jordan, “An introduction to MCMC for machine learning,” Machine Learning, vol. 50, no. 1/2, pp. 5–43, 2003.

41. C. Robert and G. Casella, Introducing Monte Carlo Methods with R. New York: Springer Verlag, 2009.

42. S. Chib and E. Greenberg, “Understanding the Metropolis-Hastings algorithm,” The American Statistician, vol. 49, no. 4, pp. 327–335, 1995.

43. P. S. R. Diniz, J. A. K. Suykens, R. Chellappa and S. Theodoridis, Academic Press Library in Signal Processing: Signal Processing Theory and Machine Learning, vol. 1. New York: Academic Press, 2013.

44. E. E. Kuruoglu, “Density parameter estimation of skewed α-stable distributions,” IEEE Transactions on Signal Processing, vol. 49, no. 10, pp. 2192–2201, 2001.

45. J. F. Kielkopf, “New approximation to the Voigt function with applications to spectral-line profile analysis,” Journal of the Optical Society of America, vol. 63, no. 8, pp. 987–995, 1973.

46. Y. Y. Liu, J. L. Lin, G. M. Huang, Y. Q. Guo and C. X. Duan, “Simple empirical analytical approximation to the Voigt profile,” Journal of the Optical Society of America B, vol. 18, no. 5, pp. 666–672, 2001.

47. H. O. Dirocco and A. Cruzado, “The Voigt profile as a sum of a Gaussian and a Lorentzian functions, when the weight coefficient depends on the widths ratio and the independent variable,” Acta Physica Polonica A, vol. 122, no. 4, pp. 670–673, 2012.

48. H. O. Dirocco and A. Cruzado, “The Voigt profile as a sum of a Gaussian and a Lorentzian functions, when the weight coefficient depends only on the widths ratio,” Acta Physica Polonica A, vol. 122, no. 4, pp. 666–669, 2012.

49. X. T. Li, J. Sun, L. W. Jin and M. Liu, “Bi-parameter CGM model for approximation of α-stable PDF,” Electronics Letters, vol. 44, pp. 1096–1097, 2008.

50. R. Kohn, M. Smith and D. Chan, “Nonparametric regression using linear combinations of basis functions,” Statistics and Computing, vol. 11, no. 4, pp. 313–322, 2001.

51. T. H. Li, “A nonlinear method for robust spectral analysis,” IEEE Transactions on Signal Processing, vol. 58, no. 5, pp. 2466–2474, 2010.

52. Y. Chen, H. C. So, E. E. Kuruoglu and X. L. Yang, “Variance analysis of unbiased complex-valued lp-norm minimizer,” Signal Processing, vol. 135, pp. 17–25, 2017.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
