DOI:10.32604/iasc.2023.025580

Intelligent Automation & Soft Computing

Article

A Novel-based Swin Transfer Based Diagnosis of COVID-19 Patients

1Department of Medicine, Division of Radiology, Medical College, Najran University, Najran, 11001, Saudi Arabia

2Department of Computer Science and Information Technology, Ibadat International University, Islamabad, 44000, Pakistan

3Electrical Engineering Department, College of Engineering, Najran University, Najran, 11001, Saudi Arabia

4Department of Chemical Engineering, PIEAS University, Islamabad, 44000, Pakistan

5Department of Computer Science, COMSATS University Islamabad (CUI), Sahiwal Campus, 57000, Pakistan

6Department of Radiology, College of Medicine, Qassim University, Buraydah, 52571, Saudi Arabia

7Radiological Sciences Department, College of Applied Medical Sciences, Najran University, Najran, 11001, Saudi Arabia

8Faculty of Human Medicine, Zagazig University, 44631, Egypt

*Corresponding Author: Maryam Zaffar. Email: maryam.zaffar82@gmail.com

Received: 29 November 2021; Accepted: 11 February 2022

Abstract: The numbers of COVID-19 cases and deaths have been increasing daily around the world. Chest X-ray imaging is a useful and fast way to monitor COVID-19. Although X-ray is a quick screening method, the features of X-ray images of confirmed COVID-19 cases vary, so a domain expert is needed to interpret them. To address this issue, we propose using deep learning. In this study, a dataset of chest X-ray images of COVID-19, lung opacity, viral pneumonia, and healthy patients is used to evaluate how well the Swin transformer predicts COVID-19. The performance of the Swin transformer is compared with seven other deep learning models: ResNet50, DenseNet121, InceptionV3, EfficientNetB2, VGG19, ViT, and CaIT. The Swin transformer achieves 98% recall and 96% accuracy on COVID-19 X-ray images. The proposed approach is also compared with state-of-the-art techniques for COVID-19 diagnosis and is found to be better in terms of accuracy. Our system could support clinicians in screening patients for COVID-19, enabling prompt treatment and better health outcomes for COVID-19 patients. By providing an accurate diagnosis of COVID-19 patients, this work may also help mitigate the adverse effects of the pandemic.

Keywords: Biomedical systems; chest X-ray images; CNN; COVID-19; swin transformer; image processing

The coronavirus disease 2019 (COVID-19) epidemic has placed the world under enormous strain since December 2019. Large numbers of people worldwide have contracted this dangerous disease, and according to the World Health Organization (WHO), roughly three hundred thousand confirmed deaths had been reported [1]. COVID-19 is caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) [2], and its common symptoms include fever, dry cough, headache, myalgia, pharyngitis, and chest discomfort. Symptoms may take up to 14 days to appear after infection. Routine clinical diagnosis of COVID-19 has become faster, but it still carries a high risk of infection for medical staff. Moreover, testing is limited by its cost for ordinary patients and by the limited availability of test kits. Medical imaging, including X-ray and computed tomography (CT) examinations, offers an alternative route to diagnosis. X-ray imaging is widely used because it is cheaper and faster than other imaging techniques and is available even in rural areas [3,4]. However, highly trained personnel are required to read X-ray images accurately. Various pre-trained models have been applied to this task in previous studies, but their accuracy still needs improvement. The contributions of this paper are therefore as follows:

• A novel application of the Swin transformer deep learning model is proposed to diagnose patients infected with COVID-19 from chest X-ray images.

• The results of the Swin transformer are compared, in terms of performance evaluation metrics, with eight other deep learning approaches (ResNet50, DenseNet121, InceptionV3, EfficientNetB2, VGG19, MobileNet, ViT, CaIT).

The main aim of this research is to classify COVID-19-related chest X-ray images. Deep learning models are trained on the dataset with the goal of improving accuracy. The rest of the paper is organized as follows: Section 2 summarizes the literature on COVID-19 detection from X-ray images. Section 3 details the proposed approach, which uses the Swin transformer to diagnose COVID-19. Section 4 presents the results, and Section 5 concludes the paper and outlines future work.

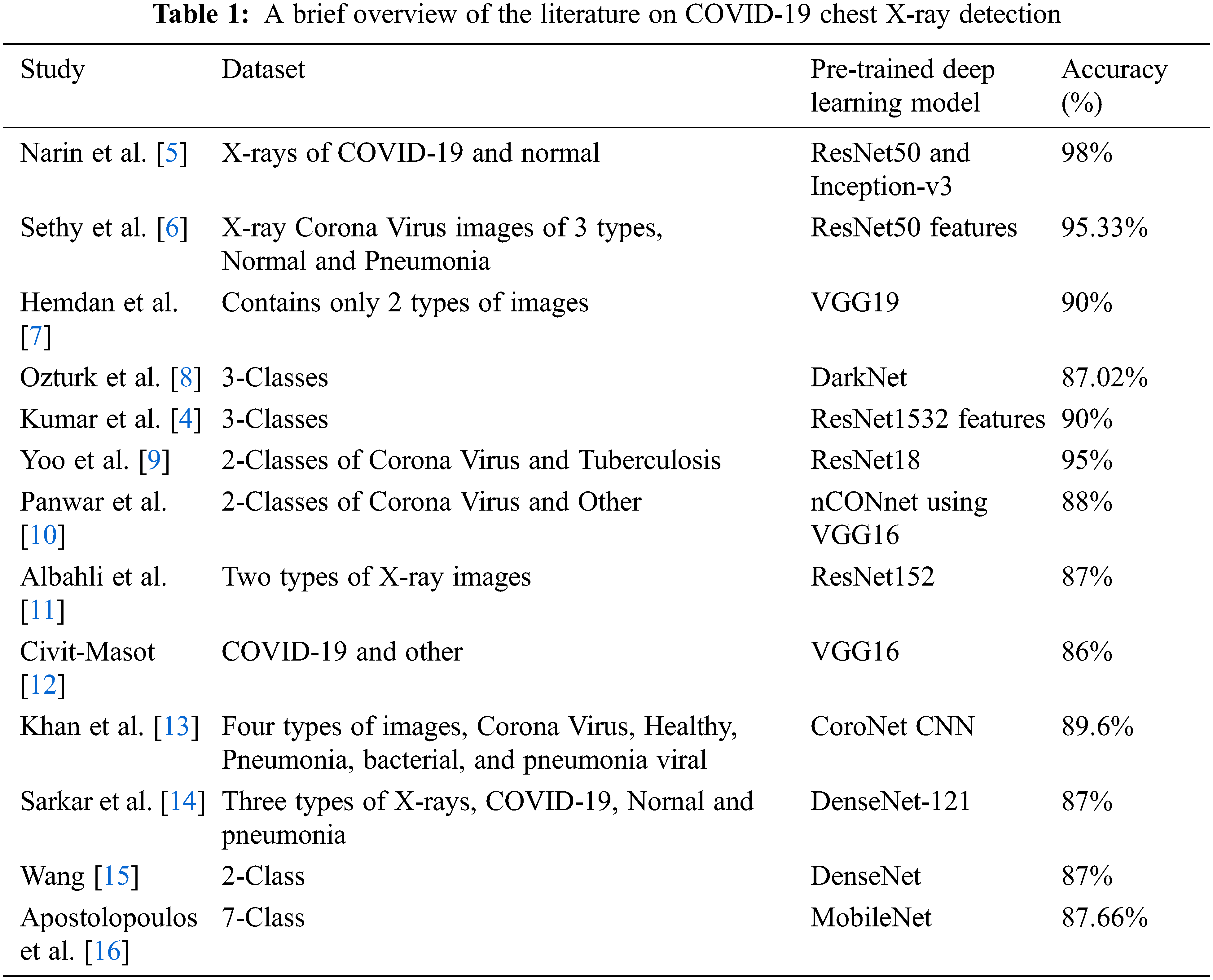

This section reviews work on COVID-19 detection from chest X-ray images, which have many advantages over traditional methods of diagnosing COVID-19. Tab. 1 briefly reviews pre-trained deep learning models for COVID-19 detection. Several studies have used pre-trained deep learning models to diagnose COVID-19, with datasets containing different numbers of classes; however, there is still room for improvement.

In summary, existing studies have predicted COVID-19 from chest X-ray images, but their accuracy still needs improvement: according to these studies, the accuracy of machine learning algorithms decreases as more types of X-ray images are added to the dataset.

This section describes the proposed methodology for diagnosing COVID-19 patients from chest X-ray images.

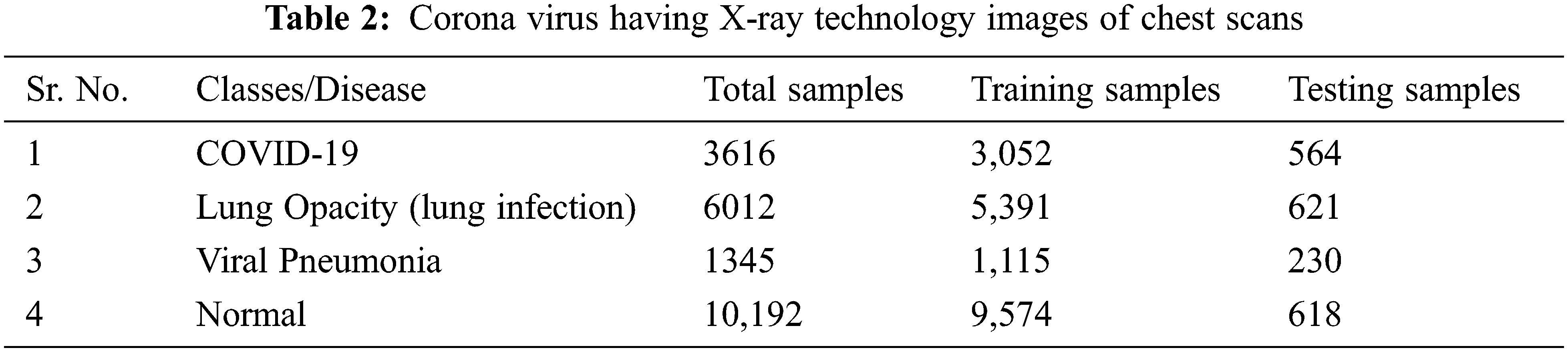

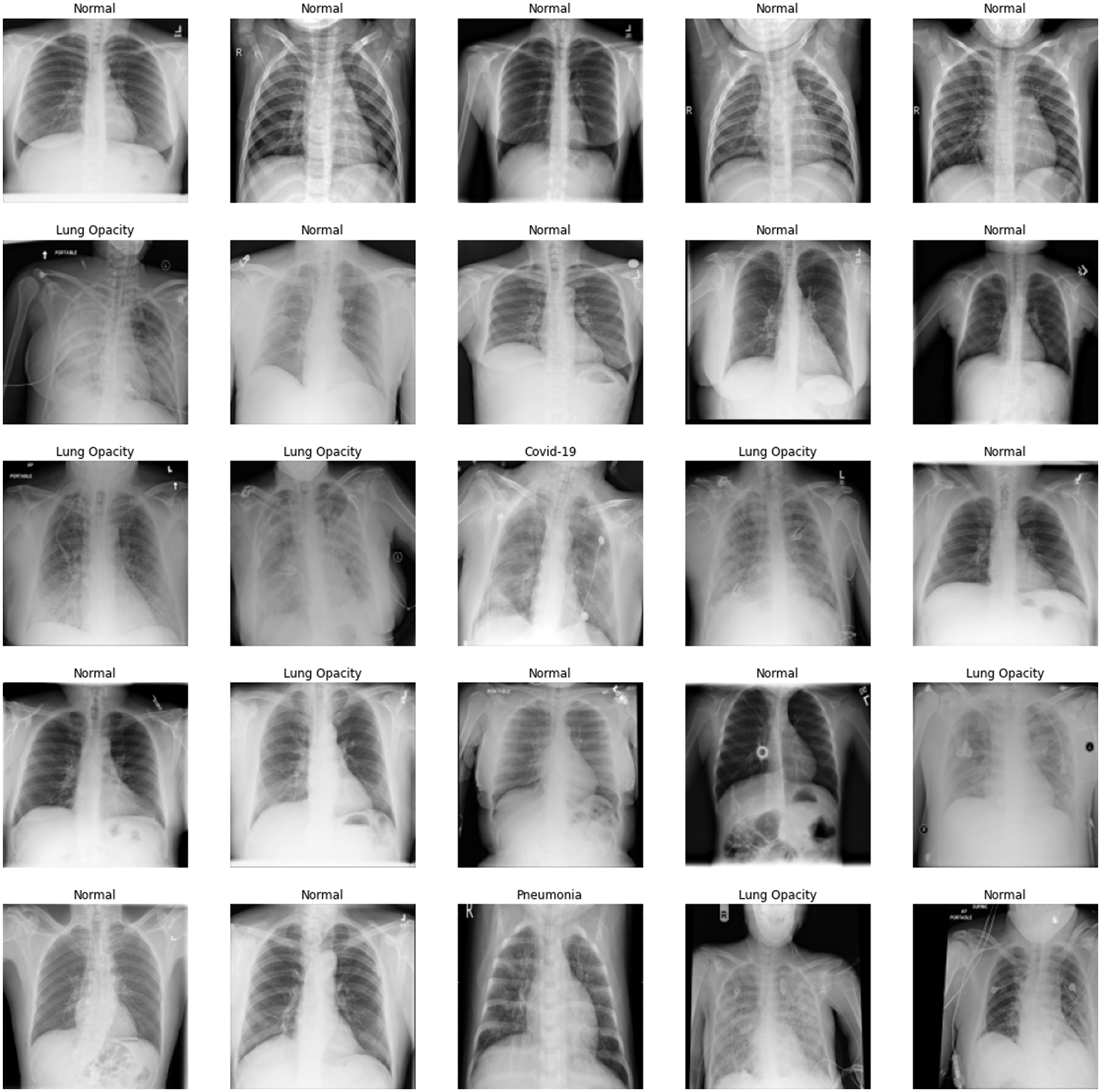

We use an open-source dataset that is freely available from the Kaggle dataset repository [17]; its details are given in Tab. 2. The dataset consists of chest X-ray images of 3616 confirmed COVID-19 cases, 1345 viral pneumonia cases, 6012 non-COVID lung opacity cases, and 10,192 healthy cases. Fig. 1 shows sample images from each of the four classes: COVID-19, lung opacity, viral pneumonia, and healthy.

Figure 1: Sample chest X-ray images: (a) COVID-19, (b) non-COVID lung opacity, (c) normal (healthy), and (d) viral pneumonia
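The class counts above make the dataset noticeably imbalanced. The Python sketch below computes the class proportions and the per-class sizes of a stratified 80:20 train/test split. It assumes stratification and per-class rounding to the nearest integer; the paper states only the 80:20 ratio, so the helper names and the rounding rule are illustrative choices, not the authors' procedure.

```python
# Class counts as reported for the Kaggle COVID-19 chest X-ray dataset [17].
CLASS_COUNTS = {
    "COVID-19": 3616,
    "Viral pneumonia": 1345,
    "Lung opacity": 6012,
    "Normal": 10192,
}


def class_proportions(counts: dict[str, int]) -> dict[str, float]:
    """Fraction of the whole dataset that each class represents."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def stratified_split_sizes(counts: dict[str, int],
                           test_fraction: float = 0.2) -> dict[str, tuple[int, int]]:
    """Per-class (train, test) sizes for a stratified split (hypothetical helper).

    Assumption: each class's test size is rounded to the nearest integer.
    """
    sizes = {}
    for name, n in counts.items():
        n_test = round(n * test_fraction)
        sizes[name] = (n - n_test, n_test)
    return sizes


if __name__ == "__main__":
    print("Total images:", sum(CLASS_COUNTS.values()))
    for name, p in class_proportions(CLASS_COUNTS).items():
        print(f"{name:>16}: {p:.1%}")
    ratio = max(CLASS_COUNTS.values()) / min(CLASS_COUNTS.values())
    print(f"Largest/smallest class ratio: {ratio:.1f}")
    print(stratified_split_sizes(CLASS_COUNTS))
```

With these counts the normal class is about 7.6 times larger than the viral pneumonia class, which is worth keeping in mind when comparing per-class metrics.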

The presented work adopts a transfer learning approach for training convolutional neural networks (CNNs): CNN variants already trained on the ImageNet dataset, with their learned weights, are further trained on the COVID-19 chest X-ray dataset. Our approach reuses the pre-trained initial layers, which is intended to prevent the vanishing gradient problem.
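A minimal PyTorch sketch of this transfer learning pattern follows, assuming the pre-trained network is exposed as a feature extractor that returns a (batch, feature_dim) tensor. With torchvision, such a backbone could be obtained from `torchvision.models.resnet50(weights=ResNet50_Weights.IMAGENET1K_V1)` by replacing its `fc` layer with `nn.Identity()` (feature_dim 2048). The helper `build_transfer_model` and the option to freeze the backbone are illustrative assumptions, since the paper does not say which layers were frozen.

```python
import torch
from torch import nn


def build_transfer_model(backbone: nn.Module, feature_dim: int, num_classes: int = 4,
                         freeze_backbone: bool = False) -> nn.Module:
    """Attach a new 4-class head to a pre-trained feature extractor (hypothetical helper).

    `backbone` must map an image batch to features of shape (batch, feature_dim).
    If `freeze_backbone` is True, only the new head is trained.
    """
    if freeze_backbone:
        for param in backbone.parameters():
            param.requires_grad = False  # keep the pre-trained weights fixed
    head = nn.Linear(feature_dim, num_classes)  # new, randomly initialised classifier
    return nn.Sequential(backbone, head)


if __name__ == "__main__":
    # Tiny stand-in backbone so the example runs without downloading weights.
    toy_backbone = nn.Sequential(
        nn.Conv2d(3, 8, kernel_size=3, padding=1),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),  # -> (batch, 8)
    )
    model = build_transfer_model(toy_backbone, feature_dim=8, freeze_backbone=True)
    logits = model(torch.randn(2, 3, 32, 32))
    print(logits.shape)  # torch.Size([2, 4])
```

Freezing the backbone trains only the small head, which is cheap; leaving it unfrozen fine-tunes all layers, which usually adapts better to X-ray images at a higher compute cost.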

The success of convolutional neural network (CNN) variants has greatly advanced computer vision. The ability of transformers to model long-range dependencies in data has also attracted researchers' attention [18]. However, visual entities vary in scale, whereas the tokens in language transformers have a fixed scale [19,20]. Moreover, dense prediction tasks such as semantic segmentation are troublesome for standard transformers, because the cost of global self-attention grows quadratically with image size. To overcome these issues, an enhanced form of transformer, the Swin transformer, was proposed. Its main properties are:

• Unlike earlier vision transformers [21], the Swin transformer can serve as a general-purpose backbone for various vision tasks. It builds hierarchical feature maps starting from small patches, and because the number of patches in each local window is fixed, its computational complexity is linear in image size.

• Shifted windows: the Swin transformer uses a shifted-window approach, which has lower latency than earlier sliding-window methods.
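The linear-versus-quadratic claim can be made concrete with the cost formulas given by Liu et al. [25] for global multi-head self-attention (MSA) and window-based self-attention (W-MSA) on an h × w patch grid with C channels and M × M windows, ignoring the softmax: Ω(MSA) = 4hwC² + 2(hw)²C and Ω(W-MSA) = 4hwC² + 2M²hwC. The Python sketch below simply evaluates them. The example grid (56 × 56 patches, C = 96, M = 7) corresponds to the first stage of the Swin-T configuration in [25] and is used only for illustration.

```python
def msa_cost(h: int, w: int, c: int) -> int:
    """Cost of global multi-head self-attention on an h x w patch grid, per [25]."""
    return 4 * h * w * c**2 + 2 * (h * w) ** 2 * c


def wmsa_cost(h: int, w: int, c: int, m: int = 7) -> int:
    """Cost of self-attention restricted to non-overlapping m x m windows, per [25]."""
    return 4 * h * w * c**2 + 2 * m**2 * h * w * c


if __name__ == "__main__":
    for side in (56, 112):  # doubling the side length quadruples the number of patches
        global_cost = msa_cost(side, side, 96)
        window_cost = wmsa_cost(side, side, 96)
        print(f"{side}x{side}: MSA {global_cost:,}  W-MSA {window_cost:,}  "
              f"ratio {global_cost / window_cost:.1f}")
```

Quadrupling the number of patches multiplies the W-MSA cost by exactly four, whereas the quadratic term of global MSA grows sixteen-fold.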

The Swin transformer has been used in several recent research areas, including medical image segmentation [22], cardiac segmentation [23], and image restoration [24]. These studies report that the Swin transformer outperforms existing state-of-the-art methods.

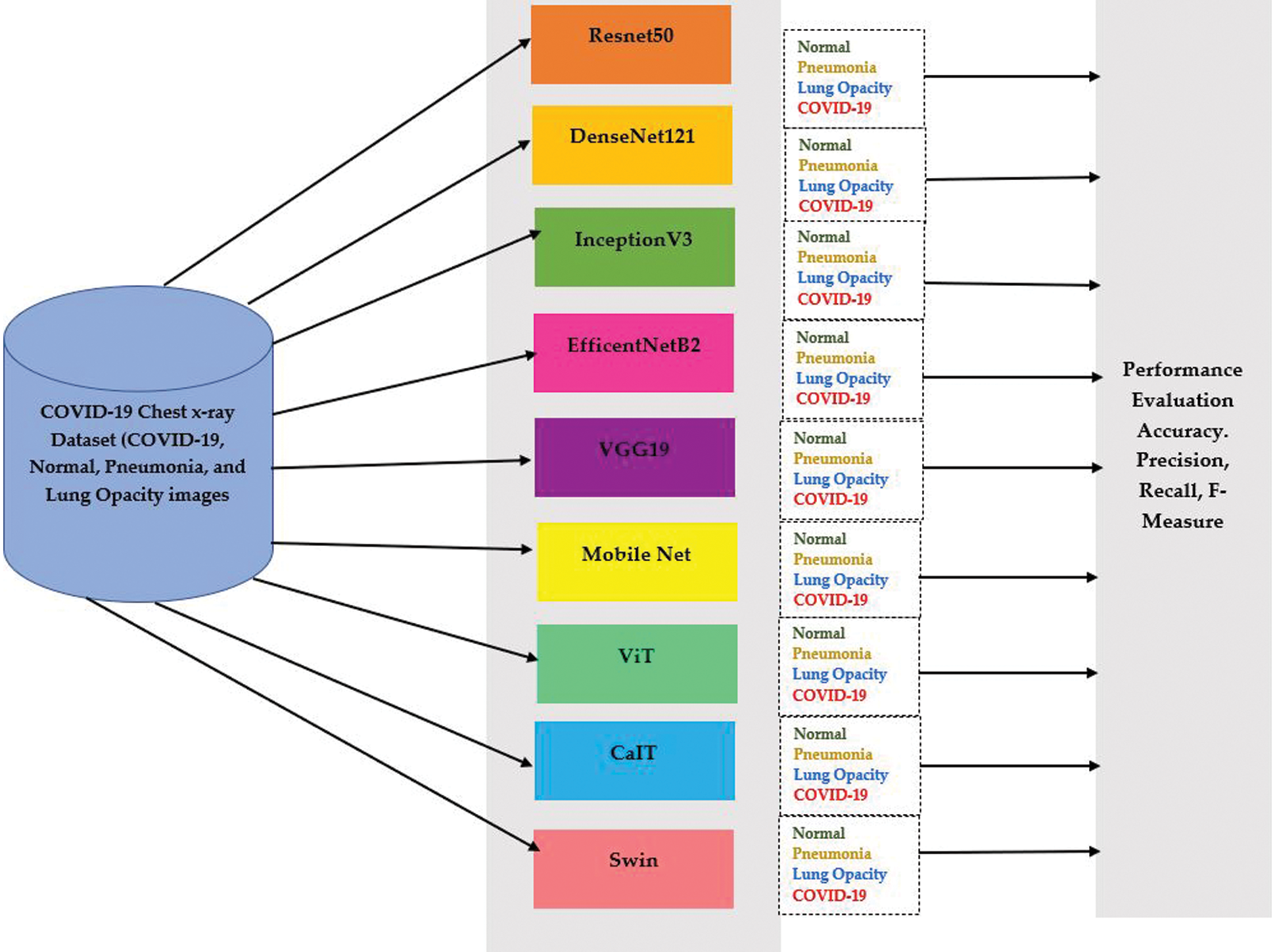

Fig. 2 presents the main workflow of this paper: eight pre-trained deep learning networks are compared with the Swin transformer on the COVID-19 chest X-ray dataset. The accuracy and performance of the Swin transformer are measured with several evaluation metrics and compared with those of the eight pre-trained models. The results are presented in the following sections.

Figure 2: The flow of presented work for predicting COVID-19 patients

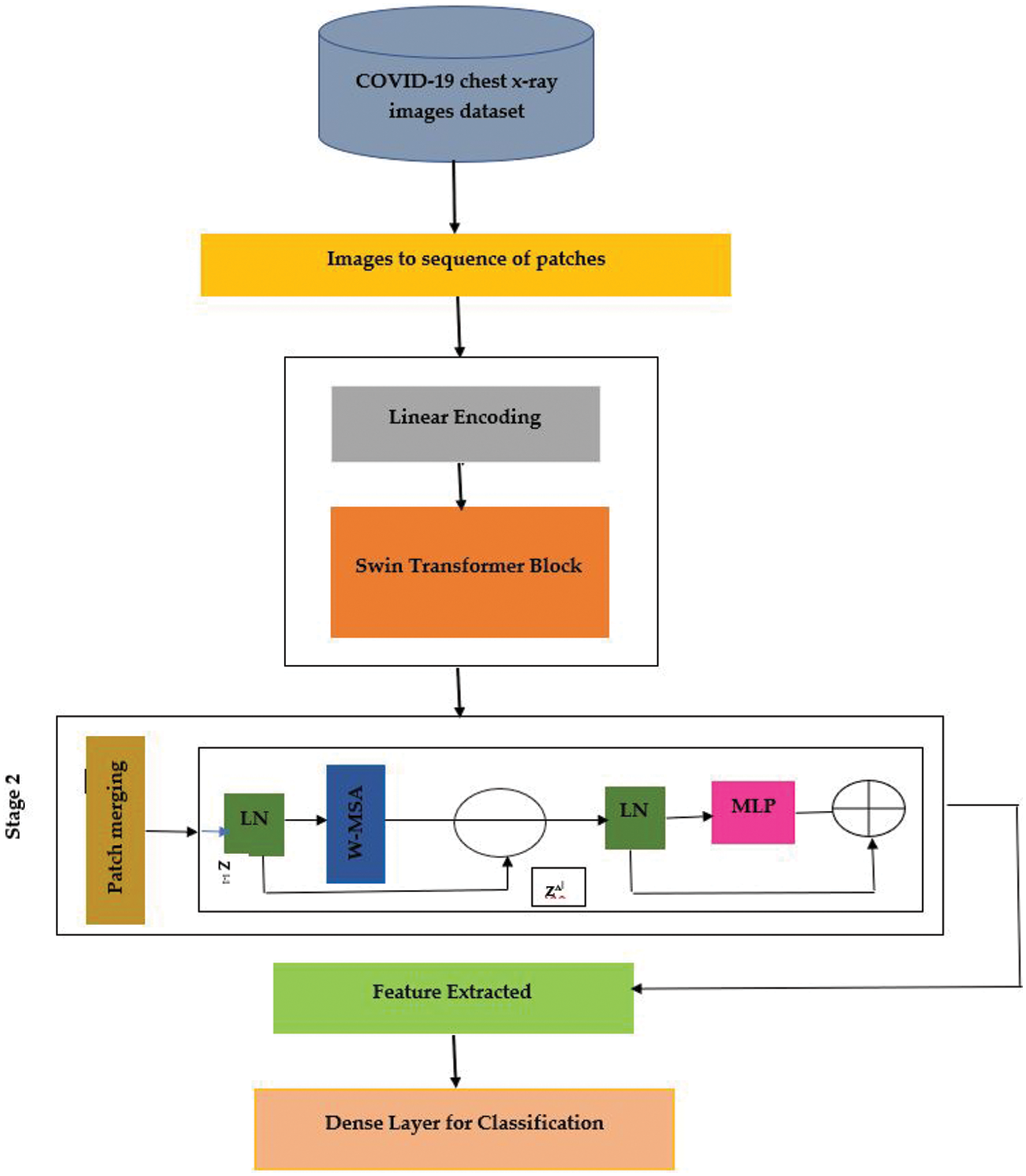

Fig. 3 presents the proposed approach for diagnosing COVID-19 patients from chest X-ray images using the Swin transformer. The Swin transformer was selected because it combines the advantages of convolutional neural networks (CNNs) and transformers:

• Like a CNN, it computes attention locally (within windows), which makes processing large images tractable.

• Like a transformer, it models long-range dependencies, which propagate across windows through the shifted-window scheme [24,25].

Figure 3: Proposed Swin transformer detection hierarchy for COVID-19 patients using X-ray images
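The window mechanism can be illustrated with NumPy. The sketch below partitions an (H, W, C) feature map into non-overlapping M × M windows and applies the cyclic shift used before SW-MSA (a shift of ⌊M/2⌋, following [25]). It is a simplified illustration that assumes the window size divides the feature map evenly; the attention mask that Swin uses to stop wrapped-around regions from attending to each other is omitted.

```python
import numpy as np


def window_partition(x: np.ndarray, m: int) -> np.ndarray:
    """Split an (H, W, C) feature map into non-overlapping m x m windows.

    Returns shape (num_windows, m*m, C): each window becomes a short token
    sequence over which self-attention is computed.
    """
    h, w, c = x.shape
    if h % m or w % m:
        raise ValueError("H and W must be divisible by the window size")
    x = x.reshape(h // m, m, w // m, m, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (H/m, W/m, m, m, C)
    return x.reshape(-1, m * m, c)


def cyclic_shift(x: np.ndarray, shift: int) -> np.ndarray:
    """Roll the feature map up and left by `shift` pixels before partitioning.

    The resulting windows straddle the borders of the previous ones, so
    information can flow between neighbouring windows.
    """
    return np.roll(x, shift=(-shift, -shift), axis=(0, 1))


if __name__ == "__main__":
    m = 4
    feature_map = np.random.default_rng(0).normal(size=(8, 8, 3))
    regular = window_partition(feature_map, m)                        # W-MSA windows
    shifted = window_partition(cyclic_shift(feature_map, m // 2), m)  # SW-MSA windows
    print(regular.shape, shifted.shape)  # (4, 16, 3) (4, 16, 3)
```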

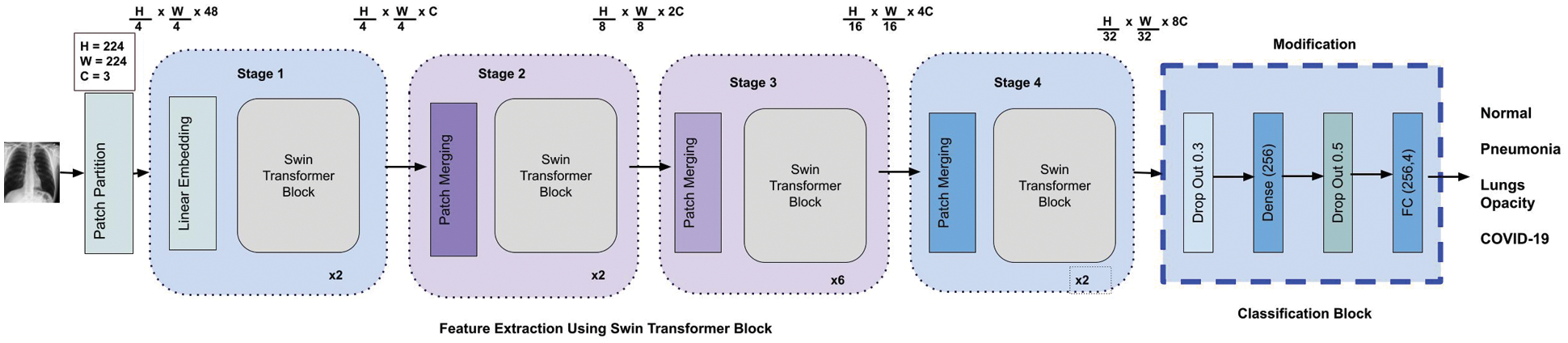

Fig. 4 presents the modified Swin transformer, in which two dropout layers and two dense layers are added. The Swin transformer is built on shifted windows. Each Swin transformer block consists of a LayerNorm (LN) layer, a multi-head self-attention module, residual connections, and a 2-layer multilayer perceptron (MLP) with GELU non-linearity. Consecutive blocks alternate between a window-based multi-head self-attention (W-MSA) module and a shifted-window-based multi-head self-attention (SW-MSA) module.

Figure 4: Modified Swin transformer block diagram
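For reference, Liu et al. [25] write the computation of two consecutive Swin transformer blocks as follows, where $\hat{z}^{l}$ and $z^{l}$ denote the outputs of the (S)W-MSA module and the MLP module of block $l$. This is the standard formulation from [25], given as a reading aid; its notation may differ from that of Eqs. (1) and (2) in this article.

$$
\begin{aligned}
\hat{z}^{l} &= \text{W-MSA}\big(\text{LN}(z^{l-1})\big) + z^{l-1},\\
z^{l} &= \text{MLP}\big(\text{LN}(\hat{z}^{l})\big) + \hat{z}^{l},\\
\hat{z}^{l+1} &= \text{SW-MSA}\big(\text{LN}(z^{l})\big) + z^{l},\\
z^{l+1} &= \text{MLP}\big(\text{LN}(\hat{z}^{l+1})\big) + \hat{z}^{l+1}.
\end{aligned}
$$

Each line is a pre-norm residual update: LayerNorm is applied before the attention or MLP sub-layer, and the sub-layer output is added back to its input.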

Eqs. (1) and (2) give the outputs of the (shifted) window-based multi-head self-attention (S/W-MSA) module and the multilayer perceptron (MLP) module, respectively. To preprocess the images, contrast-limited adaptive histogram equalization (CLAHE) is applied along with Gaussian filtering. The modification to the last layer of the Swin transformer is expressed in Eqs. (3) to (6).

Eqs. (3) and (4) describe the first dropout and fully connected (FC) layer, which help predict class labels without overfitting the model; Eqs. (5) and (6) describe the second dropout and FC pass, which is also depicted in Fig. 4.
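The two dropout and two fully connected layers can be sketched as a PyTorch module placed on top of the pooled Swin features. This is an illustrative reconstruction, not the authors' code: the hidden width, the dropout rates, and the ReLU between the FC layers are assumptions, and `in_features=768` matches the final feature dimension of the Swin-T configuration in [25], not necessarily the variant used here.

```python
import torch
from torch import nn


class DropoutDenseHead(nn.Module):
    """Classification head: dropout -> FC -> dropout -> FC (hypothetical reconstruction)."""

    def __init__(self, in_features: int, num_classes: int = 4, hidden_dim: int = 256,
                 p1: float = 0.5, p2: float = 0.3) -> None:
        super().__init__()
        self.block = nn.Sequential(
            nn.Dropout(p1),                      # first dropout pass
            nn.Linear(in_features, hidden_dim),  # first FC layer
            nn.ReLU(),                           # assumed non-linearity
            nn.Dropout(p2),                      # second dropout pass
            nn.Linear(hidden_dim, num_classes),  # second FC layer -> class logits
        )

    def forward(self, features: torch.Tensor) -> torch.Tensor:
        return self.block(features)


if __name__ == "__main__":
    head = DropoutDenseHead(in_features=768)
    head.eval()  # dropout is disabled at inference time
    with torch.no_grad():
        logits = head(torch.randn(2, 768))
    print(logits.shape)  # torch.Size([2, 4])
```

Dropout is active only in training mode, so the regularization described in the text affects training without making predictions random at test time.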

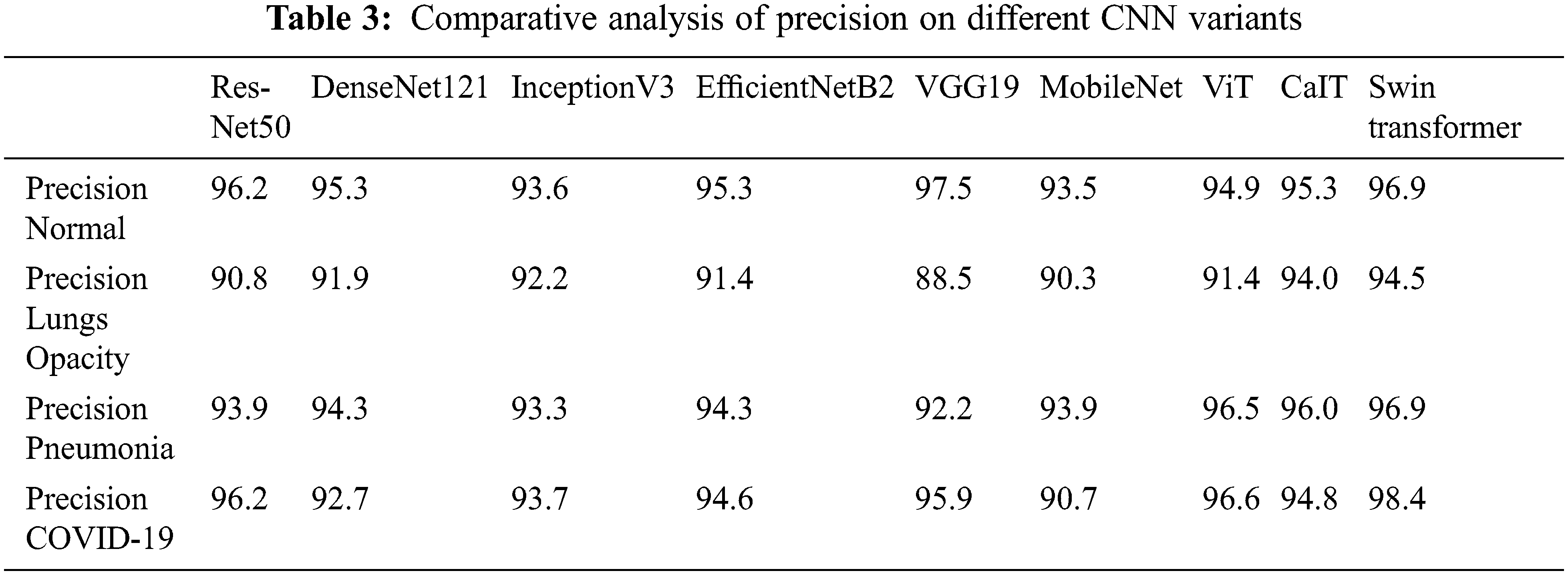

In this research, the experiments are conducted in a PyTorch environment with Python 3.7. The dataset is split into training and test sets in an 80:20 ratio. The performance of the Swin transformer is compared with that of 8 other pre-trained deep learning models. Tab. 3 compares the CNN variants and the Swin transformer in terms of the precision achieved on each class of the dataset; the Swin transformer achieves better precision on every class of the COVID-19 X-ray dataset. Eq. (1) gives the precision of each class: for example, the precision of the COVID-19 class is the number of images correctly classified as COVID-19 divided by the total number of images (normal, COVID-19, lung opacity, and pneumonia) that the model classifies as COVID-19.
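The per-class metrics can be computed directly from a confusion matrix. The NumPy sketch below assumes the layout used in the confusion-matrix figures (actual labels on rows, predicted labels on columns): precision divides the diagonal by the column sums, recall divides it by the row sums, and the F1-score is their harmonic mean. The function name is hypothetical, and the 2 × 2 matrix in the example is a toy input, not data from the paper.

```python
import numpy as np


def per_class_metrics(cm: np.ndarray) -> dict[str, np.ndarray]:
    """Precision, recall and F1 for every class of a confusion matrix.

    cm[i, j] counts images whose actual class is i and predicted class is j.
    Classes that are never predicted (or never present) get a score of 0.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: images predicted as each class
    actual = cm.sum(axis=1)     # row sums: images that truly belong to each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    toy_cm = np.array([[8, 2],
                       [1, 9]])
    for name, values in per_class_metrics(toy_cm).items():
        print(name, np.round(values, 3))
```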

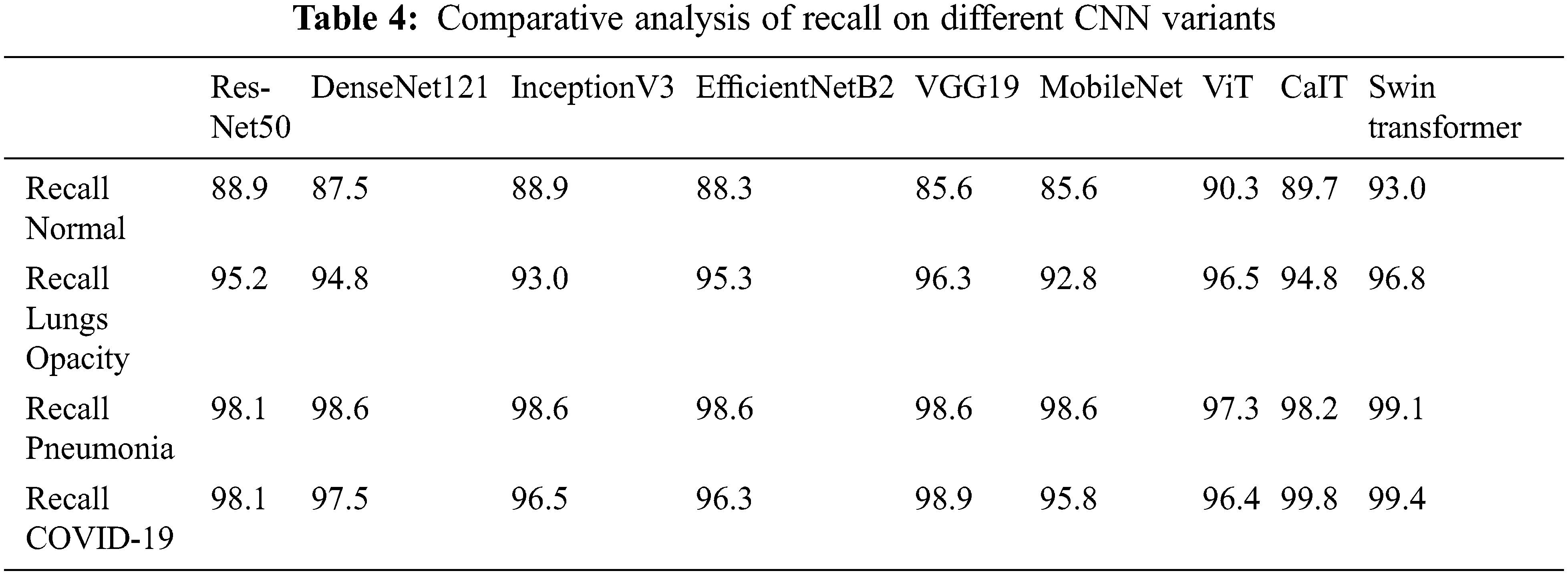

Tab. 4 shows that the Swin transformer performs better on the COVID-19 chest X-ray dataset. The CNN variants perform well, but the Swin transformer outperforms them. Eq. (2) gives the formula for the recall of any class in the dataset. For example, the recall of the COVID-19 class is the number of COVID-19 chest X-ray images correctly classified by a model divided by the total number of COVID-19 chest X-ray images.

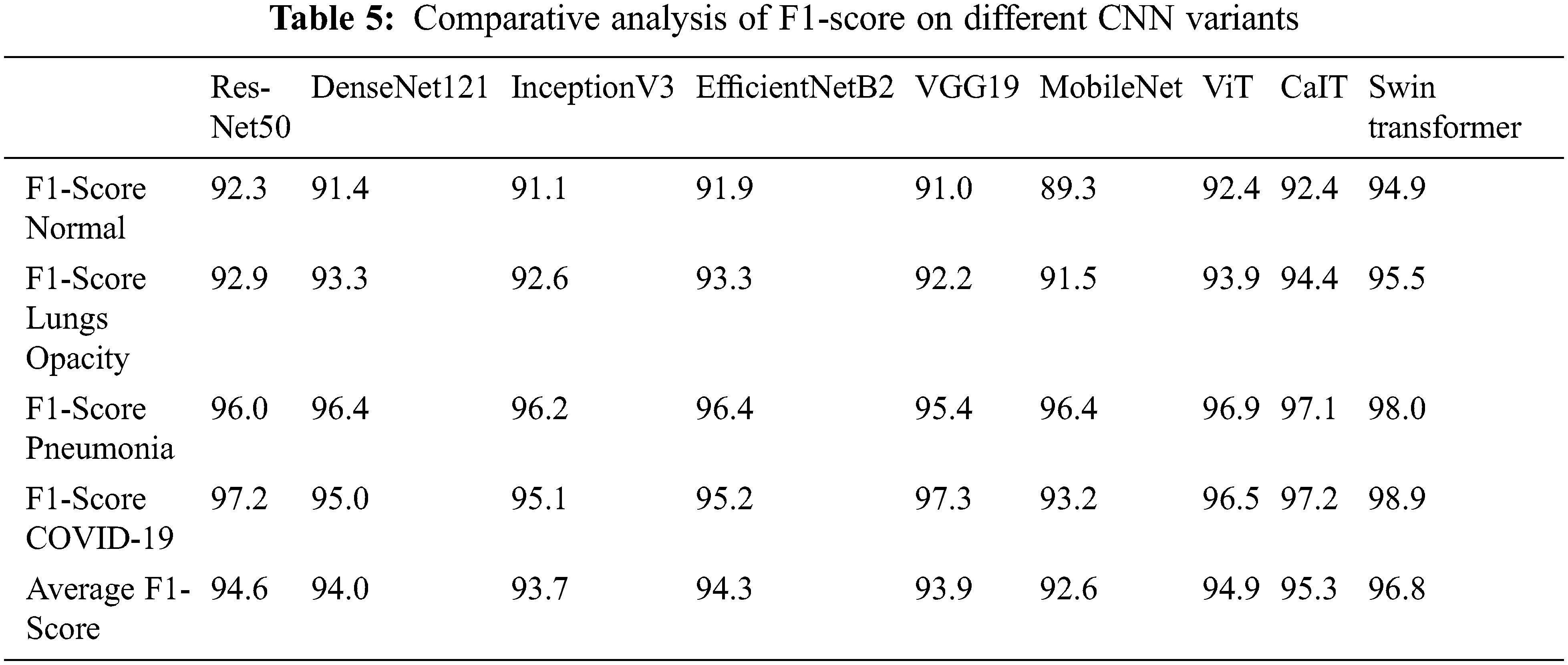

Tab. 5 presents a comparative analysis of the F1-score on the COVID-19 X-ray dataset. The Swin transformer achieves an F1-score of nearly 97%. F1-score comparisons for each class of the COVID-19 X-ray dataset are presented in Tab. 3. The existing pre-trained deep learning models achieve around 90%, but in medical diagnosis even a single missed patient matters.

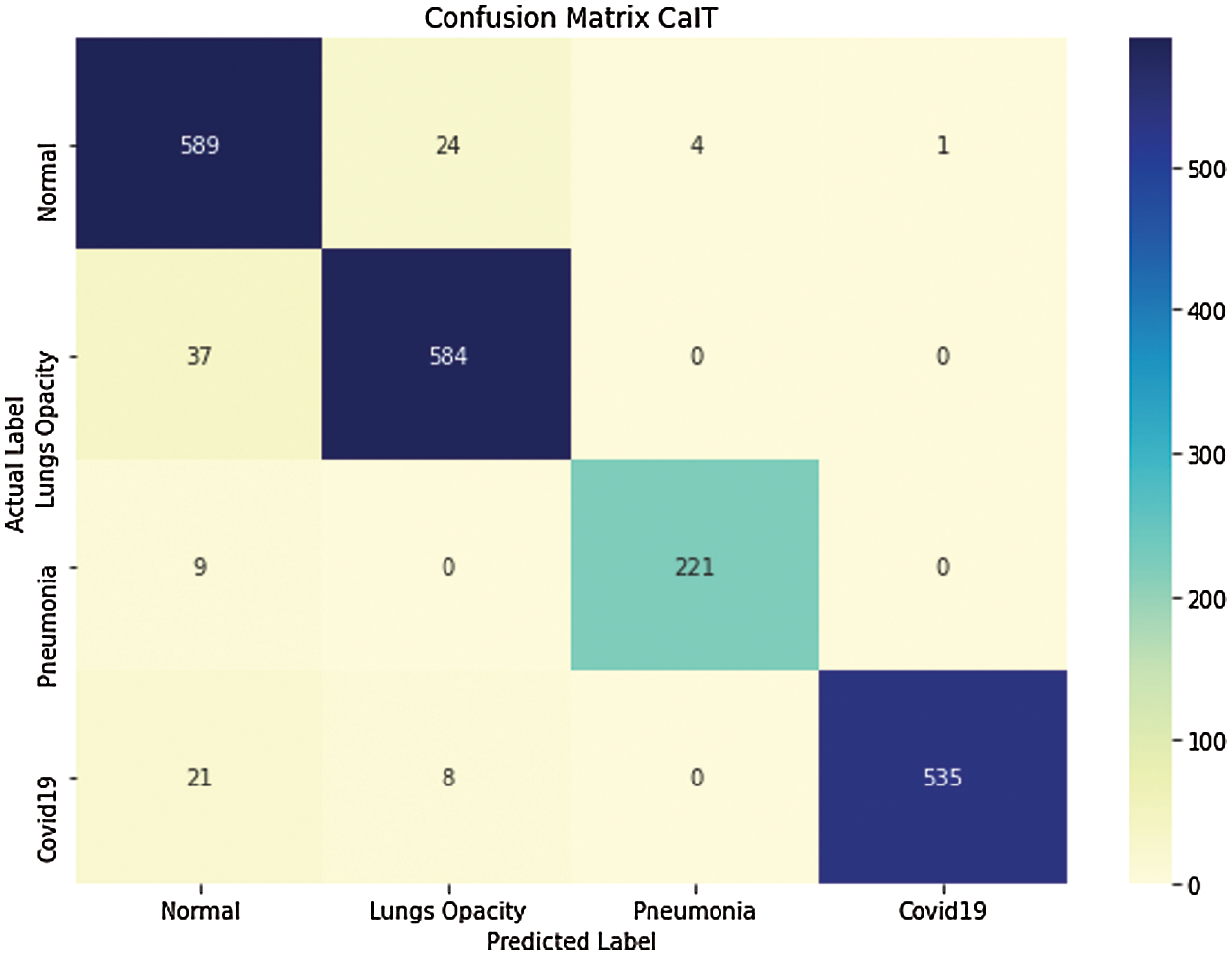

The proposed technique for predicting COVID-19 from chest X-ray images is compared with pre-trained deep learning models. Accuracy, precision, recall, and F-score all show that the Swin transformer performs better. Fig. 5 presents the confusion matrix over all classes for the pre-trained CaIT. Of 564 COVID-19 cases, 535 are correctly classified as true positives, whereas 29 COVID-19 patients are misclassified by CaIT; this is a substantial number, since each missed case can spread the virus.

Figure 5: Confusion matrix of 4-class classification using CaIT

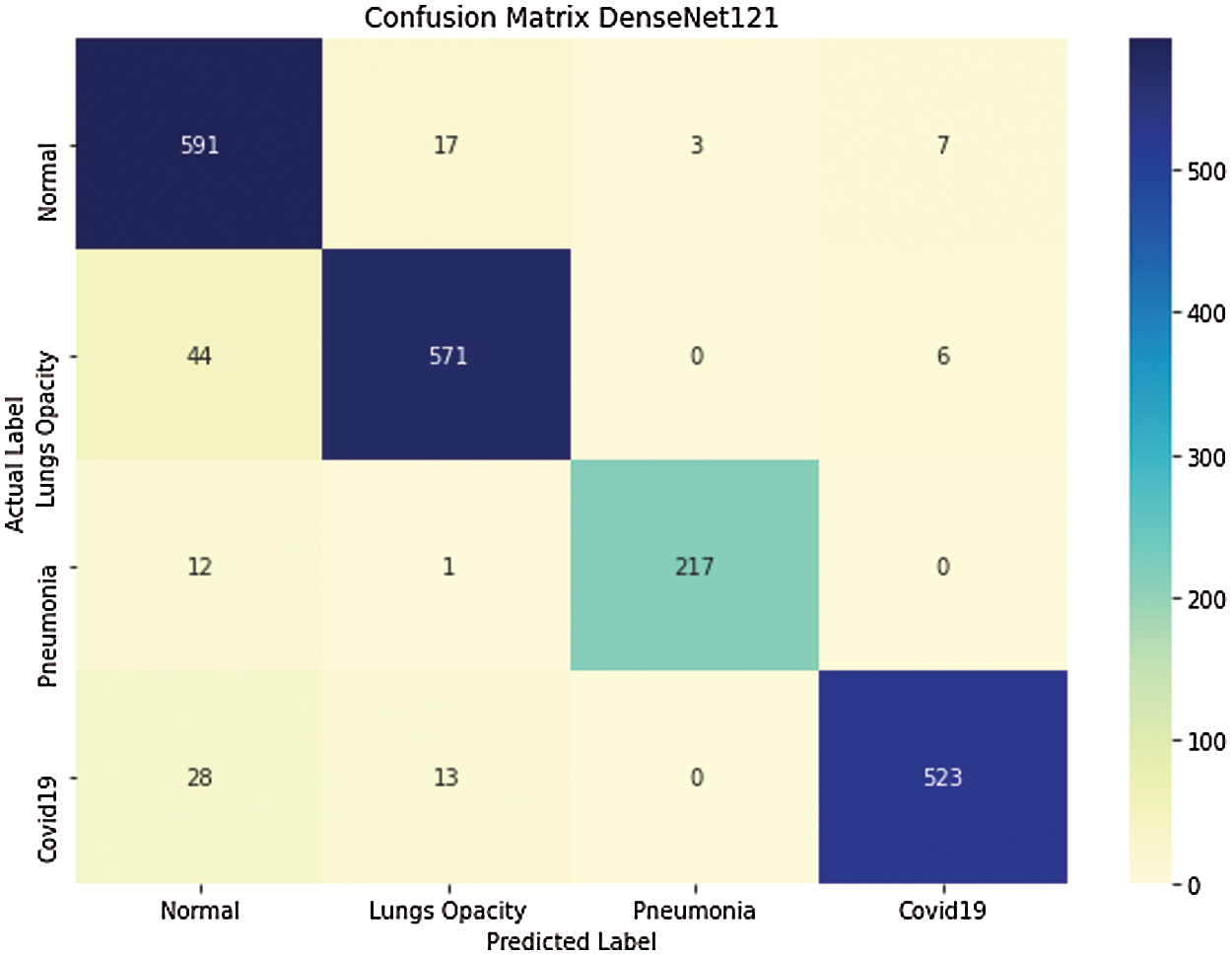

Fig. 6 presents the confusion matrix of the pre-trained DenseNet121 CNN model on the COVID-19 chest X-ray dataset. The x-axis shows the predicted labels and the y-axis the actual labels. The results indicate that 41 COVID-19 images are misclassified.

Figure 6: Confusion matrix of 4-class classification using DenseNet121

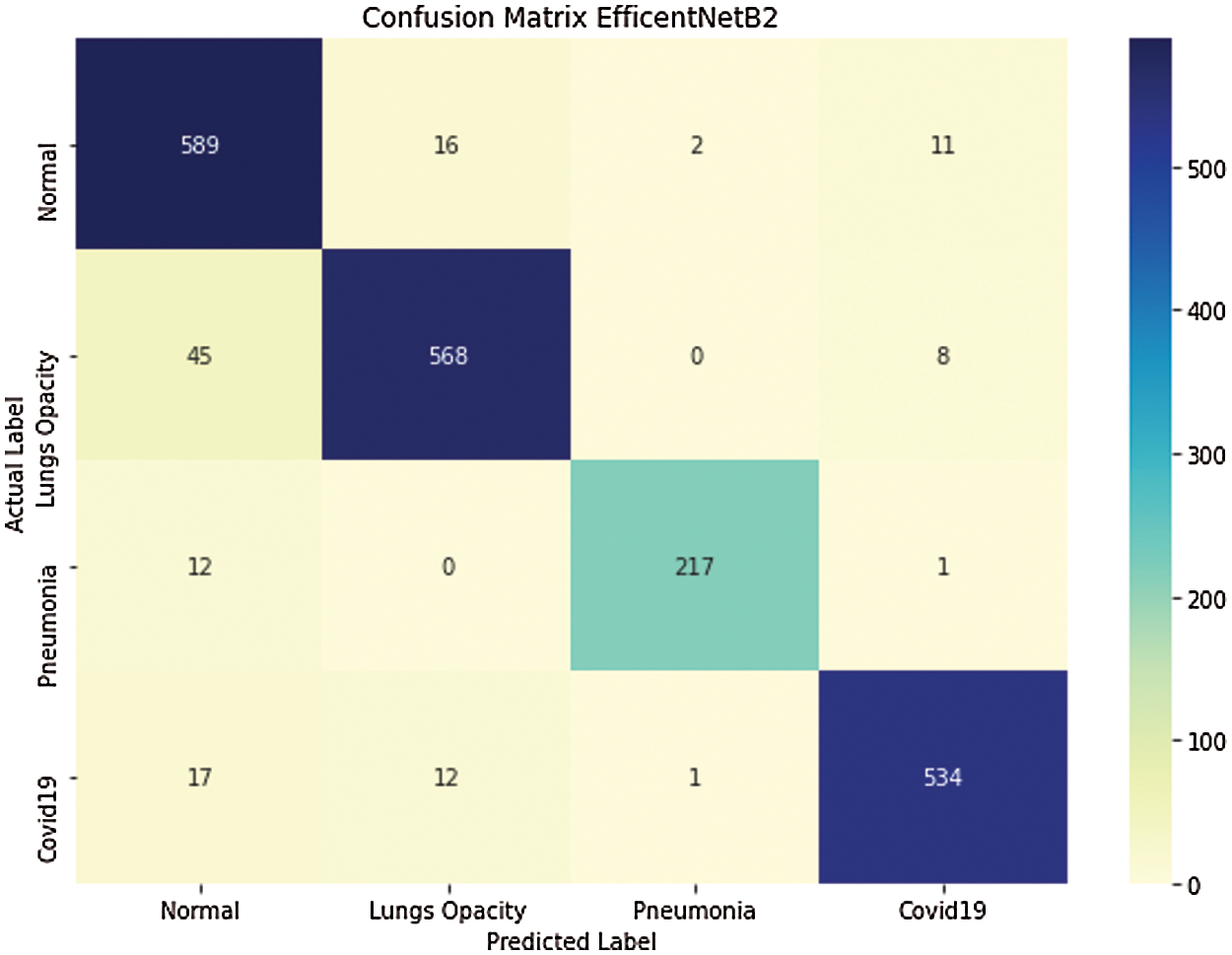

Fig. 7 shows that 534 COVID-19 images are correctly diagnosed by the pre-trained EfficientNetB2 CNN model. However, a considerable number of images are still misclassified.

Figure 7: Confusion matrix of 4-class classification using EfficientNetB2

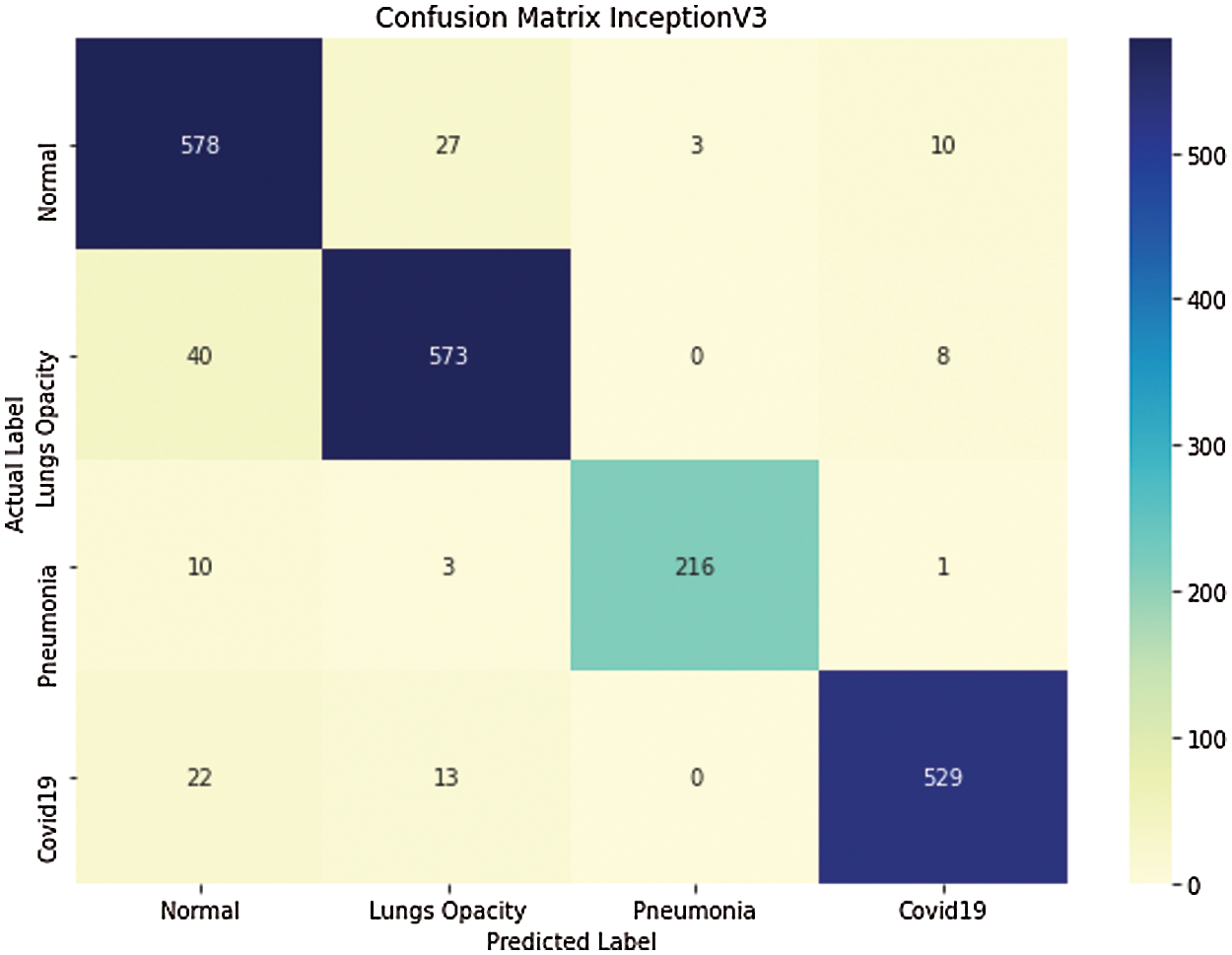

Fig. 8 shows that 35 COVID-19 images are misclassified using the InceptionV3 pre-trained deep learning model.

Figure 8: Confusion matrix of 4-class classification using InceptionV3

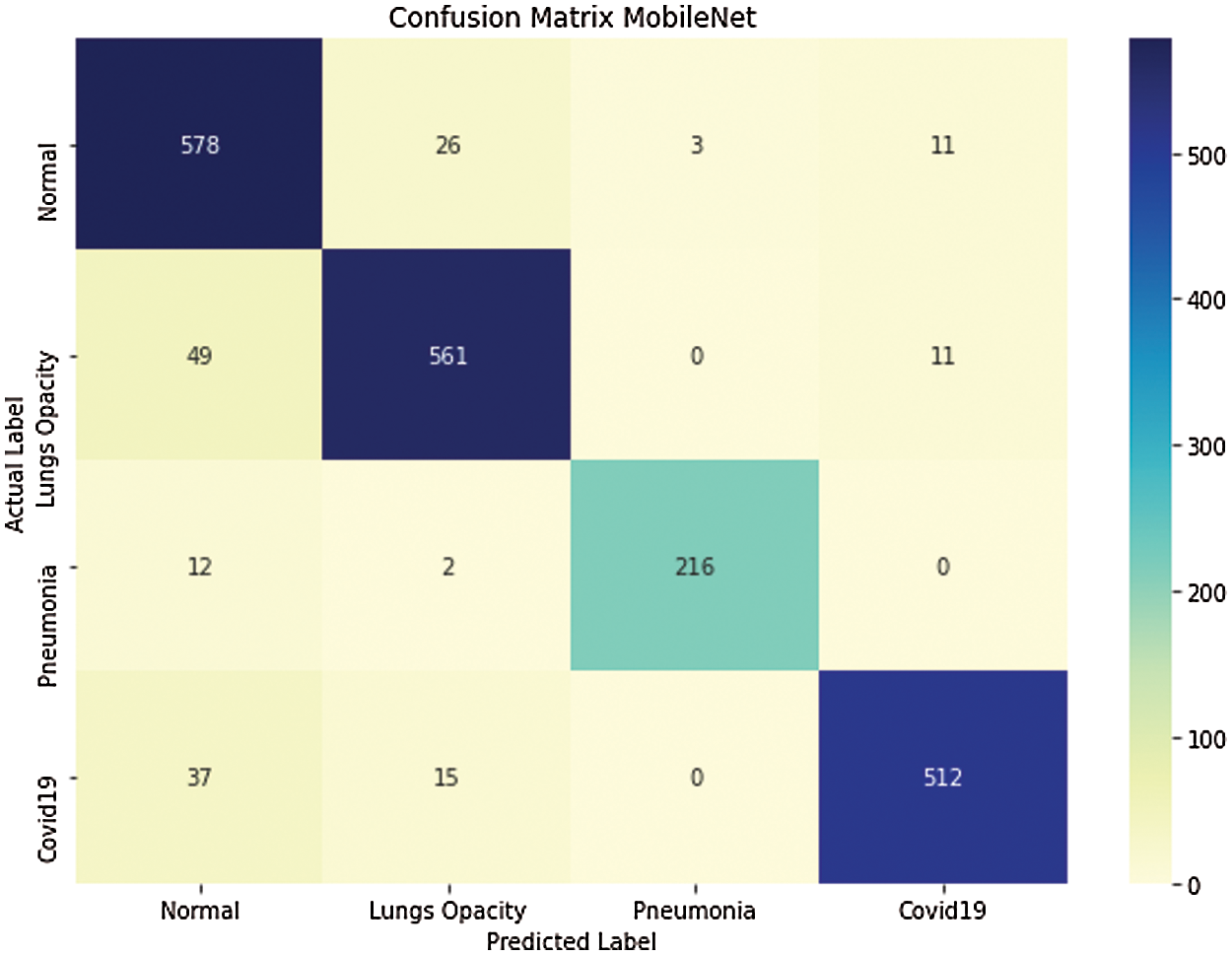

Fig. 9 shows that 512 of the 564 COVID-19 images are correctly classified by the pre-trained MobileNet model. The figure also shows the correctly classified and misclassified images for every class of the COVID-19 chest X-ray dataset.

Figure 9: Confusion matrix of 4-class classification using MobileNet

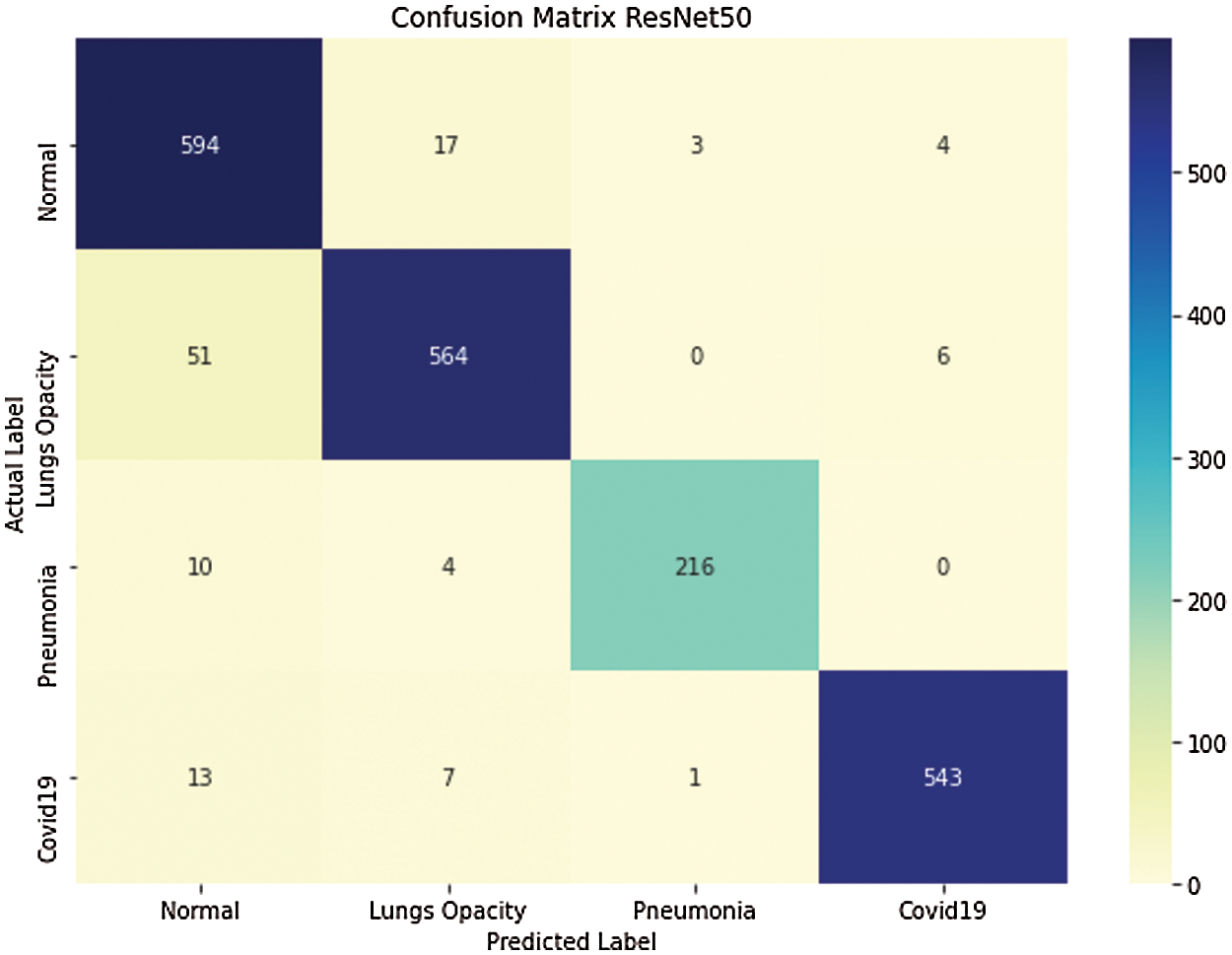

Fig. 10 shows that 543 of the 564 COVID-19 images are correctly classified by the pre-trained ResNet50 model.

Figure 10: Confusion matrix of 4-class classification using ResNet50

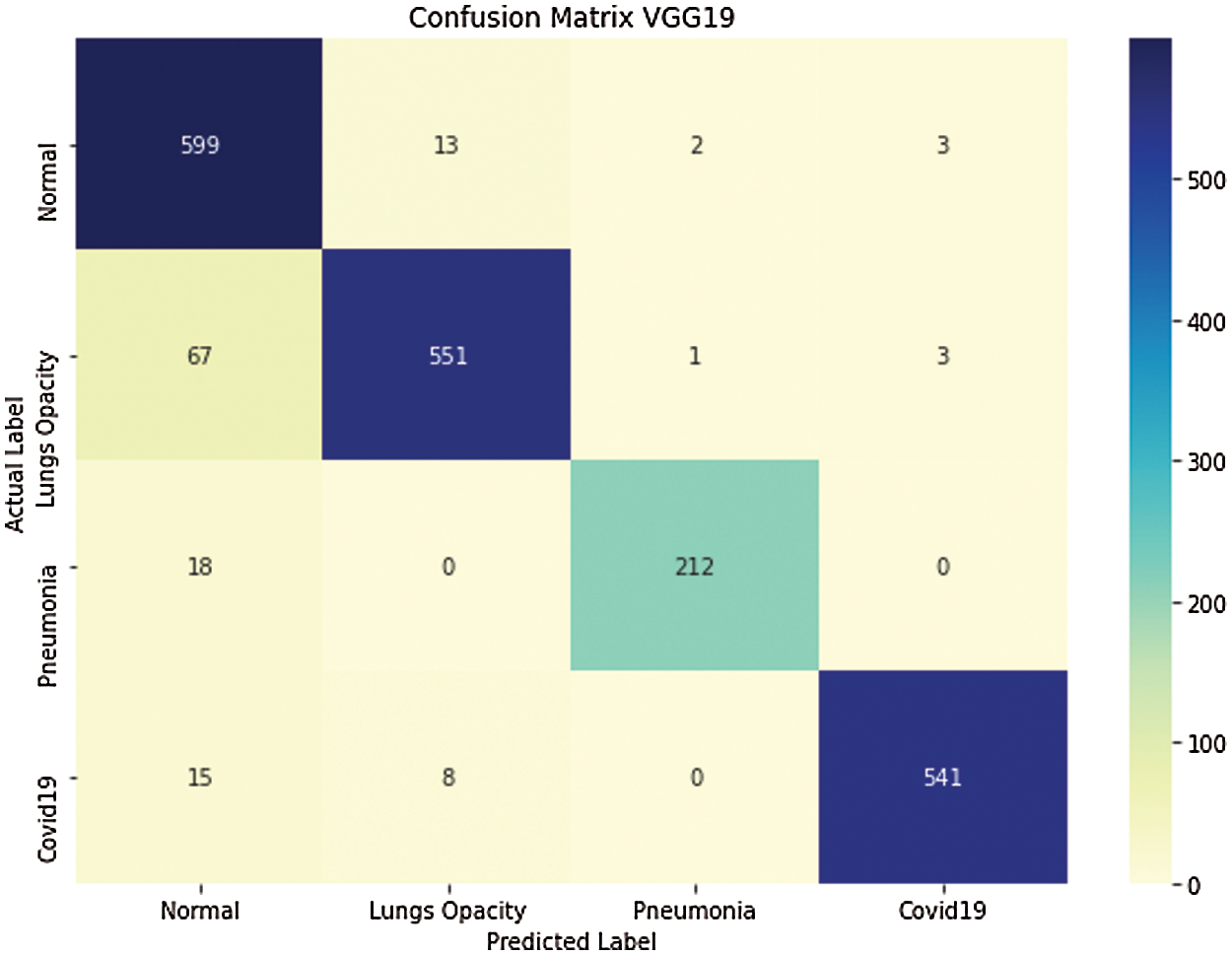

Fig. 11 shows the confusion matrix of VGG19 on the COVID-19 chest X-ray dataset, with the correctly and incorrectly classified images in each of the four classes.

Figure 11: Confusion matrix of 4-class classification using VGG19

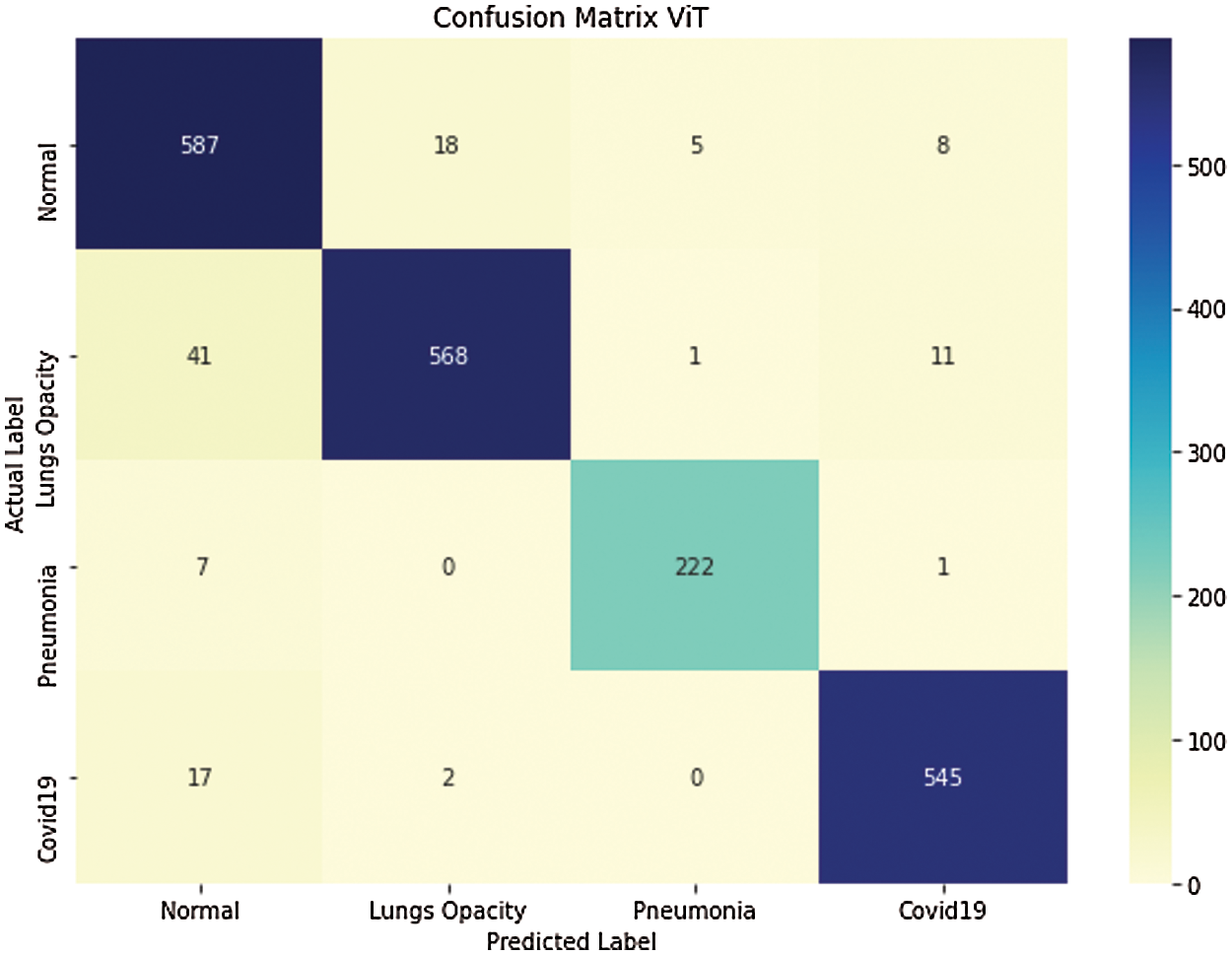

Fig. 12 shows that, with ViT, 17 COVID-19 images are misclassified as normal and 2 as lung opacity. It also presents the numbers of correctly classified and misclassified images in each class of the COVID-19 chest X-ray dataset.

Figure 12: Confusion matrix of 4-class classification using ViT

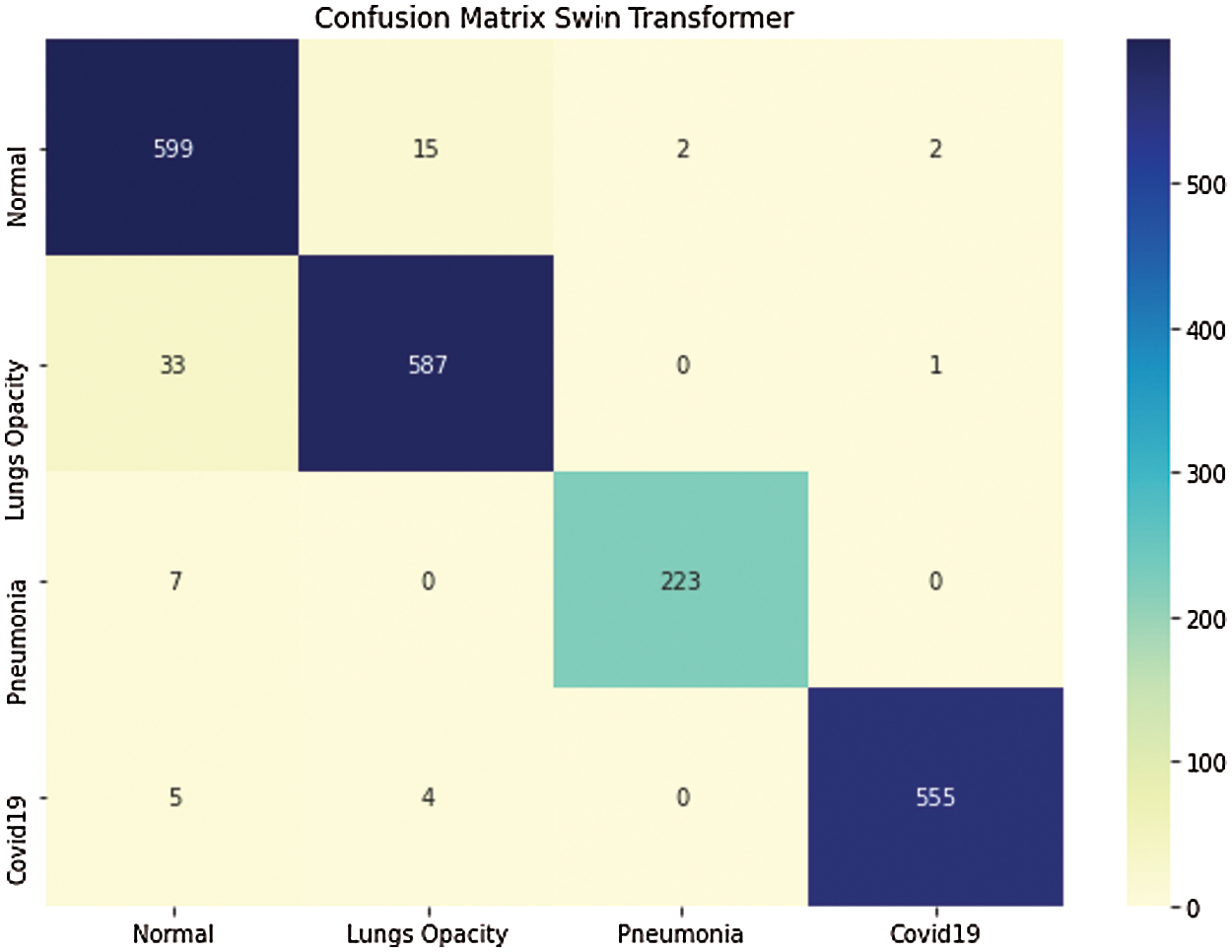

Fig. 13 presents the confusion matrix of the Swin transformer on the COVID-19 chest X-ray dataset, on which it achieves the best results: only 5 COVID-19 X-ray images are misclassified as normal and 4 as lung opacity.

Figure 13: Confusion matrix of 4-class classification using Swin transformer
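The COVID-19 counts quoted for the individual confusion matrices can be turned into a single sensitivity (recall) comparison. The sketch below assumes that every model was evaluated on the same 564 COVID-19 test images (the total stated for CaIT, MobileNet, and ResNet50) and that the Swin transformer's only COVID-19 errors are the 5 + 4 images noted above. ViT and VGG19 are left out because their complete COVID-19 counts are not stated in the text.

```python
# Correctly classified COVID-19 test images per model, taken from the text.
# Where only the number of misclassified images is given, correct = 564 - errors.
COVID_TEST_TOTAL = 564
CORRECT_COVID = {
    "CaIT": 535,
    "DenseNet121": COVID_TEST_TOTAL - 41,
    "EfficientNetB2": 534,
    "InceptionV3": COVID_TEST_TOTAL - 35,
    "MobileNet": 512,
    "ResNet50": 543,
    "Swin transformer": COVID_TEST_TOTAL - (5 + 4),
}


def covid_recall(correct: dict[str, int], total: int = COVID_TEST_TOTAL) -> dict[str, float]:
    """Recall on the COVID-19 class: correctly classified / all COVID-19 test images."""
    return {model: n / total for model, n in correct.items()}


if __name__ == "__main__":
    recalls = covid_recall(CORRECT_COVID)
    for model, r in sorted(recalls.items(), key=lambda kv: -kv[1]):
        missed = COVID_TEST_TOTAL - CORRECT_COVID[model]
        print(f"{model:>17}: recall {r:.2%}, missed {missed}")
```

Under these assumptions the Swin transformer reaches 555/564 ≈ 98.4%, in line with the 98% recall reported in the abstract, while MobileNet has the lowest COVID-19 recall (512/564 ≈ 90.8%).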

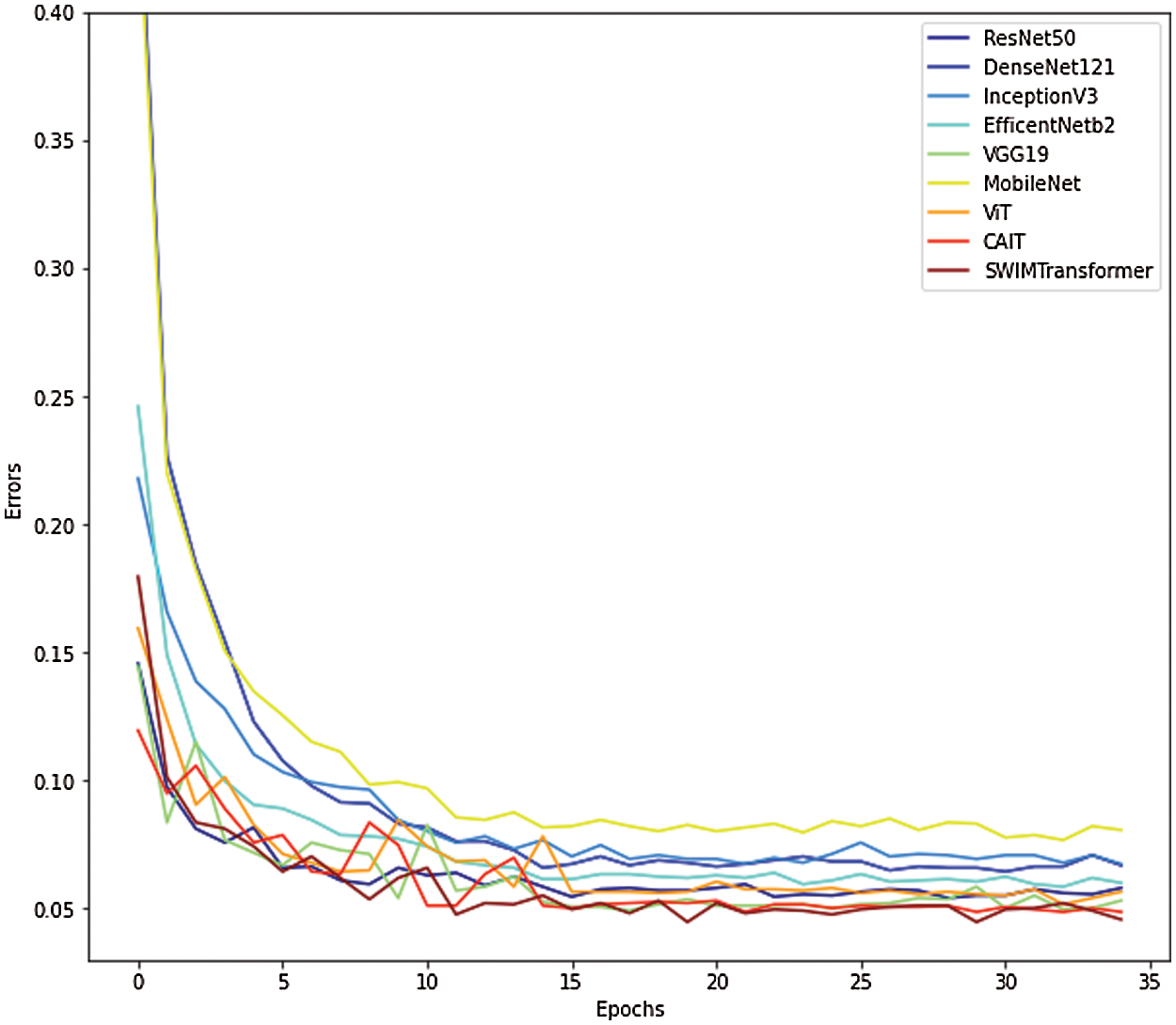

Fig. 14 compares the errors of each of the eight pre-trained deep learning models with those of the Swin transformer. It shows that MobileNet and ResNet50 have higher error rates than the Swin transformer, which has a much lower error rate on the chest X-ray dataset for COVID-19 detection.

Figure 14: Comparative analysis of the errors of each of the 8 pre-trained deep learning models on the COVID-19 chest X-ray dataset

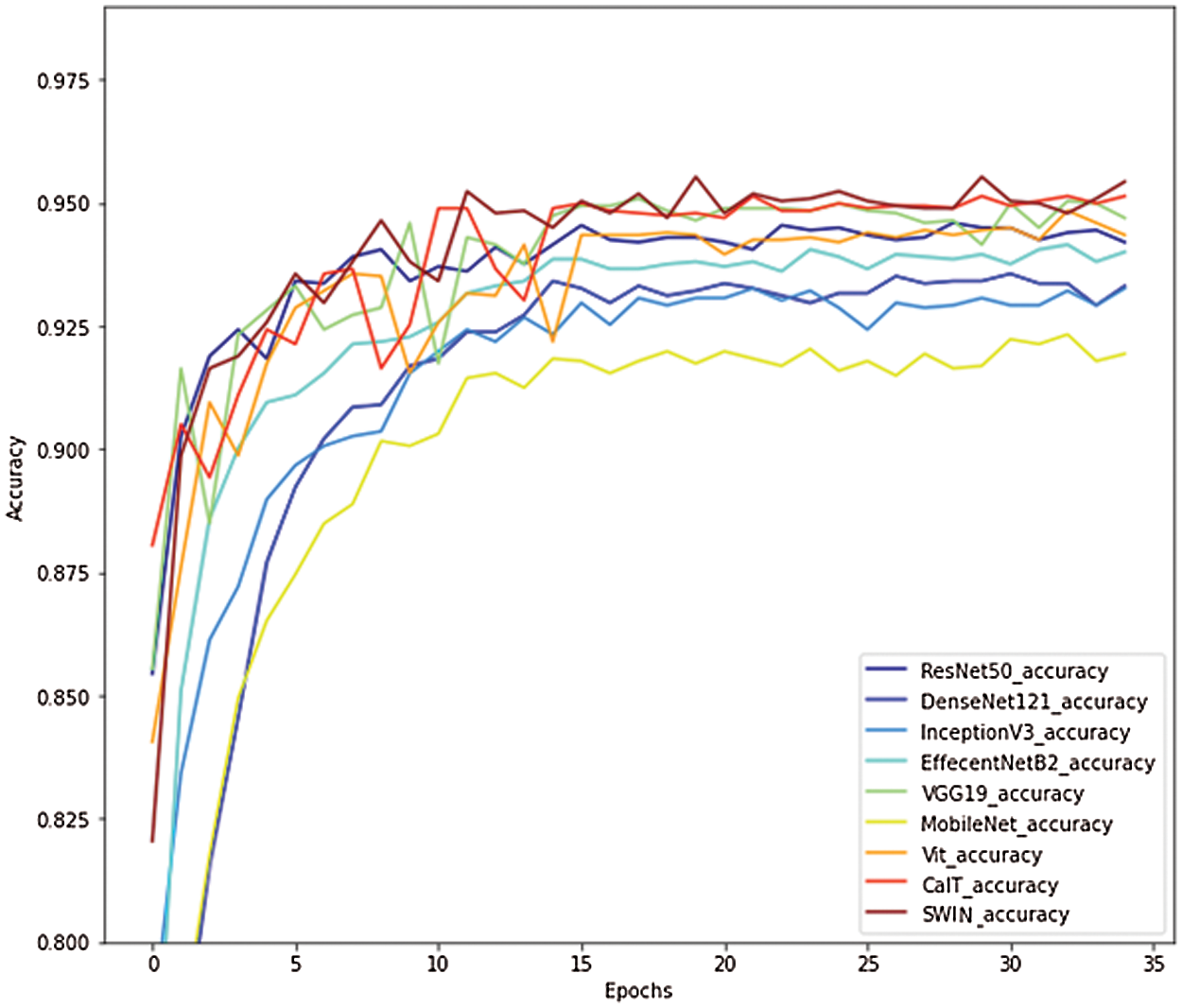

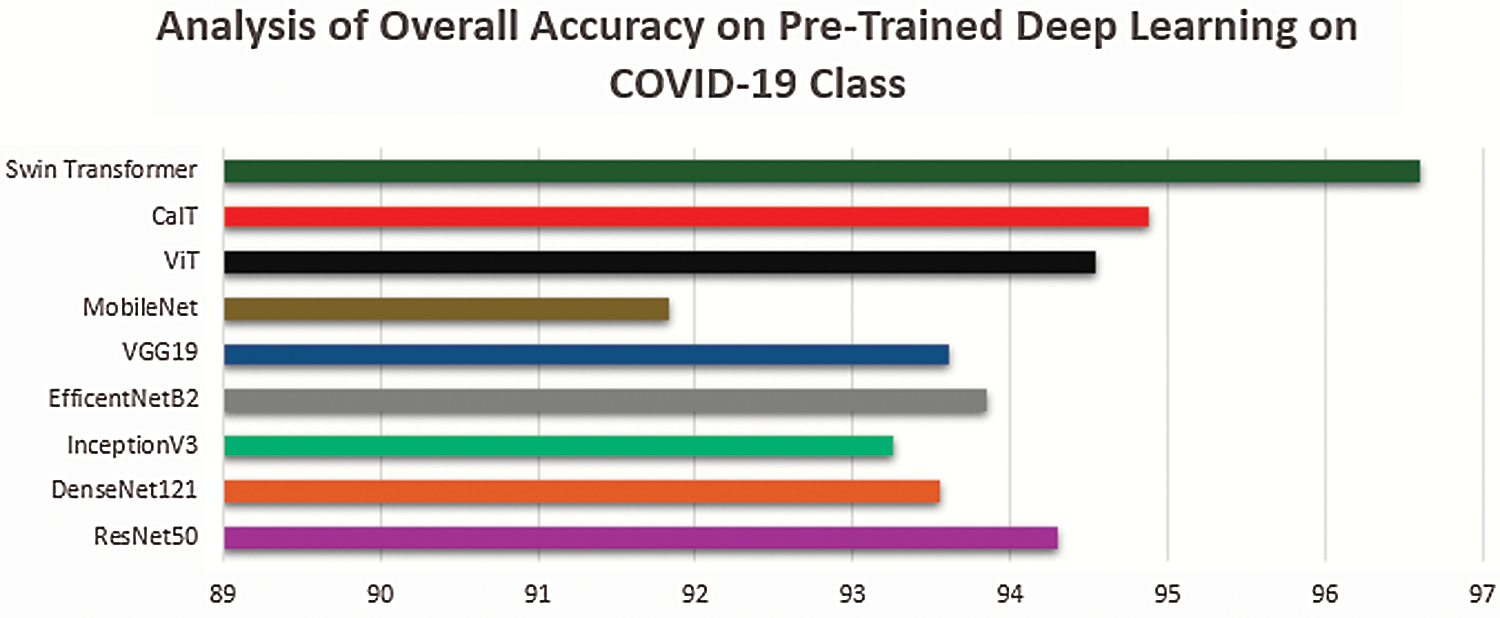

Fig. 15 compares the overall accuracy of the 8 pre-trained deep learning models with that of the Swin transformer; the Swin transformer performs best on the COVID-19 chest X-ray dataset.

Figure 15: Comparative analysis of overall accuracy on COVID-19 chest X-ray dataset

Moreover, Fig. 16 compares the accuracy of the 8 pre-trained deep learning models and the Swin transformer on the COVID-19 class.

Figure 16: Comparative analysis of the accuracy of the 8 pre-trained deep learning models and the Swin transformer on the COVID-19 class

Eq. (3) gives the formula for the accuracy of the COVID-19 class in the COVID-19 chest X-ray dataset. The accuracy of each of the 4 classes is calculated using Eq. (3) and compared in Fig. 16. The results show that the Swin transformer performs better than the other 8 pre-trained models (ResNet50, DenseNet121, InceptionV3, EfficientNetB2, VGG19, MobileNet, ViT, CaIT).
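Assuming Eq. (3) is the usual one-vs-rest definition, the accuracy for a class k is (TP + TN)/(TP + TN + FP + FN), where TN counts images that are neither actually in nor predicted as class k. The NumPy sketch below computes it for every class, alongside overall accuracy (the share of the confusion matrix on its diagonal). The function names are hypothetical, and the toy matrix in the usage is not data from the paper.

```python
import numpy as np


def one_vs_rest_accuracy(cm: np.ndarray) -> np.ndarray:
    """Per-class accuracy (TP + TN) / N, treating each class as positive in turn.

    cm[i, j] = images with actual class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as k but actually another class
    fn = cm.sum(axis=1) - tp  # actually k but predicted as another class
    tn = n - tp - fp - fn
    return (tp + tn) / n


def overall_accuracy(cm: np.ndarray) -> float:
    """Fraction of all test images that are correctly classified."""
    cm = np.asarray(cm, dtype=float)
    return float(np.trace(cm) / cm.sum())


if __name__ == "__main__":
    toy_cm = np.array([[5, 1, 0],
                       [0, 4, 1],
                       [2, 0, 7]])
    print(one_vs_rest_accuracy(toy_cm), overall_accuracy(toy_cm))
```

Because TN is usually large in a four-class problem, one-vs-rest accuracy tends to be higher than overall accuracy, so the two should not be compared directly.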

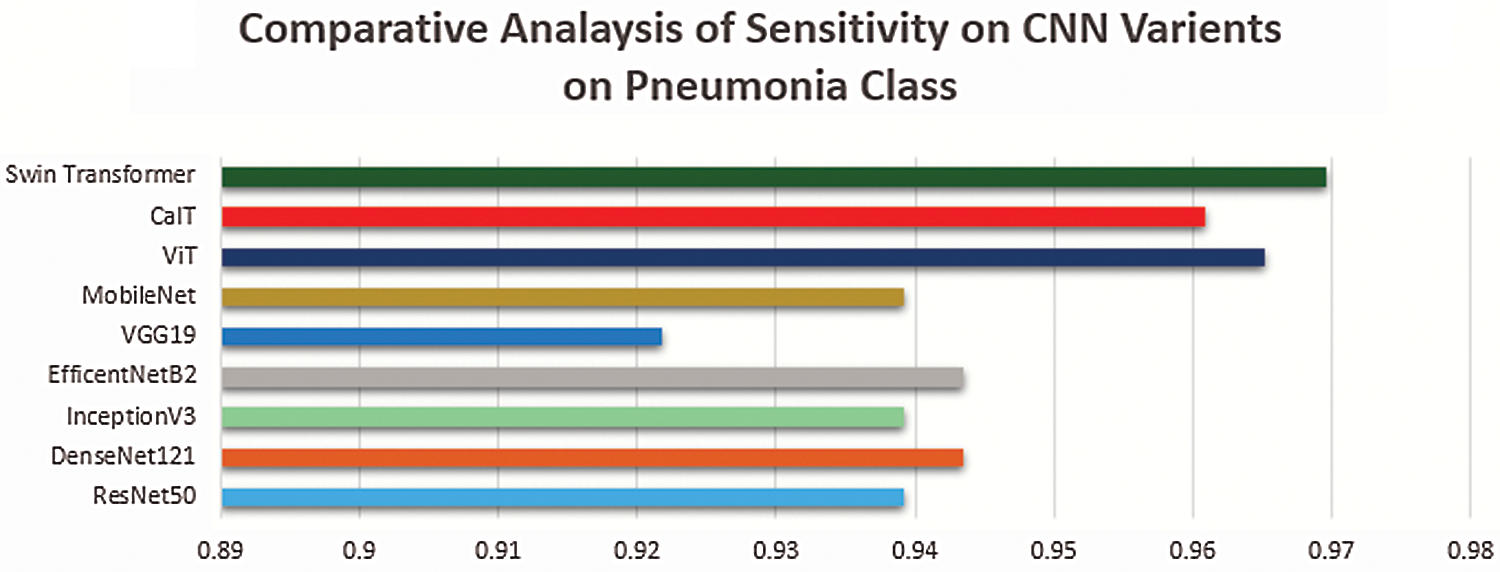

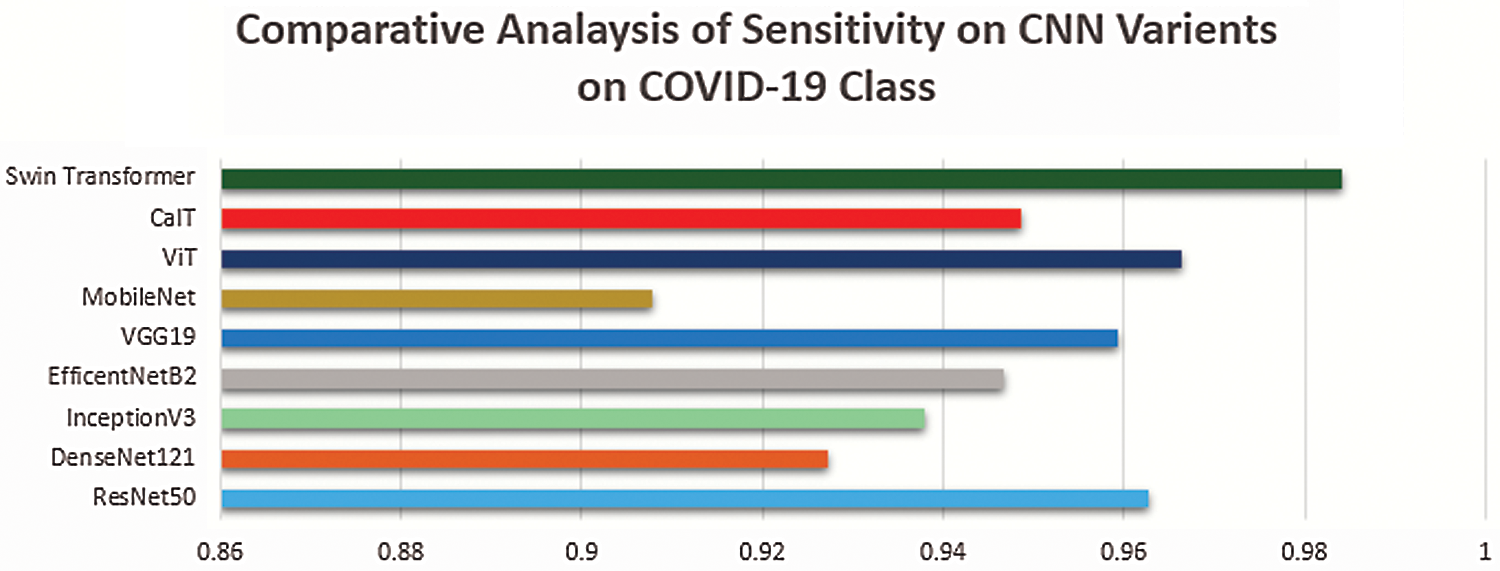

Figs. 17 and 18 present an analysis of sensitivity on the pneumonia and COVID-19 classes. The Swin transformer achieves higher sensitivity than the 8 existing pre-trained models on both the COVID-19 and pneumonia classes of the COVID-19 chest X-ray dataset. Moreover, a statistical test (t-test) is performed to check whether the results of the proposed approach differ significantly from those of state-of-the-art work; the overall p-value is below 0.05.

Figure 17: Comparative analysis of the sensitivity of the 8 pre-trained deep learning models and the Swin transformer on the pneumonia class

Figure 18: Comparative analysis of the sensitivity of the 8 pre-trained deep learning models and the Swin transformer on the COVID-19 class
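The text does not specify which scores entered the t-test. A common choice, assumed here, is a paired t-test on matched scores, such as per-run or per-fold accuracies of two models evaluated on the same splits. The sketch below wraps `scipy.stats.ttest_rel`; the helper name is hypothetical, and the 0.05 threshold mirrors the text.

```python
import numpy as np
from scipy import stats


def paired_t_test(scores_a, scores_b, alpha: float = 0.05) -> tuple[float, float, bool]:
    """Paired t-test on matched scores (e.g. per-fold accuracies of two models).

    Returns (t statistic, two-sided p-value, whether p < alpha).
    """
    a = np.asarray(scores_a, dtype=float)
    b = np.asarray(scores_b, dtype=float)
    if a.shape != b.shape or a.size < 2:
        raise ValueError("need two equally long score arrays with at least 2 entries")
    result = stats.ttest_rel(a, b)
    return float(result.statistic), float(result.pvalue), bool(result.pvalue < alpha)
```

For example, `paired_t_test(swin_fold_acc, baseline_fold_acc)` returns the t statistic, the two-sided p-value, and whether it falls below 0.05; the two array names are placeholders for the reader's own matched results.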

4.1 Comparison with State-of-the-Art Methods

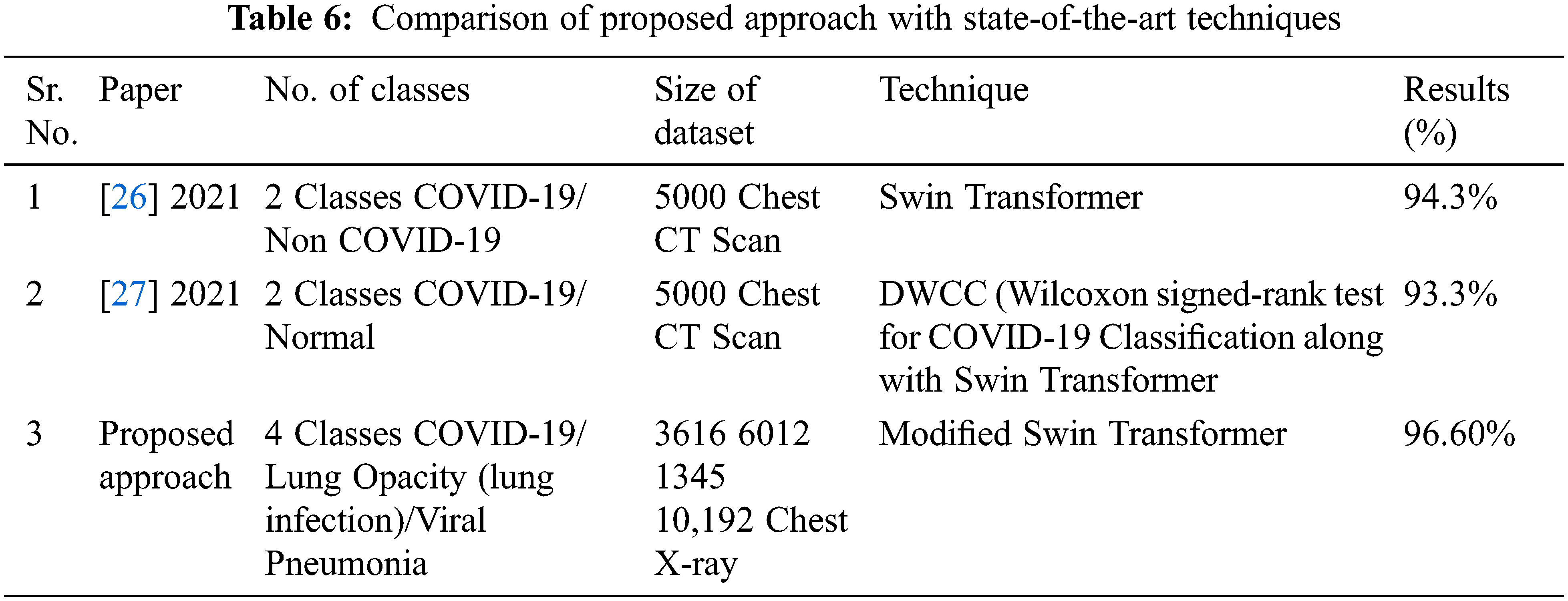

Tab. 6 compares the modified Swin transformer with recent state-of-the-art research on COVID-19 that uses the Swin transformer.

Although the proposed approach uses more classes and a larger dataset than the approaches in Tab. 6, it still outperforms them.

Rapid and accurate detection of the highly virulent COVID-19 plays a crucial part in preventing the spread of the virus. In this study, we have used X-ray images of COVID-19, lung opacity, and pneumonia patients, as well as normal chest X-ray images, from an open-source radiography database. X-ray imaging is cheaper than other conventional methods for diagnosing COVID-19. To the best of our knowledge, the dataset used in this work is the largest benchmark database available for COVID-19 detection. It contains 3616 COVID-19, 6012 non-COVID lung opacity, and 8851 normal X-ray images. Moreover, this is the first time the Swin transformer has been used to detect COVID-19 from chest X-ray images. The Swin transformer achieved 96% accuracy on the COVID-19 chest X-ray dataset and outperformed eight pre-trained models: ResNet50, DenseNet121, InceptionV3, EfficientNetB2, VGG19, MobileNet, ViT, and CaIT. This deep learning approach can support rapid screening of COVID-19 patients and thereby help save lives, particularly during the COVID-19 pandemic. In future work, enhancements to the Swin transformer may lead to better results.

Acknowledgement: The authors would like to express their gratitude to the Ministry of Education and the Deanship of Scientific Research, Najran University. They also thank the Kingdom of Saudi Arabia for its financial and technical support under code number NU/MID/18/035.

Funding Statement: This research was funded by the Deanship of Scientific Research, Najran University, Kingdom of Saudi Arabia, Grant Number NU/MID/18/035.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. L. Yan, “Prediction of criticality in patients with severe Covid-19 infection using three clinical features: A machine learning-based prognostic model with clinical data in Wuhan,” MedRxiv, vol. 27, 2020.

2. S. B. Stoecklin, “First cases of coronavirus disease 2019 (COVID-19) in France: Surveillance, investigations and control measures,” Eurosurveillance, vol. 25, no. 6, pp. 2000094, 2020.

3. B. Antin, J. Kravitz and E. Martayan, Detecting pneumonia in chest X-rays with supervised learning, Semantic scholar Org.: Allen Institute for Artificial intelligence, Seattle, WA, USA, 2017.

4. R. Kumar, “Accurate prediction of COVID-19 using chest X-Ray images through deep feature learning model with SMOTE and machine learning classifiers,” MedRxiv, vol. 40, pp. 63, 2020.

5. A. Narin, C. Kaya and Z. Pamuk, “Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks,” Pattern Analysis and Applications, vol. 24, no. 3, pp. 1–14, 2021.

6. P. K. Sethy and S. K. Behera, “Detection of coronavirus disease (COVID-19) based on deep features,” Preprints, 2020.

7. E. E. D. Hemdan, M. A. Shouman and M. E. Karar, “Covidx-net: A framework of deep learning classifiers to diagnose COVID-19 in x-ray images,” arXiv preprint arXiv:2003.11055, 2020.

8. T. Ozturk, M. Talo, E. A. Yildirim, U. B. Baloglu, O. Yildirim et al., “Automated detection of COVID-19 cases using deep neural networks with X-ray images,” Computers in Biology and Medicine, vol. 121, no. 7798, pp. 103792, 2020.

9. S. H. Yoo, “Deep learning-based decision-tree classifier for COVID-19 diagnosis from chest X-ray imaging,” Frontiers in Medicine, vol. 7, pp. 427, 2020.

10. H. Panwar, P. Gupta, M. K. Siddiqui, R. Morales-Menendez and V. Singh, “Application of deep learning for fast detection of COVID-19 in X-Rays using nCOVnet,” Chaos Solitons & Fractals, vol. 138, no. 3, pp. 109944, 2020.

11. S. Albahli, “A deep neural network to distinguish COVID-19 from other chest diseases using x-ray images,” Current Medical Imaging, vol. 17, no. 1, pp. 109–119, 2021.

12. J. Civit-Masot, F. Luna-Perejón, M. Domínguez Morales and A. Civit, “Deep learning system for COVID-19 diagnosis aid using X-ray pulmonary images,” Applied Sciences, vol. 10, no. 13, pp. 4640, 2020.

13. A. I. Khan, J. L. Shah and M. M. Bhat, “CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest x-ray images,” Computer Methods and Programs in Biomedicine, vol. 196, no. 18, pp. 105581, 2020.

14. L. Sarker, M. M. Islam, T. Hannan and Z. Ahmed, “COVID-DenseNet: A deep learning architecture to detect COVID-19 from chest radiology images,” Preprints, 2020.

15. S. Wang, “A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis,” European Respiratory Journal, vol. 56, no. 2, pp. 2000775, 2020.

16. I. D. Apostolopoulos, S. I. Aznaouridis and M. A. Tzani, “Extracting possibly representative COVID-19 biomarkers from X-ray images with deep learning approach and image data related to pulmonary diseases,” Journal of Medical and Biological Engineering, vol. 40, no. 3, pp. 1–469, 2020.

17. T. Rahman, “Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images,” Computers in Biology and Medicine, vol. 132, no. 2, pp. 104319, 2021.

18. A. Vaswani, “Attention is all you need,” in Advances in Neural Information Processing Systems, California, pp. 5998–6008, 2017.

19. B. Singh, M. Najibi and L. S. Davis, “Sniper: Efficient multi-scale training,” arXiv preprint arXiv:1805.09300, 2018.

20. T. Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan et al., “Feature pyramid networks for object detection,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, USA, pp. 2117–2125, 2017.

21. A. Dosovitskiy, “An image is worth 16×16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929, 2020.

22. A. Lin, B. Chen, J. Xu, Z. Zhang and G. Lu, “DS-TransUNet: Dual swin transformer U-Net for medical image segmentation,” arXiv preprint arXiv:2106.06716, 2021.

23. H. Cao, “Swin-Unet: Unet-like pure transformer for medical image segmentation,” arXiv preprint arXiv:2105.05537, 2021.

24. J. Liang, J. Cao, G. Sun, K. Zhang, L. Van Gool et al., “SwinIR: Image restoration using swin transformer,” in Proc. of the IEEE/CVF Int. Conf. on Computer Vision, Zurich, ZH, CH, pp. 1833–1844, 2021.

25. Z. Liu, “Swin transformer: Hierarchical vision transformer using shifted windows,” arXiv preprint arXiv:2103.14030, 2021.

26. L. Zhang and Y. Wen, “A transformer-based framework for automatic COVID19 diagnosis in chest CTs,” in Proc. of the IEEE/CVF Int. Conf. on Computer Vision, Montreal, QC, Canada, pp. 513–518, 2021.

27. C. C. Hsu, G. L. Chen and M. H. Wu, “Visual transformer with statistical test for COVID-19 classification,” arXiv preprint arXiv:2107.05334, 2021.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.