DOI:10.32604/iasc.2022.024961

Intelligent Automation & Soft Computing

Article

Image Masking and Enhancement System for Melanoma Early Stage Detection

Electrical and Electronics Eng. Dep., Kirikkale University, Kirikkale, 71450, Turkey

*Corresponding Author: Fikret Yalcinkaya. Email: fyalcinkaya@kku.edu.tr

Received: 06 November 2021; Accepted: 27 December 2021

Abstract: Early stage melanoma detection (ESMD) is crucial because late detection is often fatal. Computer aided diagnosis systems (CADS) integrated with high-level algorithms are major tools capable of ESMD with a high degree of accuracy, specificity, and sensitivity. CADS use the image and the information within its pixels. The characteristics and orientations of the pixels determine the colour and shape of the image, as the pixels and their surroundings are closely related to the lesion. CADS integrated with Convolutional Neural Networks (CNN) play a major role in accurate ESMD. The proposed system reaches its accuracy in two steps: the first produces the Fuzzy-Logic (FL) based maskProd and pixelEnhancement vectors, and the second integrates them with deep CNN algorithms. Both vectors are obtained by lesion masking and image enhancement via fuzzy logic, and the hSvMask production takes three inputs: sChannel, entropyFilter and distanceVector. The originality of this paper lies in the regional enhancement of each pixel of the image by fuzzy logic. To change the brightness of an image, conventional methods (CM) multiply the value of every pixel by the same constant weight to generate the new pixel values. In the proposed method, FL instead determines a specific weight for every single pixel of the image, called a variable weight value. Secondly, CMs produce the mask from a predetermined threshold value, and this threshold decides whether the lesion is melanoma or not. The proposed method instead detects the pixels in the lesion-free regions with an FL system that evaluates every single pixel using data from three sources: the s-channel of the HSV (Hue, Saturation and Value) colour space, the distance vector, and the image entropy vector. Integrated with AlexNet, GoogLeNet, Resnet18, VGG-16, VGG-19, Inception-V3, ShuffleNet and Xception, the proposed method detected melanoma with accuracies of 86.95%, 85.60%, 86.45%, 86.70%, 85.47%, 86.76%, 86.45% and 85.30%, respectively.

Keywords: Deep CNN algorithms; fuzzy logic masking algorithm; image segmentation; melanoma; pixel enhancement

Cancer has various forms associated with different organs or tissues. It grows abnormally and invades the rest of the tissue uncontrollably, leading to death through metastasis [1]. Melanoma resides and develops in the human skin as a deadly cancer type. Depending on the intensity and quantity of the melanocytes present, melanoma may grow uncontrollably [2]. Its diagnosis, control and cure need attention because it has been clinically demonstrated that survival rates depend strongly on early stage detection. Although statistics show that melanoma accounts for only around 1% of all skin cancers, it is the major cause of deaths. The expected survival is predicted as five years under normal conditions, but the strong tendency of melanoma to spread to distant tissues makes its early detection urgent. The major benefit of early diagnosis is its life-saving effect on the mortality caused by undetected melanoma cases [3]. Early detection makes it possible to tackle the disease in time and to try more treatment options. However, visual examination, even by experts, is subject to errors because skin and lesions look similar, and patients may be left without a diagnosis because of specialists' clinical inexperience. The accuracy of visual examination by dermatologists is only around 60%, whereas the diagnosis of skin lesions using computer aided image processing (CAIP) is considerably more accurate thanks to innovative medical instrumentation and software support systems [4].

The three steps of the diagnosis process are visual screening, dermoscopic analysis, and biopsy, which is a time-consuming histopathological examination. By the time its result is available, the condition of the patient may have deteriorated greatly [5]. Dermoscopy, as used clinically, visualizes the morphological structures in pigmented lesions under the influence of two main parameters: the uniformity of the illumination and the density of the contrast. Human visual capacity is limited, and when it is combined with the inaccuracies caused by the morphological structures, the result is a higher level of inaccuracy and uncertainty. Among the many sources of inaccuracy, some critical ones are the presence of non-essential components such as hair, the variability of lesion sizes and diameters, the different colours and shapes of the lesions, boundary irregularities caused by asymmetrical lesions, and similarities (due to poor contrast) between the normal skin and the lesion [6]. Therefore, automatic detection of malignancy based on Artificial Intelligence (AI) or deep Neural Networks (NN) is a new signal-processing tool capable of diagnosing melanoma as early as possible. Nevertheless, unresolved cases remain, and they can only be settled by the histopathological examination of surgically obtained samples of the skin cancer [7]. To detect melanoma, medical professionals commonly use the asymmetry, border, colour, diameter and evolving (ABCDE) criterion. However, the ABCDE rule is applicable only to relatively large lesions, where the diameter exceeds 6 mm [8], and it requires a minor surgery [9].

To resolve the issues that exist in identifying melanoma during its early stages, computer based automated models are required, and they have been developed to assist the experts at the identification stage. The computer based automated identification of skin lesion images goes through four steps: pre-processing and processing, lesion segmentation, feature extraction, and finally classification. Lesion segmentation, as a basic process, effectively helps to identify the presence of melanoma in its early stages. It becomes a difficult task, however, because of the major similarities not only in the location but also in the size of the skin lesion images. Secondly, poor contrast in the lesion image makes it very difficult to discriminate the lesion from the neighbouring tissue cells. Air bubbles, hair, ruler marks, blood vessels, and colour illumination act as additional constraints on the detection system and make the segmentation of skin lesion images even harder [10].

The critical features of medical lesion images arise from their pixel-level information capacity and from the high-level attributes of the lesions created through signal processing. In addition, the complex nature of the images may prevent them from providing sufficiently relevant clinical results [11]. If fully automated segmentation methods are used, a clinically relevant examination of every single pixel of the image is required in order to determine the region of interest correctly and accurately. It is very difficult to separate highly sensitive and thin boundaries from their nearest neighbourhood, although this separation is what differentiates the lesion from the healthy skin regions. For some specific applications, the automated medical image segmentation process may unexpectedly act as a source of new types of difficulties. Some common sources of additional obstacles are the lesion size, the wide variation in the shape of the lesion, the image texture, the colour of the region, and lastly the poor contrast between the lesion and the normal skin [12].

The challenge arises from the indistinguishable boundaries between two sections of the image, namely the tumour and the healthy natural skin. This challenge creates fairly high risks and difficulties for early stage melanoma detection and diagnosis [13]. Manually controlled conventional clinical practices are not enough to overcome it. Computerized techniques based on CNNs and their associated functional tools are therefore used instead, as they are superior in their ability to learn hierarchical features automatically from the raw data [11,14].

Reducing artefacts with new methods of lesion region extraction requires pre-processing of the images. To do that, the input image is processed by a deep CNN algorithm, which is able to produce the lesion region segmentation mask [15]. CNN is therefore recommended as a practical tool for such purposes, as it requires relatively few pre-processing algorithms [16]. Computer based diagnosis methods are required for accurate and sensitive skin lesion segmentation. Sometimes, however, segmentation methods perform worse than expected. The reasons for this low performance include the fuzzy boundaries of the lesions, homogenate structure, uniformity of the illumination, low image contrast, background effects, inhomogeneous textures, and artefacts. The fuzziness here calls for fuzzification of the lesion boundaries.

Detecting the melanoma lesion in dermoscopy images with an automated recognition system is not an easy task, as several challenges make it difficult to segment the lesion region accurately. The major challenge is the low contrast between the skin lesion and the normal skin regions. Another major challenge is the high degree of visual similarity between melanoma and non-melanoma lesion images [17]. Because of its importance, the segmentation of lesions is a major problem in conventional as well as automated recognition systems. Skin appearance and condition vary from patient to patient. Some major variables determining skin condition are skin colour, skin texture, blood vessels and their distribution, natural hair, and internal connections acting as biological networks. If the lesion is segmented intelligently, these variables and conditions have the potential to seriously affect the detection algorithm and its classification performance [18].

Despite many side-effects, deep learning based CNN architectures have succeeded in three main processes: segmentation, object detection, and classification. Extracting high-level meaning from images requires image feature models [19]. One example of such techniques is Deep Neural Networks (DNNs), which use self-learning algorithms. These algorithms derive, for example, edges (high-level features) from pixel intensities (low-level features) [20]. The DNN architecture has a multi-layer CNN structure, whereas traditional ANNs use some hidden layers. The main contribution of deep network architectures is their capacity to extract the features present automatically, without human interference [21]. Modern computer-aided diagnosis methods, such as NNs, FLs, CNN, and deep CNN, use the colour features as one of the most significant feature extractors of the image. Colour features are defined relative to particular colour spaces or models. RGB, LUV, HSV and HMMD are examples of frequently used colour spaces. A set of well-established, significant feature extractors for images comprises IE (image entropy), CH (colour histogram), CM (colour moments), CCV (colour coherence vector), and CC (colour correlograms). CM has been found to be the simplest and yet a very effective feature [22].

The applicable procedure follows these four steps: i.) Colour Space Transformation: The dermoscopic images in the RGB colour space are converted into the Hue, Saturation, and Value space, in short the HSV colour space. ii.) The Hue Cycle: Hue represents the colour type, described as an angle in the range from 0° to 360°, but the angular values must be normalized to the range from 0 to 1. Hue runs through the whole spectrum: it starts at red, goes through all other colours (yellow, green, cyan, blue, magenta), and finally ends at red again. iii.) The Saturation Cycle: Saturation describes the vibrancy of the colour and takes values from 0 to 1. The lower the Saturation value, the greyer the colour, which makes it appear faded. iv.) The Value (Lightness) Cycle: Value or Lightness in HSV gives the brightness of the colour. It also ranges from 0 to 1, with 0 being completely dark and 1 being fully bright. In the 3D conical representation, Hue forms a colour wheel, and Saturation and Value are two distance metrics. The HSV colour space is used because it is closely associated with human colour perception, and it is accepted as the most efficient colour space for skin detection in face recognition systems. The conversion between the RGB and HSV colour spaces is done using mathematical formulas developed for this specific conversion [23].
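The colour space transformation described above can be sketched with Python's standard `colorsys` module, which returns hue, saturation and value already normalized to the range 0 to 1. This is only an illustration: the helper name `rgb8_to_hsv` and the sample pixel values are assumptions, not part of the original system.

```python
import colorsys

def rgb8_to_hsv(r, g, b):
    """Convert one 8-bit RGB pixel to HSV with all components in [0, 1]."""
    return colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)

# Pure red: hue 0 (start of the hue cycle), full saturation, full value.
h_red, s_red, v_red = rgb8_to_hsv(255, 0, 0)

# Mid grey: no colour content, so saturation is 0 and only value carries information.
h_grey, s_grey, v_grey = rgb8_to_hsv(128, 128, 128)

# Pure green sits one third of the way around the hue cycle (120 of 360 degrees).
h_green, s_green, v_green = rgb8_to_hsv(0, 255, 0)
```

Multiplying the returned hue by 360 recovers the angular form described in step ii.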

Under poor illumination, captured images generally show some characteristic effects: low contrast, low brightness, colour distortion, and a narrow gray range. The noise level is also considerable, and it can affect two heavily weighted parameters: the visual effect perceived by the human eye and the performance of machine vision systems. Low-light image enhancement is therefore recommended to improve the images and their visual effect. The main low-light image enhancement techniques are gray transformation, frequency-domain, image fusion, and Retinex methods, as well as histogram equalization, defogging models, and machine learning methods [24]. The image quality either boosts or reduces the sensitivity and accuracy of the segmentation process. A main difficulty of segmentation, particularly in medical images, is image degradation, which is caused by four factors: low contrast, noise, uneven illumination, and obscurity. Degradation is a major cause of information loss, which can affect the classification and segmentation accuracy of CNN networks and consequently the safety of the diagnosis. Independent of the CNN model developed, noise and blurriness reduce the pixel classification accuracy because of the partial bias they introduce. Image enhancement techniques that address the degradation of image quality at all levels of processing (pre-processing, processing, and post-processing) are therefore critical, particularly those affecting both the sensitivity and the ROC curve metrics [25]. CNN is used in various applications, but its role in medical domain analysis is especially valuable where image classification, segmentation, and object detection are concerned.

In image analysis, the visibility of the images is a major parameter for processing. When visibility is low, tiny micro-level objects still have to be detected and classified correctly. To make image analysis easier for computers and even for humans, it is suggested to reduce the level of obscurity of the images. Image degrading effects such as noise, blurriness, and illumination disparity therefore require signal processing based (pre- and post-processing) enhancement techniques to obtain better CNN performance, and the architecture developed is expected to measure two major parameters, namely the output and the predictive accuracy of the network. A CNN architecture is made of layers, and the layer where the convolution operation takes place is the convolutional layer. The convolution operation convolves filters (vectors or matrices) with the large data matrices obtained from the input images to create the feature maps. The activation layer of the CNN introduces a non-linear mathematical property into the training network. The task determines the choice of the particular activation function [25].
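The convolution and activation steps described above can be illustrated in a few lines of plain Python. The sketch below is a minimal example under stated assumptions: it uses no padding and a stride of 1, it does not flip the kernel (as is usual in CNN frameworks, which compute a cross-correlation), and it takes ReLU as the activation. The kernel values and the tiny test image are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    feature_map = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map

def relu(feature_map):
    """Element-wise non-linearity: negative responses are set to zero."""
    return [[max(0.0, x) for x in row] for row in feature_map]

# Hypothetical 3x4 image with a dark left half and a bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 2x2 kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [[-1, 1], [-1, 1]]

activated = relu(conv2d_valid(image, edge_kernel))
```

The only non-zero responses appear in the middle column of `activated`, where the window straddles the edge.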

The acquisition process degrades the image quality to a certain level, so the degradation must be reduced by suitable techniques before the images are fed to CNNs for segmentation. The reason is that the segmentation accuracy depends directly on the signal processing methods used for image acquisition. Pre-processing steps are required to enhance the quality of images with such limitations. Image enhancement techniques are used for specific reasons only: to recover the original image as far as possible, or to restore the degraded image to its initial form. The actual segmentation task is carried out during the processing stage, where the predicted or expected output is obtained. The main and post-processing stages aim to further increase the abilities of the processing architecture developed. The main target is therefore to improve two parameters of the architecture, the accuracy and the segmentation output. These two parameters are improved by decreasing the noise and by properly separating the overlapping boundaries [20]. Widely used and effective pre- and post-processing techniques for image enhancement include histogram equalization, thresholding, contrast manipulation (stretching, filtering and associated operations), mask processing, smoothing operations (smoothing and/or sharpening filters), image operations (transformation, normalization and/or restoration), noise removal, and some morphological operations [25].

Computerized detection of melanoma starts with proper lesion segmentation, which is essential for accurate detection. The accuracy of lesion segmentation depends on how successfully the clinical features are extracted through the segmentation of, and feature generation from, the images. The procedure is as follows. The lesion must be separated completely from its original background, as it lies in a pool of skin and artefacts. This separation produces a new type of image, called a binary image or binary mask, which labels the skin area that acts as the background of the whole image. Clinical features are then obtained from the separated lesion region only, as the lesion regions are the active source of the most critical global features, such as symmetry, asymmetry, and the regularity or irregularity of the borders. One example of a relatively weak segmentation method is a technique that merges the pixels in the very close neighbourhood of the borders with the pixels of the segmented lesion region, that is, the region of interest (ROI). This merging may produce misleading and erroneous global and/or local border features. It may also introduce unnecessary features, drawn from the characteristics of the colour bands present, during the feature segmentation stage. In principle, image segmentation uses various methods for lesion segmentation, but thresholding, clustering, fuzzy logic, and supervised learning are the most widely used techniques [26].

Thresholding is an image segmentation operation that converts a gray scale image to binary format in order to detect the region of interest. Thresholding based algorithms, such as the Otsu method, are widely used among the conventional methods. Their disadvantage is their insensitivity when the lesion borders are irregular and have a multicolour texture. The disadvantages of the conventional methods are overcome by new methods based on NNs and/or CNNs. ANN based mask production has its own disadvantage, however, as it needs masks not only for the training images but also for the testing images [27]. Segmentation is performed to extract the region of interest (ROI) of the image, and with proper use of this process the lesion is extracted successfully in order to classify the tumour and its stages. For conversion to different gray scale levels, the images need to be pre-processed to some extent. To detect the borders of the lesions, thresholding is performed after the morphological filling and dilation processes are completed. The tracing process is then carried out to determine the border of the lesion in the input image [27].
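The Otsu method mentioned above selects the gray level that maximizes the variance between the two resulting classes. The following pure-Python sketch shows the idea on a flat list of 8-bit gray values; the function name and the sample pixels are illustrative assumptions, and a real pipeline would normally rely on an image processing library.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form one class, pixels with value > t the other.
    When several levels tie, the lowest one is returned.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of the gray values of those pixels
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue  # lower class still empty
        if w1 == 0:
            break     # upper class empty from here on
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t

# Hypothetical gray values: four dark (skin-like) and four bright (lesion-like) pixels.
pixels = [10, 12, 11, 13, 200, 205, 210, 198]
t = otsu_threshold(pixels)
binary_mask = [p > t for p in pixels]
```

Because a single global threshold is applied to every pixel, this approach inherits the insensitivity to irregular, multicoloured borders noted in the paragraph above.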

In terms of its biology, or its histopathological or morphological structure, the input image is made of two segments, the healthy skin and the unhealthy lesion, which together are called the binary structure. The binary structure has the potential to mislead CNN based training algorithms. The texture or patterns of the healthy areas may sometimes provide parameters or data that are irrelevant for melanoma detection. The outcome may be a major loss of information relevant to the lesion, the region of interest. Since normal skin is expected to have negative side-effects on the CNN based classification architecture, additional image processing steps must be used for better detection of the lesion or region of interest. Two such techniques are the segmentation mask (SM) and the colour plane transformation (CPT) [28].

To reduce the effects of the textures or patterns of normal skin on the classification process, which is crucial for lesion detection, a new fuzzy-logic based detection method is proposed that creates a fuzzy-masking system for CNN architectures. Traditional CNN based mask production has the disadvantage of needing a masked-image dataset for training. The developed fuzzy-logic based mask algorithm needs no trained dataset, and that is part of the originality of the proposed method. The colour bands or segments of an image provide a huge amount of data for diagnosis. Every channel of an image has its own unique data characteristics, and each colour band has its own specific data types. The core of this paper produces the lesion mask from the s-channel of the HSV colour space and combines the lesion mask with the lesion image in the RGB colour space to extract the lesion area. In other words, the paper uses both colour spaces together. The HSV colour space mimics the human visual system, and the S component mimics the perceptual response of the Human Visual System [29]. The S channel of the HSV colour palette is used for this purpose because, among the HSV channels, it is the most relevant one for the classification of melanoma.

A transfer learning paradigm integrated with a CNN architecture for effective skin lesion classification saves time and reduces cost [30]. The images must be captured under good and sufficient illumination. A critical result of insufficient illumination is the degradation of crucial parameters such as contrast, brightness, and visibility. To enhance the image and the information in it, the weaknesses of the captured images need to be corrected by pre-processing. Different techniques are therefore used, depending on the degraded images [31]. The success of the methods depends not only on the multi-parametric system but also on the pre- and post-processing techniques applied to both the images and their binary counterparts. In real samples, skin lesions mostly have flaws such as fuzzy boundaries, and their structures show a high degree of variability, from dense to thin surrounding natural tissue, which may sometimes act as a source of additional noise depending on the data at hand. Region-oriented segmentation methods are used for skin lesion detection, and the two most commonly used techniques among them are colour based thresholding and gray scale thresholding [32]. Extracting the feature vector is a very important task that requires elaborate image processing of the digital images produced. Component based image analysis is therefore essential. Analysing the Saturation channel of images in the HSV colour space is one such crucial task. The saturation is a measure of the purity of a colour, that is, of how little white it contains. In the saturation image, broadly speaking, two kinds of pixels are present: pixels of pure colours and pixels without colour. A pixel in which just one primary colour is missing is a pixel of pure colour and has a saturation value of 255. Pixels without colour, that is, black, gray and white, have a value of 0 (zero). Such choices improve the image processing because the HSV colour space conforms to the human perception of colours [33].

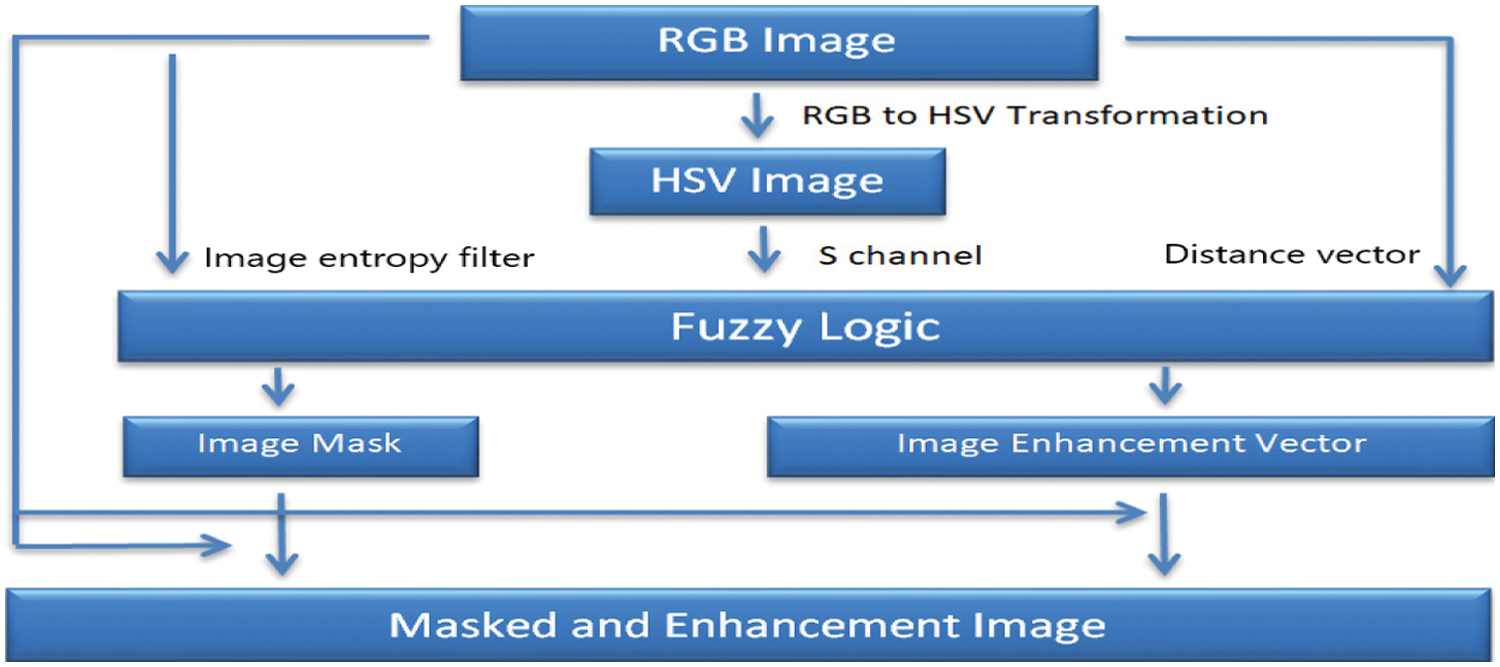

To transform the colour space so that it reflects the human visual system, the input RGB image is decomposed into a set of feature channels. Because of the nonlinear characteristics of the image pixels, not all colours in the RGB colour space are visible to the human eye [34]. Improving the efficiency of the system or network and/or reducing the computation time requires the quantization of the HSV colour space components. Unequal interval quantization therefore needs to be applied to the Hue (H), Saturation (S), and Value (V) components according to human colour perception. The quantized H, S and V are obtained based on the availability of different colours and on the quantization of subjective colour perception [6]. Hue takes a wide range of values, from 0 to 360 degrees. It corresponds to the whole colour variation spectrum, beginning with red and ending with red again, thus completing a whole circle [35]. The suggested and tested image masking and enhancement system based on the fuzzy-logic architecture is given in Fig. 1.

Figure 1: The new image masking and enhancement system with fuzzy-logic architecture

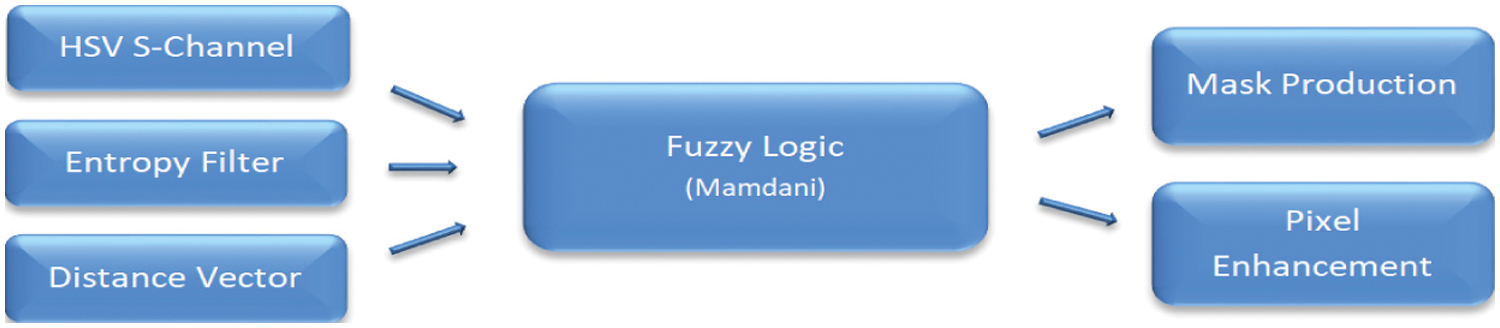

The fuzzy-logic based masking algorithm, that is, the proposed new image masking and image enhancement system, is used not only to avoid loading unnecessary data into AlexNet, but also to guard against the misleading irrelevant areas of lesion images, and thereby to ease the problem of the minimum amount of medical-grade data that CNNs require. To overcome that crucial issue, a new method has been proposed. This new method is capable of removing the irrelevant regions of the lesion images. The data is taken from the ISIC database, from which images are selected randomly. The developed method has been trained and tested with 1200 raw original images without any pre-processing. Pre-processing techniques of the brightness and histogram equalization type have the potential to enhance the image quality and the detection accuracy. The Mamdani based fuzzy-logic system, with three inputs and two outputs, is given in Fig. 2.

Figure 2: The Mamdani based fuzzy-logic system

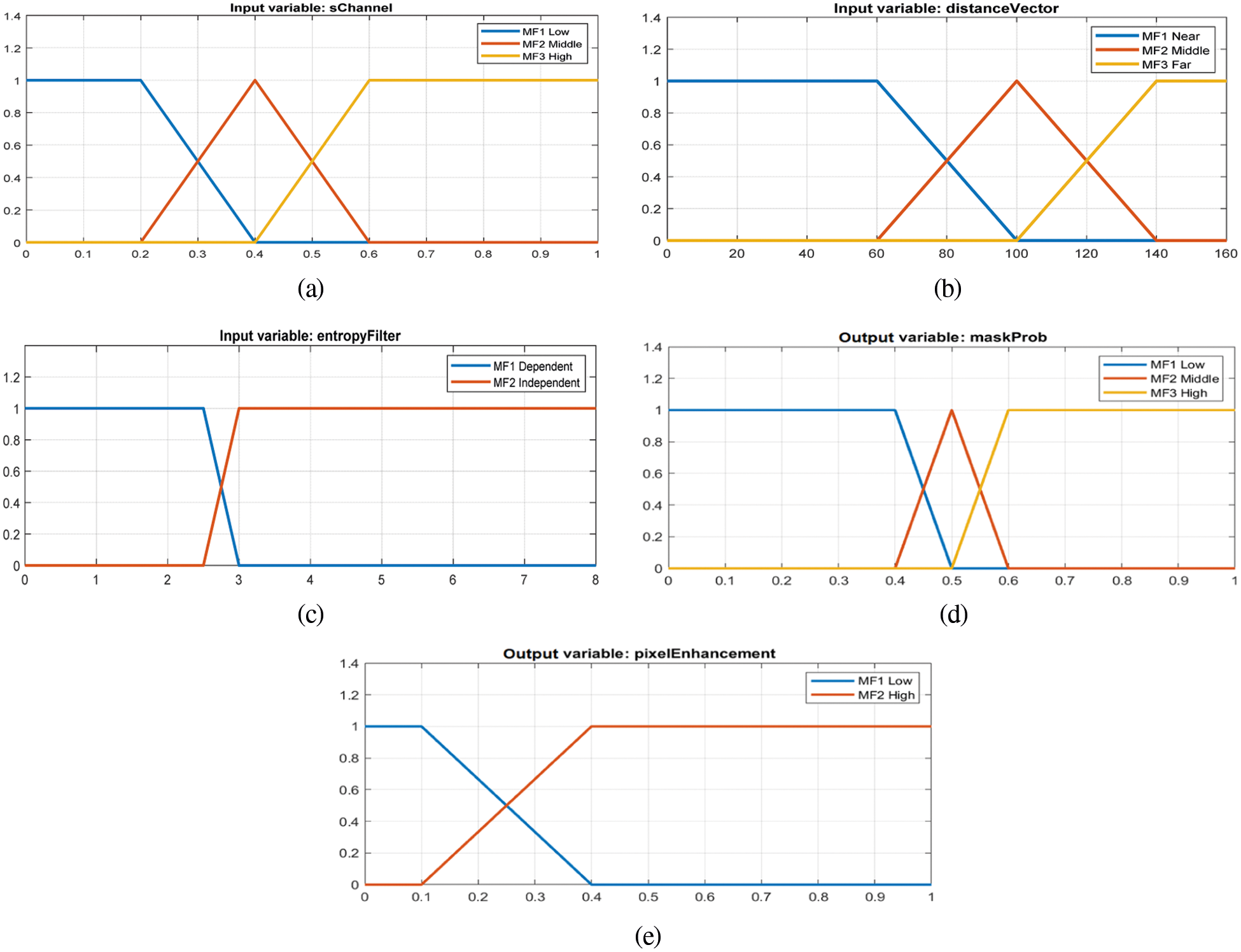

The fuzzification of the membership functions of the three inputs (sChannel, distanceVector, and entropyFilter) and the two outputs (maskProd and pixelEnhancement) is pictured in Figs. 3a–3e, respectively.

Figure 3: The fuzzification of the membership functions of the inputs and outputs: (a) HSV image S channel data matrix, (b) Distance vector, (c) Pixel entropy filter matrix, (d) Mask probability matrix, and (e) Image enhancement weight matrix

A new fuzzy-logic based architecture is used to mask the image, and the acquired data is used as input to the CNN based algorithms in order to reach a high-quality melanoma diagnosis. Appropriate filters are used within the suggested architecture, and activation maps are then created or selected according to the data available. After the data has been resized by pooling operations, all the data obtained and processed from the lesion image must be transferred to the last segment of the network. After the processing steps based on the CNN and/or its associated counterparts are completed, the data is recreated using the lesion images fed to the CNN based fuzzy-logic system, and the total system finally produces its own output, its final decision. In most CNN based applications, the input data provided is insufficient to train a newly developed deep CNN architecture. This difficulty is solved by a powerful method, namely transfer learning, which is a practical means of training with the available data to the level required for the CNN based decision making process. The pretrained CNN architecture is expected to increase the accuracy of the decisions reached in critical applications. The superiority of the fuzzy-logic based image enhancement system lies in its capacity to improve CNN performance, leading to accurate detection. This CNN performance is achieved with a sufficiently trained AlexNet and its associated auxiliary cascaded networks, using only a small number of ISIC images [36].

The definable elements of a coloured image are the Red (r), Green (g) and Blue (b) parameters of the RGB plane, and the Hue (h), Saturation (s) and Value (v) values of the HSV colour plane, respectively. The minimum and maximum values of the RGB colour plane are min{r, g, b} and max{r, g, b}. The saturation sHSV is defined by Eq. (1):
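For reference, the standard definition of HSV saturation in terms of these two quantities can be written as follows. The shorthand symbols $M$ and $m$ are introduced here only for brevity and are an assumption about notation, not taken from the original.

```latex
\[
M = \max\{r, g, b\}, \qquad
m = \min\{r, g, b\}, \qquad
s_{HSV} =
\begin{cases}
0, & M = 0,\\[4pt]
\dfrac{M - m}{M}, & \text{otherwise.}
\end{cases}
\]
```

With this definition a gray pixel ($M = m$) has zero saturation, and a pixel with at least one primary colour absent ($m = 0$, $M > 0$) has full saturation.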

This equation helps to calculate the RGB values (r, g, b)RGB from a given HSV colour {h, sHSV, v}HSV [37].

3 The Developed Fuzzy-Logic Based Masking and Enhancement System

The fuzzy-logic based image processing method performs two basic tasks: segmentation and feature extraction. Segmentation defines the region of interest (ROI), that is, the lesion, and helps to separate the ROI from the normal skin, the healthy region. This process is automated by processing the saturation images with the fuzzy-logic based system. In the saturation image the lesion is brighter than the normal skin, and the fuzzy-logic system processes the brightness available in the saturation image [33]. Once the mask probability matrix has been produced, the activation function uses it as a threshold in order to eliminate the irrelevant data. The thresholding is applied through the activation function so as to generate the required image mask. After the image mask and the image enhancement matrix have been applied to the available images, the resulting data is given as input to the CNN, which makes the decision.
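The step from a mask probability matrix to a masked image can be sketched as follows. This is a minimal illustration under assumptions: the activation is modelled as a hard step, the cut-off of 0.5 is a placeholder rather than a value from the paper, and the function name and sample data are hypothetical.

```python
def apply_mask(rgb_image, mask_prob, threshold=0.5):
    """Keep pixels whose mask probability reaches `threshold`; blank out the rest.

    `threshold=0.5` is an assumed placeholder, not a value taken from the paper.
    """
    masked = []
    for pixel_row, prob_row in zip(rgb_image, mask_prob):
        masked.append([
            pixel if prob >= threshold else (0, 0, 0)
            for pixel, prob in zip(pixel_row, prob_row)
        ])
    return masked

# Hypothetical 2x2 RGB image and its mask probability matrix.
rgb_image = [
    [(200, 120, 90), (30, 20, 10)],
    [(180, 100, 80), (25, 25, 25)],
]
mask_prob = [
    [0.9, 0.2],
    [0.6, 0.4],
]
masked_image = apply_mask(rgb_image, mask_prob)
```

Only the pixels judged likely to belong to the lesion survive, so the CNN receives the region of interest without the surrounding skin.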

3.1 Lesion Mask Generation and Fuzzy-Image Segmentation

The pre-processed images carry some difficulties in their natural original forms, such as distinct boundaries of the images and different contrast levels between the regions of interest. To analyse arbitrarily sampled images, different segmentation techniques are used and their results are evaluated. The preferred methods are mainly border, threshold, region or partial derivative based techniques. Good and effective examples of such methods are K-means, Otsu, Super Pixel, Active Contour, and Fuzzy C-Mean. Several measures are used to validate the usefulness of these methods. The Jaccard Index (JI) measures the similarity between two sets of pixels. The Probabilistic Rand Index (PRI) ranges between zero and one: it takes 0 when the two data clusterings do not agree on any pair of data points, and 1 when the clusterings are exactly the same. Contour Shape Matching (CSM), Object Identification (OI), Variation of Information (VI) and the Active Contour Model (ACM) perform well in the majority of cases while protecting the boundaries. The binary mask of the melanoma lesion may contain holes in a number of situations. In such cases, the optimal parameter combination must be determined to achieve the maximum expected segmentation correctness, and flood fill algorithms are needed to fill the regions accordingly [38].
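As an illustration of how a segmentation mask can be scored, the Jaccard Index of two binary masks is the size of their intersection divided by the size of their union. The sketch below is a minimal pure-Python version; the function name, the convention of returning 1.0 for two empty masks, and the sample masks are assumptions made for the example.

```python
def jaccard_index(mask_a, mask_b):
    """Intersection over union of two binary masks of equal shape."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            if a or b:
                union += 1
    # Two empty masks are treated as identical (an assumed convention).
    return intersection / union if union else 1.0

# Hypothetical predicted mask and reference mask (1 = lesion, 0 = skin).
predicted = [
    [0, 1, 1],
    [0, 1, 0],
]
reference = [
    [0, 1, 1],
    [0, 0, 0],
]
score = jaccard_index(predicted, reference)
```

Here two pixels are shared and three are marked in at least one mask, so the score is two thirds.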

The presence of skin artefacts can disturb the segmentation process. To increase the lesion border visibility and ensure its correct identification, two successive steps are necessary: image filtration and histogram equalization. These two tools are used to restore image clarity and to reduce the effects of skin colour variations and their backgrounds [39].

When fuzzy-logic based methods (fuzzification processes) are applied to an image, the values of its pixels can be described as fuzzy numbers. Consequently, for each pixel value (pi,j) of the image, it becomes possible to compute the membership value of the pixel with respect to its neighbouring pixels. This differs from classical methods, such as thresholding approaches, because it assigns a membership level, a value between 0 and 1, to the region [40].

3.2 Lesion Image Enhancement Part

Image quality can be improved by software that implements appropriate algorithms. By adjusting the intensity and contrast, an image can be made darker or lighter. State-of-the-art image enhancement applications use filters to apply the required changes to the image. The original lesion image is given in Fig. 4 [41].

Figure 4: The original lesion image

3.3 Fuzzy-Logic Based Masking Part

3.3.1 Fuzzy Logic System Inputs

HSV Image S Channel Data Matrix

The HSV image s-channel data matrix is produced using the formulas given below and the Matlab-based software developed. The RGB values of an image are converted into HSV values using Eq. (2):

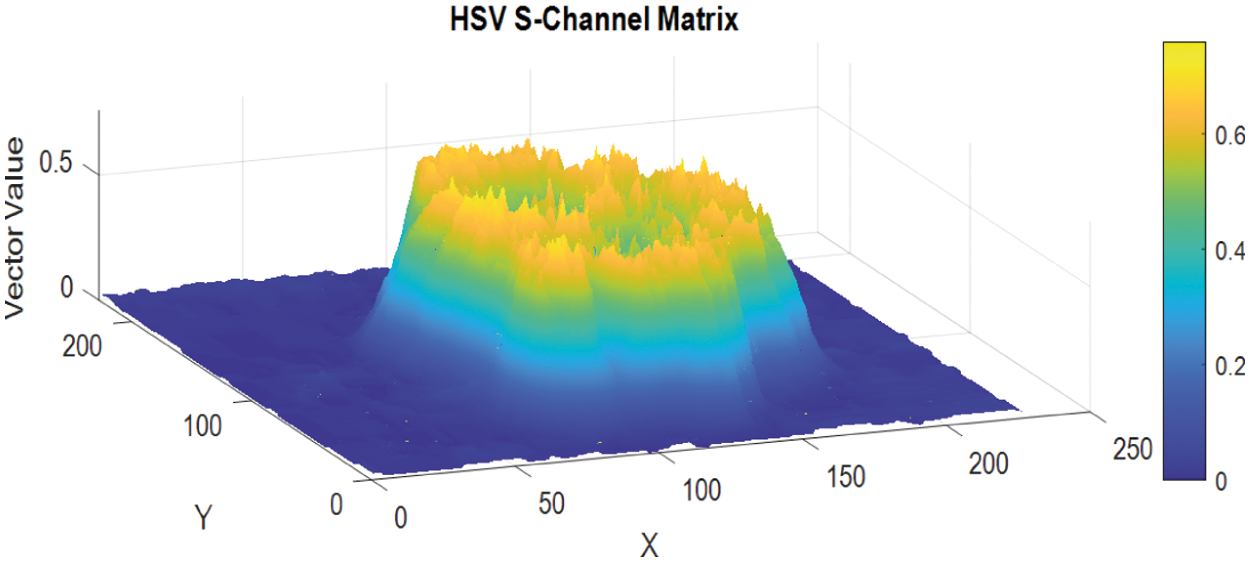

The s-channel data matrix of the HSV image is depicted as in Fig. 5.

Figure 5: The S-Channel data matrix of the HSV image
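The extraction of the s-channel can be sketched in a few lines. The example below is only an illustration written in Python rather than the Matlab software used in the paper; it relies on the standard-library `colorsys.rgb_to_hsv` conversion, whose saturation is S = (max − min) / max (and 0 for black), which is assumed here to correspond to Eq. (2). The function name and the pixel values are hypothetical.

```python
import colorsys


def saturation_channel(rgb_image):
    """Return the S channel (values in [0, 1]) of an 8-bit RGB image given as nested lists."""
    s_channel = []
    for row in rgb_image:
        s_row = []
        for r, g, b in row:
            # colorsys expects floats in [0, 1]; only the saturation component is kept.
            _, s, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            s_row.append(s)
        s_channel.append(s_row)
    return s_channel


# Hypothetical pixels: pale skin, pigmented lesion, black, white.
image = [[(200, 180, 170), (90, 40, 30)],
         [(0, 0, 0), (255, 255, 255)]]
s = saturation_channel(image)
print(s[0][0], s[0][1])  # roughly 0.15 for the pale pixel and 0.67 for the pigmented one
```

In this toy input the pigmented pixel receives a higher saturation than the pale one, which is the property the s-channel input relies on.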

The distance vector measures the distance between each pixel position and the centre of the image. It is a scalar quantity, given by Eq. (3) below:

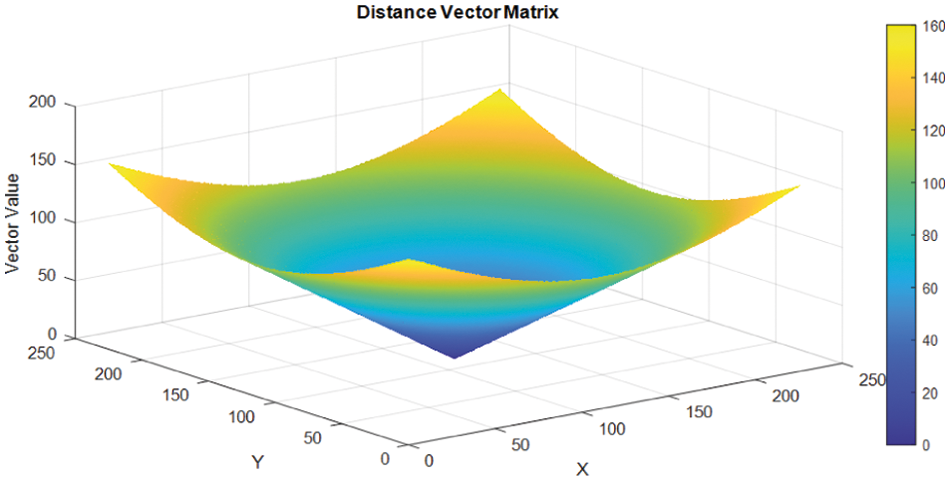

where (cx, cy) are the coordinates of the centre of the image, (cx, cy) = (113, 113), and each image is 227 × 227 pixels. The distance vector matrix is plotted against the values of its elements in Fig. 6. Close examination of the graph shows that the lesion area (ROI) is at the centre. The graph describes the location of each pixel and its information-carrying capacity based on its distance from the centre.

Figure 6: Distance vector matrix
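The distance vector matrix can be sketched as follows. This is a minimal illustration that assumes the Euclidean distance and zero-based pixel indices, for which (113, 113) is the exact centre of a 227 × 227 image; the function name is hypothetical and the code is not the authors' Matlab implementation.

```python
import math


def distance_matrix(size=227, centre=(113, 113)):
    """Euclidean distance of every pixel (x, y) from the image centre (cx, cy)."""
    cx, cy = centre
    # Rows are indexed by y and columns by x (zero-based indices, an assumption).
    return [[math.hypot(x - cx, y - cy) for x in range(size)] for y in range(size)]


d = distance_matrix()
print(d[113][113])  # 0.0 at the centre
print(d[113][0])    # 113.0 at the middle of the left edge
```

Pixels near the centre, where the lesion is expected, get small values, and the corners get the largest ones.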

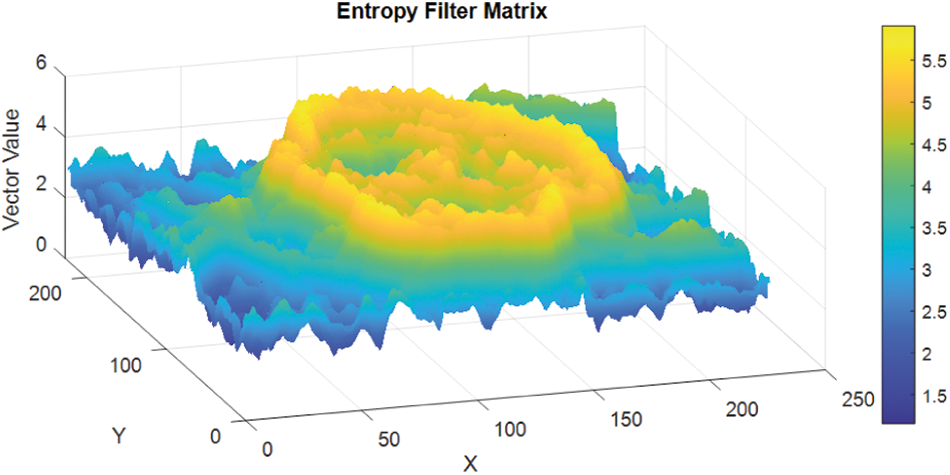

Entropy is a statistical measure of randomness that can be used to characterize the patterns or texture of the input images [42]. The entropyfilt function of Matlab was used to perform the entropy filtering. In the filtered image, brighter pixels correspond to neighbourhoods with higher entropy in the original image [42]. The neighbourhood is specified as a numeric or logical array containing zeros and ones, and its size must be odd in each dimension; by default, entropyfilt uses the neighbourhood true(9). To specify neighbourhoods of other shapes, such as a disk, the Matlab strel function can be used to create a structuring element, and the neighbourhood is then extracted from the Neighborhood property of the structuring element. The different textures of the lesion and its surrounding skin are calculated using the entropy filter algorithm developed. The algorithm successfully extracted the lesion area (ROI) from the surrounding skin; the image entropy filter output matrix is given in Fig. 7.

Figure 7: Image entropy filter matrix output
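To make the idea of local entropy filtering concrete, the sketch below computes the Shannon entropy of the gray levels in a square neighbourhood around each pixel. It is a simplified illustration, not a reimplementation of entropyfilt: the function name, the small default neighbourhood and the clamped border handling are assumptions made for brevity.

```python
import math


def local_entropy(gray, size=3):
    """Shannon entropy (in bits) of the size x size neighbourhood around each pixel."""
    if size % 2 == 0:
        raise ValueError("neighbourhood size must be odd")
    h, w = len(gray), len(gray[0])
    r = size // 2
    n = size * size
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            counts = {}
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    # Assumption: coordinates are clamped at the border (replicate padding).
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    v = gray[yy][xx]
                    counts[v] = counts.get(v, 0) + 1
            # H = sum over gray levels of p * log2(1 / p).
            out[y][x] = sum((c / n) * math.log2(n / c) for c in counts.values())
    return out


flat = [[5] * 5 for _ in range(5)]                                # uniform skin-like patch
textured = [[(x + y) % 2 for x in range(5)] for y in range(5)]    # checkerboard texture
print(local_entropy(flat)[2][2])      # 0.0: no texture
print(local_entropy(textured)[2][2])  # close to 0.99 bits: strong texture
```

A uniform patch yields zero entropy, while a textured patch yields a high value, which is why the filtered image separates the lesion texture from smoother skin.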

3.3.2 Fuzzy Logic System Outputs

The designed fuzzy-logic based system has three inputs and two outputs. Each fuzzy variable is represented graphically by its membership functions. The rule base developed for the input functions is similarly extended to the output functions, targeting a reasonable output. The three inputs of the fuzzy-logic based system are the HSV s-Channel Data Matrix (shortly, HSV-s-channel data), the Distance Vector Matrix (DVM), and the Image Entropy Filter Matrix (I-EFM). The outputs, generated by Mamdani inference, are the Mask Probability Matrix (MPM) and the Image Enhancement Weight Matrix (IE-WM). The rule definitions are the basic tools that shape the membership functions and, in turn, the behaviour of the total system. One example of the rules developed for the fuzzy-logic based system is given below:

If the HSV image s-Channel Data Matrix is low, Distance Vector is near, and Image Entropy Filter Matrix is dependent, then the Outputs are; Mask Probability Matrix is low and Image Enhancement Weight Matrix is low.
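How such a rule fires under Mamdani inference can be sketched as follows. The paper does not list its membership function parameters, so every breakpoint below is hypothetical, and the entropy term of the rule is modelled by a placeholder ramp; only the use of the minimum operator for the AND of the antecedents is the standard Mamdani convention.

```python
def falling_ramp(x, full, zero):
    """Membership that is 1 for x <= full and falls linearly to 0 at x >= zero."""
    if x <= full:
        return 1.0
    if x >= zero:
        return 0.0
    return (zero - x) / (zero - full)


def rule_strength(s_value, distance, entropy):
    """Firing strength of the example rule for one pixel."""
    # Hypothetical membership functions; all breakpoints are assumptions.
    s_low = falling_ramp(s_value, 0.2, 0.5)       # "s-channel is low"
    d_near = falling_ramp(distance, 30.0, 80.0)   # "distance is near"
    e_term = falling_ramp(entropy, 1.0, 3.0)      # placeholder for the entropy term of the rule
    # Mamdani AND: the firing strength is the minimum of the antecedent memberships.
    return min(s_low, d_near, e_term)


strength = rule_strength(s_value=0.35, distance=20.0, entropy=0.5)
print(strength)  # about 0.5, limited by the s-channel membership
```

In a full Mamdani system, this strength would clip the "low" output sets of the Mask Probability Matrix and the Image Enhancement Weight Matrix before the results of all rules are aggregated and defuzzified.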

The Mask Probability Matrix is generated by the fuzzy-logic system and defines the lesion mask. The activation function is then used as a thresholding mechanism in order to optimize the recognition of the region via the histogram(s) of the processed image(s). Erosion and dilation are used to eliminate the noise caused by non-connected objects. The binary plane is a 1-bit image that separates the regions using ones (ROI) and zeros (healthy skin). The binary plane is the basis for computing the numerical feature vectors of the images, and thus also serves the purpose of the feature extraction module [33].

The step activation function used as a filter is given by Eq. (4) below.

The z parameter for the developed system is a constant equal to 0.300 [43]. The image of the lesion mask created is given in Fig. 8.

Figure 8: The image of the lesion mask
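The thresholding of the Mask Probability Matrix by a step function can be sketched as below. The threshold z = 0.300 comes from the text; the strict comparison and the function name are assumptions, since the form of Eq. (4) is not reproduced here.

```python
Z = 0.300  # threshold value reported in the text


def step_mask(prob_matrix, z=Z):
    """Binary lesion mask: 1 where the mask probability exceeds z, 0 elsewhere."""
    # Assumption: the comparison is strict; a value exactly equal to z maps to 0.
    return [[1 if p > z else 0 for p in row] for row in prob_matrix]


# Hypothetical mask probabilities for a 2 x 3 patch.
probabilities = [[0.10, 0.30, 0.31],
                 [0.90, 0.00, 0.50]]
print(step_mask(probabilities))  # [[0, 0, 1], [1, 0, 1]]
```

The resulting 1-bit image is the binary plane described above, to which erosion and dilation would then be applied.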

Image Enhancement Weight Matrix

To enhance the image, histogram equalization based methods were used. The histogram of the s-channel elements of the HSV colour space is equalized by the fuzzy-logic rules. If the pixels of an image are distributed uniformly across all possible gray levels, the image has a high degree of contrast and a large dynamic range. Histogram equalization is built on this principle. To adjust the output gray levels, the algorithm uses the cumulative distribution function, so that the output conforms to a probability density function corresponding to a uniform distribution. This technique can make details hidden in darker or lighter areas reappear, and thus effectively enhances the visual effect of the input image [24]. The masked and enhanced lesion image produced by the fuzzy-logic based system is given in Fig. 9.

Figure 9: The masked and enhanced lesion image output
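The cumulative-distribution mapping behind histogram equalization can be sketched as follows. This is the conventional global form with a single mapping for the whole image, shown only to illustrate the principle; it does not include the per-pixel fuzzy weights of the proposed system, and the function name and test image are hypothetical.

```python
def equalize(gray, levels=256):
    """Global histogram equalization of an integer image given as nested lists."""
    hist = [0] * levels
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    # Cumulative distribution function (as pixel counts).
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # A single gray level: nothing to stretch, return a copy.
        return [row[:] for row in gray]
    # Map each level so that the output CDF is approximately linear (uniform histogram).
    lut = [max(0, round((c - cdf_min) / (total - cdf_min) * (levels - 1))) for c in cdf]
    return [[lut[v] for v in row] for row in gray]


low_contrast = [[10, 20],
                [30, 40]]
print(equalize(low_contrast))  # [[0, 85], [170, 255]]
```

The four closely spaced input levels are spread over the full 0 to 255 range, which is the contrast-stretching effect described above.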

3.4 The Development of the CNN Based Architecture System

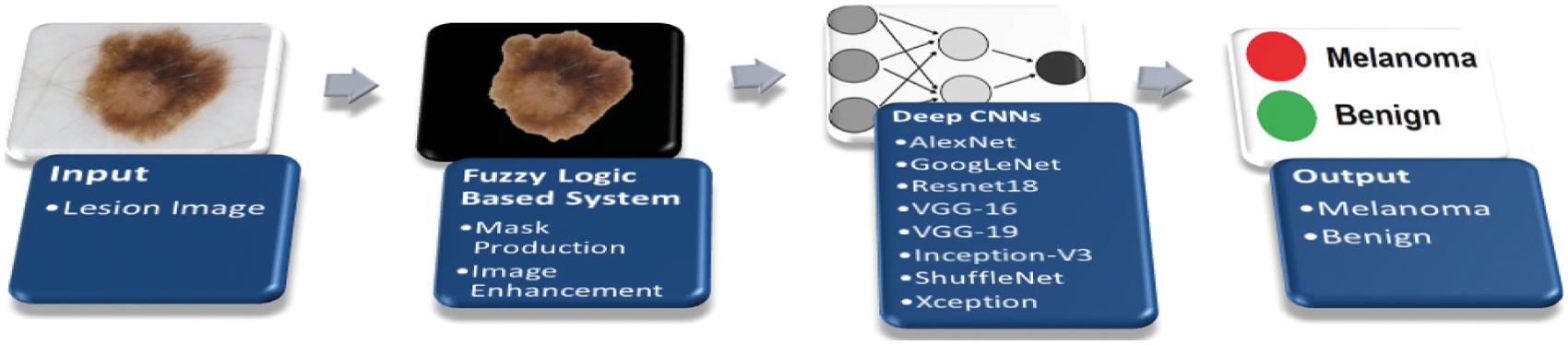

This paper suggests a two-stage, CNN-based architecture to diagnose melanoma. In the first stage, the processed ISIC images are given as inputs to the fuzzy-logic based system. This system performs two main functions: it both enhances and masks the images before submitting them as inputs to the CNN network(s). As a result, the total system becomes capable of automatically deciding whether an image shows melanoma or not. The block diagram of the overall fuzzy-logic system integrated with the CNN is given in Fig. 10. The cascaded multi-layer structure has four segments: input, fuzzy-logic based system, deep CNN algorithm, and output layer. The critical second segment performs two separate functions: Mask Production and Image Enhancement. The created maps are then automatically resized and given as inputs to the deep CNN architectures.

Figure 10: The architecture of new fuzzy-logic and CNN algorithm

In conventional applications, a set of lesion images is transferred directly to the CNN architectures as input data, whereas the system suggested in this paper first processes the input lesion images with the fuzzy-logic rules and then passes the processed outputs to the deep CNN algorithms for second-stage processing. This is the original contribution of the paper. The Matlab-based fuzzy-logic system has three inputs and two outputs, based on the membership functions defined. The two outputs of the fuzzy-logic based system are Mask Production and Image Enhancement. Once the deep CNN has been trained successfully, the system decides whether the region of interest is melanoma or not.

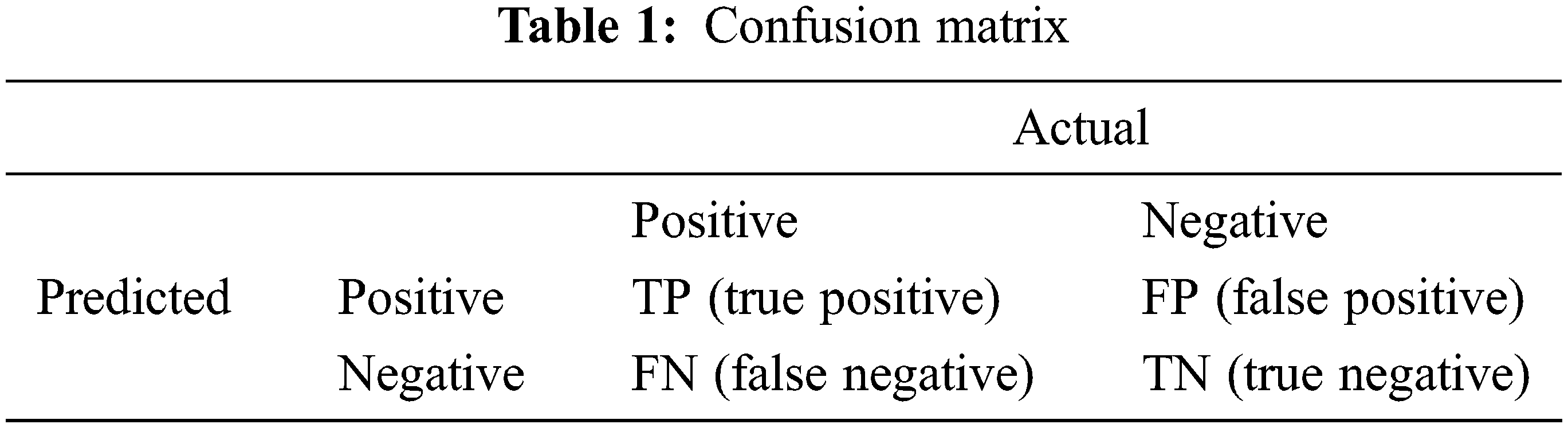

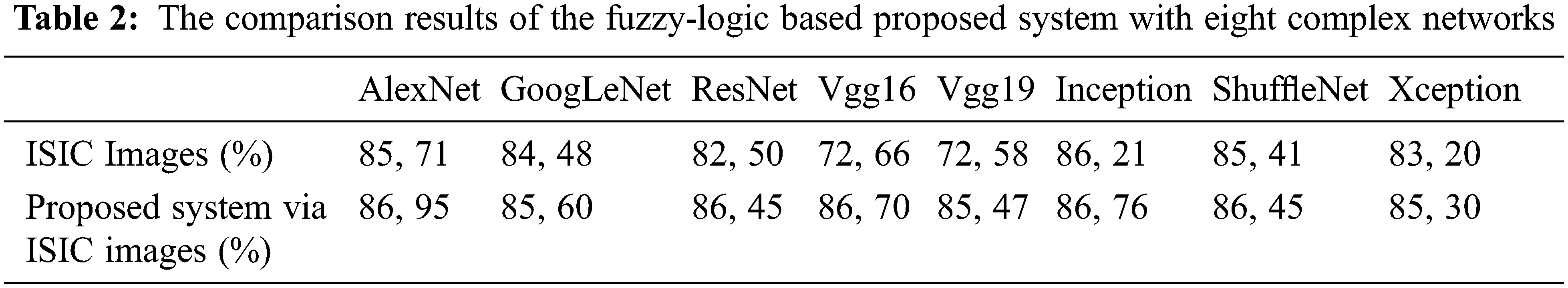

A new fuzzy-logic based image masking and image enhancement system was developed to feed the CNN architecture and help diagnose melanoma. The quantitative evaluation is based on the accuracy calculated for eight different networks. The segmentation process aims to classify each pixel as melanoma or non-melanoma. The accuracies obtained with the developed fuzzy-logic based CNN architectures were compared with those of the well-established AlexNet, GoogLeNet, Resnet18, VGG-16, VGG-19, Inception-V3, ShuffleNet and Xception architectures. The classification metrics, namely accuracy, specificity, and sensitivity, were calculated according to their standard definitions. Accuracy, defined in Eq. (5), is the percentage of correct classifications [44]. The confusion matrix of the new diagnosis system is given in Tab. 1. The performance of the suggested method was obtained by processing images from the ISIC database and entering the results as inputs to the AlexNet, GoogLeNet, Resnet18, VGG-16, VGG-19, Inception-V3, ShuffleNet and Xception architectures; the compared results are given in Tab. 2, which lists the best accuracy values obtained with the fuzzy-logic integration. The proposed method produces better accuracy than AlexNet, GoogLeNet, Resnet, VGG16, VGG19, Inception, ShuffleNet and Xception. Outputs were obtained both for normal inputs without fuzzy-logic processing and for the improved image maps with fuzzy-logic processing.
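The three classification metrics named above follow directly from the four cells of a binary confusion matrix. The sketch below uses their standard definitions; the counts are hypothetical and are not the values of Tab. 1.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity and specificity from binary confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total      # share of all images classified correctly
    sensitivity = tp / (tp + fn)      # share of melanoma images detected
    specificity = tn / (tn + fp)      # share of non-melanoma images recognised
    return accuracy, sensitivity, specificity


# Hypothetical counts for illustration only.
acc, sens, spec = classification_metrics(tp=80, tn=90, fp=10, fn=20)
print(acc, sens, spec)  # 0.85 0.8 0.9
```

Multiplying the accuracy by 100 gives the percentage form used when the networks are compared.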

Research on early stage melanoma detection has gained significant interest during the last few decades because of the lethality of the disease. Computer aided diagnosis systems (CADS) integrated with NNs, ML, CNNs, and DL are major tools capable of early stage melanoma detection with a high degree of accuracy, specificity, and sensitivity. The core of a CADS is the image taken from the patient and the information within its pixels. The colour and shapes of the images are determined by the pixels' characteristics and orientations, as the pixels and their associated environment are strongly interrelated with the lesion. The system developed has two interrelated steps: the first is the production of the fuzzy-logic based maskProd and pixelEnhancement vectors from the lesion image, and the second is their integration with deep CNN algorithms, such as AlexNet, Resnet18, and Inception. maskProd and pixelEnhancement are based on a lesion masking and image enhancement procedure via fuzzy logic, named the hSvMask production process, which has three inputs: sChannel, entropyFilter and distanceVector. Conventional image processing techniques are not sufficient by themselves to decide whether an image shows melanoma or not, but they are required to tackle the problem and to produce appropriate algorithms. The new developments in ML, CNN, NN, and DL create big opportunities to develop innovative methods. These new methods integrate modern techniques with the well-established conventional methods, leading to powerful detection algorithms. To create an innovative method, this paper combined eight algorithms with a fuzzy-logic based system. The accuracy values obtained by integrating the deep CNN algorithms with fuzzy logic are 86.95, 85.60, 86.45, 86.70, 85.47, 86.76, 86.45 and 85.30 percent, respectively. The fuzzy-logic system developed is capable of extracting the extra data hidden in the pixels and their neighbouring environment, which could not be extracted by the algorithms in their original forms.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. “Cancer”, Who.int. [Online]. 2021. Available: https://www.who.int/health-topics/cancer#tab=tab_1.

2. “What Is Melanoma?”, American Cancer Society. [Online]. 2021. Available: https://www.cancer.org/cancer/melanoma-skin-cancer/about/what-is-melanoma.html.

3. D. Bisla, A. Choromanska, R. S. Berman, J. A. Stein and D. Polsky, “Towards automated melanoma detection with deep learning: Data purification and augmentation,” in Proc. 2019 IEEE/CVF Conf. on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, pp. 2720–2728, 2019.

4. L. Ichim and D. Popescu, “Melanoma detection using an objective system based on multiple connected neural networks,” IEEE Access, vol. 8, pp. 179189–179202, 2020.

5. M. A. Kadampur and S. A. Riyaee, “Skin cancer detection: Applying a deep learning based model driven architecture in the cloud for classifying dermal cell images,” Informatics in Medicine Unlocked, vol. 18, Article 100282, 2020.

6. R. Mishra and O. Daescu, “Deep learning for skin lesion segmentation,” in Proc. of 2017 IEEE Int. Conf. on Bioinformatics and Biomedicine (BIBM), Kansas City, MO, USA, pp. 1189–1194, 2017.

7. E. Pérez, O. Reyes and S. Ventura, “Convolutional neural networks for the automatic diagnosis of melanoma: An extensive experimental study,” Medical Image Analysis, vol. 67, Article 101858, 2021.

8. G. Argenziano, G. Albertini, F. Castagnetti, B. De Pace, V. Di Lernia et al., “Early diagnosis of melanoma: What is the impact of dermoscopy?,” Dermatologic Therapy, vol. 25, no. 5, pp. 403–409, 2012.

9. A. Naeem, M. S. Farooq, A. Khelifi and A. Abid, “Malignant melanoma classification using deep learning: Datasets, performance measurements, challenges and opportunities,” IEEE Access, vol. 8, pp. 110575–110597, 2020.

10. M. Yacin Sikkandar, B. A. Alrasheadi, N. B. Prakash, G. R. Hemalakshmi, A. Mohanarathinam et al., “Deep learning based an automated skin lesion segmentation and intelligent classification model,” Journal of Ambient Intelligence and Humanized Computing, vol. 12, pp. 3245–3255, 2021.

11. F. Xie, J. Yang, J. Liu, Z. Jiang, Y. Zheng et al., “Skin lesion segmentation using high-resolution convolutional neural network,” Computer Methods and Programs in Biomedicine, vol. 186, Article 105241, 2020.

12. I. R. I. Haque and J. Neubert, “Deep learning approaches to biomedical image segmentation,” Informatics in Medicine Unlocked, vol. 18, Article 100297, 2020.

13. T. Y. Tan, L. Zhang and C. P. Lim, “Adaptive melanoma diagnosis using evolving clustering, ensemble and deep neural networks,” Knowledge-Based Systems, vol. 187, Article 104807, 2020.

14. A. Coronato, M. Naeem, G. De Pietro and G. Paragliola, “Reinforcement learning for intelligent healthcare applications: A survey,” Artificial Intelligence in Medicine, vol. 109, Article 101964, 2020.

15. M. H. Jafari, N. Karimi, E. Nasr-Esfahani, S. Samavi, S. M. R. Soroushmehr et al., “Skin lesion segmentation in clinical images using deep learning,” in Proc. of 23rd Int. Conf. on Pattern Recognition (ICPR), Cancun, Mexico, pp. 337–342, 2016.

16. P. Sabouri and H. Gholam Hosseini, “Lesion border detection using deep learning,” in Proc. of 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, pp. 1416–1421, 2016.

17. L. Yuexiang and L. Shen, “Skin lesion analysis towards melanoma detection using deep learning network,” Sensors, vol. 18, no. 2, Article 556, 2018.

18. X. Cao, J. S. Pan, Z. Wang, Z. Sun, A. Haq et al., “Application of generated mask method based on mask R-CNN in classification and detection of melanoma,” Computer Methods and Programs in Biomedicine, vol. 207, Article 106174, 2021.

19. L. Bi, J. Kim, E. Ahn, A. Kumar, M. Fulham et al., “Dermoscopic image segmentation via multistage fully convolutional networks,” IEEE Transactions on Biomedical Engineering, vol. 64, no. 9, pp. 2065–2074, 2017.

20. S. Sabbaghi, M. Aldeen and R. Garnavi, “A deep bag-of-features model for the classification of melanomas in dermoscopy images,” in Proc. of 2016 38th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, pp. 1369–1372, 2016.

21. M. P. Pour, H. Seker and L. Shao, “Automated lesion segmentation and dermoscopic feature segmentation for skin cancer analysis,” in Proc. of 39th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, pp. 640–643, 2017.

22. S. Singh and S. Urooj, “Analysis of chronic skin diseases using artificial neural network,” International Journal of Computer Applications, vol. 179, no. 31, pp. 7–13, 2018.

23. W. Al-Zyoud, A. Helou, E. Al Qasem and N. Rawashdeh, “Visual feature extraction from dermoscopic colour images for classification of melanocytic skin lesions,” EurAsian Journal of BioSciences, vol. 14, pp. 1299–1307, 2020.

24. W. Wang, X. Wu, X. Yuan and Z. Gao, “An experiment-based review of Low-light image enhancement methods,” IEEE Access, vol. 8, pp. 87884–87917, 2020.

25. O. O. Sule, S. Viriri and A. Abayomi, “Effects of image enhancement techniques on CNNs based algorithms for segmentation of blood vessels: A review,” in Proc. 2020 Int. Conf. on Artificial Intelligence, Big Data, Computing and Data Communication Systems, Durban, KwaZulu Natal, South Africa, pp. 1–6, 2020.

26. E. Okur and M. Turkan, “A survey on automated melanoma detection,” Engineering Applications of Artificial Intelligence, vol. 73, pp. 50–67, 2018.

27. M. Reshma and B. Priestly Shan, “A clinical decision support system for micro panoramic melanoma detection and grading using soft computing technique,” Measurement, vol. 163, Article 108024, 2020.

28. E. Nasr-Esfahani, S. Samavi, N. Karimi, S. M. R. Soroushmehr, M. H. Jafari et al., “Melanoma detection by analysis of clinical images using convolutional neural network,” in Proc. of 2016 38th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, pp. 1373–1376, 2016.

29. S. Pathan, V. Aggarwal, K. G. Prabhu and P. C. Siddalingaswamy, “Melanoma detection in dermoscopic images using color features,” Biomedical and Pharmacology Journal, vol. 12, no. 1, pp. 107–115, 2019.

30. A. R. Lopez, X. Giro-i-Nieto, J. Burdick and O. Marques, “Skin lesion classification from dermoscopic images using deep learning techniques,” in Proc. of 13th IASTED Int. Conf. on Biomedical Engineering (BioMed), Innsbruck, Austria, pp. 49–54, 2017.

31. C. Li, J. Guo, F. Porikli and Y. Pang, “LightenNet: A convolutional neural network for weakly illuminated image enhancement,” Pattern Recognition Letters, vol. 104, pp. 15–22, 2018.

32. E. Zagrouba and W. Barhoumi, “An accelerated system for melanoma diagnosis based on subset feature selection,” Journal of Computing and Information Technology, vol. 13, no. 1, pp. 69–82, 2005.

33. A. Sboner, E. Blanzieri, C. Eccher, P. Bauer, M. Cristofolini et al., “Knowledge based system for early melanoma diagnosis support,” in Proc. 6th IDAMAP Workshop-Intelligent Data Analysis in Medicine and Pharmacology, London, UK, 2001.

34. Q. Abbas, I. F. Garcia, M. E. Celebi, W. Ahmad and Q. Mushtaq, “A perceptually oriented method for contrast enhancement and segmentation of dermoscopy images,” Skin Research and Technology, vol. 19, no. 1, pp. 490–497, 2012.

35. M. Lumb and P. Sethi, “Texture feature extraction of RGB, HSV, YIQ and dithered images using GLCM, wavelet decomposition techniques,” International Journal of Computer Applications, vol. 68, no. 11, pp. 25–31, 2013.

36. K. M. Hosny, M. A. Kassem and M. M. Foaud, “Classification of skin lesions using transfer learning and augmentation with alex-net,” PLoS ONE, vol. 14, no. 5, 2019.

37. L. Stratmann, “HSV. Lukas Stratmann”, [Online]. 2021. Available: http://color.lukas-stratmann.com/color-systems/hsv.html.

38. A. Maiti and B. Chatterjee, “Improving detection of melanoma and naevus with deep neural networks,” Multimedia Tools and Applications, vol. 79, no. 21–22, pp. 15635–15654, 2019.

39. M. Ahmed, A. Sharwy and S. Mai, “Automated imaging system for pigmented skin lesion diagnosis,” International Journal of Advanced Computer Science and Applications, vol. 7, no. 10, pp. 242–254, 2016.

40. J. Diniz and F. Cordeiro, “Automatic segmentation of melanoma in dermoscopy images using fuzzy numbers,” in Proc. 2017 IEEE 30th Int. Symp. on Computer-Based Medical Systems (CBMS), Thessaloniki, Greece, pp. 150–155, 2017.

41. ISIC Archive, [Online]. 2021. Available: https://www.isic-archive.com/.

42. “Local entropy of grayscale image-MATLAB entropyfilt”, Mathworks.com, [Online]. 2021. Available: https://www.mathworks.com/help///images/ref/entropyfilt.html.

43. J. Lederer, “Activation functions in artificial neural networks: A systematic overview,” arXiv preprint arXiv:2101.09957, 2021.

44. A. M. A. E. Raj, M. Sundaram and T. Jaya, “Thermography based breast cancer detection using self-adaptive gray level histogram equalization color enhancement method,” International Journal of Imaging Systems and Technology, vol. 31, no. 2, pp. 854–873, 2021.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |