Intelligent Automation & Soft Computing
DOI:10.32604/iasc.2022.024939

Article

A New Color Model for Fire Pixels Detection in PJF Color Space

1REGIM-Lab: Research Groups on Intelligent Machines, University of Sfax, ENIS: National Engineering School of Sfax, Route de Soukra, 3038, Sfax, Tunisia

2Department of Computer Engineering, College of Computers and Information Technology, Taif University, Taif, 21944, Saudi Arabia

*Corresponding Author: Amal Ben Hamida. Email: benhamida.amal@gmail.com

Received: 05 November 2021; Accepted: 22 December 2021

Abstract: As the number of fires worldwide rises rapidly, automatic fire detection is attracting growing interest in the computer vision community. Instead of relying on conventional sensors, which are often inefficient, videos captured by surveillance cameras can be analyzed to detect fires quickly and prevent damage. This paper presents an early fire-alarm method based on image processing. The method discriminates between fire and non-fire pixels. Fire pixels are identified by a rule-based color model built in the PJF color space, a recently designed color space that better reflects the structure of colors. The rules of the model are established by examining the color nature of fire. The proposed fire color model is assessed on the largest dataset in the literature, collected by the authors and composed of diverse fire images and videos. Although only color information is considered, the experimental results for detecting candidate flame pixels are promising. The method achieves up to a 99.8% fire detection rate and an 8.59% error rate. A comparison with state-of-the-art color models in different color spaces is also carried out to assess the performance of the model. Based on the color descriptor alone, the developed approach can accurately detect fire areas in scenes and achieves the best compromise between true and false detection rates.

Keywords: Image processing; fire detection; PJF color space; rule-based color model

Throughout history, fire has played an essential but conflicting role: on the one hand, it has improved the conditions of everyday life, protecting humans and enabling industry; on the other hand, it has been a danger to defend against. Indeed, fire is one of the gravest calamities in the world, often causing economic, ecological and social damage and endangering people's lives [1,2]. According to the fire statistics published by the Center of Fire Statistics (CTIF), more than 4.5 million fires, with at least 30 000 fatalities and 51 000 injuries, were reported in 2018 [3]. Furthermore, the Food and Agriculture Organization of the United Nations (FAO) announced that about 350 million hectares burn worldwide every year, more than 11 hectares per second. These indicators point to the need to devote more attention to the control and prevention of fire-related accidents, and they make fire detection research of great importance. Early solutions relied mainly on ion, optical or infrared sensors that exploit fire properties such as temperature, smoke, particles, radiation and vapor. These traditional fire alarm systems were widely used in the past, but their practical efficiency is now limited by many shortcomings [4]. Recently, Video Fire Detection (VFD) has come to be seen as one of the most promising alternatives. With the rapid evolution of digital cameras and image processing techniques, there has been a marked shift towards replacing sensor-based fire detection systems with VFD ones. This technology analyzes captured videos with computer vision algorithms to search for fire. VFD systems offer several advantages over traditional ones. Firstly, they rely on video surveillance cameras, which are already widely deployed and whose price is steadily dropping. Under these circumstances, it makes sense to build fire detection on this existing equipment at no additional hardware cost. Moreover, vision-based techniques can easily be embedded into existing systems to provide more efficient solutions. Secondly, the response delay is shorter than that of sensors, since the camera does not need to wait for particles to diffuse. Thirdly, since a video camera is a volume sensor, VFD can monitor vast and open spaces of different kinds, which creates a better opportunity for early fire detection. Fourthly, the captured images carry valuable information such as color, texture, shape, dimensions and position, which substantially helps fire detection and analysis. Finally, in case of an alarm, a human operator can check the presence of fire remotely by reviewing the recorded scenes. Even the cause of the fire can be determined [5,6].

Video fire detection is attracting increasing interest from researchers. However, building a robust fire detection system that works efficiently in all real-world scenarios remains a challenge, because of the complex and non-static structure of flames and because of the real-time constraint. A flame is characterized by a dynamic shape and a changing intensity. Besides, although flame color always lies in the red-yellow range, it can be a misleading feature, since many objects have a similar appearance, such as firework lights, moving red objects and the sun, which may lead to false alarms. Overcoming these challenges depends considerably on the reliability of the fire detection method. Generally, VFD techniques exploit the color, shape, texture and motion signatures of the fire area [7–12]. Color is the most distinctive feature and is widely used to select the pixels belonging to a candidate flame region. A color-based model is the initial and crucial step of almost all works, but it is built in different color spaces [13,14]. The most commonly used are Red Green Blue (RGB), YUV, YCbCr, Hue Saturation Value (HSV) and CIE L*a*b*. Our work is motivated by the fact that, up to now, no color space has been established as the reference for flame detection. In this paper, we present a color-based model in the PJF color space, an emerging color space showing promising results in computer vision [15]. The developed technique establishes rules to determine flame-colored pixels. An experimental comparison with existing color models from the literature shows that the suggested algorithm improves the classification accuracy of fire pixels while greatly reducing the error rate. To cover real-world fire scenarios, we have built an image dataset and a video dataset by downloading fire video clips and images from publicly available fire datasets. This test data is much larger than that used by other researchers.

This work is dedicated solely to the fire color model used to extract flame-colored pixels. Color models alone cannot distinguish between fire and fire-like pixels. Further analysis should be applied to the detected regions, but that is a separate problem, which is not considered in this paper. An extension of this work, providing a complete VFD system, was addressed in [16].

This paper is organized as follows: Section 2 outlines works from the literature that use color-based models for fire detection. Section 3 presents the proposed model. Experimental findings and their analysis are provided in Section 4. Finally, Section 5 concludes the work and outlines its perspectives.

The number of publications dealing with VFD in the literature has increased exponentially [1]. According to many studies, the shape, color and movement of the fire area are pertinent features. Fire has a very distinct color, which ranges from red through yellow up to white. Based on color properties, the state-of-the-art techniques can be categorized into three classes.

We would like to mention that we only deal with color models in this work.

2.1 Methods Based on Distribution

In these methods, fire pixel colors are assumed to be concentrated in particular regions of the color space, so probability distribution functions are built by training the model on a set of fire images. A pixel is considered fire if it belongs to the predetermined color probability distribution. Existing works differ in the color space, component plane, distribution model, etc. Reference [17] shows that possible fire-pixel values form a 3D point cloud in the RGB color space. The cloud is represented by a Gaussian mixture model with 10 Gaussian distributions. In [18], a probabilistic approach for fire detection in the RGB color space is proposed. The authors assume that the color channels of every fire pixel are independently distributed and that each can be described by a unimodal Gaussian model. If the overall color probability of a pixel exceeds a threshold, the pixel is considered flame. The Gaussian model presented in [19] considers a pixel to be a flame pixel if its red component is greater than its green one and its green component is greater than its blue one. This model is used to calculate the mean value of the pixel intensities in a fire frame area. Reference [20] also uses the RGB color space, assuming that once the flame flares up, the mean of the pixel's Gaussian distribution jumps instantly from a low to a high value. Wang et al. [21] propose a Gaussian model for the Cb and Cr distributions in the YCbCr color space, assuming that fire pixels take higher values in the Cr channel than in the Cb one. The authors of [22] also use the YCbCr color model to show that Cr and Cb have their own normal distribution characteristics relative to Y. Statistical characteristics are calculated from the obtained distribution function to identify the occurrence of fire.
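As an illustration of the distribution-based idea, the following is a minimal sketch of an independent per-channel Gaussian test of the kind described for the RGB color space. It is not the implementation of any cited work: the function names, the training pixels and the threshold are hypothetical and chosen only for the example.

```python
import numpy as np

def fit_channel_gaussians(fire_pixels):
    """Estimate a mean and a standard deviation per channel from (N, 3) fire pixels."""
    fire_pixels = np.asarray(fire_pixels, dtype=np.float64)
    mean = fire_pixels.mean(axis=0)
    std = fire_pixels.std(axis=0) + 1e-6  # small offset avoids division by zero
    return mean, std

def fire_probability(pixels, mean, std):
    """Product of the per-channel Gaussian densities (channels assumed independent)."""
    pixels = np.asarray(pixels, dtype=np.float64)
    z = (pixels - mean) / std
    density = np.exp(-0.5 * z ** 2) / (std * np.sqrt(2.0 * np.pi))
    return density.prod(axis=-1)

def is_fire(pixels, mean, std, threshold):
    """Flag pixels whose overall color probability exceeds the threshold."""
    return fire_probability(pixels, mean, std) > threshold

# Hypothetical training pixels (R, G, B) taken from flame regions.
training = [[250, 150, 30], [240, 140, 20], [255, 160, 40], [245, 155, 35]]
mean, std = fit_channel_gaussians(training)
print(is_fire([248, 151, 31], mean, std, threshold=1e-6))
print(is_fire([20, 30, 200], mean, std, threshold=1e-6))
```

In practice the threshold would be tuned on labeled data; the value used here is only a placeholder.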

In this type of approach, the color intensities of a pixel are used as features to classify pixels as fire or non-fire. In [23], the chrominance components a* and b* of the CIE L*a*b* color space are provided as features to the Fuzzy C-Means (FCM) algorithm. In [24], the values of the pixel color as well as its first and second derivatives are exploited to produce a covariance matrix. The pixel features, which are the elements of the matrix, are fed to a Support Vector Machine (SVM) classifier. The authors of [25] explain the advantage of the YCbCr color model for SVM-based classification. Jiang et al. [6] obtain better classification in the L*a*b* color space using an SVM with a Radial Basis Function (RBF) kernel. Other works use Bag-of-Features (BoF), such as [26], which combines the RGB and Hue Saturation Lightness (HSL) color spaces for color feature extraction and then uses BoF to calculate the classification rate for fire presence. Similarly, [27] uses BoF in the YUV color model for its fire detection approach. The Bow Fire method, described in [28], classifies YCbCr pixel values using Naive Bayes and K-Nearest Neighbors (KNN) during the color classification step.

The majority of works on flame pixel detection are rule based: they combine rules or thresholds in a color space. Rule-based fire detection methods are the fastest and the simplest. RGB is among the oldest and most used color spaces for fire detection, since nearly all cameras capture videos in RGB. In [29], Chen defines three rules combining the RGB and HSI color spaces to identify flame pixel colors. Çelik et al. propose two methods for fire pixel detection in the RGB color space [30,31], in which several equations impose conditions on the normalized R, G and B component values. In [32], the authors exploit the YUV color space to detect candidate fire pixels from the Y channel of the video data. The chrominance information of the extracted pixels is then examined to decide whether they are fire or not. Assuming that chrominance information is not affected by illumination variations, Çelik avoids working on a mixture of luminance and chrominance, as in RGB, and chooses the chrominance channels instead. For this reason, a color model composed of rules in the YCbCr color space is developed in [33]. Other color spaces are also exploited, such as CIE L*a*b* in [34]. The motivation for using this color space is its perceptual uniformity, which helps it represent the color information of fire better than other color spaces. The HSI color space is also used, since it imitates the color sensing properties of the human visual system. An example is [35], where rules are established to select fire pixels in brighter and darker environments. Many other works rely on a combination of color spaces: [36] presents six rules applied to the RGB and YUV components, [37] combines RGB, HSV and YCbCr rule-based models, and [38] elaborates rules in RGB, HSI and YUV.

To sum up, the comparison of the related works cited above shows that no single color space is established as a standard for flame detection. We therefore aim to exploit emerging color spaces that have already shown promising results to provide a color-based model for fire detection.

3.1 RGB to PJF Color Space Conversion

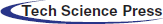

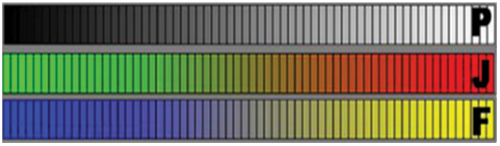

RGB is the most common output format of video surveillance cameras; however, other color spaces are used for a better representation of the data. The first step of our method is the conversion from the RGB to the PJF color space. PJF imitates the concept of L*a*b* by converting the R, G and B color channels into new ones that better describe the organization of colors with a low color calibration error. Brightness is expressed by a single variable and color by two variables, one going from blue to yellow and the other from green to red, with the vectors remaining inside the RGB color cube [15]. The variable P measures the brightness magnitude as the root of the sum of the squared channel values, J measures the relative amounts of red and green, and F measures the relative amounts of yellow and blue. Given RGB data, the P, J and F equations are expressed below [15]:

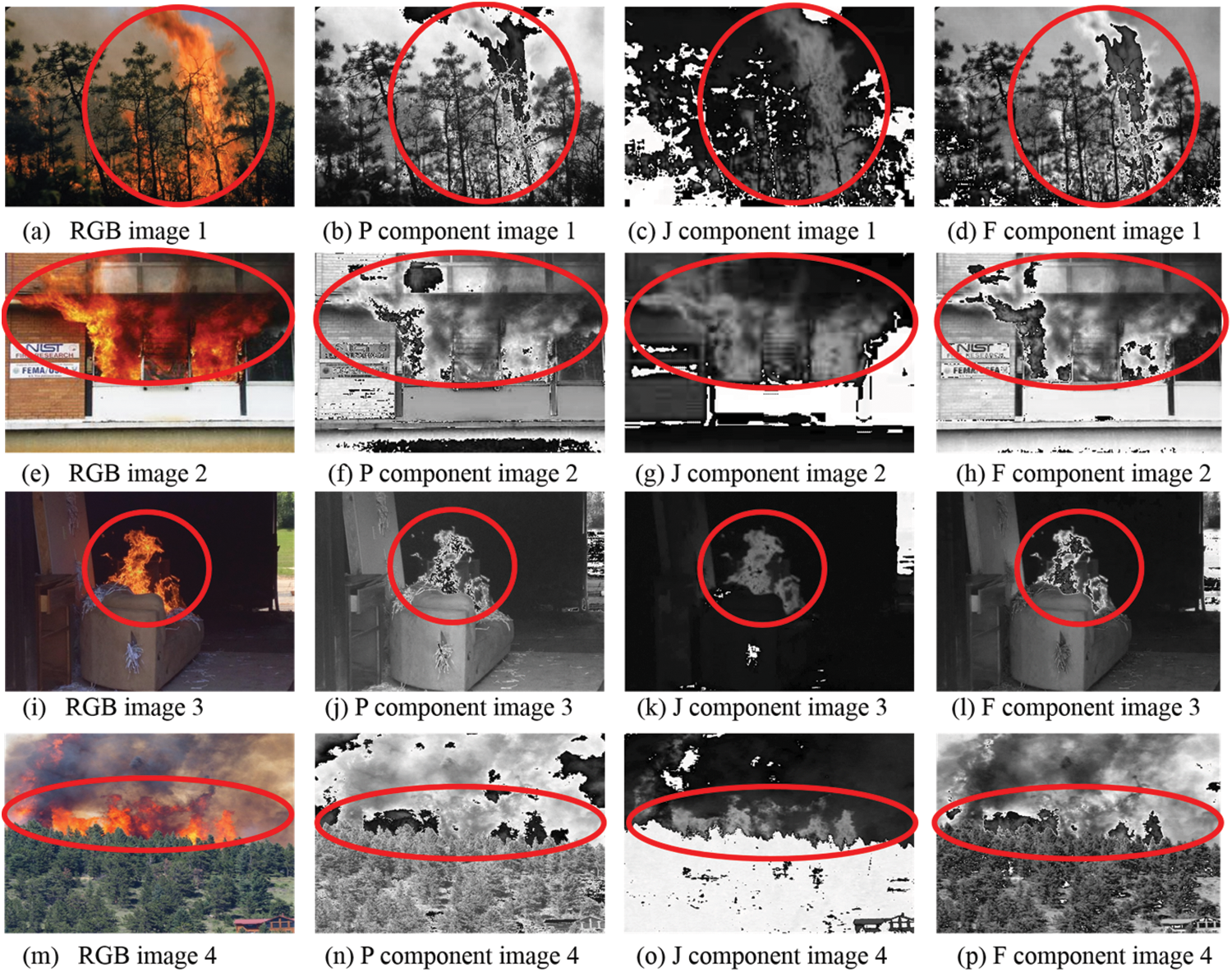

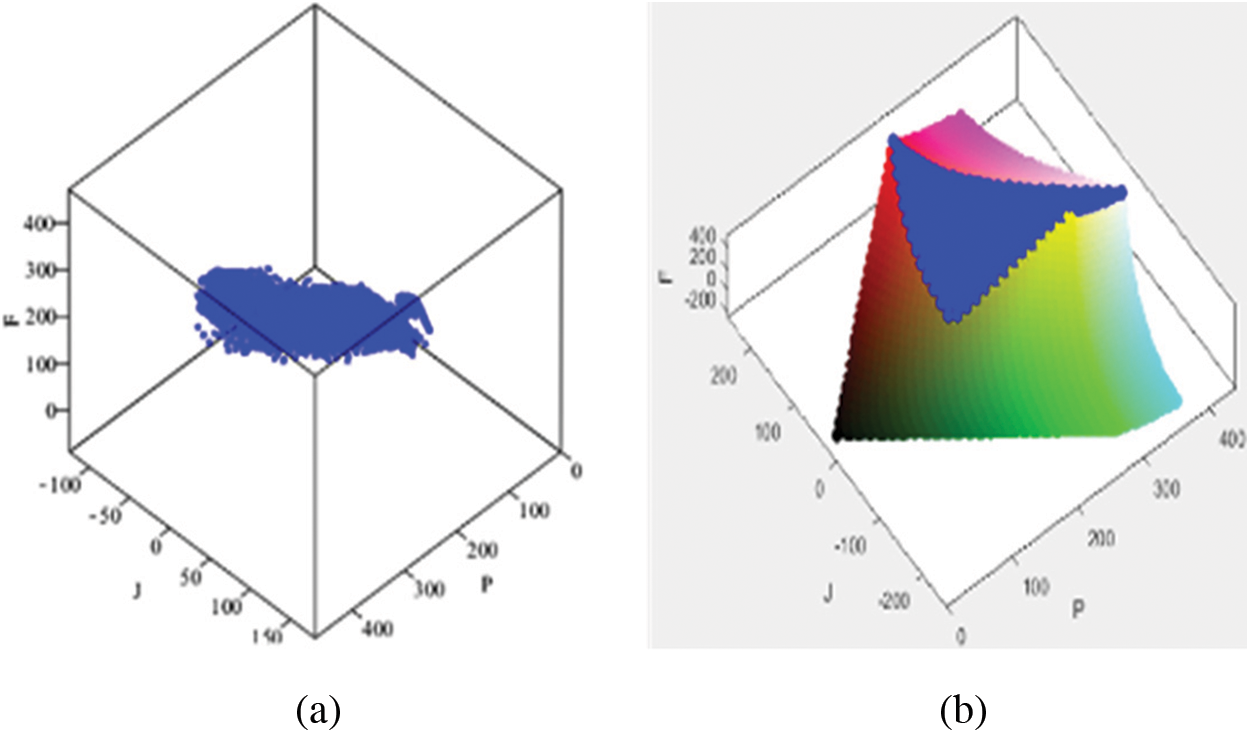
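The conversion can be sketched in a few lines. Since Eqs. (1)–(3) are not reproduced in this text, the sketch below assumes the definitions P = sqrt(R² + G² + B²), J = R − G and F = R + G − B, which match the verbal description above (root of the sum of squares, red versus green, yellow versus blue). The function name `rgb_to_pjf` is hypothetical.

```python
import numpy as np

def rgb_to_pjf(rgb):
    """Convert an (..., 3) RGB array to its P, J and F channels.

    Assumed definitions (not reproduced in the text):
      P = sqrt(R^2 + G^2 + B^2), J = R - G, F = R + G - B
    """
    rgb = np.asarray(rgb, dtype=np.float64)  # float avoids uint8 overflow
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    p = np.sqrt(r ** 2 + g ** 2 + b ** 2)  # brightness magnitude
    j = r - g                              # red versus green
    f = r + g - b                          # yellow versus blue
    return p, j, f

# One orange pixel stored as 8-bit RGB.
p, j, f = rgb_to_pjf(np.array([[[255, 128, 0]]], dtype=np.uint8))
print(p[0, 0], j[0, 0], f[0, 0])
```

Note that images loaded with OpenCV are stored in BGR order, so the channels would need to be reordered before calling such a function.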

The color components generated by Eqs. (1)–(3) are illustrated in Fig. 1 [15]. Fig. 2 displays the 3D color distribution of the PJF color space where P, J and F channels are quantized into 26 levels.

Figure 1: The PJF color space channels: P, J and F

Figure 2: The PJF 3D color distribution

3.2 Proposed Rule-Based Color Model for Fire Detection

In this work, we propose a simple and efficient rule-based color model to locate possible fire areas in images (namely fire regions and fire-like regions). It is meant to be used in a VFD system and followed by a more refined analysis that separates fire from fire-like regions [16]. A fire in an image can be defined by its chromatic attributes, which can be represented with simple mathematical formulations. Fig. 3 shows fire images and their P, J and F color channels, which helps to understand how the PJF channels represent fire pixels. Based on these observations, we establish rules in the PJF color model to detect fire regions.

Figure 3: RGB fire images and their corresponding P, J and F color components

This choice is made to decorrelate luminance and chrominance in order to avoid the effects of illumination changes, while gathering the pertinent chrominance information (fire pixel colors) in a small number of components. Therefore, a color model that exploits the chrominance channels is more meaningful for the structure of flame colors. As explained previously, the chroma components J and F measure the relative amounts of red and yellow, respectively, which are the two bounds of the fire color range. Based on the color nature of fire, two observations can be made:

1/ A fire pixel consistently has a high saturation in the Red channel (R), which means

R > RT (4)

where RT indicates the threshold value of the R component.

2/ For every fire pixel, the Red channel value (R) is higher than the Green one (G), and the Green channel value is higher than the Blue one (B), which can be translated to

R > G > B (5)

From Eqs. (2)–(4), the following formulation can be deduced:

Given Eq. (5) and the color characteristics of fire pixels, the Blue channel value is negligible compared to the Red and Green channel values. Thus, Eq. (6) can be refined by omitting the Blue channel value to obtain:

FT = 2RT denotes the threshold value of the F component.

The examination of Eqs. (2), (3) and (5) also leads to the following:

Based on these equations, and as verified by an analysis of many fire images, the following rule is built to detect a candidate pixel Pix:

where FT is the empirically fixed threshold value of the F component and (x, y) is the spatial position in the image.
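The reasoning behind these steps can be partly retraced. As a hedged sketch only, assuming the PJF definitions J = R − G and F = R + G − B (Eqs. (1)–(3) are not reproduced in this text), the two observations on fire pixels, a red value above the threshold RT and the ordering R > G > B, imply the bounds below. These are consequences of the stated assumptions and are not necessarily the authors' Eqs. (6)–(10):

$$
\begin{aligned}
R > G \;&\Rightarrow\; J = R - G > 0,\\
R > R_T,\ G > B \;&\Rightarrow\; F = R + (G - B) > R > R_T,\\
G > B \ge 0 \;&\Rightarrow\; F - J = 2G - B > G > 0 \;\Rightarrow\; F > J.
\end{aligned}
$$

When the Blue value is neglected, F ≈ R + G, which is the simplification the text applies before fixing the threshold FT.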

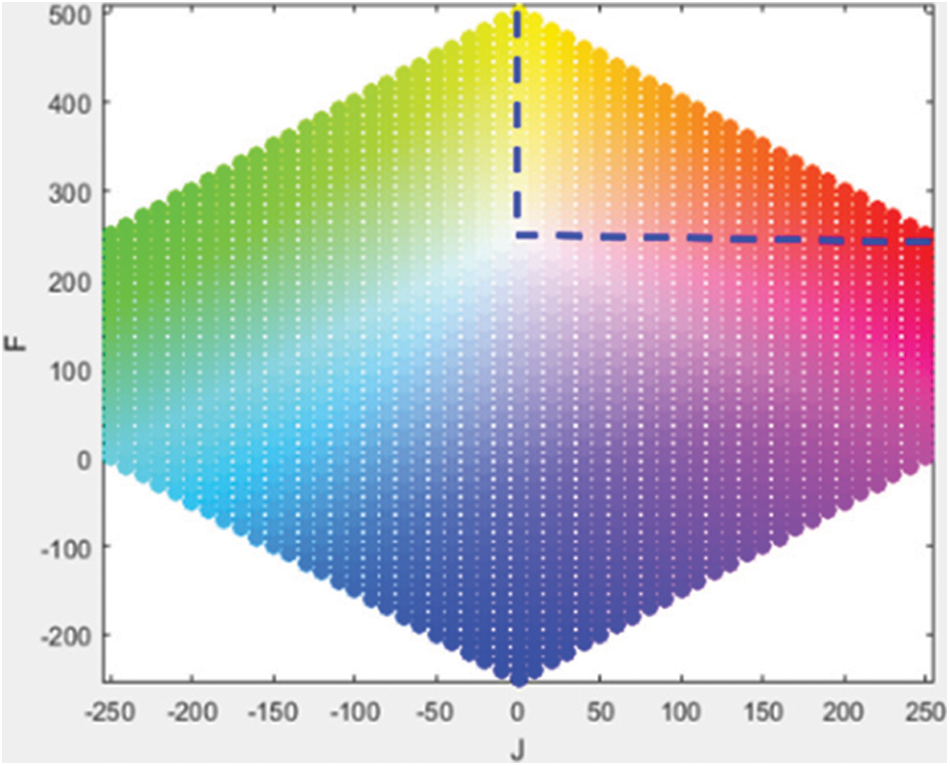

Aiming to statistically validate the developed model, the following procedure was applied. A set of RGB images, collected from public fire datasets, is manually segmented to isolate the fire regions. The segmented areas are then converted to the PJF color space. Fig. 4a shows a sample distribution of fire-colored pixels, and Fig. 4b plots the projection of the fire pixels of the sample images in the PJF space. The color variation of the fire pixels can clearly be thresholded in the J and F components, unlike the P component. The fire pixel region is delimited by two straight lines whose equations are given in Eq. (11) and which are depicted as blue lines in Fig. 5. These regions can be used to differentiate candidate fire pixels from non-fire pixels. The effectiveness of this color space lies in its ability to separate color components, essentially J and F. The (J–F) plane drawn in Fig. 5 lets us validate the accuracy of the obtained model. Since this plane condenses the fire color information in one representation (the relative amounts of yellow and red), the estimation of the boundaries of the fire color variation becomes precise. The obtained area (bounded by the blue lines in Fig. 5) contains only the colors corresponding to the fire color range. Hence, the threshold

Figure 4: (a) Example of fire-color cloud in PJF space, (b) projection of the fire pixels of the sample images in the PJF color space

Figure 5: Fire pixels region boundaries in (J–F) plane
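The thresholding of the (J–F) plane described above can be sketched as follows. Because Eq. (11) is not reproduced in this text, the sketch is an illustration under explicit assumptions, not the authors' rule: it assumes J = R − G and F = R + G − B, and it uses two hypothetical threshold tests (J at or above zero, F at or above a threshold) combined with a logical AND. The function name and the threshold value are placeholders.

```python
import numpy as np

def fire_candidate_mask(rgb, f_threshold, j_threshold=0.0):
    """Return a boolean mask of candidate fire pixels for an (H, W, 3) RGB image.

    Assumptions (not taken from the text): J = R - G, F = R + G - B, and a
    rule made of two threshold tests combined with a logical AND.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    j = r - g                    # red versus green
    f = r + g - b                # yellow versus blue
    mask_j = j >= j_threshold    # red is at least as strong as green
    mask_f = f >= f_threshold    # strong yellow component, weak blue
    return mask_j & mask_f

# 2x2 test image: orange, blue, gray, yellow.
image = np.array([[[255, 128, 0], [0, 0, 255]],
                  [[128, 128, 128], [255, 255, 0]]], dtype=np.uint8)
mask = fire_candidate_mask(image, f_threshold=250.0)  # 250.0 is a placeholder
print(mask)
```

Only the orange and yellow pixels pass both tests in this example. As the text notes, such a mask also keeps fire-like colors, so it yields candidates rather than confirmed fire.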

4 Evaluation of the Proposed Model Performances

In this section, experiments are conducted to assess the performance of the proposed method. A comparison with color models from the literature, defined in different color spaces, is also carried out.

The color model should be validated on video sequences and images captured in diverse environmental conditions, such as indoor, outdoor, daytime and nighttime scenes. The dataset used in the experiments should cover different resolutions and frame rates, and may be shot by a non-stationary camera. In the literature, no standard benchmark image/video fire dataset is openly available. For this reason, we have collected samples from public image and video fire datasets. We tried to cover the majority of real-world scenarios with stationary and dynamic backgrounds, such as forest fires, indoor fires and outdoor open-space fires. Examples of potential false alarms, which contain no flame but flame-like colors, such as sunsets, car lights and fire-colored objects, are also included. The total number of tested images is 251 996. The resulting dataset is much larger than those used by other researchers. It is composed of 3 fire image datasets: Bow Fire [39], Fire images [40] and Fismo [41]; and 6 fire video datasets: Bilkent [42], FireVid [43], Mivia [44], Rescuer [45], KMUIndoor_outdoor_flame [46], RabotVideos [47].

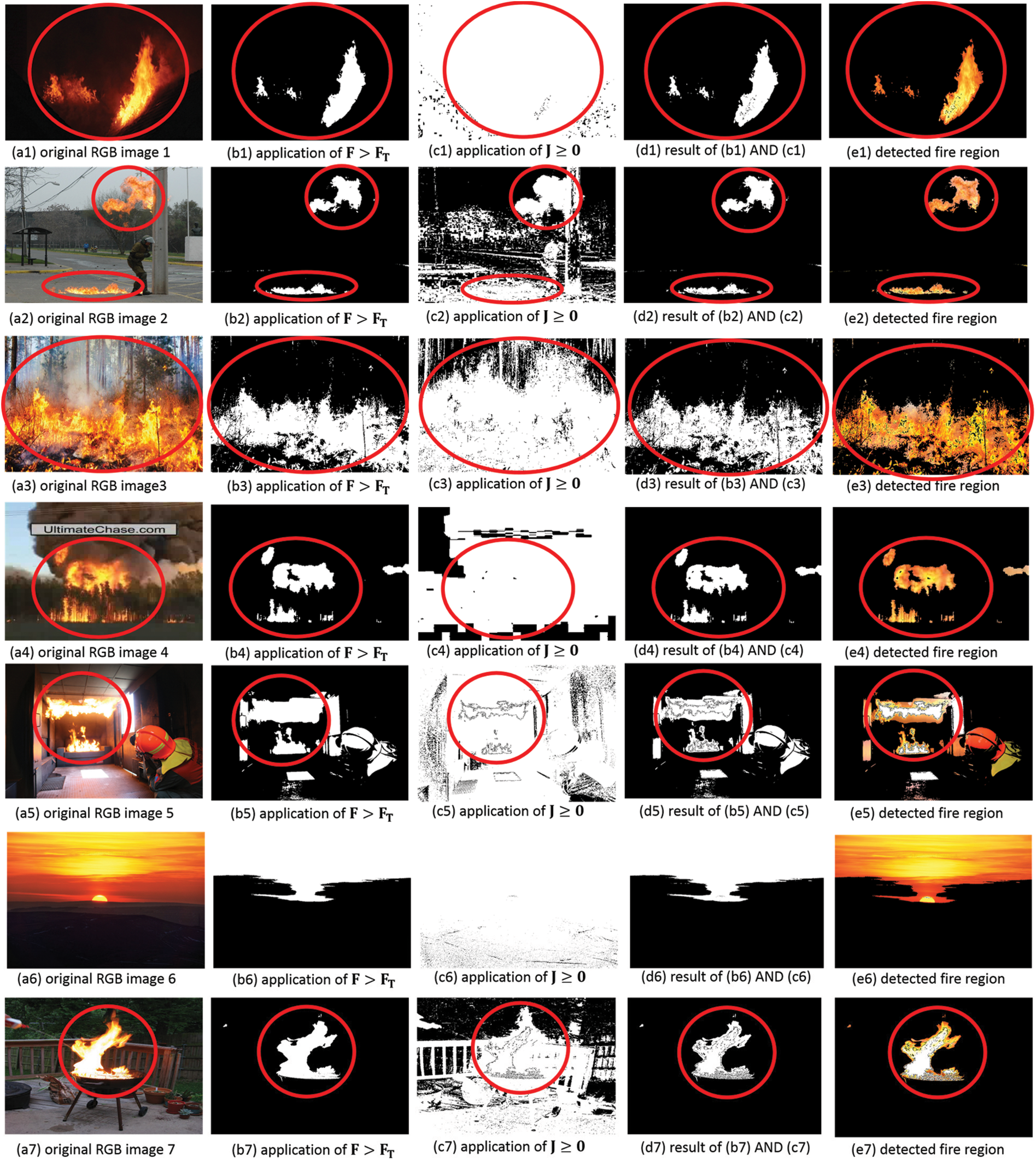

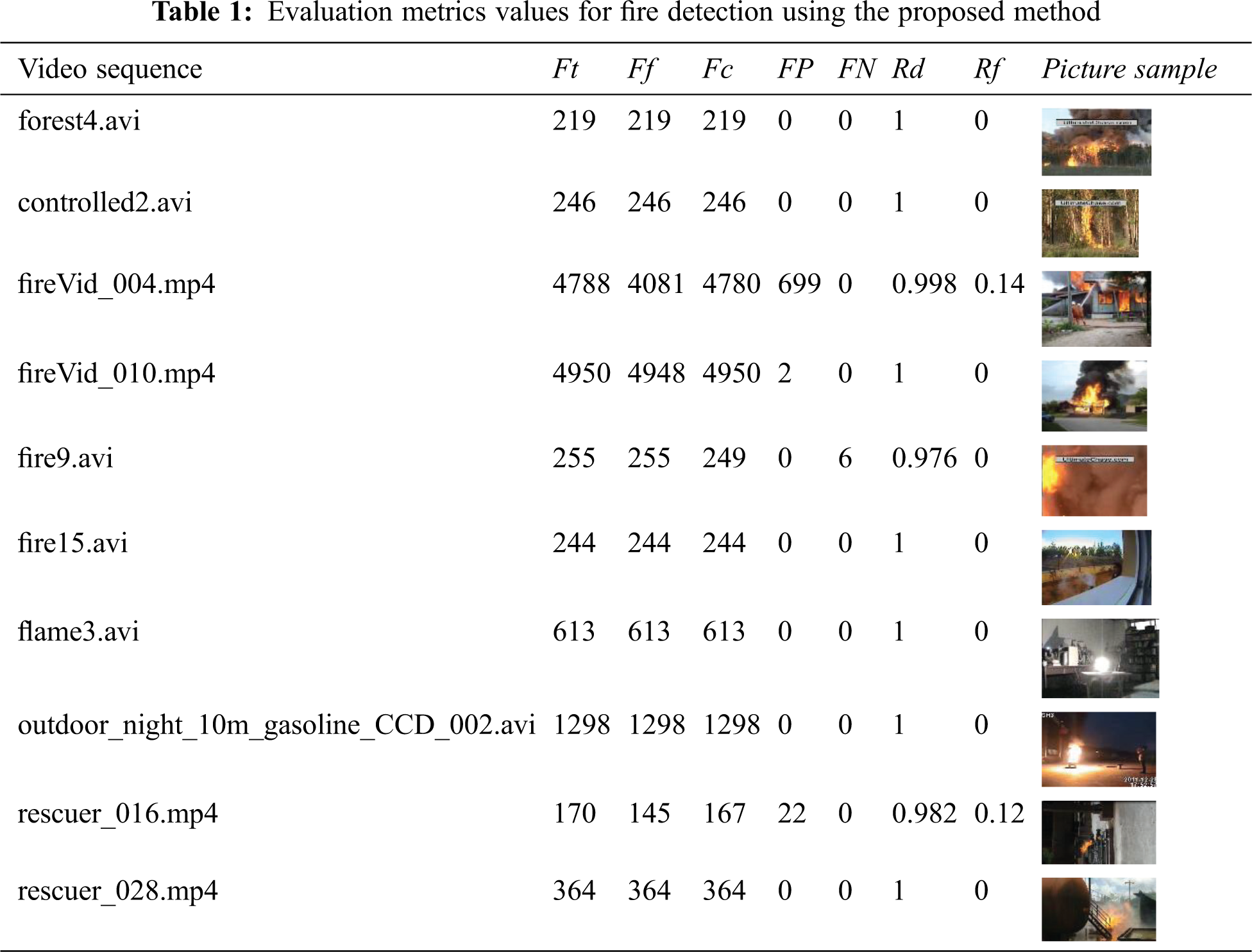

All experiments are run on an Intel i7-2670QM CPU @ 2.20 GHz with 4.0 GB of RAM under 64-bit Windows. The collected dataset is used to benchmark the performance of our method. Ground truth images are produced manually. The implementation is written in C++ with the OpenCV library. Fig. 6 illustrates some fire detection results obtained by testing the developed PJF model on different video sequences. It shows sample images and their binary results computed using Eq. (11). Fig. 6d shows the result of combining these binary outputs with the AND operator. The detected fire regions are displayed in Fig. 6e. Candidate areas, including fire and fire-like colored regions (such as the firefighter wearing a fire-colored uniform and cap or the sunset view in images 5 and 6 of Fig. 6), are successfully extracted. Moreover, the detection is accomplished without missing results, which underlines the performance of the method. It should be noted that missing a fire is more costly than raising a false alarm. Besides, although the scenes are filmed in different environments and weather conditions (indoor, outdoor, forest, daytime and nighttime), the regions of interest are effectively detected. Fig. 6 also confirms that fire pixel detection performs well despite luminosity changes, thanks to the illumination independence of the PJF color space. Tab. 1 reports the evaluation metrics of the suggested method. The rule-based model is tested on the various video sequences of the dataset. Ft is the total number of frames in the video sequence, Ff is the number of fire frames, and Fc is the number of frames correctly identified as fire images by the suggested method (including fire and fire-like areas). FP and FN represent, respectively, the numbers of false positive and false negative video frames. A false positive denotes a non-fire area wrongly identified as fire. A false negative refers to a fire region wrongly rejected by the system. The detection rate Rd, computed by Eq. (12), is the rate of successfully detecting fire in a video. The false alarm rate Rf, computed by Eq. (13), is the rate of identifying fire in a non-fire frame.
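The two frame-level rates can be illustrated with a short sketch. Eqs. (12) and (13) are not reproduced in this text, so the formulas below are assumptions read from the verbal definitions: Rd is taken as Fc / Ff (share of fire frames correctly identified) and Rf as FP / (Ft − Ff) (share of non-fire frames flagged as fire). The function names and the frame counts in the example are hypothetical.

```python
def detection_rate(frames_correct, frames_fire):
    """Rd, assumed here to be Fc / Ff."""
    if frames_fire == 0:
        raise ValueError("the sequence contains no fire frame")
    return frames_correct / frames_fire

def false_alarm_rate(false_positives, frames_total, frames_fire):
    """Rf, assumed here to be FP / (Ft - Ff)."""
    non_fire = frames_total - frames_fire
    if non_fire == 0:
        return 0.0  # no non-fire frame, so no false alarm can occur
    return false_positives / non_fire

# Hypothetical sequence: 300 frames, 200 with fire, 199 detected, 5 false alarms.
print(detection_rate(199, 200))
print(false_alarm_rate(5, 300, 200))
```

If the original equations normalize by a different quantity (for example the total number of frames), only the denominators would change.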

Figure 6: Application of Eq. (11) rules to images samples

The average detection rate achieved on the test sequences shown in Tab. 1 is 0.995, which demonstrates the efficiency of the PJF color model in detecting fire regions correctly (as shown by the high values of Fc in Tab. 1). The average false alarm rate is around 0.026, which gives the method a low error rate. The false negatives are due to the presence of tiny fire regions at initial combustion or of dense smoke regions in the scene. Fig. 6 and Tab. 1 show that the suggested method works properly in diverse environments, which is crucial for an effective fire detection system.

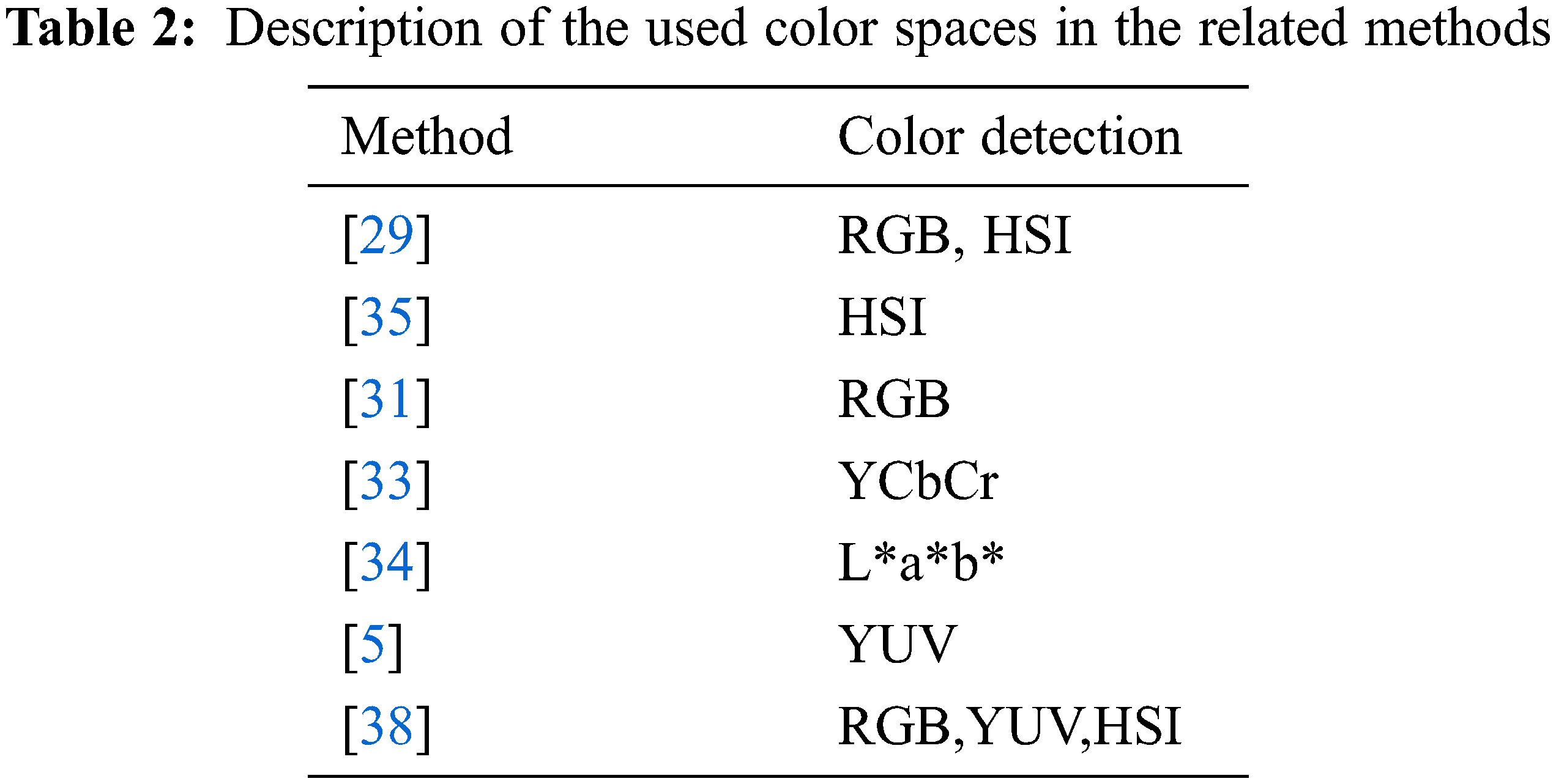

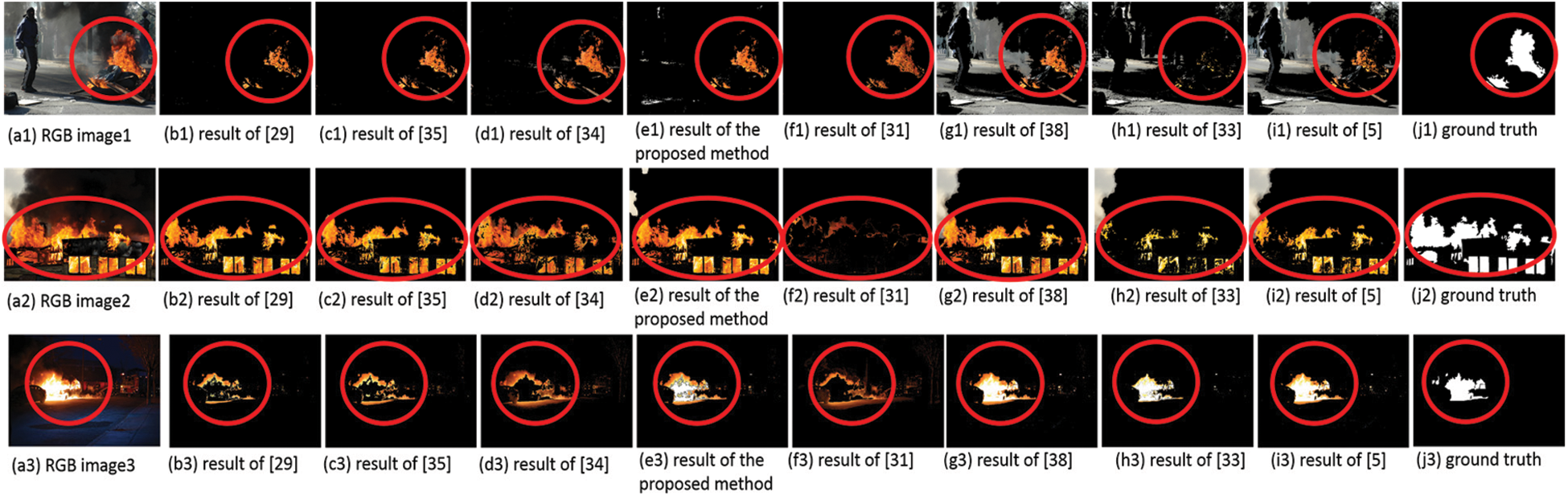

For a better assessment, we conduct experiments to compare our method with some state-of-the-art methods. These related works are the main color-based models for fire detection proposed in different color spaces, as summarized in Tab. 2. References [29,38] use rules in combined color spaces, while the others develop rules in single color spaces. Fig. 7 shows the detection outputs of the different methods applied to the Bow Fire dataset. Compared to the ground truth images, the proposed model clearly extracts the fire regions well. It outperforms the models using the RGB color space proposed in [29,31].

Figure 7: Comparison of the detection results of the developed model and different literature models applied on the Bow Fire image samples dataset

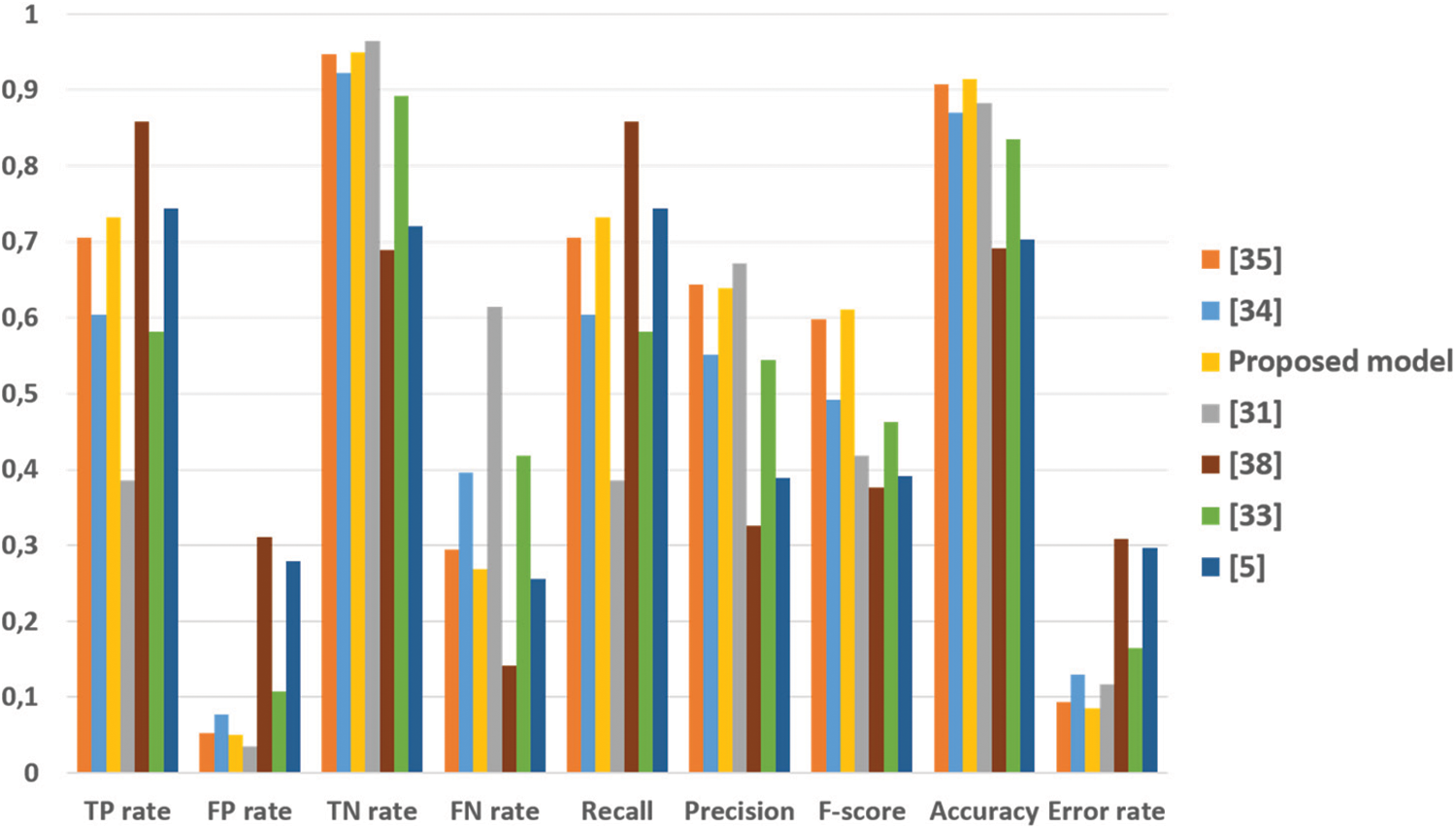

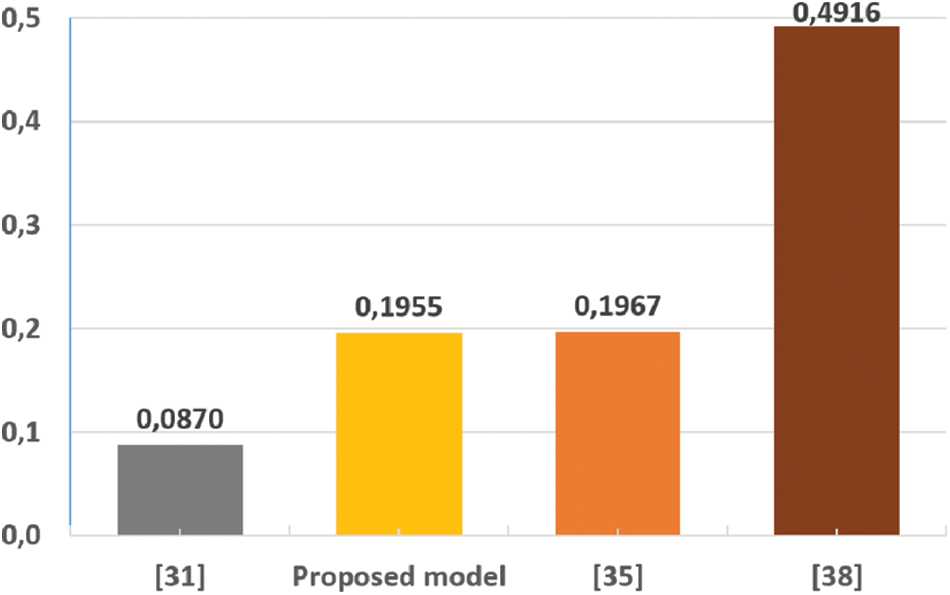

The performance enhancement is expected, since the PJF color space does not suffer from the correlation between pixel values or from the luminance-chrominance dependence. The model also performs better than that of [38], which operates in three powerful color spaces, RGB, YUV and HSI. Although YCbCr and YUV can separate luminance from chrominance information as well as the PJF color space does, the PJF model gives better results thanks to its color structure, which highlights the colors of fire in the (J–F) plane. Fig. 8 gathers the evaluation metrics of some related fire detection works and of the proposed one. The overall results are obtained by testing on the entire collected dataset. For the true positive (TP) rate, our model is ranked third after [5,38] with a value of 0.731. For the true negative (TN) rate, it achieves 0.949. Both false rates, FP and FN, are also reduced with the PJF color model, with values of 0.050 and 0.268, respectively. This demonstrates the effectiveness of our method in discriminating between fire and non-fire colors without missing results. Furthermore, our algorithm offers the best trade-off between the true rates (TP and TN) on one side and the false rates (FP and FN) on the other. Regarding the FP and FN rates, the precision and recall values in Fig. 8 are 0.639 and 0.731. The top values are, respectively, 0.671 with [31] and 0.823 with [38]. Compared to the other works, the obtained precision and recall values are quite respectable. According to Fig. 8, [35] has results very close to those of the proposed method, since the color spaces used in these works, HSI and PJF, are both able to imitate the color sensing properties of the human visual system. However, the color model of [35] is built on an experimental analysis of 70 fire images. It relies on many thresholds computed from a small dataset, which makes it very dependent on the choice of images. These threshold values also vary with the environment luminance; the authors developed two models, for brighter and darker environments, without proposing any method to determine the environment luminance. In contrast, our work justifies all the rules used in the model. Besides, it is tested on a large set of images under different environment luminance conditions to demonstrate its performance. The accuracy of the suggested algorithm is high, at 0.914, and the weighted harmonic mean F-score is 0.610. The obtained error rate of 0.085 is the lowest among the compared literature works. It should be kept in mind that the proposed method is based on color information only. In addition, according to Fig. 9, the model identifies fire pixels in test images within 0.19 s, which is very promising. It outperforms the works of [35,38]. The method of [31] has the lowest average time for fire detection; however, its detection results are not very accurate, as shown in Fig. 7. In terms of the accuracy and error rate values in Fig. 8, [31] is surpassed by our proposed model.
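For readers who want to reproduce this kind of comparison, the pixel-level metrics can be computed from a predicted mask and a ground truth mask as sketched below. The sketch uses the standard textbook definitions; the function name is hypothetical, and the F-score shown is the plain harmonic mean of precision and recall, which need not match the weighted F-score reported above since the weighting is not specified in the text. The error rate is taken as one minus the accuracy, which is consistent with the reported pair of 0.914 and 0.085.

```python
import numpy as np

def pixel_metrics(predicted, ground_truth):
    """Compute standard detection metrics from two boolean masks of equal shape."""
    pred = np.asarray(predicted, dtype=bool)
    truth = np.asarray(ground_truth, dtype=bool)
    tp = int(np.sum(pred & truth))     # fire pixels detected as fire
    tn = int(np.sum(~pred & ~truth))   # non-fire pixels rejected
    fp = int(np.sum(pred & ~truth))    # non-fire pixels detected as fire
    fn = int(np.sum(~pred & truth))    # fire pixels missed
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("empty masks")
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / total
    f_score = (2 * precision * recall / (precision + recall)
               if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "accuracy": accuracy,
            "error_rate": 1.0 - accuracy, "f_score": f_score}

# Toy masks with 2 true positives, 1 false positive, 1 false negative, 4 true negatives.
metrics = pixel_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0])
print(metrics)
```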

Figure 8: Comparison of the calculated evaluation metrics of fire detection using the proposed color model and literature models

Figure 9: Comparison of the average fire detection time, in seconds per frame, of the proposed color model and literature models

To conclude, using this model in a hybrid system that combines color with other information such as motion, geometry and texture should improve the fire detection results by accurately differentiating fire regions from fire-like areas in the scenes [16].

In this work, we present a new color-based model for fire detection in the PJF color space. The developed method discriminates between fire and non-fire pixels. Its performance is mainly due to the capacity of the PJF color space to decorrelate luminance and chrominance and to concentrate the range of fire colors in its J and F components. The largest dataset in the literature is collected to assess the color model. The model is tested on 251 996 image samples, achieving up to a 99.8% detection rate and an 8.59% error rate. Experimental outcomes show that the PJF model outperforms related models. Its main contribution is its ability to achieve the best compromise between true and false detection rates. In future work, Deep Learning methods will be considered to further improve the performance of the method and build a robust fire detection system.

Acknowledgement: This study was funded by the Deanship of Scientific Research, Taif University Researchers Supporting Project Number (TURSP-2020/161), Taif University, Taif, Saudi Arabia.

Funding Statement: This study was funded by the Deanship of Scientific Research, Taif University Researchers Supporting Project Number (TURSP-2020/161), Taif University, Taif, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. A. Gaur, A. Singh, A. Kumar, A. Kumar and K. Kapoor, “Video flame and smoke based fire detection algorithms: A literature review,” Fire Technology, vol. 53, pp. 1943–1980, 2020.

2. D. Guha-Sapir, P. Hoyois, P. Wallemacq and R. Below, “Annual Disaster Statistical Review 2017: The Numbers and Trends,” Brussels, Belgium: CRED.2017, University Cathol. Louvain, Brussels, Belgium, 2017.

3. N. N. Brushlinsky, M. Ahrens, S. V. Sokolov and P. Wagner, “World Fire Statistics. The International Association of Fire and Rescue Services, Center of Fire Statistics (CTIF),” 2020.

4. Z. C. Zou, H. Leng, K. L. Wu and W. Q. Su, “Intelligent space for building fire detection and evacuation decision support,” in Proc. Int. Conf. on Electrical, Automation and Mechanical Engineering, Phuket, Thailand, pp. 365–368, 2015.

5. A. E. Cetin, K. Dimitropoulos, B. Gouverneur, N. Grammalidis, O. Gunay et al., “Video fire detection-review,” Digital Signal Processing, vol. 23, no. 6, pp. 1827–1843, 2013.

6. B. Jiang, Y. Lu, X. Li and L. Lin, “Towards a solid solution of real-time fire and flame detection,” Multimedia Tools and Applications, vol. 74, pp. 689–705, 2015.

7. C. Rui, L. Zhe-Ming and J. Qing-Ge, “Real-time multi-feature based fire flame detection in video,” IET Image Process, vol. 11, no. 1, pp. 31–37, 2017.

8. X. F. Han, J. S. Jin, M. J. Wang, W. Jiang, L. Gao et al., “Video fire detection based on Gaussian mixture model and multicolor features,” Signal Image Video Process, vol. 11, pp. 1419–1425, 2017.

9. F. Gong, Ch. Li, W. Gong, X. Li, X. Yuan et al., “A real-time fire detection method from video with multifeature fusion,” Computational Intelligence and Neuroscience, vol. 2019, Article ID 1939171, pp. 1–17, 2019.

10. T. Kim, E. Hong, J. Im, D. Yang, Y. Kim et al., “Visual simulation of fire-flakes synchronized with flame,” Visual Computer, vol. 33, pp. 1029–1038, 2017.

11. X. Feng, D. Zhu, Z. Wang and Y. Wei, “A geometric control of fire motion editing,” Visual Computer, vol. 33, pp. 585–595, 2017.

12. B. Fangming, F. Xuanyi, C. Wei, F. Weidong, M. Xuzhi et al., “Fire detection method based on improved fruit Fly optimization-based SVM,” Computers, Materials & Continua, vol. 62, no. 1, pp. 199–216, 2020.

13. S. Y. Du and Z. G. Liu, “A comparative study of different color spaces in computer-vision-based flame detection,” Multimedia Tools and Applications, vol. 75, pp. 10291–10310, 2016.

14. M. Thanga Manickam, M. Yogesh, P. Sridhar, S. K. Thangavel and L. Parameswaran, “Video-based fire detection by transforming to optimal color space,” In: S. Smys, J. Tavares, V. Balas and A. Iliyasu (Eds.) Computational Vision and Bio-Inspired Computing. ICCVBIC 2019. Advances in Intelligent Systems and Computing, vol. 1108, pp. 1256–1264, Cham: Springer, 2020.

15. P. Jackman, D. W. Sun and G. ElMasry, “Robust colour calibration of an imaging system using a colour space transform and advanced regression modelling,” Meat Science, vol. 91, no. 4, pp. 402–407, 2012.

16. Z. Daoud, A. Ben Hamida and C. Ben Amar, “Automatic video fire detection approach based on PJF color modeling and spatio-temporal analysis,” Journal of WSCG, vol. 27, no. 1, pp. 27–36, 2019.

17. B. U. Toreyin, Y. Dedeoglu, U. Gudukbay and A. E. Çetin, “Computer vision based method for real-time fire and flame detection,” Pattern Recognition Letters, vol. 27, no. 1, pp. 49–58, 2006.

18. B. C. Ko, K. H. Cheong and J. Y. Nam, “Fire detection based on vision sensor and support vector machines,” Fire Safety Journal, vol. 44, no. 3, pp. 322–329, 2009.

19. P. V. K. Borges and E. Izquierdo, “A probabilistic approach for vision-based fire detection in videos,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 20, no. 5, pp. 721–731, 2010.

20. F. Yuan, “An integrated fire detection and suppression system based on widely available video surveillance,” Machine Vision and Applications, vol. 21, pp. 941–948, 2010.

21. D. C. Wang, X. Cui, E. Park, C. Jin and H. Kim, “Adaptive flame detection using randomness testing and robust features,” Fire Safety Journal, vol. 55, pp. 116–125, 2013.

22. T. Han, C. Ge, S. Li and X. Zhang, “Flame detection method based on feature recognition,” In: Q. Liang, W. Wang, X. Liu, Z. Na, M. Jia and B. Zhang (Eds.) Communications, Signal Processing, and Systems. CSPS 2019. Lecture Notes in Electrical Engineering, vol. 571, Singapore: Springer, 2019.

23. T. X. Truong and J. M. Kim, “Fire flame detection in video sequences using multi-stage pattern recognition techniques,” Engineering Applications of Artificial Intelligence, vol. 25, no. 7, pp. 1365–1372, 2012.

24. Y. H. Habiboğlu, O. Günay and A. E. Çetin, “Covariance matrix-based fire and flame detection method in video,” Machine Vision and Applications, vol. 23, pp. 1103–1113, 2012.

25. M. Jamali, S. Samavi, M. Nejati and B. Mirmahboub, “Outdoor fire detection based on color and motion characteristics,” in Proc. 21st Iranian Conf. on Electrical Engineering (ICEE), Mashhad, Iran, pp. 1–6, 2013.

26. K. Poobalan and S. C. Liew, “Fire detection based on color filters and bag-of-features classification,” in Proc. IEEE Student Conf. on Research and Development (SCOReD), Kuala Lumpur, Malaysia, pp. 389–392, 2015. [Google Scholar]

27. Z. G. Liu, X. Y. Zhang, Y. Yang and C. C. Wu, “A flame detection algorithm based on bag-of-features in the YUV color space,” in Proc. of Int. Conf. on Intelligent Computing and Internet of Things, Harbin, China, pp. 64–67, 2015. [Google Scholar]

28. D. Y. T. Chino, L. P. S. Avalhais, J. F. Rodrigues and A. J. M. Traina, “BoWFire: Detection of fire in still images by integrating pixel color and texture analysis,” in Proc. 28th SIBGRAPI Conf. on Graphics, Patterns and Images, Salvador, Brazil, pp. 95–102, 2015. [Google Scholar]

29. T. H. Chen, P. H. Wu and Y. C. Chiou, “An early fire-detection method based on image processing,” in Proc. ICIP, vol. 3, pp. 1707–1710, 2004. [Google Scholar]

30. T. Çelik, H. Demirel and H. Ozkaramanli, “Automatic fire detection in video sequences,” in Proc. 14th European Signal Processing Conf., Florence, Italy, pp. 1–5, 2006. [Google Scholar]

31. T. Çelik, H. Demirel, H. Ozkaramanli and M. Uyguroglu, “Fire detection in video sequences using statistical color model,” in Proc. IEEE Int. Conf. on Acoustics, Speech and Signal Processing, vol. 2, Toulouse, France, 2006. [Google Scholar]

32. G. Marbach, M. Loepfe and T. Brupbacher, “An image processing technique for fire detection in video images,” Fire Safety Journal, vol. 41, no. 4, pp. 285–289, 2006. [Google Scholar]

33. T. Çelik, H. Özkaramanlı and H. Demirel, “Fire and smoke detection without sensors: Image processing based approach,” in Proc. 15th European Signal Processing Conf., Poznan, Poland, pp. 1794–1798, 2007. [Google Scholar]

34. T. Çelik, “Fast and efficient method for fire detection using image processing,” ETRI Journal, vol. 32, no. 6, pp. 881–890, 2010. [Google Scholar]

35. W. B. Horng, J. W. Peng and C. Y. Chen, “A new image-based real-time flame detection method using color analysis,” in Proc. 2005 IEEE Networking, Sensing and Control, Tucson, AZ, USA, pp. 100–105, 2005. [Google Scholar]

36. S. Li, W. Liu, H. Ma and H. Fu, “Multi-attribute based fire detection in diverse surveillance videos,” in Proc. MultiMedia Modeling, Reykjavik, Iceland, January 4–6, pp. 238–250, 2017. [Google Scholar]

37. G. F. Shidik, F. N. Adnan, C. Supriyanto, R. A. Pramunendar and P. N. Andono, “Multi color feature, background subtraction and time frame selection for fire detection,” in Proc. Int. Conf. on Robotics, Biomimetics, Intelligent Computational Systems, Jogjakarta, Indonesia, pp. 115–120, 2013. [Google Scholar]

38. X. F. Han, J. S. Jin, M. J. Wang, W. Jiang, L. Gao et al., “Video fire detection based on Gaussian mixture model and multi-color features,” Signal, Image and Video Processing, vol. 11, pp. 1419–1425, 2017. [Google Scholar]

39. W. D. de Oliveira, 2019, Dataset Available https://bitbucket.org/gbdi/bowfire-dataset/downloads/. [Google Scholar]

40. J. Sharma and M. Goodwin, 2017, Dataset Available https://github.com/cair/Fire-Detection-Image-Dataset. [Google Scholar]

41. M. T. Cazzolato, L. P. S. Avalhais, D. Y. T. Chino, J. S. Ramos, J. A. Souza et al., 2017, Dataset Available https://github.com/mtcazzolato/dsw2017. [Google Scholar]

42. A. E. Cetin, 2006, Dataset Available http://signal.ee.bilkent.edu.tr/VisiFire/. [Google Scholar]

43. M. T. Cazzolato, L. P. S. Avalhais, D. Y. T. Chino, J. S. Ramos, J. A. Souza et al., 2017, Dataset Available https://drive.google.com/open?id=0B0SvQV_fqWgZOVI5dERTOWc4MDg. [Google Scholar]

44. P. Foggia, A. Saggese and M. Vento, 2015, Dataset Available https://mivia.unisa.it/datasets/video-analysis-datasets/fire-detection-dataset/. [Google Scholar]

45. M. T. Cazzolato, L. P. S. Avalhais, D. Y. T. Chino, J. S. Ramos, J. A. Souza et al., 2017, Dataset Available https://drive.google.com/open?id=0B0SvQV_fqWgZVGlpeGJxYXRzVmM. [Google Scholar]

46. B. Ko, K. H. Jung and J. Y. Nam, 2012, Dataset Available https://cvpr.kmu.ac.kr/. [Google Scholar]

47. D. O. Tobeck, M. J. Spearpoint and C. M. Fleischmann, 2012, Dataset Available http://multimedialab.elis.ugent.be/rabot2012/. [Google Scholar]

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.