DOI:10.32604/iasc.2022.020629

Intelligent Automation & Soft Computing

Article

Time-Efficient Fire Detection Convolutional Neural Network Coupled with Transfer Learning

Department of Computer Sciences, College of Computer and Information Sciences, Princess Nourah bint Abdulrahman University, Riyadh, 11047, KSA

*Corresponding Author: Norah S. Alghamdi. Email: NOSAlghamdi@pnu.edu.sa

Received: 01 June 2021; Accepted: 15 July 2021

Abstract: Fire detection in surveillance videos is usually performed with deep learning. Despite advances in processing power, deep learning methods usually require extensive computation and large memory resources, which restricts real-time fire detection. In this research, we present a time-efficient fire detection convolutional neural network (CNN) coupled with transfer learning for surveillance systems. The model uses a CNN architecture whose computational time is reasonable enough for real-time applications. At the same time, the model does not sacrifice accuracy for time efficiency, because it is fine-tuned on fire data. Extensive experiments are carried out on real fire data from benchmark datasets, and they demonstrate the accuracy and time efficiency of the proposed model. The model is also validated for fire detection in surveillance videos, and its performance is compared with state-of-the-art fire detection models.

Keywords: Fire detection; classification; neural network; texture features; transfer learning

Surveillance systems are used to detect abnormal events, including emergencies such as fires, disasters and suspicious activities. Obtaining such information at an early stage is essential for taking appropriate action, which can help prevent disasters and reduce the loss of human life. Detecting fire events early in surveillance videos is therefore very important for saving lives [1,2]. Beyond fatalities, disabilities affect 15% of fire victims [3]. Moreover, 80% of fire fatalities occur in home fires because of delayed escape, since home fire alarm systems must be in close proximity to the fire to be activated [4]. This has created the need for effective fire detection systems based on surveillance cameras.

Two methodologies are used in fire detection systems: sensor-based and vision-based. Sensor-based fire detection systems use thermal or smoke sensors. When an alarm is raised, these sensors require a human to confirm that a fire has actually occurred. Sensor-based smoke detectors can also be falsely triggered, because they cannot distinguish between smoke and fire. In addition, such sensors need sufficient fire intensity for correct detection, which makes them unsuitable for early-stage detection and can result in widespread damage and loss of life [2,3]. Vision-based systems, on the other hand, are beneficial for early-stage fire detection. They are scalable and suitable for both indoor and outdoor settings. However, time complexity and false alarms remain challenging.

Flame detection in surveillance videos usually relies on pixel-level image analysis or on fire blob detection [5,6]. Pixel-based methods extract color features, which leads to unreliable performance because of the bias they introduce. Fire blob detection performs better because it uses classification methods to identify flame blobs [7]. The major problem of blob classification is that it generates many false alarms, and outliers produce further false positives, which is a serious problem and degrades the accuracy of the model [8].

Model accuracy can be enhanced by extracting color and motion features of blobs and incorporating them into the training process. Chen et al. [6] studied the irregular behavior and color variations of flames in different color spaces for fire classification. They also used the frame sequence and the differences between consecutive frames in the video to improve prediction. However, their model failed to distinguish real fires from moving objects that look like flames. The authors in [9] combined the YUV color space with motion detection to predict fire pixels. In [7], the authors applied wavelet analysis to both the temporal and the spatial characteristics of video frames. However, their model was very complex and proved impractical because it used an excessive number of parameters. Another method, investigated in [10], uses color features of blobs, and the authors in [11] used the YCbCr color space with defined rules that separate the chrominance and luminance components.
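To make the rule-based color approach concrete, the following is a minimal Python sketch of pixel classification in the YCbCr color space, loosely in the spirit of the rules described for [11]. The specific rule set (Y > Cb, Cr > Cb, and comparisons of each channel with its image-wide mean), the full-range BT.601 conversion coefficients, and the function names are assumptions for illustration, not the exact method of [11].

```python
def rgb_to_ycbcr(r, g, b):
    """Convert an 8-bit RGB pixel to full-range YCbCr (ITU-R BT.601 / JPEG coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def fire_pixel_mask(image):
    """Mark candidate fire pixels in an image given as rows of (R, G, B) tuples.

    Assumed rules: luminance exceeds blue chrominance (Y > Cb), red chrominance
    exceeds blue chrominance (Cr > Cb), and the pixel is brighter and redder
    than the image average (Y > mean Y, Cb < mean Cb, Cr > mean Cr).
    """
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in image]
    pixels = [p for row in ycc for p in row]
    n = len(pixels)
    y_mean = sum(p[0] for p in pixels) / n
    cb_mean = sum(p[1] for p in pixels) / n
    cr_mean = sum(p[2] for p in pixels) / n
    return [
        [
            y > cb and cr > cb and y > y_mean and cb < cb_mean and cr > cr_mean
            for (y, cb, cr) in row
        ]
        for row in ycc
    ]


# Usage: an orange flame-like pixel next to blue, dark and green pixels.
image = [[(255, 120, 20), (30, 60, 200)],
         [(20, 20, 20), (40, 120, 40)]]
mask = fire_pixel_mask(image)
```

Because the thresholds are relative to image-wide means, such rules adapt somewhat to overall brightness, but they remain sensitive to shadows and illumination changes, as discussed next.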

In [12], the authors used multi-resolution 2D wavelets and extracted energy and shape features to reduce false positives, but the false alarm rate remained high because of the movement of rigid objects. In [13], the authors achieved an improvement using the YUV color space, with a lower false alarm rate than [14]. A color-based flame detection model operating at a temporal rate of 20 frames/s is presented in [15]. It uses a Softmax classifier to identify flame blobs, and its performance varies with distance: the model performed poorly on distant or small fires. In summary, color-based fire detection models are sensitive to illumination changes and shadows, which increases the number of false alarms. To address these issues, the authors in [16] and [17] incorporated flame blob shape and rigid-body motion into their models. They used luminance data and flame shape models to identify features that differentiate flames from moving rigid bodies. The authors in [14] improved accuracy by extracting color and motion features to identify fires in real time.
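Several of the methods above combine color with motion cues. The sketch below is a minimal illustration, assuming simple frame differencing for motion and a hypothetical RGB color test (R > 180 and R ≥ G > B); both the rule and the threshold of 25 gray levels are illustrative assumptions, not values from the cited works.

```python
def to_gray(frame):
    """Luminance of each (R, G, B) pixel using BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in frame]


def motion_mask(prev_frame, curr_frame, threshold=25.0):
    """True where the gray level changed by more than `threshold` between frames."""
    prev_gray, curr_gray = to_gray(prev_frame), to_gray(curr_frame)
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_gray, curr_gray)
    ]


def is_fire_colored(pixel):
    """Hypothetical RGB rule: strong red channel with R >= G > B."""
    r, g, b = pixel
    return r > 180 and r >= g > b


def fire_candidates(prev_frame, curr_frame, threshold=25.0):
    """Combine motion and color cues: a pixel must both change and look like fire."""
    motion = motion_mask(prev_frame, curr_frame, threshold)
    return [
        [moved and is_fire_colored(px) for moved, px in zip(m_row, c_row)]
        for m_row, c_row in zip(motion, curr_frame)
    ]


# Usage: one-row frames; pixel 0 becomes flame-colored, pixel 1 is static, pixel 2 goes dark.
prev = [[(20, 20, 20), (255, 120, 20), (250, 140, 30)]]
curr = [[(250, 140, 30), (255, 120, 20), (20, 20, 20)]]
candidates = fire_candidates(prev, curr)
```

A static flame-colored object passes the color test but not the motion test. A moving flame-colored rigid object, however, still passes both, which is the failure mode that the shape- and motion-based methods of [16] and [17] try to address.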

Transfer learning has recently become widely used in deep learning. It takes a CNN pre-trained on one task and reuses what it has learned on another problem. This can reduce training time, and it is particularly useful when the annotated training dataset is limited. For example, when training a classifier to detect fires in images, one can reuse what a network has already learned about detecting flame-like objects.
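The idea of reusing learned features while training only a new classifier can be illustrated with a toy Python sketch. Here `pretrained_features` is a hypothetical stand-in for frozen pre-trained CNN layers (it simply computes two color-difference cues), and only a new logistic-regression head is trained with gradient descent; in practice, transfer learning would reuse convolutional weights such as those of GoogleNet.

```python
import math


def pretrained_features(rgb):
    """Hypothetical frozen feature extractor standing in for pre-trained CNN layers.

    Takes an (R, G, B) tuple scaled to [0, 1] and returns two fixed cues
    (red minus blue, green minus blue). It is never updated during training.
    """
    r, g, b = rgb
    return [r - b, g - b]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, lr=0.5, epochs=200):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [pretrained_features(x) for x in samples]  # computed once: extractor is frozen
    w, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(w[0] * f[0] + w[1] * f[1] + bias)
            err = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            bias -= lr * err
    return w, bias


def predict_fire_prob(rgb, w, bias):
    f = pretrained_features(rgb)
    return sigmoid(w[0] * f[0] + w[1] * f[1] + bias)


# Usage: two flame-colored and two non-flame samples (label 1 = fire).
samples = [(0.9, 0.5, 0.1), (1.0, 0.7, 0.2), (0.1, 0.2, 0.9), (0.2, 0.3, 0.7)]
labels = [1, 1, 0, 0]
w, bias = train_head(samples, labels)
```

Because the extractor is frozen, its features are computed once and reused in every epoch, which is one source of the reduced training time mentioned above.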

Previous research shows a trade-off between accuracy and computational complexity in fire detection systems: more accurate models tend to be more computationally expensive, which is a main obstacle to real-time detection. This motivates the development of highly accurate models that are faster and produce fewer false alarms. For these reasons, we investigated the use of deep learning for real-time fire detection, with an emphasis on early detection to save lives. The contributions of our proposed research are as follows:

1. We extensively studied deep learning models for fire detection, and we present a CNN with low computational cost for flame detection in surveillance videos. One frame of the surveillance video every 0.5 seconds is used as the input to the classifier.

2. We incorporated transfer learning and tuned a model with an architecture analogous to GoogleNet [18] for fire detection.

3. The proposed model improves the accuracy of fire detection while decreasing the computational complexity, and it produces fewer false alarms. Our model proved suitable for detecting fires at an early stage to avoid disasters.
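Sampling one frame every 0.5 seconds, as in the first contribution, amounts to picking frame indices at multiples of 0.5 × fps. A small sketch, assuming a constant frame rate; the function name is hypothetical.

```python
import math


def sample_frame_indices(fps, num_frames, interval_s=0.5):
    """Indices of the frames classified when one frame is taken every interval_s seconds.

    Assumes a constant frame rate; each index is floored to the nearest earlier frame.
    """
    if fps <= 0 or interval_s <= 0:
        raise ValueError("fps and interval_s must be positive")
    indices = []
    k = 0
    while True:
        idx = math.floor(k * interval_s * fps)
        if idx >= num_frames:
            break
        indices.append(idx)
        k += 1
    return indices


# Usage: a 4-second clip at 25 frames/s.
indices = sample_frame_indices(25, 100)
```

For a 25 frames/s video this keeps 2 frames per second instead of 25, so the classifier only has to finish each prediction within 0.5 seconds to keep up.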

The remainder of the paper is organized as follows. Section 2 presents our proposed model for early-stage flame detection in surveillance videos. Section 3 describes the experiments and analyzes the results. Section 4 presents the conclusions.

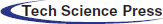

Most research on fire detection focuses on feature engineering, which can be time-consuming and leads to degraded performance. Feature engineering methods usually produce high false alarm rates, especially in surveillance videos that contain shadows and varying illumination, and they can falsely detect rigid objects whose colors resemble flames as fires. For these reasons, we extensively investigated convolutional learning models for early-stage flame detection. We explored different CNNs to improve fire detection performance and minimize the false positive rate. The block diagram of the proposed flame detection model for surveillance videos is depicted in Fig. 1.

Figure 1: Block diagram of the proposed flame detection model in surveillance videos

2.1 The Convolutional Neural Network Models

The CNN is a deep learning architecture. The first CNN architecture was the LeNet network [19], which was used for handwritten character classification and was restricted to written digits. Major improvements to the CNN framework over the last two decades have made it promising for many classification problems [20–23], pose classification [24–27], and object tracking and retrieval [28,29]. CNNs are commonly used for image classification and achieve higher accuracy on many datasets than feature engineering methods, because they learn features directly from raw data during training.

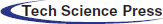

CNNs consist of three main types of layers. The first is the convolution layer, in which kernels of multiple sizes are convolved with the input data to produce feature maps. The second is the pooling layer, which performs subsampling to reduce the dimensionality of the features. The third is the fully connected layer, which infers the final decision from the extracted features.
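The three layer types can be illustrated with a dependency-free Python sketch on a single-channel image. It assumes valid (unpadded) convolution implemented as cross-correlation, as in most CNN libraries, a ReLU activation, non-overlapping 2 × 2 max pooling, and a fully connected layer; the kernel and weights are arbitrary illustrative values, not trained parameters.

```python
def conv2d(image, kernel, bias=0.0):
    """Valid (unpadded) 2D cross-correlation of a single-channel image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw)) + bias
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    return [[max(0.0, x) for x in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling (stride equals window size)."""
    h, w = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + u][j + v] for u in range(size) for v in range(size))
            for j in range(0, w - size + 1, size)
        ]
        for i in range(0, h - size + 1, size)
    ]


def fully_connected(features, weights, biases):
    """Each output is a weighted sum of all input features plus a bias."""
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]


# Usage: 5x5 image -> 2x2 conv -> ReLU -> 2x2 max pool -> flatten -> 2 outputs.
image = [[5 * r + c for c in range(5)] for r in range(5)]
fmap = relu(conv2d(image, [[1, 1], [1, 1]]))
pooled = max_pool(fmap)
flat = [x for row in pooled for x in row]
scores = fully_connected(flat, [[1, 0, 0, 0], [0, 0, 0, 1]], [0.0, -4.0])
```

The 5 × 5 input shrinks to a 4 × 4 feature map after convolution and to 2 × 2 after pooling, which is the dimensionality reduction the pooling layer provides.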

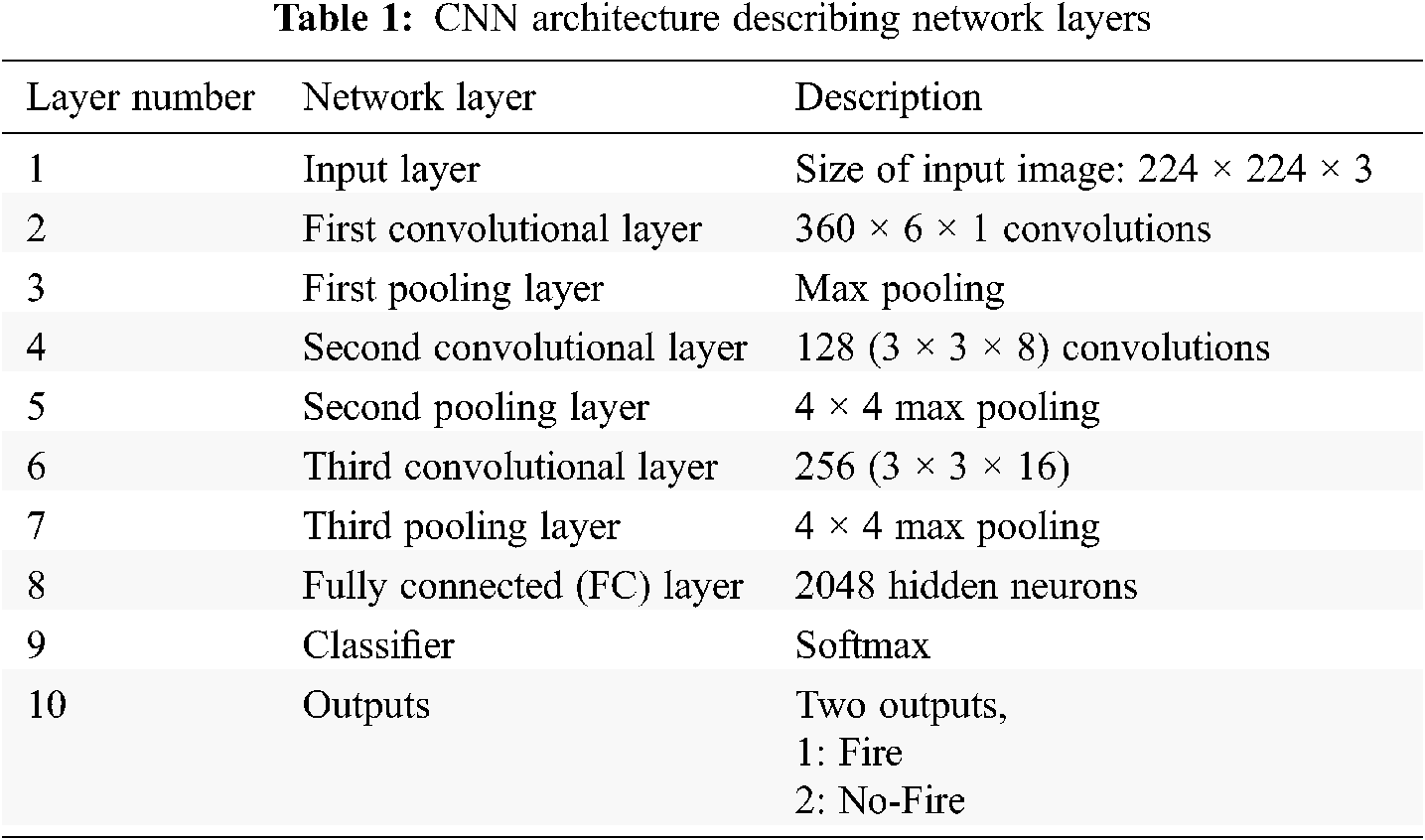

In this research, we build a CNN analogous to GoogleNet [18], with changes to the structure to serve our purposes. Compared with AlexNet, GoogleNet offers better classification precision, a smaller architecture and lower memory requirements. The proposed architecture has 120 layers, including two convolution layers, four pooling layers, one average pooling layer and a Softmax classifier, as depicted in Fig. 2. The layers of the CNN architecture are listed in Tab. 1.
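The memory argument can be made concrete by counting parameters. The sketch below assumes the standard formulas for convolution and fully connected layers with biases, and 32-bit floats (4 bytes per parameter); the example layer sizes are illustrative, not taken from Tab. 1.

```python
def conv_params(kernel_size, in_channels, out_channels, bias=True):
    """Weights of a square conv layer: k*k*C_in per filter, one filter per output channel."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def fc_params(n_in, n_out, bias=True):
    """Weights of a fully connected layer: one weight per input-output pair."""
    return n_in * n_out + (n_out if bias else 0)


def memory_mb(n_params, bytes_per_param=4):
    """Storage for the weights alone, in megabytes (10**6 bytes), assuming float32."""
    return n_params * bytes_per_param / 1e6


# Usage with illustrative layer sizes.
first_conv = conv_params(7, 3, 64)   # 7x7 kernels, RGB input, 64 feature maps
classifier = fc_params(1024, 2)      # 1024 pooled features -> Fire / Normal
```

Under the float32 assumption, the 24 M parameters reported for the proposed model in the complexity comparison would occupy about 96 MB, before counting activations.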

Figure 2: The proposed deep CNN

In the proposed solution, we tuned the CNN by training different models with various parameters on the training data. Transfer learning is also incorporated into our model by reusing previously learned knowledge. Tuning the CNN for 12 epochs increased the fire detection accuracy from 87.41% to 96.33%. Extensive experiments on various datasets led us to optimize the CNN architecture. The proposed model can detect flames in various situations in surveillance videos with better accuracy. At test time, an input image is passed through the CNN and classified into one of two classes, Fire or Normal, each with a probability score. The class with the highest score is assigned as the final label of the input image. To illustrate this process, several images from the database and their scores are depicted in Fig. 3.
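The final scoring step can be sketched as a softmax over the two output logits followed by an argmax. The logits below are hypothetical network outputs; the class names follow the Fire/Normal labels used in Fig. 3.

```python
import math

CLASSES = ("Fire", "Normal")


def softmax(logits):
    """Numerically stable softmax: subtracting the max does not change the result."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return the highest-scoring label and the probability of every class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best], dict(zip(CLASSES, probs))


label, scores = classify([2.0, -3.0])  # hypothetical network outputs
print(label, {k: f"{100 * v:.2f}%" for k, v in scores.items()})
```

The two probabilities always sum to 1, which is why the Fire and Normal percentages reported for each image in Fig. 3 add up to 100%.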

Figure 3: Labels and scores resulted from the proposed CNN framework from benchmark images. (a) Fire 99.56%, Normal 0.44% (b) Fire 99.43%, Normal 0.57% (c) Fire 98.13%, Normal 1.87% (d) Fire 7.86%, Normal 92.14% (e) Fire 0.53%, Normal 99.47% (f) Fire 0.97%, Normal 99.03%

This section presents the experiments and their results. We performed extensive experiments using different images and surveillance videos. The experiments were mainly performed on two benchmark flame datasets, Dataset1 [14] and Dataset2 [30]. Training was performed on images from other datasets, which can be found in [31,32], using a total of 70,000 images and video frames. We designed our experiments to assess the ability of our methodology to detect fire flames, and we tested the ability of our classifier to classify images as fire or normal. We also tested our methodology, which combines deep learning with transfer learning.

We devised two experiments as follows:

1. Experiment 1 (Exper1): The Deep-CNN is tested on both datasets (Dataset1 and Dataset2). The classifier's performance is evaluated by passing the inputs through the convolution, pooling and classification layers.

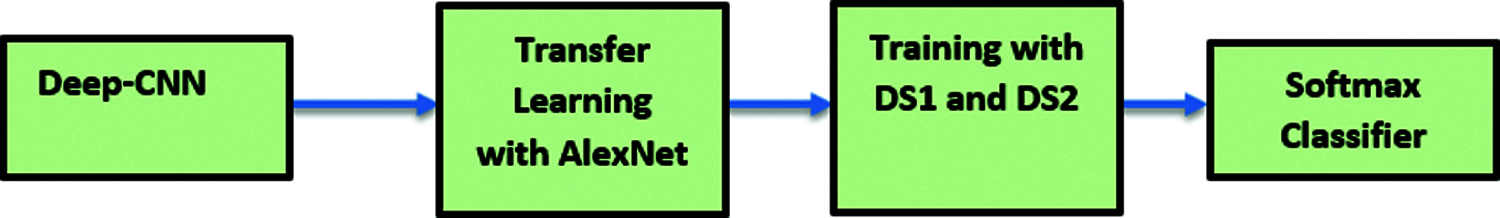

2. Experiment 2 (Exper2): The Deep-CNN is also tested on both datasets (Dataset1 and Dataset2), but this time the deep learning network is combined with transfer learning: the proposed Deep-CNN is trained on the datasets (Dataset1 and Dataset2) after prior transfer learning from AlexNet. The block diagram of Experiment 2 is depicted in Fig. 4.

Figure 4: Block diagram of Experiment 2

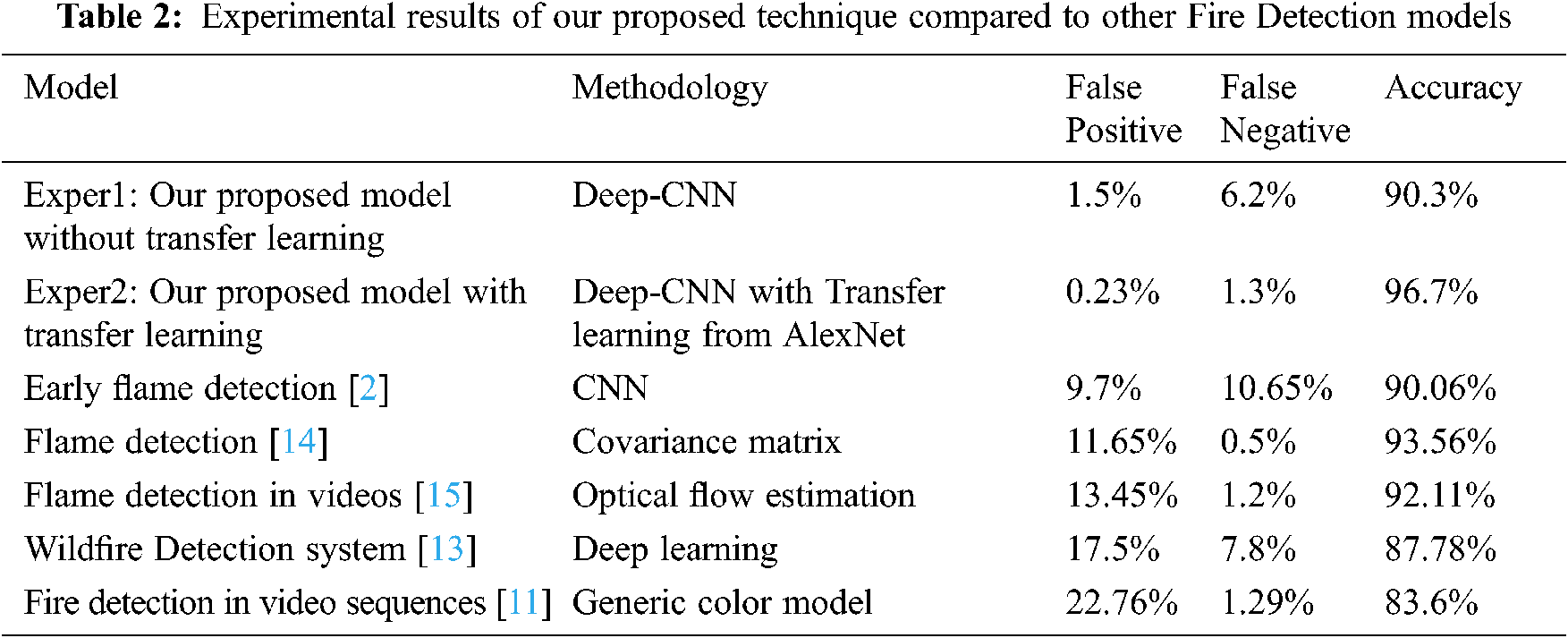

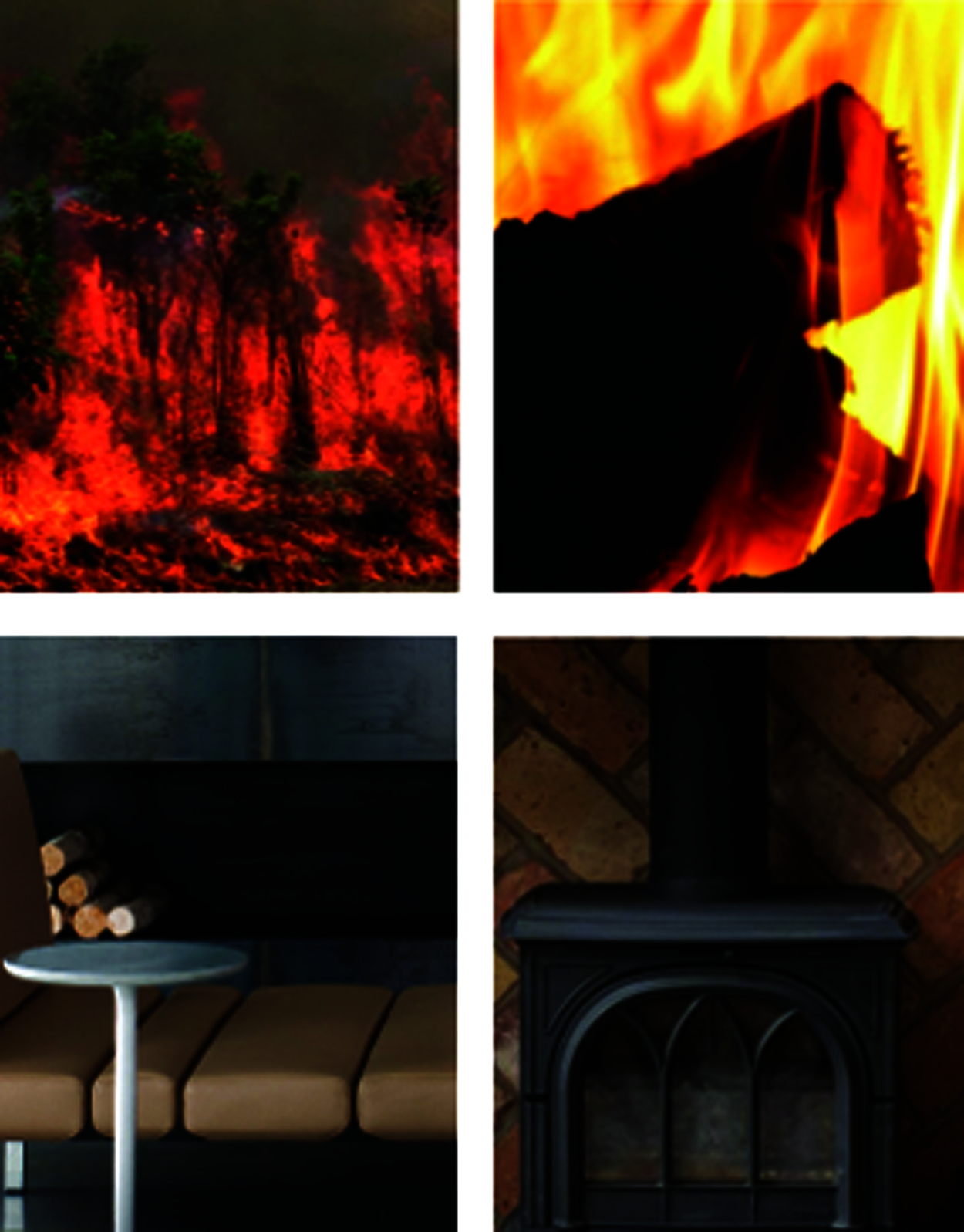

Dataset1 contains 370 videos recorded under different settings with respect to illumination (day and night), fire size and distance. It has 190 videos that include real fire flames and 180 videos without flames. Dataset1 is a good fire detection benchmark because it covers different color and motion conditions. In addition, the videos without fires contain rigid objects, clouds and other content that resemble fire or flames. Fig. 5 displays images from Dataset1. We evaluated our proposed model on this dataset and compared it with state-of-the-art fire detection methods from the literature. The comparison of our proposed model with other fire detection models is given in Tab. 2.

Figure 5: Images and frames from Dataset1; the first rows show frames containing fires, and the last two rows do not contain fires

We compared various flame detection systems with our proposed system. The selected systems are compared in terms of the features they use for fire detection and the datasets they use. The accuracy reported in [14] was 93.45%, but with a high false alarm rate of 12.3%; although the accuracy indicates high performance, this false alarm rate is unacceptable. For this reason, we explored other deep learning networks, such as AlexNet as described in [2]. We also investigated fire detection systems using GoogleNet, which achieved an accuracy of 89.41% combined with a low false positive rate of 0.11%. GoogleNet was adjusted by changing the layer weights with respect to the false alarm rate. We also investigated ways to increase accuracy by integrating transfer learning techniques [32]. Transfer learning was performed by initializing the network with GoogleNet weights trained on other systems. The learning threshold was set to 0.003, and the fully connected layer was adapted to the proposed classification scheme. This improved the accuracy and reduced the false alarm rate at the same time, and the false negative rate decreased to 1.43%.
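The rates discussed above follow from a binary confusion matrix in which Fire is the positive class. A minimal sketch, assuming the false alarm rate is defined as FP / (FP + TN) and the false negative rate as FN / (FN + TP); the counts in the example are hypothetical and do not correspond to any reported result.

```python
def detection_rates(tp, fp, tn, fn):
    """Accuracy, false alarm (false positive) rate and false negative rate, Fire = positive."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_alarm_rate": fp / (fp + tn),     # normal samples wrongly flagged as fire
        "false_negative_rate": fn / (fn + tp),  # fires that were missed
    }


# Usage with hypothetical counts: 100 fire and 100 normal samples.
rates = detection_rates(tp=90, fp=5, tn=95, fn=10)
```

This makes the trade-off in the comparison explicit: a model can report high accuracy while still raising many false alarms, because accuracy pools errors on both classes.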

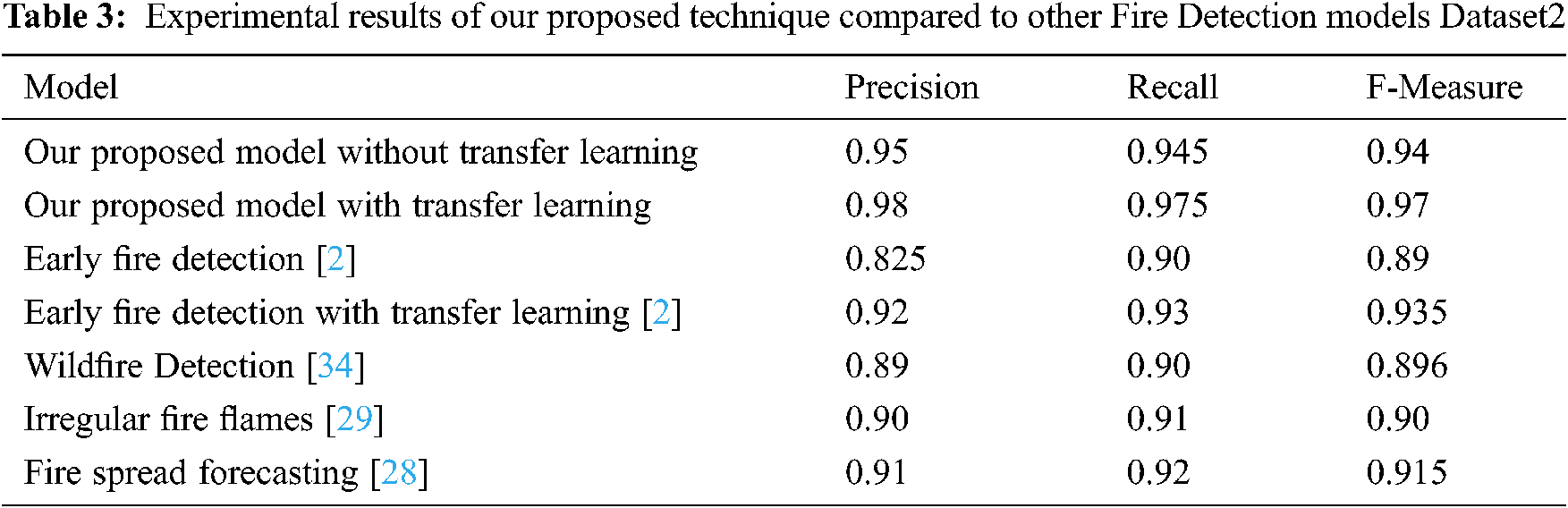

Dataset2 is described in [30]. It has 600 images: 380 images that contain fires and 220 normal images with no flames. At first glance, Dataset2 seems small, but it contains many important challenges: it includes non-flame objects with flame colors and many scenes showing the sun in different situations. Sample images from Dataset2 are shown in Fig. 6. We compared the results of our model on Dataset2 with five models: the first two use engineered features, and the other three use deep learning CNNs. We used precision, recall and F-measure as metrics [33,34]. Tab. 3 compares the performance of our model on Dataset2 with these models. Our results are better than those of the models based on engineered features.
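Precision, recall and F-measure can be computed from true positive, false positive and false negative counts. The sketch assumes that F-measure means the balanced F1 score (the harmonic mean of precision and recall); the counts are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and balanced F-measure; returns 0.0 where a ratio is undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Usage with hypothetical counts.
p, r, f = precision_recall_f1(tp=90, fp=10, fn=30)
```

On a set with flame-colored distractors such as Dataset2, precision is the metric most directly affected by false alarms, while recall reflects missed fires.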

Figure 6: First row contains images with fire, second row has images with no fires (Dataset2)

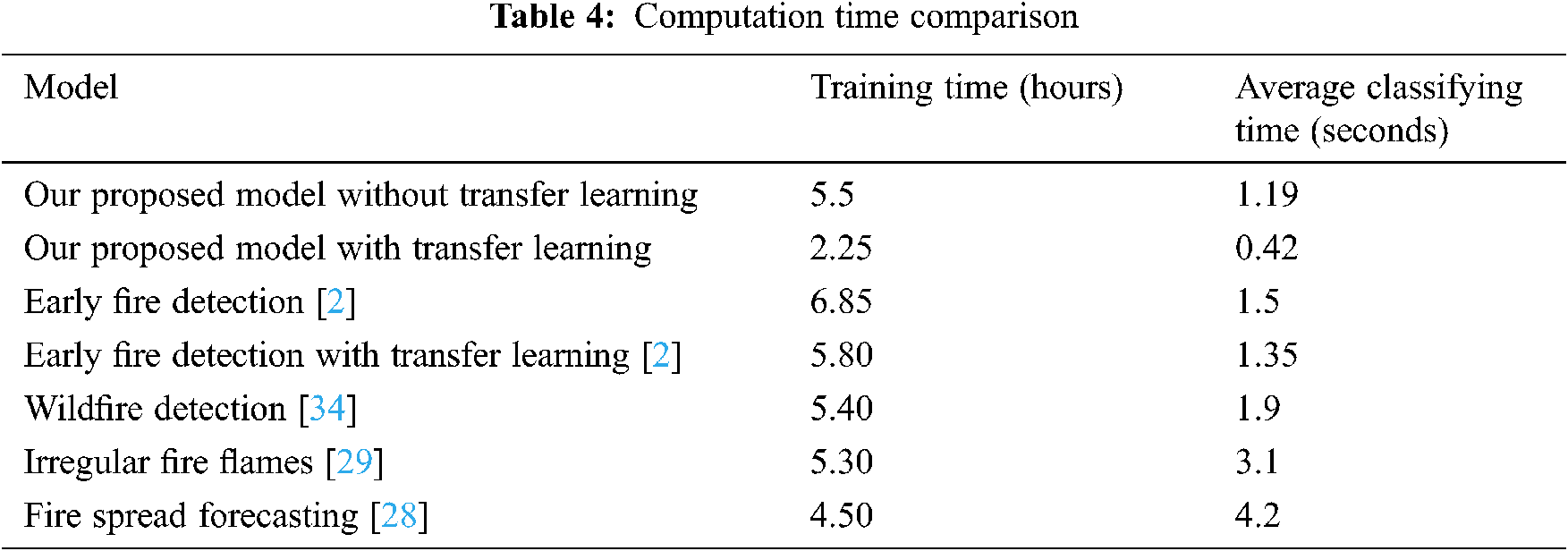

In our model, computational cost was one of the main concerns, because real-time processing is required to detect fires at an early stage. Deep-CNN models such as AlexNet and ResNet50 have high computational cost; for example, the ResNet50 deep network has 138 M parameters. In contrast, our proposed Deep-CNN has only 24 M parameters. Our proposed model also requires less training and classification time owing to transfer learning. The other Deep-CNN models are more time-consuming in both training and classification, as depicted in Tab. 4, where classification time is given in seconds. These results reflect model complexity: our proposed model takes less time to classify the entire dataset.
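Whether a model is fast enough for the 0.5-second sampling interval can be checked by timing it. The sketch below is under stated assumptions: `classify_fn` is a hypothetical placeholder for the model's inference call, latency is measured as mean wall-clock time with `time.perf_counter`, and frames are processed one at a time.

```python
import math
import time


def mean_latency(classify_fn, frames, repeats=3):
    """Mean wall-clock seconds per frame for classify_fn over `repeats` passes."""
    start = time.perf_counter()
    for _ in range(repeats):
        for frame in frames:
            classify_fn(frame)
    return (time.perf_counter() - start) / (repeats * len(frames))


def keeps_up(latency_s, interval_s=0.5):
    """True if each sampled frame is classified before the next one arrives."""
    return latency_s < interval_s


def streams_supported(latency_s, interval_s=0.5):
    """How many cameras one sequential model instance could serve at this sampling interval."""
    return math.floor(interval_s / latency_s)


# Usage with a trivial stand-in for the model (hypothetical placeholder).
latency = mean_latency(lambda frame: sum(frame), [[1, 2, 3]] * 10)
```

This measures sequential single-frame latency; batching several frames or using parallel hardware would change the numbers.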

Improved processing capabilities have led to better results in fire detection for surveillance video systems. Fire is a hazardous situation that can cause death if it is not detected early, so building automated fire detection models has become very important. In this paper, we proposed a real-time deep learning fire detection model for surveillance videos. The system is inspired by the GoogleNet model and incorporates transfer learning. Experimental results show that our proposed model performs better than fire detection models that do not use transfer learning. The experiments demonstrate the accuracy of the proposed model, validate it for fire detection in surveillance videos, and compare its performance with other fire detection models.

Funding Statement: This research was funded by the Deanship of Scientific Research at Princess Nourah bint Abdulrahman University through the Fast-track Research Funding Program.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. P. Foggia, A. Saggese and M. Vento, “Real-time fire detection for video-surveillance applications using a combination of experts based on color, shape, and motion,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 25, no. 9, pp. 1545–1556, 2015.

2. K. Muhammad, J. Ahmad and S. W. Baik, “Early fire detection using convolutional neural networks during surveillance for effective disaster management,” Neurocomputing, vol. 288, no. 5, pp. 30–42, 2018.

3. J. Choi and Y. Choi, “An integrated framework for 24-hours fire detection,” in Proc. of European Conf. of Computer Vision, Paris, France, pp. 463–479, 2020.

4. X. Zhang, Z. Zhao and J. Zhang, “Contour based forest fire detection using FFT and wavelet,” in Proc. of the Int. Conf. on Computer Science and Software Engineering, Hubei, China, pp. 760–763, 2019.

5. C. Liu and N. Ahuja, “Vision based fire detection,” in Proc. 17th Int. Conf. of Pattern Recognition, Athens, Greece, pp. 134–137, 2019.

6. T. Chen, P. Wu and Y. Chiou, “An early fire-detection method based on image processing,” in Proc. Int. Conf. of Image Processing (ICIP), Cleveland, Ohio, pp. 1707–1710, 2020.

7. B. Töreyin, Y. Dedeoğlu, U. Güdükbay and A. Çetin, “Computer vision-based method for real-time fire and flame detection,” Pattern Recognition Letters, vol. 27, no. 1, pp. 49–58, 2006.

8. J. Choi and Y. Choi, “Patch-based fire detection with online outlier learning,” in Proc. 12th IEEE Int. Conf. of Advanced Video Signal Based Surveillance (AVSS), London, England, pp. 1–6, 2019.

9. G. Marbach, M. Loepfe and T. Brupbacher, “An image processing technique for fire detection in video images,” Fire Safety Journal, vol. 41, no. 4, pp. 285–289, 2006.

10. D. Han and B. Lee, “Development of early tunnel fire detection algorithm using the image processing,” in Proc. Int. Sym. of Visual Computing, pp. 39–48, 2020.

11. T. Çelik and H. Demirel, “Fire detection in video sequences using a generic color model,” Fire Safety Journal, vol. 44, no. 2, pp. 147–158, 2019.

12. P. Borges and E. Izquierdo, “A Probabilistic approach for vision-based fire detection in videos,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 20, no. 5, pp. 721–731, 2010.

13. R. Dianat, M. Jamshidi, R. Tavakoli and S. Abbaspour, “Fire and smoke detection using wavelet analysis and disorder characteristics,” in Proc. 3rd Int. Conf. of Computational Development, Cairo, Egypt, pp. 262–265, 2019.

14. P. Govil, K. Welch, M. Ball and J. Pennypacker, “Preliminary results from a wildfire detection system using deep learning on remote camera images,” Remote Sensing, vol. 12, no. 1, pp. 166, 2020.

15. Y. Habiboğlu and O. Günay, “Covariance matrix-based fire and flame detection method in video,” Machine Vision and Applications, vol. 23, no. 6, pp. 1103–1113, 2012.

16. M. Mueller, P. Karasev, I. Kolesov and A. Tannenbaum, “Optical flow estimation for flame detection in videos,” IEEE Transactions on Image Processing, vol. 22, no. 7, pp. 2786–2797, 2013.

17. R. Lascio, A. Greco, A. Saggese and M. Vento, “Improving fire detection reliability by a combination of video analytics,” in Proc. Int. Conf. of Image Analytics Recognition, Ostrava, CZ, pp. 477–484, 2018.

18. C. Szegedy and M. Pethay, “Going deeper with convolutions,” in Proc. IEEE Conf. of Computing of Visual Pattern Recognition, Napoli, Italy, pp. 1–9, 2019.

19. Y. Cun, X. Yu and Z. Chou, “Handwritten digit recognition with a back-propagation network,” in Proc. Advanced Neural Information Processing Systems, Alexandria, Go, pp. 396–404, 1990.

20. J. Ullah, K. Ahmad, K. Muhammad and M. Sajjad, “Action recognition in video sequences using deep Bi-directional LSTM with CNN features,” IEEE Access, vol. 6, pp. 1155–1166, 2018.

21. M. Ullah, A. Mohamed and M. Morsey, “Action recognition in movie scenes using deep features of keyframes,” Journal of Korean Institute of Next Generation Computation, vol. 13, pp. 7–14, 2017.

22. B. Chen, C. Yuan and J. Song, “SmokeNet: Satellite smoke scene detection using convolutional neural network with spatial and channel-wise attention,” Remote Sensors, vol. 2, no. 1, pp. 256–267, 2019.

23. R. Zhang, J. Shen and K. Sangaiah, “Medical image classification based on multi-scale non-negative sparse coding,” Artificial Intelligence in Medicine, vol. 83, no. 2, pp. 44–51, 2017.

24. O. Samuel, H. Hanouk and M. Bayoumi, “Pattern recognition of electromyography signals based on novel time domain features for limb motion classification,” Computation Electrical Engineering, vol. 3, no. 1, pp. 45–57, 2020.

25. A. Krizhevsky, A. Sutskever and I. Hinton, “ImageNet classification with deep convolutional neural networks,” Advances in Neural Information Processing Systems, Pereira, vol. 2, no. 1, pp. 1097–1105, 2018.

26. J. Yang, B. Jiang, B. Lim and Z. Lv, “A fast image retrieval method designed for network big data,” IEEE Transactions on Industrial Informatics, vol. 13, no. 5, pp. 2350–2359, 2017.

27. A. Namozov and A. Cho, “An efficient deep learning algorithm for fire and smoke detection with limited data,” Advanced Electrical Computation, vol. 2, no. 1, pp. 121–128, 2019.

28. Y. Jia, X. Yu, M. Chi, S. Mahmoud, N. Nally et al., “Caffe: Convolutional architecture for fast feature embedding,” in Proc. 22nd ACM Conf. of Multimedia, Columbus, OH, pp. 675–678, 2020.

29. D. Chino and A. Traina, “BoWFire: Detection of fire in still images by integrating pixel color and texture analysis,” in Proc. 28th SIBGRAPI Conf. Graphics, Patterns and Images, Berlin, Germany, pp. 95–102, 2019.

30. S. Verstockt, K. Meras and F. Mamdouh, “Video driven fire spread forecasting (f) using multi-modal LWIR and visual flame and smoke data,” Pattern Recognition Letters, vol. 34, no. 1, pp. 62–69, 2013.

31. C. Ko, S. Ham and J. Nam, “Modeling and formalization of fuzzy finite automata for detection of irregular fire flames,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 21, no. 12, pp. 1903–1912, 2011.

32. S. Pan and Q. Yang, “A survey on transfer learning,” IEEE Transactions on Knowledge and Data Engineering, vol. 22, no. 10, pp. 1345–1359, 2010.

33. N. Lokman, N. Daud and M. Chia, “Fire recognition using RGB and YCbCr color space,” ARPN Journal of Engineering Application, vol. 10, no. 1, pp. 9786–9790, 2019.

34. H. Badawi and D. Cetin, “Computationally efficient wildfire detection method using a deep convolutional network pruned via Fourier analysis,” Sensors, vol. 2, no. 3, pp. 234–245, 2020.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.