DOI:10.32604/iasc.2021.019020

Intelligent Automation & Soft Computing

Article

Predicting the Breed of Dogs and Cats with Fine-Tuned Keras Applications

1Department of Power Mechanical Engineering, National Formosa University

2Department of Automation Engineering, National Formosa University, Huwei Township, Taiwan

*Corresponding Author: Kuang-Chyi Lee. Email: kclee@gs.nfu.edu.tw

Received: 29 March 2021; Accepted: 30 April 2021

Abstract: Image classification is one of the most common applications of deep learning. Images of dogs and cats are widely used as examples for image classification models because they are relatively easy for the human eye to recognize. Classifying the breed of a dog or a cat, however, is considerably harder. In this paper, pre-trained Keras application models are fine-tuned with a new dataset of dogs and cats to predict the breed of a given dog or cat. Keras applications are deep learning models that have been pre-trained on the general-purpose ImageNet image dataset. The ResNet-152 v2, Inception-ResNet v2, and Xception models, adopted from Keras applications, are retrained to predict the breed among 21 classes of dogs and cats. Our results indicate that the Xception model produces the highest prediction accuracy: the training accuracy is 99.49%, the validation accuracy is 99.21%, and the testing accuracy is 91.24%. The training time is about 14 hours and the prediction time is about 18.41 seconds.

Keywords: Image classification; deep learning; Keras; Inception; ResNet; Xception

Deep learning is commonly used to solve computer vision problems, with researchers building upon each other’s work. Dean et al. [1] applied deep learning to speech recognition, and Krizhevsky et al. [2] developed a convolutional neural network (CNN) for image classification. Because building a new, accurate CNN from scratch requires large amounts of data and training time, researchers have fine-tuned existing models to improve results without that expense. Tajbakhsh et al. [3] demonstrated that a fine-tuned CNN can produce better results than one trained from scratch. Yosinski et al. [4] demonstrated that the features learned by CNN models are transferable, which suggests that a new image classifier can be developed from a pre-built Keras model and a new dataset. Our aim is to tailor a Keras model into a classifier of dogs and cats (CDC) that identifies their breeds. With appropriate datasets of tools and workpieces, the same approach can be applied to develop image classifiers that enable robots in automated factories to identify objects.

Image classification of dogs and cats has frequently been used as a case study for deep learning methods. Parkhi et al. [5] proposed a method for classifying images of 37 different breeds of dogs and cats with an accuracy of 59%. Panigrahi et al. [6] used a deep learning model to classify images of dogs and cats simply as “dog” or “cat”, with a testing accuracy of 88.31%. Jajodia et al. [7] used a sequential CNN to make the same basic distinction, with 90.10% accuracy. Reddy et al. [8], Lo et al. [9], Deng et al. [10], and Buddhavarapu et al. [11] used transfer learning with Keras models to create new models based on ResNet, Inception-ResNet, and Xception.

We adopted Keras models pre-trained with a general image dataset and fine-tuned them for our new CDC with the images of dogs and cats listed in Tab. 1. Our data comprised images of 21 breeds of dogs and cats, divided into training, validation, and testing images as shown. All images were taken from Dreamstime stock photos [12]. In total, we used 20,574 training images, 2,572 validation images, and 2,590 testing images.
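The split sizes quoted above correspond to roughly an 80/10/10 division. The short Python sketch below checks this from the reported counts; the variable names are illustrative and not taken from the original work.

```python
# Image counts as reported in the text for the 21-breed dataset.
split_counts = {"training": 20_574, "validation": 2_572, "testing": 2_590}

total_images = sum(split_counts.values())

# Fraction of the whole dataset assigned to each split.
split_fractions = {
    name: count / total_images for name, count in split_counts.items()
}

for name, fraction in split_fractions.items():
    print(f"{name:<10} {split_counts[name]:>6} images  {fraction:6.1%}")
print(f"{'total':<10} {total_images:>6} images")
```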

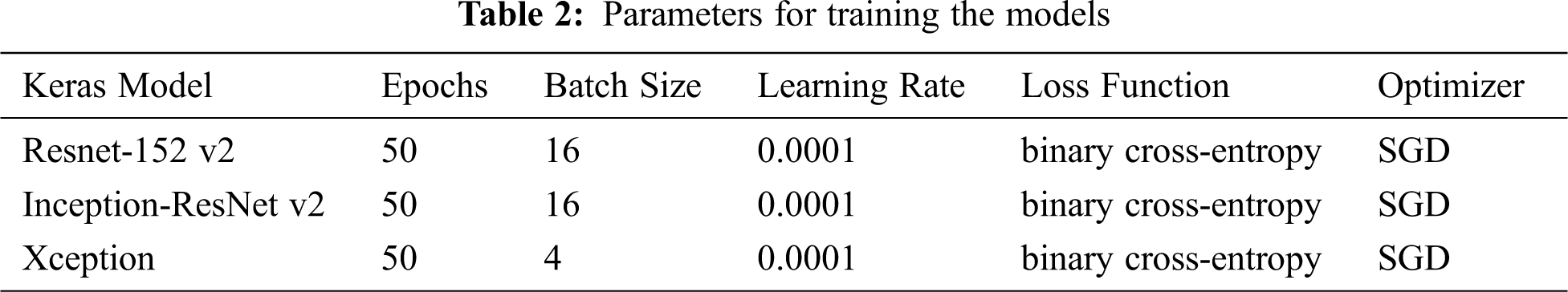

To test our approach, we adopted the Inception-ResNet v2, ResNet-152 v2, and Xception models from the Keras repository and fine-tuned them with the new dataset. For each Keras model, we omitted the top layer, redefined the fully connected layers based on the number of breeds, and fine-tuned the resulting CDC with the training and validation datasets. The training parameters are shown in Tab. 2. For training ResNet-152 v2 and Inception-ResNet v2, we used 50 epochs and a batch size of 16. For training Xception, we used 50 epochs and a batch size of 4. We used stochastic gradient descent (SGD) as the optimizer in all cases, with a learning rate of 0.0001. Finally, we employed binary cross-entropy as the loss function throughout.
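The fine-tuning setup described above can be sketched in Python with the public `tf.keras.applications` constructors. This is a minimal sketch under stated assumptions, not the authors' code: the global-average-pooling layer, the single 21-unit softmax head, the `categorical_accuracy` metric, and the helper names `build_cdc_model` and `steps_per_epoch` are all assumptions, since the text does not specify the exact head architecture. The epochs, batch sizes, optimizer, learning rate, loss, and input sizes are the values reported in the text.

```python
import math

NUM_BREEDS = 21               # classes in the dataset
EPOCHS = 50                   # same for all three models
LEARNING_RATE = 1e-4          # SGD learning rate
NUM_TRAINING_IMAGES = 20_574

# Input sizes and batch sizes as reported in the text.
TRAINING_CONFIG = {
    "ResNet152V2": {"input_size": 224, "batch_size": 16},
    "InceptionResNetV2": {"input_size": 299, "batch_size": 16},
    "Xception": {"input_size": 299, "batch_size": 4},
}


def steps_per_epoch(num_images, batch_size):
    """Number of weight updates needed to see every image once."""
    return math.ceil(num_images / batch_size)


def build_cdc_model(name, weights="imagenet"):
    """Build one fine-tunable classifier (hypothetical helper).

    TensorFlow is imported lazily so the configuration above can be
    inspected on a machine without TensorFlow installed.
    """
    from tensorflow import keras

    size = TRAINING_CONFIG[name]["input_size"]
    base_cls = getattr(keras.applications, name)
    # include_top=False drops the original 1000-class ImageNet head.
    base = base_cls(
        include_top=False,
        weights=weights,
        input_shape=(size, size, 3),
        pooling="avg",  # assumption: global average pooling before the head
    )
    # Assumption: one dense softmax layer with one unit per breed.
    outputs = keras.layers.Dense(NUM_BREEDS, activation="softmax")(base.output)
    model = keras.Model(inputs=base.input, outputs=outputs)
    model.compile(
        optimizer=keras.optimizers.SGD(learning_rate=LEARNING_RATE),
        loss="binary_crossentropy",  # loss named in the text
        # Explicit metric: with this loss, the string "accuracy" would be
        # resolved to per-output binary accuracy rather than top-1 accuracy.
        metrics=["categorical_accuracy"],
    )
    return model


for name, cfg in TRAINING_CONFIG.items():
    steps = steps_per_epoch(NUM_TRAINING_IMAGES, cfg["batch_size"])
    print(f"{name}: {steps} steps/epoch, {steps * EPOCHS} updates in total")
```

A call such as `build_cdc_model("Xception")` downloads the ImageNet weights on first use; the returned model could then be trained with `model.fit(...)` on one-hot encoded labels for `EPOCHS` epochs. The smaller batch size used for Xception means about four times as many weight updates per epoch as for the other two models.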

We created the confusion matrix by using the fine-tuned Keras model with the testing dataset as input. We calculated the testing accuracy of each CDC-Keras model combination using the confusion matrix and Eq. (1):

where TPi is the true positive value for each breed, FNi is the false negative value for each breed, and n is the number of breeds.
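Eq. (1) itself is not reproduced in this text. One form consistent with the definitions above, offered here as an assumption, is accuracy = (sum of TPi) / (sum of TPi + FNi), taken over all n breeds. The sketch below computes this quantity, together with per-breed recall TPi / (TPi + FNi), from a confusion matrix whose rows are true classes and whose columns are predicted classes. The 3 × 3 matrix is a made-up toy example, not data from the study.

```python
def true_positives(matrix):
    """Diagonal entries: images of class i predicted as class i."""
    return [matrix[i][i] for i in range(len(matrix))]


def false_negatives(matrix):
    """Images of class i predicted as any other class."""
    return [sum(row) - row[i] for i, row in enumerate(matrix)]


def overall_accuracy(matrix):
    """Assumed form of Eq. (1): sum(TP_i) / sum(TP_i + FN_i)."""
    tp = true_positives(matrix)
    fn = false_negatives(matrix)
    return sum(tp) / (sum(tp) + sum(fn))


def class_recall(matrix):
    """Recall of each class: TP_i / (TP_i + FN_i)."""
    return [
        tp / (tp + fn)
        for tp, fn in zip(true_positives(matrix), false_negatives(matrix))
    ]


# Hypothetical confusion matrix (rows: true class, columns: predicted class).
toy_matrix = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 0, 10],
]

toy_accuracy = overall_accuracy(toy_matrix)
toy_recall = class_recall(toy_matrix)
print(f"accuracy = {toy_accuracy:.2%}")
print("recall   =", [f"{r:.0%}" for r in toy_recall])
```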

4.1 ResNet-152-Based CDC Model

He et al. [13] proposed ResNet v2 as an improvement of the residual network model. It uses identity mappings to propagate the forward and backward signals directly from one block to another, and it offers better performance than the previous version. ResNet v2 comes in several variants with different numbers of layers. ResNet-152 v2 has 152 layers and uses a fixed input image size of 224 × 224 RGB pixels.
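For reference, the identity-mapping argument can be written compactly. The notation below follows He et al. [13]: x_l is the input to residual unit l, F is the residual function with weights W_l, and E is the loss. It is background from that paper rather than a result of the present study.

```latex
% One residual unit with an identity shortcut
x_{l+1} = x_l + \mathcal{F}(x_l, W_l)

% Unrolled from unit l to any deeper unit L: the input x_l reaches x_L unchanged
x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i, W_i)

% Backward pass: the constant term 1 carries the gradient directly back to unit l
\frac{\partial E}{\partial x_l}
  = \frac{\partial E}{\partial x_L}
    \left( 1 + \frac{\partial}{\partial x_l} \sum_{i=l}^{L-1} \mathcal{F}(x_i, W_i) \right)
```

The additive term in the last line is what lets the gradient reach shallow units without passing through every intermediate weight layer.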

Fig. 1 shows the training result for CDC using the fine-tuned ResNet-152 model: accuracy increases and loss decreases as training proceeds through the epochs. The ResNet-152-based CDC model reached a training accuracy of about 99.14% and a validation accuracy of about 99.00%. Training required 9 hours and 48 minutes. The confusion matrix of the ResNet-152-based CDC model, shown in Tab. 3, indicates the prediction and recall percentages of each breed. The overall testing accuracy was 89.31%.

Figure 1: Accuracy and loss versus epochs when training ResNet-152 v2 (a) Accuracy (b) Loss

4.2 Inception-ResNet-Based CDC Model

Szegedy et al. [14] developed the Inception-ResNet v2 model as an improvement to Inception v3, adding residual connections to increase training speed and recognition performance. The input images to Inception-ResNet v2 have a fixed size of 299 × 299 RGB pixels. Training results of the fine-tuned Inception-ResNet-based CDC model are shown in Fig. 2. The final training and validation accuracies were about 98.97% and 98.94%, respectively. Training this combination required 12 hours and 29 minutes. The confusion matrix of the Inception-ResNet-based CDC model is shown in Tab. 4. The testing accuracy was about 89.50%.

Figure 2: Accuracy and loss versus epochs when training Inception-ResNet v2 (a) Accuracy (b) Loss

4.3 Xception-Based CDC Model

Chollet [15] developed Xception as an improvement to Inception v3. The Xception and Inception v3 models have roughly the same number of parameters, but Xception uses depthwise separable convolutions. The inputs to Xception are fixed-size 299 × 299 RGB images. The training result of the fine-tuned Xception-based CDC model is shown in Fig. 3. The final training and validation accuracies of the Xception-based CDC model were 99.50% and 99.23%, respectively. This combination required 13 hours and 48 minutes for training. The confusion matrix of the Xception-based CDC model is shown in Tab. 5. The testing accuracy was 91.24%.
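To illustrate what a separable convolution saves, the sketch below compares the weight count of a standard convolution with that of a depthwise separable one (a per-channel spatial filter followed by a 1 × 1 pointwise convolution). The layer dimensions are a hypothetical example, not a specific Xception layer, and bias terms are ignored.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a standard 2-D convolution (biases ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def separable_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a depthwise separable convolution (biases ignored)."""
    depthwise = kernel_size * kernel_size * in_channels  # one filter per input channel
    pointwise = in_channels * out_channels               # 1x1 convolution mixing channels
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 128 input channels, 256 output channels.
kernel_size, in_channels, out_channels = 3, 128, 256

standard = standard_conv_params(kernel_size, in_channels, out_channels)
separable = separable_conv_params(kernel_size, in_channels, out_channels)

print(f"standard : {standard:,} weights")
print(f"separable: {separable:,} weights")
print(f"reduction: {standard / separable:.1f}x")
```

For this example layer the separable form needs 33,920 weights instead of 294,912.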

Figure 3: Accuracy and loss versus epochs when training Xception (a) Accuracy (b) Loss

Tab. 6 shows the training and validation accuracies for each fine-tuned CDC model. The table also includes values for the fine-tuned VGG19-based CDC model from our previous work [16]. The models we have evaluated for this paper all have higher accuracy than the VGG19-based CDC model. The Xception-based CDC model had the highest accuracy among all the models.

Tab. 7 provides a comparison of the recall values of these same four models. The Xception-based CDC model had the highest class recall among all the models for most of the breeds. However, the highest breed-specific recall values for Alaskan Malamute dog, Norwegian Forest Cat, and Devon Rex cat were achieved by the ResNet-152-based CDC model. The highest breed-specific recall values for Bernese Mountain Dog, Shiba Inu dog, Cornish Rex cat, Scottish Fold cat, and Siamese Cat were achieved by the Inception-ResNet-based CDC model. Within the ResNet-152-based CDC model, recall was highest for the Alaskan Malamute class (100%) and lowest for the Siberian Cat class (72.19%). Within the Inception-ResNet-based CDC model, recall was highest for the Bernese Mountain Dog class (99.35%) and lowest for the Norwegian Forest Cat class (64.23%). Finally, within the Xception-based CDC model, recall was highest for the Boston Terrier dog class (100%) and lowest for the Norwegian Forest Cat class (68.29%).

The testing accuracy for the Norwegian Forest Cat class was poor overall. We believe this is due to the similarities between the Norwegian Forest Cat and the Siberian and Maine Coon cats it was commonly misclassified as. Fig. 4 shows images of these three breeds.

Figure 4: Comparison of three cat breeds with similar appearance (a) Norwegian Forest Cat (b) Siberian Cat (c) Maine Coon

Tab. 8 shows the training and testing times for all models. VGG19 had the shortest training time, 6 hours and 38 minutes, but the poorest accuracy. The Xception-based CDC model had the longest training time but achieved the highest accuracy and required the least amount of time to identify the breed.
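The trade-off between training time and accuracy can be tabulated from the figures quoted in the text. The sketch below uses only the training times and testing accuracies stated in the running text (Tab. 8 itself is not reproduced here); the testing accuracy of the VGG19-based model is not quoted in this text, so it is left as `None`. The data structure and function names are illustrative.

```python
# (hours, minutes) of training and testing accuracy in percent, as quoted in the text.
reported = {
    "VGG19": {"train_time": (6, 38), "test_accuracy": None},
    "ResNet-152 v2": {"train_time": (9, 48), "test_accuracy": 89.31},
    "Inception-ResNet v2": {"train_time": (12, 29), "test_accuracy": 89.50},
    "Xception": {"train_time": (13, 48), "test_accuracy": 91.24},
}


def to_minutes(hours_minutes):
    """Convert an (hours, minutes) pair to total minutes."""
    hours, minutes = hours_minutes
    return hours * 60 + minutes


training_minutes = {
    name: to_minutes(values["train_time"]) for name, values in reported.items()
}

fastest_to_train = min(training_minutes, key=training_minutes.get)

# Only models with a quoted testing accuracy take part in the accuracy ranking.
with_accuracy = {
    name: values["test_accuracy"]
    for name, values in reported.items()
    if values["test_accuracy"] is not None
}
most_accurate = max(with_accuracy, key=with_accuracy.get)

extra_minutes = training_minutes["Xception"] - training_minutes["ResNet-152 v2"]
extra_accuracy = round(with_accuracy["Xception"] - with_accuracy["ResNet-152 v2"], 2)

for name, minutes in sorted(training_minutes.items(), key=lambda item: item[1]):
    print(f"{name:<20} {minutes:>4} min  accuracy: {reported[name]['test_accuracy']}")
print(f"fastest to train: {fastest_to_train}; most accurate: {most_accurate}")
print(f"Xception vs ResNet-152 v2: +{extra_minutes} min for +{extra_accuracy} points")
```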

We also used these combined models to identify individual dog and cat images, with results shown in Fig. 5. The prediction times are shown in Tab. 9.

Figure 5: Single image prediction results (a) American Short Hair image (b) Belgian Malinois image (c) Devon Rex image (d) Basset Hound image (e) Akita image (f) Abyssinian Cat image

Tab. 9 shows that the Xception-based CDC was slower than the VGG19-based CDC model but faster than the other two models. Fig. 6 plots the overall performance of all models. Xception offered better accuracy than VGG19, at the cost of slower classification time.

Figure 6: Overall performance plot of all models

In this paper, we have presented an image classifier for identifying the breeds of dogs and cats that incorporates fine-tuned deep learning models from Keras. Our results show that the Xception-based CDC model has the highest accuracy among those tested, with training, validation, and testing accuracies of 99.49%, 99.21%, and 91.24%, respectively. Although the VGG19-based CDC model from our previous work trains fastest among all the models, it offers the lowest accuracy. The higher accuracy of Xception comes at a cost, with the longest training time of 13 hours and 48 minutes. Nonetheless, Xception’s speed at identifying breeds was second-fastest overall, behind only the relatively inaccurate VGG19.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. J. Dean, G. Corrado, R. Monga, K. Chen, M. Devin et al., “Large scale distributed deep networks,” in Advances in Neural Information Processing Systems, pp. 1223–1231, 2012.

2. A. Krizhevsky, I. Sutskever and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” Communications of the ACM, vol. 60, no. 6, pp. 84–90, 2017.

3. N. Tajbakhsh, J. Y. Shin, S. R. Gurudu, R. T. Hurst, C. B. Kendall et al., “Convolutional neural networks for medical image analysis: Full training or fine tuning,” IEEE Transactions on Medical Imaging, vol. 35, no. 5, pp. 1299–1312, May 2016.

4. J. Yosinski, J. Clune, Y. Bengio and H. Lipson, “How transferable are features in deep neural networks,” in Advances in Neural Information Processing Systems, pp. 3320–3328, 2014.

5. O. M. Parkhi, A. Vedaldi, A. Zisserman and C. V. Jawahar, “Cats and dogs,” in IEEE Conf. on Computer Vision and Pattern Recognition, Providence, RI, pp. 3498–3505, 2012.

6. S. Panigrahi, A. Nanda and T. Swarnkar, “Deep learning approach for image classification,” in 2018 2nd Int. Conf. on Data Science and Business Analytics (ICDSBA), Changsha, pp. 511–516, 2018.

7. T. Jajodia and P. Garg, “Image classification - cat and dog images,” International Research Journal of Engineering and Technology, vol. 6, no. 12, pp. 570–572, 2019.

8. A. S. B. Reddy and D. S. Juliet, “Transfer learning with ResNet-50 for malaria cell-image classification,” in 2019 Int. Conf. on Communication and Signal Processing (ICCSP), Chennai, India, pp. 0945–0949, 2019.

9. W. W. Lo, X. Yang and Y. Wang, “An Xception convolutional neural network for malware classification with transfer learning,” in 2019 10th IFIP Int. Conf. on New Technologies, Mobility and Security (NTMS), Canary Islands, Spain, pp. 1–5, 2019.

10. G. Deng, Y. Zhao, L. Zhang, Z. Li, Y. Liu et al., “Image classification and detection of cigarette combustion cone based on Inception Resnet v2,” in 2020 5th Int. Conf. on Computer and Communication Systems (ICCCS), Shanghai, China, pp. 395–399, 2020.

11. V. G. Buddhavarapu and A. A. Jothi J, “An experimental study on classification of thyroid histopathology images using transfer learning,” Pattern Recognition Letters, vol. 140, pp. 1–9, 2020.

12. Dreamstime Stock Photos, n.d. [Online]. Available: https://www.dreamstime.com/photos-images/dreamtime.html.

13. K. He, X. Zhang, S. Ren and J. Sun, “Identity mappings in deep residual networks,” in European Conf. on Computer Vision, Cham: Springer, pp. 630–645, 2016.

14. C. Szegedy, S. Ioffe, V. Vanhoucke and A. Alemi, “Inception-v4, inception-resnet and the impact of residual connections on learning,” in Thirty-First AAAI Conf. on Artificial Intelligence, 2017. [Online]. Available: https://arxiv.org/abs/1602.07261

15. F. Chollet, “Xception: Deep learning with depthwise separable convolutions,” in 2017 IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, pp. 1800–1807, 2017.

16. I. H. Mahardi, K. C. Wang Lee, S. L. Chang, “Images classification of dogs and cats using fine-tuned VGG models,” in 2nd IEEE Eurasia Conf. on IOT, Communication and Engineering 2020, Yunlin, Taiwan, pp. 230–233, 2020.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.