DOI:10.32604/iasc.2021.019194

Intelligent Automation & Soft Computing

Article

Intelligent and Integrated Framework for Exudate Detection in Retinal Fundus Images

1Bahria University, Lahore Campus, Lahore, 54000, Pakistan

2SEECS, National University of Sciences and Technology, Islamabad, 44000, Pakistan

3Ind. Researcher, Neckarstrasse 244, PLZ: 70190, Stuttgart, Germany

*Corresponding Author: Muhammad Khurram Ehsan. Email: mehsan.bulc@bahria.edu.pk

Received: 04 April 2021; Accepted: 05 May 2021

Abstract: Diabetic Retinopathy (DR) is a disease of the retina caused by diabetes. The presence of exudates in the retina is the primary visible sign of DR, and early exudate detection can protect patients from the severe stages of DR. An intelligent framework is proposed that serves two purposes. First, it highlights exudate features in fundus images using an image processing approach. Second, the enhanced features are used as input to train AlexNet for exudate detection. The proposed framework comprises three stages: pre-processing, image segmentation, and classification. During pre-processing, image quality is enhanced using contrast enhancement, median filtering, and removal of the Optic Disc (OD). In the segmentation stage, images are segmented using morphological operations. During classification, the segmented images are applied as input to AlexNet for fine-tuning of features and classification. A new dataset of fundus images is also developed using data from a local hospital. The AlexNet CNN classifier achieves 96.6% accuracy on the newly developed local dataset of retinal fundus images and 98.88% accuracy on the benchmark public research dataset DIARETDB1. The statistical evaluation and the comparative analysis against existing state-of-the-art techniques show that the proposed framework is robust and outperforms other methods for early detection of exudates in fundus images.

Keywords: Diabetic retinopathy; exudates; optic disc; segmentation; Alexnet

1 Introduction

Diabetic Retinopathy (DR) is a complication of diabetes that affects the retina of the human eye. DR has two stages: Non-Proliferative Diabetic Retinopathy (NPDR) and Proliferative Diabetic Retinopathy (PDR). NPDR is the early stage of DR, and the development of retinal lesions such as micro-aneurysms (MA) and hemorrhages in the retina are symptoms of NPDR. A normal retinal fundus image shows a healthy retina, the tissue layer that lines the innermost surface of the eye. Light captured in the retina is converted into electrical impulses, which are carried through the optic nerve to the brain, where they are interpreted as images. The retinal surface photographed in fundus photography contains a bright circular region known as the Optic Disc (OD) [1]. Fundus images also show branch-like structures: the blood vessels that carry blood and other essential components to keep the eye healthy and functioning. Variations in blood sugar levels weaken the retinal blood vessels, which consequently leads to DR.

Retinal exudates are a sign of DR, a severe eye disease and a major cause of blindness. Exudates require regular screening because early detection can help patients avoid blindness, whereas delayed identification makes the disease worse. According to the World Health Report, about 40%–60% of patients can be examined by ophthalmologists in a year. An intelligent system is therefore required to reduce the burden on ophthalmologists and to enable timely treatment. This study proposes an intelligent and robust framework, invariant to imaging conditions and to dynamic illumination patterns (caused by severe abnormalities in the image), that automates the detection of exudates in fundus images. The contributions of this study are as follows:

• An intelligent framework is proposed for the detection of exudates using image processing techniques and AlexNet. The proposed framework is compared with existing methods on the benchmark public dataset DIARETDB1 (Standard Diabetic Retinopathy Database level 1).

• A dataset of fundus images is developed using patient data obtained from General Hospital, Lahore, to investigate exudate detection on a local dataset as well. The newly developed dataset is pre-processed, labeled, and validated with the help of ophthalmologists for training and testing the proposed approach.

• The proposed automated framework can aid ophthalmologists in diagnosing the progression of retinal pathologies such as exudates more easily and accurately than a manual physical examination.

The rest of the paper is organized as follows: Section 2 describes prominent related work in the field. Section 3 describes the collection of the datasets. Section 4 explains the proposed methodology and experimentation. Section 5 presents the results and discussion, followed by the conclusion in Section 6.

2 Related Work

State-of-the-art research suggests that deep learning achieves a high degree of performance in classification problems, and many intelligent methods for identifying exudates have been proposed in the literature [2–6]. DR can manifest in three main forms: MA, hemorrhages, and hard/soft exudates. MA appear as small, circular, dark red spots, with sizes ranging between 20 and 200 microns. In medical imaging, deep learning algorithms learn key features from medical images to classify diseases, and the trained models can then make predictions on live or test cases. Supervised learning techniques such as Convolutional Neural Networks (CNNs) and random forests have been applied to classify pixels as exudate or non-exudate. Many frameworks based on morphological operations have also been reported for extracting blood vessels and other DR-related features. Researchers also apply pre-processing techniques such as scaling, noise removal, and enhancement to fundus images to highlight regions of interest [7–10]; however, if these techniques are not applied appropriately, valuable features may be lost.

In [11], Contrast Limited Adaptive Histogram Equalization (CLAHE) is applied to the green channel of fundus images for contrast adjustment. After pre-processing, image features are extracted and fed to a support vector machine (SVM) classifier to differentiate exudate from non-exudate regions. In Franklin et al. [12], an intelligent algorithm is proposed for the detection and analysis of vascular structures in retinal images. In [13], different classifiers are compared on STARE, a publicly available dataset of retinal images, for classifying hard exudates; the authors report better accuracy for the CNN than for SVM and KNN. In [14], a transfer learning approach based on the AlexNet CNN is adopted to identify humans from ear images. In Bharkad [15], mask generation and contrast enhancement are performed on the green channel and blood vessels are eliminated, followed by morphological closing, hole filling, and thresholding. Exudates are then detected by removing the OD, and a neural network (NN) based classifier is used for further classification, achieving an accuracy of 97.46%. A simple framework based on mathematical morphology is proposed in Manohar et al. [16] for detecting MA in digital color images of the retina.

In Haloi [17], MA are assumed to be generally Gaussian distributed and structurally independent of their neighbors, and a deep neural network (DNN) based framework is proposed for classifying MA at the pixel level. In Mohamed et al. [18], an architecture based on mathematical morphology is proposed for the detection of NPDR. In another study [19], a method based on the delta local rank transform, using the obtained positive and negative deltas, is proposed to detect exudates and MA. It is applied to two publicly available datasets, achieving accuracies of 97% on DIARETDB1 and 95.44% on E-OPHTHA.

In a recent study [20], a local binary pattern (LBP) based methodology is proposed and validated on DIARETDB1, detecting exudates with an accuracy of 81%.

3 Dataset Collection

As mentioned in Section 1, one contribution of this study is the development of a local dataset to validate the proposed exudate detection and classification framework on local patients. Datasets from different sources are used so that the framework can be evaluated retrospectively on heterogeneous data. Two types of datasets are used to validate the proposed framework: (a) a local dataset (primary) and (b) a public dataset (secondary).

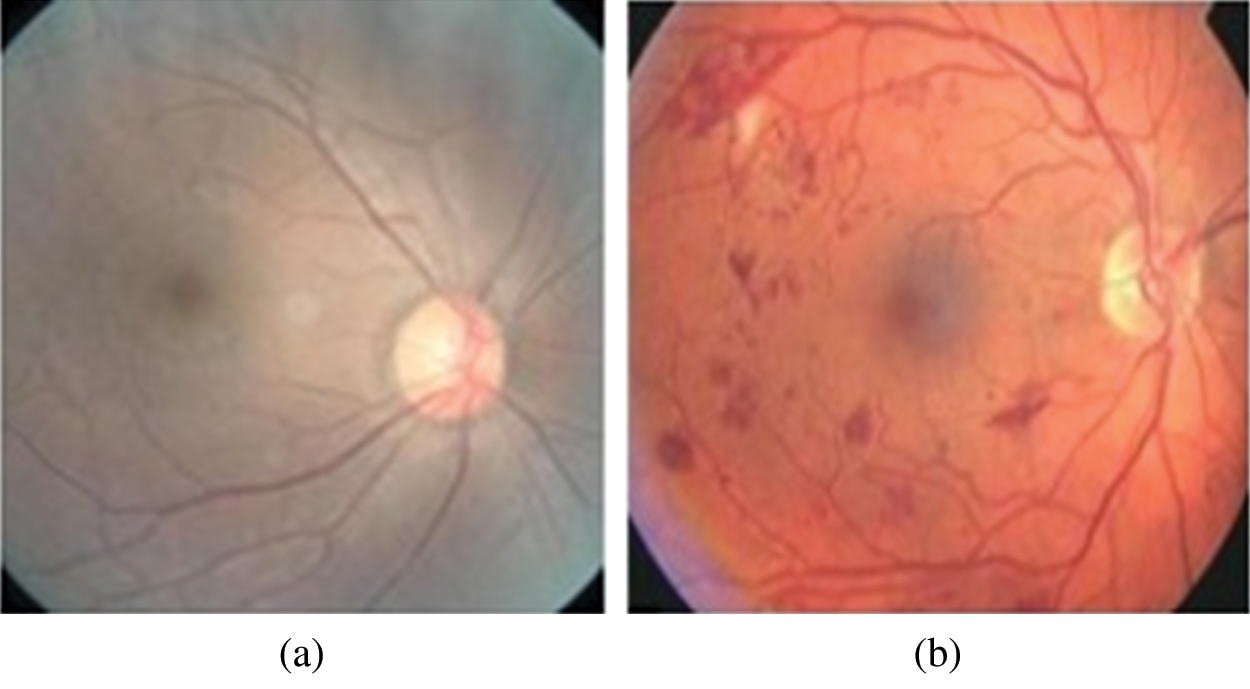

A dataset of 600 fundus images is collected from the Department of Ophthalmology, General Hospital, Lahore, Pakistan. The dataset includes images of both left and right eyes. The fundus images are captured with a fundus camera at a size of 1200 × 1500 pixels in JPEG format. Three ophthalmologists from General Hospital provided the ground truth for these images. The dataset contains 276 normal images and 324 abnormal images that show DR symptoms. Fig. 1 shows sample normal and diseased (abnormal) retinal fundus images from the local dataset.

Figure 1: Retinal fundus images of the local dataset collected at General Hospital, Lahore, Pakistan (a) Normal Fundus (b) Diseased Fundus

The standard DIARETDB1 dataset [21] is also used to validate the proposed framework. DIARETDB1 is a public benchmark dataset for DR detection from fundus images. It consists of 89 fundus images of 1500 × 1152 pixels in PNG format.

4 Proposed Methodology

Fig. 2 shows a graphical representation of the sequential steps of the proposed methodology. The phases of the methodology are described in the following subsections.

Figure 2: Methodology

4.1 Colour Retinal Image Acquisition from Database

Images from the standard dataset and the local dataset are stored in a repository named the Color Retinal Image Database (CRID). Acquiring the retinal images from this database is the first step of the proposed algorithm.

4.2 Pre-processing

The fundus images of different patients are captured with different cameras, which may result in variations in color intensity, size, and resolution. These variations can affect the overall results of the proposed algorithm, so the images are pre-processed both to normalize these variations and to provide a better learning input for the classifier. The pre-processing phase consists of image resizing, green channel extraction, contrast enhancement, and noise removal. This step not only standardizes all images but also helps the classifier extract exudates from retinal images. Image resizing increases or decreases the total number of pixels in an image to transform it to a single required standard size; bilinear interpolation is used in this process to avoid losing useful information. After resizing, the green and red planes are extracted from the image. The green plane of color fundus images has a good contrast level, and exudates are more visible in this plane, while the red plane has brighter gray-level values distributed over a wider range, which makes the blood vessels more visible in this channel. Once the green and red channels are extracted, the image is further processed to enhance its contrast using CLAHE. To improve image quality, noise filtering is then performed, followed by a morphological operation: a 3 × 3 median filter is applied to the green channel to remove noise. Figs. 3a–3d depict the output of each pre-processing step on a sample input image.
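
The resizing, channel extraction, and 3 × 3 median filtering steps described above can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: images are assumed to be nested lists of 8-bit values, the helper names are hypothetical, and the corner-aligned coordinate mapping for bilinear interpolation is one of several common conventions. CLAHE is omitted because the paper does not report its parameters; with OpenCV, `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` returns an object whose `apply` method equalizes a single-channel image.

```python
"""Minimal pre-processing sketch (pure Python, images as nested lists).

Assumptions (not from the paper): an RGB image is a list of rows of
(r, g, b) tuples with 8-bit values; the target size is a free parameter.
"""
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Gray = List[List[float]]


def extract_channel(rgb: List[List[Pixel]], index: int) -> Gray:
    """Return one colour plane (0 = red, 1 = green, 2 = blue) as a 2-D list."""
    return [[float(px[index]) for px in row] for row in rgb]


def resize_bilinear(img: Gray, out_h: int, out_w: int) -> Gray:
    """Resize a 2-D image with bilinear interpolation (corner-aligned grid)."""
    in_h, in_w = len(img), len(img[0])
    # Scale factors map output coordinates back onto the input grid.
    sy = (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
    sx = (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
    out = []
    for i in range(out_h):
        y = i * sy
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * sx
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def median_filter_3x3(img: Gray) -> Gray:
    """3 x 3 median filter; border pixels reuse clamped (replicated) neighbours."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = sorted(
                img[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                for di in (-1, 0, 1)
                for dj in (-1, 0, 1)
            )
            row.append(window[4])  # middle of the 9 sorted values
        out.append(row)
    return out
```

For example, `median_filter_3x3(resize_bilinear(extract_channel(rgb, 1), h, w))` would produce a resized, denoised green channel; the standard size `h × w` is not stated in the paper and would have to be chosen. A median filter is a natural fit here because it removes isolated impulse noise while preserving the edges of bright lesions better than a mean filter.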

4.3 Retinal Structure Extraction

The OD has a brightness level similar to that of the exudates. To avoid confusing the OD with exudates, retinal structure extraction is performed to eliminate the OD from the image completely. The OD lies in the central region of the input image. To detect it, the diameter is estimated by scanning the rows of the image and counting the number of pixels whose intensity values represent the foreground (fundus).

Figure 3: Pre-processing stages (a) Input Image (b) Green Channel (c) Red Channel (d) Histogram Equalization

The maximum count is taken as the diameter of the fundus. Once the diameter is determined, the center of the circle (fundus) can easily be extracted. Based on the center, the circle equation is used to estimate the (x, y) position of each boundary pixel as the angle is varied from 0 to 360 degrees. After all boundary positions are obtained, a 10 × 10 pixel window is moved along the boundary, and the boundary pixels are eliminated by setting their values to zero. Morphological operators are then applied to remove any remaining residue of the blood vessels that cross the optic disc boundary: to make the OD boundary clear, a dilation operator is applied, followed by an erosion operator. The stages of retinal structure extraction are shown in Fig. 4.
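
A minimal sketch of the boundary-tracking step described above is given below, assuming a grayscale image stored as nested lists and a hypothetical foreground threshold `fg_threshold` that separates the bright fundus from the dark background. The diameter is the largest per-row foreground count, as in the text; the paper does not specify how the center is derived from it, so the center computation here (midpoint of the foreground extent) is an assumption, as is the 3 × 3 window used for the dilation-then-erosion (closing) step.

```python
import math
from typing import Callable, Iterable, List, Tuple

Gray = List[List[float]]


def fundus_diameter_and_centre(img: Gray, fg_threshold: float) -> Tuple[int, float, float]:
    """Return (diameter, centre_row, centre_col) of the bright fundus disc.

    Diameter = maximum number of foreground pixels found in any single row.
    fg_threshold is a hypothetical parameter (not given in the paper).
    """
    counts = [sum(1 for v in row if v > fg_threshold) for row in img]
    diameter = max(counts)
    # Assumed centre: midpoint of the foreground rows, and midpoint of the
    # foreground span on the widest row.
    fg_rows = [i for i, c in enumerate(counts) if c > 0]
    cy = (fg_rows[0] + fg_rows[-1]) / 2
    widest = img[counts.index(diameter)]
    fg_cols = [j for j, v in enumerate(widest) if v > fg_threshold]
    cx = (fg_cols[0] + fg_cols[-1]) / 2
    return diameter, cy, cx


def remove_circle_boundary(img: Gray, cy: float, cx: float, radius: float,
                           win: int = 10) -> Gray:
    """Zero a win x win window centred on each boundary point of the circle.

    Boundary points come from the circle equation x = cx + r*cos(t),
    y = cy + r*sin(t) for t = 0..359 degrees.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    half = win // 2
    for deg in range(360):
        t = math.radians(deg)
        by = int(round(cy + radius * math.sin(t)))
        bx = int(round(cx + radius * math.cos(t)))
        for i in range(max(by - half, 0), min(by + half, h)):
            for j in range(max(bx - half, 0), min(bx + half, w)):
                out[i][j] = 0.0
    return out


def _filter_3x3(img: Gray, pick: Callable[[Iterable[float]], float]) -> Gray:
    """Apply max or min over each 3 x 3 neighbourhood with clamped borders."""
    h, w = len(img), len(img[0])
    return [[pick(img[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                  for di in (-1, 0, 1) for dj in (-1, 0, 1))
             for j in range(w)]
            for i in range(h)]


def close_3x3(img: Gray) -> Gray:
    """Grayscale closing: 3 x 3 dilation (max) followed by 3 x 3 erosion (min)."""
    return _filter_3x3(_filter_3x3(img, max), min)
```

In use, the radius passed to `remove_circle_boundary` would be half the estimated diameter. Closing (dilation followed by erosion) fills small dark gaps, such as thin vessel remnants, that are narrower than the structuring element.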

Figure 4: Retinal structure extraction (a) Green Channel Image (b) Optic Disc Mask (c) Optic Disc Eliminated

4.4 Exudate Segmentation

After retinal structure extraction, adaptive thresholding is performed to segment the image. The threshold is selected by maximizing the interclass variance, which classifies each pixel of the input image as either foreground or background. The foreground contains the exudate pixels, and the Canny edge detector is then applied to draw the boundaries of the exudate regions. Fig. 5 shows the segmentation results.
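
Selecting the threshold that maximizes the interclass (between-class) variance is Otsu's criterion. The sketch below implements that global criterion on an 8-bit grayscale image stored as nested lists; reading the paper's "adaptive thresholding" as Otsu's method is an interpretation, and the function names are hypothetical. Edge drawing is left out; OpenCV's `cv2.Canny(image, threshold1, threshold2)` is one readily available implementation of the Canny step.

```python
from typing import List

Gray = List[List[int]]


def otsu_threshold(img: Gray, levels: int = 256) -> int:
    """Return t maximising between-class variance (background: v <= t)."""
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_b = 0    # number of background pixels (values <= t)
    sum_b = 0  # intensity sum of background pixels
    for t in range(levels):
        w_b += hist[t]
        sum_b += t * hist[t]
        w_f = total - w_b
        if w_b == 0:
            continue
        if w_f == 0:
            break
        mu_b = sum_b / w_b
        mu_f = (sum_all - sum_b) / w_f
        # Between-class variance up to the constant factor 1 / total**2.
        var_between = w_b * w_f * (mu_b - mu_f) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def segment(img: Gray, t: int) -> List[List[int]]:
    """Binary mask: 1 = foreground (candidate exudate), 0 = background."""
    return [[1 if v > t else 0 for v in row] for row in img]
```

Because exudates are bright against a darker retinal background after OD removal, the foreground class produced by the threshold is the natural candidate set for exudate pixels.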

Figure 5: Exudate segmentation (a) Optic Disc Eliminated (b) Intermediate Detection (c) Final Exudate Detection

4.5 Training for Classification

CNNs are used for classification because they can learn image features that are robust to affine variations in the input data. CNNs are among the most effective techniques for image recognition and object detection in real-time applications. The topology of a CNN is divided into multiple learning stages: a typical CNN architecture comprises alternating convolution and pooling layers followed by one or more fully connected layers at the end. In addition to these learning stages, regularization units such as batch normalization and dropout are incorporated to optimize CNN performance [22]. AlexNet [23] is a popular CNN architecture pretrained on more than a million images from the ImageNet database, and it is used to classify the input images in the proposed framework. The classifier is trained on 90% of the image samples, and the remaining 10% are used for testing. AlexNet consists of five convolutional layers and three fully connected layers; its last layer is a classifier layer with a softmax activation function, and the remaining layers are used as a feature extractor. The structure of AlexNet used for training and classification in the proposed approach is depicted in Fig. 6.
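
To make the layer description above concrete, the following sketch traces the spatial size through AlexNet's five convolutional and three pooling stages using out = ⌊(n + 2p − k)/s⌋ + 1, and shows the softmax applied by the last layer. The kernel, stride, padding, and filter counts follow the original AlexNet design with a 227 × 227 input; the paper does not list them, and the two-class output (exudate vs. non-exudate) is an assumption.

```python
import math
from typing import List, Optional, Tuple

# (name, kernel, stride, padding, out_channels or None for pooling).
# Values follow the original AlexNet design (227 x 227 input); they are an
# assumption here, since the paper does not list them.
LAYERS: List[Tuple[str, int, int, int, Optional[int]]] = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]


def out_size(n: int, k: int, s: int, p: int) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * p - k) // s + 1


def alexnet_shapes(n: int = 227, channels: int = 3, num_classes: int = 2):
    """Return per-layer (name, height, width, channels), the flattened size,
    and the widths of the three fully connected layers."""
    shapes = []
    for name, k, s, p, c in LAYERS:
        n = out_size(n, k, s, p)
        if c is not None:  # pooling layers keep the channel count
            channels = c
        shapes.append((name, n, n, channels))
    flat = n * n * channels
    fc = [("fc6", 4096), ("fc7", 4096), ("fc8", num_classes)]
    return shapes, flat, fc


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax used by the final classification layer."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

With these settings the feature extractor ends in a 6 × 6 × 256 map (9216 values) that feeds the fully connected layers. In PyTorch, a comparable transfer-learning setup could load `torchvision.models.alexnet` with ImageNet weights and replace its last linear layer, `model.classifier[6]`, with `torch.nn.Linear(4096, 2)`; note that torchvision's variant uses slightly different filter counts (64, 192, 384, 256, 256).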

Figure 6: AlexNet architecture [23]

5 Results and Discussion

Sensitivity, specificity, and accuracy are estimated for each dataset (public and local) to validate the proposed framework, and the results obtained on the public DIARETDB1 dataset are compared with existing work. Sensitivity and specificity are the percentages of abnormal and normal fundus images, respectively, that are correctly classified by screening. These performance metrics are computed from the True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN) counts obtained against the ground truth [24] as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$
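
The three metrics can be computed directly from predicted and ground-truth labels, as in the minimal sketch below. It assumes that label 1 marks an abnormal (exudate) image and 0 a normal one; the function names are hypothetical, and the labels in the usage example are illustrative, not results from this study.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) with 1 = abnormal (positive), 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def screening_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, sensitivity and specificity as defined in the text."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),  # share of abnormal images detected
        "specificity": tn / (tn + fp),  # share of normal images cleared
    }


# Illustrative labels only (not data from this study).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 1]
print(screening_metrics(*confusion_counts(y_true, y_pred)))
# prints {'accuracy': 0.625, 'sensitivity': 0.75, 'specificity': 0.5}
```

Reporting sensitivity and specificity alongside accuracy matters for screening because accuracy alone can hide a classifier that misses many abnormal images when the classes are imbalanced.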

On the public DIARETDB1 dataset, the proposed framework achieves an accuracy of 98.88%, a sensitivity of 98.8%, and a specificity of 98.78%. The DIARETDB1 images are first pre-processed, retinal structure extraction is then performed, and finally segmentation is performed, as shown in Fig. 7, to obtain labels for better training of AlexNet.

Figure 7: Detection of exudate result samples for data set DIARETDB1

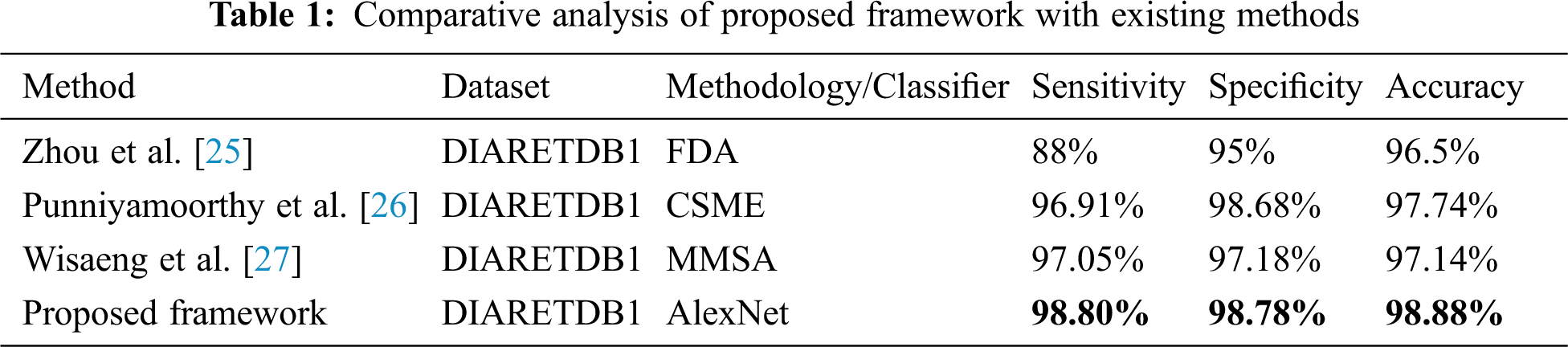

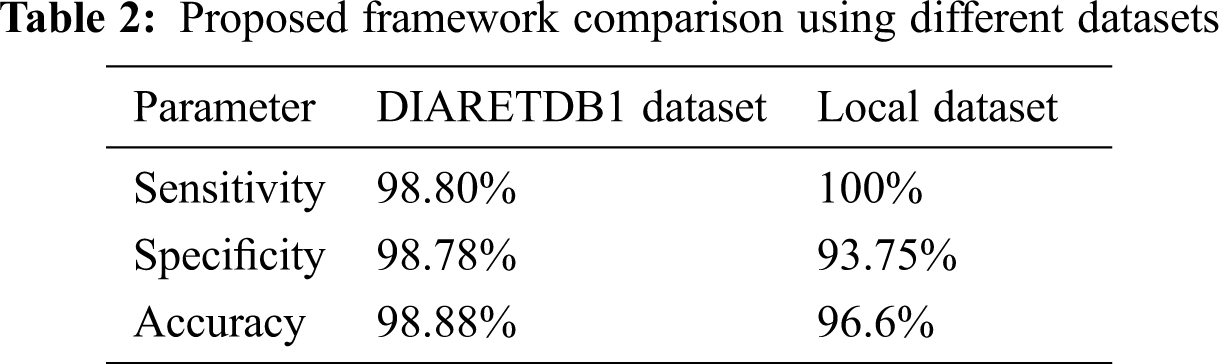

Tab. 1 compares the proposed framework with existing approaches in terms of performance metrics, and Fig. 8 depicts the comparative accuracies. Both show that the proposed framework outperforms the existing approaches.

Figure 8: Accuracy comparison of the proposed framework with existing approaches

Similarly, the proposed framework is applied to the local dataset to evaluate its performance on a different dataset. On the local dataset, it achieves an accuracy of 96.6%, a sensitivity of 100%, and a specificity of 93.75%. The results of the proposed framework were also examined by three ophthalmologists and compared with the given ground truth.

Tab. 2 compares the performance of the proposed framework on the public and local datasets in terms of the estimated performance metrics.

6 Conclusion

Automated identification of exudates can reduce the risk of blindness caused by DR by helping ophthalmologists diagnose it in time and suggest an appropriate treatment. In this study, an intelligent framework is proposed for exudate detection in fundus images of diabetic patients. The contribution of this research is twofold: an end-to-end framework that processes and analyzes the retinal images of DR patients automatically, and a new local dataset of fundus images. The proposed approach applies the necessary pre-processing and removes undesired artifacts such as the optic disc and the fundus boundary. It then performs segmentation based on selected features for exudate detection, and the segmented images are used to train and test the AlexNet classifier. The proposed framework outperforms existing frameworks, with an accuracy of 98.88% on DIARETDB1, and it is also validated on the locally developed dataset with an accuracy of 96.6%. The proposed automated framework can help ophthalmologists diagnose the progression of retinal pathologies such as exudates early, more easily and accurately than a manual physical examination.

Acknowledgement: The authors thank Dr. Bilal Hassan Khan and the staff at the Eye Clinic, Department of Ophthalmology, General Hospital, Lahore, Pakistan, for their guidance in the medical domain and their tremendous support in collecting the research data.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study. The article processing charge (APC) is to be funded by the authors' institute under its predefined standard policy.

References

1. J. Amin, M. Sharif and M. Yasmin, “A review on recent developments for detection of diabetic retinopathy,” Scientifica, vol. 2016, no. 9, pp. 1–21, 2016.

2. P. Liskowski and K. Krawiec, “Segmenting retinal blood vessels with deep neural networks,” IEEE Transactions on Medical Imaging, vol. 35, no. 11, pp. 2369–2380, 2016.

3. G. Arun, Y. H. Robinson, E. G. Julie, V. Shanmuganathan, S. Rho et al., “Intelligent prediction approach for diabetic retinopathy using deep learning based convolutional neural networks algorithm by means of retina photographs,” Computers, Materials & Continua, vol. 66, no. 2, pp. 1613–1629, 2021.

4. H. Asha, J. Anitha, D. E. Popescu, A. Asokan, D. J. Hemanth et al., “Detection and grading of diabetic retinopathy in retinal images using deep intelligent systems: a comprehensive review,” Computers, Materials & Continua, vol. 66, no. 3, pp. 2771–2786, 2021.

5. R. Sivakumar, R. Tamilselvi, N. Archana, N. Deepthi and N. Priyadharisini, “Classification and detection of retinal diseases,” in IEEE IPCSIT Proc., Kuala Lumpur, Malaysia, 2011.

6. M. Melinscak, P. Prentasic and S. Loncaric, “Retinal vessel segmentation using deep neural networks,” in SciTePress VISAPP Proc., Berlin, Germany, 2015.

7. D. Singh, Dharmveer and B. Singh, “A new morphology based approach for blood vessel segmentation in retinal images,” in IEEE INDICON Proc., Pune, India, 2014.

8. T. Walter, J. Klein, P. Massin and A. Erginay, “A contribution of image processing to the diagnosis of diabetic retinopathy-detection of exudates in color fundus images of the human retina,” IEEE Transactions on Medical Imaging, vol. 21, no. 10, pp. 1236–1243, 2002.

9. C. I. Sánchez, M. Niemeijer, I. Isgum, A. Dumitrescu, M. S. A. Suttorp-Schulten et al., “Contextual computer-aided detection: improving bright lesion detection in retinal images and coronary calcification identification in CT scans,” Medical Image Analysis, vol. 16, no. 1, pp. 50–62, 2012.

10. N. Mohanapriya and D. B. Kalaavathi, “Adaptive image enhancement using hybrid particle swarm optimization and watershed segmentation,” Intelligent Automation & Soft Computing, vol. 25, no. 4, pp. 663–672, 2019.

11. N. Chidambaram and D. Vijayan, “Detection of exudates in diabetic retinopathy,” in IEEE ICACCI Proc., Bangalore, India, 2018.

12. W. S. Franklin and S. E. Rajan, “Computerized screening of diabetic retinopathy employing blood vessel segmentation in retinal images,” Biocybernetics and Biomedical Engineering, vol. 34, no. 2, pp. 117–124, 2014.

13. J. G. Anitha and G. K. Maria, “Detecting hard exudates in retinal fundus images using convolutional neural networks,” in IEEE ICCTCT Proc., Coimbatore, India, 2018.

14. A. A. Almisreb, N. Jamil and N. M. Din, “Utilizing Alexnet deep transfer learning for ear recognition,” in IEEE CAMP Proc., Sabah, Malaysia, 2018.

15. S. Bharkad, “Morphological and neural network-based approach for detection of exudates in fundus images,” in IEEE ICCMC Proc., New Jersey, USA, 2018.

16. P. Manohar and V. Singh, “Morphological approach for retinal microaneurysm detection,” in IEEE ICAECC Proc., Bangalore, India, 2018.

17. M. Haloi, “Improved microaneurysm detection using deep neural networks,” arXiv preprint arXiv:1505.04424, 2015.

18. B. Mohamed, C. Yazid, B. Nourreddine, B. Abdelmalek and C. Assia, “Non-proliferative diabetic retinopathy detection using mathematical morphology,” in IEEE MECBME Proc., Tunisia, 2018.

19. M. R. Kamble, B. Aujih, M. Kokare and F. Mériaudeau, “Detection of bright and dark lesions from color fundus images using delta-rank transform,” in ICIAS Conf. Proc., Kuala Lumpur, Malaysia, 2018.

20. A. Colomer, J. Igual and V. Naranjo, “Detection of early signs of diabetic retinopathy based on textural and morphological information in fundus images,” Sensors, vol. 20, no. 4, pp. 1–21, 2020.

21. T. Kauppi, V. Kalesnykiene, K. J. Kamarainen, L. Lensu, I. Sorri et al., “DIARETDB1 diabetic retinopathy database and evaluation protocol,” in MIUA Conf. Proc., Aberystwyth, UK, 2007. Database [Online]. Available: www2.it.lut.fi/project/imageret/diaretab1

22. A. Krizhevsky, I. Sutskever and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” in NIPS Conf. Proc., Nevada, USA, 2012.

23. X. Han, Y. Zhong, L. Cao and L. Zhang, “Pre-trained Alexnet architecture with pyramid pooling and supervision for high spatial resolution remote sensing image scene classification,” Remote Sensing, vol. 9, no. 8, pp. 1–22, 2017.

24. L. Gowda and K. V. Viswanatha, “Automatic diabetic retinopathy detection using FCM,” International Journal of Engineering Science Invention, vol. 7, no. 4, pp. 19–24, 2018.

25. W. Zhou, C. Wu, Y. Yi and W. Du, “Automatic detection of exudates in digital color fundus images using super pixel multi-feature classification,” IEEE Access, vol. 5, pp. 17077–17088, 2017.

26. U. Punniyamoorthy and I. Pushpam, “Remote examination of exudates-impact of macular oedema,” Healthcare Technology Letters, vol. 5, no. 4, pp. 118–123, 2018.

27. K. Wisaeng and W. Sa-Ngiamvibool, “Exudates detection using morphology mean shift algorithm in retinal images,” IEEE Access, vol. 7, no. 1, pp. 11946–11958, 2019.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.