DOI:10.32604/iasc.2021.013732

Intelligent Automation & Soft Computing

Article

Design and Development of Collaborative AR System for Anatomy Training

1Company for Visualization & Simulation (CVS), Duy Tan University, Danang, 550000, Vietnam

2Hue University of Medicine and Pharmacy, Hue, 490000, Vietnam

3Institute of Research and Development, Duy Tan University, Danang, 550000, Vietnam

4Faculty of Information Technology, Duy Tan University, Danang, 550000, Vietnam

*Corresponding Author: Dac-Nhuong Le. Email: ledacnhuong@duytan.edu.vn

Received: 18 August 2020; Accepted: 30 November 2020

Abstract: Background: Augmented Reality (AR) combines real and virtual objects in real time and allows one or more users to interact with 3D models. Supporting multiple users in the same environment is difficult because of latency between devices and the limited accuracy with which each device positions the shared models. Method: To address this concern, we present a multi-user sharing technique for AR-based human anatomy that supports learning with high quality, high stability, and low latency across multiple devices. The multi-user interactive display (HoloLens) is combined with the human body anatomy application (AnatomyNow) to teach and train students, academic staff, and hospital faculty in human anatomy. We also introduce a pin system for sharing the same knowledge among multiple users. Five groups took part in the study, and the evaluation used two parameters: latency between the devices and position accuracy of the 3D objects in the same environment. Results: The proposed multi-user interaction technique was rated as good in terms of 3D object position accuracy and latency between the devices. Conclusion: AR technology provides a multi-user interactive environment for teaching and training in human anatomy.

Keywords: Augmented reality; collaboration; anatomy; sharing hologram

1 Introduction

Augmented Reality (AR) integrates real and virtual objects in a three-dimensional real-world environment and presents them through an interactive interface [1]. Single-user AR devices are used in a wide range of applications and have achieved significant success. One of the features that makes AR highly usable is the ability of multiple interfaces to interact with each other virtually [2]. Over the last few decades, AR has become one of the most promising technologies for supporting 3D display tasks, and research in this field is growing as AR systems become more widely available [3–6]. Developers and researchers are therefore aiming to build practical AR products on a global scale in the near future. Most AR products target a single-user experience and cannot share the same virtual object among users. AR collaboration scenarios can be classified into two types [7–9]:

a) Remote collaboration, which builds the illusion that two or more participants share the same space through telepresence.

b) Co-located AR collaboration, which enhances the shared workspace so that the participants are visible to each other while collaborating.

The world has witnessed many drastic changes due to natural disasters [10], epidemics [11], and wars [12], which reduce human interaction and restrict travel. Building interactive, shareable virtual applications can make remote training, evaluation, and diagnosis possible [13], which may mitigate these problems. It may also improve consultations in hospitals worldwide. Interactive environments can also be applied to teaching and training, including remote employee training workshops. Such workshops may be led by a team of experts and doctors and supported by visual aids such as photos, videos, or voice communication. One of the biggest challenges for medical experts is providing medical explanations, together with lesson-specific details and notes, in the classroom [14,15]. A multi-user AR system can address this challenge by allowing multiple users to interact with and share 3D objects [16,17].

Many researchers have proposed AR-based solutions for exploring human anatomy. Stefan et al. [18–20] proposed an AR-based system for human anatomy. The main motive of such systems is to help users learn complex anatomy in more detail and faster than with traditional learning systems. Some studies [21–25] explore human anatomy through mobile applications. Kurniawan et al. [26] proposed an AR-based mobile application for that purpose. A camera captured pictures, which were then split into several pieces and patterns to be matched against a database of images. In addition, a floating euphoria framework was deployed and integrated with an SQLite database.

Kuzuoka [27] employed a video stream to convey the actions of a skilled person. Local users were captured on video, which was transmitted to the remote skilled person, who could annotate the captured content. Baurer et al. [28] extended this approach and presented a specialist-controlled mouse pointer on the head-mounted display (HMD) of the local workers. However, the mouse pointer is positioned on a two-dimensional display, and its stability is poor while the HMD is moving. Chasitine et al. [29] presented a system that shows three-dimensional pointers to represent the skilled person's intention. However, the 3D pointers considered in that study are quite slow. Bottecchia et al. [30] proposed an AR-based system that allows 3D animated objects to be placed for local staff to observe. The purpose of this system is to demonstrate to the participants how to analyze or solve a problem. Other researchers [31,32] put forward solutions for diagnosing heart disease, raising awareness about osteoarthritis, rheumatoid arthritis, and Parkinson's disease, and diagnosing autism in children.

The introduction of Microsoft HoloLens has been a breakthrough in augmented reality and has led to a rapid increase in AR applications and academic publications. Some popular areas are medical visualization, molecular sciences, architecture, and telecommunications. Trestioreanu [31] offered a 3D medical visualization technique using HoloLens for visualizing a CT dataset in 3D. This technique is limited to a specific dataset and requires an external system for the rendering tasks. Si et al. [33] presented an AR interactive environment designed for neurosurgical training. The study used two steps to develop the holographic visualization of the virtual brain: the first reconstructs personalized anatomical structures from segmented MR imaging, and the second deploys a precise registration method for mapping virtual-real spatial information. An AR-based solution [34] was used to test clinical and non-clinical applications with HoloLens. In that study, Microsoft HoloLens was employed for virtual annotation, observing three-dimensional gross specimens, navigating whole slides, telepathology, and observing the real-time correlation between pathology and radiology.

A comparative evaluation study [35] examined an optical AR system and a semi-immersive VR table. A total of 82 participants took part in this study, and performance and preferences were evaluated via questionnaires. Maniam et al. [36] developed a mixed-reality application for teaching temporal bone anatomy and ran the simulation on the HoloLens. Through vertex displacement and texture stretching, the simulation replicates the real drilling experience in the virtual environment and allows the user to understand a variety of otological structures. Pratt et al. [37] asserted that AR techniques could help identify, dissect, and execute vascular pedunculated flaps during reconstructive surgery. Through computed tomography angiography (CTA) scanning, three-dimensional models can be generated, transformed into polygonal models, and rendered inside the Microsoft HoloLens. Erolin [38] explored interactive three-dimensional digital models to enhance anatomy learning and medical education. The study developed virtual 3D anatomy resources using photogrammetry, surface scanning, and digital modeling.

The main objective of the study is to develop a multi-user human body anatomy application (AnatomyNow) for anatomy teaching and training. Multi-user interaction in the same environment can be challenging. To address this issue, we propose a sharing technique for multiple participants in the same environment and develop high-quality 3D objects for a detailed understanding of human body parts and organs. We consider two cases: first, different users in the same physical space, and second, users in different geographical locations. The developed anatomy application is evaluated in these cases to analyze the performance of the sharing technique on HoloLens.

The main contributions of this paper are as follows:

• We integrate the human anatomy application (AnatomyNow) with the Microsoft HoloLens.

• We create a multi-user AR interaction platform for human body anatomy and test it in two different cases.

• We introduce a pin system for the interaction of multiple users in the same environment.

• We evaluate performance using two parameters: data lagging between the devices and distance between the models on different devices.

The rest of the paper is organized as follows: Section 2 describes augmented reality technologies. Section 3 discusses the methodology used in the proposed system. Section 5 presents the performance evaluation of the study, followed by a discussion of the comparative analysis of the research. Section 6 concludes the proposed study.

2 Augmented Reality Technologies

2.1 On-device Augmented Reality

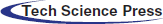

For on-device augmented reality, a typical AR application annotates content from the real-world environment. It does so by estimating the pose of the on-board camera and aligning the virtual camera used for rendering with it in each frame. Here, the pose has six degrees of freedom: three of translation and three of rotation. Two different types of mobile SDK are available for on-device augmented reality, as listed in Tab. 1:

Table 1: Different types of AR-based mobile SDKs

2.2 Cloud-Based Augmented Reality

With the introduction of cloud computing, virtual reality and AR moved into a new technological era. The cloud allows information and experiences to be tied to specific locations in real time and to persist across user terminals and devices. Google Lens [39] allows a user to search for and identify objects by taking pictures. It also provides information about a product if related information is found on its server. Many studies address the scalability problem [40,41] by combining an image tracking system with an image retrieval method. However, this approach was unable to tackle the mismatching problem. Another study [42] proposed a system that initializes image recognition and tracking with a region of interest (ROI). Jain et al. [43–53] proposed a cloud-based augmented reality solution that extracts features from the images and works with those instead of the full picture, which reduces overall network usage. CloudAR performs better in terms of network latency and power consumption than traditional AR systems.

2.3 Augmented Reality Communications

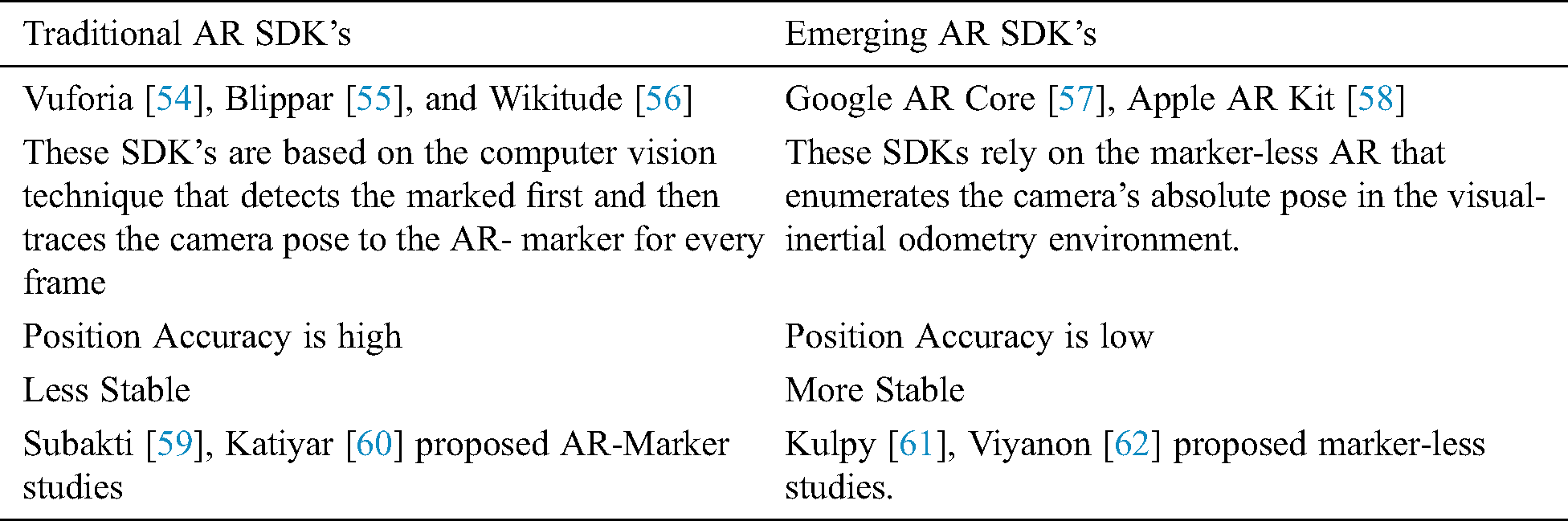

Augmented Reality communication acts as a bridge between devices, either through a cloud server or through direct (peer-to-peer) contact. AR communication is classified into two types (see Fig. 1):

1) Client-server architecture: When a user moves a 3D object, the action is sent to the server as a message. The server checks whether the received data is valid. If it is, the application state is updated and replaced with the latest status, and all users connected to this server receive the same information.

2) Peer-to-peer architecture: The peer-to-peer (P2P) architecture is a network model without a centralized server. Every peer, that is, every single user device, receives and applies updates from the other peers, and sends all of its own status changes to the other devices automatically.

Figure 1: Classification of AR communications
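The two communication styles can be contrasted with a minimal in-memory sketch. It is illustrative only: the class and function names (`Device`, `Server`, `broadcast_p2p`) and the validation rule are assumptions made for this example, not part of AnatomyNow, and no real networking is involved.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A participant that keeps a local copy of the shared scene."""
    name: str
    scene: dict = field(default_factory=dict)

    def apply(self, object_name, position):
        self.scene[object_name] = position


class Server:
    """Client-server: the server validates an update, then relays it to all clients."""

    def __init__(self, known_objects):
        self.known_objects = set(known_objects)
        self.clients = []
        self.state = {}

    def submit(self, object_name, position):
        # Assumed validation rule: the object must be known and the position 3D.
        if object_name not in self.known_objects or len(position) != 3:
            return False
        self.state[object_name] = position
        for client in self.clients:
            client.apply(object_name, position)
        return True


def broadcast_p2p(sender, peers, object_name, position):
    """Peer-to-peer: the sender applies the change and sends it to every other peer."""
    sender.apply(object_name, position)
    for peer in peers:
        if peer is not sender:
            peer.apply(object_name, position)


# Client-server session with two devices.
a, b = Device("A"), Device("B")
server = Server(known_objects=["brain"])
server.clients.extend([a, b])
accepted = server.submit("brain", (0.0, 1.0, 0.5))
rejected = server.submit("unknown", (0.0, 0.0, 0.0))

# Peer-to-peer session with two other devices.
c, d = Device("C"), Device("D")
broadcast_p2p(c, [c, d], "brain", (1.0, 0.0, 0.0))
```

In the client-server variant the server is the single place where an update can be rejected, whereas in the P2P variant every peer has to trust or check incoming updates itself.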

AnatomyNow is an application based on virtual reality (VR) systems for human anatomy. This project contributes to medical training and teaching. The 3D simulation models help teachers and students observe individual body parts visually and interact with them in 3D space. In addition, the virtual model may support hands-on clinical skills training for medical students and doctors, which saves costs and creates more opportunities to apply knowledge when samples are not available.

AnatomyNow is a 3D human body simulation system that represents the entire body of a virtual person with more than 3924 units simulating the organs and structures of the human body. It includes bones, muscles, the vascular system, the heart, the nervous system and brain, the respiratory system, the digestive system, the excretory and genital systems, glands, and lymph nodes. The anatomical details are simulated according to the anatomical characteristics of Vietnamese people. The scientific accuracy, shape, and position of all simulation models have been tested and examined by doctors, researchers, professors, and scientists working in medical universities and hospitals (Hue Medical University, Hanoi Medical University). The 3D simulation system provides learners with visualized body parts and detailed aspects of anatomy. Its interactive features support operations such as rotate, hide, show, move, and view the name and scientific name. It can also provide a brief description of an anatomical unit, marked on the surface of a specific organ in different ways. Moreover, it can describe movements and signal reception, and it supports searching, listing, and categorizing anatomical units such as anatomical landmarks, regions, subjects, systems, and sites. However, it may not be compatible with anatomical pictures or templates.

The system allows users to interact directly in 3D space via 3D projectors, 3D glasses, or VR-compatible headsets such as Oculus Rift, Gear VR, and HTC Vive, as well as GPS and touch devices. It also supports interaction through screens on operating systems such as Windows, Mac, and Linux, and can be customized for devices such as smartphones and tablets (Android or iOS).

The methodology of the proposed study covers the architecture of the system, its implementation, and the algorithm, as follows:

3.1 Architecture of the Proposed Work

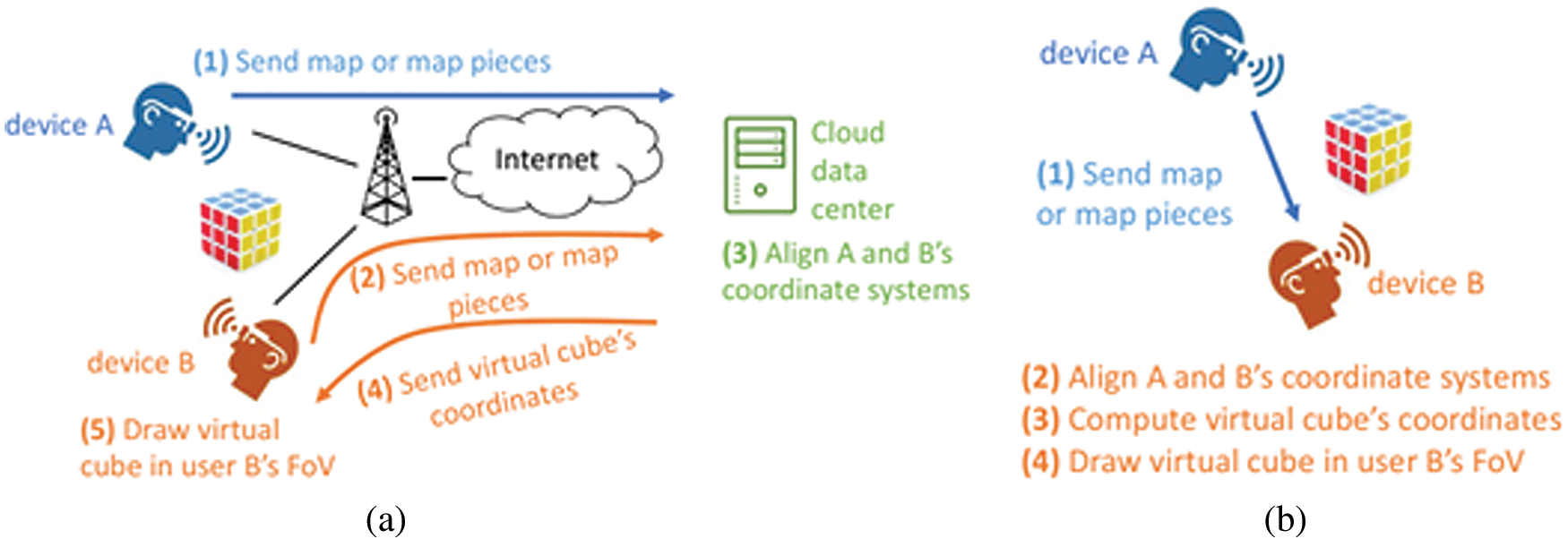

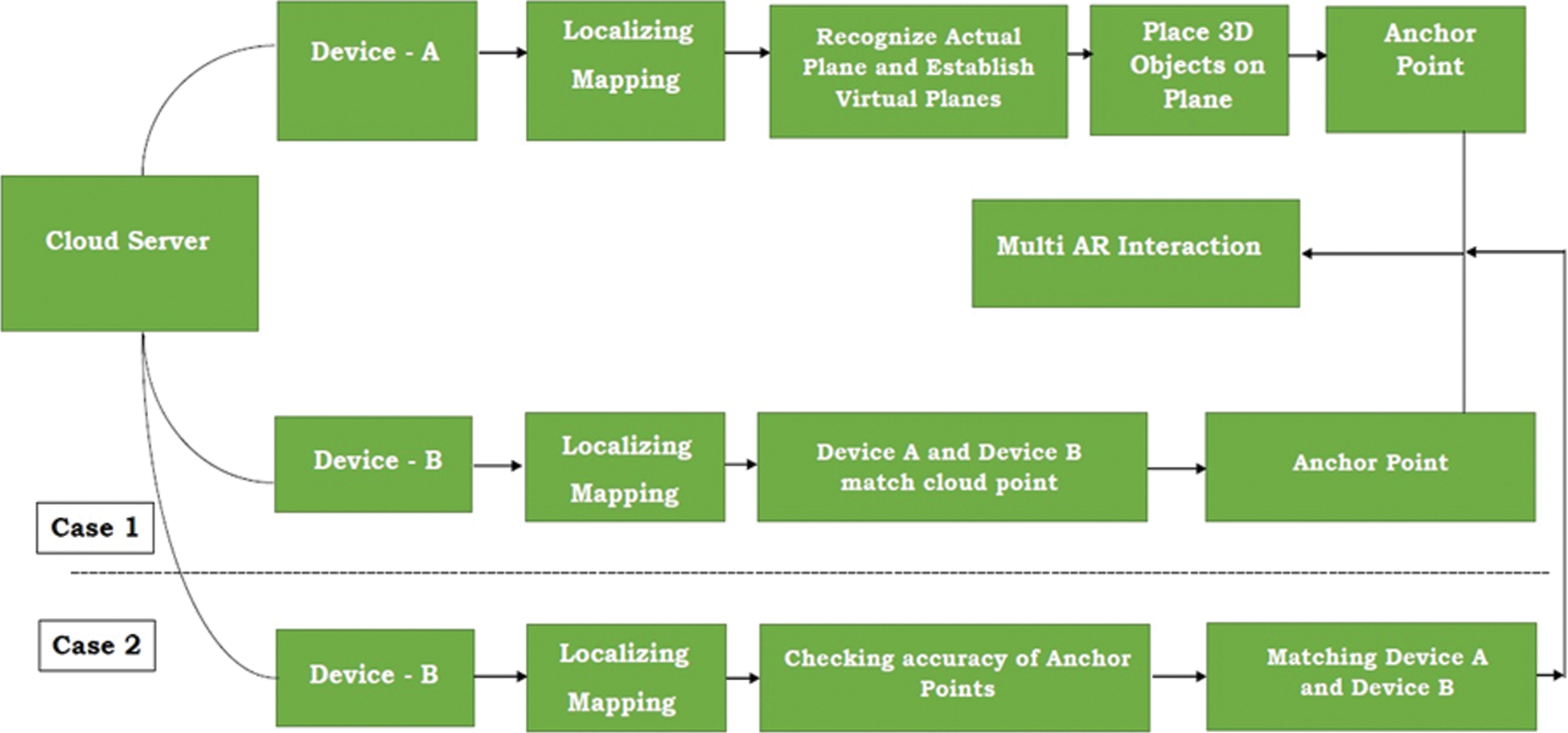

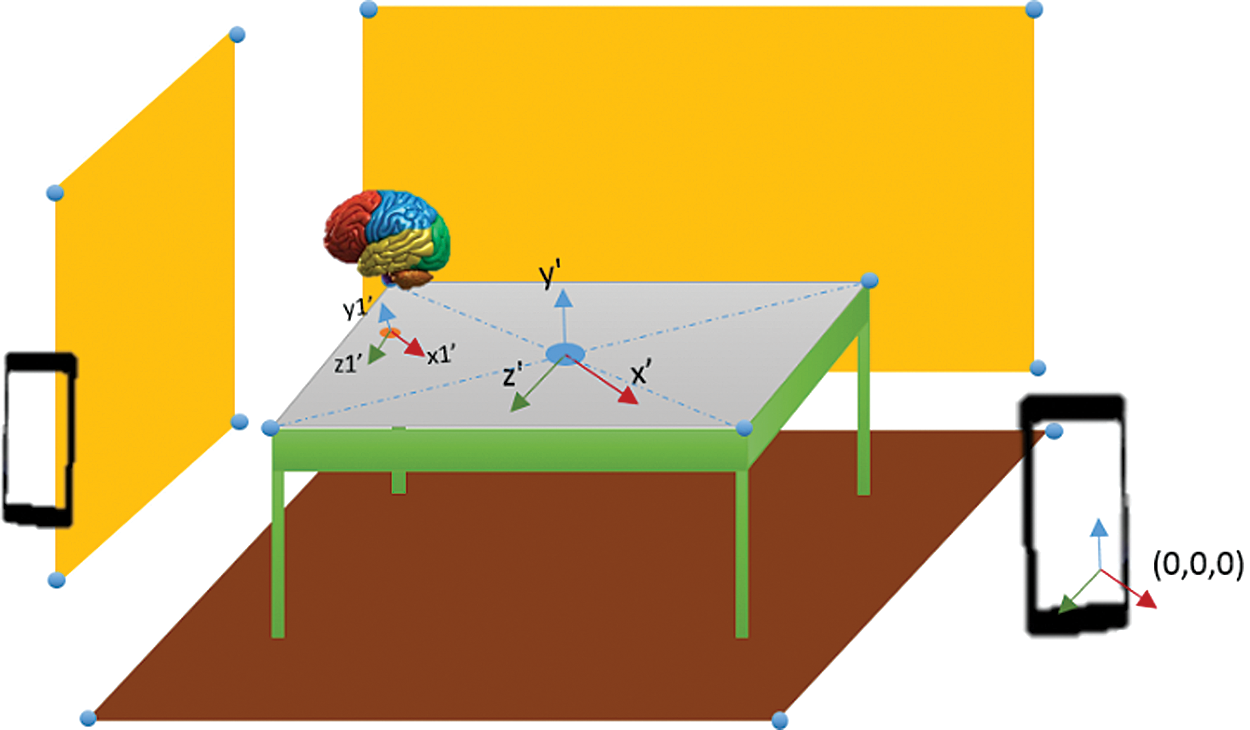

This study considers two cases for anatomical multi-user AR interaction: 1) different users in the same physical space; 2) different users in different geographical locations (see Fig. 2).

Figure 2: Architecture of the proposed work

3.1.1 Case 1: Different Users in the Same Physical Space

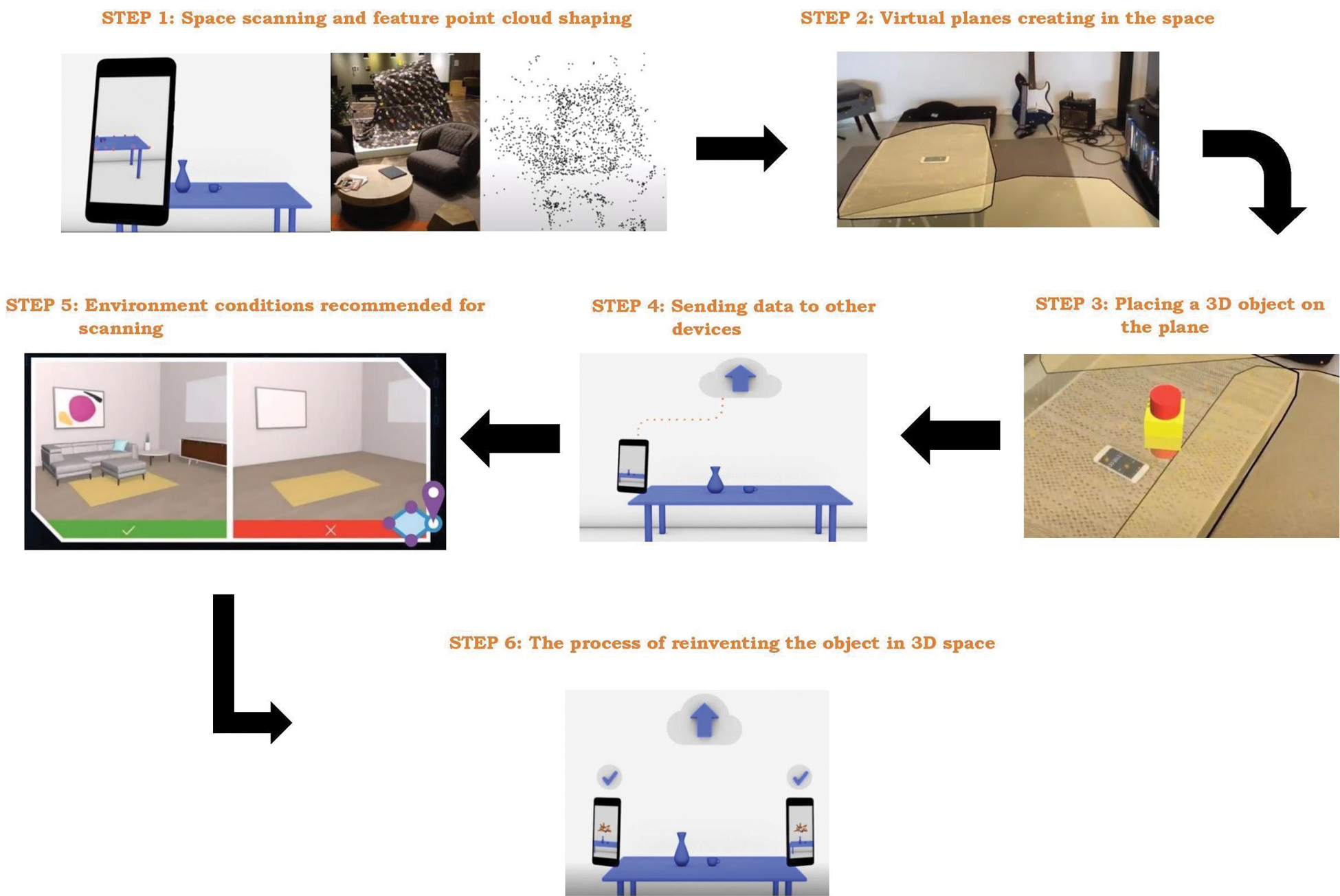

In the first case, all devices observe the 3D objects placed at a physical position. For instance, a virtual body can be placed on a floor or a table as follows (see Fig. 3):

Figure 3: Detailed setting for the proposed system

Device A (a phone or AR glasses such as HoloLens):

• Step 1: The AR application running on the device localizes the device and maps the real-world space by combining sensor data with algorithms such as SLAM.

• Step 2: The device recognizes the planes of the surrounding space from the shape of the feature point cloud and saves these feature points in storage.

• Step 3: The application builds virtual planes from the feature point clouds to form a corresponding virtual world map that includes all detectable planes.

• Step 4: Users place a 3D object on a plane in real space by interacting with the virtual plane, for example by touching the screen on a phone or air tapping on HoloLens. After that, users fix the placement via anchor points, which include location, rotation, and scale parameters.

Device B:

• Step 1: The application scans the actual space to record its own local world map while it is in operating mode. The scanning process on device B runs continuously and compares its world map with that of device A until the point clouds of the two maps match. The matching depends on factors such as lighting, the objects currently in the space, and the sensors of the different devices.

• Step 2: The anchor values are downloaded from the cloud server or the peer device.

• Step 3: The AR application on device B re-creates the 3D objects on the device’s world map.

All information, interactions, and movements from any device (user) are sent to the other devices in the session by P2P messages or through the client-server model. Each device extracts the information received from the server or from another device and changes the scene according to the received directives. The message includes the name of the virtual object being interacted with and its position, rotation, and scale. In this way, device A and device B see the same 3D object in one place and can determine each other’s position relative to the anchor’s coordinates and orientation.
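A message of this kind could be serialized and applied as in the following sketch. The `TransformMessage` type, its field names, and the JSON encoding are assumptions chosen for illustration; the paper does not specify the wire format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class TransformMessage:
    """Update for one shared object; the field names are illustrative."""
    name: str
    position: tuple
    rotation: tuple  # Euler angles in degrees
    scale: tuple


def encode(message):
    """Serialize a message to a JSON string for sending."""
    return json.dumps(asdict(message))


def decode(payload):
    """Rebuild a message from a received JSON string."""
    data = json.loads(payload)
    return TransformMessage(
        name=data["name"],
        position=tuple(data["position"]),
        rotation=tuple(data["rotation"]),
        scale=tuple(data["scale"]),
    )


def apply_to_scene(scene, message):
    """Overwrite the stored transform of the named object."""
    scene[message.name] = {
        "position": message.position,
        "rotation": message.rotation,
        "scale": message.scale,
    }


# Device A sends an update; device B decodes it and updates its own scene.
sent = TransformMessage("brain", (0.1, 0.0, 0.4), (0.0, 90.0, 0.0), (1.0, 1.0, 1.0))
payload = encode(sent)
scene_b = {}
received = decode(payload)
apply_to_scene(scene_b, received)
```

Keeping the object name in every message lets the receiver update one object without resending the whole scene.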

3.1.2 Case 2: Different Users in Different Geographical Locations

In the second case, the users join the AR sharing experience while geographically far apart, and the procedure is similar to that of case 1. The difference is that when device B scans the surrounding space, the accuracy of the anchor point depends on whether the actual area around device B has been arranged to coincide with the location of device A. When the real spaces of the two devices are entirely different, the anchor point on device B is re-created at a relative position (on any plane). This is acceptable because the remote users do not see each other; they are aware of each other’s position through their avatars.

3.2 Implement AnatomyNow in an AR Environment

The implementation of the AnatomyNow in the AR environment is discussed as follows:

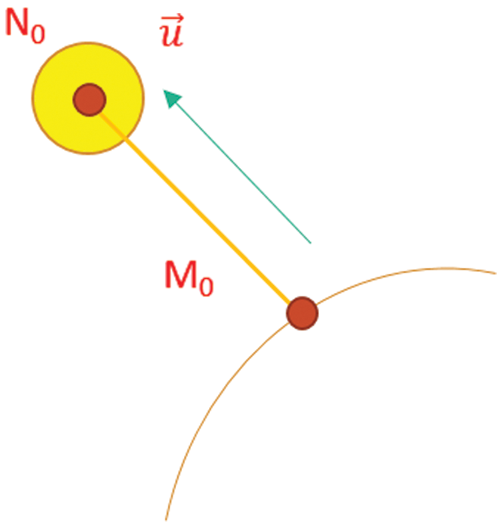

3.2.1 Create 3D Pin and Drawing

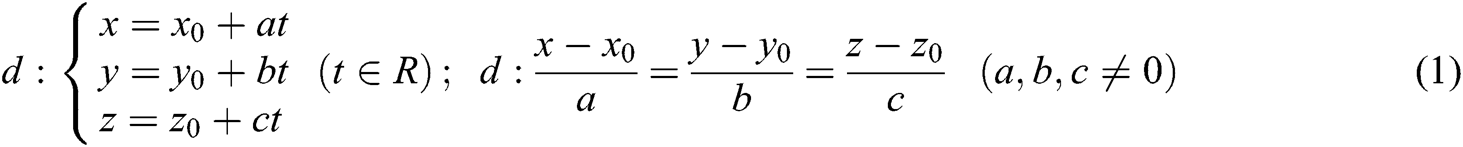

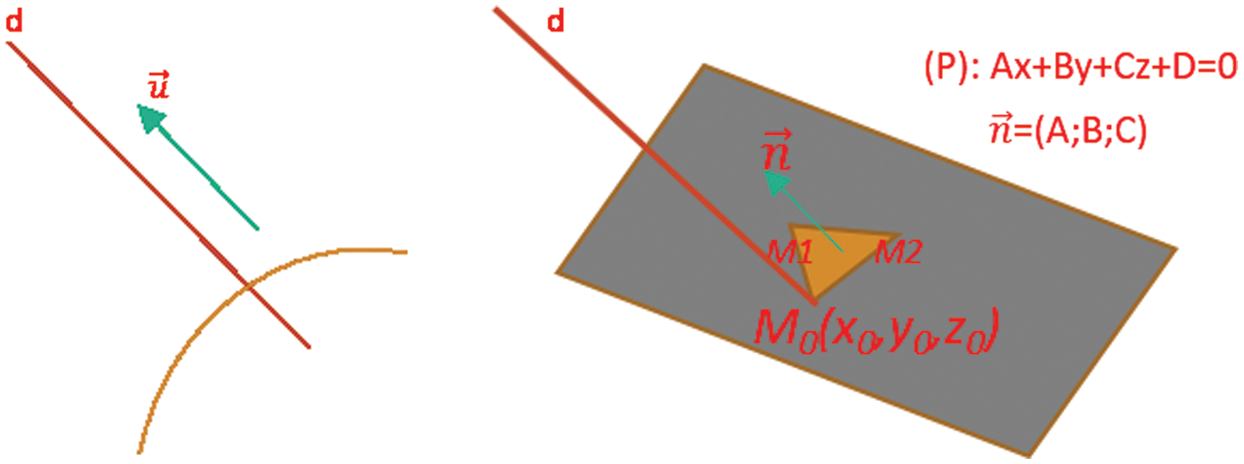

We create multi-user 3D interactive tools for dropping a marking pin or interacting with color areas on a 3D object. The steps to create the 3D pin are as follows:

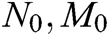

• Step 1: Draw a straight line in 3D space such that line d passes through M0(x0, y0, z0) and has a given direction vector (see Eqs. (1) and (2))

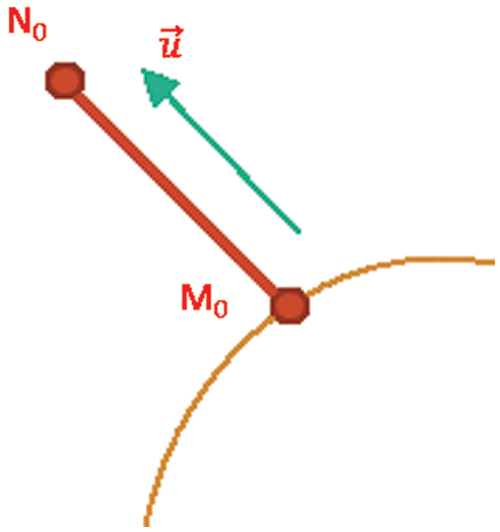

• Step 2: Draw a plane P passing through the 3 points M0, M1, M2 (see Fig. 4).

Figure 4: Position of points in 3D space

Here, M1 and M2 are determined by the smallest plane through M0. The clicked points are designated by a vector.

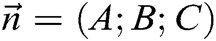

• Step 3: From M0, define N0 on line d on the outward side, so that the pin stays outside the object (see Fig. 5).

Figure 5: Pin stays outside the object

The code for obtaining the vector from a click on the plane:

PickHandler:

// result is the intersection passed to the pick handler for the clicked point
osgUtil::LineSegmentIntersector::Intersection& result

// world-space normal of the surface at the clicked point
osg::Vec3 normal = result.getWorldIntersectNormal();

The code for constructing a plane through the 3 points M0, M1, M2:

osg::Plane* plane = new osg::Plane;

// M0 = (x0, y0, z0); M1 and M2 are osg::Vec3 points
plane->set(osg::Vec3(x0, y0, z0), M1, M2);

osg::Vec3 normal = plane->getNormal();

• Step 4: Attach the pin to the selected location

Draw a line through the 2 points M0 and N0, and then make a sphere with its focus at the end of the line. Figs. 6 and 7 show the sphere with focus and the outcome of the pin interaction, respectively.

Figure 6: Sphere with Focus

Figure 7: Remote interaction results of Pin
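The geometry behind the pin steps can be sketched in a few lines. The sketch assumes that the direction of line d is the unit normal of the plane through M0, M1, M2, that the vertex order makes this normal point outward, and that the pin has a fixed length; the helper names and the value 0.05 are hypothetical.

```python
import math


def sub(a, b):
    """Component-wise a - b for 3-vectors."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        raise ValueError("the three points are collinear")
    return (v[0] / length, v[1] / length, v[2] / length)


def plane_normal(m0, m1, m2):
    """Unit normal of the plane through m0, m1, m2."""
    return normalize(cross(sub(m1, m0), sub(m2, m0)))


def pin_head(m0, normal, pin_length):
    """Point N0 on line d: N0 = M0 + pin_length * normal."""
    return tuple(m0[i] + pin_length * normal[i] for i in range(3))


# Clicked point M0 and two neighbouring points on the surface.
m0, m1, m2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
n = plane_normal(m0, m1, m2)
n0 = pin_head(m0, n, 0.05)  # the sphere of the pin would be centred here
```

If the vertex order is reversed, the normal flips and the pin would point into the object, so a real implementation would have to check the orientation.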

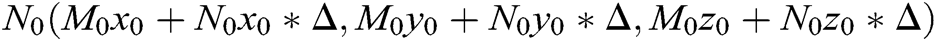

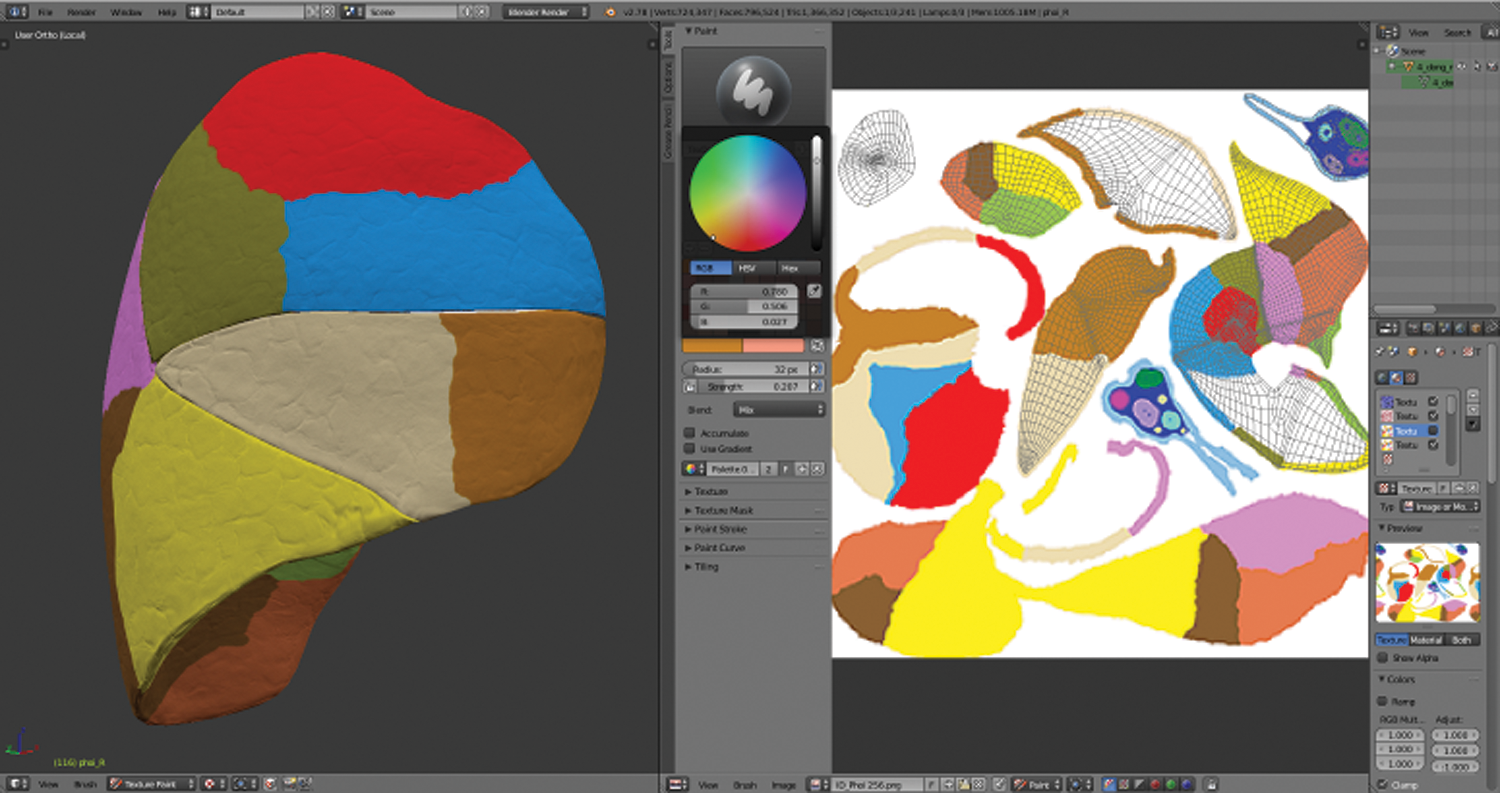

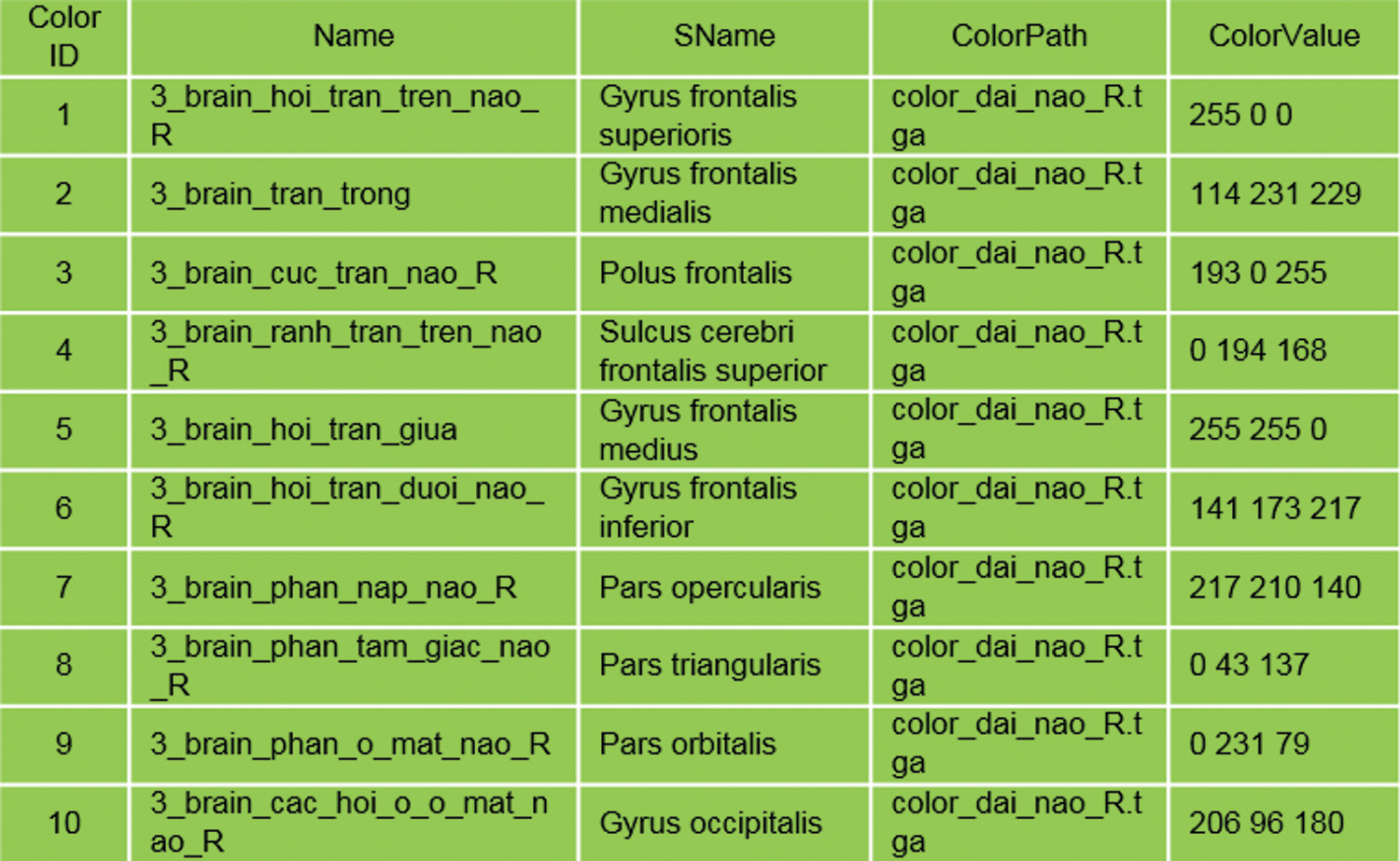

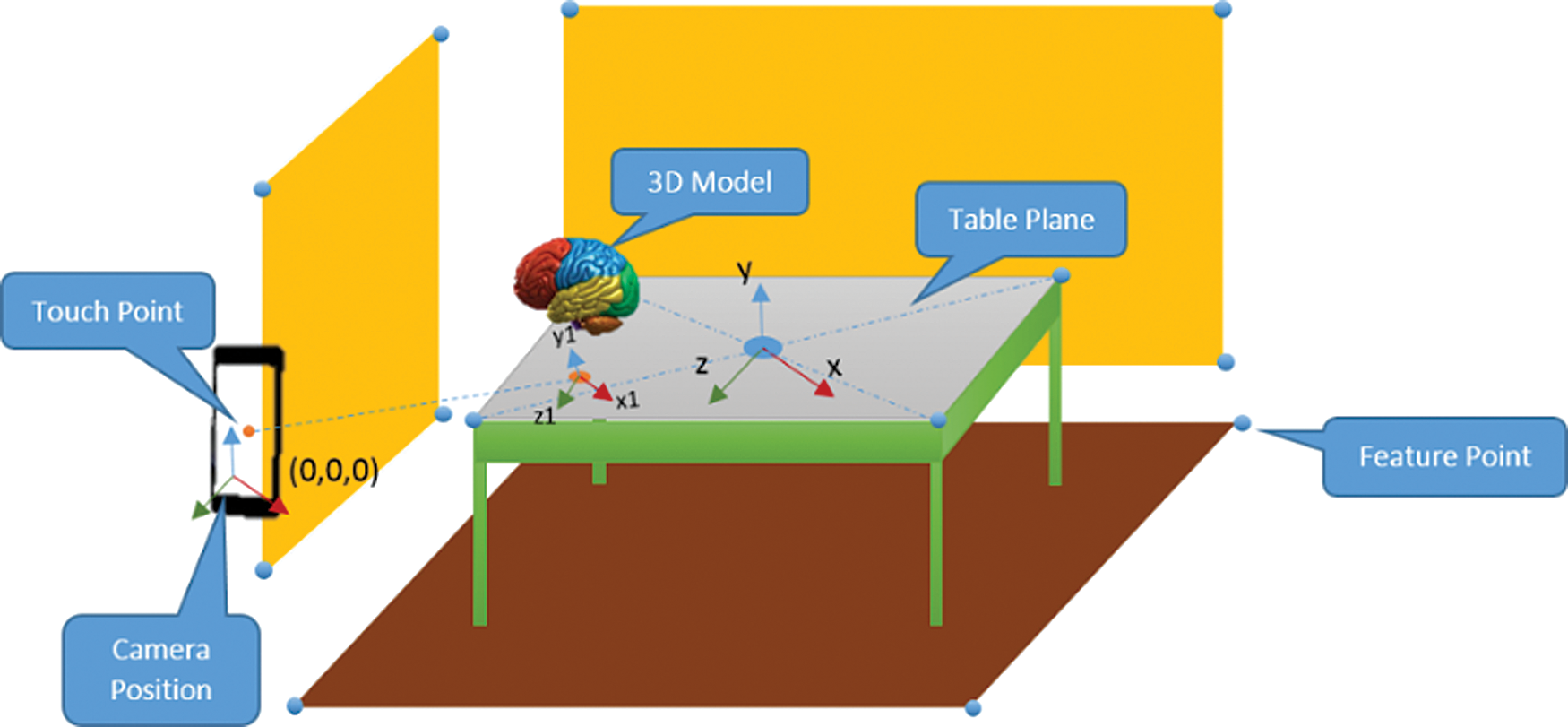

3.2.2 Create Color Areas and Interact With 3D Objects

The method is based on selecting an RGB color area on the texture map of a 3D object, as follows:

• Step 1: Draw the RGB color area identifier before selection (see Fig. 8)

Figure 8: Draw the RGB color area identifier

• Step 2: Store the data in a table (see Fig. 9)

Figure 9: RGB table data stores

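• Step 3: Code for interacting with the selected area

virtual void doUserOperations(osgUtil::LineSegmentIntersector::Intersection& result);

osg::Vec3 tc; // texture coordinate at the hit point, filled in by getTextureLookUp

osg::Texture* texture = result.getTextureLookUp(tc);

osg::Image* myImage = texture->getImage(0); // image behind the texture, assumed to be non-null

osg::Vec4 textureRGB = myImage->getColor(tc);

int red = textureRGB.r() * 255; // read the red channel

int green = textureRGB.g() * 255; // read the green channel

int blue = textureRGB.b() * 255; // read the blue channel

• Step 4: Shader code in the fragment and vertex shaders (see Fig. 10)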

uniform sampler2D baseMap;

varying vec2 Texcoord;

vec4 fvBaseColor = texture2D(baseMap, Texcoord);

vec3 color = (fvTotalAmbient + fvTotalDiffuse + fvTotalSpecular);

gl_FragColor.rgb = color;

Figure 10: Some results of RGB and Pin interaction
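The table lookup in Steps 2 and 3 can be illustrated as follows. The table contents, the area names, and the per-channel tolerance are hypothetical; the sketch also rounds the channel values, whereas the C++ snippet above truncates them.

```python
# Hypothetical table: one RGB color per anatomical area painted on the texture.
COLOR_TABLE = {
    (255, 0, 0): "frontal lobe",
    (0, 255, 0): "parietal lobe",
    (0, 0, 255): "occipital lobe",
}


def to_rgb255(texel):
    """Convert normalized (r, g, b, a) channels in [0, 1] to 0-255 integers."""
    return tuple(int(round(channel * 255)) for channel in texel[:3])


def lookup_area(texel, table=COLOR_TABLE, tolerance=2):
    """Return the area whose color is within `tolerance` per channel, else None."""
    rgb = to_rgb255(texel)
    for color, area in table.items():
        if all(abs(c - v) <= tolerance for c, v in zip(color, rgb)):
            return area
    return None


hit = lookup_area((0.0, 0.0, 1.0, 1.0))        # exact match
near = lookup_area((0.996, 0.004, 0.0, 1.0))   # slightly off, e.g. after filtering
miss = lookup_area((0.5, 0.5, 0.5, 1.0))       # color not in the table
```

A small tolerance is one way to cope with texture filtering or compression that shifts the sampled color slightly away from the value stored in the table.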

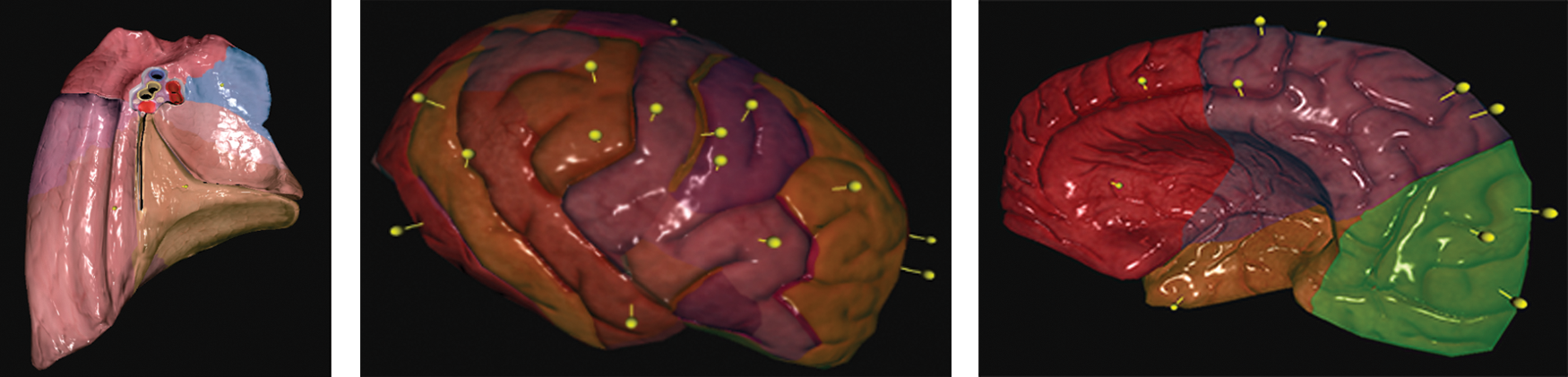

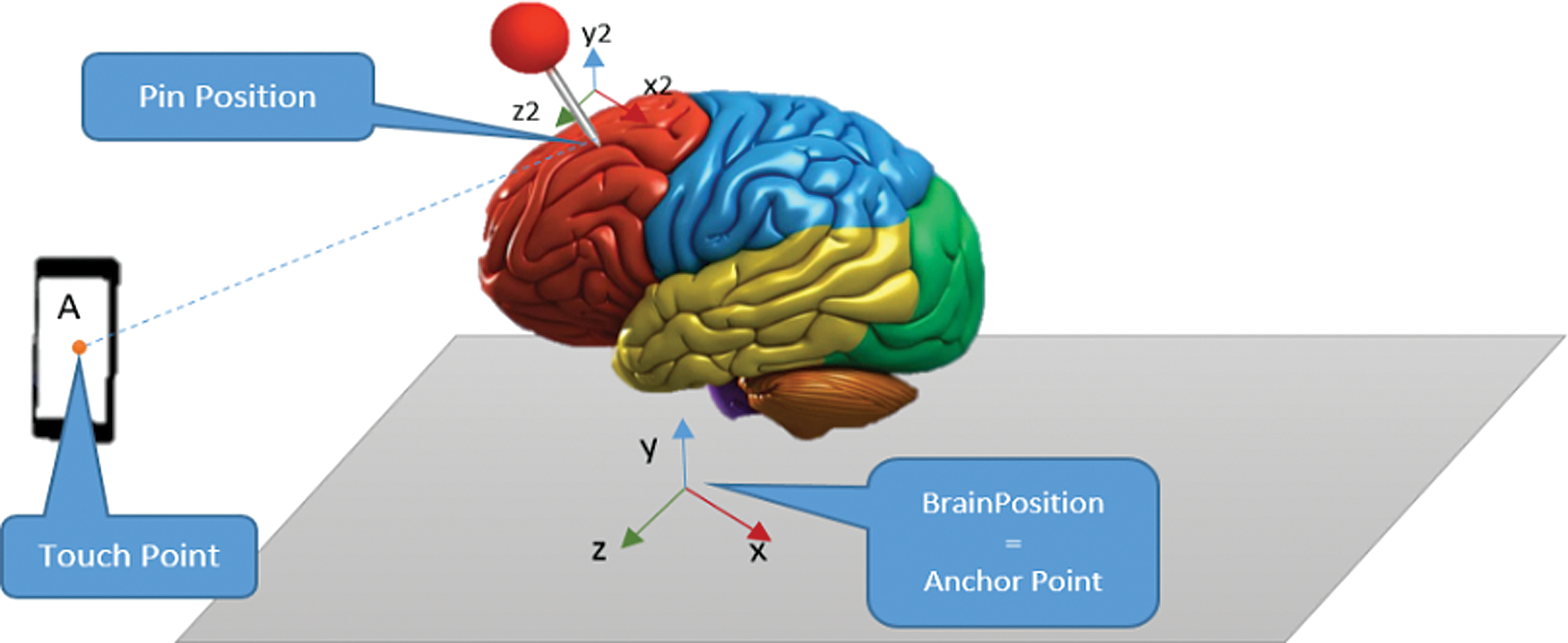

3.2.3 Sharing Multi-Users Technique

Device A

• Step 1: When device A executes AnatomyNow, the application places the device’s camera at the origin, Camera Position (0,0,0), with rotation Camera Rotation (0,0,0) (see Fig. 11).

• Step 2: The camera scans the real space and builds the world map. When planes are created from feature points, we determine the Vector3 coordinates of each plane. For example, the table plane is given by the coordinates of the table: Table Plane (x, y, z).

• Step 3: Place a 3D object on a plane by touching the screen (on a phone) or air tapping (on HoloLens). A ray cast from the touch point hits a plane, which positions the 3D object. A 3D brain model is rendered at Brain Position (x1, y1, z1); this point is called the Anchor Point.

• Step 4: Calculate the distance vector and the angle deviation from Brain Position to Table Plane:

Vector3 distance = TablePlane.transform.position - BrainPosition.transform.position;

Vector3 angle = TablePlane.transform.eulerAngles - BrainPosition.transform.eulerAngles;

Figure 11: Creation of space for Device A

Figure 12: Creation of space from the following devices

Device B: a subsequent device taking part in AR Sharing

• Step 1: Like device A, device B joins the AR Sharing experience and scans the surroundings to determine the planes and their Vector3 coordinates relative to its own origin. Device B scans the environment and builds its world map, which includes TablePlane1 (x’, y’, z’), until a match with device A’s map is found (the matching characteristics are the number, scale, and positions of the detected planes). If the world maps of the participating devices do not coincide, an arbitrary TablePlane1 (x’, y’, z’) is created (see Fig. 12).

• Step 2: Device B renders the 3D brain model at BrainPosition1 (x1’, y1’, z1’), calculated from the distance vector and angle received from device A:

BrainPosition1.transform.position = TablePlane1.transform.position - distance;

BrainPosition1.transform.eulerAngles = TablePlane1.transform.eulerAngles - angle;
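The offset calculation on device A and the reconstruction on device B can be checked with a small numeric sketch. All coordinates are made-up values, and the code mirrors the component-wise subtraction used in the text; it does not rotate the offset into device B’s frame, so it only matches exactly when the two frames have the same orientation.

```python
def sub(a, b):
    """Component-wise a - b for 3-vectors."""
    return tuple(x - y for x, y in zip(a, b))


# Device A: plane and brain poses in A's own coordinate frame (made-up values).
table_plane_a = {"position": (2.0, 0.0, 1.0), "euler": (0.0, 30.0, 0.0)}
brain_a = {"position": (2.0, 0.5, 1.5), "euler": (0.0, 10.0, 0.0)}

# Offsets that device A shares with the session.
distance = sub(table_plane_a["position"], brain_a["position"])
angle = sub(table_plane_a["euler"], brain_a["euler"])

# Device B: the same table as seen from B's own origin (made-up values).
table_plane_b = {"position": (-1.0, 0.0, 4.0), "euler": (0.0, 120.0, 0.0)}

# Device B re-creates the brain from its own plane and the shared offsets.
brain_b = {
    "position": sub(table_plane_b["position"], distance),
    "euler": sub(table_plane_b["euler"], angle),
}
```

Because only the offsets relative to the plane are shared, each device can keep its own origin and still place the model at the same spot on the table.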

Multiple Interaction: After the two devices join the AR experience and share an anchor point, every interaction on the 3D scene on each device uses the anchor coordinates as the reference. Each interaction is converted into parameters from which the other devices can compute the result. When the user of device A places a marking pin on the 3D brain model, the update is sent to the other device. The process is as follows:

• Step 1: Cast a ray onto the 3D brain model. At the hit position, a pin is placed at Pin Position (x2, y2, z2). Calculate the distance and angle values:

Vector3 distanceToAnchor = BrainPosition.transform.position - PinPosition.transform.position;

Vector3 angleToAnchor = BrainPosition.transform.eulerAngles - PinPosition.transform.eulerAngles;

• Step 2: Within the session, a message with the newly computed values is sent to the other devices with the following code (see Fig. 13):

CustomMessages.Instance.SendTransform(PinName, distanceToAnchor, angleToAnchor);

Figure 13: Position and Values of Pin on server

• Step 3: All devices participating in the session receive the message and extract its parameters, such as the name of the 3D object, distance, and angle.

The pin position on device B (PinPosition1) may be calculated as follows:

PinPosition1.transform.position = BrainPosition1.transform.position - distanceToAnchor;

PinPosition1.transform.eulerAngles = BrainPosition1.transform.eulerAngles - angleToAnchor;

• Step 4: Changing the state of the 3D object on device B

When device B moves the pin or rotates its angle, it shares the same parameters with device A. The two devices can thus fully interact with the 3D object in the AR Sharing experience. With this mechanism, in addition to manipulating pin positions or moving 3D objects, the system allows users to draw or select RGB color areas in 3D space together.

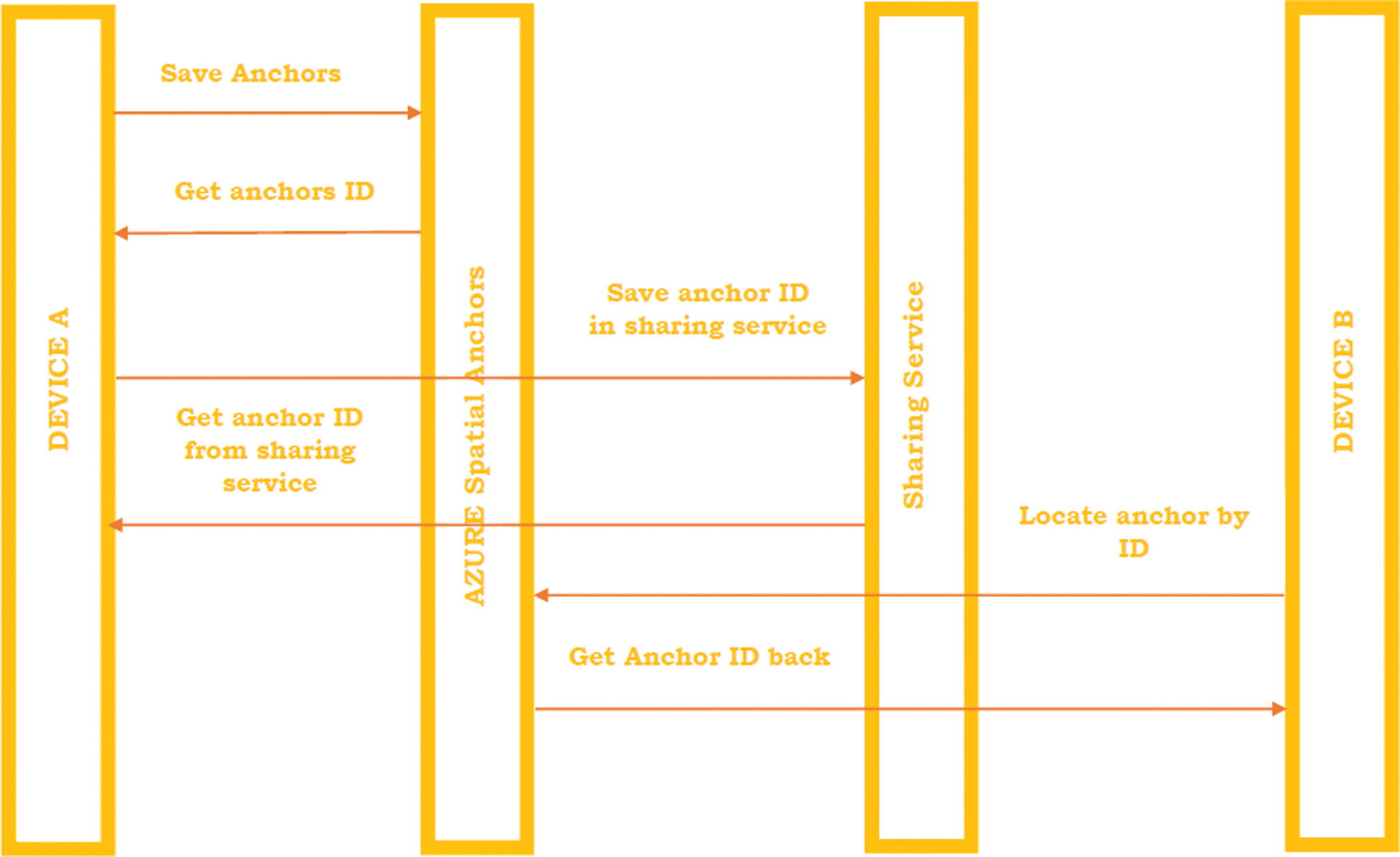

3.3 Workflow of the Multi-Device AR Interaction

This section divides multi-device AR interaction into three stages: 1) create the session, 2) generate the anchors, and 3) locate the anchors. In the first stage, Microsoft HoloLens creates the session by executing the developed application (AnatomyNow). In the next two stages, HoloLens generates the anchor points, and the client device locates them for the multi-device AR interaction.

Figure 14: Workflow of multi-device AR interaction

Fig. 14 illustrates the workflow of the multi-device AR interaction. Device A executes AnatomyNow to generate anchor points and sends them to the Azure Spatial Anchors service. The service acknowledges by returning an anchor ID to device A. After receiving the anchor ID, device A sends it to the sharing service and receives another ID from the sharing service as an acknowledgment. Device B then tries to connect through this anchor ID: it first sends a request to the spatial anchor service and retrieves the data, including the anchor points. The service also grants device B permission to enter the multi-user AR environment.
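The exchange of IDs in this workflow can be walked through with in-memory stand-ins for the two services. The classes below are hypothetical mocks written for this example; they do not use or imitate the Azure Spatial Anchors SDK, and the ID formats are invented.

```python
import itertools


class SpatialAnchorService:
    """In-memory stand-in for a cloud anchor store (not the Azure SDK)."""

    def __init__(self):
        self._anchors = {}
        self._ids = itertools.count(1)

    def create_anchor(self, anchor_data):
        """Store the anchor and return its ID as the acknowledgment."""
        anchor_id = f"anchor-{next(self._ids)}"
        self._anchors[anchor_id] = anchor_data
        return anchor_id

    def locate_anchor(self, anchor_id):
        """Return the stored anchor data, or None if the ID is unknown."""
        return self._anchors.get(anchor_id)


class SharingService:
    """In-memory stand-in that maps a session key to an anchor ID."""

    def __init__(self):
        self._sessions = {}
        self._keys = itertools.count(1)

    def publish(self, anchor_id):
        """Register the anchor ID and return a session key as the acknowledgment."""
        session_key = next(self._keys)
        self._sessions[session_key] = anchor_id
        return session_key

    def resolve(self, session_key):
        """Return the anchor ID registered under the session key, if any."""
        return self._sessions.get(session_key)


spatial = SpatialAnchorService()
sharing = SharingService()

# Device A: create the anchor, then publish its ID.
anchor = {"position": (2.0, 0.5, 1.5), "rotation": (0.0, 10.0, 0.0), "scale": (1.0, 1.0, 1.0)}
anchor_id = spatial.create_anchor(anchor)
session_key = sharing.publish(anchor_id)

# Device B: resolve the anchor ID, then download the anchor.
resolved_id = sharing.resolve(session_key)
anchor_on_b = spatial.locate_anchor(resolved_id)
```

Separating the anchor store from the sharing service means that only a short ID travels between the devices, while the anchor data itself is fetched from the store.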

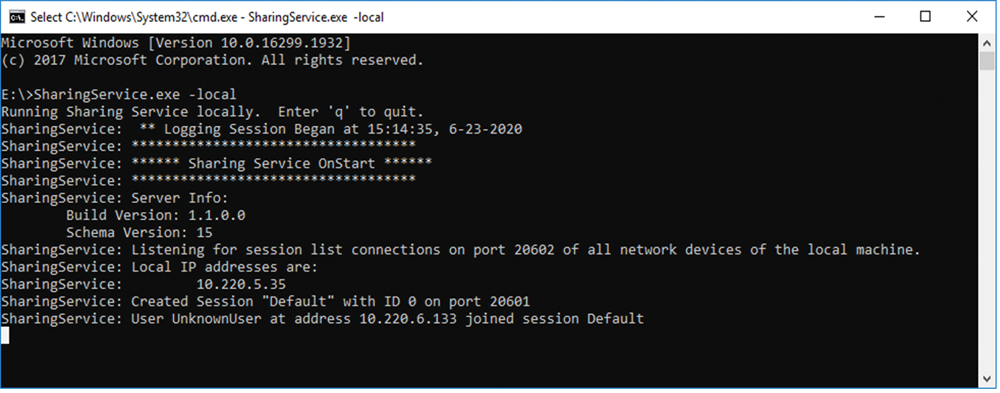

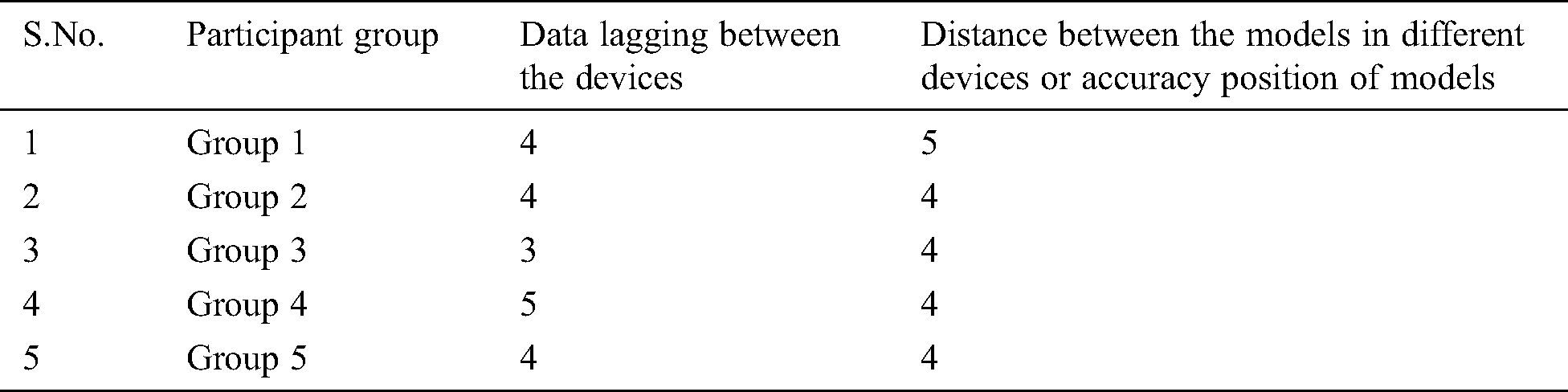

To test AnatomyNow with the multi-user sharing technique, we ran our experiment in the center of visualization and simulation, Duy Tan University. The primary goal of this study is to ensure adequate training and teaching of human anatomy for students, academic staff, and healthcare staff who lack professional experience with Microsoft HoloLens. A total of 10 participants registered for the experiment and were split into five groups. First, the participants received a description of the experiment and signed the mandatory terms and conditions agreement. Before the experiment started, instructors gave a 10 min presentation on how to use the HoloLens. After 40 min of investigation, the five groups gave their feedback on the experiment. The feedback was evaluated using two parameters: 1) data lagging between the devices, and 2) accuracy of the model’s position, that is, the distance between the models on different devices.

Following the client-server model, the AnatomyNow services run on the server, and the clients connect to this server. For sharing at the same location, a socket-based communication protocol is implemented over the local area network (LAN), based on the Mixed Reality Toolkit provided by Microsoft. All participating HoloLens users were connected to the same LAN (see Fig. 15).

Figure 15: Sharing Service IP

The proposed system tracks user interactions and synchronizes them so that they are applied to the shared objects. The synchronized data includes the transformation matrix of each object and any minimal state change. The sharing menu is designed to make handling 3D objects as easy as possible for any user.

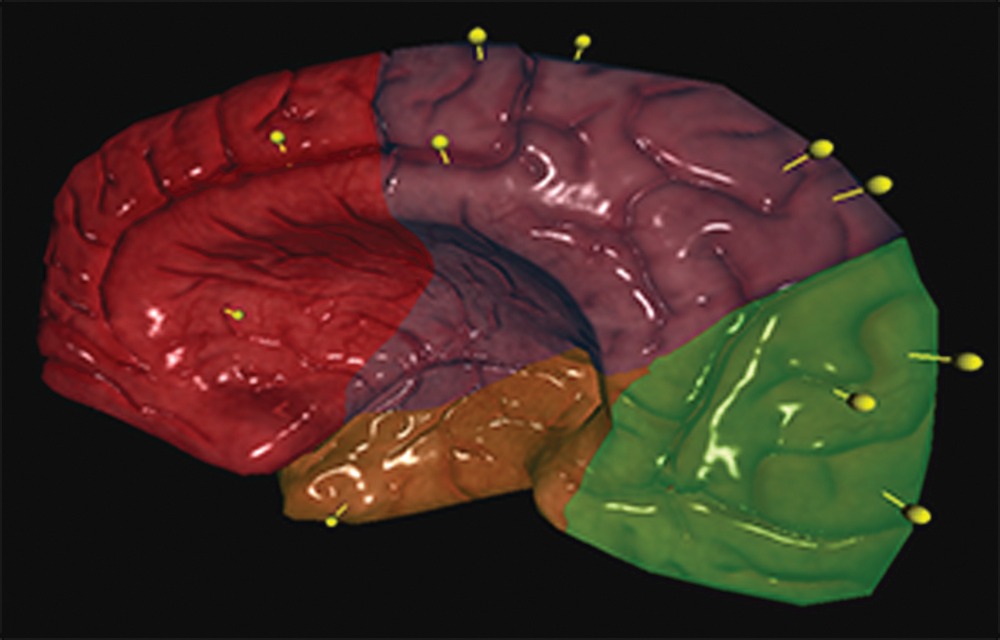

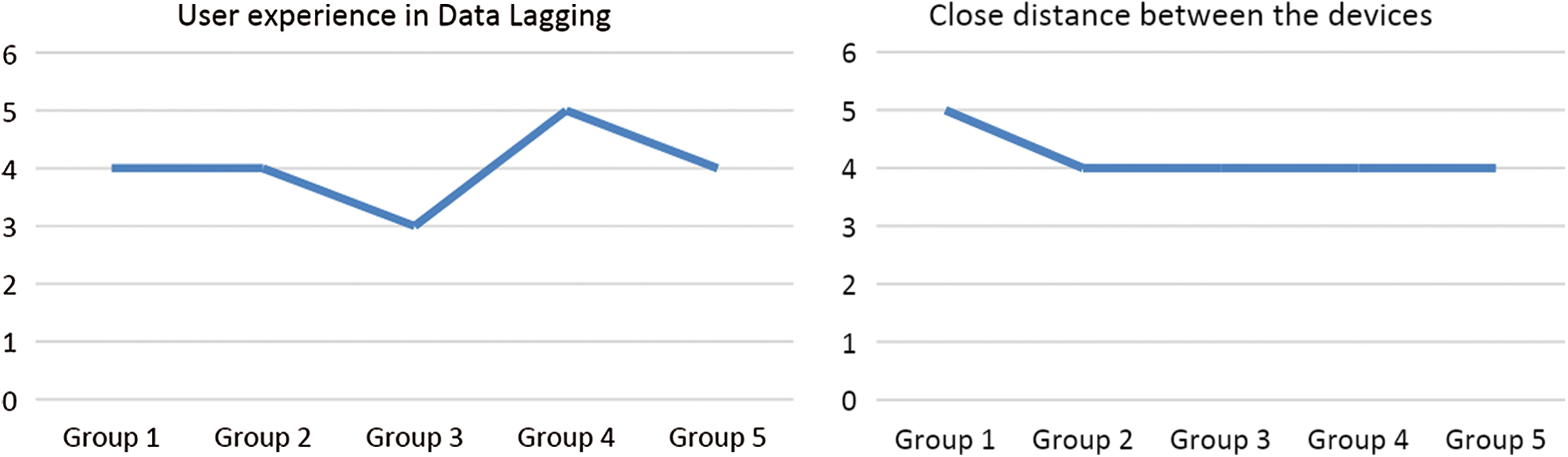

Fig. 16 shows AnatomyNow running on multiple devices. To analyze AnatomyNow in a multi-user sharing environment, we consider two parameters: data lagging between the devices, and how closely the position of the AnatomyNow model on one device matches its position on another device. The purpose of this study was to collect feedback about the experience of using AnatomyNow in multi-device AR interaction. The 10 participants were divided into five groups of 2 users each. All participants were familiar with the AR anatomy application, the HoloLens device, and the goals and objectives of the proposed solution.

Figure 16: AnatomyNow displayed with multi-user AR interaction

The experience of the participants was measured on a Likert scale from 1 to 5, where 1 represents the most negative experience and 5 the most positive. Tab. 2 presents the analysis of AnatomyNow on the HoloLens.

Table 2: Analysis of different participants using AnatomyNow in the HoloLens

Fig. 17 illustrates the user experience of AR anatomy with HoloLens. The scores for data lagging between the devices from groups 1 to 5 are 4, 4, 3, 5, and 4, respectively. The scores for the closeness of the models on different devices from groups 1 to 5 are 5, 4, 4, 4, and 4, respectively. The overall results of this experiment are good, except for data lagging in group 3, which may have been caused by fluctuations in internet speed or by data latency on the devices.

Figure 17: User Experiences on AR Anatomy with HoloLens
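As a quick arithmetic check on the scores reported above, the following sketch computes the average score per parameter. The averages are derived here from the reported per-group values and are not figures stated by the study.

```python
# Likert scores (1-5) reported per group, in the order group 1 to group 5.
data_lagging = [4, 4, 3, 5, 4]
model_distance = [5, 4, 4, 4, 4]

# Average score per parameter across the five groups.
mean_lagging = sum(data_lagging) / len(data_lagging)
mean_distance = sum(model_distance) / len(model_distance)

# Group with the lowest data-lagging score (1-based group number).
lowest_lagging_group = data_lagging.index(min(data_lagging)) + 1
```

With five groups of two users, these averages describe a very small sample and should be read as a summary of the feedback rather than as a statistical result.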

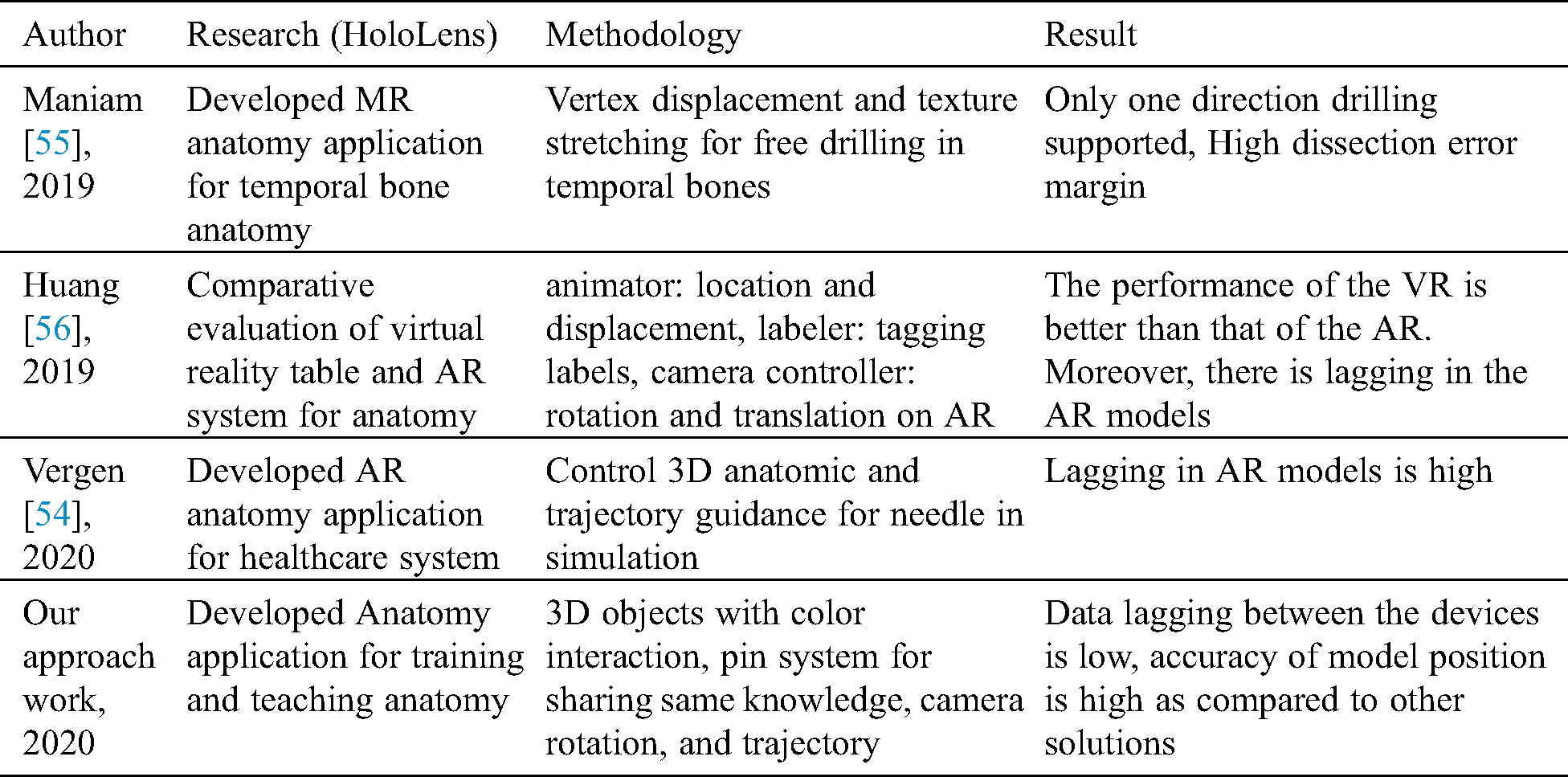

Tab. 3 presents a comparative analysis of the proposed solution and other solutions. The overall evaluation of this experiment is quite satisfactory. However, the number of devices that can share the same environment on the HoloLens is limited. During integration, a delay in device collaboration was observed. Because of network latency, 3D objects are sometimes rendered at different locations on different devices.

Table 3: Comparative analysis of the proposed solution with other solutions

Human anatomy training with real cadavers faces multiple practical problems. Hence, it is essential to find alternatives to the traditional anatomy training method, and a multi-user 3D interactive system is one of the most promising. In this study, we designed and developed an anatomy application and used it in a multi-user environment. A multi-user simultaneous control strategy coordinates control of the users’ 3D virtual sets and avoids concurrent conflicts that may arise during the interaction. The interactive multi-user system has been designed to realize collaborative interaction for multiple HoloLens users in the same 3D scene.

Furthermore, 10 participants used the AR interactive system built on AnatomyNow. The results show that a comfortable interactive mode leads to a better, more robust, and more realistic interactive experience. It is a concept that may be worth exploring further. In the future, we would like to study multi-user interaction techniques that ensure better accuracy and lower latency. Multi-user sharing techniques may also be combined with artificial intelligence techniques and computer vision algorithms to make systems more efficient and reliable.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. S. Siltanen. (2012). “Theory and applications of marker-based augmented reality,” Licentiate Thesis, VTT Technical Research Centre of Finland. [Google Scholar]

2. M. Billinghurst and H. Kato. (2002). “Collaborative augmented reality,” Communications of the ACM, vol. 45, no. 7, pp. 64–70. [Google Scholar]

3. J. Carmigniani, B. Furht, M. Anisetti, P. Ceravolo, E. Damiani et al. (2011). , “Augmented reality technologies, systems and applications,” Multimedia Tools and Applications, vol. 51, no. 1, pp. 341–377. [Google Scholar]

4. M. Billinghurst. (2002). “Augmented reality in education,” New Horizons for Learning, vol. 12, no. 5, pp. 1–5. [Google Scholar]

5. K. Lee. (2012). “Augmented reality in education and training,” TechTrends, vol. 56, no. 2, pp. 13–21. [Google Scholar]

6. D. W. F. V. Krevelen and R. Poelman. (2019). “A survey of augmented reality technologies, applications and limitations,” International Journal of Virtual Reality, vol. 9, no. 2, pp. 1–20. [Google Scholar]

7. M. Akçayır and G. Akçayır. (2017). “Advantages and challenges associated with augmented reality for education: A systematic review of the literature,” Educational Research Review, vol. 20, pp. 1–11. [Google Scholar]

8. J. Bacca, S. Baldiris, R. Fabregat and S. Graf. (2014). “Augmented reality trends in education: A systematic review of research and applications,” Journal of Educational Technology and Society, vol. 17, no. 4, pp. 133–149. [Google Scholar]

9. H. K. Wu, S. W. Y. Lee, H. Y. Chang and J. C. Liang. (2013). “Current status, opportunities and challenges of augmented reality in education,” Computers & Education, vol. 62, pp. 41–49. [Google Scholar]

10. A. Ferracani, D. Pezzatini and A. Del Bimbo. (2014). “A natural and immersive virtual interface for the surgical safety checklist training,” in Proc. of 2014 ACM Int. Workshop on Serious Games, pp. 27–32. [Google Scholar]

11. H. Y. Chen. (2010). “GVSS: A new virtual screening service against the epidemic disease in Asia,” in ISGC Conference, Taipei, Taiwan, pp. 1–23. [Google Scholar]

12. J. Pair, B. Allen, M. Dautricourt, A. Treskunov, M. Liewer et al. (2006). , “A virtual reality exposure therapy application for Iraq war post-traumatic stress disorder,” in IEEE Virtual Reality Conf. (VR 2006), Alexandria, VA, USA, pp. 67–72. [Google Scholar]

13. A. H. Al-Khalifah, R. J. Mc Crindle, P. M. Sharkey and V. N. Alexandrov. (2006). “Using virtual reality for medical diagnosis, training and education,” International Journal on Disability and Human Development, vol. 5, no. 2, pp. 135. [Google Scholar]

14. M. Meng, P. Fallavollita, T. Blum, U. Eck, C. Sandor et al. (2013). , “Kinect for interactive AR anatomy learning,” in 2013 IEEE Int. Sym. on Mixed and Augmented Reality (ISMAR), Adelaide, Australia, pp. 277–278. [Google Scholar]

15. M. Ma, P. Fallavollita, I. Seelbach, A. M. V. D. Heide, E. Euler et al. (2016). , “Personalized augmented reality for anatomy education,” Clinical Anatomy, vol. 29, no. 4, pp. 446–453. [Google Scholar]

16. A. Murugan, G. A. Balaji and R. Rajkumar. (2019). “AnatomyMR: A multi-user mixed reality platform for medical education,” Journal of Physics: Conference Series, vol. 1362, no. 1, pp. 012099, IOP Publishing. [Google Scholar]

17. Z. Kaddour, S. D. Derrar and A. Malti. (2019). “VRAnat: A complete virtual reality platform for academic training in anatomy,” in Int. Conf. on Advanced Intelligent Systems for Sustainable Development. Cham: Springer, pp. 395–403. [Google Scholar]

18. P. Stefan, P. Wucherer, Y. Oyamada, M. Ma, A. Schoch et al. (2014). , “An AR edutainment system supporting bone anatomy learning,” in 2014 IEEE Virtual Reality (VRMinneapolis, MN, USA, pp. 113–114. [Google Scholar]

19. C. H. Chien, C. H. Chen and T. S. Jeng. (2010). “An interactive augmented reality system for learning anatomy structure,” in proc. of the Int. multiconference of engineers and computer scientists, Hong Kong, China: International Association of Engineers, vol. 1, pp. 17–19. [Google Scholar]

20. R. Wirza, S. Nazir, H. U. Khan, I. García-Magariño and R. Amin. (2020). “Augmented reality interface for complex anatomy learning in the central nervous system: A systematic review,” Journal of Healthcare Engineering, vol. 2020, no. 1, pp. 1–15. [Google Scholar]

21. S. Küçük, S. Kapakin and Y. Göktaş. (2016). “Learning anatomy via mobile augmented reality: Effects on achievement and cognitive load,” Anatomical Sciences Education, vol. 9, no. 5, pp. 411–421. [Google Scholar]

22. J. H. Seo, J. Storey, J. Chavez, D. Reyna, J. Suh et al. (2014). , “ARnatomy: Tangible AR app for learning gross anatomy,” In ACM SIGGRAPH 2014 Posters, Vancouver, Canada, pp. 1. [Google Scholar]

23. F. Khalid, A. I. Ali, R. R. Ali and M. S. Bhatti. (2019). “AREd: Anatomy learning using augmented reality application,” in 2019 Int. Conf. on Engineering and Emerging Technologies (ICEETLahore, Pakistan, pp. 1–6. [Google Scholar]

24. J. A. Juanes, D. Hernández, P. Ruisoto, E. García, G. Villarrubia et al. (2014). , “Augmented reality techniques, using mobile devices, for learning human anatomy,” in Proc. of the Second Int. Conf. on Technological Ecosystems for Enhancing Multiculturality, Salamanca, Spain, pp. 7–11. [Google Scholar]

25. D. Chytas, E. O. Johnson, M. Piagkou, A. Mazarakis and G. C. Babis. (2020). “The role of augmented reality in Anatomical education: An overview,” Annals of Anatomy-Anatomischer Anzeiger, vol. 229, pp. 151463. [Google Scholar]

26. M. H. Kurniawan and G. Witjaksono. (2018). “Human anatomy learning systems using augmented reality on mobile application,” Procedia Computer Science, vol. 135, pp. 80–88. [Google Scholar]

27. H. Kuzuoka. (1992). “Spatial workspace collaboration: A SharedView video support system for remote collaboration capability,” in Proc. of SIGCHI Conf. on Human Factors in Computing Systems, California, USA, pp. 533–540. [Google Scholar]

28. M. Bauer, G. Kortuem and Z. Segall. (1999). “Where are you pointing at?” A study of remote collaboration in a wearable videoconference system,” in Digest of Papers. Third Int. Sym. on Wearable Computers, California, USA, pp. 151–158. [Google Scholar]

29. J. Chastine, K. Nagel, Y. Zhu and M. Hudachek-Buswell. (2008). “Studies on the effectiveness of virtual pointers in collaborative augmented reality,” in 2008 IEEE Sym. on 3D User Interfaces, Reno, NE, USA, pp. 117–124. [Google Scholar]

30. S. Bottecchia, J. M. Cieutat and J. P. Jessel. (2010). “TAC: Augmented reality system for collaborative tele-assistance in the field of maintenance through internet,” in Proc. of the 1st Augmented Human Int. Conf., New York, USA, pp. 1–7. [Google Scholar]

31. L. Trestioreanu. (2018). “Holographic visualization of radiology data and automated machine learning-based medical image segmentation,” arXiv preprint arXiv: 1808.04929. [Google Scholar]

32. M. Wedyan, A. J. Adel and O. Dorgham. (2020). “The use of augmented reality in the diagnosis and treatment of autistic children: A review and a new system,” Multimedia Tools and Applications, vol. 79, no. 25–26, pp. 18245–18291. [Google Scholar]

33. W. Si, X. Liao, Q. Wang and P. A. Heng. (2018). “Augmented reality-based personalized virtual operative anatomy for neurosurgical guidance and training,” in 2018 IEEE Conf. on Virtual Reality and 3D User Interfaces (VRReutlingen, Germany, pp. 683–684. [Google Scholar]

34. M. G. Hanna, I. Ahmed, J. Nine, S. Prajapati and L. Pantanowitz. (2018). “Augmented reality technology using Microsoft HoloLens in anatomic pathology,” Archives of Pathology & Laboratory Medicine, vol. 142, no. 5, pp. 638–644. [Google Scholar]

35. R. S. Vergel, P. M. Tena, S. C. Yrurzum and C. Cruz-Neira. (2020). “A comparative evaluation of a virtual reality table and a HoloLens-based augmented reality system for anatomy training,” IEEE Transactions on Human-Machine Systems, vol. 50, no. 4, pp. 337–348. [Google Scholar]

36. P. Maniam, P. Schnell, L. Dan, R. Portelli, C. Erolin et al. (2020). , “Exploration of temporal bone anatomy using mixed reality (HoloLensDevelopment of a mixed reality anatomy teaching resource prototype,” Journal of Visual Communication in Medicine, vol. 43, no. 1, pp. 17–26. [Google Scholar]

37. P. Pratt, M. Ives, G. Lawton, J. Simmons, N. Radev et al. (2018). , “Through the HoloLens™ looking glass: Augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels,” European Radiology Experimental, vol. 2, no. 1, pp. 871. [Google Scholar]

38. C. Erolin. (2019). “Interactive 3D digital models for anatomy and medical education,” in Biomedical Visualization. Cham: Springer, pp. 1–16. [Google Scholar]

39. Google Lens. (2020). Accessed on 17 July 2020, . [Online]. Available: https://play.google.com/store/apps/details?id=com.google.ar.lens&hl=en. [Google Scholar]

40. J. Pilet and H. Saito. (2010). “Virtually augmenting hundreds of real pictures: An approach based on learning, retrieval, and tracking,” in 2010 IEEE Virtual Reality Conf. (VRMassachusetts, USA, pp. 71–78. [Google Scholar]

41. S. Gammeter, A. Gassmann, L. Bossard, T. Quack and L. Van Gool. (2010). “Server-side object recognition and client-side object tracking for mobile augmented reality,” in 2010 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition-Workshops, California, USA, pp. 1–8. [Google Scholar]

42. J. Jung, J. Ha, S. W. Lee, F. A. Rojas and H. S. Yang. (2012). “Efficient mobile AR technology using scalable recognition and tracking based on server-client model,” Computers & Graphics, vol. 36, no. 3, pp. 131–139. [Google Scholar]

43. P. Jain, J. Manweiler and R. Roy Choudhury. (2016). “Low bandwidth offload for mobile AR,” in Proc. of the 12th Int. on Conf. on emerging Networking Experiments and Technologies, Nice, France, pp. 237–251. [Google Scholar]

44. A. Nayyar, B. Mahapatra, D. N. Le and G. Suseendran. (2018). “Virtual Reality (VR) & Augmented Reality (AR) technologies for tourism and hospitality industry,” International Journal of Engineering & Technology, vol. 7, no. 2. 21, pp. 156–160. [Google Scholar]

45. V. Bhateja, A. Moin, A. Srivastava, L. N. Bao, A. Lay-Ekuakille et al. (2016). , “Multispectral medical image fusion in Contourlet domain for computer based diagnosis of Alzheimer’s disease,” Review of Scientific Instruments, vol. 87, no. 7, pp. 074303. [Google Scholar]

46. A. S. Ashour, S. Beagum, N. Dey, A. S. Ashour, D. S. Pistolla et al. (2018). , “Light microscopy image de-noising using optimized LPA-ICI filter,” Neural Computing and Applications, vol. 29, no. 12, pp. 1517–1533. [Google Scholar]

47. D. N. Le, G. N. Nguyen, V. Bhateja and S. C. Satapathy. (2017). “Optimizing feature selection in video-based recognition using Max-Min Ant System for the online video contextual advertisement user-oriented system,” Journal of Computational Science, vol. 21, pp. 361–370. [Google Scholar]

48. V. C. Le, G. N. Nguyen, T. H. Nguyen, T. S. Nguyen and D. N. Le. (2020). “An effective RGB color selection for complex 3D object structure in scene graph systems,” International Journal of Electrical and Computer Engineering (IJECE), vol. 10, no. 6, pp. 5951–5964. [Google Scholar]

49. D. N. Le, C. Van Le, J. G. Tromp and G. N. Nguyen. (2018). (Eds.“Emerging technologies for health and medicine: Virtual reality, augmented reality, artificial intelligence, internet of things, robotics, industry 4.0,” John Wiley & Sons. [Google Scholar]

50. J. D. Hemanth, U. Kose, O. Deperlioglu and V. H. C. de Albuquerque. (2020). “An augmented reality-supported mobile application for diagnosis of heart diseases,” Journal of Supercomputing, vol. 76, no. 2, pp. 1242–1267. [Google Scholar]

51. F. Fiador, M. Poyade and L. Bennett. (2020). “The use of augmented reality to raise awareness of the differences between Osteoarthritis and Rheumatoid Arthritis,” in Biomedical Visualisation, Springer, pp. 115–147. [Google Scholar]

52. T. Tunur, A. DeBlois, E. Yates-Horton, K. Rickford and L. A. Columna. (2020). “Augmented reality-based dance intervention for individuals with Parkinson’s disease: A pilot study,” Disability and Health Journal, vol. 13, no. 2, pp. 100848. [Google Scholar]

53. C. L. Van, V. Puri, N. T. Thao and D. Le. (2020). “Detecting lumbar implant and diagnosing scoliosis from vietnamese x-ray imaging using the pre-trained API models and transfer learning,” Computers, Materials & Continua, vol. 66, no. 1, pp. 17–33. [Google Scholar]

54. Vuforia. (2020). Accessed on 15 July 2020, . [Online]. Available: https://developer.vuforia.com/. [Google Scholar]

55. Blippar. (2020). Accessed on 15 July 2020, . [Online]. Available: https://www.blippar.com/. [Google Scholar]

56. Wikitude. (2020). Accessed on 15 July 2020, . [Online]. Available: https://www.wikitude.com/. [Google Scholar]

57. Google AR Core. (2020). Accessed on 15 July, 2020, . [Online]. Available: https://developers.google.com/ar. [Google Scholar]

58. Apple AR Kit. (2020). Accessed on 15 July, 2020, . [Online]. Available: https://developer.apple.com/augmented-reality/arkit/. [Google Scholar]

59. H. Subakti and J. R. Jiang. (2016). “A marker-based cyber-physical augmented-reality indoor guidance system for smart campuses,” in 2016 IEEE 18th Int. Conf. on High Performance Computing and Communications, Sydney, Australia, pp. 1373–1379. [Google Scholar]

60. A. Katiyar, K. Kalra and C. Garg. (2015). “Marker based augmented reality,” Advances in Computer Science and Information Technology (ACSIT), vol. 2, no. 5, pp. 441–445. [Google Scholar]

61. A. Kulpy and G. Bekaroo. (2017). “Fruitify: Nutritionally augmenting fruits through markerless-based augmented reality,” in 2017 IEEE 4th Int. Conf. on Soft Computing & Machine Intelligence (ISCMIMauritius, pp. 149–153. [Google Scholar]

62. W. Viyanon, T. Songsuittipong, P. Piyapaisarn and S. Sudchid. (2017). “AR Furniture: Integrating augmented reality technology to enhance interior design using marker and markerless tracking,” in Proc. of the 2nd Int. Conf. on Intelligent Information Processing, Bangkok, Thailand, pp. 1–7. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |