DOI:10.32604/iasc.2021.014369

Intelligent Automation & Soft Computing

Article

Roughsets-based Approach for Predicting Battery Life in IoT

1Vellore Institute of Technology, Vellore, Tamil Nadu, 632014, India

2Faculty of Science and Technology, University of the Faroe Islands, Vestarabryggja 15, FO 100, Torshavn, Faroe Islands

*Corresponding Author: Qin Xin. Email: qinx@setur.fo

Received: 16 September 2020; Accepted: 10 November 2020

Abstract: Internet of Things (IoT) and related applications have contributed to enhancing the value of life on this planet. Advanced wireless sensor networks and their computational capabilities have enabled various IoT applications to become the next frontier, touching almost all domains of life. With this progress, energy optimization has become a primary concern, given the need to attend to green technologies. The present study focuses on predicting the sustainability of battery life in IoT frameworks in the marine environment. The data used is a publicly available dataset collected from the Chicago district beach water. First, the missing values in the data are replaced with the attribute mean. Next, one-hot encoding is applied to achieve data homogeneity, followed by the standard scaler technique to normalize the data. Then, rough set theory is used for feature extraction, and the resultant data is fed into a Deep Neural Network (DNN) model for optimized prediction results. The proposed model is then compared with state-of-the-art machine learning models, and the results justify its superiority on the basis of performance metrics such as Mean Squared Error, Mean Absolute Error, Root Mean Squared Error, and Test Variance Score.

Keywords: Internet-of-Things; sustainability; roughsets; deep neural network; pre-processing

Internet-of-Things (IoT) and related applications have gained immense momentum over the past decade, with implementations touching almost all spheres of life on our planet. IoT is often termed the Internet of Everything (IoE), which creates a global network of machines and devices integrated with one another, establishing seamless communication systems across a wide spectrum of domains [1,2]. With the rapid growth in human population and the surging usage of devices, services and related protocols, the applications of IoT are too numerous to list exhaustively [3]. Popular and extremely successful applications of IoT have been realized in healthcare, agriculture, environment, automobile, transportation and defence [4,5].

In healthcare, IoT applications have enabled reliable tracking of patients and transformation of data for providing essential and accelerated medical service [6]. The use of sensors has helped collect crop data, thereby aiding agronomic decision making and enhancement of cultivation [7]. Industry 4.0 is the present trend for automation and data exchange in the manufacturing sector using cutting-edge technologies like cyber physical systems (CPS), IoT, AI, cloud computing and Industrial IoT (IIoT). Industry 4.0 is a subset of the fourth industrial revolution, which also includes areas that are generally outliers in the industrial sector. It encompasses a framework consisting of wireless connectivity, sensors and systems capable of making intelligent decisions, providing visualization of the working of an entire manufacturing floor [8]. This concept sets the stage for smart factories. It is a giant progression from automation to a system that collects data streams from various operational processes and takes intelligent decisions for its running without human intervention. Mundane routine tasks are taken care of by AI and machine learning applications, freeing human beings to focus only on high-level decision making and exception handling [9,10]. IoT environments have significantly contributed to traffic control and parking allotment, and have even assisted in improving driving experiences, ensuring efficiency and safety [11,12]. Most importantly, in the defence sector IoT applications have been adopted for surveillance, disaster prevention and robotic interventions [13]. The applications of IoT in agriculture, industry and various real-time sectors have helped primarily in the monitoring and control of weather conditions, health conditions, mechanical failures and various other instances of failure prediction. For all of these implementations, sustainability of battery life is extremely important, because a drained battery may lead to disastrous consequences through failure to predict exceptional events [14–16].

The present study focuses on predicting battery life sustainability for sensors installed at beaches to collect marine data. These sensors and batteries are primary components of IoT applications deployed to collect and monitor data from the marine environment, namely Beach Name, Measurement Timestamp, Water Temperature, Turbidity, Transducer Depth, Wave Height, Wave Period and Battery Life. Various applications for monitoring the marine environment have been developed by integrating IoT architectures with sensing, control and communication technologies. These implementations are predominant in the areas of ocean sensing and monitoring, wave and current monitoring, water quality assessment, fish farming and coral reef monitoring. Although significant research has been conducted on IoT applications catering to the needs of the marine environment, most of the studies have been conducted at either the underwater or the surface level. Solar energy harvesting has also been a major point of discussion among various studies, along with other forms of energy such as wind, wave and ocean currents. The world is progressing towards green technologies, where energy optimization plays a vital role [17,18]. From an analysis of the various applications of IoT in marine and other sectors, it has been observed that little research has focused on predicting the sustainability of battery life. Energy optimization is an essential aspect of the many ongoing studies that emphasize green computing. Predicting the sustainability of battery life is therefore the prime motivation of the present study.

The data used in the study is a publicly available dataset collected from the Chicago Park District beach water. The missing values in the raw data are replaced with attribute means, and then one-hot encoding is applied to achieve data homogeneity, transforming all categorical values into numerical ones. This data is further normalized using the standard scaler technique, and the rough set approach is used for feature extraction. The extracted data thus contains the features with the most significant impact on the target class, battery life. The extracted data is fed into a DNN with a suitable activation function for optimized prediction of the battery life sustainability of the devices in the IoT framework. The prediction of battery life would help in tuning the connected sensor and network devices to achieve sustainable energy use. The results are compared with state-of-the-art techniques to establish the reliability of the predictions.

The contributions of the proposed model are:

1. Using DNNs, a prediction model is proposed to forecast the battery life of the water sensors deployed along Chicago District Beach.

2. The proposed architecture uses the concepts of rough sets to minimize dimensionality and select significant features to increase prediction accuracy and decrease complexity.

3. The results are compared with common state-of-the-art techniques such as Linear Regression and XGBoost, highlighting the enhanced performance of the proposed model.

4. A review of the current rough sets model and the state-of-the-art techniques is performed in depth.

5. The experiments show that the proposed prediction model outperforms other popular ML techniques, thereby validating the positive impact of integrating the rough set technique with the ML model.

In the recent decade, numerous artificial intelligence methods have been used to boost the learning level of systems in an extremely granular manner. In this section, the various contributions of deep learning algorithms and rough set concepts are discussed through an extensive survey of the literature.

In [19], the authors developed a process by which deep learning and rough set approaches can automate the evaluation network for motor vehicles travelling on highways in snow and ice environments. The experiment was first conducted on a motorway in Jilin, China, as a naturalistic driving test involving 13 licensed drivers, with specific crash points set before the experiment began. Images and numerical data were collected with multiple sensors (eye trackers, mini camera, and speed detectors). Restricted Boltzmann machines were used for the development and training of a deep belief network (DBN). The rough set technique was added to evaluate the DBN output layer. Various conditions for the perception of input factors were used to train the network. In a comparative implementation against Naive Bayes and BP-ANN, the DBN-based perception network (DBN-FS) achieved greater precision in sensing dangerous conditions. This approach is useful for designing partially automated vehicle hazard detection systems.

In [20], the authors proposed hybrid DNN architectures based on the stacked autoencoder (SAE) and the stacked denoising autoencoder (SDAE) for ultrashort-term and short-term wind velocity prediction. Auto-encoders (AEs) were used for unsupervised feature learning from wind data, and a supervised regression layer was used for wind speed forecasting. It was observed that many unknown factors had a significant effect on the accuracy of existing methodologies for predicting wind velocities. Deep learning models with RNN were used to develop new rough extensions of SAE and SDAE that are robust to wind uncertainties and ensure higher forecasting accuracy. The experimental results of this study indicated that the proposed rough DNN models are superior, in terms of mean absolute error, to traditional DNNs and to various existing methods that apply shallow architectures.

In [21], the authors provided a novel aggregation algorithm for wireless sensor networks that combines rough set concepts with an improved version of the Convolutional Neural Network (CNN). The designed model was used for feature extraction and training in the sink node. The rough set concept was adopted to simplify information effectively and to reduce tagged dimensions. Once the cluster nodes had extracted data features with the granular deep network, these features were transferred to the sink node, which reduced data density and thereby increased the life span of the network. Simulation results in the study compared the proposed granular CNN model with existing data aggregation algorithms in terms of energy consumption and showed that it contributed significantly to the accuracy of data aggregation.

In [22], the authors provided a brief summary of how IoT is combined with sensing and wireless technologies to implement the necessary health applications. In [23], the authors proposed a DNN-based prediction model that used patent indicators as predictors of a company's financial performance. The proposed approach has two phases: a learning phase and a fine-tuning phase. The learning phase uses restricted Boltzmann machines, and the fine-tuning phase uses the latest data sets with a back propagation algorithm, so that the model reflects recent trends in the relationship between the predictors and company performance.

In [24], the authors evaluated performance on data generated using the IEEE 802.15.4 standard. A DNN was used to model the relationship between different communication parameters and delays. The evaluations showed that the DNN model was capable of achieving a high level of forecast accuracy, outperforming other popular regression models. The study also highlighted the ability of the model to reach comparable accuracy when trained with only a small fraction of the data.

In [25], the authors focused on the development and implementation of a multi-source data fusion model (JDL) for the diagnosis and prediction of faults in mechanical equipment. The JDL fusion model is relatively mature and well suited to mechanical fault diagnostic applications. The ideas of this approach correlate with those of the hierarchical fusion model and are hence prevalent in the diagnosis of mechanical faults. The basis of this model was a neural network combined with data pre-processing algorithms such as SVD, EMD and WPD to achieve better accuracy. Similarly, fusion algorithms based on deep learning approaches were deployed, which are capable of directly training on and extracting data for analysis and prediction purposes.

The management of on-site maintenance visits is an important requirement for the successful operation of IoT networks. In particular, on-site visits to replace or recharge depleted batteries may be required in battery powered devices. For this purpose, practitioners often use prediction techniques to estimate the battery life of the deployed devices. Battery life is one of the biggest challenges for IoT at present [26].

In [26], the authors concentrate on the actual battery lifetime and on battery lifetime predictions made from discharge patterns during the development of the deployed system. The results show the difficulty of making predictions about battery life, with the aim of encouraging further study. In [27], the authors used four different machine learning techniques to estimate the remaining useful life of batteries under different flight conditions. The effectiveness of these machine learning techniques for battery prognostics is evaluated on experimental data from multiple flight conditions.

In [28], the authors present a deep learning method that employs a DCNN to estimate cell capacity based on measurements of voltage, current, and charging capacity for a partial charge cycle. According to the authors of [28], this is one of the first attempts to apply deep learning to online Li-ion battery capacity assessment.

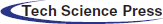

Tab. 1 presents a comparative study of the various research works conducted in the field of deep learning and rough set theory.

Table 1: Comparative study among different Deep Learning and Rough Sets

In this section the algorithms and methods used in this study are explicitly discussed.

Conventional mathematical methods such as crisp sets fail to resolve unclear and vague problems, which motivated researchers to pursue approaches in which approximations play a major role. The concept of rough sets was first proposed in [29], with primary emphasis on the selection and extraction of features, data reduction and the generation of decision rules.

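Fuzzy sets and rough sets are considered complementary generalizations of classical set theory. For an attribute set X, a subset A of the universe U is described by two X-definable sets: its lower approximation $\underline{X}(A)$ and its upper approximation $\overline{X}(A)$. The empty set is denoted by $\emptyset$.

As per the theory of rough sets, there exist four classes:

A is roughly X-definable iff $\underline{X}(A) \neq \emptyset \wedge \overline{X}(A) \neq U$

A is internally X-undefinable iff $\underline{X}(A) = \emptyset \wedge \overline{X}(A) \neq U$

A is externally X-undefinable iff $\underline{X}(A) \neq \emptyset \wedge \overline{X}(A) = U$

A is totally X-undefinable iff $\underline{X}(A) = \emptyset \wedge \overline{X}(A) = U$

The accuracy of a rough set A can be measured by the ratio of the cardinality of its lower approximation to that of its upper approximation:

$$\alpha_X(A) = \frac{|\underline{X}(A)|}{|\overline{X}(A)|} \qquad (1)$$

where $|A|$ represents the cardinality of set A and $\alpha_X(A)$ lies between 0 and 1.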
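The approximations and the accuracy measure can be illustrated with a small sketch. The toy table, the attribute names and the target set below are hypothetical and chosen only for illustration; they are not taken from the study's dataset.

```python
from collections import defaultdict


def equivalence_classes(table, attrs):
    """Group object ids that share the same values on `attrs`."""
    groups = defaultdict(set)
    for obj, row in table.items():
        groups[tuple(row[a] for a in attrs)].add(obj)
    return list(groups.values())


def approximations(table, attrs, target):
    """Return the (lower, upper) approximations of `target` under `attrs`."""
    lower, upper = set(), set()
    for block in equivalence_classes(table, attrs):
        if block <= target:   # block lies entirely inside the target
            lower |= block
        if block & target:    # block overlaps the target
            upper |= block
    return lower, upper


def accuracy(lower, upper):
    """Ratio |lower| / |upper|; taken as 1.0 here when the upper set is empty."""
    return len(lower) / len(upper) if upper else 1.0


# Hypothetical, hand-discretised readings (illustration only).
toy = {
    1: {"turbidity": "low", "wave": "calm"},
    2: {"turbidity": "low", "wave": "calm"},
    3: {"turbidity": "high", "wave": "calm"},
    4: {"turbidity": "high", "wave": "rough"},
    5: {"turbidity": "high", "wave": "rough"},
}
healthy_battery = {1, 2, 4}  # made-up target set A

lower, upper = approximations(toy, ["turbidity", "wave"], healthy_battery)
alpha = accuracy(lower, upper)
print(lower, upper, alpha)
```

In this toy case the lower approximation is non-empty and the upper approximation is smaller than the universe, so the target set falls into the first of the four classes.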

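Attribute dependency is the most significant element in determining the variables from the rough sets. Considering two attribute sets X and Y, their equivalence classes are denoted $[A]_X$ and $[A]_Y$, where $Y_i$ is the i-th of the N equivalence classes induced by the attribute set Y. The dependency of Y on X, $\gamma(X, Y)$, can be calculated as

$$\gamma(X, Y) = \frac{\sum_{i=1}^{N} |\underline{X}(Y_i)|}{|U|} \qquad (2)$$

where $\underline{X}(Y_i)$ is the lower approximation of $Y_i$ with respect to X and U is the universe of objects. From Eq. (2), it is evident that if $\gamma(X, Y) = 1$ then Y depends totally on X; on the contrary, if $\gamma(X, Y) < 1$ then Y depends only partially on X.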
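As a rough illustration of the dependency degree, the sketch below counts the rows that the equivalence classes of X place entirely inside a single class of Y and divides by the number of rows. The rows and attribute names are hypothetical.

```python
from collections import defaultdict


def partition(rows, attrs):
    """Equivalence classes (sets of row indices) induced by `attrs`."""
    groups = defaultdict(set)
    for i, row in enumerate(rows):
        groups[tuple(row[a] for a in attrs)].add(i)
    return list(groups.values())


def dependency(rows, x_attrs, y_attrs):
    """gamma(X, Y): share of rows lying in the lower approximation of some Y_i."""
    x_blocks = partition(rows, x_attrs)
    positive = 0
    for y_block in partition(rows, y_attrs):
        # Lower approximation of Y_i: union of the X-blocks contained in Y_i.
        positive += sum(len(b) for b in x_blocks if b <= y_block)
    return positive / len(rows)


# Hypothetical discretised rows (illustration only).
rows = [
    {"depth": "shallow", "wave": "calm", "battery": "ok"},
    {"depth": "shallow", "wave": "calm", "battery": "ok"},
    {"depth": "deep", "wave": "calm", "battery": "ok"},
    {"depth": "deep", "wave": "rough", "battery": "low"},
    {"depth": "deep", "wave": "rough", "battery": "ok"},
]
gamma_full = dependency(rows, ["depth", "wave"], ["battery"])
gamma_depth = dependency(rows, ["depth"], ["battery"])
print(gamma_full, gamma_depth)
```

Both values are below 1, so in this toy table the battery label depends only partially on the chosen attributes, and dropping `wave` lowers the dependency further.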

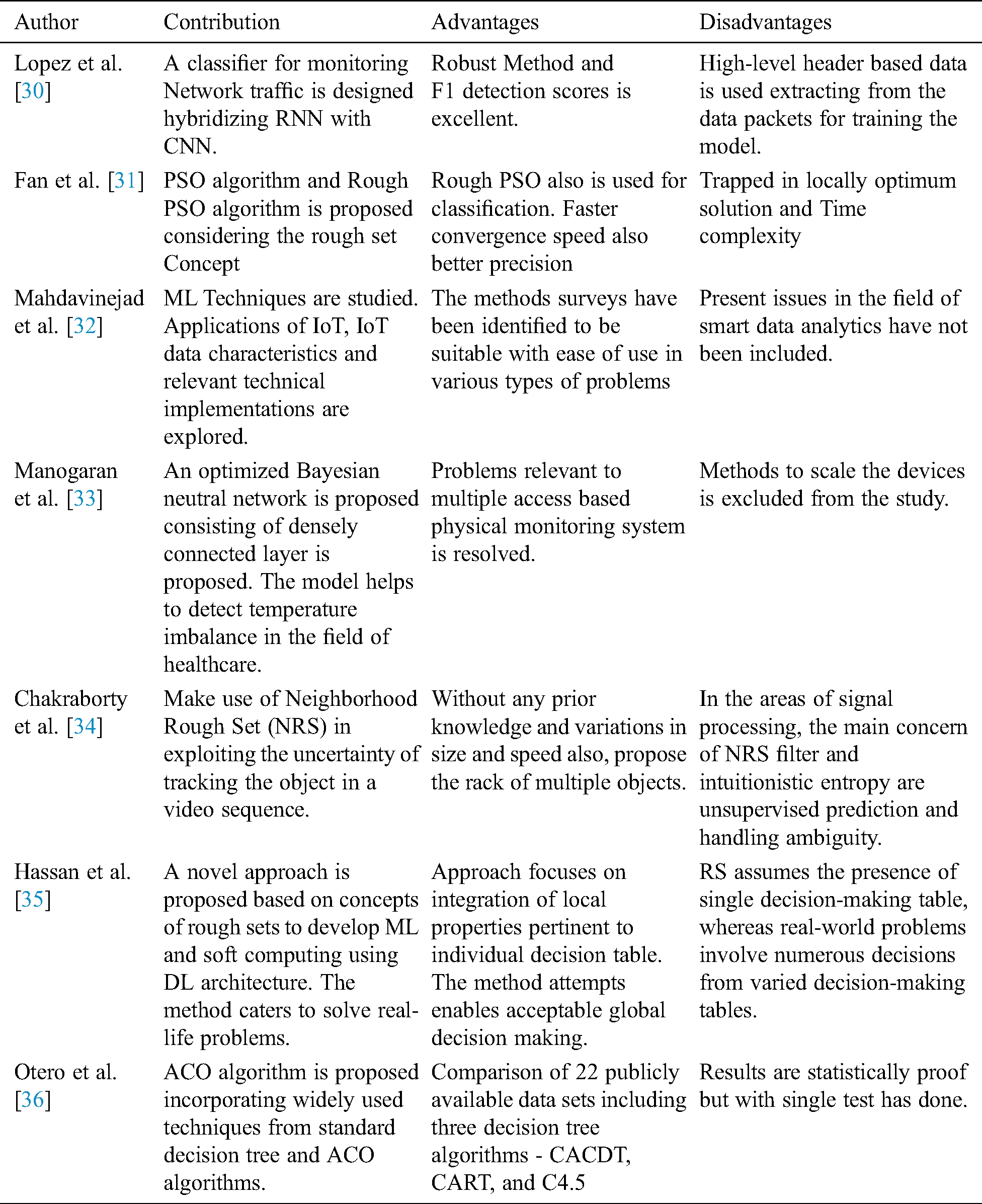

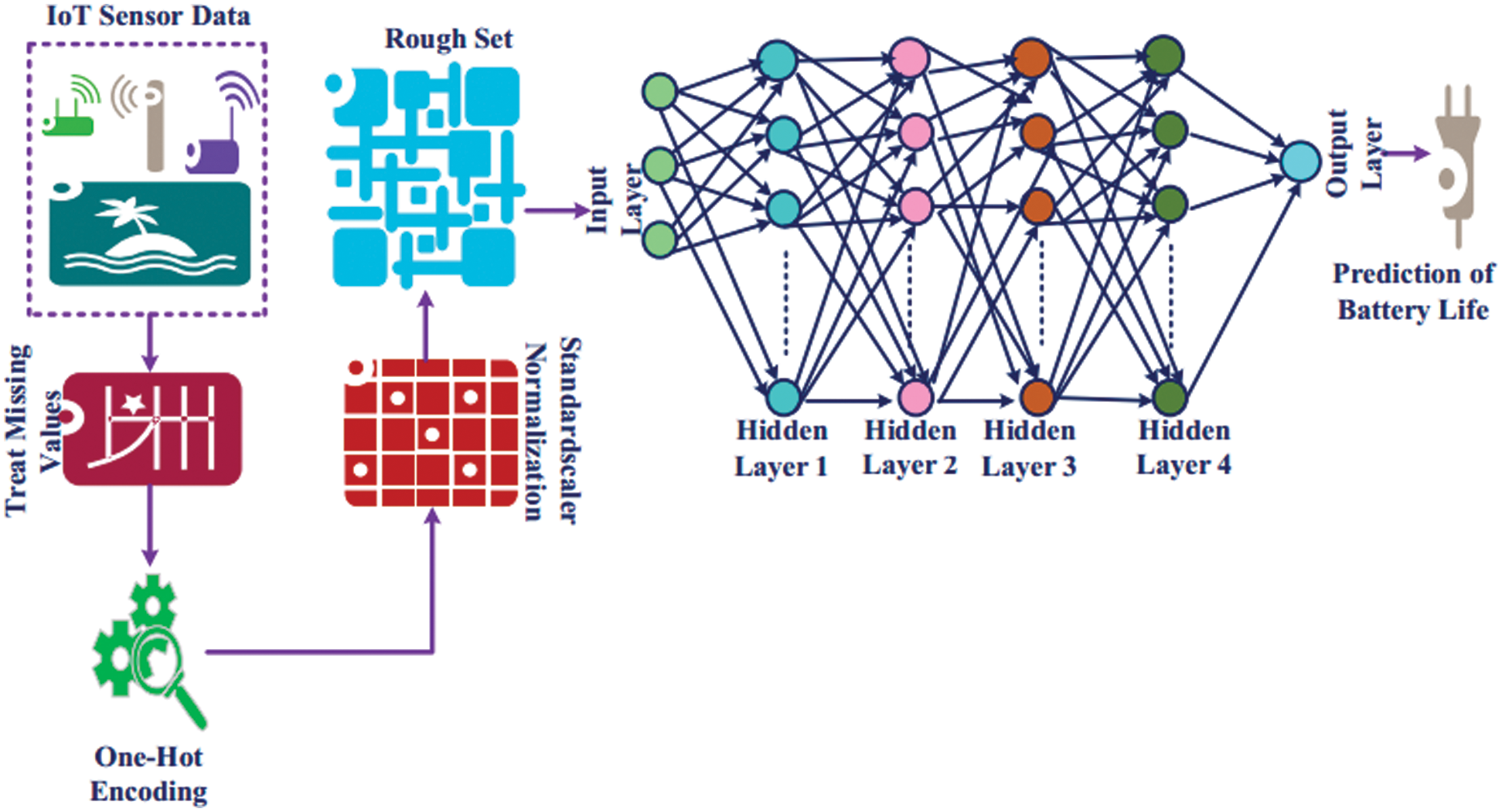

The DNN is one of the standard methods for generating classification models, where learning can be supervised, unsupervised or semi-supervised [37,38]. DNN parameters are tuned for recognition tasks and tend to provide strong performance over datasets. A DNN builds a deep architecture by stacking self-encoders to represent hierarchical features. Fig. 1 illustrates the structure of the DNN architecture, consisting of an input layer, hidden layers, and an output layer. The data set acts as the input to the input layer.

Figure 1: Deep Neural Network (DNN)

The input to a neuron in the first hidden layer is given in Eq. (3)

where $C_1(x, y)$ and $z_1(x)$ are the weight and bias, respectively. The output of neuron x in the first hidden layer is given by $h_1(x) = \text{Activation Function}(A_1(x))$, where Activation Function(·) is the particular activation function used for the DNN.
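A minimal sketch of this computation follows. It assumes the usual weighted-sum form for the neuron input (each input multiplied by its weight, summed, plus the bias) and uses ReLU as the activation; the exact form of Eq. (3) is not recoverable here, and the numbers are made up.

```python
def relu(value):
    """Rectified linear unit: negative inputs become zero."""
    return max(0.0, value)


def hidden_layer(inputs, weights, biases, activation=relu):
    """weights[x][y] links input y to neuron x; biases[x] is the bias of neuron x."""
    outputs = []
    for row, bias in zip(weights, biases):
        # Assumed pre-activation: weighted sum of the inputs plus the bias.
        pre_activation = sum(w * v for w, v in zip(row, inputs)) + bias
        outputs.append(activation(pre_activation))
    return outputs


inputs = [1.0, 2.0]                   # made-up feature values
weights = [[0.5, -1.0], [1.0, 1.0]]   # made-up weights for two neurons
biases = [0.0, -1.0]
h1 = hidden_layer(inputs, weights, biases)
print(h1)
```

The first neuron receives a negative pre-activation and is clipped to zero, while the second passes its value through unchanged.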

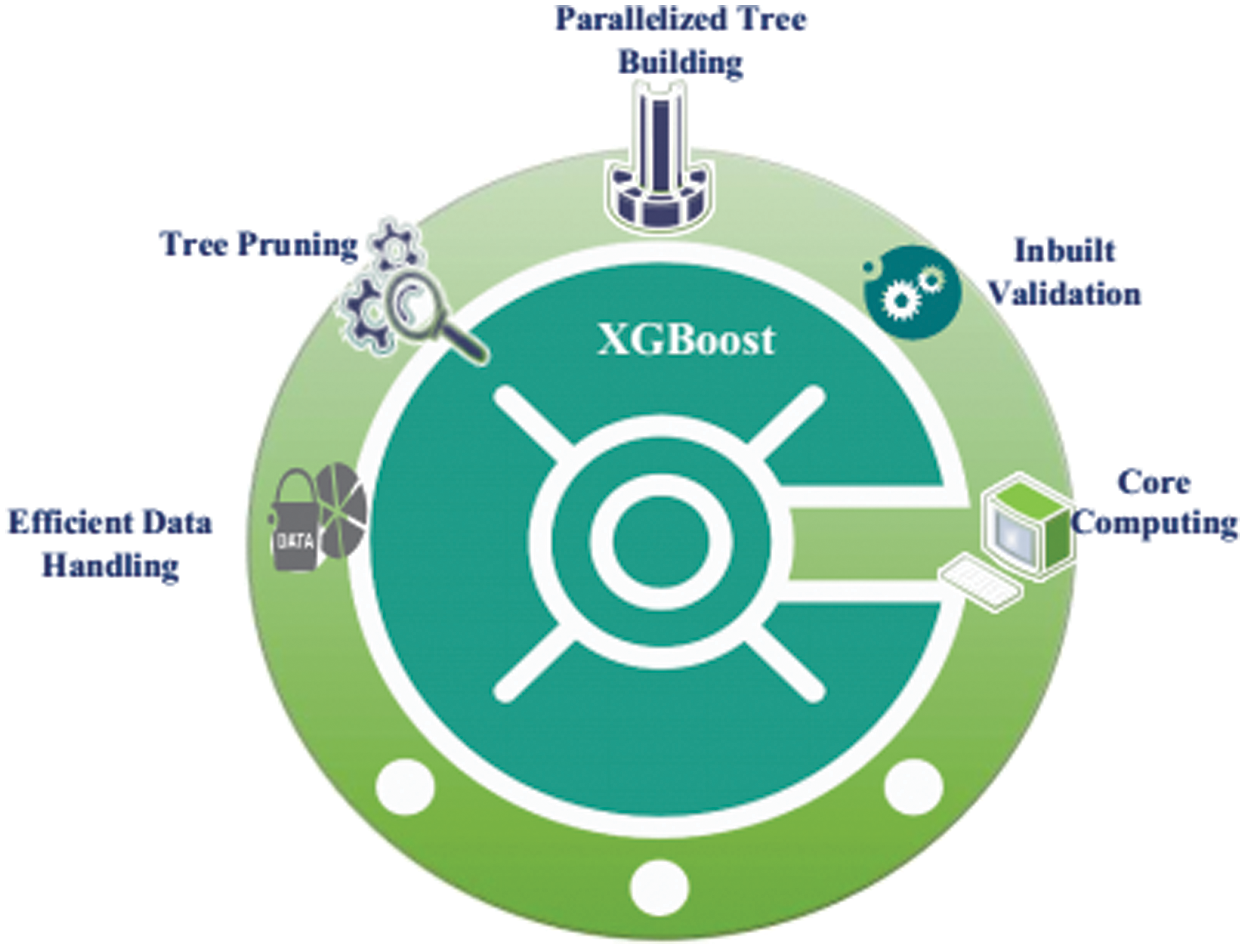

XGBoost is one of the most effective approaches in ML; it builds decision trees in sequence, each following directly from the previous one [39]. In terms of computing paradigms, the model primarily uses the gradient boosting framework for optimized estimations. Fig. 2 gives a detailed view of the various forms of gradient boosting and of effective data handling using the parallelized tree-building segment. XGBoost is a boosting algorithm that converts weak learners, which perform only slightly better than random guessing, into stronger ones. Boosting is a sequential process in which each tree is developed using information from the previously developed trees. By learning from the errors made so far, the process improves the predictions of the following iterations. The algorithm also provides regularization while boosting the model and handles missing values appropriately. Hence, XGBoost is well suited to predicting labels by modeling their attributes. Numerous researchers have preferred XGBoost over other algorithms because of its ability to generate accurate results at a high performance level.
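The sequential idea behind boosting can be sketched in a few lines. The example below is plain gradient boosting with one-split regression stumps and squared error, not XGBoost itself: it omits XGBoost's regularization, second-order updates, missing-value handling and parallel tree construction. The data, the number of rounds and the learning rate are hypothetical.

```python
def fit_stump(xs, residuals):
    """Pick the threshold whose two side means best fit the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # keeps both sides non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left)
        error += sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def predict(base, stumps, learning_rate, x):
    """Base value plus the shrunken contribution of every stump."""
    total = base
    for threshold, left_mean, right_mean in stumps:
        total += learning_rate * (left_mean if x <= threshold else right_mean)
    return total


def boost(xs, ys, rounds=20, learning_rate=0.5):
    """Each round fits a stump to the residuals left by the earlier rounds."""
    base = sum(ys) / len(ys)
    stumps = []
    for _ in range(rounds):
        residuals = [y - predict(base, stumps, learning_rate, x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return base, stumps


def mean_squared_error(actual, predicted):
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical readings: hour index versus battery level (illustration only).
xs = [1, 2, 3, 4, 5, 6]
ys = [12.4, 12.3, 12.3, 11.9, 11.8, 11.6]

base, stumps = boost(xs, ys)
baseline_mse = mean_squared_error(ys, [base] * len(ys))
boosted_mse = mean_squared_error(ys, [predict(base, stumps, 0.5, x) for x in xs])
print(baseline_mse, boosted_mse)
```

Because every stump is fitted to what the previous ones still get wrong, the training error after boosting is lower than that of the constant baseline.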

Figure 2: XGBoost

Linear Regression is a widely used supervised algorithm whose predicted output is continuous and has a constant slope. It predicts values within a continuous range. Simple regression and multi-variable regression are the two forms of Linear Regression. Simple regression and multi-variable regression are usually expressed as

where x, y, z represent attributes, and m, a1, a2 and a3 are the coefficients of the regression process. Statistical methods can be used to measure and reduce the size of the error term to improve the predictive power of the model.
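As a small worked example, the sketch below fits a simple regression by ordinary least squares. It assumes the common form in which the prediction is the slope m times the attribute x plus an intercept; the intercept name and the data are assumptions introduced for illustration.

```python
def fit_simple_regression(xs, ys):
    """Closed-form least squares for a single attribute."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance            # plays the role of m
    intercept = mean_y - slope * mean_x      # assumed intercept term
    return slope, intercept


# Made-up points lying exactly on the line with slope 2 and intercept 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_simple_regression(xs, ys)
print(slope, intercept)
```

The multi-variable case follows the same principle, with one coefficient (a1, a2, a3) per attribute instead of a single slope.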

One-hot encoding is a process of representing categorical values as binary vectors. Many machine learning algorithms cannot work directly on categorical data, so the categories have to be converted to numbers, and this is where one-hot encoding plays a significant role. It may seem natural to use integer coding directly, but integer codes imply an ordinal relationship between categories, which is a limitation when no natural ordering exists. In one-hot encoding, the categorical values in the data are first mapped to integer values, and each integer value is then represented as a binary vector [40].
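The two steps described above (category to integer index, then index to binary vector) can be sketched as follows. The beach labels are placeholders, not the names used in the dataset.

```python
def one_hot_encode(values):
    """Map each category to an integer index, then to a binary vector."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1   # exactly one position is set per row
        encoded.append(row)
    return categories, encoded


# Placeholder categorical column (illustration only).
beaches = ["Beach B", "Beach A", "Beach B", "Beach C"]
categories, encoded = one_hot_encode(beaches)
print(categories)
print(encoded)
```

Each distinct category becomes its own column, which is why a handful of categorical attributes can expand into a much larger number of numerical features.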

The architecture of the proposed model is depicted in Fig. 3. The steps involved are as follows. The IoT dataset is collected from the Chicago Park District beach water sensors [41]. The sensors are deployed at six locations in the district to record the beach name, date of measurement, water temperature, turbidity, transducer depth, wave height, and wave period, hourly during the summer.

Figure 3: Proposed Rough Set Based DNN Architecture

a. Preprocessing plays a crucial role in machine learning algorithms.

• Treat Missing Values: The missing values in the dataset are filled with the respective attribute mean, which makes the dataset ready for further analysis. The dataset consists of ten features, out of which two features are time tag driven, which do not contribute to the battery life prediction of the sensor node. Therefore, these two features are omitted for further analysis. The remaining eight features consist of categorical data, which also requires processing.

• One-Hot Encoding: In the next step of processing, the one-hot encoding scheme converts the categorical data into numerical data. The eight features, with their various instances, are expanded to 206 features.

• Standard Scaler Normalization: Further, the standard scaler normalization technique is applied to the numerical dataset. It standardizes each feature by shifting its mean to 0 and scaling it to unit variance, so that all features are on a comparable scale.

b. A dimensionality reduction technique based on rough set theory is applied to the preprocessed data.

c. The reduced dataset is then fed into a deep neural network model for predicting the sustainability of battery life.

d. The DNN model is evaluated against the traditional state of the art models to validate the performance of the predictions.
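The missing-value treatment and the standardization in step a can be sketched on a single numerical column. The readings below are hypothetical, and the population standard deviation is used, which is also what scikit-learn's `StandardScaler` uses; whether the study relied on that library is an assumption.

```python
import math


def impute_mean(column):
    """Replace missing entries (None) with the mean of the observed values."""
    present = [v for v in column if v is not None]
    mean = sum(present) / len(present)
    return [mean if v is None else v for v in column]


def standardize(column):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = sum(column) / len(column)
    std = math.sqrt(sum((v - mean) ** 2 for v in column) / len(column))
    return [(v - mean) / std for v in column]


# Hypothetical water temperature readings with two gaps (illustration only).
water_temperature = [18.0, None, 22.0, 20.0, None, 20.0]
filled = impute_mean(water_temperature)
scaled = standardize(filled)
print(filled)
print([round(v, 3) for v in scaled])
```

Standardized values are centred on zero, so roughly half of them are negative; if a strict 0 to 1 range is required, min-max scaling would be the technique to use instead.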

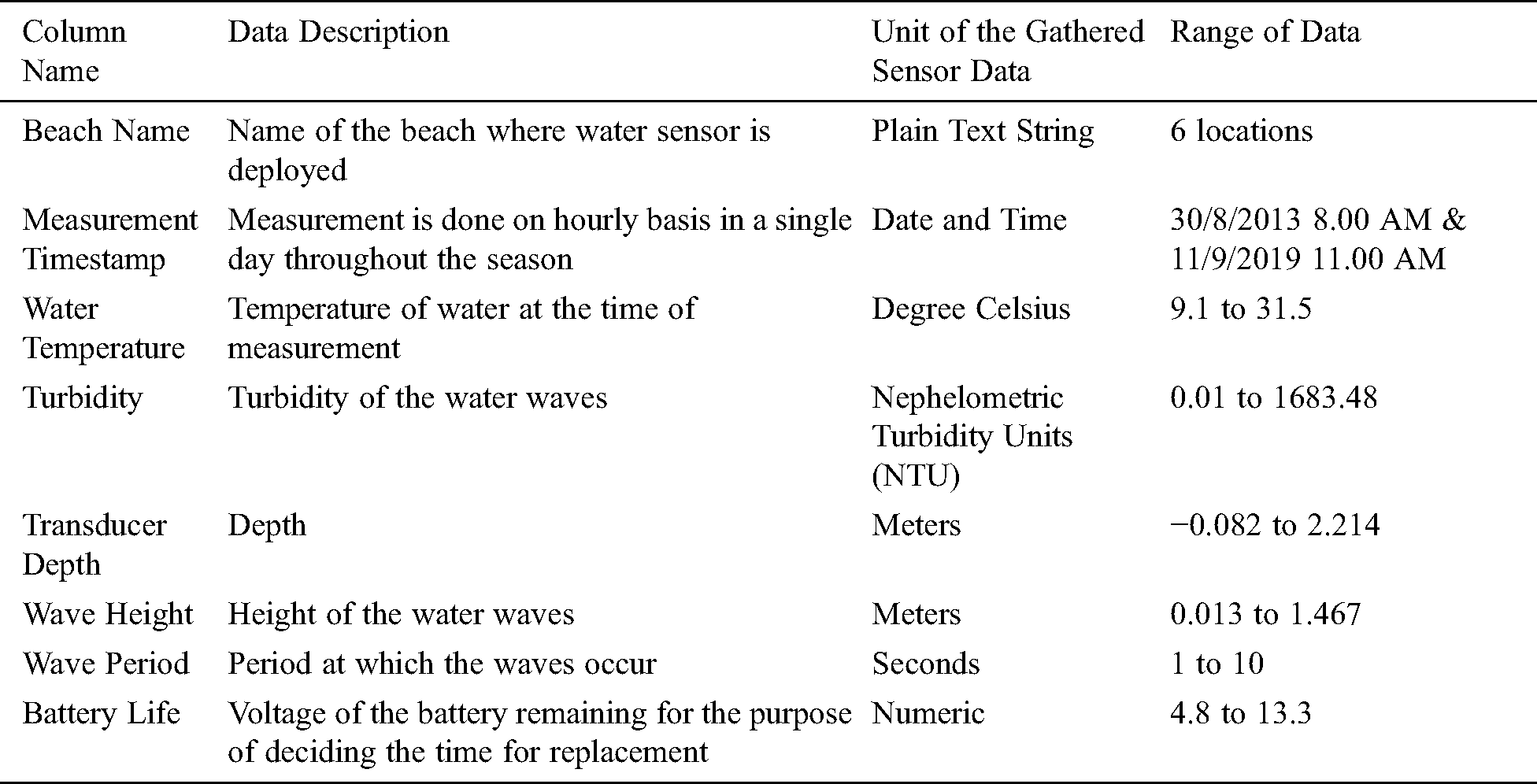

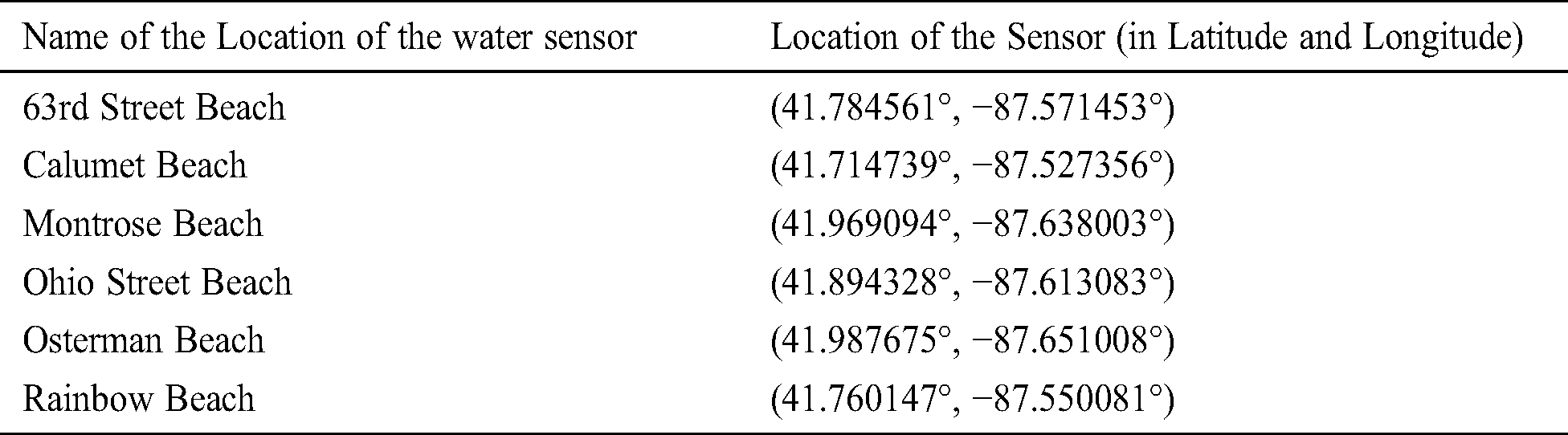

Dataset Description: The experiments were carried out on a personal laptop with 8 GB RAM, a 500 GB hard disk and the Windows 10 operating system, and the code was written in Python 3.8. The dataset used for the experiments is the Chicago Park District Beach Water dataset collected from [41]. Sensors are mounted in the water at specific beaches along the Lake Michigan lakefront in the Chicago Park District. These sensors are deployed at six locations in the district to record the name of the beach, the date of measurement, the temperature of the water, the turbidity, the depth of the transducer, the wave height and the wave period, hourly during the summer. The attributes of the dataset are described in Tab. 2. In total, the dataset holds water sensor data for 10 attributes across about 39.5 K instances. Tab. 3 lists the locations where the water sensors are mounted on the beaches.

Table 2: Chicago Park District Beach Dataset

Table 3: Location of Water Sensors Deployed in the Beaches

5.1 Metrics used for Evaluating the Model

Mean Squared Error (MSE): This is an average estimator that allows researchers to determine the squared difference between the predicted value and the actual value.

$$\text{MSE} = \frac{1}{m} \sum_{i=1}^{m} (y_i - \hat{y}_i)^2$$

where $y_i$ is the original value, $\hat{y}_i$ is the predicted value and m is the number of observations.

Mean Absolute Error (MAE): The mean absolute error measures the average absolute difference between paired continuous measurements of the same phenomenon, here the predicted and the actual values. All individual differences are weighted equally in the average.

Root Mean Squared Error (RMSE): This statistic is commonly used to assess the magnitude of the prediction errors and aggregates them into a single measurement of predictive strength.

The MAE measures how close the predictions are to the actual outcomes, while the RMSE is the sample standard deviation of the differences between the predicted $\hat{y}_i$ and the observed $y_i$ values.
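The three error metrics above can be computed directly from paired observed and predicted values. The numbers in this sketch are made up and are unrelated to the results reported later.

```python
import math


def mean_squared_error(actual, predicted):
    """Average of the squared differences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mean_absolute_error(actual, predicted):
    """Average of the absolute differences, all weighted equally."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def root_mean_squared_error(actual, predicted):
    """Square root of the MSE, expressed in the units of the target."""
    return math.sqrt(mean_squared_error(actual, predicted))


# Made-up observed and predicted battery values (illustration only).
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 12.0, 13.0, 18.0]

mse = mean_squared_error(actual, predicted)
mae = mean_absolute_error(actual, predicted)
rmse = root_mean_squared_error(actual, predicted)
print(mse, mae, rmse)
```

The RMSE is larger than the MAE here because squaring gives the single large error (2 units) more weight than the smaller ones.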

Test Variance Score (TVS): This metric calculates the variance between the actual values and observed values from the cumulative average of all expected rates generated by the forecast model or algorithm. The main goal of the predictor is to have a low variance performance. h is the sum of the variations between the observed and the predicted values.

z is every z[i] value that is observed minus the sum of the average of the ztest values that are observed. The variance is,

5.2 Dataset Preparation for Prediction

Real-time raw water data are collected from different places. Since the data is raw, a few instances have some features missing, which happens when the battery drains and the sensor stops reporting. Some time-stamped instances may also remain unusable until the battery is replaced. Filling in the missing values is therefore essential before forecasting with the ML model. In the present study, the missing values in the dataset are first filled with the respective attribute mean, which makes the dataset ready for further analysis.

It is known from the data summary that out of the ten attributes, two are time-tag driven and do not contribute to the battery life prediction of the sensor node. Therefore, both features are omitted from further analysis. The remaining eight features in Tab. 2 include categorical data, which also requires processing. Thus, the one-hot encoding scheme converts the categorical data into numerical data. The eight features, with their various instances, are expanded to 206 features. The transformed sensor data were then standardized using the standard scaler method.

The next step was to extract the features with the highest impact on battery life prediction, while those with a negative impact were eliminated using rough sets. As part of this dimensionality reduction, the number of features decreased to 182. These features have the greatest impact on the prediction of the target class.
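The text does not say which rough-set reduction algorithm was used. As one possible illustration, the sketch below uses a greedy QuickReduct-style search, a common choice that is swapped in here as an assumption: it keeps adding the feature that most increases the dependency of the decision attribute until the dependency of the full feature set is reached. The rows and feature names are hypothetical.

```python
from collections import defaultdict


def dependency(rows, attrs, decision):
    """Fraction of rows whose values on `attrs` determine `decision` uniquely."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[a] for a in attrs)].append(row[decision])
    consistent = sum(len(g) for g in groups.values() if len(set(g)) == 1)
    return consistent / len(rows)


def quick_reduct(rows, features, decision):
    """Greedy search for a small feature subset with full-set dependency."""
    target = dependency(rows, features, decision)
    selected = []
    while dependency(rows, selected, decision) < target:
        # Add the feature that raises the dependency the most.
        best = max(
            (f for f in features if f not in selected),
            key=lambda f: dependency(rows, selected + [f], decision),
        )
        selected.append(best)
    return selected


# Hypothetical discretised rows (illustration only).
rows = [
    {"beach": "A", "depth": "shallow", "turbid": "no", "battery": "ok"},
    {"beach": "A", "depth": "deep", "turbid": "no", "battery": "low"},
    {"beach": "B", "depth": "shallow", "turbid": "yes", "battery": "ok"},
    {"beach": "B", "depth": "deep", "turbid": "yes", "battery": "low"},
]
features = ["beach", "depth", "turbid"]
reduct = quick_reduct(rows, features, "battery")
print(reduct)
```

In this toy table a single feature already determines the battery label, so the other two are dropped. Continuous sensor values would need to be discretised before such a search, which is another detail the text leaves open.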

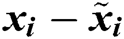

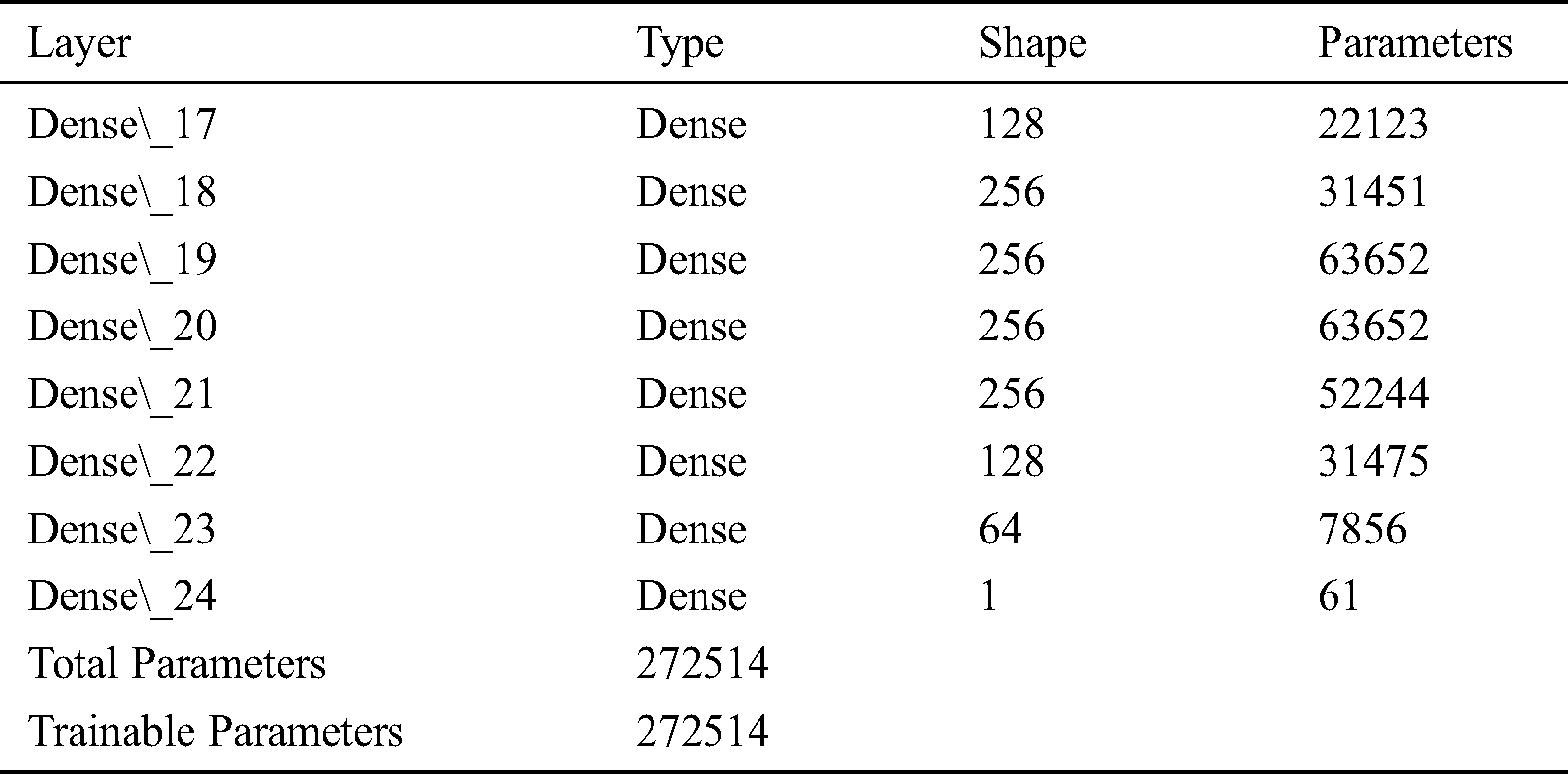

Prediction Model: The DNN used in the prediction model consisted of one input layer with 128 neurons, five hidden layers with 256, 256, 256, 128, and 64 neurons respectively, and one output layer with 1 neuron. The Adaptive Moment Estimation (Adam) optimizer and the ReLU activation function are used for compiling the prediction model. Tabs. 4 and 5 provide an overview of the DNN prediction process with and without the rough set technique.
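To give a feel for the size of this network, the sketch below counts trainable parameters for the stated layer widths. It assumes fully connected layers with bias terms and reads the "input layer with 128 neurons" as a dense layer of 128 units that receives either the 182 rough-set features or the full 206 features; the study's own summaries may differ if the layers were configured otherwise.

```python
def dense_parameter_count(input_width, layer_widths):
    """Weights plus biases for a stack of fully connected layers."""
    total = 0
    previous = input_width
    for width in layer_widths:
        total += previous * width + width   # weight matrix + bias vector
        previous = width
    return total


# Layer widths as stated in the text: 128, then 256, 256, 256, 128, 64, then 1.
layer_widths = [128, 256, 256, 256, 128, 64, 1]

with_rough_sets = dense_parameter_count(182, layer_widths)
without_reduction = dense_parameter_count(206, layer_widths)
print(with_rough_sets, without_reduction, without_reduction - with_rough_sets)
```

Under these assumptions, removing 24 input features only affects the first layer, saving 24 × 128 weights; the larger benefit claimed for rough sets is the removal of uninformative inputs rather than the reduction in model size.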

Table 4: Prediction Model DNN with rough sets as Dimensionality Reduction

Table 5: Prediction Model DNN without Dimensionality Reduction

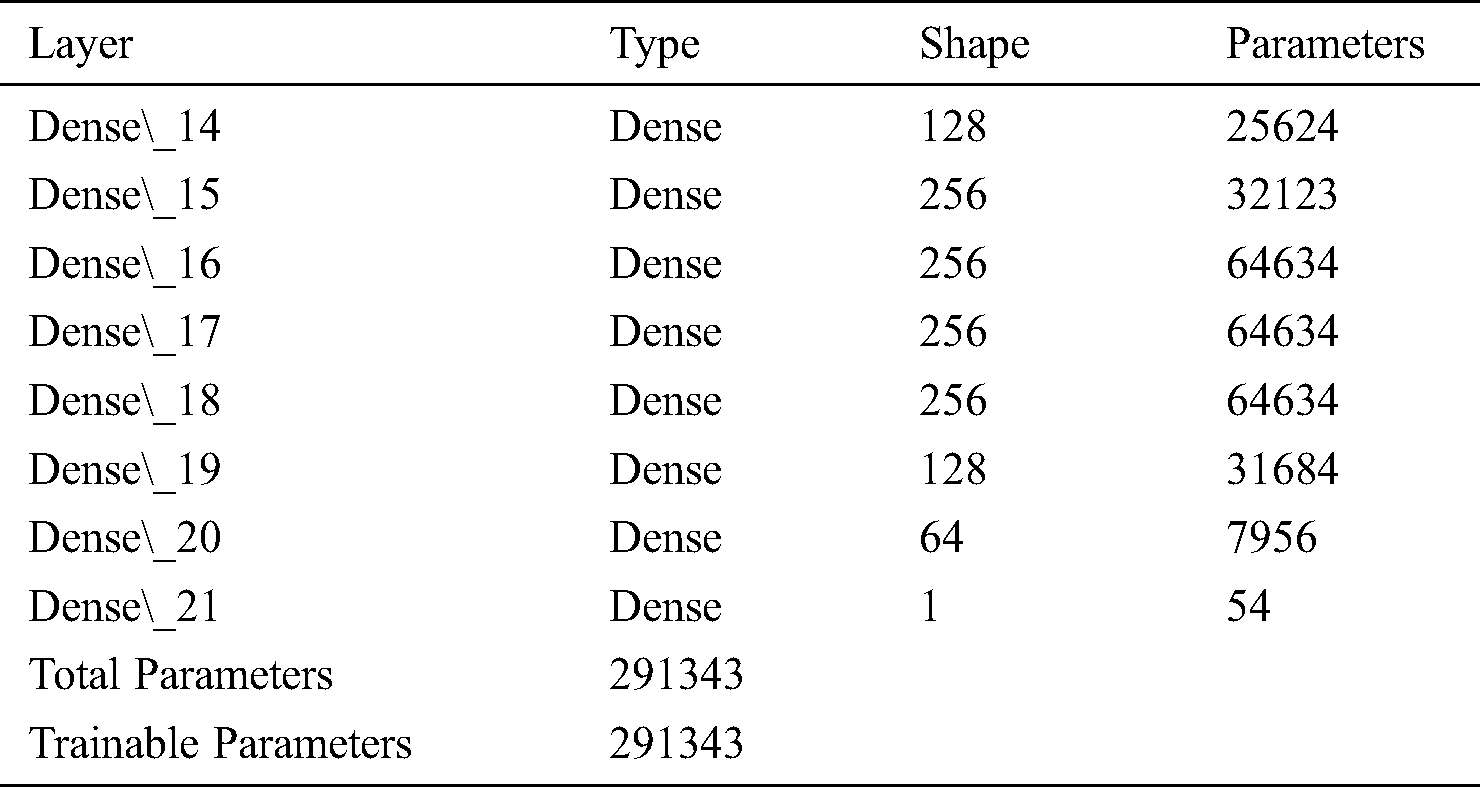

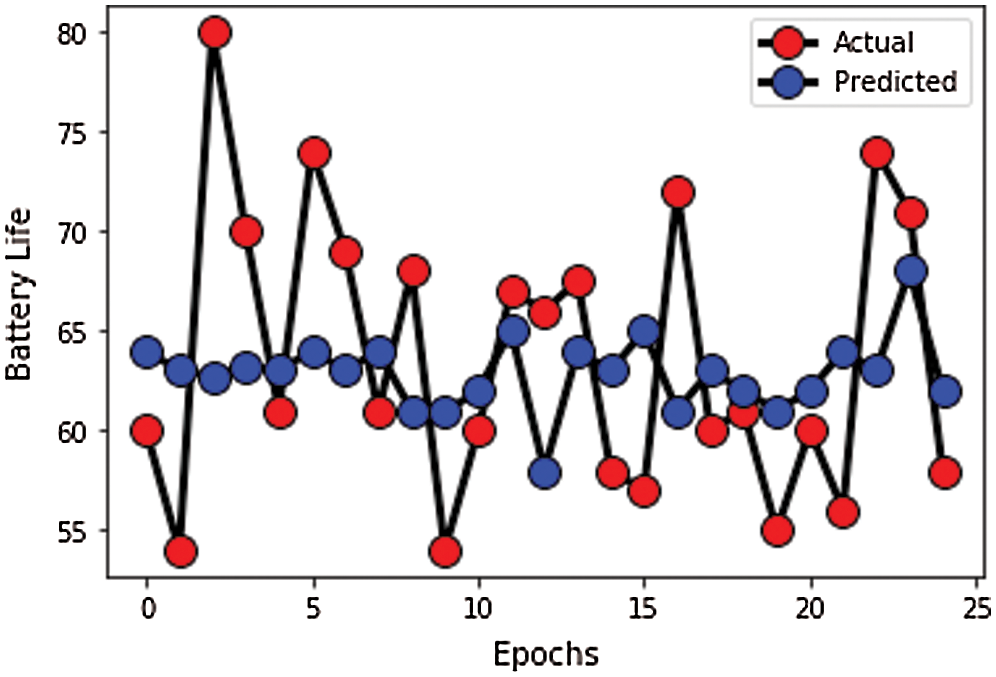

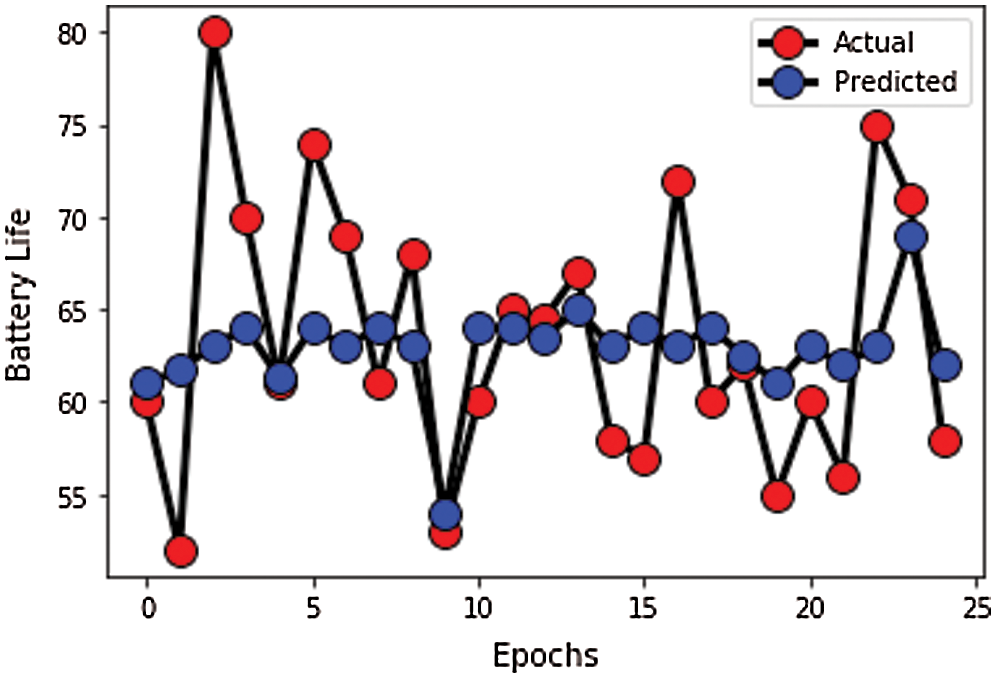

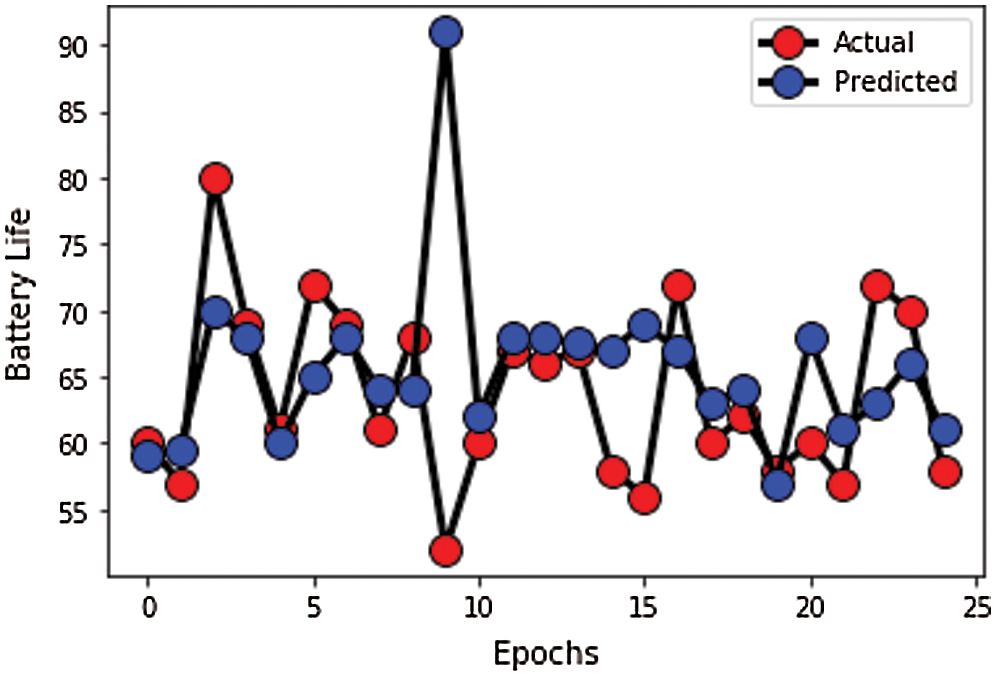

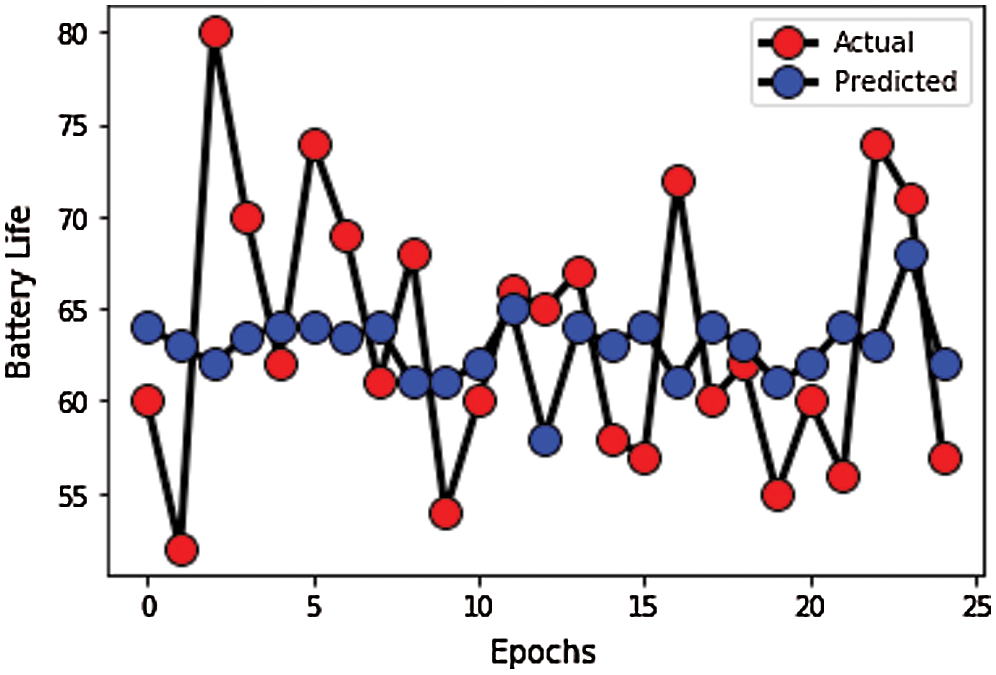

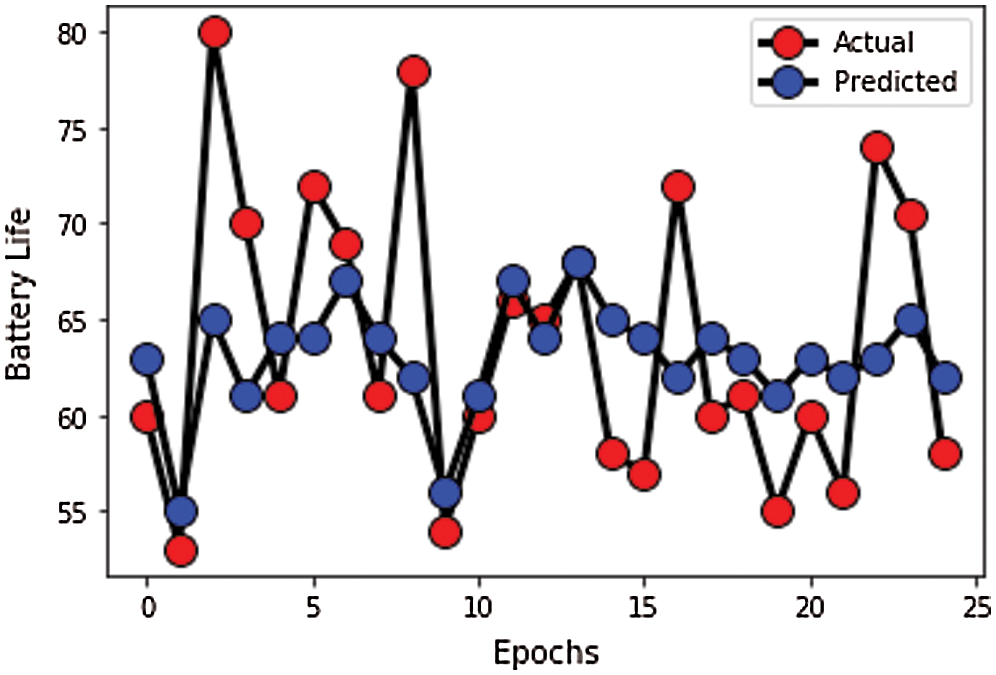

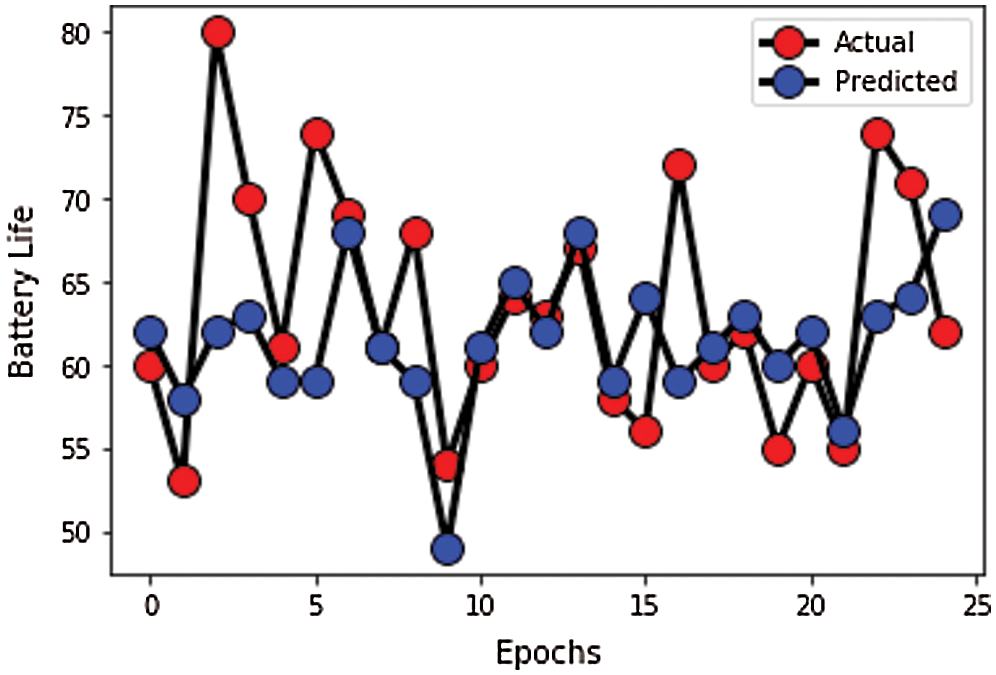

Performance Evaluation: The proposed predictive model, a DNN with rough sets integrated for dimensionality reduction, is evaluated against other commonly used state-of-the-art models, namely DNN, Linear Regression and XGBoost, to validate its predictive performance. The results with and without rough sets are critically analyzed. Figs. 4–6 show the predictions for 25 randomly selected data instances. The same experiment is repeated for all the prediction models after reducing the dimension using rough sets, and the results are shown in Figs. 7–9. Across all the figures, the proposed model shows better results than DNN, Linear Regression and XGBoost without rough sets.

Figure 4: Linear Regression Model Plot

Figure 5: XGBoost Model Plot

Figure 6: Deep Neural Networks Prediction Model

Figure 7: Linear Regression with Rough sets

Figure 8: XGBoost with Rough sets

Figure 9: Deep Neural Networks with Rough sets

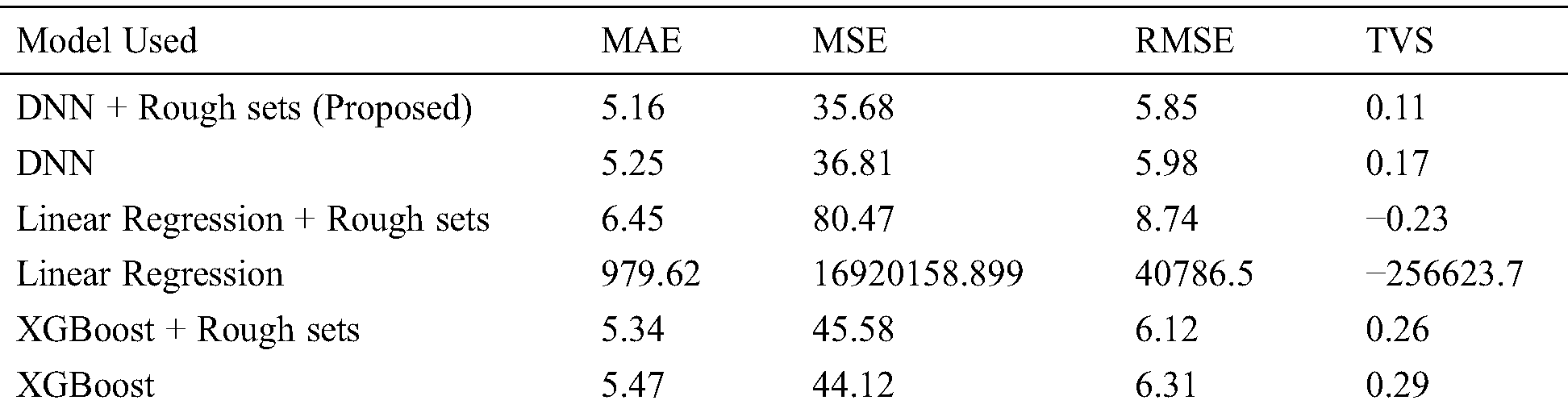

The proposed model is evaluated on the basis of various standard performance metrics: Mean Absolute Error, Mean Squared Error, Root Mean Squared Error, and Test Variance [42–47]. A comparison of the different machine learning models is shown in Tab. 6. The MAE values indicate the superiority of the rough sets DNN: Roughsets DNN, DNN, Roughsets Linear Regression, Linear Regression, Roughsets XGBoost and XGBoost achieve MAE values of 5.16, 5.25, 6.45, 979.62, 5.34 and 5.47, respectively.

Table 6: Evaluation of Prediction Models using Standard Metrics

Figs. 4–9 show the residual battery life (mAh) for the various prediction models, namely Linear Regression, XGBoost, DNN, Linear Regression + Rough Set, XGBoost + Rough Set, and DNN + Rough Set. The primary focus of the work was the prediction of battery life in terms of the residual life of the batteries used in the sensors of the Chicago district beach water. The more battery life remains, the longer these sensors can continue collecting data.

There are significant studies on the application of IoT in the marine environment. However, there is a gap in research on the energy optimization aspects of IoT applications, especially on enhancing the sustainability of battery life. In the present study, a novel approach has been adopted that combines rough sets with a DNN to predict the battery life of the IoT network with high accuracy. The usual pre-processing steps were further refined by incorporating the rough set approach for extracting significant features, which contributed to more accurate predictions. The results of the model were compared with state-of-the-art techniques to establish its superiority. As part of future work, the same model can be deployed on several IoT applications providing home surveillance, healthcare and defence services. The scalability and robustness of the model can also be validated by testing it on large-scale IoT applications such as traffic prediction, air pollution monitoring and waste management in smart city setups. The predictions of battery life would also guide the design and development of energy-efficient products involving IoT and sustainable energy technologies.

Funding Statement: The author(s) received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. S. Madakam and V. Lake. (2015). “Internet of things (IOTA literature review,” Journal of Computer and Communications, vol. 3, no. 05, pp. 164–172. [Google Scholar]

2. M. Reddy and M. R. Babu. (2019). “Implementing self adaptiveness in whale optimization for cluster head section in Internet of Things,” Cluster Computing, vol. 22, no. 1, pp. 1361–1372. [Google Scholar]

3. M. U. Farooq, M. Waseem, S. Mazhar, A. Khairi and T. Kamal. (2015). “A review on internet of things (IOT),” International Journal of Computer Applications, vol. 113, no. 1, pp. 1–7. [Google Scholar]

4. I. Lee and K. K.Lee. (2015). “The Internet of Things (IOTApplications, investments, and challenges for enterprises,” Business Horizons, vol. 58, no. 4, pp. 431–440. [Google Scholar]

5. D. Minoli, K. Sohrabyand and B. Occhiogrosso. (2017). “IOT considerations, requirements, and architectures for smart buildings—energy optimization and next-generation building management systems,” IEEE Internet of Things Journal, vol. 4, no. 1, pp. 269–283. [Google Scholar]

6. S. Ansari, T. Aslam, J. Poncela, P. Otero and A. Ansari. (2020). “Internet of things-based healthcare applications,” in B. S. Chowdhry, F. K. Shaikh and N. A. Mahoto (eds.IoT Architectures, Models, and Platforms for Smart City Applications, USA: IGI Global, pp. 1–28. [Google Scholar]

7. J. Muangprathub, N. Boonnam, S. Kajornkasirat, N. Lekbangpong, A. Wanichsombat et al. (2019). , “IoT and agriculture data analysis for smart farm,” Computers and Electronics in Agriculture, vol. 15, no. 6, pp. 467–474. [Google Scholar]

8. T. Stock and G. Seliger. (2016). “Opportunities of sustainable manufacturing in industry 4.0,” Procedia CIRP, vol. 40, pp. 536–541. [Google Scholar]

9. J. Wan, J. Hong, Z. Z.Pang, B. B.Jayaraman and F. Shen. (2019). “Key technologies for smart factory of industry 4.0,” IEEE Access, vol. 7, no. 2, pp. 17969–17974. [Google Scholar]

10. J. Lin, S. Liao and F. Leu. (2019). “Sensor data compression using bounded error piecewise linear approximation with resolution reduction,” Energies, vol. 12, no. 13, pp. 252– 259. [Google Scholar]

11. P. Ray and Y. Rao. (2019). “A review of industry 4.0 applications through smart technologies by studying examples from the automobile industry,” Advance and Innovative Research, vol. 16, no. 2, pp. 80–89. [Google Scholar]

12. C. Iwendi, P. Maddikunta, T. Gadekallu, K. Lakshmanna, A. Bashir et al. (2020). , “A metaheuristic optimization approach for energy efficiency in the IOT networks,” Software: Practice and Experience, vol. 12, no. 6, pp. 92–99. [Google Scholar]

13. T. Ahanger and A. Aljumah. (2018). “Internet of things: A comprehensive study of security issues and defence mechanisms,” IEEE Aceess, vol. 2, no. 4, pp. 161–169. [Google Scholar]

14. P. Eskerod, S. Hollensen, M. Contreras and J. ArteagaOrtiz. (2019). “Drivers for pursuing sustainability through IoT technology within high-end hotels—an exploratory study,” Sustainability, vol. 11, no. 19, pp. 53–72. [Google Scholar]

15. E. Manavalan and K. Jayakrishna. (2019). “A review of internet of things (IoT) embedded sustainable supply chain for industry 4.0 requirements,” Computers & Industrial Engineering, vol. 12, no. 7, pp. 925–953. [Google Scholar]

16. F. Behrendt. (2019). “Cycling the smart and sustainable city: Analyzing ec policy documents on internet of things, mobility and transport, and smart cities,” Sustainability, vol. 11, no. 3, pp. 76–82. [Google Scholar]

17. R. M. Swarnapriya, S. Bhattacharya, P. K. R. Maddikunta, S. R. K. Somayaji, K. Lakshmanna et al. (2020). , “Load balancing of energy cloud using wind driven and firefly algorithms in internet of everything,” Journal of Parallel and Distributed Computing, vol. 142, pp. 16–26. [Google Scholar]

18. P. K. R. Maddikunta, T. R. Gadekallu, R. Kaluri, G. Srivastava, R. M. Parizi et al. (2020). , “Green communication in IoT networks using a hybrid optimization algorithm,” Computer Communications, vol. 159, pp. 97–107. [Google Scholar]

19. W. Zhao, L. Xu, J. Bai, M. Ji and T. Runge. (2018). “Sensor-based risk perception ability network design for drivers in snow and ice environmental freeway: A deep learning and rough sets approach,” Soft Computing, vol. 22, no. 5, pp. 1457–1466. [Google Scholar]

20. M. Khodayar, O. Kaynakand and M. Khodayar. (2017). “Rough deep neural architecture for short-term wind speed forecasting,” IEEE Transactions on Industrial Informatics, vol. 13, no. 6, pp. 2770–2779. [Google Scholar]

21. J. Cao, X. Zhang, C. Zhang and J. Feng. (2018). “Improved convolutional neural network combined with rough set theory for data aggregation algorithm,” Journal of Ambient Intelligence and Humanized Computing, vol. 2, no. 6, pp. 1–8. [Google Scholar]

22. G. Elhayatmy, N. Dey and A. Ashour. (2018). Internet of things based wireless body area network in healthcare. Internet of Things and Big Data Analytics Toward Next-Generation Intelligence, Switzerland AG: Springer, pp. 3–20. [Google Scholar]

23. J. Lee, D. Jang and S. Park. (2017). “Deep learning-based corporate performance prediction model considering technical capability,” Sustainability, vol. 9, no. 6, pp. 68–72. [Google Scholar]

24. M. Ateeq, F. Ishmanov, M. Afzal and M. Naeem. (2019). “Predicting delay in IoT using deep learning: A multiparametric approach,” IEEE Access, vol. 7, no. 6, pp. 62022–62031. [Google Scholar]

25. M. Huang, Z. Liu and Y. Tao. (2019). “Tao Mechanical fault diagnosis and prediction in iot based on multi-source sensing data fusion,” Simulation Modelling Practice and Theory, vol. 102, pp. 1–19. [Google Scholar]

26. X. Fafoutis, A. Elsts, A. Vafeas, G. Oikonomou and R. Piechocki. (2018). “On predicting the battery lifetime of IOT devices: Experiences from the sphere deployments,” in Proc. of the 7th Int. Workshop on Real-World Embedded Wireless Systems and Networks, Shenzhen, China, pp. 7–12. [Google Scholar]

27. S. Mansouri, P. P.Karvelis, G. Georgoulas and G. Nikolakopoulos. (2017). “Remaining useful battery life prediction for UAVs based on machine learning,” IFAC-Papers OnLine, vol. 50, no. 1, pp. 4727–4732. [Google Scholar]

28. S. Shen, M. Sadoughi, X. Chen, M. Hong and C. Hu. (2019). “A deep learning method for online capacity estimation of lithium-ion batteries,” Journal of Energy Storage, vol. 25, no. 5, pp. 100–109. [Google Scholar]

29. Z. Pawlak. (1982). “Rough sets,” International Journal of Computer & Information Sciences, vol. 11, no. 5, pp. 341–356. [Google Scholar]

30. M. Lopez-Martin, B. Carro, A. Sanchez-Esguevillas and J. Lloret. (2017). “Network traffic classifier with convolutional and recurrent neural networks for internet of things,” IEEE Access, vol. 5, no. 2, pp. 18042–18050. [Google Scholar]

31. J. C. Fan, Y. Li, L. Y. Tang and G. K. Wu. (2018). “RoughPSO: rough set-based particle swarm optimisation,” International Journal of Bio-Inspired Computation, vol. 12, no. 4, pp. 245–253. [Google Scholar]

32. S. Mahdavinejad, M. Rezvan, M. Barekatain, P. Adibi, P. Barnaghi et al. (2018). , “Machine learning for internet of things data analysis: A survey,” Digital Communications and Networks, vol. 4, no. 3, pp. 161–175. [Google Scholar]

33. G. Manogaran, P. Shakeel, H. Fouad, Y. Nam, S. Baskar et al. (2019). , “Wearable IoT smartlog patch: An edge computing-based bayesian deep learning network system for multi access physical monitoring system,” Sensors, vol. 19, no. 13, pp. 30–38. [Google Scholar]

34. D. B. Chakraborty and S. K. Pal. (2017). “Neighborhood rough filter and intuitionistic entropy in unsupervised tracking,” IEEE Transactions on Fuzzy Systems, vol. 26, no. 4, pp. 2188–2200. [Google Scholar]

35. Y. Hassan. (2017). “Deep learning architecture using rough sets and rough neural networks,” Kybernetes, vol. 46, no. 4, pp. 693–705. [Google Scholar]

36. F. Otero, A. Freitas and C. Johnson. (2012). “Inducing decision trees with an ant colony optimization algorithm,” Applied Soft Computing, vol. 12, no. 11, pp. 3615–3626. [Google Scholar]

37. R. Miikkulainen, J. Liang, E. Meyerson, A. Rawal, D. Fink et al. (2019). , “Evolving deep neural networks,” in R. Kozma, C. Alippi, Y. Choe and F. C. Morabito (eds.Artificial Intelligence in the Age of Neural Networks and Brain Computing. USA: Elsevier, pp. 293–312. [Google Scholar]

38. T. Gadekallu, N. Khare, S. Bhattacharya, S. Singh, P. Reddy et al. (2020). , “Early detection of diabetic retinopathy using PCA-firefly based deep learning model,” Electronics, vol. 9, no. 2, pp. 274–281. [Google Scholar]

39. S. Bhattacharya, R. Kaluri, S. Singh, M. Alazab, U. Tariq et al. (2020). , “A Novel PCA-Firefly based XGBoost classification model for Intrusion Detection in Networks using GPU,” Electronics, vol. 9, no. 2, pp. 1–17. [Google Scholar]

40. H. Fu, Y. Yang, X. Wang, H. Wangand and Y. Xu. (2019). “DeepUbi: A deep learning framework for prediction of ubiquitination sites in proteins,” BMC Bioinformatics, vol. 20, no. 1, pp. 86–92. [Google Scholar]

41. C. District. (2019). “Chicago Beach Sensor Life Prediction.” (Accessed on December 12, 2019. Available: https://www.chicagoparkdistrict.com/parks-facilities/beaches. [Google Scholar]

42. T. R. Gadekallu and N. Khare. (2017). “Cuckoo search optimized reduction and fuzzy logic classifier for heart disease and diabetes prediction,” International Journal of Fuzzy System Applications (IJFSA), vol. 6, no. 2, pp. 25–42. [Google Scholar]

43. T. Reddy, R. M. Swarnapriya, M. Parimala, C. L. Chowdhary, S. Hakak et al. (2020). , “A deep neural networks based model for uninterrupted marine environment monitoring,” Computer Communications, vol. 157, pp. 64–75. [Google Scholar]

44. P. K. R. Maddikunta, G. Srivastava, T. R. Gadekallu, N. Deepa and P. Boopathy. (2020). “Predictive model for battery life in IoT networks,” IET Intelligent Transport Systems, vol. 14, pp. 1388–1395. [Google Scholar]

45. G. T. Reddy, M. P. K. Reddy, K. Lakshmanna, R. Kaluri, D. S. Rajput et al. (2020). , “Analysis of dimensionality reduction techniques on big data,” IEEE Access, vol. 8, pp. 54776–54788. [Google Scholar]

46. K. N. Tran, M. Alazab and R. Broadhurst. (2014). “Towards a feature rich model for predicting spam emails containing malicious attachments and urls,”. [Google Scholar]

47. T. R. Gadekallu, N. Khare, S. Bhattacharya, S. Singh, P. K. R. Maddikunta et al. (2020). , “Deep neural networks to predict diabetic retinopathy,” Journal of Ambient Intelligence and Humanized Computing, vol. 1, pp. 1–14. [Google Scholar]

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.