DOI:10.32604/iasc.2020.012995

Intelligent Automation & Soft Computing

Article

A Successful Framework for the ABET Accreditation of an Information System Program

1College of Computer and Information Sciences, Imam Muhammad Ibn Saud Islamic University, Riyadh, 11673, Saudi Arabia

2College of Computing and Informatics, Saudi Electronic University, Riyadh, 11673, Saudi Arabia

3Faculty of Computer Studies, Arab Open University, Riyadh, 11564, Saudi Arabia

4Department of Computer Science, College of Shari'ah and Islamic Studies in Ahsa, Imam Muhammad Ibn Saud Islamic University, Riyadh, 11673, Saudi Arabia

*Corresponding Author: Waleed Rashideh. Email: wmrashideh@imamu.edu.sa

Received: 21 July 2020; Accepted: 18 September 2020

Abstract: Scientific programs in higher education institutions are judged by their ability to show evidence of the expected quality assurance levels and to obtain academic accreditation. However, obtaining accreditation for a scientific program is a lengthy process that requires extensive effort from all members of the program. Accreditation by the Accreditation Board for Engineering and Technology (ABET) is a common form of quality assurance covering areas such as computing, engineering, and the sciences. To grant ABET accreditation, a program’s processes and methods are assessed against the required criteria. Like many other programs, Information System (IS) programs require ABET accreditation so that their academic processes are aligned with recognised standards. In this paper, a new framework based on specific processes is implemented to ensure a high-quality IS program and to acquire ABET accreditation. The paper further describes the preparation steps taken to evaluate the IS program’s objectives and outcomes through an efficient and improved process. Finally, the evaluation results are applied to develop the IS program.

Keywords: ABET accreditation; assessments; continuous improvement; information system; program outcomes; quality assurance

At present, computer education is an important pathway for developing a community. One of its main objectives is to align students’ acquired knowledge, competence, and perception of computers. Computer education aims to build awareness of one of this century’s most universal technologies, and it spans a variety of disciplines (e.g., hardware and software). Quality is therefore a significant concern in this field, and adopting assessment procedures is necessary to achieve it. Attention has been paid to the learning process in several areas, e.g., cyber security education [1,2], electrical engineering education [3], telecommunication engineering education [4,5], mechatronics education [6], computer science programs [7], and engineering programs [7]. Saudi Arabia, which is considered one of the fastest-developing countries in the Middle East, emphasises aligning its educational system with various global standards in order to produce highly qualified graduates. To achieve this goal, the Ministry of Education (MOE) has asked Saudi universities to obtain accreditation for their programs. Imam Mohammad Ibn Saud Islamic University (IMSIU) is the second largest university in Saudi Arabia, with more than 90,000 students, and it pursues initiatives to achieve the mission of the MOE. The Information System (IS) program is one of the scientific programs at the College of Computer and Information Sciences in the IMSIU, and it obtained its academic accreditation from the Accreditation Board for Engineering and Technology (ABET). The program ensures that its outcomes are equivalent to those of its local and international peer programs.
This equivalence allows the program to align its educational process with recognised standards, raises the prestige of the degree programs offered by the university, and aligns the program’s outputs with the MOE’s vision.

ABET handles most accreditations of programs in its fields in the United States and is recognised by many educational institutions across the globe [8]. It is one of the oldest accreditation bodies for technology and engineering programs, having been established in 1932 [9]. It is a non-profit, non-governmental organisation responsible for accrediting college and university programs in several disciplines, including computing. Accreditation ensures that such programs meet established standards that prepare graduates to enter critical technical fields, paving the way for innovation and emerging technologies and for anticipating the welfare and safety needs of the public [10]. Preparing the self-study report, the main document of the accreditation process, poses many challenges [11], including the heavy workload of faculty members, the lack of available training, and insufficient management commitment [6].

ABET accreditation helps improve learning experiences and program quality, even though it is a lengthy process that requires the combined efforts of all stakeholders involved. In computing, ABET establishes specific criteria that programs must meet to obtain accreditation [12]. These criteria require computing programs to apply assessment procedures that demonstrate the achievement of their program educational objectives and student outcomes. To this end, a continuous improvement process is used to document and assess the extent to which a program attains its educational objectives and outcomes. The assessment requirements of ABET accreditation are relevant to information system programs [13]. Educational accreditation is one type of quality assurance; it establishes the status, legitimacy, or appropriateness of a module of study, a program, or an institution [14]. In the accreditation process, an external body (e.g., an accreditation agency) evaluates the operations and services of educational institutions to determine whether applicable standards are met [15]. Meeting these criteria allows a program to obtain accreditation from ABET. A key element of the criteria is a continuous improvement process that regularly assesses the program’s objectives and outcomes.

This paper describes the experience of the IS department at the IMSIU in accrediting the IS program and the quality assurance system followed so far during the accreditation stage. To align the academic processes of a scientific program with its standards, the program’s outcomes should be assessed continually, and the parts of the program that require corrective action should be identified. This can be achieved by critically analysing and evaluating the program’s objectives and outcomes, and by collecting the responses of the program’s constituencies. The main research question is therefore: “What are the key procedures that should be applied to the IS program to lead successfully to ABET accreditation?” The results of applying the procedures of the continuous improvement process are described in detail in this paper. The remainder of this paper is organised as follows. Section 2 reviews the related literature. Section 3 details the accreditation process followed, presented as a case study. Section 4 introduces the applied quality assurance system, and Section 5 discusses the assessment results and the actions taken in the continuous improvement process. Finally, Section 6 concludes the paper.

Curricula of academic programs comprise technical and professional requirements, general education requirements, and electives. A program complies with these requirements to prepare students for a professional career and further study in the computing discipline [16]. The development of an educational program must be aligned with the program’s mission and objectives. Computing programs must also provide evidence that their students have attained specific skills and technical knowledge by the time of graduation [17–20]. Meeting accreditation criteria is evidence of quality in higher education. Additionally, an accredited degree carries a recognised reputation that can support the promotion and marketing of scientific programs [21]. Student outcomes are at the core of the continuous improvement and accreditation process; in particular, they are used to verify that programs meet their needs and their educational objectives [22–24]. The IS department has worked extensively to meet ABET’s criteria by formulating quality assurance procedures for the educational process and by developing its mission for this purpose. Committees were also established to launch the accreditation process and to ensure that the program’s educational objectives and outcomes are achieved efficiently. According to ABET, program educational objectives are defined as “broad statements that describe what graduates are expected to attain within a few years after graduation. Program educational objectives are based on the needs of the program’s constituencies” [10]. Student outcomes, in turn, are defined as “What students are expected to know and be able to do by the time of graduation. These relate to the knowledge, skills, and behaviours that students acquire as they progress through the program” [10].
Feedback from the program’s constituencies is used to evaluate the achievement of the educational objectives. The constituencies of the IS program include the following stakeholders: current students, alumni, industry/employers, and university staff.

Program committees work together with these constituent stakeholders to revise and approve the program’s educational objectives. Constituent meetings are conducted periodically to ensure that all stakeholders’ interests are taken into consideration. The Quality Assurance Committee (QAC) of the department ensures that the objectives of the institution and the department are consistent with the program being delivered. The college’s Quality Assurance and Development Committee (QADC) holds a series of meetings with students at the end of each academic year to obtain their feedback. Additionally, the department requires enrolled students to evaluate each course they take near the end of each semester, which helps adjust the educational objectives based on student feedback. Furthermore, industry leaders in the Kingdom of Saudi Arabia meet with policy-makers in universities and colleges to share their observations and comments, which are useful for updating the educational objectives of the IS program according to industry’s perceptions. Alumni evaluate their experience with the IS department, in light of their current career level, through surveys conducted by the deanship of the college. The university administration periodically provides the college and its departments with its updated and reviewed mission and objectives. The college and its departments use this information to review each Program’s Educational Objectives (PEOs) and to ensure harmony, consistency, and conformity among the missions of the university, the college, and the IS program [25].

Many researchers have published their experiences in acquiring ABET accreditation [24,26,27]. McKenzie et al. [27] describe their experience in developing and implementing an enhanced plan for the continuous improvement process of an engineering program at Old Dominion University, and for meeting the requirements of ABET accreditation. Al-Yahya et al. [26] describe procedures for implementing a quality system for an electrical engineering program, which in turn led to ABET accreditation. In particular, Al-Yahya and Abdel-Halim explain the processes and steps undertaken by the department to establish and evaluate the outcomes that contribute to developing the department’s scientific programs. They apply a set of procedures to a small sample of 23 students from the first graduating batch, divided into two tracks, comprising 17 voluntarily selected respondents from the Electrical Power Engineering (EPE) department and 16 voluntarily selected respondents from the Electronics and Communication Engineering (ECE) department. Implementing the continuous improvement process can improve program quality and can yield major changes to the educational plan and teaching methodologies. More recently, Shafi et al. [24] provide detailed evaluation strategies for the ABET-defined Student Outcomes (SOs) of the computer science and computer information system programs. They apply a set of direct and indirect assessment methods, including summative data analysis, formative data analysis, exit exams, and faculty and alumni surveys. They then analyse the data collected in their primary research and apply improvement activities to their accreditation-related activities.
The main contribution of this research is a set of strategies for handling the issues and challenges faced during the program’s improvement process.

In this context, it is worth examining the accreditation process at the second largest academic institution in Saudi Arabia, the IMSIU. The quality of education is measured by several criteria so that it can be examined for accreditation and so that a culture of quality assurance can be formed. Researchers have examined various aspects of different accreditation processes. Nevertheless, the experiences and challenges of implementing ABET accreditation need to be discussed within the environment of a specific institution in order to cover the challenges arising from the human factor. This paper discusses the challenges faced by the IS program in detail. It also presents the processes and methodology implemented to tackle these challenges and to obtain accreditation for the IS program, including how the PEOs and student outcomes are constructed and assessed. These processes map the student outcomes to the PEOs, and the results are obtained by implementing a continuous improvement process.

3 The Accreditation Process—Case Study

This section provides background information on the IS program, which is used as a case study to illustrate the evaluation and assessment of SOs. In 2012, the IS department revised its program objectives, structure, and curriculum, and introduced many courses, accompanied by a revision of most course specifications and contents. In particular, it introduced practical sessions and course projects. The IS department established the QAC to ensure the delivery of a quality academic program. The department consists of six faculty members. The QAC is responsible for overseeing the curriculum and course contents to ensure conformance with the rules and regulations for quality achievement. The IS program complies with the guidelines of professional organisations in the field of information systems, such as those promoted by the Association for Computing Machinery (ACM) and the Association for Information Systems (AIS). Additionally, the department achieves its objectives through a continuous improvement process according to the latest quality measures. IS graduates, having gained theoretical knowledge and practical skills, are able to compete and succeed in the marketplace and in relevant academic fields. The following subsections present the information used in the case study: the missions of the college and the IS program, the PEOs, the mapping of the program outcomes to the PEOs, and the mapping of the curriculum courses to the program outcomes.

3.1 Missions of the College and IS Program

One of the requirements for ABET accreditation of the IS program is a declared program mission. The missions of the college and the IS department, on which the program objectives are based, are quoted below from the websites of the College of Computer and Information Sciences (CCIS) and the IS department.

“The mission of the CCIS is to provide, through innovative teaching and research, science and technology education that is aimed at producing a new generation of highly motivated, competent, skilful, innovative and entrepreneurial scientists and professionals to help the Kingdom of Saudi Arabia become a leading knowledge-based economy” [25].

“The mission of the IS Department is to pursue the department vision by offering and maintaining the most effective educational programs derived from the world-class academic establishments. It is committed to discovering, teaching and disseminating skills and renowned knowledge that are based on designing intelligent interactive information systems and on leveraging the power of information technology to provide solutions in organisational contexts” [28].

3.2 Program Educational Objectives (PEOs)

Student outcomes describe what students are expected to know and be able to do, whereas PEOs describe what graduates are expected to achieve or become after graduation. The PEOs of our IS program are as follows:

• PEO-1: Become practicing professionals with the fortitude to attain managerial and executive positions in an industry or government.

• PEO-2: Pursue graduate studies with vigour and zeal, and attain a higher-level graduate degree in information systems or related fields at reputable institutions of higher learning programs.

• PEO-3: Become socially responsible, mature and well-prepared leaders who are capable of assuming positions of responsibilities by affecting and contributing positively and productively to the Saudi society.

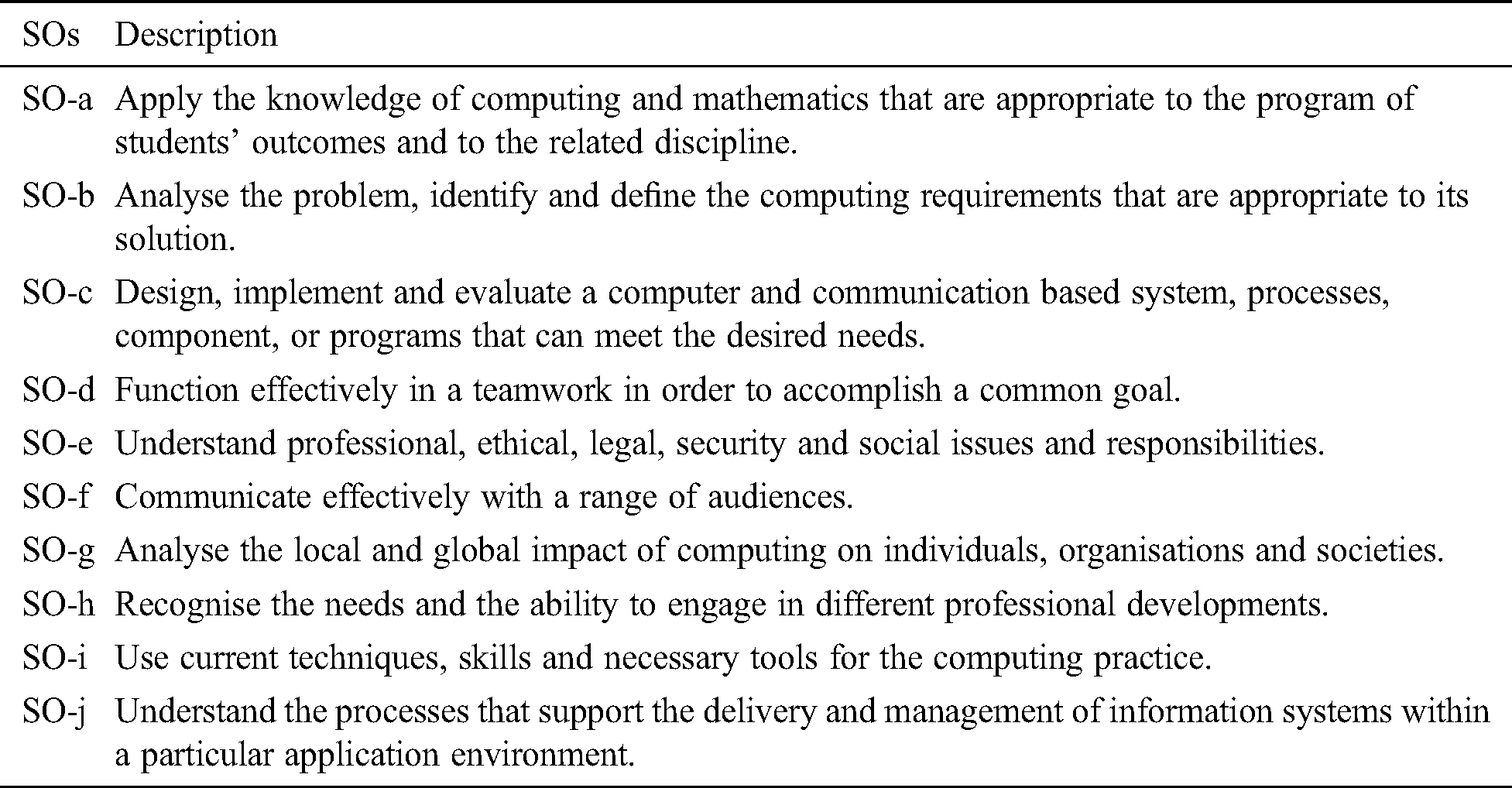

The PEOs are also posted on the college’s website [28]. Tab. 1 lists the student outcomes (a through j) along with a description of each outcome [29,30].

Table 1: The students’ outcomes

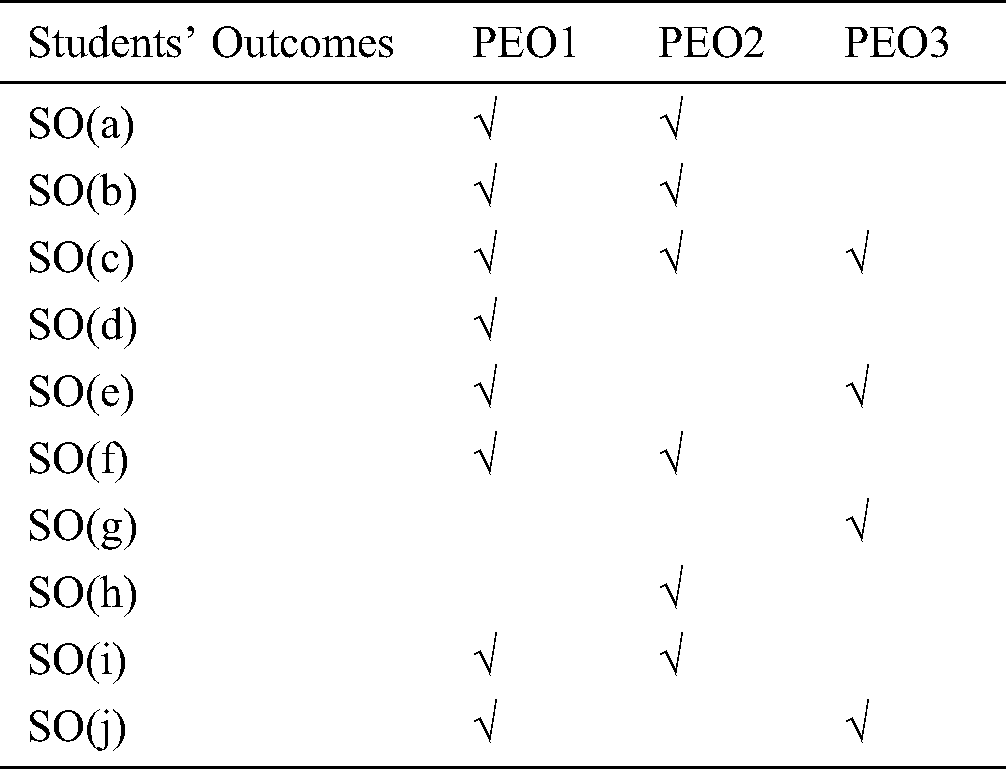

3.2.1 The Mapping of the Program’s Outcomes to Program Educational Objectives

To present the connection between PEOs and SOs clearly, the IS program uses a mapping table (Tab. 2) that shows the relationship between the student outcomes (SO(a) to SO(j)) and the program educational objectives (PEO1, PEO2, and PEO3). The rows of Tab. 2 represent the student outcomes and the columns represent the PEOs. Every student outcome supports at least one of the PEOs listed above, and each PEO is supported in at least four places in the table. All IS program objectives are therefore matched with student outcomes, and no outcome is left unmatched. Mapping the college’s objectives, the program objectives, and the student outcomes in this way is important to show that the educational objectives are appropriately addressed.

Table 2: The intersection of SOs and PEOs

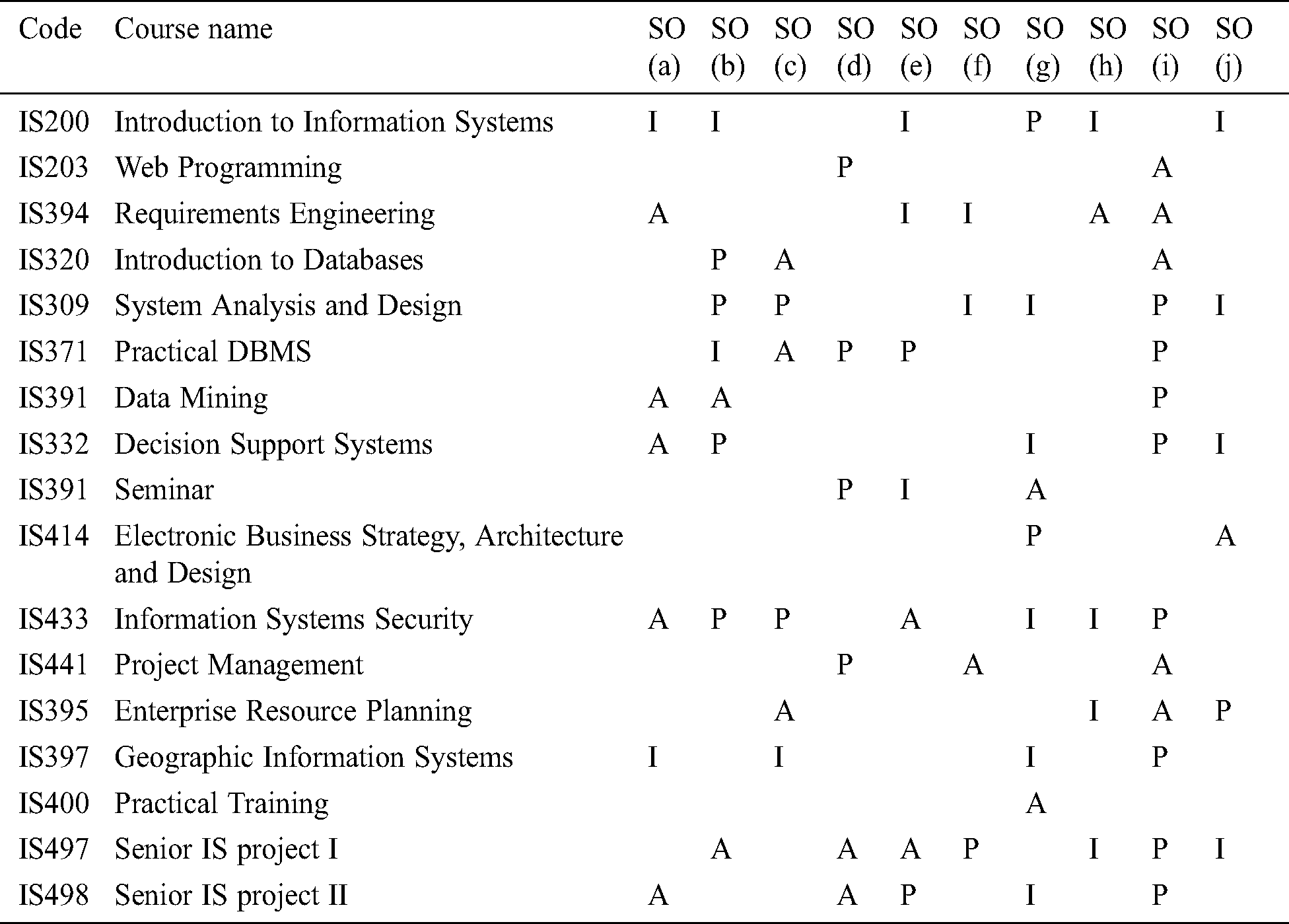
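The coverage property described for Tab. 2 can be checked mechanically. The following is a minimal Python sketch, assuming the mapping is stored as a dictionary from each SO letter to the set of PEOs it supports. The function names, the toy data, and the `min_support=4` default (an assumption based on the text’s mention of support in at least four places) are illustrative only; they are not the program’s actual tooling or the contents of Tab. 2.

```python
from typing import Dict, List, Set

# Hypothetical representation of Tab. 2: SO letter -> set of PEOs it supports.
Mapping = Dict[str, Set[str]]


def unmatched_outcomes(mapping: Mapping) -> List[str]:
    """Return the student outcomes that support no PEO (should be empty)."""
    return sorted(so for so, peos in mapping.items() if not peos)


def support_per_peo(mapping: Mapping, peos: List[str]) -> Dict[str, int]:
    """Count how many student outcomes support each PEO."""
    return {peo: sum(peo in supported for supported in mapping.values()) for peo in peos}


def check_coverage(mapping: Mapping, peos: List[str], min_support: int = 4) -> bool:
    """True if every SO supports some PEO and every PEO has >= min_support SOs."""
    counts = support_per_peo(mapping, peos)
    return not unmatched_outcomes(mapping) and all(c >= min_support for c in counts.values())
```

For instance, with a toy mapping in which SO(d) supports no objective, `check_coverage` returns `False` regardless of the per-PEO counts, because an unmatched outcome violates the first condition.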

3.2.2 The Mapping of Courses in the Curriculum to the Program Outcomes

Each IS program outcome is addressed in at least one course. Tab. 3 depicts the mapping between the ten student outcomes and the relevant subset of the eighteen required courses in the curriculum. The expected level of achievement of each student outcome is classified as Proficient (P), Advanced (A), or Introductory (I). Tab. 3 provides further details of these classifications; the number of courses in which each outcome is enabled is summarised as follows:

Table 3: The mapping process between courses and SOs

• SO(a) is enabled in 7 information system courses.

• SO(b) is enabled in 8 information system courses.

• SO(c) is enabled in 6 information system courses.

• SO(d) is enabled in 6 information system courses.

• SO(e) is enabled in 7 information system courses.

• SO(f) is enabled in 4 information system courses.

• SO(g) is enabled in 9 information system courses.

• SO(h) is enabled in 5 information system courses.

• SO(i) is enabled in 13 information system courses.

• SO(j) is enabled in 6 information system courses.

The department assesses the outcomes for selected courses only.

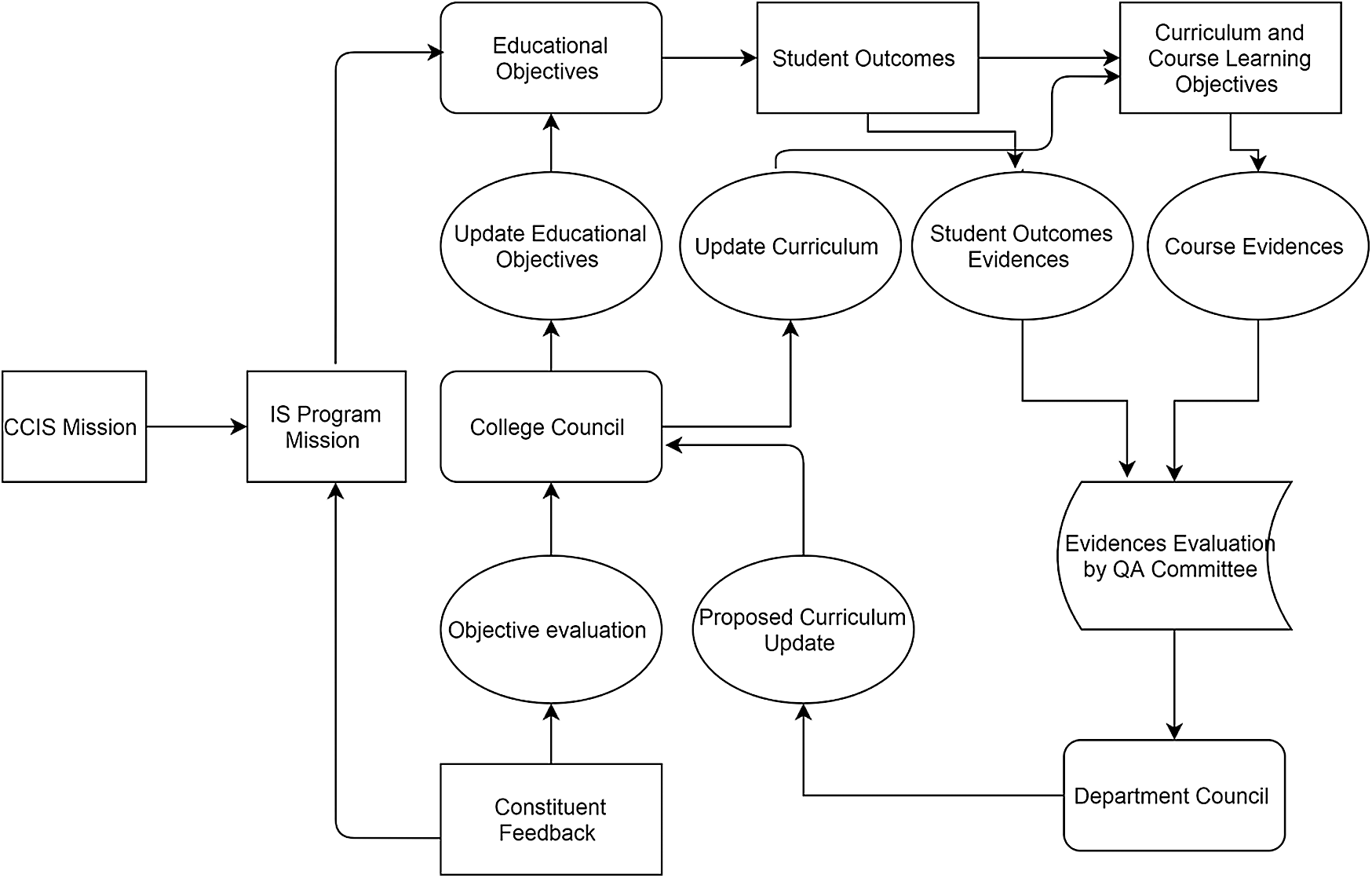
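A similar, hypothetical sketch shows how the per-outcome course counts listed above could be derived from a course-to-outcome table such as Tab. 3. It assumes each course row records the expected level (I, P, or A) for the outcomes it enables; the type alias, function names, and the placeholder course codes used in testing are assumptions, not the program’s courses or tooling.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical representation of Tab. 3: course -> {SO letter: expected level}.
# Levels: "I" = Introductory, "P" = Proficient, "A" = Advanced.
CourseMap = Dict[str, Dict[str, str]]
LEVELS = {"I", "P", "A"}
ALL_OUTCOMES = "abcdefghij"  # SO(a) through SO(j)


def courses_per_outcome(course_map: CourseMap) -> Counter:
    """Count the number of courses in which each student outcome is enabled."""
    counts: Counter = Counter()
    for course, outcomes in course_map.items():
        for so, level in outcomes.items():
            if level not in LEVELS:
                raise ValueError(f"{course}: unknown level {level!r} for SO({so})")
            counts[so] += 1
    return counts


def missing_outcomes(course_map: CourseMap) -> List[str]:
    """Return the outcomes not enabled in any course (should be empty)."""
    counts = courses_per_outcome(course_map)
    return [so for so in ALL_OUTCOMES if counts[so] == 0]
```

Validating the level codes while counting catches typos in the table early, before the counts are reported.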

4 The Quality Assurance System

This section describes the quality assurance system applied in the case study. Its aim is to implement a continuous improvement process that maintains program quality efficiently. To this end, the IS department formed a quality assurance committee, the QAC, which oversees curriculum and course content issues and ensures conformance with the rules and regulations for quality achievement. Fig. 1 illustrates the continuous improvement process that addresses the program educational objectives and student outcomes of the program. The department achieves its objectives through this continuous improvement process, which is based on the latest quality measures.

Figure 1: The continuous improvement process

The department has adopted the requirements of the ABET Computing Accreditation Commission (CAC) as guidance for achieving the program’s student outcomes [12]. The QAC reviews the student outcomes and decides whether to keep or modify them. At its meeting in the fall semester of 2014, the QAC decided to keep the student outcomes unchanged in order to observe their applicability in the Cycle 2 assessment. The IS department also adopted the PDCA (Plan, Do, Check, Act) cycle to enhance the continuous improvement process [31,32]. To implement this method, the department organised training and identified a number of evaluation techniques, including the development of key performance indicators (KPIs) for the evaluation process. At the end of each cycle, the QAC reviews the results as a guideline for the next cycle, with the aim of improving performance and the process itself. Adequate feedback is needed to keep the process improving continuously.

The established continuous improvement process passes through the following steps:

1. Collecting data of an activity for measuring its level of proficiency.

2. Analysing and evaluating the obtained performance.

3. Proposing some corrective action plans to overcome any low performance when necessary.

4. Validating and applying the proposed actions.

The IS program is assessed through its student outcomes; this is the main process used to collect data for assessing, analysing, evaluating, and improving the program. The IS department and the college have established the assessments for Cycles 1 and 2.

The research methodology implements several procedures. The main goal of the IS program’s committees is to collect relevant documents and evidence for assessing, evaluating, and developing the IS program. The main procedures are therefore:

1. Collecting, critically analysing and evaluating program’s objectives and outcomes.

2. Conducting several surveys for collecting the responses of current students and those who have graduated.

3. Proposing corrective action plans to overcome low performance in some program outcomes.

4. Applying the proposed corrective actions.

5. Evaluating the effectiveness of adopted plans and actions.

All of the procedures above are conducted to achieve the objectives of the IS program through a continuous improvement process based on the latest quality measures. Students are evaluated through quizzes, midterms, and final exams. The IS program has many goals, all of which provide students with valuable qualitative skills. This fosters high-level teaching processes, supported by standard techniques for assessing students’ performance, evaluating the outcomes of the learning process, and adjusting the process for further improvement. The intended learning outcomes of every course involve knowledge transfer, enhanced cognitive skills, and improved interpersonal and responsibility skills [33].

Whether each course reaches its goals is determined through the following direct measures of student performance:

1. Quizzes,

2. Assignments,

3. Lab exams,

4. Midterm exams,

5. Projects and case studies,

6. Final exams,

7. Summer training evaluation, and

8. Graduation project evaluation.

For summer training, the evaluation is based on the following criteria:

1. Attendance sheet,

2. Evaluation report from the supervisor of the company, and

3. Students’ final report.

To ensure that course objectives are achieved, faculty members describe in the corresponding course report how learning outcomes are assessed. They also provide student evaluation sheets that map each evaluation to the course plan. In addition to these direct measures, the achievement of program outcomes is assessed through other measures, such as the current students’ survey, the alumni survey, the employer survey, and meetings with the IS department council.

5 Continuous Improvement Actions

This section presents the assessment results for the student outcomes and the continuous improvement actions taken for the IS program.

The department conducted the assessment in a selected set of upper-level required courses. In each course, the instructor designs an activity that reflects a particular KPI and applies it to students’ work. Once these activities are completed and graded, the instructor scores the KPIs for each sampled student. The sample is intended to be representative of all students at all levels. The department then flags any KPI that does not satisfy its threshold. During the evaluation phase of the assessment process, the department scrutinises the KPIs that violate their thresholds, which helps identify the activities that do not appropriately address a particular student outcome. The department examines such cases to determine the line of action required to rectify the situation.

For Assessment Cycle 1, the QAC of the IS department selected the following courses in which to assess the KPIs (and hence the student outcomes):

• IS433: Information Security

• IS497: Graduation Project-1

• IS498: Graduation Project-2

• IS309: Systems Analysis and Design

Based on the evaluation of the results from Assessment Cycle 1, the QAC decided to modify the course selection. For Assessment Cycle 2, the QAC selected the following eight courses for the assessment:

• IS433: Information Security

• IS203: Web Programming

• IS394: Requirements Engineering

• IS441: Project Management

• IS497: Graduation Project-1

• IS309: Systems Analysis and Design

• IS498: Graduation Project-2

• IS400: Practical Training

The QAC creates the rubrics and distributes them to the instructors of the selected courses. Each instructor then selects a random sample of around 30% to 40% of the class to assess the KPIs. The QAC then collects all the completed rubrics and summarises the results per student outcome for all the courses selected in a semester. It also investigates the courses addressing KPIs whose results do not meet the thresholds. The QAC’s resulting recommendations mostly fall into one of the following three actions:

• Modify the KPI used in the assessment process to make it clearer.

• Update the course’s content by introducing a topic in the syllabus of the affected course.

• Address the KPI by using a different course.
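The sampling and pooling steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: a sampling fraction of 0.35 (the midpoint of the 30% to 40% range), a sample size rounded up, and rubric records stored as `(course, outcome, score)` tuples. The function names are hypothetical, and the QAC’s actual procedure may differ.

```python
import math
import random
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Optional, Sequence, Tuple


def sample_students(student_ids: Sequence[str], fraction: float = 0.35,
                    seed: Optional[int] = None) -> List[str]:
    """Draw a simple random sample of about `fraction` of the class, rounded up."""
    k = min(len(student_ids), max(1, math.ceil(fraction * len(student_ids))))
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible for audits
    return rng.sample(list(student_ids), k)


def summarise_by_outcome(records: Iterable[Tuple[str, str, int]]) -> Dict[str, Dict[int, int]]:
    """Pool rubric scores (1-4) per student outcome across all selected courses."""
    summary: Dict[str, Counter] = defaultdict(Counter)
    for _course, outcome, score in records:
        summary[outcome][score] += 1
    return {so: dict(sorted(counts.items())) for so, counts in summary.items()}
```

Pooling scores per outcome, rather than per course, mirrors the QAC’s practice of summarising results per student outcome across all courses selected in a semester.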

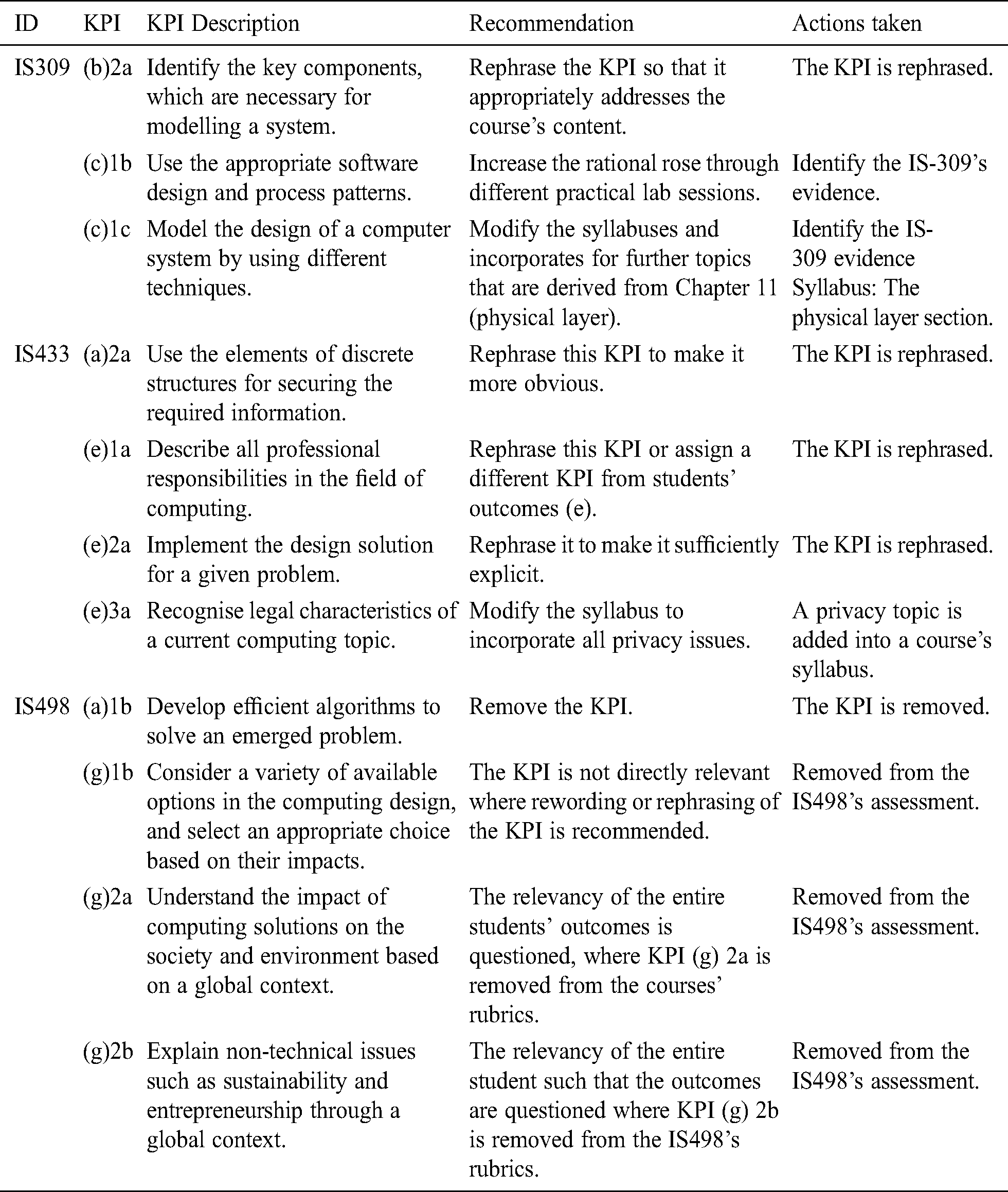

Tab. 4 lists the KPI problems identified in Cycle 1 and the recommended actions.

Table 4: KPI problems identified in Cycle 1 and the recommended actions

As a result of the actions taken on these recommendations, the rubrics used in Cycle 2 differ from those used in Cycle 1. Other changes made in the transition from Cycle 1 to Cycle 2 include the following:

1. Adding a “privacy/ethics” topic to the information system security course to enable KPI (e)3a for student outcome (e);

2. Rephrasing or modifying some KPIs at the special request of faculty members who found them not directly relevant to their courses. These requests covered all KPIs associated with IS394 (Requirements Engineering) and IS441 (Project Management).

During the Cycle 1 assessment, the department created a number of KPIs for each student outcome by partitioning the outcome into segments. For example, SO (a) states “Apply the knowledge of computing and mathematics that is appropriate to the discipline”. The department partitions this outcome into two segments, SO (a)1 and SO (a)2, with the following respective statements:

• “Apply the knowledge of computing that is appropriate to the discipline”.

• “Apply the knowledge of mathematics that is appropriate to the discipline”.

For each segment, the department establishes a set of KPIs that serve as direct assessment instruments for verifying the achievement of the corresponding student outcome.
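The partitioning of an outcome into segments and KPIs can be represented as a small data structure. The sketch below assumes, based on labels used in this paper such as SO(a) 1b and KPI (e)3a, that a KPI label combines the outcome letter, the segment number, and a KPI letter. The class and method names are hypothetical, and the single KPI listed for segment 2 is a placeholder rather than one of the department’s KPIs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Segment:
    number: int                                     # e.g. 1 for SO (a)1
    statement: str
    kpis: List[str] = field(default_factory=list)   # KPI letters, e.g. ["a", "b"]


@dataclass
class StudentOutcome:
    letter: str                                     # "a" through "j"
    statement: str
    segments: List[Segment] = field(default_factory=list)

    def kpi_labels(self) -> List[str]:
        """Label each KPI as '(<outcome>)<segment><kpi>', e.g. '(a)1b'."""
        return [f"({self.letter}){seg.number}{kpi}"
                for seg in self.segments for kpi in seg.kpis]


so_a = StudentOutcome(
    "a",
    "Apply the knowledge of computing and mathematics that is appropriate to the discipline",
    [
        Segment(1, "Apply the knowledge of computing that is appropriate to the discipline",
                ["a", "b", "c"]),  # SO(a) 1a to 1c are discussed in Section 5.3
        Segment(2, "Apply the knowledge of mathematics that is appropriate to the discipline",
                ["a"]),            # placeholder KPI for illustration only
    ],
)
```

Keeping the segment statements alongside the KPI letters makes it easy to trace a flagged KPI back to the part of the outcome it assesses.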

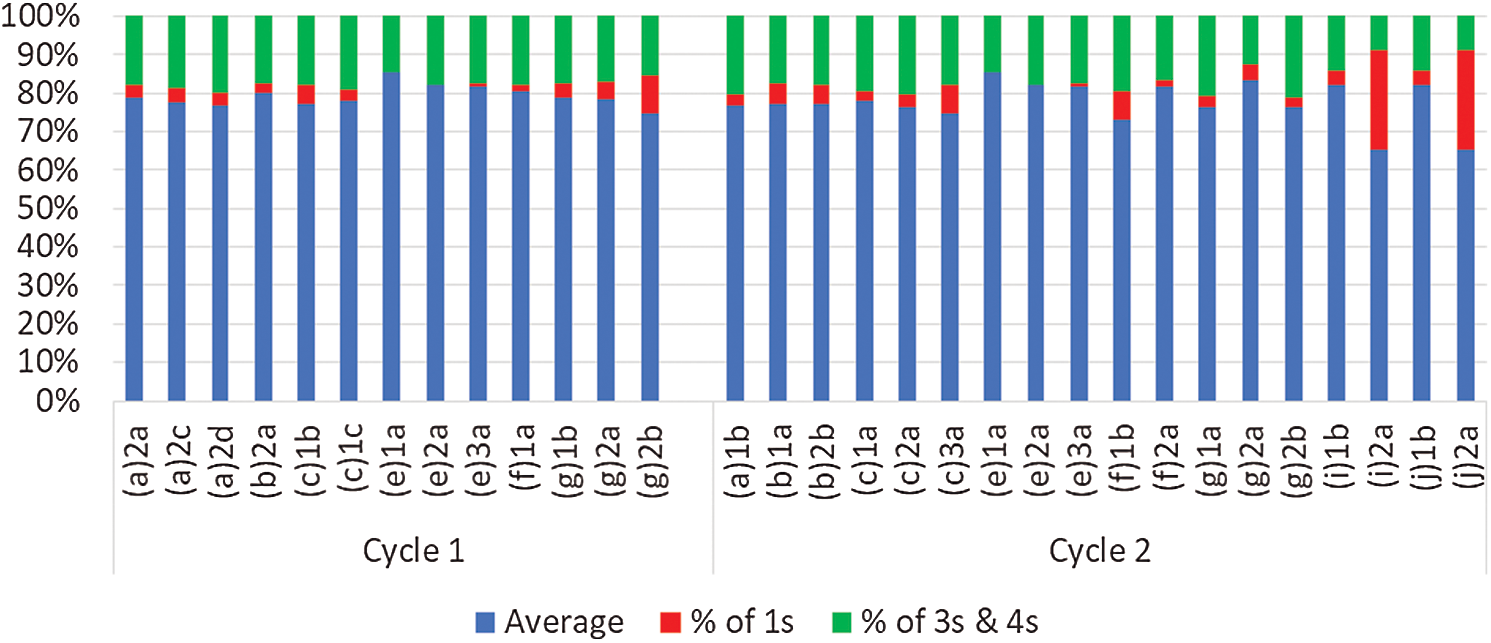

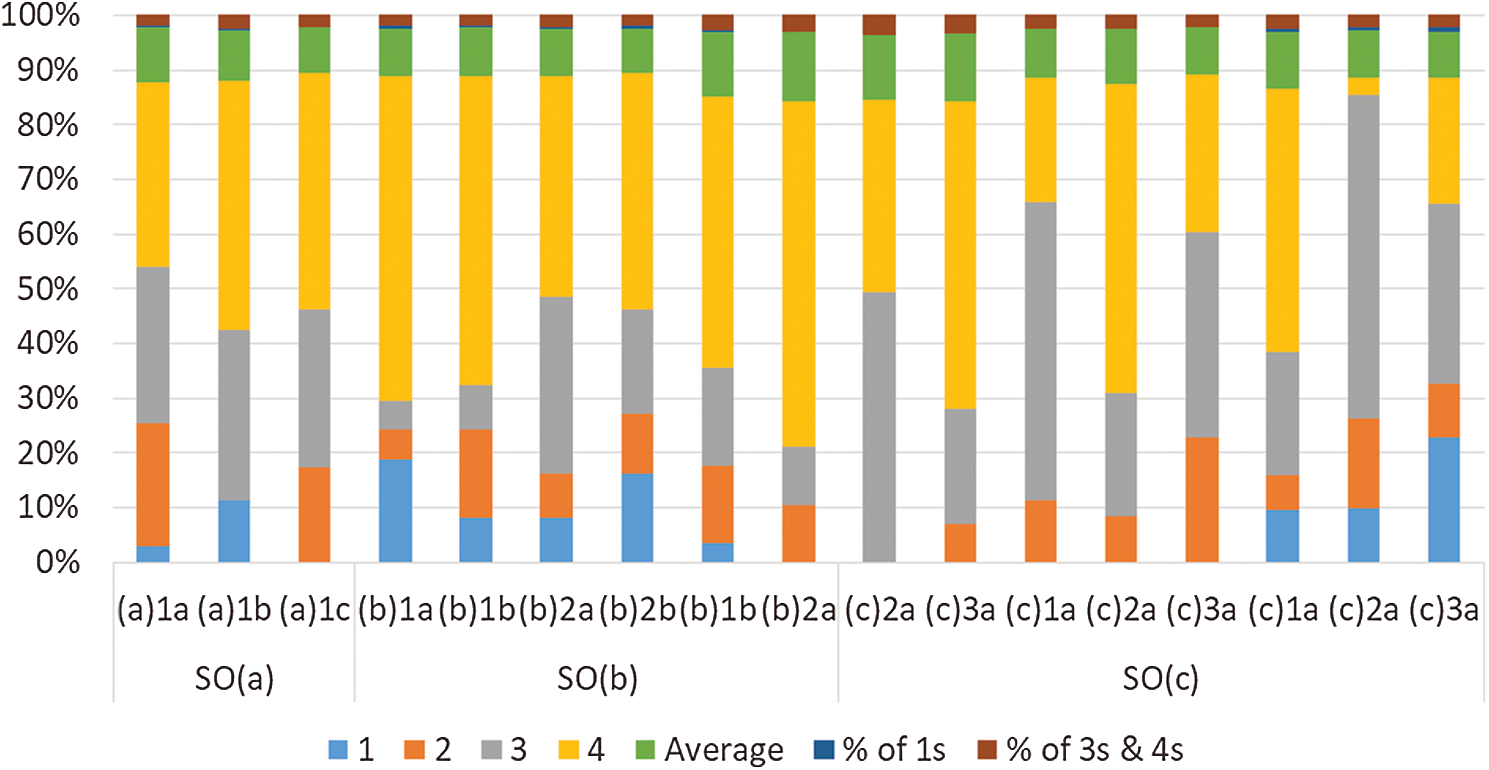

5.2 Actions Taken when Transitioning from Cycle 1 to Cycle 2

The scores are based on the following scale: 1 = Unsatisfactory, 2 = Marginal, 3 = Satisfactory, and 4 = Excellent. For each KPI, the percentage of samples scoring 1 is computed, as is the percentage of samples scoring 3 or 4. A KPI becomes an issue if the percentage of samples scoring 1 is significantly more than 10%, or if the percentage of samples scoring 3 or 4 is significantly less than 70%. KPIs are periodically modified as appropriate, including by assessing them in different courses, and course contents are updated to keep them in line with the student outcomes. Fig. 2 shows that, during the assessment of Cycles 1 and 2, 13 and 18 KPIs, respectively, did not meet their intended thresholds across several courses. In the transition from Cycle 1 to Cycle 2, topics were also added to some courses to enable KPIs for student outcomes. Tab. 5 lists the KPIs assessed in Cycle 2 for each student outcome, together with the Cycle 2 rubrics, which differ from those used in Cycle 1.
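The threshold rule for a single KPI can be expressed as a short check. This Python sketch assumes each KPI’s sampled scores are available as a list of integers from 1 to 4. Because the paper does not quantify “significantly”, the optional `tolerance` margin (default 0) is an assumption, as are the function and constant names.

```python
from collections import Counter
from typing import List, Sequence, Tuple

MAX_SHARE_1 = 0.10    # samples scoring 1 (Unsatisfactory) should not exceed 10%
MIN_SHARE_34 = 0.70   # samples scoring 3 or 4 should reach at least 70%


def kpi_status(scores: Sequence[int], tolerance: float = 0.0) -> Tuple[float, float, List[str]]:
    """Return (share of 1s, share of 3s and 4s, list of threshold issues) for one KPI."""
    if not scores:
        raise ValueError("no sampled scores for this KPI")
    if any(s not in (1, 2, 3, 4) for s in scores):
        raise ValueError("scores must be on the 1-4 rubric scale")
    counts = Counter(scores)
    n = len(scores)
    share_1 = counts[1] / n
    share_34 = (counts[3] + counts[4]) / n
    issues = []
    if share_1 > MAX_SHARE_1 + tolerance:
        issues.append("share of 1s above 10%")
    if share_34 < MIN_SHARE_34 - tolerance:
        issues.append("share of 3s and 4s below 70%")
    return share_1, share_34, issues
```

With the sample `[1, 3, 4, 3, 4, 4, 3, 2, 3, 4]`, exactly 10% of scores are 1 and 80% are 3 or 4, so no issue is raised; a share of exactly 10% is treated as acceptable because the text flags only shares above that level.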

Figure 2: The assessment results of Cycles 1 and 2 (KPIs do not meet the given threshold)

Table 5: The assessment of Cycle 2’s KPIs and the rubrics used for each KPI set

During the assessment of Cycles 1 and 2, the department produces a schedule of assessment activities, called the ‘Assessment Plan’, which contains the following details:

• The SOs,

• The parsed SOs,

• The set of related KPIs,

• When the program enables the KPIs,

• When the department assesses the KPIs, and

• When the department plans to perform the assessment of each KPI.
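An Assessment Plan with the fields listed above lends itself to a simple tabular record. The Python sketch below represents each plan row as a dataclass and answers one practical question: which KPIs are scheduled for assessment in a given semester. The field names, the semester labels and the pairing of KPI sets with the IS203 and IS394 courses (borrowed from the Cycle 2 examples in this paper) are assumptions for illustration; the department's actual plan format is not given here.

```python
from dataclasses import dataclass, field


@dataclass
class PlanEntry:
    """One row of an Assessment Plan (hypothetical field names)."""
    so: str                 # the student outcome, e.g. "a"
    parsed_so: str          # the parsed SO segment, e.g. "(a)1"
    kpis: list[str]         # the set of related KPI labels
    enabled_in: str         # course and semester in which the program enables the KPIs
    assessed_in: list[str] = field(default_factory=list)  # semesters already assessed
    planned_for: list[str] = field(default_factory=list)  # semesters with a planned assessment


def due_in(plan: list[PlanEntry], semester: str) -> list[str]:
    """Return every KPI whose assessment is planned for the given semester."""
    return [kpi for entry in plan if semester in entry.planned_for for kpi in entry.kpis]


# Hypothetical plan rows; semester labels are invented for illustration.
plan = [
    PlanEntry("a", "(a)1", ["SO(a) 1a", "SO(a) 1b", "SO(a) 1c"], "IS203, Cycle 2 Sem 1",
              assessed_in=["Cycle 1, Sem 1"], planned_for=["Cycle 2, Sem 1"]),
    PlanEntry("b", "(b)2", ["SO(b) 2a", "SO(b) 2b"], "IS394, Cycle 2 Sem 2",
              planned_for=["Cycle 2, Sem 2"]),
]

print(due_in(plan, "Cycle 2, Sem 1"))
```

Keeping past assessments and planned assessments in separate lists lets the same record serve both as a history of what was assessed and as a forward schedule.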

5.3 The Improvement of the Generated Actions through the Assessment of SOs

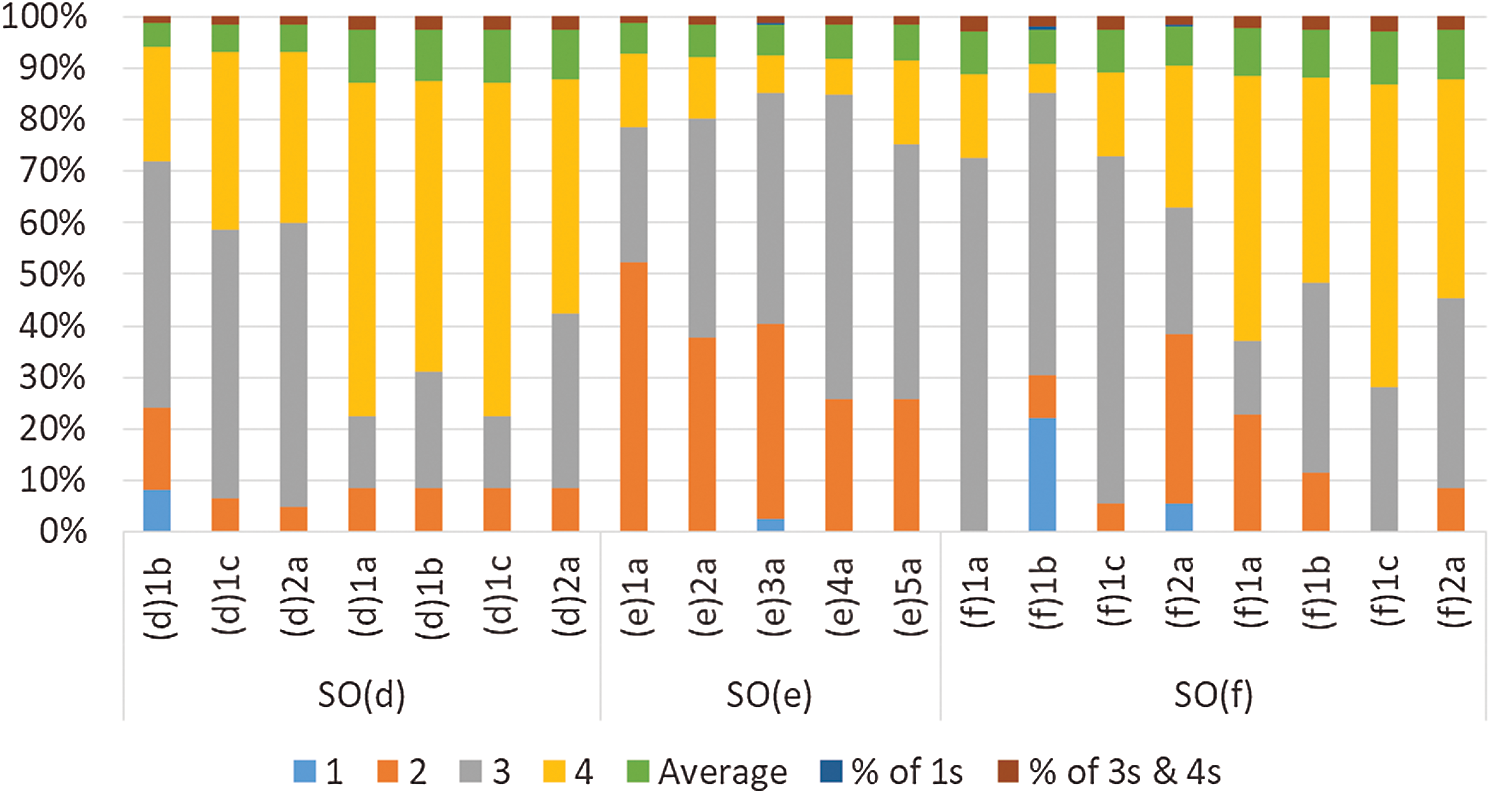

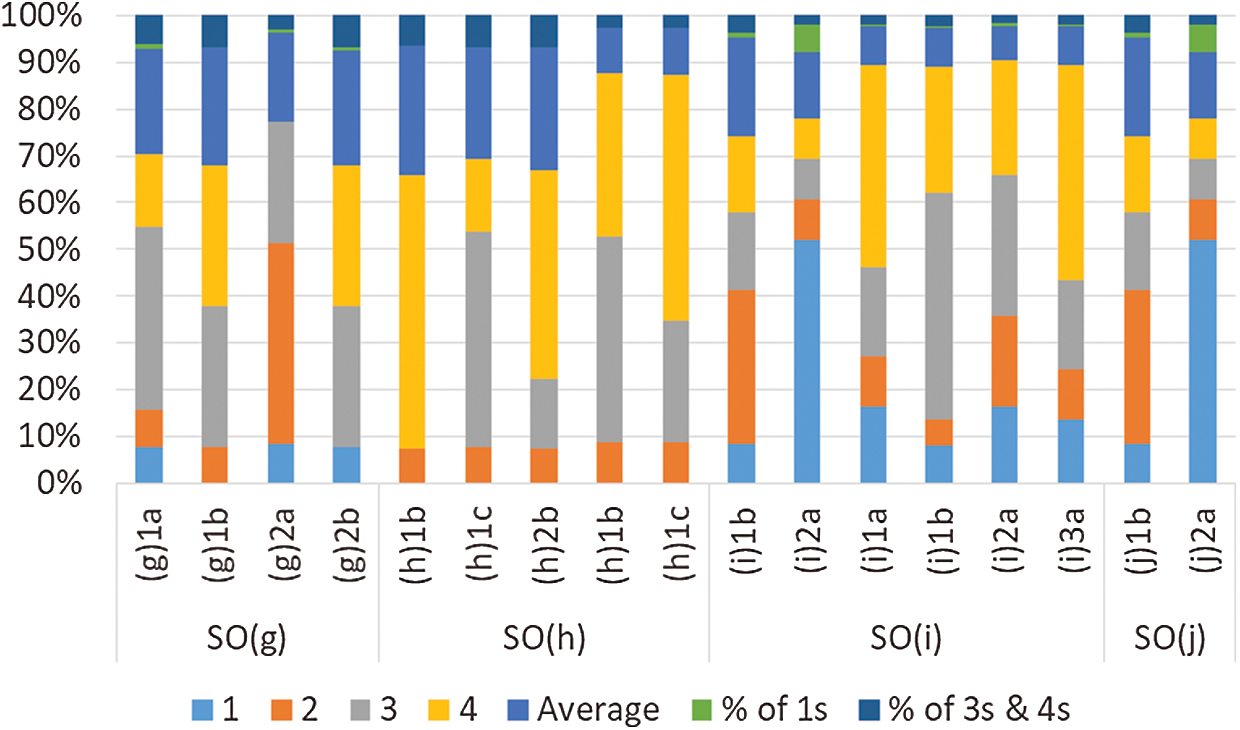

Improvement actions for Cycle 2 are identified by assessing student outcomes at the end of the first semester. The QAC then assesses and integrates the data collected in the second semester of Cycle 2, and recommendations and actions are applied where appropriate. Examples of KPI assessments from Cycle 2 are shown in Figs. 3–5.

Figure 3: An example of assessing SOs (a, b, and c)

Figure 4: An example of assessing SOs (d, e, and f)

Figure 5: An example of assessing SOs (g, h, i, and j)

According to Fig. 3, for KPI set (a), which is enabled in the IS203 course, the scores of 1s and of 3s and 4s for SO(a) 1a conform to the expected thresholds. For SO(a) 1b, the percentage of 1s is slightly above the 10% threshold mentioned above, whereas SO(a) 1c conforms to the expected thresholds for 1s, 3s and 4s. To improve this KPI set, the action taken is to include appropriate algorithm-implementation activities in students’ laboratory tasks. For KPI set (b), which is enabled in the IS394 course, the percentage of 1s for SO(b) 1a exceeds the expected 10% threshold, while SO(b) 1b conforms to the required thresholds for 1s, 3s and 4s. SO(b) 2a also conforms to the given thresholds, whereas SO(b) 2b does not conform to the thresholds for 1s, 3s and 4s. Consequently, it is recommended that a more suitable textbook, covering further topics relevant to these KPIs, be adopted for this course. Finally, for student outcome (c), which is enabled in the IS309 course, SO(c) 1a, SO(c) 2a and SO(c) 3a are addressed as required. A recommended action is nevertheless taken to support continuous improvement if a threshold non-conformity emerges, since such non-conformity can arise from the use of students’ assignments in assessing SO(c): if some students do not submit part or all of their assignments, the results may be affected. It is therefore recommended that quizzes or exams be used instead in such cases.

Fig. 4 presents the Cycle 2 assessment results for student outcomes (d), (e) and (f). Student outcome (d), which is enabled in the IS441 and IS497 courses, is adequately addressed in both courses; all targeted thresholds are met, so no further continuous-improvement action is taken. For student outcome (e), which is enabled in the IS433 course, SOs(e) 1a, 2a and 3a do not meet the established 70% threshold for 3s and 4s. Consequently, the recommended continuous-improvement action is to add further professional and ethical topics that address this set of KPIs. For student outcome (f), which is enabled in the IS433 and IS498 courses, SO(f) 1b does not conform to the thresholds for 1s, 3s and 4s, and SO(f) 2a does not conform to the threshold for 3s and 4s. To handle these cases, the recommended action is to assign specific scores to how students present their research topics during their presentations. It is also suggested that (f) 2a be rephrased more clearly for future use. Fig. 5 presents the Cycle 2 assessment results for SOs (g), (h), (i) and (j).

As Fig. 5 shows, for student outcome (g), which is enabled in the IS400 course, the 1s threshold of KPI(g) is not met, nor are the thresholds for 1s, 3s and 4s of KPI(g) 2a or the 1s threshold of KPI(g) 2b. The recommended continuous-improvement action is to have students mention in their final reports for this course what they have learned about the “impact of computing on society” in local and global environments. For student outcome (h), which is enabled in the IS400 and IS498 courses, all SO(h) KPIs are adequately addressed, so no further action is taken. For student outcome (i), which is enabled in the IS400 and IS309 courses, KPI(i) 1b and KPI(i) 2a do not conform to the thresholds for 1s, 3s and 4s when the IS400 course is used to assess this SO. The recommended action for these results is to have students provide a clear report on the technical issues they encounter, which helps to address SO(i) more appropriately. Finally, for student outcome (j), which is enabled in the IS400 course, SO(j) 1b and 2a do not conform to the thresholds for 1s, 3s and 4s. Consequently, the recommended continuous-improvement action is to gather feedback from employers and students, which may help to assess SO(j) more appropriately and objectively.

This sub-section describes how the program educational objectives meet the needs of the program’s constituencies. The IS program’s constituencies are the following: current students, alumni, industry/employers and university staff members.

1. Students: The education of students is the principal reason for the existence of the IS program. The department considers its students the primary source for defining its educational objectives, and students can evaluate several of the department’s activities. Students continuously provide the department with feedback, which is used to address identified issues and to improve the educational process.

2. Alumni: The alumni of the IS program are a mirror that reflects the extent to which the program accomplishes its objectives, since their career success reflects how well the department prepared them. The department therefore considers its alumni one of the main constituencies of the IS program. It runs a survey of recent graduates, and their feedback helps to remedy limitations of the program and to reinforce its rigour.

3. Industry: The IS program provides industry with highly qualified graduates who are capable of adding value to their institutions and contributing to the success of their enterprises. Moreover, the department regards industry as a significant constituency for reviewing its program educational objectives. The college has established close relationships with leading information technology companies in the KSA, and it periodically invites industry leaders to give plenary talks and seminars at the college.

4. University: IMSIU provides the college and the IS department with an appropriate environment for running their programs and reaching their objectives. The university also assists in defining the IS program educational objectives and reviews them on a regular basis.

5.4.2 The Constituent and Program Feedback

The university aims to develop and implement high-quality scientific programs; hence, the college requests periodic input from its constituents. To improve the curriculum and instructional delivery overall, the courses, course reports and course specifications should be reviewed continuously, every semester. This principal feedback loop shapes the organisation and content of the curriculum. Faculty members distribute feedback forms that cover either a given course or the entire program across the college.

The college collects feedback through these forms to encourage an open exchange of ideas and responses regarding both the material presented in class and the overall design of the curriculum. This particularly benefits students, as it helps them receive the most up-to-date content and knowledge. Comments and suggestions from current and prospective students reach those in charge, such as the dean of the college or the faculty advisor, which keeps students connected to, and contributing to, instructional delivery. The college can also periodically consult industry and government to ensure that the department’s efforts align with their demands.

The IS department stays in contact with current and prospective employers of its graduates, who are strongly encouraged to join the industrial affiliates program. This program provides the college’s faculty members with valuable feedback on how well students are prepared, and appropriate actions are taken where necessary through the advising unit and/or the curriculum design and development committees. In addition, alumni surveys provide valuable feedback on employment opportunities and an opportunity to assess the program’s educational outcomes.

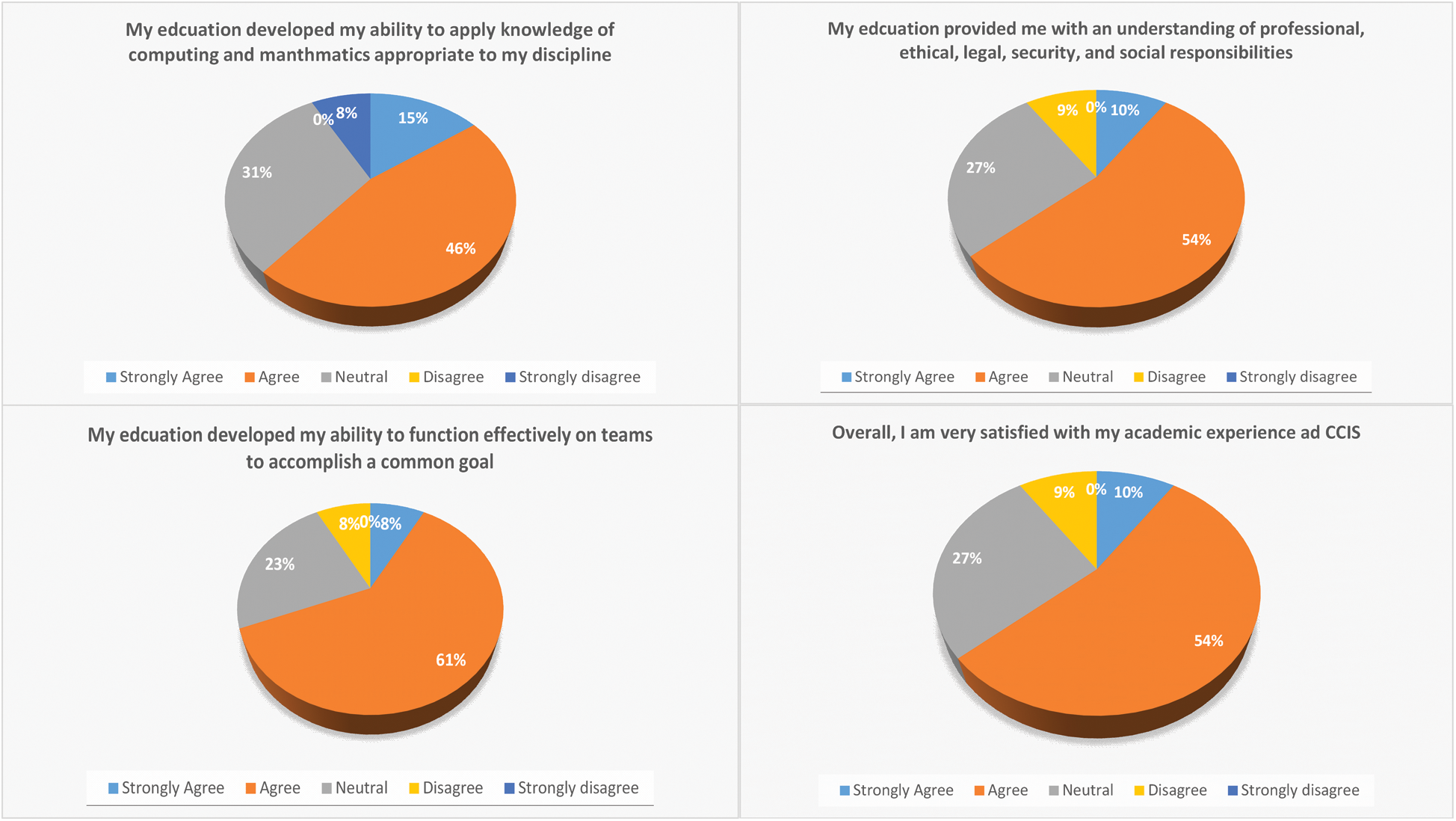

The department strongly encourages former students, once they have a longer-term perspective, to inform the college about how well they were prepared for their careers. Feedback on students’ preparation for graduate school is obtained through an external advisory committee that includes faculty colleagues at top graduate schools, and industry comments follow a similar path. Fig. 6 shows sample results of the surveys obtained from the college’s alumni.

Figure 6: Samples of the obtained surveys from the college’s alumni

According to Fig. 6, the alumni are satisfied with the preparation and quality of education they received from the IS program, which led them in a fruitful direction in their careers. They also believe that the technical preparation they received provided them with the skills required to function effectively in a professional setting and to work in teams to achieve their goals. This suggests that the program properly develops the student attributes needed to achieve the PEOs of the IS program.

During the constituency meeting, employers from industry are also given the survey forms. The questions posed to employers address the following points:

• The quality of the IS program graduates,

• Comparisons of the IS program with other similar programs,

• The ability of the IS program to adapt to up-to-date technologies,

• Managerial and leadership skills that are exhibited by the graduates of the IS program, and

• The contribution of the IS program’s graduates towards the growth of an organisation.

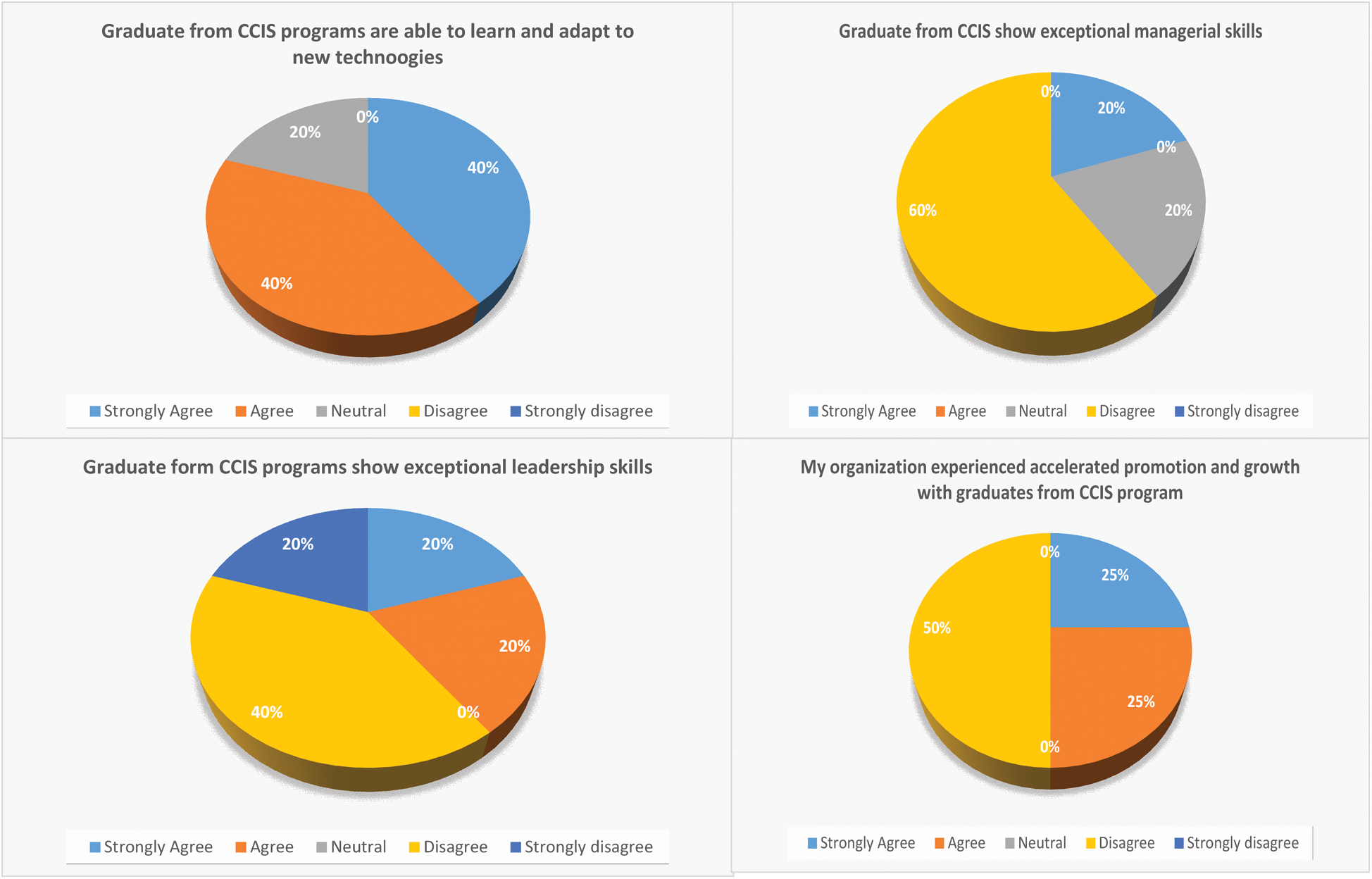

Fig. 7 shows sample results compiled during the last constituency meeting from the surveys obtained from employers.

Figure 7: The resulted samples that are compiled during the last constituency meeting according to the obtained surveys from employers

The responses to the alumni and employer surveys indicate the following regarding the graduates:

• They are satisfied overall with the quality of education they received from the university,

• They are satisfied with the combination and offering of courses in the IS program,

• The courses offered by the IS department prepare them to secure suitable careers,

• They are satisfied with the careers they are pursuing, and

• They are satisfied with their employment situations.

These results indicate that the IS course combination properly addresses the student outcomes and that the IS program meets its program educational objectives. The IS program’s educational objectives therefore serve its constituencies, that is, any entity or organisation that can benefit from the program’s outcomes. The current objectives nonetheless require periodic revision so that they reflect the needs of the program constituencies in greater depth.

The continuous improvement process is valuable for enhancing the quality of the IS program and facilitates ABET accreditation, because it can enhance several dimensions of the program, such as the program plan, curriculum development, teaching approach and evaluation methods. Further, the findings confirm that measuring the achievement of the program’s objectives and student outcomes after applying the continuous improvement process is highly effective for assessing and developing the IS program.

ABET accreditation helps to improve the learning experience and the quality of an academic program. Nonetheless, several challenges are encountered during the accreditation process, including a lack of information about the different implementation procedures, the workload of faculty members, and a lack of appropriate training and management commitment. The scarcity of available documents and reports outlining the methodology required for a successful accreditation of IS programs is another significant factor. For ABET accreditation, the assessment of student outcomes forms the basis of the continuous improvement process; this research therefore details suitable strategies and processes for implementing it in the IS program. The achievement rates of student outcomes are calculated from a set of direct and indirect measures, comprising data and procedural analysis, a current-student survey, an alumni survey, an employer survey, and meetings with the IS department council. The obtained results are then analysed, and a set of corrective actions is proposed as a plan that forms the basis of continuous improvement. Regarding generality, the implemented methodology, together with the strategies and processes adopted in this paper, can be applied to many other programs seeking ABET accreditation. Further, policy-makers in scientific departments may find this research useful before starting the accreditation process for their academic programs.

Funding Statement: The author(s) received no specific funding for this study.

Conflict of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. R. K. Raj and A. Parrish. (2018). “Toward standards in undergraduate cybersecurity education in 2018,” Computer, vol. 51, no. 2, pp. 72–75.

2. S. C. Yang. (2019). “A curriculum model for cybersecurity master’s program: A survey of AACSB-accredited business schools in the United States,” Journal of Education for Business, vol. 94, no. 8, pp. 520–530.

3. B. K. Jesiek and L. H. Jamieson. (2017). “The expansive (dis)integration of electrical engineering education,” IEEE Access, vol. 5, pp. 4561–4573.

4. T. S. El-bawab. (2015). “Telecommunication engineering education (TEE): Making the case for a new multidisciplinary undergraduate field of study,” IEEE Communications Magazine, vol. 53, no. 11, pp. 35–39.

5. S. C. Yang. (2018). “A curriculum model for IT infrastructure management in undergraduate business schools,” Journal of Education for Business, vol. 93, no. 7, pp. 304–314.

6. M. Garduno-Aparicio, J. Rodriguez, G. Macias-Bobadilla and S. Thenozhi. (2017). “A multidisciplinary industrial robot approach for teaching mechatronics-related courses,” IEEE Transactions on Education, vol. 61, no. 1, pp. 1–8.

7. S. Hadfield, T. Weingart, J. Coffman, D. Caswell, B. Fagin et al. (2019). “Streamlining computer science curriculum development and assessment using the new ABET student outcomes,” in Proc. of the Western Canadian Conf. on Computing Education, Calgary, AB, Canada.

8. J. Moore. (2020). “Towards a more representative politics in the ethics of computer science,” in Proc. of the 2020 Conf. on Fairness, Accountability, and Transparency, Barcelona, Spain.

9. P. Kumar, B. Shukla and D. Passey. (2020). “Impact of accreditation on quality and excellence of higher education institutions,” Investigación Operacional, vol. 41, no. 2, pp. 151–167.

10. ABET, “About ABET,” 2020. [Online]. Available: https://www.abet.org/about-abet/

11. C. Cook, P. Mathur and M. Visconti. (2004). “Assessment of CAC self-study report,” presented at the 34th Annual Frontiers in Education Conf., Savannah, GA, USA.

12. ABET, “Criteria for accrediting computing programs, 2010–2011,” 2020. [Online]. Available: https://www.abet.org/wp-content/uploads/2015/04/abet-cac-criteria-2011-2012.pdf

13. C. D. Challa, G. M. Kasper and R. Redmond. (2005). “The accreditation process for IS programs in business schools,” Journal of Information Systems Education, vol. 16, no. 2, pp. 207–216.

14. L. Harvey, “Analytic quality glossary,” Quality Research International, 2020. [Online]. Available: http://www.qualityresearchinternational.com/glossary/

15. CLICKS, “Quality assurance & accreditation,” 2020. [Online]. Available: http://www.cli-cks.com/quality_assurance_accreditation/#:~:text=Accreditation%20is%20a%20form%20of,a%20status%20and%20a%20process

16. ABET, “ABET glossary,” 2020. [Online]. Available: http://www.abet.org/glossary/

17. R. G. Bringle and J. A. Hatcher. (1995). “A service-learning curriculum for faculty,” Michigan Journal of Community Service Learning, vol. 2, pp. 112–122.

18. M. Carter, R. Brent and S. Rajala. (2001). “EC 2000 criterion 2: A procedure for creating, assessing, and documenting program educational objectives,” in ASEE Annual Conf. American Society for Engineering Education, Albuquerque, New Mexico, pp. 1–10.

19. M. J. Allen. (2004). Assessing Academic Programs in Higher Education. USA: John Wiley & Sons, pp. 197.

20. L. Suskie. (2018). Assessing Student Learning: A Common Sense Guide. USA: John Wiley & Sons, pp. 385.

21. T. Crick, J. H. Davenport, P. Hanna, A. Irons and T. Prickett. (2020). “Computer science degree accreditation in the UK: A post-shadbolt review update,” in Proc. of the 4th Conf. on Computing Education Practice 2020, Durham, UK.

22. R. G. Bringle and J. A. Hatcher. (1996). “Implementing service learning in higher education,” The Journal of Higher Education, vol. 67, no. 2, pp. 221–239.

23. D. Kennedy. (2007). Writing and Using Learning Outcomes: A Practical Guide. Ireland: University College Cork, pp. 103.

24. A. Shafi, S. Saeed, Y. A. Bamarouf, S. Z. Iqbal, N. Min-Allah et al. (2019). “Student outcomes assessment methodology for ABET accreditation: A case study of computer science and computer information systems programs,” IEEE Access, vol. 7, pp. 13653–13667.

25. R. Ellis. (2018). “Quality assurance for university teaching: Issues and approaches,” in Handbook of Quality Assurance for University Teaching. London: Routledge, pp. 3–18.

26. S. A. Al-Yahya and M. A. Abdel-halim. (2013). “A successful experience of ABET accreditation of an electrical engineering program,” IEEE Transactions on Education, vol. 56, no. 2, pp. 165–173.

27. F. D. McKenzie, R. R. Mielke and J. F. Leathrum. (2015). “A successful EAC-ABET accredited undergraduate program in modeling and simulation engineering (M&SE),” in 2015 Winter Simulation Conf. (WSC), Huntington Beach, CA, USA: IEEE, pp. 3538–3547.

28. QAC. (2015). “Self-study report,” Information System Department, College of Computer and Information Systems, Al Imam Mohammad Ibn Saud Islamic University, Saudi Arabia.

29. Al Imam Mohammad Ibn Saud Islamic University, College of Computer and Information Sciences, “Student handbook,” 2020. [Online]. Available: http://units.imamu.edu.sa/colleges/en/ComputerAndInformation/profile/Pages/default.aspx

30. QAC. (2016). “Reply to ABET commission: Procedures followed to avoid weaknesses,” Information System Department, College of Computer and Information Systems, Al Imam Mohammad Ibn Saud Islamic University, Saudi Arabia.

31. Al Imam Mohammad Ibn Saud Islamic University, “Information technology, mission, vision and goals,” 2020. [Online]. Available: https://units.imamu.edu.sa/colleges/en/ComputerAndInformation/academic_department/Pages/default.aspx

32. N. R. Tague. (2005). The Quality Toolbox. Second ed. USA: Quality Press, pp. 584.

33. J. A. Durlak and E. P. DuPre. (2008). “Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation,” American Journal of Community Psychology, vol. 41, no. 3–4, pp. 327–350.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.