Open Access


ARTICLE

Sand Cat Swarm Optimization with Deep Transfer Learning for Skin Cancer Classification

1 Department of Electronics and Instrumentation Engineering, V. R. Siddhartha Engineering College, Vijayawada, 520007, India

2 Department of Software Engineering, College of Computer Science and Engineering, University of Jeddah, Jeddah, Saudi Arabia

3 Department of Computer Science and Engineering, University Institute of Engineering and Technology (UIET), Guru Nanak University, Hyderabad, India

4 Department of Computer Science and Engineering, Vignan’s Institute of Information Technology, Visakhapatnam, 530049, India

5 Department of Applied Data Science, Noroff University College, Kristiansand, Norway

6 Artificial Intelligence Research Center (AIRC), College of Engineering and Information Technology, Ajman University, Ajman, United Arab Emirates

7 Department of Electrical and Computer Engineering, Lebanese American University, Byblos, Lebanon

8 Department of Software, Kongju National University, Cheonan, 31080, Korea

* Corresponding Author: Jungeun Kim. Email:

Computer Systems Science and Engineering 2023, 47(2), 2079-2095. https://doi.org/10.32604/csse.2023.038322

Received 07 December 2022; Accepted 02 February 2023; Issue published 28 July 2023

Abstract

Skin cancer is one of the most dangerous cancers. Skin cancer is divided into melanoma and non-melanoma types, and melanoma has a particularly high death rate. Dermatologists find it difficult to identify skin cancer from dermoscopy images of skin lesions, and pathology and biopsy examinations are sometimes required for diagnosis. Earlier studies have formulated computer-based systems for detecting skin cancer from skin lesion images. With recent advances in hardware and software technologies, deep learning (DL) has emerged as a promising technique for feature learning. Therefore, this study develops a new sand cat swarm optimization with deep transfer learning for skin cancer detection and classification (SCSODTL-SCC) technique. The major goal of the SCSODTL-SCC model is to recognize and classify different types of skin cancer in dermoscopic images. First, DullRazor-based hair removal and median-filtering-based noise elimination are performed. Next, the U2Net segmentation approach is employed to detect infected lesion regions in the dermoscopic images. Then, a NASNetLarge-based feature extractor with a hybrid deep belief network (DBN) model is used for classification. Finally, the classification performance is improved by using the SCSO algorithm for hyperparameter tuning, which constitutes the novelty of the work. The SCSODTL-SCC model is evaluated through simulations on a benchmark skin lesion dataset. The comparative results show that the SCSODTL-SCC model achieves the highest skin cancer classification performance across different measures.

Skin cancer is a common type of tumour that can mostly be cured if it is identified and treated early, so effective techniques should be developed for its automatic classification [1]. As the largest organ of the human body, the skin protects the other body systems, which increases its vulnerability to disease. More skin cancers are identified every year in the US than all other cancers. Melanoma has a five-year survival rate of 99% if it does not spread to other organs [2]; if it spreads to other body parts, the survival rate decreases to 20%. However, the early signs of skin cancer are not always visible, so diagnostic outcomes depend on the experience of the dermatologist [3]. For inexperienced doctors, an automated diagnosis mechanism would be a powerful tool for more precise diagnoses. In addition, identification of skin cancer by the human eye rarely generalizes. Hence, an automatic skin cancer classification technique is needed that is faster, more precise, and less expensive [4]. Applying such an automatic diagnostic mechanism could effectively reduce skin cancer deaths.

Because of the diversity and complexity of skin disease images, automatic skin cancer classification remains challenging [5]. First, different skin lesions share many interclass similarities, which leads to misdiagnosis. For instance, histopathological images contain several mimics of skin cancer, such as squamous cell carcinoma (SCC) and other diseases [6], so it is hard for a diagnosis mechanism to distinguish skin malignancies from their common imitators. Second, lesions within the same class vary in size, colour, structure, location, and appearance [7]. For instance, a skin cancer and its subclasses present differently, which makes it hard to categorize the subclasses of the same class. Third, classification techniques are extremely sensitive to the type of camera used to capture the images [8].

Machine learning (ML) techniques are used to automate diagnosis, leading to frameworks and systems in the medical sector that help physicians communicate, offer contextual relevance, enhance clinical reliability, lower medical costs, minimize errors caused by human fatigue, identify diseases more easily, and lower mortality rates [9]. An ML technique that can classify both benign and malignant pigmented skin lesions is a step toward these objectives. In [10], ML methods and convolutional neural networks (CNNs) were used to categorize pigmented skin lesions in dermoscopic images precisely in order to find malignant lesions as early as possible. However, the problem remains challenging. As DL models become deeper, their number of parameters grows quickly, which can lead to overfitting. In addition, hyperparameters, particularly the learning rate, significantly affect the performance of a CNN model, so the learning rate must be tuned to obtain better performance.

This study develops a new sand cat swarm optimization with deep transfer learning for skin cancer detection and classification (SCSODTL-SCC) technique. The major goal of the SCSODTL-SCC model is to recognize and classify different types of skin cancer in dermoscopic images. First, DullRazor-based hair removal and median-filtering-based noise elimination are performed. Next, the U2Net segmentation approach is employed to detect infected lesion regions in the dermoscopic images. Then, a NASNetLarge-based feature extractor with a hybrid deep belief network (DBN) model is used for classification. Finally, the classification performance is improved by using the SCSO algorithm for hyperparameter tuning. The SCSODTL-SCC model is evaluated through simulations on the benchmark ISIC dataset.

Reshma et al. [11] introduced an IMLT-DL-based skin lesion segmentation and classification method, which combines multilevel thresholding with DL, for dermoscopy images to overcome these difficulties. First, the IMLT-DL method integrates inpainting and top-hat filtering to pre-process the dermoscopic images. Next, a segmentation procedure based on Mayfly Optimization (MFO) and multilevel Kapur's thresholding is used to determine the affected areas. In addition, an Inception v3-based feature extractor is applied to extract a useful set of feature vectors. Finally, classification is accomplished with a gradient boosting tree (GBT) technique. In [12], a mechanism was presented for identifying melanoma automatically with the help of an ensemble method, image texture feature extraction, and CNNs. For image classification in the CNN stage, two CNN models are used: VGG-19 and a proposed network. In the feature extraction stage, texture features were derived, and their dimensionality was reduced through kernel PCA (kPCA) to improve classification performance.

In [13], the authors designed an ensemble of CNNs for a multiclass classification study that incorporates image preprocessing, risk management, and DL on skin cancer dermoscopic images with metadata. They illustrated a general stacking ensemble flow consisting of metadata concatenation, data preprocessing, a first-level CNN ensemble, and a second-level meta-classifier ensemble that groups the CNNs. Shorfuzzaman [14] devised a CNN-based stacked ensemble structure for diagnosing skin cancer at an early stage. In this stacking ensemble structure, transfer learning (TL) was leveraged, and several CNN sub-models that perform the same classification task were combined. A new model, called a meta-learner, used the predictions of all sub-models to generate the final predictions. Kaur et al. [15] suggested an automatic technique based on a deep CNN (DCNN) to classify benign and malignant melanoma precisely. The DCNN structure was carefully designed with multiple layers responsible for extracting high- and low-level features of skin images in a unique way.

Chaturvedi et al. [16] modelled an automatic computer-aided diagnosis (CAD) mechanism for multi-class skin (MCS) cancer classification with high precision. The presented technique outperformed modern DL techniques and expert dermatologists in MCS cancer classification. The authors performed optimal fine-tuning on the seven classes of the HAM10000 dataset and analysed the efficiency of four ensemble models and five pre-trained CNNs. The authors of [17] developed a DCNN-based method to classify skin cancer automatically into melanoma and non-melanoma types with higher precision. To address these two issues and attain high classification efficiency, they leveraged an EfficientNet architecture with TL, which learns more complex and fine-grained patterns from lesion images by automatically scaling the resolution, depth, and width of the network.

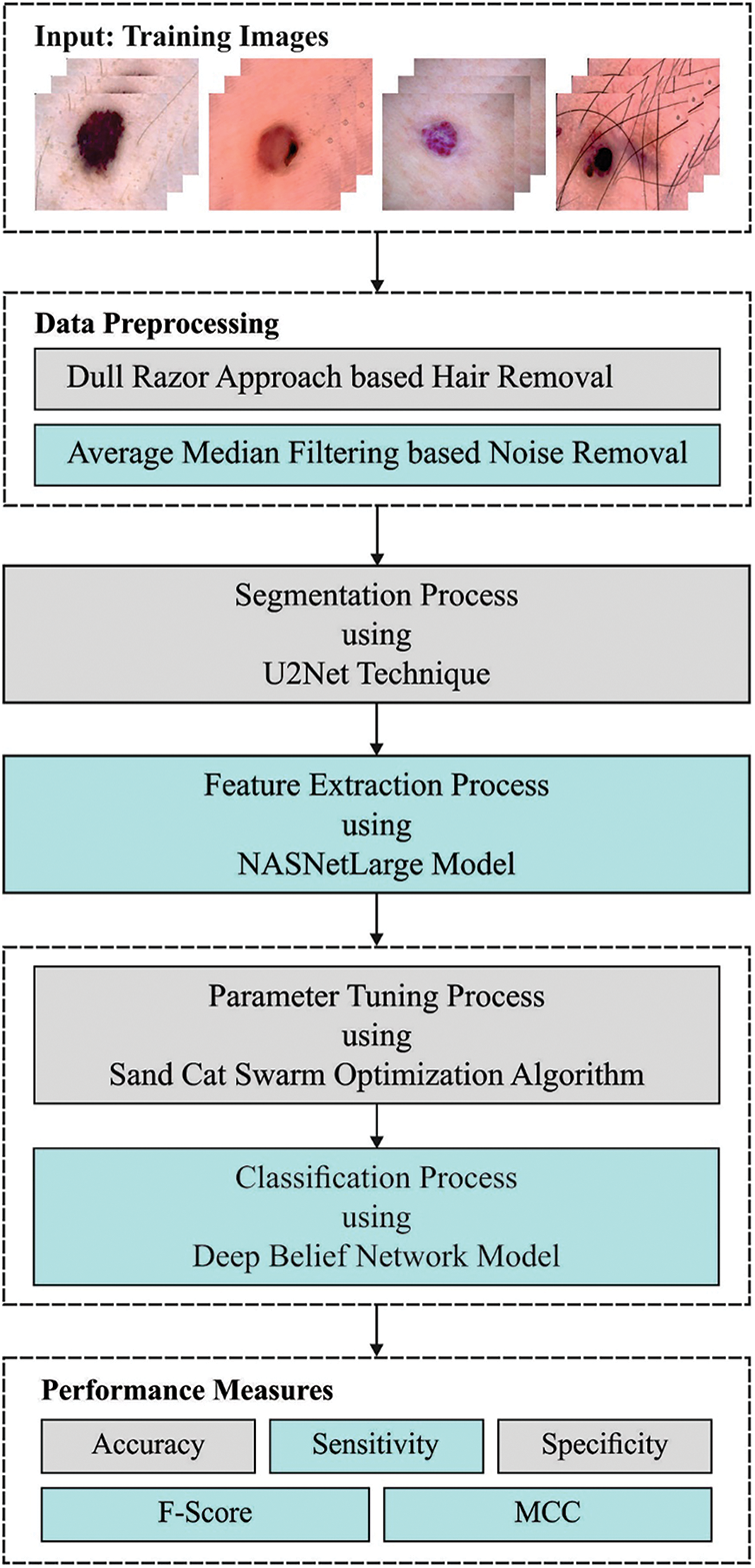

In this study, an automatic skin cancer classification technique, SCSODTL-SCC, is developed for dermoscopic images. The goal of the SCSODTL-SCC model is to detect and classify different kinds of skin cancer in dermoscopic images. The SCSODTL-SCC model involves several subprocesses, namely preprocessing, U2Net segmentation, NASNetLarge feature extraction, hybrid DBN classification, and SCSO hyperparameter tuning. Fig. 1 shows the overall process of the SCSODTL-SCC approach.

Figure 1: Working process of the SCSODTL-SCC system

First, DullRazor-based hair removal and median-filtering-based noise elimination are performed. In dermoscopic images, hairs are removed using the DullRazor technique to enable accurate detection and segmentation. This method removes hair from lesions in three stages. In the first stage, it identifies dark hair locations using a grayscale morphological closing operation. In the second stage, it verifies the hair pixels by checking the thickness and length of the detected shapes and replaces the verified pixels using bilinear interpolation. Finally, it smooths the replaced pixels with a median filter.

Next, the U2Net segmentation approach is employed to detect infected lesion regions in the dermoscopic images. U2-Net is an advanced DL method for background removal; it generates a mask that can then be used to segment the image with the image-processing functions of the Pillow and OpenCV libraries. The image with its background is given to the U2-Net model, which produces a mask for the image. This mask is used to extract the region of interest (RoI) from the original image without the background.
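The preprocessing and RoI extraction steps can be sketched in NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: the image is assumed to be a 2-D grayscale array in [0, 1], DullRazor's thickness/length verification is omitted, its bilinear interpolation is replaced by linear interpolation along each row, and the U2-Net output is assumed to be given as a saliency mask in [0, 1] (running U2-Net itself needs pretrained weights and is not shown). The closing size and threshold are hypothetical values.

```python
import numpy as np


def window_filter(img, size, reduce_fn):
    """Apply reduce_fn (np.max, np.min or np.median) over size x size windows."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    windows = [padded[i:i + h, j:j + w] for i in range(size) for j in range(size)]
    return reduce_fn(np.stack(windows), axis=0)


def grey_closing(img, size):
    # Closing = dilation (max) followed by erosion (min); it fills thin dark structures.
    return window_filter(window_filter(img, size, np.max), size, np.min)


def remove_hair(gray, size=7, threshold=0.1):
    """Simplified DullRazor-style hair removal; returns (cleaned image, hair mask)."""
    # Stage 1: dark hairs are where the closing brightens the image the most.
    hair = (grey_closing(gray, size) - gray) > threshold
    repaired = gray.astype(float).copy()
    cols = np.arange(gray.shape[1])
    # Stage 2 (simplified): linearly interpolate each row across the hair pixels.
    for r in range(gray.shape[0]):
        row_hair = hair[r]
        if row_hair.any() and (~row_hair).any():
            repaired[r, row_hair] = np.interp(
                cols[row_hair], cols[~row_hair], gray[r, ~row_hair])
    # Stage 3: a 3 x 3 median filter smooths the result and suppresses noise.
    return window_filter(repaired, 3, np.median), hair


def extract_roi(image, mask, thr=0.5):
    """Keep only the pixels where the (assumed U2-Net-style) mask is >= thr."""
    keep = mask >= thr
    if image.ndim == 3:
        keep = keep[..., None]  # broadcast the 2-D mask over colour channels
    return np.where(keep, image, 0)
```

For example, a synthetic 32 × 32 patch of value 0.8 with a one-pixel-wide dark line at column 15 is restored to a uniform 0.8, and only that column is flagged as hair.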

3.2 Feature Extraction Using NASNet Model

At this stage, the NASNetLarge-based feature extractor is applied. Neural architecture search (NAS) is the search process used to design this network. In NAS, a controller recurrent neural network (RNN) samples child networks, which are trained to convergence to reach some accuracy on a held-out validation set [18]. The resulting accuracy values are used to update the controller, which in turn generates better architectures over time; the controller weights are updated with a policy gradient. In this method, the overall structure of the convolutional network is fixed manually. It is composed of convolutional cells that share the same architecture but have different weights. Two kinds of convolutional cells are used to build scalable architectures quickly for images of various sizes: (i) cells that return feature maps whose height and width are reduced by a factor of two, and (ii) cells that return feature maps of the same dimension.
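The effect of the two cell types on feature-map size can be traced with a small Python sketch. The stacking pattern and the 42 × 42 input below are hypothetical and do not reproduce the actual NASNetLarge configuration; the sketch only assumes that reduction cells use stride 2 (halving height and width, rounded up as with "same" padding) and that normal cells preserve the dimensions.

```python
import math


def feature_map_sizes(height, width, cells):
    """Track (cell type, height, width) through a sequence of cells."""
    sizes = []
    for cell in cells:
        if cell == "reduction":
            # Stride-2 operations on the cell inputs halve height and width.
            height, width = math.ceil(height / 2), math.ceil(width / 2)
        elif cell != "normal":
            raise ValueError(f"unknown cell type: {cell}")
        # Normal cells keep the spatial dimensions unchanged.
        sizes.append((cell, height, width))
    return sizes


if __name__ == "__main__":
    # Hypothetical pattern: N normal cells between reduction cells (N = 2 here).
    pattern = ["normal"] * 2 + ["reduction"] + ["normal"] * 2 + ["reduction"] + ["normal"] * 2
    for cell, h, w in feature_map_sizes(42, 42, pattern):
        print(f"{cell:>9}: {h} x {w}")
```

Repeating normal cells between reduction cells is what lets the same searched cells be scaled to different input sizes by changing only the number of repetitions.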

These two types of convolutional cells are called reduction and normal cells, respectively. In a reduction cell, the initial operation applied to the cell inputs uses a stride of 2 to reduce the height and width. The convolutional cell supports striding because all of its operations can take a stride. The controller RNN searches for the structures of the normal and reduction cells, which may differ from each other, using the same search space for both. Each cell receives two initial hidden states (HS), $h_i$ and $h_{i-1}$, and the controller builds each block of the cell through the following steps:

Step1. Select an HS from $h_i$, $h_{i-1}$, or the set of HS created in previous blocks.

Step2. Select a second HS from the same options as in Step 1.

Step3. Select an operation to apply to the HS selected in Step 1.

Step4. Select an operation to apply to the HS selected in Step 2.

Step5. Select a method to combine the outputs of Steps 3 and 4 into a new HS. The newly created HS can then be used as an input in subsequent blocks.
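Steps 1–5 can be illustrated with a Python sketch that samples one cell description. In NASNet the choices are made by the trained controller RNN; here uniform random choices stand in for the controller, and the operation list is a hypothetical reduced subset of the search space. The two combination methods, element-wise addition and concatenation, follow the original NASNet design.

```python
import random

# Hypothetical reduced operation set; the real NASNet search space is larger.
OPERATIONS = ["identity", "3x3 sep conv", "5x5 sep conv", "3x3 avg pool", "3x3 max pool"]
COMBINERS = ["add", "concat"]


def sample_cell(num_blocks, rng):
    """Sample a cell as a list of (in1, op1, in2, op2, combine, output) blocks."""
    hidden_states = ["h_i", "h_i-1"]  # the two initial hidden states
    blocks = []
    for b in range(num_blocks):
        first = rng.choice(hidden_states)   # Step 1: first input HS
        second = rng.choice(hidden_states)  # Step 2: second input HS
        op1 = rng.choice(OPERATIONS)        # Step 3: operation on the first HS
        op2 = rng.choice(OPERATIONS)        # Step 4: operation on the second HS
        combine = rng.choice(COMBINERS)     # Step 5: how to merge the two results
        new_state = f"block_{b}"
        blocks.append((first, op1, second, op2, combine, new_state))
        hidden_states.append(new_state)     # usable as an input by later blocks
    return blocks


if __name__ == "__main__":
    for block in sample_cell(5, random.Random(0)):
        print(block)
```

Because each new HS is appended to the candidate list, later blocks can build on earlier ones, which is how a cell grows into a small directed acyclic graph.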

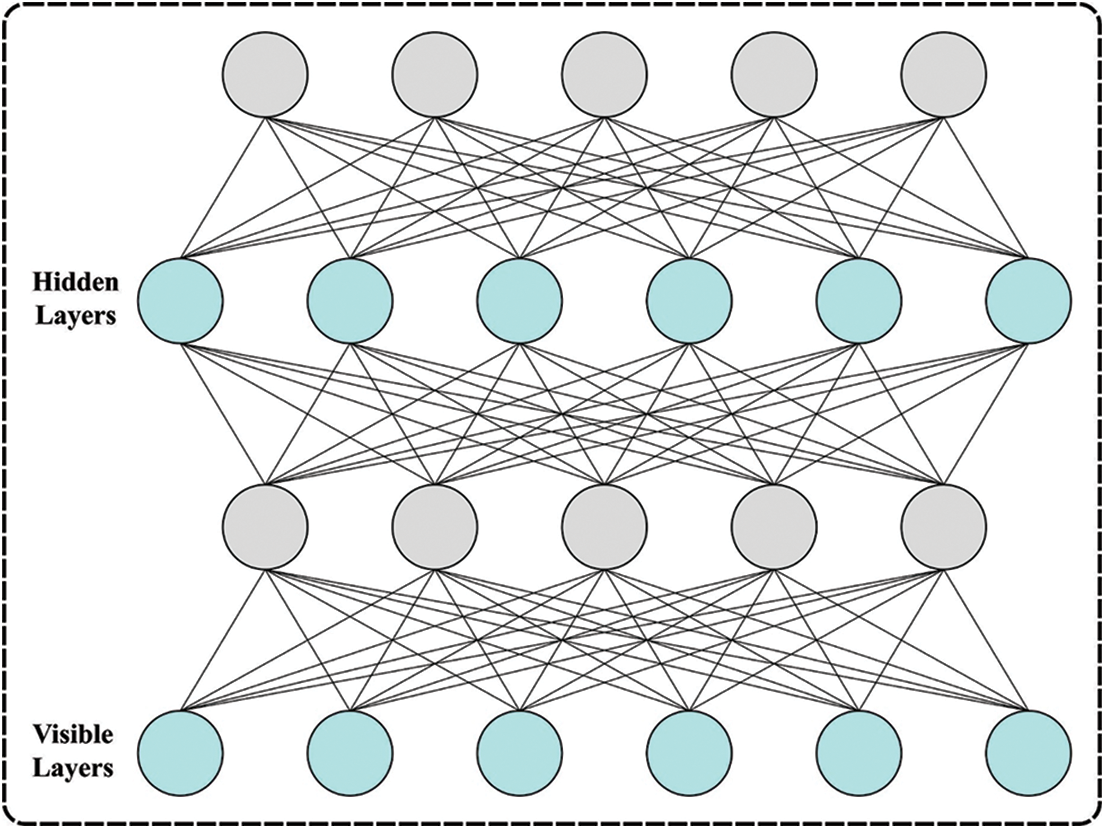

3.3 Skin Cancer Classification Using Optimal DBN Model

At this stage, the DBN model analyses the feature vectors for classification. The DBN includes four layers of pre-trained restricted Boltzmann machines (RBMs) and an output layer (softmax regression) [19]. Its parameters are estimated by training before the DBN is employed for representation and classification. DBN training is divided into pre-training, which learns the representation, and fine-tuning, which trains the classifier. The output of the stacked RBMs is passed as the input to the softmax regression layer, which is also part of the DBN. First, the DBN is trained to reconstruct the unlabeled training data, so this stage is unsupervised. In the RBM training rules, $E_{data}[\cdot]$ and $E_{model}[\cdot]$ denote expectations under the data distribution and the model distribution, respectively.

Eqs. (1)–(3) are the basic training rules of a typical DBN. The second term, $E_{model}[\cdot]$, cannot be computed directly, because it is an expectation under the probability distribution defined by the DBN itself. Gibbs sampling can be used to estimate this probability, but it is time-consuming and unsuitable for real-time use. Therefore, contrastive divergence (CD), a fast learning technique, is used instead. First, a training sample is used to initialize the Markov chain. Samples are then obtained after $k$ steps of Gibbs sampling and used to approximate the model term in the parameter update.

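In this work, the DBN is generated by training the stacked RBMs layer-wise. The greedy layer-wise training process is applied to every layer of the DBN, and Fig. 2 shows the framework of the DBN. The first RBM is trained on the input data; its hidden-layer outputs then serve as the training data for the next RBM, and this process is repeated up the stack.

Figure 2: Architecture of DBN

After this unsupervised pre-training on the training set, the whole network, including the softmax regression layer, is fine-tuned for classification.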
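The pre-training stage described above can be sketched in NumPy as a Bernoulli RBM trained with one-step contrastive divergence (CD-1) and stacked greedily. This is a minimal sketch under stated assumptions, not the authors' code: inputs are assumed to lie in [0, 1], updates are full-batch, the layer sizes are hypothetical, and the softmax fine-tuning stage is omitted.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Bernoulli RBM trained with one-step contrastive divergence (CD-1)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)  # visible biases
        self.b_h = np.zeros(n_hidden)   # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_step(self, v0, lr):
        # Positive phase: the chain starts at the training data (E_data term).
        h0 = self.hidden_probs(v0)
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        # Negative phase: one Gibbs step approximates the E_model term.
        v1 = self.visible_probs(h0_sample)
        h1 = self.hidden_probs(v1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b_v += lr * (v0 - v1).mean(axis=0)
        self.b_h += lr * (h0 - h1).mean(axis=0)
        return float(np.mean((v0 - v1) ** 2))  # reconstruction error

    def fit(self, data, epochs=50, lr=0.1):
        return [self.cd1_step(data, lr) for _ in range(epochs)]


def pretrain_dbn(data, layer_sizes, rng, epochs=50, lr=0.1):
    """Greedy layer-wise pre-training of a stack of RBMs."""
    rbms, x = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(x.shape[1], n_hidden, rng)
        rbm.fit(x, epochs, lr)
        rbms.append(rbm)
        x = rbm.hidden_probs(x)  # hidden outputs become the next RBM's training data
    return rbms, x
```

The returned top-layer activations would be the input to the softmax regression layer; with four RBMs, as in the text, `layer_sizes` would simply list four hidden sizes.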

Finally, the classification performance is improved by tuning the hyperparameters with the SCSO algorithm.

The SCSO algorithm is inspired by the foraging behaviour of sand cats in the desert [20]. Sand cats can detect low-frequency noise to find prey either below or above ground. The algorithm treats the optimum value in the search space as prey; the search agents continually explore the search space by updating their positions and finally move closer to the region where the optimum is located. SCSO consists of a prey search mechanism and a prey attack mechanism. The prey search mechanism simulates how sand cats search for prey.

Eqs. (7)–(9) formulate this prey search mechanism.

After searching, the sand cats attack the prey; this prey attack mechanism is defined by Eqs. (10) and (11).

EXPLORATION AND EXPLOITATION

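SCSO balances the exploration and exploitation stages via the adaptive factor $R$.

As the number of iterations increases, the general sensitivity range $r_G$ decreases linearly from 2 towards 0, so the range of $R$, which lies in $[-r_G, r_G]$, also narrows.

In Eq. (13), the search agent of SCSO attacks the target prey if $|R| \le 1$; otherwise, it continues searching for prey.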
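For reference, the update rules below follow the commonly published form of the basic SCSO algorithm; they are an assumption-labelled summary and may differ in notation or detail from the authors' Eqs. (7)–(13). Here $t$ is the current iteration, $T$ the maximum number of iterations, $X_c$ the current position, $X_b$ the best position, $X_{bc}$ a candidate position, $\mathrm{rand}(0,1)$ a uniform random number, and $\theta$ a random angle in $[0^\circ, 360^\circ]$.

```latex
\begin{aligned}
r_G &= s_M - \frac{s_M\,t}{T}, \qquad s_M = 2,\\
r &= r_G \cdot \mathrm{rand}(0,1),\\
R &= 2\,r_G \cdot \mathrm{rand}(0,1) - r_G,\\
X(t+1) &=
\begin{cases}
X_b(t) - r \cdot \bigl|\mathrm{rand}(0,1)\cdot X_b(t) - X_c(t)\bigr| \cdot \cos\theta, & |R| \le 1 \ \text{(attack)},\\[4pt]
r \cdot \bigl(X_{bc}(t) - \mathrm{rand}(0,1)\cdot X_c(t)\bigr), & |R| > 1 \ \text{(search)}.
\end{cases}
\end{aligned}
```

Because $|R| \le r_G$, the agents can only explore while $r_G > 1$, that is, during roughly the first half of the iterations, and they exclusively exploit afterwards.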

SCSO has the following features: 1) each population member moves in a different circular direction, which gives the search agents the possibility of getting closer to the prey and achieving a better convergence rate; 2) SCSO has a simple framework with few parameters and is easy to implement; 3) SCSO treats the position of the optimum solution as prey, and the random attack angle helps it avoid search stagnation; 4) SCSO balances the exploration and exploitation stages, which improves the convergence speed; and 5) SCSO retains the global optimum solution during the iterations, so the prey location is not affected by a reduction in population quality.
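A minimal NumPy sketch of the basic SCSO loop follows, assuming a box-constrained continuous search space. It is not the authors' implementation: the attack angle is drawn uniformly (the original SCSO uses roulette-wheel selection), random numbers are drawn per agent rather than per dimension, and the objective is a generic callable that, for hyperparameter tuning, would return the validation error of a model configured by each candidate.

```python
import numpy as np


def scso_minimize(objective, lower, upper, pop_size=20, max_iter=100, seed=0):
    """Minimal sand cat swarm optimization sketch over the box [lower, upper]."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    pos = rng.uniform(lower, upper, size=(pop_size, dim))
    fit = np.array([objective(p) for p in pos])
    best_pos = pos[int(np.argmin(fit))].copy()
    best_fit = float(fit.min())
    s_m = 2.0  # maximum sensitivity; r_G falls linearly from s_m towards 0
    for t in range(max_iter):
        r_g = s_m - s_m * t / max_iter
        for i in range(pop_size):
            r = r_g * rng.random()              # sensitivity range of this cat
            R = 2.0 * r_g * rng.random() - r_g  # adaptive switch, R in [-r_g, r_g]
            if abs(R) <= 1.0:
                # Exploitation: attack the prey (best position) at a random angle.
                theta = rng.uniform(0.0, 2.0 * np.pi, size=dim)
                pos_rnd = np.abs(rng.random(dim) * best_pos - pos[i])
                pos[i] = best_pos - r * pos_rnd * np.cos(theta)
            else:
                # Exploration: search for prey relative to a random candidate.
                cand = pos[rng.integers(pop_size)]
                pos[i] = r * (cand - rng.random(dim) * pos[i])
            pos[i] = np.clip(pos[i], lower, upper)
            f = objective(pos[i])
            if f < best_fit:  # keep the global best solution (elitism)
                best_pos, best_fit = pos[i].copy(), float(f)
    return best_pos, best_fit
```

As a usage example, minimizing the sphere function `lambda x: float(np.sum(x ** 2))` over $[-5, 5]^3$ drives the best fitness close to zero; for the DBN, each dimension would instead encode one hyperparameter, such as the learning rate.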

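The SCSO approach uses a fitness function (FF) to achieve better classifier outcomes. The FF assigns a positive value to each candidate solution, where a smaller value indicates a better candidate. In this study, the classifier error rate, which is to be minimized, is treated as the FF, as given in Eq. (14):

$$\text{fitness}(x_i) = \text{ClassifierErrorRate}(x_i) = \frac{\text{number of misclassified samples}}{\text{total number of samples}} \tag{14}$$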
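Eq. (14) can be expressed as a small Python sketch. The `train_and_predict` callable is hypothetical: it stands for training the DBN with the hyperparameters encoded in an SCSO candidate and returning its predictions on a validation set.

```python
def classifier_error_rate(y_true, y_pred):
    """Fraction of misclassified samples (Eq. (14)); lower is better."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("need two non-empty label sequences of equal length")
    wrong = sum(t != p for t, p in zip(y_true, y_pred))
    return wrong / len(y_true)


def fitness(candidate, train_and_predict, y_val):
    """Fitness of one SCSO candidate: validation error of the model it configures."""
    y_pred = train_and_predict(candidate)  # hypothetical training routine
    return classifier_error_rate(y_val, y_pred)
```

Passing `lambda c: fitness(c, train_and_predict, y_val)` as the objective of a minimizer such as SCSO then searches directly for the lowest validation error.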

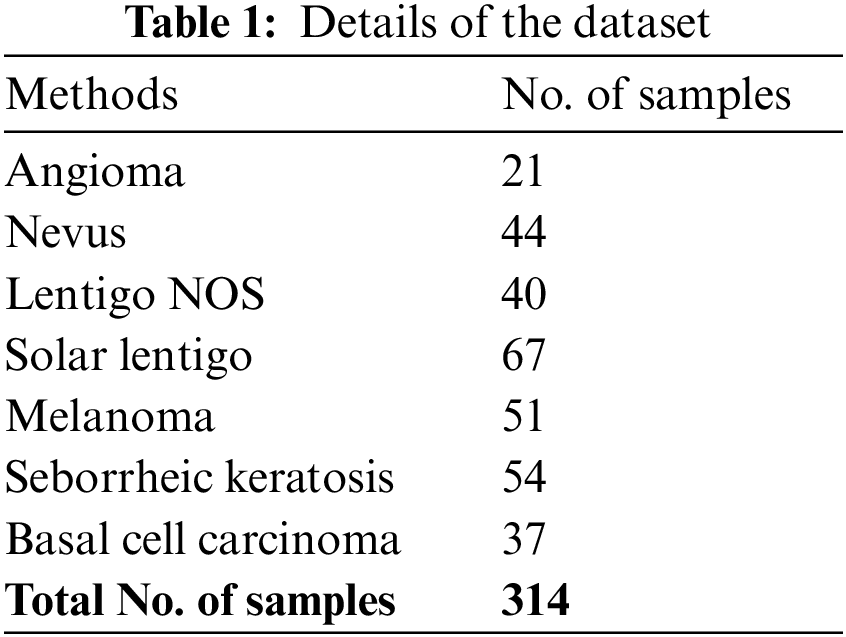

The proposed model is simulated using Python 3.6.5 on a PC with an i5-8600K CPU, a GeForce 1050 Ti GPU (4 GB), 16 GB of RAM, a 250 GB SSD, and a 1 TB HDD. The parameter settings are as follows: learning rate 0.01, dropout 0.5, batch size 5, 50 epochs, and ReLU activation. This section examines the performance of the SCSODTL-SCC method on the ISIC dataset (available at https://www.isic-archive.com), which comprises 314 images, as depicted in Table 1.
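As a sketch of the experimental protocol, the reported hyperparameters can be collected in a configuration dictionary, and the 314 images split into 80:20 and 70:30 training/testing partitions. The image IDs and the unstratified random split are hypothetical; the text does not state how the partitions were drawn, and a stratified split would additionally preserve the class proportions of Table 1.

```python
import random

# Hyperparameter settings reported in the text.
SETTINGS = {"learning_rate": 0.01, "dropout": 0.5, "batch_size": 5,
            "epochs": 50, "activation": "relu"}


def train_test_split(items, train_fraction, seed=0):
    """Shuffle a list of items and split it into training and testing parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(len(shuffled) * train_fraction))
    return shuffled[:n_train], shuffled[n_train:]


image_ids = [f"img_{i:03d}" for i in range(314)]  # hypothetical IDs for the 314 images
for label, fraction in (("80:20", 0.8), ("70:30", 0.7)):
    tr, ts = train_test_split(image_ids, fraction)
    print(f"{label} -> TR={len(tr)}, TS={len(ts)}")
```

With this rounding, the sketch prints `80:20 -> TR=251, TS=63` and `70:30 -> TR=220, TS=94`.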

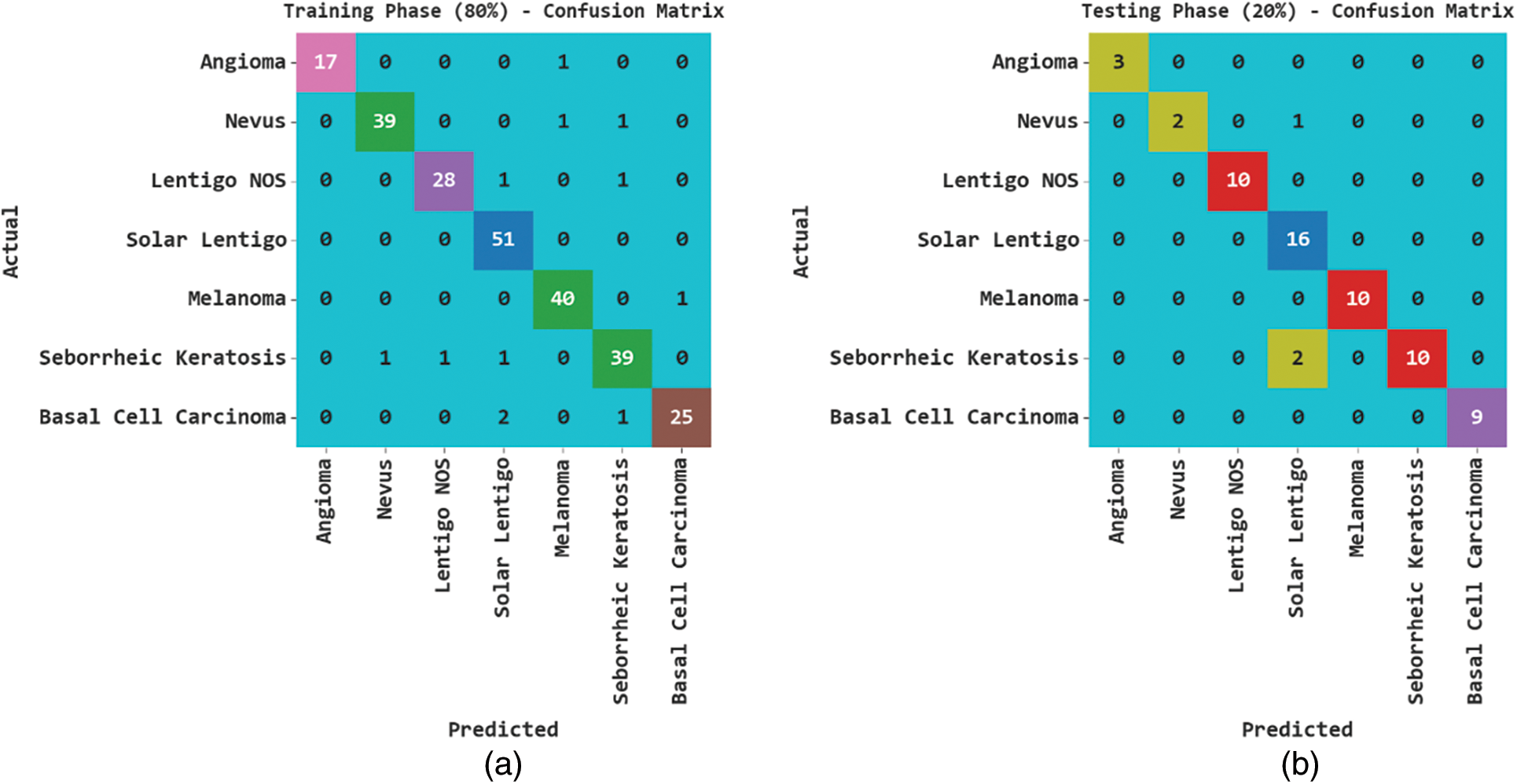

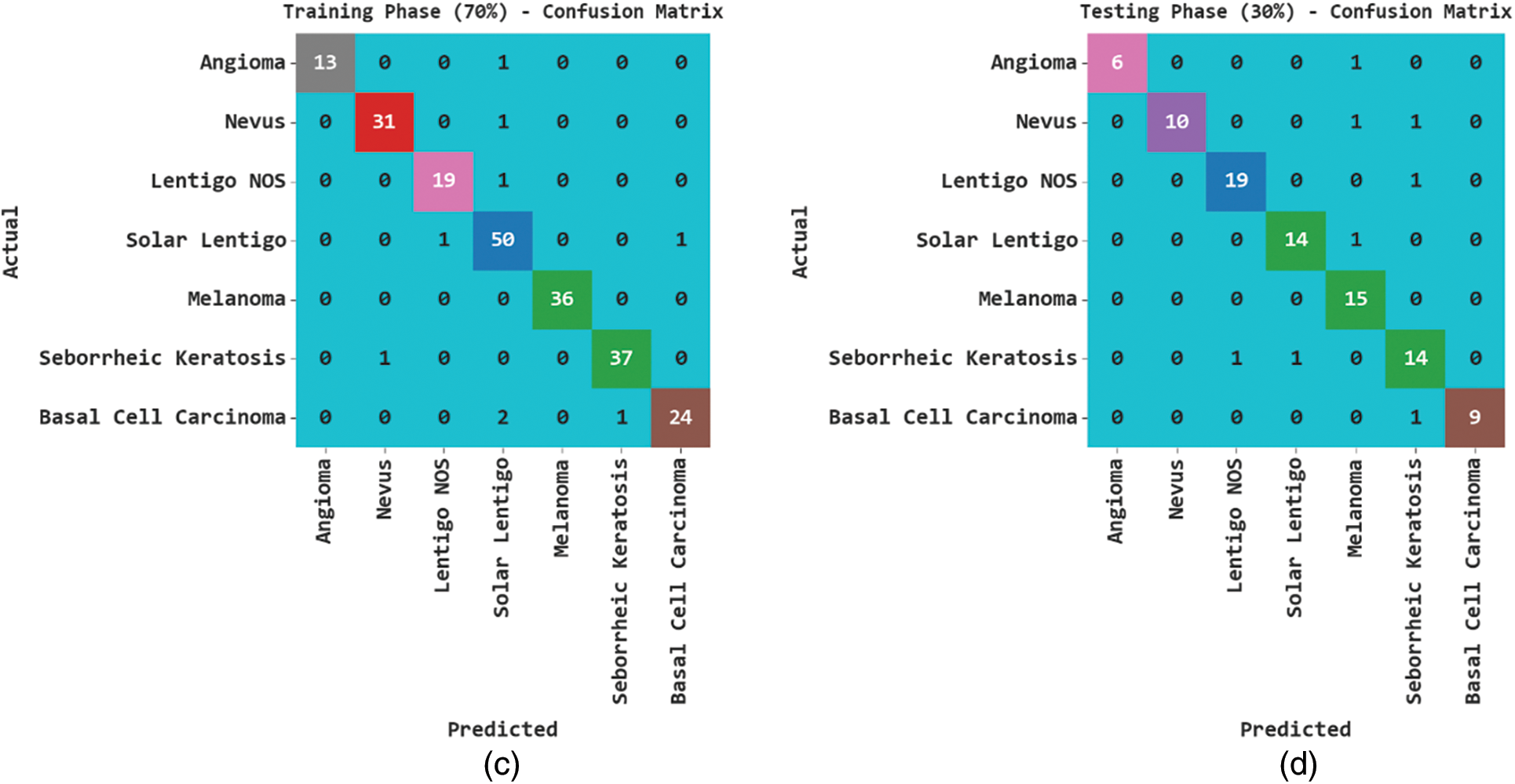

Fig. 3 shows the classification outcomes of the SCSODTL-SCC method in terms of confusion matrices. The results indicate that the SCSODTL-SCC method accurately recognizes the different skin cancer classes in every setting.

Figure 3: Confusion matrices of the SCSODTL-SCC approach: (a–b) training (TR) and testing (TS) sets of the 80:20 split and (c–d) TR and TS sets of the 70:30 split
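The per-class measures summarized in Tables 2 and 3 are typically derived from confusion matrices such as those in Fig. 3. The sketch below assumes one-vs-rest definitions of accuracy, precision, recall, specificity, and F-score with macro averaging; the class labels are hypothetical, and the exact set of measures used in the tables is not reproduced here.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: k for k, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_metrics(matrix):
    """One-vs-rest accuracy, precision, recall, specificity and F-score per class."""
    n = len(matrix)
    total = sum(map(sum, matrix))
    results = []
    for k in range(n):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp
        fp = sum(matrix[r][k] for r in range(n)) - tp
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f_score = (2 * precision * recall / (precision + recall)
                   if precision + recall else 0.0)
        results.append({"accuracy": (tp + tn) / total, "precision": precision,
                        "recall": recall, "specificity": specificity,
                        "f_score": f_score})
    return results


def macro_average(results):
    """Unweighted mean of each measure over all classes."""
    return {key: sum(r[key] for r in results) / len(results) for key in results[0]}
```

Macro averaging weights every class equally, so a poorly recognized minority class lowers the average as much as a majority class would.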

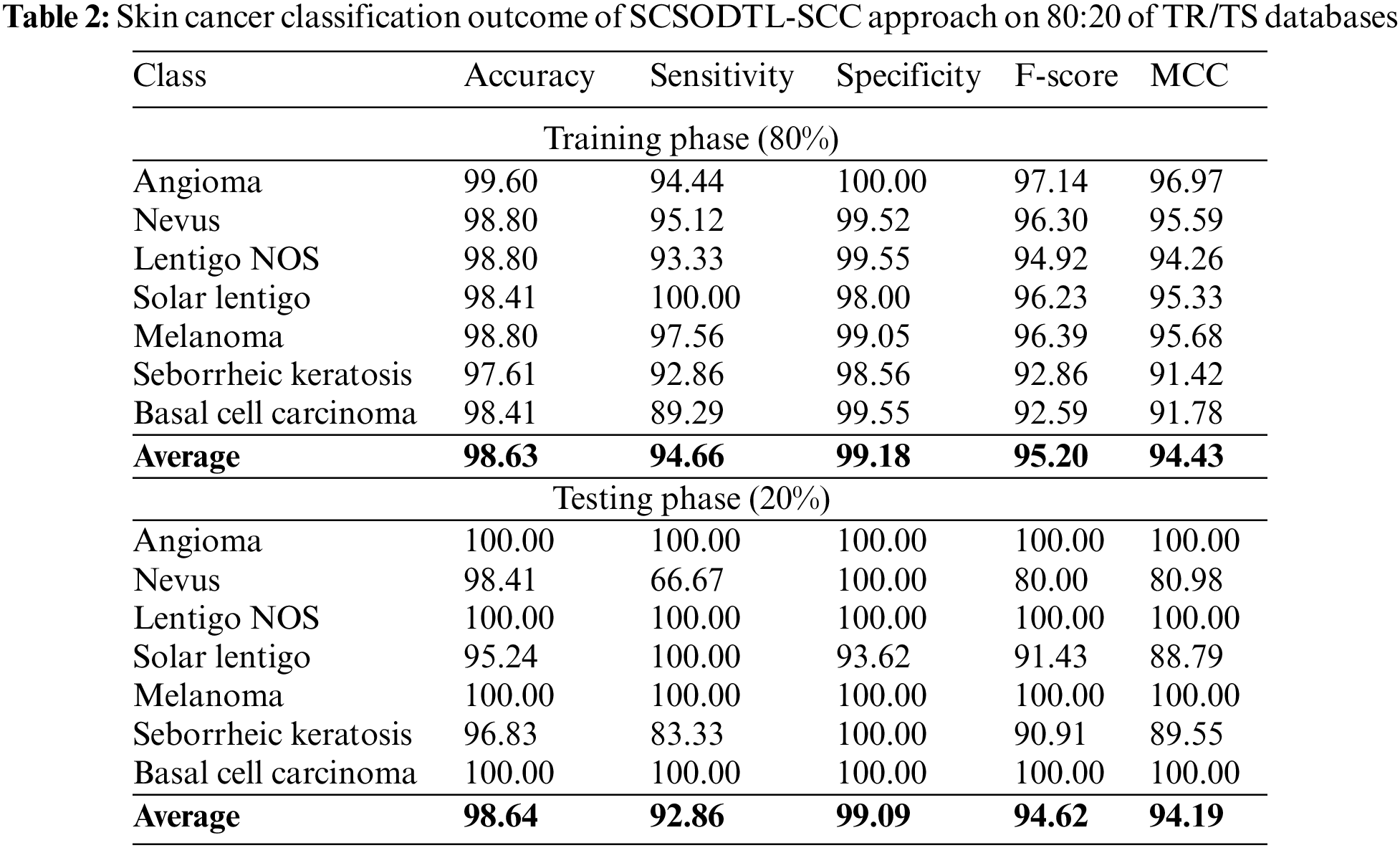

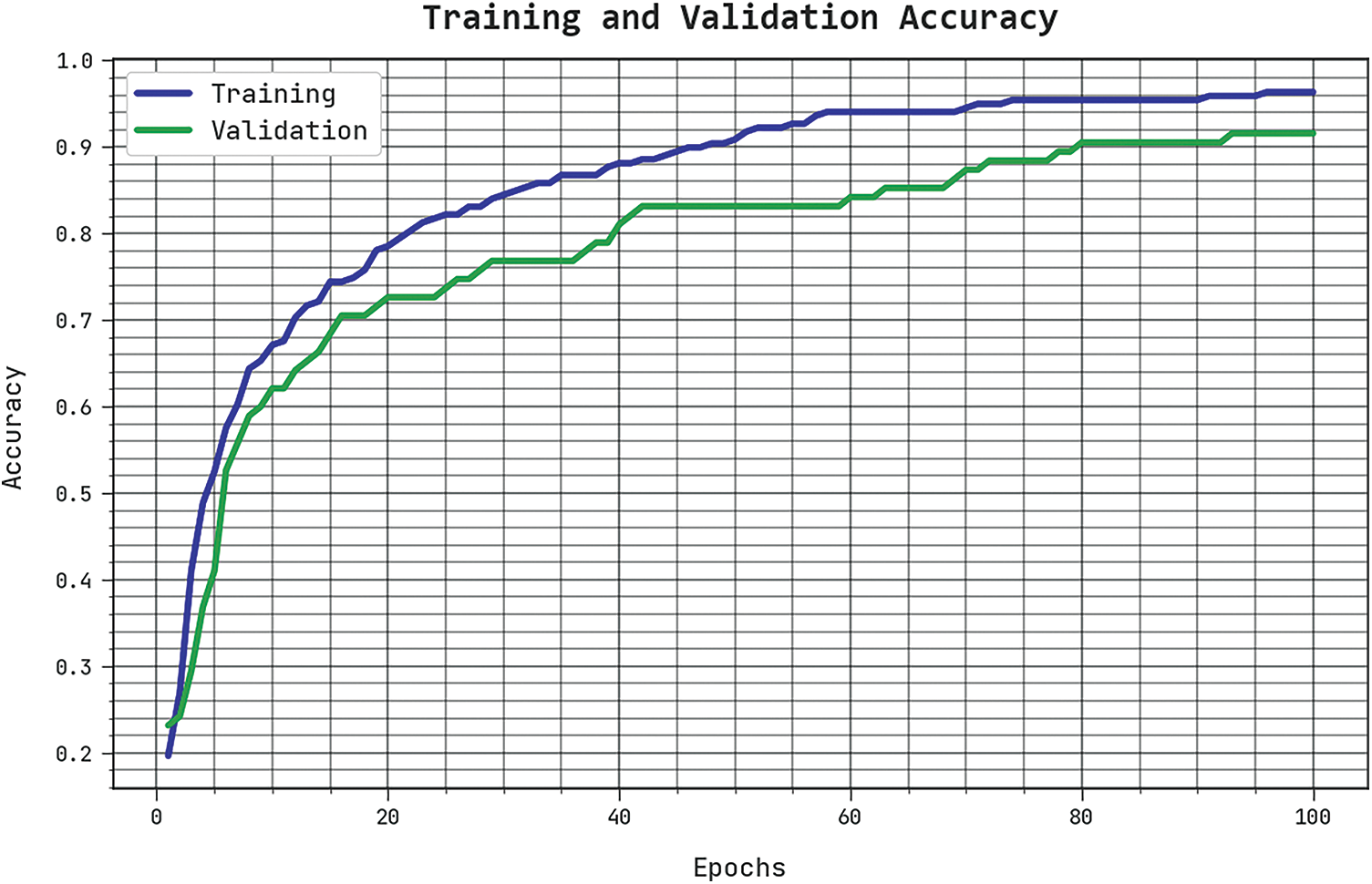

Table 2 provides the skin cancer classification results of the SCSODTL-SCC method with the 80:20 TR/TS split. The table shows that the SCSODTL-SCC technique achieves enhanced results in every class. For example, on 80% of the TR database, the SCSODTL-SCC model has gained an average

Table 3 provides the skin cancer classification results of the SCSODTL-SCC method with the 70:30 TR/TS split. The table shows that the SCSODTL-SCC methodology achieves enhanced results for the different class labels. For example, on 70% of the TR database, the SCSODTL-SCC approach has reached an average

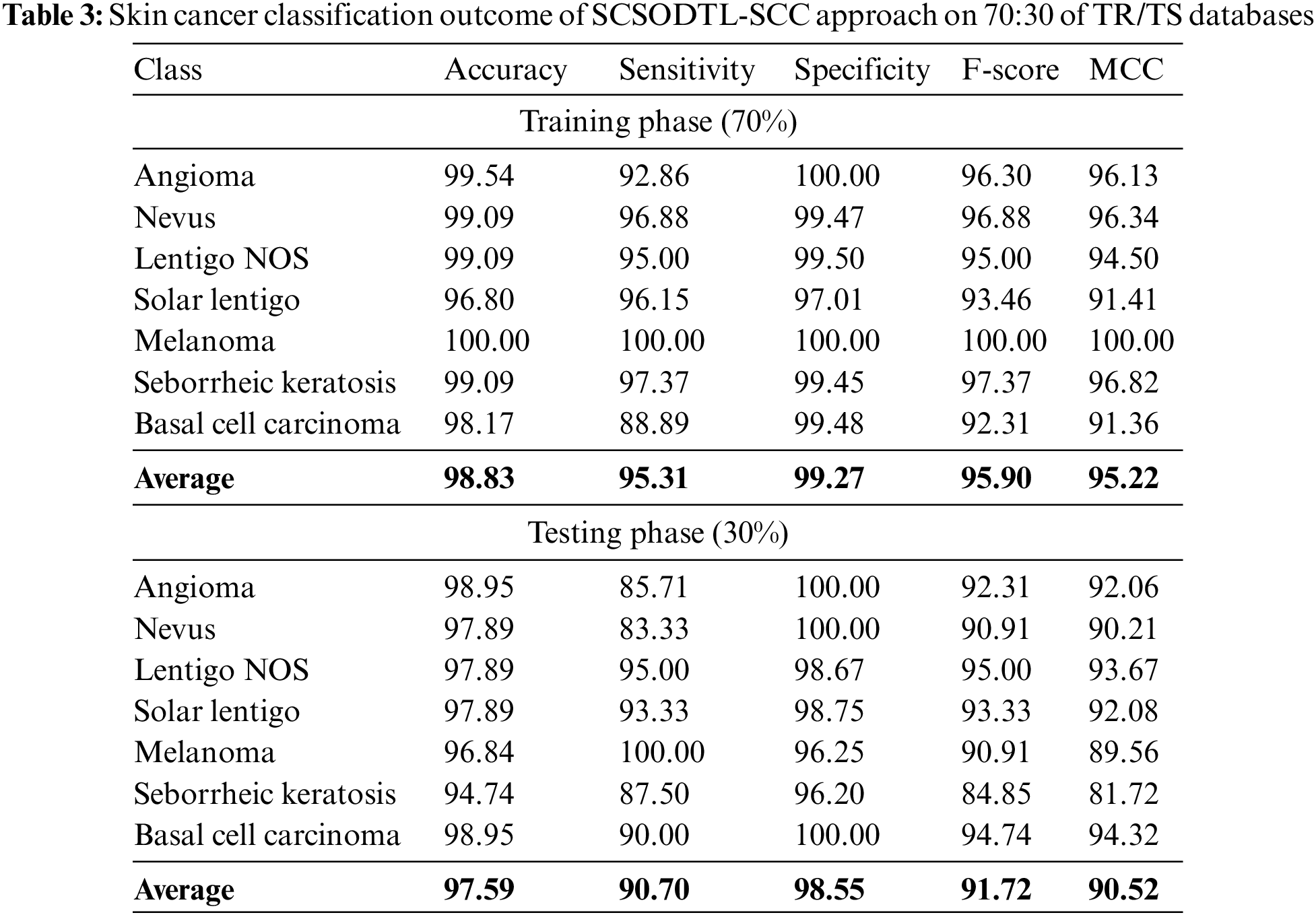

Fig. 4 examines the training accuracy (TACC) and validation accuracy (VACC) of the SCSODTL-SCC methodology for skin cancer classification. The results show that the SCSODTL-SCC approach performs well, with high TACC and VACC values. Notably, the SCSODTL-SCC approach attains the maximum TACC outcomes.

Figure 4: TACC and VACC analysis of SCSODTL-SCC approach

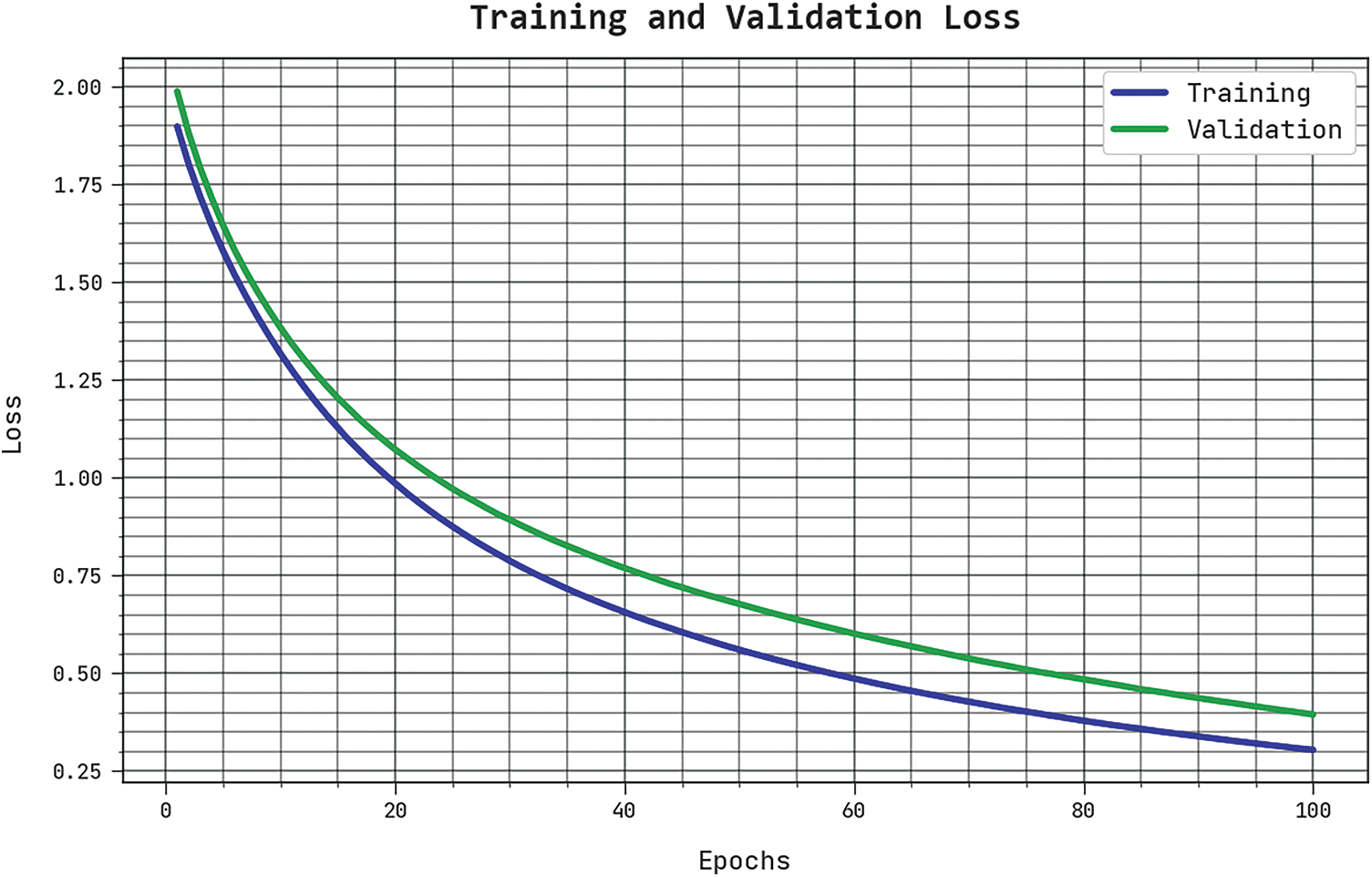

Fig. 5 examines the training loss (TLS) and validation loss (VLS) of the SCSODTL-SCC method for skin cancer classification. The outcomes show that the SCSODTL-SCC technique performs better, with the lowest TLS and VLS values; in particular, it attains reduced VLS outcomes.

Figure 5: TLS and VLS analysis of SCSODTL-SCC approach

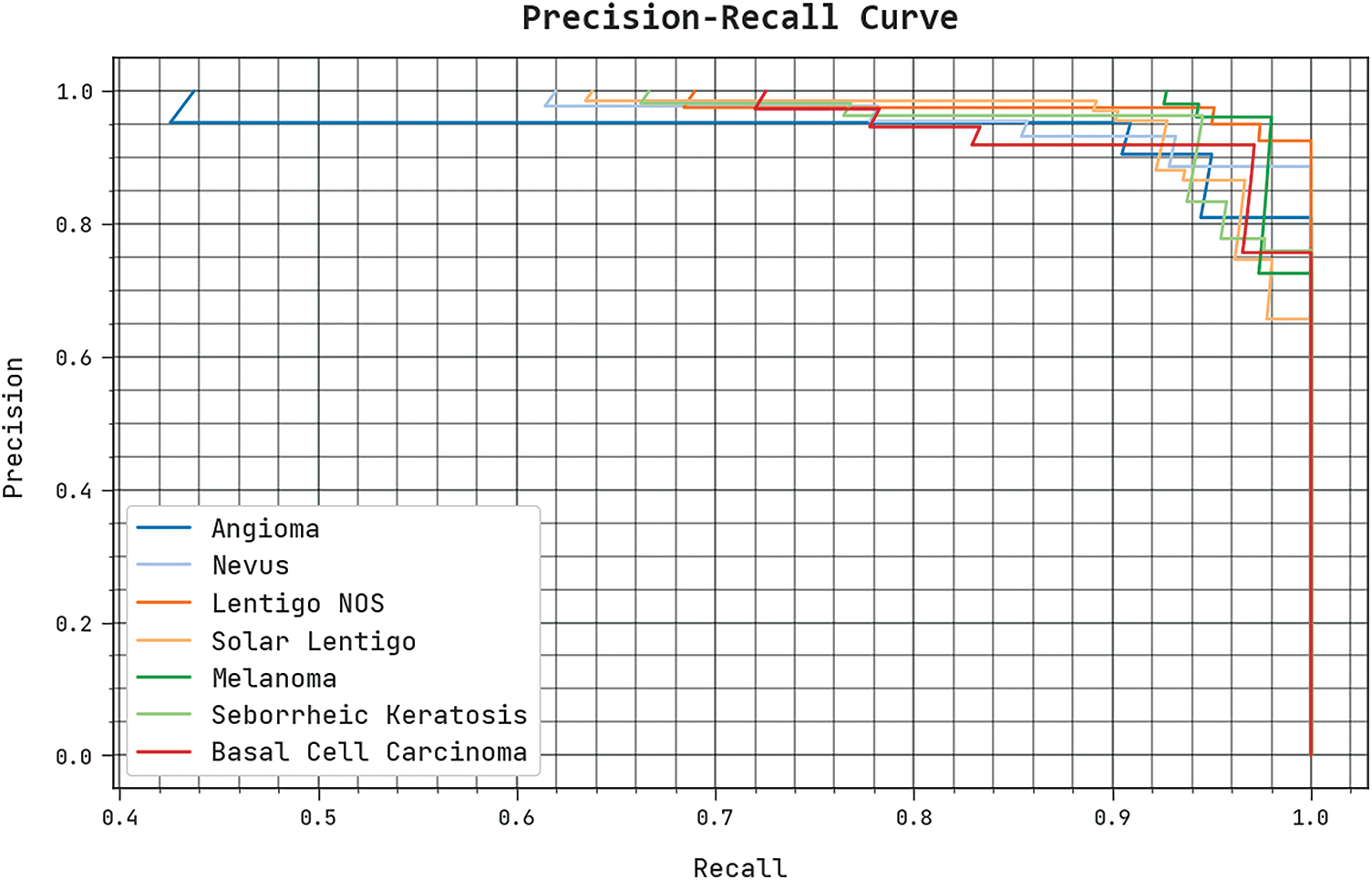

Fig. 6 presents a detailed precision-recall investigation of the SCSODTL-SCC method on the test database. The outcomes show that the SCSODTL-SCC method attains enhanced precision-recall values.

Figure 6: Precision-recall analysis of the SCSODTL-SCC approach
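A precision-recall curve such as Fig. 6 can be obtained for each class in a one-vs-rest fashion by sweeping a decision threshold over the classifier's scores for that class. The following pure-Python sketch (an illustration, not the authors' plotting code) returns the (recall, precision) points and a step-wise average precision; the scores and labels used to exercise it are made up.

```python
def precision_recall_points(scores, labels):
    """(recall, precision) pairs obtained by lowering the threshold score by score."""
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    total_pos = sum(1 for _, y in pairs if y)
    if total_pos == 0:
        raise ValueError("need at least one positive sample")
    points, tp, fp = [], 0, 0
    for i, (score, y) in enumerate(pairs):
        if y:
            tp += 1
        else:
            fp += 1
        # Emit a point only after all samples sharing this score are counted.
        if i + 1 == len(pairs) or pairs[i + 1][0] != score:
            points.append((tp / total_pos, tp / (tp + fp)))
    return points


def average_precision(points):
    """Step-wise area under the PR curve: sum of (R_k - R_{k-1}) * P_k."""
    ap, prev_recall = 0.0, 0.0
    for recall, precision in points:
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap
```

For example, scores `[0.9, 0.8, 0.7, 0.6]` with labels `[1, 0, 1, 0]` give an average precision of 5/6.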

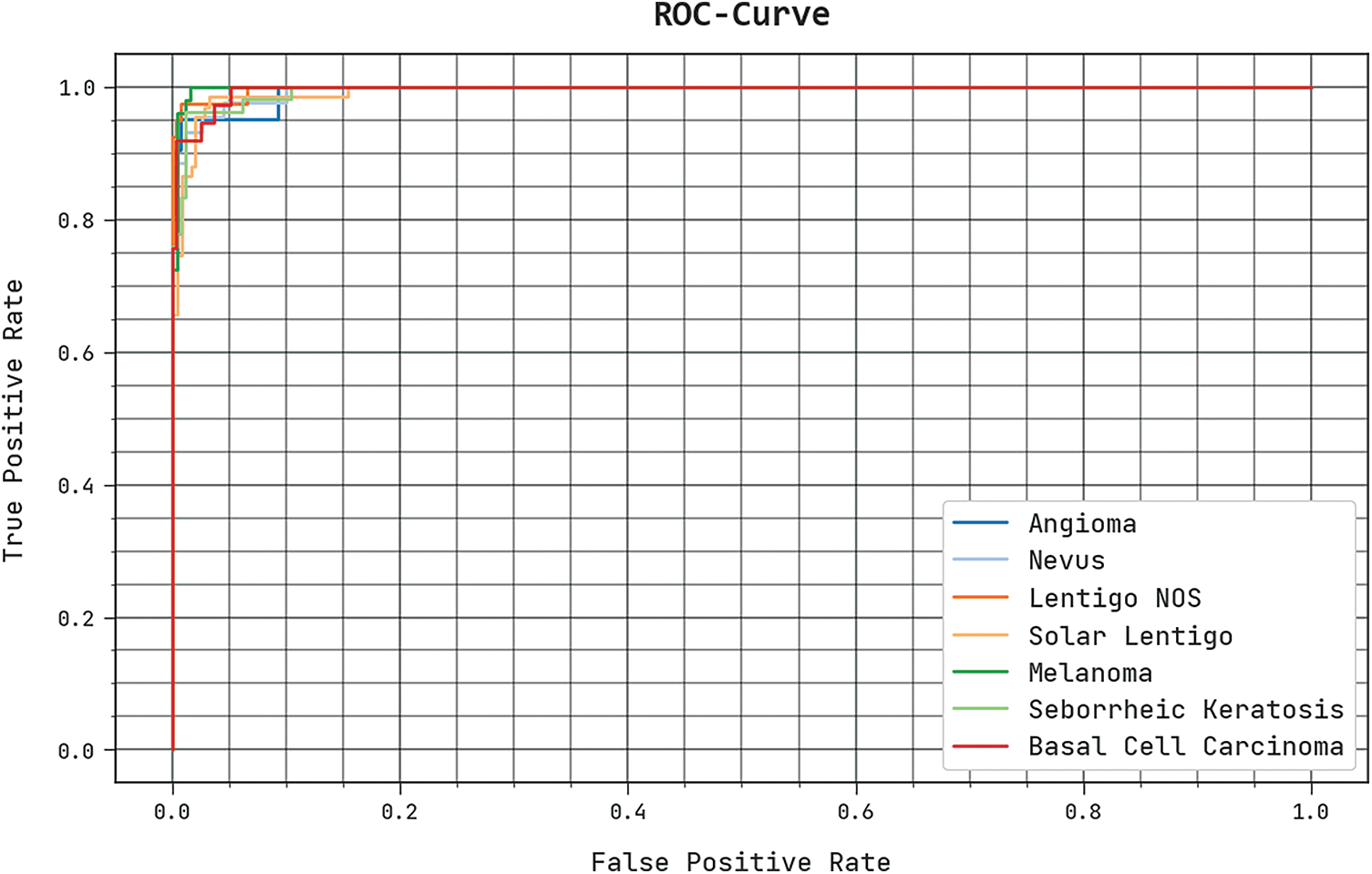

Fig. 7 presents a detailed ROC investigation of the SCSODTL-SCC method on the test database. The outcomes show the ability of the SCSODTL-SCC approach to classify the distinct classes in the test database.

Figure 7: ROC curve analysis of SCSODTL-SCC approach
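Similarly, a one-vs-rest ROC curve such as Fig. 7 and its area under the curve (AUC) can be sketched in pure Python by sweeping the threshold and integrating with the trapezoidal rule. This is an illustrative sketch, not the authors' evaluation code; the example scores are made up.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) points, starting at (0, 0)."""
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    pos = sum(1 for _, y in pairs if y)
    neg = len(pairs) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both positive and negative samples")
    points, tp, fp = [(0.0, 0.0)], 0, 0
    for i, (score, y) in enumerate(pairs):
        if y:
            tp += 1
        else:
            fp += 1
        # Tied scores share one threshold, so emit a point after the last of them.
        if i + 1 == len(pairs) or pairs[i + 1][0] != score:
            points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Trapezoidal area under a piecewise-linear curve."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For scores `[0.9, 0.8, 0.7, 0.6]` with labels `[1, 0, 1, 0]`, the AUC is 0.75, which equals the fraction of positive/negative pairs ranked correctly (3 of 4).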

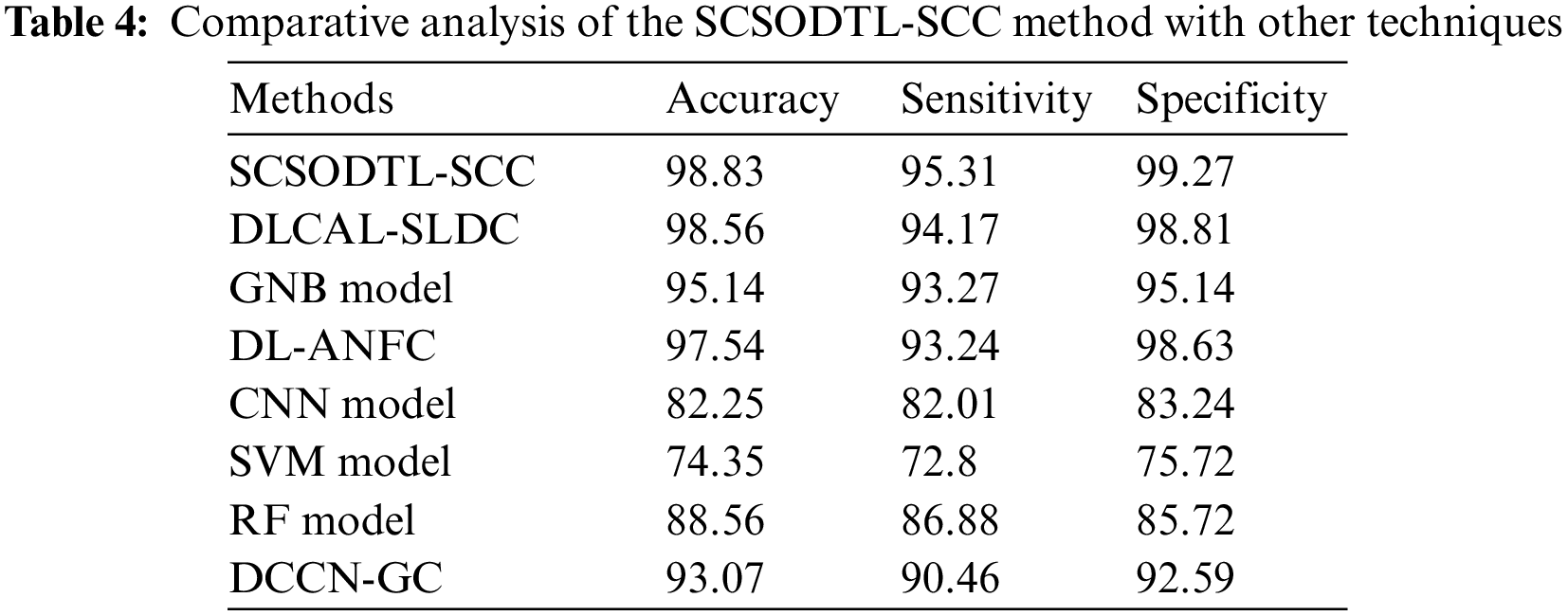

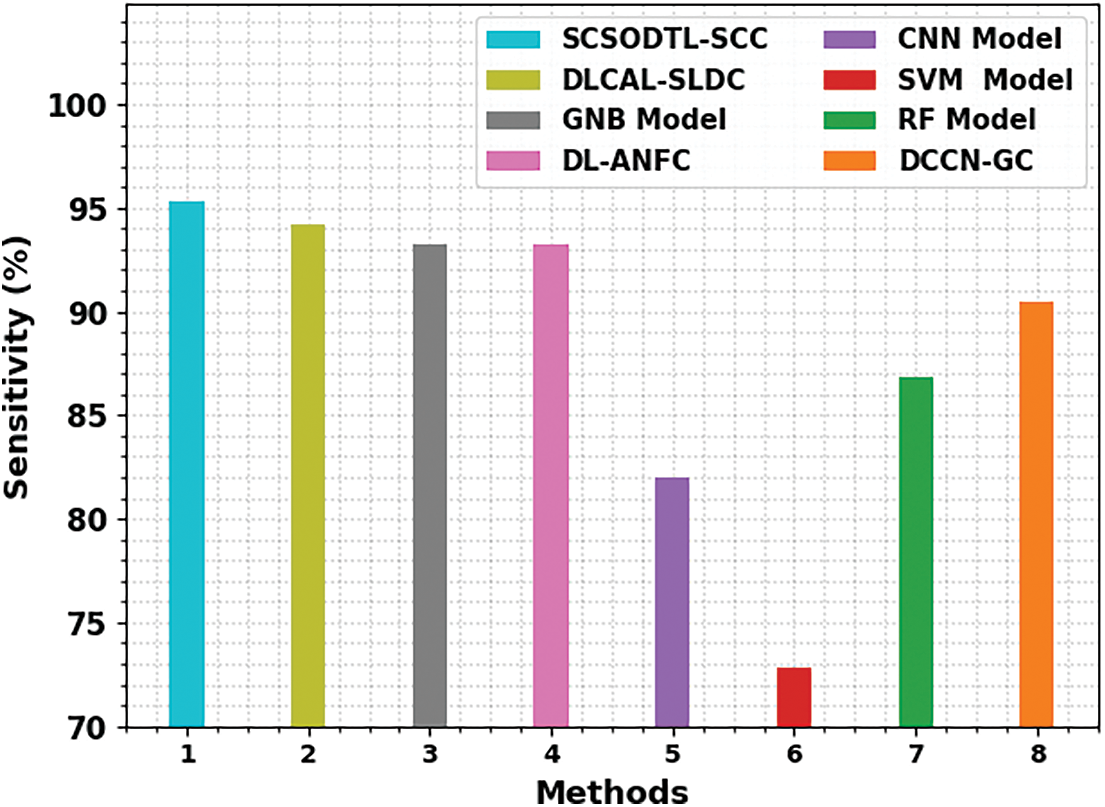

Table 4 reports an overall comparison study of the proposed model with other recent approaches [21]. Fig. 8 shows a comparative investigation of the SCSODTL-SCC model in terms of

Figure 8:

The results indicate that the SVM and CNN models reached reduced

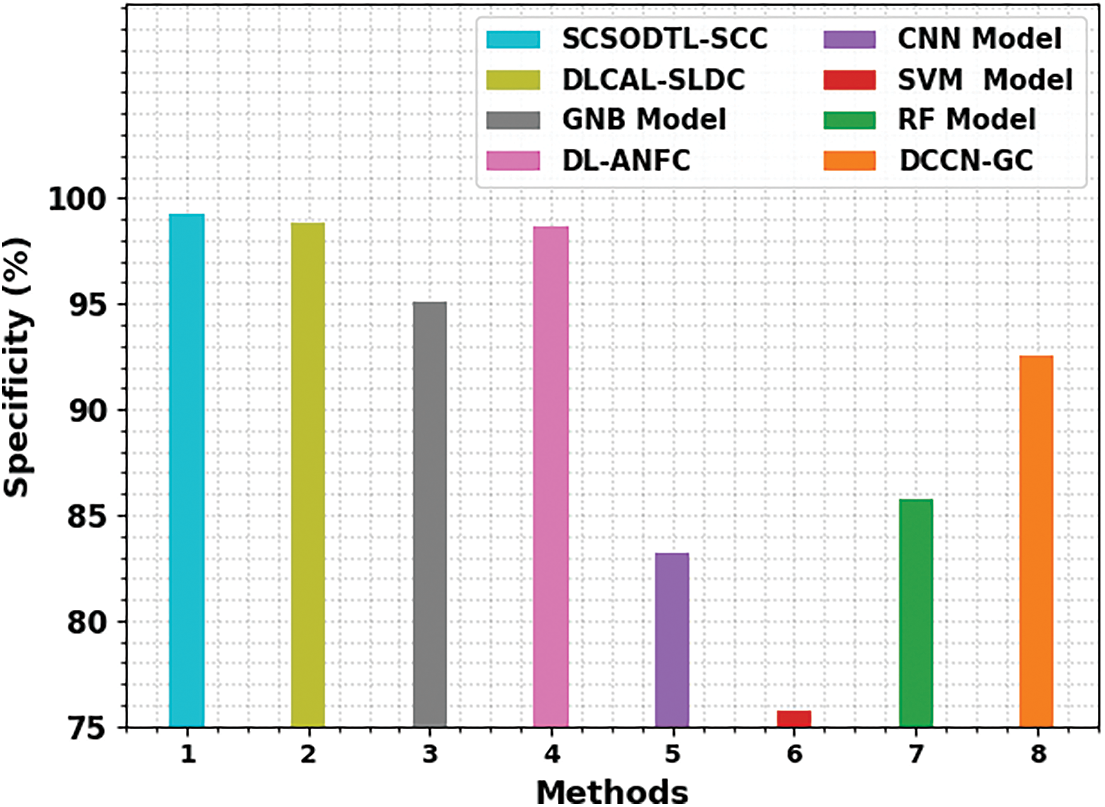

Fig. 9 shows a comparative study of the SCSODTL-SCC model in terms of

Figure 9:

These results show that the SCSODTL-SCC method achieves enhanced skin cancer classification performance.

In this study, an automatic skin cancer classification technique, SCSODTL-SCC, has been developed for dermoscopic images. The goal of the SCSODTL-SCC model is to detect and classify different types of skin cancer in dermoscopic images. First, DullRazor-based hair removal and median-filtering-based noise elimination are performed. Next, the U2Net segmentation approach is employed to detect infected lesion regions in the dermoscopic images. Then, a NASNetLarge-based feature extractor with the hybrid DBN model is used for classification. Finally, the classification performance is improved by using the SCSO algorithm for hyperparameter tuning. The SCSODTL-SCC model is evaluated through simulations on the benchmark ISIC dataset. The comparative outcomes show that the SCSODTL-SCC method achieves the best skin cancer classification performance, with a maximum accuracy of 98.83%. In the future, ensemble learning can be employed to further improve the classification results of the proposed model.

Acknowledgement: The authors thank the Siddhartha Academy of General & Technical Education and Velagapudi Ramakrishna Siddhartha Engineering College for their seed grant support.

Funding Statement: This research was partly supported by the Technology Development Program of MSS [No. S3033853] and by the National University Development Project by the Ministry of Education in 2022.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. M. Dildar, S. Akram, M. Irfan, H. U. Khan, M. Ramzan et al., “Skin cancer detection: A review using deep learning techniques,” International Journal of Environmental Research and Public Health, vol. 18, no. 10, pp. 5479, 2021.

2. K. Aljohani and T. Turki, “Automatic classification of melanoma skin cancer with deep convolutional neural networks,” Artificial Intelligence, vol. 3, no. 2, pp. 512–525, 2022.

3. H. C. Reis, V. Turk, K. Khoshelham and S. Kaya, “InSiNet: A deep convolutional approach to skin cancer detection and segmentation,” Medical & Biological Engineering & Computing, vol. 60, no. 3, pp. 643–662, 2022.

4. N. Kausar, A. Hameed, M. Sattar, R. Ashraf, A. S. Imran et al., “Multiclass skin cancer classification using ensemble of fine-tuned deep learning models,” Applied Sciences, vol. 11, no. 22, pp. 1–20, 2021.

5. T. Saba, “Computer vision for microscopic skin cancer diagnosis using handcrafted and non-handcrafted features,” Microscopy Research and Technique, vol. 84, no. 6, pp. 1272–1283, 2021.

6. A. G. Pacheco and R. A. Krohling, “An attention-based mechanism to combine images and metadata in deep learning models applied to skin cancer classification,” IEEE Journal of Biomedical and Health Informatics, vol. 25, no. 9, pp. 3554–3563, 2021.

7. M. Fraiwan and E. Faouri, “On the automatic detection and classification of skin cancer using deep transfer learning,” Sensors, vol. 22, no. 13, pp. 1–16, 2022.

8. T. Saba, M. A. Khan, A. Rehman and S. L. Marie-Sainte, “Region extraction and classification of skin cancer: A heterogeneous framework of deep CNN features fusion and reduction,” Journal of Medical Systems, vol. 43, no. 9, pp. 1–19, 2019.

9. A. M. Hemeida, S. A. Hassan, A. A. A. Mohamed, S. Alkhalaf, M. M. Mahmoud et al., “Nature-inspired algorithms for feed-forward neural network classifiers: A survey of one decade of research,” Ain Shams Engineering Journal, vol. 11, no. 3, pp. 659–675, 2020.

10. M. S. Ali, M. S. Miah, J. Haque, M. M. Rahman and M. K. Islam, “An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models,” Machine Learning with Applications, vol. 5, no. 8, pp. 1–8, 2021.

11. G. Reshma, C. Al-Atroshi, V. K. Nassa, B. Geetha, G. Sunitha et al., “Deep learning-based skin lesion diagnosis model using dermoscopic images,” Intelligent Automation & Soft Computing, vol. 31, no. 1, pp. 621–634, 2022.

12. S. M. Alizadeh and A. Mahloojifar, “Automatic skin cancer detection in dermoscopy images by combining convolutional neural networks and texture features,” International Journal of Imaging Systems and Technology, vol. 31, no. 2, pp. 695–707, 2021.

13. T. C. Lin and H. C. Lee, “Skin cancer dermoscopy images classification with meta data via deep learning ensemble,” in Int. Computer Symp. (ICS), Tainan, Taiwan, pp. 237–241, 2020.

14. M. Shorfuzzaman, “An explainable stacked ensemble of deep learning models for improved melanoma skin cancer detection,” Multimedia Systems, vol. 28, no. 4, pp. 1309–1323, 2022.

15. R. Kaur, H. GholamHosseini, R. Sinha and M. Lindén, “Melanoma classification using a novel deep convolutional neural network with dermoscopic images,” Sensors, vol. 22, no. 3, pp. 1–15, 2022.

16. S. S. Chaturvedi, J. V. Tembhurne and T. Diwan, “A multi-class skin cancer classification using deep convolutional neural networks,” Multimedia Tools and Applications, vol. 79, no. 39, pp. 28477–28498, 2020.

17. SM. J., C. Aravindan and R. Appavu, “Classification of skin cancer from dermoscopic images using deep neural network architectures,” Multimedia Tools and Applications, vol. 82, pp. 1–16, 2022.

18. F. Alrowais, S. S. Alotaibi, F. N. Al-Wesabi, N. Negm, R. Alabdan et al., “Deep transfer learning enabled intelligent object detection for crowd density analysis on video surveillance systems,” Applied Sciences, vol. 12, no. 13, pp. 6665, 2022.

19. K. A. Alissa, H. Shaiba, A. Gaddah, A. Yafoz, R. Alsini et al., “Feature subset selection hybrid deep belief network based cybersecurity intrusion detection model,” Electronics, vol. 11, no. 19, pp. 3077, 2022.

20. Y. Li and G. Wang, “Sand cat swarm optimization based on stochastic variation with elite collaboration,” IEEE Access, vol. 10, pp. 89989–90003, 2022.

21. D. Adla, G. V. R. Reddy, P. Nayak and G. Karuna, “Deep learning-based computer aided diagnosis model for skin cancer detection and classification,” Distributed and Parallel Databases, vol. 40, no. 4, pp. 717–736, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.