Open Access

ARTICLE

Artificial Humming Bird Optimization with Siamese Convolutional Neural Network Based Fruit Classification Model

1 Department of Information Technology, Chaitanya Bharathi Institute of Technology, Hyderabad, Telangana, India

2 Department of Computer Science, College of Computer and Information Sciences, Majmaah University, Al-Majmaah, 11952, Saudi Arabia

3 Department of Electrical Engineering, College of Engineering, Jouf University, Sakaka, Saudi Arabia

4 Department of Computer Science and Engineering, Vignan’s Institute of Information Technology, Visakhapatnam, 530049, India

5 Department of International Affairs and Education, Gangseo University, Seoul, 07661, Korea

6 Department of Information and Communication Engineering and Convergence Engineering for Intelligent Drone, Sejong University, Seoul, 05006, Korea

7 Department of Computer Science and Engineering, Sejong University, Seoul, 05006, Korea

* Corresponding Author: Hyeonjoon Moon. Email:

Computer Systems Science and Engineering 2023, 47(2), 1633-1650. https://doi.org/10.32604/csse.2023.034769

Received 27 July 2022; Accepted 26 October 2022; Issue published 28 July 2023

Abstract

Fruit classification using deep convolutional neural networks (CNNs) is among the most promising applications of computer vision (CV). Deep learning-based classification has made it possible to recognize fruits from images. However, because of their similarity and complexity, recognizing fruits stacked on a weighing scale remains difficult. Recently, machine learning (ML) methods have been used in fruit farming and agriculture and have brought great convenience to human life. An automated ML-based system can perform the fruit classification and sorting tasks previously handled by human experts. CNNs have attained remarkable results in image classification across several domains. Considering the success of transfer learning and CNNs in other image classification problems, this study introduces an Artificial Humming Bird Optimization with Siamese Convolutional Neural Network based Fruit Classification (AMO-SCNNFC) model. In the presented AMO-SCNNFC technique, image preprocessing is performed to enhance the contrast level of the image. In addition, spiral optimization (SPO) with the VGG-16 model is utilized to derive feature vectors. For fruit classification, AHO with an end-to-end SCNN (ESCNN) model is applied to identify different classes of fruits. The AMO-SCNNFC technique is validated on a dataset comprising diverse classes of fruit images. Extensive comparison studies report that the AMO-SCNNFC technique improves on other approaches, with a higher accuracy of 99.88%.

Automated fruit classification is an important challenge for the fruit production and retail supply chain, since it helps supermarkets and fruit producers identify different fruits and their condition in stock or in containers, and thereby improve production and business income [1]. Intelligent systems built on computer vision (CV) and machine learning (ML) techniques have therefore been applied to classification, fruit defect recognition, and ripeness grading over the past few decades. In automated fruit classification, two main approaches have been investigated: deep learning (DL)-based methods and conventional CV-based methods [2]. Conventional CV-based approaches first extract low-level features and then classify images with conventional ML techniques, whereas DL-based approaches extract the features automatically and perform end-to-end image classification [3]. In CV techniques and conventional image processing, image features such as colour, shape, and texture serve as inputs to fruit classifiers [4]. Formerly, fruit processing and selection relied on manual approaches, which wasted a large amount of labor [5]. With the rapid development of 4G communication and the wide availability of mobile Internet devices, people have generated large numbers of images, videos, sounds, and other data, and image recognition technology has gradually matured [6]. Image-based fruit recognition has attracted researchers' interest because of its low-cost devices and strong performance. At the same time, automated tools must cope with unplanned situations such as an accidental mixture of fresh goods, fruit placed in unusual packaging, spider webs on the lens, and varying lighting conditions [7].

DL is a subfield of ML that has shown outstanding results in image detection [8]. DL uses a multilayer structure to process image features, which markedly raises the efficiency of image recognition. In addition, applying image detection and DL has become a topic of interest in supply chains and logistics [9]. Another application of DL is fruit classification, where DL can efficiently derive image features for classification [10]. Recent CV advances have shown outstanding results in many areas of life. Nevertheless, fruit classification and detection have been shown to be complicated and challenging tasks.

Chen et al. [11] devised a fruit image classification algorithm based on a multi-optimization CNN. First, to prevent external noise from degrading classification accuracy, wavelet thresholding is used to denoise the fruit image, which reduces image noise while preserving image details. Then, gamma transformation is applied to correct over-dark or over-bright fruit images. Finally, while building the CNN, a self-organizing map (SOM) network is introduced to pre-learn the samples. Ashraf et al. [12] formulated a system that is trained on a fruit image dataset and identifies whether the fruit in an input image is fresh or rotten. They built the initial model using the Inception V3 architecture and trained it on this dataset using transfer learning (TL).

In [13], the authors designed a new fruit classification algorithm using recurrent neural network (RNN) architectures, CNN features, and long short-term memory (LSTM). Type-II fuzzy enhancement was also used as a preprocessing tool to enhance the images. Moreover, TLBO-MCET was employed to fine-tune the hyperparameters of the devised method. Shahi et al. [14] proposed a lightweight DL technique using an attention module and a pretrained MobileNetV2 model. First, convolution features were derived to capture high-level object-related information. Second, an attention module was employed to capture the interesting semantic information. The outputs of the convolution and attention modules were then fused to combine the interesting semantic information with the high-level object-related information, followed by fully connected (FC) and softmax layers.

Zhu et al. [15] devised a high-performance approach for vegetable image classification based on a DL architecture. The AlexNet network model in Caffe was used to train on the vegetable image dataset, which was acquired from ImageNet and divided into a training dataset and a test dataset. The output function of the AlexNet network used Rectified Linear Units (ReLU) rather than the conventional tanh and sigmoid functions, which accelerated the training of the DL network. Dropout was used to improve model generalization. In [16], the authors formulated a 13-layer CNN. Three data augmentation approaches were employed: noise injection, image rotation, and Gamma correction. Average pooling was also compared with max pooling. Stochastic gradient descent (SGD) with momentum was used to train the CNN with a minibatch size of 128. Though several models are available in the literature, there is still room to improve classification performance. Most existing works have not focused on the hyperparameter tuning process, which is addressed in this work.

This study introduces an Artificial Humming Bird Optimization with Siamese Convolutional Neural Network based Fruit Classification (AMO-SCNNFC) model. In the presented AMO-SCNNFC technique, image preprocessing is performed to enhance the contrast level of the image. In addition, spiral optimization (SPO) with the VGG-16 model is utilized to derive feature vectors. For fruit classification, AHO with an end-to-end SCNN (ESCNN) model is applied to identify different classes of fruits. The AMO-SCNNFC technique is validated on a dataset comprising diverse classes of fruit images.

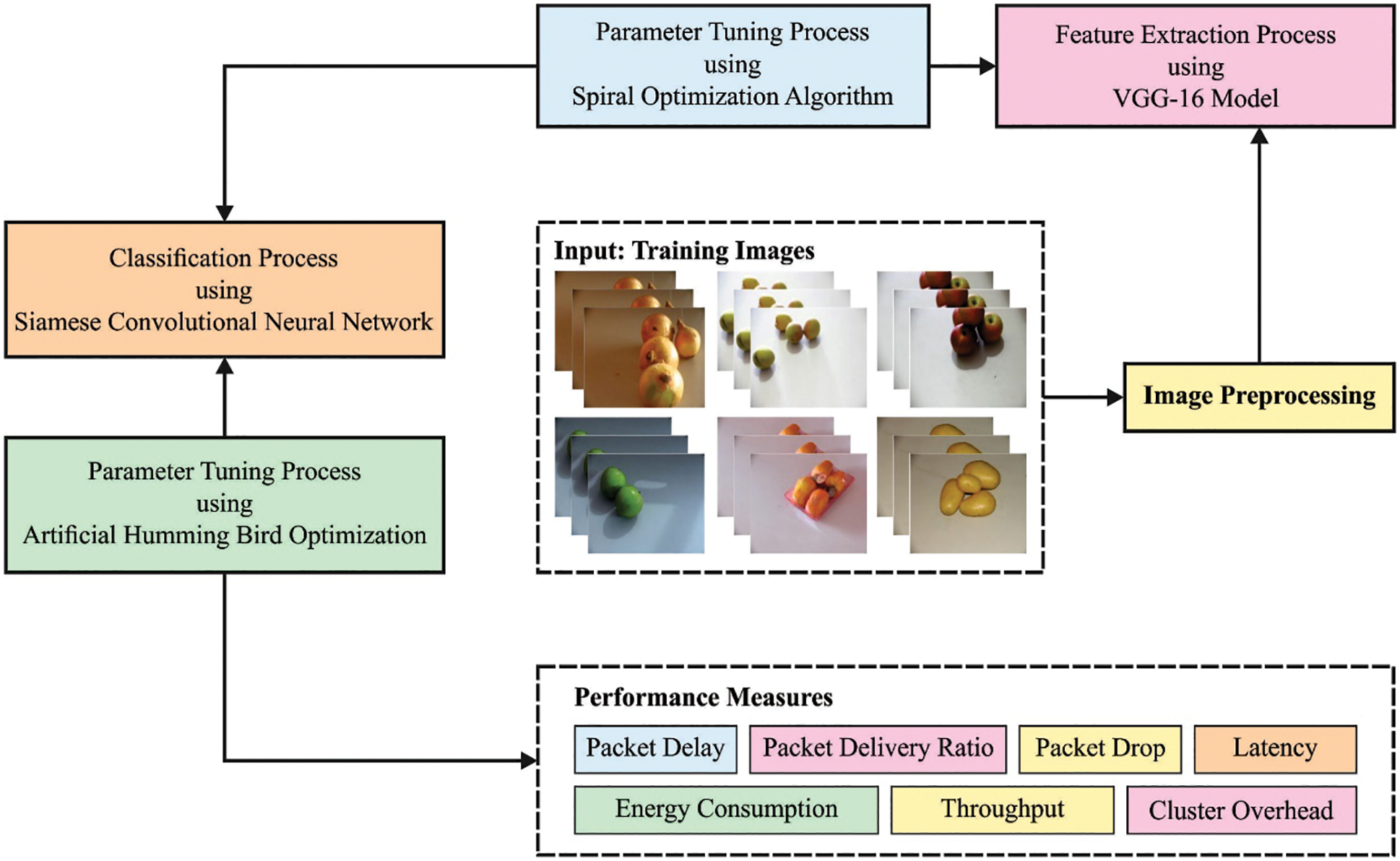

In this study, a new AMO-SCNNFC model has been developed for the automated classification of fruits. The AMO-SCNNFC technique encompasses preprocessing, VGG-16 feature extraction, SPO hyperparameter tuning, ESCNN classification, and AHO-based parameter optimization. Using SPO and AHO techniques helps accomplish enhanced fruit classification performance. Fig. 1 depicts the block diagram of the AMO-SCNNFC approach.

Figure 1: Block diagram of AMO-SCNNFC approach

2.1 Feature Extraction: VGG-16 Model

In this work, the input fruit images are fed into the VGG-16 model to derive feature vectors. A CNN is among the most effective kinds of DL technique [17]. It employs deep convolutional layers and non-linearities to learn local and spatial features and patterns directly from raw data such as sound, video, images, and text. CNNs learn the features in the data automatically, reducing the need to extract them manually. They build complex features as combinations of simple features through a series of sequential convolution layers. The earlier layers learn low-level features, such as edges and curves, and the deeper layers learn to recognize complex high-level features, such as whole objects in the images. VGG_16, the D configuration (also called VGG_D), is one of the best-performing network configurations among the VGGNets created by the VGG group. Its structure is popular in the literature because of its uniform architecture and high accuracy on classification tasks. VGG_16 is a deeper network than AlexNet. It contains 16 trainable layers: 13 convolution layers and 3 fully connected (FC) layers. It features a homogeneous and simple architecture that uses only 3 × 3 filters with a stride of one for convolution and 2 × 2 pooling with a stride of two throughout. The convolution layers are grouped into 5 blocks. Each block is followed by a max-pooling layer that downsamples by half along each spatial dimension. Max-pooling thus reduces the feature map size from 224 × 224 in the 1st block to 7 × 7 after the last one. The number of convolutional filters is fixed within a block and doubles after each max-pooling layer, from 64 in the 1st block up to 512 in the last blocks. As in AlexNet, a ReLU layer follows every convolution and FC layer. The FC layers of VGG_16 have a structure similar to those of AlexNet. VGG_16 does not use a normalization layer because of its small contribution to improving accuracy.
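The layer arithmetic described above can be checked with a short sketch. It assumes the standard VGG-16 layout of 2, 2, 3, 3, and 3 convolution layers per block and "same" padding for the 3 × 3 convolutions, neither of which is stated explicitly in the text; the helper name `vgg16_feature_shapes` is hypothetical.

```python
# Assumed standard VGG-16 layout: (number of conv layers, number of filters) per block.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def vgg16_feature_shapes(input_size=224):
    """Return (block, conv_layers, filters, output_size) after each block's max-pooling."""
    size = input_size
    shapes = []
    for block, (n_conv, filters) in enumerate(VGG16_BLOCKS, start=1):
        # 3x3 convolutions with stride 1 and padding 1 (assumed) keep the spatial size.
        size //= 2  # 2x2 max-pooling with stride 2 halves the spatial size
        shapes.append((block, n_conv, filters, size))
    return shapes


shapes = vgg16_feature_shapes()
n_conv_layers = sum(n_conv for n_conv, _ in VGG16_BLOCKS)
n_trainable_layers = n_conv_layers + 3  # plus the 3 FC layers

for block, n_conv, filters, size in shapes:
    print(f"block {block}: {n_conv} conv layers, {filters} filters, output {size}x{size}")
print("trainable layers:", n_trainable_layers)
```

Under these assumptions the spatial size goes 224, 112, 56, 28, 14, 7, and the filter count stops doubling once it reaches 512, which is why the last two blocks share the same width.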

2.2 Hyperparameter Tuning: SPO Algorithm

To optimally modify the hyperparameters related to the VGG-16 model, the SPO algorithm is exploited in this study.

The SPO approach applies a logarithmic spiral produced using the general spiral model, and it is a dynamic method where

In Eq. (1),

Now,

The inspiration for the presented approach was the observation that the dynamics generating a logarithmic spiral have an affinity with an effective metaheuristic strategy: “diversification in the first half and intensification in the second half.”

• Diversification: Search for good solutions by exploring a wider area.

• Intensification: Search for a good solution by searching intensively near a good solution.

Accordingly, the SPO approach is a direct multi-point search technique that applies several general spiral models of Eq. (1), and it is defined as follows for the problem of minimizing an objective function:

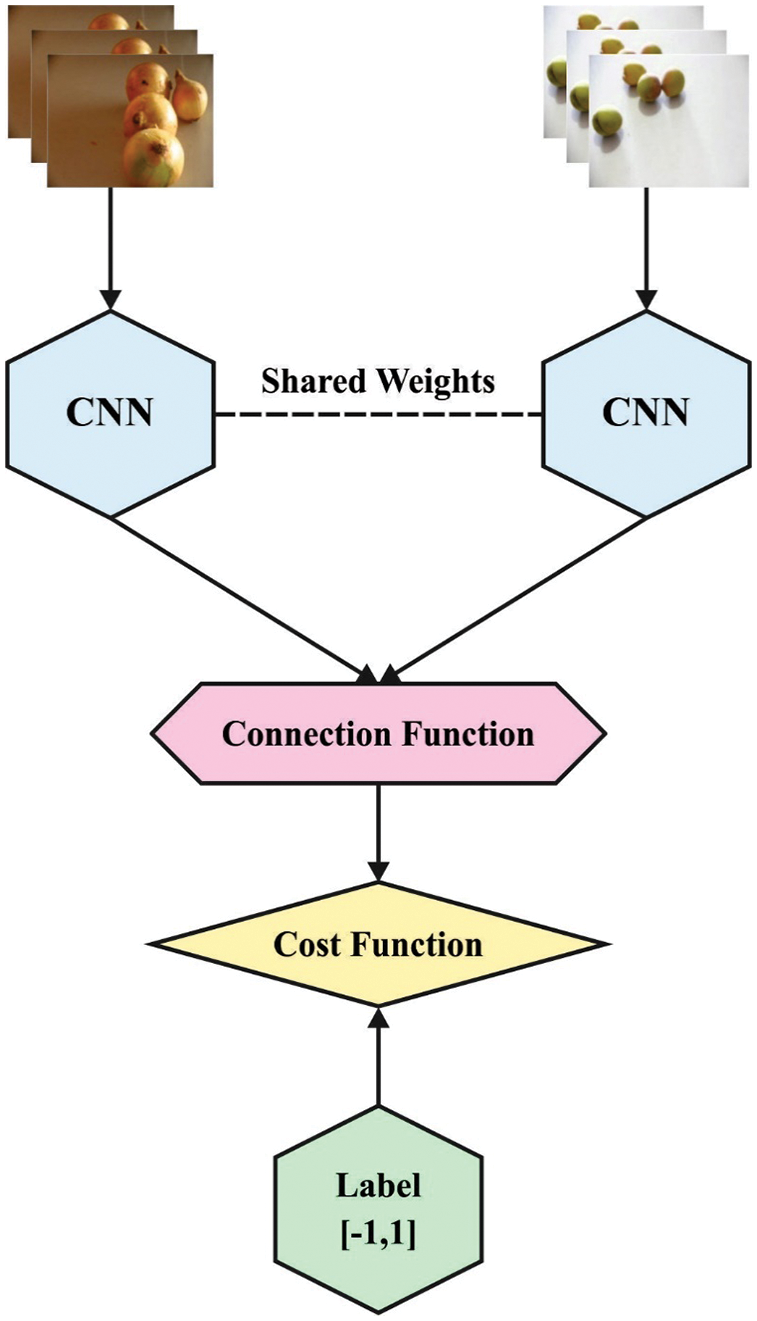
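Since Eq. (1) is not reproduced in this text, the following is only a minimal sketch of the commonly published two-dimensional form of spiral optimization, in which every search point rotates around the current best point and contracts toward it. The contraction rate `r`, rotation angle `theta`, population size, and the toy sphere objective are all assumptions chosen for illustration, not the settings used in this study, where the objective would instead depend on the VGG-16 hyperparameters.

```python
import math
import random


def spiral_optimize(f, bounds, n_points=20, r=0.95, theta=math.pi / 4, iters=200, seed=0):
    """Minimize f over 2-D points with a basic spiral search (illustrative sketch).

    Each point is rotated by `theta` around the current best point and pulled
    toward it by the contraction rate `r` (0 < r < 1).
    """
    rng = random.Random(seed)
    lo, hi = bounds
    points = [(rng.uniform(lo, hi), rng.uniform(lo, hi)) for _ in range(n_points)]
    best = min(points, key=f)
    history = [f(best)]
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    for _ in range(iters):
        moved = []
        for x, y in points:
            dx, dy = x - best[0], y - best[1]
            # Rotate the offset from the best point, then shrink it by r.
            moved.append((best[0] + r * (cos_t * dx - sin_t * dy),
                          best[1] + r * (sin_t * dx + cos_t * dy)))
        points = moved
        candidate = min(points, key=f)
        if f(candidate) < f(best):
            best = candidate  # the spiral centre moves only when a better point is found
        history.append(f(best))
    return best, history


def sphere(p):
    """Toy objective with its minimum at the origin."""
    return p[0] ** 2 + p[1] ** 2


best, history = spiral_optimize(sphere, bounds=(-5.0, 5.0))
print(best, history[-1])
```

Early iterations sweep wide arcs around the centre (diversification), while later iterations, once the radius has contracted, search close to the best point (intensification).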

2.3 Fruit Classification: SCNN Model

For fruit classification, the ESCNN model is applied to identify different classes of fruits. An important development from the original SCNN to ESCNN is that ESCNN uses a fully connected (FC) network to replace the energy function and output layer of the original SCNN [19]. The output layer of ESCNN comprises several neurons, which allows it to handle multi-class classification tasks directly. Along with this change in network topology relative to SCNN, the ESCNN sample-pair labeling approach, the process of creating the classification map, and the loss function are established as follows. First, the sample-pair labeling approach is applied. After the sample-pair set is labeled by this approach, the labeled sample-pair set contains one more class than the original training set. In detail, when the trained set involves

Figure 2: Structure of SNN
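The sample-pair labeling step can be illustrated with a small sketch. The exact labeling rule is not recoverable from this text, so the rule below is an assumption: a pair drawn from the same class keeps that class label, and a pair drawn from two different classes receives an extra label 0, which yields one more class than the original training set. The function name `label_sample_pairs` is hypothetical, and class labels are assumed to start at 1 so that 0 is free.

```python
from itertools import combinations


def label_sample_pairs(labels):
    """Label every unordered sample pair (assumed rule, for illustration only).

    A pair gets the shared class label when both samples agree,
    and the extra label 0 when they come from different classes.
    """
    pairs = []
    for i, j in combinations(range(len(labels)), 2):
        pair_label = labels[i] if labels[i] == labels[j] else 0
        pairs.append((i, j, pair_label))
    return pairs


train_labels = [1, 1, 2, 2, 3, 3]  # toy training set with 3 classes
pairs = label_sample_pairs(train_labels)
pair_classes = sorted({label for _, _, label in pairs})
print(len(pairs), pair_classes)
```

With 3 original classes, the labeled pair set in this toy case contains 4 classes, which matches the statement that the pair set has one more class than the training set.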

The underlying assumption of this approach is that the central pixel has a higher probability of belonging to the same class as its neighboring pixels. Consider that a

whereas

To adjust the hyperparameters of the ESCNN model, the AHB algorithm is used. The AHB technique was employed to efficiently tune the parameters of the ESCNN technique to achieve maximal classification efficiency [20]. AHB is an optimization method inspired by the foraging and flight behaviour of hummingbirds. Its 3 main processes are presented as follows. During guided foraging, 3 flight behaviours are employed (omnidirectional, axial, and diagonal flights). They can be expressed as:

whereas

In which

whereas

In which

The AHB algorithm derives a fitness function (FF) to achieve enhanced classification outcomes. The FF returns a positive value that indicates the quality of a candidate solution. Minimization of the classifier error rate is taken as the FF, as presented in Eq. (9).
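Eq. (9) is not reproduced in this text, so the sketch below assumes the usual definition of the classifier error rate, namely the percentage of misclassified samples. The function names and the toy labels are hypothetical; in the actual pipeline the predictions would come from an ESCNN trained with the candidate hyperparameters.

```python
def classifier_error_rate(y_true, y_pred):
    """Percentage of misclassified samples; lower values are better."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return 100.0 * wrong / len(y_true)


def fitness(y_true, y_pred):
    """Fitness of one candidate solution: its classifier error rate (assumed form)."""
    return classifier_error_rate(y_true, y_pred)


y_true = [0, 1, 2, 2, 1, 0, 1, 2]
y_pred = [0, 1, 2, 1, 1, 0, 2, 2]  # two of the eight predictions are wrong
print(fitness(y_true, y_pred))
```

Because the optimizer minimizes this value, a candidate that classifies every sample correctly reaches the lower bound of 0.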

The proposed model is simulated using Python 3.6.5 on a PC with an i5-8600k processor, a GeForce 1050Ti 4 GB GPU, 16 GB RAM, a 250 GB SSD, and a 1 TB HDD. The parameter settings are as follows: learning rate: 0.01, dropout: 0.5, batch size: 5, epoch count: 50, and activation: ReLU. This section examines the fruit classification performance of the AMO-SCNNFC model on a publicly accessible fruit and vegetable dataset [21] that comprises 15 classes, as shown in Table 1. Every class contains at least 75 images, resulting in 2633 images in total.

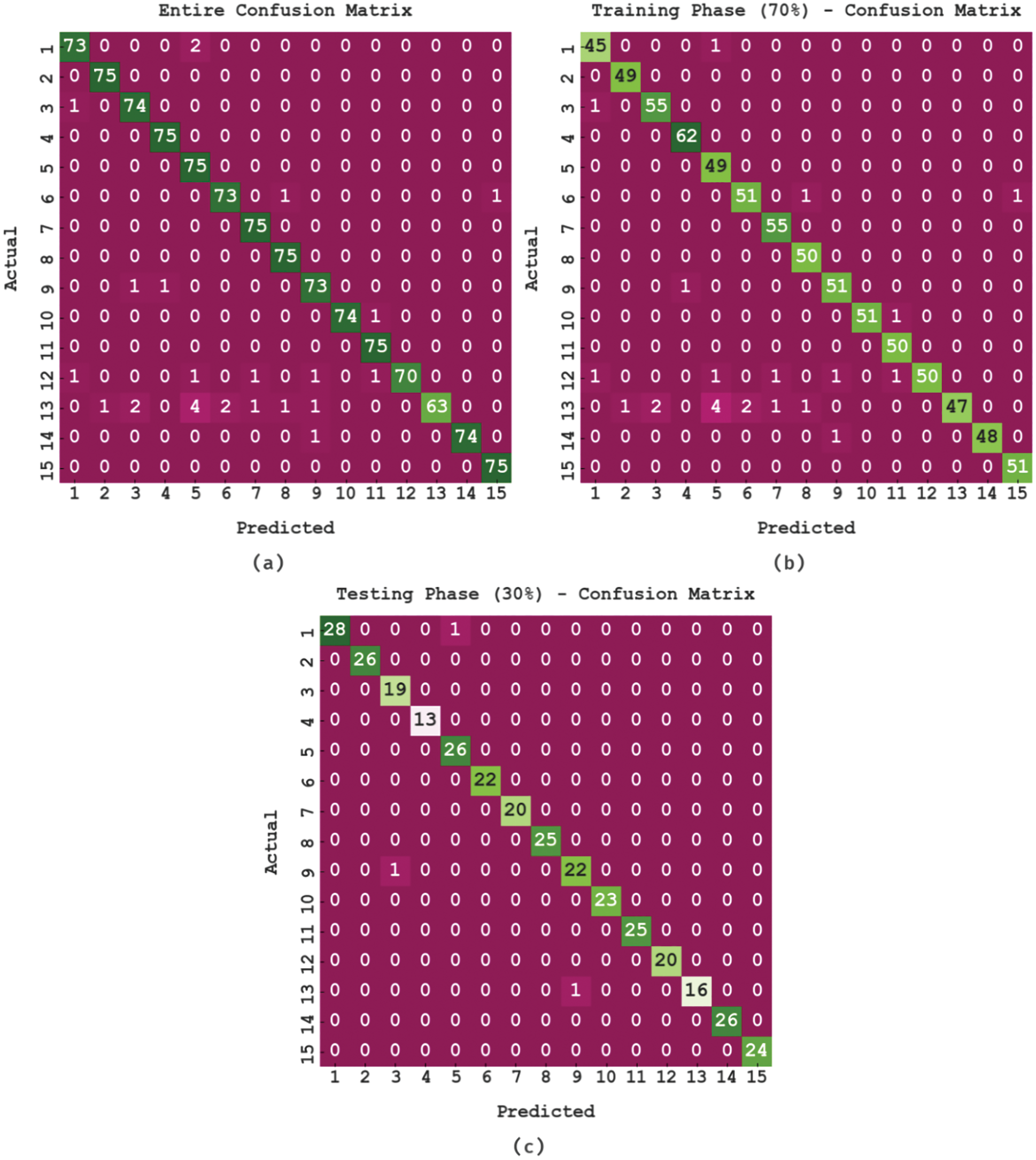
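For orientation, the reported parameter settings and the 70%/30% training/testing split used in the results can be turned into concrete sample counts. This is a sketch only: the dictionary keys and the helper `split_sizes` are illustrative names, and rounding the training share down is an assumption, since the text does not say how the split was computed.

```python
# Settings as reported in the text; the key names are illustrative.
settings = {
    "learning_rate": 0.01,
    "dropout": 0.5,
    "batch_size": 5,
    "epochs": 50,
    "activation": "relu",
}


def split_sizes(n_samples, train_percent=70):
    """Return (n_train, n_test), rounding the training share down (assumed)."""
    n_train = n_samples * train_percent // 100
    return n_train, n_samples - n_train


n_train, n_test = split_sizes(2633)
# Ceiling division: the last batch of an epoch may be smaller than batch_size.
batches_per_epoch = -(-n_train // settings["batch_size"])
print(n_train, n_test, batches_per_epoch)
```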

Fig. 3 presents the confusion matrices produced by the AMO-SCNNFC model. The figure shows that the AMO-SCNNFC model effectively identified the different fruit classes.

Figure 3: Confusion matrices of AMO-SCNNFC approach (a) Entire dataset, (b) 70% of TR data, and (c) 30% of TS data
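Per-class measures can be derived directly from a confusion matrix such as those in Fig. 3. The sketch below uses the standard definitions of precision, recall, and F-score; which measures the result tables actually report is an assumption here, and the 3 × 3 matrix is toy data, not taken from the paper.

```python
def per_class_metrics(cm):
    """Per-class precision, recall and F-score from a square confusion matrix.

    cm[i][j] counts samples of true class i that were predicted as class j.
    """
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k samples predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # other classes predicted as class k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f_score = 2 * precision * recall / denom if denom else 0.0
        metrics.append({"precision": precision, "recall": recall, "f_score": f_score})
    return metrics


toy_cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
metrics = per_class_metrics(toy_cm)
accuracy = sum(toy_cm[k][k] for k in range(3)) / sum(map(sum, toy_cm))
for k, m in enumerate(metrics):
    print(k, m)
print("accuracy:", accuracy)
```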

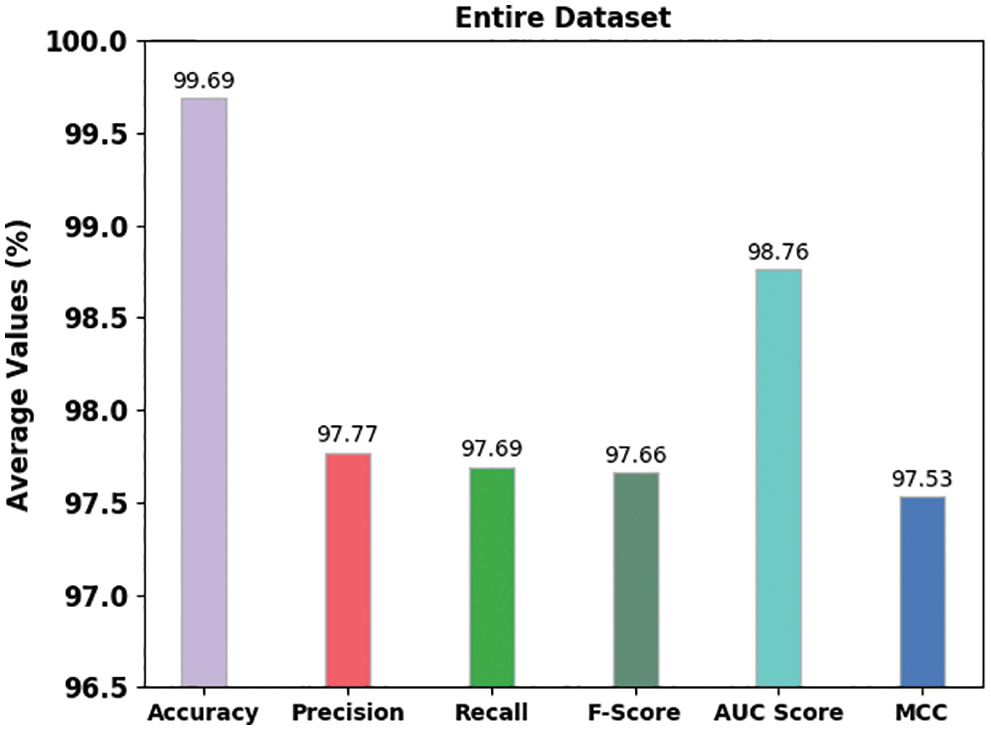

Table 2 and Fig. 4 portrayed the fruit classification results of the AMO-SCNNFC model on the entire dataset. The AMO-SCNNFC method has classified samples under class 1 with

Figure 4: Result analysis of AMO-SCNNFC approach under the entire dataset

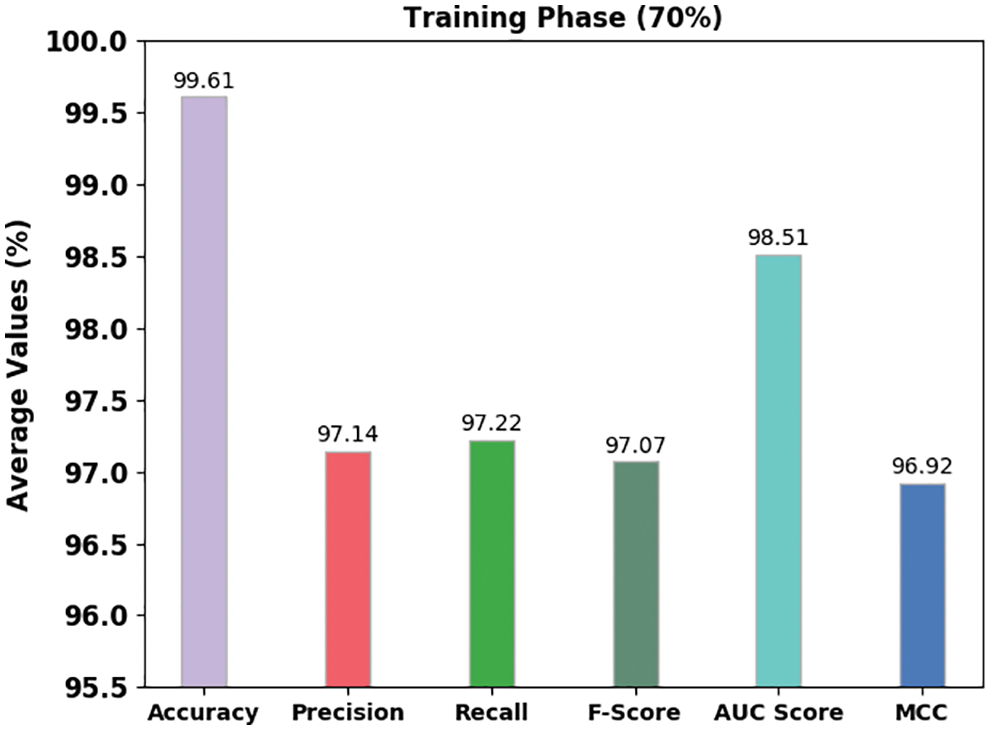

Table 3 and Fig. 5 portrayed the fruit classification results of the AMO-SCNNFC model on 70% of training (TR) data. The AMO-SCNNFC algorithm has classified samples under class 1 with

Figure 5: Result analysis of AMO-SCNNFC approach under 70% of TR data

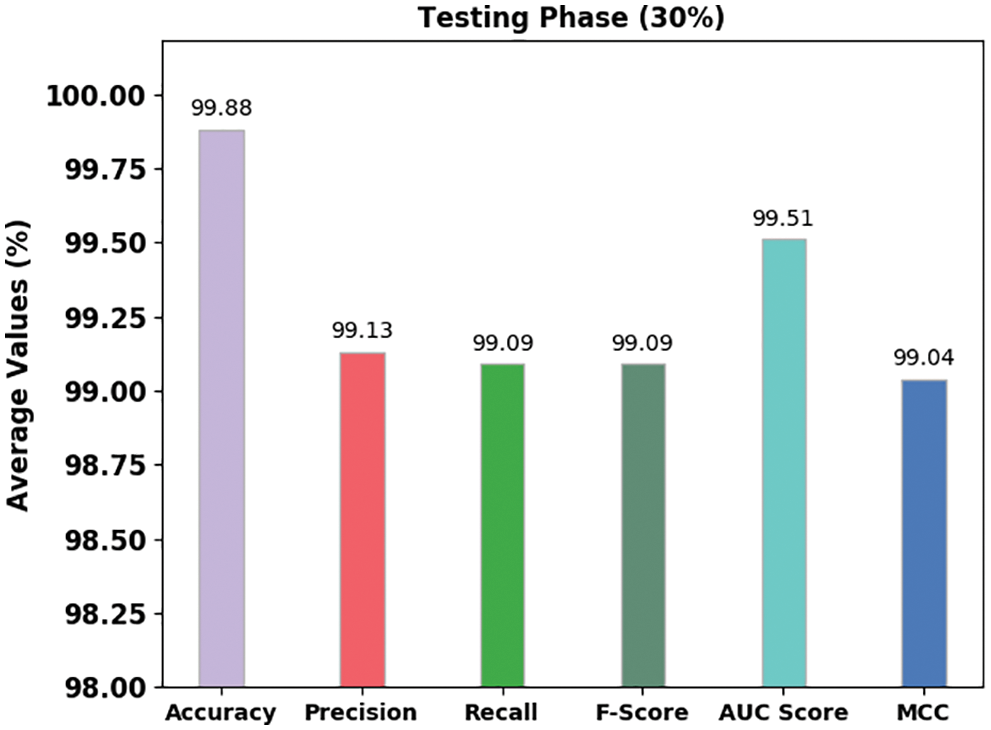

Table 4 and Fig. 6 portrayed the fruit classification results of the AMO-SCNNFC technique on 30% of testing (TS) data. The AMO-SCNNFC approach has classified samples under class 1 with

Figure 6: Result analysis of AMO-SCNNFC approach under 30% of TS data

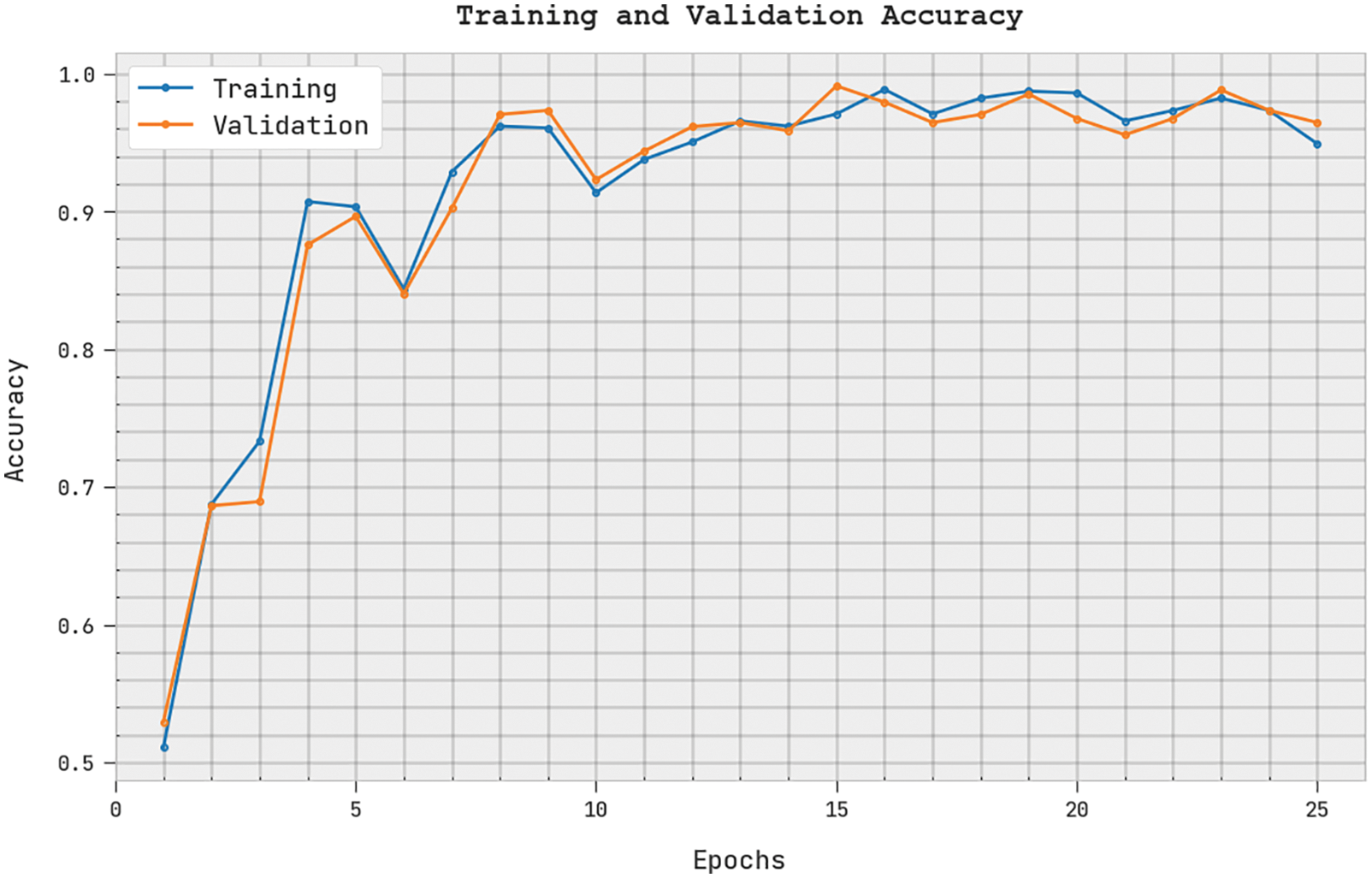

The training accuracy (TRA) and validation accuracy (VLA) acquired by the AMO-SCNNFC method on the test dataset are shown in Fig. 7. The experimental results imply that the AMO-SCNNFC method gained maximal values of TRA and VLA. Notably, the VLA is greater than the TRA.

Figure 7: TRA and VLA analysis of AMO-SCNNFC methodology

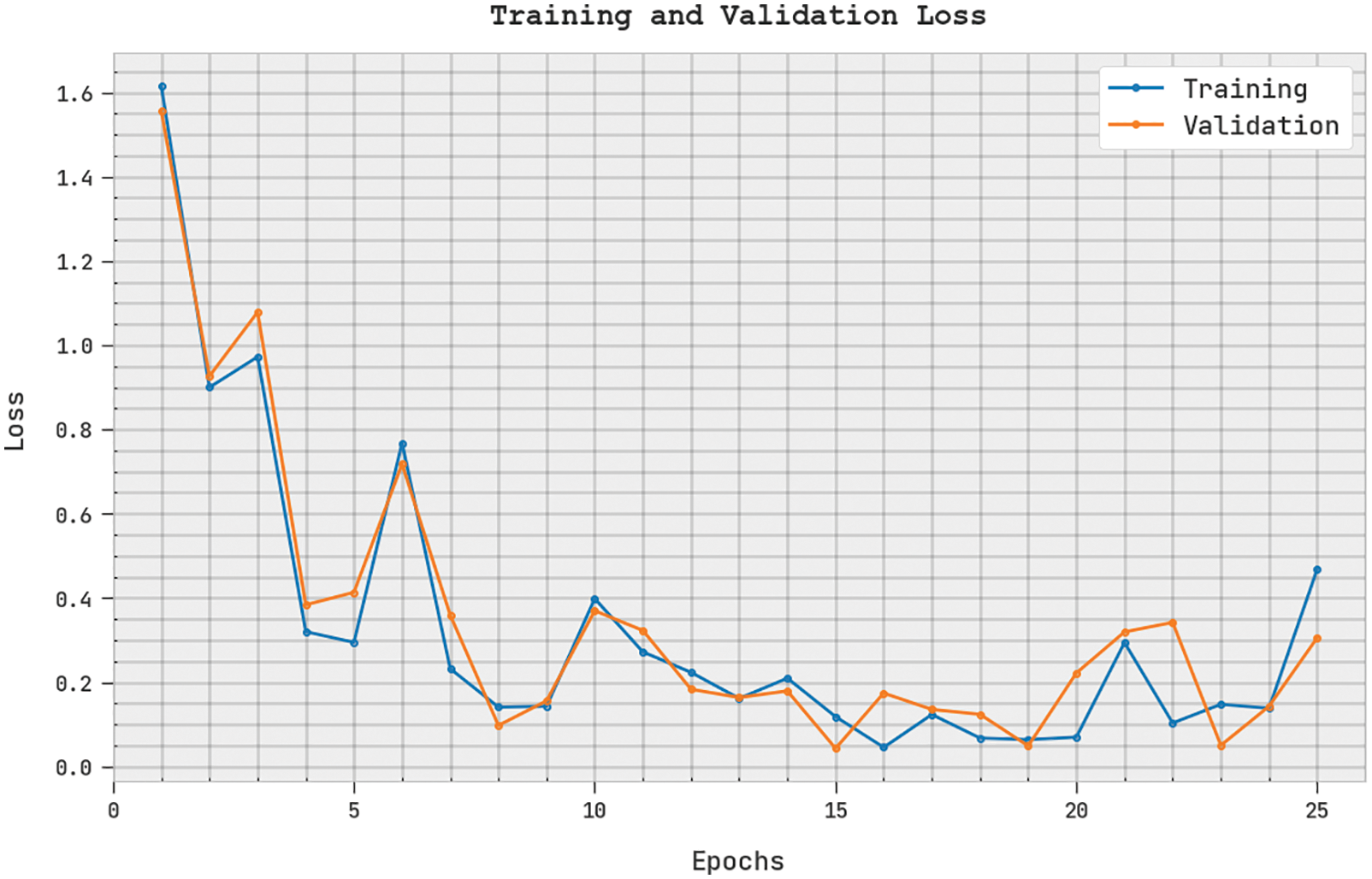

The training loss (TRL) and validation loss (VLL) attained by the AMO-SCNNFC method on the test dataset are shown in Fig. 8. The experimental outcome indicates that the AMO-SCNNFC algorithm reached the lowest values of TRL and VLL. Specifically, the VLL is lower than the TRL.

Figure 8: TRL and VLL analysis of AMO-SCNNFC methodology

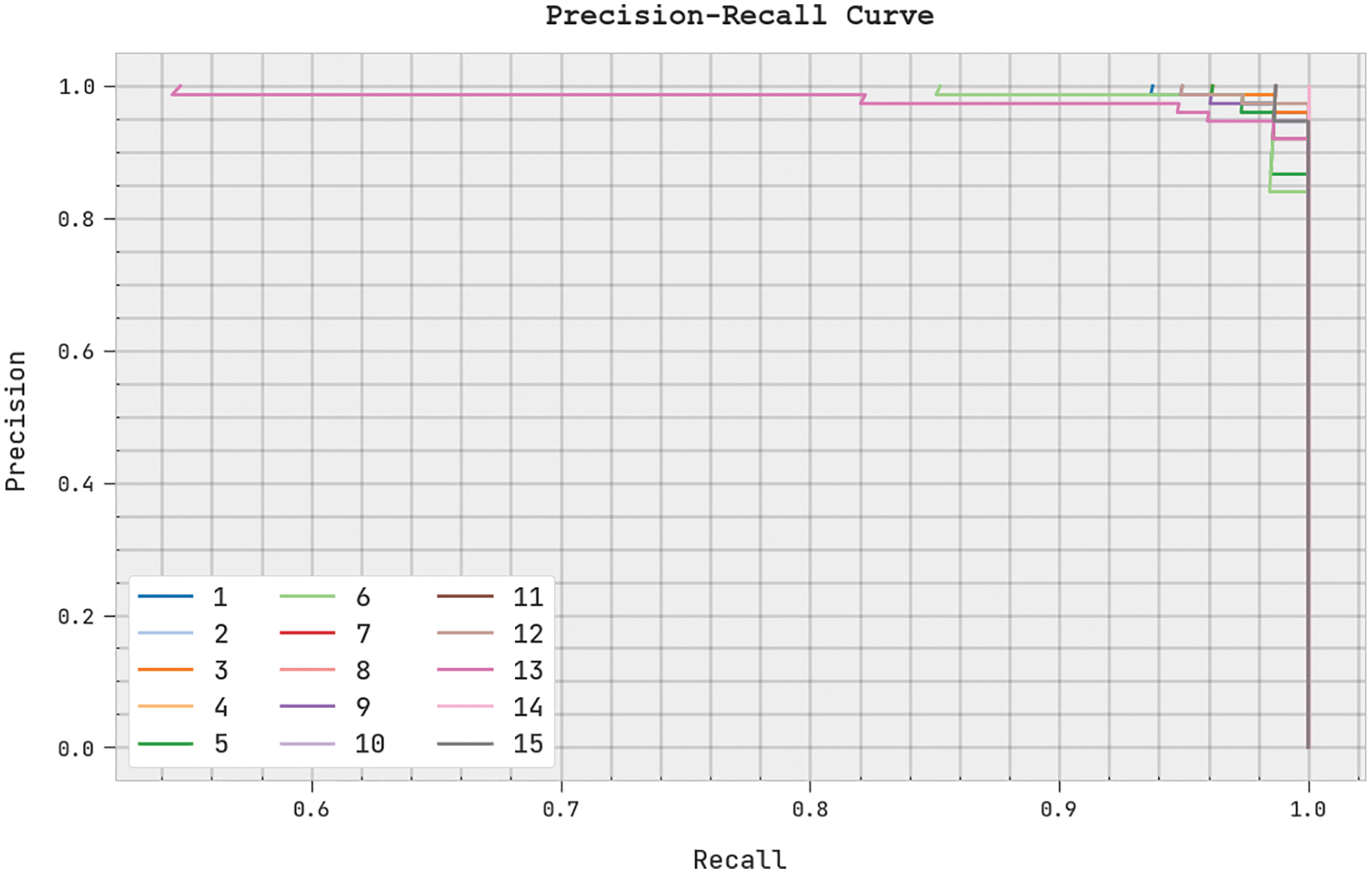

A clear precision-recall analysis of the AMO-SCNNFC method on the test dataset is shown in Fig. 9. The figure demonstrates that the AMO-SCNNFC method achieved enhanced precision-recall values under all classes.

Figure 9: Precision-recall analysis of AMO-SCNNFC methodology

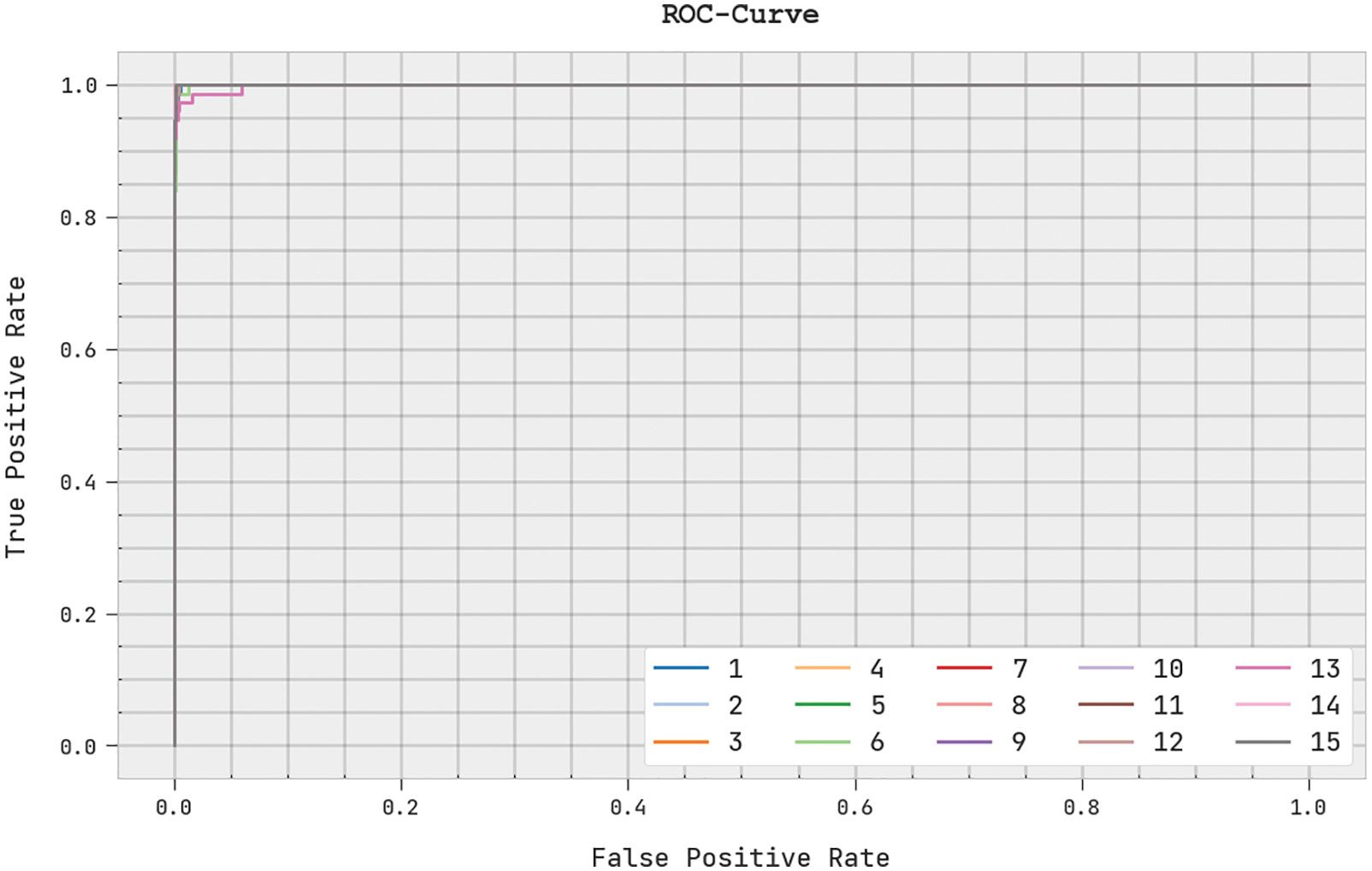

A brief ROC study of the AMO-SCNNFC method on the test dataset is shown in Fig. 10. The results indicate that the AMO-SCNNFC method is able to categorize the distinct classes of the test dataset.

Figure 10: ROC analysis of AMO-SCNNFC methodology

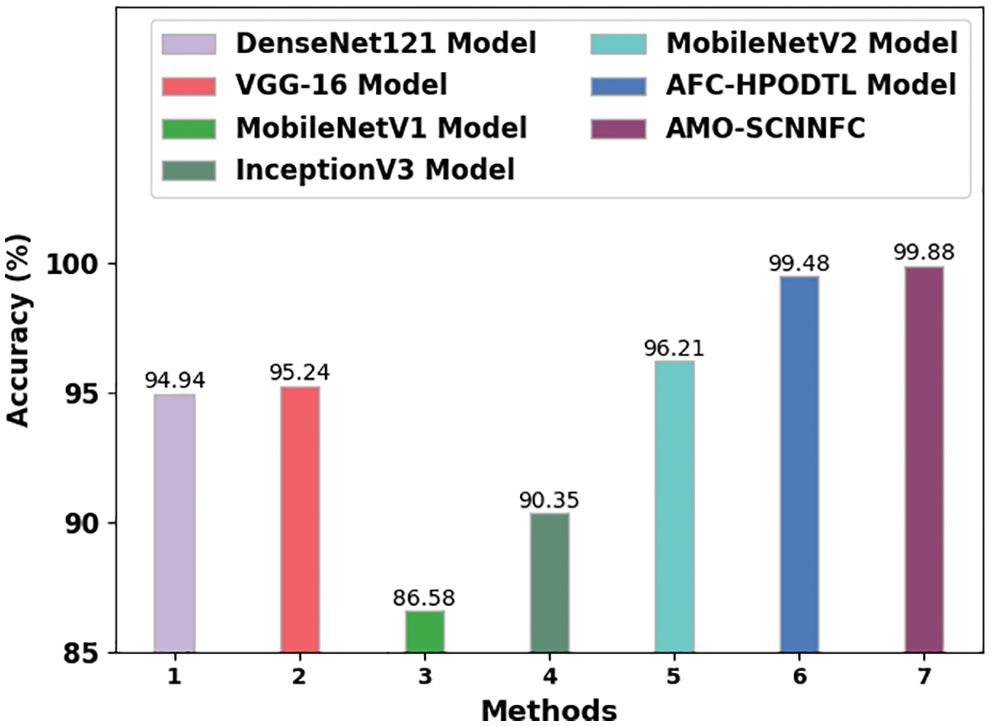

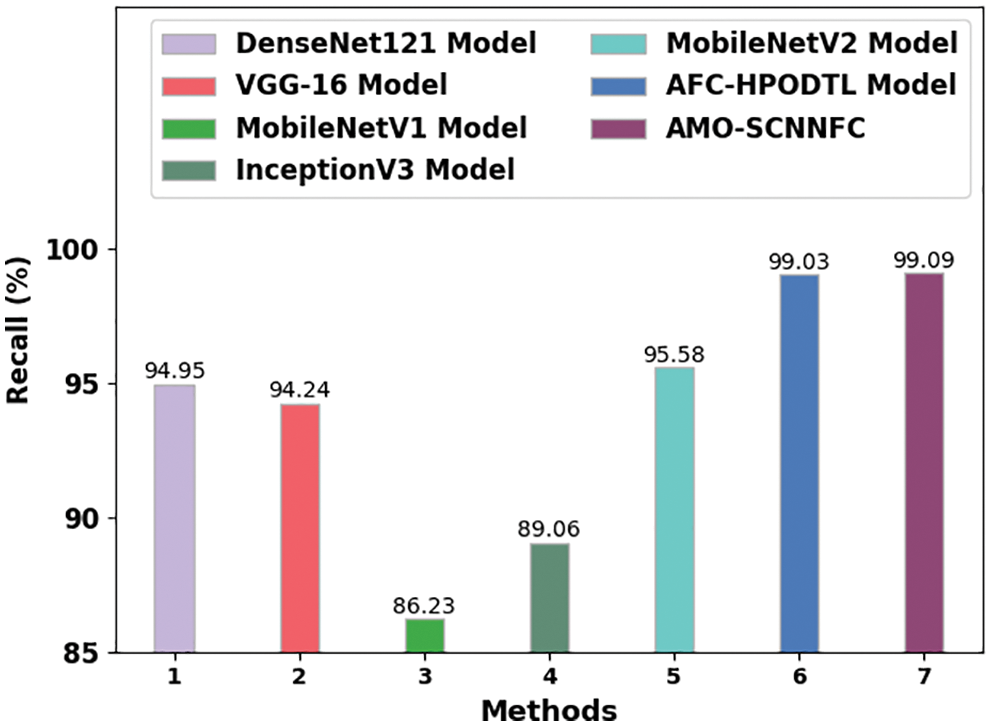

Table 5 highlights an overall comparison study of the AMO-SCNNFC model with existing techniques [22]. Fig. 11 demonstrates the comparative

Figure 11:

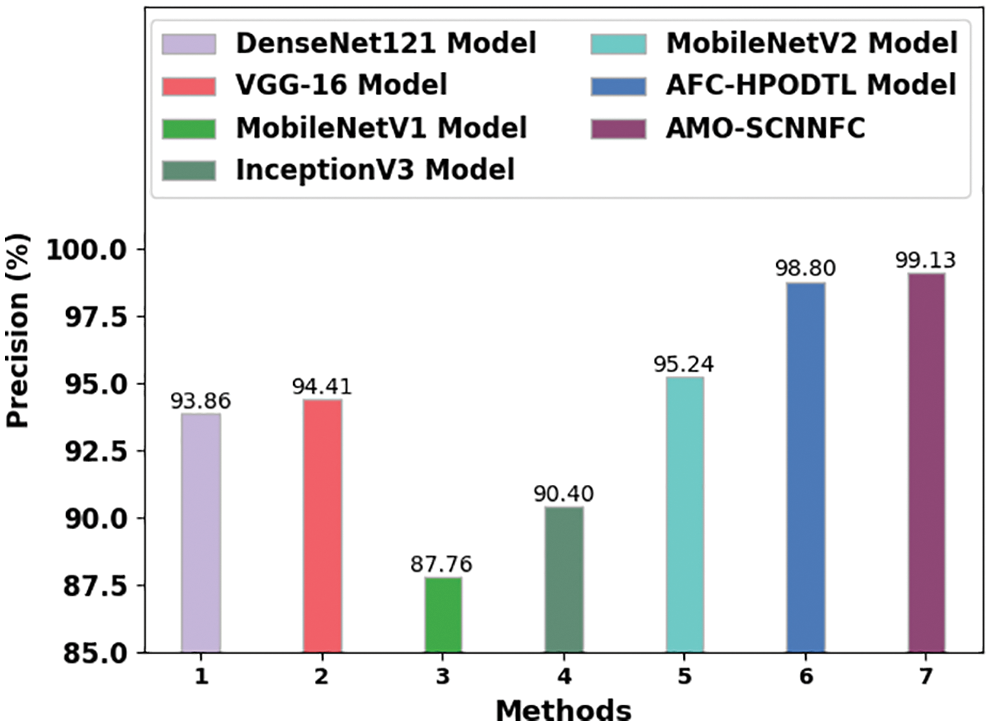

Fig. 12 establishes the comparative

Figure 12:

Fig. 13 validates the comparative

Figure 13:

The results implied that the MobileNetV1 and Inception v3 models have shown poor outcomes with minimal

In this study, a new AMO-SCNNFC model has been developed for the automated classification of fruits. The presented AMO-SCNNFC technique encompasses preprocessing, VGG-16 feature extraction, SPO hyperparameter tuning, ESCNN classification, and AHO-based parameter optimization. The use of the SPO and AHO techniques helps achieve enhanced fruit classification performance. For fruit classification, AHO with the ESCNN model is applied to identify different classes of fruits. The AMO-SCNNFC technique was validated on a dataset comprising diverse classes of fruit images. Extensive comparison studies reported the improvement of the AMO-SCNNFC technique over other approaches, with a higher accuracy of 99.88%. Thus, the AMO-SCNNFC technique can be used for effective fruit classification. In the future, three DL-based fusion approaches can be developed to improve detection performance.

Funding Statement: This work was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2020R1A6A1A03038540) and by Korea Institute of Planning and Evaluation for Technology in Food, Agriculture, Forestry and Fisheries (IPET) through Digital Breeding Transformation Technology Development Program, funded by Ministry of Agriculture, Food and Rural Affairs (MAFRA) (322063-03-1-SB010) and by the Technology development Program (RS-2022-00156456) funded by the Ministry of SMEs and Startups (MSS, Korea).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. J. N. Torres, M. Mora, R. H. García, R. J. Barrientos, C. Fredes et al., “A review of convolutional neural network applied to fruit image processing,” Applied Sciences, vol. 10, no. 10, pp. 3443, 2020.

2. D. Shakya, “Analysis of artificial intelligence based image classification techniques,” Journal of Innovative Image Processing (JIIP), vol. 2, no. 1, pp. 44–54, 2020.

3. M. Tripathi, “Analysis of convolutional neural network based image classification techniques,” Journal of Innovative Image Processing (JIIP), vol. 3, no. 2, pp. 100–117, 2021.

4. D. T. P. Chung and D. V. Tai, “A fruits recognition system based on a modern deep learning technique,” Journal of Physics: Conference Series, vol. 1, pp. 012050, 2019.

5. R. G. de Luna, E. P. Dadios, A. A. Bandala and R. R. P. Vicerra, “Size classification of tomato fruit using thresholding, machine learning, and deep learning techniques,” AGRIVITA, Journal of Agricultural Science, vol. 41, no. 3, pp. 586–596, 2019.

6. F. Valentino, T. W. Cenggoro and B. Pardamean, “A design of deep learning experimentation for fruit freshness detection,” IOP Conference Series: Earth and Environmental Science, vol. 794, no. 1, pp. 012110, 2021.

7. M. Bayğin, “Vegetable and fruit image classification with squeezenet based deep feature generator,” Turkish Journal of Science and Technology, 2022. https://dx.doi.org/10.55525/tjst.1071338

8. V. A. Meshram, K. Patil and S. D. Ramteke, “MNet: A framework to reduce fruit image misclassification,” Ingénierie des Systèmes d’Information, vol. 26, no. 2, pp. 159–170, 2021.

9. F. Risdin, P. K. Mondal and K. M. Hassan, “Convolutional neural networks (CNN) for detecting fruit information using machine learning techniques,” IOSR Journal of Computer Engineering (IOSR-JCE), vol. 22, no. 2, pp. 1–13, 2020.

10. M. Hossain, M. Al-Hammadi and G. Muhammad, “Automatic fruit classification using deep learning for industrial applications,” IEEE Transactions on Industrial Informatics, vol. 15, no. 2, pp. 1027–1034, 2019.

11. X. Chen, G. Zhou, A. Chen, L. Pu and W. Chen, “The fruit classification algorithm based on the multi-optimization convolutional neural network,” Multimedia Tools and Applications, vol. 80, no. 7, pp. 11313–11330, 2021.

12. S. Ashraf, I. Kadery, M. A. A. Chowdhury, T. Z. Mahbub and R. M. Rahman, “Fruit image classification using convolutional neural networks,” International Journal of Software Innovation, vol. 7, no. 4, pp. 51–70, 2019.

13. H. S. Gill and B. S. Khehra, “Hybrid classifier model for fruit classification,” Multimedia Tools and Applications, vol. 80, no. 18, pp. 27495–27530, 2021.

14. T. B. Shahi, C. Sitaula, A. Neupane and W. Guo, “Fruit classification using attention-based MobileNetV2 for industrial applications,” PLoS One, vol. 17, no. 2, pp. e0264586, 2022.

15. L. Zhu, Z. Li, C. Li, J. Wu and J. Yue, “High performance vegetable classification from images based on AlexNet deep learning model,” International Journal of Agricultural and Biological Engineering, vol. 11, no. 4, pp. 213–217, 2018.

16. Y. D. Zhang, Z. Dong, X. Chen, W. Jia, S. Du et al., “Image based fruit category classification by 13-layer deep convolutional neural network and data augmentation,” Multimedia Tools and Applications, vol. 78, no. 3, pp. 3613–3632, 2019.

17. S. Srivastava, P. Kumar, V. Chaudhry and A. Singh, “Detection of ovarian cyst in ultrasound images using fine-tuned vgg-16 deep learning network,” SN Computer Science, vol. 1, no. 2, pp. 81, 2020.

18. A. Kaveh and S. Mahjoubi, “Hypotrochoid spiral optimization approach for sizing and layout optimization of truss structures with multiple frequency constraints,” Engineering with Computers, vol. 35, no. 4, pp. 1443–1462, 2019.

19. M. H. Ali, M. M. Jaber, S. K. Abd, A. Rehman, M. J. Awan et al., “Threat analysis and distributed denial of service (ddos) attack recognition in the internet of things (IoT),” Electronics, vol. 11, no. 3, pp. 494, 2022.

20. A. Ramadan, S. Kamel, M. Hassan, E. Ahmed and H. Hasanien, “Accurate photovoltaic models based on an adaptive opposition artificial hummingbird algorithm,” Electronics, vol. 11, no. 3, pp. 318, 2022.

21. A. Rocha, D. Hauagge, J. Wainer and S. Goldenstein, “Automatic fruit and vegetable classification from images,” Computers and Electronics in Agriculture, vol. 70, no. 1, pp. 96–104, 2010.

22. K. Shankar, S. Kumar, A. K. Dutta, A. Alkhayyat, A. J. M. Jawad et al., “An automated hyperparameter tuning recurrent neural network model for fruit classification,” Mathematics, vol. 10, no. 13, pp. 2358, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
