Open Access

ARTICLE

Harris Hawks Optimizer with Graph Convolutional Network Based Weed Detection in Precision Agriculture

1 Department of Software Engineering, College of Computer Science and Engineering, University of Jeddah, Jeddah, Saudi Arabia

2 King Abdul Aziz City for Science and Technology, Riyadh, Kingdom of Saudi Arabia

3 Chaitanya Bharathi Institute of Technology, Hyderabad, Telangana, India

4 Department of Civil Engineering, Vignan’s Institute of Information and Technology (A), Duvvada, Visakhapatnam, AP, 530049, India

5 Department of Computer Science and Engineering, Vignan’s Institute of Information Technology, Visakhapatnam, 530049, India

6 Department of Applied Data Science, Noroff University College, Kristiansand, Norway

7 Artificial Intelligence Research Center (AIRC), College of Engineering and Information Technology, Ajman University, Ajman, United Arab Emirates

8 Department of Electrical and Computer Engineering, Lebanese American University, Byblos, Lebanon

9 Department of Software, Kongju National University, Cheonan, 31080, Korea

* Corresponding Author: Seifedine Kadry. Email:

Computer Systems Science and Engineering 2023, 46(2), 1533-1547. https://doi.org/10.32604/csse.2023.036296

Received 24 September 2022; Accepted 21 November 2022; Issue published 09 February 2023

Abstract

Precision agriculture involves the optimal and adequate use of resources depending on several variables that govern crop yield. It offers a systematic approach to current agricultural problems, such as balancing production against environmental concerns. Weed control has become one of the significant problems in the agricultural sector. In traditional weed control, the entire field is treated uniformly: a single herbicide dose is sprayed on the soil, weeds, and crops alike. For more precise farming, robots could carry out targeted weed treatment if they could locate the unwanted plants and identify the weed type. This could substantially reduce the use of agrochemicals on agricultural fields and favour sustainable agriculture. This study presents a Harris Hawks Optimizer with Graph Convolutional Network based Weed Detection (HHOGCN-WD) technique for Precision Agriculture. The HHOGCN-WD technique mainly focuses on identifying and classifying weeds for precision agriculture. For image pre-processing, the HHOGCN-WD model utilizes a bilateral normal filter (BNF) for noise removal. In addition, a coupled convolutional neural network (CCNet) model is utilized to derive a set of feature vectors. To detect and classify weeds, the GCN model is utilized with the HHO algorithm as a hyperparameter optimizer to improve the detection performance. The experimental results of the HHOGCN-WD technique are investigated on a benchmark dataset. The results indicate the promising performance of the presented HHOGCN-WD model over other recent approaches, with an increased accuracy of 99.13%.

Weeds are unwanted plants that grow in fields and compete with crops for light, water, space, and nutrients. If left uncontrolled, they can have many adverse impacts, such as loss of crop yield, contamination of grain during harvesting, and production of a considerable amount of seeds, thus forming a weed seed bank in the fields [1]. Conventionally, weed management programs control weeds by mechanical or chemical means, namely the uniform application of herbicides over the entire field [2]. However, the spatial density of weeds is not uniform throughout the field, so uniform application results in the overuse of chemicals, which leads to environmental concerns and the evolution of herbicide-resistant weeds. To resolve these shortcomings, the concept of site-specific weed management (SSWM), which involves detecting weed patches and then spot spraying them or removing them mechanically, was introduced in the early 1990s [3]. Controlling weeds early in the season is crucial because weeds compete with the crop for resources during its development phase, which leads to potential yield loss [4–6]. Considerable research has developed variable spraying methods to prevent the waste and herbicide residue problems caused by conventional full-coverage spraying [7]. To accomplish accurate variable spraying, a major problem must be resolved: the accurate real-time detection and identification of weeds and crops. Approaches to weed detection through computer vision (CV) primarily involve deep learning (DL) and conventional image processing [8]. When weed detection is carried out with conventional image-processing techniques, features such as the shape, color, and texture of the image must be extracted and combined with conventional machine learning (ML) approaches, like the Support Vector Machine (SVM) or random forest (RF), to detect weeds [9]. Such techniques require features to be designed manually and depend heavily on the quality of the feature extraction, pre-processing, and image acquisition methods. With the increase in data volume and the advancement in computing power, DL algorithms can extract multi-dimensional and multi-scale spatial semantic features of weeds via a Convolutional Neural Network (CNN) because of their improved ability to represent image data, which avoids the disadvantages of conventional extraction methods [10]. Consequently, they have gained considerable interest among researchers.

Ukaegbu et al. [11] describe the development of a modular unmanned aerial vehicle (UAV) to detect and eradicate weeds on farmland. Precision agriculture addresses the problem of poor agricultural yield caused by weeds competing for nutrients and offers a rapid way to eliminate troublesome weeds using emerging technologies. Their work targets this problem: a quadcopter was built from lightweight components, comprising a lithium polymer (Li-Po) battery, electric motors, propellers, a receiver, a flight controller, electronic speed controllers, GPS, and a frame. In [12], a DL system was developed to identify crops and weeds in croplands. The system was implemented and evaluated on high-resolution UAV images captured over two target fields, strawberry and pea. In [13], a method was developed to accelerate the manual labelling of pixels using a two-step process. First, the foreground and background were separated by maximum likelihood classification; second, the weed pixels were labelled manually. The labelled data were used to train semantic segmentation models that classify crop and background pixels into one class and all other vegetation into a second class.

Osorio et al. [14] devise three techniques for weed estimation in lettuce crops based on DL and image processing and compare them with visual assessments by experts. The first technique relies on an SVM using histograms of oriented gradients (HOG) as feature descriptors. The second relies on YOLOv3 (You Only Look Once, version 3), exploiting its robust architecture for object detection. The third relies on Mask R-CNN (region-based CNN) to obtain an instance segmentation for every individual. These techniques are supplemented with the normalized difference vegetation index (NDVI) as a background subtractor to remove non-photosynthetic objects. In [15], a novel technique that integrates high-resolution RGB and low-resolution multispectral (MS) imagery is presented for detecting Gramineae weeds in rice fields 50 days after plant emergence. The images were taken from a UAV. The technique combines the texture information offered by the high-resolution RGB imagery with the reflectance information provided by the low-resolution MS imagery to obtain a fused RGB-MS image with superior weed-discriminating features. After examining the normalized green-red difference index (NGRDI) and the NDVI for detecting weeds, the authors note that the NGRDI provides superior features.

This study presents a Harris Hawks Optimizer with Graph Convolutional Network based Weed Detection (HHOGCN-WD) technique for Precision Agriculture. The presented HHOGCN-WD technique mainly focuses on identifying and classifying weeds for precision agriculture. For image pre-processing, the HHOGCN-WD model utilizes a bilateral normal filter (BNF) for noise removal. In addition, a coupled convolutional neural network (CCNet) model is utilized to derive a set of feature vectors. To detect and classify weeds, the GCN model is utilized with the HHO algorithm as a hyperparameter optimizer to improve the detection performance. The experimental results of the HHOGCN-WD technique are investigated on a benchmark dataset.

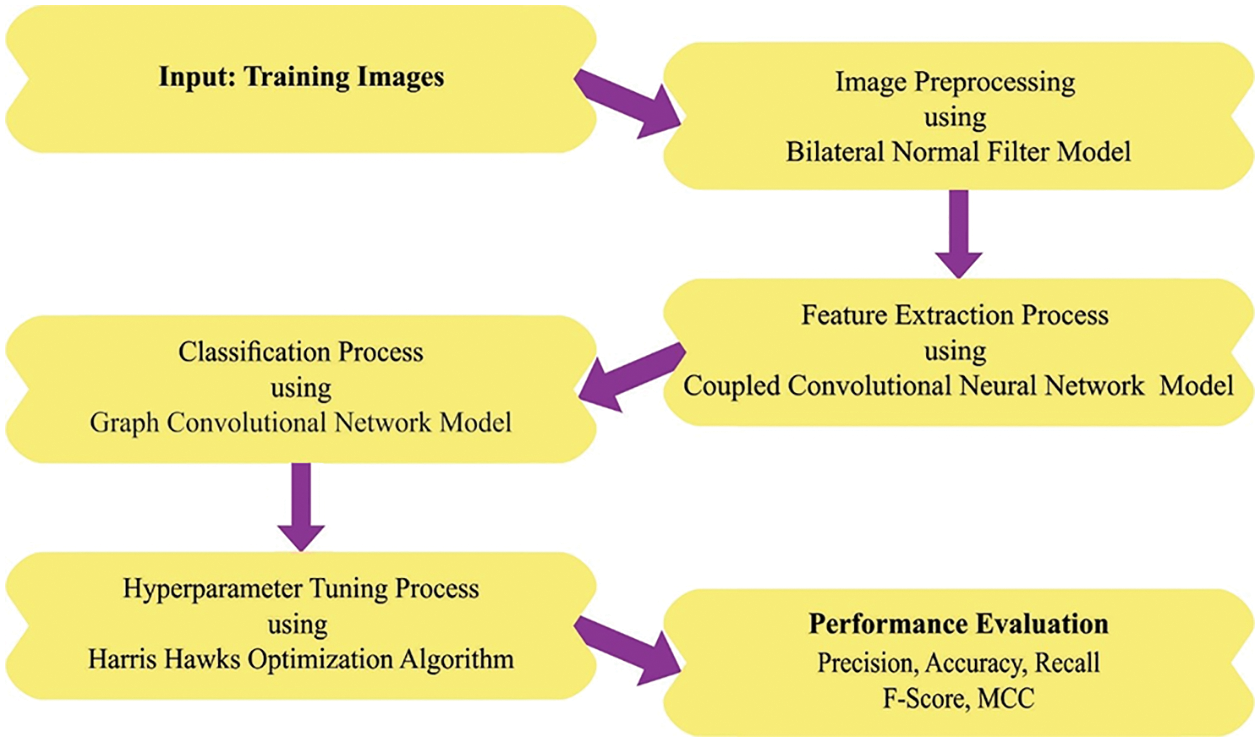

This study developed a new HHOGCN-WD technique for weed detection and classification for Precision Agriculture. The presented HHOGCN-WD technique mainly focuses on identifying and classifying weeds for precision agriculture. Fig. 1 depicts the overall block diagram of the HHOGCN-WD approach.

Figure 1: Overall block diagram of HHOGCN-WD approach

For image pre-processing, the HHOGCN-WD model utilizes BNF for noise removal. The BNF technique is a two-stage process that first updates the normals Niter times and then updates the vertices Viter times [16]. The denoising procedure with the BNF is denoted by (update normal)

In Eq. (1),

Function
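The idea of edge-preserving noise removal can be illustrated with a small sketch. The code below is not the BNF of [16], which operates on normals and vertices; it is the classical image bilateral filter, swapped in here as a simpler stand-in. The function name `bilateral_filter` and the parameters `radius`, `sigma_s`, and `sigma_r` are assumptions made for illustration only.

```python
import numpy as np

def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=0.1):
    """Classical bilateral filter for a 2-D float image (illustrative stand-in).

    Each output pixel is a weighted mean of its neighbours. The weight is the
    product of a spatial Gaussian (distance in pixels) and a range Gaussian
    (difference in intensity), so strong edges are not blurred.
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    padded = np.pad(img, radius, mode="reflect")
    acc = np.zeros_like(img)
    weight_sum = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Neighbour image shifted by (dy, dx)
            shifted = padded[radius + dy:radius + dy + h,
                             radius + dx:radius + dx + w]
            spatial = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_s ** 2))
            rng_w = np.exp(-((shifted - img) ** 2) / (2.0 * sigma_r ** 2))
            weight = spatial * rng_w
            acc += weight * shifted
            weight_sum += weight
    # The centre pixel always contributes weight 1, so weight_sum > 0
    return acc / weight_sum
```

A smaller `sigma_r` preserves weaker edges at the cost of removing less noise; suitable values depend on the intensity scale of the input images.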

2.2 Feature Extraction: CCNet Model

The CCNet model is utilized at this stage to derive a set of feature vectors. CNNs are well known for their impressive capabilities in image processing. Accordingly, numerous CNNs and their derivatives have been proposed for capturing neighbourhood spatial features from images in weed classification [17]. These discriminative features make it possible to distinguish target regions in complicated scenes.

It is noted that the input dataset

Because of the weight-sharing module, the two CNN networks share the parameters and settings of the remaining three convolution layers, excluding the initial convolution layer. This has the clear advantage of substantially reducing the number of parameters. The convolutional operation rule of the two CNN models is shown below:

In Eq. (4),
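The parameter saving from weight sharing can be made concrete with a short count. The sketch below assumes two branches of four 3×3 convolution layers each, with only the first layer kept separate, as described above; the input channel counts and layer widths are hypothetical, since the text does not state them.

```python
def conv_params(in_ch, out_ch, k=3):
    """Number of weights plus biases in one k x k convolution layer."""
    return in_ch * out_ch * k * k + out_ch

def branch_params(in_ch, widths, k=3):
    """Per-layer parameter counts for a stack of convolution layers."""
    chans = [in_ch] + list(widths)
    return [conv_params(chans[i], chans[i + 1], k) for i in range(len(widths))]

# Hypothetical sizes: 3 input channels per branch and four conv layers.
widths = (32, 64, 128, 128)
branch_a = branch_params(3, widths)
branch_b = branch_params(3, widths)

# Two fully independent branches.
independent = sum(branch_a) + sum(branch_b)
# Coupled branches: separate first layers, the other three layers shared.
coupled = branch_a[0] + branch_b[0] + sum(branch_a[1:])

print(independent, coupled)  # 481664 241728
```

With these assumed sizes the coupled design needs roughly half the parameters, because the first layer is small compared with the three shared layers.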

2.3 Weed Detection Using GCN Model

This study utilises the GCN model to detect and classify weeds. Given an input image I, the objective of the multilabel image classifier is to estimate a function f that predicts the presence or absence of each label belonging to a set

where w and h represent the width and height of the image in pixels, respectively. Note that

In Eq. (5),

Next, assume that

where h refers to the nonlinear activation function frequently selected as a Leaky Rectified Linear Unit (Leaky ReLU),
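A single graph convolution step with a Leaky ReLU activation can be sketched as follows. The symmetric normalisation of the adjacency matrix with self-loops is the widely used formulation for GCNs and is an assumption here, since the corresponding equation is not reproduced in the text; the names `normalize_adjacency`, `gcn_layer`, and the `slope` value are likewise illustrative.

```python
import numpy as np

def normalize_adjacency(A):
    """Return D^{-1/2} (A + I) D^{-1/2} for an adjacency matrix A."""
    A_hat = A + np.eye(A.shape[0])
    deg = A_hat.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def leaky_relu(x, slope=0.2):
    """Leaky ReLU: identity for positive inputs, small slope otherwise."""
    return np.where(x > 0, x, slope * x)

def gcn_layer(A_norm, H, W, slope=0.2):
    """One propagation step: activation(A_norm @ H @ W).

    A_norm: (n, n) normalised adjacency, H: (n, d_in) node features,
    W: (d_in, d_out) learnable weights.
    """
    return leaky_relu(A_norm @ H @ W, slope)

# Toy example: 3 nodes on a path graph, 4 input and 2 output features.
rng = np.random.default_rng(0)
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
H = rng.standard_normal((3, 4))
W = rng.standard_normal((4, 2))
out = gcn_layer(normalize_adjacency(A), H, W)
```

Stacking several such layers lets each node aggregate information from progressively larger neighbourhoods of the graph.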

This study applies the HHO algorithm as a hyperparameter optimizer to improve detection performance. The HHO technique uses two distinct search strategies in the exploration stage [19]. The strategy is chosen depending on q; if

whereas

The transition from the exploration stage to the exploitation stage is governed by a separate mechanism. During optimization, exploration is carried out first, followed by exploitation as the iterations progress, so that promising solutions are refined towards an optimum. Eq. (9) models this transition mathematically.
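The exploration move and the transition mechanism can be sketched as below. The formulas follow the commonly published HHO formulation and are assumed, not confirmed, to match the equations omitted from this text; the 0.5 threshold on q, the function names, and the bound handling by clipping are illustrative choices.

```python
import numpy as np

def escaping_energy(t, T, rng):
    """Prey energy E = 2 * E0 * (1 - t / T), with E0 drawn from (-1, 1).

    |E| shrinks as iteration t approaches the budget T, which moves the
    search from exploration (|E| >= 1) to exploitation (|E| < 1).
    """
    E0 = rng.uniform(-1.0, 1.0)
    return 2.0 * E0 * (1.0 - t / T)

def explore(X, i, rabbit, lb, ub, rng):
    """Exploration move for hawk i in population X of shape (n, dim)."""
    q, r1, r2, r3, r4 = rng.random(5)
    if q >= 0.5:
        # Perch relative to a randomly chosen hawk
        X_rand = X[rng.integers(len(X))]
        new = X_rand - r1 * np.abs(X_rand - 2.0 * r2 * X[i])
    else:
        # Perch relative to the best solution (rabbit) and the population mean
        new = (rabbit - X.mean(axis=0)) - r3 * (lb + r4 * (ub - lb))
    return np.clip(new, lb, ub)
```

For hyperparameter tuning, each row of `X` would encode one candidate hyperparameter vector and `rabbit` the best candidate found so far.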

If

Soft besiege

If

In Eq. (10),

Hard besiege

If

If

This method utilizes a Lévy flight (LF) to improve efficiency. In addition, the two states of Eq. (18) are compared with the present solution. The LF is not used in computing Y but is employed in computing Z, provided in Eq. (8).

In Eq. (14), S refers to a random vector of the same dimension as the problem with entries in the range of zero to one, and

In Eq. (15), u and v represent the two random numbers between
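A Lévy flight step is commonly generated with Mantegna's algorithm, sketched below. Note the assumptions: the sketch draws u and v from a standard normal distribution, as in Mantegna's algorithm and common HHO reference implementations, which may differ from the range intended in the text; `beta = 1.5` and the 0.01 scale factor are the usual defaults, not values stated here.

```python
import math
import numpy as np

def levy_sigma(beta=1.5):
    """Scale factor sigma of Mantegna's algorithm for stability index beta."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)

def levy_flight(dim, rng, beta=1.5):
    """Draw one heavy-tailed step vector of length dim."""
    u = rng.standard_normal(dim) * levy_sigma(beta)
    v = rng.standard_normal(dim)
    return 0.01 * u / np.abs(v) ** (1 / beta)

step = levy_flight(5, np.random.default_rng(0))
```

Most steps are small, but occasional long jumps help the search escape local optima.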

Based on Eq. (16), if the outcome of Eq. (13) is superior to the present solution, it replaces the present solution; otherwise, the solution achieved in Eq. (14) is compared with the present solution. Assume that

In Eq. (17), Y and Z are attained utilizing Eqs. (18) and (19).

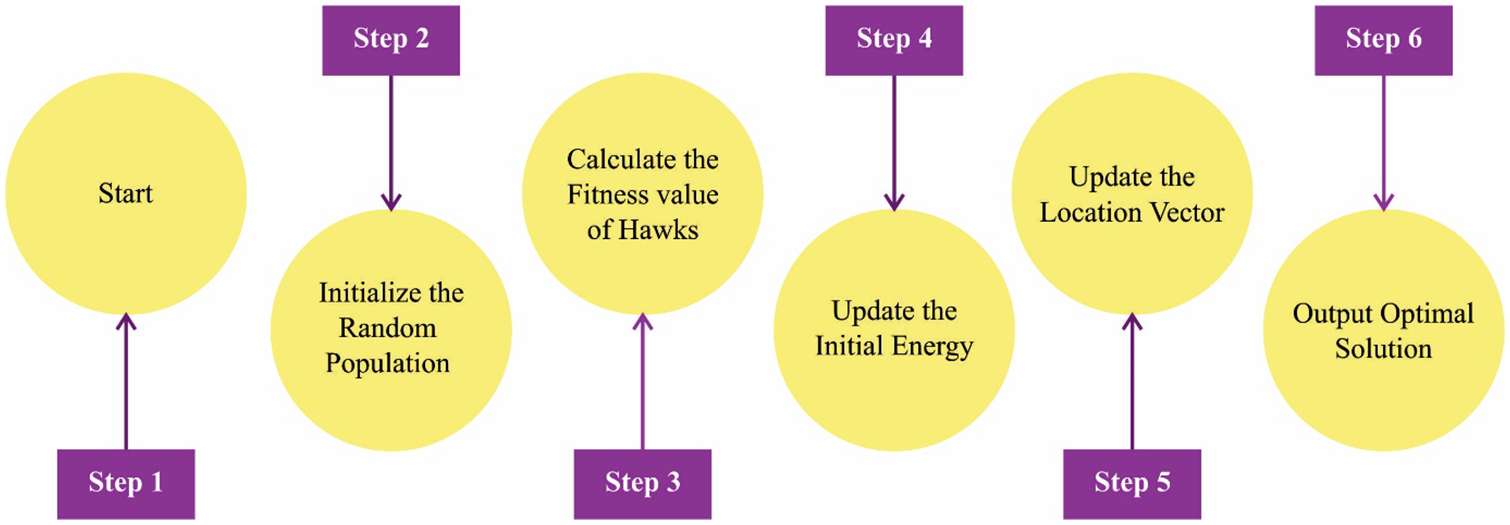

In this strategy, the solution achieved in Eq. (18) replaces the present solution when it is better; otherwise, the solution achieved in Eq. (19) replaces the present solution when it is better. Fig. 2 showcases the flowchart of the HHO technique.

Figure 2: Flowchart of HHO technique
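The four exploitation strategies and the greedy choice between Y and Z can be sketched in one function. This follows the commonly published HHO formulation and is assumed, not confirmed, to match the omitted equations; the thresholds of 0.5 on r and |E|, the jump strength `J`, and all names are illustrative. The Lévy step is passed in as an argument so that the sketch stays independent of how it is generated.

```python
import numpy as np

def exploit(x, rabbit, x_mean, E, r, J, S, levy_step, fitness):
    """One exploitation move for a hawk at position x (minimisation).

    rabbit: best solution so far, x_mean: population mean, E: escaping
    energy, r: escape chance, J: jump strength, S: random vector in [0, 1],
    levy_step: Levy flight vector, fitness: objective function.
    """
    if r >= 0.5:
        if abs(E) >= 0.5:
            # Soft besiege
            return (rabbit - x) - E * np.abs(J * rabbit - x)
        # Hard besiege
        return rabbit - E * np.abs(rabbit - x)
    # Besiege with progressive rapid dives: soft uses x, hard uses the mean
    ref = x if abs(E) >= 0.5 else x_mean
    Y = rabbit - E * np.abs(J * rabbit - ref)
    if fitness(Y) < fitness(x):
        return Y
    Z = Y + S * levy_step
    if fitness(Z) < fitness(x):
        return Z
    return x  # neither candidate improves on the present solution

def sphere(v):
    """Toy objective used only for the usage example."""
    return float(np.sum(v ** 2))

x = np.array([2.0, 2.0])
rabbit = np.zeros(2)
moved = exploit(x, rabbit, np.array([1.0, 1.0]), E=0.3, r=0.2, J=1.0,
                S=np.ones(2), levy_step=np.ones(2), fitness=sphere)
```

In the usage example the hard besiege with rapid dives is selected, and Y is accepted because it has a lower objective value than the present position.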

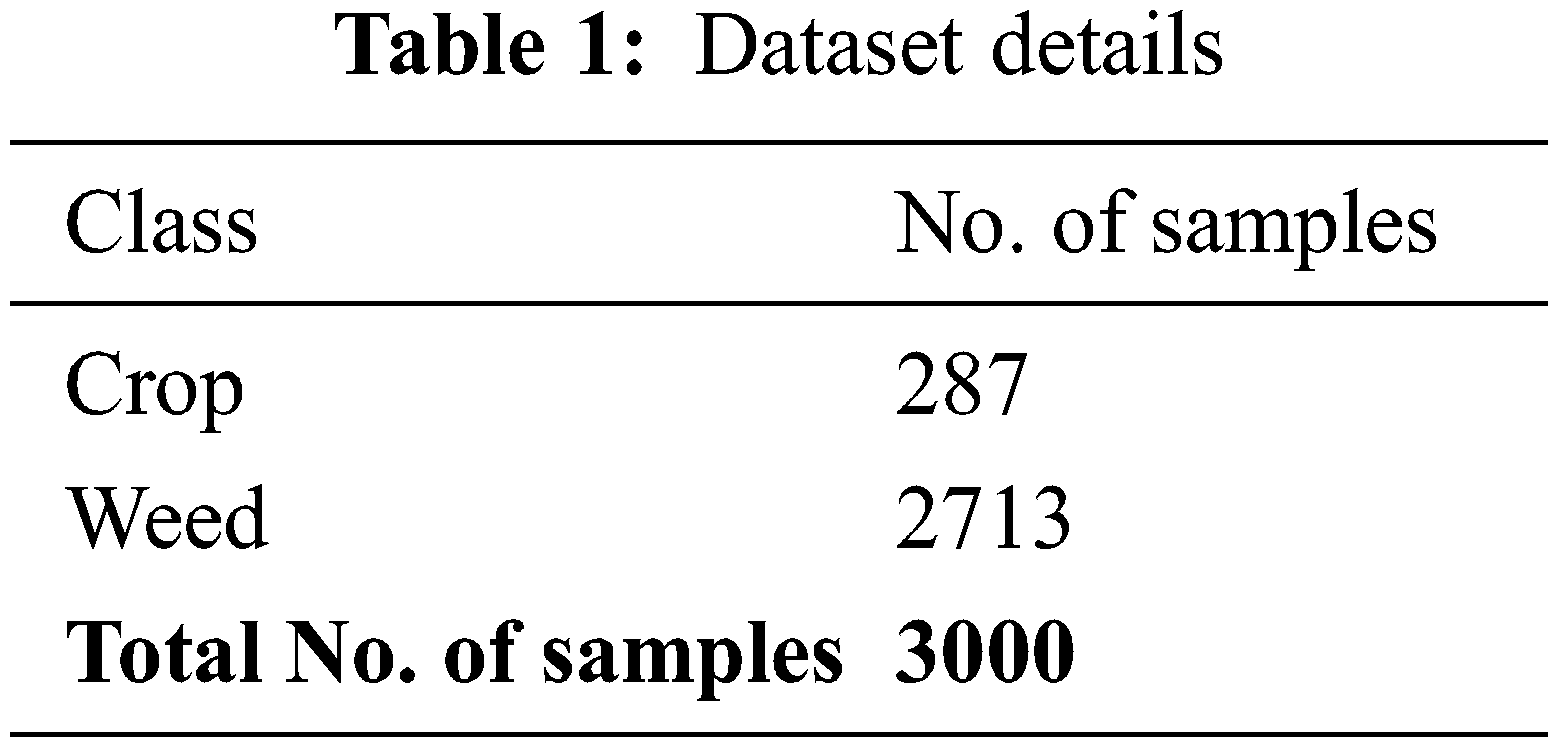

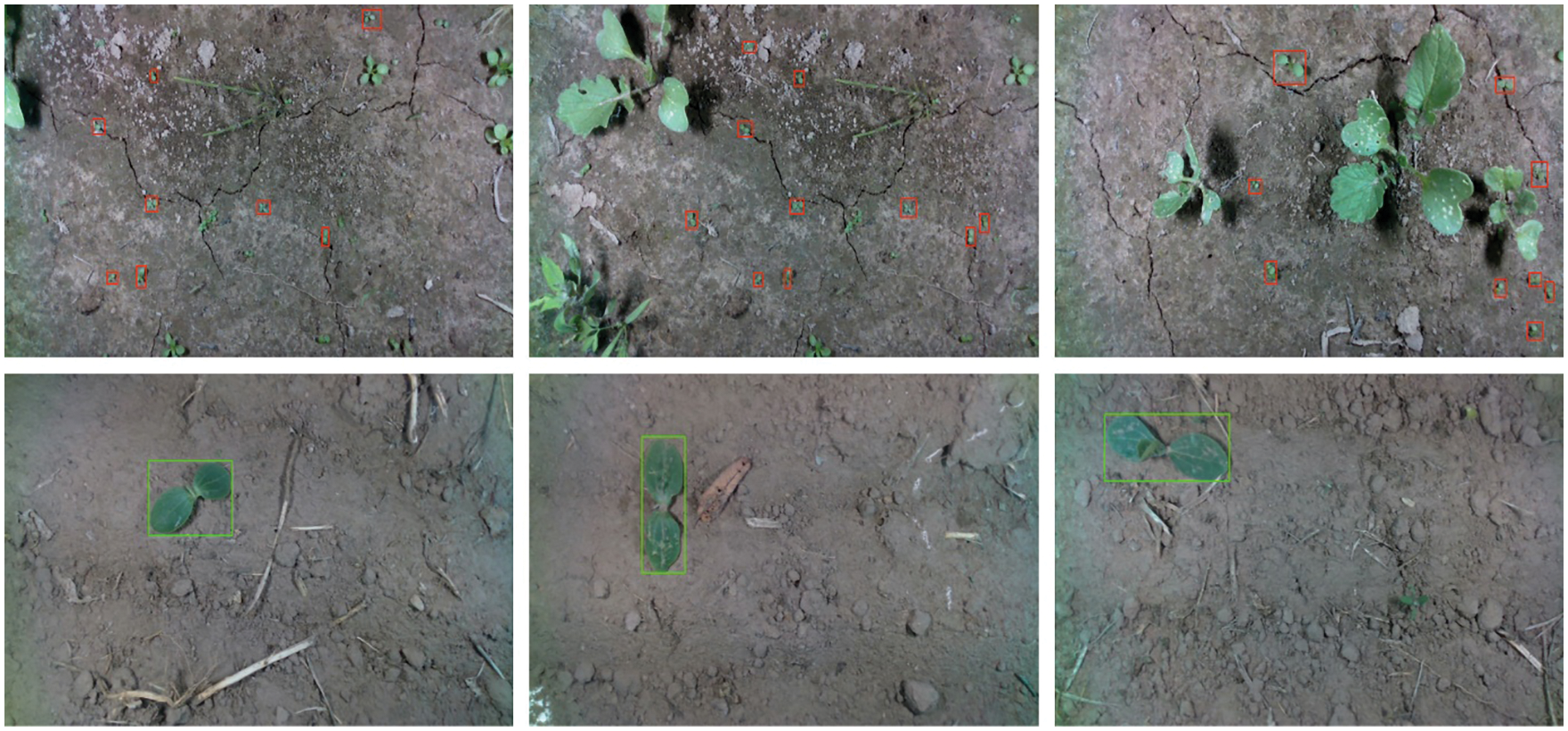

The weed detection performance of the HHOGCN-WD model is tested using a benchmark weed dataset [20]. The dataset holds 3000 samples with two classes, as in Table 1. A few sample images are demonstrated in Fig. 3.

Figure 3: Sample images

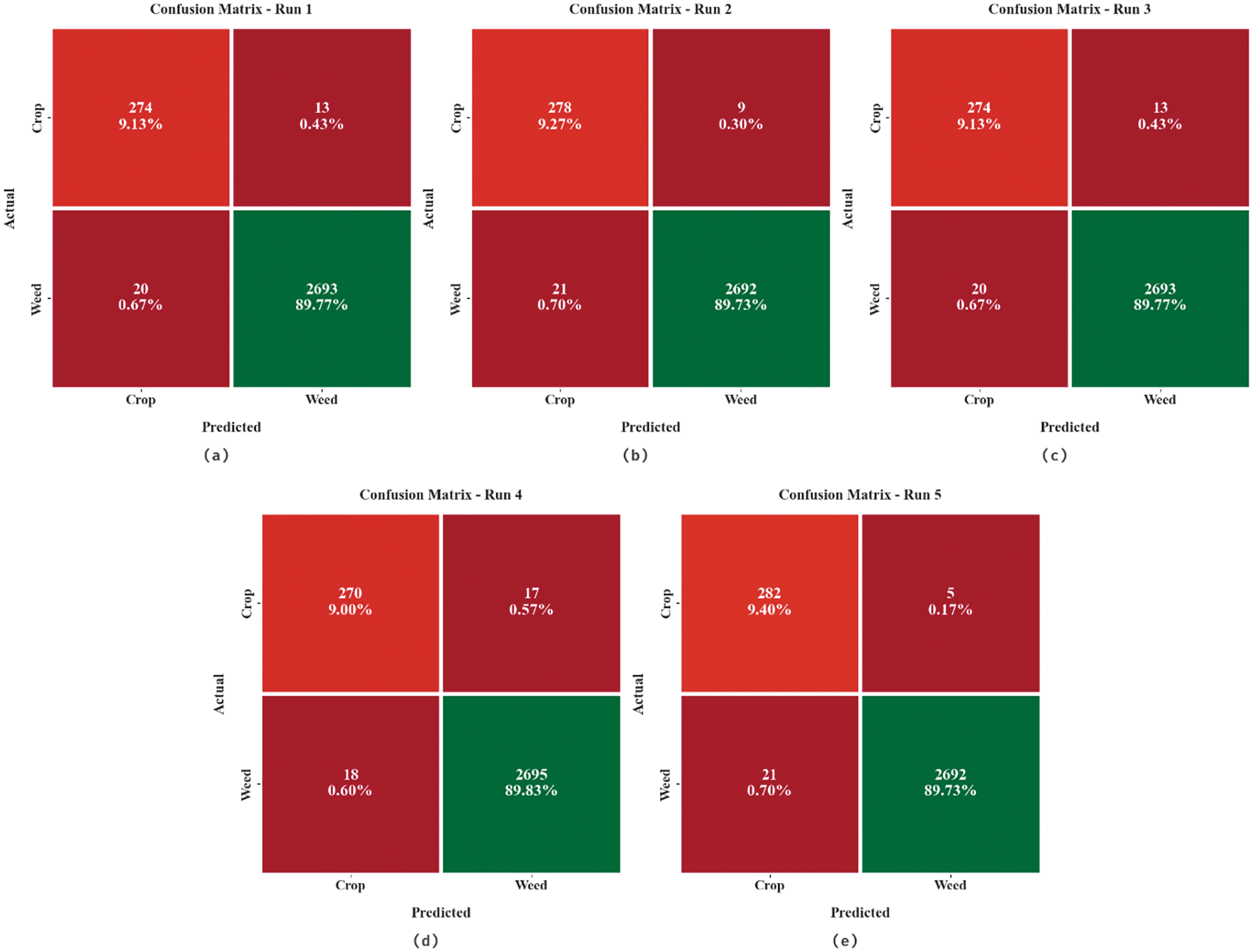

Fig. 4 depicts the confusion matrices offered by the HHOGCN-WD model under five distinct runs. The figure implied that the HHOGCN-WD model had identified the weeds proficiently under each class. For instance, on run-1, the HHOGCN-WD model detected 274 samples under crop and 2693 samples under weed class. Moreover, on run-2, the HHOGCN-WD technique detected 278 samples under crop and 2692 samples under weed class. Further, on run-3, the HHOGCN-WD approach detected 274 samples under crop and 2693 samples under weed class. Then, on run-4, the HHOGCN-WD technique detected 270 samples under crop and 2695 samples under weed class. Next, on run-5, the HHOGCN-WD method detected 282 samples under crop and 2692 samples under weed class.

Figure 4: Confusion matrices of HHOGCN-WD approach (a) Run1, (b) Run2, (c) Run3, (d) Run4, and (e) Run5
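The per-run counts quoted above translate directly into overall accuracy. The short calculation below assumes that the quoted numbers are correctly classified samples (the diagonal of each confusion matrix) and that each run is evaluated on all 3000 samples; under that reading, run-5 gives about 99.13%, which agrees with the accuracy reported in the abstract.

```python
# Correctly detected (crop, weed) samples per run, as quoted in the text.
correct = {
    1: (274, 2693),
    2: (278, 2692),
    3: (274, 2693),
    4: (270, 2695),
    5: (282, 2692),
}
TOTAL_SAMPLES = 3000  # dataset size stated in the text

# Overall accuracy = correctly classified samples / all samples
accuracy = {run: (crop + weed) / TOTAL_SAMPLES
            for run, (crop, weed) in correct.items()}

for run, acc in accuracy.items():
    print(f"run-{run}: {acc:.2%}")

best_run = max(accuracy, key=accuracy.get)
```

Per-class precision and recall cannot be recovered from these counts alone, because the class sizes in Table 1 are not reproduced in the text.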

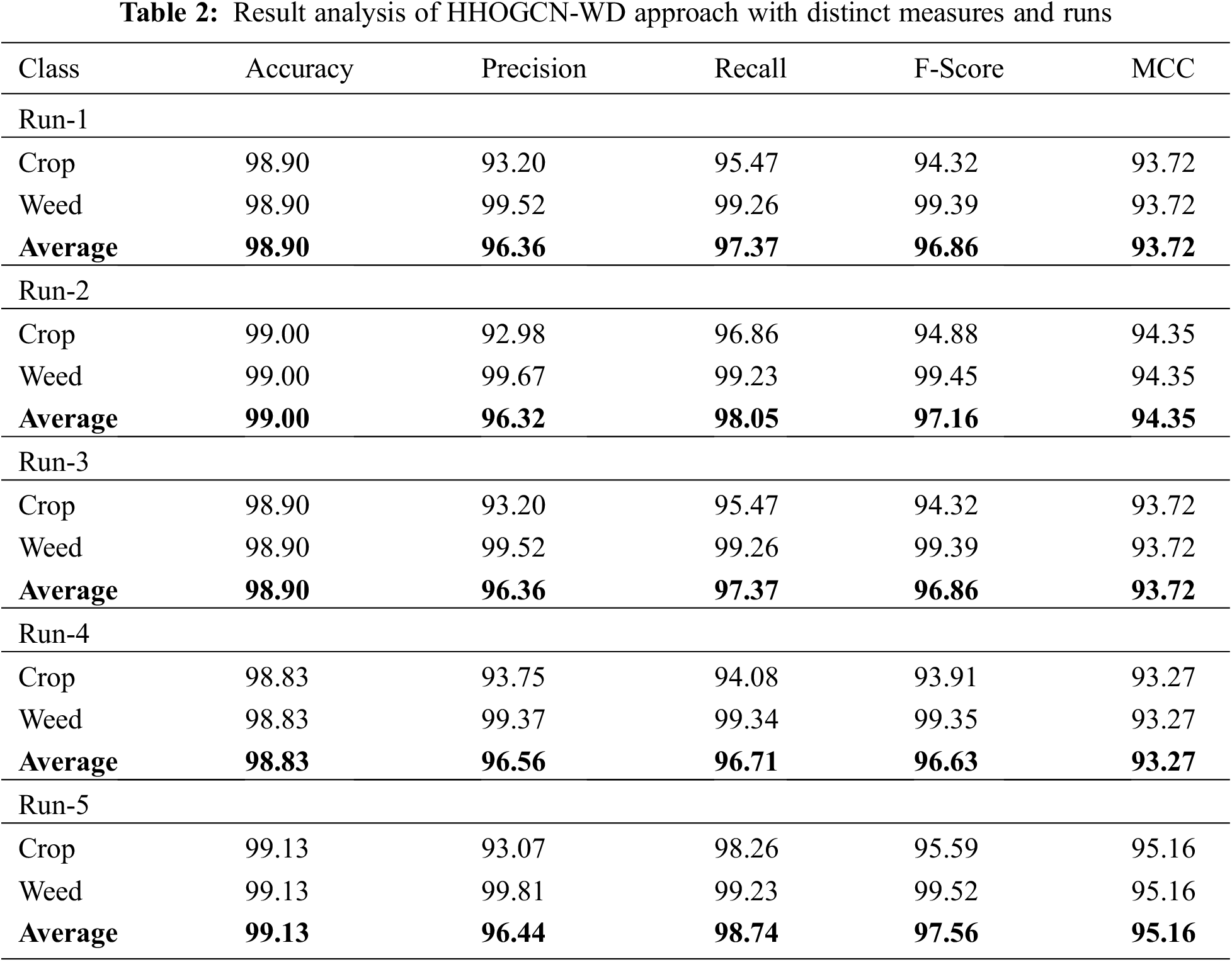

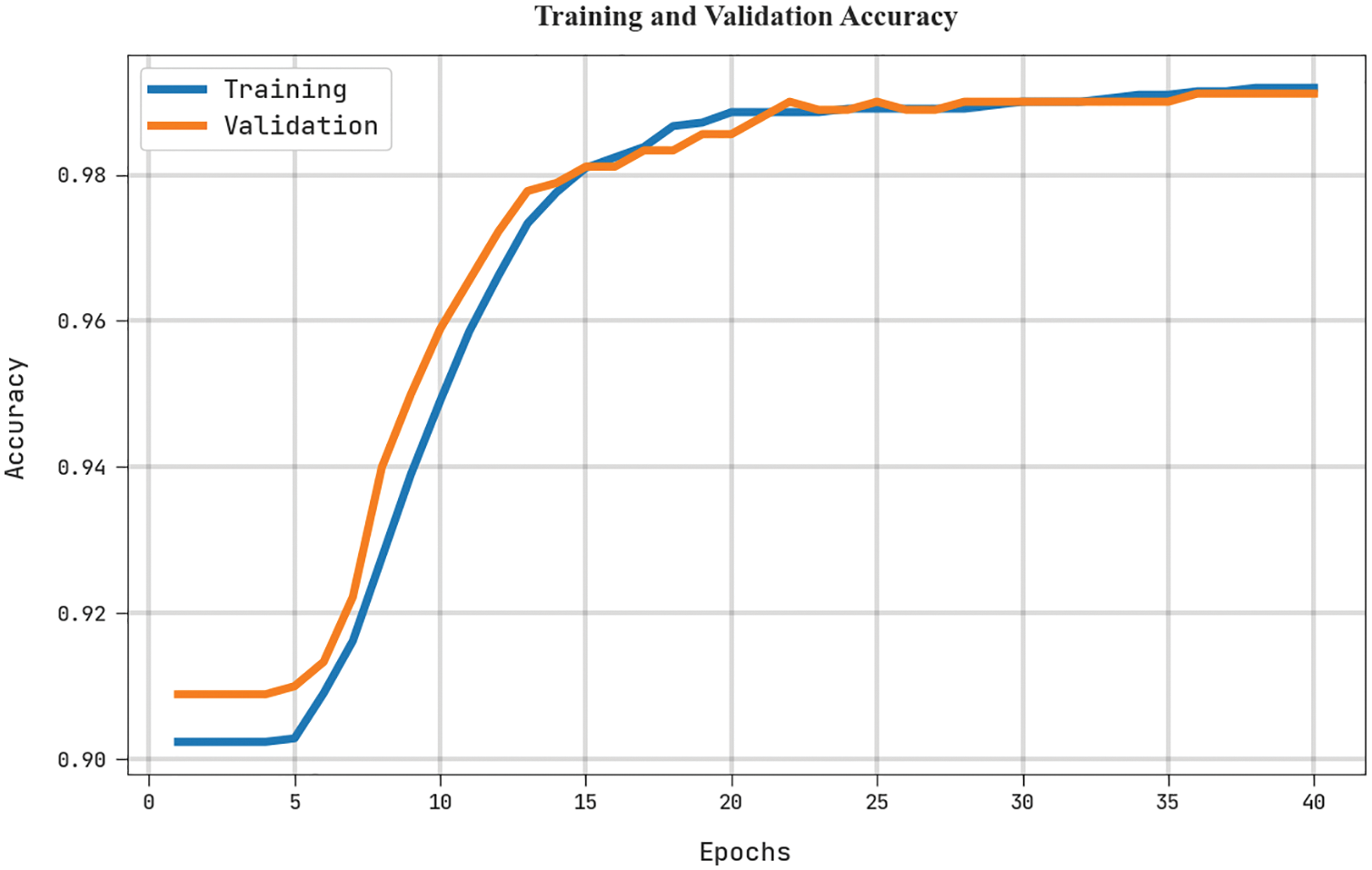

Table 2 and Fig. 5 demonstrate the overall weed detection outcomes of the HHOGCN-WD model under five distinct runs. The results indicated that the HHOGCN-WD model had shown enhanced weed detection outcomes. For instance, on run-1, the HHOGCN-WD model has offered average

Figure 5: Average analysis of HHOGCN-WD approach (a) Run1, (b) Run2, (c) Run3, (d) Run4, and (e) Run5

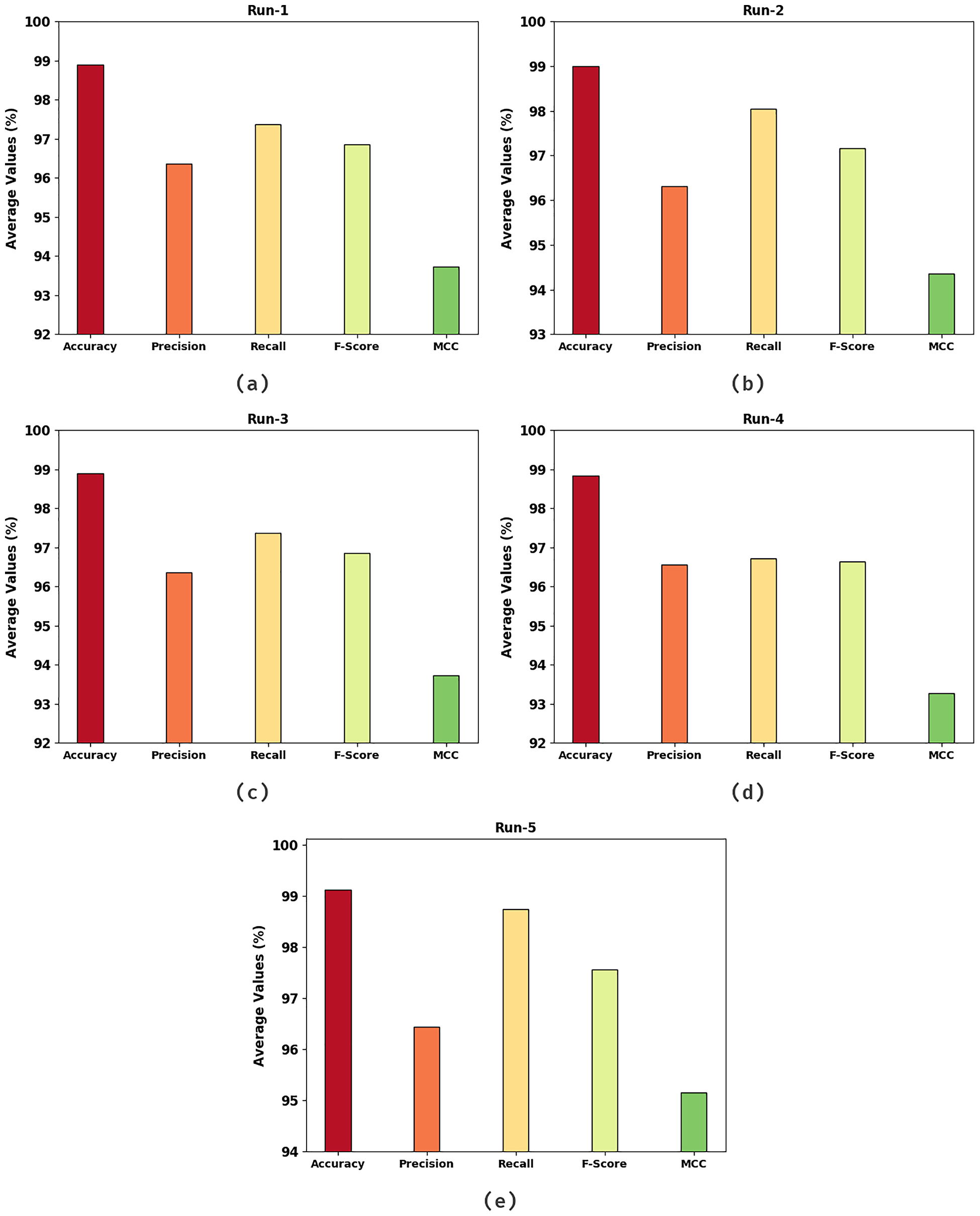

The training accuracy (TRA) and validation accuracy (VLA) acquired by the HHOGCN-WD approach on the test dataset are shown in Fig. 6. The experimental outcome implies that the HHOGCN-WD method attained high values of TRA and VLA. Notably, the VLA appears to be greater than the TRA.

Figure 6: TRA and VLA analysis of HHOGCN-WD approach

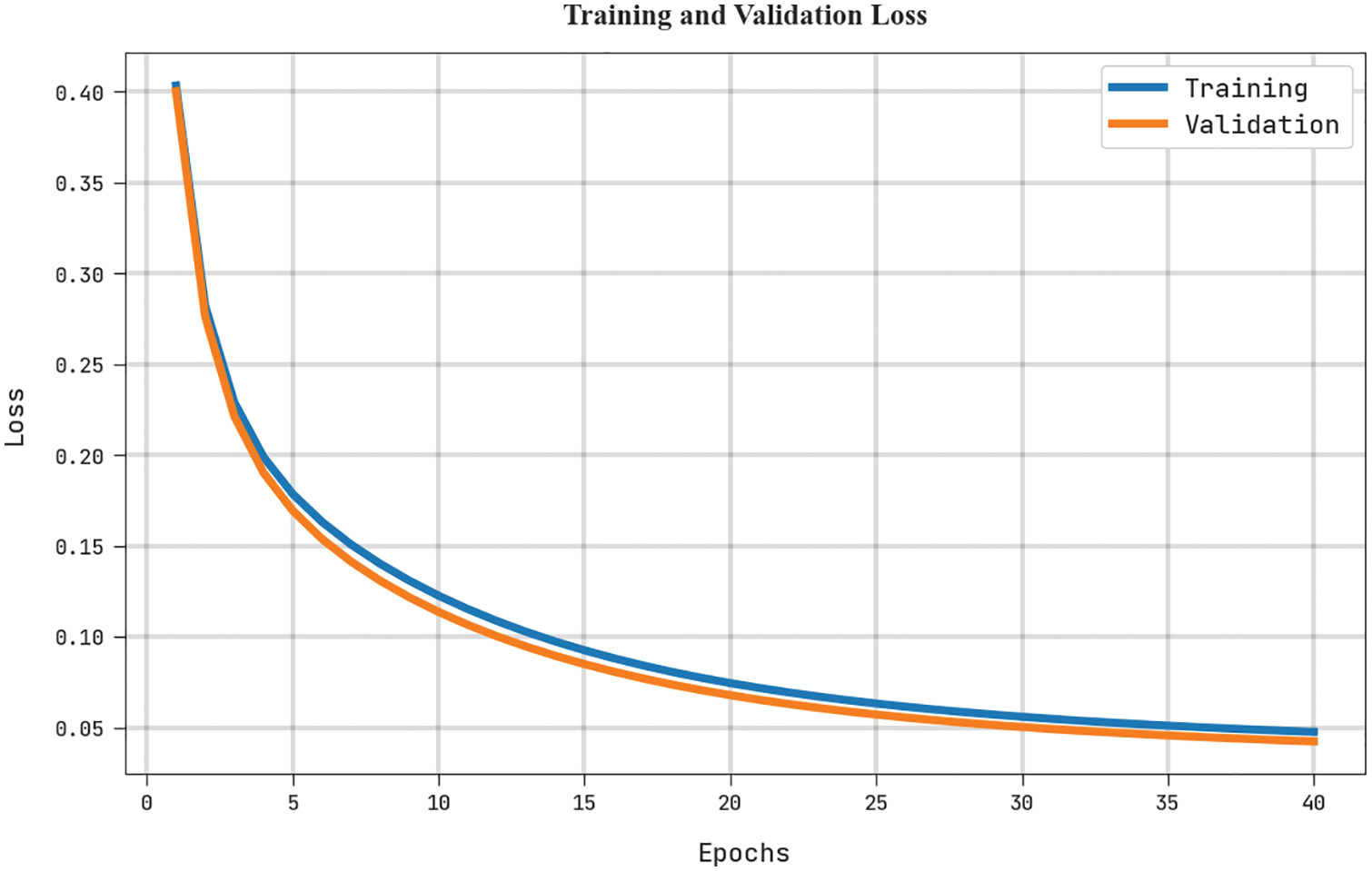

The training loss (TRL) and validation loss (VLL) obtained by the HHOGCN-WD method on the test dataset are shown in Fig. 7. The experimental outcome indicates that the HHOGCN-WD approach exhibited low values of TRL and VLL. In particular, the VLL is lower than the TRL.

Figure 7: TRL and VLL analysis of the HHOGCN-WD approach

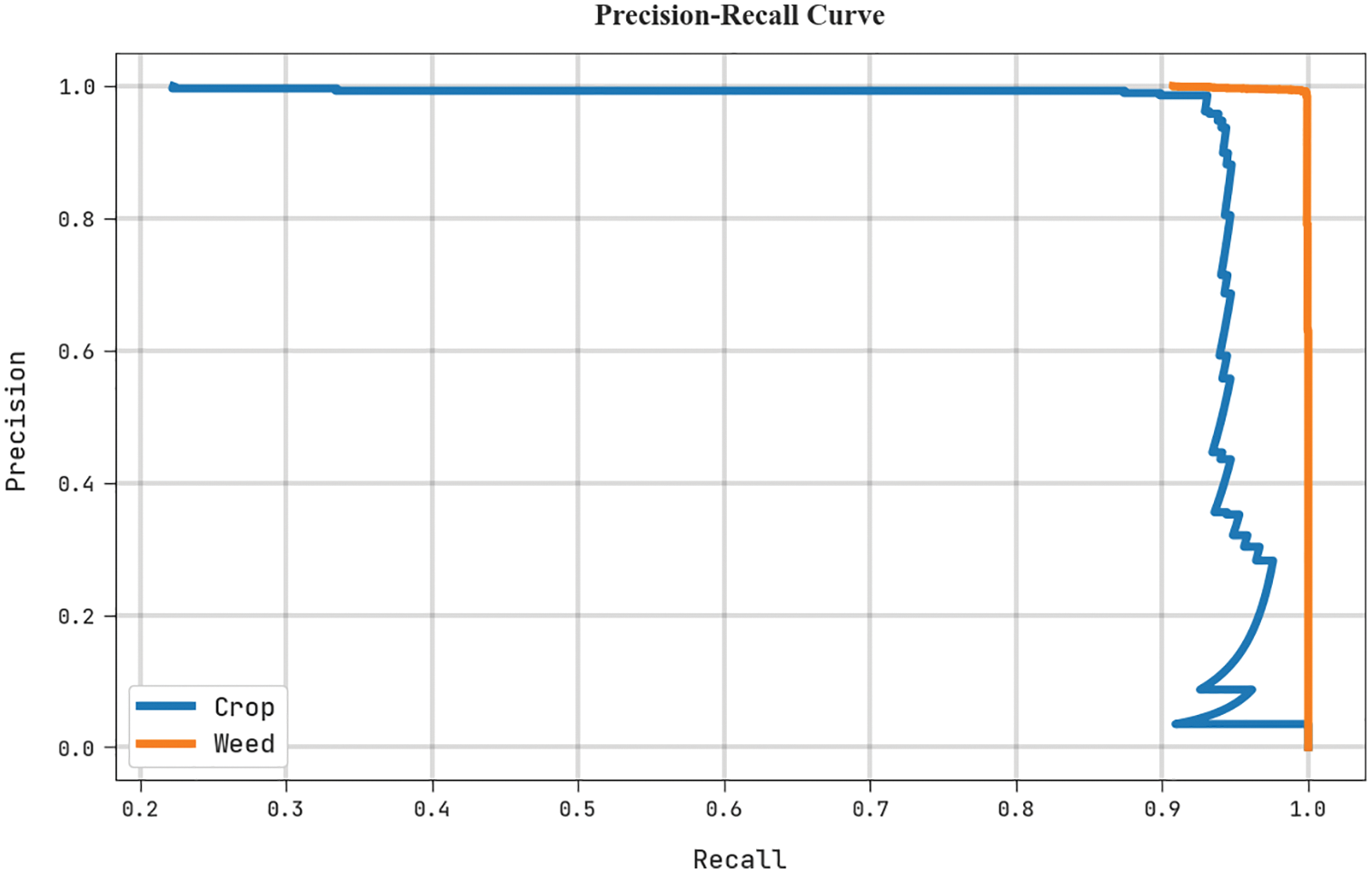

A precision-recall analysis of the HHOGCN-WD algorithm on the test dataset is given in Fig. 8. The figure shows that the HHOGCN-WD approach achieved enhanced precision-recall values in all classes.

Figure 8: Precision-recall analysis of the HHOGCN-WD approach

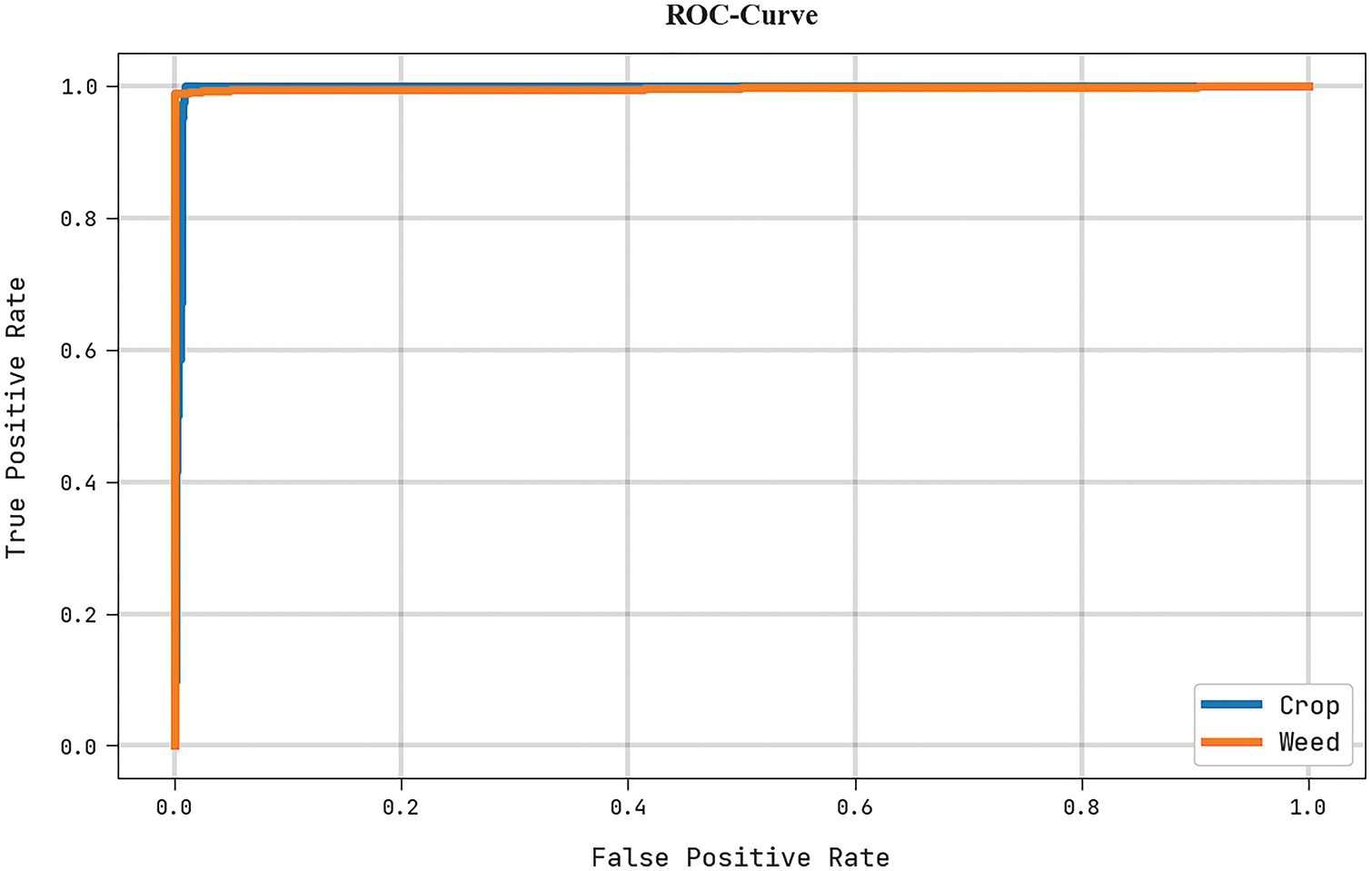

A brief ROC analysis of the HHOGCN-WD technique on the test dataset is portrayed in Fig. 9. The results imply that the HHOGCN-WD method is able to classify the distinct class labels in the test dataset.

Figure 9: ROC analysis of HHOGCN-WD approach

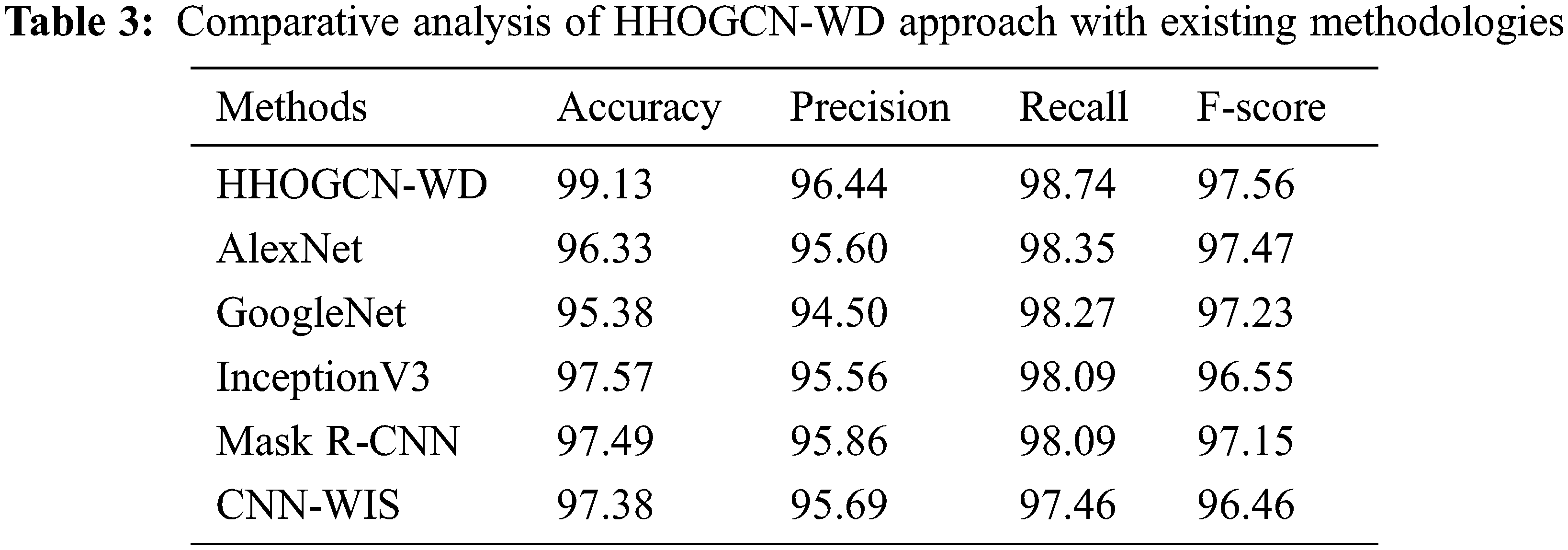

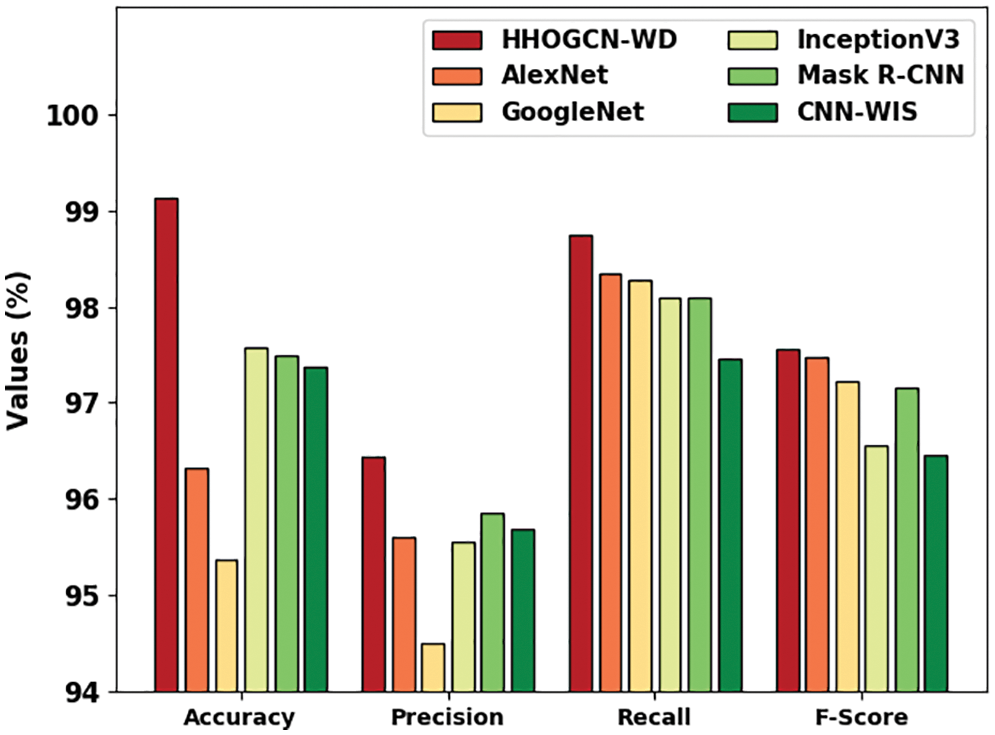

Table 3 and Fig. 10 depict the comparative weed detection results of the HHOGCN-WD model and other existing models [21,22]. The results imply that the HHOGCN-WD model obtained enhanced results in terms of different measures. For instance, concerning

Figure 10: Comparative analysis of HHOGCN-WD approach with existing methodologies

Finally, for

In this study, a new HHOGCN-WD technique has been developed for weed detection and classification for Precision Agriculture. The presented HHOGCN-WD technique mainly focuses on the identification and classification of weeds for precision agriculture. For image pre-processing, the HHOGCN-WD model utilizes BNF for noise removal. In addition, the CCNet model is utilized to derive a set of feature vectors. To detect and classify weed, the GCN model is utilized with the HHO algorithm as a hyperparameter optimizer to improve the detection performance. The experimental results of the HHOGCN-WD technique are investigated under the benchmark dataset. The results indicate the promising performance of the presented HHOGCN-WD model over other recent approaches.

Funding Statement: This research was partly supported by the Technology Development Program of MSS [No. S3033853] and by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. 2020R1I1A3069700).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. W. H. Maes and K. Steppe, “Perspectives for remote sensing with unmanned aerial vehicles in precision agriculture,” Trends in Plant Science, vol. 24, no. 2, pp. 152–164, 2019.

2. M. F. Aslan, A. Durdu, K. Sabanci, E. Ropelewska and S. S. Gültekin, “A comprehensive survey of the recent studies with UAV for precision agriculture in open fields and greenhouses,” Applied Sciences, vol. 12, no. 3, pp. 1047, 2022.

3. J. Champ, A. M. Fallas, H. Goëau, E. M. Montero, P. Bonnet et al., “Instance segmentation for the fine detection of crop and weed plants by precision agricultural robots,” Applications in Plant Sciences, vol. 8, no. 7, pp. e11373, 2020.

4. S. Goel, K. Guleria and S. N. Panda, “Machine learning techniques for precision agriculture using wireless sensor networks,” ECS Transactions, vol. 107, no. 1, pp. 9229, 2022.

5. E. Mavridou, E. Vrochidou, G. A. Papakostas, T. Pachidis and V. G. Kaburlasos, “Machine vision systems in precision agriculture for crop farming,” Journal of Imaging, vol. 5, no. 12, pp. 89, 2019.

6. A. Chawade, J. van Ham, H. Blomquist, O. Bagge, E. Alexandersson et al., “High-throughput field-phenotyping tools for plant breeding and precision agriculture,” Agronomy, vol. 9, no. 5, pp. 258, 2019.

7. D. Andujar and J. Martinez-Guanter, “An overview of precision weed mapping and management based on remote sensing,” Remote Sensing, vol. 14, no. 15, pp. 3621, 2022.

8. N. Delavarpour, C. Koparan, J. Nowatzki, S. Bajwa and X. Sun, “A technical study on UAV characteristics for precision agriculture applications and associated practical challenges,” Remote Sensing, vol. 13, no. 6, pp. 1204, 2021.

9. A. E. Abouelregal and M. Marin, “The size-dependent thermoelastic vibrations of nanobeams subjected to harmonic excitation and rectified sine wave heating,” Mathematics, vol. 8, no. 7, pp. 1128, 2020.

10. L. Zhang, M. M. Bhatti, E. E. Michaelides, M. Marin and R. Ellahi, “Hybrid nanofluid flow towards an elastic surface with tantalum and nickel nanoparticles, under the influence of an induced magnetic field,” The European Physical Journal Special Topics, vol. 231, no. 3, pp. 521–533, 2021.

11. U. F. Ukaegbu, L. K. Tartibu, M. O. Okwu and I. O. Olayode, “Development of a light-weight unmanned aerial vehicle for precision agriculture,” Sensors, vol. 21, no. 13, pp. 4417, 2021.

12. S. Khan, M. Tufail, M. T. Khan, Z. A. Khan and S. Anwar, “Deep learning-based identification system of weeds and crops in strawberry and pea fields for a precision agriculture sprayer,” Precision Agriculture, vol. 22, no. 6, pp. 1711–1727, 2021.

13. M. H. Asad and A. Bais, “Weed detection in canola fields using maximum likelihood classification and deep convolutional neural network,” Information Processing in Agriculture, vol. 7, no. 4, pp. 535–545, 2020.

14. K. Osorio, A. Puerto, C. Pedraza, D. Jamaica and L. Rodríguez, “A deep learning approach for weed detection in lettuce crops using multispectral images,” AgriEngineering, vol. 2, no. 3, pp. 471–488, 2020.

15. O. Barrero and S. A. Perdomo, “RGB and multispectral UAV image fusion for Gramineae weed detection in rice fields,” Precision Agriculture, vol. 19, no. 5, pp. 809–822, 2018.

16. H. D. Han and J. K. Han, “Modified bilateral filter for feature enhancement in mesh denoising,” IEEE Access, vol. 10, pp. 56845–56862, 2022.

17. L. Wang and X. Wang, “Dual-coupled CNN-GCN-based classification for hyperspectral and LiDAR data,” Sensors, vol. 22, no. 15, pp. 5735, 2022.

18. L. Zhao, Y. Song, C. Zhang, Y. Liu, P. Wang et al., “T-GCN: A temporal graph convolutional network for traffic prediction,” IEEE Transactions on Intelligent Transportation Systems, vol. 21, no. 9, pp. 3848–3858, 2019.

19. Y. Zhang, R. Liu, X. Wang, H. Chen and C. Li, “Boosted binary Harris hawks optimizer and feature selection,” Engineering with Computers, vol. 37, no. 4, pp. 3741–3770, 2021.

20. K. Sudars, J. Jasko, I. Namatevs, L. Ozola and N. Badaukis, “Dataset of annotated food crops and weed images for robotic computer vision control,” Data in Brief, vol. 31, pp. 105833, 2020.

21. Z. Wu, Y. Chen, B. Zhao, X. Kang and Y. Ding, “Review of weed detection methods based on computer vision,” Sensors, vol. 21, no. 11, pp. 3647, 2021.

22. A. Subeesh, S. Bhole, K. Singh, N. S. Chandel, Y. A. Rajwade et al., “Deep convolutional neural network models for weed detection in polyhouse grown bell peppers,” Artificial Intelligence in Agriculture, vol. 6, pp. 47–54, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
