Open Access

ARTICLE

Human Personality Assessment Based on Gait Pattern Recognition Using Smartphone Sensors

1 Department of Computer Science, University of Wah, Wah Cantt, Pakistan

2 Department of Computer Science, HITEC University, Taxila, Pakistan

3 Computer Sciences Department, College of Computer and Information Sciences, Princess Nourah bint Abdulrahman University, Riyadh, 11671, Saudi Arabia

4 Department of Management Information Systems, CoBA, Prince Sattam Bin Abdulaziz University, Al-Kharj, 16278, Saudi Arabia

5 Department of Obstetrics and Gynecology, Soonchunhyang University Cheonan Hospital, Soonchunhyang University College of Medicine, Cheonan, Korea

6 Department of ICT Convergence, Soonchunhyang University, Asan, 31538, Korea

* Corresponding Author: Yunyoung Nam. Email:

Computer Systems Science and Engineering 2023, 46(2), 2351-2368. https://doi.org/10.32604/csse.2023.036185

Received 20 September 2022; Accepted 14 December 2022; Issue published 09 February 2023

Abstract

Human personality assessment using gait pattern recognition is one of the most recent and exciting research domains. Gait is a marker of a person's identity and can reflect reliable information about their mood, emotions, and substantial personality traits. This research focuses on recognizing the key personality traits of neuroticism, extraversion, openness to experience, agreeableness, and conscientiousness, in line with the big-five model of personality. We inferred personality traits from the gait pattern recognition of individuals using built-in smartphone sensors. For experimentation, we collected a novel dataset of 22 participants using an Android application and segmented it into six data chunks for a critical evaluation. After data pre-processing, we extracted selected features from each data segment and then applied four multiclass machine learning algorithms to train on and classify the dataset according to the users' Big-Five Personality Traits (BFPT) profiles. Experimental results and performance evaluation of the classifiers demonstrated the efficacy of the proposed scheme for all big-five traits.

Every person has a distinctive gait. Gait recognition studies how people walk and how that walking can be analyzed in detail. It is defined as “the systematic investigation of human movement” [1]. The human gait cycle, commonly referred to as the stride, is a series of motions that starts and ends with the same foot contacting the ground. A stride consists of two phases: stance and swing. Stance takes up 60% of the gait cycle; it begins when the foot contacts the ground and continues until that foot lifts off. Swing takes up the remaining 40%; it begins when the foot leaves the ground and ends when the foot returns to the ground [2,3]. The entire gait cycle comprises eight sub-phases. The stance phase consists of five: initial contact, loading response, mid-stance, terminal stance, and pre-swing. The swing phase consists of three: initial swing, mid-swing, and terminal swing. Research on automatic gait identification is currently quite popular. It can be used in a variety of settings, including medicine [4], soft biometric identification [5,6], surveillance [5], rehabilitation, product marketing [7], and sports [8,9]. Several well-established methods have been developed for gathering and identifying gait patterns [10], including Video-Sensor (V-S) based [11–13], Ambient-Sensor (A-S) or Floor-Sensor based [14–16], and Wearable-Sensor (W-S) based techniques [17–19]. The most recent method collects and recognizes gait patterns using a smartphone's built-in sensors (GPS, proximity, accelerometer, gyroscope, etc.) [20–23]. It has become increasingly popular in recent years because it helps researchers collect and examine naturally occurring gait variations. Since it does not require users to attach sensors to various parts of their bodies, this method is not only inexpensive but also highly convenient.
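The nominal stance/swing split of a stride can be written as a short calculation. The Python sketch below assumes the 60%/40% proportions apply exactly and uses a hypothetical 1.2 s stride purely for illustration; real strides vary with walking speed and between individuals.

```python
# Nominal stance/swing proportions of one gait cycle (stride).
STANCE_FRACTION = 0.60
SWING_FRACTION = 0.40

# The eight sub-phases: five belong to stance, three to swing.
STANCE_SUBPHASES = ("initial contact", "loading response", "mid-stance",
                    "terminal stance", "pre-swing")
SWING_SUBPHASES = ("initial swing", "mid-swing", "terminal swing")


def split_stride(stride_time_s: float) -> dict:
    """Return the nominal stance and swing durations (in seconds) of one stride."""
    if stride_time_s <= 0:
        raise ValueError("stride time must be positive")
    return {
        "stance_s": STANCE_FRACTION * stride_time_s,
        "swing_s": SWING_FRACTION * stride_time_s,
    }


# Hypothetical 1.2 s stride, for illustration only.
durations = split_stride(1.2)
print(durations)
print(len(STANCE_SUBPHASES) + len(SWING_SUBPHASES), "sub-phases")
```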

Gait pattern analysis reveals significant details about a person's personality traits and emotions. People can recognize others from an abstract display of their movement and judge their personality traits from their behavior, for example after watching a video clip of someone walking for only a couple of seconds [24]. A person's walking pattern can vary across contexts, such as normal walking, walking upstairs, walking while listening to music, and walking with a foot injury. Behavior observation is a traditional and widely accepted technique for identifying individual differences in psychology; it judges personality from action [25]. However, this technique requires human coders to rate participants' behavior, which leaves significant room for error in the attributed personality traits and may miss salient features and details [26]. An alternative approach uses gait analysis techniques (V-S based, A-S based, and W-S based) to assess an individual's personality traits by collecting gait patterns with standard gait biomechanics measures. These techniques are the most advanced and efficient because they allow researchers to collect data continuously over many strides, unobtrusively revealing critical details with less uncertainty and fewer errors.

This paper assesses the key personality traits of the big-five personality model based on individuals' gait pattern recognition. The Big Five Inventory [27] measures the widely used big-five personality traits, and the big-five model is the most widely accepted and dependable personality model in individual-differences research. The researchers in [28,29] described the big-five traits in detail.

This research begins by maintaining each participant's Big-Five Personality Test (BFPT) profile. It then collects gait data from all participants for five gait patterns: normal walking, walking upstairs, walking downstairs, presenting with audiences, and presenting without audiences. To extract meaningful information, the data are pre-processed, features are extracted, and four Machine Learning (ML) classifiers are applied. The classification results demonstrate the efficiency of the scheme. The main contributions of our work are as follows:

• This work includes maintaining participants’ Big Five Personality Traits (BFPT) profiles before collecting data. BFPT is a 50-item questionnaire, having a scale of 1–5, developed to measure the big-five factor markers.

• A novel dataset of 22 participants (10 males and 12 females aged 19 to 26 years) was collected using an Android application, “data collector.” Two smartphones were used for data collection: participants kept one smartphone in their pocket and held the other in their hand while recording data in different contexts.

• To the best of our knowledge, this research is the first to propose a method for inferring the key personality traits of individuals, in line with the big-five model of personality, through gait pattern recognition.

• The proposed approach is evaluated by comparing the inferred personality traits to the personality scores obtained through BFPT for each user. Performance evaluation showed promising results, highlighting the efficiency of the scheme.

Gait analysis has been one of the fastest-growing research fields for decades, and researchers have done remarkable work in this domain. It has many everyday applications, including security and surveillance, soft biometrics, health, and fitness [30]. Human personality assessment through gait pattern recognition, however, is a recent phenomenon: inferring personality traits from actions was long neglected, and researchers have only been working in this domain for a little over a decade. In 1995, the philosopher Taylor suggested a link between individuals' walking patterns and their personality [31], but his claim was not pursued in practical research for years. Behavioral observation techniques have been used effectively for personality trait attribution [25]. Body movement is a medium observers use to make judgments about an individual, especially when the face and body appearance are not visible or the person is at a distance.

The authors of [32] studied point-light walkers and identified their motion-related visual cues. In their study, participants completed personality questionnaires, and their self-reported traits served as ground truth. Twenty-six participants were involved, and their whole-body walking movements were captured using a VICON system. Observers were shown one gait cycle of the point-light walker stimuli and rated each stimulus on six rating scales, five of which related to the big-five personality model. The findings confirmed that observers made reliable trait judgments that were linked to motion components derived from the analysis of the motion data, reflecting a relationship between personality traits and gait cues.

Besides behavioral observation techniques, researchers have made considerable efforts in emotion recognition through gait. The authors of [28] presented a neural network-based application that recognizes emotion by analyzing human gait. They performed two experiments. First, they identified participants' emotional states by analyzing their gait; participants expressed four emotional states: normal, happy, sad, and angry. Second, they investigated changes in gait patterns while participants listened to different types of music: excitatory, calming, or no music. The performance evaluation showed individual emotion detection rates of up to 99% through gait analysis.

In the second experiment, the participants' gait data also provided significant information for emotion recognition. Using human gait for personality assessment can be considered an extension of emotion recognition through gait analysis. The authors of [29,33] presented evidence that gait patterns carry different personality cues. They adopted a human-coding approach in which each observer rated traits after viewing the participants' gait patterns. These ratings were then correlated with the participants' self-reported measures to determine the accuracy of the perceived information.

The researchers in [34] proposed an alternative to human coding to explore how personality traits are revealed in gait. They recruited 29 participants and attached reflective markers at anatomical positions on the thorax, pelvis, knees, and feet. Participants also completed the Buss-Perry questionnaire and a big-five measure. The motion data of participants walking naturally on a treadmill were captured for 60 s. Analysis of their upper- and lower-body movements demonstrated that gait could be correlated with the big-five and aggressive personality traits. Although these studies [35–37] examined several components of gait and how they relate to aggressiveness and the big-five personality characteristics, they were exploratory and lacked a strong hypothesis. The authors of [38] evaluated the association of the big-five personality traits, particularly neuroticism, conscientiousness, and extraversion, with physical activity level and muscular strength. They suggested that neuroticism correlates negatively with muscle strength, while extraversion and conscientiousness, along with their facets, correlate positively. The study also found that various personality traits were linked with physical activity level and that having multiple negative traits carries a high risk of low muscular strength.

The researchers in [39] examined the relationship between personality traits and physical capacities in older adults by analyzing gait speed and muscular strength. Approximately 243 older women were recruited. Participants completed questionnaires measuring personality traits, subjective age, attitudes toward aging, and physical self-perceptions. The authors used structural equation modeling (SEM) for data analysis. This research provided evidence of associations between extraversion, neuroticism, conscientiousness, and walking speed, as well as between conscientiousness, neuroticism, openness, and muscular strength. Likewise, the studies in [40] and [41] indicate associations of personality traits, physical activity level, and cognitive impairment with the risk of falls in older adults. Participants completed a series of questionnaires on subjective personality type, physical activity level, and cognitive status, and the responses were analyzed descriptively. The results provided favorable evidence for these associations.

The ability to read people's emotions and traits has numerous practical uses, including rehabilitation, better product marketing, optimization based on customer behavior, security, personality detection in shopping malls, and more interactive and realistic computer games [4–9]. However, few studies have examined the possibility of assessing personality traits through the automated acquisition and analysis of motion data, which indicates the need for further study in this area.

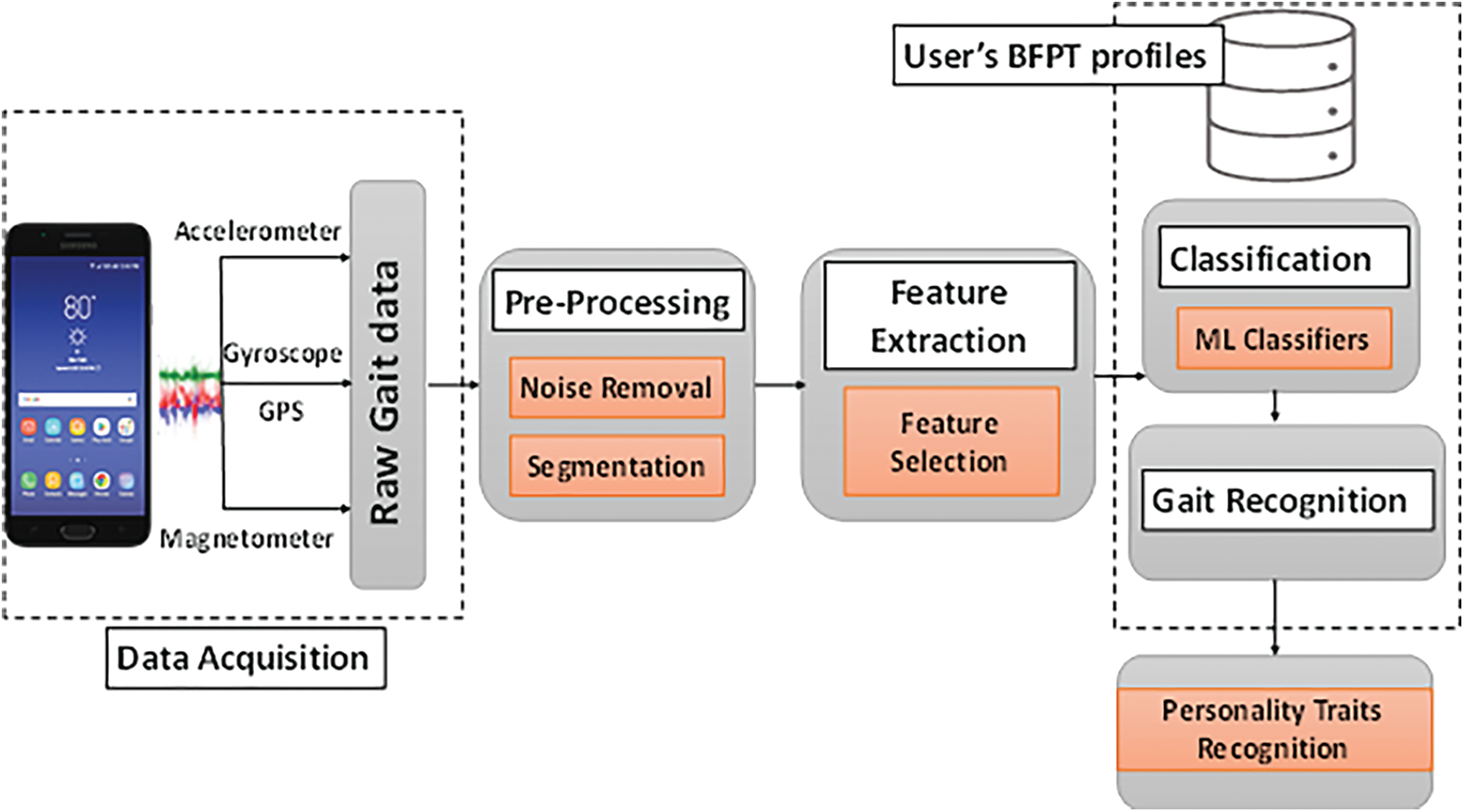

This section describes in detail the methods used to infer personality traits from gait pattern recognition with smartphone sensors. The proposed methodology consists of six distinct steps: data acquisition, pre-processing, feature selection and extraction, machine learning-based classification, gait recognition, and personality trait recognition, as shown in Fig. 1. Each phase of the proposed scheme is described in detail in the sections below.

Figure 1: Block diagram of the proposed methodology

3.1 Data Acquisition using Smartphones’ Sensors

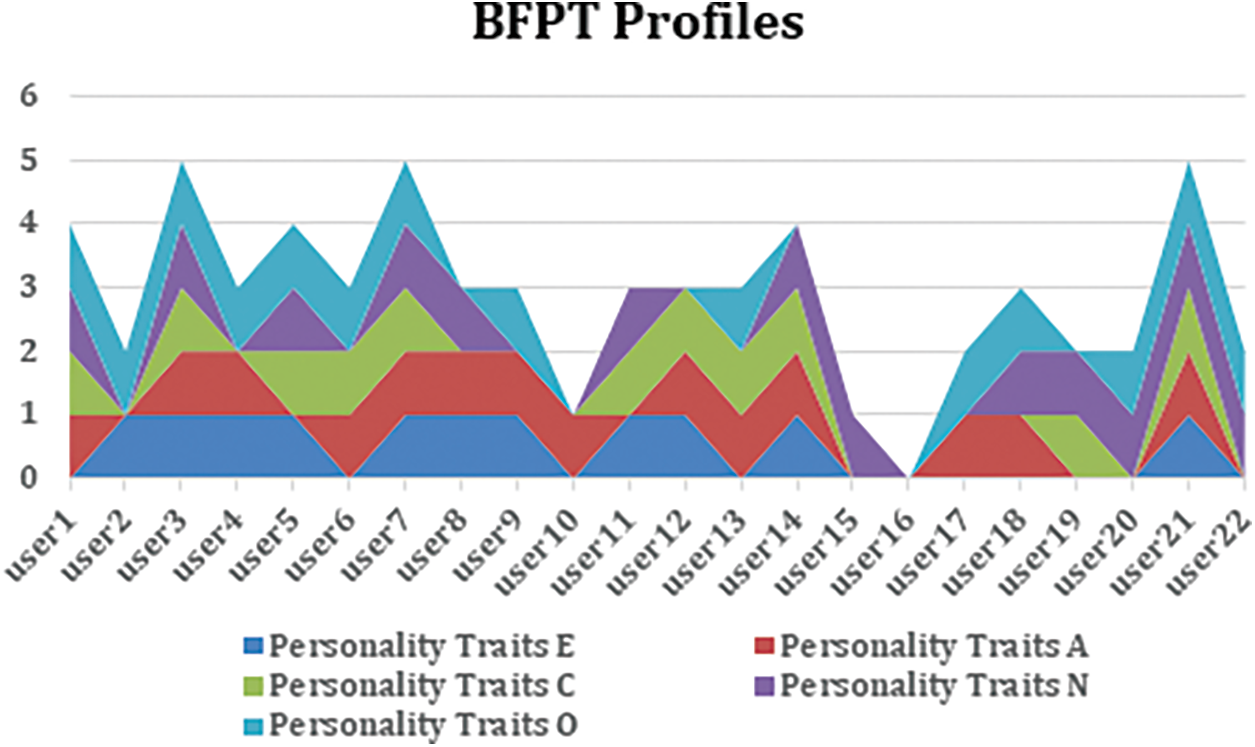

Before data recording, each participant completed the BFPT using the revisions suggested by the Big-Five Inventory [27,33]. The BFPT questionnaire [42] contains 50 questions and measures the big-five factor markers: Neuroticism (N), Extraversion (E), Openness to experience (O), Agreeableness (A), and Conscientiousness (C). Each question is answered on a scale of 1–5, where 1 = disagree, 2 = slightly disagree, 3 = neutral, 4 = slightly agree, and 5 = agree.
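Scoring a Likert-type inventory like this one typically means summing each trait's item responses, with reverse-keyed items flipped (on a 1–5 scale, a response x becomes 6 - x). The Python sketch below illustrates that general procedure. The item-to-trait map and the set of reverse-keyed items are hypothetical placeholders, not the actual scoring keys of [42,43].

```python
from typing import Dict, Set

SCALE_MIN, SCALE_MAX = 1, 5


def score_traits(responses: Dict[int, int],
                 item_trait: Dict[int, str],
                 reverse_keyed: Set[int]) -> Dict[str, int]:
    """Sum 1-5 responses per trait, flipping reverse-keyed items (x -> 6 - x).

    `item_trait` and `reverse_keyed` are hypothetical inputs; the real keys
    come from the questionnaire's scoring instructions.
    """
    scores: Dict[str, int] = {}
    for item, answer in responses.items():
        if not SCALE_MIN <= answer <= SCALE_MAX:
            raise ValueError(f"item {item}: answer {answer} outside 1-5")
        value = SCALE_MIN + SCALE_MAX - answer if item in reverse_keyed else answer
        trait = item_trait[item]
        scores[trait] = scores.get(trait, 0) + value
    return scores


# Hypothetical four-item example: items 1-2 map to E, items 3-4 to N,
# and items 2 and 4 are reverse-keyed.
item_trait = {1: "E", 2: "E", 3: "N", 4: "N"}
reverse_keyed = {2, 4}
responses = {1: 5, 2: 1, 3: 4, 4: 2}
print(score_traits(responses, item_trait, reverse_keyed))  # {'E': 10, 'N': 8}
```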

To maintain users' BFPT profiles, this work calculates each user's score for every trait according to the method given in [42,43]. After scoring the five traits separately for all participants, we computed the average value of each trait.

Fig. 2 shows the BFPT profiles maintained for all participants. Each user is marked as ‘Positive’ (1) or ‘Negative’ (0) for every trait based on the calculated average scores. These BFPT profiles were later used as labels for training the machine learning classifiers.
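The positive/negative labeling can be sketched as a per-trait threshold. This Python sketch assumes that the threshold is the mean score of the trait across all participants and that a score equal to the mean counts as positive; both the use of the group mean and the tie rule are assumptions rather than details confirmed by the text.

```python
from statistics import mean
from typing import Dict, List


def label_by_mean(trait_scores: Dict[str, List[float]]) -> Dict[str, List[int]]:
    """Label each participant 1 (positive) or 0 (negative) for every trait.

    Assumption: the threshold is the mean score of that trait across all
    participants, and a score equal to the mean is labeled positive.
    """
    labels: Dict[str, List[int]] = {}
    for trait, scores in trait_scores.items():
        threshold = mean(scores)
        labels[trait] = [1 if s >= threshold else 0 for s in scores]
    return labels


# Hypothetical extraversion scores for three participants (mean = 35).
print(label_by_mean({"E": [30, 40, 35]}))  # {'E': [0, 1, 1]}
```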

Figure 2: Big five personality traits profiles of all users

For example, for Extraversion:

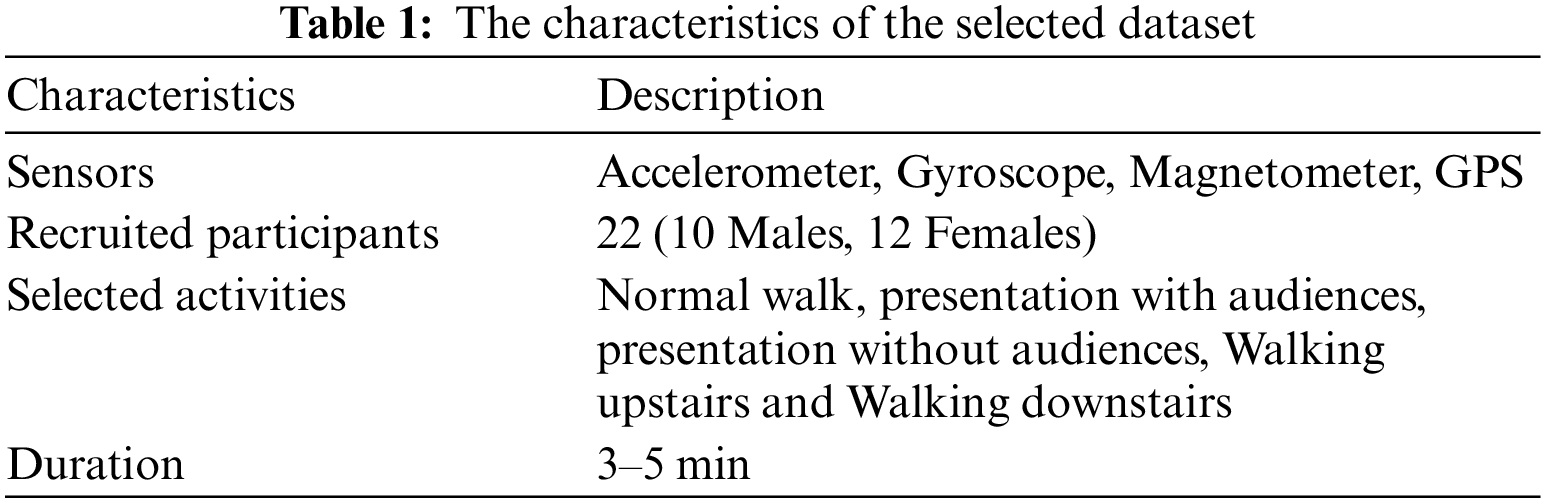

To validate the proposed scheme, a novel dataset for personality trait recognition was collected using an Android application called “data collector” [44]. The dataset includes readings from the smartphone's built-in sensors, namely GPS, accelerometer, magnetometer, and gyroscope, recorded from two perspectives (hand and pocket) and including altitude, gravity, user acceleration, and rotation rate, sampled at 50 Hz. It includes data from 22 subjects, 10 males and 12 females, aged between 19 and 26 years and weighing between 39 and 70 kg. The dataset was collected in an ideal environment with fixed temperature conditions during the morning. Participants kept one smartphone in their hand and the other in their pocket during data collection. Five gait patterns were selected for this work: normal walking, presentation with audiences, presentation without audiences, walking upstairs, and walking downstairs. Each gait pattern was recorded for 3–5 min, giving enough examples for our analysis. Each gait data file contains approximately 7,500 instances, and each user recorded five gait patterns, so each user contributes approximately 7,500 × 5 = 37,500 instances. With 22 subjects, the dataset contains approximately 37,500 × 22 = 825,000 instances per smartphone. Because data were collected from two smartphones at two different positions, the overall dataset contains approximately 825,000 + 825,000 = 1,650,000 instances. With this dataset, this research aims to identify the subjects' personality traits from time-series sensor data based on their gait pattern recognition. The characteristics of the dataset are described in Table 1.
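The instance counts above follow from simple multiplication. The snippet below reproduces them from the approximate figures quoted in the dataset description (about 7,500 instances per recording).

```python
# Approximate figures quoted in the dataset description.
INSTANCES_PER_RECORDING = 7_500  # per gait pattern, per user, per phone (approx.)
GAIT_PATTERNS = 5
USERS = 22
SMARTPHONES = 2                  # one held in hand, one placed in pocket

per_user = INSTANCES_PER_RECORDING * GAIT_PATTERNS  # 37,500
per_phone = per_user * USERS                        # 825,000
total = per_phone * SMARTPHONES                     # 1,650,000

print(f"{per_user:,} per user, {per_phone:,} per phone, {total:,} in total")
```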

3.3 Pre-processing using Average Smoothing Filter

Eliminating background noise is crucial before using the data for further analysis. Therefore, an average smoothing filter is applied along all three axes. After denoising, the next step is segmenting the gait data. For this purpose, each collected data file for each activity is divided, using a fixed-size sliding window, at six different segment lengths from 5 s to 30 s in steps of 5 s (5, 10, 15, 20, 25, and 30 s). Dividing the data files into these six distinct chunk sizes allows an in-depth study of the suggested scheme at each segment length and gives deeper insight into its behavior.
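A minimal Python sketch of these two steps follows. The paper does not state the smoothing window width or whether consecutive windows overlap, so the 5-sample centered moving average and the non-overlapping default step are assumptions; only the 50 Hz sampling rate and the 5–30 s segment lengths come from the text. In practice the filter would be applied to each of the three axes separately.

```python
from typing import List, Optional, Sequence

SAMPLING_HZ = 50                           # sampling rate of the recordings
SEGMENT_SECONDS = (5, 10, 15, 20, 25, 30)  # the six chunk lengths


def moving_average(signal: Sequence[float], width: int = 5) -> List[float]:
    """Centered moving average; the window is truncated at both ends.

    width=5 samples is an assumed value, not taken from the paper.
    """
    if width < 1 or width % 2 == 0:
        raise ValueError("width must be a positive odd integer")
    half = width // 2
    smoothed = []
    for i in range(len(signal)):
        window = signal[max(0, i - half):i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


def segment(signal: Sequence[float], seconds: int,
            step_seconds: Optional[int] = None) -> List[List[float]]:
    """Split a signal into fixed-size windows of `seconds` length.

    By default the step equals the window length (no overlap), which is an
    assumption; a trailing partial window is dropped.
    """
    size = seconds * SAMPLING_HZ
    step = size if step_seconds is None else step_seconds * SAMPLING_HZ
    return [list(signal[i:i + size])
            for i in range(0, len(signal) - size + 1, step)]


# Usage on one synthetic 60 s axis (hypothetical data): smooth, then segment
# at each of the six chunk lengths.
axis = [float(i % 10) for i in range(60 * SAMPLING_HZ)]
smoothed = moving_average(axis)
chunks = {s: segment(smoothed, s) for s in SEGMENT_SECONDS}
print({s: len(c) for s, c in chunks.items()})
```

Passing `step_seconds` smaller than `seconds` turns the same function into an overlapping sliding window, should that be the intended reading of “sliding.”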

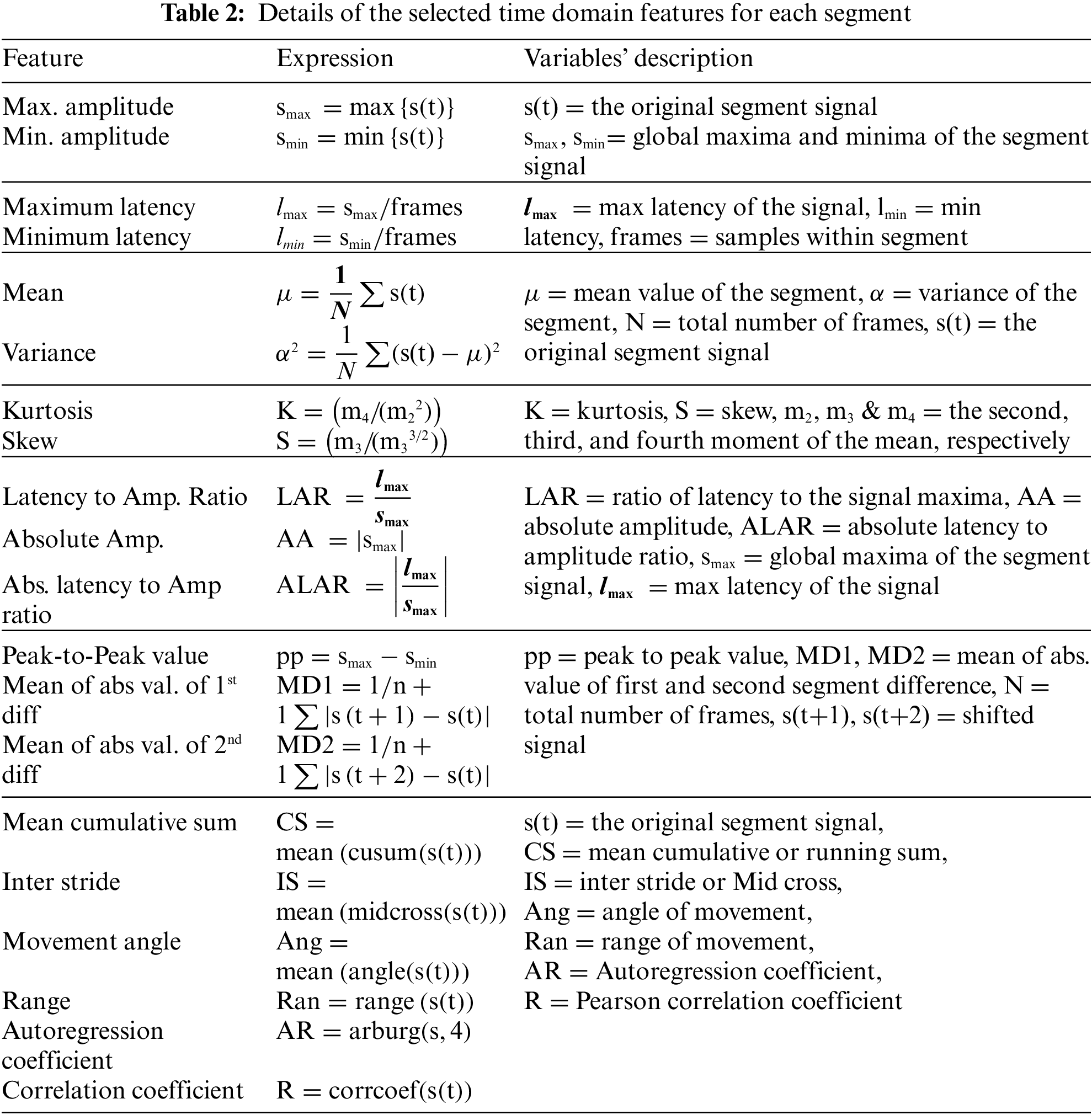

3.4 Feature Extraction on Each Segmented Chunk

Features are computed after pre-processing; this step extracts information that can be used for user recognition and considerably speeds up subsequent processing. Based on the literature, this work extracts 20 time-domain features. The size of the feature matrix is n × 20, where n is the total number of users; as the dataset includes 22 subjects, the feature matrix is 22 × 20. Table 2 gives an overview of all the features extracted for every segment.
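As an illustration of time-domain feature extraction, the Python sketch below computes a handful of common per-window statistics for one sensor axis. These nine features are assumptions chosen for illustration; the study's actual 20 features are those listed in Table 2, which this sketch does not claim to reproduce.

```python
import math
from statistics import mean, median, pstdev
from typing import Dict, Sequence


def time_domain_features(window: Sequence[float]) -> Dict[str, float]:
    """Illustrative time-domain features for one axis of one data window.

    This subset is an assumption for demonstration purposes only.
    """
    if not window:
        raise ValueError("window must not be empty")
    n = len(window)
    mu = mean(window)
    return {
        "mean": mu,
        "std": pstdev(window),                               # population std
        "min": min(window),
        "max": max(window),
        "median": median(window),
        "range": max(window) - min(window),
        "rms": math.sqrt(sum(x * x for x in window) / n),    # root mean square
        "mean_abs_dev": sum(abs(x - mu) for x in window) / n,
        # Number of strict sign changes between consecutive samples.
        "zero_crossings": float(sum(1 for a, b in zip(window, window[1:]) if a * b < 0)),
    }


# Hypothetical window of one axis, for illustration.
features = time_domain_features([1.0, -1.0, 1.0, -1.0])
print(features)
```

Computing one such vector per user (concatenated across axes if several are used) yields a feature matrix with one row per user, in the n × d shape described above.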

After feature extraction, an appropriate classification method is chosen for training.

3.5 Personality Traits Prediction using BFPT Profiles

The validation, efficiency, and performance of the proposed method depend heavily on the choice of classifier. In supervised machine learning, the extracted features are used to train an algorithm, which subsequently uses that knowledge to make predictions. Four of the most popular classifiers in use today were employed: Support Vector Machine (SVM), Bagging, Random Forest (RF), and K-Nearest Neighbor (K-NN). The method was validated using five-fold cross-validation. We trained the four multiclass machine learning classifiers with the users' BFPT profiles as labels to recognize the big-five personality traits of users from their gait, for all six data segments. These classifiers were selected mainly because of their strong performance in existing work [45–48].
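Of the four classifiers, K-NN is the simplest to show end to end. The from-scratch Python sketch below predicts a binary trait label (1 = positive, 0 = negative) by majority vote among the k nearest feature vectors. Euclidean distance and k = 3 are assumptions; the paper does not state which implementation or settings were used, and a library implementation would normally be preferred in practice.

```python
import math
from collections import Counter
from typing import Sequence


def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[int],
                query: Sequence[float], k: int = 3) -> int:
    """Majority vote among the k training vectors closest to `query`.

    Euclidean distance and k=3 are assumptions for this sketch.
    """
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training samples")
    # Sort (distance, label) pairs so the nearest neighbours come first.
    neighbours = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors with trait labels (1 = positive, 0 = negative).
train_X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = [0, 0, 0, 1, 1, 1]
print(knn_predict(train_X, train_y, [0.2, 0.3]))  # 0
print(knn_predict(train_X, train_y, [5.5, 5.5]))  # 1
```

An odd k avoids tied votes in the binary (positive/negative) setting used for each trait.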

4 Experimental Results and Analysis

This section discusses the methodology used to evaluate the proposed system, along with the detailed experimental findings and performance analyses. The suggested framework performed well in detecting personality traits from gait patterns. We tested the suggested technique on our dataset using the four aforementioned multiclass ML classifiers in a 5-fold cross-validation setup: the dataset's gait pattern instances are randomly divided into five equal groups, one group is used for testing the classifiers, and the remaining groups are used for training. This procedure is repeated five times, so that every gait example is used for testing once and for training in the other four iterations, ensuring that the final recognition results are consistent across all iterations. In addition, the hyper-parameters are tuned for each fold before training to minimize the training error.
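The five-fold protocol can be sketched in Python as follows. Shuffling with a fixed seed and dealing indices round-robin into folds (fold sizes differ by at most one when n is not divisible by five) is an assumption about how the “equal groups” were formed, and per-fold hyper-parameter tuning is omitted. `fit_predict` is a hypothetical callback standing in for any of the four classifiers; the majority-class baseline exists only to keep the example self-contained.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence


def k_fold_indices(n: int, k: int = 5, seed: int = 0) -> List[List[int]]:
    """Shuffle 0..n-1 and deal the indices round-robin into k folds."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(X: Sequence, y: Sequence,
                   fit_predict: Callable[[list, list, list], list],
                   k: int = 5, seed: int = 0) -> List[float]:
    """Per-fold accuracy; each fold serves as the test set exactly once."""
    scores = []
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        preds = fit_predict([X[i] for i in train_idx], [y[i] for i in train_idx],
                            [X[i] for i in test_idx])
        correct = sum(p == y[i] for p, i in zip(preds, test_idx))
        scores.append(correct / len(test_idx))
    return scores


def majority_baseline(train_X: list, train_y: list, test_X: list) -> list:
    """Placeholder classifier: always predicts the most frequent training label."""
    label = Counter(train_y).most_common(1)[0][0]
    return [label] * len(test_X)


folds = k_fold_indices(22, k=5)
print([len(f) for f in folds])
```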

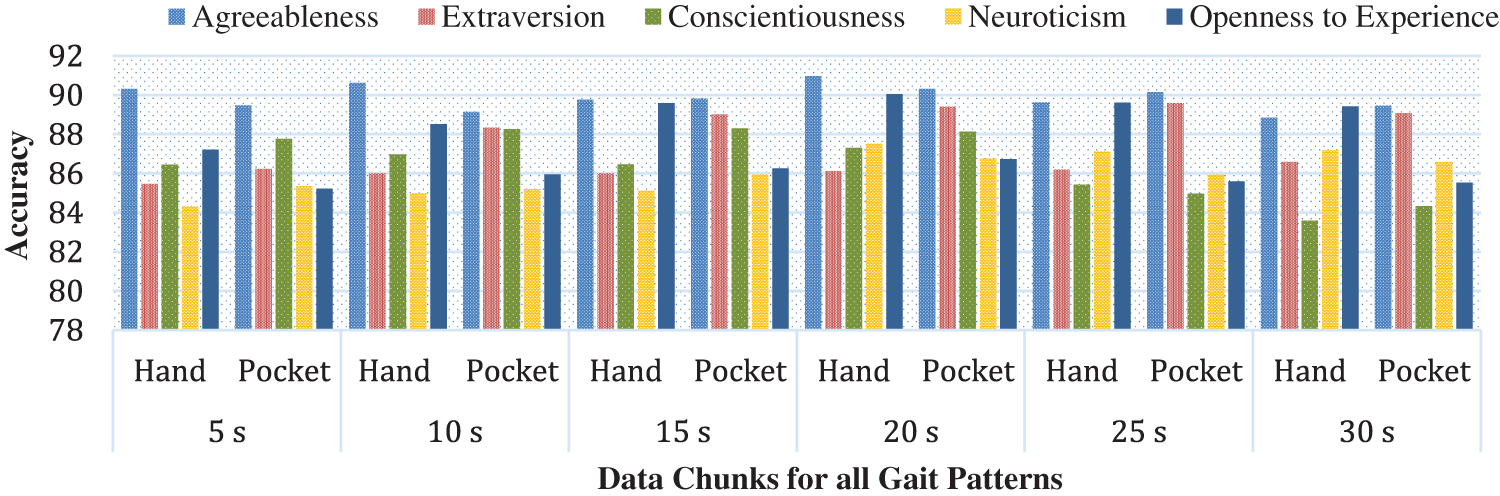

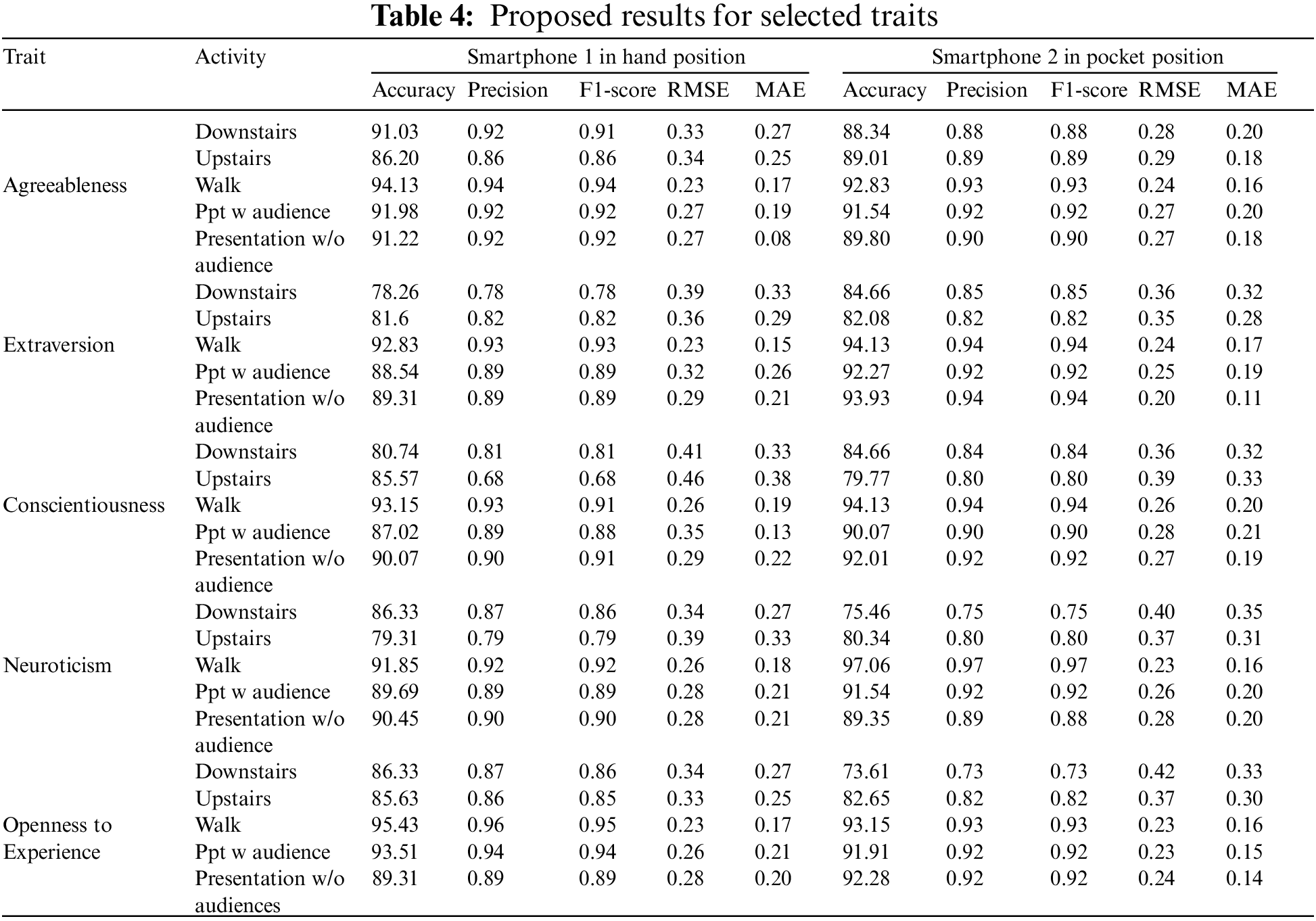

Four widely accepted ML classifiers were applied to evaluate our scheme, and overall the RF classifier gave the most effective results. Fig. 3 depicts the overall system accuracy of the RF classifier for all data segments (5, 10, 15, 20, 25, and 30 s) of each of the five gait patterns for both smartphone positions. The proposed scheme achieved promising results for all six data chunks, but the most favorable results were obtained with the 20 s chunk: accuracy increased with chunk size up to 20 s and then declined as the chunk size increased further to 30 s. Comparing the accuracies obtained for the hand and pocket positions (smartphones 1 and 2, respectively) shows that the pocket position gives more encouraging results than the hand position. Table 4 presents a detailed performance evaluation of the recognition of all big-five personality traits for all selected gait patterns for the 20 s data segment. Accuracy, F1-score, Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and Precision are used to measure the efficacy of the suggested system. An overall analysis of the table reveals some interesting facts about human personality assessment using BFPT: although all selected gait patterns were effective, the best performance was achieved with the walking and presentation patterns.
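For binary trait labels, the evaluation metrics can be computed directly from actual and predicted labels. The Python sketch below assumes that MAE and RMSE are computed on hard 0/1 predictions, with 1 (for example, A1) as the positive class; if the errors were instead computed on predicted class probabilities, the values would differ.

```python
import math
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, F1, MAE and RMSE for 0/1 trait labels.

    Assumes hard 0/1 predictions and treats 1 as the positive class.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    n = len(pairs)
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": sum(t == p for t, p in pairs) / n,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "mae": sum(abs(t - p) for t, p in pairs) / n,
        "rmse": math.sqrt(sum((t - p) ** 2 for t, p in pairs) / n),
    }


# Hypothetical labels for five test instances.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 1]
m = binary_metrics(y_true, y_pred)
print(m)
```

With hard 0/1 labels every error contributes exactly 1, so MAE equals 1 - accuracy and RMSE equals the square root of MAE; under that assumption the two error rates carry no information beyond accuracy.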

Figure 3: Overall performance analysis in terms of the system's accuracy for all six data chunks

The detailed evaluation and performance analysis of each trait against all selected gait patterns using smartphones from two different perspectives is discussed below.

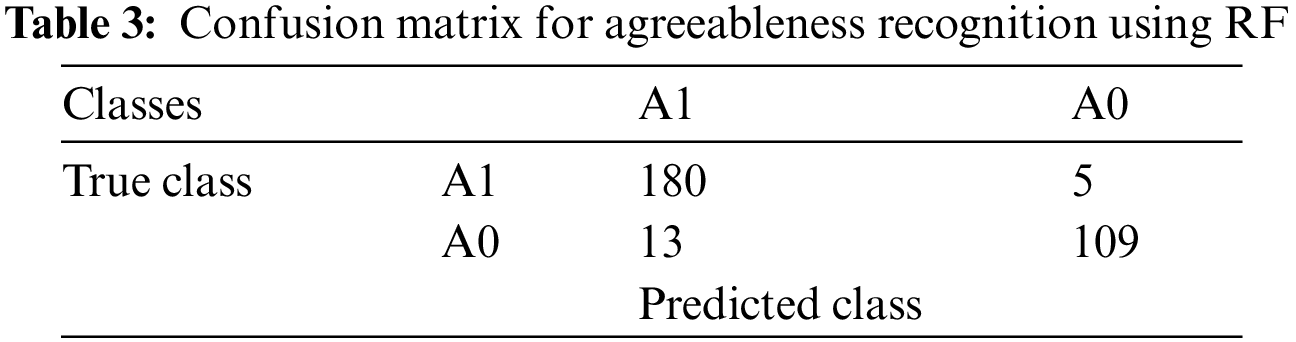

For experimentation, users were assigned a label, either A1 or A0, based on their BFPT profiles and the calculated average for agreeableness.

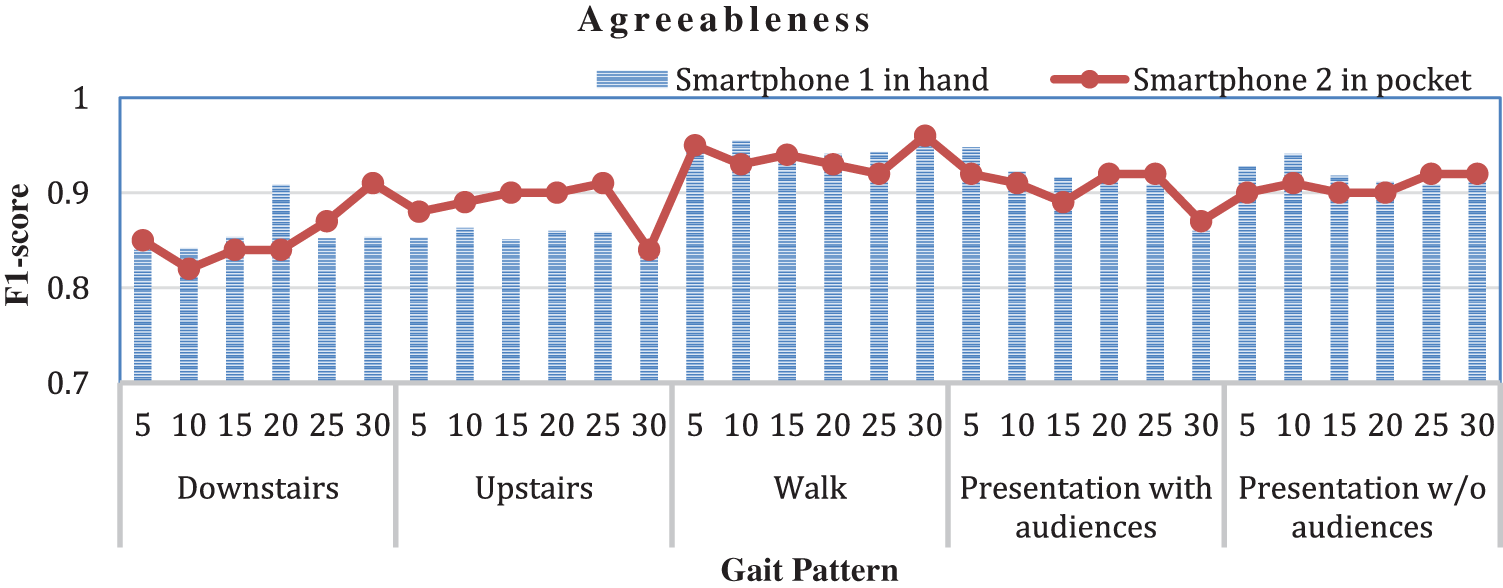

Fig. 4 shows the F1-score for all selected gait patterns across the six data segments (5, 10, 15, 20, 25, and 30 s) recorded using smartphone 1 (held in hand) and smartphone 2 (placed in pocket) for agreeableness recognition among the 22 participants. For smartphone 1, the normal walk, presentation with audiences, and presentation without audiences yield higher F1-scores than walking downstairs and upstairs; the highest F1-score, 0.956, is obtained for the normal walk at the 30 s chunk. The results improve further for smartphone 2; in particular, the F1-scores for walking downstairs and upstairs are much higher than those obtained with smartphone 1, and Fig. 4 shows increasing F-measure trends for these two activities. The highest F1-scores for walking downstairs and normal walking are 0.91 and 0.95, obtained at the 30 and 5 s chunks, respectively; for walking upstairs it is 0.895 at 25 s; and for presentation with and without audiences the best result, 0.918, is achieved at 25 s.

Figure 4: F1-score for agreeableness recognition using both smartphones by applying RF classifier

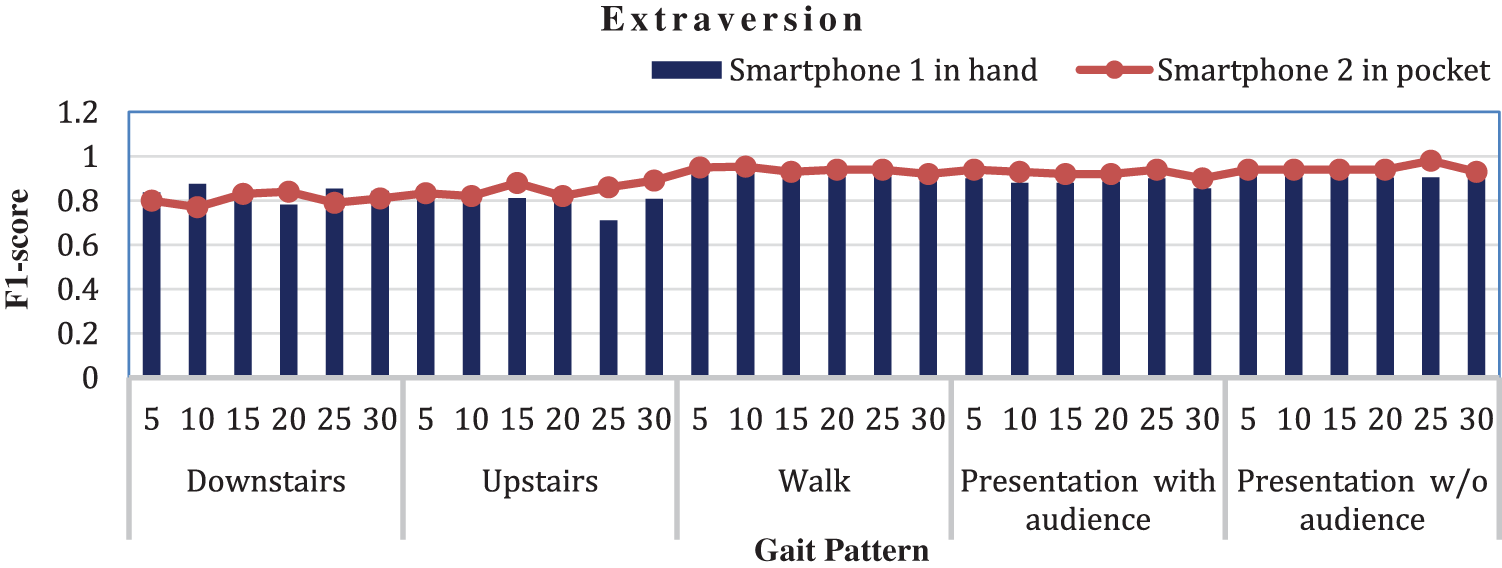

To evaluate our scheme, every participant is assigned a label, either E1 or E0, based on their BFPT profiles and the calculated average for extraversion.

Fig. 5 shows the F-measure for extraversion recognition at the 5, 10, 15, 20, 25, and 30 s data chunks for all selected gait patterns recorded using smartphones 1 and 2. For smartphone 1, Fig. 5 shows that normal walking, presentation with audiences, and presentation without audiences achieved good F1-scores; the highest F1-scores for these three patterns are 0.953, 0.937, and 0.943, respectively. The same pattern holds for smartphone 2: the results for the normal walk and both presentation scenarios are far better than those for the remaining two gait patterns. Fig. 5 also shows that the F1-scores for the gait patterns recorded using smartphone 2 are more favorable than those for smartphone 1. Overall, the presentation and normal walking scenarios give the most notable results for extraversion recognition.

Figure 5: F1-score for extraversion recognition using both smartphones by applying RF classifier

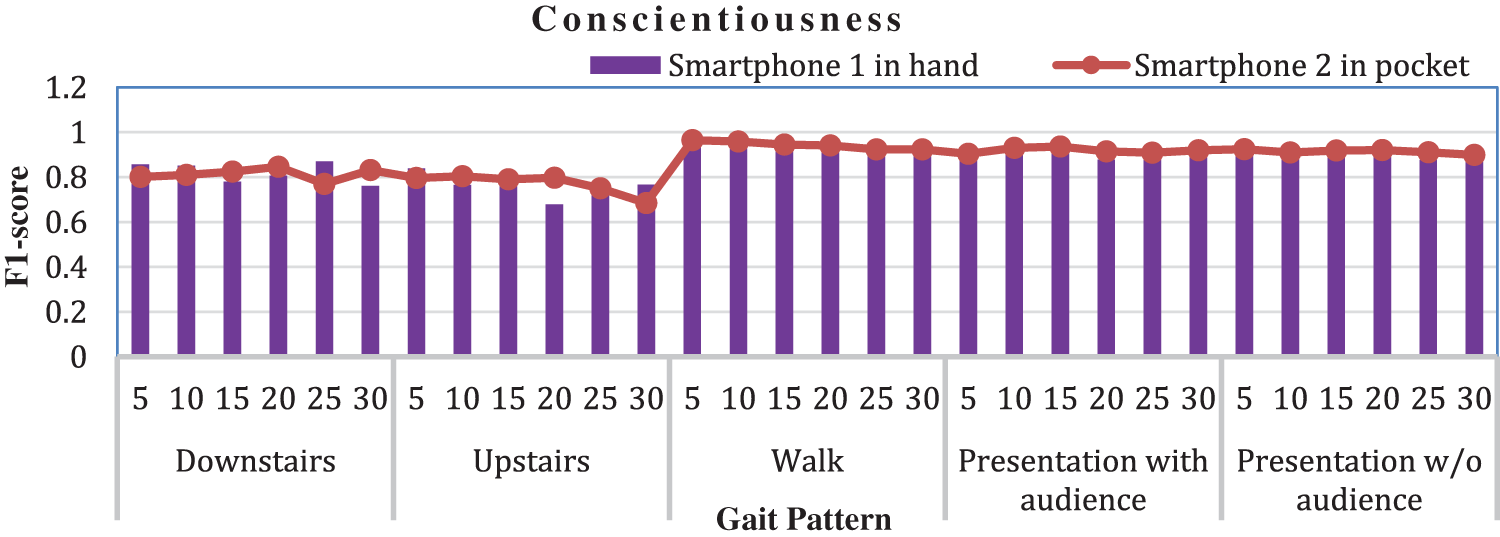

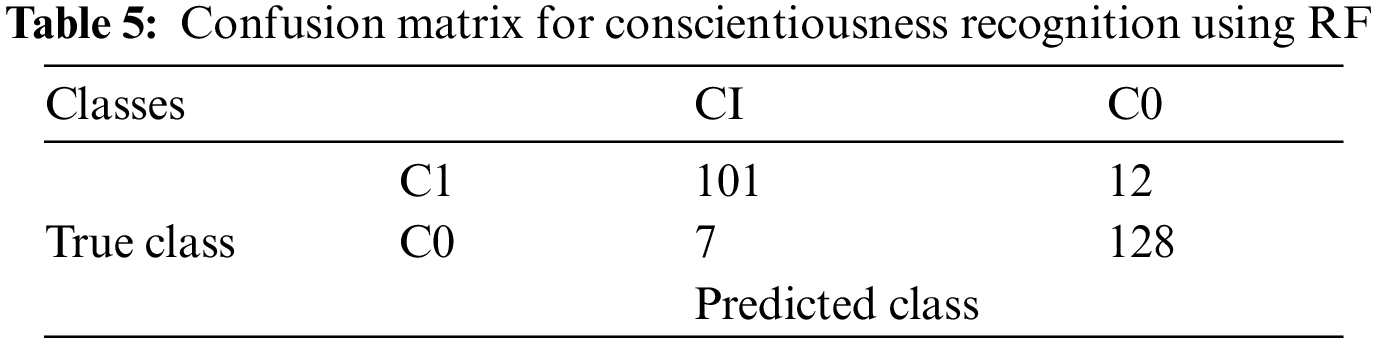

For conscientiousness recognition, each participant is marked as C1 or C0 depending on the maintained BFPT profile and the estimated average value of conscientiousness.

Fig. 6 presents the F1-score for all data segments (5 s to 30 s in steps of 5 s) for all five gait patterns recorded using both smartphones for conscientiousness recognition. For smartphone 1, the F1-score for the normal walk at the 5 s segment, 0.945, is the highest of all. For presentation with audiences and presentation without audiences, the best F1-scores are 0.907 and 0.927 at the 5 and 10 s data chunks, respectively. The results for smartphone 2 are more encouraging than those for smartphone 1: the best F1-score for walking downstairs is 0.846 at 20 s, for walking upstairs 0.804 at 10 s, and for the normal walk 0.965 at 5 s, while presentation with audiences and presentation without audiences reach 0.936 and 0.924 at 15 and 5 s, respectively. Fig. 6 shows that the dominant gait patterns for recognizing this trait are the normal walk and presentation with and without audiences, which give very promising F1-scores at every data segment. The confusion matrix for conscientiousness recognition for walking recorded using smartphone 1 with the RF classifier is given in Table 5.
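A confusion matrix like the one reported for conscientiousness recognition can be tabulated directly from actual and predicted labels. In this Python sketch, rows are actual classes and columns are predicted classes, in the order given by `labels`; that orientation is a common convention and an assumption here, as is the use of the string labels C1/C0 as class names.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     labels: Sequence[str]) -> List[List[int]]:
    """Count (actual, predicted) pairs; rows = actual, columns = predicted."""
    position = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[position[actual]][position[predicted]] += 1
    return matrix


# Hypothetical conscientiousness labels for five test instances.
labels = ("C1", "C0")
y_true = ["C1", "C1", "C0", "C0", "C1"]
y_pred = ["C1", "C0", "C0", "C1", "C1"]
for label, row in zip(labels, confusion_matrix(y_true, y_pred, labels)):
    print(label, row)
```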

Figure 6: F1-score for conscientiousness recognition using both smartphones by applying RF classifier

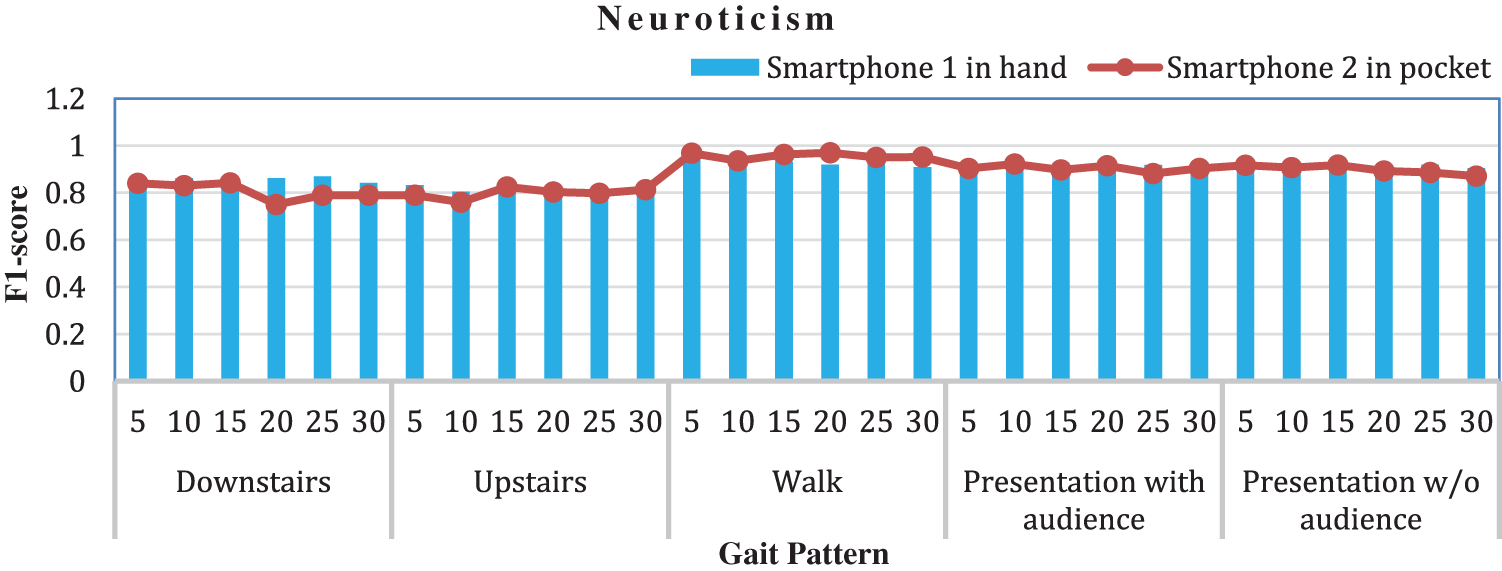

Neuroticism is considered one of the highest-order big-five personality traits in psychology. Individuals with high neuroticism scores are more likely than those with average scores to experience mood swings, anger, frustration, worry, anxiety, guilt, envy, and jealousy. Conversely, individuals with low scores tend to be more optimistic, organized, and self-managed. Table 4 presents the performance analysis of neuroticism recognition for all five gait patterns recorded using both smartphones for the 20 s segment with RF, which provided the best results on all metrics for all gait patterns. Comparing the two smartphones, the results for walking downstairs and presentation without audiences recorded using smartphone 1 are more encouraging than those recorded using smartphone 2. The error rates (MAE and RMSE) for the normal walk and both presentation scenarios are also low. Although all five patterns gave quite promising results, an overall analysis of both smartphones' data shows that, for neuroticism recognition, both presentation scenarios and the normal walk provide the best results on all metrics.

Fig. 7 shows the F1-score obtained for all six data segments for all five gait patterns recorded using both smartphones for neuroticism recognition with the RF classifier. The results are quite satisfactory. For smartphone 1, the highest F1-score for the normal walk is 0.94 at 25 s, and for presentation with audiences and presentation without audiences it is 0.927 at 30 s and 0.92 at 25 s, respectively. For smartphone 2, the highest F1-scores for walking and presentation with audiences are 0.97 and 0.92 at 20 and 10 s, respectively, and the best F1-score for presentation without audiences is 0.918 at the 15 s data segment. Analysis of both smartphones' data shows that the most notable patterns for neuroticism recognition are walking and both presentation scenarios.

Figure 7: F1-score for neuroticism recognition using both smartphones by applying RF classifier

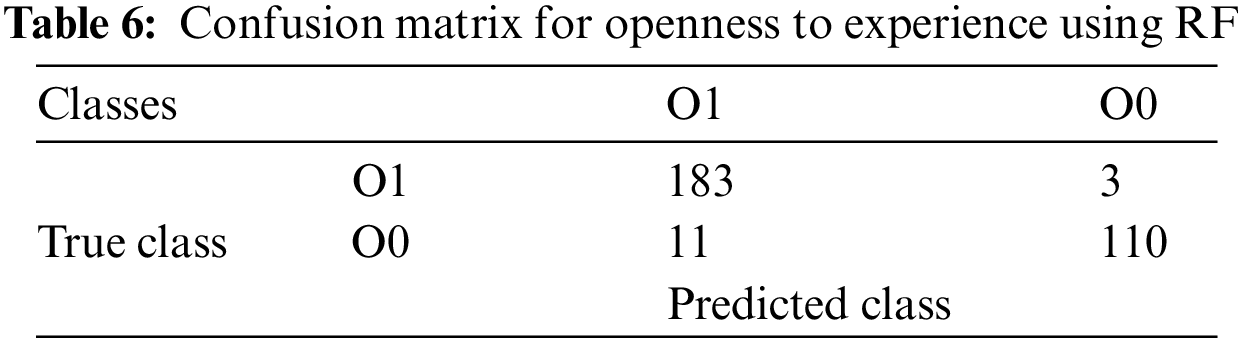

Openness to experience reflects active imagination, aesthetic sensitivity, variety and novelty in thinking, intellectual curiosity, and attentiveness to one's inner feelings. To recognize this trait among users, this work labels the dataset as O1 or O0 according to each individual's BFPT profile and the calculated average for openness to experience.

Table 4 shows the performance evaluation of the proposed scheme for all five traits and the five selected gait patterns recorded for the 20 s segment using RF. The experimental results indicate that RF provided the best results for all selected patterns for this trait as well. Although the results for smartphone 1 from walking downstairs and upstairs were quite satisfactory, the remaining three gait patterns (normal walking, presentation with audiences, and presentation without audiences) clearly outperformed them, as shown in Table 4. The results for smartphone 2 on all selected metrics for this trait are quite promising for all gait patterns; RF gave the best results for the normal walk and presentation without audiences on all metrics, including accuracy, precision, F1-score, and error rates. A comparison of both smartphones shows that, for recognizing openness to experience, the most notable patterns are the normal walk and both presentation scenarios. The confusion matrix for openness to experience recognition with the RF classifier for walking recorded using smartphone 1 for the 20 s segment is given in Table 6.

The proposed scheme provided very promising results for all big-five personality traits. A novel dataset was acquired using smartphone sensing from multiple perspectives, and performance analysis shows that the results for smartphone 2 (placed in the pocket) are more favorable than those for smartphone 1 (held in the hand). Although all activities provided satisfactory results, the normal walk and presentation with and without audiences are the most worth considering for all five traits. For agreeableness recognition, the normal walk and presentation with audiences provided the best results on all five metrics (accuracy, precision, F1-measure, RMSE, and MAE) for both smartphones. Four multiclass machine learning classifiers were applied to the selected features; the results obtained with RF were the best and are therefore analyzed in detail. The accuracy of agreeableness recognition for the walking activity is 94.13% and 92.83% with RF and 81.36% and 84.04% with SVM for smartphones 1 and 2, respectively. For presentation with audiences, the accuracy is 91.98% and 91.54% with RF and 84.19% and 83.96% with bagging for smartphones 1 and 2, respectively. For extraversion recognition, the normal walk and presentation without audiences provided the best results on all metrics for both smartphones using RF, with accuracies of 92.83% and 94.13% for the normal walk and 89.31% and 93.93% for presentation without audiences for smartphones 1 and 2, respectively. The remaining gait patterns also gave satisfactory results, especially presentation with audiences.
Additionally, the most prominent activities for conscientiousness recognition are normal walking, with accuracies of 93.15% and 94.13%, and presentation without audiences, with accuracies of 90.07% and 92.01% for smartphones 1 and 2, respectively. These gait patterns also give lower error rates than the other patterns. For both neuroticism and openness to experience, the most efficient results on all metrics come from the two presentation scenarios and normal walking for both smartphones. The accuracy of neuroticism recognition for normal walking is 91.85% and 97.06% with RF, and 87.62% and 89.9% with K-NN, for smartphones 1 and 2, respectively. For presentation with audiences, RF achieves 89.69% and 91.54%, and SVM achieves 83.2% and 83.82% for the two smartphones, respectively. For presentation without audiences, RF achieves 90.45% and 89.35%. Similarly, the accuracy of openness to experience recognition is 95.43% and 93.15% for normal walking with RF, 93.51% and 91.91% for presentation with audiences, and 89.31% and 92.28% for presentation without audiences for smartphones 1 and 2, respectively.
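K-NN, which reached 87.62% and 89.9% for neuroticism on normal walking, classifies a segment by a majority vote among its nearest labeled neighbors in feature space. The minimal Python sketch below assumes Euclidean distance and k = 3; this section does not report the distance metric, the value of k, or any feature scaling, so these are illustrative choices and `knn_predict` is a hypothetical name.

```python
import math
from collections import Counter
from typing import Sequence


def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[int],
                query: Sequence[float], k: int = 3) -> int:
    """Majority vote among the k training vectors closest to `query` (Euclidean)."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training samples")
    # Rank training indices by distance to the query and keep the k nearest.
    nearest = sorted(range(len(train_X)),
                     key=lambda i: math.dist(train_X[i], query))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]
```

Because K-NN is distance-based, features measured in different units (for example, acceleration and angular velocity) would normally be standardized before calling `knn_predict`, otherwise the feature with the largest numeric range dominates the distance.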

This research focused on inferring the key personality traits of the big-five model (neuroticism, extraversion, openness to experience, agreeableness, and conscientiousness) from the gait patterns of individuals, recognized with smartphone sensors. All participants took a personality test, and their BFPT profiles were recorded. Using two smartphones (one held in the hand and one carried in the pocket), an Android application named “data-collector” was used to collect a dataset from 22 participants (10 males and 12 females). Built-in smartphone sensors, such as GPS, proximity, accelerometer, and gyroscope, were used for gait pattern collection and recognition. The selected gait patterns were normal walking, walking downstairs, walking upstairs, and presentations with and without audiences, each recorded for 3 to 5 min. The dataset was pre-processed to obtain a set of time-domain features for personality evaluation, and each subject was assigned a positive or negative label for each of the five personality traits. These features were used to train four multiclass machine learning classifiers, of which RF achieved the best results. The analysis of the results demonstrates the effectiveness and efficiency of the proposed scheme.
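The pre-processing step summarized above, splitting each recording into fixed-length segments and computing time-domain features for each segment, can be sketched in Python as follows. The 20 s default window matches the segment length reported for Tables 4 and 6, but the sampling rate, the non-overlapping windows, the use of acceleration magnitude, and the particular feature list are assumptions for illustration; the exact feature set of this work is not restated in this section, and all function names are hypothetical.

```python
import math
import statistics
from typing import Dict, List, Sequence


def magnitude(ax: Sequence[float], ay: Sequence[float], az: Sequence[float]) -> List[float]:
    """Orientation-independent magnitude of a 3-axis signal, sample by sample."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(ax, ay, az)]


def segment(signal: Sequence[float], fs_hz: float, window_s: float = 20.0) -> List[Sequence[float]]:
    """Split a 1-D stream into consecutive, non-overlapping windows of window_s seconds.

    A trailing partial window is dropped.
    """
    size = int(round(fs_hz * window_s))
    if size <= 0:
        raise ValueError("window must contain at least one sample")
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, size)]


def time_domain_features(window: Sequence[float]) -> Dict[str, float]:
    """A hypothetical selection of common time-domain features for one window."""
    mean = statistics.fmean(window)
    return {
        "mean": mean,
        "std": statistics.pstdev(window),
        "min": min(window),
        "max": max(window),
        "range": max(window) - min(window),
        "median": statistics.median(window),
        "rms": math.sqrt(sum(v * v for v in window) / len(window)),
        # Sign changes of the mean-removed signal: a rough proxy for step rhythm.
        "mean_crossings": sum(1 for a, b in zip(window, window[1:])
                              if (a - mean) * (b - mean) < 0),
    }
```

For a 1 Hz toy stream `list(range(10))` with a 4 s window, `segment` returns `[0, 1, 2, 3]` and `[4, 5, 6, 7]` and drops the incomplete tail; the first window has mean 1.5, range 3, and one mean crossing. Working on the magnitude rather than on individual axes is one way to reduce the effect of the phone's orientation, which differs between a hand-held and a pocketed device.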

Gait pattern recognition as a tool for assessing human personality and identifying a person's underlying emotions is a relatively new field of study. Its practical applications include rehabilitation, product marketing, product design informed by consumer behavior, personality recognition in shopping malls, and security and surveillance. However, few studies have evaluated how effectively raw gait data collected through an Android application using smartphone sensors can be analyzed for personality trait assessment, which indicates the need for further work in this area.

Funding Statement: This research was supported by a grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (grant number: HI21C1831) and the Soonchunhyang University Research Fund.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. M. A. Laribi and S. Zeghloul, “Human lower limb operation tracking via motion capture systems,” Design and Operation of Human Locomotion Systems, vol. 14, no. 1, pp. 83–107, 2020.

2. A. Kharb, V. Saini, Y. Jain and S. Dhiman, “A review of gait cycle and its parameters,” International Journal of Computational Engineering & Management, vol. 13, no. 2, pp. 78–83, 2011.

3. S. Mohammed, A. Same, L. Oukhellou and Y. Amirat, “Recognition of gait cycle phases using wearable sensors,” Robotics and Autonomous Systems, vol. 75, no. 7, pp. 50–59, 2016.

4. A. Muro-De-La-Herran, B. Garcia-Zapirain and A. Mendez-Zorrilla, “Gait analysis methods: An overview of wearable and non-wearable systems, highlighting clinical applications,” Sensors, vol. 14, no. 2, pp. 3362–3394, 2014.

5. D. A. Reid, M. S. Nixon and S. V. Stevenage, “Soft biometrics; Human identification using comparative descriptions,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 36, no. 6, pp. 1216–1228, 2013.

6. K. Moustakas, D. Tzovaras and G. Stavropoulos, “Gait recognition using geometric features and soft biometrics,” IEEE Signal Processing Letters, vol. 17, no. 4, pp. 367–370, 2010.

7. Z. Li and G. Zhang, “A gait recognition system for rehabilitation based on wearable micro inertial measurement unit,” Sensors, vol. 2, no. 3, pp. 1678–1682, 2022.

8. Y. Li and S. Jiang, “Application of gait recognition technology in badminton action analysis,” Sensors, vol. 1, no. 1, pp. 52008, 2008.

9. Y. Wahab and N. A. Bakar, “Gait analysis measurement for sport application based on ultrasonic system,” Sensors, vol. 4, no. 6, pp. 20–24, 2021.

10. D. Gafurov and E. Snekkenes, “Gait recognition using wearable motion recording sensors,” EURASIP Journal on Advances in Signal Processing, vol. 2009, no. 3, pp. 1–16, 2009.

11. J. I. Liang, M. Y. Chen, T. H. Hsieh and M. L. Yeh, “Video-based gait analysis for functional evaluation of healing achilles tendon in rats,” Annals of Biomedical Engineering, vol. 40, no. 12, pp. 2532–2540, 2012.

12. U. C. Ugbolue, E. Papi, K. T. Kaliarntas and P. J. Rowe, “The evaluation of an inexpensive, 2D, video based gait assessment system for clinical use,” Gait & Posture, vol. 38, no. 3, pp. 483–489, 2013.

13. X. Ben, C. Gong, P. Zhang, X. Jia and W. Meng, “Coupled patch alignment for matching cross-view gaits,” IEEE Transactions on Image Processing, vol. 28, no. 6, pp. 3142–3157, 2019.

14. M. Alwan, P. J. Rajendran, S. Kell and R. Felder, “A smart and passive floor-vibration based fall detector for elderly,” Sensors, vol. 6, no. 4, pp. 1003–1007, 2021.

15. L. Middleton, A. A. Buss, A. Bazin and M. S. Nixon, “A floor sensor system for gait recognition,” in Fourth IEEE Workshop on Automatic Identification Advanced Technologies (AutoID’05), Beijing, China, pp. 171–176, 2020.

16. D. Gouwanda and S. Senanayake, “Real time multi-sensory force sensing mat for sports biomechanics and human gait analysis,” International Journal of Biomedical and Biological Engineering, vol. 2, no. 9, pp. 338–343, 2008.

17. G. Chelius, C. Braillon, M. Pasquier, N. Horvais and C. A. Coste, “A wearable sensor network for gait analysis: A six-day experiment of running through the desert,” IEEE/ASME Transactions on Mechatronics, vol. 16, no. 5, pp. 878–883, 2011.

18. W. Tao, T. Liu, R. Zheng and H. Feng, “Gait analysis using wearable sensors,” Sensors, vol. 12, no. 2, pp. 2255–2283, 2012.

19. W. C. Hsu, T. Sugiarto, Y. J. Lin, F. C. Yang and K. N. Chou, “Multiple-wearable-sensor-based gait classification and analysis in patients with neurological disorders,” Sensors, vol. 18, no. 10, pp. 3397, 2018.

20. K. Ibrar, M. A. Azam and M. Ehatisham-ul-Haq, “Personal attributes identification based on gait recognition using smart phone sensors,” Applied Sciences, vol. 21, no. 5, pp. 94–97, 2022.

21. M. Malekzadeh, R. G. Clegg, A. Cavallaro and H. Haddadi, “Privacy and utility preserving sensor-data transformations,” Pervasive and Mobile Computing, vol. 63, no. 6, pp. 101132, 2020.

22. J. Juen, Q. Cheng, V. Prieto-Centurion, J. A. Krishnan and B. Schatz, “Health monitors for chronic disease by gait analysis with mobile phones,” Telemedicine and e-Health, vol. 20, no. 11, pp. 1035–1041, 2014.

23. D. Steins, I. Sheret, H. Dawes, P. Esser and J. Collett, “A smart device inertial-sensing method for gait analysis,” Journal of Biomechanics, vol. 47, no. 15, pp. 3780–3785, 2014.

24. R. F. Baumeister, K. D. Vohs and D. C. Funder, “Psychology as the science of self-reports and finger movements: Whatever happened to actual behavior?,” Perspectives on Psychological Science, vol. 2, no. 4, pp. 396–403, 2007.

25. R. W. Robins, R. C. Fraley and R. F. Krueger, Handbook of Research Methods in Personality Psychology. Cham: Guilford Press, Springer, pp. 1–41, 2009.

26. N. M. Szajnberg, What the Face Reveals: Basic and Applied Studies of Spontaneous Expression using the Facial Action Coding System (FACS). Los Angeles, CA: SAGE Publications Sage CA, pp. 1–26, 2022.

27. O. P. John, L. P. Naumann and C. J. Soto, “Paradigm shift to the integrative big five trait taxonomy: History, measurement, and conceptual issues,” Sensors, vol. 21, no. 6, pp. 1–26, 2008.

28. J. Heinström, “Five personality dimensions and their influence on information behaviour,” Information Research, vol. 9, no. 1, pp. 1–9, 2003.

29. P. J. Howard and J. M. Howard, “The big five quickstart: An introduction to the five-factor model of personality for human resource professionals,” Medicine, vol. 6, no. 4, pp. 1–25, 1995.

30. T. Huang, X. Ben, C. Gong, B. Zhang and Q. Wu, “Enhanced spatial-temporal salience for cross-view gait recognition,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 5, no. 3, pp. 1–21, 2022.

31. C. Taylor, “Philosophical arguments,” Harvard University Press, vol. 1, no. 2, pp. 1–31, 1995.

32. J. C. Thoresen, Q. C. Vuong and A. P. Atkinson, “First impressions: Gait cues drive reliable trait judgements,” Cognition, vol. 124, no. 3, pp. 261–271, 2012.

33. P. T. Costa Jr and R. R. McCrae, “The revised NEO personality inventory (NEO-PI-R),” Sage Publications, vol. 5, no. 2, pp. 1–21, 2008.

34. L. R. Goldberg, “The development of markers for the big-five factor structure,” Psychological Assessment, vol. 4, no. 1, pp. 26–42, 1992.

35. R. S. Kramer, V. M. Gottwald, T. A. Dixon and R. Ward, “Different cues of personality and health from the face and gait of women,” Evolutionary Psychology, vol. 10, no. 2, pp. 147470491201000208, 2012.

36. L. Satchell, P. Morris, C. Mills, L. O’Reilly and L. Akehurst, “Evidence of big five and aggressive personalities in gait biomechanics,” Journal of Nonverbal Behavior, vol. 41, no. 1, pp. 35–44, 2017.

37. A. S. Heberlein, R. Adolphs, D. Tranel and H. Damasio, “Cortical regions for judgments of emotions and personality traits from point-light walkers,” Journal of Cognitive Neuroscience, vol. 16, no. 7, pp. 1143–1158, 2004.

38. M. I. Tolea, A. Terracciano, E. M. Simonsick and L. Ferrucci, “Associations between personality traits, physical activity level, and muscle strength,” Journal of Research in Personality, vol. 46, no. 3, pp. 264–270, 2012.

39. M. Deshayes, K. Corrion, R. Zory, O. Guérin and F. d’Arripe-Longueville, “Relationship between personality and physical capacities in older adults: The mediating role of subjective age, aging attitudes and physical self-perceptions,” Archives of Gerontology and Geriatrics, vol. 95, no. 3, pp. 104417, 2021.

40. A. Azizan, I. H. Shaari, F. Rahman and S. Sahrani, “Association between fall risk with physical activity and personality among community-dwelling older adults with and without cognitive impairment,” International Journal of Aging Health and Movement, vol. 3, no. 2, pp. 31–39, 2021.

41. E. K. Graham, B. D. James, K. L. Jackson and D. K. Mroczek, “Associations between personality traits and cognitive resilience in older adults,” The Journals of Gerontology: Series B, vol. 76, no. 1, pp. 6–19, 2021.

42. L. Goldberg, “Administering IPIP measures, with a 50-item sample questionnaire,” Medicine, vol. 2, no. 5, pp. 1–6, 2006.

43. F. J. P. Abal, J. A. Menénde and H. Félix Attorresi, “Application of the item response theory to the neuroticism scale of the big five inventory,” Ajayu Órgano de Difusión Científica del Departamento de Psicología UCBSP, vol. 17, no. 2, pp. 424–443, 2019.

44. M. Shoaib, S. Bosch, O. D. Incel and P. J. Havinga, “A survey of online activity recognition using mobile phones,” Sensors, vol. 15, no. 1, pp. 2059–2085, 2015.

45. D. Janssen, W. I. Schöllhorn, J. Lubienetzki and K. Davids, “Recognition of emotions in gait patterns by means of artificial neural nets,” Journal of Nonverbal Behavior, vol. 32, no. 2, pp. 79–92, 2008.

46. A. Mannini, D. Trojaniello, A. Cereatti and A. M. Sabatini, “A machine learning framework for gait classification using inertial sensors: Application to elderly, post-stroke and huntington’s disease patients,” Sensors, vol. 16, no. 1, pp. 134, 2016.

47. Q. Riaz, A. Vögele, B. Krüger and A. Weber, “One small step for a man: Estimation of gender, age and height from recordings of one step by a single inertial sensor,” Sensors, vol. 15, no. 12, pp. 31999–32019, 2015.

48. Y. D. Cai, X. J. Liu and K. C. Chou, “Support vector machines for prediction of protein domain structural class,” Journal of Theoretical Biology, vol. 221, no. 1, pp. 115–120, 2003.


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
