Open Access

ARTICLE

Biometric Verification System Using Hyperparameter Tuned Deep Learning Model

1 Department of Management Information Systems, Faculty of Economics and Administration, King Abdulaziz University, Jeddah, 21589, Saudi Arabia

2 Department of Business Administration, Faculty of Economics and Administration, King Abdulaziz University, Jeddah, 21589, Saudi Arabia

3 Department of Management Information Systems, Faculty of Economics and Administration, King Abdulaziz University, Jeddah, Saudi Arabia

4 Department of Computer Science and Engineering, Vignan’s Institute of Information Technology, Visakhapatnam, 530049, India

* Corresponding Author: E. Laxmi Lydia. Email:

Computer Systems Science and Engineering 2023, 46(1), 321-336. https://doi.org/10.32604/csse.2023.034849

Received 29 July 2022; Accepted 19 October 2022; Issue published 20 January 2023

Abstract

Deep learning (DL) models have proven useful in many computer vision, speech recognition, and natural language processing tasks in recent years. These models are a natural fit for the growing number of biometric recognition problems, from cellphone authentication to airport security systems, and DL approaches have recently been used to improve the efficiency of various biometric recognition systems. Iris recognition is considered the most reliable and accurate biometric detection method available. It has been an active research area over the last few decades owing to its extensive applications, from airport security to homeland security and border control. This article presents a new Political Optimizer with Deep Transfer Learning Enabled Biometric Iris Recognition (PODTL-BIR) model, which recognizes the iris for biometric security. The PODTL-BIR model begins with a pre-processing stage. Next, the MobileNetv2 feature extractor produces a collection of feature vectors, and a bidirectional gated recurrent unit (BiGRU) model recognizes the iris for biometric verification. Finally, the political optimizer (PO) algorithm is used as a hyperparameter tuning strategy to improve the recognition efficiency of the PODTL-BIR technique. A wide-ranging experimental investigation was carried out to validate the performance of the PODTL-BIR system, and the results showed promising performance compared with other existing algorithms.

Biometric security has resolved many of the hindrances of earlier computing days, and users across the globe prefer biometric recognition systems as an alternative to traditional password-based authentication. Law enforcement agencies, border control, finance, and various smart devices have adopted biometric recognition because it removes the need to remember passwords, is highly precise in validating a person’s identity, and acts as a layer of protection against unauthorized access, thereby meeting present-day security needs [1]. Biometric recognition is the science of establishing a person’s identity via semi- or fully automated methods on the basis of behavioural traits, such as signature or voice, or physical traits, such as the fingerprint and the iris [2]. The distinctive characteristics of biometric data offer several benefits over conventional recognition approaches such as passwords, since they cannot be stolen, replicated, or lost. Biometric traits are classified into two groups: extrinsic traits, i.e., fingerprint and iris, and intrinsic traits, i.e., finger vein and palm vein [3]. Generally, a biometric recognition mechanism has four modules: sensor, feature extraction, matching, and decision-making. There are two kinds of biometric recognition techniques: (1) multimodal and (2) unimodal. The unimodal method uses a single biometric trait to recognize the user [4]. Unimodal systems are trustworthy and have proved better than conventional approaches. However, they have limitations, including intra-class variations, inter-class similarities, noise in the sensed data, vulnerability to spoofing attacks, and non-universality.

Most recently, substantial attention has been paid to multimodal mechanisms because they can outperform unimodal mechanisms [5]. Multimodal systems can provide adequate population coverage by effectively addressing enrollment-stage issues such as non-universality [6]. Moreover, such mechanisms can offer higher accuracy and greater resistance to unauthorized access by an impostor than unimodal mechanisms, because it is difficult to spoof or forge several biometric traits of a legitimate user simultaneously [7,8]. Designing and deploying a multimodal biometric system is generally challenging, and various factors that strongly affect overall performance must be addressed, including accuracy, cost, fusion strategy, and the sources of biometric traits. The basic problem in devising a multimodal system is selecting the most powerful biometric traits from several sources and finding an effective technique for combining them [9]. In such cases, fusion at the rank level is implemented using a rank-level fusion technique that consolidates the ranks generated by each individual classifier to deduce a consensus rank for each individual [10].

This article introduces a new Political Optimizer with Deep Transfer Learning Enabled Biometric Iris Recognition (PODTL-BIR) model, which recognizes the iris for biometric security. The PODTL-BIR model begins with a pre-processing stage. Next, the MobileNetv2 feature extractor produces a collection of feature vectors, and a bidirectional gated recurrent unit (BiGRU) model recognizes the iris for biometric verification. Finally, the political optimizer (PO) algorithm is used as a hyperparameter tuning strategy to improve the recognition efficiency of the PODTL-BIR technique. A wide-ranging experimental analysis is carried out to validate the performance of the PODTL-BIR algorithm.

In [11], a fast-localization iris recognition method is devised that combines an iris segmentation approach with DL to rapidly extract the iris region for detection. First, the pupil edge is obtained by contour extraction and dynamic threshold analysis, and the iris is then located by gray-level calculation and edge detection. Finally, features of the normalized images are learned by the DL network. Jayanthi et al. [12] present an effective DL-based integrated algorithm for accurate iris detection, segmentation, and recognition. The method involves several stages: pre-processing, detection, segmentation, and recognition. First, the input images are pre-processed with Gamma Correction, Black Hat filtering, and Median filtering to improve their quality. The Hough Circle Transform algorithm is then applied to localize the region of interest (RoI) that potentially contains an iris. Finally, the Mask region proposal network along with R-CNN with the Inception v2 method is used for reliable iris segmentation and recognition, distinguishing iris pixels from non-iris pixels.

In [13], an effective, real-time multimodal biometric mechanism is formulated by building DL representations for images of both the left and right irises of an individual and merging the results with a rank-level fusion approach. The proposed trained DL method is named IrisConvNet. Its architecture combines a CNN and a Softmax classifier to extract discriminative features, without domain knowledge, from the input image, which represents the localized iris region, and then classifies it into one of N classes. The study presented a discriminative CNN training method that combines back-propagation (BP) for weight updating with mini-batch AdaGrad optimization for learning rate adaptation. Azam et al. [14] introduce an effective approach that uses a CNN for feature extraction and a support vector machine (SVM) for classification to increase recognition efficiency.

Gupta et al. [15] formulate a DL approach for countering two kinds of attack: the database attack and the replay attack. The presented architecture, HsIrisNet, is a CNN trained and tested on two openly available databases, IIT Delhi DB and Casia-Iris-Interval v4 DB. HsIrisNet employs histograms of particular robust regions followed by image upsampling to authenticate users. These robust regions were found to be adequate for authenticating the user, allowing a non-deterministic algorithm. Wang et al. [16] examine a novel DL-based method for iris recognition and aim to improve accuracy using a simpler structure that recovers representative features more precisely. The method also alleviates the need for up-sampling and down-sampling layers.

This study developed a new PODTL-BIR system to recognize the iris for biometric security. The PODTL-BIR model begins with a pre-processing stage. The MobileNetv2 feature extractor is then used to produce a collection of feature vectors, and PO with the BiGRU model is used to recognize the iris for biometric verification. Fig. 1 shows the block diagram of the PODTL-BIR approach.

Figure 1: Block diagram of PODTL-BIR approach

In this study, the MobileNetv2 feature extractor is utilized to produce a collection of feature vectors. Conventional machine learning (ML) algorithms require the source and target domains to share the same data distribution. Transfer learning (TL) relaxes this requirement: provided that the source and target domains are related, knowledge extracted from the source domain data is exploited while training the target domain classifier. This allows learned knowledge to be transferred and reused among related or similar domains, which considerably reduces the cost of training a model and improves the effectiveness of ML. As a result, TL helps the user handle novel application scenarios and makes ML feasible when insufficient labeled data are available. MobileNetV1 resembles the conventional VGG framework in that it constructs a network from stacked convolutional layers to increase performance. However, the vanishing gradient problem arises when many convolution layers are stacked. The residual block in ResNet aids the flow of information between layers, enabling feature reuse in forward propagation and alleviating vanishing gradients in backpropagation. MobileNetV2 therefore retains the depthwise separable convolution layers of MobileNetV1 and also draws on the ResNet architecture.
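To illustrate why depthwise separable convolutions keep MobileNet-style extractors lightweight, the following minimal sketch compares the weight count of a standard convolution with that of a depthwise separable one. The channel counts and the 3 × 3 kernel size are illustrative assumptions, not values taken from this study, and biases are ignored.

```python
def standard_conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k=3):
    """One k x k depthwise filter per input channel, then a 1 x 1 pointwise mix."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Illustrative (assumed) channel counts, not taken from the paper.
c_in, c_out = 32, 64
std = standard_conv_params(c_in, c_out)
sep = depthwise_separable_params(c_in, c_out)
print(std, sep, round(std / sep, 2))  # 18432 2336 7.89
```

Under these assumed sizes, the separable layer needs roughly one eighth of the weights of the standard layer, which is the kind of saving that makes a pre-trained MobileNet practical as a feature extractor.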

In contrast to MobileNetV1, MobileNetV2 introduces two major developments: the inverted residual block and the linear bottleneck [17]. The fundamental building block of MobileNetV2 is a depthwise separable convolution arranged as an inverted residual with a linear bottleneck. Each block transforms features from N to M channels, with expansion factor t and stride s. A 1 × 1 convolution is added before the depthwise convolution, a linear activation is used instead of a non-linear activation function after the pointwise convolution layer, and down-sampling is achieved by setting the stride s in the depthwise convolution layer. In the architecture of MobileNetV2,

3.2 Iris Recognition Using BiGRU Model

For the iris recognition process, the BiGRU model is exploited in this study. An LSTM block is composed of the forgetting gate

Lastly, the present neuron output gate

A bi-directional method processes data in both the forward and backward directions, whereas the forward layer iterates in

Variables
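As a rough illustration of how a bidirectional GRU processes a sequence of feature vectors, the sketch below implements one common textbook formulation of the GRU cell in plain Python and runs it in both directions. The weight initialisation, the sizes, and the update convention h_t = (1 − z_t) ⊙ h_{t−1} + z_t ⊙ h̃_t are assumptions made for illustration; they are not taken from this study, and conventions differ between libraries.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def make_params(input_size, hidden_size, rng):
    """Random weights for one GRU direction (hypothetical initialisation)."""
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    return {
        name: (mat(hidden_size, input_size), mat(hidden_size, hidden_size),
               [0.0] * hidden_size)
        for name in ("update", "reset", "candidate")
    }


def gru_step(params, x, h):
    """One GRU step: update gate z, reset gate r, candidate state, new state."""
    def pre(name, h_in):
        w, u, b = params[name]
        return [a + c + d for a, c, d in zip(matvec(w, x), matvec(u, h_in), b)]
    z = [sigmoid(v) for v in pre("update", h)]
    r = [sigmoid(v) for v in pre("reset", h)]
    gated = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(v) for v in pre("candidate", gated)]
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


def run_gru(params, sequence, hidden_size):
    h = [0.0] * hidden_size
    states = []
    for x in sequence:
        h = gru_step(params, x, h)
        states.append(h)
    return states


def bigru(fwd, bwd, sequence, hidden_size):
    """Concatenate forward states with time-aligned backward states."""
    forward = run_gru(fwd, sequence, hidden_size)
    backward = run_gru(bwd, sequence[::-1], hidden_size)[::-1]
    return [f + b for f, b in zip(forward, backward)]


rng = random.Random(0)
seq = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(5)]  # 5 steps, 4 features
fwd = make_params(4, 3, rng)
bwd = make_params(4, 3, rng)
outputs = bigru(fwd, bwd, seq, 3)
print(len(outputs), len(outputs[0]))  # 5 6
```

Each time step yields a vector of twice the hidden size, because the forward and backward states are concatenated. In a classifier, such outputs would typically feed a final dense layer.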

3.3 Hyperparameter Tuning Using PO Algorithm

The PO algorithm is used to optimally adjust the hyperparameters of the BiGRU model. PO is a recent socio-inspired metaheuristic that can be used to solve global optimization problems [19]. Nature-inspired algorithms are commonly classified into human-behaviour-based, physics-based, evolution-based, and swarm-based groups. PO differs from existing metaheuristics as follows:

• PO updates the current location using a mathematical model of how politicians learn from prior elections. This approach is termed a new historical-based location updating strategy.

• The most important characteristic of the algorithm is a major improvement in convergence rate, owing to its better exploration ability.

• The approach is effective in solving difficult optimization problems with a large number of variables.
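The sketch below gives a heavily simplified, PO-style search loop to make the phases described in this section more concrete. It is not the published PO update rule: the campaign step is a greedy random move toward the party leader and the constituency winner, the parliamentary affairs phase is omitted, and the cost function `toy_cost`, the search bounds, and the linearly decaying switching rate `lam` are all assumptions chosen for illustration. In a real setting, the cost would be a validation error obtained by training the BiGRU with the candidate hyperparameters.

```python
import random

BOUNDS = [(-5.0, -1.0), (16.0, 256.0)]  # assumed ranges: log10(learning rate), hidden units


def toy_cost(params):
    """Hypothetical stand-in for a validation error; lowest at (-3.0, 64.0)."""
    log_lr, units = params
    return (log_lr + 3.0) ** 2 + ((units - 64.0) / 32.0) ** 2


def clip(params):
    return [min(max(p, lo), hi) for p, (lo, hi) in zip(params, BOUNDS)]


def move_toward(member, target, rng):
    """Random step from member toward target (simplified position update)."""
    return clip([m + rng.uniform(0.0, 2.0) * (t - m) for m, t in zip(member, target)])


def political_search(n=4, iterations=30, lam_max=0.5, seed=0):
    rng = random.Random(seed)
    # pop[i][j]: member of party i contesting constituency j
    pop = [[[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(n)]
           for _ in range(n)]
    best = min((m for party in pop for m in party), key=toy_cost)
    for t in range(iterations):
        lam = lam_max * (1 - t / iterations)  # switching rate decays over time
        leaders = [min(party, key=toy_cost) for party in pop]
        winners = [min((pop[i][j] for i in range(n)), key=toy_cost) for j in range(n)]
        # Election campaign: learn from the party leader, then the constituency winner
        for i in range(n):
            for j in range(n):
                for target in (leaders[i], winners[j]):
                    trial = move_toward(pop[i][j], target, rng)
                    if toy_cost(trial) < toy_cost(pop[i][j]):
                        pop[i][j] = trial
        # Party switching: swap with the least fit member of a random party
        for i in range(n):
            for j in range(n):
                if rng.random() < lam:
                    k = rng.randrange(n)
                    worst = max(range(n), key=lambda q: toy_cost(pop[k][q]))
                    pop[i][j], pop[k][worst] = pop[k][worst], pop[i][j]
        # Election: keep the best candidate seen so far
        candidate = min((m for party in pop for m in party), key=toy_cost)
        if toy_cost(candidate) < toy_cost(best):
            best = list(candidate)
    return best, toy_cost(best)


best, cost = political_search()
print(best, cost)
```

Because moves are accepted only when they lower the cost and swaps merely reassign members between parties, the best cost found never gets worse from one iteration to the next in this sketch.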

Party and Constituency Formation

The architecture with an overall population count

Election Campaign

The election campaign stage models exploration and exploitation. It helps parliamentary candidates improve their standing in the election and search for optimal solutions to the problem. In this work, each candidate solution encodes a set of BiGRU hyperparameters to be tuned. Learning from the preceding election is defined as a new historical-based location updating approach that is expressed as follows. The following expression measures the appropriateness of a feasible candidate solution.

Party Switching

In politics, party switching goes on in parallel with the campaign in the electoral region; in the PO methodology, however, this procedure is run after the election campaign. The party-switching rate, named

Election

An election is a procedure in which winners are declared by assessing the fitness of each parliamentary candidate contesting the election in a constituency. Here, a candidate’s fitness is evaluated from the hyperparameter set it encodes; the lower the fitness (cost) value, the better the candidate. In Eq. (9),

Parliamentary Affairs

The constituency winners and party leaders are defined as follows. After the election procedure and the declaration of results, the effort to form a government begins. The government is formed after the inter-party election, once the party leaders and constituency winners have been decided. Once every parliamentarian

This section presents the experimental validation of the PODTL-BIR model on three datasets. Fig. 2 illustrates some sample images.

Figure 2: Sample images

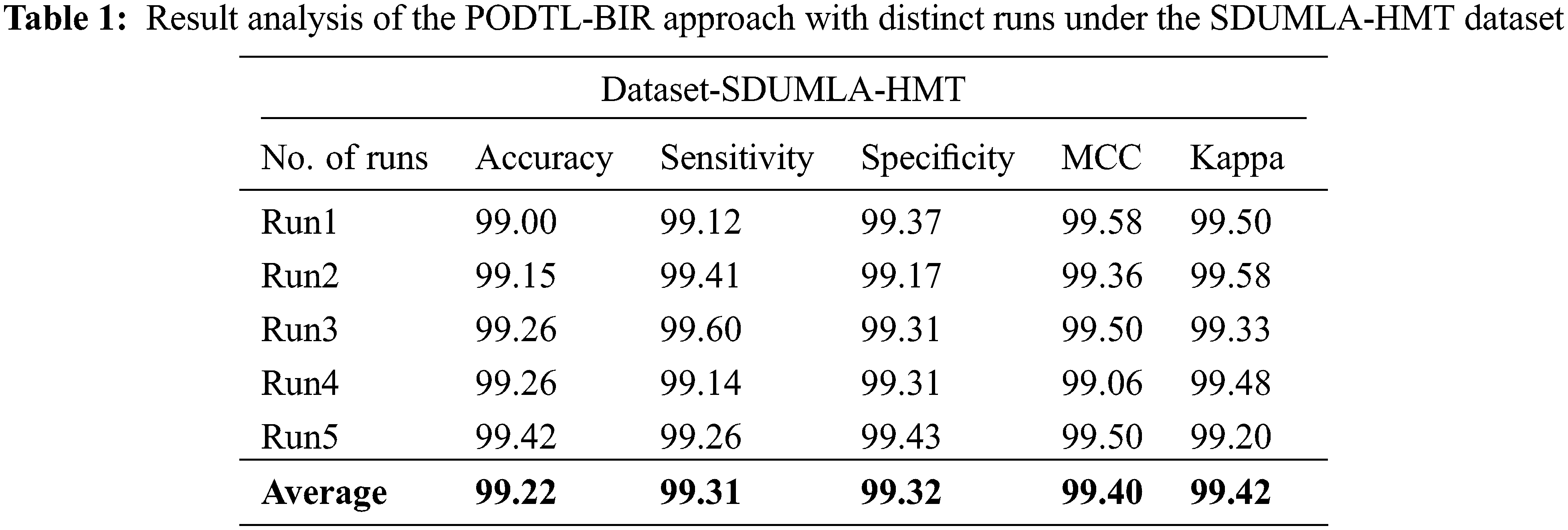

Table 1 and Fig. 3 provide detailed iris recognition outcomes of the PODTL-BIR algorithm on the SDUMLA-HMT test dataset. The experimental values indicate that the PODTL-BIR approach achieved improved performance in all runs. For instance, in run 1, the PODTL-BIR model offered

Figure 3: Result analysis of the PODTL-BIR approach under the SDUMLA-HMT dataset

Fig. 4 reports the overall results of the PODTL-BIR approach on the SDUMLA-HMT test dataset. The outcome indicates that the PODTL-BIR model reached enhanced results with maximum average

Figure 4: Average analysis of the PODTL-BIR approach under the SDUMLA-HMT dataset

The training accuracy (TRA) and validation accuracy (VLA) attained by the PODTL-BIR method on the SDUMLA-HMT dataset are illustrated in Fig. 5. The experimental results show that the PODTL-BIR system attained high values of TRA and VLA. For the most part, VLA was higher than TRA.

Figure 5: TRA and VLA analysis of the PODTL-BIR approach under the SDUMLA-HMT dataset

The training loss (TRL) and validation loss (VLL) of the PODTL-BIR system on the SDUMLA-HMT dataset are shown in Fig. 6. The experimental results show that the PODTL-BIR technique attained low values of TRL and VLL. Specifically, VLL was lower than TRL.

Figure 6: TRL and VLL analysis of the PODTL-BIR approach under the SDUMLA-HMT dataset

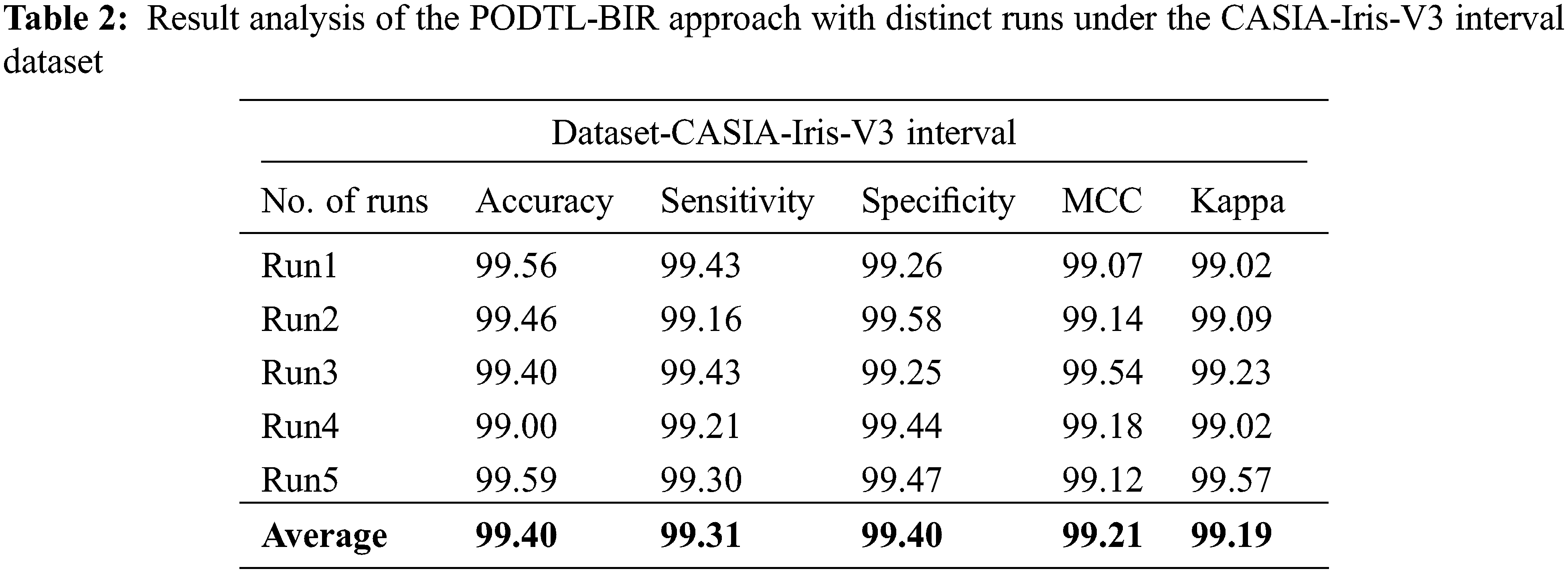

Table 2 and Fig. 7 provide detailed iris recognition outcomes of the PODTL-BIR technique on the CASIA-Iris-V3 Interval test dataset. The experimental values show that the PODTL-BIR system achieved improved performance in all runs. For instance, in run 1, the PODTL-BIR model offered

Figure 7: Result analysis of the PODTL-BIR approach under the CASIA-Iris-V3 interval dataset

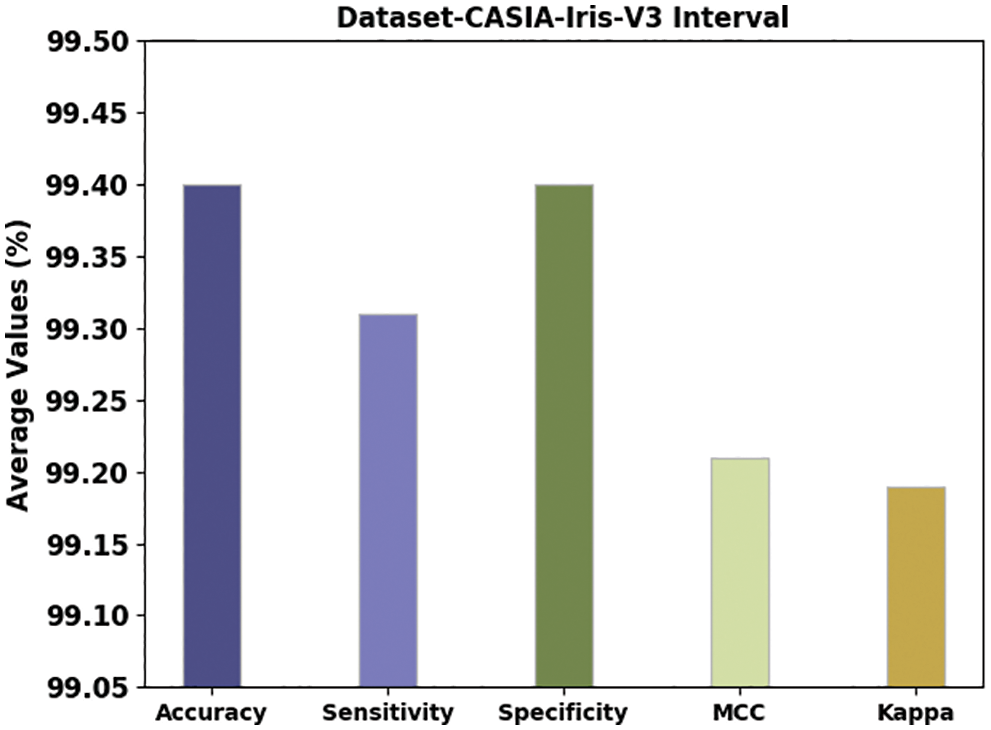

Fig. 8 shows the overall results of the PODTL-BIR method on the CASIA-Iris-V3 Interval test dataset. The outcomes indicate that the PODTL-BIR methodology obtained enhanced results with maximal average

Figure 8: Average analysis of the PODTL-BIR approach under the CASIA-Iris-V3 interval dataset

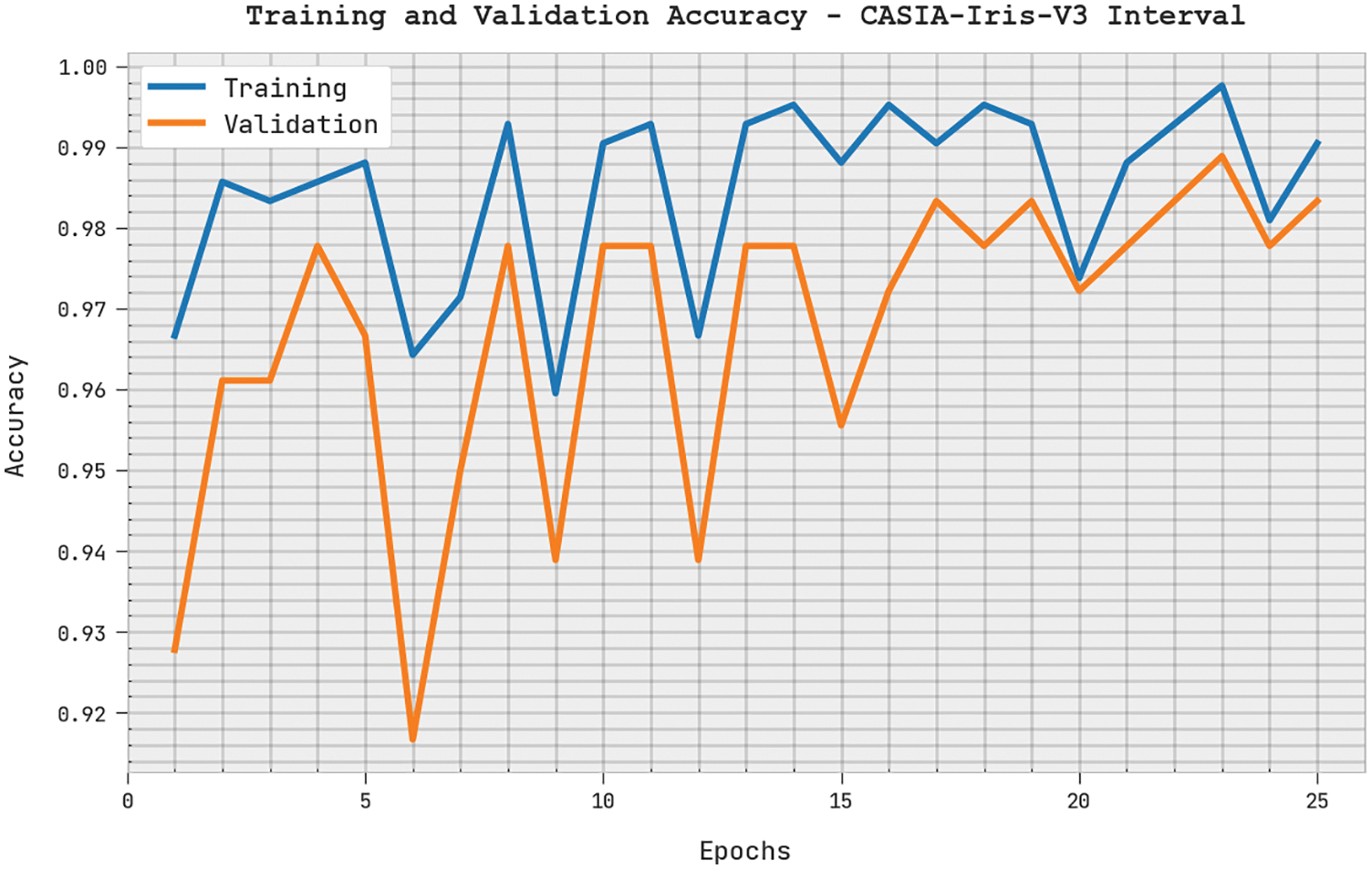

The training accuracy (TRA) and validation accuracy (VLA) of the PODTL-BIR method on the CASIA-Iris-V3 Interval dataset are depicted in Fig. 9. The experimental results show that the PODTL-BIR system attained high values of TRA and VLA. In particular, VLA was higher than TRA.

Figure 9: TRA and VLA analysis of PODTL-BIR approach under CASIA-Iris-V3 interval dataset

The training loss (TRL) and validation loss (VLL) of the PODTL-BIR approach on the CASIA-Iris-V3 Interval dataset are shown in Fig. 10. The experimental results show that the PODTL-BIR system attained low values of TRL and VLL. Specifically, VLL was lower than TRL.

Figure 10: TRL and VLL analysis of the PODTL-BIR approach under the CASIA-Iris-V3 interval dataset

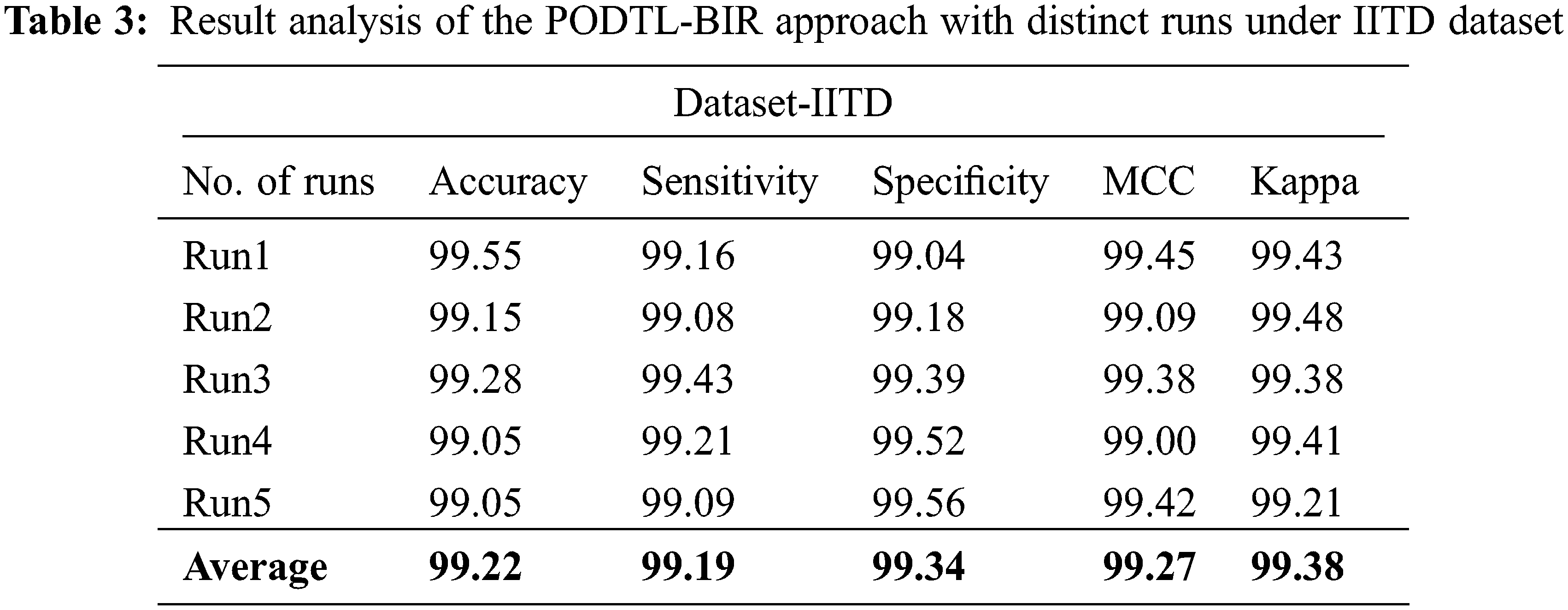

Table 3 and Fig. 11 provide detailed iris recognition outcomes of the PODTL-BIR method on the IITD test dataset. The experimental values indicate that the PODTL-BIR technique achieved improved performance in all runs. For instance, in run 1, the PODTL-BIR model offered

Figure 11: Result analysis of the PODTL-BIR approach under IITD dataset

Fig. 12 depicts the overall results of the PODTL-BIR technique on the IITD test dataset. The outcomes indicate that the PODTL-BIR methodology obtained improved results with maximal average

Figure 12: Average analysis of PODTL-BIR approach under the IITD dataset

The training accuracy (TRA) and validation accuracy (VLA) of the PODTL-BIR approach on the IITD dataset are illustrated in Fig. 13. The experimental results show that the PODTL-BIR system attained high values of TRA and VLA. For the most part, VLA was higher than TRA.

Figure 13: TRA and VLA analysis of PODTL-BIR approach under the IITD dataset

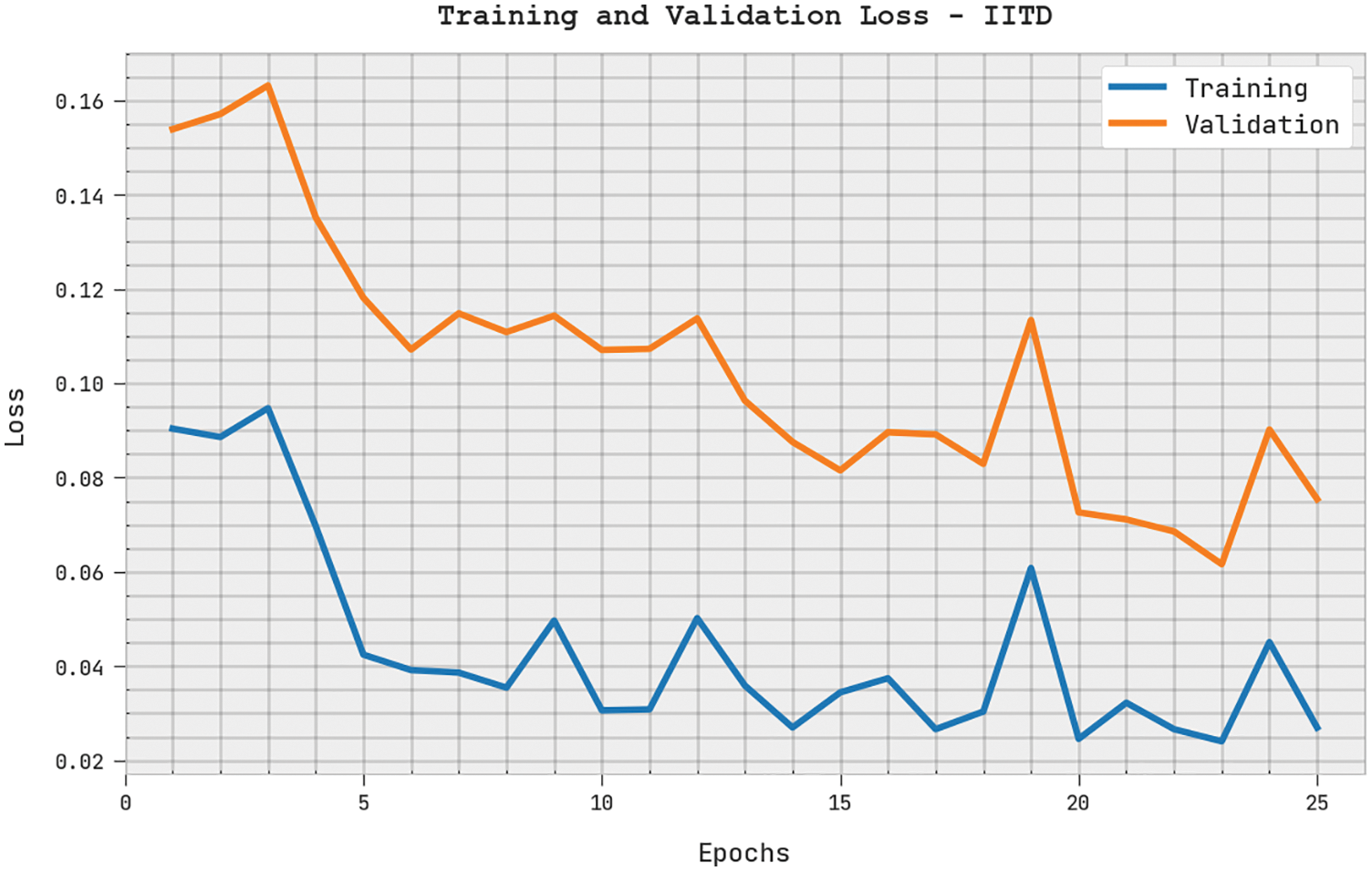

The training loss (TRL) and validation loss (VLL) of the PODTL-BIR approach on the IITD dataset are illustrated in Fig. 14. The experimental results show that the PODTL-BIR algorithm attained low values of TRL and VLL. Specifically, VLL was lower than TRL.

Figure 14: TRL and VLL analysis of PODTL-BIR approach under IITD dataset

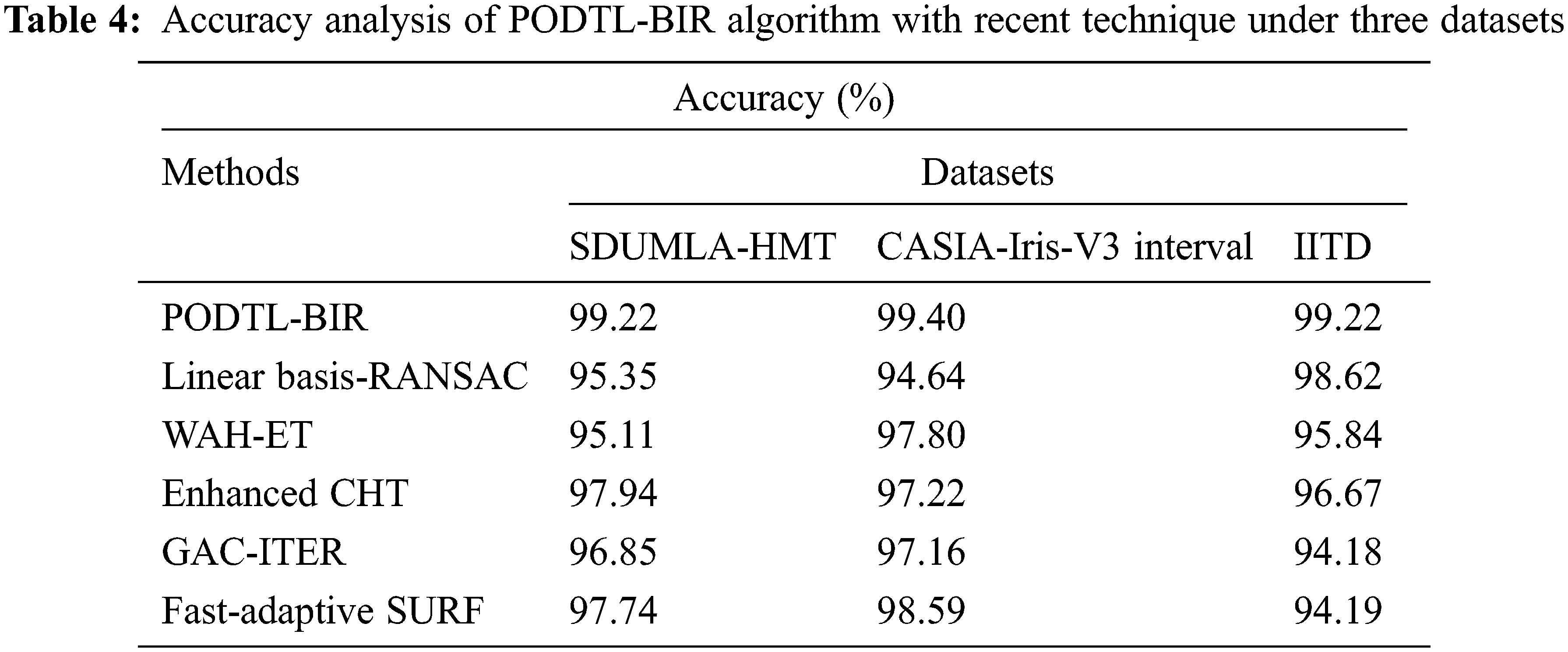

Table 4 exhibit an

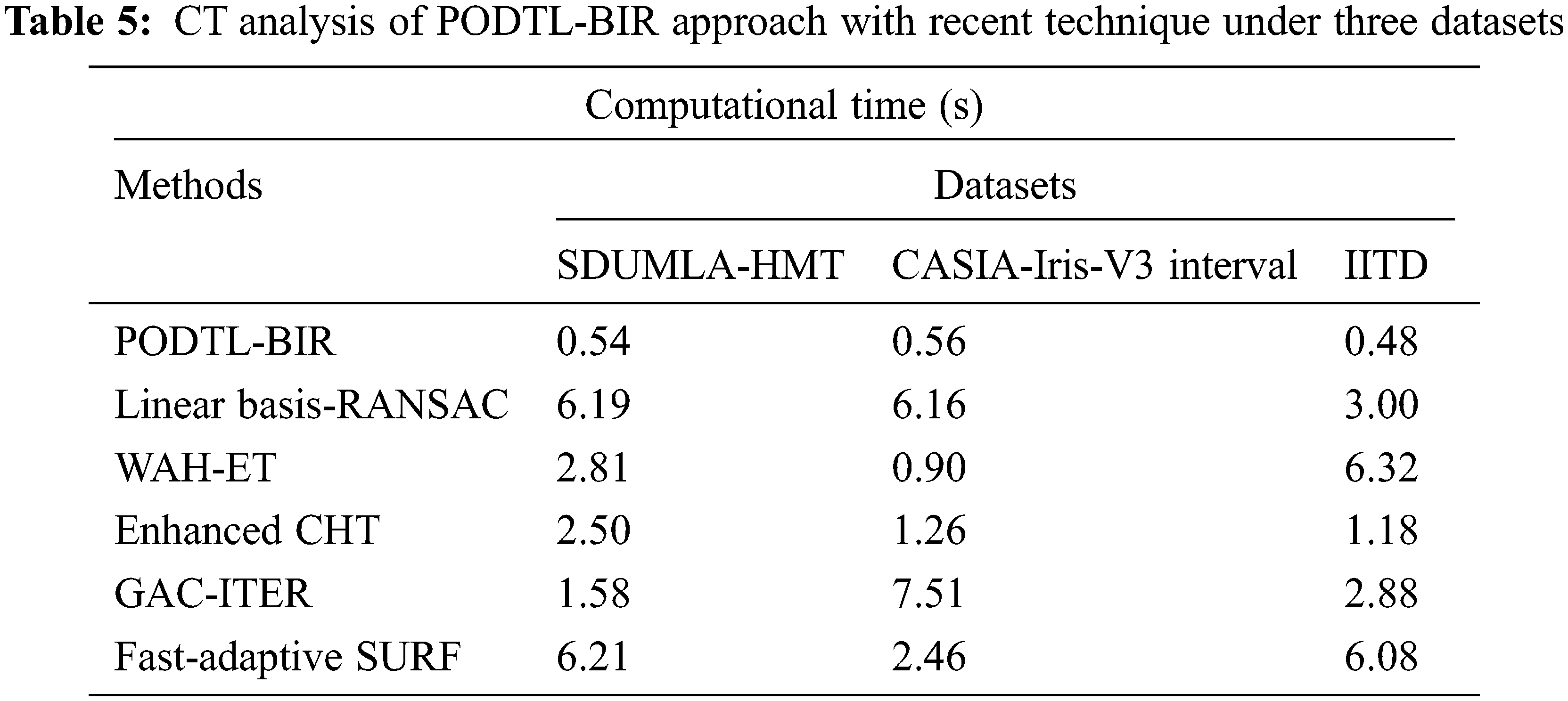

Table 5 provides a brief computation time (CT) comparison of the PODTL-BIR model on three datasets. The experimental values show that the PODTL-BIR method offered lower CT values on each dataset. For instance, on the SDUMLA-HMT dataset, the PODTL-BIR model required a minimal CT of 0.54 s, whereas the Linear Basis-RANSAC, WAH-ET, enhanced CHT, GAC-ITER, and Fast-Adaptive SURF models required higher CTs of 6.19, 2.81, 2.50, 1.58, and 6.21 s, respectively.

Moreover, on the CASIA-IRIS dataset, the PODTL-BIR algorithm required a minimal CT of 0.56 s, whereas the Linear Basis-RANSAC, WAH-ET, enhanced CHT, GAC-ITER, and Fast-Adaptive SURF techniques required higher CTs of 6.16, 0.90, 1.26, 7.51, and 2.46 s, respectively. From the detailed results and discussion, it is evident that the PODTL-BIR model showed effective outcomes on all datasets.
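The CT values quoted above can be turned into relative speed-ups with a few lines of arithmetic. The sketch below uses only the figures stated in the text for the two datasets reported here; the dictionary layout and the `speedups` helper are illustrative choices.

```python
# Computation times in seconds, as quoted in the text above.
ct_seconds = {
    "SDUMLA-HMT": {
        "PODTL-BIR": 0.54, "Linear Basis-RANSAC": 6.19, "WAH-ET": 2.81,
        "Enhanced CHT": 2.50, "GAC-ITER": 1.58, "Fast-Adaptive SURF": 6.21,
    },
    "CASIA-IRIS": {
        "PODTL-BIR": 0.56, "Linear Basis-RANSAC": 6.16, "WAH-ET": 0.90,
        "Enhanced CHT": 1.26, "GAC-ITER": 7.51, "Fast-Adaptive SURF": 2.46,
    },
}


def speedups(times, reference="PODTL-BIR"):
    """Ratio of each method's CT to the reference method's CT."""
    ref = times[reference]
    return {name: round(t / ref, 2) for name, t in times.items() if name != reference}


for dataset, times in ct_seconds.items():
    print(dataset, speedups(times))
```

On these figures, the smallest margin is against WAH-ET on CASIA-IRIS (about 1.6 times slower than PODTL-BIR), and the largest is against GAC-ITER on the same dataset (about 13.4 times slower).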

In this study, a novel PODTL-BIR approach was established to recognize the iris for biometric security. The PODTL-BIR model begins with a pre-processing stage. The MobileNetv2 feature extractor is then used to produce a collection of feature vectors, and the BiGRU model is used to recognize the iris for biometric verification. Finally, the PO algorithm is used as a hyperparameter tuning strategy to enhance the recognition efficiency of the PODTL-BIR approach. A wide-ranging experimental investigation was carried out to validate the performance of the PODTL-BIR system, and the experimental outcomes showed the promising performance of the PODTL-BIR approach over other state-of-the-art techniques. In future, the recognition rate of the PODTL-BIR approach can be boosted by fusion-based DL models.

Funding Statement: The Deanship of Scientific Research (DSR) at King Abdulaziz University (KAU), Jeddah, Saudi Arabia has funded this project, under grant no. KEP-3-120-42.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. S. Arora and M. P. S. Bhatia, “Presentation attack detection for iris recognition using deep learning,” International Journal of System Assurance Engineering and Management, vol. 11, no. S2, pp. 232–238, 2020.

2. M. Liu, Z. Zhou, P. Shang and D. Xu, “Fuzzified image enhancement for deep learning in iris recognition,” IEEE Transactions on Fuzzy Systems, vol. 28, no. 1, pp. 92–99, 2020.

3. M. Choudhary, V. Tiwari and U. Venkanna, “Enhancing human iris recognition performance in unconstrained environment using ensemble of convolutional and residual deep neural network models,” Soft Computing, vol. 24, no. 15, pp. 11477–11491, 2020.

4. H. M. Therar, E. A. Mohammed and A. J. Ali, “Multibiometric system for iris recognition based convolutional neural network and transfer learning,” IOP Conference Series: Materials Science and Engineering, vol. 1105, no. 1, pp. 012032, 2021.

5. M. Omran and E. N. AlShemmary, “An iris recognition system using deep convolutional neural network,” Journal of Physics: Conference Series, vol. 1530, no. 1, pp. 012159, 2020.

6. S. Ismail, “Reducing intra-class variations of deformed iris recognition system,” International Journal of Advanced Trends in Computer Science and Engineering, vol. 9, no. 1.3, pp. 356–360, 2020.

7. S. Shirke and C. Rajabhushnam, “Local gradient pattern and deep learning-based approach for the iris recognition at-a-distance,” International Journal of Knowledge-based and Intelligent Engineering Systems, vol. 25, no. 1, pp. 49–64, 2021.

8. Z. R. Sami, H. K. Tayyeh and M. S. Mahdi, “Survey of iris recognition using deep learning techniques,” Journal of Al-Qadisiyah for Computer Science and Mathematics, vol. 13, no. 3, pp. 47, 2021.

9. B. M. Salih, A. M. Abdulazeez and O. M. S. Hassan, “Gender classification based on iris recognition using artificial neural networks,” Qubahan Academic Journal, vol. 1, no. 2, pp. 156–163, 2021.

10. G. Liu, W. Zhou, L. Tian, W. Liu, Y. Liu et al., “An efficient and accurate iris recognition algorithm based on a novel condensed 2-ch deep convolutional neural network,” Sensors, vol. 21, no. 11, pp. 3721, 2021.

11. W. Zhou, X. Ma and Y. Zhang, “Research on image preprocessing algorithm and deep learning of iris recognition,” Journal of Physics: Conference Series, vol. 1621, no. 1, pp. 012008, 2020.

12. J. Jayanthi, E. L. Lydia, N. Krishnaraj, T. Jayasankar, R. L. Babu et al., “An effective deep learning features based integrated framework for iris detection and recognition,” Journal of Ambient Intelligence and Humanized Computing, vol. 12, no. 3, pp. 3271–3281, 2021.

13. A. S. Al-Waisy, R. Qahwaji, S. Ipson, S. Al-Fahdawi and T. A. M. Nagem, “A multi-biometric iris recognition system based on a deep learning approach,” Pattern Analysis and Applications, vol. 21, no. 3, pp. 783–802, 2018.

14. M. S. Azam and H. K. Rana, “Iris recognition using convolutional neural network,” International Journal of Computer Applications, vol. 175, no. 12, pp. 24–28, 2020.

15. R. Gupta and P. Sehgal, “HsIrisNet: Histogram based iris recognition to allay replay and template attack using deep learning perspective,” Pattern Recognition and Image Analysis, vol. 30, no. 4, pp. 786–794, 2020.

16. K. Wang and A. Kumar, “Toward more accurate iris recognition using dilated residual features,” IEEE Transactions on Information Forensics and Security, vol. 14, no. 12, pp. 3233–3245, 2019.

17. R. Shinde, M. Alam, S. Park, S. Park and N. Kim, “Intelligent IoT (IIoT) device to identifying suspected COVID-19 infections using sensor fusion algorithm and real-time mask detection based on the enhanced mobilenetv2 model,” Healthcare, vol. 10, no. 3, pp. 454, 2022.

18. H. Ikhlasse, D. Benjamin, C. Vincent and M. Hicham, “Multimodal cloud resources utilization forecasting using a bidirectional gated recurrent unit predictor based on a power efficient stacked denoising autoencoders,” Alexandria Engineering Journal, vol. 61, no. 12, pp. 11565–11577, 2022.

19. Q. Askari and I. Younas, “Political optimizer based feedforward neural network for classification and function approximation,” Neural Processing Letters, vol. 53, no. 1, pp. 429–458, 2021.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.