Open Access

Open Access

ARTICLE

Coherence Based Sufficient Condition for Support Recovery Using Generalized Orthogonal Matching Pursuit

1 Department of Electronics and Communication Engineering, Annamalai University, Chidambaram, 608002, Tamil Nadu, India

2 School of Electrical Sciences, Indian Institute of Technology, Farmgudi, Ponda, Goa, India

* Corresponding Author: Aravindan Madhavan. Email:

Computer Systems Science and Engineering 2023, 45(2), 2049-2058. https://doi.org/10.32604/csse.2023.031566

Received 21 April 2022; Accepted 17 June 2022; Issue published 03 November 2022

Abstract

In an underdetermined system, compressive sensing can be used to recover the support vector. Greedy algorithms will recover the support vector indices in an iterative manner. Generalized Orthogonal Matching Pursuit (GOMP) is the generalized form of the Orthogonal Matching Pursuit (OMP) algorithm where a number of indices selected per iteration will be greater than or equal to 1. To recover the support vector of unknown signal ‘x’ from the compressed measurements, the restricted isometric property should be satisfied as a sufficient condition. Finding the restricted isometric constant is a non-deterministic polynomial-time hardness problem due to that the coherence of the sensing matrix can be used to derive the sufficient condition for support recovery. In this paper a sufficient condition based on the coherence parameter to recover the support vector indices of an unknown sparse signal ‘x’ using GOMP has been derived. The derived sufficient condition will recover support vectors of P-sparse signal within ‘P’ iterations. The recovery guarantee for GOMP is less restrictive, and applies to OMP when the number of selection elements equals one. Simulation shows the superior performance of the GOMP algorithm compared with other greedy algorithms.Keywords

Compressed sensing will aid to recover a P-sparse (P-non zero elements) unknown signal

where

The smallest constant

Sufficient condition to guarantee the support recovery for GOMP algorithm has been derived using the coherence of the sensing matrix H.

GOMP can select

GOMP Algorithm:

Step 1: Input:

Step 2: Initialize:

Step 3: For Each

Step 4: Compute the correlation and find the first Q indices where correlation is

Step 5: Select the first ‘Q’ largest value indices

Step 6: Update sub band support indices

Step 7: Estimate the signal vector

Step 8: Update residual

Step 9:

Step 10: Go to step 2

Step 11: End For Return index set of sub band support indices

Step 12: Output: Support vector indices

Step 13: end

1.2 Preliminaries: Generalized Orthogonal Matching Pursuit (GOMP)

Algorithm for generalized orthogonal matching pursuit shown in GOMP algorithm has three parts identification, augmentation and residual update. In the step 4, magnitude of the correlation between each column of matrix H and residual is found and the values are arranged in descending order. Step 5: First Q largest column indices

Line 7 finding the least square estimate of the signal vector, to find the residual

Coherence of sensing matrix H is maximum of absolute correlation between the two columns of H and it is given by

Lemma 1: Let

Lemma 2: For any matrix H, the RIP constant

Lemma 3: Suppose a matrix H satisfy RIP of order P and

In this lemma 3 lower bound can be defined with the help of coherence

The above expression plays a key role in proving the theorem 1. It holds good for generalized case of OMP.

Remark 1: The condition

Theorem 1: Let H satisfy the coherence with

The proof for the special case

First we need to prove that the selection of Q elements contains at least one support index in iterations will be considered as success. The proof for the first iteration should be carried at first. Let us assume

Instead of proving

By expression (5),

residual in the algorithm at tth iteration can be expressed as

Thus, by Eq. (8) and the triangular inequality

It is a two-step process to fond the lower bound. To find the lower bound on maximum of

Lower bound on

Hence the proof.

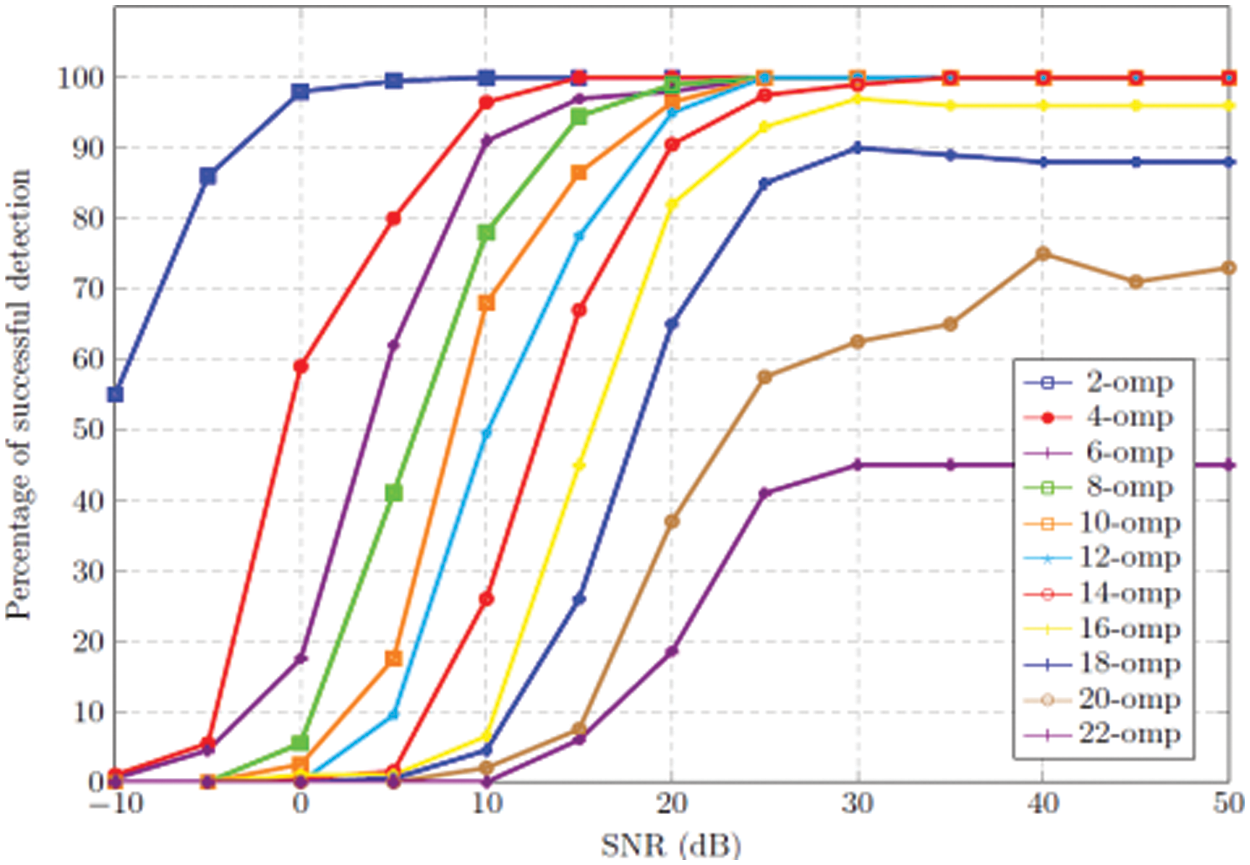

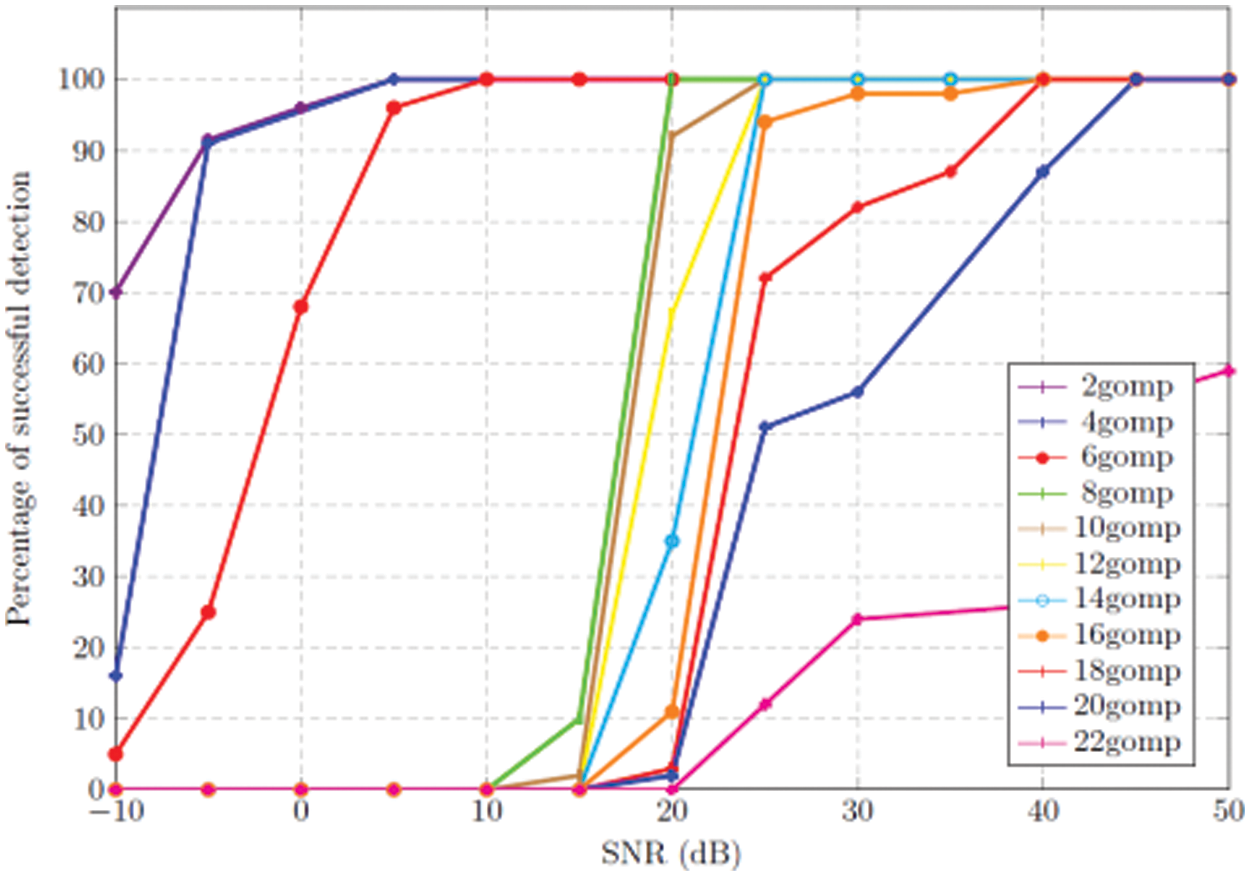

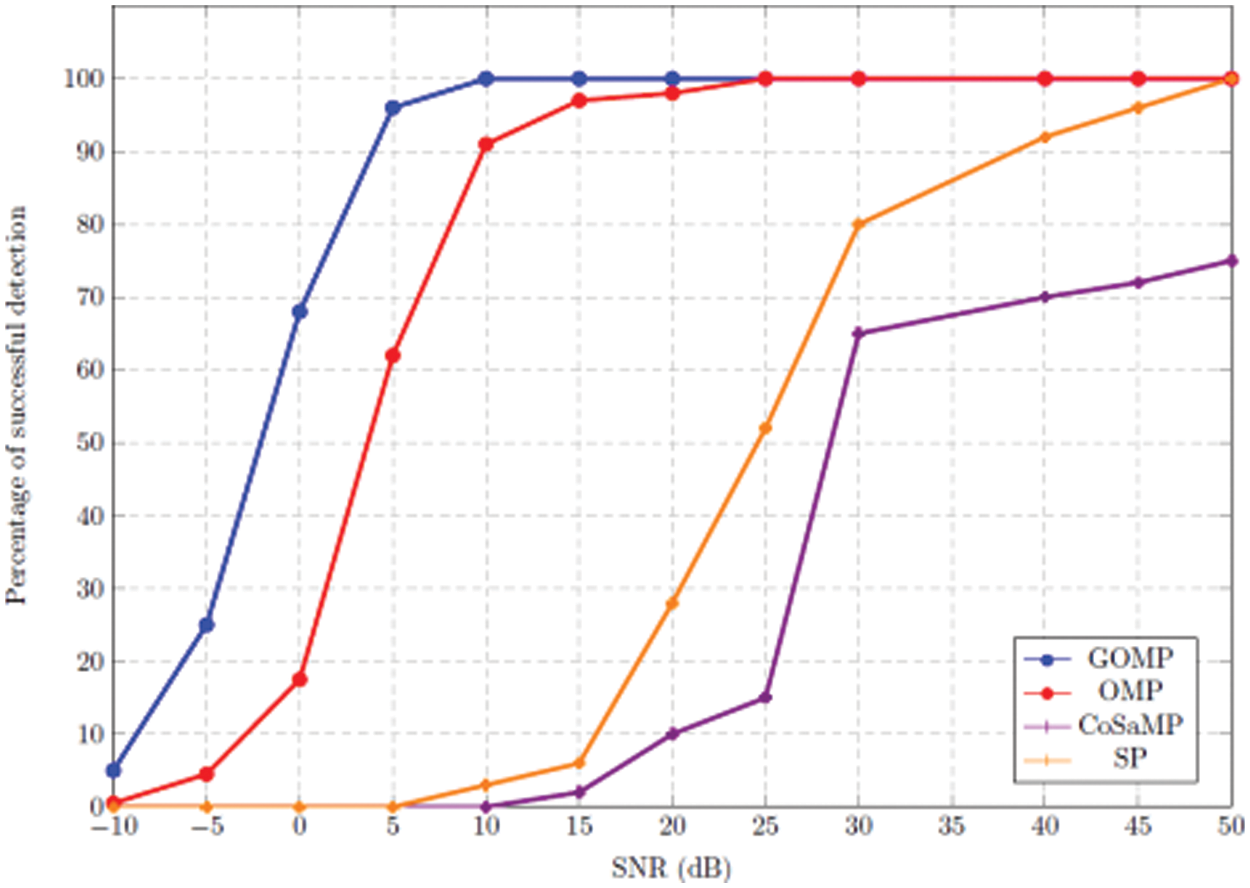

Remark: from the expression (23) it has been proved that if elements of the sparse signal satisfy the constraint, then the GOMP algorithm will identify at least one support index in t iterations. Figs. 2 and 3 show the performance comparison of GOMP and OMP algorithms at various Signal to Noise Ratio (SNR) level for different sparsity. It shows that GOMP has superior performance over the OMP at every SNR levels for the same sparsity. A sufficient condition has been derived in the previous section. Simulations have been carried out to validate the performance of the GOMP algorithm.

In the simulation, the unknown sparse signal is defined as

where L is the number of occupied bands,

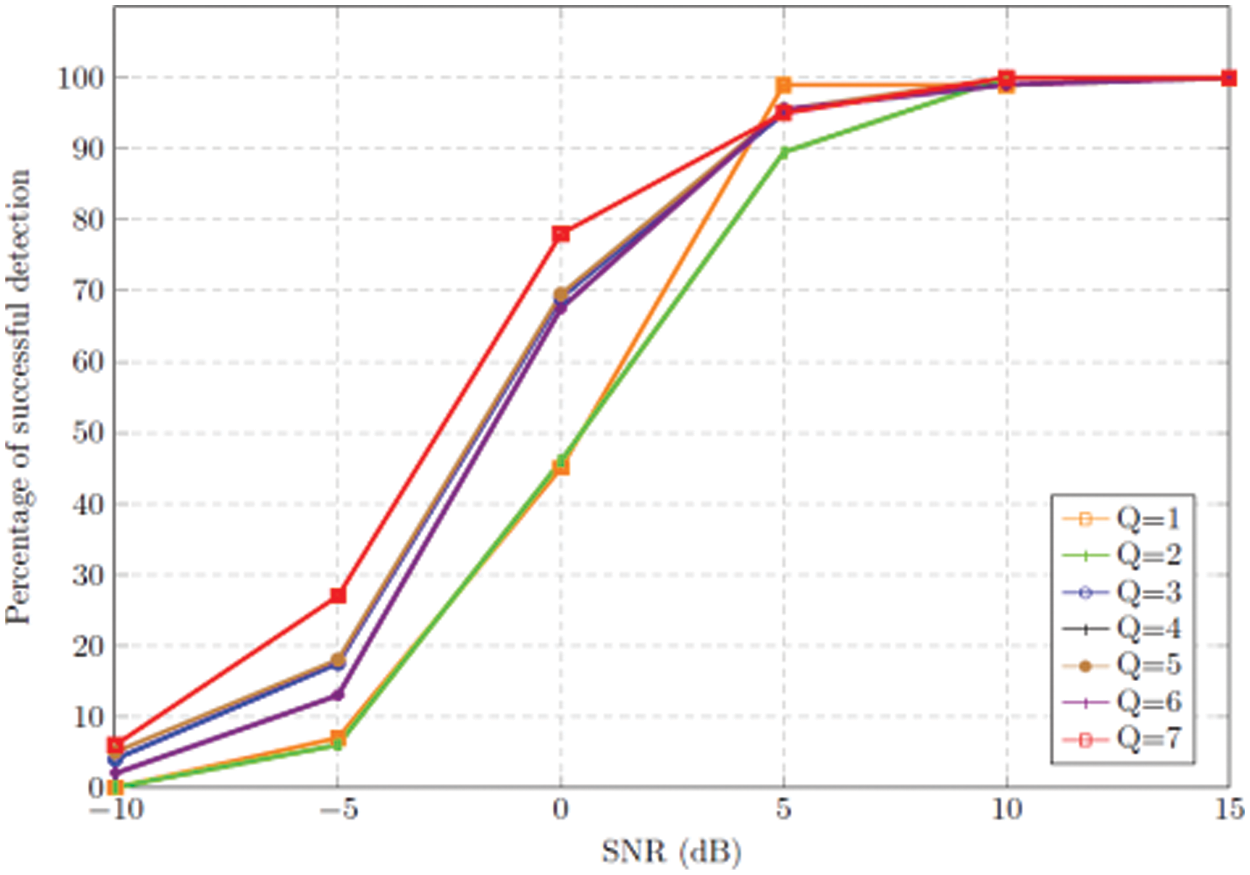

Number of support indices selected in each iteration should satisfy the constraint

Figure 1: Detection performance of GOMP algorithm for different number of selection indices in each iteration at various signal to noise ratio levels

In this paper, successful recovery of support vector indices with respect to SNR for given sparsity of P using generalized orthogonal matching pursuit is shown in the Fig. 2. Sparsity of the signal x will be varied between 2 and 22. It is evident from the Fig. 3 that the algorithm can able to recover the signal support successfully only when there are few non-zero elements.

Figure 2: Detection performance of the OMP (Special case where number of selection element per iteration Q = 1) algorithm for different sparsity P varies from 2 to 22 at various SNR levels

Figure 3: Detection performance of the GOMP algorithm for different sparsity P varies from 2 to 22 at various SNR levels

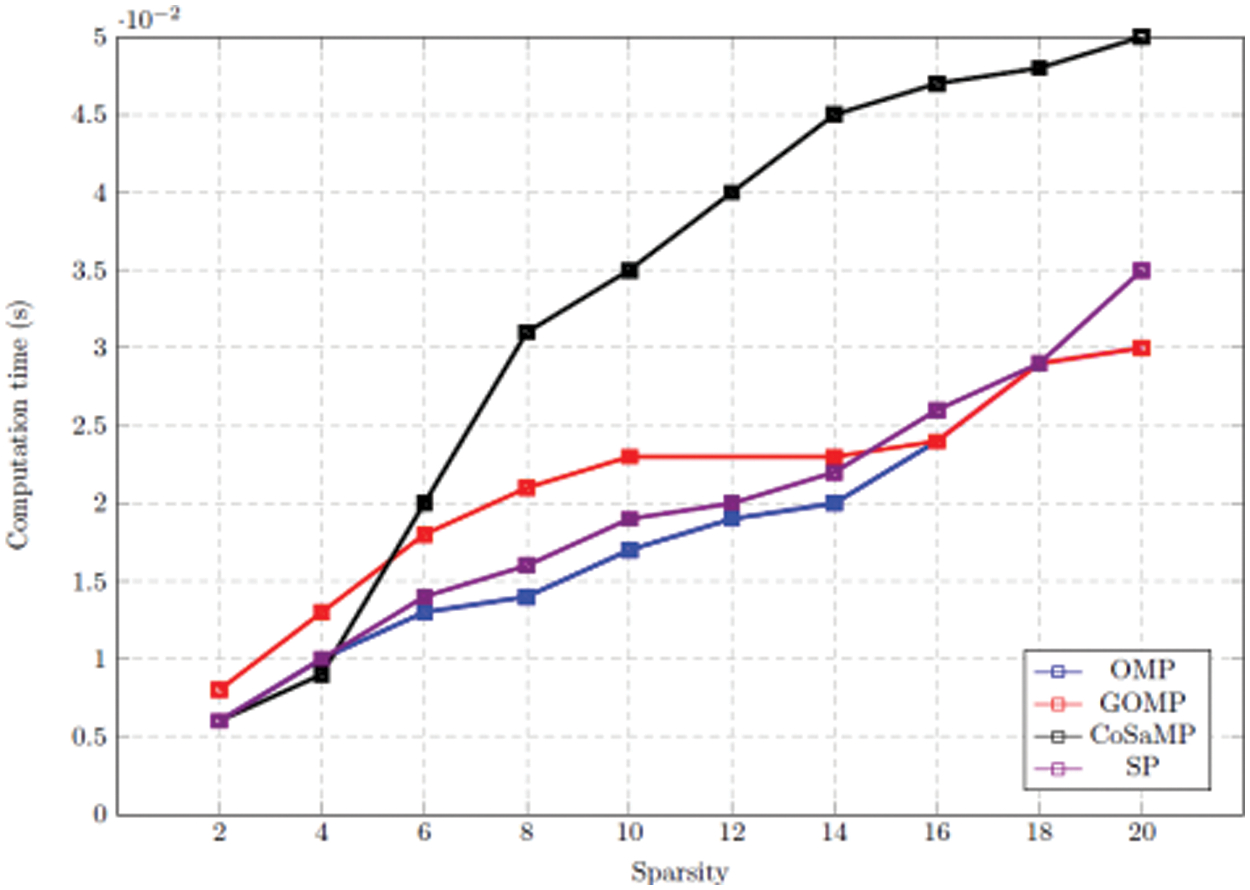

Figure 4: Graph between different sparsity level and computation time for different greedy algorithms

We note that the precise reconstruction probability and the computation time of the sparse signal vary with the level of sparsity. Reconstruction results for the different algorithms are presented in Fig. 5, where the x and y axes represent the level of sparsity and probability of exact recovery, respectively. Percentage of successful detection of support vectors at lower SNR range in GOMP is better compared to the other algorithm because GOMP recovers at least one true support vector in each iteration.

Figure 5: Detection performance of various greedy algorithm plotted between signal to noise ratio in deciBel and percentage of successful detection

In this paper, it has been proved that

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Y. Wang, Z. Tian and C. Feng, “Sparsity order estimation and its application in compressive spectrum sensing for cognitive radios,” IEEE Transactions on Wireless Communications, vol. 11, no. 6, pp. 2116–2125, 2012. [Google Scholar]

2. E. Liu and V. N. Temlyakov, “The orthogonal super greedy algorithm and applications in compressed sensing,” IEEE Transactions on Information Theory, vol. 58, no. 4, pp. 2040–2047, 2011. [Google Scholar]

3. S. Satpathi, R. L. Das and M. Chakraborty, “Improving the bound on the RIP constant in generalized orthogonal matching pursuit,” IEEE Signal Processing Letters, vol. 20, no. 11, pp. 1074–1077, 2013. [Google Scholar]

4. Y. Shen, B. Li, W. Pan and J. Li, “Analysis of generalised orthogonal matching pursuit using restricted isometry constant,” Electronics Letters, vol. 50, no. 14, pp. 1020–1022, 2014. [Google Scholar]

5. Z. Ben-Haim, Y. C. Eldar and M. Elad, “Coherence-based performance guarantees for estimating a sparse vector under random noise,” IEEE Transactions on Signal Processing, vol. 58, no. 10, pp. 5030–5043, 2010. [Google Scholar]

6. Q. Duan, T. Kim, L. Dai and E. Perrins, “Coherence statistics of structured random ensembles and support detection bounds for OMP,” IEEE Signal Processing Letters, vol. 26, no. 11, pp. 1638–1642, 2019. [Google Scholar]

7. J. A. Tropp and A. C. Gilbert, “Signal recovery from random measurements via orthogonal matching pursuit,” IEEE Transactions on Information Theory, vol. 53, no. 12, pp. 4655–4666, 2007. [Google Scholar]

8. C. K. Au-Yeung and D. J. Love, “On the performance of random vector quantization limited feedback beamforming in a MISO system,” IEEE Transactions on Wireless Communications, vol. 6, no. 2, pp. 458–462, 2007. [Google Scholar]

9. X. R. Zhang, W. Z. Zhang, W. Sun, H. L. Wu, A. G. Song et al., “A Real-time cutting model based on finite element and order reduction,” Computer Systems Science and Engineering, vol. 43, no. 1, pp. 1–15, 2022. [Google Scholar]

10. X. R. Zhang, J. Zhou, W. Sun and S. K. Jha, “A lightweight CNN based on transfer learning for COVID-19 diagnosis,” Computers, Materials & Continua, vol. 72, no. 1, pp. 1123–1137, 2022. [Google Scholar]

11. J. Wen, Z. Zhou, D. Li and X. Tang, “A novel sufficient condition for generalized orthogonal matching pursuit,” IEEE Communications Letters, vol. 21, no. 4, pp. 805–808, 2016. [Google Scholar]

12. A. S. Bandeira, M. Fickus, D. G. Mixon and P. Wong, “The road to deterministic matrices with the restricted isometry property,” Journal of Fourier Analysis and Applications, vol. 19, no. 6, pp. 1123–1149, 2013. [Google Scholar]

13. J. Weed, “Approximately certifying the restricted isometry property is hard,” IEEE Transactions on Information Theory, vol. 64, no. 8, pp. 5488–5497, 2017. [Google Scholar]

14. D. Cohen, S. Tsiper and Y. C. Eldar, “Analog-to-digital cognitive radio: Sampling, detection, and hardware,” IEEE Signal Processing Magazine, vol. 35, no. 1, pp. 137–166, 2018. [Google Scholar]

15. R. Neelakandan, “Sub-optimal low-complexity detector for generalised quadrature spatial modulation,” Electronics Letters, vol. 54, no. 15, pp. 941–943, 2018. [Google Scholar]

16. Y. C. Eldar and T. Michaeli, “Beyond bandlimited sampling,” IEEE Signal Processing Magazine, vol. 26, no. 3, pp. 48–68, 2009. [Google Scholar]

17. M. Mishali and Y. C. Eldar, “Blind multiband signal reconstruction: Compressed sensing for analog signals,” IEEE Transactions on Signal Processing, vol. 57, no. 3, pp. 993–1009, 2009. [Google Scholar]

18. M. Jia, Y. Shi, X. Gu, X. Wang and Y. Yin, “Improved algorithm based on modulated wideband converter for multiband signal reconstruction,” EURASIP Journal on Wireless Communications and Networking, vol. 2016, no. 1, pp. 1–9, 2016. [Google Scholar]

19. P. Qi, Z. Li, H. Li and T. Xiong, “Blind sub-nyquist spectrum sensing with modulated wideband converter,” IEEE Transactions on Vehicular Technology, vol. 67, no. 5, pp. 4278–4288, 2018. [Google Scholar]

Cite This Article

Copyright © 2023 The Author(s). Published by Tech Science Press.

Copyright © 2023 The Author(s). Published by Tech Science Press.This work is licensed under a Creative Commons Attribution 4.0 International License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Submit a Paper

Submit a Paper Propose a Special lssue

Propose a Special lssue View Full Text

View Full Text Download PDF

Download PDF Downloads

Downloads

Citation Tools

Citation Tools