DOI:10.32604/csse.2023.029005

Computer Systems Science & Engineering

Article

Tracking Pedestrians Under Occlusion in Parking Space

1Graduate School of Informatics, Nagoya University, Nagoya, 464-8601, Japan

2Institute of Innovation for Future Society, Nagoya University, Nagoya, Aichi, Japan

*Corresponding Author: Zhengshu Zhou. Email: shu@ertl.jp

Received: 22 February 2022; Accepted: 25 March 2022

Abstract: Many traffic accidents occur in parking lots, and vehicle-pedestrian conflict is one of the most serious safety risks. Moreover, with the development of automated driving and parking technology, parking safety has received significant attention from vehicle safety analysts. However, pedestrian protection in parking lots still faces many challenges. For example, the physical structure of a parking lot may be complex, and blind spots arise when the vehicle density is high. As a result, pedestrians may suddenly appear in a vehicle’s path from an unexpected position, leading to collisions. We argue that, besides vehicular sensing data, a high-precision digital map of the parking lot, sensing data from pedestrians’ smart devices, and pedestrian attribute information can be used to determine the position of pedestrians in the parking lot. However, this combination has not been explored in existing studies. To fill this void, this paper proposes a pedestrian tracking framework that integrates multiple information sources to provide pedestrian position and status information to vehicles and protect pedestrians in parking spaces. We also evaluate the proposed method through real-world experiments. The experimental results show that the proposed framework has advantages in pedestrian attribute information extraction and positioning accuracy, and that it can be used for pedestrian tracking in parking spaces.

Keywords: Pedestrian positioning; object tracking; LiDAR; attribute information; sensor fusion; trajectory prediction; Kalman filter

1 Introduction

It is difficult to regulate the behavior of vehicles and pedestrians in parking lots because there is little traffic guidance information, such as the signals and signs found on roads. For example, a car may suddenly come out of a parking space, a car may drive past a pedestrian and then reverse into a garage, or a pedestrian may suddenly appear in front of a car. Potential hazards that threaten pedestrian safety are everywhere. With the development of advanced driver assistance systems and automated parking systems, pedestrian safety during parking has also attracted the attention of academics and engineers. To detect hazards as early as possible, drivers must pay attention to the people around them and perform adequate safety checks. Even if the vehicle moves slowly, a vehicle-pedestrian collision can cause injuries and sometimes fatalities.

As representatives of vulnerable road users, older people and children need special protection. Older people have a narrower field of vision and weaker hearing; therefore, they often do not notice oncoming cars. Additionally, when they pay attention to the vehicle in front of them, they tend to neglect other cars because dividing attention becomes harder with age. A study published in the Journal of Safety Research [1] found that a large percentage of young children were left unsupervised in parking lots or out of reach of their adult guardians. If a child collides with a slow-moving car in a parking lot, he or she will not be thrown upward; instead, the child may fall in front of the car. If the driver continues driving, the child may be run over by a car weighing more than a ton, which creates the risk of a fatal accident. The sensing dead zone created by adjacent tall vehicles is a major cause of accidents in parking lots. In this case, even if the vehicle is equipped with a driver assistance system, it is not easy for the sensors to detect nearby pedestrians. Additionally, drivers and pedestrians tend to let their guard down because vehicles move relatively slowly in parking lots. Moreover, when parking lots are congested, pedestrian walking routes become more unpredictable. Together, these factors make it difficult to ensure pedestrian safety in parking lots.

As one of the most important functions of an autonomous driving system, pedestrian detection has become a hot topic of research and development in recent years. Pedestrian detection systems are typically integrated into collision avoidance systems that use radar, cameras, and other sensors to detect pedestrians and to slow down and brake in time to reduce accident injuries. Major car manufacturers have introduced advanced pedestrian detection systems that identify pedestrians on the road and perform dynamic analysis to predict whether they will suddenly enter the driving route. In addition to traditional automotive manufacturers, Internet companies are developing pedestrian detection systems to enable smart mobility. For example, Google’s latest pedestrian detection system relies on camera images to capture pedestrians’ movement and optimize the efficiency of pedestrian detection. Other technologies, such as vehicle-assisted driving systems, intelligent video surveillance, robotic navigation, drone monitoring, and pedestrian tracking, are also beginning to be applied to pedestrian protection. However, most of these existing methods rely on detection sensors, such as cameras, LiDAR, and RADAR, which are ineffective under complex conditions and in the observation dead zones of parking lots. Therefore, we argue that, in addition to information from sensors such as LiDAR and cameras, multiple sources of information, such as high-precision maps, pedestrian device sensors, and pedestrian attribute information, can be used to determine pedestrian location in parking lots. However, this subject has not yet been studied. To fill this gap, we propose a pedestrian tracking method that integrates multiple information sources to provide pedestrian location and status information to vehicles and thereby protect pedestrians in parking spaces. The contributions of this paper are summarized as follows:

• A framework for persistent pedestrian tracking in parking spaces. We propose a framework that can estimate pedestrian position under occlusion and extract pedestrian attribute information.

• Implementation of the proposed pedestrian tracking framework. We develop a system in C++ for pedestrian tracking in parking spaces.

• An evaluation of the proposed system. To evaluate the proposed framework and system, we carry out real-world experiments in the parking space of a municipal service center. The experimental results indicate that, compared with a sensor fusion-based method, the proposed framework has advantages in pedestrian attribute information extraction and positioning accuracy.

The remainder of this paper is organized as follows. Section 2 introduces the work related to pedestrian detection and tracking. Section 3 introduces the structure, target, and function of the proposed framework for pedestrian detection and tracking. Section 4 describes the implementation of the proposed method. In Section 5, we conduct an experiment to evaluate the proposed method. Section 6 discusses the effectiveness, novelty, and limitations of the proposed framework. Finally, Section 7 presents the conclusion and future work.

2 Related Work

Many pedestrian protection theories and methods have been published. As a pioneering work, Gandhi and Trivedi conducted a systematic literature review of research on the enhancement of pedestrian safety to develop a better understanding of the nature, issues, approaches, and challenges surrounding the problem [2]. A comprehensive survey of recent advances in tracking-by-detection-based (TBD-based) multiple pedestrian tracking algorithms is given in [3].

The most popular pedestrian detection and tracking solutions are object tracking methods based on passive sensors, such as surveillance cameras, LiDAR, and RADAR. Dai et al. proposed an improved labeled multi-Bernoulli (LMB) filter with inter-target occlusion handling ability for multi-extended target tracking using a laser range finder [4]. Chavez-Garcia et al. improved the perceived model of the environment by including the object classification from multiple sensor detections as a key component of the object’s representation and the perception process [5]. Zhao et al. proposed a system for dynamic object tracking in three-dimensional (3D) space that recovers tracking after the target is lost [6]. The system combines a 3D position tracking algorithm for dynamic objects, based on a monocular camera and LiDAR, with a re-tracking mechanism that restores tracking when the target reappears in the camera’s field of view (FOV). Wang et al. investigated the pedestrian recognition and tracking problem for autonomous vehicles using a 3D LiDAR. They used a classifier trained by a support vector machine (SVM) to recognize pedestrians and improved the recognition performance using tracking results [7]. Zhao et al. explored fundamental concepts, solution algorithms, and application guidance associated with using infrastructure-based LiDAR sensors to accurately detect and track pedestrians and vehicles at intersections [8]. Cui et al. proposed a two-stage network flow model for multiple pedestrian tracking [9]. In the local stage, a tracking-by-detection framework generates confident tracklets with a boosted particle filter. In the global stage, data association is formulated as a maximum-a-posteriori (MAP) problem and solved using a typical min-cost flow algorithm. Wu et al. proposed a method for pedestrian-vehicle near-crash identification that uses a roadside LiDAR sensor [10]. The proposed system extracts the trajectory of road users from roadside LiDAR data through several data processing algorithms: background filtering, lane identification, object clustering, object classification, and object tracking. Pedestrians’ and vehicles’ features are extracted from video data using detection and tracking techniques in computer vision. Zhang et al. proposed a long short-term memory neural network to predict pedestrian-vehicle conflicts 2 s ahead [11]. Farag introduced a real-time road-object detection and tracking (LR_ODT) method for autonomous driving based on the fusion of LiDAR and RADAR data [12]. Xu et al. developed a seamless indoor pedestrian tracking scheme using a least square-SVM (LS-SVM) assisted unbiased finite impulse response filter to achieve reliable human position monitoring in indoor environments [13]. Wang et al. proposed a multi-sensor framework to fuse camera and LiDAR data to detect objects on a railway track, including small obstacles and forward trains [14]. Stadler et al. proposed an occlusion handling strategy that explicitly models the relation between occluding and occluded tracks to achieve reliable pedestrian re-identification when occlusion occurs [15]. Wong et al. presented a methodology for pedestrian tracking and attribute recognition. The method employs high-level pedestrian attributes, a similarity measure integrating multiple cues, and a probation mechanism for robust identity matching [16]. Chowdhury et al. designed a multi-target tracking algorithm for dense point clouds based on probabilistic occlusion reasoning [17].

In addition to these passive tracking methods, there are pedestrian tracking methods based on active devices, such as smartphones and inertial measurement unit (IMU) sensors carried by road users. Park et al. designed a stand-alone pedestrian tracking system for indoor corridor environments using smartphone sensors, such as a magnetometer and accelerometer [18]. Kang et al. presented a smartphone-based pedestrian dead reckoning system to track pedestrians through a dead reckoning approach using data from inertial sensors embedded in smartphones [19]. Tian et al. proposed an approach for pedestrian tracking using dead reckoning enhanced with a mode detection using a standard smartphone [20]. Jiang et al. presented an approach to resolve the problem of tracking cooperative people, such as children, the elderly, or patients, by combining passive tracking (surveillance cameras) and active tracking (IMU carried by targets) techniques [21]. Geng et al. proposed an indoor positioning method based on the micro-electro-mechanical system sensors of smartphones [22].

Although several pedestrian tracking methods have been explored, the results of our literature survey suggest that there is no research on pedestrian tracking that uses multiple information sources (e.g., LiDAR and global positioning system (GPS) sensor data, IMU sensor data, and pedestrian attribute information) at the same time. There are studies on multiple data integration, such as [23,24], but the purpose of these studies is to improve the robustness of data processing. In this paper, we propose a persistent pedestrian tracking framework and evaluate its effectiveness through real-world experiments.

3 System Architecture and Framework

This section introduces the structure of the proposed pedestrian tracking system for parking spaces.

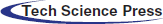

The proposed framework for persistent pedestrian tracking in parking spaces is based on an ongoing project of the DM consortium called the Dynamic Map 2.0 (DM2.0) platform [25,26], which is proposed as a city-level dataset that allows sensor data to be overlaid onto a high-definition digital map. It is also seen as the next-generation road map for integrating and sharing traffic-related information for autonomous driving and traffic situation analysis.

As shown in Fig. 1, DM2.0 PF is designed with a three-layer physical architecture: cloud server, edge servers, and Internet of Things. Furthermore, the platform has a four-layer logical architecture: highly dynamic, transient dynamic, transient static, and permanent static data. As part of the highly dynamic data, pedestrian real-time location and attribute information are of great significance to autonomous driving, road safety, and traffic condition analysis [26]. However, tracking pedestrians seamlessly remains a challenge in parking spaces, where observation dead spots are particularly likely to occur. Proposing a framework that can seamlessly track pedestrians is the main motivation of this paper.

Figure 1: System architecture of the Dynamic Map 2.0 Platform (DM2.0 PF)

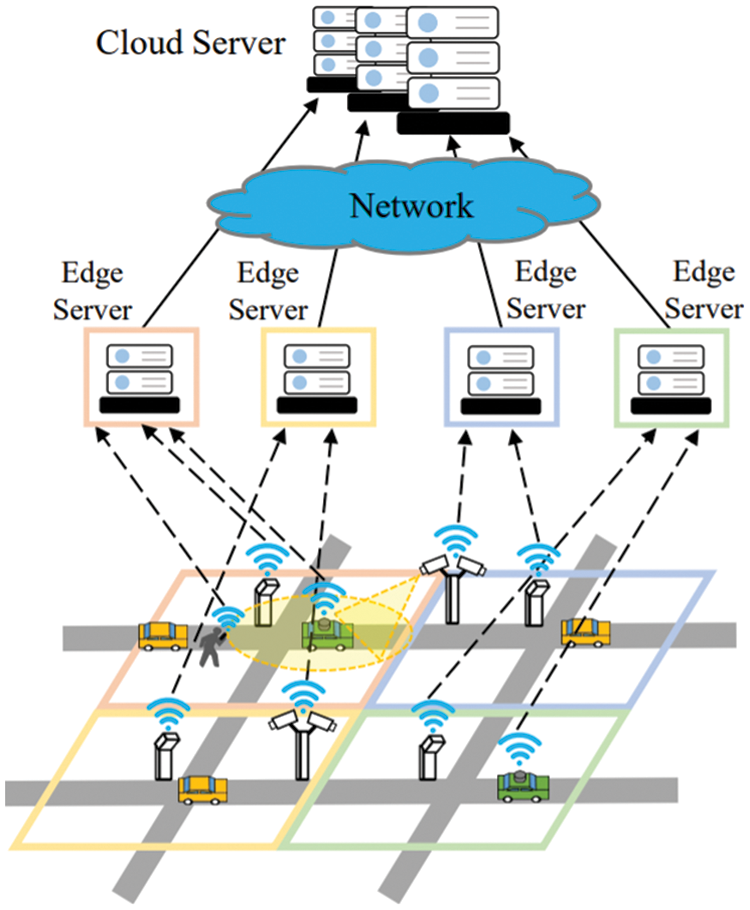

Fig. 2 shows the hardware composition of our system. The system consists of roadside infrastructure, an edge server, smart devices, and the DM2.0 platform. The roadside infrastructure includes three 3D LiDARs connected to the edge server through Ethernet. The edge server is connected to the DM2.0 platform deployed on the cloud server through a wide area network, and the smart devices carried by pedestrians are connected to the edge server through Wi-Fi.

Figure 2: Physical composition of the proposed pedestrian tracking system

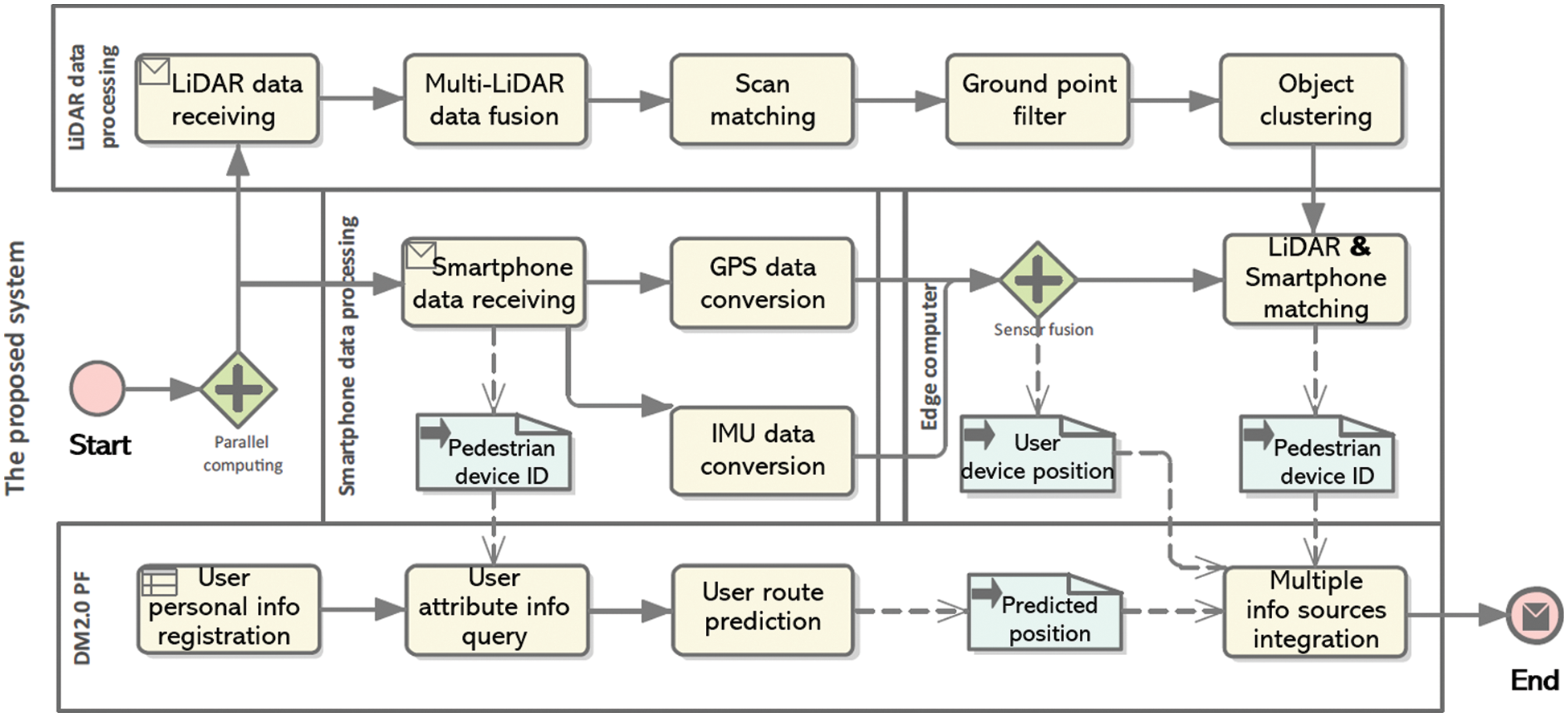

The proposed framework is shown in Fig. 3. LiDAR and smartphone data are processed in parallel. LiDAR point cloud data are received from multiple roadside units, fused, and transformed into a common coordinate system. Then, ground points are separated from the LiDAR point cloud data for accurate object clustering. After that, we use several pedestrian feature filters to extract pedestrians from the detected object clusters [27]. GPS and IMU data are also received from the built-in sensors of the pedestrian’s smartphone. We convert the raw GPS data from longitude and latitude values into the same coordinate system as the converted LiDAR point cloud data. Next, the converted GPS position of the smartphone is matched with the pedestrian positions detected by LiDAR to calculate the probability that each detected pedestrian is the smartphone user. We treat the matched pedestrian position with the largest probability as the best estimate of the actual pedestrian position. The result of the pedestrian position matching is sent to the DM2.0 platform to be linked with the personal attribute information of the pedestrian.

Figure 3: Proposed framework for persistent pedestrian tracking in parking space
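The coordinate conversion step can be illustrated with a minimal sketch. The system itself converts GPS data with the formula published by the Geospatial Information Authority of Japan; the sketch below swaps in a much simpler equirectangular (local tangent plane) approximation, which is only reasonable over parking-lot-scale distances. The function name, the spherical Earth radius, and the choice of a local origin are assumptions made for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical model is an assumption


def gps_to_local_xy(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """Approximate east/north offsets in metres from a local origin.

    Equirectangular approximation, NOT the plane rectangular conversion
    used by the system described in the text.
    """
    lat0 = math.radians(origin_lat_deg)
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(lat0)
    north = EARTH_RADIUS_M * d_lat
    return east, north
```

Once GPS fixes and LiDAR detections share one planar frame, the matching step can compare them directly in metres.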

We use the pedestrian’s present position, walking direction, and attribute information (e.g., position of the parking space and average walking speed) to predict the pedestrian’s approximate position at the next time step. If the pedestrian can be detected by the LiDARs, the best-matching position is regarded as the optimal position estimate. However, if the pedestrian is occluded, we use the extended Kalman filter to fuse the pedestrian device position (GPS and IMU fusion) and the predicted pedestrian position to obtain the optimal estimate of the pedestrian position. While occlusion continues, the optimal estimate at the previous time step is taken as the real position of the pedestrian and fed into the position estimation at the next time step until the LiDAR and GPS matching successfully detects the pedestrian again. When the occlusion is lifted, we calculate the deviations of the predicted pedestrian position and the pedestrian device position from the actual pedestrian position to evaluate the reliability of these two information sources and to decide their weights for data fusion when occlusion occurs again. Finally, the edge server publishes pedestrians’ position data and attribute information to DM2.0 PF for traffic data analysis and to autonomous vehicles (AVs) for collision warning.

4 The Proposed Framework for Seamless Multiple Pedestrian Tracking in Parking Space

This section elaborates on the implementation of the proposed pedestrian tracking framework. We aim to provide a pedestrian tracking system for contract parking spaces to enhance parking safety. We assume the following target operating environment.

1. All parking lot users carry a smart device and have registered with DM2.0 PF.

2. Every parking lot user is assigned a fixed parking space.

4.1 User Device Position Acquisition

The common defect of pedestrian detection and tracking methods based on computer vision and LiDAR is that they are ineffective at occlusion handling and pedestrian re-identification. Therefore, we argue that more pedestrian information should be integrated to track pedestrians in an environment prone to observation blind spots. Among the easily available pedestrian information, user device data are one of the most meaningful information sources for pedestrian detection and tracking. Smartphones are the most widely used user devices. Although the positioning accuracy of smartphone built-in sensors, such as GPS sensors, is unsatisfactory, they have the advantage of continuously sending pedestrian location information without being affected by occlusion. Additionally, while sending the pedestrian location, the smartphone can send the device ID to identify the pedestrian. Therefore, we first use the GPS and IMU information of user smartphones to obtain the user ID and an imprecise location. For the raw GPS data, we first use the coordinate conversion formula published by the Geospatial Information Authority of Japan to convert the GPS data into the Japanese plane rectangular coordinate system. For the raw IMU data, the rotation matrices for rotating about the x-axis, y-axis, and z-axis are expressed as Eqs. (1)–(3), respectively.

Thus, when the rotation order is x, y, z, the rotation matrix can be expressed as Eq. (4).
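For reference, the elementary rotations can be written in the standard right-handed form below. This is a sketch under assumed conventions: the angle symbols $\alpha$, $\beta$, $\gamma$ (about x, y, z), counter-clockwise positive angles, column vectors, and fixed-axis rotations applied in the order x, then y, then z are all assumptions, and the signs or symbols may differ from those used in Eqs. (1)–(4).

```latex
R_x(\alpha) =
\begin{bmatrix}
1 & 0 & 0 \\
0 & \cos\alpha & -\sin\alpha \\
0 & \sin\alpha & \cos\alpha
\end{bmatrix},
\quad
R_y(\beta) =
\begin{bmatrix}
\cos\beta & 0 & \sin\beta \\
0 & 1 & 0 \\
-\sin\beta & 0 & \cos\beta
\end{bmatrix},
\quad
R_z(\gamma) =
\begin{bmatrix}
\cos\gamma & -\sin\gamma & 0 \\
\sin\gamma & \cos\gamma & 0 \\
0 & 0 & 1
\end{bmatrix}

R = R_z(\gamma)\, R_y(\beta)\, R_x(\alpha) =
\begin{bmatrix}
\cos\gamma\cos\beta & \cos\gamma\sin\beta\sin\alpha - \sin\gamma\cos\alpha & \cos\gamma\sin\beta\cos\alpha + \sin\gamma\sin\alpha \\
\sin\gamma\cos\beta & \sin\gamma\sin\beta\sin\alpha + \cos\gamma\cos\alpha & \sin\gamma\sin\beta\cos\alpha - \cos\gamma\sin\alpha \\
-\sin\beta & \cos\beta\sin\alpha & \cos\beta\cos\alpha
\end{bmatrix}
```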

The component of gravitational acceleration in the Cartesian coordinate system [x, y, z] is given as follows:

In this way, we can obtain the residual component of the pedestrian’s acceleration after removing gravitational acceleration. We use the Kalman filter to fuse GPS and IMU data to obtain a relatively accurate user device position. The data fusion process consists of two steps: prediction and correction.

Prediction

Correction

where

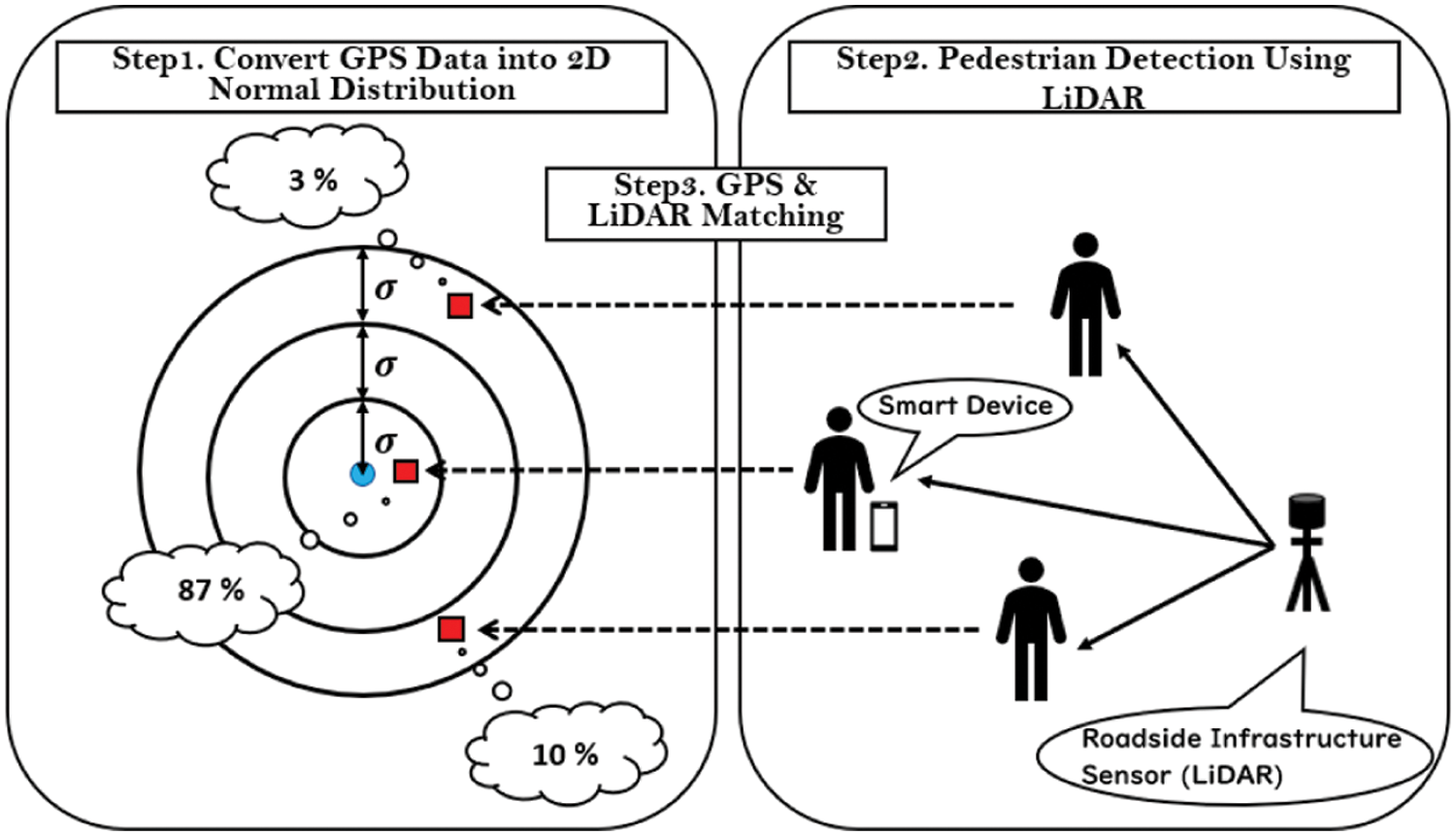
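The gravity removal and the two-step GPS and IMU fusion described in this subsection can be sketched as follows. This is a minimal illustration, not the system's implementation: it assumes roll and pitch are already known, that the sensor reads +g on its z-axis when lying flat at rest, that the gravity-free acceleration has already been rotated into the map frame, and that each map axis is filtered independently with a constant-acceleration-input model. All names and noise variances are hypothetical.

```python
import math

G = 9.80665  # standard gravity, m/s^2


def remove_gravity(accel, roll, pitch):
    """Subtract the gravity component from a device-frame accelerometer reading.

    Assumes roll/pitch in radians and a sensor that reads +G on z when flat.
    """
    gx = -G * math.sin(pitch)
    gy = G * math.cos(pitch) * math.sin(roll)
    gz = G * math.cos(pitch) * math.cos(roll)
    return (accel[0] - gx, accel[1] - gy, accel[2] - gz)


class AxisKalmanFilter:
    """Kalman filter for one map axis with state [position, velocity]."""

    def __init__(self, pos, vel=0.0, pos_var=25.0, vel_var=1.0,
                 accel_var=0.5, gps_var=25.0):
        self.pos, self.vel = pos, vel
        # Covariance matrix entries P = [[p00, p01], [p10, p11]].
        self.p00, self.p01, self.p10, self.p11 = pos_var, 0.0, 0.0, vel_var
        self.accel_var = accel_var  # assumed IMU acceleration noise variance
        self.gps_var = gps_var      # assumed GPS position noise variance

    def predict(self, accel, dt):
        """Prediction step: propagate the state with the IMU acceleration."""
        self.pos += self.vel * dt + 0.5 * accel * dt * dt
        self.vel += accel * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]].
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        q = self.accel_var
        self.p00 = p00 + q * dt ** 4 / 4.0
        self.p01 = p01 + q * dt ** 3 / 2.0
        self.p10 = p10 + q * dt ** 3 / 2.0
        self.p11 = self.p11 + q * dt * dt

    def correct(self, gps_pos):
        """Correction step: blend in a GPS position measurement (H = [1, 0])."""
        s = self.p00 + self.gps_var
        k0, k1 = self.p00 / s, self.p10 / s
        residual = gps_pos - self.pos
        self.pos += k0 * residual
        self.vel += k1 * residual
        # P <- (I - K H) P
        p00, p01, p10, p11 = self.p00, self.p01, self.p10, self.p11
        self.p00 = (1.0 - k0) * p00
        self.p01 = (1.0 - k0) * p01
        self.p10 = p10 - k1 * p00
        self.p11 = p11 - k1 * p01
```

In use, one filter instance per horizontal axis would call `predict` at the IMU rate and `correct` whenever a converted GPS fix arrives.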

4.2 LiDAR and Smartphone Data Matching

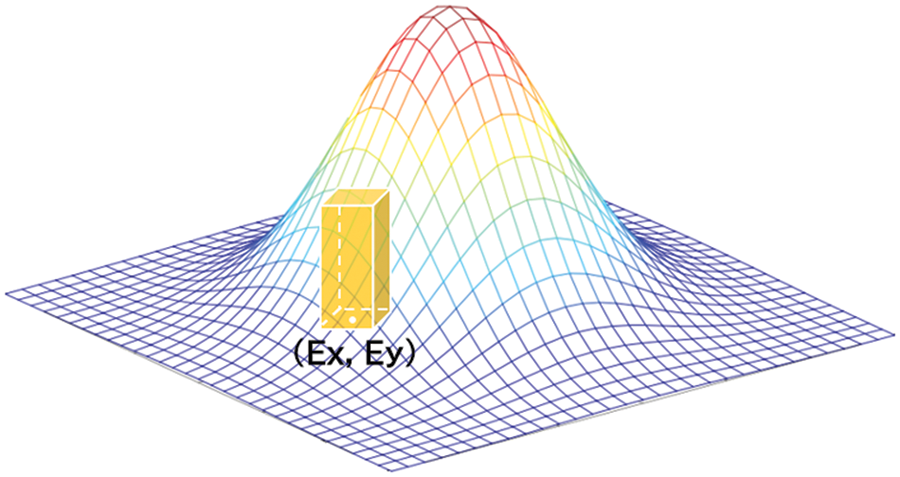

After converting GPS position and LiDAR position data into DM2.0 PF coordinates, we can match the converted GPS data with LiDAR position data to obtain the position of a specific pedestrian through the pedestrian device ID contained in the GPS message. As shown in Eq. (12), we compute the probability that the pedestrian carrying the GPS source is located within a given range by integrating the probability density function (PDF) of a 2D normal distribution over that range [27].

where x and y are the x- and y-coordinates of the object detected by LiDAR, respectively; Ex and Ey denote the expected value of the x- and y-coordinates of the GPS source, respectively;

Because the actual position of the GPS source is assumed to follow a normal distribution characterized by the coordinates and variance reported for the GPS sensor, we choose a multivariate normal distribution for GPS and LiDAR matching. Furthermore, since the vertical sensing accuracy of the smartphone GPS sensor is very low (almost unreliable), we use the PDF of the 2D normal distribution instead of that of the 3D normal distribution. Additionally, our pedestrian tracking system is based on the 2D plane of the x-y coordinate system. Therefore, we discard the z-coordinate (height) and use only the x- and y-coordinates in the horizontal plane. Fig. 4 shows that the probability that a pedestrian is in a given area can be obtained by integrating the PDF of the 2D normal distribution over that area of the x-y plane. To improve the calculation efficiency, we approximate the integral by the volume of a cuboid, following the geometric interpretation of the integral of the PDF. The base of the cuboid is a 1 m × 1 m square centered on the point (x, y), and its height is the value of the PDF at (x, y).

Figure 4: Integral method of the probability density function of the 2D normal distribution
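The cuboid approximation can be sketched in a few lines. The sketch assumes a bivariate normal PDF centered on the converted GPS position with standard deviations `sx`, `sy` and correlation `rho`; these parameter names, the 3 m standard deviation, and the two extra LiDAR objects in the usage example are hypothetical. The probability for each LiDAR object is the PDF value at the object multiplied by the 1 m × 1 m base area.

```python
import math


def bivariate_normal_pdf(x, y, ex, ey, sx, sy, rho=0.0):
    """PDF of a 2D normal distribution with mean (ex, ey)."""
    dx = (x - ex) / sx
    dy = (y - ey) / sy
    one_minus_r2 = 1.0 - rho * rho
    norm = 2.0 * math.pi * sx * sy * math.sqrt(one_minus_r2)
    exponent = -(dx * dx - 2.0 * rho * dx * dy + dy * dy) / (2.0 * one_minus_r2)
    return math.exp(exponent) / norm


def match_probability(obj_xy, gps_xy, sx, sy, rho=0.0, cell=1.0):
    """Cuboid approximation: PDF height at the object times a cell x cell base."""
    pdf = bivariate_normal_pdf(obj_xy[0], obj_xy[1], gps_xy[0], gps_xy[1],
                               sx, sy, rho)
    return pdf * cell * cell


def best_match(objects, gps_xy, sx, sy, rho=0.0):
    """Return (object, probability) for the LiDAR object most likely to be the user."""
    scored = [(match_probability(o, gps_xy, sx, sy, rho), o) for o in objects]
    prob, obj = max(scored, key=lambda item: item[0])
    return obj, prob


# Usage: device position and best LiDAR match taken from the system output
# example; the other two objects and the 3 m sigma are made up for illustration.
gps = (29.08, -11.80)
lidar_objects = [(29.41, -13.59), (35.20, -20.10), (22.70, -4.30)]
matched, probability = best_match(lidar_objects, gps, sx=3.0, sy=3.0)
```

Because the base area is fixed, ranking objects by this probability is equivalent to ranking them by PDF value, which keeps the matching cheap enough for real-time use.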

As presented in Eq. (13), within the [0~

where

Figure 5: GPS position data and LiDAR object data matching

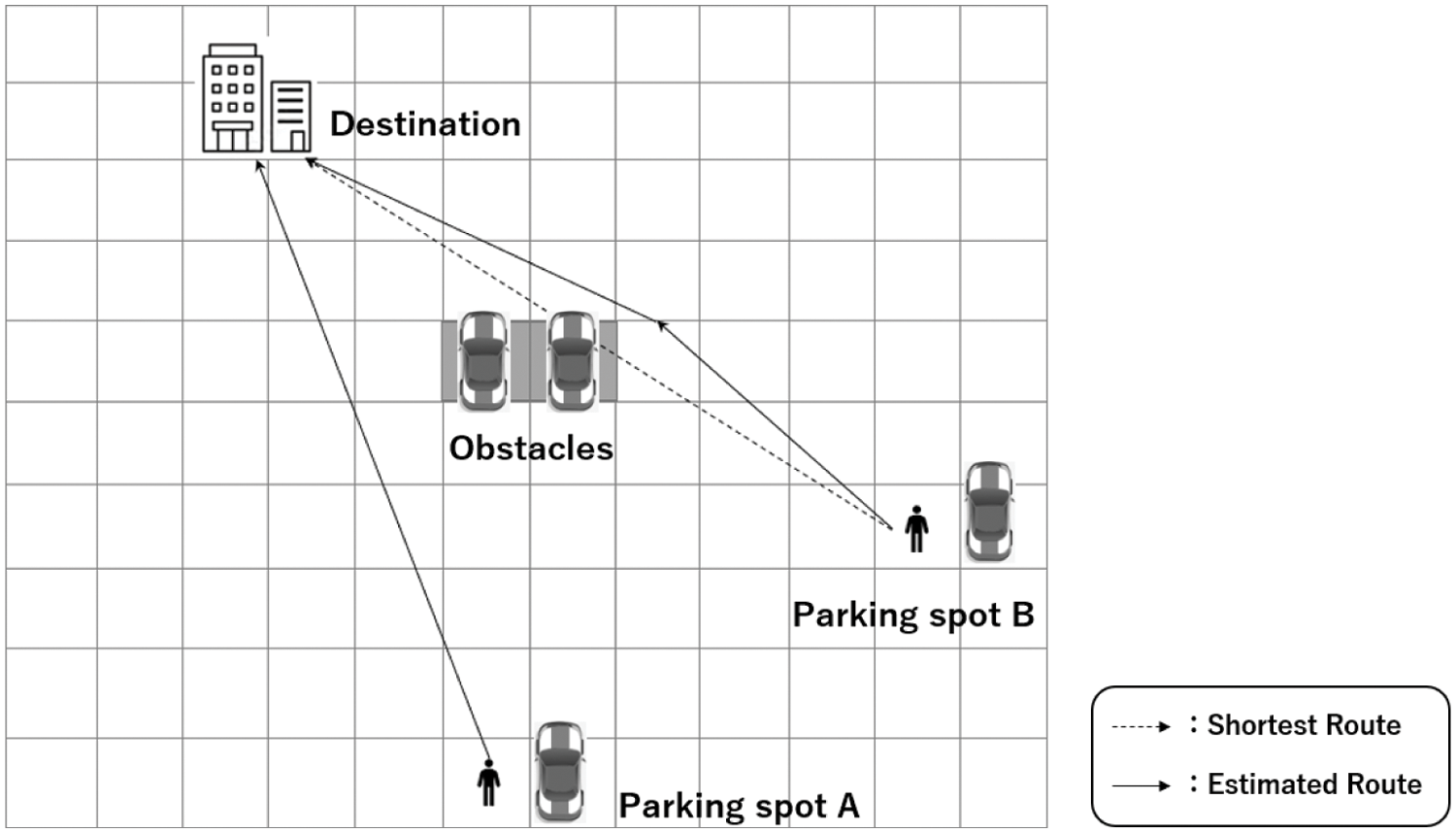

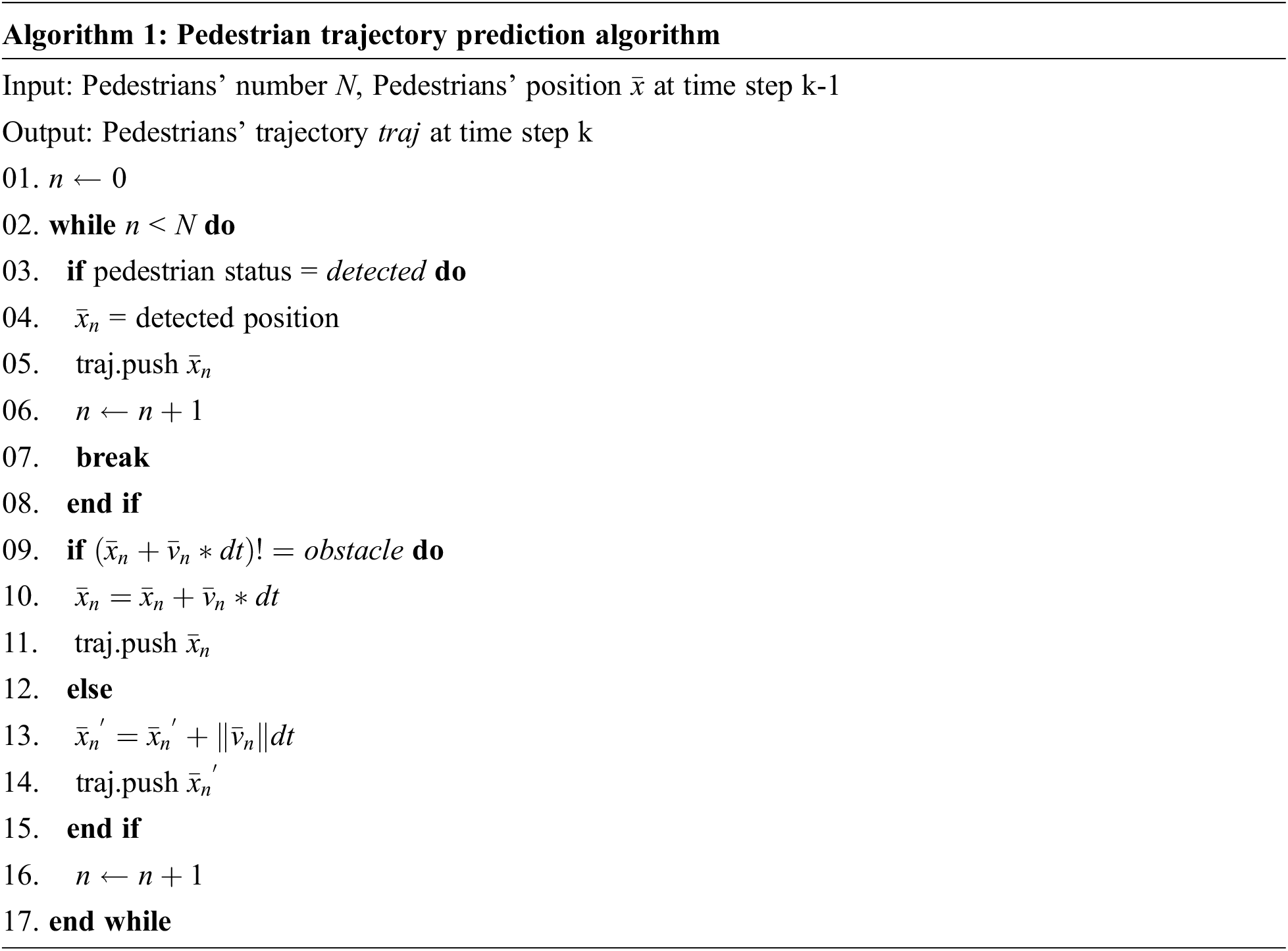

4.3 Pedestrian Route Prediction

When a pedestrian is occluded, the exact position and track of the pedestrian are unavailable. However, we know the exit position of the parking lot (digital map information) and the user’s parking position (user attribute information managed by DM2.0 PF), and we can obtain the pedestrian’s walking direction (toward the exit of the parking lot or toward his or her parking space) and instantaneous speed from historical average speed data and real-time GPS and IMU data. We use the constant velocity model to estimate the position of pedestrians because their walking speed is relatively low (usually 1.1–1.5 m/s) and their acceleration is essentially negligible. Furthermore, in addition to pedestrians, there are obstacles, such as parked vehicles, in the parking lot (see Fig. 6). These also affect the pedestrians’ route; therefore, we propose an algorithm in this section to predict the pedestrian position.

Figure 6: Pedestrian trajectory prediction based on pedestrian attribute information

The direction of movement of each pedestrian (toward the exit or toward the parking space) is first obtained from the GPS data, and the movement speed is calculated as a weighted average of the speed derived from the GPS data and the walking speed stored in the pedestrian attribute information. Then, we use LiDAR point cloud data to obtain the location of obstacles in the parking space and determine whether there is an obstacle on the pedestrian’s route. If there is no obstacle, the system predicts that the pedestrian will move at a constant speed toward the destination. If there is an obstacle, the system predicts that the pedestrian will move at a constant speed toward the target point of the adjacent route that is closest to the pedestrian’s original route. In principle, predicting the pedestrian’s path resembles a maze search that determines whether a path exists. However, in an actual parking lot there are no dead ends, and pedestrians do not search for the exit as they would in a maze. Therefore, we do not use recursive or backtracking methods, which improves the real-time performance of the proposed system.
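The prediction rule above can be sketched as a single pass without recursion or backtracking. This is a minimal sketch under stated assumptions: obstacles are treated as points with a circular clearance radius, adjacent routes are represented by a list of candidate target points, and the equal weighting of GPS speed and attribute speed is arbitrary. All function and parameter names are hypothetical.

```python
import math


def blend_speed(gps_speed, profile_speed, gps_weight=0.5):
    """Weighted average of the GPS speed and the stored average walking speed."""
    return gps_weight * gps_speed + (1.0 - gps_weight) * profile_speed


def distance_to_segment(point, start, end):
    """Shortest distance from a point to the segment start-end."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.dist(point, start)
    t = ((point[0] - start[0]) * dx + (point[1] - start[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    closest = (start[0] + t * dx, start[1] + t * dy)
    return math.dist(point, closest)


def is_blocked(start, end, obstacles, clearance):
    """True if any obstacle lies within `clearance` metres of the segment."""
    return any(distance_to_segment(o, start, end) <= clearance for o in obstacles)


def predict_next_position(position, destination, waypoints, obstacles,
                          speed, dt, clearance=1.0):
    """Constant-velocity step toward the destination or the nearest free detour."""
    target = destination
    if is_blocked(position, destination, obstacles, clearance):
        free = [w for w in waypoints
                if not is_blocked(position, w, obstacles, clearance)]
        if free:
            # Pick the detour target closest to the original straight route.
            target = min(free, key=lambda w: distance_to_segment(
                w, position, destination))
    remaining = math.dist(position, target)
    if remaining == 0.0:
        return position
    step = min(speed * dt, remaining)  # do not overshoot the target
    return (position[0] + step * (target[0] - position[0]) / remaining,
            position[1] + step * (target[1] - position[1]) / remaining)
```

Each call performs a bounded amount of work (one pass over obstacles and candidate targets), which is the property the text relies on for real-time operation.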

4.4 Pedestrian Position Data Fusion

Using the methods proposed in Subsections 4.1, 4.2, and 4.3, we can obtain the user device position, the LiDAR and smartphone matching position, and the predicted pedestrian position, respectively. However, each of these information sources has its advantages and shortcomings. Although the smartphone position and the predicted pedestrian position are continuously available, there may be a certain amount of error between them and the actual pedestrian position. Meanwhile, the LiDAR and smartphone matching results accurately reflect the pedestrian’s position, but they become unavailable when occlusion occurs. Because no single source is reliable on its own, we fuse these data to provide accurate real-time pedestrian position estimation. Therefore, we propose a Kalman filter-based algorithm for continuous pedestrian tracking that fuses the multiple information sources.

Prediction

Correction

where

When the LiDARs cannot detect the pedestrians (occlusion occurs), the proposed system estimates the pedestrians’ position by fusing the device position and predicted pedestrian position data. If occlusion persists, the estimation continues iteratively. If the LiDARs detect the pedestrians again, the system outputs the LiDAR and GPS matching result with the maximum probability as the accurate position of the pedestrians and evaluates the reliability of the device position and predicted pedestrian position data to determine the Kalman gain for future estimation.
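The switching behavior described above can be sketched with a simplified scalar-gain fusion. This is not the filter used in the system: the sketch replaces the full Kalman update with a single gain computed from running error variances of the two sources, and it updates those variances by exponential smoothing whenever a LiDAR match is available. The class name, the initial variances, and the smoothing factor are hypothetical.

```python
class OcclusionFusion:
    """Fuse device and predicted positions while the LiDAR match is missing."""

    def __init__(self, device_var=9.0, predicted_var=1.0, smoothing=0.5):
        self.device_var = device_var        # assumed device error variance (m^2)
        self.predicted_var = predicted_var  # assumed prediction error variance (m^2)
        self.smoothing = smoothing          # weight given to the newest deviation

    def gain(self):
        """Weight of the device position; grows as the prediction gets less reliable."""
        total = self.predicted_var + self.device_var
        if total == 0.0:
            return 0.5
        return self.predicted_var / total

    def _update_var(self, old_var, source, truth):
        err_sq = (source[0] - truth[0]) ** 2 + (source[1] - truth[1]) ** 2
        return (1.0 - self.smoothing) * old_var + self.smoothing * err_sq

    def step(self, lidar_match, device_pos, predicted_pos):
        """Return the position estimate for one time step."""
        if lidar_match is not None:
            # Pedestrian visible: trust LiDAR and re-evaluate both sources.
            self.device_var = self._update_var(
                self.device_var, device_pos, lidar_match)
            self.predicted_var = self._update_var(
                self.predicted_var, predicted_pos, lidar_match)
            return lidar_match
        # Pedestrian occluded: blend prediction and device position.
        k = self.gain()
        return (predicted_pos[0] + k * (device_pos[0] - predicted_pos[0]),
                predicted_pos[1] + k * (device_pos[1] - predicted_pos[1]))
```

During continuous occlusion, the caller would feed each returned estimate back in as the starting point of the next route prediction, which mirrors the iterative estimation described in the text.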

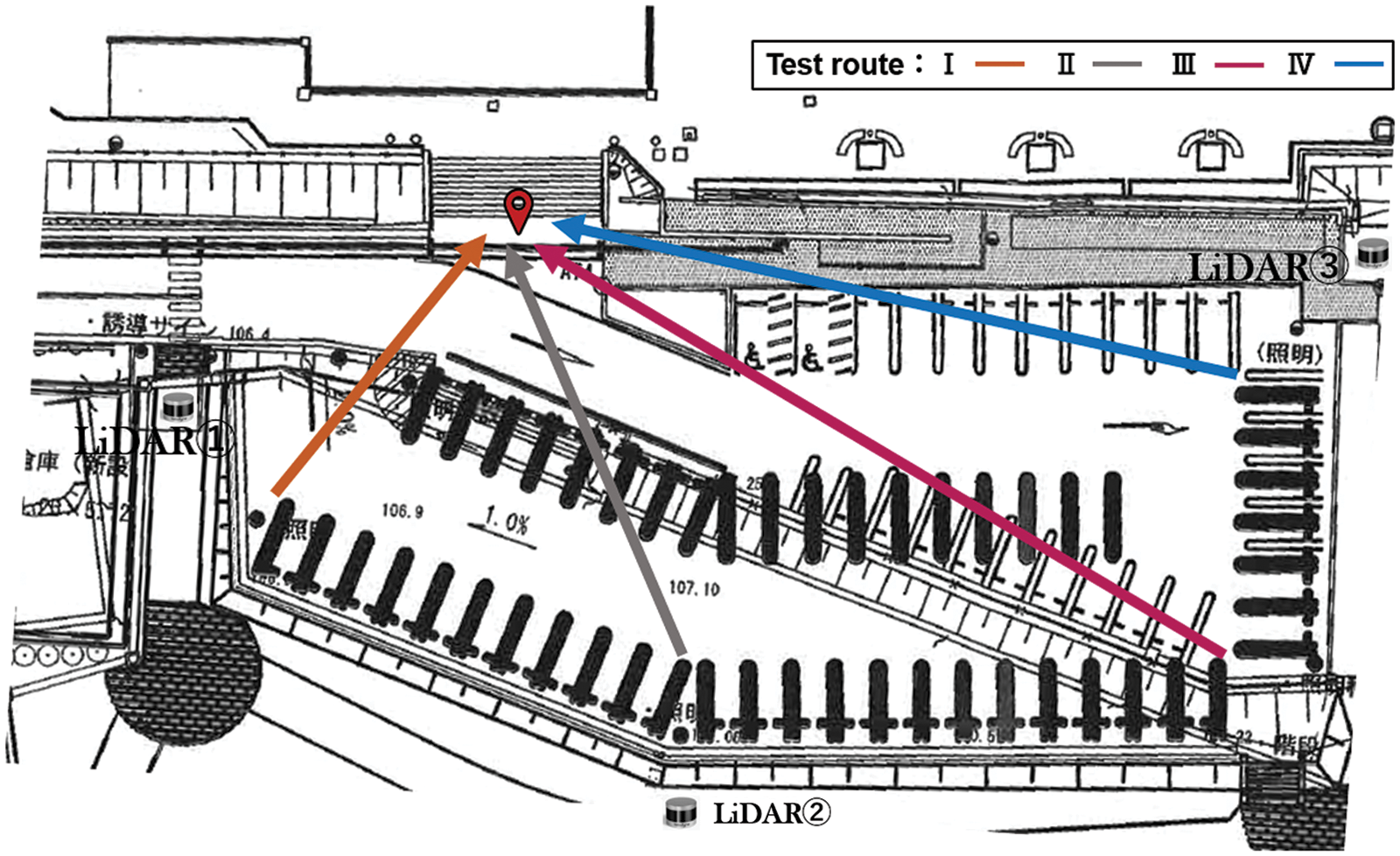

5 Experimental Evaluation

We implemented the proposed framework in C++ and conducted real-world experiments to evaluate our system in the parking space of a municipal service center in Kasugai city, Japan. As shown in Fig. 7, the experiments were conducted using LiDARs (Velodyne VLP-16 and VLP-32), smartphones (Google Pixel 4 XL), an edge server (Ubuntu 18.04, ROS Melodic), and a cloud server (DM2.0 PF). Two groups of experiments were designed to test pedestrian tracking: one with a single pedestrian and one with multiple pedestrians. In each group, we asked the participants to walk along four paths designed in advance (see Fig. 8). We chose these paths because together they cover the entire parking space.

Figure 7: Experimental devices and test scene

Figure 8: Experimental design

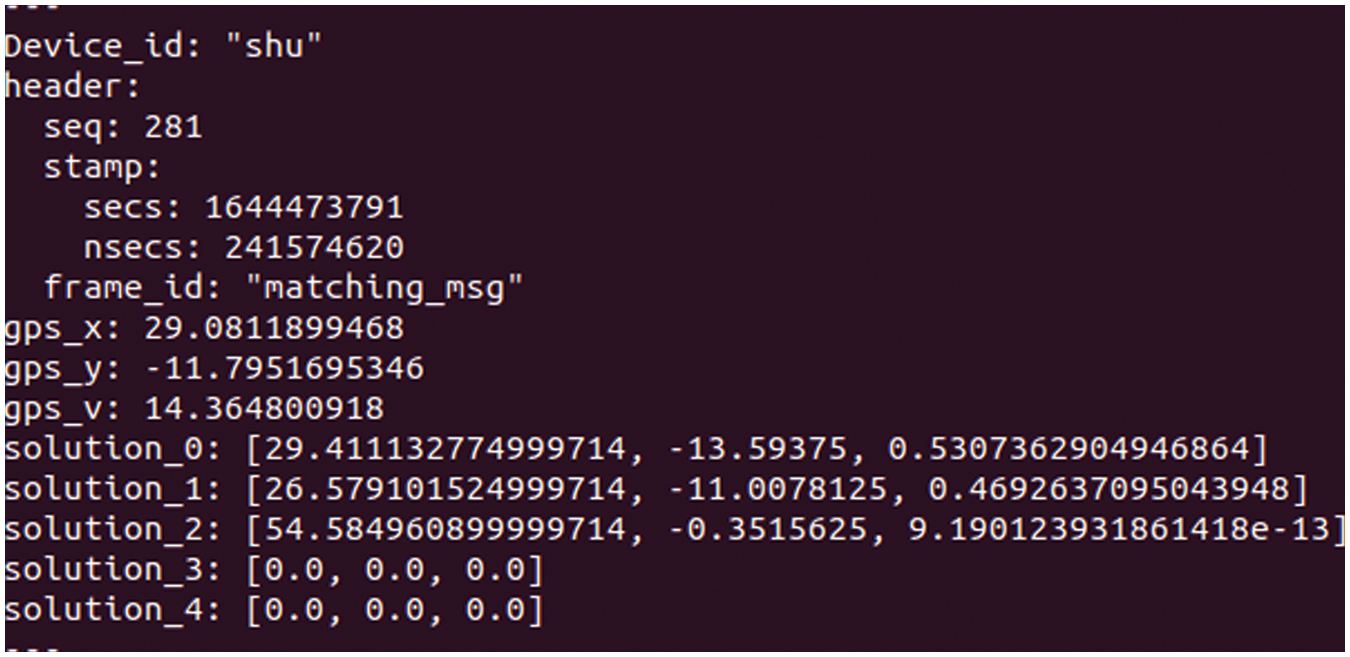

The output of our system includes a unique device ID used to identify the detected pedestrian, pedestrian device position, precise pedestrian position detected by the LiDARs, pedestrian identification probability, and the estimated pedestrian position when occlusion occurs. Fig. 9 shows an example of the output of the proposed system. The device position of the pedestrian id = “shu” is [x = 29.08, y = −11.80]. There are three detected LiDAR objects, and the pedestrian position with the maximum probability value is [x = 29.41, y = −13.59]. Furthermore, the attribute information of the pedestrian can be identified in the DM2.0 platform using the unique device ID.

Figure 9: An example of system output
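The fields of the system output listed above could be carried in a small record type. The sketch below is only an illustration of that structure: the class and field names are hypothetical, the positions are the values quoted in the text, and the probability value is a placeholder rather than a measured result.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class TrackingOutput:
    device_id: str                      # unique ID linking to attribute information
    device_position: Point              # fused GPS and IMU position
    lidar_position: Optional[Point]     # best LiDAR match, None when occluded
    match_probability: Optional[float]  # identification probability of the match
    estimated_position: Optional[Point] # fused estimate, set during occlusion

    def best_position(self) -> Point:
        """Prefer the LiDAR match, then the occlusion estimate, then the device."""
        if self.lidar_position is not None:
            return self.lidar_position
        if self.estimated_position is not None:
            return self.estimated_position
        return self.device_position


sample = TrackingOutput(
    device_id="shu",
    device_position=(29.08, -11.80),
    lidar_position=(29.41, -13.59),
    match_probability=0.5,  # placeholder value, not taken from the experiment
    estimated_position=None,
)
```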

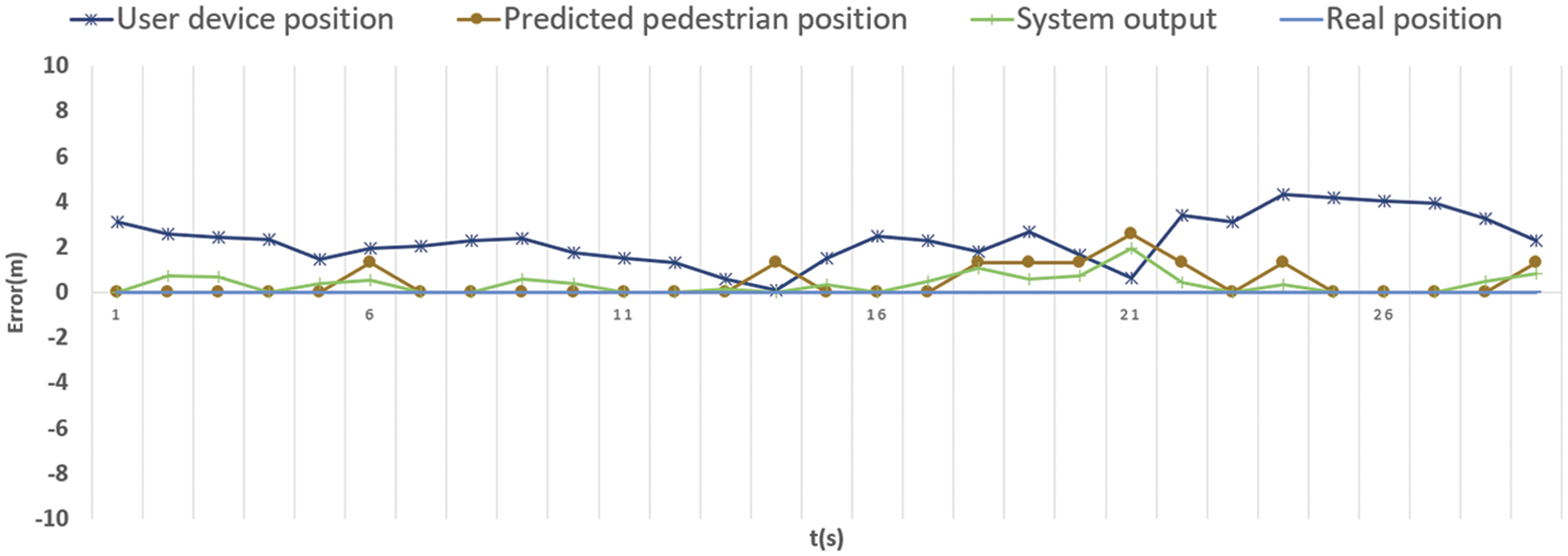

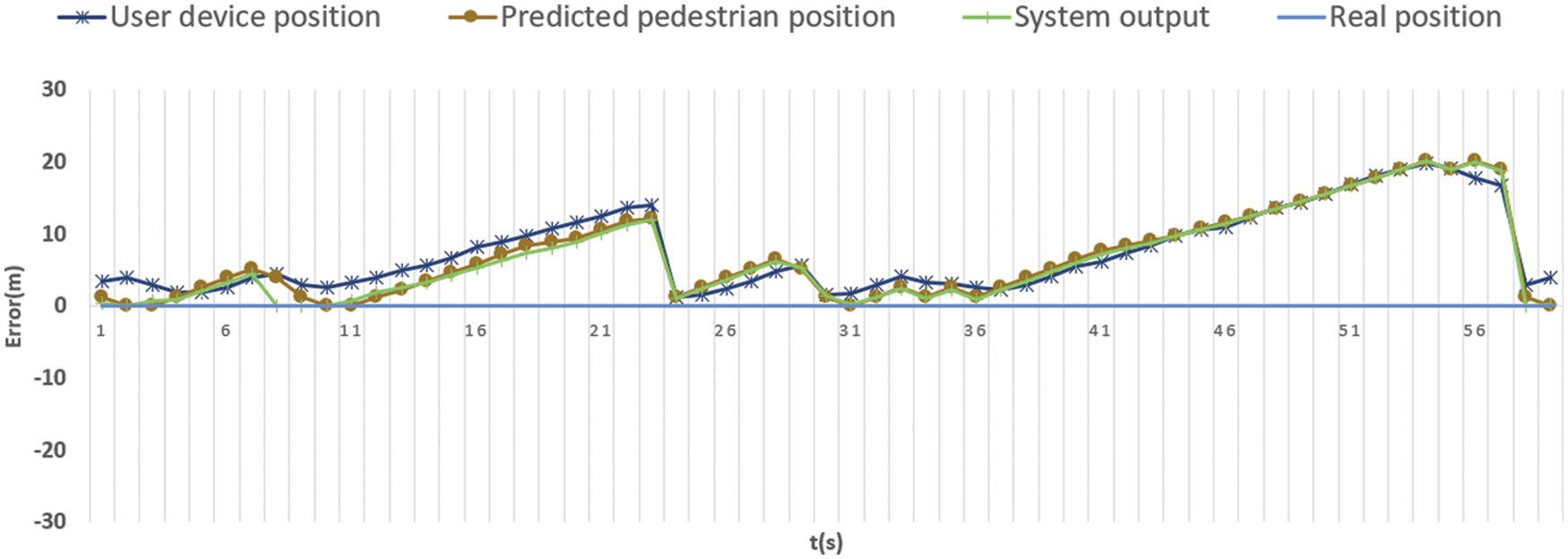

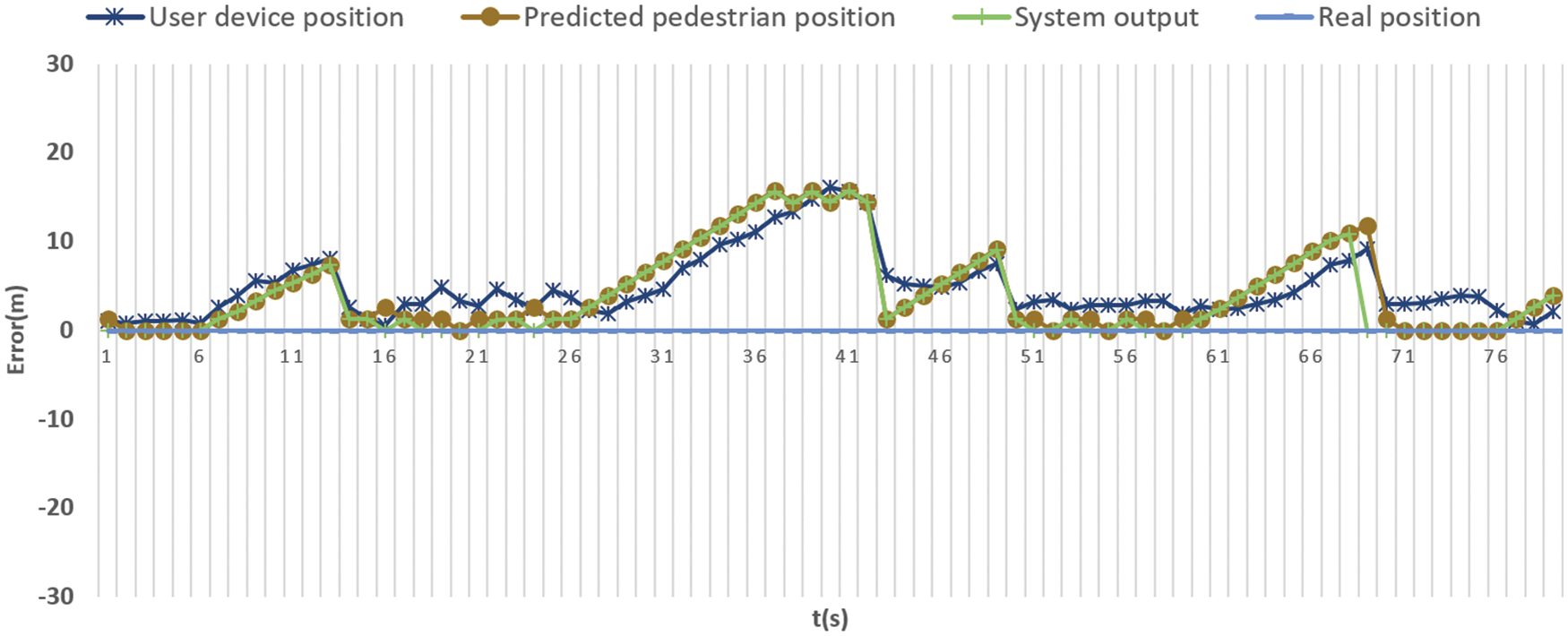

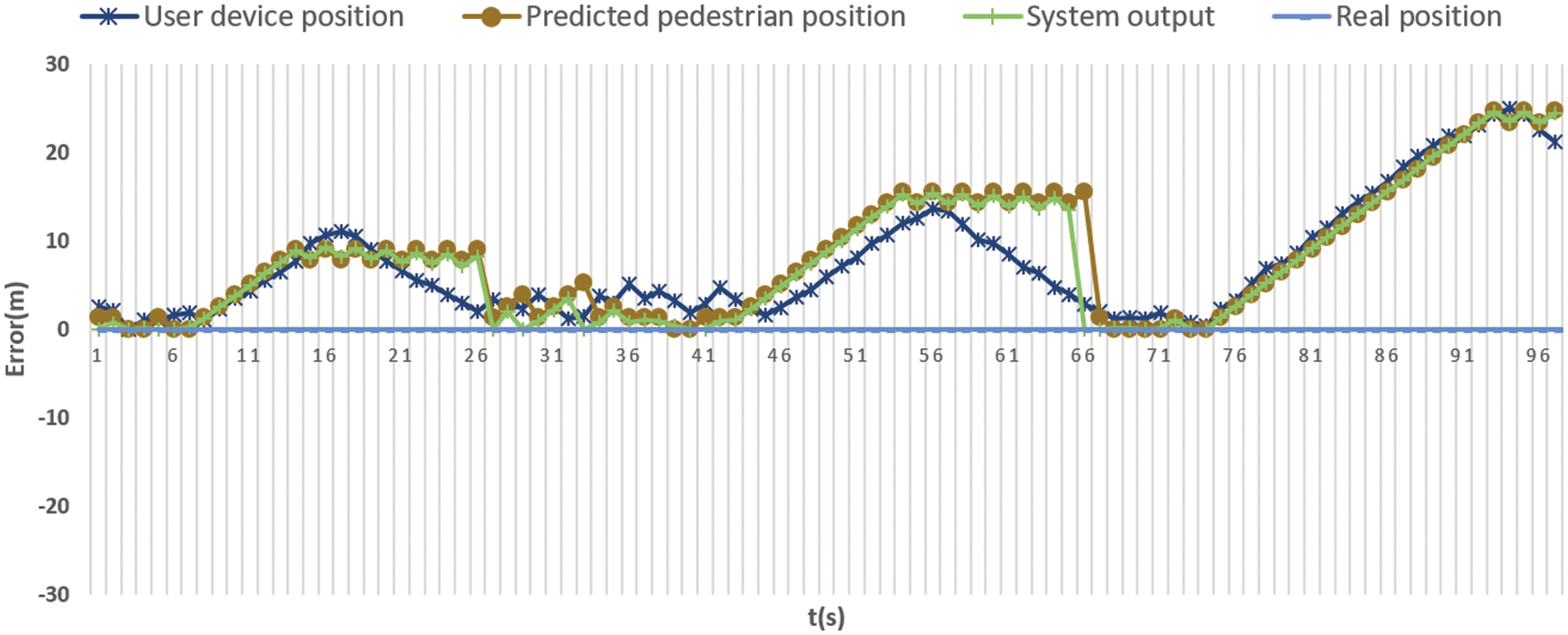

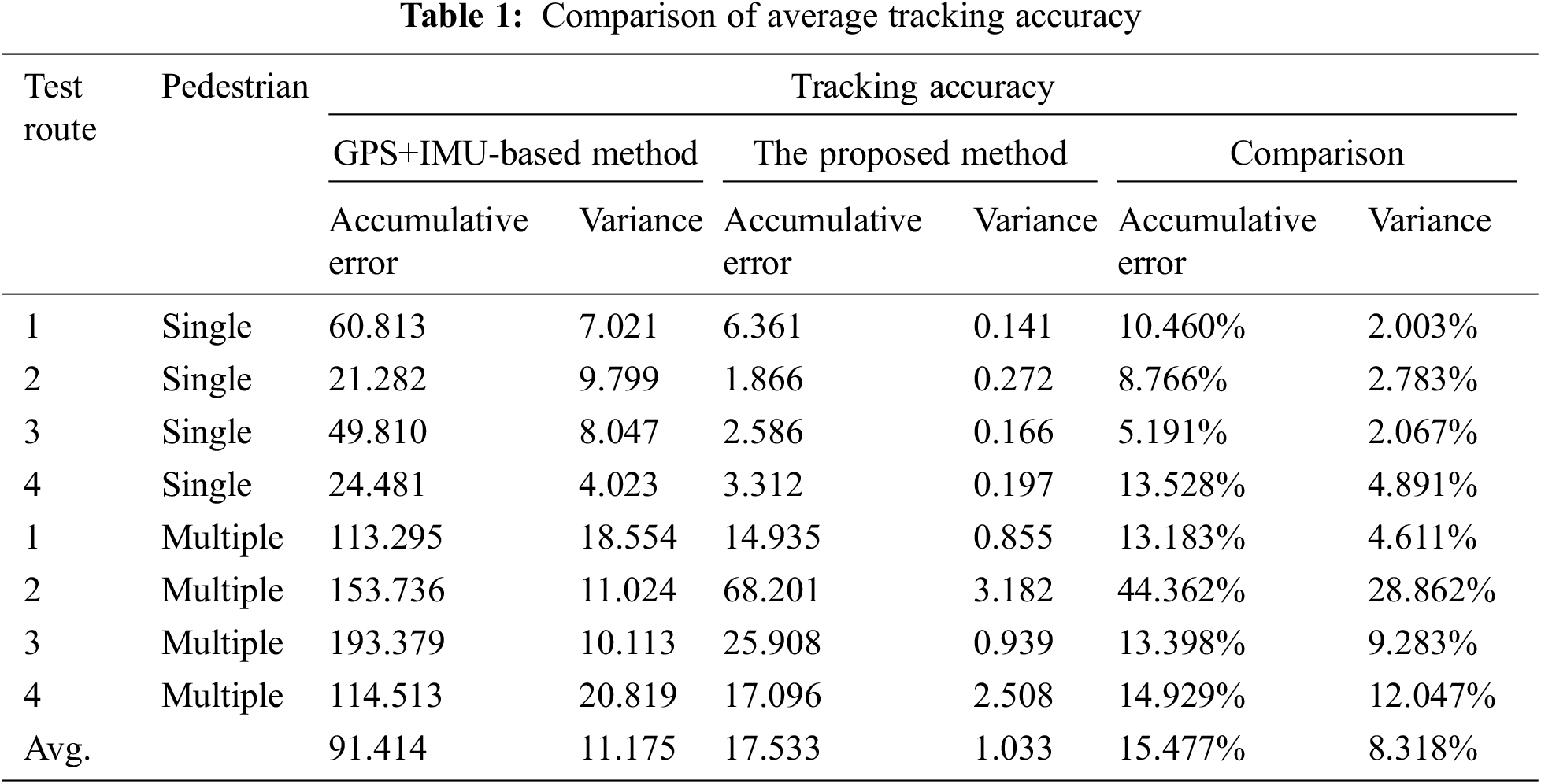

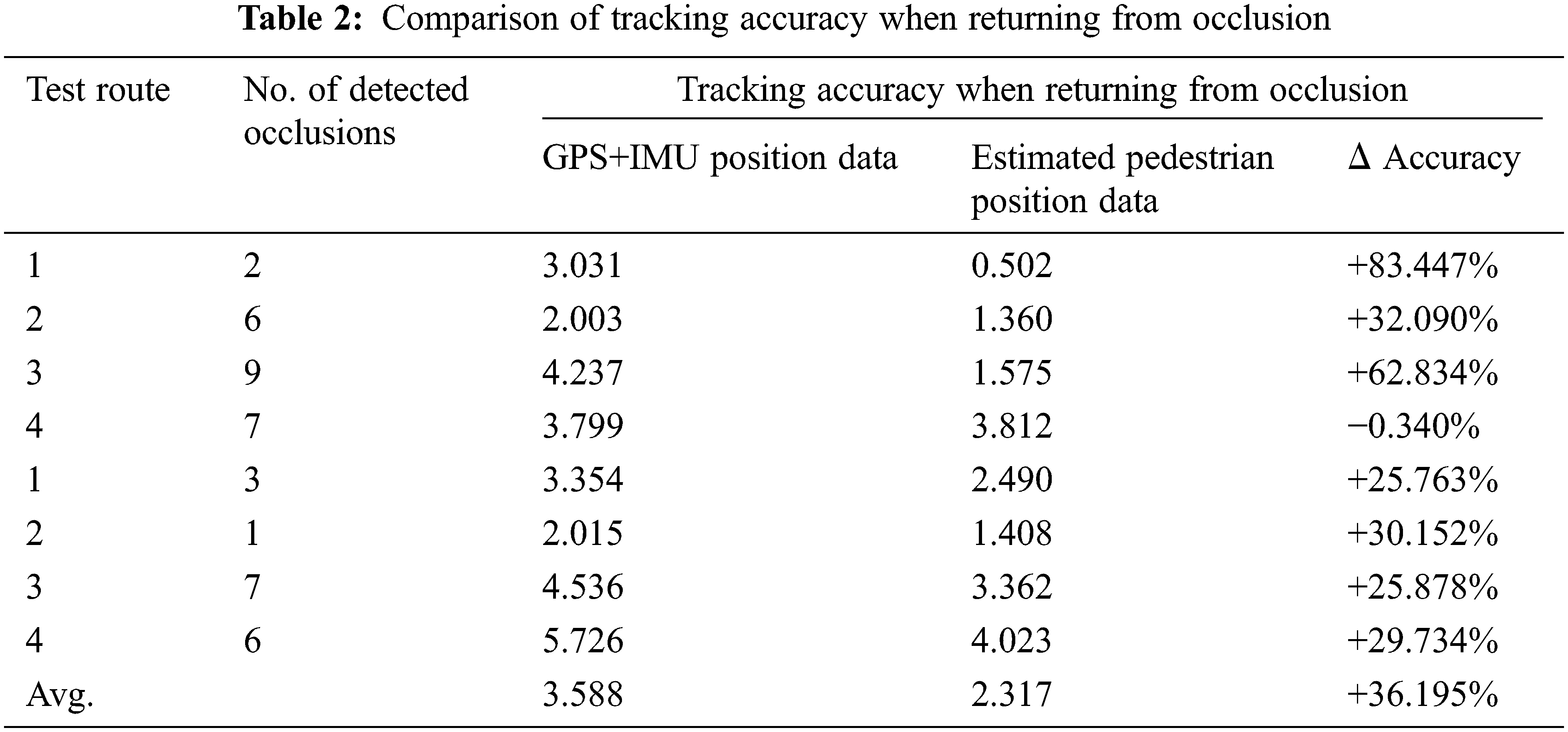

Figs. 10–13 summarize the experimental results of routes I–IV, respectively. The abscissa of these figures is the experimental time, and the ordinate is the accuracy of the pedestrian position data (measured as the error relative to the pedestrians’ real position). The participants were occluded several times when walking along the experimental routes, except route I. Tab. 1 presents the detailed experimental data. Compared with the tracking results of the GPS+IMU method, the average pedestrian tracking error of our method is 15.48% and the variance is 8.32% of the latter. This shows that the proposed method is more accurate and effective.

Figure 10: Tracking accuracy evaluation (Test route I)

Figure 11: Tracking accuracy evaluation (Test route II)

Figure 12: Tracking accuracy evaluation (Test route III)

Figure 13: Tracking accuracy evaluation (Test route IV)
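The error measure used in these figures, and the ratios used to compare two methods, can be sketched as below. The function names are hypothetical, and the sketch assumes that each estimate is paired with a ground-truth position at the same time step; it uses the population variance, which may differ from the variance definition used in Tab. 1.

```python
import math
from statistics import mean, pvariance


def position_errors(estimates, ground_truth):
    """Euclidean error in metres between each estimate and the true position."""
    return [math.dist(e, g) for e, g in zip(estimates, ground_truth)]


def relative_to_baseline(method_errors, baseline_errors):
    """Ratios of mean error and error variance (method divided by baseline)."""
    mean_ratio = mean(method_errors) / mean(baseline_errors)
    var_ratio = pvariance(method_errors) / pvariance(baseline_errors)
    return mean_ratio, var_ratio
```

A mean ratio below 1 indicates that the evaluated method has a smaller average positioning error than the baseline.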

The GPS and IMU signals sent by the user equipment can be used to identify pedestrians and are not occluded by obstacles. However, their accuracy is too low to be trusted. LiDAR data have high accuracy and are close to the real pedestrian position. However, LiDAR cannot identify pedestrians and cannot detect them when they are occluded. Our method integrates these two data sources and estimates pedestrians’ trajectory using their attribute information to improve the accuracy of the pedestrian position estimation. Although Tab. 1 shows the advantages of our data fusion-based method, LiDAR data are known to be more accurate than GPS and IMU data, so comparing overall accuracy alone is unfair. Therefore, we also compare the positioning accuracy immediately before pedestrians recover from the occluded state to the detected state. As presented in Tab. 2, when only GPS and IMU sensor fusion is used, the positioning error before recovering from occlusion is 3.588. When our multi-information source fusion method is used, the positioning error is 2.317, an improvement of about 36.2%. For test route IV with a single pedestrian, the continuous occlusion time is relatively long, which decreases the accuracy of the predicted position and is the main reason for the decrease in the system output accuracy.

6 Discussion

This section discusses the effectiveness, novelty, and limitations of the proposed tracking framework for pedestrian protection in parking spaces.

Occlusion handling is an essential and difficult task in pedestrian detection and tracking. When there is no occlusion or only partial occlusion, traditional methods based on sensor fusion and computer vision can solve the problem of pedestrian detection and tracking well. However, traditional methods are generally ineffective when severe or complete occlusion occurs. Road user device sensors alone yield only an inaccurate pedestrian position, which is inadequate for collision prevention and pedestrian protection. To solve this problem, we proposed a solution for seamless pedestrian tracking. The proposed pedestrian tracking framework uses a Kalman filter to fuse the position information of pedestrian smart devices, LiDAR position information, and pedestrian attribute information. We also conducted a real-world experiment in parking spaces to evaluate the proposed framework. The experimental results show that the proposed method is more reliable than the GPS and IMU sensor fusion-based method. When detecting the pedestrian location, the proposed framework can also retrieve personal information about pedestrians, such as whether they are elderly or children, to alert drivers and automated driving systems for decision-making support or to provide voice guidance services. This could improve traffic safety in parking spaces. Since the proposed framework tracks and manages real-time pedestrian position information, it is also a driving force for building a more comprehensive DM2.0 platform.

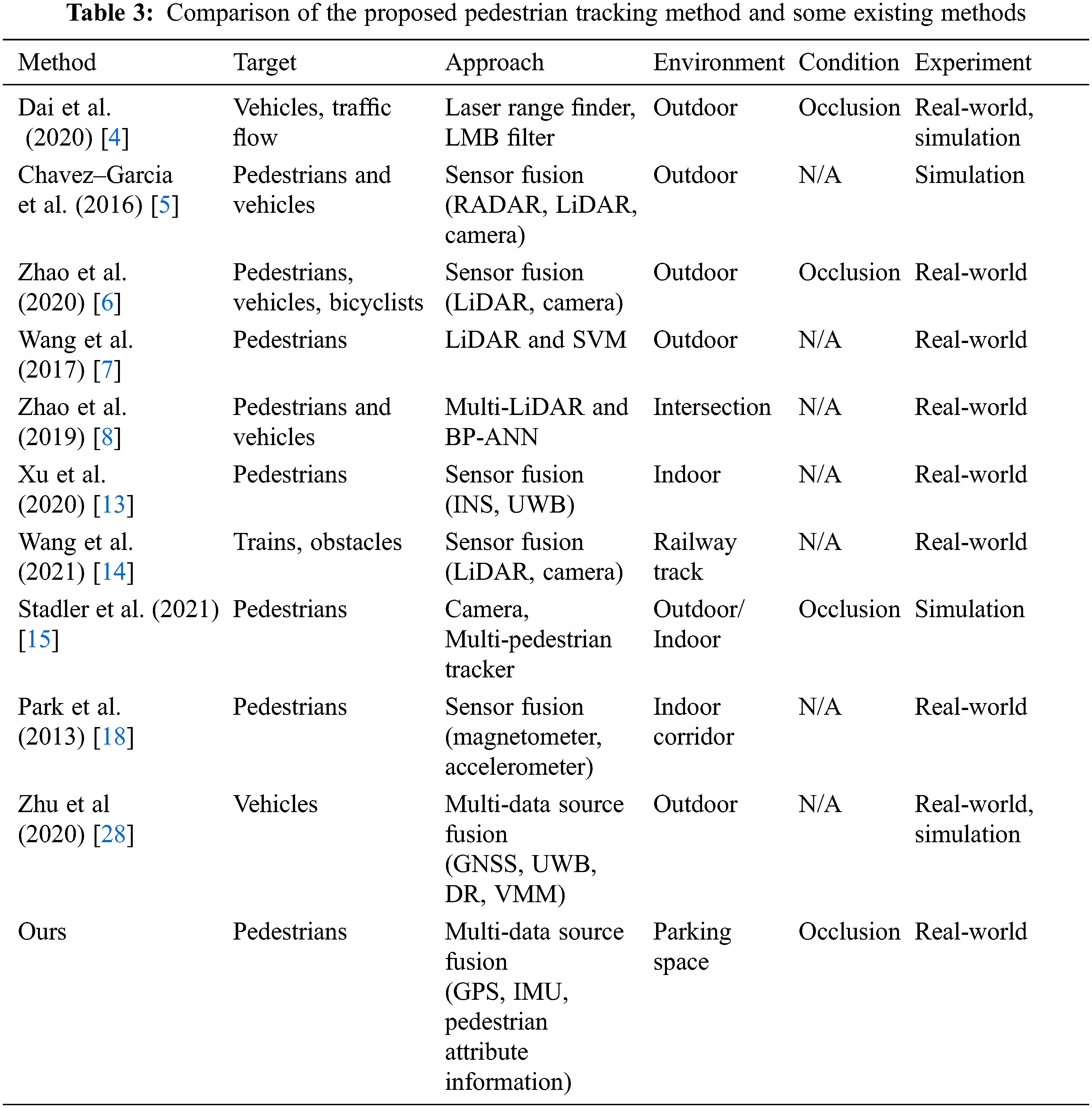

The results of the literature survey in Section 2 show that no existing research uses pedestrian attribute information for pedestrian tracking. Tab. 3 compares some existing studies with our framework. Although the referenced studies [4–6,13,14] proposed using various information sources to track targets, they did not use pedestrian attribute information for tracking. Paper [16] does study pedestrian attributes, but its purpose is to obtain them, not to use them for tracking. The purposes and means of our study and the referenced studies are therefore different. This is the basis on which we claim the novelty of this paper.

Although the proposed pedestrian tracking framework can track pedestrians in parking spaces and deal with occlusion, the experimental results show that the positioning accuracy of multi-person tracking is worse than that of single-person tracking. This is because the recognition of multiple pedestrians becomes incoherent when occlusion occurs, and the recognition accuracy of relocation is insufficient: the system sometimes recognizes detected pedestrians as occluded pedestrians. Additionally, our experiment was conducted in a parking lot with only one exit. Conducting the experiment in a more complex environment would be more challenging. The experimental data show that when the continuous occlusion time is long (

This paper presents a parking lot pedestrian tracking framework that simultaneously uses LiDAR data, pedestrian smart device data, and pedestrian attribute information. We implemented the framework in C++ and conducted real-world experiments to verify its rationality and practicability. The experimental results show that, when tracking pedestrians in the parking space, the proposed method can reduce the error by 67.31% compared with the method using only GPS and IMU sensor fusion. This shows that our system can be applied to the parking lot environment and to occlusion handling. The proposed system can also be used to extract pedestrian attribute information. However, as stated in the previous section, our research has limitations, and addressing them is the future direction of this study. For instance, we plan to test the proposed system in a more complex environment with more pedestrians present at the same time (e.g., the parking lot of a large shopping mall). Moreover, we aim to implement a pedestrian identification mechanism using machine learning to improve pedestrian re-identification accuracy for occlusion handling.

Funding Statement: Our research in this paper was partially supported by JST COI JPMJCE1317.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. J. B. Rouse and D. C. Schwebel, “Supervision of young children in parking lots: Impact on child pedestrian safety,” Journal of Safety Research, vol. 70, no. 1, pp. 201–206, 2019.

2. T. Gandhi and M. M. Trivedi, “Pedestrian protection systems: Issues, survey, and challenges,” IEEE Transactions on Intelligent Transportation Systems, vol. 8, no. 3, pp. 413–430, 2007.

3. Z. Sun, J. Chen, L. Chao, W. Ruan and M. Mukherjee, “A survey of multiple pedestrian tracking based on tracking-by-detection framework,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 31, no. 5, pp. 1819–1833, 2021.

4. K. Dai, Y. Wang, J. S. Hu, K. Nam and C. Yin, “Intertarget occlusion handling in multiextended target tracking based on labeled multi-Bernoulli filter using laser range finder,” IEEE/ASME Transactions on Mechatronics, vol. 25, no. 4, pp. 1719–1728, 2020.

5. R. O. Chavez-Garcia and O. Aycard, “Multiple sensor fusion and classification for moving object detection and tracking,” IEEE Transactions on Intelligent Transportation Systems, vol. 17, no. 2, pp. 525–534, 2016.

6. L. Zhao, M. Wang, S. Su, T. Liu and Y. Yang, “Dynamic object tracking for self-driving cars using monocular camera and LiDAR,” in 2020 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, pp. 10865–10872, 2020.

7. H. Wang, B. Wang, B. Liu, X. Meng and G. Yang, “Pedestrian recognition and tracking using 3D LiDAR for autonomous vehicle,” Robotics and Autonomous Systems, vol. 88, no. 1, pp. 71–78, 2017.

8. J. Zhao, H. Xu, H. Liu, J. Wu, Y. Zheng et al., “Detection and tracking of pedestrians and vehicles using roadside LiDAR sensors,” Transportation Research Part C: Emerging Technologies, vol. 100, no. 1, pp. 68–87, 2019.

9. Y. Cui, J. Zhang, Z. He and J. Hu, “Multiple pedestrian tracking by combining particle filter and network flow model,” Neurocomputing, vol. 351, no. 1, pp. 217–227, 2019.

10. J. Wu, H. Xu, Y. Zhang and R. Sun, “An improved vehicle-pedestrian near-crash identification method with a roadside LiDAR sensor,” Journal of Safety Research, vol. 73, no. 1, pp. 211–224, 2020.

11. S. Zhang, M. Abdel-Aty, Q. Cai, P. Li and J. Ugan, “Prediction of pedestrian-vehicle conflicts at signalized intersections based on long short-term memory neural network,” Accident Analysis & Prevention, vol. 148, no. 1, pp. 1–9, 2020.

12. W. Farag, “Road-objects tracking for autonomous driving using lidar and radar fusion,” Journal of Electrical Engineering, vol. 71, no. 3, pp. 138–149, 2020.

13. Y. Xu, Y. Li, C. K. Ahn and X. Chen, “Seamless indoor pedestrian tracking by fusing INS and UWB measurements via LS-SVM assisted UFIR filter,” Neurocomputing, vol. 388, no. 1, pp. 301–308, 2020.

14. W. Zhangyu, Y. Guizhen, W. Xinkai, L. Haoran and L. Da, “A camera and LiDAR data fusion method for railway object detection,” IEEE Sensors Journal, vol. 21, no. 12, pp. 13442–13454, 2021.

15. D. Stadler and J. Beyerer, “Improving multiple pedestrian tracking by track management and occlusion handling,” in 2021 IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, pp. 10953–10962, 2021.

16. P. K. Y. Wong, H. Luo, M. Wang, P. H. Leung and J. C. P. Cheng, “Recognition of pedestrian trajectories and attributes with computer vision and deep learning techniques,” Advanced Engineering Informatics, vol. 49, no. 1, pp. 1–17, 2021.

17. S. M. Chowdhury and J. P. de Villiers, “Extended rigid multi-target tracking in dense point clouds with probabilistic occlusion reasoning,” in 2020 IEEE 23rd Int. Conf. on Information Fusion, Rustenburg, South Africa, pp. 1–8, 2020.

18. K. Park, H. Shin and H. Cha, “Smartphone-based pedestrian tracking in indoor corridor environments,” Personal and Ubiquitous Computing, vol. 17, no. 2, pp. 359–370, 2013.

19. W. Kang and Y. Han, “SmartPDR: Smartphone-based pedestrian dead reckoning for indoor localization,” IEEE Sensors Journal, vol. 15, no. 5, pp. 2906–2916, 2015.

20. Q. Tian, Z. Salcic, K. I. K. Wang and Y. Pan, “A multi-mode dead reckoning system for pedestrian tracking using smartphones,” IEEE Sensors Journal, vol. 16, no. 7, pp. 2079–2093, 2016.

21. W. Jiang and Z. Yin, “Combining passive visual cameras and active IMU sensors to track cooperative people,” in 2015 18th Int. Conf. on Information Fusion (Fusion), Washington, DC, USA, pp. 1338–1345, 2015.

22. J. Geng, L. Xia, J. Xia, Q. Li, H. Zhu et al., “Smartphone-based pedestrian dead reckoning for 3D indoor positioning,” Sensors, vol. 21, no. 24, pp. 1–21, 2021.

23. X. Zhang, W. Zhang, W. Sun, X. Sun and S. K. Jha, “A robust 3-D medical watermarking based on wavelet transform for data protection,” Computer Systems Science & Engineering, vol. 41, no. 3, pp. 1043–1056, 2022.

24. X. Zhang, X. Sun, X. Sun, W. Sun and S. K. Jha, “Robust reversible audio watermarking scheme for telemedicine and privacy protection,” Computers, Materials & Continua, vol. 71, no. 2, pp. 3035–3050, 2022.

25. H. Shimada, A. Yamaguchi, H. Takada and K. Sato, “Implementation and evaluation of local dynamic map in safety driving systems,” Journal of Transportation Technologies, vol. 5, no. 1, pp. 102–112, 2015.

26. L. Tao, Y. Watanabe, Y. Li, S. Yamada and H. Takada, “Collision risk assessment service for connected vehicles: leveraging vehicular state and motion uncertainties,” IEEE Internet of Things Journal, vol. 8, no. 14, pp. 11548–11560, 2021.

27. Z. Zhou, S. Kitamura, Y. Watanabe, S. Yamada and H. Takada, “Extraction of pedestrian position and attribute information based on the integration of LiDAR and smartphone sensors,” in 2021 IEEE Int. Conf. on Mechatronics and Automation (ICMA), Takamatsu, Japan, pp. 784–789, 2021.

28. B. Zhu, X. Tao, J. Zhao, M. Ke, H. Wang et al., “An integrated GNSS/UWB/DR/VMM positioning strategy for intelligent vehicles,” IEEE Transactions on Vehicular Technology, vol. 69, no. 10, pp. 10842–10853, 2020.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |