Computer Systems Science & Engineering
DOI: 10.32604/csse.2023.026501

Article

Non Sub-Sampled Contourlet with Joint Sparse Representation Based Medical Image Fusion

Department of Electronics and Communication Engineering, Tamilnadu College of Engineering, Coimbatore, 641659, India

*Corresponding Author: Kandasamy Kittusamy. Email: kkandasamy.03@rediffmail.com

Received: 28 December 2021; Accepted: 09 March 2022

Abstract: Medical image fusion combines multimodal medical information using mathematical procedures to produce a single, high-quality image that conveys the image content more clearly. It plays an indispensable role in solving complex clinical problems; however, recent research has concentrated mainly on preserving medical image details, leaving color distortion and halo artifacts largely unaddressed. This paper proposes a novel method for fusing Computed Tomography (CT) and Magnetic Resonance Imaging (MRI) images using a hybrid model of the Non Sub-sampled Contourlet Transform (NSCT) and Joint Sparse Representation (JSR). The model meets the need for precise integration of medical images of different modalities, which is essential for diagnosis and for planning the treatment of patients. In the proposed model, the medical image is decomposed using NSCT, an efficient shift-invariant decomposition transform, and JSR is used to extract the common features of the medical images for the fusion process. The performance analysis shows that the proposed technique is efficient, gives better results and a high level of distinctness by integrating the advantages of complementary images, and outperforms existing medical image fusion methods in the comparative analysis.

Keywords: Medical image fusion; computed tomography; magnetic resonance imaging; non sub-sampled contourlet transform (NSCT); joint sparse representation (JSR)

The evolution of the digital era and rapid improvements in computer vision have stimulated research in digital image processing, in particular image fusion technology [1]. Image fusion performs well in diverse applications [2] such as remote sensing, military applications, surveillance, biomedicine, and various domestic and industrial applications. Medical image fusion is the process of integrating multimodal images captured by different imaging modalities into a single image that provides comprehensive information that a reader cannot obtain from any of the individual images alone. The development of image fusion technology has contributed substantially to medical research and treatment by making microscopic details easier to see and interpret. Medical images of different modalities portray different fine details of the human body, and the information they carry differs from one modality to another. Medical imaging modalities are organized into two categories, namely functional and anatomical imaging modalities. Common functional imaging modalities, including Positron Emission Tomography (PET) [3] and Single Photon Emission Computed Tomography (SPECT) [4], provide metabolic and physiological information about the individual patient, while anatomical imaging modalities provide structural information related to the disease.

Notable examples of anatomical imaging modalities are X-ray CT, Ultrasound Imaging (UI) [5] and MRI [6]. These multimodal images yield different forms of information that are not replicated in the other image types. The need for image fusion arises because a CT image yields highly accurate information for estimating radiation dose but, in contrast, provides limited soft-tissue contrast. MRI, on the other hand, provides high soft-tissue contrast and accurate information on tissue abnormalities in various parts of the body. Each modality is therefore suited to a particular type of application and says little about other aspects of the human body. This motivates image fusion, in which several images of different modalities are fused so that different types of valid information can be obtained from a single image. The fusion process retains the complementary and redundant information present in the multimodal images, and care must be taken not to introduce artifacts into the fused image. Depending on the stage at which fusion takes place, image fusion is classified into three levels: pixel, feature and decision level. In pixel-level fusion, the pixels of the multimodal source images are integrated directly to form the fused output image.

Pixel-level fusion can be performed in the spatial domain [7] or in a transform domain, the latter being well suited to fusing images at different resolutions. Feature-level fusion extracts the salient characteristics of the multimodal source images and integrates the extracted features to form a single fused image. Features can be extracted from the source images with advanced algorithms such as neural networks, other artificial intelligence methods and K-means clustering. Decision-level fusion is regarded as the most advanced fusion methodology: each source image is first processed individually to extract its features and concentrated information, and all the extracted information is then integrated to form the single fused output image. This methodology is considered precise because it concentrates on extracting the sensitive information from each source image. Each of these fusion levels suits a variety of application domains; for the medical domain considered here, pixel-level fusion is more suitable because it supports multi-scale fusion.

The proposed image fusion method concentrates on medical images whose information is spread over multiple frequency bands. It employs a hybrid of NSCT and JSR to fuse such images, for example CT [8] and MR images, into a single multimodal fused image. The method suits medical applications because the fused results are highly informative, so treatment decisions can be taken accurately on their basis. NSCT is used in this application because it effectively reduces the effect of mis-registration on the fused images. A notable advantage of NSCT is its good shift invariance during decomposition; its limitations are a more complex procedure and a high time complexity, which make the decomposition time-consuming. This limitation is overcome by a hybrid fusion scheme using Joint Sparse Representation, which takes advantage of Reduced Geometric Algebra (RGA) [9]. RGA theory represents the input multimodal color image as a three-channel multivector in the spatial domain. Integrating both algorithms reduces the computation time and increases the fusion efficiency compared with existing methods, with a high level of distinctness and better Peak Signal to Noise Ratio (PSNR) values. The rest of this manuscript is organized as follows. Section 2 reviews recent research results, Section 3 describes the theory of the proposed hybrid NSCT and JSR method, Section 4 presents the experiments and the performance analysis, and Section 5 concludes the paper.

This section describes various methodologies and algorithms for performing image fusion efficiently. Many researchers have contributed to the design of efficient multimodal image fusion methods, and the notable results are summarized briefly in this section.

Jingyue Chen et al. (2019) proposed a novel medical image fusion method that uses Rolling Guidance Filtering [10] to separate the source image into two components, namely a detail component and a structural component. The authors employed a Laplacian-pyramid-based fusion rule to integrate the structural components, and their performance analysis shows that the method outperforms existing methods, with an average processing time of 0.1314 s.

Zhaobin Wang et al. (2020) performed multimodal medical image fusion using the Laplacian pyramid and Adaptive Sparse Representation [11]. Adaptive Sparse Representation does not require the high level of redundancy needed by the traditional Sparse Representation method, and the method decomposes the input image into four pyramid levels of different sizes.

Lu Tang et al. (2020) performed a perceptual quality assessment of multimodal medical image fusion using a Pulse Coupled Neural Network (PCNN) [12] in the NSCT domain, and the resulting sub-images were integrated to obtain the fused output image.

Hikmat Ullah et al. (2020) proposed a medical image fusion technique based on local features of fuzzy sets [13] and a novel Sum-Modified-Laplacian in the Non Sub-sampled Shearlet Transform domain. The method was then extended to color image fusion with improved visibility.

Lisa Corbat et al. (2020) fused multiple segmentations using Overlearning Vector for Valid Sparse Segmentations (OV2ASSION) and deep learning methods [14]. The authors applied the method to CT images of tumoral kidneys. During segmentation, the system was trained with a Fully Convolutional Network (FCN-8N) on data from a larger number of patients in order to resolve conflicting pixels.

Qiu Hu et al. (2020) designed a medical image fusion method based on separable dictionary learning and Gabor filtering [15]. The method decomposes the source image into low-frequency and high-frequency components with NSCT, and the Gabor filtering analyzes the weighting of the low-frequency component of the source image to improve the fusion quality.

Sarmad Maqsood et al. (2020) performed multimodal medical image fusion employing two-scale image decomposition and Sparse Representation [16]. The authors use a spatial-gradient-based edge detection method to extract edge information from the source image. The image is decomposed into two components, and the final detail layer is extracted using the Spatial Stimuli Gradient Sketch Model (SSGCM).

Jiau Du et al. (2020) proposed an adaptive two-scale biomedical image fusion method with statistical comparisons [17]. In this method, two-scale image fusion is implemented, and an adaptive threshold is employed for pseudo-color images. The performance analysis reveals that the method yields a fused multimodal image with enhanced structural and luminance information.

Vikrant Bhateja et al. (2020) developed a two-stage multimodal Magnetic Resonance (MR) image fusion method using Parametric Logarithmic Image Processing (PLIP) [18]. The method fuses MR-T1, MR-T2, MR-PD and MR-Gad images, and the results were analyzed in terms of entropy, fusion factor and edge strength.

Lina Xu et al. (2020) proposed a novel image fusion model using a modified shark smell optimization algorithm and a hybrid wavelet-homomorphic filter [19]. The hybrid scheme achieves better fusion with good entropy and fusion factor. The simulation was performed on five medical image sets, including CT-MR, MR-SPECT and MR-PET combinations, as source images for the multimodal fusion process.

The review of existing methods and results shows that these methods are complex in handling the decomposition of the multimodal source images. This operational complexity degrades the performance of the medical image fusion system and makes it slower. The objectives of the proposed methodology are as follows:

• To design a medical image fusion methodology with an efficient shift-invariant decomposition transform.

• To extract the common features of the medical source images for the fusion process.

The proposed work is a hybrid methodology that combines two techniques, NSCT and JSR, to fuse multimodal medical source images. Consider a source image Im(x, y) obtained as the product of the reflectance Ir(x, y) and the illumination Ii(x, y).

The source image Im(x, y) is processed by the two techniques described in the following subsections. The common notation used throughout this manuscript is that of a two-dimensional filter represented by its Z transform H(z), where z is defined as in Eq. (2).
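For reference, the image model above and the usual z-domain notation for 2-D filters can be written as below. This is a sketch: the vector form of z and the upsampled-filter notation follow the common convention in the NSCT literature and are assumed here rather than copied from the original Eqs. (1) and (2).

$$I_m(x, y) = I_r(x, y)\, I_i(x, y)$$

$$H(\mathbf{z}) = H(z_1, z_2), \qquad \mathbf{z} = [z_1,\ z_2]^{T}, \qquad z_k = e^{j\omega_k},\ k = 1, 2$$

$$H(\mathbf{z}^{2I}) = H(z_1^{2}, z_2^{2})$$

The last line is the notation used for the upsampled pyramid filters: replacing z by z^{2I} inserts one zero between neighbouring filter taps in each dimension.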

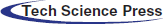

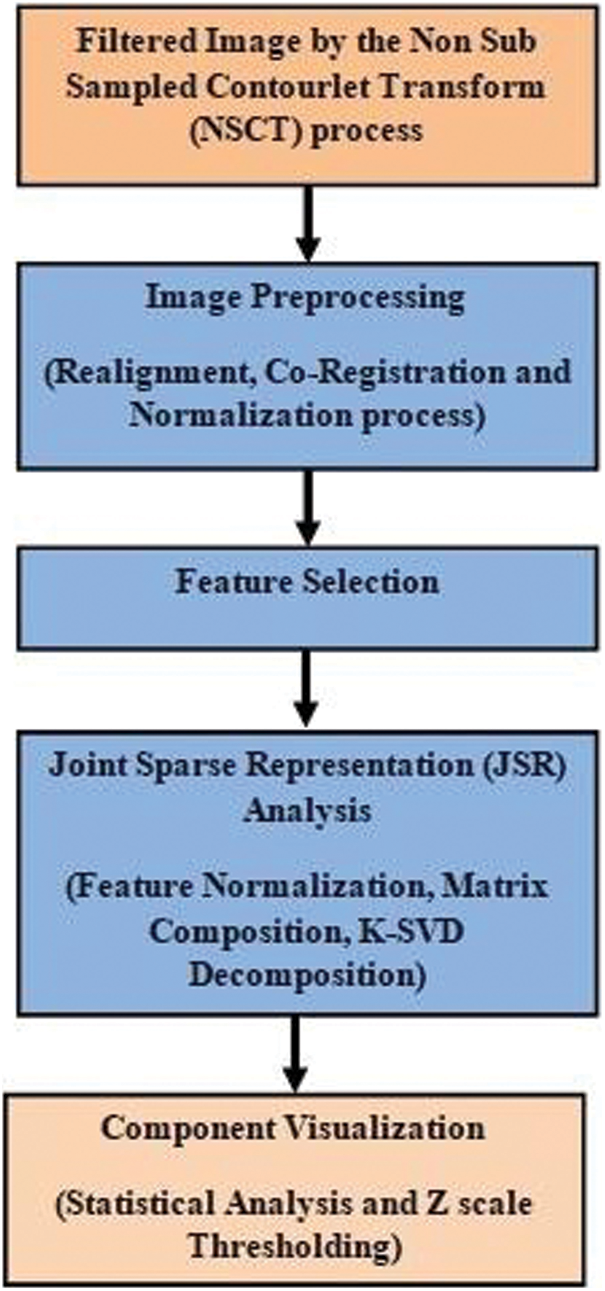

The two-dimensional filter is represented as a polynomial f(x1, x2), where x1 and x2 are the phase angles of the filter's frequency variables on the unit circle. The architecture of the proposed method is depicted in Fig. 1.

Figure 1: Architecture diagram of proposed image fusion method

3.1 Non Sub-Sampled Contourlet Transform (NSCT)

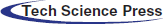

NSCT is a multi-directional, multi-scale transform with efficient shift-invariant characteristics, which has proved efficient in image fusion and related applications [20]; it reduces the pseudo-Gibbs phenomenon and thereby mitigates the critical problem of mis-registration. NSCT performs medical image fusion with the Non Sub-sampled Pyramid (NSP) and the Non Sub-sampled Directional Filter Bank (NSDFB), which provide the multi-scale and multi-directional decomposition of the source image without upsampling or downsampling, as depicted in Fig. 2.

Figure 2: Non sub sampled contourlet transform process

NSCT is characterized by shift invariance and by sub-bands that all have the same size as the input image, which reduces the mis-registration effect. NSCT still suffers from some critical problems, such as unclear object regions and visual artifacts in the fused image [21–24]. To address these problems, NSCT is combined with region detection in the source image to obtain detailed information. The NSCT used in the proposed method consists of two major parts, namely the Non Sub-sampled Pyramid (NSP) and the Non Sub-sampled Directional Filter Bank (NSDFB), which provides a high degree of directionality.

3.1.1 Non Sub-Sampled Pyramid (NSP)

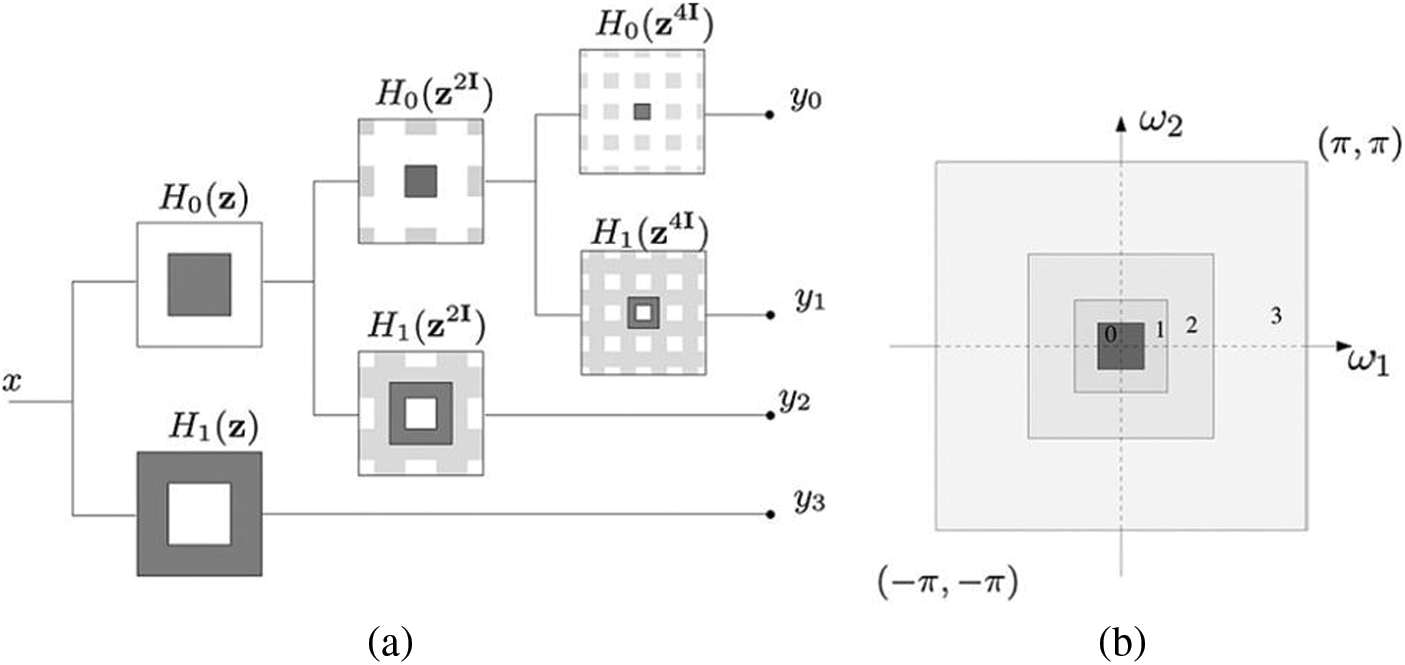

The Non Sub-sampled Pyramid uses two-channel filter banks without any upsampling or downsampling, and the ideal frequency response of the NSP is depicted in Fig. 2. The multi-scale property of NSCT is obtained from a shift-invariant filtering structure that achieves a sub-band decomposition similar to that of the Laplacian pyramid. This is implemented with a two-channel non-sub-sampled filter bank, as depicted in Fig. 3. As Fig. 3 shows, the NSP decomposition in the proposed NSCT uses J = 3 stages.

Figure 3: (a) Non sub-sampled pyramid: Proposed three stage decomposition. (b) Two dimensional frequency plane-sub bands

In the frequency plane of Fig. 3b, $H_k(z)$, k = 0, 1, are the analysis filters of the first stage and $G_k(z)$, k = 0, 1, are the corresponding synthesis filters. Each of the three NSP stages produces one low-pass filtered image (y0) and one band-pass filtered image (y1). Subsequent decomposition stages are obtained by iterating on the low-frequency sub-band, giving progressively finer filtering. In the three-stage structure of Fig. 3a, the filters of each stage are obtained by upsampling the filters of the preceding stage. The first-stage low-pass and band-pass filters are $H_0(z)$ and $H_1(z)$, the second-stage filters are $H_0(z^{2I})$ and $H_1(z^{2I})$, and the third-stage filters are $H_0(z^{4I})$ and $H_1(z^{4I})$. With one low-pass and one band-pass filter at each of the three stages, the system isolates selected noisy parts of the spectrum of the source image in the pyramid coefficients.
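The three-stage NSP can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the source does not list its filter coefficients, so a separable B3-spline low-pass kernel is assumed for $H_0(z)$, the band-pass output is taken as the difference between a stage's input and its low-pass output (that is, $H_1 = 1 - H_0$), and image borders are handled by circular convolution. The helper names `upsample_kernel`, `circular_filter`, `nsp_decompose` and `nsp_reconstruct` are hypothetical.

```python
import numpy as np

# Assumed 1-D B3-spline prototype for H0(z); the source does not list its taps.
B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def upsample_kernel(kernel: np.ndarray, factor: int) -> np.ndarray:
    """Return the taps of H(z^{factor*I}): factor - 1 zeros between taps."""
    rows, cols = kernel.shape
    out = np.zeros(((rows - 1) * factor + 1, (cols - 1) * factor + 1))
    out[::factor, ::factor] = kernel
    return out


def circular_filter(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Centred 2-D circular convolution; the output keeps the input size."""
    out = np.zeros(image.shape)
    ci, cj = kernel.shape[0] // 2, kernel.shape[1] // 2
    for i, j in zip(*np.nonzero(kernel)):
        # np.roll(x, s)[n] == x[n - s], so this adds kernel[i, j] * x[n - (i - ci)]
        out += kernel[i, j] * np.roll(image, (i - ci, j - cj), axis=(0, 1))
    return out


def nsp_decompose(image: np.ndarray, levels: int = 3):
    """Non-subsampled pyramid with `levels` stages (three by default).

    Stage j low-pass filters the previous low-pass image with H0(z^{2^j I});
    the band-pass image is what the low-pass filter removed (H1 = 1 - H0).
    """
    h0 = np.outer(B3, B3)
    low = image.astype(float)
    bands = []
    for j in range(levels):
        smoothed = circular_filter(low, upsample_kernel(h0, 2 ** j))
        bands.append(low - smoothed)   # band-pass sub-band y1 of stage j
        low = smoothed                  # low-pass y0 feeds the next stage
    return low, bands


def nsp_reconstruct(low: np.ndarray, bands: list) -> np.ndarray:
    """Synthesis with G0 = G1 = 1: add the sub-bands back together."""
    return low + sum(bands)


rng = np.random.default_rng(0)
img = rng.random((32, 32))
low, bands = nsp_decompose(img, levels=3)
print(len(bands), low.shape)                          # 3 (32, 32)
print(np.allclose(nsp_reconstruct(low, bands), img))  # True
```

Because no stage subsamples, every sub-band keeps the input size, and shifting the input shifts every sub-band by the same amount, which is the shift-invariance property the text attributes to the NSP. The upsampled kernel grows with each stage, but its number of non-zero taps stays at 25.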

3.1.2 Non Sub-sampled Directional Filter Bank (NSDFB)

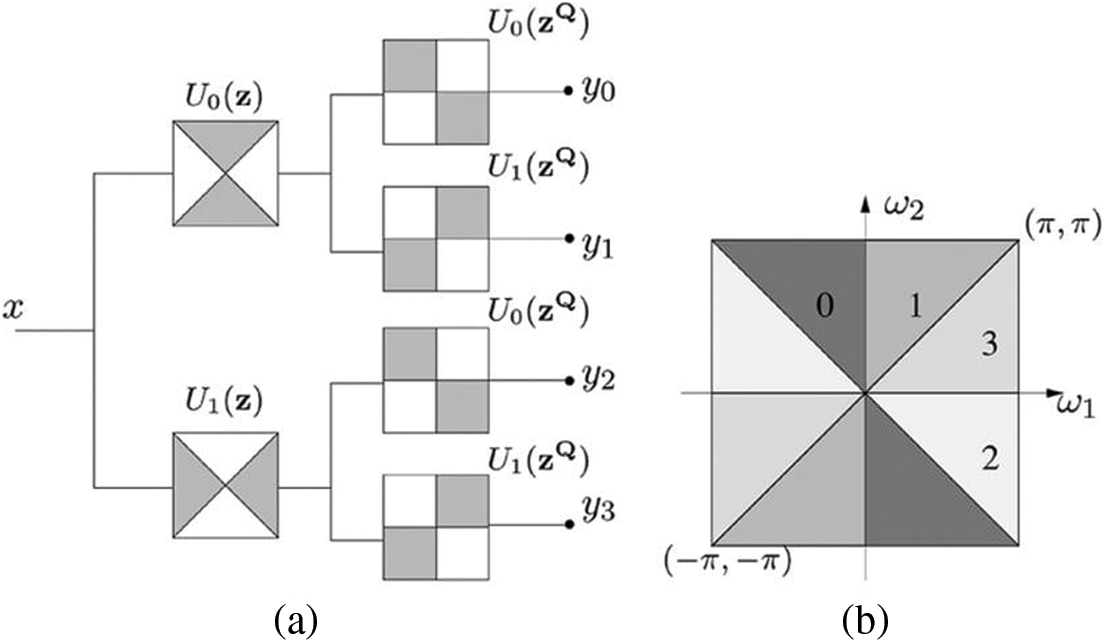

The NSP takes the multimodal source image and passes its band-pass images to the NSDFB to achieve directional decomposition. The NSDFB, the second stage of NSCT, is constructed by eliminating upsampling and downsampling, that is, by switching off the upsamplers and downsamplers in every two-channel filter bank of the directional filter bank tree. Switching them off yields a new tree of two-channel non-sub-sampled filter banks. Following this model, the proposed system uses a four-channel non-sub-sampled directional filter bank built from two-channel filter banks, as depicted in Fig. 4.

Figure 4: (a) Non sub-sampled directional filter bank structure (b) Frequency decomposition sub bands

The non-sub-sampled directional filter bank in Fig. 4a decomposes the image into four channels. As Fig. 4 shows, the second level of filtering uses non-sub-sampled fan filters with the function given in Eq. (3).
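The idea of a non-subsampled directional split can be illustrated with ideal frequency-domain wedges. This sketch is a stand-in for the four-channel NSDFB of Fig. 4 under explicit assumptions: it applies ideal angular masks with the FFT instead of the FIR fan filters of Eq. (3), and the names `directional_masks` and `directional_decompose` are hypothetical. It does show the two properties the text relies on: every directional sub-band keeps the full image size, and the sub-bands add back up to the input.

```python
import numpy as np


def directional_masks(shape, n_dirs=4):
    """Ideal wedge masks that partition the 2-D DFT plane by orientation.

    Illustration only: the NSDFB in the text uses FIR fan filters arranged
    in a two-channel tree, not ideal masks.
    """
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    # Orientation folded into [0, pi) so that opposite frequencies share a wedge.
    theta = np.mod(np.arctan2(fy, fx), np.pi)
    index = np.minimum((theta // (np.pi / n_dirs)).astype(int), n_dirs - 1)
    return [(index == k).astype(float) for k in range(n_dirs)]


def directional_decompose(band, n_dirs=4):
    """Split an image into n_dirs full-size directional sub-bands (no subsampling).

    np.real keeps the real part; the masks sum to one at every frequency,
    so the real sub-bands still add up exactly to the input.
    """
    spectrum = np.fft.fft2(band)
    return [np.real(np.fft.ifft2(spectrum * m)) for m in directional_masks(band.shape, n_dirs)]


x = np.arange(16)
stripes = np.tile(np.cos(2 * np.pi * 4 * x / 16), (16, 1))  # varies along x only
subbands = directional_decompose(stripes)
print(len(subbands), np.allclose(sum(subbands), stripes))   # 4 True
```

In this toy input all the energy lies on the horizontal frequency axis, so it falls entirely into the first wedge and the other three sub-bands are zero.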

The complexity of the NSFB is lower than that of the NSDFB. The reduction is achieved by lowering the complexity of the filters at each level of the NSFB tree, with no subsampling in any of the filters.

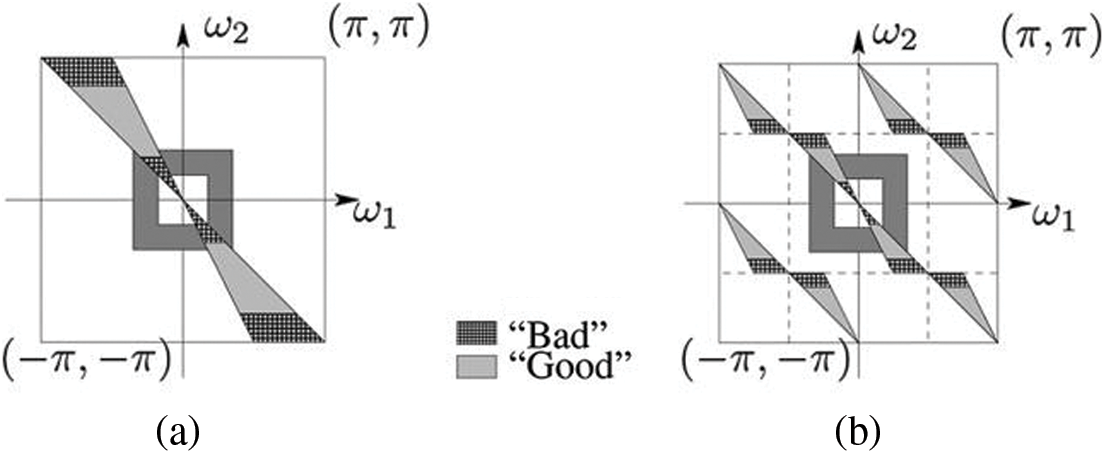

In the proposed NSCT, the non-sub-sampled pyramid is combined with the directional filter bank by integrating the directional filters at each level of the filter bank tree with the NSP. This must be done carefully, because the response of the directional filters at the lowest and highest frequencies suffers from aliasing, which causes loss of information in the upper pyramid stages, as depicted in Fig. 5.

Figure 5: Up sampling process in NSCT (a) Without up sampling process (b) With up sampling process

Fig. 5 shows the pass band of the directional filter divided into "good" and "bad" regions, and the directional filter removes the unwanted regions. The proposed system accepts a CT image and an MR image, and all unwanted portions are removed by the directional filter when the NSP and NSFB are combined. At the coarser scales, the high-pass channel of the directional filter causes severe aliasing and reduces the resolution of its output. This effect is neutralized by upsampling the NSDFB filters $U_k(z)$, that is, by substituting $U_k(z^{2^m I})$ for $U_k(z)$, where m is chosen so that the good region of the filter overlaps the passband of the pyramid image. The three filtering stages do not increase the complexity of the proposed system but improve the clarity of the data in the fused output image. The proposed system uses a sampling matrix S and a response function H(z) to produce the filtered output using the convolution theorem, as given in Eq. (4).

The filters in the deeper layers of the NSDFB have the same complexity as those in the first stage, which receives the CT and MR images. The proposed NSCT, comprising the NSP and NSDFB, requires M operations per output sample plus an additional SNL operations, where S is the number of sub-bands and L is the number of operations required at each filtering stage. In the proposed system, L is taken as 32, with four pyramid levels and 16 directions. NSCT is not restricted in the number of operations and directions, and the number of directions in the NSDFB can be chosen flexibly.

3.1.3 Non Sub-sampled Filter Bank (NSFB)

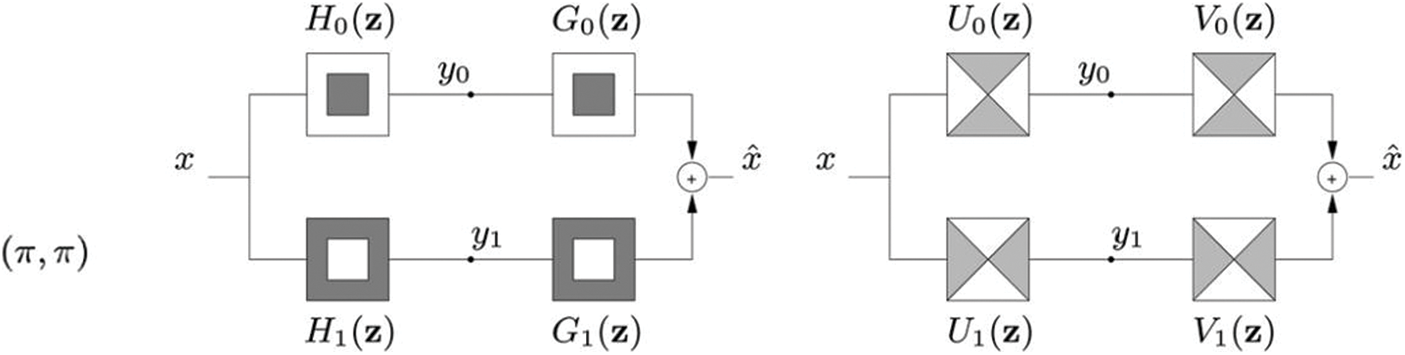

The proposed NSCT uses a two-dimensional (2-D), two-channel Non Sub-sampled Filter Bank (NSFB), as depicted in Fig. 6.

Figure 6: Non sub sampled filter bank (NSFB) with dual channel structure (i) Pyramid structured NSFB (ii) Fan structured NSFB

The proposed method uses Finite Impulse Response (FIR) filters, which are known for their simple structure and ease of implementation. Fig. 6 depicts the two-channel NSFB with pyramid-shaped and fan-shaped structures. The proposed two-channel NSFB satisfies the Bezout identity given in Eq. (5).
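The Bezout identity for a two-channel non-subsampled filter bank is commonly written as $H_0(z)G_0(z) + H_1(z)G_1(z) = 1$, the perfect-reconstruction condition. Assuming Eq. (5) takes this standard form, it can be checked numerically by multiplying the filter polynomials, i.e. convolving their taps in 2-D. The filters below are hypothetical (a B3-spline low-pass $H_0$, the difference filter $H_1 = 1 - H_0$, and trivial synthesis filters $G_0 = G_1 = 1$), and the helper names are illustrative.

```python
import numpy as np


def conv2_full(a, b):
    """Full 2-D linear convolution, i.e. the product of two 2-D filter polynomials."""
    out = np.zeros((a.shape[0] + b.shape[0] - 1, a.shape[1] + b.shape[1] - 1))
    for i in range(a.shape[0]):
        for j in range(a.shape[1]):
            out[i:i + b.shape[0], j:j + b.shape[1]] += a[i, j] * b
    return out


def pad_centered(k, shape):
    """Zero-pad an odd-sized, centred kernel to `shape`, keeping it centred."""
    out = np.zeros(shape)
    r0 = (shape[0] - k.shape[0]) // 2
    c0 = (shape[1] - k.shape[1]) // 2
    out[r0:r0 + k.shape[0], c0:c0 + k.shape[1]] = k
    return out


def satisfies_bezout(h0, h1, g0, g1, tol=1e-12):
    """Check H0(z)G0(z) + H1(z)G1(z) = 1 for odd-sized, centred FIR filters."""
    p0, p1 = conv2_full(h0, g0), conv2_full(h1, g1)
    shape = (max(p0.shape[0], p1.shape[0]), max(p0.shape[1], p1.shape[1]))
    total = pad_centered(p0, shape) + pad_centered(p1, shape)
    identity = np.zeros(shape)
    identity[shape[0] // 2, shape[1] // 2] = 1.0   # the polynomial "1"
    return bool(np.allclose(total, identity, atol=tol))


b3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0
h0 = np.outer(b3, b3)                      # hypothetical low-pass H0
h1 = -h0.copy()
h1[2, 2] += 1.0                            # H1 = 1 - H0
one = np.array([[1.0]])                    # G0 = G1 = 1
laplacian = np.array([[0.0, 1.0, 0.0], [1.0, -4.0, 1.0], [0.0, 1.0, 0.0]])
print(satisfies_bezout(h0, h1, one, one))         # True
print(satisfies_bezout(h0, laplacian, one, one))  # False
```

The second call shows that an arbitrary high-pass filter paired with trivial synthesis filters does not satisfy the identity; in that case the synthesis filters would have to be designed to compensate.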

Imposing the Bezout identity on the filters does not affect the frequency response of any of the filter bands used in the decomposition, which gives a good solution to the objective of fusing CT and MR source images. The designed filter bank is analyzed as a frame, characterized by its analysis and synthesis operators; such systems are known as frame systems. The analysis of the designed frame can be expressed as in Eq. (6).

The incoming CT and MR frames are synthesized by the proposed NSFB as given in Eq. (7).

With this design, the linear-phase filters and the frame are mutually constrained, and the pseudo-inverse is the desirable reconstruction operator.
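For a non-subsampled filter bank, the frame analysis operator maps an image to one full-size output per filter, and the pseudo-inverse synthesis operator reconstructs the image even when the filters do not satisfy the Bezout identity. With circular convolution both operators are diagonal in the 2-D DFT domain, which gives the compact sketch below. It is an illustration under those assumptions (circular boundaries, hypothetical B3-spline and Laplacian filters, illustrative function names), not a reproduction of the authors' Eqs. (6) and (7).

```python
import numpy as np


def freq_response(kernel, shape):
    """2-D DFT of an odd-sized kernel whose centre tap is moved to the origin."""
    padded = np.zeros(shape)
    kr, kc = kernel.shape
    padded[:kr, :kc] = kernel
    padded = np.roll(padded, (-(kr // 2), -(kc // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def analyze(image, kernels):
    """Frame analysis: one full-size (non-subsampled) output per filter."""
    spectrum = np.fft.fft2(image)
    return [np.real(np.fft.ifft2(freq_response(k, image.shape) * spectrum)) for k in kernels]


def synthesize_pinv(coeffs, kernels):
    """Pseudo-inverse synthesis: X = sum_k conj(H_k) Y_k / sum_k |H_k|^2."""
    shape = coeffs[0].shape
    responses = [freq_response(k, shape) for k in kernels]
    num = sum(np.conj(h) * np.fft.fft2(y) for h, y in zip(responses, coeffs))
    den = sum(np.abs(h) ** 2 for h in responses)   # must be > 0 at every frequency
    return np.real(np.fft.ifft2(num / den))


b3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0
kernels = [np.outer(b3, b3),                                            # hypothetical low-pass
           np.array([[0.0, 1.0, 0.0], [1.0, -4.0, 1.0], [0.0, 1.0, 0.0]])]  # Laplacian
img = np.random.default_rng(0).random((16, 16))
coeffs = analyze(img, kernels)
print(np.allclose(synthesize_pinv(coeffs, kernels), img))  # True
```

These two filters do not sum to an identity, so simply adding the sub-bands would not recover the image; the division by $\sum_k |H_k|^2$ is what makes the reconstruction exact, and it requires that the filters never vanish simultaneously at any frequency.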

3.2 Joint Sparse Representation (JSR)

The filtered CT and MR source images are multidimensional and require a suitable representation for denoising, for reconstructing the output as a super-resolution image [25–29], and finally for retrieving the fused image. Joint Sparse Representation is well suited to this multi-objective process and is known for its attractive properties in multimodal image fusion. Let Si be the output image of the NSCT stage, D the dictionary, and ρ the coefficient vector introduced by JSR, as given in Eq. (8).

where $S_i^r$ and $S_i^c$ are the redundant and complementary components of the input image to the JSR process, $D_r$ and $D_c$ are the dictionaries of the redundant and complementary components, and $\rho_r$ and $\rho_c$ are the corresponding sparse coefficients. The process flow of JSR is depicted in Fig. 7.
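Read together with these definitions, the decomposition can be written as follows. This is a reconstruction consistent with the listed symbols, assuming the usual additive form in which each component is a dictionary times its sparse coefficients; it is not a copy of the original Eq. (8).

$$S_i = S_i^{r} + S_i^{c} = D_r\,\rho_r + D_c\,\rho_c = \begin{bmatrix} D_r & D_c \end{bmatrix} \begin{bmatrix} \rho_r \\ \rho_c \end{bmatrix}$$

Stacking the two dictionaries into one joint dictionary $D = [D_r\ D_c]$ lets the fused image be reconstructed with a single product of the joint dictionary and the fused sparse coefficients.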

Figure 7: Flow chart of joint sparse representation on CT and MRI images

The methodology uses three joint sparse models that apply under different conditions: JSR-1 (Sparse Common Component + Innovations), JSR-2 (Common Sparse Supports) and JSR-3 (Non-sparse Common + Sparse Innovations). Fusion of the multimodal source images begins by training an overcomplete dictionary with the K-Singular Value Decomposition (K-SVD) algorithm; the fused coefficients are then denoised, and an inverse transform yields the fused image with a high level of perception. The adaptive dictionary produced by K-SVD is also termed the matching dictionary. The key step in JSR is representing the input images by their sparse coefficients. The fused multimodal image is constructed from the fused coefficients of the input images and the fused dictionary, and the final output image is obtained by adding the corresponding merged image to the redundant image, as depicted in Fig. 8.

Figure 8: Proposed architecture of joint sparse representation

Fusion using the joint sparse coefficients is performed in three steps:

i) Construct the joint dictionary and separate the redundant and complementary components of the multimodal source images, with particular emphasis on the complementary components.

ii) Fuse the sparse coefficients according to the optimization model in Eq. (9) to extract the maximum complementary information from the source images.

iii) Multiply the joint dictionary by the fused sparse coefficients to reconstruct the fused image.
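Steps i) to iii) can be sketched for a pair of vectorized patches under the JSR-1 model (a common sparse component plus sparse innovations). The sketch makes several assumptions that are not from the source: a fixed orthonormal DCT dictionary stands in for the K-SVD-trained dictionary, orthogonal matching pursuit (OMP) is used as the sparse solver, and a hypothetical max-absolute rule replaces the optimization model of Eq. (9). All function names are illustrative.

```python
import numpy as np


def dct_dictionary(n):
    """Orthonormal DCT-II basis with atoms as columns (stand-in for K-SVD)."""
    t = np.arange(n)[:, None]
    k = np.arange(n)[None, :]
    d = np.cos(np.pi * (t + 0.5) * k / n) * np.sqrt(2.0 / n)
    d[:, 0] /= np.sqrt(2.0)
    return d


def omp(phi, y, max_atoms, tol=1e-10):
    """Orthogonal matching pursuit over the columns of phi."""
    norms = np.linalg.norm(phi, axis=0)
    residual = y.astype(float).copy()
    support, sol = [], np.zeros(0)
    for _ in range(max_atoms):
        if np.linalg.norm(residual) <= tol * np.linalg.norm(y):
            break
        k = int(np.argmax(np.abs(phi.T @ residual) / norms))
        if k in support:            # no new direction left to add
            break
        support.append(k)
        sol, *_ = np.linalg.lstsq(phi[:, support], y, rcond=None)
        residual = y - phi[:, support] @ sol
    coef = np.zeros(phi.shape[1])
    if support:
        coef[support] = sol
    return coef


def joint_sparse_code(x_a, x_b, d, max_atoms=None):
    """JSR-1 style model: x_a = D(rho_c + rho_a), x_b = D(rho_c + rho_b)."""
    n, m = d.shape
    z = np.zeros((n, m))
    phi = np.block([[d, d, z], [d, z, d]])   # step i): joint dictionary
    y = np.concatenate([x_a, x_b])
    rho = omp(phi, y, 2 * n if max_atoms is None else max_atoms)
    return rho[:m], rho[m:2 * m], rho[2 * m:]


def fuse(x_a, x_b, d):
    """Keep the common part; pick the larger-magnitude innovation (hypothetical rule)."""
    rho_c, rho_a, rho_b = joint_sparse_code(x_a, x_b, d)
    rho_i = np.where(np.abs(rho_a) >= np.abs(rho_b), rho_a, rho_b)   # step ii)
    return d @ (rho_c + rho_i)   # step iii): dictionary times fused coefficients


rng = np.random.default_rng(0)
d = dct_dictionary(8)
ct_patch, mr_patch = rng.random(8), rng.random(8)   # stand-ins for vectorised patches
rho_c, rho_a, rho_b = joint_sparse_code(ct_patch, mr_patch, d)
print(np.allclose(d @ (rho_c + rho_a), ct_patch))   # True
fused_patch = fuse(ct_patch, mr_patch, d)
```

With the atom budget set to the stacked signal length (2n), this toy example reconstructs both patches exactly; a practical implementation would cap the number of atoms or stop at an error tolerance so that the codes stay sparse.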

The fusion is performed on the sparse coefficients extracted from the source images, following the optimization model in Eq. (9).

From the above Eq. (9), the

The initial fusion decision map is obtained with the choose-max rule, which selects the coefficient with the higher activity measure, as expressed in Eq. (11).

According to Eq. (11), a value of 1 at position (m, n) indicates that the coefficient of image A is selected, and a value of 0 indicates that the coefficient of image B is selected. The final fusion decision map is derived by consistency verification over a 3 × 3 window using majority filtering. The proposed technique employs a city-block distance weighting matrix, as given in Eq. (12).

The weighted similarity is computed at every high-frequency sub-band coefficient of images A and B and serves as their activity measure.

where
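Because Eqs. (11) and (12) are not reproduced in this text, the following is only a sketch of the general pattern they describe, under stated assumptions: the activity of each high-frequency coefficient is taken as a weighted local energy, the 3 × 3 weights halve with each unit of city-block distance from the centre (a hypothetical stand-in for Eq. (12)), the choose-max rule gives the initial decision map, and a 3 × 3 majority filter performs the consistency verification. Function names are illustrative.

```python
import numpy as np

# Hypothetical stand-in for Eq. (12): weights 1, 1/2, 1/4 for city-block
# distances 0, 1, 2 from the centre, normalised to sum to 1.
W = np.array([[1.0, 2.0, 1.0],
              [2.0, 4.0, 2.0],
              [1.0, 2.0, 1.0]]) / 16.0


def local_sum3(x, weights):
    """Weighted 3x3 neighbourhood sum, replicating edge pixels."""
    padded = np.pad(x.astype(float), 1, mode="edge")
    rows, cols = x.shape
    out = np.zeros((rows, cols))
    for di in range(3):
        for dj in range(3):
            out += weights[di, dj] * padded[di:di + rows, dj:dj + cols]
    return out


def activity(band):
    """Assumed activity measure: weighted local energy of the coefficients."""
    return local_sum3(band ** 2, W)


def majority3(mask):
    """Consistency verification: a pixel becomes 1 when at least 5 of the
    9 decisions in its 3x3 window are 1, and 0 otherwise."""
    votes = local_sum3(mask, np.ones((3, 3)))
    return (votes >= 5).astype(int)


def decision_map(band_a, band_b):
    """Choose-max: 1 where image A is at least as active as B, then majority filter."""
    initial = (activity(band_a) >= activity(band_b)).astype(int)
    return majority3(initial)


def fuse_band(band_a, band_b):
    """Take each coefficient from the image selected by the decision map."""
    return np.where(decision_map(band_a, band_b) == 1, band_a, band_b)


noisy = np.zeros((5, 5), dtype=int)
noisy[2, 2] = 1                       # an isolated "choose A" decision
print(majority3(noisy).sum())         # 0: the isolated decision is voted away
```

The majority filter is what removes isolated, likely noise-driven selections, so that neighbouring coefficients tend to come from the same source image.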

4 Experiment Results and Performance Analysis

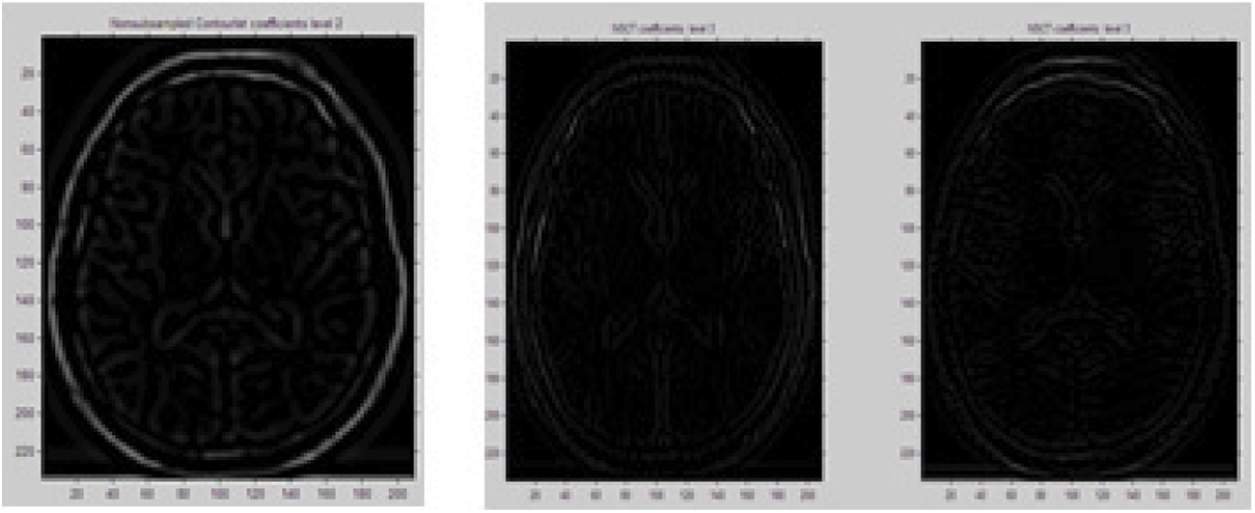

To perform the experimental analysis of the proposed system, CT and MR images, two different modalities, were considered; the image groups C and M are to be fused. In the simulation, the proposed NSCT performs three levels of decomposition of the input images. For comparison, Discrete Cosine Transform and Discrete Wavelet Transform methods were also implemented, and the results are depicted in Fig. 9, which portrays the fused results of the two modalities, the Computed Tomography image and the Magnetic Resonance image.

Figure 9: NSCT coefficients extracted from source image

Fig. 9 depicts the extraction of the first level of NSCT coefficients from the multimodal source image, from which the successive levels of NSCT coefficients are extracted, as depicted in Fig. 10.

Figure 10: NSCT coefficients 2 and 3 extracted from coefficient 1

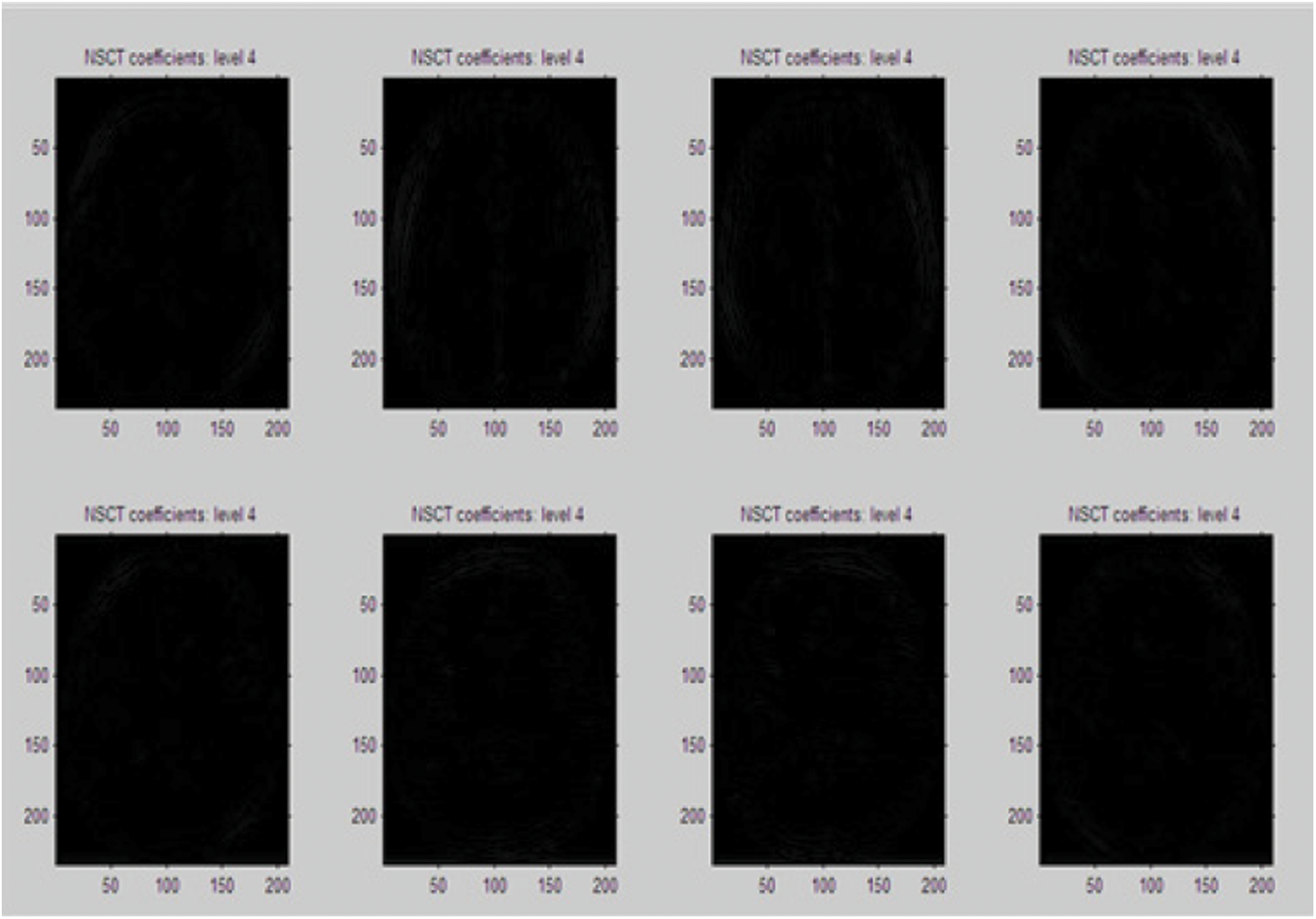

NSCT coefficient 4 is extracted from the previously obtained coefficients by a further level of directional filtering, in order to capture the finest details of the multimodal source image. The extracted NSCT coefficient 4 is depicted in Fig. 11.

Figure 11: NSCT coefficient 4 extracted from previous coefficients

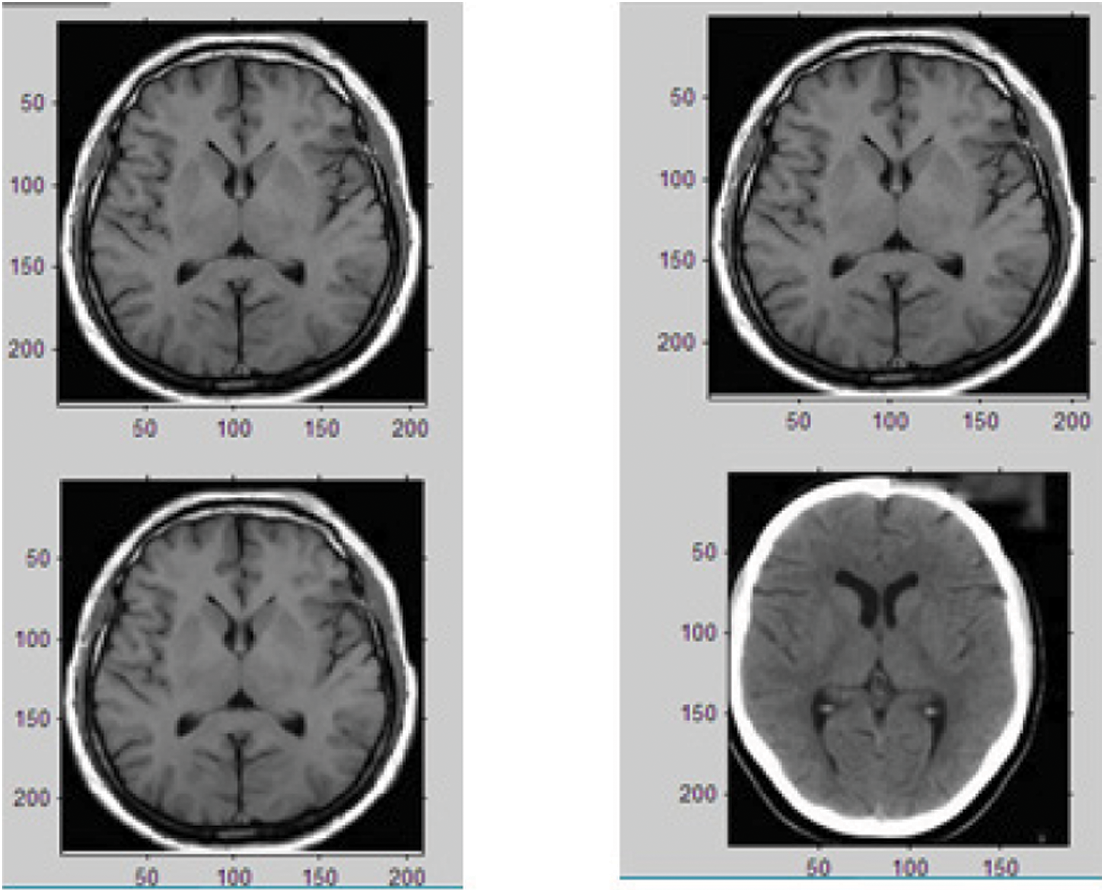

After the four levels of NSCT coefficients have been extracted from the multimodal source images, the NSCT fusion rule is applied to combine the low-frequency and high-frequency components, and a partially fused output image is obtained. In the second phase, Joint Sparse Representation (JSR) is applied to the NSCT coefficients; preprocessing is performed before the sparse coefficients are extracted from the multimodal source images. The multi-level sparse coefficients extracted from the source images are depicted in Fig. 12.

Figure 12: Sparse coefficients extraction from the input image

The coefficients obtained from the Non Sub-sampled Contourlet Transform and the Joint Sparse Representation are fused to obtain the final fused image, as depicted in Fig. 13. Fig. 13 portrays the fusion process of the proposed hybrid NSCT and JSR method, and the final fused image provides detailed information on the multimodal input images.

Figure 13: Final fused image by the proposed NSCT-JSR process

The experiments yield a highly informative output image from less informative multimodal input images. The performance of the proposed method was analyzed in MATLAB using the following metrics.

• Average Gradient

• Entropy

• Sharpness

• Standard Deviation

• Mean Square Error (MSE)

• Mutual Information (MI)

• Peak Signal to Noise Ratio (PSNR)

• Structural Similarity Index (SSIM)
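Common definitions of most of the listed metrics can be sketched with NumPy. The definitions below are widely used conventions assumed for illustration, since the text does not state the exact formulas behind Tab. 1: 8-bit images with a peak value of 255, histogram-based entropy and mutual information in bits, and a single-window (global) SSIM instead of the usual locally windowed index. Sharpness is omitted because its definition varies between papers. For fusion, MI is often reported as MI(F, A) + MI(F, B) between the fused image F and the two source images.

```python
import numpy as np


def entropy(img, bins=256):
    """Shannon entropy in bits of an 8-bit image's grey-level histogram."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def mutual_information(a, b, bins=256):
    """Histogram-based mutual information in bits between two 8-bit images."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pab = joint / joint.sum()
    pa = pab.sum(axis=1, keepdims=True)
    pb = pab.sum(axis=0, keepdims=True)
    nz = pab > 0
    return float(np.sum(pab[nz] * np.log2(pab[nz] / (pa @ pb)[nz])))


def mse(a, b):
    """Mean square error between two images of equal size."""
    return float(np.mean((a.astype(float) - b.astype(float)) ** 2))


def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(a, b)
    return float("inf") if err == 0 else float(10 * np.log10(peak ** 2 / err))


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    f = img.astype(float)
    dx = np.diff(f, axis=1)[:-1, :]
    dy = np.diff(f, axis=0)[:, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def standard_deviation(img):
    """Standard deviation of the grey levels (a simple contrast measure)."""
    return float(np.std(img.astype(float)))


def global_ssim(a, b, peak=255.0):
    """SSIM computed over one window covering the whole image (simplified)."""
    a, b = a.astype(float), b.astype(float)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    ma, mb = a.mean(), b.mean()
    cov = np.mean((a - ma) * (b - mb))
    num = (2 * ma * mb + c1) * (2 * cov + c2)
    den = (ma ** 2 + mb ** 2 + c1) * (a.var() + b.var() + c2)
    return float(num / den)
```

For example, `psnr(np.zeros((4, 4)), np.ones((4, 4)))` returns 10·log10(255²), about 48.13 dB, and the mutual information of an image with itself equals its entropy.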

Tab. 1 lists the values of these metrics for the proposed technique and compares them with existing image fusion techniques. The results of the proposed technique are given in the last row; the existing medical image fusion methods considered are the Discrete Cosine Transform (DCT), Contourlet Transform (CT), Discrete Wavelet Transform (DWT) and basic Non Sub-sampled Contourlet Transform (NSCT) methods.

The performance analysis shows that the proposed system outperforms the existing methods on the reported metrics, which makes it well suited to medical image fusion with a high-perception output image.

Medical image fusion is an essential process in medical treatment, because the diagnosis of disease depends on the quality of the images recorded by Computed Tomography and Magnetic Resonance Imaging. Digital image processing is effective at extracting features from the recorded images and providing an enhanced image that highlights those features. Medical experts use these fused images for accurate diagnosis and further treatment of diseases. Fusing two multimodal images is a challenging process, and the result carries a high level of information. The proposed hybrid of the Non Sub-sampled Contourlet Transform (NSCT) and Joint Sparse Representation (JSR) performs image fusion effectively and yields a high-perception output image with better performance than existing methods. The experimental results show that the proposed medical image fusion technique preserves the bone structure information of the Computed Tomography image and the soft-tissue details of the Magnetic Resonance image, with good contrast and a high level of perception. In future work, the model can be extended to the design of image classification models for the healthcare sector.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. B. Huang, F. Yang, M. Yin, X. Mo and C. Zhong, “A review of multimodal medical image fusion techniques,” Computational and Mathematical Methods in Medicine, vol. 2020, pp. 1–16, 2020.

2. T. Li, Y. Wang, C. Chang, N. Hu and Y. Zheng, “Color-appearance-model based fusion of gray and pseudo-color images for medical applications,” Information Fusion, vol. 19, pp. 103–114, 2014.

3. J. M. A. van der Krogt, W. H. van Binsbergen, C. J. van der Laken and S. W. Tas, “Novel positron emission tomography tracers for imaging of rheumatoid arthritis,” Autoimmunity Reviews, vol. 20, no. 3, pp. 102764, 2021.

4. C. M. Naumann, C. Colberg, M. Jüptner, M. Marx, Y. Zhao et al., “Evaluation of the diagnostic value of preoperative sentinel lymph node (SLN) imaging in penile carcinoma patients without palpable inguinal lymph nodes via single photon emission computed tomography/computed tomography (SPECT/CT) as compared to planar scintigraphy,” Urologic Oncology: Seminars and Original Investigations, vol. 36, no. 3, pp. 92.e17–92.e24, 2018.

5. B. C. F. de Oliveira, B. S. Marció and R. C. C. Flesch, “Enhanced damage measurement in a metal specimen through the image fusion of tone-burst vibro-acoustography and pulse-echo ultrasound data,” Measurement, vol. 167, pp. 108445, 2021.

6. A. Vijan, P. Dubey and S. Jain, “Comparative analysis of various image fusion techniques for brain magnetic resonance images,” Procedia Computer Science, vol. 167, pp. 413–422, 2020.

7. W. Kong, Y. Chen and Y. Lei, “Medical image fusion using guided filter random walks and spatial frequency in framelet domain,” Signal Processing, vol. 181, pp. 107921, 2021.

8. J. Tan, T. Zhang, L. Zhao, X. Luo and Y. Y. Tang, “Multi-focus image fusion with geometrical sparse representation,” Signal Processing: Image Communication, vol. 92, pp. 116130, 2021.

9. F. Yang, D. Zhang, H. Zhang, K. Huang and Y. Du, “Fusion reconstruction algorithm to ill-posed projection (FRAiPP) for artifacts suppression on X-ray computed tomography,” Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, vol. 976, pp. 164263, 2020.

10. J. Chen, L. Zhang, L. Lu, Q. Li, M. Hu et al., “A novel medical image fusion method based on rolling guidance filtering,” Internet of Things, vol. 14, pp. 100172, 2021.

11. Z. Wang, Z. Cui and Y. Zhu, “Multi-modal medical image fusion by Laplacian pyramid and adaptive sparse representation,” Computers in Biology and Medicine, vol. 123, pp. 103823, 2020.

12. L. Tang, C. Tian, L. Li, B. Hu, W. Yu et al., “Perceptual quality assessment for multimodal medical image fusion,” Signal Processing: Image Communication, vol. 85, pp. 115852, 2020.

13. H. Ullah, B. Ullah, L. Wu, F. Y. O. Abdalla, F. Y. Ren et al., “Multi-modality medical images fusion based on local-features fuzzy sets and novel sum-modified-Laplacian in non-subsampled shearlet transform domain,” Biomedical Signal Processing and Control, vol. 57, pp. 101724, 2020.

14. L. Corbat, J. Henriet, Y. Chaussy and J. C. Lapayre, “Fusion of multiple segmentations of medical images using OV2ASSION and deep learning methods: Application to CT-scans for tumoral kidney,” Computers in Biology and Medicine, vol. 124, pp. 103928, 2020.

15. Q. Hu, S. Hu and F. Zhang, “Multi-modality medical image fusion based on separable dictionary learning and Gabor filtering,” Signal Processing: Image Communication, vol. 83, pp. 115758, 2020.

16. S. Maqsood and U. Javed, “Multi-modal medical image fusion based on two-scale image decomposition and sparse representation,” Biomedical Signal Processing and Control, vol. 57, pp. 101810, 2020.

17. P. Dharani Devi and D. Iyanar, “CNN based nutrient extraction from food images,” in 2020 Fourth Int. Conf. on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Palladam, India, pp. 398–402, 2020.

18. S. Li, B. Yang and J. Hu, “Performance comparison of different multi-resolution transforms for image fusion,” Information Fusion, vol. 12, no. 2, pp. 74–84, 2011.

19. H. Lu, L. Zhang and S. Serikawa, “Maximum local energy: An effective approach for multisensor image fusion in beyond wavelet transform domain,” Computers & Mathematics with Applications, vol. 64, no. 5, pp. 996–1003, 2012.

20. G. Pajares and J. M. D. L. Cruz, “A wavelet-based image fusion tutorial,” Pattern Recognition, vol. 37, no. 9, pp. 1855–1872, 2004.

21. G. Piella, “A general framework for multiresolution image fusion: From pixels to regions,” Information Fusion, vol. 4, no. 4, pp. 259–280, 2003.

22. Y. Yang, D. S. Park, S. Huang and N. Rao, “Medical image fusion via an effective wavelet-based approach,” EURASIP Journal on Advances in Signal Processing, vol. 2010, no. 1, pp. 579341, 2010.

23. J. J. Lewis, R. J. O'Callaghan, S. G. Nikolov, D. R. Bull and N. Canagarajah, “Pixel- and region-based image fusion with complex wavelets,” Information Fusion, vol. 8, no. 2, pp. 119–130, 2007.

24. H. Li, Y. Chai and Z. Li, “Multi-focus image fusion based on nonsubsampled contourlet transform and focused regions detection,” Optik, vol. 124, no. 1, pp. 40–51, 2013.

25. Y. Chai, H. Li and X. Zhang, “Multifocus image fusion based on features contrast of multiscale products in nonsubsampled contourlet transform domain,” Optik, vol. 123, no. 7, pp. 569–581, 2012.

26. Y. Zheng, E. A. Essock, B. C. Hansen and A. M. Haun, “A new metric based on extended spatial frequency and its application to DWT based fusion algorithms,” Information Fusion, vol. 8, no. 2, pp. 177–192, 2007.

27. M. N. Do and M. Vetterli, “The contourlet transform: An efficient directional multiresolution image representation,” IEEE Transactions on Image Processing, vol. 14, no. 12, pp. 2091–2106, 2005.

28. L. Yang, B. L. Guo and W. Ni, “Multimodality medical image fusion based on multiscale geometric analysis of contourlet transform,” Neurocomputing, vol. 72, no. 1–3, pp. 203–211, 2008.

29. A. L. Da Cunha, J. Zhou and M. N. Do, “The nonsubsampled contourlet transform: Theory, design, and applications,” IEEE Transactions on Image Processing, vol. 15, no. 10, pp. 3089–3101, 2006.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.