DOI:10.32604/csse.2023.027980

Computer Systems Science & Engineering

Article

Study on Real-Time Heart Rate Detection Based on Multi-People

1School of Electrical and Electronic Engineering, Wuhan Polytechnic University, Wuhan, 430000, China

2School of Electronic and Information Engineering, Guizhou Normal University, Guizhou, 550000, China

3Interactive Media and Computer Science, University of Miami, Miami, 33124, Florida, USA

*Corresponding Author: Wu Zeng. Email: zengwu@whpu.edu.cn

Received: 30 January 2022; Accepted: 19 April 2022

Abstract: Heart rate is an important vital sign that indicates physical and mental health status. Typical heart rate measurement instruments require direct contact with the skin, which is time-consuming and costly. Therefore, the study of non-contact heart rate measurement methods is of great importance. Based on the principles of photoplethysmography, we use a computer and a camera to capture facial images, accurately detect face regions, and track multiple faces using a multi-target tracking algorithm. After the facial image is segmented into regions, the signal of the region of interest is extracted. Finally, frequency detection of the collected Photoplethysmography (PPG) and Electrocardiography (ECG) signals is completed with peak detection, Fourier analysis, and a wavelet filter. The experimental results show that the subject's heart rate can be detected quickly and accurately even when multiple faces are monitored simultaneously.

Keywords: Face recognition; face analysis; heart rate detection; IPPG signal

The heart is one of the most important organs of the human body and is closely associated with health status. If the state of the heart can be monitored in real time, the early onset of heart disease may even be prevented. In conventional medical devices, heart rate and cardiac activity are monitored by measuring electrophysiological signals with electrocardiography (ECG), which requires attaching electrodes to the body to record the electrical activity of the heart tissue. Each heartbeat also sends a pressure wave through the blood vessels, which slightly changes their diameter. Because ECG instruments are expensive, they are usually installed in large hospitals and are not suitable for daily life or other specific scenarios.

An alternative to ECG is based on measuring reflected light. In this approach, the hemoglobin concentration in the blood changes with each heartbeat, and when light passes through the skin and the blood beneath it, the change in light absorption caused by the changing concentration of blood components is collected to form an IPPG optical pulse signal [1,2]. Image Photoplethysmography (IPPG) is a method to obtain Photoplethysmography (PPG) signals from remote, non-contact video images. When the heart contracts, peripheral blood volume increases; when it relaxes, peripheral blood volume decreases. Because blood absorbs more light than the surrounding tissue, the intensity of the light reflected from the skin surface pulsates periodically with the heartbeat. Studies have shown that cameras can obtain PPG signals by detecting color changes of the human face. In this paper, we describe a method for detecting the heart rates of multiple subjects in real time from facial video, by measuring the facial color changes caused by changes in blood flow. The pulsating change in blood volume can generally be measured with a photoelectric volume sensor, whose waveform contains volume pulse and blood flow information; systolic and diastolic blood pressures can then be obtained by comparing the volume pulse information with blood pressure signals. This method is called photoplethysmography. Image photoplethysmography uses conventional cameras to capture facial images and measures the small periodic color changes of the facial skin caused by cardiac activity to obtain basic physiological parameters of the human body. It is more convenient and faster than traditional contact heart rate measurement.

In early PPG work in the 1980s, J. A. Nijboer studied the principle of photoplethysmography and observed that reflection and transmission values differ depending on where the signal is acquired. Because erythrocytes absorb light, the reflected light varies in antiphase with the blood volume, and this absorption appears against the strong reflection from the surrounding tissue. In 2007, J. Allen proposed using PPG technology to measure physiological signals such as heart rate and respiratory rate because of its simplicity and low cost [3]. In 2010, Ming-Zher Poh et al. proposed an IPPG heart rate detection method based on automated face tracking. This technique uses Independent Component Analysis (ICA) to separate the Red-Green-Blue (RGB) time traces, namely the red-Image Photoplethysmography (r-IPPG), green-Image Photoplethysmography (g-IPPG), and blue-Image Photoplethysmography (b-IPPG) signals, into independent source signals from which the pulse is obtained. This application of IPPG led to the rapid development of contactless heart rate detection [4]. In 2013, G. de Haan et al. proposed a chrominance-based method (CHROM) that differs from the earlier blind source separation approach: it forms the pulse signal as a linear combination of the color channels by assuming a standardized skin tone under a white-balanced camera [5]. In 2014, G. de Haan proposed a method for improving motion robustness (PBV) based on the blood volume pulse signature, which uses the characteristic blood volume changes at different wavelengths to explicitly distinguish pulse-induced color changes from motion noise in RGB measurements [6]. In 2016, W. Wang et al. designed the plane-orthogonal-to-skin (POS) algorithm to improve accuracy; it defines a plane orthogonal to the skin tone in the temporally normalized RGB space for pulse extraction [7].
In 2019, W. J. Wang et al. proposed a multi-person heart rate measurement system based on the Open Source Computer Vision Library (OpenCV). The system uses a target tracking algorithm to capture the facial regions of interest of multiple people and monitor their heart rates in real time [8,9].

The key developments in photoplethysmographic methods can be summarized as follows. Early work extracted the heartbeat signal from the single green channel to verify technical feasibility. Blind source separation was then proposed, which separates the three color channels and selects the most suitable component as the final heartbeat signal. Researchers subsequently proposed a series of methods to overcome motion artifacts, non-rigid movement, specular reflection, and illumination changes. Later, researchers proposed dividing the face region into a grid and weighting each cell to define the region of interest more effectively. These methods have achieved good results in heart rate measurement.

Using a Light Emitting Diode (LED) light source and an optical volumetric heart rate sensor, photoplethysmography measures the attenuated light reflected and absorbed by blood vessels and tissues, traces the pulsation of the blood vessels, and thereby measures the pulse wave.

The essence of heart rate measurement with an LED and an optical sensor is photoelectric conversion.

That is, when LED light illuminates the skin, the light reflected by the skin tissue is received by a photosensitive sensor and converted into an electrical signal, which an analog-to-digital converter then converts into a digital signal. Most optical sensors use green light (~500 nm) as the light source, for the following reasons:

(1) The skin’s melanin tends to absorb shorter wavelengths;

(2) Moisture in the skin also tends to absorb UV and IR light;

(3) Most of the yellow light (600 nm) is absorbed by red blood cells in the tissue;

(4) Red and near-IR light passes through skin tissue more easily than other wavelengths of light;

(5) Compared with red light, green (green-yellow) light is absorbed more strongly by both oxyhemoglobin and deoxyhemoglobin.

When the light source illuminates the skin tissue and the light is reflected to the optical sensor, the light intensity fluctuates, mainly because of changes in arterial blood flow: muscle, bone, and veins exhibit largely constant light absorption over time (assuming no large movement at the image acquisition site).

When the light signals are converted into electrical signals, the measured signal can be divided into DC and AC components precisely because the light absorption of the arteries changes while that of the other tissues remains essentially constant (this is the most important premise of the approach).

The AC component, which reflects the characteristics of blood flow, is then extracted. Because skin tissues absorb red and IR light to different degrees, the DC component naturally differs between wavelengths. Removing the DC component and analyzing the varying AC component is called photoplethysmography (PPG).
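The AC/DC separation above can be illustrated with a minimal Python sketch. It assumes the DC component can be approximated by a centered moving average over roughly one cardiac period; the function name `split_ac_dc` and the window length are illustrative choices, not the method used in this paper.

```python
from statistics import fmean


def split_ac_dc(samples, window=5):
    """Split a raw PPG trace into a DC baseline and an AC (pulsatile) part.

    Assumption: the DC baseline is approximated by a centered moving average
    of `window` samples (truncated at the edges); AC = raw - DC.
    """
    n = len(samples)
    half = window // 2
    dc = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        dc.append(fmean(samples[lo:hi]))
    ac = [s - d for s, d in zip(samples, dc)]
    return ac, dc


# A constant reflection level (tissue, bone, venous blood) has no AC part.
ac, dc = split_ac_dc([120.0] * 8)
```

In practice the window would be chosen from the sampling rate so that it spans about one heartbeat; a longer window keeps more of the pulse in the AC part.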

IPPG technology is based on PPG. Unlike conventional PPG technology, which requires dedicated sensor equipment, IPPG can obtain human Blood Volume Pulse (BVP) signals through smartphone cameras and the cameras of various monitoring devices. It generally estimates heart rate parameters by extracting skin color changes (usually of facial or fingertip skin) from the captured video. With the development of IPPG technology [10–15], contactless heart rate detection can be achieved with ordinary consumer-grade cameras; on this basis, this paper studies multi-person real-time heart rate detection with network cameras in a controlled environment.

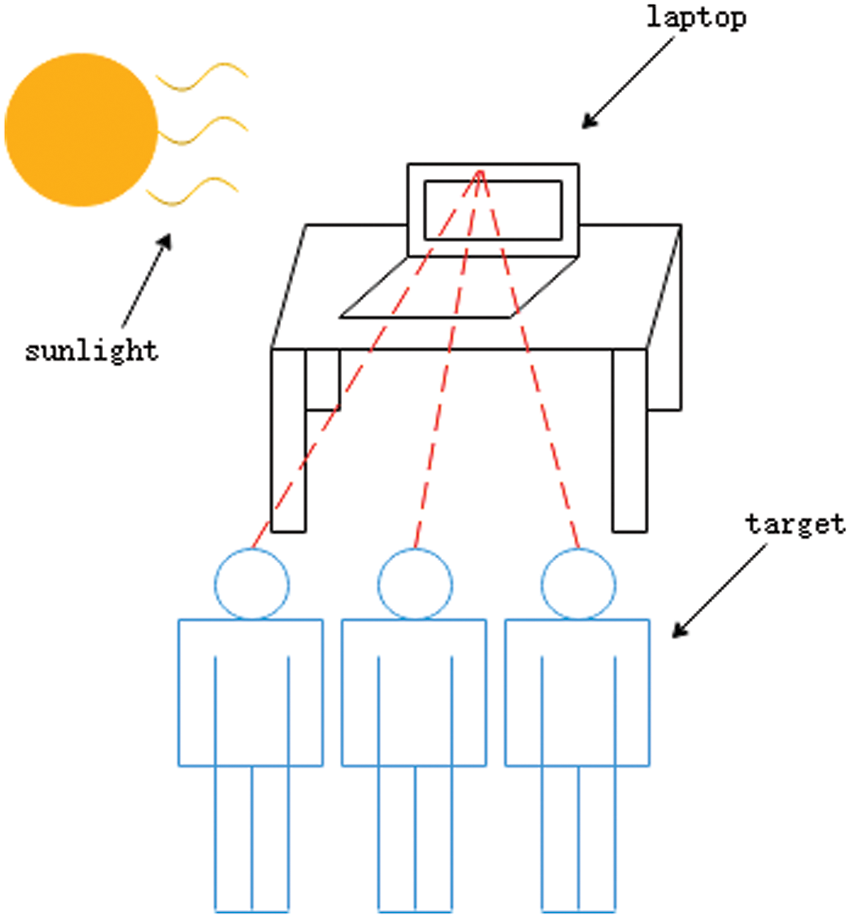

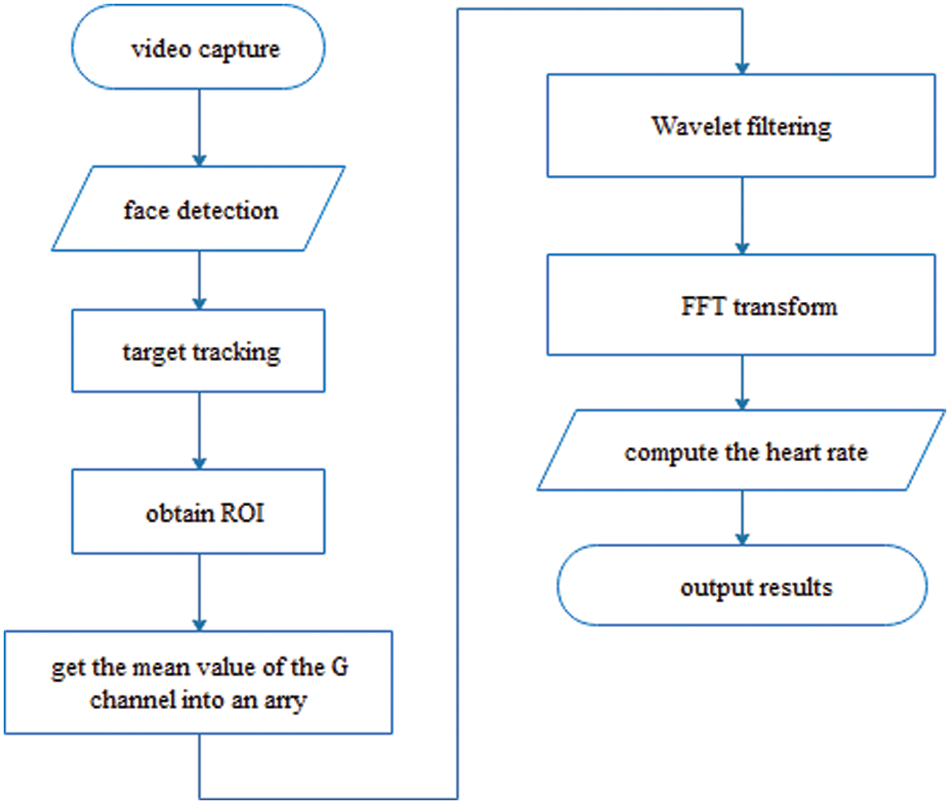

This section introduces the experimental process of a video-based multi-person heart rate detection system that achieves real-time heart rate detection for multiple people using remote photoplethysmography (RPPG) to calculate the heart rate values. In the experimental setup, natural lighting was kept constant, and real-time heart rate monitoring was conducted through a network camera. The experimental process of the whole system is divided into four parts: face detection, face tracking, region of interest (ROI) selection, and IPPG extraction. The experimental setup is shown in Fig. 1.

Figure 1: The experimental setup for this study

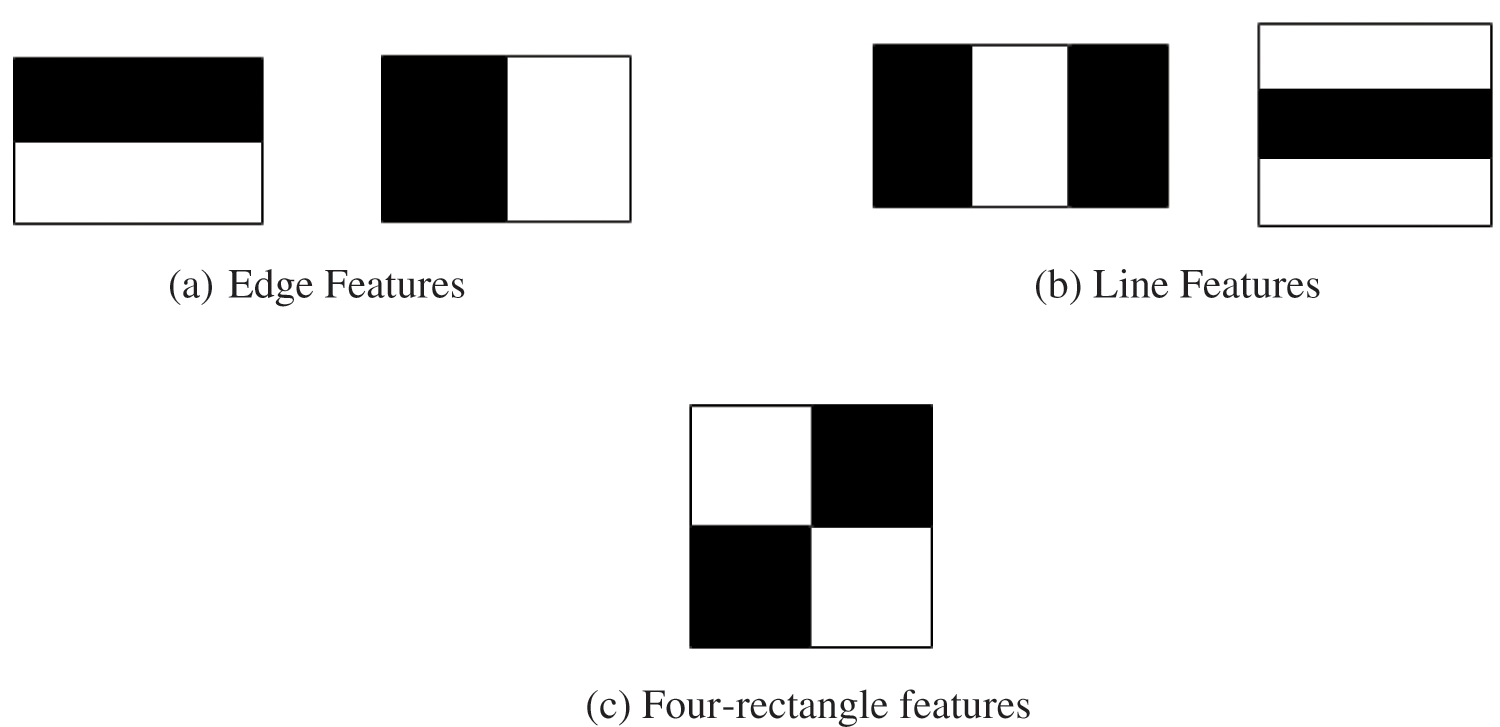

In this paper, face detection uses a cascade classifier based on Haar features, an effective machine-learning object detection method in which a cascade function is trained on many positive and negative samples and then applied to detect objects, such as faces, in other images.

First, the algorithm requires many positive samples (images containing faces) and negative samples (images without faces) to train the classifier. Features are then extracted from these images. The Haar features used are shown in Fig. 2 below. Similar to a convolution kernel, the value of each feature is obtained by subtracting the sum of the pixels in the white region from the sum of the pixels in the black region. Evaluating each kernel at all possible sizes and locations yields a large number of features, and for each feature the pixel sums of the white and black rectangles must be calculated.

Figure 2: Haar feature map

To reduce this extensive computation, the integral image, a matrix representation that captures global information, is introduced, as shown in Eq. (1). The value ii(i, j) of the integral image at position (i, j) is the sum of all pixels above and to the left of (i, j) in the original image I:

$$ii(i,j)=\sum_{i'\le i}\sum_{j'\le j} I(i',j') \qquad (1)$$

This greatly simplifies the calculation: once the integral image has been computed, the pixel sum of any rectangle, whatever its size, requires only four array references. Converting pixel intensity values to an integral image therefore allows the sum of the pixels in an image, or in any rectangle within it, to be computed quickly.
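The integral image and a two-rectangle Haar feature can be sketched in plain Python as follows. The zero-padded layout and the left-black/right-white feature layout are assumptions chosen for illustration; the point is that any rectangle sum costs four lookups regardless of its size.

```python
def integral_image(img):
    """Zero-padded integral image: ii[y][x] = sum of img[0..y-1][0..x-1]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left pixel is (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Hypothetical horizontal two-rectangle feature: black left half minus white right half."""
    half = w // 2
    black = rect_sum(ii, x, y, half, h)
    white = rect_sum(ii, x + half, y, half, h)
    return black - white


ii = integral_image([[1, 2], [3, 4]])
feature = two_rect_feature(ii, 0, 0, 2, 2)  # (1 + 3) - (2 + 4)
```

The integral image is built once per frame in a single pass, after which every candidate feature at every position and scale reuses it.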

The face detection process is divided into a training stage and a test stage. The training stage uses a prepared training set of 5,250 face detection images containing 11,931 faces; each image has an annotation file recording the coordinates of the upper-left corner of each bounding box, the box's length and width, and attributes such as blur, illumination, occlusion, validity, and pose. Model parameters for face detection were obtained from this training set and saved locally. In the test stage, we experimentally validated the accuracy of the Haar-feature cascade classifier, and the face of each subject participating in the experiment was detected accurately.
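How a trained cascade rejects most non-face windows early can be sketched as below. The `WeakClassifier` and `Stage` structures are hypothetical, simplified after the Viola-Jones design (each stage is a boosted sum of thresholded Haar-feature votes); they are not OpenCV's internal data structures, and the thresholds in the example are made up.

```python
from dataclasses import dataclass, field


@dataclass
class WeakClassifier:
    feature_index: int  # which Haar feature value to look at
    threshold: float
    polarity: int       # +1 or -1
    alpha: float        # vote weight learned by boosting

    def vote(self, features):
        # Fires when polarity * f < polarity * threshold.
        f = features[self.feature_index]
        return self.alpha if self.polarity * f < self.polarity * self.threshold else 0.0


@dataclass
class Stage:
    weak: list = field(default_factory=list)
    stage_threshold: float = 0.0


def run_cascade(features, stages):
    """Return (is_face, number_of_stages_evaluated) for one detection window."""
    for i, stage in enumerate(stages, start=1):
        score = sum(w.vote(features) for w in stage.weak)
        if score < stage.stage_threshold:
            return False, i  # rejected early: later, costlier stages are skipped
    return True, len(stages)


stages = [
    Stage([WeakClassifier(0, 10.0, -1, 1.0)], 0.5),  # requires feature 0 > 10
    Stage([WeakClassifier(1, 5.0, 1, 1.0)], 0.5),    # requires feature 1 < 5
]
```

Because most windows in a frame contain no face and fail an early stage, the average cost per window stays low even though later stages are large.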

Once face detection has identified all subjects, the real-time heart rate of each subject can be determined. We used a face tracking algorithm for real-time tracking to prevent data from different subjects being mixed up and contaminating the experimental results. Real-time tracking is possible because the algorithm is executed on every new frame, so the procedure runs in one direction only: forward. Only the current frame is needed to provide the necessary information for the next tracking step.
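Keeping each subject's signal separate requires that a face keep the same identity from frame to frame. The paper uses a dedicated tracker for this (SAMF, discussed below); the sketch here swaps in a much simpler greedy intersection-over-union (IoU) association purely to illustrate the bookkeeping. The function names and the 0.3 IoU threshold are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily give each existing track id the best unused detection.

    tracks: dict track_id -> last (x, y, w, h) box.
    Tracks with no match are dropped; unmatched detections get new ids.
    """
    updated, used = {}, set()
    for tid, box in tracks.items():
        best_j, best_score = None, min_iou
        for j, det in enumerate(detections):
            if j in used:
                continue
            score = iou(box, det)
            if score >= best_score:
                best_j, best_score = j, score
        if best_j is not None:
            updated[tid] = detections[best_j]
            used.add(best_j)
    next_id = max(tracks, default=-1) + 1
    for j, det in enumerate(detections):
        if j not in used:
            updated[next_id] = det
            next_id += 1
    return updated


tracks = {0: (0, 0, 10, 10), 1: (50, 0, 10, 10)}
matched = associate(tracks, [(51, 1, 10, 10), (1, 0, 10, 10)])
```

Each track id would then index its own signal buffer, so a subject's color trace is never appended to another subject's.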

OpenCV is a widely used computer vision library that provides many algorithms capable of tracking a small number of targets. These algorithms can be divided into two categories. The first category tracks the target's trajectory by predicting its future location, which requires correcting inter-frame errors. One disadvantage is that accuracy is lost when the network camera moves too fast or the target suddenly changes direction; the tracker may then identify another object at the location predicted by the algorithm. Examples include MIL (multiple-instance learning), Boosting, and Median-Flow. The second category consists of algorithms developed for complex tracking problems, which can adapt to new conditions and correct major errors; their disadvantage is that they need more memory and processing power. Several target tracking algorithms are described below.

(1) MOSSE: The Minimum Output Sum of Squared Error (MOSSE) filter is initialized on the first frame of the video. It is robust to changes in lighting, scale, pose, and non-rigid deformation, and runs at 669 frames per second (fps). Filter-based tracking models the appearance of the target object with a filter trained on template images. The target is initially selected with a small tracking window centered on it in the first frame, after which tracking and filter training proceed together: the target is tracked by filtering the search window in the next frame, the location of the maximum filter response is taken as the target's new position, and the filter is updated online at that position.
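A one-dimensional toy version of MOSSE training and detection is sketched below, using a naive DFT so that only the standard library is needed. It uses the single-template closed form H* = (G·F*)/(F·F* + ε); the real tracker works on 2-D image patches, averages over several training samples, and updates the filter online, none of which is shown here. The template values are made up.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def gaussian_peak(n, center, sigma=2.0):
    """Desired output g: a Gaussian peaked at the target position."""
    return [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(n)]


def train_mosse(template, desired, eps=1e-3):
    """Closed-form (conjugate) filter H* = (G . F*) / (F . F* + eps), element-wise."""
    F, G = dft(template), dft(desired)
    return [g * f.conjugate() / (f * f.conjugate() + eps) for f, g in zip(F, G)]


def locate(h_conj, patch):
    """Apply the filter to a new patch (G' = F' . H*) and return the peak index."""
    response = [r.real for r in idft([f * h for f, h in zip(dft(patch), h_conj)])]
    return max(range(len(response)), key=response.__getitem__)


n = 32
template = [1.0 if i == 10 else 0.1 * (i % 4) for i in range(n)]
h_conj = train_mosse(template, gaussian_peak(n, center=16))
shifted = [template[(i - 3) % n] for i in range(n)]  # target moved 3 samples right
print(locate(h_conj, template), locate(h_conj, shifted))
```

The response peak follows the target: it sits at the trained position for the original template and moves by the same three samples when the target is shifted.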

(2) KCF: The Kernelized Correlation Filter (KCF) tracking algorithm is a discriminative tracking method that trains a target detector during tracking, uses the trained detector to predict the object's position in the next frame, and then updates the detector with the new result. The target region is generally used as a positive sample and the surrounding area outside it as negative samples. The algorithm collects these positive and negative samples with a circulant matrix of the region around the target and trains the detector with ridge regression. Because circulant matrices are diagonalized in Fourier space, matrix operations are turned into element-wise (Hadamard) products of vectors, which greatly reduces computation, increases speed, and allows the algorithm to meet real-time requirements.
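The property the KCF description relies on, that a circulant matrix is diagonalized by the Fourier transform, can be checked numerically with the sketch below. It compares an explicit circulant matrix-vector product with an element-wise product of DFTs; the naive O(n^2) DFT is used only to stay dependency-free, whereas a real tracker would use an FFT. The vectors are arbitrary example values.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def circulant(x):
    """C[i][j] = x[(i - j) % n]: column j is x cyclically shifted down by j."""
    n = len(x)
    return [[x[(i - j) % n] for j in range(n)] for i in range(n)]


def matvec(m, v):
    """Explicit O(n^2) matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def circulant_matvec_fourier(x, v):
    """Same product as an element-wise (Hadamard) product in the Fourier domain."""
    return [c.real for c in idft([a * b for a, b in zip(dft(x), dft(v))])]


x = [1.0, 2.0, 3.0, 4.0]
v = [0.5, -1.0, 2.0, 0.0]
direct = matvec(circulant(x), v)
fourier = circulant_matvec_fourier(x, v)
```

With an FFT, the Fourier route costs O(n log n) instead of O(n^2), which is where KCF's speed comes from.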

(3) CSK: In the Circulant Structure of Tracking-by-detection with Kernels (CSK) method, as in most motion tracking, the target is tracked by finding the correlation between two adjacent frames and thereby determining the direction of motion of the object; after enough iterations the object can be tracked completely. Once the tracking object has been determined, windows are cropped from the current and next frames at the target position, both are transformed with the Fast Fourier Transform (FFT), and the transforms are multiplied directly in the frequency domain. This can be understood simply as finding where the two consecutive frames correlate most strongly; the result is then mapped through a kernel function and used for training. Training uses a desired response Y, which encodes the starting position of the object: because the object always starts at the center of the first-frame window, Y is a Gaussian function constructed according to the size of the tracking window. CSK uses this circulant structure for correlation detection between adjacent frames: element-wise multiplication of the two frames in the frequency domain corresponds to convolution (correlation) of the two frames in the spatial domain. Earlier motion trackers performed correlation detection with a sliding window; with a sliding step of 1, this is equivalent to a convolution between the two frames. However, convolution in the spatial domain is computationally very expensive, whereas element-wise multiplication in the frequency domain is much cheaper, so CSK's circulant structure allows the computation to be greatly accelerated in the frequency domain.
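The equivalence described above, a step-1 sliding-window correlation versus an element-wise product in the frequency domain, can be sketched in one dimension as follows, together with a CSK-style Gaussian desired response Y. Only the linear correlation is shown (CSK additionally applies a kernel), and the sigma parameter of `gaussian_label` is an assumption; in CSK it is derived from the target size.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def gaussian_label(n, sigma):
    """Desired response Y: a Gaussian peaked at the window center."""
    c = n // 2
    return [math.exp(-0.5 * ((i - c) / sigma) ** 2) for i in range(n)]


def sliding_correlation(a, b):
    """Step-1 circular sliding window: r[s] = sum_k a[k] * b[(k + s) % n]; O(n^2)."""
    n = len(a)
    return [sum(a[k] * b[(k + s) % n] for k in range(n)) for s in range(n)]


def fourier_correlation(a, b):
    """Same correlation via the frequency domain: IDFT(conj(A) * B) for real inputs."""
    A, B = dft(a), dft(b)
    return [v.real for v in idft([x.conjugate() * y for x, y in zip(A, B)])]


prev_window = [0.0, 1.0, 0.0, 0.0, 0.0]  # object at index 1 in frame t
next_window = [0.0, 0.0, 0.0, 1.0, 0.0]  # object at index 3 in frame t + 1
direct = sliding_correlation(prev_window, next_window)
via_fourier = fourier_correlation(prev_window, next_window)
shift = max(range(len(via_fourier)), key=via_fourier.__getitem__)
```

The peak of the correlation gives the displacement of the object between the two frames.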

(4) CN: Color Names (CN) is a color representation, like RGB and Hue, Saturation, Value (HSV). The original CN study reports that the CN space performed better than the other color spaces it tested, so the CN color space is used to extend CSK with color. The procedure is simple: first map the RGB image to CN space (11 channels: black, blue, brown, grey, green, orange, pink, purple, red, white, and yellow), apply the FFT to each channel, perform the kernel mapping, sum the frequency-domain signals of the 11 channels linearly, and then complete the remaining CSK calculations, such as computing α, training, and detection. However, the computation is numerically intensive, so Principal Component Analysis (PCA) is used to reduce the dimensionality. Some of the 11 CN color channels carry little information, so PCA condenses the 11-channel information into 2 dimensions that retain most of the meaningful information, and the reduced 2-dimensional representation allows much faster calculation.
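The PCA step can be sketched as follows, using power iteration with deflation so that only the standard library is needed. The input is assumed to be per-pixel 11-dimensional CN vectors; the learned RGB-to-CN lookup table from the original CN work is not reproduced here, and the function names and example data are hypothetical.

```python
import math


def _matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def _normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return v if norm == 0.0 else [x / norm for x in v]


def pca_components(vectors, k=2, iters=200):
    """Top-k principal directions of the vectors via power iteration + deflation."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[i] for v in vectors) / n for i in range(d)]
    centered = [[v[i] - mean[i] for i in range(d)] for v in vectors]
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(d)] for i in range(d)]
    comps = []
    for _ in range(k):
        v = _normalize([1.0 + i for i in range(d)])
        for _ in range(iters):
            v = _normalize(_matvec(cov, v))
        lam = sum(a * b for a, b in zip(v, _matvec(cov, v)))  # eigenvalue estimate
        # Deflate: remove the found direction so the next pass finds the next one.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
        comps.append(v)
    return mean, comps


def project(vector, mean, comps):
    """Project one 11-D CN vector onto the retained components."""
    return [sum((x - m) * c for x, m, c in zip(vector, mean, comp)) for comp in comps]


# Toy data: all variation lies in the first two of the 11 CN channels.
pixels = [[x0, x1] + [0.0] * 9 for x0 in (-2.0, 2.0) for x1 in (-1.0, 1.0)]
mean, comps = pca_components(pixels, k=2)
reduced = project([2.0, 1.0] + [0.0] * 9, mean, comps)
```

Because the projection matrix is only 11 x 2, every later FFT and kernel computation runs on 2 channels instead of 11.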

(5) SAMF: The Scale Adaptive with Multiple Features (SAMF) tracker improves on KCF by fusing multiple features (grayscale, HOG, and CN). The HOG and CN features are complementary (gradient and color information, respectively). SAMF also adopts a multi-scale search strategy.
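SAMF's multi-scale search can be sketched as trying a small pool of scale factors and keeping the one whose correlation response peaks highest. The scale pool below is an assumption (it is the pool commonly quoted for SAMF), `fuse_features` only illustrates channel stacking, and `response_at` stands in for a full correlation-filter evaluation on the fused grayscale, HOG, and CN features.

```python
SCALE_POOL = (0.985, 0.99, 0.995, 1.0, 1.005, 1.01, 1.015)  # assumed scale pool


def fuse_features(*channel_groups):
    """Stack feature channels (e.g. grayscale, HOG, CN) into one multi-channel list."""
    fused = []
    for group in channel_groups:
        fused.extend(group)
    return fused


def best_scale(response_at, base_size, scales=SCALE_POOL):
    """Return (scale, (w, h)) whose peak response is highest.

    `response_at(w, h)` is a hypothetical callback that would crop a patch of
    size (w, h) around the last position, resize it to the template size, and
    return the peak correlation response.
    """
    w0, h0 = base_size
    scale = max(scales, key=lambda s: response_at(w0 * s, h0 * s))
    return scale, (w0 * scale, h0 * scale)


# Toy usage: a response that peaks when the width is about 101 pixels.
scale, size = best_scale(lambda w, h: -abs(w - 101.0), (100.0, 50.0))
```

Searching only a few nearby scales per frame keeps the cost to a small constant multiple of single-scale KCF.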

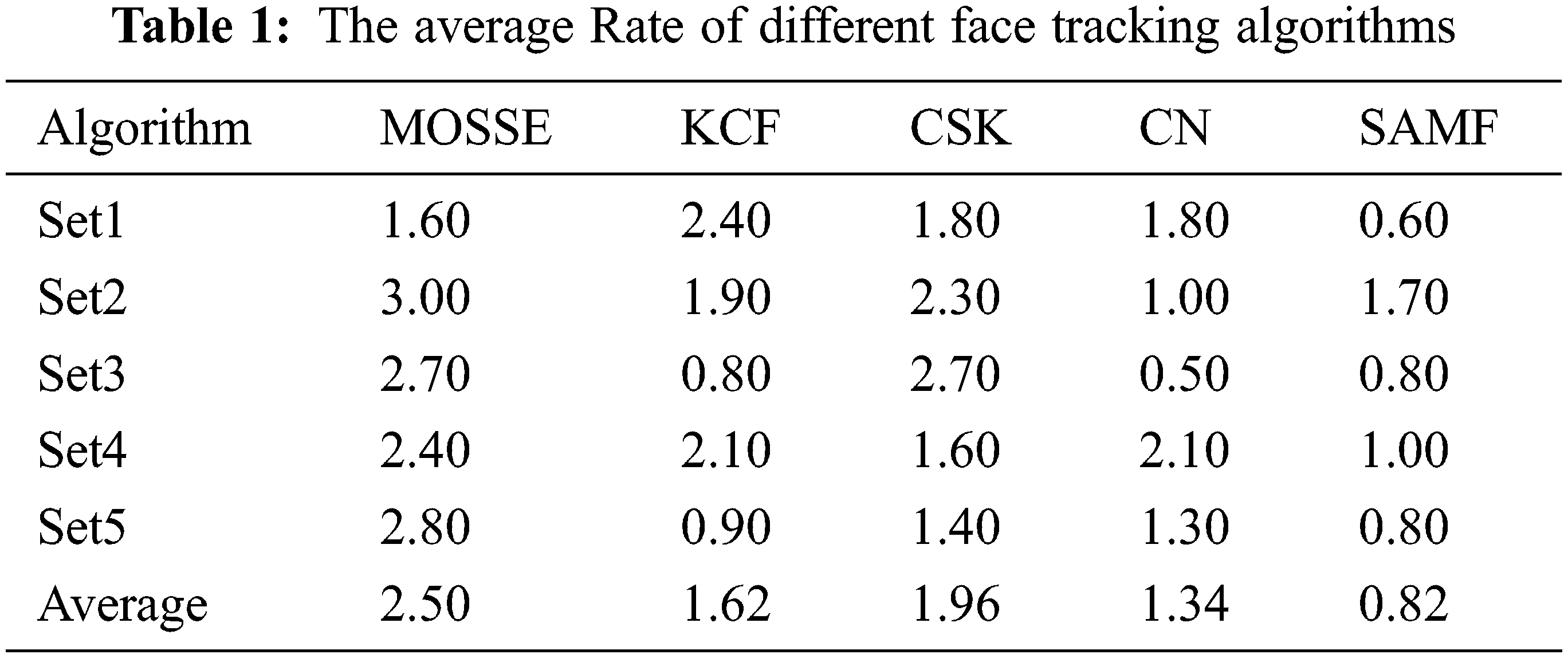

Because the algorithms described above are suited to different situations, it is difficult to compare them comprehensively and objectively. Tracking algorithms have been developed to overcome different problems: some are more effective when the target rotates, while others better handle changes in lighting or sudden movements of the web camera. Sometimes the tracker fails to initialize, or the target is lost while the algorithm is running. A fully comprehensive comparison would therefore likely require a very large dataset. In comparative experiments during the test stage, the SAMF algorithm had the best overall performance, so it was chosen to track the target images in the video in real time in this experiment.

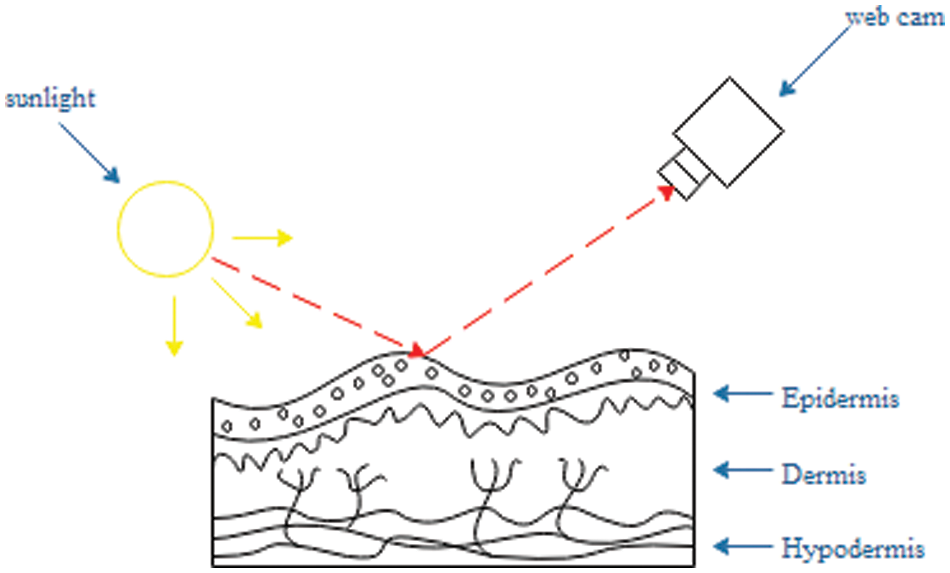

Unlike traditional PPG technology, IPPG does not require contact sensors. IPPG captures facial images through a network camera and then extracts heart rate information from the video sequence. The human heartbeat has a periodic rhythm: the systolic and diastolic contractions of the heart cause periodic fluctuations in arterial pressure, so the arteries periodically expand and retract, producing periodic changes in the blood volume within the vessels. This property underlies IPPG technology, which works as illustrated in Fig. 3. Reflected or incident external natural light is used to sample the skin tone variation caused by cardiac activity and the resulting change in hemoglobin content in the dermal microvascular network. Because light absorption varies with hemoglobin content, the intensity of the reflected light changes accordingly. IPPG uses a camera to obtain facial video images containing pulse information, records the light intensity changes caused by blood volume changes in the dermal microvascular structures, and then extracts the pulse wave through image pre-processing and signal separation techniques. Finally, the real-time heart rate value is determined from this image information.
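The spatial averaging at the heart of IPPG, reducing each frame's skin region to one mean value per color channel, can be sketched as follows. Frames are assumed to be nested lists of (R, G, B) tuples, and the 250-sample buffer length mirrors the maximum N mentioned later in this section; `roi_rgb_mean` and `RGBTrace` are hypothetical helpers.

```python
from collections import deque


def roi_rgb_mean(frame, roi):
    """Mean (R, G, B) over an ROI; frame is a list of rows of (r, g, b) tuples.

    Assumes the (x, y, w, h) ROI lies fully inside the frame.
    """
    x, y, w, h = roi
    sums = [0.0, 0.0, 0.0]
    for row in frame[y:y + h]:
        for r, g, b in row[x:x + w]:
            sums[0] += r
            sums[1] += g
            sums[2] += b
    count = w * h
    return tuple(s / count for s in sums)


class RGBTrace:
    """Keeps the most recent `maxlen` ROI means per channel (r-, g-, b-IPPG)."""

    def __init__(self, maxlen=250):
        self.channels = tuple(deque(maxlen=maxlen) for _ in range(3))

    def push(self, frame, roi):
        for channel, value in zip(self.channels, roi_rgb_mean(frame, roi)):
            channel.append(value)


frame = [[(10, 20, 30)] * 3 for _ in range(3)]
trace = RGBTrace(maxlen=2)
for _ in range(3):
    trace.push(frame, (0, 0, 2, 2))
```

Averaging over many skin pixels suppresses per-pixel camera noise, which matters because the pulsatile color change is far smaller than that noise.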

Figure 3: Basic principles of IPPG measurement

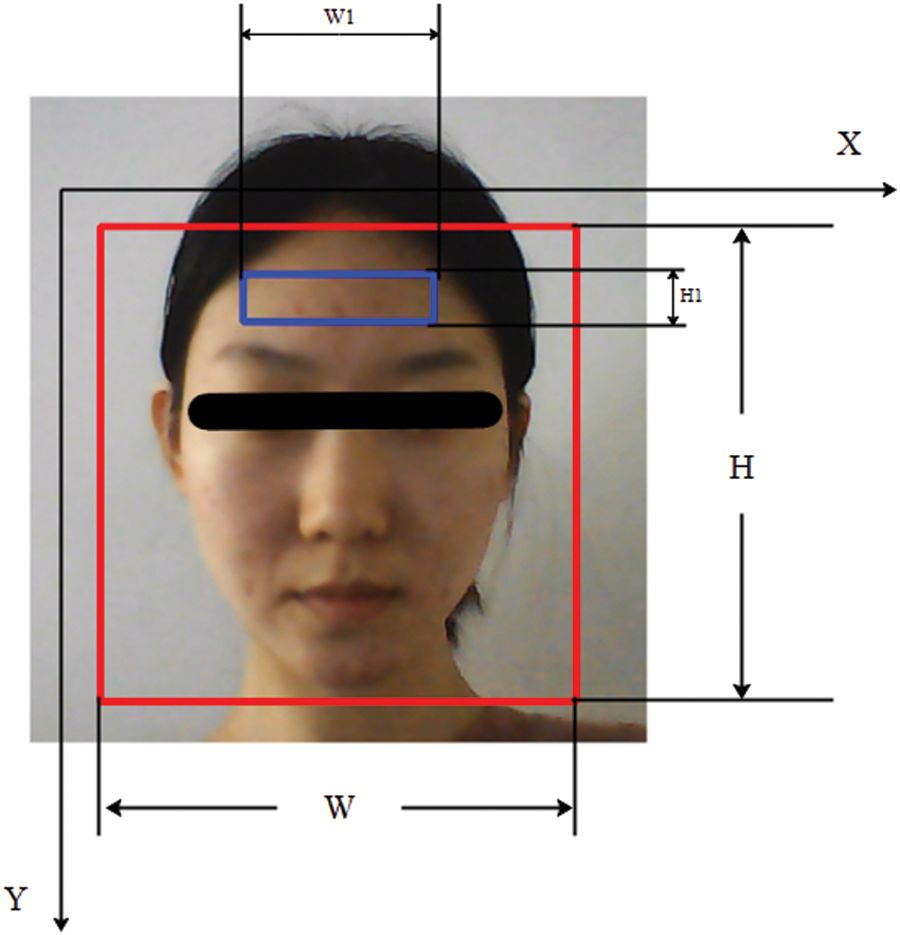

To apply IPPG, a well-vascularized area of the body should be selected as the region of interest. Because the forehead has rich capillaries and smooth skin, the subject's forehead is an ideal region of interest, as shown in Fig. 4.

Figure 4: ROI fixed position

First, each face was modeled as a rectangular detection box with the parameters X, Y, W, and H, where X and Y are the coordinates of the upper-left corner of the box and W and H are its width and height, respectively. A corresponding rectangular ROI box with the parameters X1, Y1, W1, and H1 was set inside the face detection box of each subject. The calculation is shown in Eq. (2).
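Because the coefficients of Eq. (2) are not reproduced here, the sketch below only illustrates the general form such a calculation takes: the forehead ROI is placed at fixed fractions of the face box. All four ratios are hypothetical placeholders, not the values used in the paper.

```python
def forehead_roi(face_box, fx=0.25, fy=0.1, fw=0.5, fh=0.15):
    """Map a face box (X, Y, W, H) to an ROI box (X1, Y1, W1, H1).

    fx, fy, fw, fh are hypothetical fractions of the face width/height.
    """
    x, y, w, h = face_box
    return (x + round(fx * w), y + round(fy * h), round(fw * w), round(fh * h))


roi = forehead_roi((100, 50, 200, 200))
```

Defining the ROI relative to the face box keeps it on the forehead as subjects move toward or away from the camera.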

The experimental flowchart for this work is shown in Fig. 5. After image pre-processing of each subject's facial image, the signal is processed by equalizing the histogram of each frame received from the network camera and then computing the average R, G, and B pixel values of the region of interest in each equalized frame. The histogram of an image is its grayscale distribution (a graph of the number of pixels at each intensity), and histogram equalization is a method that improves image contrast by making the histogram approximately uniform. Over continuously recorded frames, three continuous IPPG channel signals can be obtained for each individual, namely r-IPPG, g-IPPG, and b-IPPG. Because the light intensity change caused by blood volume change is very weak, the resulting IPPG signal is easily buried in noise caused by quantization noise in the imaging equipment, subject motion, and so on, so the original three-channel signal must be denoised. In this paper, we use a wavelet filtering algorithm for this purpose. The next step is to apply the FFT to the vector formed by the last N mean values (N at most 250) obtained in the previous step.
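Global histogram equalization, as described above, can be sketched for a single 8-bit channel using the standard cumulative-distribution mapping. Applying it per channel (or to a luminance channel) of a color frame is an assumption, since the text does not say which variant was used.

```python
def equalize_histogram(pixels, levels=256):
    """Global histogram equalization of a flat list of ints in [0, levels)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:  # constant image: nothing to spread out
        return list(pixels)
    # Map each level through the normalized cumulative distribution.
    lut = [round((c - cdf_min) / (n - cdf_min) * (levels - 1)) for c in cdf]
    return [lut[p] for p in pixels]


equalized = equalize_histogram([50, 50, 100, 200])
```

The mapping stretches the occupied intensity range over the full 0 to 255 scale, which increases contrast in the ROI.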

Figure 5: Experimental flowchart

A wavelet filter [16,17] is applied to remove the superimposed noise and interference components in the signal so that the FFT result is more accurate. Wavelet filtering can typically suppress both high- and low-frequency noise while keeping the source signal smooth. After the time-domain IPPG signal is converted into a frequency spectrum with the FFT, the frequency with the maximum spectral amplitude within the heart rate range [0.5, 3] Hz is taken as the number of heartbeats per second and multiplied by 60 to obtain the number of heartbeats per minute (so the detectable range is 30 to 180 BPM).
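The last two steps can be sketched together: a one-level Haar wavelet transform with soft thresholding of the detail coefficients (a deliberately simple stand-in for the paper's wavelet filter, whose wavelet type and threshold are not specified here), followed by a search for the strongest spectral peak in [0.5, 3] Hz. A direct DFT over the in-band bins replaces the FFT to keep the sketch dependency-free; the 30 fps frame rate and the test signal are assumptions.

```python
import cmath
import math


def haar_denoise(signal, threshold):
    """One-level Haar DWT, soft-threshold details, inverse DWT.

    Assumes an even length; a trailing odd sample would be dropped.
    """
    s = 1 / math.sqrt(2)
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[i] + signal[i + 1]) * s for i in pairs]
    detail = [(signal[i] - signal[i + 1]) * s for i in pairs]
    detail = [math.copysign(max(abs(d) - threshold, 0.0), d) for d in detail]
    out = []
    for a, d in zip(approx, detail):
        out += [(a + d) * s, (a - d) * s]
    return out


def heart_rate_bpm(signal, fps, lo_hz=0.5, hi_hz=3.0):
    """Frequency of the largest in-band DFT magnitude, converted to beats/min."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    best_f, best_mag = None, -1.0
    for k in range(1, n // 2 + 1):
        f = k * fps / n
        if lo_hz <= f <= hi_hz:
            mag = abs(sum(c * cmath.exp(-2j * math.pi * k * t / n) for t, c in enumerate(centered)))
            if mag > best_mag:
                best_f, best_mag = f, mag
    return best_f * 60


fps = 30
# 10 s of a 1.2 Hz pulse plus alternating high-frequency noise.
raw = [math.sin(2 * math.pi * 1.2 * t / fps) + 0.2 * (-1) ** t for t in range(300)]
bpm = heart_rate_bpm(haar_denoise(raw, 0.3), fps)
```

With 300 samples at 30 fps the frequency resolution is 0.1 Hz, i.e. 6 BPM, so longer windows (or interpolation around the peak) give finer heart rate estimates.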

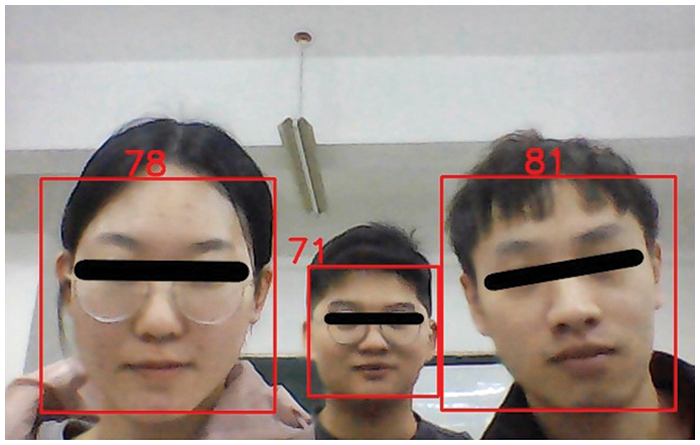

The test subjects and their real-time heart rate data are shown in Fig. 6. The top of the image shows three subjects, from left to right, each with the ROI obtained from the face detection algorithm; the value displayed in red in the middle is the mean heart rate for the current frame of the heart rate calculation. All data and face detection bounding boxes are displayed in real time on each video frame for easy observation. This experiment supports real-time heart rate detection for up to five people; detection for larger groups needs further research. Tab. 1 lists the average rates for each algorithm, comparing the SAMF-based target tracking algorithm with several other mainstream algorithms, such as MOSSE, KCF, and CSK. The results show that, on average, the SAMF-based algorithm outperformed all the other algorithms, although KCF and CN perform slightly better on certain specific datasets.

Figure 6: Multi-person real-time heart rate measurement

3.2 Performance Evaluation Metric

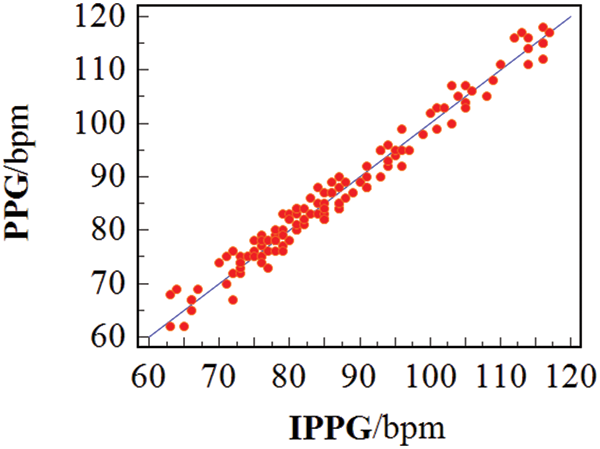

The experiment used natural light and two devices, a pulse oximeter and a web camera, to collect two types of physiological data, namely PPG and IPPG data. The results of 120 experiments, shown in Fig. 7, indicate high agreement between the PPG and IPPG methods.
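Agreement of the kind shown in Fig. 7 is commonly quantified with Pearson's correlation coefficient between paired readings; a minimal sketch follows. The example readings are made-up numbers for illustration, not data from this study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


ppg_bpm = [68, 72, 75, 80, 85]   # hypothetical pulse-oximeter readings
ippg_bpm = [67, 73, 74, 81, 84]  # hypothetical camera-based readings
r = pearson_r(ppg_bpm, ippg_bpm)
```

A high r shows that the two methods rise and fall together, but not that they agree in absolute value, which is why a Bland-Altman analysis is also used.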

Figure 7: Comparison of the correlation results between IPPG and PPG

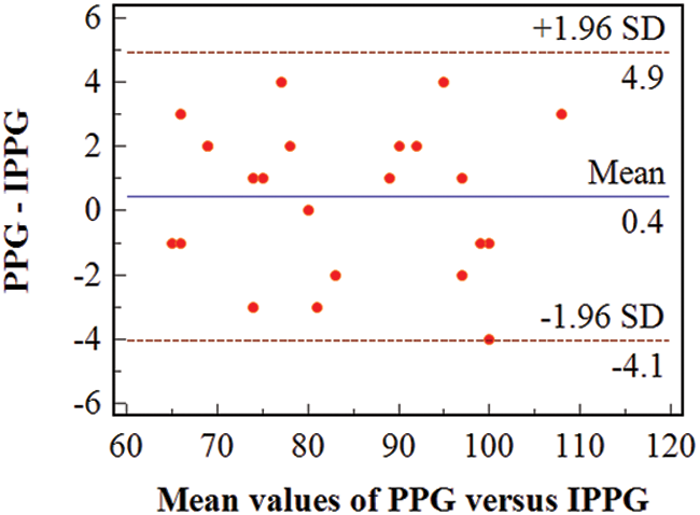

The consistency between the contact and non-contact measurements was analyzed with Bland-Altman analysis. Fig. 8 is the Bland-Altman plot of the IPPG method (non-contact) against the PPG method (contact). The upper and lower red dashed horizontal lines indicate the upper and lower 95% limits of agreement (mean difference ± 1.96 standard deviations of the differences), and the blue solid horizontal line in the middle represents the mean difference.
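The Bland-Altman quantities plotted in Fig. 8 can be computed as follows. The sketch uses the sample standard deviation of the paired differences; the input lists are hypothetical, not data from this study.

```python
from statistics import fmean, stdev


def bland_altman(method_a, method_b, z=1.96):
    """Bias and 95% limits of agreement for paired measurements."""
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = fmean(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    lower, upper = bias - z * sd, bias + z * sd
    within = sum(lower <= d <= upper for d in diffs) / len(diffs)
    return {"bias": bias, "lower": lower, "upper": upper, "fraction_within": within}


result = bland_altman([70, 72, 74, 76], [69, 71, 75, 75])  # hypothetical BPM pairs
```

In a Bland-Altman plot, each pair is drawn at (mean of the two readings, difference), with horizontal lines at the bias and at the two limits.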

Figure 8: Bland-Altman analysis

From Fig. 8, the mean difference between the heart rates measured by the IPPG and PPG methods is 0.4 BPM (mean difference with limits of agreement: 0.4 +4.1/−4.9). Most of the points lie within the 95% limits of agreement, indicating that the detection results of the video-based real-time multi-person heart rate monitoring system designed in this paper are acceptable.

Currently, widely used heart rate measurement instruments (such as ECG monitors) require direct contact with the skin of the test subject, and contact measurement equipment is expensive, typically not portable, and cannot be deployed in daily life or in specific scenarios. For these reasons, there is significant demand for contactless pulse wave extraction techniques. This paper presents a multi-person real-time heart rate detection system under natural light, with a detailed introduction and evaluation of the entire experimental process. The system detects and tracks the ROI in acquired video frames using Haar-cascade face detection and SAMF-based face tracking. The ROI image sequence was processed with image pre-processing and signal separation techniques to obtain RGB color signals containing pulse wave information, and a band-pass noise reduction filtering algorithm produced pulse time series with a high signal-to-noise ratio. Finally, the heart rate was calculated using the FFT. Experimental results show that the non-contact measurement method and algorithms presented here can accurately determine the heart rates of multiple people simultaneously in real time, in high agreement with the accepted contact measurement method that uses a pulse oximeter for heart rate detection. As contactless heart rate measurement technology develops, it will be applicable to more scenarios, both for medical testing and for checking the physical condition of test subjects.

Acknowledgement: The authors gratefully acknowledge the laboratory of electronic and information engineering, Multimedia Computing Laboratory, Guizhou Normal University, Guizhou Province, China.

Funding Statement: This work was financially supported by the National Natural Science Foundation of China (Grant Number: 61962010).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. X. Y. Li, P. Wu, Y. Liu, H. Y. Si, Z. L. Wang et al., "Heart rate parameter extraction based on face video," Optics and Precision Engineering, vol. 3, pp. 548–557, 2020.

2. H. C. Liu, X. M. Wang and G. A. Chen, "Non-contact fetal heart rate detection," Computer Systems & Applications, vol. 28, pp. 204–209, 2019.

3. J. Allen, "Photoplethysmography and its application in clinical physiological measurement," Physiological Measurement, vol. 28, no. 3, pp. R1–R39, 2007.

4. M. Z. Poh, D. J. McDuff and R. W. Picard, "Non-contact, automated cardiac pulse measurements using video imaging and blind source separation," Optics Express, vol. 18, no. 10, pp. 10762–10774, 2010.

5. G. de Haan and V. Jeanne, "Robust pulse rate from chrominance-based rPPG," IEEE Transactions on Biomedical Engineering, vol. 60, no. 10, pp. 2878–2886, 2013.

6. G. de Haan and A. van Leest, "Improved motion robustness of remote-PPG by using the blood volume pulse signature," Physiological Measurement, vol. 35, no. 9, pp. 1913–1926, 2014.

7. W. Wang, A. C. den Brinker, S. Stuijk and G. de Haan, "Algorithmic principles of remote PPG," IEEE Transactions on Biomedical Engineering, vol. 64, no. 7, pp. 1479–1491, 2017.

8. X. R. Wang, W. J. Wang, J. S. Zhao, L. Q. Kong and Y. J. Zhao, "Real-time video-based non-contact multiplayer heart rate detection during exercise," Optical Technique, vol. 45, pp. 6, 2019.

9. W. J. Wang and L. Q. Kong, "Real-time and robust heart rate measurement for multi-people in motion using IPPG," in Applications of Digital Image Processing XLII, San Diego, CA, USA, vol. 11137, pp. 1113706, 2019.

10. W. Wang, S. Stuijk and G. de Haan, "A novel algorithm for remote photoplethysmography: Spatial subspace rotation," IEEE Transactions on Biomedical Engineering, vol. 63, no. 9, pp. 548–557, 2015.

11. J. Y. Zhang and Z. Wu, "Multimodal face recognition based on color and depth information," Engineering Journal of Wuhan University, vol. 4, pp. 353–363, 2020.

12. L. Lu and J. Cheng, "Non-contact thermal infrared video heart rate detection method based on multi-region analysis," Journal of Biomedical Engineering Research, vol. 40, pp. 21–27, 2021.

13. W. Zeng, Y. Sheng, Q. Y. Hu, Z. X. Huo and Y. G. Zhang, "Heart rate detection using SVM based on video imagery," Intelligent Automation & Soft Computing, vol. 32, no. 1, pp. 377–387, 2022.

14. J. C. Zou, S. Y. Zhou, B. L. Ge and X. Yang, "Non-contact blood pressure measurement based on IPPG," Journal of New Media, vol. 3, no. 2, pp. 41–51, 2021.

15. X. Y. Lv, X. F. Lian, L. Tan, Y. Y. Song and C. Y. Wang, "HPMC: A multi-target tracking algorithm for the IoT," Intelligent Automation & Soft Computing, vol. 28, no. 2, pp. 513–526, 2021.

16. X. R. Zhang, W. F. Zhang, W. Sun, X. M. Sun and S. K. Jha, "A robust 3-D medical watermarking based on wavelet transform for data protection," Computer Systems Science & Engineering, vol. 41, no. 3, pp. 1043–1056, 2022.

17. X. R. Zhang, X. Sun, X. M. Sun, W. Sun and S. K. Jha, "Robust reversible audio watermarking scheme for telemedicine and privacy protection," Computers Materials & Continua, vol. 71, no. 2, pp. 3035–3050, 2022.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.