DOI:10.32604/csse.2023.026011

Computer Systems Science & Engineering

Article

Combining Entropy Optimization and Sobel Operator for Medical Image Fusion

1Faculty of Computer Science and Engineering, Thuyloi University, 175 Tay Son, Dong Da, Hanoi, 010000, Vietnam

2University of Information and Communication Technology, Thai Nguyen University, Thai Nguyen, 240000, Vietnam

*Corresponding Author: Nguyen Tu Trung. Email: trungnt@tlu.edu.vn

Received: 13 December 2021; Accepted: 14 January 2022

Abstract: Medical image fusion is a topic of interest in medical image processing. It combines information from multimodal images in order to increase the accuracy of clinical diagnosis. The fusion aims to improve image quality while preserving specific features. Medical image fusion methods generally draw on knowledge from many fields, such as clinical medicine, computer vision, digital imaging, machine learning and pattern recognition, to fuse different medical images. There are two main approaches to image fusion: the spatial domain approach and the transform domain approach. This paper proposes a new algorithm for fusing multimodal images. The algorithm is based on Entropy optimization and the Sobel operator. The wavelet transform is used to split the input images into low and high frequency components. Then, two fusion rules are used to obtain the fused image. The first rule, based on the Sobel operator, is used for the high frequency components. The second rule, based on Entropy optimization using the Particle Swarm Optimization (PSO) algorithm, is used for the low frequency components. The proposed algorithm is applied to images related to central nervous system diseases. The experimental results show that the proposed algorithm outperforms several recent methods in terms of brightness level, contrast, entropy, gradient, visual information fidelity for fusion (VIFF) and Feature Mutual Information (FMI).

Keywords: Medical image fusion; wavelet; entropy optimization; PSO; Sobel operator

Medical image fusion combines the information of multimodal images to obtain more accurate information [1]. The fusion aims to improve image quality while preserving specific features. An overview of image fusion techniques for medical applications is given in [2]. Medical image fusion methods generally draw on knowledge from many fields, such as clinical medicine, computer vision, digital imaging, machine learning and pattern recognition, to fuse different medical images [3].

There are two main approaches to image fusion: the spatial domain approach and the transform domain approach [4]. In the spatial domain approach, the fused image is formed by selecting regions or pixels from the input images without any transformation [5]. This approach includes region-based [6] and pixel-based [4] methods. Transform domain techniques fuse the corresponding transform coefficients and then apply the inverse transform to produce the fused image. Multi-scale transforms are among the most popular fusion techniques, and various methods have been built on the contourlet and curvelet transforms [7–9], the complex wavelet transform [10], the discrete wavelet transform [11] or sparse representation [12].

Many new image fusion techniques have been proposed recently. Mishra and Bhatnagar [13] presented a method for fusing Computed Tomography-Magnetic Resonance Imaging (CT-MRI) images using the discrete wavelet transform. In [14] and [15], the authors introduced image fusion methods using Principal Component Analysis (PCA). Maqsood and Javed proposed a multimodal medical image fusion method based on sparse representation and two-scale image decomposition [16]. Xu et al. [17] proposed a medical image fusion method using a hybrid wavelet-homomorphic filter and a modified shark smell optimization algorithm. Polinati and Dhuli [18] introduced a method for fusing the information of various image modalities, such as single-photon emission computed tomography (SPECT), positron emission tomography (PET) and MRI, using an empirical wavelet transform representation and a local energy maxima fusion rule. Hu et al. [19] presented a fusion method combining dictionary optimization and Gabor filtering in the contourlet transform domain. Chen et al. [20] proposed a medical image fusion method based on Rolling Guidance Filtering. Haribabu and Guruvaiah [21] reported statistical measurements of MRI-PET image fusion using the 2D Hartley transform in HSV color space. Manchanda and Sharma [22] improved a medical image fusion algorithm by using the fuzzy transform (FTR). In [23], a medical image fusion algorithm based on a lifting scheme biorthogonal wavelet transform was proposed. Ullah et al. [24] proposed a multimodal medical image fusion method based on local-feature fuzzy sets and a novel sum-modified-Laplacian in the non-subsampled shearlet transform domain. In [25], Liu et al. introduced a medical image fusion method based on convolutional sparsity based morphological component analysis (CSMCA).

Deep learning based techniques have also been proposed recently. In [26], a medical image fusion method based on convolutional neural networks (CNNs) is proposed, in which a Siamese convolutional network generates a weight map that integrates the pixel activity information from the two source images. Yang et al. [27] presented a joint multi-focus image fusion and super-resolution method based on a CNN, and a joint image fusion and super-resolution algorithm was also proposed in [28]. Ma et al. [29] proposed an end-to-end model, termed the dual-discriminator conditional generative adversarial network (DDcGAN), for fusing infrared and visible images of different resolutions.

Wavelet-based medical image fusion usually applies the average rule to the low frequency components and the maximum selection rule to the high frequency components. This causes the fused image to appear considerably grayer than the original images, because the gray levels of the frequency components of the input images differ greatly. In addition, some recent methods focus mainly on the fusion itself and may therefore reduce the contrast and brightness of the fused image, which makes diagnosis and analysis based on the fused image more difficult. To overcome these limitations, this paper proposes a novel algorithm for fusing multimodal images by combining Entropy optimization and the Sobel operator.

The main contributions of this article include:

• Propose a new algorithm based on the Sobel operator for combining high frequency components.

• Propose a novel algorithm that is used for fusing multimodal images based on wavelet transform.

• Propose a new rule for combining low frequency components, in which the weighting parameter is obtained by Entropy optimization using the PSO algorithm. The fused image preserves colors and textures similar to those of the input image.

The remainder of this article is structured as follows. Section 2 presents related works. The proposed image fusion algorithm is presented in Section 3. Section 4 presents experiments with our algorithm and other related algorithms on selected images. Conclusions and future work are given in Section 5.

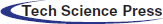

The wavelet transform (WT) is a mathematical tool [30] for representing images at multiple resolutions. Transforming an image yields wavelet coefficients, which for digital images can be obtained by the Discrete Wavelet Transform (DWT). The most important content is the low frequency component: it keeps most of the features of the input image, while its size is reduced by a factor of four. Applying low-pass filters in both directions (along rows and along columns) yields the approximation image (LL).

At each DWT level, the LL image is four times smaller than the LL image of the previous level. Therefore, if the input image is decomposed into three levels, the final approximation image is 64 times smaller than the input image (4 × 4 × 4 = 64). The wavelet decomposition of an image is illustrated in Fig. 1.
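The size reduction per level can be checked with a minimal sketch of a one-level 2D DWT and its inverse. It assumes the Haar wavelet (the wavelet family is not named in this section) and stores images as Python lists of lists; the function names `haar_dwt2` and `haar_idwt2` are illustrative, not part of the paper.

```python
# Sketch: one level of an orthonormal 2D Haar DWT and its inverse.
# Assumption: Haar is used only for illustration. LH/HL naming conventions
# differ between libraries.


def haar_dwt2(img):
    """Split an even-sized image into half-size LL, LH, HL and HH sub-bands."""
    ll, lh, hl, hh = [], [], [], []
    for i in range(0, len(img), 2):
        r_ll, r_lh, r_hl, r_hh = [], [], [], []
        for j in range(0, len(img[0]), 2):
            a, b = img[i][j], img[i][j + 1]          # top pair of the 2x2 block
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom pair
            r_ll.append((a + b + c + d) / 2)  # low-pass in both directions
            r_lh.append((a + b - c - d) / 2)  # horizontal low-pass, vertical high-pass
            r_hl.append((a - b + c - d) / 2)  # horizontal high-pass, vertical low-pass
            r_hh.append((a - b - c + d) / 2)  # high-pass in both directions
        ll.append(r_ll)
        lh.append(r_lh)
        hl.append(r_hl)
        hh.append(r_hh)
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Invert haar_dwt2 (the transform is orthonormal, so reconstruction is exact)."""
    h, w = len(ll), len(ll[0])
    img = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            s, v, u, t = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            img[2 * i][2 * j] = (s + v + u + t) / 2
            img[2 * i][2 * j + 1] = (s + v - u - t) / 2
            img[2 * i + 1][2 * j] = (s - v + u - t) / 2
            img[2 * i + 1][2 * j + 1] = (s - v - u + t) / 2
    return img


# Three decomposition levels on a synthetic 256 x 256 image.
image = [[(3 * i + 5 * j) % 256 for j in range(256)] for i in range(256)]
approx = image
for level in range(3):
    approx = haar_dwt2(approx)[0]  # keep only LL for the next level
print(len(approx), len(approx[0]))                      # 32 32
print(256 * 256 // (len(approx) * len(approx[0])))      # 64
```

Each level halves both dimensions, so three levels turn 256 × 256 into 32 × 32, i.e. 64 times fewer pixels.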

Figure 1: Image Decomposition using DWT

2.2 Particle Swarm Optimization (PSO)

PSO is an algorithm for finding solutions to optimization problems [31]. It was derived from modeling bird flocks searching for food, and it has been applied successfully in many fields. PSO first initializes a population of individuals randomly and then updates it over generations to find the optimal solution. In each generation, two best positions are tracked, denoted PI_best and GI_best: PI_best is the best position an individual has reached so far, and GI_best is the best position obtained by the whole population up to the present time. After each generation, the velocity and position of each individual are updated by the following formulas:

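$$v_i^{t+1} = w\,v_i^{t} + c_1 r_1\left(PI_{best,i} - x_i^{t}\right) + c_2 r_2\left(GI_{best} - x_i^{t}\right)$$

$$x_i^{t+1} = x_i^{t} + v_i^{t+1}$$

where:

• $v_i^{t}$ and $x_i^{t}$ are the velocity and position of individual $i$ at generation $t$;

• $w$ is the inertia weight;

• $c_1$ and $c_2$ are the acceleration coefficients;

• $r_1$ and $r_2$ are random numbers uniformly distributed in $[0, 1]$;

• $PI_{best,i}$ is the best position individual $i$ has reached so far;

• $GI_{best}$ is the best position reached by the whole population.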
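The velocity and position updates of PSO can be sketched in Python as follows. This is a minimal global-best PSO that assumes the inertia-weight form of the velocity update; the parameter values (w = 0.7, c1 = c2 = 1.5), the clipping of positions to the search bounds and the name `pso_minimize` are illustrative assumptions, not the settings used in the paper.

```python
import random


def pso_minimize(f, bounds, n_particles=20, n_iter=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize f over a box with global-best PSO (inertia-weight variant).

    bounds is a list of (low, high) pairs, one per dimension.
    Returns (GI_best position, objective value at GI_best).
    """
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the box; velocities start at zero.
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    p_best = [xi[:] for xi in x]                 # PI_best of each individual
    p_val = [f(xi) for xi in x]
    k0 = min(range(n_particles), key=lambda k: p_val[k])
    g_best, g_val = p_best[k0][:], p_val[k0]     # GI_best of the population

    for _ in range(n_iter):
        for k in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + pull towards PI_best + pull towards GI_best.
                v[k][d] = (w * v[k][d]
                           + c1 * r1 * (p_best[k][d] - x[k][d])
                           + c2 * r2 * (g_best[d] - x[k][d]))
                lo, hi = bounds[d]
                # Position update, clipped to the search box (an added safeguard).
                x[k][d] = min(max(x[k][d] + v[k][d], lo), hi)
            val = f(x[k])
            if val < p_val[k]:          # refresh PI_best
                p_best[k], p_val[k] = x[k][:], val
                if val < g_val:         # refresh GI_best as soon as it improves
                    g_best, g_val = x[k][:], val
    return g_best, g_val


# Usage: the minimum of (x - 1)^2 + (y + 2)^2 is at (1, -2).
best, value = pso_minimize(lambda p: (p[0] - 1) ** 2 + (p[1] + 2) ** 2,
                           bounds=[(-5, 5), (-5, 5)])
print(best, value)
```

Here GI_best is refreshed within a generation, as soon as any individual improves on it; updating it only once per generation is an equally common variant.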

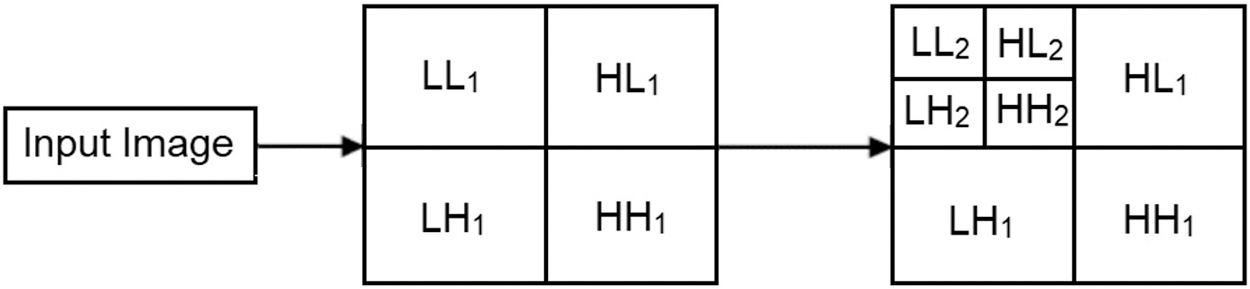

2.3 Fusing Images Based on Wavelet Transformation

Reference [13] presented a wavelet-based image fusion (WIF) method for CT-MRI images using the discrete wavelet transform, as shown in Fig. 2.

Figure 2: The chart of fusing image using the wavelet transform

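where $C_1(i, j)$ and $C_2(i, j)$ are the corresponding coefficients of the two input images at position $(i, j)$, and the fused coefficient $C_F(i, j)$ is obtained by one of the following rules:

• Average method: $C_F(i, j) = \dfrac{C_1(i, j) + C_2(i, j)}{2}$

• Select Maximum: $C_F(i, j) = \max\left(C_1(i, j), C_2(i, j)\right)$

• Select Minimum: $C_F(i, j) = \min\left(C_1(i, j), C_2(i, j)\right)$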
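The three WIF rules can be sketched as element-wise operations on two coefficient arrays of the same size. This is an illustration only: the helper name `fuse_coefficients` is hypothetical, and Select Maximum and Select Minimum are applied to signed values exactly as the rules are named (many implementations compare absolute values instead).

```python
def fuse_coefficients(c1, c2, rule):
    """Element-wise fusion of two equally sized coefficient arrays (lists of lists).

    rule is "average", "max" (Select Maximum) or "min" (Select Minimum).
    """
    ops = {
        "average": lambda a, b: (a + b) / 2,
        "max": max,
        "min": min,
    }
    op = ops[rule]
    return [[op(a, b) for a, b in zip(row1, row2)] for row1, row2 in zip(c1, c2)]


# Toy 2 x 2 coefficient arrays from two input images.
c1 = [[1, 4], [-2, 0]]
c2 = [[3, 2], [5, -1]]
print(fuse_coefficients(c1, c2, "average"))  # [[2.0, 3.0], [1.5, -0.5]]
print(fuse_coefficients(c1, c2, "max"))      # [[3, 4], [5, 0]]
print(fuse_coefficients(c1, c2, "min"))      # [[1, 2], [-2, -1]]
```

In the usual wavelet-based setting described in the introduction, the average rule would be applied to the LL band and Select Maximum to the detail bands.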

3.1 The Algorithm of Combining High Frequency Components Based on Sobel Operator

The algorithm for combining high frequency components based on the Sobel operator (CHCSO) is as follows:

Input: Two high frequency components

Output: The combined component.

The main steps of CHCSO include:

Step 1: Get

Step 2: Get

Step 3: Combine component
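Because the formulas of Steps 1 to 3 are not reproduced in this text, the following is only a hypothetical sketch of a Sobel-based rule for high frequency components: it computes the Sobel gradient magnitude of each component and keeps, at every position, the coefficient whose component has the larger response. The selection rule, the border handling (edge replication) and the function names are assumptions, not the exact CHCSO formulas.

```python
# Sobel kernels for horizontal (x) and vertical (y) derivatives.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Sobel gradient magnitude of a 2D array, replicating edge pixels at borders.

    The kernels are applied by correlation; flipping them (true convolution)
    only changes the sign of gx and gy, so the magnitude is the same.
    """
    h, w = len(img), len(img[0])

    def px(i, j):
        # Clamp indices so border pixels are replicated outside the image.
        return img[min(max(i, 0), h - 1)][min(max(j, 0), w - 1)]

    mag = []
    for i in range(h):
        row = []
        for j in range(w):
            gx = sum(SOBEL_X[u][v] * px(i + u - 1, j + v - 1)
                     for u in range(3) for v in range(3))
            gy = sum(SOBEL_Y[u][v] * px(i + u - 1, j + v - 1)
                     for u in range(3) for v in range(3))
            row.append((gx * gx + gy * gy) ** 0.5)
        mag.append(row)
    return mag


def fuse_high_sobel(h1, h2):
    """Hypothetical rule: keep the coefficient whose component has the larger
    Sobel response at that position (ties keep the coefficient of h1)."""
    g1, g2 = sobel_magnitude(h1), sobel_magnitude(h2)
    return [[a if ga >= gb else b for a, b, ga, gb in zip(r1, r2, s1, s2)]
            for r1, r2, s1, s2 in zip(h1, h2, g1, g2)]


# Usage: h1 is flat, h2 contains a vertical edge between columns 1 and 2.
h1 = [[0] * 4 for _ in range(4)]
h2 = [[0, 0, 5, 5] for _ in range(4)]
print(fuse_high_sobel(h1, h2)[0])  # [0, 0, 5, 0]
```

The same rule would be applied to each pair of detail bands, (HL1, HL2), (LH1, LH2) and (HH1, HH2). The last column falls back to h1 because both responses are zero there, which shows that the tie-breaking choice matters in flat regions.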

3.2 The Medical Image Fusion Algorithm

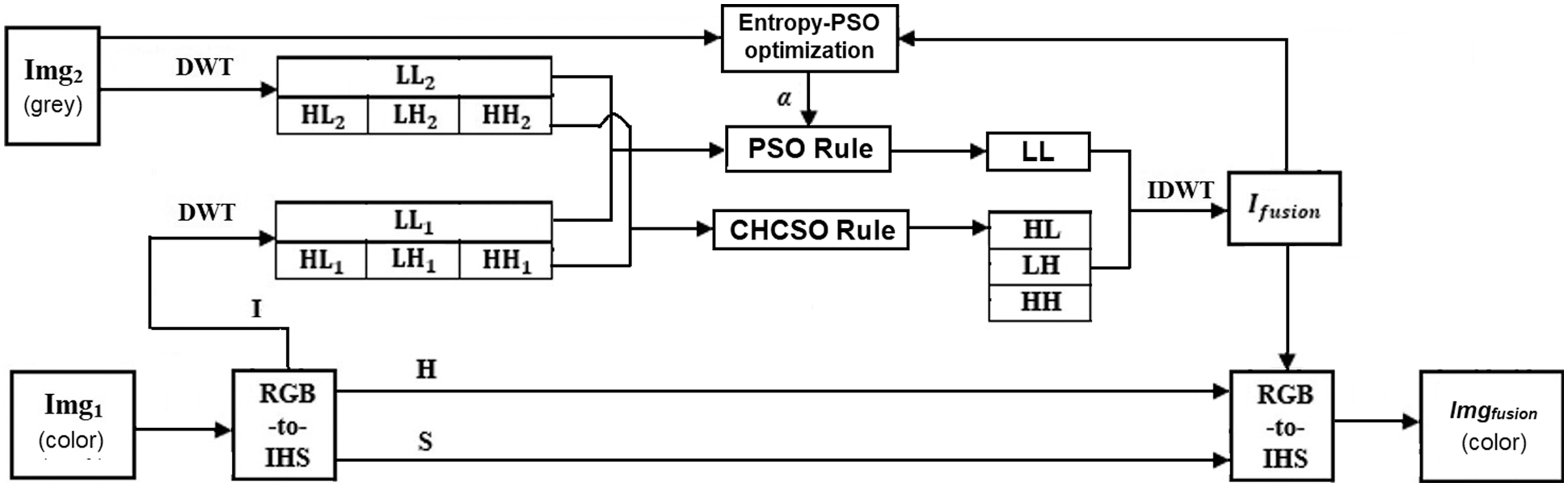

In this section, a new algorithm for fusing medical images, named Entropy optimization and Sobel operator based Image Fusion (ESIF), is proposed. The general framework of ESIF is shown in Fig. 3.

Figure 3: The framework of the algorithm of medical image fusion ESIF

Here, Img1 is a PET or SPECT image (color image) and Img2 is a CT or MRI image (grayscale image).

According to Fig. 3, the algorithm includes the following steps:

• Step 1: Convert image img1 from the Red, Green and Blue (RGB) color space to the Hue, Saturation, Intensity (HIS) color space to get

• Step 2: Transform

• Step 3: Fuse the high frequency components (HL1, LH1, HH1) and (HL2, LH2, HH2) to get HL, LH, HH using the rule based on the CHCSO algorithm as follows:

• Step 4: Fuse the low frequency components (LL1) and (LL2) to get LL using the rule as follows:

The parameter

• Step 5: Apply the inverse DWT (IDWT) to the components (LL, LH, HL, HH) to obtain Ifusion.

where,

• Step 6: Convert the components Ifusion, HImg1, SImg1 from the HIS color space to the RGB color space to obtain the output fused image.
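Steps 1 and 6 rely on converting between RGB and the hue, saturation, intensity space. A minimal per-pixel sketch using the common textbook geometric HSI formulas is shown below, with RGB values in [0, 1] and hue in degrees; whether the paper uses exactly these formulas is an assumption, and the function names are illustrative.

```python
import math


def rgb_to_hsi(r, g, b):
    """RGB in [0, 1] -> (H in degrees, S, I) with the geometric HSI formulas."""
    i = (r + g + b) / 3
    if i == 0:                        # black pixel: hue and saturation undefined
        return 0.0, 0.0, 0.0
    s = 1 - min(r, g, b) / i          # equals 1 - 3 * min / (R + G + B)
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:                      # gray pixel: hue undefined, use 0
        return 0.0, s, i
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    h = theta if b <= g else 360 - theta
    return h, s, i


def hsi_to_rgb(h, s, i):
    """Inverse of rgb_to_hsi, handled sector by sector (RG, GB, BR)."""
    h = h % 360

    def sector(hh):
        # Returns (smallest channel, leading channel, remaining channel).
        low = i * (1 - s)
        high = i * (1 + s * math.cos(math.radians(hh)) / math.cos(math.radians(60 - hh)))
        return low, high, 3 * i - (low + high)

    if h < 120:                       # RG sector
        b, r, g = sector(h)
    elif h < 240:                     # GB sector
        r, g, b = sector(h - 120)
    else:                             # BR sector
        g, b, r = sector(h - 240)
    return r, g, b


# Round trip for one pixel; in ESIF, I would be replaced by the fused intensity.
h, s, i = rgb_to_hsi(0.2, 0.5, 0.8)
print(hsi_to_rgb(h, s, i))
```

Recombining the original hue and saturation with a fused intensity can push channels outside [0, 1], so clipping the output is a practical precaution in a full pipeline.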

The proposed algorithm has the following advantages:

i) The high frequency components are combined adaptively by the CHCSO algorithm with the Sobel operator instead of the Select Maximum rule [13].

ii) The low frequency components are combined using weighting parameters found by the PSO algorithm, which optimizes the objective function in formula (11).

iii) It overcomes the limitations of wavelet transform based approaches mentioned in Section 1.
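The low frequency rule can be illustrated with a hypothetical sketch. It assumes that the fused band is the weighted sum LL = α·LL1 + (1 − α)·LL2 with α in [0, 1], and that the objective is the Shannon entropy of the fused band after rounding to integer gray levels; the exact objective in formula (11) may differ. A compact one-dimensional PSO searches for α; all names and parameter values are illustrative.

```python
import math
import random
from collections import Counter


def entropy(band):
    """Shannon entropy (bits) of a band after rounding values to integer levels."""
    counts = Counter(round(v) for row in band for v in row)
    n = sum(counts.values())
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def fuse_low(ll1, ll2, alpha):
    """Weighted low frequency fusion: alpha * LL1 + (1 - alpha) * LL2 (assumed form)."""
    return [[alpha * a + (1 - alpha) * b for a, b in zip(r1, r2)]
            for r1, r2 in zip(ll1, ll2)]


def optimize_alpha(ll1, ll2, n_particles=10, n_iter=30, w=0.7, c1=1.5, c2=1.5, seed=1):
    """One-dimensional PSO that maximizes the entropy of the fused LL band."""
    rng = random.Random(seed)

    def score(a):
        return entropy(fuse_low(ll1, ll2, a))

    x = [rng.random() for _ in range(n_particles)]   # alpha candidates in [0, 1)
    v = [0.0] * n_particles
    p_best, p_val = x[:], [score(a) for a in x]
    k0 = max(range(n_particles), key=lambda k: p_val[k])
    g_best, g_val = p_best[k0], p_val[k0]
    for _ in range(n_iter):
        for k in range(n_particles):
            v[k] = (w * v[k]
                    + c1 * rng.random() * (p_best[k] - x[k])
                    + c2 * rng.random() * (g_best - x[k]))
            x[k] = min(max(x[k] + v[k], 0.0), 1.0)   # keep alpha in [0, 1]
            val = score(x[k])
            if val > p_val[k]:                        # maximize, not minimize
                p_best[k], p_val[k] = x[k], val
                if val > g_val:
                    g_best, g_val = x[k], val
    return g_best, g_val


# Usage on toy 4 x 4 bands: LL1 is flat, LL2 has 16 distinct levels.
ll1 = [[100.0] * 4 for _ in range(4)]
ll2 = [[2.0 * (4 * i + j) for j in range(4)] for i in range(4)]
alpha, best_entropy = optimize_alpha(ll1, ll2)
print(alpha, best_entropy)
```

On this toy input, the flat LL1 carries no information, so any α below 0.5 keeps all 16 levels distinct and reaches the maximum entropy of 4 bits. On real bands, the objective trades off the contributions of both images.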

The input data were downloaded from the Whole Brain Atlas [32], which contains 1500 image files as slices. The image size is 256 × 256. This dataset serves as an introduction to basic neuroanatomy, with emphasis on the pathoanatomy of several central nervous system diseases. It includes many different types of medical images, such as MRI, PET and SPECT. On this dataset, the proposed algorithm (ESIF) is compared with existing methods, including wavelet based image fusion (WIF) [13], PCA based image fusion (PCAIF) [14] and convolutional sparsity based morphological component analysis (CSMCA) [25].

To assess image quality, we use the measures such as the brightness level (

Here, we illustrate the experiments with five slices: 070, 080, 090, 004 and 007. The input and output images of the fusion methods are presented in Tab. 1.
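Several of the quality indices can be computed directly from a grayscale image. The sketch below uses common definitions that are assumed here rather than taken from the paper: brightness as the mean gray level, contrast as the standard deviation, entropy as the Shannon entropy of the gray-level histogram, and the average gradient from forward differences. VIFF [33] and FMI [34] need more elaborate models and are not covered.

```python
import math
from collections import Counter


def quality_indices(img):
    """Brightness, contrast, entropy and average gradient of a grayscale image.

    img is a list of lists of integer gray levels with at least 2 rows and 2 columns.
    """
    values = [v for row in img for v in row]
    n = len(values)
    brightness = sum(values) / n                                          # mean gray level
    contrast = math.sqrt(sum((v - brightness) ** 2 for v in values) / n)  # standard deviation
    counts = Counter(values)                                              # gray-level histogram
    ent = -sum(c / n * math.log2(c / n) for c in counts.values())
    h, w = len(img), len(img[0])
    # Average gradient from forward differences in x and y.
    grads = [math.sqrt(((img[i][j + 1] - img[i][j]) ** 2
                        + (img[i + 1][j] - img[i][j]) ** 2) / 2)
             for i in range(h - 1) for j in range(w - 1)]
    avg_gradient = sum(grads) / len(grads)
    return {"brightness": brightness, "contrast": contrast,
            "entropy": ent, "avg_gradient": avg_gradient}


print(quality_indices([[0, 255], [255, 0]]))
# {'brightness': 127.5, 'contrast': 127.5, 'entropy': 1.0, 'avg_gradient': 255.0}
```

Higher values of these four indices correspond to brighter, higher-contrast, more informative and sharper fused images, which is the sense in which the comparison below reports improvements.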

From the output images of the four methods in Tab. 1, the characteristics of the results can be summarized as follows:

• The WIF and PCAIF methods do not highlight the boundaries of the regions in the resulting images.

• The CSMCA method generates much darker fused images than the WIF and PCAIF methods, which makes it difficult to distinguish regions in the image.

• The fused images generated by the proposed method have better contrast and brightness, and distinguish the regions more clearly than the fused images of the compared methods.

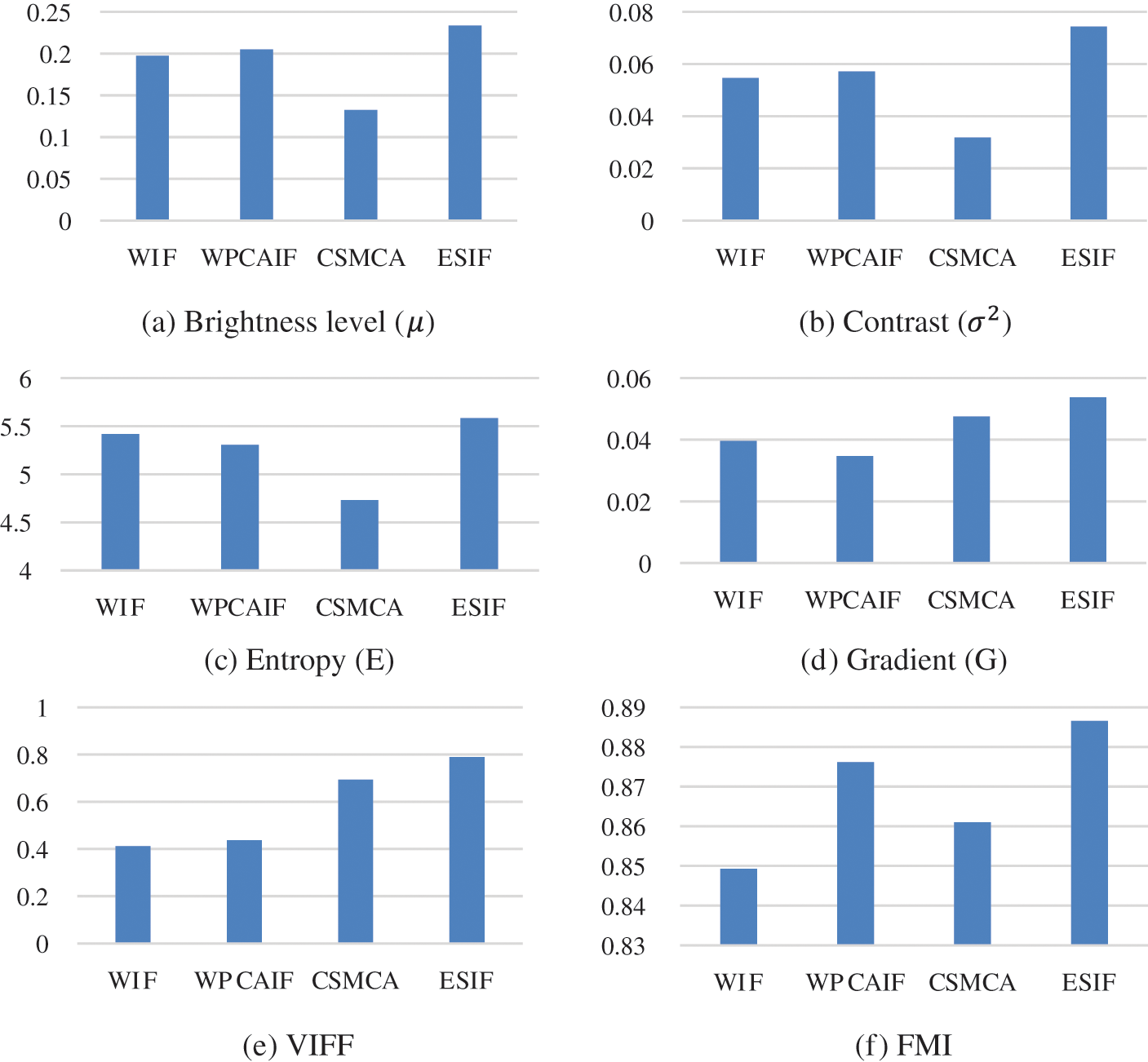

For the quantitative evaluation, the values of criteria

From the results in Tab. 2, by using our proposed method, the results of

Figure 4: Comparison among four methods by the average values on 5 slices of 6 evaluation indices. (a) Brightness level (

Fig. 4 shows that the average values of

Moreover, Tab. 2 and Fig. 4 show that ESIF achieves higher values than the other methods on all criteria. In particular, the values of ESIF are 1.76 times higher than those of CSMCA on brightness level, 2.34 times higher than those of CSMCA on contrast, and 1.92 times higher than those of WIF on FMI. This indicates that, on the same data, the proposed method produces fused images of considerably better quality than the three compared methods.

5 Conclusions and Future Works

This paper introduces a new algorithm for fusing multimodal images based on Entropy optimization and the Sobel operator (ESIF). The algorithm aims to obtain fused images without reducing brightness and contrast. The advantages of the proposed method are the adaptive combination of the high frequency components by the CHCSO algorithm with the Sobel operator and the effective combination of the low frequency components using the weighting parameter obtained by the PSO algorithm. In addition, the proposed method overcomes the limitations of wavelet transform based approaches.

The experimental results on five different image slices show that the proposed method performs better in terms of brightness level, contrast, entropy, gradient, VIFF and FMI. In future work, we intend to integrate parameter optimization into image processing and to apply the improved method to other problems.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. H. Li, Z. Yu and C. Mao, “Fractional differential and variational method for image fusion and super-resolution,” Neurocomputing, vol. 171, no. 9, pp. 138–148, 2016.

2. J. Du, W. Li, K. Lu and B. Xiao, “An overview of multi-modal medical image fusion,” Neurocomputing, vol. 215, no. 4, pp. 3–20, 2016.

3. A. P. James and B. V. Dasarathy, “Medical image fusion: A survey of the state of the art,” Information Fusion, vol. 19, no. 3, pp. 4–19, 2014.

4. S. Li, X. Kang, L. Fang, J. Hu and H. Yin, “Pixel-level image fusion: A survey of the state of the art,” Information Fusion, vol. 33, no. 6583, pp. 100–112, 2017.

5. H. Li, H. Qiu, Z. Yu and B. Li, “Multifocus image fusion via fixed window technique of multiscale images and non-local means filtering,” Signal Processing, vol. 138, no. 3, pp. 71–85, 2017.

6. M. Zribi, “Non-parametric and region-based image fusion with Bootstrap sampling,” Information Fusion, vol. 11, no. 2, pp. 85–94, 2010.

7. S. Yang, M. Wang, L. Jiao, R. Wu and Z. Wang, “Image fusion based on a new contourlet packet,” Information Fusion, vol. 11, no. 2, pp. 78–84, 2010.

8. F. Nencini, A. Garzelli, S. Baronti and L. Alparone, “Remote sensing image fusion using the curvelet transform,” Information Fusion, vol. 8, no. 2, pp. 143–156, 2007.

9. H. Li, H. Qiu, Z. Yu and Y. Zhang, “Infrared and visible image fusion scheme based on NSCT and low-level visual features,” Infrared Physics & Technology, vol. 76, no. 8, pp. 174–184, 2016.

10. B. Yu, B. Jia, L. Ding, Z. Cai, Q. Wu et al., “Hybrid dual-tree complex wavelet transform and support vector machine for digital multi-focus image fusion,” Neurocomputing, vol. 182, no. 11, pp. 1–9, 2016.

11. Y. Yang, “A novel DWT based multi-focus image fusion method,” Procedia Engineering, vol. 24, pp. 177–181, 2011.

12. B. Yang and S. Li, “Multifocus image fusion and restoration with sparse representation,” IEEE Transactions on Instrumentation and Measurement, vol. 59, no. 4, pp. 884–892, 2009.

13. H. O. S. Mishra and S. Bhatnagar, “MRI and CT image fusion based on wavelet transform,” International Journal of Information and Computation Technology, vol. 4, no. 1, pp. 47–52, 2014.

14. S. Mane and S. D. Sawant, “Image fusion of CT/MRI using DWT, PCA methods and analog DSP processor,” International Journal of Engineering Research and Applications, vol. 4, no. 2, pp. 557–563, 2014.

15. S. Deb, S. Chakraborty and T. Bhattacharjee, “Application of image fusion for enhancing the quality of an image,” CS & IT, vol. 6, pp. 215–221, 2012.

16. S. Maqsood and U. Javed, “Multi-modal medical image fusion based on two-scale image decomposition and sparse representation,” Biomedical Signal Processing and Control, vol. 57, no. 2, pp. 101810–101817, 2020.

17. L. Xu, Y. Si, S. Jiang, Y. Sun and H. Ebrahimian, “Medical image fusion using a modified shark smell optimization algorithm and hybrid wavelet-homomorphic filter,” Biomedical Signal Processing and Control, vol. 59, no. 4, pp. 101885–101894, 2020.

18. S. Polinati and R. Dhuli, “Multimodal medical image fusion using empirical wavelet decomposition and local energy maxima,” Optik, vol. 205, no. 3, pp. 163947–163979, 2020.

19. Q. Hu, S. Hu and F. Zhang, “Multi-modality medical image fusion based on separable dictionary learning and Gabor filtering,” Signal Processing: Image Communication, vol. 83, pp. 115758–115787, 2020.

20. J. Chen, L. Zhang, L. Lu, Q. Li, M. Hu et al., “A novel medical image fusion method based on Rolling Guidance Filtering,” Internet of Things, vol. 14, no. 3, pp. 100172–100188, 2021.

21. M. Haribabu and V. Guruvaiah, “Statistical measurements of multi modal MRI-PET medical image fusion using 2D-HT in HSV color space,” Procedia Computer Science, vol. 165, no. 38, pp. 209–215, 2019.

22. M. Manchanda and R. Sharma, “An improved multimodal medical image fusion algorithm based on fuzzy transform,” Journal of Visual Communication and Image Representation, vol. 51, no. 2, pp. 76–94, 2018.

23. O. Prakash, C. M. Park, A. Khare, M. Jeon and J. Gwak, “Multiscale fusion of multimodal medical images using lifting scheme based biorthogonal wavelet transform,” Optik, vol. 182, pp. 995–1014, 2019.

24. H. Ullah, B. Ullah, L. Wu, F. Y. Abdalla, G. Ren et al., “Multi-modality medical images fusion based on local-features fuzzy sets and novel sum-modified-Laplacian in non-subsampled shearlet transform domain,” Biomedical Signal Processing and Control, vol. 57, pp. 101724–101738, 2020.

25. Y. Liu, X. Chen, R. K. Ward and Z. J. Wang, “Medical image fusion via convolutional sparsity based morphological component analysis,” IEEE Signal Processing Letters, vol. 26, no. 3, pp. 485–489, 2019.

26. Y. Liu, X. Chen, J. Cheng and H. Peng, “A medical image fusion method based on convolutional neural networks,” in Proc. of 20th Int. Conf. on Information Fusion, pp. 1–7, 2017.

27. B. Yang, J. Zhong, Y. Li and Z. Chen, “Multi-focus image fusion and super-resolution with convolutional neural network,” International Journal of Wavelets, Multiresolution and Information Processing, vol. 15, no. 4, pp. 1–15, 2017.

28. J. Zhong, B. Yang, Y. Li, F. Zhong and Z. Chen, “Image fusion and super-resolution with convolutional neural network,” in Proc. of Chinese Conf. on Pattern Recognition, pp. 78–88, 2016.

29. J. Ma, H. Xu, J. Jiang, X. Mei and X.-P. Zhang, “DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion,” IEEE Transactions on Image Processing, vol. 29, pp. 4980–4995, 2020.

30. S. G. Mallat, “A theory for multiresolution signal decomposition: The wavelet representation,” in Fundamental Papers in Wavelet Theory. Princeton: Princeton University Press, pp. 494–513, 2009.

31. J. Kennedy and R. Eberhart, “Particle swarm optimization,” in Proc. of ICNN’95 - Int. Conf. on Neural Networks, IEEE, vol. 4, pp. 1942–1948, 1995.

32. http://www.med.harvard.edu/AANLIB.

33. Y. Han, Y. Cai, Y. Cao and X. Xu, “A new image fusion performance metric based on visual information fidelity,” Information Fusion, vol. 14, no. 2, pp. 127–135, 2013.

34. M. B. A. Haghighat, A. Aghagolzadeh and H. Seyedarabi, “A non-reference image fusion metric based on mutual information of image features,” Computers & Electrical Engineering, vol. 37, no. 5, pp. 744–756, 2011.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.