DOI:10.32604/csse.2022.021086

Computer Systems Science & Engineering

Article

FASTER–RCNN for Skin Burn Analysis and Tissue Regeneration

Department of Computer Science and Engineering, SRM Valliammai Engineering College, Chennai, 603203, India

*Corresponding Author: C. Pabitha. Email: pabithac.cse@valliammai.co.in

Received: 22 June 2021; Accepted: 19 August 2021

Abstract: Skin is the largest organ of the body and the one most directly exposed to the environment. It is therefore susceptible to many kinds of damage, including burns. Burns can endanger life and are linked to high morbidity and mortality rates. Effective diagnosis, supported by accurate evaluation of the burn zone and wound depth, is important for clinical efficacy. Skin burn wounds are associated with several characteristics, including the course of healing, infection, pain and stress, and keloid formation. Tissue regeneration also takes a significant amount of time during skin healing after a burn injury. Deep neural networks can automatically extract features from a burn image. In our approach to analyzing skin burn wounds and the regeneration of their tissue, we use Faster R-CNN (Faster Region-based Convolutional Neural Network) to classify burn wounds by severity. The success rate of skin healing after burn injuries can be increased dramatically with the use of different skin substitutes. Our objective is to analyze deep learning techniques that can help classify burn wounds as superficial, partial thickness, or full thickness, so that burn wounds can be treated more accurately. Faster R-CNN effectively classifies wounds as first, second, or third degree without confusing these classes, thus providing a suitable solution for burn wound assessment. Advances in deep learning offer an important path for the processing and analysis of burn trauma.

Keywords: Faster R-CNN; skin burn; deep learning; RPN; computer vision

Skin burn damage remains one of the most stressful mental and physical injuries. It damages the skin, the largest and most widely spread organ of the human body, and can also affect almost every other organ system. Its impact can lead to death, or to a loss of sensation from which an ordinary individual may not fully recover, which makes burns one of the most difficult and challenging problems for physicians. Because human skin is the largest organ in the body and is directly exposed to the physical environment, it is highly susceptible to diseases, damage, and burns or wounds of different kinds. Treating skin injuries and burns takes time, depending on their severity and impact level. A burn wound heals only as its tissue cells recover from the damage, and classifying skin burn wounds takes additional time; this situation calls for both regional and procedural responses. With the extensive use of modern medical technologies, the rate of successful cure of skin burns has increased enormously. The severity, depth of impact, and extent of a skin burn are regarded as critical parameters for analyzing and categorizing burn wounds by level of severity: Superficial (First Degree), Partial Thickness (Second Degree), and Full Thickness (Third Degree). Accurate grading and assessment of burn severity is critical because it determines the course of treatment. Delays in classifying and grading skin burn wounds, and therefore in treating them, increase the likelihood of deep scarring and infection. As technology advances, several solutions have been proposed and implemented.
Advances in artificial intelligence and computer vision can support the treatment of skin burn wounds and enable faster recovery. Machine learning, a subfield of Artificial Intelligence, offers many popular classification algorithms that can accurately classify skin burn wounds according to the severity of the impact. Tissue regeneration, in turn, is the process by which a body part whose cells and tissues have been severely traumatized by an external burn, or partially lost, uses the cells that remain to rebuild the same physical structure and recover its former function. It is a slow and tedious process in which the lost part regrows. Tissue regeneration includes the regeneration of epithelial tissue present in the skin and of fibrous tissue, as well as the regeneration of bone, cartilage, blood vessels, muscle tissue, and nerve cells. Extensive skin burn damage frequently results in extreme scarring, disfigurement, and chronically tight skin with severe itchiness.

This is primarily because, unlike true regeneration or restoration of human skin tissue, the body's natural healing process prevents infection by closing wounds as quickly as possible. When medical aid is given only at a later stage, without proper initial treatment, the recovery process becomes even more complicated. Since the dawn of technology, people have been drawn to digital automation and invention. Artificial Intelligence enables computers to reason and act without human intervention, and it is one of the most advanced and widely used branches of computer science. Artificially intelligent computer systems can be classified into three major types. Artificial narrow intelligence (ANI) is programmed for a specific goal and aims to excel at its assigned task. Artificial general intelligence (AGI) would enable computers to adapt to their surroundings and learn. Artificial super intelligence (ASI) is a hypothetical form of AI in which computers display intelligence surpassing human knowledge and capability. Machine learning is a major subfield of Artificial Intelligence that relies on mathematical methods such as statistical learning algorithms. It aims to create machines that automatically gather information from their surroundings, learn from it, and improve with experience without being explicitly programmed. Deep learning is a class of machine learning techniques loosely inspired by the way the human brain works, filtering information and keeping only what is necessary. It learns and adapts to its surroundings through training examples.
Its multiple layers enable a system to filter input data step by step in order to predict and classify information. Because deep learning is often likened to the way the human brain processes information, it is widely used in applications that would normally be carried out by a human being. Computer vision is a subfield of AI that trains computers to interpret and understand information from their visual environment. Detecting objects and locating their positions in images is one of the most basic and time-consuming challenges in computer vision. Many techniques have been developed to address it, ranging from Convolutional Neural Networks (CNNs) to Region-based Convolutional Neural Networks (R-CNNs). R-CNN is an object detection algorithm for machine vision that uses deep learning. It follows a three-step procedure: first, a selective search algorithm proposes Regions of Interest (ROIs); second, a convolutional neural network extracts features from each selected ROI; third, classifiers such as SVMs determine which object category, if any, each region contains. Its successor, Fast R-CNN, adds ROI pooling, a max-pooling operation that converts each ROI on a shared feature map into a fixed-size feature, and uses a softmax classifier in place of the SVMs.
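The ROI pooling step can be illustrated with a short NumPy sketch. It assumes a single-channel feature map and an ROI given in feature-map cells as (x0, y0, x1, y1) with exclusive upper bounds; the function name `roi_max_pool` and the 2×2 output grid are illustrative choices, not part of any specific implementation.

```python
import numpy as np

def roi_max_pool(feature_map, roi, out_h=2, out_w=2):
    """Max-pool one ROI of a 2-D feature map into a fixed out_h x out_w grid.

    roi = (x0, y0, x1, y1) in feature-map cells, with x1 and y1 exclusive.
    """
    x0, y0, x1, y1 = roi
    region = feature_map[y0:y1, x0:x1]
    h, w = region.shape
    # Bin edges that split the region into roughly equal sub-windows.
    row_edges = np.linspace(0, h, out_h + 1).astype(int)
    col_edges = np.linspace(0, w, out_w + 1).astype(int)
    pooled = np.empty((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # Guarantee every bin covers at least one cell.
            r0, r1 = row_edges[i], max(row_edges[i + 1], row_edges[i] + 1)
            c0, c1 = col_edges[j], max(col_edges[j + 1], col_edges[j] + 1)
            pooled[i, j] = region[r0:r1, c0:c1].max()
    return pooled

# ROIs of different sizes all map to the same fixed-size output.
fmap = np.arange(36, dtype=float).reshape(6, 6)
print(roi_max_pool(fmap, (0, 0, 4, 4)))
print(roi_max_pool(fmap, (1, 0, 6, 3)).shape)
```

The fixed output size is what lets proposals of arbitrary shape feed the same fully connected classifier.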

Convolutional Neural Networks (CNNs) are the models most widely used by researchers in machine vision for image classification and image segmentation. They have been widely adopted in application areas such as character recognition, handwriting recognition, image processing, and face recognition [1,2], as well as animal, skin, and plant disease identification [3]. The main strength of ConvNets is their ability to identify and collect a wide variety of rich parameters that discriminate features at every level of processing. Another widely acknowledged approach, proposed in [4], classifies images of human skin as normal or burnt. It begins by passing image attributes through pre-trained CNNs to generate feature maps, and several such models were used because few datasets are available. Because training data are scarce and the images come in different formats, these datasets are difficult to process. The images are first fed to the ConvNet, which carries out the main pre-processing steps: noise removal and resizing to a fixed standard size using filters, strides, and padding/pooling. This standardization helps the model determine whether an object is present in the image and group images by class. CNN models such as VGG-16, VGG-19, Inception, and ResNet were used as pre-trained models for these datasets. The extracted feature maps are classified with a Support Vector Machine (SVM) and evaluated with ten-fold cross-validation.
The authors report that this approach classifies skin burn images with an accuracy of up to 99%.
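The feature-plus-SVM pipeline with ten-fold cross-validation can be sketched as follows. This is a minimal NumPy illustration, not the method of [4]: synthetic vectors stand in for CNN feature maps (no real images or pre-trained network are used), and a linear SVM is trained by sub-gradient descent on the regularized hinge loss. The function names and hyperparameters are hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=200, lr=0.1):
    """Linear SVM: full-batch sub-gradient descent on the L2-regularised hinge loss.
    Labels y must be in {-1, +1}."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # samples inside or on the wrong side of the margin
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / len(y)
        grad_b = -y[active].sum() / len(y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def k_fold_accuracy(X, y, k=10, seed=0):
    """Mean held-out accuracy over k folds (ten-fold by default)."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, k)
    scores = []
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        w, b = train_linear_svm(X[train], y[train])
        pred = np.where(X[test] @ w + b >= 0, 1, -1)
        scores.append(np.mean(pred == y[test]))
    return float(np.mean(scores))

# Synthetic stand-in for CNN feature vectors: "burnt" (+1) vs "non-burnt" (-1).
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(1.0, 1.0, (100, 16)), rng.normal(-1.0, 1.0, (100, 16))])
y = np.array([1] * 100 + [-1] * 100)
acc = k_fold_accuracy(X, y)
print(f"10-fold accuracy: {acc:.3f}")
```

In practice a library SVM would replace the hand-written trainer; the point of the sketch is the fold structure, in which every sample is scored exactly once by a model that never saw it during training.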

Another major approach, put forward in [5], uses deep learning to classify skin burn images by binary classification: an image is labeled yes if the object (a burn) is present and no if it is not, with neural network features used to make this decision. This approach also uses an SVM to classify the images. Three ConvNet models were implemented. Two of them were pre-trained using images from the ImageNet dataset (more than 5000 images, according to the study), which helps them classify the input image; the third was pre-trained for face recognition. In the combined process, each of the three pre-trained ConvNet models is applied separately to extract feature maps for classification. The reported average accuracies exceed 98.5% and reach nearly 97% for the models based on the pre-trained VGG16 and VGG19 networks, respectively, and exceed 95% for the model pre-trained for face recognition. The authors therefore concluded that models pre-trained on ImageNet achieve high accuracy, consistent with the broader observation that CNN models learn features that transfer across diverse categories of applications.

Another major approach, introduced in [6], addresses the weakness of deep neural networks trained from scratch on very limited datasets. It relies on transfer learning, a widely used technique, to extract features for deep learning. Two pre-trained Residual Network (ResNet) models generate feature maps so that skin burn images can be distinguished from other skin diseases and from rashes or allergies caused by external factors. SVM classifiers trained on large amounts of data were used for accurate image classification and object detection. Compared with models such as Inception, VGG16, and VGG19, ResNet models generate features with a greater variety of patterns learned from the enormous training datasets. The authors claim that SVM classification in this setting yields an accuracy of 99%.

Another methodology, proposed in [7], uses a similar binary classification of skin burn images together with a fine-tuning approach that distinguishes healthy skin from burn wounds. Pre-trained ImageNet models were extended with additional top layers for the new task. The model's deep inner layers were extracted and replaced with new layers, which were then trained using properties and attributes from the ResNet model's base layers. The model correctly recognizes 97% of skin burn images and achieves 99% classification accuracy on images of different skin tones.

Very few works use machine learning to recognize burn depth. For example, a study published in [8] classified deep partial-thickness (DPT), superficial partial-thickness (SPT), and first-degree burns using machine learning. The model first segments the relevant region of interest (ROI), then uses the discrete wavelet transform (DWT) to extract texture features, and applies Principal Component Analysis (PCA) to reduce the dimensionality of the features and speed up the whole process. A simple logistic regression classifier then achieves an accuracy of 73%.
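The DWT, PCA, and logistic regression pipeline can be sketched in NumPy under stated assumptions: a single-level 2-D Haar wavelet stands in for whatever DWT variant [8] used, sub-band energies serve as the texture features, and the images are synthetic 16×16 patches (smooth versus high-frequency texture), not burn data. All function names are hypothetical.

```python
import numpy as np

def haar_dwt2(img):
    """Single-level orthonormal 2-D Haar DWT of an even-sized image.
    Returns the approximation band and three detail bands."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    approx = (a + b + c + d) / 2
    detail_1 = (a - b + c - d) / 2
    detail_2 = (a + b - c - d) / 2
    detail_3 = (a - b - c + d) / 2
    return approx, detail_1, detail_2, detail_3

def texture_features(img):
    """Mean energy of each sub-band: a simple wavelet texture descriptor."""
    return np.array([np.mean(band ** 2) for band in haar_dwt2(img)])

def pca_fit_transform(X, n_components):
    """Project mean-centred data onto its top principal components (via SVD)."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:n_components].T

def add_bias(X):
    return np.hstack([X, np.ones((len(X), 1))])

def train_logistic(X, y, lr=0.5, epochs=500):
    """Binary logistic regression by batch gradient descent; y in {0, 1}."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-np.clip(Xb @ w, -30, 30)))
        w -= lr * Xb.T @ (p - y) / len(y)
    return w

def predict_logistic(w, X):
    return (add_bias(X) @ w >= 0).astype(int)

# Synthetic 16x16 patches: smooth (class 0) vs. high-frequency texture (class 1).
rng = np.random.default_rng(0)
smooth = [rng.uniform(0, 1) + 0.1 * rng.normal(size=(16, 16)) for _ in range(50)]
rough = [rng.normal(size=(16, 16)) for _ in range(50)]
X = np.array([texture_features(p) for p in smooth + rough])
y = np.array([0] * 50 + [1] * 50)
Z = pca_fit_transform(X, n_components=2)
w = train_logistic(Z, y)
train_acc = np.mean(predict_logistic(w, Z) == y)
print(f"training accuracy: {train_acc:.2f}")
```

The figure printed is training accuracy only; a real evaluation would fit PCA and the classifier on a training split and report results on held-out images.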

In another study [9], a diagnostic process that automatically classifies burn wounds by level of severity is proposed, and it was used to classify both skin burn images and common skin diseases. Because of a lack of datasets, the study followed two transfer learning approaches: fine-tuning, which retrains some of the layers of a pre-trained deep learning model, and training an SVM on the feature maps generated by pre-trained deep neural networks. The methodology compares the results of three pre-trained models.

Overall, an SVM trained repeatedly on features from a ResNet CNN was found to be the best image classifier, with an accuracy of nearly 99% and an area under the ROC curve above 99%. Compared with earlier techniques, this model was found to give the best results when a large amount of data is available.

A study by [10] uses machine learning to categorize skin burn images by wound depth. Its main goal is an automated diagnostic approach that determines whether a burn wound requires surgery or other treatment; making this decision at an early stage ensures timely attention, avoids delay, and considerably reduces healing time and scarring. The training images are pre-processed to extract features such as HOG, chroma, color, hue, skewness, and kurtosis. The paper also employs an SVM, which achieves an F1-score of 82%, a precision of 88%, and a recall of 85%.
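Several of the listed color features are simple statistics of pixel values. The standard-library sketch below assumes RGB pixels scaled to [0, 1], takes hue from `colorsys.rgb_to_hsv`, defines chroma as max(R, G, B) minus min(R, G, B), and uses population skewness and excess kurtosis. The feature layout and function names are illustrative, not those of [10]. Note that hue is an angle, so plain moments are only a rough descriptor for reddish regions whose hue wraps around 0.

```python
import colorsys
import math

def moments(values):
    """Mean, standard deviation, skewness and excess kurtosis (population formulas)."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0:
        return mean, 0.0, 0.0, 0.0  # constant channel: report 0 for the higher moments
    skew = sum((v - mean) ** 3 for v in values) / (n * std ** 3)
    kurt = sum((v - mean) ** 4 for v in values) / (n * std ** 4) - 3.0
    return mean, std, skew, kurt

def colour_features(pixels):
    """pixels: iterable of (r, g, b) floats in [0, 1].
    Returns [hue mean, std, skew, kurtosis, chroma mean, std, skew, kurtosis]."""
    pixels = list(pixels)
    hues = [colorsys.rgb_to_hsv(r, g, b)[0] for r, g, b in pixels]
    # Chroma in the HSV/HSL sense: the range of the RGB components.
    chromas = [max(p) - min(p) for p in pixels]
    return list(moments(hues)) + list(moments(chromas))

# Hypothetical pixels from a reddish wound region and a paler surrounding area.
sample = [(0.8, 0.2, 0.1), (0.7, 0.25, 0.2), (0.9, 0.3, 0.2), (0.95, 0.9, 0.85)]
print([round(f, 3) for f in colour_features(sample)])
```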

Another method, described in [11], uses approximately 500 skin burn wound images and proposes a discriminative method to categorize wounds by depth and severity. The burn images are converted to a different color space, compressed, and resized to a lower resolution. Each burn category (first, second, and third degree) contains 100–200 images. The paper employs various ROI-based classification and segmentation techniques and achieves 75% accuracy.

Artificial Neural Networks (ANNs) are among the most widely used neural network methodologies and are loosely based on the human brain, its neurons, and their network connectivity. An ANN is made up of a large number of interconnected nodes (neurons) that model how brain synapses either fire or not as signals propagate across the network. Deep learning architectures built from such networks, like convolutional neural networks, are used for feature selection, classification, and dimensionality reduction, or as a sub-module of a deeper architecture.
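A fully connected layer computes every unit as a weighted sum of all outputs of the previous layer, followed by a non-linearity. A minimal NumPy sketch of a two-layer forward pass, with random weights and layer sizes chosen purely for illustration (the three outputs could stand for the three burn degrees):

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def mlp_forward(x, params):
    """x: (batch, n_in). Every hidden unit receives every input (fully connected)."""
    h = relu(x @ params["W1"] + params["b1"])
    return softmax(h @ params["W2"] + params["b2"])

rng = np.random.default_rng(0)
params = {
    "W1": rng.normal(0, 0.1, (8, 16)), "b1": np.zeros(16),
    "W2": rng.normal(0, 0.1, (16, 3)), "b2": np.zeros(3),
}
probs = mlp_forward(rng.normal(size=(4, 8)), params)
print(probs.shape, probs.sum(axis=1))  # one probability vector per input row
```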

A Convolutional Neural Network (CNN) is a deep neural network architecture most commonly used for analyzing visual imagery. It was originally designed as a fully automated image analysis network for classifying handwritten characters. CNNs are regularized versions of multilayer perceptrons: in a multilayer perceptron, each node in one layer is fully connected to every node in the next layer, whereas a convolutional layer connects each unit only to a small local region of the previous layer. An ANN can be described as a collection of interconnected, tunable units that pass signals from one unit to another. In contrast, CNNs have many layers of convolution units, each of which receives input from neighboring units in the previous layer and combines it into a local result. The basic idea behind this deep architecture is to compute and blend many feature maps in order to infer non-linear relationships between the input and the desired output [12]. CNNs are regarded as one of the most prominent techniques for feature extraction, feature selection, and feature reduction, primarily for classifying image datasets.
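The local connectivity and weight sharing that distinguish a convolutional layer from a fully connected one are visible in a direct implementation of a single-channel 2-D convolution (strictly a cross-correlation, as in most deep learning libraries). A minimal NumPy sketch with stride and zero padding; the edge-detecting kernel is an illustrative choice:

```python
import numpy as np

def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2-D cross-correlation (the 'convolution' used in CNN layers)."""
    if padding:
        image = np.pad(image, padding)  # zero padding on all sides
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            # Each output unit sees only a local kh x kw patch, and the same
            # kernel weights are shared across all positions.
            out[i, j] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

img = np.zeros((5, 5))
img[:, 2:] = 1.0                              # a vertical edge between columns 1 and 2
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)     # responds to left-to-right increases
print(conv2d(img, kernel))                    # strong response near the edge, zero on the flat area
print(conv2d(img, kernel, padding=1).shape)   # 3x3 kernel, stride 1, padding 1 keeps 5x5
```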

Recurrent Neural Networks (RNNs) are another widely used technique. They are derived from feed-forward neural networks (FNNs) [13], but the connections between their nodes form a directed graph along a temporal sequence [14]. This allows RNNs to exhibit dynamic temporal behavior through an internal memory. RNNs can therefore retain information from previously processed states, which makes them well suited to sequential analysis and a good predictive method. The main advantage of RNNs is their ability to carry information from a prior step into the current step.
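The internal memory of a vanilla RNN is the hidden state updated as h_t = tanh(W_x x_t + W_h h_{t-1} + b). A minimal NumPy sketch with random weights and arbitrary sizes, all illustrative:

```python
import numpy as np

def rnn_forward(xs, W_x, W_h, b):
    """Run a vanilla RNN over a sequence xs of shape (T, n_in); return all hidden states."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in xs:
        # The new state mixes the current input with the previous state (the "memory").
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
n_in, n_hidden, T = 3, 5, 4
W_x = rng.normal(0, 0.5, (n_hidden, n_in))
W_h = rng.normal(0, 0.5, (n_hidden, n_hidden))
b = np.zeros(n_hidden)
H = rnn_forward(rng.normal(size=(T, n_in)), W_x, W_h, b)
print(H.shape)  # one hidden state per time step
```

Because h_{t-1} feeds into h_t, changing the first input of the sequence also changes the final state, which is how earlier information reaches later steps.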

Long Short-Term Memory networks (LSTMs) are a variant of RNNs that can learn long-term dependencies and are explicitly designed to avoid the long-term dependency problem [15]. An LSTM unit is made up of a cell, an input gate, an output gate, and a forget gate. The cell remembers values over arbitrary time intervals, and the three gates regulate the flow of information into and out of the cell.
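The gating can be written as a single-step update, sketched here in NumPy. The stacked weight layout (one matrix holding the four gate blocks in the order input, forget, output, candidate) and the sizes are illustrative assumptions; the equations are the standard LSTM with a forget gate.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W: (4n, n_in + n), b: (4n,), gate blocks ordered i, f, o, g."""
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[:n])            # input gate: how much new information to write
    f = sigmoid(z[n:2 * n])       # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2 * n:3 * n])   # output gate: how much of the cell to expose
    g = np.tanh(z[3 * n:])        # candidate values
    c = f * c_prev + i * g        # the cell state carries long-term memory
    h = o * np.tanh(c)
    return h, c

rng = np.random.default_rng(0)
n_in, n = 3, 4
W = rng.normal(0, 0.5, (4 * n, n_in + n))
b = np.zeros(4 * n)
h, c = np.zeros(n), np.zeros(n)
for x in rng.normal(size=(5, n_in)):
    h, c = lstm_step(x, h, c, W, b)
print(h, c)
```

With the forget gate saturated near 1 and the input gate near 0, the cell state passes through unchanged, which is the mechanism that preserves information over long intervals.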

Generative Adversarial Networks (GANs) are a more recent architecture that pits two neural networks against each other [16]. The first network, the generator, produces realistic synthetic data, while the second, the discriminator, judges whether the data are real or generated. GANs have been shown to improve classification accuracy in a variety of domains, including medical image processing [17].
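The adversarial game can be made concrete through the two losses of the standard GAN formulation, including the commonly used non-saturating generator loss. A minimal NumPy sketch that operates only on discriminator output probabilities; the networks themselves are omitted and the function names are illustrative:

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for the discriminator: push D(real) -> 1 and D(fake) -> 0."""
    return -np.mean(np.log(d_real + eps) + np.log(1.0 - d_fake + eps))

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return -np.mean(np.log(d_fake + eps))

# d_real / d_fake are discriminator probabilities for real and generated images.
confused = np.array([0.5, 0.5])
print(discriminator_loss(confused, confused))  # about 2 ln 2 when D cannot tell them apart
print(generator_loss(np.array([0.9])), generator_loss(np.array([0.1])))
```

The generator's loss falls as the discriminator is fooled more often, while the discriminator's loss rises; training alternates between minimizing the two.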

Autoencoders (AEs) are well-known deep learning models for unsupervised learning: an encoder learns a compact representation of the data, and the network is trained to ignore signal "noise" [18]. Neural networks commonly use simple non-linearities, in which a non-linear function is applied to the scalar output of a linear filter. Capsules employ a more complex non-linearity, in which a group of neurons models a region of the input by activating a small subset of its properties [19]. The CapsuleNet proposed in [20] consists of capsules that do not depend on one another but depend on kernels.
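As an example of the vector non-linearity used by capsules, the "squash" function from dynamic-routing capsule networks (Sabour et al., 2017) rescales each capsule output vector s to length ‖s‖²/(1 + ‖s‖²) while preserving its direction, so the length can be read as the probability that an entity is present. The NumPy sketch below is illustrative and may differ from the exact formulation in [19] or [20].

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Capsule non-linearity: v = (|s|^2 / (1 + |s|^2)) * s / |s|."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    scale = sq_norm / (1.0 + sq_norm)
    return scale * s / np.sqrt(sq_norm + eps)  # eps avoids division by zero

caps = np.array([[3.0, 4.0], [0.03, 0.04]])  # a long and a short capsule vector
v = squash(caps)
print(np.linalg.norm(v, axis=-1))  # long vector -> length near 1, short -> near 0
```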

Deep learning models have been reported to outperform earlier methods on most skin burn analysis tasks. Furthermore, deep learning can effectively handle multimodal and highly heterogeneous data, making it an excellent candidate for achieving precision medicine. On the other hand, the translation of medical image processing knowledge into clinically useful tools has been extremely slow. More work is needed to analyze and combine datasets (both private and public) in order to strengthen the role of deep learning in medical prediction and prognosis. Deep learning models also offer a promising path to identifying a range of skin conditions, including tissue damage and diseases.

3.1 Summary of Significant Contributions of the Paper

1. The paper proposes a novel method for segmenting burn wounds in images using a cutting-edge deep learning technique. Faster R-CNN is used to classify the burn wounds.

2. We labeled 1300 images in the Common Objects in Context (COCO) annotation format to train our framework, and trained our model on 1000 of them.

3. During the evaluation, we compared several backbone networks in our framework, namely VGG-16, Inception, and SegNet, against Faster R-CNN.

4. Finally, we used precision, recall, and the Dice coefficient as evaluation metrics to compare the models.
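For readers unfamiliar with the COCO annotation format, a minimal burn-image record might look like the following Python sketch. The file name, image size, category names, and polygon coordinates are hypothetical placeholders, not taken from the authors' dataset; the field names (`images`, `annotations`, `categories`, `bbox` as [x, y, width, height], `segmentation` as flat polygon lists, `iscrowd`) follow the public COCO format.

```python
import json

coco = {
    "images": [
        {"id": 1, "file_name": "burn_0001.jpg", "width": 640, "height": 480},
    ],
    "categories": [
        {"id": 1, "name": "first_degree"},
        {"id": 2, "name": "second_degree"},
        {"id": 3, "name": "third_degree"},
    ],
    "annotations": [
        {
            "id": 1,
            "image_id": 1,
            "category_id": 2,
            # Polygon as a flat [x1, y1, x2, y2, ...] list.
            "segmentation": [[100, 120, 220, 120, 220, 260, 100, 260]],
            "bbox": [100, 120, 120, 140],  # [x, y, width, height]
            "area": 16800,
            "iscrowd": 0,
        },
    ],
}

def bbox_from_polygon(poly):
    """Derive a COCO [x, y, w, h] box from a flat polygon list."""
    xs, ys = poly[0::2], poly[1::2]
    return [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)]

ann = coco["annotations"][0]
assert bbox_from_polygon(ann["segmentation"][0]) == ann["bbox"]
print(json.dumps(coco)[:80])
```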
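The three metrics can be computed from a predicted and a ground-truth binary mask as precision = TP/(TP+FP), recall = TP/(TP+FN), and Dice = 2TP/(2TP+FP+FN). A minimal NumPy sketch; computing them per pixel, rather than per detection, is an assumption made for this illustration:

```python
import numpy as np

def mask_metrics(pred, truth):
    """Pixel-wise precision, recall and Dice coefficient for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # burn pixels correctly predicted
    fp = int(np.sum(pred & ~truth))   # predicted burn, actually healthy
    fn = int(np.sum(~pred & truth))   # missed burn pixels
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Convention for two empty masks: perfect agreement.
    dice = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 1.0
    return precision, recall, dice

truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:3] = True     # 4 ground-truth burn pixels
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True      # 6 predicted pixels, 4 of them correct
print(mask_metrics(pred, truth))
```

Here precision is 4/6, recall is 1, and Dice is 0.8. For binary masks the Dice coefficient equals the pixel-wise F1-score, the harmonic mean of precision and recall.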

4 Skin Anatomy and Various Levels of Burn Injury

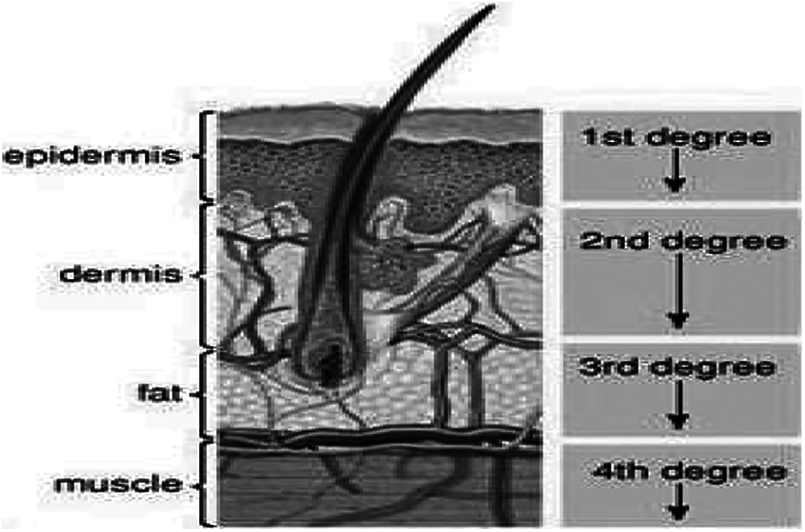

As Fig. 1 shows, burns are classified as first-, second-, or third-degree burns depending on how deeply they penetrate the skin's surface.

Figure 1: Skin classification and structure representation

4.1 First Degree Burns (Superficial)

Superficial burns, termed first-degree burns, affect only the epidermis, the outer layer of the skin. The burn site is red, painful, and dry, and may become scaly and itchy, but it is free of blisters. A mild sunburn is a typical example. Long-term tissue damage is rare and usually manifests only as an increase or decrease in skin color.

4.2 Second Degree - (Partial Thickness) Burns

Second-degree burns, also called partial-thickness burns, affect the epidermis and part of the underlying dermis. The burn site appears red and blistered, may be swollen, and is very painful.

4.3 Third Degree Burns (Full Thickness)

The epidermis and dermis are destroyed in third-degree burns, also called full-thickness burns. Third-degree burns can also damage the underlying bones, muscles, and tendons. The burn area appears white or charred. Because the nerve endings have been destroyed, there is no sensation in the area.

A major, severe burn can be a life-changing injury, not only physically but also emotionally [21]. It can affect the entire family as well as the burn victim. Individuals with severe burns may suffer the loss of one or more limbs, disfigurement, loss of mobility, scarring, and recurrent infections, because burned skin is less able to fight infection.

Burns also cause emotional distress, such as mental stress, depression, post-traumatic stress disorder, or flashbacks of the event. Skin healing is a systematic process that comprises four overlapping classic phases: hemostasis (coagulation), inflammation (mononuclear cell infiltration), proliferation, and maturation (collagen deposition, which forms scar tissue on the skin). Skin healing after a burn injury is influenced by the cause, degree, and size of the burn, the general condition of the patient, and the type of graft or material used to cover the burn wound.

The course of healing, and its consequences, depend on the severity of the burn. Typically, first-degree burns heal in two weeks or less and cause minimal damage and scarring. In partial-thickness burns, keratinocytes migrating from skin appendages within a few hours of the injury ensure re-epithelialization. Because of the need for rapid wound closure, deeper burns heal from the edges rather than from the center. Early acceleration of cell proliferation results from the release of various factors by dendritic cells, which ensures rapid healing of burns. Agents that enhance dendritic cells are therefore viewed as therapeutics for improving burn wound care. In burns, hypoxia-inducible factor 1 (HIF-1) induces angiogenic cytokines such as VEGF and CXCL12, which raise blood levels of endothelial progenitor cells in proportion to the burned skin area. Activation of the TGF-β pathway increases wound contraction, which in turn may cause tissue restructuring and skin scarring.

Compared with other types of wounds, burns can have a systemic effect on nearly every system of the body, causing changes in the lungs, kidneys, heart, liver, gastrointestinal tract, bone marrow, and lymphoid organs, as well as multiple organ dysfunction syndrome [22]. These systemic effects are driven by inflammatory mediators such as tumor necrosis factor alpha (TNF-α) and interleukins 6, 8, and 1β, whose serum concentrations are related to the area of the burn. The risk of infection, multi-organ dysfunction, and death rises accordingly.

After a skin injury, the damaged tissue is restored through coordinated signals that drive skin repair. This response takes place in three phases: inflammation, proliferation, and maturation. During the first phase, which lasts about four days, blood clotting forms a clot that provides temporary protection against pathogens and fluid loss. Blood flow to the areas adjacent to the wound then increases, followed by swelling and redness, as local inflammatory agents increase vascular permeability so that plasma extravasates and forms a fibrin matrix. To eliminate dead tissue and control infection, neutrophils, monocytes, and other immunocompetent cells infiltrate this matrix [23].

From about day 5 to day 20, growth factors secreted by inflammatory cells stimulate the proliferation of vascular endothelial cells and fibroblasts. Collagen secreted by fibroblasts gradually replaces the fibrin matrix. Fibroblasts can differentiate into actin-expressing myofibroblasts, which contract and reduce the area of the injury. Angiogenesis also takes place, regenerating capillaries from vascular endothelial cells. Keratinocytes migrate from the wound margins across the surface of the granulation tissue, beneath the blood clot [24].

4.4 Wound Healing and Deep Learning Process

Wound healing can be divided artificially into three phases [25], although in reality these phases are continuous and overlapping. Phase 1, the inflammatory phase, includes hemostasis, damage-limiting inflammation, platelet activation and degranulation that trigger inflammation, a clotting cascade that creates a fibrin clot sealing the wound, a fibrin matrix that serves as a temporary scaffold for inflammatory cells, and the release of cytokines that attract inflammatory, mesenchymal, and endothelial cells to the wound. Phase 2, the proliferative phase, involves macrophage activity, production and secretion of extracellular matrix (including an increased proportion of type III collagen as collagen formation begins), granulation tissue formation, re-epithelialization, angiogenesis, and structural repair. Phase 3, the remodeling phase, progresses toward scarring: the wound is filled and closed as the extracellular matrix is restructured through protease synthesis and secretion, and type III collagen is progressively replaced by type I collagen. Restoring the ratio of type I to type III collagen optimizes the strength of the vascular and dermal beds and of the mature scar according to the depth of the injury. Finally, the outcome of wound healing depends on a number of factors, including the severity and depth of the wound, its anatomical location and skin thickness, the density of adnexal structures, the genetic and epigenetic background, the time to healing, comorbidities, and microbial contamination.

5 Faster R-CNN for Skin Burn Segmentation

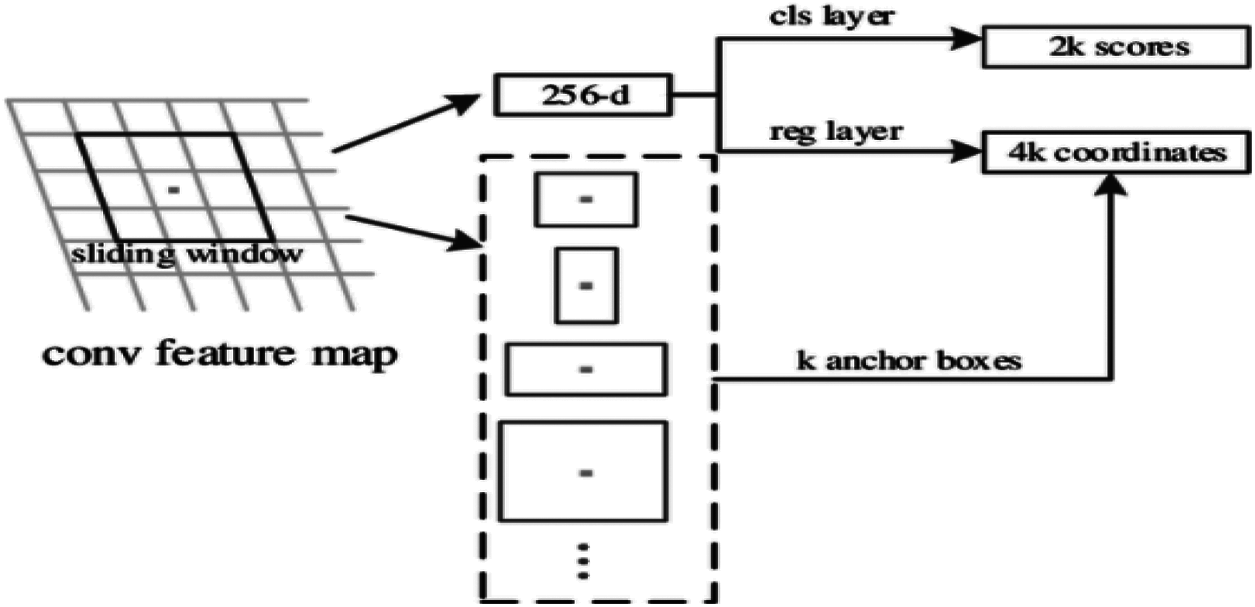

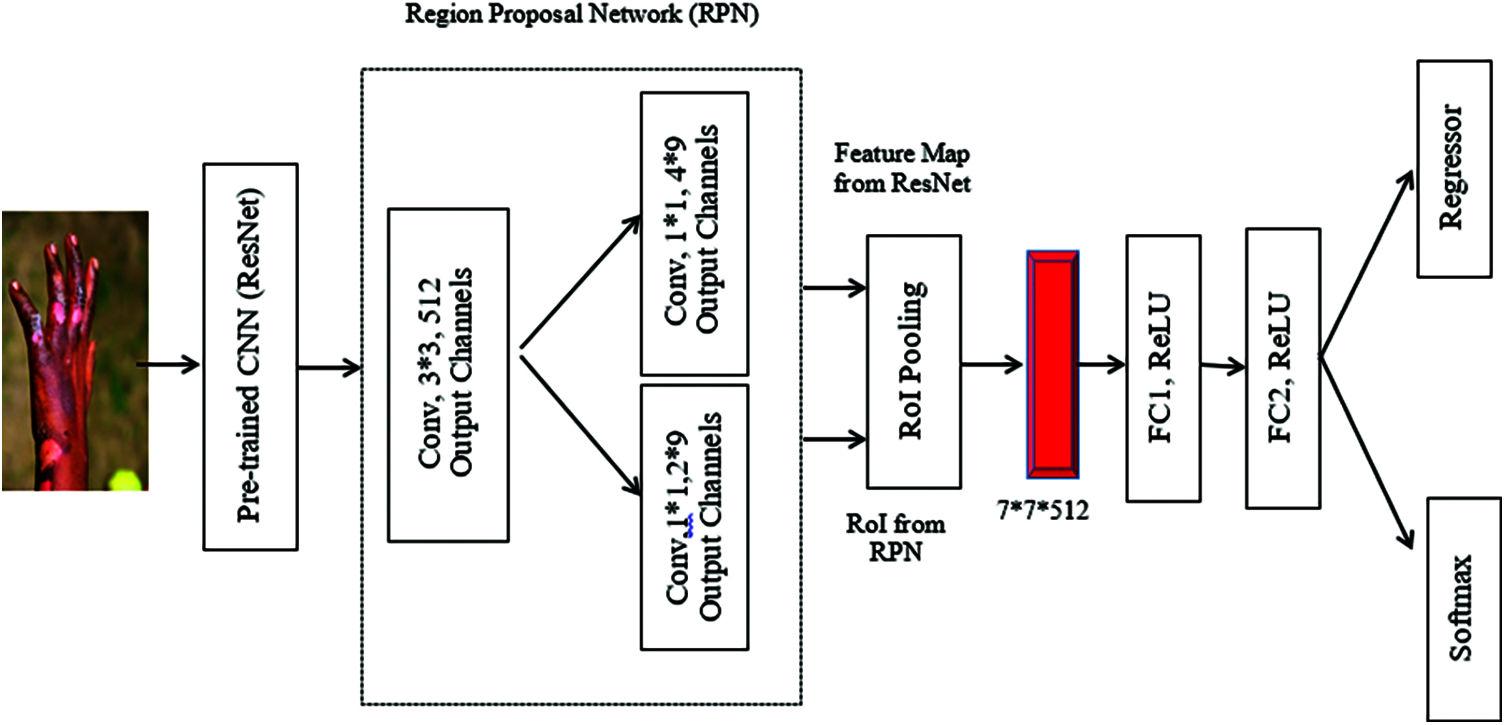

Following R-CNN and Fast R-CNN, researchers proposed Faster R-CNN, an object detection method that reduces the running time of the detection network even further. They introduced a region proposal network (RPN) that generates object bounding-box proposals, replacing the traditional selective search, and shares full-image convolutional features with the detection network. The RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position of the input image. It aims to produce high-quality region proposals for Fast R-CNN classification and detection in a short time, and during training the RPN and Fast R-CNN share convolutional features. Faster R-CNN thus uses the RPN to determine, from the feature map, whether or not an object is present in each ROI. A small sliding window over the feature map output by the last convolutional layer connects the RPN to that map. Each window position is mapped to a 256-dimensional vector, which feeds two sibling branches, one for classification and one for box regression. The researchers also propose using anchors, reference boxes of varying sizes and aspect ratios, rather than the whole window as the starting point for regression. With k anchors per position, the RPN produces 2k classification outputs and 4k regression outputs.
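The anchor mechanism can be sketched as follows: at every sliding-window position, k reference boxes are generated from a set of scales and aspect ratios, giving 2k objectness scores and 4k box offsets per position. The three scales (128, 256, and 512 pixels) and three ratios (0.5, 1, 2), giving k = 9, are the defaults reported for the original Faster R-CNN; the feature-map size, the stride of 16, and the cell-centre convention below are illustrative assumptions.

```python
import numpy as np

def make_anchors(scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """k = len(scales) * len(ratios) anchors centred at (0, 0) as (x1, y1, x2, y2).
    ratio = height / width; each anchor keeps area scale**2."""
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            anchors.append([-w / 2, -h / 2, w / 2, h / 2])
    return np.array(anchors)

def shift_anchors(base, feat_h, feat_w, stride=16):
    """Place the base anchors at the centre of every feature-map cell (image coordinates)."""
    cx = (np.arange(feat_w) + 0.5) * stride
    cy = (np.arange(feat_h) + 0.5) * stride
    xx, yy = np.meshgrid(cx, cy)
    shifts = np.stack([xx, yy, xx, yy], axis=-1).reshape(-1, 1, 4)
    return (shifts + base[None, :, :]).reshape(-1, 4)

base = make_anchors()
k = len(base)
feat_h, feat_w = 38, 50  # roughly a 600x800 image at stride 16
all_anchors = shift_anchors(base, feat_h, feat_w)
print(k, all_anchors.shape)  # 9 anchors per position
print("cls outputs per position:", 2 * k, "reg outputs per position:", 4 * k)
```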

As Fig. 2 shows, the subsequent ROI pooling layer collects the input feature maps and the proposals, combines this information, extracts a feature map for each proposal, and passes it to the fully connected layers to determine the object category. At the classification stage, these proposal feature maps are used to compute the class of each proposal, while bounding-box regression simultaneously produces bbox_pred, the refined object detection box. The general process for classifying skin burn wounds is shown in Fig. 3.

Figure 2: Architecture of region proposal network

Figure 3: Overall architecture and structure of classification

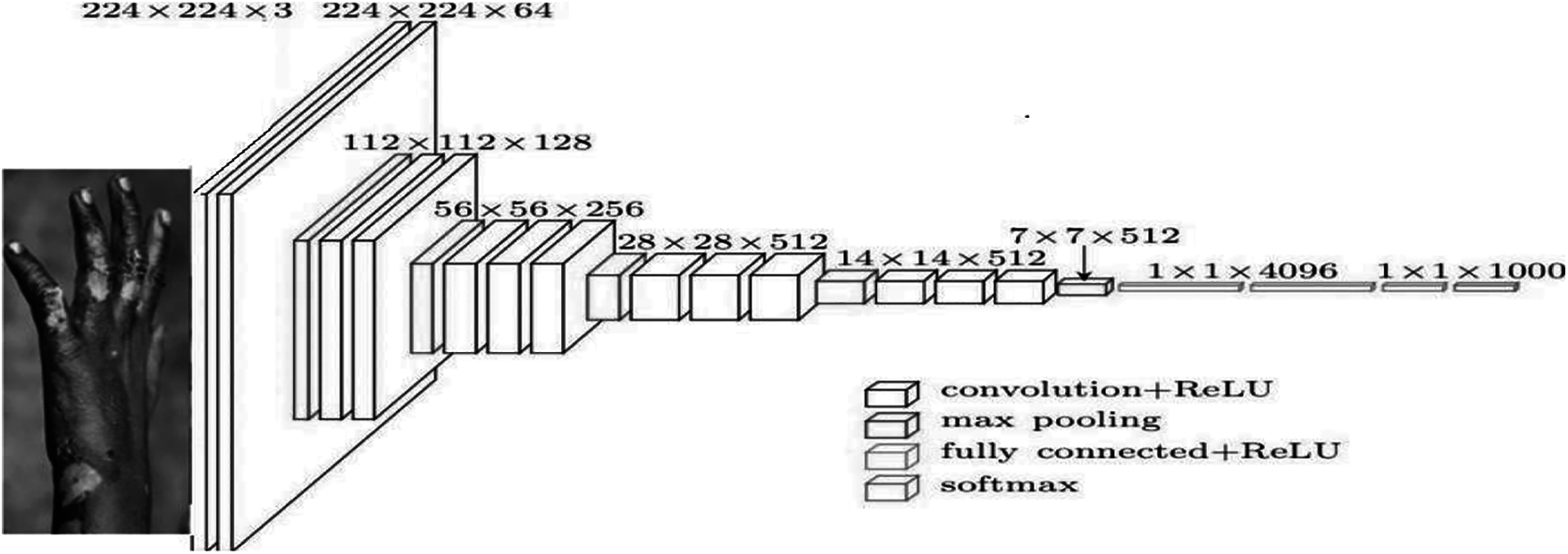

5.1 VGG-16 for Skin Burn Image Classification

K. Simonyan and A. Zisserman of the University of Oxford proposed the VGG16 convolutional neural network model in their paper “Very Deep Convolutional Networks for Large-Scale Image Recognition.” The model achieves 92.7% top-5 test accuracy on ImageNet, evaluated on the 1000-class ILSVRC benchmark drawn from the ImageNet database of over 14 million images.

VGG16 improves on AlexNet by replacing its large kernel-sized filters (11 × 11 in the first convolutional layer and 5 × 5 in the second) with multiple 3 × 3 kernel filters stacked one after another. VGG16 was trained for weeks on NVIDIA Titan Black GPUs. The VGG-16 architecture used in our analysis is shown in Fig. 4. To train our framework, we labeled 1300 images in the Common Objects in Context (COCO) annotation format and trained our model on 1000 of them. During the evaluation, we compared various backbone networks in our framework. The different burn degrees are shown in Fig. 5.
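The benefit of stacking small filters can be checked with a little arithmetic. As argued in the VGG paper, two stacked stride-1 3 × 3 layers see a 5 × 5 region and three see a 7 × 7 region, yet they need fewer weights than a single large layer (27C² versus 49C² for the 7 × 7 case, with C channels in and out) while adding extra non-linearities. The helper names below are hypothetical, biases are ignored, and C = 512 is only an illustrative channel count.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stacked stride-1 convolutions with the same kernel size."""
    field = 1
    for _ in range(num_layers):
        field += kernel - 1  # each stride-1 layer widens the field by kernel - 1 pixels
    return field


def conv_weights(kernel, channels, num_layers=1):
    """Weights (biases ignored) of num_layers kernel x kernel convs with C channels in and out."""
    return num_layers * kernel * kernel * channels * channels


C = 512  # illustrative channel count, as in VGG-16's deepest blocks
for n in (2, 3):
    rf = stacked_receptive_field(n)
    print(f"{n} stacked 3x3 layers: {rf}x{rf} receptive field, "
          f"{conv_weights(3, C, n):,} weights vs {conv_weights(rf, C):,} for one {rf}x{rf} layer")
```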

Figure 4: VGG-16 Architecture for skin burn image analysis

Figure 5: Superficial, partial-thickness and full-thickness burn wounds

The input to the convolutional layers is a fixed-size 224 × 224 RGB skin burn image. The image is passed through a stack of convolutional layers whose filters have a 3 × 3 receptive field. In one of its configurations, VGG also uses 1 × 1 convolution filters, which can be seen as a linear transformation of the input channels (followed by a non-linearity). The convolution stride is fixed at 1 pixel, and the spatial padding of the 3 × 3 convolutional layers is 1 pixel, so the spatial resolution is preserved after convolution. Spatial pooling is carried out by five max-pooling layers that follow some of the convolutional layers. Max pooling is performed over a 2 × 2 pixel window with stride 2. The stack of convolutional layers is followed by three fully connected (FC) layers: the first two have 4096 channels each, and the third performs 1000-way ImageNet classification and therefore has 1000 channels (one for each class). The final layer is the softmax layer. The configuration of the fully connected layers is the same in all networks. All hidden layers use the rectification non-linearity (ReLU).
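The layer description above fully determines the feature-map sizes and parameter count of VGG-16. The sketch below traces them for a 224 × 224 × 3 input, assuming the standard VGG-16 layer list (configuration "D": thirteen 3 × 3 convolutions and five 2 × 2 max-pooling layers, then three FC layers); `trace_vgg16` is a hypothetical helper, not part of any library.

```python
# VGG-16 (configuration "D"): numbers are 3x3 conv output channels, "M" is 2x2 max pooling, stride 2
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def trace_vgg16(size=224, in_channels=3, fc_dims=(4096, 4096, 1000)):
    """Return (final spatial size, final channels, total parameters incl. biases)."""
    params = 0
    channels = in_channels
    for layer in VGG16_CFG:
        if layer == "M":
            size //= 2  # 2x2 window with stride 2 halves the resolution
        else:
            # padding 1 with stride 1 keeps the size; count weights plus one bias per filter
            params += (3 * 3 * channels + 1) * layer
            channels = layer
    features = size * size * channels  # flattened input to the first FC layer
    for dim in fc_dims:
        params += (features + 1) * dim
        features = dim
    return size, channels, params


size, channels, params = trace_vgg16()
print(f"final feature map: {size}x{size}x{channels}, parameters: {params:,}")
```

Five halvings take 224 down to 7, so the first FC layer receives 7 × 7 × 512 = 25,088 features, and that single layer holds most of the roughly 138 million parameters. Replacing the 1000-way layer with a smaller head, for example three outputs for superficial, partial-thickness and full-thickness burns (a hypothetical choice for illustration), is a matter of passing different `fc_dims`.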

The performance of image segmentation can be analyzed by calculating the precision, recall and Dice coefficient, which are defined as follows.

Precision

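Precision measures how accurate the segmentation is: it is the percentage of pixels segmented as burn that are segmented correctly, calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where TP (true positives) is the number of pixels correctly segmented as burn and FP (false positives) is the number of pixels wrongly segmented as burn.

Recall

Recall measures segmentation quality relative to the ground truth: it is the percentage of ground-truth burn pixels that are correctly segmented:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where FN (false negatives) is the number of ground-truth burn pixels that the segmentation misses.

Dice coefficient (Dice)

The Dice coefficient represents the overlap between the segmentation and the ground truth. Dice is also known as the F1 score, a measure that balances precision and recall, and it is calculated as their harmonic mean:

$$\mathrm{Dice} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}$$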
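The three segmentation metrics can be computed directly from a predicted mask and a ground-truth mask. In this minimal sketch, masks are equal-sized lists of 0/1 rows, with 1 marking a burn pixel; the function name `segmentation_metrics` is hypothetical, and returning 0.0 when a denominator is zero is an assumed convention, not one stated in the paper. Precision is TP / (TP + FP), recall is TP / (TP + FN), and Dice is their harmonic mean, which equals 2TP / (2TP + FP + FN).

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise precision, recall and Dice for two equal-sized binary masks."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # burn pixel correctly segmented
            elif p:
                fp += 1  # background pixel wrongly segmented as burn
            elif t:
                fn += 1  # burn pixel missed by the segmentation
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # harmonic mean of precision and recall (the F1 score)
    dice = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, dice


predicted = [[1, 1, 0],
             [1, 0, 0],
             [0, 0, 0]]
ground_truth = [[1, 0, 0],
                [1, 1, 0],
                [0, 0, 0]]
print(segmentation_metrics(predicted, ground_truth))
```

In this toy example there are two true positives, one false positive and one false negative, so precision, recall and Dice all equal 2/3.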

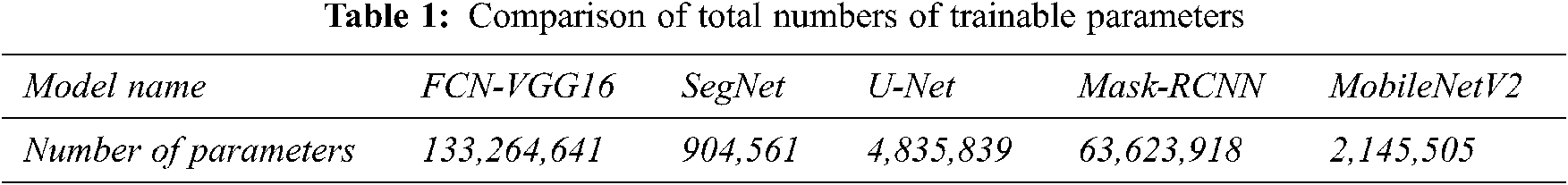

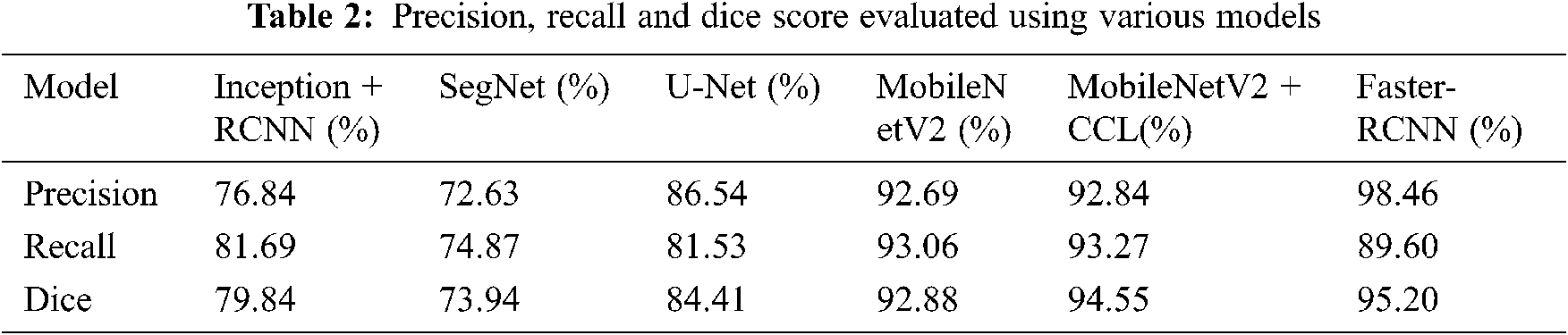

The same methods are used to compare all the models, and the results are shown in Tabs. 1 and 2, respectively.

Once the skin burn wound has been successfully classified, various regeneration processes can be applied to the skin tissue. Skin healing comprises four classical stages: hemostasis (coagulation), inflammation (infiltration by mononuclear cells), proliferation (epithelialization, fibroplasia, angiogenesis and granulation-tissue formation) and maturation (collagen deposition and scar-tissue formation). Skin healing after a burn injury is affected by a number of factors, including the cause of the burn, its depth and size, the general health of the patient, external factors, and the type of material used to cover the burn wound.

Depending on the severity of the burn, the healing process can have different outcomes. Superficial burns heal within two weeks and leave no scar. In partial-thickness burns, keratinocytes migrate from the dermal skin appendages and begin to re-epithelialize the wound within hours of the injury. Deeper burns heal from the edges rather than from the middle, which is why they need fast wound closure. Depending on the severity of the wound, various techniques are available to treat burn patients, including cell therapy. The proposed Faster R-CNN deep learning model, implemented with a VGG-16 backbone, not only evaluates burn wounds but also suggests appropriate treatment techniques and methods. After evaluating burn injuries and classifying them by severity, the proposed model points to appropriate options for the healing process. This greatly helps both patients and clinicians in providing the patient with the necessary treatment.

A burn is not merely a wound but a symptom of a greater problem. Although burn wounds resemble other injuries, their healing is marked by significant interaction with systemic factors. A burn wound directly affects the general health of the patient and can lead to conditions such as septicaemia and death. Prolonged hospitalization can cause further problems, such as higher hospital costs for patients and their families. Long hospital stays can also be attributed to treatment delays caused by the lack of proper objective assessment procedures and of access to nearby burn centers. The proposed model, which combines Faster R-CNN with VGG-16, achieves an excellent predictive score on burn injuries classified by severity.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. S. K. Jilani, H. Ugail, M. Bukar, A. Logan and T. Munshi, “A machine learning approach for ethnic classification: The British Pakistani face,” in Proc. Int. Conf. on Cyber Worlds ICCW, New York, USA, pp. 170–173, 2017.

2. A. Elmahmudi and H. Ugail, “Experiments on deep face recognition using partial faces,” in Proc. Int. Conf. on Cyber Worlds ICCW, Singapore, pp. 357–362, 2018.

3. K. Polat and K. O. Koc, “Detection of skin diseases from dermoscopy image using the combination of convolutional neural network and one-versus-all,” Journal of Artificial Intelligence and Systems, vol. 2, no. 1, pp. 80–97, 2020.

4. A. Abubakar and H. Ugail, “Discrimination of human skin burns using machine learning,” Journal of Medical and Biological Engineering, vol. 40, no. 3, pp. 321–333, 2019.

5. P. Kuan, C. Stephanie, E. Safawi, H. Wang and T. William, “A comparative study of the classification of skin burn depth in human,” Journal of Telecommunication, Electronic and Computer Engineering, vol. 9, no. 2, pp. 15–23, 2017.

6. D. Yadav, S. Ashish, S. Madhusudhan and G. Ayush, “Feature extraction based machine learning for human burn diagnosis from burn images,” IEEE Journal of Translational Engineering in Health and Medicine, vol. 7, no. 1, pp. 1–7, 2019.

7. F. A. Khan, U. R. B. Ateeq, A. Muhammad, A. Waqar, N. Muhammad et al., “Computer-aided diagnosis for burnt skin images using deep convolutional neural network,” Journal of Multimedia Tools and Applications, vol. 24, no. 79, pp. 34545–34568, 2020.

8. H. Shahin, M. Elmasry, I. Steinvall, F. Söberg and A. El-Serafi, “Vascularization is the next challenge for skin tissue engineering as a solution for burn management,” Journal on Burns & Trauma, vol. 8, no. 1, pp. 102–117, 2020.

9. T. Erika Maria, D. Rossella, G. Ida, C. Enricha, P. Silvia et al., “Skin wound healing process and new emerging technologies for skin wound care and regeneration,” Journal on Pharmaceutics, vol. 12, no. 8, pp. 86–98, 2020.

10. E. Hofmann, J. Fink, A. Eberl, P. Eva-Maria, K. Dagmar et al., “A novel human ex vivo skin model to study early local responses to burn injuries,” Journal on Scientific Reports, vol. 11, pp. 364–376, 2021.

11. E. Margarita, K. Ankita, A. Ayesha, V. D. K. Alex and G. J. Mark, “Burns in the elderly: Potential role of stem cells,” International Journal of Molecular Science, vol. 21, no. 13, pp. 45–64, 2020.

12. T. B. Steven and L. L. Andrea, “Tissue engineering of skin and regenerative medicine for wound care,” Journal on Burns & Trauma, vol. 6, no. 4, pp. 197–210, 2018.

13. S. Anastasia, B. Denis, A. B. Evgeny, B. S. Roman, A. Anthony et al., “Skin tissue regeneration for burn injury,” Journal on Stem Cell Research & Therapy, vol. 10, no. 1, pp. 94–109, 2019.

14. P. D. S. Anna, O. K. Marta, M. C. Anna and S. Maria, “Local treatment of burns with cell-based therapies tested in clinical studies,” Journal of Clinical Medicine, vol. 10, no. 3, pp. 396–410, 2021.

15. A. N. Saeid, D. Reinhard, E. Gertruad, K. D. Andrea, P. Alexandra et al., “Stem cells derived from burned skin - the future of burn care,” Journal on E-BioMedicine, vol. 37, no. 11, pp. 509–520, 2018.

16. A. B. Jordan and C. Renford, “Burn debridement, grafting and reconstruction,” StatPearls, Treasure Island (FL), StatPearls Publishing, vol. 1, pp. 10–27, 2020. [Online]. Available: https://www.ncbi.nlm.nih.gov/books/NBK551717/.

17. L. Yuan, X. Wei-Dong, V. M. Leanne, D. Wen-Tong and L. Cai, “Efficacy of stem cell therapy for burn wounds: A systematic review and meta-analysis of preclinical studies,” Journal of Stem Cell Research & Therapy, vol. 11, no. 1, pp. 322–334, 2020.

18. Y. Lara, T. K. T. Nguyen and M. S. Alexander, “Skin regeneration scaffolds: A multimodal bottom-up approach,” Journal of Trends in Biotechnology, Science Direct, vol. 30, no. 12, pp. 638–648, 2012.

19. C. Luis and C. Mara, “Skin acute wound healing: A comprehensive review,” International Journal of Inflammation, vol. 1, no. 1, pp. 15–30, 2019.

20. P. J. Gill, “The critical evaluation of laser Doppler imaging in determining burn depth,” International Journal of Burns and Trauma, vol. 3, no. 2, pp. 72–87, 2013.

21. J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li et al., “ImageNet: A large-scale hierarchical image database,” in Proc. IEEE Conf. on Computer Vision and Pattern Recognition, Miami, FL, pp. 248–255, 2009.

22. V. Vapnik, “The Nature of Statistical Learning Theory,” ACM Digital Library, New York: Springer-Verlag, Berlin, Heidelberg, 2013. [Online]. Available: https://dl.acm.org/doi/book/10.5555/211359.

23. A. M. Mahfouz, D. Venugopal and S. G. Shiva, “Comparative analysis of ML classifiers for network intrusion detection,” in Proc. Fourth Int. Congress on Information and Communication Technology, Berlin, Springer, vol. 12, pp. 193–207, 2020.

24. S. Roghayeh, G. Eric and F. Giorgio, “F-measure curves: A tool to visualize classifier performance under imbalance,” Journal of Pattern Recognition, vol. 31, pp. 3203–3238, 2019.

25. J. M. Reinke and H. Sorg, “Wound repair and regeneration,” European Surgical Journal of Research, vol. 49, no. 1, pp. 35–43, 2012.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |