Computer Systems Science & Engineering, DOI:10.32604/csse.2022.021909

Article

Diabetic Retinopathy Diagnosis Using ResNet with Fuzzy Rough C-Means Clustering

Sri Ramakrishna Engineering College, Coimbatore, 641022, India

*Corresponding Author: R. S. Rajkumar. Email: r.s.rajkumar89@gmail.com

Received: 19 July 2021; Accepted: 03 September 2021

Abstract: Diabetic Retinopathy (DR) is an eye disease caused by long-standing Diabetes Mellitus. It affects the retina and can severely damage vision. If not treated in time, it may lead to permanent vision loss in diabetic patients. There is currently no medication that cures DR. However, if it is diagnosed at an early stage, it can be controlled and permanent vision loss can be avoided. Compared to the size of the diabetic population, experts who can diagnose DR are few, particularly in local areas. Hence, automatic computer-aided diagnosis for DR detection is necessary. In this paper, we propose an unsupervised clustering technique that automatically assigns a DR image to one of the five development stages of the disease. The deep learning based unsupervised clustering improves itself with the help of fuzzy rough c-means (FRCM) clustering: cluster centers are updated by the FRCM algorithm during the forward pass, and the deep learning model representations are updated by Stochastic Gradient Descent during the backward pass of training. The proposed method was implemented in Python, and the results were obtained on a DGX server with Tesla V100 GPU cards. Experimental results on the publicly available Kaggle dataset show an overall accuracy of 88.7%. The proposed model improves the accuracy of DR diagnosis compared to existing unsupervised algorithms such as k-means, FCM, auto-encoder, and FRCM with AlexNet.

Keywords: Diabetic retinopathy detection; diabetic retinopathy diagnosis; fuzzy rough c-means clustering; unsupervised CNN; clustering

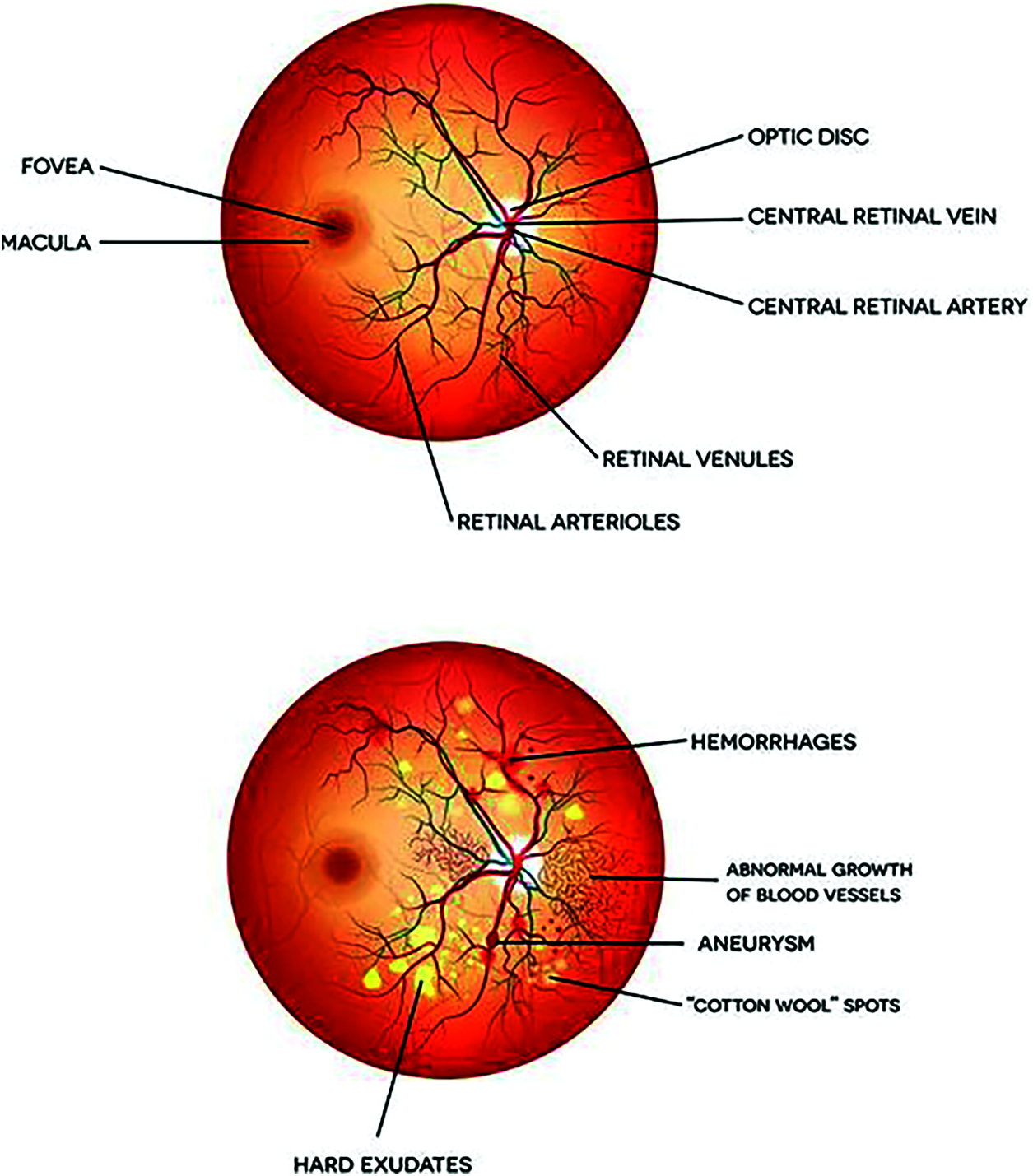

The increase in blood sugar level due to insulin resistance leads to Diabetes Mellitus (DM). DM is a major cause of a cluster of diseases. One such complication of diabetes is Diabetic Retinopathy (DR). Recent statistics from the International Diabetes Federation [1] show that more than 400 million people are living with diabetes and that this number may reach 700 million by 2045. The difference between a DR eye and a normal eye, and the vision experienced by DR patients, are shown in Figs. 1 and 2.

Figure 1: Normal vs. DR eye [2] (a) Normal eye (b) DR eye

Figure 2: Normal (left) vs. DR (right) vision [3]

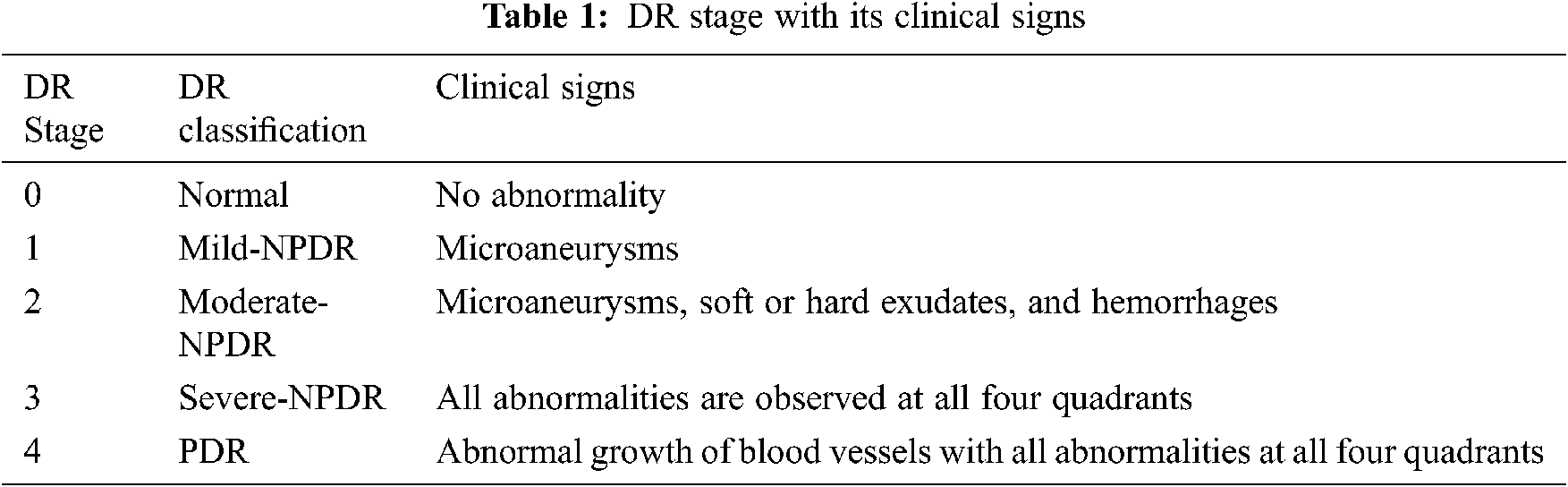

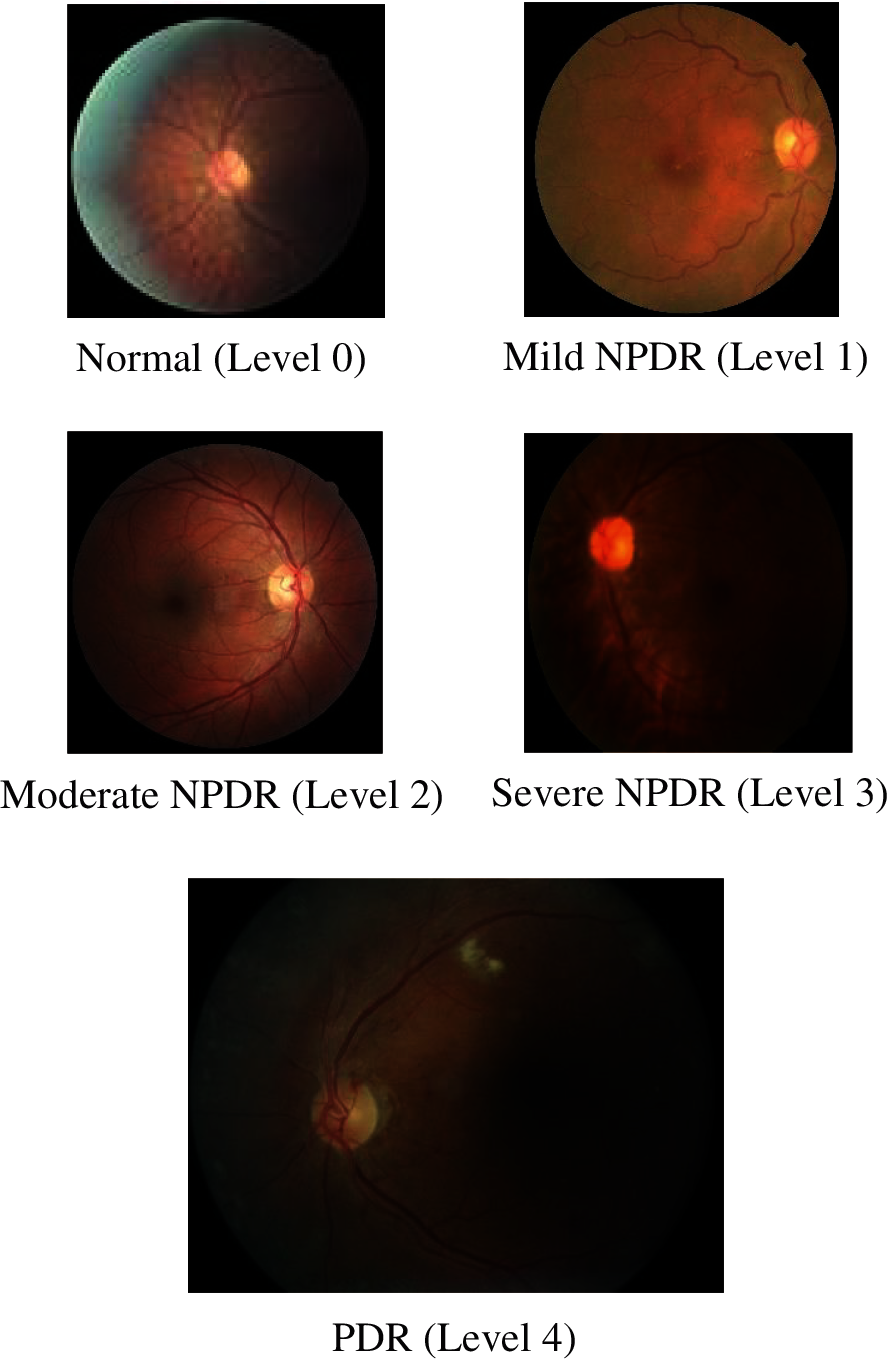

DR alters the blood vessels in the retina (the light-sensitive region) of the eye. According to clinical studies, DR develops in five stages. The DR stages and their clinical signs in the fundus of the eye are listed in Tab. 1.

Microaneurysms are small lesions in the blood vessels. Exudates are white or yellowish-white spots caused by proteins leaking from microaneurysms. Further leakage of fluid into the retina leads to hemorrhages. In people with long-term DM, DR can lead to permanent vision impairment. However, early detection, timely treatment, and regular eye checkups can prevent it.

Image clustering [4–16] has become a significant research area in image processing and computer vision. For large-scale image processing, the major focus is on dimensionality reduction [17] and feature encoding [18]. Unsupervised clustering is a major machine learning technique for discovering hidden patterns in unlabeled datasets [19]. Centroid-based, hierarchical, graph-based, and density-based clustering are a few approaches to unsupervised clustering. For effective clustering of images, the main challenges are uncertainty, ambiguity, and overlap among the clusters.

Deep Learning is a subset of artificial intelligence that mimics the working of the human brain to solve complex problems. The Convolutional Neural Network (CNN) [20] is a deep learning model that is extensively used for image, audio, and video processing. A CNN extracts the features of the input by performing a convolution operation in its convolution layer, producing a feature map. The best features from the feature map are then selected by the pooling layer, which reduces the dimensionality of the input.
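The convolution and pooling steps can be illustrated with a minimal pure-Python sketch. The toy image, the 2 × 2 filter, and the function names `conv2d_valid` and `max_pool2x2` are illustrative assumptions, not part of the proposed model; a real CNN learns its filters and uses an optimized library.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, no padding) to build a feature map.

    As in most CNN libraries, the kernel is not flipped (cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2x2(feature_map: Matrix) -> Matrix:
    """Keep the largest value in each non-overlapping 2x2 window."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]


# Hypothetical 5x5 single-channel image and a toy 2x2 filter.
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [1, 0, 1, 2, 2],
    [2, 1, 0, 1, 0],
    [1, 3, 2, 0, 1],
]
kernel = [[1, 0], [0, -1]]

feature_map = conv2d_valid(image, kernel)  # 4x4 feature map
pooled = max_pool2x2(feature_map)          # 2x2 after pooling
print(pooled)  # [[0, 1], [0, 2]]
```

Pooling halves each spatial dimension here (4 × 4 to 2 × 2), which is the dimensionality reduction referred to above.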

Several machine learning and deep learning algorithms have been used for early-stage DR detection [21]. To help prevent permanent vision loss due to DR, we propose a novel unsupervised deep learning based computer-aided analysis method for early DR detection. The proposed fuzzy rough c-means based unsupervised clustering, combined with the deep learning model, is reliable and robust because the vagueness and uncertainties in the dataset are removed using rough set and fuzzy set theory. The paper is structured as follows: Section 2 describes related work on DR diagnosis using unsupervised clustering, Section 3 gives the background of Fuzzy Rough C-Means clustering (FRCM) and the ResNet CNN model, Section 4 describes the dataset, Section 5 explains our proposed model in detail, and Sections 6 and 7 present our experimental setup and the results of our approach on the DR dataset.

The introduction of deep learning has drawn computer vision researchers away from purely statistical methods. This is due to the state-of-the-art results produced by deep learning models on large-scale datasets such as Big Data. Deep learning has proven its efficiency in supervised learning. Owing to this success, researchers have recently focused on deep learning for unsupervised learning, of which clustering is a popular example. The Convolutional Neural Network has proven itself in supervised learning, yet it is not well suited to large-scale image clustering, as there is not sufficient labeled data with feature representations for the CNN to learn from.

Xie et al. [22] made an early attempt at clustering using deep learning, named Deep Embedded Clustering. Auto-encoders were used as the deep learning model, and the traditional k-means algorithm was used at the end for clustering. Li et al. [23] proposed a method similar to Deep Embedded Clustering. They used a convolutional neural network instead of auto-encoders, as high-dimensional data representations are learned better by CNNs than by auto-encoders. The problem with auto-encoders is that they are not well suited to learning representations from images, since images are high-dimensional data.

Yang et al. [24] proposed performing dimensionality reduction and clustering jointly. They used the k-means algorithm for clustering and auto-encoders for dimensionality reduction. The joint representation learning helped them map the high-dimensional data into a latent space for k-means clustering. Yang et al. [25] used agglomerative clustering jointly with a CNN. The representations are learned by the CNN in the backward propagation, and the agglomerative clustering is updated in the forward propagation. However, agglomerative clustering requires more memory and computation time than centroid-based clustering.

Hsu et al. [26] proposed a clustering CNN that learns representations and clusters together. During clustering, they avoided drift error through feature drift compensation. Dundar et al. [27] proposed a connection matrix along with a CNN for representation learning and k-means for clustering. The connection matrix helped them learn the representations together with their associated additional data, which led to better clustering with the k-means algorithm.

Yellapragada et al. [28] proposed an unsupervised deep learning model with Non-Parametric Instance Discrimination (NPID) to grade age-related macular degeneration. NPID predicts the class of the input image by identifying the recognizable class within the hypersphere of the feature vectors obtained from the training images. Vimala et al. [29] proposed a k-means algorithm for segmentation. The segmented images are then classified as either exudates or non-exudates using a support vector machine.

Compared to the above non-fuzzy models, fuzzy models deal better with the uncertainty and vagueness in unlabelled image data. Riaz et al. [30] proposed a Fuzzy Rough C-Mean Unsupervised Convolutional Neural Network (FRUCNN) architecture in which the images are assigned initial clusters using the AlexNet architecture and the cluster centroids are updated with the help of the FRCM algorithm. Though AlexNet has fewer parameters and a lower computation time than other deep CNNs, it falls short in finding simple correlations when they exist in the data. Hence, we need a deep CNN model that captures such correlations. However, increasing the number of learnable parameters in the deep CNN should not drastically increase the computation time of the model. Among various deep CNN models, ResNet50 was found to perform better [31].

Based on the above literature, we used ResNet50 for diagnosing the DR stage and integrated FRCM with ResNet50 to improve its unsupervised learning.

3.1 Fuzzy Rough C Means Clustering (FRCM)

Clustering is an unsupervised learning technique that groups data into clusters such that the data within a cluster are more similar to each other than to data in other clusters. Cluster analysis is one of the major aspects of Granular Computing [32]. Granular computing reduces the uncertainties (roughness and fuzziness) in the records. Rough C-Means clustering (RCM) [33,34] and Fuzzy C-Means clustering (FCM) [35] are the major tools for achieving this in granular computing. Hu et al. [36] proposed a novel approach named FRCM in which RCM and FCM are combined. FRCM assigns membership values to the data lying in the boundary and lower approximation regions of the cluster.
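The idea of combining rough and fuzzy memberships can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact formulation of [36]: a point whose nearest centroid is clearly closer than all others (by more than a hypothetical threshold `delta`) is placed in that cluster's lower approximation with membership 1, while a point in the boundary region receives the standard fuzzy c-means membership. The function name, the threshold rule, and the values of `m` and `delta` are assumptions.

```python
import math
from typing import List, Sequence


def frcm_memberships(x: Sequence[float],
                     centroids: Sequence[Sequence[float]],
                     m: float = 2.0,
                     delta: float = 0.5) -> List[float]:
    """Membership of one point in every cluster (rough + fuzzy sketch)."""
    dists = [math.dist(x, c) for c in centroids]
    nearest = min(range(len(dists)), key=dists.__getitem__)
    # Clusters whose centroid is nearly as close as the nearest one (assumed rule).
    close = [j for j, d in enumerate(dists) if d - dists[nearest] <= delta]
    if len(close) == 1 or dists[nearest] == 0.0:
        # Lower approximation: the point belongs to exactly one cluster.
        return [1.0 if j == nearest else 0.0 for j in range(len(centroids))]
    # Boundary region: standard fuzzy c-means membership over all clusters.
    power = 2.0 / (m - 1.0)
    return [1.0 / sum((dj / dk) ** power for dk in dists) for dj in dists]


# Hypothetical 2-D centroids for three clusters.
centroids = [(0.0, 0.0), (4.0, 0.0), (0.0, 10.0)]
crisp = frcm_memberships((0.5, 0.0), centroids)  # clearly nearest to cluster 0
fuzzy = frcm_memberships((2.0, 0.0), centroids)  # halfway between clusters 0 and 1
print(crisp)  # [1.0, 0.0, 0.0]
print([round(u, 3) for u in fuzzy])
```

In the second case the point is shared almost equally between the first two clusters, and the memberships sum to 1.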

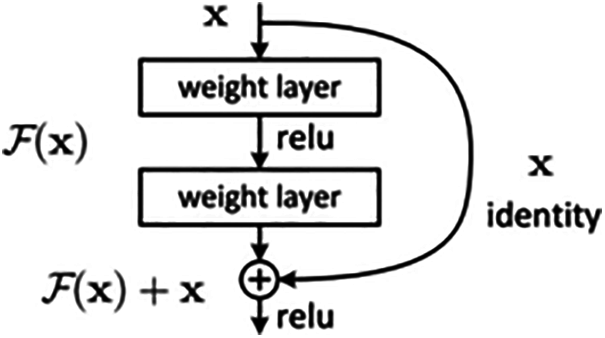

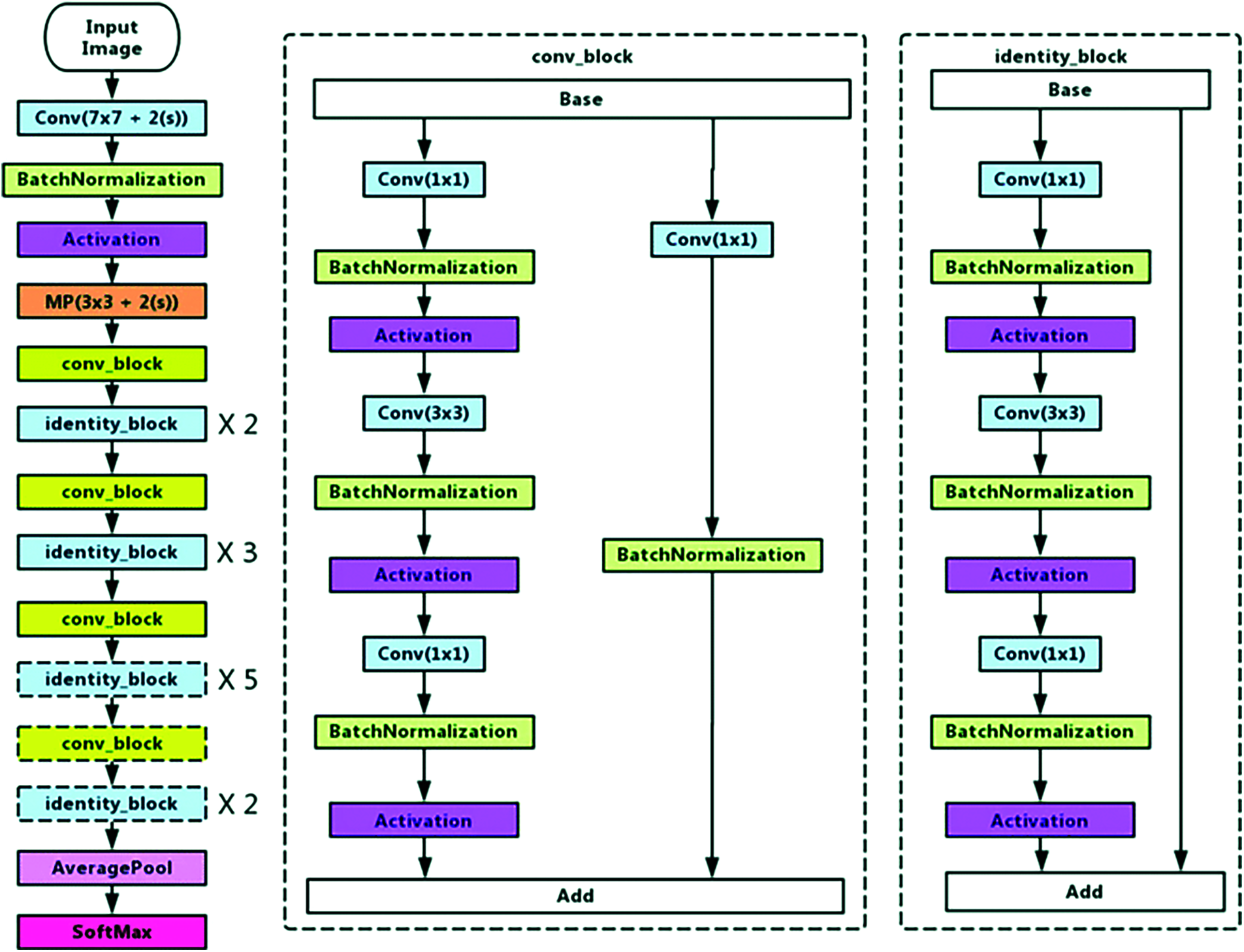

Following several breakthroughs of deep CNNs [37,38] in image classification [39,40], He et al. [41] proposed a deep neural network with residual connections called ResNet. The introduction of residual connections in the CNN architecture provided groundbreaking results and won 1st place in the ILSVRC 2015 classification contest. ResNet overcomes the vanishing gradient problem (training deep networks involves backpropagating the error gradient, which shrinks as it passes in the backward direction) through its skip connections, as shown in Fig. 3. For image classification, ResNet and its variants were found to produce good results. Among these variants, we used ResNet50, as the representations it learned from unlabelled images were good. The architecture of ResNet50 is shown in Fig. 4.
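A skip connection adds the block's input back to its output, so that y = ReLU(F(x) + x). The sketch below is a deliberately simplified, assumed form that uses small fully connected layers in place of the convolution and batch-normalization layers of a real ResNet block; the names `residual_block`, `w1`, and `w2` are illustrative.

```python
from typing import List

Vector = List[float]
Weights = List[List[float]]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def linear(v: Vector, weights: Weights) -> Vector:
    """Matrix-vector product, standing in for a convolution layer."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def residual_block(x: Vector, w1: Weights, w2: Weights) -> Vector:
    """y = ReLU(F(x) + x), with F(x) = W2 * ReLU(W1 * x)."""
    fx = linear(relu(linear(x, w1)), w2)
    return relu([f + a for f, a in zip(fx, x)])  # skip connection adds x back


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
half = [[0.5 if i == j else 0.0 for j in range(3)] for i in range(3)]

# Even if the learned transform F contributes nothing, the input passes through.
print(residual_block(x, zeros, zeros))    # [1.0, 2.0, 3.0]
print(residual_block(x, identity, half))  # [1.5, 3.0, 4.5]
```

Because the input always reaches the output through the identity path, the gradient also has a direct path backward, which is what mitigates the vanishing gradient.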

Figure 3: Skip connection in ResNet [42]

Figure 4: ResNet50 architecture [43]

The publicly accessible Kaggle Diabetic Retinopathy Detection dataset [44] was used for clustering DR development levels. The fundus images in the dataset were taken under different conditions by EyePACS. These images are of high resolution, with different shapes and lighting conditions. There are more than 35,000 images in the dataset. Each image was graded on a scale of 0–4 according to the observations listed in Tab. 1. Fig. 5 shows a fundus image of each stage taken from the dataset with its respective DR grade.

Figure 5: Various levels of DR

5 Proposed Unsupervised Clustering Architecture

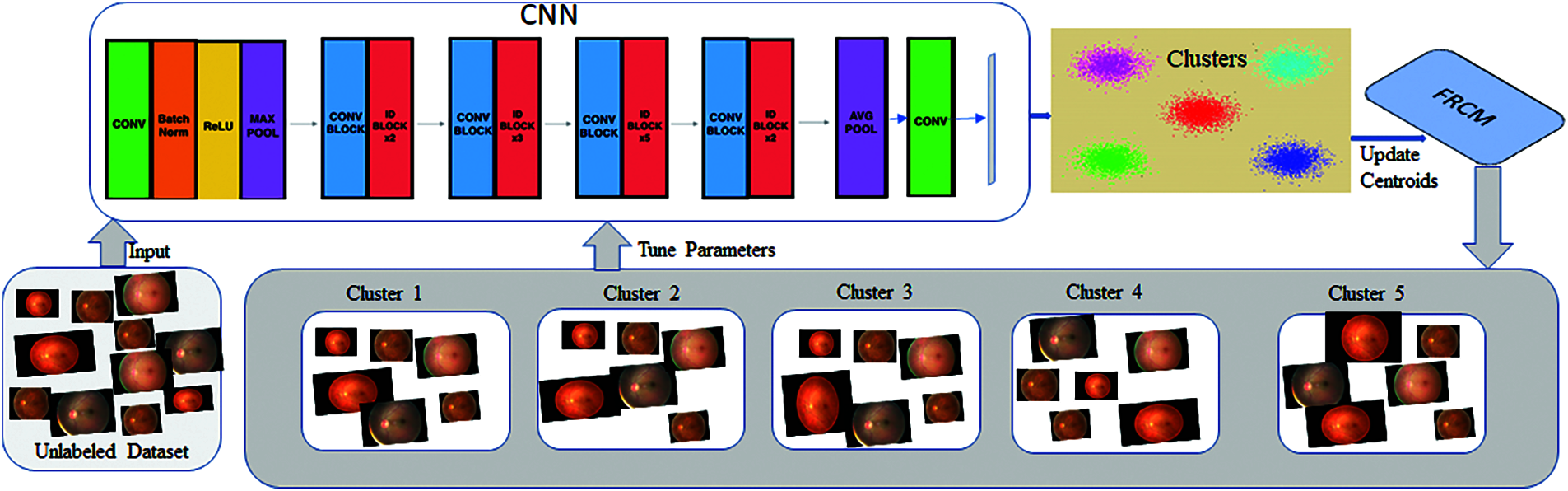

The proposed unsupervised clustering for DR diagnosis was performed with the ResNet50 architecture, and the cluster centroids were updated during training of the model using the FRCM algorithm. The proposed unsupervised clustering architecture for DR diagnosis is shown in Fig. 6.

The objective of our proposed system is to group the n fundus images FI = {FI1, FI2, FI3, …, FIn} in the dataset into 5 clusters (the 5 stages of DR). If F = {f1, f2, f3, …, fn} are the features at the fully connected layer of our architecture, then these features are used to update the cluster centroids using the FRCM algorithm.

For initial clustering, we used the pre-trained ResNet50 model with its ImageNet weights. We randomly chose batches of 32 fundus images from the dataset and assigned them initial cluster centroids. Each fundus image is then assigned a cluster label by FRCM. This is repeated for all the images in the dataset. During this process, the parameters of the proposed CNN model and the centroids of the clusters are updated by backpropagating the loss gradient using the SGD (Stochastic Gradient Descent) method.

Figure 6: Proposed unsupervised clustering architecture

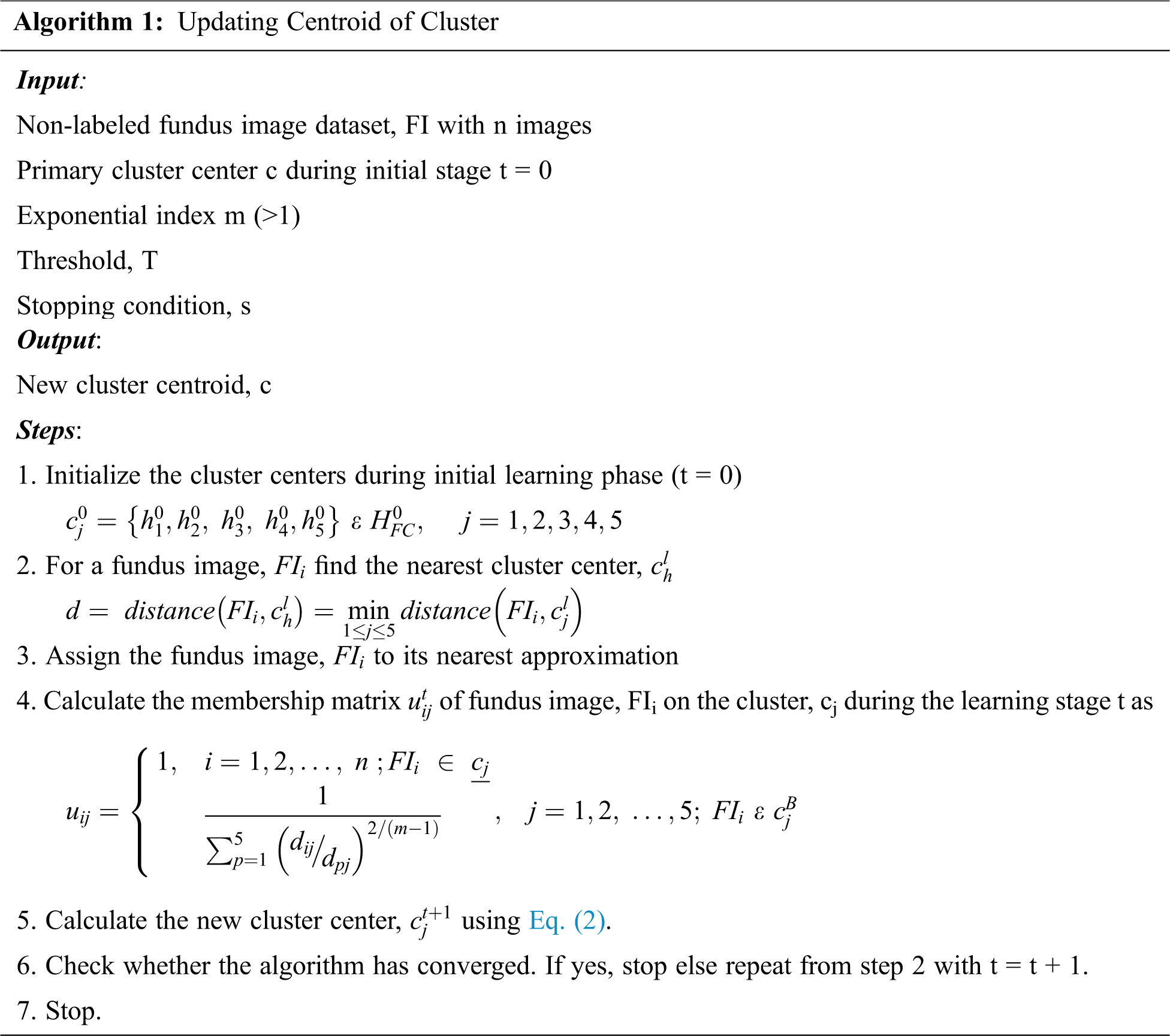

During the learning phase, the cluster centroids are updated according to Algorithm 1 (updating the centroid of the cluster). Let FI = {FI1, FI2, FI3, …, FIn} be the set of n fundus images. Five sample fundus images from FI were randomly picked and fed to the proposed unsupervised CNN architecture. Five images are chosen because our objective is to classify each image into one of the five development stages of DR. Their features Fi(t) = {f1(t), f2(t), f3(t), f4(t), f5(t)} from the fully connected layer were extracted and used as the initial cluster centroids in the first stage (t = 0). These cluster centroids are then updated using the FRCM objective function as follows

where m controls the influence of the membership values and must be greater than 1, and uij is the membership value of fundus image FIi in cluster cj.
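The objective function itself is not reproduced in this text. Assuming the usual fuzzy c-means form, which is consistent with the roles of m and uij described here, it would read as below. The symbol J, the use of the squared Euclidean distance, and the indexing of the five clusters are assumptions rather than confirmed notation.

```latex
J = \sum_{i=1}^{n} \sum_{j=1}^{5} u_{ij}^{\,m} \, \lVert f_i - c_j \rVert^{2}, \qquad m > 1
```

Here f_i is the feature of fundus image FI_i and c_j is the centroid of cluster j, so a larger m spreads the contribution of each image more evenly across clusters.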

In the tth iteration, the jth centroid cjt, i.e., the newly extracted feature fj(t) from the fully connected layer, is updated as follows
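The update equation is not reproduced in this text. A minimal sketch, assuming the standard membership-weighted mean used in fuzzy c-means style algorithms (each centroid is the average of the features weighted by the membership raised to the power m), is given below. The function name `update_centroids` and the toy features and memberships are illustrative assumptions.

```python
from typing import List


def update_centroids(features: List[List[float]],
                     memberships: List[List[float]],
                     m: float = 2.0) -> List[List[float]]:
    """Assumed update: c_j = sum_i(u_ij^m * f_i) / sum_i(u_ij^m)."""
    n_clusters = len(memberships[0])
    dim = len(features[0])
    centroids = []
    for j in range(n_clusters):
        weights = [u[j] ** m for u in memberships]
        total = sum(weights)
        if total == 0.0:
            raise ValueError(f"cluster {j} has no members")
        centroids.append([
            sum(w * f[d] for w, f in zip(weights, features)) / total
            for d in range(dim)
        ])
    return centroids


# Hypothetical 2-D features for three images and their memberships in two clusters.
features = [[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]]
memberships = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

new_centroids = update_centroids(features, memberships)
print(new_centroids)
```

With m = 2, the shared middle point contributes a weight of only 0.25 to each cluster, so each centroid stays close to the point that fully belongs to it.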

6 Experimental Setup and Result Analysis

The dataset [44] consists of 35,126 eye fundus images, of which 13,600 were randomly chosen. The 13,600 images were split in the ratio 60:20:20 into 8,160 for training, 2,720 for validation, and 2,720 for testing. The chosen color fundus images were then preprocessed as follows for better feature extraction:

i) Images were resized to 512 × 512 × 3 pixels.

ii) Randomly chosen images were flipped horizontally.

iii) Chosen images were augmented with basic transformations: changes in image brightness and contrast, and cropping.
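The subset selection and the 60:20:20 split described above can be sketched as follows. The sketch works on image indices only; the function name `split_60_20_20` and the fixed seed are assumptions made for reproducibility of the illustration, not details reported for the experiment.

```python
import random
from typing import List, Tuple


def split_60_20_20(indices: List[int],
                   seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Shuffle the indices and split them 60:20:20 into train/validation/test."""
    shuffled = list(indices)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 60 // 100  # integer arithmetic avoids rounding issues
    n_val = len(shuffled) * 20 // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Randomly choose 13,600 of the 35,126 image indices, then split them.
chosen = random.Random(0).sample(range(35126), 13600)
train_idx, val_idx, test_idx = split_60_20_20(chosen)
print(len(train_idx), len(val_idx), len(test_idx))  # 8160 2720 2720
```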

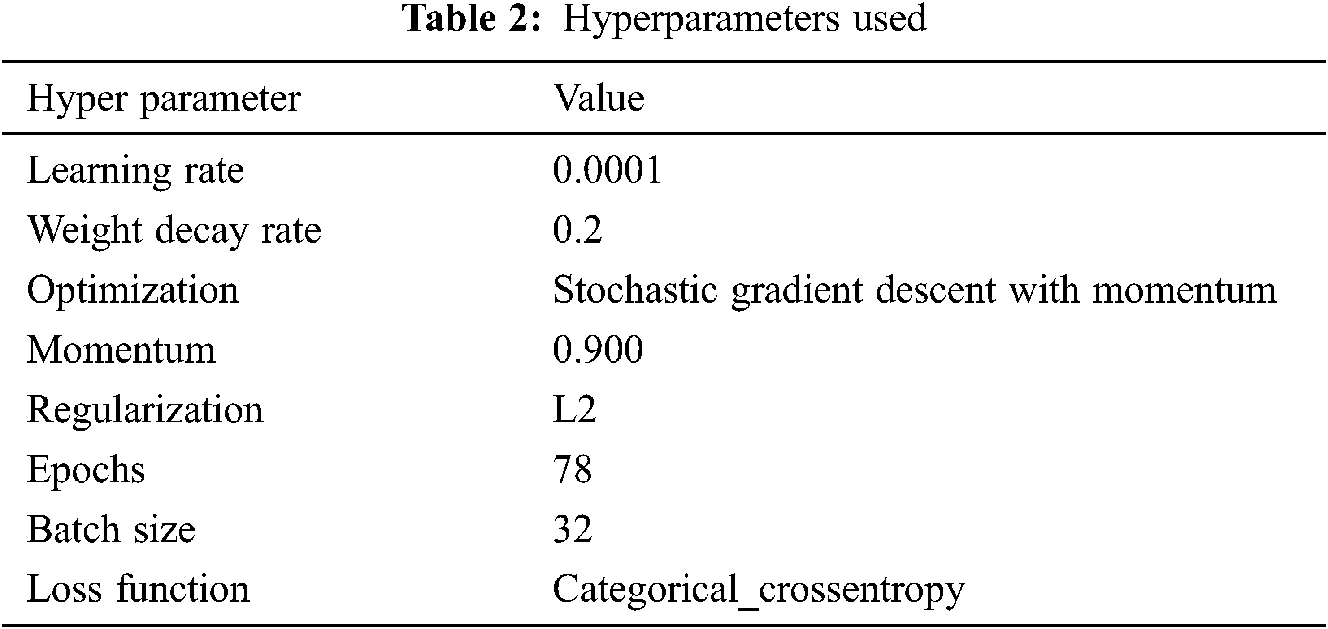

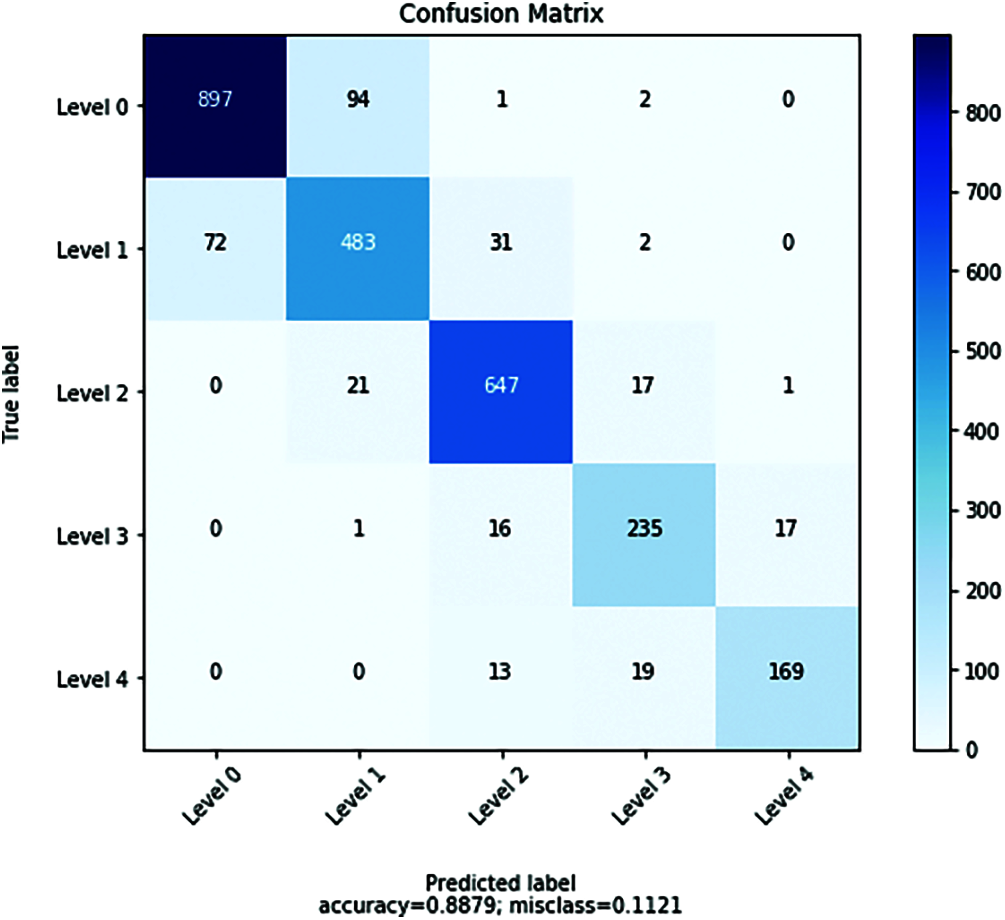

The pre-processed images were then used to train and test our proposed model described in Section 5. The hyperparameters used during training are listed in Tab. 2. Training the proposed model is computationally expensive; hence it was built and trained on a DGX server with Tesla V100 GPU cards. The confusion matrix obtained during testing is shown in Fig. 7.

The performance of the proposed model was measured as follows
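The metric equations are not reproduced in this text. The standard definitions of the five reported metrics, assumed here to be the ones intended, are:

```latex
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Sensitivity} &= \frac{TP}{TP + FN} \\
\text{Specificity} &= \frac{TN}{TN + FP} \\
\text{F1 score} &= \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}
\end{aligned}
```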

where,

True Positive (TP) = Instances whose predicted class is yes and whose actual class is also yes

False Positive (FP) = Instances whose predicted class is yes but whose actual class is no

True Negative (TN) = Instances whose predicted class is no and whose actual class is also no

False Negative (FN) = Instances whose predicted class is no but whose actual class is yes
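For a multi-class problem such as the five DR stages, these counts are usually obtained per class in a one-vs-rest manner from the confusion matrix. The sketch below assumes that rows are actual classes and columns are predicted classes; the 3 × 3 matrix is a made-up toy example, not the matrix of Fig. 7, and the function names are illustrative.

```python
from typing import Dict, List


def safe_div(num: float, den: float) -> float:
    """Return 0.0 instead of failing when a class has no relevant samples."""
    return num / den if den else 0.0


def one_vs_rest_metrics(cm: List[List[int]], k: int) -> Dict[str, float]:
    """Treat class k as 'yes' and all other classes as 'no'."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # actual k, predicted as another class
    fp = sum(row[k] for row in cm) - tp  # predicted k, actually another class
    tn = total - tp - fn - fp
    precision = safe_div(tp, tp + fp)
    sensitivity = safe_div(tp, tp + fn)
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": safe_div(tn, tn + fp),
        "accuracy": safe_div(tp + tn, total),
        "f1": safe_div(2 * precision * sensitivity, precision + sensitivity),
    }


def overall_accuracy(cm: List[List[int]]) -> float:
    """Fraction of samples on the diagonal of the confusion matrix."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    return safe_div(correct, sum(sum(row) for row in cm))


# Toy confusion matrix (rows: actual, columns: predicted); values are invented.
toy_cm = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
class0 = one_vs_rest_metrics(toy_cm, 0)
print(class0)
print(overall_accuracy(toy_cm))
```

For class 0 of the toy matrix, TP = 8, FN = 2, FP = 2, and TN = 18, which gives a precision and sensitivity of 0.8 and a specificity of 0.9.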

Figure 7: Confusion matrix

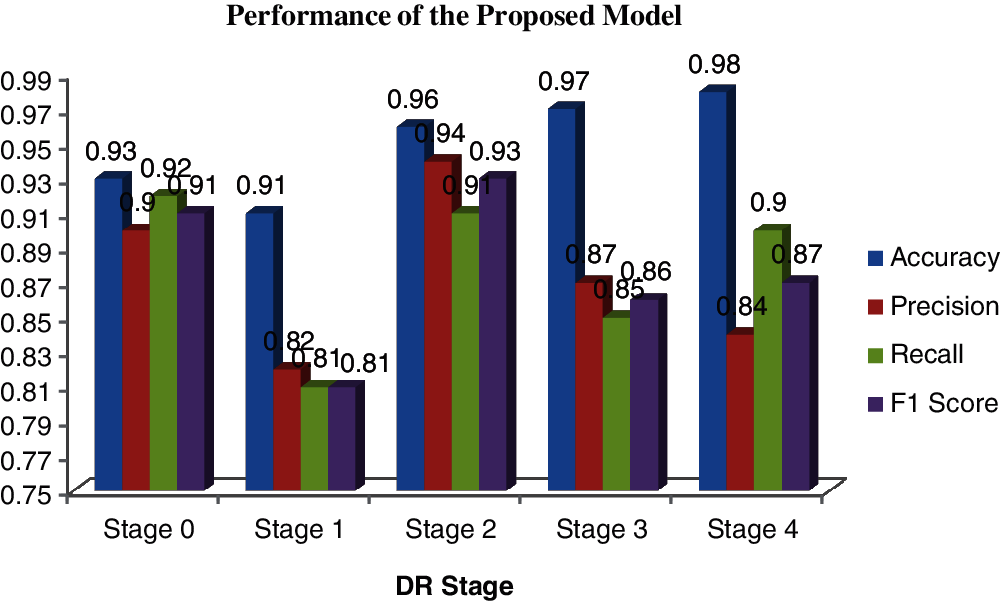

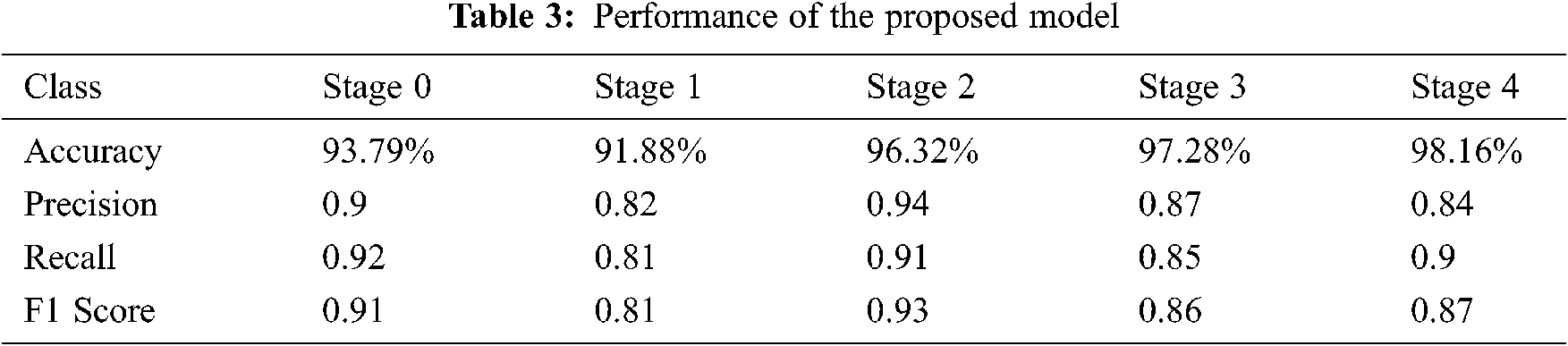

From the obtained confusion matrix, the precision, sensitivity, accuracy, specificity, and F1 score were computed and are shown in Tab. 3. The overall accuracy of our model is 88.7%. Fig. 8 shows a graphical representation of the performance of the proposed model.

Figure 8: Performance of the proposed model

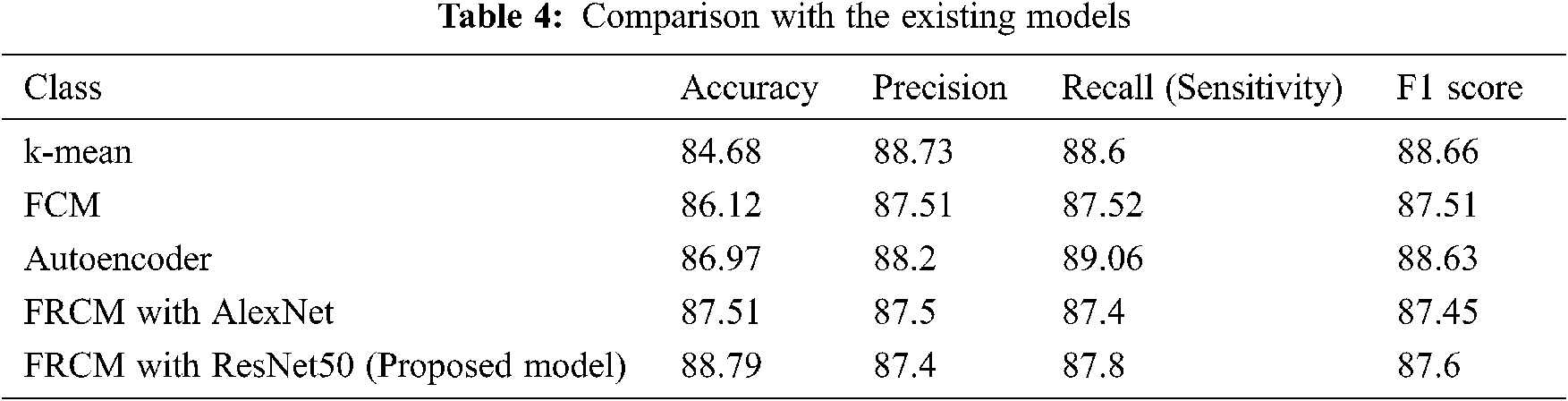

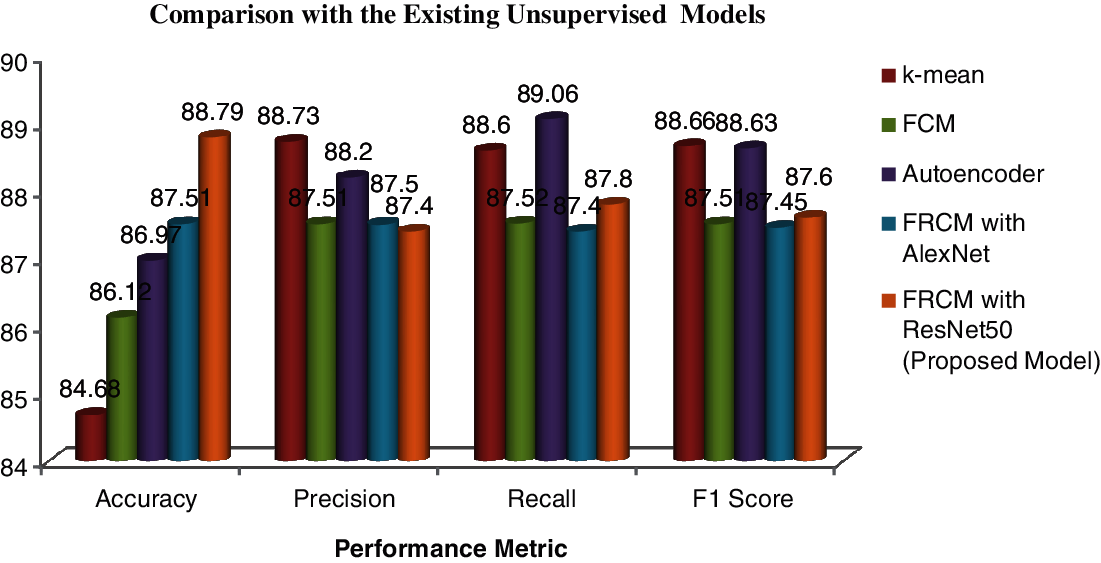

The proposed model was also compared with existing unsupervised models; the results are shown in Tab. 4 and depicted graphically in Fig. 9.

Figure 9: Comparison with the existing unsupervised models

We proposed a novel method for the early diagnosis of DR in diabetic patients based on unsupervised learning. The features extracted from the fully connected layer of the unsupervised ResNet50 model were used for the diagnosis of DR. The performance of the unsupervised clustering by ResNet50 was improved using rough set theory and fuzzy set theory. The FRCM-based unsupervised deep learning clustering technique provided state-of-the-art results in the diagnosis of DR at an early stage without human intervention. This is due to the use of rough set concepts together with fuzzy set concepts: the vagueness, uncertainty, and incompleteness were removed by the rough set through its approximations, and the overlapping of cluster partitions was handled efficiently by the fuzzy set concept. Based on the extracted features of the image, initial clusters were formed by the unsupervised ResNet50 CNN model. The cluster centroids and the representations are learned jointly during training, with cluster centers and network representations updated through forward and backward propagation, respectively. The experimental outcome confirms that the proposed model gives an overall accuracy of 88.7%. The proposed model improves the accuracy of DR diagnosis compared to existing unsupervised algorithms such as k-means, FCM, auto-encoder, and FRCM with AlexNet. The proposed model needs to learn many parameters. Hence, in the future, we would work on reducing the number of trainable parameters or making them adaptive so that they consume less memory.

Acknowledgement: We would like to acknowledge the faculty of the Department of Computer Science and the research scholars at Bennett University, Greater Noida, UP, who guided us during the sabbatical at Bennett University. We were allowed to work on their DGX server with 8 × Tesla V100 GPU cards. We thank the principal and management of Sri Ramakrishna Engineering College for their constant support.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. International Diabetes Federation, Atlas, 9th ed., Brussels, Belgium, 2019. [Online]. Available: https://diabetesatlas.org/en/sections/worldwide-toll-of-diabetes.html.

2. Exquisite Eye Care. [Online]. Available: https://exquisiteeyecare.com/diabetic-eye-disease.

3. Topcon Healthcare. [Online]. Available: https://topconhealth.com.au/diabetic-retinopathy-an-eye-disease-with-4-stages/.

4. C. Doersch, A. Gupta and A. A. Efros, “Mid-level visual element discovery as discriminative mode seeking,” in Proc. Conf. on Information Processing Systems, Lake Tahoe, NV, USA, pp. 494–502, 2013.

5. D. Han and J. Kim, “Unsupervised simultaneous orthogonal basis clustering feature selection,” in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, pp. 5016–5023, 2015.

6. B. Hariharan, J. Malik and D. Ramanan, “Discriminative decorrelation for clustering and classification,” in Proc. European Conf. on Computer Vision, Florence, Italy, pp. 459–472, 2012.

7. F. Nie, Z. Zeng, I. W. Tsang, D. Xu and C. Zhang, “Spectral embedded clustering: A framework for in-sample and out-of-sample spectral clustering,” IEEE Transactions on Neural Networks and Learning Systems, vol. 22, no. 11, pp. 1796–1808, 2011.

8. F. Nie, X. Dong and X. Li, “Initialization independent clustering with actively self-training method,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 42, no. 1, pp. 17–27, 2012.

9. J. Song, L. Gao, F. Nie, H. T. Shen, Y. Yan et al., “Optimized graph learning using partial tags and multiple features for image and video annotation,” IEEE Transactions on Image Processing, vol. 25, no. 11, pp. 4999–5011, 2016.

10. F. Nie, X. Wang and H. Huang, “Clustering and projected clustering with adaptive neighbors,” in Proc. 20th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining, New York, NY, USA, pp. 977–986, 2014.

11. F. Nie, H. Wang, C. Deng, X. Gao, X. Li et al., “New l1-Norm relaxations and optimizations for graph clustering,” in Proc. Thirtieth AAAI Conf. on Artificial Intelligence, Phoenix, AZ, USA, pp. 1962–1968, 2016.

12. L. Gao, J. Song, F. Nie, F. Zou, N. Sebe et al., “Graph-without-cut: An ideal graph learning for image segmentation,” in Proc. Thirtieth AAAI Conf. on Artificial Intelligence, Phoenix, AZ, USA, pp. 1188–1194, 2016.

13. F. Nie, C. Ding, D. Luo and H. Huang, “Improved minmax cut graph clustering with nonnegative relaxation,” in Proc. European Conf. on Machine Learning and Knowledge Discovery in Databases: Part II, Barcelona, Spain, pp. 451–466, 2010.

14. F. Tian, B. Gao, Q. Cui, E. Chen, T. Y. Liu et al., “Learning deep representations for graph clustering,” in Proc. Twenty-Eighth AAAI Conf. on Artificial Intelligence, Quebec, QC, Canada, pp. 1293–1299, 2014.

15. G. Trigeorgis, K. Bousmalis, S. Zafeiriou and B. Schuller, “A deep semi-NMF model for learning hidden representations,” in Proc. 31st Int. Conf. on Machine Learning, Beijing, China, pp. 1692–1700, 2014.

16. P. Xie and E. Xing, “Integrating image clustering and codebook learning,” in Proc. Twenty-Ninth AAAI Conf. on Artificial Intelligence and the Twenty-Seventh Innovative Applications of Artificial Intelligence Conf., Palo Alto, California, The AAAI Press, pp. 1903–1909, 2015.

17. Y. Guo, L. Ma, F. Zhu and F. Liu, “Selecting training samples from large-scale remote-sensing samples using an active learning algorithm,” in Computational Intelligence and Intelligent Systems, 1st ed., Singapore: Springer, pp. 40–51, 2018.

18. J. Song, Y. Yang, Z. Huang, H. T. Shen and J. Luo, “Effective multiple feature hashing for large-scale near-duplicate video retrieval,” IEEE Transactions on Multimedia, vol. 15, no. 8, pp. 1997–2008, 2013.

19. C. C. Aggarwal and C. K. Reddy, Data Clustering: Algorithms and Applications, 1st ed., Boca Raton, FL, USA: CRC Press, 2013.

20. Y. LeCun and Y. Bengio, “Convolutional networks for images, speech, and time series,” in The Handbook of Brain Theory and Neural Networks, 2nd ed., vol. 3361, MIT Press, Cambridge, Massachusetts, London, pp. 255–258, 1995.

21. R. S. Rajkumar, A. G. Selvarani and S. Ranjithkumar, “Comprehensive study on diabetic retinopathy,” in Soft Computing for Problem Solving. Advances in Intelligent Systems and Computing, vol. 1057. Singapore: Springer, pp. 155–164, 2020.

22. J. Xie, R. Girshick and A. Farhadi, “Unsupervised deep embedding for clustering analysis,” in Proc. 33rd Int. Conf. on Machine Learning, New York, NY, USA, pp. 19–24, 2016.

23. F. Li, H. Qiao, B. Zhang and X. Xi, “Discriminatively boosted image clustering with fully convolutional auto-encoders,” Pattern Recognition, vol. 83, no. 1, pp. 161–173, 2018.

24. B. Yang, X. Fu, N. D. Sidiropoulos and M. Hong, “Towards k-means-friendly spaces: Simultaneous deep learning and clustering,” in Proc. Int. Conf. on Machine Learning, vol. 70, pp. 3861–3870, 2017.

25. J. Yang, D. Parikh and D. Batra, “Joint unsupervised learning of deep representations and image clusters,” in Proc. Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, pp. 5147–5156, 2016.

26. C. C. Hsu and C. W. Lin, “CNN-Based joint clustering and representation learning with feature drift compensation for large-scale image data,” IEEE Transactions on Multimedia, vol. 20, no. 2, pp. 421–429, 2018.

27. A. Dundar, J. Jin and E. Culurciello, “Convolutional clustering for unsupervised learning,” in Proc. Int. Conf. on Learning Representations, San Juan, PR, USA, pp. 1–11, 2016.

28. B. Yellapragada, S. Hornhauer, K. Snyder, S. Yu and G. Yiu, “Unsupervised deep learning for grading of age-related macular degeneration using retinal fundus images,” Investigative Ophthalmology & Visual Science, vol. 62, no. 8, pp. 1–29, 2021.

29. G. S. A. G. Vimala and S. K. Mohideen, “An efficient approach for detection of exudates in diabetic retinopathy images using clustering algorithm,” IOSR Journal of Computer Engineering, vol. 2, no. 5, pp. 43–48, 2012.

30. S. Riaz, A. Arshad and L. Jiao, “Fuzzy rough C-mean based unsupervised CNN clustering for large-scale image data,” Applied Sciences, vol. 8, no. 10, pp. 1869, 2018.

31. V. Maeda-Gutiérrez, C. E. Galván-Tejada, L. A. Zanella-Calzada, J. M. Celaya-Padilla, J. I. Galván-Tejada et al., “Comparison of convolutional neural network architectures for classification of tomato plant diseases,” Applied Sciences, vol. 10, no. 4, pp. 1–15, 2020.

32. J. T. Yao, A. V. Vasilakos and W. Pedrycz, “Granular computing: Perspectives and challenges,” IEEE Transactions on Cybernetics, vol. 43, no. 6, pp. 1977–1989, 2013.

33. P. Lingras and C. West, “Interval set clustering of web users with rough k-means,” Journal of Intelligent Information Systems, vol. 23, no. 1, pp. 5–16, 2004.

34. G. Peters, “Outliers in rough k-means clustering,” in Proc. First Int. Conf. on Pattern Recognition and Machine Intelligence, Kolkata, India, 1st ed., vol. 3776. Springer, pp. 702–707, 2005.

35. W. Jin, A. K. H. Tung and J. Han, “Mining top-n local outliers in large databases,” in Proc. 7th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining, Association for Computing Machinery, New York, NY, United States, pp. 293–298, 2001.

36. Q. Hu and D. Yu, “An improved clustering algorithm for information granulation,” in Lecture Notes in Computer Science, 1st ed., vol. 3613. Berlin, Heidelberg: Springer, pp. 494–504, 2005.

37. Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard et al., “Backpropagation applied to handwritten zip code recognition,” Neural Computation, vol. 1, no. 4, pp. 541–551, 1989.

38. A. Krizhevsky, I. Sutskever and G. Hinton, “Imagenet classification with deep convolutional neural networks,” in NIPS: Proc. Int. Conf. on Neural Information Processing Systems, vol. 1, pp. 1097–1105, 2012.

39. M. D. Zeiler and R. Fergus, “Visualizing and understanding convolutional neural networks,” in Proc. European Conf. on Computer Vision, Zurich, Switzerland, pp. 818–833, 2014.

40. P. Sermanet, D. Eigen, X. Zhang, M. Mathieu, R. Fergus et al., “Overfeat: Integrated recognition, localization and detection using convolutional networks,” in Proc. Int. Conf. on Learning Representations (ICLR), Banff, Canada, pp. 1–16, 2014.

41. K. He, X. Zhang, S. Ren and J. Sun, “Deep residual learning for image recognition,” in Proc. Computer Vision and Pattern Recognition (CVPR), Las Vegas, Nevada, USA, pp. 770–778, 2016.

42. Harshit Kumar, “Skip connections and residual blocks,” Technical Fridays blog, 2018. [Online]. Available: https://kharshit.github.io/blog/2018/09/07/skip-connections-and-residual-blocks.

43. https://www.researchgate.net/figure/Left-ResNet50-architecture-Blocks-with-dotted-line-represents-modules-that-might-be_fig3_331364877.

44. EyePACS, LLC, “Diabetic Retinopathy Detection,” 2014, Accessed: Sep. 1, 2018. [Online]. Available: https://www.kaggle.com/c/diabetic-retinopathy-detection/data.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.