DOI:10.32604/csse.2022.022103

| Computer Systems Science & Engineering DOI:10.32604/csse.2022.022103 |  |

| Article |

Deep Reinforcement Learning Empowered Edge Collaborative Caching Scheme for Internet of Vehicles

1State Key Laboratory of Networking and Switching Technology, Beijing University of Posts and Telecommunications, Beijing, 100876, China

2Information and Communication Branch, State Grid Liaoning Electric Power Co., Ltd., Shenyang, 110000, China

3China Global Energy Interconnection Research Institute, Nanjing, 210000, China

4Syracuse University, New York, 13244, USA

*Corresponding Author: Zhili Wang. Email: zlwang@bupt.edu.cn

Received: 27 July 2021; Accepted: 30 August 2021

Abstract: With the development of internet of vehicles, the traditional centralized content caching mode transmits content through the core network, which causes a large delay and cannot meet the demands for delay-sensitive services. To solve these problems, on basis of vehicle caching network, we propose an edge collaborative caching scheme. Road side unit (RSU) and mobile edge computing (MEC) are used to collect vehicle information, predict and cache popular content, thereby provide low-latency content delivery services. However, the storage capacity of a single RSU severely limits the edge caching performance and cannot handle intensive content requests at the same time. Through content sharing, collaborative caching can relieve the storage burden on caching servers. Therefore, we integrate RSU and collaborative caching to build a MEC-assisted vehicle edge collaborative caching (MVECC) scheme, so as to realize the collaborative caching among cloud, edge and vehicle. MVECC uses deep reinforcement learning to predict what needs to be cached on RSU, which enables RSUs to cache more popular content. In addition, MVECC also introduces a mobility-aware caching replacement scheme at the edge network to reduce redundant cache and improving cache efficiency, which allows RSU to dynamically replace the cached content in response to the mobility of vehicles. The simulation results show that the proposed MVECC scheme can improve cache performance in terms of energy cost and content hit rate.

Keywords: Internet of vehicles; vehicle caching network; collaborative caching; caching replacement; deep reinforcement learning

With the development of internet of vehicles, the content demand of mobile vehicles has increased rapidly. Artificial intelligence-driven vehicles need to constantly learn their surrounding environment and make instant decisions. Vehicles can be regarded as mobile devices to collect and process environmental data and support various information services. The development of Internet of Vehicles (IoV) and wireless technology provides a way to improve the driving experience [1]. Due to high latency and network energy consumption [2], traditional mobile cloud computing is not suitable for latency-sensitive applications [3,4], which affects the experience of vehicle users.

To solve the problems of high latency and energy consumption in IoV, road side units (RSU) are used to help collect vehicle data and provide vehicle-based information services, mobile edge computing (MEC) is used to calculate content popularity [5–7]. Caching popular content in RSU can bring valuable data, thereby reducing access latency and network energy consumption. However, the caching performance is limited by the RSU storage capacity. The storage capacity of single RSU is not enough to support the intensive content requests in IoV, which leads to an unbalanced and redundant cache in adjacent RSUs [8,9]. In addition, due to the high-speed mobility, the vehicle will quickly pass through its coverage region when sending a request to RSU, which means that the RSU cached content is easily out of date [10,11]. In order to improve the caching efficiency, the caching scheme should respond to the vehicle mobility and enable the RSU to selectively replace part of the cached content.

Therefore, an intelligent collaborative caching mechanism is needed to improve caching efficiency. Considering that collaborative caching can enable the cached content to be shared between RSUs, the implementation of collaborative caching at the edge of IoV can alleviate caching load of a single RSU. In addition, vehicle-to-vehicle (V2V) communication can further shorten the transmission distance and reduce the transmission delay. Therefore, we integrate MEC, collaborative caching and V2V caching to realize collaborative caching among cloud, RSU and vehicle.

However, the complex and dynamically changing IoV network environment makes it difficult to determine what content should be cached on RSU. Moreover, the caching optimization problem is a long-term mixed integer linear programming (LT-MILP), which has been proven to be NP-hard [12]. Through neural networks to continuously interact with the environment, deep reinforcement learning (DRL) has excellent performance in dealing with complex dynamic environments.

For this reason, we propose a MEC-assisted vehicle edge collaborative caching (MVECC) scheme based on the vehicle caching network. MVECC uses DRL to predict content popularity and cache popular content in RSUs. Each RSU shares the cached content to achieve collaborative caching. In MVECC, RSUs collect and upload the learning data to macro base station (MBS). Then MBS acts as a DRL agent to determine content caching and delivery schemes. In addition, we have also designed a mobility-aware caching replacement algorithm, RSU can selectively replace and update the cached content according to vehicle mobility. Our contributions can be summarized as follows:

• We build a MVECC scheme. In this scheme, we make full use of the caching capability of RSU and smart vehicles and design two content caching modes, including RSU caching and assisted caching vehicle (ACV) caching. In addition, MVECC includes four content delivery modes, namely content center server (CCS) delivery, RSU direct delivery, RSU collaborative indirect delivery and ACV assisted delivery. MVECC can maximize performance through the coordination of multiple caching and delivery modes.

• We propose a content caching algorithm based on deep deterministic policy gradient (DDPG) and a mobility-aware caching replacement algorithm. Through the learning process of DRL, MVECC continuously adapt to the dynamic changes of IoV network and determine caching scheme based on content popularity. We use Markov chains to simulate state changes. The content transmission energy consumption is regarded as a criterion for supervising the learning process. Agent decide the caching and delivery scheme based on historical experience. At the same time, caching replacement enables RSUs to replace the cached content in response to vehicle mobility, which ensures that RSUs do not store outdated and unpopular content.

In order to solve the caching optimization problem, many excellent works have studied mobile edge computing and caching methods in wireless networks. In terms of caching allocation, Huang et al. [13,14] considered the best combination of multiple transmissions. Campolo et al. [15] evaluated a solution that can reduce V2R link gap and improve the utilization of wireless channel. Kwon et al. [16] encoded the network and realizes data transmission between multiple RSUs. Mei et al. [17] optimized the radio resources and coding scheme of V2V communication to reduce transmission delay.

In addition, based on the similarity and population of the user community in the V2V communication scenario, Zhao et al. [18] proposed an effective caching scheme to improve the content hit rate. Ding et al. [19] proposed a cache-assisted data transmission scheme, which uses a large number of cache servers deployed on the roadside to support vehicle applications. To solve the data loss caused by vehicle mobility, Abdelhamid et al. [20,21] proposed a vehicle-assisted content caching scheme to distribute content at each exit and entrance of road segment.

Above works solves the cache optimization problem in IoV with the help of edge caching, the cache efficiency of these schemes in processing intensive service requests is still limited by the storage capacity of RSU. Specifically, adjacent RSUs tend to cache the same content, resulting in redundant caching. Through the collaboration between RSUs, MBS can make cache decisions for each RSU from a global perspective and effectively avoid redundant cache.

Considering the randomness of content popularity and IoV network state, it is very complicated to make effective caching decisions in IoV network. As an intelligent learning solution, DRL can effectively overcome these problems and find an optimal caching scheme. There have been some works focusing on DRL-based caching solutions. To solve the problem of continuously acting variables in Markov decision process (MDP) model, Li et al. [22] used a deterministic strategy gradient learning algorithm to provide the optimal resource pricing strategy. Sadeghi et al. [23] introduced the concept of global content popularity and proposed a novel RL-based content caching scheme to implement the best caching strategy.

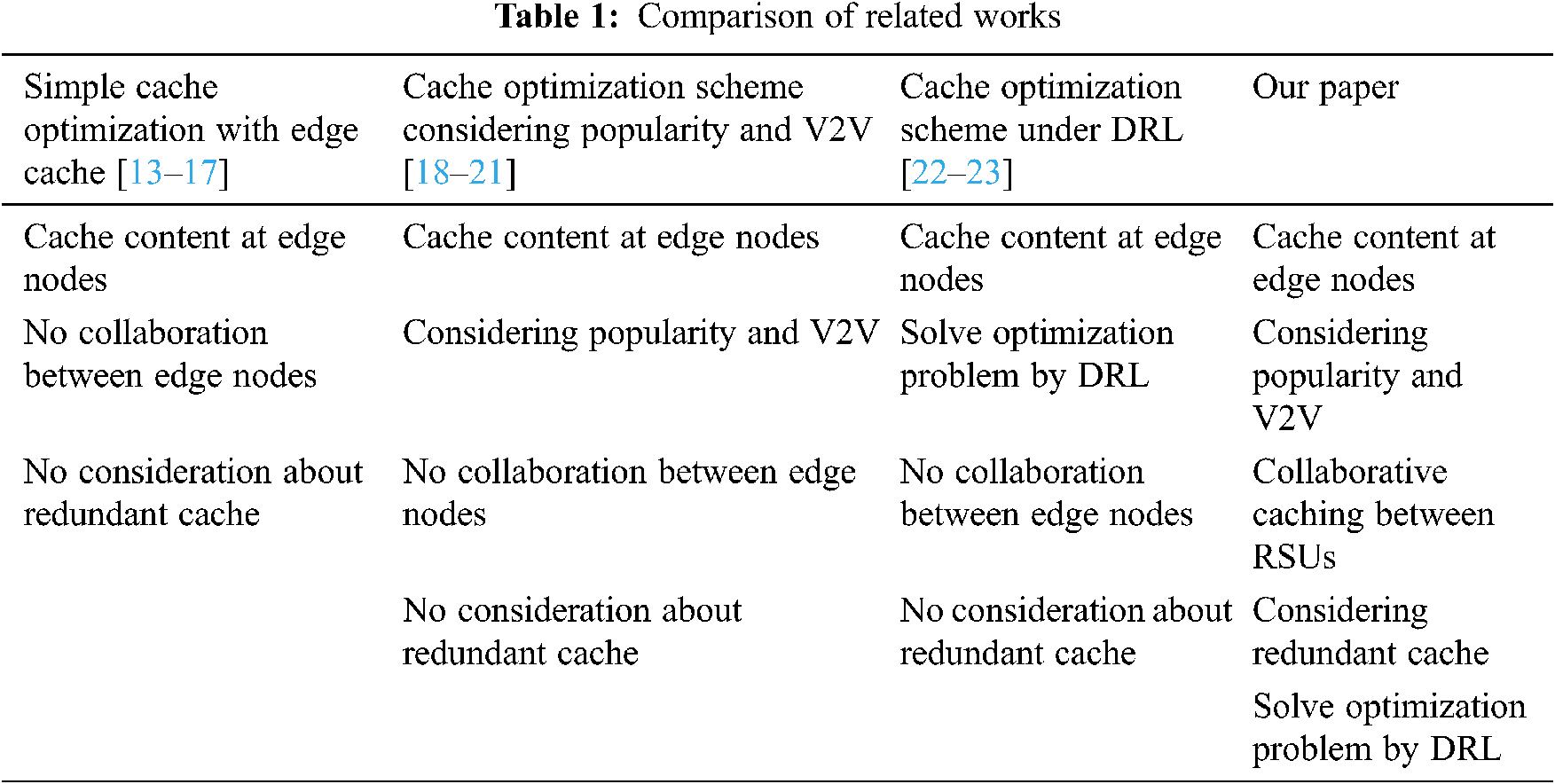

For reader convenience, the above references are presented in Tab. 1. Although several excellent caching schemes have been proposed to improve resource utilization and vehicle user experience. But most of these studies ignore the high-speed mobility of vehicle. After the vehicle moves, the RSU passing by the vehicle still caches the requested content, which may cause a waste of storage resources. To minimize the energy cost of vehicle caching network, this article introduce an artificial intelligence-assisted caching scheme, which allows multiple caching and delivery modes to coexist, and obtains the best caching and delivery by applying DRL. The simulation results show that we have proposed an effective and feasible reference scheme for vehicle caching network.

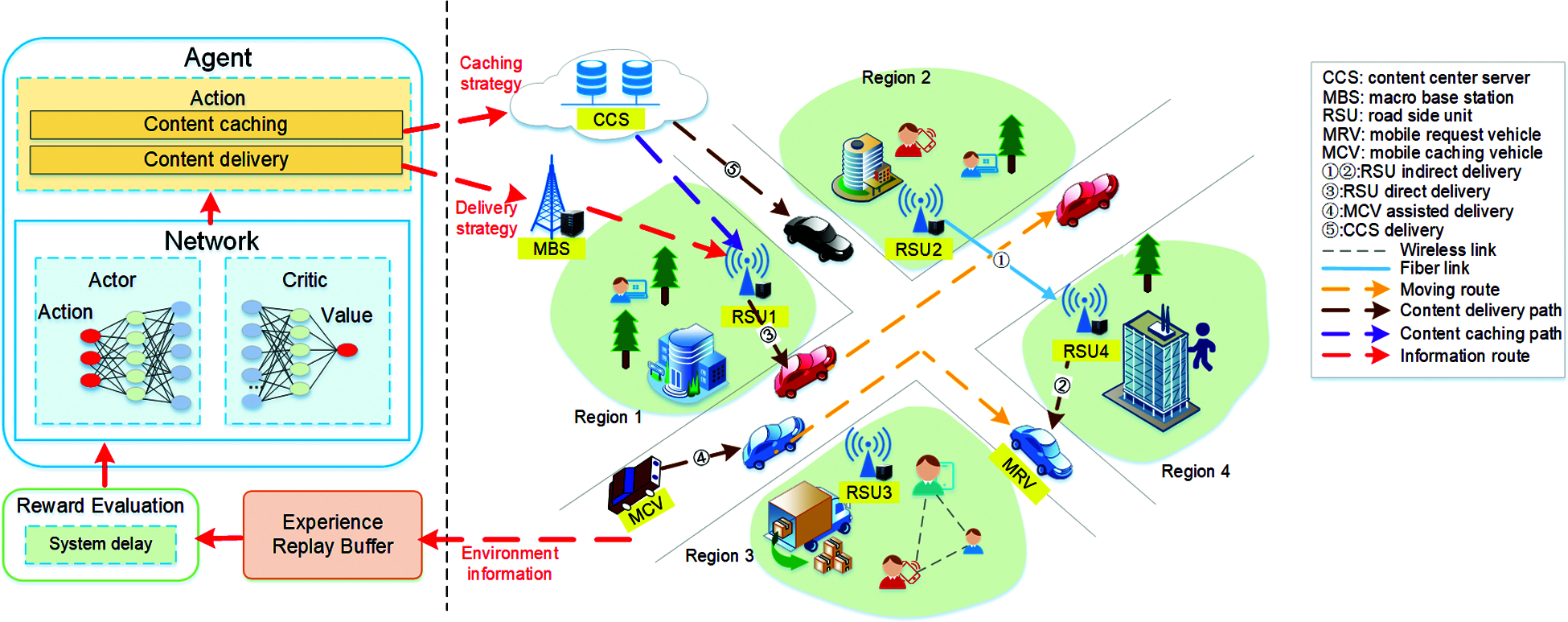

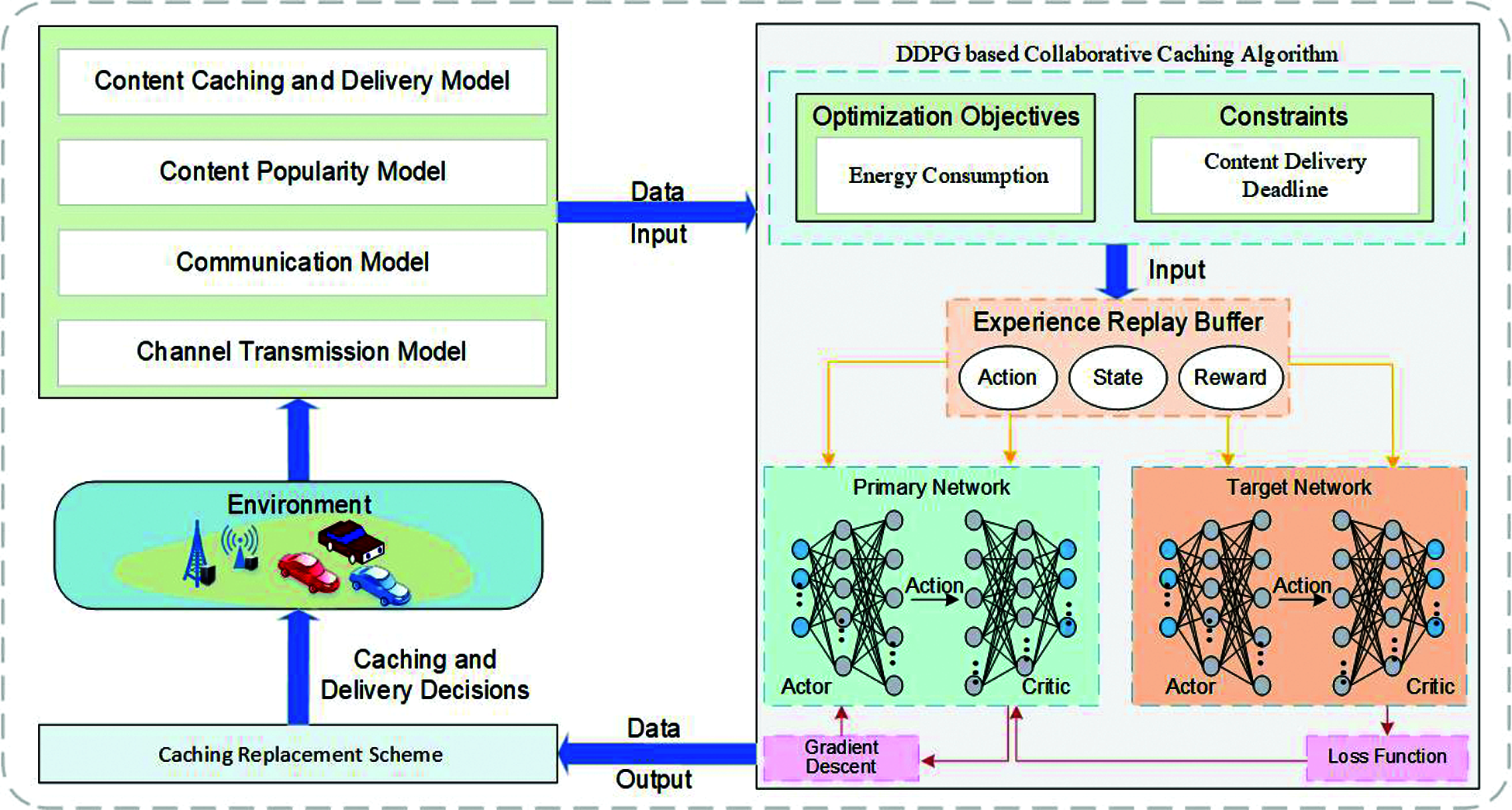

In this section, we propose a DRL-based MVECC scheme. As shown in Fig. 1, the MVECC scheme includes a content center server (CCS), a macro base station (MBS), multiple RSUs and assisted caching vehicles (ACVs). We define RSU and ACV as caching nodes, suppose set

Figure 1: System model

The proposed MVECC scheme uses DRL agents to monitor environment and extract vehicle and content features to estimate vehicle mobility and content popularity. By using Deep Deterministic Policy Gradient (DDPG) algorithm and caching replacement scheme, content can be cached and delivered more efficiently at edge network. DDPG algorithm are calculated and executed on MBS, the content is cached on RSU and ACV. Fig. 1 shows the proposed scheme, where on the left is a DRL module, responsible for determining the best caching and delivery decision. On the right is a MVECC network, where the content is cached from CCS to RSU and ACV, and finally delivered to MRV.

In MVECC, MBS is acted as a DRL agent to monitor the network environment, DDPG are calculated and executed on RSU. In content caching process, caching nodes caches content from CCS according to content popularity; in content delivery process, MRV requests content, MBS selects the optimal delivery path to complete content delivery with lower system energy consumption. Specifically, if the requested content is cached in MRV itself, the content can be obtained without any system energy consumption. Otherwise, the content will be obtained from the communication range, such as obtaining content from ACV or RSU in communication region of MRV. When neither RSU nor ACV within MRV communication range has cached the required content, it will consider forwarding the cached content from adjacent RSU to implement the delivery process. When the cache nodes cannot deliver the cached content, the content will be obtained from CCS.

3.2 Content Caching and Delivery Model

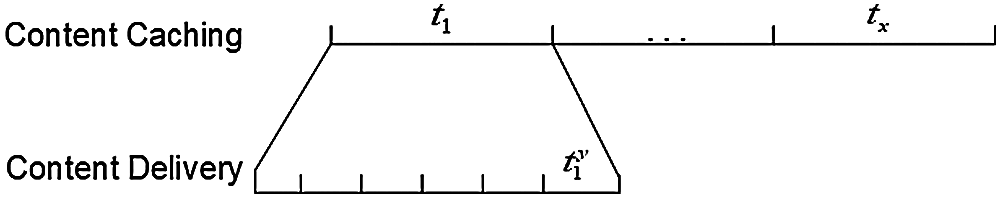

Considering that the content popularity and location of MRV/ACV are time-varying and uncertain, the time-varying scale of content popularity update is much larger than MRV/ACV location changes. Therefore, the content caching process and delivery process should be constructed based on different time scales. As shown in Fig. 2, the large time scale is content caching process. Let

Figure 2: Content caching and delivery time slot

We define caching matrix

To improve content delivery efficiency, we also propose a RSU collaborative content delivery model. Specifically, RSUs are linked by optical fiber links, they share the cached content with each other and perform content delivery process in a collaborative manner. For example, as shown in Fig. 1,

In summary, based on our proposed RSU collaborative content delivery model, there are four content delivery models for MRV content requests:

1. RSU direct delivery: as shown in step 1 of Fig. 1, the directly connected

2. RSU indirect delivery: as shown in steps 2–3 of Fig. 1, the directly connected

3. ACV assisted delivery: as shown in steps 4 of Fig. 1, in the communication range of MRV, ACV has cached content

4. CCS delivery: all caching nodes (RSU and ACV) cannot delivery content

Most of the current work assumes that the popularity of content follows the Zipf distribution in mobile social networks. Since the MRV may pass through multiple RSU regions during the content delivery process, this assumption may not always be applicable in IoV network, and distribution information of content popularity cannot correctly reflect the real-time content requirements of vehicles.

To provide a reasonable content popularity model, we define a global content popularity based on content request probability. RSU collects the content request information of all MRV and ACV in the region, calculates and updates the content popularity at the beginning of

where

In this section, we use the communication connection state between caching nodes (RSU, ACV) and MRV, as well as the communication connection state between cache nodes

Let

3.5 Channel Transmission Model

In this section, based on the real mobile network scenario, we analyze the link state between MRV and caching nodes and build an energy consumption model.

The signal-to-noise ratio (SNR) between

where

Let

According to Shannon's formula, the data transmission rate when

In this section, we transform the joint optimization problem of content caching and delivery into MDP. The behavior of MRV request content is modeled as Markov chain, in which each vehicle changes state with probability. The basic elements of characterizing MDP are: state set

RSU gets all MRVs content request information at

The system state set

At content caching time slot, after receiving the content request from MRV, MBS calculates content popularity, and then decides which content to cache in caching node. At content delivery time slot, MBS selects the best content delivery path to reduce the content delivery cost. Therefore, the action space contains the following parts:

The system action space

4.3 Content Caching and Delivery Energy Consumption

4.3.1 Content Caching Energy Consumption

On the basis of the previous section, we can calculate that the delay of

the delay of

The updated content size on caching node

where

The content caching cost is the energy consumption cost when transmitting updated content from backhaul link. It can be formulated as the product of transmission time and transmission power.

Therefore, according to Eqs. (6)–(8), the energy consumption of caching node

where

4.3.2 Content Delivery Energy Consumption

On the basis of the previous section, we can calculate that the delay of

If the content is delivered by collaborative forwarding, the delay of forwarding content

where

Therefore, according to Eqs. (11)–(12), the total energy consumption of content delivery process at

where

If the whole content is not obtained before the delivery deadline, the penalty cost will be:

where

According to Eqs. (6)–(14), the total system cost function at

where

After taking action

5.1 Principle of DDPG Algorithm

For some actions, it may be a continuous value, or a very high-dimensional discrete value, so that the spatial dimension of the action is great. If the random policy is used, it is possible to study the probability of all possible actions like DQN, and calculate the value of each possible action, the sample amount of that requires is very large. Although at the same state, the transition probability is different, but the maximum probability is unique. Determinative strategies take only the maximum probability, the strategy is:

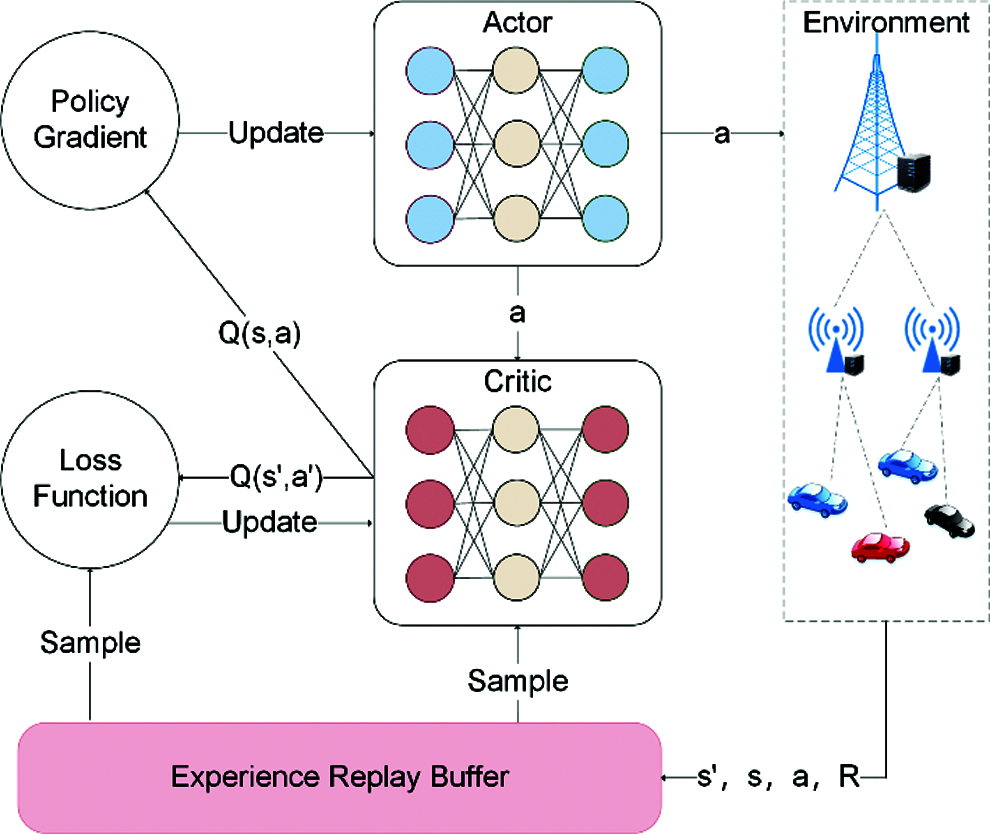

Therefore, based on the deterministic Actor-Critic model, DDPG provide an accurate estimate decisive policy function and value function. Similar to the Actor-Critic model, the actor network uses policy functions, responsible for generating action and interacts with the environment. The critic network uses value function, responsible for assessing the actor's performance and guides the actor's next action. This combination can be used to implement joint optimization of the proposed content caching and delivery issues. The schematic diagram of DDPG is shown in Fig. 3. There are three modules: 1) actor network; 2) critic network; 3) experience replay buffer.

Figure 3: Algorithm flow chart

5.2 DDPG Based Mobile Edge Collaborative Caching Algorithm

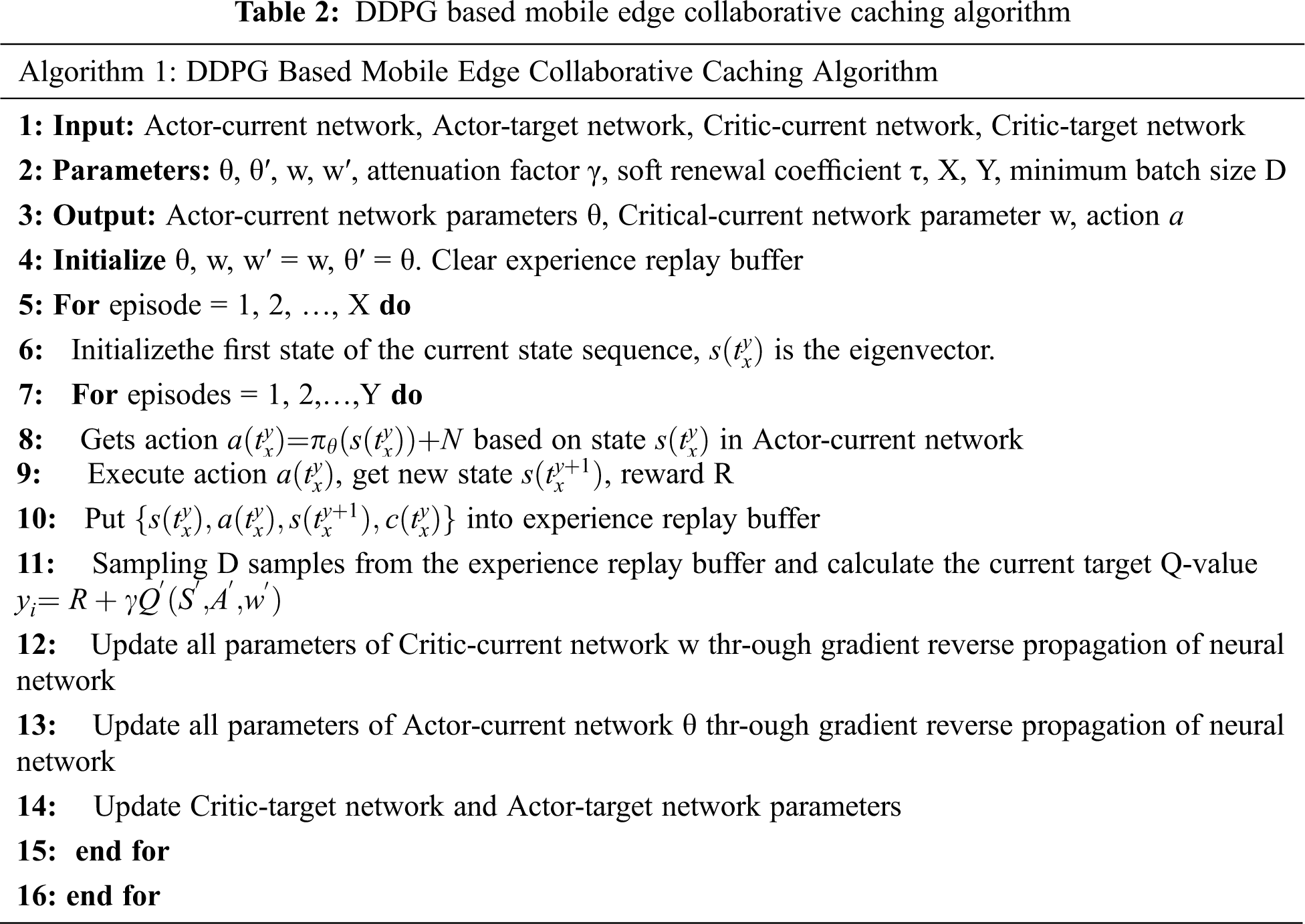

We develop DDPG based solutions to jointly optimize caching and delivery strategies and minimize energy consumption costs, the algorithm is shown in Tab. 2. Network parameters are initialized at the beginning of the training process. The training dataset is collected by MBS. Then, vehicle information is calculated according to different models, and the parameters are input into the training network in the form of state and action matrix. Finally, after continuous training, the model will converge, and the output data is the optimal cache and delivery decision.

5.3 Mobile-Aware Caching Replacement Scheme

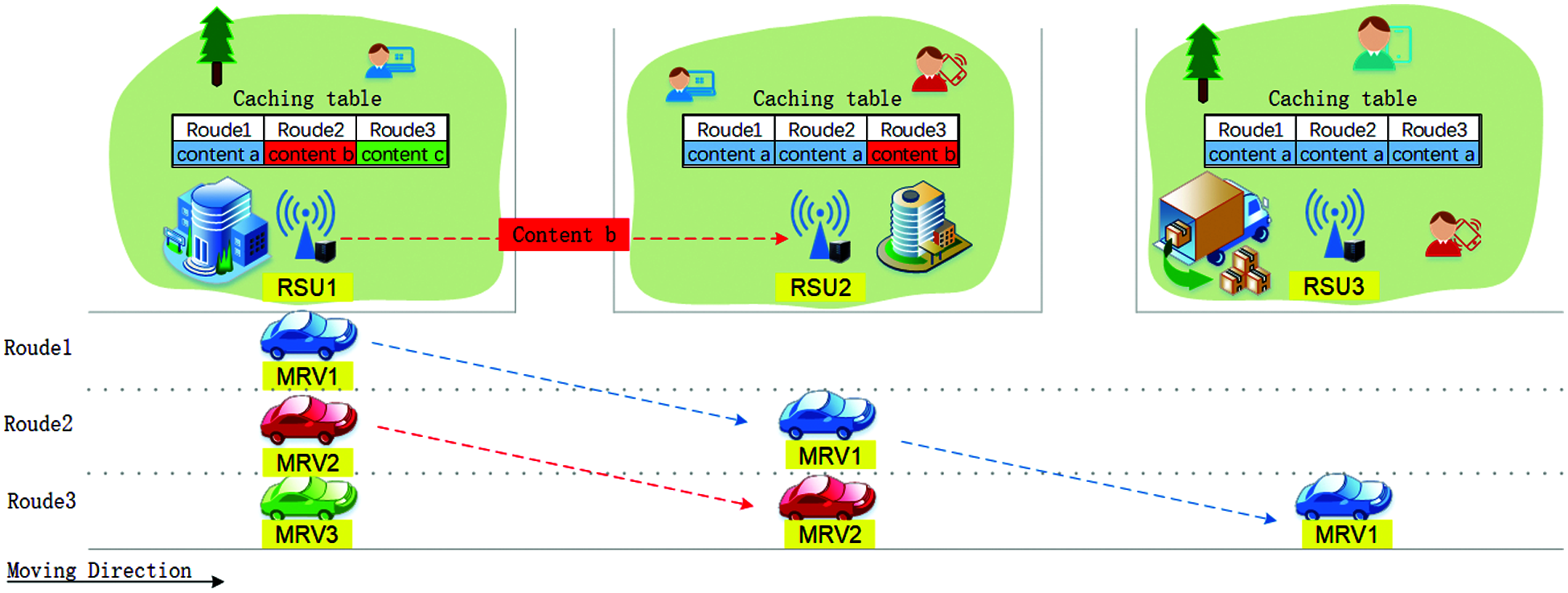

RSU should selectively replace outdated content based on the mobility of MRV. For example, when MRV moves from RSU1 to RSU2, RSU1 still stores the content requested by MRV, which causes a waste of storage resources. Fig. 4 shows the detailed process of three caching replacement rounds. MRV1, MRV2, and MRV3 request content

Figure 4: Caching replacement process

In the first caching round, MRV1 passes through the coverage area of RSU1 and requests content

In the second caching round, MRV1 leaves the coverage area of RSU1. At the same time, MRV2 will pass the coverage area of RSU1 and request content

At the beginning of the third caching round, the cache status of each RSU is: RSU1 cache content

The flow of caching algorithm is shown in Fig. 5. In DDPG based collaborative caching algorithm, the input dataset is the state information of IoV environment, and the output dataset is content caching and delivery decisions. According to the decisions and node cache state, the cache replacement scheme determines the contents that need to be replaced.

Figure 5: Flow chart of collaborative caching scheme

6 Simulation Results and Discussions

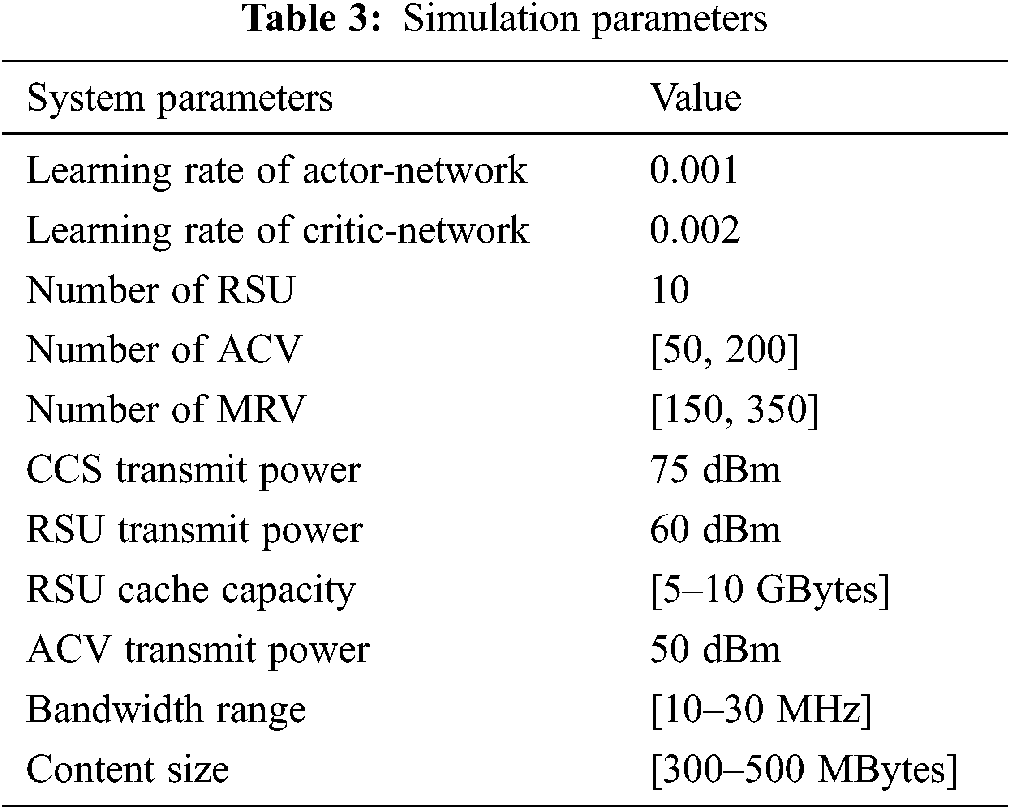

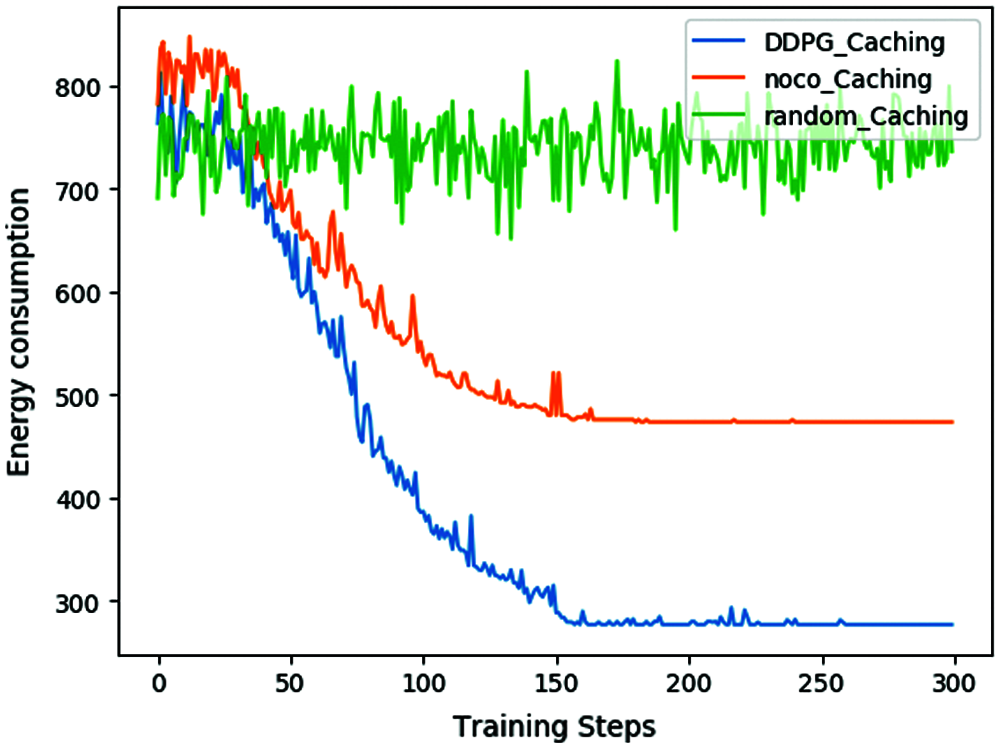

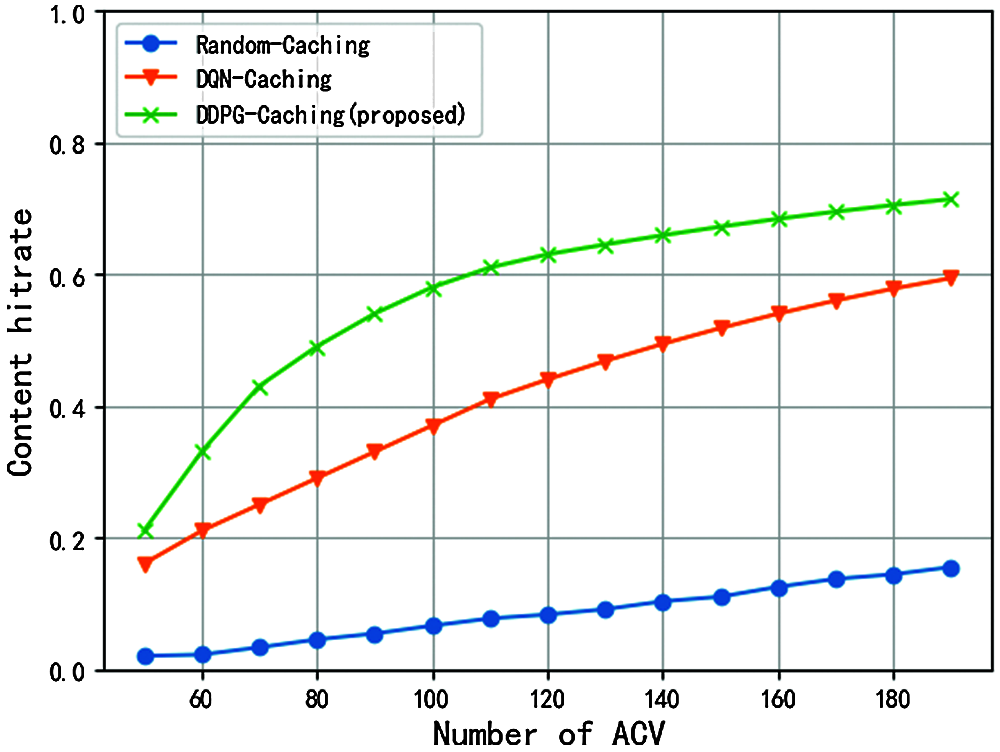

In this section, we use Python to build a simulation environment for the MVECC scheme. Using the TensorFlow platform to implement a DDPG-based collaborative caching solution (DDPG-Caching). Tab. 3 summarizes the main parameters used in the simulation.

As a reference, we implemented a random caching scheme and a deep Q-network (DQN)-based caching scheme (DQN-Caching) [24] as benchmark caching scheme. In the random caching scheme, the system randomly makes content caching and delivery decisions. In the DQN-caching scheme, there is no RSU collaborative caching, and only the RSU or ACV is responsible for delivery. We take energy consumption and content hit rate as performance measures. The energy consumption is generated by the content transmission, it can be calculated by Eqs. (9)–(13). We take the ratio of content hit number to the total requests number as content hit rate, which measures the caching efficiency of RSUs. The content hit rate can be formulated as:

where

6.2 System Performance Analysis

Figure 6: Comparison of energy consumption

Fig. 6 shows the comparison of system energy consumption costs when MRV = 200 and ACV = 50. As the number of training increases, we can draw the following observations from Fig. 6. First of all, the random cache scheme has the highest average system energy cost, the energy cost does not decrease with the increase of the number of trainings. This is because the random content caching and delivery decisions are not optimal system decisions. Therefore, random cache has a higher energy cost and does not have convergence. Secondly, because the collaboration of RSU and the assisted caching of ACV provide a more effective caching and delivery mode, the MVECC scheme can effectively reduce system costs. Third, compared with other benchmark schemes, through the DDPG reinforcement learning algorithm, our proposed MVECC scheme successfully solves the complex problem of high-dimensional action space in the joint optimization. So that MVECC can quickly find the best caching and delivery solution.

Next, in order to verify the performance of our proposed collaborative caching scheme, we compared the DQN-Caching algorithm with the proposed DDPG-Caching algorithm in terms of content hit rate and energy consumption.

Figure 7: Content hit rate under different ACV numbers

As shown in Fig. 7, compared with the non-collaborative algorithm, the proposed MVECC scheme increases the content hit rate by about 15%. As the number of ACVs increases, MVECC can provide MRV with more opportunities for V2V content delivery. In addition, through the caching and delivery decision made by DRL agent, RSU can cache more valuable content and deliver the content with a higher hit rate. Since DQN does not have strong learning ability when dealing with high-latitude spatial problems, the more ACVs there are, the higher the content hit rate of DRL based-MVECC will be.

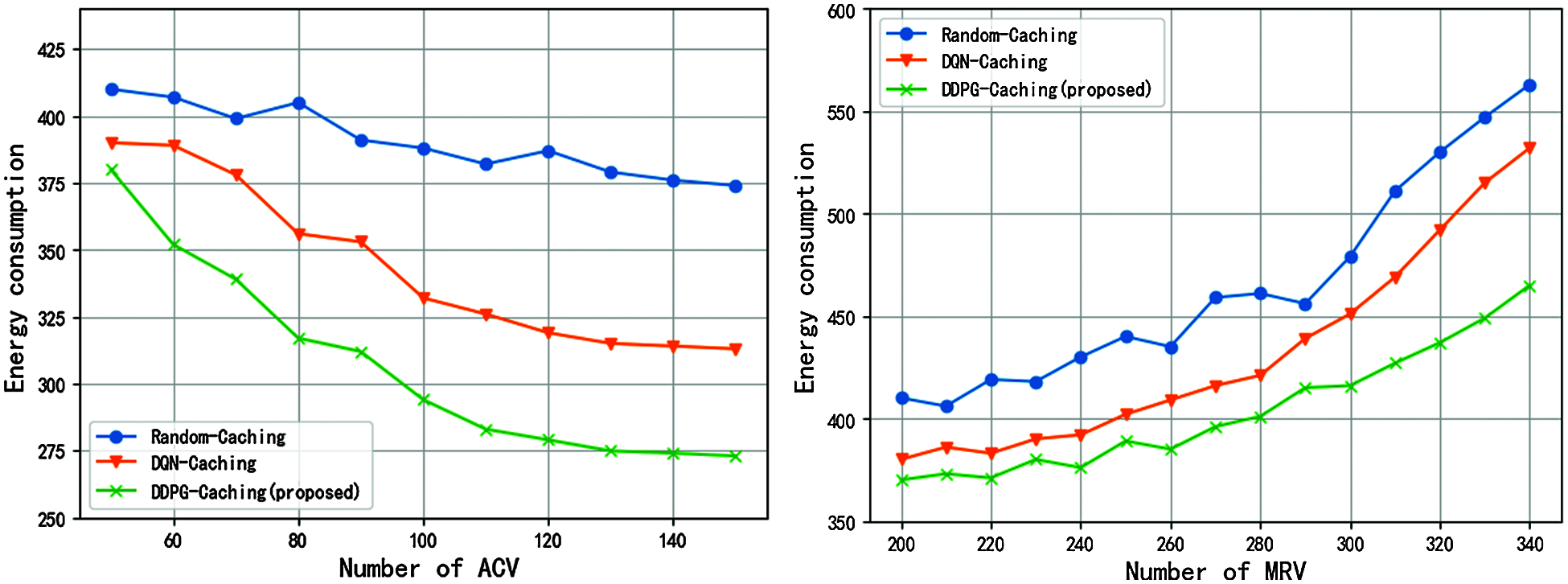

Figure 8: System energy consumption under different ACV and MRV numbers

Then, we use the overall system energy consumption as a performance indicator. As shown in Fig. 8, our proposed MVECC scheme has the lowest system energy consumption. The reason is that in the MVECC scheme, RSUs can respond to MRV content requests on the edge side, which can reduce system latency by reducing transmission distance and sharing cached content. When there are more MRV numbers, the number of requests will increase accordingly, so the system energy consumption will also increase. The increase in ACV will provide more V2V opportunities, so the system energy consumption will continue to decrease. Since there is no learning process for random caching, the content caching and delivery decisions executed each time are random rather than optimal, which results in a large system energy consumption. However, for DRL, when the number of MRV and ACV increases, there will be a disaster of dimensionality. Therefore, compared with the DQN caching algorithm, the DRL agent can avoid the dimensionality disaster through the DDPG algorithm and determine the best caching and delivery strategy for MVECC. Therefore, the optimal caching and delivery decisions made by the DRL agent in the MVECC scheme can still minimize the energy consumption.

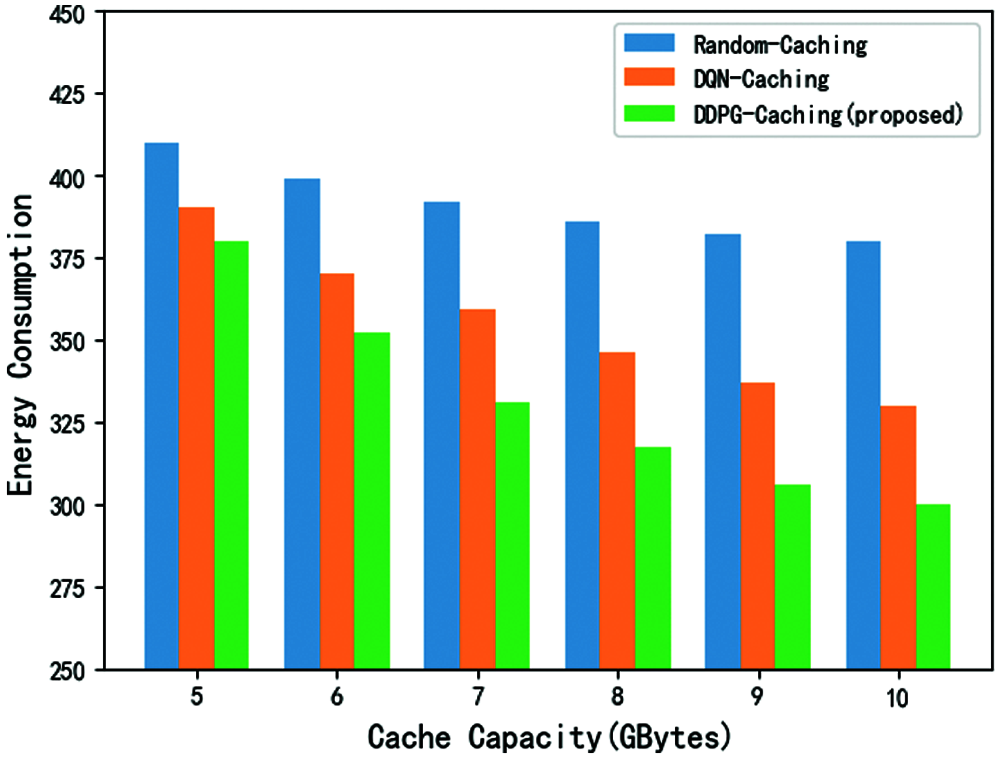

Figure 9: System energy consumption under different cache capacity

Finally, we compared the system energy consumption under different cache capacities. As shown in Fig. 9, as the cache capacity increases, the system energy consumption continues to decrease. This is because more content can be cached on the edge side, MRV content requests will be responded to more on the edge side. The number of content requests forwarded by the link to the CCS will be greatly reduced, thereby effectively reducing the high energy consumption costs on the backhaul link. In addition, the DRL agent selects the optimal content caching and delivery decision for the MVECC scheme, so that the caching node can cache the content that is more likely to be delivered under the limited cache capacity. RSU and ACV have more opportunities for content delivery, so that the system has the lowest energy consumption.

In this article, we take the vehicle caching network as an example to study the collaborative caching problem in the internet of vehicles. We propose a novel MEC-assisted vehicle edge collaborative caching (MVECC) scheme to improve cache efficiency. With the help of the roadside unit (RSU) and assisted caching vehicle, MVECC has two content caching modes and four content delivery modes coexist. MVECC also uses DRL to actively cache popular content on RSU, so as to reduce cache energy consumption. In addition, based on the vehicle mobility, we designed a mobility-aware cache replacement strategy to dynamically update the cached content on RSU. The simulation results show that the method is better than the random caching scheme and the DQN caching scheme in terms of content hit rate and system energy consumption. We do not consider the impact of link state changes on the transmission process, in our future work, we intend to study this problem and design a caching scheme based on federated learning to better adapt to user behavior.

Acknowledgement: Thanks for the technical support provided by the State Key Laboratory of Networking and Switching Technology.

Funding Statement: This work is supported by the Science and Technology Project of State Grid Corporation of China: Research and Application of Key Technologies in Virtual Operation of Information and Communication Resources.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. Y. Kim, N. An, J. Park and H. Lim, “Mobility support for vehicular cloud radio-access-networks with edge computing,” in 2018 IEEE 7th Int. Conf. on Cloud Networking (CloudNetTokyo, pp. 1–4, 2018. [Google Scholar]

2. Y. Yan, Y. Dai, Z. Zhou, W. Jiang and S. Guo, “Edge computing-based tasks offloading and block caching for mobile blockchain,” Computers Materials & Continua, vol. 62, no. 2, pp. 905–915, 2020. [Google Scholar]

3. D. Zhu, Y. Sun, X. Li and R. Qu, “Massive files prefetching model based on LSTM neural network with cache transaction strategy,” Computers Materials & Continua, vol. 63, no. 2, pp. 979–993, 2020. [Google Scholar]

4. M. Yu, R. Li and Y. Chen, “A cache replacement policy based on multi-factors for named data networking,” Computers Materials & Continua, vol. 65, no. 1, pp. 321–336, 2020. [Google Scholar]

5. G. Qiao, S. Leng, S. Maharjan, Y. Zhang and N. Ansari, “Deep reinforcement learning for cooperative content caching in vehicular edge computing and networks,” IEEE Internet of Things Journal, vol. 7, no. 1, pp. 247–257, 2020. [Google Scholar]

6. Z. Li, W. Wei, T. Zhang, M. Wang, S. Hou et al., “Online multi-expert learning for visual tracking,” IEEE Transactions on Image Processing, vol. 29, pp. 934–946, 2020. [Google Scholar]

7. Q. Deng, Z. Li, J. Chen, F. Zeng, H. Wang et al., “Dynamic spectrum sharing for hybrid access in OFDMA-based cognitive femtocell networks,” IEEE Transactions on Vehicular Technology, vol. 67, no. 11, pp. 10830–10840, 2018. [Google Scholar]

8. M. A. Maddah-Ali and U. Niesen, “Fundamental limits of caching,” IEEE Transactions on Information Theory, vol. 60, no. 5, pp. 2856–2867, 2014. [Google Scholar]

9. S. Wang, X. Zhang, Y. Zhang, L. Wang, J. Yang et al., “A survey on mobile edge networks: Convergence of computing, caching and communications,” IEEE Access, vol. 5, pp. 6757–6779, 2017. [Google Scholar]

10. X. Wang, S. Leng and K. Yang, “Social-aware edge caching in fog radio access networks,” IEEE Access, vol. 5, pp. 8492–8501, 2017. [Google Scholar]

11. L. Yao, A. Chen, J. Deng, J. Wang and G. Wu, “A cooperative caching scheme based on mobility prediction in vehicular content centric networks,” IEEE Transactions on Vehicular Technology, vol. 67, no. 6, pp. 5435–5444, 2018. [Google Scholar]

12. H. Wang, Y. Li, X. Zhao and F. Yang, “An algorithm based on markov chain to improve edge cache hit ratio for blockchain-enabled IoT,” China Communications, vol. 17, no. 9, pp. 66–76, 2020. [Google Scholar]

13. J. Huang, C. Zhang and J. Zhang, “A multi-queue approach of energy efficient task scheduling for sensor hubs,” Chinese Journal of Electronics, vol. 29, no. 2, pp. 242–247, 2020. [Google Scholar]

14. J. Huang, J. Liang and S. Ali, “A simulation-based optimization approach for reliability-aware service composition in edge computing,” IEEE Access, vol. 8, pp. 50355–50366, 2020. [Google Scholar]

15. C. Campolo and A. Molinaro, “Improving V2R connectivity to provide ITS applications in IEEE 802.11p/WAVE VANETs,” in 2011 18th Int. Conf. on Telecommunications, Ayia Napa, pp. 476–481, 2011. [Google Scholar]

16. J. Kwon and H. Park, “Reliable data dissemination strategy based on systematic network coding in V2I networks,” in 2019 Int. Conf. on Information and Communication Technology Convergence (ICTCJeju Island, Korea (Southpp. 744–746, 2019. [Google Scholar]

17. J. Mei, K. Zheng, L. Zhao, Y. Teng and X. Wang, “A latency and reliability guaranteed resource allocation scheme for LTE V2V communication systems,” IEEE Transactions on Wireless Communications, vol. 17, no. 6, pp. 3850–3860, 2018. [Google Scholar]

18. W. Zhao, Y. Qin, D. Gao, C. Foh and H. Chao, “An efficient cache strategy in information centric networking vehicle-to-vehicle scenario,” IEEE Access, vol. 5, pp. 12657–12667, 2017. [Google Scholar]

19. R. Ding, T. Wang, L. Song, Z. Han and J. Wu, “Roadside-unit caching in vehicular ad hoc networks for efficient popular content delivery,” in 2015 IEEE Wireless Communications and Networking Conf. (WCNCNew Orleans, LA, USA, pp. 1207–1212, 2015. [Google Scholar]

20. S. Abdelhamid, H. S. Hassanein and G. Takahara, “On-road caching assistance for ubiquitous vehicle-based information service,” IEEE Transactions on Vehicular Technology, vol. 64, no. 12, pp. 5477–5492, 2015. [Google Scholar]

21. B. Hu, L. Fang, X. Cheng and L. Yang, “Vehicle-to-vehicle distributed storage in vehicular networks,” in 2018 IEEE Int. Conf. on Communications (ICCKansas City, MO, USA, pp. 1–6, 2018. [Google Scholar]

22. Z. Li, T. Chu, I. Kolmanovsky, Y. Xiang and X. Yin, “Cloud resource allocation for cloud-based automotive applications,” Mechatronics, vol. 50, no. 4, pp. 356–365, 2018. [Google Scholar]

23. A. Sadeghi, F. Sheikholeslam and G. B. Giannakis, “Optimal and scalable caching for 5G using reinforcement learning of space-time popularities,” IEEE Journal of Selected Topics in Signal Processing, vol. 12, no. 1, pp. 180–190, 2018. [Google Scholar]

24. J. Tang, H. Tang, X. Zhang, K. Cumanan, G. Chen et al., “Energy minimization in D2D-assisted cache-enabled internet of things: A deep reinforcement learning approach,” IEEE Transactions on Industrial Informatics, vol. 16, no. 8, pp. 5412–5423, 2020. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |