Computer Systems Science & Engineering, DOI:10.32604/csse.2022.018034

Article

An Automated Brain Image Analysis System for Brain Cancer using Shearlets

1Department of Electronics and Communication Engineering, University College of Engineering Thirukkuvalai, Tamilnadu, 610204, India

2Department of Electronics and Communication Engineering, University College of Engineering Kancheepuram, Kancheepuram, 631552, India

*Corresponding Author: R. Muthaiyan. Email: muthume2005@gmail.com

Received: 22 February 2021; Accepted: 27 April 2021

Abstract: In this paper, an Automated Brain Image Analysis (ABIA) system that classifies Magnetic Resonance Imaging (MRI) images of the human brain is presented. The classification of MRI images as normal, low grade, or high grade plays a vital role in early diagnosis. The Non-Subsampled Shearlet Transform (NSST), which captures more visual information than conventional wavelet transforms, is employed for feature extraction. As the dimensionality of the NSST feature space is very high, a statistical t-test is applied to select the dominant directional sub-bands at each level of NSST decomposition based on sub-band energies. A combination of features comprising Gray Level Co-occurrence Matrix (GLCM) based features, Histograms of Positive Shearlet Coefficients (HPSC), and Histograms of Negative Shearlet Coefficients (HNSC) is extracted. The combined feature set is used in the classification phase, where a hybrid approach is designed with three classifiers: k-Nearest Neighbor (kNN), Naive Bayes (NB), and Support Vector Machine (SVM). The outputs of the individually trained classifiers for a test input are combined to reach a final decision. Quantitative results of the ABIA system on the Repository of Molecular Brain Neoplasia Data (REMBRANDT) database show improved overall performance compared with a single-classifier model, with an accuracy of 99% for normal/abnormal classification and 98% for low and high risk classification.

Keywords: Brain image analysis; wavelets; Shearlet; multi-scale analysis; hybrid classification

1 Introduction

The brain is the primary organ of the human body. As the cause of brain cancer is still unknown, early diagnosis is required to decrease the mortality rate. Image classification is one of the diagnostic approaches used in the medical field that does not require segmentation [1–3]. Most image classification algorithms fall into one of two categories: supervised and unsupervised. The former learns the inherent patterns of the training data, using classifiers such as neural networks [4–6], Support Vector Machine (SVM) [7–11], k-Nearest Neighbor (kNN) [12], and Naive Bayes (NB) [13], whereas the latter depends only on the input data. Clustering approaches such as k-means and fuzzy c-means fall into the unsupervised category. Compared with unsupervised systems, supervised systems give better results because they are trained on many labeled samples.

A regularized extreme learning machine is discussed in Gumaei et al. [4], which combines two feature extraction approaches: normalized GIST and Principal Component Analysis (PCA). These features are used to classify brain tumors with a feed-forward neural network. A convolutional neural network structure is used for feature extraction and classification in Sultan et al. [5]. It consists of 16 layers, in which features are extracted by the convolution and rectified linear unit layers. A dropout layer is used to prevent overfitting. Then, a fully connected layer and a softmax layer are used for classification. Another deep learning approach is described in Kumar et al. [6], which uses the Discrete Wavelet Transform (DWT) to decompose the input images and reduces the resulting feature space with an auto-encoder.

Though deep learning approaches provide better results, their architectures are difficult to interpret and their time complexity is very high. To achieve high accuracy with reduced complexity, a hybrid approach is developed in this study using three different classifiers: kNN, NB and SVM. A hybrid approach combines the strengths of each technique and can thus provide better performance than a single classifier.

An approach to classify brain MRI images is described in Madheswaran et al. [7]. The input brain images are decomposed by DWT and the features are selected by a genetic algorithm. Parameters such as smoothness, entropy, correlation, root mean square and kurtosis are analyzed. An SVM classifier is used for the classification. MRI brain image classification using SVM with various kernels is described in Mallick et al. [8]. The fuzzy c-means algorithm is used to remove the skull region. Then, GLCM features are extracted and irrelevant features are eliminated using a genetic algorithm with joint entropy. An SVM classifier is used for classification.

The energy features of different wavelet families are discussed in Mohankumar [9] using an SVM classifier. Median filtering is used for de-noising, and the image is then decomposed by DWT up to the 5th level to extract energy features. A Tetrolet based system with an SVM classifier is discussed in Babu et al. [10] for brain MRI image classification. After preprocessing, the brain image is transformed into the frequency domain by the Tetrolet transform and a t-test is then applied for feature selection. An extension of the wavelet transform called the dual tree M-band wavelet transform is employed for brain MRI image classification in Ayalapogu et al. [11]. Statistical and co-occurrence based features are extracted and SVM with a Radial Basis Function kernel (SVM-RBF) is used for classification.

In this paper, an efficient ABIA system for brain MRI image classification is presented that uses NSST with a hybrid classification approach. Though the use of frequency domain analysis and feature extraction for a classification system is not new, the salient feature of the ABIA system is that a combination of features (GLCM + HPSC + HNSC) is extracted from the selected NSST sub-bands at each level, rather than from all NSST sub-bands. In many transformation based systems in the literature, features are extracted directly from the sub-bands [9–11]. Also, the outcome of the ABIA system is obtained by a hybrid approach from the results of three classifiers, kNN (a lazy classifier), NB (a probabilistic classifier) and SVM (a non-probabilistic classifier), instead of from a single classifier model.

The paper is organized as follows. The methods and materials used to develop the ABIA system for brain MRI image classification are discussed in Section 2. The next section presents the quantitative results and the performance of the ABIA system, and the last section presents the conclusion.

2 Methods and Materials

The non-invasive diagnostic support system for brain cancer is treated as a two-class image classification system with two stages. First, the given brain image is classified as Normal or Abnormal (NA stage), and then the severity of an abnormal image is classified as Low grade or High grade (LH stage). The Shearlet transform has been studied in various image processing applications such as de-noising [14], enhancement [15], fusion [16], mammogram classification [17], and prostate cancer classification [18]. In this work, Shearlet transform based features are analyzed for the classification of brain images. The ABIA system uses MRI of the brain as it is a low-risk, non-invasive imaging technique.

2.1 Representation of MRI Brain Images

A directional representation system that uses directional filter banks is employed by the ABIA system because of its superior approximation performance over wavelets [19]. In contrast to wavelets, the number of orientations varies in Contourlets [21], Curvelets [20] and Shearlets [22,23] at a particular level of decomposition. Also, they precisely locate the boundary curves in a smooth region. However, Shearlets are also able to detect curves in a non-smooth region. Hence, the Shearlet transform is used as the feature extraction technique. Shearlets consist of well localized functions that are controlled by three variables: translation (t), shear (s) and scale (a). They are defined by [24]

where

Let

where

Figure 1: (a) Frequency domain by discrete Shearlet (b) Frequency domain on the cone by discrete Shearlet

The horizontal truncated cone regions (

Based on the horizontal and vertical cone regions, the Shearlet system in Eq. (1) can be rewritten as

where the index d denotes the horizontal or vertical cone region. NSST is employed in the ABIA system to overcome the lack of translation invariance of the Shearlet transform. The feature extraction stage of the ABIA system is shown in Fig. 2.

Figure 2: Feature extraction stage of ABIA system

First, the given MRI brain image is represented by NSST at various scales of decomposition. This produces several directional sub-bands, each of which carries significant information about the given image. Fig. 3 shows the NSST decomposition at scale 1 with 4 directions.

Figure 3: NSST decomposition at scale 1 with 4-directions (a) Source image (b) Low frequency components (c)–(f) directional components

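As the dimensionality of the NSST coefficient feature space is very high, a statistical t-test is applied to select the directional sub-bands based on their energies. For the features of two classes A and B, it is defined by

$$t = \frac{\mu_A - \mu_B}{\sqrt{\dfrac{\sigma_A^2}{n_A} + \dfrac{\sigma_B^2}{n_B}}}$$

where $\mu_A$ and $\mu_B$ are the class means, $\sigma_A^2$ and $\sigma_B^2$ are the class variances, and $n_A$ and $n_B$ are the numbers of samples in classes A and B, respectively.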

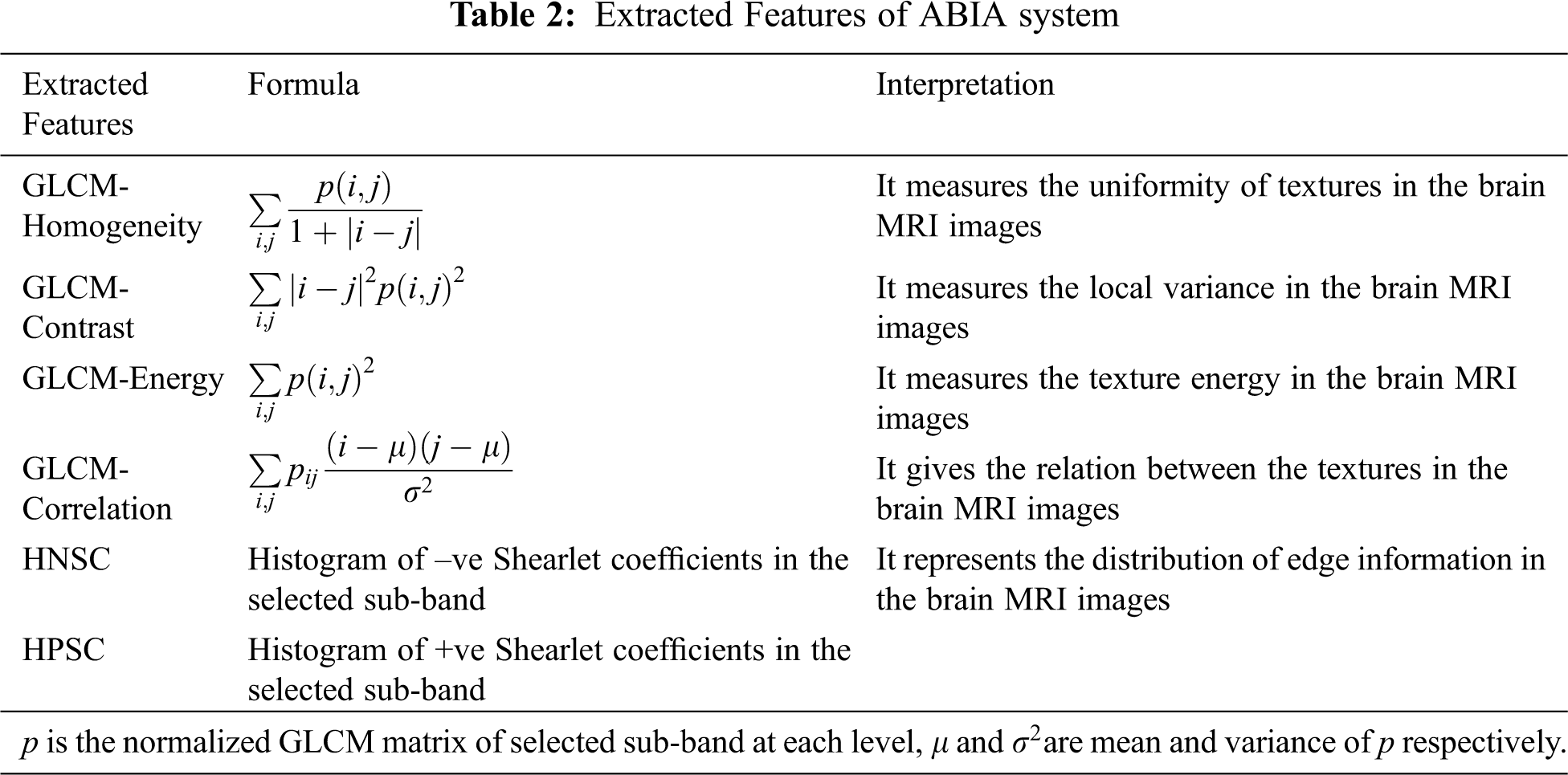
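The sub-band selection step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that sub-band energy is the mean squared coefficient, that the unequal-variance form of the two-sample t statistic is used, and that the sub-band with the largest absolute t value at a level is the one selected. The function names `subband_energy`, `t_statistic` and `select_subband` are hypothetical.

```python
import numpy as np


def subband_energy(coeffs):
    """Energy of one sub-band, assumed here to be the mean squared coefficient."""
    c = np.asarray(coeffs, dtype=float)
    return float(np.mean(c ** 2))


def t_statistic(a, b):
    """Two-sample t statistic (unequal-variance form) for 1-D samples a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    var_a = a.var(ddof=1)  # unbiased sample variance of class A
    var_b = b.var(ddof=1)  # unbiased sample variance of class B
    return (a.mean() - b.mean()) / np.sqrt(var_a / a.size + var_b / b.size)


def select_subband(energies_a, energies_b):
    """Pick the sub-band whose energies separate the two classes most strongly.

    energies_a, energies_b: arrays of shape (n_images, n_subbands) holding the
    energy of every directional sub-band at one decomposition level.
    Returns (index of the selected sub-band, |t| score of every sub-band).
    """
    energies_a = np.asarray(energies_a, dtype=float)
    energies_b = np.asarray(energies_b, dtype=float)
    scores = np.array([
        abs(t_statistic(energies_a[:, j], energies_b[:, j]))
        for j in range(energies_a.shape[1])
    ])
    return int(np.argmax(scores)), scores
```

Running `select_subband` once per decomposition level on the training images of the two classes would give one selected directional sub-band per level, which matches the selection described in the text under the assumptions stated above.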

The selected directional sub-bands are used to extract GLCM [25], HPSC, and HNSC features. GLCM features are extracted at a distance of one pixel and at four angular directions: 0, 45, 90 and 135 degrees. The HPSC and HNSC features use 10-bin histograms to reduce the feature space. Tab. 2 shows the features used by the ABIA system for brain MRI image classification. Four features are extracted at each angular direction of the GLCM, giving 16 GLCM features from the four directions. In addition, a total of 20 histogram features are obtained from HPSC and HNSC. Thus, the ABIA system uses 36 features for the classification.
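The 36-element feature vector described above (16 GLCM features plus two 10-bin histograms) can be sketched in NumPy as follows. Several details are assumptions made for illustration only: the four GLCM features are taken to be contrast, correlation, energy and homogeneity, the sub-band is quantised to 8 gray levels, and the co-occurrence matrix is made symmetric. All function names are hypothetical.

```python
import numpy as np

# Pixel offsets (row, col) for distance 1 at 0, 45, 90 and 135 degrees.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def quantise(band, levels):
    """Map real-valued coefficients to integer gray levels 0..levels-1."""
    band = np.asarray(band, dtype=float)
    lo, hi = band.min(), band.max()
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    q = np.floor((band - lo) / (hi - lo) * levels).astype(int)
    return np.clip(q, 0, levels - 1)


def glcm(q, offset, levels):
    """Symmetric, normalised co-occurrence matrix of a quantised image q."""
    dr, dc = offset
    rows, cols = q.shape
    r0, r1 = max(0, -dr), min(rows, rows - dr)
    c0, c1 = max(0, -dc), min(cols, cols - dc)
    src = q[r0:r1, c0:c1].ravel()                      # reference pixels
    dst = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc].ravel()  # neighbours at the offset
    m = np.zeros((levels, levels), dtype=float)
    np.add.at(m, (src, dst), 1.0)                      # count each pixel pair
    m = m + m.T                                        # count both orderings
    return m / m.sum()


def glcm_features(p):
    """Contrast, correlation, energy and homogeneity (an assumed choice of four)."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt((((i - mu_i) ** 2) * p).sum())
    sd_j = np.sqrt((((j - mu_j) ** 2) * p).sum())
    contrast = (((i - j) ** 2) * p).sum()
    denom = sd_i * sd_j
    correlation = ((i - mu_i) * (j - mu_j) * p).sum() / denom if denom > 0 else 1.0
    energy = (p ** 2).sum()
    homogeneity = (p / (1.0 + np.abs(i - j))).sum()
    return [contrast, correlation, energy, homogeneity]


def sign_histograms(band, bins=10):
    """Histograms of the positive (HPSC) and negative (HNSC) coefficients."""
    band = np.asarray(band, dtype=float)
    hpsc, _ = np.histogram(band[band > 0], bins=bins)
    hnsc, _ = np.histogram(band[band < 0], bins=bins)
    return hpsc.astype(float), hnsc.astype(float)


def feature_vector(band, levels=8, bins=10):
    """16 GLCM features + 10 HPSC bins + 10 HNSC bins = 36 features."""
    q = quantise(band, levels)
    feats = []
    for angle in (0, 45, 90, 135):
        feats.extend(glcm_features(glcm(q, OFFSETS[angle], levels)))
    hpsc, hnsc = sign_histograms(band, bins)
    return np.concatenate([feats, hpsc, hnsc])
```

Calling `feature_vector` on one selected sub-band returns an array of length 36. Splitting the coefficients by sign before building the histograms keeps the positive and negative parts of the coefficient distribution as separate descriptors.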

2.2 Classification of MRI Brain Images

The selection of a good classification algorithm is also an important step in achieving higher accuracy. In the ABIA system, a hybrid classification is employed with three different classifiers: kNN, NB and SVM. The outputs of the individual classifiers for a test input are combined to reach a final decision on the classification of brain cancer. Fig. 4 shows the hybrid classification stage of the brain image classification system.

Figure 4: Hybrid classification stage of ABIA system

kNN [12] works by finding the k nearest neighbours of a test sample in the feature space defined by the feature vector. The feature vector of the ABIA system is the combination of features (GLCM + HPSC + HNSC) extracted from the selected NSST sub-bands at each level. Each neighbour votes on the class of the test sample. The closeness of neighbours in n-space is calculated with the n-dimensional Euclidean distance metric. Let us consider two n-dimensional feature vectors, $x = (x_1, x_2, \ldots, x_n)$ and $y = (y_1, y_2, \ldots, y_n)$. The Euclidean distance between them is

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

There is no training phase in kNN. Hence, it is classified as a lazy classifier. As the computation of Euclidean distance requires all of the training objects each time, kNN requires more storage space and more calculation at the time of classification.
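A minimal sketch of the kNN decision described above is given below. It stores the training set as is, computes the Euclidean distance from the test sample to every training vector at classification time, and takes a majority vote among the k closest. The function name `knn_predict` and the default `k=3` are illustrative assumptions; the value of k used by the ABIA system is not stated in this section.

```python
from collections import Counter

import numpy as np


def knn_predict(train_x, train_y, x, k=3):
    """Classify x by majority vote among its k nearest training vectors."""
    train_x = np.asarray(train_x, dtype=float)
    x = np.asarray(x, dtype=float)
    # Euclidean distance from x to every stored training vector.
    dists = np.sqrt(((train_x - x) ** 2).sum(axis=1))
    # Indices of the k smallest distances (stable sort keeps ties in input order).
    nearest = np.argsort(dists, kind="stable")[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]
```

Because every call scans the whole training set, the storage and per-query cost grow with the number of training samples, which is the drawback noted in the text.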

The NB classifier [13] uses Bayesian inference for classification, under the assumption that the features are independent of one another given the class. This assumption reduces the computational complexity, as only a small amount of training data is required to train the classifier in 1-dimensional space n times, where n is the number of features. If the features are assumed to be related to one another, then the test object needs to be classified in n-dimensional space.
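The effect of the independence assumption can be illustrated with a small sketch in which each feature is modelled separately in one dimension. The Gaussian form of the per-feature likelihood is an assumption made here for illustration; the section does not state which likelihood model the ABIA system uses. The function names are hypothetical.

```python
import numpy as np


def fit_gaussian_nb(x, y):
    """Estimate a prior plus a per-feature mean and variance for every class."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    model = {}
    for c in np.unique(y):
        xc = x[y == c]
        prior = xc.shape[0] / x.shape[0]
        # n independent 1-D estimates; a small constant avoids zero variance.
        model[c] = (prior, xc.mean(axis=0), xc.var(axis=0) + 1e-9)
    return model


def predict_gaussian_nb(model, sample):
    """Return the class with the largest log posterior (up to a constant)."""
    sample = np.asarray(sample, dtype=float)
    best, best_score = None, -np.inf
    for c, (prior, mean, var) in model.items():
        # Sum of per-feature log-likelihoods, valid under independence.
        log_like = -0.5 * np.sum(np.log(2 * np.pi * var) + (sample - mean) ** 2 / var)
        score = np.log(prior) + log_like
        if score > best_score:
            best, best_score = c, score
    return best
```

Only a mean and a variance per feature and per class are estimated, so the number of parameters grows linearly with the number of features rather than with the size of a full n-dimensional model.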

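The posterior probability defined by Bayes' theorem is the probability that the object belongs to class $C_i$ given its feature vector $x$:

$$P(C_i \mid x) = \frac{P(x \mid C_i)\,P(C_i)}{P(x)}$$

where $P(C_i)$ is the prior probability of class $C_i$, $P(x \mid C_i)$ is the likelihood of $x$ under that class, and $P(x)$ is the evidence.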

In many machine learning applications, SVM classifier [26] is used as a classification tool. It is very useful for two-class and multi-class classification problems. Let T

where the bias (b) and weight (w) are computed using T. The hyperplane defined in Eq. (12) separates the features in T optimally [26].

where the trade-off parameter (C) controls the trade-off between complexity and empirical risk.

where the support vectors are

where

The final decision of ABIA system is made from the classification results of each classifier to obtain a better decision. It combines the robustness of each classification algorithm and eliminates their drawbacks. Let

where k is the number of classifiers used. The weight of each classifier is set according to its accuracy when using different training samples.
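One way to read the combination rule above is as an accuracy-weighted vote, sketched below. The exact fusion formula is not recoverable from this section, so the summation of weights per predicted label is an assumption, as is the function name `hybrid_decision`. The weights in the usage example are made-up numbers, not results from the paper.

```python
def hybrid_decision(predictions, weights):
    """Return the label with the largest total weight among the classifiers.

    predictions: one predicted label per classifier (e.g. kNN, NB, SVM).
    weights: one non-negative weight per classifier, e.g. its accuracy on
             held-out training samples (an assumed choice).
    """
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per prediction")
    totals = {}
    for label, weight in zip(predictions, weights):
        totals[label] = totals.get(label, 0.0) + weight
    return max(totals, key=totals.get)


# Hypothetical usage: kNN says "high", NB and SVM say "low".
decision = hybrid_decision(["high", "low", "low"], [0.95, 0.90, 0.97])
```

With three classifiers and comparable weights this behaves like a majority vote, but a single classifier with a much larger weight can still override the other two.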

3 Results and Discussion

The performance of the ABIA system in classifying brain MRI images is evaluated using the standard set of brain tumor images available in the REMBRANDT database [27–29]. It consists of MRI brain images collected from 130 subjects. All images are in DICOM format with a resolution of 256 × 256 pixels. From the large number of images available, 100 images in each category (normal/abnormal) are selected [11]. Fig. 5 shows brain MRI images from the REMBRANDT database.

Figure 5: REMBRANDT database brain MRI images (a) Normal (b) Low grade (c) High grade

The ability of ABIA system to classify all brain MRI images is measured by classification accuracy (

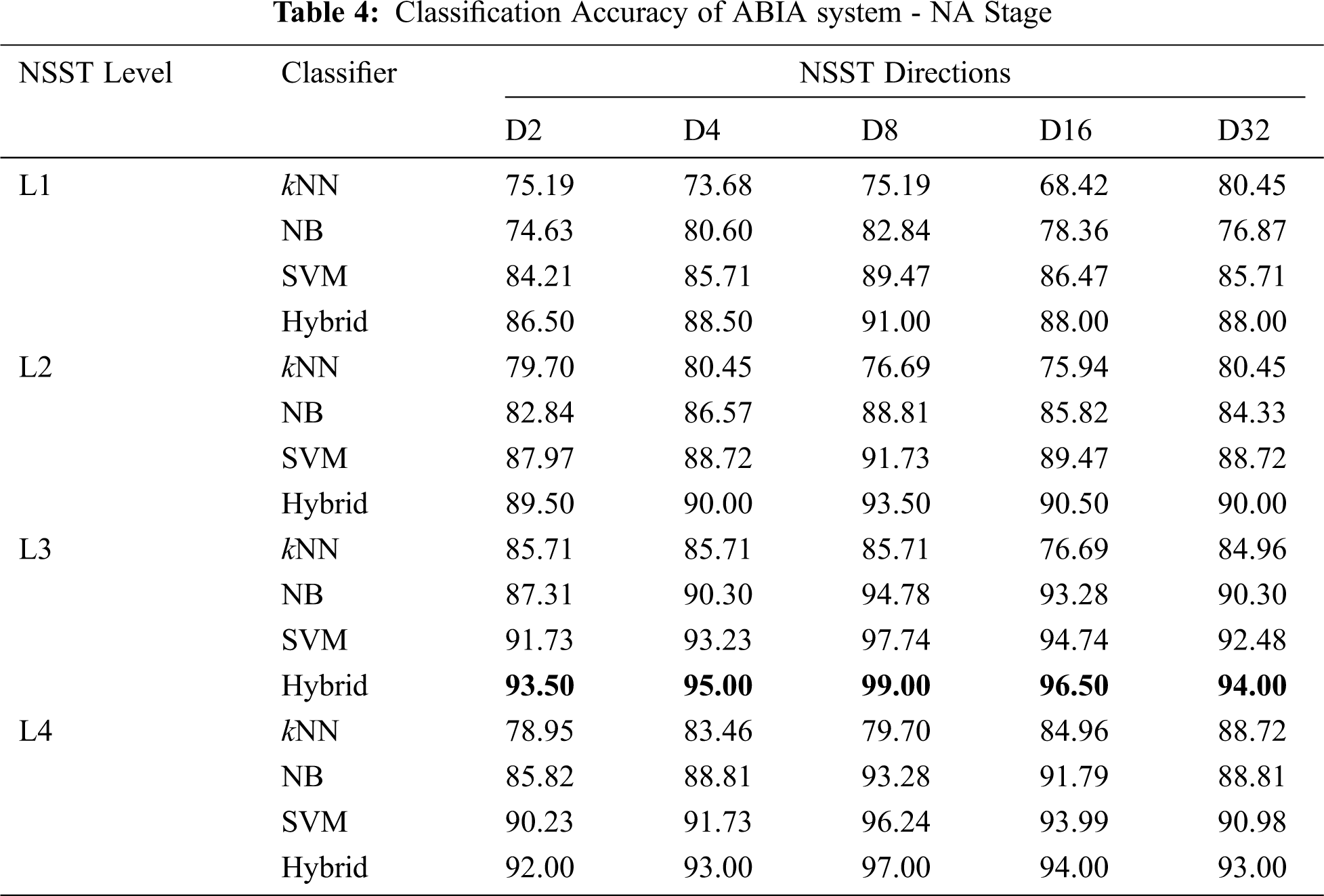
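For reference, the usual confusion-matrix definitions of accuracy, sensitivity and specificity can be sketched as follows. Treating the abnormal (or high grade) class as the positive class is an assumption made here, and the function name `classification_metrics` is hypothetical.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy, sensitivity and specificity for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    def ratio(num, den):
        # Undefined ratios (empty class) are reported as NaN.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": ratio(tp, tp + fn),  # true positive rate
        "specificity": ratio(tn, tn + fp),  # true negative rate
    }
```

Sensitivity measures how many positive cases are detected, while specificity measures how many negative cases are correctly left unflagged; accuracy alone can hide an imbalance between the two.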

Tab. 4 shows the

It is observed that the hybrid approach gives much higher performance in L3-D8 than other combinations. Also the

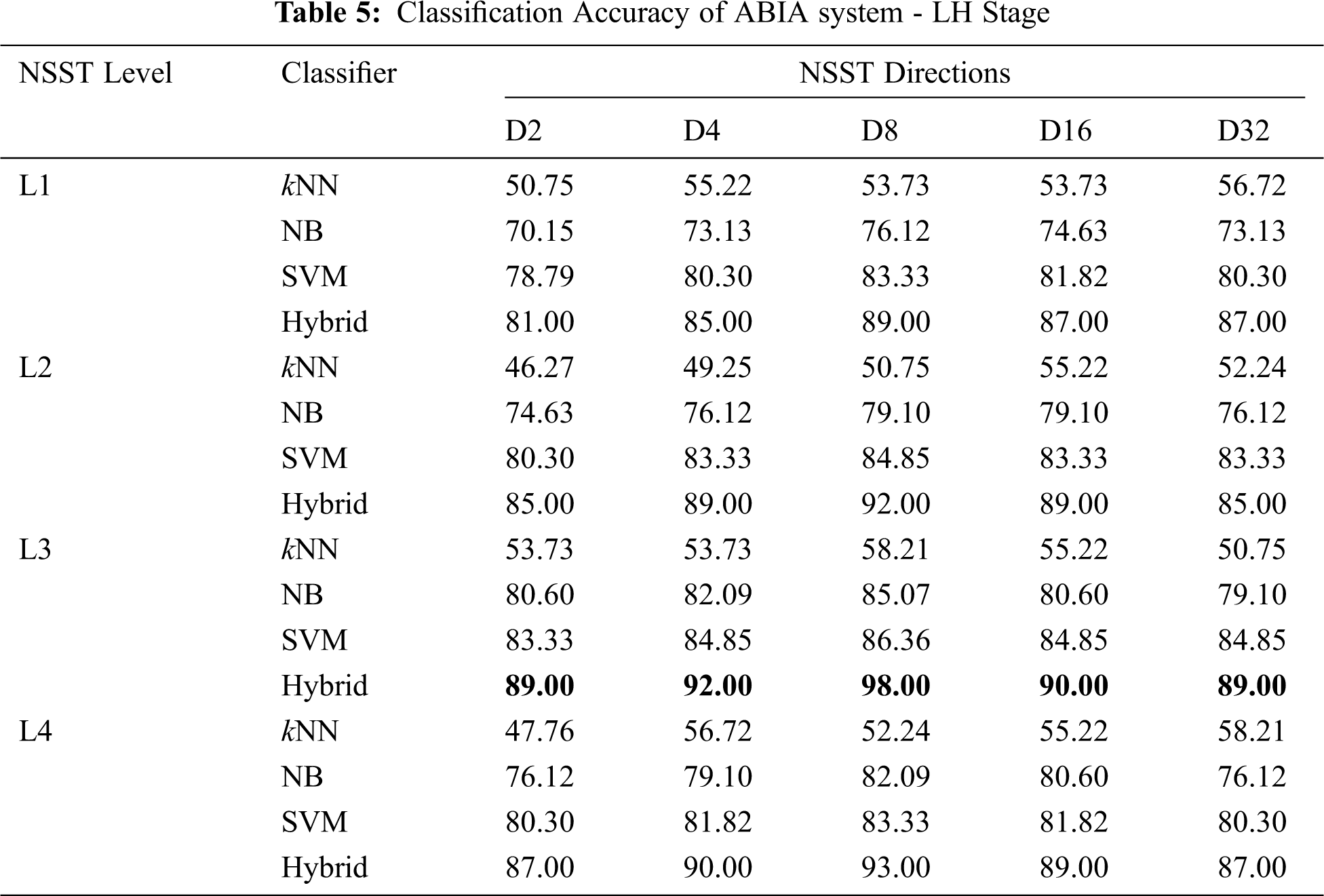

It is observed from Tab. 5 that the LH stage of ABIA system also produces higher performance in L3-D8 than others. The hybrid approach yields an

Figure 6: ROCs of ABIA system: NA stage (left image) and LH stage (right image)

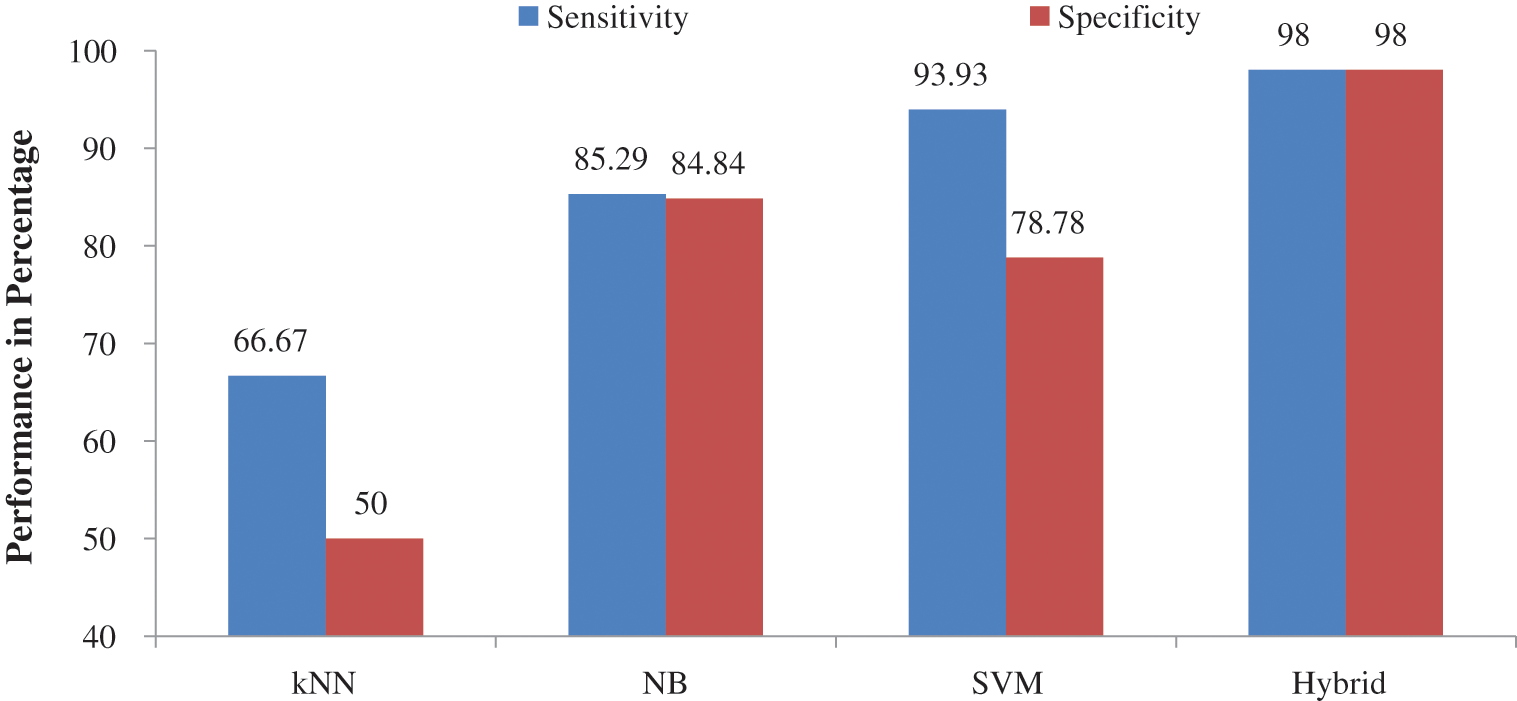

Figs. 7 and 8 show the other performances such as

Figure 7: Performance comparison of individual classifier with hybrid approach – NA stage of ABIA system

Figure 8: Performance comparison of individual classifier with hybrid approach – LH stage of ABIA system

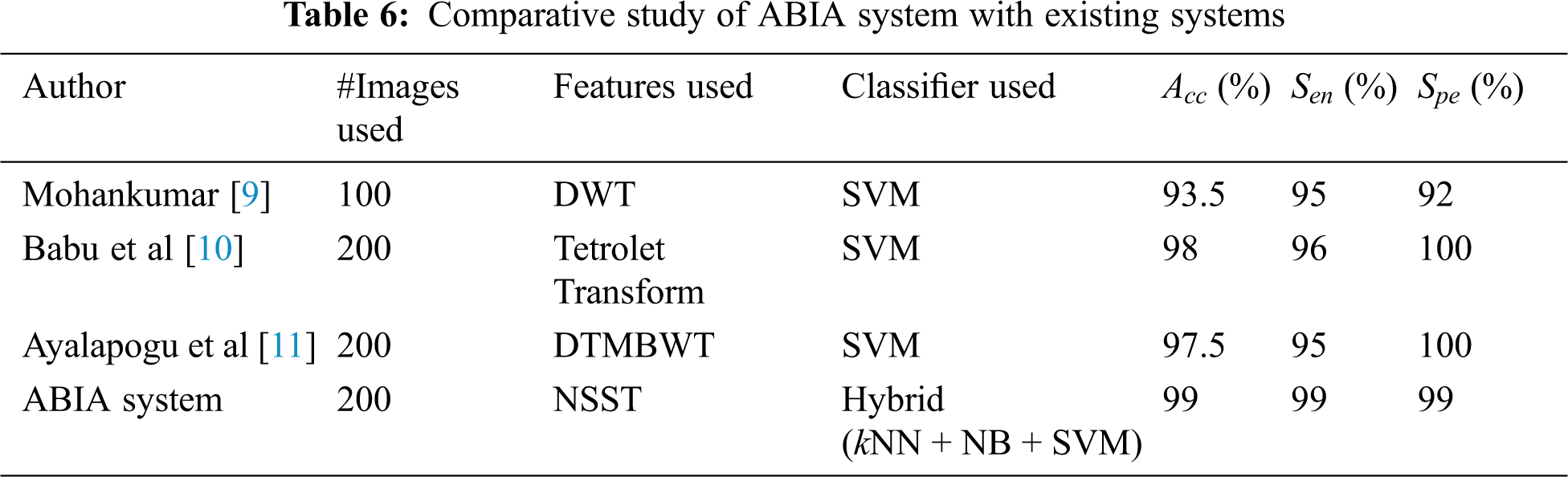

It is observed from the performance comparisons in Figs. 7 and 8 that the hybrid approach performs better than each of the individual classifiers. kNN is the weakest performer, which may be because it is a lazy classifier. Tab. 6 shows a comparative study of the ABIA system with existing approaches that use REMBRANDT database images. The existing approaches are designed to classify images into the normal or abnormal category only. Thus, their performance is compared with the performance of the NA stage of the ABIA system.

From Tab. 6, it is observed that the ABIA system achieves near perfect sensitivity and specificity. Also, the accuracy of the ABIA system shows a statistically significant difference from the accuracy of existing systems. From the performance of the ABIA system, it is concluded that it could potentially decrease the physician bias seen in ROI analysis. The output of the ABIA system serves as an alert to the radiologist, who can still examine the image for further review.

4 Conclusion

In this paper, an ABIA system to classify brain MRI images is discussed. The most correlated NSST sub-band at each NSST level is selected by a t-test on the training data set. The ABIA system uses a combination of GLCM, HPSC, and HNSC features as indicators for the characterization of brain MRI images. The extracted features are then used to train a hybrid classification approach that includes kNN, NB and SVM. A two-stage, two-class classification system (NA stage and LH stage) is designed to classify brain MRI images. The results indicate the clinical applicability of the ABIA system for brain MRI image classification, with an accuracy of 99% for the NA stage and 98% for the LH stage using the hybrid approach.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. K. Gokul Kannan and T. R. Ganeshbabu, “Glaucoma image classification using discrete orthogonal stockwell transform,” International Journal of Advances in Signal and Image Sciences, vol. 3, no. 1, pp. 1–6, 2017.

2. M. Chithra Devi and S. Audithan, “Analysis of different types of entropy measures for breast cancer diagnosis using ensemble classification,” Biomedical Research, vol. 28, no. 7, pp. 3182–3186, 2017.

3. K. S. Thivya, P. Sakthivel and P. M. Venkata Sai, “Analysis of framelets for breast cancer diagnosis,” Technology and Health Care, vol. 24, no. 1, pp. 21–29, 2016.

4. A. Gumaei, M. M. Hassan, M. R. Hassan, A. Alelaiwi and G. Fortino, “A hybrid feature extraction method with regularized extreme learning machine for brain tumor classification,” IEEE Access, vol. 7, pp. 36266–36273, 2019.

5. H. H. Sultan, N. M. Salem and W. Al-Atabany, “Multi-classification of brain tumor images using deep neural network,” IEEE Access, vol. 7, pp. 69215–69225, 2019.

6. S. Kumar, C. Dabas and S. Godara, “Classification of brain MRI tumor images: A hybrid approach,” Procedia Computer Science, vol. 122, pp. 510–517, 2017.

7. M. Madheswaran and D. Anto sahaya Das, “Classification of MRI images using support vector machine with various kernels,” Biomedical Research, vol. 26, no. 3, pp. 505–513, 2015.

8. P. K. Mallick, S. H. Ryu, S. K. Satapathy, S. Mishra, G. N. Nguyen et al., “Brain MRI image classification for cancer detection using deep wavelet autoencoder-based deep neural network,” IEEE Access, vol. 7, pp. 46278–46287, 2019.

9. S. Mohankumar, “Analysis of different wavelets for brain image classification using support vector machine,” International Journal of Advances in Signal and Image Sciences, vol. 2, no. 1, pp. 1–4, 2016.

10. B. S. Babu and S. Varadarajan, “Detection of brain tumour in MRI scan images using tetrolet transform and SVM classifier,” Indian Journal of Science and Technology, vol. 10, no. 19, pp. 1–10, 2017.

11. R. R. Ayalapogu, S. Pabboju and R. R. Ramisetty, “Analysis of dual tree M-band wavelet transform based features for brain image classification,” Magnetic Resonance in Medicine, vol. 80, no. 6, pp. 2393–2401, 2018.

12. K. Beyer, J. Goldstein, R. Ramakrishnan and U. Shaft, “When is “nearest neighbor” meaningful?,” in Int. Conf. on Database Theory, Berlin, Heidelberg: Springer, pp. 217–235, 1999.

13. I. Rish, “An empirical study of the naive Bayes classifier,” IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, vol. 3, no. 22, pp. 41–46, 2001.

14. G. T. Selvi and R. K. Duraisamy, “A novel 3-D digital shearlet transform based image fusion technique using MR and CT images for brain tumor detection,” Middle-East Journal of Scientific Research, vol. 22, no. 2, pp. 255–260, 2014.

15. L. Li, Y. Si and Z. Jia, “Microscopy mineral image enhancement based on improved adaptive threshold in nonsubsampled shearlet transform domain,” AIP Advances, vol. 8, no. 3, pp. 035002, 2018.

16. X. Jin, R. Nie, D. Zhou, Q. Wang and K. He, “Multifocus color image fusion based on NSST and PCNN,” Journal of Sensors, vol. 2016, pp. 1–12, 2016.

17. M. Kanchana and P. Varalakshmi, “Computer aided system for breast cancer in digitized mammogram using shearlet band features with LS-SVM classifier,” International Journal of Wavelets, Multiresolution and Information Processing, vol. 14, no. 3, pp. 1650017, 2016.

18. H. Rezaeilouyeh and M. H. Mahoor, “Automatic gleason grading of prostate cancer using shearlet transform and multiple kernel learning,” Journal of Imaging, vol. 2, no. 3, pp. 1–19, 2016.

19. S. Mallat, “A wavelet tour of signal processing: the sparse way,” Academic Press, Elsevier, 2008.

20. D. Donoho and E. Candes, “Continuous curvelet transform: II. Discretization and frames,” Applied and Computational Harmonic Analysis, vol. 19, no. 2, pp. 198–222, 2005.

21. M. N. Do and M. Vetterli, “The contourlet transform: An efficient directional multiresolution image representation,” IEEE Transactions on Image Processing, vol. 14, no. 12, pp. 2091–2106, 2005.

22. W. Q. Lim, “The discrete shearlet transform: A new directional transform and compactly supported shearlet frames,” IEEE Transactions on Image Processing, vol. 19, no. 5, pp. 1166–1180, 2010.

23. G. Easley, D. Labate and W. Q. Lim, “Sparse directional image representations using the discrete shearlet transform,” Applied and Computational Harmonic Analysis, vol. 25, no. 1, pp. 25–46, 2008.

24. K. Guo, D. Labate and W. Q. Lim, “Edge analysis and identification using the continuous shearlet transform,” Applied and Computational Harmonic Analysis, vol. 27, no. 1, pp. 24–46, 2009.

25. R. M. Haralick, K. Shanmugam and I. H. Dinstein, “Textural features for image classification,” IEEE Transactions on Systems, Man, and Cybernetics, vol. 6, pp. 610–621, 1973.

26. C. Cortes and V. Vapnik, “Support-vector networks,” Machine Learning, vol. 20, no. 3, pp. 273–297, 1995.

27. Scarpace, Lisa, Flanders, E. Adam, Jain et al., “Data from REMBRANDT,” The Cancer Imaging Archive, 2015. http://doi.org/10.7937/K9/TCIA.2015.588OZUZB.

28. K. Clark, B. Vendt, K. Smith, J. Freymann, J. Kirby et al., “The Cancer Imaging Archive (TCIA): Maintaining and operating a public information repository,” Journal of Digital Imaging, vol. 26, no. 6, pp. 1045–1057, 2013.

29. Brain MRI images: https://wiki.cancerimagingarchive.net/display/Public/REMBRANDT.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |