Open Access

Open Access

REVIEW

A Comprehensive Review of Pill Image Recognition

1 Faculty of Information Technology, Nguyen Tat Thanh University, 300A Nguyen Tat Thanh Street, District 4, Ho Chi Minh City, 700000, Vietnam

2 Faculty of Information Technology, School of Technology, Van Lang University, 69/68 Dang Thuy Tram Street, Ward 13, Binh Thanh District, Ho Chi Minh City, 700000, Vietnam

3 Faculty of Information Technology, Ho Chi Minh City Open University, 35-37 Ho Hao Hon Street, Ward Co Giang, District 1, Ho Chi Minh City, 700000, Vietnam

* Corresponding Authors: Linh Nguyen Thi My. Email: ; Vinh Truong Hoang. Email:

(This article belongs to the Special Issue: Novel Methods for Image Classification, Object Detection, and Segmentation)

Computers, Materials & Continua 2025, 82(3), 3693-3740. https://doi.org/10.32604/cmc.2025.060793

Received 10 November 2024; Accepted 16 January 2025; Issue published 06 March 2025

Abstract

Pill image recognition is an important field in computer vision. It has become a vital technology in healthcare and pharmaceuticals due to the necessity for precise medication identification to prevent errors and ensure patient safety. This survey examines the current state of pill image recognition, focusing on advancements, methodologies, and the challenges that remain unresolved. It provides a comprehensive overview of traditional image processing-based, machine learning-based, deep learning-based, and hybrid-based methods, and aims to explore the ongoing difficulties in the field. We summarize and classify the methods used in each article, compare the strengths and weaknesses of traditional image processing-based, machine learning-based, deep learning-based, and hybrid-based methods, and review benchmark datasets for pill image recognition. Additionally, we compare the performance of proposed methods on popular benchmark datasets. This survey applies recent advancements, such as Transformer models and cutting-edge technologies like Augmented Reality (AR), to discuss potential research directions and conclude the review. By offering a holistic perspective, this paper aims to serve as a valuable resource for researchers and practitioners striving to advance the field of pill image recognition.Keywords

Pill image recognition has practical applications and benefits across various fields. In healthcare and patient care, it assists patients through mobile applications that identify pills via images, helping the elderly and those managing multiple medications by ensuring correct medication intake and reducing errors. It also supports pharmacists and doctors by verifying medications to prevent mix-ups and providing quick identification for accurate advice. In hospital medication management, it enhances inventory control by tracking and managing medication stock, and increases efficiency and accuracy through automation, minimizing the need for manual checks. For safety and legal compliance, it helps identify counterfeit or substandard medications and supports regulatory inspections and adherence to standards. Additionally, it aids the visually impaired through assistive applications that help them recognize medications and receive information via audio.

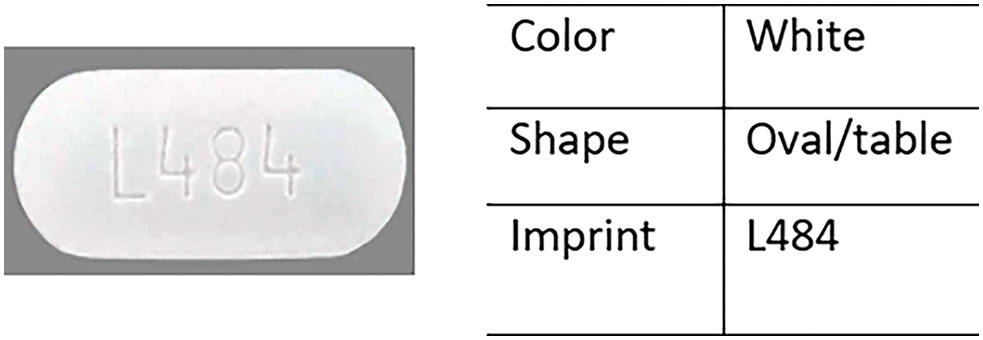

Recently, artificial intelligence (AI) has made significant strides and emerged as a powerful tool for addressing various challenges. In its early stages, pill identification was managed through various online systems that required users to manually enter multiple attributes, such as shape, color, and imprint, as shown in Fig. 1.

Figure 1: Shape, color, imprint of the Paracetamol pill

Websites like https://www.drugs.com/imprints.php (accessed on 15 January 2025) have made it easier for users to identify pills based on characteristics such as color, shape, and imprint. Despite this convenience, several challenges arise when relying on these platforms. For one, users must manually input various attributes, which can be difficult if the pill’s features are worn or unclear, making the process time-consuming. Additionally, the accuracy of the identification depends largely on the user’s ability to correctly describe the pill, increasing the probability of mistakes. Moreover, the website’s database may not always include the latest or less common medications, potentially leading to incomplete identification. These limitations emphasize the need for more efficient, automated solutions using pill image recognition technology.

This review highlights key ethical considerations in the development and application of pill image recognition technologies. Misidentification remains a critical risk, as inaccurate pill identification can lead to incorrect medication or dosage, potentially causing severe health consequences. To mitigate this, rigorous validation and verification processes must be implemented, alongside the use of diverse and representative datasets. Accessibility is another important factor, especially for visually impaired individuals and older adults. Developers should integrate assistive features, such as auditory feedback or simplified interfaces, to ensure inclusivity. Additionally, issues related to data privacy, security, and accountability necessitate compliance with ethical standards and regulatory frameworks. These considerations underscore the importance of ethical oversight in this field, encouraging the research community to prioritize safety, inclusivity, and trust in the development of pill image recognition systems.

In this literature review, we explore a range of methodologies employed for pill image recognition, categorizing them into four primary approaches: traditional image processing-based, traditional machine learning-based, deep learning-based, and hybrid-based methods. Traditional image processing-based methods use basic image processing techniques to extract features like shape, color, and imprint, facilitating the matching of pill images. Traditional machine learning-based methods build on these features, employing algorithms like K-Nearest Neighbors (k-NN), Support Vector Machines (SVM), and Decision Trees to classify pill images. Deep learning-based methods, powered by Convolutional Neural Networks (CNNs) and other neural network architectures, have shown remarkable performance by automatically learning intricate features from large datasets of pill images. Lastly, hybrid-based methods combine elements of the aforementioned approaches to capitalize on their respective strengths and reduce their limitations. The remainder of the article is organized as follows. Section 2 provides a comprehensive background on the foundational concepts relevant to this study. Section 3 presents a detailed literature survey. Section 4 discusses the benchmark datasets commonly used in the research community. In Section 5, a thorough comparison of various methods is conducted to highlight their respective strengths and weaknesses. Section 6 identifies open research problems. Finally, Section 7 concludes the article.

2.1 Definition of Pill Image Recognition

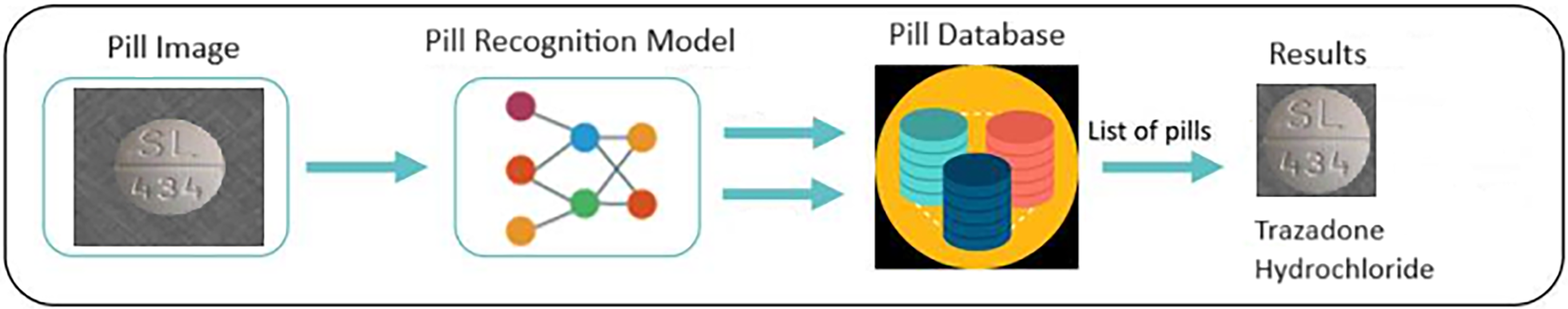

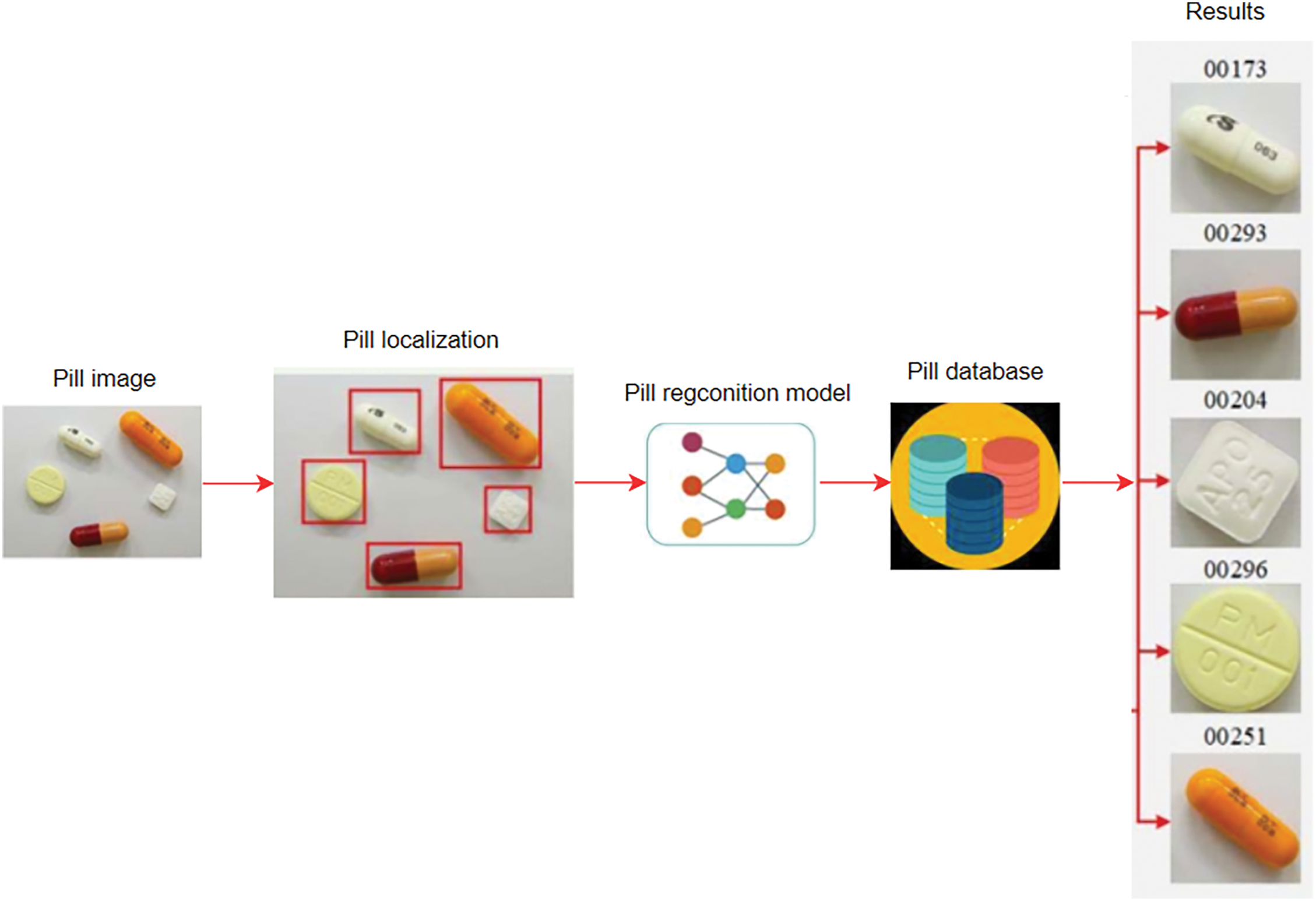

Pill image recognition is the process of using computer algorithms to recognize and identify pills based on visual features from photographic images of the pill. Figs. 2 and 3 illustrate the identification of one pill or multiple pills.

Figure 2: Defines the recognition of a pill

Figure 3: Defines the recognition of multiple pills

In the Fig. 2, the input is an image of a pill, including the background of the pill, the output is to identify the identity of that pill. In the Fig. 3, the input is an image of many pills, including the background of pills, the output is to identify each pill.

2.2 Definition of Pill Features

The shape of a pill refers to the geometric form or outline of the pill when viewed from above. It is one of the key physical characteristics used to identify and differentiate medications. Pill shapes can vary widely and are often designed for specific purposes related to the medication’s use, the ease of swallowing, or to avoid confusion with other pills.

The color of a pill refers to the hue or shade visible on the surface of the medication. Pill color is a significant identifying feature that helps distinguish one medication from another. It can be a single color or a combination of colors and may be used in conjunction with other physical characteristics such as shape, size, and imprint for accurate identification.

An imprint on a pill refers to any characters, symbols, logos, or patterns that are stamped, engraved, or printed on the surface of the pill. These imprints serve as unique identifiers to help distinguish one pill from another, providing essential information for identification and verification purposes. Example about shape, color, imprint of pill is shown in Fig. 1.

In this section, we review papers that utilize algorithms for pill image recognition. We categorize pill image recognition methods into four main approaches: traditional image processing-based, traditional machine learning-based, deep learning-based, and hybrid-based methods. During the data preprocessing stage, image filtering is commonly employed, with popular techniques such as averaging filters, median filters, and Gaussian filters frequently used by authors. In our summary of the methods used, we focus on the primary techniques and do not include these widely-used image filtering methods.

3.1 Traditional Image Processing-Based Methods for Pill Image Recognition

The traditional image processing-based method for pill image recognition refers to an approach that uses classic techniques in image analysis and manipulation, such as preprocessing for noise reduction and enhancement, segmentation to isolate pill features, and extraction of shape, color, and imprint attributes. This method relies on established algorithms and mathematical operations to interpret and classify pill images based on predefined visual characteristics. The main methods used by the authors in this category are summarized in Table 1.

Lee et al. [1,19] both emphasize the importance of imprint matching, using techniques like edge detection and contour analysis to extract pill imprints. These methods perform well when the pill imprints are clear and distinguishable, but their accuracy decreases when the imprint is faint or damaged. These approaches rely on predefined feature extraction algorithms, which are less flexible compared to modern learning-based approaches.

Morimoto et al. [6], Kim et al. [8] focus on matching pills based on their shape and color, utilizing features like contour descriptors and color histograms. These methods are effective for distinguishing pills with distinct shapes and colors but struggle with pills that are similar in these aspects. Shape-based recognition often suffers when pills are rotated or partially occluded, limiting the robustness of these methods in real-world conditions.

The work in Hartl et al. [9] explores the challenges of recognizing pills in unconstrained environments, such as on mobile devices, where lighting conditions and camera angles can vary significantly. The use of traditional image processing techniques here, including color and imprint analysis, shows promise, but the performance is highly dependent on controlled conditions. Variations in lighting and background can significantly affect the accuracy of recognition.

In Caban et al. [17], the shape distribution model is applied to pills by capturing the distribution of distances from a set of boundary points. This method improves the robustness to shape variations but can still be limited by the need for clear boundaries in the pill image, which might not always be available due to noise or occlusion.

Chen et al. [25] proposes a method that combines various features-shape, color, and texture-weighted dynamically depending on the context of the pill image. This method enhances the system’s flexibility to adapt to different pill appearances, though it still struggles with ambiguous or noisy images where feature distinction is difficult.

Yu et al. [36] introduces a method for recognizing pill imprints by analyzing distances between imprint features. This two-step approach improves the robustness of imprint recognition, but it is still reliant on the quality of the imprint and can struggle in cases of faint imprints.

Hema et al. [40] and Ranjitha et al. [49] emphasize feature extraction techniques, such as shape, color, and imprint, to differentiate pills. These methods are highly interpretable but lack the ability to generalize well across diverse pill types, particularly when there are subtle differences between them.

Suntronsuk et al. [43,45] tackle the challenging problem of imprint recognition, applying binarization and segmentation techniques to separate text from the pill’s surface. While effective for high-contrast imprints, these techniques face challenges when the pill’s imprint contrast is poor or the surface is reflective.

Lastly, Chokchaitam [50] addresses the issue of background interference by compensating for shadows and lighting variations. This improves the reliability of color-based features but is still sensitive to extreme lighting changes.

In summary, traditional image processing methods offer interpretability and efficiency in pill recognition but face challenges with variations in pill appearance, lighting conditions, and imprint quality. These methods are generally effective in controlled environments but struggle in real-world applications where pills may have similar colors or shapes, or where imprints are not clearly visible. As a result, while these methods laid the groundwork for pill recognition, they have been increasingly supplemented or replaced by machine learning and deep learning techniques in recent years due to the limitations in handling more complex or ambiguous pill images.

3.2 Traditional Machine Learning-Based Methods for Pill Image Recognition

The traditional machine learning-based method for pill image recognition involves using algorithms and statistical models to train on extracted features from pill images. This approach typically includes preprocessing steps to enhance image quality and feature extraction to identify key characteristics such as shape, color, and imprint. Machine learning models like Support Vector Machines (SVM), K-Nearest Neighbors (k-NN), and Random Forests are trained on these features to classify pill images based on learned patterns and similarities within a labeled dataset. The main methods used by the authors in this category are summarized in Table 2.

Cunha et al. [51] employ traditional machine learning to create a mobile-based tool for pill recognition. The system uses characteristics such as pill shape and color, employing a machine learning classifier to help elderly users identify pills. This study highlights the effectiveness of traditional methods in resource-constrained environments, particularly mobile platforms, where computational efficiency is crucial.

Yu et al. [37] focus on imprint-based features combined with traditional machine learning classifiers. Imprint information, including text or symbols on the pill surface, is extracted and processed to train models k-NN. This approach is particularly effective for pills that have similar shapes or colors but distinct imprints.

Ushizima et al. [55] explore various traditional machine learning approaches on a large-scale pill dataset. The study compares different feature extraction techniques and classifiers, including SVM and k-NN, finding that SVM performed better when combined with text and imprint features, underlining the importance of accurate feature selection.

Chupawa et al. [62] investigate the use of neural networks in pill recognition, but in the context of traditional machine learning rather than deep learning. The study employs shallow neural networks trained on features like imprints, shape, and imprint, achieving good results in recognizing pills with distinct imprints. This marks an early exploration of neural networks prior to the dominance of deep learning methods.

Vieira Neto et al. [63] introduce a novel feature extractor, CoforDes, to enhance the robustness of pill recognition systems against variations in lighting and pose. Combined with SVMs, this method provides invariance to scale and rotation, making it particularly useful in cases where pills are photographed under varying conditions.

Chughtai et al. [64] continue the exploration of shallow neural networks in pill identification. It emphasizes the effectiveness of combining hand-crafted features with a neural network classifier, demonstrating that neural networks can still produce strong results when paired with carefully designed feature sets, even without relying on deep learning.

Finally, Dhivya et al. [67] present a method that combines SVM for text recognition with an n-gram-based error correction algorithm. This method addresses challenges related to recognizing imprints on pill surfaces, particularly in low-quality images, making it a robust solution for text-based pill identification.

In summary, traditional machine learning methods for pill image recognition rely heavily on feature extraction, with classifiers such as SVM, k-NN, and shallow neural networks being commonly used. While earlier studies focused on shape and color features, more recent work has explored the use of text and imprint information as key distinguishing factors. Feature engineering remains crucial in these methods, with techniques like CoforDes and error correction algorithms enhancing robustness and accuracy. Although deep learning has overshadowed these approaches in recent years, traditional machine learning methods still provide efficient and effective solutions in scenarios with limited computational resources.

3.3 Deep Learning-Based Methods for Pill Image Recognition

The deep learning-based method for pill image recognition refers to an approach that employs deep neural networks, particularly Convolutional Neural Networks (CNNs), to automatically learn and extract features from pill images. This method eliminates the need for handcrafted features by using multiple layers of neurons to progressively learn hierarchical representations of visual data. Deep learning models are trained on large datasets of labeled pill images, enabling them to accurately classify and identify pills based on complex visual patterns and features. The main methods used by the authors in this category are summarized in Table 3.

Simonyan et al. [73] introduce the use of deep convolutional neural networks (CNNs) for image classification, laying the foundation for later work in pill image recognition. The depth of these networks allows for capturing intricate features from complex image data, which has proven beneficial for fine-grained pill identification.

Wong et al. [74] leverage a deep CNN for distinguishing between pills that have subtle visual differences. This approach demonstrates high accuracy by extracting detailed features, showing superior performance in handling pills with similar shapes or colors.

Ou et al. [79] apply a CNN to detect pills in real-world scenarios, where pills may be partially occluded or in cluttered environments. While it achieves high detection accuracy, the model’s performance degrades in scenarios with significant lighting variations.

Chang et al. [83,94] employ CNNs for real-time pill identification through wearable devices. These systems ensure rapid and accurate recognition while maintaining computational efficiency, making them suitable for resource-constrained environments like wearable smart glasses.

Larios Delgado et al. [86] emphasize computational efficiency while maintaining high accuracy. This method focuses on optimizing CNN architectures to deliver real-time pill recognition, crucial for clinical settings that require immediate results.

Cordeiro et al. [88] and Swastika et al. [89] explore multi-stream CNN architectures, where multiple networks are used to focus on different features of the pills (shape, color, imprint). The combination of these streams yields improved accuracy, especially for visually similar pills.

Usuyama et al. [91] present a benchmark for evaluating pill recognition models in low-shot settings. It highlights that models pretrained on large datasets can be adapted for pill identification with minimal additional data, making them more efficient in scenarios where labeled data is scarce.

Ou et al. [96] integrate feature pyramid networks (FPN) with CNNs to improve multiscale feature extraction. This approach achieves superior performance in detecting pills of varying sizes and in challenging backgrounds.

Marami et al. [98] address the unique challenge of recognizing discarded medications. The CNN-based system is able to identify damaged or altered pills, demonstrating the robustness of deep learning in handling real-world pill recognition scenarios.

Ling et al. [101] and Zhang et al. [130,139] focus on recognizing new pill classes with minimal data. These few-shot learning techniques enable the models to adapt to new pill types without requiring extensive retraining, making them highly adaptable in dynamic pharmaceutical environments.

Tsai et al. [107] present an innovative application where CNNs are used to automatically identify pills within a smart pillbox, ensuring that patients take the correct medications. The model’s accuracy, combined with a physical pill-dispensing system, improves patient compliance and safety.

Kwon et al. [108] apply deep learning for quality control in pharmaceutical manufacturing, where CNNs are used to inspect pills for defects. This model ensures the integrity of pills before they are distributed, highlighting the role of deep learning in ensuring quality assurance.

Lester et al. [110] evaluate CNN-based models across real-world clinical environments, showing that deep learning models generalize well when trained on diverse datasets. The study indicates that CNNs can handle varying lighting conditions and pill orientations effectively.

Ozmermer et al. [113] incorporate deep metric learning with CNNs to improve pill identification. The method outperforms traditional approaches by learning discriminative feature embeddings, enabling the model to distinguish visually similar pills more accurately.

Tan et al. [116,117] compare object detection models for pill recognition, revealing that YOLO-v3 offers the best balance between accuracy and inference speed for real-time applications. However, Faster R-CNN provides higher accuracy but at the cost of slower performance.

Tan et al. [118] propose a novel multi-stream CNN architecture that integrates different streams of information (shape, color, imprint) for pill classification. This method handles class-incremental learning more effectively, showing that it adapts to new pill classes without degrading the performance on previously learned classes.

Suksawatchon et al. [119] and Wu et al. [121] focus on shape-based recognition using deep CNNs. These models demonstrate superior performance in recognizing pills even under unconstrained conditions, such as varying orientations and partial occlusions.

Pornbunruang et al. [124] and Duy et al. [127] introduce hybrid approaches by combining CNNs with external knowledge sources, such as medical knowledge graphs. These models improve pill recognition by incorporating contextual information about the pill’s medical properties, dosage, and use cases.

Thanh et al. [128] and Duy et al. [132] integrate graph neural networks (GNNs) with CNNs to enhance pill recognition. These methods demonstrate improved accuracy by considering relationships between pills, prescriptions, and medical knowledge, providing more reliable and explainable results.

Bodakhe Sakshi et al. [129] focus on accurate and efficient pill detection using CNNs in dynamic environments, demonstrating strong performance in recognizing multiple pills in a single image.

Ashraf et al. [133] highlight the use of deep learning models that do not require extensive customization, making them more accessible for diverse clinical applications. This model performs well across different healthcare settings, demonstrating the adaptability of CNNs.

Dang et al. [135] emphasize the need for accurate real-time recognition on mobile devices. The CNN-based system ensures that visually impaired individuals can quickly and reliably identify their medications.

In conclusion, deep learning methods for pill image recognition, particularly CNN-based models, have shown significant success in both real-time and high-accuracy tasks. The creation of more efficient, flexible, and interpretable models, especially those incorporating external knowledge or few-shot learning methods, suggests a bright future for pill recognition technologies in a range of real-world applications. The diversity in architectural choices-ranging from feature pyramid networks to hybrid GNN-CNN approaches-demonstrates the growing sophistication and effectiveness of deep learning in pharmaceutical image analysis.

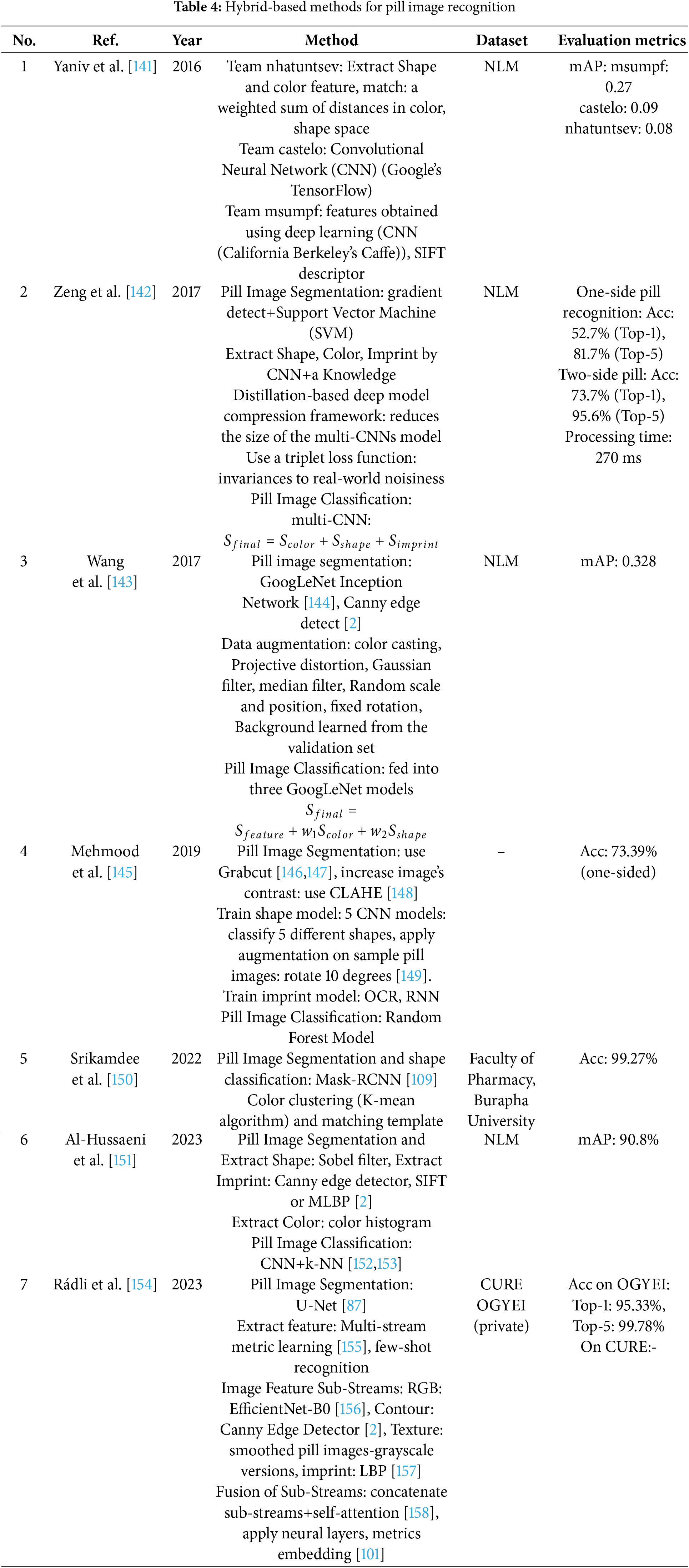

3.4 Hybrid-Based Methods for Pill Image Recognition

Hybrid-based methods for pill image recognition combine traditional image processing techniques with modern machine learning or deep learning approaches. This approach leverages the strengths of both methodologies to enhance accuracy and robustness in identifying pills based on their visual characteristics. The main methods used by the authors in this category are summarized in Table 4.

In Yaniv et al. [141], a hybrid method combining traditional machine learning features with convolutional neural networks (CNNs) is explored to address the challenge of pill identification. This approach aims to combine handcrafted features such as color and shape with deep learning-based features to improve accuracy, particularly for difficult cases where imprints or shapes are ambiguous.

Zeng et al. [142] present a lightweight hybrid model designed for mobile environments. This method leverages deep learning for feature extraction while incorporating post-processing techniques to handle unconstrained environments, such as varying lighting conditions and pill orientations. The hybrid approach enables efficient recognition on resource-constrained mobile devices while maintaining accuracy.

In Wang et al. [143], a hybrid approach is introduced to address the problem of limited labeled data. By combining transfer learning and semi-supervised learning, the model achieves high accuracy with minimal annotations. This method employs pre-trained deep learning models and incorporates clustering techniques like k-means to refine the classification process, demonstrating the effectiveness of hybrid systems in handling data scarcity.

Mehmood et al. [145] further explore hybrid techniques for mobile platforms, combining deep CNNs with traditional feature extraction methods to balance the computational load and accuracy. This method integrates deep learning for initial feature extraction while using clustering-based techniques to refine the identification process, optimizing the system for mobile use.

Srikamdee et al. [150] take a unique hybrid approach by combining CNNs with k-means clustering for pill identification. The CNNs extract deep features, which are then grouped using k-means clustering to handle variations in pill appearances, such as color or shape. This hybrid method allows for a more efficient search and retrieval process in real-world applications, showing its strength in large-scale pill identification systems.

Al-Hussaeni et al. [151] focus on combining CNNs with traditional image retrieval methods. The hybrid approach integrates deep learning features with image matching techniques, such as SIFT or SURF, for more accurate retrieval of similar pill images. This hybrid model aims to improve retrieval precision in scenarios where pills share similar visual characteristics but have subtle differences, like imprints or logos.

Finally, Rádli et al. [154] extend the hybrid approach by incorporating multi-stream CNNs with an attention mechanism to handle complex pill recognition tasks. The model combines multiple streams of deep learning features, such as color, shape, and imprint information, and applies attention to focus on the most discriminative features. This hybrid architecture outperforms single-stream CNNs by better capturing subtle variations in pill images and emphasizing important visual cues during classification.

In conclusion, hybrid-based methods for pill image recognition effectively combine the strengths of deep learning with traditional machine learning and clustering techniques to enhance accuracy, especially in challenging scenarios such as mobile deployment, limited data, and visually similar pills. By leveraging the complementary strengths of these methods, hybrid approaches provide a more robust solution for pill identification in diverse real-world environments.

Benchmark datasets play a crucial role in evaluating the performance of proposed methods. For pill image recognition, there is a wide range of commonly used benchmark datasets. In the methods discussed in the previous section, the authors utilized both public and private datasets. We note that some datasets are private, and we do not review these private datasets in this section. Here, we provide a brief review of the public datasets and their relevant information for pill image recognition.

4.1 National Library of Medicine (NLM)

In January 2016, the US National Library of Medicine (NLM) [141] initiated a competition aimed at developing advanced algorithms and software for accurately ranking prescription pill identifications based on images submitted by users (consumer images). The data for identification is drawn from the RxIMAGE collection (reference images).

The identification dataset was composed of three key parts: a training dataset accessible to all participants, a segregated testing dataset, and a non-segregated testing dataset reserved for evaluating the top three submissions after the competition concluded.

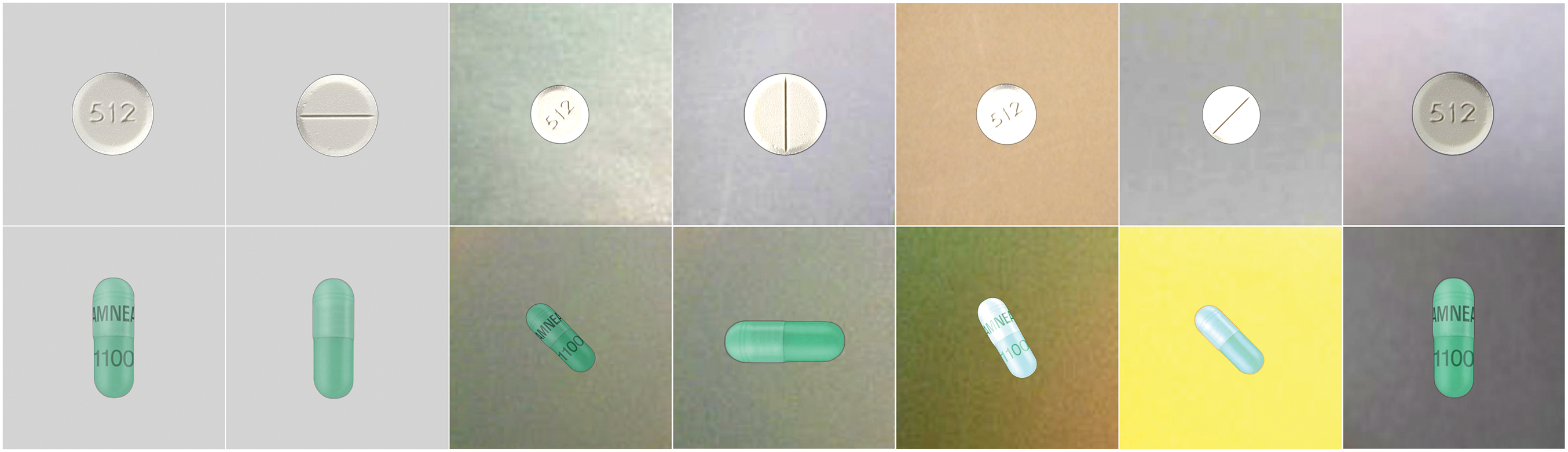

The training dataset contained 7000 images representing 1000 different pills. For each pill, there was one high-resolution macro photograph of each side and five consumer-quality images. For capsules, the image dataset included clear views of the pill’s imprint area with text aligned perpendicularly to the plane of the capsule. The pills included in this dataset were randomly selected from the RxIMAGE collection to ensure a representative distribution by color and shape. An example of a similar consumer-quality image and reference image in the NLM dataset for tablets and capsules is shown in Fig. 4.

Figure 4: Similar sample images from the training dataset in the NLM dataset. The first two columns are the reference, macro photographs. The remaining pictures are consumer quality images

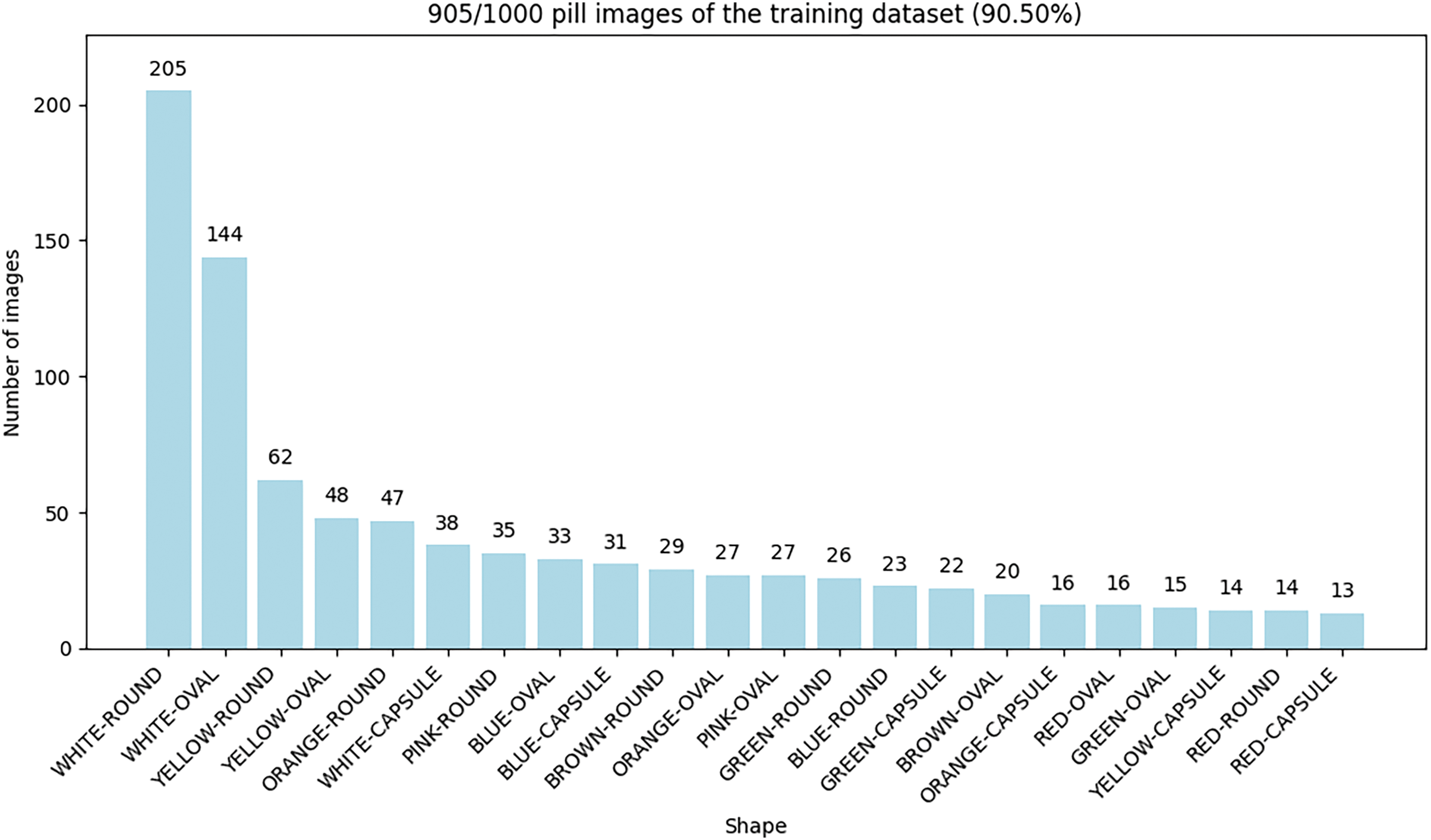

The distribution of pills based on their key visual properties, shape, and color in the training data is shown in Fig. 5.

Figure 5: Distribution of pills based on their key visual properties, shape and color of training data

The segregated testing dataset was assembled using a separate set of 1000 pills that were not available to the participants during the competition. For each pill in this dataset, there were two reference images and five consumer images. The pills were randomly selected based on their shape and color to ensure their distribution was similar to that of the training set. A bar chart displaying the pill distributions by color and shape is shown in Fig. 6.

Figure 6: Distribution of pills based on their key visual properties, shape and color. The segregated testing dataset is comprised of reference and consumer quality images from products that were not part of the training set

This dataset enables the contest judges to evaluate an algorithm’s generalization ability and its alignment with their long-term goals. As the contest judges continue to acquire additional images for the RxIMAGE collection, they prefer to train an algorithm only once.

The non-segregated testing dataset included data from the same 1000 pills used in the training set. For this dataset, the contest judges used 6486 consumer-quality images that were not part of the training set. This data allows the contest judges to assess whether an algorithm is potentially overfitting to the training data, as it should generalize well to variations in consumer images similar to those it has previously encountered.

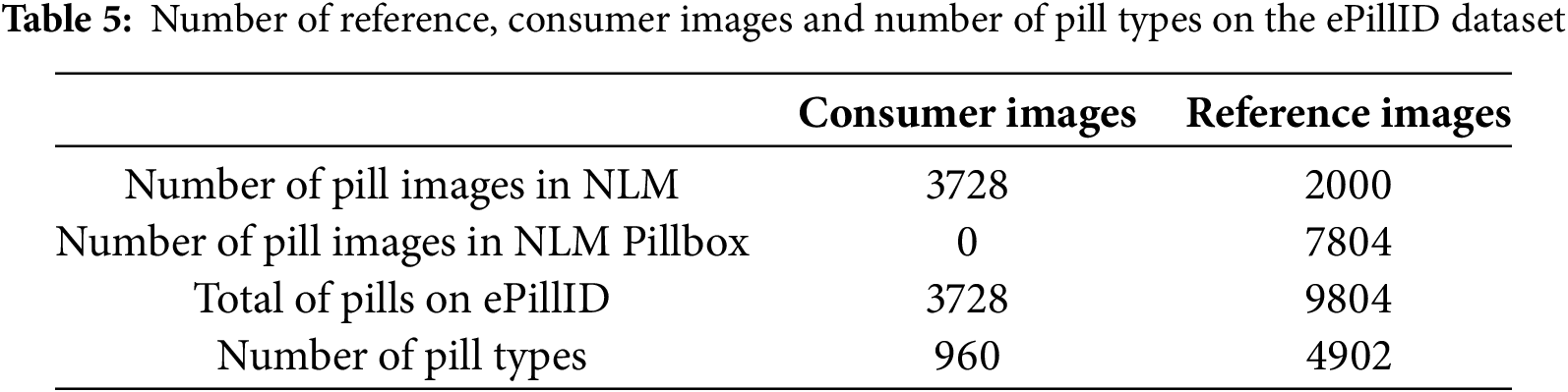

Usuyama et al. [91] used the NLM challenge dataset and the NLM Pillbox dataset to create it. The ePillID dataset includes a total of 13,532 images of 6766 pills, with each pill photographed from both sides (front and back). For each pill captured and saved as either a consumer image or a reference image, both sides are documented.

Of the 13,532 images, 3728 are consumer images and 9804 are reference images. Among these, the 3728 consumer images are sourced from the NLM dataset, while the 9804 reference images are divided into 2000 images from NLM and 7804 images from NLM Pillbox.

In the 3728 consumer images, there are 960 pill types, and in the 9804 reference images, there are 4902 pill types. Detailed descriptions of the number of images, consumer images, reference images, and pill types are shown in Table 5. These specific data are taken from the author’s article combined with the ePillID dataset that the author has published.

This dataset is particularly challenging due to its low-shot recognition setting, where most classes have only a single reference image, making it difficult for models to generalize. Various baseline models were evaluated, with a multi-head metric learning approach using bilinear features achieving the best performance. However, error analysis revealed that these models struggled to reliably distinguish between similar pill types. The paper also discusses future directions, including integrating Optical Character Recognition (OCR) to address challenges such as low-contrast imprinted text, irregular layouts, and varying pill materials like capsules and gels. Furthermore, the ePillID benchmark is set to expand with additional pill types and images, promoting further research in this critical area of healthcare.

The images in the NLM dataset have limitations related to lighting, background conditions, and equipment, among other factors. The CURE dataset [101] addresses these limitations.

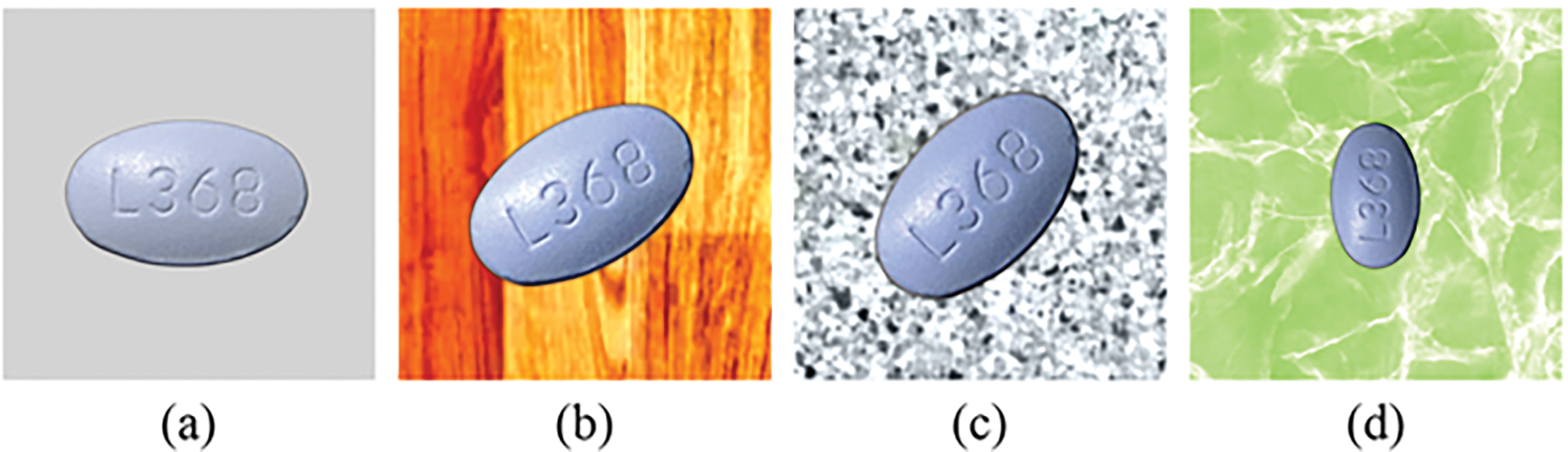

This dataset contains 8973 images across 196 categories, with approximately 45 samples for each pill category, as shown in Figs. 7 and 8.

Figure 7: Similar images in the CURE dataset of one pill type: (a): reference image; (b–d): consumer images

Figure 8: Similar images in the CURE dataset of a different pill than the one in Fig. 7. (a): reference image; (b–d): consumer images

As summarized in Table 6, this dataset accounts for more challenging real-world conditions (i.e., with more diverse backgrounds, lighting, and zooming conditions), making it a better reflection of practical scenarios compared to the NLM dataset [141]. Examples of images from the dataset are shown in Figs. 7 and 8. It can be observed that: 1) images were taken under different lighting conditions, leading to significant changes in pill color (especially in Fig. 8d, where the color of the images taken under different lighting conditions with the MPI equipment varies significantly); 2) Fig. 7c,d was taken under different zooming conditions; and 3) the backgrounds in this dataset are diverse.

mCURE Dataset

To adapt the CURE dataset for the Few-shot Class-incremental Learning (FSCIL) setting, Zhang et al. [130] used a splitting strategy similar to that employed for miniImageNet in [131]. They sampled 171 classes to create the miniCURE dataset, abbreviated as mCURE. These 171 classes were divided into 91 base classes and 80 new classes. The new classes were further divided into eight incremental sessions, with the training data in each session formatted as 10-way 5-shot.

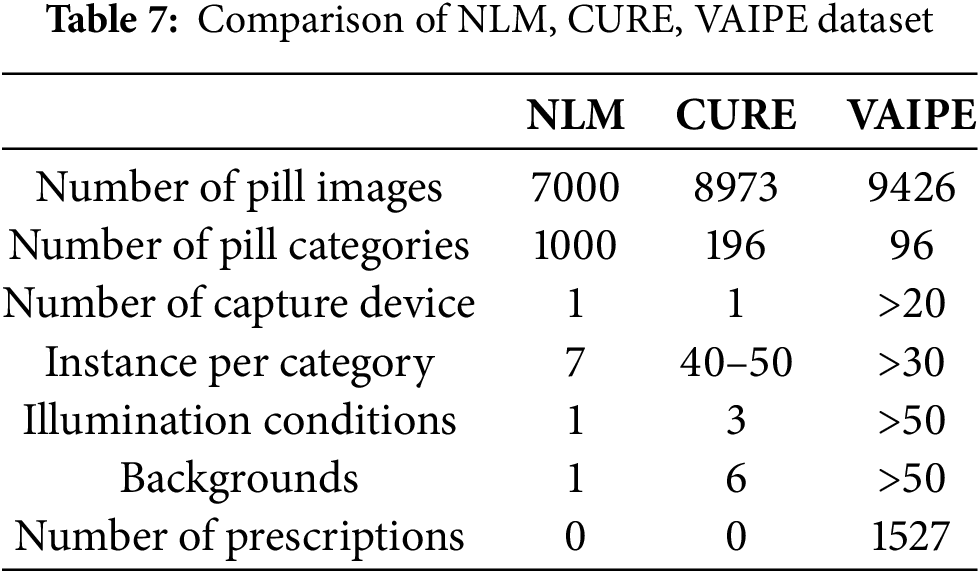

VAIPE [118] is the first real-world multi-pill image dataset, consisting of 9426 images representing 96 pill classes. The images were captured with ordinary smartphones in various settings and include prescriptions.

This dataset is designed for identifying images of multiple pills within their context, based on prescriptions. Each image containing multiple pills is annotated with a bounding box around each pill and labeled with its respective name. Prescriptions are also assigned a bounding box and labeled with the pill name and diagnosis in Vietnamese, making it suitable for the pill market in Vietnam.

The VAIPE dataset can serve as a valuable resource for training generic pill detectors. The characteristics of the VAIPE dataset, as well as comparisons with the NLM and CURE datasets, are detailed in Table 7.

VAIPE-PCIL Dataset

To facilitate research on Class-Incremental Learning (CIL) in pill image classification tasks, the authors derived a dataset version called VAIPE-PCIL (VAIPE Pill Class Incremental Learning) [118] from the original VAIPE data. VAIPE-PCIL was created by cropping pill instances from the original dataset. The authors selected only those categories that met the following criteria: 1) the number of samples should be greater than 10, and 2) the image size of samples should be at least 64

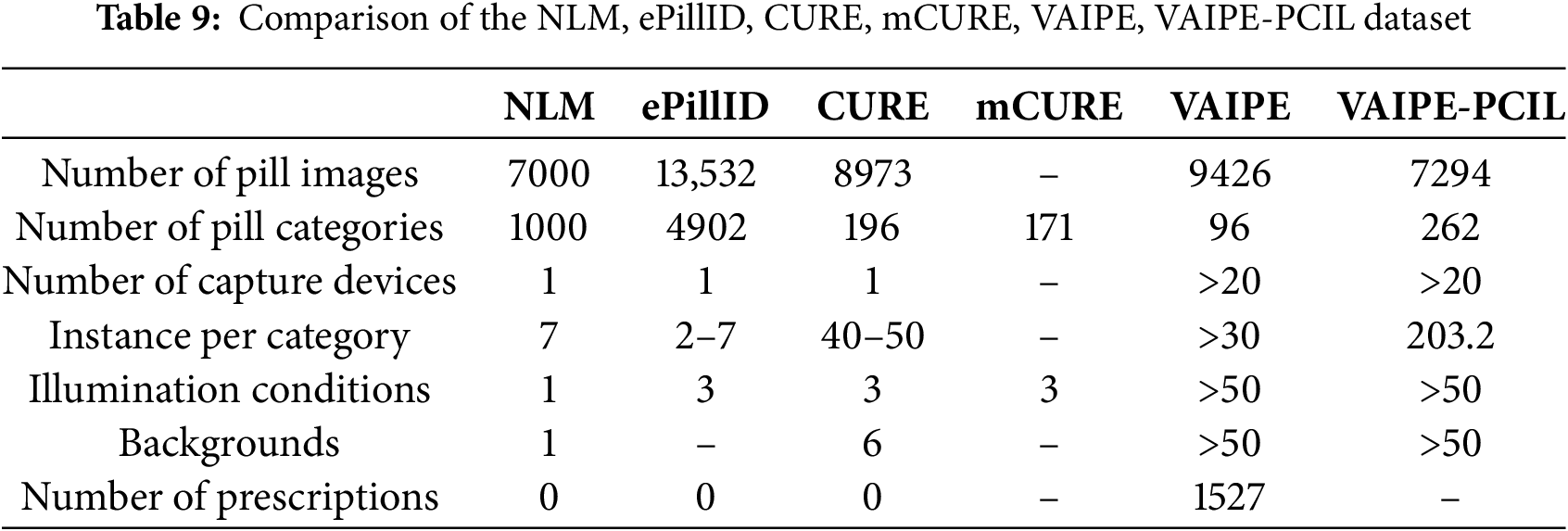

We compare the following 6 popular datasets: NLM, ePillID, CURE, mCURE VAIPE, VAIPE-PCIL in Table 9.

In summary, the NLM dataset is a popular source for pill identification problems, captured under ideal conditions. The ePillID dataset offers a large number of images for each pill and is more diverse, with images sourced partly from the NLM dataset and partly from the NLM Pillbox, and taken in natural conditions, is particularly challenging due to its low-shot recognition setting. The CURE dataset provides high-quality images with a variety of angles, lighting, and backgrounds, helping deep learning models handle real-life scenarios more effectively. The VAIPE dataset includes multi-pill images commonly found in the Vietnamese market, making it suitable for multi-pill image recognition with context-specific prescriptions and annotations in Vietnamese. This makes VAIPE a valuable resource for research and development in pill image recognition in Vietnam. Each dataset has its unique advantages, suited to different goals and applications in pill image recognition. Selecting the appropriate dataset depends on the user’s specific needs and the application environment.

5 Performance Comparison of Methods

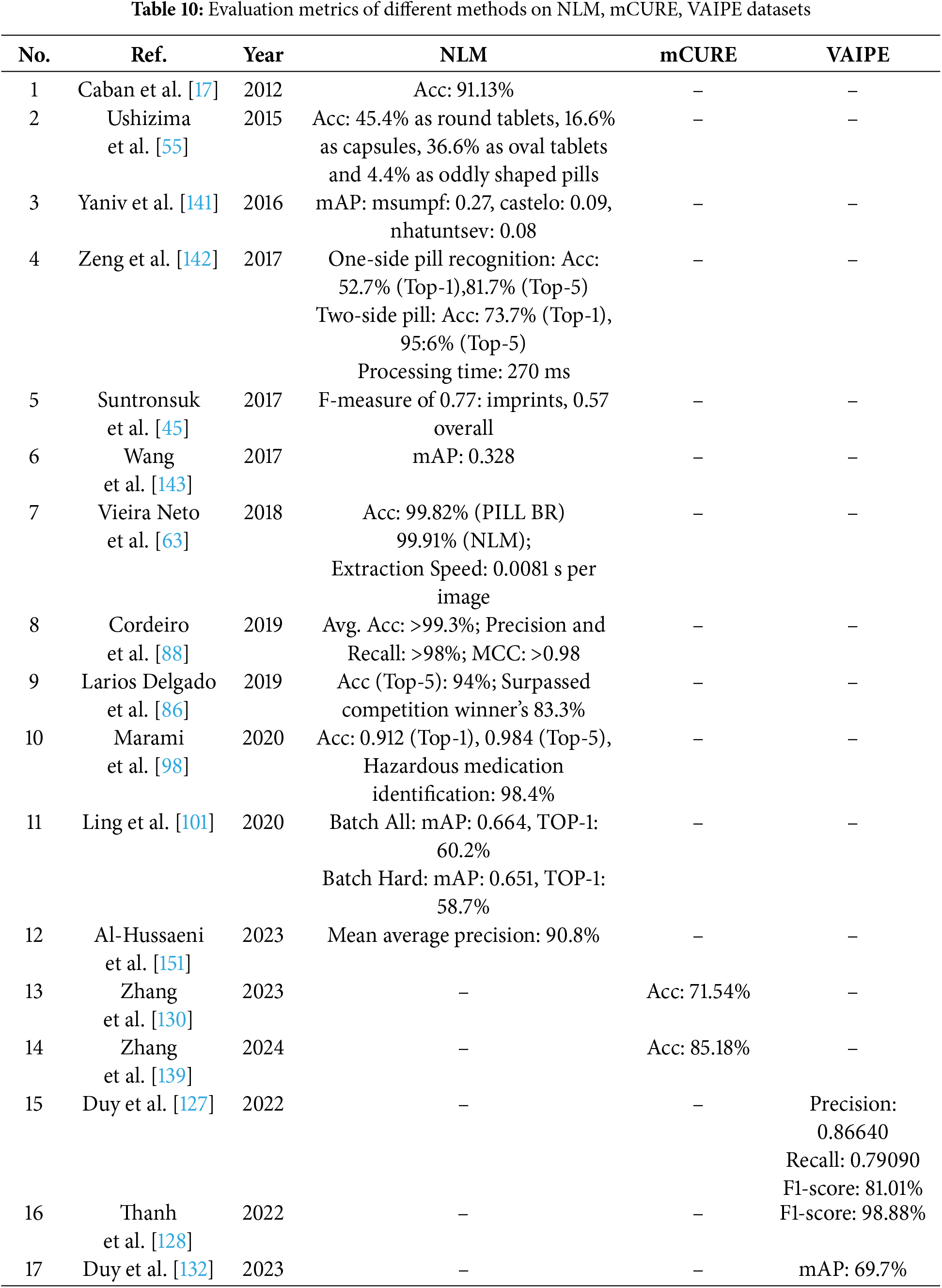

In the literature survey above, there is only one article using the CURE dataset, one article using the ePillID dataset, and one article using the VAIPE-PCIL dataset. Consequently, we do not compare the evaluation metrics of the algorithms applied to these datasets. Table 10 compares the evaluation metrics of algorithms used in articles on the NLM, mCURE, and VAIPE datasets.

5.1 Performance Comparison of Methods on Dataset NLM

Caban et al. [17] shows strong potential for automatic medication identification. By utilizing a modified shape distribution approach that analyzes the shape, color, and imprint of pills, the system achieves 91.13% accuracy on a dataset of 568 U.S. prescription pills. This high accuracy suggests the system’s robustness against real-world variability such as lighting and camera angles. Additionally, it identifies the correct pill within the top 5 matches, even when an exact match is not found at the top. The system assumes a top-view pill image, which may limit its application in cases where different views are required. Further optimization could involve adjusting feature weights based on their relevance to recognition. Overall, the system’s performance suggests its potential for practical use in healthcare settings.

Ushizima et al. [55] discuss a pill recognition system using the NLM dataset, which demonstrates promising results but also highlights areas for improvement. The system segments pill images and extracts features based on the FDA’s recommended physical pill attributes, organizing them into four main categories: round tablets (45.4%), capsules (16.6%), oval tablets (36.6%), and oddly shaped pills (4.4%). This categorization serves as a foundational step for content-based image retrieval.

While the shape descriptors help differentiate between standard pill shapes, the system struggles to distinguish oddly shaped pills, pointing to the need for more refined feature extraction. The paper suggests incorporating additional descriptors, such as convexity points, to enhance performance in identifying irregular shapes. Future improvements include capturing more minor variations in pill contours, like contour bending energy, and incorporating lower-resolution images to ensure functionality with standard mobile cameras.

Overall, while the system provides a useful framework for classifying pills, it requires further advancements to be reliably used in real-world applications, particularly for patient safety. The ongoing research promises significant contributions to the development of practical pill recognition automation.

Yaniv et al. [141] discuss how the NLM Pill Image Recognition challenge highlights both the potential and limitations of current algorithms for pill identification using consumer-quality images. The top three submissions achieved mean average precision scores of 0.27, 0.09, and 0.08, with correct reference images retrieved in the top five results for 43%, 12%, and 11% of queries. While capsules, with distinct textual and color features, were more easily identified (74% accuracy in the top five), tablets, especially those with embossed markings, posed a challenge, with only 34% accuracy in the top five results.

The challenge revealed that improvements are needed in dataset size, feature descriptors, and image acquisition methods. The best submissions utilized deep learning, but their performance was limited by a small training dataset. To improve results, the use of data augmentation, pre-training on unrelated datasets, and more controlled image acquisition (e.g., including reference objects for pose estimation) is recommended. Overall, continued research is needed to enhance pill identification algorithms for both healthcare professionals and the public.

Zeng et al. [142] present the MobileDeepPill system, showcased in the NLM Pill Image Recognition Challenge, which demonstrates significant progress in pill recognition using mobile devices. Its architecture incorporates a triplet loss function, multi-CNNs, and a Knowledge Distillation-based model compression framework, leading to impressive recognition accuracy. In one-side pill recognition, MobileDeepPill achieved a Top-1 accuracy of 52.7% and a Top-5 accuracy of 81.7%. In two-side recognition, the system’s performance improved, reaching a Top-1 accuracy of 73.7% and a Top-5 accuracy of 95.6%. These results highlight its robustness in real-world conditions, where image quality may vary due to factors like lighting and camera angles.

MobileDeepPill is notable for its small footprint, requiring only 34 MB of runtime memory, making it suitable for mobile devices without cloud offloading. With high-end mobile GPUs, the system’s processing time has been reduced to 270 milliseconds, enabling near real-time pill identification. Although there is potential for improvement in the accuracy of one-side recognition, the strong performance in two-side recognition and overall efficiency demonstrate that MobileDeepPill is a promising tool for mobile pill identification, both in healthcare environments and for everyday use.

Suntronsuk et al. [45] present a pill imprint extraction method that offers a novel approach to pill identification by extracting text from pill imprints, which is essential for linking pill images to databases used by healthcare providers. The method involves a multi-step process, beginning with image normalization and enhancement to improve imprint contrast. Two binarization techniques, Otsu’s thresholding and K-means clustering, were evaluated, with Otsu’s method outperforming K-means in handling engraved and printed imprints.

The system achieved an F-measure of 0.77 for printed imprints, demonstrating effectiveness in this category. However, the overall F-measure of 0.57 reflects challenges in handling complex or noisy areas, particularly for engraved imprints. Despite this, the use of Tesseract for text recognition was successful with binarized images. Future work is needed to refine the binarization step, especially for engraved imprints, potentially through advanced or hybrid techniques. Overall, the method shows promise but requires further improvement for more reliable pill identification.

Wang et al. [143] propose a pill recognition system on the NLM dataset that addresses the challenges of pill identification under real-world conditions, including limited labeled data and the domain shift from controlled environments to consumer images. To overcome these issues, the authors employ data augmentation techniques to generate synthetic images, enriching the training dataset and enhancing model robustness.

The system uses a Convolutional Neural Network (CNN), specifically the GoogLeNet Inception Network, with three models trained to specialize in color, shape, and feature extraction. This ensemble approach aims to improve recognition performance. The system achieved a Mean Average Precision (MAP) score of 0.328 on the NLM dataset, which reflects its ability to recognize pills but also highlights difficulties in dealing with noise, background interference, poor lighting, and varying image quality.

Key contributions include data augmentation and model ensembling, but the relatively modest MAP score indicates the need for further improvements. The authors suggest future extensions such as dynamic model weights, expanded data augmentation (e.g., JPEG distortions), and pill segmentation to improve performance. Overall, while the system shows promise, further optimization is needed for practical use in real-world pill recognition scenarios.

Vieira Neto et al. [63] present the CoforDes feature extraction method, which demonstrates exceptional performance on the NIH NLM PIR dataset, achieving 99.82% accuracy and 99.91% specificity. This highlights its effectiveness in classifying pill images based on shape and color, outperforming traditional descriptors such as GLCM, SCM, LBP, and moments (e.g., Zernike and Hu). The method’s rapid extraction speed of 0.00810 s per image further underscores its suitability for real-time applications, making it a robust tool for pill identification in critical healthcare settings.

Additionally, the study introduces a national dataset of Brazilian pills with 100 classes, marking a valuable contribution to the field. Despite its limited size, this dataset provides a basis for future expansion, particularly given the large number of drug classes in Brazil.

Future improvements focus on two key areas: expanding the dataset to include more pill classes and incorporating texture features into CoforDes. The latter is expected to enhance its ability to handle variations not fully captured by shape and color alone, increasing robustness and accuracy in more complex scenarios.

Overall, CoforDes shows great promise as a reliable and efficient solution for pill recognition, with clear potential for real-world deployment. Its combination of high accuracy, speed, and scalability positions it as a leading approach in the field, with further advancements likely to solidify its impact.

Cordeiro et al. [88] propose a pill classification system that demonstrates outstanding performance on the NLM PIR dataset, achieving over 99.3% average accuracy, with precision and recall exceeding 98%. These metrics highlight the system’s robustness and reliability, especially in handling unbalanced classes, as evidenced by its high Matthews Correlation Coefficient (MCC) score above 0.98.

The method leverages computationally efficient machine learning algorithms, including Support Vector Machines (SVM) and Multilayer Perceptron (MLP), which classify pills based on shape and color features extracted through image processing techniques. This method demonstrates high accuracy and remains robust to translation and rotation, making it suitable for images taken under different conditions, including those captured with consumer-grade cameras.

Compared to related studies, this system offers similar or superior accuracy at a significantly lower computational cost, enhancing its suitability for real-time applications. Future directions include testing the method on images captured in less controlled settings, incorporating additional attributes such as texture and imprints, and exploring content-based image retrieval (CBIR) and legal vs. illegal pill differentiation.

Overall, the system is highly promising for practical pill classification tasks, especially in controlled environments. Further work in real-world scenarios will determine its broader applicability and potential impact.

Larios Delgado et al. [86] present a deep learning-based prescription pill identification system that demonstrated significant efficacy on the NIH NLM Pill Image Recognition Challenge dataset, achieving a top-5 accuracy of 94%, surpassing the original competition winner’s accuracy of 83.3%. This remarkable performance underscores the system’s ability to address a critical need in healthcare by reducing preventable medical errors, a leading cause of patient harm.

The study highlights the potential of artificial intelligence (AI) to transform medication reconciliation and enhance clinical workflows by leveraging deep learning for precise pill identification. The system’s capability to work with mobile images further emphasizes its practicality and accessibility for healthcare providers, promoting integration into real-world applications.

This work aligns with healthcare goals of improving patient outcomes, reducing costs, enhancing population health, and supporting providers’ work-life balance. Although the results are promising, future research should assess the system’s robustness by using diverse real-world datasets to ensure its performance across different conditions.

The 94% top-5 accuracy achieved in this study underscores the promising role of AI in reducing medication errors and improving patient safety in clinical environments.

Marami et al. [98] propose a deep learning-based method for the automatic detection and classification of prescription medications, which achieved outstanding performance, with an overall accuracy of 91.2% and a top-5 accuracy of 98.4% on the NIH NLM PIR dataset. These results underscore its robustness in identifying diverse medications, making it highly suitable for applications like drug take-back programs.

A key strength of the system is its ability to distinguish hazardous medications from non-hazardous ones with 98.4% accuracy, ensuring compliance with DEA regulations and enhancing the safety of medication disposal practices. This capability minimizes the risk of environmental and public health hazards arising from improper disposal or mixing of medications.

Beyond classification, the system offers broader utility by enabling data collection on medication wastage patterns, which can inform pharmaceutical supply chain improvements, drug monitoring, and diversion prevention. Its compatibility with mobile devices enhances accessibility, fostering greater involvement in pill take-back programs and supporting sustainable disposal practices.

In summary, the system’s high accuracy and practical applications position it as a promising tool for improving the efficiency of drug take-back programs, reducing hazardous waste, and supporting public health and environmental safety initiatives.

Ling et al. [101] propose a pill recognition system that demonstrates strong performance on the NLM dataset, effectively addressing challenges related to few-shot learning and real-world imaging conditions. A notable contribution of this study is the introduction of the CURE dataset, which provides a more extensive and diverse set of instances per class under varied conditions, enhancing the model’s generalization capabilities for complex recognition tasks.

A key innovation is the

Additionally, the model excels in processing hard samples, particularly those with imprinted text, by emphasizing high-frequency components and incorporating texture information. The use of text imprint as an auxiliary stream in the multi-stream architecture significantly improves recognition accuracy in challenging scenarios.

Overall, the proposed system outperforms state-of-the-art models on both the NLM and CURE datasets, demonstrating its robustness and effectiveness in real-world pill recognition tasks, particularly in settings with limited data and unconstrained imaging conditions. This combination of enhanced segmentation, innovative architecture, and efficient training strategies positions it as a leading solution for practical applications in pill identification.

Al-Hussaeni et al. [151] evaluate three deep learning-based pill recognition methods in this study, including two hybrid models (CNN+SVM and CNN+kNN) and the ResNet-50 architecture, to improve pill image segmentation and classification. Among these, the CNN+kNN model achieved the highest accuracy of 90.8% on the NLM dataset, outperforming existing methods by approximately 10% while maintaining a fast runtime of about 1 ms per execution.

The hybrid approach, particularly CNN+kNN, demonstrated superior accuracy over traditional methods without a significant increase in computational cost, making it suitable for practical applications. This highlights the potential of combining convolutional neural networks with powerful classifiers like k-NN for recognizing complex or incomplete pill images.

Despite its strengths, the model’s accuracy can be impacted by poor lighting conditions, which affect pill shape detection. To mitigate this, the authors proposed capturing multiple images from various angles to construct a 3D model, enhancing robustness under diverse lighting scenarios.

Overall, the study underscores the feasibility of using deep learning for pill image recognition, achieving high accuracy and rapid processing speeds. This approach shows promise for improving medication safety and accuracy, supporting error prevention in clinical and pharmaceutical settings.

In summary, deep learning-based methods have shown superior performance compared to traditional image processing and machine learning approaches in pill recognition tasks. Traditional image processing methods such as those by Caban et al. [17], Suntronsuk et al. [45] have shown significant promise in automatic pill identification, achieving high accuracy in shape, color, and imprint recognition. However, these methods often struggle with image variability such as different views, lighting conditions, and noise. In contrast, traditional machine learning approaches, such as those developed by Ushizima et al. [55] and Vieira Neto et al. [63], have achieved promising results, especially with shape and color-based classification, but still face challenges in handling irregular shapes and achieving high accuracy across diverse datasets. Hybrid approaches, such as those proposed by Yaniv et al. [141] and Zeng et al. [142], have made considerable progress by combining traditional methods with machine learning techniques, resulting in improved outcomes in real-world situations by tackling dataset limitations and image quality challenges.

However, the real advancement in performance is seen in deep learning methods. Studies by Cordeiro et al. [88], Larios Delgado et al. [86], and Ling et al. [101] have demonstrated that deep learning models, particularly those leveraging convolutional neural networks (CNNs), achieve remarkable accuracy and robustness across various pill recognition tasks. These methods not only outperform traditional and hybrid models in terms of accuracy but also show greater adaptability to real-world conditions such as varying lighting, image resolution, and complex pill features. The ability of deep learning models to process large, diverse datasets and learn distinct features like those in the CURE dataset used by Ling et al. [101] has led to improved performance in challenging scenarios, such as few-shot learning and identifying pills with imprinted text. Moreover, deep learning methods, exemplified by the work of Marami et al. [98], have achieved exceptional results, including a top-5 accuracy of 98.4%, highlighting their effectiveness in not only recognizing pills but also distinguishing hazardous medications.

In conclusion, deep learning techniques surpass traditional image processing and machine learning approaches in both accuracy and robustness, making them the most promising solution for practical pill recognition applications in healthcare and everyday use.

5.2 Performance Comparison of Methods on Dataset mCURE

The comparison of the few-shot class-incremental learning (FSCIL) frameworks introduced in Zhang et al. [130,139] reveals notable advancements in automatic pill recognition systems, particularly for dynamic and resource-constrained environments. Both studies address the challenges posed by the continuously increasing categories of pills and the limited availability of annotated data, yet their distinct architectural designs and methodological approaches lead to different levels of performance on the mCure dataset. The framework proposed in Zhang et al. [130] adopt a decoupled learning strategy that separates representation learning and classifier adaptation. The representation learning leverages a Center-Triplet (CT) loss to enhance intra-class compactness and inter-class separability, while the classifier adaptation employs a Graph Attention Network (GAT) trained on pseudo pill images to accommodate new classes incrementally. In contrast, the method presented in Zhang et al. [139] build on this foundation by integrating Discriminative and Bidirectional Compatible Few-Shot Class-Incremental Learning (DBC-FSCIL). This framework introduces forward-compatible learning, which synthesizes virtual classes as placeholders in the feature space to enrich semantic diversity and support future class updates. It also incorporates backward-compatible learning, employing uncertainty quantification to generate reliable pseudo-features of old classes, which facilitates effective Data Replay (DR) and Knowledge Distillation (KD) for balancing memory efficiency and knowledge retention. The superior performance of Zhang et al. [139] on the mCure dataset can be attributed to its more comprehensive management of the feature space and its robust approach to preserving old-class knowledge during incremental updates. By synthesizing virtual classes, the framework anticipates and incorporates future class distributions, ensuring a richer training dataset compared to the reliance on pseudo image generation in Zhang et al. [130]. Furthermore, the uncertainty-based synthesis of pseudo-features allows Zhang et al. [139] to mitigate catastrophic forgetting more effectively, addressing a critical limitation in Zhang et al. [130], which lacks explicit mechanisms for backward compatibility. Additionally, the use of DR and KD strategies in Zhang et al. [139] optimize the trade-off between performance and storage requirements, further highlighting its adaptability to real-world applications.

Overall, while both frameworks demonstrate significant advancements in FSCIL for pill recognition, the holistic design of Zhang et al. [139], balancing forward and backward compatibility, enables it to achieve superior results on mCure. This underscores the importance of simultaneously addressing feature discrimination, knowledge retention, and adaptability in designing FSCIL systems for practical scenarios.

5.3 Performance Comparison of Methods on Dataset VAIPE

The VAIPE dataset presents unique challenges for pill identification and recognition due to the high visual similarity among pills, multi-pill scenarios, and unconstrained conditions of real-world images. Several deep learning-based approaches have been proposed to address these issues, leveraging external knowledge, novel architectures, and multi-modal learning techniques. Among these, the PIKA framework, introduced by Duy et al. [127], integrates external prescription-based knowledge graphs with image features via a lightweight attention mechanism, achieving an F1-score improvement of 4.8%–34.1% over baseline methods. This approach underscores the critical role of external knowledge graphs in enhancing recognition accuracy, though its scalability is constrained by the availability of accurate prescription data. Meanwhile, the PIMA framework, proposed by Thanh et al. [128], addresses the pill-prescription matching task by leveraging Graph Neural Networks (GNNs) and contrastive learning to effectively align textual and visual representations. PIMA demonstrates notable performance gains, improving the F1-score from 19.05% to 46.95%, while maintaining efficiency with limited training costs. For multi-pill detection in real-world settings, the PGPNet framework, developed by Duy et al. [132], integrates heterogeneous a priori graphs to model co-occurrence likelihood, size relationships, and visual semantics of pills. PGPNet achieves significant improvements, with COCO mAP metrics increasing by 9.4% compared to Faster R-CNN and 12.0% over YOLO-v5, while also emphasizing robustness and explainability. However, its dependency on extensive external knowledge poses scalability challenges. Collectively, these methods address different facets of the pill recognition problem, with PIKA excelling in single-pill identification, PIMA optimizing pill-prescription matching, and PGPNet advancing multi-pill detection in complex scenarios. This comparative analysis highlights the critical importance of external knowledge, multi-modal learning, and tailored frameworks in addressing the challenges posed by the VAIPE dataset, while also identifying areas for future research, including scalability, expanded knowledge bases, and computational optimization.

5.4 Performance Comparison of Methods on Dataset VAIPE-PCIL

Nguyen et al. [118] addressed the challenge of catastrophic forgetting in pill image classification by introducing the Incremental Multi-stream Intermediate Fusion (IMIF) framework. This approach integrates an additional guidance stream, leveraging color histogram information, to enhance traditional class incremental learning (CIL) systems. The authors proposed Color Guidance with Multi-stream Intermediate Fusion (CG-IMIF), which can be seamlessly incorporated into existing exemplar-based CIL methods. Experimental evaluation on the VAIPE-PCIL dataset revealed that CG-IMIF significantly outperforms state-of-the-art methods, achieving accuracies of 76.85% (N = 5), 69.94% (N = 10), and 64.97% (N = 15) under varying task settings. These results highlight CG-IMIF’s robustness in handling incremental learning tasks in real-world pill classification scenarios, offering a promising solution for smart healthcare applications.

5.5 Performance Comparison of Methods on Dataset ePillID

Usuyama et al. [91] introduced ePillID, the largest public benchmark for pill image recognition, consisting of 13 k images representing 8184 appearance classes, corresponding to 4092 pill types with two sides. This dataset is particularly challenging due to its low-shot recognition setting, where most classes have only a single reference image. The authors evaluated various baseline models, with a multi-head metric learning approach with bilinear features yielding the best performance. Despite this, error analysis revealed that these models struggled to reliably distinguish particularly confusing pill types. The paper also highlights future directions, including the integration of Optical Character Recognition (OCR) to address challenges such as low-contrast imprinted text, irregular layouts, and pill materials like capsules and gels. This benchmark, aimed at advancing pill identification systems, also plans to expand with additional pill types and images, fostering further research in this critical healthcare task.

Based on the insights gathered from the Literature Survey, Benchmark Datasets, and the Comparison of Performance of Methods, several open research problems in the field of pill image recognition remain. In this section, we will explore these unresolved issues and discuss potential research directions that could drive future progress in the field. By identifying these open problems, we aim to provide a roadmap for researchers to focus on the most critical areas for further development and innovation in pill image recognition.

6.1 Integrating Pill Recognition Systems into Resource-Constrained Environments

In recent years, wearable devices designed to assist visually impaired individuals with pill recognition have gained significant attention. Notably, the works by Chang et al. [83,94] contribute valuable insights into this area, proposing systems that leverage deep learning models for pill identification. Despite the promising potential of these devices, several challenges hinder their real-world applicability. One major issue is the difficulty of accurately recognizing pills under adverse conditions, such as low lighting, complex backgrounds, or partial occlusion. These factors significantly reduce the effectiveness of the systems, particularly in emergency situations. Furthermore, the large diversity of medications, varying in shape, color, and imprint, presents scalability challenges, as deep learning models require extensive training datasets to ensure reliable identification across a wide range of pill types.

The computational demands of deep learning models, such as Convolutional Neural Networks (CNNs), further complicate the deployment of these systems on resource-constrained devices like smart glasses, highlighting the need for hardware optimization. Additionally, enhancing user experience is crucial, as wearable devices must provide seamless interfaces with fast response times to be practical in everyday use.

To address these challenges, future research could focus on improving recognition models by exploring advanced architectures such as Vision Transformers (ViT) or hybrid CNN-ViT models. Data augmentation methods can be used to improve the model’s robustness across different conditions, while lightweight models such as MobileNet or EfficientNet could enhance performance for wearable devices. Moreover, the integration of Augmented Reality (AR) could significantly improve user interaction, making pill recognition more intuitive. AR overlays could display crucial information, such as the pill name, dosage, and usage instructions, directly onto the user’s field of view, facilitating easy identification without the need to focus on a separate screen. The combination of AR with haptic feedback or voice prompts could further enhance usability by providing alternative modes of interaction, particularly for users with limited vision. In addition, AR could help address the challenge of recognizing pills in complex environments by emphasizing key features like shape and imprint, thereby improving identification accuracy in real-world scenarios.

Ultimately, extensive real-world testing and collaborations with healthcare organizations will be essential to ensure the seamless integration of these systems into healthcare workflows, improving safety and efficiency. The advancements in AR, along with lightweight model architectures and enhanced data processing techniques, hold the potential to transform wearable pill recognition systems into reliable tools for visually impaired individuals in their daily lives.

Several studies, including those by Hartl et al. [9], Cunha et al. [51], Dang et al. [135], Zeng et al. [142], and Mehmood et al. [145], have contributed significantly to the advancement of mobile-based pill recognition systems, providing portable, real-time solutions. However, challenges remain, particularly in adapting these systems to dynamic real-world environments with variable lighting, pill orientations, occlusions, and complex backgrounds, all of which can affect performance. Furthermore, these systems are often limited by the need for specific datasets, which pose scalability challenges due to the wide variety of pill appearances and regional differences in pill design. Mobile devices’ resource constraints also create barriers, as many models must balance computational efficiency with recognition accuracy. Additionally, the user experience, particularly for vulnerable populations like the elderly or visually impaired, requires further improvement in terms of accessibility, ease of use, and response times.

To overcome these limitations, future research should focus on enhancing algorithmic robustness through hybrid or domain adaptation techniques, as well as ensuring broader model generalization by incorporating more diverse and expansive datasets for continuous learning. Lightweight architectures like MobileNet or EfficientNet, combined with model optimization strategies, can address the computational constraints of mobile hardware. Moreover, integrating Augmented Reality (AR) into mobile pill recognition systems can significantly improve usability. AR can guide users in real-time by overlaying visual cues, such as alignment instructions or pill information, directly onto the pill in their view. This integration can be enhanced with tactile or voice feedback to further improve accessibility for users with impaired vision or limited mobility.

For these advancements to be truly effective, rigorous real-world testing and collaboration with healthcare providers will be necessary to ensure the safety, reliability, and compliance of these systems with regulatory standards. By addressing these challenges, mobile-based pill recognition systems can evolve into more robust, scalable, and user-friendly solutions, improving accessibility and patient outcomes, particularly for those in need of immediate pill identification.

6.2 Developing Datasets for Multi-Region Markets

A major challenge in pill image recognition is the creation of diverse and representative datasets that can accurately capture the wide variety of pill appearances found globally. This is particularly true for the Vietnamese market, where the pharmaceutical landscape includes both locally manufactured and imported medications. Existing international datasets often fall short in representing the full spectrum of pills available in Vietnam, leading to potential gaps in the performance and generalizability of pill recognition systems. For instance, while the VAIPE dataset [118] is useful for the Vietnamese context, it is primarily focused on prescription medications and includes a limited variety of pills, most of which are generic, with prescription information provided in Vietnamese.

To address this issue, there is an urgent need to develop a more comprehensive dataset that encompasses a wider range of pill types, reflecting the diversity of the Vietnamese pharmaceutical market.

Beyond the Vietnamese market, it is also essential to advocate for the development and use of diverse datasets that represent pills from various regions, conditions, and manufacturing standards. Such datasets should capture the global diversity of medications, enabling pill recognition systems to operate effectively across different environments and cultural contexts. This broader approach would not only improve recognition accuracy but also enhance the scalability and adaptability of systems deployed in resource-constrained settings.

The integration of pill recognition systems into resource-constrained environments, such as wearable devices for visually impaired individuals, is increasingly important. Wearable technologies, like smart glasses, are being developed to assist users with real-time pill identification. However, to ensure these systems’ effectiveness in such settings, datasets must reflect real-world variations, such as low lighting, complex backgrounds, and diverse pill types. Without a rich and diverse dataset, these systems may struggle to accurately recognize pills under challenging conditions, thereby limiting their practical utility in daily life.

Creating a robust, region-specific dataset for Vietnam, alongside the integration of international datasets, would require collaboration between local healthcare institutions, pharmaceutical companies, research organizations, and regulatory bodies. This initiative would improve the accuracy of pill recognition systems in Vietnam, facilitating their adoption in local healthcare practices and improving medication safety and patient compliance. Moreover, such a dataset would contribute to advancing the field of machine learning and computer vision within the pharmaceutical industry, providing a valuable resource for researchers and developers. Ultimately, the development of diverse and expansive pill image datasets will foster innovation and lead to better healthcare outcomes in Vietnam and beyond.

Additionally, integrating Augmented Reality (AR) into these systems could significantly enhance user interaction, making pill recognition more intuitive. AR overlays could display crucial information, such as the pill name, dosage, and usage instructions, directly onto the user’s field of view, facilitating easy identification without the need to focus on a separate screen. The combination of AR with haptic feedback or voice prompts could further improve usability, particularly for users with limited vision. Furthermore, AR could help address the challenge of recognizing pills in complex environments by emphasizing key features like shape and imprint, thereby improving identification accuracy in real-world scenarios.

Ultimately, extensive real-world testing and collaborations with healthcare organizations will be essential to ensure the seamless integration of these systems into healthcare workflows, improving safety and efficiency. The advancements in AR, along with lightweight model architectures and enhanced data processing techniques, hold the potential to transform wearable pill recognition systems into reliable tools for visually impaired individuals in their daily lives. By addressing these challenges through the development of diverse datasets and innovative technologies, we can enhance pill recognition systems’ effectiveness and expand their utility, particularly in underserved or resource-constrained settings.

6.3 Improving Performance in Pill Image Segmentation and Imprint Identification

In the methods presented in the literature review, the authors used the NLM [141], ePillID [91], VAIPE [118] and CURE [101] datasets. The NLM dataset was collected under ideal conditions, while ePillID, CURE and VAIPE were collected under natural conditions, so they are suitable for pill image recognition under real-world conditions. However, the VAIPE dataset is suitable for multi-pill image recognition in the context of prescriptions and is suitable for generic pill recognition. ePillID dataset is particularly challenging due to its low-shot recognition setting, where most classes have only a single reference image, making it difficult for models to generalize. In this section, this article focuses on the recognition of pill images of various types, in natural conditions, so this article focuses on improving the algorithms using the CURE dataset.

The accuracy and other metrics presented by Ling et al. (2020) in [101] on the CURE dataset emphasize the necessity for advancements in the pill image segmentation phase. In the above paper, the author uses the

6.3.1 Building a New Model Based on Combining

Image segmentation plays a crucial role in enhancing the performance of pill recognition systems, especially when dealing with datasets such as CURE, which contain natural conditions, complex backgrounds, and limited data. Accurate segmentation isolates the pill from distracting backgrounds, emphasizing critical features such as color, shape, and imprint. When segmentation is inaccurate, subsequent recognition stages may suffer from noise introduced by irrelevant elements, leading to reduced performance. Therefore, selecting an appropriate segmentation method is essential to improve recognition performance, particularly for datasets like CURE that are characterized by challenging visual diversity and limited training samples.

The

To address this, leveraging transfer learning using a model pre-trained on datasets with similar characteristics emerges as a viable alternative. Transfer learning not only reduces computational costs but also enhances generalization on small and complex datasets such as CURE.

The U-Net architecture is one of the most prominent and effective models for image segmentation tasks, particularly in the medical field. Its distinctive U-shaped design consists of two main parts: the encoder and the decoder. The encoder employs convolutional and pooling layers to extract complex features and reduce the spatial dimensions of the image, while the decoder restores spatial information using upsampling or transposed convolution layers to reconstruct the image to its original size. A key feature of U-Net is the use of skip connections, which directly transfer features from corresponding layers in the encoder to the decoder. This mechanism preserves detailed information and enhances accuracy, especially along object boundaries. Thanks to its powerful processing capabilities and relatively low data requirements, U-Net has become a standard for applications such as medical image segmentation.

In the context of recognizing images of pills with few data and complex backgrounds, we need U-Net-based algorithms that have been pre-trained on datasets similar to the data of pill images with complex backgrounds to optimize performance.

Popular algorithms based on U-Net that have been pre-trained on popular datasets include U-Net++, Attention U-Net, Res-U-Net,

Pill images often exhibit significant variability in shape, color, and imprint (e.g., logos, numbers, or letters), while being situated on backgrounds that range from simple to highly cluttered. Such attributes mirror the challenges present in SOD datasets. Leveraging

The architectural strengths of

6.3.2 Developing Deep TextSpotter (DTS) Pill Imprint Extraction Method Based on Improvement and Flexible Application of Existing Algorithms