Open Access


ARTICLE

Local Content-Aware Enhancement for Low-Light Images with Non-Uniform Illumination

College of Computer Science and Technology, Xi’an University of Science and Technology, Xi’an, 710054, China

* Corresponding Author: Qi Mu. Email:

(This article belongs to the Special Issue: New Trends in Image Processing)

Computers, Materials & Continua 2025, 82(3), 4669-4690. https://doi.org/10.32604/cmc.2025.058495

Received 13 September 2024; Accepted 17 December 2024; Issue published 06 March 2025

Abstract

In low-light image enhancement, prevailing Retinex-based methods often struggle with precise illumination estimation and brightness modulation. This can result in halo artifacts, blurred edges, and diminished details in bright regions, particularly under non-uniform illumination. We propose an approach that enhances low-light images by exploiting awareness of the local content within the image. By introducing multi-scale effective guided filtering, our method overcomes the limitations of traditional isotropic filters, such as Gaussian filters, in handling non-uniform illumination. It dynamically adjusts regularization parameters in response to local image characteristics and integrates edge perception across different scales. This balances smoothing against detail preservation and enables more accurate illumination estimation. Additionally, we design an adaptive gamma correction function that adjusts the brightness value based on local pixel intensity, further balancing enhancement across different brightness levels in the image. Experimental results demonstrate the effectiveness of the proposed method for non-uniform illumination images across various scenarios, with better visual quality and objective evaluation scores than existing methods. Our method effectively addresses problems that existing methods encounter when processing non-uniform illumination images, producing enhanced images with precise details and natural, vivid colors.

Images contain rich information and are indispensable to a wide array of high-level computer vision tasks. Nonetheless, their quality can be compromised under low-light conditions, typically exhibiting diminished contrast, reduced brightness, and obscured details. These degradations challenge human visual perception and impede the accuracy of critical computer vision tasks, such as object detection, tracking, and semantic segmentation. Thus, developing effective low-light image enhancement techniques has become a prominent research focus [1,2].

Retinex-based [3] methods are widely used because they balance several enhancement goals, particularly color retention and detail enhancement in low-light conditions [4–6]. These methods employ filters as center-surround functions to estimate the illumination and then remove its effect, so the accuracy of the estimate depends on the performance of the filter. Traditional Retinex methods, which use isotropic filters, successfully increase the brightness of low-light images and reveal details in dark regions. However, when applied to non-uniformly illuminated images, isotropic filters tend to misestimate the illumination in regions where it changes abruptly, resulting in halo artifacts and detail loss, as exemplified by the multi-scale Retinex with color restoration (MSRCR) [7] method in Fig. 1b. To overcome these limitations, researchers have developed new Retinex enhancement methods, which fall into traditional and deep learning categories.

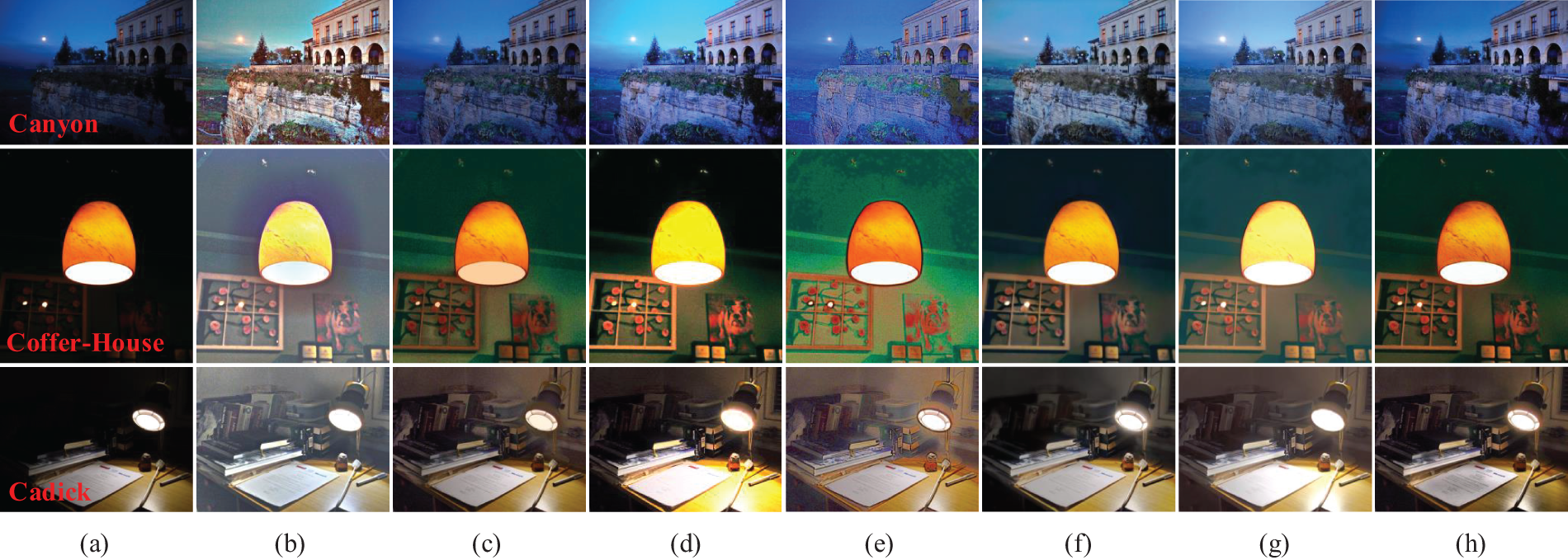

Figure 1: The enhanced results of different methods in images with non-uniform low illumination: (a) Original images, (b) MSRCR, (c) NPE, (d) Mu’s method, (e) Retinex-Net, (f) KinD, (g) URetinex-Net, (h) Ours

In traditional Retinex enhancement methods, various anisotropic filters have been used to estimate illumination [8]. Although these methods reduce halo artifacts and render colors more naturally, they still have difficulty estimating illumination accurately in light transition areas. This can cause edge blur in the enhanced images, as exemplified by the naturalness preserved enhancement (NPE) [9] method shown in Fig. 1c. Additionally, these methods may not adjust brightness finely enough across different regions of an image, which can lead to inadequate enhancement of dark areas or over-enhancement of bright areas, as illustrated by the method of Mu et al. [10] in Fig. 1d.

In recent years, advances in deep neural networks have prompted researchers to use these models to separate and adjust the illumination and reflectance components of images. Compared to traditional methods, deep learning approaches have demonstrated stronger brightness enhancement and fewer halo artifacts at illumination transitions, as illustrated by models such as the deep Retinex network (Retinex-Net) [11], Kindling the Darkness network (KinD) [12], and Retinex-based deep unfolding network (URetinex-Net) [13]. Nonetheless, a network structure that is not well optimized can lose features during decomposition, leading to blurred details, as shown in Fig. 1e,f. The performance of supervised learning-based low-light image enhancement methods also depends largely on the training dataset, including its size, its diversity, and its differences from real-world data [14]. As a result, some deep learning methods risk over-enhancing bright regions in non-uniformly illuminated images, as shown in Fig. 1g.

To cope with the above problems, we propose a novel local content-aware enhancement method for low-light images with non-uniform illumination. Our approach effectively mitigates halo artifacts, edge blurring, and over-enhancement in bright regions while simultaneously removing noise and preserving essential image details, as illustrated in Fig. 1. Our contributions are as follows:

(1) We propose a multi-scale effective guided filtering approach complemented by an adaptive gamma correction function. Together they provide precise illumination estimation and adjustment across diverse image regions, tailored to the characteristics of each local area within the image.

(2) We use an effective guided filter to denoise the reflectance component, preserving edges while removing noise accurately. We then apply detail enhancement to the denoised image, which prevents noise from being amplified during enhancement.

(3) We conduct comprehensive experiments on six low-light image datasets covering a range of scenes and compare our method with several mainstream Retinex enhancement methods. These experiments substantiate the effectiveness of our approach in enhancing low-light images, particularly under non-uniform illumination.

2.1 Image Enhancement through Gray Mapping Techniques

Gray mapping methods adjust brightness and contrast by compressing or stretching pixel values point by point. Representative methods include gamma correction and histogram equalization (HE). Gamma correction adjusts brightness using a nonlinear power transformation. A single global correction parameter cannot suit both the dark and bright regions of a non-uniformly illuminated image, so adaptive gamma correction functions have been developed [15]. Histogram equalization enhances contrast by expanding the dynamic range of pixel values. Early global methods processed all pixels uniformly and ignored local differences, which often led to over-enhancement and noise amplification in low-light images with non-uniform illumination. Consequently, many local HE methods and improvements have been proposed to enhance brightness and contrast [16,17].
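As a point of reference for the two classic gray-mapping operators, the sketch below implements global gamma correction and global histogram equalization in Python with NumPy. It is illustrative only and the function names are ours: both operators apply one mapping to every pixel, which is exactly the limitation under non-uniform illumination discussed above.

```python
import numpy as np


def gamma_correct(img: np.ndarray, gamma: float) -> np.ndarray:
    """Global gamma correction for an image scaled to [0, 1].

    gamma < 1 brightens and gamma > 1 darkens; every pixel uses the same gamma.
    """
    return np.clip(img, 0.0, 1.0) ** gamma


def histogram_equalize(img_u8: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image.

    Each gray level is mapped through the normalized cumulative histogram (CDF).
    """
    hist = np.bincount(img_u8.ravel(), minlength=256)
    cdf = np.cumsum(hist).astype(np.float64)
    cdf_min = cdf[cdf > 0][0]              # CDF value at the darkest occupied level
    denom = max(cdf[-1] - cdf_min, 1.0)    # guard against constant images
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255.0), 0, 255).astype(np.uint8)
    return lut[img_u8]
```

For example, equalizing a 2 × 2 image with gray levels 10, 20, 30, and 40 spreads them to 0, 85, 170, and 255, regardless of where in the image each pixel lies.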

Gray mapping methods ignore the influence of spatial context, making the image prone to over-enhancement and detail loss, which results in an unnatural appearance. They are typically used with other methods to improve enhancement effects in practical applications [8,18].

2.2 Retinex-Based Image Enhancement

Land proposed the Retinex theory [3] based on color constancy. This theory effectively models color perception in the human visual system. According to the Retinex theory, the image P can be decomposed into two components: the reflectance component R and the illumination component I. The mathematical description is given in Eq. (1).
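For reference, the decomposition is commonly written in the multiplicative form below (we assume Eq. (1) takes this form, with the product taken pixel-wise). Retinex implementations usually work in the log domain, where removing the illumination becomes a subtraction:

```latex
P(x, y) = R(x, y) \cdot I(x, y)
\quad\Longrightarrow\quad
\log R(x, y) = \log P(x, y) - \log I(x, y)
```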

The core of Retinex-based image enhancement methods is to remove or suppress the influence of the illumination component from the original image P, so the key lies in estimating the illumination accurately. Early Retinex enhancement methods assumed uniform illumination, used isotropic Gaussian filtering (GF) for estimation, and took the reflectance component as the enhanced result. However, real-world illumination is non-uniform, and GF often fails in light transition areas, leading to halo artifacts, edge blurring, and detail loss in enhanced images. To address these issues, anisotropic bilateral filtering (BF) [19] has been applied for illumination estimation, which enhances image details and reduces halos; however, gradient reversal near edges causes artifacts. The NPE method [9] combines neighborhood brightness information with a bright-pass filter and a dual-logarithm transformation to enhance detail and naturalness. Many researchers use guided image filters (GIF) [20] and their improved versions for more accurate illumination estimation [21]. Taking a different approach, Fu et al. [22] designed a weighted variational model for better prior modeling and edge preservation. The structure-revealing low-light image enhancement (SRLIE) [23] method introduces various priors to construct an optimization function, effectively suppressing noise and halos.
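To make the early Gaussian-based scheme concrete, the following sketch estimates the illumination of a single brightness channel with an isotropic Gaussian surround and returns the log-domain reflectance. It is a minimal single-scale Retinex in Python/NumPy under our own assumptions (edge-replicated borders, a kernel truncated at about 3σ, a small `eps` to avoid log(0)); it is not the MSRCR implementation from [7].

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable isotropic Gaussian blur with edge-replicated borders."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(np.float64), r, mode="edge")
    # Filter along rows, then columns; "valid" mode strips the padding again.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def single_scale_retinex(v: np.ndarray, sigma: float = 15.0, eps: float = 1e-6):
    """Return the Gaussian-surround illumination I and log R = log P - log I."""
    illum = gaussian_blur(v, sigma)
    log_r = np.log(v + eps) - np.log(illum + eps)
    return illum, log_r
```

Because the Gaussian surround ignores edges, running it on an image with a sharp bright/dark boundary blurs the estimated illumination across that boundary, so log R undershoots on the dark side and overshoots on the bright side; these are the halo artifacts described above.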

Deep learning-based low-light image enhancement methods have made significant progress recently. Zhang et al. proposed KinD [12], which reduces noise and preserves color fidelity by training on paired images captured under different exposure conditions. However, its enhanced images still exhibit light spots and blurred details in some cases. To address these issues, Zhang et al. [24] introduced an improved version, KinD++, which incorporates a multi-scale illumination attention module to significantly reduce light spots and detail blurring. Nevertheless, when processing images with non-uniform illumination, artifacts may still appear in areas with abrupt lighting transitions. Wu et al. [13] introduced URetinex-Net, a deep unfolding network that restores clear details while suppressing noise, though it tends to over-enhance non-uniformly illuminated images. Cai et al. [25] presented Retinexformer, the first Transformer-based method built on Retinex theory, which improves low-visibility areas, removes noise, and avoids artifacts. Compared to traditional methods, deep learning approaches recover details and restore colors more accurately by learning rich features and contextual information. However, they require large annotated datasets, complex training, and substantial computational resources.

2.3 Guided Filter-Based Illumination Estimation

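The GIF is an anisotropic filter that establishes a local linear relationship between the guide image G and the filtered result image O, as shown in Eq. (2).

$$O_i = a_k G_i + b_k, \quad \forall i \in \omega_k \tag{2}$$

where $\omega_k$ is a square window of radius $r$ centered at pixel $k$, and $a_k$ and $b_k$ are linear coefficients assumed to be constant within $\omega_k$.

The values of $a_k$ and $b_k$ are obtained by minimizing the following cost function between $O$ and the input image $X$ in each window:

$$E(a_k, b_k) = \sum_{i \in \omega_k}\left[\left(a_k G_i + b_k - X_i\right)^2 + \varepsilon a_k^2\right]$$

whose solution is

$$a_k = \frac{\frac{1}{|\omega|}\sum_{i \in \omega_k} G_i X_i - \mu_k \bar{X}_k}{\sigma_k^2 + \varepsilon}, \qquad b_k = \bar{X}_k - a_k \mu_k$$

where $\mu_k$ and $\sigma_k^2$ are the mean and variance of $G$ in $\omega_k$, $\bar{X}_k$ is the mean of $X$ in $\omega_k$, $|\omega|$ is the number of pixels in the window, and $\varepsilon$ is the regularization parameter. Because each pixel $i$ is covered by several windows, the final output averages their coefficients: $O_i = \bar{a}_i G_i + \bar{b}_i$.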

GIF uses the guide image’s structural information to adjust weights. GIF ensures that the output image shares the same gradient edges as the guide image, effectively avoiding the gradient reversal problem in BF [26]. However, GIF uses the same regularization parameter in all filtering windows, overlooking regional differences in non-uniform illumination. This results in halo artifacts and edge blurring in areas with significant texture variations. To address this, researchers have developed improved versions of GIF for illumination estimation. Weighted guided image filtering (WGIF) [27] introduces edge-aware weights and adaptively adjusts the regularization factor, better preserving edges and preventing blurring. Gradient domain guided image filtering (GGIF) [28] further enhances filtering performance by incorporating multi-scale edge-aware weights and edge-aware constraints. However, these filters have limited local perception capabilities and are highly sensitive to the regularization parameter. As the regularization parameter increases, halo artifacts become more severe. Effective guided image filtering (EGIF) [29] incorporates the average of local variances of all pixels into the cost function, accurately preserving edges. This approach is more robust to the regularization parameter and significantly reduces halo artifacts. Therefore, we employ EGIF for illumination estimation.
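The classic GIF of He et al. [20] can be sketched in a few lines with box (window-mean) filters, as below. This is our own NumPy illustration of the standard GIF, not EGIF: it uses a single global `eps`, which is exactly the property criticized above, whereas WGIF, GGIF, and EGIF replace that fixed term with edge-aware weights that this sketch does not implement.

```python
import numpy as np


def box_mean(img: np.ndarray, r: int) -> np.ndarray:
    """Mean over each (2r+1) x (2r+1) window via an integral image.

    Windows are clipped at the image border and divided by their true pixel count.
    """
    h, w = img.shape
    ii = np.zeros((h + 1, w + 1))
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    y0 = np.clip(np.arange(h) - r, 0, h)
    y1 = np.clip(np.arange(h) + r + 1, 0, h)
    x0 = np.clip(np.arange(w) - r, 0, w)
    x1 = np.clip(np.arange(w) + r + 1, 0, w)
    total = ii[y1][:, x1] - ii[y0][:, x1] - ii[y1][:, x0] + ii[y0][:, x0]
    count = np.outer(y1 - y0, x1 - x0)
    return total / count


def guided_filter(guide: np.ndarray, src: np.ndarray, r: int, eps: float) -> np.ndarray:
    """Classic guided image filter: O = mean(a) * G + mean(b)."""
    mean_g = box_mean(guide, r)
    mean_p = box_mean(src, r)
    cov_gp = box_mean(guide * src, r) - mean_g * mean_p
    var_g = box_mean(guide * guide, r) - mean_g ** 2
    a = cov_gp / (var_g + eps)   # the same eps is used in every window
    b = mean_p - a * mean_g
    # Each pixel lies in many windows, so average their coefficients.
    return box_mean(a, r) * guide + box_mean(b, r)
```

With the input as its own guide and a very small `eps`, the filter leaves a step edge essentially unchanged, illustrating its edge-preserving behavior; raising `eps` trades edge fidelity for smoothing uniformly over the whole image.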

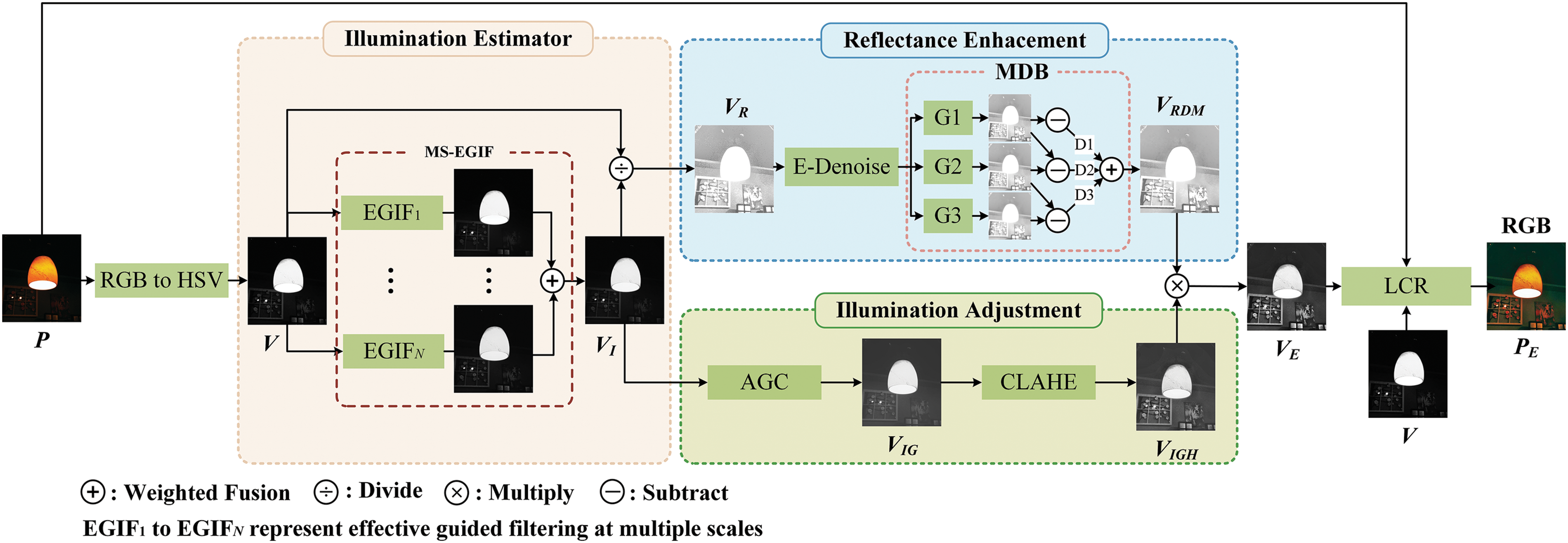

Fig. 2 shows the framework of the proposed local content-aware enhancement method for low-light images with non-uniform illumination. The input image P is converted from the RGB to the HSV color model, and only the value (brightness) channel V is enhanced. First, multi-scale effective guided image filtering (MS-EGIF) estimates the illumination component

Figure 2: Framework of local content-aware enhancement for low-light images with non-uniform illumination
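Enhancing only V can be done without a full HSV round trip. In HSV, every RGB component of a pixel is proportional to V for fixed H and S, so replacing V by an enhanced value is equivalent to scaling the pixel's RGB values by the ratio of new to old V. The sketch below (Python/NumPy, our own helper names, RGB assumed to lie in [0, 1]) shows this; the text does not state whether the authors implement the conversion this way.

```python
import numpy as np


def value_channel(rgb: np.ndarray) -> np.ndarray:
    """HSV value channel: V = max(R, G, B) for an H x W x 3 image in [0, 1]."""
    return rgb.max(axis=2)


def replace_value(rgb: np.ndarray, v_new: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Return the RGB image whose HSV value is v_new, with H and S unchanged.

    Since every RGB component is proportional to V, scaling by v_new / V matches
    converting to HSV, swapping V, and converting back. Pixels with V = 0
    (undefined hue and saturation) simply stay black here.
    """
    v = value_channel(rgb)
    scale = v_new / np.maximum(v, eps)
    return np.clip(rgb * scale[..., None], 0.0, 1.0)
```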

We introduce the MS-EGIF method for illumination estimation. This method enhances the accuracy of illumination estimation by utilizing EGIF across multiple scales to capture both local and global illumination variations, achieving superior detail smoothing and edge preservation.

In the MS-EGIF method, multiple appropriate scale parameters are initially selected using a scale-adaptive selection strategy. Based on these scale parameters, illumination estimation is subsequently conducted using effective guided image filtering. Finally, the illumination components estimated at different scales are weighted and averaged to derive the final illumination component


The scale-adaptive selection strategy adjusts the scale parameters to the image size, which improves the perceptual accuracy of the illumination estimation. As indicated by Eq. (5), smaller images receive smaller scale parameters, which avoids excessive smoothing and preserves crucial edge information. Conversely, larger images receive larger scale parameters, which capture a broader range of illumination variations while still smoothing details. Unlike fixed scale parameters, this strategy balances edge preservation and detail smoothing across images of varying sizes, thereby improving the accuracy of illumination estimation.
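The multi-scale idea can be sketched as a weighted average of single-scale estimates whose window radii grow with image size. Everything specific here is hypothetical: Eq. (5) and the weights are not reproduced in this text, so `adaptive_radii` uses a made-up rule (radii proportional to the shorter image side), the weights default to equal values, and `box_smooth` is only a stand-in for EGIF so that the example runs on its own.

```python
import numpy as np
from typing import Callable, List, Optional, Sequence, Tuple

# (guide, src, radius) -> smoothed image; an EGIF implementation would plug in here.
Smoother = Callable[[np.ndarray, np.ndarray, int], np.ndarray]


def adaptive_radii(shape: Tuple[int, ...],
                   fractions: Sequence[float] = (0.01, 0.03, 0.08)) -> List[int]:
    """HYPOTHETICAL scale rule (not Eq. (5)): radii proportional to the shorter side."""
    short_side = min(shape[:2])
    return [max(1, int(round(f * short_side))) for f in fractions]


def box_smooth(guide: np.ndarray, src: np.ndarray, r: int) -> np.ndarray:
    """Stand-in smoother: plain box mean of src that ignores guide. Replace with EGIF."""
    h, w = src.shape
    padded = np.pad(src.astype(np.float64), r, mode="edge")
    acc = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (2 * r + 1) ** 2


def multi_scale_illumination(v: np.ndarray, smooth: Smoother, radii: Sequence[int],
                             weights: Optional[Sequence[float]] = None) -> np.ndarray:
    """Weighted average of self-guided single-scale illumination estimates."""
    if weights is None:
        weights = [1.0] * len(radii)          # equal weights (assumption)
    w = np.asarray(weights, dtype=np.float64)
    w = w / w.sum()                           # normalize so the weights sum to 1
    estimates = [smooth(v, v, r) for r in radii]
    return sum(wi * est for wi, est in zip(w, estimates))
```

Passing the smoother as a parameter keeps the averaging logic independent of the filter, so the same function works whether the per-scale estimator is GIF, WGIF, or EGIF.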

The EGIF used in the MS-EGIF method is an outstanding edge-preserving smoothing filter. EGIF uses the average of local variances of all pixels as edge-aware weights


According to Eqs. (8) and (9), if pixel

Following these steps, the estimated illumination component

After obtaining the illumination component, our method applies adaptive illumination adjustment, which sets the enhancement magnitude according to each pixel's brightness. First, the brightness of low-light regions is raised through adaptive gamma correction (AGC), improving the overall visibility of these areas. Because AGC alone may not recover local details, the image's local contrast is then enhanced with contrast limited adaptive histogram equalization (CLAHE), particularly in low-light regions, making the image details clearer.
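CLAHE differs from plain HE mainly in clipping the histogram before building the mapping, which bounds how much any gray level can be stretched and hence limits noise amplification. The simplified sketch below applies that clip-and-redistribute step once, globally; full CLAHE additionally works on tiles and interpolates between them. The function name and the `clip_limit` convention (a fraction of the pixel count) are our own; in practice one would typically use an existing implementation such as OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)`.

```python
import numpy as np


def clip_limited_equalize(img_u8: np.ndarray, clip_limit: float = 0.01) -> np.ndarray:
    """Global, single-pass contrast-limited histogram equalization (simplified CLAHE).

    Bins are clipped at clip_limit * N pixels, the clipped excess is spread evenly
    over all 256 bins, and the mapping is the normalized cumulative histogram.
    """
    hist = np.bincount(img_u8.ravel(), minlength=256).astype(np.float64)
    limit = max(clip_limit * img_u8.size, 1.0)            # max count allowed per bin
    excess = np.maximum(hist - limit, 0.0).sum()           # counts above the limit
    hist = np.minimum(hist, limit) + excess / hist.size    # redistribute uniformly
    cdf = np.cumsum(hist)
    lut = np.round(cdf / cdf[-1] * 255.0).astype(np.uint8)
    return lut[img_u8]
```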

The adaptive gamma correction function adjusts the gamma correction parameter adaptively based on the value of each pixel in the illumination component image. It corrects the local brightness of the image pixel by pixel to achieve varying degrees of enhancement in dark and bright regions, as shown in Eqs. (10) and (11).
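Eqs. (10) and (11) are not reproduced in this text, so the sketch below uses a hypothetical stand-in with the qualitative behavior described: the exponent is computed per pixel from the illumination value, falls below 1 for pixels darker than a reference level `m` (brightening them), equals 1 at `m`, and rises above 1 for brighter pixels (mildly suppressing them). The form `alpha ** ((m - L) / m)` and the parameters `alpha` and `m` are our assumptions, not the authors' AGC.

```python
import numpy as np
from typing import Optional


def adaptive_gamma(illum: np.ndarray, alpha: float = 0.5,
                   m: Optional[float] = None) -> np.ndarray:
    """HYPOTHETICAL pixel-wise adaptive gamma correction for illumination in [0, 1].

    gamma(L) = alpha ** ((m - L) / m) with 0 < alpha < 1:
    L < m gives gamma < 1 (brighten), L = m gives gamma = 1, L > m gives gamma > 1.
    """
    illum = np.clip(illum, 0.0, 1.0)
    if m is None:
        m = float(illum.mean())       # default reference level: mean illumination
    m = max(m, 1e-6)                  # avoid division by zero for black images
    gamma = alpha ** ((m - illum) / m)
    return illum ** gamma
```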


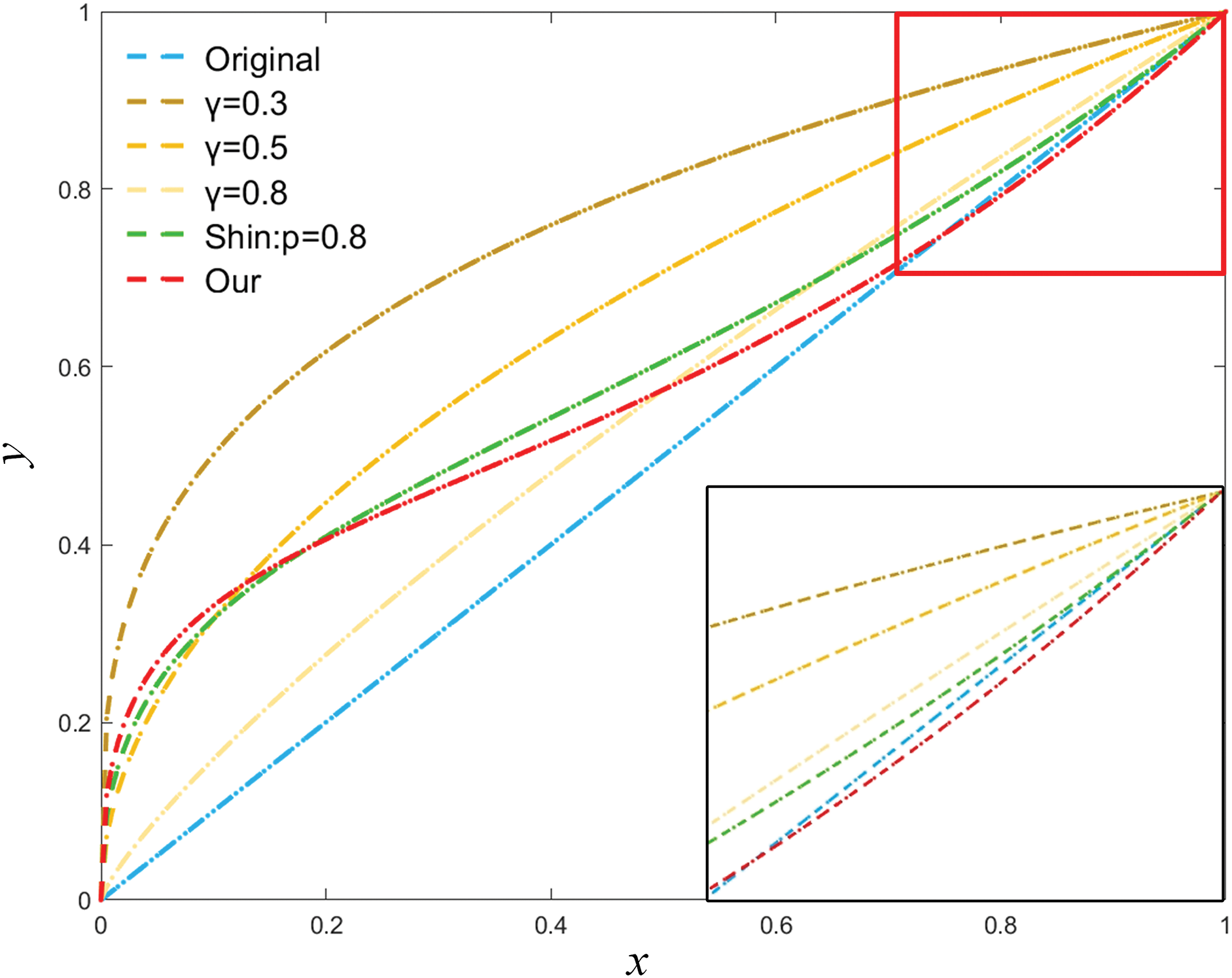

Fig. 3 shows the AGC designed in this study (red curve), the standard gamma correction function (

Figure 3: The different gamma correction function curves. The horizontal axis

Subsequently, the adjusted illumination component

Classical Retinex-based enhancement methods do not consider noise, leading to noise amplification. Direct denoising causes detail loss. We propose an effective reflectance component adjustment method that considers both noise and details, resulting in a reflectance component with reduced noise and clear details.

First, EGIF is used to denoise the reflectance component (E-Denoise), as shown in Eq. (13). EGIF effectively senses noise and textures. It retains as much edge and texture information as possible during denoising.
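Denoising with a guided filter amounts to using the image as its own guide. The sketch below shows this with the classic GIF rather than EGIF (EGIF's edge-aware weighting is not reproduced here), applied to a reflectance map `refl` in Python/NumPy; the function name and the defaults for `r` and `eps` are our own. The mechanism is the same in spirit: flat, noisy windows are replaced by their local mean, while windows straddling strong edges pass the input through.

```python
import numpy as np


def _box(img: np.ndarray, r: int) -> np.ndarray:
    """Mean over (2r+1) x (2r+1) windows with edge-replicated borders."""
    h, w = img.shape
    padded = np.pad(img.astype(np.float64), r, mode="edge")
    acc = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (2 * r + 1) ** 2


def self_guided_denoise(refl: np.ndarray, r: int = 2, eps: float = 1e-3) -> np.ndarray:
    """Self-guided filtering (guide = input), classic GIF form.

    Where local variance << eps (flat but noisy), a -> 0 and the output tends to
    the local mean; where variance >> eps (strong edges), a -> 1 and edges are kept.
    """
    mean = _box(refl, r)
    var = _box(refl * refl, r) - mean ** 2
    a = var / (var + eps)
    b = (1.0 - a) * mean
    return _box(a, r) * refl + _box(b, r)
```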

A multi-scale local detail enhancement method (MDB) [32] is applied to the denoised reflectance component

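The MDB equations are not reproduced in this text, so the following sketch follows a multi-scale detail boosting formulation that is common in the low-light enhancement literature; we have not verified that it matches [32] exactly. Three Gaussian blurs B1, B2, B3 of the input X (σ = 1, 2, 4) give detail layers D1 = X − B1, D2 = B1 − B2, D3 = B2 − B3, which are combined as D* = (1 − w1·sgn(D1))·D1 + w2·D2 + w3·D3 with w1 = 0.5, w2 = 0.5, w3 = 0.25 and added back to X. The sigmas, weights, and function names are assumptions.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur (kernel truncated at ~3 sigma, edge-replicated borders)."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def multi_scale_detail_boost(img: np.ndarray, sigmas=(1.0, 2.0, 4.0),
                             w=(0.5, 0.5, 0.25)) -> np.ndarray:
    """Add a weighted sum of three Gaussian detail layers back to the input."""
    b1, b2, b3 = (gaussian_blur(img, s) for s in sigmas)
    d1, d2, d3 = img - b1, b1 - b2, b2 - b3
    # Positive fine-scale details are scaled by (1 - w1), negative ones by (1 + w1).
    boosted = (1.0 - w[0] * np.sign(d1)) * d1 + w[1] * d2 + w[2] * d3
    return img + boosted
```

The output is not clipped; in a full pipeline it would normally be clipped to the valid intensity range before recombination.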

Finally, the enhanced illumination component

The enhanced value channel image

This section validates the effectiveness of the proposed method by comparing it with various existing low-light image enhancement methods. These include traditional methods such as MSRCR [7], MF [34], SRLIE [23], Hong's method [35], and Frequency [36], as well as deep learning methods such as Retinex-Net [11], URetinex-Net [13], Retinexformer [25], global structure-aware diffusion (GSAD) [37], and the double domain guided network (DDNet) [38]. The code and results of the comparison methods were obtained from the original authors' websites, and the default parameters specified in their articles were used. All experiments were conducted on the following setup: Intel Core i7 CPU @ 2.40 GHz, 16 GB RAM, Windows 11 x64 operating system, and the MATLAB R2020a platform.

To ensure data diversity, this study used images from six low-light image datasets for subjective and objective evaluations: DICM [39], LIME [40], MEF [41], NPE [9], VV¹, and LOL [11]. The DICM dataset contains 69 images, of which 44 were captured under low-light conditions. The LIME dataset includes 10 low-light images spanning various scenes. The MEF dataset comprises 9 indoor and 8 outdoor low-light images. The NPE dataset includes 85 low-light images captured under diverse weather and lighting conditions. The VV dataset comprises 24 real multi-exposure images at various resolutions, featuring indoor and outdoor scenes with people and natural landscapes. The LOL dataset contains 500 pairs of low-light and normal-light images. Together, these datasets cover various lighting conditions and scenes, including indoor objects and decor, outdoor buildings, and natural landscapes. Additionally, we compared the average objective metrics and running times of all methods on the DICM, LIME, and MEF datasets.

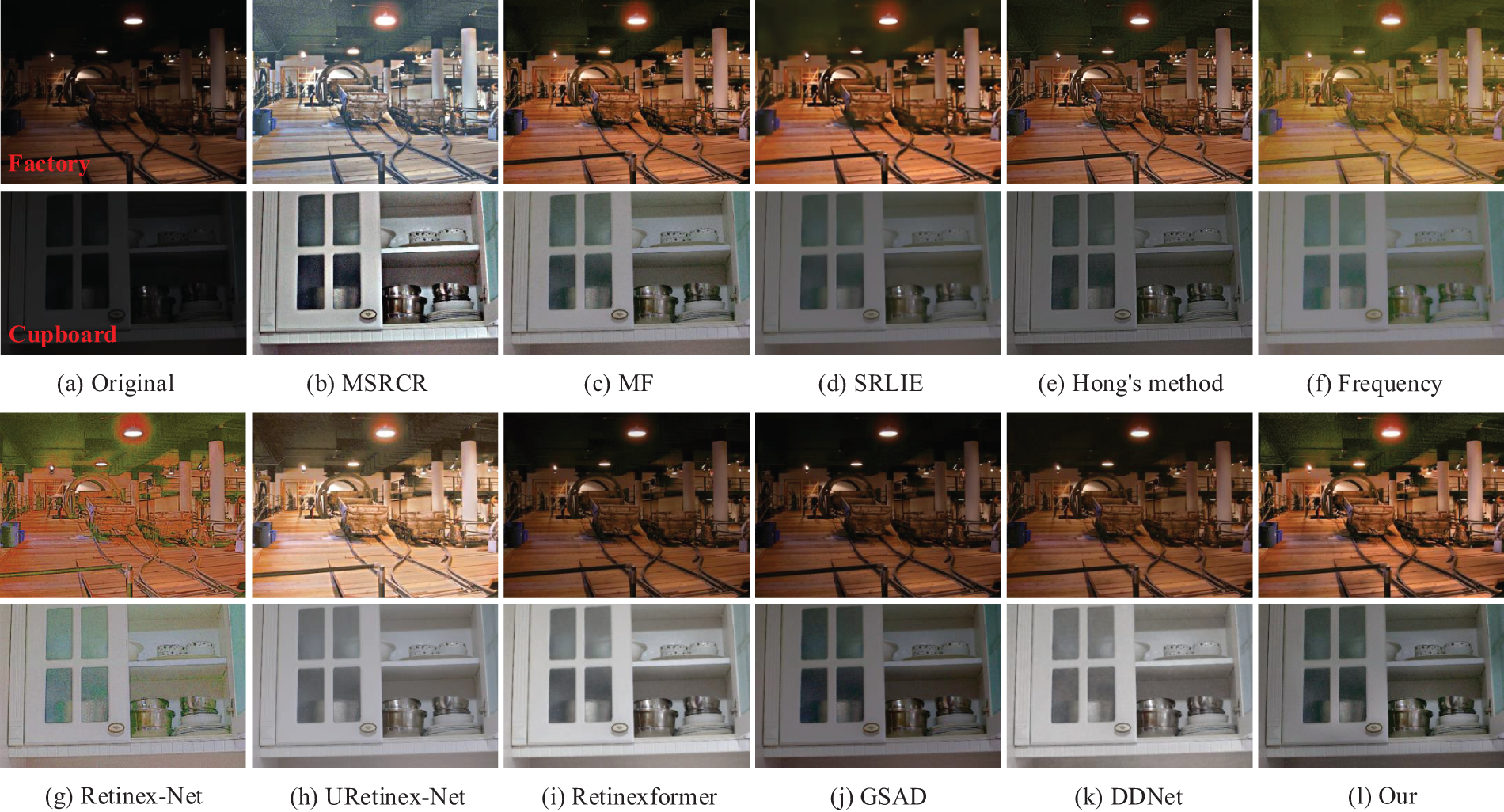

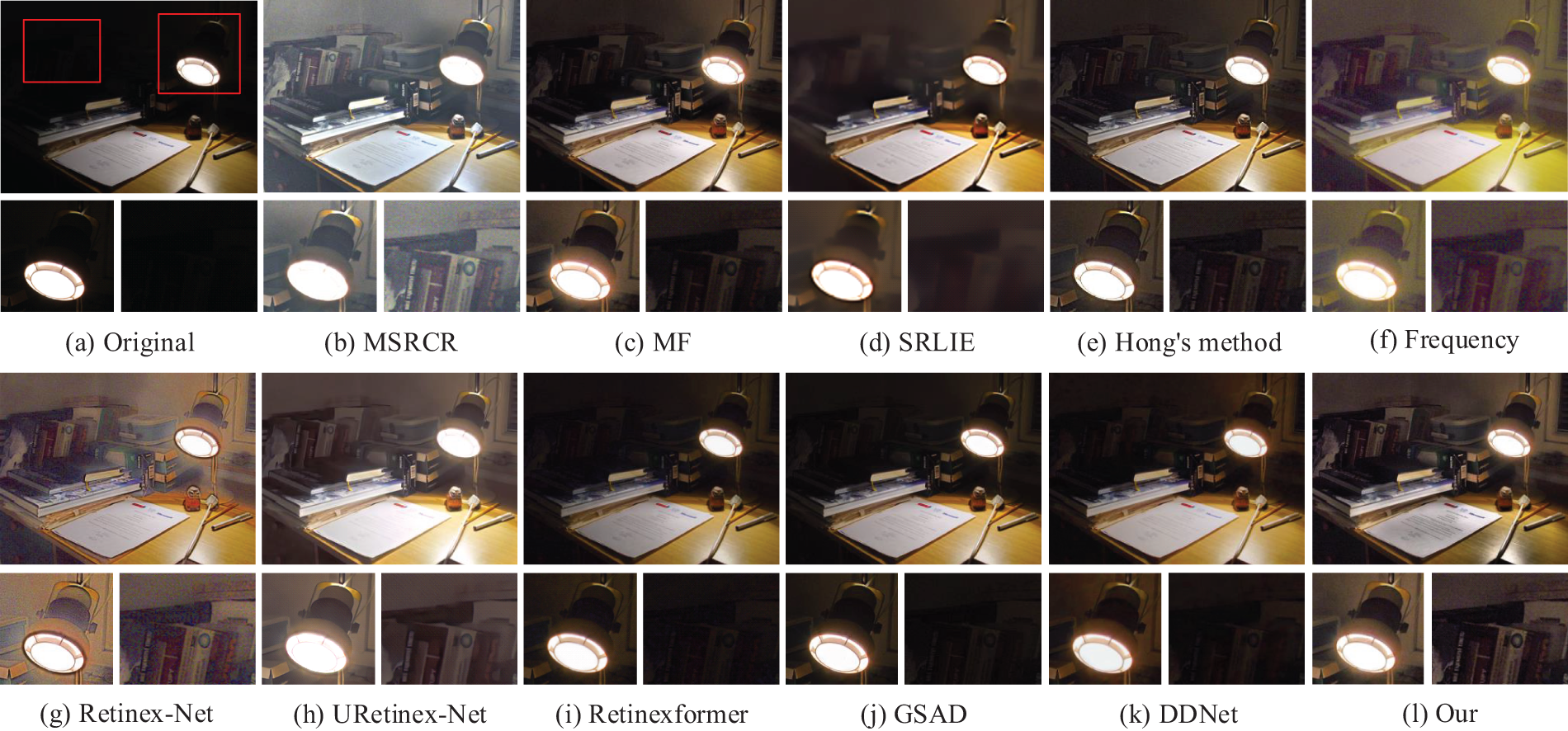

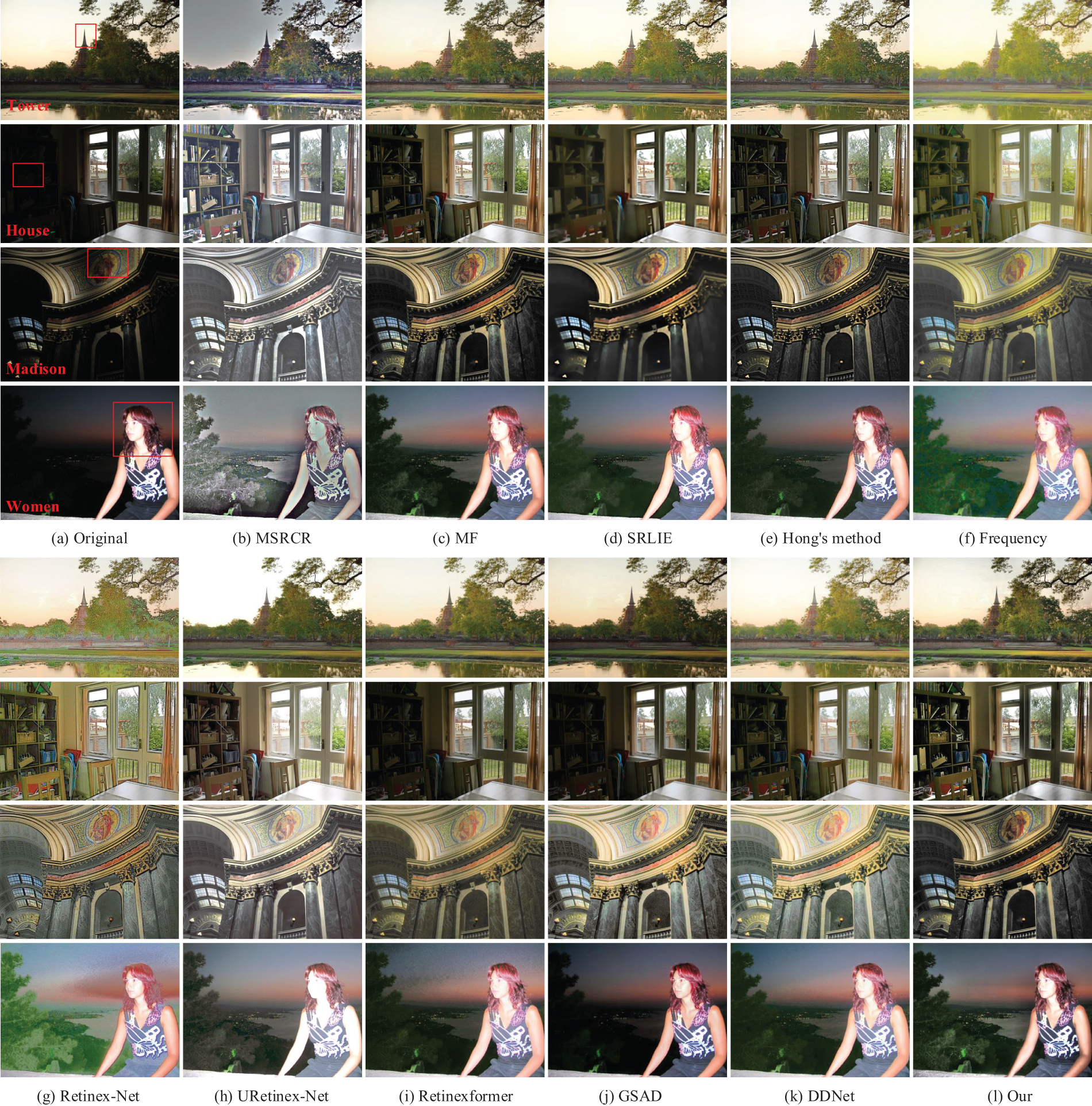

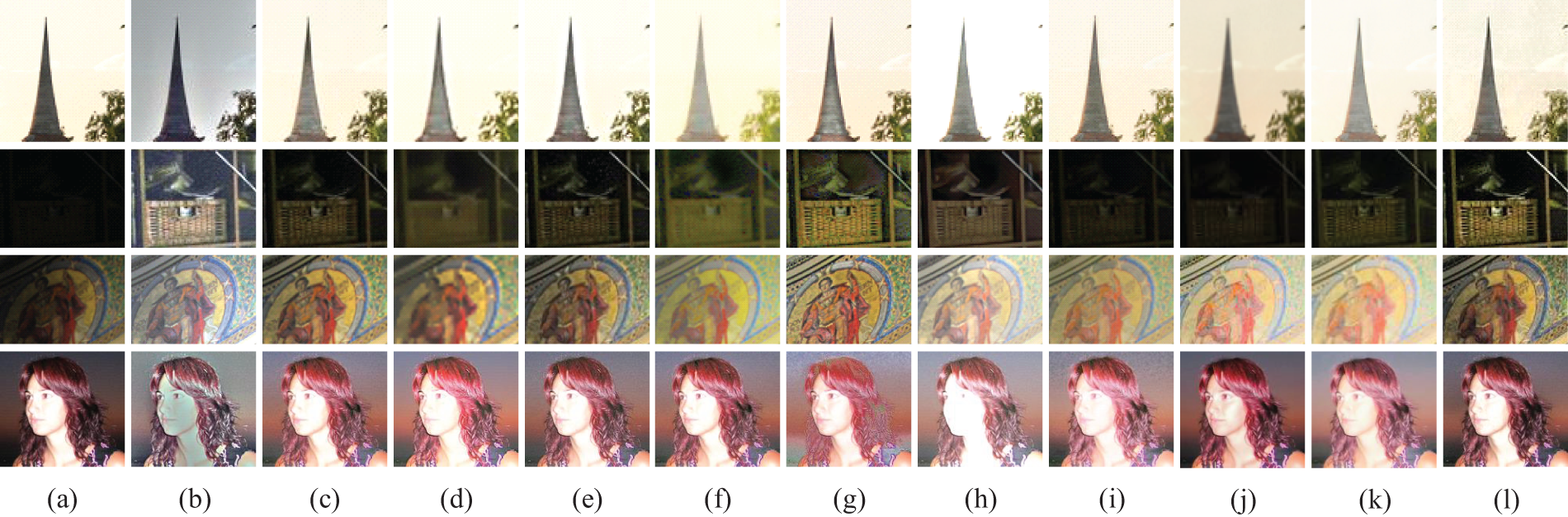

Figs. 4–8 illustrate the enhancement results of our method and other methods for uniform and non-uniform low-illumination color images. Figs. 4 and 7 display the enhancement results of images with uniform and non-uniform illumination, respectively. Fig. 8 displays the local details within the red-boxed regions of the images shown in Fig. 7. Figs. 5 and 6 show the enhancement outcomes of the non-uniform illumination images Candle and Cadik, along with detailed comparisons of local regions.

Figure 4: The enhanced results of different methods in images with uniform low illumination

Figure 5: The detailed enhanced results of different methods in Candle

Figure 6: The detailed enhanced results of different methods in Cadik

Figure 7: The enhanced results of different methods in images with non-uniform low illumination

Figure 8: The detailed enhanced results of different methods in images with non-uniform low illumination: (a) Original images, (b) MSRCR, (c) MF, (d) SRLIE, (e) Hong’s method, (f) Frequency, (g) Retinex-Net, (h) URetinex-Net, (i) Retinexformer, (j) GSAD, (k) DDNet, (l) Ours

As shown in Fig. 4, all methods effectively enhance the brightness of images with uniform low illumination, revealing more detailed information. Among them, MSRCR, Frequency, Retinex-Net, and URetinex-Net produce the largest brightness improvements, but each introduces some degree of color distortion. Although SRLIE effectively reduces noise, it blurs image details, as shown in Fig. 4d. In comparison, MF, Hong's method, and the proposed method achieve satisfactory enhancement, although MF produces slightly lower image sharpness than the proposed method, as seen in Fig. 4c. Hong's method and GSAD do not enhance brightness sufficiently for images taken in extremely low light, as shown in Fig. 4e,j. Retinexformer and DDNet improve brightness in uniformly low-light images and keep the colors natural; however, DDNet reduces image sharpness, as shown in Fig. 4k.

As shown in Figs. 5–8, MSRCR, Retinex-Net, and URetinex-Net effectively enhance brightness in the dark regions of images with non-uniform illumination. However, MSRCR and Retinex-Net exhibit severe halo artifacts, color distortion, and noise amplification. URetinex-Net suffers substantial detail loss in bright regions and low contrast, giving the image an overall washed-out appearance. In contrast, MF, SRLIE, Hong's method, Frequency, DDNet, and the proposed method handle non-uniform illumination effectively, enhancing brightness in dark regions without over-enhancing bright areas. These methods still have some limitations. For instance, SRLIE and Hong's method fail to remove edge artifacts, with halos still visible around the candle base in Fig. 5d,e. SRLIE also blurs details significantly in dark regions, as shown in Fig. 7d. The Frequency method produces blurred edges and unnatural colors, as shown in Fig. 7f. The detail sharpness of images enhanced by DDNet is slightly inferior to that of the proposed method, as shown in Fig. 7k. Additionally, Retinexformer and GSAD enhance dark regions only to a limited extent, leaving details obscured, as illustrated by the Cadik image in Fig. 6i,j.

Overall, our method demonstrates significant advantages in handling images with non-uniform illumination. The images enhanced by our method demonstrate no significant halo artifacts in areas with abrupt changes in illumination, and the colors are rendered more vividly and authentically. Both dark and bright regions are well-enhanced. For example, the inner frame of the lamp in the Cadik image in Fig. 6l and the clouds in the Tower image in Fig. 7l are well-defined. Additionally, our method preserves precise details in enhanced images, as seen in the books in the Cadik image in Fig. 6l and the portrait in the Madison image in Fig. 7l. However, compared to URetinex-Net and Retinexformer, our algorithm still has room for improvement in noise reduction in extremely dark regions of non-uniform illumination images, as illustrated by the magnified details in the Cadik image in Fig. 6l and the wall in the House image in Fig. 7l.

To comprehensively and objectively evaluate the enhancement effects of the proposed method and its comparison methods, we will use the following seven objective metrics to measure the quality of the enhanced images from detail richness, sharpness, color, contrast, and naturalness perspectives. (1) Information entropy (IE) is employed to assess the richness of image information. A greater value of IE indicates a richer abundance of detail. (2) The energy of the gradient (EOG) is utilized to evaluate image sharpness. A higher value of EOG reflects improved image sharpness. (3)
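The first two metrics are simple to compute. The sketch below uses the Shannon entropy (in bits) of the 256-bin gray-level histogram for IE, and the sum of squared horizontal and vertical forward differences for EOG. Published EOG definitions vary slightly (for example, in normalization or border handling), so these exact forms are our assumption rather than the authors' evaluation code.

```python
import numpy as np


def information_entropy(img_u8: np.ndarray) -> float:
    """IE: Shannon entropy (bits) of the 256-bin gray-level histogram."""
    hist = np.bincount(img_u8.ravel(), minlength=256).astype(np.float64)
    p = hist[hist > 0] / hist.sum()        # drop empty bins (0 * log 0 = 0)
    return float(-(p * np.log2(p)).sum())


def energy_of_gradient(img: np.ndarray) -> float:
    """EOG: sum of squared forward differences along x and y."""
    f = img.astype(np.float64)
    dx = f[:, 1:] - f[:, :-1]
    dy = f[1:, :] - f[:-1, :]
    return float((dx ** 2).sum() + (dy ** 2).sum())
```

For a 2 × 2 image whose left column is 0 and right column is 255, IE is exactly 1 bit (two equally likely levels) and EOG is 2 × 255².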

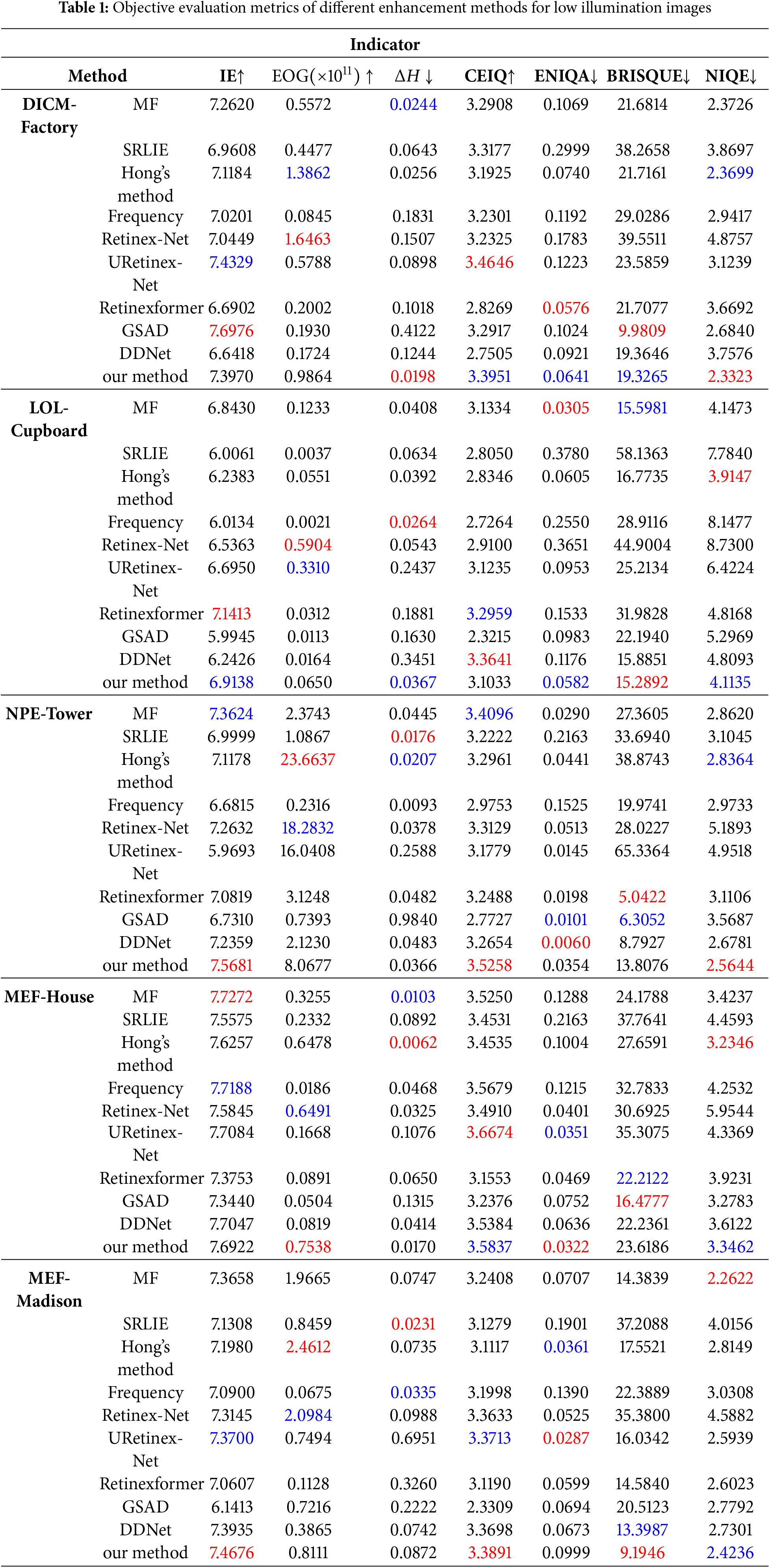

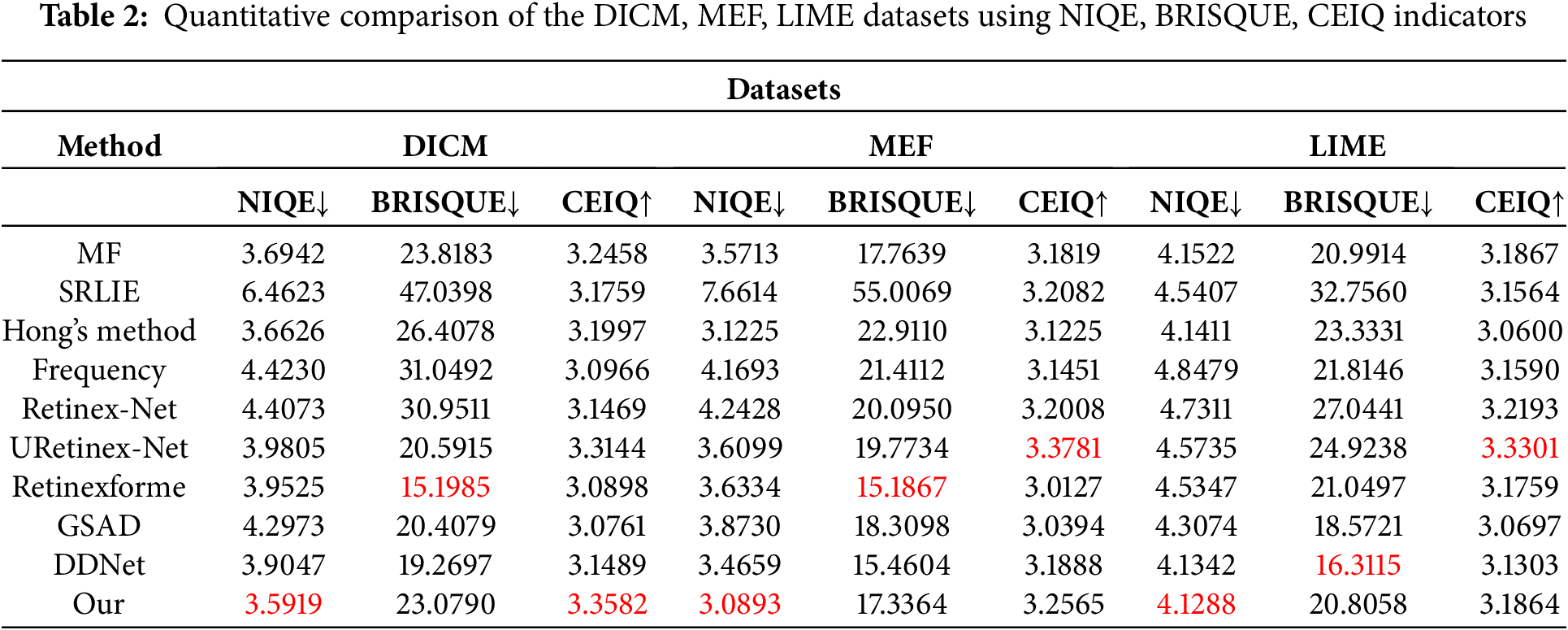

Table 1 presents the objective evaluation metrics for selected images used in the subjective assessment of this study. Tables 2 and 3 display the average NIQE, BRISQUE, and CEIQ values, along with the average time performance, for various methods applied to images from the DICM, MEF, and LIME datasets. The best values are highlighted in red, and the second-best values are highlighted in blue. Subjective evaluations reveal that images enhanced by MSRCR exhibit severe distortion, adversely affecting visual perception. In contrast, the other comparative methods, including ours, show favorable enhancement results. To further evaluate the strengths and weaknesses of these methods, various objective metrics are used to compare image detail richness, clarity, color, contrast, and naturalness.

As shown in Table 1, images processed by our method under various lighting conditions achieved the best or second-best results across multiple metrics. This indicates that our approach performs well regarding image detail richness, sharpness, color fidelity, contrast, and naturalness under diverse lighting conditions, consistent with subjective evaluations. Specifically, Retinex-Net and Frequency methods lead to color distortion in enhanced images, reflected in their higher

As illustrated in Table 2, our method consistently achieves the best NIQE scores across three datasets and obtains optimal or near-optimal values in the BRISQUE and CEIQ metrics. This indicates that our method reliably enhances low-light images under various illumination conditions and across diverse scenes. Although our method does not achieve the highest score for every metric on each dataset, it provides the best overall enhancement effect with better robustness.

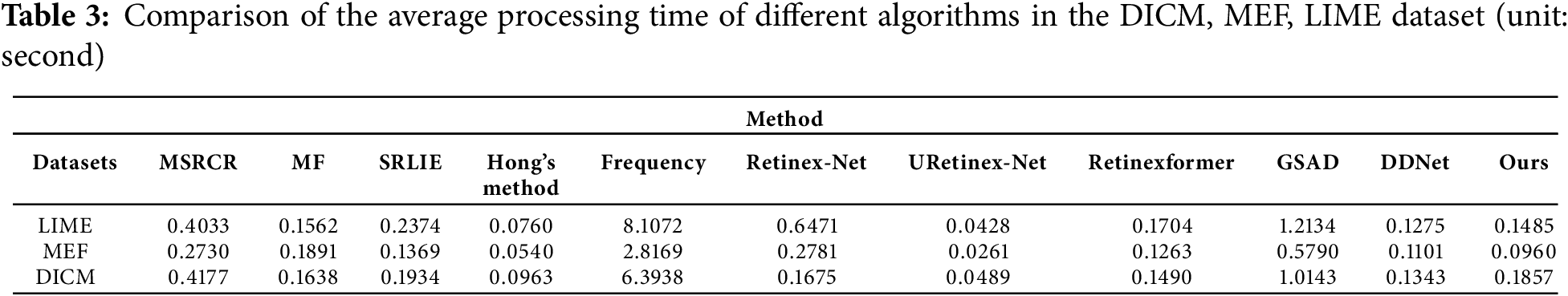

Table 3 presents the average processing time for enhancing low-light images in the DICM, MEF, and NPE datasets using the algorithms discussed. As shown in Table 3, Hong’s method and URetinex-Net demonstrate shorter processing times, whereas MSRCR, Frequency, Retinex-Net, and GSAD require longer processing times. The runtime of MF, Retinexformer, DDNet, and our method is comparable. Considering both subjective and objective evaluations, as well as the time efficiency of each algorithm, our method achieves the best overall performance.

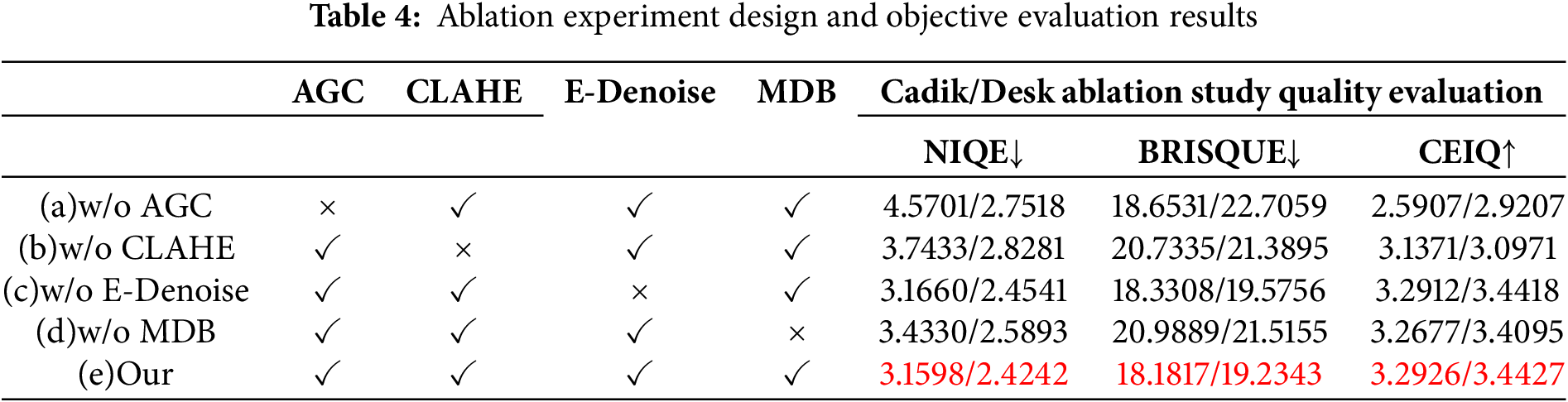

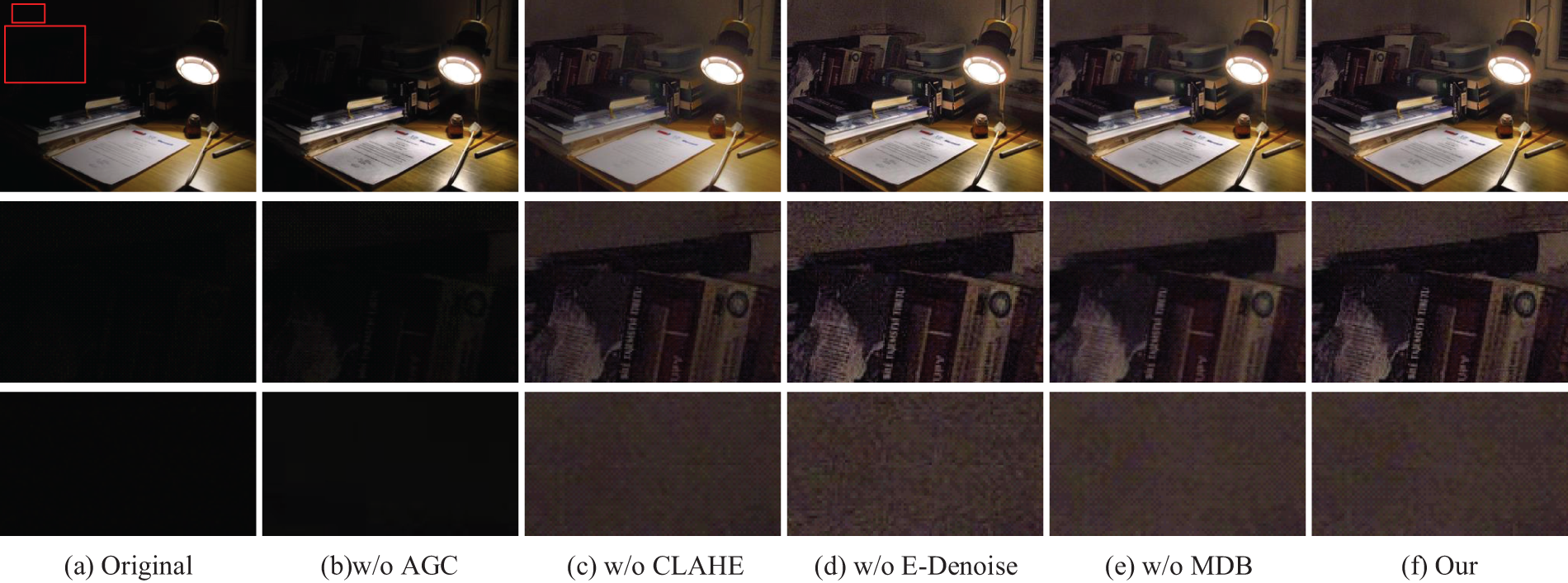

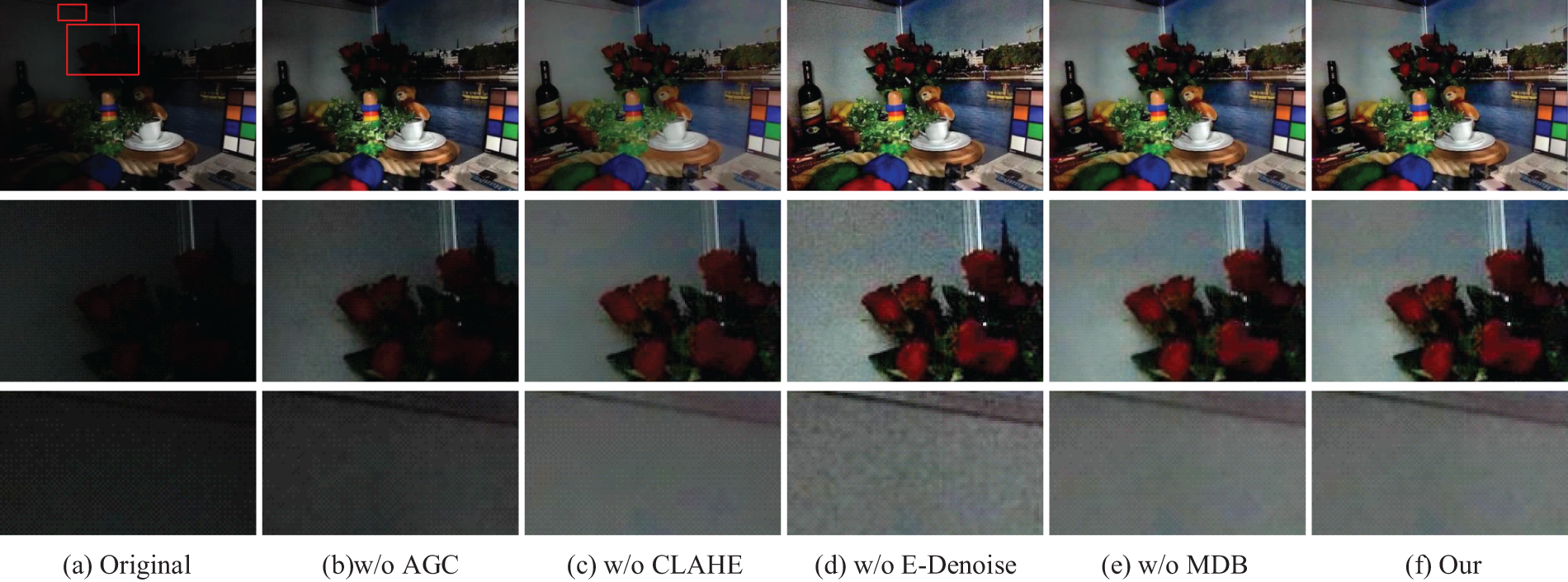

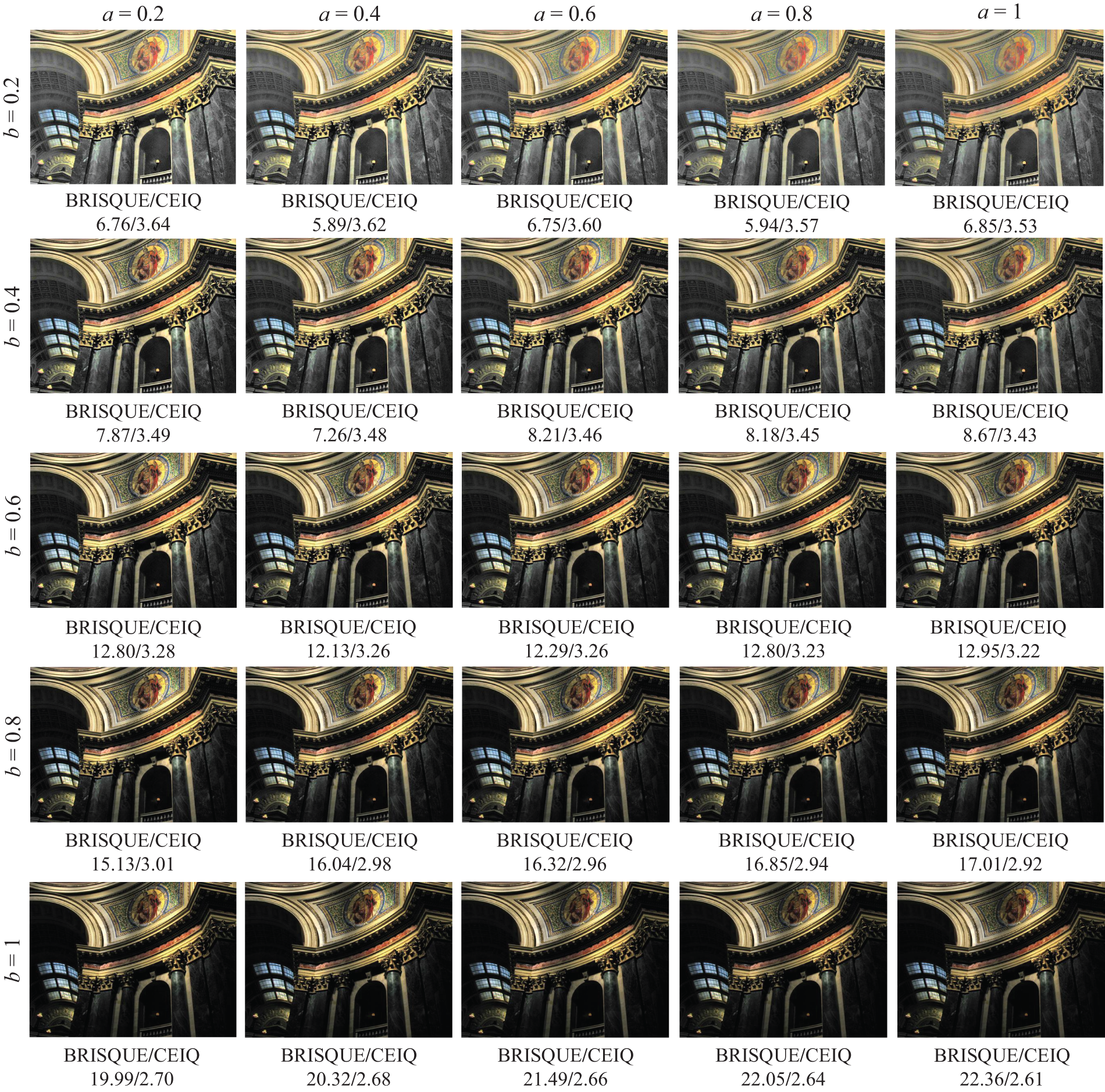

To verify the necessity of each processing module used in our method, we conducted an ablation experiment. The experiments include: (a) without adaptive gamma correction (w/o AGC), (b) without contrast limited adaptive histogram equalization (w/o CLAHE), (c) without EGIF denoising (w/o ED), (d) without multi-scale detail boosting (w/o MDB), and (e) the complete proposed method. The ablation study design is shown in Table 4. Due to space constraints, only two images, Cadik and Desk, from different scenes are presented for the ablation study. The final experimental results are displayed in Figs. 9 and 10.

Figure 9: Ablation experiment results in Cadik image

Figure 10: Ablation experiment results in Desk image

Figs. 9 and 10 show the results of the ablation study for each design scheme, leading to the following conclusions: (a) Without AGC, the overall brightness of the image is low and dark areas are insufficiently enhanced, as seen in the books against the wall in Fig. 9a. (b) Without CLAHE, the image brightness is significantly enhanced, but local details are insufficiently recovered. (c) Without E-Denoise, the image details are richer and edge textures are clearer, but the noise is significantly amplified and the image looks unnatural (e.g., the wall sections in Figs. 9c and 10c). (d) Without MDB, the noise is effectively filtered out, but detailed texture information is blurred, resulting in a less clear image. (e) Our method, with the complete set of processing modules, performs better in brightness, contrast enhancement, denoising, and detail enhancement. Additionally, Table 4 presents the objective evaluation metrics for all images in Figs. 9 and 10; our complete method achieves the best values in multiple metrics, outperforming the ablated variants.

4.5 Parameter Analysis Experiment

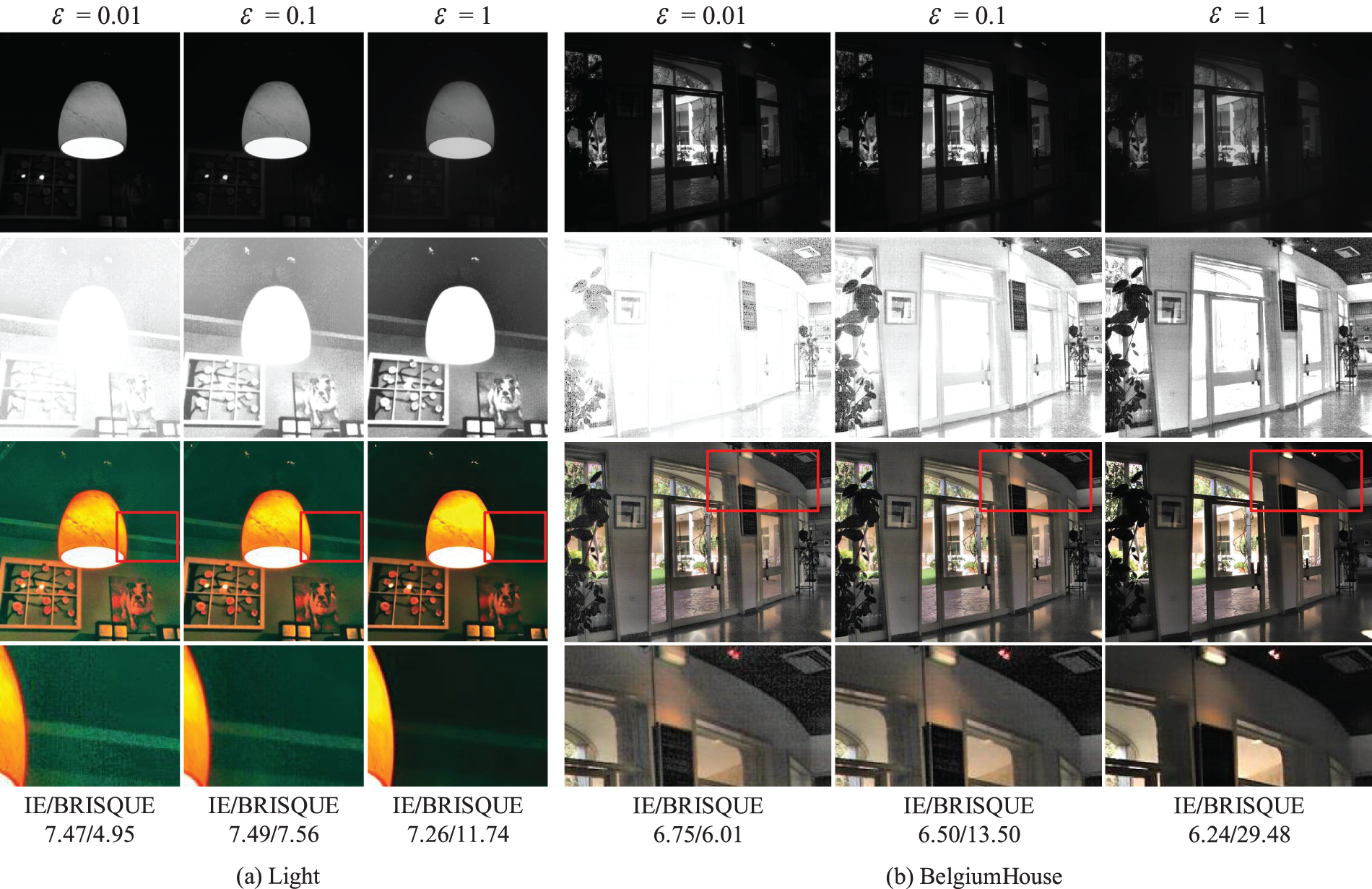

In this section, we evaluate the relevant parameters in the paper, specifically the regularization parameter

First, we assess the regularization parameter

Figure 11: The enhanced results of different regularization parameter values

In Eq. (11), the AGC function determines the gamma correction parameter primarily based on the parameters

Figure 12: The enhanced results of different parameter values

As shown in Fig. 12, parameter

Despite the strong performance of deep learning in image enhancement, traditional methods remain prevalent in resource-limited scenarios such as embedded devices, industrial inspection, and mobile processing. We propose a local content-aware enhancement method for low-light images with non-uniform illumination. MS-EGIF is used for localized illumination estimation, improving its accuracy and effectively suppressing the halo artifacts that often appear when non-uniform illumination images are enhanced. To avoid over-enhancing bright regions, an AGC function is designed to brighten dark areas while restraining bright regions. EGIF and MDB are used to adjust the reflectance component, reducing noise while preserving image details.

Experimental results show that the proposed method efficiently enhances images captured under both uniform and non-uniform illumination. Compared to existing methods, the resulting images show significant improvements in suppressing halo artifacts and over-enhancement in bright regions. Additionally, the images exhibit natural colors and richer, more precise details. Although the method achieves satisfactory enhancement results, its denoising in low-light regions of images with extremely non-uniform illumination is still limited. Resolving this issue will be part of our future work.

Acknowledgement: None.

Funding Statement: The authors received no specific funding for this study.

Author Contributions: The authors confirm contribution to the paper as follows: study conception and design: Qi Mu, Zhanli Li; data collection: Yuanjie Guo, Xinyue Wang; analysis and interpretation of results: Qi Mu, Yuanjie Guo, Xiangfu Ge; draft manuscript preparation: Qi Mu, Yuanjie Guo. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data and materials used to support the findings of this study are available from the corresponding author upon request.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

¹ https://sites.google.com/site/vonikakis/datasets, accessed on 01 November 2024.

References

1. Li C, Guo C, Han L, Jiang J, Cheng M-M, Gu J, et al. Low-light image and video enhancement using deep learning: a survey. IEEE Trans Pattern Anal Mach Intell. 2022;44(12):9396–416. doi:10.1109/TPAMI.2021.3126387.

2. Liu X, Wu Z, Li A, Vasluianu F-A, Zhang Y, Gu S, et al. NTIRE 2024 challenge on low light image enhancement: methods and results. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2024; Seattle, WA, USA: IEEE. p. 6571–94.

3. Land EH. The retinex theory of color vision. Sci Am. 1977;237(6):108–29. doi:10.1038/scientificamerican1277-108.

4. Lin Y-H, Lu Y-C. Low-light enhancement using a plug-and-play retinex model with shrinkage mapping for illumination estimation. IEEE Trans Image Process. 2022;31:4897–908. doi:10.1109/TIP.2022.3189805.

5. Du S, Zhao M, Liu Y, You Z, Shi Z, Li J, et al. Low-light image enhancement and denoising via dual-constrained retinex model. Appl Math Model. 2023;116:1–15. doi:10.1016/j.apm.2022.11.022.

6. Yi X, Xu H, Zhang H, Tang L, Ma J. Diff-Retinex: rethinking low-light image enhancement with a generative diffusion model. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV); 2023; Paris, France: IEEE. p. 12302–11.

7. Rahman Z-U, Jobson DJ, Woodell GA. Retinex processing for automatic image enhancement. J Electron Imaging. 2004;13(1):100–10. doi:10.1117/1.1636183.

8. Wang W, Wu X, Yuan X, Gao Z. An experiment-based review of low-light image enhancement methods. IEEE Access. 2020;8:87884–917. doi:10.1109/ACCESS.2020.2992749.

9. Wang S, Zheng J, Hu H-M, Li B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans Image Process. 2013;22(9):3538–48. doi:10.1109/TIP.2013.2261309.

10. Mu Q, Wang X, Wei Y, Li Z. Low and non-uniform illumination color image enhancement using weighted guided image filtering. Comput Vis Media. 2021;7(4):529–46. doi:10.1007/s41095-021-0232-x.

11. Wei C, Wang W, Yang W, Liu J. Deep retinex decomposition for low-light enhancement. arXiv:1808.04560. 2018.

12. Zhang Y, Zhang J, Guo X. Kindling the darkness: a practical low-light image enhancer. In: Proceedings of the 27th ACM International Conference on Multimedia; 2019; New York, NY, USA: ACM. p. 1632–40.

13. Wu W, Weng J, Zhang P, Wang X, Yang W, Jiang J. URetinex-Net: retinex-based deep unfolding network for low-light image enhancement. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2022; New Orleans, LA, USA: IEEE. p. 5901–10.

14. Ye J, Qiu C, Zhang Z. A survey on learning-based low-light image and video enhancement. Displays. 2023;81:102614.

15. Jeon JJ, Park JY, Eom IK. Low-light image enhancement using gamma correction prior in mixed color spaces. Pattern Recognit. 2024;146:110001. doi:10.1016/j.patcog.2023.110001.

16. Paul A. Adaptive tri-plateau limit tri-histogram equalization algorithm for digital image enhancement. Vis Comput. 2023;39(1):297–318. doi:10.1007/s00371-021-02330-z.

17. Veluchamy M, Subramani B. Fuzzy dissimilarity color histogram equalization for contrast enhancement and color correction. Appl Soft Comput. 2020;89(1):106077. doi:10.1016/j.asoc.2020.106077.

18. Chang Y, Jung C, Ke P, Song H, Hwang J. Automatic contrast-limited adaptive histogram equalization with dual gamma correction. IEEE Access. 2018;6:11782–92. doi:10.1109/ACCESS.2018.2797872.

19. Tomasi C, Manduchi R. Bilateral filtering for gray and color images. In: Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271); 1998; Bombay, India: IEEE. p. 839–46.

20. He K, Sun J, Tang X. Guided image filtering. IEEE Trans Pattern Anal Mach Intell. 2012;35(6):1397–409. doi:10.1109/TPAMI.2012.213.

21. Xu X, Yu Z. Low-light image enhancement based on retinex theory. In: 2023 IEEE 6th International Conference on Electronic Information and Communication Technology (ICEICT); 2023; Qingdao, China: IEEE. p. 1–6.

22. Fu X, Zeng D, Huang Y, Zhang X-P, Ding X. A weighted variational model for simultaneous reflectance and illumination estimation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016; Las Vegas, NV, USA: IEEE. p. 2782–90.

23. Li M, Liu J, Yang W, Sun X, Guo Z. Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans Image Process. 2018;27(6):2828–41. doi:10.1109/TIP.2018.2810539.

24. Zhang Y, Guo X, Ma J, Liu W, Zhang J. Beyond brightening low-light images. Int J Comput Vis. 2021;129(4):1013–37. doi:10.1007/s11263-020-01407-x.

25. Cai Y, Bian H, Lin J, Wang H, Timofte R, Zhang Y. Retinexformer: one-stage retinex-based transformer for low-light image enhancement. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV); 2023; Paris, France: IEEE. p. 12504–13.

26. Yin J, Li H, Du J, He P. Low illumination image retinex enhancement algorithm based on guided filtering. In: 2014 IEEE 3rd International Conference on Cloud Computing and Intelligence Systems; 2014; Shenzhen, China: IEEE. p. 639–44.

27. Li Z, Zheng J, Zhu Z, Yao W, Wu S. Weighted guided image filtering. IEEE Trans Image Process. 2014;24(1):120–9.

28. Kou F, Chen W, Wen C, Li Z. Gradient domain guided image filtering. IEEE Trans Image Process. 2015;24(11):4528–39. doi:10.1109/TIP.2015.2468183.

29. Lu Z, Long B, Li K, Lu F. Effective guided image filtering for contrast enhancement. IEEE Signal Process Lett. 2018;25(10):1585–9. doi:10.1109/LSP.2018.2867896.

30. He Z, Mo H, Xiao Y, Cui G, Wang P, Jia L. Multi-scale fusion for image enhancement in shield tunneling: a combined MSRCR and CLAHE approach. Meas Sci Technol. 2024;35(5):056112. doi:10.1088/1361-6501/ad25e4.

31. Shin Y, Jeong S, Lee S. Content awareness-based color image enhancement. In: The 18th IEEE International Symposium on Consumer Electronics (ISCE 2014); 2014; Jeju, Republic of Korea: IEEE. p. 1–2.

32. Kim Y, Koh YJ, Lee C, Kim S, Kim C-S. Dark image enhancement based on pairwise target contrast and multi-scale detail boosting. In: 2015 IEEE International Conference on Image Processing (ICIP); 2015; Quebec City, QC, Canada: IEEE. p. 1404–8.

33. Gorai A, Ghosh A. Hue-preserving color image enhancement using particle swarm optimization. In: 2011 IEEE Recent Advances in Intelligent Computational Systems; 2011. p. 563–8.

34. Fu X, Zeng D, Huang Y, Liao Y, Ding X, Paisley J. A fusion-based enhancing method for weakly illuminated images. Signal Process. 2016;129(12):82–96. doi:10.1016/j.sigpro.2016.05.031.

35. Hong Y, Zhu DP, Gong PS. Retinex mine image enhancement algorithm based on tophat weighted guided filtering. J Mine Automat. 2022;48(8):43–9. doi:10.13272/j.issn.1671-251x.2022020029.

36. Zhou M, Leng H, Fang B, Xiang T, Wei X, Jia W. Low-light image enhancement via a frequency-based model with structure and texture decomposition. ACM Trans Multimedia Comput Commun Appl. 2023;19(6):1–23. doi:10.1145/3590965.

37. Hou J, Zhu Z, Hou J, Liu H, Zeng H, Yuan H. Global structure-aware diffusion process for low-light image enhancement. In: Proceedings of the 37th International Conference on Neural Information Processing Systems (NIPS '23); 2024; Red Hook, NY, USA: Curran Associates Inc. Vol. 36, p. 79734–47.

38. Qu J, Liu RW, Gao Y, Guo Y, Zhu F, Wang F-Y. Double domain guided real-time low-light image enhancement for ultra-high-definition transportation surveillance. IEEE Trans Intell Transp Syst. 2024;25(8):9550–62. doi:10.1109/TITS.2024.3359755.

39. Lee C, Lee C, Kim C-S. Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans Image Process. 2013;22(12):5372–84. doi:10.1109/TIP.2013.2284059.

40. Guo X, Li Y, Ling H. LIME: low-light image enhancement via illumination map estimation. IEEE Trans Image Process. 2016;26(2):982–93.

41. Ma K, Zeng K, Wang Z. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans Image Process. 2015;24(11):3345–56. doi:10.1109/TIP.2015.2442920.

42. Yan J, Li J, Fu X. No-reference quality assessment of contrast-distorted images using contrast enhancement. arXiv:1904.08879. 2019.

43. Chen X, Zhang Q, Lin M, Yang G, He C. No-reference color image quality assessment: from entropy to perceptual quality. EURASIP J Image Video Process. 2019;2019:1–14.

44. Mittal A, Moorthy AK, Bovik AC. No-reference image quality assessment in the spatial domain. IEEE Trans Image Process. 2012;21(12):4695–708. doi:10.1109/TIP.2012.2214050.

45. Mittal A, Soundararajan R, Bovik AC. Making a "completely blind" image quality analyzer. IEEE Signal Process Lett. 2012;20(3):209–12.


Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
