Open Access

ARTICLE

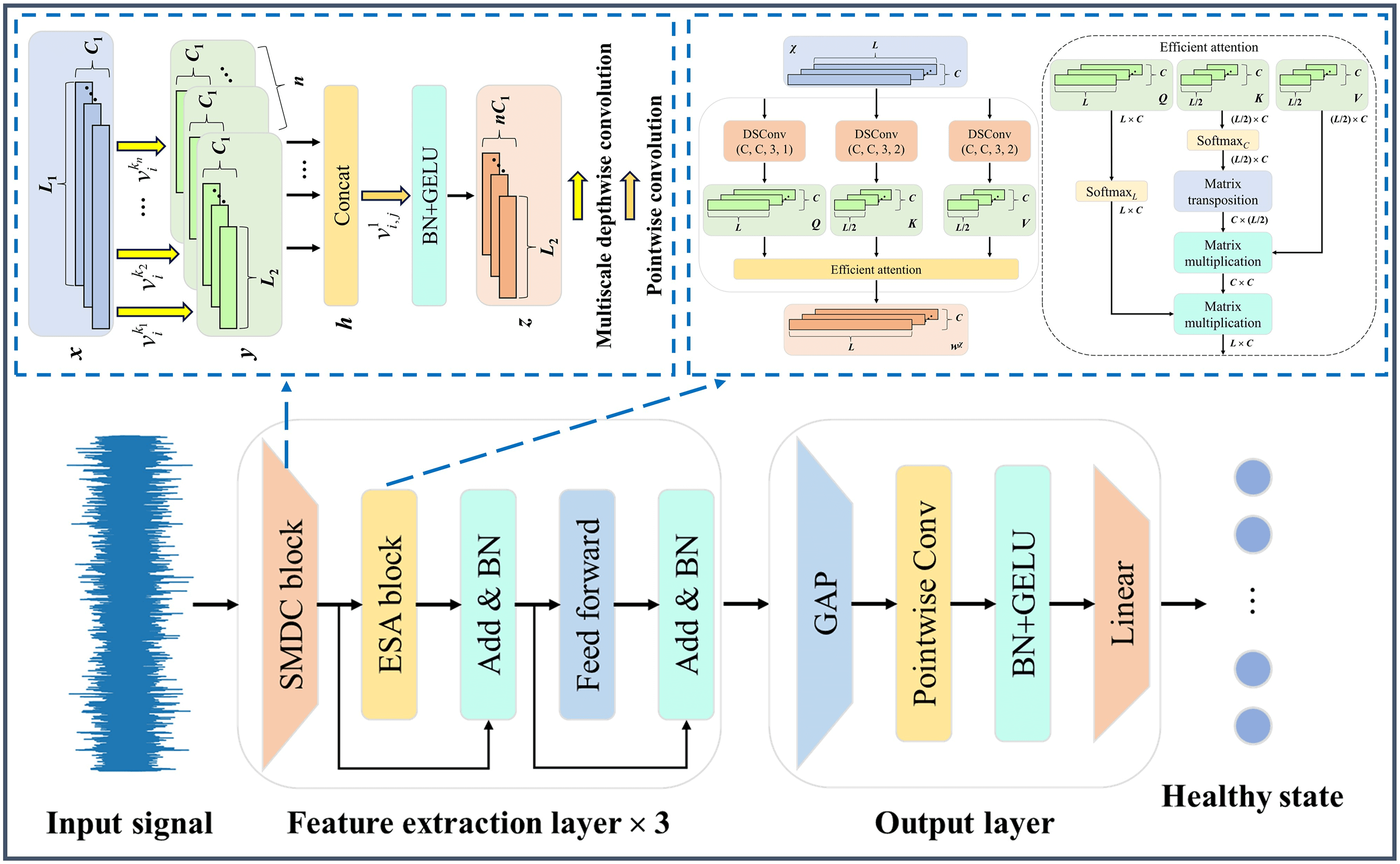

SEFormer: A Lightweight CNN-Transformer Based on Separable Multiscale Depthwise Convolution and Efficient Self-Attention for Rotating Machinery Fault Diagnosis

1 State Key Laboratory of Mechanics and Control for Aerospace Structures, Nanjing University of Aeronautics and Astronautics, Nanjing, 210016, China

2 School of Computer Science and Engineering, Nanyang Technological University, Singapore, 639798, Singapore

* Corresponding Authors: Hua Zhu. Email: ; Huafeng Li. Email:

(This article belongs to the Special Issue: Industrial Big Data and Artificial Intelligence-Driven Intelligent Perception, Maintenance, and Decision Optimization in Industrial Systems)

Computers, Materials & Continua 2025, 82(1), 1417-1437. https://doi.org/10.32604/cmc.2024.058785

Received 21 September 2024; Accepted 25 November 2024; Issue published 03 January 2025

Abstract

Traditional data-driven fault diagnosis methods rely on expert experience to manually extract effective fault features from signals, an approach with inherent limitations. In contrast, deep learning techniques have become a central research focus in fault diagnosis owing to their strong fault feature extraction ability and end-to-end diagnostic efficiency. Recently, research combining convolutional neural networks (CNNs) and Transformers, which excel at local and global feature extraction, respectively, has shown promise in fault diagnosis. However, the cross-channel convolution mechanism of CNNs and the self-attention computation of Transformers make such hybrid models excessively complex, resulting in high computational cost and limited industrial applicability. To address these challenges, this paper proposes SEFormer, a lightweight CNN-Transformer for rotating machinery fault diagnosis. First, a separable multiscale depthwise convolution block is designed to extract and integrate multiscale feature information from different channel dimensions of vibration signals. Then, an efficient self-attention block is developed to capture critical fine-grained features of the signal from a global perspective. Finally, experimental results on a planetary gearbox dataset and a motor roller bearing dataset demonstrate that the proposed framework achieves a favorable balance of robustness, generalization, and lightweight design compared with recent state-of-the-art CNN- and Transformer-based fault diagnosis models. This study presents a feasible strategy for developing lightweight rotating machinery fault diagnosis frameworks suited to cost-effective deployment.
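The abstract does not give kernel sizes, branch fusion, or layer widths, so the following is only a minimal, dependency-free Python sketch of the general idea behind a separable multiscale depthwise convolution, not the authors' SEFormer code. It assumes odd kernel sizes, zero "same" padding, fusion of the scale branches by summation, and no bias terms; every function name here is hypothetical. Each channel is filtered on its own at several scales (depthwise), and only then are channels mixed by a 1x1 (pointwise) convolution, which is where the parameter savings come from.

```python
from typing import List, Sequence, Tuple

Signal = List[List[float]]  # layout: [channels][time steps]


def depthwise_conv1d(x: Signal, kernels: Sequence[Sequence[float]]) -> Signal:
    """Filter each channel with its own kernel (cross-correlation, as in DL
    frameworks), using zero padding so the output length equals the input
    length. Assumes every kernel has odd length."""
    out = []
    for ch, kern in zip(x, kernels):
        k = len(kern)
        pad = k // 2
        padded = [0.0] * pad + list(ch) + [0.0] * pad
        out.append([sum(kern[j] * padded[t + j] for j in range(k))
                    for t in range(len(ch))])
    return out


def pointwise_conv1d(x: Signal, weights: Sequence[Sequence[float]]) -> Signal:
    """1x1 convolution: at each time step, output channel o is a weighted sum
    of all input channels, with weights[o][i]."""
    length = len(x[0])
    return [[sum(w[i] * x[i][t] for i in range(len(x))) for t in range(length)]
            for w in weights]


def multiscale_separable_block(x: Signal,
                               branch_kernels: Sequence[Sequence[Sequence[float]]],
                               pw_weights: Sequence[Sequence[float]]) -> Signal:
    """Run one depthwise branch per scale, sum the branches (assumed fusion
    rule), then mix channels with a pointwise convolution."""
    branches = [depthwise_conv1d(x, ks) for ks in branch_kernels]
    fused = [[sum(b[c][t] for b in branches) for t in range(len(x[0]))]
             for c in range(len(x))]
    return pointwise_conv1d(fused, pw_weights)


def param_counts(c_in: int, c_out: int, kernel_sizes: Sequence[int]) -> Tuple[int, int]:
    """Weights (no bias) for one standard conv per scale vs. the separable
    multiscale design (per-channel kernels at each scale + one 1x1 mixing)."""
    standard = sum(c_in * c_out * k for k in kernel_sizes)
    separable = c_in * sum(kernel_sizes) + c_in * c_out
    return standard, separable


if __name__ == "__main__":
    x = [[1.0, 2.0, 3.0, 4.0],   # channel 0
         [0.0, 1.0, 0.0, 1.0]]   # channel 1
    branch_kernels = [
        [[1.0], [1.0]],                      # scale k=1 (identity per channel)
        [[1.0, 1.0, 1.0], [0.0, 1.0, 0.0]],  # scale k=3
    ]
    pw_weights = [[1.0, 1.0]]  # one output channel summing both inputs
    print(multiscale_separable_block(x, branch_kernels, pw_weights))
    # prints [[4.0, 10.0, 12.0, 13.0]]
    print(param_counts(64, 64, (3, 5, 7)))
    # prints (61440, 5056)
```

With 64 input and output channels and scales of 3, 5, and 7, the separable design needs roughly one twelfth of the weights of three standard convolutions, which illustrates why depthwise-separable designs are a common route to lightweight models.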

Keywords

Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.