Open Access

ARTICLE

A Hybrid Deep Learning Approach for Green Energy Forecasting in Asian Countries

1 School of Computer Science, Chengdu University, Chengdu, 610106, China

2 Information Technology Services, University of Okara, Okara, 56310, Pakistan

3 MLC Research Lab, University of Okara, Okara, 56300, Pakistan

4 Department of Mathematics, University of Okara, Okara, 56310, Pakistan

5 School of Technology and Innovations, University of Vaasa, Vaasa, 65200, Finland

* Corresponding Author: Muhammad Faheem. Email:

Computers, Materials & Continua 2024, 81(2), 2685-2708. https://doi.org/10.32604/cmc.2024.058186

Received 06 September 2024; Accepted 30 September 2024; Issue published 18 November 2024

Abstract

Accurate electricity forecasting is essential for keeping power networks balanced between supply and demand, especially because electricity is costly to store. This article examines deep learning methods for forecasting how much green energy different Asian countries will produce. The main goal is to make reliable and accurate predictions that can help with the planning of new power plants to meet rising demand, given the growing mismatch between energy supply and demand. We propose a new deep learning model, the Green-electrical Production Ensemble (GP-Ensemble), which combines three types of neural networks: convolutional neural networks (CNNs), gated recurrent units (GRUs), and feedforward neural networks (FNNs). The dataset covers green energy generation statistics from ten Asian countries for 1965–2023. The GP-Ensemble deep learning model outperforms the individual models (GRU, FNN, and CNN) and alternative approaches such as fully convolutional networks (FCN) and other ensemble models on the mean squared error (MSE), mean absolute error (MAE), and root mean squared error (RMSE) metrics. With an MSE of 0.0631, an MAE of 0.1754, and an RMSE of 0.2383, this study enhances our ability to predict green electricity production over time. It may inform policy and improve energy management.

Green energy sources are renewable, sustainable sources derived from non-polluting, naturally occurring resources. These include wind, solar, hydroelectric, geothermal, and biomass power. The goals of green energy include diversifying and securing energy supplies, lowering greenhouse gas emissions, and decreasing dependence on fossil fuels [1]. Green energy is gaining ground in many sectors, including electricity generation, transportation, and industrial activities.

Asian investment in renewable energy is driven by growing energy needs and environmental aspirations. China is the world’s biggest producer and consumer of renewable energy. The Global Wind Energy Council (GWEC) projects that by 2021, China’s wind power capacity will be 2145 gigawatts (GW) and the world's 756 GW [2]. Pakistan wants to raise its share of renewable energy from 5% to 20% by 2025 and to 30% by 2030 [3], and it offers excellent potential for wind and solar energy. Malaysia’s energy comes from 4% solar, 2% biofuel, and 15% hydropower. Its 2022–2040 National Energy Policy (NEP) targets a renewable mix of 4% solar, 4% hydropower, and 4% biofuels by 2040. Energy in the Philippines comes from 7% hydropower, 4% solar, and 1% geothermal. Thailand uses 3% wind, 6% solar, and 6% hydropower, and its capacity for renewable energy is growing. Indonesia uses less than 1% wind and solar, 6% biofuel, 5% geothermal, and 8% hydropower [4].

The REN21 Renewables 2022 Global Status Report (GSR) ranks India fourth in the world for installed renewable capacity in hydropower, wind power, and solar power. At the 26th Conference of the Parties (COP26), the nation committed to 500 GW of renewable energy by 2030 [5]. Western Iraq has some of the strongest solar resources, well above the world average of 170 watts per square meter (W/m2): the German Aerospace Center (DLR) reports that the Iraqi deserts receive 270–290 W/m2 and 2310 kWh/m2 yearly [6]. In 2015, hydro accounted for 24.1%, natural gas for 8.5%, nuclear for 8.1%, solar for 11%, geothermal for 7.8%, oil for 1.6%, and wind for 1.5% of Japan’s energy production, with biofuels and waste making up 37.5% of that total [7]. The Korean government aims for new and renewable energy (NRE) to account for 5% of primary energy by 2020, 7.7% by 2025, 9.7% by 2030, and 11% by 2035. From 2014 to 2035, these targets would increase NRE supply by 6.2%, more than South Korea’s primary energy demand growth of 0.7% [8].

The impact of solar irradiance and ambient temperature on array power output can be measured using mathematical formulas in renewable energy optimization, which is used in Asia and other regions [9]. In this model, the power output is calculated as:

Here, Gpvg stands for photovoltaic (PV) generating efficiency, Apvg for PV generator area in square meters, and J for solar irradiation in the sloped plane of the module in W/m2. We can additionally define Gpvg as:

Here, Gr denotes the reference module efficiency, and Gpce is the power conditioning efficiency, which is set to one when maximum power point tracking (MPPT) is employed. B is the temperature coefficient, which ranges from 0.004 to 0.006 per degree Celsius, and Tcreff is the reference cell temperature in degrees Celsius.
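The relationships between these quantities can be sketched numerically. The snippet below assumes the commonly used form of this PV model, in which power is the product of efficiency, area, and irradiation, and efficiency falls linearly as the cell temperature rises above the reference temperature. The function names, the cell temperature argument `t_cell`, and all numeric inputs are illustrative assumptions, not values from this study.

```python
def pv_efficiency(g_r, g_pce, beta, t_cell, t_cell_ref):
    """PV generating efficiency with a linear temperature derating (assumed form)."""
    return g_r * g_pce * (1.0 - beta * (t_cell - t_cell_ref))


def pv_power(g_pvg, a_pvg, j):
    """Array power in watts: efficiency x area (m^2) x irradiation (W/m^2)."""
    return g_pvg * a_pvg * j


# Illustrative inputs only (hypothetical module, not taken from the paper).
eta = pv_efficiency(g_r=0.15, g_pce=1.0, beta=0.005, t_cell=45.0, t_cell_ref=25.0)
power_w = pv_power(g_pvg=eta, a_pvg=10.0, j=800.0)
print(f"efficiency = {eta:.4f}, power = {power_w:.1f} W")
```

With a temperature coefficient in the stated 0.004 to 0.006 range, a cell running 20 degrees above the reference loses roughly a tenth of its reference efficiency in this sketch.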

Asia's fast-growing electricity consumption has led to power outages, load shedding, and related economic disruption. Pollution and population growth worsen these problems by straining the energy infrastructure. Under these constraints, accurate models for predicting renewable energy production up to 2030 are necessary. Achieving affordable and clean energy, the seventh Sustainable Development Goal (SDG) established by the United Nations (UN), requires informed decision-making and prudent energy management [10]. Stakeholders and policymakers need accurate estimates of renewable energy generation to prepare for a sustainable energy future.

Deep learning uses artificial neural networks (ANNs) to find complex patterns in large datasets. Its applications include computer vision, voice recognition, and natural language processing (NLP). For green power generation, it can also predict energy use and supply. Advanced prediction methods include CNNs, FNNs, and GRUs. GRU stands out among the many univariate time series analysis models [11]. It resembles long short-term memory (LSTM) but is less complex in design, which can lead to faster training and processing times. FNNs are simple and practical because they forecast time series using successive neural layers [12]. CNNs, in turn, are used extensively in time series analysis because they can detect spatial and temporal patterns, especially local correlations in sequential data [13].

Deep learning models are needed to predict renewable energy production and so maximize the efficiency of power generation and distribution. Ateş [1] suggests using swarm intelligence and ANN to make efficient and accurate short-term projections of wind turbine power. Shekhar et al. [14] show that CNNs are better than recurrent neural networks (RNNs) at predicting solar and wind power output, using data from the International Renewable Energy Agency (IREA) and the Pennsylvania, New Jersey, and Maryland interconnection. Jamil et al. [15] use three deep learning models (ANN, RNN, and CNN-LSTM) to predict how much solar energy Quaid-e-Azam Solar Park will generate. The best model was the CNN-LSTM hybrid, which gave more precise predictions of solar energy generation. For smart microgrid forecasting, Widodo et al. [16] evaluate a deep neural network (DNN) model with LSTM using RMSE and confusion matrix accuracy.

According to recent research, deep learning and machine learning have shown remarkable progress in estimating solar panels’ power output. Khortsriwong et al. [17] examine deep learning models for floating PV plant power production prediction and Miraftabzadeh et al. [18] for day-ahead photovoltaic power. Furthermore, Sedai et al. [19–21] thoroughly investigate the current status of renewable energy forecasting. They specifically look at the efficacy and adaptability of hybrid deep learning models when projecting future solar power production.

This study suggests using deep learning instead of traditional machine learning techniques. This is because deep learning models can find more complex patterns and dependencies in data, work with more extensive and complicated datasets, and do better at tasks involving high-dimensional data, which regular methods might have trouble with.

The research on green electricity output projections has substantial gaps, which this study intends to fill. The Asian green electricity generation dataset needs to be used more effectively. Prediction mistakes caused by inaccurate data can affect energy sector planning and regulation. Only a few studies have compiled all the relevant data on renewable energy. With long-term data, it becomes increasingly challenging to forecast energy consumption and production capacities reliably. The shift away from fossil fuels and toward renewable energy sources is a pressing concern, and the UN's seventh SDG on sustainable energy is due to be achieved by 2030. Accurate predictions of green electricity production are needed to deliver modern, affordable, dependable, and environmentally friendly energy. New error reduction and prediction approaches need to be developed to cover the research gaps, building on recent advances in data collection, processing, deep learning, and modelling. This project aims to improve green electricity output estimates and accelerate the global transition to sustainable electrical infrastructure by addressing these gaps. The primary contributions of this study are as follows:

1. This paper introduces the GP-Ensemble deep learning model, which combines FNN, GRU, and CNN to improve the accuracy of sustainable electricity forecasts.

2. The study enhances the accuracy of long-term predictions for green electricity and minimizes forecasting errors, lowering the chances of making errors while planning.

3. This study uses the updated dataset until 2023 and contributes by forecasting total green electricity generation until 2030. This will help achieve the United Nations’ seventh SDG of guaranteeing universal access to modern, affordable, dependable, and sustainable energy.

The outline for the remaining portion of the article is as follows: There is a literature review in Section 2. Materials, dataset splitting, data processing, algorithms (FNN, GRU, CNN, GP-Ensemble), experimental design, and assessment metrics are all covered in Section 3. In Section 4, a model comparison and a contextual assessment of previous research are offered together with the results and discussions. The report is concluded in Section 5, which also provides a plan for the future.

Deep learning algorithms are valuable for forecasting green power, which sustainably meets the long-term electricity needs of modern society. These algorithms are very good at predicting how much green energy will be produced in the future. They therefore make the power system more efficient, better at allocating resources, and better at tracking demand trends, because they can learn from complicated data and find hidden trends. CNNs and RNNs are the best-known deep learning algorithms. They are employed for medium-term forecasting, demand response, error identification, load profiling, and green energy prediction. Modern methods for evaluating green electricity production are more accurate and highlight energy use trends and their underlying causes.

This section aims to provide a full review of the literature on deep learning methods for predicting and producing green energy, focusing on the work Asian countries do in this area. In the past few years, many countries have realized that they need to switch to sustainable and environmentally friendly energy sources to have less of an impact on the environment and less dependence on fossil fuels.

Al-Ali et al. [13] propose a new deep learning model that combines transformers, LSTMs, and CNNs to predict the quantity of solar energy generated. Because its predictions are more accurate, the model makes it easier to integrate solar power into power networks, and the research shows that this hybrid approach works better than standard methods. Mystakidis et al. [22] developed and tested a new machine learning and deep learning method to make Energy Generation Forecasting (EGF) more accurate at different levels of data. To improve smart grid layouts and demand response management, many approaches have been tried for predicting building energy performance from historical data. With the lowest RMSE, deep neural networks are the best way to predict how much energy will be used [23]. Highly complex green building designs still need to be investigated. Wang et al. [24] find that LSTM-CNN hybrids achieve lower MAE, RMSE, and mean absolute percentage error (MAPE) values than CNN-LSTM hybrids and single models (LSTM, CNN), which makes them better at predicting solar power.

Significant advances have been made in load frequency management and power generation prediction. Kumar et al. [25] use LSTM-RNNs to predict the impact of solar and wind power on load frequency in microgrids at a specific distance. The deep LSTM-RNN technique developed by Abdel-Nasser et al. [26] predicts PV power generation accurately. This technique significantly improves grid planning and control over conventional models by reducing the number of errors due to merging hourly data from many places. Huang et al. [2] developed a model for short-term wind power prediction based on BiLSTM-CNN-WGAN-GP. This generative adversarial network technique uses variational mode decomposition (VMD) for data decomposition to increase accuracy and stability, and the model is further improved by integrating data from the Jiuquan wind farm. An et al. [27] propose a PVMD-ESMA-DELM model to forecast wind generation over very short periods. The model’s decomposition optimization and global optimization improve wind power projections, and the method outperforms traditional prediction approaches. Using LSTM and selected input analysis, Pasandideh et al. [28] predict steam engine output with a 0.47 error rate.

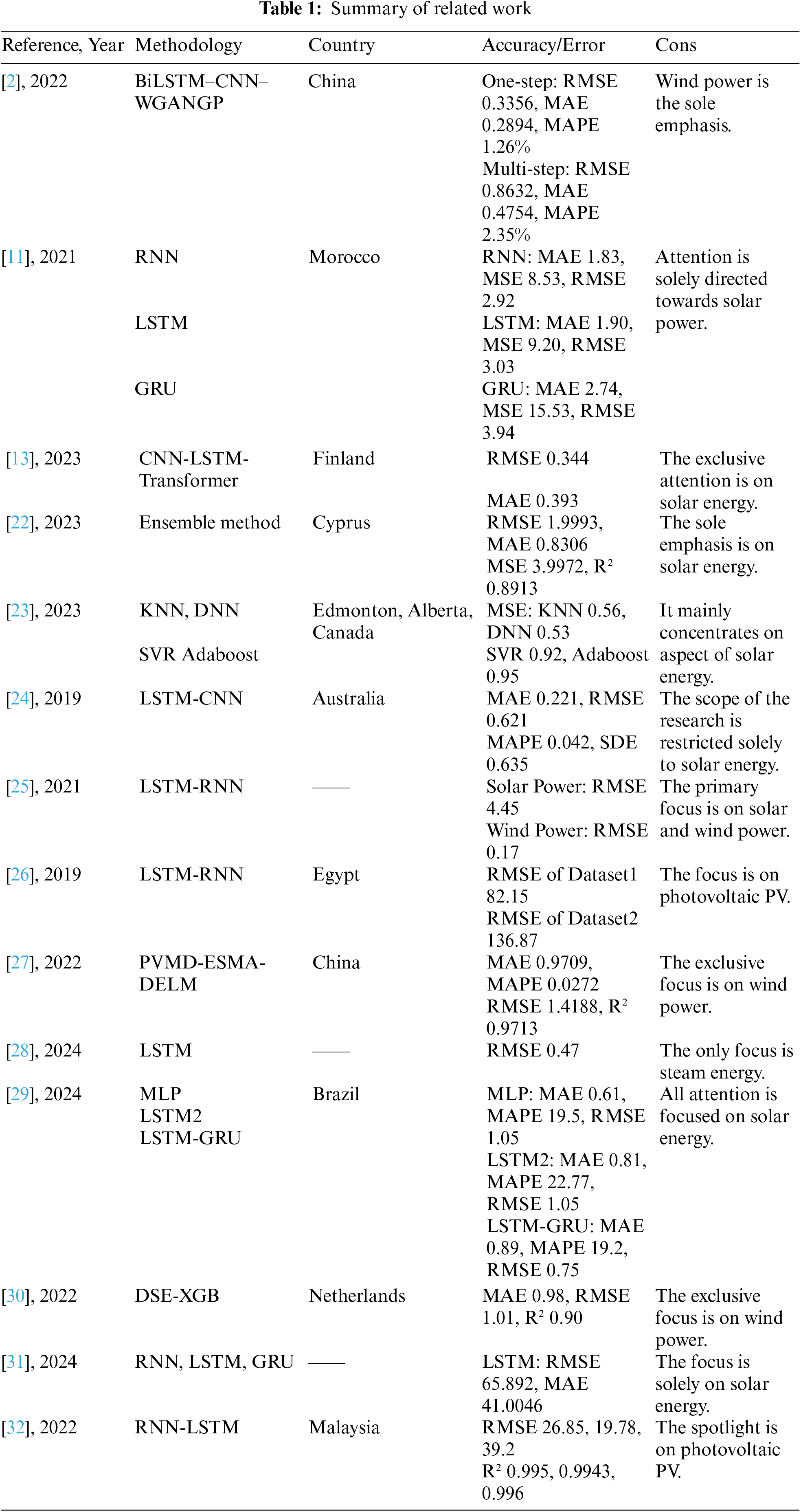

Marques et al. [29] estimate solar exposure in the Amazon using deep learning and data from twelve regions. Their study uses neural networks such as LSTM and LSTM-GRU. The LSTM-GRU has the lowest MAPE, especially in Labrea. Consequently, it can support clean energy production in environmentally significant places like the Amazon Basin. Jebli et al. [11] use data from Errachidia (2016–2018) and RNN, LSTM, and GRU deep learning models to forecast solar energy production. In capturing long-term dependencies in time series, the RNN and LSTM models do much better than GRU for solar prediction and grid integration. Khan et al. [30] create a deep learning-based ensemble stacking method to predict solar energy. ANN and LSTM models are 10 to 12 percent less accurate than this method across different weather conditions. Abubakar et al. [31] report that LSTM models can predict smart grid solar power with 97% accuracy, which improves both the use and the performance of solar energy. Akhter et al. [32] find that their RNN-LSTM model predicts PV power more accurately than older methods. Table 1 lists the relevant publications.

A literature review found many knowledge gaps in green power output prediction. The research addresses these gaps by emphasizing the necessity of trustworthy green power records in Asia. It also emphasizes the need to avoid incorrect datasets that lead to inaccurate energy policy and planning estimations. Integrating all renewable energy sources requires a lot of data, making long-term estimates difficult. Limiting energy usage and abandoning fossil fuels is crucial. The research flow of this study is given in Fig. 1.

Figure 1: Research framework

This section describes each phase of the procedure for gathering and evaluating data for our study. This explains various models, including GRU, FNN, CNN, and the GP-Ensemble model, which combines GRU, FNN, and CNN. The analysis that follows gives a thorough overview of our study’s methods.

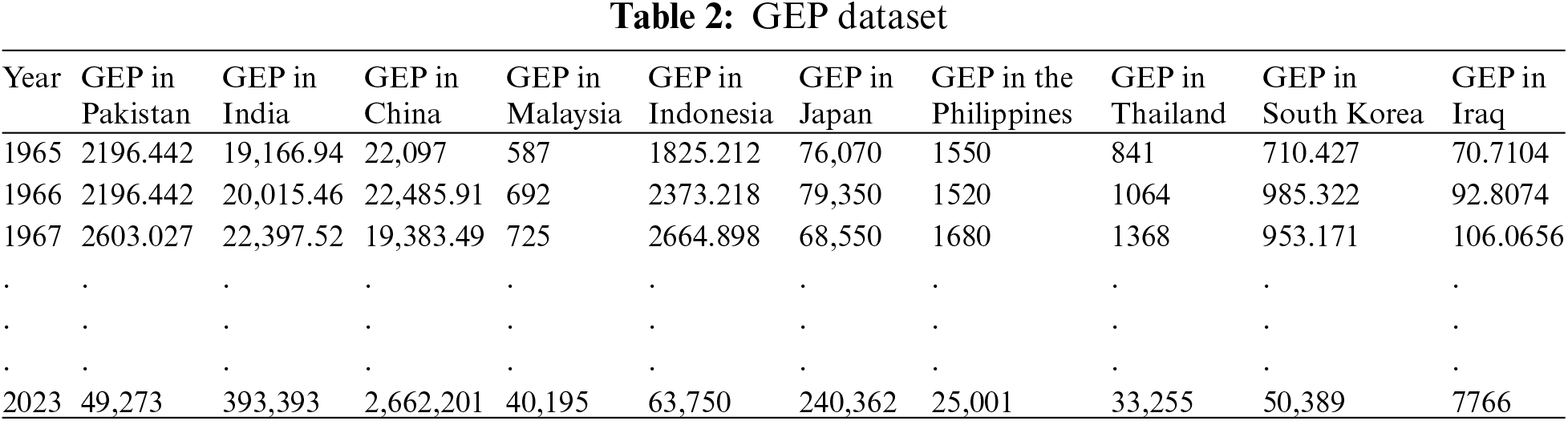

Our dataset follows the progress of renewable energy sources from 1965 to 2021 by evaluating total green electricity production (GEP) in several Asian countries. It includes data from 10 countries (Iraq, Pakistan, India, China, Malaysia, Indonesia, Japan, the Philippines, Thailand, and South Korea) and provides a comprehensive picture of renewable energy generation on the continent. The data for 1965 to 2021, originally acquired from Kaggle [33], report the terawatt-hours (TWh) of renewable energy produced by solar, hydro, wind, thermal, and biofuel sources. We updated this dataset by combining all sources into a single total in gigawatt-hours (GWh) for each country, giving a unified picture of green energy generation across its renewable sources. The data for 2022 to 2023, obtained from [34], are monthly, and we aggregate them to yearly totals. With this update, renewable electricity generation in the ten countries is presented consistently, as illustrated in Table 2; these values are our model inputs. The update allows a clear understanding and comparison of each country’s contribution to the green energy sector. Renewable energy use and other sustainability measures in Asia have advanced significantly, and we hope to bring this to light.
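The two aggregation steps described here, summing per-source TWh into one GWh total and rolling monthly values up to yearly totals, can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function names, record layout, and sample numbers are hypothetical, and the only fixed fact used is that 1 TWh equals 1000 GWh.

```python
from collections import defaultdict

TWH_TO_GWH = 1000.0  # 1 TWh = 1000 GWh


def total_gwh(sources_twh):
    """Sum per-source generation (TWh) into one green-electricity total (GWh)."""
    return sum(sources_twh.values()) * TWH_TO_GWH


def yearly_totals(monthly_records):
    """Aggregate (year, month, gwh) records into a {year: total_gwh} mapping."""
    totals = defaultdict(float)
    for year, _month, gwh in monthly_records:
        totals[year] += gwh
    return dict(totals)


# Hypothetical values for one country and year, in TWh per source.
row = {"solar": 0.5, "hydro": 30.0, "wind": 2.5}
total = total_gwh(row)

# Hypothetical monthly series for 2022 and 2023, in GWh.
monthly = [(2022, m, 100.0) for m in range(1, 13)]
monthly += [(2023, m, 110.0) for m in range(1, 13)]
yearly = yearly_totals(monthly)
```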

Data preprocessing cleans the information so that our model learns from it much more effectively. We arrange the data as a time series indexed by year, which keeps the procedure straightforward and lets the same preprocessing be applied to each yearly record. We begin by carefully cleaning the dataset, an essential first step that relies mainly on the skills of our data scientists and machine learning engineers. This step ensures the dataset is sound and lays a solid foundation for training the model. Finding and fixing data errors, outliers, and missing values requires careful examination.

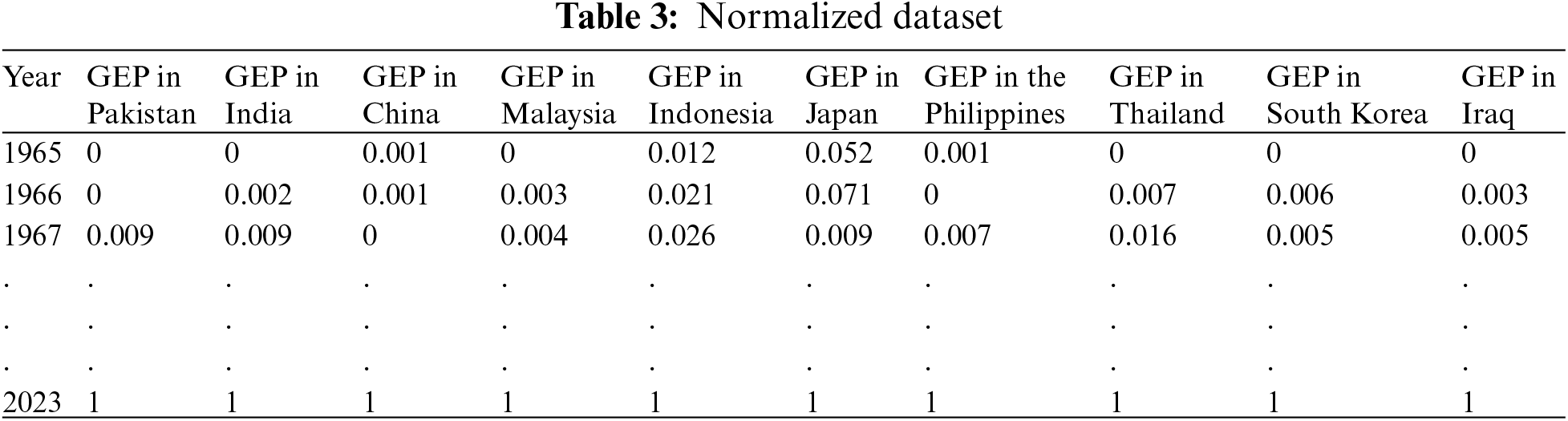

After cleaning the dataset, it is essential to ensure that all columns are on the same scale. The Min-Max Normalization method, shown in Eq. (3), is used to do this. When the data are rescaled to the [0, 1] range, no single feature can dominate the results because of its magnitude [35], so all features contribute equally to the model’s learning process. The normalization step helps the ensemble model learn from the data because it harmonizes the scales of the different attributes in the dataset. Table 3 shows the normalized data.

The set of values is represented by
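Min-max normalization as described above can be sketched as follows, assuming the standard form x' = (x - min) / (max - min) applied separately to each country's column. The function names and the sample series are illustrative assumptions; the inverse function is included because forecasts made on the [0, 1] scale must be mapped back to GWh before they are reported.

```python
def min_max_normalize(values):
    """Scale a list of numbers to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no scale information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def min_max_denormalize(scaled, lo, hi):
    """Invert the scaling to recover values in the original unit (e.g., GWh)."""
    return [s * (hi - lo) + lo for s in scaled]


# Hypothetical yearly totals for one country, in GWh.
series = [2000.0, 2500.0, 4000.0, 10000.0]
scaled = min_max_normalize(series)
restored = min_max_denormalize(scaled, min(series), max(series))
```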

Data splitting is integral to training and testing deep learning models: it helps ensure that they generalize well and do not overfit. It lets us see how well the model works on data it has not been trained on. Researchers often use training-to-testing splits such as 90:10, 80:20, 70:30, or even 10:90 to find the most accurate RNN model for prediction [36]. The best split ratio depends on many factors, such as the model’s structure, the data type, and the length of the prediction horizon. In this study, the model is trained on about 80% of the dataset, which is used to find the best mix of prediction elements and fine-tune the network parameters. The final 20% is the test set for comparing the models.
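An 80:20 split of a yearly series can be sketched as below. The text does not say whether the split is chronological or random; this sketch assumes a chronological split, which is the usual choice for time series because it keeps future years out of the training set. The function name is hypothetical.

```python
def chronological_split(rows, train_fraction=0.8):
    """Split time-ordered rows into an earlier training part and a later test part."""
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


years = list(range(1965, 2024))  # 59 yearly records, 1965 through 2023
train_years, test_years = chronological_split(years)
print(len(train_years), len(test_years), train_years[-1], test_years[0])
```

Under this assumption, the 59 yearly records divide into 47 training years and 12 test years.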

Many deep learning models are available, each with the potential to improve prediction precision. As part of our research, we introduce the GP-Ensemble deep learning model, a novel technique that combines the best features of GRUs, CNNs, and FNNs. We propose this composite model to improve the accuracy of our forecasts. Understanding the GP-Ensemble model requires a basic grasp of GRU, FNN, and CNN.

3.2.1 GRU Neural Network Model

The GRU is one of several RNN variants for interpreting sequence data. Fig. 2 shows its streamlined structure, which achieves performance comparable to LSTM. For learning long-term dependencies in sequence data, GRU mitigates the vanishing gradient problem and requires fewer computing resources than LSTM [37]. A GRU comprises three parts: the hidden state, the update gate, and the reset gate. The update gate is crucial because it controls how much past information is retained. On a scale from 0 to 1, values close to 1 mean that information from the past is remembered, while values close to 0 mean that it is lost. Similarly, a reset gate value close to 0 means more past information is discarded, and a value close to 1 means more is retained. Working together, these two gates produce the new hidden state, updating the GRU’s memory with a mix of old and new information.

Figure 2: The GRU’s internal architecture

Eq. (4) gives the mathematical formulation of the GRU, where St is the reset gate, yt is the update gate, ht is the hidden state, and xt is the current input. The reset gate has weights ws, and the update gate has weights wy.
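The gate behaviour described above can be illustrated with a single-unit GRU step. This is a sketch under assumptions: it uses the common formulation in which an update gate near 1 keeps the previous hidden state (matching the description in the text), it omits bias terms for brevity, and the weight names and values in the dictionary are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x_t, h_prev, w):
    """One step of a single-unit GRU (scalar input and state, no biases)."""
    # Update gate y_t: near 1 keeps the past state, near 0 replaces it.
    y_t = sigmoid(w["wy_x"] * x_t + w["wy_h"] * h_prev)
    # Reset gate s_t: near 0 discards the past when forming the candidate.
    s_t = sigmoid(w["ws_x"] * x_t + w["ws_h"] * h_prev)
    # Candidate state built from the input and the reset-scaled past state.
    h_cand = math.tanh(w["wh_x"] * x_t + w["wh_h"] * (s_t * h_prev))
    # New hidden state: a blend of the old state and the candidate.
    return y_t * h_prev + (1.0 - y_t) * h_cand


# Hypothetical weights; a trained model would learn these values.
weights = {"wy_x": 0.4, "wy_h": 0.3, "ws_x": -0.2, "ws_h": 0.5,
           "wh_x": 0.8, "wh_h": 0.6}

h = 0.0
for x in [0.1, 0.5, 0.9]:  # a short normalized input sequence
    h = gru_step(x, h, weights)
```

Because the candidate passes through tanh and the gates lie between 0 and 1, the hidden state in this sketch always stays within (-1, 1) when it starts there.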

3.2.2 FNN: Feedforward Neural Network Model

The FNN, a basic type of ANN, comprises layers that pass information in only one direction. As data moves from the input nodes through the hidden nodes to the output nodes, no cycles or loops are formed. The core of an FNN comprises the three levels in Fig. 3: input, hidden, and output [38]. The input layer receives the data, the hidden layers process it using weighted sums and activation functions, and the output layer delivers the results. Each neuron is connected to the neurons in the next layer through weights [12], which indicate how strongly the neurons are linked.

Figure 3: The FNN’s internal architecture

The mathematical representation of a FNN consists of layers and activation functions within each layer. In general, consider an n-layer FNN, where p is the output space (Y), k is the input space (X), and (

Here, xi is the input to the ith layer, bi is the bias vector, ai is the activation function, and wi is the weight matrix that connects the previous and current layers.
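A layer-by-layer forward pass of this kind can be sketched in a few lines. The sketch assumes each layer computes a_i(w_i x_i + b_i), which is the usual feedforward form; the helper names, the ReLU activation, and the tiny weight values are illustrative assumptions rather than the configuration used in this study.

```python
def relu(vector):
    return [max(0.0, v) for v in vector]


def dense(x, weights, bias, activation=None):
    """One layer: weighted sums plus bias, then an optional activation.

    `weights` holds one row of input weights per output unit.
    """
    z = [sum(w * xi for w, xi in zip(row, x)) + b
         for row, b in zip(weights, bias)]
    return activation(z) if activation else z


def fnn_forward(x, layers):
    """Pass the input through each (weights, bias, activation) layer in order."""
    for weights, bias, activation in layers:
        x = dense(x, weights, bias, activation)
    return x


# Hypothetical network: 2 inputs -> 2 hidden units (ReLU) -> 1 linear output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4]], [0.0, 0.1], relu),
    ([[1.0, 1.0]], [0.0], None),
]
y = fnn_forward([1.0, 2.0], layers)
```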

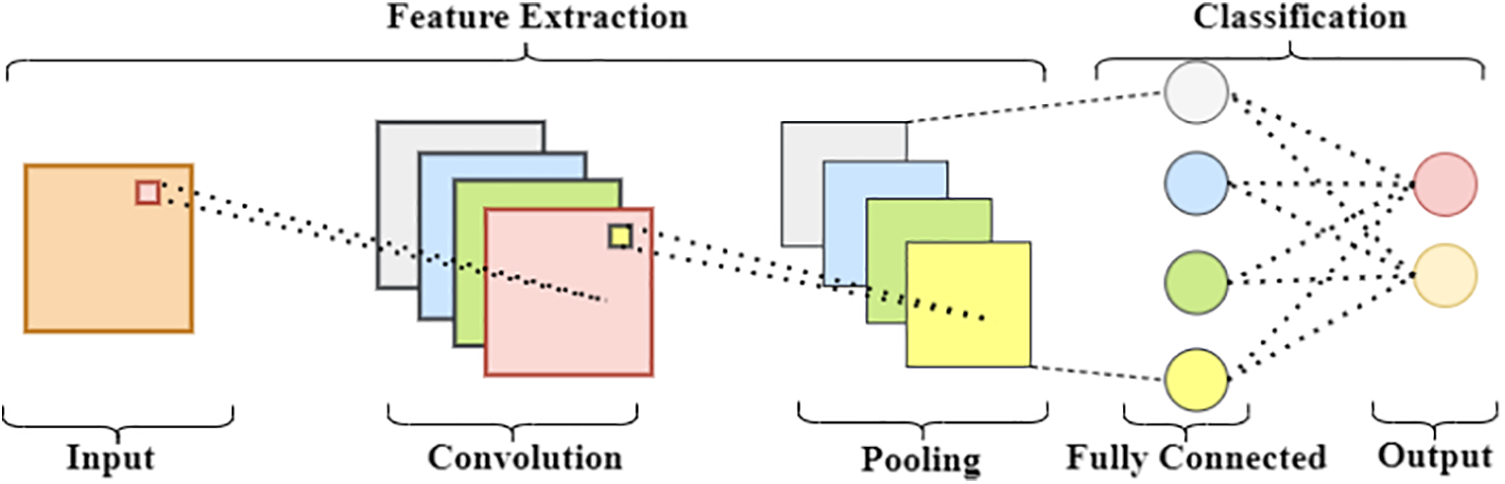

3.2.3 CNN: Convolutional Neural Network Model

CNNs are a type of deep learning model that can handle multidimensional data. They operate using filters, downsampling techniques, and linear operations. Convolutional layers let a CNN extract low-level features directly from the data. Pooling layers then simplify the representation of the resulting feature maps and make them easier to process. Finally, fully connected layers use these features to capture broader patterns and produce the model’s output [13]. Fig. 4 illustrates how these architectural components work together to process the incoming data and produce results efficiently.

Figure 4: Structure of CNN

A feature map is generated by the convolution operation, which applies a kernel (or weight function) to an input function. The kernel is a multidimensional array whose weights are updated during training. As it traverses the input, the kernel computes a dot product at each position to obtain the feature map values. As shown in Eq. (6), these values are obtained by combining the input function h with the kernel p, where r and s index the rows and columns of the output matrix.
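The sliding dot product can be made concrete with a small two-dimensional example. The sketch below keeps the names h, p, r, and s from the text; it assumes no padding and a stride of one, and it does not flip the kernel (the cross-correlation convention used by most deep learning libraries), since the exact index convention of Eq. (6) is not reproduced here.

```python
def conv2d_valid(h, p):
    """Slide kernel p over input h; each output value is a dot product."""
    k_rows, k_cols = len(p), len(p[0])
    out_rows = len(h) - k_rows + 1
    out_cols = len(h[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):          # r indexes rows of the output
        row = []
        for s in range(out_cols):      # s indexes columns of the output
            row.append(sum(h[r + m][s + n] * p[m][n]
                           for m in range(k_rows)
                           for n in range(k_cols)))
        feature_map.append(row)
    return feature_map


# Hypothetical 3x3 input and 2x2 kernel.
h = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
p = [[1, 0],
     [0, -1]]
feature_map = conv2d_valid(h, p)  # 2x2 output
```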

Max-pooling and average-pooling are the two most commonly used pooling layers in convolutional neural networks. When processing input segments, max-pooling filters select the highest value from each segment, while average-pooling filters calculate the average value of each segment [39]. Eq. (7) gives the formula for max-pooling.
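The difference between the two pooling types is easy to see on a one-dimensional sequence. The sketch below assumes non-overlapping segments (stride equal to the pool size) and drops any incomplete trailing segment; the function names and sample values are illustrative.

```python
def pool1d(values, size, reduce_fn):
    """Apply reduce_fn to each non-overlapping segment of length `size`."""
    return [reduce_fn(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


def mean(segment):
    return sum(segment) / len(segment)


x = [1.0, 3.0, 2.0, 8.0, 5.0, 4.0]
max_pooled = pool1d(x, 2, max)   # highest value of each pair
avg_pooled = pool1d(x, 2, mean)  # average value of each pair
print(max_pooled, avg_pooled)    # [3.0, 8.0, 5.0] [2.0, 5.0, 4.5]
```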

3.2.4 GP-Ensemble Deep Learning Model

Fig. 5 depicts the structure of our proposed ensemble model, which is a concatenated model. It combines CNN, FNN, and GRU branches to exploit the capabilities of each architecture. The novelty of this ensemble technique is that it uses one hidden GRU layer, two hidden FNN layers, and one CNN layer, which reduces its cost compared to other models. This multi-branch design supports the analysis of time-series or sequential data by capturing many aspects of the data’s structure and trends. The input to the GRU, FNN, and CNN branches is the renewable electricity generation of the ten Asian countries, as illustrated in Table 2. The recurrent structure of the GRU branch makes it well suited to sequence prediction problems because it captures temporal correlations. The FNN branch, with its densely connected layers that capture subtle patterns in the input, excels at non-sequential data processing. The CNN branch uses convolutional and pooling layers to extract localized features, which simplifies pattern recognition in the data. The model concatenates the outputs of these branches into a comprehensive feature set, and a final dense layer produces the result. This ensemble approach improves the model’s forecast accuracy and generalizability as it learns from more complicated data.

Figure 5: GP-Ensemble deep learning approach

The ensemble model can be expressed mathematically as follows. XGRU, XFNN, and XCNN denote the inputs to the GRU, FNN, and CNN branches. The activation functions fGRU, fFNN, and fCNN, along with the weights WGRU, WFNN, and WCNN, define the transformation each branch applies to its input. OGRU, OFNN, and OCNN are the separate outputs of the three branches. These outputs are concatenated into one feature vector. The model’s output O is obtained by passing this combined vector through the final dense layer with weights Wfinal and bias bfinal, optionally followed by an activation function ffinal. Eq. (8) gives the mathematical description of the process.

Here, OGRU = fGRU (WGRU. XGRU), OFNN = fFNN (WFNN. XFNN), and OCNN = fCNN (WCNN. XCNN), and the symbol [;] denotes concatenation of the branch outputs. This formula underscores how an ensemble model leverages different data representations to enhance prediction accuracy.
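The merge step can be sketched numerically as O = Wfinal [OGRU; OFNN; OCNN] + bfinal with a linear final layer. The branch outputs, weights, and biases below are hypothetical placeholders, and the sketch uses two output units for brevity, whereas the model described in this paper uses ten linear units, one per country.

```python
def concat(*vectors):
    """Join branch outputs end to end into one feature vector ([;] in the text)."""
    merged = []
    for vector in vectors:
        merged.extend(vector)
    return merged


def dense_linear(x, w_final, b_final):
    """Final dense layer without activation: one weighted sum per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(w_final, b_final)]


# Hypothetical branch outputs (in the real model these come from trained layers).
o_gru = [0.2, 0.4]
o_fnn = [0.1]
o_cnn = [0.3, 0.5]

features = concat(o_gru, o_fnn, o_cnn)      # length 5
w_final = [[1.0] * 5, [0.5] * 5]            # 2 output units x 5 features
b_final = [0.0, 0.1]
o = dense_linear(features, w_final, b_final)
```

Concatenation lets the final layer learn how much weight to give each branch, rather than fixing the combination in advance as a simple average would.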

The GRU, FNN, and CNN models use the most recent pattern from their test datasets to predict what will happen next. The ensemble model integrates the predictions of the individual branches to make the overall prediction more precise. This method demonstrates high precision in forecasting the outcomes for ten Asian countries from 2024 to 2030.

This research investigates whether a GP-Ensemble deep learning model can predict how much green energy ten Asian countries will produce. The model’s last dense layer combines the GRU, FNN, and CNN results. It has ten linear units, one for each of the ten countries studied. This ensemble method, which combines the strengths of several neural networks, makes it easier to find spatial and temporal trends in the data. The Adam optimizer is used for model optimization because it handles sparse gradients on noisy problems well. MSE, RMSE, and MAE are used to measure prediction accuracy. As Table 4 shows, the entire model is trained for 190 epochs with a batch size of 32 to learn the trends in the green power production dataset. Our experiment aims to determine how well ensemble deep learning methods can predict the output of renewable energy sources in Asian countries. Algorithm 1 presents the pseudocode for the GP-Ensemble deep learning approach.

To evaluate the performance of our approach, we used several evaluation metrics: MAE, MSE, and RMSE, defined by Eqs. (9)–(11). The MAE is the average absolute difference between the actual and predicted values. The MSE is the average of the squared differences between observed and predicted values, and the RMSE is its square root, which expresses the error in the same unit as the data [22].

where
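The three metrics can be computed directly from their standard definitions, as in the sketch below. The function names and the sample values are illustrative; the inputs are assumed to be on the normalized [0, 1] scale, like the errors reported in this paper.

```python
import math


def mse(actual, predicted):
    """Mean of squared differences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mae(actual, predicted):
    """Mean of absolute differences."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Square root of the MSE, in the same unit as the data."""
    return math.sqrt(mse(actual, predicted))


# Hypothetical normalized values for three test years.
actual = [0.2, 0.5, 0.9]
predicted = [0.1, 0.7, 0.9]
scores = {"MSE": mse(actual, predicted),
          "MAE": mae(actual, predicted),
          "RMSE": rmse(actual, predicted)}
```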

The deep learning models were built using Python and the Keras library. The training and testing phases were conducted on Google Colab [40], a platform that provides graphics processing unit (GPU) support. The main points from the models’ findings are as follows:

1. By analyzing Green Electricity Production Database (GEPD) data from around Asia, we tested the GP-Ensemble model’s ability to forecast and enhance renewable power generation strategies.

2. We compared the GP-Ensemble deep learning model to CNN, FCN, GRU, FNN, and an ensemble model that combined CNN and FNN.

3. To evaluate the GP-Ensemble deep learning model, we compared its results to those of existing research.

4.1 GP-Ensemble Deep Learning Model Performance on Dataset of Asian Countries

Table 5 shows the performance metrics of the GP-Ensemble deep learning model: MSE, MAE, and RMSE. The model achieves an MSE of 0.0631, an MAE of 0.1754, and an RMSE of 0.2383, each averaged across the ten Asian countries. These low values show that the GP-Ensemble deep learning model is accurate and has captured the trends and patterns in the Asian countries’ dataset.
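The reported RMSE (0.2383) is slightly below the square root of the reported MSE (the square root of 0.0631 is about 0.2512). One reading consistent with the per-country averaging described above is that the RMSE was computed for each country and then averaged; this is an assumption, since the averaging procedure is not spelled out. The sketch below uses hypothetical per-country MSE values to show that an average of per-country RMSEs never exceeds the square root of the average MSE.

```python
import math

# Hypothetical per-country MSE values (not taken from the paper's tables).
per_country_mse = [0.01, 0.04, 0.16]

mean_mse = sum(per_country_mse) / len(per_country_mse)
mean_rmse = sum(math.sqrt(m) for m in per_country_mse) / len(per_country_mse)
sqrt_mean_mse = math.sqrt(mean_mse)

# The average of square roots is at most the square root of the average.
print(f"mean RMSE = {mean_rmse:.4f}, sqrt(mean MSE) = {sqrt_mean_mse:.4f}")
```

The gap between the two quantities grows as the per-country errors become more uneven, so the two reported figures are compatible under this reading.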

Table 6 shows the forecast GEP in GWh for ten Asian nations from 2024 to 2030: Iraq, Pakistan, India, China, Malaysia, Indonesia, Japan, Thailand, South Korea, and the Philippines. Because production figures are reported for every year, it is easy to see that green electricity generation is on the rise.

Projections for GEP in Asian nations from 1965 to 2030 are shown in Fig. 6 as bar charts. The graph’s x-axis represents years, while the y-axis represents green electricity generation in GWh. Pakistan’s GEP has steadily increased, from 2196 GWh in 1965 to 49,273 GWh in 2023. India’s energy generation has surged dramatically, from 19,167 GWh in 1965 to 392,293 GWh in 2023, highlighting its rapid industrialization and expanding energy needs. With its substantial economic growth, China escalated its GEP from 22,097 to 2,662,201 GWh, representing one of the most significant increases globally. Malaysia's GEP grew from 587 to 40,195 GWh, reflecting steady progress in its energy sector. Similarly, Indonesia's production rose from 1825 to 63,750 GWh, indicating robust development. Japan's energy production gradually increased from 76,070 to 240,362 GWh despite challenges such as the Fukushima disaster. The Philippines' GEP increased from 1550 to 25,001 GWh, while Thailand's grew from 841 to 33,255 GWh, showcasing its expanding energy infrastructure.

Figure 6: Prediction of GEP with GP-Ensemble deep learning model on a testing set

South Korea’s production grew from 710 to 50,389 GWh, underscoring its industrial advancement. Despite its turbulent history, Iraq saw an increase from 71 to 7766 GWh, reflecting some growth in its energy sector. Predictions from 2024 to 2030 suggest continued growth for these nations. Pakistan is expected to reach 51,386 GWh by 2024 and 55,498 GWh by 2025. India’s GEP is projected to grow from 418,067 GWh in 2024 to 522,715 GWh in 2030. China’s energy generation is forecasted to grow significantly from 2,818,646 GWh in 2024 to 3,757,321 GWh in 2030. Malaysia’s production is anticipated to reach 42,933 GWh by 2024 and 56,626 GWh by 2028. Indonesia is projected to generate 67,304 GWh by 2024 and 77,965 GWh by 2028. Japan’s forecast suggests a steady increase to 251,777 GWh by 2024 and 320,267 GWh by 2030. The Philippines’ GEP is expected to rise to 25,429 GWh in 2024 and 27,571 GWh by 2028. Thailand is predicted to generate 35,447 GWh by 2024 and 41,407 GWh by 2028. South Korea’s GEP is forecasted to increase to 54,615 GWh by 2024 and 71,969 GWh by 2030. Iraq’s GEP is expected to reach 8692 GWh by 2024 and 9322 GWh by 2028. These projections indicate a trend of increasing energy production across all countries, driven by growing populations and expanding industrial activities.
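To compare these forecasts across countries of very different size, the start and end values can be converted to an implied compound annual growth rate. The calculation below is an illustrative addition, not part of the original analysis; it uses the 2024 and 2030 figures quoted above for India and China, and the function name is hypothetical.

```python
def compound_annual_growth_rate(start, end, years):
    """Constant yearly growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


span = 2030 - 2024  # six yearly steps

india = compound_annual_growth_rate(418_067, 522_715, span)
china = compound_annual_growth_rate(2_818_646, 3_757_321, span)
print(f"India: {india:.2%} per year, China: {china:.2%} per year")
```

On these figures, the forecasts imply growth of roughly 3.8% per year for India and 4.9% per year for China.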

4.2 Comparison of Deep Learning Models Performance on the Dataset of Asian Countries

Fig. 7 is a line graph of training loss. The vertical axis shows the MSE loss, and the horizontal axis shows the number of epochs, which ranges from 0 to 190. Each line depicts the performance of a different model over time:

• A blue line represents the GRU model.

• An orange line shows the FNN model.

• A red line represents the GP-Ensemble, which combines GRU, FNN, and CNN.

• The purple line represents the ensemble model of FNN and CNN.

• A brown line represents the FCN model.

• The dark purple line represents the ensemble model of FCN and FNN.

• The light green line represents the ensemble model of GRU and FCN.

Figure 7: Training losses comparison of forecasting models

All models exhibit a steep initial drop in training loss during the first 25 epochs, indicating rapid learning. After that, the rate of loss reduction slows as the models converge toward their minimum losses. The GP-Ensemble model consistently achieves the lowest final loss, indicating the best training performance. Among the remaining models, CNN and FNN are competitive, with GRU trailing slightly. The (FCN and FNN) and (GRU and FCN) ensembles fluctuate strongly at the start and converge more slowly than the other methods. FCN shows moderate performance, while the (FNN and CNN) ensemble performs strongly in the later stages of training. Overall, ensemble models, particularly the GP-Ensemble, enhance performance by combining the strengths of individual models.

Table 7 compares the performance of several deep learning models. The table shows six distinct models, each evaluated with three statistical measures (MSE, MAE, and RMSE). Lower values indicate more precise predictions. The GP-Ensemble outperforms the other deep learning models: its error rates of 0.0631 MSE, 0.1754 MAE, and 0.2383 RMSE are the lowest among the models. The GRU model also does well, differing only slightly from the GP-Ensemble model. The FCN model has higher error rates than the other models, so it may not be the best choice for this forecasting task.
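For reference, the three error measures can be computed as in the following minimal sketch. It uses the standard textbook definitions. The function names and the sample values are illustrative assumptions and are not taken from the study's code or data.

```python
import math


def _check(y_true, y_pred):
    # Both sequences must be non-empty and of equal length.
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")


def mse(y_true, y_pred):
    """Mean squared error: average of squared differences."""
    _check(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error: average of absolute differences."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the MSE on the same samples."""
    return math.sqrt(mse(y_true, y_pred))


# Illustrative (made-up) normalized targets and predictions.
y_true = [0.10, 0.40, 0.35, 0.80]
y_pred = [0.12, 0.35, 0.40, 0.70]
print(mse(y_true, y_pred), mae(y_true, y_pred), rmse(y_true, y_pred))
```

All three measures are non-negative and equal zero only for perfect predictions. RMSE equals the square root of MSE only when both are computed over the same set of samples; values averaged per batch or per country need not satisfy that relation exactly.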

Fig. 8 is a bar chart comparing MSE, MAE, and RMSE for the different deep learning models on the Asian testing set. With an MSE of 0.0631, the GP-Ensemble model (a combination of GRU, FNN, and CNN) produced the best results. By comparison, the FCN model has an MSE of 0.1286, and the FNN model received a higher score of 0.2599. The GP-Ensemble model remained the most accurate predictor, with an MAE of 0.1754 and an RMSE of 0.2383, while the FCN model had the highest RMSE of 0.3587. On the testing set, the GP-Ensemble model beats all other ensemble approaches and individual models.

Figure 8: Performance comparison of forecasting models on a testing set

4.2.1 Ablation Study on Asian Countries Dataset

Table 8 presents the ablation study of the GP-Ensemble deep learning model on the Asian countries dataset. It reports how different batch sizes (16 and 32), optimizers (SGD and Adam), and numbers of epochs (100 and 190) affect MSE, MAE, and RMSE. Each row corresponds to one combination of these settings.

In this investigation, the Adam optimizer, 190 epochs, and a batch size of 32 yielded the lowest MSE of 0.0631, MAE of 0.1754, and RMSE of 0.2383. This suggests that longer training and larger batch sizes may benefit deep learning models on complicated datasets such as the Asian countries dataset.
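The ablation settings described above form a small grid that can be enumerated programmatically. The sketch below assumes a full 2 × 2 × 2 grid and a hypothetical caller-supplied `train_and_evaluate` function that trains the model for one configuration and returns its metrics. Neither the function nor the dictionary keys come from the study.

```python
from itertools import product

# Settings named in the ablation study.
BATCH_SIZES = (16, 32)
OPTIMIZERS = ("SGD", "Adam")
EPOCHS = (100, 190)


def ablation_grid():
    """Return every combination of batch size, optimizer, and epoch count."""
    return [
        {"batch_size": b, "optimizer": o, "epochs": e}
        for b, o, e in product(BATCH_SIZES, OPTIMIZERS, EPOCHS)
    ]


def run_ablation(train_and_evaluate):
    """Evaluate every configuration and return the rows sorted by MSE.

    `train_and_evaluate` is a hypothetical callable: it takes one
    configuration dict and returns a dict containing at least "mse".
    """
    rows = []
    for config in ablation_grid():
        metrics = train_and_evaluate(config)
        rows.append({**config, **metrics})
    return sorted(rows, key=lambda row: row["mse"])


# List the configurations that would be trained.
for config in ablation_grid():
    print(config)
```

With a real training routine passed in, the first row returned by `run_ablation` would be the configuration with the lowest MSE.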

4.3 Comparison of GP-Ensemble Deep Learning Model Performance with Existing Studies

Table 9 compares the MSE, RMSE, and MAE of several deep learning approaches from earlier work with those of the proposed model. An LSTM-CNN study reported an RMSE of 0.621 and an MAE of 0.221 [24]. In 2022, PVMD-ESMA-DELM reported an RMSE of 1.4188 and an MAE of 0.9709 [27], while a CNN-GRU investigation found lower errors, with an MAE of 0.2894 and an RMSE of 0.3356 [2]. In 2023, the CNN-LSTM-Transformer approach had an MAE of 0.393 and an RMSE of 0.344 [13], an ensemble method had an MSE of 3.9972, an MAE of 0.8306, and an RMSE of 1.9993 [22], and a deep neural network (DNN) had an MSE of 0.53 [23]. One LSTM study reported an RMSE of 0.47 [28], the LSTM-GRU approach reported 0.89 and 0.75 [29], and the SSA-CNN-LSTM had an MSE of 0.2031, an MAE of 0.1774, and an RMSE of 0.4506 [41]. In this comparison, the proposed GP-Ensemble deep learning model has the lowest error scores and therefore the strongest forecasting ability: MSE 0.0631, MAE 0.1754, and RMSE 0.2383.

The study found that the GP-Ensemble model is an efficient deep learning technique for predicting green power production in Asian countries. It predicts better than GRU, FNN, and CNN individually and better than the other ensemble models. This improves green power output predictions as energy demand rises and can support better-informed energy management strategies. Lawmakers, energy firms, and academics can use these results to anticipate future energy demands and use energy more efficiently. Further deep learning-based research on green power is expected to improve models and predictions.

Despite these encouraging findings, the study has limitations. It does not investigate more complex models such as LSTMs or attention-based models, and it only uses data from ten Asian countries, which limits its diversity. The model also does not yet use real-time data, which is needed to make accurate forecasts. The GP-Ensemble model works well but takes a long time to run and does not estimate uncertainty, which is essential for reliable energy predictions. The study also only considers basic error measures and does not examine their real-world implications. It does not use advanced data processing methods to improve the model input. The model has also yet to be applied to other types of energy or to different industries, which limits how widely it can be used. Future research should use more real-time and varied data, simplify the model, add uncertainty measures, apply more advanced data processing techniques, and test the model on various energy sectors to make it more useful in practice.

Acknowledgement: The authors would like to thank their affiliated universities for providing research funding to accomplish this research work.

Funding Statement: The research of Muhammad Faheem is funded by the Academy of Finland and the University of Vaasa, Finland.

Author Contributions: Study conception and design: Muhammad Shoaib Saleem; data collection: Javed Rashid, Sajjad Ahmad, Muhammad Faheem, Tao Yan; analysis and interpretation of results: Javed Rashid, Sajjad Ahmad, Muhammad Shoaib Saleem; draft manuscript preparation: Sajjad Ahmad, Muhammad Shoaib Saleem. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data can be shared upon valid request to the corresponding author.

Ethics Approval: The research does not involve human participants or animal subjects, and therefore does not require formal ethical approval. The study is based on publicly available datasets and computational models, ensuring compliance with ethical standards in research and data usage. All data used in the study are sourced from open-access repositories, and no personally identifiable information or sensitive data is involved. The research team has adhered to best practices in data handling and analysis, maintaining transparency and integrity throughout the research process.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. K. T. Ateş, “Estimation of short-term power of wind turbines using artificial neural network (ANN) and swarm intelligence,” Sustainability, vol. 15, no. 18, Sep. 2023, Art. no. 13572. doi: 10.3390/su151813572.

2. L. Huang, L. Li, X. Wei, and D. Zhang, “Short-term prediction of wind power based on BiLSTM–CNN–WGAN–GP,” Soft Comput., vol. 26, no. 20, pp. 10607–10621, Oct. 2022. doi: 10.1007/s00500-021-06725-x.

3. The World Bank, “A renewable energy future for Pakistan’s power system,” Accessed: Feb. 21, 2020. [Online]. Available: https://www.worldbank.org/en/news/feature/2020/11/09/a-renewable-energy-future-for-pakistans-power-system

4. D. Fallin, K. Lee, and G. B. Poling, “Clean energy and decarbonization in Southeast Asia,” Accessed: Feb. 21, 2023. [Online]. Available: https://www.csis.org/analysis/clean-energy-and-decarbonization-southeast-asia-overview-obstacles-and-opportunities

5. REN21, “Renewable 2022 Global Status Report,” 2022. Accessed: Feb. 22, 2024. [Online]. Available: https://www.ren21.net/gsr-2022

6. F. M. Abed, Y. Al-Douri, and G. M. Al-Shahery, “Review on the energy and renewable energy status in Iraq: The outlooks,” Renew. Sustain. Energ. Rev., vol. 39, pp. 816–827, Nov. 2014. doi: 10.1016/j.rser.2014.07.026.

7. D. Zhu, S. M. Mortazavi, A. Maleki, A. Aslani, and H. Yousefi, “Analysis of the robustness of energy supply in Japan: Role of renewable energy,” Energy Rep., vol. 6, no. 2, pp. 378–391, Nov. 2020. doi: 10.1016/j.egyr.2020.01.011.

8. C. Y. Lee and S. Y. Huh, “Forecasting new and renewable energy supply through a bottom-up approach: The case of South Korea,” Renew. Sustain. Energ. Rev., vol. 69, no. 4, pp. 207–217, Mar. 2017. doi: 10.1016/j.rser.2016.11.173.

9. B. Bhandari, S. R. Poudel, K. T. Lee, and S. H. Ahn, “Mathematical modeling of hybrid renewable energy system: A review on small hydro-solar-wind power generation,” Int. J. Precis. Eng. Manuf. Green Technol., vol. 1, no. 2, pp. 157–173, Apr. 2014. doi: 10.1007/s40684-014-0021-4.

10. SDG 7, “GOAL 7: Affordable and clean energy,” Accessed: Feb. 22, 2024. [Online]. Available: https://www.unep.org/explore-topics/sustainable-development-goals/why-do-sustainable-development-goals-matter/goal-7

11. I. Jebli, F. Z. Belouadha, M. I. Kabbaj, and A. Tilioua, “Deep learning based models for solar energy prediction,” Adv. Sci. Technol. Eng. Syst. J., vol. 6, no. 1, pp. 349–355, Jan. 2021. doi: 10.25046/aj060140.

12. Y. Xue, T. Tang, and A. X. Liu, “Large-scale feedforward neural network optimization by a self-adaptive strategy and parameter based particle swarm optimization,” IEEE Access, vol. 7, pp. 52473–52483, Apr. 2019. doi: 10.1109/ACCESS.2019.2911530.

13. E. M. Al-Ali et al., “Solar energy production forecasting based on a hybrid CNN-LSTM-Transformer model,” Mathematics, vol. 11, no. 3, Jan. 2023, Art. no. 676.

14. H. Shekhar et al., “Demand side control for energy saving in renewable energy resources using deep learning optimization,” Elect. Power Compon. Syst., vol. 51, no. 19, pp. 2397–2413, Nov. 2023. doi: 10.1080/15325008.2023.2246463.

15. I. Jamil et al., “Predictive evaluation of solar energy variables for a large-scale solar power plant based on triple deep learning forecast models,” Alex. Eng. J., vol. 76, pp. 51–73, Aug. 2023. doi: 10.1016/j.aej.2023.06.023.

16. D. A. Widodo, N. Iksan, and E. D. Udayanti, “Renewable energy power generation forecasting using deep learning method,” IOP Conf. Series: Earth Environ. Sci., Indonesia, vol. 700, no. 1, Mar. 2021, Art. no. 012026. doi: 10.1088/1755-1315/700/1/012026.

17. N. Khortsriwong et al., “Performance of deep learning techniques for forecasting PV power generation: A case study on a 1.5 MWp floating PV power plant,” Energies, vol. 16, no. 5, Feb. 2023, Art. no. 2119. doi: 10.3390/en16052119.

18. S. M. Miraftabzadeh, C. G. Colombo, M. Longo, and F. Foiadelli, “A day-ahead photovoltaic power prediction via transfer learning and deep neural networks,” Forecasting, vol. 5, no. 1, pp. 213–228, Feb. 2023. doi: 10.3390/forecast5010012.

19. A. Sedai et al., “Performance analysis of statistical, machine learning, and deep learning models in long-term forecasting of solar power production,” Forecasting, vol. 5, no. 1, pp. 256–284, Feb. 2023. doi: 10.3390/forecast5010014.

20. J. Li, C. Zhang, and B. Sun, “Two-stage hybrid deep learning with strong adaptability for detailed day-ahead photovoltaic power forecasting,” IEEE Trans. Sustain. Energy, vol. 14, no. 1, pp. 193–205, Sep. 2022. doi: 10.1109/TSTE.2022.3206240.

21. N. E. Benti, M. D. Chaka, and A. G. Semie, “Forecasting renewable energy generation with machine learning and deep learning: Current advances and future prospects,” Sustainability, vol. 15, no. 9, Apr. 2023, Art. no. 7087. doi: 10.3390/su15097087.

22. A. Mystakidis et al., “Energy generation forecasting: Elevating performance with machine and deep learning,” Computing, vol. 105, no. 8, pp. 1623–1645, Aug. 2023. doi: 10.1007/s00607-023-01164-y.

23. M. A. Mirjalili, A. Aslani, R. Zahedi, and M. Soleimani, “A comparative study of machine learning and deep learning methods for energy balance prediction in a hybrid building-renewable energy system,” Sustain. Energy Res., vol. 10, no. 1, Jun. 2023, Art. no. 8. doi: 10.1186/s40807-023-00078-9.

24. K. Wang, X. Qi, and H. Liu, “Photovoltaic power forecasting based LSTM-convolutional network,” Energy, vol. 189, Dec. 2019, Art. no. 116225.

25. D. Kumar, H. D. Mathur, S. Bhanot, and R. C. Bansal, “Forecasting of solar and wind power using LSTM RNN for load frequency control in isolated microgrid,” Int. J. Model. Simul., vol. 41, no. 4, pp. 311–323, Jul. 2021.

26. M. Abdel-Nasser and K. Mahmoud, “Accurate photovoltaic power forecasting models using deep LSTM-RNN,” Neural Comput. Appl., vol. 31, pp. 2727–2740, Jul. 2019.

27. G. An et al., “Ultra-short-term wind power prediction based on PVMD-ESMA-DELM,” Energy Rep., vol. 8, pp. 8574–8588, Nov. 2022.

28. M. Pasandideh, M. Taylor, S. R. Tito, M. Atkins, and M. Apperley, “Predicting steam turbine power generation: A comparison of long short-term memory and willans line model,” Energies, vol. 17, no. 2, Jan. 2024, Art. no. 352.

29. A. L. F. Marques, M. J. Teixeira, F. V. De Almeida, and P. L. P. Corrêa, “Neural networks forecast models comparison for the solar energy generation in Amazon Basin,” IEEE Access, vol. 12, no. 1, pp. 17915–17925, Jan. 2024. doi: 10.1109/ACCESS.2024.3358339.

30. W. Khan, S. Walker, and W. Zeiler, “Improved solar photovoltaic energy generation forecast using deep learning-based ensemble stacking approach,” Energy, vol. 240, Feb. 2022, Art. no. 122812. doi: 10.1016/j.energy.2021.122812.

31. M. Abubakar, Y. Che, M. Faheem, M. S. Bhutta, and A. A. Mudasar, “Intelligent modeling and optimization of solar plant production integration in the smart grid using machine learning models,” Adv. Energy Sustain. Res., vol. 5, no. 4, Apr. 2024, Art. no. 2300160. doi: 10.1002/aesr.202300160.

32. M. N. Akhter et al., “An hour-ahead PV power forecasting method based on an RNN-LSTM model for three different PV plants,” Energies, vol. 15, no. 6, Mar. 2022, Art. no. 2243. doi: 10.3390/en15062243.

33. B. Hossainds, “Dataset of green electricity production 1965–2021,” Accessed: Feb. 24, 2022. [Online]. Available: https://www.kaggle.com/datasets/belayethossainds/renewable-energy-world-wide-19652022

34. Ember, “Dataset of green electricity production 2022–2023,” Accessed: Apr. 15, 2024. [Online]. Available: https://ember-climate.org/countries-and-regions/countries/united-kingdom/

35. F. T. Lima and V. M. Souza, “A large comparison of normalization methods on time series,” Big Data Res., vol. 34, Nov. 2023, Art. no. 100407.

36. Q. H. Nguyen et al., “Influence of data splitting on performance of machine learning models in prediction of shear strength of soil,” Math. Probl. Eng., vol. 2021, pp. 1–15, Feb. 2021. doi: 10.1155/2021/4832864.

37. N. Son and Y. Shin, “Short-and medium-term electricity consumption forecasting using prophet and GRU,” Sustainability, vol. 15, no. 22, Nov. 2023, Art. no. 15860.

38. M. G. Abdolrasol et al., “Artificial neural networks based optimization techniques: A review,” Electronics, vol. 10, no. 21, Nov. 2021, Art. no. 2689.

39. V. Agrawal, P. K. Goswami, and K. K. Sarma, “Week-ahead forecasting of household energy consumption using CNN and multivariate data,” WSEAS Trans. Comput., vol. 20, pp. 182–188, Aug. 2021. doi: 10.37394/23205.

40. Google Colaboratory, “Welcome to Colaboratory,” Accessed: Feb. 1, 2024. [Online]. Available: https://colab.research.google.com/notebooks/intro.ipynb?utm_source%20=%20scs-index

41. V. D. Venkateswaran and Y. Cho, “Efficient solar power generation forecasting for greenhouses: A hybrid deep learning approach,” Alex. Eng. J., vol. 91, pp. 222–236, Mar. 2024. doi: 10.1016/j.aej.2024.02.004.

Copyright © 2024 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
