Open Access

Open Access

REVIEW

A Comprehensive Survey on Joint Resource Allocation Strategies in Federated Edge Learning

1 School of Internet of Things Engineering, Jiangnan University, Wuxi, 214122, China

2 Department of Electronic Engineering, Beijing National Research Center for Information Science and Technology, Tsinghua University, Beijing, 100084, China

3 Qualcomm, San Jose, CA 95110, USA

* Corresponding Author: Qiong Wu. Email:

Computers, Materials & Continua 2024, 81(2), 1953-1998. https://doi.org/10.32604/cmc.2024.057006

Received 05 August 2024; Accepted 12 October 2024; Issue published 18 November 2024

Abstract

Federated Edge Learning (FEL), an emerging distributed Machine Learning (ML) paradigm, enables model training in a distributed environment while ensuring user privacy by using physical separation for each user’s data. However, with the development of complex application scenarios such as the Internet of Things (IoT) and Smart Earth, the conventional resource allocation schemes can no longer effectively support these growing computational and communication demands. Therefore, joint resource optimization may be the key solution to the scaling problem. This paper simultaneously addresses the multifaceted challenges of computation and communication, with the growing multiple resource demands. We systematically review the joint allocation strategies for different resources (computation, data, communication, and network topology) in FEL, and summarize the advantages in improving system efficiency, reducing latency, enhancing resource utilization, and enhancing robustness. In addition, we present the potential ability of joint optimization to enhance privacy preservation by reducing communication requirements, indirectly. This work not only provides theoretical support for resource management in federated learning (FL) systems, but also provides ideas for potential optimal deployment in multiple real-world scenarios. By thoroughly discussing the current challenges and future research directions, it also provides some important insights into multi-resource optimization in complex application environments.Keywords

With the proliferation of the Internet of Things (IoT) and mobile devices, the paradigm of data generation and processing is fundamentally changing. In the past decades, data was typically collected and transmitted to remote data centers for processing and analysis. However, with the explosive growth of data volume and the growing concern for low latency, high reliability, and privacy protection, this centralized data processing model is no longer applicable. As a result, edge computing has been proposed as an emerging computing paradigm to address these issues. Edge computing involves performing computing tasks on devices close to the user, thereby reducing the time and network load for data transmission. Currently, Federated learning (FL) is a distributed machine learning (ML) approach that allows different devices to train models without sharing their data. With the development of technology in recent years, significant progress has been made in theory and practice, and in-depth research has been carried out on model fairness, heterogeneity handling, privacy-preserving security, model training efficiency, robustness, and scalability. The combination of edge computing and the FL will benefit from both of them and have more powerful functions in the application. However, FL requires frequent client-to-server communication for updating model parameters, so the communication efficiency of FL is still a key issue. Edge learning (EL) is a method of data processing and model training on edge devices (ED) close to the data source to reduce computing and communication latency [1], improve real-time performance, and reduce the burden on the center server (CS). However, EL usually suffers from resource constraints as well as device heterogeneity. Because of the defective problems of FL and EL, an emerging distributed learning technique called Federated Edge Learning (FEL) has been proposed [2]. In recent years, the development of edge computing technology has provided a solid infrastructure for FEL to better handle the large amount of data generated by IoT devices.

FEL combines the advantages of FL and EL by performing local model computation and training on the ED, which can leverage the computational power of the device for learning, speeding up data processing while preserving data privacy, and then aggregating the updates on the edge server (ES) through the mechanism of FL [3]. The core features of FEL include distributed training, data privacy protection, as well as low latency and high efficiency. FEL achieves distributed training by performing model training on multiple ED, which prevents the transmission of sensitive data to the CS and thus effectively protects user privacy. The approach significantly reduces data transmission latency and improves the overall computational efficiency since data processing and model training occur in the edge network close to the data source. Moreover, FEL is able to utilize distributed computation and storage resources at the edge of the network, thus ensuring effective model training while enhancing data privacy protection [4]. However, the resource allocation problem remains a challenging issue. With the popularity of IoT as well as mobile ED, in the smart planet, a large amount of distributed data is generated, and the combination of FEL and resource allocation is designed to address the resource constraints [5], data privacy, communication efficiency, and energy management in distributed computing environments to achieve efficient model training data processing while protecting data privacy and security. In FEL, resource allocation involves optimizing computational resources, communication resources, network topology, data selection, device scheduling, and energy consumption among participating ED. In fact, optimizing computational resources refers to the process of allocating computational power to the participating ED in FL, and optimizing communication resources refers to the strategy for efficient data transfer and model updating among ED. Optimizing network topology refers to the process of improving system performance and efficiency by adjusting the connections between ED in the network. Optimizing data selection refers to the process of selecting the most valuable data for model training from the data collected from multiple ED. Optimizing device scheduling refers to the process of reasonably allocating and scheduling computing tasks to individual devices in a distributed computing system according to the real-time state and demand of the system, in order to achieve the optimal use of resources and the maximization of system performance. Optimizing energy consumption refers to minimizing the energy consumed by ED during model training and data processing while ensuring system performance, which is crucial for extending the service life of the devices, reducing operating costs and achieving sustainable development. Therefore, an effective resource allocation strategy cannot only significantly reduce the communication cost, but also improve the overall system efficiency.

In today’s increasingly sophisticated technology, focusing on a single performance metric to the exclusion of other important factors may have limitations in fully dealing with complex multi-objective problems, whereas joint optimization can efficiently integrate and weigh different performance metrics in a multi-objective environment by selecting different weight for each metric where multiple available resources can simultaneously get a balance among multiple objectives, satisfying multiple physical constraints. For example, joint optimization of computation and communication resources can balance the weights between computation and communication appropriately, which can significantly improve the overall energy efficiency of the system [6,7]. Joint data selection and communication resources are to select effective data to train the model and address the allocation of communication resources across devices. In such a way, it can effectively protect device privacy, reduce local model training time, and reduce energy consumption [8] to improve learning efficiency [9]. Joint scheduling and communication resource allocation is trying to optimize system performance and efficiency and reduce latency and energy consumption [10,11]. Joint topology and computational resource optimization are usually used in minimizing energy consumption and latency by adjusting the connectivity between ED in the network [12].

In practical applications, joint optimization resources have demonstrated significant benefits. For example, in intelligent transportation systems, joint optimization of computing and communication resources enables real-time adjustment of traffic light control strategies, improving traffic flow and reducing congestion. In smart agriculture, by optimizing data selection and communication resources, edge devices are able to efficiently collect and transmit agricultural data, thereby improving the timeliness of decision-making. In addition, in smart manufacturing, through joint device scheduling and communication resource optimization, the resource utilization and productivity of production equipment are significantly improved. Meanwhile, in financial services, joint optimization of equipment scheduling and communication resources ensures fast processing and security of real-time financial transactions. Similarly, in some smart agriculture, joint optimization of network topology and communication resources improves the efficiency of data collection and transmission in precision agriculture, enabling low-latency agricultural monitoring. The financial domain also improves the collaboration capability of distributed models through joint optimization, which effectively improves the accuracy of fraud detection. Therefore, joint optimization resources can effectively improve system performance in various application scenarios.

This survey bridges the gaps in existing research, mainly from the single resource optimization to the multi-resource co-optimization. This survey will discuss in detail the latest technological developments and cutting-edge advances in FL, with a special focus on joint optimization problems in resource allocation, which is less covered in existing reviews. Through the specific joint optimization case analysis and practical application scenarios, the applications of FEL theory in practice are demonstrated, while some in-depth empirical analysis and future research directions are provided.

This survey will review the research progress in combining FEL with resource allocation, focusing on computational resources, communication resources, data selection, network topology, and device scheduling, and present a deep analysis of the current state-of-the-art methods, as well as point out the existing challenges, and future directions. That is, various resource allocations to enhance the efficiency of optimized FEL and reduce energy consumption will be presented in detail. By synthesizing the existing research results, the advantages as well as limitations will be analyzed, and the existing challenges and future development trends will be discussed.

In order to more effectively capture and learn large-scale data from the edge of the network, and provide rapid response and intelligent services for network terminals and other powerful functions, edge intelligence technology has been developed. By applying ML algorithms at the edge of the network, it is possible to continuously train and optimize artificial intelligence (AI) models in the edge cloud because ML algorithms can quickly and efficiently utilize distributed mobile data [13]. Among the many branches of edge intelligence technologies, FEL is increasingly becoming one of the most popular EL approaches due to its superior data privacy protection and efficient utilization of endpoint computational resources [4]. FEL is a distributed ML paradigm whose core idea is to perform local data processing and model training on distributed ED [14], while aggregating and protecting user data privacy through the physical separation of raw data of users [15]. In FEL, multiple components may be involved, including ED, ES, and potentially cloud servers [16].

2.1 FEL Basic Framework and Process

In FEL, distributed wireless devices collaborate to perform the ML task, and individual ED do not need to upload their complete training data samples to the ES [17]. Instead, each device independently trains the model locally on its local dataset. The model parameters generated as stochastic gradient descent (SGD) are uploaded to the ES. This approach ensures that the original data samples remain on the device locally, thus avoiding the need to share data resources, and enhancing data privacy protection. The basic framework of FEL is shown in Fig. 1.

Figure 1: FEL basic framework

The typical framework for FEL presented in this paper involves multiple participants, including ED, ES, and CS.

1) Edge Devices: refers to devices at the edge of the network, which may include smartphones, sensors, routers, smartwatches, computers, and so on. They have computing and storage capabilities and can perform data processing as well as model training locally.

2) Edge Server: refers to a server that exists at the edge of the network and is responsible for coordinating and managing the computational tasks of the ED. The ES receives model updates from the ED and then aggregates the model updates to finally generate the global model.

3) Cloud Server: refers to the CS located in the cloud, which is responsible for coordinating and managing the computational tasks on the ES, and the cloud server receives model updates from the ES, and finally aggregates the model updates to generate the global model.

Based on the numbering of the figures in Fig. 1, the basic flow of the FEL can be understood, as shown in Fig. 2.

Figure 2: FEL basic flowchart local

① Firstly, in the initialization phase, each ED loads its respective initial ML model, and the ES is responsible for coordinating the model training process of the ED and broadcasting the global model.

② In the local training phase, each ED is trained using its own collected data, during which the device updates the local parameters of the model.

③ In the model update upload phase, each ED will send the updated model to ES instead of uploading its original data, and ES is responsible for receiving the updated model from each device, which can better protect the user’s data privacy.

④ In the model aggregation phase, ES aggregates all model updates received to generate a new global model.

⑤ In the update phase of the global model, the newly generated global model is sent to the CS, which uses the aggregated updates to compute the global model. To protect data privacy, the CS only receives model updates and does not directly access the raw data.

⑥ During the model distribution phase, the updated global model is broadcast to the ES.

⑦ The ES sends the global model back to the relevant ED, and then each ED uses its newly received global model and continues its training to update the local model parameters.

The above looping process will continue until the model reaches the expected performance or stops when specific conditions are met. In each iteration, the model is transferred among ED, ES, and CS, while the original data is always kept in ED.

FEL combines the advantages of FL and edge computing to provide an efficient, secure, and expandable approach to data processing and model training. FEL has several advantages over traditional centralized ML and single FL or EL.

2.2.1 Low Latency and Efficient Computing

In traditional ML, all the data in the device needs to be transmitted to the CS, especially when the data volume is large and widely distributed, which may lead to high latency, however, in FL, the data transmission mainly consists of the model related data transmission, and although some of the data volume is reduced, the model update still needs to be transmitted to the CS for aggregation, which may have latency when the network is unstable or the bandwidth is limited [18]. EL significantly reduces latency by processing data at the ED, which is suitable for applications with high real-time performance [19]. However, the emergence of FEL not only retains the low-latency advantage of EL, which allows real-time processing and response, but also significantly improves the system efficiency through the distributed computation and storage capabilities among the ED. ED processes the data and trains the model locally, which reduces the traffic of model related data transmission to the CS and thus reduces network congestion, can adapt to changes in the local environment in real time, and improves the intelligence of the overall system by sharing knowledge with other devices through the FL mechanism [20].

2.2.2 Data Privacy and Security

In conventional ML, the data has to be transmitted to the CS for unified processing training, which may have the problem of privacy leakage when some users’ private and sensitive data are involved. However, FL performs model training on local devices and shares model updates that are not raw data, which reduces the risk of leaking private raw data [21,22]. However, FL still relies on the CS for aggregation and coordination of model updates [23]. There is still a privacy leakage problem during parameter exchanges in FL [24]. EL in the vicinity of the data source can collaborate on data processing and model training [25], which reduces data transmission and improves data privacy protection, but there are challenges in inter-device collaboration and global model training. Finally, FEL excels in protecting data privacy as it avoids centralized data storage and transmission by training the model locally at the ED and updating it collaboratively with other ED and CS, and the training and updating process does not require the uploading of raw data [26,27]. In addition, ED usually have better security as they are located at the edge of the network and are subject to a lower risk of attacks. Performing a large number of computations on ED allows the computational burden of CS and the frequency of data transmission to be further reduced, so that it is evident that FEL is better at protecting privacy compared to others.

2.2.3 Reduce Energy Consumption and Minimize Communication Costs

In traditional ML, the entire data needs to be uploaded, which results in a large amount of data being transmitted to the CS for processing updates and storage. Therefore, it may cause high communication costs. The CS needs to process a large amount of data from the ED, which requires a large amount of energy consumption. In FL, although there is no more transmission of raw data, frequent model updating and aggregation still consume a large amount of communication cost [28,29], especially with a large number of participating ED. EL processes the data locally. The requirement for data transmission is reduced, and leads to a reduction in communication costs and energy consumption [30,31]. However, independent ED processing may lead to uneven resource utilization and overall energy consumption is not optimized [32]. However, FEL reduces the amount of raw data transmission to the CS by performing local model training at the ED, so not only the communication cost but also the energy consumption is reduced. The collaborative learning and model update transmission between each ED is relatively small, further minimizing the communication overhead. In addition, the data is distributed and processed locally, which can further optimize the energy consumption of the devices, especially when using low-power devices and optimizing the allocation of computational tasks, making the overall system more efficient and significant in reducing energy consumption [33].

3 Related Work to FEL Based Resource Allocation

FEL excels in data privacy protection, real-time processing, and resource optimization. However, due to the heterogeneous nature of ED and limited resources [34], how to efficiently allocate and manage computational and communication resources as well as optimize network topology, data selection, energy consumption, and communication efficiency in the scaling of multiple demands have become important research issues in FEL. In this section, we provide a detailed overview of the current state of research on resource allocation in FEL.

3.1 FEL Based Computational Resource Allocation

Computational resource allocation in FEL involves efficiently distributing computational resources among multiple ED to support the training and updating of distributed machine learning models [35]. These computational resources include central processing units (CPU), graphics processing units (GPU), memory, storage, and other hardware resources used to perform computational tasks, etc. In FEL, the goal of computational resource allocation is to optimize the efficiency of computational resource utilization and reduce latency while ensuring the quality of model training.

In [36], Aghapour et al. utilized the ES available in the environment, which can optimize the total latency and energy consumption of the IoT devices in the system. Then a Deep Reinforcement Learning (DRL)-based strategy is proposed to decompose the device offloading and resource allocation problem into two independent sub-problems. Specifically, the offloading strategy is adjusted by constantly updating the environment information, and the resource allocation is optimized using the Salp Swarm Algorithm (SSA). Simulation experimental results show that the algorithm achieves significant cost minimization and performs well in terms of latency and power consumption. In [37], in order to minimize the total cost of FL, Liu et al. proposed a DRL-based resource allocation algorithm for joint optimization of computational resources and multi-UAV association. With this algorithm, it aims to optimize the allocation of computational resources while reducing the energy consumption of Unmanned Aerial Vehicle (UAV). In order to reduce the computational energy consumption of UAV, a UAV computational resource allocation strategy is proposed. This strategy reduces energy consumption by dynamically adjusting the allocation of computational tasks on the UAV. Ultimately, it is demonstrated that this strategy can effectively reduce the total cost of FL, thus improving the economy and sustainability of the system. In [38], in order to address the computational power limitations encountered by clients when training Deep Neural Networks (DNN) using FL, Wen et al. designed an innovative FL framework by combining edge computing and split learning techniques. This framework allows the model to be split during the training process, which reduces the latency during the training process while ensuring that the test accuracy is maintained. In this way, Wen et al.’s work effectively alleviates the problem of insufficient computational resources on the client side when dealing with complex DNN models, providing a new solution for the application of FL on ED. In [39], Salh et al. developed efficient integration of federated edge smart nodes by investigating the computational resource allocation, optimal transmission power, etc., to reduce the energy consumption of each iteration of the FL time from IoT devices to edge smart nodes and to meet the learning time requirements of all IoT devices. In [40], Zhang et al. designed an FL service-oriented computational allocation policy algorithm to solve the problem of allocation policy computation in time-varying environments. Finally, it is found that the wise allocation of computational resources is crucial for the overall performance of the FL task allocation system. The task allocation is dynamically adjusted according to the real-time computing capability and current load of the device.

In [41], Sardellitti et al. proposed a DRL-based task allocation algorithm that is able to adjust the allocation of computational tasks in real-time according to the state of the devices and network conditions. Part of the computational tasks are offloaded to the ES for execution to reduce the computational burden of the ED. In [19], Shi et al. proposed an innovative offloading strategy for edge computing, which achieves optimal allocation of computing resources by subdividing computing tasks into segments and making offloading decisions. Through collaborative computing between devices, this strategy realizes effective offloading and sharing of tasks, and improves the overall computational efficiency and performance of the system. In [42], Liu et al. designed an FL framework that centers on collaborative computing and significantly improves the overall computational efficiency by facilitating the collaboration of tasks among devices. In addition, an energy-aware scheduling method is proposed, which is able to intelligently adjust the allocation of computational tasks by real-time monitoring of the device’s battery power and computational load, thus effectively extending the device’s endurance. In order to achieve this goal, an energy consumption model is also established, which is able to dynamically adjust the allocation of computing tasks according to the real-time energy consumption of the device, ensuring the effective use of resources and the optimization of energy consumption. In [43], Wang et al. proposed a task scheduling algorithm based on the energy consumption model, which considers a typical EL framework, utilizes limited computational communication resources in EL for the best performance, and optimizes the task scheduling strategy by real-time monitoring of device power consumption. In [44], Zhou et al. used a low-power algorithm to reduce energy consumption during computation, and designed an energy-efficient FL algorithm through the overall architecture, framework, and emerging key technologies for training and reasoning in deep learning models at the edge of the network, which effectively extends the device’s endurance by optimizing the energy consumption during the computation process. Through this innovation, the energy efficiency of the FL system is improved, which enables the device to run for a longer period of time while performing learning tasks, thus enhancing the utility and flexibility of the system. For tasks with high real-time requirements, computational resources are prioritized and allocated to improve the real-time response capability of the system. In [45], Li et al. designed a computational scheduling framework centered on real-time prioritization, which significantly improves the real-time response performance of the system by dynamically adjusting the priority of computational tasks. This innovation ensures that the system can respond quickly to real-time tasks and improves the efficiency of the system.

The above studies address a single computational resource allocation, and through various strategies such as task decomposition and scheduling, task offloading, energy-aware scheduling and computational priority scheduling, the utilization of computational resources can be optimized to improve the overall performance and efficiency of the system. However, in [46], computation offloading is a key technique for solving the resource-constrained problem of user devices. Computing on a single device may lead to increased application latency due to limited computation and communication as well as storage resources, computing resources alone may not be sufficient to handle complex and large-scale data streams, leading to processing capacity bottlenecks. On the contrary, for multiple devices, joint scheduling of resource allocation effectively reduces overall latency by optimizing the distribution of tasks across them. In the next chapter, we will explore in-depth in conjunction with other resource allocation algorithms, dynamic scheduling strategies, and co-scheduling mechanisms to jointly enhance the resource management capabilities of FEL.

3.2 FEL Based Communication Resource Allocation

Communication resource allocation for FEL involves efficiently distributing wireless communication resources among multiple ED to support the training and updating of the distributed ML model. These communication resources include wireless spectrum, transmission power, bandwidth optimization, and coding resources. In FEL, the goal of communication resource allocation is to optimize the efficiency of communication resource utilization and reduce latency while ensuring the quality of model training.

FEL faces great challenges in communication resource allocation, especially when the number of ED is huge and the network environment is complex, how to efficiently allocate and manage communication resources becomes a key issue to improve the overall performance of FEL. FEL needs to frequently exchange model parameters between ED and ES, so the optimization of communication bandwidth is especially important. The bandwidth allocation is dynamically adjusted according to the network state and data volume to optimize the data transmission efficiency.

Current research focuses on reducing the amount of transmitted data and improving transmission efficiency. For example, methods based on model compression and sparsity can significantly reduce the communication overhead [28]. Through data compression and coding techniques, the amount of transmitted data is reduced and the efficiency of communication bandwidth utilization is improved. The size of the model is reduced by removing parameters that contribute less to the model through pruning. In [29], McMahan et al. proposed an SGD algorithm for differential privacy, called Differential Private SGD (DPSGD). This algorithm adds Gaussian noise to the transmitted parameters, thereby reducing the amount of exact data to be transmitted while preserving data privacy. By adding Gaussian noise to the gradient update, the DPSGD algorithm is able to provide effective protection of user privacy without sacrificing model performance. In [47], Lin et al. proposed a gradient quantization technique that effectively reduces the amount of data transmission by reducing the representation accuracy of model parameters. Specifically, the gradient values are converted to a low-precision representation, which significantly reduces the communication cost. With this approach, not only the communication efficiency is improved, but also the network bandwidth requirement is reduced, providing an effective solution for the application of FL systems in resource-constrained environments.

The utilization of communication resources can be effectively improved by dynamically adjusting the communication frequency through real-time monitoring of the network status and device requirements. Based on the current network bandwidth and the computational load of the devices, the communication period of the model update can be dynamically adjusted. Based on the real-time network load and data transmission demand, the bandwidth allocation strategy can be dynamically adjusted.

In [43], Wang et al. proposed an adaptive FL mechanism that dynamically adjusts the communication frequency by monitoring the network and device status, thus reducing the communication overhead. This method can flexibly adjust the communication strategy according to the actual conditions, which optimizes the allocation and utilization of communication resources. In [48], Aji et al. significantly reduced the amount of data transmitted by evaluating the importance of gradients, which allows selective transmission of parameters that are critical for model updating. This approach optimizes communication efficiency as it transmits only key information that is critical for model improvement, thus reducing unnecessary data transmission and saving bandwidth and communication resources. In a multi-device environment, a communication prioritization allocation strategy based on device characteristics can be used for more efficient use of communication resources. Each device is assigned a corresponding communication priority based on its computing power, data volume, and network condition. This strategy enables the devices to contribute more computational resources and updates during the training process according to their capabilities, which in turn improves the overall model training efficiency. In [49], Nishio et al. proposed that better performing devices can communicate with the server more frequently to provide timely model updates, thus speeding up the training progress. This method of prioritization assignment based on device characteristics helps to achieve collaborative training among multiple devices and improves the overall performance of FL. According to the urgency and importance of tasks, the allocation of communication resources can be flexibly adjusted to prioritize the needs of critical tasks. In [18], Kairouz et al. proposed that more communication bandwidth can be allocated to tasks that are critical for model training or decision making to ensure that they can be completed in a timely and efficient manner. This task-priority based communication resource allocation strategy helps to improve the overall performance of the system, especially when resources are limited, and ensures that critical tasks are prioritized, thus optimizing the response time and resource utilization efficiency of the system. ES plays an important role in FEL by optimizing the resource allocation through auxiliary communication, and ES locally aggregates the model parameters and reduces communication with the CS. In [19], Shi et al. designed a hierarchical aggregation technique that effectively reduces the overall communication through local aggregation of ES. With this approach, the communication efficiency is significantly improved and the overhead of data transmission is reduced, thus optimizing the system performance. In [30], Mao et al. designed a hierarchical network architecture that optimizes communication resources through multi-layered ES distribution. In this multilayered ES architecture, data can be processed and aggregated at different levels, thus reducing the communication burden on a single node. Communication delay and energy consumption are reduced by optimizing communication paths and selecting the best transmission protocol. Data traffic is decentralized and congestion on a single path is reduced through multi-path transmission techniques. It also improves communication efficiency and reliability by selecting the optimal transmission protocol according to the network conditions.

The communication resource allocation based on FEL effectively optimizes the communication bandwidth utilization through the strategies of model compression, adaptive communication, inter-device collaboration, and ES assistance. Separate communication resource allocation has been extensively studied in the aforementioned works, aiming to optimize the efficiency of federated learning through effective communication resource management. In contrast, federated resource allocation approaches can bring more performance improvements. In [50], Ni et al. suggested that complex tasks with multiple rounds of updates lead to high communication costs, increasing network bottlenecks and affecting system performance. In addition, load imbalance is a serious problem and may lead to degradation of quality of service. Joint resource allocation addresses these challenges more effectively by sharing the load and improving system reliability and flexibility. Joint resource allocation not only enhances the flexibility and robustness of the FEL system compared to single communication resource optimization, but also substantially improves the model performance in multi-device and multi-task environments, making it more efficient and practical in real-world applications. Future research can be combined with other systematic processing methods to jointly enhance the resource management capability of FEL.

Due to the uneven and diverse data distribution on each device, the role of data selection strategies in FEL is crucial. This subsection explores effective data selection strategies in the FEL framework to enhance model performance and training efficiency.

In FEL, data selection not only affects the performance of the model, but also directly relates to the efficiency of training and privacy protection. Data on different ED may have significant differences, i.e., data heterogeneity, and such differences can lead to inconsistency in model performance. If an effective data selection strategy is not chosen during model training, it may result in data on certain devices contributing less to the model, thus affecting the overall model accuracy and generalization ability. The privacy of the devices should also be protected during the data selection process while maintaining the validity and availability of the data.

In [51], Serhani et al. designed a dynamic sample selection model designed to optimize resource utilization and address the problem of big data heterogeneity and data imbalance. On ED, computational and storage resources are usually limited. Therefore, by selecting a subset that best represents the overall data distribution for training, resource utilization efficiency can be significantly improved and unnecessary computational overhead can be reduced. In [52], Hu et al. developed a framework called Auto FL, which enhances device involvement in model training by empowering clients to make autonomous decisions. This autonomous decision-making process allows the client to flexibly decide whether or not to participate in model training based on its own resource status and network conditions, thus effectively increasing the device’s participation. This increased participation not only expands the range of devices participating in training, but also significantly improves the performance and training efficiency of FEL. In FEL, data selection also requires consideration of privacy protection. By selecting the non-sensitive data that contributes the most to the model training, the model performance can be improved while ensuring privacy. In [53], Wei et al. proposed a participant selection problem aiming to minimize the total cost of hierarchical FL with multiple models, and based on this, to enhance privacy protection and reduce the total learning cost. In [54], a wireless gradient aggregation technique is employed in order to achieve efficient resource management in FEL. In order to solve the channel and data distortion problem caused by channel fading and data-aware scheduling, Su et al. proposed a sensor-side residual feedback mechanism. This mechanism is able to offset the bias caused by channel and data distortion, which improves the convergence speed of training, reduces the training loss, and avoids the distortion problem. In this way, not only faster convergence speed and lower training loss are realized, but also the accuracy and reliability of the training process are ensured. In [55], Kim et al. developed an innovative mobile edge computing (MEC) server selection and datasets management mechanism for FL-based mobile network traffic prediction. The impact of MEC server participation and datasets utilization on global model accuracy and training cost is deeply analyzed to construct an accurate mixed-integer nonlinear programming problem. Validated by simulation experiments and real datasets, the proposed framework achieves a 40% reduction in energy consumption while reducing the number of MEC servers involved in the FL process and maintains a high prediction accuracy.

Data selection plays a crucial role in FEL. An effective data selection strategy not only improves the performance of the model, but also optimizes resource utilization, improves training efficiency, and protects data privacy. However, separate data selection strategies, while optimizing the quality of training data to some extent, often fail to take full advantage of their inherent values when communication bandwidth is limited or computational power is restricted. In [56], Xin et al. suggested that a single data selection strategy has several drawbacks. It can lead to poor data representation, significant model bias, high risk of over-fitting, and poor performance in dealing with data imbalance and insufficient update frequency. Comparatively, joint resource allocation, by combining data sources from multiple nodes, can provide more comprehensive data diversity, reduce bias, balance data categories, and improve the generalization ability and updating effectiveness of the model. Future research can further explore more data selection methods and combine them with other resources (computational resources, communication resources). Compared to individual data selection, the joint resource allocation approach not only considers the importance of data selection, but also simultaneously optimizes multiple dimensions such as communication resources, computational resources, and device scheduling. This integrated optimization approach can better coordinate the use of data selection with other system resources, enabling the system to achieve better performance under different network conditions and computing environments when facing the growing multiple demands of users.

3.4 FEL Based Device Scheduling

In FEL, the heterogeneity of ED makes device scheduling crucial. A reasonable device scheduling strategy can fully utilize the computational resources of ED, balance the load, reduce the training time, and improve the overall performance of the system. The wide variation in ED in terms of computational power, storage capacity, network bandwidth, and battery life poses challenges to device scheduling. How to maximize the utilization of these heterogeneous resources while ensuring the model training effect is the core problem of device scheduling. The main goals of scheduling strategies include maximizing resource utilization, balancing device load, minimizing model training time, optimizing communication overhead, and extending device battery life, etc.

Resources such as computing power, storage space, and energy supply of the ED are evaluated to determine the performance and capacity of the equipment. Since the ED has limited resources, the device capacity assessment can optimize resource utilization and improve the scalability and utility of the system.

Through the application of DRL algorithm, it can dynamically learn and adjust the task allocation strategy according to the real-time state of the equipment and network conditions, so as to improve the efficiency of resource utilization. In [57], Chen et al. constructed an adaptive scheduling model for energy hubs based on the DRL algorithm. The model adopts the federated DRL method to meet the requirements of data privacy protection. The matching learning technique accelerates the convergence of DRL intelligence by solving the training instability problem in large-scale shared learning. The algorithm significantly improves the training efficiency and economic benefits in the application of multi-energy hubs. In [58], Yan et al. pointed out that due to the rapid development of IoT and edge computing technologies in recent years, personal privacy and data leakage have become major issues in IoT edge computing environments. FL has been proposed as a solution to address these privacy issues. However, the heterogeneity of the devices in the IoT edge computing environments poses a significant challenge to the implementation of FL. To overcome this challenge, a novel DRL-based node selection strategy is proposed to optimize FL in heterogeneous device IoT environments. Furthermore, the proposed strategy can ensure the efficiency of heterogeneous devices participating in the training and improve the accuracy of the model while guaranteeing privacy protection.

Dynamic task allocation strategies achieve efficient resource utilization by dynamically adjusting the allocation of tasks based on the real-time state of the device. In [59], Ren et al. introduced an innovative scheduling strategy aimed at improving the performance of FEL by developing a probabilistic-based scheduling framework that ensures unbiased aggregation of global gradients and accelerates the convergence speed of the model. The strategy designs a relatively optimal scheduling scheme by taking into account the importance of channel states and updated data. In addition, a detailed convergence analysis is performed to demonstrate the effectiveness of the strategy. The final experimental results show that the strategy can significantly improve the learning performance of FEL. In [60], Chu et al. explored the Constrained Markov Decision Process (CMDP) problem of combining FL with MEC server. In this model, the mobile device periodically transmits updates of the local model to the ES, which contains training on locally sensitive data. ES is responsible for aggregating the parameters from the mobile device and broadcasting the aggregated parameters back to the mobile device. The ultimate goal is to dynamically optimize the mobile device’s transmit power and the scheduling of training tasks. In the first step, the resource scheduling problem during synchronized FL is modeled as a CMDP problem and the size of the training samples is used as a measure of FL performance. Due to the coupling between iterations and the complexity of the state-action space, the authors employ a Lagrange multiplier approach to solve this problem. The final simulation results show that the proposed stochastic learning algorithm outperforms other benchmark algorithms in terms of performance. This suggests that the strategy can effectively balance the performance and resource constraints of FL, thus realizing efficient FL in an MEC environment.

Energy-aware scheduling strategies dynamically adjust task assignments to reduce energy consumption through the real-time energy consumption of devices. In [61], Hu et al. conducted an in-depth study on the problem of device scheduling in FEL systems, which face stochastic data generation constraints of energy and delay at the ED. To cope with the system dynamics of data arrivals and energy consumption, a dynamic scheduling algorithm was designed using Liapunov optimization, which aims to maximize the importance of long-term data while taking into account the energy consumption and per-round latency under the constraints of the set of scheduling devices. The final results show that the proposed method has significant advantages in reducing energy consumption and achieving better learning performance. This suggests that the strategy can effectively balance the performance and resource constraints of the FEL system to achieve efficient FEL in practical applications. Both energy consumption and model performance are important metrics of FEL. In [62], Hu et al. modeled the two metrics, energy consumption and model performance, to reveal the relationship between them, especially the correlation with the size of training data. In order to further optimize the FEL system, a workload constraint is added to the model, resulting in a common factor constraint problem. A strategy for resource optimization and device scheduling is proposed to address this problem, aiming to achieve a balance between energy consumption and model performance. The strategy minimizes the energy consumption of the training device by dynamically adjusting the workload of the device and the size of the training data. Experimental results demonstrate the effectiveness of the strategy, which can significantly reduce the energy consumption of the training device while maintaining good model performance.

In FEL, communication time minimization is necessary for device scheduling. In FEL, due to bandwidth constraints, only some devices can be selected to upload their model updates at each training iteration. This challenge has led to the study of optimal device scheduling strategies in FEL aiming at minimizing communication time. In [11], Zhang et al. proposed a new probabilistic scheduling scheme aimed at minimizing the communication time. The final results of this scheme show that the method is robust to changes in different network conditions and device performances, and it is effective in minimizing the communication time. In [63], Zhang et al. focused on exploring the optimal device scheduling strategy in FEL to significantly reduce communication time. However, due to the difficulty in quantifying the communication time, the current study can only partially address this issue by considering the number of communication rounds or the delay per round to indirectly determine the total communication time. In order to address this challenge more precisely, a first attempt is made to formulate and solve the communication time minimization problem. Based on the analytical results, a closed form of an optimized probabilistic scheduling policy is obtained by solving an approximate communication time minimization problem. As the training process progresses, this optimized strategy gradually shifts the priority from reducing the number of remaining communication rounds to reducing the delay per round. Ultimately, the proposed scheme is demonstrated to be effective in minimizing the communication time and reducing the delay per round in a case study of cooperative 3D target detection.

FEL-based device scheduling effectively improves the overall system performance and resource utilization efficiency through the application of DRL algorithms, dynamic task allocation, energy-aware scheduling, and minimization of communication time. Unlike standalone device scheduling, joint device scheduling and communication resource allocation not only focuses on selecting which devices participate in training, but also simultaneously optimizes each device’s communication resources, bandwidth allocation, and computational capabilities. In [64], Taïk et al. suggested that single-device scheduling strategies face problems such as poor data representation, uneven resource utilization, large fluctuations in model performance, data imbalance, and insufficient update frequency. In contrast, joint resource allocation can improve data diversity, optimize resource allocation, enhance model stability, balance data categories, and increase update frequency and efficiency by integrating data from multiple devices. Future research can delve into more intelligent scheduling algorithms, real-time dynamic adjustment strategies, and multi-level collaborative mechanisms. By jointly optimizing additional resources, FEL systems can better coordinate the relationship between device selection and other resource allocation.

3.5 FEL Based Network Topology

Network topology is the structure of devices and their connections in a network. In FEL, network topology directly affects data transmission, model synchronization, and resource allocation. FEL based network topology is a distributed computing architecture that combines edge computing and FL to enable collaborative learning among devices while protecting data privacy and reducing communication overhead. In this topology, ED such as smartphones, sensors, and IoT devices collect data locally and train on local models. These devices then share updates to the model, typically usually gradient or parameter updates, rather than raw data, in a privacy-preserving manner, either through ES or by directly collaborating with other devices.

The network topology in FEL can be categorized as star topology, tree topology, mesh topology, hybrid topology, etc.

The origin of FL is the co-training of ML algorithms on devices in multiple locations, and the core idea is to implement ML without centralizing or directly exchanging private user data. Nevertheless, most FL implementations still rely on the presence of CS. The most common network topology in FL, including the initial work, uses a centralized aggregation and distribution architecture, which is also known as a star topology. Thus, the graphical representation of this server-client architecture resembles a star, as shown in Fig. 3. Many FL studies and algorithms have been designed based on the assumption of this star topology. In a star topology, all ED are directly connected to a CS, which is responsible for coordinating the aggregation and distribution of models. The advantage of this topology is that the CS can easily manage all the ED, but the disadvantage is that the CS then becomes a single point of failure and can lead to bottlenecks in large-scale networks. In [65], Wu et al. considered that traditional Federated Averaging Algorithm (FedAvg)-based FL approaches tend to use a simple star network structure, which does not adequately consider the changes in edge computing environments in real-world scenarios and the diverse heterogeneous and hierarchical characteristics of the network topology. Therefore, other topology needs to be considered to address the limitations and bottlenecks of the star topology.

Figure 3: Star topology

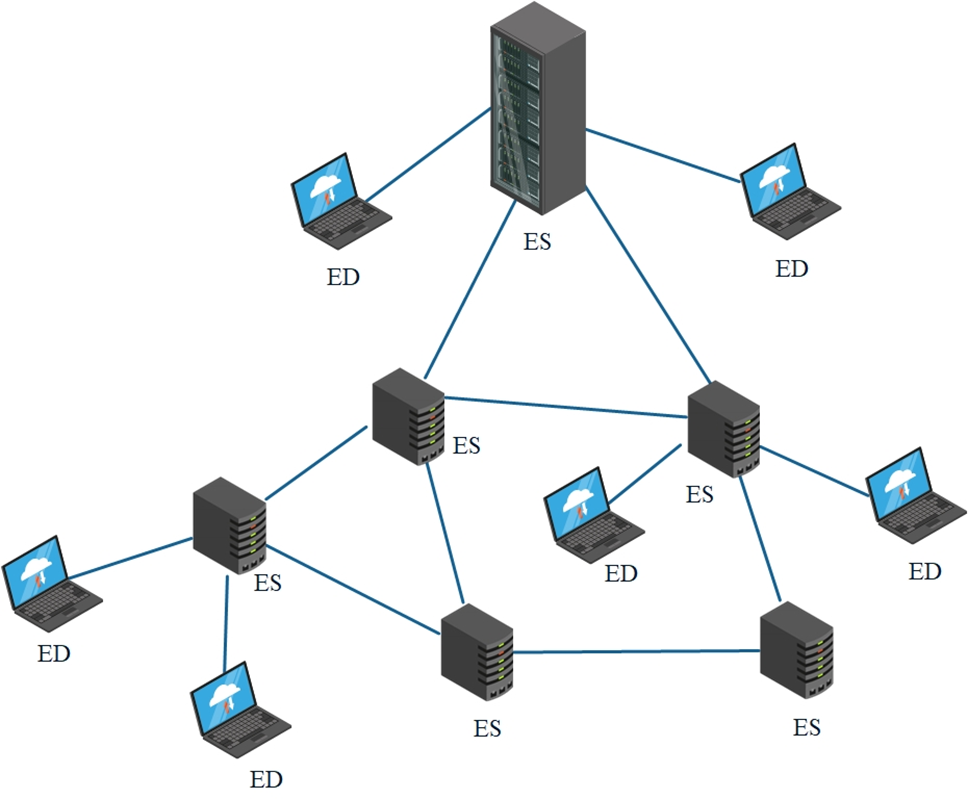

Between the CS and the ED, one or more additional levels may exist. For example, the ES connecting the ED and the CS may form one or more layers to form a tree topology, as shown in Fig. 4. In this structure, the CS is located at the top of the tree and the ED is located at the bottom of the tree. A tree topology contains at least three levels, otherwise it would be considered as a star topology. Tree topology helps to overcome the bottleneck in performance and single point of failure in star topology as compared to conventional FL. Thus, FL with tree topology can achieve different communication costs in different clusters based on their energy profiles. In the FL system with tree topology, any level of clients can exist. Fuzzy logic research in tree topology is divided into two main categories: hierarchical and dynamic. Hierarchical research is concerned with how to rationally divide and design the hierarchical structure of the tree topology in order to improve the overall performance and stability of the system. Dynamic research, on the other hand, focuses on how to dynamically adjust the hierarchical structure of the tree topology according to the changes in the system state and requirements in order to adapt to different application scenarios and environmental conditions. The tree topology is similar to a hierarchical network, where ED are connected according to a hierarchical structure and data and models can be transmitted along tree paths. This structure reduces the risk of single point of failure and can be scaled efficiently, but bottlenecks may still exist at some levels.

Figure 4: Tree topology

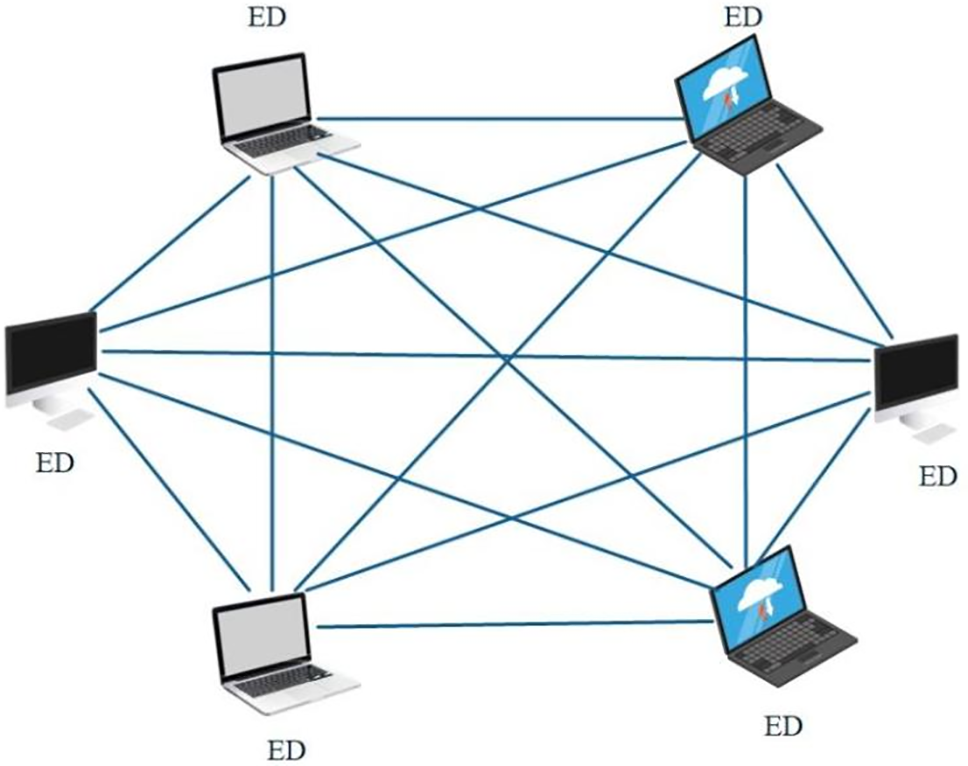

A mesh topology is a network structure in which all end devices are directly connected in a local network. In recent research, this topology is often used in FL systems. For example, Peer-to-Peer (P2P) or Device-to-Device (D2D) FL models are mesh topologies. Nevertheless, many existing FL systems still rely on centralized or cloud servers for model aggregation. When centralized servers are not feasible, decentralized approaches are sometimes considered as a sub-optimal alternative to centralized approaches. In a mesh topology, each ED can be directly connected to multiple other devices to form a mesh-like network, as shown in Fig. 5. The mesh topology provides high redundancy and fault tolerance, but the network complexity is higher and more difficult to manage and maintain. In [66], Xu et al. proposed a decentralized learning architecture using a mesh topology with multiple autonomous computing nodes. In this setup, all nodes are equal and communicate directly with each other through P2P, promoting efficient and flexible data exchange.

Figure 5: Mesh topology

In the previous study, we explored a series of topologies that are prevalent in the FL domain. Although these topologies are able to cope with numerous scenarios, each of them has certain advantages and limitations. In order to fully utilize the advantages of different topologies, attempts have been made to fuse various topologies to form a hybrid network topology. The design concept of this hybrid topology is to combine the advantages of at least two traditional architectures in order to seek a balance between performance and complexity, and thus create a more flexible and efficient solution, as shown in Fig. 6, where the addition of mesh connections can be added on top of a star, and the redundancy and fault tolerance can be improved. In [67], Kaur et al. modeled and simulated a wireless sensor network using Zigbee technology according to the characteristics of the Zigbee protocol, designed a hybrid topology, considered three unused combinations of possibilities of routing schemes for Zigbee in different scenarios, and finally verified the communication by testing key metrics such as latency, throughput, network loading, and packet delivery rate, and finally verified the communication reliability of the network.

Figure 6: Hybrid topology

Network topology plays a crucial role in FEL. Properly designing the network topology and adopting some dynamic and collaborative resource allocation strategies can significantly improve the performance and efficiency of FEL as well as the stability of the system. However, under complex network conditions, it is difficult to achieve globally optimal performance with separate topology optimization strategies. In contrast, the joint resource allocation strategy is able to optimize the network topology and communication resources together as a whole. In [68], Wei et al. noted the limitations of single network topology resource allocation, including constraints and high communication costs, and stressed that federated resource allocation improves flexibility and scalability. Under the framework of joint network topology and communication resource allocation, not only can the network topology be dynamically adjusted according to the geographic location of devices, network structure, and communication status between devices, but also the allocation of communication resources can be optimized simultaneously. This strategy can effectively reduce the communication delay under different topologies and maximize the participation of devices and the overall efficiency of the system. The next chapter will further explore more ways to combine network topology with other resource allocations.

4 Optimized Multi-Resource Allocation for FEL

With the popularity of IoT devices and the explosive growth of data volume, the traditional centralized data processing paradigm can no longer meet the demands of real-time and privacy protection. Therefore, FEL, an emerging distributed ML paradigm, has been proposed to address these issues. FEL allows model training and updating on distributed devices at the edge of the network, thereby reducing data transmission latency, improving computational efficiency, and enhancing privacy protection. However, FEL faces many challenges in its implementation, one of which is how to efficiently allocate and manage multiple resources to ensure model training efficiency and performance. In FEL, multi-resource allocation involves how to rationally allocate computational resources, communication resources, data selection, device scheduling, and network topology to support model training and updating. In this section, we explore the issue of multi-resource allocation in FEL.

4.1 Joint Computing and Communication Resource Optimization for FEL

In FEL, computational and communication resources are closely related. The computational capacity of ED determines the speed and energy consumption of local model training, while the limitation of communication resources affects the transmission efficiency of model parameters. Jointly optimizing the computational and communication resources can reduce the training time and energy consumption and improve the overall efficiency of the system under the premise of ensuring the model’s accuracy. Existing research focuses on the following aspects, dynamic task allocation and scheduling, model compression and communication optimization, and energy consumption awareness and energy saving optimization.

4.1.1 Dynamic Tasking and Scheduling

Dynamic task allocation and scheduling is to dynamically adjust the allocation and communication frequency of computing tasks according to the real-time status of ED. Dynamic task allocation and scheduling contains not only task decomposition and dynamic scheduling, but also load balancing and computational prioritization. Among them, the task decomposition and dynamic scheduling technique improves the efficiency of resource utilization, improves the training efficiency, and reduces the overall training time while fully utilizing the computational resources of the ED by decomposing the computational task into a number of sub-tasks, decomposing the model into a number of sub-models, and dynamically adjusting the order of the task execution allocation and scheduling according to the real-time computational capacity of the device system and the network condition [69]. Task decomposition is the process of decomposing a large-scale model training task is decomposed into multiple small tasks and assigned to different ED.

In [45], Li et al. proposed a task decomposition method based on ED collaboration, which optimizes resource utilization by decomposing and assigning tasks. Dynamic scheduling refers to the dynamic adjustment of task allocation and scheduling based on the real-time computing capability, load and network conditions of the device, then in [70], Chen et al. proposed a DRL-based dynamic scheduling algorithm to achieve efficient resource allocation by monitoring and predicting the device status in real-time. Through load balancing and computing priority setting in dynamic task allocation and scheduling, the computing load of each ED is balanced, avoids overloading of a single device, and effectively improves the computational efficiency of the overall system. Load balancing refers to real-time monitoring of the computing load of each device according to the computing capacity, storage space and energy supply and other resources of the ED, and reasonably allocating tasks, and realizing load balancing by adjusting the task allocation, and ultimately achieving the optimal use of resources. Among them, load balancing can improve the overall performance of the system and avoid resource waste and bottleneck problems. In [71], Lu et al. proposed a load balancing framework to optimize resource utilization by dynamically adjusting task allocation through distributed algorithms. Computational prioritization refers to setting the computational priority according to the importance and urgency of tasks and prioritizing the allocation of resources to high-priority tasks. Computational prioritization ensures that critical tasks are prioritized and improves the response speed and performance of the system. In [49], Nishio et al. proposed a priority scheduling algorithm that improves system response speed by prioritizing high priority tasks. In [72], Gu et al. proposed an improved federated self-supervised learning algorithm. It optimizes computational and communication resource allocation by combining integrated sensing and communication technologies in an intelligent transport system. The algorithm does this by offloading some tasks to the roadside unit (RSU). It also adjusts the transmission power, CPU frequency and task allocation ratio to balance the energy efficiency of local computing with RSU computing while optimizing resource allocation. The study shows that the improved algorithm reduces energy consumption and improves offloading efficiency, demonstrating its effectiveness in dynamic task offloading and resource allocation.

In FEL, dynamic task allocation and scheduling is a complex process that requires comprehensive consideration and optimization of factors such as task decomposition, dynamic scheduling, load balancing, and computational priority. By reasonably allocating tasks and scheduling, the overall performance and efficiency of the system can be improved and the optimal utilization of resources can be achieved.

4.1.2 Model Compression and Communication Optimization

Model compression and communication optimization are used to reduce the amount of transmitted data and optimize communication resources through model compression and parameter quantization [28]. Model compression techniques significantly reduce the amount of transmitted data, reduce storage and computation requirements, and improve the efficiency of communication bandwidth utilization by reducing the number of model parameters, using simpler model architectures, and so on [47]. In FEL, since ED are usually limited in terms of resources, such as computational power, storage space, and energy supply, model compression is crucial to improve the scalability and utility of the system. Parameter pruning in model compression techniques is used to reduce the size of the model by removing parameters that contribute less to the model through pruning.

A weight pruning method significantly reduces the number of parameters of the DNN by pruning and fine-tuning layer by layer to achieve model compression. The low-rank decomposition in the model compression technique is to reduce the number of parameters by decomposing the weight matrix into two smaller matrices. This approach reduces the model storage space and improves the computational efficiency. Communication optimization strategy in FEL is to reduce unnecessary communication overhead, optimize communication resources, improve communication efficiency, reduce communication delay and optimize system performance through adaptive communication frequency and differential privacy strategies. In FEL, communication overhead is an important performance bottleneck. By optimizing the communication, the overall efficiency and responsiveness of the system can be improved. Adaptive communication in the communication optimization strategy dynamically adjusts the communication frequency according to the network state and device requirements. In [43], Wang et al. proposed an adaptive communication mechanism that dynamically adjusts the communication frequency and reduces the communication overhead by monitoring the network and device states. Differential privacy in the communication optimization strategy protects data privacy by adding noise to the transmission parameters while reducing the amount of precise data to be transmitted. In [29], the DPSGD algorithm proposed by McMahan et al. protects user privacy by adding Gaussian noise. These techniques not only reduce the amount of communication but also increase the efficiency of communication at the same time. In [73], Guo et al. proposed a robust and efficient soft clustered federated system named REC-Fed, which aims to solve the problem of resource-constrained edge networks. The system enhances the personalization and robustness of model aggregation through a hierarchical aggregation method. In addition, adaptive model transmission optimization was also designed to jointly optimize model compression and bandwidth allocation to improve transmission efficiency. In [74], Ma et al. proposed a novel FL framework. Joint optimization of computational and communication resources is achieved through computational offloading. The framework utilizes computational offloading to deal with the challenges posed by data heterogeneity. It also minimizes Kullback-Leibler (KL) scatter by optimizing computational offload scheduling. Minimizing communication costs through resource allocation. Federated learning based on computing Offloading decouples the optimization of computational and communication resource allocation into two steps. It effectively improved the convergence and accuracy of the model and reduced the negative impact of data heterogeneity on the system.

In FEL, model compression and communication optimization are interrelated. Model compression reduces the amount of data that needs to be transmitted, thus reducing communication overhead. Meanwhile, communication optimization can improve communication efficiency and thus reduce the need for model compression. Therefore, in FEL, model compression and communication optimization are complementary and need to be considered and optimized comprehensively.

4.1.3 Energy Consumption Sensing and Energy Optimization

Energy-aware and energy-saving optimization is to reduce the energy consumption in the computation and communication process by means of an energy model and a low-power algorithm [43]. The energy-aware scheduling method dynamically adjusts the allocation order of computation tasks according to the real-time situation by means of the ED battery power and the computation load in order to balance the system devices, reduce the waiting time, reduce the energy consumption, and prolong the device’s endurance [44]. The energy model in energy-aware scheduling is implemented by means of establishing an energy consumption model to dynamically adjust the computation tasks according to the real-time energy consumption of the devices.

In [75], Li et al. proposed a task scheduling algorithm based on the energy consumption model to optimize the task scheduling strategy by monitoring the device power in real-time. In addition, the energy-saving algorithm in energy-aware scheduling uses a low-power algorithm to reduce the energy consumption in the computation process. In [76], Wen et al. proposed an energy-efficient FL algorithm to improve the device endurance by optimizing the energy consumption during computation. Inter-device Collaboration and Energy Sharing in Energy Sensing and Energy Saving Optimization Achieve efficient use of resources and energy saving optimization through collaborative computation and energy sharing between devices. In [77], Stergiou et al. proposed an innovative cloud-based architecture, InFeMo, focusing on optimizing the allocation of computational and communication resources. InFeMo achieves efficient computational resource utilization by combining FL scenarios and existing cloud models with the flexibility of choosing to train the model on either a local client or a cloud server. This strategy not only reduces the waiting time for user requests, but also optimizes resource efficiency through energy-efficient design, further enhancing the coordinated allocation of computing and communication resources.

Collaborative computing is inter-device collaborative computing, where a task or model training is accomplished together through the decomposition and sharing of tasks and communication collaboration between ED [78]. Inter-device collaboration can fully utilize the computational resources of ED to improve computational efficiency and system performance, while reducing the energy consumption of individual devices [79]. In [42], Liu et al. proposed a FL framework based on collaborative computing, which improves computational efficiency through inter-device task collaboration. Energy sharing is an energy saving optimization through energy sharing and dynamic scheduling among ED. Energy sharing can improve the overall energy efficiency of the system and extend the device’s endurance while reducing energy consumption. In [80], Zhang et al. proposed an energy sharing mechanism that optimizes computational resource utilization through energy scheduling between devices.

In FEL, energy sensing and energy saving optimization is a complex process that requires comprehensive consideration and optimization of factors such as energy sensing scheduling, inter-device collaboration and energy sharing. By reasonably assigning tasks and scheduling, the overall performance and efficiency of the system can be improved and the optimal utilization of resources can be achieved. At the same time, through inter-device collaboration and energy sharing, the energy consumption of individual devices can be reduced, the duration of the devices can be extended, and energy-saving optimization can be achieved.

4.2 Joint Data Selection and Communication Resource Allocation for FEL

In FEL, due to the heterogeneity of ED and resource constraints, how to effectively select training data and optimize communication resources becomes the key to improving the performance of FEL. In this paper, we explore the strategy of joint data selection and communication resource optimization based on FEL and its application to achieve efficient resource allocation and system performance improvement.

In FEL, the data on ED are often non-independently and identically distributed (non-IID), and there are large differences in data distribution and data volume across devices. An effective data selection strategy not only reduces the communication overhead, but also ensures the global convergence and performance of the model. Therefore, reasonable data selection is crucial for the successful implementation of FEL. In addition, communication resources are one of the key factors affecting system performance. An efficient communication resource optimization strategy can reduce the transmission delay, lower the energy consumption, and improve the transmission efficiency, thus enhancing the overall performance of FEL.

The integration of joint data selection and communication resource optimization strategies aims to construct an efficient optimization framework to achieve the co-optimization of data selection and communication resource allocation. The joint data selection and communication optimization model is constructed to achieve overall optimization by comprehensively considering data characteristics and communication costs. By incorporating data selection and communication resource optimization into the same optimization model, global optimization is achieved by comprehensively considering data distribution, computational load and communication cost. Current research on sensing technology mainly focuses on a single federated device, ignoring the competition between devices and the resource allocation problems within devices, which limit the application of sensing technology.

To address this challenge, in [3], Fu et al. first delved into the potential bottlenecks when executing multiple federated tasks and constructed a federated optimization model to model the problem as a multidimensional optimization problem, which involves the device selection and communication resource allocation in a two-stage Stackelberg game. In order to solve this problem more efficiently, we propose a device selection and resource allocation method based on a multi-coalition game, which ultimately proves that the proposed method can reduce training time and save communication costs. The traditional centralized learning approach transmits data directly to the center for processing, which, although simple, introduces significant communication delays and may raise the risk of serious privacy breaches. To overcome these challenges, a significance-aware FEL system has been proposed in [81]. The system aims to enhance learning efficiency by optimizing end-to-end latency. By analyzing the relationship between communication resource allocation and data selection, and by exploiting the correlation between loss attenuation and gradient paradigm, an optimization model aiming to maximize the learning efficiency is constructed. Based on this, a data selection strategy and a communication resource allocation method are further developed that achieves optimal performance for a given end-to-end delay and sample size. By using a golden section search algorithm with low computational complexity, the optimal end-to-end delay setting can be determined. Experimental results on three popular convolution neural network (CNN) models show that the scheme not only significantly reduces the training latency but also improves the learning accuracy compared to other benchmark algorithms.