Open Access

ARTICLE

Cross-Target Stance Detection with Sentiments-Aware Hierarchical Attention Network

College of Cryptographic Engineering, Engineering University of PAP, Xi’an, 710086, China

* Corresponding Author: Mingshu Zhang. Email:

Computers, Materials & Continua 2024, 81(1), 789-807. https://doi.org/10.32604/cmc.2024.055624

Received 02 July 2024; Accepted 20 August 2024; Issue published 15 October 2024

Abstract

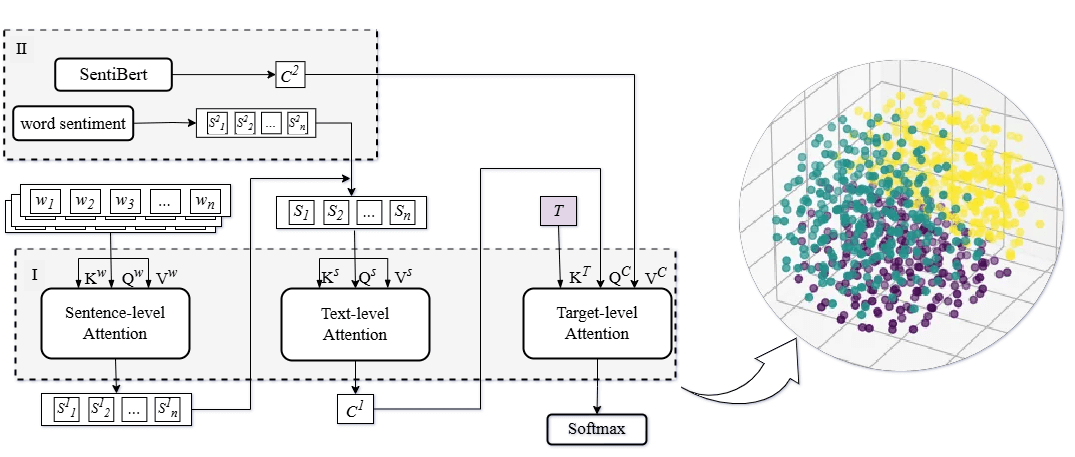

The task of cross-target stance detection faces significant challenges because emerging knowledge domains lack additional background information and the language involved is often colloquial. Traditional stance detection methods often struggle to understand limited context and generalize poorly across diverse sentiments and semantic structures. This paper focuses on effectively mining and utilizing sentiment-semantics knowledge for stance knowledge transfer and proposes a sentiment-aware hierarchical attention network (SentiHAN) for cross-target stance detection. SentiHAN introduces an improved hierarchical attention network designed to make full use of high-level representations of targets and texts at multiple levels of granularity. The model integrates phrase-level combinatorial sentiment knowledge to bridge the knowledge gap between known and unknown targets, which enables a comprehensive understanding of stance representations for unknown targets across different sentiments and semantic structures. Its ability to leverage sentiment-semantics knowledge improves its performance in detecting stances that may not be directly observable from the immediate context. Extensive experimental results indicate that SentiHAN significantly outperforms existing baseline methods in both accuracy and robustness. Moreover, the paper employs ablation studies and visualization techniques to explore the intricate relationship between sentiment and stance. These analyses further confirm the effectiveness of sentence-level combinatorial sentiment knowledge in improving stance detection capabilities.
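The abstract does not give SentiHAN's equations, so the following is only a minimal pure-Python sketch of the general idea it describes: target-conditioned attention applied hierarchically (tokens pooled into phrase vectors, phrase vectors pooled into a text vector) with a sentiment signal injected at the token level. It is not the authors' model. The two-level structure, the `add_sentiment` fusion (appending a lexicon polarity score in [-1, 1] as an extra feature), the `sentiment_weight` parameter, and all function names are hypothetical choices made for illustration.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(items: Sequence[Vector], query: Vector) -> Vector:
    """Attention pooling: score each item against the query, normalize the
    scores with softmax, and return the weighted sum of the items."""
    weights = softmax([dot(v, query) for v in items])
    dim = len(items[0])
    return [sum(w * v[d] for w, v in zip(weights, items)) for d in range(dim)]


def add_sentiment(token: Vector, polarity: float) -> Vector:
    """Hypothetical fusion step: append a lexicon polarity score in [-1, 1]
    to the token embedding as one extra feature."""
    return list(token) + [polarity]


def hierarchical_encode(
    phrases: Sequence[Sequence[Vector]],
    polarities: Sequence[Sequence[float]],
    target: Vector,
    sentiment_weight: float = 1.0,
) -> Vector:
    """Two-level, target-conditioned attention (illustrative only).

    Level 1 pools the sentiment-augmented tokens of each phrase into a
    phrase vector; level 2 pools the phrase vectors into one text vector.
    Both levels use the target embedding as the attention query.
    """
    # The query gets one extra slot so it can also score the polarity feature.
    query = list(target) + [sentiment_weight]
    phrase_vecs = [
        attend([add_sentiment(t, p) for t, p in zip(toks, pols)], query)
        for toks, pols in zip(phrases, polarities)
    ]
    return attend(phrase_vecs, query)


if __name__ == "__main__":
    # Toy input: two phrases of 2-d token embeddings with per-token polarities.
    text = [[[0.2, 0.1], [0.9, 0.4]], [[0.1, 0.7]]]
    pols = [[0.0, -0.8], [0.5]]
    target = [1.0, 0.0]
    vec = hierarchical_encode(text, pols, target)
    print(len(vec))  # 3: two embedding dimensions plus the polarity feature
```

Appending polarity as a raw feature is the simplest possible fusion and keeps the sketch readable; a trained model would more likely project sentiment knowledge into the embedding space and learn separate query vectors for each level instead of reusing the raw target embedding.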

Copyright © 2024 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.