Open Access

Open Access

REVIEW

Confusing Object Detection: A Survey

1 School of Computer Science and Technology, East China Normal University, Shanghai, 200062, China

2 Shanghai Institute of Ceramics, Chinese Academy of Sciences, Shanghai, 200050, China

* Corresponding Author: Xin Tan. Email:

# These authors contributed equally to this work

Computers, Materials & Continua 2024, 80(3), 3421-3461. https://doi.org/10.32604/cmc.2024.055327

Received 23 June 2024; Accepted 07 August 2024; Issue published 12 September 2024

Abstract

Confusing object detection (COD), such as glass, mirrors, and camouflaged objects, represents a burgeoning visual detection task centered on pinpointing and distinguishing concealed targets within intricate backgrounds, leveraging deep learning methodologies. Despite garnering increasing attention in computer vision, the focus of most existing works leans toward formulating task-specific solutions rather than delving into in-depth analyses of methodological structures. As of now, there is a notable absence of a comprehensive systematic review that focuses on recently proposed deep learning-based models for these specific tasks. To fill this gap, our study presents a pioneering review that covers both the models and the publicly available benchmark datasets, while also identifying potential directions for future research in this field. The current dataset primarily focuses on single confusing object detection at the image level, with some studies extending to video-level data. We conduct an in-depth analysis of deep learning architectures, revealing that the current state-of-the-art (SOTA) COD methods demonstrate promising performance in single object detection. We also compile and provide detailed descriptions of widely used datasets relevant to these detection tasks. Our endeavor extends to discussing the limitations observed in current methodologies, alongside proposed solutions aimed at enhancing detection accuracy. Additionally, we deliberate on relevant applications and outline future research trajectories, aiming to catalyze advancements in the field of glass, mirror, and camouflaged object detection.Keywords

Object detection is the task that aims to detect and locate the object in the images or videos, which has attracted considerable research attention in recent years. The methods for object detection have achieved significant advancements since the introduction of deep convolutional neural networks, e.g., AlexNet [1]. While general object detection methods [2,3] perform well on most regular objects, there exist many tricky objects that it cannot detect reliably.

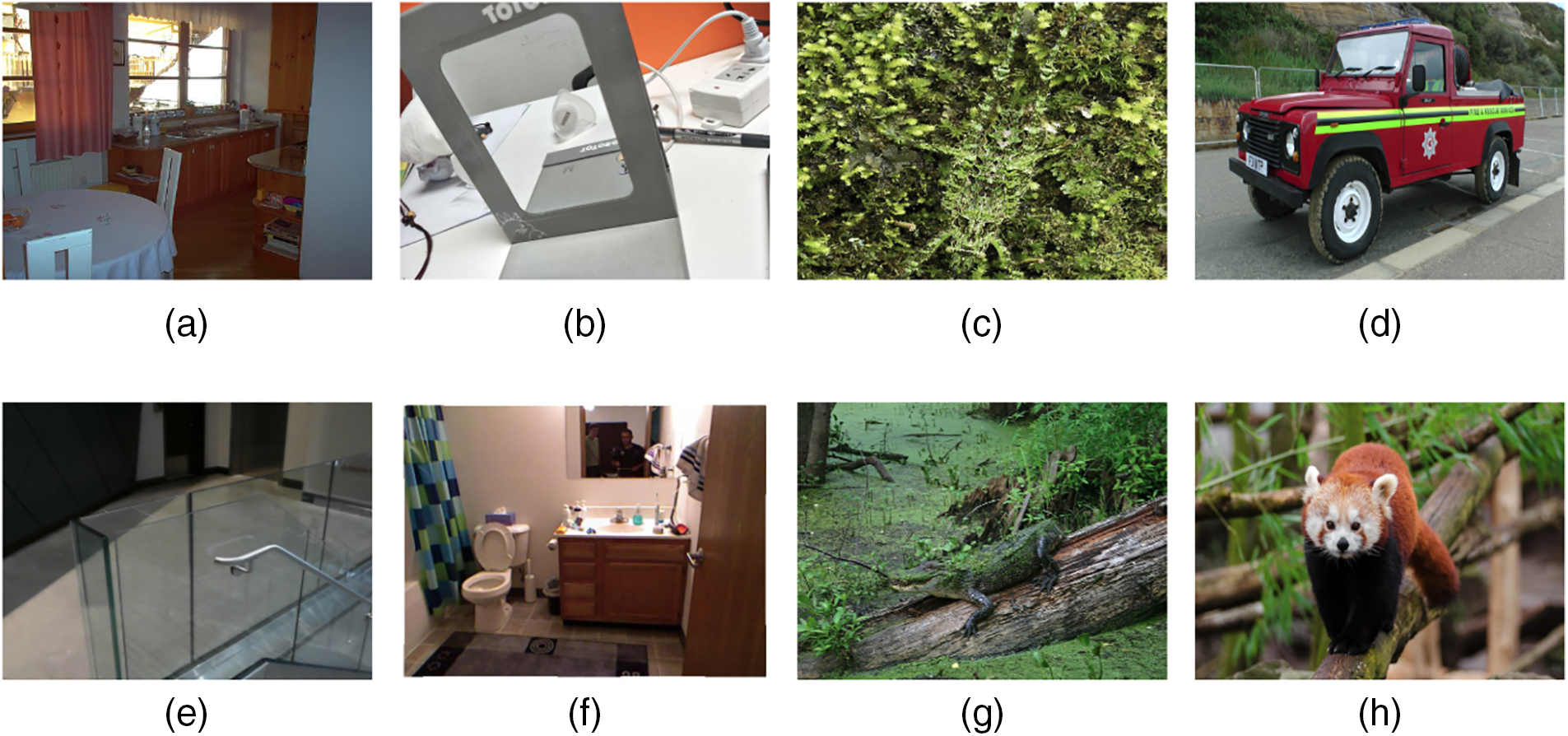

Easily confused objects such as glass, mirrors, and camouflaged objects pose a significant challenge to commonly used general object detection methods. The left three columns of Fig. 1 show some examples of confusing objects. For instance, the inherent optical properties of mirrors pose considerable difficulties for reliable object detection. This challenge arises from the striking resemblance between the content within the mirror and the surrounding environment. Moreover, the variability in both the size and shape of mirrors, coupled with the potential presence of any object within their reflections, poses a substantial hurdle for conventional object detection systems. Similar complexities are observed in the task of detecting glass. Unlike mirrors that reflect real-world objects, glass transmits light, allowing for the visualization of objects positioned behind it. This unique characteristic of glass introduces inherent complexities distinct from those associated with mirror detection. Compared to glass and mirrors, which we humans can easily recognize, we cannot realize the existence of camouflaged objects without paying enough attention to the objects and their surroundings. Therefore, it is difficult to detect confusing objects using general or salient object detection methods.

Figure 1: Representative samples of confusing objects sourced from popular datasets are as follows: Image (a) depicts a glass surface sourced from the glass detection dataset (GDD). Reprinted with permission from Reference [4]. Copyright 2020, Copyright Haiyang Mei. Image (e) depicts a glass surface sourced from the glass surface dataset (GSD). Reprinted with permission from Reference [5]. Copyright 2021, Copyright Jiaying Lin. Image (b) represents a mirror sourced from the Mirror Segmentation Dataset (MSD). Reprinted with permission from Reference [6]. Copyright 2019, Copyright Xin Yang. Image (f) represents a mirror sourced from the Progressive Mirror Detection (PMD) dataset. Reprinted with permission from Reference [7]. Copyright 2020, Copyright Jiaying Lin. Image (c) illustrates a camouflaged object sourced from the camouflaged object images (CAMO) dataset. Reprinted with permission from Reference [8]. Copyright 2020, Copyright Elsevier. Image (g) illustrates a camouflaged object sourced from the COD10K dataset. Reprinted with permission from Reference [9]. Copyright 2020, Copyright Dengping Fan. Image (d, h) is non-confusing object images in the public domain that were downloaded from the Internet

In various applications integrating object detection technology, the inability to detect confusing objects such as glasses, mirrors and camouflaged objects presents a significant concern. Given the widespread prevalence of these objects in both indoor settings, like vanity mirrors, and outdoor environments, including glass doors in public spaces, accurate detection holds paramount importance in averting undesirable incidents. The ability to detect these objects correctly is crucial in preventing potential mishaps. For instance, a failure to recognize the presence of glass doors by a robot may result in collisions, leading to property damage and posing safety hazards to pedestrians. While camouflaged object detection, a task aimed at accurately detecting a target object from an environment that blends perfectly with the target, has long been a research hotspot [10–14] in biology and the medical aspect. Mainstream protruding object detection methods make extensive use of discriminative features, while confusing targets have relatively few discriminative features. Accordingly, due to the opposed properties of protruding and camouflaged objects, it is not a good idea to impose related methods on protruding object detection tasks. Hence, establishing an object detection system resilient enough to reliably detect the existence of confusing objects stands as a matter of substantial significance.

Historically, research in object detection predominantly emphasized discerning prominent entities or salient objects like humans [15], animals [16], and vehicles [17]. These studies showcased cutting-edge performance by harnessing extensive datasets and leveraging deep neural networks. Instead, for confusing objects like glasses, mirrors, and camouflaged objects, it lacks both datasets and efficient methods to work on them. In recent years, this situation has begun to change with the prevalence of deep learning. Numerous deep learning-based models have been proposed for the challenging task of COD. This progress is accompanied by the availability of large-scale datasets for COD with professionally annotated tags. The research on confusing object has a long and rich history since the early days [18]. However, early approaches to confusing object segmentation predominantly centered on low-level features encompassing color [12], texture [19], shape [11], and edge [18]. However, these methodologies exhibited limitations, being primarily suited for simple scenes and proving inadequate when confronted with intricate environmental contexts.

Indeed, detecting confusing objects like mirrors, glass, and camouflaged targets is a challenging task. Nonetheless, these areas not only have research value but also have broad application prospects. For example, these specialized object detection accuracy improvements can address edge cases in general object detection methods, thereby avoiding unnecessary failure conditions. The following section covers the literature on deep learning-based glass, mirror, and camouflaged object detection. Since object detection for these specialized subjects is relatively new, the number of papers is smaller than in the general area of object detection and semantic segmentation. We organize the subsequent section as follows: Section 2 provides a brief review comparing our work with previous surveys in the related field. Section 3 briefly introduces the general object detection method. Section 4 dives into the popular methods for confusing object detection, focusing on mirror, glass, and camouflaged objects. Section 5 provides an overview of the mainstream datasets used for our task, with a primary focus on open-source ones. Section 6 overviews the performance of various models under the same settings. Section 7 summarizes the recent work and the future direction of COD.

2 Comparison with Previous Review

To the best of our knowledge, this study is the first to systematically address the detection and segmentation of confusing objects, encompassing glass/transparent objects, mirrors, and camouflaged objects at the same time. There is no prior survey literature on glass and mirror detection. Therefore, our work represents a novel and comprehensive review of this subject area. While camouflaged object has a longer history and is supported by a few existing studies, the research on glass and mirror detection is relatively limited.

Previous studies, such as [20], have primarily focused on non-deep learning approaches to camouflage detection. This is a very limiting scope, considering the pervasive influence of deep learning in recent years. Another study [21,22] summarizes image-level models for camouflaged object detection, although it includes only a small amount of literature. In addition, literature [23] provides a comprehensive review on model structure and paradigm classification, public benchmark datasets, evaluation metrics, model performance benchmarks, and potential future development directions. Specifically, a number of existing deep learning algorithms are reviewed, offering researchers an extensive overview of the latest methods in this field.

Meanwhile, the published literature [24] reviews relevant work in the broader field of concealed scene understanding. This comprehensive review summarizes a total of 48 existing models for the image-level task. Unlike previous surveys, this study systematically and comprehensively examines the integration of deep learning into the image-level camouflaged scene understanding. It provides an in-depth analysis and discussion on various aspects, including model structure, learning paradigms, datasets, evaluation metrics, and performance comparisons.

In particular, we have summarized and cataloged a substantial number of existing deep learning-based methods for COD. We compare the performance of relevant mainstream models using core metrics to enhance understanding of these approaches. Furthermore, we provide insights into the challenges, key open issues, and future directions of image-level COD.

3 Overview of General Object Detection

Object detection or segmentation has a long history since the early days of computer vision, and image segmentation plays a vital role in different real-world applications. Before the era of deep learning, image segmentation methods used techniques such as k-mean clustering, normalized cuts, region growing, and threshold, which usually yield bad performance. As deep learning comes onto the scene, models based on convolutional neural networks (CNNs) [25] have achieved outstanding performance never achieved by earlier methods. Previous work [26] already has a comprehensive depiction of this topic. The most common deep learning-based method in object detection is the CNN-based model, CNN is one of the most successful and widely used architectures in the field of computer vision. As the transformer model prevails in natural language processing, researchers in computer vision find it also achieves promising results when it is incorporated into computer vision tasks. Different from CNN-based approaches, transformers rely on the attention mechanism at its heart, which enhances their performance in various vision tasks. Simply put it, this mechanism allows transformers to capture long-range dependencies and contextual information more effectively. Besides object detection, transformers have demonstrated their effectiveness in a wide range of vision applications, including image classification, segmentation, and even generative tasks. Generative Adversarial Networks (GANs) [27] is a newly proposed model, using GANs to solve the segmentation task has been a research interest in recent times. The dilated convolution is a slightly modified version of convolution with an additional parameter called dilation rate. The working mechanism of dilation convolution expands the receptive field, therefore capturing more information with less computation cost. Dilation convolution is a widespread technique in the segmentation model. Besides, the probabilistic graphic model is helpful to exploit scene-level semantic information. While challenges exist, probabilistic graphical model Conditional Random fields and Markov Random Fields still achieve promising outcomes in recent works. Encoder-decoder architecture is applied in most object detection models explicitly or implicitly nowadays. It has gained popularity since Badrinarrayann et al. [3] proposed SegNet. These general object techniques are also common components of the model for COD.

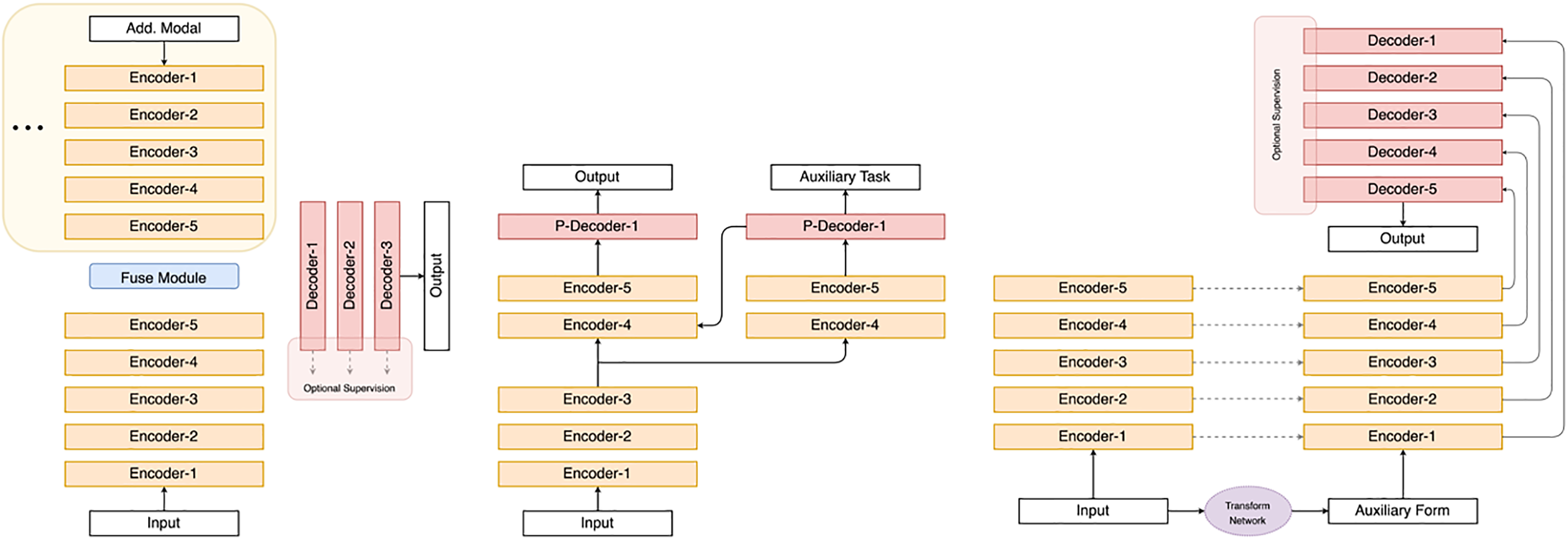

However, given the special nature of confusing objects, a different approach from general object detection is required. Fig. 2 provides an overview summary of the overall architecture present in the literature. The following section aims to give a comprehensive review of popular methods in recent years.

Figure 2: Overview of network architectures for confusing object detection. Three common frameworks are presented in a sequential arrangement from left to right

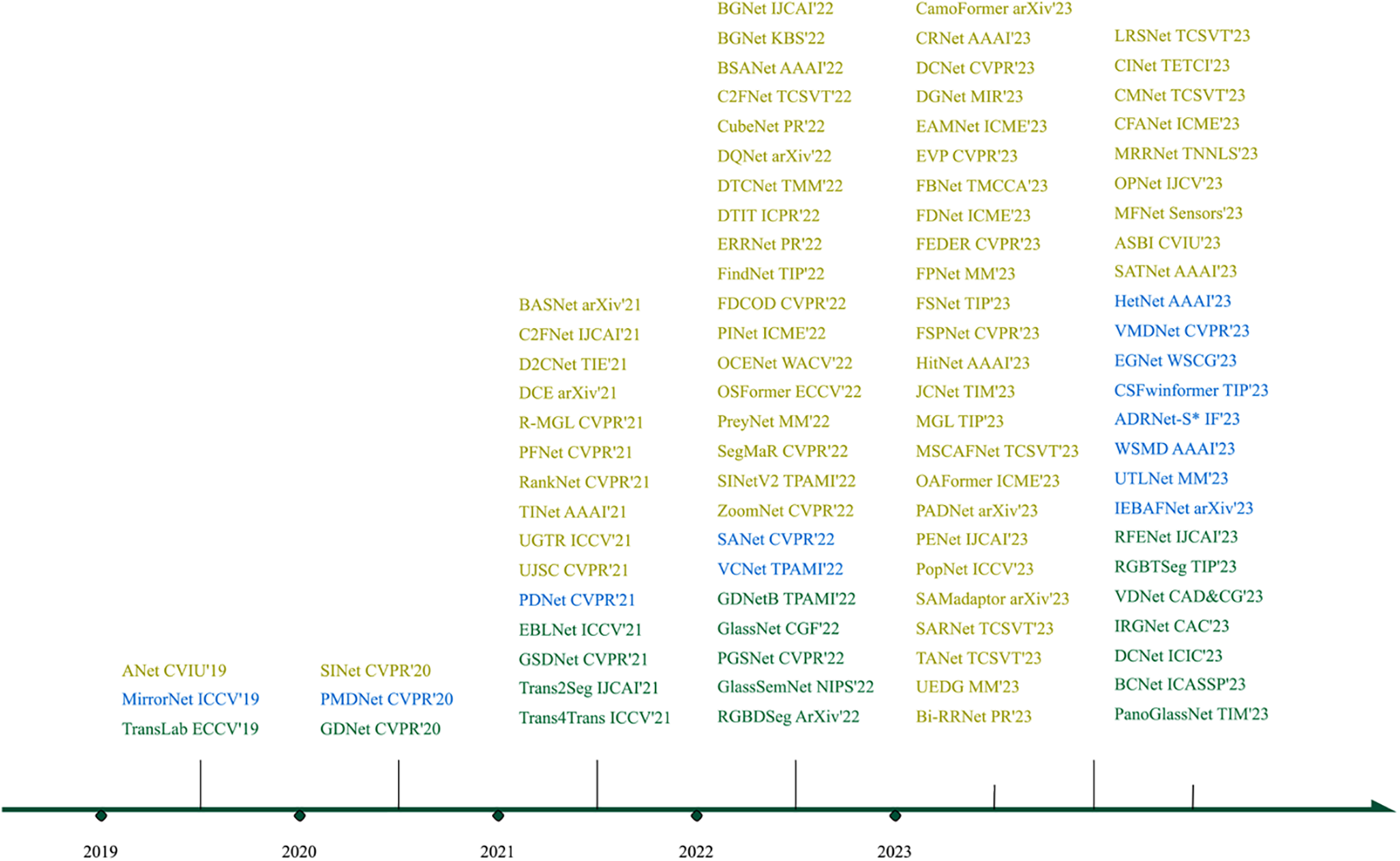

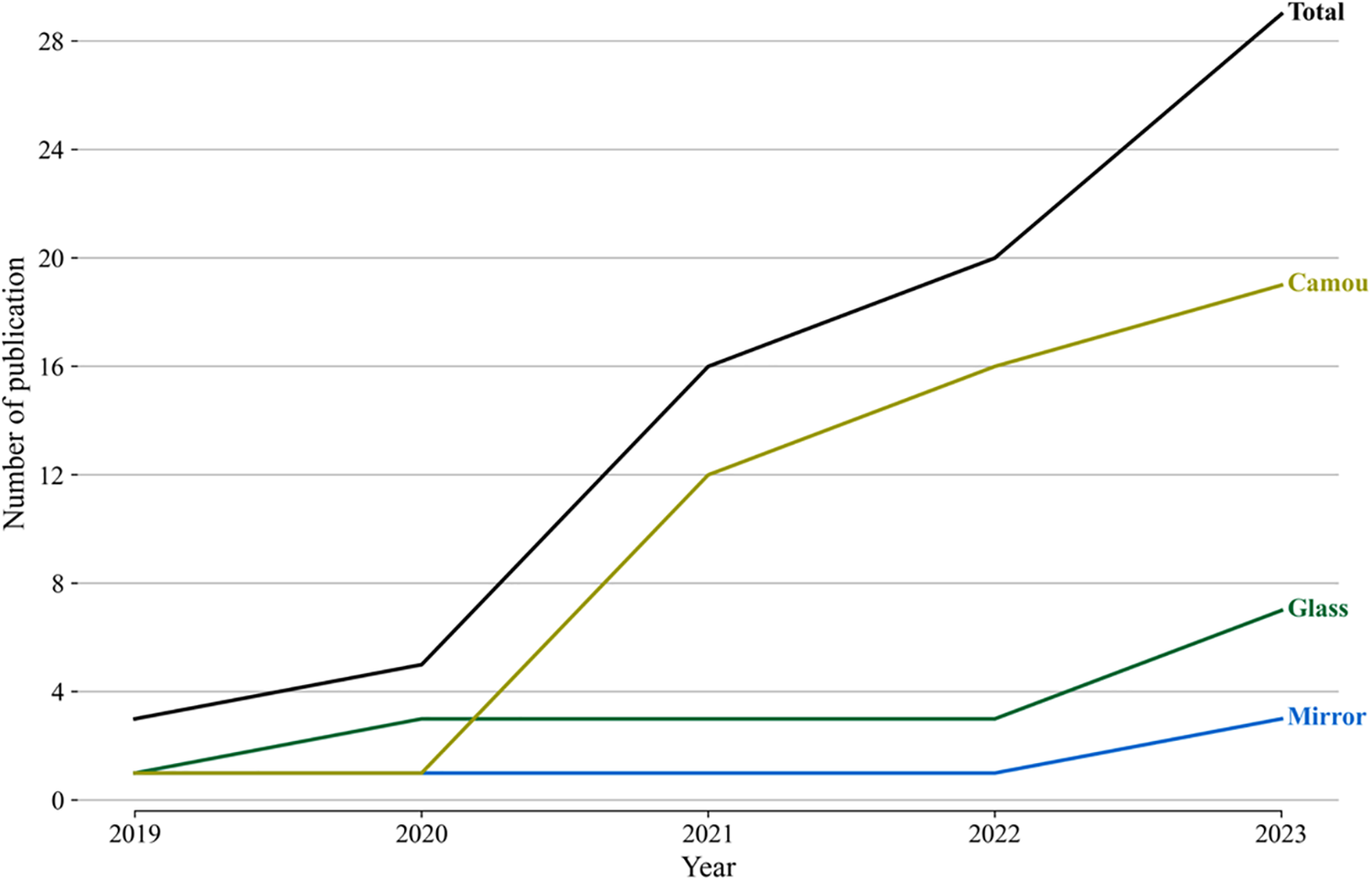

Confusing objects are intrinsically different from general or salient object detection. Confusing object reference to objects like shadow, water, glass, mirrors, and camouflaged objects. Confusing object has internal and optical characteristics that are completely different from ordinary or prominent objects. Using generic object parts directly will certainly not yield good performance, so it is necessary to design a model specifically for obfuscated objects. In recent years, many researchers have published a large amount of work using deep learning-based models on this topic, and it is time to summarize their outstanding work. Fig. 3 demonstrates the amount of work published within each category. With the missing of some topics, here we focus on glass, mirrors, and camouflaged objects.

Figure 3: Timeline of the deep learning-based COD methods from 2019 to 2023

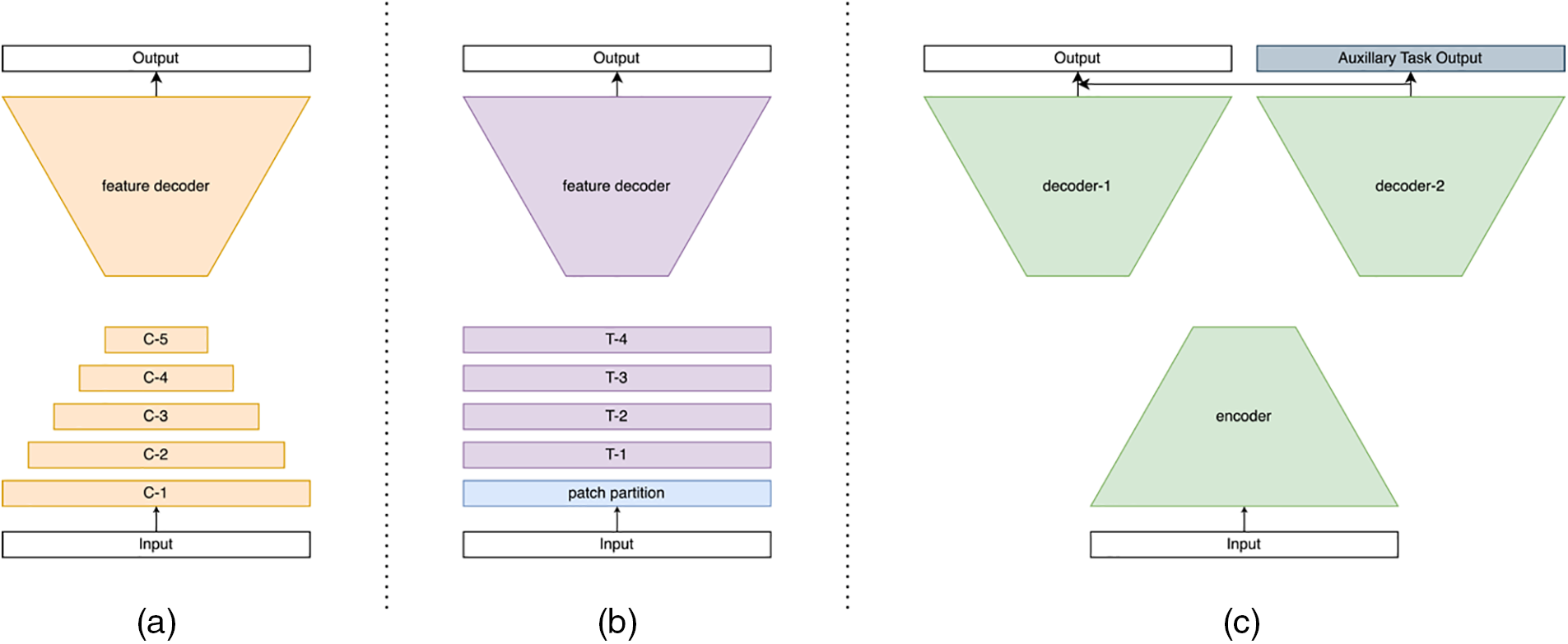

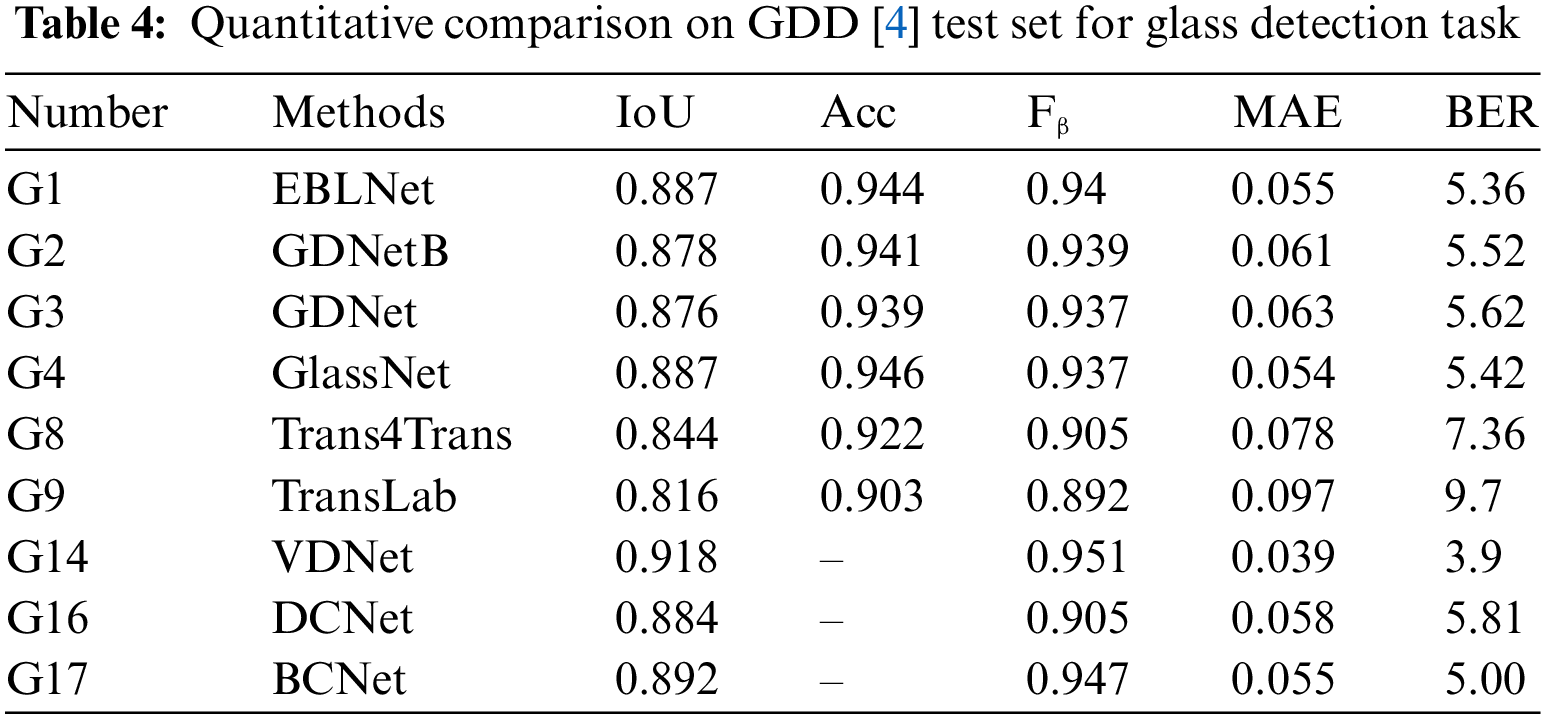

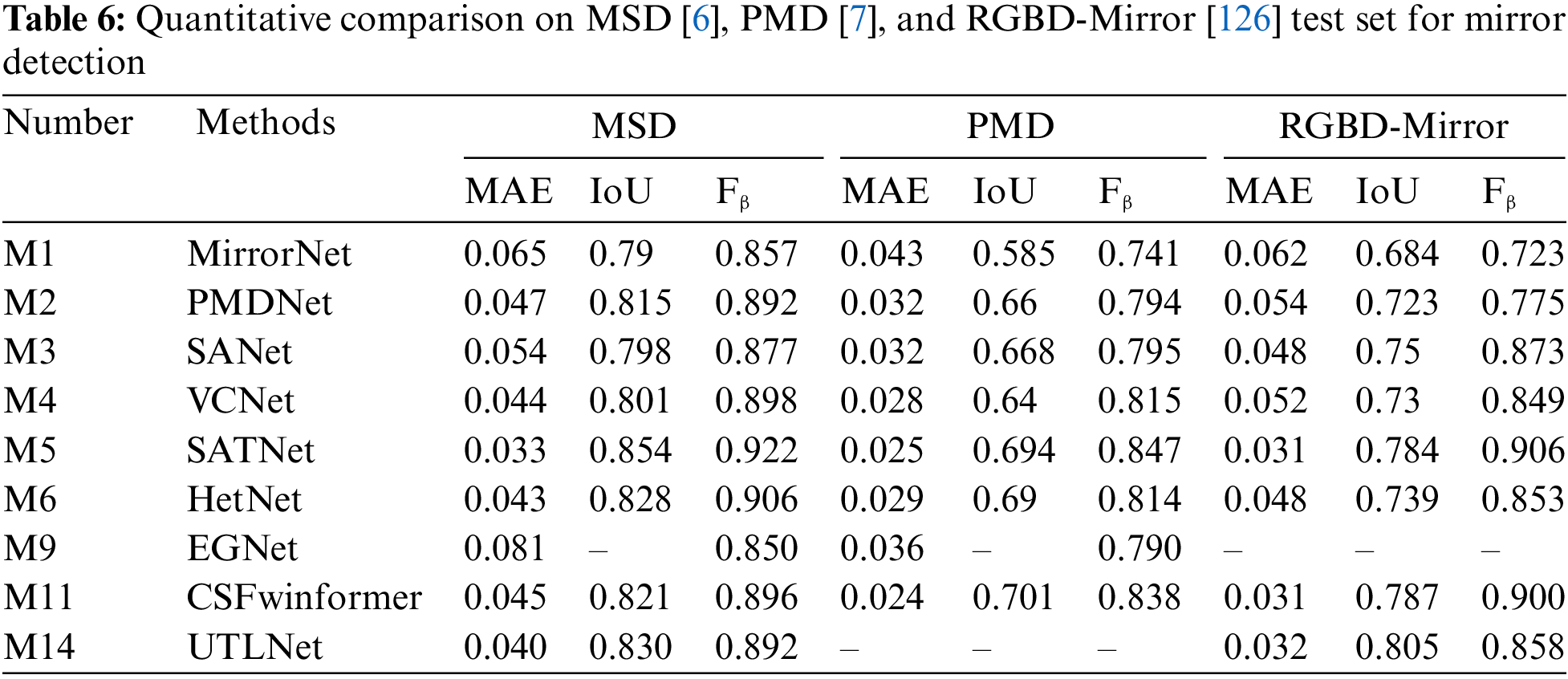

For an overall understanding of CNN-based architecture, refer to subfigure (a) in Fig. 4. For the differences between Transformer-based models and multi-task learning frameworks, see subfigure (b,c). Yang et al. [6] propose the first deep learning-based model MirrorNet to apply to the mirror detection task. It is inspired by human biology and detects entire mirrored areas by identifying content discontinuities. Notably, MirrorNet employs ResNeXt101 as the backbone feature extraction network. Lin et al. [7] propose the PMDNet, which uses not only discontinuities but also the relational content inside and outside the mirror, and then leverages the extracted features to construct mirror edges. The methods that exploit discontinuities and the correspondence between mirror contents will fail in certain scenarios. Tan et al. [28] realize the mirror image exhibits visual chirality property and propose the model called VCNet makes use of visual chirality cues to detect mirror region. Guan et al. observe that there is a semantic connection in the position mirror (i.e., people usually place mirrors in several fixed positions), and propose the SANet to leverage the semantic association for mirror detection. Aiming at the shortcomings of previous methods that consume a lot of computing resources, He et al. [29] introduce the HetNet which applies heterogeneous modules in different levels of features, which outperforms the previous work in accuracy and efficiency. The key difference with the previous method lies in that it treats the backbone feature differently with different specially design modules to utilize the characteristics of varying level features fully. The model proposed by Gonzales et al. [30] incorporates parallel convolutional layers alongside a lightweight convolutional block attention module to capture both low-level and high-level features for edge extraction. Mei et al. [31] propose a novel network MirrorNet+ which models both contextual contrasts and semantic associations. While Mei et al. [4] propose the glass detection net (GDNet) for glass object detection. By employing ResNeXt101 as the feature extractor, GDNet utilizes both low-level cues and high-level semantic information for high-accuracy glass detection. The GDNet employs a cascade strategy by embedding multiple modules in the last four layers of the backbone network and dealing with low-level and high-level features separately. The GDNet outperforms existing semantic segmentation and object detection methods. GDNet-B [32] is the successor to GDNet, with the additional boundary feature enhancement module incorporated into the original design to boost performance.

Figure 4: Simplified architectures of CNN-based models, Transformer-based models, and multi-task learning frameworks, where (a) refers to the CNN-based architecture, the lower part refers to the pre-trained CNN backbone, and the upper part refers to the specialized decoder architecture. (b) has a similar architecture, but the backbone is replaced by the Transformer backbone. (c) uses two decoder architectures, and the final result is a fusion of its own decoder and the auxiliary task

Xie et al. [33] propose TransLab, named after “Looking at the Boundary”, focusing on transparent object detection. As the name suggests, TransLab takes the inspiration that transparent object often has a clear boundary, it adopts a dual path scheme with backbone features sending into two different modules for calculating boundary loss and segment loss separately. Besides, Xie et al. [34] present Trans2Seg as a reformed version of TransLab. Although Trans2Seg adopts a Transformer-based encoder-decoder architecture, it uses a CNN-based network as the feature extractor. Due to the hybrid CNN-Transformer architecture, Trans2Seg obtains a wider receptive field, thereby showing more advantages than previous CNN-based models. Like TransLab, EBLNet introduced by He et al. [35] also extensively uses boundary information. EBLNet adopts a powerful module called the fine differential module, which works in a coarse-to-fine manner to reduce the impact of complex internal components and obtain accurate boundary predictions. Besides, they use a point-based graph convolution network module to leverage accurate edge prediction to enhance global feature learning around edges, thereby improving the final prediction. Another model based on CNN is GSDNet [5], GSDNet uses glass reflections and boundaries as the two main cues for locating and segmenting glass objects. GSDNet reliably extracts boundary features and detects glass reflections from inputs to segment mirrors in images. Han et al. [36] propose a structure similar to EBLNet, but in their work, they differentiated the boundary regions into internal and external boundaries, based on which they optimized the internal and external features of transparent surfaces.

Yu et al. [37] introduce IRGNet, a lightweight RGB-Infrared fusion glass detection network specifically designed to satisfy low power consumption requirements and ensure high real-time performance for mobile robots. This network incorporates an information fusion module that amalgamates complementary feature information from RGB and infrared images at multiple scales. Zhang et al. [38] propose a novel detail-guided and cross-level fusion network, termed DCNet, to utilize label decoupling to obtain detail labels explore finer detail cues. This approach leverages discontinuities and correlations to refine the glass boundary, thereby effectively extracting local pixel and global semantic cues from glass-like object regions and fusing features from all stages. Xiao et al. [39] propose BCNet, a network for efficient and accurate glass segmentation. It features a multi-branch boundary extraction module for precise boundary cues and a boundary cue guidance module that integrates these cues into representation learning. This approach captures contextual information across different receptive fields to detect glass objects of varying sizes and shapes. Zheng et al. [40] propose a novel glass segmentation network, termed GlassSegNet, for detecting transparent glass. This two-stage network comprises an identification stage and a correction stage. The former stage simulates human recognition by utilizing global context and edge information to identify transparent glass. While the correction stage refines the coarse predictions by correcting erroneous regions based on the information gathered in the identification stage. The transparent object segmentation network, ShuffleTrans [41], is designed with a Patch-wise Weight Shuffle operation combined with dynamic convolution to incorporate global context cues.

Le et al. [8] present ANet, the first deep learning-based network for detecting camouflaged objects. The idea of ANet is straightforward, using a salient object segmentation method to accomplish the task and an additional classification stream to determine if the image contained camouflaged objects. As a result, it does not design a deep learning-based method to specifically address the problem of camouflaged object segmentation, which is a clear departure from later work. The SINet [9] propose by Fan et al. uses a partial decoder structure that is roughly divided into two sub-modules (i.e., search module and recognition module). SINet also is the most common baseline for the camouflaged object detection task. The subsequent iteration of SINet, known as SINet v2 [42] in journal publications, advances visual outcomes by enhancing adaptability to various lighting conditions, alterations in appearance, and addressing ambiguous or undefined boundaries more effectively. The PFNet [43] employs a positioning and focusing strategy, using a positioning module to locate the position and the cascading focusing modules to refine the segmentation map using features obtained at different stages of ResNet-50. Zhang et al. [44] endeavor to unravel the intricacies of accurate detection and propose PreyNet, a model that emulates two fundamental facets of predation: initial detection and predator learning, akin to cognitive mechanisms. To harness the sensory process effectively, PreyNet integrates a bidirectional bridging interaction module, specifically crafted to discern and consolidate initial features through attentive selection and aggregation. The process of predator learning is delineated through a policy and calibration paradigm, aimed at identifying uncertain regions and fostering targeted feature refinement. Taking inspiration from biological search and recognition mechanisms, Yue et al. [45] introduce a novel framework named DCNet, which leverages two specific constraints—object area and boundary—to explore candidate objects and additional object-related edges. Employing a coarse-to-fine approach, it detects camouflaged objects by progressively refining the identification process. Utilizing a deep supervision strategy, DCNet achieves precise localization of camouflaged objects, thereby enhancing its accuracy in COD tasks.

Sun et al. [46] propose the C2FNet represents a novel approach that capitalizes on contextual information leveraging an attention mechanism. Central to its design is an attention-induced cross-level fusion module, strategically engineered to amalgamate multiscale features effectively. C2FNet cascades these two modules into the network to get the final prediction map. D2CNet [47] bears a striking resemblance to that of SINet, albeit with notable distinctions. D2CNet delineates itself through the adoption of a U-shaped network structure, where the first stage of the network operation does not incorporate the underlying information. Moreover, D2CNet introduces supplementary modules, notably the self-refining attention unit and the cross-refining unit, augmenting its functionality and enabling enhanced information refinement and integration throughout the network’s processing stages. Zhai et al. [48] devise a novel model of Mutual Graph Learning (MGL) that extends the idea of mutual learning from the regular grid to the graph domain. MGL is equipped with typed functions to handle different complementary relationships, thus maximizing information interactions and obtaining superior performance gains. The updated iteration of MGL, named R-MGLv2 [49], brings forth multiple enhancements. These improvements encompass the integration of a new multi-source attention block explicitly engineered for conducting attention within the realm of COD. Moreover, Zhai et al. incorporate side-output features intended to collaboratively guide the learning process. This innovation effectively alleviates the burdens associated with recurrent learning overhead and mitigates the accuracy reduction during inference at lower resolutions.

POCINet [50] propose by Liu et al. has a similar procedure to SINet, which can also be divided into search and recognition phases. The difference is that POCINet adopts a novel scheme to decode camouflaged objects using contrast information and part-object relationship knowledge. TANet [51] exploits the subtleties inherent in the texture distinction between camouflaged objects and their backgrounds. This strategic approach enables the model to cultivate texture-aware features, delve into intricate object structural details, and amplify texture differences. As a result, TANet enhances the ability to recognize texture differences, thereby improving the overall efficacy and performance. Inspired by the complementary relationship between texture labels and camouflaged object labels, Zhu et al. [52] design TINet as an interactive guidance framework that focuses on finding uncertain boundaries and texture differences through progressive interactive guidance. It maximizes the usefulness of fine-grained multilevel texture cues for guiding segmentation. The work of Chen et al. [53] is an improved version of the work of Sun et al. [46] with similar design ideas and follows the name C2FNet. The advancements made in this work significantly enhance the previous model by implementing a multi-step refinement process that involves the iterative refining of low-level features through the utilization of preliminary maps. This iterative refinement strategy culminates in the prediction of the final outcome. The improvements introduced in this updated version showcase notable enhancements in performance compared to the preceding model. Li et al. [54] introduce the Progressive Enhancement Network (PENet), a novel system that mirrors the human visual detection system. PENet adopts a three-stage detection process, comprising object localization, texture refinement, and boundary restoration.

Ji et al. [55] introduce a novel network architecture named ERRNet, which stands out for its innovative edge-based reversible re-calibration mechanism. Compared to prevailing methods, ERRNet demonstrates substantial performance enhancements coupled with notably higher processing speeds. Its ability to achieve superior performance while maintaining efficiency positions ERRNet as a promising solution with broad applicability. Chen et al. [56] propose a novel boundary-guided network (BgNet), to tackle the challenging task in a systematic coarse-to-fine fashion. The architecture of BgNet is characterized by the localization module and the boundary-guided fusion module. The dual-module strategy empowers BgNet to achieve accurate and expeditious segmentation of camouflaged regions, thereby establishing its efficacy in addressing this intricate problem. The BGNet [57] shares a parallel motivation akin to BgNet, aiming to strategically incorporates essential object-related edge semantics into the representation learning process, compelling the model to prioritize the generation of features that accentuate the object’s structural elements. By explicitly integrating edge semantics and extensively leveraging boundary features, BGNet enables the model to discern and emphasize critical structural components of camouflaged objects, thereby elevating its capability for accurately localizing boundaries, a crucial aspect within this domain.

Obviously, COD methods heavily rely on boundary information, and BSA-Net [58] introduces a novel boundary-guided separation of attention mechanism. The network design is based on procedural steps observed in the human way to detect camouflaged objects, where object boundaries are delineated by recognizing subtle differences between foreground and background. Distinguishing itself from existing networks, BSA-Net adopts a dual-stream separated attention module specifically crafted to emphasize the separation between the image background and foreground. This unique design incorporates a reverse attention stream, facilitating the exclusion of the interior of camouflaged objects to prioritize the background. Conversely, the normal attention stream works to restore the interior details, emphasizing foreground elements. Both attention streams are steered by a boundary-guiding module, collaboratively combining their outputs to augment the model’s understanding and refinement of object boundaries. FAPNet [59] adopts a multifaceted approach. FAPNet capitalizes on cross-level correlations, enhancing the overall contextual understanding. One notable advantage of FAPNet lies in its adaptability and scalability to the polyp segmentation task, demonstrating its versatility and robustness beyond the realm of COD. Li et al. [60] propose another boundary-guided network (FindNet) with the utilization of texture cues from a single image. The capability of FindNet to perform accurate detection across diverse visual conditions characterized by varying textures and boundaries underscores its versatility and robustness. Sun et al. [61] introduce the Edge-aware Mirror Network (EAMNet), which implements a two-branch architecture facilitating mutual guidance between the segmentation and edge detection branches, establishing a cross-guidance mechanism to amplify the extraction of structural details from low-level features.

In the realm of COD, accurate annotations prove challenging due to the resemblance between camouflaged foreground and background elements, particularly around object boundaries. Liu et al. [62] highlight concerns regarding direct training with noisy camouflage maps, positing that this approach may result in models lacking robust generalization capabilities. To address this, they introduce an explicitly designed aleatoric uncertainty estimation technique to account for predictive uncertainty arising from imperfect labeling. Their proposed framework, OCENet, positions itself as a confidence-aware solution. This framework leverages dynamic supervision to generate both precise camouflage maps and dependable aleatoric uncertainty estimations. Once OCENet is trained, the embedded confidence estimation network is capable of assessing pixel-wise prediction accuracy autonomously, reducing reliance on ground truth camouflage maps for evaluation purposes.

HeelNet [63] employing cascading decamouflage modules to iteratively refine the prediction graph. These modules are composed of distinct components: a region enhancement module and a reverse attention mining module, strategically designed for precise detection and the thorough extraction of target objects. Additionally, HeelNet introduces a novel technique called classification-based label reweighting. This method generates a gated label graph, serving as supervisory guidance for the network. Its purpose is to aid in identifying and capturing the most salient regions of camouflaged objects, thereby facilitating the complete acquisition of the target object. The objective of CubeNet [64] revolves around harnessing hierarchical features extracted from various layers and diverse input supervisions. Specifically, CubeNet employs X-connections to facilitate multi-level feature fusion, utilizes different supervised learning methods, and employs a refinement strategy to elaborate on the complex details of camouflaged objects. To this end, it makes full use of edge information and considers it as an important cue for capturing object boundaries. By incorporating these methodologies, CubeNet endeavors to enhance the detection and delineation of camouflaged objects, effectively leveraging hierarchical features, diverse supervisory signals, and edge information for comprehensive object understanding and boundary delineation. Zhai et al. [65] present a novel approach in their paper, introducing the Deep Texton-Coherence Network (DTC-Net). The primary focus of DTC-Net revolves around extracting discriminative features through the comprehensive understanding of spatial coherence within local textures. This understanding facilitates the effective detection of camouflaged objects within scenes. DTC-Net implements a deep supervision mechanism across multiple layers. This mechanism involves applying supervision at various stages of the network, facilitating the iterative refinement of network parameters through continuous feedback from these supervisory signals. This approach serves to promote the effective updating and optimization of the network’s parameters, enhancing the model’s performance in camouflage object detection. Zhai et al. [66] advocate for leveraging the intricate process of figure-ground assignment to enhance the capabilities of CNNs in achieving robust perceptual organization, even in the presence of visual ambiguity. By integrating the figure-ground assignment mechanism into CNN architectures, the model’s success in various challenging applications, notably in COD tasks, underscores the potential efficacy of integrating cognitive-inspired mechanisms into CNN architectures. DGNet [67], a newly introduced deep framework, revolutionizes COD by leveraging object gradient supervision. The linchpin of this architecture lies in the gradient-induced transition, symbolizing a fluid connection between context and texture features. This mechanism essentially establishes a soft grouping between the two feature sets. The application of DGNet in various scenarios, including polyp segmentation, defect detection, and transparent object segmentation, has demonstrated remarkable efficacy and robustness, further validating its superiority and versatility in diverse visual detection tasks. Hu et al. [68] aim to address the challenges by prioritizing the extraction of high-resolution texture details, thereby mitigating the problem of detail degradation that leads to blurred edges and boundaries. Their approach includes the introduction of HitNet, a novel framework designed to enhance low-resolution representations by incorporating high-resolution features into iterative feedback loops. In addition, the authors propose an iterative feedback loss that adds an extra layer of constraints to each feedback connection. This iterative feedback loss approach aims to further refine the iterative connections, thereby enhancing the model’s ability to capture the fine-grained details that are critical. Wang et al. [69] propose a new framework named FLCNet, which includes an underlying feature mining module, a texture-enhanced module, and a neighborhood feature fusion module. Deng et al. [70] propose a new ternary symmetric fusion network for detecting camouflaged objects by fully fusing features from different levels and scales. To effectively enhance detection performance, Shi et al. [71] propose a novel model featuring context-aware detection and boundary refinement.

Motivated by the complementary relationship between boundaries and camouflaged object regions, Yu et al. [72] propose an alternate guidance network named AGNet for enhanced interaction. They introduce a feature selective module to choose highly discriminative features while filtering out noisy background features. Liu et al. [73] propose MFNet, a novel network for multi-level feature integration. Xiang et al. [74] propose a double-branch fusion network with a parallel attention selection mechanism. Yan et al. [75] propose a matching-recognition-refinement network (MRR-Net) to break visual wholeness and see through camouflage by matching the appropriate field of view. Zhang et al. [76] design a novel cross-layer feature aggregation network (CFANet). CFANet effectively aggregates multi-level and multi-scale features from the backbone network by exploring the similarities and differences of features at various levels.

To sum up, CNN-based methods can offer high accuracy and robust performance due to their ability to automatically learn and extract complex features from images, making them effective in diverse and challenging COD settings. Fig. 5 indicates the number of publications on CNN-based literature from 2019 to 2023. On the other hand, CNN-based architecture facilitates end-to-end learning and adaptability, particularly through transfer learning, which allows the use of pre-trained models to enhance efficiency and accuracy. Yet, these methods are computational resources-consuming, which could be an obstacle for real-time applications. Besides, CNNs are less effective on unseen data and vulnerable to adversarial attacks. Despite these challenges, CNN-based methods remain a reliable tool for COD in complex scenarios with sufficient data.

Figure 5: Number of CNN-based image-level COD methods published annually from 2019 to 2023

Huang et al. [77] present the SATNet which applies a transformer as the backbone network. Observing the content in the mirror is not strictly symmetry with the real-world object, i.e., loose symmetry, they proposed a network that takes as input an input image and its flipped image to provide data augmentation techniques and further fully exploits symmetry features and proposed a network with takes input images and its flipped images as input to fully exploit symmetry features as well as serves a data augmentation technique. Liu et al. [78] focus on the phenomenon of reflections on mirror surfaces, introducing a frequency domain feature extraction module. This module maps multi-scale features of the mirror to the frequency domain, extracts mirror-specific features, and suppresses interference caused by reflections of external objects. Additionally, they propose a cross-level fusion module based on reverse attention, which integrates features from different levels to enhance overall performance. To identify mirror features in more diverse scenes, Xie et al. [79] introduce a cross-space-frequency window transformer, which is designed to extract both spatial and frequency characteristics for comprehensive texture analysis.

Furthermore, some transparent object detection methods employ transformer architecture to detect glass-like objects. Zhang et al. [80] contribute Trans4Trans, which means Transformer for Transparent, an encoder-decoder model based entirely on the Transformer architecture. With a carefully designed Transformer pairing module and dual-path structure, Trans4Trans outperforms the then-SOTA transparent object segmentation method, namely Trans2Seg. Xin et al. [81] observe glass-induced image distortion and introduced a visual distortion-aware module to mitigate this problem. This module captures multi-scale visual distortion information and integrates it effectively, allowing the Swin-B backbone network to focus on regions affected by glass-induced distortion, thereby accurately identifying these surfaces. In addition, the inclusion of glass surface centroid information through a classification sub-task improves the accuracy of glass mask predictions. To differentiate between glass and non-glass regions, the transformer architecture leverages two crucial visual cues: boundary and reflection feature learning. Vu et al. [82] propose TransCues, a pyramidal transformer encoder-decoder architecture designed for the segmentation of transparent objects from color images.

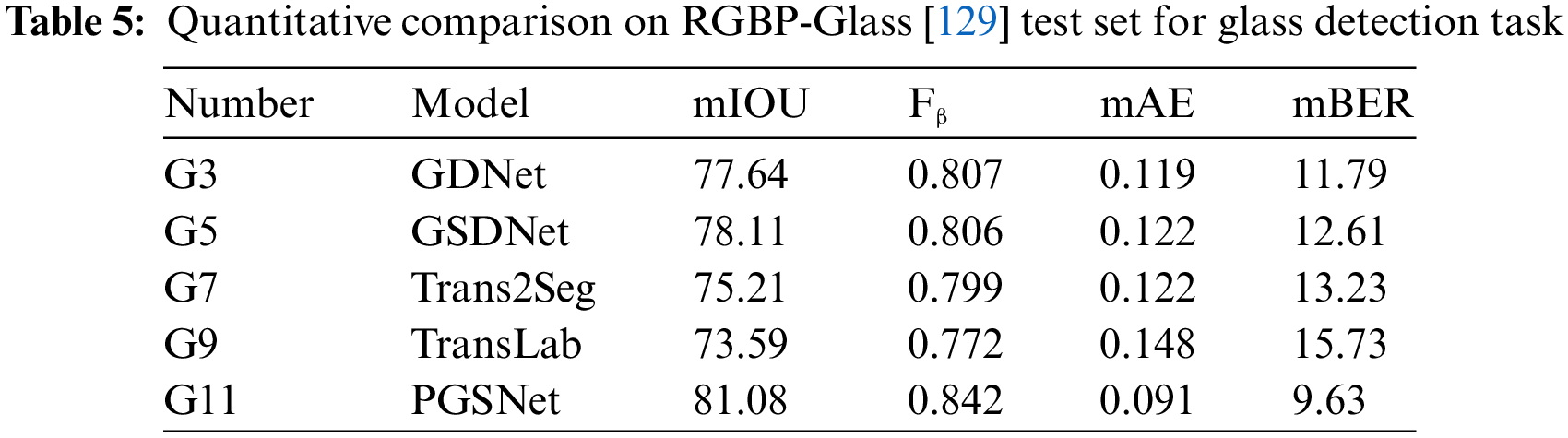

The study [83] constructs a contextual attention module to extract backbone features using a self-attention approach and proposes a new enhanced feature fusion algorithm for detecting glass regions in a single RGB image. Additionally, it introduces a VIT-based deep semantic segmentation architecture that associates multilevel receptive field features and retains the feature information captured at each level. Hu et al. [84] introduce a novel convolutional attention glass segmentation network, designed to minimize the number of training cycles and iterations, thereby enhancing performance and efficiency. The network employs a custom edge-weighting scheme to optimize glass detection within images, further improving segmentation precision. Inspired by the scale integration strategy and refinement method, Xu et al. [85] propose MGNet, featuring a fine-rescaling and merging module to enhance spatial relationship extraction and a primary prediction guiding module to mine residual semantics from fused features. An uncertainty-aware loss supervises the model to produce high-confidence segmentation maps. Observing glass naturally results in blurs. Building on this intrinsic visual blurriness cue, Qi et al. [86] propose a novel visual blurriness aggregation module that models blurriness as a learnable residual. This approach extracts and aggregates valuable multiscale blurriness features, which guide the backbone features to detect glass with high precision. The Progressive Glass Segmentation Network (PGSNet) [87] is constructed using multiple discriminability enhancement modules and a focus-and-exploration-based fusion strategy. This design progressively aggregates features from high-level to low-level, enabling a coarse-to-fine glass segmentation approach.

Yang et al. [88] introduce an innovative method named Uncertainty-Guided Transformer Reasoning (UGTR), utilizing probabilistic representational models in conjunction with a transformer architecture to facilitate explicit reasoning under uncertainty. The foundational concept involves the initial acquisition of an estimate and its associated uncertainty by learning the conditional distribution of the backbone output. Subsequently, the attention mechanism is employed to deliberate over these uncertain regions, culminating in a refined and definitive prediction. This method strategically amalgamates the strengths of Bayesian learning and transformer-based inference, leveraging both deterministic and probabilistic information. The synergistic integration of these elements enhances the model’s capability to discern camouflaged objects effectively. Inherent uncertainty poses a significant challenge which is compounded by two primary biases observed in the training data. The “center bias” prevalent in the dataset causes models to exhibit poor generalization, as they tend to focus on detecting camouflaged objects primarily around the image center, termed as “model bias.” Additionally, accurately labeling the boundaries of camouflaged objects is challenging due to the resemblance between the object and its surroundings, leading to inaccuracies in defining the object’s scope, known as “data bias.” To effectively address these biases, Zhang et al. [89] propose leveraging uncertainty estimation techniques. They introduce a predictive uncertainty estimation approach, combining model uncertainty and data uncertainty. Their proposed solution, PUENet, comprises a Bayesian conditional VAE to achieve predictive uncertainty estimation. PUENet aims to mitigate the impact of model and data biases by estimating predictive uncertainty. Liu et al. [90] challenge the conventional bio-inspired framework used in object detection methodologies, highlighting the inherent limitations in the recurrent search for objects and boundaries, which can be taxing and limiting for human perception. Their proposed solution involves a transformer-based model that enables the simultaneous detection of the object’s accurate position and its intricate boundary by extracting features related to the foreground object and its surrounding background, enabling the acquisition of initial object and boundary features. Pei et al. [91] introduce OSFormer, a one-stage transformer framework which is founded on two fundamental architectural components. OSFormer adeptly combines local feature extraction with the assimilation of extensive contextual dependencies. This integration enhances its capability to accurately predict camouflaged instances by efficiently leveraging both local and long-range contextual information. Yin et al. [92] decompose the multi-head self-attention mechanism into three distinct segments. Each segment is tasked with discerning camouflaged objects from the background by employing diverse mask strategies. Additionally, they introduce CamoFormer, a novel framework designed to progressively capture high-resolution semantic representations. This is achieved through a straightforward top-down decoder augmented by the proposed masked separable attention mechanism, enabling the attainment of precise segmentation outcomes.

Existing methods often replicate the predator’s sequential approach of positioning before focusing, but they struggle to locate camouflaged objects within cluttered scenes or accurately outline their boundaries. This limitation arises from their lack of holistic scene comprehension while concentrating on these objects. Mei et al. [93] contend that an ideal model for COD should concurrently process both local and global information, achieving a comprehensive perception of the scene throughout the segmentation process. Their proposed Omni-Perception Network (OPNet) aims to amalgamate local features and global representations, facilitating accurate positioning of camouflaged objects and precise focus on their boundaries, respectively. Huang et al. [94] introduce the FSPNet, a transformer-based framework which employs a hierarchical decoding strategy aimed at enhancing the local characteristics of neighboring transformer features by progressively reducing their scale. The objective is to accumulate subtle yet significant cues progressively, aiming to decode critical object-related information that might otherwise remain imperceptible. Drawing inspiration from query-based transformers, Dong et al. [95] present a unified query-based multi-task learning framework, UQFormer, specifically designed for camouflaged instance segmentation. UQFormer approaches instance segmentation as a query-based direct set prediction task, eliminating the need for additional post-processing techniques like non-maximal suppression. Jiang et al. [96] introduce a novel joint comparative network (JCNet), leveraging joint salient objects for contrastive learning. The key innovation within JCNet lies in the design of the contrastive network, which generates a distinct feature representation specifically for the camouflaged object, setting it apart from others. The methodology involves the establishment of positive and negative samples, alongside the integration of loss functions tailored to different sample types, contributing to the overall efficacy of the approach.

Liu et al. [97] present the MSCAF-Net, a comprehensive COD framework. This framework concentrates on acquiring multi-scale context-aware characteristics by employing the enhanced Pyramid Vision Transformer (PVTv2) model as the primary extractor for global contextual data across various scales. An improved module for expanding the receptive field is developed to fine-tune characteristics at each scale. Additionally, they introduce a cross-scale feature fusion module to effectively blend multi-scale information, enhancing the variety of features extracted. Moreover, a dense interactive decoder module is formulated to generate a preliminary localization map, utilized for fine-tuning the fused features, leading to more precise detection outcomes. Drawing inspiration from human behavior, which involves approaching and magnifying ambiguous objects for clearer recognition, Xing et al. [98] introduce a novel three-stage architecture termed the SARNet which alternate their focus between foreground and background, employing attention mechanisms to effectively differentiate highly similar foreground and background elements, thereby achieving precise separation. Song et al. [99] present an innovative approach centered on focus areas, which signify regions within an image that exhibit distinct colors or textures. They introduce a two-stage focus scanning network named FSNet. This process aims to enhance the model’s ability to discern camouflaged objects by capturing detailed information within these distinctive regions. Yang et al. [100] introduce an innovative occlusion-aware transformer network (OAFormer) aimed at precise identification of occluded camouflaged objects. Within OAFormer, a hierarchical location guidance module is developed to pinpoint potential positions of camouflaged objects. This approach enables OAFormer to cleverly capture the full picture of camouflaged objects and integrates an assisted supervision strategy to enhance the learning capability of the model. Bi et al. [101] propose a novel architecture which comprising an in-layer information enhancement module and a cross-layer information aggregation module. By combining shallow texture information with deep semantic information, this architecture accurately locates target objects while minimizing noise and interference. Liu et al. [102] present Bi-level Recurrent Refinement Network (Bi-RRNet. It includes a Lower-level RRNet (L-RRN) that refines high-level features with low-level features in a top-down manner, and an Up-level RRNet (U-RRN) that polishes these features recurrently, producing high-resolution semantic features for accurate detection.

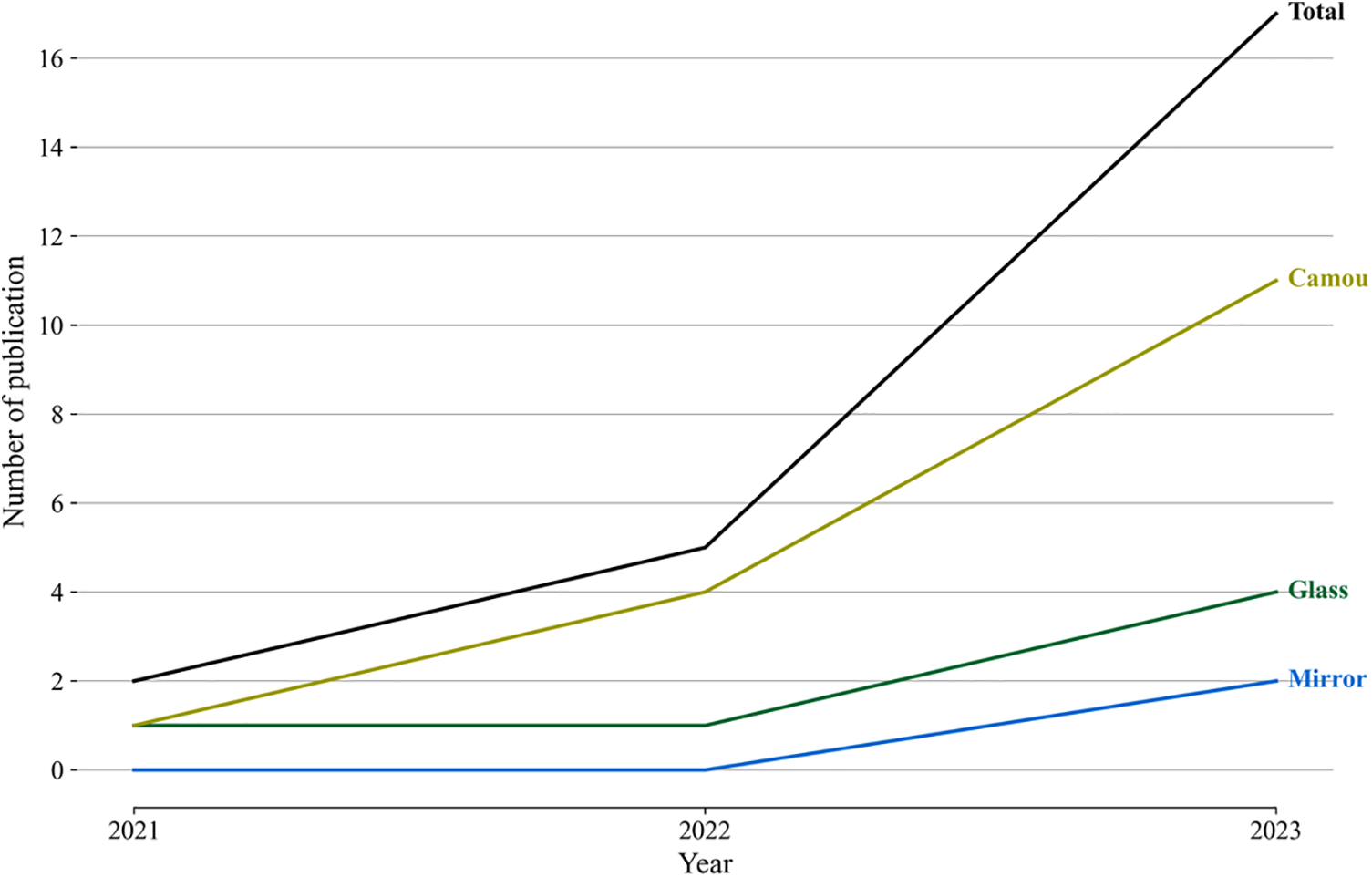

The Transformer architecture excels in handling long-range dependencies and can easily extract contextual information, which makes it an ideal choice for our COD task. Transformer-based models have considerable ability to capture global dependencies in images, so they can help reliably detect confusing objects without being affected by complex conditions and environments. The complexity of such models may add obstacles to the tuning part. Despite these shortcomings, the Transformer-based models in the environment can surpass CNN-based models in terms of accuracy in detecting confusing objects. Fig. 6 presents the number of publications on Transformer-based methods from 2021 to 2023.

Figure 6: Number of Transformer-based image-level COD methods published annually from 2021 to 2023

4.3 Multi-Task Learning Framework

GlassNet [103] utilizes the label decoupling framework in the glass detection task, which has the advantage of better predicting the labels of pixels near the actual boundaries. Specifically, they use the label decoupling procedure on the Ground Truth (GT) map to obtain internal diffusion maps and boundary diffusion maps, which in turn serve as the GT maps of additional supervision streams in a multi-task learning manner. The RFENet designed by Fan et al. [104] also utilizes the boundary as an additional supervisory stream to work in an independent but consistent manner. It introduces semantic and boundary-supervised losses at different feature stages in a cascading manner to achieve synergistic feature enhancement. Zhang et al. [105] propose an algorithm for staged feature extraction that employs a multitype backbone network, integrating features from both CNNs and transformers. Additionally, a multiview collector is used to extract cross-modal fusion features from diverse perspectives. Wan et al. [106] propose a novel bidirectional cross-modal fusion framework incorporating shift-window cross-attention for glass segmentation which includes a feature exchange module and a shifted-window cross-attention feature fusion module within each transformer block stage to calibrate, exchange, and fuse cross-modal features. Chang et al. [107] propose PanoGlassNet for panoramic images which uses a novel module with four branches of varying kernel sizes and deformable convolutions to capture the wide field of view and irregular boundaries. Given that most existing structures are complex and heavy, while lightweight structures often lack accuracy, Zhou et al. [108] propose a novel Asymmetric Depth Registration Network student model. This model, trained with distilled knowledge, is designed to address these limitations effectively. Zhou et al. [109] propose a novel uncertainty-aware transformer localization network for RGB-D mirror segmentation. This approach is inspired by biomimicry, particularly the observational behavior patterns of humans. It aims to explore features from various perspectives and concentrate on complex features that are challenging to discern during the coding stage.

Lv et al. [110] devise a multi-task learning framework termed RankNet, aimed at concurrent localization, segmentation, and ranking of camouflaged objects. The motivation is that explicitly capturing the unique properties of camouflaged objects in their surroundings not only enriches the understanding of camouflage and animal evolutionary strategies but also provides valuable insights for the development of more sophisticated camouflage techniques. Notably, specific components or features of camouflaged objects play a key role in predator discrimination of camouflaged objects in their surroundings. The proposed network consists of three interrelated models, a localization model designed to identify discriminatory regions that make camouflaged objects conspicuous, a segmentation model responsible for delineating the complete range of camouflaged objects, and a novel ranking model designed to assess the ability to detect differences between different camouflaged entities. The collaborative operation of these three modules within RankNet yields promising results when tested on widely used datasets.

He et al. [111] introduce the FEDER model, specifically addressing the inherent similarity between foreground and background elements. FEDER employs learnable wavelets to decompose features into distinct frequency bands, mitigating this similarity. To tackle the issue of ambiguous boundaries, FEDER adopts an auxiliary edge reconstruction task concurrent with the primary objective. The FEDER model achieves enhanced precision in generating prediction maps with precise object boundaries through simultaneous learning of both tasks. Lv et al. [112] introduce a triple-task learning framework capable of concurrently localizing, segmenting, and ranking camouflaged objects, thus quantifying the level of conspicuousness in camouflage. Due to the absence of datasets for the localization and ranking models, the authors employ an eye tracker to generate localization maps. These maps are subsequently aligned with instance-level labels to create their ranking-based training and testing dataset, providing a pioneering approach to comprehensively evaluating and ranking.

Yang et al. [113] propose a novel perspective, emphasizing its potential to deepen the understanding of camouflage and revolutionize the approach to detecting camouflaged objects. Subsequently, acknowledging the intrinsic connections between salient object detection (SOD) and COD, introducing a multi-task learning framework. This framework captures the inherent relationships between the two tasks from diverse angles. The task-consistent attribute, established through an adversarial learning scheme, seeks to accentuate the boundary disparities between camouflaged objects and backgrounds, thereby achieving comprehensive segmentation of the camouflaged objects. Xing et al. [114] introduce a groundbreaking paradigm termed the “pre-train, adapt, and detect” approach. This method leverages a significantly pre-trained model, allowing the direct transfer of extensive knowledge obtained from vast multi-modal datasets. To tailor the features for the downstream task, a lightweight parallel adapter is integrated, facilitating necessary adjustments. Furthermore, a multi-task learning scheme is implemented to fine-tune the adapter, enabling the utilization of shared knowledge across various semantic classes for enhanced performance. Lyu et al. [115] introduce the UEDG architecture, adept at amalgamating probabilistic-derived uncertainty and deterministic-derived edge information to achieve precise detection of concealed objects. UEDG harnesses the advantages of both Bayesian learning and convolution-based learning, culminating in a robust multitask-guided approach.

The multi-task learning framework allows models to be trained on related tasks, which can bring multiple benefits. By training models on related tasks simultaneously, such as depth estimation and edge detection, our model can exploit complementary information in shared features, thereby improving overall performance. This framework can be easily extended from existing methods, achieving stronger generalization capabilities across different scenarios and reducing the risk of overfitting. However, it also presents challenges, such as the need for carefully balanced loss functions to ensure that all tasks are learned effectively without one dominating the others. They require more complex architectures and hyperparameter optimization, and often require the help of additional data or pseudo-labels.

4.4 Models Using Multimodal Inputs

Mei et al. [116] present the first mirror segmentation model called PDNet that leverages the information from the depth map. PDNet subdivides the mirror segmentation task into two separate stages (i.e., positioning and delineating). The previous stage is performed by exploiting semantic and depth discontinuities from RGB and depth maps respectively. The delineating stage utilizes lower-level features to refine the mirrored area progressively to obtain the final map. Kalra et al. [117] introduce polarization cues to the task of transparent object segmentation, naming the model Polarized Mask R-CNN. Specifically, polarized CNNs use three separated CNN backbones (RGB images) and two polarization cues to extract features separately and fuse the features with a self-designed attention module to better utilize features from different sources. GlassSemNet [118] introduces an additional semantic ground truth mask that serves as additional input. GlassSemNet uses two independent backbone networks (i.e., SegFormer and ResNet50) on different inputs to extract spatial and semantic features, and then uses an attention mechanism to segment glass objects from the enhanced features. PGSNet [119] is another network that takes Angle of Linear Polarization (AoLP) and Degree of Linear Polarization (DoLP) information as additional input. Unlike the Polarized Mask R-CNN, PGSNet is a more complex structure that makes full use of multimodal cues with the Conformer as its backbone. Noting the subtle physical differences in the response of glass-like objects to thermal radiation and visible light, Huo et al. [120] introduce another modal input (i.e., thermal images.) RGBTSeg builds the network in an encoder-decoder fashion and implements an efficient fusion strategy with a novel multimodal fusion module.

Yan et al. [121] present MirrorNet, a bio-inspired network tailored for camouflage body measurements, in which the bio-inspired representation uses flipped images to reveal more information, uniquely exploiting synergies between instance segmentation and bio-inspired attack streams. Unlike traditional models that rely on a single input stream, this innovative model integrates two segmentation streams. Pang et al. [122] introduce ZoomNet, a mixed-scale triplet network designed to emulate the human behavior of zooming in and out when observing ambiguous images. The central strategy employed by ZoomNet involves utilizing zooming techniques to acquire discriminative mixed-scale semantics. ZoomNet aims to capture nuanced visual details across multiple scales, thereby enhancing its ability to discern and accurately predict objects in the presence of ambiguous or vague visual information. Jia et al. [123] align with ZoomNet’s approach by employing human attention principles alongside a coarse-to-fine detection strategy. Their proposed framework, named SegMaR, operates through an iterative refinement process involving Segmentation, Magnification, and Reiteration across multiple stages for detection. Specifically, SegMaR introduces a novel discriminative mask that directs the model’s focus toward fixation and edge regions. Notably, the model utilizes an attention-based sampler to progressively amplify object regions without requiring image size enlargement. Comprehensive experimentation demonstrates SegMaR’s remarkable and consistent enhancements in performance.

Unlike existing approaches utilizing contextual aggregation techniques developed primarily for SOD, Lin et al. [124] approach introduces a new method, FBNet, which aims to address a key challenge, i.e., the prevalent contextual aggregation strategy tends to prioritize distinctive objects while potentially attenuating the features of less discriminative objects. The FBNet approach incorporates frequency learning to effectively suppress high-frequency texture information. In addition, the proposed FBNet integrates a gradient-weighted loss function that strategically directs the method to emphasize the contour details, thus refining the learning process. Luo et al. [125] introduce a De-camouflaging Network (DCNet) comprising a pixel-level camouflage decoupling module and an instance-level camouflage suppression module, marking a novel approach in the field. The authors introduce reliable reference points to establish a more robust similarity measurement, aiming to diminish the impact of background noise during segmentation. By integrating these two modules, the DCNet models de-camouflaging, enabling precise segmentation of camouflaged instances.

Zhong et al. [126] assert that the objective of the task surpasses replicating human visual perception within a singular RGB domain; rather, it aims to transcend human biological vision. They present FDCOD, a robust network integrating two specialized components to effectively incorporate frequency clues into CNN models. The frequency enhancement module encompasses an offline discrete cosine transform enabling the extraction and refinement of significant information embedded within the frequency domain. Subsequently, a feature alignment step is employed to fuse the features derived from both the RGB and frequency domains. Furthermore, to maximize the utilization of frequency information, Zhong et al. propose the high-order relation module which is designed to handle the intricate fusion of features, leveraging the rich information obtained from the fused RGB and frequency domains. This module thereby facilitates the comprehensive integration and exploitation of frequency clues, augmenting the network’s capacity for accurate and nuanced detection. Cong et al. [127] introduce FPNet, integrating a learnable and separable frequency perception mechanism driven by semantic hierarchy within the frequency domain.

PopNet [128] integrates depth cues into the task. Rather than directly deriving depth maps from RGB images, Wu et al. employ modern learning-based techniques to infer reliable depth maps in real-world scenarios. This approach utilizes pre-trained depth inference models to establish the “pop-out” prior for objects in a 3D context. The “pop-out” prior assumes object placement on the background surface, enabling reasoning about objects in 3D space. Xiang et al. [129] investigate the role of depth information, utilizing depth maps generated through established monocular depth estimation methodologies. However, due to inherent discrepancies between the MDE dataset and the camouflaged obejct dataset, the resulting depth maps lack the necessary accuracy for direct utilization. Zheng et al. [130] introduce a behavior-inspired framework termed the MFFN, drawing inspiration from human approaches to identifying ambiguous objects in images. This framework mirrors human behavior by employing multiple perspectives, angles, and distances to observe such objects. MFFN leverages the interplay between views and channels to explore channel-specific contextual information across diverse feature maps through iterative processes.

Lin et al. [131] propose the first video mirror detection model VCNet which takes video as input. To make use of the properties of video data, VCNet applies a novel Dual Correspondence (DC) module to leverage both spatial and temporal correspondence inside videos. Qiao et al. [132] introduce the first polarization-guided video glass segmentation propagation solution, capable of robustly propagating glass segmentation in RGB-P video sequences. This method leverages spatiotemporal polarization and color information, combining multi-view polarization cues to reduce the view dependence of single-input intensity variations on glass objects.

Lamdouar et al. [133] introduce a novel approach to camouflaged animal detection in video sequences leveraging optical flow between consecutive frames. The model is divided into two key modules, the first of which consists of a differentiable registration module responsible for the alignment of consecutive frames, and the second of which consists of a motion segmentation module characterized by a modified U-shaped network structure with an additional memory component aimed at segmenting camouflaged animals. Yang et al. [134] introduce a self-supervised model specifically designed to address the intricate task of segmenting camouflaged objects within video sequences. Their approach hinges upon the strategic exploitation of motion grouping mechanisms. The methodology commences by employing a modified Transformer framework, serving as the initial stage in segmenting optical flow frames into distinct primary objects and background components within the video context. SIMO [135] is another work on RGB camouflaged object segmentation in video sequences. In this work, Lamdouar et al. designed a two-path architecture consisting of ConvNets and Transformer that accepts optical flow input sequences and is designed to learn to segment moving objects in difficult scenarios such as partial occlusion or stationary states.

The research conducted by Meunier et al. [136] operates under the assumption that the input optical flow can be effectively represented as a set of parametric motion models, commonly characterized by affine or quadratic forms. Notably, this approach eliminates the need for ground truth or manual annotation during the training phase. The research introduces an efficient data augmentation technique tailored for optical flow fields which is applicable to any network utilizing optical flow as input and is inherently designed to segment multiple motions. The resulting Expectation-Maximization (EM)-driven motion segmentation network was evaluated on both camouflaged object and salient object video datasets, demonstrating high performance while maintaining efficiency during test time.

Since current video camouflaged object detection methods usually utilize isomorphic or optical flow to represent motion, the detection error may be accumulated by motion estimation error and segmentation error. Cheng et al. [137] propose a new video detection framework that can detect camouflaged objects from video frames using short-term dynamics and long-term temporal coherence. Lamdouar et al. [138] utilize a transformer-based architecture trained on synthetic datasets, showcasing its efficacy in identifying concealed objects within real video content, such as in the case of MoCA. Their proposed model amalgamates elements from two pre-existing architectures—motion segmentation and SINet. This combined framework facilitates the production of high-resolution segmentation masks derived from the motion stream, enhancing the model’s ability to reveal concealed objects within the visual content.

Video COD research is making significant progress, but challenges remain for a number of reasons. Contemporary approaches use deep learning-based models to combine object detection and optical flow analysis to exploit information between different frames. Such approaches typically do not handle challenges such as static objects, rapid scene changes, changing lighting conditions, and reflections well. In addition, real-time detection still requires a lot of computation, especially on edge devices, and the price of high-quality data annotation is quite expensive and difficult to deploy in real scenarios. To address the above issues, future research on video COD should develop more advanced and efficient architectures. Another promising area is to combine unsupervised and semi-supervised learning techniques to exploit the large amount of unlabeled video data and reduce the dependence on large annotated datasets.

Costanzino et al. [139] present a simple pipeline for neural networks to estimate depth accurately for reflective surfaces without ground-truth annotations. They generate reliable pseudo labels by in-painting mirror objects and using a monocular depth estimation model. Li et al. [140] develop UJSC, a combined SOD and COD segmentation model which leverages the connection between them. The authors constructed a similarity measurement module to refine the feature encoder since the saliency and camouflage streams’ predictions should not intersect. In addition, they introduce adversarial learning to train the predictive encoder for generating the final result and the confidence estimation module for modeling uncertainty in certain regions of the image. The performance achieved a satisfactory result despite using only optical flow as input. To delve into cross-task relevance through a “contrast” approach, Li et al. [141] incorporate contrast learning into their dual-task learning framework. By injecting a structure based on adversarial training, they explored multiple training strategies specialized for discriminators, thus improving the stability of training. Zhao et al. [142] propose to utilize existing successful SOD models for camouflage object detection to reduce the development costs associated with COD models. Their central premise lies in the fact that SOD and COD share two aspects of information, namely the semantic representation of objects used to differentiate between objects and context, and the contextual attributes that play a key role in determining the class of an object.

Observing mirror reflections is crucial to how people perceive the presence of mirrors, and such mid-level features can be effectively transferred from self-supervised pre-trained models. Lin et al. [143] aim to enhance mirror detection methods by proposing a novel self-supervised learning pre-training framework. This framework progressively models the representation of mirror reflections during the pre-training process. Le et al. [144] propose a simple yet efficient CFL framework that undergoes a dual-stage training process to achieve its optimized performance. This scene-driven framework capitalized on the diverse advantages offered by different methods, adaptive selecting the most suitable models for each image. Song et al. [145] propose FDNet, strategically combines Convolutional Neural Networks and Transformer architectures to encode multi-scale images simultaneously. To synergistically exploit the advantages of both encoders, the authors designed a feature grafting module based on a cross-attention mechanism.

The primary aim of camouflaged object detection techniques is the identification of objects seamlessly blending into their environments visually. While prevailing COD methodologies concentrate solely on recognizing camouflaged objects within familiar categories in the training dataset, they confront challenges in accurately identifying objects from unfamiliar categories, resulting in diminished performance. Real-world implementation proves arduous due to the complexities in amassing adequate data for recognized categories, compounded by the demanding expertise needed for accurate labeling, rendering these established approaches unfeasible. Li et al. [146] introduce a novel zero-shot framework tailored to proficiently detect previously unseen categories of camouflaged objects. The incorporation of Li’s graph reasoning, underpinned by a dynamic searching strategy, prioritizes object boundaries, effectively mitigating the influence of background elements.

Chen et al. [147] observe that despite the remarkable success of the Segment Anything Model (SAM) large model in various image segmentation tasks, it encountered limitations in tasks like shadow detection and camouflaged object detection. Rather than opting for fine-tuning SAM directly, they introduced SAM-Adapter, a novel approach aiming to enhance SAM’s performance in these challenging tasks. SAM-Adapter integrates domain-specific information into the segmentation network through simple yet effective adapters. He et al. [148] address the constraints of prevalent techniques relying on extensive datasets with pixel-wise annotations, a process inherently laborious due to the intricate and ambiguous object boundaries. Their introduced CRNet framework via scribble learning underscores the importance of structural insights and semantic relationships to augment the model’s comprehension and detection capabilities in scenarios with limited annotation information. Primarily, they propose a new consistency loss function, leveraging scribble annotations outlining object structures without detailing pixel-level information. This function aims to guide the network in accurately localizing camouflaged object boundaries. Additionally, CRNet incorporates a feature-guided loss, using both directly extracted visual features from images and semantically significant model-captured features.

Zhang et al. [149] introduce the concept of referring camouflaged object detection, a novel task focused on segmenting specified camouflaged objects. This segmentation is achieved using a small set of referring images containing salient target objects for reference. Ma et al. [150] propose a cross-level interaction network with scale-aware augmentation. The scale-aware augmentation module calculates the optimal receptive field to perceive object scales, while the cross-level interaction module enhances feature map context by integrating scale information across levels.

Le et al. [151] aim to automatically learn transformations that reveal the underlying structure of camouflaged objects, enabling the model to better identify and segment them. They propose a learnable augmentation method in the frequency domain via a Fourier transform approach, dubbed CamoFourier. Chen et al. [152] propose a new paradigm that treats camouflaged object detection as a conditional mask-generation task by leveraging diffusion models. They employ a denoising process to progressively refine predictions while incorporating image conditions. The stochastic sampling process of diffusion allows the model to generate multiple possible predictions, thus avoiding the issue of overconfident point estimation. Chen et al. [153] propose a diffusion-based framework. This novel framework treats the camouflaged object segmentation task as a denoising diffusion process, transforming noisy masks into precise object masks. Zhang et al. [154] formulate unsupervised camouflaged object segmentation as a source-free unsupervised domain adaptation task, where both source and target labels are absent during the entire model training process. They define a source model comprising self-supervised vision transformers pre-trained on ImageNet. In contrast, the target domain consists of a simple linear layer and unlabeled camouflaged objects. Liu et al. [155] present the first systematic work on military high-level camouflage object detection, targeting objects embedded in chaotic backgrounds. Inspired by biological vision, which first perceives objects through global search and then strives to recover the complete object, they propose a novel detection network called MHNet. Li et al. [156] recognize the ambiguous semantic biases in camouflaged object datasets that affect detection results. To address this challenge, they design a counterfactual intervention network (CINet) to mitigate these biases and achieve accurate results.

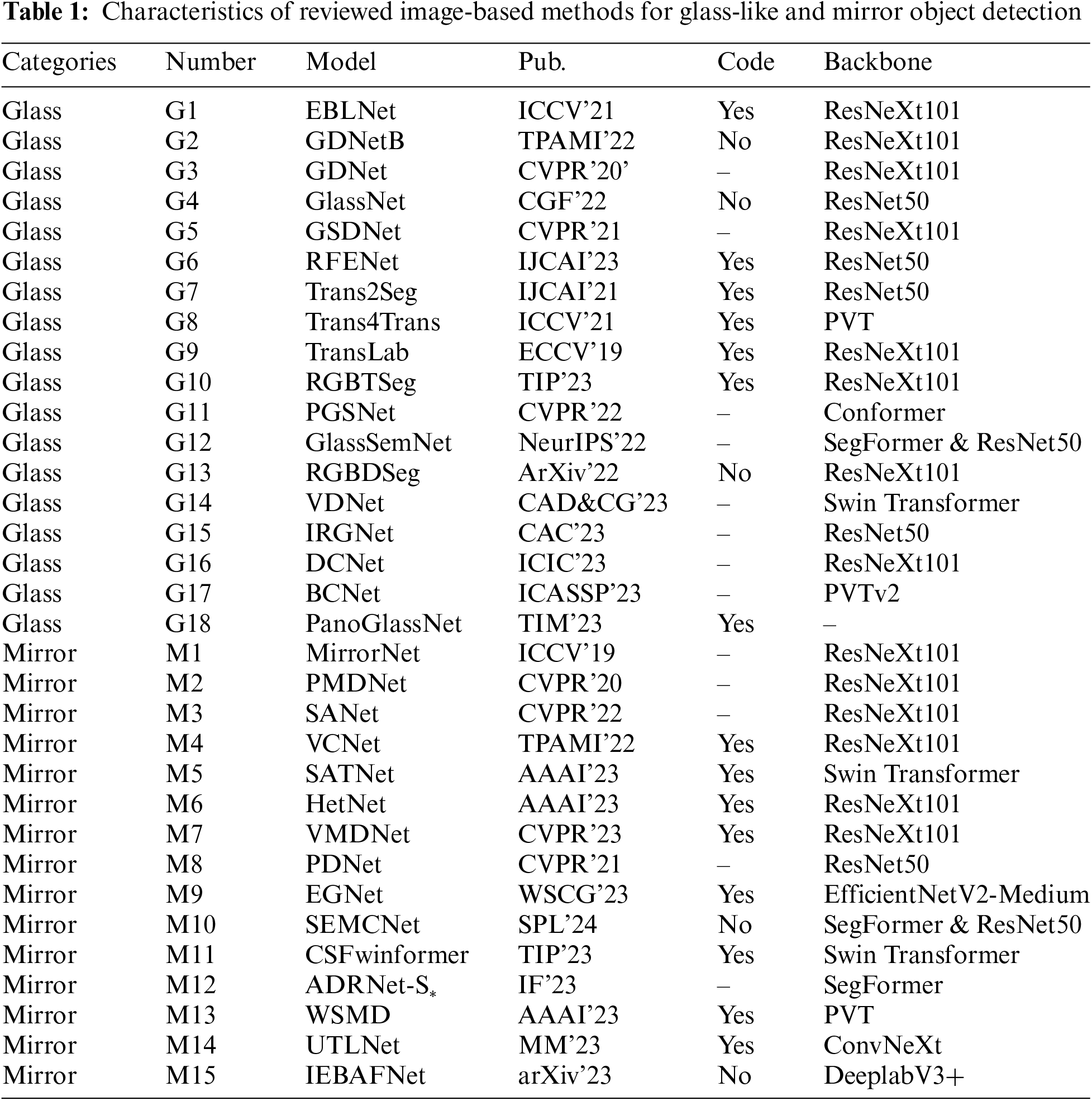

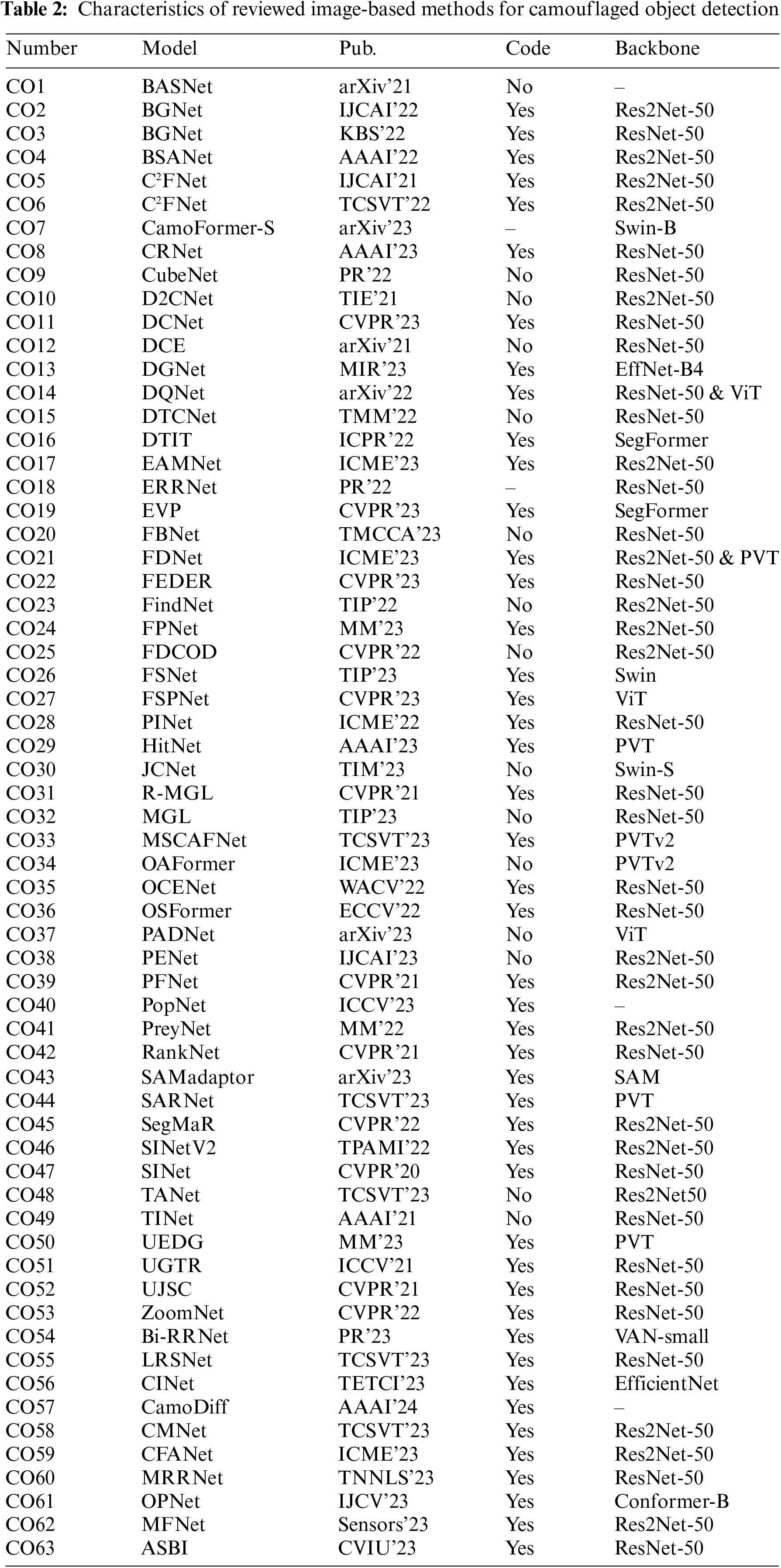

This section introduces some novel methods in COD, most of which cannot be directly benchmarked with previous methods. Yet it presents the probable direction for future research, such as the zero-shot framework for COD, the novel sam-adaptor to fine-tune the segment anything model, and more recently the diffusion model-based COD framework. We summarize notable characteristics of the reviewed models in Tables 1 and 2.

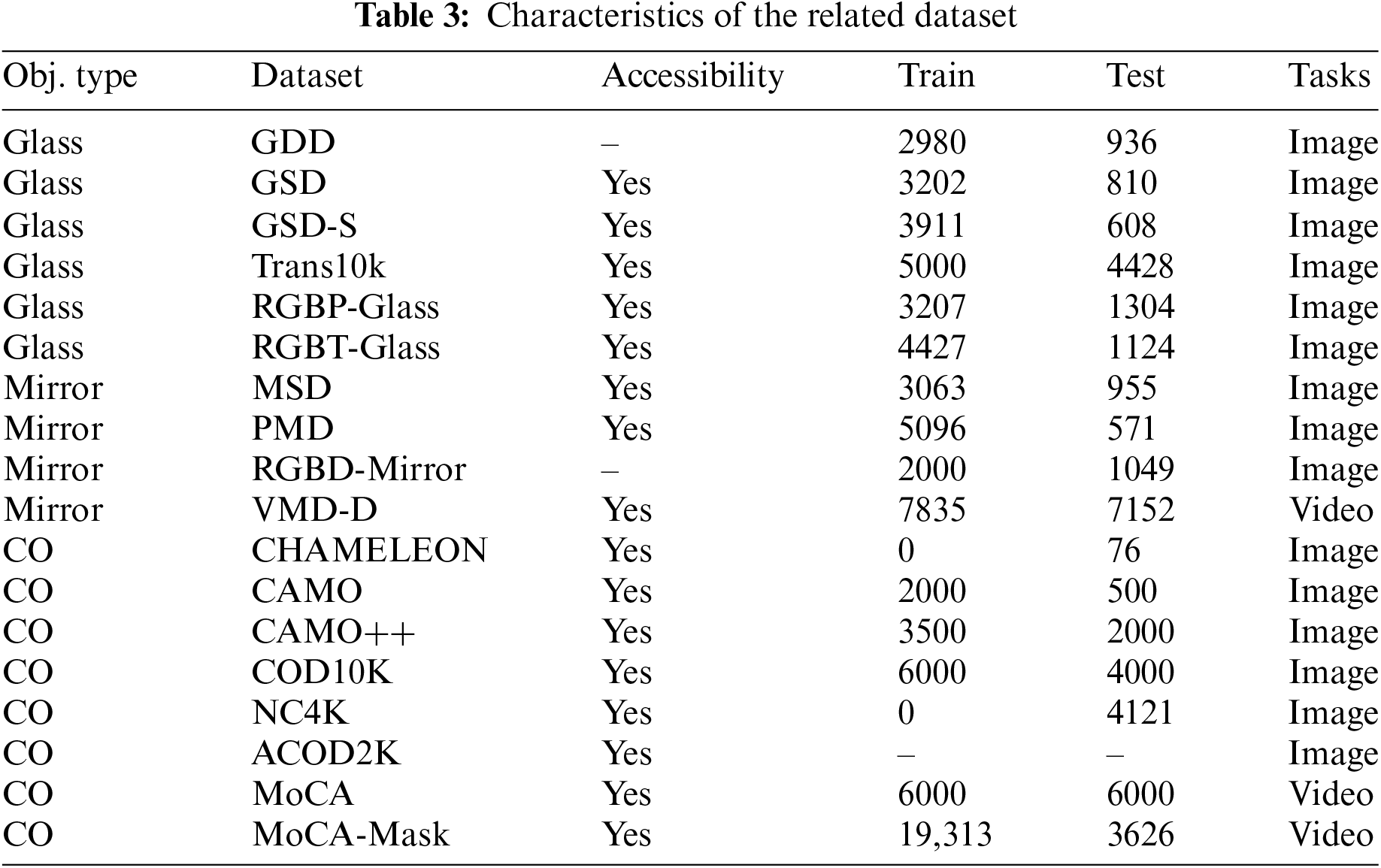

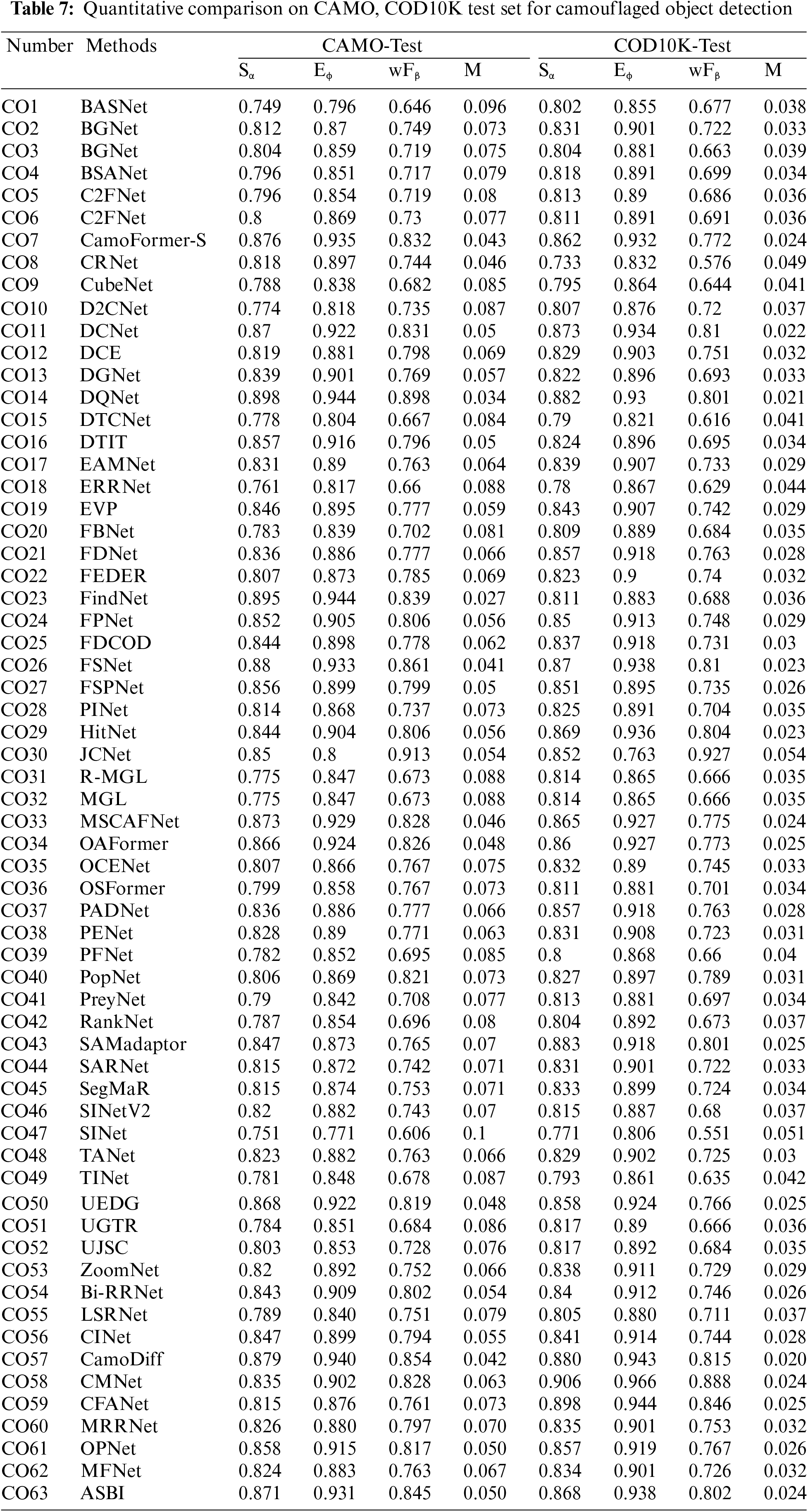

Table 3 lists the characteristics of the related COD dataset. Mei et al. [4] construct the first large benchmark dataset called the GDD for glass detection. GDD contains a total of 3916 images, of which 2980 are used for training and 936 for testing. To overcome the limitations of GDD, Lin et al. [5] contribute a more challenging dataset called GSD, composed of 4012 images collected from existing datasets and the Internet with manually annotated masks. Similarly, Xie et al. [33] contribute to the first dataset Trans10K specifically for transparent object segmentation in the same year. Trans10K is composed of 10,428 transparent object images collected from the real world, with different categories including glass objects and other transparent objects like plastic bottles. Furthermore, Xie et al. [34] extend the Trans10K dataset with a new dataset, Trans10K-v2, designed to overcome the previous version’s lack of detailed transparent object categories.