Open Access

REVIEW

Caching Strategies in NDN Based Wireless Ad Hoc Network: A Survey

1 Department of Computer Science, FAST School of Computing, National University of Computer and Emerging Sciences, Lahore, 54000, Pakistan

2 Department of Software and Communications Engineering, Hongik University, Sejong City, 30016, Republic of Korea

* Corresponding Author: Byung-Seo Kim. Email:

Computers, Materials & Continua 2024, 80(1), 61-103. https://doi.org/10.32604/cmc.2024.049981

Received 24 January 2024; Accepted 01 June 2024; Issue published 18 July 2024

Abstract

Wireless ad hoc networks consist of devices that are wirelessly connected. Mobile Ad Hoc Networks (MANETs), the Internet of Things (IoT), and Vehicular Ad Hoc Networks (VANETs) are the main domains of wireless ad hoc networks. These networks currently rely on the Internet, which is based on the Transmission Control Protocol (TCP)/Internet Protocol (IP) stack, where clients and servers interact with each other through IP in a pre-defined environment and data are fetched from a fixed location. Data redundancy, mobility, and location dependency are the main issues of the IP network paradigm, and all of these factors result in poor performance of wireless ad hoc networks. The main disadvantage of IP is that it does not provide in-network caching. Therefore, there is a need to move towards a new network architecture that overcomes these limitations. Named Data Networking (NDN), a project within the Information-Centric Networking (ICN) paradigm, is such an architecture. NDN provides in-network caching, which helps in responding quickly to user queries. Implementing NDN in wireless ad hoc networks provides many benefits in terms of caching, mobility, scalability, security, and privacy. With this in mind, we present a comprehensive survey of caching strategies in NDN-based wireless ad hoc networks. Results obtained with various caching mechanisms are also described. Finally, we shed light on the challenges and future directions of this promising field to provide a clear understanding of the caching-related problems that exist in NDN-based wireless ad hoc networks.

1 Introduction

The Internet is based on the TCP/IP stack. Its origins lie in the Advanced Research Projects Agency Network of the 1960s [2]. When data are sent over the Internet, they are divided into small packets [1], which travel from source to destination using TCP and IP. IP carries the addressing information needed to send the data between the client and the server, whereas TCP ensures that the receiver obtains the complete data, that packets lost during transmission are recovered, and that the data are reliable. TCP is a connection-oriented transport protocol: it establishes a reliable connection between the communicating devices before transmission begins, imposes a flow control mechanism that regulates the data transmission rate, and allows data to flow in both directions. IP, in turn, provides addressing and best-effort, packet-switched delivery. The Internet nevertheless has some issues that need to be addressed. Some of the important issues of traditional TCP/IP are:

• The Internet is host-centric, whereas users care about content rather than its location.

• To locate content delivery network (CDN) servers, the Internet relies on the domain name system (DNS).

• Security issues (because the location of the data is known).

• Mobility issues (because a node needs a new IP address whenever it moves to a new location).

• The TCP/IP model is difficult to manage, set up, and control.

• The TCP/IP model cannot describe protocol stacks other than TCP/IP [3].

• Risk of failure when traffic grows rapidly over time.

• Inefficient packet delivery.

To overcome the inefficient delivery of packets, researchers introduced the CDN mechanism [4]. In this mechanism, a replica server is created that holds all the data of the original server. When the same content is requested again, the query is redirected to the replica server instead of the original server, which saves time and energy because the original server is far from the user. However, this mechanism is difficult to support when the content changes dynamically or when mobility issues occur. Moreover, because CDN is based on IP, a client that is far away from the replica server may still not be served on time. Therefore, there is a need for a new network that can overcome these issues.

With the evolution of the Internet, new requirements have emerged for the network, such as mobility, scalable data distribution, and security. These requirements have encouraged researchers and stakeholders to study and propose alternative network architectures. One result is a new network paradigm called information-centric networking (ICN), a content-centric approach to the future Internet. ICN retrieves content in two phases: content discovery and content delivery. ICN content is identified by unique names. Content names are hierarchical and contain multiple attributes, such as location, publisher, and content type information. A request in ICN is forwarded based on the content name, and the content is then cached within the network, closer to the user. ICN enables content distribution, flexibility, and scalability. ICN has four main design components:

1. Data objects based on the name: photos, documents, songs, and webpages.

2. Security and naming: unique names to distinguish data objects.

3. Routing and forwarding: Data routing and forwarding paths and decisions.

4. Caching: In-network caching.

Major ICN projects in the EU include scalable adaptive Internet solutions, content mediator architecture for content-aware networks, content-aware searching retrieval and streaming, publish-subscribe Internet technology, ALICANTE, and CONVERGENCE. Major U.S. projects include content-centric networks (CCN) and NDN. CCN and NDN are the most useful projects. In 2009, Vasilakos et al. [5] elaborated the idea of the CCN paradigm. CCN is now implemented in the NDN project. Both of these projects were proposed by the Palo Alto Research Center. The main goal of NDN is to replace the traditional IP-based network with a name-based content model. NDN is necessary for the following reasons:

• NDN provides an effective and simple model of communication.

• Instead of IP, the content name is used.

• NDN focuses on “what” instead of “where.”

• The name of the content is more important than the location of the content.

• It is a decentralized approach (less chance of failure).

• Consumers initiate the communication.

• Fewer scalability issues (because names are hierarchical and human-readable).

• Provides in-network caching.

• Not only end-users, but intermediate nodes are also aware of user requests.

• Supports mobility.

• Better security (because the location is not known).

• It was proposed by researchers in the USA; hence, it is important from a business perspective.

A comparison of IP and NDN is illustrated in Table 1, which shows why NDN is better suited than a traditional IP network. NDN can use the major services of IP, such as DNS, and different IP protocols can be used with NDN after minor modifications. There are two packet types in NDN: the INTEREST packet and the DATA packet. The INTEREST packet is used to request content, whereas the DATA packet carries the actual data. Both packets hold the names that distinguish different pieces of content. The requesting node transmits the INTEREST packet into the network until either the requested content is found or no producer of that content can be found [6].
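The two packet types can be pictured with a minimal Python sketch. The class names, field names, example content name, and the default lifetime value are assumptions made for illustration; they are not taken from the NDN packet specification or from [6], and real packets carry more fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interest:
    """Request for a piece of content, identified only by its name."""
    name: str                 # hierarchical content name (hypothetical example below)
    lifetime_ms: int = 4000   # assumed default: how long the request stays pending


@dataclass(frozen=True)
class Data:
    """Reply that carries the content matching an INTEREST name."""
    name: str
    content: bytes
    signature: bytes = b""    # placeholder only; real signing is out of scope here


def satisfies(data: Data, interest: Interest) -> bool:
    """Simplification: a DATA packet answers an INTEREST when the names match exactly."""
    return data.name == interest.name


interest = Interest("/campus/lectures/week1/seg0")
data = Data("/campus/lectures/week1/seg0", b"payload")
print(satisfies(data, interest))
```

Note that neither packet contains a source or destination address, which is the point of the "what" instead of "where" design.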

A wireless ad hoc network is a broad network consisting of different nodes and devices, each connected to the others over wireless links. Traditional networks, in contrast, connect nearby devices through a sink node or router. Some of the key benefits of wireless ad hoc networks are listed below:

• They provide mobility.

• They are easy to set up and expand.

• They provide better global coverage.

• They provide greater flexibility.

• They are cheaper than wired networks because they do not require cables.

As the number of wireless users increases, traffic on wireless networks also increases, and so does the demand for quick data retrieval. To meet this demand, we have to shift from traditional wireless networks toward a new paradigm in which users’ queries are fulfilled quickly and efficiently. A content-centric approach should be considered, in which a user request is served by the nodes that are available near the user. To support this, NDN nodes cache content, and the way content is cached on NDN nodes differs from other networks. In a wireless ad hoc network, node mobility must also be considered, because if the user or node moves to another region, data delivery has to adapt to the changed scenario. Therefore, wireless ad hoc networks should be integrated with NDN, which would result in many benefits. However, due to limited storage space and cost, caching content on wireless ad hoc network nodes is still a problem in NDN-based wireless ad hoc networks [7]. The main issue is determining the criteria by which data should be cached on wireless nodes. NDN nodes are equipped with various cache policies that determine which content is to be replaced or deleted and on what basis.

In this paper, we comprehensively discuss different caching strategies in NDN-based wireless ad hoc networks. Though many different works have been carried out on caching in NDN, those studies only focused on limited caching strategies and not on NDN-based wireless ad hoc networks.

In this survey paper, we present a survey of caching strategies in NDN-based wireless ad hoc networks. We highlight different aspects (how NDN works and its applications, caching in NDN, wireless ad hoc networks, and caching in NDN-based wireless ad hoc networks) that are necessary to better understand this topic. This is the first survey paper to comprehensively cover the up-to-date caching schemes of NDN-based wireless ad-hoc networks, including MANETs, the IoT, and VANETs, in a single paper. This paper contributes the following:

1. As compared with other survey papers, we present a discussion of the need for caching strategies in NDN and the classification of caching in NDN-based wireless ad hoc networks in detail.

2. Different caching strategies used in NDN-based MANETs, IoT, and VANETs are explained.

3. We identify the challenges and future directions of this promising field.

Section 1 discusses the current Internet network paradigm and the need for a new network paradigm as well as the benefits of wireless ad hoc networks and why we should integrate these with NDN. Section 2 describes the NDN architecture and its applications. Section 3 elaborates on caching in NDN and the need for caching policies. Section 4 describes caching in NDN-based wireless ad hoc networks. Section 5 explains caching in NDN-based MANETs and different caching strategies (cooperative, popularity, location, priority, hybrid, and multifarious caching). Caching strategies in NDN-based IoT (cooperative, freshness, popularity, hybrid, and multifarious caching) are explained in Section 6. Section 7 explains caching strategies in NDN-based VANETs (cooperative, popularity, probability, proactive, optimization, hybrid, and multifarious caching). In Section 8, we list some challenges and future directions regarding caching in NDN-based wireless ad hoc networks. Finally, the survey paper is concluded in Section 9.

2 NDN Working and Its Applications

In this section, we discuss the background of our proposed work, the motivation behind our research work, and the problem statement that drove us to contribute here.

2.1 NDN Architecture and Working

The NDN architecture has an hourglass structure. A comparison between the IP and NDN architectures is illustrated in Fig. 1. The two have similar structures, with one key difference. NDN’s first layer is the application layer, which includes different applications such as browsers. The second layer is the transport layer, which includes different protocols. Below the second layer, the content name replaces the IP address, because NDN focuses on the content name rather than location. An NDN node maintains three main tables:

1. Content store (CS) table: To save bandwidth, enhance sharing possibilities, and reduce content retrieval time, NDN routers cache a copy of each DATA packet in the CS table until it is replaced by new content. Lookups in the CS table use exact matching of the content name.

2. Pending interest table (PIT): The PIT handles INTEREST packets and keeps a record of the interfaces on which they arrived. It maintains an entry for every incoming INTEREST packet until its lifetime expires or the corresponding DATA packet arrives. Data are delivered to the requesting users based on the recorded interfaces. A PIT entry is deleted when the data reach the user or the lifetime of the INTEREST packet expires. Lookups in the PIT also use exact matching of the content name.

3. Forwarding information base (FIB) table: The role of the FIB is to maintain information on the next-hop nodes to which an INTEREST packet should be forwarded [8].

Figure 1: Comparison of the hourglass model of IP vs. NDN architecture

The data forwarding process involves both packet types. When a user wants data, an INTEREST packet is sent to the nearest NDN node. This node first searches its CS table for an exact match of the requested name. If a match exists, the DATA packet is sent to the user immediately. If the requested content is not available in the CS table, the NDN node checks the PIT for an exact match. If the PIT already has an entry for the requested name, the incoming interface is added to that entry, so that the user receives the DATA packet as soon as it becomes available. If no PIT entry is found, the NDN router passes the INTEREST packet to the FIB, which tries to match the requested name using longest prefix matching. When a matching FIB entry is found, the INTEREST packet is forwarded to the next node, and a new PIT entry is created that records the incoming interface. If no matching FIB entry exists, the INTEREST packet is either dropped or flooded to all outgoing interfaces, depending on the NDN router’s policy. When a DATA packet arrives at the router, the PIT is searched for an exact match of its name. If a matching entry is present, the DATA packet is forwarded to all interfaces listed in that entry. Moreover, the content is saved in the CS table, and the corresponding PIT entry is deleted. Data are stored in the CS table because NDN has an in-network caching ability; the NDN router stores data so that future requests for the same data can be served quickly [6].
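The lookup order described above (CS, then PIT, then FIB) can be sketched in a few lines of Python. This is a simplified illustration, not the forwarding code of any real NDN implementation: the class name `NdnNode`, the interface labels, and the choice to drop rather than flood an INTEREST with no FIB match are assumptions, and lifetimes, signatures, and cache eviction are omitted.

```python
class NdnNode:
    """Toy NDN forwarder with a content store (CS), PIT, and FIB."""

    def __init__(self, fib):
        self.cs = {}      # content name -> cached content
        self.pit = {}     # content name -> set of interfaces waiting for it
        self.fib = fib    # name prefix -> next-hop interface

    def _longest_prefix_match(self, name):
        parts = name.strip("/").split("/")
        # Try the full name first, then progressively shorter prefixes.
        for end in range(len(parts), 0, -1):
            prefix = "/" + "/".join(parts[:end])
            if prefix in self.fib:
                return self.fib[prefix]
        return None

    def on_interest(self, name, in_face):
        if name in self.cs:                            # 1. CS hit: answer from cache
            return ("data", in_face, self.cs[name])
        if name in self.pit:                           # 2. already pending: aggregate
            self.pit[name].add(in_face)
            return ("aggregated", None, None)
        next_hop = self._longest_prefix_match(name)    # 3. FIB lookup
        if next_hop is None:
            return ("dropped", None, None)             # assumed policy: drop, do not flood
        self.pit[name] = {in_face}
        return ("forwarded", next_hop, None)

    def on_data(self, name, content):
        faces = self.pit.pop(name, None)               # exact match, entry removed
        if faces is None:
            return []                                  # no pending request: ignore
        self.cs[name] = content                        # in-network caching
        return sorted(faces)                           # deliver to every waiting interface


node = NdnNode(fib={"/campus": "face-upstream"})
print(node.on_interest("/campus/lectures/seg0", "face-A"))
print(node.on_interest("/campus/lectures/seg0", "face-B"))
print(node.on_data("/campus/lectures/seg0", b"payload"))
print(node.on_interest("/campus/lectures/seg0", "face-C"))
```

In the usage lines, the second request is aggregated into the existing PIT entry instead of being forwarded again, and the last request is answered directly from the CS.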

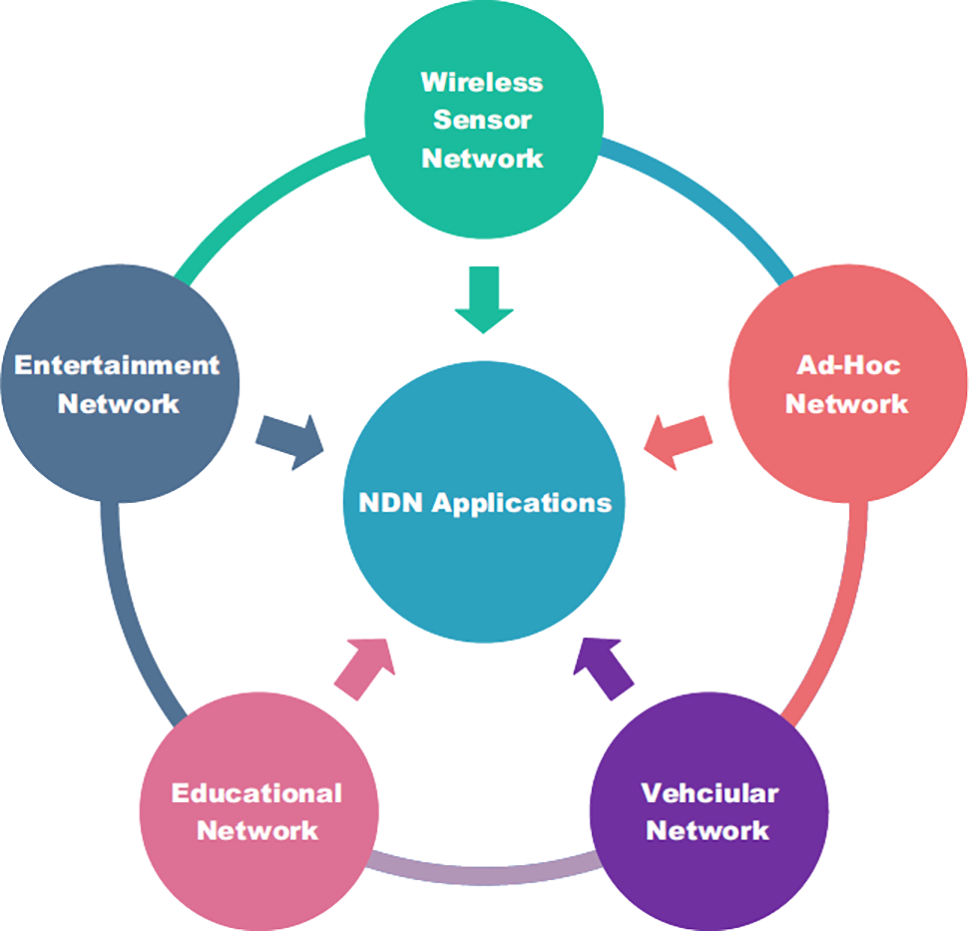

2.2 NDN Applications

In this section, we discuss different application services in which NDN is used widely. Multiple applications are used in our daily lives and cover a huge amount of human workload. Therefore, it is necessary to implement NDN in these applications. NDN applications, such as the wireless sensor network (WSN), ad-hoc network, vehicular network, educational network, and entertainment network, are illustrated in Fig. 2.

Figure 2: NDN applications

1. WSN: WSNs are widely used in different domains. A WSN is made up of multiple sensor nodes that are deployed over a wide area. The attributes of an NDN-based WSN are:

● Task nature: Tells us the task name.

● Task duration: Includes the time duration of the task and can also include values in real-time.

● Location: Includes the geographic area identification and can also include different logical or region-based names.

● Data duplication: Discards duplicate data. If multiple sensors fulfill the same interest request, the sink node must identify and discard the duplicate data [9].

2. Ad-hoc network: This is a decentralized wireless network in which multiple wireless devices establish connections with one another. Ad-hoc networks provide benefits in many domains. They are widely used for connecting army equipment and personnel at headquarters and on the battlefield. Many state-of-the-art army communication systems depend solely on traditional IP networks. Ad-hoc networks face multiple challenges, such as mobility, high latency, limited connectivity, and limited bandwidth. In many army communication systems, nodes frequently move from one region to another, which causes widespread mobility issues. Therefore, it is necessary to shift from the traditional IP network mechanism towards a better network paradigm. NDN can overcome these issues in ad-hoc networks, and its characteristics and topologies make it a strong candidate. A navy network consists of mobile nodes (ships) that use a mechanism to transmit crucial content to other nodes. NDN-based scenarios used in special army vehicles also contain mobile nodes, which work as the network backbone. If NDN is implemented with proper guidelines, it may work more efficiently than existing ad-hoc networks [10].

3. Vehicular network: This is a network in which vehicles are treated as nodes that can move anywhere at any time. With the development of intelligent transportation systems, the data of simple Internet vehicles and autonomous vehicles are sent to the provider instead of just the consumer [11]. Internet vehicles and autonomous vehicles are based on IP networks. These vehicles collect and analyze data such as road safety information, navigation data, road traffic status, and weather conditions. They share these data to reduce traffic jams and to provide information on speeders, road closures or openings, and road conditions. Vehicles are loaded with different units and sensors that are used for different purposes. Because of this diversity, designing a scalable and flexible scheme for data dissemination is a difficult challenge in the vehicular network domain [12]. Combining NDN with vehicular networks is a solution that may reduce the issues faced by traditional vehicular networks, as NDN is relatively fast and more efficient than a traditional network. Two models of communication are used in vehicular networks: the information-centric model and the address-centric model. NDN follows the information-centric model, in which named data are used to establish communication. Joint online optimization of the data sampling rate is a process of collecting data from sensors in real time while adjusting the sampling rates to achieve given objective functions. It is a sophisticated approach that balances system performance, quality, and resources. The preprocessing mode for edge-cloud collaboration-enabled industrial IoT, in turn, combines cloud and edge computing with data preprocessing to enhance the industrial IoT system. It improves the usability of raw data before they are stored or used.

Another optimization approach is the vacation queue-based approach, which improves energy consumption and latency. It dynamically allocates applications to cloud computing devices while taking energy efficiency into account. In vacation queue mode, a server reports its status as inactive when it is not participating in processing tasks, and during this time it saves energy by minimizing its resource usage. In contrast to the information-centric model, the address-centric model uses the nodes’ addresses, such as their IDs, to generate communication paths. Cluster-based routing in named data is an algorithm that mitigates the drawbacks of vehicular networks. Some of the main aspects of this algorithm are highlighted below:

● It uses the NDN mechanism to reduce the error rate.

● It uses the NDN mechanism to provide greater bandwidth and efficient usage of the network.

● It provides a greater data delivery ratio by using the ICN setup.

● It is a self-configuring and lightweight technique.

Implementing a vehicular network with cluster-based routing in named data considerably improved the results [13]. This suggests that combining vehicular techniques with NDN can yield substantial benefits.

4. Educational network: Educational networks are a key part of today’s world. Traditional IP networks are used to connect students and teachers, but they sometimes fail to meet teachers’ and students’ demands because of heavy traffic, low throughput, and long delays. Therefore, it is essential to use a network that can overcome these issues, and implementing NDN in educational networks may do so. A program named inherent Indonesian topology was started by the Indonesian government. This program connects educational institutes in 33 Indonesian cities using satellite or fiber optic networks. The authors of [14] performed experiments on this topology that considered throughput, delay, and packet loss. Throughput is the number of bits transmitted successfully in a given time frame. Delay is the time spent sending or receiving information. Packet loss is the number of sent packets that do not reach their destination. In their scenario, they stored 100 INTEREST packets with a payload size of 1024 and used a CS value of 1000. Two producer nodes and four consumer nodes were used, together with the best route policy. Network Simulator 3 (NS3) was used to carry out the simulations, and all files were run on ndnSIM. Simulations were run approximately every 20 s. The routing information protocol was used in the IP scenario. The simulation results for NDN and IP are listed below:

● The throughput of NDN was better than IP. Because the NDN CS table caches the information, there is no need to go to the destination to get information. In contrast, the throughput of IP was inferior because all information was gained by the nodes from the original location.

● Two types of delay were measured by ndnSIM: last delay and full delay. The last delay is the total time a packet needs to travel from one place to another without considering the path length; thus, it is the smaller delay. In contrast, the full delay is the total time a packet needs to travel from one place to another with the path length taken into account; thus, it is the larger delay. The delay was higher for the IP network because the nodes had to collect data from the original location, which may be far away. NDN had a low delay because all nodes could cache content, and any node holding the content could fulfill the user’s query.

● For both the NDN and IP networks, no packet loss was detected. This was because the scenario that they used did not have enough consumers [14].

Real-time videoconferencing is another important feature used in educational institutions. Especially in online classes, almost all lectures are attended via videoconferencing. Implementing NDN in real-time videoconferencing is a promising approach. Videoconferencing can benefit from NDN’s forwarding scheme, caching scheme, data signature, and aggregation. The main goals of implementing NDN in real-time videoconferencing are listed below [15]:

● Low-latency audio and video.

● Caching ability by all nodes.

● Verification of data.

● Network assumption.

● Fast data retrieval.

5. Entertainment networks: Multimedia is the basic source of entertainment, and the prime form of multimedia is video. As the number of users increases, video streaming to computers, mobile phones, tablets, and laptops over traditional IP networks is limited by network capacity, bandwidth, and throughput. To overcome these issues, a new network paradigm should be considered. NDN is particularly well-suited to the speedy delivery of video to clients. In the experiments that were performed, videos of up to 18 Mbps and resolutions up to 4K were streamed with multiple clients and channels, and different building blocks were used to compare NDN and IP. Some comparisons are listed below:

● In-network recovery of loss was handled by the NDN with the help of caches.

● Better bandwidth because the demanded data could already be in the cache.

● NDN had better load balancing as NDN nodes store data [16].

3 Caching in NDN

In this section, we focus on the classification of caching policies. Nodes store objects, data, and information temporarily to support peer-to-peer and content-centric approaches. Caching speeds up data transmission, decreases the extra cost of data transmission, and saves time on data recovery. It also reduces the cost of sending user requests upstream and information downstream. In NDN caching, when a client sends a request, the node closest to the client tries to find a DATA packet matching that INTEREST. If a DATA packet is found, the node forwards it to the client, so the client is served promptly. Caching provides several benefits:

• Reduces traffic load on nodes.

• Improves response time as almost all nodes cache the content.

• Better bandwidth utilization.

• Reduces data waste using multiple cache policies.

3.1 Need for Caching Strategies in NDN

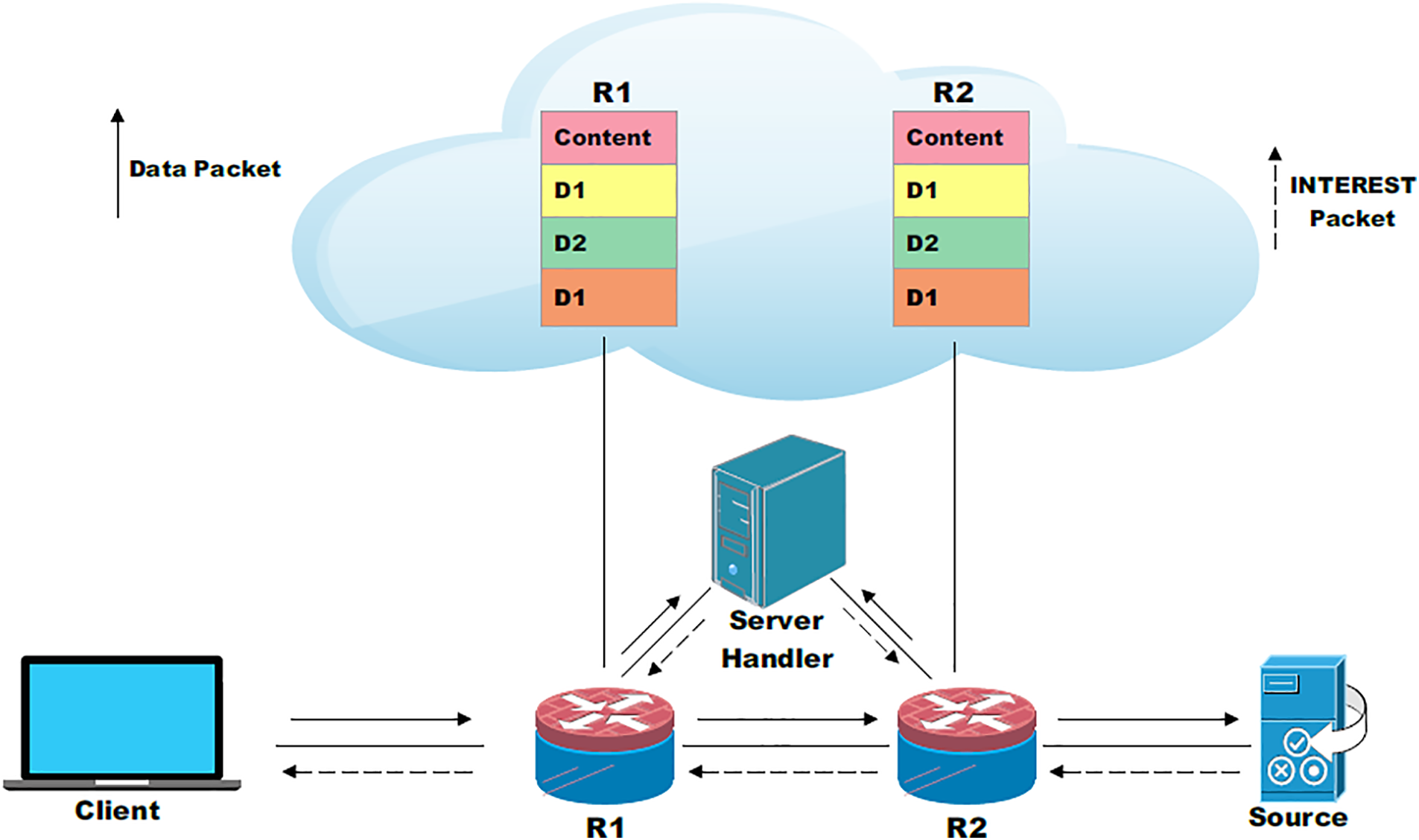

In CCN, data content is the most valuable component of communication. As discussed earlier, NDN offers several advantages over traditional IP networks, such as mobility, security, and simplicity. Furthermore, it supports diverse communication models by transmitting heterogeneous data. Two terms need to be considered: homogeneous caching and heterogeneous caching. In homogeneous caching, every router caches all the content packets that pass through it. The NDN and CCN architectures support homogeneous caching [17]. For example, as seen in Fig. 3, two routers (R1 and R2) cache the same content (D1, D2, and D3). In heterogeneous caching, only some routers along the path cache a given DATA packet. For example, as seen in Fig. 4, routers R1 and R2 cache different content: D1 and D2 are cached by router R1, whereas D3 is cached by router R2. Each router has a different cache size in heterogeneous caching [18]. NDN is therefore a promising candidate for the future Internet, and an NDN-based caching architecture can deliver notable performance gains.

Figure 3: Homogeneous caching

Figure 4: Heterogeneous caching
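The difference between the two figures can be reproduced with a small Python sketch. The function names are hypothetical, and the rule used for heterogeneous placement (fill each router in path order up to its capacity) is an assumption chosen only so that the output matches the example of R1 and R2 above; real schemes use other placement criteria.

```python
def homogeneous_caching(routers, packets):
    """Every router on the path caches every DATA packet that passes through it."""
    return {router: list(packets) for router in routers}


def heterogeneous_caching(capacities, packets):
    """Each packet is cached at one router; routers are filled in path order.

    capacities maps router name -> cache size (sizes may differ per router).
    """
    placement = {router: [] for router in capacities}
    remaining = iter(packets)
    for router, capacity in capacities.items():
        for _ in range(capacity):
            packet = next(remaining, None)
            if packet is None:          # nothing left to place
                return placement
            placement[router].append(packet)
    return placement


packets = ["D1", "D2", "D3"]
print(homogeneous_caching(["R1", "R2"], packets))
print(heterogeneous_caching({"R1": 2, "R2": 1}, packets))
```

Homogeneous caching stores six copies in total for three packets, whereas the heterogeneous placement stores three, which is why the latter uses limited cache space more economically.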

Three main caching aspects are used in NDN scenarios:

1. In-network caching: This is a strategy in which data are generally stored in an area nearer to the user who requested them. For example, a browser stores site resources in a web cache that is closer to and more reliable for the user, so that web queries are satisfied efficiently. In-network caching has two forms:

● Non-coordinated caching: In this form, data and objects are selected for caching without any coordination, and data duplication and redundancy are not considered. As a result, neighboring nodes may contain identical data. The load on the original data distributor is high because data often need to be fetched from it, and the hop count needed to reach the content also increases.

● Coordinated caching: In this form, data and objects are carefully selected to avoid data duplication across nodes, which reduces latency. Neighboring nodes do not contain identical data, the load on the original data distributor is reduced, and the hop count needed to reach the content is minimized. A few research questions still require researchers’ attention [17]: When should a coordinated caching scheme be used? What is the best way to use a coordinated caching scheme? Which metrics should be used to differentiate between recently used data to avoid duplication?

2. On-path caching: This is a strategy in which the delivery path determines where content is cached. When a user requests content, the data content is cached along the path over which it is delivered. On-path caching is easily applicable in NDN. The mechanism of on-path caching is illustrated in Fig. 5, which shows that the routers lying on the path between the client and the source can cache the data. This is the most important approach because it is flexible with respect to content caching. CCN, NDN, and DONA support on-path caching [17].

3. Off-path caching: In off-path caching, when a user requests a DATA packet, the data content may or may not be cached along the path. Off-path caching relies on a centralized topology: a server handler placed at the center is the only entity that decides where a DATA packet should be cached [18]. The off-path caching mechanism is illustrated in Fig. 6, which shows that the server handler decides where to cache data. Caching strategies are therefore necessary, and more efficient strategies, capable of finding the appropriate nodes or routers for data storage, may yield more favorable results.

Figure 5: On-path caching

Figure 6: Off-path caching
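The contrast between on-path and off-path caching can be sketched as follows. The function names and node labels are hypothetical, and the hash-based choice made by the central handler is an illustrative assumption; the text above only states that a central server handler decides where to cache.

```python
import hashlib


def on_path_cache_nodes(delivery_path):
    """On-path: any router between the source and the client may keep a copy."""
    return delivery_path[1:-1]   # exclude the source and the client themselves


def off_path_cache_node(name, routers):
    """Off-path: a central handler picks the caching router, possibly off the path.

    Assumption: the handler maps the content name to a router with a hash.
    """
    digest = hashlib.sha256(name.encode("utf-8")).digest()
    return routers[int.from_bytes(digest[:4], "big") % len(routers)]


path = ["source", "R1", "R2", "client"]
all_routers = ["R1", "R2", "R3", "R4"]
print(on_path_cache_nodes(path))
print(off_path_cache_node("/campus/lectures/seg0", all_routers))
```

Because the off-path choice depends only on the content name, every request for the same name is directed to the same router, at the cost of a possible detour away from the delivery path.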

Caching decision policies determine where DATA packets should be cached, for example whether or not they are cached on intermediate routers. There are two main aspects: placement of the cache (at which nodes or routers content is cached) and replacement of the cache (which cached data are replaced with newly cached data).

1. Placement of cache: Cache placement decides where to store the data content [6]. There are many policies regarding content storage. Under one policy, a DATA packet that is available in the network is cached at the downstream neighboring nodes, so the packet is cached along the data delivery path. Under another, a DATA packet that is available in the network is cached at all the nodes or routers. If a new request for the content arrives, intermediate nodes try to satisfy it because they hold a copy of that particular content. The utilization of bandwidth is improved in the on-path caching mechanism. Many ICN architectures support different caching techniques.

2. Replacement of cache: Cache replacement decides which cached data should be replaced with newly cached data, and it is the main aspect of cache policies [6]. Many replacement policies for cache storage exist. Under some policies, the content that was accessed least recently is dropped first by the nodes or routers (least recently used, LRU). Under others, the content that is used least often is the first to be dropped (least frequently used, LFU). Drop timing may depend on the router.
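The two replacement behaviours mentioned above, dropping the least recently accessed content and dropping the least used content, can be sketched in Python as follows. The class names are hypothetical, a capacity of at least one entry is assumed, and ties in the frequency-based cache are broken in favour of evicting the oldest entry, which is an arbitrary choice.

```python
from collections import OrderedDict


class LruCache:
    """Evicts the entry that was accessed least recently."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def get(self, name):
        if name not in self.items:
            return None
        self.items.move_to_end(name)           # mark as most recently used
        return self.items[name]

    def put(self, name, content):
        if name in self.items:
            self.items.move_to_end(name)
        self.items[name] = content
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)     # drop the least recently used entry


class LfuCache:
    """Evicts the entry that has been requested the fewest times."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = {}
        self.hits = {}

    def get(self, name):
        if name not in self.items:
            return None
        self.hits[name] += 1
        return self.items[name]

    def put(self, name, content):
        if name not in self.items and len(self.items) >= self.capacity:
            victim = min(self.items, key=lambda n: self.hits[n])
            del self.items[victim]
            del self.hits[victim]
        self.items[name] = content
        self.hits[name] = self.hits.get(name, 0)


lru = LruCache(capacity=2)
lru.put("D1", b"a"); lru.put("D2", b"b")
lru.get("D1")                 # D1 becomes the most recently used entry
lru.put("D3", b"c")           # D2 is evicted
print(list(lru.items))

lfu = LfuCache(capacity=2)
lfu.put("D1", b"a"); lfu.put("D2", b"b")
lfu.get("D1"); lfu.get("D1"); lfu.get("D2")
lfu.put("D3", b"c")           # D2 has fewer hits than D1 and is evicted
print(list(lfu.items))
```

Recency-based eviction reacts quickly to shifts in demand, whereas frequency-based eviction protects content that has been popular over a longer period.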

4 Caching in NDN-Based Wireless Ad Hoc Networks

A wireless network consists of multiple mobile devices that are connected via some kind of signal. It is a large group of nodes that exchange information with each other without any fixed infrastructure or topology, using radio signals for communication. This is a kind of dynamic network. Because the nodes do not rely on a predefined infrastructure, such networks are called wireless ad hoc networks [19]. The radio network consists of multi-hop devices, and each node is capable of communicating directly with other nodes. Different wireless technologies (such as Wi-Fi and Bluetooth) can be used to form an ad hoc wireless network. These networks are very helpful in emergency scenarios. NDN, in turn, supports caching, in which data are exchanged between different devices in an asynchronous manner. Devices at both ends of the wireless ad hoc network may or may not be connected simultaneously, and maintaining a path between two end devices is not necessary.

Mobility is the main challenge in a wireless ad hoc network because it is difficult for a device to provide its services continuously after moving from one region to another. As NDN is decentralized, it provides a flexible and efficient caching mechanism in the network. If a central server station fails in a simple wireless ad hoc network, then coverage issues may occur. Using an NDN-based caching mechanism in a wireless ad hoc network improves the response time of the network compared with a traditional IP-based wireless network. An NDN-based caching mechanism with a wireless ad hoc network can be used in offices, on roads (e.g., syncing with roadside unit signs while moving from one place to another), by educational institutions (e.g., arranging online classes in less time), by the military (e.g., establishing communication between army headquarters in less time), and in industry (e.g., dealing with foreign clients via video meetings by establishing quick connections). It also helps devices and nodes deal with mobility issues because NDN does not need network configuration parameters such as an IP address. Nodes can easily take advantage of the CCN approach because their location is not fixed in NDN, and data can be fetched from anywhere at any time. Implementing an NDN caching mechanism can improve various wireless ad hoc networks, such as MANETs, IoT, and VANETs. Avoidance of packet collision and aggregation of INTEREST packets (i.e., if a node receives the same INTEREST packet again, it does not retransmit it) can increase the performance of wireless ad hoc networks based on NDN. Moreover, applying multiple strategies with an NDN scheme in different wireless ad hoc networks has resulted in improved versions of those networks [8]. Some of the advantages of caching using wireless ad hoc networks are listed below:

• Speeds up data transmission.

• Decreases the extra cost of data transmission and saves time on data recovery.

• Reduces the traffic load.

• Improves node response time.

• Improves bandwidth utilization.

• Decreases unwanted content by using different node cache policies.

• Improves data availability.

• Lessens query delay time.

• Widely used in homes, educational institutions, industry, and private and public sectors.

There are many types of NDN-based wireless ad hoc networks; we discuss caching mechanisms for the following:

1. Caching in NDN-based MANETs

2. Caching in NDN-based IoT

3. Caching in NDN-based VANETs

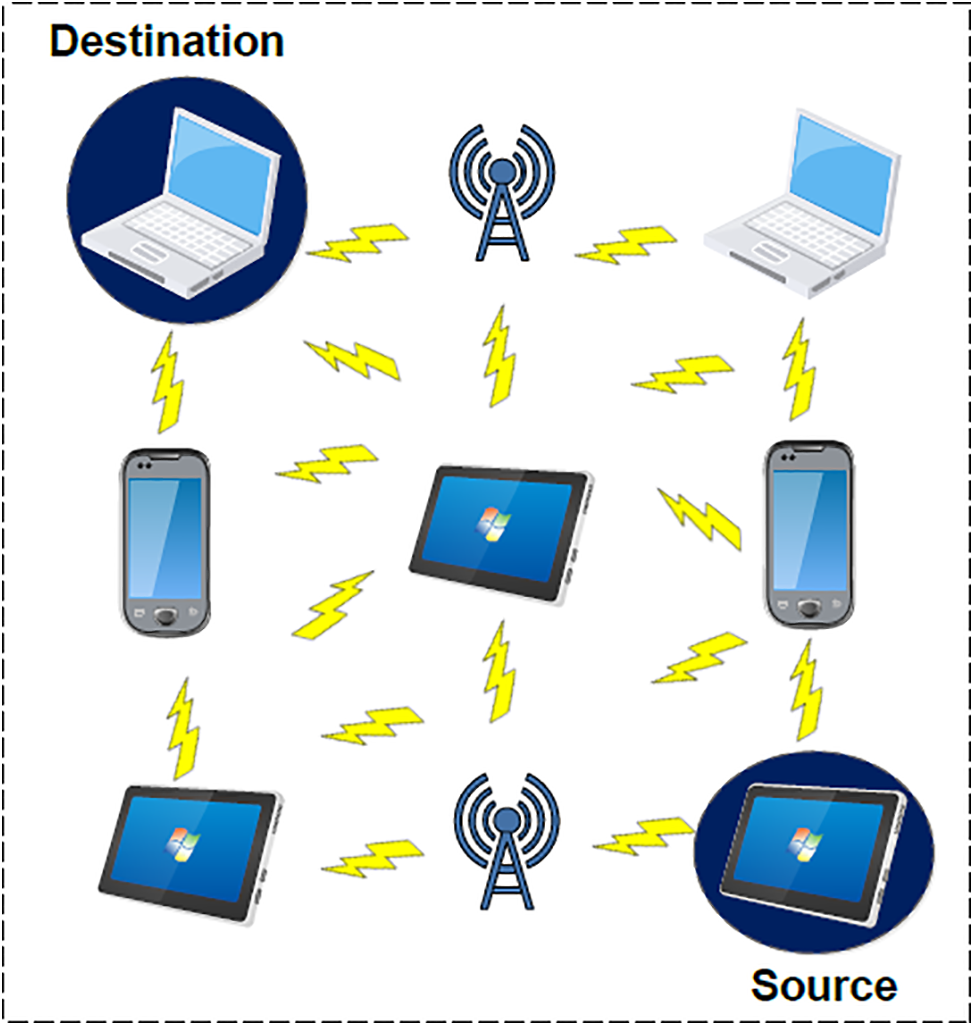

MANETs are basically networks that consist of mobile devices or nodes that are capable of moving from one place to another. Advancements in MANETs have made them an ideal candidate as the next main source of communication. Nodes in MANETs create a network that is decentralized. These nodes automatically connect over a link (i.e., wireless). Nodes present in the same range (e.g., radio signals) interact directly with each other. There is no need for a dedicated router or node because all routers and nodes are capable of self-forwarding the packet. Each device or node can work as a router and can transfer the packet without any difficulty. This network is very helpful in several environments (e.g., earthquake rescues, battles, and disaster recovery) [20].

Caching in NDN-based MANETs is a process in which different mobile nodes cache the data for future use. If a user demands specific data from the network, the mobile nodes contact each other and check their cache storage. If the cache storage contains the data, then the data are forwarded to the user. Through caching, overall time is saved. If the data are not found in the mobile nodes, then a data request is sent to the data originator or server. The data originator or server sends the data to the demanding node, and the mobile nodes cache the data for future user requests. NDN-based MANETs work in different challenging and active environments. They are limited in terms of connectivity, battery, and storage. They also face many problems such as retransmission of packets, packet collision, data duplication, and packet flooding. In NDN-based MANETs, INTEREST packets are forwarded via different methods [21]. Different types of caching in NDN-based MANETs may be used depending on the scenario. Caching is the core aspect of NDN in MANETs, and it provides multiple advantages:

• Avoids caching of similar data content on adjacent nodes.

• Lessens the duplicate data in the network.

• Enhances the cache hit ratio.

• Provides quick response time.

• Decreases data retrieval latency.

• Lessens duplicate data requests in the server.

• Covers mobility issues in some cases.

The working mechanisms of MANETs are depicted in Fig. 7, which shows different wireless devices communicating with each other and messages being forwarded from source to destination using different wireless nodes. In MANETs, caching is also a challenge because the environment is different and resources are limited. Caching in MANETs depends upon the placement and distribution of data. Many aspects limit the performance of caching mechanisms in MANETs. These aspects are:

• Node mobility.

• Limited resources.

• Limited energy.

• Limited bandwidth.

• Limited memory.

Figure 7: Working of MANETs

Strategies for data caching in NDN-based MANETs are necessary to deal with energy, memory, bandwidth, and resource constraint issues. Caching strategies in NDN-based MANETs decide how to efficiently select the best caching scheme. The design of caching schemes for NDN-based MANETs depends upon the context and the complete network scenario. A detailed classification of different caching strategies in NDN-based MANETs is illustrated in Fig. 8.

We divide the different caching strategies for NDN-based MANETs into different branches:

1. Cooperative caching

2. Popularity-based caching

3. Location-based caching

4. Priority-based caching

5. Hybrid caching

6. Multifarious caching

Figure 8: Caching strategies in NDN-based MANETs

In wireless ad hoc networks, the cooperative caching technique is used the most often. Due to several challenges of node size and network nature, there was a need for an efficient caching scheme in wireless ad hoc networks, which is fulfilled by cooperative caching. Cooperative caching is a type of in-network caching. In-network caching enables more than one node to work together to enhance the caching process. By doing in-network caching, many challenges and limitations can be resolved. Cooperative caching allows sharing and mutual coordination between multiple moving nodes. This helps improve network performance.

Cooperative caching consists of four components. The first is data locating, which focuses on locating the data in the cache. When data are required by the node, it first determines whether the required data are available in the node locally. If data are not available in the node, then this component evaluates how to access the data. The second is cache management, which caches all the data regardless of where they come from. It may use certain parameters (e.g., bandwidth, distance between the nodes, and latency) to decide what to cache. The third is replacement of the cache, which is when the decision to eliminate data content is decided independently by the node using its native information. The fourth is cache regularity, which ensures whether cached content is valid or not. Due to congestion in the network, a user request may not be very important if it takes a long time to respond to the user query. Therefore, some kind of attribute (e.g., TTL) should be added in the caching process that tells the system about data validity [22].

In [23], the authors proposed a cooperative caching technique called caching strategy in CCN-based MANETs (CSCM). This technique selects a suitable node for caching by taking mobility into account, which also lessens the mobility issue of nodes. The model consists of mobile nodes that work as consumer, intermediate, or producer nodes and can move freely in the region. The selection of appropriate nodes is an important task. For better overall performance, every node calculates its ability to cache the incoming content in the form of a selection score (SC), which is computed for every single node and for all incoming data. A threshold value is also defined. If the SC is less than the threshold value, then the node transfers the content to other nodes. If the SC is greater than or equal to the threshold value, then it caches the content. Thus, only a few nodes are selected for caching instead of every node, which helps with energy efficiency and reduces content duplication. The authors considered different metrics for different limitations of MANETs, such as available cache memory and node middling. These metrics were calculated and combined into the SC. The equation for these metrics is presented in [23].
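The threshold rule for the SC can be sketched as follows. Because the actual SC equation is only given in [23], the weighted sum used here, together with the names `selection_score`, `w_cache`, and `w_mid`, is a hypothetical stand-in chosen purely for illustration.

```python
def selection_score(free_cache_ratio, middling, w_cache=0.5, w_mid=0.5):
    """Hypothetical SC: a weighted sum of two metrics in the range [0, 1].

    The real equation is presented in [23]; this form is an assumption.
    """
    return w_cache * free_cache_ratio + w_mid * middling


def handle_content(score, threshold):
    """Cache when SC >= threshold, otherwise pass the content on."""
    return "cache" if score >= threshold else "forward"
```

For example, a node with plenty of free cache and a high middling value would return `"cache"`, whereas a nearly full, poorly connected node would return `"forward"`.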

Node middling was also considered. A node that has the highest middling ranking among the other nodes enhances cache accessibility and achieves more successful cache hits. Node middling is used to find the most important node in the network [24]. In CSCM, node middling is measured using the eigenvector centrality formula [25]. Under this formula, a node that is connected to other crucial nodes with high eigenvector centrality values is a middling node. Likewise, if a node with a low eigenvector centrality value is connected with multiple other nodes, then its eigenvector centrality becomes higher, and it is labelled a middling node. The middling score of every node is calculated according to its connections with other nodes. These middling values are broadcast to the adjacent nodes. Nodes that were previously less middling may become middling nodes after learning that a neighbor node is a middling node. This helps with tackling topology or mobility changes.
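To illustrate how eigenvector centrality rewards nodes that are connected to other well-connected nodes, the following minimal sketch computes it by power iteration on an undirected graph given as an adjacency dictionary. It is a generic textbook procedure, not the implementation used in CSCM; the function name and the max-normalization are assumptions.

```python
def eigenvector_centrality(adj, iterations=100, tol=1e-9):
    """Power iteration on an undirected graph {node: [neighbors]}.

    Scores are scaled so that the most central node has value 1.0.
    """
    nodes = list(adj)
    if not nodes:
        return {}
    score = {n: 1.0 for n in nodes}
    for _ in range(iterations):
        # Adding the node's own score (iterating on A + I) leaves the
        # dominant eigenvector unchanged but avoids oscillation on
        # bipartite topologies such as stars and chains.
        new = {n: score[n] + sum(score[m] for m in adj[n]) for n in nodes}
        norm = max(new.values()) or 1.0
        new = {n: v / norm for n, v in new.items()}
        if max(abs(new[n] - score[n]) for n in nodes) < tol:
            return new
        score = new
    return score
```

In a star topology, the hub obtains the highest value and would therefore be the natural middling node under this measure.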

Checking available cache memory is important for caching new content. There are two types of mobility: mobility of the node within the area (content unavailability never occurs) and mobility of the node across areas (content unavailability may occur). Therefore, mobility across areas is considered here. If a node predicts that it is about to move from one area to another, it selects the most suitable node and transfers all of its content to it so that the new node works as the cache node in that area. In CSCM, each middling node checks its status. If it is about to move, then it broadcasts a message, which includes a list of its available data, to its adjacent nodes. After that, every adjacent node calculates its SC. If its SC is less than the threshold, or the node is itself about to move, then it does not send an INTEREST packet. If its SC is greater than the threshold and the node is not about to move, then it sends an INTEREST packet. After receiving the INTEREST packet, the departing node sends the content to the new middling node [23]. In [23], the performance of CSCM was evaluated using NS3. Fifty nodes were placed in an area and moved from one place to another randomly. One node was labeled as a producer node, while the remaining nodes were labeled as client or consumer nodes. The results showed that the network traffic was reduced, the hit rate increased, and content retrieval time increased.

In [20], the authors described the group caching (GC) technique, which is based on cooperative caching. Because nodes in MANETs can exchange information with other nodes at any time, a cooperative caching scheme is used in this technique. Each mobile node forms a group with its adjacent nodes that are one hop away, and the caching status of all nodes is recorded in the group. By using this technique, the caching space is utilized efficiently, average latency is reduced, and data duplication is decreased. In this model, the network is a graph (M) whose vertices represent nodes and whose edges (E) represent the connectivity between them. An edge is present between two nodes only if they are within range of each other, and it may be bi-directional. A group is formed by the nodes that are connected to their one-hop adjacent nodes. A unique group ID is assigned to each node. The node that is connected to the highest number of other nodes is known as the leader node. A “SYNC” message is broadcast to group members to check the connectivity of nodes. In this way, each node can identify its neighbor nodes. Each node forwards its caching status to its group member nodes. Thus, when new content becomes available, the leader node selects the most suitable node from the group for caching. Each node in the group keeps two tables for maintaining the caching status of the group: the collective table and the individual table. The collective table stores the caching status of the group members. It contains the columns cached content ID, content source ID, timestamp, and cached content. In contrast, the individual table stores the node's own caching status. It contains the columns cached content ID, content source ID, timestamp, and ID of the group member. When a mobile node gets a caching status notification from the other nodes in the group, it updates the collective table.

With the help of the collective table, the leader node knows which content is stored in which node of the group. When the leader node receives an INTEREST packet, it searches both tables for the requested content. A message is broadcast to every node in the group to check the available cache space of each node. The message contains several fields (e.g., group member ID, cached content ID, available cache space, and timestamp). The leader node updates the collective table after receiving the messages from the other nodes. When new content arrives, the leader node checks its own cache storage space. If the storage space is greater than the size of the new content, then it stores the data; otherwise, it checks the collective table and forwards the data to a node in the group whose available storage is greater than or equal to the size of the newly arrived content. If no node in the group has sufficient space, then the leader node checks whether this content is already cached in other nodes in the group. If yes, then it discards the data. If no, then the leader node selects the node that holds the cached data with the oldest timestamp, and the content is placed in that node. When clients demand data from the source, the leader node first checks its individual table and then the collective table. If the data are found, then it forwards the content to the client. If they are not found in either table, then it forwards the request to another leader by constructing a routing path to the data originator. The next leader repeats the same process and checks both tables first. This process continues until the data are found. If the data originator receives a request for data, it sends the data, and cache placement and replacement are done by the intermediate nodes. In [20], GC performance was evaluated using NS2 for 95 nodes. The radio range was about 99 meters, and 999 content packets were distributed among all the leader nodes. These nodes moved from one place to another randomly. The results show that the average hop count was reduced, the cache hit rate increased, and the average latency decreased. Another study presented a cooperative scheme combined with popularity for improved cache hit ratio [26]. Improved caching was also found by using a valuable node for suitable caching in MANETs [27]. Furthermore, cooperative caching schemes in MANETs were described by the authors in [22,28].
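The leader node's placement decision described for GC can be sketched as a single function. This is a simplified illustration under stated assumptions: each node is a dictionary with hypothetical keys `id`, `free`, and `cached` (content ID mapped to timestamp), and replaced items are assumed to be the same size as the new content, which [20] does not state.

```python
def place_content(content_id, size, now, leader, members):
    """Return the ID of the node that caches the content, or None if discarded."""
    # Step 1: the leader stores the content itself if it has room.
    if leader["free"] > size:
        target = leader
    else:
        # Step 2: otherwise pick a group member with enough free space.
        target = next((m for m in members if m["free"] >= size), None)
    if target is not None:
        target["cached"][content_id] = now
        target["free"] -= size
        return target["id"]
    # Step 3: no space anywhere; discard if the group already holds a copy.
    if any(content_id in m["cached"] for m in members):
        return None
    # Step 4: replace the item with the oldest timestamp in the group.
    holders = [m for m in members if m["cached"]]
    if not holders:
        return None
    node = min(holders, key=lambda m: min(m["cached"].values()))
    oldest = min(node["cached"], key=node["cached"].get)
    del node["cached"][oldest]
    node["cached"][content_id] = now
    return node["id"]
```

The sketch keeps all state in plain dictionaries so that the four decision steps remain visible; a real node would update its collective table from status messages instead.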

Every NDN-based mobile router or node keeps track of the total number of times specific content is requested by the client. It calculates the total number of data chunks by seeing the demand for that particular datum [6]. For example, if a user demands a piece of content, the mobile nodes first check their caches to determine if the demanded content is available or not. They also count the total number of requests for that specific content. If the content is not available, they request that content from the data generator and cache the content for future use. If the count for a specific piece of content increases beyond the threshold value, then this content is labeled as popular content. Popular content has the highest hit ratio.
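The request counting described above can be sketched as a small tracker. The class name `PopularityTracker` and the example threshold are illustrative assumptions; the text only states that content becomes popular once its request count exceeds a threshold.

```python
from collections import Counter


class PopularityTracker:
    """Counts requests per content name and flags popular content."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.requests = Counter()

    def record_request(self, name):
        """Register one request and report whether the content is now popular."""
        self.requests[name] += 1
        return self.is_popular(name)

    def is_popular(self, name):
        # Popular means the count has gone beyond the threshold.
        return self.requests[name] > self.threshold
```

With a threshold of 2, the third request for the same name is the first one for which `record_request` returns `True`.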

Energy, cache space, and mobility are issues in MANETs. Therefore, the authors of [21] proposed the time-dependent caching (TDPC) technique as a solution to overcome these issues. TDPC is a popularity-based caching scheme that caches the data along the forwarding path. It is time-dependent and chooses the content on the basis of its popularity. It also measures the storage capacity and cache distribution of the MANET nodes. In this technique, the content is not cached on all nodes along the routing path but only on a few of them. It uses two factors for caching the content: the distance to the consumer and time-based popularity. The distance between nodes is measured in hop counts, that is, the total number of nodes that are passed by the INTEREST packet. A new field is added to the INTEREST packet to record the hop count. When a user demands the content, the INTEREST packet is forwarded to the adjacent nodes. First, the CS table is checked for the required content; if it is found in the CS table, then the content is immediately sent to the user. If the required content is not found, then the PIT is checked. If the content entry is not found in the PIT, then the node adds the entry to the PIT, increases the hop count in the INTEREST packet, and forwards the packet further. Every time the INTEREST packet is moved forward, the hop count is increased by one. When the content is found, it travels backward, and the PIT is checked for the interface. If the interface is not found in the PIT, then the node discards the data. If the interface is found, then it transfers the content toward the user. The device with the smallest hop count is considered the nearest node. In the future, if the same node requests the content, then it gets the data from this node. When an INTEREST packet is received by the data originator, the total hop count is fetched and added to the content. Popularity based on time is calculated here. The amount of content is divided by the current time to calculate the popularity of the content. If the popularity is greater than the threshold, then the content is stored in the CS table. In [21], the performance of TDPC was evaluated using NS3 for 55 MANET nodes. These nodes randomly moved from one place to another at speeds of 9 to 24 m/s. The nodes were labeled as producer, consumer, and intermediate nodes. The results showed that the content retrieval time was better, the cache hit rate increased, and unnecessary replacements decreased.
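The INTEREST and DATA handling described for TDPC can be sketched as two functions over a node's CS and PIT. This is a hedged illustration: the dictionary layout, the field names `in_face` and `hops`, and the aggregation of a repeated INTEREST into an existing PIT entry (standard NDN behaviour, not spelled out in the text) are assumptions, and the popularity value is passed in rather than computed because its exact formula is given in [21].

```python
def on_interest(node, interest):
    """Process an INTEREST at a node holding 'cs' and 'pit' dictionaries."""
    name = interest["name"]
    if name in node["cs"]:
        return ("data", node["cs"][name])  # cache hit: answer immediately
    if name in node["pit"]:
        # Assumed standard NDN aggregation: remember the extra face only.
        node["pit"][name].add(interest["in_face"])
        return ("aggregated", None)
    node["pit"][name] = {interest["in_face"]}  # new PIT entry
    interest["hops"] += 1  # one more hop away from the consumer
    return ("forward", interest)


def on_data(node, name, content, popularity, threshold):
    """Return the faces to send DATA to; cache only sufficiently popular content."""
    faces = node["pit"].pop(name, None)
    if faces is None:
        return []  # no pending entry: discard the data
    if popularity > threshold:
        node["cs"][name] = content
    return sorted(faces)
```

Keeping the caching decision inside `on_data` mirrors the description that content is stored in the CS only when its time-based popularity exceeds the limit.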

In [29], the authors proposed a scheme called less space still faster (LF) to enhance the caching of NDN-based MANETs. LF places the content close to the requesting node and provides a faster response using less space. Four factors are responsible for LF's performance: free space, distance, content popularity, and data redundancy. First, caching only occurs if the free space is greater than the space required for caching. Second, distance is measured by the total number of hops that a packet has passed. A new field is added to the INTEREST packet that counts the hops passed, and the hop field is also checked when a DATA packet is forwarded to the destination. The node with the minimum hop count is selected as the caching node. Third, content popularity is represented by frequency. The frequency of a piece of content increases by one each time it is demanded, and the content with the highest number of requests is considered popular content. Such content is cached as urgent. Fourth, a new field is added to the INTEREST packet to detect data redundancy; its value indicates whether the data are already cached or not. The performance of LF was evaluated using NS3 [29]. Sixty MANET nodes were used. The distance between these nodes was at least 240 m. The results showed that the response time was better, and the cache hit rate increased.
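Two of the LF checks, the free-space and redundancy test and the minimum-hop selection, can be sketched as follows. The function names and the dictionary of hop counts are hypothetical, and how LF weighs popularity against these checks is left to [29].

```python
def lf_should_cache(free_space, content_size, already_cached):
    """Cache only if there is more free space than needed and no duplicate."""
    return free_space > content_size and not already_cached


def pick_caching_node(hops_by_node):
    """Select the node with the minimum hop count from the requester."""
    return min(hops_by_node, key=hops_by_node.get)
```

For instance, `pick_caching_node({"n1": 3, "n2": 1, "n3": 2})` selects `"n2"`, the node closest to the requester.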

In location-aware caching, all NDN nodes in MANETs cache the data according to the mobile nodes’ locations. Performing caching according to location parameters results in different improvements. If the location is known by the NDN-based mobile nodes, then they may cache the data in the nearest node, which helps improve the performance of the overall network. The performance of the network depends heavily on the location of the caching mobile nodes.

In [30], the authors described a novel caching scheme, location-based caching in CCN MANETs, which is based on the location of the mobile nodes. Each mobile node uses a positioning system (e.g., GPS) to determine its exact location. The location of every node is shared while sending and receiving INTEREST and DATA packets. When a client node needs a DATA packet, an INTEREST packet that contains the location coordinates is forwarded to the whole network. When this packet is received, the distance is calculated using the Euclidean formula. If the distance between the client node and the intermediate node is smaller, then the node forwards the packet further. If the distance is greater, it discards the packet. Every single node in the network performs distance-based caching. This avoids a slow response time as content is cached on the nodes that are nearest to the client node. In order to lessen content duplication and collision, every mobile node continuously senses the whole network for a limited amount of time. If a similar DATA or INTEREST packet is received by the mobile nodes during this period, then this transmission is terminated. The authors evaluated the performance using ndnSIM. Mobile nodes were found to travel up to 700 m. The nodes cached 990 packets. The results showed that the content retrieval time was improved, and packet retransmission decreased.
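The distance test can be illustrated with a short sketch. The text does not state what the distance is compared against, so the `max_distance` parameter below is a hypothetical reference value introduced only to make the example concrete; coordinates are assumed to be planar (x, y) positions in meters.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two (x, y) positions."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def should_forward(client_pos, node_pos, max_distance):
    """Forward the INTEREST only when the node is close enough to the client.

    max_distance is an assumed cut-off, not a value taken from [30].
    """
    return euclidean_distance(client_pos, node_pos) <= max_distance
```

A node at (3, 4) is 5 m from a client at the origin, so it would forward the packet for any cut-off of at least 5 m and discard it otherwise.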

The authors of [31] proposed a scheme called location-based on-demand multipath caching and forwarding (LOMCF), which is also based on location caching. In NDN, if a node demands content, then a standard NDN communication procedure is followed. In LOMCF, the locations of both the sender and receiver are considered during the forwarding mechanism, and distance-based caching is performed by every single node. When a client node in the network needs a DATA packet, an INTEREST packet is forwarded with the location coordinates encapsulated in it. The distance from other nodes is calculated using the Euclidean formula. If the distance between the client node and the intermediate node is greater, the node discards the packet; if it is smaller, the node forwards the packet to the other nodes. This avoids slow response times as the content is cached by the nodes that are nearest to the client node. By calculating the total distance between the client and intermediate node, unnecessary packet flooding in the network is reduced. The authors evaluated the performance of LOMCF using NS3 for 990 DATA packets. The results showed that packet retransmission decreased, and content retrieval time improved.

Priority-based caching means that the content is cached on the basis of priority. The priority assigned to the different data content indicates which data content will be swapped first. When different nodes try to exchange data content, they swap their high-priority data content first. Content with a high priority will be found in NDN more frequently compared to content with a low priority. Content with a low priority may suffer from poor latency. Priority can be of any type depending on the developer [6].

Caching strategies in NDN-based MANETs focus less on content precedence. This affects the crucial data usability. In [32], a priority-based content caching scheme was proposed by the authors. Different data are prioritized by considering different demands of the data. Content priority is the key factor in this scheme. The authors added a new field, named field of priority, to the NDN packet. A greater priority value is added in the field for identifying priority. Frequency of content was also introduced to calculate how many times a particular piece of content was demanded in the CS table. This is used to distinguish between the popular and unpopular content. Frequency of content is measured by multiplying the content size by content priority. The authors evaluated the performance using ndnSIM for 45 mobile nodes. The results showed that content availability improved, and important content was better cached.

Hybrid caching is when multiple schemes are combined with each other to obtain better results. In [26], the authors proposed a novel cooperative scheme that incorporates a popularity mechanism for caching in CCN MANETs. They named this scheme CCMANET. They developed a random topology and implemented the cache scheme on different levels. They also elaborated a way of calculating the popularity of content in the network. They evaluated this scheme using NS3. Both the cache hit ratio and server load improved. In [27], cooperative caching combining popularity and probability was implemented via groups in NDN-based MANETs. The model selects the most crucial adjacent node for cache placement and excludes the content that has the lowest probability. The authors evaluated this scheme using ndnSIM and found that the cache hit probability improved.

Here, we summarize the different caching schemes that do not adopt any targeted schemes. This involves all the caching policies that depend on different parameters. Many more caching techniques exist in NDN-based MANETs. In [33], a technique for caching in MANETs was described. This technique works on a normal caching scheme. A new parameter (interval of data) was added to the DATA packet. This parameter manages the interval of data along the forwarding path. Performance was evaluated using the QualNet network simulator. The results showed that overhead decreased, cache hit rate increased, and average path length decreased. In [34], a technique for cache space efficient caching in MANETs was described. This technique works on the graph scenario, which represents the mobile nodes. If graph nodes are connected, this means that mobile nodes are connected. Performance was evaluated using ndnSIM, and the results showed better cache space utilization and increased cache hit rate.

The IoT is usually considered to be a type of WSN. The IoT has received much attention due to its recent advancements. It enables different hardware objects to think, learn, hear, and perform different crucial tasks. The IoT uses devices, actuators, and sensors that interact with each other and perform multiple operations. It converts these devices into smart devices by giving them these abilities. Radio frequency is necessary for IoT communication. However, many technologies have been introduced that are similar to radio frequency. The IoT can connect almost any device with others. There are many state-of-the-art techniques for operating the IoT. The first technique is to use fully automated methods of retaining and organizing content. The second technique is to use a new method that is more productive to get information. The third technique is to use a secured method to combine IoT data regardless of where they originated from. The IoT is used in different applications [35].

The IoT consists of a data-centric network. IoT devices heavily depend on resources, and sometimes these resources are limited. The nature of the IoT network and inefficient addressing make the IoT a perfect candidate for implementing NDN. The IoT naming system lists multiple sensing tasks. The NDN naming scheme is similar to the IoT naming scheme. For example, the aggregator node depicts what type of data is necessary. Applying NDN in WSN has several advantages:

• Provides caching as sensor nodes have storage capability.

• Provides easy retrieval and searching of content via hierarchical naming.

• Provides flexibility in deployment by implementing NDN on top of a traditional IP network.

• Provides better scalability as several nodes easily retrieve data using the NDN approach.

• Provides easy development of applications [9].

The performance of IoT nodes is affected by many parameters, such as the limited storage space of IoT nodes, limited energy resources, and limited bandwidth. Strategies for data caching in NDN-based IoT are necessary to deal with these aspects. The design and selection of NDN-based IoT caching schemes depend on the context and the complete network scenario. A detailed classification of caching strategies in NDN-based IoT is illustrated in Fig. 9. We divide the different caching strategies for NDN-based IoT into the following branches:

1. Cooperative caching

2. Freshness-based caching

3. Popularity-based caching

4. Hybrid caching

5. Multifarious caching

Figure 9: Caching strategies in NDN-based IoT

In the IoT, the cooperative caching technique is widely used. Cooperative caching allows sharing and mutual coordination between multiple moving nodes. Cooperative caching works on the mechanism of in-network caching. NDN also supports in-network caching because more than one node can work together to enhance the caching process. Cooperative caching helps improve network performance by resolving several restrictions, such as slow response time to user queries, the limited storage space of IoT nodes, limited energy resources, and limited bandwidth. It is based on four important parameters.

In [36], a disaster management system (DMS) scheme based on cooperative caching was proposed by the authors. In this technique, the producer node sends a fire alert notification to the adjacent consumer. A fire scenario is considered in school. In this scheme, the nodes collaborate with each other and manage the situation efficiently. PIT is restricted because, in a disaster, it is useless to maintain PIT entries.

The reason for this is that the main focus is to send the fire alert notification to the adjacent nodes. This helps decrease the delay. If the value of a fire sensor crosses the threshold limit, then an alert notification is broadcast to the network using a push-based technique. Fire sensors are implemented in schools. When the sensor values are less than the threshold level, then the sensor indicates that the environment is safe. When sensor values are greater than the threshold level, then the sensor indicates that the environment is unsafe. In an unsafe environment, all nodes send alert information to each node. In this way, all nodes are notified, and all instructors and children know about the fire incident. The authors evaluated the performance of DMS using NS2 for 49 nodes placed in a school. The simulation results indicated that energy utilization and delay were reduced.
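The push-based alert rule can be sketched as follows. The function names, the `"FIRE_ALERT"` label, and the treatment of a reading exactly equal to the threshold as safe are assumptions made for illustration; [36] is described above only in terms of values below and above the threshold.

```python
def sensor_state(value, threshold):
    """Classify a fire-sensor reading against the threshold."""
    return "unsafe" if value > threshold else "safe"


def push_alert(reading, threshold, neighbors):
    """Return the alert messages pushed to neighbors, or nothing if safe.

    No PIT entry is created: the alert is pushed without a prior INTEREST.
    """
    if sensor_state(reading, threshold) == "safe":
        return []
    return [(n, "FIRE_ALERT") for n in neighbors]
```

Because nothing is recorded in a PIT, each receiving node can apply the same function to relay the alert to its own neighbors.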

In [37], the authors proposed a form of cooperative caching called information-centric wireless sensor networking (ICWSN) for an energy-efficient WSN. NDN sensor nodes have a limited battery and may have to wait for a long time for a request, which results in an inefficient use of energy. Therefore, the authors suggested an energy-efficient cooperative caching scheme that speeds up data fetching from cache nodes and improves energy usage. NDN-based WSN sensors were deployed that frequently sense the network and forward the required data. Three working modes are considered in an IoT scenario: normal, mild sleep, and full sleep. In normal mode, the nodes perform their operations normally. In mild-sleep mode, the nodes are in power-saving mode and use less energy, whereas in full-sleep mode, the nodes are sleeping and are woken by an alarm when processing is required. When an INTEREST packet enters the network, NDN sensors check the PIT to detect whether this packet was requested earlier or not. If it was not requested earlier, then the sensors activate the cooperation policy and calculate other parameters, such as eigenvector centrality [25], to determine the node with a large enough neighborhood to cache the content. The authors evaluated the performance of ICWSN using the NetworkX library for 95 nodes. The simulation results showed that delays were reduced, and energy efficiency was achieved.

Data freshness is used for caching schemes that cache the content on the basis of content lifetime [38]. In [39], cooperative caching was proposed for NDN-based IoT. An ICN architecture scheme was applied in a low-power IoT system for better energy consumption. As we know, NDN offers in-network caching, which is beneficial because if IoT devices are in sleep mode, then the content still remains available. CoCa is an IoT protocol. In this scheme, CoCa was implemented with NDN to enhance effective caching in the IoT environment. The authors evaluated the performance using the remote interactive optimization testbed. The results showed that energy consumption was reduced, and the availability of recently used content was almost 91%.

In [40], an NDN-based IoT technique known as SPICE-IT was implemented to help control the pandemic situation. This scheme works on a pull-based mechanism. Individuals were monitored for body temperature and mask adherence. The authors evaluated the performance using NS3, and the results showed that cache overflow and network congestion were reduced. A different popularity model can also be used to collaborate with the cooperative mechanism [41].

Freshness-based caching means that the freshest or latest content that was required in the network is determined. This concept is vital in the field of IoT, as IoT nodes have limited storage space. Caching fresh content helps with eliminating the old content that is rarely needed in the network. If the freshness of the content is not considered, then there is a chance of slow response times for the user and network overload. Freshness caching is a way of caching only the fresh content that circulates in the NDN-based IoT network. It also helps speed up the caching process because old content is evicted and space is made for fresh content in the nodes. Here, DATA packets should be cached by considering some value that differentiates the fresh content from the old content.

In [42], the authors proposed a freshness-based caching approach for an IoT scenario. In an NDN-based IoT network, new information is consumed and produced by the consumer and data generator, respectively. Users mostly demand up-to-date information. Previously, a sequence number was assigned to the content, but this process was not very useful because when a new node entered the system, it did not know which sequence number was required. To address this issue, a new parameter, named the latest parameter, was added. It determines whether a particular packet will remain in the network and for how long. If the latest parameter is not added, then aggregate nodes always forward the old packet. Two values are assigned to the latest parameter: low and high. Content that is new to the network is labeled as high, and content that is old in the network is labeled as low. Because different users demand different types of content, the user specifies the needed content. If the desired content is not available in the CS due to a lack of freshness, it is considered a cache miss, and the query is forwarded to the data originator. A new field (that determines the content freshness) is added to the INTEREST packet, and a check (a timestamp indicating when the content was cached) is added to the CS table. Old cached content is replaced by new content. The authors evaluated the performance using NS3. Nine producer nodes and four consumer nodes were placed. The simulation results showed that cache hits and overall caching performance increased.
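The freshness check described above can be sketched as follows. This is an illustrative model only, not the code of [42]: the class names, the `want_latest` flag standing in for the new INTEREST field, and the `fresh_window` used to decide between the high and low labels are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class CsEntry:
    data: bytes
    cached_at: float  # timestamp recorded when the content entered the CS


class ContentStore:
    def __init__(self, fresh_window):
        # Assumed rule: content younger than fresh_window seconds is "high".
        self.fresh_window = fresh_window
        self.entries = {}

    def insert(self, name, data, now):
        # New content simply replaces any older copy under the same name.
        self.entries[name] = CsEntry(data, now)

    def label(self, name, now):
        age = now - self.entries[name].cached_at
        return "high" if age <= self.fresh_window else "low"

    def lookup(self, name, want_latest, now):
        """Return cached data, or None for a cache miss."""
        entry = self.entries.get(name)
        if entry is None:
            return None
        if want_latest and self.label(name, now) == "low":
            # Stale for this consumer: treated as a miss, so the INTEREST
            # would be forwarded towards the data originator.
            return None
        return entry.data


cs = ContentStore(fresh_window=10)
cs.insert("/room1/temperature", b"21.5", now=0)
fresh_hit = cs.lookup("/room1/temperature", want_latest=True, now=5)
stale_miss = cs.lookup("/room1/temperature", want_latest=True, now=20)
```

A consumer that does not ask for the latest content would still be served the cached copy at `now=20`, which is why the freshness requirement is carried in the request rather than enforced globally in this sketch.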

In [43], the authors proposed a caching scheme named least fresh first (LFF). In this scheme, cached contents that are considered invalid based on a forecast of the sensors' event times are evicted first. It is used to enhance the freshness of data in the network. The least fresh content is selected if the cached content has not been modified in the cache since its last request. The main idea is to predict the sensor event time to obtain a rough estimate of the remaining life of the content. The prediction of freshness depends on the behavior of the traffic in the IoT network. If data flow non-stop, the traffic is called continuous. If data flow at a fixed time interval, the traffic is called periodic. If traffic is periodic, then after a certain amount of time, cached content is no longer fresh because new content is flowing into the network. Freshness is estimated from this observation. The authors evaluated the performance of LFF using ccnSim. Many nodes were placed in the evaluation scenario. The simulation results showed that the cache freshness percentage and overall retrieval time increased.
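For periodic traffic, the eviction order can be sketched as below. The forecasting model in [43] is not reproduced here. Instead, the sketch assumes that each cached item records when it was generated and the sensor's known reporting period, so that the remaining life is simply the time until the next expected update. The field names and the function `evict_least_fresh` are hypothetical.

```python
def remaining_life(entry, now):
    """Estimated time until the sensor publishes a newer value."""
    return entry["generated_at"] + entry["period"] - now


def evict_least_fresh(cache, now):
    """Remove and return the name with the smallest remaining life.

    Entries whose remaining life is negative are already invalid under this
    estimate, so they are naturally chosen first.
    """
    victim = min(cache, key=lambda name: remaining_life(cache[name], now))
    del cache[victim]
    return victim


cache = {
    "/hall/smoke": {"generated_at": 0, "period": 10},
    "/lab/humidity": {"generated_at": 5, "period": 30},
    "/gate/motion": {"generated_at": 8, "period": 5},
}
first_victim = evict_least_fresh(cache, now=12)
```

At `now=12` the item `/hall/smoke` is past its expected update time and is evicted first, whereas a recency-based policy such as LRU would ignore the sensor period entirely.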

Content freshness can also be calculated by subtracting the content cache time from the total time and then dividing the result by the total time [44]. In [45], an energy-efficient content caching scheme was proposed that works on the mechanism of content freshness. This scheme addresses the energy issue by caching the content. The mechanism maintains energy efficiency and content freshness as real-time user request rates increase. The authors evaluated the performance using ndnSim, with nodes that had about 50 to 100 MB of memory. The results showed that energy consumption was reasonably reduced, and response time increased.
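The freshness ratio attributed to [44] above can be written compactly. The symbols are introduced here for illustration only: $T_{\text{total}}$ denotes the total time (assumed to be the content lifetime) and $T_{\text{cache}}$ the time the content has already spent in the cache.

```latex
F = \frac{T_{\text{total}} - T_{\text{cache}}}{T_{\text{total}}},
\qquad 0 \le T_{\text{cache}} \le T_{\text{total}}
```

Under these assumptions, content with a total time of 60 s that has been cached for 15 s has $F = (60 - 15)/60 = 0.75$. Newly cached content has $F = 1$, and $F$ falls to 0 as the cache time approaches the total time.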

In [46], caching considering content freshness and energy was described by the authors. In their scheme, a new field was added that manages all cached content entries, maintains fresh data, and removes the old data. They evaluated the performance using ndnSim. Performance was measured using different parameters such as the quantity of nodes, mobility, and simulation time. The results showed that data freshness increased, and caching efficiency improved. More than one distinct caching strategy can also be combined (i.e., popularity and freshness) for efficient caching [47].

Content popularity directly affects caching efficiency. If some nodes frequently demand the same data, then caching that data results in less delay, reduced load on the network, and faster access. NDN-based WSN nodes count the total number of times a piece of content is requested by the consumer. When the client wants a piece of content, IoT nodes first check their cache to see whether that particular content is available in the CS table. If the content is not available in the CS, then they request it from the data producer and cache it for future use. If the request count for specific content rises above the threshold value, then this content is labeled as popular content.
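The counting rule above can be sketched in a few lines. The class name `PopularityTracker` and the threshold value are assumptions chosen for illustration. Content becomes popular once its request count rises strictly above the threshold.

```python
from collections import Counter


class PopularityTracker:
    def __init__(self, threshold):
        self.threshold = threshold
        self.requests = Counter()  # content name -> number of requests seen

    def record(self, name):
        """Count one request and report whether the content is now popular."""
        self.requests[name] += 1
        return self.is_popular(name)

    def is_popular(self, name):
        return self.requests[name] > self.threshold


tracker = PopularityTracker(threshold=2)
flags = [tracker.record("/city/air-quality") for _ in range(3)]
```

With a threshold of 2, the first two requests leave the content unlabeled and the third marks it as popular.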

Due to advancements in the IoT, NDN is considered the only future network paradigm that efficiently maintains all the services of the IoT. Therefore, in [48], the authors proposed a scheme called periodic caching (PC) for an NDN-based smart city. The smart city is one of the key application areas of the IoT. It is the ideal scenario for almost all fields. PC is a flexible strategy for efficient caching in NDN-based IoT networks. Each node in the smart city contains a separate table in which the number of INTEREST packets for each piece of content is counted. This table includes multiple fields such as data name, threshold, frequency count, and recent accessed time. The most-used content is determined with the help of this table. The threshold algorithm calculates the maximum value for the threshold. This indicates which content is used the most. For each specific piece of content, if the interest count reaches the threshold value, then this content is marked as the most-used content. The most-used content is cached at the edge routers. If the cache space in the edge routers is full, then some data packets are transferred to the central node. All the new INTEREST packets pass through these central nodes. Furthermore, if requested data are found at these central nodes, then the request is fulfilled there, and the INTEREST packet is not sent to the edge routers. The LRU policy is used for content eviction. Desired content is placed near the user in the edge router, which results in less data retrieval time and a shorter path length. Moreover, the cache hit ratio increases because all the INTEREST packets now pass through the central node. The authors evaluated the PC performance using the SocialCCN simulator. A user load of 4000 was put on the PC, and the tree topology was implemented. Different cache sizes of 90 and 999 were used. The results showed that the cache hit ratio increased, and data retrieval time decreased. Popularity and freshness have also been combined for better caching in NDN-based IoT devices [49].
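The per-node table and the LRU eviction described for PC can be sketched as follows. This is a simplified illustration, not the algorithm of [48]. It uses a fixed threshold instead of the threshold algorithm, checks the edge cache before the central node, and assumes that the packet moved to the central node is the least recently used one. All class and field names are hypothetical.

```python
from collections import OrderedDict


class EdgeRouter:
    def __init__(self, capacity, threshold):
        self.capacity = capacity
        self.threshold = threshold
        self.table = {}             # name -> {"count": int, "last_access": float}
        self.cache = OrderedDict()  # name -> data, least recently used first
        self.central = {}           # stand-in for the central node's cache

    def on_interest(self, name, data, now):
        """Process one INTEREST; `data` is what the producer would return."""
        row = self.table.setdefault(name, {"count": 0, "last_access": now})
        row["count"] += 1
        row["last_access"] = now
        if name in self.cache:
            self.cache.move_to_end(name)  # refresh the LRU position
            return "edge-hit"
        if name in self.central:
            return "central-hit"
        if row["count"] >= self.threshold:
            # Content has reached the threshold: cache it at the edge.
            if len(self.cache) >= self.capacity:
                old_name, old_data = self.cache.popitem(last=False)
                self.central[old_name] = old_data  # overflow to central node
            self.cache[name] = data
        return "miss"


router = EdgeRouter(capacity=1, threshold=2)
first = router.on_interest("/park/noise", b"42dB", now=1)
```

The `OrderedDict` keeps entries in access order, so `popitem(last=False)` removes the least recently used item without scanning the table.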

In [50], the authors proposed a popularity-based caching scheme. This collaborative strategy caches the content by considering the node degree and its distance. The authors evaluated the performance using CCNx Contiki and ran the simulation nine times with different parameters. The results showed that the cache hit ratio and energy consumption were improved. Caching for heterogeneous content in the IoT is also possible by combining one or more caching schemes in a single framework [51].

In [38], a cooperative scheme that integrates a data freshness mechanism for caching in NDN-based IoT was proposed by the authors. This scheme uses data freshness and cooperative mechanisms. They named this scheme lifetime cooperative caching. The scheme uses the request rate and lifetime of the IoT content. They also proposed a threshold level for caching that automatically adjusts different data rates under different conditions. They evaluated this scheme by developing a simulator in C++. The results showed that data retrieval delay and energy consumption were improved.

In [41], a cooperative caching scheme that combines content popularity in NDN-based IoT was proposed. This scheme works on machine-to-machine NDN-based IoT networks. The goal was to improve the ICN-IoT domain. The lifetime of WSN data was considered for efficient retrieval and nodes’ battery consumption. Performance was evaluated using the TOSSIM simulator, and the results showed that the cache hit ratio increased and energy consumption and retrieval delay were improved.

In [44], the authors proposed a freshness- and probability-based caching scheme named probability-based IoT in-network caching. Their model caches the content on the basis of content freshness and then calculates its probability using different parameters. Content is cached by evicting the less probable content. The authors evaluated the performance for 399 routers using MATLAB. The results showed that cost and network load were reduced.

In [47], the authors proposed edge caching that considers freshness and popularity in NDN-based IoT. This scheme works under different scenarios depending upon the requirement. Two different caching strategies were combined: autonomous and coordinated. Performance was evaluated using the GEANT topology. The results showed that the cache hit ratio increased.

In [49], the authors proposed a scheme named caching fresh and popular content (CFPC). In this scheme, an NDN-based IoT network caches the data by calculating the content popularity and lifetime in the network. The main aim was to cache the most popular data by considering the maximum lifetime of the cached content. The decision about content popularity was based on the content forwarded by the NDN-based IoT routers. After a fixed interval of time, the router calculates the total number of received requests and the number of requests for each distinct piece of content. Popularity is measured by dividing the number of requests for each distinct piece of content by the total number of requests received. Then, an exponential weighting is applied for a more accurate calculation. After a fixed amount of time, these counts are reset to zero, and a new popularity is defined. If the content is popular, then it is cached; otherwise, it is dropped. The authors evaluated the performance of CFPC using the ndnSim simulator for 10 NDN routers with a binary tree topology. The results showed that the cache hit ratio increased, and data retrieval time decreased.
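The interval-based popularity calculation can be sketched as below. The exact weighting used in [49] is not given in the description above, so the sketch assumes a standard exponentially weighted moving average with a smoothing factor `alpha`. The class name and the parameter value are likewise assumptions.

```python
class PopularityEstimator:
    def __init__(self, alpha=0.5):
        self.alpha = alpha    # assumed weight given to the latest interval
        self.counts = {}      # requests per content in the current interval
        self.popularity = {}  # smoothed popularity per content

    def record(self, name):
        self.counts[name] = self.counts.get(name, 0) + 1

    def end_interval(self):
        """Update popularity from this interval's counts, then reset them."""
        total = sum(self.counts.values())
        for name in set(self.counts) | set(self.popularity):
            # Share of all requests in this interval that asked for `name`.
            ratio = self.counts.get(name, 0) / total if total else 0.0
            old = self.popularity.get(name, 0.0)
            self.popularity[name] = self.alpha * ratio + (1 - self.alpha) * old
        self.counts = {}  # counters restart from zero for the next interval
        return self.popularity


est = PopularityEstimator(alpha=0.5)
for name in ["/a", "/a", "/a", "/b"]:
    est.record(name)
after_first = dict(est.end_interval())
```

A router would then cache a Data packet only if its smoothed popularity exceeds some cut-off. The smoothing lets content that was popular in earlier intervals decay gradually instead of dropping to zero after one quiet interval.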

In [51], the authors proposed an edge caching-based heterogeneous scheme for the IoT. This scheme uses artificial edge caching for heterogeneous IoT applications and mainly considers the content’s popularity and probability. It uses edge clustering and edge caching processes that count and cache the popular content. The probability of specific content is predicted via collaborative filtering. Content with the highest probability is stored at the edge nodes. The authors evaluated the performance using the Icarus simulator. The results showed that the cache hit ratio increased and the average hop count and content retrieval time decreased.