Open Access

ARTICLE

5G Resource Allocation Using Feature Selection and Greylag Goose Optimization Algorithm

1 Department of Computer Sciences, College of Computer and Information Sciences, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

2 Computer Science and Intelligent Systems Research Center, Blacksburg, VA 24060, USA

* Corresponding Author: S. K. Towfek. Email:

Computers, Materials & Continua 2024, 80(1), 1179-1201. https://doi.org/10.32604/cmc.2024.049874

Received 21 January 2024; Accepted 29 May 2024; Issue published 18 July 2024

Abstract

Fifth-generation technology (5G) is seen as a vital step forward, with theoretical maximum download speeds of up to twenty gigabits per second (Gbps). Current implementations reach slightly below 1 Gbps, which is still a great leap forward from fourth-generation technology (4G), and they offer significantly reduced latency, making 5G essential for applications such as gaming, virtual conferencing, and other interactive services. The prospects of this change are not limited to connectivity alone; it urges operators to refine their business strategies and offers users improved digital solutions. An essential factor is the application of optimization and artificial intelligence throughout the intricate architecture of 5G networks. Binary Greylag Goose Optimization (bGGO) is integrated to significantly reduce the feature set while maintaining or improving model performance, leading to more efficient and effective 5G network management, and Greylag Goose Optimization (GGO) is used to increase the efficiency of the machine learning models, so that the model yields more accurate results. This work proposes a new method to schedule resources in 5G, based on feature selection using GGO and a regression model that is an ensemble of K-Nearest Neighbors (KNN), Gradient Boosting, and Extra Trees algorithms. The ensemble model shows better prediction performance, with a coefficient of determination (R squared) of 0.99348. The proposed framework is supported by several statistical analyses, such as the Wilcoxon signed-rank test. The benefits of this study include the introduction of new efficient optimization algorithms, feature selection, and more reliable ensemble models, which improve the efficiency of 5G technology.

Communication technology is being reshaped by the new paradigm of 5G, which offers a theoretical peak speed of around twenty gigabits per second, far beyond 4G, whose theoretical top speed is close to a single gigabit per second. However, 5G is not a minor enhancement; it offers the potential for major change, primarily through low latency [1]. This trait can increase the efficacy of various applications, from business systems to immersive information technologies, including online gaming, virtual conferences, and self-driving cars. Unlike previous cellular technologies, which were all about connectivity, 5G goes beyond those boundaries by providing connected experiences that extend from the cloud to end users. These include virtualized, software-driven designs based on cloud technology. 5G connectivity also removes obstacles to mobility. Using its seamless roaming features, a user can move uninterruptedly from one cellular access point or Wi-Fi hotspot to another, outdoors and in buildings. This is achieved without any user participation or need for re-authentication, so connectivity is not broken between environments [2,3].

5G will connect people who live in remote rural areas to the Internet, which will help solve problems. It also tries to keep up with the growing need for connectivity in densely populated cities, where 4G technology might not be able to keep up. Some ways to speed up the processing of data are to change the network’s architecture to make it denser and more distributed and to store data closer to the edges. The combination of AI and optimization has become the most important part of 5G technology in order to get the most out of it [4]. Metaheuristic optimization is a field that uses complex algorithms to solve these kinds of optimization problems. It is the way to solve these problems because there are an infinite number of resources available. It is important to use the most useful information from the sources that are available in the real world, so optimizations and new ways of doing things are needed [5,6].

One of the main aspects of machine learning model optimization is feature selection, which refers to picking the relevant features from the available data. This cumbersome process involves complex combinatorial optimization. Binary optimization algorithms, including bGGO [7,8] and others, pave the way. Following the feature extraction strategy, they obtain better results with relatively few selected features and can be applied in many areas [9]. Our investigation is not limited to binary optimization but extends to other wide-ranging approaches. In the framework dedicated to 5G resource allocation, machine learning models are optimized using various algorithms such as Greylag Goose Optimization (GGO) [10], Gray Wolf Optimizer (GWO) [11], Particle Swarm Optimization (PSO) [12], and Biogeography-Based Optimization (BBO) [13]. Additionally, a vast array of machine learning algorithms play varied roles in the optimization process for resource allocation in 5G networks [14–16]. In our study, we use a toolkit that mainly consists of K-Nearest Neighbors (KNN) [17], Gradient Boosting [18], Extra Trees [19], Multilayer Perceptron (MLP) [20], Support Vector Regression [21], XGBoost [22], CatBoost [23], Decision Tree [24], Random Forest, and Linear Regression [25–27].

The main contributions of this study include:

○ Development of a Novel Optimization Framework: The introduction of an advanced optimization framework that leverages bGGO for feature selection, specifically tailored to enhance the performance of machine learning models in 5G resource allocation.

○ Improvement in Feature Selection Strategies: Utilizing bGGO to significantly reduce the feature set while maintaining or improving model performance, leading to more efficient and effective 5G network management.

○ Ensemble Model Innovation: Creation of a robust ensemble model combining KNN, Gradient Boosting, and Extra Trees algorithms, which significantly improves predictive accuracy and stability in the context of 5G networks.

○ Optimization Using GGO: Implementing the GGO algorithm to fine-tune the ensemble model, further enhancing its performance and ensuring optimal resource allocation in 5G networks.

○ Empirical Validation: Demonstrating the practical applicability and superiority of the proposed framework through rigorous testing and validation, highlighting substantial improvements in network efficiency and resource management.

These contributions collectively advance the understanding of optimization techniques, feature selection strategies, and ensemble modeling in 5G technology, offering valuable insights for researchers and practitioners.

Previous research has focused largely on heuristic and metaheuristic optimization techniques for resource scheduling. Although these methods have proven effective, in many cases they are limited in their scalability and their ability to adapt to the current state of the network. Moreover, feature selection remains an important issue because 5G network data contains many elements that must be analyzed to create machine learning models.

The primary research gaps identified include:

○ Scalability Issues: Several optimization algorithms available today are not easily scaled up to large volumes of data and the high-speed technological environments of the 5G network.

○ Dynamic Resource Management: Issues remain in managing resources dynamically as network conditions and user demands change.

○ Efficient Feature Selection: When machine learning models are employed in 5G network data, high dimensionality affects feature selection, which may reduce the model’s performance.

○ Integration of Advanced Optimization Techniques: AI and machine learning have been incorporated into 5G in diverse areas; however, the existing models are fragmented and do not integrate feature selection with model optimization to improve performance.

Based on these shortcomings, this research proposes a new optimization framework that performs feature selection using bGGO and fine-tunes an ensemble model with GGO. This integrated approach addresses these concerns and promotes scalability, dynamism, and optimization in resource management in 5G networks. By filling these gaps, the study aims to accelerate the development of 5G technology by offering concrete recommendations for improving the interconnected components of a telecommunication network.

The subsequent parts of this paper focus on the specific performance measures and statistical tests used to measure the efficiency of optimization algorithms. The paper then covers Related Works, Materials and Methods, Experimental Results, and a forward-looking Conclusion and Future Directions, offering a holistic view of the research endeavor.

Deploying small base stations (s-BSs) interconnected via mmWave backhaul (BH) links is one strategy for handling dynamic traffic demands in contemporary 5G mobile networks [28]. The authors introduce the intelligent backhauling and s-BS/BH link sleeping (IBSBS) framework to minimize power consumption in heterogeneous networks (HetNets). Considering power and capacity constraints, the framework optimizes user equipment (UE) association and backhauling through a heuristic function-based intelligent backhauling algorithm. Additionally, a load-sharing-based s-BS sleeping algorithm dynamically adjusts the states of s-BSs to meet UE demands without compromising power efficiency. Evaluation results demonstrate the superiority of the proposed framework in terms of network energy efficiency, power consumption, and the number of active s-BSs/BH links compared to state-of-the-art algorithms.

In 5G/B5G mmWave communications, beam selection is a difficult problem that must be solved to overcome attenuation and penetration losses [29]. Traditional exhaustive search methods for beam selection struggle within short contact times. The study in [29] explores the application of machine learning (ML) to the beam selection problem in a vehicular scenario. Through a comparative study of various ML methods, the work leverages a common dataset from the literature, enhancing accuracy by approximately 30%. The dataset is further enriched with synthetic data, and ensemble learning techniques achieve 94% accuracy. The novelty lies in improving the dataset and designing a custom ensemble learning method.

Spoofing attack detection is important in 5G wireless communications because it helps preserve network security [30]. The work in [30] leverages information from the radio channel to minimize the impact of cyber threats in 5G IoT environments. The integration of artificial intelligence (AI), particularly a Support Vector Machine (SVM) based PHY-layer authentication algorithm, enhances security by detecting possible attacks at the physical layer. The simulation results demonstrate the effectiveness of the proposed method in achieving a high detection rate across various attacks.

The growing dependence on wireless devices for daily necessities calls for efficient data processing, particularly in applications involving smart technologies [31]. In this context, 5G networks play a crucial role, and the work in [31] addresses the challenge of 5G data offloading to save energy over time. The suggested solution applies mobile edge computing (MEC) and PSO for edge node selection in order to achieve energy-efficient offloading over 5G networks. Load balancing across the edge nodes is used to minimize energy consumption, and the results indicate that the PSO-based offloading method works well. Mobile edge computing is shown to consume less energy than the core cloud and mobile devices, highlighting the power savings obtained with this approach.

A significant surge in mobile data traffic forces 5G base stations (BSs) to consume a lot of power [32]. However, existing energy conservation technologies usually ignore the dynamics of user equipment (UE) changes, which limits their ability to achieve maximum sleep for idle or lightly loaded BSs. To overcome this, the work in [32] presents ECOS-BS, an Energy Consumption Optimization Strategy of 5G BSs Considering Variable Threshold Sleep Mechanism. This strategy dynamically adjusts the optimal sleep threshold of BSs based on UEs’ dynamic changes, enhancing the management of BS energy consumption in heterogeneous cellular networks.

Multi-tenancy network slicing is a fundamental aspect of next-generation access networks, commonly known as 5G cellular networks. This technology introduces, for the first time, network slices as a new kind of public cloud service that brings more flexibility to services and better utilizes network resources. However, network slices also bring new problems in managing the network system’s resources. Over time, researchers have proposed multiple techniques to tackle these problems, and several of them use ML and AI solutions. In [33], the authors present a general overview of network slicing for resource management and the capabilities of machine learning algorithms in the context of the presented solutions. The overview outlines the need to manage network slicing better and to handle multi-tenancy complexity by utilizing ML and AI in 5G networks. The article seeks to bring insight into the current state of network slicing resource management, especially with the advent of machine learning as a transformative force in this network architecture.

The Internet and other communication networks are among the most vital parts of modern society, yet several issues remain unsolved, hence the need for constant evolution. Graph-based deep learning algorithms have gained significant prominence in modeling different network topologies, outperforming other approaches on several problems in the field. The survey in [34] provides a comprehensive overview of the rapidly growing literature applying a wide range of graph-based deep learning models, including GCNs and GATs, to problems in various communication scenarios (e.g., wireless, wired, and SDN). The survey systematically presents the problems examined and the solutions suggested in the studies and offers a convenient reference for scholars. It also points out potential avenues of study and fields that would be worth investigating more deeply. Most notably, to the authors’ knowledge, that work is novel in surveying deep learning architectures built on graph theory for both wired and wireless network scenarios.

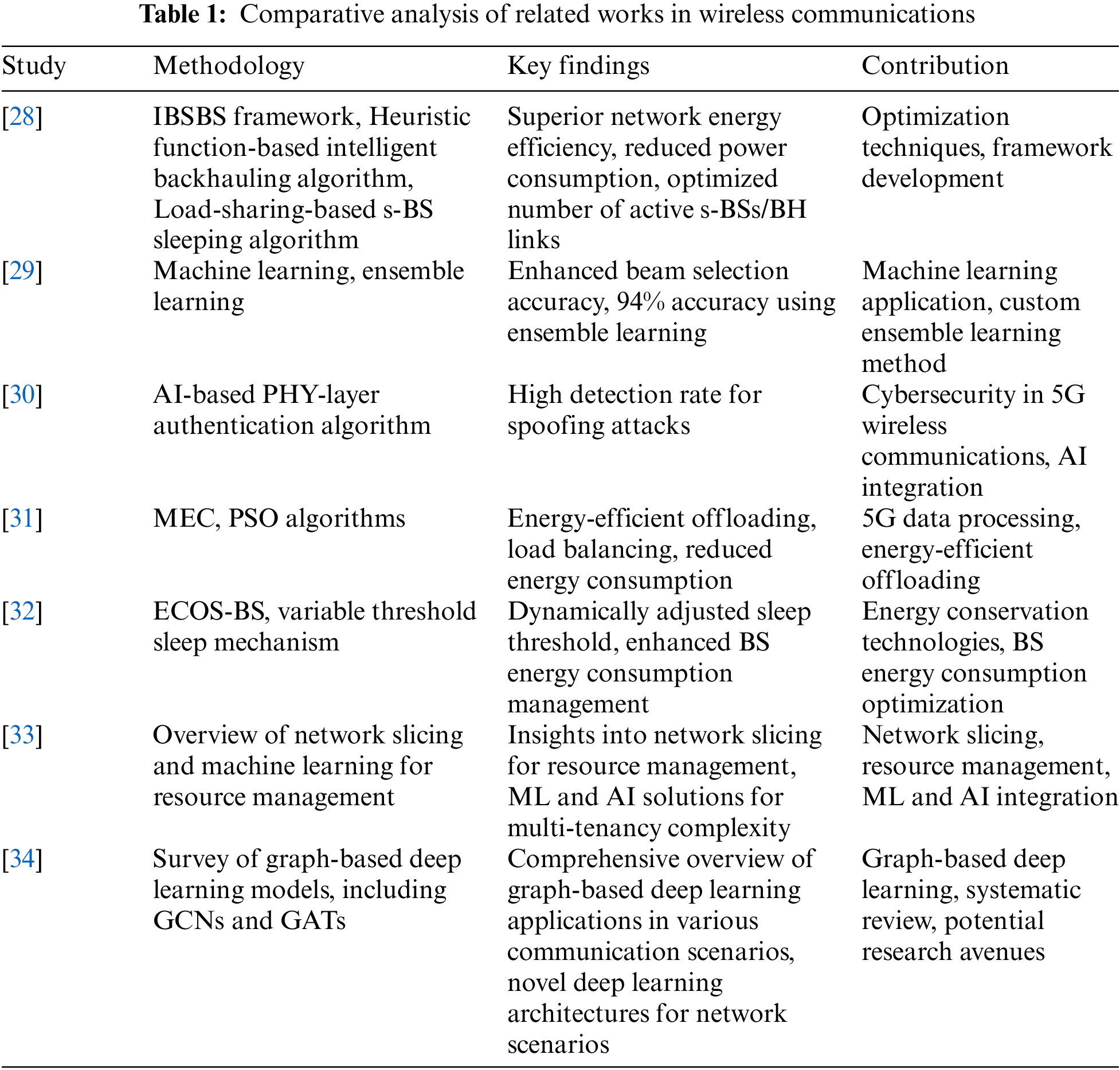

Table 1 summarizes the key contributions of the related works.

This paper uses the Kaggle-obtained dataset [35] to achieve a deep understanding of 5G resource allotment trends. Advanced AI algorithms play an important role in the dynamic resource management of the network using bandwidth, frequency spectrum, and computing power. The dataset provides a multifaceted perspective on resource allocation, delving into various aspects:

○ Application Types: This facet offers insights into the resource demands and allocations associated with diverse applications, ranging from high-definition video calls to IoT sensor data.

○ Signal Strength: The dataset allows an in-depth examination of how signal strength influences resource allocation decisions and subsequently impacts the quality of service delivered.

○ Latency: A crucial element in the study, latency considerations shed light on the intricate balance between low-latency requirements and the availability of resources.

○ Bandwidth Requirements: The dataset enables detailed exploration of the varied bandwidth needs of different applications, unveiling their influence on allocation percentages.

○ Resource Allocation: At the core of the investigation lies the dynamic resource allocation process, where AI-driven decisions determine optimal network performance through allocated percentages.

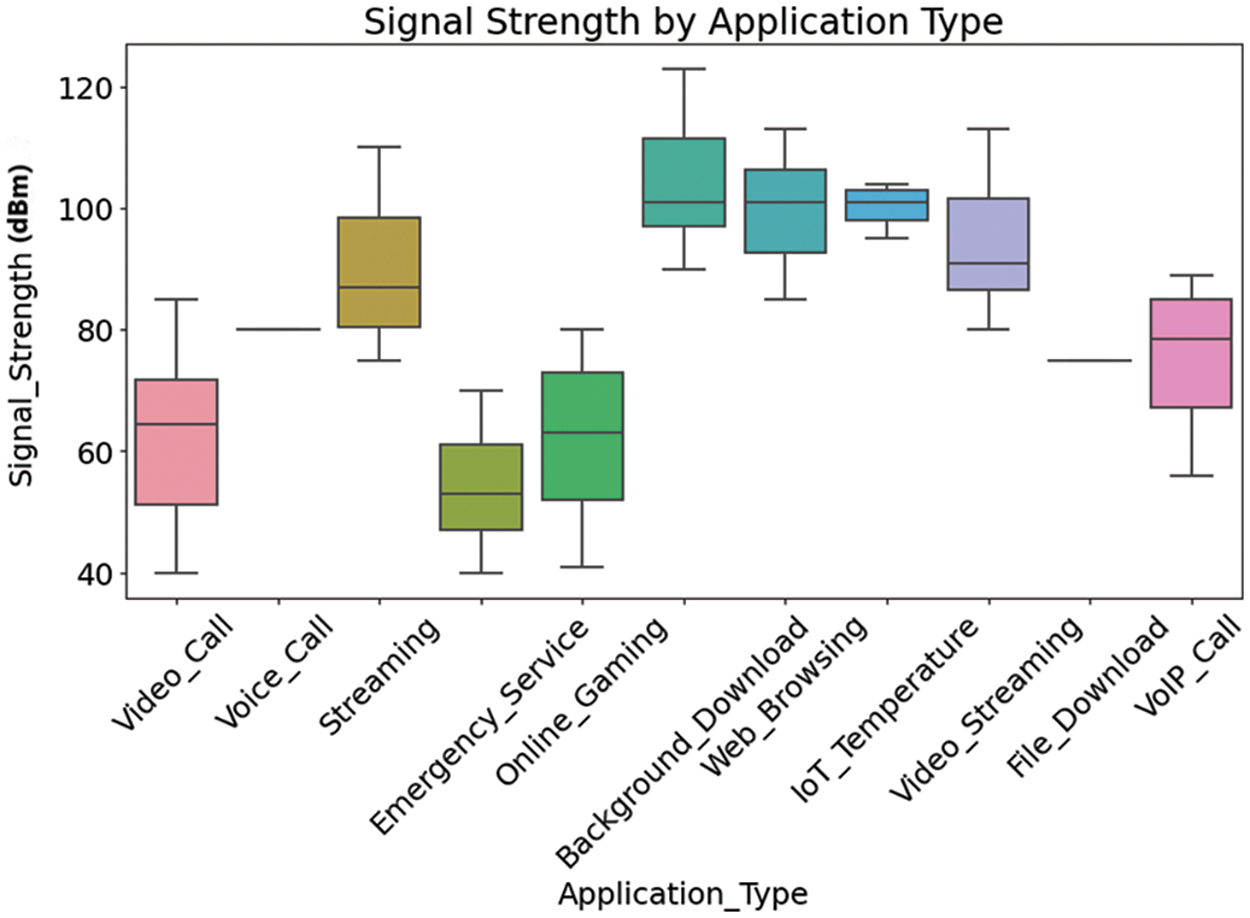

Fig. 1 shows the distribution of signal strength with respect to the different application types in the dataset. The figure groups applications, from high-definition video calls to IoT sensor data, to show how signal strength varies across application types.

Figure 1: Signal strength by application type in the selected dataset

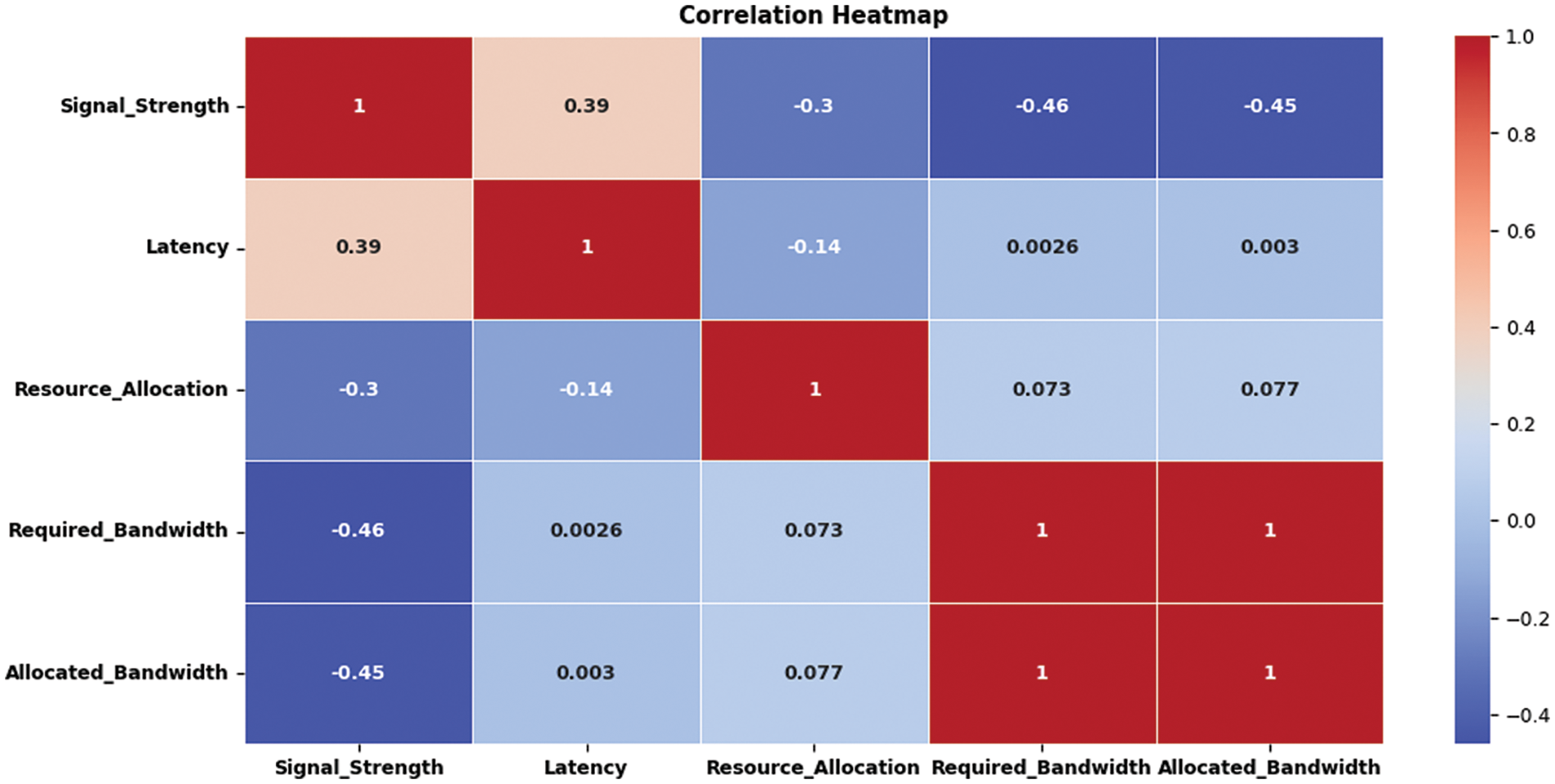

Fig. 2 shows a heatmap of the correlation matrix of the numeric columns in the dataset. This graphical representation provides a broad view of the relationships between the core variables. Every cell in the heatmap denotes a correlation coefficient, color-coded to show how strongly two variables are related.

Figure 2: Heatmap of the correlation matrix for numeric columns in the selected dataset
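The correlation coefficients behind a heatmap such as Fig. 2 can be sketched as follows. This is a minimal illustration that assumes the Pearson coefficient is the one plotted; the column names and values in `toy` are hypothetical and are not taken from the dataset.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns):
    """Map every ordered pair of column names to its correlation coefficient."""
    names = list(columns)
    return {(a, b): pearson(columns[a], columns[b]) for a in names for b in names}


# Hypothetical toy columns (illustrative values only, not from the dataset).
toy = {
    "signal_strength": [-75.0, -80.0, -85.0, -90.0],
    "latency": [30.0, 20.0, 40.0, 10.0],
    "allocation": [70.0, 80.0, 75.0, 90.0],
}
matrix = correlation_matrix(toy)
```

Each entry of `matrix` corresponds to one cell of the heatmap; the diagonal is 1 and the matrix is symmetric.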

3.2 Machine Learning Basic Models

Machine learning, a branch of artificial intelligence, teaches machines to mimic intelligent human behavior in solving complex problems. The following fundamental machine learning models are used as reference models:

○ K-Nearest Neighbors (KNN): This algorithm is applied for classification and regression tasks. It operates on the principle that similar data points share similar labels or values. During training, KNN stores the complete dataset as a reference [36].

○ Gradient Boosting: A boosting technique in machine learning that minimizes overall prediction error by amalgamating the strengths of prior models. It strategically establishes target outcomes for the subsequent model to diminish error [37].

○ Extra Trees: Short for extremely randomized trees, this supervised ensemble learning method utilizes decision trees. It is applied by the Train Using AutoML tool, contributing to classification and regression tasks [38].

○ Multilayer Perceptron (MLP): Employed in diverse machine learning tasks, such as classification and regression, MLPs demonstrate heightened accuracy for classification problems. Regression, a supervised learning technique, approximates continuous-valued variables [39].

○ Support Vector Regression (SVR): Applied for regression analysis, SVR seeks a function that approximates the relationship between input variables and a continuous target variable, curtailing prediction error [40].

○ XGBoost: A robust machine-learning algorithm implementing gradient-boosting decision trees. It has earned global acclaim among data scientists for optimizing machine-learning models [41].

○ CatBoost: A variant of gradient boosting adept at managing both categorical and numerical features. It circumvents traditional feature encoding techniques, utilizing an algorithm termed symmetric weighted quantile sketch to manage missing values and enhance performance [42].

○ Decision Tree: A non-parametric supervised learning algorithm utilized for both classification and regression tasks. It structures information hierarchically with root nodes, branches, internal nodes, and leaf nodes [43].

○ Random Forest: An ensemble learning method constructing numerous decision trees during training for classification, regression, and other tasks. For classification, the output is the class selected by the majority of trees [44].

○ Linear Regression: This analysis predicts a variable’s value based on another variable. The predicted variable is the dependent variable, while the variable used for prediction is the independent variable [45].
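As an illustration of one of the reference models listed above, KNN regression can be sketched in a few lines. This is a minimal sketch that assumes Euclidean distance and an unweighted mean over the neighbors; the function name `knn_predict` and the toy data are hypothetical.

```python
import math


def knn_predict(train_x, train_y, query, k=3):
    """Predict a continuous target as the mean target of the k nearest points."""
    # Pair every training point's distance to the query with its target value.
    distances = sorted(
        (math.dist(row, query), target) for row, target in zip(train_x, train_y)
    )
    nearest = distances[:k]
    return sum(target for _, target in nearest) / len(nearest)


# Hypothetical one-feature training set where the target equals the feature.
train_x = [(0.0,), (1.0,), (2.0,), (10.0,)]
train_y = [0.0, 1.0, 2.0, 10.0]
prediction = knn_predict(train_x, train_y, (1.0,), k=3)
```

The stored training set is the whole "model", which matches the description of KNN keeping the complete dataset as a reference.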

Ensemble learning is a machine learning strategy in which the predictions of several models are combined to obtain better results than any single model alone. Because the combined prediction draws on an assortment of trained models, the errors of the individual models tend to offset one another. This, in turn, contributes to better precision and improved performance of the overall system, making ensemble learning a powerful tool within the machine-learning field [46].
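The error-cancelling effect described above can be sketched with a weighted average of base predictions. The combination rule is an assumption (the text does not state how the ensemble combines its members), and `model_a`, `model_b`, and `model_c` are hypothetical stand-ins for trained regressors, each biased in a different direction around the true relation y = 2x.

```python
def ensemble_predict(models, weights, x):
    """Weighted average of the predictions of several regression models."""
    if len(models) != len(weights):
        raise ValueError("one weight per model is required")
    total = sum(weights)
    return sum(w * m(x) for m, w in zip(models, weights)) / total


# Hypothetical base models: each over- or under-estimates y = 2x slightly.
def model_a(x):
    return 2.0 * x + 0.3


def model_b(x):
    return 2.0 * x - 0.2


def model_c(x):
    return 2.0 * x - 0.1


models = [model_a, model_b, model_c]
combined = ensemble_predict(models, [1.0, 1.0, 1.0], 1.0)
individual_errors = [abs(m(1.0) - 2.0) for m in models]
ensemble_error = abs(combined - 2.0)
```

Here the individual biases cancel, so `ensemble_error` is smaller than every entry of `individual_errors`; with real models the cancellation is only partial.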

3.4 Greylag Goose Optimization (GGO) Algorithm

3.4.1 Geese: Social and Dynamic Behavior

Geese show both social and dynamic behaviors that are adaptive and make them cooperative. They form durable bonds with their mates, showing fidelity by staying vigilant when a partner is sick or injured. Intriguingly, a goose may withdraw into isolation after losing a partner and remain single until it dies, signaling a level of loyalty rarely seen in other species. During the breeding season, males fervently guard the nests while females tend to them. Some geese show nest site fidelity, repeatedly returning to the same nesting place if conditions allow. This trait reflects their dedication to keeping the next generation safe.

In group settings, geese gather to form larger groups known as gaggles. Within these groups, individuals take turns acting as sentries, watching for predators while the others feed. This interdependent behavior resembles the teamwork among sailors on board a ship, where members watch over each other’s safety. Healthy geese have been observed protecting injured individuals and looking after the brood in general. The migration of geese is equally impressive. They travel thousands of kilometers in large flocks, flying in a distinctive V-shaped pattern that reduces air resistance and allows them to save energy on long trips. Geese also have shared memory and navigation abilities, relying on recognized landmarks and celestial cues.

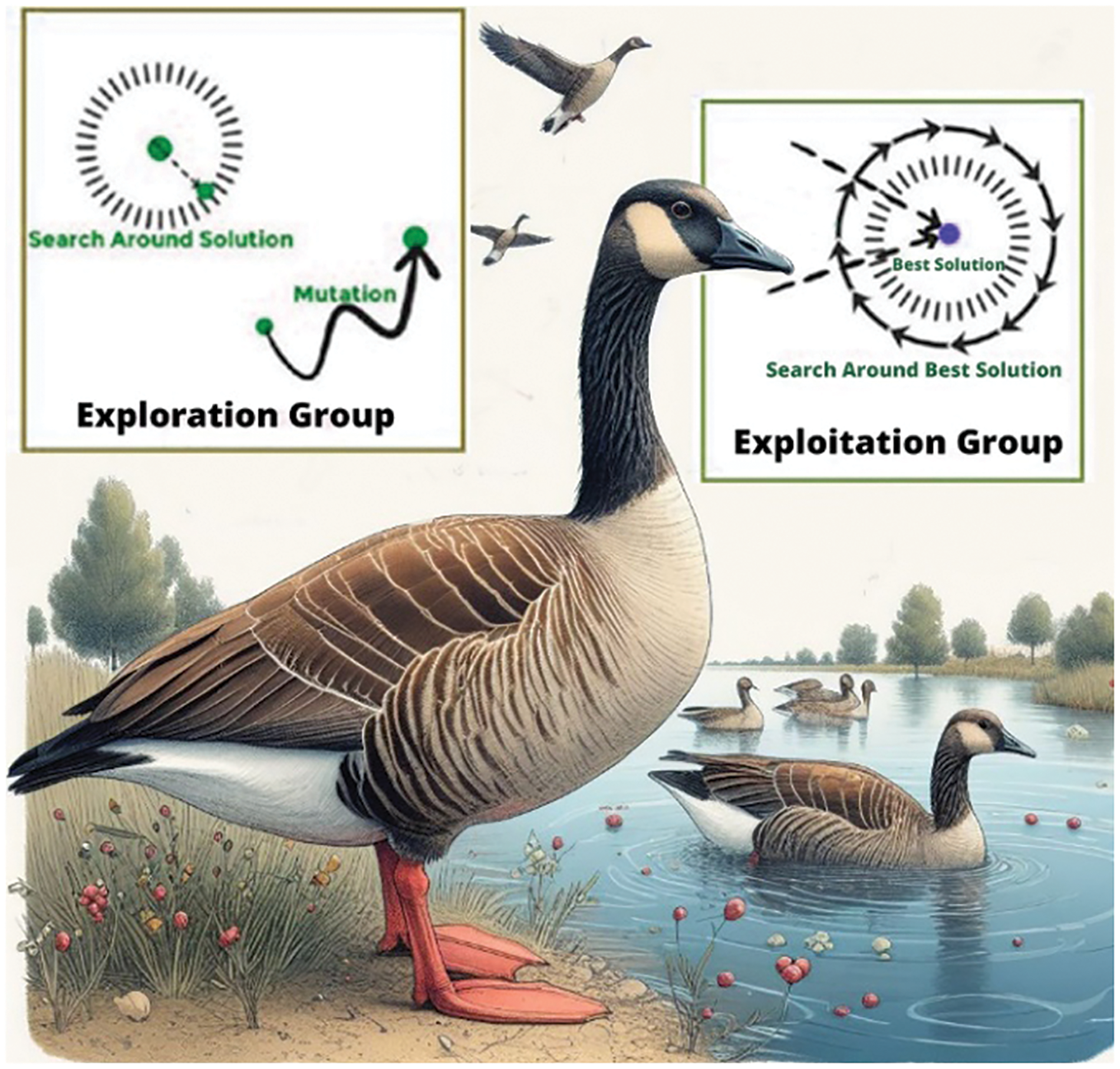

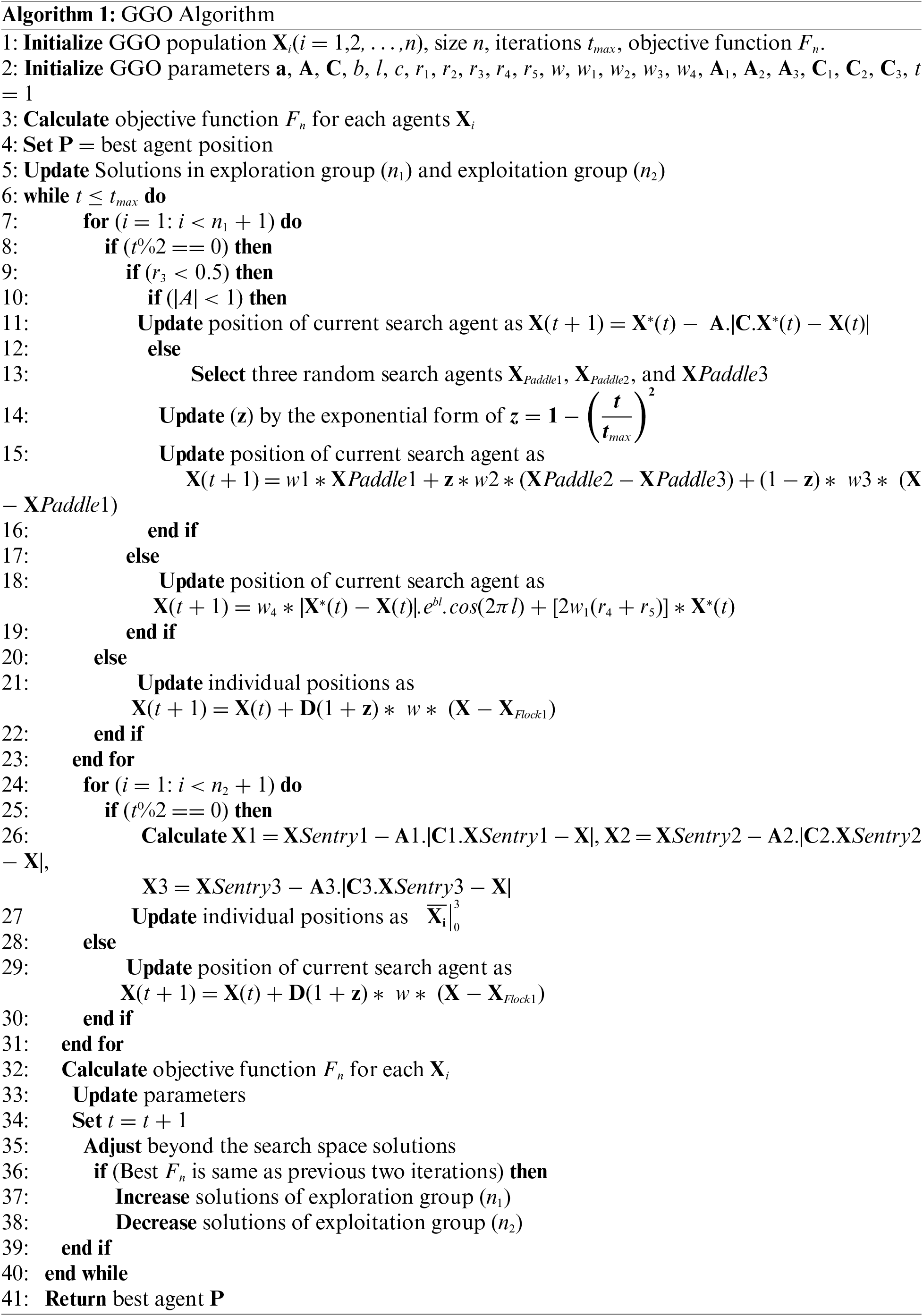

The Greylag Goose Optimization (GGO) algorithm [10] borrows concepts from this dynamic behavior of geese. It begins by randomly generating individuals, each representing a prospective solution to the problem. These individuals are then divided into exploration and exploitation groups, the size of each adjusting dynamically based on how well the best solution is performing. This results in an effective search of the solution space and prevents the algorithm from getting stuck in local optima, as shown in Fig. 3.

Figure 3: Exploration and exploitation of grey goose optimization

The exploration operation in the Greylag Goose Optimization (GGO) algorithm focuses on finding promising areas within the search space and avoiding stagnation in local optima by moving towards the best solution. This is achieved through iterative adjustments of agent positions using specific equations.

One key equation used in the GGO algorithm for updating agent positions is:

Another equation used to update agent positions involves utilizing three random search agents, named

where

Additionally, another updating process involves adjusting vector values based on certain conditions, and is expressed as:

where

The exploitation operation in the Greylag Goose Optimization (GGO) algorithm focuses on improving existing solutions based on their fitness. The GGO identifies the solutions with the highest fitness at the end of each cycle and utilizes two strategies to enhance them.

1. Moving towards the best solution: This strategy involves guiding other individuals towards the estimated position of the best solution using three solutions known as sentries. The position updating equations for each sentry

where

2. Searching the area around the best solution: In this strategy, individuals explore regions close to the best solution to find enhancements. The position updating equation is given by:

where

These strategies allow the GGO algorithm to continuously improve solutions by adjusting the positions of individuals towards more promising regions in the search space.
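The two-group structure described above can be sketched as follows. This is a simplified illustration, not the GGO update equations: exploration is replaced by a random jump around the best solution, exploitation by a partial move towards it, and the rule for resizing the groups (grow the exploration group after three iterations without improvement) is an assumption. All names (`two_group_search`, `sphere`) are hypothetical.

```python
import random


def sphere(x):
    """Simple test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def two_group_search(fitness, dim=5, pop_size=20, iterations=100, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(pop_size)]
    best = min(pop, key=fitness)[:]
    best_fit = fitness(best)
    history = [best_fit]          # best fitness after each iteration
    n_explore = pop_size // 2     # agents with index < n_explore explore
    stall = 0                     # iterations since the last improvement
    for t in range(iterations):
        step = 2.0 * (1.0 - t / iterations)  # shrinks from 2 towards 0
        improved = False
        for i, agent in enumerate(pop):
            if i < n_explore:
                # Exploration stand-in: random jump in a box around the best.
                candidate = [b + step * rng.uniform(-1.0, 1.0) for b in best]
            else:
                # Exploitation stand-in: move part of the way towards the best.
                r = rng.random()
                candidate = [a + r * (b - a) for a, b in zip(agent, best)]
            pop[i] = candidate
            f = fitness(candidate)
            if f < best_fit:
                best, best_fit = candidate[:], f
                improved = True
        if improved:
            # Progress is being made: shift one agent to exploitation.
            n_explore = max(1, n_explore - 1)
            stall = 0
        else:
            stall += 1
            if stall >= 3:
                # Stagnation: shift one agent back to exploration.
                n_explore = min(pop_size - 1, n_explore + 1)
                stall = 0
        history.append(best_fit)
    return best, best_fit, history


best, best_fit, history = two_group_search(sphere)
```

The `history` list is the kind of best-so-far trace that a convergence curve plots against the iteration count.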

Algorithm 1 presents the GGO algorithm step by step, showing how it uses behaviors analogous to the flock dynamics observed in Greylag Geese. Walking through the algorithm shows how GGO improves solutions by emulating the collaborative and efficient flight behavior of a flock.

Feature selection has gained prominence in data analysis due to its ability to reduce the dimensionality of data by eliminating irrelevant or redundant features. This optimization process aims to find relevant features that minimize classification errors across various domains. Feature selection is often formulated as a minimization problem in optimization. In the context of the GGO algorithm, solutions are binary, taking values of 0 or 1, to facilitate feature selection. To convert continuous values to binary ones, the Sigmoid function is utilized:

where

In the binary GGO algorithm, the quality of a solution is evaluated using the objective equation

where
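The binary conversion and the solution-quality evaluation described above can be sketched as follows. This is a minimal sketch under stated assumptions: the plain logistic sigmoid with a 0.5 threshold stands in for the transfer function, and the objective is a commonly used weighted sum of the prediction error and the fraction of selected features. The weight `alpha=0.99` and the function names are assumptions for illustration, not values taken from the paper.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def binarize(position, threshold=0.5):
    """Turn a continuous position vector into a 0/1 feature mask."""
    return [1 if sigmoid(v) >= threshold else 0 for v in position]


def fitness(mask, error, alpha=0.99):
    """Assumed objective: alpha * error + (1 - alpha) * selected fraction.

    `error` is the error of a model trained on the selected features;
    lower fitness is better. An empty mask is treated as invalid (assumption).
    """
    if not any(mask):
        return float("inf")
    return alpha * error + (1.0 - alpha) * sum(mask) / len(mask)


mask = binarize([-2.0, 0.0, 3.0])        # hypothetical continuous position
score = fitness([1, 0, 1, 0], error=0.2)
```

With this form of objective, two masks with the same error are ranked by how few features they keep, which is what drives the reduction of the feature set.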

Hyperparameter tuning is a basic element of machine learning, since it gives data scientists a way to maximize the performance of their models. The process concerns settings, referred to as hyperparameters, that are chosen before training and that shape the performance of a model. Selecting proper values for these hyperparameters is key to success. Taking the learning rate, one of the most significant hyperparameters, as an example, tuning it correctly can lead to dramatic changes in model performance and in the reliability of its results.
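The sensitivity to the learning rate mentioned above can be sketched with a small grid search. This is an illustrative stand-in, not the GGO-based tuning used in the framework: gradient descent minimizes the toy loss (w − 3)², and the candidate learning rates are hypothetical.

```python
def final_loss(learning_rate, steps=20):
    """Run gradient descent on (w - 3)^2 from w = 0 and return the final loss."""
    w = 0.0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        w -= learning_rate * grad
    return (w - 3.0) ** 2


# Hypothetical candidate values: too small, reasonable, and too large.
candidates = [0.001, 0.01, 0.1, 0.5, 1.1]
results = {lr: final_loss(lr) for lr in candidates}
best_lr = min(results, key=results.get)
```

A very small rate barely moves from the starting point in 20 steps, while a rate above 1 makes the iterates diverge, so the same model and data give very different results depending on this one setting.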

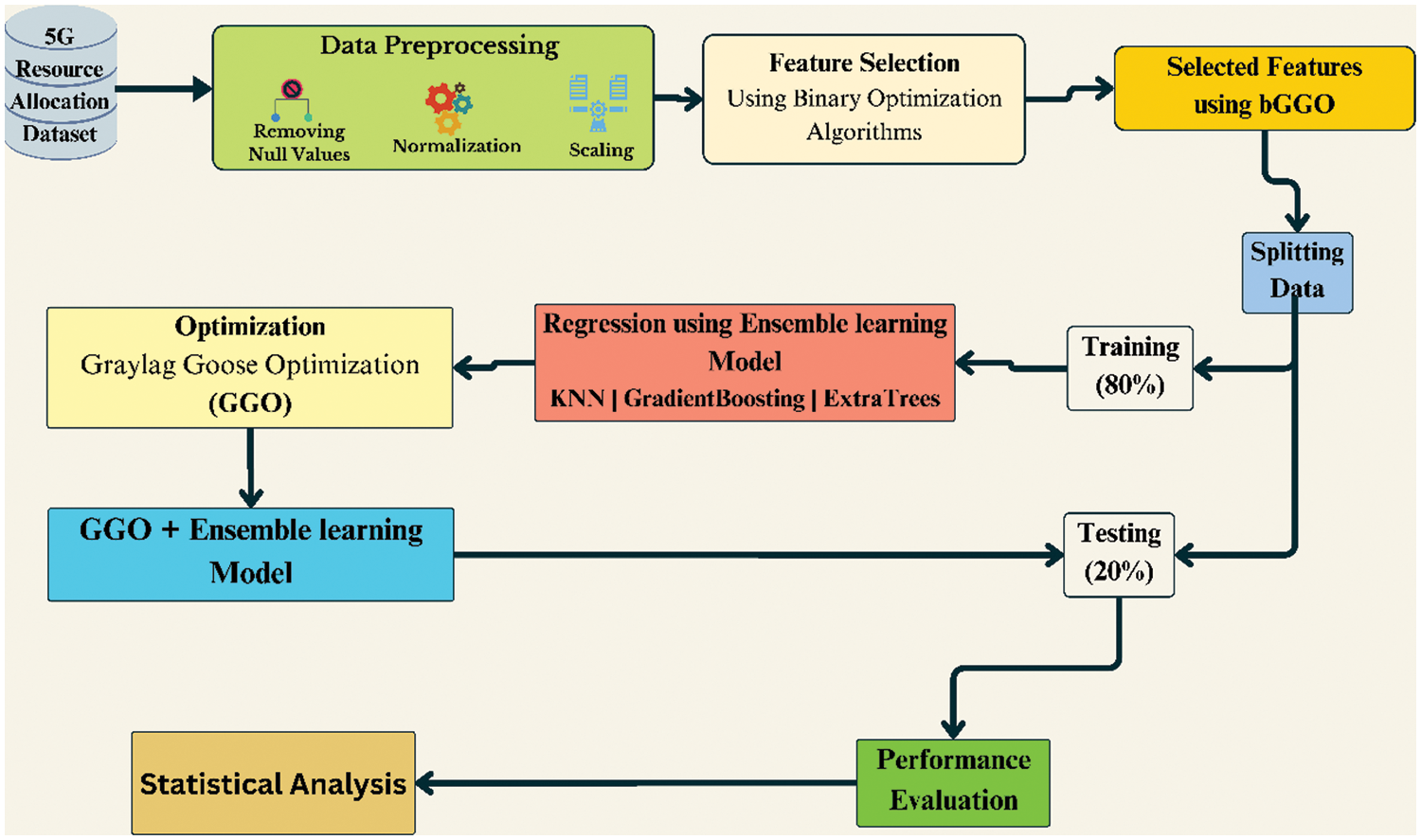

Fig. 4 shows the general approach to resource management in 5G networks and how feature selection and optimization complement each other. The framework uses the Binary Greylag Goose Optimization (bGGO) algorithm to determine which features of the dataset are relevant to the ML models and passes them to the models for better performance. These optimized features are then fed to the ensemble model, consisting of K-Nearest Neighbors (KNN), Gradient Boosting, and Extra Trees algorithms, which produces the final prediction. This approach helps optimize the resources used across the layers and fulfill the 5G requirements. The figure summarizes the steps within the framework, including data pre-processing, selection of the relevant features, and building and training the model. This structured methodology underscores how the interaction between feature selection and ensemble learning yields considerable gains in precision and better resource utilization in 5G networks. The results obtained on test data, in terms of Mean Squared Error (MSE) and explained variance (R-squared), indicate the efficiency of the proposed framework, which is one of the main contributions of this paper to the field of 5G network optimization.

Figure 4: Proposed framework for optimizing 5G resource allocation

In our study, we conducted at least 30 independent runs for each of the algorithms evaluated. This approach supports the robustness and reliability of our experimental results. Beginning with the crucial step of feature selection, we identified the most significant features through various optimization techniques such as bGGO, bGWO, bPSO, and others. Notably, the bGGO implementation stood out, exhibiting the lowest average error compared to the alternative methods. Encouraged by these results, we proceeded to assemble an ensemble model tuned with the GGO algorithm.

Our ensemble model combines three well-known machine learning algorithms: KNN, Gradient Boosting, and Extra Trees. We selected these algorithms for their strong predictive abilities, as together they deliver high R2 values. In the following, we discuss our methodology, explain the algorithms used, and present the results of the experiments together with their critical analysis. The discussion highlights the cooperation between feature selection and ensemble modeling and the role of both in improving the predictive accuracy of machine learning models.

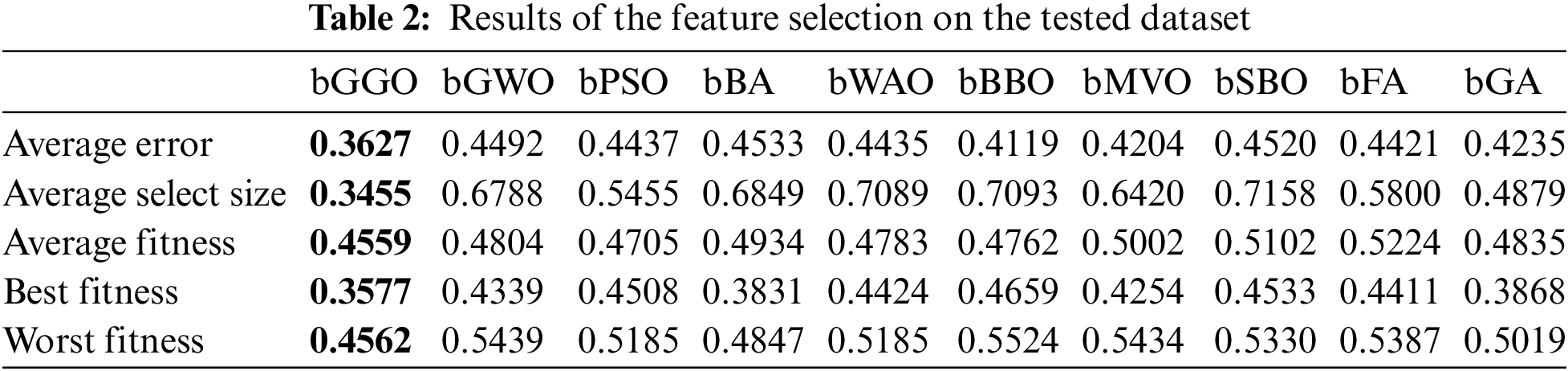

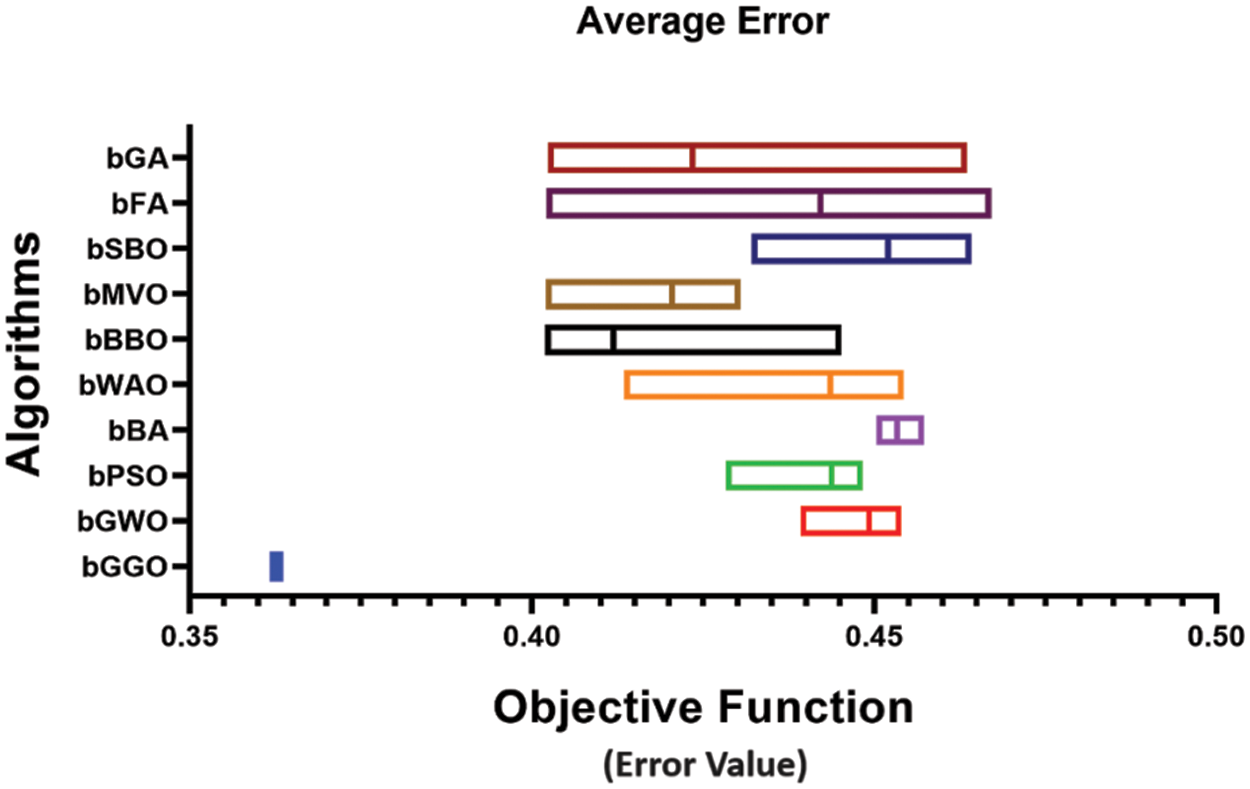

Table 2 shows the feature selection results of the various algorithms tested on this dataset. The binary Greylag Goose Optimization (bGGO) algorithm gives the best results in terms of performance and precision, with the lowest average error of 0.3627, which demonstrates its efficiency in finding relevant features.

Fig. 5 shows the accuracy of the different feature selection algorithms. The findings indicate that the bGGO algorithm achieves remarkable accuracy in feature selection for the dataset.

Figure 5: Accuracy investigation of various feature selection algorithms

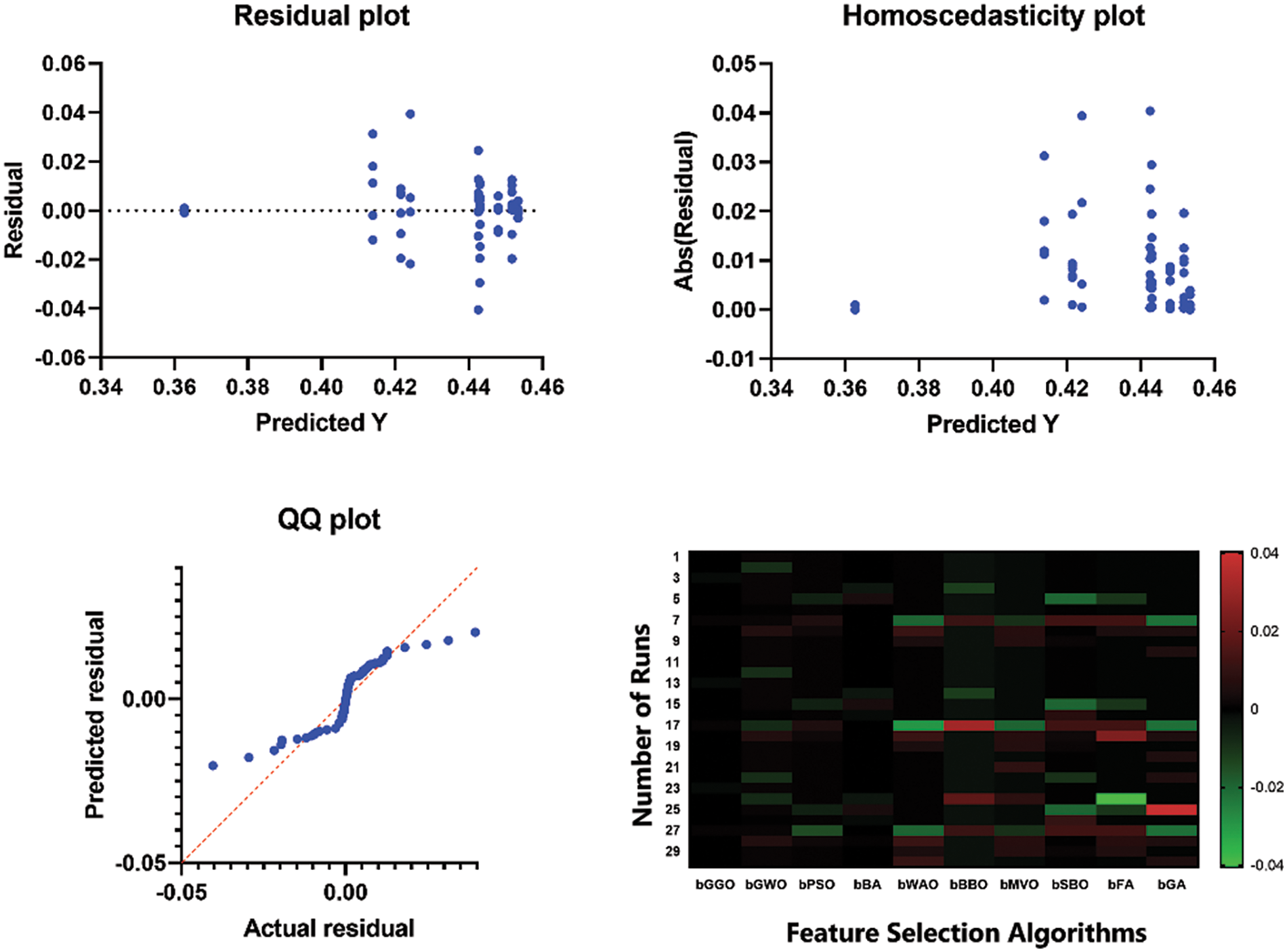

The heatmap and the residual values for bGGO, bGA, and bPSO are shown in Fig. 6. The residual values make it possible to assess closely the differences between observed and predicted outcomes and thus to determine how effective each model has been. The heatmap, in turn, graphically shows the correlation matrix of the numeric columns, which helps in understanding how the different variables influence each other. This figure is useful in evaluating the efficacy of the bGGO algorithm compared to the other algorithms by looking at the residual analysis and the correlation patterns.

Figure 6: Residual values and heatmap for the proposed GGO algorithm and other algorithms

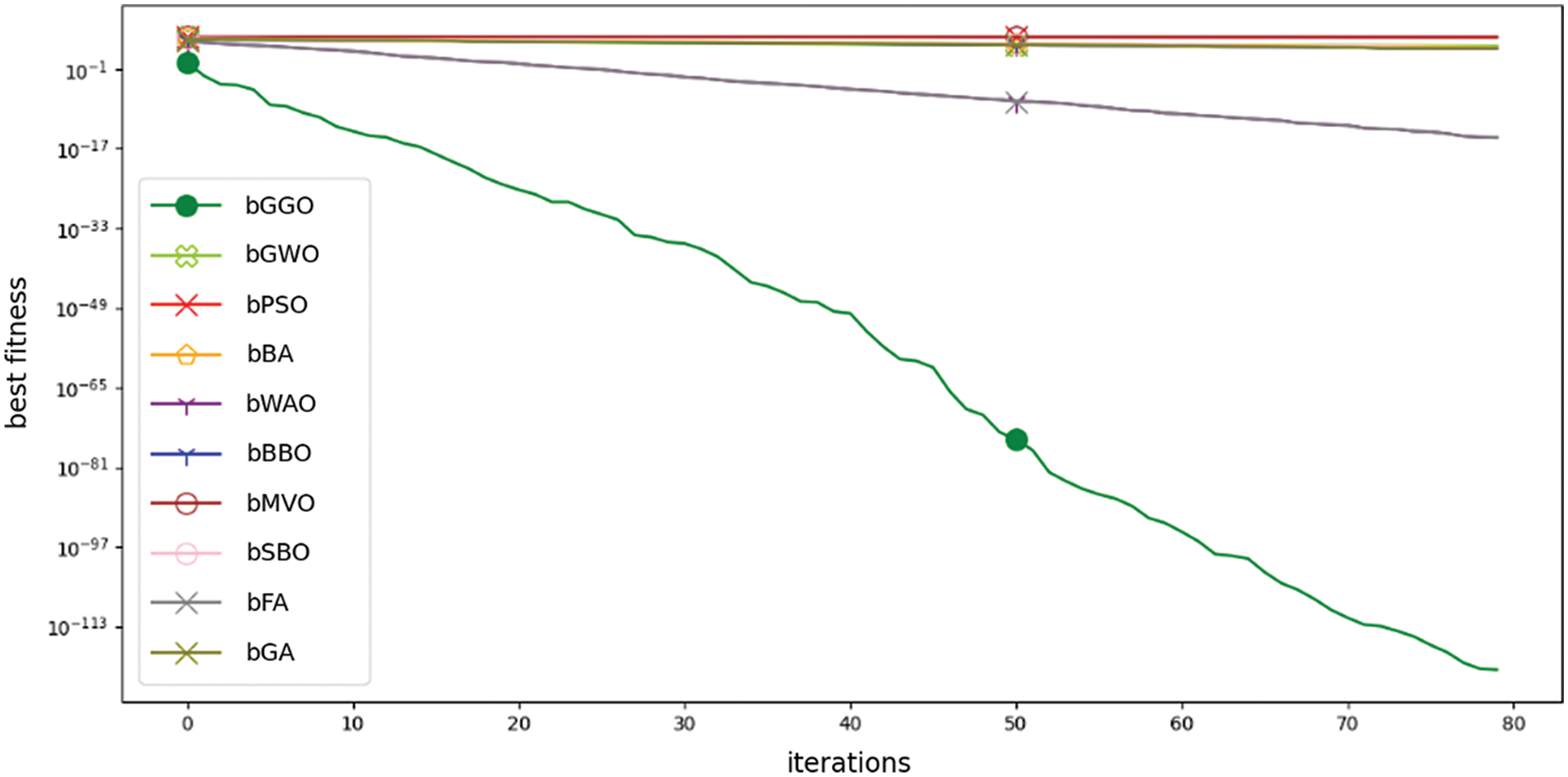

Fig. 7 depicts a convergence plot of the proposed bGGO algorithm against different algorithms in the literature. This chart shows how the optimization process progresses as a function of the number of evaluations.

Figure 7: Convergence curve of proposed bGGO algorithm vs. other algorithms

Convergence curves show, over the course of the evaluations, how an optimization run progresses toward the optimal solution during its execution. Examining such a curve makes it possible to compare the efficiency and convergence patterns of the bGGO algorithm with those of the other algorithms.

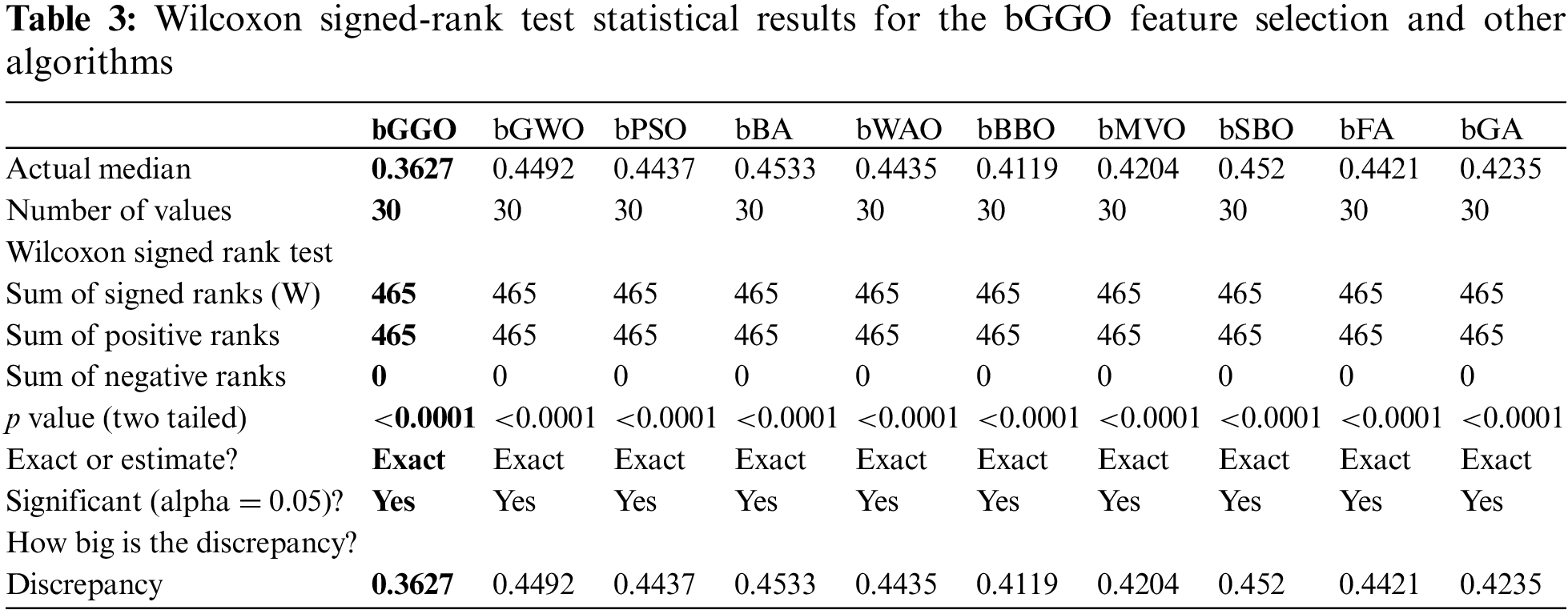

Table 3 shows the outcome of the Wilcoxon signed-rank test, which was conducted to evaluate bGGO feature selection against the other algorithms. The p values, together with whether each is exact or estimated, indicate the significance of the differences. The table also contains the observed differences in performance measurements, such as average error, for all the algorithms that show significance.
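The mechanics of a Wilcoxon signed-rank test can be sketched as follows. This is a minimal illustration that assumes a two-sided test with an exact p-value computed from the null distribution of the rank sums; the paper's tables may follow a different convention, and the input differences below are hypothetical, not experimental results.

```python
def wilcoxon_signed_rank(diffs):
    """Return (W_plus, W_minus, two-sided exact p-value) for paired differences."""
    d = [x for x in diffs if x != 0]          # zero differences are dropped
    n = len(d)
    if n == 0:
        raise ValueError("all differences are zero")
    order = sorted(range(n), key=lambda i: abs(d[i]))
    # Ranks are stored doubled so that averaged ranks of ties stay integers.
    ranks2 = [0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks2[order[k]] = i + j + 2      # 2 * average of ranks i+1 .. j+1
        i = j + 1
    total2 = sum(ranks2)
    w_plus2 = sum(r for r, x in zip(ranks2, d) if x > 0)
    w_minus2 = total2 - w_plus2
    stat2 = min(w_plus2, w_minus2)
    # Null distribution: every rank is positive or negative with probability 1/2.
    # counts[s] = number of sign assignments whose positive (doubled) ranks sum to s.
    counts = [0] * (total2 + 1)
    counts[0] = 1
    for r in ranks2:
        for s in range(total2, r - 1, -1):
            counts[s] += counts[s - r]
    tail = sum(counts[: stat2 + 1])
    p_value = min(1.0, 2.0 * tail / 2 ** n)
    return w_plus2 / 2, w_minus2 / 2, p_value


# Hypothetical paired differences between two algorithms over five runs.
w_plus, w_minus, p = wilcoxon_signed_rank([1.0, 2.0, 3.0, 4.0, 5.0])
```

When every difference has the same sign, the smaller rank sum is 0 and the p-value is the smallest one attainable for that sample size, which is why a sufficient number of runs is needed before the test can report significance.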

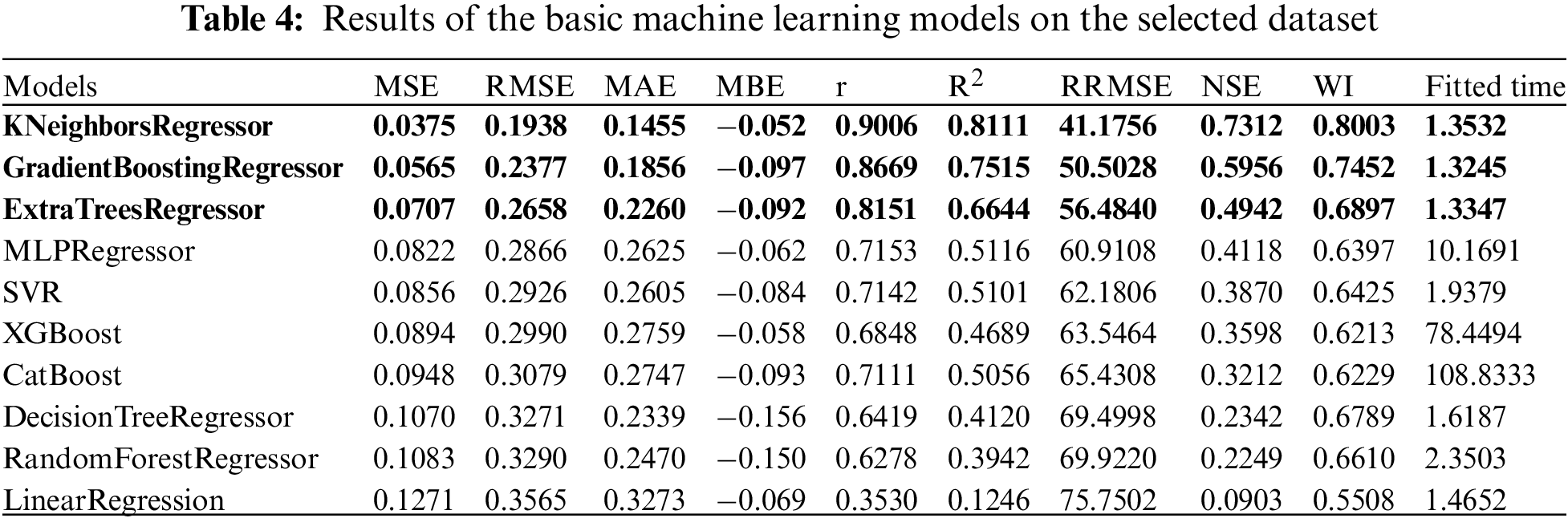

As part of our study, we used the GGO algorithm to build an ensemble of K-Nearest Neighbors, Gradient Boosting, and Extra Trees models. These models were chosen for the ensemble because together they attained the highest R-squared values. Table 4 summarizes the performance of several baseline machine learning models on the dataset described above. The models include KNeighborsRegressor, GradientBoostingRegressor, ExtraTreesRegressor, and a multilayer perceptron (MLP) regressor. The evaluation measures include Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and Mean Bias Error (MBE). Together, these metrics assess the models against several complementary criteria.
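For reference, the error measures named above can be computed as in the following minimal sketch. The sample values are hypothetical, and the sign convention for MBE (predicted minus observed) is an assumption, since conventions differ between sources.

```python
from math import sqrt


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE, MBE and R-squared for paired observations."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    # Assumed convention: error = predicted - observed.
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    mse = ss_res / n
    return {
        "MSE": mse,
        "RMSE": sqrt(mse),
        "MAE": sum(abs(e) for e in errors) / n,
        "MBE": sum(errors) / n,
        "R2": 1.0 - ss_res / ss_tot,
    }


# Hypothetical targets and predictions (not taken from the paper).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.5, 4.0]
metrics = regression_metrics(y_true, y_pred)
```

MSE, RMSE, and MAE measure the size of the errors, MBE shows whether a model systematically over- or under-predicts, and R-squared expresses the share of variance in the target that the model explains.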

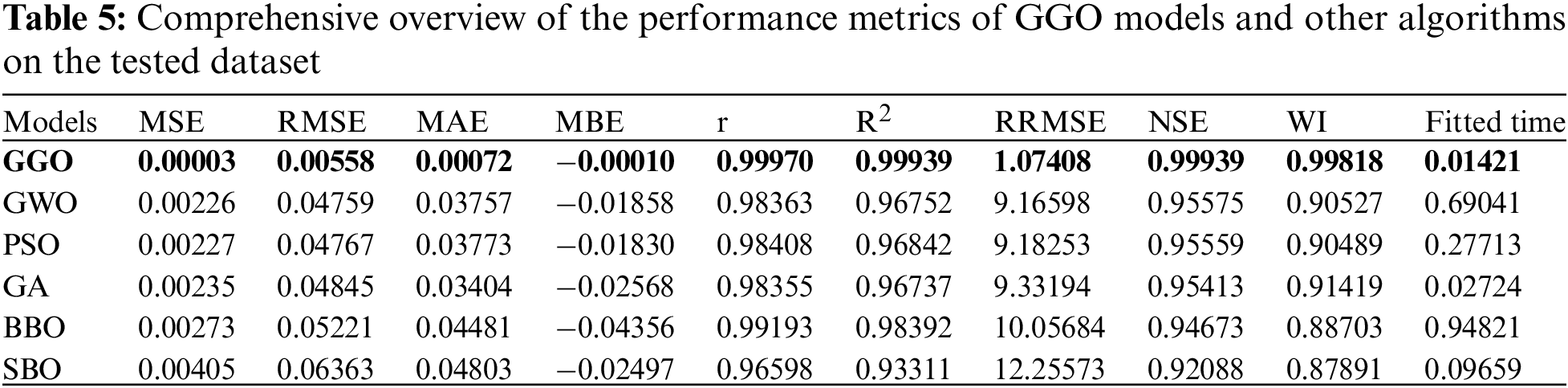

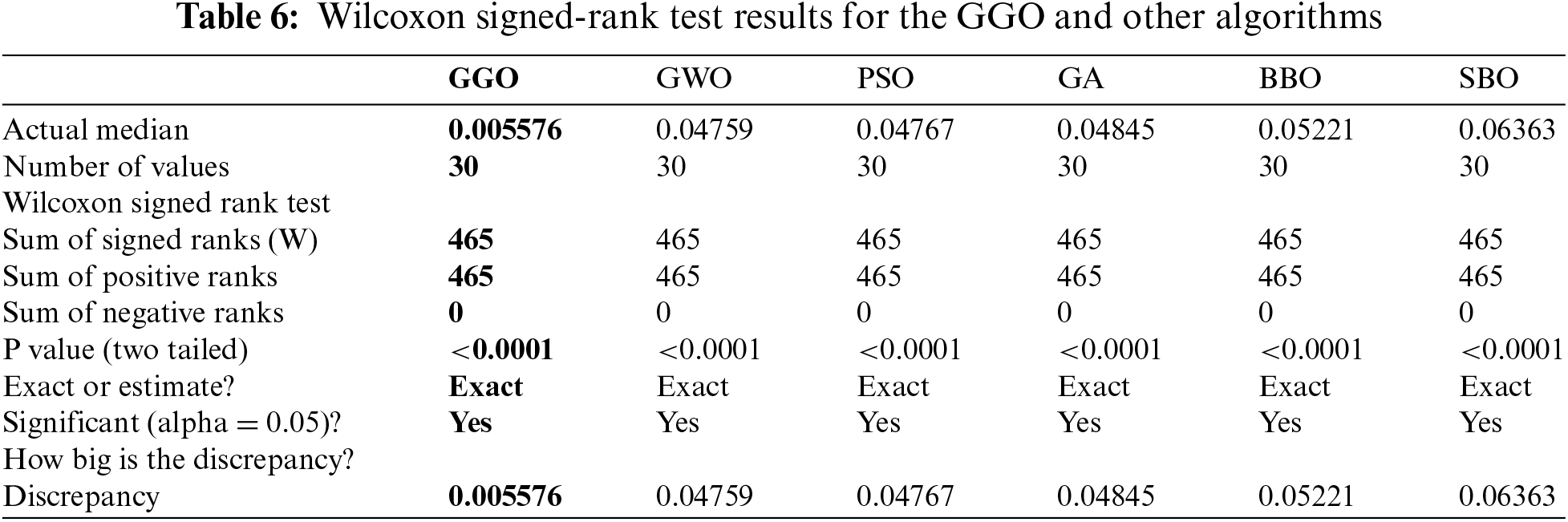

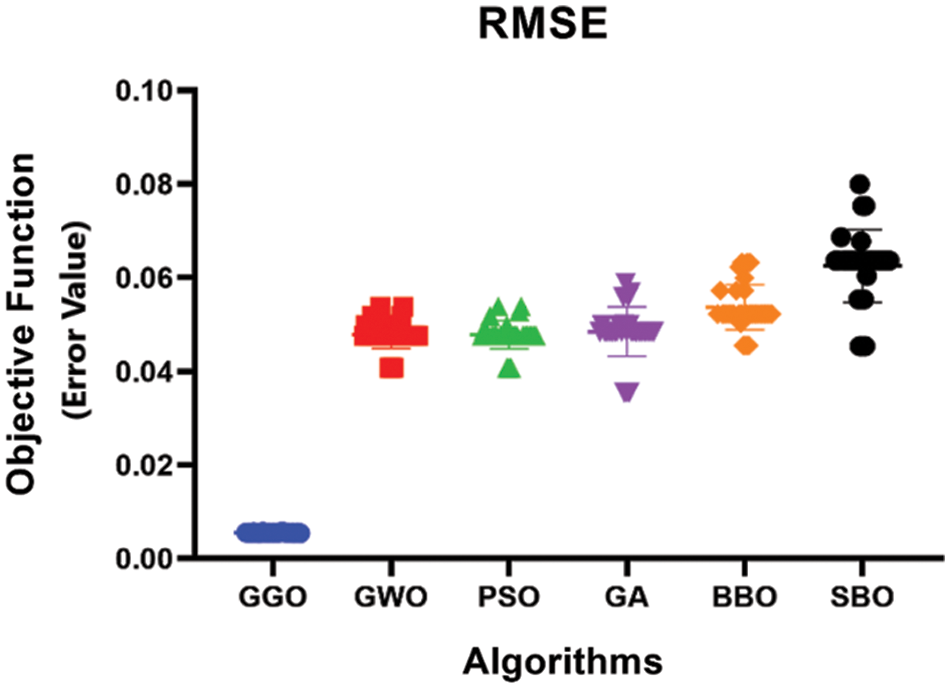

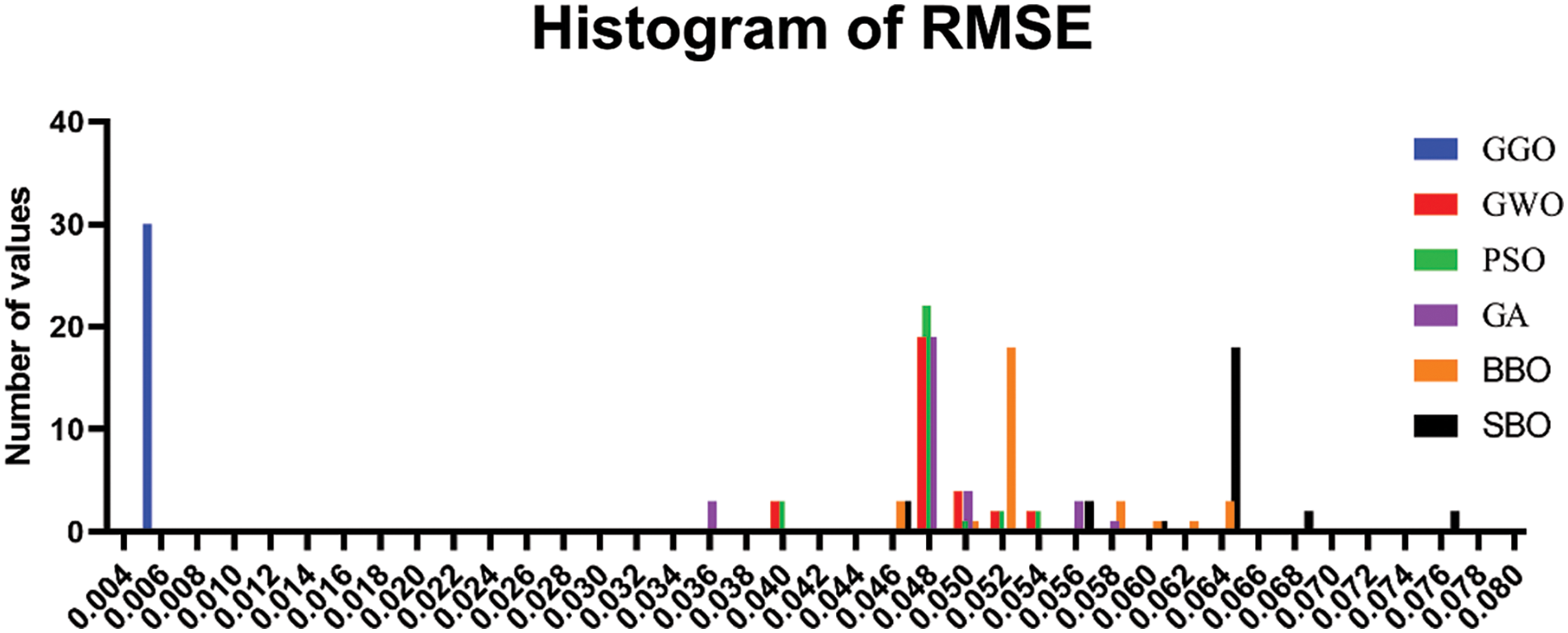

Table 5 details the performance measures of the different optimization algorithms on the tested data. Notably, the GGO model achieves the lowest error values, which indicates that it is effective at optimizing predictions. Furthermore, the small fitted time of 0.01421 for GGO suggests that it also runs faster than the other algorithms.

The other algorithms, including Grey Wolf Optimization (GWO), Particle Swarm Optimization (PSO), Genetic Algorithm (GA), Binary Bat Optimization (BBO), and Sine Cosine Algorithm (SCA), display higher error values. For instance, GWO has a higher MSE of 0.00226, RMSE of 0.04759, MAE of 0.03757, and MBE of −0.01858. These differences highlight the greater efficiency of the GGO model in refining forecasts on the tested dataset.

Table 6 presents the results of the Wilcoxon signed-rank test [47], which was used to evaluate the statistical validity of the differences between the GGO model and the other algorithms. The p-values indicate statistical significance, and the results support the consistency of GGO’s superior performance over its rivals across different parameters. The differences further show that the GGO algorithm yields better outcomes.
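To illustrate how such a test operates on paired results, the following sketch computes the signed-rank statistic and an exact two-sided p-value by enumerating every sign assignment. It is an illustration under stated assumptions rather than the procedure used to produce the tables: the error values are hypothetical, zero differences are discarded, and full enumeration is practical only for small samples.

```python
from itertools import product


def average_ranks(values):
    """Rank values in ascending order (1-based); ties share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(a, b):
    """Return (statistic, exact two-sided p-value) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # drop zero differences
    if not diffs:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    statistic = min(w_plus, w_minus)
    total = sum(ranks)
    # Under the null hypothesis every sign pattern is equally likely.
    hits = 0
    for signs in product((0, 1), repeat=len(ranks)):
        plus = sum(r for r, s in zip(ranks, signs) if s)
        if min(plus, total - plus) <= statistic:
            hits += 1
    return statistic, hits / 2 ** len(ranks)


# Hypothetical errors of two algorithms over six paired runs.
errors_a = [0.010, 0.012, 0.011, 0.013, 0.009, 0.010]
errors_b = [0.020, 0.025, 0.018, 0.030, 0.021, 0.024]
stat, p_value = wilcoxon_signed_rank(errors_a, errors_b)
```

In this toy example the first algorithm has the lower error in every run, so the statistic is 0 and the p-value is 2/64 = 0.03125, below the conventional 0.05 threshold.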

Fig. 8 compares the accuracy of GGO with that of the other methods. This visual representation makes it possible to judge the performance of GGO against the other optimization procedures and to assess their overall accuracy.

Figure 8: Accuracy investigation of GGO and compared algorithms

Fig. 9 presents a histogram of the accuracy distribution for GGO and the alternative methods. It offers an easily understandable comparison of the accuracy levels achieved by GGO and the other algorithms and shows how those levels are distributed.

Figure 9: The histogram of accuracy for GGO and other algorithms

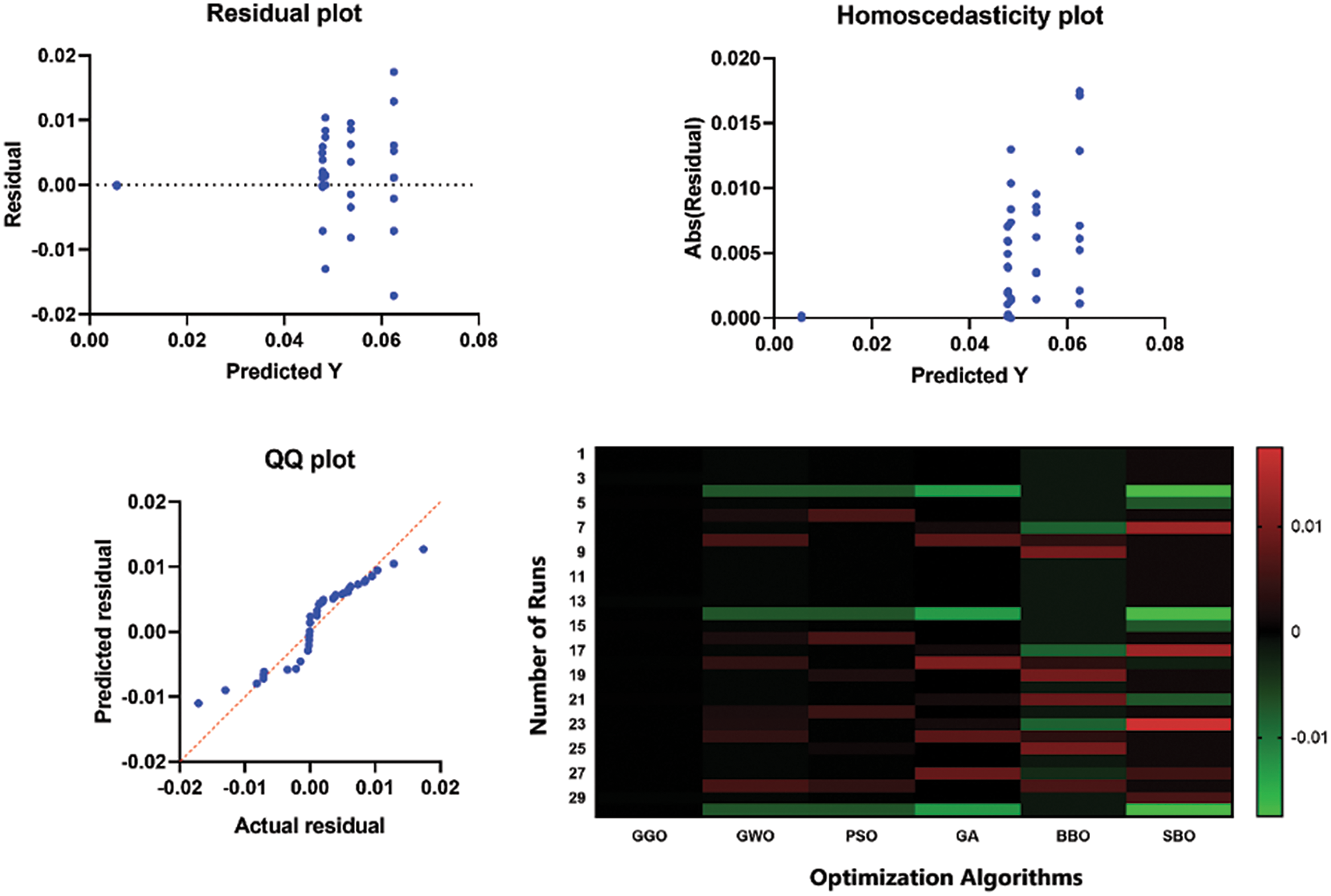

Finally, Fig. 10 shows the residual values and the heatmap for the proposed GGO algorithm and its competitors. Residuals identify the differences between predicted and observed values, while the heatmap visualizes the correlations among the numeric variables. This figure supports further analysis of the predictive behavior of GGO and facilitates its comparison with the other algorithms.

Figure 10: Residual values and heatmap for the proposed GGO algorithm and other algorithms

5 Conclusion and Future Directions

This study examined optimization algorithms, with a particular focus on the GGO algorithm and its application to ensemble models. Through experimentation and analysis, we demonstrated the superior performance of bGGO in feature selection, where it consistently achieves low error rates while remaining effective. A comprehensive assessment of baseline machine learning models and a GGO-based ensemble model produced the main results. Statistical analyses, such as ANOVA tests and the Wilcoxon signed-rank test, were also conducted. The R-squared values are high, and GGO shows consistently lower errors than the other algorithms. The correlation coefficients further support this observation. The GGO experiments yielded promising results, suggesting various opportunities for further study and practical application.

Future research should fine-tune and extend the GGO algorithm, tailor it to specific applications, and explore its potential across diverse fields. Hybrid models that combine GGO with other optimization techniques hold promise for improving results and robustness. The limitations of our study must also be acknowledged, such as the need for further empirical validation and the consideration of real-world constraints. Despite these constraints, our findings contribute valuable insights into the progression of optimization algorithms and set the stage for future advances in artificial intelligence.

Acknowledgement: Princess Nourah bint Abdulrahman University Researchers Supporting Project Number (PNURSP2024R 308), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding Statement: This research was funded by Princess Nourah bint Abdulrahman University Researchers Supporting Project.

Author Contributions: The authors confirm their contribution to the paper as follows: study conception and design: S. K. Towfek; data collection: S. K. Towfek; analysis and interpretation of results: Amel Ali Alhussan; draft manuscript preparation: Amel Ali Alhussan, S. K. Towfek. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data that support the findings of this study are openly available in Kaggle at https://www.kaggle.com/datasets/omarsobhy14/5g-quality-of-service (accessed on 01/04/2024).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. F. Palmieri, “A reliability and latency-aware routing framework for 5G transport infrastructures,” Comput. Netw., vol. 179, no. 12, pp. 107365, 2020. doi: 10.1016/j.comnet.2020.107365. [Google Scholar] [CrossRef]

2. R. Ahmed, Y. Chen, and B. Hassan, “Deep learning-driven opportunistic spectrum access (OSA) framework for cognitive 5G and beyond 5G (B5G) networks,” Ad Hoc Netw., vol. 123, no. 1, pp. 102632, 2021. doi: 10.1016/j.adhoc.2021.102632. [Google Scholar] [CrossRef]

3. A. Çalhan and M. Cicioğlu, “Handover scheme for 5G small cell networks with non-orthogonal multiple access,” Comput. Netw., vol. 183, no. 5, pp. 107601, 2020. doi: 10.1016/j.comnet.2020.107601. [Google Scholar] [CrossRef]

4. C. Zhang, Y. L. Ueng, C. Studer, and A. Burg, “Artificial intelligence for 5G and beyond 5G: Implementations, algorithms, and optimizations,” IEEE J. Emerg. Sel. Top. Circuits Syst., vol. 10, no. 2, pp. 149–163, Jun. 2020. doi: 10.1109/JETCAS.2020.3000103. [Google Scholar] [CrossRef]

5. B. Abdollahzadeh, F. S. Gharehchopogh, N. Khodadadi, and S. Mirjalili, “Mountain gazelle optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems,” Adv. Eng. Softw., vol. 174, no. 3, pp. 103282, 2022. doi: 10.1016/j.advengsoft.2022.103282. [Google Scholar] [CrossRef]

6. F. S. Gharehchopogh, B. Abdollahzadeh, S. Barshandeh, and B. Arasteh, “A multi-objective mutation-based dynamic Harris Hawks optimization for botnet detection in IoT,” Internet Things, vol. 24, no. 7, pp. 100952, 2023. doi: 10.1016/j.iot.2023.100952. [Google Scholar] [CrossRef]

7. Y. E. Kim, Y. S. Kim, and H. Kim, “Effective feature selection methods to detect IoT DDoS attack in 5G core network,” Sensors, vol. 22, no. 10, pp. 10, Jan. 2022. doi: 10.3390/s22103819. [Google Scholar] [PubMed] [CrossRef]

8. Y. He, F. Zhang, S. Mirjalili, and T. Zhang, “Novel binary differential evolution algorithm based on taper-shaped transfer functions for binary optimization problems,” Swarm Evol. Comput., vol. 69, no. 2, pp. 101022, 2022. doi: 10.1016/j.swevo.2021.101022. [Google Scholar] [CrossRef]

9. R. Zhang, L. Ning, M. Li, C. Wang, W. Li and Y. Wang, “Feature extraction of trajectories for mobility modeling in 5G NB-IoT networks,” Wirel. Netw., Aug. 2021. doi: 10.1007/978-3-030-88666-0. [Google Scholar] [CrossRef]

10. E. S. M. El-Kenawy et al., “Greylag goose optimization: Nature-inspired optimization algorithm,” Expert. Syst. Appl., vol. 238, no. 22, pp. 122147, 2024. doi: 10.1016/j.eswa.2023.122147. [Google Scholar] [CrossRef]

11. R. Purushothaman, S. P. Rajagopalan, and G. Dhandapani, “Hybridizing gray wolf optimization (GWO) with grasshopper optimization algorithm (GOA) for text feature selection and clustering,” Appl. Soft Comput., vol. 96, no. 4, pp. 106651, 2020. doi: 10.1016/j.asoc.2020.106651. [Google Scholar] [CrossRef]

12. S. Waleed, I. Ullah, W. U. Khan, A. U. Rehman, T. Rahman and S. Li, “Resource allocation of 5G network by exploiting particle swarm optimization,” Iran J. Comput Sci., vol. 4, no. 3, pp. 211–219, Sep. 2021. doi: 10.1007/s42044-021-00091-5. [Google Scholar] [CrossRef]

13. L. Liu, A. Wang, G. Sun, T. Zheng, and C. Yu, “An improved biogeography-based optimization approach for beam pattern optimizations of linear and circular antenna arrays,” Int. J. Numer. Model.: Electron. Netw., Devices Fields, vol. 34, no. 6, pp. e2910, 2021. doi: 10.1002/jnm.2910. [Google Scholar] [CrossRef]

14. J. Kaur, M. A. Khan, M. Iftikhar, M. Imran, and Q. Emad Ul Haq, “Machine learning techniques for 5G and beyond,” IEEE Access, vol. 9, pp. 23472–23488, 2021. doi: 10.1109/ACCESS.2021.3051557. [Google Scholar] [CrossRef]

15. D. Bega, M. Gramaglia, A. Banchs, V. Sciancalepore, and X. Costa-Pérez, “A machine learning approach to 5G infrastructure market optimization,” IEEE Trans. Mob. Comput., vol. 19, no. 3, pp. 498–512, Mar. 2020. doi: 10.1109/TMC.2019.2896950. [Google Scholar] [CrossRef]

16. H. N. Fakhouri, S. Alawadi, F. M. Awaysheh, I. B. Hani, M. Alkhalaileh and F. Hamad, “A comprehensive study on the role of machine learning in 5G security: Challenges, technologies, and solutions,” Electronics, vol. 12, no. 22, pp. 22, Jan. 2023. doi: 10.3390/electronics12224604. [Google Scholar] [CrossRef]

17. Y. Gong and L. Zhang, “Improved K-nearest neighbor algorithm for indoor positioning using 5G channel state information,” in 2021 IEEE 5th Inf. Technol., Netw., Electron. Autom. Control Conf. (ITNEC), Xi’an, China, Oct. 2021, pp. 333–337. doi: 10.1109/ITNEC52019.2021.9587088. [Google Scholar] [CrossRef]

18. C. Bentéjac, A. Csörgő, and G. Martínez-Muñoz, “A comparative analysis of gradient boosting algorithms,” Artif. Intell. Rev., vol. 54, no. 3, pp. 1937–1967, 2021. doi: 10.1007/s10462-020-09896-5. [Google Scholar] [CrossRef]

19. Y. A. Alsariera, V. E. Adeyemo, A. O. Balogun, and A. K. Alazzawi, “AI meta-learners and extra-trees algorithm for the detection of phishing websites,” IEEE Access, vol. 8, pp. 142532–142542, 2020. doi: 10.1109/ACCESS.2020.3013699. [Google Scholar] [CrossRef]

20. R. Kruse, S. Mostaghim, C. Borgelt, C. Braune, and M. Steinbrecher, “Multi-layer perceptrons,” in R. Kruse, S. Mostaghim, C. Borgelt, C. Braune, M. Steinbrecher (Eds.), Computational Intelligence: A Methodological Introduction, Cham: Springer International Publishing, 2022, pp. 53–124. doi: 10.1007/978-3-030-42227-1_5. [Google Scholar] [CrossRef]

21. F. Zhang and L. J. O’Donnell, “Chapter 7—support vector regression,” in A. Mechelli, S. Vieira (Eds.), Machine Learning, Academic Press, 2020, pp. 123–140. doi: 10.1016/B978-0-12-815739-8.00007-9. [Google Scholar] [CrossRef]

22. X. Liu and T. Wang, “Application of XGBOOST model on potential 5G mobile users forecast,” in J. Sun, Y. Wang, M. Huo, L. Xu (Eds.), Signal and Information Processing, Networking and Computers, Singapore: Springer Nature, 2023, pp. 1492–1500. doi: 10.1007/978-981-19-3387-5_177. [Google Scholar] [CrossRef]

23. L. Zhang, J. Guo, B. Guo, and J. Shen, “Root cause analysis of 5G base station faults based on Catboost algorithm,” in Y. Wang, J. Zou, L. Xu, Z. Ling, X. Cheng (Eds.), Signal and Information Processing, Networking and Computers, Singapore: Springer Nature, 2024, pp. 224–232. doi: 10.1007/978-981-97-2124-5_27. [Google Scholar] [CrossRef]

24. T. Jo, “Decision tree,” in T. Jo (Ed.), Machine Learning Foundations: Supervised, Unsupervised and Advanced Learning, Cham: Springer International Publishing, 2021, pp. 141–165. doi: 10.1007/978-3-030-65900-4_7. [Google Scholar] [CrossRef]

25. G. K. Uyanık and N. Güler, “A study on multiple linear regression analysis,” Proc. Soc. Behav. Sci., vol. 106, pp. 234–240, 2013. doi: 10.1016/j.sbspro.2013.12.027. [Google Scholar] [CrossRef]

26. A. Priyanka, P. Gauthamarayathirumal, and C. Chandrasekar, “Machine learning algorithms in proactive decision making for handover management from 5G & beyond 5G,” Egypt. Inform. J., vol. 24, no. 3, pp. 100389, 2023. doi: 10.1016/j.eij.2023.100389. [Google Scholar] [CrossRef]

27. J. Norolahi and P. Azmi, “A machine learning based algorithm for joint improvement of power control, link adaptation and capacity in beyond 5G communication systems,” Telecommun. Syst., vol. 83, no. 4, pp. 323–337, 2023. doi: 10.1007/s11235-023-01017-1. [Google Scholar] [CrossRef]

28. K. Venkateswararao, P. Swain, S. S. Jha, I. Ioannou, and A. Pitsillides, “A novel power consumption optimization framework in 5G heterogeneous networks,” Comput. Netw., vol. 220, no. 7, pp. 109487, 2023. doi: 10.1016/j.comnet.2022.109487. [Google Scholar] [CrossRef]

29. E. Chatzoglou and S. K. Goudos, “Beam-selection for 5G/B5G networks using machine learning: A comparative study,” Sensors, vol. 23, no. 6, pp. 6, 2023. doi: 10.3390/s23062967. [Google Scholar] [PubMed] [CrossRef]

30. S. Sumathy, M. Revathy, and R. Manikandan, “Improving the state of materials in cybersecurity attack detection in 5G wireless systems using machine learning,” Mater. Today: Proc., vol. 81, no. 2, pp. 700–707, 2023. doi: 10.1016/j.matpr.2021.04.171. [Google Scholar] [CrossRef]

31. N. Bacanin et al., “Energy efficient offloading mechanism using particle swarm optimization in 5G enabled edge nodes,” Cluster Comput., vol. 26, no. 1, pp. 587–598, 2023. doi: 10.1007/s10586-022-03609-z. [Google Scholar] [CrossRef]

32. X. Ma et al., “Energy consumption optimization of 5G base stations considering variable threshold sleep mechanism,” Energy Rep., vol. 9, no. 3, pp. 34–42, 2023. doi: 10.1016/j.egyr.2023.04.026. [Google Scholar] [CrossRef]

33. Q. Abu Al-Haija and S. Zein-Sabatto, “An efficient deep-learning-based detection and classification system for cyber-attacks in IoT communication networks,” Electronics, vol. 9, no. 12, Dec. 2020. doi: 10.3390/electronics9122152. [Google Scholar] [CrossRef]

34. W. Jiang, “Graph-based deep learning for communication networks: A survey,” Comput. Commun., vol. 185, no. 1, pp. 40–54, 2022. doi: 10.1016/j.comcom.2021.12.015. [Google Scholar] [CrossRef]

35. V. C. Prakash, G. Nagarajan, and N. Priyavarthan, “Channel coverage identification conditions for massive MIMO millimeter wave at 28 and 39 GHz using fine K-nearest neighbor machine learning algorithm,” in E. S. Gopi (Ed.), Machine Learning, Deep Learning and Computational Intelligence for Wireless Communication, Singapore: Springer, 2021, pp. 143–163. doi: 10.1007/978-981-16-0289-4_12. [Google Scholar] [CrossRef]

36. S. I. Majid, S. W. Shah, and S. N. K. Marwat, “Applications of extreme gradient boosting for intelligent handovers from 4G To 5G (mm Waves) technology with partial radio contact,” Electronics, vol. 9, no. 4, pp. 4, Apr. 2020. doi: 10.3390/electronics9040545. [Google Scholar] [CrossRef]

37. S. K. Patel, J. Surve, V. Katkar, and J. Parmar, “Machine learning assisted metamaterial-based reconfigurable antenna for low-cost portable electronic devices,” Sci. Rep., vol. 12, no. 1, pp. 12354, Jul. 2022. doi: 10.1038/s41598-022-16678-2. [Google Scholar] [PubMed] [CrossRef]

38. M. Saedi, A. Moore, P. Perry, and C. Luo, “RBS-MLP: A deep learning based rogue base station detection approach for 5G mobile networks,” in IEEE Trans. Veh. Technol., Mar. 2024. Accessed: May 12, 2024. [Online]. Available: https://openaccess.city.ac.uk/id/eprint/32451/ [Google Scholar]

39. Y. Li, J. Wang, X. Sun, Z. Li, M. Liu and G. Gui, “Smoothing-aided support vector machine based nonstationary video traffic prediction towards B5G networks,” IEEE Trans. Veh. Technol., vol. 69, no. 7, pp. 7493–7502, Jul. 2020. doi: 10.1109/TVT.2020.2993262. [Google Scholar] [CrossRef]

40. C. Karthikraja, J. Senthilkumar, R. Hariharan, G. Usha Devi, Y. Suresh and V. Mohanraj, “An empirical intrusion detection system based on XGBoost and bidirectional long-short term model for 5G and other telecommunication technologies,” Comput. Intell., vol. 38, no. 4, pp. 1216–1231, 2022. doi: 10.1111/coin.12497. [Google Scholar] [CrossRef]

41. R. Sanjeetha, A. Raj, K. Saivenu, M. I. Ahmed, B. Sathvik and A. Kanavalli, “Detection and mitigation of botnet based DDoS attacks using catboost machine learning algorithm in SDN environment,” IJATEE, vol. 8, no. 76, pp. 445–461, Mar. 2021. doi: 10.19101/IJATEE.2021.874021. [Google Scholar] [CrossRef]

42. P. T. Kalaivaani, R. Krishnamoorthy, A. S. Reddy, and A. D. D. Chelladurai, “Adaptive multimode decision tree classification model using effective system analysis in IDS for 5G and IoT security issues,” in S. Velliangiri, M. Gunasekaran, P. Karthikeyan (Eds.), Secure Communication for 5G and IoT Networks, Cham: Springer International Publishing, 2022, pp. 141–158. doi: 10.1007/978-3-030-79766-9_9. [Google Scholar] [CrossRef]

43. S. Imtiaz, G. P. Koudouridis, H. Ghauch, and J. Gross, “Random forests for resource allocation in 5G cloud radio access networks based on position information,” J. Wirel. Com. Netw., vol. 2018, no. 1, pp. 142, Jun. 2018. doi: 10.1186/s13638-018-1149-7. [Google Scholar] [CrossRef]

44. M. Qasim, M. Amin, and T. Omer, “Performance of some new Liu parameters for the linear regression model,” Commun. Stat.-Theory Methods, vol. 49, no. 17, pp. 4178–4196, 2020. doi: 10.1080/03610926.2019.1595654. [Google Scholar] [CrossRef]

45. K. Abbas, T. A. Khan, M. Afaq, and W. C. Song, “Ensemble learning-based network data analytics for network slice orchestration and management: An intent-based networking mechanism,” in NOMS 2022–2022 IEEE/IFIP Netw. Oper. Manag. Symp., Apr. 2022, pp. 1–5. doi: 10.1109/NOMS54207.2022.9789706. [Google Scholar] [CrossRef]

46. F. S. Samidi, N. A. Mohamed Radzi, K. H. Mohd Azmi, N. Mohd Aripin, and N. A. Azhar, “5G technology: ML hyperparameter tuning analysis for subcarrier spacing prediction model,” Appl. Sci., vol. 12, no. 16, pp. 16, Jan. 2022. doi: 10.3390/app12168271. [Google Scholar] [CrossRef]

47. X. Li et al., “A novel index of functional connectivity: Phase lag based on Wilcoxon signed rank test,” Cogn. Neurodyn., vol. 15, no. 4, pp. 621–636, Aug. 2021. doi: 10.1007/s11571-020-09646-x. [Google Scholar] [PubMed] [CrossRef]

Copyright © 2024 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
