Open Access

ARTICLE

An Elite-Class Teaching-Learning-Based Optimization for Reentrant Hybrid Flow Shop Scheduling with Bottleneck Stage

College of Automation, Wuhan University of Technology, Wuhan, 430070, China

* Corresponding Author: Mingbo Li. Email:

(This article belongs to the Special Issue: Metaheuristic-Driven Optimization Algorithms: Methods and Applications)

Computers, Materials & Continua 2024, 79(1), 47-63. https://doi.org/10.32604/cmc.2024.049481

Received 09 January 2024; Accepted 11 March 2024; Issue published 25 April 2024

Abstract

Bottleneck stages and reentrance often exist in real-life manufacturing processes; however, previous research has rarely addressed these two processing conditions together in a scheduling problem. In this study, a reentrant hybrid flow shop scheduling problem (RHFSP) with a bottleneck stage is considered, and an elite-class teaching-learning-based optimization (ETLBO) algorithm is proposed to minimize maximum completion time. To produce high-quality solutions, teachers are divided into formal ones and substitute ones, and multiple classes are formed. The teacher phase is composed of teacher competition and teacher teaching. The learner phase is replaced with a reinforcement search of the elite class. Adaptive adjustment on teachers and classes is established based on class quality, which is determined by the number of elite solutions in class. Extensive experimental results demonstrate the effectiveness of the new strategies and show that ETLBO has a significant advantage in solving the considered RHFSP.

1 Introduction

A hybrid flow shop scheduling problem (HFSP) is a typical scheduling problem that exists widely in many industries such as petrochemicals, chemical engineering, and semiconductor manufacturing [1,2]. The term ‘reentrant’ means a job may be processed multiple times on the same machine or stage [3]. A typical form of reentrance is cyclic reentrance [4,5], in which each job is cycled through the manufacturing process. As an extension of HFSP, RHFSP is extensively used in electronic manufacturing industries, including printed circuit board production [6] and semiconductor wafer manufacturing [7].

RHFSP has been widely investigated and many results have been obtained in the past decade. Xu et al. [8] applied an improved moth-flame optimization algorithm to minimize maximum completion time and reduce the comprehensive impact of resources and environment. Zhou et al. [9] proposed a hybrid differential evolution algorithm with an estimation of distribution algorithm to minimize total weighted completion time. Cho et al. [10] employed a Pareto genetic algorithm with a local search strategy and a Minkowski distance-based crossover operator to minimize maximum completion time and total tardiness. Shen et al. [11] designed a modified teaching-learning-based optimization (TLBO) algorithm to minimize maximum completion time and total tardiness, in which a Pareto-based ranking method and a training phase are adopted.

In recent years, RHFSP with real-life constraints has attracted much attention. Lin et al. [12] proposed a hybrid harmony search and genetic algorithm (HHSGA) for RHFSP with limited buffer to minimize weighted values of maximum completion time and mean flowtime. For RHFSP with missing operations, Tang et al. [13] designed an improved dual-population genetic algorithm (IDPGA) to minimize maximum completion time and energy consumption. Zhang et al. [14] considered machine eligibility constraints and applied a discrete differential evolution algorithm (DDE) with a modified crossover operator to minimize total tardiness. Chamnanlor et al. [15] adopted a genetic algorithm hybridized with ant colony optimization for the problem with time window constraints. Wu et al. [16] applied an improved multi-objective evolutionary algorithm based on decomposition to solve the problem with bottleneck stage and batch processing machines.

In HFSP with

As stated above, RHFSP with real-life constraints such as machine eligibility and limited buffer has been investigated; however, RHFSP with a bottleneck stage, which arises in real-life manufacturing processes such as seamless steel tube cold drawing production [16], is seldom considered. Modelling and optimizing reentrance and the bottleneck stage together can yield results with high application value, so it is necessary to deal with RHFSP with a bottleneck stage.

TLBO [22–26] is a population-based algorithm inspired by the passing on of knowledge within a classroom environment and consists of a teacher phase and a learner phase. TLBO [27–31] has become a main approach to production scheduling [32–35] due to its simple structure and few parameters. TLBO has been successfully applied to solve RHFSP [11], where its search ability and advantages have been tested; however, it is rarely used to solve RHFSP with a bottleneck stage, which is an extension of RHFSP. The successful applications of TLBO to RHFSP suggest that TLBO has potential advantages in addressing RHFSP with a bottleneck stage, so TLBO is chosen.
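For readers unfamiliar with the basic scheme, the two phases of the original continuous TLBO [22] can be sketched as follows. This is a minimal illustration on a toy sphere function, not the discrete ETLBO developed in this paper; the function names, population size, bounds and iteration count are illustrative assumptions.

```python
import random

def sphere(x):
    """Toy objective (assumption): sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)

def tlbo(objective, dim=5, pop_size=20, iterations=100, low=-10.0, high=10.0, seed=0):
    rng = random.Random(seed)
    clip = lambda v: max(low, min(high, v))
    pop = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(pop_size)]
    fit = [objective(x) for x in pop]

    for _ in range(iterations):
        # Teacher phase: move each learner toward the best solution (the teacher).
        teacher = pop[min(range(pop_size), key=fit.__getitem__)][:]
        mean = [sum(x[d] for x in pop) / pop_size for d in range(dim)]
        for i in range(pop_size):
            tf = rng.randint(1, 2)  # teaching factor, 1 or 2
            cand = [clip(pop[i][d] + rng.random() * (teacher[d] - tf * mean[d]))
                    for d in range(dim)]
            f = objective(cand)
            if f < fit[i]:  # greedy acceptance
                pop[i], fit[i] = cand, f

        # Learner phase: each learner interacts with a randomly chosen classmate.
        for i in range(pop_size):
            j = rng.choice([k for k in range(pop_size) if k != i])
            # Move away from a worse classmate, toward a better one.
            sign = 1.0 if fit[i] < fit[j] else -1.0
            cand = [clip(pop[i][d] + sign * rng.random() * (pop[i][d] - pop[j][d]))
                    for d in range(dim)]
            f = objective(cand)
            if f < fit[i]:
                pop[i], fit[i] = cand, f

    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]
```

Calling `tlbo(sphere)` returns the best position found and its objective value. For scheduling problems the arithmetic updates are replaced by discrete operators, as in the algorithm proposed here.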

In this study, reentrance and a bottleneck stage are simultaneously investigated in a hybrid flow shop, and an elite-class teaching-learning-based optimization (ETLBO) is developed. The main contributions can be summarized as follows: (1) RHFSP with bottleneck stage is solved and a new algorithm called ETLBO is proposed to minimize maximum completion time. (2) In ETLBO, teachers are divided into formal ones and substitute ones. The teacher phase consists of teacher competition and teacher teaching, and the learner phase is replaced by reinforcement search of the elite class; adaptive adjustment on teachers and classes is applied based on class quality, and class quality is determined by the number of elite solutions in class. (3) Extensive experiments are conducted to test the performance of ETLBO by comparing it with other existing algorithms from the literature. The computational results demonstrate that the new strategies are effective and ETLBO has promising advantages in solving RHFSP with bottleneck stage.

The remainder of the paper is organized as follows. The problem is described in Section 2. Section 3 presents the proposed ETLBO for RHFSP with the bottleneck stage. Numerical experiments on ETLBO are reported in Section 4. Conclusions and some topics of future research are given in the final section.

2 Problem Description

RHFSP with bottleneck stage is described as follows. There are

There are the following constraints on jobs and machines:

All jobs and machines are available at time 0.

Each machine can process at most one operation at a time.

No jobs may be processed on more than one machine at a time.

Operations cannot be interrupted.
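The machine and job constraints above can be checked mechanically on any candidate schedule. The sketch below assumes a schedule is given as a list of `(job, machine, start, end)` tuples, one uninterrupted interval per operation; this representation and the function name `is_feasible` are illustrative assumptions rather than part of the original model.

```python
from collections import defaultdict

def is_feasible(operations):
    """operations: iterable of (job, machine, start, end) tuples."""
    by_machine = defaultdict(list)
    by_job = defaultdict(list)
    for job, machine, start, end in operations:
        if start < 0 or end < start:  # nothing may start before time 0
            return False
        by_machine[machine].append((start, end))
        by_job[job].append((start, end))

    def no_overlap(intervals):
        # After sorting by start time, only consecutive intervals need checking.
        intervals = sorted(intervals)
        return all(prev_end <= next_start
                   for (_, prev_end), (next_start, _) in zip(intervals, intervals[1:]))

    # Each machine processes at most one operation at a time,
    # and no job is on more than one machine at a time.
    return (all(no_overlap(v) for v in by_machine.values())
            and all(no_overlap(v) for v in by_job.values()))
```

Non-preemption is implicit in this representation because each operation occupies a single interval.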

The problem can be divided into two sub-problems: scheduling and machine assignment. Scheduling determines the processing sequence of all jobs on each machine. Machine assignment selects an appropriate machine at each stage for each job. The two sub-problems are strongly coupled, since the optimization content of scheduling is directly determined by the machine assignment. To obtain an optimal solution, it is necessary to combine the two sub-problems efficiently.

The goal of the problem is to minimize maximum completion time when all constraints are met.

where

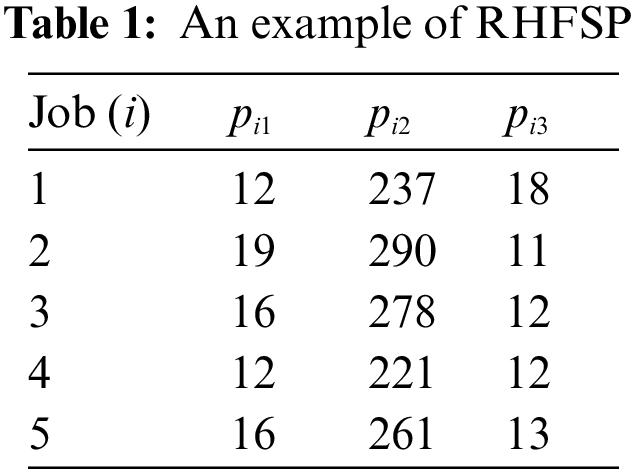

An example is shown in Table 1, where

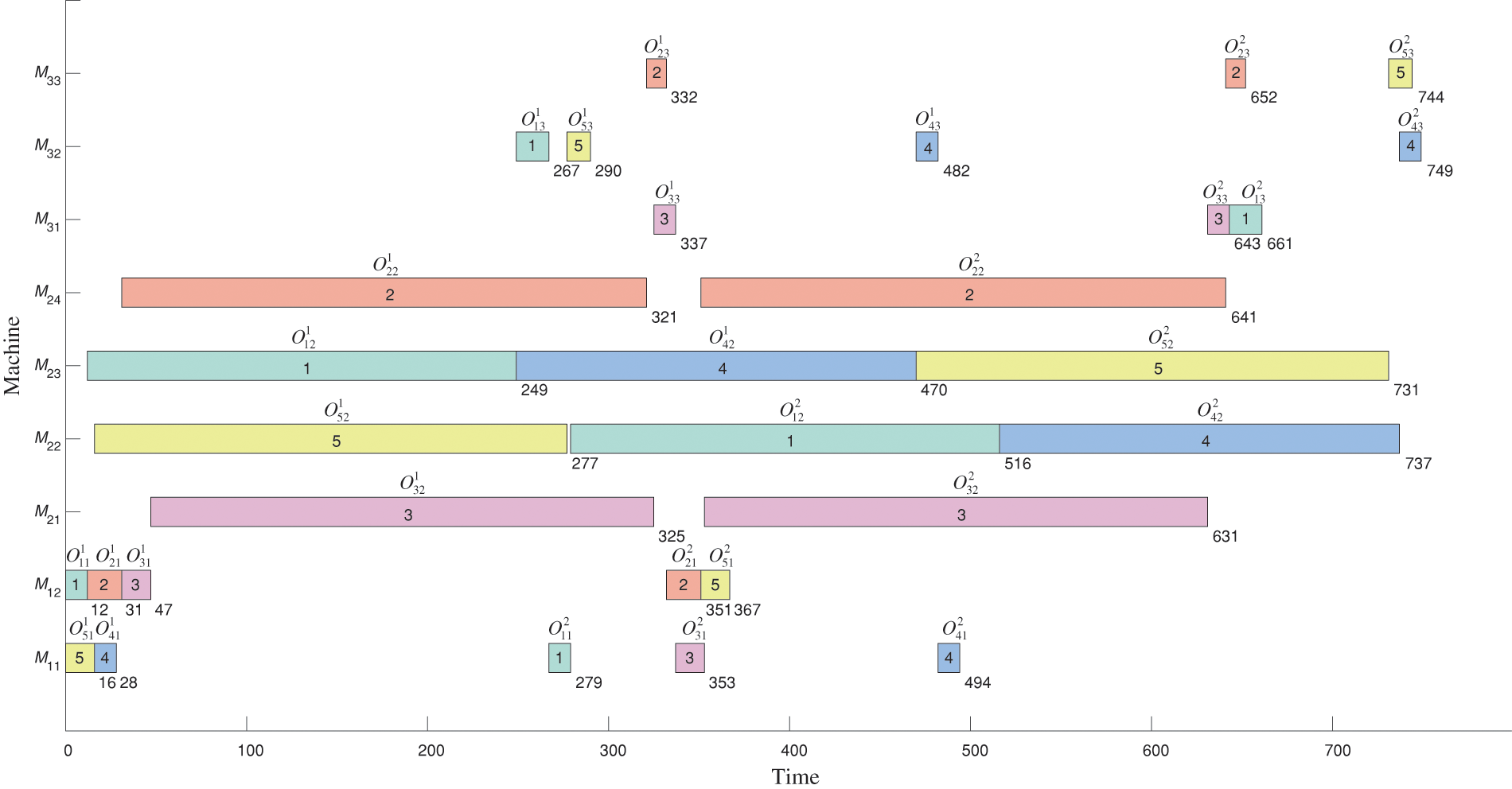

Figure 1: A schedule of the example

3 ETLBO for RHFSP with Bottleneck Stage

Some results have been obtained on TLBO with multiple classes; however, in the existing TLBO [36–39], competition among teachers is not used, and reinforcement search of some elite solutions and adaptive adjustment on classes and teachers are rarely considered. To effectively solve RHFSP with bottleneck stage, ETLBO is constructed based on reinforcement search of elite class and adaptive adjustment.

3.1 Initialization and Formation of Multiple Classes

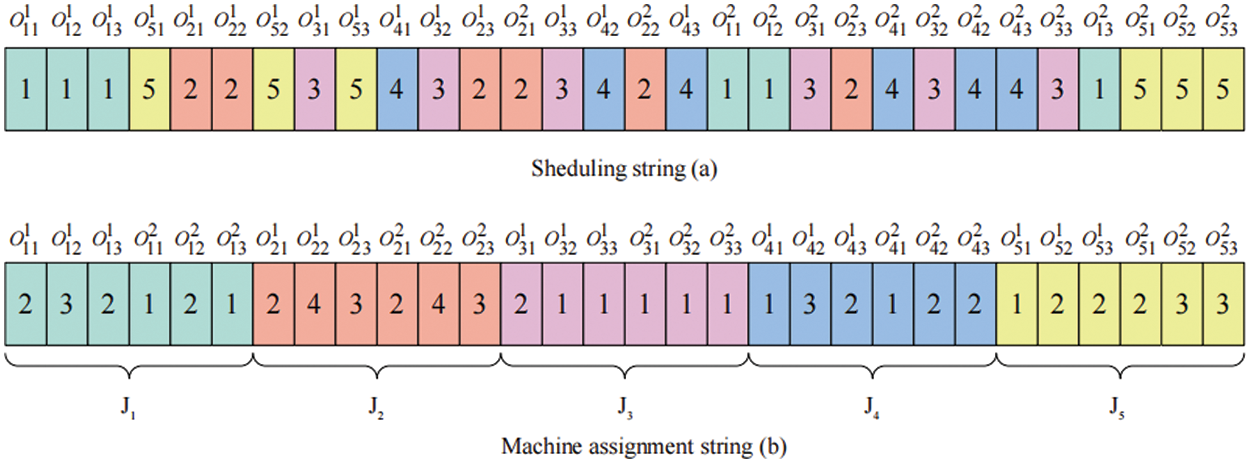

To solve the considered RHFSP with reentrant feature, a two-string representation is used [12]. For RHFSP with

In scheduling string, the frequency of occurrence is

The decoding procedure to deal with reentrant feature is shown below. Start with job

For the example in Section 2, the solution is shown in Fig. 2. For job

Figure 2: A coding of the example
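Because the formal definitions did not survive in this version of the text, the following sketch shows one common way to decode a two-string solution for a reentrant hybrid flow shop. It assumes that every job visits the stages in order and repeats this route a fixed number of times, that the k-th occurrence of a job in the scheduling string denotes its k-th operation, and that the machine string stores one machine index per operation. Bottleneck-stage handling is not modelled, and all names are hypothetical.

```python
def decode(schedule, machines, proc_time, num_stages, num_passes):
    """Return the makespan of a two-string solution.

    schedule  : list of job ids; each job appears num_stages * num_passes times
    machines  : dict job -> list of machine indices, one per operation
    proc_time : dict (job, stage) -> processing time (same on every pass, an assumption)
    """
    job_ready = {}       # completion time of each job's previous operation
    machine_ready = {}   # time at which each (stage, machine) becomes free
    op_count = {}        # number of operations already scheduled per job

    for job in schedule:
        k = op_count.get(job, 0)
        stage = k % num_stages              # cyclic reentrance through the stages
        machine = (stage, machines[job][k])
        start = max(job_ready.get(job, 0), machine_ready.get(machine, 0))
        end = start + proc_time[(job, stage)]
        job_ready[job] = machine_ready[machine] = end
        op_count[job] = k + 1

    # Every job must have received all of its operations.
    assert all(c == num_stages * num_passes for c in op_count.values())
    return max(job_ready.values())
```

For two jobs, two stages with one machine each and two passes, with processing times 2 and 3 for job 1 and 1 and 2 for job 2, the alternating string `[1, 2, 1, 2, 1, 2, 1, 2]` decodes to a makespan of 12.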

Initial population

The formation of multiple classes is described as follows:

1. Sort all solutions of

2. Divide all learners into

3. Each class

where

The remaining

Global search

Ten neighborhood structures

Multiple neighborhood search is executed in the following way. Let
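The ten neighborhood structures and the exact acceptance rule are not recoverable from this version of the text. As a rough illustration of the general idea only, the sketch below applies a list of neighborhood moves in order and returns to the first one after each improvement; the two moves shown (swap and insert on the scheduling string) and all names are hypothetical stand-ins.

```python
import random

def swap_move(seq, rng):
    """Exchange two randomly chosen positions of the scheduling string."""
    s = seq[:]
    i, j = rng.sample(range(len(s)), 2)
    s[i], s[j] = s[j], s[i]
    return s

def insert_move(seq, rng):
    """Remove one element and reinsert it at a random position."""
    s = seq[:]
    item = s.pop(rng.randrange(len(s)))
    s.insert(rng.randrange(len(s) + 1), item)
    return s

def multiple_neighborhood_search(solution, cost, moves, rng):
    """Try the neighborhoods in order; restart from the first after an improvement."""
    best, best_cost = solution, cost(solution)
    g = 0
    while g < len(moves):
        cand = moves[g](best, rng)
        c = cost(cand)
        if c < best_cost:
            best, best_cost, g = cand, c, 0
        else:
            g += 1
    return best, best_cost
```

Both moves only permute the string, so the number of occurrences of each job is preserved and the result remains a valid scheduling string.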

Class evolution is composed of teacher competition, teacher’s teaching and reinforcement search of elite class. Let

Teacher competition is described as follows:

1. For each teacher

2. For each formal teacher

When

Teacher teaching is shown below. For each learner

Reinforcement search of elite class is performed in the following way. Sort all solutions in population

Unlike the previous TLBO [40–43], ETLBO uses reinforcement search of the elite class in place of the learner phase. Since elite solutions are mostly composed of teachers and good learners, better solutions are more likely to be generated by global search and multiple neighborhood search on these elite solutions, and the waste of computational resources can be avoided on interactive learning between those worse learners with bigger

3.4 Adaptive Adjustment on Teachers and Classes

Class quality is determined by the number of elite solutions in class. The quality

Adaptive adjustment on teachers and classes is shown below:

(1) Sort all classes in descending order of

(2) For each solution

(3) Let

(4)

When roulette selection is done, selection probability

In step (1), communication between classes

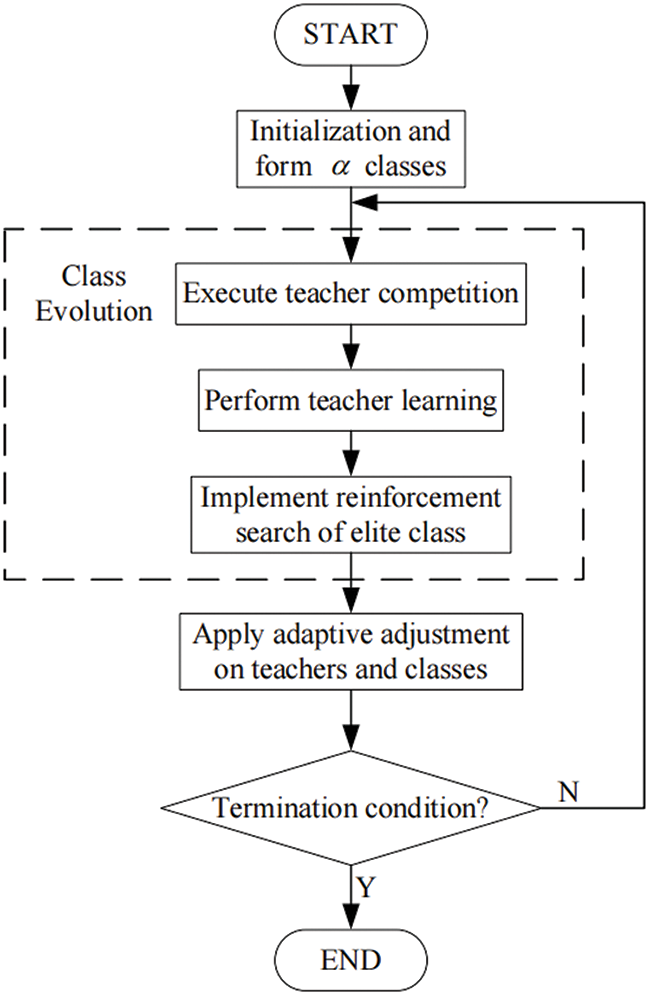
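Class quality and roulette selection, as used in the adaptive adjustment of Section 3.4, can be sketched as follows. The text states only that class quality is determined by the number of elite solutions in a class; taking the elite solutions to be the `num_elite` best of the whole population, and making the selection probability proportional to a given weight, are assumptions of this sketch, as are all names.

```python
import random

def class_quality(classes, makespan, num_elite):
    """Count, for each class, how many of the num_elite best solutions it holds.

    classes  : list of classes, each a list of solution ids
    makespan : dict solution id -> objective value (smaller is better)
    """
    ranked = sorted((s for c in classes for s in c), key=makespan.__getitem__)
    elite = set(ranked[:num_elite])
    return [sum(s in elite for s in c) for c in classes]

def roulette_select(weights, rng):
    """Return an index with probability proportional to its weight."""
    total = sum(weights)
    pick = rng.random() * total          # uniform in [0, total)
    acc = 0.0
    for idx, w in enumerate(weights):
        acc += w
        if pick < acc:
            return idx
    return len(weights) - 1  # reached only if all weights are zero or on round-off
```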

The search procedure of ETLBO is shown below:

1. Randomly produce an initial population

2. Execute teacher competition.

3. Perform teacher’s teaching.

4. Implement reinforcement search of elite class.

5. Apply adaptive adjustment on teachers and classes.

6. If the termination condition is not met, go to step 2; otherwise, stop the search and output the best solution found.

Fig. 3 shows the flow chart of ETLBO.
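The six steps above map onto a simple control loop. The skeleton below only fixes the order of the phases; every phase is passed in as a callable, the iteration limit stands in for the unspecified termination condition, and all names are hypothetical rather than taken from the authors' implementation.

```python
def etlbo(init, phases, evaluate, max_iterations):
    """Skeleton of the search procedure.

    init     : callable returning the initial state (population, teachers, classes)
    phases   : ordered callables state -> state (steps 2 to 5)
    evaluate : callable state -> (best_solution, best_value)
    """
    state = init()                                   # step 1
    best, best_value = evaluate(state)
    for _ in range(max_iterations):                  # step 6: termination condition
        for phase in phases:                         # steps 2 to 5, in order
            state = phase(state)
        cand, value = evaluate(state)
        if value < best_value:                       # keep the best solution found
            best, best_value = cand, value
    return best, best_value
```

In a real implementation `phases` would hold teacher competition, teacher's teaching, reinforcement search of the elite class and adaptive adjustment, in that order.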

Figure 3: Flow chart of ETLBO

ETLBO has the following new features. Teachers are divided into formal ones and substitute ones. Teacher competition is applied between formal and substitute teachers. Teacher’s teaching is performed, and reinforcement search of elite class is used to replace the learner phase. Adaptive adjustment on teachers and classes is conducted based on class quality assessment. These features promote a balance between exploration and exploitation, so that good results can be obtained.

Extensive experiments are conducted to test the performance of ETLBO for RHFSP with bottleneck stage. All experiments are implemented in Microsoft Visual C++ 2022 and run on a computer with an i7-8750H CPU (2.20 GHz) and 24 GB of RAM.

4.1 Test Instance and Comparative Algorithms

60 instances are randomly produced. For each instance depicted by

For the considered RHFSP with maximum completion time minimization, there are no existing methods. To demonstrate the advantages of ETLBO for the RHFSP with bottleneck stage, hybrid harmony search and genetic algorithm (HHSGA, [12]), improved dual-population genetic algorithm (IDPGA, [13]) and discrete differential evolution algorithm (DDE, [14]) are selected as comparative algorithms.

Lin et al. [12] proposed an algorithm named HHSGA for RHFSP with limited buffer to minimize weighted values of maximum completion time and mean flowtime. Tang et al. [13] designed IDPGA to solve RHFSP with missing operations to minimize maximum completion time and energy consumption. Zhang et al. [14] applied DDE to address RHFSP with machine eligibility to minimize total tardiness. These algorithms have been successfully applied to deal with RHFSP, so they can be directly used to handle the considered RHFSP by incorporating bottleneck formation into the decoding process; moreover, missing judgment vector and related operators of IDPGA are removed.

A basic TLBO is also constructed. It consists of a single class in which the best solution is regarded as the teacher and the remaining solutions are students, and it includes a teacher phase and a learner phase. The teacher phase is implemented by each learner learning from the teacher, and the learner phase is done by interactive learning between a learner and another randomly selected learner. These activities are the same as global search in ETLBO. ETLBO is compared with TLBO to show the effect of the new strategies of ETLBO.

It can be found that ETLBO can converge well when

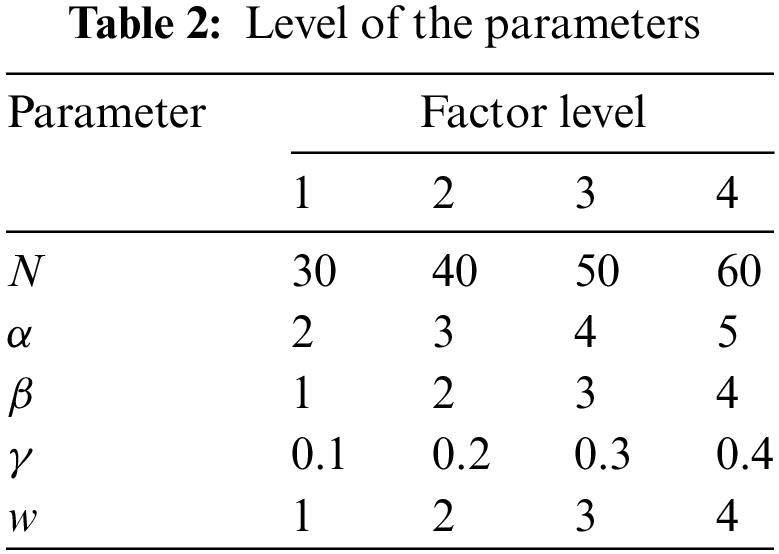

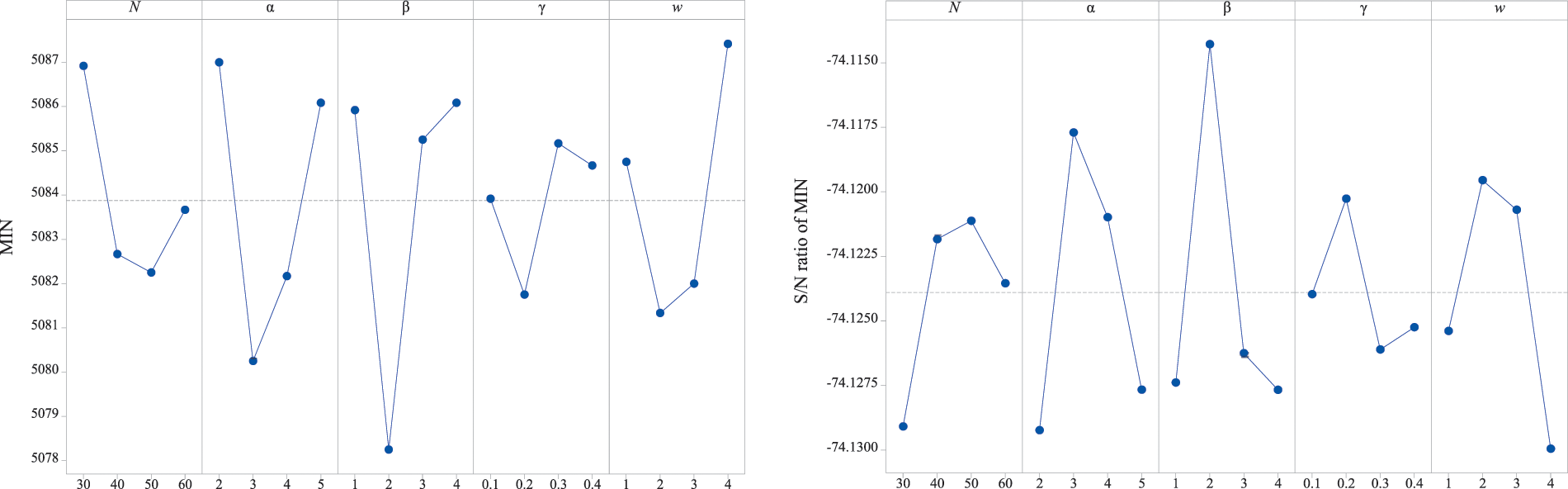

Other parameters of ETLBO, namely

Fig. 4 shows the results of MIN and S/N ratio, which is defined as

Figure 4: Main effect plot for mean MIN and S/N ratio
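The exact expression used by the authors is not recoverable from this version of the text. For orientation only, the smaller-the-better S/N ratio commonly used in Taguchi analysis, for $n$ observed responses $y_i$, is

```latex
S/N = -10 \log_{10}\!\left( \frac{1}{n} \sum_{i=1}^{n} y_i^{2} \right)
```

With a single response per parameter combination (for example MIN, which is an assumption here), this reduces to $-10 \log_{10}(\mathrm{MIN}^{2})$, so a larger S/N ratio corresponds to a smaller MIN.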

TLBO have

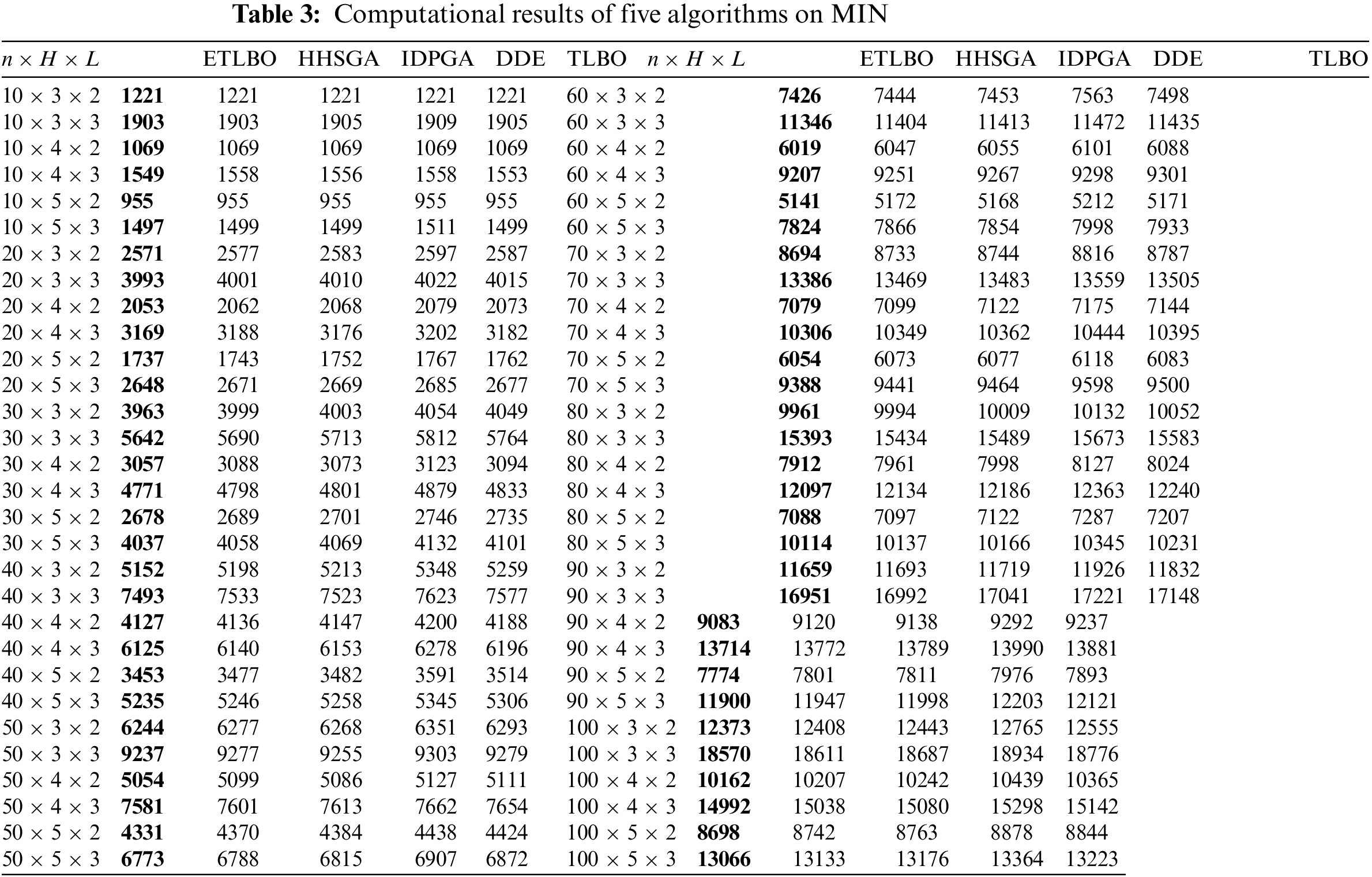

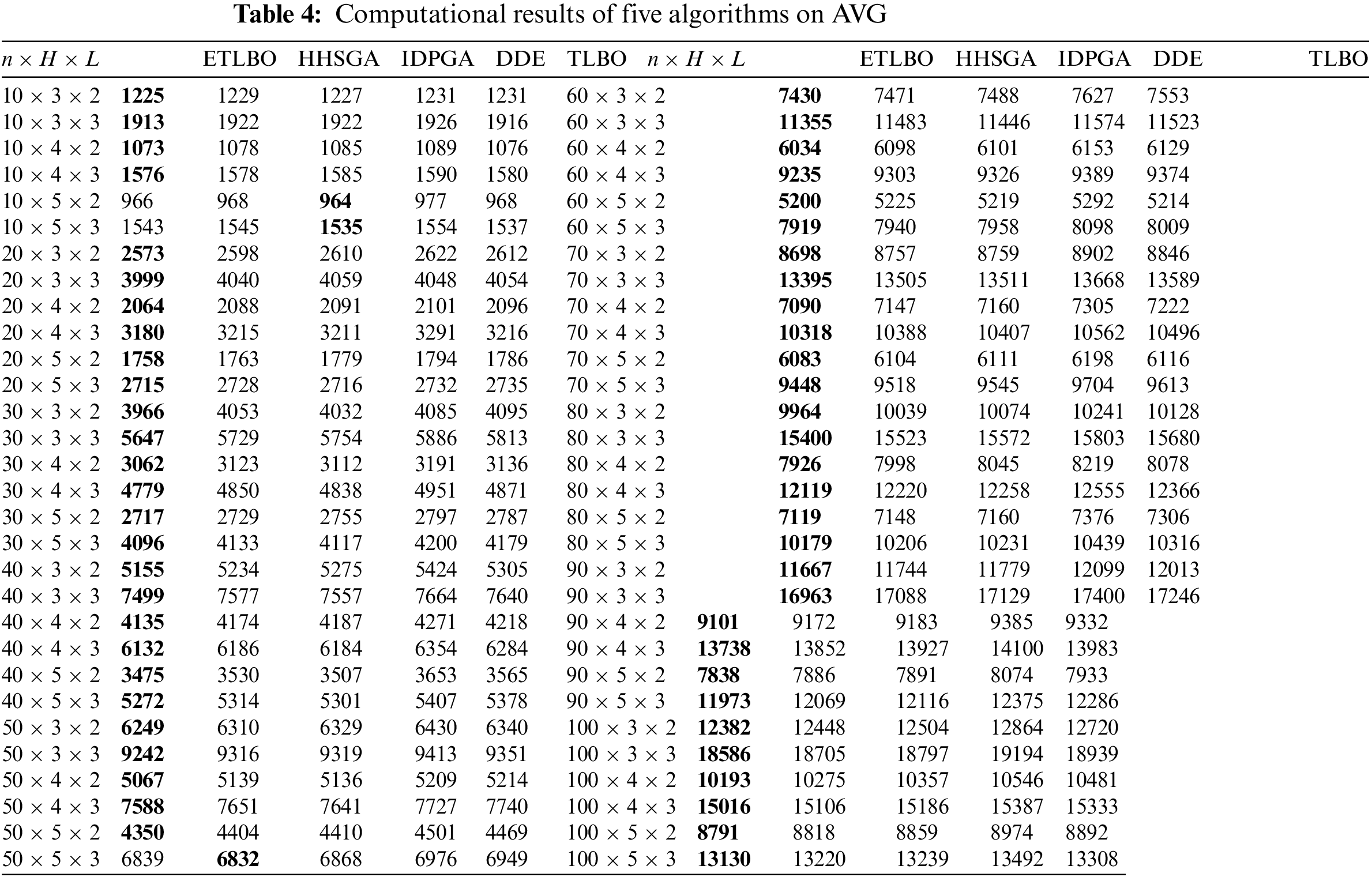

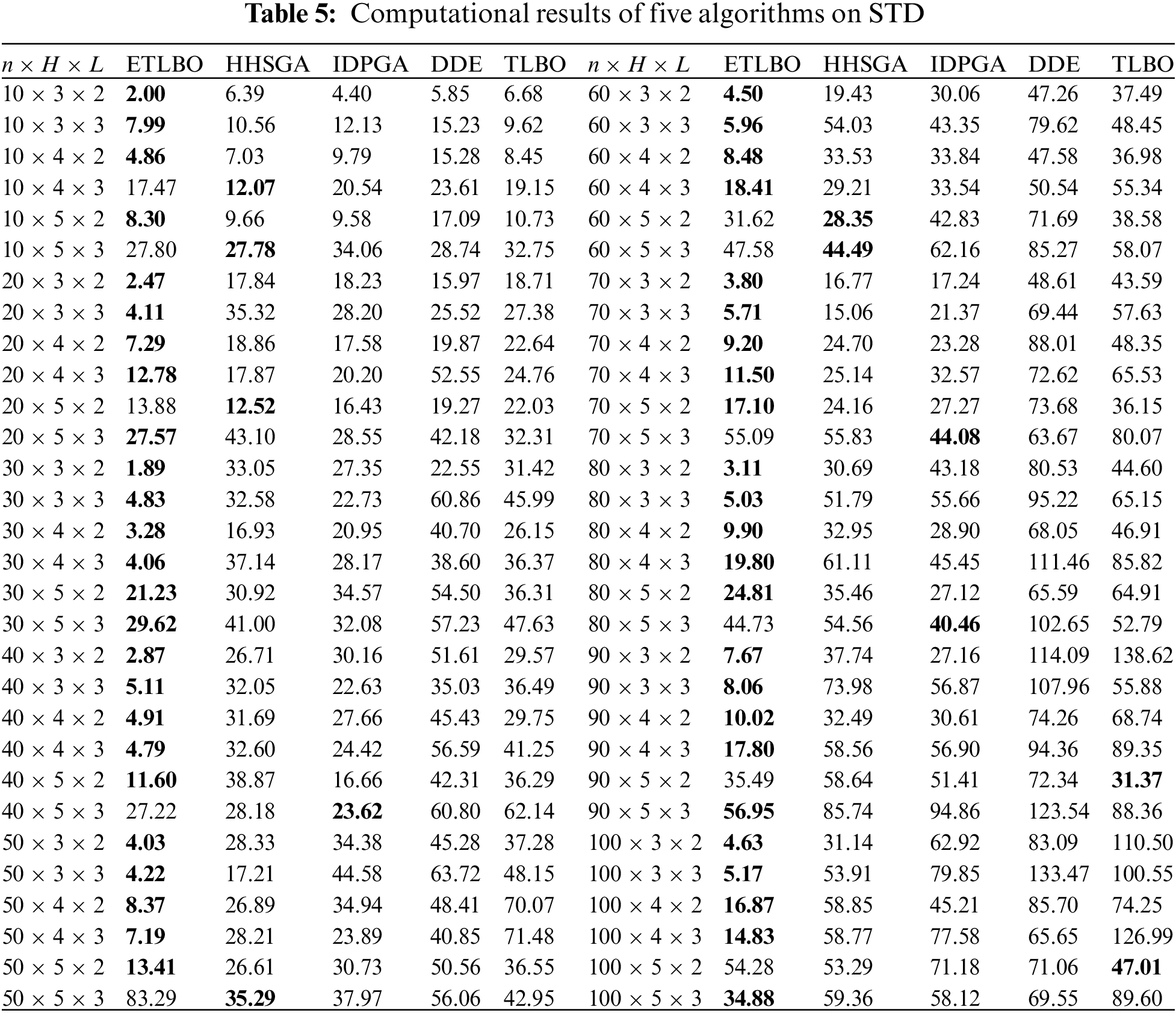

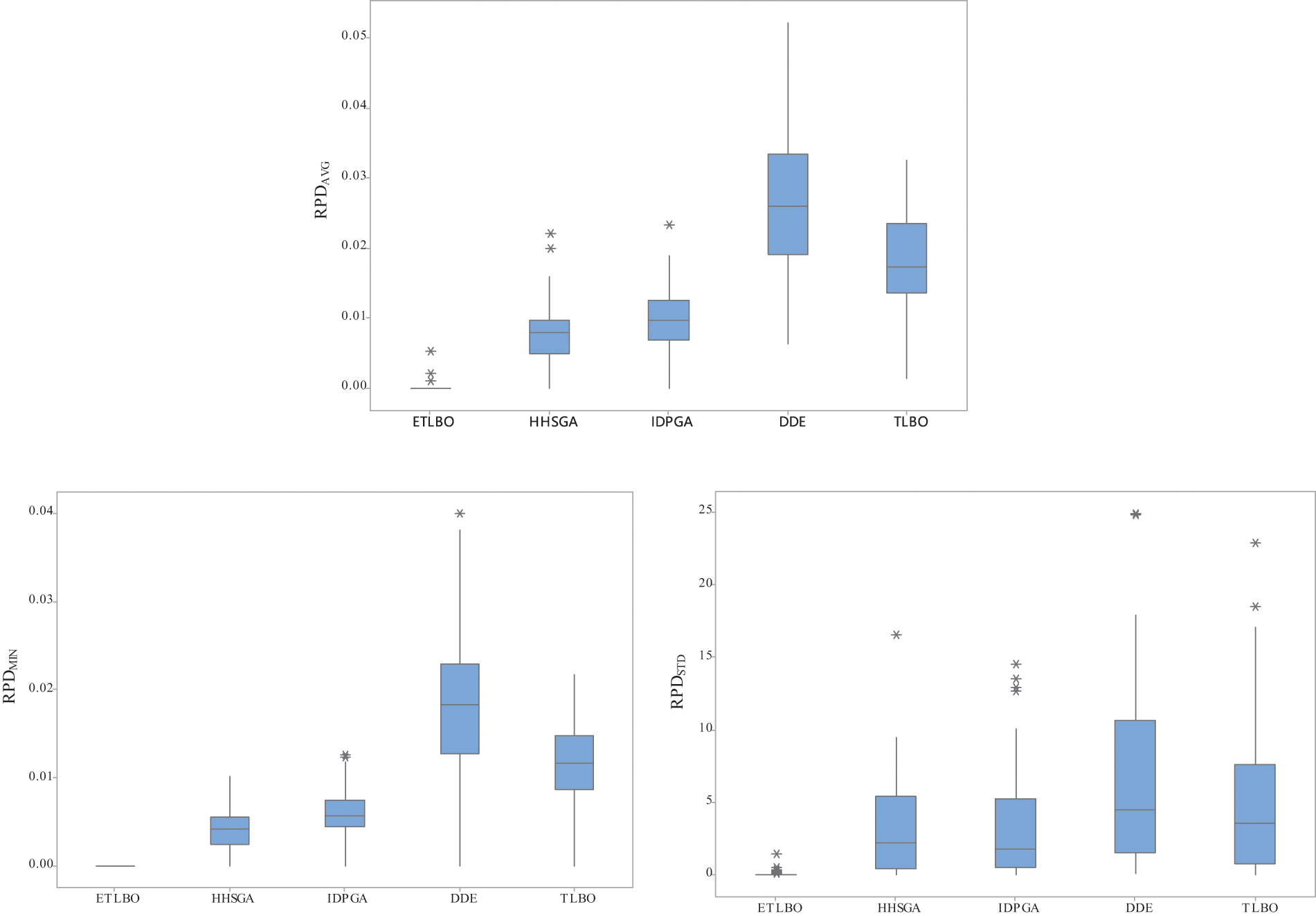

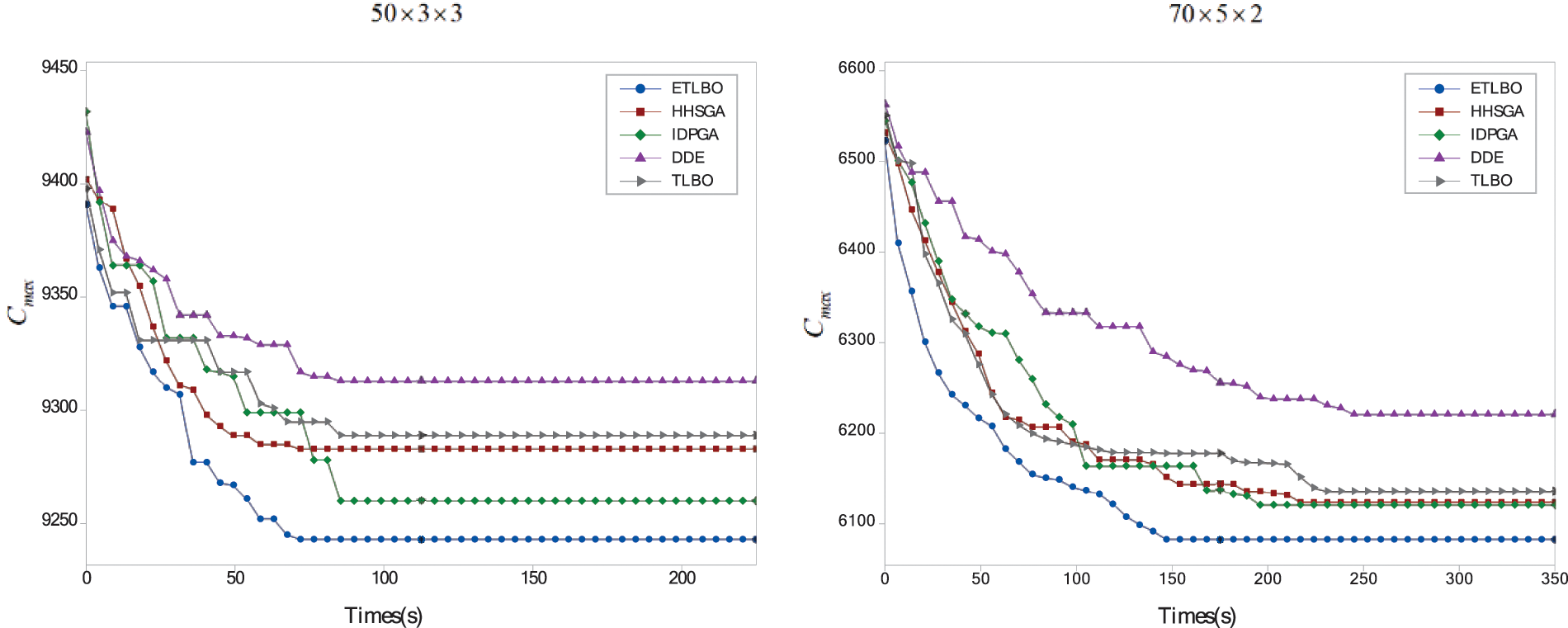

ETLBO is compared with HHSGA, IDPGA, DDE and TLBO. Each algorithm is run 10 times independently on each instance. AVG and STD denote the average and standard deviation of the solutions obtained over the 10 runs. Tables 3–5 describe the corresponding results of the five algorithms. Figs. 5 and 6 show box plots of all algorithms and convergence curves of instance
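The per-instance statistics can be computed from the makespans of the repeated runs as in the short sketch below. The function name is hypothetical, and the text does not say whether STD is the population or the sample standard deviation; the population form is used here as an assumption.

```python
import statistics

def summarize(makespans):
    """Return (MIN, AVG, STD) of the makespans obtained in repeated runs."""
    return (min(makespans),
            statistics.mean(makespans),
            statistics.pstdev(makespans))  # population form; statistics.stdev gives the sample form
```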

Figure 5: Box plots for all algorithms

Figure 6: Convergence curves of instance

where MIN* (MAX*, STD*) is the smallest MIN (MAX, STD) obtained by all algorithms, when MIN and MIN* are replaced with STD(AVG) and STD*(AVG*), respectively,

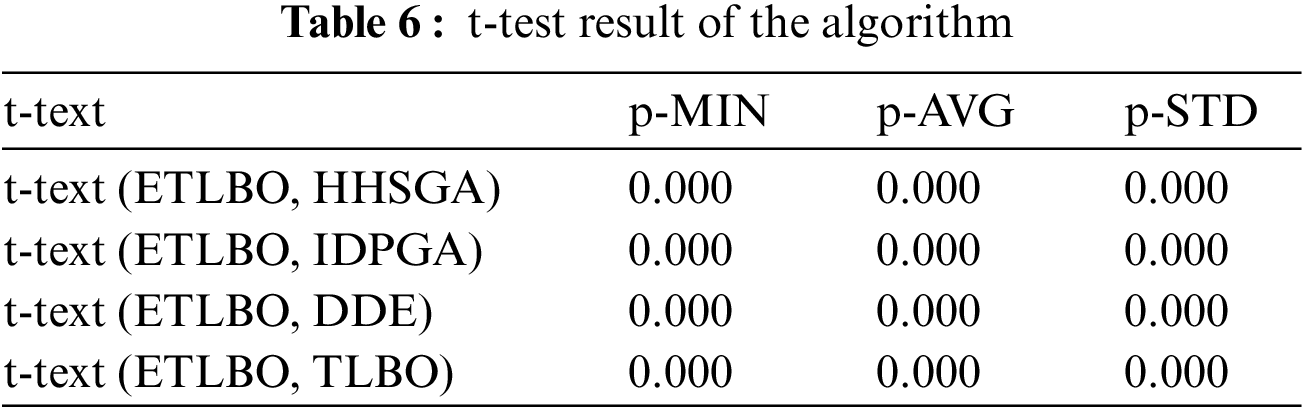

Table 6 describes the results of a paired-sample t-test, in which t-test (A1, A2) means that a paired t-test is performed to judge whether algorithm A1 gives a better sample mean than A2. With the significance level set at 0.05, a p-value less than 0.05 indicates a statistically significant difference between A1 and A2.
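For reference, the statistic behind a paired t-test can be computed with the standard library alone, as in the following sketch; the function name and the sample data in the usage note are illustrative assumptions. Turning the statistic into a p-value requires the Student t distribution, which the standard library does not provide; `scipy.stats.ttest_rel` is one readily available option.

```python
import math
import statistics

def paired_t(a, b):
    """Return (t statistic, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("paired samples must have equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)           # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    n = len(diffs)
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1
```

With `a = [10, 12, 9, 11]` and `b = [12, 15, 10, 15]`, the differences are -2, -3, -1 and -4, giving t = -√15 ≈ -3.873 with 3 degrees of freedom. For a minimization objective, a negative t means the first algorithm has the smaller mean.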

As shown in Tables 3–5, ETLBO obtains smaller MIN than TLBO on all instances, and MIN of ETLBO is lower than that of TLBO by at least 50 on 46 instances. It can be found from Table 4 that AVG of ETLBO is better than that of TLBO on 59 of 60 instances, and AVG of TLBO is worse than that of ETLBO by at least 50 on 45 instances. Table 5 shows that ETLBO obtains smaller STD than TLBO on 58 instances. Table 6 shows that there are notable performance differences between ETLBO and TLBO in a statistical sense. Fig. 5 depicts the notable differences between STD of the two algorithms, and Fig. 6 reveals that ETLBO converges significantly better than TLBO. It can be concluded that teacher competition, reinforcement search of elite class and adaptive adjustment on teachers and classes have a positive impact on the performance of ETLBO.

Table 3 shows that ETLBO performs better than HHSGA and IDPGA on MIN for all instances. As can be seen from Table 4, ETLBO produces smaller AVG than the two comparative algorithms on 57 of 60 instances; moreover, AVG of ETLBO is less than that of HHSGA by at least 50 on 26 instances and less than that of IDPGA by at least 50 on 48 instances. Table 5 also shows that ETLBO obtains smaller STD than the two comparative algorithms on 49 instances. ETLBO converges better than HHSGA and IDPGA. The results in Table 6 and Figs. 5 and 6 also demonstrate the convergence advantage of ETLBO.

It can be concluded from Tables 3–5 that ETLBO performs significantly better than DDE. ETLBO produces smaller MIN than DDE on all instances, generates better AVG than DDE by at least 50 on 45 instances, and obtains STD better than or equal to that of DDE on nearly all instances. The same conclusion can be drawn from Table 6. Fig. 5 illustrates the significant difference in STD, and Fig. 6 demonstrates the notable convergence advantage of ETLBO.

As analyzed above, ETLBO outperforms its comparative algorithms. The good performance of ETLBO mainly results from its teacher competition, reinforcement search of elite class and adaptive adjustment on teachers and classes. Teacher competition makes full use of teacher solutions. Reinforcement search of elite class concentrates the search on better solutions and so avoids wasting computational resources. Adaptive adjustment on teachers and classes dynamically adjusts class composition according to class quality, which can effectively prevent the algorithm from falling into local optima. Thus, it can be concluded that ETLBO is a promising method for RHFSP with bottleneck stage.

This study considers RHFSP with bottleneck stage, and a new algorithm named ETLBO is presented to minimize maximum completion time. In ETLBO, teachers are divided into formal teachers and substitute teachers. A new teacher phase is implemented, which consists of competition between the two types of teachers and teacher teaching. Reinforcement search of the elite class is used to replace the learner phase. Based on class quality, adaptive adjustment is applied to classes and teachers to change their composition. The experimental results show that ETLBO is a very competitive algorithm for the considered RHFSP.

In the near future, we will continue to focus on RHFSP and use other meta-heuristics such as the artificial bee colony algorithm and the imperialist competitive algorithm to solve it. Adding new optimization mechanisms, such as cooperation and reinforcement learning, into meta-heuristics is one of our future research topics. Fuzzy RHFSP and distributed RHFSP are further directions. Furthermore, the application of ETLBO to other scheduling problems is also worthy of further investigation.

Acknowledgement: The authors would like to thank the editors and reviewers for their valuable work, as well as the supervisor and family for their valuable support during the research process.

Funding Statement: This research was funded by the National Natural Science Foundation of China (Grant Number 61573264).

Author Contributions: The authors confirm contribution to the paper as follows: study conception and design: Deming Lei, Surui Duan; data collection: Surui Duan; analysis and interpretation of results: Deming Lei, Surui Duan, Mingbo Li, Jing Wang; draft manuscript preparation: Deming Lei, Surui Duan, Mingbo Li, Jing Wang. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: Data supporting this study are described in the first paragraph of Section 4.1.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. C. Low, C. J. Hsu, and C. T. Su, “A two-stage hybrid flowshop scheduling problem with a function constraint and unrelated alternative machines,” Comput. Oper. Res., vol. 35, no. 3, pp. 845–853, Mar. 2008. doi: 10.1016/j.cor.2006.04.004.

2. R. Ruiz and J. A. Vázquez-Rodríguez, “The hybrid flow shop scheduling problem,” Eur. J. Oper. Res., vol. 205, no. 1, pp. 1–18, Aug. 2010. doi: 10.1016/j.ejor.2009.09.024.

3. J. Dong and C. Ye, “Green scheduling of distributed two-stage reentrant hybrid flow shop considering distributed energy resources and energy storage system,” Comput. Ind. Eng., vol. 169, pp. 108146, Mar. 2022. doi: 10.1016/j.cie.2022.108146.

4. J. S. Chen, “A branch and bound procedure for the reentrant permutation flow-shop scheduling problem,” Int. J. Adv. Manuf. Technol., vol. 29, pp. 1186–1193, Aug. 2006. doi: 10.1007/s00170-005-0017-x.

5. J. C. H. Pan and J. S. Chen, “Minimizing makespan in re-entrant permutation flow-shops,” J. Oper. Res. Soc., vol. 54, pp. 642–653, Jun. 2003. doi: 10.1057/palgrave.jors.2601556.

6. S. Kumar and M. K. Omar, “Stochastic re-entrant line modeling for an environment stress testing in a semiconductor assembly industry,” Appl. Math. Comput., vol. 173, no. 1, pp. 603–615, Feb. 2006. doi: 10.1016/j.amc.2005.04.050.

7. Y. H. Lee and B. Lee, “Push-pull production planning of the re-entrant process,” Int. J. Adv. Manuf. Technol., vol. 22, pp. 922–931, Aug. 2003. doi: 10.1007/s00170-003-1653-7.

8. F. Xu et al., “Research on green reentrant hybrid flow shop scheduling problem based on improved moth-flame optimization algorithm,” Process., vol. 10, no. 12, pp. 2475, Nov. 2022. doi: 10.3390/pr10122475.

9. B. H. Zhou, L. M. Hu, and Z. Y. Zhong, “A hybrid differential evolution algorithm with estimation of distribution algorithm for reentrant hybrid flow shop scheduling problem,” Neural. Comput. Appl., vol. 30, no. 1, pp. 193–209, Jul. 2018. doi: 10.1007/s00521-016-2692-y.

10. H. M. Cho et al., “Bi-objective scheduling for reentrant hybrid flow shop using Pareto genetic algorithm,” Comput. Ind. Eng., vol. 61, no. 3, pp. 529–541, Oct. 2011. doi: 10.1016/j.cie.2011.04.008.

11. J. N. Shen, L. Wang, and H. Y. Zheng, “A modified teaching-learning-based optimisation algorithm for bi-objective re-entrant hybrid flowshop scheduling,” Int. J. Prod. Res., vol. 54, no. 12, pp. 3622–3639, Jun. 2016. doi: 10.1080/00207543.2015.1120900.

12. C. C. Lin, W. Y. Liu, and Y. H. Chen, “Considering stockers in reentrant hybrid flow shop scheduling with limited buffer capacity,” Comput. Ind. Eng., vol. 139, pp. 106154, Jan. 2020. doi: 10.1016/j.cie.2019.106154.

13. H. T. Tang et al., “Hybrid flow-shop scheduling problems with missing and re-entrant operations considering process scheduling and production of energy consumption,” Sustainability, vol. 15, no. 10, pp. 7982, May 2023. doi: 10.3390/su15107982.

14. X. Y. Zhang and L. Chen, “A re-entrant hybrid flow shop scheduling problem with machine eligibility constraints,” Int. J. Prod. Res., vol. 56, no. 16, pp. 5293–5305, Dec. 2017. doi: 10.1080/00207543.2017.1408971.

15. C. Chamnanlor et al., “Embedding ant system in genetic algorithm for re-entrant hybrid flow shop scheduling problems with time window constraints,” J. Intell. Manuf., vol. 28, pp. 1915–1931, Dec. 2017. doi: 10.1007/s10845-015-1078-9.

16. X. L. Wu and Z. Cao, “An improved multi-objective evolutionary algorithm based on decomposition for solving re-entrant hybrid flow shop scheduling problem with batch processing machines,” Comput. Ind. Eng., vol. 169, pp. 108236, Jul. 2022. doi: 10.1016/j.cie.2022.108236.

17. A. Costa, V. Fernandez-Viagas, and J. M. Framiñan, “Solving the hybrid flow shop scheduling problem with limited human resource constraint,” Comput. Ind. Eng., vol. 146, pp. 106545, Aug. 2020. doi: 10.1016/j.cie.2020.106545.

18. W. Shao, Z. Shao, and D. Pi, “Modelling and optimization of distributed heterogeneous hybrid flow shop lot-streaming scheduling problem,” Expert. Syst. Appl., vol. 214, pp. 119151, Mar. 2023. doi: 10.1016/j.eswa.2022.119151.

19. C. J. Liao, E. Tjandradjaja, and T. P. Chung, “An approach using particle swarm optimization and bottleneck heuristic to solve hybrid flow shop scheduling problem,” Appl. Soft Comput., vol. 12, no. 6, pp. 1755–1764, Jun. 2012. doi: 10.1016/j.asoc.2012.01.011.

20. C. Zhang et al., “A discrete whale swarm algorithm for hybrid flow-shop scheduling problem with limited buffers,” Robot. CIM-Int. Manuf., vol. 68, pp. 102081, Oct. 2020. doi: 10.1016/j.rcim.2020.102081.

21. J. Wang, D. Lei, and H. Tang, “An adaptive artificial bee colony for hybrid flow shop scheduling with batch processing machines in casting process,” Int. J. Prod. Res., pp. 1–16, Nov. 2023. doi: 10.1080/00207543.2023.2279145.

22. R. V. Rao, V. J. Savsani, and D. P. Vakharia, “Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems,” Comput. Aided. Des., vol. 43, no. 3, pp. 303–315, Mar. 2011. doi: 10.1016/j.cad.2010.12.015.

23. M. K. Sun, Z. Y. Cai, and H. A. Zhang, “A teaching-learning-based optimization with feedback for L-R fuzzy flexible assembly job shop scheduling problem with batch splitting,” Expert. Syst. Appl., vol. 224, pp. 120043, Aug. 2023. doi: 10.1016/j.eswa.2023.120043.

24. A. Baykasoğlu, A. Hamzadayi, and S. Y. Köse, “Testing the performance of teaching-learning based optimization (TLBO) algorithm on combinatorial problems: Flow shop and job shop scheduling cases,” Inf. Sci., vol. 276, pp. 204–218, Aug. 2014. doi: 10.1016/j.ins.2014.02.056.

25. Z. Xie et al., “An effective hybrid teaching-learning-based optimization algorithm for permutation flow shop scheduling problem,” Adv. Eng. Softw., vol. 77, pp. 35–47, Nov. 2014. doi: 10.1016/j.advengsoft.2014.07.006.

26. W. Shao, D. Pi, and Z. Shao, “An extended teaching-learning based optimization algorithm for solving no-wait flow shop scheduling problem,” Appl. Soft Comput., vol. 61, pp. 193–210, Dec. 2017. doi: 10.1016/j.asoc.2017.08.020.

27. C. Song, “A hybrid multi-objective teaching-learning based optimization for scheduling problem of hybrid flow shop with unrelated parallel machine,” IEEE Access, vol. 9, pp. 56822–56835, Apr. 2021. doi: 10.1109/ACCESS.2021.3071729.

28. R. Buddala and S. S. Mahapatra, “Improved teaching-learning-based and JAYA optimization algorithms for solving flexible flow shop scheduling problems,” J. Ind. Eng. Int., vol. 14, pp. 555–570, Sep. 2018. doi: 10.1007/s40092-017-0244-4.

29. B. J. Xi and D. M. Lei, “Q-learning-based teaching-learning optimization for distributed two-stage hybrid flow shop scheduling with fuzzy processing time,” Complex Syst. Model. Simul., vol. 2, no. 2, pp. 113–129, Jun. 2022. doi: 10.23919/CSMS.2022.0002.

30. U. Balande and D. Shrimankar, “A modified teaching learning metaheuristic algorithm with opposite-based learning for permutation flow-shop scheduling problem,” Evol. Intell., vol. 15, no. 1, pp. 57–79, Mar. 2022. doi: 10.1007/s12065-020-00487-5.

31. X. Ji et al., “An improved teaching-learning-based optimization algorithm and its application to a combinatorial optimization problem in foundry industry,” Appl. Soft Comput., vol. 57, pp. 504–516, Aug. 2017. doi: 10.1016/j.asoc.2017.04.029.

32. R. Buddala and S. S. Mahapatra, “An integrated approach for scheduling flexible job-shop using teaching-learning-based optimization method,” J. Ind. Eng. Int., vol. 15, no. 1, pp. 181–192, Mar. 2019. doi: 10.1007/s40092-018-0280-8.

33. M. Rostami and A. Yousefzadeh, “A gamified teaching-learning based optimization algorithm for a three-echelon supply chain scheduling problem in a two-stage assembly flow shop environment,” Appl. Soft Comput., vol. 146, pp. 110598, Oct. 2023. doi: 10.1016/j.asoc.2023.110598.

34. R. Buddala and S. S. Mahapatra, “Two-stage teaching-learning-based optimization method for flexible job-shop scheduling under machine breakdown,” Int. J. Adv. Manuf. Technol., vol. 100, pp. 1419–1432, Feb. 2019. doi: 10.1007/s00170-018-2805-0.

35. J. Jayanthi et al., “Segmentation of brain tumor magnetic resonance images using a teaching-learning optimization algorithm,” Comput. Mater. Contin., vol. 68, no. 3, pp. 4191–4203, Mar. 2021. doi: 10.32604/cmc.2021.012252.

36. D. M. Lei, B. Su, and M. Li, “Cooperated teaching-learning-based optimisation for distributed two-stage assembly flow shop scheduling,” Int. J. Prod. Res., vol. 59, no. 23, pp. 7232–7245, Nov. 2020. doi: 10.1080/00207543.2020.1836422.

37. D. M. Lei and B. Su, “A multi-class teaching-learning-based optimization for multi-objective distributed hybrid flow shop scheduling,” Knowl. Based. Syst., vol. 263, pp. 110252, Jan. 2023. doi: 10.1016/j.knosys.2023.110252.

38. A. Dubey, U. Gupta, and S. Jain, “Medical data clustering and classification using TLBO and machine learning algorithms,” Comput. Mater. Contin., vol. 70, no. 3, pp. 4523–4543, Oct. 2021. doi: 10.32604/cmc.2022.021148.

39. D. M. Lei and B. J. Xi, “Diversified teaching-learning-based optimization for fuzzy two-stage hybrid flow shop scheduling with setup time,” J. Intell. Fuzzy Syst., vol. 41, no. 2, pp. 4159–4173, Sep. 2021. doi: 10.3233/JIFS-210764.

40. D. M. Lei, L. Gao, and Y. L. Zheng, “A novel teaching-learning-based optimization algorithm for energy-efficient scheduling in hybrid flow shop,” IEEE Trans. Eng. Manage., vol. 65, no. 2, pp. 330–340, May 2018. doi: 10.1109/TEM.2017.2774281.

41. A. Sharma et al., “Identification of photovoltaic module parameters by implementing a novel teaching learning based optimization with unique exemplar generation scheme (TLBO-UEGS),” Energy Rep., vol. 10, pp. 1485–1506, Nov. 2023. doi: 10.1016/j.egyr.2023.08.019.

42. M. Arashpour et al., “Predicting individual learning performance using machine-learning hybridized with the teaching-learning-based optimization,” Comput. Appl. Eng. Educ., vol. 31, no. 1, pp. 83–99, Jan. 2023. doi: 10.1002/cae.22572.

43. A. K. Shukla, S. K. Pippal, and S. S. Chauhan, “An empirical evaluation of teaching-learning-based optimization, genetic algorithm and particle swarm optimization,” Int. J. Comput. Appl., vol. 45, no. 1, pp. 36–50, Jan. 2023. doi: 10.1080/1206212X.2019.1686562.

44. J. Deng and L. Wang, “A competitive memetic algorithm for multi-objective distributed permutation flow shop scheduling problem,” Swarm Evol. Comput., vol. 32, pp. 121–131, Feb. 2017. doi: 10.1016/j.swevo.2016.06.002.

Copyright © 2024 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
