Open Access


ARTICLE

A Novel 6G Scalable Blockchain Clustering-Based Computer Vision Character Detection for Mobile Images

1 Department of Mathematics and Computer Engineering, Ordos Institute of Technology, Ordos, 017000, China

2 School of Mathematics and Physics, North China Electric Power University, Beijing, 102206, China

3 School of Electrical Information, Hunan University of Engineering, Xiangtan, 411104, China

* Corresponding Author: Yuejie Li. Email:

Computers, Materials & Continua 2024, 78(3), 3041-3070. https://doi.org/10.32604/cmc.2023.045741

Received 06 September 2023; Accepted 20 November 2023; Issue published 26 March 2024

Abstract

6G is envisioned as the next generation of wireless communication technology, promising unprecedented data speeds, ultra-low latency, and ubiquitous connectivity. In tandem with these advances, blockchain technology can be leveraged to enhance the security, trustworthiness, and transparency of computer vision applications. With the widespread use of camera-equipped mobile devices, the ability to capture and recognize Chinese characters in natural scenes has become increasingly important. In applications where privacy is paramount, such as facial recognition or personal healthcare monitoring, blockchain can facilitate privacy-preserving mechanisms that let users control their visual data and grant or revoke access as needed. Recognizing Chinese characters in images brings convenience to many aspects of daily life. However, traditional Chinese text recognition methods often lack accuracy, leading to recognition failures or misidentified characters, whereas computer vision technologies have significantly improved image recognition accuracy. This paper proposes a Secure end-to-end recognition system (SE2ERS) for Chinese characters in natural scenes, based on convolutional neural networks (CNN) and 6G technology. SE2ERS uses the Weighted Hyperbolic Curve Cryptograph (WHCC) together with a blockchain model to secure data transmission in the 6G network, and employs a gradient directional histogram (GDH) within the computer vision system for character estimation. Deploying WHCC and GDH in SE2ERS achieves secure communication for data transmitted over the 6G network. SE2ERS is compared with traditional Chinese text recognition methods in terms of both recognition performance and data transmission over 6G communication.
Experimental results demonstrate that SE2ERS achieves an average recognition accuracy of 88% for simple Chinese characters, compared to 81.2% with traditional methods. For complex Chinese characters, the average recognition accuracy improves to 84.4% with our system, compared to 72.8% with traditional methods. Additionally, deploying the WHCC model improves data security, with a data encryption rate complexity approximately 12% higher than that of traditional techniques.

1 Introduction

6G technology in computer vision emerges from the continuous evolution of wireless communication and the expanding capabilities of computer vision [1]. This synergy between cutting-edge communication networks and advanced computer vision systems promises to reshape how we perceive and interact with the visual world. Building on the successes of 5G, 6G is envisioned as the next frontier, offering data speeds above 100 gigabits per second and latency as low as one millisecond [2]. This connectivity and speed lay the foundation for real-time computer vision applications that were once the realm of science fiction. Moreover, because 6G aims at ubiquitous connectivity, computer vision systems can operate in remote and challenging environments, enabling applications in healthcare, transportation, augmented reality, and beyond. As 6G technology takes shape, the fusion of high-speed data transmission and advanced computer vision promises innovative solutions to complex problems and richer digital experiences. Blockchain technology is poised to play a pivotal role in the convergence of 6G and computer vision, offering a secure and transparent foundation for many applications [3]. Such applications demand data integrity, privacy, and trust, which blockchain can provide. Data security is one of blockchain’s primary roles in 6G and computer vision: as 6G networks enable the rapid exchange of massive volumes of data for computer vision processing, ensuring the integrity and confidentiality of this data is paramount. Blockchain’s immutable ledger, secured by cryptographic principles, safeguards data against tampering or unauthorized access. This is especially crucial in applications such as remote medical diagnostics, autonomous vehicles, and smart cities, where data accuracy and privacy are critical [4].
Moreover, blockchain introduces a trust layer to the ecosystem. In computer vision applications, trust in the accuracy of the visual data and its source is essential. Blockchain’s decentralized and transparent nature allows participants to verify the authenticity of data sources and the integrity of computations. This trust enables collaborative efforts, such as multi-agent robotics and augmented reality applications, to function seamlessly and reliably. Furthermore, blockchain facilitates data monetization and provenance tracking. Through smart contracts and tokenization, 6G and computer vision ecosystem participants can fairly and transparently exchange value for data or services [5]. This opens up opportunities for data marketplaces, where computer vision data providers can be fairly compensated for their contributions. Additionally, blockchain tracks the data lineage, ensuring traceability and accountability, which is crucial for regulatory compliance and auditability.

Natural scenes in everyday life usually contain rich text information, which can be captured in images with a handheld mobile device. Recognizing and analyzing the text in such images can help people understand the information in natural scenes [6]. Natural scene text extraction detects, locates, and recognizes text in the natural environment. It is widely used wherever scene text must be analyzed and understood, such as recognizing subtitles in movies or TV, autonomous driving, and guidance for blind people. Many kinds of text exist in today’s living environment [7]. Extracting and recognizing the text in acquired natural scene images, and converting these pictures into usable text that provides the information people need, is a challenging and active topic in image research today [8]. Popular mobile devices equipped with multimedia technology can take pictures anytime and anywhere. The pictures stored on these devices contain a large amount of Chinese character information, and extracting this text can make people’s lives more convenient [9]. However, traditional Chinese text recognition performs poorly, and people often struggle to obtain the Chinese text in natural scenes accurately. Computer vision algorithms improve the recognition of Chinese text, obtaining it more accurately from natural scene images captured by mobile devices, which motivates this research [10].

Computer vision can accurately obtain the image information of natural scenes and the Chinese text they contain [9]. Many studies apply computer vision algorithms to end-to-end (E2E) recognition of Chinese characters in natural scenes. Chaudhary’s research showed that deep learning algorithms can effectively improve the recognition of Chinese text in moving images [10]. Although computer-vision-based E2E recognition of Chinese characters in natural scenes can improve the accuracy of Chinese text recognition, such work lacks a comparative analysis against traditional Chinese text recognition [11]. With the increasing popularity of mobile devices, images of natural scenes can easily be captured with them. These images contain a great deal of information, so recognizing the Chinese characters in them is essential [12]. Computer vision algorithms enable E2E recognition of the text in images. Compared with traditional Chinese text recognition, E2E recognition of Chinese text in natural scenes using computer vision algorithms on mobile devices can effectively shorten recognition time [13].

The paper introduces the Secure end-to-end recognition system (SE2ERS), a novel framework for recognizing Chinese characters in natural scenes. The system is built on convolutional neural networks (CNN) and integrates 6G technology with the Weighted Hyperbolic Curve Cryptograph (WHCC). Through extensive experiments, the paper demonstrates that SE2ERS achieves significantly higher recognition accuracy than traditional methods for both simple and complex Chinese characters, an improvement that is crucial for reliable character identification in real-world scenarios. The integration of WHCC in SE2ERS provides data security and privacy: the paper shows how WHCC contributes to encryption and secure data transmission, enabling users to protect and control access to their visual data. In the context of 6G technology, the paper examines SE2ERS’ data transmission capabilities, including high data speeds, low latency, and robust connectivity, which are critical for real-time character recognition and secure data exchange. The paper also underscores the importance of blockchain technology in enhancing security, trustworthiness, and transparency in computer vision applications, and discusses how blockchain can facilitate privacy-preserving mechanisms that protect user data in facial recognition and healthcare monitoring scenarios. By combining computer vision, 6G technology, and blockchain, the paper anticipates future advances in secure and efficient data handling, character recognition, and overall user experience.

The rest of the paper is organized as follows. Section 2 reviews related work. Section 3 presents the research method for secure character recognition with 6G blockchain. Section 4 explains the SE2ERS6G blockchain model for Chinese character recognition. Section 5 describes the integration of the WHCC blockchain with 6G technology. Section 6 presents the simulation results, and Section 7 concludes the paper.

2 Related Works

Blockchain technology is transforming various industries by providing a secure, transparent, decentralized platform for transactions and data management. At its core, a blockchain is a distributed ledger composed of a chain of blocks, each containing a list of transactions. Its decentralized nature sets it apart, eliminating the need for intermediaries and central authorities. The technology provides transparency and immutability, which makes recorded data highly resistant to tampering. Blockchain is best known as the backbone of cryptocurrencies such as Bitcoin, but its applications extend to smart contracts, supply chain management, identity verification, voting systems, healthcare, cross-border payments, intellectual property protection, and more. As the technology evolves, it has considerable potential to change how business is conducted, how data is managed, and how digital lives are secured.

Recognition of in-air handwritten Chinese characters (IAHCCR) has been effectively accomplished using convolutional neural networks (CNN). However, current CNN-based IAHCCR models require converting a character’s coordinate series into images. This conversion lengthens training and classification times and results in data loss. Reference [14] proposed a novel CNN-based end-to-end classifier for online handwritten Chinese character recognition. The network’s output features are aggregated by global average pooling into a fixed-size feature vector, which is then passed to softmax for classification. The authors experimented with the IAHCC-UCAS2016 and SCUT-COUCH2009 databases and compared their results with existing image-based CNN models and RNN-based techniques. Reference [15] proposed a technique for detecting Chinese scene text in tilted images captured by mobile devices.
First, the Connectionist Text Proposal Network (CTPN) is used to achieve robust horizontal text detection. Second, a lightweight Oxford Visual Geometry Group network (VGGnet) corrects the tilt of the entire image, removing the reliance on horizontal text detection [16]. Experiments show that the combined scheme achieves good results, even in some difficult cases.

Offline handwritten Chinese recognition, which includes offline handwritten Chinese character recognition (offline HCCR) and offline handwritten Chinese text recognition (offline HCTR), is a significant area of pattern recognition research. Reference [17] surveyed the research advances and difficulties in offline handwritten Chinese recognition from 2016 to 2022, covering conventional techniques, deep learning techniques, combinations of the two, and information from other fields. It first introduces the research context and current state of handwritten Chinese recognition, along with standard datasets and evaluation metrics [18]. Second, it gives a thorough overview of offline HCCR and offline HCTR approaches over those seven years, describing their principles, details, and results.

Chinese character recognition accuracy remains limited, particularly for characters with similar shapes. Therefore, considering both the structure of similar characters and the semantic information of the context, reference [19] proposed the Similar-CRNN algorithm based on the conventional CNN + RNN + CTC model [20]. To improve the recognition accuracy of similar Chinese characters from the perspective of character structure, the authors first construct a similar-character library using a Chinese character similarity algorithm and then conduct enhanced training on the feature differences between similar characters. After the preliminary results are obtained, three stages (error detection, candidate recall, and error-correction ranking) correct semantically irrelevant recognition errors and further increase recognition accuracy at the semantic level.

To recognize large-scale Chinese character sets, reference [21] proposed LCSegNet, an effective semantic segmentation model based on label coding (LC). The authors first create Wubi-CRF, a novel label coding method based on the Wubi Chinese character code, to reduce the number of labels; this technique encodes Chinese character glyphs and structural details into 140-bit labels [15]. Tests are then run on three standard datasets: HIT-OR3C, ICDAR2019-ReCTS, and the large Chinese Text in the Wild (CTW) dataset. The results show that the approach achieves state-of-the-art results in both challenging scene text recognition and handwritten character recognition tasks.

Text is a crucial cue for visual identification, and character recognition in natural scenes is a significant area of computer vision research. With the rapid advance of deep learning in recent years, text detection has made significant progress. Reference [22] discussed the difficulties in text recognition, introduced the most recent text recognition techniques based on CTC and attention mechanisms, and suggested future research directions. An approach for detecting, predicting the orientation of, and recognizing Urdu ligatures in outdoor pictures was proposed in [23]. For detection and localization in images of 320 × 240 pixels, a customized FasterRCNN algorithm was combined with well-known CNNs, including Squeezenet, Googlenet, Resnet18, and Resnet50. For ligature orientation prediction, a customized Regression Residual Neural Network (RRNN) was trained and tested on datasets of randomly oriented ligatures [24]. A Two-Stream Deep Neural Network (TSDNN) was used to recognize ligatures. To assess ligature detection, orientation prediction, and recognition, the authors created five datasets containing 4.2 K and 51 K synthetic images with embedded Urdu text, using the CLE annotation text. These synthetic images contain 32 different permutations of 132 and 1600 distinct ligatures, giving 4.2 K and 51 K images, respectively.

Additionally, 1094 real photos containing more than 12 K Urdu characters were used to evaluate TSDNN. Finally, the average precision (AP) of Urdu text detection/localization was assessed and compared across all four detectors. The FasterRCNN detector based on Resnet50 features performed best, with an AP of 0.98, while the detectors based on Squeezenet, Googlenet, and Resnet18 achieved testing APs of 0.65, 0.88, and 0.87, respectively.

Reference [25] proposed a reliable technique that detects and rectifies multiple severely skewed or distorted license plates in a single image and then feeds the corrected plates into the license plate recognition module. Unlike previous license plate detection and recognition methods, the approach applies an affine transformation during detection to correct distorted plate images, which can increase recognition accuracy and prevent the accumulation of intermediate errors. Many Chinese and international students write neatly on paper with grid lines running in both directions, and reference [26] proposed a character detection method based on such grid lines. The method avoids the overlaps and omissions common in other approaches. Adaptive Canny edge detection first converts the image into a binary edge image. The Hough transform then extracts the horizontal and vertical lines from the binary edge image, and character bounding rectangles are recognized from the adjusted lines. Experimental results show that the method correctly identifies characters in various formats of grid-lined Chinese text images [27–33].
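The line-finding step of the grid-line method in [26] can be illustrated with a minimal sketch. It assumes the adaptive Canny stage has already produced a binary edge image and restricts Hough voting to the two orientations the method needs (θ = 0° and θ = 90°), so the accumulator reduces to per-row and per-column vote counts. The function names, the `min_votes` threshold, and the merging of thick lines are illustrative assumptions, not details from [26]; a production pipeline would typically use a library Hough implementation over all angles.

```python
from typing import List, Tuple

EdgeImage = List[List[int]]  # binary edge map: 1 = edge pixel, 0 = background


def hough_axis_lines(edges: EdgeImage, min_votes: int) -> Tuple[List[int], List[int]]:
    """Hough voting restricted to theta = 90 deg (horizontal) and theta = 0 deg (vertical).

    With rho = x*cos(theta) + y*sin(theta), a horizontal line is rho = y and a
    vertical line is rho = x, so each edge pixel adds one vote to its row
    accumulator and one to its column accumulator.
    """
    height, width = len(edges), len(edges[0])
    row_votes = [0] * height
    col_votes = [0] * width
    for y in range(height):
        for x in range(width):
            if edges[y][x]:
                row_votes[y] += 1
                col_votes[x] += 1
    rows = [y for y, v in enumerate(row_votes) if v >= min_votes]
    cols = [x for x, v in enumerate(col_votes) if v >= min_votes]
    return merge_adjacent(rows), merge_adjacent(cols)


def merge_adjacent(indices: List[int]) -> List[int]:
    """Collapse runs of consecutive indices (a thick ruled line) into their middle index."""
    merged: List[int] = []
    run: List[int] = []
    for i in indices:
        if run and i != run[-1] + 1:
            merged.append(run[len(run) // 2])
            run = []
        run.append(i)
    if run:
        merged.append(run[len(run) // 2])
    return merged


def grid_cells(rows: List[int], cols: List[int]) -> List[Tuple[int, int, int, int]]:
    """Character-box candidates (top, left, bottom, right) between adjacent grid lines."""
    return [(top, left, bottom, right)
            for top, bottom in zip(rows, rows[1:])
            for left, right in zip(cols, cols[1:])]


# A 7x7 edge map with ruled lines every 3 pixels (rows and columns 0, 3, 6).
edges = [[1 if y % 3 == 0 or x % 3 == 0 else 0 for x in range(7)] for y in range(7)]
rows, cols = hough_axis_lines(edges, min_votes=7)
print(rows, cols)                   # [0, 3, 6] [0, 3, 6]
print(len(grid_cells(rows, cols)))  # 4
```

Each cell between adjacent detected lines is a candidate character box; real scans would also need tolerance for slightly tilted or broken ruled lines.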

Reference [34] introduced a blockchain-based authentication solution tailored for 6G communication networks. Security is a paramount concern in the 6G era due to the sheer volume of data and the proliferation of connected devices. Blockchain’s decentralized, tamper-resistant, and transparent nature makes it a promising candidate for enhancing security. This study explores how blockchain can be leveraged for robust authentication, mitigating potential security threats in tactile networks associated with 6G. It underlines the importance of establishing trust and authenticity in an environment characterized by high connectivity and data exchange. Reference [35] presented the idea of using blockchain for transparent data management in the context of 6G. In the age of 6G communication, where data plays a central role, maintaining data integrity, transparency, and traceability is critical. Blockchain, as a distributed ledger technology, can ensure that data is unaltered and that its history is recorded in a tamper-resistant manner. This article discusses the potential of blockchain to provide secure and transparent data management, emphasizing the importance of data reliability and authenticity in 6G networks. Reference [36] focused on the challenges specific to vehicular ad-hoc networks (VANET) in the 6G context. These networks play a crucial role in enabling communication between vehicles and infrastructure. The study presents an efficient data-sharing scheme that combines blockchain and edge intelligence. It is designed to not only protect privacy but also to address the unique requirements of VANETs. This illustrates how blockchain can be tailored to meet the specific demands of different 6G applications.

Reference [37] is concerned with the security of IoT devices, an integral part of the 6G ecosystem. The authors propose integrating blockchain into an IoT device security gateway architecture, aiming to enhance the privacy and security of IoT devices, which are expected to play an increasingly significant role in the 6G landscape. The study underlines the importance of securing the multitude of IoT devices that will be connected to 6G networks. Reference [38] focused on privacy preservation in 6G networks, proposing a verifiable searchable symmetric encryption system based on blockchain. This approach seeks to balance data security and accessibility: in the data-rich environment of 6G, sensitive information must be protected while legitimate users can still access the data they need. This study offers insights into how blockchain can achieve this balance.

3 Research Method for the Secure Character Recognition with 6G Blockchain

The proposed method develops a Secure end-to-end recognition system (SE2ERS) for accurate Chinese character recognition in natural scenes, integrating 6G technology and blockchain for enhanced security. The process begins with collecting a comprehensive dataset of Chinese character images in natural scenes that vary in lighting conditions, angles, and backgrounds. Standard preprocessing techniques such as resizing, normalization, and noise reduction are applied to standardize the dataset. The neural network architecture includes layers for feature extraction, convolution, pooling, and fully connected layers, and transfer learning from pre-trained CNN models is considered to boost recognition accuracy. SE2ERS leverages the ultra-low latency and high data rates of 6G for real-time image processing and character recognition. Blockchain technology is integrated to enhance security and data integrity, with smart contracts managing data access control and enforcing privacy-preserving mechanisms. The Weighted Hyperbolic Curve Cryptograph (WHCC) secures data transmission within the 6G network, protecting the confidentiality and authenticity of the data.

The Gradient Directional Histogram (GDH) technique is used within the computer vision system for character estimation. GDH captures the directional gradients within images, which provide valuable information for character recognition. SE2ERS operates as a complete end-to-end system, covering the entire process from image capture to character recognition and secure data transmission. The research compares SE2ERS with traditional recognition methods to demonstrate its potential for improving Chinese character recognition. Experimental results show a significant improvement in accuracy: SE2ERS achieves an average recognition accuracy of 88% for simple Chinese characters, compared to 81.2% with traditional methods, and 84.4% for complex Chinese characters, compared to 72.8% with traditional methods. Additionally, deploying the WHCC model enhances data security through a higher encryption rate complexity, protecting the integrity and privacy of data transmitted within the 6G network. The approach thus applies 6G technology and blockchain to advance Chinese character recognition in natural scenes while prioritizing both data security and accuracy.
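To make the idea of a gradient-direction descriptor concrete, the following sketch computes a HOG-style histogram for a single image patch. The paper does not give GDH’s parameters here, so the choices below are assumptions borrowed from common HOG practice: central-difference gradients, 9 unsigned orientation bins over 0 to 180°, magnitude-weighted votes, and L2 normalization. Unlike full HOG, it builds one histogram per patch rather than per cell and block, and the function name is hypothetical.

```python
import math
from typing import List

Image = List[List[float]]


def gradient_direction_histogram(img: Image, bins: int = 9) -> List[float]:
    """HOG-style histogram of unsigned gradient directions (0 to 180 degrees).

    Gradients use central differences [-1, 0, 1]; each interior pixel votes into
    the bin of its direction with a weight equal to its gradient magnitude.
    The histogram is L2-normalized.
    """
    height, width = len(img), len(img[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = img[y][x + 1] - img[y][x - 1]
            gy = img[y + 1][x] - img[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue  # flat region: no direction to vote for
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # fold into [0, 180)
            hist[int(angle // bin_width) % bins] += magnitude
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm > 0 else hist


img = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]  # vertical edge: dark left, bright right
hist = gradient_direction_histogram(img)
print(hist[0])  # 1.0: all gradient energy points horizontally (direction 0 degrees)
```

Unsigned directions are a common choice for text because a dark stroke on a light background and a light stroke on a dark background then produce the same descriptor.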

SE2ERS integrates several technologies to achieve accurate and secure Chinese character recognition in natural scenes. Combining 6G technology, blockchain, and convolutional neural networks (CNNs) yields a robust end-to-end solution: the system processes images captured by mobile devices, recognizes Chinese characters, and securely transmits the results using blockchain-based encryption methods. During preprocessing, normalization scales pixel values to the range [0, 1], as computed with Eqs. (1) and (2).
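Eqs. (1) and (2) are not reproduced in this text. A minimal sketch, assuming they describe the common min-max rescaling x′ = (x - min)/(max - min), with division by 255 as a simpler alternative for 8-bit images, could look like this; the function names are hypothetical.

```python
from typing import List

Image = List[List[float]]


def min_max_normalize(img: Image) -> Image:
    """Rescale pixels linearly into [0, 1]: x' = (x - min) / (max - min)."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in img]
    return [[(v - lo) / (hi - lo) for v in row] for row in img]


def scale_8bit(img: List[List[int]]) -> Image:
    """Simpler alternative for 8-bit images: divide by the maximum code value 255."""
    return [[v / 255 for v in row] for row in img]


print(min_max_normalize([[50, 100], [150, 250]]))  # [[0.0, 0.25], [0.5, 1.0]]
```

Min-max scaling stretches each image’s contrast to the full range, whereas dividing by 255 preserves absolute brightness differences between images; which one suits the paper’s Eqs. (1) and (2) cannot be confirmed from this text.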

Gaussian or median filters can be applied for noise reduction. A CNN consists of multiple layers, including convolutional, activation, pooling, and fully connected layers, and feature extraction and classification are achieved through Eq. (3).
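Eq. (3) is not reproduced here, so the sketch below only illustrates the standard operations the paragraph names, assuming a single-channel image: a 3×3 median filter for noise reduction, a "valid" convolution (implemented, as in most CNN frameworks, as cross-correlation), ReLU activation, and 2×2 max pooling. It is written in plain Python for readability; the helper names and the edge kernel are hypothetical, and a real model would learn its kernels and run in a deep learning framework.

```python
from statistics import median
from typing import List

Image = List[List[float]]


def median_filter3(img: Image) -> Image:
    """3x3 median filter for salt-and-pepper noise; border pixels are copied unchanged."""
    h, w = len(img), len(img[0])
    out = [list(row) for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = median(window)
    return out


def conv2d_valid(img: Image, kernel: Image, bias: float = 0.0) -> Image:
    """'Valid' 2D cross-correlation, the operation CNN convolutional layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(img[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw)) + bias
             for x in range(out_w)]
            for y in range(out_h)]


def relu(fmap: Image) -> Image:
    """Element-wise activation max(0, v)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2(fmap: Image) -> Image:
    """Non-overlapping 2x2 max pooling; an odd trailing row or column is dropped."""
    return [[max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
             for x in range(0, len(fmap[0]) - 1, 2)]
            for y in range(0, len(fmap) - 1, 2)]


image = [[0, 0, 0, 9, 9, 9] for _ in range(6)]  # dark left half, bright right half
edge_kernel = [[-1.0, 1.0]]  # hypothetical horizontal-difference kernel (vertical edges)
features = max_pool2(relu(conv2d_valid(median_filter3(image), edge_kernel)))
print(features)  # [[0.0, 9.0], [0.0, 9.0], [0.0, 9.0]]
```

The pooled map keeps a strong response only where the vertical edge lies, which is the kind of localized stroke feature the convolutional layers are meant to extract.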

The fully connected layer used for estimation is computed with Eq. (4).
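Eq. (4) is likewise not shown in this text. A common form for a fully connected layer is z = Wx + b, followed by softmax to turn the scores into class probabilities; the sketch below assumes that form, and the weights and three "character classes" in the usage example are made up for illustration.

```python
import math
from typing import List


def fully_connected(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """z_k = sum_i W[k][i] * x[i] + b[k] for every output unit k."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(z: List[float]) -> List[float]:
    """Numerically stable softmax: subtract max(z) before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def predict(features: List[float], weights: List[List[float]], bias: List[float]) -> int:
    """Index of the most probable class."""
    probs = softmax(fully_connected(features, weights, bias))
    return max(range(len(probs)), key=lambda k: probs[k])


weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 hypothetical character classes
bias = [0.0, 0.0, 0.0]
print(predict([1.0, 2.0], weights, bias))  # 2
```

Subtracting the maximum score before exponentiating does not change the softmax output but prevents overflow when a class vocabulary as large as Chinese produces large logits.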

The SE2ERS system combines advanced technologies into a comprehensive solution for Chinese character recognition in natural scenes: it preprocesses images, applies CNNs for recognition, integrates 6G for real-time connectivity, uses blockchain for security, and employs GDH for feature extraction.

6G networks are expected to have a more decentralized and distributed architecture to support massive connectivity and low-latency communication. The 6G model comprises several components. Advanced base stations with beamforming capabilities provide efficient communication. 6G may incorporate Low Earth Orbit (LEO) and geostationary satellites for global coverage. Edge computing nodes are distributed throughout the network to enable low-latency processing. Ultra-low latency (L) is achieved by minimizing propagation delay and processing time; latency is typically below 1 millisecond (ms). High data rates (R) above 100 Gbps are supported. The achievable data rate can be calculated using Shannon’s capacity formula, Eq. (5):

$$C = B \log_2(1 + \mathrm{SNR}) \qquad (5)$$

Here, C is the channel capacity, B is the bandwidth, and SNR is the signal-to-noise ratio. Ubiquitous, seamless connectivity is a design goal, and the area covered can be determined with the coverage equation in Eq. (6), where the cell radius depends on signal strength and frequency.
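A short worked example of the capacity formula C = B log2(1 + SNR) shows what the 100 Gbps target implies. Note that Shannon capacity is an upper bound on the error-free rate, not a rate a real link achieves. The 10 GHz bandwidth, 20 dB SNR, and 100 m cell radius are hypothetical values, and because Eq. (6) is not reproduced here, the coverage helper assumes a circular cell, A = πr².

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Upper bound C = B * log2(1 + SNR), with SNR converted from dB to linear."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


def bandwidth_for_rate(rate_bps: float, snr_db: float) -> float:
    """Minimum bandwidth B = C / log2(1 + SNR) for a target capacity."""
    return rate_bps / math.log2(1 + 10 ** (snr_db / 10))


def circular_cell_area(radius_m: float) -> float:
    """Assumed circular coverage model for one cell: A = pi * r^2."""
    return math.pi * radius_m ** 2


c = shannon_capacity_bps(10e9, 20.0)  # hypothetical 10 GHz channel at 20 dB SNR
print(f"{c / 1e9:.1f} Gbit/s")       # 66.6 Gbit/s
b = bandwidth_for_rate(100e9, 20.0)   # bandwidth needed for 100 Gbit/s at 20 dB
print(f"{b / 1e9:.1f} GHz")          # 15.0 GHz
print(f"{circular_cell_area(100.0):.0f} m^2")  # 31416 m^2
```

Because capacity grows only logarithmically with SNR but linearly with bandwidth, reaching 100 Gbps in practice depends mainly on very wide channels, which is why 6G proposals look to higher frequency bands.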

3.2 Blockchain Model

Integrating blockchain into a 6G network involves creating a decentralized, secure, and transparent ledger for various purposes. Key considerations include:

Smart Contracts: Implement smart contracts for data access control, network management, and payment processing. These can be coded in Solidity or other smart contract languages.

Consensus Mechanism: Choose a consensus mechanism suitable for 6G, such as Proof of Stake (PoS), Proof of Authority (PoA), or even more advanced mechanisms that provide scalability and security.

Data Privacy: Use cryptographic techniques like zero-knowledge proofs or homomorphic encryption to protect user data while ensuring transparency.

Blockchain Data Size (BDS): Estimate the blockchain data size based on the expected number of transactions and blocks. This can be calculated using Eq. (7).

Ensure that BDS is manageable within the network’s storage capacity. Implement quantum-resistant cryptographic algorithms to protect against future quantum attacks. Use blockchain for secure identity management, ensuring user privacy and authentication.
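The ledger ideas above (hash-linked blocks, user-controlled grant/revoke access, and a BDS estimate) can be illustrated with a toy, single-node sketch. It is not a smart contract and has no consensus mechanism or networking; SHA-256 hash linking simply makes edits to past blocks detectable. Eq. (7) is not reproduced in this text, so `estimate_bds` assumes BDS = blocks × (header size + transactions per block × transaction size). All names, record fields, and sizes are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List

Tx = Dict[str, str]  # hypothetical record: {"owner", "viewer", "action": "grant" | "revoke"}


@dataclass
class Block:
    index: int
    prev_hash: str
    transactions: List[Tx]
    block_hash: str = ""

    def compute_hash(self) -> str:
        """SHA-256 over the block contents, including the previous block's hash."""
        payload = json.dumps([self.index, self.prev_hash, self.transactions], sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AccessLedger:
    """Toy single-node, hash-linked ledger of access events (no network, no consensus)."""

    def __init__(self) -> None:
        genesis = Block(0, "0" * 64, [])
        genesis.block_hash = genesis.compute_hash()
        self.chain: List[Block] = [genesis]

    def append(self, transactions: List[Tx]) -> None:
        block = Block(len(self.chain), self.chain[-1].block_hash, transactions)
        block.block_hash = block.compute_hash()
        self.chain.append(block)

    def is_valid(self) -> bool:
        """Recompute every hash and link; editing any past block breaks validation."""
        for i, block in enumerate(self.chain):
            if block.block_hash != block.compute_hash():
                return False
            if i > 0 and block.prev_hash != self.chain[i - 1].block_hash:
                return False
        return True

    def has_access(self, owner: str, viewer: str) -> bool:
        """Replay events in chain order; the most recent grant or revoke wins."""
        allowed = False
        for block in self.chain:
            for tx in block.transactions:
                if tx["owner"] == owner and tx["viewer"] == viewer:
                    allowed = tx["action"] == "grant"
        return allowed


def estimate_bds(n_blocks: int, tx_per_block: int, tx_bytes: int, header_bytes: int) -> int:
    """Assumed size model: n_blocks * (header_bytes + tx_per_block * tx_bytes)."""
    return n_blocks * (header_bytes + tx_per_block * tx_bytes)


ledger = AccessLedger()
ledger.append([{"owner": "alice", "viewer": "clinic", "action": "grant"}])
ledger.append([{"owner": "alice", "viewer": "clinic", "action": "revoke"}])
print(ledger.has_access("alice", "clinic"), ledger.is_valid())  # False True
ledger.chain[1].transactions[0]["action"] = "revoke"  # tamper with history
print(ledger.is_valid())  # False
print(estimate_bds(1000, 2000, 250, 80))  # 500080000 bytes, about 500 MB
```

Replaying events in order is what lets a user revoke access later without deleting history: the grant stays on the ledger, and the newer revoke simply takes precedence.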

4 SE2ERS6G Blockchain Model for the Chinese Character Recognition

Analyzing Chinese characters in natural scenes to retrieve the text information people need involves processing images of natural scenes and extracting and recognizing the Chinese characters in them. With the continuous advance of information technology, camera-equipped mobile devices have become common, making it easy for individuals to capture photos containing text in daily life. Once an image is acquired, the necessary Chinese character information can be extracted and converted into usable electronic data. Recognizing Chinese characters in photos of natural scenes has many practical applications. The structural model of Chinese character recognition in natural scenes is shown in Fig. 1.

Figure 1: SE2ERS for Chinese characters in realistic circumstances

Fig. 1 depicts the structural model of SE2ERS for recognizing Chinese characters in realistic circumstances. People use mobile devices to capture natural scenes, and by detecting and recognizing the picture content, the system analyzes the Chinese text in the picture, making people’s lives more convenient. With the continuous development of intelligent mobile devices, people can use their high-definition cameras to photograph content-rich, colorful natural scenes anytime and anywhere; besides colors and textures, these pictures contain a great deal of text. Text often carries much more information than color or texture. Text in natural scenes is compact, information-rich, and expressive. Chinese characters are commonly used and familiar, and their detection and recognition in natural scenes can promote social development [39–41].

Identifying Chinese characters in natural scenes has considerable practical significance. Recognizing the text content of pictures allows images to be labeled and classified accurately, enabling faster and more accurate text-based image search. In traffic management, pictures can be taken of complex and congested traffic conditions, and the traffic situation at that moment can be analyzed through Chinese text recognition. Driverless and intelligent vehicles use cameras to recognize road scenes and react intelligently.

4.1 Chinese Character Text Recognition with DGH

Before Chinese text in natural scene images can be recognized, the localized text image must be preprocessed. Preprocessing comprises character extraction, character segmentation, and skew correction. Its goal is to divide the Chinese text in a natural scene image into individual characters, which facilitates recognition [26,27]. Character extraction relies on the color of the characters: their color information and spatial position in the image are used to locate them. The text region is binarized according to character color, yielding black characters on a white background, and the pixel coordinates of each character are recorded. The position of each Chinese character is then determined by cluster analysis of the character color.
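The extraction step above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: color clustering is done with plain two-cluster k-means on RGB values, the smaller cluster is assumed to be the character strokes, and characters are located with a vertical projection of the resulting binary image. All function names are hypothetical.

```python
import numpy as np


def kmeans_two_colors(pixels: np.ndarray, iters: int = 10) -> np.ndarray:
    """Split (N, 3) float RGB pixels into two color clusters with plain k-means (k=2)."""
    # Initialize the two centers with the darkest and the brightest pixel.
    brightness = pixels.sum(axis=1)
    centers = np.stack([pixels[brightness.argmin()], pixels[brightness.argmax()]]).astype(float)
    labels = np.zeros(len(pixels), dtype=int)
    for _ in range(iters):
        # Assign every pixel to its nearest center (squared Euclidean distance).
        dists = ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean color of its members.
        for k in range(2):
            if np.any(labels == k):
                centers[k] = pixels[labels == k].mean(axis=0)
    return labels


def binarize_text(image: np.ndarray) -> np.ndarray:
    """Return a binary map where 1 marks character pixels (the 'black' characters)."""
    h, w, _ = image.shape
    labels = kmeans_two_colors(image.reshape(-1, 3).astype(float)).reshape(h, w)
    # Assumption: character strokes cover fewer pixels than the background.
    text_label = 0 if (labels == 0).sum() < (labels == 1).sum() else 1
    return (labels == text_label).astype(np.uint8)


def character_spans(binary: np.ndarray) -> list[tuple[int, int]]:
    """Return [start, end) column ranges of consecutive ink columns, one per candidate character."""
    ink_cols = binary.sum(axis=0) > 0
    spans, start = [], None
    for x, has_ink in enumerate(ink_cols):
        if has_ink and start is None:
            start = x
        elif not has_ink and start is not None:
            spans.append((start, x))
            start = None
    if start is not None:
        spans.append((start, len(ink_cols)))
    return spans
```

A vertical projection will split characters whose left and right components are separated by a blank column, so a practical system would merge neighboring spans, for example using the average character width.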

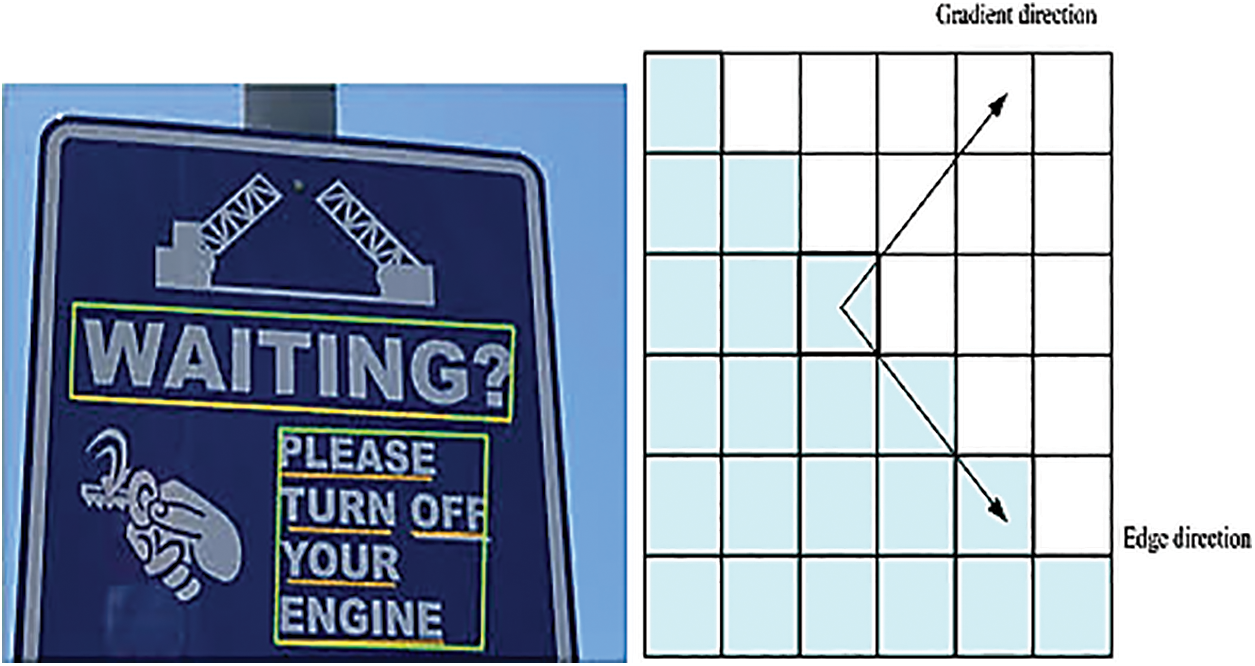

Character segmentation and correction address the tilt that arises when a mobile device photographs Chinese text in a natural scene at an angle. The method analyzes character heights along the horizontal direction: first, the average character height is computed; then, the text is divided at the middle into a left half and a right half; finally, the skew is corrected according to the difference between the left and right halves relative to the average character height [28,29]. In traditional natural-scene Chinese character recognition, the directional gradient histogram algorithm (also known as the histogram of oriented gradients, HOG) is used to detect and recognize characters. This technique is widely used for object detection; its fundamental idea is to describe an image by its gradient information. The structural model of the directional gradient histogram is shown in Fig. 2.

Figure 2: Structure of directional gradient histogram
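A minimal sketch of the left/right skew estimate described above, assuming a binarized text line in which 1 marks ink. The paper does not give the exact formula, so this version compares the ink centroids of the left and right halves to obtain the line's slope and then shears each column back; the centroid rule and the function names are assumptions.

```python
import numpy as np


def estimate_slope(binary: np.ndarray) -> float:
    """Estimate the text-line slope dy/dx from the ink centroids of the left and right halves."""
    _, w = binary.shape
    ys, xs = np.nonzero(binary)
    left, right = xs < w // 2, xs >= w // 2
    if not left.any() or not right.any():
        return 0.0  # not enough ink on one side to estimate a tilt
    dy = ys[right].mean() - ys[left].mean()
    dx = xs[right].mean() - xs[left].mean()
    return float(dy / dx)


def deskew(binary: np.ndarray, slope: float) -> np.ndarray:
    """Shift each column vertically to cancel the slope (a shear about the center column)."""
    h, w = binary.shape
    out = np.zeros_like(binary)
    xc = (w - 1) / 2
    for x in range(w):
        shift = int(round(slope * (x - xc)))
        for y in np.nonzero(binary[:, x])[0]:
            if 0 <= y - shift < h:  # pixels shifted outside the image are dropped
                out[y - shift, x] = binary[y, x]
    return out
```

The tilt angle in degrees is `math.degrees(math.atan(slope))`. A shear rather than a full rotation keeps the sketch short and is adequate for small angles.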

Fig. 2 depicts the structural model of the directional gradient histogram. The image is divided into a grid of small, closely spaced cells. Within each cell, a histogram of the gradient (edge) orientations of its pixels is accumulated; at each pixel, the gradient direction is perpendicular to the local edge direction. Each histogram summarizes the local shape information of its cell, and the histograms are combined to form the feature descriptor. The natural-scene Chinese text recognition process of the directional gradient histogram algorithm is as follows:

First, the color image is converted to grayscale and its contrast is adjusted. This reduces the influence of illumination and suppresses local shadows and lighting changes in the image to some extent.

Next, the gradient of the image is computed: for each pixel, the gradient components along the horizontal (abscissa) and vertical (ordinate) directions are obtained, and from them the gradient magnitude and gradient direction of the pixel are calculated. The horizontal and vertical gradient components of each pixel are given by Eqs. (8) and (9).

In Eqs. (8) and (9),

The gradient direction of the pixel is expressed as in Eq. (11).
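For reference, a standard histogram-of-oriented-gradients formulation consistent with this description is given below. It is an assumption about the form of Eqs. (8) to (11), not a transcription of them: H(x, y) denotes the gray value at pixel (x, y), G_x and G_y the horizontal and vertical gradients, G the gradient magnitude, and α the gradient direction.

$$
G_x(x,y) = H(x+1,y) - H(x-1,y), \qquad G_y(x,y) = H(x,y+1) - H(x,y-1)
$$

$$
G(x,y) = \sqrt{G_x(x,y)^2 + G_y(x,y)^2}, \qquad \alpha(x,y) = \arctan\frac{G_y(x,y)}{G_x(x,y)}
$$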

In Eq. (11), α represents the gradient direction of the pixel. In the traditional method, the image is then divided into multiple small, non-overlapping cells, and the gradient histogram of each cell is computed. Each cell histogram is normalized to suppress interference from external factors. Many factors affect Chinese text recognition in natural scenes, and illumination is the most critical: image quality suffers unless the illumination is sufficiently strong. Finally, the feature vectors of all cells are combined into a single feature vector representing the Chinese characters in the natural scene.
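The traditional pipeline (grayscale conversion, illumination normalization, per-pixel gradients, non-overlapping cell histograms, per-cell normalization, concatenation) can be sketched in NumPy as below. This is an illustrative sketch, not the paper's code: the BT.601 gray weights, gamma compression with gamma = 0.5, 8-pixel cells, and 9 unsigned orientation bins are assumed defaults commonly used with HOG, and the function names are hypothetical.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB image to gray values using ITU-R BT.601 weights."""
    return rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])


def hog_cells(gray: np.ndarray, cell: int = 8, bins: int = 9, gamma: float = 0.5) -> np.ndarray:
    """Directional-gradient-histogram features over non-overlapping cells.

    gray: 2-D array of gray values in [0, 255]. Returns one `bins`-long,
    L2-normalized histogram per full cell, concatenated row by row.
    """
    # Gamma compression damps illumination differences (gamma = 0.5 is an assumption).
    img = (gray.astype(float) / 255.0) ** gamma
    # Centered differences (assumed form of Eqs. (8) and (9)); border pixels keep zero gradient.
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(gx, gy)
    # Unsigned gradient direction alpha in [0, 180) degrees.
    direction = np.degrees(np.arctan2(gy, gx)) % 180.0
    h, w = img.shape
    features = []
    for y0 in range(0, h - cell + 1, cell):
        for x0 in range(0, w - cell + 1, cell):
            mag = magnitude[y0:y0 + cell, x0:x0 + cell].ravel()
            ang = direction[y0:y0 + cell, x0:x0 + cell].ravel()
            # Each pixel votes into one orientation bin, weighted by its gradient magnitude.
            idx = np.minimum((ang // (180.0 / bins)).astype(int), bins - 1)
            hist = np.bincount(idx, weights=mag, minlength=bins)
            # Per-cell L2 normalization (standard HOG normalizes overlapping blocks instead).
            features.append(hist / (np.linalg.norm(hist) + 1e-6))
    if not features:
        return np.zeros(0)  # image smaller than one cell
    return np.concatenate(features)
```

On a 16 × 16 image containing a single vertical edge, every cell's histogram concentrates in the 0° bin, because the gradient there is horizontal, perpendicular to the edge.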

4.2 Computer Vision Algorithms for SE2ERS

Computer vision is a multidisciplinary field that draws on computer science, mathematics, and engineering, and much of its current research relies on deep learning. Computer vision uses cameras and other imaging equipment to acquire target images; intelligent analysis then detects and recognizes the targets and extracts the required information from the image [33]. A CNN is a neural network structure that includes convolution operations. It is an analytical model widely used in computer vision and can effectively recognize Chinese characters in natural scenes. The structural model of the CNN is shown in Fig. 3. The CNN in Fig. 3 is a particular kind of feedforward neural network that typically contains an input layer, convolutional layers, pooling layers, a fully connected layer, and an output layer. A convolutional layer usually has multiple feature maps, each consisting of several neurons. Convolutional and pooling layers alternate to form several convolutional groups that extract features layer by layer.

Figure 3: Structural model diagram of CNN

The forward propagation of a convolutional layer mainly extracts features from its input, which may be the original input image or the output of a preceding convolutional or pooling layer. All feature maps of a convolutional layer generally use convolution kernels of the same size. The size of the output feature maps is determined by the layer's input size and kernel size (together with stride and padding), while the number of output feature maps is fixed when the network is initialized. Owing to its recognition mechanism, a CNN achieves high image recognition accuracy and robustness. Therefore, end-to-end recognition of Chinese characters in natural scenes can be achieved using CNNs.
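To make the forward pass concrete, here is a minimal NumPy sketch of one convolutional layer followed by max pooling. The stride-1 unpadded convolution, the ReLU activation, and 2 × 2 pooling are assumptions chosen for illustration; the paper does not specify these hyperparameters here. `conv_output_size` shows how the output size follows from the input size and kernel size (plus stride and padding).

```python
import numpy as np


def conv_output_size(n: int, k: int, stride: int = 1, padding: int = 0) -> int:
    """Output side length of a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - k) // stride + 1


def conv2d_relu(x: np.ndarray, kernels: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Stride-1, unpadded multi-channel convolution followed by ReLU.

    x: (C_in, H, W); kernels: (C_out, C_in, K, K); bias: (C_out,).
    Like most CNN libraries, this computes cross-correlation (no kernel flip).
    """
    c_out, _, k, _ = kernels.shape
    _, h, w = x.shape
    oh, ow = conv_output_size(h, k), conv_output_size(w, k)
    out = np.empty((c_out, oh, ow))
    for o in range(c_out):
        for i in range(oh):
            for j in range(ow):
                # Value at output position (i, j): weighted sum over the receptive field plus bias.
                out[o, i, j] = np.sum(x[:, i:i + k, j:j + k] * kernels[o]) + bias[o]
    return np.maximum(out, 0.0)  # activation function: ReLU (an assumed choice)


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that do not fill a whole window are dropped."""
    c, h, w = x.shape
    oh, ow = h // size, w // size
    windows = x[:, :oh * size, :ow * size].reshape(c, oh, size, ow, size)
    return windows.max(axis=(2, 4))
```

For example, a 32 × 32 input with a 5 × 5 kernel gives 28 × 28 feature maps, since (32 − 5) / 1 + 1 = 28.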

The process of end-to-end Chinese character recognition in natural scenes using CNNs is as follows:

The input size of the convolution layer is

The value of position

In Eq. (6),

In Eq. (14), function

5 Integration of WHCC Blockchain with 6G Technology

In this system, users capture images containing Chinese characters with camera-equipped mobile devices. These images are processed by CNNs, which excel at extracting image features, and the CNN-based model recognizes Chinese characters from the extracted features with high accuracy. What sets SE2ERS apart is its integration of 6G technology, which provides ultra-low latency, high data rates, and ubiquitous connectivity, allowing SE2ERS to operate with minimal delay and to handle large volumes of data seamlessly. To address data security and privacy concerns, the WHCC blockchain is employed: it encrypts the recognized characters before transmission, ensuring data confidentiality and tamper resistance, and it implements privacy-preserving mechanisms that let users control their visual data and manage access rights. In the workflow, characters are encrypted using WHCC, stored securely in the blockchain, and managed through smart contracts that control access. When users query the system, the data are retrieved, decrypted, and returned in real time.

WHCC encrypts the data during transmission. Encryption uses cryptographic algorithms to transform data into an unreadable form that remains unreadable until the intended recipient decrypts it. To preserve user privacy, the blockchain, including WHCC, employs cryptographic techniques such as zero-knowledge proofs (ZKPs), which allow one party (the prover) to prove to another party (the verifier) that a statement is true without revealing any additional information. Image capture (I): users capture images I containing Chinese characters using camera-equipped mobile devices. CNN processing (F): the images pass through the CNN, producing feature vectors F, as represented in Eq. (15):

$$F = \mathrm{CNN}(I) \qquad (15)$$

where CNN is the convolutional neural network.

Recognized characters (R) are obtained based on the extracted features. The recognition process can be expressed as in Eq. (16).

Encryption (E): before transmission, WHCC encrypts the recognized characters R to secure the data, as computed with Eq. (17).

Storage (B): the encrypted data E are stored securely in the WHCC blockchain B. Access control (S): smart contracts S on the blockchain manage access to the data, controlling who can retrieve and decrypt it. User query and response (Q): when users query the system for recognized characters or related information, the system retrieves the data from the blockchain, decrypts it (D), and returns the response Q in real time, as given by Eqs. (18) and (19).
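The I → F → R → E → B → S → D → Q flow can be sketched end to end as follows. Everything here is hypothetical: the CNN and the classifier are passed in as plain callables, the ledger is an in-memory list, access control is a dictionary standing in for smart contracts, and the cipher is a toy SHA-256 keystream XOR used only because WHCC's construction is not specified. It is not WHCC and is not secure (no authentication tag, no key management); a real system would use a vetted authenticated-encryption scheme.

```python
import hashlib
import secrets


def _keystream(key: bytes, nonce: bytes, n: int) -> bytes:
    """Toy keystream: concatenated SHA-256(key || nonce || counter) blocks."""
    out, counter = b"", 0
    while len(out) < n:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:n]


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(16)  # fresh random nonce per message, stored in front
    body = bytes(p ^ s for p, s in zip(plaintext, _keystream(key, nonce, len(plaintext))))
    return nonce + body


def toy_decrypt(key: bytes, blob: bytes) -> bytes:
    nonce, body = blob[:16], blob[16:]
    return bytes(c ^ s for c, s in zip(body, _keystream(key, nonce, len(body))))


class SE2ERSPipelineSketch:
    """Hypothetical in-memory model of the capture-to-query workflow."""

    def __init__(self, extract, classify, key: bytes):
        self.extract = extract    # stands in for the CNN: F = CNN(I)
        self.classify = classify  # maps features F to recognized characters R
        self.key = key
        self.ledger = []          # B: append-only list standing in for the blockchain
        self.acl = {}             # S: record id -> {"owner", "readers"} (smart-contract stand-in)

    def ingest(self, image, owner: str) -> int:
        chars = self.classify(self.extract(image))                 # R
        self.ledger.append(toy_encrypt(self.key, chars.encode()))  # E, stored in B
        rid = len(self.ledger) - 1
        self.acl[rid] = {"owner": owner, "readers": {owner}}
        return rid

    def grant(self, rid: int, owner: str, user: str) -> None:
        if self.acl[rid]["owner"] != owner:
            raise PermissionError("only the owner can grant access")
        self.acl[rid]["readers"].add(user)

    def revoke(self, rid: int, owner: str, user: str) -> None:
        if self.acl[rid]["owner"] != owner:
            raise PermissionError("only the owner can revoke access")
        self.acl[rid]["readers"].discard(user)

    def query(self, rid: int, user: str) -> str:
        if user not in self.acl[rid]["readers"]:
            raise PermissionError(f"{user} may not read record {rid}")
        return toy_decrypt(self.key, self.ledger[rid]).decode()    # D, returned as Q
```

Passing the recognition stages in as callables keeps the sketch independent of any particular CNN framework.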

The SE2ERS system integrates WHCC blockchain and 6G technology to offer secure, accurate, and privacy-aware character recognition in natural scenes. Mathematical equations underpin the functionality of 6G features, data encryption, and decryption, ensuring the system’s effectiveness in recognizing characters while safeguarding user data.

Steps in blockchain:

1. The process begins with generating data, including images containing Chinese characters. SE2ERS employs computer vision techniques, specifically convolutional neural networks (CNN), to recognize and extract Chinese characters from these images. The recognized characters are the valuable data to be secured and stored.

2. Before data is added to the blockchain, it undergoes verification and validation. This step ensures that the recognized Chinese characters are accurate and reliable. Validation may involve cross-checking with reference databases or employing validation algorithms to enhance accuracy.

3. SE2ERS utilizes the Weighted Hyperbolic Curve Cryptograph (WHCC) to secure the recognized Chinese characters. The characters are encrypted using WHCC before they are added to the blockchain. WHCC ensures data confidentiality and protection against unauthorized access.

4. Encrypted Chinese characters and relevant metadata (such as timestamps and transaction details) are organized into transactions. Each transaction represents a secure data entry containing the encrypted characters.

5. SE2ERS operates within a 6G blockchain network. This network consists of multiple nodes: edge devices, servers, or participants within the 6G ecosystem. These nodes use a consensus mechanism suitable for 6G networks, ensuring efficient transaction validation and consensus.

6. Validated transactions are grouped into blocks. In SE2ERS, these blocks may represent batches of recognized characters. Each block is linked to the previous one, forming a secure and tamper-evident data chain.

7. The blockchain is distributed across multiple nodes in the 6G network, ensuring decentralization and redundancy. Each node stores a blockchain copy, enhancing data availability and fault tolerance.

8. Within the blockchain model, privacy-preserving mechanisms may be integrated. For example, users may have control over their data and can grant or revoke access rights through blockchain-based smart contracts. This ensures that data privacy is maintained even within a decentralized network.

9. Users or authorized parties can query the blockchain to retrieve encrypted Chinese characters. The decryption key (WHCC key) is required to access the plaintext data. This dual-layer security ensures that only authorized parties can access and decipher the data.

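10. Blockchain’s inherent security features, including cryptographic hashing and consensus mechanisms, make it highly resistant to tampering and unauthorized access. Once data are added to the blockchain, altering them would require recomputing all subsequent blocks and overriding the network’s consensus, so the recorded data are effectively immutable and trustworthy.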
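Steps 4, 6, and 10 (transactions, hash-linked blocks, tamper evidence) can be illustrated with a minimal single-node sketch. It assumes transactions are JSON-serializable dicts (for example, ciphertext plus metadata) and deliberately omits the 6G consensus mechanism and the replication across nodes of steps 5 and 7; the class and field names are hypothetical.

```python
import hashlib
import json
import time

FIELDS = ("index", "timestamp", "transactions", "prev_hash")


def block_hash(block: dict) -> str:
    """SHA-256 of the canonical JSON encoding of a block's contents (excluding its own hash)."""
    payload = json.dumps({k: block[k] for k in FIELDS}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ChainSketch:
    def __init__(self):
        genesis = {"index": 0, "timestamp": 0.0, "transactions": [], "prev_hash": "0" * 64}
        genesis["hash"] = block_hash(genesis)
        self.blocks = [genesis]

    def add_block(self, transactions: list) -> dict:
        """Group validated transactions into a block linked to the previous one."""
        prev = self.blocks[-1]
        block = {
            "index": prev["index"] + 1,
            "timestamp": time.time(),
            "transactions": list(transactions),
            "prev_hash": prev["hash"],
        }
        block["hash"] = block_hash(block)
        self.blocks.append(block)
        return block

    def is_valid(self) -> bool:
        """Recompute every hash and link; any edit to a stored block breaks the chain."""
        if self.blocks[0]["hash"] != block_hash(self.blocks[0]):
            return False
        for prev, cur in zip(self.blocks, self.blocks[1:]):
            if cur["prev_hash"] != prev["hash"] or cur["hash"] != block_hash(cur):
                return False
        return True
```

Because each block stores its predecessor's hash, editing one transaction changes that block's hash and breaks every later link, which is what makes the chain tamper-evident.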

SE2ERS employs Convolutional Neural Networks (CNNs), a class of deep learning models specifically designed for image analysis. CNNs are highly effective at recognizing patterns and features in images. In this system, when users capture images containing Chinese characters using their mobile devices, these images are processed through the CNNs. The CNNs analyze the images, extracting essential features crucial for character recognition. This feature extraction is critical, ensuring the system’s accuracy in identifying Chinese characters, even in complex natural scenes.

The hierarchical architecture of blockchain can be effectively applied to store characters recognized in natural scenes, ensuring data integrity and accessibility. The hierarchical architecture implemented in SE2ERS is stated as follows:

Primary Blockchain Layer: The primary blockchain layer is at the core of the hierarchical architecture. This layer serves as the foundation for storing essential data related to character recognition. Each character recognized in natural scenes is securely stored as a transaction on the blockchain. Using blockchain ensures the stored data’s immutability, transparency, and security.

Data Segmentation: When recognizing characters in natural scenes, data can be segmented based on different criteria, such as the recognition location, the date and time of recognition, or the specific application or use case. Each segment corresponds to a separate chain or sidechain within the hierarchical architecture.

Application-Specific Sidechains: Sidechains are created for specific applications or use cases. For instance, if character recognition is used in multiple contexts, separate sidechains can be established for each context. This segregation allows for efficient data management and access control. Different applications may have varying requirements for data privacy and access.

Data Access and Control: Smart contracts are employed at the sidechain level to manage data access and control. Access to the recognized characters is restricted and granted only with permission. Users can control who can access their data, ensuring privacy and compliance with data protection regulations.

Metadata and Timestamps: Metadata related to character recognition, such as the confidence score of recognition or the geographical location, can be appended to the blockchain transactions. Timestamps are also used to record when the character recognition took place.

Data Encryption: To enhance security, data encryption is applied to the transactions stored on the blockchain. Encryption ensures that even if unauthorized access occurs, the data remains confidential.

Merger Chains: In some cases, data from multiple sidechains may need to be merged for broader analysis or access. Merger chains can aggregate data from different sources while maintaining security and privacy protocols.

Interoperability: Interoperability standards are implemented to allow data exchange between different chains within the hierarchical architecture. This ensures that data can be used for various purposes without compromising security.

Using a hierarchical blockchain architecture for storing characters recognized in natural scenes, data is secured, privacy is maintained, and access control is enforced. The architecture offers flexibility, scalability, and adaptability to different applications and contexts, making it a robust solution for character recognition and data management in 6G technology.
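A minimal sketch of the segmentation idea: one hash-linked sidechain per application, with the current head hash of a sidechain anchored into the primary chain so that rewriting the sidechain later becomes detectable. The anchoring scheme, class, and field names are assumptions for illustration; merger chains, smart-contract access control, and interoperability are not modeled.

```python
import hashlib
import json
from collections import defaultdict

GENESIS = "0" * 64


def digest(obj) -> str:
    """SHA-256 over a canonical JSON encoding."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


class HierarchicalLedgerSketch:
    def __init__(self):
        self.sidechains = defaultdict(list)  # application -> hash-linked entries
        self.primary = []                    # primary layer: anchors of sidechain heads

    def append(self, app: str, record: dict) -> None:
        chain = self.sidechains[app]
        prev = chain[-1]["hash"] if chain else GENESIS
        chain.append({"record": record, "prev": prev, "hash": digest([record, prev])})

    def anchor(self, app: str) -> None:
        """Commit the current head hash of one sidechain to the primary chain."""
        head = self.sidechains[app][-1]["hash"]
        prev = self.primary[-1]["hash"] if self.primary else GENESIS
        self.primary.append({"app": app, "head": head, "prev": prev, "hash": digest([app, head, prev])})

    def verify(self, app: str) -> bool:
        prev = GENESIS
        for entry in self.sidechains[app]:
            if entry["prev"] != prev or entry["hash"] != digest([entry["record"], prev]):
                return False
            prev = entry["hash"]
        # Every head ever anchored for this application must still exist in its sidechain.
        hashes = {e["hash"] for e in self.sidechains[app]}
        return all(a["head"] in hashes for a in self.primary if a["app"] == app)
```

Anchoring only head hashes keeps the primary layer small while still committing to the entire history of each sidechain.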

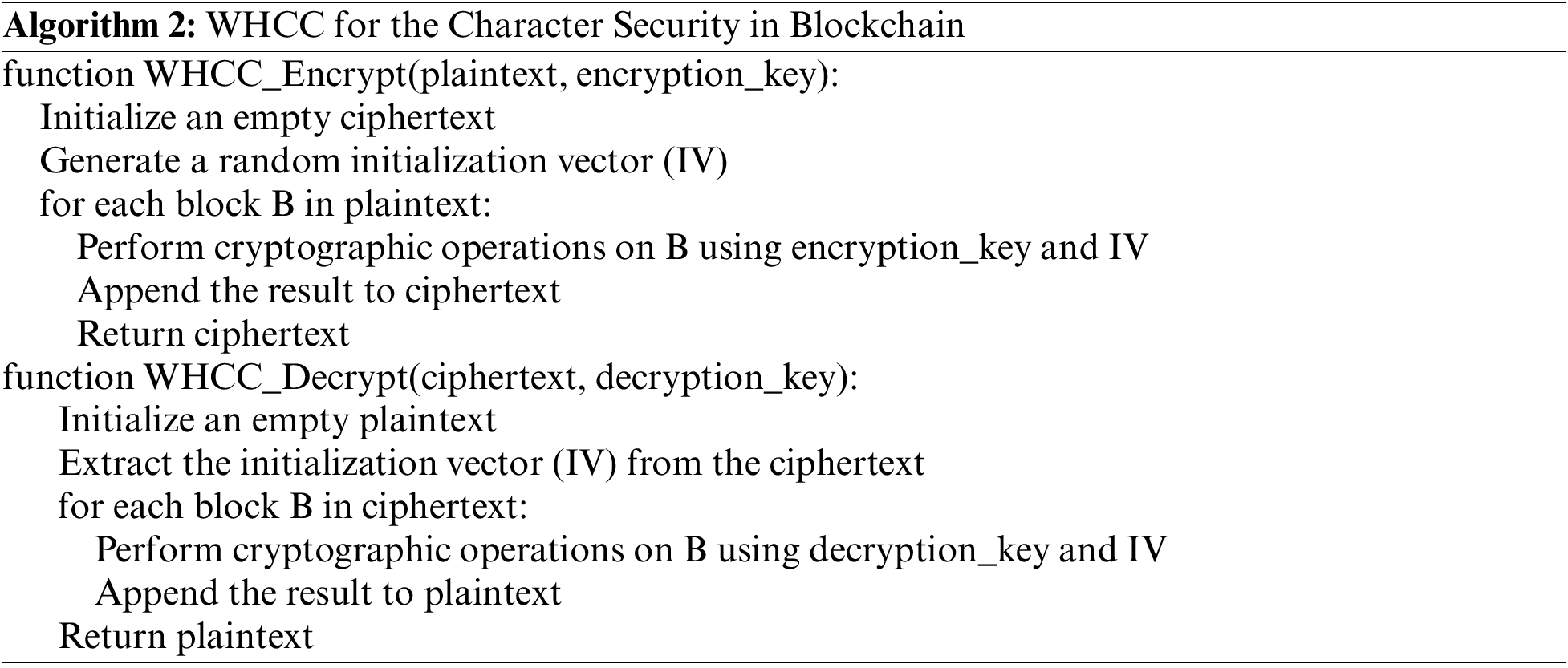

5.1 WHCC Cryptographic Algorithm 6G Blockchain Model

WHCC is a cryptographic algorithm intended to provide secure and confidential data transmission. Its operations transform plaintext data into ciphertext, rendering the data unreadable and protecting it against unauthorized access or tampering. The primary equation for WHCC encryption can be expressed as Eq. (20):

$$C = E(K, M) \qquad (20)$$

where C is the ciphertext (the encrypted data), E is the encryption function, K is the encryption key supplied as an input to E, and M is the plaintext message or data to be encrypted. In Eq. (20), the encryption function E operates on the plaintext message M using the encryption key K to produce the ciphertext C. Depending on the specific cryptographic scheme used in WHCC, encryption may involve modular arithmetic, substitution, permutation, and bitwise operations.

In SE2ERS, WHCC is integrated into the 6G blockchain model to ensure the security and privacy of character recognition data. Before transmitting the recognized Chinese characters over the 6G network, SE2ERS encrypts them with WHCC according to Eq. (20), where M is the recognized characters and K is the encryption key. The resulting ciphertext C is sent over the network and stored in the blockchain, protecting the data’s integrity. Even if someone gains access to the blockchain, they find only encrypted data, which is practically impossible to decipher without the proper decryption key.

WHCC, as part of the blockchain model, enables privacy-preserving mechanisms. For instance, it can facilitate zero-knowledge proofs (ZKPs), which allow a user to prove ownership of specific data or a private key without revealing the actual data or key. This ensures that users can control their data and manage access rights. When a user queries the system for recognized characters, the ciphertext C is retrieved from the blockchain. To access the plaintext data, the user must have the decryption key K. This ensures that only authorized users can decrypt and access the data.
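The zero-knowledge idea can be illustrated with the Schnorr identification protocol, a classic proof of knowledge of a discrete logarithm: the prover shows that it holds the private key x behind a public key y = g^x mod p without revealing x. This is a swapped-in, well-known technique, not part of WHCC as described; the tiny group (p = 2039, q = 1019, g = 4) is chosen for readability only and offers no security.

```python
import secrets

# Toy group: p = 2q + 1 with p and q prime; g = 4 generates the subgroup of order q.
P, Q, G = 2039, 1019, 4


def keygen() -> tuple[int, int]:
    x = secrets.randbelow(Q - 1) + 1  # private key x in [1, q-1]
    return x, pow(G, x, P)            # public key y = g^x mod p


def commit() -> tuple[int, int]:
    r = secrets.randbelow(Q)          # prover's one-time random nonce
    return r, pow(G, r, P)            # commitment t = g^r mod p


def challenge() -> int:
    return secrets.randbelow(Q)       # verifier's random challenge c


def respond(x: int, r: int, c: int) -> int:
    return (r + c * x) % Q            # s = r + c*x mod q; uniformly distributed because r is


def verify(y: int, t: int, c: int, s: int) -> bool:
    # Accept iff g^s == t * y^c (mod p), which holds when s was computed with the true x.
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

In practice the challenge is often derived by hashing the commitment (the Fiat–Shamir heuristic) to make the proof non-interactive, and cryptographically sized groups, such as standard elliptic curves, are used.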

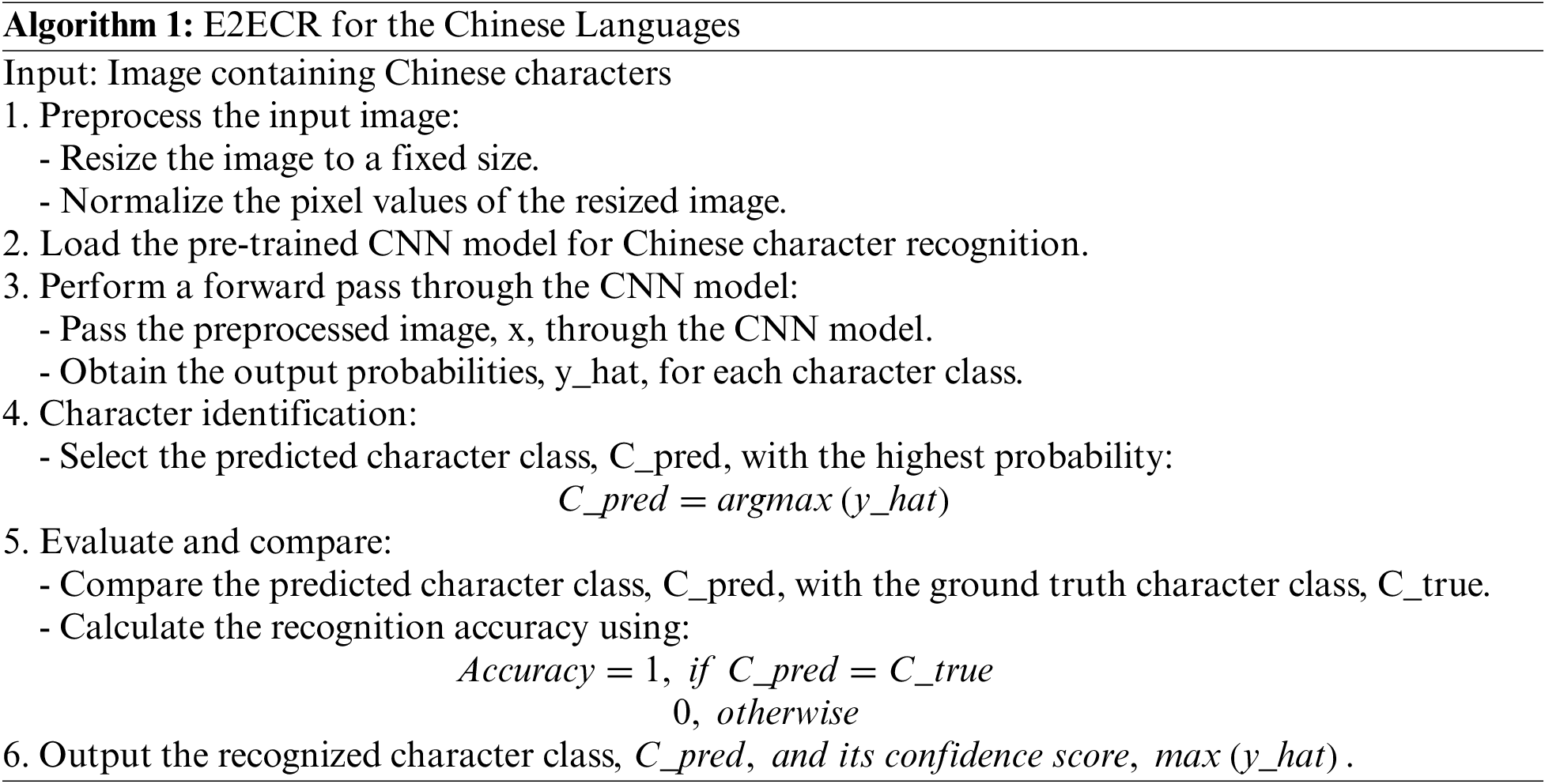

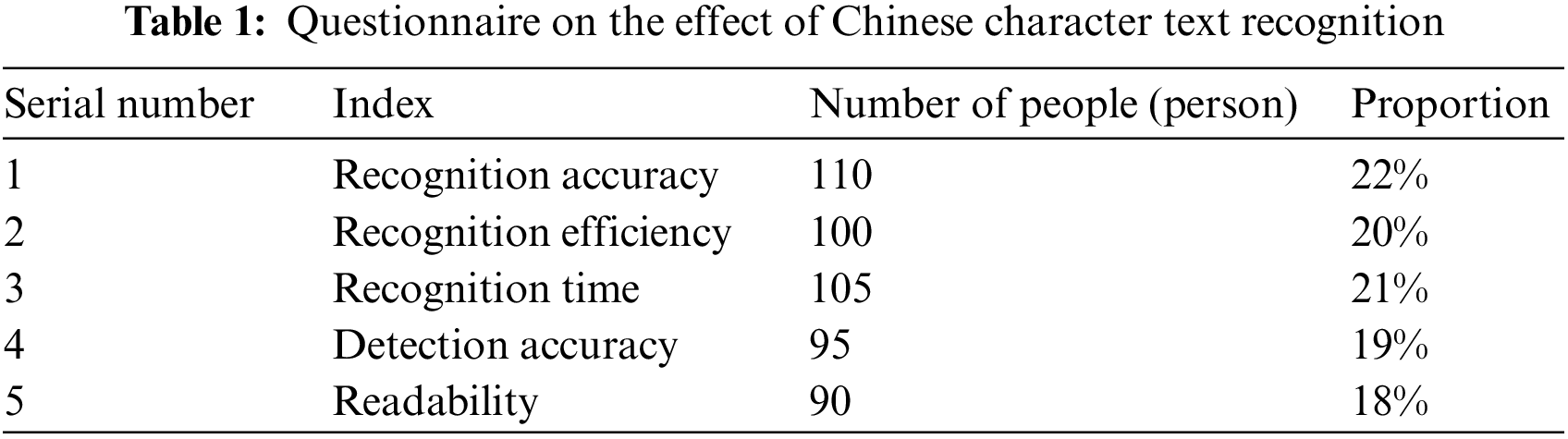

6 Experiments on SE2E Recognition of Chinese Characters

Experiments were conducted to evaluate 6G communication with a secure blockchain for character recognition in computer vision and to validate the performance of the proposed secure end-to-end recognition system (SE2ERS) for Chinese characters. Mobile devices store many natural scene pictures from people’s lives. These pictures contain a large amount of Chinese text, and identifying that text can make life more convenient. To examine how the recognition of Chinese characters in natural surroundings should be evaluated, this paper surveyed 200 mobile device photographers and 300 Chinese image recognition technicians by questionnaire. Respondents indicated which indicators they considered to measure the recognition effect of Chinese text in natural scenes, and the proportion of respondents choosing each indicator was recorded. Table 1 shows the questionnaire results for the 500 respondents.

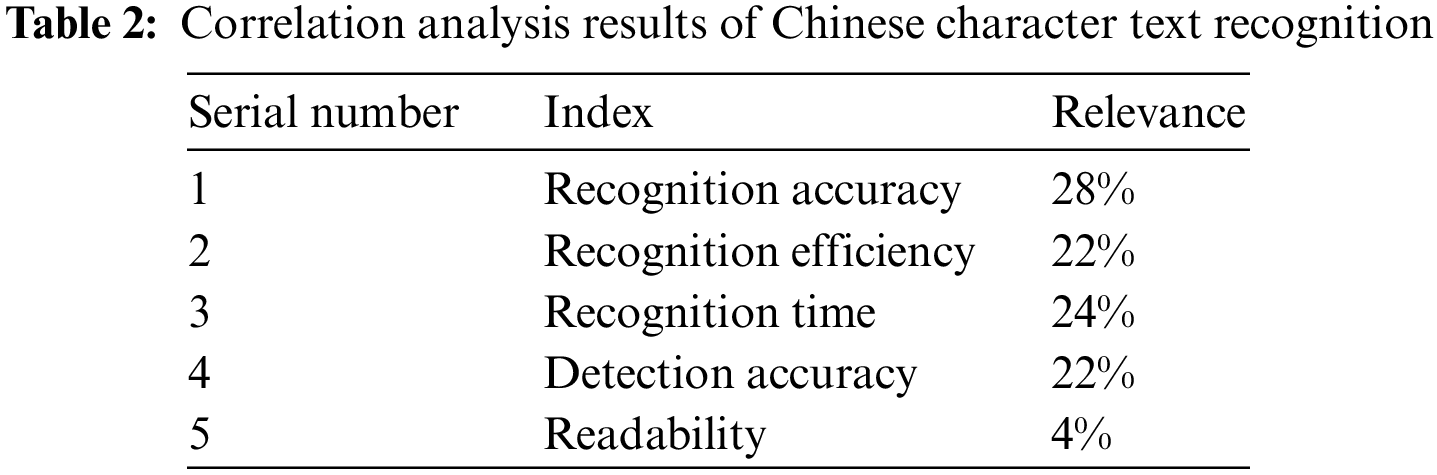

Table 1 counts the indicators that measure the recognition effect of Chinese characters in natural scenes. There were five indicators in total; the one with the largest share was recognition accuracy (22%), followed by recognition time (21%). The proportions of the five indicators were similar, and all were considered by Chinese text recognition practitioners to be effective measures of the recognition effect. A correlation analysis was then carried out to determine how strongly each of the five indicators correlates with Chinese text recognition: the stronger the correlation, the better the indicator evaluates the recognition effect. The results of the correlation analysis on the five indicators in Table 1 are shown in Table 2 and Fig. 4.

Figure 4: Correlation analysis results of Chinese character text recognition

Table 2 and Fig. 4 describe the correlation analysis results, giving the degree of correlation between each indicator and the Chinese text recognition effect. The most relevant indicator was recognition accuracy, with a correlation of 28%; the least relevant was readability, with a correlation of 4%. Because the readability indicator was too strongly correlated with the other indicators, it was excluded from the experiment, and recognition accuracy, recognition efficiency, recognition time, and detection accuracy were used to evaluate the recognition effect of Chinese characters.

To analyze the effect of SE2ERS recognition of Chinese characters in natural scenes based on computer vision algorithms on mobile devices, the experiment set up a control group: end-to-end recognition of Chinese characters based on computer vision algorithms was compared with traditional Chinese text recognition. Traditional recognition used the directional gradient histogram algorithm for image detection and recognition; specifically, it computed the gradient of each pixel in the image to determine where the Chinese text was located. SE2ERS recognition based on computer vision algorithms used CNN algorithms for detection and recognition. The image feature

Since CNN recognition depends on the number of iterations, the number of iterations was set to 100, 200, 300, 400, and 500 for Chinese text recognition. To measure recognition time, different numbers of pictures were used: 10, 20, 30, 40, and 50. In addition, the complexity of Chinese text in natural scenes varies, and the more characters a picture contains, the harder it is to recognize. The experiment therefore evaluated simple and complex Chinese text separately: complex text contained 10 or more characters, and simple text contained more than 0 and fewer than 10 characters.
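The experimental settings above can be captured in a small configuration sketch. The iteration counts, picture counts, and complexity thresholds come from the text; how the runs are combined in `experiment_plan` is an assumption for illustration, and all names are hypothetical.

```python
ITERATIONS = (100, 200, 300, 400, 500)  # CNN iteration counts compared
PICTURE_COUNTS = (10, 20, 30, 40, 50)   # workload sizes for the timing runs
COMPLEXITIES = ("simple", "complex")


def text_complexity(num_chars: int) -> str:
    """Classify a text sample with the thresholds stated in the text."""
    if num_chars >= 10:
        return "complex"
    if num_chars > 0:
        return "simple"
    raise ValueError("a text sample must contain at least one character")


def experiment_plan() -> tuple[list, list]:
    """Hypothetical run list: accuracy runs per iteration count, timing runs per picture count."""
    accuracy_runs = [(it, cx) for it in ITERATIONS for cx in COMPLEXITIES]
    timing_runs = [(n, cx) for n in PICTURE_COUNTS for cx in COMPLEXITIES]
    return accuracy_runs, timing_runs
```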

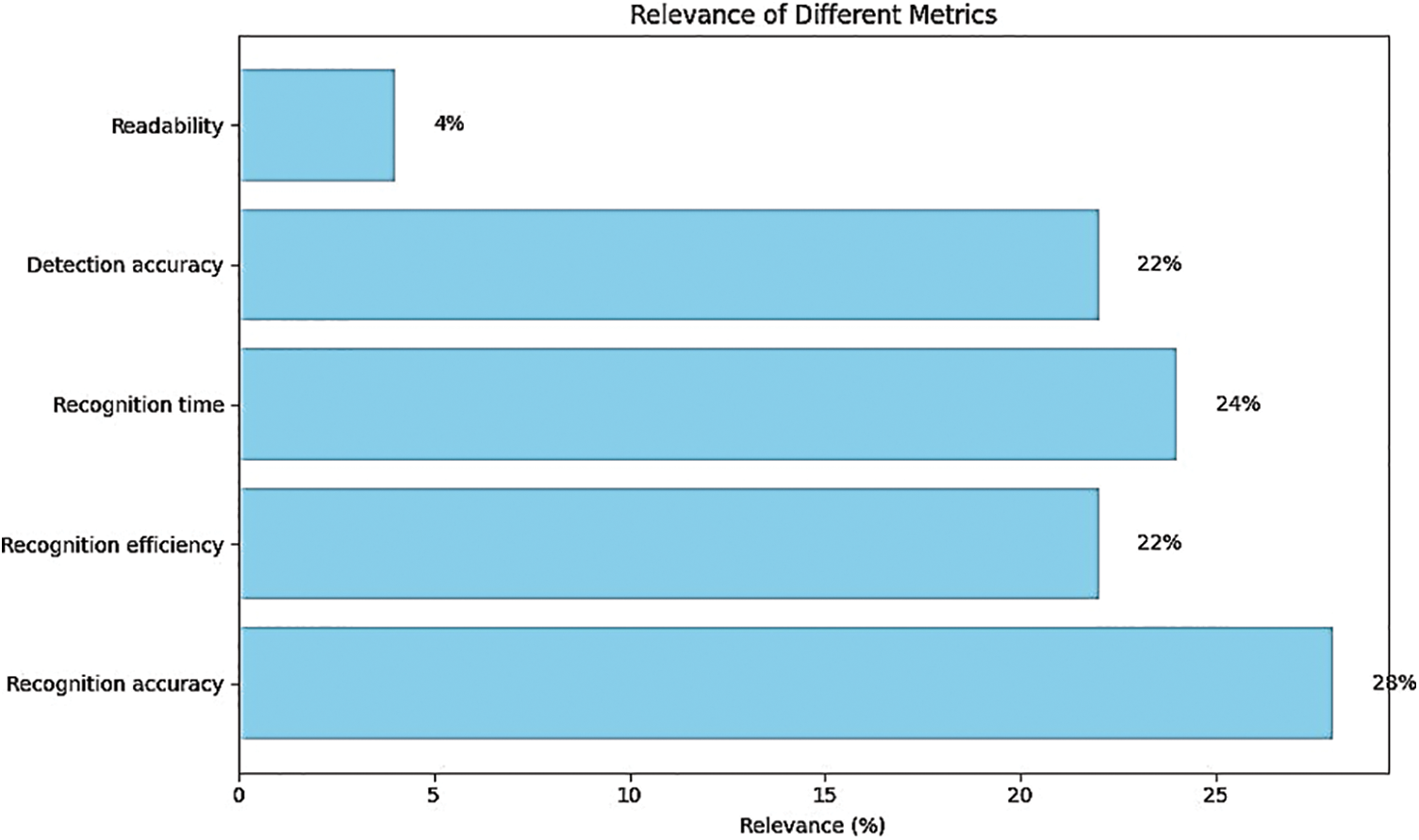

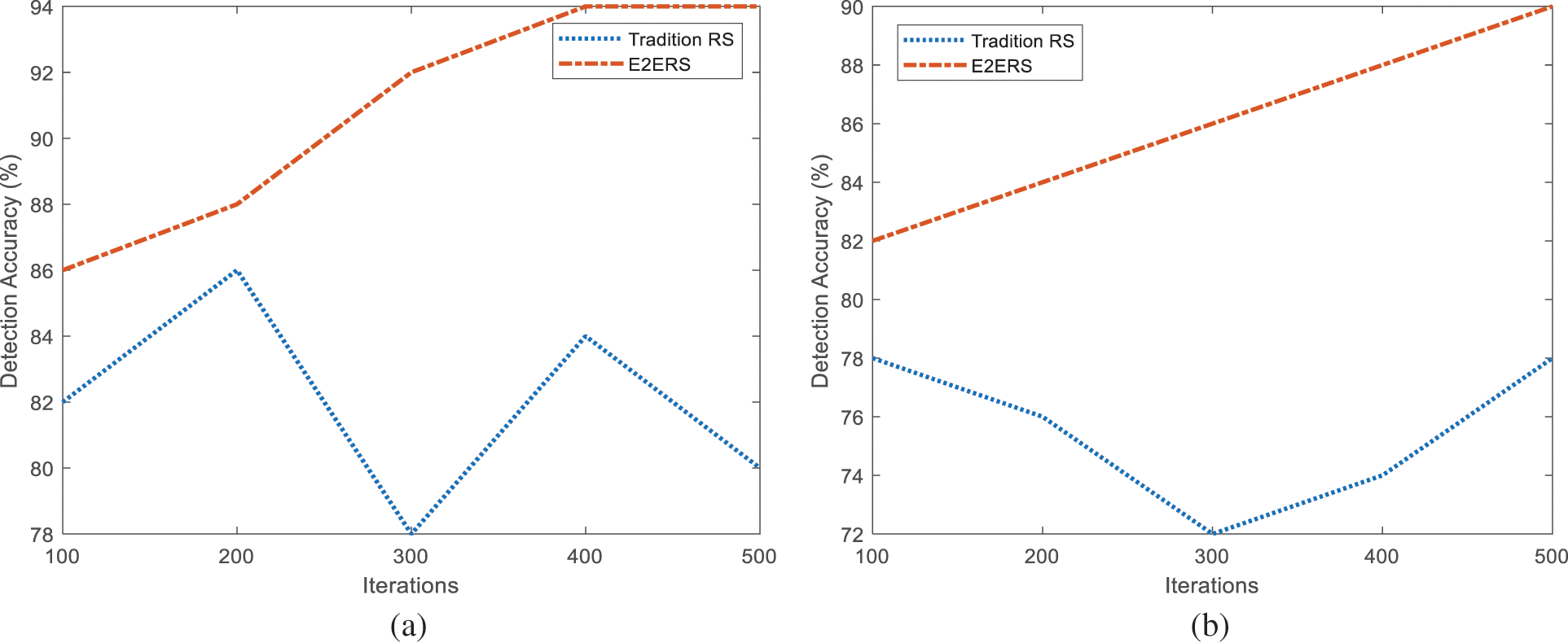

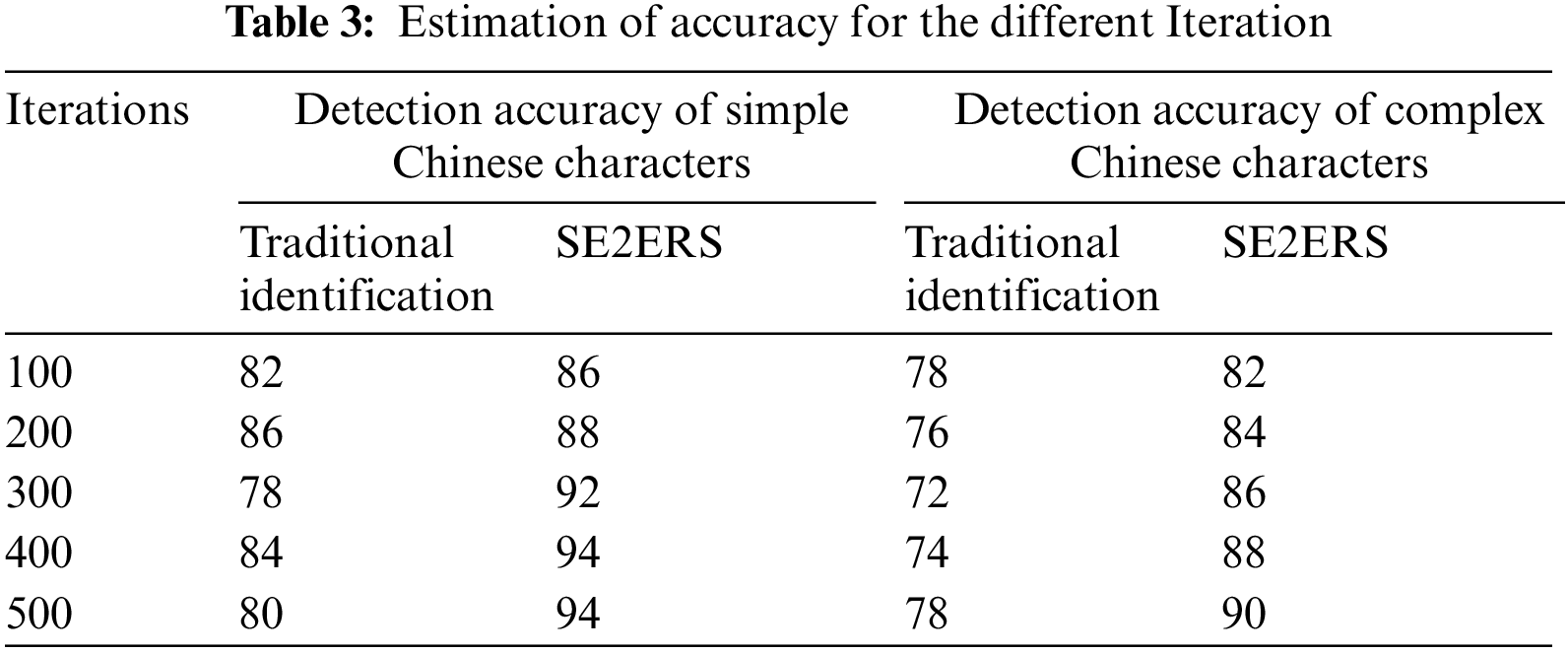

Before Chinese text in a natural scene can be recognized, it must first be detected in the picture. The detection accuracy of SE2ERS recognition based on a computer vision algorithm was compared with that of traditional Chinese text recognition in two cases: simple Chinese text and complex Chinese text. The comparison results for the two cases are shown in Fig. 5, and Table 3 lists the detection accuracy for different numbers of iterations.

Figure 5: Comparison results of detection accuracy (a) Simple Chinese characters (b) Complex Chinese characters

Fig. 5a compares the detection accuracy of the two recognition methods for simple Chinese characters. The detection accuracy of the traditional method reached a maximum of 86% at 200 iterations and a minimum of 78% at 300 iterations. The detection accuracy of the SE2ERS method based on the computer vision algorithm reached a minimum of 86% at 100 iterations and 94% at 500 iterations. Fig. 5b compares the detection accuracy for complex Chinese characters: the average detection accuracy was 75.6% for traditional recognition and 86% for E2E recognition based on the computer vision algorithm. Therefore, E2E recognition of Chinese characters in natural scenes based on computer vision algorithms can improve the accuracy of Chinese text detection.
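The reported averages for complex characters translate into the following absolute and relative gains (simple arithmetic on the figures stated above):

$$
\Delta_{\text{abs}} = 86\% - 75.6\% = 10.4\ \text{percentage points}, \qquad
\Delta_{\text{rel}} = \frac{10.4}{75.6} \approx 13.8\%
$$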

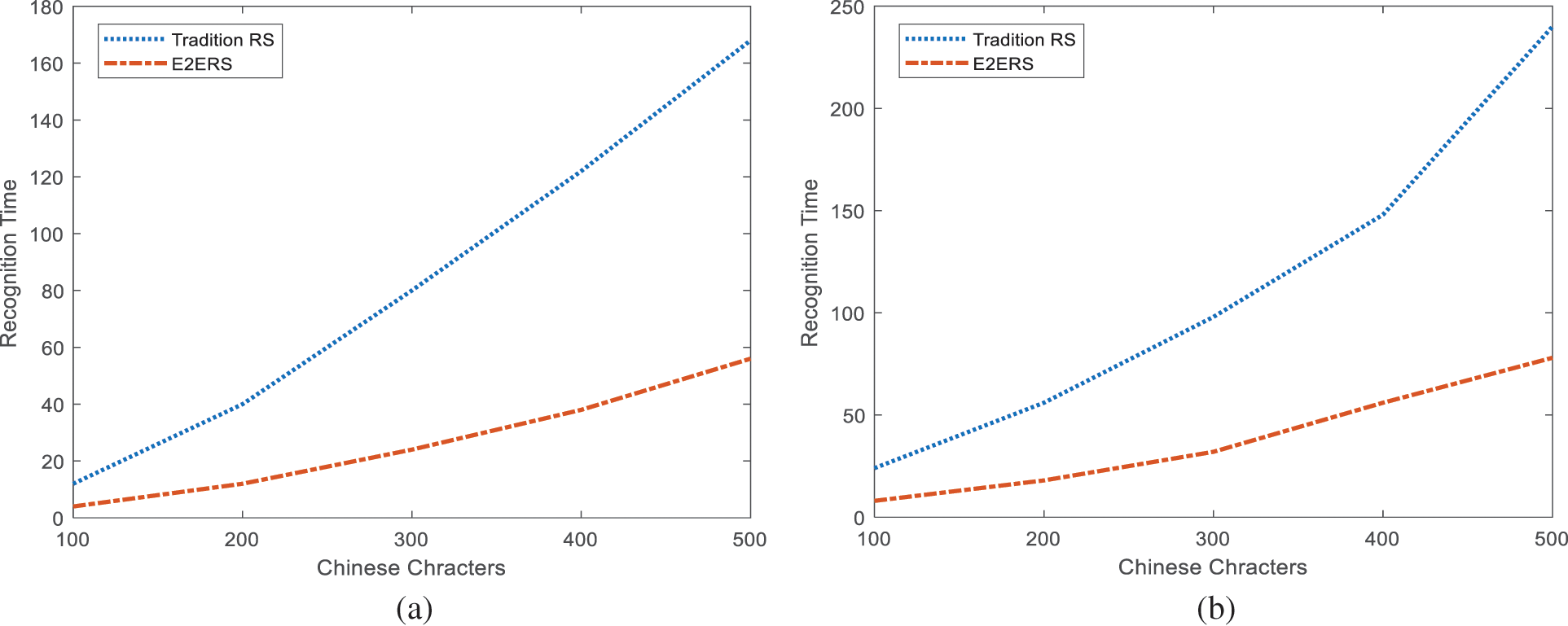

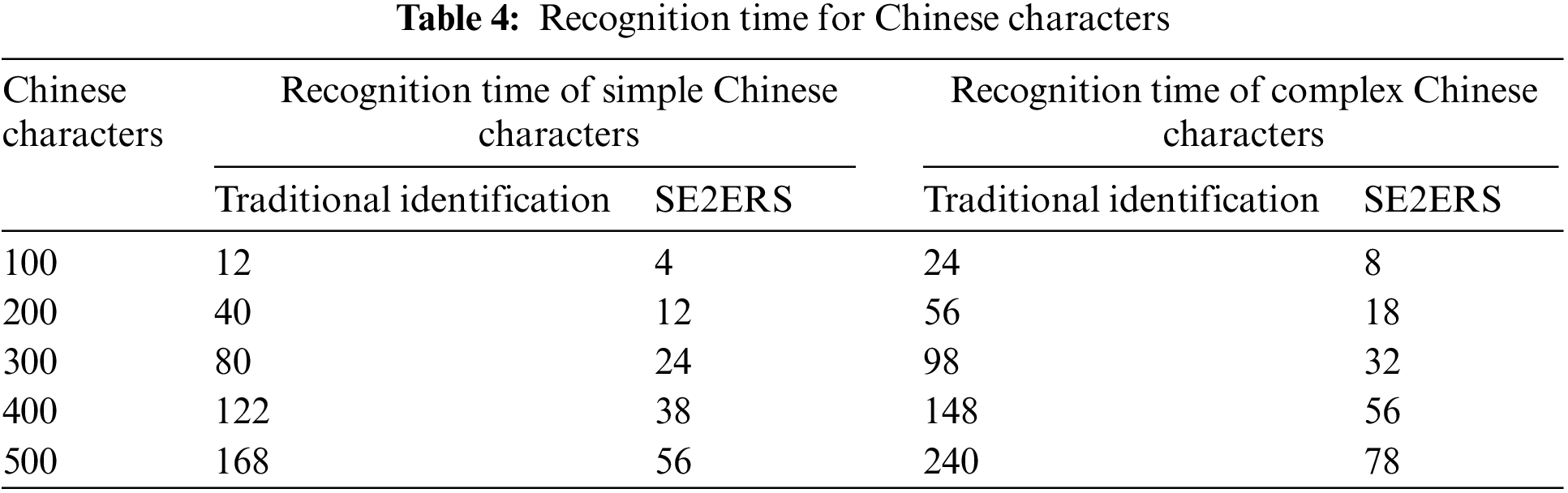

Recognition time is an important measure for Chinese text recognition in natural scenes. The number of characters to be recognized strongly affects the time required, and when many characters must be recognized, a long recognition time seriously degrades the user experience. To compare the recognition time of traditional Chinese text recognition with that of recognition based on a computer vision algorithm, the experiment used five different numbers of Chinese characters. The comparison results for the two methods are shown in Fig. 6, and Table 4 lists the recognition times.

Figure 6: Recognition time (a) Simple Chinese character (b) Complex Chinese character

Fig. 6a compares the recognition time for simple Chinese text under the two methods. The recognition time of the traditional method increased with the number of recognized characters, reaching 168 ms for 50 characters. The recognition time of the method based on a computer vision algorithm also increased but was dramatically shorter, reaching only 56 ms for 50 characters. Fig. 6b compares the recognition time for complex Chinese text. The traditional method took 24 ms for 10 characters and 240 ms for 50 characters, whereas the method based on the computer vision algorithm took 78 ms for 50 characters. Therefore, end-to-end recognition of Chinese characters in natural scenes can shorten recognition time.
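For 50 characters, the reported times correspond to the following speedups and time savings (arithmetic on the figures stated above):

$$
\text{Simple: } \frac{168\ \text{ms}}{56\ \text{ms}} = 3.0, \qquad \frac{168 - 56}{168} \approx 66.7\%\ \text{less time}
$$

$$
\text{Complex: } \frac{240\ \text{ms}}{78\ \text{ms}} \approx 3.08, \qquad \frac{240 - 78}{240} = 67.5\%\ \text{less time}
$$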

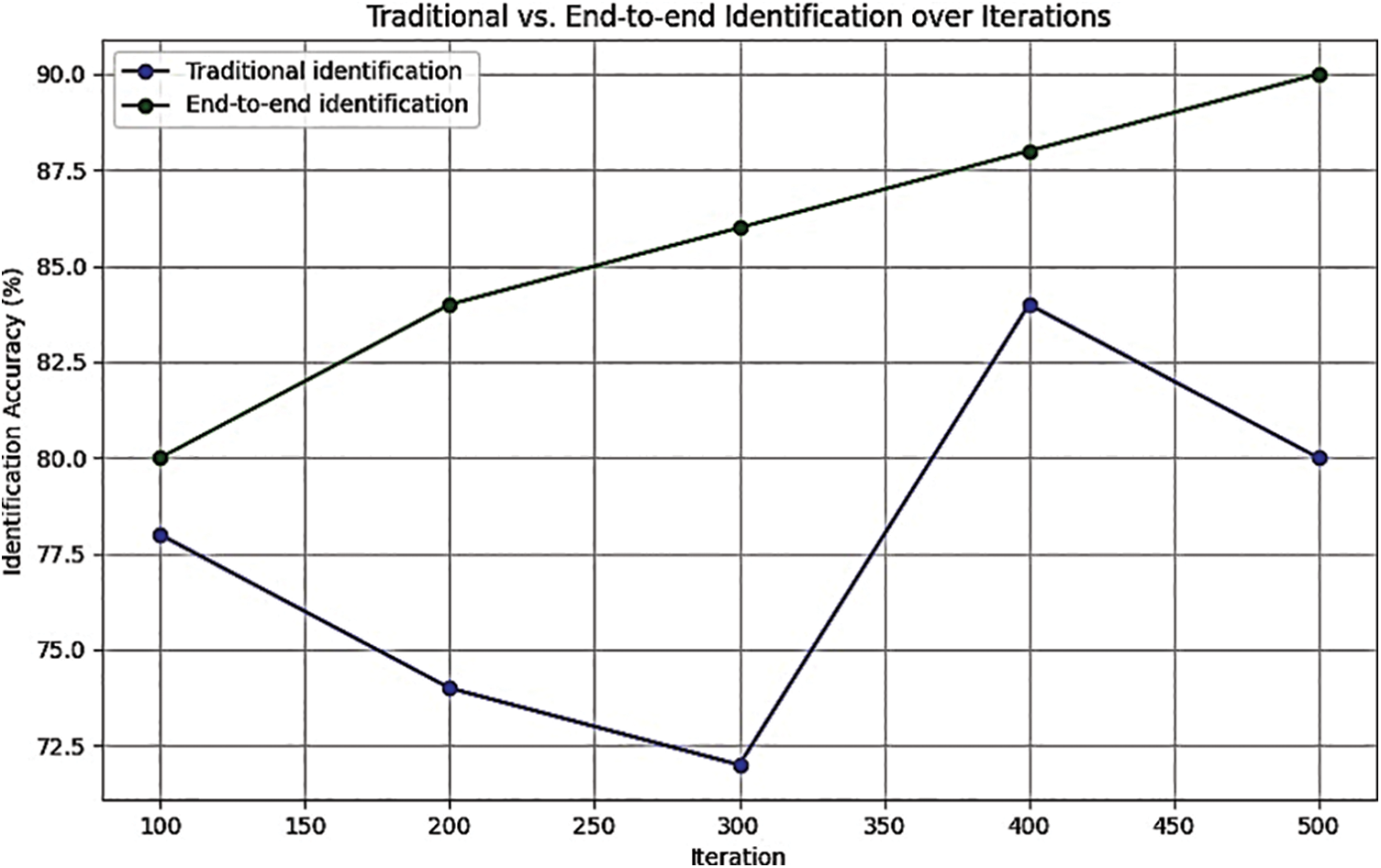

Recognition Efficiency: Recognizing Chinese characters in natural scenes involves many factors, and recognition efficiency can serve as an evaluation index. The recognition efficiency of the two Chinese text recognition algorithms was tested under various numbers of iterations. The comparison results are shown in Fig. 7, and Table 5 lists the recognition efficiency values.

Figure 7: Comparison results of recognition efficiency

Fig. 7 compares the recognition efficiency of the two methods. The recognition efficiency of traditional Chinese text recognition was 78% at 100 iterations and 80% at 500 iterations. Recognition based on a computer vision algorithm was more efficient, and its efficiency rose with the number of iterations, from 80% at 100 iterations to 90% at 500 iterations. Because E2E recognition by a computer vision algorithm is built around a CNN, whose recognition improves through training, it can effectively improve the recognition efficiency of Chinese text.

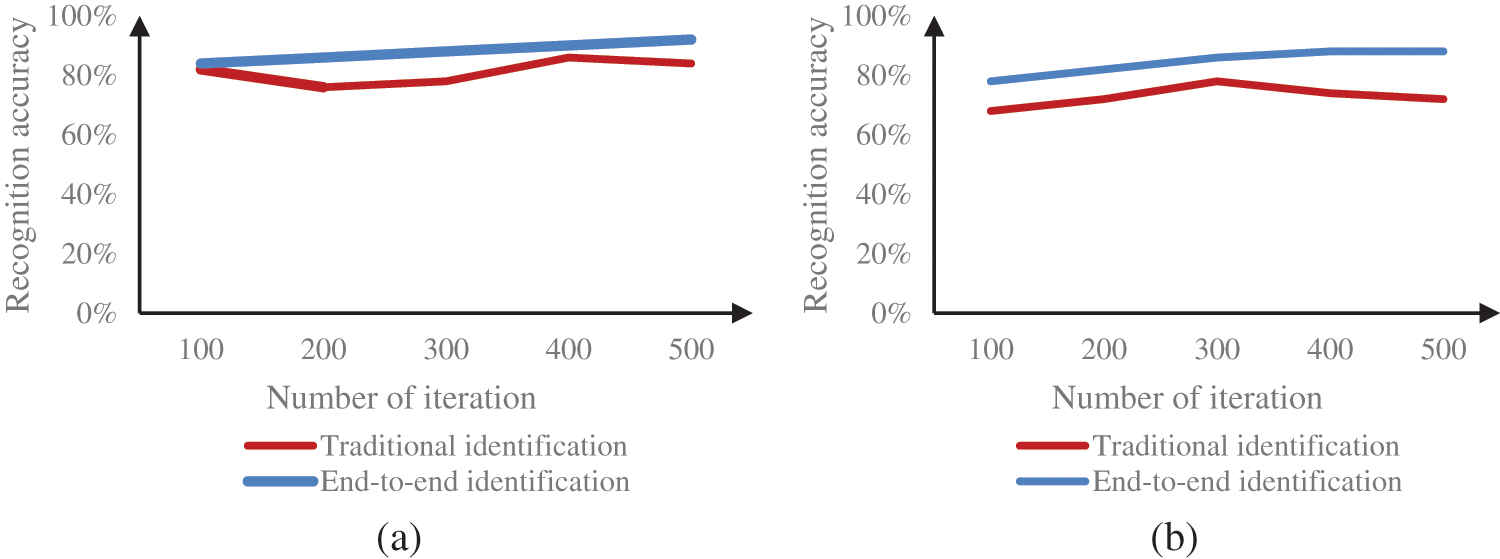

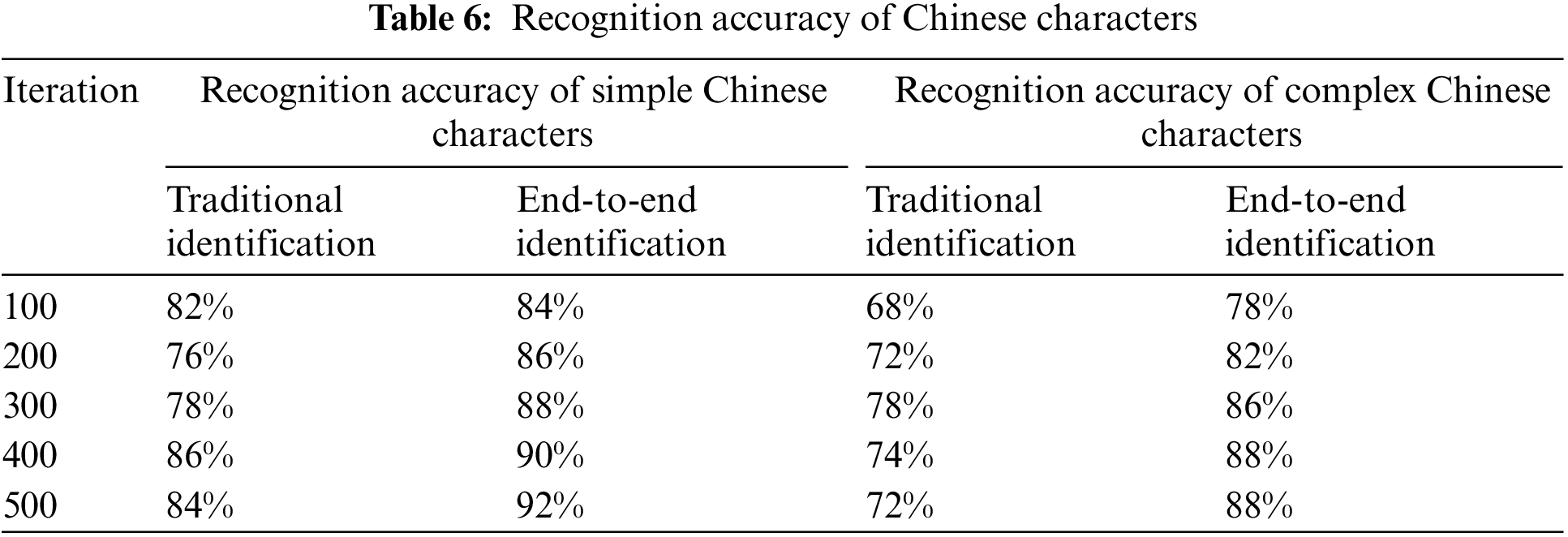

Recognition Accuracy: Recognition accuracy is the indicator that most directly captures the recognition effect of Chinese characters in natural environments. Traditional Chinese text recognition and recognition based on computer vision techniques were compared on simple and complex Chinese characters. The recognition accuracy of the two methods is shown in Fig. 8, and Table 6 lists the recognition accuracy values.

Figure 8: Recognition accuracy (a) Simple Chinese characters (b) Complex Chinese characters

Fig. 8a compares the accuracy of the two recognition methods for simple Chinese characters. The recognition accuracy of traditional recognition reached a minimum of 76% at 200 iterations and a maximum of 86% at 400 iterations, with an average of 81.2%. The average recognition accuracy of E2E recognition based on computer vision algorithms was 88%. Fig. 8b compares recognition accuracy for complex Chinese text: the average accuracy was 72.8% for traditional recognition and 84.4% for E2E recognition based on computer vision algorithms. Therefore, applying computer vision algorithms to recognize Chinese characters in natural scenes can improve recognition accuracy.
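The reported average accuracies correspond to the following gains in percentage points (pp) and in relative terms (arithmetic on the figures stated above):

$$
\text{Simple: } 88\% - 81.2\% = 6.8\ \text{pp}, \qquad \frac{6.8}{81.2} \approx 8.4\%\ \text{relative}
$$

$$
\text{Complex: } 84.4\% - 72.8\% = 11.6\ \text{pp}, \qquad \frac{11.6}{72.8} \approx 15.9\%\ \text{relative}
$$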

Table 7 evaluates the recognition accuracy achieved by the Secure end-to-end recognition system (SE2ERS) integrated with the Weighted Hyperbolic Curve Cryptograph (WHCC) across several scenarios with different input images. In the first sample, a photograph of a street sign featuring the Chinese character meaning “luck” or “blessing” was processed by SE2ERS; the system correctly recognized and output the character with a recognition accuracy of 96%. The second sample involved an image of a restaurant menu displaying a complex Chinese character meaning “meal” or “food”; SE2ERS correctly recognized and output the character with a recognition accuracy of 92%. The third scenario represents a user query that retrieves data from the blockchain. Here SE2ERS prioritizes privacy preservation: the output remains encrypted character data, ensuring data security and confidentiality. Another sample represents the user’s authorized decryption of the retrieved data, which reveals the recognized characters and illustrates SE2ERS’ ability to handle sensitive information securely. Similarly, in Table 8, each row (Samples 1 to 5) represents a different scenario, and the following metrics are evaluated for each sample: Data Speed (Gbps), Latency (ms), Connectivity (%), and Security Score.

Data Speed: The data speed represents the rate at which data is transmitted in gigabits per second (Gbps).

Latency: Latency measures the delay in data transmission in milliseconds (ms).

Connectivity: Connectivity represents the percentage of data transmissions that complete successfully.

Security Score: The security score quantifies the overall security effectiveness of the integrated system.
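The first three metrics can be computed from per-transfer measurements. The Python sketch below assumes each transfer is logged with its payload size, duration, measured latency, and a success flag; the `Transmission` record and `summarize` function are hypothetical names, and aggregating data speed as total bits over total time (rather than averaging per-transfer rates) is an assumed design choice. The Security Score is omitted because its formula is not given here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Transmission:
    payload_bytes: int   # bytes delivered in this transfer (hypothetical log field)
    duration_s: float    # wall-clock transfer time in seconds
    latency_ms: float    # measured delay for this transfer, in milliseconds
    succeeded: bool      # whether the transfer completed successfully


def summarize(records: list[Transmission]) -> dict[str, float]:
    """Aggregate Data Speed (Gbps), Latency (ms) and Connectivity (%) over records."""
    ok = [r for r in records if r.succeeded]
    total_bits = sum(r.payload_bytes * 8 for r in ok)
    total_time = sum(r.duration_s for r in ok)
    return {
        # Throughput over all successful transfers: bits / seconds / 1e9.
        "data_speed_gbps": total_bits / total_time / 1e9 if total_time else 0.0,
        # Mean delay of the successful transfers.
        "latency_ms": mean(r.latency_ms for r in ok) if ok else float("nan"),
        # Share of attempted transfers that succeeded.
        "connectivity_pct": 100.0 * len(ok) / len(records) if records else 0.0,
    }
```

Excluding failed transfers from the speed and latency figures keeps those two metrics from being distorted by transfers that never completed; failures are reflected only in Connectivity.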

Table 9 presents a thorough security analysis of the cryptographic components within the Secure end-to-end recognition system (SE2ERS). These components play a pivotal role in safeguarding sensitive data and ensuring the system’s overall security. The critical security metrics assessed in this table are as follows:

Encryption Rate Complexity (∼12 bits): The encryption rate complexity, measured at approximately 12 bits, characterizes the complexity of the encryption process employed by SE2ERS. This level indicates a moderate computational cost for the encryption algorithm used to protect data.

Security Strength (High, 128-bit encryption): SE2ERS achieves a high security-strength rating by using 128-bit encryption. This rating reflects the system’s capability to withstand unauthorized access and maintain data confidentiality; 128-bit encryption provides a strong defense against potential threats and data breaches.

Computational Efficiency (Moderate): The assessment of computational efficiency for SE2ERS yields a moderate rating. This indicates that while the system offers robust security measures, it balances these with reasonable computational efficiency. This equilibrium is essential to ensure the cryptographic processes do not burden the system’s overall performance.

Key Management (Robust): SE2ERS employs a robust key management mechanism that ensures the secure generation, distribution, and storage of cryptographic keys. Effective key management is fundamental to the system’s security infrastructure.

Resistance to Attacks (Strong): The system’s resistance to attacks is rated as “Strong,” implying its capacity to withstand various malicious attempts effectively. SE2ERS incorporates measures to thwart potential attacks, bolstering its overall security posture.
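To put the 128-bit figure in perspective, the sketch below estimates the expected time for an exhaustive key search. It assumes that the 128-bit rating corresponds to a work factor of 2^128 trials and that an attacker can test 10^12 keys per second; both the function name and the attack rate are illustrative assumptions, not values from the paper.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def expected_brute_force_years(security_bits: int, guesses_per_second: float) -> float:
    """Expected years to find a key by exhaustive search.

    On average an attacker succeeds after trying half of the
    2**security_bits possible keys.
    """
    expected_guesses = 2 ** (security_bits - 1)
    return expected_guesses / guesses_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Assumed attacker speed: one trillion key trials per second.
    for bits in (56, 80, 128):
        print(f"{bits:>3}-bit: {expected_brute_force_years(bits, 1e12):.3g} years")
```

Each additional bit doubles the expected search time, which is why the gap between 80-bit and 128-bit strength is a factor of 2^48 rather than a modest increase.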

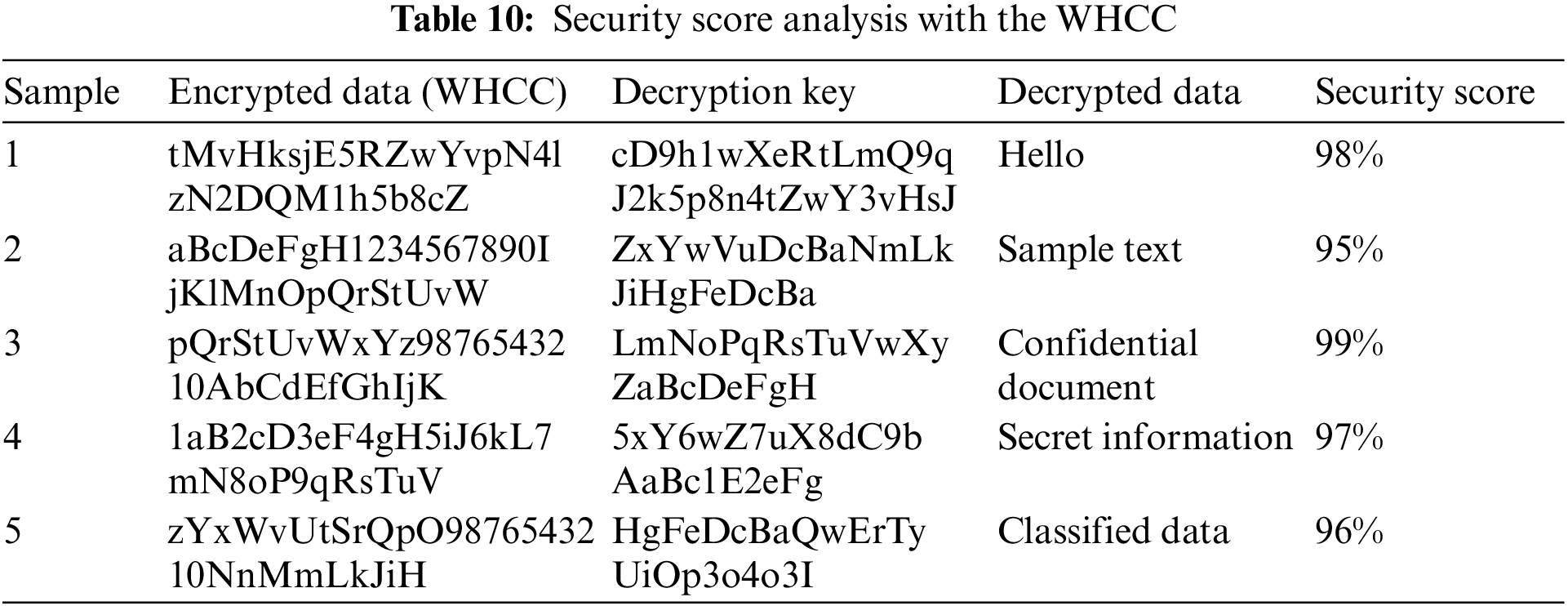

Each row in Table 10 represents a different sample or scenario in which WHCC has been applied for data encryption and decryption. The “Encrypted Data (WHCC)” column displays the data in its protected, encrypted form, ensuring confidentiality and security. The “Decryption Key” column gives the key required to reverse the encryption and accurately retrieve the original data, and the “Decrypted Data” column shows the data restored to its original, accessible format after decryption.
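The three columns of Table 10 correspond to a standard encrypt/decrypt round trip. Because the internals of WHCC are not specified in this section, the sketch below substitutes a plainly different, illustrative symmetric scheme built only from the Python standard library: a SHA-256 counter-mode keystream with an HMAC-SHA256 integrity tag. It is not WHCC and is not intended for production use (a vetted authenticated cipher such as AES-GCM would be used in practice); all function names are hypothetical.

```python
import hashlib
import hmac
import secrets

NONCE_LEN, TAG_LEN = 12, 32


def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    """Derive separate encryption and MAC keys so one key is not reused for both."""
    return (hashlib.sha256(b"enc" + key).digest(),
            hashlib.sha256(b"mac" + key).digest())


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Concatenate SHA-256(key || nonce || counter) blocks, truncated to length."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Return nonce || ciphertext || tag (the 'Encrypted Data' column)."""
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(NONCE_LEN)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt(key: bytes, blob: bytes) -> bytes:
    """Verify the tag, then undo the XOR (the 'Decrypted Data' column)."""
    enc_key, mac_key = _subkeys(key)
    nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed: wrong key or tampered data")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


if __name__ == "__main__":
    key = secrets.token_bytes(16)  # plays the role of the 'Decryption Key' column
    blob = encrypt(key, "汉字识别".encode("utf-8"))
    print(decrypt(key, blob).decode("utf-8"))
```

Verifying the tag before decrypting means a wrong key or altered ciphertext is rejected outright instead of silently producing garbled characters.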

The most noteworthy column in this analysis is “Security Score,” which quantifies how successfully WHCC secures data. WHCC is consistently effective, with security scores ranging from 95% to 99%. These scores support the robustness of WHCC as a cryptographic method within SE2ERS and its ability to maintain the confidentiality of sensitive information across various scenarios, a property that is essential to the trustworthiness and integrity of SE2ERS when handling sensitive data and applications.
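The internals of WHCC are not given in this section. As a hedged illustration of how a hyperbolic curve can serve as a cryptographic group, the sketch below runs a Diffie-Hellman key agreement on the conic `x^2 - D*y^2 = 1` over a prime field, whose points form a group under `(x1, y1)*(x2, y2) = (x1*x2 + D*y1*y2, x1*y2 + x2*y1)`. This is a swapped-in textbook construction, not the authors' method: the weighting in WHCC is not modeled, the parameters (`P = 2**127 - 1`, `D = 3`, base point from `t = 5`) and all function names are assumptions, and these toy parameters are for illustration only and are not secure.

```python
import hashlib
import secrets

# Toy parameters (assumptions, illustration only): a Mersenne prime and D = 3.
P = 2**127 - 1
D = 3
IDENTITY = (1, 0)


def add(a, b):
    """Group law on x^2 - D*y^2 = 1 (mod P), i.e. multiplying x + y*sqrt(D) values."""
    (x1, y1), (x2, y2) = a, b
    return ((x1 * x2 + D * y1 * y2) % P, (x1 * y2 + x2 * y1) % P)


def mul(k, point):
    """Scalar multiplication k*point by double-and-add."""
    result, base = IDENTITY, point
    while k:
        if k & 1:
            result = add(result, base)
        base = add(base, base)
        k >>= 1
    return result


def point_from_t(t):
    """Rational parameterization of the conic; requires 1 - D*t^2 != 0 (mod P)."""
    inv = pow((1 - D * t * t) % P, -1, P)
    return ((1 + D * t * t) * inv % P, 2 * t * inv % P)


def on_curve(point):
    x, y = point
    return (x * x - D * y * y) % P == 1


G = point_from_t(5)  # public base point (assumed choice)


def keypair():
    """Private scalar in [1, P-2] and the matching public point."""
    priv = secrets.randbelow(P - 2) + 1
    return priv, mul(priv, G)


def shared_key(priv, peer_pub):
    """Both parties hash the same shared point into a 128-bit symmetric key."""
    x, y = mul(priv, peer_pub)
    return hashlib.sha256(f"{x},{y}".encode()).digest()[:16]


if __name__ == "__main__":
    alice_priv, alice_pub = keypair()
    bob_priv, bob_pub = keypair()
    print(shared_key(alice_priv, bob_pub) == shared_key(bob_priv, alice_pub))
```

Because the group is commutative, `a*(b*G)` equals `b*(a*G)`, so both parties derive the same key while only the public points travel over the network.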

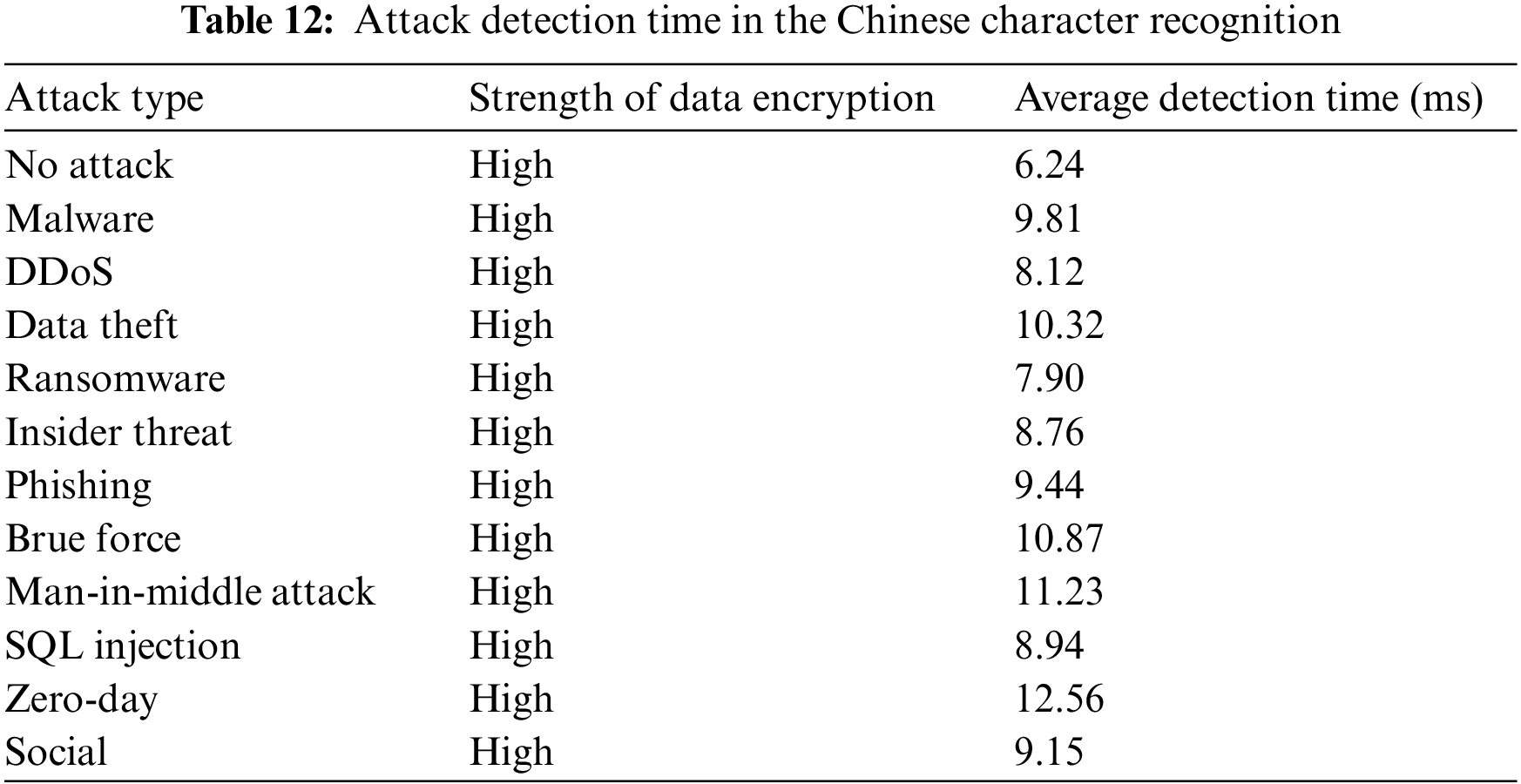

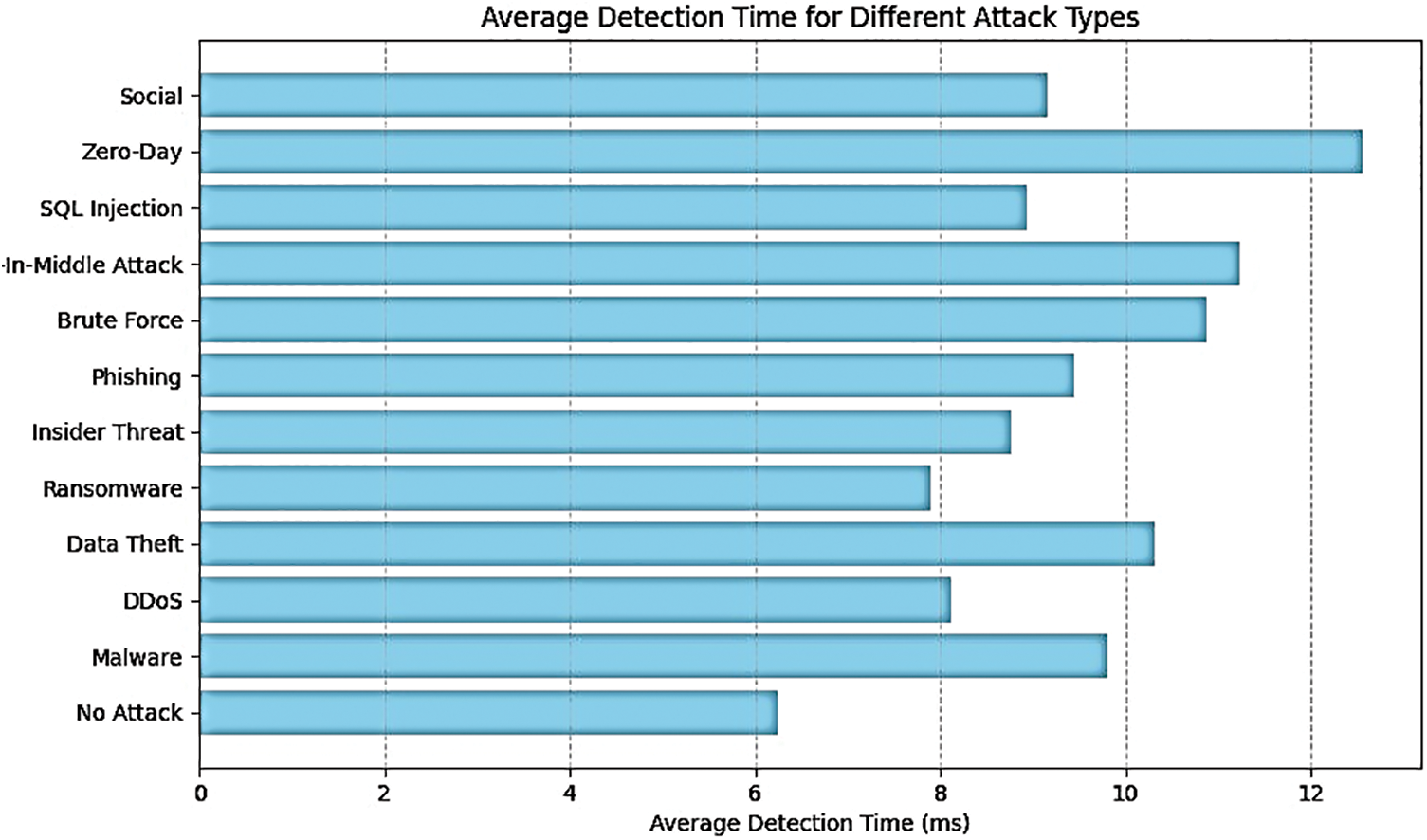

Table 11 presents a complexity analysis of the Secure end-to-end recognition system (SE2ERS), focusing on the Weighted Hyperbolic Curve Cryptograph (WHCC) algorithm. The table outlines WHCC’s performance across different scenarios or samples. The “Complexity” column indicates the complexity level of the WHCC algorithm for each sample, categorized as “High” or “Moderate”; this level reflects the computational demands of WHCC in handling data encryption and decryption. The “Encryption Time (ms)” and “Decryption Time (ms)” columns indicate the computational efficiency of WHCC: these values, given in milliseconds, represent the hypothetical time required for data encryption and decryption. Table 12 shows the attack detection time in Chinese character recognition, and the attack detection rate is shown in Fig. 9.
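Since the Table 11 timings are described as hypothetical, encryption and decryption times in milliseconds can instead be measured directly. The minimal Python helper below assumes the operation under test is exposed as a callable; `time_ms` is a hypothetical name, and hashing a 1 MB buffer is only a placeholder workload standing in for real WHCC encrypt/decrypt calls.

```python
import hashlib
import time
from statistics import median


def time_ms(fn, *args, repeats: int = 50) -> float:
    """Median wall-clock time of fn(*args), in milliseconds.

    The median is reported so that a few slow runs (e.g. scheduler noise)
    do not dominate the figure.
    """
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000.0)
    return median(samples)


if __name__ == "__main__":
    payload = bytes(1_000_000)  # 1 MB of zeros as a stand-in message
    # Placeholder workload; replace with the actual encryption/decryption calls.
    print(f"SHA-256 over 1 MB: {time_ms(lambda: hashlib.sha256(payload).digest()):.3f} ms")
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short intervals.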

Figure 9: Attack detection rate

In Table 12, for instance, ransomware and SQL injection attacks were detected relatively quickly, with average detection times of 7.90 and 8.94 milliseconds, respectively, whereas the Zero-Day exploit had the longest detection time, at 12.56 milliseconds. These results indicate that SE2ERS can promptly recognize and respond to cyberattacks, contributing to its overall data security and integrity. Maintaining short detection times even under intense attack scenarios supports the system’s potential for safeguarding Chinese character recognition in natural scenes while leveraging 6G technology and blockchain-enhanced security measures.
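Per-attack averages such as those reported above can be derived from a detection log. The sketch below assumes each detection event is recorded as an (attack type, detection time in ms) pair; the log format and both function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_detection_ms(events: list[tuple[str, float]]) -> dict[str, float]:
    """Average detection time per attack type from (attack_type, ms) pairs."""
    by_type: dict[str, list[float]] = defaultdict(list)
    for attack_type, ms in events:
        by_type[attack_type].append(ms)
    return {attack: mean(times) for attack, times in by_type.items()}


def slowest_attack(averages: dict[str, float]) -> str:
    """Attack type with the longest average detection time."""
    return max(averages, key=averages.__getitem__)
```

Grouping first and averaging second keeps the per-type means independent of how many events of each type appear in the log.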

6G is expected to offer unprecedented data speeds, ultra-low latency, and ubiquitous connectivity. In computer vision, 6G is set to transform how visual data is captured, processed, and transmitted, and to strengthen privacy and security by integrating advanced encryption and blockchain technologies that enable secure transmission of sensitive visual information and protect user privacy. This paper demonstrated the potential of SE2ERS to achieve high recognition accuracy for Chinese characters, even in complex, real-world scenarios. Our experiments showed significant improvements in recognition rates over traditional methods, with average recognition accuracies of 88% for simple characters and 84.4% for complex characters. Furthermore, the integration of WHCC not only enhanced data security and encryption but also provided privacy-preserving mechanisms, which are particularly important for sensitive information retrieval and user data protection. The security results, including high encryption rates and strong resistance to attacks, underscore the effectiveness of WHCC within the SE2ERS framework. In the 6G setting, SE2ERS with WHCC achieved high data speeds, low latency, and robust connectivity, making it a promising solution for secure and efficient data transmission in the rapidly advancing digital landscape.

Acknowledgement: The authors would like to thank North China Electric Power University, Beijing, 102206, China; Ordos Institute of Technology, Ordos, Inner Mongolia, 017000, China; Hunan Institute of Engineering, Xiangtan, Hunan, 411101, China; and the College of Information Engineering, Zhengzhou University of Technology, Zhengzhou, Henan, 450052, China, for supporting this work.

Funding Statement: This work was supported by the Inner Mongolia Natural Science Fund Project (2019MS06013); Ordos Science and Technology Plan Project (2022YY041); Hunan Enterprise Science and Technology Commissioner Program (2021GK5042).

Author Contributions: The authors confirm their contribution to the paper as follows: study conception and design: Y. Li, S. Li; data collection: S. Li; analysis and interpretation of results: Y. Li, S. Li; draft manuscript preparation: Y. Li. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: Data are available on request.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. H. H. Pajooh, S. Demidenko, S. Aslam and M. Harris, “Blockchain and 6G-enabled IoT,” Inventions, vol. 7, no. 4, pp. 109, 2022.

2. A. Kalla, C. D. Alwis, P. Porambage, G. Gür and M. Liyanage, “A survey on the use of blockchain for future 6G: Technical aspects, use cases, challenges and research directions,” Journal of Industrial Information Integration, vol. 30, pp. 100404, 2022.

3. F. Kausar, F. M. Senan, H. M. Asif and K. Raahemifar, “6G technology and taxonomy of attacks on blockchain technology,” Alexandria Engineering Journal, vol. 61, no. 6, pp. 4295–4306, 2022.

4. J. Wang, X. Ling, Y. Le, Y. Huang and X. You, “Blockchain-enabled wireless communications: A new paradigm towards 6G,” National Science Review, vol. 8, no. 9, pp. nwab069, 2021.

5. N. Moosavi and H. Taherdoost, “Blockchain-enabled network for 6G wireless communication systems,” in Int. Conf. on Intelligent Cyber Physical Systems and Internet of Things, Coimbatore, India, pp. 857–868, 2022.

6. R. Shadiev, T. T. Wu and Y. M. Huang, “Using image-to-text recognition technology to facilitate vocabulary acquisition in authentic contexts,” ReCALL, vol. 32, no. 2, pp. 195–212, 2020.

7. G. Koo, J. P. Yun, J. L. Sang, H. Choi and W. K. Sang, “End-to-end billet identification number recognition system,” ISIJ International, vol. 59, no. 1, pp. 98–103, 2019.

8. W. Hu, X. Cai, J. Hou, S. Yi and Z. Lin, “GTC: Guided training of CTC towards efficient and accurate scene text recognition,” in Proc. of the AAAI Conf. on Artificial Intelligence, New York, USA, vol. 34, no. 7, pp. 11005–11012, 2020.

9. E. M. Hicham, H. Akram and S. Khalid, “Using features of local densities, statistics and HMM toolkit (HTK) for offline Arabic handwriting text recognition,” Journal of Electrical Systems and Information Technology, vol. 4, no. 3, pp. 387–396, 2017.

10. I. Syed, Manzoor, J. Singla and P. Kshirsagar, “Design engineering a novel system for multi-linguistic text identification and recognition in natural scenes using deep learning,” Design Engineering, vol. 2021, no. 9, pp. 8344–8362, 2021.

11. P. Shetteppanavar and A. Dara, “Word recognition of Kannada text in scene images using neural network,” International Journal of Advanced Research, vol. 5, no. 11, pp. 1007–1016, 2017.

12. S. Al, “Automate identification and recognition of handwritten text from an image,” Turkish Journal of Computer and Mathematics Education (TURCOMAT), vol. 12, no. 3, pp. 3800–3808, 2021.

13. T. L. Yuan, Z. Zhu, K. Xu, C. J. Li, T. J. Mu et al., “A large Chinese text dataset in the wild,” Journal of Computer Science and Technology, vol. 34, no. 3, pp. 509–521, 2019.

14. O. Petrova, K. Bulatov, V. V. Arlazarov and V. L. Arlazarov, “Weighted combination of per-frame recognition results for text recognition in a video stream,” Computer Optics, vol. 45, no. 1, pp. 77–89, 2021.

15. J. Liang, C. T. Nguyen, B. Zhu and M. Nakagawa, “An online overlaid handwritten Japanese text recognition system for small tablet,” Pattern Analysis & Applications, vol. 22, no. 1, pp. 233–241, 2019.

16. M. Hu, X. Qu, J. Huang and X. Wu, “An end-to-end classifier based on CNN for in-air handwritten-Chinese-character recognition,” Applied Sciences, vol. 12, no. 14, pp. 6862, 2022.

17. T. Zheng, X. Wang and X. Xu, “A novel method of detecting Chinese rendered text on tilted screen of mobile devices,” IEEE Access, vol. 8, pp. 25840–25847, 2020.

18. L. Shen, B. Chen, J. Wei, H. Xu, S. K. Tang et al., “The challenges of recognizing offline handwritten Chinese: A technical review,” Applied Sciences, vol. 13, no. 6, pp. 3500, 2023.

19. Y. Wan, F. Ren, L. Yao and Y. Zhang, “Research on scene Chinese character recognition method based on similar Chinese characters,” in 2020 2nd Int. Conf. on Machine Learning, Big Data and Business Intelligence (MLBDBI), Taiyuan, China, pp. 459–463, 2020.

20. X. Wu, Q. Chen, Y. Xiao, W. Li, X. Liu et al., “LCSegNet: An efficient semantic segmentation network for large-scale complex Chinese character recognition,” IEEE Transactions on Multimedia, vol. 23, pp. 3427–3440, 2021.

21. X. Li, J. Liu and S. Zhang, “Text recognition in natural scenes: A review,” in 2020 Int. Conf. on Culture-Oriented Science & Technology (ICCST), Beijing, China, pp. 154–159, 2020.

22. S. Y. Arafat and M. J. Iqbal, “Urdu-text detection and recognition in natural scene images using deep learning,” IEEE Access, vol. 8, pp. 96787–96803, 2020.

23. W. Yu, M. Ibrayim and A. Hamdulla, “Research on text recognition of natural scenes for complex situations,” in 2022 3rd Int. Conf. on Pattern Recognition and Machine Learning (PRML), Chengdu, China, pp. 97–104, 2022.

24. M. X. He and P. Hao, “Robust automatic recognition of Chinese license plates in natural scenes,” IEEE Access, vol. 8, pp. 173804–173814, 2020.

25. A. Sun and X. Zhang, “Detecting characters from digital image with Chinese text based on grid lines,” in 2019 6th Int. Conf. on Systems and Informatics (ICSAI), Shanghai, China, pp. 1244–1249, 2019.

26. H. Li, “Text recognition and classification of English teaching content based on SVM,” Journal of Intelligent and Fuzzy Systems, vol. 39, no. 5, pp. 1–11, 2020.

27. S. Naz, A. I. Umar, R. Ahmad, S. B. Ahmed, S. H. Shirazi et al., “Urdu nasta’liq text recognition system based on multi-dimensional recurrent neural network and statistical features,” Neural Computing & Applications, vol. 28, no. 2, pp. 219–231, 2017.

28. X. Ma, H. Xu, X. Zhang and H. Wang, “An improved deep learning network structure for multitask text implication translation character recognition,” Complexity, vol. 2021, no. 1, pp. 1–11, 2021.

29. M. Geetha, R. C. Pooja, J. Swetha, N. Nivedha and T. Daniya, “Implementation of text recognition and text extraction on formatted bills using deep learning,” International Journal of Control and Automation, vol. 13, no. 2, pp. 646–651, 2020.

30. X. Y. Zhang, Y. Bengio and C. L. Liu, “Online and offline handwritten Chinese character recognition: A comprehensive study and new benchmark,” Pattern Recognition, vol. 61, pp. 348–360, 2017.