Open Access

ARTICLE

Shadow Extraction and Elimination of Moving Vehicles for Tracking Vehicles

1 Department of Electronics & Communication Engineering, Faculty of Engineering and Technology, Parul Institute of Engineering and Technology, Parul University, Vadodara, Gujarat, 391760, India

2 Department Electronics and Electrical Communications Engineering, Faculty of Electronic Engineering, Menoufia University, Menouf, 32952, Egypt

3 Computer Science Department, Community College, King Saud University, Riyadh, 11362, Saudi Arabia

4 Electronics and Communications Engineering Department, College of Engineering and Technology, Arab Academy for Science, Technology and Maritime Transport, Alexandria, 1029, Egypt

5 Department of Computer Engineering, Faculty of Engineering and Technology, Parul Institute of Engineering and Technology, Parul University, Vadodara, Gujarat, 391760, India

6 Department of Electrical and Computer Engineering, University of Saskatchewan, 57 Campus Drive, Saskatoon, SK S7N5A9, Canada

* Corresponding Author: Vishal Sorathiya. Email:

Computers, Materials & Continua 2023, 77(2), 2009-2030. https://doi.org/10.32604/cmc.2023.043168

Received 23 June 2023; Accepted 27 September 2023; Issue published 29 November 2023

Abstract

Shadow extraction and elimination are essential for vehicle tracking in intelligent transportation systems (ITS). Shadows are a source of error in vehicle detection: they cause vehicles to be misclassified and raise the false alarm rate in vehicle counting, detection, tracking, and classification. Most existing research addresses shadow extraction of moving vehicles under high illumination and on standard datasets, whereas extracting the shadows of moving vehicles in low-light real scenes remains difficult. To address this problem, the authors recorded a dataset of real vehicle scenes on the Vadodara–Mumbai highway during periods of poor illumination. This paper presents a robust method for extracting and eliminating the shadows of moving vehicles for vehicle tracking. The method has two phases. In the first phase, foreground regions are extracted using a mixture of Gaussians (MoG) model. In the second phase, the shadow region is located and removed from the foreground areas with the help of Gamma correction, the intensity ratio, negative transformation, and a combination of Gaussian filters. Compared with an existing method, the proposed method achieves an average true negative rate above 90%, and a shadow detection rate SDR (η%) and a shadow discrimination rate SDR (ξ%) of 80%. Hence, the proposed method is more appropriate for moving shadow detection in real scenes.

Intelligent transportation systems (ITS) must handle various traffic tasks, such as counting vehicles, estimating speed, identifying accidents, and assisting traffic surveillance [1–5]. Counting vehicles in complicated scenes with a high vehicle density is challenging for computer vision. In complex vehicle scenes (e.g., express highways), detecting and removing the cast shadows of moving vehicles is an open research problem in vehicle detection for vehicle counting systems. Therefore, an efficient algorithm must be developed that accurately removes the cast shadows of vehicles and provides only the vehicle information to the vehicle counting system in real-time scenes.

Traffic at the Bapod crossroad of the Vadodara-Mumbai highway is very heavy in the evening (5:30 PM to 7:30 PM), when employees of universities, industries, and other firms leave their offices. Traffic management in such heavy traffic requires detecting vehicles without their cast shadows so that vehicles can be counted exactly, which would help with traffic issues on the Vadodara-Mumbai highway. Most previous research on identifying the shadow regions of moving objects has been carried out solely on standard datasets with low shadow strength (high intensity/daytime). In that case, the shadows of moving objects can be identified easily using any segmentation method. However, identifying the shadow regions of moving objects with high shadow strength (low intensity/evening) is difficult. This paper proposes vehicle tracking on the Vadodara-Mumbai expressway after detecting and removing the shadows of moving vehicles in the evening.

The following characteristics distinguish the proposed work:

• Existing methods perform well on either the shadow region or the foreground region, but not both. For example, an existing method achieves a shadow detection rate of around 90% but a shadow discrimination rate of only around 50%, which means it cannot classify the shadow and foreground regions equally well for downstream computer vision applications such as tracking, counting, and recognition. By using a few straightforward image processing techniques, namely pixel-by-pixel division, negative transformation, Gamma correction, and logical operations between the MoG output and the Gamma-corrected output, the proposed method classifies the shadow and foreground regions equally well.

• Existing methods have been implemented on standard datasets, so they may fail to detect the shadow and foreground regions in real scenes, which pose challenges such as trees or grass moving in the wind, clouds crossing the sky, and changes in illumination. In contrast, the proposed method detects both regions on standard datasets and in real scenes, because the mixture of Gaussians model used as its initial vehicle detection stage is suited to these conditions.

• The proposed technique has been examined on a standard dataset and on a self-generated dataset from the Vadodara–Mumbai expressway. On the standard dataset, comparison with the existing approach shows that the proposed approach is robust enough to separate the shadow and foreground regions equally well. On the self-generated dataset, it detected shadows in more than 80% of cases.

• Existing chroma-based methods fail when the chroma properties of the vehicle are similar to those of the background. The proposed method does not rely on chroma characteristics for shadow detection and therefore detects the shadow in this case.

Ground truth frames are required to measure the performance parameters on the authors' own dataset of the Vadodara-Mumbai expressway. We therefore used the Java-based ImageJ software to obtain the ground truth frames. The performance parameters were computed by an algorithm that compares the ground truth frames with the target frames.

Many background models have been introduced for vehicle detection. A statistical model (the mixture of Gaussians model) is well suited to scenes that contain moving vehicles against a dynamic background (movement of leaves, changes in illumination). This paper proposes cast shadow detection of moving vehicles for a vehicle counting system. The proposed method combines a mixture of Gaussians model, negative transformation, Gamma decoding, and a logical AND operation with a suitable threshold.

The article is organized as follows. Section 2 covers related work on identifying the moving cast shadows of moving vehicles using existing techniques. Section 3 describes the proposed methodology in full. Section 4 discusses the findings and critiques the proposed approach. Section 5 summarizes the work and its findings and outlines directions for future research.

Various methods for cast shadow detection have been introduced in the past decade. Existing work detects shadows either in static images or in image sequences (video). This paper mainly reviews the second case, cast shadow detection of moving vehicles for vehicle tracking systems; the main objective is therefore to find the moving shadows of moving vehicles. In the related work, moving cast shadow detection methods are divided into two categories: (i) model-based and (ii) properties-based. Model-based methods rely on prior geometrical information [6] about the dataset, the object, and brightness. Properties-based methods rely on chromaticity, texture pattern, photometric physical properties, and brightness [1].

Many color models, including normalized RGB, HSV, HSI, YCbCr, and C1C2C3, have been used to detect moving cast shadows. These methods use chromaticity (a measurement of color) to detect moving shadows, and they fail when the color of an object is the same as that of the shadow [2]. Wan et al. proposed a shadow removal method to remove ghost artifacts in moving objects. The ghosting region was reformed to avoid eliminating the shadow pixels of vehicles passing through the scene. Hence, the approach is comparatively unsuitable for surveillance and noisy environments [3].

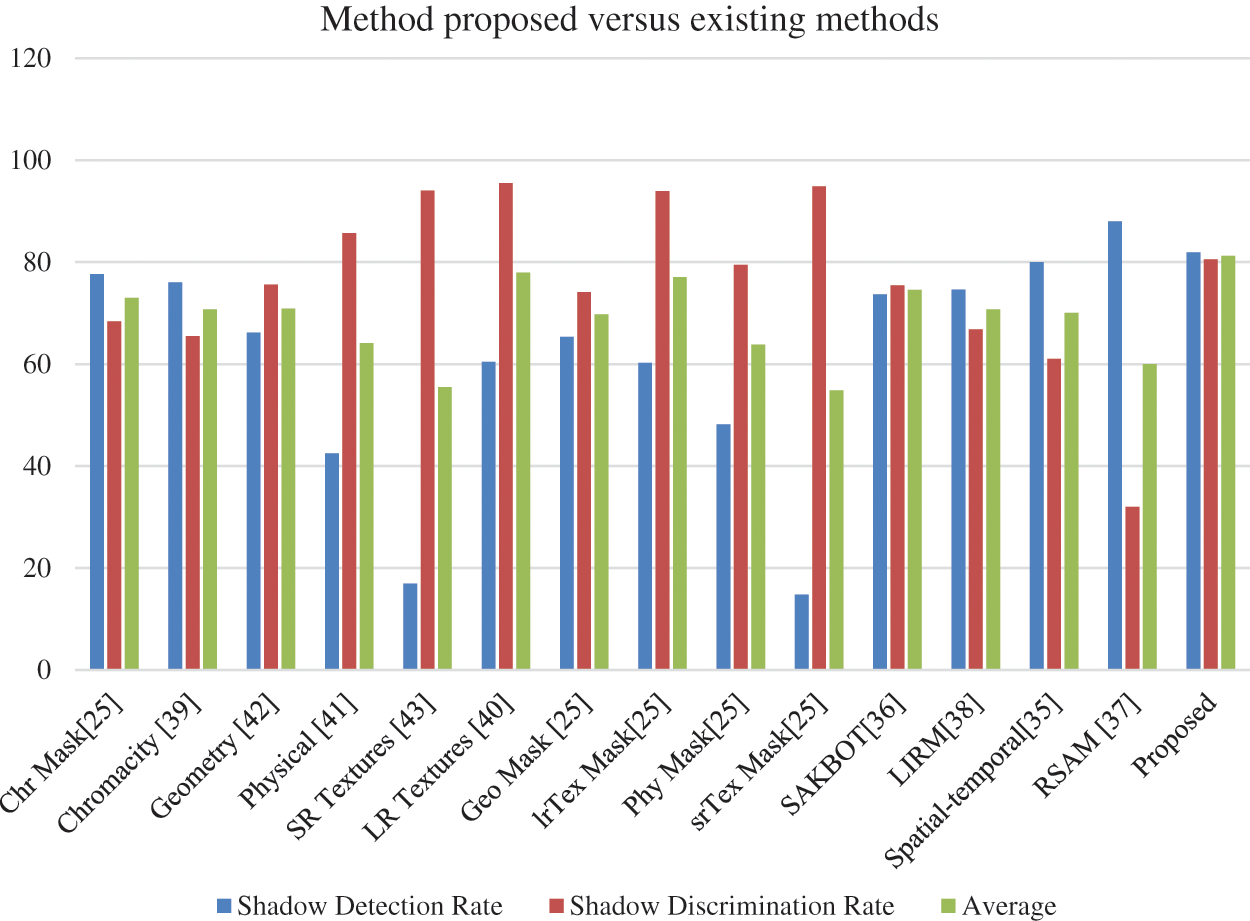

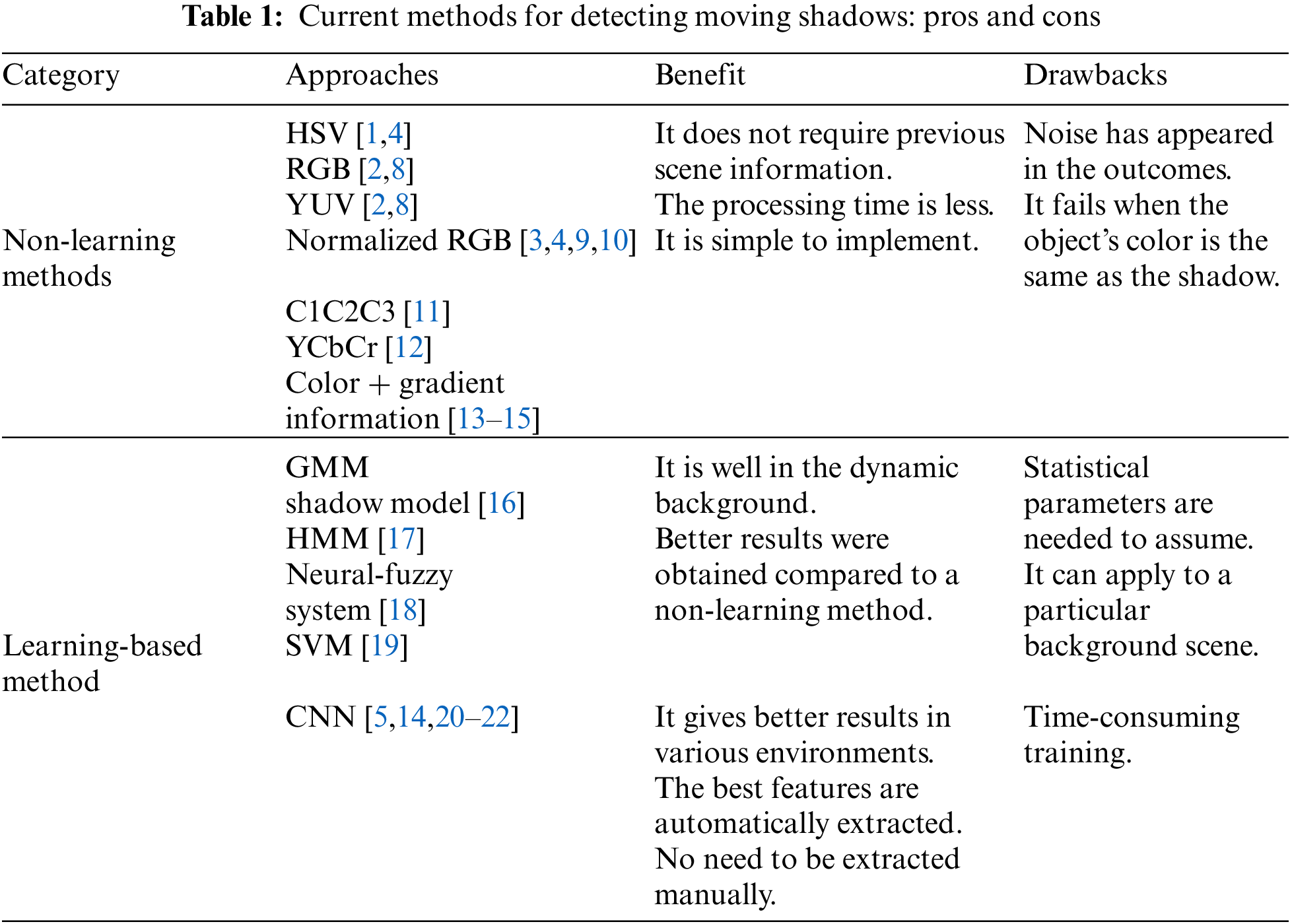

Cucchiara et al. proposed a method that distinguishes moving cast shadow pixels using the hue, saturation, and value (HSV) color system. A shadow darkens the background in the brightness component, while the hue and saturation components are altered [4,7]. Kim et al. reviewed the advantages and disadvantages of the available learning and non-learning approaches for detecting moving cast shadows. Compared with the non-learning methods, the learning methods produce better results [5]. The pros and cons of existing methods are summarized in Table 1.

Martel et al. developed an algorithm that models the non-uniform and dynamic intensity of moving cast shadows and builds a statistical model for extracting moving cast shadow pixels using the learning capability of a mixture of Gaussians model [19]. Several approaches to detecting and classifying vehicles are available. Current approaches rely on motion-based features, appearance-based features, and neural network-based techniques. The fundamental motion-based techniques are optical flow, frame differencing, and background subtraction. Background subtraction works well for a static background but may fail when the background changes suddenly. Frame differencing gives good vehicle detection results against a dynamic background, but noise appears in the outcomes. Optical flow can provide a high vehicle detection accuracy of 98.60% at a vehicle speed of 0.212 cm/s but fails in front-view vehicle detection [23].

Abbas et al. presented a comparative review of existing vehicle detection methods. Appearance feature-based methods use Haar-like features, SIFT (Scale-Invariant Feature Transform) features, HOG (Histogram of Oriented Gradients) features, and basic features (e.g., color, size, and shape). Methods based on basic features give good vehicle detection results but may fail when size, illumination, and similar conditions change. The SIFT-based method offers an 87% vehicle detection rate but is computationally expensive and slow. The HOG-based method gives 97% accuracy in vehicle detection but fails to recognize vehicles in complex scenarios with several obstructed objects. The Haar-like feature-based method provides 80% accuracy in vehicle detection at a false alarm rate of 120 but can only detect small vehicles. R-CNN (Region-based Convolutional Neural Network) and Faster R-CNN are two established, well-known neural network techniques for detecting vehicles. Compared with the previous methods, R-CNN and Faster R-CNN can segment vehicles in natural frame sequences with complicated background motion and variations in lighting, but the network cannot be retrained [24].

Zhang et al. developed a moving shadow removal method based on the hue-saturation-value (HSV) color space and a fusion of multiple features. They identified dark objects using HSV shadow detection and reduced false detections using texture features. Their method was implemented on standard datasets and obtained good results for the shadow and foreground regions [25,26].

Deep learning (DL) techniques require a large amount of labelled data and a long training time before the resulting model can be used in another scenario. DL-based shadow removal is mostly concerned with removing shadows from a single image; therefore, more research is required.

The rigorous literature review revealed the following research gaps:

• Shadow detection of moving objects in the evening (low illumination) remains to be addressed.

• Since most researchers have only concentrated on the identification/extraction of either the shadow region or the foreground region, a study on the classification of the shadow and foreground of moving objects is still needed for both indoor and outdoor scenarios.

• On publicly accessible datasets, the performance of current techniques can be improved. Performance can also be evaluated on the Vadodara-Mumbai highway dataset generated by the author.

• The proposed method can be made robust and effective in order to eliminate the noise seen in the results of existing approaches.

• Because foreground pixels are mistakenly identified as shadow pixels, current dynamic shadow detection algorithms have a high detection rate but a low discrimination rate.

This research contributes the following to address the existing research gaps:

• A novel approach for detecting the moving shadows of vehicles in poor illumination has been developed using data from the Vadodara-Mumbai expressway. The method introduces the intensity ratio of the background and current frames in grayscale as a shadow extraction step that produces more accurate results than previous methods when low illumination occurs against a dynamic background. Furthermore, Gamma correction in conjunction with a morphological operation is proposed as an additional shadow identification criterion to improve detection results by thresholding. A mixture of Gaussians model is used for vehicle detection. The outcomes of the proposed method have been evaluated using self-generated ground truth.

• The following processes have been combined: MoG, pixel-by-pixel image division, negative transformation, Gamma correction, and a morphological operation. Together they form an efficient moving shadow identification technique, developed using datasets created by the author and publicly available video sequences. Additionally, a logical operation between the MoG output and the output of Gamma correction with the morphological operation is added to improve the shadow detection and discrimination rates.

• According to the results and performance analysis, compared with those of the current approach, the proposed approach is easy to use and robust in accurately classifying the shadow and foreground of moving objects.

In this paper, a mixture of Gaussians model is used to detect moving vehicles against a dynamic background because it handles changes in illumination and the repetitive motion of vehicles in the scene [27]. Intensity ratios with negative transformation and Gamma correction are used to detect moving cast shadows. The shadow region is removed from the output of the mixture of Gaussians model for exact vehicle tracking in the dynamic scene.

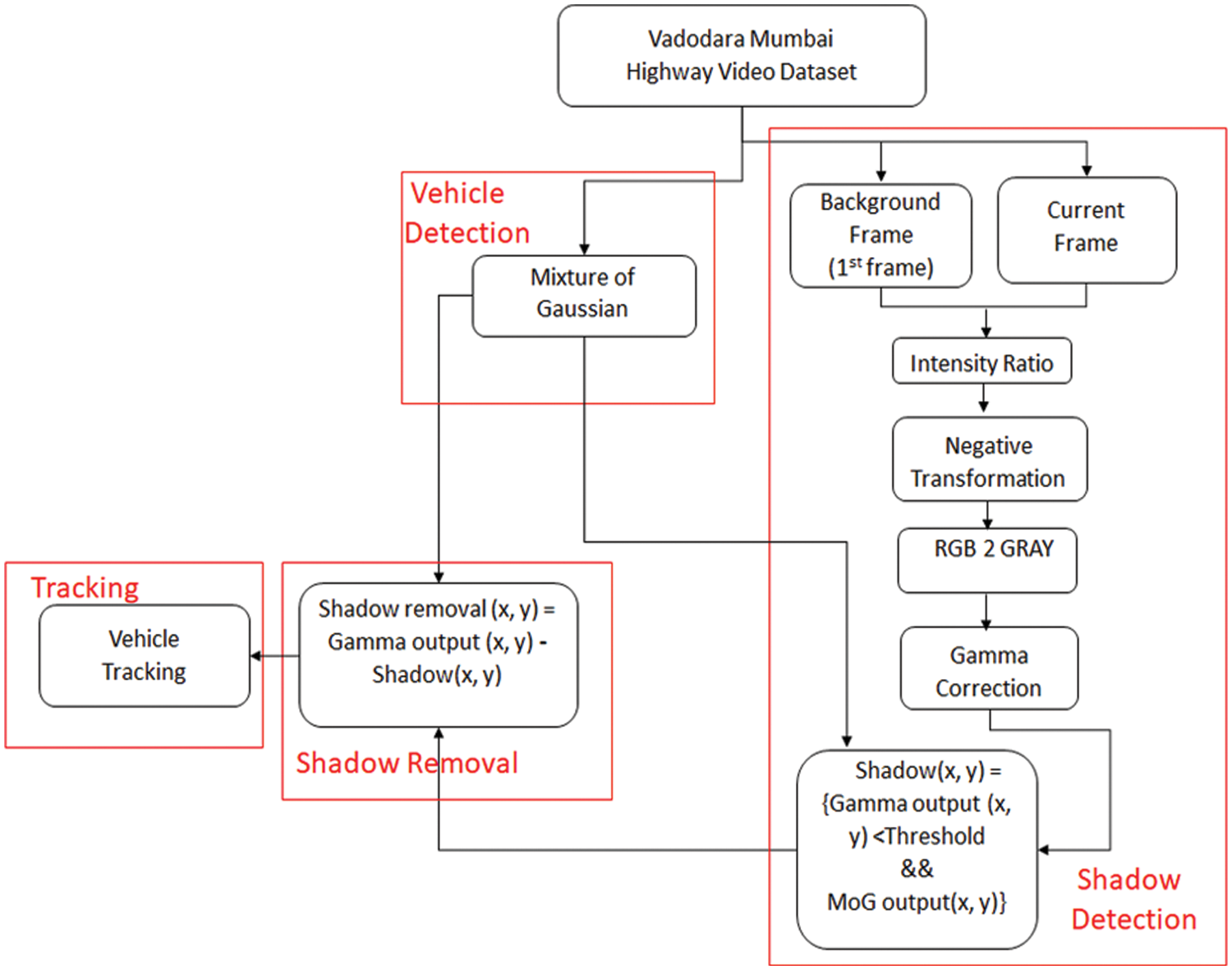

The proposed method is organized into four major tasks: vehicle detection, shadow detection, shadow removal, and vehicle tracking. The key tasks, shown in Fig. 1, are discussed in the following sections.

Figure 1: The proposed method’s detailed flow

Existing research has used datasets of moving vehicles recorded during the daytime, when the shadows of moving vehicles are weak. Shadow detection is then simple, whereas identifying the strong shadows of moving vehicles in low light is challenging. To address moving shadow detection in heavy traffic, the dataset was recorded with a GoPro camera on the Vadodara-Mumbai expressway during the low-light period (after 5:00 PM). Table 2 lists the technical specifications of the self-made dataset. The collected video is divided into frames, which are then processed to produce the desired output.

In the first stage, a Gaussian mixture [28] is used to detect vehicles under changing illumination and a dynamic background. For every pixel, K Gaussian distributions are maintained at time t, which makes it possible to identify whether the pixel belongs to a vehicle or to the background.

The distribution of the random variable x is described as a mixture of K Gaussians, and the sum of all the distributions is the distribution of the scene. Eq. (1) represents the probability density function of the mixture of Gaussians of the scene (only three components are considered).
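For reference, the standard mixture-of-Gaussians density for a scalar (grayscale) pixel value can be written as below. This is the common Stauffer-Grimson form and is only assumed to match Eq. (1); the symbols w, μ, and σ follow the surrounding text, and K = 3 corresponds to the three components mentioned above.

```latex
P(x_t) = \sum_{k=1}^{K} w_{k,t}\, \eta\!\left(x_t;\ \mu_{k,t},\ \sigma_{k,t}^{2}\right),
\qquad
\eta\!\left(x;\ \mu,\ \sigma^{2}\right) = \frac{1}{\sqrt{2\pi\sigma^{2}}}
\exp\!\left(-\frac{(x-\mu)^{2}}{2\sigma^{2}}\right),
\qquad
\sum_{k=1}^{K} w_{k,t} = 1
```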

MoG processing of the background model has three main steps. First, all pixels of the image are assumed to be mutually independent. Second, the intensity distribution of each pixel at time t is modeled as a Gaussian mixture. Third, a computational K-means approximation is used for modeling. The weight and mean of the reference Gaussian observation distribution are taken as 1. Each other Gaussian distribution is compared with the reference observation using match parameters based on the standard deviation, represented by Eq. (3), which identifies the pixels belonging to the background.

If the kth Gaussian distribution matches according to the match definition in Eq. (3), its parameters are updated [29,30]; otherwise, the last distribution is replaced by one with a mean equal to the current value of xt, a high variance σ, and a low weight, as per Eqs. (4)–(6).

where α is the learning constant

where ρ is the learning factor
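The match-and-update rule described above can be sketched for a single grayscale pixel as follows. This is a minimal illustration of the common Stauffer-Grimson update, not the authors' MATLAB code: the 2.5σ match test, the values of `alpha` and `rho`, and the initial variance and weight of a replacement component are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Gaussian:
    weight: float
    mean: float
    var: float


def update_pixel(mixture: List[Gaussian], x: float, alpha: float = 0.01,
                 rho: float = 0.05, match_k: float = 2.5,
                 init_var: float = 900.0, init_weight: float = 0.05) -> int:
    """Update one pixel's mixture in place; return the matched index or -1."""
    # A component matches when x lies within match_k standard deviations
    # of its mean (assumed form of the match test).
    matched = -1
    for i, g in enumerate(mixture):
        if abs(x - g.mean) <= match_k * g.var ** 0.5:
            matched = i
            break

    if matched >= 0:
        # Weights: the matched component grows, the others decay.
        for i, g in enumerate(mixture):
            indicator = 1.0 if i == matched else 0.0
            g.weight = (1.0 - alpha) * g.weight + alpha * indicator
        # Mean and variance of the matched component move toward x.
        g = mixture[matched]
        g.mean = (1.0 - rho) * g.mean + rho * x
        g.var = (1.0 - rho) * g.var + rho * (x - g.mean) ** 2
    else:
        # No match: replace the least probable component (lowest w/sigma)
        # with one centred on x, with high variance and low weight.
        worst = min(range(len(mixture)),
                    key=lambda i: mixture[i].weight / mixture[i].var ** 0.5)
        mixture[worst] = Gaussian(init_weight, float(x), init_var)

    # Renormalise so the weights sum to 1.
    total = sum(g.weight for g in mixture)
    for g in mixture:
        g.weight /= total
    return matched
```

A pixel for which `update_pixel` returns -1 matched none of its components and would be treated as foreground in that frame.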

In the subtraction process, the distributions are ranked in descending order of the ratio w/σ to identify the background distributions. The most likely background distribution, with a high weight and a low variance, is placed at the top of this ranking. Only the first B Gaussian distributions, given by Eq. (7), are treated as background, where T is the specified threshold. Eq. (7) is then used to categorize the pixels as background or foreground.
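The ranking step can be illustrated with a short sketch. It assumes the usual reading of the rule, in which the first B components are those whose cumulative weight first exceeds T; the function name and the default T = 0.7 are hypothetical.

```python
from typing import List, Sequence


def background_components(weights: Sequence[float], sigmas: Sequence[float],
                          T: float = 0.7) -> List[int]:
    """Return indices of the components treated as background.

    Components are ranked by w/sigma in descending order, and the ranking
    is cut as soon as the cumulative weight exceeds the threshold T.
    """
    order = sorted(range(len(weights)),
                   key=lambda k: weights[k] / sigmas[k], reverse=True)
    chosen: List[int] = []
    cumulative = 0.0
    for k in order:
        chosen.append(k)
        cumulative += weights[k]
        if cumulative > T:
            break
    return chosen
```

A larger T admits more components into the background model, which tolerates repetitive background motion at the cost of absorbing slow foreground objects.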

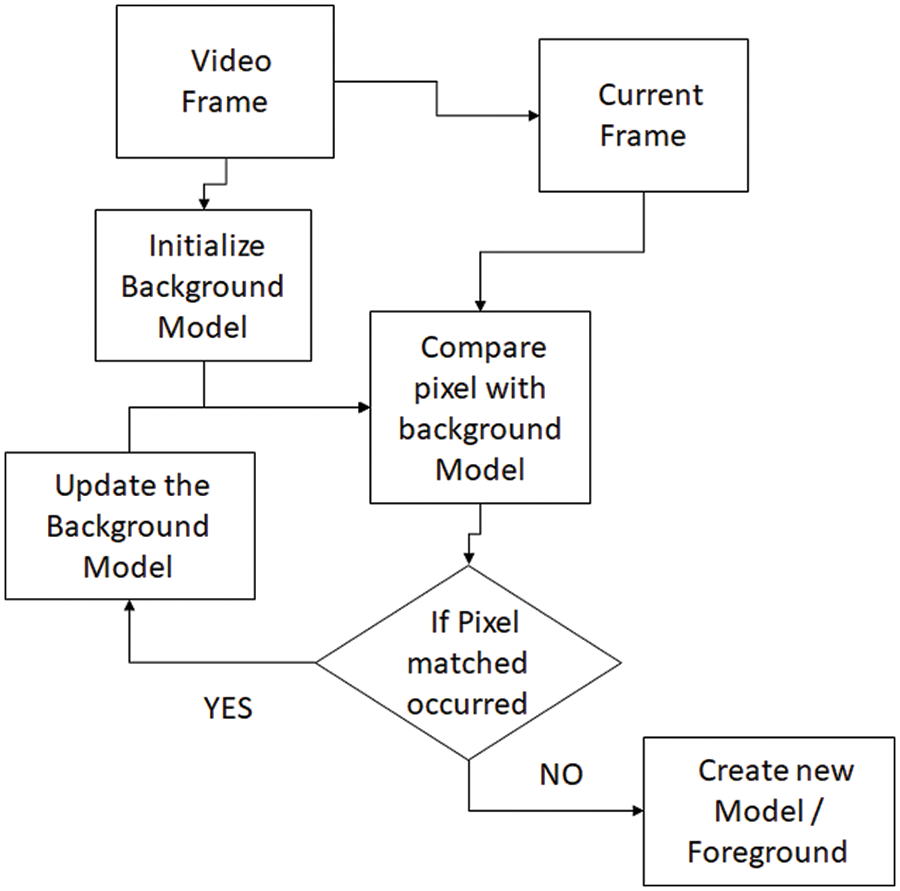

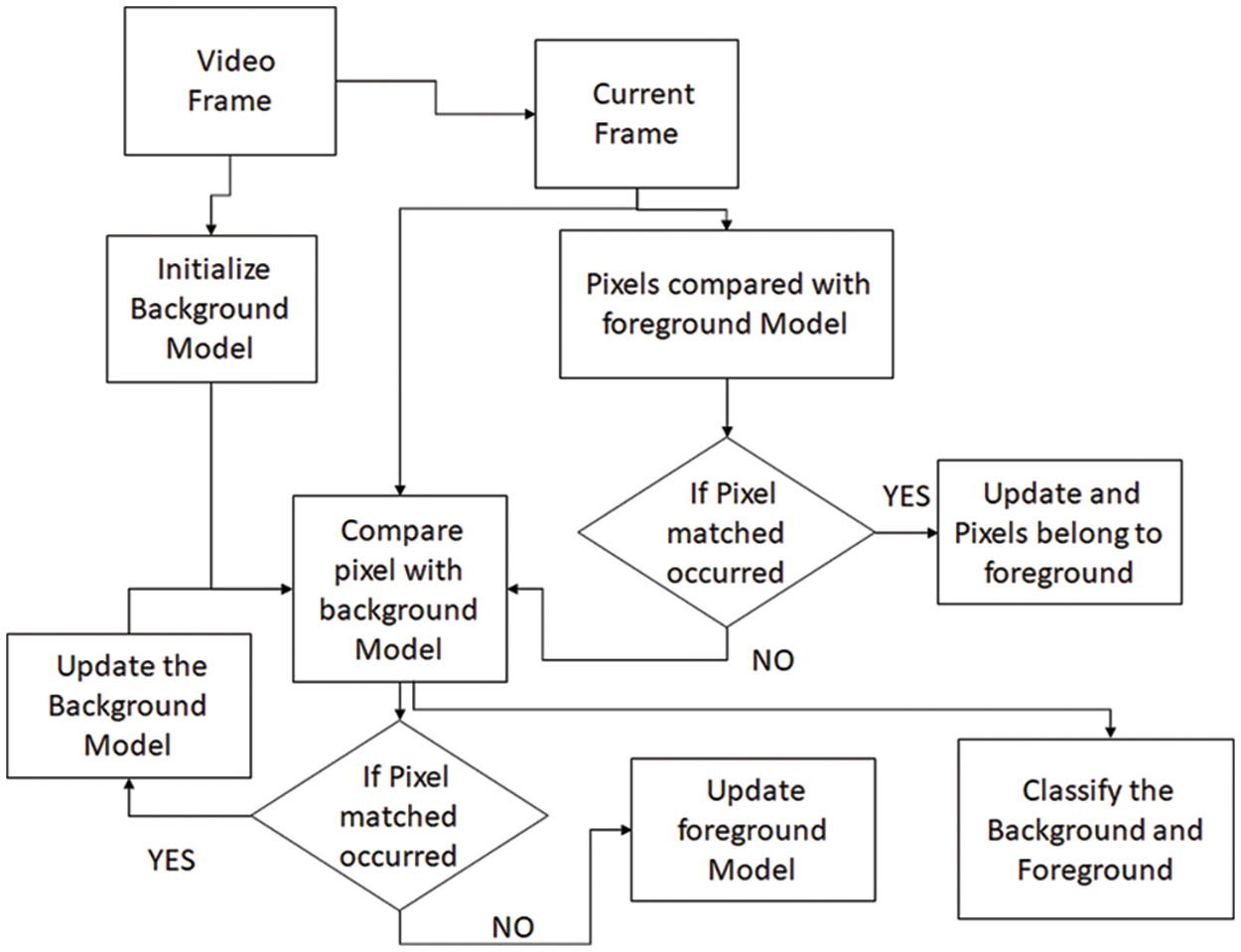

Fig. 2 shows how a MoG classifies a pixel as background or not, starting from the background model setup. The distribution of each pixel at time t is a mixture of Gaussians, represented by Eq. (1). The background model is first formed with its parameters, and every pixel of the current frame is then compared with the background model using the matching criterion in Eq. (2). If the pixel satisfies Eq. (2), the Gaussian is said to be ‘matched’: its weight is increased, its mean is moved closer to the new value, and its variance is decreased as per Eqs. (4)–(6). Otherwise, the Gaussian is unmatched and a new Gaussian is created. If all Gaussians of the mixture are unmatched for a pixel, the pixel belongs to the foreground; the least probable Gaussian is found and replaced with a new one that has a high variance and a low weight. The mixture of Gaussians model is also updated using the foreground model shown in Fig. 3, in which every pixel is compared with the foreground model. If the pixel matches, it is treated as a foreground pixel; if not, it is processed according to the flow in Fig. 2.

Figure 2: Flow of the mixture of Gaussians model using the background model

Figure 3: Flow of the mixture of Gaussians model for the foreground model and the background model

Shadow detection is accomplished using pixel-by-pixel image division, Gamma correction, negative transformation, and a logical operation between the Gamma correction output and the MoG output. To extract the region of dark (shadow) intensities, the reference frame and the current frame are divided pixel by pixel, as shown in Eq. (8).
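A minimal sketch of the pixel-by-pixel division on grayscale frames stored as nested lists is given below. The direction of the division (current frame over background frame), the clipping of the ratio to 1, and the `eps` guard against division by zero are assumptions made so that unchanged pixels map to 1 and darker pixels to values below 1.

```python
from typing import List

Image = List[List[float]]


def intensity_ratio(background: Image, current: Image, eps: float = 1.0) -> Image:
    """Divide the current frame by the background frame, pixel by pixel.

    Unchanged pixels give a ratio of 1; pixels darker than the background
    (for example, shadow) give a ratio below 1. Ratios are clipped to 1.
    """
    return [
        [min(1.0, c / max(b, eps)) for b, c in zip(b_row, c_row)]
        for b_row, c_row in zip(background, current)
    ]
```

For example, a background pixel of 100 that drops to 50 under a shadow gives a ratio of 0.5, while an unchanged pixel gives 1.0.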

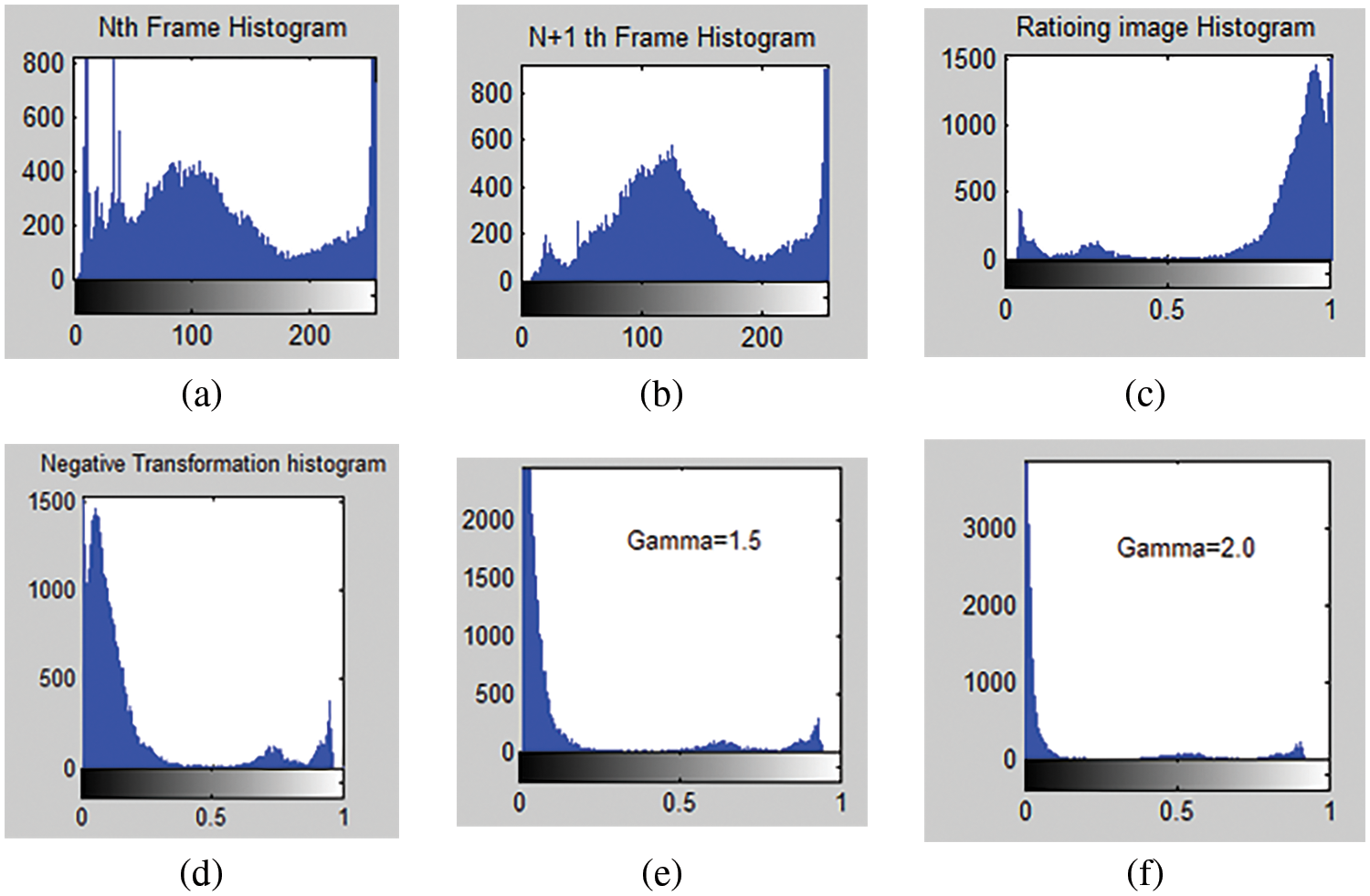

In the result of the image division, pixels where the background frame and the current frame have the same intensity take the value 1, and the remaining pixels take values less than 1. In the histogram of the image division output, depicted in Fig. 4c, the pixels with identical background and current intensities lie near 1, while the values less than 1 correspond to the shadow, the vehicle, and other changes in the background.

Figure 4: Image division, negative transform, and Gamma correction output histogram: (a) background image’s histogram (b) current image’s histogram (c) proportionality of background and current image’s histogram (d) negative transformation’s output histogram (e) Gamma correction histogram from Gamma = 1.5 (f) Gamma correction histogram from Gamma = 2.0

The identical intensities of the background and current frames, which have a value of 1 in the image division result, are shifted using the negative transformation. The negative transformation output is obtained by subtracting each pixel of the frame from the maximum intensity value, as defined in Eq. (9). The shadow intensities can be found by applying a suitable threshold to the output of the negative transformation, shown in Fig. 4d. However, the thresholded output still contains both the vehicle (a change in the background) and its shadow, which have similar intensities; hence, Gamma correction, an image enhancement technique, is used to extract the exact shadow intensities of the vehicle from these similar intensities.
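The negative transformation can be sketched in a few lines. The function name and the `max_value` parameter are illustrative; `max_value` is 1.0 for a ratio image and 255 for an 8-bit image.

```python
from typing import List

Image = List[List[float]]


def negative_transform(image: Image, max_value: float = 1.0) -> Image:
    """Subtract every pixel from the maximum intensity value."""
    return [[max_value - p for p in row] for row in image]
```

Applied to a ratio image, this maps unchanged pixels (ratio 1) to 0 and changed pixels (ratio below 1) to positive values, so that the changes stand out against a dark background.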

3.2.2 Gamma Correction (Image Enhancement)

Gamma correction controls the overall luminance of an image using a Gamma-based non-linear function. The luminance of each pixel is the average of its red, green, and blue values; as a result, the image must be converted to grayscale before Gamma correction. The grayscale image is the average luminance calculated from Eq. (10) [31].

Mathematically, Gamma correction makes the output proportional to the input raised to the power γ, as shown in Eq. (11). With γ = 1 the image is unchanged; for intensities normalized to [0, 1], γ < 1 brightens the image and γ > 1 darkens it. To extract the shadow intensities, Gamma correction with γ > 1 (1.5, 2, 2.5, …) is applied to the grayscale output of the negative transformation. The Gamma correction outputs for γ = 1.5 and γ = 2 were selected for extracting the shadow intensities; their histograms are shown in Figs. 4e and 4f.

Grayscale (x, y) is the grayscale conversion of the color negative transformation output image, used to reduce computational complexity [32].
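The grayscale conversion and the power-law Gamma correction can be sketched as follows. The sketch assumes the simple RGB average described above and the power-law form output = max · (input / max)^γ; the function names and the `max_value` parameter are illustrative.

```python
from typing import List, Tuple

GrayImage = List[List[float]]
ColorImage = List[List[Tuple[float, float, float]]]


def to_grayscale(rgb: ColorImage) -> GrayImage:
    """Average the red, green, and blue values of every pixel."""
    return [[(r + g + b) / 3.0 for (r, g, b) in row] for row in rgb]


def gamma_correct(gray: GrayImage, gamma: float,
                  max_value: float = 255.0) -> GrayImage:
    """Apply the power law after normalising intensities to [0, 1]."""
    return [
        [max_value * (p / max_value) ** gamma for p in row]
        for row in gray
    ]
```

With this form, the end points 0 and `max_value` are unchanged for any γ, and only the intermediate intensities are redistributed, which is what makes the subsequent thresholding easier.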

3.2.3 Thresholding and Logical Operation

A logical operation is applied between the Gamma correction output and the MoG output to extract the shadow region using a suitable threshold, as given by Eq. (12).

The thresholds are selected based on the nearest dark intensities in the histogram of the grayscale Gamma correction output.

The shadow region output is subtracted from the MoG output, as shown in Eq. (13). This removes the shadow, and the result is used for further computer vision applications (vehicle tracking).
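The thresholding, logical AND, and subtraction steps can be sketched on small binary masks. The band threshold `[low, high]` is a hypothetical reading of "thresholds"; the actual values would be read from the histogram of the Gamma correction output.

```python
from typing import List

Gray = List[List[float]]
Mask = List[List[int]]


def shadow_mask(gamma_out: Gray, foreground: Mask,
                low: float, high: float) -> Mask:
    """Logical AND of the thresholded Gamma output and the MoG foreground."""
    return [
        [1 if (f == 1 and low <= g <= high) else 0
         for g, f in zip(g_row, f_row)]
        for g_row, f_row in zip(gamma_out, foreground)
    ]


def remove_shadow(foreground: Mask, shadow: Mask) -> Mask:
    """Subtract the shadow mask from the MoG foreground mask.

    The shadow mask is a subset of the foreground mask, so the
    difference is again a binary (0/1) mask.
    """
    return [
        [f - s for f, s in zip(f_row, s_row)]
        for f_row, s_row in zip(foreground, shadow)
    ]
```

Because the AND restricts shadow candidates to MoG foreground pixels, dark background regions that never moved are not reported as shadow.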

A region-filling morphological operation is applied to the shadow removal output to remove noise.

The shadow removal output is a binary image. All pixels with intensity 1 are connected using 8-connectivity and assigned labels, which are represented by rectangles for vehicle tracking over time. The following steps are performed in the proposed method for vehicle tracking:

Steps:

1) Scan the binary detection output from the upper-left corner to the bottom-right corner, testing every pixel p in turn and looking for foreground pixels whose intensity is 1.

2) Give each connected component in the image a distinct label.

3) Measure the properties of each labeled connected component in the binary image.

4) Draw the rectangle for vehicle tracking using the regionprops command.
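The steps above can be sketched in plain Python as a raster scan with a breadth-first flood fill over the 8-neighbourhood. This is an illustrative stand-in for the MATLAB labeling and regionprops calls, not the authors' implementation; bounding boxes are returned as (top row, left column, bottom row, right column).

```python
from collections import deque
from typing import Dict, List, Tuple

Mask = List[List[int]]
Box = Tuple[int, int, int, int]


def label_components(mask: Mask) -> Tuple[List[List[int]], Dict[int, Box]]:
    """Label 8-connected components and return their bounding boxes."""
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    labels = [[0] * cols for _ in range(rows)]
    boxes: Dict[int, Box] = {}
    next_label = 0

    # Raster scan: upper-left to bottom-right.
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or labels[r][c] != 0:
                continue
            next_label += 1
            labels[r][c] = next_label
            queue = deque([(r, c)])
            top, left, bottom, right = r, c, r, c
            while queue:
                y, x = queue.popleft()
                top, left = min(top, y), min(left, x)
                bottom, right = max(bottom, y), max(right, x)
                # Visit all 8 neighbours of the current pixel.
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] == 1
                                and labels[ny][nx] == 0):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
            boxes[next_label] = (top, left, bottom, right)
    return labels, boxes
```

With 8-connectivity, diagonally touching pixels belong to the same component, so a vehicle blob that is only diagonally connected after shadow removal still receives a single bounding box.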

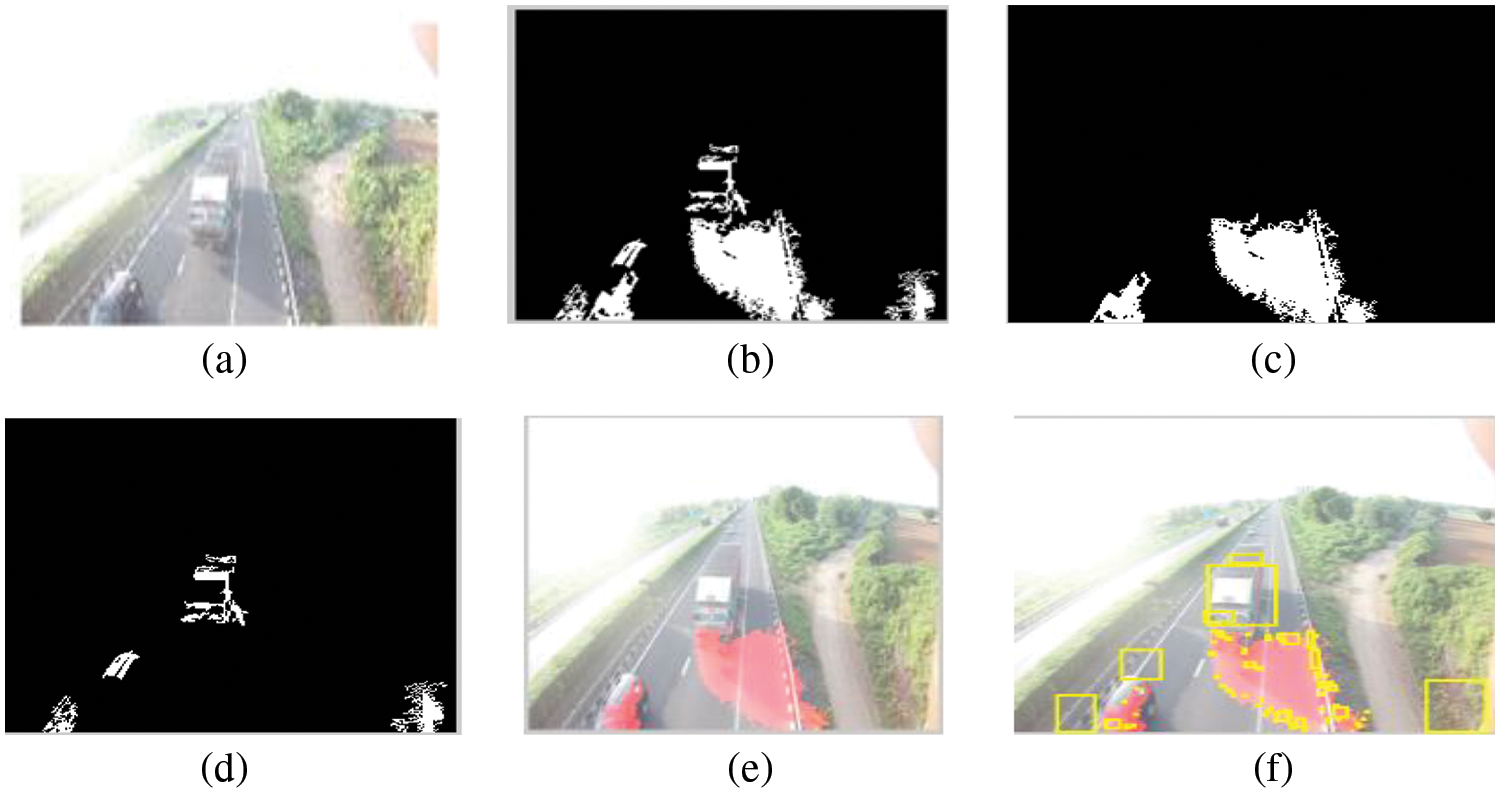

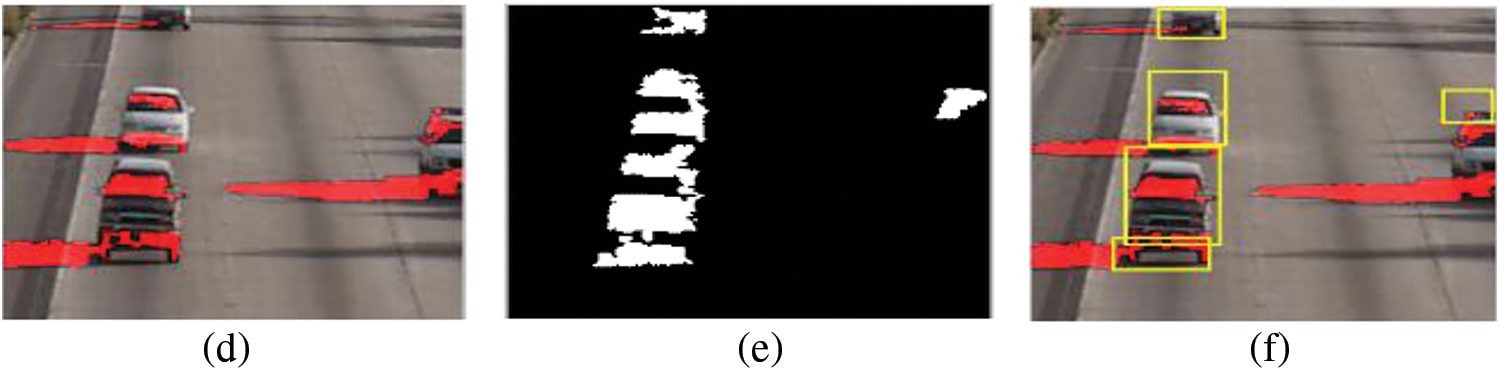

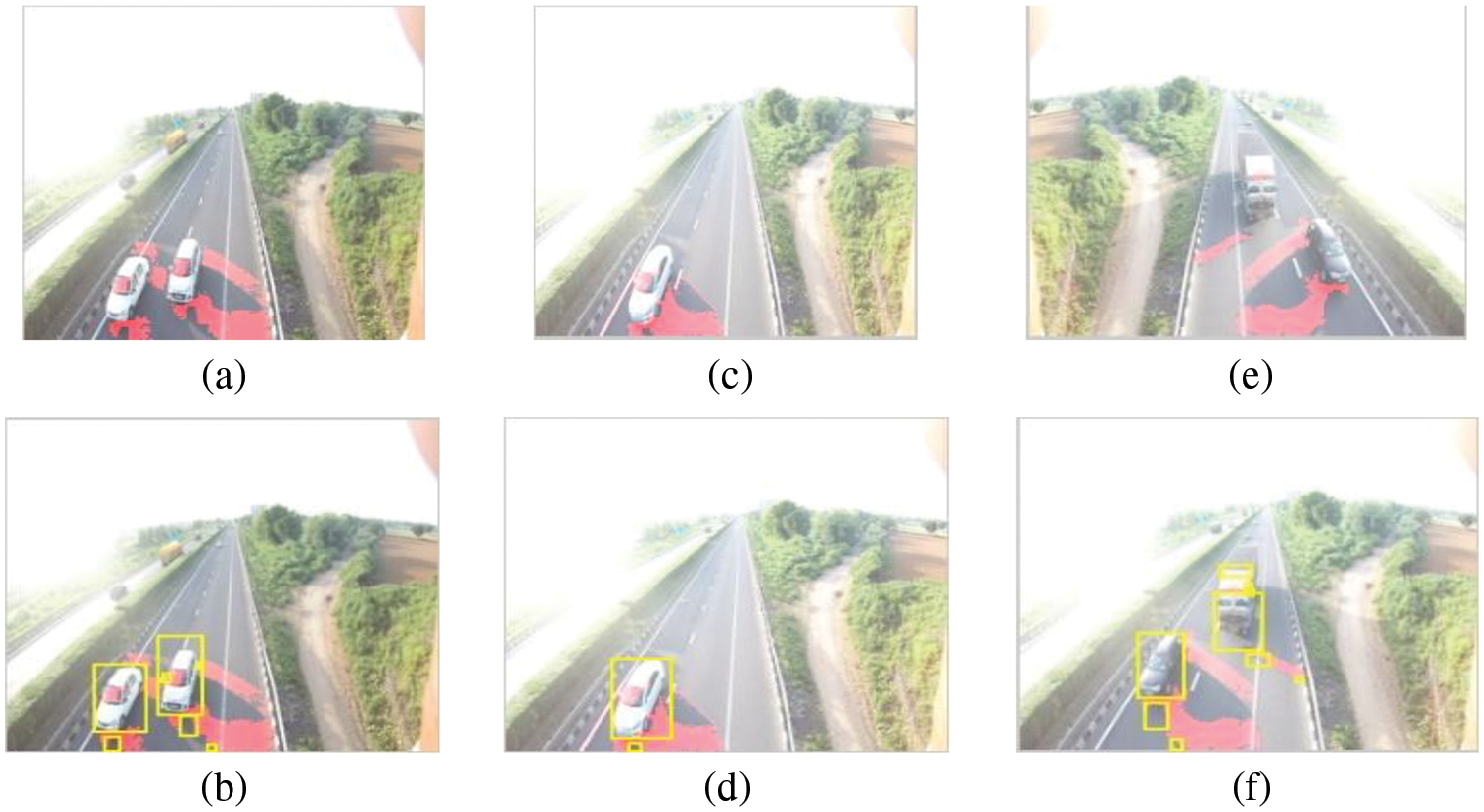

The results were obtained by applying the proposed technique in MATLAB on an Intel Core i3 CPU at 2.4 GHz with 4 GB of RAM and a 64-bit operating system (Windows 8.1). The technique detects and removes the moving shadows of vehicles using a mixture of Gaussians together with image division, negative transformation, Gamma correction, and a logical operation. The proposed method was tested on all frames of the datasets; Figs. 5 and 6 show the results on the 30th frame of the self-acquired dataset and the 45th frame of the standard dataset. The vehicle detection output for both datasets is obtained from the mixture of Gaussians model, as shown in Figs. 5b and 6b. Fig. 5c shows the shadow detection output, which is obtained from the steps of the proposed method: image ratio, negative transformation, Gamma correction, and thresholding. The shadow removal output, shown in Fig. 5d, is obtained by subtracting the shadow detection output from the output of the mixture of Gaussians model and is used for further ITS applications. In the proposed system, vehicle tracking is achieved by labeling the connected components and drawing a bounding box around the vehicle region only. Vehicle tracking results are shown in Fig. 5f. Fig. 6 displays the corresponding outcomes for frame 45 of the standard dataset. Fig. 7 displays the outcomes of shadow identification and vehicle tracking for various frames of the self-prepared dataset.

Figure 5: Proposed method results on the self-prepared dataset: (a) 30th frame of the self-prepared dataset (b) vehicle detection output of the 30th frame of the self-prepared dataset using the mixture of Gaussians model (c) shadow detection output in binary form (d) shadow removal output of the 30th frame (e) shadow detection shown in red on the 30th frame (f) vehicle tracking on the 30th frame

Figure 6: Proposed method results on a standard dataset: (a) 45th frame of the standard dataset (b) vehicle detection output of the 45th frame of the standard dataset using the mixture of Gaussians model (c) shadow detection output in binary form (d) shadow removal output of the 45th frame (e) shadow detection shown in red on the 45th frame (f) vehicle tracking on the 45th frame

Figure 7: Shadow detection and vehicle tracking outcomes of the proposed method on various frames of self-prepared dataset: (a) Shadow detection outcomes of 85th frame (b) vehicle tracking outcomes of 85th frame (c) shadow detection outcomes of 242nd frame (d) vehicle tracking outcomes of 242nd frame (e) shadow detection outcomes of 27th frame (f) vehicle tracking outcomes of 27th frame

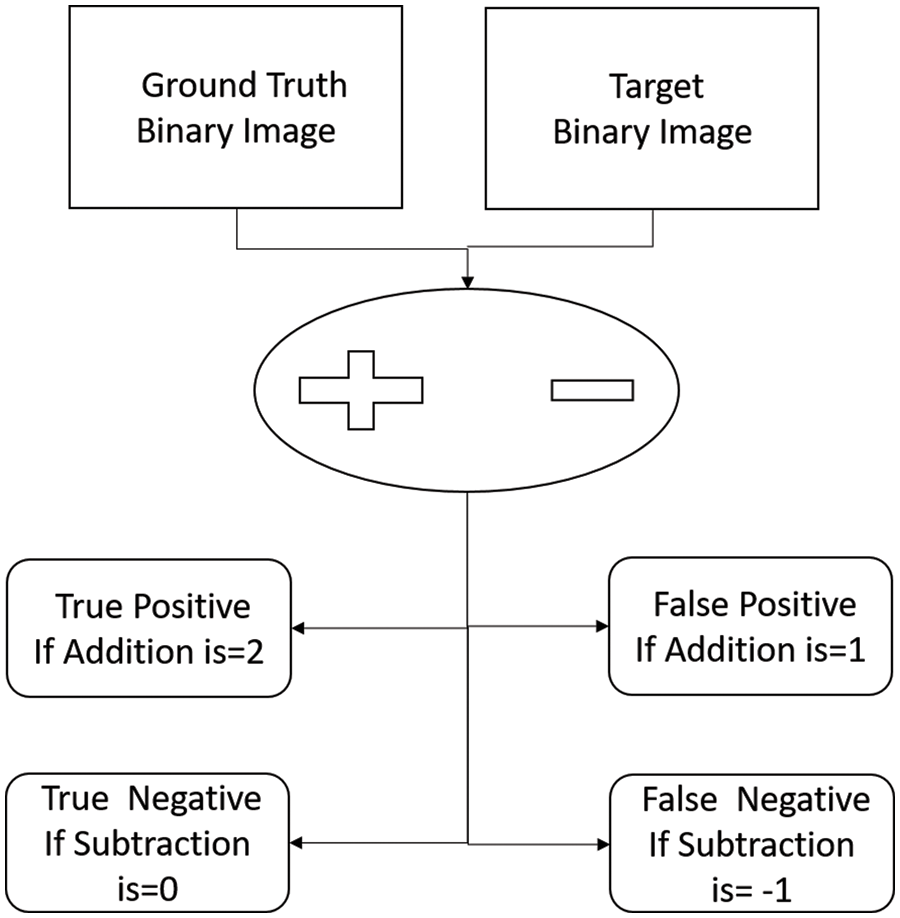

The shadow performance analysis of the proposed technique was obtained using the algorithm depicted in Fig. 8. The ground truth images required for the analysis were produced with the ImageJ software. For the performance metrics, the binary shadow detection outcomes are added to and subtracted from the binary self-generated ground truth images. Pixels whose values (0 or 1) in the output of the arithmetic operation match those of the ground truth image are counted as true positives and true negatives. Pixels whose values (0 or 1) do not match those of the ground truth image are counted as false positives and false negatives. The results are assessed using the shadow detection analysis parameters, i.e., the shadow detection rate and the shadow discrimination rate. The shadow detection rate, shadow discrimination rate, and specificity (true negative rate) are defined mathematically in Eqs. (14)–(16).

Figure 8: Performance evaluation of the suggested approach: TP, TN, FP, and FN

where “s” stands for “shadow” and “f” stands for “foreground”.

TP stands for true positive: the pixels of the shadow/foreground region that are correctly detected, out of the total number of shadow/foreground pixels in the ground truth. TN stands for true negative: the pixels of the non-shadow/non-foreground region that are correctly detected as such. FP stands for false positive: pixels wrongly detected as shadow/foreground. FN stands for false negative: pixels that are shadow/foreground in the ground truth but were wrongly identified as non-shadow or non-foreground.
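The counts and rates defined above can be computed from a predicted mask and a ground truth mask as sketched below. The rate formulas used here, TP / (TP + FN) for the detection and discrimination rates and TN / (TN + FP) for specificity, are the commonly used definitions and are assumed to correspond to Eqs. (14)–(16); the function names are illustrative.

```python
from typing import List, Tuple

Mask = List[List[int]]


def confusion_counts(predicted: Mask, truth: Mask) -> Tuple[int, int, int, int]:
    """Count (TP, TN, FP, FN) by comparing two binary masks pixel by pixel."""
    tp = tn = fp = fn = 0
    for p_row, t_row in zip(predicted, truth):
        for p, t in zip(p_row, t_row):
            if p == 1 and t == 1:
                tp += 1
            elif p == 0 and t == 0:
                tn += 1
            elif p == 1 and t == 0:
                fp += 1
            else:
                fn += 1
    return tp, tn, fp, fn


def detection_rate(tp: int, fn: int) -> float:
    """TP / (TP + FN): applied to shadow masks this is the shadow detection
    rate; applied to foreground masks, the shadow discrimination rate."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def specificity(tn: int, fp: int) -> float:
    """TN / (TN + FP): the true negative rate."""
    return tn / (tn + fp) if (tn + fp) else 0.0
```

Running the same two functions once on the shadow masks and once on the foreground masks yields the pair of rates that the comparison tables report.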

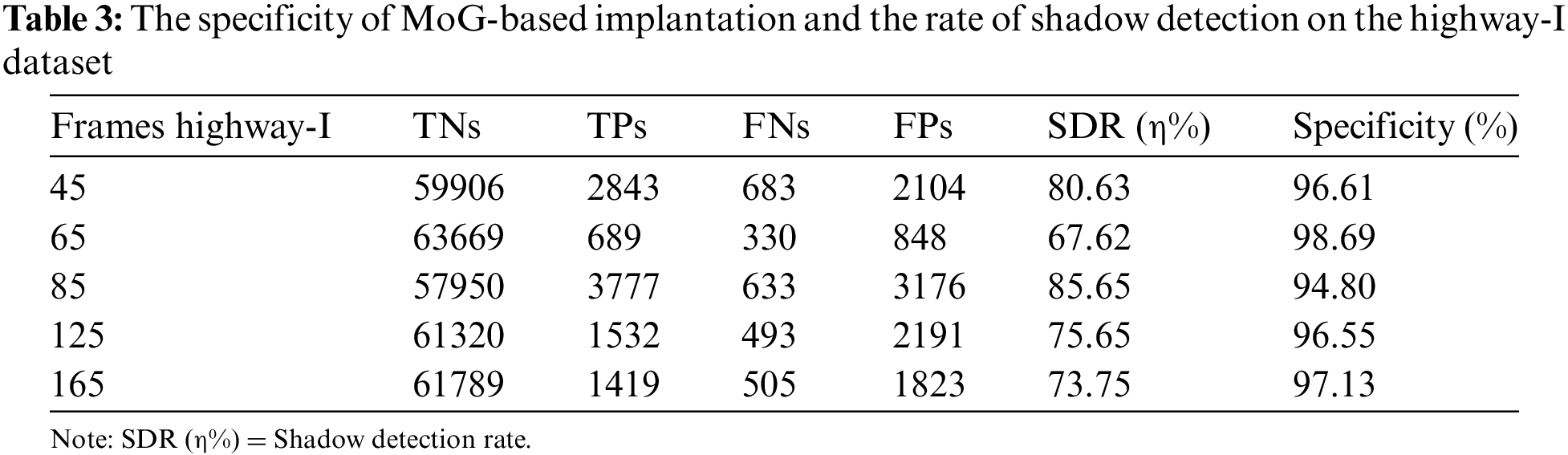

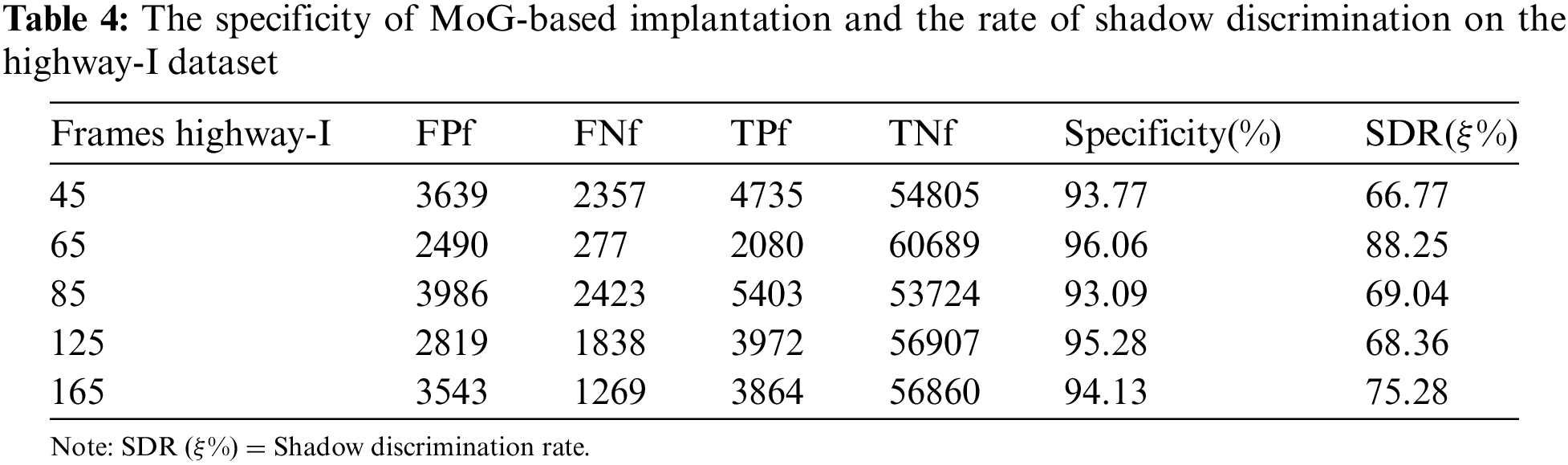

The proposed method was tested on a standard dataset so that its results could be compared with those of existing techniques using the shadow detection rate, shadow discrimination rate, and true negative rate (specificity). The outcomes for frames 45, 65, 85, 125, and 165 were analyzed using the shadow detection and discrimination rates with specificity, as given in Tables 3 and 4. According to Tables 3 and 4, the proposed method has a high specificity (over 90%) for detecting the shadow and foreground regions.

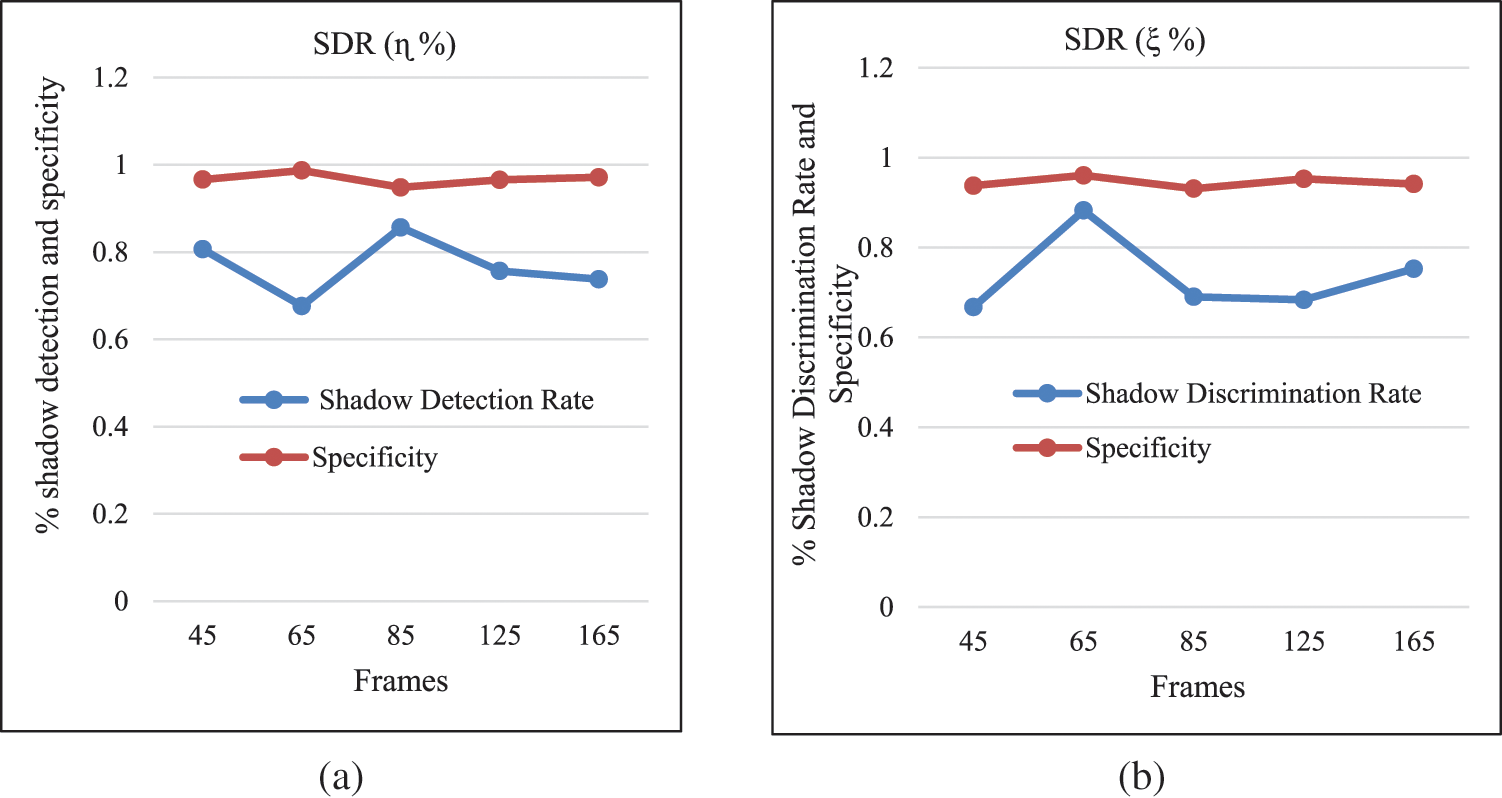

Fig. 9 shows these results graphically: the shadow detection rate and the shadow discrimination rate of all frames are above 70%, which indicates that the proposed method is stable for both the foreground and background regions. As per Table 3, the 80.63% shadow detection rate and the corresponding 96.61% specificity of frame 45 mean that 80.63% of the shadow region is detected and 96.61% of the pixels that should not be detected are correctly left undetected. As per Table 4, the 66.77% shadow discrimination rate and the corresponding 93.77% specificity mean that 66.77% of the vehicle pixels are properly detected and 93.77% of the pixels that should not be detected are correctly left undetected. The results for the other frames similarly show that the proposed method properly classifies the vehicle and its cast shadow with a true negative rate of more than 90%. Fig. 9 also demonstrates that the proposed method is not limited to the shadow region: it handles the vehicle region as well, which implies that it can separate the vehicle region from its shadow region. Thus, the outcomes of the proposed approach give the correct vehicle region for vehicle tracking.

Figure 9: Shadow/foreground analysis in graphics: (a) shadow detection efficiency with specificity (b) shadow discrimination efficiency with specificity

The performance of the proposed method on the moving shadow region has been studied: the shadow detection rate is more than 70% with more than 90% specificity. Other associated measures, such as precision and F-measure, are provided in Table 5. Eqs. (17) and (18) define precision and F-measure [26,33,34]. According to the performance analysis on the self-generated dataset, the proposed method detects shadow regions correctly while correctly rejecting non-shadow pixels.
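For reference, the standard definitions of precision and F-measure in terms of the counts TP, FP, and FN are given below. They are assumed to correspond to Eqs. (17) and (18); recall is the same quantity as the detection rate TP / (TP + FN).

```latex
\text{Precision} = \frac{TP}{TP + FP},
\qquad
\text{Recall} = \frac{TP}{TP + FN},
\qquad
F\text{-measure} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
```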

In Table 6, for shadow detection in a real scene, the average shadow detection rate is around 80% and the average true negative rate is 92.96%. That is, the proposed method detects up to 80% of the shadow while correctly rejecting up to 92.96% of the wrong pixels. Fig. 10 presents Table 7 graphically and shows that the existing methods perform well in either the shadow region or the foreground region, whereas the proposed method can extract both the shadow and the foreground regions.

Figure 10: Graphical comparison of the proposed method and the existing methods

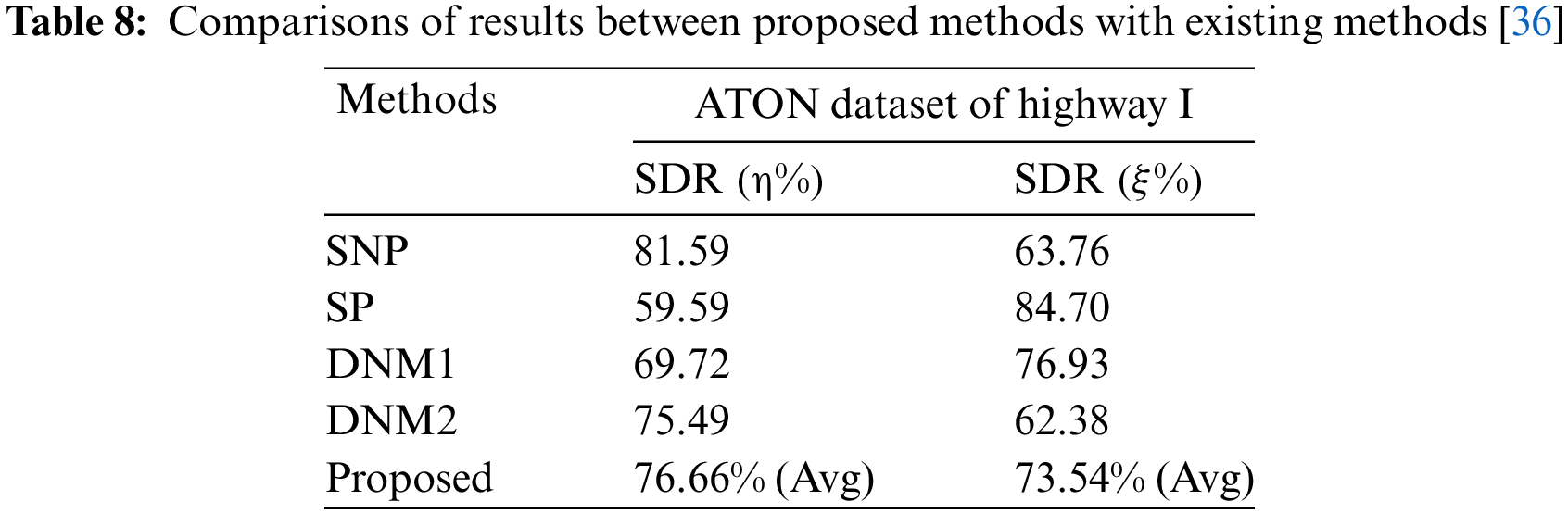

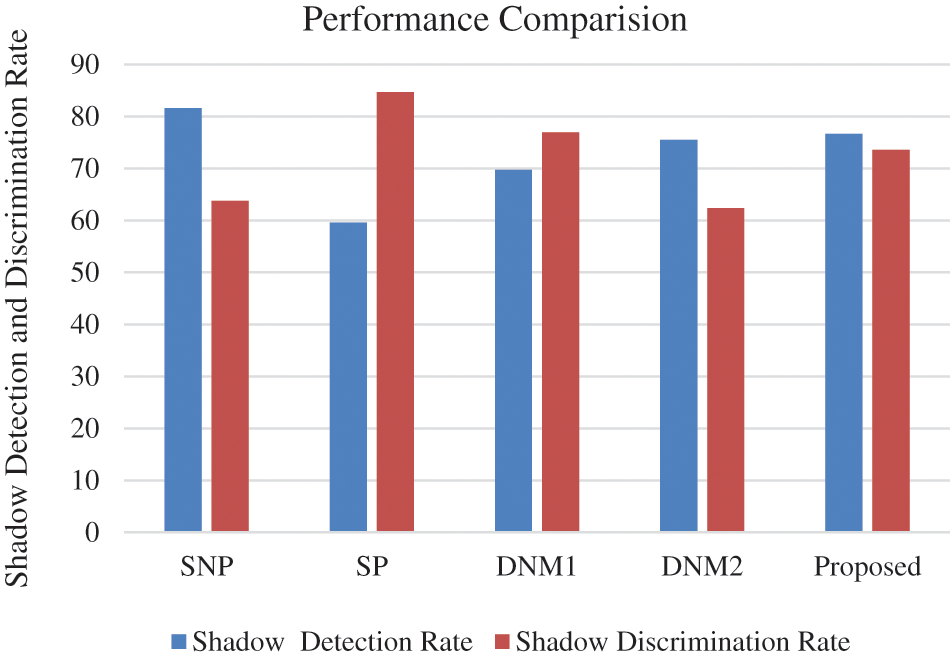

The results of the MoG-based method have been compared with those of existing methods in terms of shadow detection and discrimination rates, as listed in Table 8. The comparison covers the statistical nonparametric (SNP), statistical parametric (SP), and two deterministic non-model-based (DNM1 and DNM2) approaches on the standard dataset. Fig. 11 presents the output of the proposed method and the other existing approaches on a standard dataset graphically.

Figure 11: Graphical comparison of the suggested method with other existing methods on a standard dataset

As a result, the statistical parametric, statistical nonparametric, DNM1, and DNM2 approaches are not capable of detecting shadows properly because they detected some regions of the vehicle; hence, the SDR (

From Table 7, the Statistical Parametric approach has obtained an 84.70% SDR (ξ%), but the SDR (

Most research has focused on either the pixels of vehicles or the pixels of shadows, and the majority of studies have found only vehicle regions or only shadow regions. Past approaches cannot detect vehicle and shadow regions simultaneously; thus, it is necessary to separate the shadow region from the foreground correctly for applications such as classification, counting, and tracking. Under low illumination and against a dynamic background, detecting the shadows of moving vehicles is extremely difficult. For vehicle tracking in such conditions, the authors developed a technique that uses a mixture of Gaussian (MoG) model for vehicle detection and pixel-by-pixel image division, negative transformation, Gamma correction, and morphological operations for shadow detection. The low-illumination, dynamic-background dataset was recorded on the Vadodara-Mumbai highway using a high-resolution camera, and the required vehicle ground truth images were prepared with ImageJ software. The suggested approach was also evaluated on a standard dataset to compare its results with those of an existing method using standard shadow detection performance parameters. Under low illumination and with a dynamic background, the proposed method achieved a shadow detection rate of more than 70% and a specificity (true negative rate) of more than 90% on the self-prepared dataset. Based on the performance analysis, the suggested technique is simple to apply for detecting moving shadows in the self-prepared video frames and stable for vehicle and shadow classification on the standard dataset.

The suggested work is distinctive in the following way. When we examine the intensity histograms of the foreground and background objects, the intensity of the vehicle lies on the brighter side of the intensity range, whereas the intensity of the shadow lies on the darker side. When we examine an object that contains both a vehicle region and a shadow, the histogram does not fall clearly on either the brighter or the darker side. As a result, the foreground and shadow intensities must be separated.

To do this, the suggested solution uses the Gamma correction technique in conjunction with an intensity ratio and a negative transformation. The pixel-by-pixel intensity ratio of the current frame to the following frame is computed, and the histogram of the resulting image displays an intensity distribution on the brighter side, suggesting the presence of information about the vehicle region. Nevertheless, this image combines foreground and shadow data. The image produced after the image ratio and negative transformation includes the shadow intensity, and its intensity level is set by the Gamma correction procedure. When the Gamma value is 2.5, the intensity value is close to the dark intensity, and when the Gamma value is 2.0, the histogram lies in the darker intensity zone. Thus, the shadow intensity can be computed by combining the image intensity ratio, the negative transformation, and the Gamma correction technique. After Gamma correction, the resulting image is combined with the mixture of Gaussian output using the logical AND operation. Morphological operations are then used to highlight the region of the shadow intensity. This output is subtracted from the mixture of Gaussian model output, which then contains only the vehicle. Thereafter, a connected-component labeling algorithm is applied so that only the vehicles are tracked, each marked by a rectangle that follows it over time. Vehicle re-identification, like other computer vision applications, can be implemented using existing deep feature-based approaches and handcrafted feature-based methods [43]. In future work, the tracking can be improved by using the Kalman filter tracking method in place of the connected-component labeling method, and the vehicle speed should be estimated so that the proposed method can be analyzed against existing vehicle speed estimation methods [44].
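The order of operations described above can be sketched on a toy example. This is one possible reading of the text, not the authors' implementation: it assumes grayscale frames stored as nested lists, a ratio clipped to [0, 1], a hypothetical threshold of 0.3 on the Gamma-corrected response, and a foreground mask that would in practice come from the mixture of Gaussian model. The morphological step is omitted, and all function names are illustrative. A known limitation of this simplified version is that pixels brighter than the reference frame are clipped to a ratio of 1 and therefore look as dark as shadow after the negative transformation.

```python
from collections import deque


def shadow_response(current, reference, gamma=2.5, eps=1e-6):
    """Per pixel: intensity ratio -> negative transformation -> Gamma correction."""
    out = []
    for cur_row, ref_row in zip(current, reference):
        row = []
        for c, r in zip(cur_row, ref_row):
            ratio = min(c / (r + eps), 1.0)  # intensity ratio, clipped to [0, 1]
            negative = 1.0 - ratio           # negative transformation
            row.append(negative ** gamma)    # Gamma > 1 pushes mid-range values darker
        out.append(row)
    return out


def split_foreground(response, foreground, threshold=0.3):
    """AND the dark (below-threshold) response with the foreground mask to get the
    shadow mask, then subtract it from the foreground to leave the vehicle mask."""
    shadow = [[fg and resp < threshold for resp, fg in zip(r_row, f_row)]
              for r_row, f_row in zip(response, foreground)]
    vehicle = [[fg and not sh for fg, sh in zip(f_row, s_row)]
               for f_row, s_row in zip(foreground, shadow)]
    return shadow, vehicle


def bounding_boxes(mask):
    """4-connected component labeling; one (top, left, bottom, right) box per blob."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            top, left, bottom, right = y, x, y, x
            while queue:
                cy, cx = queue.popleft()
                top, bottom = min(top, cy), max(bottom, cy)
                left, right = min(left, cx), max(right, cx)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


# Toy 2 x 5 frames (hypothetical values): a dark vehicle (20) and its shadow (120)
# on a road of intensity 200; the foreground mask stands in for the MoG output.
reference = [[200] * 5 for _ in range(2)]
current = [[200, 20, 20, 120, 120], [200, 20, 20, 120, 200]]
foreground = [[False, True, True, True, True], [False, True, True, True, False]]

shadow, vehicle = split_foreground(shadow_response(current, reference), foreground)
print(bounding_boxes(vehicle))  # [(0, 1, 1, 2)]: one rectangle around the vehicle only
```

In this toy case the shadow pixels give a response of about 0.10 and the vehicle pixels about 0.77, so the threshold separates them; on real footage the Gamma value and threshold would need to be tuned, and the Kalman filter mentioned as future work would replace the per-frame rectangles with a tracked state.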

For the vehicle tracking system, the following methods are suggested: MoG-based vehicle detection, and shadow detection based on pixel-by-pixel image division, negative transformation, and Gamma correction. Compared to previous methods, MoG-based vehicle detection may be able to find vehicles in settings with larger variance. The image division, together with the subsequent image processing steps (negative transformation, Gamma correction, logical operations, and thresholding), improved shadow identification on both the self-prepared dataset and the standard dataset. The reference images for the performance analysis were prepared using ImageJ software. The results of the suggested method are compared with those of other methods, indicating that it is a reliable and straightforward way to locate and eliminate the shadow region. The suggested strategy is applicable to both the shadow region and the foreground.

Acknowledgement: This work is funded by King Saud University, Riyadh, Saudi Arabia.

Funding Statement: This work is funded by Researchers Supporting Project Number (RSP2023R503), King Saud University, Riyadh, Saudi Arabia.

Author Contributions: Study conception and design: K.J. and V.S.; data collection: T.A., M.A.; analysis and interpretation of results: K.J., V.V.; draft manuscript preparation: K.A., F.B., K.J.; supervision and funding acquisition: W.S., T.A., M.A. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: Data will be available from the corresponding author upon reasonable request.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. S. Elena, A. Cavallaro and T. Ebrahimi, “Spatio-temporal shadow segmentation and tracking,” Image and Video Communications and Processing, vol. 5, no. 2, pp. 1–19, 2003.

2. E. Salvador, A. Cavallaro and T. Ebrahimi, “Shadow identification and classification using invariant color models,” Acoustics, Speech, and Signal Processing Journal, vol. 3, no. 5, pp. 1545–1548, 2001.

3. Y. Wan and Z. Miao, “Automatic panorama image mosaic and ghost eliminating,” Multimedia Systems, vol. 4, no. 3, pp. 945–948, 2008.

4. R. Cucchiara, C. Crana, M. Piccardi, A. Prati and S. Sirotti, “Improving shadow suppression in moving object detection with HSV color information,” Intelligent Transportation Systems, vol. 3, no. 9, pp. 334–339, 2001.

5. D. Kim, M. Arsalan and K. Park, “Convolutional neural network-based shadow detection in images using visible light camera sensor,” Sensors, vol. 18, no. 4, pp. 1–32, 2018.

6. A. Whangbo, “Detection and removal of moving object shadows using geometry and color information for indoor video streams,” Applied Science, vol. 9, no. 23, pp. 1–15, 2019.

7. M. Kumar, “An effective framework using region merging and learning machine for shadow detection and removal,” Turkish Journal of Computational Mathematics and Education, vol. 12, no. 2, pp. 2506–2514, 2021.

8. O. Schreer, I. Feldmann, U. Golz and P. Kauff, “Fast and robust shadow detection in video conference applications,” International Journal of Multimedia Communications Systems, vol. 4, no. 7, pp. 371–375, 2002.

9. A. Cavallaro, E. Salvador and T. Ebrahimi, “Shadow-aware object-based video processing,” Multimedia Systems, vol. 12, no. 4, pp. 39–51, 2005.

10. H. Wang and D. Suter, “A consensus-based method for tracking: Modelling background scenario and foreground appearance,” Pattern Recognition, vol. 40, no. 3, pp. 1091–1105, 2007.

11. E. Salvador, A. Cavallaro and T. Ebrahimi, “Cast shadow segmentation using invariant color features,” Computation Visual and Image Understanding, vol. 95, no. 2, pp. 238–259, 2004.

12. K. Deb, “Shadow detection and removal based on YCbCr color space,” Journal of Smart Computers, vol. 4, no. 1, pp. 1–19, 2014.

13. A. Sanin, C. Sanderson and B. Lovell, “Improved shadow removal for robust person tracking in surveillance scenarios,” Pattern Recognition, vol. 3, no. 8, pp. 141–144, 2010.

14. G. Fung, N. Yung, G. Pang and A. Lai, “Effective moving cast shadow detection for monocular color image sequences,” Image Analysis and Processing Journal, vol. 3, no. 6, pp. 404–409, 2001.

15. G. Lee, M. Lee, J. Park and T. Kim, “Shadow detection based on regions of light sources for object extraction in nighttime video,” Sensors, vol. 17, no. 3, pp. 65–86, 2017.

16. N. Martel-Brisson and A. Zaccarin, “Moving cast shadow detection from a gaussian mixture shadow model,” Pattern Recognition, vol. 2, no. 4, pp. 643–648, 2005.

17. R. Jens, “A probabilistic background model for tracking,” Computer Vision Journal, vol. 3, no. 6, pp. 1–23, 2000.

18. M. Chacon and S. Gonzalez, “An adaptive neural-fuzzy approach for object detection in dynamic backgrounds for surveillance systems,” IEEE Transaction of Industrial Electronics, vol. 59, no. 8, pp. 3286–3298, 2012.

19. N. Martel and A. Zaccarin, “Learning and removing cast shadows through a multi-distribution approach,” IEEE Transaction of Pattern Recognition, vol. 29, no. 7, pp. 1133–1146, 2007.

20. K. Bin, J. Lin and X. Tong, “Edge intelligence-based moving target classification using compressed seismic measurements and convolutional neural networks,” IEEE Transaction of Remote Sensing, vol. 19, no. 7, pp. 1–5, 2022.

21. G. Jin, B. Ye, Y. Wu and F. Qu, “Vehicle classification based on seismic signatures using convolutional neural network,” IEEE Transaction of Remote Sensing, vol. 16, no. 4, pp. 628–632, 2019.

22. T. Xu, N. Wang and X. Xu, “Seismic target recognition based on parallel recurrent neural network for unattended ground sensor systems,” IEEE Access, vol. 7, no. 4, pp. 137823–137834, 2019.

23. V. Keerthi, P. Parida and S. Dash, “Vehicle detection and classification: A review,” Computer Vision Journal, vol. 4, no. 7, pp. 1–17, 2021.

24. A. Abbas, U. Sheikh, F. AL-Dhief and M. Mohd, “A comprehensive review of vehicle detection using computer vision,” Telecommunication Computers and Electronic Control, vol. 19, no. 3, pp. 83–93, 2021.

25. J. Xiang, H. Fan and S. Yu, “Moving object detection and shadow removing under changing illumination condition,” Mathematics and Probability Engineering, vol. 2, no. 14, pp. 1–10, 2014.

26. H. Zhang, S. Qu, H. Li and W. Xu, “A moving shadow elimination method based on the fusion of multi-feature,” IEEE Access, vol. 8, no. 4, pp. 63971–63982, 2020.

27. W. Zhang, “Spatiotemporal Gaussian mixture model to detect moving objects in dynamic scenes,” Journal of Electronic Imaging, vol. 16, no. 2, pp. 23–42, 2007.

28. Y. Wang, X. Cheng and X. Yuan, “Convolutional neural network-based moving ground target classification using raw seismic waveforms as input,” IEEE Sensors Journal, vol. 19, no. 14, pp. 5751–5759, 2019.

29. J. Hsieh, W. Hu, C. Chang and Y. Chen, “Shadow elimination for effective moving object detection by Gaussian shadow modeling,” Image Visual and Computations, vol. 21, no. 6, pp. 505–516, 2003.

30. P. Jagannathan, S. Rajkumar, J. Frnda, P. Divakarachari and P. Subramani, “Moving vehicle detection and classification using Gaussian mixture model and ensemble deep learning technique,” Wireless Communication and Mobile Computation, vol. 2, no. 11, pp. 1–15, 2021.

31. G. Xu, J. Su, H. D. Pan, Z. G. Zhang and H. B. Gong, “An image enhancement method based on Gamma correction,” Computational Intelligence and Design, vol. 3, no. 8, pp. 60–63, 2009.

32. Y. Miura, W. Zhou and H. Toyoda, “A new image correction method for the moving-object recognition of low-illuminance video-images,” Consumer of Electronics Journal, vol. 4, no. 6, pp. 1–12, 2018.

33. B. Wang, Y. Zhao and C. Chen, “Moving cast shadows segmentation using illumination invariant feature,” IEEE Transaction of Multimedia, vol. 22, no. 9, pp. 2221–2233, 2020.

34. A. Russell and J. Zou, “Moving shadow detection based on spatial-temporal constancy,” Signal Processing and Communication Systems, vol. 3, no. 7, pp. 1–16, 2013.

35. M. Russell, J. J. Zou and G. Fang, “An evaluation of moving shadow detection techniques,” Computational Visual Media, vol. 2, no. 3, pp. 195–217, 2016.

36. A. Prati, I. Mikic, M. Trivedi and R. Cucchiara, “Detecting moving shadows: Algorithms and evaluation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 7, pp. 918–923, 2003.

37. Y. Zhang, G. Chen, J. Vukomanovic, K. Singh, Y. Liu et al., “Recurrent shadow attention model (RSAM) for shadow removal in high-resolution urban land-cover mapping,” Remote Sensing of Environment, vol. 24, no. 7, pp. 111–123, 2020.

38. J. Xiang, H. Fan, H. Liao, J. Xu, W. Sun et al., “Moving object detection and shadow removing under changing illumination condition,” Mathematical Problems in Engineering, vol. 20, no. 14, pp. 1–10, 2014.

39. A. Sanin, C. Sanderson and B. Lovell, “Shadow detection: A survey and comparative evaluation of recent methods,” Pattern Recognition, vol. 45, no. 4, pp. 1684–1695, 2013.

40. J. Huang and C. Chen, “Moving cast shadow detection using physics-based features,” in Proc. of IEEE Conf. on Computer Vision and Pattern Recognition, Quebec City, QC, Canada, pp. 2310–2317, 2009.

41. J. Hsieh, W. Hu, C. Chang and Y. Chen, “Shadow elimination for effective moving object detection by Gaussian shadow modeling,” Image and Vision Computing, vol. 21, no. 6, pp. 505–516, 2003.

42. A. Leone and C. Distante, “Shadow detection for moving objects based on texture analysis,” Pattern Recognition, vol. 40, no. 4, pp. 1222–1233, 2007.

43. S. Khan and H. Ullah, “A survey of advances in vision-based vehicle re-identification,” Computer Vision and Image Understanding, vol. 18, no. 2, pp. 50–63, 2019.

44. M. Saqib, S. Khan and S. Basalamah, “Vehicle speed estimation using wireless sensor network,” Multimedia Tools and Applications, vol. 2, no. 3, pp. 195–217, 2011.


Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
