Open Access

ARTICLE

Telepresence Robots and Controlling Techniques in Healthcare System

1 Electrical Engineering Department, The University of Lahore, Lahore, Pakistan

2 Faculty of Computing and Information Technology, University of the Punjab, Lahore, Pakistan

3 Electrical Engineering Department, Fatima Jinnah Women University, Rawalpindi, Pakistan

* Corresponding Author: Fawad Naseer. Email:

Computers, Materials & Continua 2023, 74(3), 6623-6639. https://doi.org/10.32604/cmc.2023.035218

Received 12 August 2022; Accepted 26 October 2022; Issue published 28 December 2022

Abstract

In the post-COVID-19 era, people have become psychologically inclined to limit their interactions with others. According to the World Health Organization (WHO), many kinds of human interaction allow viruses to multiply rapidly from person to person and spread worldwide. During the pandemic, most healthcare systems shifted to isolation and a highly restricted working environment. Researchers investigated remedies, developed various techniques and recommended solutions. Telepresence robots emerged as a solution adopted across industries to continue operations with almost no physical interaction between people, and they played a vital role in helping people perform daily routine tasks. Healthcare workers can use telepresence robots to interact without direct contact with patients who visit a healthcare center for an initial diagnosis, improving the performance of the healthcare system. This paper compares different telepresence robots and their controlling techniques for the respective scenarios of healthcare environments, and comprehensively analyzes and reviews how these techniques are applied to control different telepresence robots. Our feature-wise analysis also points to specific technical, appropriateness and ethical challenges that remain to be solved. The investigation highlights the need for further multifaceted research on the design and impact of telepresence robots for healthcare centers, building on new insights gained during the COVID-19 pandemic.

1 Introduction

This article explores how telepresence robots can be improved for doctor-patient interaction and other uses at healthcare centers. Because of COVID-19, keeping patients at a prescribed standard distance while doctors interact with them at the healthcare center is mandatory, and many doctors have lost their lives. Alongside various frameworks for e-health services, including diagnostic testing during the COVID-19 outbreak [1], we must protect our healthcare workers by using telepresence robotic solutions.

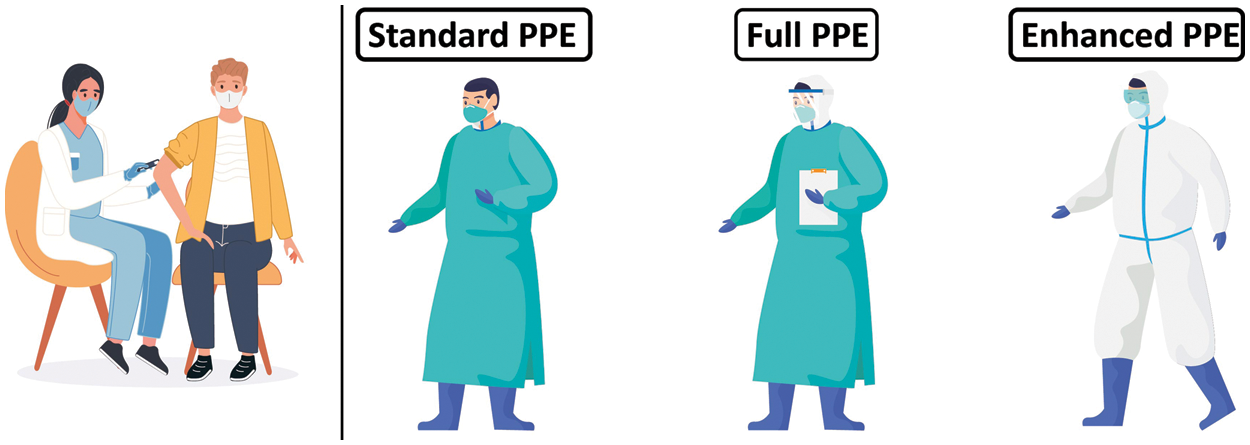

This section discusses the state of Pakistan's healthcare system and the need for telepresence robots, and then introduces different categories of telepresence robots in the healthcare environment. Our healthcare system can hardly handle routine hospitalizations, let alone the unusual circumstances of a pandemic, and many patients in this fragile system carry viral infections. This has brought existing services, resources and staff in these crowded environments to a near standstill. As a result, infection has spread from healthcare center staff to non-infected healthcare centers, to patients with other health problems and to their families. This reflects how healthcare center staff have been affected by the pandemic. Several factors lead to poor adherence to infection control policies. Different types of personal protective equipment (PPE) are available for doctors, as shown in Fig. 1, yet many doctors unintentionally wear PPE incorrectly. In addition, some doctors may be unable to achieve an adequate level of protection because of missing information and equipment, shortages of PPE, poor ventilation and the unavailability of air-conditioned wards.

Figure 1: Different types of personal protective equipment (PPE) for doctors

Doctors deal with many kinds of patients and relatives, which is especially difficult when counseling them about a COVID-19 diagnosis or breaking the news of a patient's death. Some families rejected the findings, accused doctors of misrepresenting the results and often responded aggressively toward them.

1.1 Categorization of Different Telepresence Robots

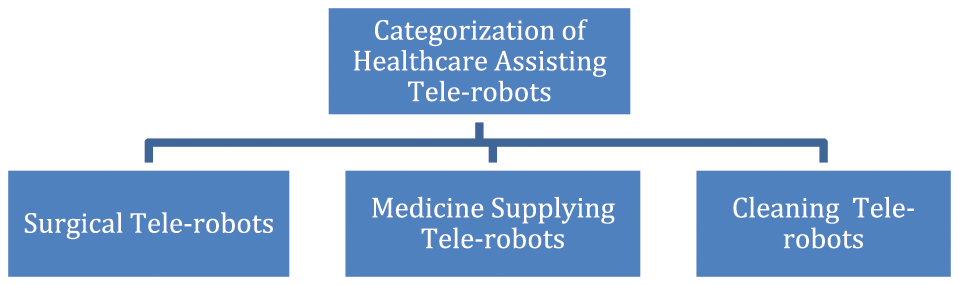

COVID-19 is highly contagious, making it challenging for healthcare professionals to deal directly with potentially infected patients without proper protection. In emergencies such as the current one, respirators, goggles, gloves and gowns are in short supply. Performing sensitive surgery on a human patient is one of the most visible aspects of the problem. Fig. 2 gives a detailed categorization of medical robots that assist with surgery, medicine supply, interaction with patients and cleaning of the healthcare center environment.

Figure 2: Categorization of healthcare center assisting tele-robots

Robots are now part of almost all medical departments in healthcare centers [2,3], in forms including prosthetics, orthotics and assistive robots. Researchers predict that robots will perform more than 36% of healthcare activities in healthcare centers [4]. This will push healthcare systems to adapt their business process models, both to better understand their processes and to use Industry 4.0 technological advances to reduce cost, enhance quality, increase profit and reduce waste while maintaining environmental sustainability [5].

Remote-controlled surgical telerobots can help surgeons perform sensitive tasks during surgery on a human patient. Most of these are minimally invasive procedures; the hallmark of a teleoperated surgical robot is a surgeon sitting at a workstation outside the operating room and remotely controlling a complex robotic arm. Advanced robots have been developed to give surgeons the spatial references needed for highly complex surgical interventions [6]. Robots are used in a variety of surgical procedures. First, the surgeons carefully plan the surgery; the robot then registers the patient, aligning the patient's anatomy with the plan, so that the procedure can be performed smoothly and efficiently.

1.1.2 Medicine Supplying Tele-Robot

Many delivery tasks are performed in healthcare centers during working hours, including point-to-point delivery of medicines. Such tele-robots can also be extended to multiple collection and delivery runs, including operating-room medications and other surgical instruments, with a remote user completing the delivery workflow. Various teleoperated robots have been developed for delivering medical supplies.

Cleanliness is an essential element of healthcare environments, and a good cleaning team is indispensable to it. Because of COVID-19, however, healthcare environments are at high risk. Reducing that risk requires lowering the chance of infection transmission, for example by replacing current cleaning equipment with telerobots. These remote-controlled robots can perform disinfection and cleaning tasks, and assigning such tasks to telepresence robots can save lives by reducing the number of human staff exposed to them.

Ultraviolet-C (UV-C) robots are designed with in-depth knowledge of microbiology. Remotely controlled by a teleoperator, such a robot moves between healthcare center rooms and operating rooms and covers all essential surfaces with the right amount of UV-C light to kill certain viruses and bacteria. The more UV-C light the robot delivers, the more harmful microorganisms it kills.
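The statement that more UV-C exposure kills more microorganisms can be made concrete with the standard dose relation: dose (mJ/cm²) equals irradiance (mW/cm²) multiplied by exposure time (s). The sketch below additionally assumes a simple first-order inactivation model, N/N0 = exp(-k·D), with an organism-specific constant k; real inactivation curves can deviate from this model. The irradiance and k values are hypothetical placeholders, not measurements for any robot or pathogen.

```python
import math


def uv_dose(irradiance_mw_cm2: float, exposure_s: float) -> float:
    """UV-C dose (fluence) in mJ/cm^2: irradiance in mW/cm^2 times exposure time in s."""
    return irradiance_mw_cm2 * exposure_s


def surviving_fraction(dose_mj_cm2: float, k_cm2_mj: float) -> float:
    """Assumed first-order inactivation model: N/N0 = exp(-k * D)."""
    return math.exp(-k_cm2_mj * dose_mj_cm2)


def exposure_time_for_log_reduction(target_log10: float,
                                    irradiance_mw_cm2: float,
                                    k_cm2_mj: float) -> float:
    """Seconds of exposure needed so that log10(N0/N) reaches target_log10."""
    # log10(N0/N) = k * D / ln(10)  =>  D = target * ln(10) / k
    required_dose = target_log10 * math.log(10) / k_cm2_mj
    return required_dose / irradiance_mw_cm2


# Hypothetical values for illustration only.
E = 0.5   # mW/cm^2 reaching the surface
k = 0.2   # cm^2/mJ, organism-specific inactivation constant
t = exposure_time_for_log_reduction(3.0, E, k)
print(f"{t:.1f} s of exposure for a 3-log (99.9%) reduction")
```

With these placeholder values the required exposure is about 69 s; halving the irradiance (for example, because the surface is farther from the lamp) doubles the time needed.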

This paper compares telepresence systems used for interacting with patients, together with their controlling techniques and communication protocols. It presents a detailed review of robotic telepresence systems in the literature; its main motive is to summarize the available telepresence robotic systems, their proposed uses and the primary research directions, reflecting on the lessons learned from these research initiatives. The literature uses various terms to refer to these systems and their users. The COVID-19 pandemic has severely damaged economic and social structures, especially in developing countries, and older adults and sick patients are more vulnerable to the disease.

This paper reviews healthcare-based telepresence robots that can be used for senior care and for interaction with COVID-19 patients. It also describes different telepresence robots and their controlling techniques and compares these techniques by response time. In an experiment, we simulated the response time of different techniques for controlling a telepresence robot from a remote, distant location to assess how efficient each technique is for healthcare-based telepresence robots.

This paper is organized as follows. Section 2 describes the core telepresence robot system, including its system architecture and software flow. Section 3 discusses different healthcare-based telepresence robots in detail, and Section 4 details the available controlling techniques. Section 5 describes telepresence communication protocols. Section 6 discusses open issues and future developments, and Section 7 concludes the paper.

2 General Telepresence Robot Core System

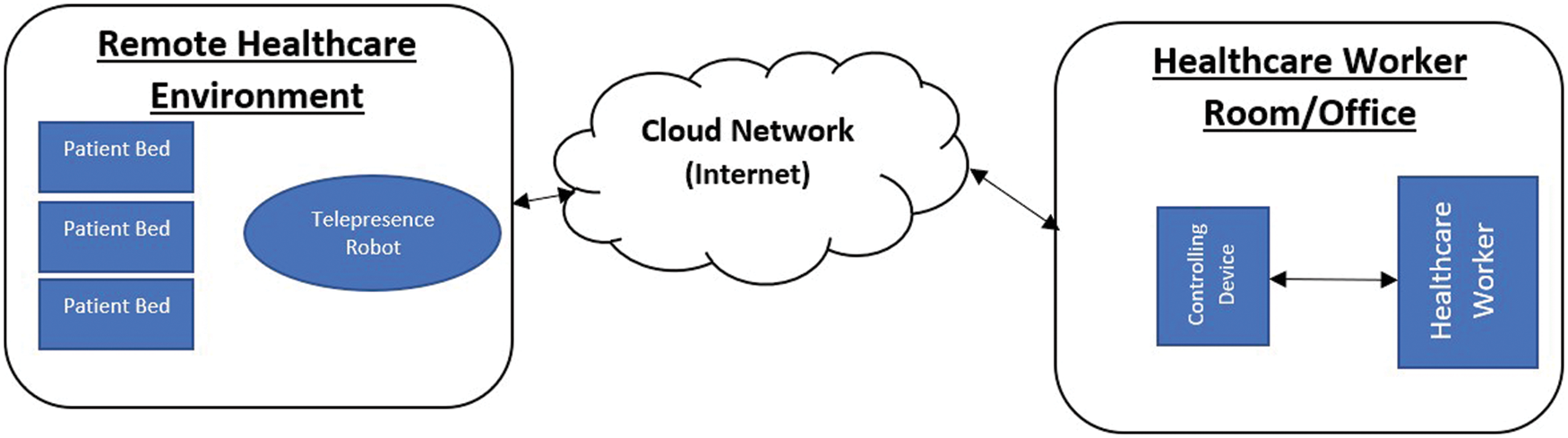

As shown in Fig. 3, the general system comprises two environments: the remote environment and the host (master) controller environment. The remote environment contains the telepresence robot and the patients; a display device connected to the robot, together with the video and audio devices attached to it, lets the doctor interact with patients and share medical data. The host system consists of a controlling device, such as a PC or laptop, a joystick, or any virtually operated device, including brain-controlled devices, through which communication takes place.

Figure 3: General core telepresence system

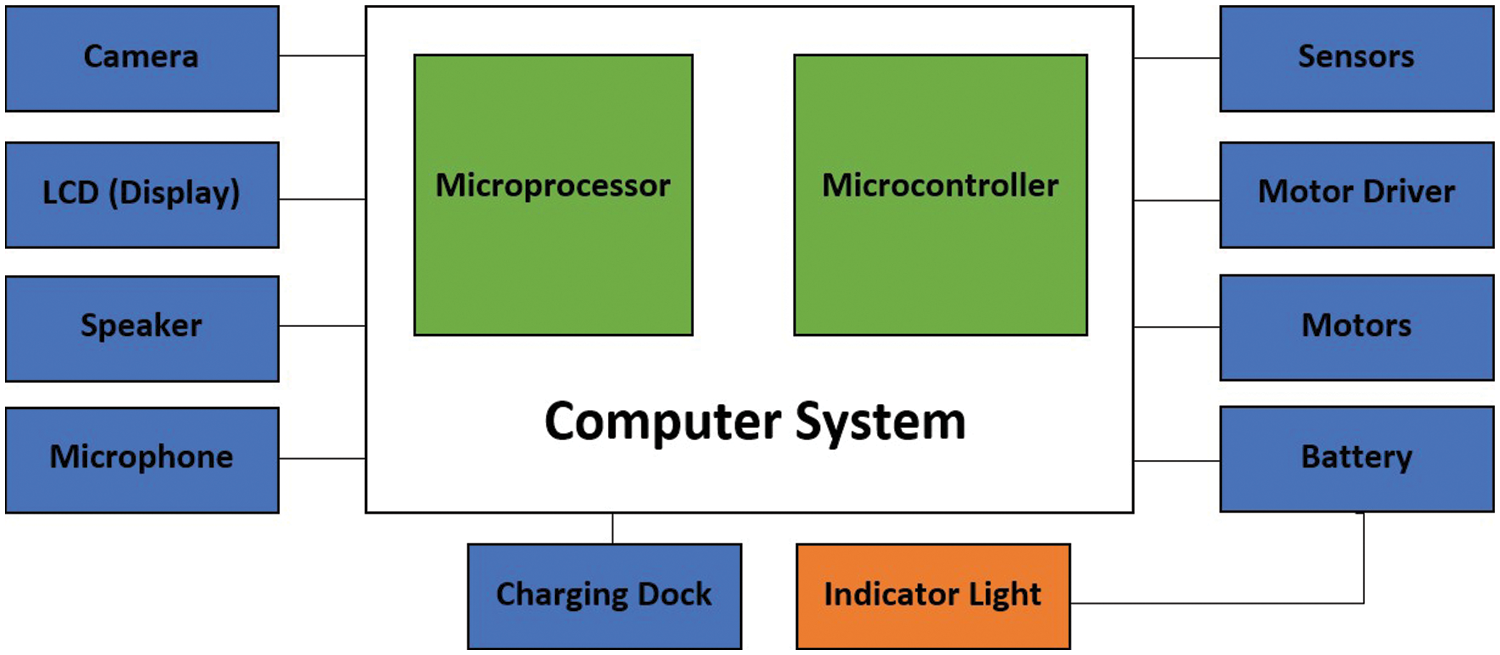

As shown in Fig. 4, the general system architecture comprises a host controller system on the doctor's side and a telepresence robot at a remote location in an unknown environment. Communication and control between the doctor and the telepresence robot take place over wireless communication via the internet. The master controller consists of a PC or laptop with a display device and a graphical user interface that is used to control the telepresence robot.

Figure 4: General telepresence robot system architecture
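The architecture above leaves the format of the commands sent from the host controller to the robot open. As an illustration only, the sketch below packs a control command into a fixed-size binary message with Python's standard `struct` module. The field layout, command codes and the `ControlPacket` name are hypothetical assumptions, not a format used by any robot discussed here; the sequence number and timestamp are included because they let the robot discard stale packets and estimate latency.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire format: sequence number (uint32), send timestamp (float64),
# command code (uint8), normalized speed (float32).
# "!" selects network byte order (big-endian) with no padding between fields.
PACKET_FORMAT = "!IdBf"
COMMAND_CODES = {"stop": 0, "forward": 1, "backward": 2, "left": 3, "right": 4}
CODE_TO_COMMAND = {code: name for name, code in COMMAND_CODES.items()}


@dataclass
class ControlPacket:
    seq: int        # lets the robot discard stale or out-of-order packets
    sent_at: float  # host-side timestamp in seconds, useful for latency estimates
    command: str
    speed: float    # 0.0 .. 1.0


def encode(packet: ControlPacket) -> bytes:
    """Serialize a command on the host controller before sending it."""
    return struct.pack(PACKET_FORMAT, packet.seq, packet.sent_at,
                       COMMAND_CODES[packet.command], packet.speed)


def decode(payload: bytes) -> ControlPacket:
    """Deserialize a received command on the robot side."""
    seq, sent_at, code, speed = struct.unpack(PACKET_FORMAT, payload)
    return ControlPacket(seq, sent_at, CODE_TO_COMMAND[code], speed)
```

For example, `encode(ControlPacket(1, time.time(), "forward", 0.5))` yields a 17-byte payload that could be sent over a UDP or TCP socket. Because speed is stored as a 32-bit float, values such as 0.3 are rounded slightly on the way through.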

Telepresence robot movements and behavior at remote locations are generally controlled from a distant site using tablets, PCs and laptops. On the robot, the central control unit is connected to the microcontroller, which serves as the primary processing board, and is used to control the motor shield.

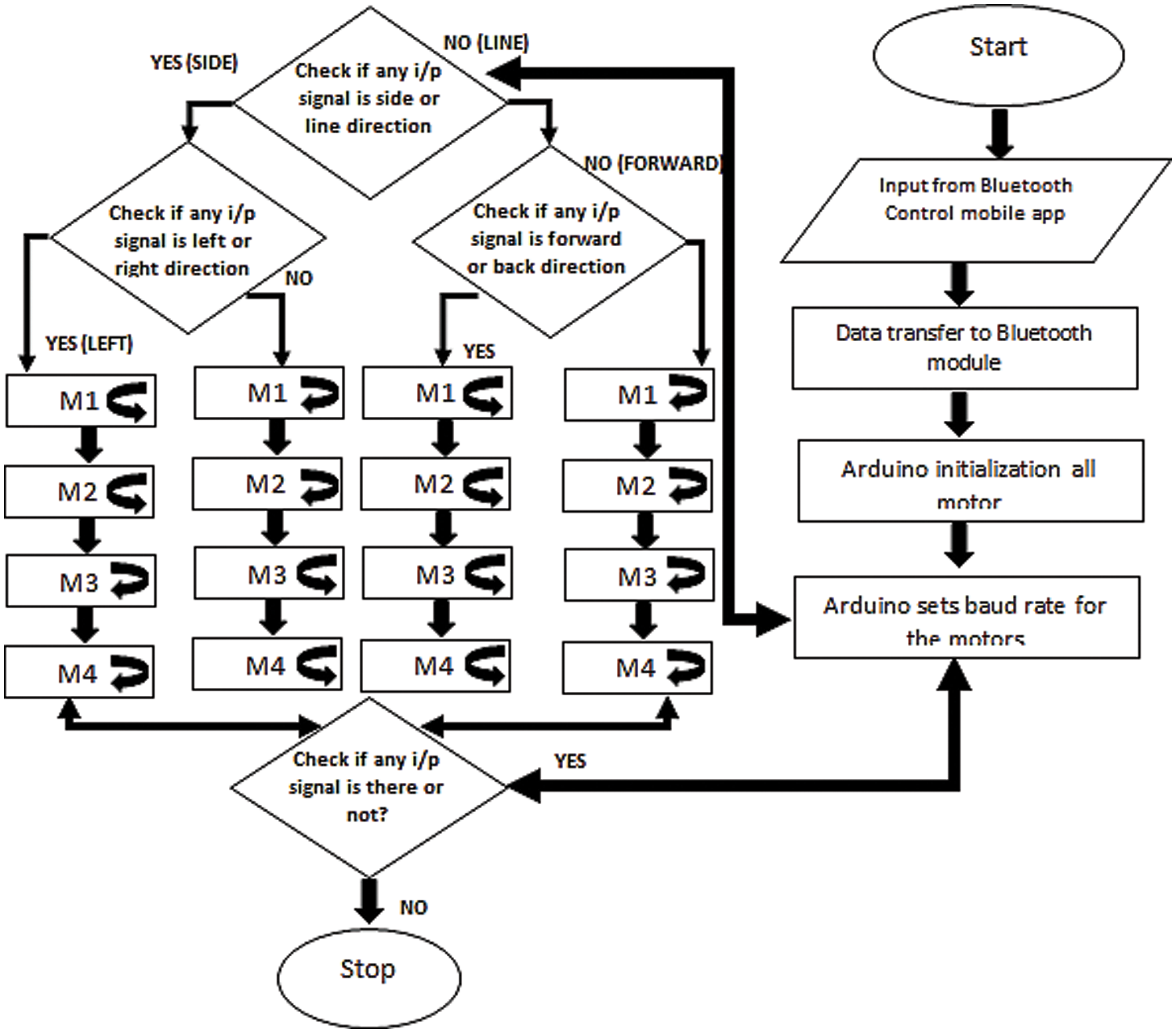

Fig. 5 shows the control flow of the program that executes remote-control operations on the telepresence robot. M1 (left front), M2 (right front), M3 (left rear) and M4 (right rear) denote the robot's four motors. The program first checks whether the input signal commands the robot to move left, right, forward, or backward. For a horizontal command, it checks whether the direction is left or right and turns each motor clockwise or counterclockwise accordingly. Similarly, for a linear command, it checks whether the direction is forward or backward and turns each motor clockwise or counterclockwise according to the input signal received from the host controller.

Figure 5: Flowchart of controlling operation of the telepresence robot
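The decision logic of Fig. 5 can be sketched as a lookup table from command to motor directions. This is a minimal sketch assuming a skid-steer base that turns in place by driving its two sides in opposite directions; directions are written as forward/reverse wheel rotation because whether "forward" corresponds to clockwise depends on how each motor is mounted (an assumption not fixed by the text). Unknown commands stop all motors as a conservative default.

```python
from enum import Enum
from typing import Dict


class Dir(Enum):
    FORWARD = 1    # wheel rotation that pushes the robot forward
    REVERSE = -1   # wheel rotation that pushes the robot backward
    STOP = 0


# Motor order follows the text: M1 left front, M2 right front, M3 left rear, M4 right rear.
MOTOR_TABLE = {
    "forward":  (Dir.FORWARD, Dir.FORWARD, Dir.FORWARD, Dir.FORWARD),
    "backward": (Dir.REVERSE, Dir.REVERSE, Dir.REVERSE, Dir.REVERSE),
    # Turn in place: the left side reverses while the right side drives forward.
    "left":     (Dir.REVERSE, Dir.FORWARD, Dir.REVERSE, Dir.FORWARD),
    "right":    (Dir.FORWARD, Dir.REVERSE, Dir.FORWARD, Dir.REVERSE),
}


def motor_directions(command: str) -> Dict[str, Dir]:
    """Return the direction for M1..M4; unrecognized commands stop every motor."""
    directions = MOTOR_TABLE.get(command, (Dir.STOP,) * 4)
    return dict(zip(("M1", "M2", "M3", "M4"), directions))
```

On real hardware, each `Dir` value would then be translated into the direction and PWM signals expected by the particular motor shield.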

3 Literature Review About Different Telepresence Robots

Different categories of healthcare-based telepresence robots were discussed above. This section describes telepresence robots that doctors can use to interact with patients during their initial visit, helping our frontline healthcare workers stay safe from infected patients.

Healthcare workers include nurses and doctors. An initial visit is a patient's first visit to a healthcare center, when the staff have no knowledge of the patient's health history. During this visit, a healthcare worker sees the patient live, asks about symptoms and discusses how the patient is feeling.

3.1 Different Telepresence Robots for Healthcare Environment

The following subsections describe and compare different telepresence robots, focusing on those used in healthcare environments.

3.1.1 Giraff Telepresence Robot

The Giraff telepresence robot is built on an iron frame platform with electric circuits that control motor-driven wheels. It has a video conferencing suite with microphones, speakers, cameras and a display screen. Giraff can move around an unknown environment and interact with the people around it. Its height is designed so that the controller's video is streamed to a screen at a natural viewing level. Tilting Giraff's head creates a user-friendly interaction and gives the controller a clearer view of the people in the remote environment. Fig. 6a shows the evolution of the Giraff telepresence robot.

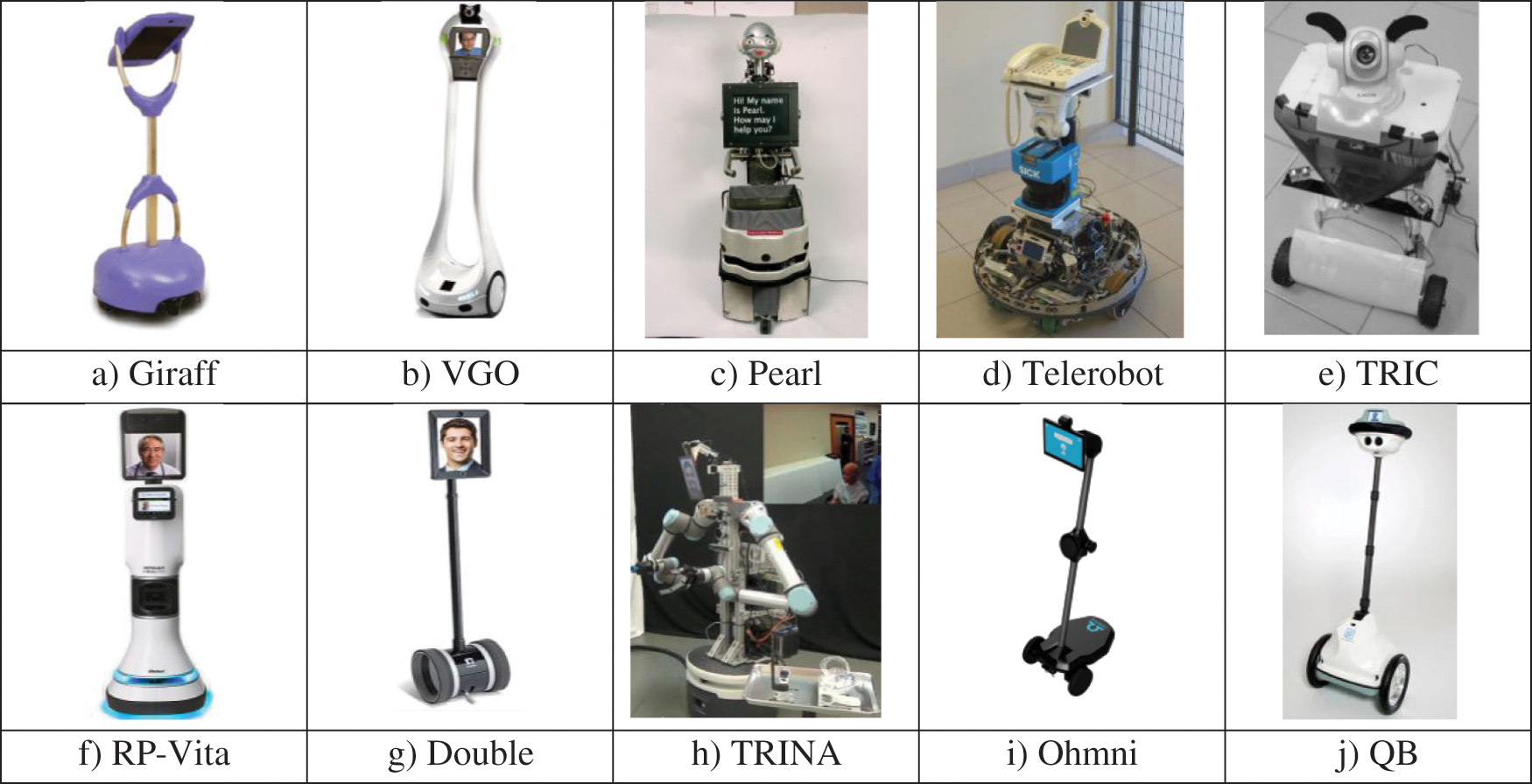

Figure 6: Comparison of ten different telepresence robots. (a) Giraff, (b) VGO, (c) Pearl, (d) Telerobot, (e) TRIC, (f) RP-Vita, (g) Double, (h) TRINA, (i) Ohmni, (j) QB

3.1.2 VGo Telepresence Robot

The VGo system and software provide advanced, well-established communication between the telepresence robot and the controller. The VGo App is dedicated software for two-way communication with real-time video conferencing, and it supports various video- and audio-based communication capabilities. The controller-side user interface shows the live feed from the robot's camera. The VGo robot has a pleasing appearance: the display screen at the top, surrounded by a black plastic ring, resembles the robot's head, as shown in Fig. 6b.

3.1.3 Pearl Telepresence Robot

The Pearl telepresence robot, shown in Fig. 6c, was developed within the Nursebot project, started in 1998 by an interdisciplinary team of researchers from the health and computer science departments of three universities. The initial objective was to develop a mobile assistant robot for older adults with mild cognitive impairment living at home. Over time, its scope grew to include nursing assistance in healthcare environments [7]. In terms of software, Pearl includes standard stand-alone mobile navigation, speech synthesis, speech recognition and compression software that allows audio and video transmission over low bandwidth.

3.1.4 Telerobot Telepresence Robot

The prototype of Telerobot is shown in Fig. 6d. It can cross obstacles and move in tight spaces while providing stable, adequate video communication for teleoperation of the robotic telepresence platform. Its developers built a round-shaped robot with a rocker-bogie suspension. Telerobot is programmed to move to specific locations on its own; for example, it can return to its charging station when its battery is low. It also offers smooth remote control, avoids surrounding obstacles and stops before holes. The platform supports a variety of localization approaches, including odometry, beacons and maps, with assistance from CARMEN, the Carnegie Mellon Robot Navigation Toolkit.

3.1.5 TRIC Telepresence Robot

While aging is associated with an increased risk of social isolation, social interaction can delay the decline of older people and the associated health problems. TRIC, shown in Fig. 6e, is a telepresence robot for interpersonal communication with older people in home environments or healthcare centers. The primary goal of TRIC was to enable older people to remain at home while caregivers or doctors maintain two-way communication and monitoring [8].

3.1.6 RP-Vita Telepresence Robot

RP-VITA was designed and manufactured in collaboration with iRobot. This telepresence robot is 5 feet tall, with a touch screen display and a camera for two-way communication. It allows doctors to see patients remotely when they cannot be present in person, for example during epidemics of viral disease. RP-VITA, shown in Fig. 6f, enables physicians to consult and interact with patients and colleagues, and its built-in electronic stethoscope lets them assess the patient. RP-VITA can be controlled from a tablet or laptop interface, and a dedicated autonomous navigation system lets it move around the healthcare center on its own.

3.1.7 Double Telepresence Robot

The Double robot is a commercial product designed by Double Robotics. Research in 2022 has updated telepresence robots to better reflect human expectations and needs. The Double robot consists of three main parts: the physical robot body, an iPad that serves as the display screen with an integrated camera, and a charging station, as shown in Fig. 6g. Double provides a web interface for remote operation. Once the controller is authenticated with a username and password, the robot can be used to navigate a remote environment and interact with people at home or at distant locations.

3.1.8 TRINA Telepresence Robot

The Telerobotic Intelligent Nursing Assistant (TRINA) telepresence robot, shown in Fig. 6h, was designed and manufactured to be safe for humans. Novice operators can also use it as a relatively quick and cost-effective solution. In principle, such a system could stand in for human workers. This raises many research challenges, such as developing hardware robust enough for both light tasks, such as bringing food or medication, and heavy tasks, such as lifting a patient into a cart [9].

3.1.9 Ohmni Telepresence Robot

Silicon Valley-based robotics startup Ohmni Labs developed a telepresence robot in 2015, shown in Fig. 6i. Ohmni is designed to be as self-contained as possible so that it is simple and accessible enough for people to communicate with their families, even if they are unfamiliar with the technology. This makes it well suited to families who do not live nearby and to seniors who want to stay in touch. The basic functionality of Ohmni is the same as that of any other telepresence robot. A 2022 software update provided excellent audio and video streaming through the robot's camera, audio hardware and display screen.

3.1.10 QB Telepresence Robot

QB was created by a Silicon Valley-based startup called Anybots [10]. Anybots describes QB as a communication platform that lets remote workers collaborate in ways that are not possible with wall-mounted monitors in conference rooms. QB has a small LCD on top of its body that continuously shows a live broadcast of the controller's face, as shown in Fig. 6j. Remote-controlled telepresence robots have long been used to extend human access to remote areas such as space and the deep sea, and to dangerous areas such as mines and nuclear reactors.

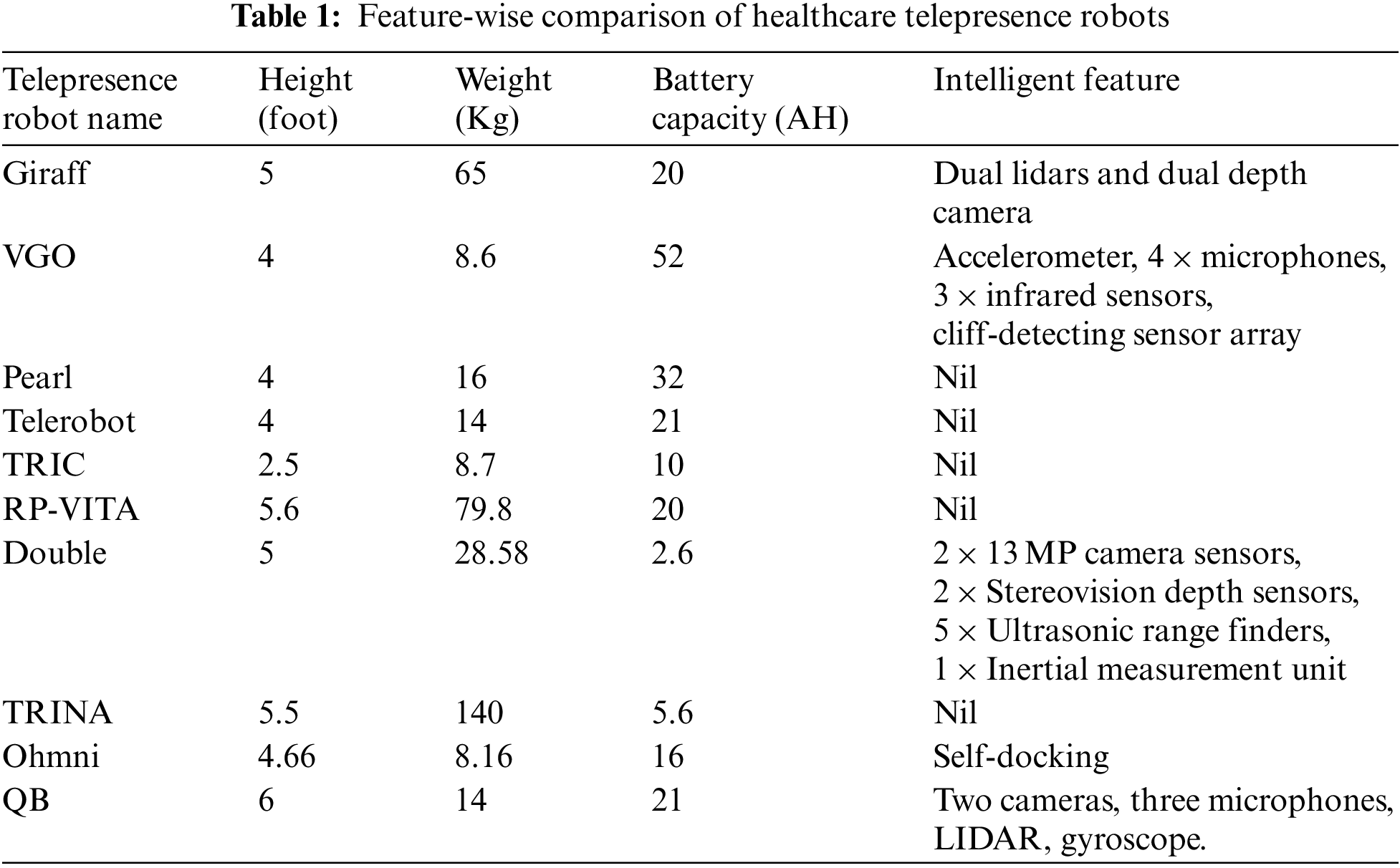

3.2 Feature-Wise Comparison of Different Telepresence Robots

Table 1 compares the telepresence robots feature by feature, giving an in-depth review of all of them.

4 Different Telepresence Robot-Controlling Techniques

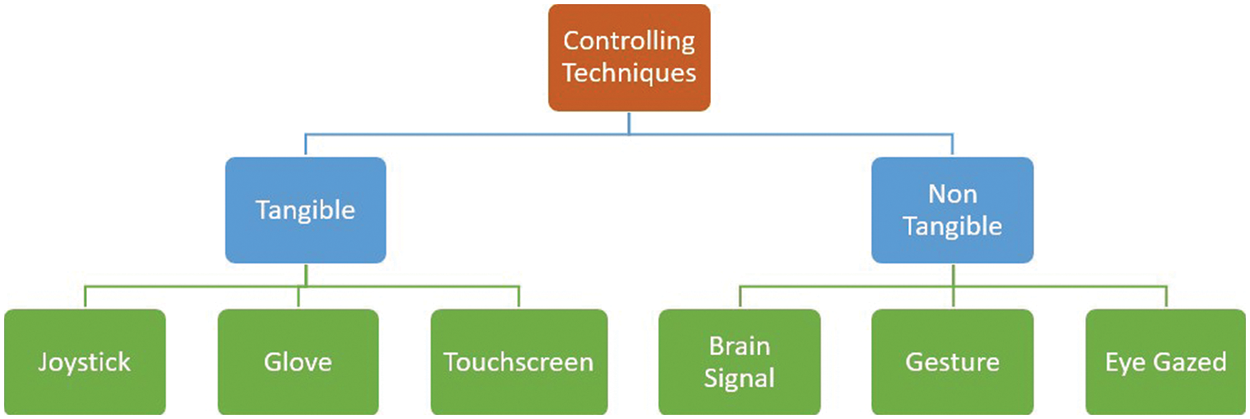

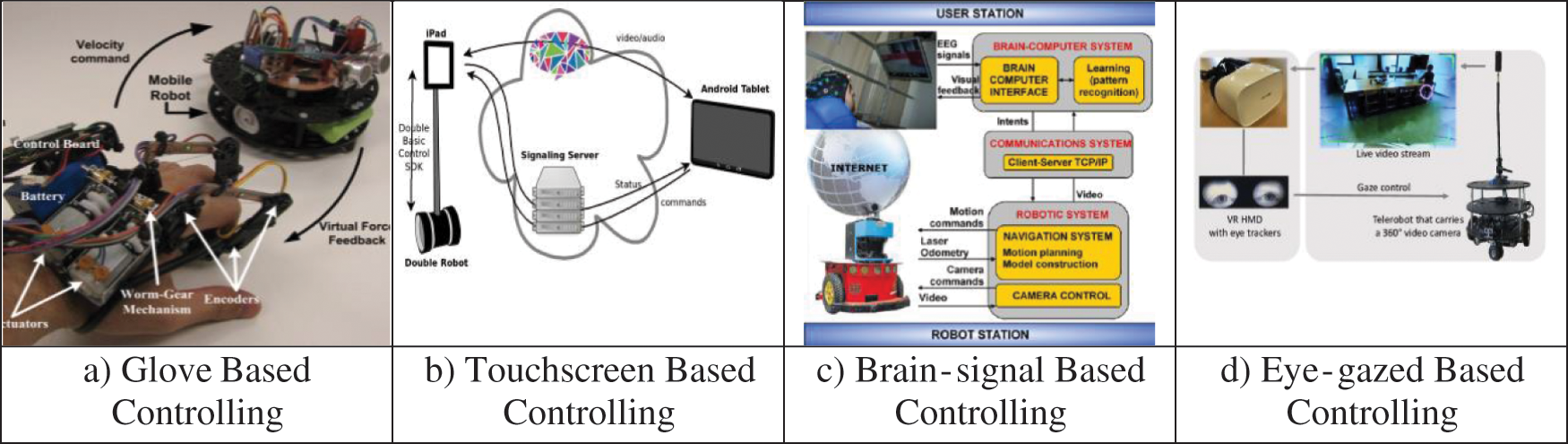

A remote telepresence robot is a semi-autonomous robotic platform that allows people to navigate a remote environment and interact with other humans there from a distant location [11]. The telepresence robot can be controlled by different techniques, as shown in Fig. 7.

Figure 7: Different types of controlling techniques for telepresence robot

Different control techniques exist for a telepresence robot to interact with other humans at a remote location. They can be categorized as tangible, nontangible, or hybrid. Tangible techniques include hardware joysticks, touch screens (smartphones or tablets) and electronic gloves. Nontangible techniques include brain-signal, gesture and eye-gaze-based control. Hybrid techniques integrate both tangible and nontangible inputs. In general, a controlling technique captures human intention, processes it and provides the proper signal to the telepresence robot. Intention can be expressed through brain signals, head movement, eye movement, speech, gestures, walking patterns and hand and finger movements. The signals from these sources are processed, and the instruction they convey is delivered to the telepresence robot, which then behaves accordingly in the remote environment. Secure information systems [12] are mandatory for managing all private data.
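The tangible/nontangible/hybrid taxonomy above can be expressed directly in code. This minimal sketch only encodes the grouping of input modalities given in the text; the modality names are hypothetical identifiers, and a real controller would attach a signal-processing stage to each modality before issuing robot commands.

```python
from enum import Enum
from typing import Set


class Category(Enum):
    TANGIBLE = "tangible"
    NONTANGIBLE = "nontangible"
    HYBRID = "hybrid"


# Modality groups as listed in the text (identifiers are illustrative).
TANGIBLE_INPUTS = {"joystick", "touchscreen", "glove"}
NONTANGIBLE_INPUTS = {"brain_signal", "gesture", "eye_gaze"}


def categorize(modalities: Set[str]) -> Category:
    """Classify a controller by the input modalities it combines."""
    has_tangible = bool(modalities & TANGIBLE_INPUTS)
    has_nontangible = bool(modalities & NONTANGIBLE_INPUTS)
    if has_tangible and has_nontangible:
        return Category.HYBRID
    if has_tangible:
        return Category.TANGIBLE
    if has_nontangible:
        return Category.NONTANGIBLE
    raise ValueError(f"no known modality in {sorted(modalities)}")
```

For example, a glove combined with eye tracking, `categorize({"glove", "eye_gaze"})`, is classified as hybrid.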

4.1 Glove Based Controlling

Reference [13] presents the implementation and experimental verification of a haptic glove, designed as a lightweight, portable, fully equipped mechatronic system that provides tactile feedback for each finger. The glove can control the navigation of a telepresence robot, as shown in Fig. 8a. Park et al. [14] proposed an intuitive operating technique that uses an inertial measurement unit (IMU) and tactile gloves to control a remote 6-DOF robotic arm. A 3GPP (3rd Generation Partnership Project) Long-Term Evolution (LTE) network and a virtual private network (VPN) were used for communication, and experiments validated the performance of the proposed system [15].

Figure 8: Different controlling techniques for telepresence robot. (a) Glove based controlling, (b) Touchscreen based controlling, (c) Brain-signal based controlling, (d) Eye-gazed based controlling
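To show how an IMU-equipped glove can become a navigation input, the sketch below converts a static accelerometer reading into hand pitch and roll and maps the dominant tilt to a movement command. It is not the method of [13] or [14]; it assumes the hand is held roughly still (so the accelerometer measures only gravity, in units of g), a hypothetical axis convention, and a hypothetical 15° dead zone so that small hand tremors do not move the robot.

```python
import math
from typing import Tuple

DEAD_ZONE_DEG = 15.0  # hypothetical: tilts smaller than this mean "stop"


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Pitch and roll in degrees from a gravity-only accelerometer reading."""
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return pitch, roll


def glove_command(ax: float, ay: float, az: float) -> str:
    """Map the dominant hand tilt to a navigation command."""
    pitch, roll = tilt_from_accel(ax, ay, az)
    if abs(pitch) < DEAD_ZONE_DEG and abs(roll) < DEAD_ZONE_DEG:
        return "stop"
    if abs(pitch) >= abs(roll):
        return "forward" if pitch > 0 else "backward"
    return "right" if roll > 0 else "left"
```

With this convention, a flat hand `(0, 0, 1)` gives "stop" and a hand tilted about 30° forward, `(-0.5, 0, 0.866)`, gives "forward". Using the dominant axis keeps the mapping to one command at a time, which suits the discrete commands of Fig. 5.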

4.2 Brain-Signal Based Controlling

Several brain-computer interface (BCI) techniques have been used to control telepresence robots [16–20]. These studies show that choosing the proper paradigm is an essential step in designing a BCI for telepresence. Sensorimotor rhythm (SMR) systems [21–24] do not require any external visual or auditory stimuli to transmit control signals to the telepresence robot, as shown in Fig. 8c. However, they demand the user's full concentration and extended training time, and they are generally difficult for people with disabilities to use. In addition, BCI systems driven by external stimuli, such as those based on steady-state visually evoked potentials (SSVEP), are routinely used in distance learning; they offer high information transfer rates (ITR) and excellent performance with few or no training sessions [25–28]. Abibullaev et al. [29] designed an endogenous paradigm based on event-related potentials (ERP), measured by EEG in healthy volunteers, to control a humanoid telepresence robot.
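The information transfer rate mentioned above is commonly computed with the Wolpaw formula, which combines the number of selectable commands N, the classification accuracy P and the time per selection. The sketch below implements that standard definition; it does not reproduce results from [25–29], and the example values are hypothetical.

```python
import math


def wolpaw_itr(n_classes: int, accuracy: float, seconds_per_selection: float) -> float:
    """Information transfer rate in bits/min using the Wolpaw definition.

    bits per selection B = log2 N + P log2 P + (1 - P) log2((1 - P) / (N - 1))
    """
    if n_classes < 2:
        raise ValueError("need at least two classes")
    if not 1.0 / n_classes <= accuracy <= 1.0:
        raise ValueError("accuracy must lie between chance level 1/N and 1")
    n, p = n_classes, accuracy
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:  # the last term vanishes when P = 1
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits * 60.0 / seconds_per_selection
```

For a hypothetical four-command SSVEP interface (forward, backward, left, right) with 90% accuracy and 2 s per selection, `wolpaw_itr(4, 0.9, 2.0)` gives about 41.2 bits/min. At chance accuracy (P = 1/N) the rate drops to zero, which is why short selection times only help when accuracy stays high.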

4.3 Gesture Based Controlling

Gesture-based control of telepresence robots is an active research topic, for example guiding the robot to pick up an object [30] and indicating the direction of motion on the ground [31]. The AIBO entertainment robot can be controlled to perform individual actions through visual gesture recognition using a self-organizing feature map [32]. Gesture recognition has also been studied over a local area network (LAN) to command a simulated mobile robot to perform simple actions such as moving, stopping and rotating left or right. Gesture-based control has also been demonstrated through a wearable sleeve interface with EMG and IMU sensors [33] and through wirelessly transmitted accelerometer signals from the user [34]. Conversely, rather than recognizing human gestures, [35] studied whole-body gestures performed by small humanoid robots, enabling playful interaction with humans from a remote location.
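Once a recognizer outputs gesture labels, they still have to be turned into the simple actions listed above (moving, stopping, rotating left or right) without reacting to every spurious detection. The sketch below assumes a hypothetical recognizer that returns a label and a confidence per frame, and a hypothetical label vocabulary; it only issues an action after the same confident gesture has been seen several frames in a row.

```python
from collections import deque
from typing import Optional

# Hypothetical gesture labels mapped to the simple actions named in the text.
GESTURE_ACTIONS = {
    "open_palm": "stop",
    "point_forward": "move",
    "swipe_left": "rotate_left",
    "swipe_right": "rotate_right",
}


class GestureCommander:
    """Emit an action only after the same confident gesture is seen `window` times in a row."""

    def __init__(self, window: int = 3, min_confidence: float = 0.8):
        self.window = window
        self.min_confidence = min_confidence
        self.recent = deque(maxlen=window)

    def update(self, label: str, confidence: float) -> Optional[str]:
        # Low-confidence recognitions are stored as None and break the streak.
        self.recent.append(label if confidence >= self.min_confidence else None)
        streak_complete = len(self.recent) == self.window and len(set(self.recent)) == 1
        if streak_complete and self.recent[0] is not None:
            return GESTURE_ACTIONS.get(self.recent[0])  # None for unknown labels
        return None
```

The window length trades responsiveness for robustness: at 30 frames per second, a window of 3 adds roughly 0.1 s of delay before a command is sent.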

4.4 Eye-Gazed Based Controlling

Eye gaze can serve as an input technique for controlling telepresence robots and interacting socially with people in a remote environment, improving the quality of communication. The primary concern was to develop a hands-free, easy-to-use controlling technique for telepresence systems that is straightforward, compact, discreet and convenient. The authors of [36] developed a system for gaze-controlled telepresence robots built on the open-source Robot Operating System (ROS), as shown in Fig. 8d.
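A common way to turn gaze into steering is to divide the live video into regions and require the user to dwell on a region before a command is issued, which mitigates the "Midas touch" problem of triggering commands merely by looking. The sketch below is not the system of [36]; it assumes gaze coordinates normalized to the video frame and hypothetical dead-zone and dwell values.

```python
from typing import Optional


def gaze_to_command(x: float, y: float, dead_zone: float = 0.15) -> str:
    """Map a normalized gaze point ((0, 0) top-left, (1, 1) bottom-right) to a command."""
    dx, dy = x - 0.5, y - 0.5
    if abs(dx) <= dead_zone and abs(dy) <= dead_zone:
        return "stop"  # looking at the center of the video means "hold"
    if abs(dx) > abs(dy):
        return "right" if dx > 0 else "left"
    return "forward" if dy < 0 else "backward"


class DwellFilter:
    """Pass a command through only after it has been held for `dwell_s` seconds."""

    def __init__(self, dwell_s: float = 0.8):
        self.dwell_s = dwell_s
        self.candidate: Optional[str] = None
        self.since = 0.0

    def update(self, command: str, t: float) -> Optional[str]:
        if command != self.candidate:
            self.candidate, self.since = command, t  # gaze moved: restart the timer
            return None
        return command if t - self.since >= self.dwell_s else None
```

In use, each gaze sample is first mapped with `gaze_to_command` and then passed to `DwellFilter.update` with its timestamp; only non-`None` results are sent to the robot.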

4.5 Joystick Based Controlling

Previous studies have developed teleoperation systems for different types of remotely operated robots [37–40]. In Japan's Humanoid Robotics Project (HRP) [41–43], Neo Ee Sian and colleagues proposed a teleoperation technique that uses a physical joystick to control the lower body of a humanoid robot. Lu et al. [44] introduced a joystick-based teleoperation system for humanoid robots. A humanoid robot's body comprises a head, torso, arms and legs with more than three degrees of freedom (DOF). Remotely controlling a humanoid robot with a joystick has several advantages over a keyboard or mouse [45–47]. For example, a joystick is more comfortable and easier to operate, and its handheld form makes it more efficient and adaptable [48].
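For a wheeled telepresence base, rather than the humanoid robots in [44], a two-axis joystick is typically mapped to left and right wheel speeds with "arcade" mixing: the throttle axis drives both sides equally and the turn axis adds to one side and subtracts from the other. The sketch below assumes a differential-drive robot, axis values in [-1, 1] and a hypothetical dead zone for stick drift; it is an illustration, not the mapping of any system cited here.

```python
from typing import Tuple


def apply_dead_zone(value: float, dead_zone: float = 0.05) -> float:
    """Ignore small stick deflections caused by drift or a resting thumb."""
    return 0.0 if abs(value) < dead_zone else value


def arcade_mix(x: float, y: float) -> Tuple[float, float]:
    """Map joystick axes to (left, right) wheel speeds in [-1, 1].

    x: turn axis (-1 full left .. +1 full right)
    y: throttle axis (-1 full backward .. +1 full forward)
    """
    x, y = apply_dead_zone(x), apply_dead_zone(y)
    left, right = y + x, y - x
    # Scale down together so both outputs stay in range and the turn ratio is kept.
    scale = max(1.0, abs(left), abs(right))
    return left / scale, right / scale
```

Pushing the stick straight ahead gives `(1.0, 1.0)`, pushing it fully right turns in place with `(1.0, -1.0)`, and a diagonal `(1.0, 1.0)` input gives `(1.0, 0.0)`, a pivot around the right wheel.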

4.6 Touchscreen Based Controlling

Using technology and communications equipment in healthcare environments is not new. However, the COVID-19 pandemic required communication between the medical team and patients without direct contact, so mobile robots were adopted as a solution, as shown in Fig. 8b. Companies such as Ohmni Labs offer remote control capability for telepresence robots to perform different tasks in remote environments. This is useful because it lets nurses and doctors avoid commuting and monitor patients remotely during a COVID-19 crisis [49,50].

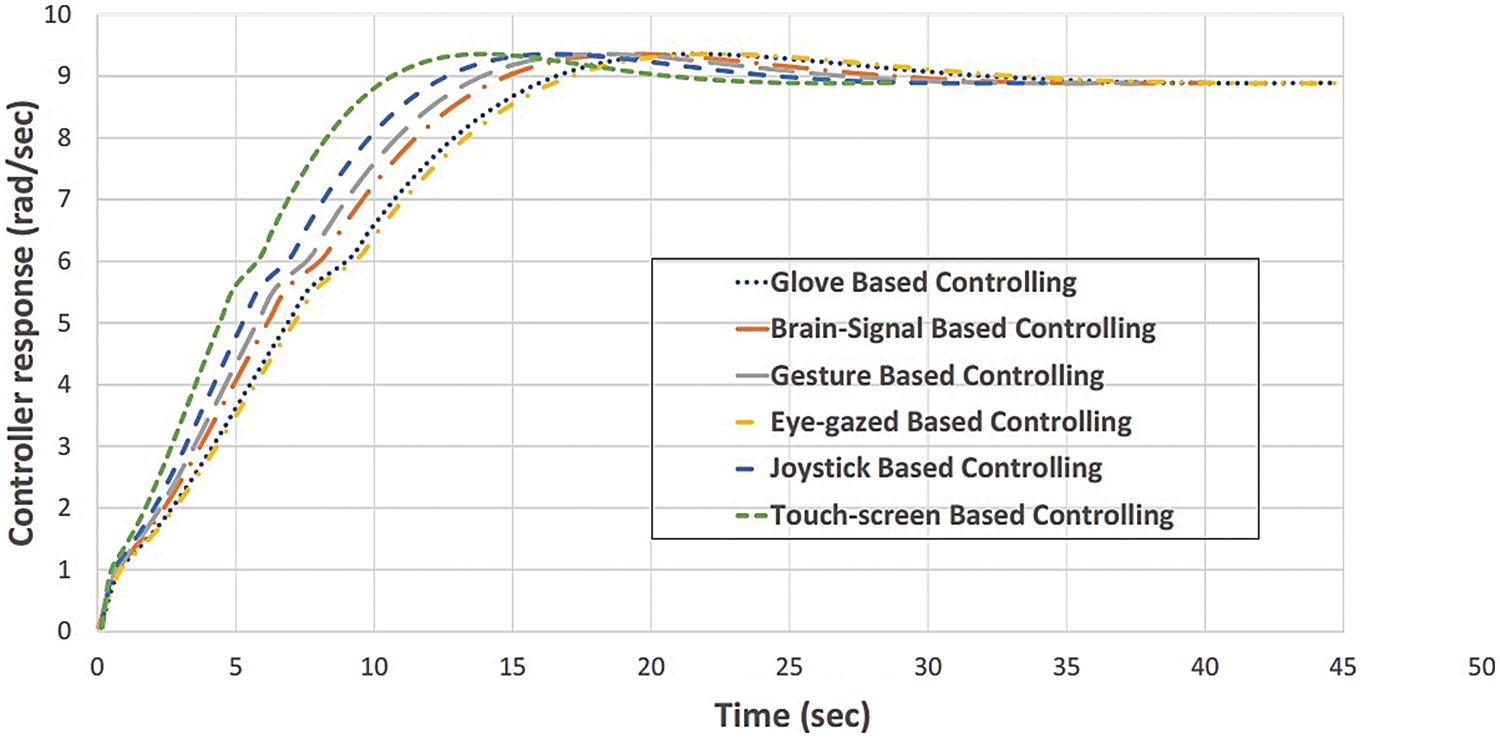

4.7 Response Time of Different Controlling Techniques

Response time is defined as the time from the occurrence of a control event at a remote, distant site, through its detection and recognition by the robot and the resulting decision, to the robot's response under real-time constraints. Many approaches based on different classes of deterministic and stochastic methods have been proposed [51]. Fig. 9 shows the simulated response time of the telepresence robot's motor rotation (in rad/s) for each of the controlling techniques compared above. The responses differ clearly between techniques, indicating that each controlling technique yields a different response time. Here, response time is the time elapsed from the moment the telepresence robot receives an instruction from the remote controller until the commanded behavior, measured as motor rotation in rad/s, is successfully activated.

Figure 9: Controller response time of different controlling techniques
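The response time defined above can be estimated from a recorded or simulated motor speed trace. The sketch below assumes a first-order motor model with a pure transport delay standing in for each technique's detection, recognition and transmission latency, and it measures response time as the moment the speed first reaches 90% of the commanded rad/s. The model, the 90% criterion and the latency values are hypothetical assumptions, not the simulation behind Fig. 9.

```python
import math
from typing import List, Optional, Sequence, Tuple


def simulate_motor(latency_s: float, tau_s: float, omega_target: float,
                   dt: float = 0.001, duration_s: float = 1.0) -> Tuple[List[float], List[float]]:
    """Assumed first-order motor model: omega = target * (1 - exp(-(t - latency) / tau))."""
    times, omegas = [], []
    for i in range(int(round(duration_s / dt)) + 1):
        t = i * dt
        if t < latency_s:
            omega = 0.0  # command still being detected, recognized or transmitted
        else:
            omega = omega_target * (1.0 - math.exp(-(t - latency_s) / tau_s))
        times.append(t)
        omegas.append(omega)
    return times, omegas


def response_time(times: Sequence[float], omegas: Sequence[float], t_command: float,
                  omega_target: float, fraction: float = 0.9) -> Optional[float]:
    """Time from t_command until omega first reaches fraction * omega_target (target > 0)."""
    threshold = fraction * omega_target
    for t, omega in zip(times, omegas):
        if t >= t_command and omega >= threshold:
            return t - t_command
    return None  # target not reached within the recorded window


# Placeholder latencies for two unnamed techniques (not values from Fig. 9).
for name, latency in {"technique A": 0.05, "technique B": 0.20}.items():
    ts, ws = simulate_motor(latency, tau_s=0.05, omega_target=5.0)
    print(name, response_time(ts, ws, 0.0, 5.0))
```

Under this model the 90% response time is the latency plus τ·ln(10), so differences between techniques come almost entirely from their input-processing and communication delays when the motor is the same.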

5 Telepresence Robot General Communication Protocols

The telepresence robot uses wireless communication to send control commands and visual and audio data so that it can drive and behave socially in a remote, distant environment; a forward error correction (FEC)-based control and transmission scheme for video and audio [52] can keep this communication between the robot and the host controller stable. Telepresence robotic systems are becoming popular because they provide two-way voice and video communication over protocols ranging from Bluetooth, LoRa and Wi-Fi to the internet as a backbone [53]. However, wireless communication is more prone to packet loss and transmission delay than wired communication. Achieving proper audio and video quality while interacting with the telepresence robot system requires a reliable connection without high latency or data loss.
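The simplest form of forward error correction sends one extra parity packet per group: the bytewise XOR of all packets in the group. If exactly one packet of the group is lost, the receiver rebuilds it from the others and the parity without waiting for a retransmission, which matters for live audio and video. The sketch below illustrates this single-parity idea with equal-length packets; it is not necessarily the scheme of [52], and grouping, packet sizes and headers are left as assumptions.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(group: List[bytes]) -> bytes:
    """Parity packet = XOR of all equal-length packets in the group."""
    if not group or len({len(p) for p in group}) != 1:
        raise ValueError("a FEC group needs one or more packets of equal length")
    return reduce(xor_bytes, group)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[Optional[bytes]]:
    """Rebuild at most one lost packet (marked None) from the others plus the parity."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("single-parity FEC can repair only one loss per group")
    repaired = list(received)
    if missing:
        # XOR of the parity with every surviving packet leaves exactly the lost one.
        survivors = [p for p in received if p is not None]
        repaired[missing[0]] = reduce(xor_bytes, survivors, parity)
    return repaired
```

The design trade-off is overhead versus protection: one parity packet per group of k packets costs 1/k extra bandwidth and repairs one loss per group, while burst losses need interleaving or stronger codes.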

6 Discussion and Future Developments

Today, the easiest way to design and deploy a telepresence robot is to establish audio and video communication between the robot at a distant remote site and the main host controller [54]. Standard applications of today's telepresence robots are often limited to video, audio, or presentation-screen sharing. This can be sufficient, for example, in distance learning and in activities based on communication and visual experience. However, some areas require high accuracy, easy controlling techniques and flawless technology for maneuvering in remote areas, for example when telepresence robotic technology is extended with medical devices.

This paper cannot fully represent the long-term use of telepresence in remote environments because several factors remain open: controlling telepresence robots in outdoor environments, unknown indoor environments, environments with dynamic moving obstacles, height-restricted environments, long-duration operation and complex environments, as well as delays in control commands from the host controller and disconnection of the robot from the host controller. The operators of general telepresence robots include both technology-oriented and non-technology-oriented people; however, previous studies have not considered non-technology-oriented operators controlling telepresence robots remotely. A simple on-off control cannot cover all the behaviors a remote telepresence robot must display. Moreover, despite their simple user control techniques, previous telepresence robot controllers offer no control over expressive behavior. We therefore see a need to rationalize the design of the instructions used to control telepresence robots. These observations point to new features that could be added to telepresence robots and to more specific controlling techniques that will be required.

6.1 Design Issues Regarding the Interconnection of a Telepresence Robot

Our study of different telepresence robots suggests that, from the controller's perspective, the robot's movement feels unnatural for holding a conversation in a remote environment. People interacting with the telepresence robot see only a flat image of the controller's face streamed live from the controller's camera, which does not feel natural. Likewise, the controller's voice, reproduced by the robot's speaker at the remote location, does not sound as natural as real face-to-face interaction because of environmental noise and distortion in the digital communication channel.

6.2 Lack of Human-Like Maneuverability in a Telepresence Robot

Telepresence robots are driven by DC motors, which lack the feel of real human-like movement. The motorized movement and the control signals from the host controller do not reproduce human-like motion or turning, and motor-based robots lack smooth movement in both indoor and remote outdoor environments. As a result, real-time interaction with telepresence robots lacks a human-like feel because of their rigid movement.

6.3 Lack of Wireless Communication Lag Management with a Telepresence Robot

Another issue is communication lag, or the case in which a telepresence robot roaming an unknown environment is disconnected from the internet for any reason. The robot is then on its own in the remote environment and behaves unattended, which may damage the robot itself or its surroundings. Although communication delays or loss of connectivity between the robot and the host controller may last only a few seconds, they can still cause serious harm to the robot or its environment.
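A common mitigation for the unattended-robot problem is a robot-side watchdog (often called a dead-man switch): the robot keeps executing the last command only while commands keep arriving, and stops once none has arrived within a timeout. The following Python sketch is hypothetical and is not taken from any robot reviewed here; the class name `CommandWatchdog`, the (linear, angular) velocity command format, and the 0.5 s default timeout are all assumptions.

```python
import time
from typing import Callable, Optional, Tuple

# (linear velocity in m/s, angular velocity in rad/s)
Velocity = Tuple[float, float]
STOP: Velocity = (0.0, 0.0)


class CommandWatchdog:
    """Robot-side fail-safe: output a stop command when host commands go stale.

    Hypothetical sketch; the default 0.5 s timeout is an assumed value.
    """

    def __init__(self, timeout_s: float = 0.5,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock                    # injectable so the logic can be tested
        self.last_cmd: Velocity = STOP
        self.last_rx: Optional[float] = None  # arrival time of the latest command

    def on_command(self, cmd: Velocity) -> None:
        """Record a velocity command received from the host controller."""
        self.last_cmd = cmd
        self.last_rx = self.clock()

    def output(self) -> Velocity:
        """Velocity to send to the motors in the current control cycle."""
        if self.last_rx is None:
            return STOP  # no command received yet
        if self.clock() - self.last_rx > self.timeout_s:
            return STOP  # link delayed or lost: hold still instead of driving blind
        return self.last_cmd


if __name__ == "__main__":
    now = [0.0]  # simulated clock, in seconds
    wd = CommandWatchdog(timeout_s=0.5, clock=lambda: now[0])
    wd.on_command((0.3, 0.0))
    now[0] = 0.2
    print(wd.output())  # fresh command: (0.3, 0.0)
    now[0] = 1.0
    print(wd.output())  # 0.8 s without a command: (0.0, 0.0)
```

Stopping is the simplest safe fallback. A robot with onboard sensing could instead slow down or switch to local obstacle avoidance until the link to the host controller recovers.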

6.4 Lack of Assistance in a Telepresence Robot

A telepresence robot at a distant location transmits video of the remote environment with a limited field of view. For example, the robot may be moving straight ahead with no obstacle visible in front, yet still damage a surrounding object in the remote environment that the host controller cannot see. This can happen whether the camera is mounted high or low on the robot. Without 360-degree surround coverage, the telepresence robot can collide with any static or dynamic object moving around it.
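The blind strip in front of the robot can be estimated with simple geometry. Assuming a flat floor and a pinhole camera mounted at height h, with its optical axis tilted down by θ below the horizontal and a vertical field of view φ, the lowest ray in the image meets the floor at a horizontal distance d = h / tan(θ + φ/2). Floor-level objects nearer than d are not visible to the operator. The Python sketch below computes d; the function name and the example camera parameters are hypothetical, and occlusion by the robot's own body is ignored.

```python
import math


def nearest_visible_floor_distance(height_m: float, tilt_down_deg: float,
                                   vfov_deg: float) -> float:
    """Horizontal distance from the camera to the closest floor point in view.

    Assumes a flat floor and a pinhole camera whose optical axis is tilted
    `tilt_down_deg` below horizontal; occlusion by the robot body is ignored.
    Floor-level objects closer than the returned distance are not visible.
    """
    lower_edge = math.radians(tilt_down_deg + vfov_deg / 2.0)  # steepest ray in the image
    if lower_edge <= 0.0:
        return math.inf  # lowest ray is at or above the horizon: floor never visible
    if lower_edge >= math.pi / 2:
        return 0.0  # view reaches straight down: no blind strip in front
    return height_m / math.tan(lower_edge)


if __name__ == "__main__":
    # Hypothetical camera: 45 degree vertical field of view, tilted 15 degrees down.
    for h in (1.5, 0.5):
        d = nearest_visible_floor_distance(h, 15.0, 45.0)
        print(f"camera at {h} m: floor hidden within {d:.2f} m")
```

With the assumed 15° tilt and 45° vertical field of view, a camera at 1.5 m leaves roughly the first 1.95 m of floor unseen, while a camera at 0.5 m leaves roughly 0.65 m unseen. Lowering the camera shrinks the blind strip but limits the view of objects above camera height, which reflects the trade-off between high and low camera positions noted above.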

This paper categorizes telepresence robots that can be used in healthcare environments and focuses on the different robots available. Various control techniques are discussed in detail to provide a good understanding of how a telepresence robot is controlled in a remote, unknown environment. Most of the control techniques reviewed can interact with telepresence robots efficiently and robustly, except that they lack control when communication is delayed or disconnected. Based on the review, none of the existing telepresence robots can behave intelligently in accordance with the operator's intent when communication issues occur. This study compares control techniques to identify those that are more effective and efficient for interacting with telepresence robots, whether for specific purposes or in general. It also highlights features that existing telepresence robots lack: design modifications that support more natural human interaction, safe rather than chaotic behavior when wireless communication is delayed or disconnected, obstacle avoidance, and peripheral attachments for more human-like behavior in a remote environment. Continued development along these lines will help researchers build more capable telepresence robots for healthcare.

Acknowledgement: This paper presents the categorization of tele-assisted robots used in a healthcare environment.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare they have no conflicts of interest to report regarding the present study.

References

1. Y. Alotaibi and A. F. Subahi, “New goal-oriented requirements extraction framework for e-health services: A case study of diagnostic testing during the COVID-19 outbreak,” Business Process Management Journal, vol. 28, no. 1, pp. 273–292, 2022.

2. S. M. El-Sadig, L. A. Fahal, Z. B. Abdelrahim, E. S. Ahmed, N. S. Mohamed et al., “Impact of COVID-19 on doctors and healthcare providers during the pandemic in Sudan,” Transactions of the Royal Society of Tropical Medicine and Hygiene, vol. 115, no. 6, pp. 577–578, 2021.

3. T. Ginoya, Y. Maddahi and K. Zareinia, “A historical review of medical robotic platforms,” Hindawi Journal of Robotics, vol. 2021, pp. 13, 2021.

4. R. A. Beasley, “Medical robots: Current systems and research directions,” Hindawi Journal of Robotics, vol. 2012, pp. 14, 2012.

5. Y. Alotaibi, “Business process modelling challenges and solutions: A literature review,” Journal of Intelligent Manufacturing, vol. 27, no. 4, pp. 701–723, 2016.

6. J. H. Lonner, J. Zangrilli and S. Saini, “Emerging robotic technologies and innovations for hospital process improvement,” in Robotics in Knee and Hip Arthroplasty: Current Concepts Techniques and Emerging Uses, Springer, Cham, chapter no. 23, section no. 4, pp. 233–243, 2019. [Online]. Available: https://link.springer.com/chapter/10.1007/978-3-030-16593-2_23.

7. M. Pollack, S. Engberg, S. Thrun, L. Brown, D. Colbry et al., “Pearl: A mobile robotic assistant for the elderly,” in AAAI Workshop on Automation as Caregiver, Edmonton, Alberta, Canada, pp. 85–92, 2002.

8. T. Tsai, Y. Hsu, A. Ma, T. King and C. Wu, “Developing a telepresence robot for interpersonal communication with the elderly in a home environment,” Telemedicine Journal & E-Health, vol. 13, no. 4, pp. 407–424, 2007.

9. Z. Li, P. Moran, Q. Dong, R. J. Shaw and K. Hauser, “Development of a tele-nursing mobile manipulator for remote care-giving in quarantine areas,” in IEEE Int. Conf. on Robotics and Automation (ICRA), Singapore, pp. 3581–3586, 2017.

10. E. Guizzo, “When my avatar went to work,” IEEE Spectrum, vol. 47, no. 9, pp. 26–50, 2010.

11. T. W. Butler, G. K. Leong and L. N. Everett, “The operations management role in hospital strategic planning,” Journal of Operations Management, vol. 14, no. 2, pp. 137–156, 1996.

12. Y. Alotaibi, “Automated business process modelling for analyzing sustainable system requirements engineering,” in 6th Int. Conf. on Information Management (ICIM), London, UK, pp. 157–161, 2020.

13. Z. Ma and P. Ben-Tzvi, “RML glove—An exoskeleton glove mechanism with haptics feedback,” IEEE/ASME Transactions on Mechatronics, vol. 20, no. 2, pp. 641–652, 2015.

14. S. Park, Y. Jung and J. Bae, “A tele-operation interface with a motion capture system and a haptic glove,” in 13th Int. Conf. on Ubiquitous Robots and Ambient Intelligence (URAI), Xi'an, China, pp. 544–549, 2016.

15. J. Suh, M. Amjadi, I. Park and H. Yoo, “Finger motion detection glove toward human–machine interface,” in IEEE SENSORS, Busan, South Korea, pp. 1–4, 2015.

16. C. Escolano, J. M. Antelis and J. Minguez, “A telepresence mobile robot controlled with a noninvasive brain–computer interface,” IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 42, no. 3, pp. 793–804, 2012.

17. L. Tonin, R. Leeb, M. Tavella, S. Perdikis and J. D. R. Millán, “The role of shared-control in BCI-based telepresence,” in IEEE Int. Conf. on Systems, Man and Cybernetics, Istanbul, Turkey, pp. 1462–1466, 2010.

18. A. Chella, E. Pagello, E. Menegatti, R. Sorbello, S. M. Anzalone et al., “A BCI teleoperated museum robotic guide,” in Int. Conf. on Complex, Intelligent and Software Intensive Systems, CISIS, Fukuoka, Japan, pp. 783–788, 2009.

19. C. Escolano, A. R. Murguialday, T. Matuz, N. Birbaumer and J. Minguez, “A telepresence robotic system operated with a P300-based brain–computer interface: Initial tests with ALS patients,” in Proc. Annu. Int. Conf. IEEE Eng. Med. Biol., Buenos Aires, Argentina, pp. 4476–4480, 2010.

20. S.-J. Yun, M.-C. Lee and S.-B. Cho, “P300 BCI based planning behavior selection network for humanoid robot control,” in Proc. 9th Int. Conf. Natural Comput. (ICNC), Shenyang, China, pp. 354–358, 2013.

21. R. Leeb, L. Tonin, M. Rohm, L. Desideri, T. Carlson et al., “Towards independence: A BCI telepresence robot for people with severe motor disabilities,” Proceedings of the IEEE, vol. 103, no. 6, pp. 969–982, 2015.

22. Y. Chae, S. Jo and J. Jeong, “Brain-actuated humanoid robot navigation control using asynchronous brain–computer interface,” in Proc. 5th Int. IEEE/EMBS Conf. on Neural Engineering (NER), Cancun, Mexico, pp. 519–524, 2011.

23. W. Li, C. Jaramillo and Y. Li, “Development of mind control system for humanoid robot through a brain computer interface,” in Proc. 2nd Int. Conf. Intelligent System Design and Engineering Application (ISDEA), Sanya, China, pp. 679–682, 2012.

24. A. Thobbi, R. Kadam and W. Sheng, “Achieving remote presence using a humanoid robot controlled by a non-invasive BCI device,” International Journal of Artificial Intelligence and Machine Learning, vol. 10, pp. 41–45, 2010.

25. A. Güneysu and H. L. Akin, “An SSVEP based BCI to control a humanoid robot by using portable EEG device,” in Proc. 35th Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. (EMBC), Osaka, Japan, pp. 6905–6908, 2013.

26. D. Petit, P. Gergondet, A. Cherubini and A. Kheddar, “An integrated framework for humanoid embodiment with a BCI,” in Proc. IEEE Int. Conf. Robot. Automat. (ICRA), Seattle, WA, USA, pp. 2882–2887, 2015.

27. M. Bryan, J. Green, M. Chung, L. Chang, R. Scherer et al., “An adaptive brain–computer interface for humanoid robot control,” in Proc. 11th IEEE-RAS Int. Conf. Humanoid Robots, Bled, Slovenia, pp. 199–204, 2011.

28. E. Tidoni, P. Gergondet, A. Kheddar and S. M. Aglioti, “Audio-visual feedback improves the BCI performance in the navigational control of a humanoid robot,” Front. Neurorobot., vol. 8, pp. 20, 2014.

29. B. Abibullaev, A. Zollanvari, B. Saduanov and T. Alizadeh, “Design and optimization of a BCI-driven telepresence robot through programming by demonstration,” IEEE Access, vol. 7, pp. 111625–111636, 2019.

30. J. J. Steil, G. Heidemann, J. Jockusch, R. Rae, N. Jungclaus et al., “Guiding attention for grasping tasks by gestural instruction: The gravis-robot architecture,” in Proc. IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, Maui, HI, USA, pp. 1570–1577, 2001.

31. S. Abidi, M. Williams and B. Johnston, “Human pointing as a robot directive,” in Proc. ACM/IEEE Int. Conf. Human–Robot Interaction, Tokyo, Japan, pp. 67–68, 2013.

32. T. Hashiyama, K. Sada, M. Iwata and S. Tano, “Controlling an entertainment robot through intuitive gestures,” in Proc. IEEE Int. Conf. Systems, Man and Cybernetics, Taipei, Taiwan, pp. 1909–1914, 2006.

33. M. T. Wolf, C. Assad, M. T. Vernacchia, J. Fromm and H. L. Jethani, “Gesture-based robot control with variable autonomy from the JPL BioSleeve,” in Proc. IEEE Int. Conf. Robotics and Automation, Karlsruhe, Germany, pp. 1160–1165, 2013.

34. X. H. Wu, M. C. Su and P. C. Wang, “A hand-gesture-based control interface for a car-robot,” in Proc. IEEE/RSJ Int. Conf. Intelligent Robots and Systems, Taipei, Taiwan, pp. 4644–4648, 2010.

35. M. D. Cooney, C. Becker-Asano, T. Kanda, A. Alissandrakis and H. Ishiguro, “Full-body gesture recognition using inertial sensors for playful interaction with small humanoid robot,” in Proc. IEEE/RSJ Int. Conf. Intelligent Robots and Systems, Taipei, Taiwan, pp. 2276–2282, 2010.

36. J. P. Hansen, A. Alapetite, M. Thomsen, Z. Wang, K. Minakata et al., “Head and gaze control of a telepresence robot with an HMD,” in Proc. of the ACM Symposium on Eye Tracking Research & Applications, New York, NY, USA, pp. 82, 2018.

37. H. Hasunuma, M. Kobayashi, H. Moriyama, T. Itoko, Y. Yanagihara et al., “A tele-operated humanoid robot drives a lift truck,” in IEEE Int. Conf. on Robotics & Automation, Washington, DC, USA, pp. 2246–2252, 2002.

38. A. Monferrer and D. Bonyuet, “Cooperative robot teleoperation through virtual reality interfaces,” in IEEE Proc. of the Sixth Int. Conf. on Information Visualisation (IV'02), London, UK, pp. 243–248, 2002.

39. I. R. Belousov, R. Chellali and G. J. Clapworthy, “Virtual reality tools for internet robotics,” in IEEE Int. Conf. on Robotics & Automation, Seoul, South Korea, pp. 1878–1883, 2001.

40. S. Kagami, J. J. Kuffner, K. Nishiwaki, T. Sugihara, T. Michikata et al., “Design and implementation of remotely operation interface for humanoid robot,” in IEEE Int. Conf. on Robotics & Automation, Seoul, South Korea, pp. 401–406, 2006.

41. N. E. Sian, K. Yokoi, S. Kajita and K. Tanie, “Whole body teleoperation of a humanoid robot integrating operator's intention and robot's autonomy—An experimental verification,” in IEEE Int. Conf. on Intelligent Robots and Systems, Las Vegas, NV, USA, pp. 1651–1656, 2003.

42. N. E. Sian, K. Yokoi, S. Kajita, K. Tanie and F. Kanehiro, “Whole body teleoperation of a humanoid robot—A method of integrating operator's intention and robot's autonomy,” in IEEE Int. Conf. on Robotics & Automation, Taipei, Taiwan, pp. 1651–1656, 2003.

43. N. E. Sian, K. Yokoi, S. Kajita and K. Tanie, “Whole body teleoperation of a humanoid robot-development of a simple master device using joystick,” in IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, EPFL, Lausanne, Switzerland, pp. 2569–2574, 2002.

44. Y. Lu, Q. Huang, M. Li, X. Jiang and M. Keerio, “A friendly and human-based teleoperation system for humanoid robot using joystick,” in 7th World Congress on Intelligent Control and Automation, Chongqing, China, pp. 2283–2288, 2008.

45. T. Carlson, L. Tonin, S. Perdikis, R. Leeb and J. D. R. Millán, “A hybrid BCI for enhanced control of a telepresence robot,” in 35th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, pp. 3097–3100, 2013.

46. G. Pfurtscheller, B. Allison, G. Bauernfeind, C. Brunner, T. S. Escalante et al., “The hybrid BCI,” Front. Neurosci., vol. 4, pp. 42, 2010.

47. T. Carlson and Y. Demiris, “Collaborative control for a robotic wheelchair: Evaluation of performance, attention and workload,” IEEE Transactions on Systems, Man and Cybernetics, Part B (Cybernetics), vol. 42, no. 3, pp. 876–888, 2012.

48. T. Carlson and Y. Demiris, “Collaborative control in human wheelchair interaction reduces the need for dexterity in precise manoeuvres,” in ACM/IEEE HRI Robot. Helpers User Interact. Interfaces Companions Assistive Therapy Robot, Amsterdam, NL, pp. 59–66, 2008.

49. T. Carlson and Y. Demiris, “Increasing robotic wheelchair safety with collaborative control: Evidence from secondary task experiments,” in Proc. IEEE ICRA, Anchorage, AK, USA, pp. 5582–5587, 2010.

50. T. Carlson and Y. Demiris, “Using visual attention to evaluate collaborative control architectures for human robot interaction,” in Proc. New Frontiers in Human Robot Interaction a Symposium at AISB, Edinburgh, Scotland, pp. 38–43, 2009.

51. Y. Alotaibi, “A new meta-heuristics data clustering algorithm based on Tabu search and adaptive search memory,” Symmetry, vol. 14, no. 3, pp. 623–637, 2022.

52. Y. Alotaibi, “A new multi-path forward error correction (FEC) control scheme with path interleaving for video streaming,” in IEEE 10th Int. Conf. on Industrial Electronics and Applications (ICIEA), Auckland, New Zealand, pp. 1655–1660, 2015.

53. Y. Xu, C. Yu, J. Li and Y. Liu, “Video telephony for end-consumers: Measurement study of Google+, iChat, and Skype,” in ACM Conf. on Internet Measurement Conf., Boston, Massachusetts, USA, pp. 371–384, 2012.

54. L. Almeida, P. Menezes and J. Dias, “Telepresence social robotics towards co-presence: A review,” Applied Sciences, vol. 12, no. 11, pp. 5557, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
