Open Access

ARTICLE

CVIP-Net: A Convolutional Neural Network-Based Model for Forensic Radiology Image Classification

Department of Artificial Intelligence, Faculty of Computing, The Islamia University of Bahawalpur, Bahawalpur, 63100, Pakistan

* Corresponding Author: Ghulam Gilanie. Email:

Computers, Materials & Continua 2023, 74(1), 1319-1332. https://doi.org/10.32604/cmc.2023.032121

Received 07 May 2022; Accepted 29 June 2022; Issue published 22 September 2022

Abstract

Automated and autonomous decisions made by image classification systems have essential applicability in the modern age. Image-based decisions are commonly made through explicit or automatic feature engineering of images. In forensic radiology, automated image-based decisions significantly advance the automation of various tasks. This study aims to assist forensic radiology in estimating the biological profile when only bones are left. A benchmark dataset from the Radiological Society of North America (RSNA) has been used for research and experiments. Additionally, a locally developed dataset has been used to cross-validate the results. A Convolutional Neural Network (CNN)-based model named computer vision and image processing-net (CVIP-Net) has been proposed to learn and classify image features. Experiments have also been performed on state-of-the-art pre-trained models, namely alex_net, inceptionv_3, google_net, Residual Network (resnet)_50, and Visual Geometry Group (VGG)-19. Experiments showed that the proposed CNN model is more accurate than the other models when panoramic dental x-ray images are used to identify age and gender. For age estimation on the locally developed dataset, the specially designed CNN-based model achieved an accuracy of 98.90%, a specificity of 97.99%, a sensitivity of 99.34%, and an Area under the Curve (AUC) value of 0.99. For gender estimation on the local dataset, the proposed model achieved 99.57%, 97.67%, 98.99%, and 0.98 in terms of accuracy, specificity, sensitivity, and AUC-value, respectively. For age estimation on the RSNA dataset, it achieved 96.80%, 96.80%, 97.03%, and 0.99 in terms of accuracy, specificity, sensitivity, and AUC-value, respectively.

Despite several machine-learning issues, autonomous decisions based upon image classification have essential applicability in modern society to make human life more comfortable. An image classification system is a system that inputs an image and classifies it in one of the targeted classes. Several machine-learning models are being used to assist in decision-making for various industrial applications.

Identity is an essential attribute of every human being, living or dead, and it is the only attribute that differentiates one person from another. All proceedings of the forensics department, law enforcement agencies, courts, and other official departments depend on facts about human identity. Identifying data may include the face, hair, fingerprints, skin color, the retina of the eye, or the personal possessions of living or dead persons. Identifying a deceased person becomes difficult when fingerprints or other physical characteristics are lost because of partial or complete (only bones) decomposition of the body. This can happen because of natural disasters such as earthquakes and tsunamis, as well as blasts, burning, and criminal cases. In October 2005, an earthquake in Pakistan with a magnitude of 7.6 and a Mercalli rating of VIII, which also affected neighboring nations including Afghanistan, Tajikistan, and China, caused 75000 casualties [1]. Multiple deceased bodies were incorrectly identified and temporarily buried. Officials associated the personal possessions of the dead with the information provided by plaintiffs. No autopsy, X-Ray fingerprinting, or deoxyribonucleic acid (DNA) analysis could be performed for identification. Another incident occurred on 25 June 2017 in Ahmedpur East, Punjab, Pakistan, when an oil tanker overturned, spilling 50,000 liters of petrol along the road. A crowd from nearby villages gathered to collect the petrol, but it was accidentally ignited, and the resulting explosion burned 219 people and injured several others [2]. All the dead bodies lost their identity. More than 120 unidentified victims were buried in a mass grave. The Government of Pakistan was to pay Rs. 2 million to the guardian or parent of each victim. More than 10,000 individuals representing various families came forward to make a claim. Because only bones remained, identification was once again a challenging task.

Between 2001 and 2010, a total of 63898 people were killed in bomb and suicide attacks, including 22657 civilians, 7127 security personnel, and 34114 terrorists and insurgents [3]. Terrorists and non-terrorists alike lost their identities. In criminal cases, especially rape cases involving children and women, the criminals bury the dead bodies.

In scenarios where only bones are left, identifying a person from the remains becomes a significant problem. Usually, the first claimant is the police department, which refers the discovered remains to a doctor for age assessment using intramembranous, endochondral, and wrist tests, with an assessment difference of two years. This method is used only for individuals over 18 years of age [4]. When a physician provides reports or results, he initiates an additional investigation and presents his findings before the court or other law enforcement agencies. The local physician does not perform gender identification. Additionally, this method is used only at the request of the claimants. If a claimant insists and is financially able, he may also refer the case to the forensic department for a DNA test.

The forensic department deals with such issues daily and conducts investigations using both pathological and radiological techniques. Although DNA is the best test for age and gender estimation, is it possible to conduct DNA testing on every claim in a reasonable length of time if 10,000 persons claim ownership of a body? Is it possible for each claimant to pay the DNA cost? The answer to each of these questions is obviously no. Therefore, it is impractical to conduct DNA testing for every claimant who claims on behalf of a dead person. In Pakistan, Punjab has only one laboratory capable of performing DNA tests for its massive population of over 150 million. This issue can be resolved if the body’s age and gender are determined first.

Human bones help determine not only age but also gender. The left-hand wrist, femur [5,6], and skull [7–10] can be used to determine an individual's age, whereas the pelvic bone can only be used to determine a person's gender [11]. In addition, teeth can be used to determine both parts of the biological profile. Teeth can be found in a dead body for a long time because they are less affected by external and physical factors; they can even last more than 1300 years [12]. Automating the forensic identification system has become critical because of the evolution of information technology and the massive volume of cases that must be investigated by forensic specialists, who have relied on biometric identifiers as a primary tool for decades [13]. A bone X-Ray is the most straightforward method to determine a biological profile quickly and easily.

A benchmark, publicly available dataset and a locally developed dataset have been used for research and experiments. Features are automatically engineered and extracted from images using deep convolutional procedures. A convolutional neural network (CNN), a deep learning model, requires little preprocessing compared to other image classification algorithms; therefore, it is considered ideal for processing two-dimensional (2D) images. It is a preferred neural network model for image classification because of its local understanding of an image. A CNN extracts features itself, which eliminates the need for manual feature extraction: the features are learned automatically while the network is trained on a set of images. Hidden layers provide the CNN with feature detection capabilities, and the complexity of the learned features grows with each layer. As a result, deep learning models for computer vision tasks are highly accurate. CNN-based models have outperformed other models in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).

The goal of the research is to develop a CNN-based model to identify the age and gender of a deceased person. This research will directly benefit the forensic department and indirectly benefit police, courts, law enforcement, and other agencies.

The rest of the article is organized as follows: Section 2 comprises the literature review, Section 3 covers the material and methods, Section 4 presents the results and discussions, and Section 5 gives the conclusions and future work.

In [14], cross-sectional and descriptive analyses are the two fundamental models used for gender estimation. The inter-acetabular distance, acetabular diameter, and pelvic breadth of the ilium are measured using the picture archiving and communication system (PACS). One hundred eighty pelvic bones from patients with pelvic injuries, 90 males and 90 females, were used for research and experiments. The accuracy was 77% for ilium height and 70% for acetabular diameter and breadth estimation. The main flaws of the study are its accuracy, which is below 80%, and the limited dataset used for the experiments. In [15], the authors used a deep convolutional network for gender estimation on 4155 grey-scale panoramic X-Ray images, comprising 1662 males and 2493 females. The class probability is obtained at the output layer using the Softmax activation function, and an average accuracy of 94.3% is achieved. In [16], bone age is compared with chronological age using Demirjian's approach. The dataset comprises 1260 students, 566 males and 694 females, divided into two groups, i.e., one for bone age and one for chronological age. The results are good, with less than 1% difference between bone age and chronological age. The limitation of this study is the small volume of the dataset used for research and experiments.

In [17], the authors applied the Cameriere approach for age estimation by establishing a relationship between age and the third molar maturity index. The dataset comprised 276 students, 139 females and 137 males. This approach determines whether an individual is aged 18 or above. The study achieved specificities of 89% and 84% for males and females, respectively. However, a small dataset was used. In [18], the authors applied 10-fold cross-validation for age estimation. The dataset consists of 814 (685 male and 185 female) postmortem scans. Transfer learning is used for training. The proposed technique produced good results, with a mean absolute error of 5.15 years and a concordance correlation value of 0.80. In [19], a multiclass Support Vector Machine (SVM) is used for age estimation and a Library for Support Vector Machines (LIBSVM) for gender prediction. A total of 1142 X-Rays of teeth were used for research and experiments. The accuracy achieved in this study was 96%.

In a nutshell, most studies estimate gender and age from bone scans using machine learning-based approaches. They either achieve low classification rates or are trained on limited datasets.

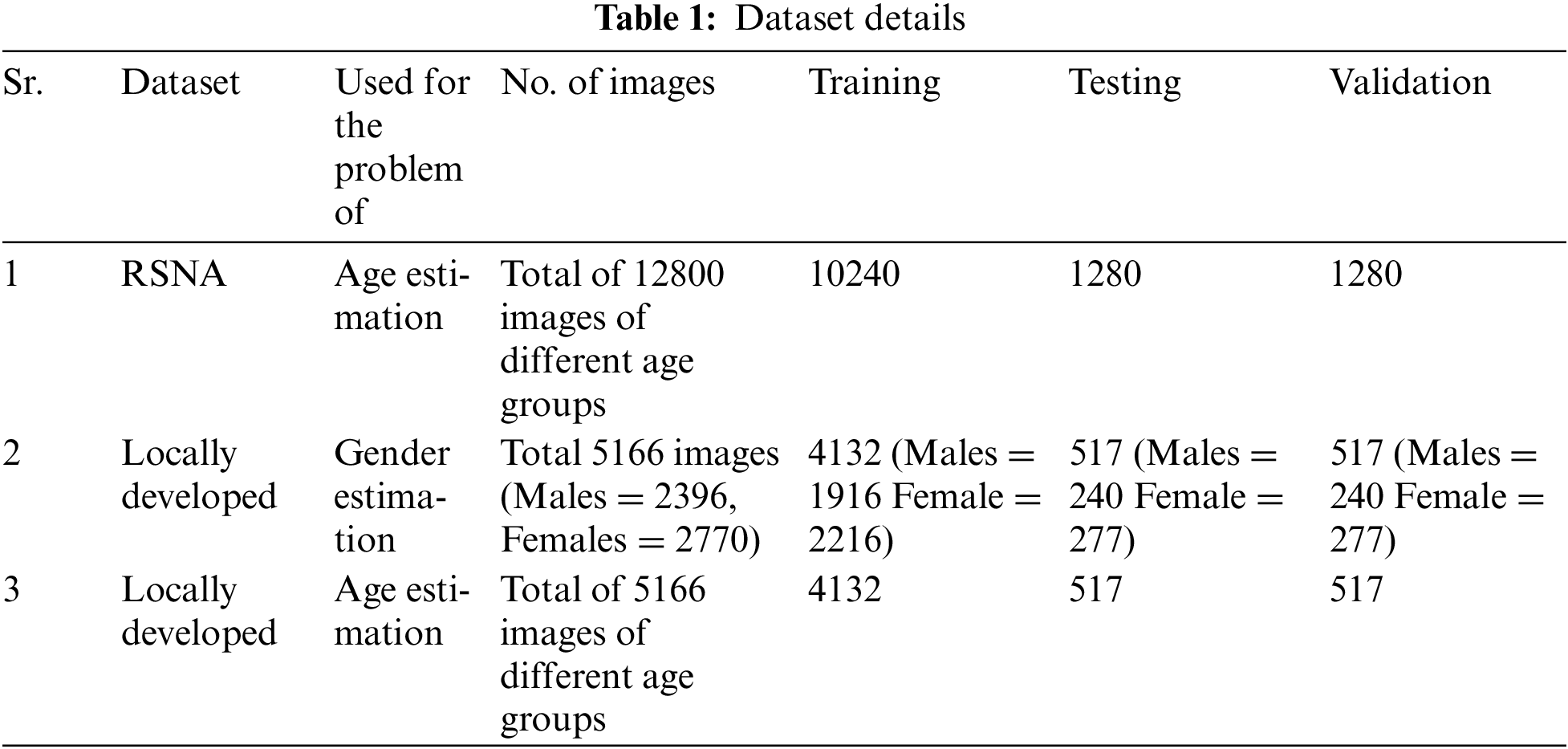

For research and experiments, a benchmark, publicly available dataset from the Radiological Society of North America (RSNA) [20] and a locally developed dataset have been used. In 2017, RSNA held a contest to identify the age of children from their hand X-Rays; this dataset comprises hand X-Ray radiographs. The other dataset was collected on request from Civil Hospital, Bahawalpur, Pakistan, and consists of 5166 images. It contains dental X-Ray radiographs of both males and females and is helpful in gender and age estimation. Details of the datasets are presented in Tab. 1. 80% of the available data was used for training, while 10% each was used for testing and validation.
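The 80/10/10 partition described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `split_dataset`, the fixed seed, and the placeholder file names are assumptions, and the article does not state whether the split was random or stratified.

```python
import random


def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle items and split them into train, validation, and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Hypothetical file names standing in for the 5166 local radiographs.
image_ids = [f"xray_{i:04d}.png" for i in range(5166)]
train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))
```

Because the subset sizes are truncated to whole images, the test subset absorbs any remainder, so the three parts always cover the full dataset without overlap.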

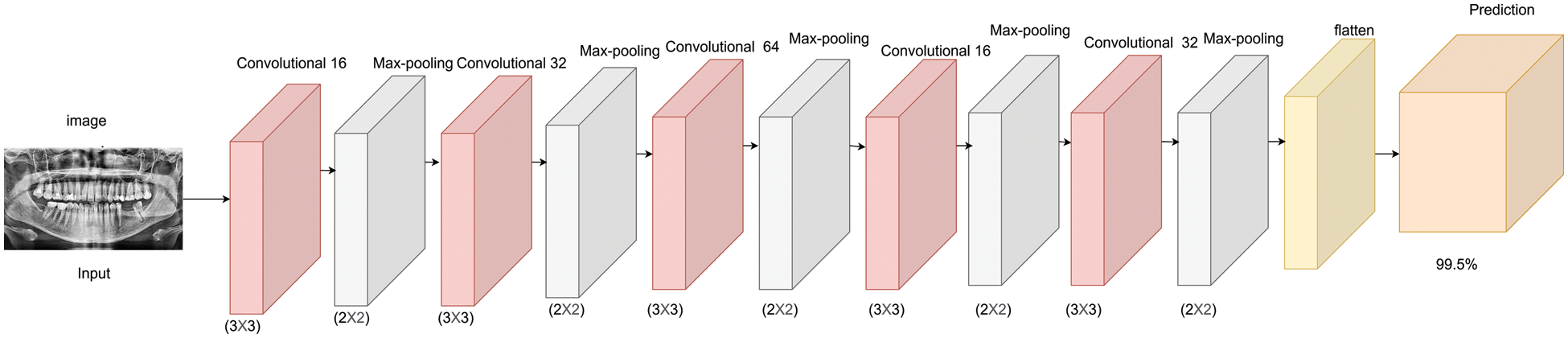

3.2 Specially Designed CNN Architecture

A lightweight CNN-based model with few layers (12) and few parameters (0.47 million), named Computer Vision and Image Processing-Net (CVIP-Net), has been designed for X-Ray images. This model is used to determine age and gender from X-Ray scans. The layer-wise architecture of the proposed CVIP-Net is shown in Fig. 1, while the complete model is described in Tab. 2. The layers used in the proposed model are described as follows.
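To make the notion of learnable parameters concrete, the sketch below counts the parameters of convolutional and fully connected layers. The layer sizes are hypothetical and chosen only for illustration; they are not the CVIP-Net configuration of Tab. 2, and the helper names are assumptions.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Learnable parameters of a convolutional layer: weights plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * out_channels


def dense_params(in_features, out_features):
    """Learnable parameters of a fully connected layer: weights plus one bias per unit."""
    return (in_features + 1) * out_features


# Hypothetical layer sizes for illustration only; NOT the CVIP-Net layers.
layer_counts = [
    conv_params(3, 3, 1, 16),   # grey-scale input, 16 filters
    conv_params(3, 3, 16, 32),
    conv_params(3, 3, 32, 64),
    dense_params(64, 2),        # two output classes
]
total = sum(layer_counts)
print(layer_counts, total)
```

Pooling and ReLU layers add no learnable parameters, which is one reason a network with few convolutional filters can stay well below a million parameters.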

Figure 1: The proposed computer vision and image processing-net (CVIP-Net) model

The convolutional layer generates feature maps representing the features learned from the input set of images [21]. Trainable weights aid in the generation of feature maps through filters [22]. Eq. (1) represents the convolution of a given image Img with dimensions M, N and a filter F of size p, q. Feature maps are created using a convolutional process that begins at the top left and ends at the bottom right of the input image.
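The sliding-window process can be sketched in plain Python. This is an illustrative sketch under stated assumptions: stride 1, no padding, a single channel, and the cross-correlation form commonly used in CNN libraries. The function name `conv2d_valid` and the sample values are not taken from the article.

```python
def conv2d_valid(img, kernel):
    """Slide kernel over img from top left to bottom right (stride 1, no padding)."""
    M, N = len(img), len(img[0])        # image dimensions
    p, q = len(kernel), len(kernel[0])  # filter dimensions
    feature_map = []
    for i in range(M - p + 1):
        row = []
        for j in range(N - q + 1):
            acc = 0.0
            for a in range(p):
                for b in range(q):
                    acc += img[i + a][j + b] * kernel[a][b]
            row.append(acc)
        feature_map.append(row)
    return feature_map


img = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = conv2d_valid(img, kernel)
print(feature_map)
```

Under these assumptions an M × N image and a p × q filter give a feature map of size (M − p + 1) × (N − q + 1), here 2 × 3.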

Pooling is a simple and effective process that is frequently applied after the convolution process [23]. The pooling technique generates feature maps with local perceptual fields [24]. The pooling layer was used to extract the critical contributing features that helped the model estimate age and gender from the dataset. It was also used to reduce the dimensionality of the extracted features.
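As an illustration of how pooling reduces dimensionality, the sketch below applies max pooling with a 2 × 2 window and stride 2. The article does not state which pooling variant or window size CVIP-Net uses, so these choices, the function name `max_pool2d`, and the sample values are assumptions.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Keep the largest value in each size x size window of the feature map."""
    rows = (len(fmap) - size) // stride + 1
    cols = (len(fmap[0]) - size) // stride + 1
    return [
        [
            max(
                fmap[r * stride + a][c * stride + b]
                for a in range(size)
                for b in range(size)
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


fmap = [
    [1, 3, 2, 4],
    [5, 6, 1, 0],
    [7, 2, 9, 8],
    [0, 1, 3, 4],
]
pooled = max_pool2d(fmap)
print(pooled)
```

Each 2 × 2 window is replaced by its strongest response, so a 4 × 4 feature map shrinks to 2 × 2 while the dominant activations are retained.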

3.2.3 Rectified Linear Unit (ReLU) Layers

This layer is essential for enabling convolutional neural networks to learn complex problems and to work effectively [25]. Without an activation function, the matrix multiplications of a network during training may leave the network linear. As indicated in Eq. (2), the sigmoid function is commonly used as an activation function. The gradient descent algorithm depends on the gradient of the sigmoid function, which is calculated using Eq. (3).

When the gradients diminish, learning gradually slows down, which is why the sigmoid function is not used in deep architectures. As a result, the ReLU is frequently a feasible option for deep networks; moreover, it may be used in a variety of ways. The ReLU is mathematically represented by Eq. (4).
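The contrast between the two activations can be sketched numerically. The sketch assumes the standard definitions, sigmoid(x) = 1 / (1 + e^(−x)) with derivative sigmoid(x)(1 − sigmoid(x)) and ReLU(x) = max(0, x); the function names and sample inputs are illustrative.

```python
import math


def sigmoid(x):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid; it never exceeds 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x):
    """Rectified linear unit."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


for x in (-6.0, 0.0, 6.0):
    print(x, sigmoid_grad(x), relu_grad(x))

# Best case for the sigmoid: ten stacked layers each contributing a gradient of 0.25.
deep_chain = sigmoid_grad(0.0) ** 10
print(deep_chain)
```

Even in the best case the sigmoid gradient shrinks by a factor of at least four per layer during backpropagation, whereas the ReLU passes a gradient of 1 for every positive input.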

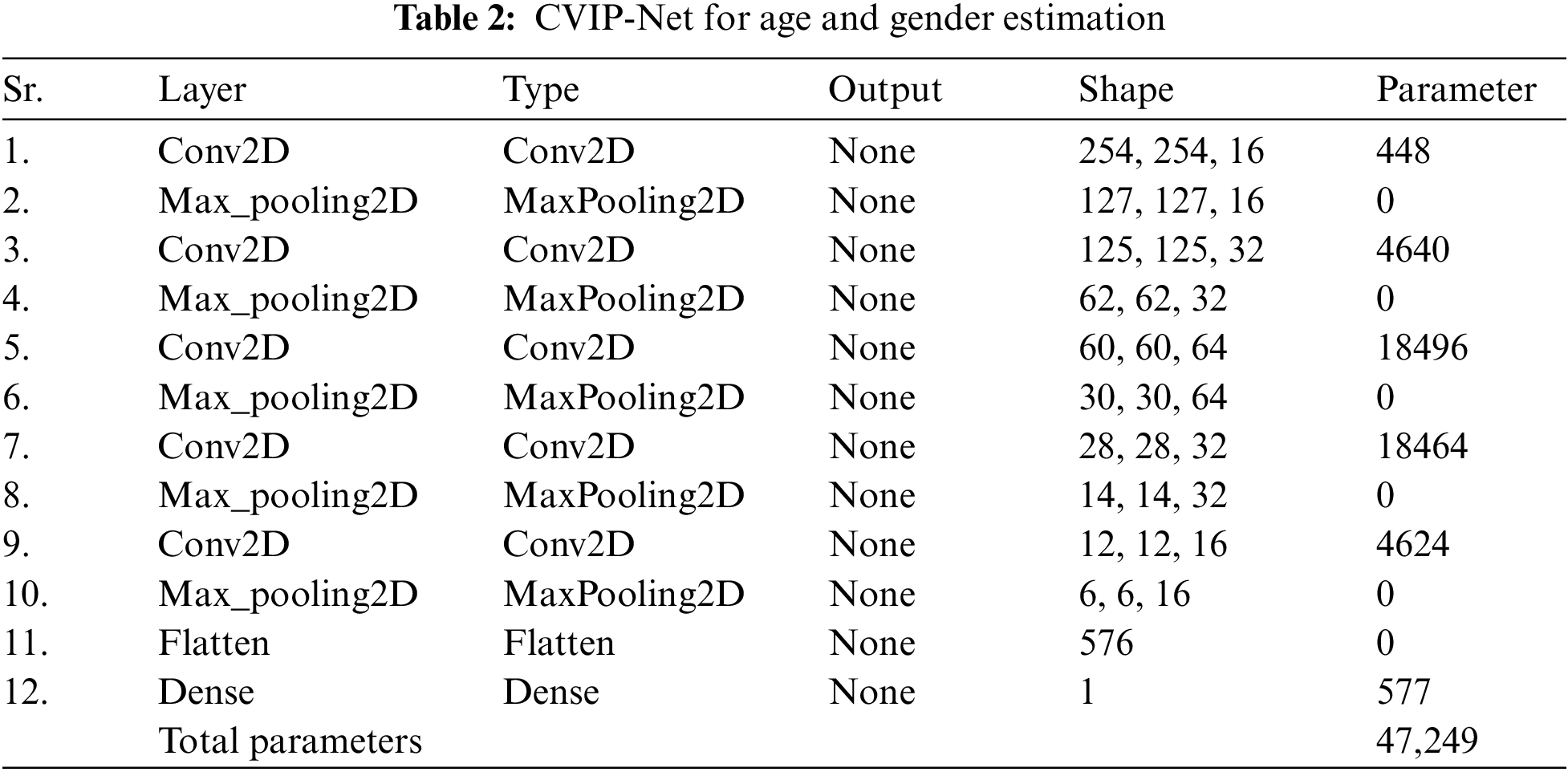

This layer is usually placed at the end of CNN models [26] and is used to identify the target classes, which are age and gender in this architecture. The overall methodology is depicted in Fig. 2. The proposed CVIP-Net and other state-of-the-art CNN models, i.e., alex_net, inceptionv_3, google_net, Residual Network (resnet)_50, and VGG_19, have been trained on 80% of the data, while the remaining 20% is split equally between testing and validation (10% each). Standard evaluation measures including accuracy, specificity, sensitivity, and AUC-value have been used for the evaluation of the proposed model.

Figure 2: Overall proposed methodology for age and gender estimation
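The evaluation measures named above can be computed from predictions as sketched below. This is an illustrative sketch for the binary case only; the article does not specify how the measures were aggregated across multiple age classes. The function names, the 0.5 threshold, and the toy labels and scores are assumptions.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity (true positive rate), and specificity (true negative rate)."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def auc_score(y_true, scores, positive=1):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy labels and model scores, invented for illustration.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = classification_metrics(y_true, y_pred)
auc = auc_score(y_true, scores)
print(metrics, auc)
```

Accuracy, sensitivity, and specificity depend on the chosen decision threshold, while the AUC summarizes ranking quality over all thresholds, which is why the two kinds of measure are reported side by side.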

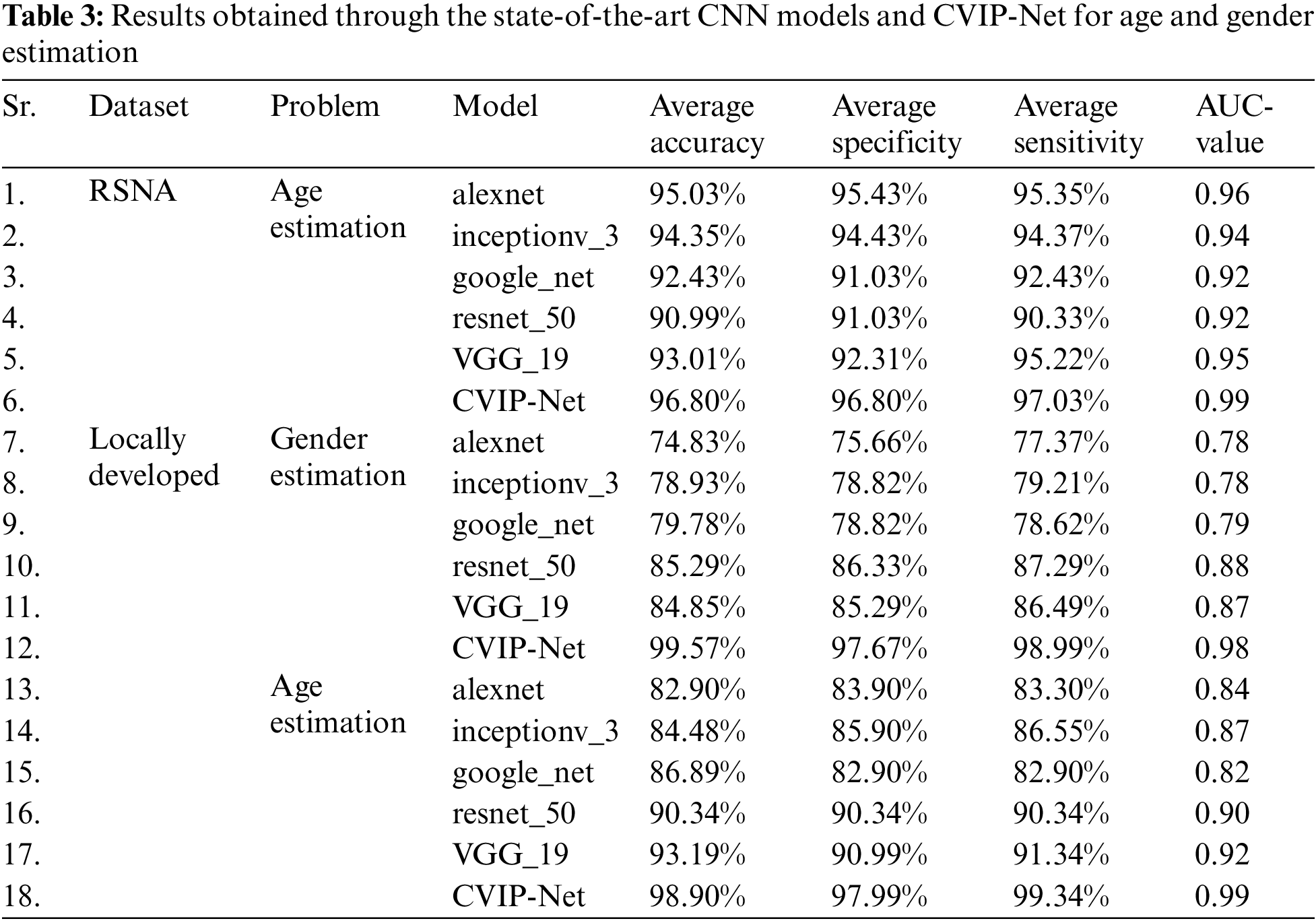

Experiments have been performed on the datasets shown in Tab. 1 for both age and gender estimation, and the results obtained are presented in Tab. 3. Experiments were performed not only with the proposed model but also with other state-of-the-art CNN models, i.e., alex_net, inceptionv_3, google_net, resnet_50, and VGG_19, for comparison.

In this study, 80% of the dataset was used for training, 10% for testing, and 10% for validation. To compare the performance of the proposed CVIP-Net, the pre-trained models alex_net, inceptionv_3, google_net, resnet_50, and VGG_19 were evaluated under the same split.

To assess the applicability of the proposed model, the results are discussed by dataset, by CNN model, and by task (age and gender).

On the RSNA dataset, age estimation using inceptionv_3 gives good results: accuracy = 94.35%, specificity = 94.43%, sensitivity = 94.37%, and AUC-value = 0.94. The alex_net model gives better results: accuracy = 95.03%, specificity = 95.43%, sensitivity = 95.35%, and AUC-value = 0.96. CVIP-Net gives the best results: accuracy = 96.80%, specificity = 96.80%, sensitivity = 97.03%, and AUC-value = 0.99.

On the locally developed dataset, age estimation using resnet_50 gives good results: accuracy = 90.34%, specificity = 90.34%, sensitivity = 90.34%, and AUC-value = 0.90. VGG_19 gives better results: accuracy = 93.19%, specificity = 90.99%, sensitivity = 91.34%, and AUC-value = 0.92. CVIP-Net gives the best results: accuracy = 98.90%, specificity = 97.99%, sensitivity = 99.34%, and AUC-value = 0.99. For gender estimation on the locally developed dataset, VGG_19 gives good results: accuracy = 84.85%, specificity = 85.29%, sensitivity = 86.49%, and AUC-value = 0.87. The resnet_50 model gives better results: accuracy = 85.29%, specificity = 86.33%, sensitivity = 87.29%, and AUC-value = 0.88. CVIP-Net gives the best results: accuracy = 99.57%, specificity = 97.67%, sensitivity = 98.99%, and AUC-value = 0.98.

Pre-trained models, i.e., alex_net, inceptionv_3, google_net, resnet_50, and VGG_19, and the proposed CVIP-Net are used for age and gender identification. Among the pre-trained models, VGG_19 produced good results for age detection when trained on the locally developed dataset: 93.19%, 90.99%, 91.34%, and 0.92 in terms of accuracy, specificity, sensitivity, and AUC-value, respectively. The pre-trained model resnet_50 produced good results for gender identification when trained on the locally developed dataset: accuracy (85.29%), specificity (86.33%), sensitivity (87.29%), and AUC-value (0.88).

The results showed that the proposed CVIP-Net outperformed the other models in both cases, i.e., age and gender estimation, regardless of the dataset. When trained on the RSNA dataset for age estimation, it achieved the highest measures, i.e., 96.80%, 96.80%, 97.03%, and 0.99 in terms of accuracy, specificity, sensitivity, and AUC-value, respectively. Similarly, when trained on the locally developed dataset for gender estimation, it again achieved the best results, i.e., 99.57% (accuracy), 97.67% (specificity), 98.99% (sensitivity), and 0.98 (AUC-value). The results obtained for age estimation on the locally developed dataset also demonstrated its efficacy over the rest of the CNN-based models: 98.90%, 97.99%, 99.34%, and 0.99 in terms of accuracy, specificity, sensitivity, and AUC-value, respectively.

The experimental results showed that gender is estimated better by the proposed CVIP-Net than by the other models when it is trained, tested, and evaluated on the locally developed dataset, with accuracy = 99.57%, specificity = 97.67%, sensitivity = 98.99%, and AUC-value = 0.98. As per the results, age is best estimated from bones when the proposed CVIP-Net is trained on the locally developed dataset, with accuracy = 98.90%, specificity = 97.99%, sensitivity = 99.34%, and AUC-value = 0.99.

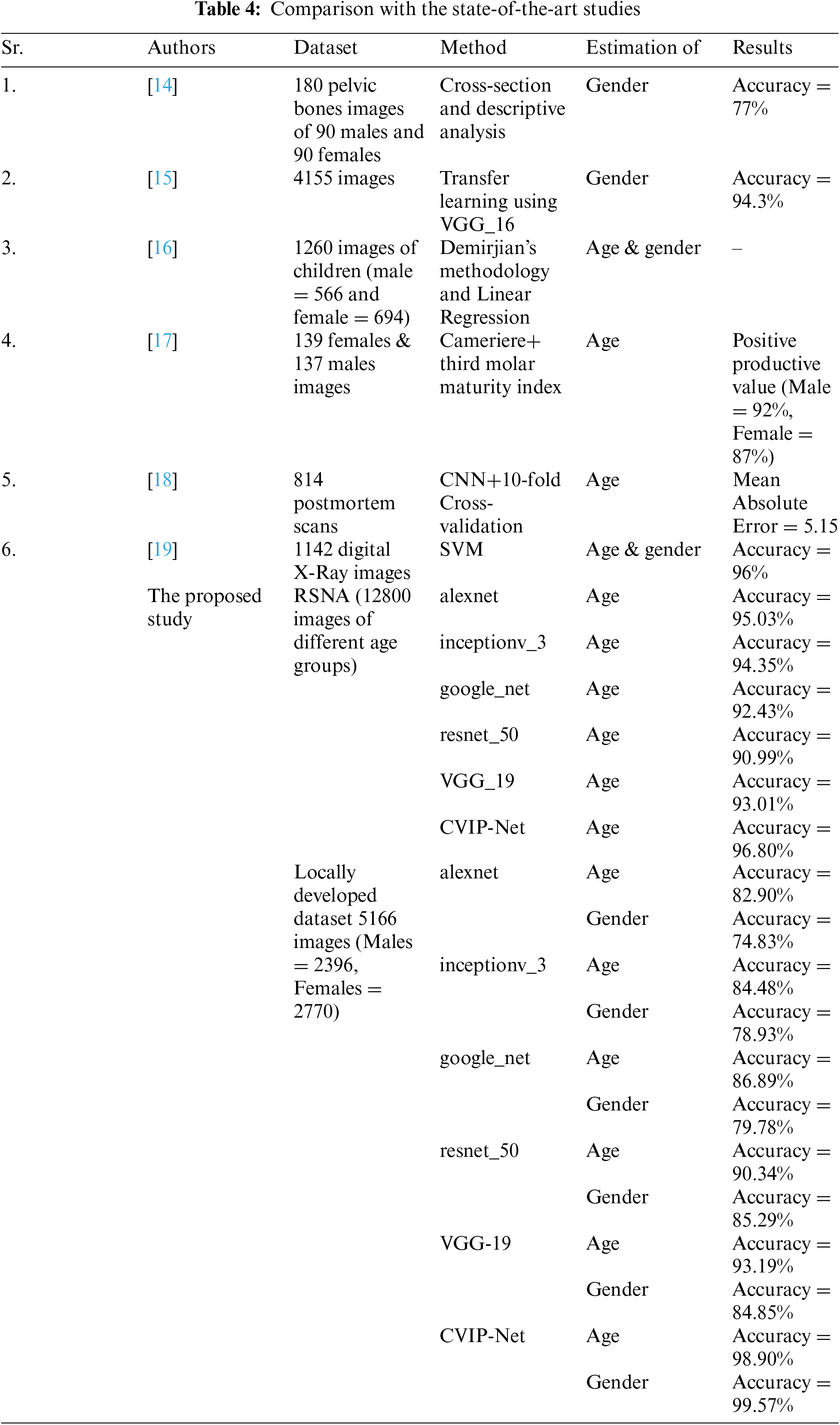

4.3 Comparison with the State-of-the-Art Studies

As shown in Tab. 4, in [14], the pelvic bone is used to estimate gender. The dataset includes 90 male and 90 female individuals. The accuracy achieved in that study is 77%. In [15], a transfer learning model, i.e., VGG_16, is used on 4155 high-quality x-ray scans to estimate gender, with an accuracy of 94.3%. The evaluation measures achieved by that study are not very high; moreover, the CNN-based model it proposes consists of 16 layers, which makes it computationally complex to train. The study in [16] uses Demirjian’s technique for age and gender estimation. The dataset consists of 1260 students. The results are good, as the difference between chronological age and bone age is around 1%. The limitation of that research is the limited dataset. In [17], the authors used the Cameriere approach for age estimation. The dataset consists of 276 students. The specificity is 96% for males and 93% for females. A small dataset is used for the experiments. In [18], a CNN has been used with 10-fold cross-validation for age estimation on a small sample of 814 full-body post-mortem computed tomography images. The results are evaluated in terms of mean absolute error, which is 5.15 years, and a concordance correlation value, which is 0.80. Although the results are reasonable, 814 scans cannot ensure the robustness of the model. In [19], SVM has been used for age and gender estimation. The model has been evaluated on a total of 1142 images, with an accuracy of 96%. However, the dataset is small.

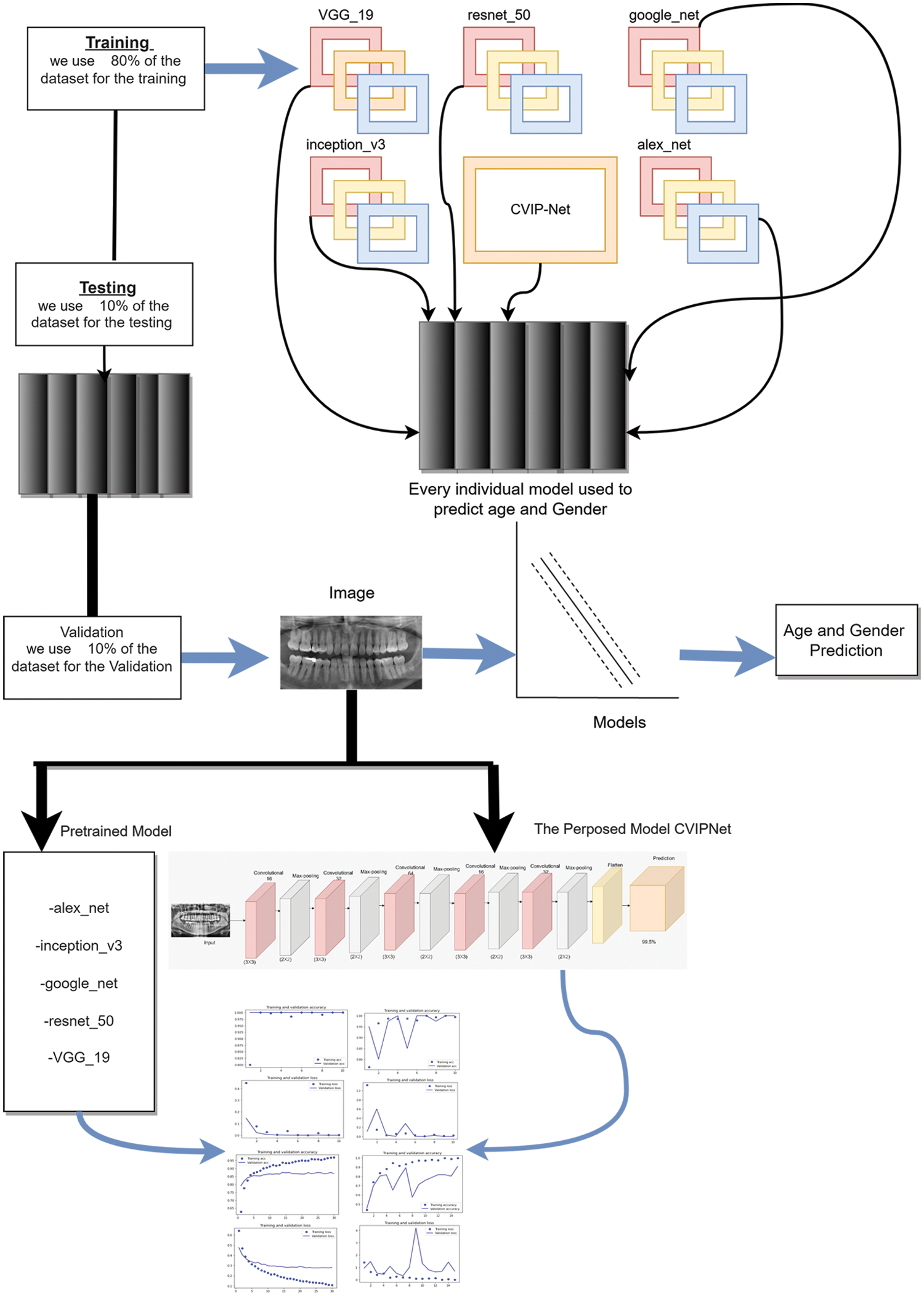

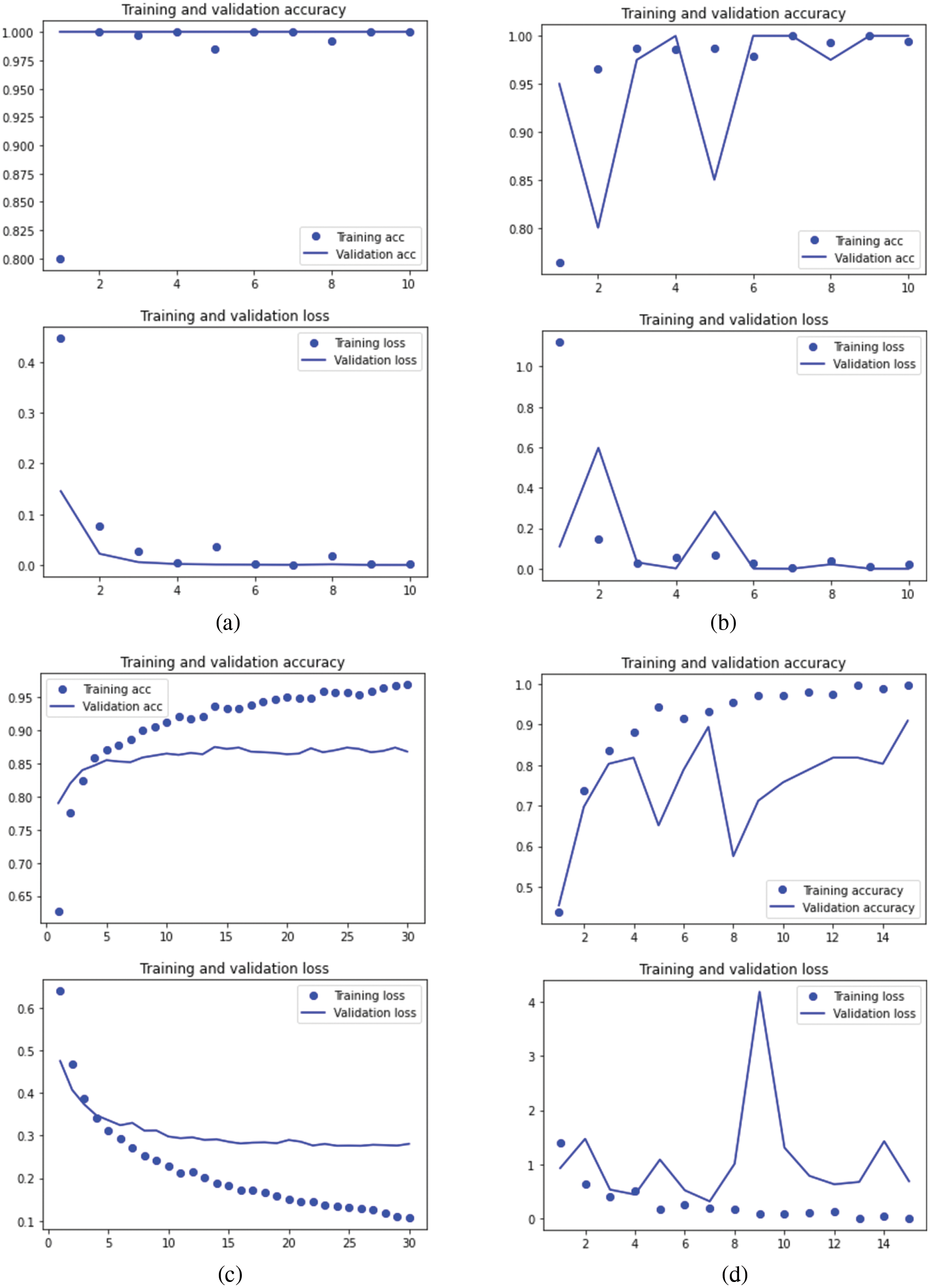

The proposed CVIP-Net consists of 12 layers and only 0.47 million learnable parameters, which makes it computationally efficient during training, testing, and validation. Moreover, it can estimate both age and gender from X-Ray scans. The proposed model is trained on 12800 images of different age groups for age estimation, while 5166 images are used for gender estimation, which is a large dataset compared to those of the state-of-the-art studies. The results achieved by CVIP-Net for both age and gender estimation are also better than those of all the previously conducted studies. An accuracy of 96.80% for age estimation on the publicly available RSNA dataset, and of 98.90% for age and 99.57% for gender on the locally developed dataset, demonstrates the superiority of CVIP-Net over previously conducted studies. Fig. 3 shows accuracy and loss plots of the results: Fig. 3A for the proposed CVIP-Net model for age and gender estimation, Fig. 3B for the VGG_19 model, Fig. 3C for google_net for age estimation, and Fig. 3D for resnet_50 for age estimation.

Figure 3: Accuracy and loss plots of the results, (A) Accuracy and loss plots of the proposed CVIP-Net model for age and gender estimation, (B) Accuracy and loss plot of VGG_19 model, (C) Accuracy and loss plots of the google_net for age estimation, (D) Accuracy and loss plots of resnet_50 for age estimation

Technological advancement in medicine has resulted in several types of evolution in radiologic technology, including radiographic fluoroscopy, molecular imaging, and digital imaging. When only the skeleton remains, gender identification becomes increasingly difficult and time-consuming. In this research work, age and gender estimation is performed using the proposed CNN model, i.e., CVIP-Net, and other pre-trained models, i.e., alex_net, inceptionv_3, google_net, resnet_50, and VGG_19, are used for comparison. For age and gender estimation, the proposed CVIP-Net achieved significant classification rates. It consists of 12 layers and only 0.47 million learnable parameters, which makes it computationally efficient during training, testing, and validation. Moreover, it can estimate both age and gender from X-Ray scans. The proposed model was trained on 12800 images of different age groups for age estimation and on 5166 images for gender estimation, which is a large dataset compared to those of the state-of-the-art studies. An accuracy of 96.80% for age estimation on the publicly available RSNA dataset, and of 98.90% for age and 99.57% for gender on the locally developed dataset, demonstrates the superiority of CVIP-Net over previously conducted studies.

In the future, we aim to extend this work to detect the remaining particulars of the biological profile from radiological scans in a real-time environment.

Acknowledgement: The researchers would like to thank the staff of Civil Hospital, Bahawalpur, Pakistan for their cooperation in providing the dataset.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. J. P. Hamilton and S. J. Halvorson, “The 2005 Kashmir earthquake,” Mountain Research and Development, vol. 27, no. 4, pp. 296–301, 2007.

2. O. M. Qureshi, A. Hafeez and S. S. H. Kazmi, “Ahmedpur Sharqia oil tanker tragedy: Lessons learnt from one of the biggest road accidents in history,” Journal of Loss Prevention in the Process Industries, vol. 67, pp. 104243, 2020.

3. Q. Ali, M. R. Yaseen and M. T. I. Khan, “The impact of temperature, rainfall, and health worker density index on road traffic fatalities in Pakistan,” Environmental Science and Pollution Research, vol. l, pp. 1–20, 2020.

4. S. Ghimire, S. Miramini, G. Edwards, R. Rotne, J. Xu et al., “The investigation of bone fracture healing under intramembranous and endochondral ossification,” Bone Reports, vol. 14, pp. 100740, 2020.

5. S. KC, “Development of human age and gender identification system from teeth, wrist and femur images,” in Proc. of Int. Conf. on Sustainable Computing in Science, Technology and Management (SUSCOM), Amity University Rajasthan, Jaipur, India, 2019.

6. K. Santosh and N. Pradeep, Knowledge discovery (feature identification) from teeth, wrist and femur images to determine human age and gender. In: Machine Learning with Health Care Perspective, vol. 13. Switzerland AG: Springer Nature, pp. 133–158, 2020.

7. J. Clarke, H. Peyre, M. Alison, A. Bargiacchi, C. Stordeur et al., “Abnormal bone mineral density and content in girls with early-onset anorexia nervosa,” Journal of Eating Disorders, vol. 9, no. 1, pp. 1–8, 2021.

8. J. Adserias-Garriga and R. Wilson-Taylor, Skeletal age estimation in adults. In: Age Estimation, Texas State University, San Marcos, TX, USA, Academic Press, Elsevier, pp. 55–73, 2019.

9. D. H. Ubelaker and H. Khosrowshahi, “Estimation of age in forensic anthropology: Historical perspective and recent methodological advances,” Forensic Sciences Research, vol. 4, no. 1, pp. 1–9, 2019.

10. A. Kotěrová, D. Navega, M. Štepanovský, Z. Buk, J. Brůžek et al., “Age estimation of adult human remains from hip bones using advanced methods,” Forensic Science International, vol. 287, pp. 163–175, 2018.

11. Y. Li, Z. Huang, X. Dong, W. Liang, H. Xue et al., “Forensic age estimation for pelvic X-ray images using deep learning,” European Radiology, vol. 29, no. 5, pp. 2322–2329, 2019.

12. J. S. Sehrawat and M. Singh, “Application of trace elemental profile of known teeth for sex and age estimation of Ajnala skeletal remains: A forensic anthropological cross-validation study,” Biological Trace Element Research, vol. 193, no. 2, pp. 295–310, 2020.

13. P. Mesejo, R. Martos, Ó. Ibáñez, J. Novo and M. Ortega, “A survey on artificial intelligence techniques for biomedical image analysis in skeleton-based forensic human identification,” Applied Sciences, vol. 10, no. 14, pp. 4703, 2020.

14. M. Varzandeh, M. Akhlaghi, M. V. Farahani, F. Mousavi, S. K. Jashni et al., “The diagnostic value of anthropometric characteristics of ilium for sex estimation using pelvic radiographs,” International Journal of Medical Toxicology and Forensic Medicine, vol. 9, no. 1, pp. 1–10, 2019.

15. I. Ilić, M. Vodanović and M. Subašić, “Gender estimation from panoramic dental X-ray images using deep convolutional networks,” in IEEE EUROCON 2019-18th Int. Conf. on Smart Technologies, Novi Sad, Serbia, IEEE, pp. 1–5, 2019.

16. N. Khdairi, T. Halilah, M. N. Khandakji, P.-G. Jost-Brinkmann and T. Bartzela, “The adaptation of Demirjian’s dental age estimation method on North German children,” Forensic Science International, vol. 303, no. 4, pp. 109927, 2019.

17. A. Kumagai, N. Takahashi, L. A. V. Palacio, A. Giampieri, L. Ferrante et al., “Accuracy of the third molar index cut-off value for estimating 18 years of age: Validation in a Japanese samples,” Legal Medicine, vol. 38, no. Suppl., pp. 5–9, 2019.

18. C. V. Pham, S.-J. Lee, S.-Y. Kim, S. Lee, S.-H. Kim et al., “Age estimation based on 3D post-mortem computed tomography images of mandible and femur using convolutional neural networks,” PLOS ONE, vol. 16, no. 5, pp. e0251388, 2021.

19. K. Santosh, N. Pradeep, V. Goel, R. Ranjan, E. Pandey et al., “Machine learning techniques for human age and gender identification based on teeth X-Ray images,” Journal of Healthcare Engineering, vol. 2022, no. 5, pp. 1–14, 2022.

20. S. S. Halabi, L. M. Prevedello, J. Kalpathy-Cramer, A. B. Mamonov, A. Bilbily et al., “The RSNA pediatric bone age machine learning challenge,” Radiology, vol. 290, no. 2, pp. 498–503, 2019.

21. X. Zhang, W. Zhang, W. Sun, H. Wu, A. Song et al., “A real-time cutting model based on finite element and order reduction,” Computer Systems Science and Engineering, vol. 43, no. 1, pp. 1–15, 2022.

22. X. Zhang, J. Zhou, W. Sun and S. K. Jha, “A lightweight CNN based on transfer learning for COVID-19 diagnosis,” Computers, Materials & Continua, vol. 72, no. 1, pp. 1123–1137, 2022.

23. G. Gilanie, M.-u. Hassan, M. Asghar, A.-M. Qamar, H. Ullah et al., “An automated and real-time approach of depression detection from facial micro-expressions,” Computers, Materials & Continua, vol. 73, no. 2, pp. 2513–2528, 2022.

24. G. Gilanie, U. I. Bajwa, M. M. Waraich and M. W. Anwar, “Risk-free WHO grading of astrocytoma using convolutional neural networks from MRI images,” Multimedia Tools and Applications, vol. 80, no. 3, pp. 4295–4306, 2021.

25. G. Gilanie, U. I. Bajwa, M. M. Waraich, M. Asghar, R. Kousar et al., “Coronavirus (COVID-19) detection from chest radiology images using convolutional neural networks,” Biomedical Signal Processing and Control, vol. 66, no. 3, pp. 102490, 2021.

26. G. Gilanie, N. Nasir, U. I. Bajwa and H. Ullah, “RiceNet: Convolutional neural networks-based model to classify Pakistani grown rice seed types,” Multimedia Systems, vol. 27, no. 5, pp. 867–875, 2021.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
