Open Access

ARTICLE

A Deep Learning Approach for Detecting Covid-19 Using the Chest X-Ray Images

1 Department of Industrial Engineering, Sharif University of Technology, Tehran, 14588-89694, Iran

2 Department of Industrial Engineering, University of Houston, Houston, TX, 77204, USA

3 Department of Computer Engineering, Gachon University, Seongnam, 13120, Korea

* Corresponding Author: Seong Oun Hwang. Email:

Computers, Materials & Continua 2023, 74(1), 751-768. https://doi.org/10.32604/cmc.2023.031519

Received 20 April 2022; Accepted 24 June 2022; Issue published 22 September 2022

Abstract

Real-time detection of Covid-19 has been one of the most widely studied classification problems since the start of the pandemic in 2020. In the meantime, lung-related airspace opacities have been among the most challenging problems in this area. A common approach is to use chest X-ray images to better diagnose positive Covid-19 cases. As in most other classification problems, machine learning-based approaches have been the first and most-used candidates in this application. Many schemes based on machine/deep learning have been proposed in recent years, though improving the performance and accuracy of the system remains an open issue. In this paper, we develop a novel deep learning architecture to better classify Covid-19 X-ray images. To do so, we first propose a novel multi-habitat migration artificial bee colony (MHMABC) algorithm to improve the exploitation/exploration of the artificial bee colony (ABC) algorithm. After that, we train the fully connected layer using the proposed MHMABC algorithm to obtain better accuracy and convergence rate while reducing the execution cost. Our experimental results on a Covid-19 X-ray image dataset show that the proposed deep architecture performs well on several important performance measures. Furthermore, we show that the MHMABC algorithm outperforms state-of-the-art algorithms by evaluating its performance on some well-known benchmark datasets.

1 Introduction

The rapid and accurate detection of Covid-19 has been one of the most important and challenging problems for scientists since the beginning of the pandemic in 2020. Among the various tests, the polymerase chain reaction (PCR) test has been the most widely used method for detecting Covid-19 worldwide. The PCR test, however, has proved arduous: it requires considerable time to return a result and involves a complicated procedure with kits that are in short supply. In contrast, X-ray images are readily available and the scans are comparatively low cost [1–6].

The need for a real-time Covid-19 indicator that accurately detects the coronavirus is growing day by day. Since analyzing X-ray images is an image processing task, deep learning is a promising candidate for it. Therefore, our aim is to provide an accurate X-ray image classifier based on a deep convolutional neural network (DCNN) to efficiently detect COVID-19 cases. According to the current literature, only a few studies have been carried out in this specific area since the beginning of the pandemic [7–15]. Despite deep learning’s extraordinary ability to solve a wide range of complex problems, optimally updating the weights and biases of the fully-connected neural network (the training procedure) is one of the most challenging issues, and it offers considerable potential to improve the performance of the classifier [16–21]. A few algorithms have been frequently used to train the fully-connected layers in deep architectures, e.g., gradient descent (GD) [22,23], conjugate gradient (CG) [24–26], arbitrary Lagrangian–Eulerian (ALE) [27–29], Hessian-free optimization (HFO) [30], and Krylov subspace descent (KSD) [31].

It is known that GD-based training algorithms have relatively low implementation cost and fast execution time [32]. However, these algorithms require various tuning parameters, which must be set manually, to improve the overall performance of the system. Furthermore, GD-based training algorithms are sequential in nature, so parallelizing them in hardware implementations, for example on microcontrollers [33] or field programmable gate arrays (FPGAs), is challenging. In addition, GD-based algorithms are comparatively slow, despite their stable behavior, when they are used to train a fully-connected network [34]. Therefore, GD-based algorithms require considerable computational capacity and large random access memory (RAM) resources [35]. Later, HFO was used to train deep architectures, especially deep auto-encoders. It has been shown that this algorithm performs better in pre-training and optimizing deep auto-encoders than the algorithm proposed by Hinton and Salakhutdinov. It is worth mentioning that KSD has lower implementation complexity than HFO while being more robust. In addition, further studies have shown that KSD has a higher classification accuracy and convergence rate than HFO. Nevertheless, KSD needs more storage space than HFO [30].

Although meta-heuristic approaches have played an important role in finding the best solutions for a wide range of complicated optimization problems, efforts to optimize deep architectures using meta-heuristic algorithms are still at a very early stage. The scheme proposed in [36] was the first to employ the genetic algorithm (GA) to train a DCNN. It took advantage of the different GA operators (e.g., selection, crossover, and mutation) to train the deep architecture by treating the weights and biases of each network as a chromosome. Furthermore, in the re-mixture stage, only the weights and biases of the first and third convolution layers were rectified. Rosa et al. [37] proposed a harmony search (HS)-based approach for optimizing the DCNN parameters. The authors in [38] presented a scheme based on progressive unsupervised learning (PUL) for tuning the parameters of a pre-trained DCNN. They showed that their approach can be implemented efficiently and can also be used as an optimized baseline for unsupervised learning. This approach adds a selection step between the clustering and fine-tuning phases for cases in which the results of the clustering phase are noisy.

The authors in [39] proposed a GA-based automatic DCNN for optimal image classification. The most important feature of the presented GA is that it is automatic: no additional information about the trained DCNN is needed. The main weakness of this approach, however, is that the training algorithm becomes slow when the deep structure is large, because the GA’s chromosomes then become considerably large. The authors in [40] used the social-spider optimization (SSO) algorithm to train a deep adaptive neuro-fuzzy inference system (ANFIS) for predicting biochar yields [41]; however, the proposed scheme faced an ill-conditioning issue. More training algorithms have been proposed in recent years to improve the performance of deep architectures [42–45]. However, these schemes suffer from high computational cost and unreliability when the input to the deep architecture is a large set of high-dimensional images.

Given the drawbacks and weaknesses of the schemes proposed in the literature, we aim to develop a novel meta-heuristic approach for optimally training a DCNN to accurately detect the coronavirus using the COVIDetectioNet dataset [46]. Furthermore, we update the last layer of the deep architecture by replacing its fully-connected neural network with a new fully-connected neural network trained by the novel MHMABC algorithm. The contributions of this paper are as follows:

• We develop a meta-heuristic algorithm named MHMABC in which, for the first time, the migration operator of biogeography-based optimization (BBO) is used to improve the exploitation ability of the ABC algorithm.

• We evaluate the performance of the MHMABC algorithm using some benchmark datasets [46].

• We improve the performance of the DCNN by updating its optimization parameters using the proposed MHMABC algorithm.

• By using the proposed deep architecture to analyze X-ray images, we propose an accurate alternative to PCR for detecting Covid-19 cases.

The remainder of this paper is organized as follows. Section 2 presents the proposed MHMABC algorithm. Section 3 describes the developed deep architecture trained by the MHMABC algorithm. Section 4 evaluates the performance of the MHMABC algorithm on some well-known benchmark datasets. In Section 5, we use the proposed deep architecture to analyze chest X-ray images from the COVIDetectioNet dataset [46]. Finally, we conclude the paper in Section 6.

2 The Proposed MHMABC Algorithm

Artificial bee colony (ABC) is a swarm-based meta-heuristic algorithm that was first introduced by Karaboga [47] in 2005. The ABC algorithm was inspired by the intelligent search behavior of honey bees. In the ABC algorithm, a colony consists of three types of artificial bees:

• Scout bees: Solutions that are randomly generated to discover new spaces are called scout bees. Scout bees are responsible for exploring the search space.

• Employed bees: A number of scout bees with good fitness values become employed bees. Employed bees are responsible for advertising quality food sources.

• Onlooker bees: The onlooker bees are responsible for searching the neighborhood of employed bees. Onlooker bees receive information about food sources and search around these sources. These bees contribute to both the exploitation and the exploration of the algorithm.

In the ABC algorithm, scout bees randomly discover a population of initial solution vectors, which are then repeatedly improved by the onlooker and employed bees (using a neighbor search to move towards better solutions while eliminating poor ones). In general, the ABC algorithm uses two main methods to reach the optimal answer: random search, carried out by scout and onlooker bees, and neighbor search, carried out by employed and onlooker bees. Each candidate answer represents the position of a food source, and the quality of its nectar is used as the fitness function. First, the whole initial population is explored by scout bees. The scout bees with the best fitness values are selected as employed bees. Employed bees exploit the solution positions, and then onlooker bees are created. The higher the quality of an employed bee, the more onlooker bees are created around it. The onlooker bees select new food positions (based on the information of the employed bees) and exploit the area around these positions. Next, random scout bees are created to find new random food positions. The ABC algorithm can be formulated as Eqs. (1)–(3) [46].

where,

where,

Figure 1: An example of neighborhood search and multi-habitat migration in MHMABC algorithm

Figure 2: The flowchart of the MHMABC algorithm
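As a rough illustration of the search loop described in this section, the following Python sketch implements the basic ABC cycle in the form commonly attributed to Karaboga [47]: an employed-bee phase, a fitness-proportional onlooker phase, and a scout phase that abandons stagnant food sources. It is an assumption that this matches Eqs. (1)–(3) in detail, and the multi-habitat migration step of MHMABC is deliberately not included. The names `abc_minimize`, `n_sources`, and `limit`, the parameter values, and the sphere test function are all hypothetical choices made for the example.

```python
import random


def sphere(x):
    """Toy objective (assumed for the example): sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def abc_minimize(f, dim, lower, upper, n_sources=20, limit=30, iters=200, seed=0):
    """Basic ABC loop for minimizing a non-negative cost function f."""
    rng = random.Random(seed)

    def new_source():
        # Random food source inside the search bounds (scout behavior)
        return [rng.uniform(lower, upper) for _ in range(dim)]

    sources = [new_source() for _ in range(n_sources)]
    costs = [f(s) for s in sources]
    trials = [0] * n_sources  # consecutive failed improvements per source
    best_cost = min(costs)
    best = list(sources[costs.index(best_cost)])

    def try_neighbor(i):
        # Neighbor search: perturb one coordinate relative to another random source
        k = rng.choice([j for j in range(n_sources) if j != i])
        d = rng.randrange(dim)
        phi = rng.uniform(-1.0, 1.0)
        cand = list(sources[i])
        cand[d] = min(upper, max(lower, cand[d] + phi * (cand[d] - sources[k][d])))
        c = f(cand)
        if c < costs[i]:  # greedy selection keeps the better position
            sources[i], costs[i], trials[i] = cand, c, 0
        else:
            trials[i] += 1

    for _ in range(iters):
        # Employed-bee phase: one neighbor search per food source
        for i in range(n_sources):
            try_neighbor(i)
        # Onlooker phase: better sources attract more searches
        # (fitness 1 / (1 + cost) assumes cost >= 0)
        fitness = [1.0 / (1.0 + c) for c in costs]
        for _ in range(n_sources):
            i = rng.choices(range(n_sources), weights=fitness)[0]
            try_neighbor(i)
        # Remember the best source before any of them is abandoned
        i_best = min(range(n_sources), key=costs.__getitem__)
        if costs[i_best] < best_cost:
            best_cost, best = costs[i_best], list(sources[i_best])
        # Scout phase: replace sources that failed to improve for too long
        for i in range(n_sources):
            if trials[i] > limit:
                sources[i] = new_source()
                costs[i] = f(sources[i])
                trials[i] = 0
    return best, best_cost


best, cost = abc_minimize(sphere, dim=5, lower=-5.0, upper=5.0)
print(best, cost)
```

The greedy selection and the `limit` counter are what separate exploitation (refining a source) from exploration (abandoning it); a migration operator such as the one MHMABC borrows from BBO would be an additional step inside the main loop.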

3 Training Deep Architecture with MHMABC Algorithm

The main architecture of a DCNN is based on four kinds of layers: convolution, pooling, activation function, and fully connected layers. The role of the convolution layer is to apply a convolution filter (kernel), a set of weights that is multiplied with the input data, in order to extract features. The kernel passes over the image many times, moving from top to bottom and right to left so as to cover all of the data. At every kernel position, the weights are multiplied with the corresponding inputs and the products are summed to generate a single value. The rectified linear unit (ReLU) then acts as a nonlinear activation function through which the feature map produced by the convolution operation is passed. ReLU replaces the negative values with zeros.
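A minimal sketch of the convolution and ReLU steps described above, assuming a single-channel image, stride 1, and no padding (none of these settings is stated in the text). The 4×4 image and the 2×2 kernel values are hypothetical, and, as in most deep learning frameworks, the kernel is applied without flipping. The order in which kernel positions are visited does not affect the result.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)  # one value per kernel position
        out.append(row)
    return out


def relu(feature_map):
    """Replace negative values with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical input and kernel, chosen only for illustration
image = [
    [1, 2, 0, 1],
    [0, 1, 3, 1],
    [2, 1, 0, 0],
    [1, 0, 1, 2],
]
kernel = [[1, -1], [-1, 1]]

feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)  # [[0.0, 4.0, 0.0], [0.0, 0.0, 2.0], [0.0, 2.0, 1.0]]
```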

The pooling layer is responsible for reducing the size of the input data while retaining the most informative values. As an example, for a group of four pixels, max pooling keeps only the pixel with the largest value. The pooling layer plays an important role in deep learning by decreasing the computational cost and limiting overfitting. After the convolution and pooling steps, the data reach the fully connected layer, which mostly consists of a multi-layer perceptron (MLP) neural network. One of the most important contributions of our work is to optimally update the parameters of the fully connected layer in the proposed deep architecture using the MHMABC algorithm. To this end, we take the weights and biases of the MLP neural network as the optimization parameters of the MHMABC algorithm. The MHMABC algorithm optimizes the weights and biases of the DCNN used here to better classify the Covid-19 X-ray images.
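To make the four-pixel example concrete, the following sketch applies 2×2 max pooling with stride 2 to a small feature map. The window size, stride, and input values are assumptions chosen for illustration.

```python
def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    out = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window))
        out.append(row)
    return out


# Hypothetical 4x4 feature map: each 2x2 group of four pixels becomes one value
fm = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]
print(max_pool2d(fm))  # [[4, 2], [2, 8]]
```

The output has a quarter of the input's entries, which is where the reduction in computational cost comes from.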

We name the proposed approach MHMABC-DCNN. Fig. 3 shows the definition of a bee in MHMABC algorithm. The fitness function of algorithm (mean square error (MSE)) can be calculated as Eq. (6), where

Figure 3: An overview of bees in MHMABC algorithm
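One plausible way to realize this encoding, offered as a sketch rather than the authors' implementation: a bee is a flat vector holding all weights and biases of a one-hidden-layer MLP, and its fitness is the MSE between the network outputs and the targets. The layer sizes, the sigmoid activations, the ordering of the parameters within the vector, and the averaging of the error over samples and outputs are assumptions; Eq. (6) may normalize differently.

```python
import math


def bee_length(n_in, n_hidden, n_out):
    """Number of weights and biases in a one-hidden-layer MLP."""
    return n_hidden * n_in + n_hidden + n_out * n_hidden + n_out


def sigmoid(z):
    """Numerically safe logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def decode(bee, n_in, n_hidden, n_out):
    """Split the flat bee vector into (W1, b1, W2, b2); the ordering is an assumption."""
    idx = 0

    def take(rows, cols):
        nonlocal idx
        block = [bee[idx + r * cols: idx + (r + 1) * cols] for r in range(rows)]
        idx += rows * cols
        return block

    w1 = take(n_hidden, n_in)
    b1 = take(1, n_hidden)[0]
    w2 = take(n_out, n_hidden)
    b2 = take(1, n_out)[0]
    return w1, b1, w2, b2


def forward(x, params):
    """Forward pass of the MLP for one input vector x."""
    w1, b1, w2, b2 = params
    hidden = [sigmoid(sum(w * v for w, v in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [sigmoid(sum(w * h for w, h in zip(row, hidden)) + b)
            for row, b in zip(w2, b2)]


def mse_fitness(bee, samples, targets, n_in, n_hidden, n_out):
    """Mean squared error of the MLP encoded by `bee` (lower is better)."""
    params = decode(bee, n_in, n_hidden, n_out)
    total = 0.0
    for x, t in zip(samples, targets):
        y = forward(x, params)
        total += sum((yi - ti) ** 2 for yi, ti in zip(y, t))
    return total / (len(samples) * n_out)


# Hypothetical two-sample problem: an all-zero bee outputs 0.5 for every input
samples = [[0.2, 0.7], [0.9, 0.1]]
targets = [[0.0], [1.0]]
zero_bee = [0.0] * bee_length(2, 3, 1)
print(mse_fitness(zero_bee, samples, targets, 2, 3, 1))  # 0.25
```

With this encoding, any population-based optimizer that works on real-valued vectors can train the fully connected layer by minimizing `mse_fitness`, without computing gradients.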

4 MHMABC Algorithm Performance Evaluation

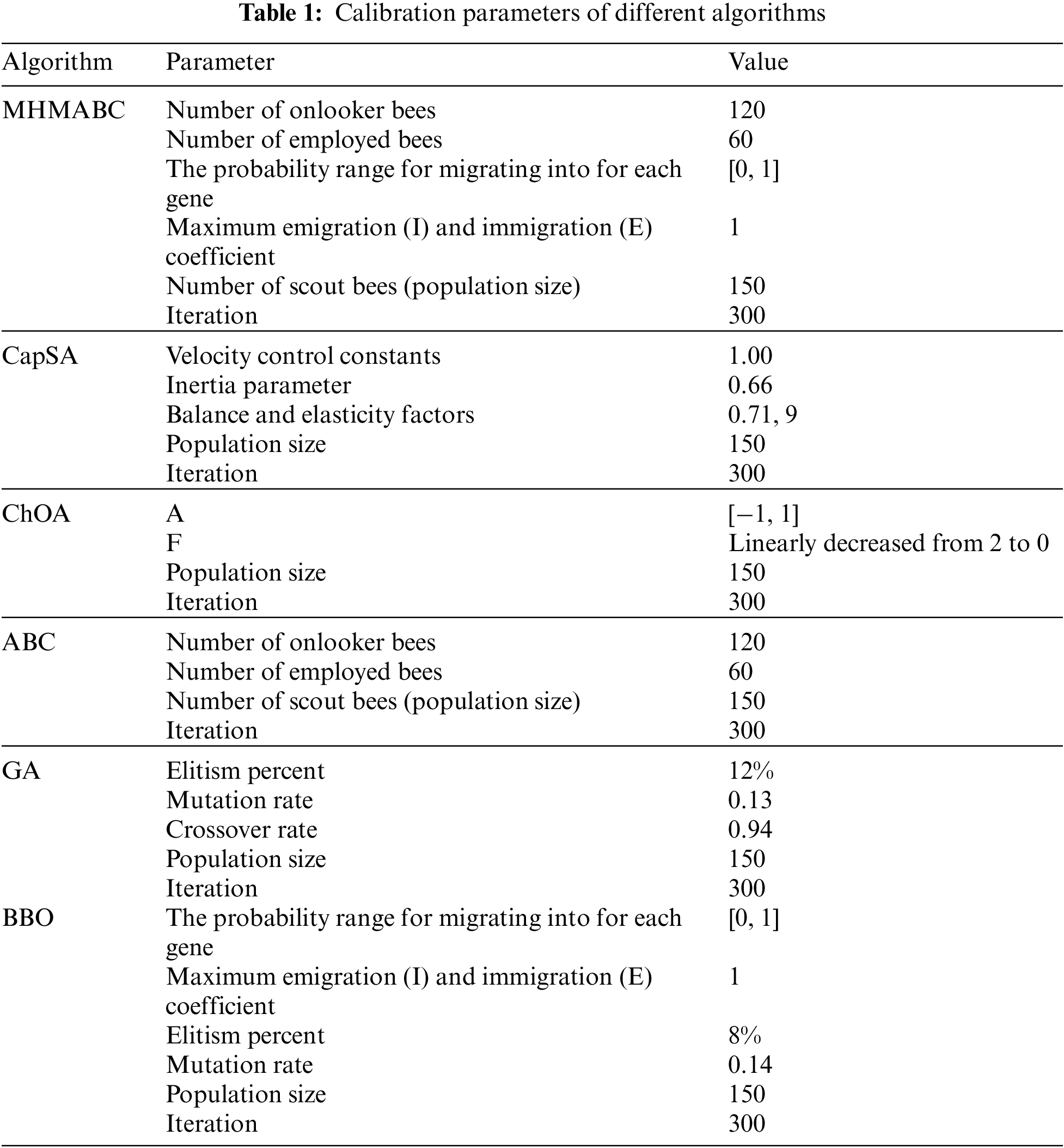

In this section, the performance of the proposed MHMABC algorithm is evaluated on 21 competitive benchmark functions. For comparison, three well-known algorithms (GA, ABC, and BBO) and two recent algorithms, the capuchin search algorithm (CapSA) [49] and the chimp optimization algorithm (ChOA) [50], are used. Tab. 1 shows the calibration parameters of all algorithms.

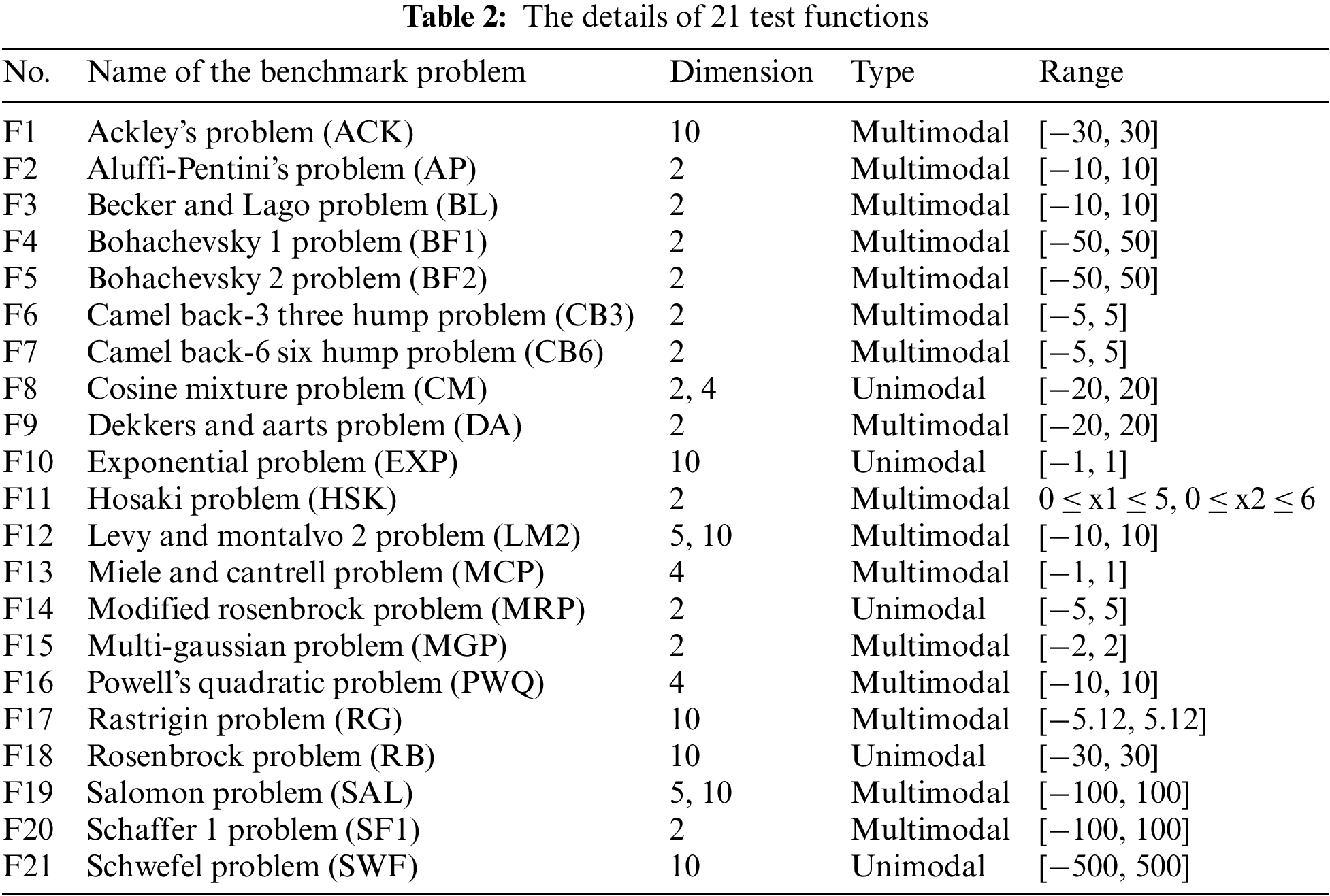

4.1 Competitive Test Functions

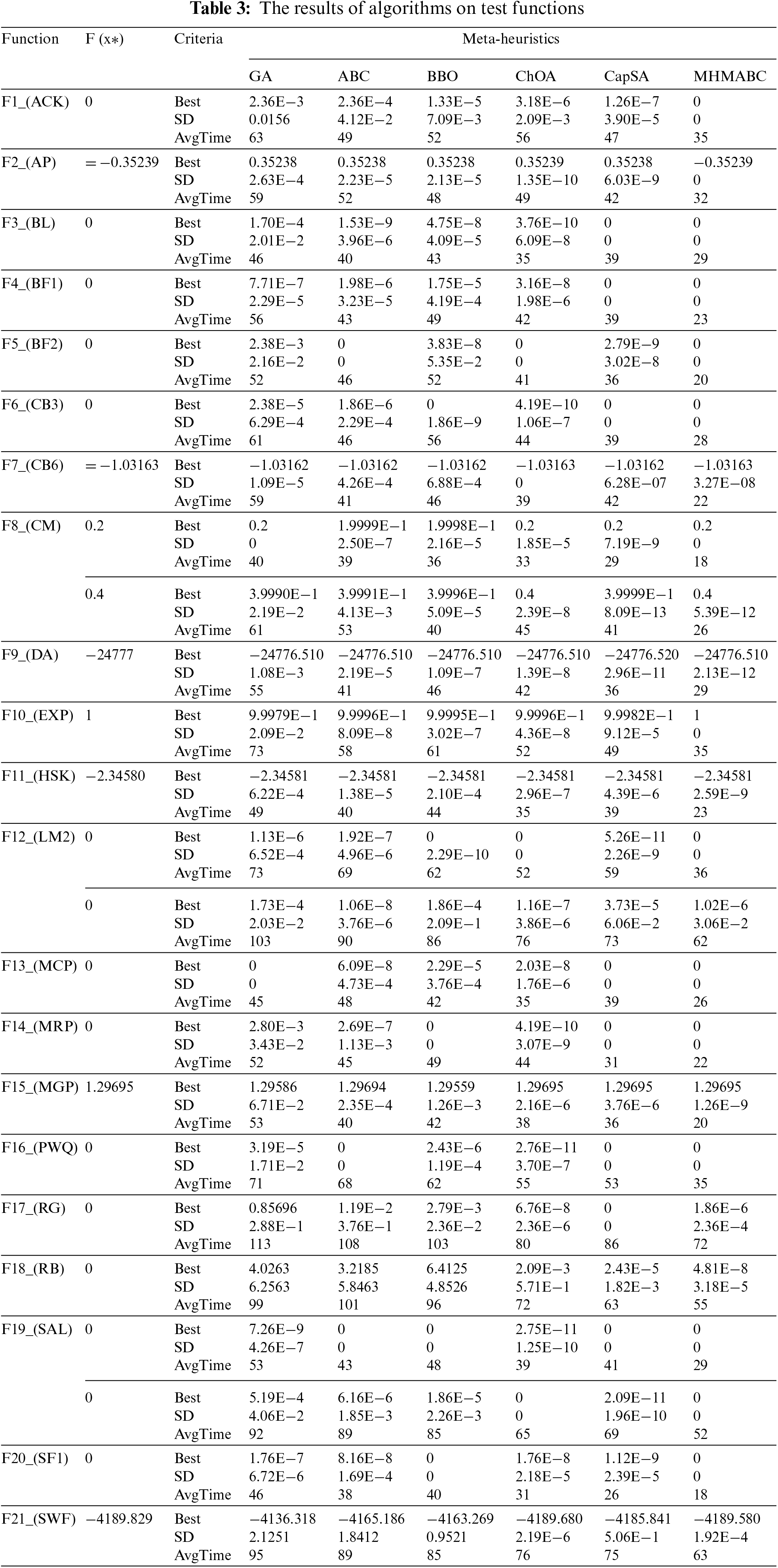

In this section, 16 multimodal and 5 unimodal functions are used to evaluate the performance of the proposed algorithm. Tab. 2 shows the details of these benchmark functions (see [51] for more details), and Tab. 3 shows the results of the algorithms on them. Each algorithm was run 30 times on each problem. Tab. 3 reports the best value of the fitness function (Best), the standard deviation over the 30 runs (StdDev), and the average runtime of the algorithms (AvgTime). As presented in Tab. 3, the results of the MHMABC algorithm are better than those of the other algorithms. MHMABC achieved the best value of “Best” on 21 of 24 test functions (87.5%), compared with CapSA on 12 functions (50%), ChOA on 10 functions (41.66%), BBO on 6 functions (25%), ABC on 5 functions (20.83%), and GA on 3 functions (12.50%).

Also, the MHMABC algorithm is better than the others in terms of AvgTime (Fig. 4) and standard deviation (SD). As shown in Fig. 4, the total “Average Time” of MHMABC is lower than that of the other algorithms. The SD values presented in Tab. 3 indicate that MHMABC has the highest repeatability among the compared algorithms. According to the results, MHMABC produces the results with the lowest SD in most of the benchmark problems. Thus, MHMABC is a very stable search algorithm.

Figure 4: Total AvgTime of algorithms

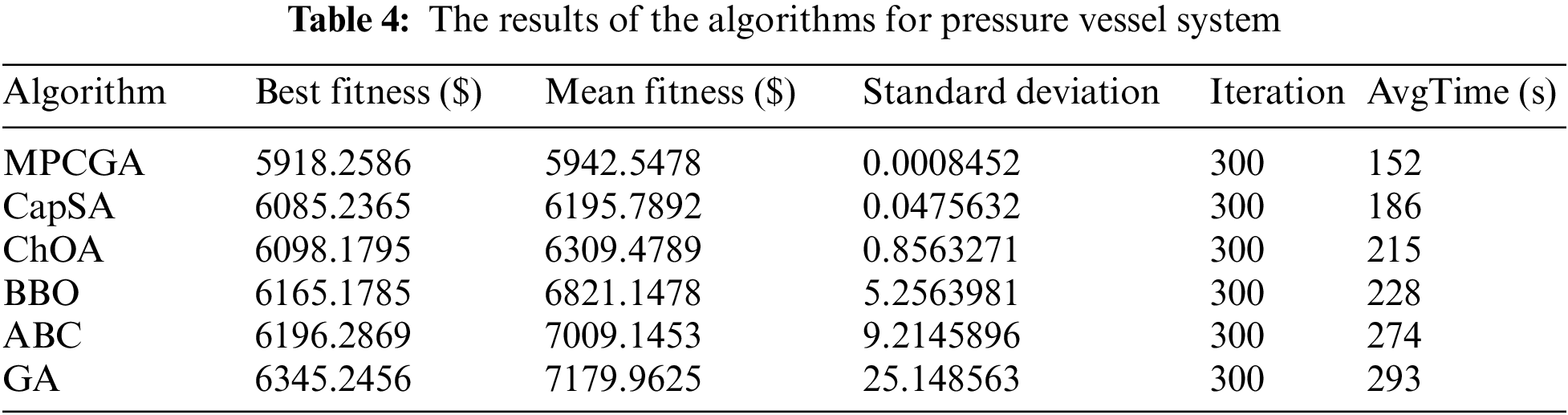
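The three statistics reported in Tab. 3 can be gathered with a small harness like the one below. It is only a sketch: a plain random search stands in for the optimizer under test, and the sphere (unimodal) and Rastrigin (multimodal) functions are assumed as stand-ins for the benchmark set, whose exact members are listed in Tab. 2. The function names, bounds, and evaluation budget are hypothetical.

```python
import math
import random
import statistics
import time


def sphere(x):
    """Unimodal test function with minimum 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal test function with minimum 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def random_search(f, dim, lower, upper, evals, rng):
    """Placeholder optimizer: best of `evals` uniformly random points."""
    best = math.inf
    for _ in range(evals):
        best = min(best, f([rng.uniform(lower, upper) for _ in range(dim)]))
    return best


def summarize(f, dim, lower, upper, runs=30, evals=500, seed=0):
    """Run the optimizer `runs` times and report Best, StdDev, and AvgTime."""
    results, times = [], []
    for r in range(runs):
        rng = random.Random(seed + r)  # independent, reproducible runs
        start = time.perf_counter()
        results.append(random_search(f, dim, lower, upper, evals, rng))
        times.append(time.perf_counter() - start)
    return {
        "Best": min(results),
        "StdDev": statistics.stdev(results),
        "AvgTime": statistics.mean(times),
    }


for name, func in [("sphere", sphere), ("rastrigin", rastrigin)]:
    print(name, summarize(func, dim=5, lower=-5.12, upper=5.12))
```

A low StdDev across runs is what the text refers to as repeatability; AvgTime depends on the machine, so it is only comparable between algorithms measured under the same conditions.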

4.2 Pressure Vessel System Benchmark

In this section, the performance of the proposed MHMABC and the other algorithms on the pressure vessel system benchmark is investigated. The problem can be formulated as Eqs. (7)–(12).

Subjected to

where,
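For orientation, the sketch below evaluates the pressure vessel design problem in the form commonly used in the meta-heuristic literature: four design variables (shell thickness, head thickness, inner radius, and length of the cylindrical section), a cost function, and four inequality constraints g(x) ≤ 0. It is an assumption that Eqs. (7)–(12) coincide with this form, and the quadratic penalty used to handle the constraints is a hypothetical choice, not a method stated in the text.

```python
import math


def pressure_vessel_cost(x):
    """Material, forming, and welding cost of the vessel."""
    ts, th, r, length = x  # shell thickness, head thickness, radius, length
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


def pressure_vessel_constraints(x):
    """Constraint values g_i(x); a design is feasible when every g_i <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                      # minimum shell thickness
        -th + 0.00954 * r,                     # minimum head thickness
        -math.pi * r ** 2 * length
        - (4.0 / 3.0) * math.pi * r ** 3
        + 1296000.0,                           # minimum enclosed volume
        length - 240.0,                        # maximum length
    ]


def penalized_cost(x, penalty=1e6):
    """Cost plus a quadratic penalty for violated constraints (assumed scheme)."""
    violation = sum(max(0.0, g) ** 2 for g in pressure_vessel_constraints(x))
    return pressure_vessel_cost(x) + penalty * violation


# A design frequently quoted as near-optimal in the literature
x_ref = (0.8125, 0.4375, 42.0984456, 176.6365958)
print(round(pressure_vessel_cost(x_ref), 2))
```

An optimizer that works on unconstrained vectors can then minimize `penalized_cost` directly; infeasible designs are not forbidden, only made expensive.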

5 Simulation Results for Covid-19 Detection

In this section, the performance of the proposed evolutionary deep architecture, MHMABC-DCNN, and of the other architectures is evaluated for COVID-19 X-ray image classification. Accuracy, sensitivity, and specificity are used for this comparison. These three criteria are obtained from the confusion matrix and can be calculated as Eqs. (13)–(15) [52].

where

Tab. 5 shows the accuracy, specificity, and sensitivity of different deep learning architectures for COVID-19 X-ray image classification. According to Tab. 5, MHMABC-DCNN shows the best results on the training and validation datasets. MHMABC-DCNN achieved accuracies of 99.32% and 98.32% on the test and train datasets, respectively, and sensitivities of 99.63% and 98.82% on the test and train datasets, respectively. The results of the evolutionary deep learning architectures on the test dataset show that deep learning architectures are well trained by meta-heuristic algorithms, because their accuracy, specificity, and sensitivity are highly stable across the test and train datasets. As shown in Tab. 5, the “Average Time” of MHMABC-DCNN is lower than that of the other architectures for COVID-19 X-ray image classification. Fig. 6 shows the radar plot of the architectures on both datasets. As can be seen, the accuracy, sensitivity, and specificity of MHMABC-DCNN are better than those of the other architectures.
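The three criteria follow directly from the four cells of a binary confusion matrix. The sketch below uses the standard definitions, which are assumed to correspond to Eqs. (13)–(15); the labels and counts in the usage example are made up and are not results from Tab. 5.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    """Return (accuracy, sensitivity, specificity)."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)   # share of positive cases that are detected
    specificity = tn / (tn + fp)   # share of negative cases that are cleared
    return accuracy, sensitivity, specificity


# Hypothetical labels: 1 = Covid-19 positive, 0 = negative
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
counts = confusion_counts(y_true, y_pred)
print(counts)                           # (3, 3, 1, 1)
print(classification_metrics(*counts))  # (0.75, 0.75, 0.75)
```

For a screening task, sensitivity is usually the figure to watch, since a false negative sends an infected patient home undetected.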

Figure 5: Six randomly selected sample images from the COVID-X-ray-5k dataset

Figure 6: The radar plot of the machine learning approaches

Tab. 6 also compares the different deep architectures in terms of MSE. It can be seen that the proposed MHMABC-DCNN architecture has a lower MSE than the other approaches. Therefore, the proposed MHMABC-DCNN is well suited to this problem. The algorithms rank in this comparison as follows: MHMABC-DCNN, CapSA-DCNN, ChOA-DCNN, BBO-DCNN, ABC-DCNN, GA-DCNN, DCNN, and MLPNN. For a more comprehensive comparison, the convergence curves of the architectures are shown in Fig. 7. As shown in this figure, the MHMABC-DCNN, CapSA-DCNN, and ChOA-DCNN architectures converge faster than the others. All architectures trained by meta-heuristic algorithms show higher convergence rates.

Figure 7: The convergence curve of the architectures

6 Conclusion

This paper proposed a real-time Covid-19 detector based on a novel deep learning architecture. To overcome the drawbacks of PCR tests, we used chest X-ray images to better diagnose positive Covid-19 cases. We then proposed a novel algorithm named MHMABC to improve the exploitation and exploration of the ABC algorithm, and trained the fully connected layer of our deep architecture with it. The experimental results on a Covid-19 X-ray image dataset showed that the proposed scheme (MHMABC-DCNN) obtains better accuracy and convergence rate while reducing the execution cost. Furthermore, the performance evaluation on some well-known benchmark datasets showed that the proposed MHMABC algorithm outperformed the state-of-the-art algorithms.

Funding Statement: This work has been supported in part by the Institute of Information and Communications Technology Planning and Evaluation (IITP) under the High-Potential Individuals Global Training Program under Grant 2021-0-01532 (50%), and in part by the National Research Foundation of Korea (NRF) under Grant 2020R1A2B5B01002145 (50%), all funded by the Korean Government through Ministry of Science and ICT (MSIT).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. X. Li, Z. Q. Dong, P. Yu, L. P. Wang, X. D. Niu et al., “Effect of self-assembly on fluorescence in magnetic multiphase flows and its application on the novel detection for COVID-19,” Physics of Fluids, vol. 33, no. 4, pp. 042004, 2021.

2. M. Abdel-Basset, R. Mohamed, M. Elhoseny, R. K. Chakrabortty and M. Ryan, “A hybrid COVID-19 detection model using an improved marine predators algorithm and a ranking-based diversity reduction strategy,” IEEE Access, vol. 8, pp. 79521–79540, 2020.

3. W. Wang, H. Liu, J. Li, H. Nie and X. Wang, “Using CFW-net deep learning models for X-ray images to detect COVID-19 patients,” International Journal of Computational Intelligence Systems, vol. 14, no. 1, pp. 199–207, 2021.

4. D. Zhang, J. Hu, F. Li, X. Ding, A. K. Sangaiah et al., “Small object detection via precise region-based fully convolutional networks,” Computers, Materials and Continua, vol. 69, no. 2, pp. 1503–1517, 2021.

5. S. Wacharapluesadee, T. Kaewpom, W. Ampoot, S. Ghai, W. Khamhang et al., “Evaluating the efficiency of specimen pooling for PCR-based detection of COVID-19,” Journal of Medical Virology, vol. 92, no. 10, pp. 2193–2199, 2020.

6. A. M. Karim, H. Kaya, V. Alcan, B. Sen and I. A. Hadimlioglu, “New optimized deep learning application for COVID-19 detection in chest X-ray images,” Symmetry, vol. 4, no. 5, pp. 1003, 2022.

7. M. Khishe, F. Caraffini and S. Kuhn, “Evolving deep learning convolutional neural networks for early COVID-19 detection in chest X-ray images,” Mathematics, vol. 9, no. 9, pp. 1002, 2021.

8. J. Wang, Y. Wu, S. He, P. K. Sharma, X. Yu et al., “Lightweight single image super-resolution convolution neural network in portable device,” KSII Transactions on Internet and Information Systems (TIIS), vol. 15, no. 11, pp. 4065–4083, 2021.

9. S. M. J. Jalali, M. Ahmadian, S. Ahmadian, R. Hedjam, A. Khosravi et al., “X-ray image based COVID-19 detection using evolutionary deep learning approach,” Expert Systems with Applications, vol. 201, pp. 116942, 2022.

10. M. Kaveh, M. Khishe and M. R. Mosavi, “Design and implementation of a neighborhood search biogeography-based optimization trainer for classifying sonar dataset using multi-layer perceptron neural network,” Analog Integrated Circuits and Signal Processing, vol. 100, no. 2, pp. 405–428, 2019.

11. F. Najafi, M. Kaveh, D. Martin and M. R. Mosavi, “Deep PUF: A highly reliable DRAM PUF-based authentication for iot networks using deep convolutional neural networks,” Sensors, vol. 21, no. 6, pp. 2009, 2021.

12. J. Wang, Y. Zou, P. Lei, R. S. Sherratt and L. Wang, “Research on recurrent neural network based crack opening prediction of concrete dam,” Journal of Internet Technology, vol. 21, no. 4, pp. 1161–1169, 2020.

13. M. Kaveh and M. S. Mesgari, “Improved biogeography-based optimization using migration process adjustment: An approach for location-allocation of ambulances,” Computers & Industrial Engineering, vol. 135, pp. 800–813, 2019.

14. A. Lotfy, M. Kaveh, M. R. Mosavi and A. R. Rahmati, “An enhanced fuzzy controller based on improved genetic algorithm for speed control of DC motors,” Analog Integrated Circuits and Signal Processing, vol. 105, no. 2, pp. 141–155, 2020.

15. M. Khishe, M. R. Mosavi and M. Kaveh, “Improved migration models of biogeography-based optimization for sonar dataset classification by using neural network,” Applied Acoustics, vol. 118, pp. 15–29, 2017.

16. S. He, Z. Li, Y. Tang, Z. Liao, F. Li et al., “Parameters compressing in deep learning,” Computers Materials & Continua, vol. 62, no. 1, pp. 321–336, 2020.

17. M. Kaveh, M. Kaveh, M. S. Mesgari and R. S. Paland, “Multiple criteria decision-making for hospital location-allocation based on improved genetic algorithm,” Applied Geomatics, vol. 12, no. 3, pp. 291–306, 2020.

18. N. Kianfar, M. S. Mesgari, A. Mollalo and M. Kaveh, “Spatio-temporal modeling of COVID-19 prevalence and mortality using artificial neural network algorithms,” Spatial and Spatio-Temporal Epidemiology, vol. 40, pp. 100471, 2022.

19. O. Rostami and M. Kaveh, “Optimal feature selection for SAR image classification using biogeography-based optimization (BBO), artificial bee colony (ABC) and support vector machine (SVM): A combined approach of optimization and machine learning,” Computational Geosciences, vol. 25, no. 3, pp. 911–930, 2021.

20. J. Wang, M. Khishe, M. Kaveh and H. Mohammadi, “Binary chimp optimization algorithm (BChOA): A new binary meta-heuristic for solving optimization problems,” Cognitive Computation, vol. 13, no. 5, pp. 1297–1316, 2021.

21. S. R. Zhou and B. Tan, “Electrocardiogram soft computing using hybrid deep learning CNN-ELM,” Applied Soft Computing, vol. 86, pp. 105778, 2020.

22. M. Ali, L. T. Jung, A. H. Abdel-Aty, M. Y. Abubakar, M. Elhoseny et al., “Semantic-k-NN algorithm: An enhanced version of traditional k-NN algorithm,” Expert Systems with Applications, vol. 151, pp. 113374, 2020.

23. L. Zhu, L. Kong and C. Zhang, “Numerical study on hysteretic behaviour of horizontal-connection and energy-dissipation structures developed for prefabricated shear walls,” Applied Sciences, vol. 10, no. 4, pp. 1240, 2020.

24. X. R. Zhang, X. Sun, W. Sun, T. Xu and P. P. Wang, “Deformation expression of soft tissue based on BP neural network,” Intelligent Automation & Soft Computing, vol. 32, no. 2, pp. 1041–1053, 2022.

25. Y. Wei, M. M. Zhao, M. Hong, M. J. Zhao and M. Lei, “Learned conjugate gradient descent network for massive MIMO detection,” IEEE Transactions on Signal Processing, vol. 68, pp. 6336–6349, 2020.

26. X. R. Zhang, W. F. Zhang, W. Sun, X. M. Sun and S. K. Jha, “A robust 3-D medical watermarking based on wavelet transform for data protection,” Computer Systems Science & Engineering, vol. 41, no. 3, pp. 1043–1056, 2022.

27. C. Zhang, M. Abedini and J. Mehrmashhadi, “Development of pressure-impulse models and residual capacity assessment of RC columns using high fidelity arbitrary lagrangian-eulerian simulation,” Engineering Structures, vol. 224, pp. 111219, 2020.

28. M. Jiang, B. Gallagher, J. Kallman and D. Laney, “A supervised learning framework for arbitrary lagrangian-eulerian simulations,” in Proc. of the 2016 IEEE 15th Int. Conf. on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, pp. 977–982, 2016.

29. W. Wang, Y. Jiang, Y. Luo, J. Li, X. Wang et al., “An advanced deep residual dense network (DRDN) approach for image super-resolution,” International Journal of Computational Intelligence Systems, vol. 12, no. 2, pp. 1592–1601, 2019.

30. J. Martens, “Deep learning via hessian-free optimization,” in Proc. of the 27th ICML’10 Int. Conf. on Machine Learning, Haifa, Israel, vol. 27, pp. 735–742, 2010.

31. O. Vinyals and D. Povey, “Krylov subspace descent for deep learning,” Artificial Intelligence and Statistics, vol. 22, pp. 1261–1268, 2012.

32. B. Cao, J. Zhao, P. Yang, P. Yang, X. Liu et al., “Multiobjective feature selection for microarray data via distributed parallel algorithms,” Future Generation Computer Systems, vol. 100, pp. 952–981, 2019.

33. A. Lotfy, M. Kaveh, M. R. Mosavi and A. R. Rahmati, “An enhanced FPGA-based implementation of fuzzy controller using a personalized microcontroller,” in Proc. of the 2019 IEEE 34th Int. Conf. on Power System (PSC), Tehran, Iran, pp. 1–4, 2019.

34. M. Kaveh, D. Martín and M. R. Mosavi, “A lightweight authentication scheme for V2G communications: A PUF-based approach ensuring cyber/physical security and identity/location privacy,” Electronics, vol. 9, no. 9, pp. 1479, 2020.

35. A. Lotfy, M. Kaveh, D. Martín and M. R. Mosavi, “An efficient design of anderson PUF by utilization of the xilinx primitives in the SLICEM,” IEEE Access, vol. 9, pp. 23025–23034, 2021.

36. W. Wang, Y. Yang, J. Li, Y. Hu, Y. Luo et al., “Woodland labeling in Chenzhou, China, via deep learning approach,” International Journal of Computational Intelligence Systems, vol. 13, no. 1, pp. 1393–1403, 2020.

37. G. Rosa, J. Papa, A. Marana, W. Scheirer and D. Cox, “Fine-tuning convolutional neural networks using harmony search,” in Proc. of the 20th Iberoamerican Int. Conf. on Pattern Recognition, Montevideo, Uruguay, pp. 683–690, 2015.

38. H. Fan, L. Zheng, C. Yan and Y. Yang, “Unsupervised person re-identification: Clustering and fine-tuning,” ACM Transactions on Multimedia Computing, Communications, and Applications, vol. 14, no. 4, pp. 1–18, 2018.

39. Y. Sun, B. Xue, M. Zhang, G. G. Yen and J. Lv, “Automatically designing CNN architectures using the genetic algorithm for image classification,” IEEE Transactions on Cybernetics, vol. 50, no. 9, pp. 3840–3854, 2020.

40. A. A. Ewees, M. Abd El Aziz and M. Elhoseny, “Social-spider optimization algorithm for improving ANFIS to predict biochar yield,” in Proc. of the 2017 IEEE 8th Int. Conf. on Computing, Communication and Networking Technologies (ICCCNT), Delhi, India, pp. 1–6, 2017.

41. K. Shankar, M. Elhoseny, S. Lakshmanaprabu, M. Ilayaraja, R. M. Vidhyavathi et al., “Optimal feature level fusion based ANFIS classifier for brain MRI image classification,” Concurrency and Computation-Practice & Experience, vol. 32, no. 1, pp. 1–12, 2020.

42. R. M. Rizk-Allah, A. E. Hassanien and M. Elhoseny, “A multi-objective transportation model under neutrosophic environment,” Computers & Electrical Engineering, vol. 69, pp. 705–719, 2018.

43. I. M. El-Hasnony, S. I. Barakat, M. Elhoseny and R. R. Mostafa, “Improved feature selection model for big data analytics,” IEEE Access, vol. 8, pp. 66989–67004, 2020.

44. M. Elhoseny, “Intelligent firefly-based algorithm with levy distribution (FF-L) for multicast routing in vehicular communications,” Expert Systems with Applications, vol. 140, pp. 112889, 2020.

45. X. Wang, C. Gong, M. Khishe, M. Mohammadi and T. A. Rashid, “Pulmonary diffuse airspace opacities diagnosis from chest X-ray images using deep convolutional neural networks fine-tuned by whale optimizer,” Wireless Personal Communications, vol. 124, no. 2, pp. 1–20, 2021.

46. M. Turkoglu, “COVIDetectioNet: COVID-19 diagnosis system based on X-ray images using features selected from pre-learned deep features ensemble,” Applied Intelligence, vol. 51, no. 3, pp. 1213–1226, 2021.

47. D. Karaboga, “An idea based on honey bee swarm for numerical optimization,” Technical Report-tr06, vol. 200, pp. 1–10, 2005.

48. D. Simon, “Biogeography-based optimization,” IEEE Transactions on Evolutionary Computation, vol. 12, no. 6, pp. 702–713, 2008.

49. M. Braik, A. Sheta and H. Al-Hiary, “A novel meta-heuristic search algorithm for solving optimization problems: Capuchin search algorithm,” Neural Computing and Applications, vol. 33, no. 7, pp. 2515–2547, 2021.

50. M. Khishe and M. R. Mosavi, “Chimp optimization algorithm,” Expert Systems with Applications, vol. 149, pp. 113338, 2020.

51. M. M. Ali, C. Khompatraporn and Z. B. Zabinsky, “A numerical evaluation of several stochastic algorithms on selected continuous global optimization test problems,” Journal of Global Optimization, vol. 31, no. 4, pp. 635–672, 2005.

52. W. Kaidi, M. Khishe and M. Mohammadi, “Dynamic levy flight chimp optimization,” Knowledge-Based Systems, vol. 235, pp. 107625, 2022.

53. J. Irvin, P. Rajpurkar, M. Ko, Y. Yu, S. Ciurea-Ilcus et al., “Chexpert: A large chest radiograph dataset with uncertainty labels and expert comparison,” in Proc. of the 33th AAAI Int. Conf. on Artificial Intelligence, Honolulu, Hawaii, USA, vol. 33, no. 1, pp. 590–597, 2019.

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.