Open Access

ARTICLE

Tracking and Analysis of Pedestrian’s Behavior in Public Places

1 Department of Computer Science, Bahria University, Islamabad, Pakistan

2 Department of Computer Science, College of Computers and Information Technology, Taif University, Taif, 21944, Saudi Arabia

3 Department of Computer Science, Air University, Islamabad, Pakistan

4 Department of Computer Science, College of Computer, Qassim University, Buraydah, 51452, Saudi Arabia

5 Department of Computer Engineering, Tech University of Korea, 237 Sangidaehak-ro, Siheung-si, 15073, Gyeonggi-do, Korea

* Corresponding Author: Jeongmin Park. Email:

Computers, Materials & Continua 2023, 74(1), 841-853. https://doi.org/10.32604/cmc.2023.029629

Received 08 March 2022; Accepted 15 June 2022; Issue published 22 September 2022

Abstract

Crowd management has become a global concern due to the growing population in urban areas. Better management of pedestrians leads to improved use of public places, and pedestrian behavior is a major factor in crowd management. Multiple applications exist in this area, but the problem remains open because crowds are complex and behavior depends on the environment. In this paper, we propose a new method for pedestrian behavior detection. A Kalman filter is used to detect and track pedestrians with a movement-based approach. Next, occlusion detection and removal is performed with a region shrinking method to isolate occluded humans. Human verification is performed on each human silhouette, and wavelet analysis and particle gradient motion features are extracted for each silhouette. The Gray Wolf Optimizer (GWO) is used to optimize the feature set, and behavior classification is then performed with the Extreme Gradient Boosting (XGBoost) classifier. Performance is evaluated on pedestrian data from the Avenue and UBI-Fight datasets, which cover different environments. The mean accuracies achieved are 91.3% and 85.14% on the Avenue and UBI-Fight datasets, respectively. These results are more accurate than those of other existing methods.

Crowd management is becoming a global issue due to the growing population in urban areas. According to research, the world's urban population will increase by 60% by 2030 [1]. This enormous change increases the need for effective management of public places and for crowd behavior analysis. Crowd behavior is the main factor affecting space management and the safety measures used in public places [2]. Researchers have proposed various algorithms for estimating pedestrian intention, and these perform well in multiple scenarios [3–6]. However, challenges remain because pedestrians can suddenly change direction and perform arbitrary actions. Another challenge is mutual occlusion between pedestrians and occlusion by other obstacles [7].

Intelligent prediction of pedestrian behavior in public places is the main focus of this work. We propose an alternative, robust approach for pedestrian behavior tracking that examines the behavior of an individual or crowd and determines whether it is normal or abnormal. Our approach classifies the behavior of people in crowded scenes and deals efficiently with occlusions, arbitrary movements, and overlaps. First, we apply a preprocessing step to the extracted video frames for noise removal and pedestrian detection and tracking, and then perform shadow removal to enhance the appearance of each human. Next, object shrinking (object separation) isolates multiple occluded people. Human/non-human clustering is done using fuzzy c-means, and local descriptors are applied. We use a gray wolf optimizer to optimize the features and an XGBoost classifier [8] to categorize crowd behavior into two classes, normal and abnormal. We evaluate the proposed approach on the publicly available Avenue [9] and UBI-Fight [10] benchmark datasets, where it is validated for efficacy and outperforms other state-of-the-art methods.

The main contribution of this paper is the selection of motion-based features that can effectively identify pedestrian behavior. The other important aspect of this work is the occlusion removal process. When occlusion is found, we use a semicircle to identify the head regions of pedestrians and then estimate the layout of the silhouettes using body-part estimation. The estimated regions are then separated, which removes the occlusion.
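To make the head-based separation step concrete, here is a minimal sketch under simplifying assumptions. The paper fits a semicircle to each head. This stand-in instead treats the local maxima of a binary silhouette's top profile as head candidates and cuts the merged blob at the lowest column between two heads. The functions `top_profile`, `head_peaks` and `split_columns`, together with the `min_height` and `window` parameters, are hypothetical and not part of the authors' implementation.

```python
def top_profile(mask):
    """Silhouette height per column of a binary mask (row 0 is the top of the image)."""
    rows = len(mask)
    profile = []
    for c in range(len(mask[0])):
        # Index of the topmost foreground pixel in this column, or `rows` if the column is empty.
        top = next((r for r in range(rows) if mask[r][c]), rows)
        profile.append(rows - top)
    return profile


def head_peaks(profile, min_height, window):
    """Columns that are the highest point within +/- `window` columns (head candidates)."""
    peaks = []
    for c, h in enumerate(profile):
        lo, hi = max(0, c - window), min(len(profile), c + window + 1)
        if h >= min_height and h == max(profile[lo:hi]):
            # On a flat head top, keep only the first column of the plateau.
            if not peaks or c - peaks[-1] > window:
                peaks.append(c)
    return peaks


def split_columns(profile, peaks):
    """Cut between consecutive heads at the lowest point of the profile (the gap between bodies)."""
    return [min(range(a, b + 1), key=lambda c: profile[c]) for a, b in zip(peaks, peaks[1:])]


# Two overlapping pedestrians rendered as a 10-row binary mask.
heights = [0, 6, 8, 8, 6, 4, 4, 6, 9, 9, 6, 0]
rows = 10
mask = [[r >= rows - h for h in heights] for r in range(rows)]
profile = top_profile(mask)
peaks = head_peaks(profile, min_height=5, window=2)
print(peaks, split_columns(profile, peaks))  # [2, 8] [5]
```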

The rest of the paper is structured as follows. Section 2 gives a detailed overview of existing work. Section 3 outlines the complete methodology for pedestrian behavior detection. Section 4 discusses feature extraction, and Section 5 describes feature optimization. Section 6 describes the experimental setup and compares the proposed system with existing state-of-the-art systems. Section 7 discusses the results, and Section 8 presents conclusions and future directions.

Researchers have studied pedestrians in different settings. Some have tracked and identified pedestrians in indoor areas such as shopping malls, educational institutes, and hospitals, whereas others have focused on outdoor places such as bus stations, parks, and roads to track pedestrian intentions. We therefore divide the literature into two parts, and this section describes recent work in both.

Several research articles have focused on safety systems for indoor pedestrians. Kamal et al. [11] built a model using a Gaussian Process (GP) [12] to compute the pedestrian's body pose. They used a reduced-dimensional representation to predict pedestrian action dynamics such as walking, stopping, starting, and standing. However, their method required a person to stand in the same place for at least four seconds before the action could be detected. In another study, Kamal [13] predicted pedestrian actions and behavior, including walking, standing, and performing other actions. Jalal et al. [14], [15] detected motion contours using a Histogram of Oriented Gradients (HOG)-like descriptor to detect pedestrians, combined with Support Vector Machines (SVM) to estimate pedestrian intention. Pedestrian behavior has been predicted in several studies, most of which used images [16], 3D point clouds [17], or a fusion of both.

Estimating pedestrian intention is even more challenging because of uncertainty about their impending motion [18]. Within a fraction of a second, pedestrians can decide to move in any of many possible directions or stop walking abruptly [19]. Their image or point cloud can be occluded by a variety of obstacles, and they can be distracted by talking to other pedestrians or using a mobile phone. According to [20], the analysis of non-critical situations has not received considerable attention, and Jalal et al. [21] observed that the difference between an effective and an ineffective intervention can depend on only a few centimeters or a fraction of a second.

Researchers have made many efforts, using different approaches, to predict pedestrian behavior. Most of this work has focused on outdoor environments, especially road crossings. According to [22], pedestrians account for 22% of road traffic deaths worldwide. To improve safety and reduce the number of accidents, [23] proposed an algorithm to predict the intentions of pedestrians crossing roads and described such prediction as a prerequisite for the safe operation of automated vehicles. Mahmood et al. [24] developed a stereo-vision-based system that enables autonomous cars to estimate the movements of pedestrians crossing roads. Quaid et al. [25] proposed a system that evaluates driver and pedestrian awareness to estimate the risk of collision, which helps improve safety during road crossing.

Nadeem et al. [26] developed a system that detects cyclists and pedestrians concurrently and warns the driver when a pedestrian is detected. Pedestrian and pedestrian-behavior detection has been examined in several studies using 3D point clouds or images. In [27], pedestrian intention is predicted using path prediction. In [28], interactions between humans and autonomous vehicles were investigated and evaluated for different pedestrian motion patterns. Another related work is given in [29]. Jalal et al. [30] predicted pedestrians' future actions using visual data and the effects of the physical environment on pedestrians' choice of behavior, drawing on static semantic scene understanding and control theory. All these applications are useful and perform well in different settings, but there is still room for improvement. The first challenge we identified is mutual occlusion of pedestrians, which complicates pedestrian detection at rush hours. The second challenge is the variability of pedestrian behavior: pedestrians can behave in many different ways, which can mislead the classification process.

In this research, we focus on occlusion detection and removal to improve the object detection process. We also account for the variability of pedestrian behavior by training our system on multiple datasets containing different actions performed in public places, so that it can handle multiple types of behavior.

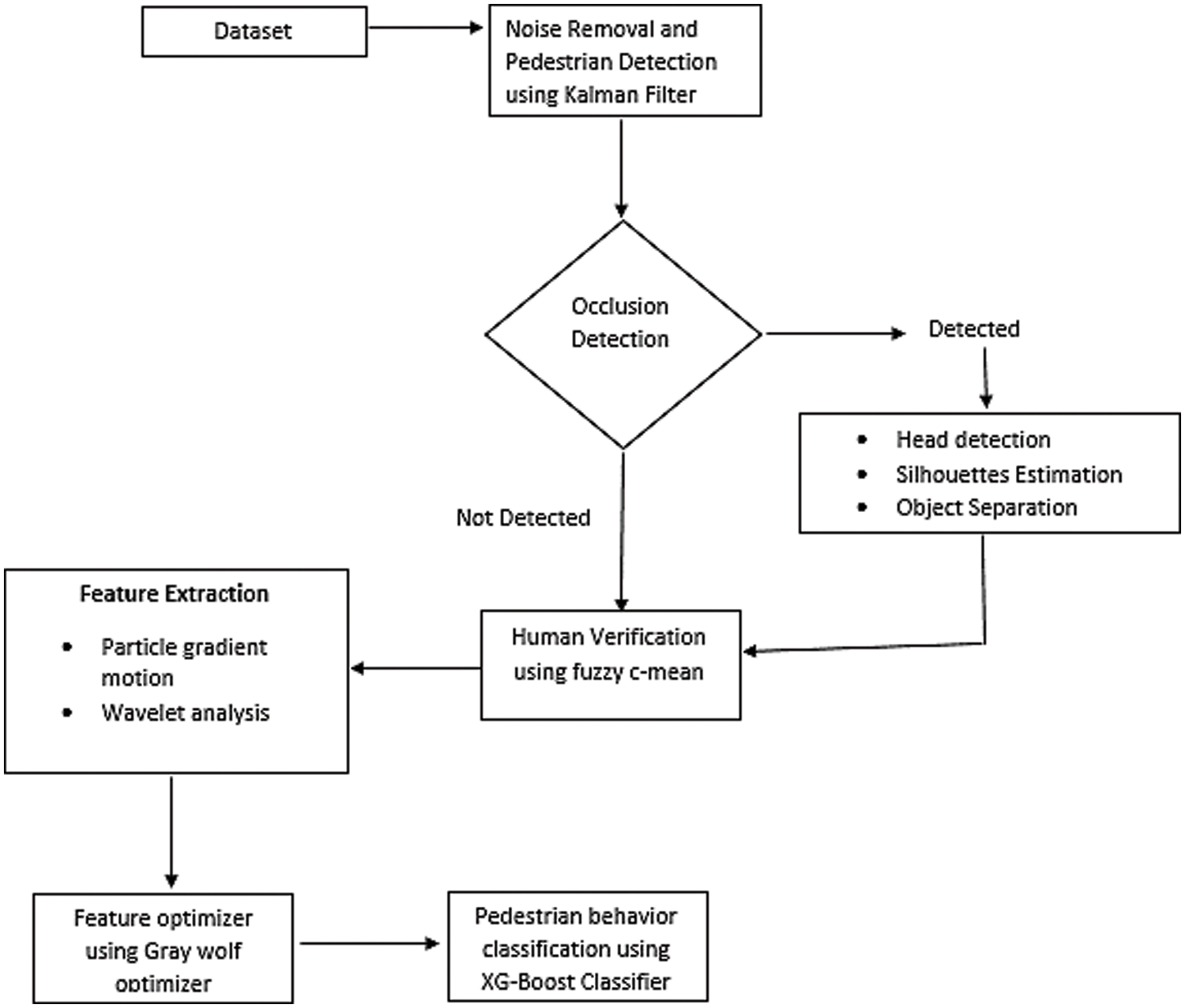

In this section, we use two datasets as input to track pedestrian behavior in indoor and outdoor environments. Fig. 1 shows the complete architecture of the proposed behavior detection system. Preprocessing is the initial step, performed to remove noise, extract the foreground, and detect objects. We apply a Gaussian mixture model (GMM) [31] for background subtraction and identification of foreground objects.

Figure 1: Proposed methodology to detect pedestrian’s behavior
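The per-pixel background model used in preprocessing can be sketched as follows. This is a simplified Stauffer-Grimson-style GMM for grayscale intensities, written in plain Python for readability. The class and function names, the three-component limit and the learning-rate and threshold values are illustrative assumptions, not settings reported for the proposed system. In practice, a library implementation such as OpenCV's MOG2 background subtractor would process whole frames.

```python
import math


class PixelGMM:
    """Online Gaussian mixture for one grayscale pixel (simplified Stauffer-Grimson update)."""

    def __init__(self, k=3, alpha=0.05, init_var=225.0, match_sigma=2.5, bg_ratio=0.7):
        self.k = k                      # maximum number of Gaussian components
        self.alpha = alpha              # learning rate (also used for mean/variance updates)
        self.init_var = init_var        # variance of a newly created component
        self.match_sigma = match_sigma  # a value matches a component within this many std devs
        self.bg_ratio = bg_ratio        # cumulative weight treated as background
        self.w, self.mu, self.var = [], [], []

    def _rank(self, i):
        return self.w[i] / math.sqrt(self.var[i])

    def update(self, x):
        """Update the mixture with intensity x and return True if x is foreground."""
        match = next((i for i in range(len(self.mu))
                      if abs(x - self.mu[i]) <= self.match_sigma * math.sqrt(self.var[i])), None)
        self.w = [(1 - self.alpha) * wi for wi in self.w]
        if match is None:
            # No component explains x: add one, or replace the least probable one.
            if len(self.mu) < self.k:
                self.w.append(self.alpha)
                self.mu.append(float(x))
                self.var.append(self.init_var)
            else:
                j = min(range(self.k), key=self._rank)
                self.w[j], self.mu[j], self.var[j] = self.alpha, float(x), self.init_var
        else:
            d = x - self.mu[match]
            self.w[match] += self.alpha
            self.mu[match] += self.alpha * d
            self.var[match] = max(1.0, (1 - self.alpha) * self.var[match] + self.alpha * d * d)
        total = sum(self.w)
        self.w = [wi / total for wi in self.w]
        if match is None:
            return True
        # Background = top-ranked components whose cumulative weight first exceeds bg_ratio.
        cumulative = 0.0
        for i in sorted(range(len(self.w)), key=self._rank, reverse=True):
            if i == match:
                return False
            cumulative += self.w[i]
            if cumulative > self.bg_ratio:
                break
        return True


def foreground_mask(models, frame):
    """Apply one PixelGMM per pixel; frame and models are row-major 2-D lists."""
    return [[models[r][c].update(px) for c, px in enumerate(row)]
            for r, row in enumerate(frame)]


models = [[PixelGMM() for _ in range(4)] for _ in range(4)]
background = [[100] * 4 for _ in range(4)]
for _ in range(30):                      # warm-up on a static background
    foreground_mask(models, background)
frame = [row[:] for row in background]
frame[1][2] = 220                        # one bright "pedestrian" pixel
mask = foreground_mask(models, frame)
print(sum(v for row in mask for v in row))  # 1
```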

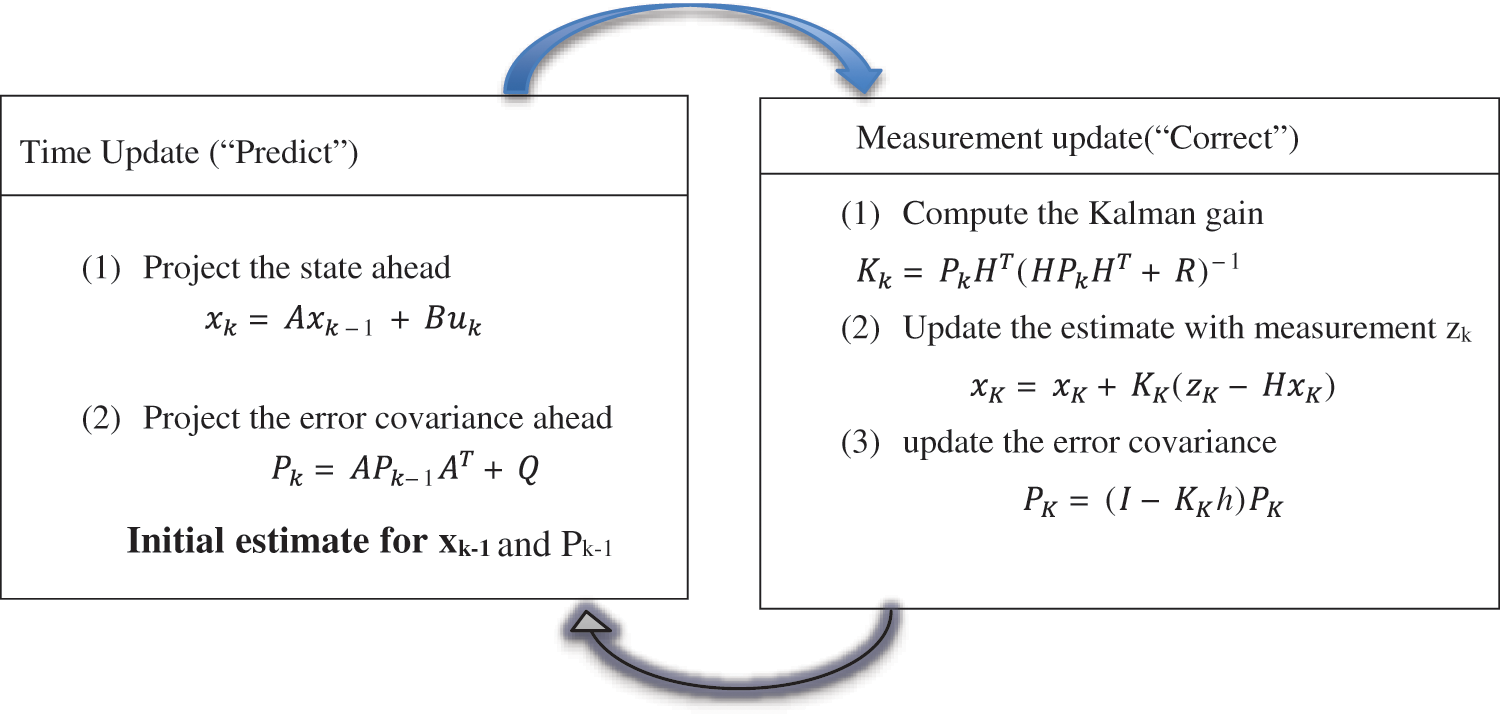

Pedestrian tracking is the second stage after preprocessing in an image/video analysis system, and it is a challenging and error-prone task. After preprocessing with the Gaussian mixture model, we use the Kalman filter [32] to track pedestrians. The Kalman filter estimates the state of a process, whether past, present, or future, even when the precise nature of the modeled system is unknown. It predicts the state in two phases: the time update and the measurement update.

In the time update, the filter uses the previous state estimate to predict the next state of the object. In the measurement update, the new measurement is fed back to correct this prediction, converting the old (predicted) estimate into a new (corrected) estimate. Two main variables describe the filter: the state estimate $X_{k|k}$ and the error covariance $P_{k|k}$. Lastly, the noise in the measured value is accounted for. Fig. 2 presents the main architecture of the Kalman filter, and Fig. 3 displays results on the Avenue dataset after applying the Kalman filter.

Figure 2: Object state prediction process of Kalman filter

Figure 3: Object tracking using Kalman Filter. (a) Original image and (b) object tracked using Kalman
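The time and measurement updates described above can be illustrated with a constant-velocity Kalman filter applied independently to the x and y coordinates of a pedestrian centroid. This is a minimal sketch, assuming a state of [position, velocity], a diagonal process-noise term `q` and a measurement noise `r`. These values and the `Kalman1D` and `track` names are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Constant-velocity Kalman filter for one image coordinate.

    State is [position, velocity]; P = [[a, b], [b, d]] is the error covariance.
    """
    p: float          # position estimate
    v: float = 0.0    # velocity estimate
    a: float = 1.0    # P[0][0]
    b: float = 0.0    # P[0][1] == P[1][0]
    d: float = 1.0    # P[1][1]
    q: float = 1e-2   # process noise added to both diagonal terms (simplifying assumption)
    r: float = 1.0    # measurement noise variance

    def predict(self, dt=1.0):
        """Time update: x = F x, P = F P F^T + Q with F = [[1, dt], [0, 1]]."""
        self.p += dt * self.v
        self.a, self.b = self.a + 2 * dt * self.b + dt * dt * self.d + self.q, self.b + dt * self.d
        self.d += self.q
        return self.p

    def update(self, z):
        """Measurement update with H = [1, 0]: correct the prediction using measurement z."""
        s = self.a + self.r               # innovation variance
        k0, k1 = self.a / s, self.b / s   # Kalman gain
        y = z - self.p                    # innovation
        self.p += k0 * y
        self.v += k1 * y
        self.a, self.b, self.d = (1 - k0) * self.a, (1 - k0) * self.b, self.d - k1 * self.b
        return self.p


def track(centroids, dt=1.0):
    """Track a sequence of (x, y) centroids with two independent 1-D filters."""
    kx, ky = Kalman1D(p=centroids[0][0]), Kalman1D(p=centroids[0][1])
    for zx, zy in centroids[1:]:
        kx.predict(dt)
        ky.predict(dt)
        kx.update(zx)
        ky.update(zy)
    return kx, ky


path = [(10 + 2 * t, 5 - t) for t in range(60)]   # pedestrian moving at (+2, -1) px/frame
kx, ky = track(path)
print(round(kx.v, 2), round(ky.v, 2))             # estimates should be close to 2.0 and -1.0
```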

After detecting pedestrians, we observed that the size of some objects increases because of their shadows. This complicates human verification and creates confusion between humans and obstacles. To avoid such errors and to enhance the appearance of humans, shadow detection and removal are performed on images in the Hue, Saturation and Value (HSV) color space.
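One common way to realize HSV shadow detection is the rule of Cucchiara et al. A foreground pixel is labeled as shadow when its brightness (V) is a fixed fraction of the background's brightness while its hue and saturation change little. The sketch below assumes that rule, an available background image and illustrative thresholds `alpha`, `beta`, `tau_s` and `tau_h`. The paper does not state its exact criterion.

```python
import colorsys


def is_shadow(pixel_rgb, background_rgb, alpha=0.4, beta=0.9, tau_s=0.15, tau_h=0.1):
    """HSV shadow test: darker than the background but with similar hue and saturation.

    All thresholds are illustrative assumptions; hue is in [0, 1) as returned by colorsys.
    """
    h_i, s_i, v_i = colorsys.rgb_to_hsv(*(ch / 255.0 for ch in pixel_rgb))
    h_b, s_b, v_b = colorsys.rgb_to_hsv(*(ch / 255.0 for ch in background_rgb))
    if v_b == 0:
        return False
    ratio = v_i / v_b                    # shadows attenuate brightness...
    dh = abs(h_i - h_b)
    dh = min(dh, 1.0 - dh)               # ...but barely change hue (circular distance)
    return alpha <= ratio <= beta and (s_i - s_b) <= tau_s and dh <= tau_h


def remove_shadows(frame, background, mask):
    """Clear foreground pixels that pass the shadow test."""
    return [[bool(m) and not is_shadow(frame[r][c], background[r][c])
             for c, m in enumerate(row)] for r, row in enumerate(mask)]


background = [[(200, 180, 160), (200, 180, 160)]]
frame = [[(120, 108, 96), (30, 60, 200)]]   # a darkened floor pixel and a clothing pixel
mask = [[True, True]]
print(remove_shadows(frame, background, mask))  # [[False, True]]
```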

Further, we handle occlusion with a region estimation method that detects and removes occlusions, which helps isolate multiple occluded people. Human/non-human silhouette verification is done using fuzzy c-means and a local descriptor. The Gray Wolf Optimizer (GWO) is used to optimize the feature set, and an XGBoost classifier [33] is used to classify crowd behavior. The Avenue [34] and UBI-Fight [35] datasets are used to assess the performance of our system. On both datasets, the proposed method was validated and performed better than other state-of-the-art methods.
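Human/non-human verification with fuzzy c-means can be sketched as below. Each silhouette is described by a small feature vector and receives a soft membership in each of `c` clusters. The two features used here (an aspect ratio and a fill ratio) and the sample values are hypothetical, because the paper does not list the descriptor it clusters. The fuzzifier `m = 2` is a common default, not a value from the source.

```python
import math
import random


def fuzzy_c_means(points, c=2, m=2.0, max_iter=300, tol=1e-6, seed=0):
    """Return (centers, memberships); memberships[i][j] is point i's degree in cluster j."""
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    # Random initial memberships, each row normalized to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() + 1e-9 for _ in range(c)]
        total = sum(row)
        u.append([x / total for x in row])
    centers = []
    for _ in range(max_iter):
        # Centers are means weighted by membership ** m.
        centers = []
        for j in range(c):
            w = [u[i][j] ** m for i in range(n)]
            sw = sum(w)
            centers.append([sum(w[i] * points[i][k] for i in range(n)) / sw for k in range(dim)])
        # Memberships: u_ij = 1 / sum_k (d_ij / d_ik) ** (2 / (m - 1)).
        new_u = []
        for i in range(n):
            dists = [math.dist(points[i], ctr) for ctr in centers]
            if min(dists) == 0.0:   # point sits exactly on a center: crisp membership
                hits = [1.0 if d == 0.0 else 0.0 for d in dists]
                new_u.append([h / sum(hits) for h in hits])
            else:
                new_u.append([1.0 / sum((dists[j] / dists[k]) ** (2 / (m - 1)) for k in range(c))
                              for j in range(c)])
        shift = max(abs(new_u[i][j] - u[i][j]) for i in range(n) for j in range(c))
        u = new_u
        if shift < tol:
            break
    return centers, u


# Hypothetical silhouette features: (height/width aspect ratio, foreground fill ratio).
silhouettes = [(2.8, 0.55), (3.0, 0.60), (2.6, 0.50), (0.5, 0.90), (0.6, 0.85), (0.4, 0.80)]
centers, u = fuzzy_c_means(silhouettes)
labels = [max(range(len(row)), key=row.__getitem__) for row in u]
print(labels)  # the first three share one label, the last three the other
```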

In this section, we discuss the feature set used to classify events: wavelet analysis of object movement and particle gradient motion. Features are extracted independently for each human silhouette and are discussed in detail below.

Wavelet analysis is used to analyze the frequency spectrum of object movement, whether linear or non-linear [36]. It decomposes a time series into time and frequency space simultaneously, see Eq. (1). This gives information both on the amplitude of any periodic signals within the series and on how this amplitude varies with time. We applied wavelet analysis to object velocity to obtain the frequency spectrum [37] of normal and abnormal parts of the videos, as shown in Fig. 4.

Figure 4: Wavelet analysis of normal and abnormal Events
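As a simplified illustration of the wavelet step, the sketch applies a multi-level discrete Haar transform to a per-frame speed signal and reports the energy of the detail coefficients at each scale. It substitutes the Haar DWT for the transform specified in Eq. (1), which is not reproduced here, and the sample signals are made up. A smooth speed profile puts almost no energy in the finest scale, whereas rapid alternation, as in fighting, concentrates most of its energy there.

```python
import math


def haar_step(signal):
    """One level of the orthonormal Haar DWT (signal length must be even)."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail


def wavelet_energies(signal, levels):
    """Energy of the detail coefficients per level (level 1 = finest time scale)."""
    approx, energies = list(signal), []
    for _ in range(levels):
        if len(approx) < 2 or len(approx) % 2:
            raise ValueError("signal length must be divisible by 2 ** levels")
        approx, detail = haar_step(approx)
        energies.append(sum(d * d for d in detail))
    return energies


walking = [1.0 + 0.01 * t for t in range(16)]      # smooth speed profile (px/frame)
fighting = [1.0 + (-1) ** t for t in range(16)]    # speed alternating every frame
print(wavelet_energies(walking, 3)[0] < wavelet_energies(fighting, 3)[0])  # True
```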

Particle gradient motion identifies the density-based gradient of particles moving in multiple directions [38]. We use the motion of each particle, computed with Eq. (2), together with its density and gradient information, to classify the flow as normal or abnormal. Fig. 5 visualizes particle gradient motion.

Figure 5: Gradient motion of multiple particles. (a) Abnormal Event and (b) corresponding gradient motion of a particle
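The general idea of combining particle motion with a density gradient can be sketched as follows. The sketch assumes a grid of particles advected by a dense flow field (for example, optical flow), a density histogram over coarse cells and finite-difference gradients of that density. The flow functions, grid size and cell size are hypothetical. Eq. (2) of the paper is not reproduced, so this illustrates the general technique rather than the authors' descriptor.

```python
def advect(particles, flow, steps=1):
    """Move each (x, y) particle along flow(x, y) -> (u, v) for a number of frames."""
    for _ in range(steps):
        particles = [(x + u, y + v) for x, y in particles for u, v in [flow(x, y)]]
    return particles


def density_grid(particles, width, height, cell):
    """Count particles per cell of a (height // cell) x (width // cell) grid."""
    nx, ny = width // cell, height // cell
    grid = [[0] * nx for _ in range(ny)]
    for x, y in particles:
        row, col = int(y // cell), int(x // cell)
        if 0 <= row < ny and 0 <= col < nx:
            grid[row][col] += 1
    return grid


def _diff(values, k):
    """Central difference in the interior, one-sided at the borders."""
    if len(values) == 1:
        return 0.0
    if k == 0:
        return float(values[1] - values[0])
    if k == len(values) - 1:
        return float(values[-1] - values[-2])
    return (values[k + 1] - values[k - 1]) / 2.0


def density_gradient(grid):
    """Return (gx, gy): finite-difference gradient of the particle density."""
    columns = [list(col) for col in zip(*grid)]
    gx = [[_diff(row, j) for j in range(len(row))] for row in grid]
    gy = [[_diff(columns[j], i) for j in range(len(columns))] for i in range(len(grid))]
    return gx, gy


def max_gradient(grid):
    gx, gy = density_gradient(grid)
    return max(abs(v) for row in gx + gy for v in row)


start = [(x + 0.5, y + 0.5) for x in range(32) for y in range(32)]
calm = density_grid(advect(start, lambda x, y: (0.0, 0.0), 3), 32, 32, 4)
crush = density_grid(advect(start, lambda x, y: (0.5 * (16 - x), 0.5 * (16 - y)), 3), 32, 32, 4)
print(max_gradient(calm), max_gradient(crush))  # 0.0 128.0
```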

5 Feature Optimization and Classification

GWO is a recent swarm intelligence optimization method. Grey wolves belong to the Canidae family and are apex predators at the top of the food chain [39]. They prefer to live in groups, or packs. The leader wolf, called the alpha, decides the hunting plan, and alpha wolves are the best at managing tasks. The alpha's subordinate, called the beta, helps and assists the alpha in planning and decision making and also communicates with the other wolves in the pack. The omega is the lowest-ranking wolf; it accepts the decisions of the others in the pack and follows them.

Following the same hierarchy, we consider the alpha as the best solution, the beta as the second-best solution, and the delta as the third-best solution; the remaining solutions are considered omegas [40]. The hunting behavior of the wolves is modeled using Eqs. (3) and (4).

where A and C are coefficient vectors and t is the current iteration.

Using these equations, the positions of the wolves are updated according to the position of the prey, and the best solution is retained.
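For reference, the standard GWO update (Mirjalili et al., 2014), which we assume Eqs. (3) and (4) express, is $\vec D = |\vec C \cdot \vec X_p(t) - \vec X(t)|$ and $\vec X(t+1) = \vec X_p(t) - \vec A \cdot \vec D$. Here $\vec A = 2a\vec r_1 - a$, $\vec C = 2\vec r_2$, $\vec r_1, \vec r_2$ are random vectors in $[0, 1]$ and $a$ decreases linearly from 2 to 0. Each wolf then moves to the average of the positions suggested by the alpha, beta and delta. The sketch below minimizes a continuous test function. For feature selection, one could threshold each coordinate (for example, keep feature k if $x_k > 0.5$) and use a classifier's validation error as the objective. That wrapper is a hypothetical extension and is not shown.

```python
import random


def gwo(objective, dim, bounds, n_wolves=20, n_iter=200, seed=0):
    """Minimize `objective` over the box [lo, hi]^dim with the standard Grey Wolf Optimizer."""
    rng = random.Random(seed)
    lo, hi = bounds
    wolves = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_wolves)]
    # alpha, beta, delta = the three best solutions found so far (copies, not aliases).
    alpha, beta, delta = (w[:] for w in sorted(wolves, key=objective)[:3])
    for t in range(n_iter):
        a = 2.0 - 2.0 * t / n_iter              # decreases linearly from 2 towards 0
        for wolf in wolves:
            for k in range(dim):
                x_new = 0.0
                for leader in (alpha, beta, delta):
                    A = 2.0 * a * rng.random() - a    # A = 2a*r1 - a
                    C = 2.0 * rng.random()            # C = 2*r2
                    D = abs(C * leader[k] - wolf[k])  # D = |C*X_p - X|
                    x_new += leader[k] - A * D        # X_p - A*D
                wolf[k] = min(hi, max(lo, x_new / 3.0))  # average of the three pulls, clipped
        ranked = sorted([alpha, beta, delta] + [w[:] for w in wolves], key=objective)
        alpha, beta, delta = ranked[0], ranked[1], ranked[2]
    return alpha, objective(alpha)


def sphere(x):
    return sum(v * v for v in x)


best, value = gwo(sphere, dim=5, bounds=(-10.0, 10.0))
print(value)  # expected to be very close to 0
```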

An XGBoost classifier [41] is used for abnormal event classification, and we apply it to both datasets, Avenue and UBI-Fight. XGBoost is one of the most popular machine learning algorithms today, and it often outperforms other machine learning algorithms across a wide range of data types. It supports parallel computation for extreme gradient boosting. Eqs. (7) and (8) give its computation on a single machine.

where $\{x_1, x_2, \ldots, x_n\}$ is the training set, $F(x)$ is the low-cost approximation function, and $h_m$ is the learner function.
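The additive model that $F(x)$ and $h_m$ refer to can be illustrated with plain gradient boosting. Starting from a constant $F_0$, each round fits a learner $h_m$ to the negative gradient of the loss and sets $F_m(x) = F_{m-1}(x) + \nu\, h_m(x)$. The sketch assumes regression stumps, log loss and a learning rate $\nu = 0.3$. It is first-order gradient boosting, not XGBoost itself, which additionally uses second-order (Newton) leaf weights, regularization and optimized tree construction. The two features in the usage example are hypothetical.

```python
import math


def fit_stump(X, r):
    """Least-squares regression stump fitted to pseudo-residuals r."""
    n, best = len(X), None
    for f in range(len(X[0])):
        values = sorted({x[f] for x in X})
        for lo_v, hi_v in zip(values, values[1:]):
            thr = (lo_v + hi_v) / 2
            left = [r[i] for i in range(n) if X[i][f] <= thr]
            right = [r[i] for i in range(n) if X[i][f] > thr]
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, f, thr, lm, rm)
    if best is None:                      # every feature is constant
        mean = sum(r) / n
        return (0, math.inf, mean, mean)
    return best[1:]


def stump_predict(stump, x):
    f, thr, left_value, right_value = stump
    return left_value if x[f] <= thr else right_value


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def boost(X, y, n_rounds=50, lr=0.3):
    """Gradient boosting for labels y in {0, 1} under log loss: F_m = F_{m-1} + lr * h_m."""
    p0 = sum(y) / len(y)                  # both classes must be present
    f0 = math.log(p0 / (1 - p0))          # F_0: log-odds of the positive class
    F, stumps = [f0] * len(X), []
    for _ in range(n_rounds):
        residuals = [yi - sigmoid(fi) for yi, fi in zip(y, F)]  # negative gradient of log loss
        h = fit_stump(X, residuals)
        stumps.append(h)
        F = [fi + lr * stump_predict(h, xi) for fi, xi in zip(F, X)]
    return f0, lr, stumps


def predict_proba(model, x):
    f0, lr, stumps = model
    return sigmoid(f0 + lr * sum(stump_predict(h, x) for h in stumps))


# Hypothetical per-silhouette features, e.g. (high-frequency wavelet energy, density gradient).
X = [[0.1, 1.0], [0.2, 0.5], [0.3, 0.8], [0.9, 0.4], [0.8, 0.9], [0.7, 0.1]]
y = [0, 0, 0, 1, 1, 1]                     # 0 = normal, 1 = abnormal
model = boost(X, y)
print(predict_proba(model, [0.15, 0.7]) < 0.5 < predict_proba(model, [0.85, 0.3]))  # True
```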

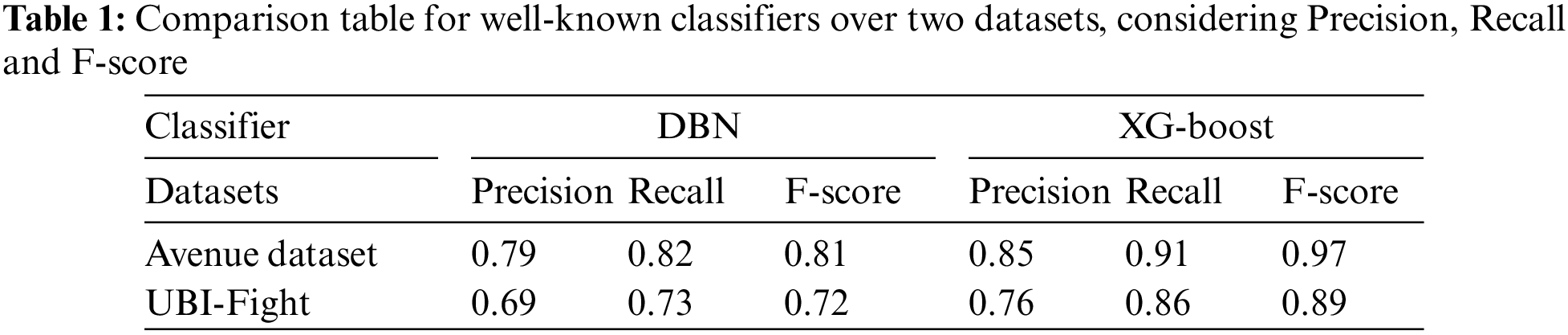

We performed a comparative analysis of a Deep Belief Network (DBN) [42] and the XGBoost classifier to verify the effectiveness of the classifier used in this study. Tab. 1 shows the results of all three classifiers used in the comparative analysis; XGBoost outperforms the other two classifiers.

Experiments were performed on an Intel Core i5 2.80 GHz CPU with 16 GB RAM and a 64-bit operating system. The system was implemented in MATLAB R2013b with the Image Processing Toolbox.

This section provides details of the datasets used to evaluate the proposed system. We used two datasets that contain different types of anomalies in different settings.

The UBI-Fight dataset contains multiple fighting scenarios captured in public places and is openly available; Fig. 6 shows some examples. It is widely used for activity, anomaly, action, and behavior detection. It contains 1000 videos with a total length of 80 h. The videos are annotated at the frame level and divided into normal and abnormal behavior: 784 videos show normal behavior, and the remaining 216 belong to the abnormal category.

Figure 6: Some examples from the UBI-Fight dataset

The Avenue dataset was collected on the CUHK campus avenue and comprises 16 training clips and 21 testing videos, with 30,652 frames in total. The videos contain multiple normal and abnormal behavior scenarios. Fig. 7 presents different abnormal behaviors in the Avenue dataset.

Figure 7: Abnormal behavior in the Avenue dataset

6.2 Performance Measurement and Results Analysis

We use precision [43] as the performance measure for evaluating our system. It is calculated using Eq. (9):

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{9}$$

where $TP$ is the number of true positives and $FP$ is the number of false positives.

Figure 8: Results of behavior detection with the UBI-Fight dataset

Figure 9: Results of behavior detection with the Avenue dataset

Figure 10: Performance measures of both datasets used to evaluate the system
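Precision as defined in Eq. (9) reduces to counting decisions. The following minimal sketch assumes binary frame-level labels with abnormal = 1 as the positive class. The paper does not say which class its reported precision refers to.

```python
def precision(y_true, y_pred, positive=1):
    """Precision = TP / (TP + FP) for the chosen positive class (here, abnormal = 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    return tp / (tp + fp) if tp + fp else 0.0


y_true = [1, 0, 1, 1, 0, 0]   # hypothetical ground truth per frame
y_pred = [1, 1, 1, 0, 0, 0]   # hypothetical detector output
print(precision(y_true, y_pred))  # 2 TP and 1 FP, so 2/3
```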

To evaluate performance in more detail, the equal error rate (EER) [44], the area under the receiver operating characteristic curve (AUC), and decidability [45] are computed on both datasets. AUC provides an aggregate measure of performance across all possible classification thresholds, and its value lies between 0 and 1. EER is the error rate at the threshold where the false acceptance rate equals the false rejection rate. Fig. 10 shows the overall performance on both datasets.
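The three additional measures can be computed from per-sample anomaly scores, as sketched below. AUC is computed as the probability that a randomly chosen abnormal sample scores higher than a randomly chosen normal one, which equals the area under the ROC curve. EER is approximated by sweeping the observed thresholds until the false acceptance and false rejection rates meet. Decidability is assumed to be the standard index $d' = |\mu_1 - \mu_2| / \sqrt{(\sigma_1^2 + \sigma_2^2)/2}$. Both the convention that higher scores mean "more abnormal" and the sample scores are assumptions.

```python
import math
from statistics import mean, pvariance


def roc_auc(scores_pos, scores_neg):
    """P(abnormal score > normal score), counting ties as 1/2 (equals the ROC AUC)."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            wins += 1.0 if sp > sn else 0.5 if sp == sn else 0.0
    return wins / (len(scores_pos) * len(scores_neg))


def equal_error_rate(scores_pos, scores_neg):
    """Threshold sweep: error where false acceptance and false rejection are closest."""
    best = None
    for thr in sorted(set(scores_pos) | set(scores_neg)):
        far = sum(s >= thr for s in scores_neg) / len(scores_neg)  # normal flagged abnormal
        frr = sum(s < thr for s in scores_pos) / len(scores_pos)   # abnormal missed
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2)
    return best[1]


def decidability(scores_pos, scores_neg):
    """d' = |mu1 - mu2| / sqrt((var1 + var2) / 2)."""
    spread = math.sqrt((pvariance(scores_pos) + pvariance(scores_neg)) / 2)
    return abs(mean(scores_pos) - mean(scores_neg)) / spread


abnormal = [0.9, 0.8, 0.7, 0.4]   # hypothetical anomaly scores
normal = [0.1, 0.2, 0.3, 0.6]
print(roc_auc(abnormal, normal), equal_error_rate(abnormal, normal))  # 0.9375 0.25
print(round(decidability(abnormal, normal), 3))                      # 2.138
```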

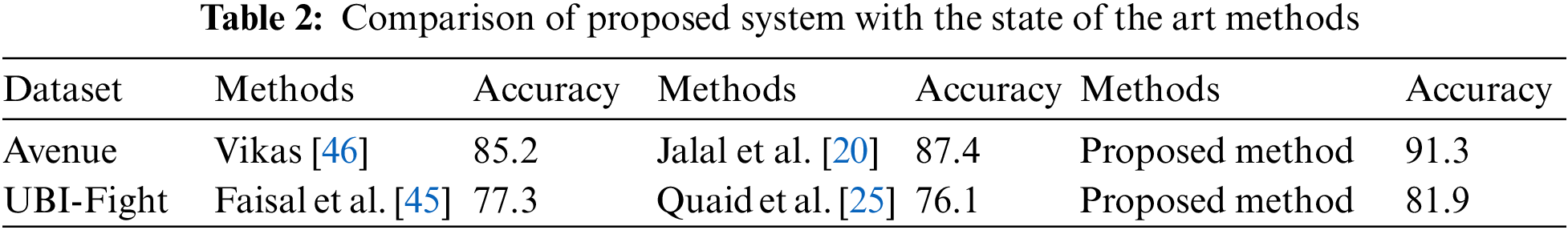

We compared the proposed method with existing methods evaluated on the same datasets (see Tab. 2). The proposed system performs well compared with other state-of-the-art methods.

The system has been evaluated on two different datasets with different types of anomalies. The results in Figs. 8 and 9 show that the system performs better on the UBI-Fight dataset than on the Avenue dataset, even though UBI-Fight contains fighting scenarios in which occlusion is more likely than in the other dataset. The main reasons we identified relate to how the videos were captured and how anomalous behavior is defined. First, multiple Avenue videos were captured with a shaky camera. Because our features are motion-based and pedestrian detection relies on the movement of silhouettes, camera shake can mislead pedestrian detection. Second, the object types differ: we consider human silhouettes as the objects of interest and use them to classify behavior, but in the Avenue dataset multiple other objects, such as bicycles and cars, are labeled as abnormal behavior. Finally, some events occur rarely, which affects the learning process of the proposed system.

This paper proposes an effective method for detecting pedestrian behavior. To improve the performance of our system and handle occlusion, we designed a new region shrinking algorithm to extract human silhouettes in occluded situations. Fuzzy c-means clustering is used to verify objects as human or non-human silhouettes. We extracted motion-related features, namely wavelet analysis and particle gradient motion, to classify behavior. To reduce computational cost and optimize the feature data, the Gray Wolf Optimizer is used. The proposed behavior detection system works in surveillance applications across different environments. It also has potential applications in behavior analysis at railway stations, airports, and shopping malls.

In the future, we will work on high-density crowds with moving background objects in complex environments, and on places with high levels of occlusion, such as religious events, rallies, and shopping festivals.

Acknowledgement: This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2023-2018-0-01426) supervised by the IITP (Institute for Information & Communications Technology Planning & Evaluation).

Funding Statement: This work was partially supported by the Taif University Researchers Supporting Project number (TURSP-2020/115), Taif University, Taif, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. A. Jalal, S. Lee, J. Kim and T. Kim, “Human activity recognition via the features of labeled depth body parts,” in Proc. Smart Homes Health Telematics, Berlin, Heidelberg, pp. 246–249, 2012.

2. A. Jalal, J. T. Kim and T.-S. Kim, “Development of a life logging system via depth imaging-based human activity recognition for smart homes,” in Proc. Int. Symp. on Sustainable Healthy Buildings, Seoul, Korea, vol. 19, pp. 91–95, 2012.

3. A. Jalal, J. T. Kim and T.-S. Kim, “Human activity recognition using the labeled depth body parts information of depth silhouettes,” in Proc. 6th Int. Symp. on Sustainable Healthy Buildings, Korea, pp. 1–8, 2012.

4. A. Jalal, N. Sharif, J. T. Kim and T.-S. Kim, “Human activity recognition via recognized body parts of human depth silhouettes for residents monitoring services at smart homes,” Indoor and Built Environment, vol. 22, no. 9, pp. 271–279, 2013.

5. A. Jalal, M. Zia Uddin and T. S. Kim, “Depth video-based human activity recognition system using translation and scaling invariant features for life logging at smart home,” IEEE Transactions on Consumer Electronics, vol. 58, no. 3, pp. 863–871, 2012.

6. A. Jalal, Y. Kim and D. Kim, “Ridge body parts features for human pose estimation and recognition from RGB-D video data,” in Proc. IEEE Int. Conf. on Computing, Communication and Networking Technologies, Hefei, China, pp. 1–6, 2014.

7. A. Jalal and Y. Kim, “Dense depth maps-based human pose tracking and recognition in dynamic scenes using ridge data,” in Proc. IEEE Int. Conf. on Advanced Video and Signal-based Surveillance, Seoul, Korea, HanaSquare, Korea University, pp. 119–124, 2014.

8. A. Jalal and S. Kamal, “Real-time life logging via a depth silhouette-based human activity recognition system for smart home services,” in Proc. IEEE Int. Conf. on Advanced Video and Signal-based Surveillance, Seoul, HanaSquare, Korea University, pp. 74–80, 2014.

9. C. Lu, J. Shi and J. Jia, “Abnormal event detection at 150 fps in MATLAB,” in Proc. IEEE Int. Conf. on Computer Vision, Washington, DC, USA, 2013.

10. B. Degardin and H. Proença, “Human activity analysis: Iterative weak/self-supervised learning frameworks for detecting abnormal events,” in IEEE Int. Joint Conf. on Biometrics (IJCB), Houston, USA, 2020.

11. S. Kamal and A. Jalal, “A hybrid feature extraction approach for human detection, tracking and activity recognition using depth sensors,” Arabian Journal for Science and Engineering, vol. 41, no. 3, pp. 1043–1051, 2016.

12. A. Jalal, Y. H. Kim, Y. J. Kim, S. Kamal and D. Kim, “Robust human activity recognition from depth video using spatiotemporal multi-fused features,” Pattern Recognition, vol. 61, no. 4, pp. 295–308, 2017.

13. S. Kamal, A. Jalal and D. Kim, “Depth images-based human detection, tracking and activity recognition using spatiotemporal features and modified HMM,” Journal of Electrical Engineering and Technology, vol. 11, no. 6, pp. 1921–1926, 2016.

14. A. Jalal, S. Kamal and D. Kim, “Facial expression recognition using 1D transform features and hidden markov model,” Journal of Electrical Engineering & Technology, vol. 12, no. 4, pp. 1657–1662, 2017.

15. A. Jalal, S. Kamal and D. Kim, “A depth video-based human detection and activity recognition using multi-features and embedded hidden markov models for health care monitoring systems,” International Journal of Interactive Multimedia and Artificial Intelligence, vol. 4, no. 4, pp. 54–62, 2017.

16. A. Jalal, M. A. K. Quaid and A. S. Hasan, “Wearable sensor-based human behavior understanding and recognition in daily life for smart environments,” in IEEE Conf. on Int. Conf. on Frontiers of Information Technology, Islamabad, Pakistan, 2018.

17. A. Jalal, M. Mahmood and M. A. Sidduqi, “Robust spatio-temporal features for human interaction recognition via artificial neural network,” in IEEE Conf. on Int. Conf. on Frontiers of Information Technology, Islamabad, Pakistan, 2018.

18. A. Jalal, M. A. K. Quaid and M. A. Sidduqi, “A Triaxial acceleration-based human motion detection for ambient smart home system,” in IEEE Int. Conf. on Applied Sciences and Technology, Korea, 2019.

19. A. Jalal, M. Mahmood and A. S. Hasan, “Multi-features descriptors for human activity tracking and recognition in Indoor-outdoor environments,” in IEEE Int. Conf. on Applied Sciences and Technology, Queretaro, Mexico, 2019.

20. A. Jalal and M. Mahmood, “Students’ behavior mining in e-learning environment using cognitive processes with information technologies,” Education and Information Technologies, Springer, vol. 24, no. 5, pp. 2719–2821, 2019.

21. A. Jalal, A. Nadeem and S. Bobasu, “Human body parts estimation and detection for physical sports movements,” in IEEE Int. Conf. On Communication, Computing and Digital Systems, Islamabad, Pakistan, 2019.

22. A. Ahmed, A. Jalal and A. A. Rafique, “Salient segmentation based object detection and recognition using hybrid genetic transform,” in IEEE ICAEM Conf., Taxila, Pakistan, 2019.

23. A. Ahmed, A. Jalal and K. Kim, “Region and decision tree-based segmentations for multi-objects detection and classification in outdoor Scenes,” in IEEE Conf. on Frontiers of Information Technology, Islamabad, Pakistan, 2019.

24. M. Mahmood, A. Jalal and K. Kim, “WHITE STAG model: Wise human interaction tracking and estimation (WHITE) using spatio-temporal and angular-geometric (STAG) descriptors,” Multimedia Tools and Applications, vol. 79, no. 11, pp. 6919–6950, 2020.

25. M. A. K. Quaid and A. Jalal, “Wearable sensors based human behavioral pattern recognition using statistical features and reweighted genetic algorithm,” Multimedia Tools and Applications, vol. 79, no. 9, pp. 6061–6083, 2019.

26. A. Nadeem, A. Jalal and K. Kim, “Human actions tracking and recognition based on body parts detection via artificial neural network,” in IEEE Int. Conf. on Advancements in Computational Sciences, Lahore, Pakistan, 2020.

27. N. Golestani and M. Moghaddam, “Human activity recognition using magnetic induction-based motion signals and deep recurrent neural networks,” Nature Communications, vol. 11, pp. 1–11, 2020.

28. S. Amna, A. Jalal and K. Kim, “An accurate facial expression detector using multi-landmarks selection and local transform features,” in IEEE ICACS Conf., Quebec, Canada, 2020.

29. A. Ahmed, A. Jalal and K. Kim, “A novel statistical method for scene classification based on multi-object categorization and logistic regression,” Sensors, vol. 20, no. 14, pp. 3871, 2020.

30. A. Jalal, N. Khalid and K. Kim, “Automatic recognition of human interaction via hybrid descriptors and maximum entropy markov model using depth sensors,” Entropy, vol. 22, no. 8, pp. 217, 2020.

31. A. Rafique, A. Jalal and K. Kim, “Automated sustainable multi-object segmentation and recognition via modified sampling consensus and kernel sliding perceptron,” Symmetry, vol. 13, no. 12, pp. 1928, 2020.

32. A. Ahmed, A. Jalal and K. Kim, “Multi‐objects detection and segmentation for scene understanding based on texton forest and kernel sliding perceptron,” Journal of Electrical Engineering and Technology, vol. 16, no. 2, pp. 1143–1150, 2020.

33. A. Jalal, I. Akhtar and K. Kim, “Human posture estimation and sustainable events classification via pseudo-2d stick model and k-ary tree hashing,” Sustainability, vol. 12, no. 23, pp. 9814, 2020.

34. M. Pervaiz, A. Jalal and K. Kim, “Hybrid algorithm for multi people counting and tracking for smart surveillance,” in IEEE IBCAST, Bhurban, Pakistan, 2021.

35. K. Nida, M. Gochoo, A. Jalal and K. Kim, “Modeling two-person segmentation and locomotion for stereoscopic action identification: A sustainable video surveillance system,” Sustainability, vol. 12, no. 2, pp. 970, 2021.

36. J. Madiha, M. Gochoo, A. Jalal and K. Kim, “HF-SPHR: Hybrid features for sustainable physical healthcare pattern recognition using deep belief networks,” Sustainability, vol. 13, no. 5, pp. 1699, 2021.

37. A. Jalal, A. Ahmed, A. A. Rafique, K. Kim, “Scene semantic recognition based on modified fuzzy c-mean and maximum entropy using object-to-object relations,” IEEE Access, vol. 9, pp. 27758–27772, 2021.

38. M. Gochoo, I. Akhter, A. Jalal and K. Kim, “Stochastic remote sensing event classification over adaptive posture estimation via multifused data and deep belief network,” Remote Sensing, vol. 13, no. 5, pp. 912, 2021.

39. H. Ansar, A. Jalal, M. Gochoo, K. Kim, “Hand gesture recognition based on auto-landmark localization and reweighted genetic algorithm for healthcare muscle activities,” Sustainability, vol. 13, no. 5, pp. 2961, 2021.

40. N. Amir, A. Jalal and K. Kim, “Automatic human posture estimation for sport activity recognition with robust body parts detection and entropy markov model,” Multimedia Tools and Applications, vol. 80, no. 14, pp. 21465–21498, 2021.

41. I. Akhter, A. Jalal and K. Kim, “Adaptive pose estimation for gait event detection using context‐aware model and hierarchical optimization,” Journal of Electrical Engineering and Technology, vol. 16, no. 5, pp. 2721–2729, 2021.

42. M. Gochoo, S. Badar, A. Jalal and K. Kim, “Monitoring real-time personal locomotion behaviors over smart indoor-outdoor environments via body-worn sensors,” IEEE Access, vol. 9, pp. 70556–70570, 2021.

43. P. Mahwish, G. Yazeed, M. Gochoo, A. Jalal, S. Kamal et al., “A smart surveillance system for people counting and tracking using particle flow and modified SOM,” Sustainability, vol. 13, no. 12, pp. 5367, 2021.

44. M. Gochoo, S. R. Amna, G. Yazeed, A. Jalal, S. Kamal et al., “A systematic deep learning based overhead tracking and counting system using RGB-D remote cameras,” Applied Sciences, vol. 11, no. 12, pp. 5503, 2021.

45. A. Faisal, Y. Ghadi, M. Gochoo, A. Jalal and K. Kim, “Multi-person tracking and crowd behavior detection via particles gradient motion descriptor and improved entropy classifier,” Entropy, vol. 23, no. 5, pp. 628, 2021.

46. M. Vikas, “Walkable streets: Pedestrian behavior, perceptions and attitudes,” Journal of Urbanism, vol. 1, no. 3, pp. 217–245, 2008.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
